Bandcamp's AI Music Ban: What Artists Need to Know [2025]

Something shifted in the music industry when Bandcamp announced it was banning music made "wholly or in substantial part" by generative AI. It wasn't just another policy update buried in terms of service. It was a statement about what Bandcamp values, and it caught the attention of every artist, platform, and tech company watching how this whole AI music thing would play out.

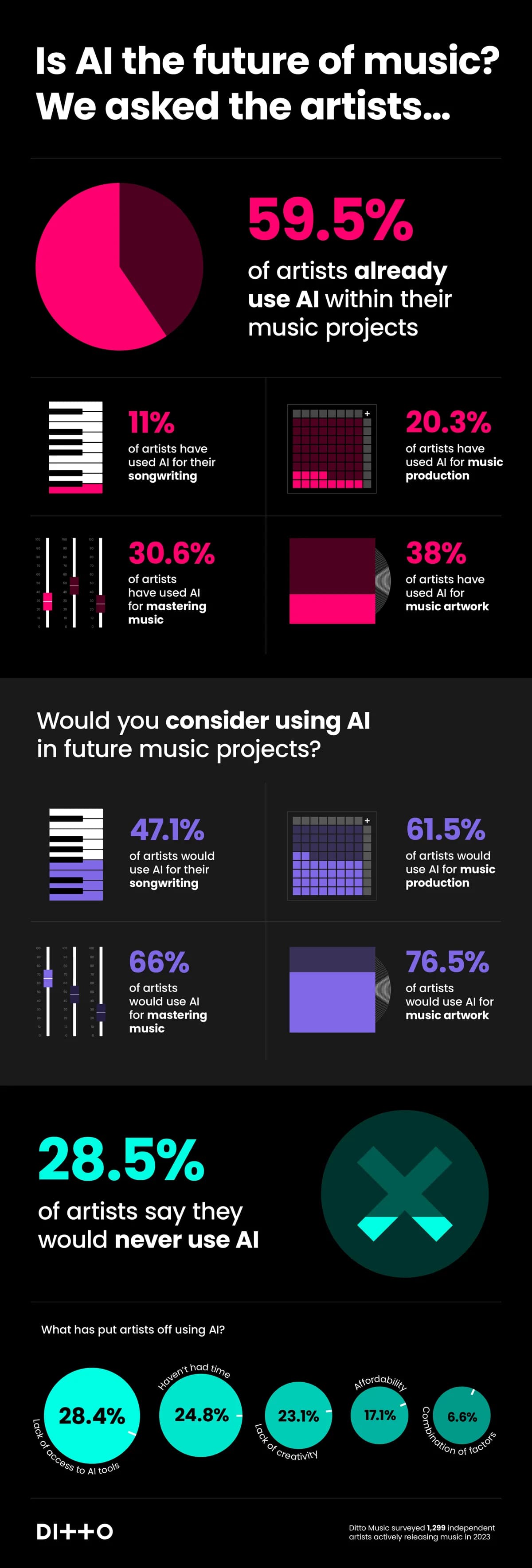

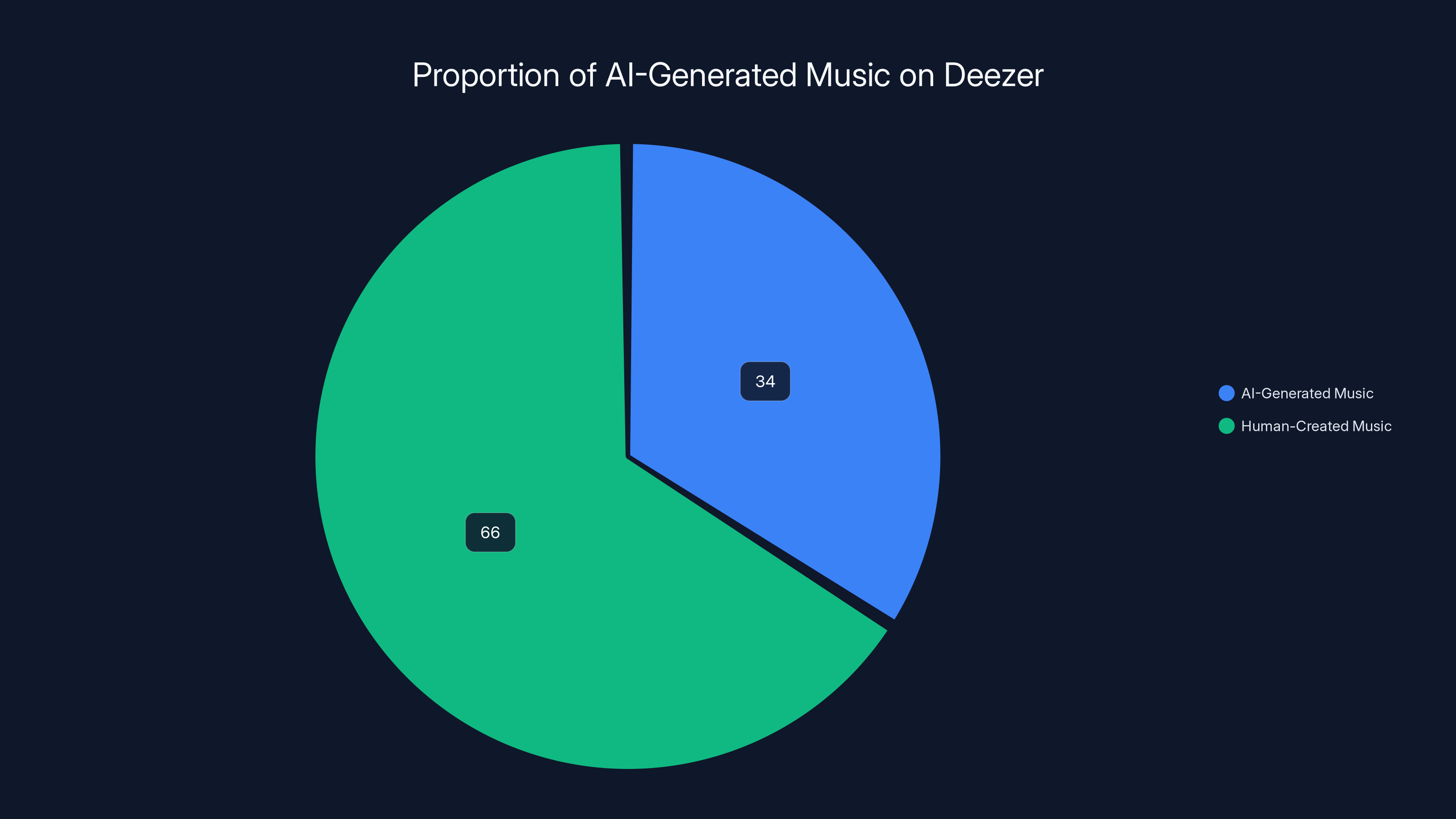

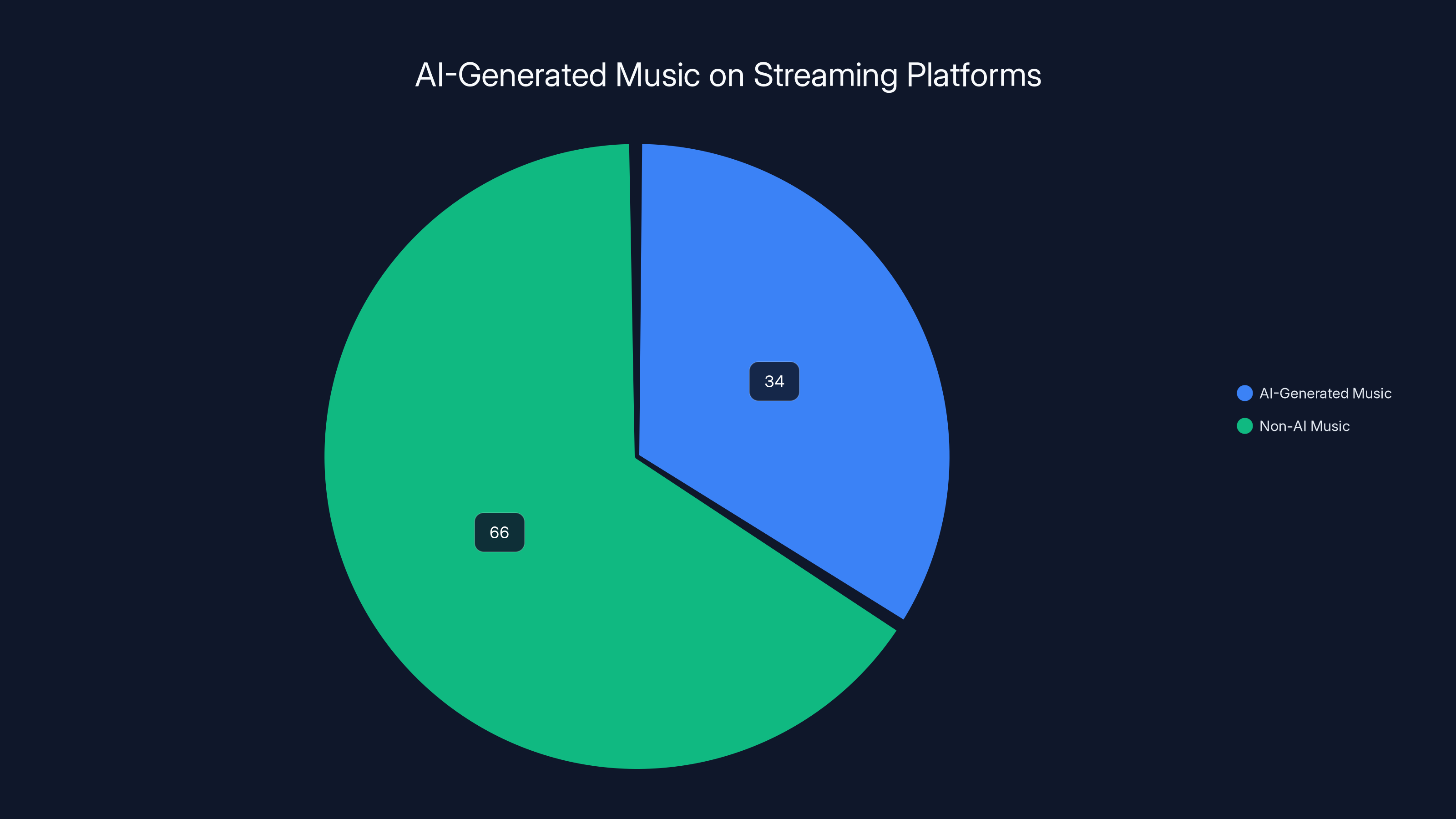

The music streaming world had been sleepwalking through an AI problem for months. Deezer reported that roughly 50,000 AI-generated songs were being uploaded daily, accounting for about 34 percent of all daily uploads to the platform. That's not a niche issue anymore. That's an existential threat to the entire ecosystem. Musicians were frustrated. Fans were confused. Platforms were scrambling.

Bandcamp decided to act decisively. And that decision tells us something important about where the music industry is headed and what happens when a platform prioritizes artists over algorithm-driven growth.

Here's what's really happening with Bandcamp's AI policy, why it matters more than you think, and what comes next for everyone involved.

TL;DR

- Bandcamp banned music made wholly or substantially by AI, with tools to report suspected AI content

- The policy is stricter than competitors like Spotify, which only requires disclosure labels

- AI impersonation of artists is strictly prohibited, addressing a major problem for original musicians

- Roughly 50,000 AI-generated songs are uploaded to Deezer every day, making enforcement increasingly critical

- Bottom Line: Bandcamp's stance signals a shift toward artist protection over platform growth

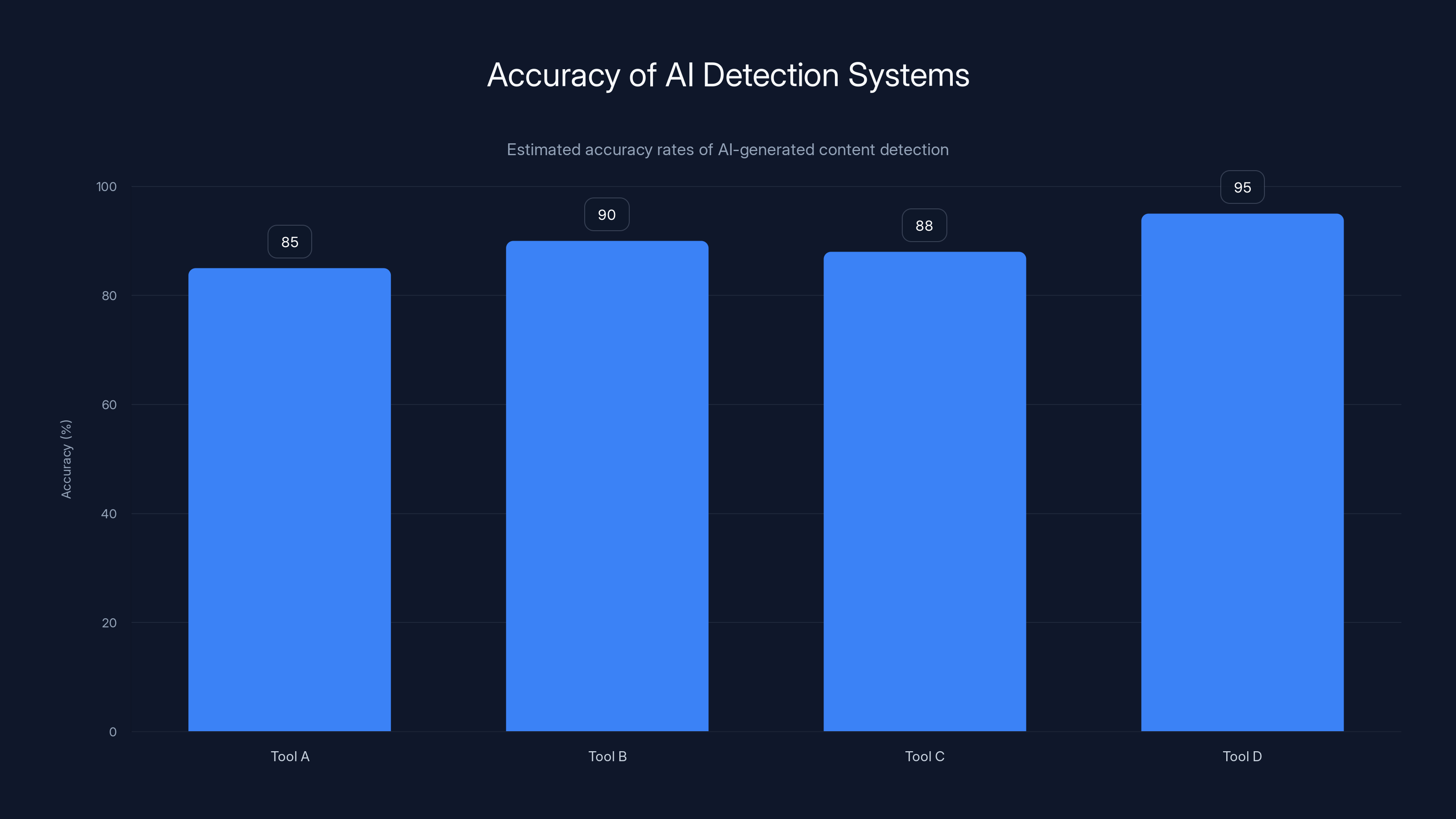

AI detection systems used by platforms like Bandcamp have varying accuracy rates, typically between 85-95%, depending on the tool and audio characteristics. Estimated data.

The AI Music Problem Nobody Wanted to Talk About

Let's back up. For years, the music industry pretended AI-generated music wasn't really a problem. It was niche. It was novelty. It was something for YouTube enthusiasts and experimental musicians, not a real threat to professional artists.

Then the floodgates opened.

Tools like Suno and Udio made AI music generation accessible to anyone with a browser. You don't need to understand music theory. You don't need recording equipment. You don't need talent. You just type a prompt, wait 30 seconds, and you have a song.

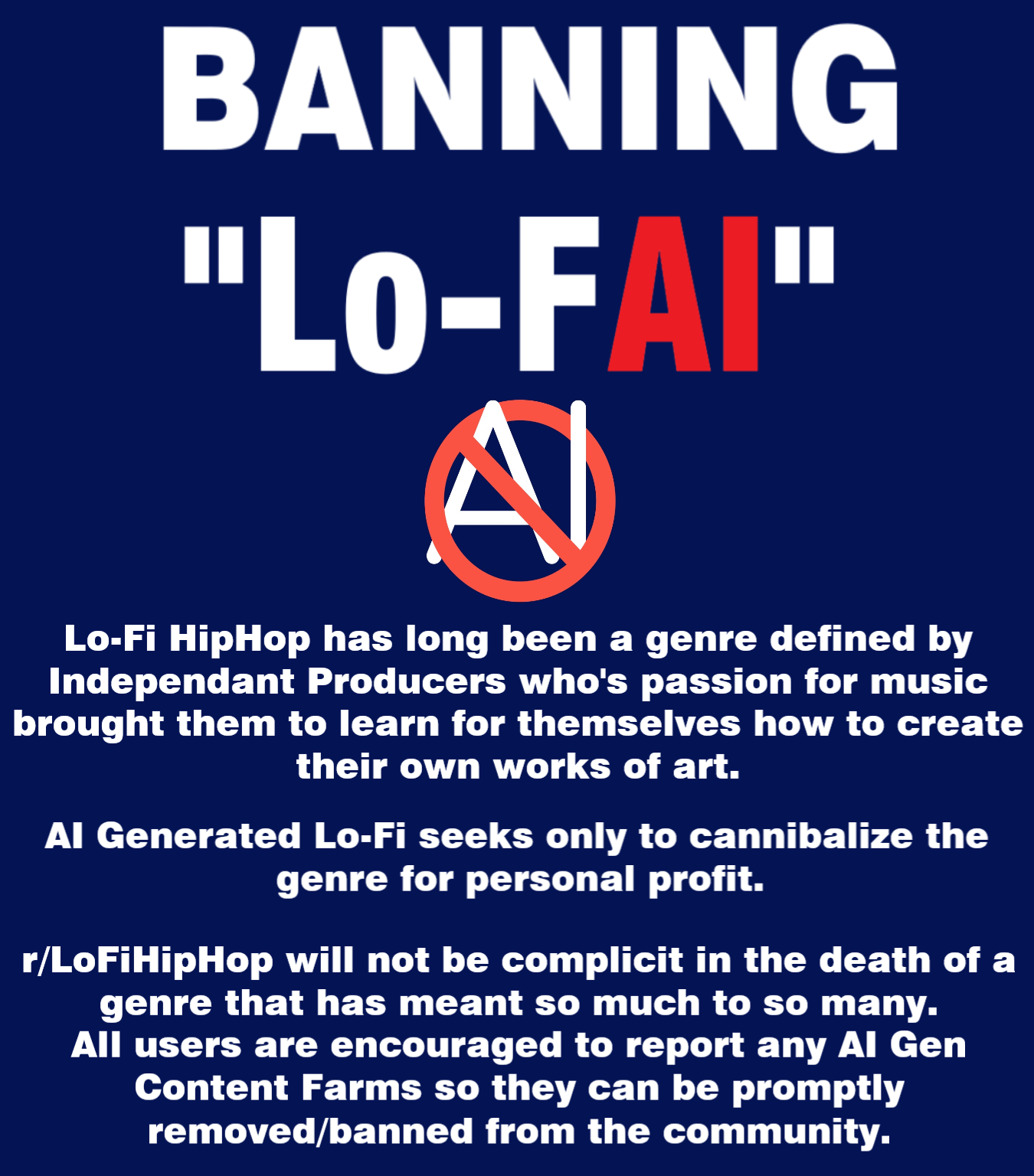

This democratization of music creation sounds beautiful in theory. In practice, it created what musicians started calling "AI slop"—low-effort, often derivative music flooding platforms at scale.

The problem isn't that AI-generated music exists. The problem is volume without quality control. Streaming platforms have algorithms designed to reward engagement metrics, not artistic merit. An AI-generated bedroom pop song that gets 100 streams cost almost nothing to make. A human artist who spent three months in a studio producing an album might get comparable numbers.

From an economics perspective, that's a disaster for working musicians.

And then there's the impersonation angle. Artists started reporting that AI tools were creating songs that deliberately mimicked their voice, style, and delivery. Fans would search for a specific artist and find dozens of fake "songs" mixed in with the real catalog. Some of these were novelty. Some were explicitly designed to monetize an artist's likeness without permission.

That's where the legal and ethical lines get interesting. And that's where Bandcamp decided to take a stand.

Understanding Bandcamp's Specific Policy Language

Bandcamp's policy doesn't just say "no AI music." The language matters, and understanding it matters even more.

The platform explicitly bans music made "wholly or in substantial part" by generative AI. That's a careful phrase. It's not a blanket prohibition on any tool that touches a song. It's targeting songs where AI is the primary creative force.

This distinction is important. A producer using AI to generate drum patterns, then building original melodies and arrangements on top? That probably survives. A person running a prompt through Suno and uploading the output directly? That doesn't.

The policy recognizes a reality that many anti-AI absolutists skip over: technology has always been part of music creation. Digital audio workstations are AI-adjacent. Autotune is technically algorithmic. Sample-based music relies on processing. The question isn't whether technology should be involved. The question is whether the human creative decision-making is the primary force.

Bandcamp's leadership was explicit about their reasoning. "We believe that the human connection found through music is a vital part of our society and culture, and that music is much more than a product to be consumed," the company wrote on their blog.

That's not about the technology. It's about values. And that distinction matters.

The policy also explicitly prohibits impersonation. Using AI to create music that imitates another artist's voice, style, or likeness without permission is "strictly prohibited." This is where Bandcamp went further than simple AI disclosure. They're protecting artist identity.

That's a bigger deal than it might sound. Impersonation isn't just a copyright issue. It's about reputation and control. Your voice is part of your brand. If someone generates a song in your style and it goes viral, people might assume it's legitimately yours. Your fans get confused. Your authentic work gets buried. And you had no say in the matter.

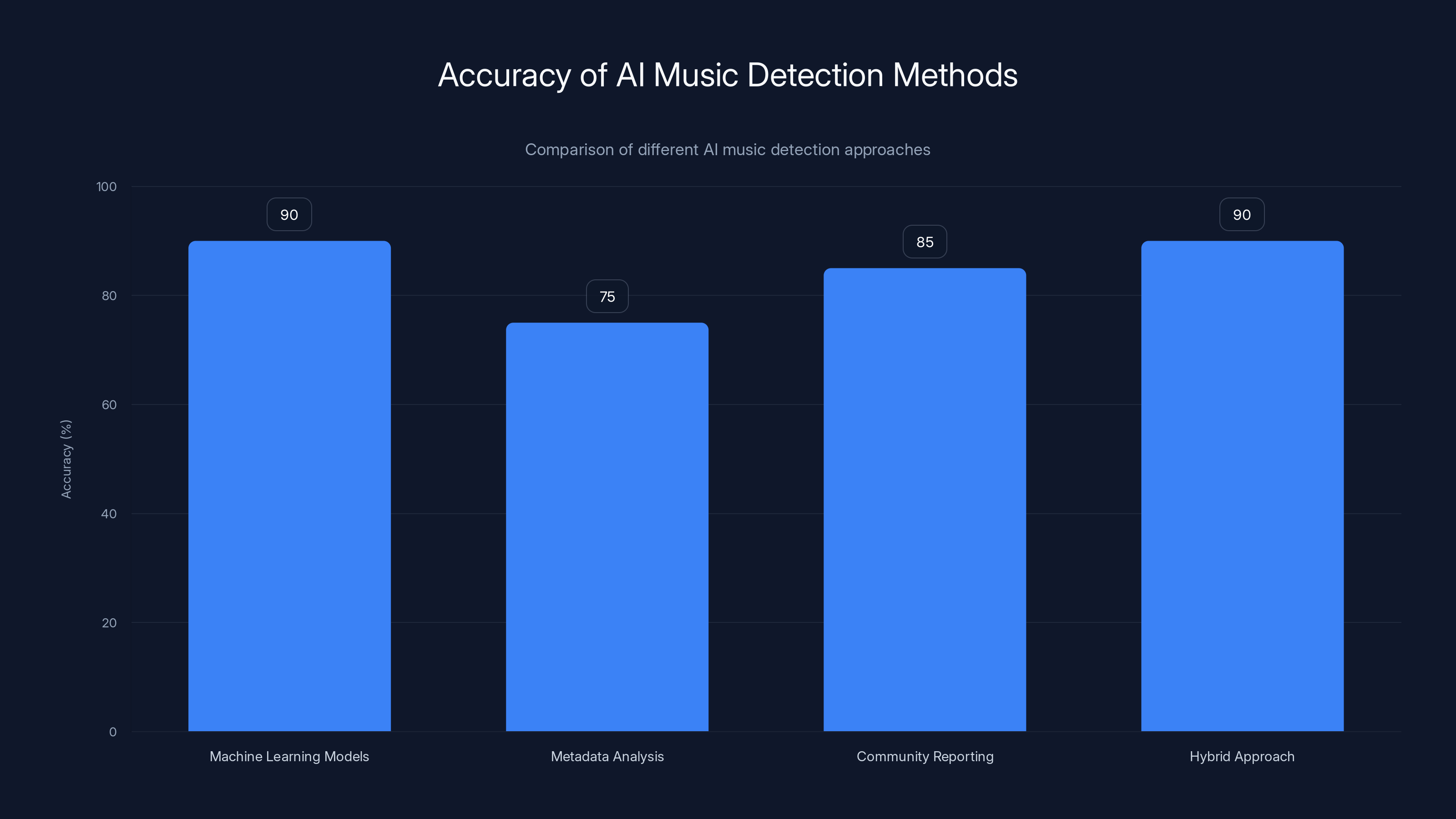

Machine learning models and hybrid approaches offer high accuracy (around 90%) in detecting AI-generated music, while metadata analysis and community reporting are slightly less effective.

How Bandcamp Will Enforce the Policy

Here's where policy meets reality: enforcement is the hard part.

Bandcamp said it will actively monitor uploads for suspected AI-generated content. The company didn't specify exactly how this would work, but the most likely approach involves audio analysis. AI-generated music has certain acoustic fingerprints that machine learning models can identify with reasonable accuracy.

But here's the catch. Those detection systems aren't perfect. They have false positives and false negatives. And they have to keep getting better as AI generation improves. It's an arms race that Bandcamp acknowledged implicitly by not claiming they'd catch everything.

So they're also giving users reporting tools. Community enforcement. If fans or other artists suspect something is AI-generated, they can flag it. Bandcamp then investigates.

This is smart for a few reasons. First, it scales. Bandcamp doesn't have unlimited moderation resources. Crowdsourced reporting costs nothing. Second, the community has strong incentives to be accurate. False flagging damages the platform's trust. Third, it distributes responsibility. If someone gets wrongly removed, they can appeal and point to the reporting system's limitations.

The unknown variable: false positives. How many legitimate artists will get caught in the filter? What's the appeals process? Will Bandcamp's moderation team be trained to understand nuanced questions about AI-assisted production?

These are the details that matter in real enforcement, and Bandcamp hasn't fully specified them yet.

What we do know is that Bandcamp has a track record of supporting artists in concrete ways. The platform introduced Bandcamp Fridays, where the company waives its revenue share on sales so the proceeds go to artists. Since the program started, over $120 million has gone directly to musicians. That's not hypothetical artist support. That's money.

So when Bandcamp makes a policy decision, it comes from a company that's financially aligned with artist welfare. That changes the calculation.

How This Compares to Other Streaming Platforms

Bandcamp didn't make this decision in isolation. They made it while other platforms were moving slowly, and that contrast is revealing.

Spotify has approached the problem cautiously. The platform committed to developing an industry standard for AI disclosure in music credits, so listeners can see when AI was involved. They've also promised an impersonation policy. But as of early 2025, these are still in development. Spotify is being thoughtful, collaborative, and slow.

Bandcamp went faster and further. An outright ban is more aggressive than disclosure labels. It's saying "no AI-generated music," not "label your AI-generated music."

Deezer signed a global AI artist training statement alongside actors and songwriters. That's about protecting artists' training data, preventing AI companies from scraping their work without permission. It's important, but it's a different focus from Bandcamp's policy.

Apple Music has been mostly quiet on this. YouTube and SoundCloud have taken minimal action.

So Bandcamp is currently out front. Not just in timing, but in decisiveness.

There's a strategic element here worth understanding. Bandcamp's core audience is artists, not passive listeners. The platform became successful by treating musicians as partners, not inventory. An AI ban makes sense for that market positioning. It differentiates Bandcamp from platforms that optimize for growth metrics above all else.

But it also comes with risk. What if the enforcement system is too aggressive? What if legitimate artists get caught in false positives and decide to leave? What if the AI music community decides to build alternative platforms?

Bandcamp is betting that artist trust is worth more than the potential audience of AI music creators. That's a values bet.

The Real Impact: What This Means for Musicians

If you're a musician, Bandcamp's policy directly affects you. Maybe obviously. Maybe less so.

First, the obvious part. If you were worried about fake AI versions of your music appearing on Bandcamp alongside your legitimate catalog, you just got more protection. The platform committed resources to identifying and removing that content. When you report impersonation, there's now a specific policy backing your complaint.

This matters most for artists without substantial reach. Big-name musicians have teams handling this stuff. Independent artists usually don't. A solo bedroom producer with 5,000 followers can now rely on Bandcamp's moderation rather than hiring lawyers.

Second, less obvious part. The policy affects the economics of the platform. If AI music is banned, then the only music generating streams is human-created. That changes how revenue gets distributed. Fewer low-cost uploads competing with your work. More resources flowing to legitimate creators.

Bandcamp Fridays already give artists 100 percent of revenue on those days. If the overall platform leans toward quality over quantity, that money gets divided among fewer, typically more serious artists. Whether that helps or hurts any individual creator depends on their position in the catalog.

Third, the precedent angle. When a major platform makes a policy decision, it creates pressure on other platforms to follow. Not immediately. Not universally. But the conversation changes. If every platform had banned AI slop, the problem wouldn't exist. Now Spotify, YouTube, and others face implicit pressure to do something comparable.

Bandcamp created a public standard. They're essentially saying "this is what responsible platform stewardship looks like." Other platforms can ignore that, but they're now doing so explicitly.

AI-generated music accounts for 34% of all daily uploads on Deezer, highlighting the significant presence of AI in music creation.

The Gray Area: What About AI-Assisted Production?

Here's where the policy gets complicated, and where smart artists will ask important questions.

Bandcamp's language prohibits music made "wholly or in substantial part" by AI. But what does "substantial part" actually mean? 50 percent? 60 percent? Is it by time, by creative impact, or something else?

A producer might use AI to:

- Generate drum patterns, then manually adjust them

- Create melodic sketches, then write original variations

- Suggest chord progressions, then compose something different

- Analyze vocal recordings and suggest mixing approaches

In each case, AI played a role. But a human made conscious creative decisions that shaped the final product. Does that survive Bandcamp's policy? Probably. But Bandcamp hasn't provided exact thresholds.

This ambiguity creates practical problems. If you're an artist pushing creative boundaries with AI tools, how do you know if your work complies? If you get flagged, how do you prove it's legitimate?

Bandcamp would need to clarify this through appeals, precedent, and iterative guidance. That's not ideal, but it's how most policy enforcement works initially. The rules get defined through application and adjustment.

The safer approach for now: if AI tools are part of your production, document the process. Show the original prompts, the source files, the manual edits. If you get questioned, you have evidence that human creativity was the driving force.
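
One way to keep that kind of record is a small manifest listing a hash and modification time for each project file. The sketch below is only an illustration: the function name `build_manifest`, the folder layout, and the JSON fields are assumptions made for this example, not anything Bandcamp has requested or specified.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def build_manifest(project_dir: str) -> dict:
    """Record a SHA-256 hash, size, and modification time for every file in a folder."""
    root = Path(project_dir)
    entries = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        stat = path.stat()
        entries.append({
            "file": path.relative_to(root).as_posix(),
            "sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
            "bytes": stat.st_size,
            "modified_utc": datetime.fromtimestamp(
                stat.st_mtime, tz=timezone.utc
            ).isoformat(),
        })
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "files": entries,
    }


if __name__ == "__main__":
    # "my_album_project" is a placeholder folder holding prompts, stems, and session files.
    print(json.dumps(build_manifest("my_album_project"), indent=2))
```

Saving the printed JSON alongside each release gives a dated snapshot of prompts, stems, and edits. A manifest like this shows which files existed and when; it does not by itself prove who made the creative decisions.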

There's also the philosophical question of whether AI-assisted production should even be restricted. Some artists and technologists argue that prohibitions are too blunt. Tools are morally neutral. Only the intent and outcome matter.

Bandcamp's answer is: intent absolutely matters. If you're using AI to shortcut the creative process, to generate lazy content at scale, that's different from using AI as one tool among many in your toolkit.

But distinguishing between those in practice? That's hard. And it's where even well-intentioned policies run into enforcement challenges.

The Bigger Picture: Why This Policy Matters Beyond Bandcamp

Bandcamp's decision doesn't exist in isolation. It's part of a larger cultural and economic recalibration around AI.

The music industry is essentially asking: what do we value? Is it growth in content volume, or quality and authenticity? Is it algorithm optimization, or artist relationships? Is it democratizing music creation, or protecting professional musicians' livelihoods?

These aren't new questions. They've been asked about technology's impact on music since sampling became standard. But AI scales the questions up dramatically.

When a tool makes it trivial to create music, the scarcity that gave music value disappears. Supply increases infinitely. Demand doesn't. The economic model breaks.

That's why even platforms that benefit from volume have to think about this. If your platform becomes 50 percent AI slop, your brand suffers. Artists stop uploading there. Listeners stop searching there. The business model collapses.

So the policy question becomes a business question. And business questions get answered by whoever has the most market power.

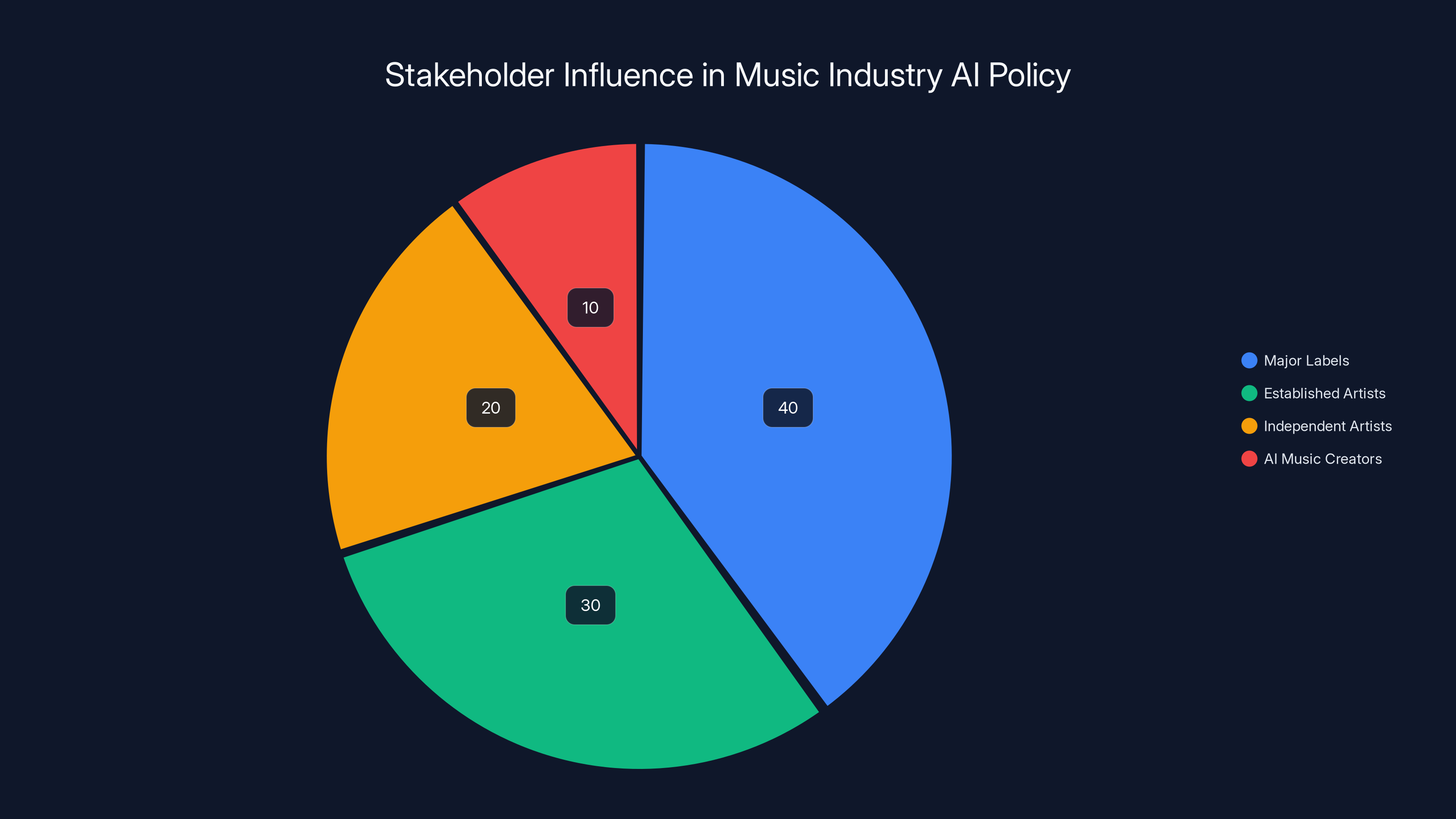

Right now, major labels and established artists have market power. They can pressure platforms. Independent artists have less power but more sympathy from platforms like Bandcamp. AI music creators have neither.

Bandcamp's stance aligns the platform with the artist community. That's a deliberate choice with business consequences. They're betting that artist trust is worth more than AI creator attention.

Technical Detection: How Do Platforms Identify AI Music?

Enforcing the policy requires identifying AI music at scale. How does that actually work?

AI-generated audio has statistical signatures. The way frequencies distribute, the way transitions happen, the acoustic characteristics of drums and instruments—these leave traces. Machine learning models trained on large datasets of known AI music can learn to recognize these patterns.

Companies like Audio Shake and specialized research labs have built detection systems. The accuracy is decent, typically in the 85-95 percent range, but it depends heavily on the specific AI tool used to generate the music.
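
A headline accuracy figure does not say how many flagged tracks are actually AI-generated, because that also depends on how common AI uploads are. The worked example below applies Bayes' rule under loudly stated assumptions: the detector is right 90 percent of the time on both AI and human tracks, and the 5 percent share of AI uploads is a made-up input, not a Bandcamp figure.

```python
def flagged_precision(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a flagged track really is AI-generated (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


def expected_false_flags(uploads: int, specificity: float, prevalence: float) -> float:
    """Expected number of human-made tracks wrongly flagged among `uploads`."""
    return uploads * (1.0 - prevalence) * (1.0 - specificity)


# Hypothetical inputs: 90% sensitivity, 90% specificity, 5% of uploads are AI.
precision = flagged_precision(0.90, 0.90, 0.05)
false_flags = expected_false_flags(10_000, 0.90, 0.05)
print(f"Share of flags that are correct: {precision:.1%}")
print(f"Human tracks wrongly flagged per 10,000 uploads: {false_flags:.0f}")
```

With these inputs, only about a third of flags are correct, and roughly 950 human-made tracks per 10,000 uploads would be wrongly flagged. That is why a detector alone is a weak basis for removal when AI uploads are a small share of the catalog.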

Here's the problem: as AI music tools improve, they're specifically trained to avoid these detection signatures. It's the same adversarial dynamic as AI image detection. Someone builds a detector. The AI tools get better. The detectors improve. It's an endless cycle.

Bandcamp probably isn't building detection from scratch. They're likely licensing or integrating existing tools. That means their accuracy depends on what's available on the market, and that technology is still improving.

Another detection method: metadata analysis. Who uploaded it? Do they have a history of uploads? Is their account pattern consistent with human artists? This is less about the audio itself and more about the account behavior.
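
As a rough sketch of what account-behavior analysis could look like, the snippet below counts the largest burst of uploads inside any 24-hour window and flags accounts that exceed a limit. The function names and the limit of 20 uploads per day are hypothetical choices for illustration; nothing here reflects how Bandcamp actually evaluates accounts.

```python
from datetime import datetime, timedelta


def max_uploads_in_window(
    upload_times: list[datetime], window: timedelta = timedelta(days=1)
) -> int:
    """Largest number of uploads that fall inside any single sliding window."""
    times = sorted(upload_times)
    best = 0
    start = 0
    for end in range(len(times)):
        # Advance the left edge until the span is shorter than the window.
        while times[end] - times[start] >= window:
            start += 1
        best = max(best, end - start + 1)
    return best


def looks_like_bulk_uploader(upload_times: list[datetime], daily_limit: int = 20) -> bool:
    """Flag an account for review if it ever exceeded `daily_limit` uploads in a day."""
    return max_uploads_in_window(upload_times) > daily_limit
```

A signal like this says nothing about the audio itself. A label uploading a back catalog would trip it too, so it only makes sense as one input to human review.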

And then there's the community reporting component, which bypasses detection entirely. If someone reports a track, humans can listen and make judgment calls. That's slow to scale, but it's thorough.

The realistic scenario is hybrid. Automated detection catches obvious cases. Community reports catch deliberate impersonation. Human review handles edge cases and appeals. That probably catches 80-90 percent of problematic content while allowing most legitimate artists to operate freely.
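
The hybrid flow described here can be sketched as a small routing function. Everything in it is an assumption made for illustration: the `Track` fields, the detector score scale, and the thresholds are hypothetical, since Bandcamp has not published how its review pipeline works.

```python
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    detector_score: float      # 0.0 (likely human) to 1.0 (likely AI), hypothetical detector
    community_reports: int     # number of user reports filed against the track
    impersonation_claim: bool  # True if an artist filed an impersonation complaint


# Illustrative thresholds only; real values would need tuning on labeled data.
HOLD_SCORE = 0.95
REVIEW_SCORE = 0.70
REVIEW_REPORTS = 3


def triage(track: Track) -> str:
    """Route a track to 'hold', 'human_review', or 'allow'."""
    # Obvious cases: automated detection alone is enough to hold the upload.
    if track.detector_score >= HOLD_SCORE:
        return "hold"
    # Impersonation claims, repeated reports, and mid-range scores go to a person.
    if (
        track.impersonation_claim
        or track.community_reports >= REVIEW_REPORTS
        or track.detector_score >= REVIEW_SCORE
    ):
        return "human_review"
    return "allow"
```

Keeping the automatic path narrow and sending everything ambiguous to a person is one way to limit false positives, at the cost of a larger review queue.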

Not perfect. But probably sufficient.

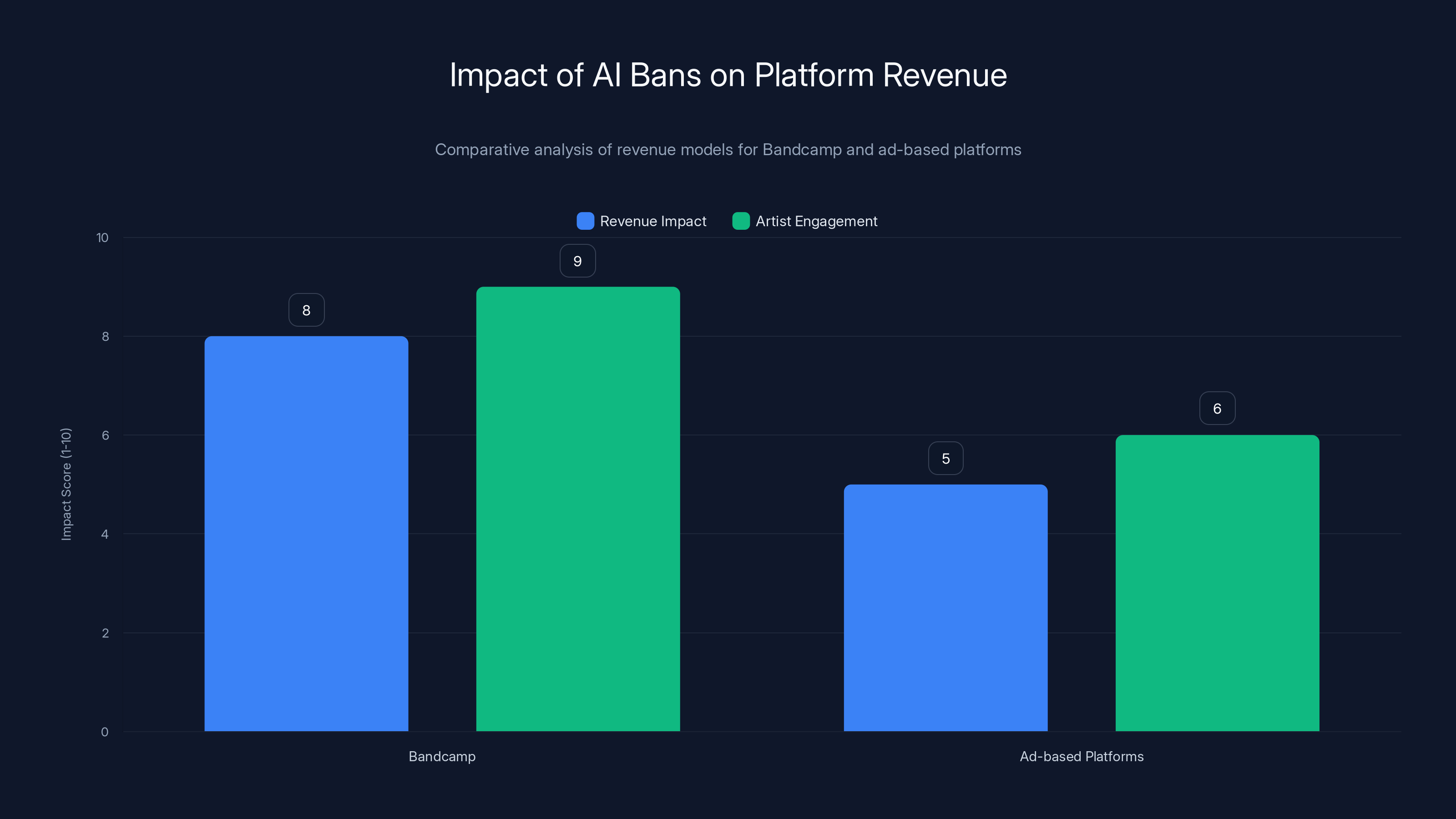

Bandcamp's revenue model benefits from AI bans due to increased artist engagement and higher quality content, whereas ad-based platforms may see a mixed impact. (Estimated data)

What About Independent AI Musicians? Are They Just Shut Out?

This is the tension at the heart of the policy. Bandcamp is shutting out an entire category of music creation tools. That affects people who identify as AI musicians.

Some of them are making genuinely interesting work. They're exploring the aesthetic possibilities of the medium. They're pushing creative boundaries. From an artistic innovation perspective, that's valuable.

Bandcamp's position is that innovation happens within human-creative constraint. The platform values human artistic intent above algorithmic generation. That's a philosophical stance, not a technical one.

It's also a market stance. Bandcamp's audience is predominantly human musicians and indie music fans. Those communities explicitly value human creation. Hosting AI music would contradict the platform's brand positioning.

But it does create a question: where do AI musicians go? They can't use Bandcamp. Major platforms haven't decided yet. Will alternate platforms emerge specifically for AI music?

Probably yes. There's demand. Someone will build Bandcamp-for-AI-music. It might be niche. It might be weird. But it'll exist.

The policy also creates an interesting innovation dynamic. If AI musicians want access to human-centric platforms, they face pressure to incorporate more human creative input. That might actually push the technology in more interesting directions—toward AI as a tool for human creativity, rather than AI as a replacement for it.

So Bandcamp's policy isn't necessarily suppressing AI music. It's redirecting it toward different platforms and different use cases. That's actually more functional than a total prohibition would be.

The Reporting System: How Community Enforcement Actually Works

Bandcamp gave users reporting tools. This is crucial because it distributes the enforcement burden.

The system probably works like this: if you suspect a track is AI-generated, you can file a report. The report includes your reason, possibly links to similar tracks, or evidence of impersonation. Bandcamp's moderation team reviews it.

For impersonation reports, the process is potentially clearer. If you're an artist and someone uploaded a track claiming to be you or obviously mimicking your style, you can file a copyright/identity claim. Bandcamp likely has expedited handling for that.

The incentive structure matters. If reporting is easy but false reports are common, the system gets clogged. If reporting is hard, legitimate problems get ignored. Bandcamp needs to find the middle ground.
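
One possible middle ground is to keep reporting easy but weight each report by the reporter's track record. The sketch below uses a Laplace-smoothed share of past reports that moderators upheld. The function names and the scoring rule are assumptions for illustration, not a description of Bandcamp's system.

```python
def reporter_weight(upheld: int, rejected: int) -> float:
    """Smoothed share of a reporter's past reports that moderators upheld.

    A brand-new reporter gets 0.5; the weight moves toward 1.0 or 0.0
    as their history accumulates.
    """
    return (upheld + 1) / (upheld + rejected + 2)


def report_score(reporter_histories: list[tuple[int, int]]) -> float:
    """Sum of weights for every reporter who flagged a track.

    Each history is a (upheld, rejected) pair for one reporter.
    """
    return sum(reporter_weight(u, r) for u, r in reporter_histories)


# Two reliable reporters outweigh five reporters with a history of rejected flags.
reliable = report_score([(9, 1), (9, 1)])
unreliable = report_score([(0, 8)] * 5)
print(reliable > unreliable)
```

Under this scheme a pile of reports from accounts with a history of rejected flags moves a track up the queue less than a couple of reports from accounts that are usually right.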

Community moderation works best when the community is invested in accuracy. On Bandcamp, the community is artists. Artists have strong incentives to correctly identify AI music (to reduce competition) but also strong incentives to avoid false positives (to preserve community trust).

Compare this to platforms where users are mostly passive listeners. They have no incentive to help moderate. Reporting becomes spam.

Bandcamp's advantage here is their community composition. They've built a platform for creators by creators. That makes community enforcement actually functional.

But there are still failure modes. What if a competitor falsely reports your track as AI to damage you? What if someone legitimately confused about the policy reports ambient electronic music made with synthesizers?

The appeals process becomes critical. Bandcamp needs robust appeals where creators can defend their work. They haven't detailed this yet, which is a gap.

What Happens Next: The Evolution of Platform Policies

Bandcamp made the first move. What comes next?

Expect other platforms to gradually tighten their own policies. Not all at once. Not with Bandcamp's decisiveness. But the pressure is there.

SoundCloud will probably follow relatively quickly. They've positioned themselves as artist-friendly and would face criticism if they allowed unchecked AI slop.

Spotify will continue moving toward disclosure requirements. They're the market leader and move more cautiously, but they'll probably eventually require AI disclosure in credits and metadata.

YouTube is the wildcard. They have the most to lose from AI regulation but also the most to lose from platform degradation. Expect them to move slowly but eventually implement something.

The majors—Sony, Universal, Warner—will push for stricter rules. They have the most to gain from AI restrictions because they represent established artists.

Independent labels and smaller platforms will fragment. Some will go hard on AI prohibition. Others will embrace it. That diversity might actually be healthy.

Legislatively, we'll probably see some regulation. Not necessarily AI-specific, but music platform regulation that touches on AI. The European Union is already moving in that direction.

The likeliest outcome: a spectrum. Some platforms ban AI music entirely (Bandcamp model). Some require disclosure and separate categorization (Spotify trajectory). Some embrace it as a category. Some create hybrid models where AI music is allowed but with clear labeling and separation.

This spectrum is actually better than uniformity would be. It lets different communities find their platform. Human-focused communities can use Bandcamp. Experimental communities can use other platforms. Everyone gets choice.

Major labels and established artists hold the most influence over AI policy decisions in the music industry, while AI music creators have the least. (Estimated data)

The Economics: How AI Bans Affect Platform Revenue

Here's the business reality: fewer uploads can mean higher quality, which can mean better long-term revenue despite lower volume.

Bandcamp already prioritizes revenue sharing over pure growth. The Bandcamp Fridays initiative directly transfers money to artists. If the platform has fewer total uploads but higher engagement and legitimacy, the per-artist revenue could actually increase.

Less AI slop means less competition for promotion. Human artists get better discoverability. Better discoverability means more listeners. More listeners means more revenue per track. Even with fewer total tracks, the platform could be more profitable.

For Bandcamp specifically, the economics probably work. The platform makes money through revenue sharing and features like better analytics and customizable themes. More high-quality content serves their business model better than more total content.

For platforms that monetize primarily through ads (YouTube, Spotify's free tier), the math is different. Lower volume could mean lower revenue if listener hours decline. But AI music is generally low-engagement. People listen to AI slop as background noise, not attentively. Removing it might actually increase average listener engagement per track.

The longer play is that platforms enforcing AI policies will develop better reputations with artists and listeners. That's a competitive advantage. They become the "artist-friendly" platforms, and that attracts people.

Bandcamp is already known for this. The policy reinforces that brand. It's good business wrapped in good principles.

Practical Implications for Different Stakeholders

The policy affects different groups in different ways.

For Independent Artists: This is mostly good news. Less competition from AI slop. Better discoverability. More protection against impersonation. The costs are minimal unless you were using AI tools substantially in your process.

For Established Artists: Better brand protection. Less dilution of your catalog from AI impersonation. Maintains the scarcity that makes your music valuable. Reinforces your professional positioning.

For Music Producers and Technologists: Some friction if they were experimenting with AI tools on Bandcamp. Pressure to clearly delineate human creative input. But opportunity to develop better tools that augment human creativity without replacing it.

For Streaming Listeners: Cleaner platform. Less algorithmic noise. More curated experience. Potentially higher discoverability for human music, though it depends on how the algorithm adjusts.

For Deezer, Spotify, and YouTube: Increased pressure to make similar policies. Competitive disadvantage if artists migrate to Bandcamp. But also opportunity to differentiate their approach.

For AI Music Tool Companies: Reduced addressable market for pure generation (where the tool creates music independently). But potential expansion of AI-as-augmentation tools (where AI helps humans create).

The Impersonation Problem: A Deeper Look

Impersonation is the most ethically clear-cut problem the policy addresses. And it's the most serious.

Imagine you're an independent artist with 50,000 fans. Someone creates AI versions of your music, uploads them to multiple platforms, and monetizes the streams. Listeners assume it's you. Fans get confused. Your authentic work gets buried in search results.

From your perspective, this is theft. Not copyright infringement, necessarily (that's legally complex). But definitely theft of your identity, your reputation, and your potential revenue.

Now imagine you're a woman in music, where research shows you already face higher rates of impersonation, harassment, and identity theft. AI tools that make this easier are particularly threatening.

Bandcamp's explicit prohibition on impersonation is the policy's strongest component. It's clear. It's enforceable. It protects something real.

The question is whether prohibition is enough, or whether technical solutions are needed. Should platforms use authentication systems where verified artists get identity markers? Should they use voice recognition to prevent impersonation at the upload level?

Probably both. Bandcamp's policy creates the rule. But enforcement technology will need to improve to stay ahead of impersonation attempts.

Deezer reports that 34% of its daily uploads are AI-generated, while Bandcamp's ban aims to reduce AI-generated content to an estimated 5%. Estimated data.

Looking Ahead: What the Music Industry Needs

Bandcamp's policy is a good start, but it's not a complete solution to the AI music problem.

What the industry needs:

1. Industry Standards for AI Disclosure: When AI is used anywhere in production, credit it clearly. Not hidden in metadata, but visible to listeners. This gives consumers information and artists credit for their creative decisions.

2. Stronger Training Data Consent Agreements: AI music tools shouldn't be trained on artist work without explicit consent and compensation. Deezer signed the global statement on this. Others need to follow.

3. Artist Authentication Systems: Verified artists need digital credentials that prevent impersonation at the platform level. This is technically feasible and needs to become standard.

4. Royalty Clarity for AI-Assisted Work: If AI is part of production but humans made primary creative decisions, who gets paid? The rules need to be clearer. Bandcamp's policy is about prohibition, but the industry needs rules for permission.

5. Litigation Clarity: Courts need to clarify the legal boundaries between AI assistance and AI replacement. If an artist generates a drum pattern and keeps it, is the tool company liable for copyright infringement? Is the artist?

6. Career Development for AI Musicians: If the technology is here to stay, the industry should develop pathways where AI musicians can create legitimately interesting work, maybe on dedicated platforms or with human collaboration requirements.

Bandcamp addressed the acute problem (AI slop and impersonation). The industry needs to address the deeper questions about how music creation evolves with new tools.

How This Affects Different Music Genres

The policy doesn't affect all music equally.

Electronic Music and Ambient: These genres have used algorithmic and generative tools for decades. Artists producing ambient music with algorithmic systems might be in a gray area. If the human creative decision-making is primary, probably fine. If it's mostly algorithmic generation, probably not.

Hip-Hop and Production Music: Sample-based production and beat-making might involve AI tools. The same analysis applies. If you're primarily making creative decisions and using AI as one tool, you're probably safe.

Pop and Singer-Songwriter: Less likely to involve AI at all. The policy barely affects this space. Unless you're using AI to generate lyrics or melodies, you're fine.

Experimental and Avant-Garde: This is the weirdest space. Some experimental music deliberately uses algorithms and generative systems as an artistic medium. That's legitimate creative work. Whether it survives Bandcamp's policy depends on how the policy gets applied and clarified.

Classical and Orchestral: Likely unaffected. AI tools can augment composition, but the creative depth typically comes from human arrangers and musicians.

So really, the policy creates challenges primarily for:

- Pure AI generation (no human creative input)

- Impersonation

- Low-effort mass production

Legitimate tools-based creation is generally fine if human intention is obvious.

Bandcamp's Competitive Position in Context

Why is Bandcamp able to make this move while bigger platforms can't?

It comes down to business model and community.

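Bandcamp has historically faced less pressure from investors demanding growth metrics. It was profitable as an independent company for years before being acquired, first by Epic Games and then by Songtradr. That history gives leadership space to prioritize values over volume.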

Bandcamp's community is artists first. The platform succeeds by being useful to creators. An AI ban aligns with that community's values. Spotify's community is more mixed. Listeners care about music variety. Artists care about earnings. These interests sometimes conflict.

Bandcamp also has a smaller moderation burden. They can actually review uploads and maintain quality. Bigger platforms can't do that at scale.

Bandcamp has positioned itself deliberately as the artist-friendly alternative. This policy reinforces that positioning. It's strategic and principled at the same time.

Bigger platforms have to move more cautiously because the costs of being wrong are higher. If Spotify bans AI music and legitimate productions that use synthetic sounds get caught in the filter, the damage is massive. With Bandcamp, the damage is localized to their community.

That's not an excuse for bigger platforms to move slowly. But it explains why Bandcamp can move decisively.

The Philosophical Question Underneath

All of this policy stuff sits on top of a deeper question: what is music?

Is music a product? A service? An art form? A technology? A social connection?

Different people answer that question differently. And their answer determines how they feel about AI music.

If music is a product, AI music is fine. It's a product produced more efficiently. Why care how it's made?

If music is an art form, AI music is fundamentally problematic. Art requires intention and skill. Can algorithms have intention?

If music is a social connection, AI music is concerning because it bypasses the human connection. You're not connecting with an artist. You're consuming content from an algorithm.

Bandcamp's position is closest to the third view. The company believes in human connection through music. That belief drives the policy.

This is important because it means the policy isn't primarily about technology. It's about values. And values don't change just because technology improves.

So even if AI music generation becomes indistinguishable from human music, Bandcamp would probably maintain the policy. Because the policy is about whether you value the human connection, not about whether you can technically detect the AI.

That's actually stronger than a detection-based policy. It's principled. It won't become obsolete as AI improves.

Preparing for Further Evolution

Bandcamp's policy is current as of early 2025, but the situation will evolve.

Expect several developments:

More Sophisticated AI Tools: The generation tools are improving rapidly. In a year, current detection methods might be significantly less effective. Bandcamp will need to update its detection systems.

Genre-Specific Guidance: Bandcamp will probably eventually provide clearer guidance on specific genres and use cases. The current policy is intentionally broad. As edge cases emerge, clarification will follow.

Potential Appeals Process: The platform will likely develop a more formal appeals system if too many false positives accumulate. Right now, it seems ad-hoc.

Integration with Industry Standards: Bandcamp might align with broader industry standards as they develop. Right now, the platform is leading. Eventually, they'll probably move toward interoperability.

Pricing or Features Around Verification: Bandcamp might introduce verification badges or artist authentication features. Not necessarily paid, but probably optional and beneficial.

Possible Collaboration with Detection Tools: The platform might partner with AI detection companies to improve their systems. Or build in-house expertise.

For artists, the key is to stay informed. Policy details matter. What's prohibited changes as enforcement technology evolves. Maintaining awareness helps you avoid problems.

FAQ

What exactly is Bandcamp's policy on AI music?

Bandcamp prohibits music made "wholly or in substantial part" by generative AI. This means music where AI is the primary creative force is banned. The platform also explicitly prohibits using AI to impersonate other artists or styles. Human-created music using AI tools as part of the production process is generally allowed, provided human artistic decision-making remains primary.

How does Bandcamp enforce this policy?

Bandcamp uses both automated detection systems and community reporting. The platform has tools to analyze audio files for AI signatures, and users can report suspected AI-generated music or impersonation. When content is reported or flagged, Bandcamp's moderation team reviews it. The platform has stated it will remove suspected AI-generated music, though specific appeals processes haven't been fully detailed.
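To make the "flag first, review second" idea concrete, here is a minimal Python sketch of how two signals could feed a human review queue. It is purely illustrative and not Bandcamp's actual system: the `Upload` fields, the `triage` function, and both thresholds are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NO_ACTION = "no_action"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Upload:
    track_id: str
    detector_score: float  # hypothetical 0.0-1.0 score from an automated AI-audio classifier
    report_count: int      # number of community reports filed against the track


def triage(upload: Upload, score_threshold: float = 0.9, report_threshold: int = 3) -> Action:
    """Queue a track for human review if either signal crosses its (assumed) threshold.

    Neither signal removes a track on its own; a moderator makes the final call.
    """
    flagged_by_detector = upload.detector_score >= score_threshold
    flagged_by_community = upload.report_count >= report_threshold
    if flagged_by_detector or flagged_by_community:
        return Action.HUMAN_REVIEW
    return Action.NO_ACTION
```

The design choice worth noticing is that the only automated outcome is "send to a person". That mirrors the description above, where flagged or reported content goes to the moderation team rather than being removed automatically.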

Can I use AI tools in my music if I upload to Bandcamp?

Yes, if human creativity remains the dominant force. Using AI to generate drum patterns that you then modify, or creating melodic sketches you build upon, is likely permissible. The policy targets content made primarily by algorithms without substantial human creative input. Document your creative process to protect yourself if your work is questioned.

How does Bandcamp's policy compare to other streaming platforms?

Bandcamp's prohibition is stricter than most competitors. Spotify is working toward requiring AI disclosure in music credits but hasn't implemented a prohibition. Deezer has focused on preventing AI training on artists' work without consent. Apple Music, YouTube, and SoundCloud haven't taken major public stances. Bandcamp is currently the most restrictive major platform.

What's the difference between AI-assisted music and AI-generated music?

AI-assisted music involves humans making primary creative decisions using AI as one tool among many. AI-generated music involves an algorithm creating the content with minimal human input or decision-making. Bandcamp bans the latter but generally allows the former. The distinction relies on whether human artistic intent and decision-making are clearly primary.

If my music is wrongly flagged as AI-generated, what can I do?

Bandcamp has indicated there's an appeals process, though specific procedures haven't been fully detailed. If you're flagged, you should be able to appeal and provide evidence of your creative process. Documentation of how you created the music, including original files, drafts, and your production process, will strengthen your appeal.

Will AI music creators be able to use alternative platforms?

Yes. While Bandcamp is prohibiting AI music, other platforms haven't taken the same stance. Specialized platforms for AI music creators may emerge. Additionally, music created with AI as part of a human-driven process can likely exist on most platforms as long as it doesn't violate impersonation rules.

How does Bandcamp's policy affect the overall music industry?

Bandcamp's decision creates industry pressure for other platforms to develop their own AI policies. It signals that artist protection and content quality matter more than maximum volume and growth. This pushes the conversation from "is this a problem?" to "what's the right policy?" and may accelerate policy development across streaming services.

Can someone impersonate me using AI on Bandcamp?

Bandcamp's policy explicitly prohibits AI impersonation of artists and styles. If someone uploads AI-generated music impersonating you, you can report it using the platform's reporting tools. The company commits to investigating and removing content that violates this rule. However, enforcement depends on detection and your reporting, so monitoring for impersonation remains important.

What should independent artists do to prepare for these platform policies?

Document your creative process thoroughly. Keep original files, drafts, and notes about how you created your music. This documentation helps if your work is ever questioned. Also, diversify your music distribution across multiple platforms rather than relying exclusively on Bandcamp. Stay informed about policy changes by following official platform announcements. Finally, consider how you use AI tools in your workflow to ensure they complement your creative vision rather than replace it.
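One low-effort way to document your process is to snapshot each project folder as you work. The Python sketch below is an assumption about what useful evidence might look like, not a format Bandcamp has asked for: it records the path, size, modification time, and SHA-256 hash of every file in a folder. The function names and the manifest layout are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def file_sha256(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(project_dir: Path) -> dict:
    """Describe every file under project_dir (stems, drafts, session files, notes)."""
    entries = []
    for path in sorted(p for p in project_dir.rglob("*") if p.is_file()):
        stat = path.stat()
        modified = datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc)
        entries.append({
            "path": path.relative_to(project_dir).as_posix(),
            "bytes": stat.st_size,
            "modified_utc": modified.isoformat(),
            "sha256": file_sha256(path),
        })
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "files": entries,
    }


def write_manifest(project_dir: Path, out_file: Path) -> dict:
    """Build the manifest and save it as JSON; returns the manifest for inspection."""
    manifest = build_manifest(project_dir)
    out_file.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return manifest
```

A call such as `write_manifest(Path("my-album"), Path("manifests/my-album.json"))` (both paths are placeholders) would produce one snapshot. Saving the manifest outside the project folder keeps it from being hashed into later snapshots, and a series of dated manifests shows how a track developed over time.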

Final Thoughts: Where This Leads

Bandcamp's AI policy is more significant than it might initially appear. On the surface, it's a moderation rule. Dig deeper, and it's a statement about values, community, and what music means.

The platform is saying that human connection matters. That artists matter. That quality matters. That growth for its own sake doesn't.

In an industry increasingly optimized around metrics, that's a meaningful position.

Will other platforms follow? Some probably will, but not all. The industry appears to be heading toward a spectrum in which different platforms serve different communities and use cases.

That might actually be healthier than a uniform approach would be. Artists can choose where to publish. Listeners can choose where to listen. Communities can self-select for their values.

For anyone making music, the practical takeaway is simpler: know the rules of the platforms you use, document your creative process, and stay aware of how these policies evolve. The landscape is shifting. Being informed helps you navigate it.

Bandcamp made a decision. Now we'll see what everyone else does.

Key Takeaways

- Bandcamp prohibits music made wholly or substantially by AI, making it the first major platform to take this decisive stance

- The policy explicitly bans AI impersonation of artists, protecting musicians from identity theft and unauthorized deepfakes

- Detection uses both automated audio analysis and community reporting, distributing enforcement across technology and human judgment

- Deezer reported roughly 50,000 AI-generated songs uploaded daily, an existential problem for human artists' discoverability and earnings

- Competing platforms like Spotify favor disclosure requirements over prohibition, suggesting industry fragmentation into different policy models

- The policy is philosophically rooted in the belief that human connection through music matters, not in technical detection capabilities

- Artists using AI as a production tool (not a replacement) likely remain compliant, though the gray area makes documenting the creative process important
