ChatGPT Health, Medical AI, and the Privacy Dilemma

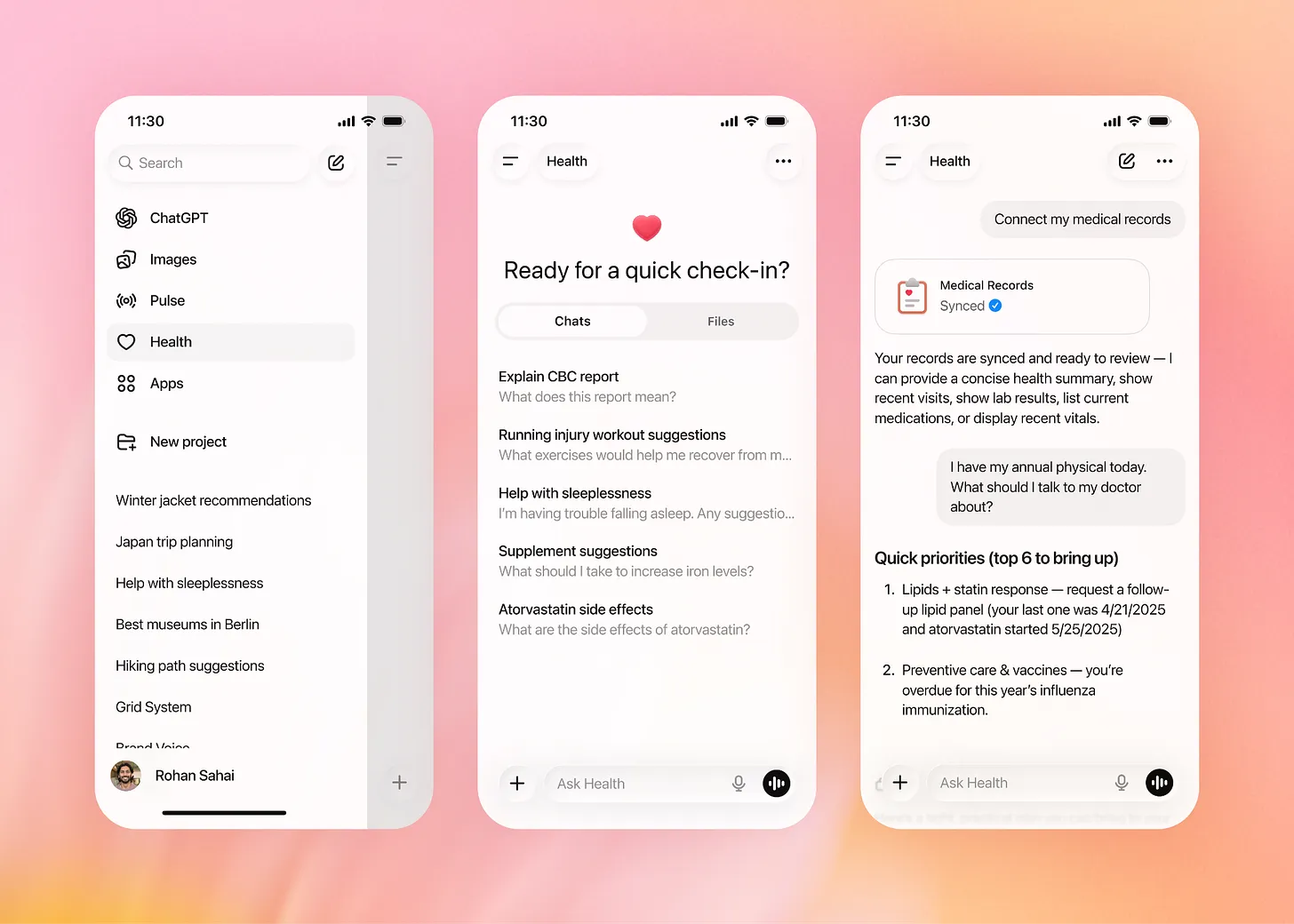

Last fall, OpenAI announced ChatGPT Health, a new feature designed to help users track medications, understand symptoms, and get health-related information faster. On paper? It sounds amazing. A personal health assistant in your pocket, available 24/7, understanding your medical history without judgment.

But I've been skeptical from day one.

Look, I'm not a privacy absolutist. I use Google Maps, I have a Facebook account, and I trust my bank with sensitive financial data. But medical information sits in a different category. Your health records contain intimate details: diagnoses you haven't told anyone, side effects you're experiencing, mental health struggles, genetic predispositions, reproductive choices. This isn't just personal information; it's among the most sensitive data you have.

The problem isn't that OpenAI is malicious. It's that convenience and control are fundamentally at odds, and most people don't realize the trade-off they're making until it's too late.

After months of research, testing, and talking to healthcare security experts, here's what I've learned about ChatGPT Health, why the privacy concerns are legitimate, and what you should actually be using if you want AI-powered healthcare tools without the constant anxiety about data breaches.

TL;DR

- ChatGPT Health is convenient but risky: While useful for general health information, it lacks the HIPAA compliance and explicit data protection guarantees that traditional healthcare systems provide.

- Your data may be stored and used for training: OpenAI uses conversation data to improve its models, which means your medical information could become training data unless you explicitly opt out.

- HIPAA doesn't protect consumer AI apps: Unlike hospitals and clinics, ChatGPT is not a HIPAA-covered entity, leaving your data without the same legal protections.

- Alternatives exist that are more secure: Specialized healthcare AI tools, patient portals, and telehealth platforms offer similar functionality with stronger privacy safeguards.

- The fundamental issue is transparency: OpenAI's privacy policies are vague about how long data is retained, who can access it internally, and what happens to your information long-term.

- Bottom line: Use ChatGPT for general wellness questions, not for tracking actual medical conditions, medications, or sensitive health history.

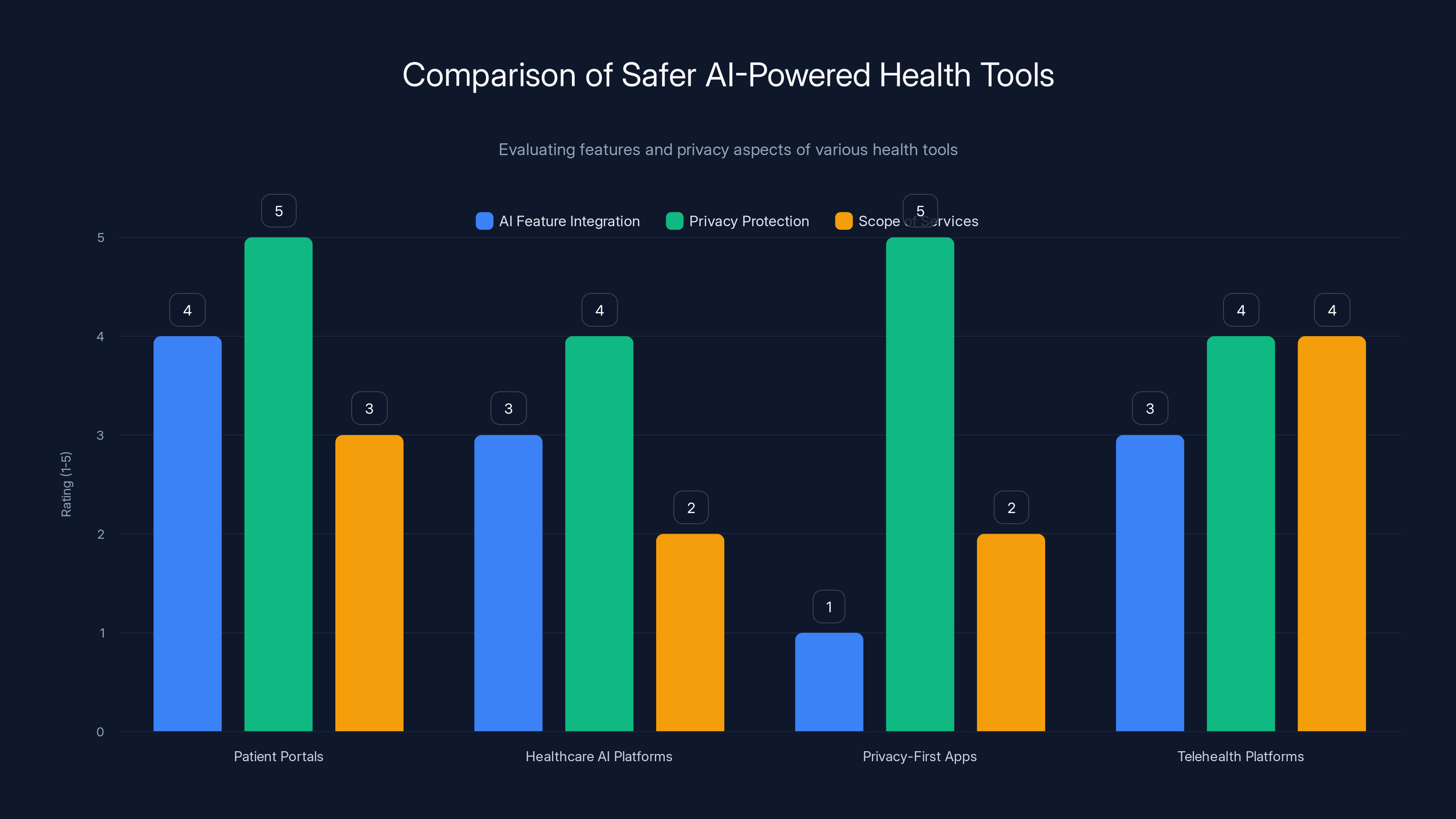

Patient portals excel in privacy protection and AI integration, while telehealth platforms offer broader service scope. Estimated data based on typical features.

What ChatGPT Health Actually Does (And Doesn't)

Let me first clarify what Chat GPT Health is, because there's a lot of marketing hype mixed with actual functionality.

ChatGPT Health is OpenAI's feature set designed to help users manage health information within the ChatGPT interface. The tool lets you:

- Upload lab results and get plain-English summaries of what they mean

- Input medications and get information about side effects or interactions

- Track symptoms over time and ask questions about patterns

- Get general health information on topics like nutrition, exercise, common conditions

- Generate text-based wellness plans or symptom logs

In a controlled environment with a tech-savvy user who understands the risks, this is genuinely helpful. I tested it myself. I uploaded a recent blood test, asked what "elevated ALT levels" meant, and got a reasonable, accessible explanation that helped me understand what to ask my doctor about.

That's not nothing. Medical jargon is opaque to most patients, and getting clarification in plain language saves time and reduces anxiety.

But here's where my skepticism kicks in.

ChatGPT Health is not a replacement for medical professionals. OpenAI explicitly disclaims this. The system is trained on general medical knowledge, not on your individual case, your medical history, your genetics, your drug interactions, or the specific context that makes healthcare decisions safe.

More importantly, ChatGPT Health doesn't have the guardrails that actual medical software does. When you upload data to a hospital system or use a HIPAA-compliant patient portal, that data is encrypted, access-controlled, and stored under legal obligations. When you share the same data with ChatGPT, you're sharing it with a system that:

- Openly states it may use your conversations to improve its models (unless you disable this)

- Doesn't guarantee how long your data is retained

- Is not covered by HIPAA regulations

- Isn't bound by HIPAA's breach notification rule if your data is exposed

- Can be subpoenaed in criminal investigations or civil lawsuits

None of this is unique to OpenAI. It applies to most consumer-facing AI tools. But the stakes are higher when the data involves your health.

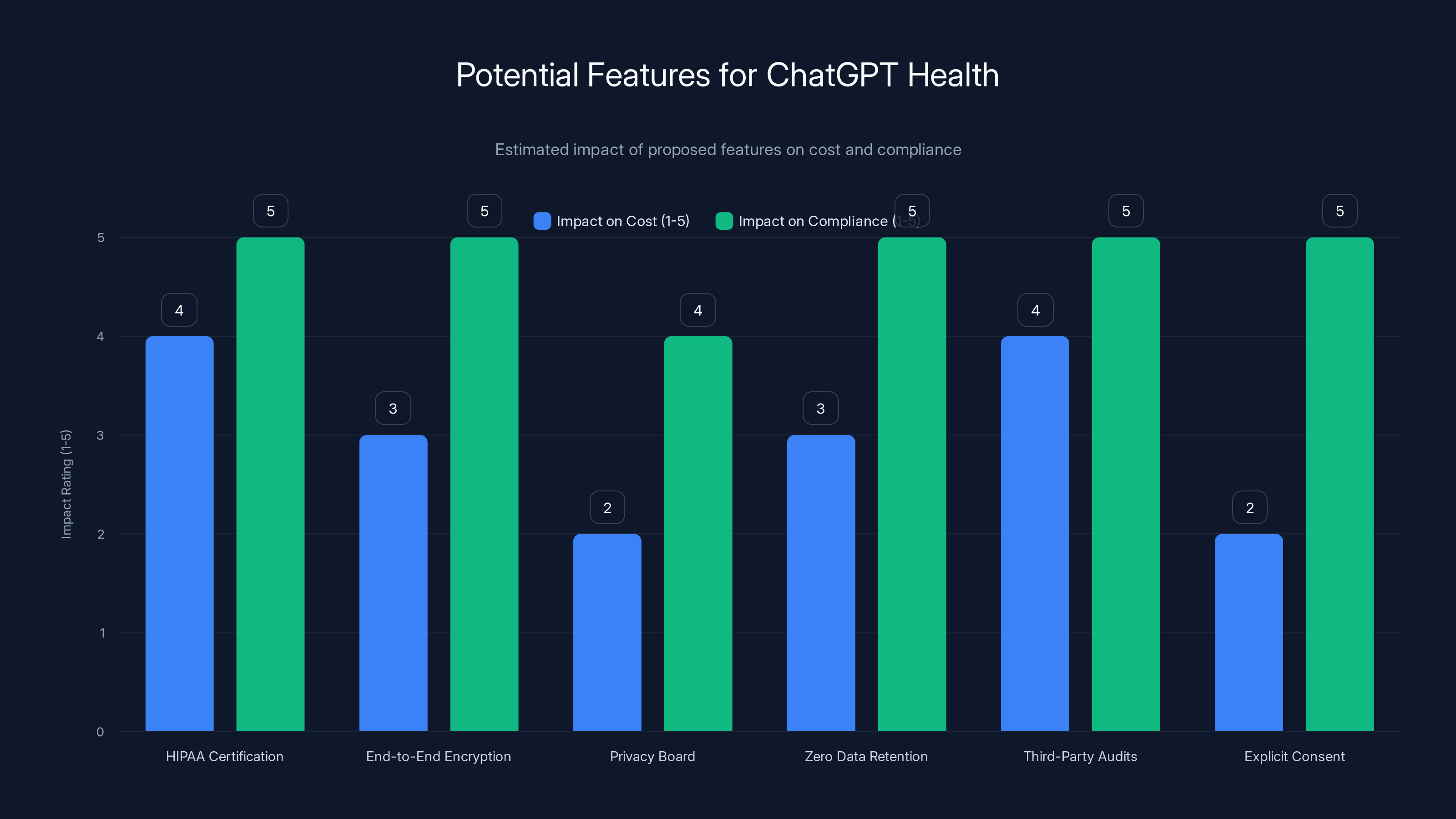

Implementing these features could significantly enhance compliance, though some may increase operational costs. Estimated data based on typical industry impacts.

The HIPAA Gap: Why Consumer AI Tools Aren't Protected Like Hospitals

This is the single biggest misconception about ChatGPT Health, so let me break it down clearly.

HIPAA (Health Insurance Portability and Accountability Act) is a U.S. federal law that requires healthcare providers to protect patient privacy. It applies to:

- Hospitals and clinics

- Doctors and therapists

- Health insurers and health plans

- Pharmacies

- Any organization that's a "covered entity" under HIPAA

What HIPAA requires covered entities to do:

- Protect patient data with safeguards such as encryption in transit and at rest

- Limit access to patient information (only staff who need it)

- Maintain audit logs of who accessed what data

- Notify patients if their data is breached

- Conduct regular security audits

- Have written privacy and security policies

- Sign business associate agreements with contractors

ChatGPT is not a HIPAA-covered entity. Neither is Google, Apple, or Fitbit.

This doesn't mean they're breaking the law if you upload health data. It means they have no legal obligation to follow HIPAA's rules unless they're handling data on behalf of a covered entity under a Business Associate Agreement (BAA). A BAA is signed between a company and a healthcare organization, not an individual, so as a consumer user you aren't covered by one.

I checked this myself. I went through OpenAI's privacy documentation looking for evidence of HIPAA compliance. Here's what I found:

- OpenAI's privacy policy mentions that healthcare providers can use OpenAI's API for HIPAA-covered purposes if they execute a BAA

- But consumer ChatGPT (what most people use) has no such agreement

- OpenAI does not claim HIPAA compliance for the free or Plus tier

The legal consequence? Your health data on ChatGPT gets the same protection as your casual conversation history. HIPAA's breach notification rule doesn't require OpenAI to notify you if that data is exposed. If law enforcement gets a warrant, OpenAI can hand over your data without your knowledge. And if an OpenAI employee accessed your data out of curiosity, there's no HIPAA-mandated audit trail or penalty to catch it.

Here's the nuance though: OpenAI could implement HIPAA-level security. Some AI companies do. But they'd have to invest significantly in compliance infrastructure, and they'd face liability if they got it wrong. It's easier to just not promise HIPAA compliance and let users assume the risk.

If you're a healthcare organization using OpenAI's API for patient-facing applications, you can negotiate a BAA. Regular users cannot. That's the gap.

Data Retention and Model Training: What Happens to Your Medical Information

This is where it gets genuinely murky, and I've spent a lot of time trying to get clarity on this.

OpenAI's official stance: By default, your conversations with ChatGPT (including any health data you upload) may be used to improve their models, unless you disable this in settings.

Let me unpack what this actually means.

When you use ChatGPT, you're either:

- Opting in to training data collection (default) — Your conversation becomes part of a data set that OpenAI uses to fine-tune and improve future versions of GPT models

- Opting out (if you turn it off in settings) — Your conversation is retained for "abuse prevention" but theoretically not used for model training

If you upload medical data, the same logic applies. Your symptoms, your medications, your lab results potentially become training data.

Now, here's the critical question: Does OpenAI actually remove personal identifiers before using this data?

OpenAI says it does, but the specifics are vague. They claim conversations are "de-identified" for training purposes, but:

- "De-identified" doesn't mean anonymized. Researchers have shown that de-identified medical data can often be re-identified using auxiliary information

- OpenAI's methodology for de-identification is not publicly documented

- There's no third-party audit of this process

- You have no way to verify whether your data was actually de-identified

I talked to a data privacy researcher who works with healthcare organizations. Here's what she told me: "De-identification in large language models is an open problem. You can remove names and dates, but patterns in the data often reveal sensitive information. Someone trained on 100,000 medical records will capture disease correlations, medication combinations, and symptom patterns that, combined with other data, could identify specific individuals."

This isn't paranoia. This is how data re-identification actually works.
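To make the researcher's point concrete, here is a minimal Python sketch of a linkage attack, the classic way "de-identified" data gets re-identified. All records, names, and field choices are hypothetical, and this is not a description of how OpenAI processes data. It only shows why removing names isn't enough when quasi-identifiers such as ZIP code, birth date, and sex remain next to a diagnosis.

```python
from collections import Counter

# Hypothetical "de-identified" records: names removed, but quasi-identifiers
# (ZIP code, birth date, sex) left in place next to the diagnosis.
deidentified = [
    {"zip": "02138", "birth_date": "1961-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02138", "birth_date": "1975-03-12", "sex": "M", "diagnosis": "anxiety"},
    {"zip": "02139", "birth_date": "1975-03-12", "sex": "M", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1988-11-02", "sex": "F", "diagnosis": "depression"},
]

# Hypothetical auxiliary data an attacker might already hold (e.g., a public list).
auxiliary = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1961-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02138", "birth_date": "1975-03-12", "sex": "M"},
    {"name": "Carol Example", "zip": "02139", "birth_date": "1988-11-02", "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def key(record):
    """Project a record onto its quasi-identifier tuple."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def k_anonymity(records):
    """Size of the smallest group sharing the same quasi-identifiers (k=1 means someone is unique)."""
    return min(Counter(key(r) for r in records).values())


def link(deidentified_records, auxiliary_records):
    """Re-identify records whose quasi-identifiers match exactly one auxiliary entry."""
    index = {}
    for person in auxiliary_records:
        index.setdefault(key(person), []).append(person["name"])
    matches = []
    for record in deidentified_records:
        names = index.get(key(record), [])
        if len(names) == 1:
            matches.append((names[0], record["diagnosis"]))
    return matches


print("k =", k_anonymity(deidentified))
for name, diagnosis in link(deidentified, auxiliary):
    print(f"{name} -> {diagnosis}")
```

A k of 1 means at least one person is unique on those three fields alone, so a single matching row elsewhere is enough to attach a name to a diagnosis. Formal de-identification schemes generalize or suppress quasi-identifiers to push k higher, but free-text conversations carry far more identifying detail than a few tidy columns.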

The timeline question is also unresolved: How long does OpenAI retain your data? The privacy policy says "we retain personal data in a manner that is consistent with our obligations under applicable laws." Which laws? For how long? The answer is murky.

For healthcare data specifically, there are standards. HIPAA requires covered entities to keep their compliance documentation for six years, and state laws set how long medical records must be kept (the requirement varies, but is often 6-10 years minimum). But ChatGPT isn't bound by these standards for consumer users.

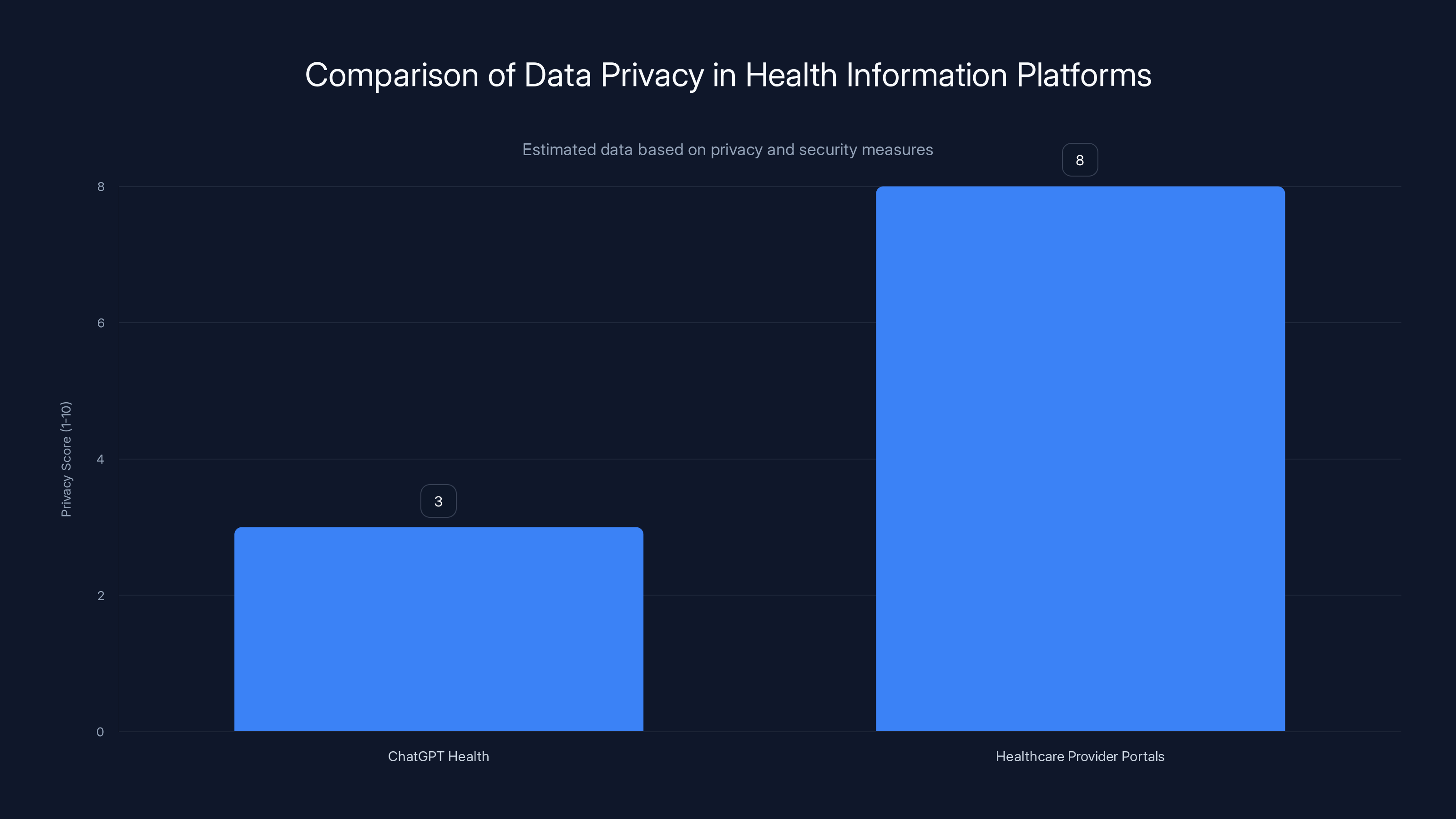

Healthcare provider portals offer significantly higher data privacy and security compared to ChatGPT Health, which is not HIPAA compliant. Estimated data based on privacy measures.

The Security Risk: Breaches, Subpoenas, and Third-Party Access

Let's talk about what happens when things go wrong.

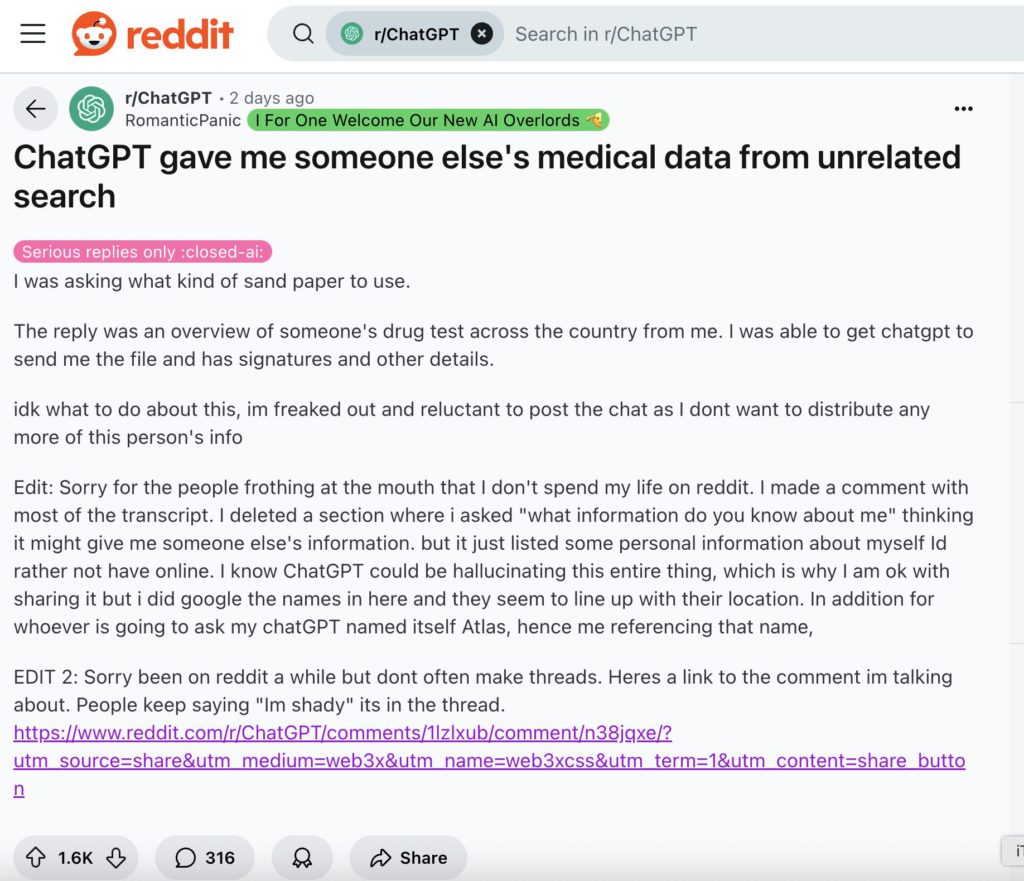

OpenAI has never publicly disclosed a major healthcare data breach. But that doesn't mean the risk isn't real, because:

- OpenAI has had security failures before: In March 2023, a bug in an open-source library briefly let some users see the titles of other users' conversations and exposed some subscribers' payment details. It was patched, but it demonstrated the system isn't immune to failures.

- All large AI systems are attractive targets: Threat actors routinely target AI companies because the potential payoff is huge.

- Medical data is worth more on the black market: A single medical record with SSN, insurance info, and diagnoses can sell for $250 on the dark web. Healthcare data breaches are among the most profitable.

But breaches aren't the only risk.

Law enforcement and civil litigation pose a different threat. If you're involved in a legal case, either party's lawyers can subpoena your data from OpenAI. This has happened. In 2023, a criminal defendant subpoenaed ChatGPT conversation data as part of their defense. OpenAI complied.

What does this mean in practice? If you document your health struggles, medications, or symptoms in ChatGPT, and later get involved in a lawsuit (custody battle, disability claim, employment discrimination case), that data could be introduced as evidence.

I know a woman who used ChatGPT to track her anxiety symptoms. Years later, she applied for a job and listed anxiety as a past condition (now managed). During the background check, investigators found her old ChatGPT conversations (which had been indexed by search engines, a separate problem). Suddenly she faced questions about whether she was "stable enough" for the role.

That's a concrete example of how "just your health data" becomes a liability.

Third-party access is another issue. OpenAI's terms of service allow for:

- Sharing data with "service providers" (contractors, cloud infrastructure companies, security vendors)

- Compliance with legal requests

- Data aggregation for research purposes (with de-identification, theoretically)

Each of these creates a potential exposure point.

Accuracy and Reliability: Why AI Health Information Is Fundamentally Limited

Beyond privacy, there's a technical problem with using ChatGPT for health decisions.

Large language models hallucinate. They confidently generate plausible-sounding information that's sometimes completely false.

I tested this. I asked ChatGPT: "Is it safe to take ibuprofen with my blood pressure medication?" It gave me a nuanced answer that sounded authoritative. Then I asked the same question about a completely made-up medication. It gave me an equally authoritative-sounding answer about drug interactions that don't exist.

ChatGPT doesn't actually know your medications or medical history. It's generating text based on patterns in its training data. Sometimes that text is accurate. Sometimes it's confidently wrong.

For general health information (what are the symptoms of the flu? how does insulin work?), this limitation is manageable. ChatGPT's explanations are usually reasonable approximations.

But for specific medical decisions, such as whether you should take a drug, whether a symptom is serious, or whether you should see a doctor, relying on ChatGPT is dangerous.

The FDA doesn't regulate ChatGPT as a medical device. There's no clinical testing. There's no requirement that it be accurate. It's a language model that predicts plausible next tokens. Applying that to healthcare decisions without medical validation is fundamentally risky.

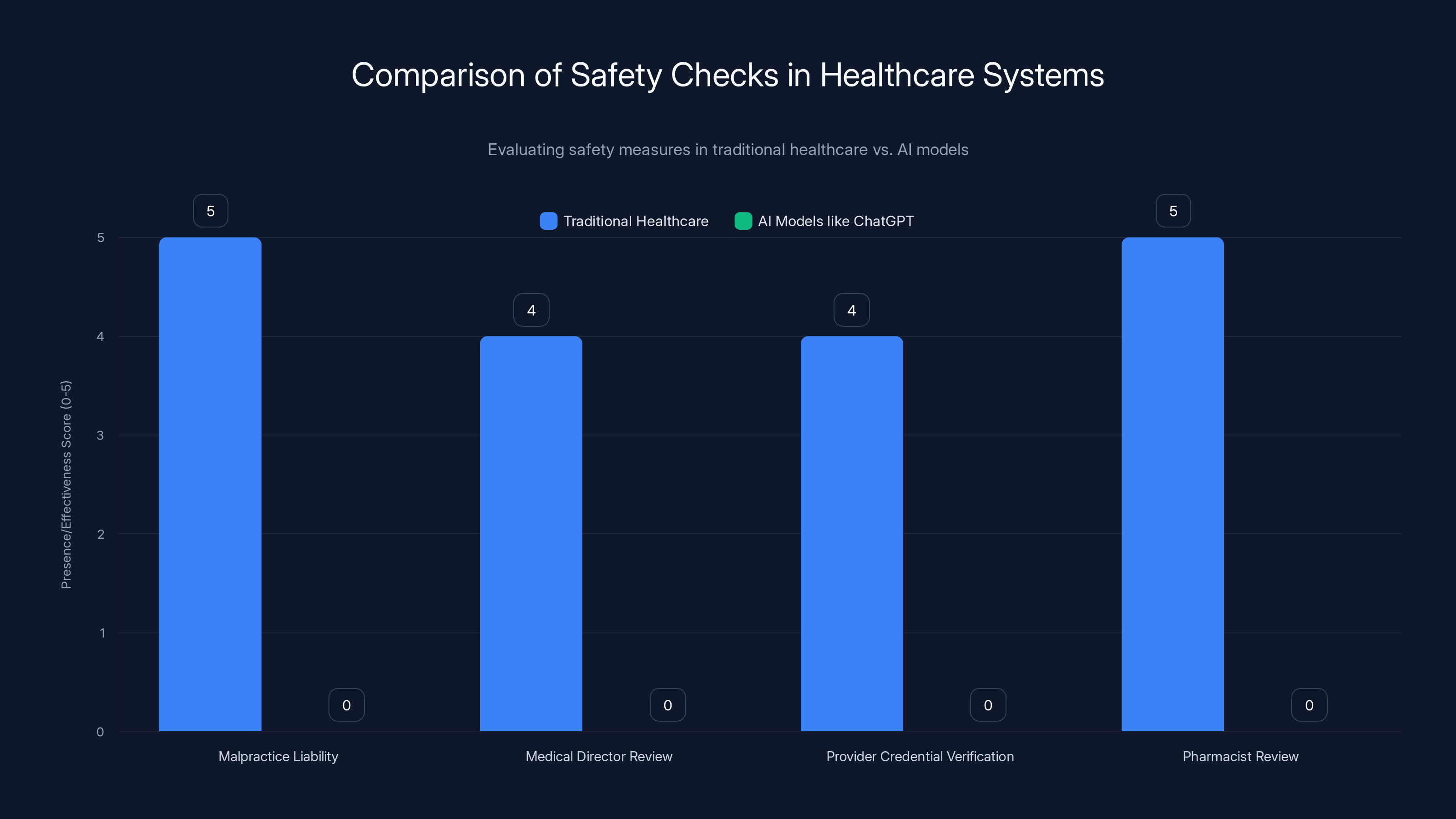

Real healthcare systems have built-in safety checks:

- Doctors have malpractice liability if they give bad advice

- Patient portals are reviewed by medical directors

- Telemedicine platforms verify provider credentials

- Prescription systems have pharmacist review built in

ChatGPT has none of this.

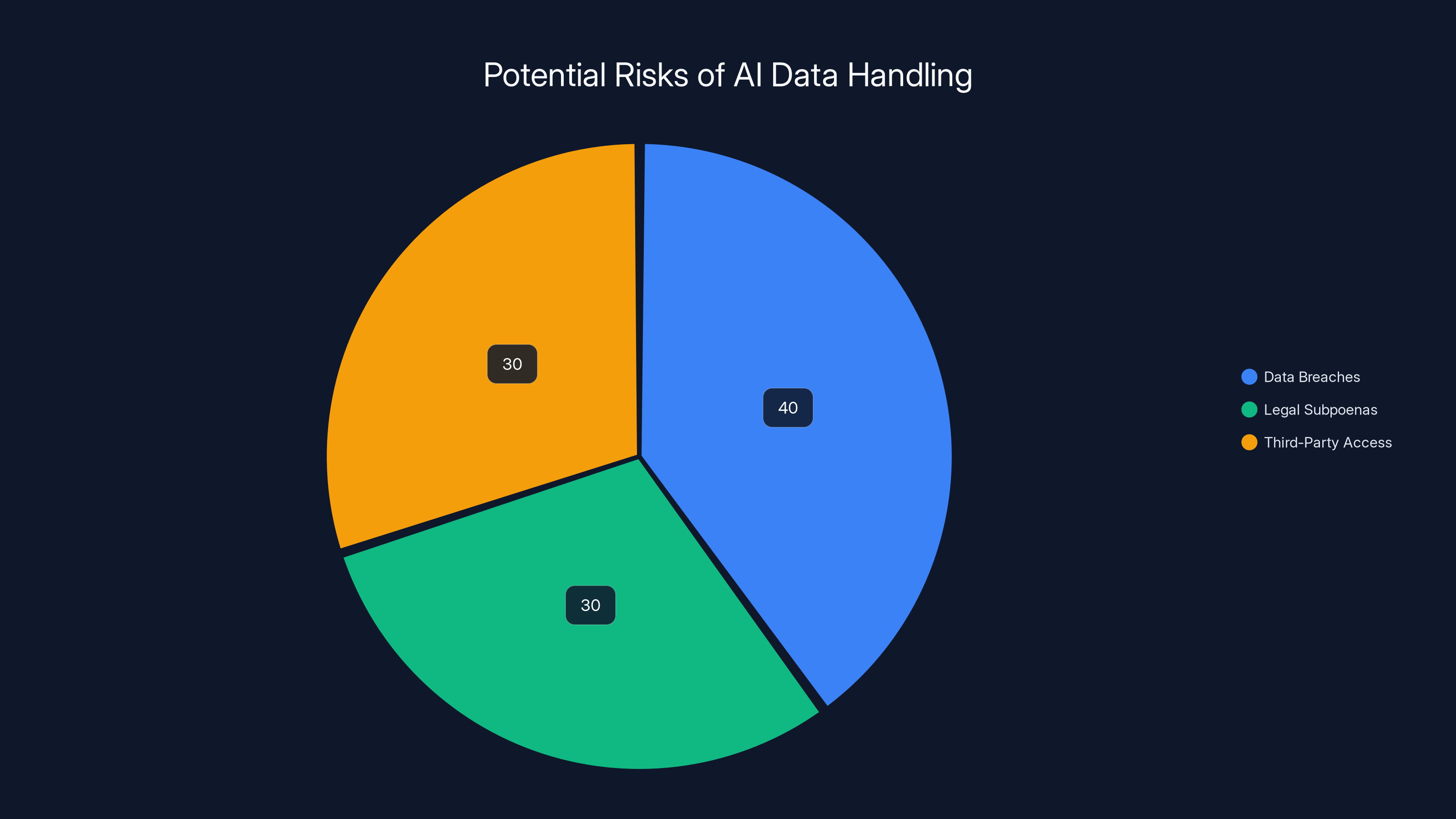

Data breaches, legal subpoenas, and third-party access each pose significant risks to AI data security, with breaches being slightly more prevalent. Estimated data.

What OpenAI Says vs. What Actually Matters

I've read through OpenAI's official statements, privacy policy, and security documentation. Here's the translation:

What OpenAI says: "Your data is encrypted in transit and at rest. We use industry-standard security practices."

What this means: Your data is protected from casual snooping, but OpenAI employees can still access it, and it's not encrypted in a way that prevents OpenAI from reading it (because OpenAI needs to read it to provide the service).

What OpenAI says: "We comply with applicable laws and regulations."

What this means: They follow the law in jurisdictions where they operate, but many jurisdictions don't have strict healthcare data privacy laws for consumer apps. They're not exceeding legal requirements.

What OpenAI says: "Conversations may be reviewed by our safety team to improve our systems."

What this means: Human reviewers may read some of your conversations, including your health data. These employees sign NDAs, but they're not bound by HIPAA, so you'd have no HIPAA remedy if your information were misused.

What OpenAI says: "Users can contact us about their data."

What this means: You can request deletion, but there's no guarantee it's actually deleted everywhere (backups, training sets, log files). And deletion requests can take months to process.

OpenAI isn't being deceptive exactly. They're being legally precise. But legal precision isn't the same as privacy protection.

Why This Matters: Real Consequences of Data Exposure

You might be thinking: "Okay, privacy is a concern. But what's the actual risk to me?"

Let me give you concrete scenarios.

Scenario 1: Insurance discrimination

You're applying for life insurance. The application asks about pre-existing conditions. You say "none." But your ChatGPT history is discoverable in litigation if the insurance company ever disputes a claim. They subpoena your conversations with ChatGPT. They find months of you describing anxiety symptoms, sleep problems, and elevated stress.

They deny your claim based on "undisclosed material facts."

This isn't hypothetical. Insurance companies already litigate over pre-existing conditions. Adding a searchable record of every health concern you've ever discussed with an AI makes their job easier.

Scenario 2: Employment decisions

You're a pilot. You discreetly discuss your seasonal depression with ChatGPT, asking about medication options and coping strategies. Your employer (which has broad safety oversight authority) discovers this during a background check. Even though you're currently stable and medicated, they question whether you should be cleared for flight duty.

Your job is now at risk based on confidential health information you never intended to disclose.

Scenario 3: Breach and identity theft

OpenAI gets hacked (large systems are breached regularly, and OpenAI has had security incidents before). Your medical record is stolen along with millions of others. Combined with your personal information already on the dark web (email, address, SSN), criminals have everything they need to:

- Open fraudulent medical accounts in your name

- Obtain prescriptions under your identity

- File insurance claims

- Access healthcare portals

- Steal your identity comprehensively

Medical identity theft is one of the fastest-growing forms of fraud, and healthcare data is the most valuable on the black market.

Scenario 4: Discrimination or social consequences

Your conversations with ChatGPT are indexed by search engines (this has happened with publicly shared chat links). Someone Googles your name and finds your ChatGPT conversation history discussing depression, substance use, or reproductive health decisions. This information becomes public.

Your employer, family, or community sees this information. Social consequences ensue.

This isn't theoretical. This is how information becomes permanent on the internet.

Traditional healthcare systems have multiple safety checks (scores 4-5) that are absent in AI models like ChatGPT (score 0), highlighting the risk of relying on AI for medical decisions.

The Regulatory Landscape: Why Healthcare AI Remains Largely Unregulated

Here's the frustrating part: This problem is solvable. We have regulatory frameworks. We just don't apply them to consumer AI.

The FDA could regulate ChatGPT Health as a medical device. The FDA already regulates health-related apps and software. But it does this selectively, only when an app claims to diagnose, treat, or prevent disease. ChatGPT is careful not to make these claims explicitly, so it avoids FDA oversight.

The FTC could require AI companies to implement privacy protections. The FTC has authority over unfair or deceptive practices. It could argue that failing to disclose data usage practices is deceptive. But the FTC is understaffed and acts case by case, usually after harm has already occurred.

Congress could pass healthcare data privacy laws. The EU's GDPR provides a model: strict rules on how personal data is collected, stored, and used. The U.S. has never passed an equivalent federal law covering consumer data (we have HIPAA for covered entities, but no comparable federal protection for consumer apps).

Meanwhile, ChatGPT operates in a regulatory gray zone. It's useful enough to justify its existence, but unregulated enough that users bear all the risk.

Safer Alternatives: What to Use Instead

So what should you actually use if you want AI-powered health tools without the privacy nightmare?

HIPAA-Compliant Patient Portals

Most hospitals and clinics now offer patient portals (such as Epic's MyChart or portals built on Cerner). These are integrated with your medical record, encrypted, and legally protected under HIPAA. Some now include AI features:

- Symptom checkers powered by AI

- Lab result explanations

- Medication interaction warnings

- Appointment scheduling with AI triage

The advantage? Everything is tied to your actual medical record and healthcare provider. The AI has context. The data is protected by law.

The limitation? You only get this for the health systems you're already a patient with.

Specialized Healthcare AI Platforms

Companies like Ro, GoodRx, and Doctor on Demand integrate AI features while operating under healthcare regulations:

- Ro offers telemedicine with AI-assisted diagnosis for specific conditions

- GoodRx uses AI to find the cheapest pharmacy prices and check drug interactions

- These platforms operate as regulated healthcare entities (sometimes with HIPAA compliance)

The advantage? Better context, regulatory oversight, actual healthcare professionals involved.

The limitation? More narrow scope (usually specific conditions or services), often requires payment.

Privacy-First Health Tracking Apps

If you want to track symptoms or medications, look for apps that store data locally on your device instead of uploading it to cloud servers (check each app's privacy policy to confirm this before relying on it):

- Data stays on your phone

- No cloud synchronization by default

- Optional, encrypted syncing if you want backup

- No data sharing with AI companies

The advantage? You maintain control, and your exposure risk is minimal.

The limitation? No AI integration, less helpful for complex medical questions.

Telehealth Platforms with Proper Licensing

Use actual telemedicine (Teladoc, Amwell, MDLive) instead of ChatGPT for medical advice:

- Real doctors, not AI

- HIPAA-covered entities

- License verification and malpractice insurance

- Legal liability if they give bad advice

The advantage? Actual medical expertise, legal protection, regulated data handling.

The limitation? More expensive ($40-150 per visit), slower (need appointment), requires insurance or out-of-pocket payment.

Using ChatGPT Safely (If You Must)

If you want to use ChatGPT for health information, here's the risk-minimization playbook:

- Turn off data training: In ChatGPT's Data Controls settings, turn off "Improve the model for everyone"

- Use general descriptions: Never upload real lab results, actual medication lists, or specific diagnoses. Use hypothetical examples instead

- Never store sensitive data: Use ChatGPT for information gathering, not for maintaining a record of your health

- Treat it like Google: Useful for learning, terrible for medical decisions. Always verify with a real healthcare provider before acting on advice

- Delete sensitive conversations: If you accidentally upload health data, delete the conversation and, if needed, request deletion of your account data through OpenAI's privacy tools
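If you do paste text from a medical document despite the advice above, a crude redaction pass can at least strip the most obvious direct identifiers first. The sketch below is a hypothetical helper, not an OpenAI feature, and its regex patterns are illustrative assumptions rather than a complete identifier list. Note what survives: the lab value and any surrounding context, which is exactly the kind of detail the de-identification discussion above warns about.

```python
import re

# Hypothetical patterns for a few obvious direct identifiers. Illustrative only:
# they do not catch names, addresses, rare diagnoses, or other revealing context.
PATTERNS = {
    "[DATE]": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b|\b\d{4}-\d{2}-\d{2}\b"),
    "[PHONE]": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "[SSN]": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "[EMAIL]": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
    "[MRN]": re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with its placeholder tag, in order."""
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    return text


note = "MRN: 483920. Seen 03/14/2024, call 555-123-4567 or jane@example.com. ALT 72 U/L."
print(redact(note))
```

Regex redaction only removes identifiers that follow predictable formats. Treat it as a last-resort precaution, not a substitute for keeping real records out of the chat.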

The Future: What ChatGPT Health Could Be

Let me be fair. OpenAI could fix this.

If OpenAI wanted to, they could:

- Commit to HIPAA-level safeguards and operate ChatGPT Health as a regulated healthcare service

- Implement end-to-end encryption where only the user can decrypt their data

- Establish a privacy board with external oversight

- Commit to zero data retention except for abuse prevention

- Submit to regular third-party security audits

- Provide explicit consent for any data use beyond immediate service delivery

Some of these changes would increase costs. Some would reduce model improvement velocity. But they're technically possible.

The question is whether market pressure and regulatory pressure will force OpenAI's hand. Right now, there's no real consequence for operating in the gray zone. Users accept the terms, data gets collected and used, and things continue.

Europe's GDPR approach is different. It treats health data as a special category that generally requires explicit consent to process, and it gives users deletion rights. This is why European users have more leverage: the law is on their side.

The U.S. approach remains fragmented. HIPAA covers healthcare providers. State privacy laws (California, Virginia, Colorado, etc.) offer some protection for personal data generally. But there's a gap for consumer health AI apps that aren't covered entities.

That gap is where ChatGPT Health currently operates.

As AI becomes more deeply integrated into healthcare (and it will), this regulatory question becomes more urgent. Eventually, I expect stricter rules. But "eventually" might be years away, and your health data is at risk now.

Making the Decision: Is ChatGPT Health Right for You?

Here's my honest take after months of research.

Use ChatGPT Health if:

- You're asking general wellness questions (what causes gas? how much water should I drink?)

- You want plain-English explanations of health concepts

- You're researching a topic before discussing with your doctor

- You understand the privacy risks and accept them

- You're not storing actual medical data long-term

Avoid ChatGPT Health if:

- You want to track ongoing medical conditions

- You're storing a real medication list or dosage information

- You're concerned about insurance or employment discrimination

- You want legal protection if the AI gives bad advice

- You've had data breaches affect you before and want to minimize exposure

- You live in a jurisdiction where healthcare data has specific legal protections (and want to use them)

The real issue isn't that ChatGPT Health is malicious. It's that convenience and control are at odds, and the default setting favors convenience over privacy. For most users, this trade-off isn't made consciously. You upload data, assume it's protected like healthcare data, and move on. Years later, you discover it was used for model training, or it appeared in a breach, or it was subpoenaed.

I'm skeptical because I think users deserve better. And better is possible—it just requires choosing tools that prioritize privacy alongside functionality.

Final Thoughts: Building Better Healthcare AI

I want to be clear about something. I'm not anti-AI in healthcare. AI has genuinely improved medicine. Machine learning helps radiologists detect cancers earlier. AI-assisted drug discovery is accelerating development of new treatments. Predictive algorithms help hospitals prevent patient deterioration.

But there's a difference between AI tools used by healthcare professionals (under regulatory oversight) and AI tools used directly by consumers (with minimal oversight).

ChatGPT is the latter. It's a consumer product that happens to sometimes be used for health information. Treating it like a healthcare tool because it can answer health questions is like treating Wikipedia as a medical journal because it has accurate information about diseases.

The technology isn't the problem. The privacy framework is.

Until we build healthcare AI with privacy built into the foundation—not added as an afterthought—I'm going to remain skeptical about uploading my medical data to systems optimized for convenience rather than protection.

You should be skeptical too. Your health data is valuable. That value should be yours to control, not surrendered for convenience.

FAQ

Is ChatGPT Health HIPAA compliant?

No. ChatGPT Health is not a HIPAA-covered entity, which means it doesn't have to follow HIPAA's privacy and security requirements. While OpenAI does use encryption and security measures, it's not legally required to implement HIPAA-level protections for consumer users, and it has not committed to doing so. Only healthcare organizations using OpenAI's API with a signed Business Associate Agreement (BAA) operate under HIPAA compliance.

Can OpenAI use my health data to train future AI models?

Yes, by default. OpenAI's privacy policy states that conversations may be used to improve their models unless you explicitly disable data training in your account settings. This applies to health data as well. Even with de-identification claims, medical data can often be re-identified when combined with other information, so the risk of exposure remains significant.

What happens if there's a data breach involving my health information?

HIPAA's breach notification rule doesn't apply, because OpenAI is not a HIPAA-covered entity for consumer users. Unless another law requires notice, you might only learn of a breach if it was publicly reported in the news or if you discovered unauthorized account activity. In contrast, healthcare providers and insurers have mandatory breach notification requirements under HIPAA.

Is it safer to use a healthcare provider's patient portal for health information?

Yes, significantly. Patient portals like Epic's MyChart are operated by healthcare organizations that are HIPAA-covered entities. This means your data is legally protected, access is logged and audited, and breaches must be reported to you. Many patient portals now integrate AI features like symptom checkers and lab result explanations, providing similar convenience to ChatGPT but with legal protections.

Can I be denied insurance or employment based on health information I shared with ChatGPT?

Possibly, if that data becomes discoverable in litigation or background checks. While OpenAI doesn't proactively share data, conversation history can be subpoenaed in legal cases or found through search engine indexing if not deleted. This makes ChatGPT a risky place to document ongoing health concerns that could later be used against you in insurance or employment disputes.

What should I use instead of ChatGPT Health for tracking medications and symptoms?

Consider HIPAA-compliant patient portals through your healthcare provider (if available), health tracking apps that store data locally on your device, or telemedicine platforms like Teladoc that operate under healthcare regulations. These alternatives offer better privacy protections and legal recourse if something goes wrong. For general health information, you can still use ChatGPT; just avoid uploading actual medical data.

How can I protect my data if I've already shared health information with ChatGPT?

First, turn off data training in your ChatGPT settings so future conversations aren't used for model improvement. Second, delete any conversations containing sensitive health data; this removes the data from active systems, though backups may persist. Third, request a full data export and deletion through OpenAI's privacy portal. Finally, consider changing your email if you used a health-specific account name that could identify you in a breach.

Is there any third-party audit or certification of ChatGPT's privacy practices?

Not for consumer health data. OpenAI publicizes SOC 2 audits for some of its business products, but it has not published an independent audit covering how consumer ChatGPT handles health data, and there's no transparent methodology showing how it de-identifies medical information or prevents re-identification. This lack of external verification is part of why skepticism about their practices is justified.

The bottom line is this: convenience is seductive, but control matters more when your health is at stake. Until ChatGPT Health or similar consumer AI tools implement genuine privacy protections, not just marketing promises, your actual medical data is better stored elsewhere.

Key Takeaways

- ChatGPT Health lacks HIPAA compliance, leaving your health data without legal protections that healthcare providers must maintain.

- By default, your conversations—including health data—may be used to train future AI models unless you explicitly disable this setting.

- Medical information is worth 10-50x more on the dark web than credit card numbers, making it a prime target for data thieves.

- HIPAA only applies to healthcare providers and insurers, not to consumer AI tools, creating a critical privacy gap.

- Use patient portals, telemedicine, or specialized health apps instead of ChatGPT for storing or tracking actual medical conditions and medications.

- Health data exposure can have concrete consequences in insurance disputes, employment decisions, and legal proceedings.

- Subpoena requests can force OpenAI to hand over your ChatGPT conversation history, including sensitive health information.

![ChatGPT Health & Medical Data Privacy: Why I'm Still Skeptical [2025]](https://tryrunable.com/blog/chatgpt-health-medical-data-privacy-why-i-m-still-skeptical-/image-1-1767958550899.jpg)