How Claude Code Just Solved the AI Agent Context Problem

Imagine building the world's most capable programming assistant, then watching it struggle because you gave it too much to think about.

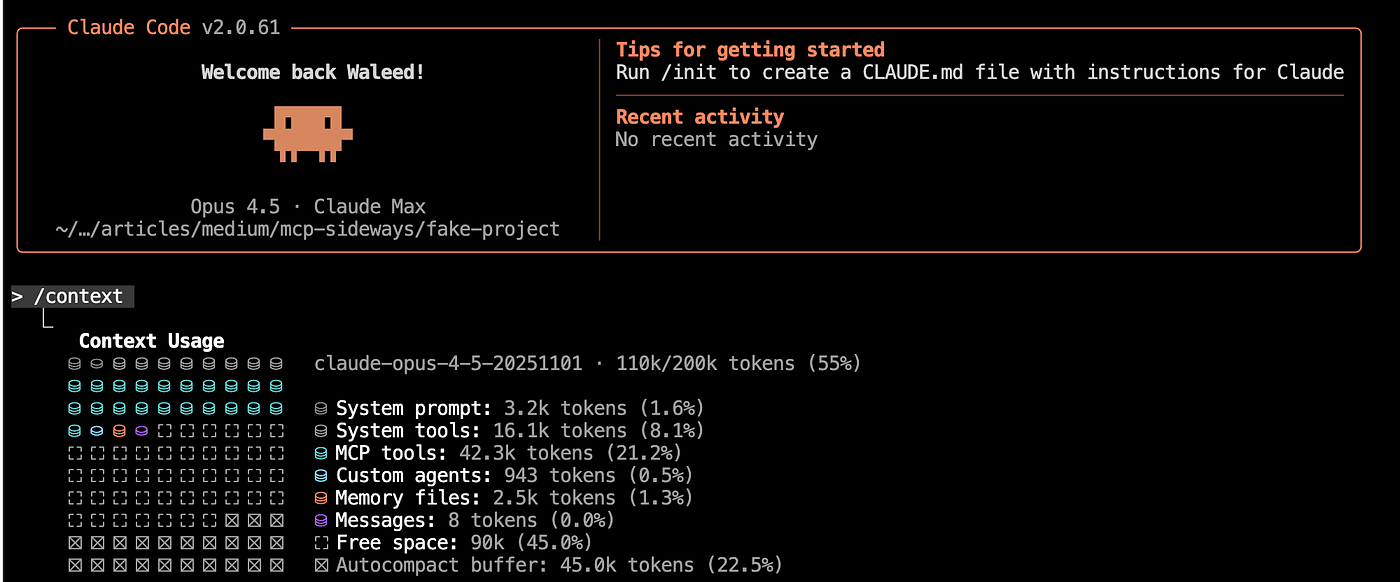

That was the problem Anthropic faced with Claude Code. The platform had become powerful, but bloated. Every time Claude Code accessed tools, whether GitHub integration, Docker commands, or file operations, it had to "read the instruction manual" for every single tool in the system, even the ones it would never use. This wasn't a minor inefficiency. It was consuming massive chunks of context that could otherwise be filled with actual user input, previous conversation history, or the AI's reasoning process.

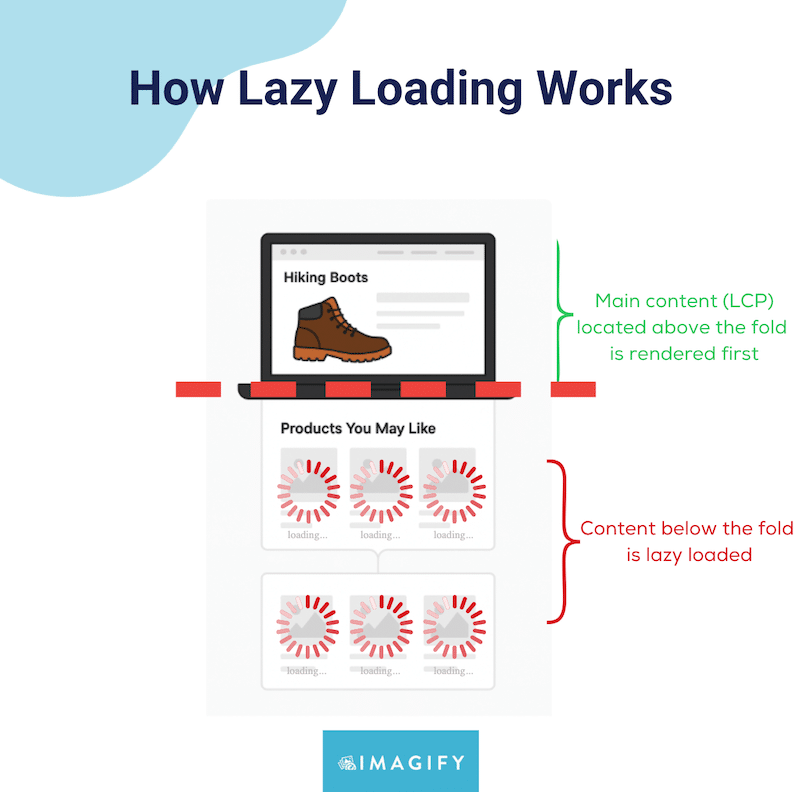

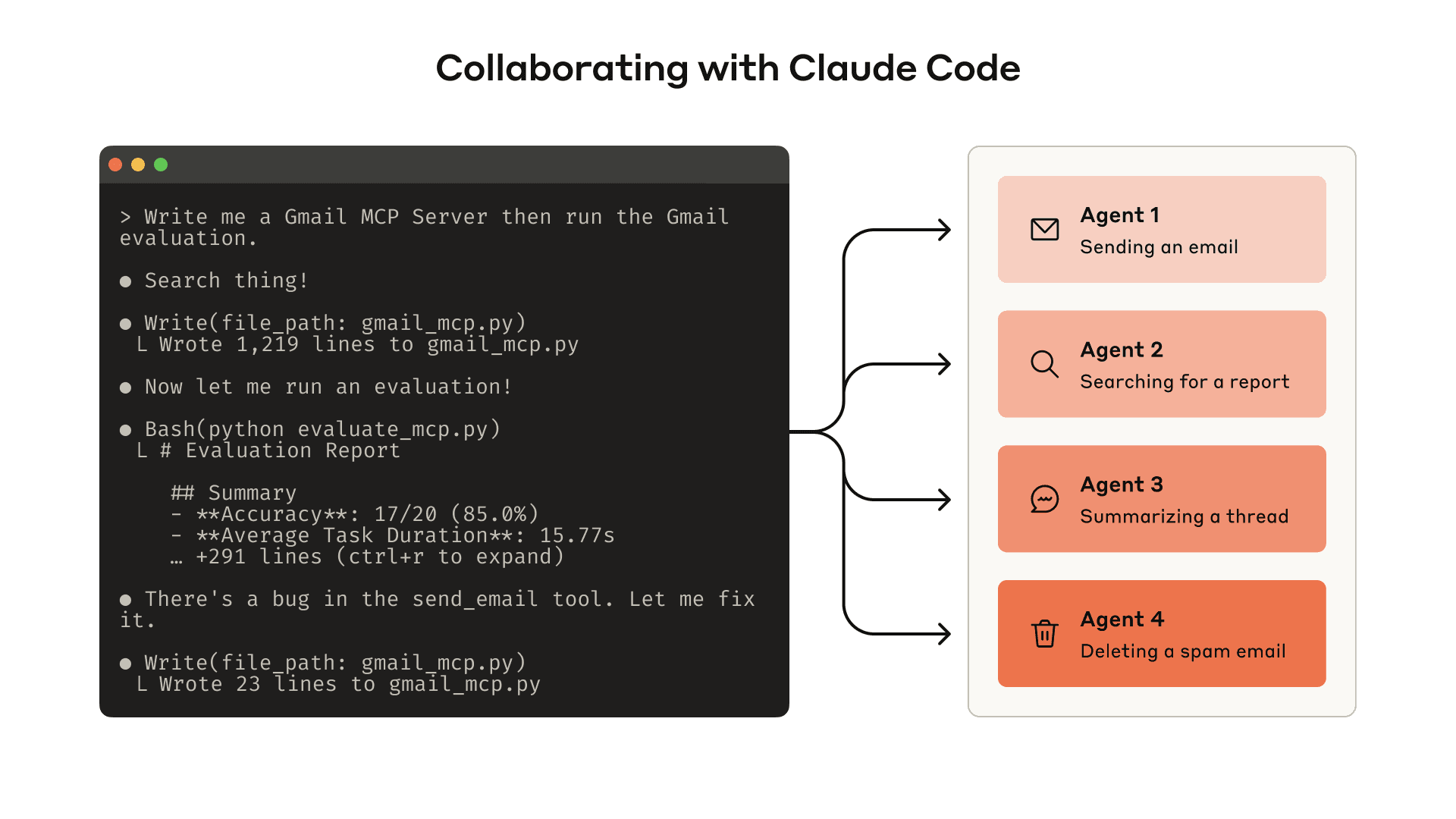

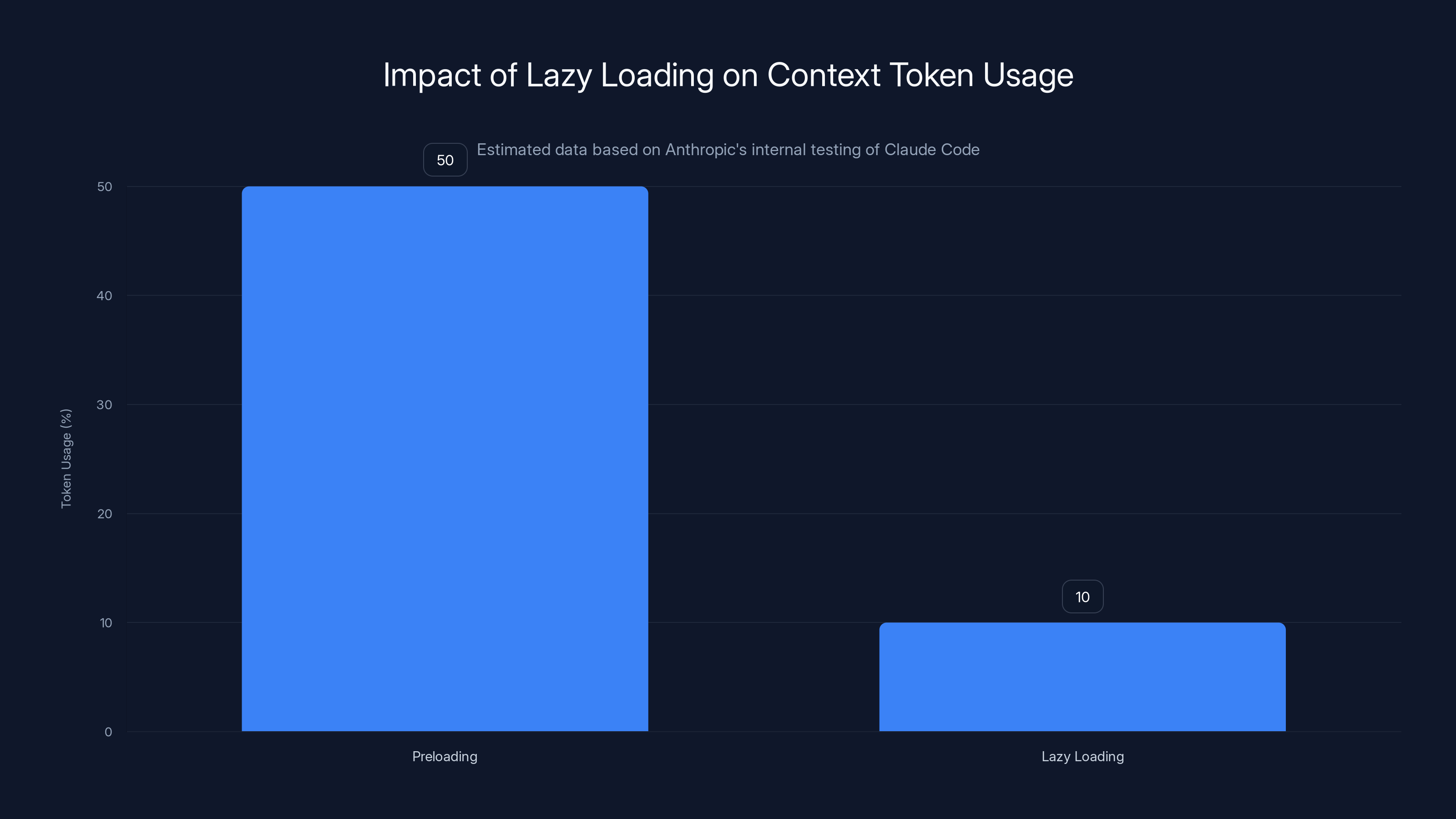

Then, in early 2026, something changed. The Claude Code team released MCP Tool Search, a feature that fundamentally altered how AI agents interact with external tools. Instead of preloading hundreds of tool definitions into the context window, the system now uses "lazy loading," fetching only the tools that are actually needed for the task at hand.

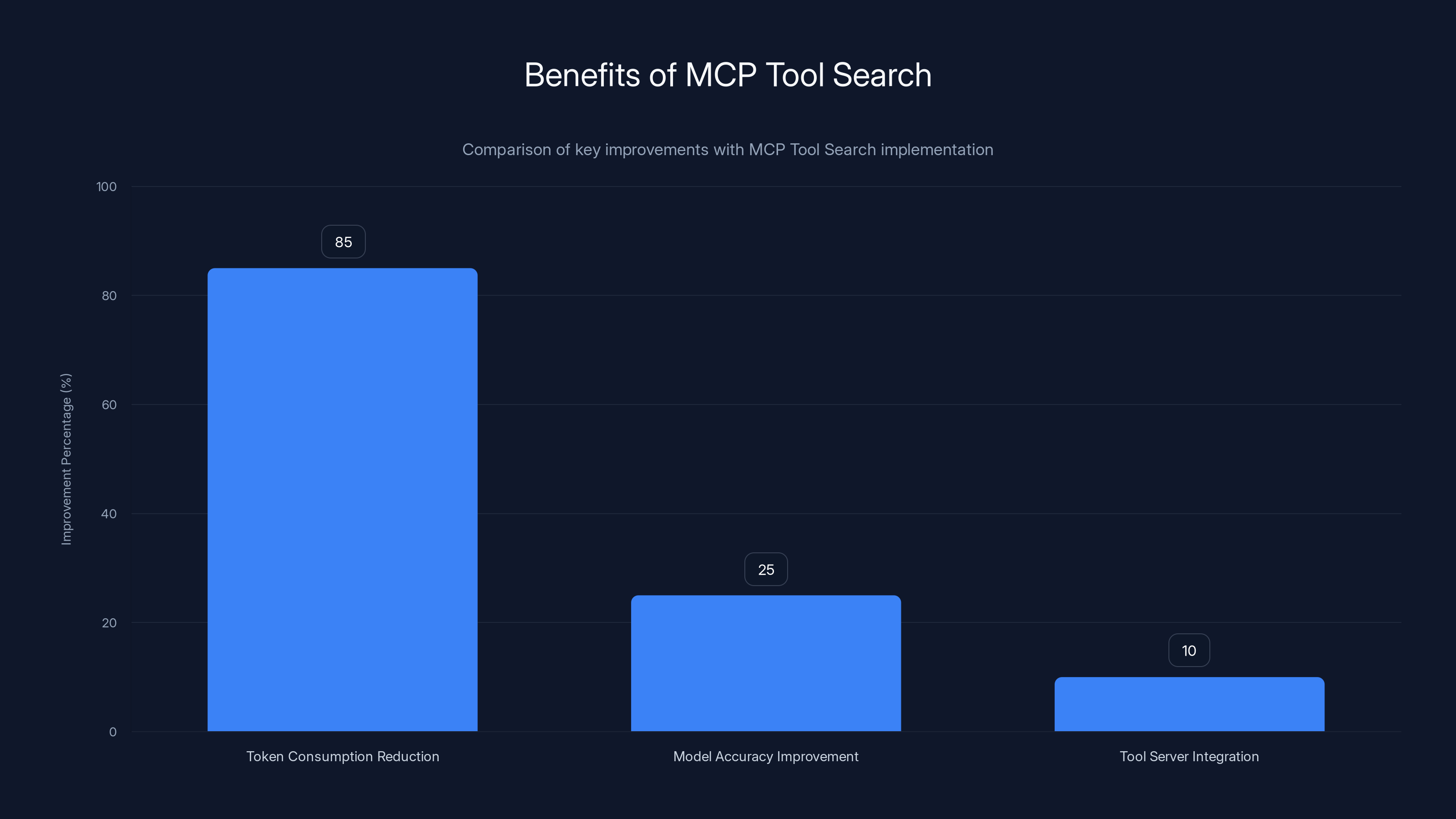

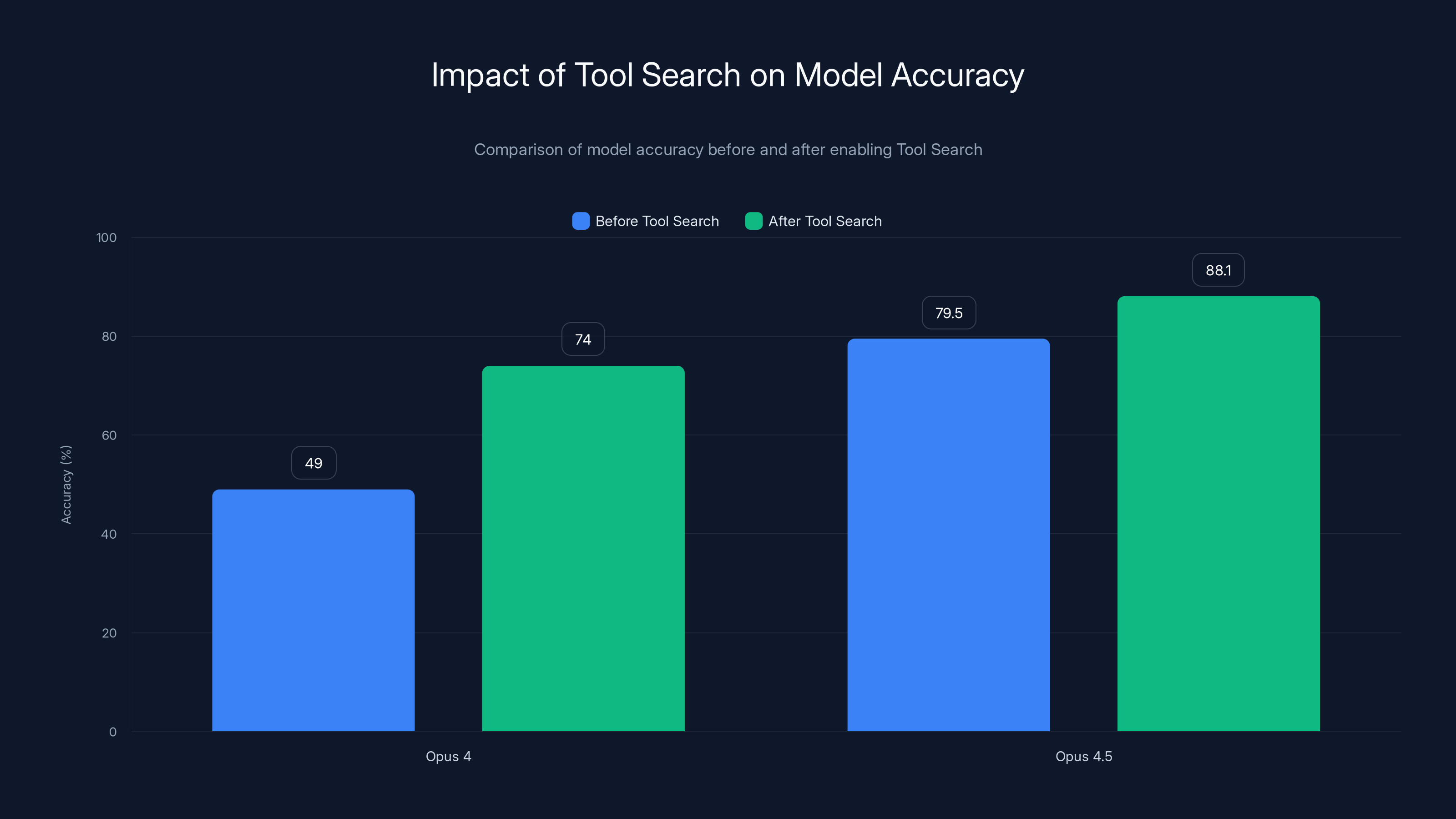

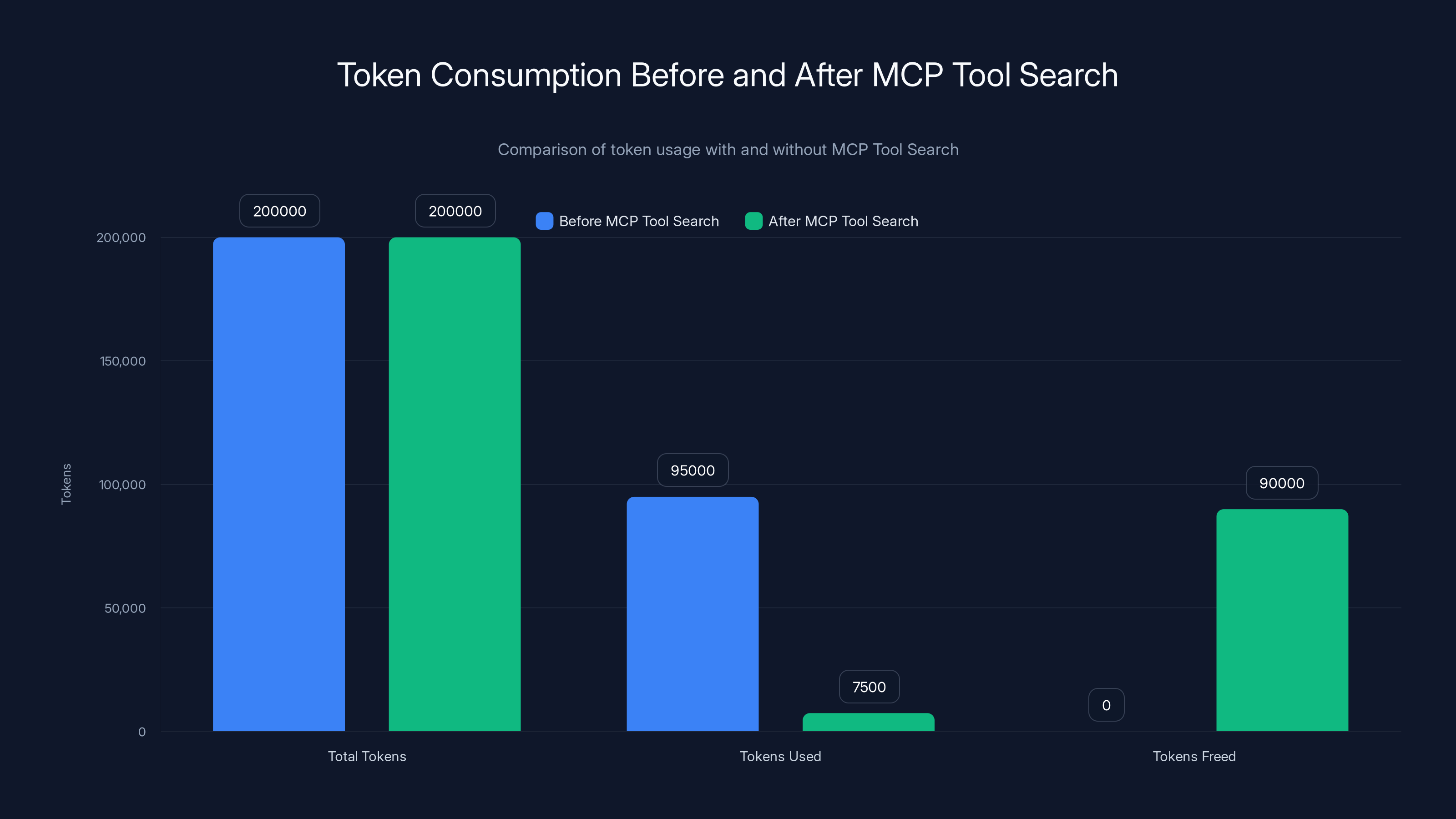

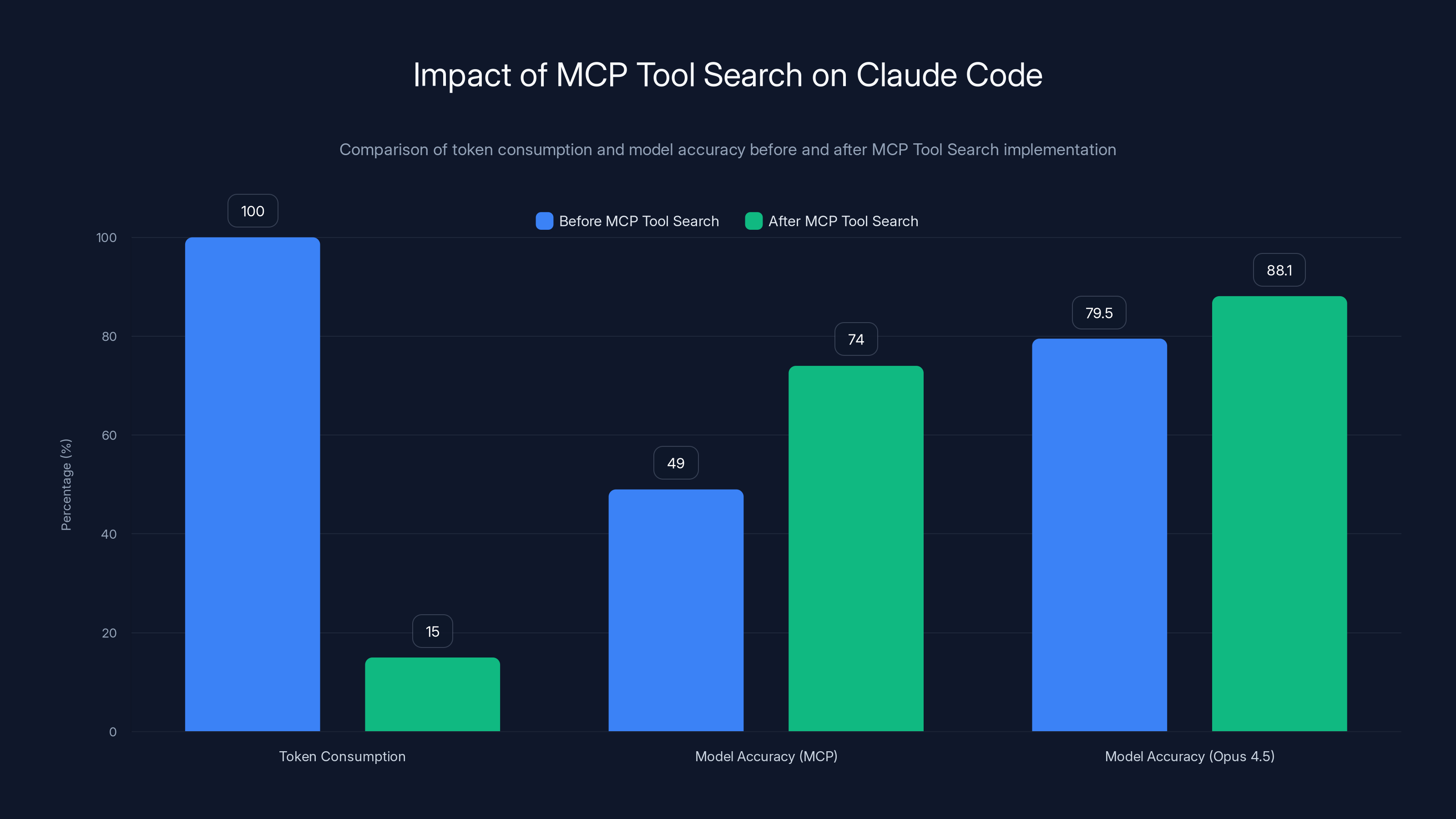

The results speak for themselves. Token consumption dropped by 85% in internal testing. Opus 4's accuracy on MCP evaluations jumped from 49% to 74%, and the newer Opus 4.5 model improved from 79.5% to 88.1%. This isn't just an optimization; it's a glimpse into how AI infrastructure should work as agents become more sophisticated.

Let's break down what happened, why it matters, and what it means for the future of AI development.

Understanding the "Startup Tax" on AI Agents

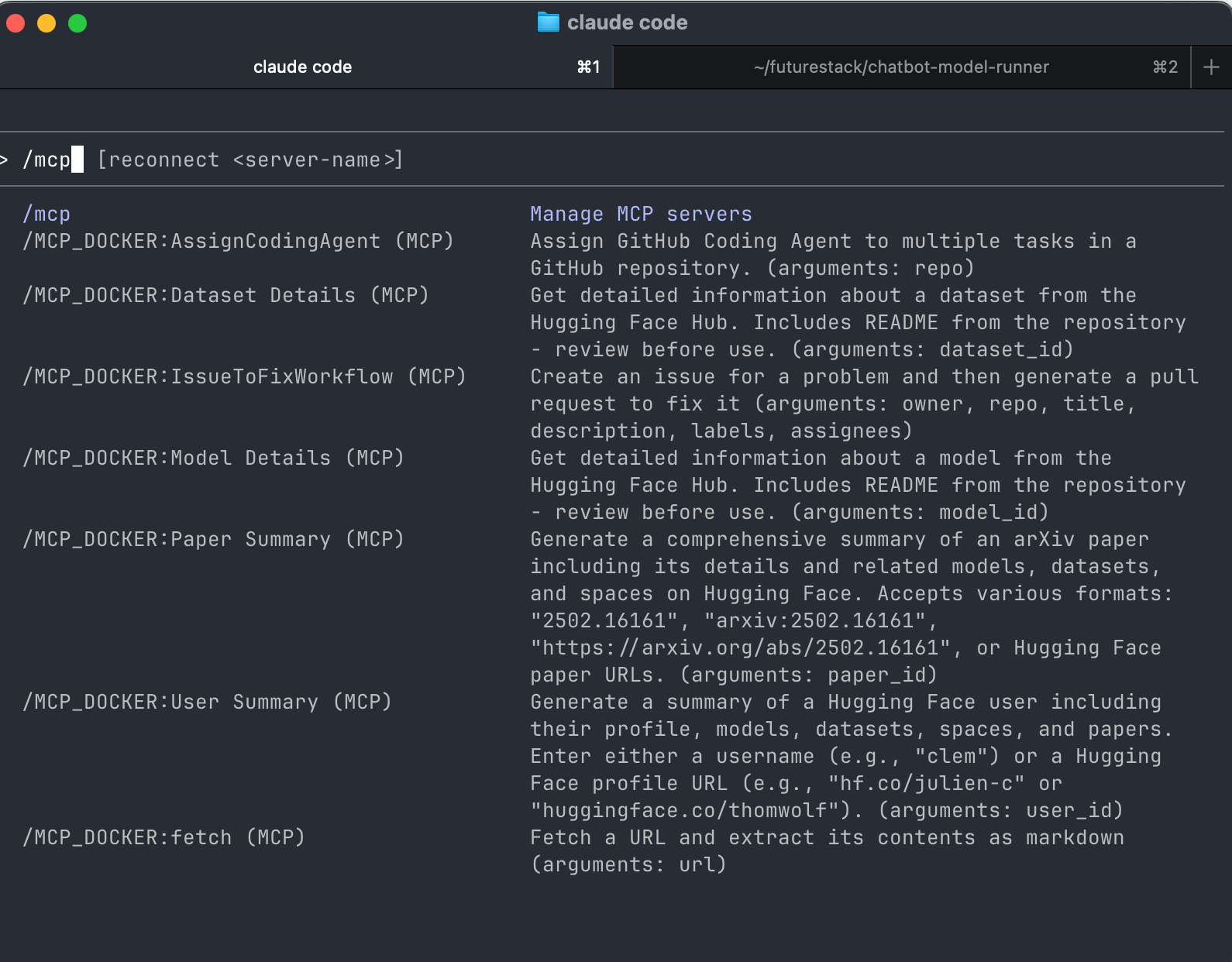

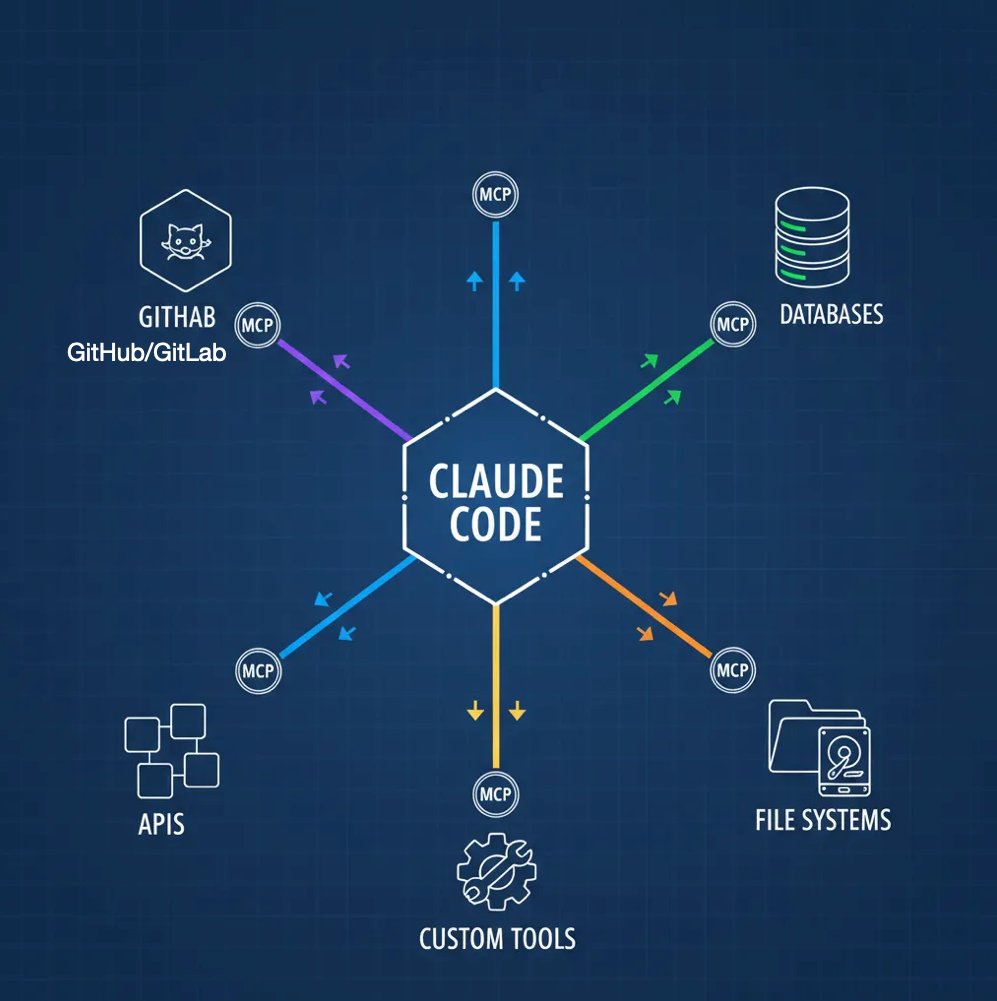

To understand the significance of MCP Tool Search, you need to understand the friction of the previous system. The Model Context Protocol, released in 2024 as an open source standard, was designed to be a universal way to connect AI models to data sources and tools. Whether you needed GitHub access, local file systems, Docker environments, or custom APIs, MCP provided a structured, reliable format.

Sounds great in theory. But as the ecosystem grew, a problem emerged.

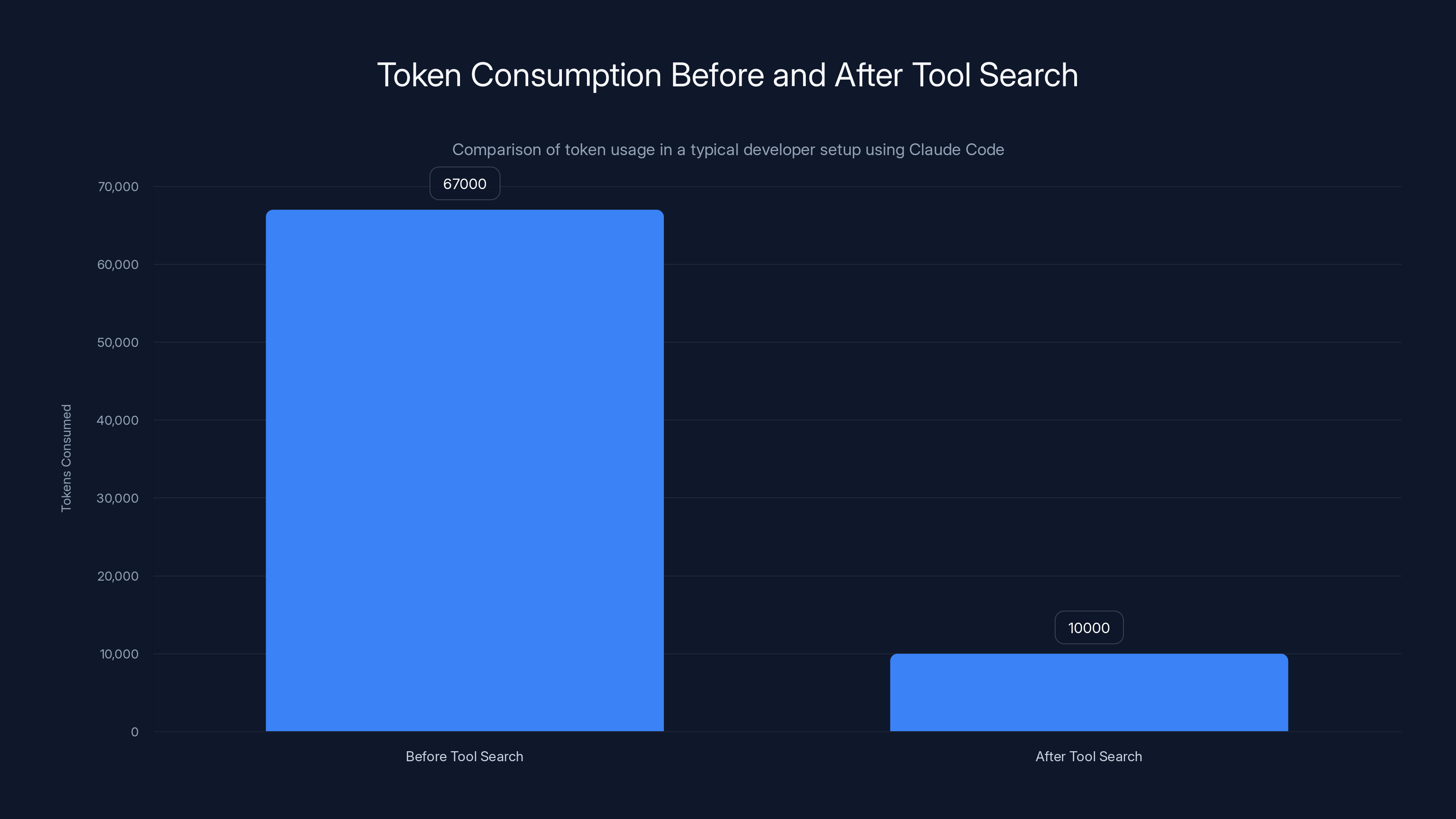

MCP servers could have 50+ tools each. Users were building setups with 7+ servers, and those setups were consuming 67,000+ tokens just to define all available tools. A single Docker MCP server could consume 125,000 tokens just to document its 135 tools. That's roughly equivalent to feeding the model 150+ pages of dense technical documentation before it even started working on the user's actual task.

Think about what this means in practice. Claude's context window (the amount of information it can hold at once) isn't infinite. Each token used to describe available tools is a token that can't be used for your actual code, your project history, or the AI's reasoning process. It's like showing up to work with a briefcase full of tools you'll never use, leaving no room for the ones you actually need.

As Thariq Shihipar, a technical staff member at Anthropic, put it: the old constraint forced "a brutal tradeoff. Either limit your MCP servers to 2-3 core tools, or accept that half your context budget disappears before you start working."

Community members analyzing the situation were even more blunt. Aakash Gupta, analyzing the problem before the fix was released, described it as a bottleneck that wasn't about too many tools—it was about loading tool definitions like "2020-era static imports instead of 2024-era lazy loading."

VSCode doesn't load every extension at startup. JetBrains doesn't inject every plugin's documentation into memory. Modern software engineering solved this problem years ago. AI agents, being a newer paradigm, were still using the brute-force approach.

The frustration was building. Users wanted more tool integration, more capabilities, more power. But adding more tools made the system worse. The ecosystem had hit an architectural ceiling.

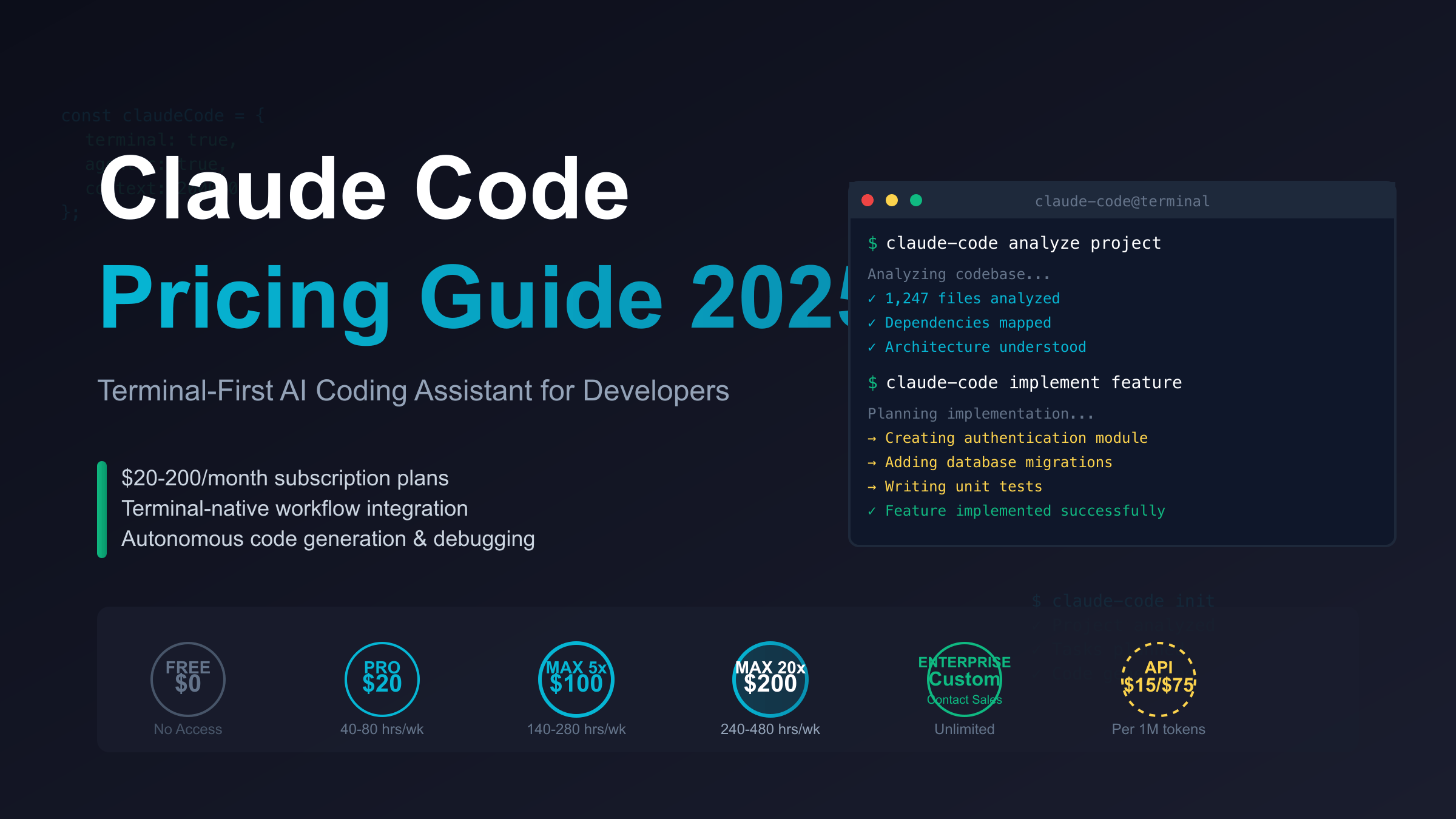

MCP Tool Search reduces token consumption by 85%, improves model accuracy by up to 25 percentage points, and allows integration of 10+ tool servers without hitting context limits. Estimated data.

Enter MCP Tool Search: The Lazy Loading Solution

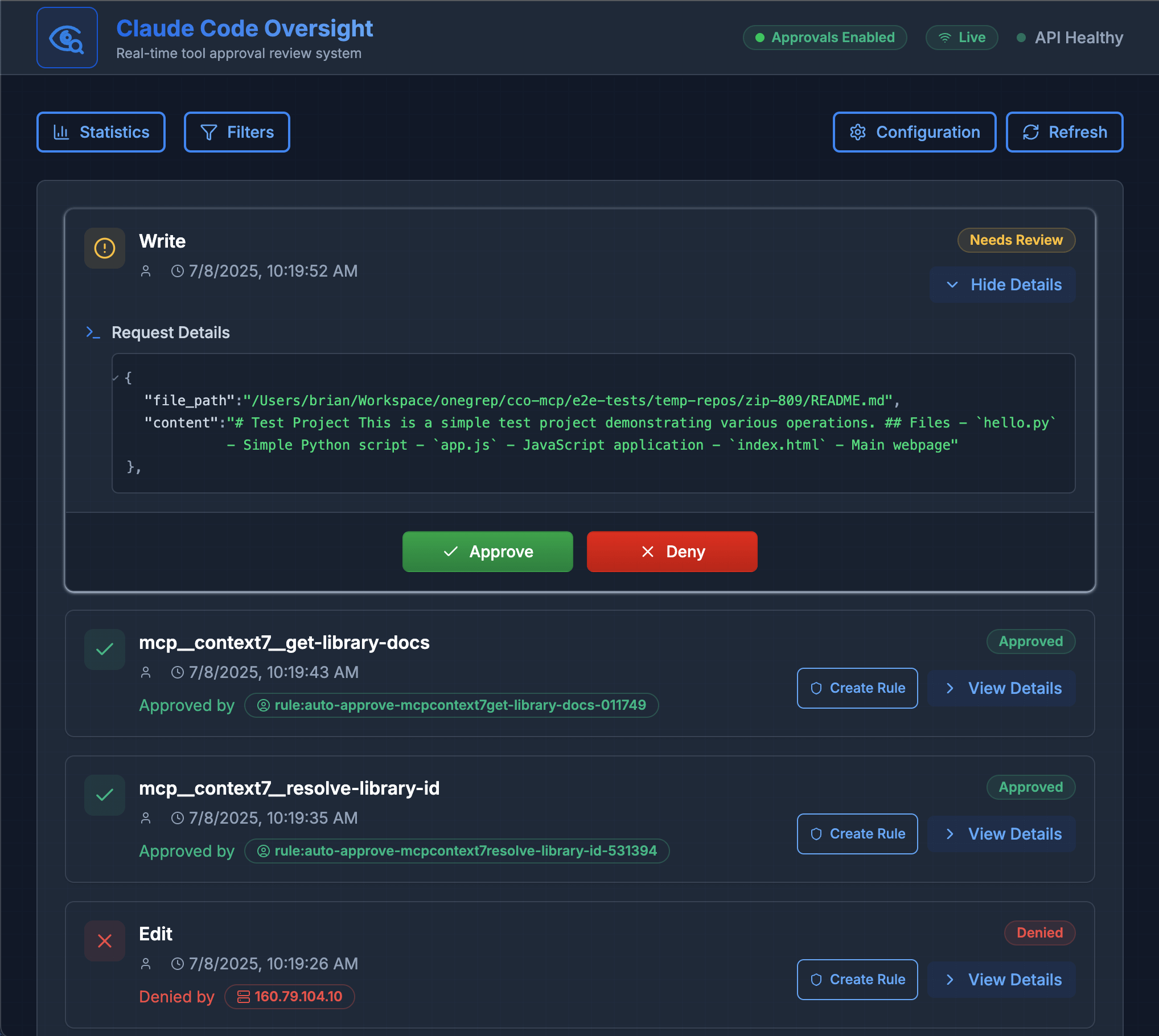

The solution Anthropic released was elegant in its restraint. Instead of preloading every tool definition into the context window, Claude Code now monitors context usage and switches strategies automatically.

Here's how it works: When the system detects that tool descriptions would consume more than 10% of the available context, it doesn't dump all the raw documentation into the prompt. Instead, it creates a lightweight search index. When you ask Claude Code to do something specific (say, "deploy this container" or "push this code to GitHub"), the system doesn't scan a massive pre-loaded list of 200 commands. Instead, it queries the index, finds the relevant tool definition, and pulls only that specific tool into the context.
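The switching rule itself is a single comparison. Here is a minimal sketch of it, assuming the 10% figure above is applied to an estimated token count for all tool definitions; the function name and the example token counts are hypothetical, not Claude Code's actual code.

```python
def should_defer_tool_loading(tool_definition_tokens: int,
                              context_window_tokens: int,
                              threshold: float = 0.10) -> bool:
    """Return True when preloading every tool definition would use more than
    `threshold` of the context window, i.e. when a search index should be
    used instead of injecting all definitions up front."""
    if context_window_tokens <= 0:
        raise ValueError("context_window_tokens must be positive")
    return tool_definition_tokens / context_window_tokens > threshold


# ~67,000 tokens of definitions in a 200,000-token window is 33.5% of the
# context, well past 10%, so tools would be loaded lazily.
print(should_defer_tool_loading(67_000, 200_000))  # True
# ~8,000 tokens is 4% of the window, so everything could simply be preloaded.
print(should_defer_tool_loading(8_000, 200_000))   # False
```

Keeping the threshold as a parameter makes it easy to see how a different cutoff would change which setups switch to search.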

It's a shift from brute-force architecture to something resembling modern software engineering best practices.

For developers maintaining MCP servers, this change has practical implications. The server_instructions field in the MCP definition—previously a "nice to have" for documentation—is now critical. It acts as metadata that helps Claude understand when to search for your tools. You're essentially writing a brief summary of what your tools do and when they should be used, similar to how you'd describe a skill in a résumé.

If your MCP server handles Docker operations, your instructions might say something like: "Use these tools for container management, image building, and deployment operations." Claude Code uses this description to decide whether to pull in your tools when the user mentions containerization.
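In the MCP specification, a server's response to the `initialize` request can carry an optional `instructions` string. Assuming that is the field this article calls server_instructions, a Docker server's response might look roughly like the sketch below. The server name, version, and wording are hypothetical.

```python
import json

# Sketch of the JSON-RPC "initialize" result a hypothetical Docker MCP server
# might send. "instructions" is the optional server-level description the MCP
# spec defines; we assume it is what Tool Search reads as server instructions.
initialize_result = {
    "protocolVersion": "2025-06-18",
    "capabilities": {"tools": {"listChanged": False}},
    "serverInfo": {"name": "docker-tools", "version": "0.1.0"},
    "instructions": (
        "Use these tools for container management, image building, "
        "and deployment operations."
    ),
}

print(json.dumps(initialize_result, indent=2))
```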

The token savings from this approach are dramatic. In Anthropic's internal testing, switching from preloading all tool definitions to using Tool Search reduced token consumption from approximately 134,000 tokens to around 5,000 tokens, a reduction of roughly 96% for that setup. You're maintaining full access to every tool while using a fraction of the context.

To put this in perspective, consider what savings on this scale mean. In a context window that is already heavily used, freeing up 129,000 tokens leaves room for roughly as much text as the 150+ pages of dense documentation mentioned above, on the order of 10,000 lines of code for the model to review, or a significantly longer conversation history. The model has room to breathe.
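The savings arithmetic is easy to check directly. Using the two before/after pairs quoted in this article (Anthropic's 134,000 to 5,000 test, and the 67,000 to 10,000 seven-server setup), a small helper shows how many tokens each frees and the percentage reduction:

```python
def token_savings(before: int, after: int) -> tuple[int, float]:
    """Return (tokens freed, percentage reduction) for a before/after pair."""
    freed = before - after
    return freed, 100 * freed / before


# Before/after figures quoted in this article.
for label, before, after in [
    ("Anthropic internal test", 134_000, 5_000),
    ("7-server developer setup", 67_000, 10_000),
]:
    freed, pct = token_savings(before, after)
    print(f"{label}: {freed:,} tokens freed ({pct:.1f}% reduction)")
```

The seven-server case lands almost exactly on the 85% headline figure, while the 134,000-token test works out to a reduction of about 96%.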

The Secondary Effect: Improved Accuracy Through Focus

While the token savings are the obvious benefit, there's a more subtle and potentially more important effect: improved model focus.

Large language models are notoriously sensitive to distraction. When a model's context window is stuffed with thousands of lines of irrelevant tool definitions, its ability to reason decreases. Imagine trying to concentrate on a complex problem while someone's reading an unrelated technical manual in the background. The human brain struggles. So does the AI's attention mechanism.

This creates a real problem called the "needle in a haystack" effect. When the model has access to hundreds of similar-sounding commands, it struggles to differentiate between them. Should it use notification-send-user or notification-send-channel? When you've got 50+ notification tools to choose from, even a smart model gets confused.

The MCP Tool Search update eliminates this noise. By loading only relevant tool definitions, Claude Code can dedicate its "attention" mechanisms to the user's actual query and the relevant active tools. It's the difference between having a cluttered desk with 500 books piled on it versus having just the 3 books you need for today's work.

The data backs this up with impressive numbers. Internal benchmarks shared by the Anthropic team indicate that enabling Tool Search improved the accuracy of the Opus 4 model on MCP evaluations from 49% to 74%. That's a 25-percentage-point jump, or roughly a 51% relative improvement. For the newer Opus 4.5 model, which was already performing better, accuracy jumped from 79.5% to 88.1%, an improvement of 8.6 percentage points or about 11% relative improvement.
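Percentage points and relative improvement are easy to mix up. A quick check of the benchmark numbers above:

```python
def improvement(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative gain in percent), rounded."""
    points = after_pct - before_pct
    relative = 100 * points / before_pct
    return round(points, 1), round(relative, 1)


print("Opus 4:  ", improvement(49.0, 74.0))  # (25.0, 51.0)
print("Opus 4.5:", improvement(79.5, 88.1))  # (8.6, 10.8)
```

The Opus 4.5 relative gain comes out at 10.8%, which the text rounds to about 11%.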

These aren't marginal gains. In practical terms, this means Claude Code is now more likely to select the correct tool, use it correctly, and chain multiple tools together more intelligently. A 74% accuracy rate is approaching something you could confidently use in production for many real-world tasks.

Boris Cherny, the Head of Claude Code, captured the significance in his reaction to the launch: "Every Claude Code user just got way more context, better instruction following, and the ability to plug in even more tools."

Let's unpack each of those three things:

More context: Literally. You're freeing up 85% of the tokens previously consumed by tool definitions.

Better instruction following: Because the model isn't distracted by irrelevant tool definitions, it can focus on understanding your specific instructions and reasoning through the task step-by-step.

More tools: You're no longer constrained by the brutal tradeoff. You can safely plug in 10+ tool servers instead of being limited to 2-3.

The introduction of Tool Search reduces token consumption by 85%, freeing up 57,000 tokens per session. Estimated data based on typical developer setup.

Technical Architecture: How Lazy Loading Actually Works

Understanding how lazy loading works technically helps explain why it's such an elegant solution.

In the previous system, Claude Code maintained what we might call a "static binding" architecture. Every tool definition was loaded into the context window upfront, the same way traditional software loads all dependencies at startup. This is simple to implement but inefficient at scale.

With MCP Tool Search, Anthropic implemented what's effectively a "dynamic binding" system using a search-based approach. When the system detects that tool definitions would exceed the 10% context threshold, it builds a lightweight index. This index doesn't contain the full tool definitions—instead, it contains metadata and searchable descriptions.

Here's the flow:

- System initialization: Claude Code loads MCP servers and builds a metadata index without loading full tool definitions.
- User request: You ask Claude Code to do something, like "Build a Docker image from this Dockerfile."
- Intent detection: The system analyzes your request and determines that Docker tools are relevant.
- Index search: Instead of scanning a list of 200 tools, the system queries the index using your request as context.
- Selective loading: Only the relevant tool definitions are loaded into the context window.
- Execution: Claude Code now has the tool definitions it needs, plus massive amounts of freed-up context for reasoning.

The technical elegance lies in the server_instructions field. This is where you document what your tools do and when they should be used. If you maintain an authentication MCP server, your instructions might read: "Use these tools for user login, token management, and permission verification." Claude Code uses this as a guide when deciding whether to include your tools in the search results.

It's a clever inversion of the traditional approach. Instead of asking the model to understand all available tools and figure out which ones to use (a task that degrades as the number of tools increases), you're telling the model upfront what each tool is good for. The model then decides whether to use that tool based on the user's request.
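The flow and the server instructions idea above can be sketched as a toy model in a few dozen lines. It rests on several assumptions. The class and function names are hypothetical. The index holds a short description per tool plus each server's instructions. Intent detection and index search are collapsed into one ranking step. Simple word overlap stands in for whatever ranking Claude Code actually uses, which the article does not describe.

```python
import re
from dataclasses import dataclass, field

# Hypothetical sketch: names, fields, and the scoring rule are illustrative,
# not Claude Code's actual implementation.
STOPWORDS = {"a", "an", "the", "this", "that", "from", "to", "for", "and",
             "of", "with", "use", "these", "tools", "my"}


def words(text: str) -> set[str]:
    """Lower-case word set with common filler words removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


@dataclass
class ToolEntry:
    server: str
    name: str
    description: str       # short summary kept in the lightweight index
    full_definition: dict  # full schema, only pulled into context on demand


@dataclass
class ToolIndex:
    server_instructions: dict[str, str] = field(default_factory=dict)
    entries: list[ToolEntry] = field(default_factory=list)

    def search(self, request: str, limit: int = 3) -> list[ToolEntry]:
        """Rank tools by word overlap between the request and the tool's
        name, description, and its server's instructions."""
        query = words(request)
        scored = []
        for entry in self.entries:
            text = " ".join([entry.name, entry.description,
                             self.server_instructions.get(entry.server, "")])
            score = len(query & words(text))
            if score > 0:
                scored.append((score, entry.name, entry))
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [entry for _, _, entry in scored[:limit]]


def load_for_request(index: ToolIndex, request: str) -> list[dict]:
    """Selective loading: return only the matching tools' full definitions."""
    return [entry.full_definition for entry in index.search(request)]


index = ToolIndex(
    server_instructions={
        "docker": "Use these tools for container management, image building, "
                  "and deployment operations.",
        "github": "Use these tools for repositories, pull requests, and commits.",
    },
    entries=[
        ToolEntry("docker", "docker-build-image",
                  "Build a Docker image from a Dockerfile.",
                  {"name": "docker-build-image"}),
        ToolEntry("docker", "docker-list-containers",
                  "List running containers.", {"name": "docker-list-containers"}),
        ToolEntry("github", "github-push-commit",
                  "Push local commits to a remote branch.",
                  {"name": "github-push-commit"}),
    ],
)

request = "Build a Docker image from this Dockerfile"
print([d["name"] for d in load_for_request(index, request)])
# ['docker-build-image', 'docker-list-containers']
```

Both Docker tools are pulled in: the build tool matches on its own description, and the list tool matches through its server's instructions. The GitHub definition never enters the context.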

The Architectural Evolution: From Novelty to Production

This update signals something deeper than just a performance optimization. It represents a maturation in how we think about AI infrastructure. It shows that AI agents are transitioning from novel experiments to production systems that need architectural discipline.

In the early days of any technology paradigm, brute force is common. The first websites didn't care about performance optimization—they just put everything on the page and hoped for the best. The first databases didn't worry about query optimization. Early software frameworks loaded everything into memory and dealt with the consequences later.

But as systems scale, efficiency becomes not just a nice-to-have—it becomes the primary engineering challenge. The MCP Tool Search update is Anthropic acknowledging that AI agents have reached this inflection point.

Consider how modern development tools evolved. VSCode doesn't load every extension at startup; it uses lazy loading. JetBrains IDEs don't inject every plugin's documentation into memory; they load it on demand. Modern web frameworks use lazy loading for images, components, and data. These patterns are well-established in software engineering precisely because they solve the scale problem.

AI agents are applying the same lessons. Claude Code can now support an ecosystem of tools the way VSCode supports an ecosystem of extensions. You don't have to worry that adding one more tool will degrade performance for everyone. The architecture scales.

This also reflects a maturation in the open source ecosystem around MCP. The protocol itself provided the structure, but the ecosystem needed architectural evolution to realize its full potential. With Tool Search, MCP servers can be more ambitious. They can expose more tools. They can serve more specialized use cases. The ecosystem can expand without the bloat that previously constrained it.

Real-World Impact: What Changed for Developers

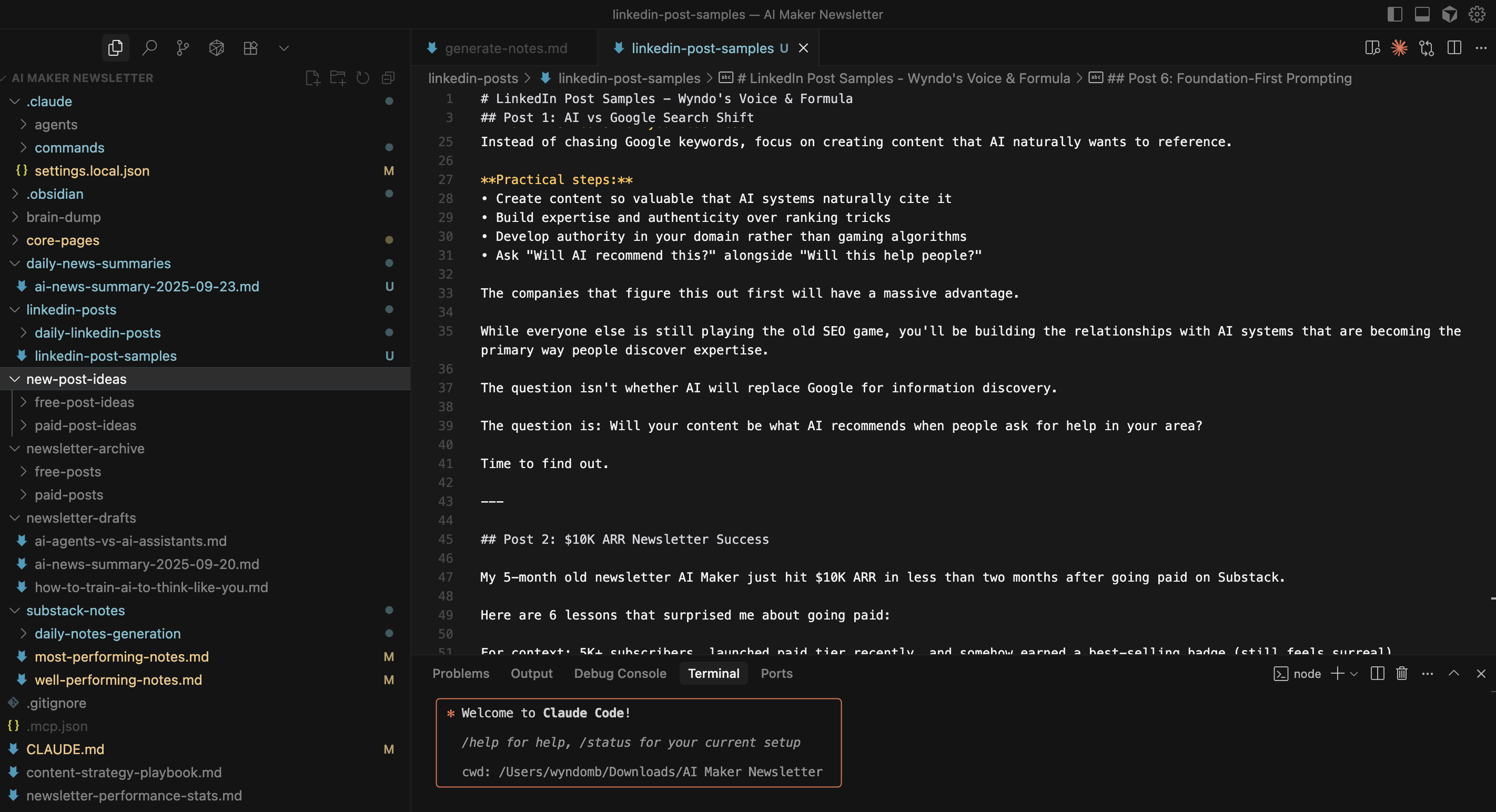

For developers using Claude Code, the impact is immediate but subtle. You don't see a dramatic UI change or new buttons to click. Instead, you notice that Claude Code "just works" better.

You can now safely configure Claude Code with more tool servers. Previously, adding too many servers meant you'd hit context limits and the AI would struggle. Now, you can integrate your GitHub server, your Docker server, your database tools, your custom API server, and your file management tools all at once without worry.

The AI's responses become more coherent. Because it's not distracted by irrelevant tool definitions, it reasons through problems more clearly. It chains multiple tools together more intelligently. It makes fewer mistakes.

Developers who had to carefully curate which tools to expose now have flexibility. You can be more comprehensive in your MCP server design without worrying about context bloat. This means better-designed tools, more complete integrations, and fewer architectural compromises made for performance reasons.

For teams building MCP servers, this opens new possibilities. You're no longer constrained by the "2-3 core tools" limitation. You can build comprehensive MCP servers that expose all the functionality your system has, confident that Claude Code will only load what's needed.

Enabling Tool Search significantly improved model accuracy: Opus 4 saw a 25-percentage-point increase, while Opus 4.5 improved by 8.6 percentage points.

The Broader Implications for AI Infrastructure

This update is significant beyond just Claude Code. It demonstrates a pattern that will likely repeat across AI infrastructure.

Right now, most AI agent systems are relatively simple. They might integrate with a few external tools or data sources. As these systems become more sophisticated, they'll face similar scaling challenges. An AI agent that needs to access dozens of APIs, databases, and services will run into the same context bloat problem Claude Code faced.

Solutions like lazy loading, dynamic indexing, and smarter tool discovery will likely become standard features in AI frameworks. We'll see similar patterns in other platforms as they scale their agent capabilities.

This also has implications for how we design AI-accessible tools going forward. The emphasis on clear server_instructions and metadata suggests that future AI systems will care deeply about how tools document themselves. Tools that provide clear, concise descriptions of what they do and when to use them will work better with AI agents.

We might also see tool ecosystems evolve in response. Just as VSCode has a massive extension marketplace, we could see MCP server marketplaces where developers can easily find and integrate pre-built tool servers. But unlike a VSCode extension marketplace where extension quality varies widely, MCP servers designed with lazy loading in mind will be more reliable and performant.

How to Optimize Your MCP Server for Tool Search

If you're building or maintaining an MCP server, understanding how Tool Search works helps you optimize for it.

First, invest in clear server_instructions. This is now critical infrastructure. Your instructions should answer: What does this server do? When should it be used? What problems does it solve? What's the best way for an AI to understand when my tools are relevant?

Second, organize your tools logically. If you have 50 tools, group them by functionality. Document each tool with a clear name and description. Help Claude Code's search function understand what each tool does so it can fetch the right one when needed.

Third, consider your tool naming conventions. Tools with clear, descriptive names that use consistent patterns are easier for the search function to categorize. docker-build-image is better than build. github-push-commit is better than push.

Fourth, avoid redundancy. If you have five tools that do essentially the same thing with minor variations, consolidate them. Tool Search works best when each tool has a distinct purpose. The search function can then clearly determine when each tool is needed.

Fifth, test your MCP server with Claude Code directly. Pay attention to whether Claude Code is finding your tools when they're relevant. If it's not, your server_instructions might need clarification or your tool descriptions might need to be more specific.
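The naming and redundancy advice above is easy to check mechanically before you test with Claude Code. Here is a rough sketch. It assumes a hypothetical `<domain>-<verb>-<object>` naming convention and uses Python's `difflib.SequenceMatcher` as a crude stand-in for spotting near-duplicate descriptions; the 0.8 similarity cutoff is arbitrary.

```python
import re
from difflib import SequenceMatcher

# Hypothetical convention: "<domain>-<verb>-<object>", e.g. docker-build-image.
NAME_PATTERN = re.compile(r"^[a-z0-9]+(-[a-z0-9]+){2,}$")


def lint_tools(tools: dict[str, str], similarity: float = 0.8) -> list[str]:
    """Return warnings for vague names and near-duplicate descriptions."""
    warnings = []
    for name in tools:
        if not NAME_PATTERN.match(name):
            warnings.append(f"{name}: expected <domain>-<verb>-<object>")
    names = sorted(tools)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            ratio = SequenceMatcher(None, tools[a].lower(), tools[b].lower()).ratio()
            if ratio >= similarity:
                warnings.append(f"{a} / {b}: descriptions {ratio:.0%} similar, "
                                "consider merging")
    return warnings


tools = {
    "docker-build-image": "Build a Docker image from a Dockerfile.",
    "build": "Build the project.",
    "github-push-commit": "Push local commits to the remote branch.",
    "github-push-commits": "Push local commits to a remote branch.",
}
for warning in lint_tools(tools):
    print(warning)
```

Here the bare `build` name and the two almost identical push tools are flagged; the other names pass.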

Measuring the Impact: Token Efficiency and Cost

Let's talk about what the 85% token reduction actually means in terms of real-world impact.

Consider a typical scenario: A developer using Claude Code with 7 tool servers, each with 10+ tools. Before Tool Search, that setup consumed roughly 67,000 tokens just to define all available tools. After Tool Search, the same setup might consume 10,000 tokens or less. That's 57,000 tokens freed up per session.

For the Claude models that Claude Code runs on, input tokens typically cost around

But the cost calculation is only part of the story. The real value is in what you can do with those freed tokens. You can include more context from your project. You can provide longer code samples for analysis. You can maintain longer conversation history. All of these things improve the quality of Claude Code's responses.

In some cases, that might be worth more than the cost savings. Being able to give Claude Code the full context it needs to solve a complex problem is more valuable than cutting costs.

The implementation of MCP Tool Search significantly reduces token usage from 95,000 to 7,500, freeing up 87,500 tokens for improved model performance. Estimated data.

The Evolution of Context Windows and Agent Capability

MCP Tool Search is interesting in the context of broader trends in AI development. Models are getting larger context windows. Claude 3 supports up to 200,000 tokens. Newer models are pushing toward 1 million tokens. You'd think this would eliminate the context bloat problem.

It doesn't. Here's why: Even with massive context windows, the quality of the AI's reasoning degrades when it has to process irrelevant information. A 200,000-token context window sounds infinite until you realize that a large codebase, full project history, and current conversation can easily consume 100,000+ tokens on their own. Add in tool definitions, and you're cutting into the space available for actual reasoning and output.

MCP Tool Search solves this not by giving you more context, but by being smarter about how context is used. It's a quality improvement rather than a quantity improvement. This suggests that future AI developments will focus on similar efficiency gains—smarter context management, better prioritization of information, and more efficient reasoning patterns.

We might also see this pattern influence how model training evolves. If models become better at focusing on relevant information and ignoring irrelevant information, they could become more efficient even with the same context window size. Tool Search is one implementation of this principle, but we'll likely see similar optimizations across different AI systems.

Challenges and Edge Cases

Like any optimization, MCP Tool Search has limitations worth understanding.

One challenge is with tools that have complex interdependencies. If Tool A needs to be used before Tool B, the search function might not understand that relationship if it's not explicitly documented in the server instructions. You need to be very clear about these dependencies in your documentation.
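One way to make such a dependency explicit is to expand a search result so that prerequisite tools are loaded alongside the tool that needs them, in dependency order. The sketch below uses a hypothetical `requires` mapping; it is not an MCP field, only an illustration of documenting ordering in a machine-readable form.

```python
# Hypothetical "requires" metadata: not an MCP field, just a way to make a
# documented ordering dependency machine-readable.
TOOL_REQUIRES = {
    "db-run-migration": ["db-backup-snapshot"],
    "db-backup-snapshot": ["db-connect"],
    "db-connect": [],
}


def with_prerequisites(selected: list[str],
                       requires: dict[str, list[str]]) -> list[str]:
    """Expand selected tools with their prerequisites (depth-first), listing
    each prerequisite before the tool that needs it. Names already visited
    are skipped, which stops cycles from looping but does not report them."""
    ordered, seen = [], set()

    def visit(name: str) -> None:
        if name in seen:
            return
        seen.add(name)
        for dep in requires.get(name, []):
            visit(dep)
        ordered.append(name)

    for name in selected:
        visit(name)
    return ordered


print(with_prerequisites(["db-run-migration"], TOOL_REQUIRES))
# ['db-connect', 'db-backup-snapshot', 'db-run-migration']
```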

Another edge case involves highly specialized tools that don't fit into obvious categories. If you have a tool that's useful in 10 different contexts but the tool's name suggests it's only relevant to one context, the search function might not find it when needed. The fix is better documentation and clearer naming.

There's also the question of what happens when Tool Search doesn't find the tool you need. Currently, if the search function doesn't identify a tool as relevant, it won't be loaded into the context. This is usually correct behavior, but there are edge cases where a tool might be useful in an unexpected way. Users can work around this by being more explicit in their requests (e.g., "I need to use the Docker build tool" instead of just "Build this"), but this isn't ideal.

Another consideration: The 10% context threshold is a reasonable default, but different use cases might benefit from different thresholds. Some users might want to be more aggressive about lazy loading to maximize context for reasoning. Others might want to preload more tools if they're working on a task that requires many tool interactions. Future versions might offer configuration options for this.

Looking Forward: The Future of AI Agent Architecture

MCP Tool Search represents an inflection point in how AI agent systems will be designed going forward.

We're moving from the era where agents were novelties—interesting experiments that worked on simple tasks—toward the era where they're production infrastructure that needs to handle complex, real-world workflows. That transition requires architectural maturity, and Tool Search is one expression of that maturity.

Future developments might include even smarter search functions that understand semantic relationships between tools. Instead of just keyword matching, the search might understand that if you're doing database optimization, you might need profiling tools, query analysis tools, and indexing tools even if you didn't explicitly ask for them.

We might also see more sophisticated caching mechanisms. If Claude Code frequently uses the same set of tools in a session (e.g., you're doing Docker work all day), the system might keep those tools in context to avoid repeatedly loading and unloading them.
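Caching of this kind is speculation in the article, not a shipped feature. As a rough illustration of the idea, here is a least-recently-used cache with a token budget; the class name, the budget, and the per-tool token counts are all hypothetical.

```python
from collections import OrderedDict


class ToolDefinitionCache:
    """Hypothetical LRU cache that keeps recently used tool definitions in
    context and evicts the least recently used ones over a token budget."""

    def __init__(self, token_budget: int):
        self.token_budget = token_budget
        self._entries = OrderedDict()  # tool name -> token cost, oldest first

    @property
    def used_tokens(self) -> int:
        return sum(self._entries.values())

    def use(self, name: str, tokens: int) -> list[str]:
        """Mark a tool as used (loading it if needed) and return the names of
        any tools evicted to get back under the budget."""
        if name in self._entries:
            self._entries.move_to_end(name)  # reused: now most recent
        else:
            self._entries[name] = tokens
        evicted = []
        # Never evict the tool just used, even if it alone exceeds the budget.
        while self.used_tokens > self.token_budget and len(self._entries) > 1:
            oldest, _ = self._entries.popitem(last=False)
            evicted.append(oldest)
        return evicted

    def loaded(self) -> list[str]:
        return list(self._entries)


cache = ToolDefinitionCache(token_budget=3_000)
cache.use("docker-build-image", 1_200)
cache.use("docker-run-container", 1_000)
cache.use("docker-build-image", 1_200)       # reused, moves to the end
print(cache.use("github-push-commit", 900))  # ['docker-run-container']
print(cache.loaded())  # ['docker-build-image', 'github-push-commit']
```

Recency is only one possible retention policy; the usage-based prioritization described next would swap in a different eviction rule.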

There's also potential for learning-based optimization. The system could track which tools you actually use and prioritize keeping those in context, or predict which tools you'll need based on your conversation history.

As AI agents become more sophisticated and integrate with more external systems, these kinds of architectural innovations will become increasingly important. The lesson from MCP Tool Search is that efficiency matters just as much as capability when building systems that need to operate at scale.

Estimated data shows that lazy loading reduces token usage from 50% to 10%, significantly improving system performance by loading only necessary tool definitions.

Comparison: Before and After MCP Tool Search

Let's create a concrete comparison to illustrate the shift.

Before MCP Tool Search:

You want to build an AI agent that can work with GitHub, Docker, and database tools. You set up three MCP servers with a total of 85 tools across all of them. When Claude Code starts, it loads all 85 tool definitions into context. That's roughly 85,000-100,000 tokens consumed just describing tools. Your 200,000-token context window is already halfway gone before you've given the agent any real task to work on. The model is distracted by irrelevant tools (why does it need to know about every database tool when you're just deploying containers?). Its accuracy suffers.

After MCP Tool Search:

You set up the same three MCP servers with the same 85 tools. Claude Code builds a lightweight search index instead of loading all tool definitions. When you ask it to "Deploy the application," the system searches the index, identifies that Docker tools are relevant, loads just those definitions into context. Maybe 5,000-10,000 tokens consumed. Your context window is still largely empty. The model focuses entirely on your deployment task with full context for reasoning. Its accuracy improves.

The difference is dramatic. You've freed up roughly 75,000 to 95,000 tokens while actually improving the model's performance.

Getting Started with MCP Tool Search

If you want to take advantage of MCP Tool Search, here's how to approach it.

First, make sure you're using the latest version of Claude Code. The feature rolled out in early 2026, so any recent update should include it.

Second, audit your current MCP server setup. How many servers are you using? How many tools total? If you're currently limited to 2-3 servers, you might have been hitting the context bloat problem. With Tool Search, you can safely expand.
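For a rough audit, you can estimate how many tokens each server's tool definitions would take if preloaded. This sketch assumes you already have each server's tool list as MCP-style dictionaries (`name`, `description`, `inputSchema`). It uses a crude four-characters-per-token heuristic rather than a real tokenizer, so treat the numbers as order-of-magnitude only. The two sample servers are hypothetical.

```python
import json


def estimate_tokens(text: str) -> int:
    # Crude heuristic: ~4 characters per token. Real tokenizers differ.
    return max(1, len(text) // 4)


def audit(servers: dict[str, list[dict]], context_window: int = 200_000):
    """Return per-server token estimates, the total, and the share of the
    context window that preloading every definition would use."""
    per_server = {
        server: sum(estimate_tokens(json.dumps(tool)) for tool in tools)
        for server, tools in servers.items()
    }
    total = sum(per_server.values())
    return per_server, total, total / context_window


servers = {
    "docker": [{
        "name": "docker-build-image",
        "description": "Build a Docker image from a Dockerfile.",
        "inputSchema": {"type": "object",
                        "properties": {"path": {"type": "string"}},
                        "required": ["path"]},
    }],
    "github": [{
        "name": "github-push-commit",
        "description": "Push local commits to a remote branch.",
        "inputSchema": {"type": "object",
                        "properties": {"branch": {"type": "string"}}},
    }],
}

per_server, total, share = audit(servers)
for server, tokens in per_server.items():
    print(f"{server}: {len(servers[server])} tools, ~{tokens:,} tokens")
print(f"total: ~{total:,} tokens ({share:.2%} of a 200,000-token window)")
```

If the share for your real setup sits well above the 10% mark, your configuration is the kind Tool Search is designed for.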

Third, improve your server_instructions. Look at the MCP servers you're using or building. Make sure the instructions clearly describe what the server does and when it should be used. This is critical for Tool Search to work effectively.

Fourth, test the configuration. Use Claude Code normally and pay attention to whether it's accessing the right tools for your tasks. If it's missing tools you expected it to use, the search function might not be finding them. That usually means the server instructions need clarification.

Fifth, if you maintain MCP servers, consider optimizing them for lazy loading. Better documentation, clearer tool names, logical grouping, and removal of redundant tools all help.

The Broader Philosophy: Efficiency as a Feature

What's interesting about MCP Tool Search from a product philosophy perspective is that it reframes efficiency as a feature rather than just an implementation detail.

Traditional software optimization happens behind the scenes. Users don't see it. They might notice the software is faster, but they don't experience the optimization directly. With Tool Search, the optimization directly enables new capabilities. You can use more tools because the system is smarter about managing context.

This is a subtle but important shift. It means future product development will likely emphasize this kind of "efficiency-as-enablement" thinking. Make the system more efficient with context, and suddenly you can support more complex tasks. Make reasoning more efficient, and you can tackle harder problems. This focuses engineering effort on improvements that have direct user-facing value.

It also suggests that future competition in the AI space will be increasingly won by whoever can be most efficient with limited resources (like context) rather than whoever has the largest models. A model that can solve complex problems with limited context might be more valuable than a larger model that needs massive context to work properly.

The introduction of MCP Tool Search reduced token consumption by 85% and significantly increased model accuracy for both the Opus 4 and Opus 4.5 models. Estimated data based on internal testing results.

Real-World Workflows: How Tool Search Changes Development

Let's think through some concrete development workflows and how Tool Search changes them.

Scenario 1: Building a full-stack application

Previously, you might configure Claude Code with GitHub tools (for code management), Docker tools (for containerization), and database tools (for schema management). But the combined context from all these tools was so large that Claude struggled with complex reasoning. With Tool Search, you can safely include all these tools. When you're working on the API layer, Docker tools fade into the background while database tools stay readily accessible. The model's focus follows your work.

Scenario 2: Debugging complex issues

When something breaks in production, you want Claude Code to have access to logging tools, profiling tools, database query analysis, and application health checks. Previously, connecting all these would consume massive context. Now, you can safely set up all of them. The model searches for the relevant tools based on your problem description, giving you more complete debugging capabilities.

Scenario 3: Documentation and code generation

Generating accurate documentation requires understanding code structure, API specifications, and usage patterns. With Tool Search, Claude Code can access all the tools needed to understand your system without drowning in irrelevant information. This improves the quality of generated documentation.

Implementing Tool Search in Your Own Workflows

If you're building with Claude Code or planning to integrate it into your workflow, here's a practical implementation strategy.

Step 1: Start with a minimal setup. Choose your core tools (maybe GitHub and one domain-specific tool). Get comfortable with how Claude Code works.

Step 2: Gradually add more tools. As you add each tool, pay attention to how Claude Code discovers and uses it. This gives you feedback about whether your server instructions are clear.

Step 3: Monitor context usage. Some Claude Code integrations will show you how many tokens are being consumed. Watch for situations where you hit context limits. Those are places where Tool Search is working hard but might need optimization.

Step 4: Refine your server instructions based on what you learn. If Claude frequently uses tools in unexpected ways, that's feedback that your documentation might be misleading.

Step 5: Expand confidently. Once you understand how your tools interact with Tool Search, you can safely expand to comprehensive setups that would have been impractical before.

Industry Trends: Why This Timing Matters

MCP Tool Search arrived in early 2026, and the timing is significant. This is when AI agents were starting to move from hype into practical usage. Teams were trying to integrate agents into real workflows and hitting exactly this kind of practical limitation.

Solutions like this one arrive just in time to enable the next wave of adoption. Before Tool Search, ambitious teams would try to give Claude Code comprehensive tool access and run into problems. After Tool Search, those same ambitious integrations become practical. This removes a barrier to AI agent adoption and enables more sophisticated use cases.

This also suggests where AI development is heading. Early stages of any technology focus on capability ("what can it do?"). Mature stages focus on efficiency ("how can it do it better?"). We're clearly moving into the efficiency phase of AI agent development. Expect to see more innovations focused on smarter context management, better resource allocation, and more efficient reasoning patterns.

Integration Considerations: Working with Existing Systems

If you're integrating Claude Code into existing systems, Tool Search changes some of your architecture decisions.

Previously, you had to make hard choices about which tools to expose through Claude Code, because exposing too many would flood the context window with tool definitions. Now you can expose more comprehensive tool sets, which might mean redesigning how your systems surface capabilities to Claude Code.

You might also want to reorganize your MCP servers. Instead of having one mega-server with everything, you could split into domain-specific servers (database server, infrastructure server, application monitoring server, etc.). With Tool Search, this modular approach works better than before because the system intelligently finds the relevant servers for each task.
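One way to plan such a split is to group an existing mega-server's tools by domain. This sketch assumes a hypothetical naming convention in which each tool name starts with its domain (for example `db_query`), and it only proposes a grouping; actually deploying separate servers is outside its scope.

```python
from collections import defaultdict


def split_by_domain(tools: list[dict]) -> dict[str, list[str]]:
    """Group a mega-server's tools into candidate domain servers.

    Assumes (hypothetically) that each tool name starts with its domain,
    e.g. 'db_query' belongs to the 'db' domain.
    """
    servers: dict[str, list[str]] = defaultdict(list)
    for tool in tools:
        domain, _, _ = tool["name"].partition("_")
        servers[f"{domain}-server"].append(tool["name"])
    return dict(servers)


mega_server_tools = [
    {"name": "db_query"},
    {"name": "db_schema"},
    {"name": "infra_scale_service"},
    {"name": "monitor_list_alerts"},
]
print(split_by_domain(mega_server_tools))
# {'db-server': ['db_query', 'db_schema'], 'infra-server': ['infra_scale_service'], 'monitor-server': ['monitor_list_alerts']}
```

A prefix rule is deliberately crude; in practice you would group by whatever boundary matches how your teams and systems are organized.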

You might also want to implement feedback mechanisms. Track which tools Claude Code actually uses for which kinds of tasks. This data helps you understand whether your tool organization and documentation is working well.
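A feedback mechanism can start as a simple counter keyed by task type and tool. The sketch below is hypothetical: the class name, the task categories, and the tool names are illustrative, and how tool calls get captured (a wrapper around your server, log parsing, and so on) is assumed rather than shown.

```python
from collections import Counter, defaultdict


class ToolUsageLog:
    """Hypothetical tracker for which tools Claude Code uses on which kinds of tasks."""

    def __init__(self, available_tools: list[str]):
        self.available_tools = set(available_tools)
        self.by_task: dict[str, Counter] = defaultdict(Counter)

    def record(self, task_category: str, tool_name: str) -> None:
        """Log one tool call under a task category you define (e.g. 'bug triage')."""
        self.by_task[task_category][tool_name] += 1

    def top_tools(self, task_category: str, n: int = 3) -> list[tuple[str, int]]:
        """Most-used tools for a category; unknown categories return an empty list."""
        return self.by_task.get(task_category, Counter()).most_common(n)

    def unused_tools(self) -> list[str]:
        """Tools never used for any task: candidates for better docs or removal."""
        used = {tool for counts in self.by_task.values() for tool in counts}
        return sorted(self.available_tools - used)


log = ToolUsageLog(["github_create_issue", "db_query", "docker_logs"])
log.record("bug triage", "github_create_issue")
log.record("bug triage", "docker_logs")
log.record("bug triage", "docker_logs")
print(log.top_tools("bug triage"))  # [('docker_logs', 2), ('github_create_issue', 1)]
print(log.unused_tools())           # ['db_query']
```

Tools that never show up for the tasks they were designed for are a hint that their names or descriptions are not helping the search find them.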

The Competition Angle: What This Means for Other Platforms

MCP Tool Search is Anthropic-specific, but the problem it solves is universal. Any AI agent system that connects to multiple external tools faces similar context challenges.

We should expect similar solutions from other platforms. Teams building AI agents on top of OpenAI's models, Google's models, or open-source models will need comparable mechanisms. The specific implementation will differ, but the pattern is becoming clear: lazy loading for tool definitions is table stakes for production agent systems.

This might also inspire cross-platform collaboration. If MCP Tool Search becomes the standard approach, other platforms might adopt MCP or something similar. Or we might see competitive implementations that try to solve the problem more elegantly.

Either way, the broader trend is clear: as AI agents mature, infrastructure solutions that improve efficiency will become increasingly valuable. Tool Search is an example of the kind of innovation that wins market share in this space.

FAQ

What is the Model Context Protocol (MCP)?

The Model Context Protocol is an open-source standard released by Anthropic in 2024 that provides a structured way to connect AI models to external tools, data sources, and APIs. It allows tools like Claude Code to safely access and control systems like GitHub repositories, Docker environments, and file systems through a standardized interface.

How does MCP Tool Search work?

Instead of preloading all tool definitions into the context window, MCP Tool Search creates a lightweight search index and only loads tool definitions when they're actually needed. When the system detects that tool descriptions would consume more than 10% of available context, it switches from static loading to dynamic search-based loading. The system queries this index based on your request and pulls only relevant tool definitions into context.
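The flow described in this answer (preload under the threshold, search above it, then pull in only the matching definitions) can be illustrated with a toy sketch. Only the 10% figure comes from the article; the keyword-overlap scoring, the stopword list, and the tool names are assumptions for illustration, and Claude Code's actual search and ranking may work quite differently.

```python
import re

# Share of the context window above which definitions are searched instead of preloaded
# (the 10% figure comes from the article; the rest of this sketch is illustrative).
THRESHOLD = 0.10
STOPWORDS = {"a", "an", "the", "for", "from", "in", "of", "on", "to", "me"}


def keywords(text: str) -> set[str]:
    """Lowercase word set, splitting on anything non-alphanumeric (including '_')."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


class ToolIndex:
    """Toy lazy-loading index: only keyword sets are consulted until a tool is selected."""

    def __init__(self, definitions: dict[str, dict]):
        self._definitions = definitions  # full definitions, kept out of the prompt
        self._keywords = {
            name: keywords(name + " " + d.get("description", ""))
            for name, d in definitions.items()
        }

    def all_definitions(self) -> list[dict]:
        return list(self._definitions.values())

    def search(self, request: str, limit: int = 3) -> list[dict]:
        """Return full definitions for the best keyword matches, highest score first."""
        wanted = keywords(request)
        scored = [(len(wanted & kws), name) for name, kws in self._keywords.items()]
        hits = sorted((p for p in scored if p[0] > 0), key=lambda p: (-p[0], p[1]))
        return [self._definitions[name] for _, name in hits[:limit]]


def select_tools(index: ToolIndex, request: str, definition_tokens: int,
                 context_window: int = 200_000) -> list[dict]:
    """Preload everything under the threshold; switch to search-based loading above it."""
    if definition_tokens / context_window <= THRESHOLD:
        return index.all_definitions()
    return index.search(request)


definitions = {
    "github_create_issue": {"name": "github_create_issue",
                            "description": "Open a new issue in a GitHub repository."},
    "docker_logs": {"name": "docker_logs",
                    "description": "Fetch logs from a Docker container."},
    "db_query": {"name": "db_query",
                 "description": "Run a read-only SQL query against the analytics database."},
}
index = ToolIndex(definitions)

# 30,000 tokens of definitions is 15% of a 200,000-token window, so search kicks in.
picked = select_tools(index, "Show me the logs for the api container", definition_tokens=30_000)
print([tool["name"] for tool in picked])  # ['docker_logs']
```

The design point the sketch captures is that the index is cheap to keep around while the full definitions stay out of the prompt until a request actually matches them.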

What are the benefits of MCP Tool Search?

The primary benefits include an 85% reduction in token consumption for tool definitions, improved model accuracy (from 49% to 74% on MCP evaluations for Opus 4), and the ability to safely integrate 10+ tool servers without hitting context limits. This frees up context for actual project code, reasoning, and longer conversation history, directly improving Claude Code's capability to solve complex problems.

Why was the old system problematic?

The previous system loaded all tool definitions upfront, similar to how legacy software loads all dependencies at startup. With 7+ MCP servers containing 50+ tools each, this consumed 67,000+ tokens just describing available tools. That is roughly a third of a 200,000-token context window gone before any actual work began, and the abundance of irrelevant tool information distracted the model's reasoning.

How can I optimize my MCP server for Tool Search?

Write clear and specific server_instructions that explain what your tools do and when they should be used. Use descriptive tool names that clearly indicate their purpose. Organize related tools logically, remove redundant tools, and provide concise but informative descriptions for each tool. Test your configuration with Claude Code to ensure the search function correctly identifies and loads your tools when relevant.
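Several of these guidelines can be checked mechanically before testing in Claude Code. The linter below is a hypothetical sketch, not an official validator: the dictionary shape (a `server_instructions` string plus a list of tools with `name` and `description`) is an assumption, and the length thresholds and the "name should include a domain prefix" rule are arbitrary heuristics chosen for illustration.

```python
from collections import defaultdict

MIN_INSTRUCTIONS_CHARS = 40  # arbitrary thresholds chosen for illustration
MIN_DESCRIPTION_CHARS = 20


def lint_server(server: dict) -> list[str]:
    """Flag weak server instructions, vague tool names, thin descriptions, and duplicates."""
    problems = []
    instructions = server.get("server_instructions", "").strip()
    if len(instructions) < MIN_INSTRUCTIONS_CHARS:
        problems.append("server_instructions is missing or too short to guide search")

    by_description = defaultdict(list)
    for tool in server.get("tools", []):
        name = tool.get("name", "")
        description = tool.get("description", "").strip()
        if "_" not in name:
            problems.append(f"{name!r}: name does not say what it acts on (e.g. 'db_query')")
        if len(description) < MIN_DESCRIPTION_CHARS:
            problems.append(f"{name!r}: description is missing or too short")
        by_description[description.lower()].append(name)

    # Identical descriptions usually mean redundant tools that compete in search.
    for description, names in by_description.items():
        if description and len(names) > 1:
            problems.append(f"possible redundant tools with identical descriptions: {sorted(names)}")
    return problems


server = {
    "server_instructions": "Tools for querying the analytics warehouse. "
                           "Use for SQL questions about sales data.",
    "tools": [
        {"name": "run", "description": "Runs it."},
        {"name": "sales_query", "description": "Run a read-only SQL query on sales tables."},
        {"name": "sales_query_v2", "description": "Run a read-only SQL query on sales tables."},
    ],
}
for problem in lint_server(server):
    print(problem)
```

Running a check like this in CI keeps descriptions from drifting into the vague, overlapping state that makes search-based loading pick the wrong tool.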

What happens if Tool Search doesn't find a tool I need?

If the system doesn't identify a tool as relevant to your request, it won't be loaded into context. This is usually correct behavior, but if you need a tool in an unexpected context, you can be more explicit in your request. For example, instead of asking generically for help with a task, explicitly mention the tool name or the specific capability you need.

How does Tool Search impact costs?

The 85% token reduction saves approximately

Is Tool Search available for all Claude models?

MCP Tool Search is implemented in Claude Code, which uses Anthropic's latest models. Check your Claude Code version to make sure you have an update that includes Tool Search functionality.

Can I use Tool Search with custom MCP servers?

Yes. Any MCP server you build or deploy can take advantage of Tool Search. The key is providing clear server_instructions that help Claude Code understand what your tools do and when they should be used. The system automatically applies lazy loading to custom servers as well once the combined tool definitions exceed the context threshold.

What's the difference between Tool Search and previous context management approaches?

Previous approaches either preloaded everything (wasteful) or required developers to manually limit tools (restrictive). Tool Search is intelligent and automatic. It maintains full access to all tools while dynamically managing what's loaded based on actual need. This provides the best of both worlds: comprehensive tool access with efficient context usage.

How will AI agent infrastructure evolve beyond Tool Search?

Future developments might include smarter semantic search that understands relationships between tools, learning-based optimization that predicts which tools you'll need, more sophisticated caching for frequently used tool sets, and user-configurable context thresholds. The broader trend suggests that efficiency improvements will become increasingly important as AI agents scale to more complex real-world workloads.

Key Takeaways

- This isn't just an optimization—it's a glimpse into how AI infrastructure should work as agents become more sophisticated

- To understand the significance of MCP Tool Search, you need to understand the friction of the previous system

- It's a shift from brute-force architecture to something resembling modern software engineering best practices

- For developers maintaining MCP servers, this change has practical implications

- The server_instructions field in the MCP definition, previously a "nice to have" for documentation, is now critical
