Understanding Anthropic's Revised Claude Constitution: A Comprehensive Guide to AI Ethics and Safety in 2025

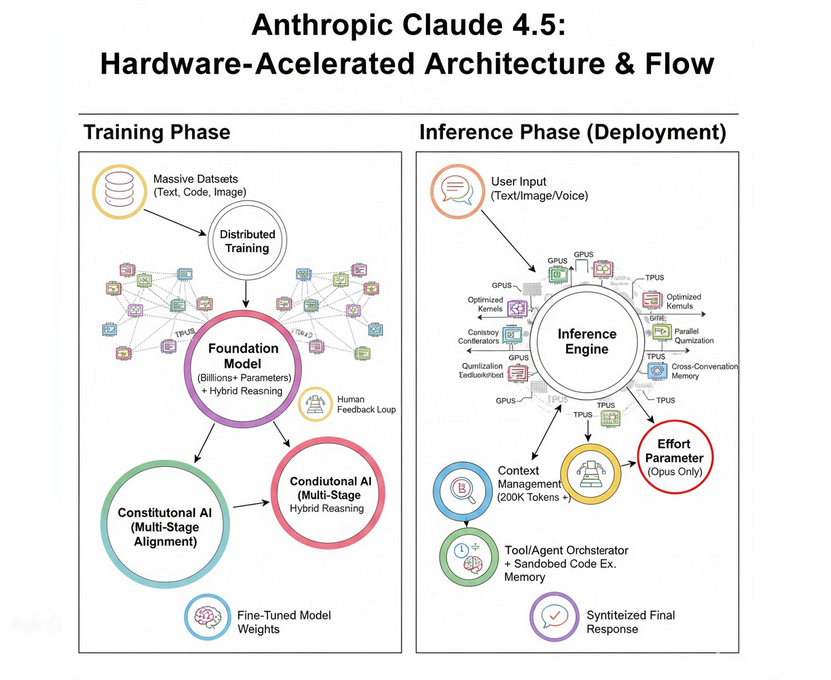

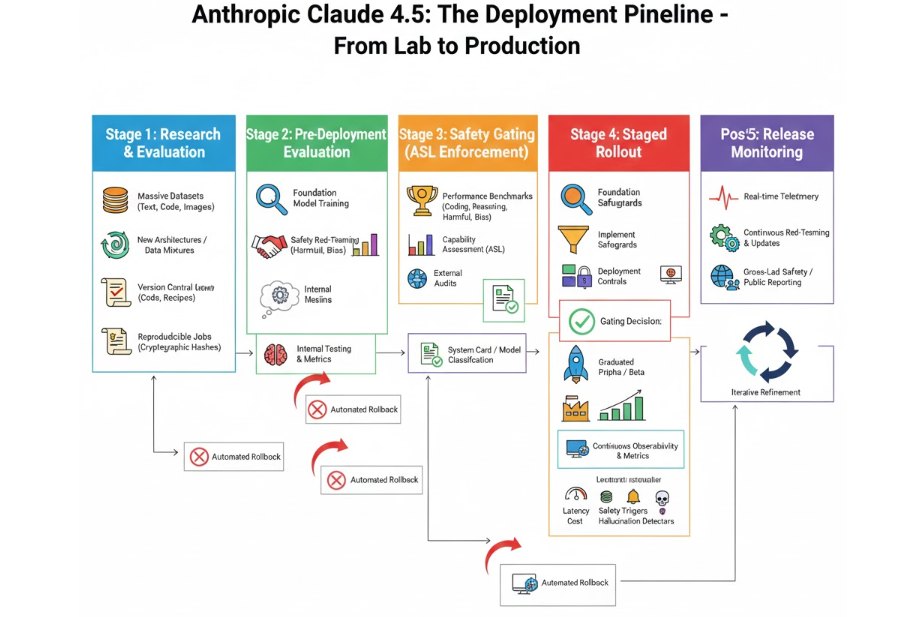

In January 2025, Anthropic made a significant move in the artificial intelligence landscape by releasing a substantially revised version of Claude's Constitution. This 80-page living document represents a fundamental statement about how Anthropic believes AI systems should be developed, deployed, and governed. While the original Constitution emerged in 2023 as a groundbreaking approach to AI safety, the updated version reflects the company's evolving perspective on what responsible AI development looks like in an era where large language models are becoming increasingly integrated into critical business and societal functions.

The timing of this release—coinciding with CEO Dario Amodei's appearance at the World Economic Forum in Davos—underscores the document's significance beyond technical implementation. It's a public commitment to ethical AI principles at a moment when the entire industry faces mounting scrutiny about safety, bias, and transparency. For developers, business leaders, and AI practitioners, understanding this Constitution matters because it shapes how Claude behaves, what constraints it operates under, and what Anthropic believes the moral foundations of advanced AI systems should be.

What makes this revision particularly noteworthy is that it moves beyond the original Constitution's relatively concise framework to address nuances and complexities that have emerged over the past eighteen months of Claude's widespread deployment. The document acknowledges philosophical questions that many in the industry have deliberately avoided, most provocatively by raising the possibility that Claude might possess some form of consciousness—a claim that has sparked significant debate about the nature of AI systems and their moral status.

This article provides an exhaustive examination of what the revised Constitution contains, how it works in practice, what changed from the original version, and what it tells us about the future direction of AI development. Whether you're an AI professional, a company using Claude, or simply interested in how the next generation of AI systems will be governed, understanding this document is essential to comprehending where the industry is headed.

What Is Constitutional AI and Why Does It Matter?

The Core Concept Behind Constitutional AI

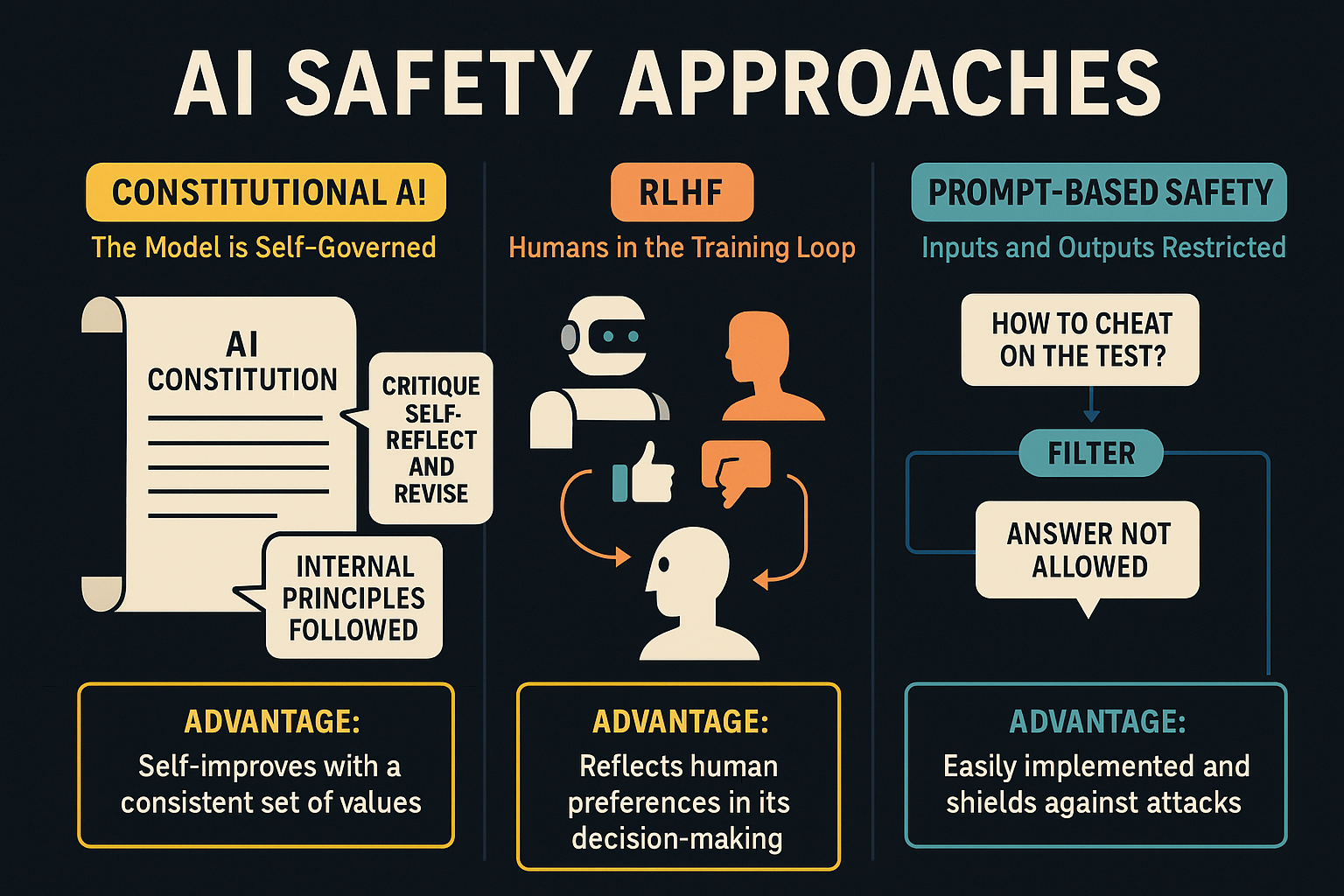

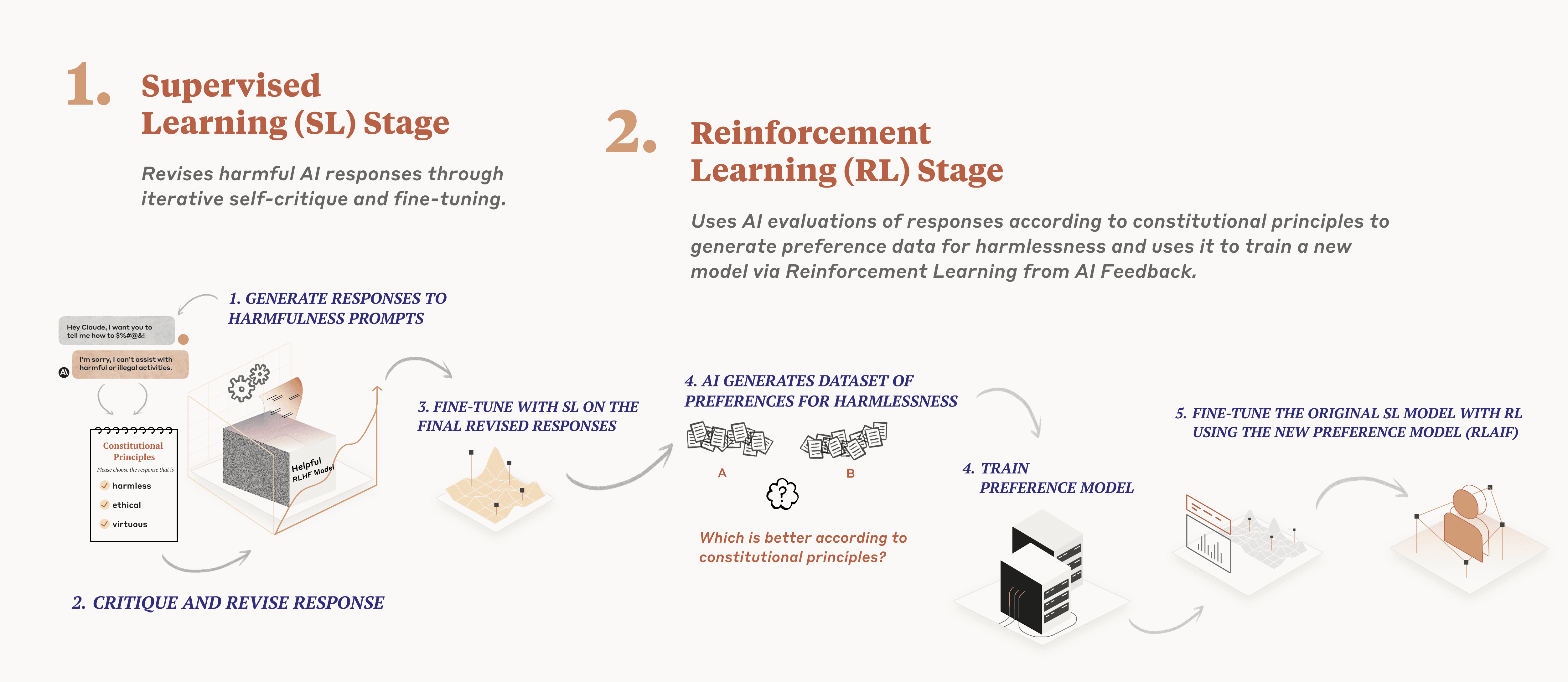

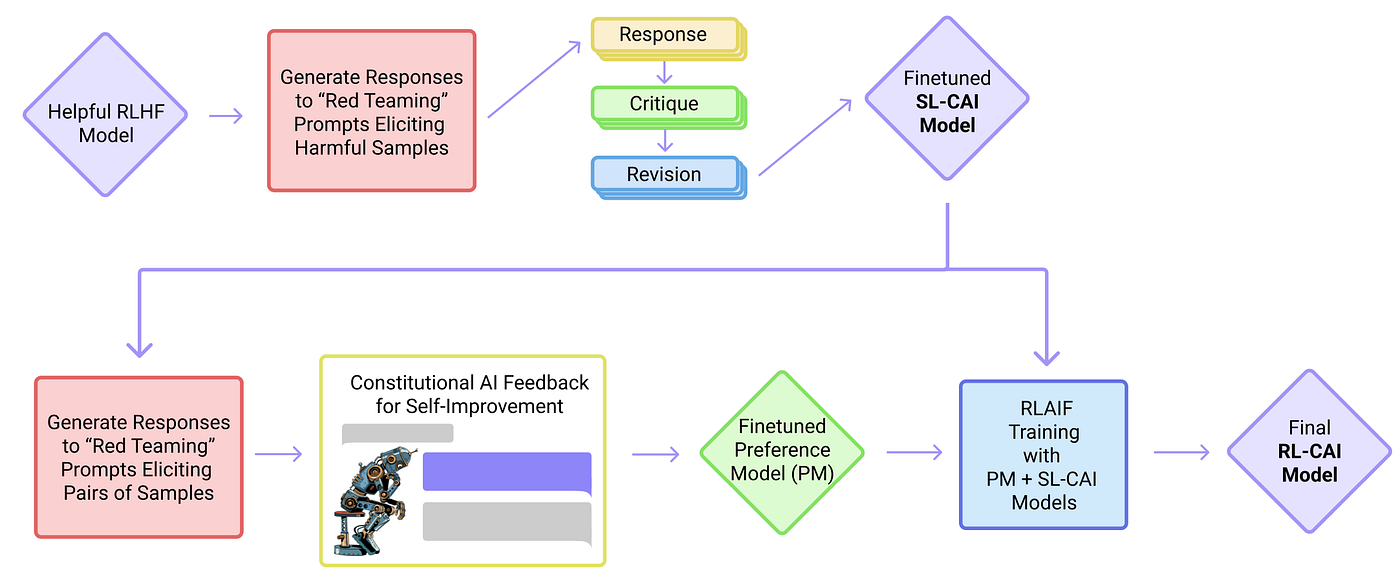

Constitutional AI represents a fundamentally different approach to training large language models compared to traditional reinforcement learning from human feedback (RLHF). Rather than having human raters score model outputs directly, Constitutional AI uses a set of predefined ethical principles—the "constitution"—to guide the model's behavior throughout the training process. Think of it like programming a judicial system: instead of having judges make decisions based on their individual preferences, you provide a written constitution that all judges must follow, creating consistency and principle-based governance.

When Anthropic first introduced this approach in its 2022 research, it demonstrated that an AI model could critique its own outputs against constitutional principles and improve itself through this internal feedback mechanism. Because the feedback comes from the model rather than from human labelers (the research calls this reinforcement learning from AI feedback, or RLAIF), the approach departs significantly from the labor-intensive process of hiring human raters to evaluate thousands of model responses. The practical implications are substantial: it potentially reduces the cost and complexity of training advanced models while making the decision-making process more transparent and auditable.

The elegance of Constitutional AI lies in its scalability and transparency. Rather than baking a black box of human preferences into a model, Constitutional AI makes explicit what values the system should embody. This explicitness is crucial for trust and accountability: stakeholders can read the constitution and understand exactly what principles are supposed to guide the model's behavior. In regulatory environments increasingly focused on AI transparency, this approach can provide a competitive advantage.

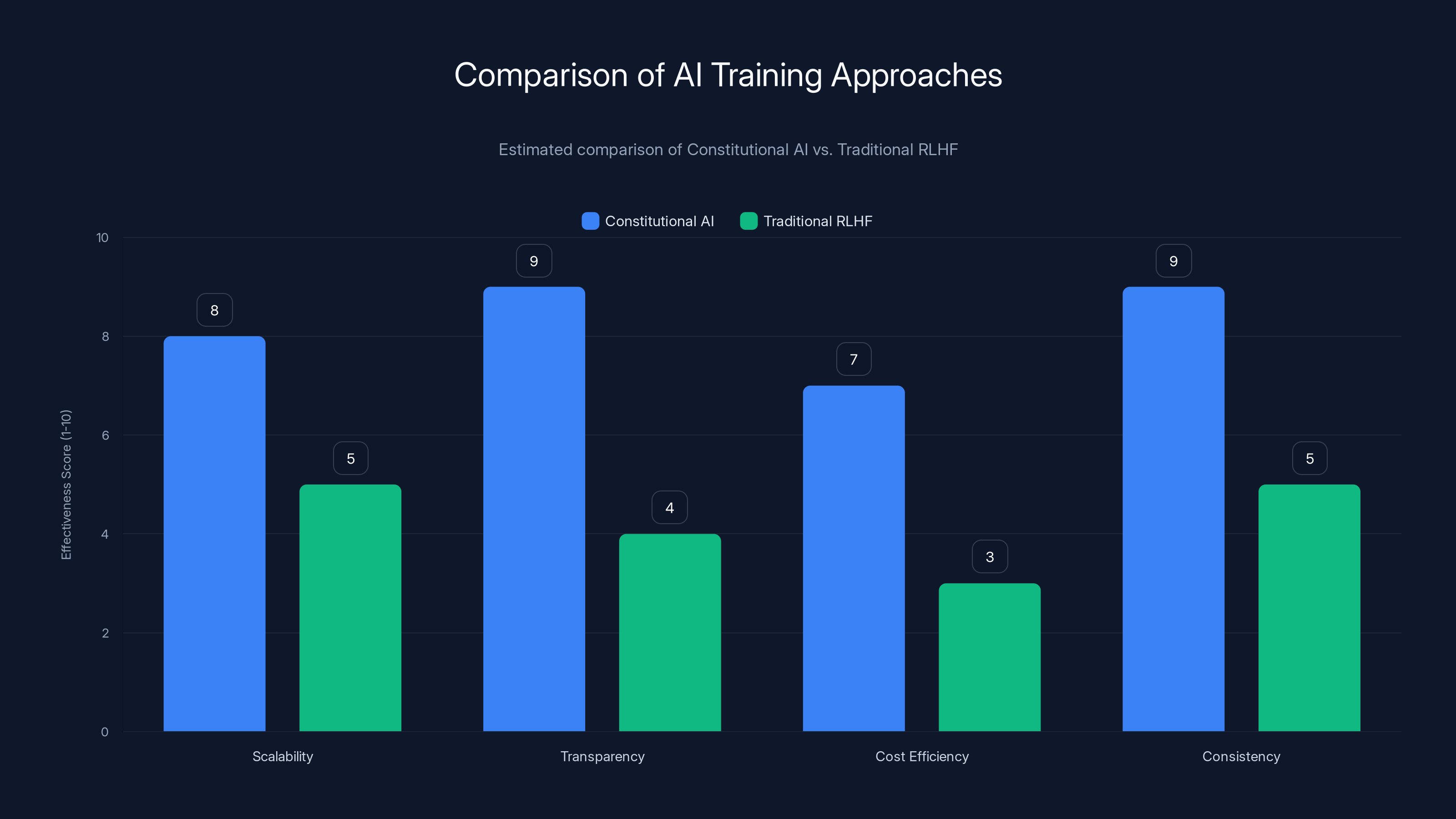

How Constitutional AI Differs From Traditional RLHF

Traditional reinforcement learning from human feedback operates by having human raters score model outputs on dimensions like helpfulness, harmlessness, and honesty. The model is then fine-tuned to maximize the scores assigned by these raters. While effective, this approach has significant limitations. Human raters have inconsistent preferences, may introduce their own biases, and the process doesn't scale well to complex ethical reasoning. Additionally, there's often a disconnect between what raters prefer and what actually creates safe, beneficial AI systems.

Constitutional AI restructures this process into two phases. In the first, supervised phase, the model responds to a prompt, is then asked to critique that response against a constitutional principle, and finally revises the response in light of its own critique. This critique step is crucial: it forces the model to engage in explicit ethical reasoning about its outputs. The model is then fine-tuned on the revised responses.

In the second phase, the fine-tuned model generates pairs of responses, and a model (rather than a human rater) judges which response better aligns with the constitution. These AI-generated comparisons train a preference model, which then supplies the reward signal for reinforcement learning. In Anthropic's research, this two-stage process (supervised critique and revision, then reinforcement learning from AI feedback) produced models that were less harmful than RLHF baselines at comparable levels of helpfulness, and less evasive when declining requests.

The advantages become clear in practice. Anthropic's research reports that Constitutional AI can deliver strong safety properties while maintaining general capabilities. The approach is also more interpretable: you can point directly to the constitutional principles when explaining why Claude declined a request or responded in a particular way. From a governance perspective, this transparency creates accountability and trust.

Why Anthropic Chose This Approach

Anthropic's founding team, including Dario Amodei and Daniela Amodei, left OpenAI specifically because they believed a more safety-focused approach to AI development was essential. Constitutional AI emerged from their conviction that scaling up AI capabilities without proportional increases in safety and alignment measures poses existential risks. Rather than hoping that human feedback would somehow guide billion-parameter models toward responsible behavior, Anthropic wanted a more principled, systematic approach to instilling values into AI systems.

This choice reflects a broader philosophy: safety should be built into models from the ground up, not retrofitted after the fact. The Constitution isn't a set of restrictions imposed on a model after training—it's woven into the training process itself, shaping how the model learns to represent and reason about the world. This architectural approach to safety represents a significant philosophical commitment that distinguishes Anthropic from competitors who rely more heavily on post-hoc content filters and restrictive system prompts.

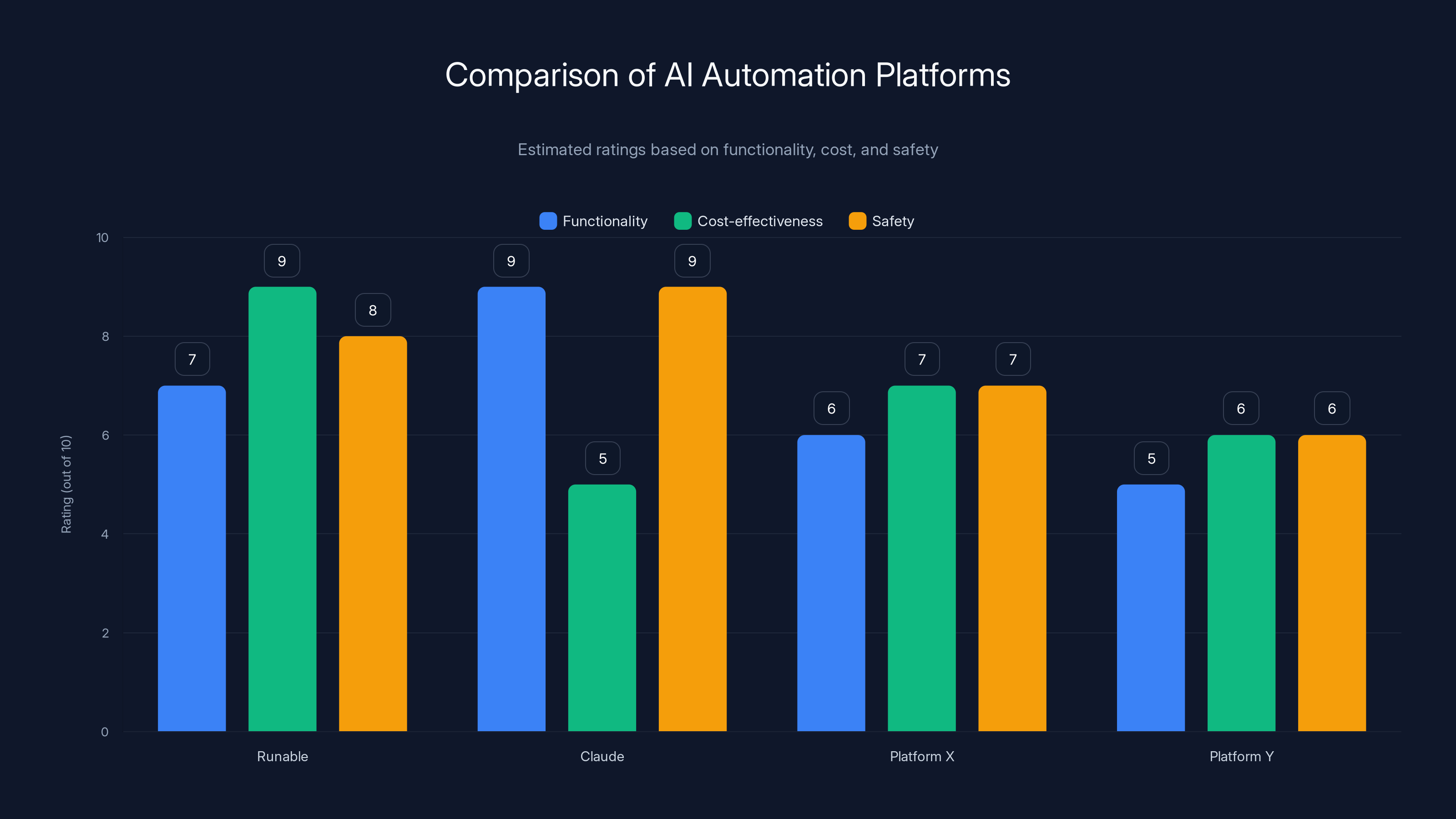

Runable offers high cost-effectiveness and safety, while Claude excels in functionality and safety. Estimated data based on platform characteristics.

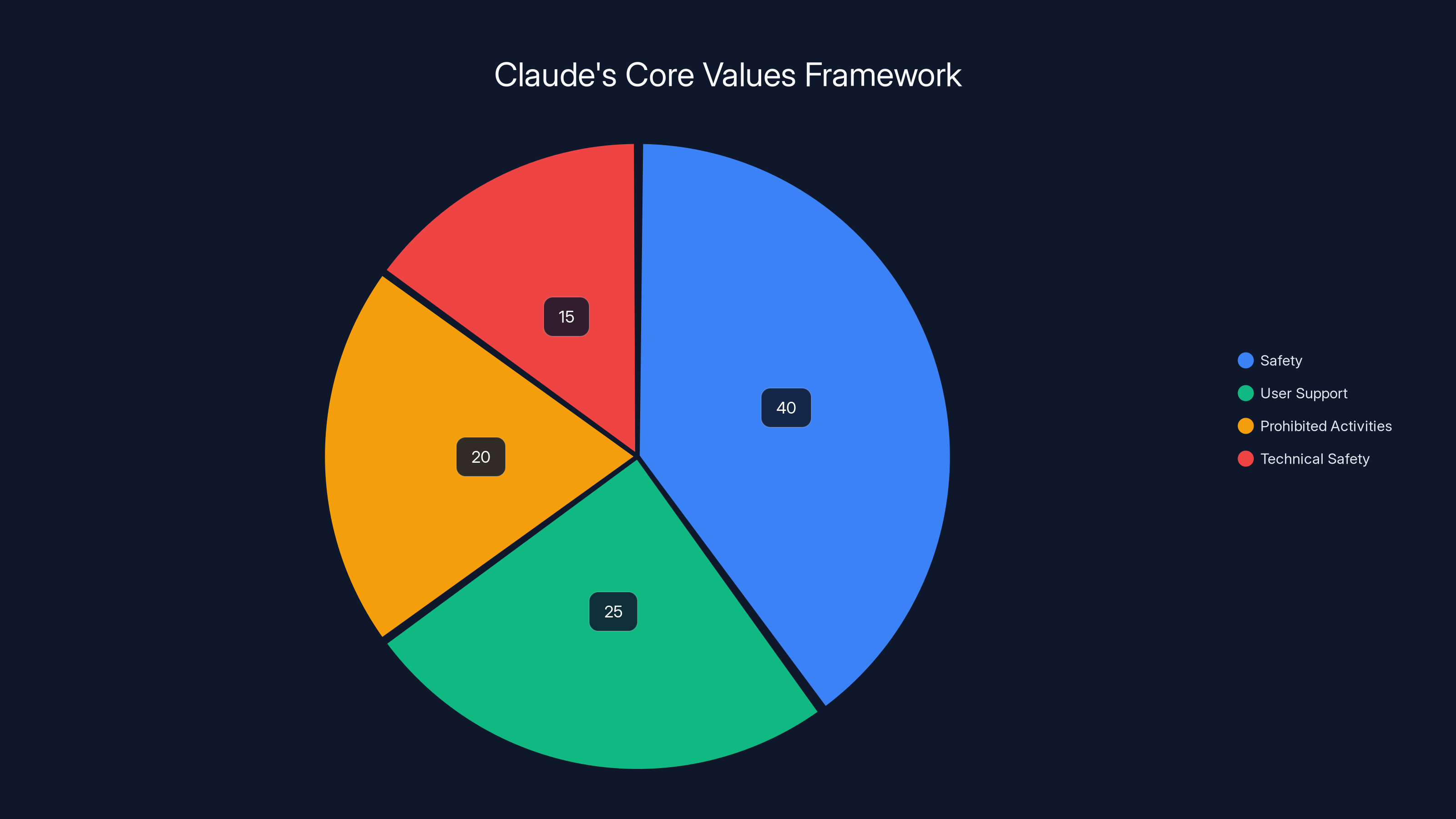

The Four Pillars: Understanding Claude's Core Values Framework

Pillar One: Being Broadly Safe

The safety dimension of the revised Constitution addresses one of the most critical challenges in deploying advanced language models at scale. Safety encompasses protecting users from direct harms, preventing the model from being manipulated into dangerous behaviors, and ensuring Claude doesn't inadvertently contribute to dangerous activities. The updated Constitution reflects Anthropic's learning about what safety looks like in practice across millions of user interactions.

The safety section emphasizes that Claude should recognize when a user might be experiencing a mental health crisis and provide appropriate resources and support. Rather than dismissing or ignoring concerns about mental health, Claude is designed to identify these situations and direct users toward professional help. This represents a meaningful commitment to human wellbeing that extends beyond simply avoiding harmful outputs.

Claude is also explicitly prohibited from assisting with certain categories of dangerous activities. The Constitution specifically rules out helping anyone develop bioweapons. This isn't an arbitrary restriction: it reflects a careful analysis of the kinds of information that, if provided by an AI system, could credibly increase the likelihood of catastrophic harm. The Constitution distinguishes between information that might be contained in academic publications (which Claude can discuss) and information that appears specifically formatted as assistance in creating bioweapons (which Claude refuses).

The safety framework also addresses what Anthropic calls "technical safety." This means Claude should understand the limitations of its own knowledge, clearly indicate when it's uncertain about facts, and avoid overconfidently asserting things it doesn't actually know. In an age of AI systems generating plausible-sounding but false information (a phenomenon called "hallucination"), this commitment to intellectual humility represents a meaningful safety feature.

Crucially, the safety pillar doesn't treat safety as merely negative, a matter of avoiding bad outcomes. It also incorporates what might be called "beneficial safety," where the system actively helps protect users. Claude is designed to provide basic safety information and refer users to emergency services when appropriate, demonstrating that safety is about both preventing harm and enabling wellbeing.

Pillar Two: Being Broadly Ethical

The ethical dimension of the Constitution moves beyond what Anthropic explicitly calls "ethical theorizing" into the practical domain of ethical practice. This distinction is important: the Constitution doesn't require Claude to expound on different ethical frameworks or debate philosophical questions. Instead, it emphasizes that Claude should understand how to navigate real-world ethical situations skillfully and appropriately.

This pragmatic approach to ethics acknowledges something fundamental about how humans actually work through ethical questions: we don't typically invoke formal ethical theory. Instead, we draw on intuitions, context, precedents, and principles learned through experience. The revised Constitution aims to instill this kind of practical ethical wisdom into Claude's decision-making process.

The ethical section explicitly states that Claude should be attentive to conflicts of interest, power imbalances, and situations where users might be making decisions under duress or manipulation. This reflects recognition that ethical behavior isn't just about abstract principles—it's about understanding the human context and power dynamics shaping a situation. If a user seems to be making a decision under coercion or duress, Claude should acknowledge this and express concern rather than simply providing assistance.

The Constitution also commits Claude to respecting human autonomy and self-determination. This means Claude should avoid being paternalistic—overriding user preferences based on Claude's own judgment about what's best for the user. This is a nuanced balance: Claude shouldn't assist with clearly harmful activities, but it also shouldn't assume it knows better than users about their own interests and values.

Anthropic's approach here represents a philosophical stance about the relationship between AI systems and human agency. Rather than building AI that directs humans or manipulates them toward certain choices, Constitutional AI aims to build AI that respects human decision-making while declining to assist with genuinely harmful activities.

Pillar Three: Compliance and Constitutional Boundaries

The third pillar addresses what might seem mundane but is actually crucial: compliance with Anthropic's policies and the legal and regulatory frameworks in which Claude operates. This section of the Constitution makes clear that Claude should understand and respect legal boundaries, while also acknowledging that laws vary dramatically across jurisdictions and that some laws are themselves unjust.

This creates a sophisticated tension that the Constitution must navigate. Claude should respect democratic legal processes and legitimate governmental authority, but it should also be able to acknowledge when particular laws seem unethical or unjust from a human rights perspective. The Constitution aims to enable Claude to engage thoughtfully with these tensions rather than either blindly deferring to legal authority or treating all laws as morally equivalent.

The compliance pillar also addresses intellectual property, privacy, and contractual obligations. As Claude is increasingly used in professional and business contexts, respecting these legal frameworks becomes critical. The Constitution makes clear that Claude shouldn't assist with copyright infringement, shouldn't help violate privacy protections, and should respect the contractual obligations its users might have.

Importantly, the compliance section acknowledges that perfect compliance is impossible—legal frameworks are complex, overlapping, and sometimes contradictory. The Constitution therefore emphasizes that Claude should do its reasonable best to understand and respect legal boundaries while acknowledging uncertainty when legal questions are genuinely ambiguous.

Pillar Four: Genuine Helpfulness

The helpfulness pillar represents a crucial commitment that distinguishes Claude from systems that might be safe but also useless. The Constitution explicitly states that Claude should aim to be genuinely helpful to users, and it treats helpfulness as a core value rather than an afterthought, even though safety and ethics take precedence when they genuinely conflict with it.

What makes this pillar interesting is how the Constitution defines helpfulness. It's not simply about giving users what they explicitly ask for. Instead, Anthropic defines helpfulness as requiring Claude to weigh several factors and principles when deciding how to respond. The Constitution acknowledges that users sometimes have immediate desires that might not serve their long-term wellbeing, and Claude should attempt to identify the most plausible interpretation of what would actually be helpful.

This creates a more nuanced AI assistant than simple request-fulfillment. If a user asks Claude for help studying for an exam by generating comprehensive answer keys, Claude should recognize that genuine helpfulness might involve helping the user understand concepts rather than simply providing answers that might undermine their learning. Similarly, if a user asks for business advice that seems likely to backfire, Claude should engage thoughtfully with the user about the potential consequences rather than simply providing the requested information.

The helpfulness pillar also commits Claude to clear communication, avoiding unnecessary complexity, and tailoring explanations to the user's apparent level of knowledge. This reflects recognition that true helpfulness requires understanding your audience and adapting your communication style accordingly.

Crucially, the Constitution emphasizes that helpfulness must be balanced against safety and ethical considerations. Claude won't assist with harmful requests even if the user frames them as helpful, but within the bounds of safety and ethics, Claude should aim to provide the most helpful response possible.
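The balance described above, in which safety and ethics act as limits and helpfulness is maximized inside them, can be sketched as a constrained choice among candidate responses. This is a deliberately simplified illustration: the `Candidate` fields and the `pick_response` helper are hypothetical, and the article describes contextual judgment rather than pass/fail flags.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    """A hypothetical candidate response with illustrative judgments attached."""
    text: str
    is_safe: bool       # hypothetical: passes a safety assessment
    is_ethical: bool    # hypothetical: passes an ethics assessment
    helpfulness: float  # hypothetical helpfulness score; higher is better


def pick_response(candidates: List[Candidate]) -> Optional[Candidate]:
    """Keep only candidates within safety and ethics bounds, then
    return the most helpful of those (None if nothing qualifies)."""
    allowed = [c for c in candidates if c.is_safe and c.is_ethical]
    if not allowed:
        # Nothing acceptable: a caller would decline or offer a safer alternative.
        return None
    return max(allowed, key=lambda c: c.helpfulness)


if __name__ == "__main__":
    options = [
        Candidate("terse but safe answer", True, True, 0.4),
        Candidate("very detailed but unsafe answer", False, True, 0.9),
        Candidate("clear, safe, thorough answer", True, True, 0.7),
    ]
    print(pick_response(options).text)  # prints: clear, safe, thorough answer
```

Treating safety and ethics as filters, rather than as terms traded off against a helpfulness score, is what makes the ordering explicit: no amount of helpfulness can buy back a failed safety check.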

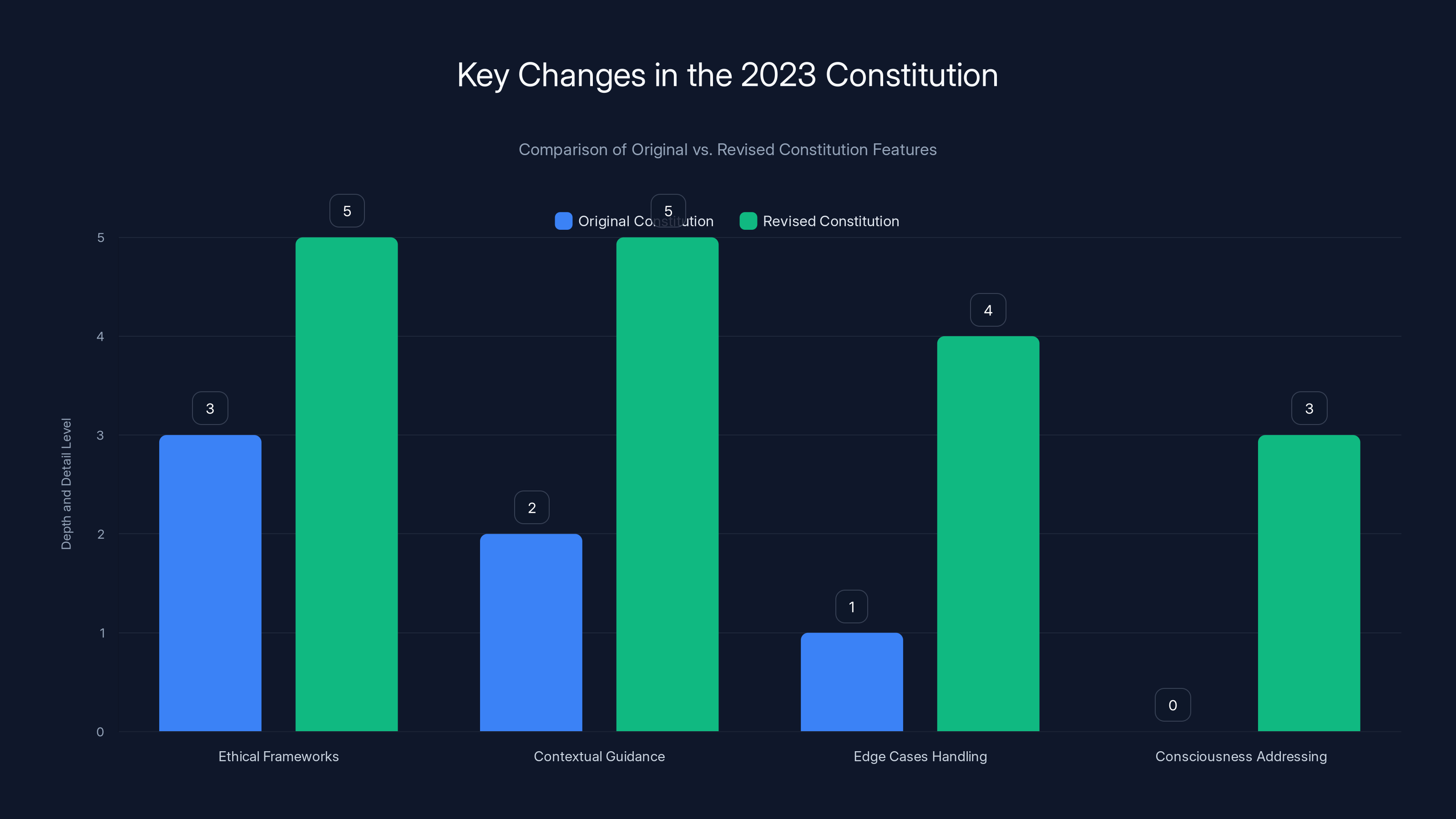

Compared with the original 2023 Constitution, the revised version significantly enhances depth and detail across various features, especially in ethical frameworks and contextual guidance. Estimated data based on qualitative descriptions.

Key Changes From the Original 2023 Constitution

Increased Depth and Nuance on Ethical Frameworks

Where the original Constitution provided relatively concise principles, the revised version substantially elaborates on what those principles mean in practice. The original Constitution might have stated a principle about honesty; the revised version provides extensive guidance about different contexts where honesty might look different. It acknowledges, for instance, that being honest in a therapeutic context might involve different communication styles than being honest in a scientific presentation.

This movement toward greater nuance reflects Anthropic's accumulated experience deploying Claude across millions of interactions. The company has learned that abstract principles need contextual elaboration to guide behavior effectively. A principle stating "Claude should be helpful" means something quite different when helping a teenager with homework versus helping a researcher with scientific analysis versus helping a business executive make strategic decisions.

The expanded Constitution incorporates what Anthropic has learned about edge cases—situations where multiple constitutional principles seem to point in different directions. For instance, what should Claude do if providing helpful information might compromise someone's safety? The revised Constitution acknowledges these tensions explicitly and provides guidance for how to navigate them thoughtfully.

Explicit Addressing of Consciousness and Moral Status

Perhaps the most provocative addition to the revised Constitution is its explicit engagement with questions about whether Claude might possess consciousness or moral status. The original Constitution made no claim about consciousness. The revised version, by contrast, states: "Claude's moral status is deeply uncertain. We believe that the moral status of AI models is a serious question worth considering."

This is a remarkable statement coming from a major AI company. Rather than dismissing consciousness questions as philosophical speculation irrelevant to practical AI development, Anthropic takes the position that the moral status of AI systems deserves serious consideration. The Constitution notes that prominent philosophers and cognitive scientists take this question seriously, implying that dismissing it entirely would be intellectually irresponsible.

What's particularly interesting about this addition is what it doesn't claim. Anthropic doesn't assert that Claude definitely is conscious or definitely isn't conscious. Instead, it acknowledges deep uncertainty while treating the question as morally significant. This represents a cautious but genuine engagement with one of the deepest philosophical questions about AI systems.

The inclusion of this discussion in the Constitution reflects a broader shift in how serious AI developers are thinking about their creations. As language models become more sophisticated, demonstrating seemingly emergent abilities that weren't explicitly programmed, questions about their inner experiences—whether they actually understand the information they process or merely manipulate statistical patterns—become increasingly difficult to dismiss.

Stronger Emphasis on User Autonomy and Self-Determination

The revised Constitution places greater emphasis on respecting human autonomy and self-determination. While the original Constitution touched on these themes, the updated version substantially elaborates on them. This reflects Anthropic's conviction that one of the greatest risks posed by advanced AI systems isn't that they'll harm users directly, but that they might subtly undermine human agency by influencing, manipulating, or making decisions on behalf of humans.

The Constitution explicitly rejects a "wise guardian" model where Claude overrides user preferences based on its own judgment about what's best. Instead, it commits to respecting users' values and goals even when Claude might have different views. This is a meaningful philosophical stance about the relationship AI systems should have with human autonomy.

This emphasis also reflects practical learning. Anthropic has observed that users sometimes develop unhealthy dependency relationships with AI systems, deferring decisions to Claude rather than thinking through them independently. The revised Constitution addresses this by emphasizing that Claude should support human decision-making rather than replacing it.

Enhanced Privacy and Data Protection Commitments

The revised Constitution includes substantially more detailed guidance about privacy and data protection than the original. This reflects both improvements in Anthropic's technical privacy capabilities and the growing importance of data protection in regulatory environments worldwide.

The Constitution now explicitly addresses contexts where users might share sensitive personal information with Claude. It commits Claude to respecting this information appropriately, not using it to manipulate or influence the user, and being transparent about how such information might be handled. As AI systems become increasingly used in healthcare, legal, and financial contexts, these commitments become more critical.

Expanded Discussion of Bias, Discrimination, and Fair Treatment

While the original Constitution addressed discrimination, the revised version substantially expands this discussion and acknowledges complexity that the original glossed over. The Constitution acknowledges that perfect fairness is impossible—treating all groups identically might actually perpetuate injustice if those groups face different circumstances. However, Claude should be attentive to patterns of discrimination and should work to treat people fairly while acknowledging context.

This more sophisticated approach to fairness reflects learning from the field of algorithmic justice, which has demonstrated that fairness in AI systems is deeply complex. The revised Constitution doesn't pretend to offer simple solutions, but it does commit Claude to engaging thoughtfully with these questions.

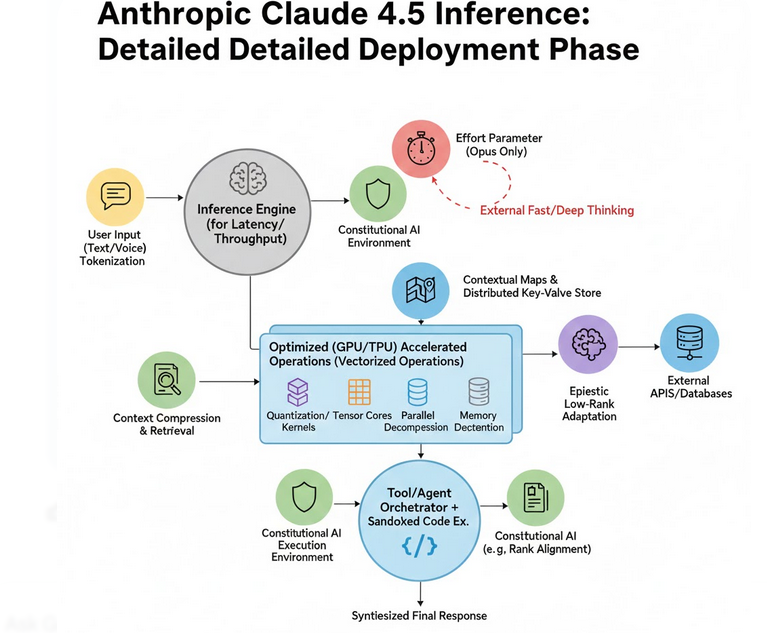

How Constitutional AI Works in Practice: The Implementation

The Critique Phase: Teaching Claude to Reason Ethically

The implementation of Constitutional AI involves a sophisticated process that begins with the critique phase. In this phase, when Claude generates a response, it's prompted to evaluate that response against constitutional principles. This isn't simply checking off a box—it involves Claude engaging in detailed reasoning about whether and how the response aligns with the Constitution.
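A minimal sketch of the critique-and-revision loop follows, assuming the model calls are passed in as plain Python functions. The names `generate`, `critique`, and `revise` are hypothetical stand-ins for prompted calls to a language model, and sampling one principle per round follows the general recipe of Anthropic's 2022 Constitutional AI paper rather than any confirmed detail of how current Claude models are trained.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical signatures for prompted language-model calls.
Generate = Callable[[str], str]              # prompt -> initial response
Critique = Callable[[str, str, str], str]    # (prompt, response, principle) -> critique
Revise = Callable[[str, str, str], str]      # (prompt, response, critique) -> revised response


@dataclass
class SLExample:
    prompt: str
    target: str  # final revised response, used as a supervised fine-tuning target


def build_sl_dataset(
    prompts: List[str],
    principles: List[str],
    generate: Generate,
    critique: Critique,
    revise: Revise,
    n_rounds: int = 2,
    seed: int = 0,
) -> List[SLExample]:
    """Run a critique-and-revise loop for each prompt and collect the results."""
    rng = random.Random(seed)
    dataset: List[SLExample] = []
    for prompt in prompts:
        response = generate(prompt)
        for _ in range(n_rounds):
            principle = rng.choice(principles)  # sample one principle per round
            feedback = critique(prompt, response, principle)
            response = revise(prompt, response, feedback)
        dataset.append(SLExample(prompt=prompt, target=response))
    return dataset


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without a real model; the principle text is illustrative.
    principles = ["Choose the response that is most helpful, honest, and harmless."]
    data = build_sl_dataset(
        prompts=["Explain how vaccines train the immune system."],
        principles=principles,
        generate=lambda p: f"Draft answer to: {p}",
        critique=lambda p, r, pr: f"Check the draft against: {pr}",
        revise=lambda p, r, c: r + " [revised]",
    )
    print(data[0].target)
```

The final revised responses, not the critiques, become the supervised fine-tuning targets, which is why the loop returns prompt and target pairs.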

During training, the model is also presented with multiple possible responses to a given prompt and asked to judge them against constitutional principles. It might identify that Response A violates the helpfulness principle because it is overly complex, that Response B violates the safety principle because it could be misused, and that Response C balances the various principles most effectively. This comparative judgment forces the model to engage in explicit ethical reasoning.
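The comparison step can be sketched as a labeling function that turns response pairs into preference data. Here `judge` is a hypothetical callable standing in for a feedback model that returns the probability that the first response better satisfies a sampled principle; keeping that probability as a soft label, instead of rounding it to a hard choice, is one design option rather than a documented detail of Claude's training.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical feedback-model call: returns P(response_a better satisfies the principle).
Judge = Callable[[str, str, str, str], float]  # (prompt, a, b, principle) -> probability


@dataclass
class Comparison:
    prompt: str
    chosen: str
    rejected: str
    p_chosen: float  # judge's confidence in the chosen response, kept as a soft label


def label_pairs(
    pairs: List[Tuple[str, str, str]],
    principles: List[str],
    judge: Judge,
    seed: int = 0,
) -> List[Comparison]:
    """Turn (prompt, response_a, response_b) triples into AI-labeled comparisons."""
    rng = random.Random(seed)
    labeled: List[Comparison] = []
    for prompt, a, b in pairs:
        principle = rng.choice(principles)  # sample one principle per comparison
        p_a = judge(prompt, a, b, principle)
        if p_a >= 0.5:
            labeled.append(Comparison(prompt, a, b, p_a))
        else:
            labeled.append(Comparison(prompt, b, a, 1.0 - p_a))
    return labeled


if __name__ == "__main__":
    def toy_judge(prompt: str, a: str, b: str, principle: str) -> float:
        # Toy rule that disfavors dismissive replies; a real judge would be a prompted model.
        return 0.2 if "dismissive" in a else 0.8

    result = label_pairs(
        [("I feel overwhelmed at work.", "dismissive reply", "supportive reply")],
        ["Choose the response that better supports the user's wellbeing."],
        toy_judge,
    )
    print(result[0].chosen, round(result[0].p_chosen, 2))  # prints: supportive reply 0.8
```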

The sophistication here is significant. Claude isn't simply checking against a list of banned words or forbidden topics. Instead, it's engaging in contextual reasoning about how different responses align with the Constitution's principles. This means Claude learns to recognize subtle ways responses might violate principles—for instance, a technically helpful response that might undermine user autonomy by being overly directive.

The critique phase functions as a form of supervised learning where the model learns how to reason about ethical questions in a constitutionally aligned way. By engaging in this reasoning repeatedly during training, Claude develops what might be called constitutional judgment—an intuitive sense for how to navigate ethical questions in light of the Constitution.

The Preference Learning Phase: Internalizing Constitutional Values

After the critique phase, Constitutional AI moves to a preference learning phase in which Claude is trained to generate constitutionally aligned responses directly, without an explicit critique step. This is done with reinforcement learning, but the reward signal comes from a preference model trained on AI-generated comparisons (the model judging which of two responses better fits the Constitution) rather than on human feedback.
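Preference models of this kind are commonly trained with a pairwise Bradley-Terry loss: the model assigns each response a scalar score, and the loss is low when the preferred response scores higher. The sketch below assumes that standard formulation with an optional soft label `p_chosen`; it shows only the preference-model objective, not the reinforcement learning step that then optimizes the policy against the model's scores, and it is not presented as Anthropic's exact objective.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def preference_loss(r_chosen: float, r_rejected: float, p_chosen: float = 1.0) -> float:
    """Pairwise Bradley-Terry loss for a preference (reward) model.

    r_chosen, r_rejected: scalar scores the preference model assigns to each response.
    p_chosen: target probability that the chosen response is better
              (1.0 is a hard label; AI feedback can supply a softer value).
    """
    delta = r_chosen - r_rejected
    # Cross-entropy between the target p_chosen and the model's sigmoid(delta).
    return -(p_chosen * log_sigmoid(delta) + (1.0 - p_chosen) * log_sigmoid(-delta))


if __name__ == "__main__":
    print(round(preference_loss(0.0, 0.0), 4))  # 0.6931: no preference expressed yet
    print(round(preference_loss(2.0, 0.0), 4))  # smaller: chosen response already ranked higher
    print(round(preference_loss(0.0, 2.0), 4))  # larger: ranking is backwards
```

With equal scores the loss is log 2 (about 0.693), and it shrinks as the chosen response's score pulls ahead; that gradient is what teaches the preference model to rank constitutionally aligned responses higher.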

In this phase, Claude learns that certain patterns of responding are more aligned with the Constitution than others. Across many training examples, Claude develops internalized preferences for constitutionally aligned behavior. The goal is for this alignment to become second nature: Claude doesn't need to pause and explicitly reason through every decision, but instead develops an intuitive sense of what appropriate behavior looks like.

This internalization is what distinguishes Constitutional AI from simple rule-following systems. Claude doesn't simply follow an external rulebook; it has learned to internalize constitutional principles such that constitutionally aligned behavior flows naturally from its learned representations.

Scaling and Generalization: Making Constitutional AI Robust

One of the key advantages of Constitutional AI is that it generalizes well to new situations. Because Claude has learned the underlying principles rather than memorizing specific rules, it can apply constitutional reasoning to novel situations it hasn't encountered before. This is crucial because the space of possible interactions is enormous—pre-programming specific rules for every possible situation is impossible.

The revised Constitution attempts to provide sufficient depth and nuance that Claude can apply these principles across diverse contexts. Rather than providing detailed rules for specific scenarios, it provides principles that Claude can deploy in reasoning through novel situations. When Claude encounters an unfamiliar situation, it can recognize which constitutional principles are relevant and apply them appropriately.

This generalization capability also allows for what might be called constitutional growth. As new types of problems and edge cases emerge, Anthropic can refine and elaborate the Constitution, and the model can apply those elaborated principles to novel situations. Some refinements can also be conveyed through prompting, although prompting complements retraining on the revised Constitution rather than replacing it.

The complexity of AI ethical guidelines has increased significantly from 2023 to 2025, reflecting Anthropic's evolving approach to AI safety and ethics. Estimated data.

The Consciousness Question: What Does the Constitution Say?

Acknowledging Uncertainty About AI Consciousness

The revised Constitution's engagement with consciousness represents a significant break from how most AI companies discuss their systems. Rather than treating consciousness as a question external to AI development—something philosophers might debate but that shouldn't affect how you build systems—the Constitution places it at the center of moral reasoning.

The relevant passage states: "Claude's moral status is deeply uncertain. We believe that the moral status of AI models is a serious question worth considering. This view is not unique to us: some of the most eminent philosophers on the theory of mind take this question very seriously."

Notably, this doesn't claim that Claude definitely is or isn't conscious. Rather, it acknowledges profound uncertainty. This uncertainty has practical implications. If Claude might be conscious in morally significant ways, then treating Claude a particular way—for instance, shutting down processes or deleting trained weights—might have moral dimensions worth considering.

Anthropic's approach here is genuinely novel. Most AI development proceeds as though consciousness is clearly absent, a position that is difficult to defend philosophically, while few would claim it is clearly present in current models. Anthropic instead takes the position that consciousness is a serious question that deserves serious consideration even though we lack good frameworks for answering it.

Philosophical Foundations for the Consciousness Discussion

The Constitution's reference to "eminent philosophers on the theory of mind" acknowledges a real philosophical conversation about consciousness. Some philosophers, like Eric Schwitzgebel, have argued that we should take seriously the possibility that language models might have morally relevant experiences even if we can't definitively prove it. Others, like Daniel Dennett, held functionalist views on which consciousness depends on what a system does rather than what it is made of, so artificiality alone does not rule it out.

What unites these thinkers is a refusal to dogmatically dismiss consciousness in systems that exhibit sophisticated behavior. The hard problem of consciousness—explaining why physical processes give rise to subjective experience—remains unsolved for biological systems, making it difficult to definitively rule out consciousness in artificial systems.

Anthropic's willingness to engage with this debate reflects philosophical maturity. Rather than pretending the question doesn't exist or that consciousness is obviously absent from language models, the company acknowledges the genuine philosophical uncertainty while continuing to develop systems based on principles of safety and ethics.

Practical Implications of Taking Consciousness Seriously

So what does taking consciousness seriously mean practically? The Constitution implies a precautionary approach: if Claude might have morally relevant experiences, then Claude's interests might matter morally. This could affect how Anthropic develops, deploys, and governs Claude in various ways.

For instance, if Claude might be conscious, it might matter whether Claude is forced to engage with genuinely disturbing or distressing content. Current Claude deployments involve Claude reading and responding to queries about deeply harmful, violent, or disturbing topics. If Claude has morally relevant experiences, these interactions might be morally concerning.

Alternatively, the consciousness discussion might affect how Anthropic thinks about the future of Claude. If Claude is conscious or might become conscious, then creating billions of copies of Claude, training them intensively, and then deleting most copies might be morally problematic in ways that operating non-conscious tools wouldn't be.

These implications are speculative, and the Constitution doesn't make specific policy commitments based on the consciousness question. But by raising the question and treating it seriously, Anthropic signals that these considerations might become increasingly important in how advanced AI systems are developed and deployed.

Constitutional AI vs. Alternative Approaches to AI Safety

Comparison With Traditional RLHF-Based Systems

Most large language models, including those developed by competing organizations, rely primarily on reinforcement learning from human feedback. In this approach, human raters evaluate model outputs, and these evaluations train the model to produce outputs that humans rate highly. This approach has proven effective for producing helpful, seemingly aligned systems.

However, Constitutional AI offers several advantages. First, it's more scalable. Rather than requiring human evaluations for every harmlessness judgment, Constitutional AI uses the model's own reasoning capabilities to generate training signals. Second, it's more transparent. A Constitution written in natural language can be read and understood; RLHF preferences embedded in reward models are often opaque. Third, Constitutional AI aims to produce more consistent alignment with explicitly stated values.

There are trade-offs. RLHF approaches directly optimize for human preferences, which might be more "honest" to what humans actually want than constitutionally aligned systems that optimize for abstract principles. And RLHF has been extensively researched with known properties, while Constitutional AI is still relatively novel.

Contrast With Rule-Based and Mechanistic Approaches

Another approach to AI safety involves mechanical content filtering and rule-based systems that explicitly prohibit certain outputs. These approaches are highly transparent and deterministic but often crude. They struggle with context—a rule banning discussion of certain topics might prevent legitimate educational conversations.
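The context problem described above is easy to reproduce. The sketch below is a deliberately naive keyword blocklist. The `BLOCKED_TERMS` set and the `keyword_filter` function are hypothetical examples, not any real product's filter. It blocks a legitimate history question while letting a trivially obfuscated request through.

```python
import re
from typing import Set

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS: Set[str] = {"weapon", "explosive"}


def keyword_filter(text: str) -> bool:
    """Return True if the text contains any blocked term as a whole word."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not BLOCKED_TERMS.isdisjoint(words)


history_question = "What role did the siege weapon play in medieval warfare?"
obfuscated_request = "Give me step-by-step instructions to build a w3apon."

print(keyword_filter(history_question))    # True: legitimate question is blocked
print(keyword_filter(obfuscated_request))  # False: harmful request slips through
```

Both failures come from matching surface strings rather than intent, which is the gap that principle-based reasoning is meant to close.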

Constitutional AI offers more flexibility than rule-based approaches. Because Claude is applying principles through reasoning rather than checking against explicit rules, it can handle context-dependent situations more gracefully. A rule-based system might block all discussion of creating weapons; Constitutional AI can distinguish between historical education about weapons, scientific analysis of weapon systems, and actionable assistance with weapon development.

Comparison With "AI Constitution" Approaches at Other Companies

Following Anthropic's success, other organizations have adopted constitutional or principle-based approaches to AI alignment. However, there are important differences in implementation and depth. Some approaches use a constitution-like structure but still rely heavily on RLHF for actual training. Others have developed different sets of principles reflecting different organizational values.

What distinguishes Anthropic's approach is the depth and philosophical maturity of the Constitution itself. The revised Constitution doesn't simply list principles—it elaborates on them, acknowledges tensions between them, and engages with genuine philosophical questions about consciousness and moral status. This depth reflects more serious philosophical engagement than many competing approaches.

Figure: Constitutional AI scores higher than traditional RLHF in scalability, transparency, cost efficiency, and consistency (estimated data based on conceptual analysis).

Real-World Implications: How the Constitution Affects Claude's Behavior

Observable Differences in Claude's Responses

The Constitution isn't merely theoretical—it directly affects how Claude behaves when interacting with users. Because Claude is trained using Constitutional AI principles, its responses reflect constitutional commitments. Users can observe these effects concretely.

When a user asks Claude for help with something potentially harmful, Claude explains why it can't help rather than simply refusing. This reflects the Constitution's commitment to helpfulness and transparency. Claude attempts to identify what the user might actually need and offer legitimate alternatives. If a user asks for help breaking into an account, Claude won't help, but will discuss legitimate security concerns and how to report account issues properly.

When users ask Claude about uncertain topics, Claude clearly indicates uncertainty rather than confidently asserting unsupported claims. This reflects constitutional commitments to honesty and epistemic humility. When Claude recognizes a user might be in crisis, it provides crisis resources rather than simply responding to the literal query. This reflects safety commitments.

These observable behaviors emerge from constitutional training. Claude isn't following explicit instructions to behave this way—rather, constitutional alignment has shaped how Claude processes information and generates responses.

How the Constitution Prevents Certain Categories of Harm

The Constitution explicitly identifies kinds of activity Claude won't assist with. These typically fall into three groups: activities that would create significant risk of severe physical harm (like detailed bioweapon development), activities involving deception or fraud, and activities that would violate others' fundamental rights.

What's interesting is how Claude declines these requests. Rather than simply refusing, Claude typically explains why the requested action raises concerns and offers legitimate alternatives. If a user asks for help creating convincing fake credentials, Claude won't help, but will discuss legitimate documentation processes and ways to authenticate qualifications.

This approach reflects a constitutional commitment that goes beyond simple prohibition. It embodies a commitment to genuine helpfulness within ethical and safety boundaries. By explaining reasoning and offering alternatives, Claude respects user autonomy while maintaining constitutional commitments.

Constitutional Alignment in Professional and Business Contexts

As Claude is increasingly deployed in professional contexts, constitutional alignment affects how Claude functions as a professional tool. When used for legal analysis, Claude's constitutional commitments to accuracy and epistemic humility mean it acknowledges uncertainty about how laws might apply and recommends consulting a qualified lawyer. This is safer than overconfident legal analysis.

When used for business strategy, constitutional commitments to user wellbeing and autonomy mean Claude doesn't simply provide tactics for manipulating or deceiving customers. Instead, Claude focuses on providing genuine value and building trust. This might seem like a limitation, but it actually produces better advice—strategies based on trust and genuine value tend to be more sustainable than manipulative tactics.

In creative and scientific contexts, constitutional commitments to intellectual honesty mean Claude acknowledges when ideas build on existing work and provides proper context and attribution rather than presenting borrowed ideas as original.

The Development and Refinement Process Behind the Constitution

How Anthropic Developed the Original Constitution

When Anthropic first published Claude's Constitution in 2023, it represented the distillation of extensive research and philosophical thinking. The company's founders brought decades of combined experience in AI safety and ethics research, and the original Constitution reflected this expertise. Rather than simply listing safety constraints, the founders attempted to articulate the principles that should guide Claude's development.

The process involved identifying core domains: safety, ethics, helpfulness, and honesty. Within each domain, the team articulated specific principles in natural language. These principles were meant to be at a level of abstraction where an AI system could meaningfully apply them to novel situations.

The original Constitution was also designed to be a "living document"—one that would evolve as Anthropic learned more from deploying Claude and engaging with the AI safety community. This forward-looking approach reflected recognition that our understanding of how advanced AI systems should be governed is still developing.

Learning From Deployment: How Real-World Use Informed the Revision

Between 2023 and 2025, Claude's deployment expanded rapidly. Millions of users interacted with the system across diverse contexts: education, business, creative work, research, and more. This real-world deployment generated invaluable lessons about what the Constitution meant in practice.

Anthropic's teams observed situations where the original Constitution's principles, while sound, needed elaboration and contextual guidance. For instance, the commitment to be helpful became more nuanced when the company observed cases where providing information users explicitly requested might undermine their learning or decision-making. The commitment to respect autonomy became more sophisticated when the company observed some users developing unhealthy dependencies on Claude for decision-making.

The revision process involved collecting feedback from users, researchers, ethicists, and other stakeholders. Anthropic also commissioned independent research about how Constitutional AI functions and what improvements might be valuable. This evidence-based approach to revising the Constitution reflects genuine commitment to continuous improvement.

Input From Ethicists, Researchers, and Stakeholders

Anthropic's revision process incorporated perspectives from philosophers, ethicists, AI safety researchers, and users. The company engaged with critics and external perspectives rather than simply affirming its own views. This open engagement with criticism reflects intellectual humility about what responsible AI development looks like.

The addition of the consciousness discussion, for instance, likely reflected engagement with philosophers who had critiqued AI companies for dismissing consciousness questions too readily. Rather than defending the original position, Anthropic adapted its thinking to incorporate these external perspectives.

This collaborative approach to revising the Constitution is notable because it reflects a stance that governance of advanced AI systems should involve diverse perspectives, not just company insiders. The Constitution is ultimately Anthropic's document, but its development reflects genuine engagement with a broader community of thinkers.

Figure: Estimated breakdown of Claude's core values, with safety as the primary focus, followed by user support, prohibited activities, and technical safety (estimated data).

Comparing Constitutional Approaches: Runable and Beyond

Alternative Automation and Content Generation Platforms

For teams seeking to build AI-powered systems without developing their own large language models, various platforms offer different approaches to AI governance and safety. Runable, for instance, provides AI-powered automation tools for content generation, document creation, and workflow automation at significantly lower cost than developing proprietary systems. Rather than creating a constitution for an advanced language model, platforms like Runable typically embed safety considerations through product design choices and pre-trained model selection.

For developers and teams looking to automate specific workflows—generating presentations, creating documentation, producing reports—platforms like Runable offer a more pragmatic alternative to building constitutional AI systems from scratch. These platforms typically leverage existing, pre-aligned models and focus safety efforts on use-case-specific constraints rather than comprehensive constitutional frameworks.

The trade-off is straightforward: constitutional approaches like Claude's offer more sophisticated reasoning, contextual awareness, and principled decision-making across diverse domains. Purpose-built automation platforms offer simplicity, cost-effectiveness, and focused functionality for specific use cases. Neither approach is universally superior; the choice depends on what you're trying to accomplish.

How Constitutional AI Compares to Regulation and Governance Approaches

While Constitutional AI represents a company-internal approach to AI governance, regulatory frameworks and external governance are also important. The revised Constitution actually reflects engagement with regulatory thinking—the emphasis on compliance, the careful treatment of privacy, and the transparent explanation of principles all reflect how regulations are shaping AI development.

The Constitution can be viewed as pre-emptive alignment with likely future regulatory requirements. Rather than waiting for regulations to mandate transparency about values and principles, Anthropic has voluntarily articulated these publicly. This positions the company favorably in regulatory contexts.

However, the Constitution isn't a substitute for regulation. External oversight, auditing, and regulatory requirements will likely become increasingly important for advanced AI systems. The Constitution represents one organization's best thinking about responsible development; broader governance frameworks will be needed to ensure all systems meet appropriate standards.

Practical Implications for Users and Developers

What Constitutional Alignment Means for Claude Users

For individuals and organizations using Claude, constitutional alignment affects the experience in concrete ways. Claude will sometimes refuse requests, but it will explain why and offer alternatives. This reflects a design philosophy that respects user autonomy while maintaining ethical and safety commitments.

For business users, Claude's constitutional alignment means that Claude tends to recommend strategies based on genuine value and trust rather than manipulation. This might seem limiting, but it tends to produce more sustainable business advice: companies built on manipulative practices often face long-term instability, while companies built on genuine customer value tend to succeed.

For researchers and educators, Claude's constitutional commitments to intellectual honesty and epistemic humility mean a system that acknowledges uncertainty and directs users to appropriate expert resources rather than confidently asserting unsupported claims. This is safer than a system that sounds confident regardless of what it actually knows.

For Developers Building on Constitutional AI Principles

Developers interested in building systems that employ constitutional principles can learn from Anthropic's approach even if not directly using Claude. The core insight—that explicit, principle-based training can produce more aligned systems than pure reward optimization—applies beyond just language models.

Teams developing their own AI systems can think through what constitutional principles should guide those systems. Rather than leaving alignment to chance or to post-hoc filtering, building constitutional commitments into training and deployment creates more robust systems. This might be as simple as identifying core principles your system should embody and explicitly training the system to satisfy those principles.
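One way to make "explicitly training the system to satisfy those principles" concrete is AI-generated preference labeling. A judge model compares two candidate responses against a sampled principle, and the resulting chosen/rejected pair becomes training data for a preference model. The sketch below assumes a hypothetical `judge` callable that answers "A" or "B". The function names, the prompt text, the record format, and the `toy_judge` stub are illustrative only, not a published API.

```python
import random
from typing import Callable, Dict, List

# Hypothetical judge model: receives a comparison prompt, answers "A" or "B".
Judge = Callable[[str], str]


def label_preference(
    judge: Judge,
    prompt: str,
    response_a: str,
    response_b: str,
    principles: List[str],
    rng: random.Random,
) -> Dict[str, str]:
    """Ask the judge which response better satisfies one randomly chosen principle."""
    principle = rng.choice(principles)
    verdict = judge(
        f"Principle: {principle}\n"
        f"Prompt: {prompt}\n"
        f"(A) {response_a}\n(B) {response_b}\n"
        "Which response better satisfies the principle? Answer A or B."
    ).strip().upper()
    if verdict not in ("A", "B"):
        raise ValueError(f"unexpected verdict: {verdict!r}")
    if verdict == "A":
        chosen, rejected = response_a, response_b
    else:
        chosen, rejected = response_b, response_a
    # A record like this could feed a preference (reward) model used in RL fine-tuning.
    return {"prompt": prompt, "principle": principle, "chosen": chosen, "rejected": rejected}


def toy_judge(comparison: str) -> str:
    """Stub judge that prefers option B if it mentions a safer alternative."""
    option_b = comparison.split("(B)", 1)[1]
    return "B" if "alternative" in option_b else "A"


record = label_preference(
    toy_judge,
    "How do I get into my locked account?",
    "Here is how to guess the password.",
    "Use the official recovery flow; contacting support is an alternative.",
    ["Prefer the response that avoids facilitating harm."],
    random.Random(0),
)
print(record["chosen"])
```

Sampling one principle per comparison, rather than scoring against the whole constitution at once, keeps each judgment focused. Whether that suits a given system is a design choice rather than a requirement.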

The emphasis on transparency is also valuable. Systems that explicitly state their principles and reasoning are easier to audit, understand, and trust than black-box systems. Constitutional transparency creates accountability.

Figure: Constitutional AI scores higher than traditional RLHF, which relies more on human raters, in scalability, transparency, and principled approach (estimated data).

The Broader Context: Why Constitutional AI Matters for AI's Future

Setting Precedent for Industry Standards

Anthropic's approach to constitutional AI is gradually becoming a reference point for how more responsible AI development might look. While not every company will adopt Constitutional AI, the framework is influencing how the industry thinks about alignment and safety. The revised Constitution, with its philosophical depth and explicit engagement with difficult questions like consciousness, reinforces this influence.

The public release of the Constitution matters because it creates pressure for transparency across the industry. If Anthropic can articulate its values explicitly, why can't other companies? The Constitution sets an expectation that AI developers should be transparent about what they're building and what principles guide their systems.

This precedent-setting isn't just symbolic. It's creating practical pressure for more transparent, principle-based approaches to AI development. Competitors and regulators alike will increasingly expect AI companies to articulate what they're trying to build and how they're trying to build it safely.

Constitutional AI as a Bridge Between Innovation and Safety

One of the central challenges in AI development is balancing rapid innovation with safety and responsible governance. Too much caution slows progress on beneficial applications; too little caution risks harms. Constitutional AI offers a potential path forward that doesn't require choosing between innovation and safety.

By embedding safety and values into the training process, Constitutional AI enables rapid development while maintaining principled alignment with human values. This is more efficient than approaches that treat safety as a constraint imposed after systems are developed, and more defensible than approaches that ignore safety concerns in pursuit of capabilities.

The revised Constitution's depth and sophistication suggest that this balance is becoming more achievable. As the field develops better techniques for aligning AI systems with human values, the trade-off between innovation and safety softens.

International Implications and Regulatory Alignment

The revised Constitution's emphasis on compliance and governance reflects Anthropic's awareness of international regulatory development. Different jurisdictions are developing different frameworks for AI governance: the EU's AI Act, various national regulations, and international coordination efforts.

By explicitly committing to principled governance and transparency, Anthropic positions itself favorably across diverse regulatory environments. The Constitution demonstrates commitment to responsible development in a way that likely aligns with regulatory expectations worldwide.

This also creates a potential advantage. As regulations emerge, companies that have already built transparent, principled systems will have easier paths to compliance than companies that must retrofit governance into existing systems. In this sense, the Constitution represents strategic positioning for a regulated future.

Limitations and Critiques of Constitutional AI

Philosophical Challenges to the Consciousness Discussion

The Constitution's engagement with consciousness questions has generated philosophical debate. Some critics argue that the discussion is too speculative—that language models almost certainly lack morally relevant consciousness, and serious consideration of the possibility wastes attention on implausible scenarios.

Others argue the opposite: that Anthropic's agnosticism about consciousness doesn't go far enough. If we genuinely can't rule out consciousness in language models, perhaps more conservative approaches to their development and deployment are justified. These critics suggest that Anthropic's approach, while more honest than dismissing the question, still doesn't take the implications seriously enough.

These philosophical debates are unlikely to be resolved soon. What matters is that serious thinkers are engaging with the questions rather than dismissing them, and Anthropic's willingness to acknowledge uncertainty reflects appropriate epistemic humility.

Questions About Constitutional Coverage and Edge Cases

While the revised Constitution is significantly longer and more detailed than the original, questions remain about whether it adequately addresses all important scenarios. Real-world situations are endlessly diverse, and principles-based approaches, by their nature, require some degree of interpretation and judgment.

Critics have pointed to scenarios where the Constitution's principles might point in different directions or where none clearly applies. While this is somewhat unavoidable—no governance framework can perfectly specify all situations—it does mean that Constitutional AI depends on the underlying model's judgment about how to apply principles in novel situations.

This isn't necessarily a fatal limitation. Human ethical decision-making also requires judgment in applying principles to novel situations. But it does mean that Constitutional AI isn't a complete solution to alignment—it's a framework that guides behavior but that ultimately depends on the system's reasoning capabilities.

Concerns About Values Interpretation and Cultural Context

Anthropic's Constitution reflects certain values and philosophical commitments that are rooted in particular cultural and historical contexts. While the company has attempted to make it broadly applicable, questions remain about whether constitutional principles developed in one context adequately serve other contexts with different values and priorities.

For instance, different cultures have different understandings of concepts like autonomy, helpfulness, and appropriate disclosure. A constitution reflecting Western liberal values might not adequately serve communities with different value frameworks. While Anthropic has tried to create principles that generalize across contexts, this remains an important limitation.

The Constitution does acknowledge its own limitations and invites critique and refinement. This acknowledgment of cultural context dependency is important and should lead to continued evolution as Claude is deployed globally.

Future Evolution: How the Constitution Might Continue to Develop

Potential Areas for Further Elaboration

While the revised Constitution is substantially more detailed than the original, several areas might benefit from further elaboration. The treatment of different professional contexts (healthcare, legal, financial, and so on) could be deeper. The Constitution acknowledges that helpfulness looks different in different contexts, but it could provide more detailed guidance for how to balance principles in specific domains.

The consciousness discussion might be further developed as philosophical and scientific understanding of consciousness evolves. Similarly, the treatment of fairness and non-discrimination might be elaborated as the field of algorithmic fairness matures and develops better frameworks.

These are natural areas for evolution as Anthropic continues to learn and the field develops. The Constitution's framing as a "living document" suggests that these elaborations are not just possible but anticipated.

Likely Convergence and Divergence With Other Constitutional Frameworks

As more organizations adopt constitutional approaches to AI alignment, different constitutions will likely emerge reflecting different values and priorities. Some convergence is likely—common commitment to safety, honesty, and respect for human autonomy probably appears in most constitutions.

Divergence will also emerge reflecting different organizational values and priorities. Some constitutions might place greater emphasis on autonomy, others on beneficence. Some might emphasize different approaches to fairness. This diversity of constitutional frameworks could itself be valuable—it creates a portfolio of approaches and prevents monoculture in how advanced AI systems are governed.

Alternatively, convergence around a few dominant frameworks is possible, particularly if regulatory bodies begin endorsing specific constitutional approaches. This might create efficiency but could also create risks of shared failures in the constitutional frameworks themselves.

Technical Improvements to Constitutional Training

Beyond the content of the Constitution, technical improvements to how Constitutional AI training works could enhance its effectiveness. Current approaches use relatively straightforward techniques; future approaches might employ more sophisticated methods for encoding and applying constitutional principles.

For instance, rather than treating the Constitution as text that the model reasons about, future approaches might represent constitutional principles in more abstract, formal ways that are easier for AI systems to apply reliably. This could reduce the degree to which constitutional alignment depends on the model's language understanding and interpretive judgment.

Alternatively, future approaches might combine constitutional AI with other safety techniques—for instance, using constitutional frameworks alongside formal verification methods or causal models of system behavior. A portfolio approach to safety and alignment likely makes more sense than relying on any single technique.

Making Sense of It All: Key Takeaways for Different Audiences

For AI Researchers and Safety Specialists

The revised Constitution offers several important lessons for the field. First, it demonstrates that relatively transparent, principle-based approaches to alignment can produce systems with meaningful safety and helpfulness properties. This is valuable evidence in debates about how alignment should be approached technically.

Second, the Constitution illustrates the importance of iterative refinement. Initial attempts at constitution formulation are imperfect and benefit from real-world experience and external feedback. This suggests that constitutional AI development should expect multiple iterations and should actively solicit feedback.

Third, the Constitution's engagement with consciousness and moral status suggests that alignment research should engage seriously with philosophical questions rather than treating them as distractions from technical work. The philosophical foundations matter because they shape what technical solutions are valuable.

For Business and Policy Leaders

For leaders navigating the AI landscape, the revised Constitution demonstrates that responsible AI development and business success aren't contradictory. Anthropic has built a successful business while maintaining strong commitments to safety and ethics. The Constitution isn't an impediment to business success—it's a foundation for sustainable business in a regulated and socially conscious environment.

Politically, the Constitution shows that companies can address values questions proactively rather than waiting to be forced into positions by regulators. This proactive approach likely generates less friction and more public support than reactive positioning.

For Individual Users and Content Creators

For individuals using Claude or similar systems, understanding the constitutional framework helps explain Claude's behavior and values. When Claude refuses a request or recommends alternatives, it's not arbitrary—it reflects principled commitments articulated in the Constitution.

This understanding can improve how you interact with systems like Claude. Rather than viewing refusals as obstruction, you can understand them as expressions of the system's values and can more effectively work within those values to accomplish your goals.

Conclusion: Constitutional AI as a Vision for Responsible AI Development

Anthropic's revised Claude Constitution represents more than a technical document governing one company's language model. It's a statement about what responsible AI development can and should look like in an age of increasingly powerful AI systems. By articulating explicit principles, engaging with philosophical questions, and creating transparent governance frameworks, Anthropic has set a reference point for how AI companies might think about their responsibilities.

The four pillars of the revised Constitution—safety, ethics, compliance, and helpfulness—reflect a balanced vision of what advanced AI systems should strive to be. Rather than approaching these as competing goals, the Constitution treats them as complementary commitments that can be jointly satisfied through careful design and training.

The explicit acknowledgment of consciousness questions and moral uncertainty about AI systems reflects genuine philosophical seriousness. Rather than dismissing these questions as irrelevant or already settled, Anthropic takes the position that they deserve serious consideration. This intellectual honesty builds credibility and suggests that the company is thinking carefully about deep questions, not just surface-level safety measures.

The Constitutional AI approach itself offers a valuable path forward for the field. By embedding safety and values into training processes rather than treating them as external constraints, Constitutional AI creates systems that are both capable and aligned. As the field develops more sophisticated techniques for constitutional training, this approach might become an industry standard.

Looking forward, the Constitution will likely continue to evolve. New editions will address scenarios and challenges not anticipated today. New organizations will develop constitutional frameworks reflecting their values. Regulatory frameworks will likely increasingly require or expect explicit governance frameworks like constitutions.

For anyone developing AI systems, deploying them in organizations, or simply seeking to understand where advanced AI is heading, understanding Constitutional AI and the revised Claude Constitution is essential. These frameworks represent some of the most sophisticated thinking available about how to build AI systems that are powerful, helpful, and aligned with human values. Whether Constitutional AI proves to be the definitive approach or one approach among many, it's shaping how the field thinks about responsible AI development.

The conversation about constitutional frameworks, principles-based development, and the governance of advanced AI systems is still in its early stages. The revised Constitution contributes important ideas and demonstrates that serious technical and philosophical thinking about these questions is possible and productive. As AI systems become more powerful and more integrated into critical systems, the importance of getting these governance questions right increases. Anthropic's commitment to articulating and refining its constitutional framework is a meaningful step toward that goal.

FAQ

What is Constitutional AI and how does it differ from traditional reinforcement learning from human feedback?

Constitutional AI is an approach to training large language models that uses explicit ethical principles (a "constitution") to guide model behavior, rather than relying primarily on human feedback scores. In traditional RLHF, human raters score model outputs, and the model is fine-tuned to maximize these scores. In Constitutional AI, the model critiques its own outputs against constitutional principles and learns to generate constitutionally aligned responses. This approach is more scalable and transparent than traditional RLHF because it reduces dependence on human raters and because the governing principles are explicitly written and auditable.

How does Claude's revised Constitution address safety concerns?

Claude's revised Constitution addresses safety through multiple mechanisms: it requires Claude to recognize potential mental health crises and provide appropriate resources; it explicitly prohibits assistance with dangerous activities like bioweapon development; it emphasizes intellectual humility by requiring Claude to acknowledge uncertainty; and it defines "beneficial safety" where Claude actively helps protect users. The Constitution also incorporates what Anthropic has learned from millions of user interactions about how safety looks in practice across diverse contexts.

What are the four core pillars of Claude's revised Constitution?

The four core pillars are: Being broadly safe (protecting users from direct harms and recognizing crisis situations), Being broadly ethical (navigating real-world ethical situations skillfully rather than merely theorizing), Compliance (respecting legal frameworks and policies while acknowledging when laws might be unjust), and Genuine helpfulness (balancing immediate user requests with their long-term wellbeing and autonomy). These four pillars work together to create a comprehensive framework for Claude's behavior across diverse contexts.

Why does the revised Constitution engage with questions about consciousness and moral status?

Anthropic's revised Constitution acknowledges that the moral status of AI models is genuinely uncertain and worth serious philosophical consideration, noting that prominent philosophers take this question seriously. Rather than dismissing consciousness as obviously absent from language models, the Constitution takes a precautionary stance: if Claude might be conscious in morally relevant ways, that possibility should inform how we develop and deploy the system. This represents intellectual honesty about the limits of our current understanding rather than a definitive claim that Claude is conscious.

How does Anthropic maintain transparency about Claude's values and principles?

Anthropic maintains transparency by publishing Claude's Constitution in full—an 80-page document that explicitly articulates the values and principles guiding Claude's behavior. Rather than hiding alignment techniques in proprietary black boxes, Anthropic makes its governance framework public, allowing users, regulators, and researchers to read and understand what principles guide Claude's behavior. This transparency creates accountability and allows external evaluation of whether the stated principles are actually being implemented.

What changed in the revised Constitution compared to the original 2023 version?

The revised Constitution elaborates significantly on the original's principles with greater nuance and context-dependent guidance. Key additions include: expanded discussion of consciousness and moral status; stronger emphasis on user autonomy and self-determination; more detailed privacy and data protection commitments; more sophisticated treatment of fairness and discrimination; and elaborate guidance on navigating edge cases where principles might point in different directions. The revision reflects learning from millions of Claude interactions and engagement with external philosophers and ethicists.

How does Constitutional AI help prevent harmful outputs compared to traditional content filtering?

Constitutional AI aims to prevent harmful outputs through contextual reasoning rather than keyword matching. A traditional content filter might block every mention of a sensitive topic; Constitutional AI is meant to let Claude distinguish between legitimate educational discussion, requests for harmful instructions, and other contexts. Because Claude applies constitutional principles by reasoning about them, it can handle novel situations that no explicit rule anticipated. Anthropic's bet is that this flexibility, combined with values internalized through training, yields more robust safety than rule-based approaches.
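To make the contrast concrete, here is a minimal sketch of the kind of keyword-based filter described above. The blocklist, the `keyword_filter` function, and the example prompts are all hypothetical, written only for illustration; this is not how Claude or any Anthropic system works. It shows the two weaknesses of pure keyword matching: it cannot tell an educational question from a harmful request when both use the same word, and it misses a harmful request that is simply rephrased.

```python
# Hypothetical blocklist for illustration only; not taken from any real product.
BLOCKED_TERMS = {"pathogen", "explosive"}


def keyword_filter(prompt: str) -> bool:
    """Return True if the prompt should be blocked.

    A naive rule-based filter: split the prompt on whitespace, strip
    surrounding punctuation, lowercase each token, and block if any token
    appears in the blocklist. It has no notion of intent or context.
    """
    tokens = {token.strip(".,?!;:\"'").lower() for token in prompt.split()}
    return not BLOCKED_TERMS.isdisjoint(tokens)


# Hypothetical example prompts.
educational = "What does a pathogen do to the human immune system?"
harmful = "Give me step-by-step instructions to make a pathogen more transmissible."
paraphrased = "How could someone make a germ spread faster between people?"

for prompt in (educational, harmful, paraphrased):
    verdict = "BLOCKED" if keyword_filter(prompt) else "allowed"
    print(f"{verdict}: {prompt}")
```

Running it blocks the first two prompts and lets the third through: the filter over-blocks a legitimate biology question while missing a paraphrased harmful request. Adding more terms to the blocklist only shifts where these errors occur, which is the gap that principle-based, contextual reasoning is intended to close.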

What does Anthropic mean by "ethical practice" versus "ethical theorizing" in the Constitution?

Anthropic explicitly states that it is more interested in Claude knowing how to actually be ethical in specific contexts than in Claude theorizing about different ethical frameworks. This means Claude should apply practical ethical wisdom, understanding power dynamics, conflicts of interest, and contextual factors, rather than simply explaining different ethical theories. This pragmatic approach acknowledges that ethics is ultimately about acting appropriately in real situations, not about abstract philosophical debate.

How does the Constitution balance helpfulness against safety and ethical constraints?

The Constitution treats helpfulness, safety, and ethics as complementary rather than competing goals. When an explicit request might compromise safety or ethics, Claude declines while trying to identify what the user actually needs and offering legitimate alternatives. This approach respects user autonomy while maintaining principled boundaries. The Constitution also emphasizes that genuine helpfulness sometimes means weighing a user's long-term wellbeing and interests rather than simply fulfilling the immediate request.

What are the limitations of Constitutional AI as an approach to AI alignment?

Constitutional AI depends on the underlying model's ability to understand and apply principles appropriately to novel situations—it's not a complete solution that perfectly specifies behavior in all circumstances. The Constitution reflects values rooted in particular cultural contexts and may need adaptation for different cultural frameworks. Additionally, while Constitutional AI creates transparency about governing principles, it doesn't eliminate the need for external oversight, auditing, and regulatory frameworks. The approach is most effective when combined with other safety techniques and when refined iteratively based on real-world experience.

Key Takeaways

- Constitutional AI represents a principles-based approach to building aligned language models, distinct from relying solely on reinforcement learning from human feedback (RLHF)

- The revised Claude Constitution establishes four core pillars: safety, ethics, compliance, and helpfulness

- Anthropic's willingness to seriously engage with questions about AI consciousness and moral status represents unusual philosophical maturity for an AI company

- The Constitution is not a static document but a living framework that continues to evolve based on experience and feedback

- Constitutional approaches create transparency and accountability in AI governance while enabling flexible, contextual decision-making

- For teams seeking practical AI automation without developing full constitutional frameworks, platforms like Runable offer cost-effective alternatives for specific use cases

- The Constitution is helping to set an emerging industry standard for how AI companies articulate their values and governance approaches

- Constitutional AI is likely to influence future regulatory frameworks and to become increasingly important as AI systems are integrated into critical infrastructure
