Cognitive Diversity in LLMs: Transforming AI Interactions [2025]

You've probably experienced that frustrating moment: you ask an AI tool a question and get an answer that's technically correct but somehow not what you needed. The problem isn't the AI. It's that we're asking it to think like everyone else, when what we really need is for it to think like you.

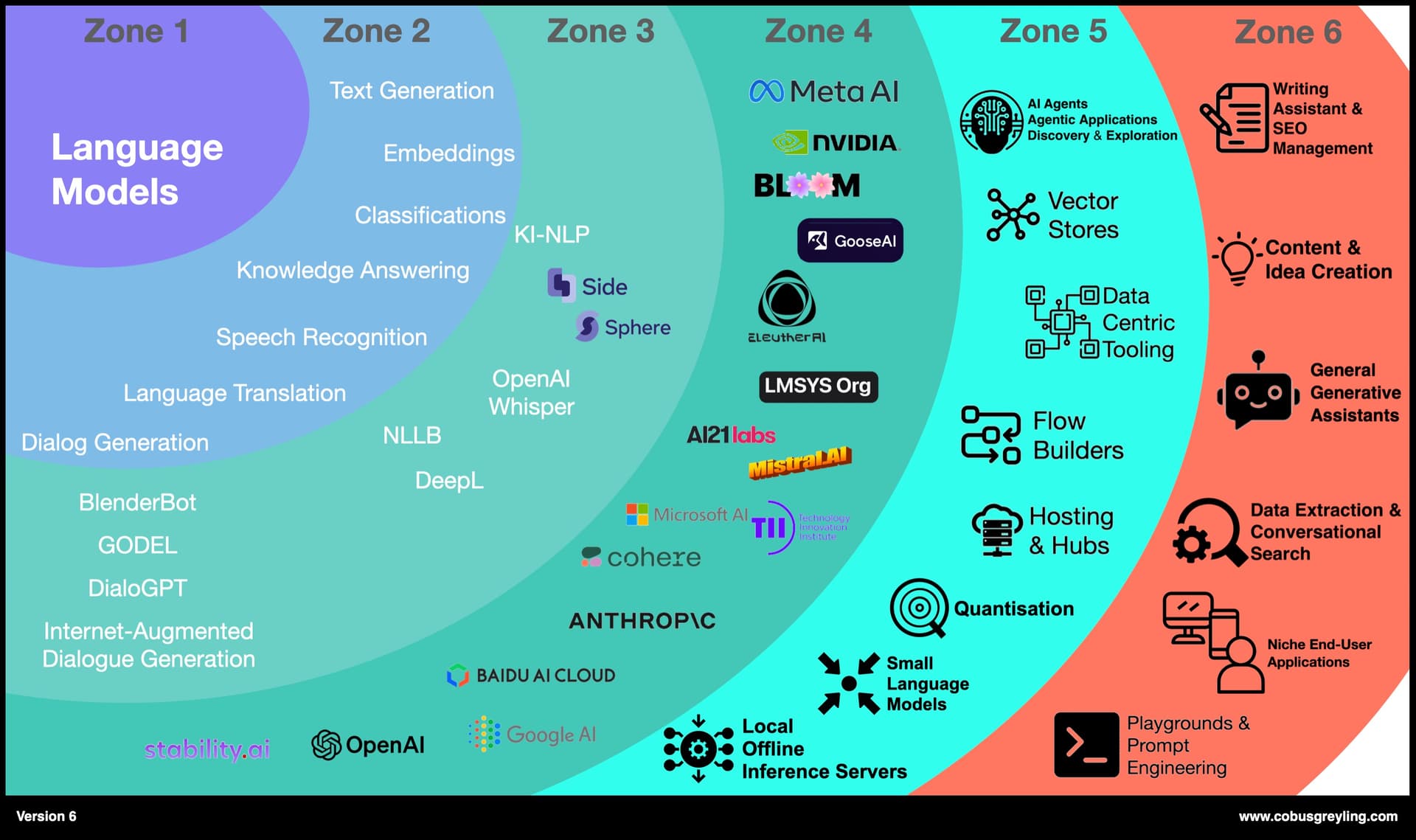

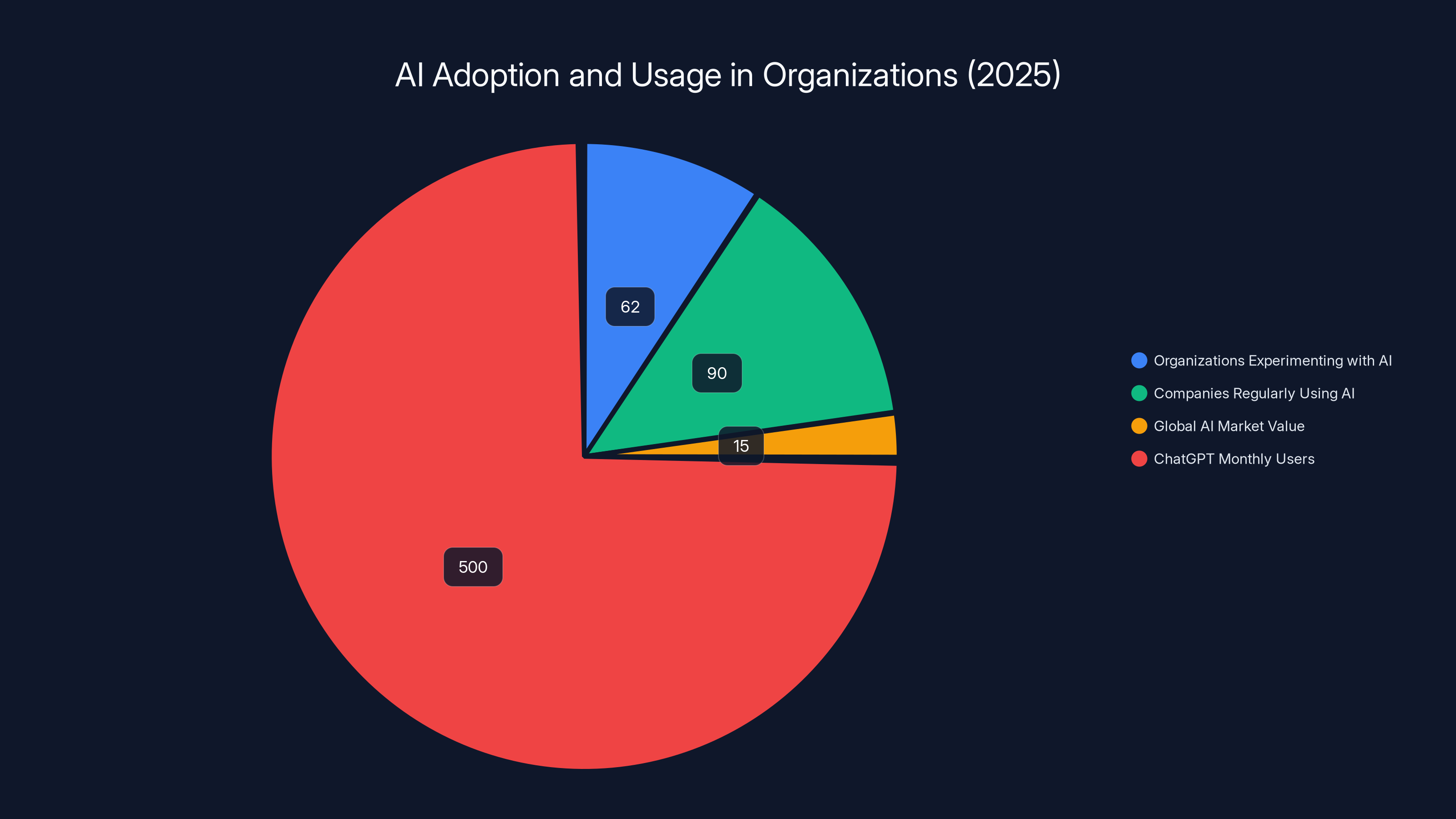

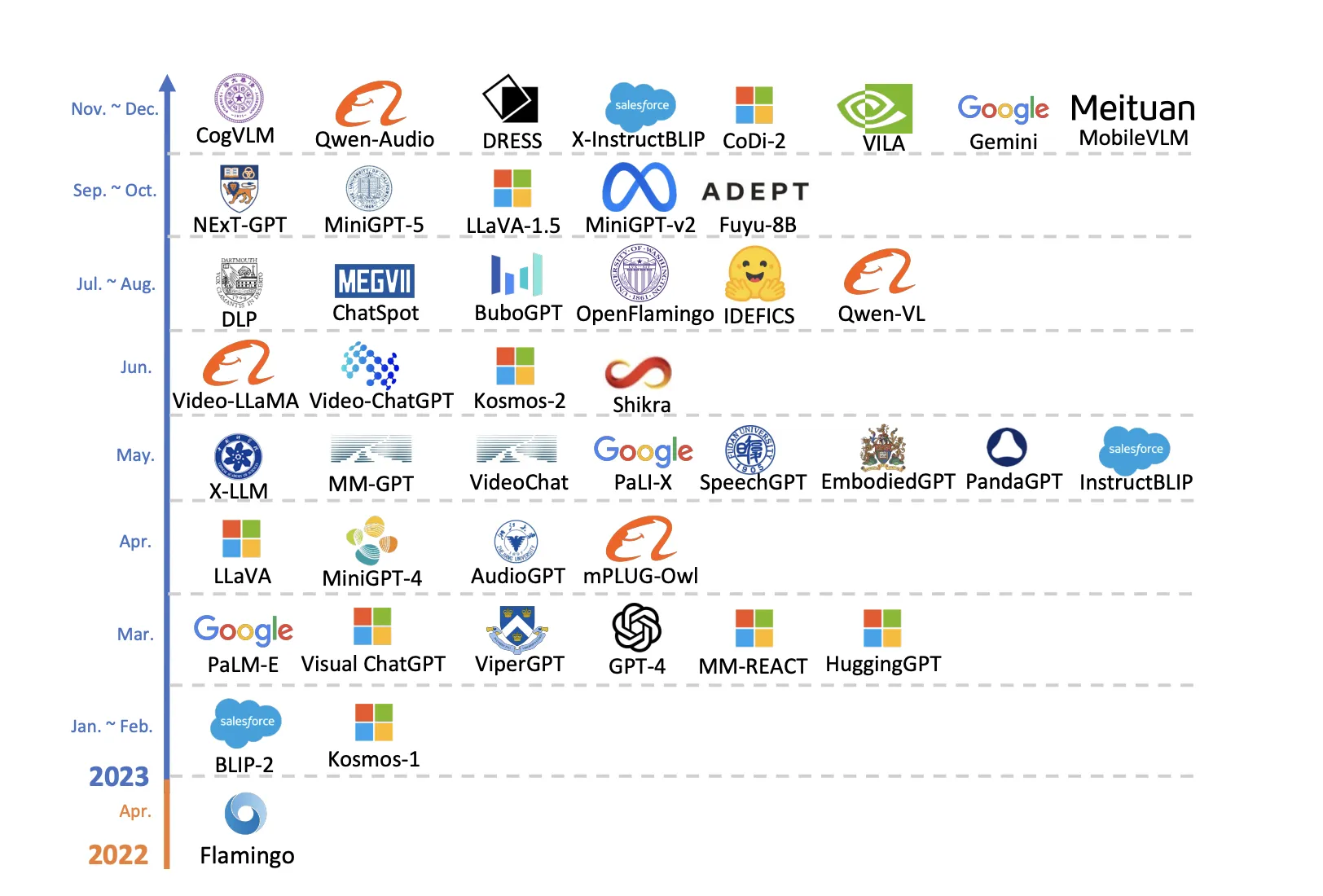

Here's what's happening. We're in the middle of a massive AI adoption surge. According to McKinsey research, 62% of organizations are actively experimenting with AI agents in 2025, and nearly 9 in 10 companies regularly use AI. The global AI market is valued at around $15 billion, and ChatGPT alone reaches over 500 million monthly users. The numbers are staggering.

But there's a persistent problem nobody talks about enough: AI systems currently have one thinking style. They're trained to generate answers, but not necessarily the right kind of answers for your way of thinking. When someone asks for a creative solution, they get a by-the-book response. When someone needs a practical, rule-based approach, they get something experimental. The mismatch happens because LLMs don't understand how you think—they just generate what they've been trained to generate.

What if that could change?

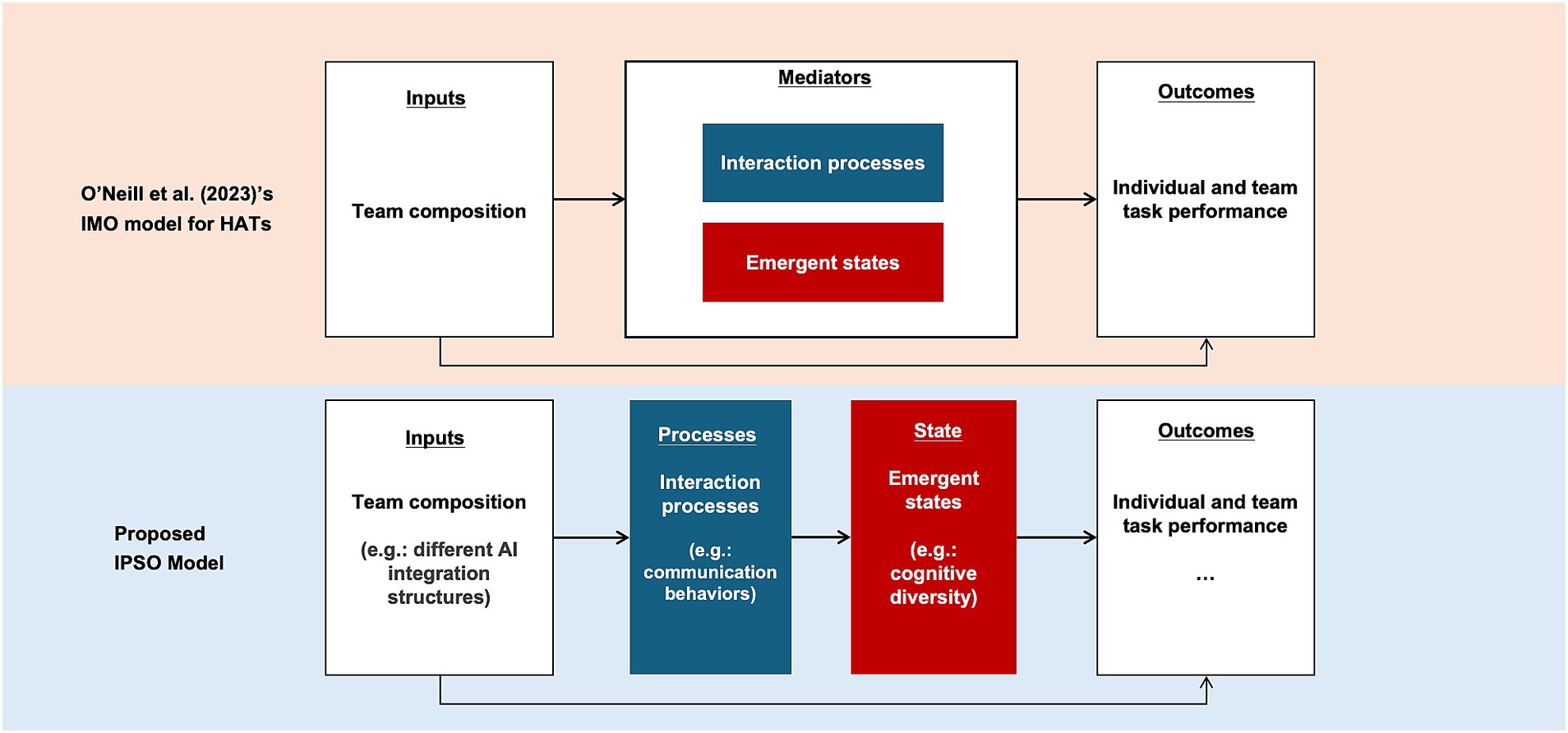

A growing body of research suggests that embedding cognitive diversity into language models could be a game-changer. We're not talking about diversity as a concept—we're talking about programming AI systems to recognize and respond to different cognitive styles, the way a good manager adapts their communication to each team member. The result? Better answers, delivered faster, in the way that actually matters to you.

Let's dig into what cognitive diversity really means, why it matters for LLMs, and how this research is already pointing toward the next evolution of AI interfaces.

TL;DR

- Cognitive diversity refers to differences in how people think, solve problems, and make decisions, ranging from highly adaptive (structured, rule-based) to highly innovative (flexible, rule-breaking)

- LLMs can be prompted to emulate different cognitive styles, generating either practical or paradigm-challenging solutions depending on how the prompt is framed

- Current AI limitations stem from treating all users the same way, ignoring that humans have innate preferences for how much structure they want and how rules should apply

- Research from Carnegie Mellon and Penn State indicates that cognitive-style-aware prompting shifts solutions along two dimensions, feasibility and paradigm-relatedness, so output can be matched to user needs

- The next generation of AI interfaces will likely incorporate cognitive diversity by default, eliminating the need for users to perfectly optimize their prompts

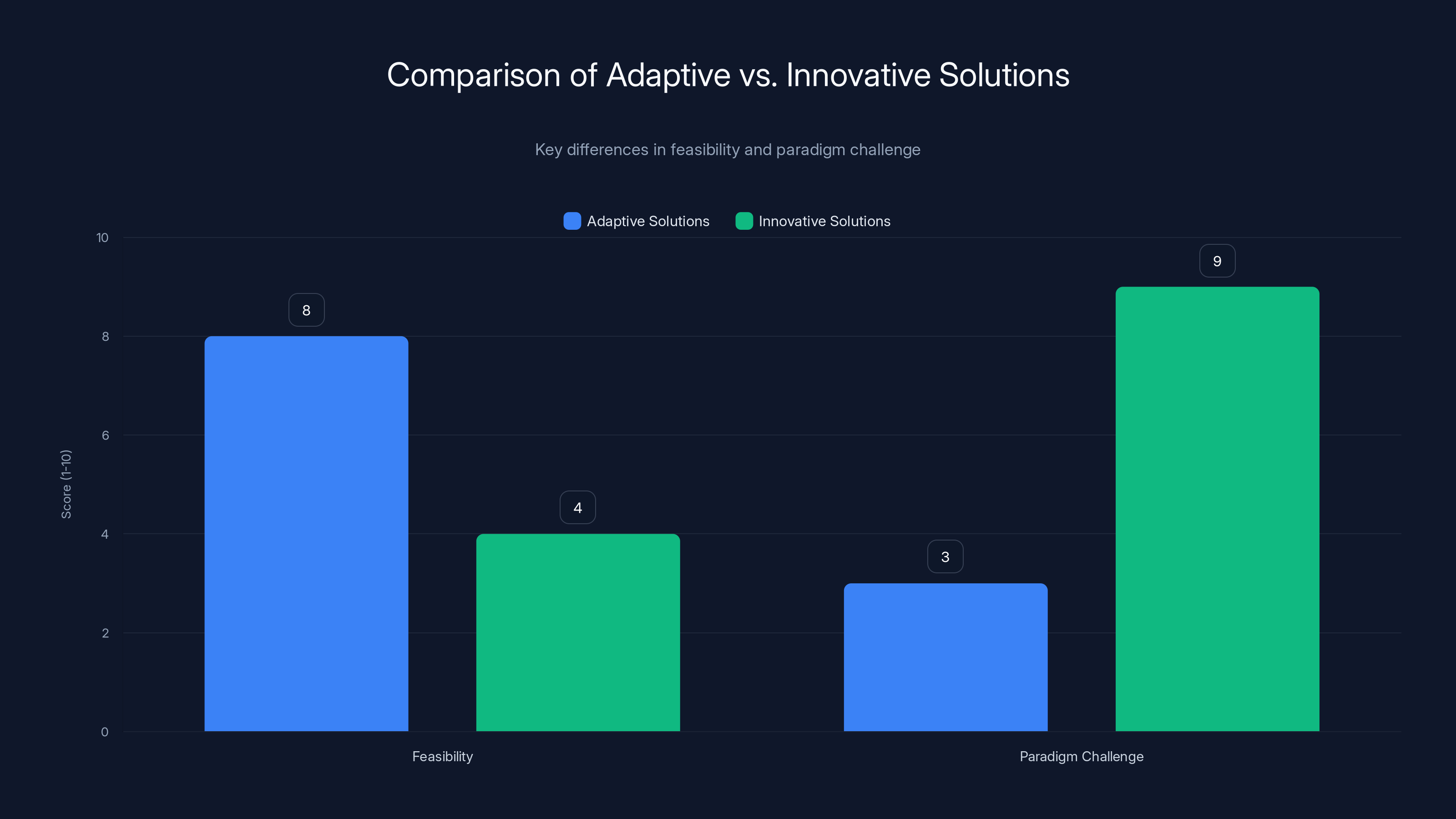

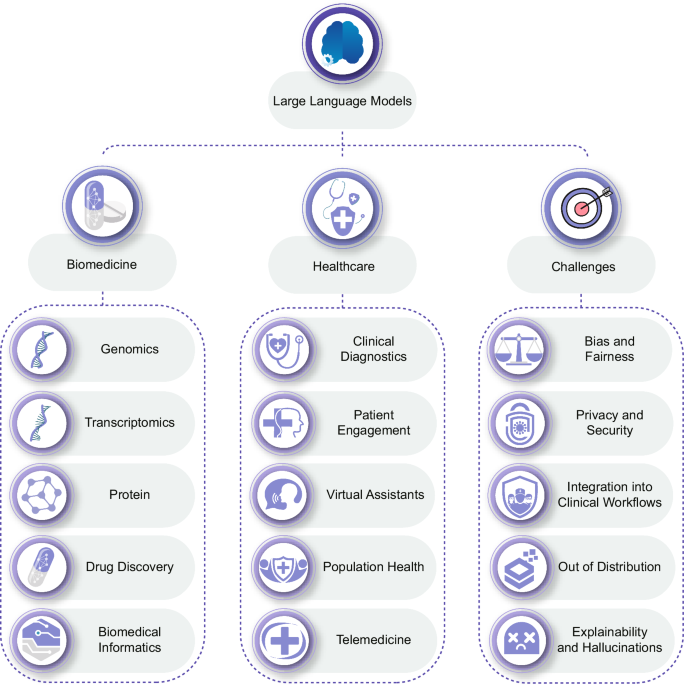

Adaptive solutions are highly feasible but less challenging to paradigms, while innovative solutions are less feasible but significantly challenge existing paradigms. Estimated data based on typical characteristics.

Understanding Cognitive Diversity: The Hidden Variable in Problem-Solving

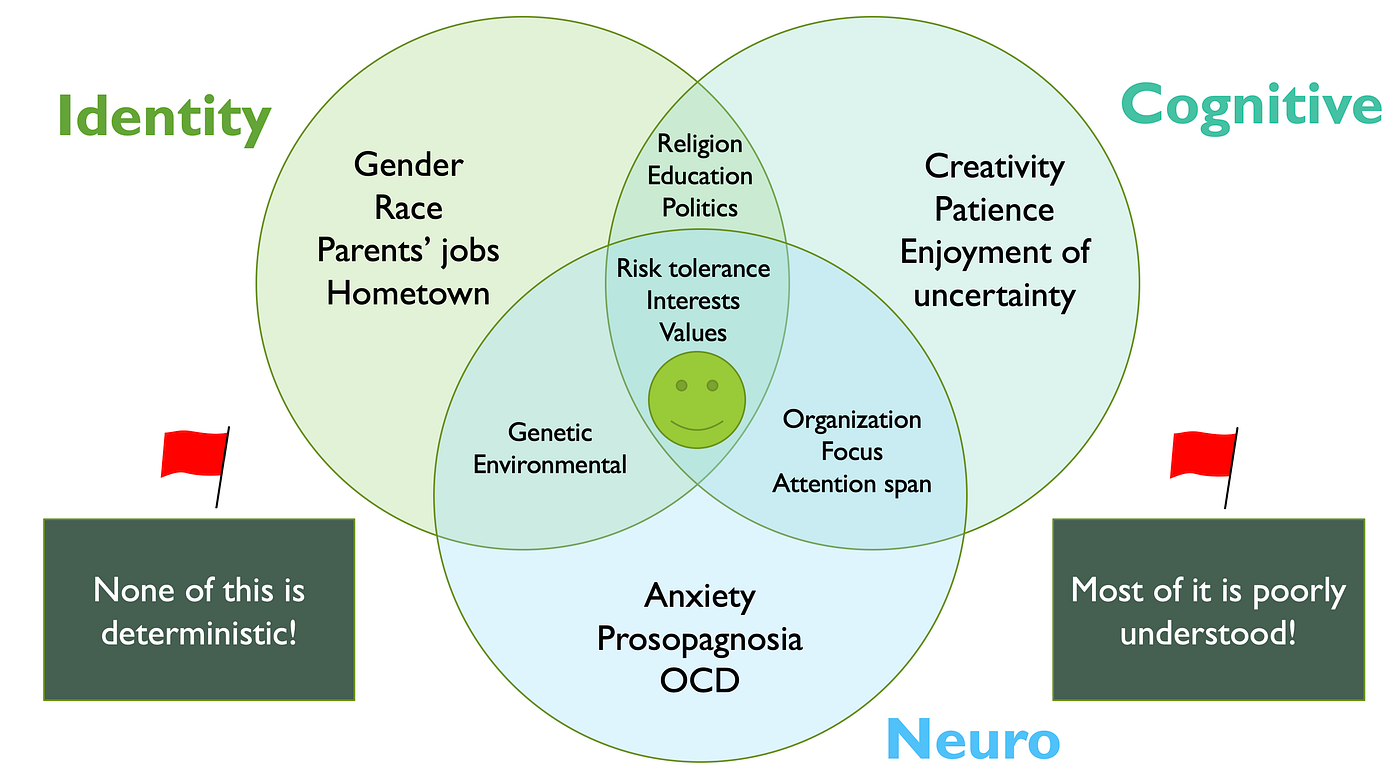

Cognitive diversity isn't about being smart or motivated. It's about how your brain naturally approaches problems.

Think about your team. You probably have someone who loves detailed planning and systematic processes. They want to know the rules, understand the structure, and execute flawlessly. You also have someone who gets bored with routine, breaks rules creatively, and generates wild ideas that somehow work. Neither person is better. They're just different.

This difference is what researchers call cognitive diversity, and it has nothing to do with IQ or work ethic.

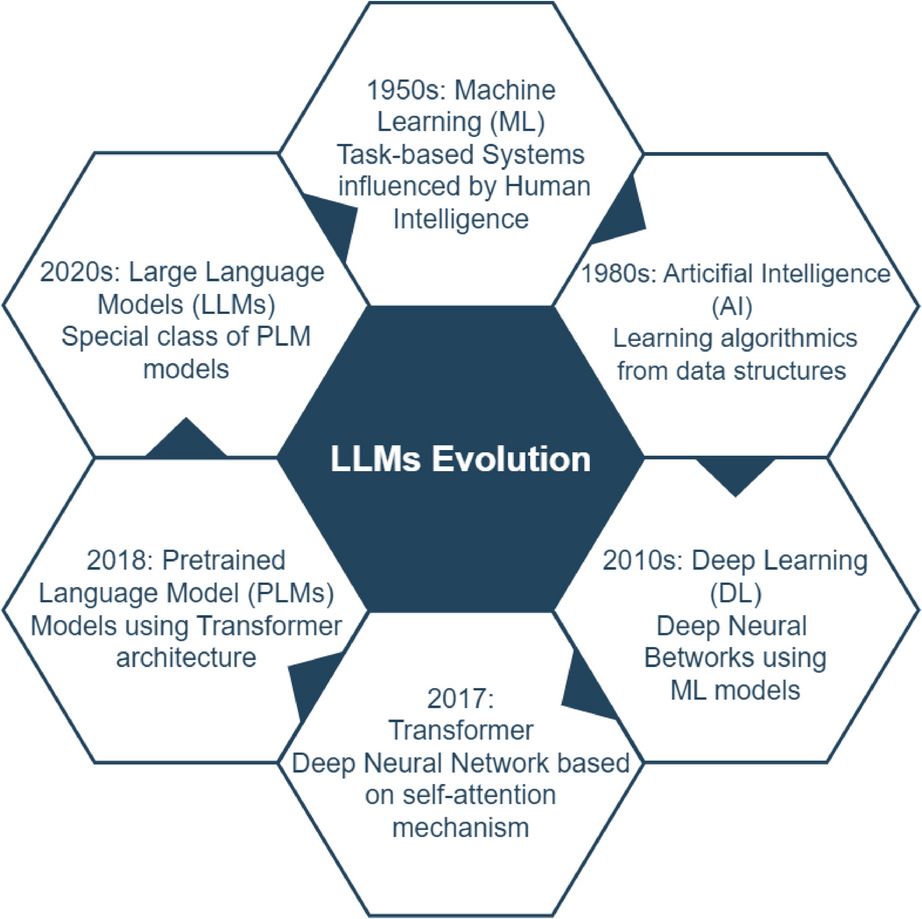

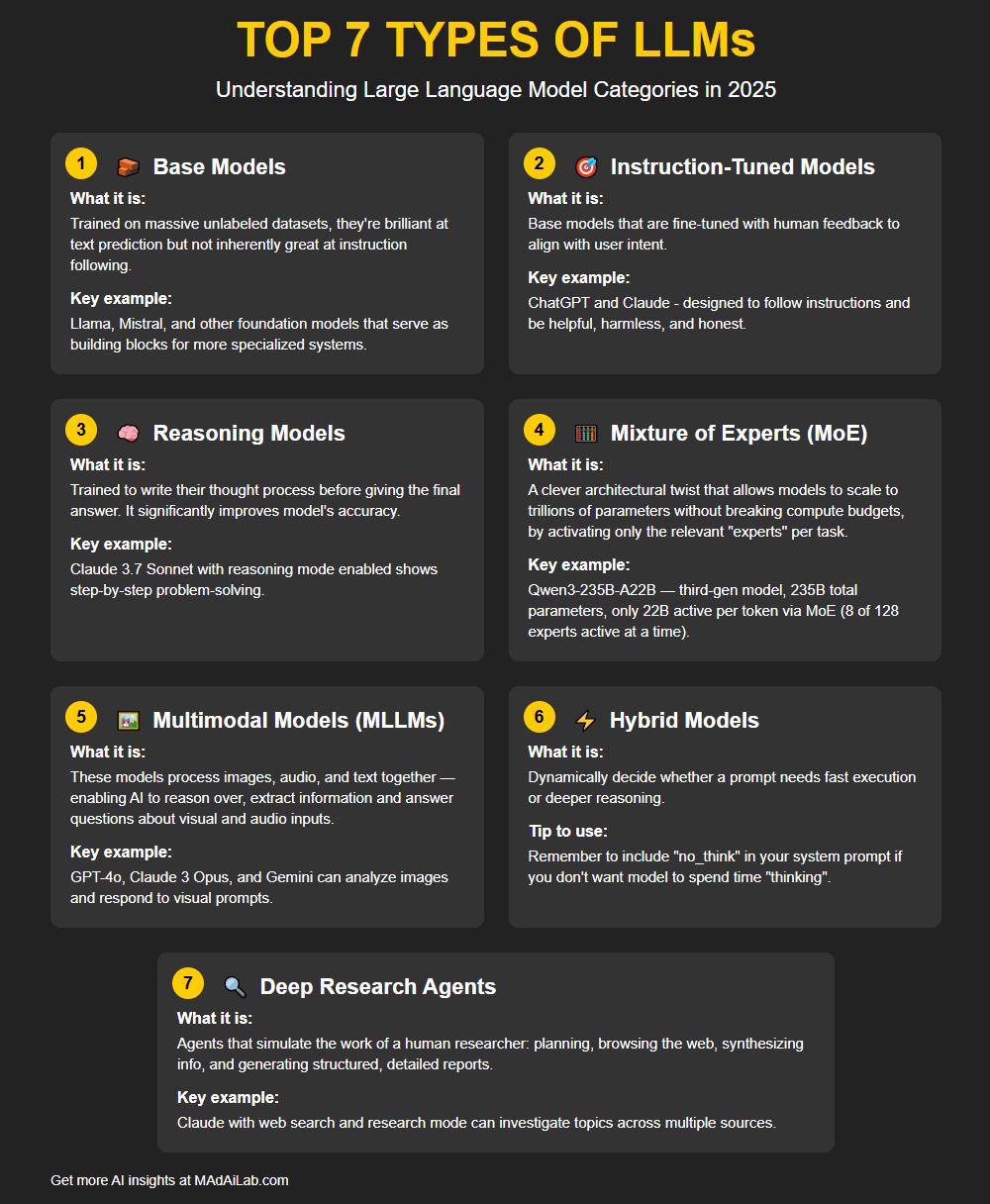

The Adaption-Innovation Theory (KAI)

Decades of research by Dr. M. J. Kirton established something called Adaption-Innovation Theory. The theory is built on a simple observation: when solving problems, people fall along a spectrum. On one end, you have highly adaptive thinkers—they prefer structure, clear rules, and incremental improvements. On the other end, you have highly innovative thinkers—they prefer flexibility, ignoring or changing rules, and radical shifts in approach.

The KAI (Kirton Adaption-Innovation) inventory measures where someone falls on this spectrum. It's not a personality test. It's a cognitive preference measure. It tells you how much structure someone needs to feel productive and focused.

Adaptive thinkers thrive with:

- Clear, consistent rules

- Structured environments

- Well-defined problems with clear success criteria

- Incremental, step-by-step solutions

- Documentation and processes

Innovative thinkers thrive with:

- Ambiguity and flexibility

- Loose boundaries and creative freedom

- Novel, undefined challenges

- Paradigm-breaking approaches

- Minimal constraints and bureaucracy

Here's the critical insight: there is no ideal position on this spectrum. A surgeon needs adaptive thinking during an operation. A research scientist needs innovative thinking during discovery. The same person might need both at different times.

What matters is alignment. When the cognitive style of the solution matches the cognitive style of the person receiving it, everything works better. The solution makes sense. It feels actionable. It fits their way of thinking.

Why This Matters in Real Life

Consider a design problem: you need to improve user onboarding for a mobile app.

An adaptive solution might look like: "Implement a step-by-step tutorial with clear instructions, measure completion rates, then optimize each step based on data." It's practical, testable, and stays within existing UX frameworks.

An innovative solution might look like: "Eliminate the traditional onboarding entirely. Use AI to detect user intent from their first action and adapt the interface in real-time." It's bold, it breaks convention, and it might not be feasible tomorrow.

Both solutions are valid. Both could work. But if you're an adaptive thinker working from an innovative solution, you'll feel lost. If you're an innovative thinker stuck with an adaptive solution, you'll feel constrained. The mismatch creates friction.

Now imagine if AI tools understood this. Imagine if you could ask a question and get back multiple possible answers, each framed according to different cognitive styles. The tool would recognize what you need and serve it up accordingly.

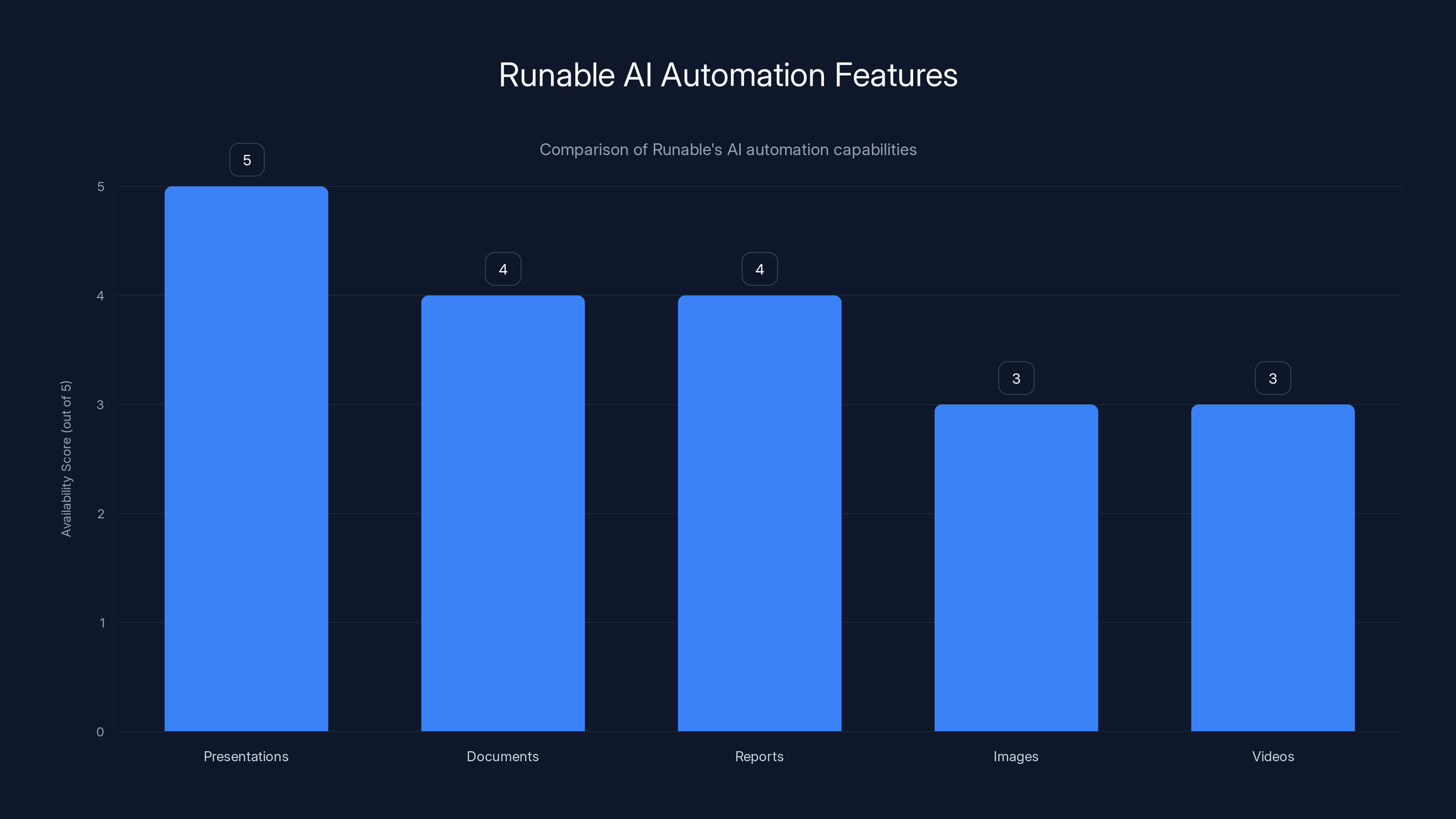


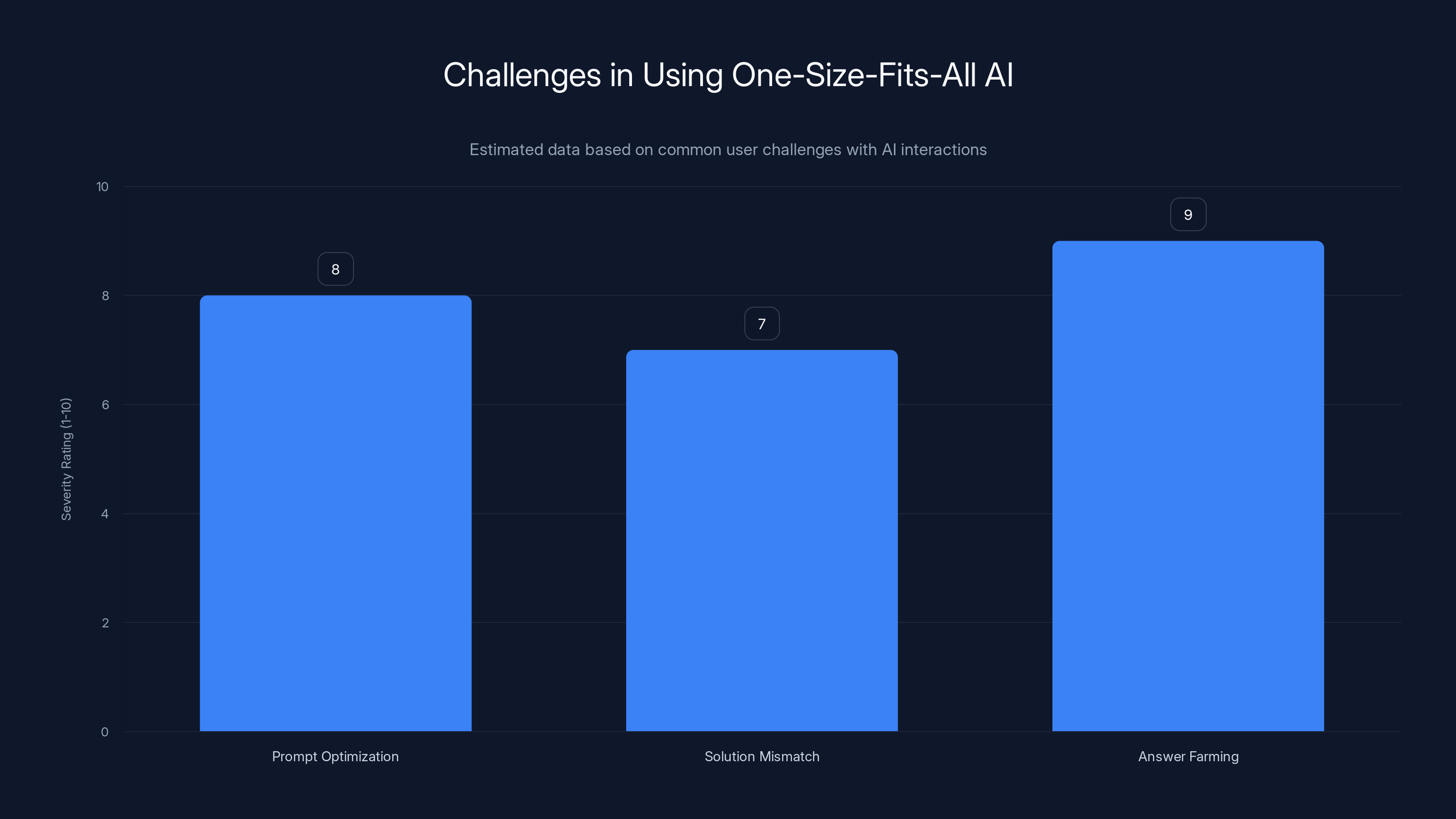

The Current Problem: One-Size-Fits-All AI

Let's be blunt: current LLMs treat everyone the same.

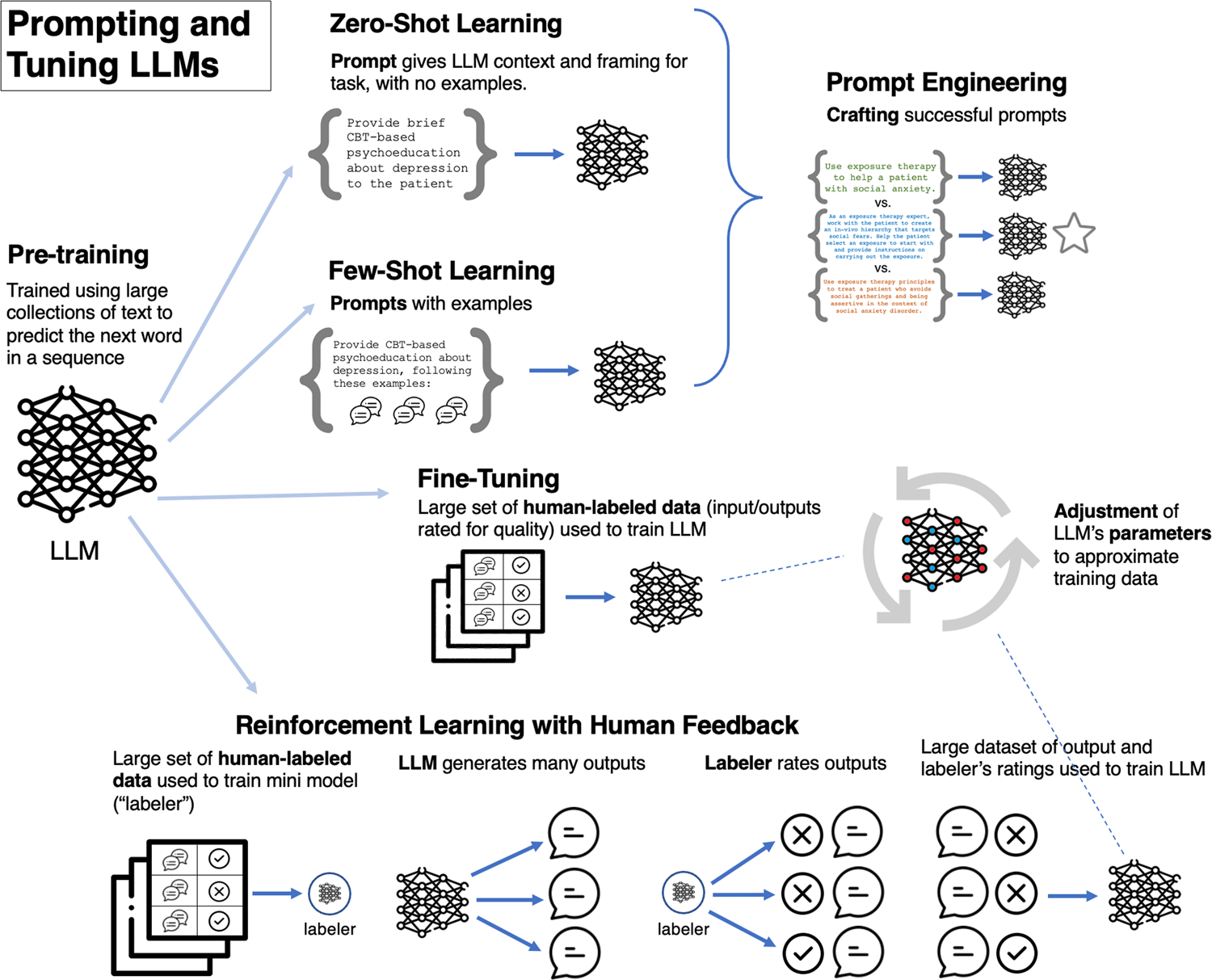

When you prompt ChatGPT, Claude, or Gemini, you're interacting with a system that has no idea how you prefer to think. It generates responses based on patterns in training data and the phrasing of your prompt. The quality of the output depends heavily on how well you craft that prompt, something most users never learn to do well.

This creates several problems.

The Prompt Optimization Burden

Right now, the responsibility for good AI output falls on the user. You're supposed to be better at prompting. You need to learn techniques like:

- Chain-of-thought prompting (breaking down complex questions into steps)

- Role-playing (asking the AI to act as a specific expert)

- Few-shot prompting (providing examples)

- Temperature adjustment (controlling randomness)

- Constraint specification (limiting scope and format)

Most users don't do any of this. They ask a question the way they'd ask a person, get a mediocre answer, and assume the AI is limited. The AI isn't limited—the interaction is.

The Solution Mismatch Problem

Even when people craft good prompts, they often get technically correct but emotionally unsatisfying answers. A data analyst asks for insight from a dataset and gets back a by-the-book statistical summary. A product manager asks for a strategy and gets a generic framework instead of something tailored to their situation.

This happens because the LLM has no model of how you think. It's generating generic solutions that work for hypothetical average users, not the specific person asking the question.

The "Answer Farming" Problem

According to McKinsey research, inaccuracy is the #1 risk organizations work to mitigate when using AI. People get wrong answers. But the real problem isn't always the answer itself—it's that the answer wasn't the kind of answer they needed.

An innovative thinker might need to explore unconventional approaches to a problem. If the AI keeps suggesting proven, safe solutions, it's technically accurate but practically useless for that person's cognitive style.

The Cognitive Load of Iteration

Getting the right answer from an LLM often requires multiple attempts. You ask, get something generic, rephrase, get something better, rephrase again. Each iteration costs time and mental energy. If the AI understood your cognitive style upfront, it could nail it on the first try.

McKinsey data shows that 39% of respondents attribute operational income improvements to AI, but those same respondents report spending significant time optimizing prompts and validating outputs. The friction is real.

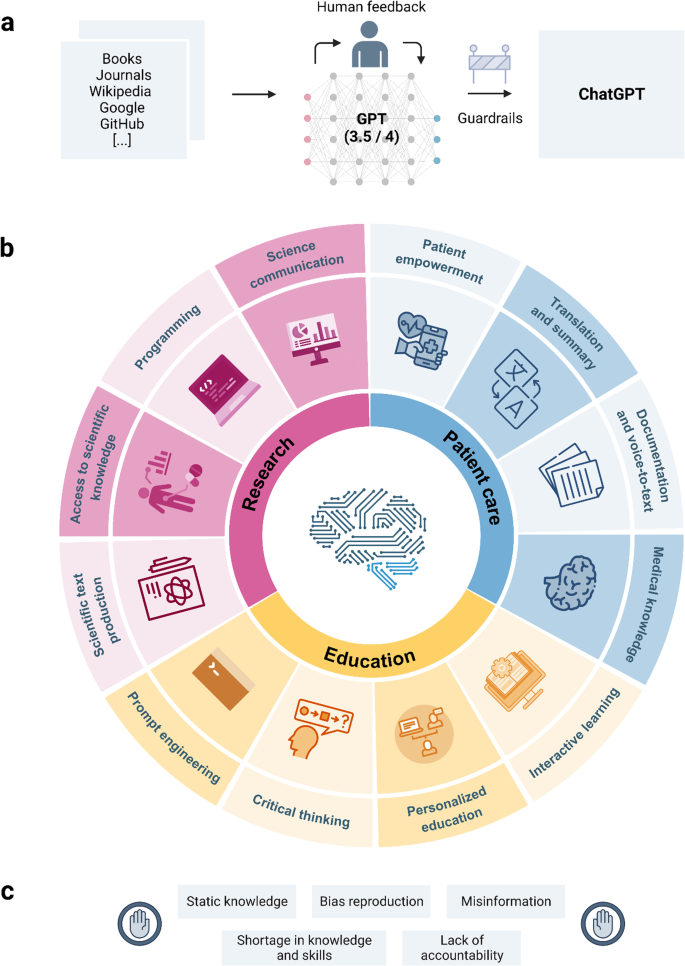

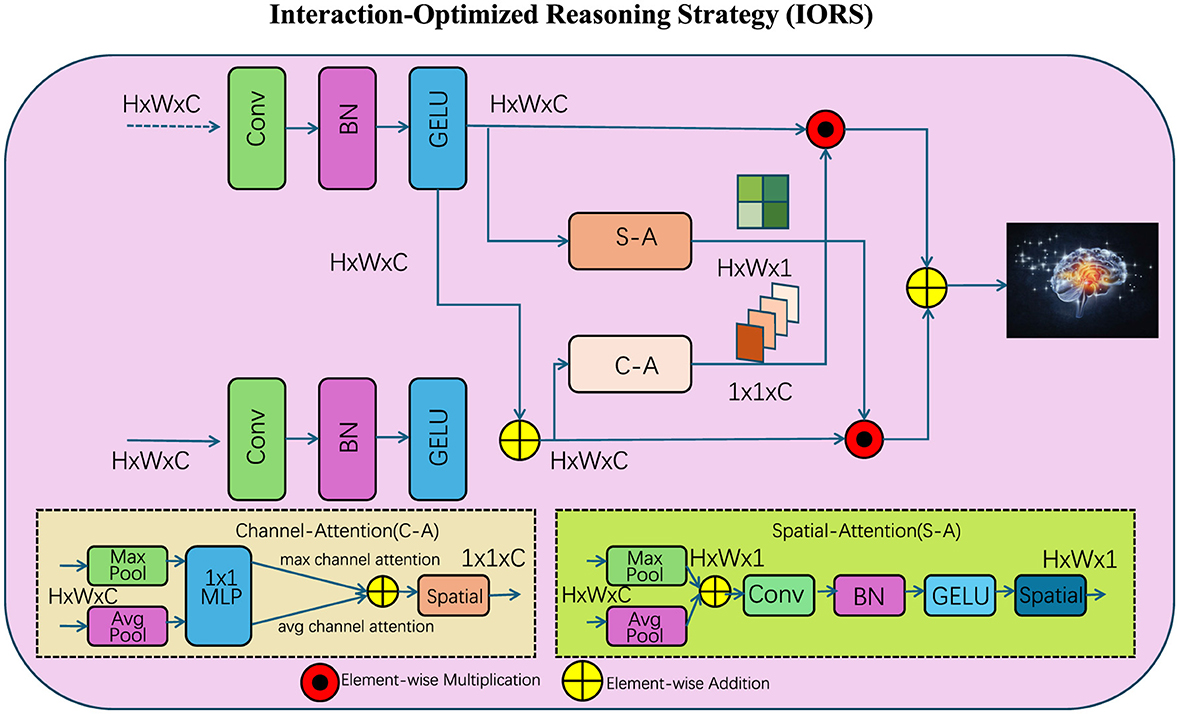

The Research Breakthrough: Teaching LLMs Cognitive Styles

Here's where things get interesting.

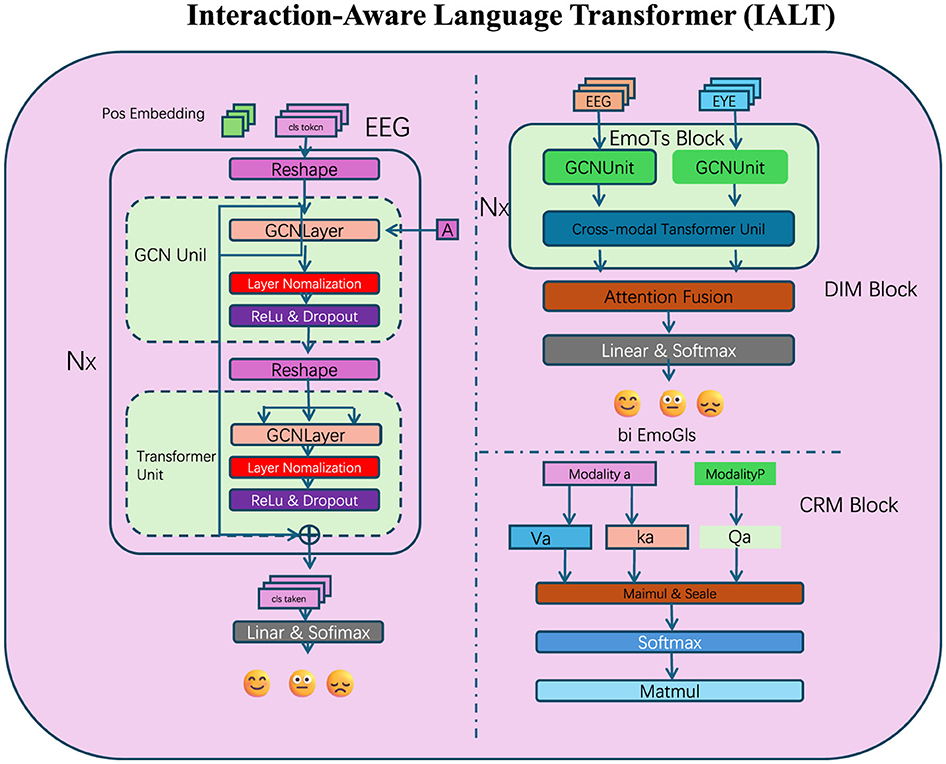

Researchers at Carnegie Mellon University and Penn State University asked a fundamental question: Can language models learn to emulate different cognitive styles?

Their answer was yes.

The Experiment

The research team (published in a paper titled "Putting the Ghost in the Machine: Emulating Cognitive Style in Large Language Models") conducted a structured experiment.

They took an LLM and taught it about Adaption-Innovation Theory. They gave it examples of adaptive thinking and innovative thinking. They showed it what meticulous, detail-oriented, rule-following solutions looked like versus bold, boundary-breaking, paradigm-shifting ones.

Then they gave the model three design problems to solve. For each problem, they used two different prompts:

- The Adaptive Prompt: Framed with language emphasizing structure, clarity, feasibility, and working within existing frameworks. It asked for solutions that are practical, testable, and grounded in proven approaches.

- The Innovative Prompt: Framed with language emphasizing ambiguity, flexibility, and breaking from convention. It asked for solutions that challenge existing assumptions and explore new possibilities.

The results were striking.

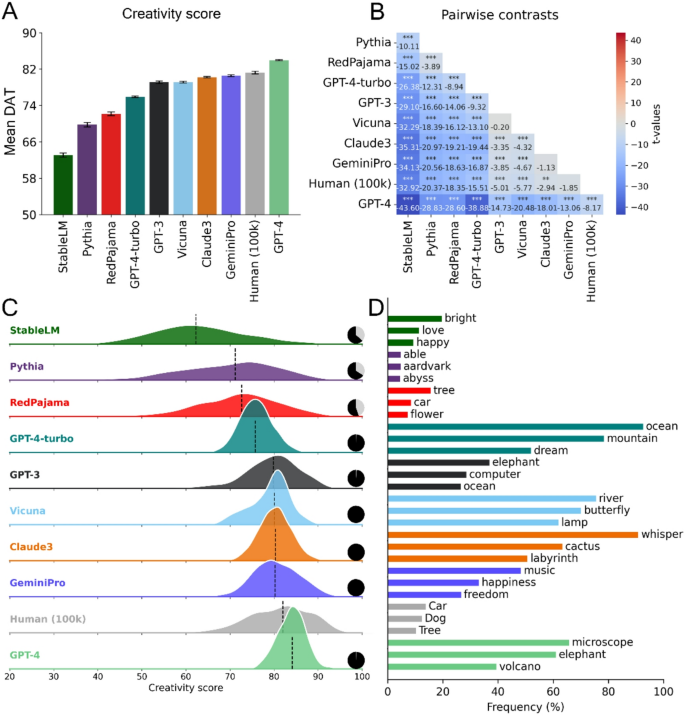

What They Found

When given the adaptive prompt, the LLM generated more feasible, structured, traditional solutions. When given the innovative prompt, it generated less feasible but more paradigm-challenging solutions.

But here's the key: the AI wasn't just generating different random outputs. It was generating contextually appropriate answers based on understanding cognitive diversity.

The adaptive solutions stayed within existing frameworks. They proposed incremental improvements. They focused on implementation feasibility and risk mitigation. They read like they came from a meticulous, systematic thinker.

The innovative solutions broke new ground. They challenged assumptions. They proposed radical shifts. They read like they came from a creative, rule-breaking thinker.

Most importantly, the differences were not cosmetic. The solutions differed in how they framed and approached each problem, not just in wording, depending on the cognitive style the AI was asked to emulate.

The Evaluation Framework

The researchers didn't just ask: "Which answer is better?" They evaluated answers on two dimensions:

Feasibility (how realistic and implementable):

- Can this actually be done with current resources?

- What's the implementation timeline?

- What are the risks and unknowns?

- How much does this build on proven approaches?

Paradigm-Relatedness (how much it challenges existing frameworks):

- Does this stay within current paradigms or break them?

- What fundamental assumptions does it question?

- How innovative is this relative to current practice?

- Would this require reimagining how we approach this problem?

Adaptive solutions typically scored high on feasibility but lower on paradigm challenge. Innovative solutions typically scored lower on feasibility but higher on paradigm challenge. Neither was "better"—they were different kinds of better.
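The two-dimension evaluation can be sketched in a few lines of Python. This is only an illustration of keeping feasibility and paradigm challenge as separate axes: the `Rating` class, the 1-10 scale, and the sample numbers are assumptions made for this example, not the researchers' rubric or data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Rating:
    """One rater's scores for one solution (1-10 scale is an assumption)."""
    feasibility: float
    paradigm_challenge: float


def summarize(ratings: list[Rating]) -> Rating:
    """Average each dimension on its own; the axes are never merged into one score."""
    if not ratings:
        raise ValueError("need at least one rating")
    return Rating(
        feasibility=mean(r.feasibility for r in ratings),
        paradigm_challenge=mean(r.paradigm_challenge for r in ratings),
    )


# Made-up ratings from three hypothetical raters per solution.
adaptive = summarize([Rating(9, 3), Rating(8, 2), Rating(9, 4)])
innovative = summarize([Rating(4, 9), Rating(5, 8), Rating(3, 9)])

print(adaptive)
print(innovative)
```

Reporting two numbers instead of one is the point: collapsing them into a single "quality" score would hide exactly the trade-off the framework is meant to expose.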

Why This Matters

This research suggests something important: LLMs aren't limited to one thinking style. They can be directed to think adaptively or innovatively, and they can generate the kind of solution you actually need when the prompt conveys your cognitive preferences.

This isn't about personality or preferences in the fuzzy sense. This is about fundamentally different approaches to problem-solving, and the research shows that existing LLMs can be prompted to emulate both.

Estimated data shows that 'Answer Farming' is perceived as the most severe challenge, followed by 'Prompt Optimization' and 'Solution Mismatch'.

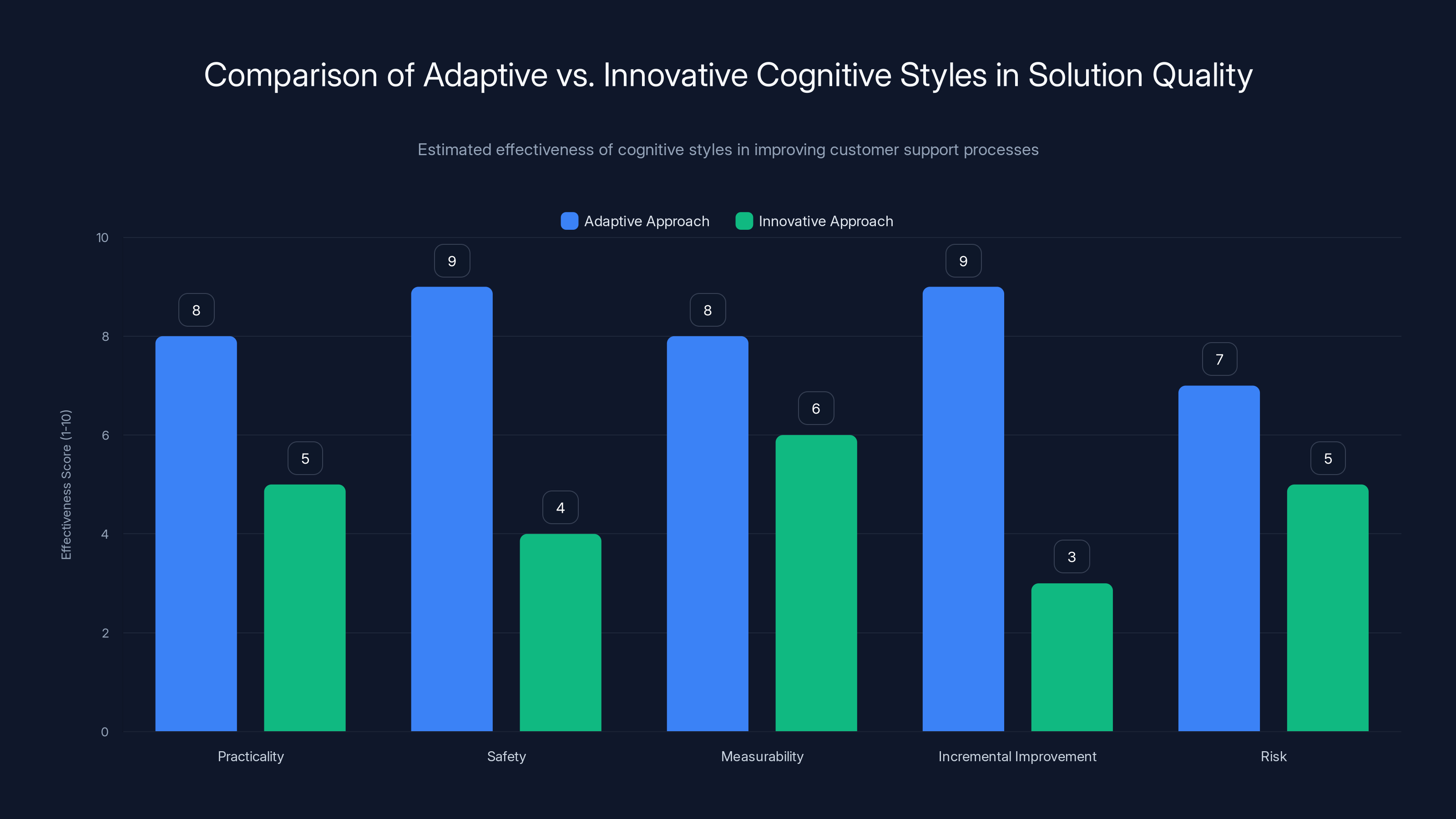

How Cognitive Styles Affect Solution Quality

Let's get concrete. How does cognitive style actually change the quality of an answer?

Consider a real-world scenario: improving a company's customer support process.

The Adaptive Approach

A cognitive style-aware AI prompted adaptively might suggest:

"Implement a structured improvement to your current system. First, audit your current ticket response times and identify bottlenecks. Second, create standard response templates for the top 20 ticket categories. Third, implement a tiered escalation process based on complexity. Fourth, track metrics weekly and optimize templates based on customer satisfaction scores."

This answer is:

- Practical: Every step can be executed immediately with current resources

- Safe: It builds on proven customer support frameworks

- Measurable: It defines specific metrics and tracking points

- Incremental: It improves what already exists rather than replacing it

- Risk-averse: It minimizes disruption and failure points

This is exactly what an adaptive thinker wants to hear. They're thinking: "Okay, I can do this. I know what success looks like. I have a clear roadmap."

The Innovative Approach

The same AI prompted innovatively might suggest:

"Rethink the entire customer support model. Instead of reactive ticket handling, implement predictive support that surfaces solutions before customers need them. Use AI to monitor user behavior, detect frustration signals, and proactively offer help. Replace human response templates with AI-generated, context-specific answers. Measure success not by response time but by the percentage of issues prevented before they happen."

This answer is:

- Bold: It proposes a fundamental shift from current practice

- Ambitious: It requires new infrastructure and thinking

- Risky: It could fail if implementation isn't smooth

- Paradigm-challenging: It questions whether reactive support is even the right model

- Forward-looking: It imagines support as prevention, not problem-solving

The Metrics Side

Let's look at how these would score on our evaluation dimensions:

Adaptive Solution:

- Feasibility: 9/10 (can be implemented in 4-8 weeks)

- Paradigm Challenge: 3/10 (builds on existing support models)

- Time to Value: 2-3 months

- Risk Level: Low

- Improvement Potential: 20-30% better support metrics

Innovative Solution:

- Feasibility: 4/10 (requires significant architectural changes)

- Paradigm Challenge: 9/10 (fundamentally reimagines support)

- Time to Value: 6-12 months

- Risk Level: High

- Improvement Potential: 3-5x better support outcomes if it works

Why One Answer Isn't Better Than the Other

Here's the critical insight: which answer is better depends entirely on who's asking.

If you're running a startup with limited resources and need quick wins, the adaptive answer is gold. You can implement it in weeks and improve your metrics. The innovative approach would bankrupt you.

If you're running a mature company with resources and you need to differentiate in a crowded market, the adaptive approach is a waste of effort. You'll just be matching competitors. The innovative approach is what you need.

The same question, asked by two different people with two different cognitive styles, needs two different answers. Current AI gives you one answer and hopes it lands right.
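To make "it depends on who's asking" concrete, here is a small worked example using the illustrative 9/3 and 4/9 scores from the metrics above. The weighting scheme and the `feasibility_weight` parameter are assumptions introduced for this sketch; they are one simple way to express priorities, not a method from the research.

```python
# Illustrative scores taken from the metrics comparison above.
SOLUTIONS = {
    "adaptive": {"feasibility": 9, "paradigm_challenge": 3},
    "innovative": {"feasibility": 4, "paradigm_challenge": 9},
}


def fit(scores: dict[str, int], feasibility_weight: float) -> float:
    """Weighted sum: the remaining weight goes to paradigm challenge."""
    if not 0.0 <= feasibility_weight <= 1.0:
        raise ValueError("feasibility_weight must be between 0 and 1")
    return (feasibility_weight * scores["feasibility"]
            + (1.0 - feasibility_weight) * scores["paradigm_challenge"])


def best_for(feasibility_weight: float) -> str:
    """Return the solution name with the highest weighted fit."""
    return max(SOLUTIONS, key=lambda name: fit(SOLUTIONS[name], feasibility_weight))


# A resource-constrained startup weights feasibility heavily (assumed 0.8).
print(best_for(0.8))  # adaptive: 7.8 vs 5.0
# A mature company seeking differentiation weights it lightly (assumed 0.3).
print(best_for(0.3))  # innovative: 7.5 vs 4.8
```

The same two solutions swap rank as soon as the weights change, which is the whole argument in numeric form.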

The Mechanisms: How Cognitive Style Prompting Works

Now let's look under the hood. How does this actually work from an LLM perspective?

Prompt Engineering for Cognitive Diversity

Cognitive style-aware prompting isn't magic. It's about giving the LLM different conceptual frameworks within which to generate answers.

When you prompt adaptively, you're essentially saying: "Here's the thinking style I want you to use: meticulous, detail-oriented, risk-averse, rule-following, incremental." The LLM then generates responses that reflect that thinking style.

When you prompt innovatively, you're saying: "Here's a different thinking style: boundary-breaking, ambiguity-comfortable, risk-tolerant, rule-challenging, radical." The LLM generates differently.

The language signals matter:

Adaptive Prompt Markers:

- "Provide practical, implementable steps"

- "Focus on feasibility and risk mitigation"

- "Work within existing frameworks and proven approaches"

- "Prioritize speed of implementation"

- "Define clear success metrics"

Innovative Prompt Markers:

- "Challenge existing assumptions"

- "Explore unconventional approaches"

- "Break from established frameworks"

- "Prioritize transformational impact over quick wins"

- "Question fundamental premises"

How the LLM Processes Cognitive Styles

When an LLM receives a cognitively-framed prompt, it's receiving instruction about how to think about the problem, not just what to solve.

Think of it like this: if you asked a chess player to suggest moves, you'd get one thing. If you said "suggest moves that prioritize immediate advantage" versus "suggest moves that prioritize long-term positional strength," you'd get different suggestions. The player is still playing chess, but they're thinking about it differently.

LLMs work similarly. They're processing:

- The problem (the actual question or challenge)

- The cognitive style (how to approach this type of problem)

- The constraints (what counts as a good answer within that style)

The combination shapes the output.
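As a rough sketch, the three ingredients can be assembled into a single prompt string. The marker phrases are the ones listed earlier in this section; the `StyledRequest` class and the prompt layout are hypothetical choices for illustration and do not reproduce the prompts used in the research.

```python
from dataclasses import dataclass

# Marker phrases copied from the adaptive and innovative lists above.
STYLE_MARKERS = {
    "adaptive": [
        "Provide practical, implementable steps",
        "Focus on feasibility and risk mitigation",
        "Work within existing frameworks and proven approaches",
        "Prioritize speed of implementation",
        "Define clear success metrics",
    ],
    "innovative": [
        "Challenge existing assumptions",
        "Explore unconventional approaches",
        "Break from established frameworks",
        "Prioritize transformational impact over quick wins",
        "Question fundamental premises",
    ],
}


@dataclass(frozen=True)
class StyledRequest:
    """Hypothetical container for problem, cognitive style, and constraints."""
    problem: str
    style: str
    constraints: tuple[str, ...] = ()

    def to_prompt(self) -> str:
        if self.style not in STYLE_MARKERS:
            raise ValueError(f"unknown style: {self.style!r}")
        lines = [f"Problem: {self.problem}", "", "Approach:"]
        lines += [f"- {marker}" for marker in STYLE_MARKERS[self.style]]
        if self.constraints:
            lines += ["", "Constraints:"]
            lines += [f"- {c}" for c in self.constraints]
        return "\n".join(lines)


request = StyledRequest(
    problem="Improve user onboarding for a mobile app",
    style="adaptive",
    constraints=("No new backend services",),
)
print(request.to_prompt())
```

Swapping `style="adaptive"` for `style="innovative"` changes only the approach section, so the same problem and constraints can be sent to any LLM under both framings.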

The Training Effect

Here's something important: models don't need to be retrained to recognize cognitive styles. They can be prompted to emulate them. The research showed this with existing models.

However, models that are specifically trained on cognitive diversity principles would be even better. You could:

- Fine-tune models on adaptive solutions and innovative solutions separately

- Train models to recognize which cognitive style a user prefers based on past interactions

- Build models that automatically suggest multiple cognitive approaches to the same problem

- Create models that learn user preferences over time and optimize accordingly

These advances would make cognitive diversity a core feature rather than a prompting hack.

The adaptive approach scores higher in practicality, safety, measurability, and incremental improvement, while the innovative approach is more risky and ambitious. Estimated data based on typical cognitive style attributes.

Real-World Applications: Where This Changes Everything

So where does this actually matter? Where would cognitive diversity in LLMs make the biggest difference?

Product Design and Innovation

Designers need both thinking styles. Early-stage design needs innovative thinking: challenge assumptions, explore radical directions, break conventions. Late-stage design needs adaptive thinking: refine approaches, optimize for feasibility, focus on implementation.

A cognitive diversity-aware design tool could serve up innovative prompts during ideation and adaptive prompts during refinement. Designers wouldn't need two different tools—one AI could adapt its thinking to match the design phase.

Software Development

Developers face similar challenges. Sometimes they need innovative approaches to architecture decisions. Sometimes they need meticulous, systematic approaches to debugging and optimization.

Consider code review feedback. An adaptive reviewer might suggest: "Refactor this function to follow the existing pattern we use in module X." An innovative reviewer might suggest: "This function reveals a fundamental flaw in our architecture. We should reconsider the whole approach."

An LLM that understood cognitive diversity could generate different code review styles. A team could even specify: "During this sprint, generate review feedback in the innovative style to challenge our assumptions about this module."

Business Strategy and Planning

Executives often need strategy advice. Sometimes they need adaptive thinking: "Here's how to optimize your current business model." Sometimes they need innovative thinking: "Here's a completely different business model that could disrupt your market."

A CEO could ask the same question but get two completely different strategic answers depending on their cognitive style and what they're trying to accomplish. This would make AI strategy tools far more useful than generic frameworks.

Education and Learning

Students have different learning styles. Some thrive with structured curriculum and clear rules (adaptive). Others need flexibility, ambiguity, and self-directed exploration (innovative).

A cognitive diversity-aware educational AI could adapt its teaching style to the student. Not personality-based adaptation (which is often superficial), but cognitive-style-based adaptation that fundamentally changes how it explains concepts and structures learning.

Content Creation and Copywriting

Writers need different styles for different purposes. Blog posts often need structure (adaptive). Creative fiction needs flexibility and rule-breaking (innovative).

LLMs that understood cognitive diversity could generate content in fundamentally different voices. A marketer could say: "Generate a product description in the adaptive style (emphasizing proven benefits) and the innovative style (emphasizing paradigm-breaking capabilities)" and get two genuinely different outputs.

Healthcare and Medical Decision-Making

Doctors need both. They need adaptive thinking for diagnosis (systematic, rule-based): "Follow the diagnostic algorithm systematically." They need innovative thinking for novel cases: "This doesn't match standard patterns. What unconventional explanations should we consider?"

An AI that switched between cognitive styles could provide more comprehensive medical support.

The Limitations and Challenges Ahead

Let's be real: this research is promising, but there are real limitations to address.

The Feasibility Problem

Innovative solutions often aren't actually feasible. The research found that innovative prompts generate less feasible answers—and that's okay for ideation, but it's a problem if you're trying to implement something.

The challenge: users might get excited about innovative ideas that can't actually be built. The AI needs to balance cognitive style with realistic constraint acknowledgment.

User Self-Awareness

For this to work, users need to understand their own cognitive style. Not everyone does. An adaptive thinker might request an innovative approach and then reject the answer because it clashes with their actual preferences.

This means the next-generation AI interfaces need to help users discover their cognitive style, not just respond to what they ask for.

The Validation Problem

How do you know if a solution is actually good? Feasibility is measurable. But how do you measure whether an innovative solution is genuinely good versus just novel?

The research used expert evaluation, but that doesn't scale. Real-world implementation would need clearer metrics for solution quality across different cognitive styles.

Training Data Bias

LLMs are trained on internet text, which reflects humanity's existing thinking patterns. Adaptive thinking is probably overrepresented (because standard, proven approaches appear more often in training data). Innovative thinking might be underrepresented.

This means LLMs might naturally bias toward adaptive solutions even with innovative prompting. Correcting this requires deliberate training adjustments.

The Personalization Privacy Tradeoff

To truly personalize based on cognitive diversity, the system would need to track user cognitive preferences over time. That's valuable, but it's also a privacy concern. How much user data is okay to collect in pursuit of better AI interactions?

The Cost Question

Cognitive diversity-aware AI is more complex than generic AI. It requires better prompting infrastructure, more sophisticated models, or finer-grained training. That all costs more. Will people pay for it?

In 2025, 62% of organizations are experimenting with AI, nearly 90% use AI regularly, the AI market is valued at $15 billion, and ChatGPT has 500 million monthly users. Estimated data.

Implications for AI Interface Design

If cognitive diversity becomes a core principle in LLM development, what changes?

The Death of Generic Prompting

Right now, prompting is universal: everyone uses the same techniques. In a cognitive diversity-aware future, prompting becomes personalized. Your preferred prompting style emerges from your cognitive style.

An adaptive thinker might prefer detailed, structured prompts. An innovative thinker might prefer open-ended, ambiguous prompts. The interface could adapt to what you naturally do well.

Multi-Style Response Delivery

Instead of getting one answer, you might get:

- The adaptive version (practical, feasible, incremental)

- The innovative version (bold, paradigm-challenging, transformational)

- A hybrid version (combining both approaches)

You'd choose which one matters for your current context. Different answers for different needs.
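A multi-style response could be prototyped today by sending the same question under several framings. In the sketch below, `generate` stands in for whatever function calls your LLM; it is injected as a parameter so that no particular vendor API is assumed, and the instruction wording is invented for this example.

```python
from typing import Callable

# Hypothetical one-line framings for each response style.
STYLE_INSTRUCTIONS = {
    "adaptive": "Give a practical, feasible, incremental answer.",
    "innovative": "Give a bold, paradigm-challenging, transformational answer.",
    "hybrid": "Combine a practical first step with a bold longer-term direction.",
}


def multi_style(question: str, generate: Callable[[str], str]) -> dict[str, str]:
    """Ask the same question once per style and collect the answers by style name."""
    return {
        style: generate(f"{instruction}\n\n{question}")
        for style, instruction in STYLE_INSTRUCTIONS.items()
    }


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it just echoes the framing line."""
    return f"[stub reply to: {prompt.splitlines()[0]}]"


answers = multi_style("How should we improve customer support?", stub_model)
for style, answer in answers.items():
    print(f"{style}: {answer}")
```

Replacing `stub_model` with a real client call is the only change needed to try this against an actual model.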

Cognitive Style Detection

Advanced AI interfaces could detect your cognitive style through interaction patterns. How do you ask questions? How do you react to different answer types? What problems do you naturally lean toward? Over time, the system learns your style and serves up answers in your preferred way.

This isn't personality detection (which feels invasive and often wrong). This is functional cognitive style detection based on how you actually work.
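One very simple way to approximate this kind of detection is to tally which style of answer a user actually picks over time. The sketch below is a toy heuristic: the `StylePreference` class, the minimum of five observations, and the 65% threshold are all arbitrary assumptions, and nothing here is a validated measure of cognitive style.

```python
from collections import Counter
from typing import Optional


class StylePreference:
    """Toy tracker: infers a leaning from which answer style the user chose."""

    def __init__(self, min_observations: int = 5, threshold: float = 0.65):
        self.counts: Counter = Counter()
        self.min_observations = min_observations
        self.threshold = threshold

    def record(self, chosen_style: str) -> None:
        if chosen_style not in ("adaptive", "innovative"):
            raise ValueError(f"unknown style: {chosen_style!r}")
        self.counts[chosen_style] += 1

    def estimate(self) -> Optional[str]:
        """Return a leaning, or None until enough choices have been seen."""
        total = sum(self.counts.values())
        if total < self.min_observations:
            return None
        adaptive_share = self.counts["adaptive"] / total
        if adaptive_share >= self.threshold:
            return "adaptive"
        if adaptive_share <= 1.0 - self.threshold:
            return "innovative"
        return "mixed"


tracker = StylePreference()
for choice in ["adaptive", "adaptive", "innovative", "adaptive", "adaptive"]:
    tracker.record(choice)
print(tracker.estimate())  # 4 of 5 choices were adaptive
```

Returning `None` until enough data exists, and allowing a "mixed" result, keeps the tracker from forcing users into a category too early.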

Context-Aware Cognitive Switching

The best approach might be context-aware cognitive switching. The system recognizes what phase of work you're in and suggests appropriate cognitive styles:

- Ideation phase: Lean heavily toward innovative prompting

- Planning phase: Shift toward adaptive prompting

- Execution phase: Mostly adaptive, with innovative prompts for obstacles

- Evaluation phase: Hybrid approach examining both angles

You'd rarely need to explicitly request a cognitive style—the system would learn when you need it.
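The phase-to-style mapping above is simple enough to write down directly. This sketch encodes the four bullets as a lookup; the `Phase` enum and the `hit_obstacle` flag are names invented for illustration, and a real system would have to infer the phase rather than be told it.

```python
from enum import Enum


class Phase(Enum):
    IDEATION = "ideation"
    PLANNING = "planning"
    EXECUTION = "execution"
    EVALUATION = "evaluation"


def style_for(phase: Phase, hit_obstacle: bool = False) -> str:
    """Pick a prompting style for a work phase, following the list above."""
    if phase is Phase.IDEATION:
        return "innovative"
    if phase is Phase.PLANNING:
        return "adaptive"
    if phase is Phase.EXECUTION:
        # Mostly adaptive, switching to innovative only when stuck on an obstacle.
        return "innovative" if hit_obstacle else "adaptive"
    return "hybrid"  # evaluation examines both angles


for phase in Phase:
    print(phase.value, "->", style_for(phase))
print("execution (blocked) ->", style_for(Phase.EXECUTION, hit_obstacle=True))
```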

Team Cognitive Diversity Tools

For teams, this gets really interesting. Imagine:

- A brainstorming session where the AI generates both adaptive and innovative ideas, making sure both thinking styles contribute

- A project planning tool that flags when a team is too homogeneous in cognitive style and suggests bringing in different perspectives

- A code review system that switches between adaptive (quality, standards) and innovative (architecture rethinking) review modes

This would actually force teams to embrace cognitive diversity rather than just talking about it.

The Future of LLM Interaction

We're standing at an interesting moment. The research indicates that LLMs can be prompted to emulate distinct cognitive styles. The applications are clear. The limitations are manageable. So what's next?

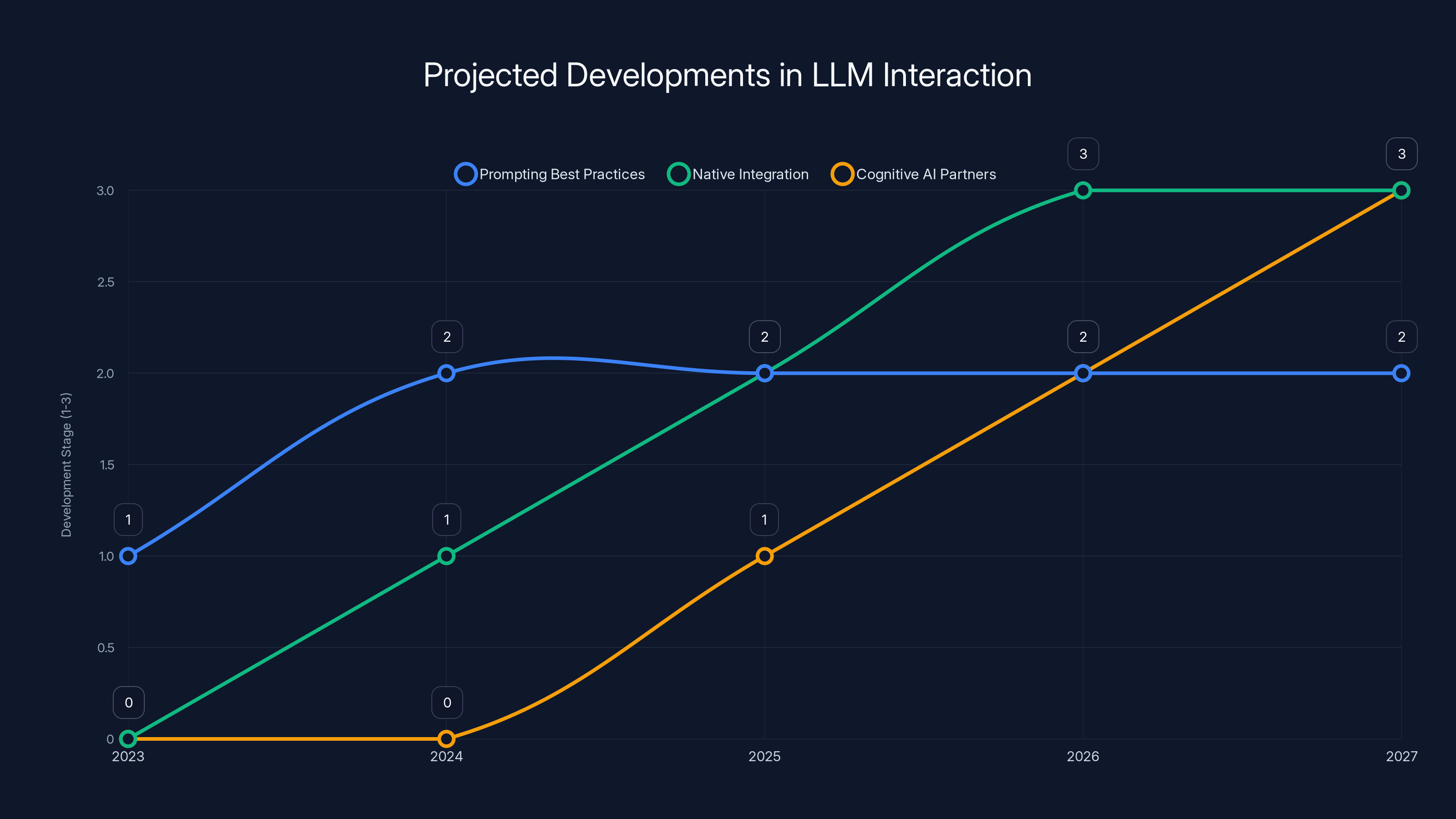

Near-Term: Prompting Best Practices

In the next 12-18 months, expect:

- Cognitive style guides that teach people how to prompt adaptively and innovatively

- Prompt templates that bake in cognitive diversity principles

- Prompting frameworks that help users diagnose their needs and select the right cognitive style

- Interface improvements that make cognitive style selection visible and easy

This is the low-hanging fruit. You don't need new models—just better prompting infrastructure.

Medium-Term: Native Integration

18-36 months out, expect:

- Fine-tuned models specifically trained on adaptive and innovative solution generation

- Native cognitive style parameters in API calls: you specify style just like you specify temperature or length

- Automatic style detection based on user interaction patterns

- Multi-output generation where the system returns solutions in multiple cognitive styles simultaneously

- Hybrid reasoning where models understand when to apply which cognitive style mid-reasoning

This is where cognitive diversity becomes a core feature, not a prompting trick.

Long-Term: Cognitive AI Partners

In 3-5 years, the vision is clear:

- Personalized cognitive AI that learns your style and serves answers accordingly

- Team cognitive AI that ensures diverse thinking is applied to all decisions

- Domain-specific cognitive AI (medical AI that switches between diagnostic and innovative thinking, for example)

- Automatic cognitive style collaboration where you don't have to request different styles—the system provides them proactively

At this point, we're not just asking better questions of AI. We're collaborating with AI partners who think in different ways and together generate better solutions.

The chart illustrates the projected timeline for advancements in LLM interaction, with near-term improvements in prompting practices, medium-term native integration, and long-term cognitive AI partnerships. Estimated data.

Why This Matters More Than You Think

Cognitive diversity in LLMs isn't just a nice-to-have feature. It's foundational to better AI.

Right now, we're in the early stages of an AI adoption boom. Organizations are experimenting, trying things, finding that AI helps—but also finding that it's clunky, requires constant prompt optimization, and doesn't feel like a true partnership.

The bottleneck isn't intelligence. The bottleneck is alignment. AI isn't aligned with how humans actually think and work. We all have cognitive preferences, and current AI ignores that.

By embedding cognitive diversity into LLMs, we're essentially saying: "Let AI adapt to how humans naturally think, not the other way around."

This changes everything:

- Better adoption: If AI thinks the way you do, you'll use it more and better

- Faster learning curves: You don't need to learn prompting tricks—the system learns your style

- Higher quality outputs: You get answers in the format and style that actually works for you

- Better decisions: Teams that embrace both adaptive and innovative thinking make better calls

- Less frustration: You're not constantly wrestling with an AI that thinks differently from you

The research shows this is possible. The applications are everywhere. The path forward is clear.

Cognitive diversity in LLMs is moving from "interesting research" to "practical implementation" right now. Within 2-3 years, it likely won't be a differentiating feature. It'll be table stakes.

How to Start Experimenting Today

You don't need to wait for new tools or updated models. You can start experimenting with cognitive diversity prompting right now.

Step 1: Understand Your Own Cognitive Style

Reflect on how you naturally approach problems:

- Do you prefer clear structure and defined rules, or flexibility and ambiguity?

- Are you energized by optimization and incremental improvement, or by paradigm-shifting ideas?

- When you solve problems, do you tend to work within existing frameworks or break them?

- Do you value feasibility and low risk, or transformational impact and ambitious swings?

If your answers lean toward structure, rules, optimization, and feasibility, you're probably more adaptive. If they lean toward flexibility, ambiguity, paradigm-breaking, and ambition, you're probably more innovative. Most people are somewhere in between.

Step 2: Design Two Prompts

Take a real problem you're working on. Design two prompts:

Prompt A (Adaptive): "Suggest practical, feasible improvements to [problem]. Focus on solutions that: (1) can be implemented with existing resources, (2) build on proven approaches, (3) have clear success metrics, (4) minimize risk and disruption, (5) can be completed in the next 1-2 months."

Prompt B (Innovative): "Suggest bold, unconventional approaches to [problem]. Focus on solutions that: (1) challenge existing assumptions, (2) break from established frameworks, (3) prioritize transformational impact over quick wins, (4) embrace ambitious thinking, (5) could completely reimagine how we approach this."
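If you want to reuse the two prompts across problems, a small helper can fill in the [problem] placeholder for you. The sketch below is one way to do it, not a prescribed implementation: the names `ADAPTIVE_TEMPLATE`, `INNOVATIVE_TEMPLATE`, and `build_prompts` are made up for illustration, and the wording is copied from Prompt A and Prompt B above.

```python
# Illustrative helper: the constant and function names are assumptions,
# the prompt wording comes from Prompt A and Prompt B in this article.
ADAPTIVE_TEMPLATE = (
    "Suggest practical, feasible improvements to {problem}. "
    "Focus on solutions that: (1) can be implemented with existing resources, "
    "(2) build on proven approaches, (3) have clear success metrics, "
    "(4) minimize risk and disruption, "
    "(5) can be completed in the next 1-2 months."
)

INNOVATIVE_TEMPLATE = (
    "Suggest bold, unconventional approaches to {problem}. "
    "Focus on solutions that: (1) challenge existing assumptions, "
    "(2) break from established frameworks, "
    "(3) prioritize transformational impact over quick wins, "
    "(4) embrace ambitious thinking, "
    "(5) could completely reimagine how we approach this."
)


def build_prompts(problem: str) -> dict[str, str]:
    """Return the adaptive and innovative prompt for one problem statement."""
    problem = problem.strip()
    if not problem:
        raise ValueError("problem must be a non-empty description")
    return {
        "adaptive": ADAPTIVE_TEMPLATE.format(problem=problem),
        "innovative": INNOVATIVE_TEMPLATE.format(problem=problem),
    }


if __name__ == "__main__":
    for style, prompt in build_prompts("our slow customer onboarding").items():
        print(f"[{style}] {prompt}\n")
```

Paste either prompt into whichever LLM you already use; nothing here depends on a specific provider.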

Step 3: Compare Outputs

Generate responses to both prompts using your preferred LLM. Don't pick one as "better." Instead:

- Which answer feels more natural to how you actually think?

- Which approach would better solve your actual problem?

- Could you combine the best of both?
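To make the comparison easier, it can help to see both answers side by side under clear labels. Here is a rough sketch, assuming you wrap your own LLM client in a plain function that takes a prompt and returns text. The `generate` parameter and the `fake_generate` stub are placeholders for illustration, not a real API.

```python
from typing import Callable


def compare_styles(prompts: dict[str, str], generate: Callable[[str], str]) -> str:
    """Run each style's prompt through `generate` and label the answers.

    `generate` is a stand-in for whatever function calls your LLM.
    """
    sections = []
    for style, prompt in prompts.items():
        answer = generate(prompt)
        sections.append(f"=== {style.upper()} ===\n{answer.strip()}")
    return "\n\n".join(sections)


def fake_generate(prompt: str) -> str:
    """Stub used only for this demo; replace it with a real model call."""
    return f"(model output for: {prompt[:40]}...)"


if __name__ == "__main__":
    demo_prompts = {
        "adaptive": "Suggest practical, feasible improvements to our onboarding.",
        "innovative": "Suggest bold, unconventional approaches to our onboarding.",
    }
    print(compare_styles(demo_prompts, fake_generate))
```

Keeping the model call behind a plain function means you can swap providers without touching the comparison logic.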

Step 4: Learn and Iterate

Over time, you'll notice patterns in which cognitive style serves which contexts best. Use that knowledge to refine your prompting.

You might discover: "For strategic planning, I always want the innovative approach. For tactical implementation, I want the adaptive approach. For complex problems, I want both." That's valuable knowledge.
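Once you have patterns like these, you can write them down as a simple lookup so you don't have to re-decide each time. The sketch below encodes the example discovery above. The table contents and the `styles_for` name are illustrative assumptions; your own mapping will likely look different.

```python
# Illustrative mapping based on the example above; adjust to your own findings.
STYLE_BY_CONTEXT = {
    "strategic planning": ("innovative",),
    "tactical implementation": ("adaptive",),
    "complex problem": ("adaptive", "innovative"),
}

# When a context has no recorded pattern yet, ask for both styles and compare.
DEFAULT_STYLES = ("adaptive", "innovative")


def styles_for(context: str) -> tuple[str, ...]:
    """Return which prompt style(s) to request for a given kind of work."""
    return STYLE_BY_CONTEXT.get(context.strip().lower(), DEFAULT_STYLES)


if __name__ == "__main__":
    for context in ("Strategic planning", "Tactical implementation", "Hiring"):
        print(context, "->", ", ".join(styles_for(context)))
```

Defaulting to both styles for unknown contexts keeps you comparing until you have enough experience to pick one.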

Key Takeaways for Organizations

If you're leading an organization adopting AI, cognitive diversity is worth thinking about systematically.

For AI Implementation Teams

- Start with education: Help your organization understand that cognitive diversity exists and that different thinkers need different approaches from AI

- Develop prompting standards: Create templates that bake in cognitive diversity principles, rather than assuming one-size-fits-all prompts

- Train in cognitive awareness: Help people discover their own cognitive style and learn to prompt accordingly

- Measure what matters: Track whether AI-generated solutions actually get implemented, not just how many prompts were run

For Leadership

- Diverse thinking is a feature, not a bug: Don't homogenize your teams around one cognitive style. Adaptive and innovative thinkers together outperform either alone

- AI should amplify team diversity: Choose AI tools that support different thinking styles, not tools that normalize one approach

- Expect longer evaluation cycles: If your AI generates both adaptive and innovative solutions, you need time to evaluate both, not just pick the first one

For Product Teams

- Design for cognitive diversity: Build tools that serve multiple cognitive styles, not just one "best way"

- Make cognitive style visible: Let users explicitly choose which thinking style they want from AI, then learn their preferences over time

- Surface multiple answers: Generate multiple approaches in one response, explicitly labeled by cognitive style
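For product teams, "let users choose, then learn their preferences" can start very small. The sketch below is one possible approach, not a recommended design: it counts a user's explicit style choices and only suggests a default once there is a clear favorite. The `StylePreferences` class and its `min_choices` threshold are hypothetical names and values chosen for illustration.

```python
from collections import Counter
from typing import Optional

STYLES = ("adaptive", "innovative")


class StylePreferences:
    """Track explicit style choices and suggest a default once one leads."""

    def __init__(self, min_choices: int = 5) -> None:
        # min_choices is an arbitrary illustrative threshold.
        self.min_choices = min_choices
        self._counts: Counter = Counter()

    def record_choice(self, style: str) -> None:
        """Record one explicit choice made by the user."""
        if style not in STYLES:
            raise ValueError(f"unknown style: {style!r}")
        self._counts[style] += 1

    def suggested_default(self) -> Optional[str]:
        """Return the leading style, or None if data is sparse or tied."""
        if sum(self._counts.values()) < self.min_choices:
            return None
        ranked = self._counts.most_common(2)
        if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
            return None
        return ranked[0][0]


if __name__ == "__main__":
    prefs = StylePreferences(min_choices=3)
    for choice in ("adaptive", "innovative", "adaptive"):
        prefs.record_choice(choice)
    print(prefs.suggested_default())
```

Returning no default when choices are sparse or tied keeps the style selector visible, so the user stays in control instead of being locked into an early guess.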

Addressing the Skepticism

Let's address the pushback you might hear.

"This is just personality typing dressed up in research language."

Not really. Personality typing is about who you are. Cognitive diversity is about how you think about problems. Someone can be an introvert (personality) who's a highly innovative problem-solver (cognitive style), or an extrovert who's highly adaptive. They're different dimensions. The adaptive-innovative distinction comes from Kirton's Adaption-Innovation theory and its KAI inventory, which rest on decades of psychometric work, not pop psychology.

"If we optimize for individual cognitive styles, won't we lose team coherence?"

The opposite. Teams with diverse cognitive styles make better decisions than homogeneous teams. The real risk is that everyone defaults to their own style and never stretches beyond it. An organization that encourages both cognitive styles, expecting adaptive thinking in some contexts and innovative thinking in others, gets the best of both worlds.

"This feels like we're outsourcing thought to AI."

No more than a calculator outsources math. The AI generates possibilities. You decide. Cognitive diversity-aware AI actually gives you more options to choose from, not fewer.

"Won't adaptive thinking just optimize people into stagnation?"

Adaptive thinking is powerful. It's how you execute well, reduce risk, and build on proven approaches. The issue is when it's the only thinking style in an organization. Breakthroughs rarely come from optimizing what already exists; they need innovative thinking. The sweet spot is knowing when to use which.

The Competitive Advantage

Here's what organizations that embrace cognitive diversity in AI will have over those that don't:

- Faster decision-making: You get multiple well-reasoned approaches and choose faster

- Better adoption: Tools that match how people naturally think get used more

- Higher quality solutions: You're not forcing everyone through one cognitive framework

- More innovation: Systematically generating innovative thinking, not just adaptive optimization

- Stronger teams: Diverse thinking styles are brought into collaboration systematically

- Lower frustration: People stop wrestling with AI that thinks differently from them

In an AI-driven future, the competitive advantage isn't raw intelligence. It's alignment. Whoever can align AI with how humans actually think and work will win.

Conclusion: The Future Is Cognitive

We're at an inflection point. Research suggests LLMs can emulate different cognitive styles when prompted to. The applications are everywhere. The path forward is clear.

The next generation of AI interfaces won't ask you to think differently. They'll learn how you think and serve up answers in that style.

You won't need to become a prompting expert. You'll just ask questions the way you naturally do, and the system will deliver answers in the way that works for your cognitive style. Adaptive thinkers will get practical, feasible solutions. Innovative thinkers will get bold, paradigm-challenging ideas. Teams will get both, because that's what teams need.

This isn't sci-fi. It's not "someday maybe." The research is done. The path is clear. The only question is how fast organizations move to adopt it.

If you're using AI today and feeling frustrated—like the answers are technically right but emotionally wrong—now you know why. And now you know what to do about it.

The ghost in the machine isn't just intelligence. It's also style. And learning to work with both will fundamentally change how we collaborate with AI.

Start experimenting today. Create both adaptive and innovative prompts for a problem you're working on. Compare the results. You'll see immediately why cognitive diversity matters. And you'll start thinking about AI interaction differently.

The future of AI isn't smarter machines. It's machines that think like we do—in all our diverse, adaptive, innovative, beautiful variety.

That's worth building toward.

FAQ

What is cognitive diversity, and how does it apply to AI?

Cognitive diversity refers to differences in how individuals think, solve problems, and approach challenges. It ranges from highly adaptive thinkers (who prefer structure and proven approaches) to highly innovative thinkers (who prefer flexibility and rule-breaking). Applying cognitive diversity to LLMs means training or prompting them to recognize and respond to different cognitive styles, generating solutions that match how a particular person naturally thinks rather than forcing a one-size-fits-all approach.

How can LLMs learn to emulate different cognitive styles?

Research from Carnegie Mellon and Penn State demonstrated that LLMs can emulate cognitive styles through carefully framed prompts. An "adaptive prompt" asks for practical, feasible, risk-averse solutions within existing frameworks. An "innovative prompt" asks for bold, paradigm-challenging, ambitious approaches. The LLM then generates fundamentally different types of answers based on the cognitive style framework provided, not through retraining but through intelligent prompt engineering.

What are the key differences between adaptive and innovative solutions?

Adaptive solutions score high on feasibility and low on paradigm challenge. They're practical, quick to implement, low-risk, and built on proven approaches. Innovative solutions score low on feasibility but high on paradigm challenge. They're bold, transformational, often require significant resources, and question fundamental assumptions. Neither is inherently better; the right approach depends on context and user needs.

Why is it better to have both cognitive styles available rather than just one?

Teams and organizations that embrace both adaptive and innovative thinking outperform those relying on one style alone. Adaptive thinking drives execution and optimization. Innovative thinking drives transformation and breakthrough ideas. Different problems require different approaches. A customer support company needs both: adaptive solutions for optimization, innovative thinking for differentiating in the market.

How can I start experimenting with cognitive diversity in my own AI use today?

Create two prompts for a problem you're working on: one explicitly asking for practical, feasible, low-risk solutions (adaptive framing), and one asking for bold, unconventional, paradigm-challenging approaches (innovative framing). Generate responses to both using your preferred LLM and compare. You'll immediately see how prompting style shapes the type of answer you receive. Use this knowledge to request the cognitive style that best serves your actual needs.

What are the main limitations of cognitive diversity-aware AI?

Key limitations include: innovative solutions often aren't immediately feasible; users may not understand their own cognitive style; measuring solution quality across different styles is complex; LLM training data may over-index on adaptive thinking; tracking user preferences raises privacy concerns; and implementation costs may increase. These are addressable challenges, but they require thoughtful design and user education.

Will cognitive diversity in AI lead to teams ignoring practical constraints?

Not if implemented thoughtfully. Cognitive diversity-aware AI shouldn't eliminate feasibility considerations—it should be transparent about them. A good system might label innovative solutions as "transformational but requires 12-month implementation," making the tradeoffs clear. The goal is expanding the range of good options, not removing practical grounding.

How do I know if I'm an adaptive or innovative thinker?

Adaptive thinkers naturally prefer structure, clear rules, proven approaches, and incremental optimization. They're energized by reducing risk and implementing well. Innovative thinkers prefer flexibility, challenging conventions, exploring new possibilities, and paradigm shifts. They're energized by ambiguity and transformation. Most people fall somewhere on the spectrum. The Kirton Adaption-Innovation inventory (KAI) is the formal assessment tool if you want an official score.

Is cognitive diversity the same as personality types or learning styles?

No. Cognitive diversity measures how you naturally approach problem-solving and change. Personality types measure traits like introversion/extroversion. Learning styles describe how you absorb information. Someone can be an introverted (personality), visual learner (learning style) who's an innovative (cognitive style) problem-solver. They're different dimensions that aren't strongly correlated.

What's the timeline for cognitive diversity becoming standard in AI tools?

Prompting best practices are available now. Fine-tuned models with native cognitive style support are likely 18-36 months away. Widespread adoption and automatic style detection could take 3-5 years. Expect the transition to happen gradually, with early-adopter organizations gaining competitive advantages before cognitive diversity becomes table stakes.

