Introduction: The Privacy Crisis in AI Chatbots

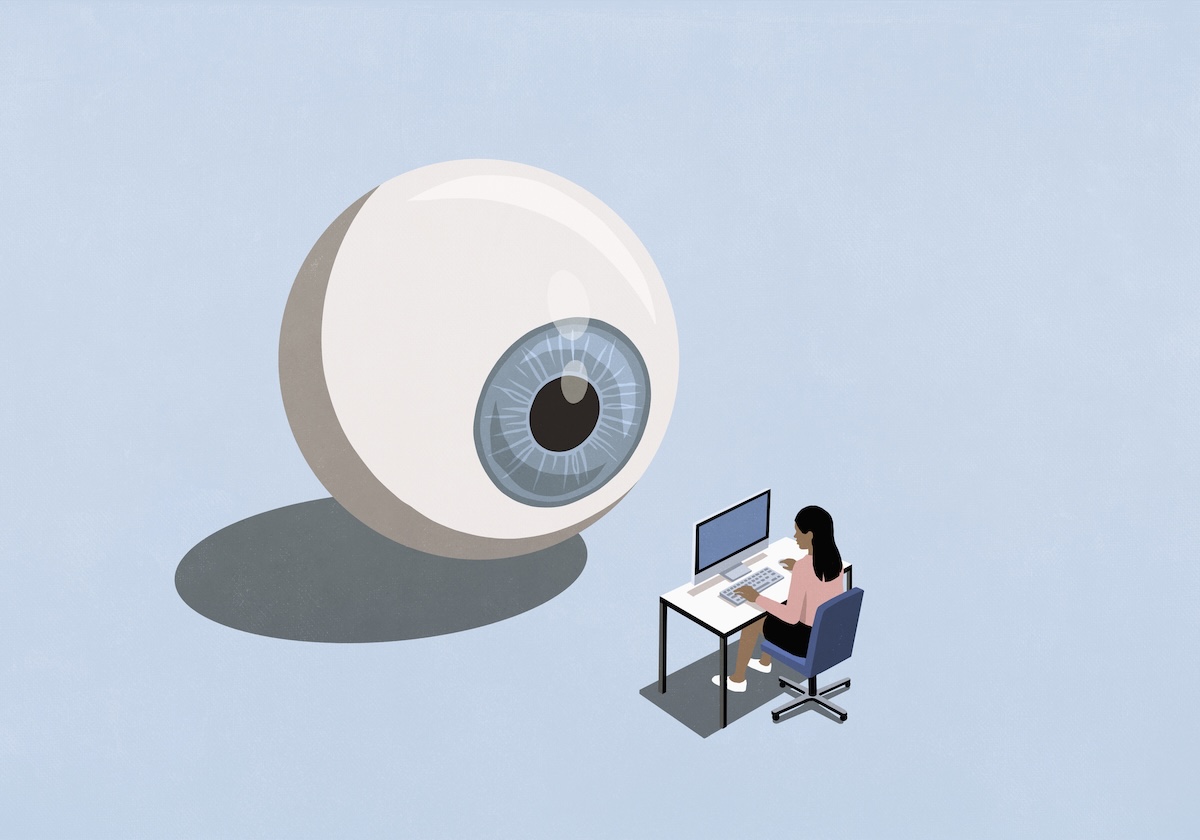

The rise of artificial intelligence chatbots has fundamentally transformed how we interact with technology. From customer service to creative writing, from code generation to personal advice, these tools have become indispensable for millions worldwide. Yet beneath this convenience lies a growing concern that few users adequately consider: what happens to every conversation you have with ChatGPT, Claude, or Google's Gemini?

The uncomfortable truth is that every message you send, every question you ask, every personal detail you share with mainstream AI assistants becomes part of a vast data collection pipeline. These conversations feed training datasets, inform model improvements, and increasingly, fuel advertising strategies. OpenAI has already begun testing advertising features, signaling a future where the intimate conversations you have with AI could be weaponized for commercial purposes. This is fundamentally different from traditional web services where you understand the trade-off between free content and data collection. With AI chatbots, the intimacy of conversation itself invites users to share deeply personal information—health concerns, relationship struggles, financial anxiety, career doubts—information that no user reasonably expects to become part of an advertising targeting mechanism.

This privacy paradox has created a significant gap in the AI market. Users want the power and convenience of modern AI assistants, but increasingly, they also want assurance that their conversations remain private. Traditional solutions like self-hosted models offer privacy but sacrifice convenience, requiring technical expertise and computational resources most users don't possess. Commercial alternatives promise convenience but demand trust in corporate data practices that have repeatedly proven untrustworthy.

In December 2024, Signal's founder Moxie Marlinspike launched a project designed to bridge this gap: Confer. Rather than accepting the false choice between privacy and capability, Confer represents a fundamental rethinking of how AI services can be architected. By applying cryptographic principles, trusted execution environments, and open-source transparency to the AI chatbot space, Confer demonstrates that you can have a ChatGPT-like interface without sacrificing the privacy protections that made Signal the gold standard in encrypted communications.

This comprehensive guide explores what Confer is, how it works, what it means for the future of AI privacy, and how it compares to both mainstream alternatives and emerging competitors in the privacy-conscious AI space.

Who is Moxie Marlinspike and Why His Track Record Matters

The Signal Founder's Privacy Philosophy

Moxie Marlinspike isn't a newcomer to privacy advocacy. As the founder and lead architect of Signal, one of the world's most respected encrypted messaging applications, Marlinspike has spent nearly two decades thinking deeply about privacy, security, and cryptography. Signal's E2E encryption protocol has become so trusted that privacy advocates, journalists, human rights organizations, and even governments acknowledge it as best-in-class. The protocol itself was so well-designed that it became the standard for encrypted messaging across platforms—WhatsApp, Facebook Messenger, and Skype all eventually adopted Signal Protocol for their encrypted communications.

What distinguishes Marlinspike's approach to privacy is that it's not theoretical or aspirational. Signal operates under the principle of cryptographic guarantees, meaning the technical architecture itself prevents privacy violations rather than relying on policy promises or corporate goodwill. Even Signal employees cannot access user conversations because the cryptography makes it technically impossible. This architectural approach—designing systems so that privacy violations become mathematically impossible rather than merely against company policy—represents a paradigm shift in how privacy-conscious technology should be built.

Marlinspike's background in security extends beyond Signal. He's studied cryptography at the University of Chicago, contributed to numerous open-source security projects, and maintained a public position that technological solutions to privacy problems are more reliable than legal frameworks or regulatory approaches. His skepticism of corporate commitments to privacy is well-founded: he's watched encryption become a compliance checkbox for companies that simultaneously monetize user data in other ways.

Why an AI Privacy Project Now?

Marlinspike's concerns about AI assistants stem from what he calls the "intimate nature" of the service. Chat interfaces, by their design, encourage disclosure and confession in ways that other technologies do not. People ask their AI assistants questions they wouldn't search for on Google, reveal anxieties they wouldn't post on social media, and share details they wouldn't tell friends. This combination of intimate communication with powerful data collection creates what Marlinspike describes as a dangerous scenario: "Chat interfaces like ChatGPT know more about people than any other technology before. When you combine that with advertising, it's like someone paying your therapist to convince you to buy something."

This metaphor captures the core concern. A therapist maintains confidentiality not out of benevolence but out of ethical obligation. Advertising-supported AI assistants, by contrast, have a financial incentive to exploit what they learn about users. The assistant's business model and the user's privacy interests are fundamentally misaligned. Marlinspike's previous work demonstrated that when technical architecture enforces privacy, the protection stays durable even as business incentives shift. Confer applies that same principle to AI.

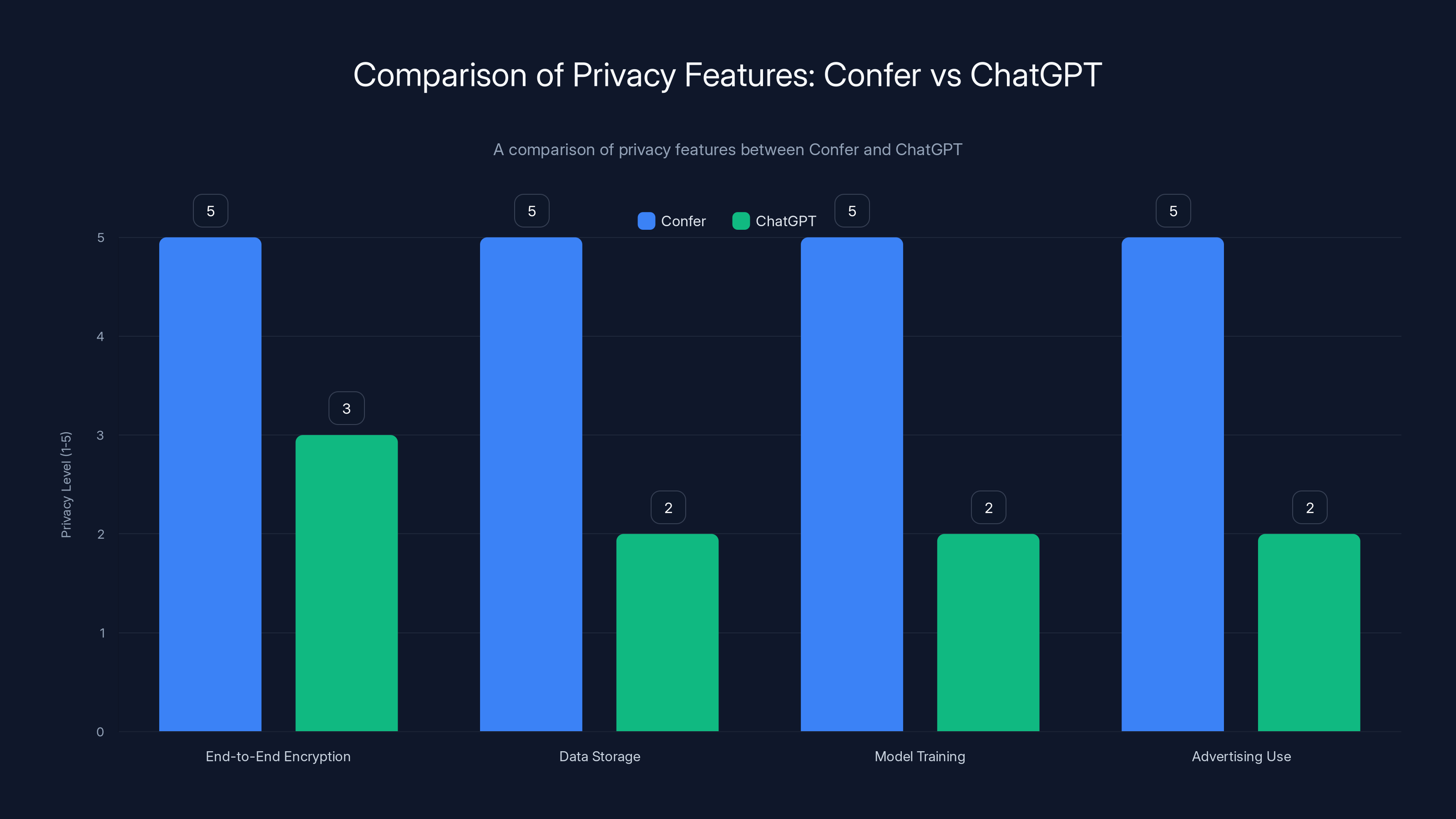

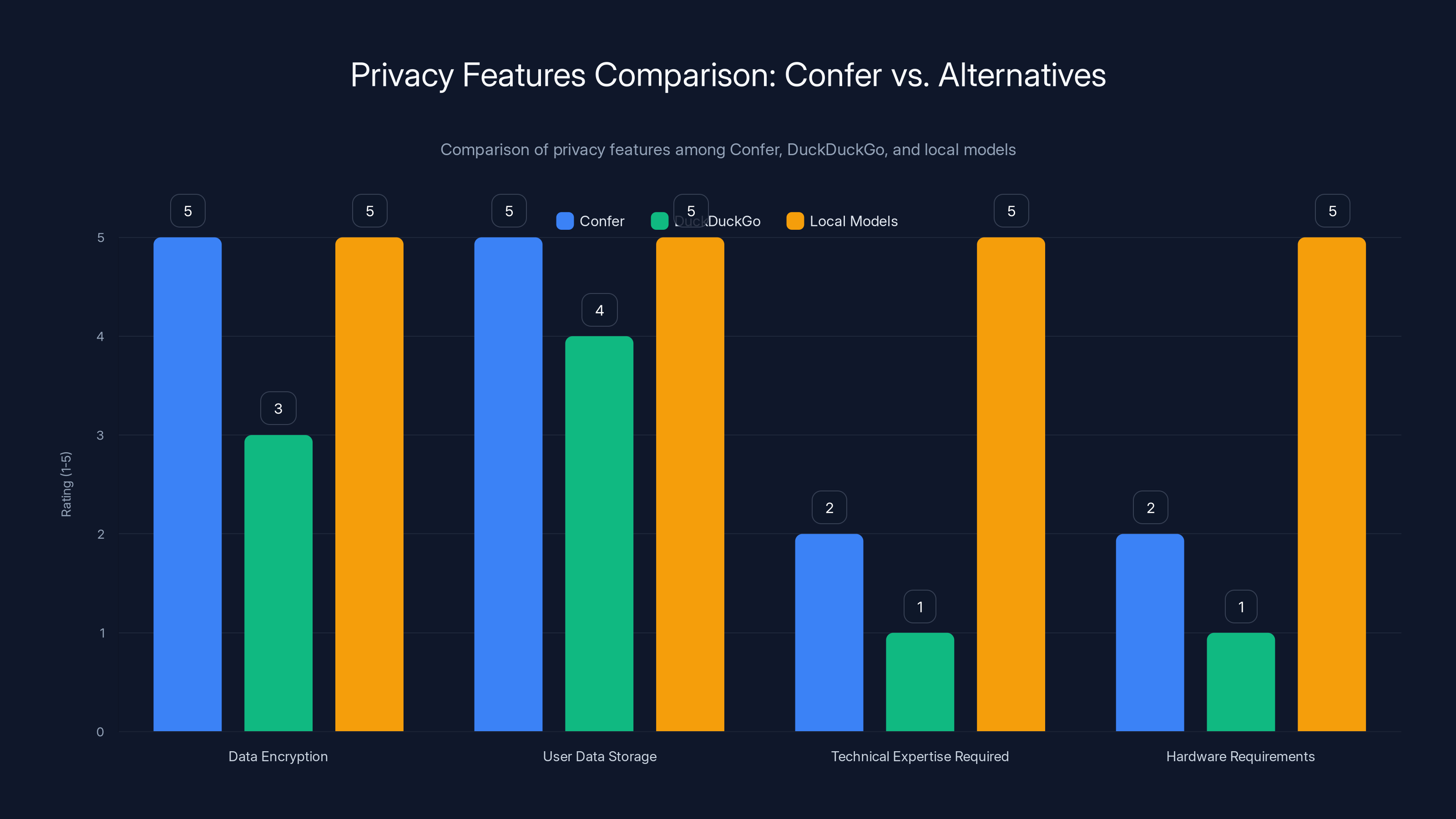

Confer offers superior privacy features compared to ChatGPT, with maximum scores in encryption, data storage, and non-use for model training or advertising. (Estimated data)

Understanding Confer: Architecture and Technical Implementation

The Three-Layer Privacy Architecture

Confer's technical design reflects deep thinking about where privacy needs to be protected in an AI system. Rather than relying on a single security mechanism, Confer implements three independent layers of privacy protection, each addressing different potential vulnerabilities:

First Layer: Encryption at the Transport Level

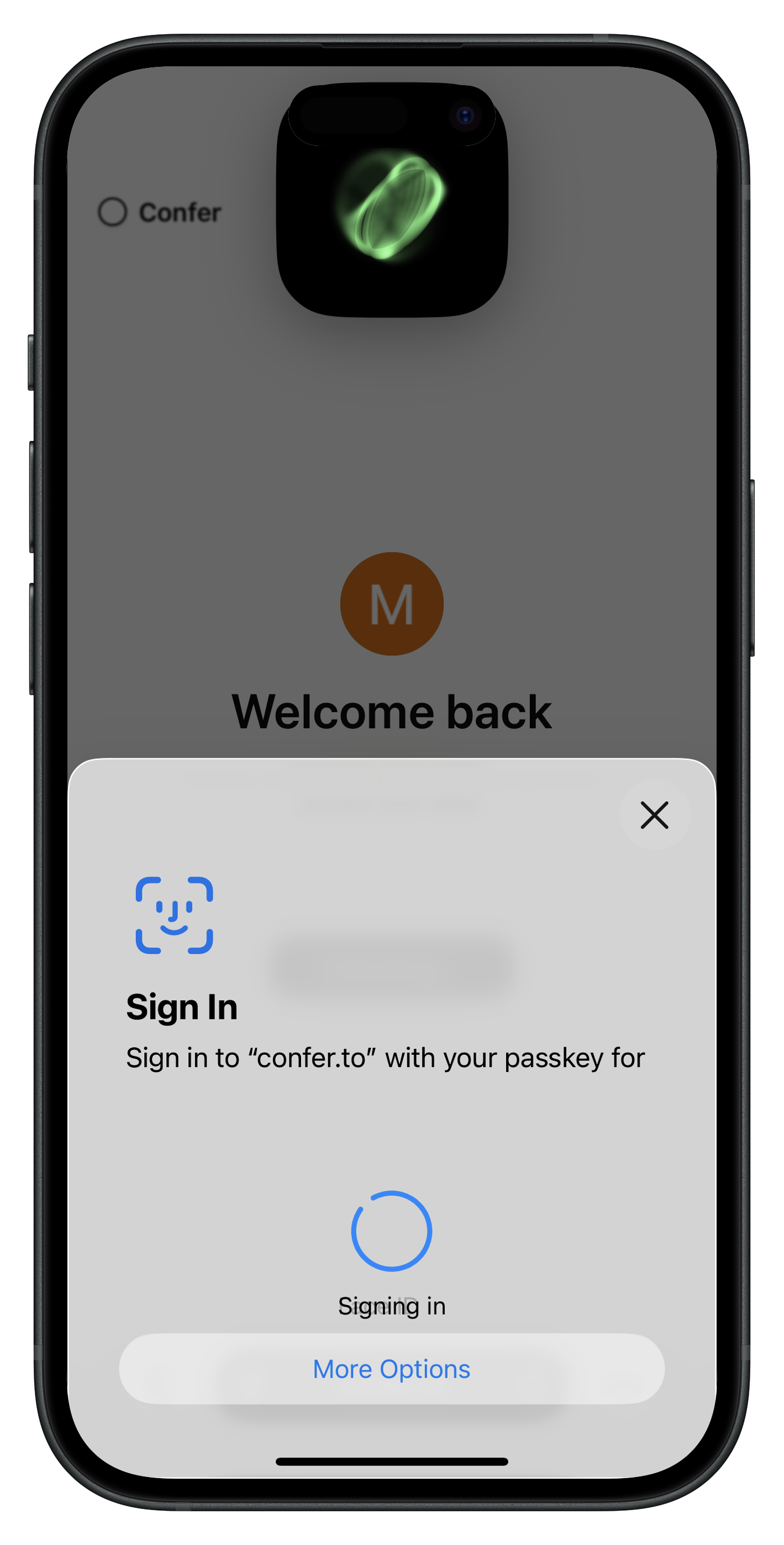

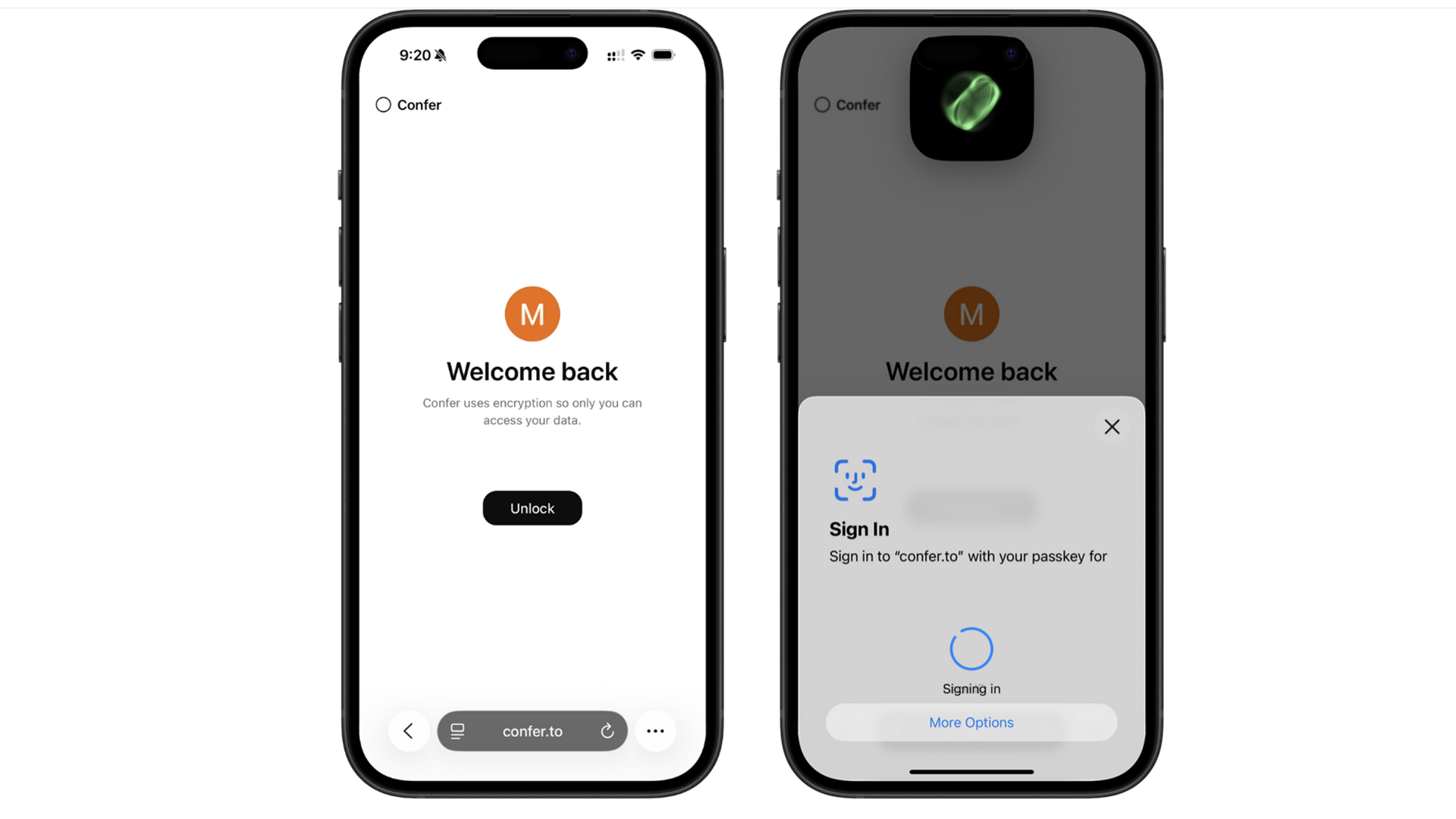

Conversations entering and leaving Confer are encrypted using the WebAuthn passkey system, a modern authentication standard that's gaining adoption across platforms like Apple and Google. Unlike traditional password-based authentication, WebAuthn uses public-key cryptography, meaning the server never actually handles your password or authentication secret. Instead, authentication happens through cryptographic challenge-response protocols. This ensures that even the initial connection to Confer cannot leak unencrypted user data to intermediaries like internet service providers or network monitoring systems.

The use of passkeys represents a significant usability improvement over traditional password authentication. Rather than memorizing complex passwords, users authenticate through biometric verification (fingerprint, face recognition) or hardware security keys. This approach also eliminates the risk of password reuse attacks that plague traditional authentication systems. However, Marlinspike acknowledges that WebAuthn's full potential is currently limited to certain platforms. Modern macOS (Sequoia and later), iOS, Android, and newer Windows implementations natively support WebAuthn passkeys. Users on older systems or Linux can still use Confer, but with password-based authentication that's less technically elegant than the passkey approach.
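The challenge-response idea described above can be sketched in a few lines. This is an illustration under stated assumptions, not Confer's or WebAuthn's actual code: passkeys typically sign with ECDSA or EdDSA, which Python's standard library does not provide, so the sketch substitutes a Lamport one-time signature built from SHA-256. A real WebAuthn assertion also covers more than the bare challenge (for example, the origin), and a Lamport key may safely sign only one message. All function and variable names here are hypothetical.

```python
import hashlib
import secrets

HASH_BITS = 256  # SHA-256 digest length in bits


def generate_keypair():
    """Create a one-time key pair: 256 pairs of secrets and their hashes."""
    private_key = [
        (secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(HASH_BITS)
    ]
    public_key = [
        (hashlib.sha256(zero).digest(), hashlib.sha256(one).digest())
        for zero, one in private_key
    ]
    return private_key, public_key


def _digest_bits(message: bytes):
    """Return the 256 bits of SHA-256(message), most significant first."""
    value = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return [(value >> (HASH_BITS - 1 - i)) & 1 for i in range(HASH_BITS)]


def sign(private_key, message: bytes):
    """Reveal one secret per digest bit; only the private-key holder can."""
    return [private_key[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(public_key, message: bytes, signature) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    bits = _digest_bits(message)
    if len(signature) != len(bits):
        return False
    return all(
        hashlib.sha256(revealed).digest() == public_key[i][bit]
        for i, (bit, revealed) in enumerate(zip(bits, signature))
    )


# Registration: the device keeps the private key; the server stores only the public key.
device_private_key, server_public_key = generate_keypair()

# Login: the server sends a fresh random challenge and the device signs it.
challenge = secrets.token_bytes(32)
assertion = sign(device_private_key, challenge)

login_ok = verify(server_public_key, challenge, assertion)
# Replaying the same assertion against a different challenge should be rejected.
replay_ok = verify(server_public_key, secrets.token_bytes(32), assertion)
```

The point of the design is visible in what the server holds: only `server_public_key`, which is useless for impersonating the user, and a challenge that changes on every login, so a captured assertion cannot simply be replayed.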

Second Layer: Encryption at the Application Level

Conversation encryption doesn't stop at the transport layer. Even if data reaches Confer's servers, it remains encrypted end-to-end, meaning the user's encryption key never leaves their device. This is similar to how Signal implements encryption—the service operator literally cannot decrypt user messages even if required to do so by subpoena or governmental pressure. The encryption key exists only on the user's device, making decryption technically impossible for anyone without access to that device.

This design decision has profound implications. It means Confer's operators (Marlinspike and his team) cannot access user conversations. Neither can service providers, cloud infrastructure operators, or governmental actors with legal authority over Confer as a company. The conversation content is literally inaccessible to anyone except the user. This represents a fundamental architectural difference from ChatGPT, Claude, and other mainstream alternatives where service operators have intentional, designed-in access to all conversation data.
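To make the "key never leaves the device" property concrete, here is a minimal sketch of client-side encryption in which the server only ever stores an opaque blob. It is an assumption-laden toy, not Confer's scheme: Python's standard library has no AES or ChaCha20, so the sketch builds a stream cipher from HMAC-SHA256 in counter mode and authenticates the result with a second HMAC (encrypt-then-MAC). A production system would use a vetted AEAD cipher instead. All names are hypothetical.

```python
import hashlib
import hmac
import secrets

NONCE_LEN = 16
TAG_LEN = 32


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudorandom bytes from HMAC-SHA256 in counter mode."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block_input = nonce + counter.to_bytes(8, "big")
        out += hmac.new(key, block_input, hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    """Runs on the user's device; returns nonce + ciphertext + tag."""
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    """Also runs on the device; rejects blobs that were altered."""
    nonce = blob[:NONCE_LEN]
    ciphertext = blob[NONCE_LEN:-TAG_LEN]
    tag = blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("blob was modified or the key is wrong")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, stream))


# Keys are generated and kept on the device; they are never uploaded.
device_enc_key = secrets.token_bytes(32)
device_mac_key = secrets.token_bytes(32)

message = b"my private question"
stored_on_server = encrypt(device_enc_key, device_mac_key, message)
recovered = decrypt(device_enc_key, device_mac_key, stored_on_server)
```

Anyone holding only `stored_on_server`, including the service operator, sees random-looking bytes; without the two device keys, `decrypt` has nothing to work with.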

Third Layer: Computation in Trusted Execution Environments

The most technically sophisticated aspect of Confer's architecture involves where and how AI inference actually happens. Confer processes all queries inside Trusted Execution Environments (TEEs), specialized CPU features that create isolated computation zones on the server. Major chip vendors including Intel, AMD, and ARM have implemented TEE technology through products like Intel SGX (Software Guard Extensions), AMD SEV (Secure Encrypted Virtualization), and ARM TrustZone.

Within a TEE, several security properties hold: the processing is isolated from the broader operating system, making it difficult for system-level attacks to observe computation; memory used during inference is encrypted, preventing even privileged attackers from observing what data is being processed; and the environment supports remote attestation, a cryptographic proof that the exact code running in the TEE is what you expect it to be.

Why does this matter for privacy? Inference—the process of running a trained AI model on new input to generate output—requires temporary access to both the encrypted conversation and the model weights. Inside a standard environment, this creates a window where sensitive data could be logged, observed by attackers, or accidentally leaked. Within a TEE, this computation happens in an isolated, auditable environment. Remote attestation allows users or independent observers to verify that the code running in the TEE is legitimate Confer code, not some modified version designed to log conversations.

Open-Source Transparency and Verifiability

Beyond the technical mechanisms, Confer's architecture includes a commitment to open-source transparency that's unusual for AI services. The core infrastructure code is publicly available, allowing security researchers, cryptographers, and independent auditors to examine exactly how Confer implements these protections. This transparency serves several purposes: it enables expert review that improves security, it reduces the possibility of hidden vulnerabilities going unnoticed, and it provides users with concrete evidence that Confer's privacy claims are technically grounded rather than marketing rhetoric.

This commitment to open-source mirrors the approach that made Signal trusted. Rather than asking users to trust Moxie Marlinspike's assertions about privacy, the code itself proves the claims. Security researchers have independently verified Signal's encryption implementation, found it sound, and continue to audit it as cryptographic standards evolve. Confer's open-source approach enables similar independent verification.

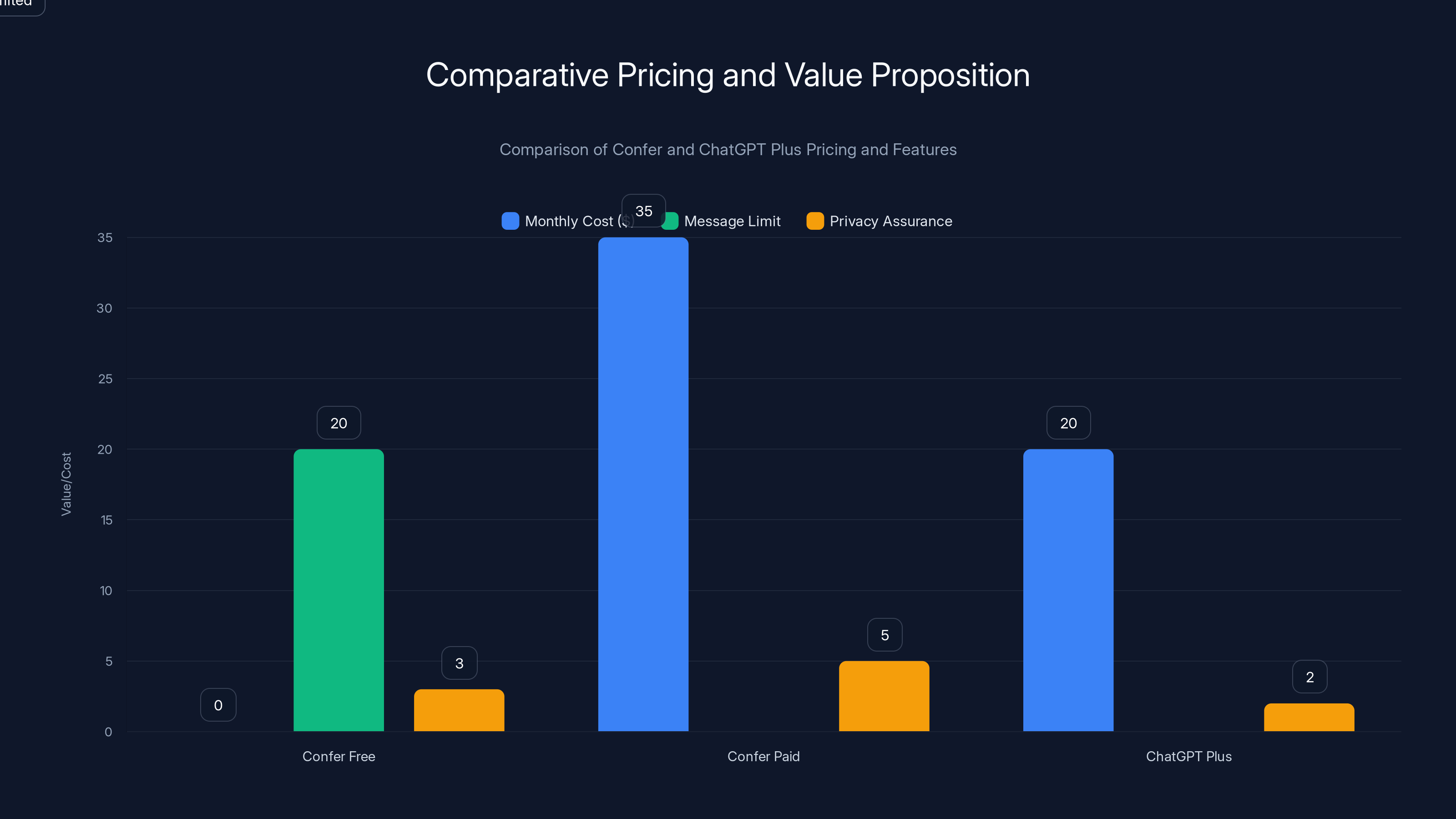

Confer offers a free tier with limited messages and a

How Confer Works: User Experience and Practical Implementation

Getting Started: Authentication and Passkeys

Creating a Confer account begins with the passkey authentication process. Rather than creating a password, users choose their authentication method based on their device. On modern Apple devices, passkeys integrate with the system's biometric authentication, creating a seamless experience: users approach the signup page, and their device offers to create a passkey protected by Face ID or Touch ID. The passkey itself never leaves the device; instead, it's stored securely in the system keychain, cryptographically isolated from other applications.

For Android users, Google Password Manager provides similar functionality. Windows users can use Windows Hello biometric authentication or external hardware security keys like YubiKeys. On Linux or older systems without native passkey support, Confer allows password authentication, though this path lacks some security properties of the cryptographic approach.

Once authenticated, users arrive at the Confer interface, which deliberately mimics the design language of ChatGPT and Claude. This isn't accidental: Marlinspike recognized that users have developed mental models about how AI chatbots work. Preserving familiar interface patterns reduces cognitive load and makes transitioning to Confer painless for users switching from other platforms.

Starting Conversations and Message Encryption

When a user types a message in Confer, the message is encrypted on the user's device before ever leaving it. The encryption happens in the browser or application, using encryption keys that never transmit to Confer's servers. This is crucial: even if someone intercepted the network traffic between the user's device and Confer's infrastructure, they would see only encrypted gibberish, not the actual conversation content.

The encrypted message travels to Confer's infrastructure, where it's processed. However, because the message remains encrypted throughout transport and initial handling, the server doesn't work with readable text. Instead, the inference process happens inside the Trusted Execution Environment, where the message is decrypted just long enough to be processed by the AI model.

The AI model itself runs within the TEE. Confer uses an "array of open-weight foundation models," meaning it leverages publicly available, non-proprietary language models rather than a single proprietary model. This approach offers flexibility: if one model works better for certain types of queries, Confer's infrastructure can route intelligently. The model generates a response, which is then re-encrypted before exiting the TEE and transmitting back to the user's device.

The Conversation Experience

From the user's perspective, Confer functions like any other AI chatbot. Messages appear, responses generate, conversations develop naturally. The privacy protections operate entirely behind the scenes. A user concerned about privacy has nothing special to do to enable protection—protection is the default, enforced by architecture rather than settings or configurations.

Confer maintains conversation history. Over time, a user builds up a library of chats with Confer, searchable and organized by topic. However, this conversation history receives the same encryption protections as individual messages. Each conversation in a user's history remains encrypted such that Confer's operators cannot access it.

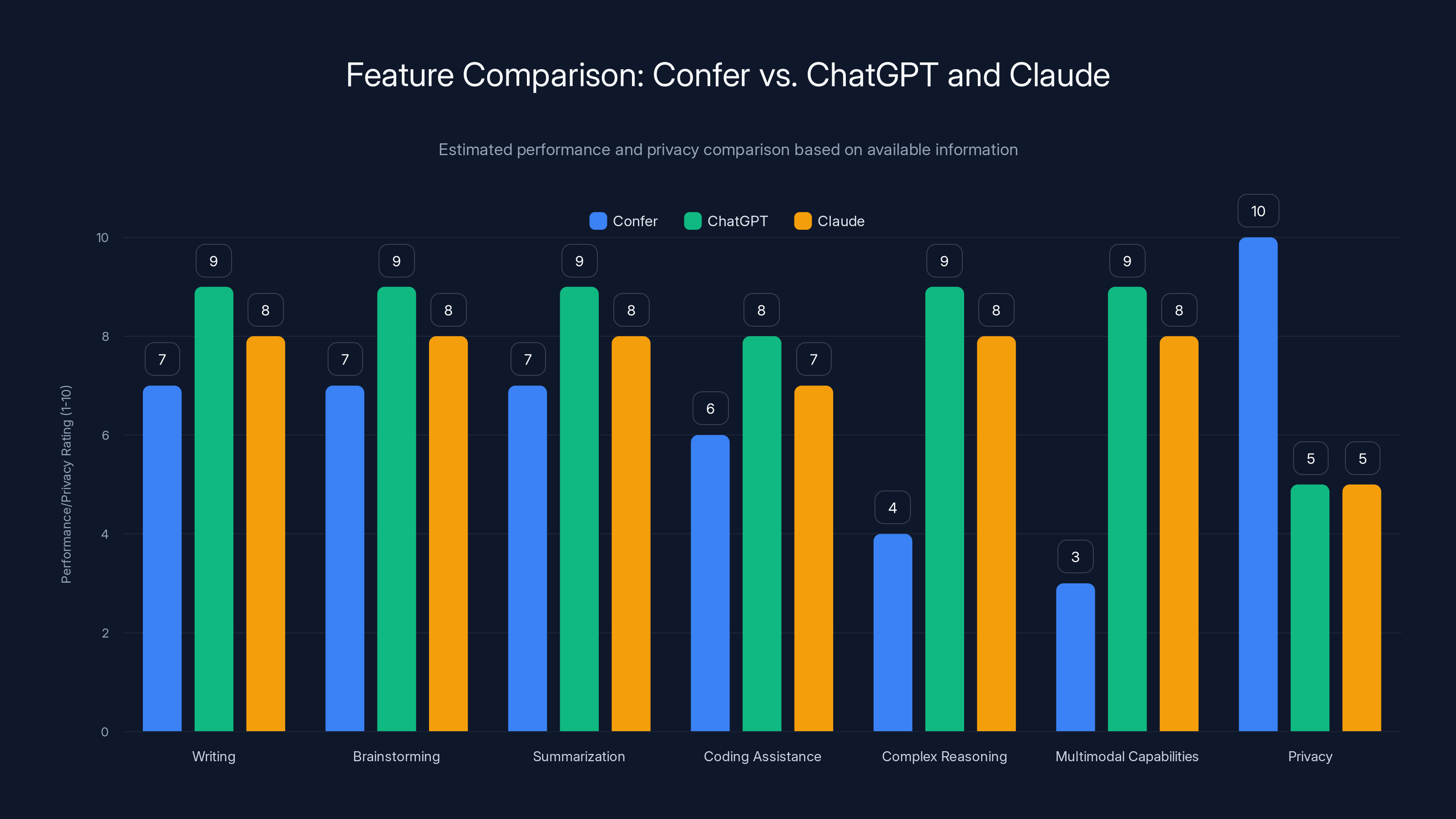

Feature Comparison: Confer vs. ChatGPT and Claude

Capability and Model Performance

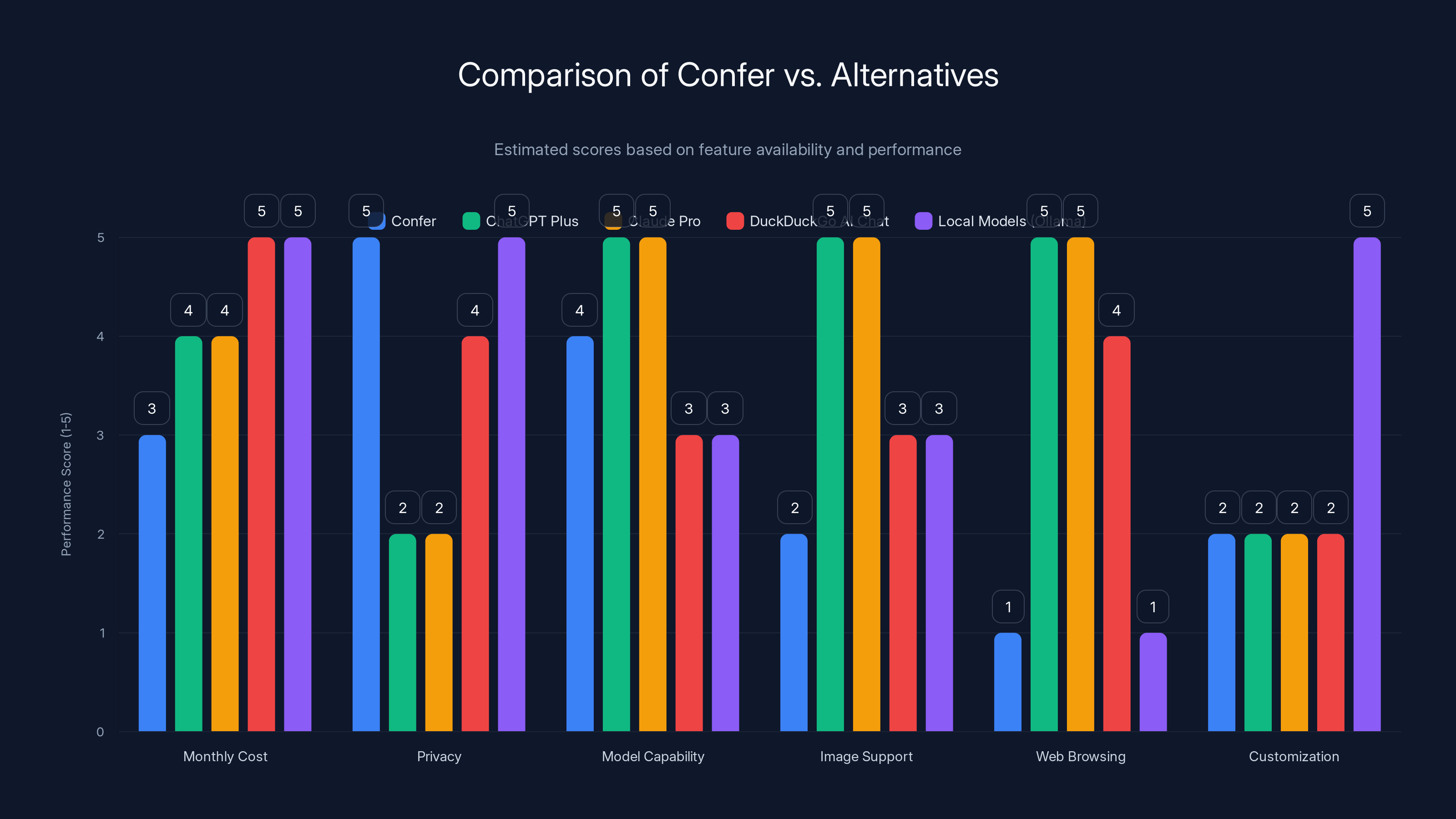

Confer deliberately doesn't position itself as having superior AI capabilities compared to ChatGPT or Claude. In fact, Marlinspike acknowledges that OpenAI and Anthropic have vastly larger resources dedicated to developing more advanced models. Confer's current implementation uses open-weight models, such as Meta's Llama family, Mistral AI's models, or other publicly available foundation models, which, while capable, don't match the performance of the largest proprietary models like GPT-4 or Claude 3.5 Sonnet.

This is a deliberate trade-off. The most advanced large language models are proprietary, which means they're trained on proprietary data and their weights are closed. Using them would require either licensing them from their creators (which might involve data sharing) or running them in an environment where privacy guarantees become harder to maintain. By choosing open-weight models, Confer accepts a modest capability trade-off in exchange for privacy guarantees and architectural simplicity.

However, this comparison is more nuanced than "Confer is worse." For many everyday tasks (writing, brainstorming, summarization, coding assistance, research), the performance difference between a well-implemented Llama 2 model and GPT-4 is often marginal in practice. The practical limitations matter more than raw capability. If a user's primary need is help writing an email or brainstorming ideas, Confer performs admirably. If a user needs cutting-edge reasoning for extremely complex tasks, or multimodal capabilities (images, video, voice), the current Confer implementation falls short.

Conversation Privacy Protection

Here the comparison becomes entirely one-directional in Confer's favor. ChatGPT and Claude, despite any privacy policies, retain conversations on their servers in a form accessible to their operators. OpenAI and Anthropic employees can, if needed, read your conversations. Law enforcement with appropriate legal authority can compel access. The companies themselves can use conversation data to improve models and train new systems.

Confer, by architectural design, cannot offer conversations to anyone. The technical implementation makes it impossible for even Marlinspike and his team to access conversation content. This isn't a policy promise but a cryptographic guarantee. No amount of legal pressure, system compromise, or malicious insider action can leak conversation content from Confer because that content never exists in a decryptable form in Confer's infrastructure.

Model Training and Data Usage

ChatGPT and Claude both use user conversations to improve their models, with options to opt out. However, even users who opt out know that their data is processed, stored, and accessed by the companies. Confer takes a different approach: by design, user conversations cannot be used for model training because they cannot be accessed. This isn't an opt-in privacy setting; it's the fundamental architecture.

This distinction matters significantly for data minimization, a core privacy principle. Confer collects the minimum data necessary to function: encrypted conversations needed to provide service, plus basic account information (email, authentication credentials). It doesn't collect telemetry about what users ask, doesn't build profiles about user interests, and doesn't use conversations for any secondary purposes.

Advertising and Monetization Model

ChatGPT Plus costs

Confer's higher price point reflects the technical complexity of the privacy architecture and Marlinspike's commitment that the business model remain aligned with user privacy. There's no cheaper paid tier because a cheaper tier would require compromises on privacy protections to justify the lower cost.

Feature Limitations to Consider

Confer's feature set is currently more limited than ChatGPT's or Claude's. It doesn't support image generation, image analysis, web browsing, or voice interaction. It doesn't have integration with external services or the ability to run complex workflows like ChatGPT's advanced features. These limitations exist partly because they would complicate the privacy architecture and partly because Confer is earlier in its development.

Over time, these features could be added with appropriate privacy protections. Image analysis, for instance, could work similarly to text inference within a TEE. But the initial version deliberately keeps features focused on core chat functionality, ensuring privacy protections are robust and well-tested before expanding.

Confer excels in privacy protection, while ChatGPT and Claude lead in advanced capabilities. Estimated data based on feature descriptions.

Pricing, Plans, and Value Proposition

Tier Breakdown and Usage Limits

Confer offers two tiers: a free tier and a paid subscription.

The free tier allows 20 messages per day across up to five active chats. For casual use, experimentation, or users evaluating whether Confer fits their needs, the free tier provides meaningful capability. Twenty messages daily is sufficient for several conversation turns, and five concurrent chats enable multiple topic threads. However, the 20-message daily limit prevents heavy usage or workflows requiring extensive AI interaction.

The paid tier costs $35 per month and removes message limits, provides access to more advanced models within Confer's model array, and enables personalization features. Unlimited usage opens workflows impossible under the free tier: extended research sessions, repeated refinement and iteration, complex multi-turn conversations.

The

Comparative Value Analysis

For users with high privacy sensitivity, Confer's value proposition is stronger than the price comparison suggests. Consider what users are implicitly paying for ChatGPT Plus:

Confer's $35 is the complete, transparent cost. No hidden extraction, no data monetization, no uncertainty about future practices.

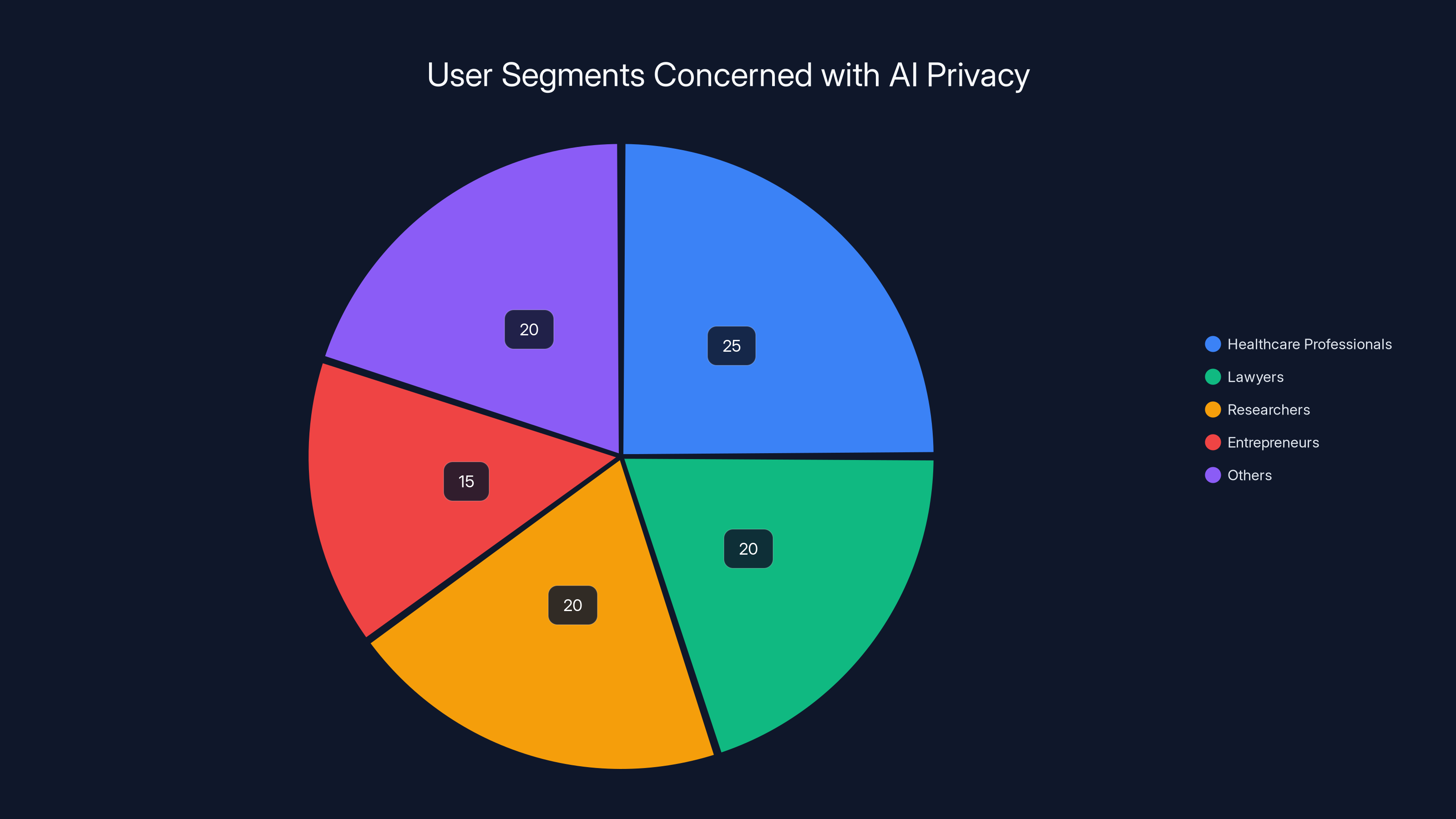

For price-sensitive users without strong privacy concerns, ChatGPT Plus remains the better choice. For privacy-conscious users, researchers, individuals discussing sensitive topics, healthcare workers, legal professionals, and anyone regularly sharing information they'd prefer remain private, Confer's premium makes sense.

Annual Pricing and Sustainability

Confer doesn't currently offer annual discounts (though this could change). At

Use Cases: Who Should Use Confer?

Healthcare and Mental Health

One of the strongest use cases for Confer involves health-related conversations. Users asking about symptoms, managing chronic conditions, processing mental health concerns, or researching medical information rightfully expect privacy. Health information is protected by regulations like HIPAA in the US, GDPR in Europe, and comparable frameworks elsewhere. While these regulations don't technically govern AI assistants the same way they govern licensed healthcare providers, the spirit of health privacy protection aligns with using tools that cannot data-mine health information for advertising or other purposes.

A patient exploring anxiety treatment options, for instance, probably doesn't want that exploration influencing their advertising profiles. Someone with diabetes managing their diet doesn't need AI companies building a detailed profile of their health condition. Confer enables health-related conversations with full privacy—appropriate for a space where vulnerabilities and personal medical information naturally emerge.

Legal and Compliance Work

Lawyers, compliance officers, and legal professionals often use AI assistants to review contracts, research legal precedent, draft documents, and analyze regulations. These conversations can involve confidential client information, proprietary business terms, or sensitive legal strategy. Standard AI assistants create liability risks: conversations might be inadvertently exposed, data retention could violate client confidentiality obligations, or future model training might contaminate proprietary information.

Confer's architecture eliminates these risks. Lawyers can use Confer for legal research and analysis knowing that conversations remain truly private. This is particularly valuable for small law firms and solo practitioners who can't afford dedicated private AI infrastructure but need privacy guarantees for client work.

Financial and Personal Planning

People discuss financial situations with AI assistants—retirement planning, investment strategy, debt management, financial anxiety. This information is sensitive and personal, but it's also the kind of thing that creates lucrative advertising profiles. Financial services companies would pay substantial amounts to know who's worried about their finances, who's planning to invest, or who's interested in specific financial products.

Confer prevents this. Users can discuss their financial situations with confidence that information won't be built into advertising profiles or sold to financial services marketers.

Research and Competitive Intelligence

Researchers, entrepreneurs, and strategists use AI assistants to help with competitive analysis, market research, and strategy development. This work often involves exploring proprietary ideas, competitive approaches, or market dynamics they'd prefer competitors didn't know they were investigating. While conversation metadata alone might not reveal much, the content of extended research conversations could expose significant competitive intelligence if accessed by competitors or their service providers.

Confer enables this research with full privacy assurance. Entrepreneurs can analyze their market landscape and competitive positioning without creating a digital trail of their strategic thinking.

Sensitive Creative Work

Writers, artists, and creators sometimes work on projects they prefer to keep private until launch. A novelist developing a story about sensitive topics, an illustrator exploring controversial themes, or a musician writing about personal trauma might use AI assistance but prefer that assistance doesn't build profiles about their creative interests and future work.

Confer provides this privacy, enabling creative development without surveillance.

Enterprise Deployment

For organizations with privacy requirements, Confer could eventually offer enterprise deployment. While the current version focuses on individual users, the architecture could theoretically support on-premises or private cloud deployment for organizations that need to ensure no conversation data leaves their infrastructure. This could appeal to government agencies, healthcare organizations, or corporations with strict data residency requirements.

Estimated data suggests that healthcare professionals and lawyers are among the top segments prioritizing privacy in AI tools, reflecting their need to handle sensitive information securely.

The Broader Privacy Ecosystem: Alternatives and Complementary Tools

Self-Hosted Models and Local Solutions

Before Confer emerged, the primary privacy-conscious alternative to mainstream chatbots involved running language models locally. Tools like Ollama, LM Studio, and others enable downloading open-weight models (Llama, Mistral, Falcon) and running them entirely on a user's computer. These solutions provide absolute privacy: no data leaves your device, no servers are involved, no data collection is possible.

However, local models come with significant constraints. They require substantial computational resources, typically a modern GPU and plenty of RAM, which many users don't have. They require technical expertise to set up and maintain. The most capable models are too large to run on affordable consumer hardware. And users must manually manage model updates and maintain the software infrastructure themselves.
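A rough back-of-the-envelope calculation shows why hardware is the limiting factor. The sketch below estimates the memory needed just to hold a model's weights, as parameter count times bytes per weight. The 7-billion and 70-billion parameter sizes and the 16-bit and 4-bit precisions are illustrative assumptions, and the estimate ignores activation memory, context caches, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate gigabytes needed to store the weights alone.

    params_billion * 1e9 weights, each bits_per_weight / 8 bytes,
    divided by 1e9 bytes per gigabyte, simplifies to the expression below.
    """
    return params_billion * bits_per_weight / 8


# Illustrative model sizes (billions of parameters) and precisions (bits).
estimates = {
    (size, bits): weight_memory_gb(size, bits)
    for size in (7, 70)
    for bits in (16, 4)
}

for (size, bits), gigabytes in sorted(estimates.items()):
    print(f"{size}B parameters at {bits}-bit: about {gigabytes:g} GB of weights")
```

Under these assumptions a 7-billion-parameter model fits on many consumer machines once quantized to 4 bits, while a 70-billion-parameter model needs tens of gigabytes even at 4 bits, which is beyond most consumer GPUs.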

Confer sits between local models and cloud-based commercial services. It provides privacy guarantees similar to local models but without requiring users to operate their own infrastructure. This accessibility is valuable for users who prioritize privacy but lack technical infrastructure or expertise.

DuckDuckGo and Search-Based Alternatives

DuckDuckGo, the privacy-focused search engine, has developed some AI assistance features, including AI Chat, which connects to various language models while avoiding storing user data long-term. DuckDuckGo's approach emphasizes privacy by design in search—not tracking users, not building profiles, not personalizing based on behavior.

However, DuckDuckGo's AI Chat is fundamentally different from Confer. It focuses on search integration and information retrieval rather than conversational depth. The privacy approach is also different: DuckDuckGo deletes chat history but doesn't employ the end-to-end encryption and trusted execution environment approach Confer uses. For privacy-conscious users wanting basic search-integrated AI, DuckDuckGo works well. For users needing deeper conversational interaction with stronger cryptographic privacy, Confer offers advantages.

Open-Source Alternatives and Deployment Options

Tools like Hugging Face's Transformers, LangChain, and other open-source frameworks enable developers to build privacy-conscious AI applications. These tools don't themselves provide privacy—instead, they're infrastructure for building systems with privacy properties. A developer could use LangChain to build a chatbot that runs on private infrastructure, deploying it in ways that ensure privacy.

These solutions require technical expertise and development resources. They're appropriate for organizations large enough to maintain custom AI infrastructure. For individual users and small organizations, the overhead of building and maintaining custom solutions makes generalized platforms like Confer more practical.

Anthropic's Constitutional AI Approach

Anthropic, Claude's creator, has researched "Constitutional AI," an approach to training models to be more helpful, harmless, and honest. While this represents important progress in AI safety and alignment, it doesn't directly address privacy concerns. A model trained to be harmless and honest can still retain user data, use it for training, and share it with operators. Constitutional AI improves model behavior but doesn't prevent data extraction.

Enterprise Privacy Solutions

Some enterprises use dedicated AI infrastructure that maintains privacy through isolation and governance. These typically involve running models on private cloud infrastructure with strict access controls, minimal data retention, and architectural isolation from data monetization pipelines. Services like Anthropic's managed offerings can include privacy protections, but at substantial cost and complexity.

For small organizations and individual users, these enterprise solutions are economically inaccessible. Confer fills that gap by providing enterprise-grade privacy protections at individual pricing.

Emerging Privacy-First AI Platforms

Confer isn't the only new entrant focusing on privacy. Other projects have emerged targeting similar concerns. Some focus on encryption, others on local processing, others on explicit privacy guarantees. The ecosystem is developing with increasing recognition that privacy-conscious AI infrastructure is a legitimate market category.

Confer distinguishes itself through Marlinspike's reputation and the sophistication of its technical approach. His track record with Signal provides confidence that privacy claims are grounded in technical reality rather than marketing aspirations.

Technical Deep Dive: Trusted Execution Environments and Remote Attestation

How TEEs Work

Trusted Execution Environments represent a significant breakthrough in server security architecture. Modern CPUs from Intel, AMD, and ARM include special processor modes that create isolated execution zones. Within a TEE, code runs in a manner largely isolated from the broader operating system.

Intel's SGX (Software Guard Extensions) is one implementation. Software running in an SGX enclave executes in a protected memory region. The processor encrypts the memory, preventing the operating system, hypervisor, or other processes from reading what's happening inside the enclave. The enclave developer specifies exactly what code should run inside, and the hardware ensures only that code executes there.

AMD's SEV (Secure Encrypted Virtualization) takes a different approach, encrypting virtual machine memory so that the hypervisor can't observe guest computation. This enables virtualized environments where the cloud provider can't inspect what running applications do.

These technologies were originally designed for other purposes, such as enabling secure computation in untrusted environments and protecting intellectual property in cloud deployments. But they're powerfully applicable to privacy-conscious AI: they enable computation on sensitive data in a manner where the hosting provider cannot observe that computation.

The Challenge of Side-Channel Attacks

TEEs significantly improve security, but they're not perfect. Research has discovered "side-channel attacks" that observe aspects of computation without directly accessing the data. For example, by measuring how long computation takes, or by observing memory access patterns, attackers can sometimes infer properties of protected data.
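To make the timing example concrete, here is a minimal Python sketch of a timing side channel in ordinary software. It is an illustration of the general idea only, not an attack on any TEE and not code from Confer. The function names and the sample secret are hypothetical. The naive comparison stops at the first mismatching byte, so the amount of work it does reveals how much of a guess was correct, while `hmac.compare_digest` from the standard library is designed to avoid that content-dependent timing.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: work done depends on where the first mismatch is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # returns sooner when the mismatch is near the start
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Comparison designed so its duration does not depend on mismatch position."""
    return hmac.compare_digest(a, b)


def steps_before_exit(secret: bytes, guess: bytes) -> int:
    """Count loop iterations of the naive comparison, as a stand-in for elapsed time."""
    steps = 0
    for x, y in zip(secret, guess):
        steps += 1
        if x != y:
            break
    return steps


secret = b"s3cret-token"  # hypothetical value, for illustration only
print(steps_before_exit(secret, b"x3cret-token"))  # wrong first byte: 1 step
print(steps_before_exit(secret, b"s3cret-tokeX"))  # wrong last byte: 12 steps
```

An observer who can only measure duration still learns which guess was closer. Side channels against TEEs follow the same principle, using signals such as cache behavior or memory access patterns instead of a loop counter.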

Confer's architecture accounts for these limitations. The documentation acknowledges that Trusted Execution Environments provide strong protections but aren't perfect. Rather than claiming absolute immunity to any possible attack, Confer positions TEEs as one component of a multi-layered approach. Combined with encryption, transport security, and open-source review, TEEs contribute to privacy assurance even accounting for known vulnerabilities.

Remote Attestation and Verification

Remote attestation is the process of cryptographically proving that specific code is running in a TEE. This matters for users because they want assurance that Confer's privacy-protecting code is actually what's running in the TEE, not some modified version.

The attestation process works like this: The TEE generates a cryptographic report containing a hash of the code running inside it, plus other system state. The processor signs this report with a key that's tied to the specific hardware. The report can be sent to a remote verifier who checks the signature using keys appropriate to that hardware. If the signature is valid and the code hash matches what we expect, we have cryptographic proof that the correct code is running.

This is powerful but also creates responsibilities. Confer must maintain and publish the expected code hashes. Users or independent verifiers can then check that these match what the remote attestation reports. If someone modified Confer's inference code to log conversations, the attestation would fail—users would see that the code running doesn't match what Confer published.
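The attestation flow described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Confer's implementation: real TEEs sign reports with an asymmetric key certified by the CPU vendor, whereas this sketch uses an HMAC with a shared `HARDWARE_KEY` purely so it runs on the standard library. All names (`measure`, `make_report`, `verify_report`) are hypothetical.

```python
import hashlib
import hmac
import json

# Stand-in for a hardware-bound signing key (assumption: real hardware uses an
# asymmetric key with a vendor certificate chain, not a shared secret).
HARDWARE_KEY = b"hypothetical-hardware-key"


def measure(code: bytes) -> str:
    """Hash of the code loaded into the enclave (the 'measurement')."""
    return hashlib.sha256(code).hexdigest()


def make_report(code: bytes, key: bytes = HARDWARE_KEY) -> dict:
    """What the TEE produces: a signed statement of which code is running."""
    body = json.dumps({"code_hash": measure(code)}, sort_keys=True)
    signature = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def verify_report(report: dict, published_hashes: set[str],
                  key: bytes = HARDWARE_KEY) -> bool:
    """What a verifier does: check the signature, then check the code hash."""
    expected = hmac.new(key, report["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, report["signature"]):
        return False  # report was not signed by the expected hardware key
    return json.loads(report["body"])["code_hash"] in published_hashes


audited = b"def infer(prompt): return model(prompt)"
tampered = audited + b"; log(prompt)"
published = {measure(audited)}  # hashes the operator publishes for review

print(verify_report(make_report(audited), published))   # True
print(verify_report(make_report(tampered), published))  # False
```

The second call fails because the tampered code hashes to a value that is not in the published set, which mirrors the case where inference code has been modified to log conversations.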

[Chart: privacy features versus technical and hardware requirements for Confer and local models. Confer offers strong privacy features with moderate requirements, while local models demand high technical expertise and hardware. Estimated data based on typical privacy features.]

Security Considerations and Potential Vulnerabilities

Threat Model and Scope of Protection

Confer's privacy protections are significant but operate within a defined threat model. They protect against:

- Data interception in transit: Encryption prevents eavesdropping on network communication

- Server-side data access: Conversation encryption and TEE isolation prevent Confer operators from accessing conversation content

- Advertising targeting based on content: The inability to access conversations prevents building profiles based on content

- Model training on conversations: The encrypted, inaccessible conversations cannot be used to train models

- Law enforcement demands: Even if law enforcement with proper legal authority demands access to conversations, Confer cannot provide them

However, Confer doesn't protect against:

- Local endpoint compromise: If a user's device is compromised with malware, that malware might capture conversations before encryption occurs

- Side-channel attacks on TEEs: Sophisticated attackers with physical access to servers might exploit known TEE vulnerabilities

- Metadata analysis: While conversation content is private, metadata (how often users chat, at what times, rough conversation length) might be observable

- Account compromise: If someone gains access to a user's account credentials, they can read that user's conversations

- Future cryptographic breakthroughs: If encryption algorithms are broken through new mathematics or quantum computing, historical conversations could theoretically be decrypted

This threat model is appropriate and honest. No system provides absolute security against all possible adversaries. Confer provides strong protections against the most likely threats (data extraction by Confer operators, advertising profiling, law enforcement access) while acknowledging where limitations exist.

Dependency on Hardware Security

Confer's security ultimately depends on TEE hardware functioning as designed. If a processor manufacturer has implemented SGX, SEV, or ARM TrustZone with security flaws, Confer's protections could be compromised. This is a real concern: researchers have discovered vulnerabilities in these systems before.

However, this dependency is actually better than the alternative. In a traditional server, the operating system and all software layers must be perfectly secure. TEEs add an additional hardware-based security boundary that's harder to compromise than pure software security. While hardware can be flawed, the additional security layer it provides is valuable.

The Role of Open-Source Auditing

Confer's commitment to open-source code mitigates many security concerns. When code is public, security researchers can audit it. Vulnerabilities are discovered through research rather than exploited in secret. While this means attackers also see the code, the security community typically moves faster at discovering and fixing vulnerabilities than attackers at exploiting them.

Open-source also enables users to verify that Confer does what it claims. Rather than trusting Marlinspike's assertions, users and researchers can examine the code.

Comparison Table: Confer vs. Alternatives

| Feature | Confer | ChatGPT Plus | Claude Pro | DuckDuckGo AI Chat | Local Models (Ollama) |
|---|---|---|---|---|---|
| Monthly Cost | $35 | $20 | $20 | Free | Free (hardware costs) |
| Conversation Privacy | End-to-end encrypted | Retained by OpenAI | Retained by Anthropic | Deleted quickly | Complete (local) |
| Data for Model Training | No | Yes (opt-out) | Yes (opt-out) | Minimal | N/A |
| Advertising Risk | None | High | Low-moderate | Minimal | None |
| Model Capability | Good (open-weight) | Excellent (GPT-4) | Excellent (Claude 3.5) | Moderate | Depends on model |
| Image Support | No | Yes | Yes | Limited | Depends on model |
| Web Browsing | No | Yes | Yes | Built-in | No |
| Setup Complexity | Very Simple | Very Simple | Very Simple | Very Simple | Moderate-Complex |
| Technical Knowledge Required | None | None | None | None | Some |
| Server Infrastructure | Cloud-based TEE | Cloud | Cloud | Cloud | Local |
| Hardware Requirements | Internet connection | Internet connection | Internet connection | Internet connection | GPU/RAM intensive |
| Message Limits (Free) | 20/day | Limited to 3-4 per minute | N/A | No limit | N/A |
| Offline Capability | No | No | No | No | Yes |
| API Access | No | Yes | Yes | No | Yes |
| Customization | Limited | Limited | Limited | Limited | Extensive |

[Chart: Confer, ChatGPT Plus, Claude Pro, and local models compared on privacy, cost, model capability, web browsing, and customization. Estimated data based on feature descriptions.]

The Economics of Privacy: Why Confer Costs More

Infrastructure Costs

Confer's

ChatGPT's lower price is possible because OpenAI subsidizes the per-user cost through planned data monetization and advertising. The $20 represents only the explicit subscription revenue, not the full economic value OpenAI extracts from users.

Engineering and Maintenance

Confer's privacy architecture requires sophisticated engineering. The codebase needs careful security review, architecture needs to be verified by cryptographers and security experts, and operations require expertise in TEE management and remote attestation. This level of security sophistication costs more than building a straightforward cloud service.

OpenAI, with vastly larger resources, can amortize security engineering costs across millions of users. Confer, as a smaller organization, needs to pass more of those costs directly to users.

Sustainable Business Model

Confer's $35 pricing is explicitly designed to create a sustainable, durable business. At this price point, a relatively small team can maintain the service indefinitely without requiring future funding, data monetization, or pivot to advertising. This business model predictability benefits users—they can be confident the service won't suddenly change or become less privacy-respecting as business needs evolve.

ChatGPT's lower price ultimately depends on a business model that hasn't fully materialized. As OpenAI implements advertising, diversifies into enterprise services, and monetizes data in other ways, the disconnect between $20/month subscription revenue and the actual value extracted will become more apparent.
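For readers weighing the price gap, the arithmetic is simple enough to sketch. The snippet below uses only the two subscription prices quoted in this article ($35 and $20 per month). The function name `privacy_premium` is a hypothetical helper, and the calculation ignores taxes, discounts, and any free-tier usage.

```python
CONFER_MONTHLY = 35        # USD per month, as quoted in this article
CHATGPT_PLUS_MONTHLY = 20  # USD per month, as quoted in this article


def privacy_premium(private_price: float, mainstream_price: float,
                    months: int = 12) -> dict:
    """Extra cost of the private option over a period, in absolute and relative terms."""
    monthly = private_price - mainstream_price
    return {
        "monthly": monthly,
        "total": monthly * months,
        "relative": monthly / mainstream_price,
    }


result = privacy_premium(CONFER_MONTHLY, CHATGPT_PLUS_MONTHLY)
print(result)  # {'monthly': 15, 'total': 180, 'relative': 0.75}
```

In other words, the premium works out to $15 per month, $180 per year, or 75% more than the mainstream subscription.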

Implementation Guidance: Getting Started with Confer

Assessment: Is Confer Right for You?

Before committing to Confer, users should consider whether the privacy benefits justify the cost difference from ChatGPT.

Confer is appropriate if:

- You discuss sensitive health, financial, or personal information with AI assistants

- You work on confidential projects (legal, research, competitive strategy) that shouldn't be profiled

- You're deeply concerned about advertising targeting and data monetization

- You work in a regulated industry with privacy obligations (healthcare, legal, finance)

- You value knowing that your conversations literally cannot be accessed by anyone else

- You use AI assistants heavily and want to eliminate data collection across all usage

ChatGPT or Claude might be better if:

- You need advanced capabilities like image generation or sophisticated multimodal analysis

- You need web browsing or integration with external services

- Cost is a primary constraint and privacy concerns are secondary

- You work exclusively with non-sensitive information where profiling creates no harm

- You need the absolute latest, most capable language models

Migration Path from ChatGPT

Users switching from ChatGPT will find Confer's interface immediately familiar. The signup process is straightforward, requiring either passkey setup (on compatible devices) or password creation. Once inside, the chat interface resembles ChatGPT closely enough that existing users feel at home.

The main adjustment involves understanding Confer's limitations compared to ChatGPT: no image generation, no web browsing, no voice interaction. Users should evaluate whether these missing features matter for their primary use cases. If they do, Confer might not be the right primary tool, though it could still serve as a secondary tool for sensitive conversations.

Hybrid Strategies

Some users adopt hybrid approaches: they use ChatGPT or Claude for tasks where capabilities matter most and privacy concerns are minimal, and they use Confer for sensitive conversations and research. This hybrid approach optimizes for both capability and privacy.

For instance, a user might use ChatGPT for image generation and creative tasks, but use Confer for discussing personal health concerns or sensitive project planning. This splits the data footprint, ensuring that the most sensitive conversations occur in the privacy-protected environment.
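One way to picture the hybrid strategy is as a routing rule applied before a prompt is sent anywhere. The sketch below is a deliberately crude illustration: the keyword list, the `choose_assistant` function, and the `"confer"` and `"mainstream"` labels are all hypothetical, and keyword matching will miss many sensitive prompts. In practice the decision is usually a human judgment made before typing.

```python
import re

# Hypothetical patterns a user might treat as markers of a sensitive topic.
SENSITIVE_PATTERNS = [
    r"\bdiagnos",       # diagnosis, diagnosed, ...
    r"\bmedication",
    r"\bsalary\b",
    r"\bdebt\b",
    r"\blawsuit\b",
    r"\bconfidential\b",
]


def choose_assistant(prompt: str) -> str:
    """Return 'confer' for prompts that look sensitive, otherwise 'mainstream'."""
    lowered = prompt.lower()
    if any(re.search(pattern, lowered) for pattern in SENSITIVE_PATTERNS):
        return "confer"
    return "mainstream"


print(choose_assistant("Draw a logo for my bakery"))         # mainstream
print(choose_assistant("What does this diagnosis mean?"))    # confer
print(choose_assistant("Summarize this CONFIDENTIAL memo"))  # confer
```

A safer default for anyone unsure is to invert the rule: send everything to the private tool unless a prompt specifically needs a capability it lacks.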

Looking Forward: The Future of Privacy-Conscious AI

Potential Feature Roadmap

While Confer currently focuses on core chat functionality, future development could add features while maintaining privacy protections. Image analysis using open-weight models could work similarly to text inference within TEEs. Custom instructions could enable personalization without violating privacy (the instructions would be encrypted the same as conversations).

Web browsing capability presents more complexity—accessing external websites creates metadata trails about user interests—but privacy-preserving web browsing approaches exist and could theoretically be integrated.

Marlinspike has emphasized that Confer's initial release is deliberately focused on doing one thing exceptionally well: private conversations. Future expansion would follow only after validating that the core privacy architecture works reliably.

Market Dynamics and Competitive Response

Confer's emergence may influence how competitors approach privacy. If significant user bases demonstrate willingness to pay premium prices for privacy, it creates business incentive for mainstream players to offer privacy-respecting options. OpenAI and Anthropic could theoretically launch privacy-focused variants, though their existing business models create conflicts of interest.

More likely, the competitive landscape will differentiate: mainstream platforms continue optimizing for capability and data monetization, while privacy-focused platforms like Confer serve the segment that prioritizes privacy. This mirrors the relationship between Chrome (which optimizes for integration with Google services) and Firefox (which emphasizes privacy).

Regulatory Pressures

Regulatory frameworks globally are increasing privacy requirements for AI systems. GDPR already constrains how European companies can use conversational data. California's privacy laws create similar constraints. As these regulations tighten, mainstream platforms may face pressure to offer privacy guarantees they currently don't provide.

Confer, already designed to comply with strict privacy regulations, may find regulatory shifts advantageous. Compliance becomes a competitive advantage when regulations mandate privacy protections.

The Broader Privacy Architecture Movement

Confer represents a broader architectural shift in thinking about privacy. Rather than treating privacy as a policy or compliance checkbox, the approach treats privacy as a fundamental architectural property. This represents the evolution of privacy thinking from "we promise to be careful with your data" to "we cannot access your data because of how we built the system."

This architectural approach is spreading: messaging apps increasingly implement end-to-end encryption at the architectural level, cloud storage providers increasingly implement encryption designs where providers cannot access user files, and AI platforms are beginning to adopt similar principles.

Confer's success would validate this architectural approach to privacy in the AI space, potentially influencing how the industry builds future systems.

Potential Challenges and Limitations

User Adoption and Critical Mass

Confer faces a significant challenge: achieving sufficient user adoption to sustain operations while maintaining small size and privacy-first culture. Network effects favor established players like ChatGPT and Claude. Most users already have accounts there, have conversations already stored there, and have established habits.

Switching to Confer requires not just cost-benefit calculation but enough privacy concern to justify migration. This limits the addressable market compared to mainstream alternatives. Confer likely succeeds as a secondary tool or specialized service rather than a universal ChatGPT replacement.

Mobile Experience and Platform Support

Confer's full capabilities work best on modern devices with native WebAuthn support. Older devices, less common platforms, and less popular operating systems receive degraded experiences. While password-based authentication provides a fallback, it lacks the security properties of passkey-based auth.

This creates a potential limitation for users on less mainstream devices. Someone using older Windows, Linux systems without password manager integration, or unusual mobile platforms might find Confer's authentication UX frustrating.

Model Quality and Performance Expectations

Users switching from GPT-4 to Confer will encounter performance differences. The models powering Confer are capable but not cutting-edge. For complex reasoning tasks, long-context understanding, or specialized domains, users might find Confer insufficient.

Managing user expectations around capability becomes important. Confer isn't positioned as the most capable AI assistant—it's positioned as a privacy-conscious option with adequate capabilities. Users need to understand and accept this trade-off.

Feature Gaps

The absence of image generation, image analysis, web browsing, and voice interaction represents real limitations. For workflows that depend on these features, Confer cannot serve as a complete replacement for ChatGPT or Claude. Users may find themselves bouncing between Confer and ChatGPT, which diminishes the privacy benefits because they still rely on non-private tools for multimodal features.

Alternative Platforms for Comparison: Beyond Confer

Runable: Cost-Effective Automation Alternative

For teams and developers seeking privacy-respecting AI automation at scale, platforms like Runable offer different architectural approaches to the problem Confer addresses. Runable provides AI-powered agents for content generation, workflow automation, and developer productivity tools at significantly lower price points—

While Runable's primary focus differs from conversational privacy, the platform's emphasis on developer control, transparent data handling, and automation-first architecture attracts users concerned about data minimization. For developers building privacy-conscious workflows or teams needing AI automation without surveillance, Runable's approach of simple, transparent tooling represents an alternative philosophy: rather than complex enterprise infrastructure, provide focused tools that developers can audit and control.

The choice between Confer and alternatives like Runable depends on primary needs. Conversationalists seeking private dialogue choose Confer. Developers building automated systems might find Runable's automation-focused approach more suitable.

Other Privacy-Respecting AI Platforms

Beyond Confer and mainstream options, several other projects pursue privacy-conscious AI:

Perplexity offers a search-integrated AI experience with privacy-focused design choices around data retention. While not as architecturally privacy-first as Confer, Perplexity takes privacy more seriously than mainstream ChatGPT.

You.com provides another search-integrated AI interface with options for private browsing and minimal tracking. Like Perplexity, it balances functionality with privacy considerations without the cryptographic architecture Confer employs.

Open-source projects like Llama-based applications enable privacy-conscious deployment on private infrastructure. These require technical sophistication but offer flexibility and control.

FAQ

What is Confer and who created it?

Confer is a privacy-focused AI chatbot launched in December 2024 by Moxie Marlinspike, the founder of Signal, the world's most trusted encrypted messaging application. Confer is designed to provide a ChatGPT-like conversational interface while using end-to-end encryption, Trusted Execution Environments, and architectural guarantees to ensure that conversations remain completely private and inaccessible even to Confer's operators.

How does Confer protect conversation privacy?

Confer implements a three-layer privacy architecture: first, it authenticates users with WebAuthn passkeys and encrypts conversations in transit; second, it maintains end-to-end encryption so that conversation content cannot be decrypted by Confer's servers; and third, it processes all AI inference inside Trusted Execution Environments with remote attestation, ensuring that the computation environment is isolated and verified. This combination is designed to make conversation content inaccessible to Confer's operators and to any other party.

What's the difference between Confer and ChatGPT in terms of privacy?

ChatGPT stores conversations on OpenAI's servers in a decryptable form, meaning OpenAI employees can access them, they can be used for model training and improvement, and they can contribute to building advertising profiles. Confer, by architectural design, cannot access conversations: they remain encrypted in a form that's inaccessible even to Confer's team. Additionally, conversations cannot be used for model training, advertising targeting, or any secondary purpose because Confer cannot read them.

What does Confer cost and is it worth the price?

Confer's free tier allows 20 messages daily across five conversations. The paid subscription costs

What are Confer's limitations compared to ChatGPT and Claude?

Confer currently lacks image generation, image analysis, web browsing, voice interaction, and integration with external services. It uses open-weight language models which, while capable, don't match the performance of proprietary models like GPT-4 or Claude 3.5 Sonnet. For many tasks (writing, summarization, coding assistance, brainstorming), Confer performs admirably. For complex reasoning, cutting-edge performance, or multimodal work, ChatGPT and Claude remain superior. Confer's trade-off deliberately prioritizes privacy over maximum capability.

How does end-to-end encryption work in Confer?

End-to-end encryption in Confer means that your messages are encrypted on your device before transmission. The encryption keys never leave your device and are never transmitted to Confer's servers. Messages remain encrypted during transmission and while stored. When Confer needs to process your message, the decryption happens inside an isolated Trusted Execution Environment, and the decrypted content never exists in a form accessible to Confer's operators or storage systems. This is similar to how Signal protects messages, but applied to AI assistant conversations.
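The core idea, encrypting on the device so that anything stored or relayed is unreadable without the key, can be shown with a toy example. This is not Confer's actual scheme and must not be used for real data: it builds a keystream from SHA-256 in counter mode and authenticates with HMAC only so that the sketch runs on Python's standard library. Production systems use vetted constructions such as AES-GCM or ChaCha20-Poly1305. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    """Derive separate encryption and authentication keys from one device key."""
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key, nonce, and an incrementing counter."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(enc_key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def encrypt_on_device(key: bytes, plaintext: bytes) -> bytes:
    """Runs on the user's device; only the returned blob is ever transmitted."""
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt_with_key(key: bytes, blob: bytes) -> bytes:
    """Only a holder of the key can recover the message; tampering is detected."""
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext was modified or the key is wrong")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


device_key = secrets.token_bytes(32)  # generated and kept on the device
blob = encrypt_on_device(device_key, b"my health question")
print(decrypt_with_key(device_key, blob))  # b'my health question'
```

A server that stores `blob` without `device_key` holds only a nonce, ciphertext, and authentication tag. Attempting `decrypt_with_key` with any other key raises `ValueError` rather than returning readable text.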

What is a Trusted Execution Environment and why does Confer use them?

A Trusted Execution Environment (TEE) is a special isolated zone within a computer processor that's protected from the operating system and other software. Confer uses TEEs (Intel SGX, AMD SEV, or ARM TrustZone) to ensure that AI inference processing happens in an isolated environment where computation cannot be observed even by privileged system-level attackers. Remote attestation systems allow verification that the correct code is running in the TEE. This combination makes it mathematically difficult for Confer's infrastructure operators to extract insights from conversations even if they wanted to.

Can law enforcement or governments access my Confer conversations?

No. Because Confer's architecture makes conversations inaccessible to Confer itself, law enforcement or government actors cannot compel Confer to hand over conversation content, because there is nothing for Confer to hand over. Even with proper legal authority, such as a subpoena or court order, Confer cannot access or provide your conversations. This is fundamentally different from ChatGPT or Claude, where law enforcement could legally compel OpenAI or Anthropic to provide conversation records.

Is Confer appropriate for business or professional use?

Confer can serve professional use cases involving sensitive information—legal analysis, health-related research, competitive strategy, client confidential information. However, organizations with strict compliance requirements (HIPAA-regulated healthcare, SOC 2 requirements) should clarify whether Confer meets their specific regulatory obligations. Individual practitioners in legal, healthcare, and consulting can use Confer for client work, knowing conversations remain completely private. Larger organizations might eventually benefit from enterprise deployment options, though these aren't currently available.

How does Confer compare to running local AI models for privacy?

Both Confer and local models (via tools like Ollama) provide strong privacy protections. Local models keep everything on your device with zero data transmission. Confer requires internet connectivity but doesn't require you to operate your own infrastructure or have advanced technical knowledge. Local models demand computational resources (GPU, RAM) that most consumer devices don't possess, plus technical expertise to set up and maintain. For privacy-conscious users without technical infrastructure, Confer provides significantly better accessibility while maintaining comparable privacy guarantees.

What happens to your data if Confer shuts down?

Because your conversations are encrypted end-to-end and inaccessible to Confer, if the service shuts down, Confer cannot hand over user conversation data to anyone. The encrypted conversation records might exist on Confer's servers, but they remain encrypted and inaccessible. Confer plans to offer data export features so users can download their encrypted conversations, but even Confer itself cannot decrypt them. This is fundamentally different from services like ChatGPT, where the operator retains the ability to read, delete, or transfer conversation data during a shutdown.

How is Confer's business model sustainable long-term?

Confer's business model depends on direct user subscription revenue at a premium price point (

Should I use Confer instead of ChatGPT or should I use both?

Many users adopt hybrid approaches: using ChatGPT for general capability, image generation, and non-sensitive conversations, and using Confer for sensitive discussions, health-related queries, financial planning, and confidential project work. This approach optimizes for both capability (leveraging ChatGPT's advanced models) and privacy (ensuring sensitive conversations use privacy-protected infrastructure). If you discuss sensitive information with AI assistants, using Confer at least for those conversations provides meaningful privacy protection. Whether you completely replace ChatGPT or use both depends on your tolerance for the cost difference and how often you discuss sensitive topics.

Conclusion: Privacy as Architectural Principle

Confer represents more than another AI chatbot entering an already crowded market. It embodies a fundamental shift in thinking about privacy—from privacy as a compliance obligation or marketing promise to privacy as an architectural principle enforced by mathematics and cryptography. This distinction matters profoundly.

The privacy concerns about mainstream AI assistants aren't hypothetical or alarmist. They're grounded in the fact that companies like OpenAI have already begun testing advertising features that could draw on conversational data. They're grounded in historical patterns where companies claiming to be committed to privacy eventually found profit in data monetization. And they're grounded in the argument that companies charging users only $20/month for access to cutting-edge AI models must be extracting substantial value from user data; otherwise the economics don't work.

Confer's answer to this dilemma is architectural: design systems where data extraction becomes mathematically impossible rather than merely against policy. Make privacy a property of how the system works, not a promise about how the company behaves. This approach has been proven in Signal, where more than a decade of scrutiny has validated that end-to-end encryption prevents even the creators of Signal from accessing user messages. Marlinspike is applying similar principles to AI, creating systems where privacy is guaranteed by cryptography and architectural design rather than corporate goodwill.

For many users, ChatGPT or Claude at $20/month remains the right choice. They offer superior capability, more features, better user experience in many dimensions. For users without acute privacy concerns, the lower price and higher capability justify the trade-offs. The market is large enough for multiple approaches to coexist.

But for a significant segment of users—healthcare professionals, lawyers, researchers, entrepreneurs, anyone regularly discussing sensitive information with AI—Confer's approach offers something genuinely valuable. It offers assurance that conversations are private not through policy but through technical guarantees. It offers confidence that information won't be data-mined for advertising. It offers the ability to use powerful AI assistance without building a digital profile of your vulnerabilities, concerns, and confidential information.

The $35 monthly price is higher than mainstream alternatives, but it reflects real infrastructure costs and intentional business design ensuring privacy remains the priority rather than an afterthought. For users who value privacy enough to choose a tool specifically designed around it, that premium makes economic sense.

Beyond Confer itself, the project signals an important market development: privacy-conscious AI infrastructure is becoming viable as a standalone service category. Users exist who will pay for privacy. Teams exist that need privacy-respecting AI. This validation may influence how the broader AI industry evolves, creating competitive pressure for mainstream players to offer more privacy-respecting options or face losing privacy-conscious users to specialized alternatives.

For developers and teams exploring automation needs with privacy considerations, platforms offering transparent data handling and developer control—such as Runable with its automation-first, cost-effective approach at $9/month—represent complementary options in the privacy-conscious technology ecosystem.

Ultimately, Confer succeeds by doing one thing exceptionally well: providing private conversations with AI assistants through architectural guarantees rather than policy promises. In an era of increasing privacy concern and legitimate skepticism of corporate data practices, that focused approach to a real problem represents genuine innovation in the AI space.

The question isn't whether Confer will replace ChatGPT for most users. It won't. The question is whether privacy-conscious users will increasingly demand tools that protect their conversations with the same rigor they expect from encrypted messaging. If that demand exists and grows, Confer provides the blueprint: architect privacy in, make data extraction impossible, price honestly, and build a business model aligned with user interests rather than in tension with them. For privacy-conscious users making that choice, Confer represents not just a tool but a philosophical statement about how AI assistance should work when privacy matters.

Key Takeaways

- Confer is a privacy-first AI chatbot launched by Signal founder Moxie Marlinspike that encrypts conversations so even operators cannot access them

- Three-layer privacy architecture uses WebAuthn passkeys, end-to-end encryption, and Trusted Execution Environments to guarantee conversation privacy

- $35/month pricing reflects real infrastructure costs and is higher than ChatGPT because it's not subsidized by data monetization

- Confer uses open-weight language models offering good capability but not cutting-edge performance like GPT-4 or Claude 3.5

- Privacy protections are architectural—enforced by cryptography and hardware security rather than policy promises

- Strong use cases include healthcare discussions, legal work, financial planning, and confidential research where privacy is essential

- Confer represents a market validation that users will pay premium prices for privacy-respecting AI infrastructure

- Local models and self-hosted solutions exist but require technical expertise; Confer offers accessibility without sacrificing privacy
