Discord's Age Verification Plan Exploded Into a PR Nightmare

It was supposed to be straightforward. Discord, the communication platform hosting millions of gaming communities, wanted to verify user ages to comply with regulations. Instead, it became one of the most spectacularly mishandled tech announcements in recent memory.

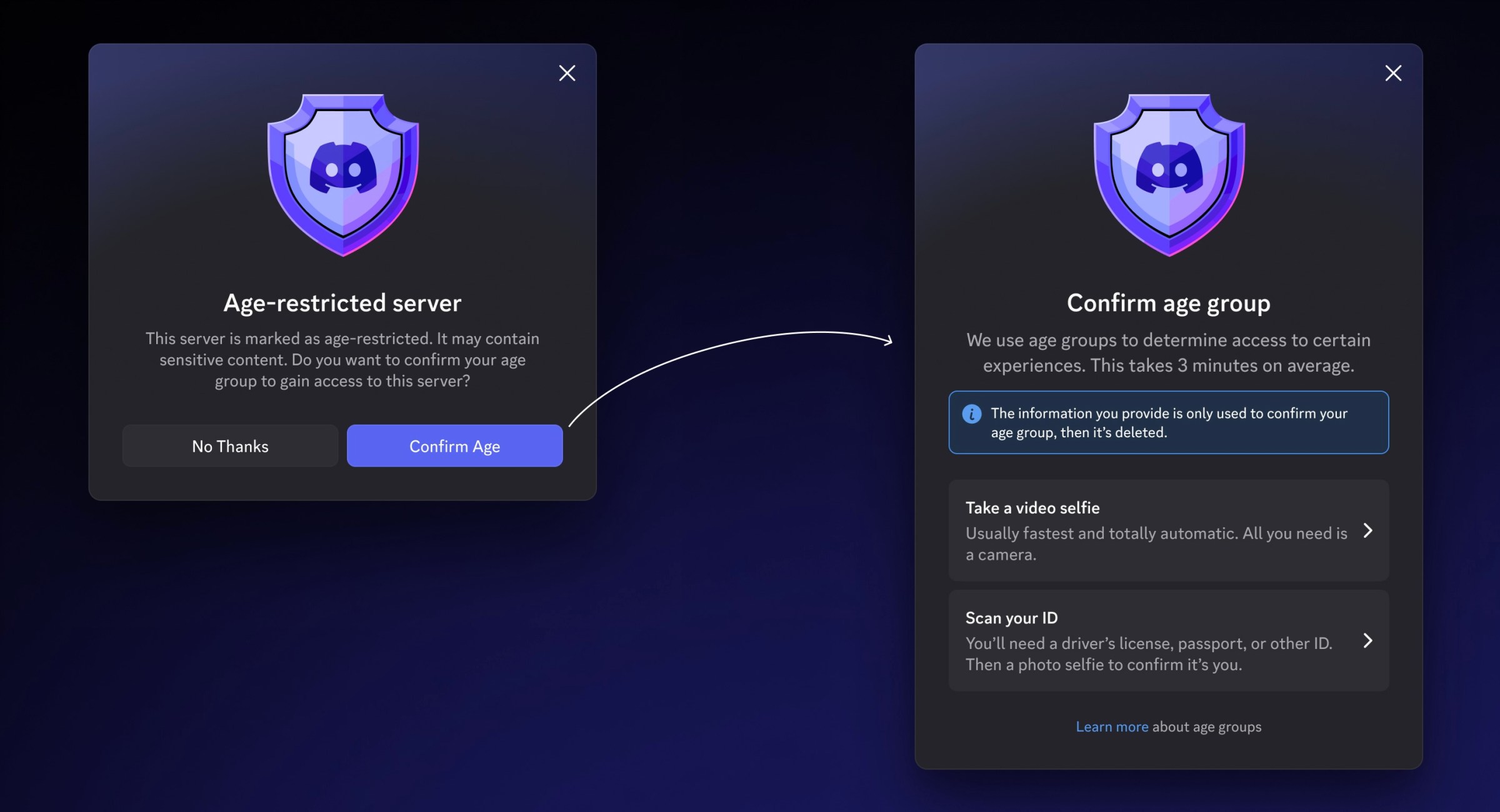

In early 2025, Discord announced a policy requiring age verification for certain regions and features. The plan seemed reasonable on its surface: protect minors, follow the law, move on. Except Discord skipped the critical part. It had to explain why verification was necessary, how it would work, what data would be collected, and, most importantly, how that information would be protected.

Within hours, the announcement spiraled. Users flooded social media with criticism. Community notes explaining the privacy concerns appeared across platforms. Forum threads exploded with people discussing migration to alternative platforms. By day two, Discord was in full damage-control mode, but by then the community had already reached its verdict.

This wasn't just a bad announcement. It was a masterclass in how not to communicate with your user base when privacy is on the line. The backlash revealed something uncomfortable about how tech companies often view their users: as problems to be solved rather than communities to be consulted.

TL;DR

- The Issue: Discord announced mandatory age verification without clear communication about data collection and privacy safeguards

- The Response: Massive user backlash, platform exodus, community notes debunking company claims across multiple platforms

- The Problem: Users feared biometric data collection, insufficient transparency, and regulatory overreach

- The Alternative: Competing platforms gained users as Discord scrambled for damage control

- Bottom Line: Tech companies underestimate how seriously users take privacy violations, especially when children are involved

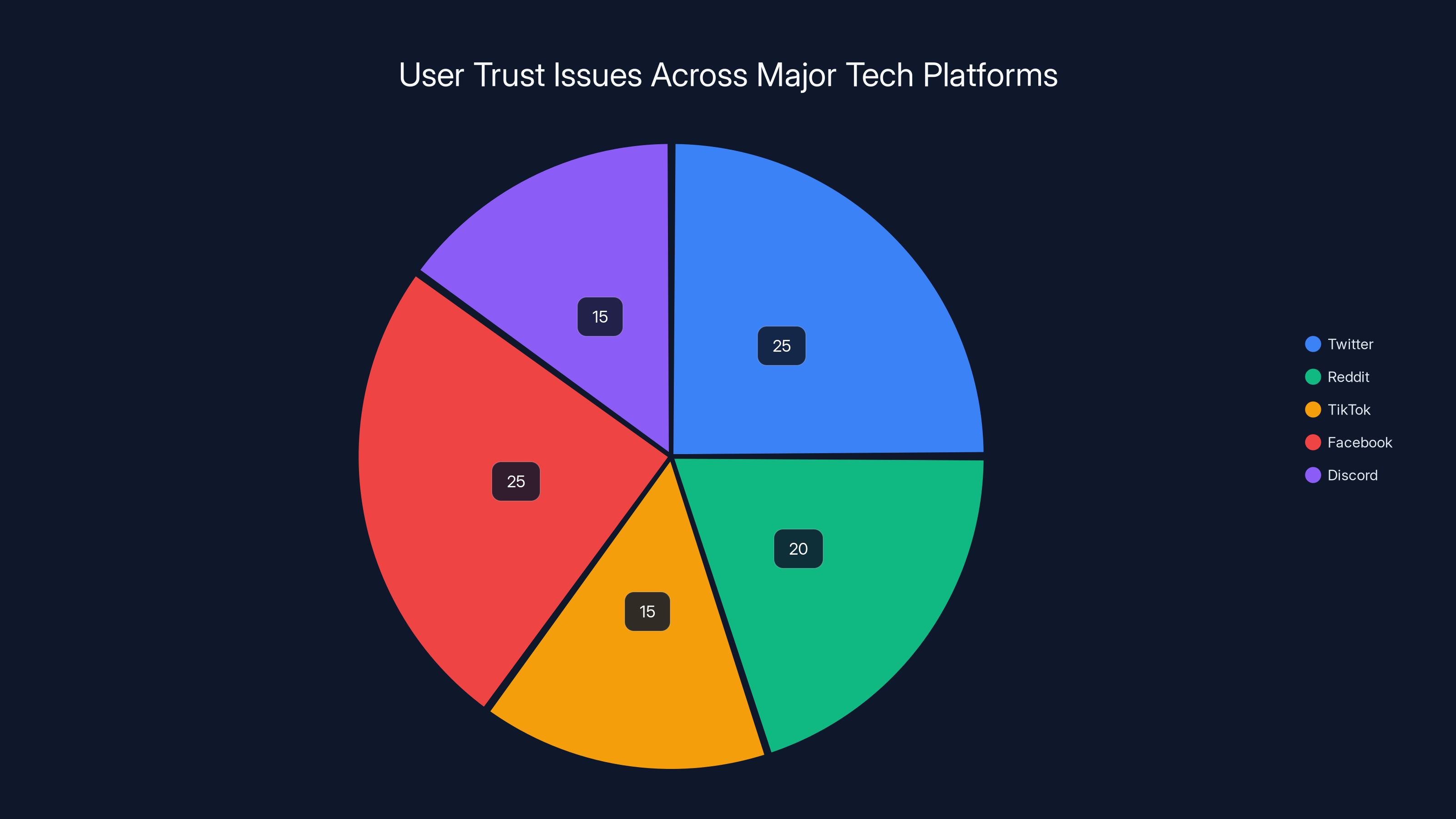

Estimated data shows that major tech platforms like Twitter and Facebook have the highest percentage of users considering alternatives due to trust issues.

What Actually Happened: The Timeline of Discord's Age Verification Plan

Understanding the disaster requires knowing what Discord actually announced and when. The company didn't wake up one morning and decide to collect everyone's government ID—the plan was more subtle, which somehow made it worse.

Discord's age verification system was designed to roll out incrementally across different regions. Certain countries have strict regulations around online services for minors, and Discord claimed it needed to verify ages to remain compliant. According to TechCrunch, the system would use third-party verification services to confirm that users were the age they claimed to be.

On the surface, this sounds reasonable. Age verification is a real problem that platforms struggle with. YouTube does it, TikTok does it, even some Reddit communities require it. The difference was execution and transparency.

Discord's announcement buried critical details. The company didn't clearly explain which regions would be affected first, what "verification" actually meant (would it require government ID? Biometric data? Credit card information?), how long data would be stored, or who would have access to it. Instead, it focused on compliance language that made users nervous, as noted by Engadget.

The timing made it worse. Discord announced this during a period when several other platforms were facing regulatory pressure around child safety. Facebook, Instagram, and TikTok were all dealing with legislative scrutiny. Discord's announcement arrived alongside news that various governments were considering restrictions on teen access to social media. Context matters, and Discord's announcement felt like a precursor to something bigger and scarier, as highlighted by The Verge.

Within hours, communities started comparing notes. One Discord server would share theories about what the verification system actually did. Another would post screenshots of regulatory filings. Someone would link to the terms of service of the third-party verification company Discord planned to use. Pretty soon, users had pieced together a picture that was far more invasive than what Discord's official announcement suggested.

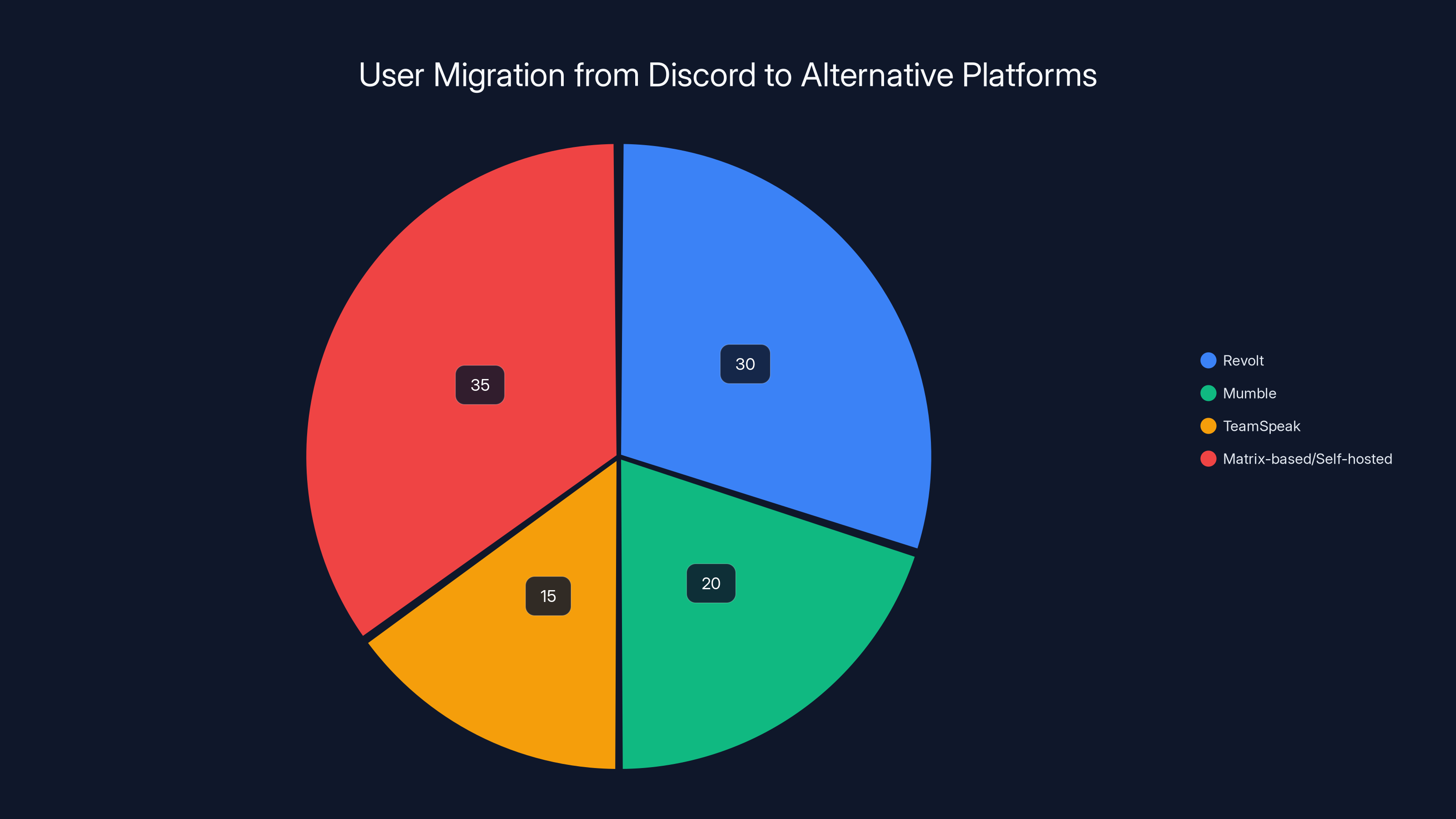

Estimated data shows that Matrix-based platforms and self-hosted solutions attracted the highest percentage of users migrating from Discord, highlighting a strong preference for privacy and control.

Why Users Immediately Distrusted the Plan

Trust is fragile. Once it breaks, companies spend months or years rebuilding it. Discord managed to shatter it with a single announcement.

The core issue wasn't age verification itself. It was that Discord gave people no reason to believe the company would handle sensitive data responsibly. This wasn't paranoia—it was pattern recognition based on years of tech company behavior.

Look at the precedent. Meta (formerly Facebook) has been caught repeatedly mishandling user data. Google claims to care about privacy while building surveillance infrastructure so sophisticated that it tracks users across the entire web. Amazon collects everything about everything. When Discord announced it would collect age verification data, users reasonably wondered: will this data be sold to advertisers? Will it be breached? Will it be retained forever?

Discord's response to these questions was essentially silence. The company didn't proactively address data retention, third-party sharing, or security measures. It simply said "we're doing age verification" and seemed shocked when users didn't celebrate, as reported by Kotaku.

Another factor: Discord's user base skews young. Millions of teenagers use the platform daily to talk with friends, join gaming communities, and create content. For these users, the idea of their age being verified by some third-party company was genuinely frightening. What if that data was leaked? What if it was shared with parents? What if advertisers could access it?

Discord also had little history of explaining its privacy decisions. Users didn't know what information Discord already collected about them or how it was used. Adding age verification on top of that mystery made users understandably uncomfortable, as discussed by Daily Kos.

The distrust was amplified because Discord hadn't consulted its community before the announcement. Many gaming and streaming platforms run community councils, feedback systems, and beta testing groups. Discord skipped those steps entirely. It announced a major policy change to millions of users and then seemed surprised when they objected.

The Community Notes Phenomenon: How Public Fact-Checking Destroyed Discord's Narrative

Community notes are a relatively new tool, pioneered by X (formerly Twitter), that let users add context to posts they think are misleading. A note becomes visible to everyone only when enough contributors, including people who usually disagree with one another, rate it as helpful. It's crowdsourced fact-checking in real time.

When Discord's official channels posted about the age verification plan, community notes exploded. Users added context explaining the privacy concerns, linked to privacy research, shared information about the third-party verification companies, and broke down what Discord wasn't saying, as noted by The Record.

On Reddit, moderators of major subreddits stickied posts explaining the privacy issues. On Threads, the Discord announcement was heavily community-noted. Even on TikTok, creators explained why this was a bad idea to millions of followers.

What's significant about this is that Discord couldn't control the narrative. The company tried to frame age verification as a necessary compliance measure, but users immediately reframed it as a privacy invasion. And they did it publicly, at scale, with evidence and reasoning.

This revealed something important about 2025: companies can no longer announce major policies and expect their framing to stick. If users disagree, they'll organize counterarguments faster than the company can respond. Discord's PR team probably spent hours crafting messaging around "protecting young users" and "responsible compliance," only to see it eviscerated by 13-year-olds on Reddit who understood the privacy implications better than Discord's own privacy officer apparently did.

The community notes also did something else crucial: they pushed the story beyond Discord's own user base. Journalists picked up on the fact that Discord's age verification plan was facing mass criticism. That coverage then spread to mainstream media, turning it from a niche tech controversy into a story about tech companies and child privacy, as reported by BBC News.

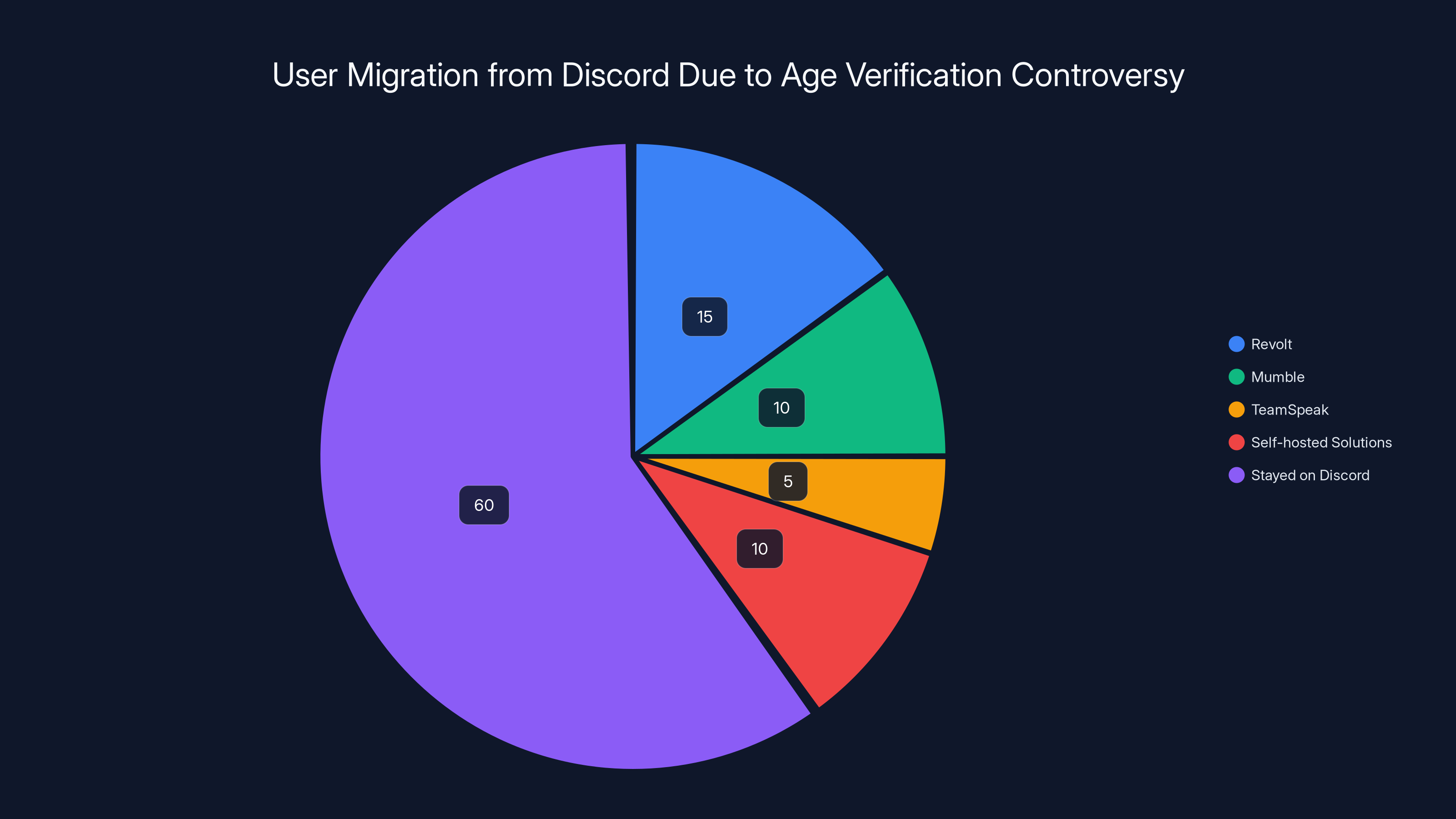

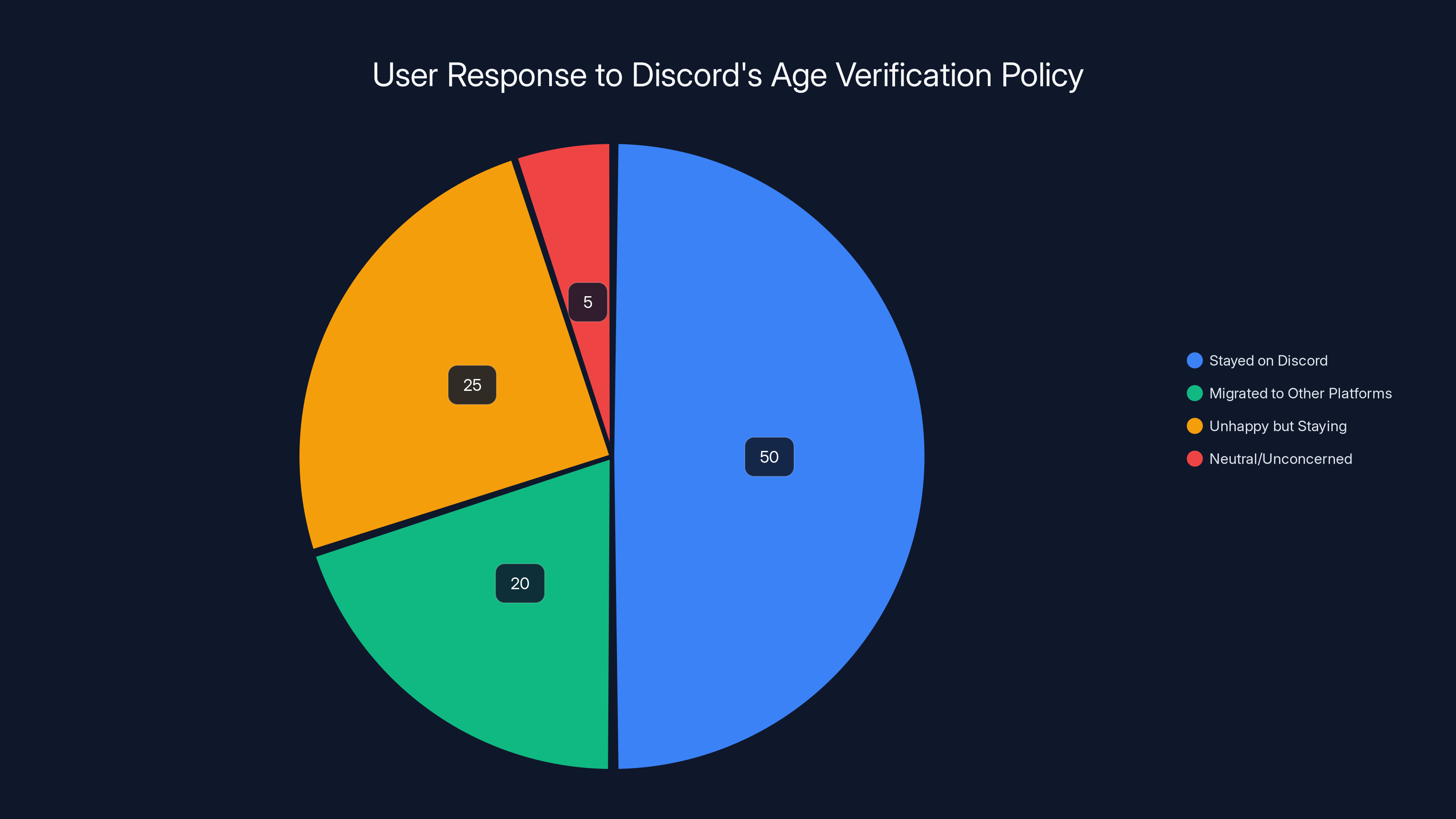

Estimated data suggests that while a significant portion of users considered alternatives, the majority remained on Discord due to the inconvenience of switching and limitations of other platforms.

Data Privacy Concerns That Discord Didn't Address

Underlying the entire backlash was a fundamental question: what data would Discord actually collect, and what would happen to it?

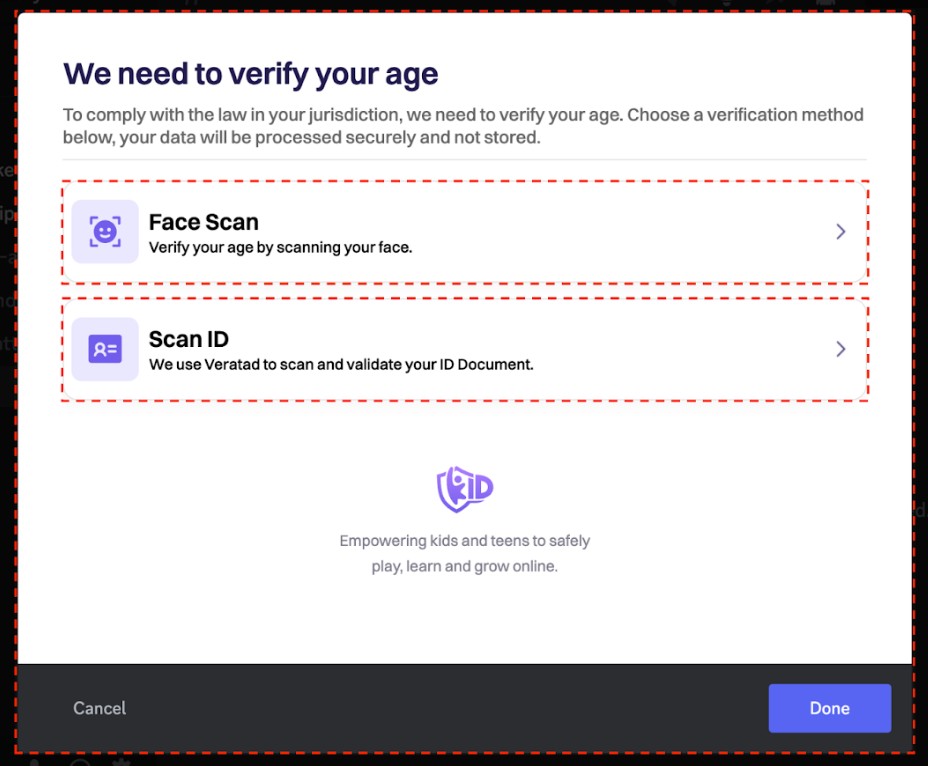

Discord never clearly answered this. Different verification services work differently. Some require government ID. Some use biometric data. Some combine multiple verification methods. Discord didn't specify which service it would use, what data would be collected, or how long it would be kept, as noted by Help Net Security.

The ambiguity was either deliberate or careless, and probably a bit of both. Discord likely hadn't fully decided on its verification approach when it announced the policy. Or it knew the details would be unpopular and hoped users would accept the vague announcement first and ask questions later.

Here's what users rightfully feared:

Government ID collection. If verification required scanning a government ID, Discord would suddenly have access to legal names, addresses, government ID numbers, and passport information for millions of users. This data is incredibly valuable to hackers and extremely sensitive if breached.

Biometric data. Some age verification systems use facial recognition or other biometric data. Many jurisdictions regulate biometric information more strictly than other personal data. Discord didn't clarify whether it would use biometrics, and if it did, how it would secure that data.

Third-party data sharing. Would the verification company share data with Discord? Would Discord use it for other purposes? Would it be sold to advertisers or data brokers? Discord's terms of service don't exactly build confidence on this front.

Data retention. How long would verification data be stored? Forever? Just long enough to confirm age, then deleted? Discord had published no retention policy.

Cross-referencing. Could Discord link age verification data to behavioral data it already collected about users—what games they play, what communities they join, what they talk about? Possibly yes, which would create incredibly detailed profiles of minors.

None of these concerns were paranoid. Companies have a proven track record of mishandling sensitive data. Data breaches are common. Regulatory oversight is minimal. Users had every right to demand clarity on these points before accepting age verification.

Discord's failure to address these concerns head-on was a massive strategic error. A company serious about user trust would have published a detailed privacy impact assessment, explained its data retention policy, committed to not selling verification data, and offered transparency into how the data would be used. Instead, Discord essentially said "trust us," which is a losing strategy in 2025, as highlighted by TechBuzz.

The Exodus: Where Users Went and Why

When users decided they didn't trust Discord, they didn't just complain. Some of them left. Platform migrations are notoriously difficult: you give up existing communities, lose connections with friends, and start from scratch on a new service. That so many Discord users were willing to switch, or seriously considered it, was a massive statement about how serious their concerns were.

Several competing platforms benefited from the Discord exodus. Revolt, an open-source Discord alternative, saw traffic spike significantly. Mumble, an older but privacy-focused voice chat platform, got renewed interest. Even TeamSpeak, which most people thought was dead, saw new user signups.

But the most interesting alternatives were Matrix-based platforms and self-hosted solutions. Some communities decided to run their own servers using open-source software. This is more complicated to set up than simply switching to another platform, but for communities that valued privacy and control, it was worth the technical overhead.

The exodus also highlighted something that Discord probably didn't expect: communities are more loyal to the people in them than to the platform itself. If the community leadership decided to migrate, users followed. Several major gaming communities, streaming groups, and creative communities evaluated moving away from Discord entirely, as reported by Gizmodo.

Not all of them actually left. But the fact that they seriously considered it showed how much goodwill Discord had burned with a single policy announcement.

What made the exodus more damaging than a typical platform controversy was that it revealed Discord's vulnerability. The company doesn't own its users in the way it probably thinks it does. Communities are portable. If you mistreat them, they'll leave. This is a lesson other platforms have learned the hard way—Reddit during the 2023 API pricing controversy, Twitter during the Elon Musk transition, Tumblr during the 2018 content policy change.

Discord's mistake was assuming it was too big to fail, too integrated into people's daily lives for them to leave. But if the price of staying is surrendering privacy, many users will pay the cost of switching.

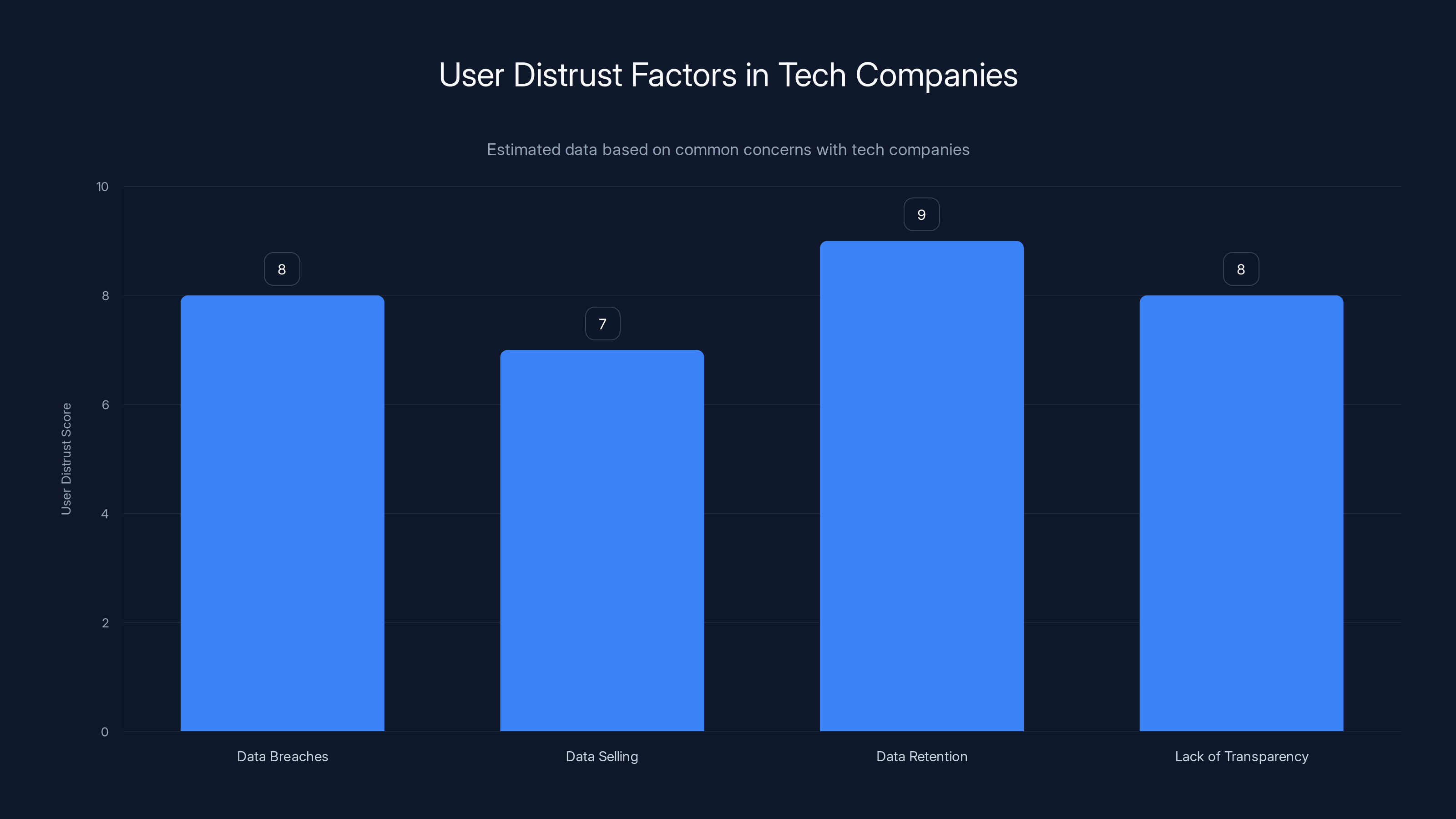

Users distrust tech companies primarily due to data breaches, lack of transparency, and concerns over data selling and retention. (Estimated data)

Regulatory Context: Why Discord Was Under Pressure in the First Place

Understanding Discord's decision requires understanding the regulatory environment it operates in. Discord didn't wake up and decide to collect age data for fun—it was responding to government pressure, albeit ham-handedly.

Multiple countries were implementing stricter regulations around online services and minors. The European Union was tightening rules through measures such as the Digital Services Act. The US FTC was investigating tech companies for violating COPPA (the Children's Online Privacy Protection Act). Various countries were considering outright restrictions on teen social media access, as reported by White & Case.

In this context, Discord probably felt it had to do something to show regulators it was serious about child safety and age verification. The problem was how it communicated and implemented that something.

Regulatory pressure isn't going away. If anything, it's accelerating. Governments worldwide are moving toward stricter rules around online services for minors. This means more platforms will need to implement age verification. The question is whether they'll do it thoughtfully or bungle it the way Discord did.

The irony is that Discord's ham-fisted approach probably made regulators' jobs easier. By generating so much negative attention, Discord inadvertently demonstrated why careful, transparent age verification frameworks are necessary. If Discord had done this right—with clear communication, strong privacy protections, and community input—regulators might have seen it as a model. Instead, they saw a company that didn't understand its own users, as noted by Atlantic Council.

How Discord's PR Response Made Things Worse

After the initial backlash, Discord tried to issue clarifications. This is where things got really bad, because each clarification seemed to reveal new problematic details that the company had apparently failed to think through.

Discord's official statement tried to frame age verification as "protecting young users" and "following regulations." Both true, but both also missing the point. Users didn't object to age verification in principle—they objected to the lack of transparency, the potential for data misuse, and the way the policy was announced without consultation.

The follow-up clarifications were worse. When users asked what data would be collected, Discord provided vague answers. When users asked about data retention, Discord seemed to not have a clear policy. When users asked about third-party sharing, Discord didn't commit to anything specific, as reported by The Verge.

Each non-answer just confirmed what users suspected: Discord hadn't thought through the privacy implications of its own policy. The company was reacting to regulatory pressure without having a coherent strategy for implementing age verification in a privacy-conscious way.

Discord also made the mistake of being defensive instead of apologetic. When users raised concerns, Discord's responses came across as frustrated rather than understanding. The company seemed to view criticism as unreasonable instead of recognizing it as valid feedback from users who cared about privacy.

Good crisis communication requires acknowledging the legitimate concerns, explaining how you'll address them, and giving people concrete reassurance. Discord did none of these things effectively. Instead, it doubled down on regulatory justifications and technical details that users found either inadequate or irrelevant.

The PR disaster was compounded by the fact that different parts of Discord seemed to be saying different things. The official blog post had one framing, Twitter responses had another, support tickets got yet another explanation. Inconsistent messaging is always worse than bad messaging because it makes the company look disorganized.

Estimated data shows that while 50% of users stayed on Discord, 20% migrated to other platforms, and 25% are unhappy but still using the service. Only 5% remain neutral or unconcerned.

The Bigger Picture: Tech Companies and Trust

Discord's age verification fiasco is just one data point in a much larger trend: tech companies have catastrophically mismanaged user trust, and users are finally calling them on it.

We've seen this movie before. Elon Musk took over Twitter and immediately started implementing changes that antagonized users. The company lost advertisers, creators moved to alternatives, and the platform's relevance declined. Reddit's API pricing disaster alienated communities and third-party app developers. TikTok faced bans in multiple countries because of data privacy concerns. Facebook's various privacy scandals have made the company permanently untrustworthy in the eyes of millions.

What these situations have in common is that tech companies seemed shocked that users actually cared about the issues in question. Musk was surprised Twitter users wanted a consistent algorithm and moderation policy. Reddit was surprised that developers and users were upset about steep new fees for API access. Discord was surprised users cared about age verification and privacy, as highlighted by Bloomberg Law.

But here's what's changed: users have options now. Viable alternatives exist for almost every major platform. Discord found out the hard way that a dominant market position isn't permanent anymore.

Tech companies need to reckon with the fact that trust is the foundation of everything. Without it, users will leave the moment a better option exists. Discord had built a lot of goodwill over the years by being relatively user-friendly, avoiding excessive advertising, and supporting community-driven features. One bad policy announcement and poor communication eroded that goodwill in days.

The question going forward is whether Discord can rebuild trust or whether it's permanently damaged the relationship with its user base. The company can't take back the announcement, but it can change how it handles privacy going forward.

What Discord Should Have Done Differently

This question is worth some time because the answer shows what responsible tech communication looks like. If Discord wanted to implement age verification, here's what it should have done:

First, transparency. Before announcing anything, Discord should have published a detailed explanation of why age verification was necessary, what regulations it was responding to, and what specific data collection method it would use. This isn't optional—it's baseline for any policy affecting user data.

Second, community input. Discord has millions of active users and thousands of active communities. Rather than announcing a policy, the company could have asked for feedback. This doesn't mean doing whatever users want, but it means acknowledging their concerns before implementing changes.

Third, privacy-first design. If Discord needed to verify ages, it could have chosen methods that collect minimal data. Some age verification systems work without requiring government ID or biometric data. Discord could have prioritized these options to minimize privacy invasion.

Fourth, clear data policy. Discord should have committed in writing to specific promises: data will be deleted after X days, third parties will not have access, data will not be used for profiling, users will be notified of all data uses. Clear commitments reduce uncertainty and build trust.

Fifth, opt-out mechanisms. For regions where age verification wasn't legally required, Discord could have let communities opt out. This would have given users choice and shown the company respected their concerns.

Sixth, transparency reports. After implementation, Discord should publish regular transparency reports showing how many users verified, how many data requests it received, and how it handled privacy concerns.

None of this is complicated or unreasonable. It's just what responsible data handling looks like. The fact that Discord didn't do any of it shows the company either didn't understand the stakes or didn't care about user concerns.

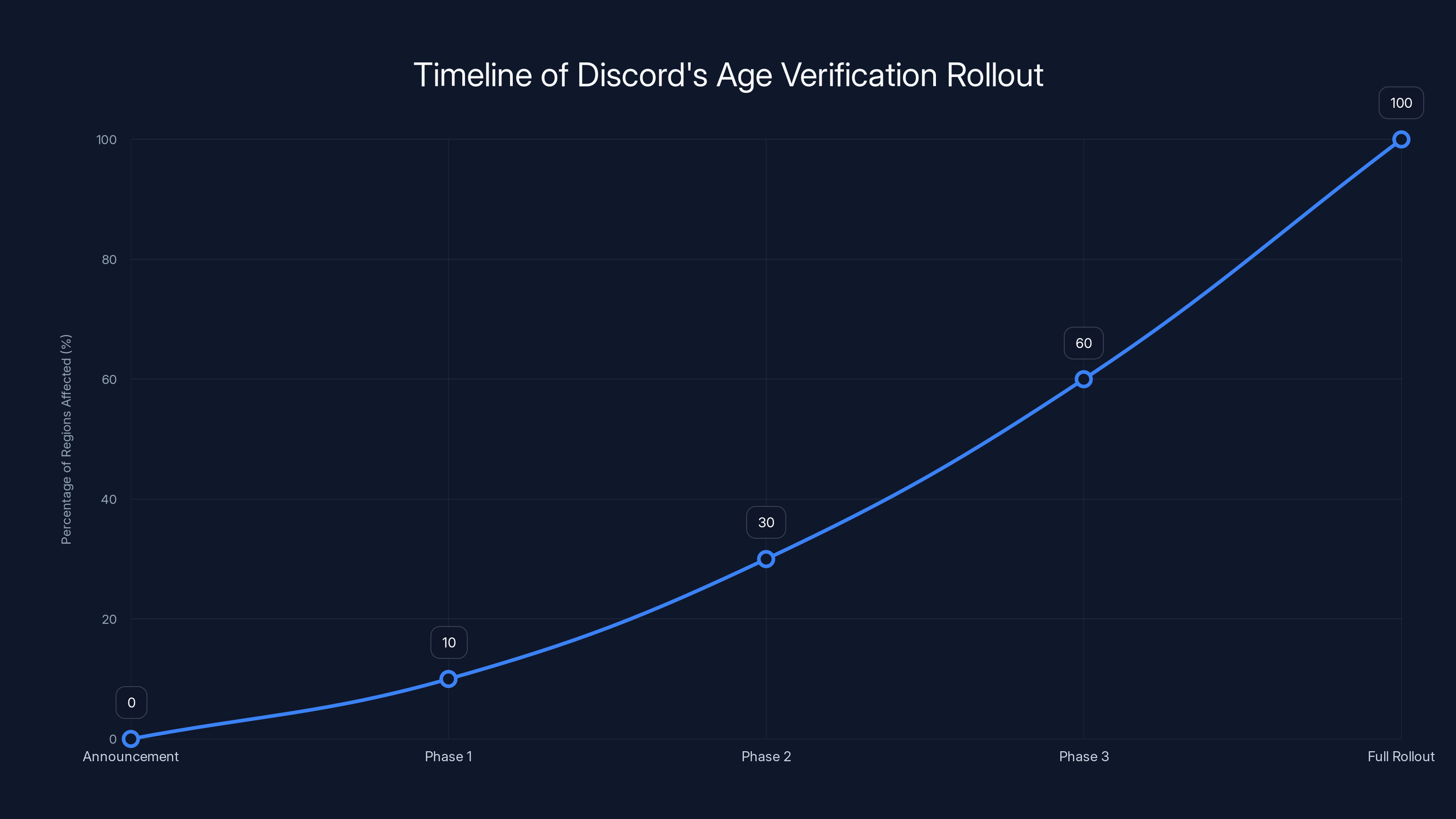

Estimated data showing a gradual increase in regions affected by Discord's age verification plan, highlighting the incremental rollout strategy.

The Ripple Effects: What This Means for Other Platforms

Discord's disaster has ripple effects throughout the tech industry. Other platforms are watching and learning (hopefully) from Discord's mistakes.

For Twitch, which also serves millions of young users, the Discord situation is a warning. If Twitch ever needs to implement age verification, users will immediately compare it to Discord's failures. Twitch has a slightly better track record on communication, but not by much.

For YouTube, which already has age verification systems in place, the Discord situation validates criticisms users have been making about its approach to teen privacy. YouTube hasn't had a Discord-level backlash, but that's partly because users see YouTube as inevitably invasive. Discord had more goodwill to lose.

For smaller platforms and startups, the Discord situation is a template for what not to do. If you're building a platform with young users, invest in privacy infrastructure and transparency first. Make it a feature, not an afterthought. Make it a reason users choose your platform over competitors.

For regulators, Discord's failure shows that you can't expect companies to self-regulate on privacy. Guidelines need to be specific, enforcement needs to be real, and companies need to know there are consequences for mishandling data.

Most importantly, the Discord situation shows that users' appetite for privacy advocacy is not declining—it's growing. Each platform failure builds momentum for the next privacy-focused alternative.

Where We Stand Now: Recovery and Long-Term Implications

As of early 2025, Discord is still trying to recover from the age verification backlash. The company hasn't abandoned the policy entirely—regulatory requirements don't just disappear—but it's clearly scrambling to find a less invasive implementation.

Some communities have migrated, but most users have stayed. The exodus was real but not total. This is partly because switching platforms is genuinely difficult, and partly because alternative platforms have their own limitations. But Discord can't take this as vindication. Millions of users are still unhappy, and any future privacy misstep could trigger another migration wave.

Investor confidence in the company's ability to manage policy and communication has reportedly declined. The company faces increased regulatory scrutiny. Advocacy groups are watching Discord's age verification implementation closely.

For users, the takeaway is clear: you can't trust any tech platform with your privacy unless you push it to be trustworthy. This means demanding transparency, asking hard questions about data collection, supporting privacy-focused alternatives, and being willing to switch if a company crosses a line.

Looking forward, the question isn't whether Discord will implement age verification—it will, because regulators will require it. The question is whether Discord will learn from this disaster and do it right next time. Given the company's response so far, that's uncertain.

The broader question is whether tech companies will finally accept that users care about privacy and are willing to act on that concern. For years, tech companies have dismissed privacy complaints as niche concerns from overly cautious users. Discord's backlash suggests that's no longer true. Privacy is now mainstream, and companies that ignore it do so at their peril.

What Users Can Do Now

If you're a Discord user concerned about privacy, your options are limited but real.

First, disable optional data collection. Discord collects various types of data. Go into your privacy settings and disable anything not essential to basic functionality. Turn off activity status, disable marketing preferences, opt out of analytics where possible.

Second, minimize sensitive information. Don't put your real name as your display name. Don't link accounts unnecessarily. Keep your profile information minimal. The less data Discord has about you, the less is at risk if the age verification system fails.

Third, use strong passwords and two-factor authentication. If age verification data is collected, the security of your account becomes more important. A strong password and 2FA make your account harder to compromise.

Fourth, stay informed. Follow technology news sources, privacy advocacy groups, and Discord's official announcements. If the company announces new privacy-affecting changes, you'll want to know before they take effect.

Fifth, support alternatives. Even if you continue using Discord, try out alternatives like Revolt or Matrix-based platforms. Using them, contributing to them, and supporting their development creates real alternatives that can compete with Discord on privacy grounds.

Sixth, give feedback. If you're frustrated with Discord's privacy approach, tell them. Send support tickets, post in communities, leave reviews, make your concerns known. Companies listen to loud users.

The Future of Age Verification and Privacy

Age verification is becoming standard across platforms, and that's not necessarily bad. The problem is the implementation. Done right, age verification can protect young users from harmful content without invading everyone's privacy. Done wrong, it becomes a vehicle for mass data collection and surveillance.

The technology is improving. Some new age verification methods work without requiring sensitive personal data. Blockchain-based solutions exist. Even simple methods like email-based age estimation have improved. There are ways to verify age that don't require government IDs or biometric data.

The question is whether platforms will use these methods or whether they'll take the path of least resistance and collect as much data as possible.

Discord's disaster suggests that users will force the issue. If platforms try to collect excessive data under the guise of age verification, users will call them out, spread information through community notes, and migrate to alternatives. The calculus for tech companies is shifting: heavy-handed data collection generates backlash that damages the bottom line. Privacy-conscious design builds trust and loyalty.

This doesn't mean every platform will suddenly become privacy-focused. But it does mean that privacy is no longer a niche concern. It's becoming a competitive advantage. Platforms that get this right will attract users frustrated with privacy violations elsewhere.

FAQ

What was Discord's age verification plan?

Discord announced it would implement mandatory age verification for users in certain regions to comply with regulations around online services for minors. However, the company didn't provide clear details about what data would be collected, how it would be stored, or what third parties would have access to it, which triggered immediate backlash.

Why did users react so negatively to the announcement?

Users were concerned about privacy implications that Discord didn't address. Questions about whether government IDs would be required, whether biometric data would be collected, how long data would be retained, and whether it would be shared with third parties went unanswered. The announcement also came without any community input or transparency about implementation details.

What are community notes and how did they affect Discord's messaging?

Community notes are user-added context that appears on posts contributors consider misleading. When Discord announced its age verification plan, users added community notes across multiple platforms explaining privacy concerns, linking to relevant research, and breaking down why the policy was problematic. This crowdsourced fact-checking undercut Discord's official messaging and made the concerns highly visible.

Did Discord lose users over this controversy?

Yes, some users migrated to alternative platforms including Revolt, Mumble, TeamSpeak, and self-hosted open-source solutions. However, the exodus wasn't total, partly because switching platforms is inconvenient and alternatives have their own limitations. Still, the fact that millions of users seriously considered leaving shows how much damage the announcement did to user trust.

What specific data privacy concerns did users raise?

Users raised multiple concerns: whether government ID would be required (exposing sensitive identification documents), whether biometric data would be collected (facial recognition, fingerprints), whether data would be sold to advertisers or data brokers, how long data would be stored, whether it would be linked to behavioral data Discord already collected, and whether it could be breached and exposed. Discord failed to address these concerns directly.

Why didn't Discord communicate better about the age verification plan?

Likely a combination of factors: the company probably hadn't fully developed its implementation strategy when announcing, leadership underestimated how seriously users care about privacy, the company was reacting to regulatory pressure rather than planning proactively, and internal communication between Discord teams was apparently inconsistent. The result was vague announcements that triggered suspicion rather than reassurance.

What should Discord have done differently?

Discord should have: published detailed transparency about why verification was needed and how it would work, sought community input before announcing, chosen privacy-minimal verification methods, committed to specific data handling practices in writing, offered opt-out mechanisms where legal, and published regular transparency reports. Essentially, the company needed to treat privacy as a priority rather than an afterthought.

What does this mean for other platforms?

Other platforms that serve young users, like Twitch and YouTube, should expect similar backlash if they announce age verification without careful communication and privacy safeguards. The Discord situation demonstrates that users will migrate to alternatives if a platform is perceived as violating their privacy, making privacy-conscious design a competitive advantage.

How can Discord rebuild trust after this fiasco?

Discord needs to implement age verification in a genuinely privacy-conscious way, publish detailed privacy policies for the system, commit to not selling verification data, offer transparency reports, respond to user concerns seriously rather than defensively, and demonstrate through action (not just words) that it takes privacy seriously going forward. Trust is rebuilt through consistent, transparent behavior over time.

What does Discord's failure teach users about platform privacy?

It reinforces that users cannot assume platforms will protect their privacy without pressure. Users need to actively demand transparency, ask hard questions about data collection, audit what data platforms have about them, support privacy-focused alternatives, and be willing to switch platforms if privacy is violated. Tech companies respond to user demand, and demand for privacy is now becoming mainstream.

Conclusion: The New Reality of Platform Power and User Agency

Discord's age verification disaster wasn't just a PR failure—it was a moment that exposed fundamental truths about how tech platforms and users interact in 2025. The company learned, in the most public way possible, that it doesn't control its users the way it assumed. Communities are portable. Users have options. And once trust is broken, rebuilding it is exponentially harder than maintaining it in the first place.

What's particularly telling about this situation is that Discord is neither the worst nor the most privacy-invasive platform available. Yet the backlash was severe enough to send the company scrambling and trigger a genuine platform exodus. This suggests users' tolerance for privacy violations has hit a threshold. Tech companies can no longer get away with vague policies and defensive responses.

The reason community notes were so effective in amplifying the Discord backlash is that they democratized fact-checking. Users didn't have to wait for journalists to cover the story or for privacy advocates to issue statements. They could immediately add context and explanation to Discord's official announcements. This changes the power dynamic dramatically. Companies can no longer control their own narrative.

For Discord specifically, the path forward is clear but challenging. The company must implement age verification where regulators require it. But it can do so in a way that minimizes privacy invasion, maximizes transparency, and respects user concerns. This means collecting less data, being explicit about data handling, committing to privacy practices in writing, and building systems that users can actually trust.

For users, the takeaway is that your concerns about privacy matter. When you express them loudly and clearly, platforms listen. When enough users are willing to switch platforms, alternatives flourish. You have more power than you probably realize—but only if you actually exercise it.

For other tech companies, Discord's disaster is a warning. Privacy is no longer optional. Transparency is now expected. Defensive communication will backfire. Users are organized, informed, and willing to act on their principles. Companies that ignore these realities do so at significant risk to their bottom line and reputation.

The broader implication is that tech companies' era of unchecked data collection might actually be ending. Not because of government regulation (though that helps) and not because of activism (though that helps too), but because users themselves have decided they're done trading privacy for convenience. They're demanding better options, and if platforms don't provide them, they'll build alternatives or switch to them.

Discord tried to implement age verification without properly consulting its users or being transparent about the implications. It assumed goodwill would carry the company through the backlash. Instead, it discovered that goodwill is fragile and that users in 2025 will not accept vague promises about their data. The lesson is expensive, but hopefully Discord, and the other platforms watching this unfold, will actually learn from it.

The future of online platforms depends on rebuilding trust with users. That starts with transparency, continues with respect for privacy, and succeeds only if companies are willing to acknowledge that users, not platforms, are ultimately in control of where they spend their time and attention.

Key Takeaways

- Discord announced mandatory age verification without clear communication about data collection, retention, or third-party sharing, triggering immediate distrust

- Community notes across multiple platforms fact-checked Discord's messaging, showing that crowdsourced fact-checking can undercut official company narratives

- Millions of users seriously considered switching to alternative platforms like Revolt and Mumble, demonstrating that privacy concerns translate into real user behavior change

- Discord's defensive, vague responses to privacy questions confirmed user suspicions that the company hadn't adequately planned the privacy implications of age verification

- The backlash reveals that user tolerance for privacy violations has shifted—tech companies can no longer assume goodwill will carry them through controversial policies

