Discord's Age Verification Mandate and the Future of a Gated Internet

Last month, Discord announced something that caught a lot of people off guard: starting in 2025, users who can't prove their age would lose access to substantial chunks of the platform. No age verification, no access to servers marked for older audiences. No stage channels. No unfiltered content. According to The Verge, this move is part of a broader trend across the tech industry.

It's not a gentle nudge. It's a gatekeeping system that treats the internet less like a commons and more like a nightclub with a bouncer. And it's happening across the entire tech industry at the exact same moment.

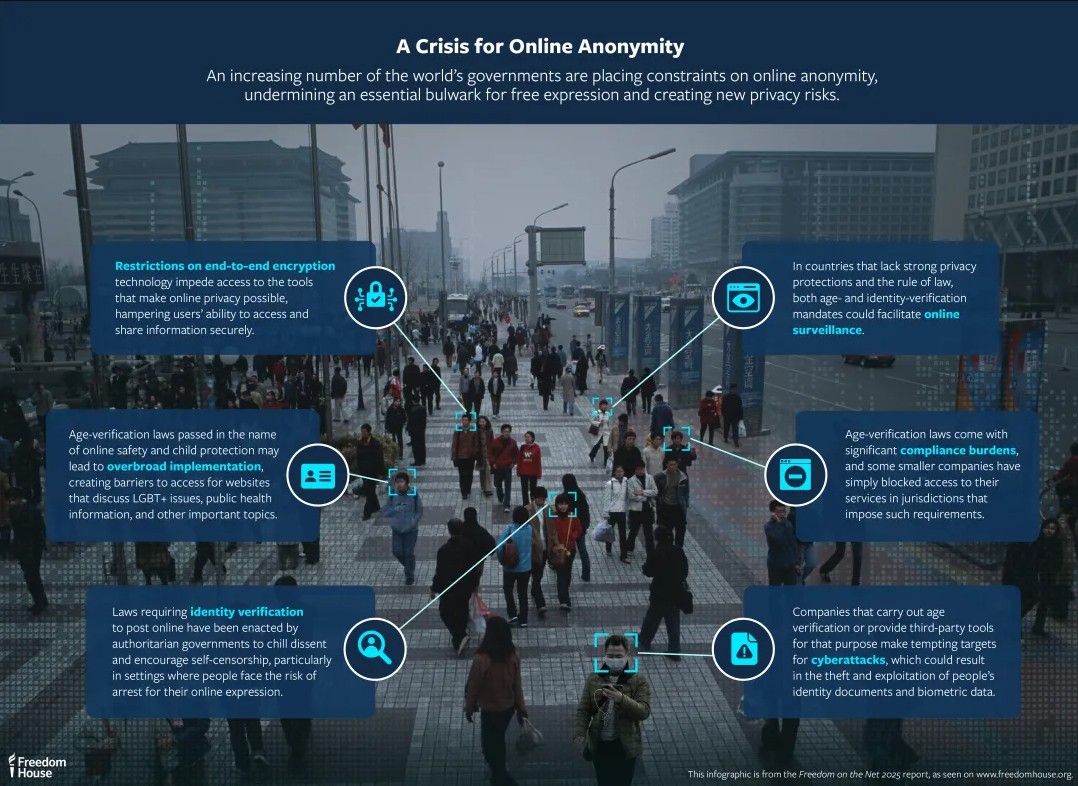

What's happening with Discord isn't just about keeping minors out of adult communities. It's a harbinger of a larger transformation: the internet is slowly becoming age-gated. And unlike physical spaces, this digital gatekeeping doesn't require a person at a door. It requires biometric scanning, government IDs, AI inference models, and data collection at a scale most people haven't even considered.

This article dives deep into what Discord's move means, why every major platform is doing this simultaneously, what the technology actually does, and what the real risks are beyond what you've heard on Twitter.

TL;DR

- Discord is forcing age verification globally: Users must prove their age or lose access to 18+ content, stage channels, and restricted servers starting next month, as reported by TechCrunch.

- Regulatory pressure is the real driver: Laws in the UK, Australia, and the US are forcing platforms to implement age checks, and they're all doing it at once, according to Reuters.

- The technology isn't as accurate as promised: AI age inference relies on behavioral signals that users can easily fake or game, while government ID verification poses privacy risks, as noted by Tech Policy Press.

- This signals a fundamental shift in internet architecture: Platforms are moving from open-to-all to age-gated by default, which could reshape how the internet works globally, as discussed in USA Today.

- Privacy and data risks are significant: A third-party vendor used by Discord suffered a data breach exposing IDs, and most platforms haven't disclosed their data security protocols, as highlighted by BBC News.

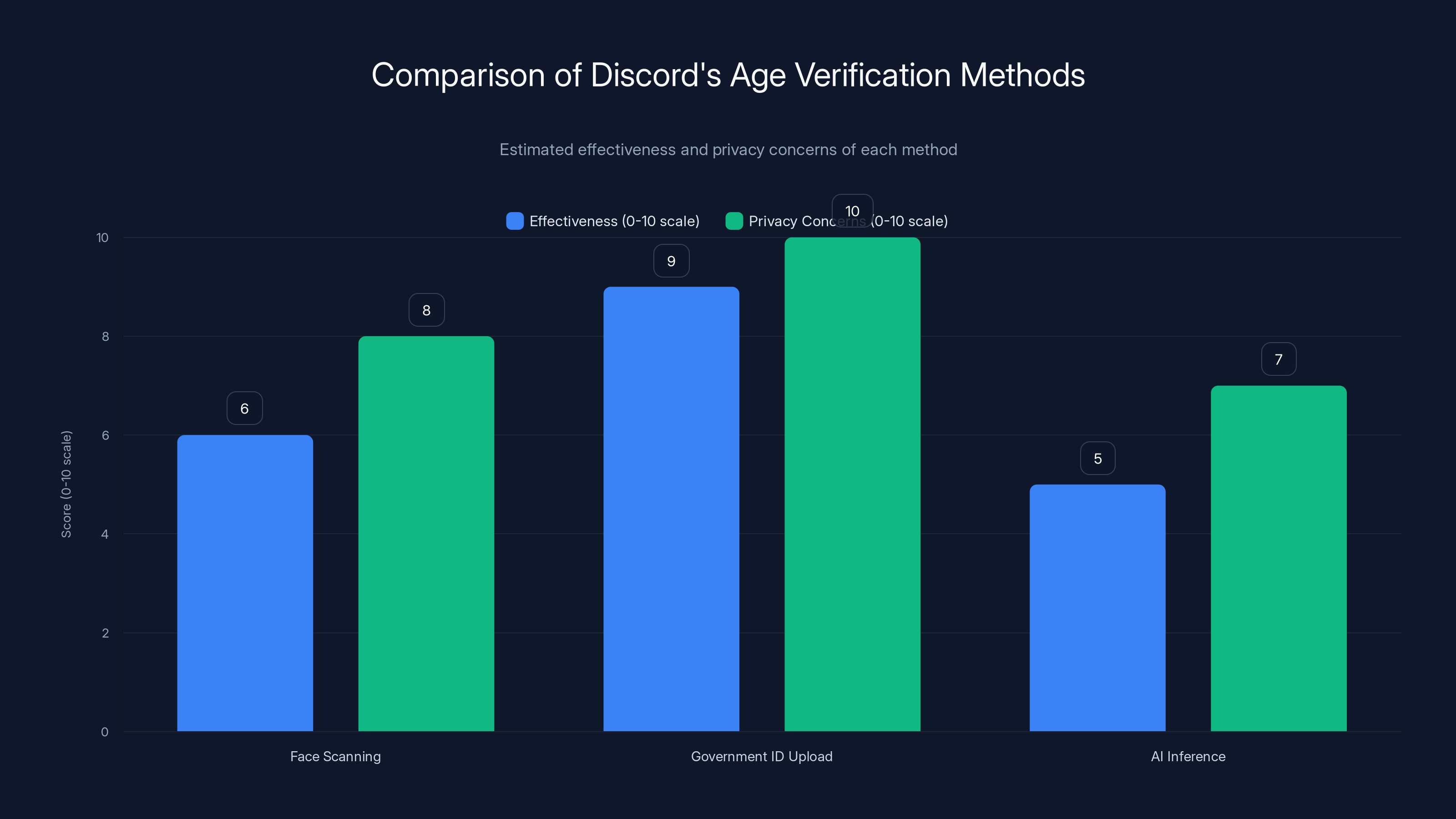

Government ID upload is the most effective but raises the highest privacy concerns. Face scanning offers moderate effectiveness with significant privacy issues. AI inference is the least effective and has moderate privacy concerns. Estimated data.

The Age Verification Trend Is Everywhere: Here's Why It's Happening Now

Discord didn't invent age verification. But what makes Discord's move significant is the timing and the scale. According to Education Week, age verification is becoming baseline infrastructure.

Instagram started the ball rolling in 2022 when it asked users changing their age to 18+ to submit a video selfie. The company framed it as preventing lies and protecting minors. By 2024, Instagram ramped up significantly, using AI to scan posts from friends to identify if someone was actually underage (like detecting when people wished them a happy 14th birthday).

Google followed suit with an announcement that it would use machine learning to estimate user age based on search history and YouTube viewing patterns. YouTube users identified as under 18 are now automatically placed in a restricted mode with limited recommendations and no access to certain content categories, as detailed in YouTube's official blog.

OpenAI launched an AI age prediction model in ChatGPT that flags users under 18 and restricts their access to graphic violence, sexual roleplay, and other dangerous content. According to CNBC, the model analyzes behavioral signals such as the age someone claims to be, how old their account is, and their login patterns.

Reddit rolled out age verification. Bluesky did too. Xbox requires it in some regions. Roblox forced age checks for anyone wanting to chat with other players, as reported by Tech Policy Press.

Then came the laws.

The UK's Online Safety Act, which became law in 2023, doesn't explicitly mandate age verification but makes platforms liable for child safety failures. Australia's legislation follows the same logic. In the US, states like Utah, Ohio, and Indiana passed laws requiring age verification for social media access. That's not a gentle suggestion. That's a legal requirement with fines attached, as noted by Tech Policy Press.

When lawyers start getting involved, engineers move fast.

But here's the thing: most of these regulations weren't designed to force technology that doesn't work yet. They were written by people who assumed age verification was a solved problem. It's not.

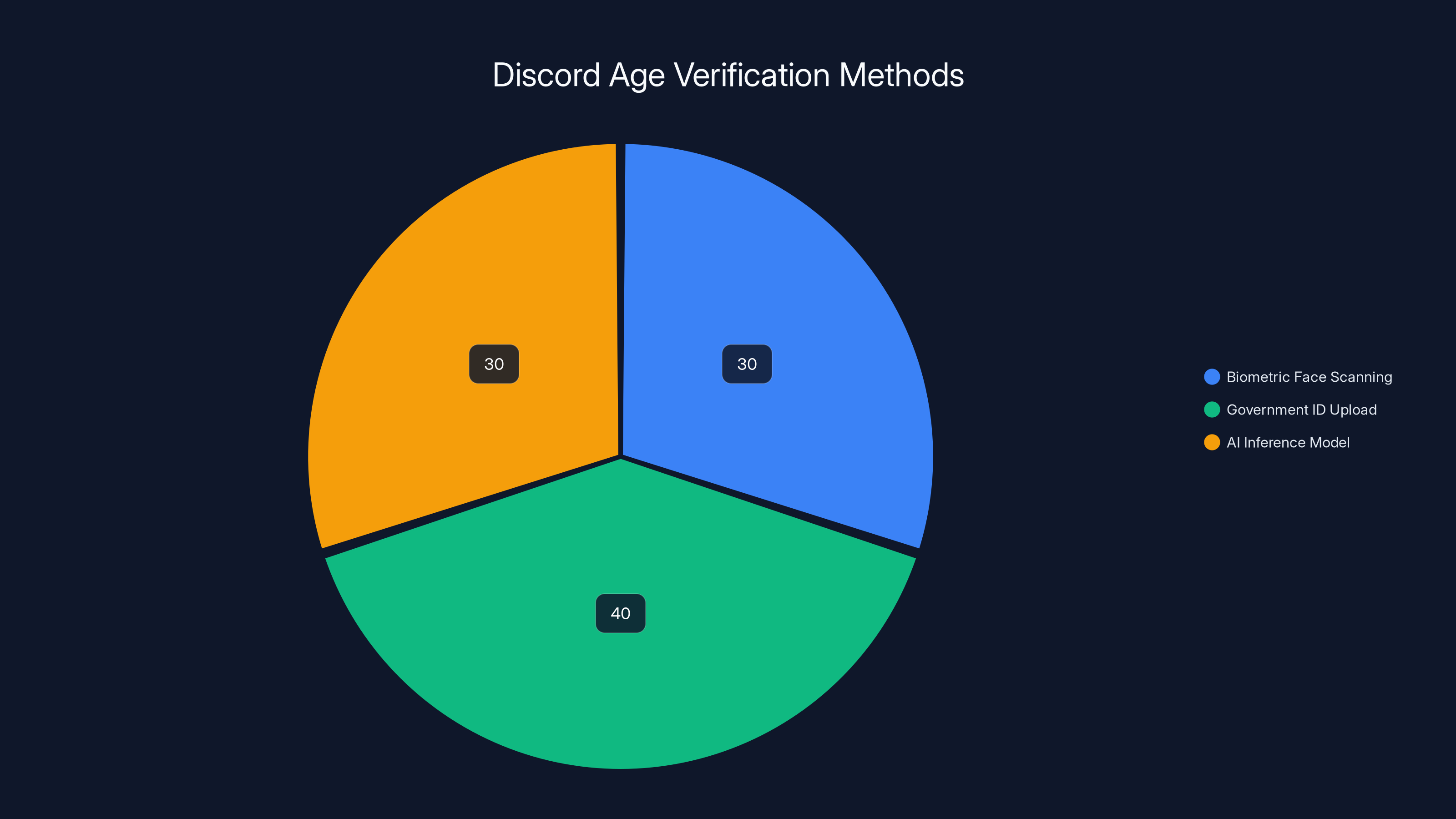

Estimated data suggests a balanced preference among Discord users for age verification methods, with government ID upload slightly more favored.

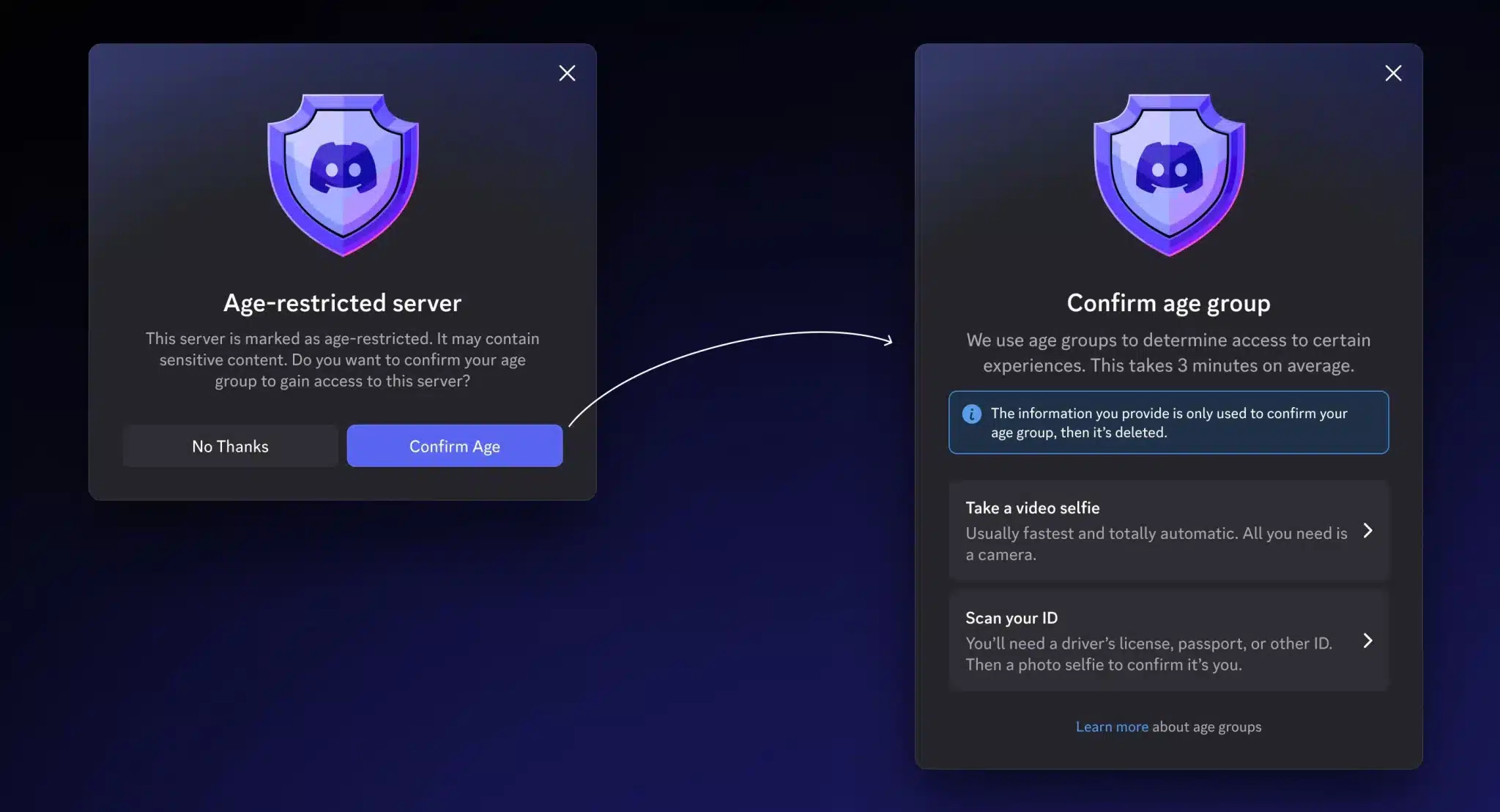

How Discord's Age Verification Actually Works: Three Methods

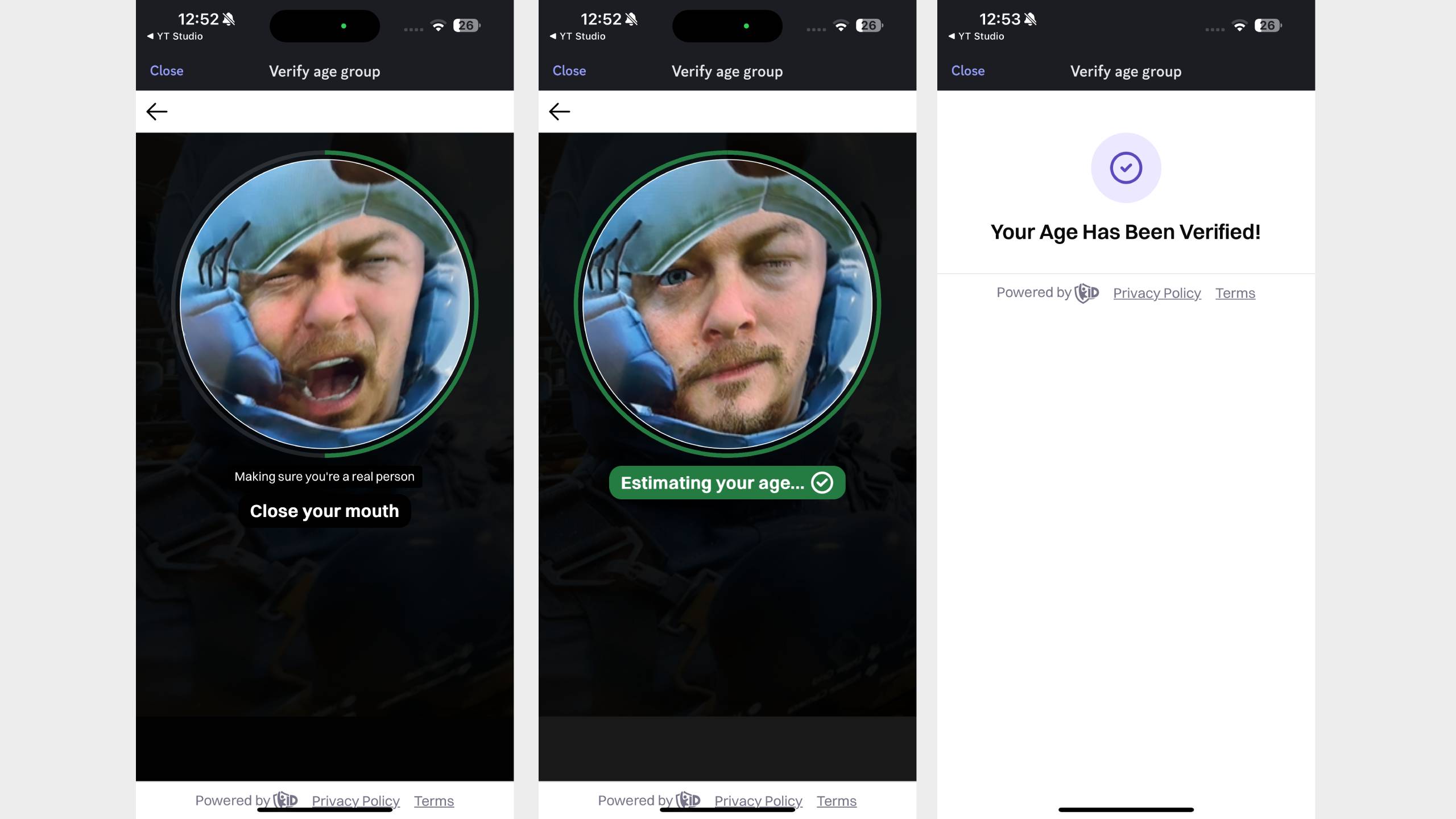

Discord isn't forcing everyone to do the same thing. Instead, it's offering a tiered system, and most users will land in the tier they have the least control over: AI inference.

Method 1: Face Scanning via Biometric Verification

If you want instant, definitive age verification, Discord lets you submit a face scan. You take a selfie, the system analyzes facial features associated with age (bone structure, skin texture, etc.), and Discord gets a confidence score.

The appeal is obvious: it's fast, users don't have to share sensitive documents, and it works in theory.

The reality is messier. Facial recognition for age estimation isn't a solved problem. Different ethnicities age at different rates. Lighting conditions matter. Makeup, filters, and even expressions can throw off the model. Studies on age estimation AI show error margins of 3-5 years in controlled environments. In the wild, with users taking selfies in their bedrooms under bad lighting? The error margin widens.
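One way to make an error margin explicit is to accept a face estimate as conclusive only when the whole error band sits on one side of 18. The sketch below assumes a symmetric margin of 4 years (a hypothetical midpoint of the 3-5 year range above), and the function name is our own invention; Discord has not said how, or whether, it applies such a buffer.

```python
# Hypothetical buffer policy: a face-based estimate only counts when the entire
# error band (estimate +/- margin) lies on one side of the legal age.
LEGAL_AGE = 18

def face_estimate_decision(estimated_age: float, error_margin: float) -> str:
    """Return 'adult', 'minor', or 'fallback' (ask for another verification method)."""
    if estimated_age - error_margin >= LEGAL_AGE:
        return "adult"      # even the low end of the band is 18+
    if estimated_age + error_margin < LEGAL_AGE:
        return "minor"      # even the high end of the band is under 18
    return "fallback"       # the band straddles 18, so the scan is inconclusive

for age in (12.0, 19.0, 25.0):
    print(age, face_estimate_decision(age, error_margin=4.0))
```

In this sketch, a 4-year margin means only estimates of 22 or older pass on their own, so many genuine adults in their late teens and early twenties would be sent to another method.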

More critically, Discord will store those images somewhere. The company says it uses third-party vendors for verification, which brings us back to last year's data breach where a third-party Discord vendor exposed user information and a "small number" of ID images, as reported by BBC News.

Small number. That's doing a lot of work in that sentence.

Method 2: Government ID Upload

Want the most accurate verification? Upload a government ID. Passport, driver's license, national ID card.

This is the nuclear option for privacy. You're giving Discord a copy of a government document that contains your full name, address, date of birth, identification number, and often a photograph.

Does Discord store that? They say the third-party vendor handles it and Discord doesn't retain copies. But "third-party vendor" is the same phrase they used before the breach.

Why would anyone do this? Because if you want access to the widest range of Discord's features without restrictions, this is the fastest route. No AI inference, no waiting for algorithmic decision-making. Just straight verification.

The tradeoff is that you're putting a government ID in the hands of a company that had a vendor security breach. Good luck with that.

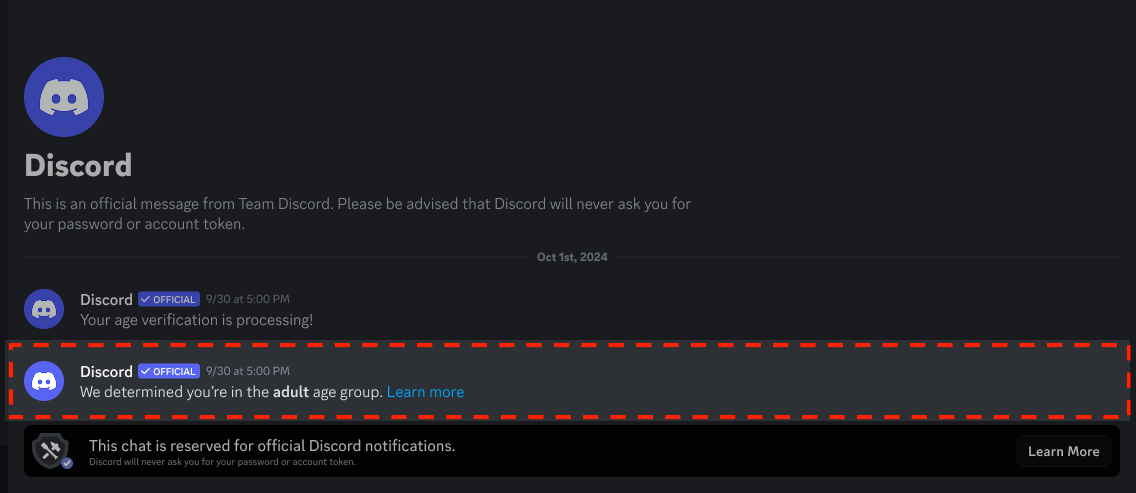

Method 3: AI Inference (The Default)

Most users won't choose biometric or ID verification. They'll get caught in the default: AI inference.

This is where Discord analyzes your account behavior and predicts your age. The company doesn't disclose exactly what signals it uses, but based on what other platforms have publicly shared, the model looks at:

- The age you claim in your profile

- How old your account is

- When you're active (school hours vs. late nights)

- Servers you join and content you engage with

- Language patterns and vocabulary

- Whether your friends are adults or minors

The system then generates a confidence score. If Discord thinks you're an adult, you get full feature access. If it's uncertain or thinks you're a minor, restrictions kick in.
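To make "confidence score" concrete, here is a toy scorer built from the kinds of signals listed above. Every feature, weight, and the 0.9 threshold are hypothetical; Discord has not published its inputs or model. The point is the shape of the logic: signals are combined into a score, and anything short of a confident "adult" call falls back to restrictions.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class AccountSignals:
    # Hypothetical features loosely mirroring the signals listed above.
    claimed_age: Optional[int]    # age stated in the profile, if any
    account_age_years: float      # how long the account has existed
    late_night_share: float       # share of activity outside school hours, 0..1
    adult_server_share: float     # share of joined servers that skew older, 0..1
    adult_friend_share: float     # share of friends believed to be adults, 0..1

# Made-up weights for illustration only.
BIAS = -3.0
W_CLAIMED_ADULT = 1.5
W_ACCOUNT_AGE = 0.4
W_LATE_NIGHT = 1.0
W_ADULT_SERVERS = 1.2
W_ADULT_FRIENDS = 1.5

def adult_confidence(s: AccountSignals) -> float:
    """Weighted sum of signals, squashed to 0..1 with the logistic function."""
    z = BIAS
    if s.claimed_age is not None and s.claimed_age >= 18:
        z += W_CLAIMED_ADULT
    z += W_ACCOUNT_AGE * min(s.account_age_years, 5.0)  # cap so old accounts don't dominate
    z += W_LATE_NIGHT * s.late_night_share
    z += W_ADULT_SERVERS * s.adult_server_share
    z += W_ADULT_FRIENDS * s.adult_friend_share
    return 1.0 / (1.0 + math.exp(-z))

def access_tier(s: AccountSignals, threshold: float = 0.9) -> str:
    # Only a confident "adult" call unlocks everything; uncertainty means restrictions.
    return "full" if adult_confidence(s) >= threshold else "restricted"

veteran = AccountSignals(30, 6.0, 0.7, 0.8, 0.9)
blank_new_account = AccountSignals(None, 0.1, 0.0, 0.0, 0.0)
print(access_tier(veteran), access_tier(blank_new_account))
```

Note how the blank new account ends up restricted: with no evidence pushing the score up, the bias term alone keeps it below the threshold.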

Sounds reasonable until you consider how easy it is to game. Want Discord to think you're 25? Set your profile age to 25. Join some communities that skew older. Post at times adults typically post. Use different language.

A determined teenager can beat this system in an afternoon.
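The weakness is easy to demonstrate with a toy model. In the sketch below (made-up weights and a hypothetical 0.9 cutoff, not Discord's system), every input is something the user chooses, so a teenager who changes their profile age, posting schedule, and server list moves from a near-zero score to a passing one.

```python
import math

def adult_confidence(claimed_age: int, late_night_share: float, adult_server_share: float) -> float:
    """Toy score with made-up weights; every input here is user-controlled."""
    z = -2.0
    z += 1.5 if claimed_age >= 18 else -1.5   # profile age is self-reported
    z += 2.0 * late_night_share               # posting schedule is a choice
    z += 2.0 * adult_server_share             # so is which servers you join
    return 1.0 / (1.0 + math.exp(-z))

THRESHOLD = 0.9  # hypothetical cutoff for "adult"

honest_teen = adult_confidence(claimed_age=15, late_night_share=0.1, adult_server_share=0.1)
gaming_teen = adult_confidence(claimed_age=25, late_night_share=0.8, adult_server_share=0.9)
print(f"honest: {honest_teen:.2f}, gamed: {gaming_teen:.2f}, passes: {gaming_teen >= THRESHOLD}")
```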

But here's the catch: a false positive (Discord thinking you're an adult when you're not) means a minor gets access to 18+ content. A false negative (Discord thinking you're a minor when you're an adult) means adults lose access unnecessarily.

Neither outcome is great. But which one does Discord optimize for? Looking at their public statements, they're trying to minimize false positives (letting minors through), which means they'll accept higher false negatives (blocking adults).

That's a business decision, not a technical limitation.
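Concretely, that choice comes down to where the confidence threshold sits. The sketch below uses ten hypothetical scored accounts with known true ages (invented numbers, not Discord data) and counts both error types at three thresholds. Raising the threshold lets fewer minors through but blocks more adults.

```python
# Hypothetical evaluation set: (model score, is_actually_adult).
SAMPLES = [
    (0.95, True), (0.91, True), (0.88, True), (0.72, True), (0.55, True),
    (0.81, False), (0.60, False), (0.42, False), (0.30, False), (0.12, False),
]

def error_counts(threshold: float) -> tuple:
    """Treat score >= threshold as 'adult' and count both kinds of mistakes."""
    false_pos = sum(1 for score, adult in SAMPLES if score >= threshold and not adult)  # minors let through
    false_neg = sum(1 for score, adult in SAMPLES if score < threshold and adult)       # adults blocked
    return false_pos, false_neg

for t in (0.5, 0.7, 0.9):
    fp, fn = error_counts(t)
    print(f"threshold={t}: minors let through={fp}, adults blocked={fn}")
```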

Why Age Verification Sounds Good in Theory But Fails in Practice

Regulators genuinely want to protect minors. That's not cynical. That's the motivation.

The problem is that age verification isn't the right tool for the job. It's like using a sledgehammer to hang a picture.

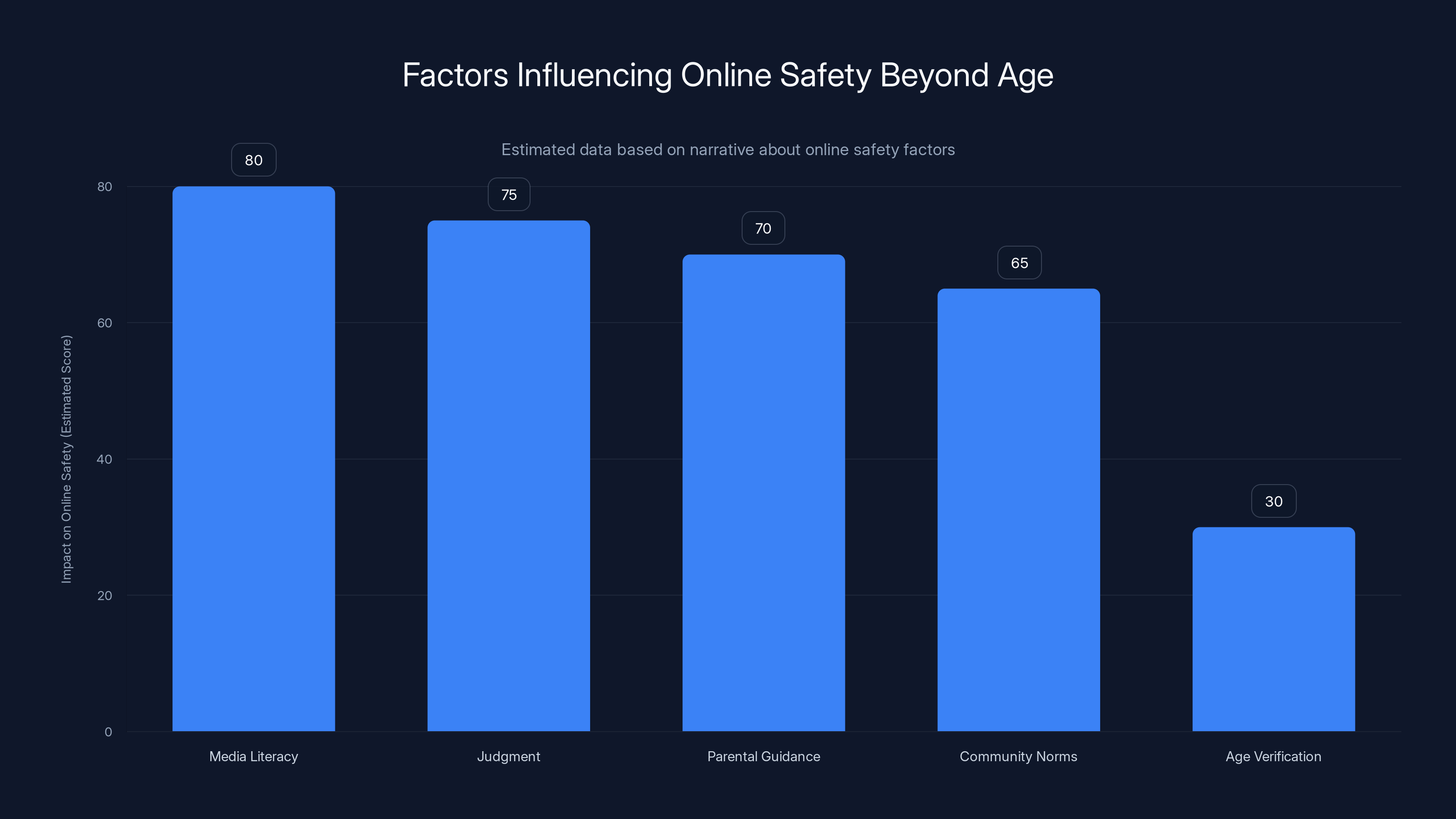

The False Premise: Age as a Safety Proxy

Everyone assumes older equals safer. Turn 18 and suddenly you can handle graphic content? The logic doesn't hold.

A 17-year-old and an 18-year-old have virtually identical cognitive development, psychological resilience, and maturity levels. But one gets access to 18+ servers and the other doesn't.

Age is a legal construct. Development is biological and psychological. They don't map neatly onto each other.

What actually makes someone safer online isn't their age—it's media literacy, judgment, parental guidance, and community norms. A thoughtfully designed platform with good moderation and user controls is safer for a 16-year-old than a poorly moderated platform is for a 25-year-old.

But age verification is easy to implement and easy to defend in court. Media literacy takes work.

The Privacy Extraction Problem

Every age verification method collects data. Face scans. Government IDs. Behavioral signals.

Once platforms have that data, they optimize for monetization. Your age inference profile becomes another data point used for targeting ads. Your facial biometric gets added to their model. Your government ID... well, that's sitting on a server somewhere.

Regulators framed age verification as a child safety measure. Platforms are implementing it as a data collection tool that also protects them from liability.

Those aren't mutually exclusive. But they're not aligned either.

The Regulatory Arbitrage Problem

Age verification laws vary by country. The UK has one standard. Australia has another. Various US states have different requirements.

Discord's solution? Implement the strictest standard globally.

Why? Because it's easier to maintain one system than regional variants. It's also legally safer to over-comply than to under-comply.

So users in countries with no age verification requirements still get age-gated because Discord is implementing UK and Australian standards globally.

This is regulatory capture disguised as compliance.

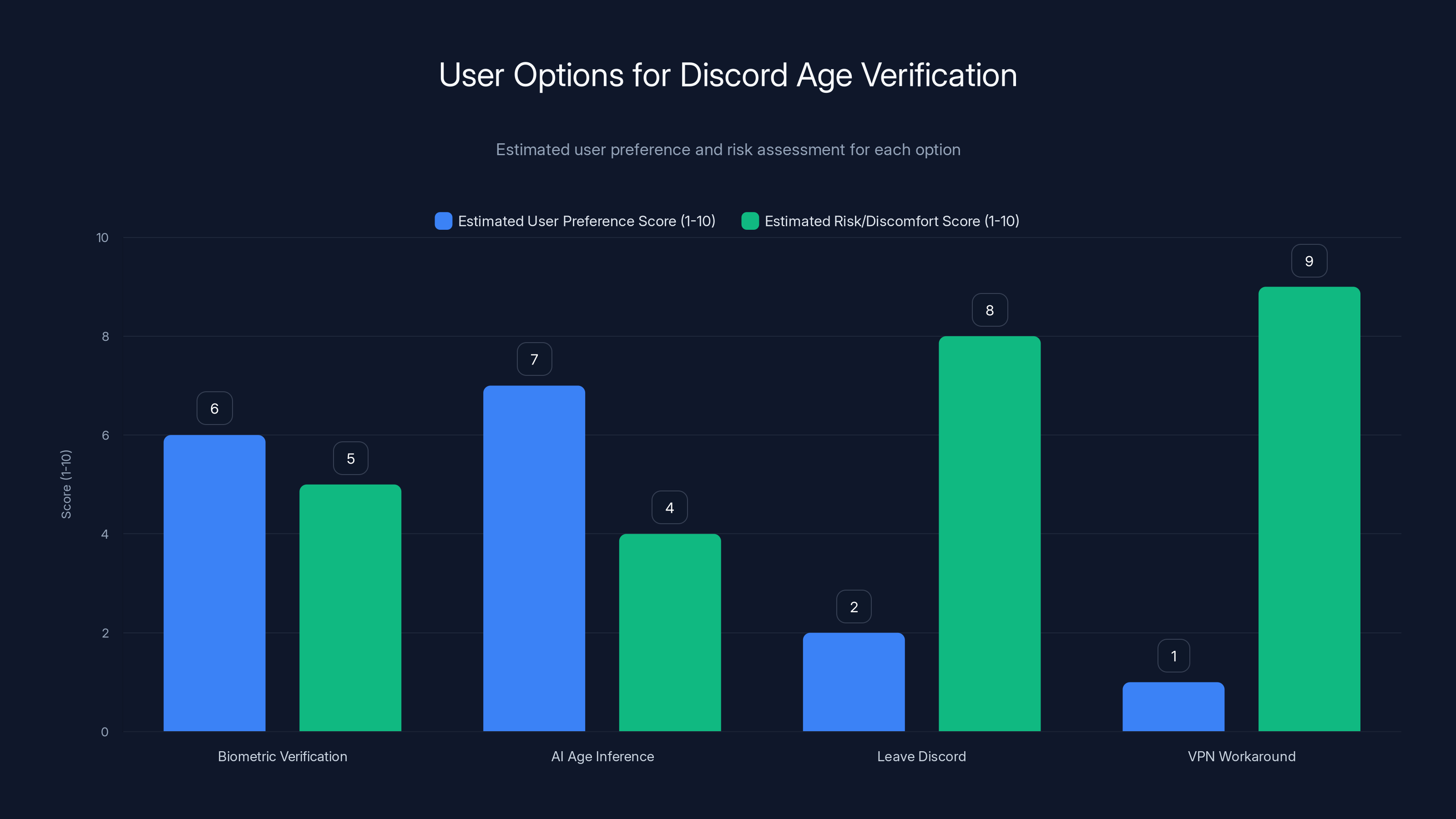

AI age inference is preferred by users due to lower discomfort, but carries moderate risk. Leaving Discord is the least preferred due to high social cost. (Estimated data)

What Discord Users Are Actually Experiencing: The Restrictions

Let's be concrete about what access loss looks like.

If Discord's AI inference model identifies you as a minor (or can't confidently identify you as an adult), you lose:

Restricted Servers and Channels: Any server marked "18+" becomes inaccessible. The server still exists, you just can't see it. That could be gaming communities, music communities, hobby communities—anything the server owner tagged as restricted.

Stage Channels: These are live audio channels where people broadcast to groups. They're commonly used for gaming tournaments, music sessions, community events. Without age verification, you can't access them.

Content Filtering: Discord will apply aggressive content filters. Images that appear to show violence, nudity, or sexual content get blurred or hidden. This is algorithmic moderation running in real time on everything you see.

Limited Direct Messaging: This one isn't explicitly stated, but based on patterns from other platforms, expect DM restrictions with adults you're not already connected to.

For a 17-year-old who's been on Discord for three years building communities and friendships? This is a meaningful degradation of the service.

For a 22-year-old? If Discord's AI gets it wrong, they're suddenly locked out of content they should have access to, with no straightforward way to appeal or override the model's decision.

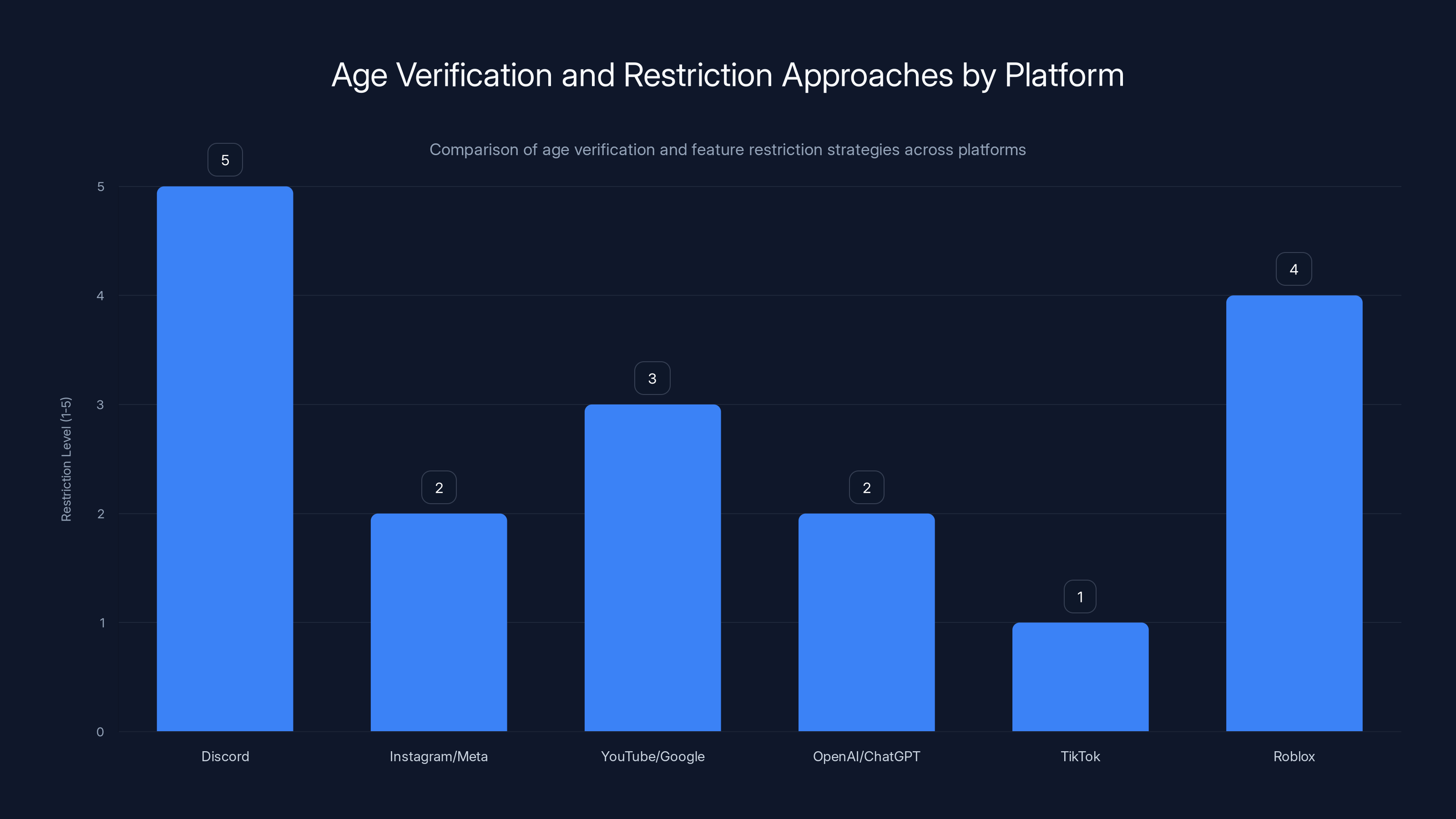

The Comparison to Other Platforms: Who's Doing What

Discord isn't alone, but its approach is more aggressive than most.

Instagram and Meta: Uses AI inference plus optional video selfie verification for age-gating features like Stories and Reels visibility. Doesn't restrict feature access as broadly as Discord. More about algorithmic content filtering than hard access blocks.

YouTube/Google: Age estimation based on search and viewing history. Restricts recommendations but doesn't block feature access. Users can manually update their age in settings, creating an honor system overlay, as noted in Google's blog.

OpenAI/ChatGPT: AI age prediction for restricting certain conversation topics. Behavioral analysis of chat patterns. Less restrictive than Discord because ChatGPT doesn't really have a community dimension—it's one-to-one interaction.

TikTok: Didn't implement age verification broadly. Instead, it uses algorithmic content filtering and recommendation restrictions. It's a different approach entirely: rather than authenticating anyone's age, TikTok limits what gets recommended to different age groups.

Roblox: Requires age verification to access social features like chat. Most restrictive after Discord because Roblox is explicitly child-focused and needed to tighten safety measures, as reported by Tech Policy Press.

Discord's approach is notable because it's applying aggressive age-gating to a platform that serves all ages, not just children or young adults.

Media literacy, judgment, and community norms have a higher estimated impact on online safety compared to age verification. Estimated data.

The Privacy Nightmare: What Happens to Your Data

Here's what nobody wants to talk about: once you submit age verification data to Discord, you've created a new liability.

Under GDPR (in Europe), under CCPA (in California), under various other data protection laws, Discord now has legal obligations around that data. They have to tell you what they're doing with it. They have to let you request deletion. They have to disclose breaches.

But the data lives somewhere. And someone has access to it.

Discord's current infrastructure uses third-party vendors for verification. That vendor processes the data, generates a yes/no decision, and (supposedly) deletes the original submission.

Supposedly.

Last year's breach showed us what "supposedly" means in practice. A vendor that Discord trusted with verification data wasn't properly securing it. User information got exposed. ID images got exposed.

Discord didn't lose that data directly. But they passed it to someone who did.

This is the classic vendor risk problem, and tech companies are terrible at solving it. You can have the best security practices in the world, but if a vendor cuts corners, you're exposed.

What should happen: Discord should implement age verification in-house or use vendors with government-level security audits, not just annual SOC 2 certifications.

What will happen: Discord will use the cheapest vendor that meets legal minimum standards.
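What "generates a yes/no decision and deletes the original" could look like in code is sketched below. The class and function names are hypothetical, and the check itself is passed in as a stand-in. The sketch also shows the limit of such promises: clearing an in-memory record says nothing about copies already written to logs, disks, or backups, which is exactly where "supposedly" does its work.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable

@dataclass(frozen=True)
class AgeAttestation:
    """The only record kept in this sketch: a yes/no result, not the document."""
    is_adult: bool
    method: str
    verified_at: datetime

def verify_and_discard(submission: dict, check: Callable[[dict], bool], method: str) -> AgeAttestation:
    """Run a hypothetical age check, then clear the raw upload from this dict."""
    try:
        result = check(submission)
    finally:
        # Clears only this in-memory dict; copies in logs, disks, or backups are untouched.
        submission.clear()
    return AgeAttestation(is_adult=result, method=method, verified_at=datetime.now(timezone.utc))

upload = {"id_image": b"<jpeg bytes>", "date_of_birth": "1990-05-01"}
attestation = verify_and_discard(upload, lambda s: True, method="id_upload")
print(attestation.is_adult, upload)
```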

The Government ID Problem: Why Uploading Your ID Is Risky

Let's talk about the scariest option: uploading a government ID to prove your age.

On its surface, it seems like the most foolproof method. You take a photo of your license or passport, Discord's system reads it, confirms your age, done.

Except a government ID is the skeleton key to identity theft.

Your driver's license contains: full legal name, date of birth, address, driver's license number, possibly a physical description, and your photo. If someone has that, they can:

- Open credit accounts in your name

- Apply for loans

- File taxes as you

- Get a phone number in your name

- Rent apartments

- Pretty much commit identity fraud at scale

And you're uploading this to Discord, a company that was recently compromised through a vendor and has already shown it doesn't properly vet its security partners.

Why would you do this?

Most people won't. Some will, thinking the verification is secure because it's a large company. Those people are taking a real risk.

The best-case scenario: Discord properly deletes the image after verification. The image never gets stored long-term.

The likely scenario: Discord stores the image for audit purposes, backing up the backup, keeping copies on disaster recovery systems.

The nightmare scenario: A vendor with access to those images gets compromised, like happened last year.

Discord's official statement is reassuring: "Discord does not store copies of your ID." Great. But their vendor does, at least temporarily. And we know what happened last time.
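Once a date of birth has been read off the ID (the document scanning and text extraction are not shown here), the "confirms your age" step at the start of this section is a small date calculation. A minimal sketch, with function names of our own: the only subtlety is subtracting a year when this year's birthday hasn't arrived yet. With this comparison, someone born on February 29 turns a year older on March 1 in non-leap years; that convention is an assumption, and legal rules vary.

```python
from datetime import date

def age_on(dob: date, today: date) -> int:
    """Whole years elapsed, minus one if this year's birthday hasn't arrived yet."""
    birthday_passed = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if birthday_passed else 1)

def is_adult(dob: date, today: date, legal_age: int = 18) -> bool:
    return age_on(dob, today) >= legal_age

print(is_adult(date(2008, 3, 15), date(2026, 3, 14)))  # the day before an 18th birthday
print(is_adult(date(2008, 3, 15), date(2026, 3, 15)))  # the 18th birthday itself
```

Notice that the answer needs only one bit, while the ID photo that produced it carries the name, address, and document number listed above.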

Discord and Roblox have the highest restriction levels due to aggressive age-gating and verification measures, while TikTok has the least restrictive approach focusing on content filtering. Estimated data based on platform strategies.

AI Age Inference: The Model That Thinks It Knows You Better Than You Do

Most Discord users will end up in the AI inference system by default. This is the least transparent but most widely applied method.

Here's how it works in theory: Discord's AI watches your behavior. What servers you join. What you talk about. When you're active. How you write. Then it makes a prediction: is this person an adult?

The model probably trains on labeled data: accounts where the user manually confirmed their age, plus maybe partnerships with other platforms that share age labels.

Then the model makes real-time predictions on unlabeled accounts.

This sounds scientific. It's actually probabilistic guessing with sophisticated math behind it.
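The train-then-predict loop described above can be sketched with plain logistic regression. Everything here is hypothetical: three invented features, six invented labeled accounts, and a simple classifier standing in for whatever Discord actually uses. The model learns weights from accounts with confirmed ages and then outputs a probability for accounts it has never seen.

```python
import math

# Hypothetical labeled accounts: ([account_age_years, late_night_share, adult_server_share], is_adult).
LABELED = [
    ([6.0, 0.7, 0.8], 1), ([4.0, 0.6, 0.9], 1), ([3.0, 0.8, 0.6], 1),
    ([0.5, 0.1, 0.1], 0), ([1.0, 0.2, 0.0], 0), ([2.0, 0.1, 0.2], 0),
]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, lr=0.1, epochs=5000):
    """Plain batch gradient descent on the logistic (cross-entropy) loss."""
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i in range(n_features):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gw / len(data) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b

def predict(w, b, x) -> float:
    """Probability the model assigns to 'this account belongs to an adult'."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

w, b = train(LABELED)
print(predict(w, b, [5.0, 0.75, 0.85]))   # unlabeled account resembling the adult rows
print(predict(w, b, [0.3, 0.05, 0.05]))   # unlabeled account resembling the minor rows
```

The output is a probability, not a fact about the person: the model only knows what people like you looked like in its training data.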

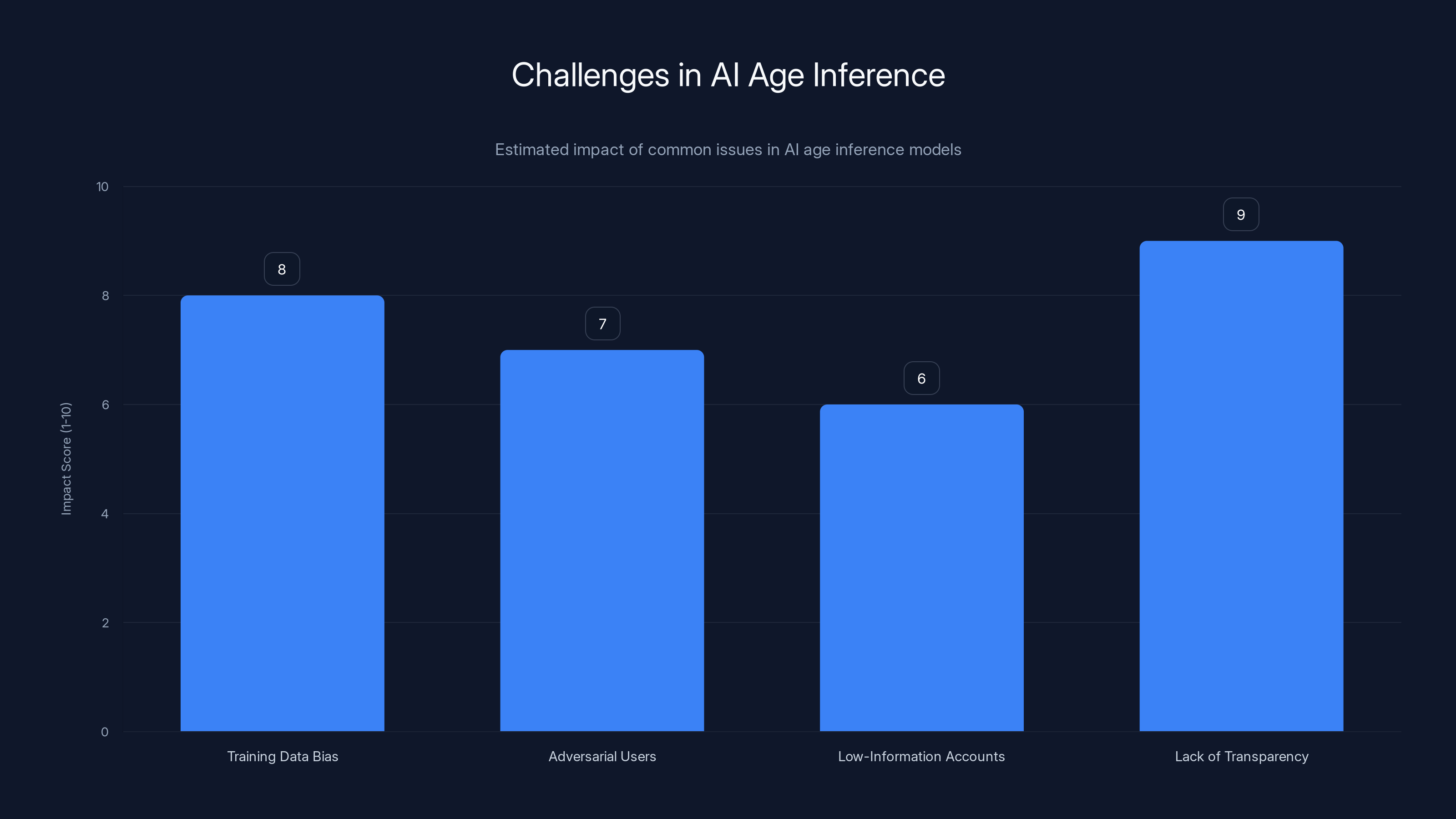

Why AI Age Inference Fails

First problem: Training data bias. If Discord's labeled data comes mostly from users in developed countries, users who are actively online, users who play certain games—the model learns the demographic distribution of those users, not how to accurately infer age across all humans.

Second problem: Adversarial users. Anyone who wants to beat the system can. Claim you're 25. Join adult communities. Change your posting patterns. Use different language. Within a week, the model probably thinks you're an adult.

Third problem: Low-information accounts. What if you're a new user with a blank profile? The model has almost no signal. It has to make a decision based on next to nothing, and it will probably default to "minor" (to avoid false positives, where minors get through).

Fourth problem: The model can't prove its reasoning. You can't appeal to Discord saying "your model is wrong about my age." There's no transparent decision process. It's a black box that says "no" and offers no recourse.

This is where the system becomes genuinely dystopian. Your access to services is determined by an algorithm you can't see, can't challenge, and can't override without submitting biometric data or a government ID.

At scale, Discord's AI system will make millions of mistakes. The model will restrict adults. It will let through minors it misjudges.
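A quick calculation shows why "millions" is plausible. Assume, purely for illustration, that $N = 10^8$ accounts are classified by inference and that the model is wrong on $\varepsilon = 2\%$ of them (neither figure comes from Discord):

$$\text{misclassified accounts} = N \times \varepsilon = 10^{8} \times 0.02 = 2 \times 10^{6}$$

Even a model that is right 98% of the time would misjudge two million accounts under these assumptions. The threshold only decides how those errors split between restricted adults and admitted minors.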

Discord has decided that false negatives (restricting adults) are preferable to false positives (letting minors through). That's a reasonable safety choice, but it also means a significant portion of adult users will be unnecessarily restricted.
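Which adults end up restricted is unlikely to be random if the training data is skewed, as the first problem above describes. One way an auditor could surface that is to break the "adults wrongly restricted" rate out by group. The group names and records below are hypothetical, invented only to show the calculation.

```python
from collections import defaultdict

# Hypothetical audit records: (group, is_actually_adult, predicted_adult).
RECORDS = [
    ("group_a", True, True), ("group_a", True, True), ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, False), ("group_b", True, False), ("group_b", True, True), ("group_b", True, False),
]

def adult_block_rate_by_group(records) -> dict:
    """Share of real adults in each group that the model wrongly restricted."""
    adults = defaultdict(int)
    blocked = defaultdict(int)
    for group, is_adult, predicted_adult in records:
        if is_adult:
            adults[group] += 1
            if not predicted_adult:
                blocked[group] += 1
    return {group: blocked[group] / adults[group] for group in adults}

print(adult_block_rate_by_group(RECORDS))
```

An overall error rate can look acceptable while one group bears most of the wrongful restrictions; only a per-group breakdown like this would show it.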

The Regulatory Capture Angle: Why Platforms Love Age Verification

Here's something nobody's saying out loud: platforms love age verification because it actually helps their bottom line.

Regulators see age verification as a child safety measure. Platforms see it as legal protection plus data collection plus audience segmentation.

Once Discord has an age inference profile for every user, what does the company do with that data? They build an "adult" recommendation engine. They optimize differently for adult users. They can sell advertising to brands that specifically want to reach "verified adults."

This is why every platform is implementing this simultaneously. It's not just regulatory pressure. It's also a business optimization that regulators are forcing them to do anyway.

Suzanne Bernstein from the Electronic Privacy Information Center nailed it: "The way to protect not just kids, but everyone's safety online, is to design products that are less manipulative and that don't pose a risk of harm."

But that requires structural change. Age verification requires engineers to build a new feature. Guess which one gets chosen?

Regulators wanted platforms to be safer. Platforms interpreted this as: "get legal cover for current practices and extract more data." That's the regulatory capture problem in action.

This chart estimates the impact of various challenges faced by AI age inference models. Lack of transparency is considered the most significant issue, followed by training data bias. (Estimated data)

The Global Rollout Problem: Exporting Strict Standards Worldwide

Here's something counterintuitive: Discord is implementing UK and Australian age verification standards globally.

Why? Because building regional variants is expensive and legally risky. If Discord has to comply with Australia's age assurance requirements, but it also operates in the US where there's no federal mandate, the company could theoretically offer different experiences in different countries.

That creates compliance complexity. Someone has to track what applies where. Someone has to test regional variants. Someone has to monitor for loopholes.

Or you just implement the strictest standard everywhere and accept that users in permissive jurisdictions get the restrictive treatment.

This is how global regulatory minimum effectively becomes global regulatory maximum. The strictest jurisdiction's rules become everyone's rules.
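The "one system everywhere" logic can be sketched as collapsing every jurisdiction's rules into a single policy by taking the strictest value of each field. The jurisdictions and numbers below are hypothetical placeholders, not summaries of any real law.

```python
# Hypothetical per-jurisdiction rules; values are illustrative, not legal summaries.
RULES = {
    "jurisdiction_a": {"min_age_unrestricted": 18, "requires_age_assurance": True},
    "jurisdiction_b": {"min_age_unrestricted": 16, "requires_age_assurance": True},
    "jurisdiction_c": {"min_age_unrestricted": 13, "requires_age_assurance": False},
}

def strictest_global_policy(rules: dict) -> dict:
    """Merge regional rules into one worldwide policy by taking the strictest value of each field."""
    return {
        "min_age_unrestricted": max(r["min_age_unrestricted"] for r in rules.values()),
        "requires_age_assurance": any(r["requires_age_assurance"] for r in rules.values()),
    }

print(strictest_global_policy(RULES))
```

In this sketch, users in `jurisdiction_c`, which requires no age assurance at all, still get the 18+ threshold and the verification requirement.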

A 16-year-old in a country with no age verification laws just had an age verification requirement imposed on them because Discord has to comply with laws in other countries where it operates.

This is regulatory arbitrage in reverse. Instead of companies finding the most permissive jurisdiction, platforms are being forced to satisfy the most restrictive jurisdiction, which effectively exports that jurisdiction's standards globally.

What This Means for the Future: Age-Gated by Design

Discord's rollout isn't an end point. It's a way station.

Once age verification becomes normalized on Discord—once users accept that they need to prove their age to access internet services—that becomes the expectation for every other platform.

Imagine the next generation of internet: you need to authenticate your age to use social media, to access streaming services, to use communication platforms. It becomes as standard as having a login.

That's not inherently bad. Age-appropriate content is reasonable.

But the infrastructure it requires is unprecedented. Every platform needs biometric data or government IDs or behavioral AI models tracking your activity. Every platform needs to store or process that sensitive information.

The surface-level benefit (protecting minors from age-inappropriate content) is real. But the infrastructure cost is enormous and the privacy risks are substantial.

We're building a gated internet. Not because it's the best solution, but because it's the easiest way to satisfy regulators and limit platform liability.

Once that infrastructure exists, what happens when it's used for other purposes?

The Normalization Problem

Age verification used to be exotic. "Upload your ID? No way."

Now it's becoming routine. "Yeah, I verified my age on Discord."

Once it's routine, suddenly it's expected everywhere. Every service implements it. Every service collects the same data. Every service has a vendor managing your sensitive information.

The infrastructure scales faster than safeguards catch up.

The Data Aggregation Problem

We're right to worry about single platforms holding our data. But if a vendor breach exposes your Discord data, the damage is at least limited to one platform, and you can take steps to protect your account.

But what happens when Facebook has your biometric data, Discord has your behavioral age profile, Google has your search-based age inference, and TikTok has your video-based age model?

A data broker aggregates all of it. Suddenly they have an unprecedented picture of who you are across every major platform you use.
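The mechanics of aggregation are mundane: a join on a shared identifier. In the sketch below, the platform names, fields, and the use of an email address as the join key are all hypothetical. It shows how separate age signals collapse into one profile.

```python
from collections import defaultdict

# Hypothetical per-platform records, each keyed by the same email address.
PLATFORM_RECORDS = {
    "platform_a": [{"email": "sam@example.com", "face_age_estimate": 24}],
    "platform_b": [{"email": "sam@example.com", "inferred_adult": True, "top_servers": ["retro-games"]}],
    "platform_c": [{"email": "sam@example.com", "search_age_band": "18-24"}],
}

def aggregate_profiles(sources: dict) -> dict:
    """Merge every platform's fields into one profile per shared identifier."""
    profiles = defaultdict(dict)
    for platform, rows in sources.items():
        for row in rows:
            key = row["email"]
            for field, value in row.items():
                if field != "email":
                    profiles[key][f"{platform}.{field}"] = value
    return dict(profiles)

print(aggregate_profiles(PLATFORM_RECORDS)["sam@example.com"])
```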

Data aggregators don't get breached that often because they don't advertise what they have. But it's there. And if it ever leaks—or gets subpoenaed—the damage is massive.

Age verification creates unique, sensitive data that becomes very valuable to anyone who wants to know who you really are, how old you actually are, and what kind of content you engage with.

The Regulatory Scope Creep Problem

Age verification is being justified for child safety. Governments are pushing it for that reason.

But government uses for age verification are broader. Border control. Law enforcement. Fraud detection. Tax compliance.

Once the infrastructure is built, it's available for use. A government could theoretically require platforms to share age verification data with authorities. Not for child safety, but for whatever the government deems important.

This has precedent. Facial recognition systems were built for security theater. Now they're used for mass surveillance.

Age verification could follow the same path.

What Users Can Actually Do: Your Options Aren't Great

Let's be real: if you want to use Discord, you have limited options.

Option 1: Submit to Age Verification

Your least bad choice is probably biometric verification. It's uncomfortable, but your face scan doesn't identify you the way a government ID does. Discord gets a yes/no decision, supposedly doesn't store the image long-term, and you move on.

This assumes Discord's vendors are secure and Discord's data handling practices are honest. Recent evidence suggests caution on both fronts.

Option 2: Hope the AI Gets It Right

Do nothing. Let Discord's AI model infer your age. If it's right, great. If it's wrong, appeal the decision and hope for human review.

For most adult users, this will probably work. The model will see account age, activity patterns, and server memberships and correctly infer adulthood.

For young adults (18-22) and for any adults with unusual activity patterns, there's risk. Wrong classification and you lose feature access with no clear recourse.

Option 3: Leave Discord

If you're fundamentally uncomfortable with biometric data collection or behavioral tracking, the most consistent option is just leaving. Use a different platform. There are alternatives.

This is a nuclear option that most people won't take. Discord is popular because of network effects—your friends are there. Moving is costly.

But it's worth acknowledging: the option exists. You're not trapped.

Option 4: VPN to Another Country

Discord specifically said their global rollout will prevent users from getting around restrictions using VPNs to access another region's version of the service.

So this is explicitly blocked. You can't circumvent the system this way, which shows Discord anticipated and deliberately closed this loophole.

The Missing Conversation: What Would Actually Protect Minors Online

Everyone's focused on age verification. Almost nobody's talking about what would actually reduce harm to minors online.

Spoiler: it's not age gates.

Real Solutions Look Like This

Better Platform Design: Platforms could make default feeds less algorithmically manipulative. Instead of optimizing for engagement (which favors extreme content), optimize for diverse, balanced feeds. A teenager on a well-designed platform is safer than an adult on a poorly designed one.

Real Moderation: Discord already has the ability to moderate servers and channels. Age verification doesn't improve moderation. Better moderation practices do.

Media Literacy: Teach teenagers how to spot misinformation, manipulation, and dangerous trends online. This works. It reduces harm.

Transparency: Let users understand what algorithms are recommending to them and why. Instead of hidden AI, open systems.

Parental Controls: Give parents tools to monitor and guide their kids' online activity. Actually useful tools, not security theater.

All of these are harder than implementing age verification. None of them generate data that can be monetized. All of them require platforms to rethink business models.

So instead, we get age gates.

Age verification is what happens when regulators want solutions but don't want to disrupt platform business models. It's a compromise that satisfies regulators ("we're protecting children"), satisfies platforms ("we're compliant, also we got more data"), and doesn't actually fix the underlying problems.

The Precedent This Sets: What Comes After Age Verification

Once we normalize identity verification for internet access, what's next?

Credential Stacking: You verify your age. Then you verify your real name for account verification. Then you verify your location. Then you verify your employment status.

Each additional credential is justified: fraud prevention, tax compliance, platform safety.

But cumulatively, you've just verified yourself completely. The internet went from pseudonymous to authenticated. That's a fundamental architecture change.

Liability-Driven Restrictions: As platforms face more legal liability, they implement more restrictions. Age gates today. Next comes income verification for trading platforms. Then citizenship verification for speech platforms.

Platforms optimize to minimize risk, not to maximize user experience.

Government Integration: Once platforms have verified identity, governments can request that data. Not subpoena—request. Then demand. Then require sharing.

No platform wants that. But if everyone has verified identity data, governments will push for access. And they'll justify it: border security, fraud prevention, national security.

It happened with metadata. It happened with biometric databases. It will happen with age verification data.

Fragmentation: Different countries require different verification standards. Eventually, platforms maintain separate verified identity databases for different regions. Users can't move between regions with the same account. Internet fragments further.

None of this is science fiction. It's the logical progression of what age verification infrastructure enables.

Industry Context: Who Benefits and Who Gets Harmed

Who wins with age verification infrastructure?

Platforms win: Legal protection. Data collection. Audience segmentation. Behavioral targeting capability.

Data brokers win: More verified identity data to aggregate and sell.

Regulatory agencies win: They can claim they're protecting children while the underlying business model stays intact.

Incumbent platforms win: New competitors have higher friction to entry. Building age verification infrastructure is expensive.

Who loses?

Users lose: Privacy, data security risk, reduced service access, more authentication friction.

Younger people especially: They're the least likely to understand the privacy tradeoffs and most affected by access restrictions.

Alternative platforms lose: The infrastructure cost of age verification favors big platforms that can afford it. Decentralized, smaller, or niche platforms get priced out.

Data protection advocates lose: Hard to advocate for less data collection when regulators are mandating more.

This is a classic regulatory structure that protects the powerful while imposing costs on everyone else.

FAQ

What is Discord's age verification requirement?

Starting in 2025, Discord is requiring users worldwide to prove their age through one of three methods: biometric face scanning, government ID upload, or being classified as an adult by an AI inference model that analyzes account behavior. Users who don't verify or don't pass the AI check will have restricted access to 18+ servers, stage channels, and unfiltered content.

How does Discord's age verification work?

Discord offers three verification methods: biometric scanning of your face (analyzed by an AI model), uploading a government-issued ID, or being identified as an adult by Discord's proprietary AI inference system that analyzes your account activity, server memberships, login patterns, and behavioral signals. The biometric and ID methods use third-party vendors; the AI method is handled in-house but relies on analyzing your account behavior.

Why is Discord implementing age verification?

Regulatory pressure from governments in the UK, Australia, and various US states is the primary driver. Laws like the UK's Online Safety Act and Australia's Digital Age Assurance Services hold platforms liable for child safety failures, creating a legal incentive to implement age-gating. Discord is also implementing these systems to limit platform liability and to gain additional user data for targeting and personalization.

What happened with the previous Discord age verification vendor breach?

Last year, a third-party vendor used by Discord for age verification suffered a data breach that exposed user information and a "small number" of ID images submitted for verification. Discord stated it "immediately stopped" working with that vendor, but the incident raised concerns about vendor oversight and data retention practices that remain largely unanswered by the company.

Can I opt out of age verification and still use Discord?

Only partially. Users who don't verify can keep using Discord, but with restricted access. If Discord's AI inference system decides you can't access restricted features, the only way to unlock them is to submit biometric or government ID verification. What you can't avoid is some form of age assessment: either you volunteer your own data, or Discord analyzes your behavior.

Is biometric face scanning or government ID upload safer for privacy?

Neither is truly safe, but biometric scanning is less immediately dangerous than uploading a government ID. A face scan doesn't identify you for fraud purposes the way a driver's license or passport does. However, biometric data stored by vendors can be misused, and Discord's vendor breach shows that verification data held by third parties does get exposed. Government ID upload poses a much higher identity theft risk if breached.

How accurate is Discord's AI age inference model?

Discord doesn't disclose accuracy metrics, but similar AI age inference systems typically have 10-15% false positive and false negative rates. This means millions of adult users could be incorrectly flagged as minors and restricted, while some minors could successfully circumvent the system. The accuracy varies significantly based on account history, activity patterns, and region.
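To make the scale argument concrete, here is a back-of-the-envelope Python sketch. The account counts are hypothetical placeholders, not Discord's figures, and the 10% and 15% rates simply reuse the range quoted above. It also assumes, for simplicity, that the error rate is the same in both directions. The only point is that a fixed percentage error becomes a large absolute number at platform scale.

```python
# Back-of-the-envelope sketch: how a fixed age-inference error rate
# translates into absolute numbers of misclassified accounts.
# All account counts below are hypothetical placeholders, not Discord data.


def expected_misclassified(population: int, error_rate: float) -> int:
    """Return the expected number of accounts misclassified at error_rate."""
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return round(population * error_rate)


ADULT_ACCOUNTS = 100_000_000  # hypothetical number of adult accounts checked
MINOR_ACCOUNTS = 20_000_000   # hypothetical number of minor accounts checked

# Assumes the same error rate in both directions, for simplicity.
for rate in (0.10, 0.15):
    adults_restricted = expected_misclassified(ADULT_ACCOUNTS, rate)
    minors_passed = expected_misclassified(MINOR_ACCOUNTS, rate)
    print(
        f"{rate:.0%} error rate: "
        f"{adults_restricted:,} adults wrongly restricted, "
        f"{minors_passed:,} minors wrongly treated as adults"
    )
```

With these placeholder numbers, even the low end of the range means 10,000,000 adults wrongly restricted and 2,000,000 minors let through. Real figures would depend on Discord's actual account counts and on how its error rates differ by direction, neither of which the company has disclosed.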

What other platforms are implementing age verification?

Instagram, YouTube, Google, OpenAI/ChatGPT, Reddit, Bluesky, Xbox, Roblox, TikTok, and others have rolled out various forms of age verification or age inference in response to similar regulatory pressure. Discord's approach is notably more aggressive because it restricts feature access broadly rather than just limiting content recommendations.

Will age verification actually protect minors from harmful content?

Age verification doesn't address the underlying causes of harm online: algorithmic manipulation, poor moderation, and lack of media literacy. A 17-year-old and an 18-year-old have virtually identical developmental capacity. Age is a legal construct, not a safety proxy. What actually protects minors is platform design that reduces manipulation, honest moderation, and education about online safety.

What could governments have done instead of requiring age verification?

Effective alternatives include requiring platforms to optimize for user well-being instead of engagement, providing better moderation tools, mandating algorithmic transparency, requiring media literacy education, and giving parents legitimate oversight tools. These require changing platform business models, which is why age verification was chosen instead—it's a compliance measure that doesn't disrupt core business practices.

The Bottom Line: We're Building a Gated Internet, One Verification at a Time

Discord's age verification mandate isn't really about protecting minors from inappropriate content. If it were, there are better solutions.

It's about liability. It's about data collection. It's about building infrastructure that makes platforms legally safer and users more trackable.

The precedent is being set right now. Every major platform is implementing similar systems simultaneously. Users are getting accustomed to biometric authentication and government ID uploads. Regulators are seeing compliance without requiring structural change.

The internet is slowly being gated. Not because it's the best solution to the problems we're trying to solve, but because it's the easiest way to satisfy regulators while maintaining the status quo.

Once this infrastructure is normalized—once every service requires age verification, biometric data, or behavioral analysis—it becomes the foundation for more restrictive systems.

The precedent matters. And right now, we're setting one that favors surveillance and control over privacy and freedom.

Key Takeaways

- Discord's age verification mandate forces users to choose between submitting biometric data, uploading government IDs, or accepting algorithmic restrictions, marking a significant shift toward gated internet access

- Regulatory pressure from UK, Australian, and US laws is driving simultaneous age verification implementation across every major platform, effectively exporting strict standards globally

- AI age inference models used by Discord likely have 10-15% error rates, risking both inappropriate minor access and unnecessary adult restrictions without meaningful recourse

- Age verification infrastructure creates unprecedented privacy and identity theft risks by concentrating data at platforms and vendors, as shown by the vendor breach that already exposed ID images

- The real solution to online safety—thoughtful platform design, better moderation, media literacy—is being bypassed in favor of regulatory theater that protects platform liability while monetizing user data

