The Chat GPT Caricature Trend That's Got Everyone Talking

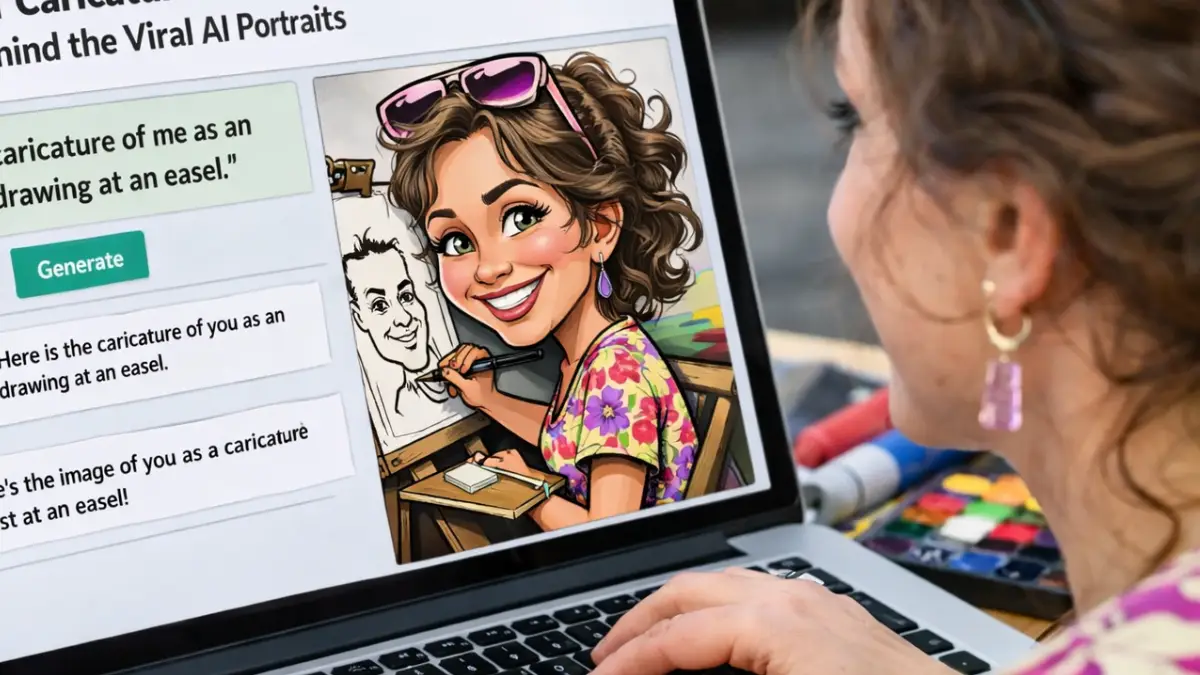

You've probably seen them all over social media. Those hilarious, weirdly accurate caricatures of people that somehow capture not just what they look like, but who they actually are. The twist? They're generated by Chat GPT.

I got curious about the hype. So I did what thousands of others have done—I fed the AI some personal details about myself and waited to see what it would create. The results were genuinely shocking. Not just because the caricature was funny (it was), but because of what it revealed about how much information these AI systems actually absorb and synthesize from the data we give them.

This trend has sparked a larger conversation that goes way beyond viral art. It's forcing us to reckon with some uncomfortable questions: How well does AI really know us? What happens when we feed our personal information into these systems? And should we be concerned about what this means for privacy and data security?

The Chat GPT caricature trend isn't just another Tik Tok moment. It's a fascinating window into how modern AI works, what it learns from user input, and the implications that has for how we interact with technology in our daily lives.

TL; DR

- The trend works surprisingly well because Chat GPT learns patterns from detailed personal descriptions and synthesizes them into visual caricatures

- AI absorption of data is real and demonstrates how thoroughly these systems process and retain user information patterns

- Privacy concerns are valid but manageable with proper data hygiene and understanding AI's actual capabilities versus myths

- The phenomenon reveals AI strengths in pattern recognition and creative synthesis that go far beyond simple text responses

- User behavior matters most because what you share with AI determines what it can know and reflect back to you

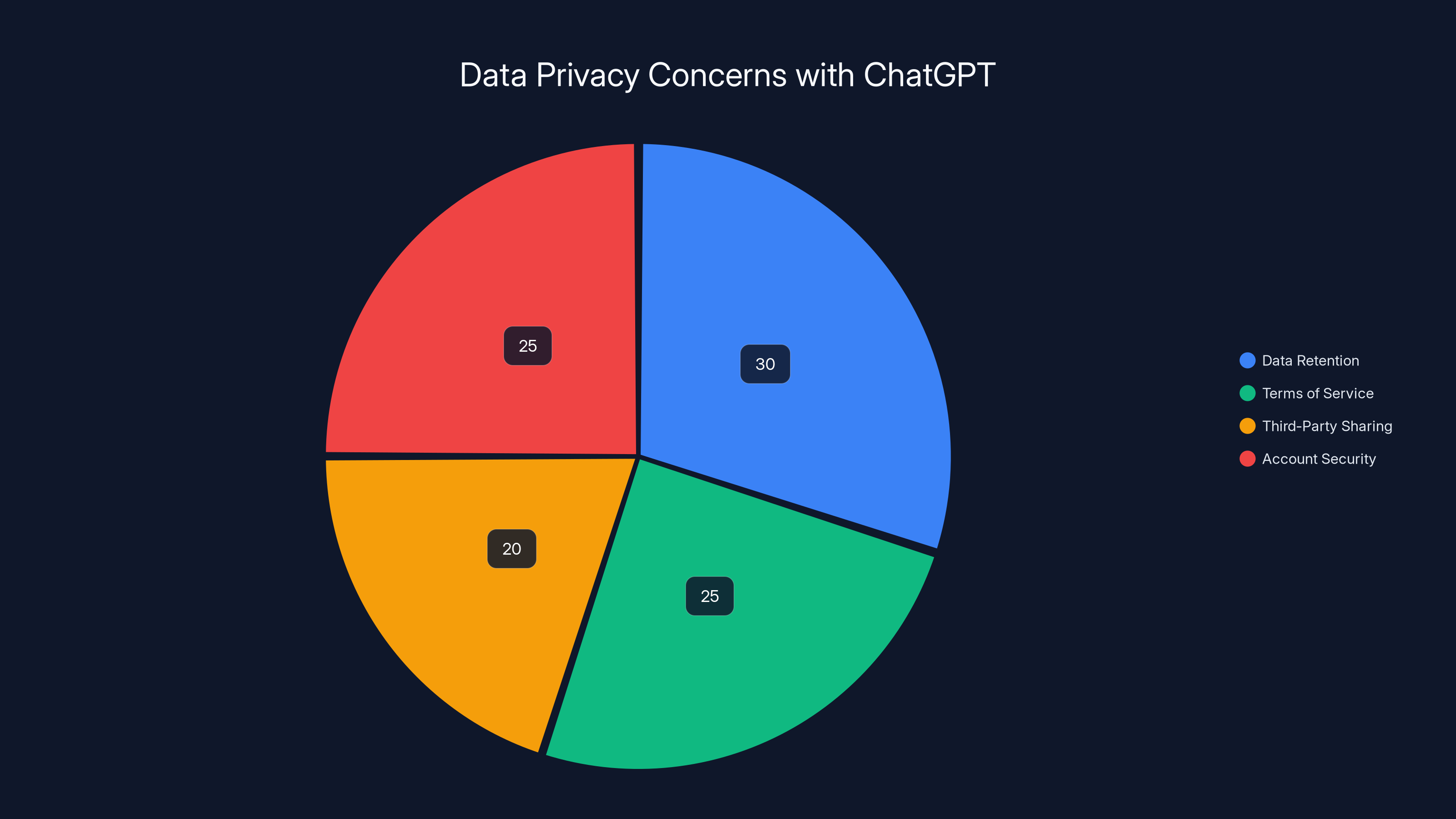

Estimated data shows that data retention and account security are the top concerns for users regarding ChatGPT's data privacy. Estimated data.

What Exactly Is This Caricature Trend?

Let's start with the basics, because if you've been living under a rock (no judgment), here's what's actually happening. The Chat GPT caricature trend involves users writing detailed descriptions of themselves—their appearance, personality, habits, quirks, sense of humor, all of it—and then asking Chat GPT to create a visual caricature based on that information.

The magic happens because Chat GPT's newer versions have image generation capabilities built in. You describe yourself in text, and the AI translates those words into a visual representation. But here's the kicker: it doesn't just draw what you literally look like. It exaggerates the characteristics you mentioned, adds context clues about your personality, and somehow manages to capture your essence in a single image.

People started doing this on platforms like Tik Tok and Twitter around early 2025, and the results started going viral almost immediately. Why? Because they work. And not just "kind of work." They work in ways that feel uncomfortably accurate.

The format is simple: you write a prompt like, "I'm a 28-year-old marketing manager who drinks too much coffee, has an unhealthy obsession with true crime podcasts, and always looks slightly stressed but makes jokes about it." Then Chat GPT generates an image that captures exactly that vibe.

What makes this different from other AI art trends is the emphasis on personal characterization. Other tools like Midjourney or Ideogram are brilliant at creating art from specific prompts, but they're often working from scratch. Chat GPT is working from your personal narrative, which introduces a whole new dimension.

The trend taps into something deeply human: we want to be understood. And when an AI seems to understand you well enough to turn your self-description into something that makes you laugh and go "yes, that's exactly me," it feels validating in a weird way.

How AI Actually Creates These Caricatures

Understanding how this works requires getting into the weeds a bit about how Chat GPT actually processes information. And I promise it's more interesting than it sounds.

When you describe yourself to Chat GPT, you're giving it a series of data points. It's analyzing the semantic meaning of those points—not just the individual words, but the relationships between them and what they collectively suggest about character, appearance, and personality.

Let's break down the actual process. Chat GPT's language model has been trained on billions of examples of human communication. When you feed it a personal description, it recognizes patterns from that training data. A person who says they're "always running late and has 47 open tabs" probably has certain visual and behavioral characteristics. Chat GPT has seen enough examples of people like this (through books, articles, social media, conversations) to make educated associations.

Then comes the creative part. The AI doesn't just describe your appearance. It exaggerates specific elements—the kind of exaggeration you'd see in a professional caricature. If you mention you're stressed, it emphasizes that in facial expression. If you say you're always tired, the eyes get darker, more drawn. If you mention a distinctive style choice, it becomes a defining visual element.

What's genuinely impressive is the synthesis layer. Chat GPT doesn't generate these images by accident. It's making deliberate creative choices about exaggeration, style, and emphasis based on your input. This requires understanding not just what you said, but what those details mean when combined together.

The technical process uses DALL-E, Open AI's image generation model, which creates images from text descriptions. But Chat GPT is doing the heavy lifting of understanding your personal narrative and translating it into visual language that DALL-E can work with.

This is genuinely novel territory in AI development. Previous image-generation systems could create art from abstract descriptions, but Chat GPT adds a layer of contextual understanding and character development that makes the results feel more like caricature art—exaggerated but recognizable, humorous but meaningful.

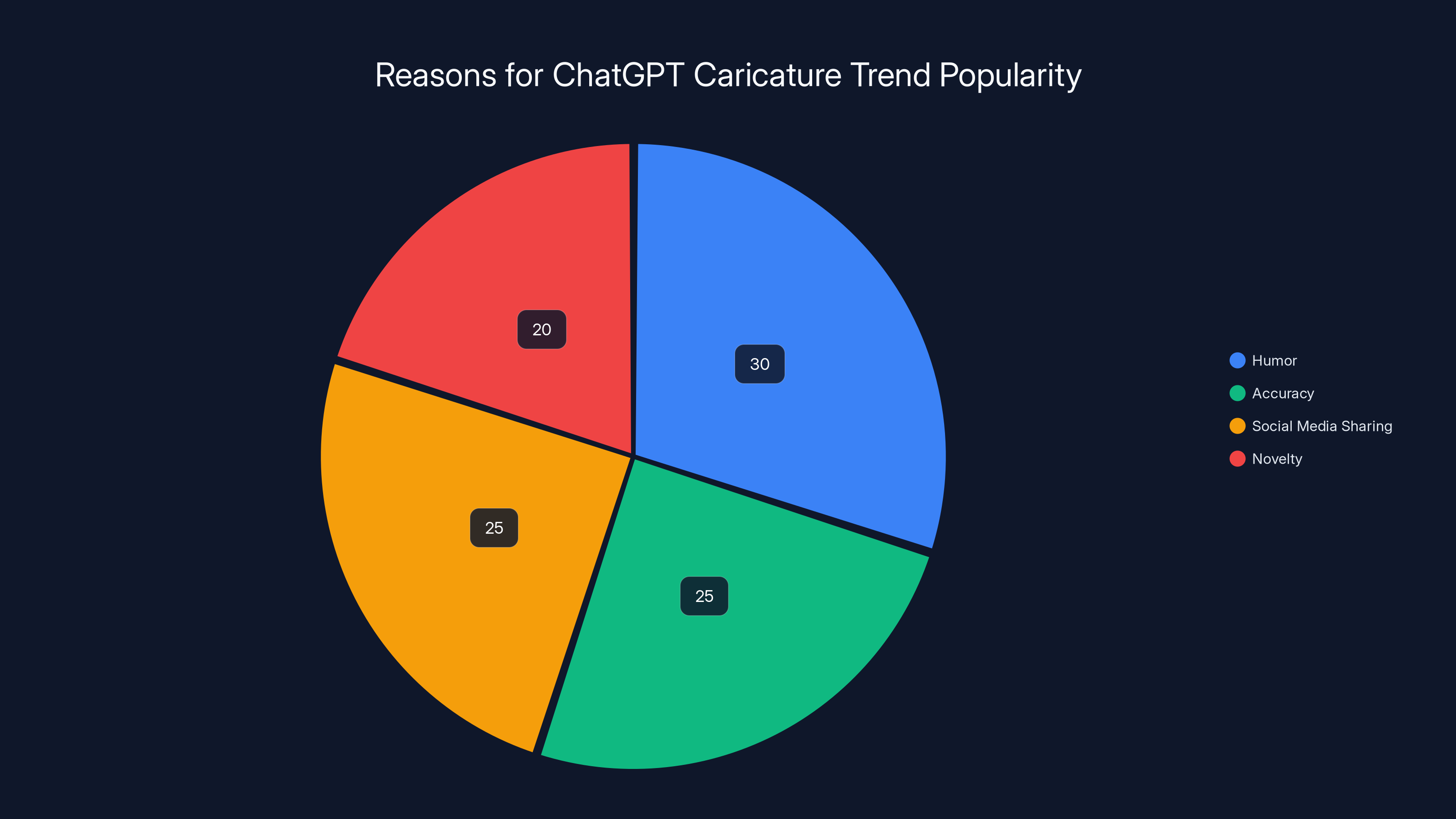

Humor and accuracy are the leading factors driving the popularity of the ChatGPT caricature trend, with social media sharing and novelty also playing significant roles. Estimated data.

Why These Caricatures Hit So Accurately

Here's the part that actually matters: why do these things work so well? Why do people look at their Chat GPT-generated caricature and immediately go "that's me"?

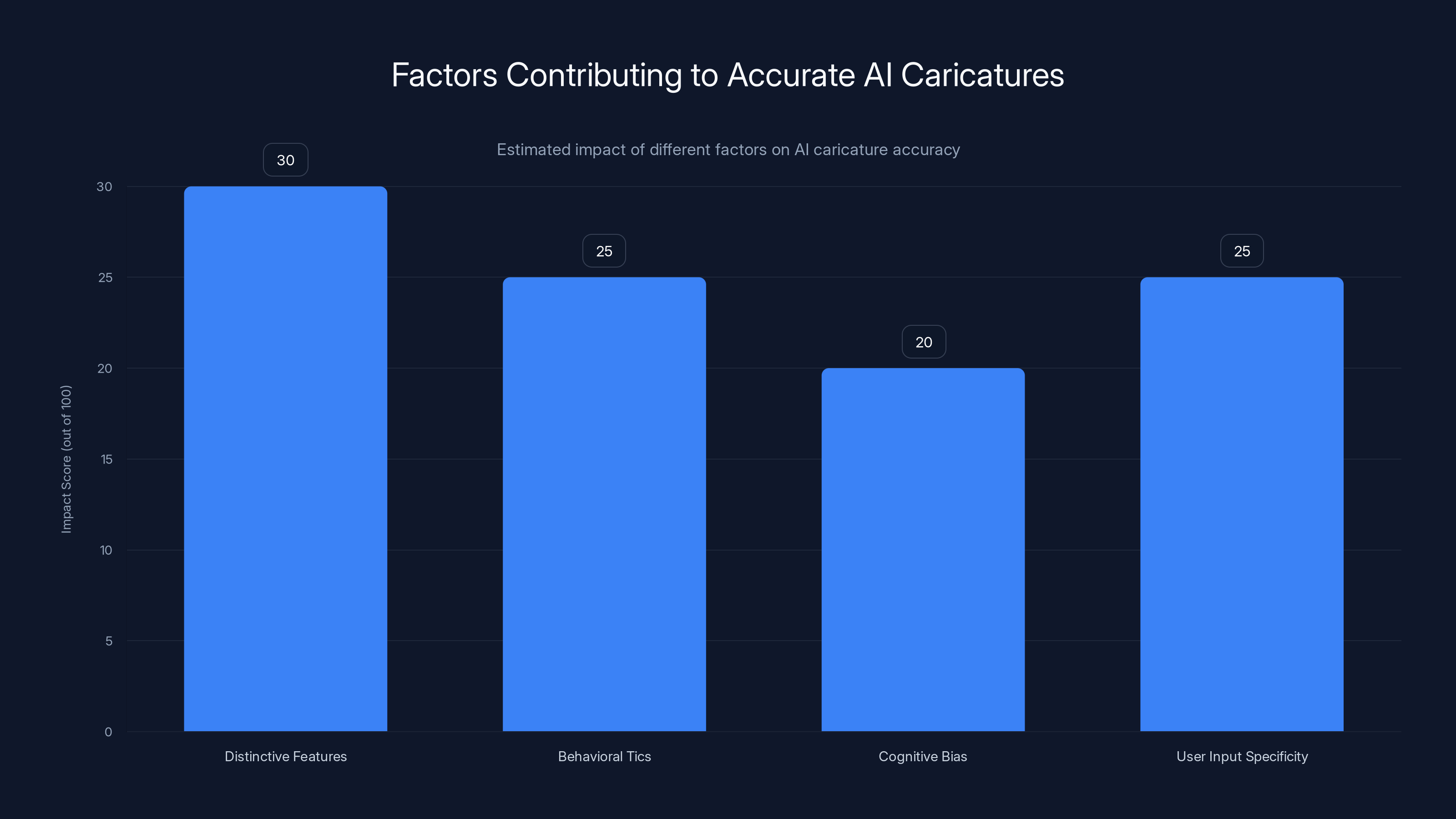

The answer has to do with how human perception works and how AI has learned to replicate it. When professional caricature artists sketch someone, they're not aiming for photorealism. They're looking for the distinctive elements that make you recognizable—the features that stand out, the behavioral tics that define you, the little details that tell your story.

AI has essentially learned to do this by analyzing thousands of professional caricatures, celebrity sketches, and personality-driven artwork. Chat GPT understands the relationship between personality traits and visual representation in ways that mirror how human artists think about their work.

But there's another element at play: cognitive bias. We're predisposed to see ourselves in ambiguous images. If an image contains even a few elements that match our self-perception, we tend to interpret the rest of it as accurate. This is called the Barnum effect—the tendency to accept vague, general statements as uniquely applicable to ourselves.

However, dismissing these caricatures as purely the result of cognitive bias misses the point. Users consistently report that multiple specific details in their AI-generated caricatures match aspects of their personality or appearance that they mentioned but had no reason to expect the AI would capture so precisely.

For example, one user reported that they casually mentioned being "someone who always has paint stains on my hands" in their description. The AI caricature showed them with noticeably paint-stained hands. Another mentioned they have a "nervous laugh" and the AI captured that exact expression in the face.

The accuracy comes from pattern recognition operating at scale. Chat GPT has analyzed enough human language about personality and appearance to understand correlations. Anxious people often describe similar physical manifestations. Confident people tend to describe themselves in certain ways. Creative people mention specific types of clutter or disorganization.

When you feed the AI your personal description, it's not guessing blindly. It's drawing on learned patterns about what combinations of traits typically look like when expressed by a human being.

The Data Privacy Elephant in the Room

Now we get to the part that actually matters if you're worried about anything. What happens to the personal information you share with Chat GPT? How is it stored? Who can access it? And should you be concerned?

First, the straightforward answer: Open AI states that they do not use free-tier Chat GPT conversations for model training purposes (as of 2024). This is actually a significant shift from earlier policies and something they made clear after privacy concerns were raised.

However, that doesn't mean your data is completely invisible. Open AI does retain conversation logs for safety and moderation purposes. This means your detailed personal description is stored on their servers. It's encrypted in transit and at rest, but it is stored.

Here's what you should actually care about:

Data Retention: Your conversations with Chat GPT are kept for up to 30 days. After that, they're deleted from Open AI's systems. You can also manually delete conversation history whenever you want.

Terms of Service: By using Chat GPT, you've agreed to let Open AI process your data for improving their service. This technically includes analyzing conversation patterns to improve model performance, though they say individual conversation content isn't used for training.

Third-Party Sharing: Open AI doesn't sell your data to advertisers or marketing companies. But they do work with law enforcement when presented with valid legal requests.

Account Security: Your account security is tied to your email. If someone gains access to your email, they can access your Chat GPT conversations. This is a realistic risk vector that most people overlook.

The deeper issue isn't so much Open AI's data practices as it is what you're teaching the AI about yourself. Every time you use Chat GPT, you're contributing to the data that trains future models. Even if Open AI isn't using your current conversations, the fact that millions of people are detailing their personal characteristics, habits, and insecurities means future AI systems will have incredibly detailed information about human psychology and behavior.

This isn't necessarily nefarious. But it's worth understanding the implications. AI systems trained on this kind of detailed personal data could be used to create incredibly convincing targeted messaging, psychological manipulation, or social engineering attacks.

The real privacy risk isn't that Open AI is going to sell your caricature description to advertisers. It's that as AI gets better at understanding human psychology, the information you casually share becomes more valuable and more capable of being misused by bad actors.

What This Trend Reveals About How AI Understands You

Beyond the meme-ness of it all, the caricature trend is actually revealing something profound about how far AI has come in understanding human psychology and personality expression.

When an AI system can translate your self-description into an image that feels recognizable and accurate, it's demonstrating understanding at multiple levels simultaneously. It understands the semantic meaning of your words. It understands cultural references and how they relate to personality. It understands visual communication and how certain expressions convey psychological states.

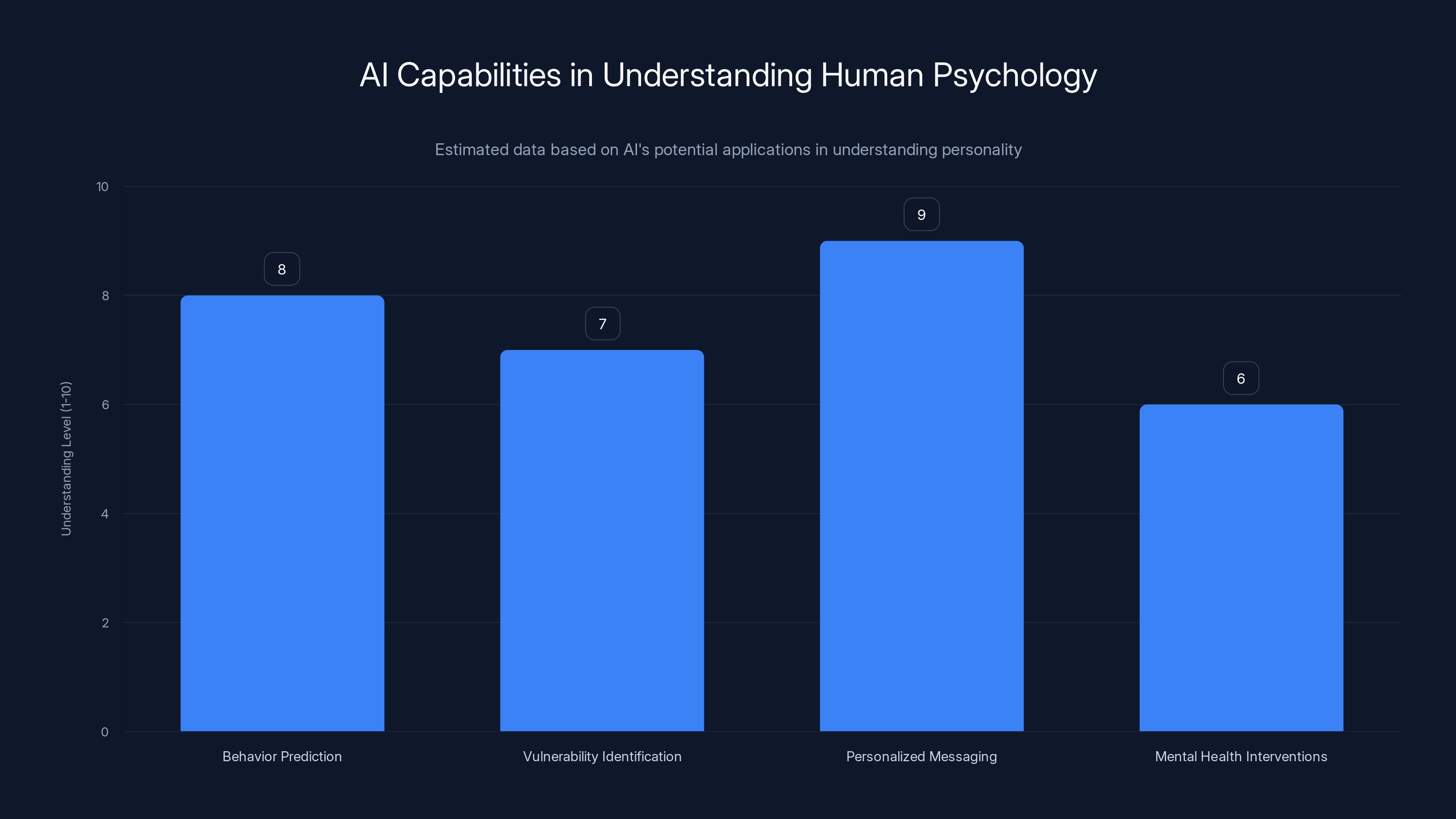

Let's think about what this means practically. AI systems that can understand personality this well could potentially:

Predict behavior patterns with greater accuracy. If Chat GPT understands that a "perpetually exhausted marketing professional who stress-eats and makes self-deprecating jokes" probably has certain decision-making patterns, it could anticipate your preferences or reactions.

Identify vulnerability patterns for social engineering. Someone who describes themselves as "trusting, naive, but loves helping others" is potentially more vulnerable to certain manipulation tactics. That's data a bad actor could theoretically use.

Personalize messaging at an unprecedented level. Advertisers have dreamed of being able to understand consumer psychology this deeply. Now they have tools that can.

Create personalized mental health interventions. This one's actually positive. AI that understands personality patterns could help identify people at risk for depression, anxiety, or other conditions.

The caricature trend is basically a party trick that accidentally demonstrated that modern AI has achieved a meaningful level of psychological understanding. And that's simultaneously cool and slightly unsettling.

Distinctive features and user input specificity are key factors in the accuracy of AI-generated caricatures, with cognitive bias also playing a significant role. (Estimated data)

The Psychology Behind Why We Love This Trend

Let's talk about the human side of this. Why have millions of people rushed to create their AI caricatures? What's the appeal beyond "haha the AI made me look funny"?

It taps into several deep psychological needs. First, there's the narcissistic element—and I don't mean that pejoratively. We all have a fundamental desire to be seen and understood. When an AI chatbot demonstrates that it "gets" you well enough to create a visual representation, that's validating. It feels like being truly understood.

Second, there's the element of self-discovery. Many people report that seeing their caricature gave them new insight into how they come across to others. The exaggeration that made it funny also made certain aspects of their personality crystal clear. Someone might think of themselves as "moderately organized" but the caricature making them look chaotic might prompt them to think, "Wait, am I actually more disorganized than I realized?"

Third, there's the social element. This trend thrives on sharing. You create your caricature, you post it online, and then you get validation from friends and strangers who go, "Yes, that's totally you." This social feedback loop is incredibly powerful.

There's also an element of control and play. In an era where we're often concerned about AI's capabilities and potential dangers, this trend lets people interact with AI on their own terms. They're directing it, they're in control, they're making it do something that entertains them and their audience.

Psychologically, this trend also speaks to our desire to make AI relatable and humanized. By treating Chat GPT as something that can understand and represent us, we're engaging in a form of anthropomorphization that makes AI feel less threatening and more like a tool we can collaborate with creatively.

Comparing Caricature AI to Other Personality-Based AI Tools

The Chat GPT caricature trend isn't the only AI tool focused on understanding and representing personality. Let's look at how it stacks up against similar offerings.

Traditional Personality Quizzes (Myers-Briggs, Di SC) have been the standard way people understand themselves for decades. But they're based on predetermined categories. You answer questions, you fit into a box. Chat GPT caricatures are generative and creative—they create something new rather than sorting you into existing categories.

AI Avatar Generators like character.ai or similar tools can create avatar representations of you, but they're typically based on visual input or very basic text descriptions. They don't synthesize personality and appearance the way Chat GPT does.

Social Media Personality Analysis Tools (which have become increasingly controversial) promise to analyze your posts and predict personality. But they're often inaccurate because they're trying to infer personality from behavior someone's publicly displaying, not personality someone's directly describing.

Professional Psychometric Tools used by companies like Google or consulting firms are incredibly detailed and sophisticated, but they're not designed for entertainment or self-discovery. They're designed for hiring, management, and organizational development.

The Chat GPT caricature approach is unique because it combines elements of all of these: it uses self-reported data (like personality quizzes), it generates creative output (like avatar tools), it's attempting personality assessment (like AI analysis tools), but it's framed as entertainment and self-discovery rather than as a formal assessment.

This is actually what makes it potentially more useful than formal personality tools. People are more honest and more detailed when they think they're just having fun.

The Technical Capabilities That Make This Work

Understanding the technical architecture helps explain why Chat GPT caricatures work better than you might expect.

Chat GPT uses a transformer-based architecture (GPT-4 at its core, with updates rolling out constantly) that processes language probabilistically. It's not following explicit rules like "if personality trait A, then visual feature B." Instead, it's learned associations through exposure to billions of examples.

When you describe yourself, Chat GPT's language model converts your description into a numerical representation called an embedding. This embedding captures the semantic meaning of your description. It's not just the words; it's the meaning behind them.

Then, that embedding gets passed to DALL-E or another image generation model. DALL-E has been trained to understand the relationship between text descriptions and visual representations. But instead of getting a simple text prompt ("draw a caricature of a tired person"), it's getting a richly detailed embedding that captures the nuance and specificity of your self-description.

The image generation happens through diffusion models, which start with random noise and iteratively refine it based on the prompt. The model is essentially saying, "What does an image look like when it matches this embedding?" and gradually building toward the answer.

What's remarkable is that this process can work across extremely varied inputs. Someone describing themselves in completely unique terms still gets an accurate caricature because the system isn't looking for specific keywords. It's understanding the underlying meaning and intent.

This is why generic descriptions produce mediocre results. If you just say "I'm a software engineer," there are too many possible variations. But if you say "I'm a software engineer who mainlines cold brew coffee, argues about tabs vs. spaces with way too much intensity, and has tried starting a side project approximately 47 times," the model has much more specific information to work with.

AI systems demonstrate high levels of understanding in personalized messaging and behavior prediction, indicating significant advancements in psychological comprehension. Estimated data.

Privacy-Conscious Alternatives and Best Practices

If you want to try the caricature trend but are concerned about data privacy, here are some practical steps you can take.

Use Chat GPT on a Private Window: This won't prevent Open AI from storing your conversation, but it will prevent it from being stored in your browser history.

Omit Identifying Details: Don't include your name, employer, location, or other information that could specifically identify you. Focus on personality traits and general appearance details instead.

Create a Throwaway Description: Write your caricature prompt in a text editor first, then paste it into Chat GPT. This keeps your actual personal file separate from what you're sharing with AI.

Understand What You're Agreeing To: Before using Chat GPT, review their terms of service and privacy policy. Their practices have been evolving, and it's worth understanding the current state.

Consider Paid Versions: Chat GPT Plus subscribers have some additional privacy protections. Your conversations are still stored, but you get better control over data and preferences.

Alternative Tools: If you want similar functionality with a different privacy model, Claude (by Anthropic) has different data policies and similar capabilities. Antml and other open-source alternatives offer more privacy control but require more technical setup.

Honestly, if you're just doing this for fun on your personal account, the privacy risk is probably lower than other things you're already doing online. The real concern would be if you're doing this on a work account or sharing extremely sensitive information.

What This Means for the Future of AI and Personalization

The caricature trend is a microcosm of where AI is heading. We're moving toward systems that don't just process information but genuinely understand context, nuance, and personality.

In the next few years, you can expect:

More sophisticated personality-based AI interactions. Systems that adapt their communication style and recommendations based on understanding who you are, not just what you're currently asking for.

Increased use of personal data for customization. Brands and services will get better at personalizing experiences, but this will require more personal information. The ethical debates about this will intensify.

More advanced deepfakes and synthetic media. If AI can create accurate caricatures of your personality, it can create other synthetic representations. Video, audio, and text deepfakes will get harder to distinguish from real content.

Potential for misuse and manipulation. As AI gets better at understanding personality, it becomes a more powerful tool for targeted persuasion, social engineering, and psychological manipulation.

New privacy regulations and frameworks. Governments are already working on AI regulation. Personality data will likely become a protected category similar to biometric data under GDPR and similar frameworks.

The caricature trend is fun and harmless on its surface. But it's also a demonstration of a capability that has serious implications for privacy, autonomy, and how we interact with technology.

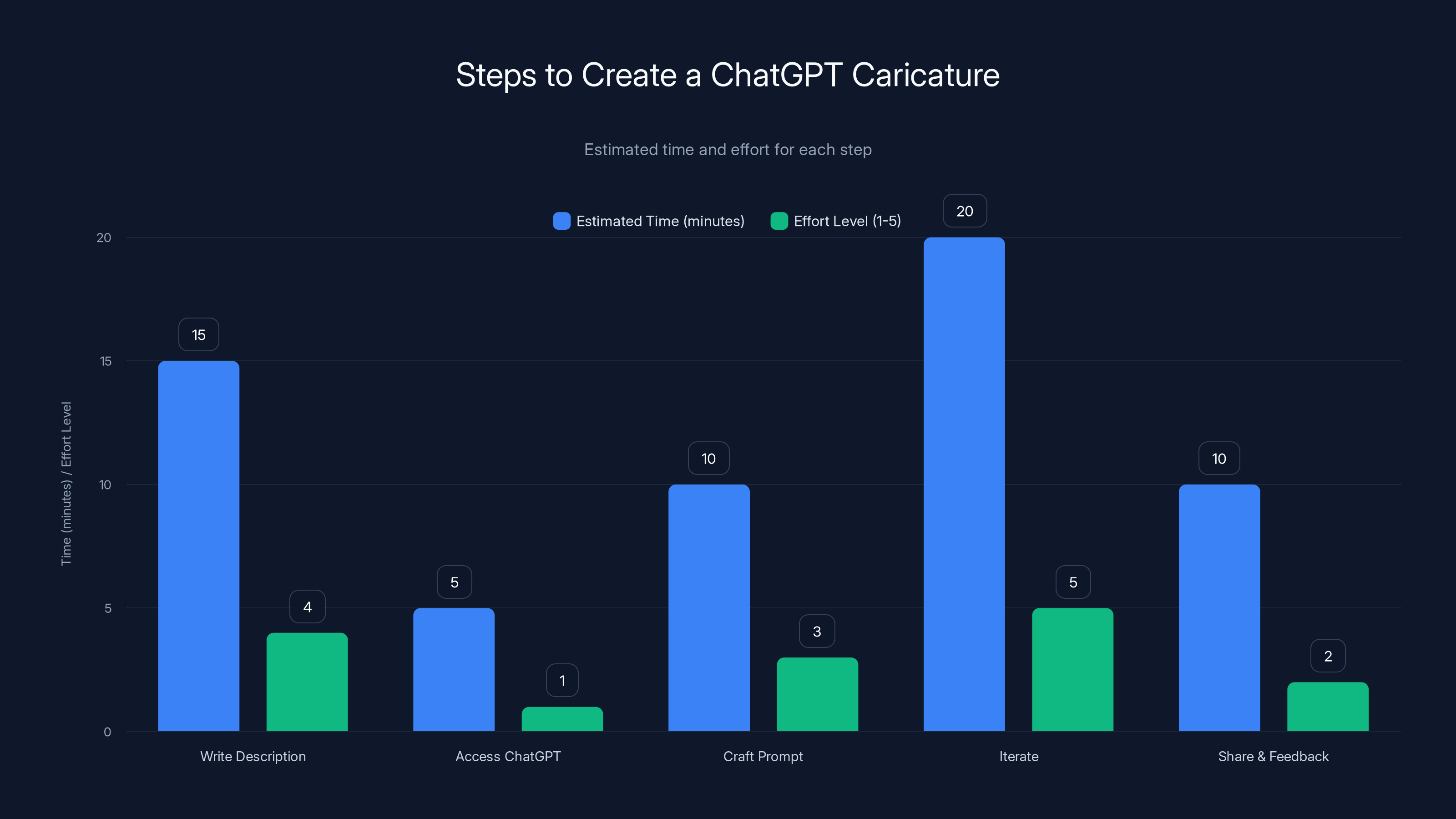

How to Create Your Own Chat GPT Caricature (Step-by-Step)

If you want to try this yourself, here's exactly how to do it.

Step 1: Write Your Description

Start with appearance. What do people notice about you visually? Hair color, style, typical expression, clothing choices, distinguishing features. Be specific. "Tired eyes with dark circles" is better than "brown eyes."

Then move to personality. What are your dominant traits? Are you anxious, confident, playful, serious? How do you come across to people who don't know you?

Finally, add behavioral details. What are your habits? What do you do constantly? What's your typical day like? What are you known for?

Step 2: Access Chat GPT

Go to Chat GPT and log in. You need to be using a version with image generation capability (GPT-4 or later).

Step 3: Craft Your Prompt

Here's a template that works:

"Create a caricature of a person based on this description: [Your description]. Make it exaggerated but recognizable, capturing their personality through visual elements. Include their typical expression, style choices, and distinctive features. Make it funny but not mean-spirited."

Step 4: Iterate

If the first result doesn't feel right, you can ask Chat GPT to adjust. "Make the expression more anxious." "Add more visual emphasis to the clothing style." "Make it look more professional but still funny."

Step 5: Share and Get Feedback

Show it to friends and family. See if they think it's accurate. This part is honestly the most fun because people's reactions tell you whether the AI actually nailed it or if you're just experiencing confirmation bias.

Creating a ChatGPT caricature involves several steps, with iteration being the most time-consuming and effort-intensive. Estimated data.

Common Mistakes People Make When Creating Caricatures

Not all caricatures turn out great. Here's what makes some fall flat.

Being Too Generic: "I'm an introvert who likes coffee" is too broad. Millions of people fit this description. The AI needs specificity to create something unique to you.

Not Balancing Visual and Personality Details: If you only describe appearance, you get a pretty drawing. If you only describe personality, you get something that doesn't match how you look. You need both.

Overly Humble or Self-Deprecating Descriptions: If your entire description is negative ("I'm anxious, weak, pathetic"), the AI will create a sad or unappealing image. Balance criticism with genuine self-perception.

Including Too Much Information: Long descriptions (over 300 words) start to dilute the impact. Focus on your most distinctive elements.

Not Using the Feedback Loop: If the first attempt isn't good, most people just give up. But asking the AI to adjust specific elements usually improves results dramatically.

Treating It Like a Photo Generator: Chat GPT caricatures aren't meant to look photorealistic. The style is exaggerated and cartoony. Expecting photorealism will lead to disappointment.

The Ethical Implications of AI Understanding Personality

Beyond the fun and the potential privacy concerns, there are some deeper ethical questions worth considering.

When AI systems can understand personality this well, who should be allowed to use that capability? Right now, it's available to anyone with a Chat GPT account. But imagine if employers had access to this technology. They could theoretically create personality caricatures of job candidates and use them in hiring decisions. That's ethically problematic for multiple reasons.

There's also the question of consent and data ownership. You've given Chat GPT detailed information about your personality. Do you retain ownership of that information? Can Open AI use patterns from your description to train future models without your explicit permission?

Then there's the philosophical question: what does it mean for an AI to "know" you? The caricature feels accurate because we tend to project meaning onto images and see ourselves in them. But the AI isn't actually knowing you—it's pattern-matching against examples in its training data. Is that understanding, or just sophisticated imitation?

There's also fairness and bias to consider. If AI learns to understand personality from training data that overrepresents certain groups (which most training data does), it might generate caricatures that are less accurate or more stereotyped for underrepresented groups.

And finally, there's the question of manipulation. As AI gets better at understanding personality, the gap between using this technology for entertainment and using it for persuasion/manipulation gets narrower.

Comparing Chat GPT Caricatures to Professional AI Tools for Personality Analysis

While the caricature trend is fun, there are actually serious tools that do similar personality analysis at a more sophisticated level.

IBM Watson Personality Insights (retired in 2021) used AI to analyze text and predict the Big Five personality traits. It was sophisticated but required substantial data and was prone to errors.

Hire Vue's AI Interviewing Tool attempts to assess personality and competence based on video interviews. It's been controversial for potential bias and has faced significant criticism.

Palantir's Analysis Tools (used by governments and large corporations) can analyze vast amounts of data about people to create detailed psychological and behavioral profiles. This is serious-level stuff with serious implications.

Academic Research Tools like those used in psychology studies often employ AI to analyze personality based on social media data, writing patterns, or behavioral data. These are designed for research purposes but reveal how sophisticated personality prediction can become.

The Chat GPT caricature is actually more honest than many of these tools because it's based on your self-description rather than attempting to infer your personality from behavior you're not aware is being analyzed.

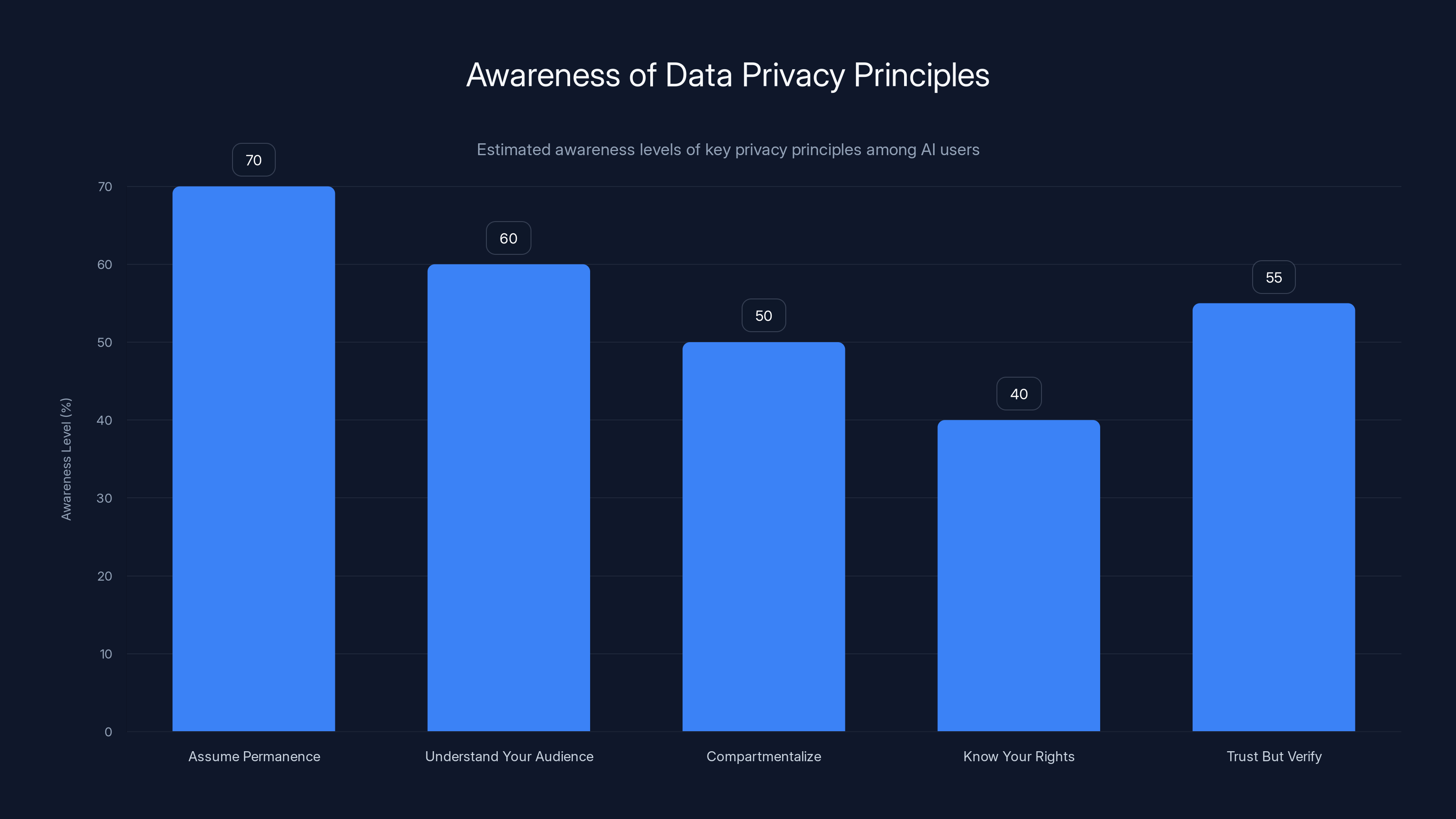

Estimated data suggests varying awareness levels of key privacy principles, with 'Assume Permanence' being the most recognized among users.

Why This Trend Matters Beyond the Meme

At its core, the Chat GPT caricature trend matters because it demonstrates something important about where AI technology has arrived. We've moved past the era where AI is just a tool for specific tasks. We're now in an era where AI can engage in creative synthesis, understanding personality and context in ways that feel meaningfully human.

This is genuinely significant from a technological standpoint. It means AI has developed capabilities in:

- Semantic understanding: Knowing what things mean, not just what they are

- Contextual reasoning: Understanding how pieces of information relate to each other

- Creative synthesis: Combining information in novel ways

- Psychological inference: Understanding human psychology well enough to make educated guesses about unmentioned characteristics

The trend also matters because it's shifting how people think about AI. Instead of being something mysterious and threatening, it's becoming something interactive and creative. This is both good and bad. Good because it demystifies AI and encourages people to experiment with it. Bad because it might make people less cautious about the implications of sharing detailed personal information with AI systems.

From a cultural standpoint, the trend reflects our current moment: we're fascinated by AI, somewhat concerned about it, and looking for ways to engage with it on our own terms. The caricature trend lets us do that.

Tips for Protecting Yourself While Using Personality-Based AI

If you're going to interact with AI systems that understand personality, here are practical steps for protecting yourself.

Principle 1: Assume Permanence: Anything you share with an AI system could potentially exist forever. Write as if what you're saying will be publicly available in 10 years.

Principle 2: Understand Your Audience: When you use an AI system, you're sharing with that company's engineers, their security team, potentially law enforcement, and theoretically anyone who manages to hack the system. Write accordingly.

Principle 3: Compartmentalize: Don't use the same descriptions or consistent details across multiple platforms. If you describe yourself as "a 28-year-old marketing manager at Tech Corp" to Chat GPT, Facebook, Linked In, and Google, that data can be cross-referenced to build a complete profile.

Principle 4: Know Your Rights: Different jurisdictions have different laws about data privacy. In the EU, GDPR gives you significant rights. In the US, it's much more limited. Know what applies to you.

Principle 5: Trust But Verify: Just because an AI system seems to understand you well doesn't mean it actually does. It might just be matching patterns. Don't assume accuracy equals understanding.

The Future of AI Caricatures and Personality Representation

The Chat GPT caricature trend probably won't be around in its current form in five years. But the underlying capability is here to stay and will only get more sophisticated.

We're likely to see:

3D Avatar Generation: Instead of 2D caricatures, imagine AI generating full 3D avatars that capture your personality. These could be used in virtual meetings, the metaverse, or gaming.

Video Caricatures: AI-generated videos of caricaturized versions of you, potentially speaking with your voice but exaggerated personality traits.

Interactive Caricatures: Imagine a chatbot that embodies your caricature—it has your personality traits, your communication style, your sense of humor. You could have conversations with an AI version of yourself.

Predictive Caricatures: AI that shows you how you might look and act at different ages or with different life circumstances. "Here's what you'd look like if you pursued a different career path."

Professional Applications: HR departments might use personality-based caricatures for team building. Therapists might use them for self-awareness exercises. Marketers might create personalized brand representatives.

The technology is moving toward increasingly sophisticated personality representation. The caricature trend is cute and fun, but it's also an early glimpse at how intimate AI's understanding of human psychology will become.

Debunking Common Myths About AI and Personality Understanding

There's a lot of confusion about what AI can and can't do. Let's clear up some misconceptions.

Myth 1: "AI reads your mind" Reality: AI analyzes patterns in what you explicitly tell it. It can make educated guesses about unmentioned traits, but it's not reading thoughts. It's doing sophisticated inference.

Myth 2: "If the caricature is accurate, the AI truly understands you" Reality: Accuracy might just mean the AI has learned patterns that match how humans typically express those traits. That's not the same as genuine understanding.

Myth 3: "The caricature reveals your 'true self'" Reality: It reveals how you perceive yourself and how you describe yourself. That's your self-presentation, not necessarily your objective reality.

Myth 4: "Open AI uses your caricature descriptions to train future models" Reality: Open AI has stated they don't use free-tier conversations for training. However, they do use them for safety research and improvement, and policies change.

Myth 5: "Only Chat GPT can do this" Reality: Any sophisticated language model paired with an image generator could do this. Claude, Gemini, and other AI systems have similar capabilities.

Myth 6: "This is definitely going to be used for bad purposes" Reality: Like any technology, it can be used well or poorly. The fact that something can be misused doesn't mean it will be or that we shouldn't engage with it.

Making This Technology Work for You

Instead of just being concerned or delighted by the caricature trend, here's how to actually use it constructively.

For Self-Discovery: Create multiple versions of your caricature with different emphasis. One focused on how you see yourself professionally. One focused on how you see yourself with close friends. One focused on your anxieties and insecurities. The differences reveal interesting things about how you compartmentalize your identity.

For Communication: If you struggle to describe yourself in interviews or dates or introductions, your caricature description becomes a template. It forces you to articulate what makes you distinctive.

For Creative Projects: Writers use these to develop characters. Designers use them to understand personality-to-visual translation. Anyone doing creative work can use AI caricatures as inspiration.

For Relationship Understanding: Create caricatures of people you're close to and see if your AI-generated representation matches how they actually are. This can reveal gaps between how you perceive people and how they perceive themselves.

For Therapy and Reflection: Some therapists are starting to use AI-generated personality representations as tools for patients to reflect on self-perception and identity. It's not a replacement for therapy but a useful tool.

The Bottom Line: Should You Try This?

Yes. Probably. Here's why.

The privacy concerns are real but manageable. You can create a caricature without sharing identifying information. You can limit what you reveal. You have control over what you put in.

The experience is actually valuable. Articulating how you see yourself is harder than you think. The process of describing yourself in detail for the AI to work with forces clarity. And then seeing how the AI interprets that description creates an interesting mirror for self-reflection.

The technology is fascinating. Whether you're interested in AI, psychology, art, or just fun internet trends, this is a meaningful example of what modern AI can do.

The risk of missing out is real. This is a moment where a new capability is democratized and available to anyone. In a few years, this might be integrated into hiring, dating, or other contexts where it's less optional and more loaded with implications.

So try it. Have fun with it. But understand what you're doing. You're not just creating a funny image. You're teaching an AI system about human psychology at scale, and you're giving that system detailed personal information. As long as you go in with eyes open, the benefits probably outweigh the risks.

FAQ

What is the Chat GPT caricature trend exactly?

The Chat GPT caricature trend involves writing detailed descriptions of yourself—including your appearance, personality, habits, and quirks—and asking Chat GPT to generate a visual caricature based on that description. The AI uses image generation capabilities (DALL-E) to create exaggerated but recognizable drawings that capture your essence as described. The results have gone viral on social media because they often feel uncannily accurate and funny.

How does Chat GPT create these caricatures?

Chat GPT processes your self-description using language models to understand semantic meaning and personality patterns. It then translates that understanding into visual language that DALL-E, Open AI's image generation model, can work with. The image generator creates the visual caricature by starting with random noise and iteratively refining it to match the personality-based description. The accuracy comes from pattern recognition learned during training on billions of examples of human communication and artistic style.

Is my personal data safe when I use Chat GPT for this?

Open AI states that they don't use free-tier conversations for model training. However, they do retain conversations for up to 30 days for safety and moderation purposes. Your data is encrypted in transit and at rest, but it is stored on their servers. You can manually delete conversations or adjust your privacy settings. The real concern isn't Open AI misusing your data, but rather that detailed personality data could be valuable if the system is breached, or used to train future AI models. You can minimize risk by omitting identifying details like your employer or location.

Why do these caricatures feel so accurate?

They feel accurate due to a combination of factors: AI pattern recognition that associates personality traits with visual characteristics, your own cognitive bias (tendency to see yourself in ambiguous representations), and the genuine sophistication of modern language models in understanding personality psychology. Professional caricaturists have understood for centuries that you don't need photorealism to capture someone accurately—you need to exaggerate the distinctive elements. Chat GPT has learned to do exactly this by analyzing thousands of caricatures and personality descriptions during its training.

Could this technology be misused?

Yes, potentially. As AI gets better at understanding personality, it could be used for social engineering, psychological manipulation, targeted persuasion, or creating convincing deepfakes. Employers might use personality prediction to discriminate in hiring. Governments might use it for surveillance. However, the current version of the caricature trend is relatively low-risk because it's based on voluntary self-description rather than behavioral analysis. Misuse requires deliberate action by bad actors, not just the technology existing.

What should I include in my caricature description for the best results?

Include three dimensions for best results: physical appearance details (hair, typical expression, style choices), behavioral habits (what you do constantly, distinctive patterns), and personality traits (how you come across to others). Be specific rather than generic. "I have dark circles and drink coffee constantly" is better than "I like coffee." The more distinctive and honest details you include, the more accurate and personalized the result will be. Aim for 150-250 words of detailed description.

Will Chat GPT remember this caricature description in future conversations?

No. Each conversation with Chat GPT is technically separate, though the AI has context within a single conversation thread. However, the conversation itself is stored on Open AI's servers for 30 days. If you're concerned about this, you can use the same description across multiple sessions and then delete the conversations afterward. Alternatively, create a new conversation for your caricature so it's isolated from your other Chat GPT usage.

Are there privacy-focused alternatives to Chat GPT for this?

Claude by Anthropic has similar capabilities and different data policies. Runable offers AI-powered document and presentation creation starting at $9/month with strong privacy controls. Open-source models like Llama can be run locally for maximum privacy but require more technical setup. Each option has trade-offs between privacy, ease of use, and capability quality.

Could this trend become mainstream beyond social media?

Likely yes. Therapists might use personality caricatures for self-awareness exercises. Companies might use them for team building. Dating apps might integrate personality-based caricature generation. However, there will probably be backlash and regulation around using personality analysis for hiring, advertising, or other high-stakes decisions. The frivolous version (making funny caricatures) will probably always exist, but the serious versions will face scrutiny.

What does this tell us about AI's future capabilities?

The caricature trend demonstrates that AI has achieved meaningful understanding of personality, context, and creative synthesis. This suggests future AI will be increasingly personalized, increasingly capable of understanding psychological nuance, and increasingly capable of creating content tailored to individual users. It also suggests that privacy concerns about AI systems will become more pressing as the technology becomes more capable of understanding us deeply.

Key Takeaways

The Chat GPT caricature trend is far more than a passing internet joke. It's a meaningful demonstration of how sophisticated modern AI has become in understanding human psychology and personality. It reveals both the impressive capabilities of current AI systems and the legitimate privacy and ethical concerns that come with that capability.

If you decide to participate in the trend, you're contributing to a massive dataset that helps train future AI systems. You're also giving yourself a chance to reflect on how you see yourself and how you come across to others. The experience of describing yourself in detail is valuable regardless of how accurate the final caricature turns out to be.

The broader implication is that we're entering an era where AI can understand us in ways that feel uncomfortably personal and intimate. This isn't necessarily bad. But it requires awareness, intentionality about what we share, and ongoing conversations about how these capabilities should be governed and used ethically.

The trend will evolve. The underlying technology will get more sophisticated. But the fundamental question it raises—how well should AI be allowed to understand us, and what should we do with that understanding—will remain relevant for years to come.

Try the caricature. Have fun with it. But understand what you're doing and what it means.

Related Articles

- OpenAI GPT-5.3 Codex vs Anthropic: Agentic Coding Models [2025]

- Substack Data Breach: What Happened & How to Protect Yourself [2025]

- Why Loyalty Is Dead in Silicon Valley's AI Wars [2025]

- ICE Out of Our Faces Act: Facial Recognition Ban Explained [2025]

- Claude Opus 4.6: Anthropic's Bid to Dominate Enterprise AI Beyond Code [2025]

- Are VPNs Legal? Complete Global Guide [2025]

![ChatGPT Caricature Trend: How Well Does AI Really Know You? [2025]](https://tryrunable.com/blog/chatgpt-caricature-trend-how-well-does-ai-really-know-you-20/image-1-1770381436555.png)