Why Enterprise AI Is Failing (And How to Fix It)

Last quarter, a Fortune 500 financial services company spent $8 million on AI implementation. Their new AI system could summarize earnings reports, draft meeting notes, and answer routine questions. Pretty impressive on the surface.

Then they asked it to forecast quarterly revenue.

The AI produced a confident prediction. It looked reasonable. Senior leadership almost used it to set budget targets. But when the CFO dug deeper, she found something alarming: the model had mixed up customer acquisition costs with retention metrics, confused one division's reporting with another's, and made assumptions about market seasonality that contradicted five years of actual company history.

The AI hadn't failed because it wasn't smart enough. It failed because it didn't understand how the business actually worked.

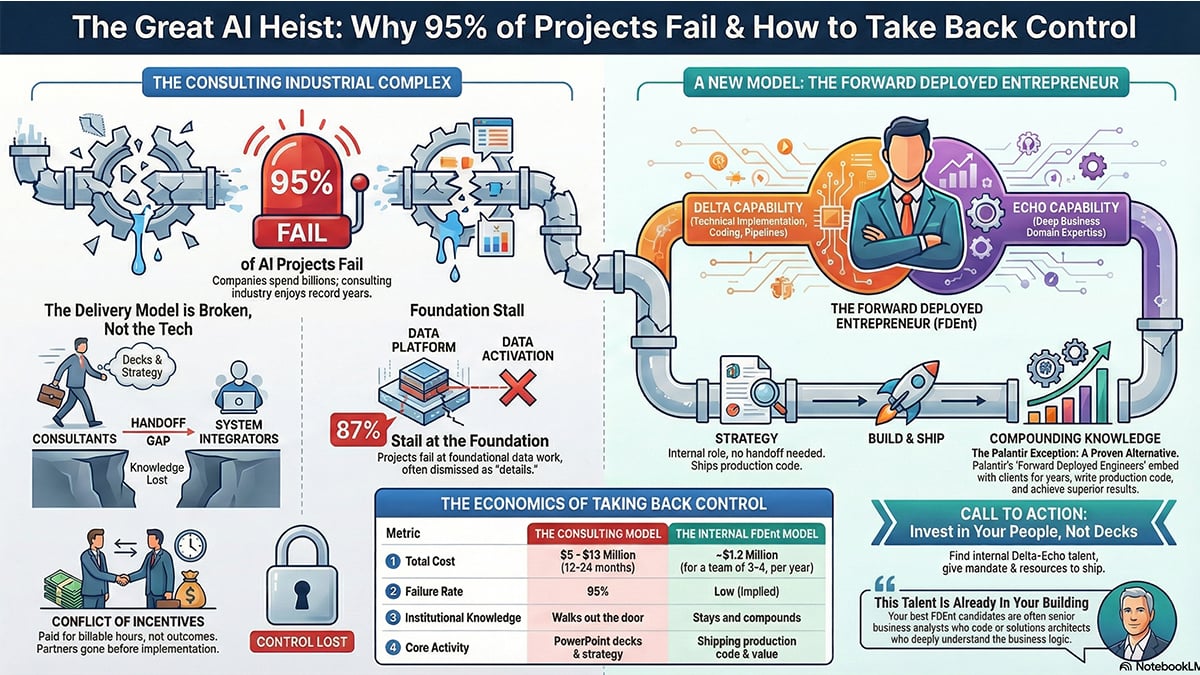

This is the hidden crisis in enterprise AI. Companies are drowning in tools, platforms, and models. What they're starving for is institutional context: the interconnected relationships between customers, systems, processes, metrics, and historical decisions that determine how a business actually functions.

Here's the brutal truth that most CIOs are learning the hard way: You can have the most advanced AI model in the world, but if it doesn't understand your business semantics, governance, and operational reality, it's just an articulate liar.

TL;DR

- Single-player AI is maxed out: Today's AI excels at individual tasks (drafting emails, summarizing docs) but crumbles when asked to make institutional decisions

- Institutional context is the missing piece: Most enterprises lack formal definitions of metrics, clear data ownership, and documented business logic that AI needs to reason reliably

- Analytics reveals the cracks first: Revenue forecasting, churn analysis, and cost variance explanation expose how fragmented most data ecosystems are

- Context-aware AI agents unlock real ROI: AI that can identify root causes, diagnose cross-functional issues, and recommend actions grounded in business reality drives measurable value

- The foundation matters more than the model: Success requires treating business semantics, governance, and operational context as first-class infrastructure, not an afterthought

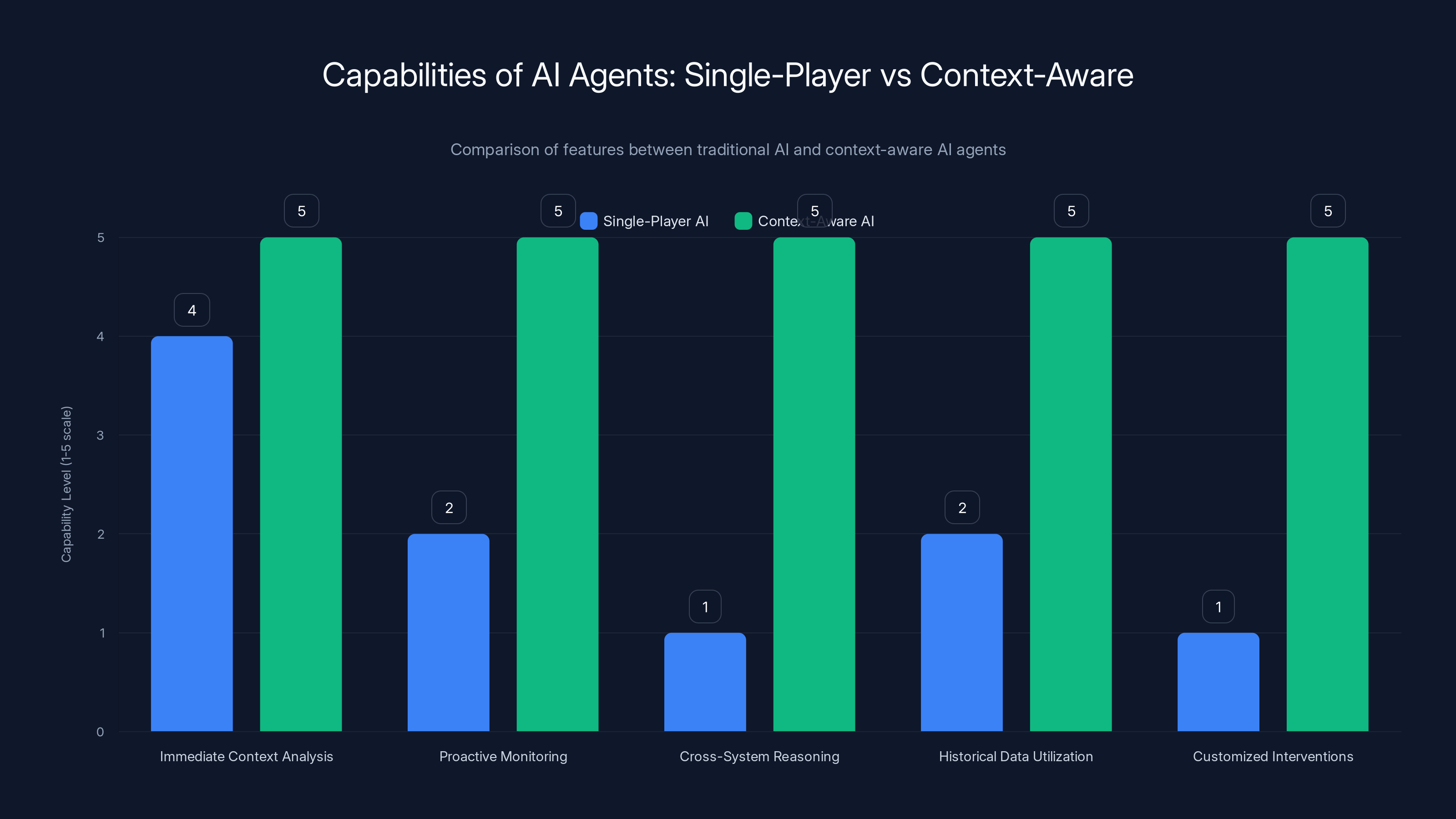

Context-aware AI agents significantly outperform single-player AI in proactive monitoring, cross-system reasoning, and utilizing historical data for customized interventions. Estimated data based on typical AI capabilities.

The Fundamental Problem: Single-Player AI in a Multi-System Enterprise

When you ask ChatGPT to write a professional email, it works. When you ask it to summarize a document, it works. When you ask it to explain a coding concept, it works.

Why? Because these tasks are self-contained. They don't require understanding how your organization operates. The AI has all the information it needs right in front of it.

But enterprise decisions don't work that way.

Consider a simple business question: "Why did customer churn increase 12% last month?"

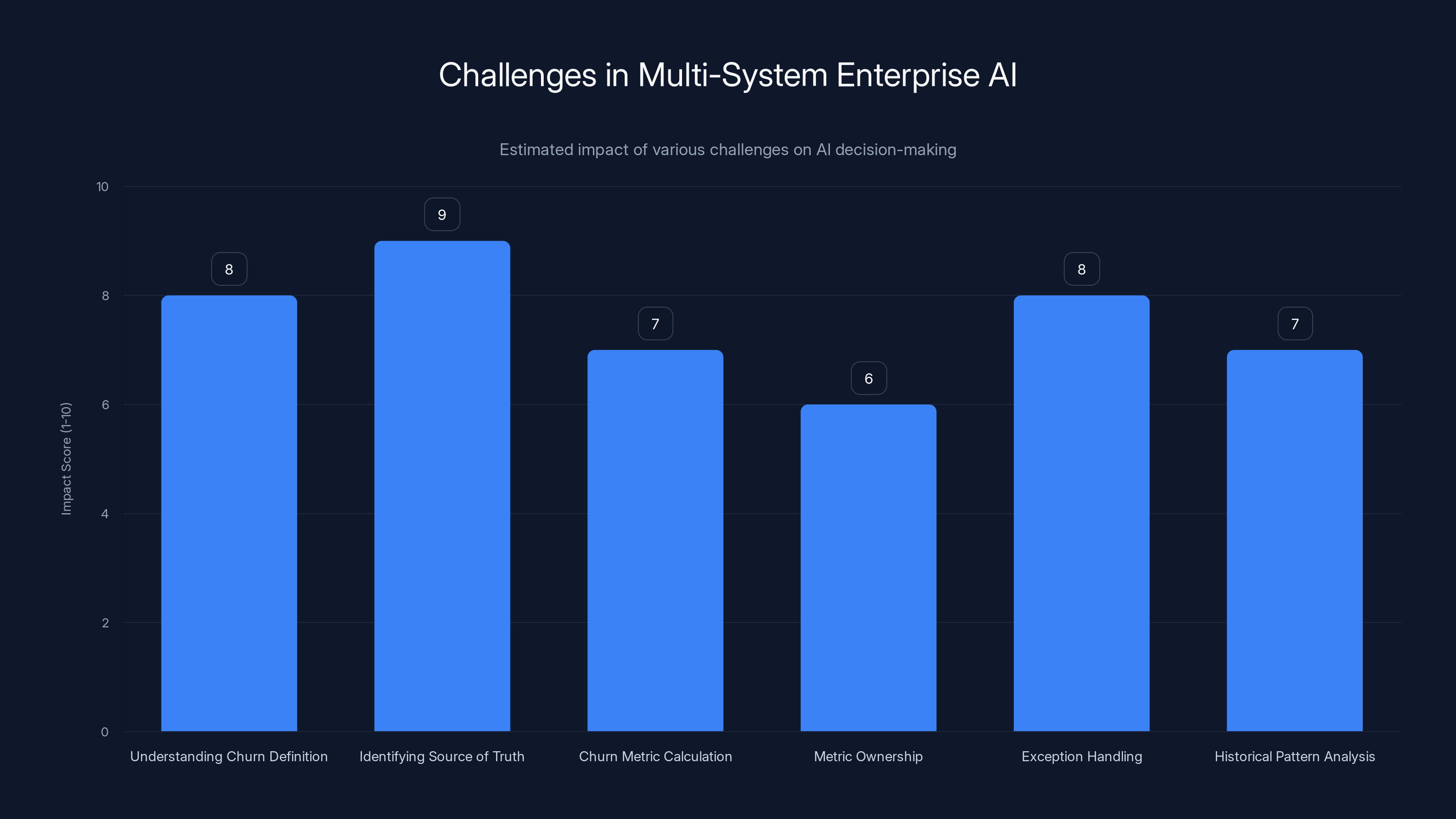

To answer this reliably, an AI system needs to understand:

- What "churn" actually means in your business (is it subscription cancellation? Inactive accounts after 90 days? Failed payment after two retries?)

- Which system holds the source of truth (is it your billing platform, CRM, or data warehouse, and which version is current?)

- Where churn metrics originate and how they're calculated (is it raw counts, percentages, or cohort-adjusted figures?)

- Who owns this metric and can validate the analysis (is it the VP of Customer Success, or someone else?)

- What exceptions exist (did we change our definition last month? Launch a new product line? Run a retention campaign?)

- What historical patterns matter (is a 12% increase normal quarterly volatility, or a genuine anomaly?)

Most enterprises can't answer half of these questions with confidence. Metrics live in multiple systems with conflicting definitions. Data ownership is implicit rather than explicit. Historical context is tribal knowledge held by people, not documented anywhere.
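To make the definition problem concrete, here is a minimal sketch of the three churn definitions mentioned above applied to the same accounts. The `Account` fields, the thresholds, and the sample data are all hypothetical, chosen only to illustrate the point.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Account:
    account_id: str
    cancelled_on: Optional[date]   # explicit subscription cancellation, if any
    last_active_on: date           # most recent product usage
    failed_payment_retries: int    # consecutive failed billing retries


def churned_by_cancellation(a: Account, as_of: date) -> bool:
    return a.cancelled_on is not None and a.cancelled_on <= as_of


def churned_by_inactivity(a: Account, as_of: date, days: int = 90) -> bool:
    return (as_of - a.last_active_on).days > days


def churned_by_failed_payment(a: Account, retries: int = 2) -> bool:
    return a.failed_payment_retries >= retries


# Made-up accounts, purely illustrative.
AS_OF = date(2024, 6, 30)
ACCOUNTS = [
    Account("A1", date(2024, 6, 10), date(2024, 3, 1), 0),
    Account("A2", None, date(2024, 2, 1), 0),
    Account("A3", None, date(2024, 6, 25), 2),
    Account("A4", None, date(2024, 6, 28), 0),
]

# Same data, three definitions, three different answers.
churn_counts = {
    "cancellation": sum(churned_by_cancellation(a, AS_OF) for a in ACCOUNTS),
    "inactivity_90d": sum(churned_by_inactivity(a, AS_OF) for a in ACCOUNTS),
    "failed_payment": sum(churned_by_failed_payment(a) for a in ACCOUNTS),
}
```

On these four accounts the definitions flag one, two, and one account respectively, and they disagree about which accounts those are. An AI system that is never told which definition applies has to guess.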

So when you feed this ambiguity to an AI system, it doesn't fail loudly. It produces an answer that sounds plausible. It connects dots in ways that seem logical. It tells a narrative.

But that narrative isn't grounded in how your business actually works. It's a confabulation: an intelligent-sounding story that fills information gaps with reasonable-sounding fictions.

And here's the dangerous part: unless you already know the answer, it's hard to tell the difference.

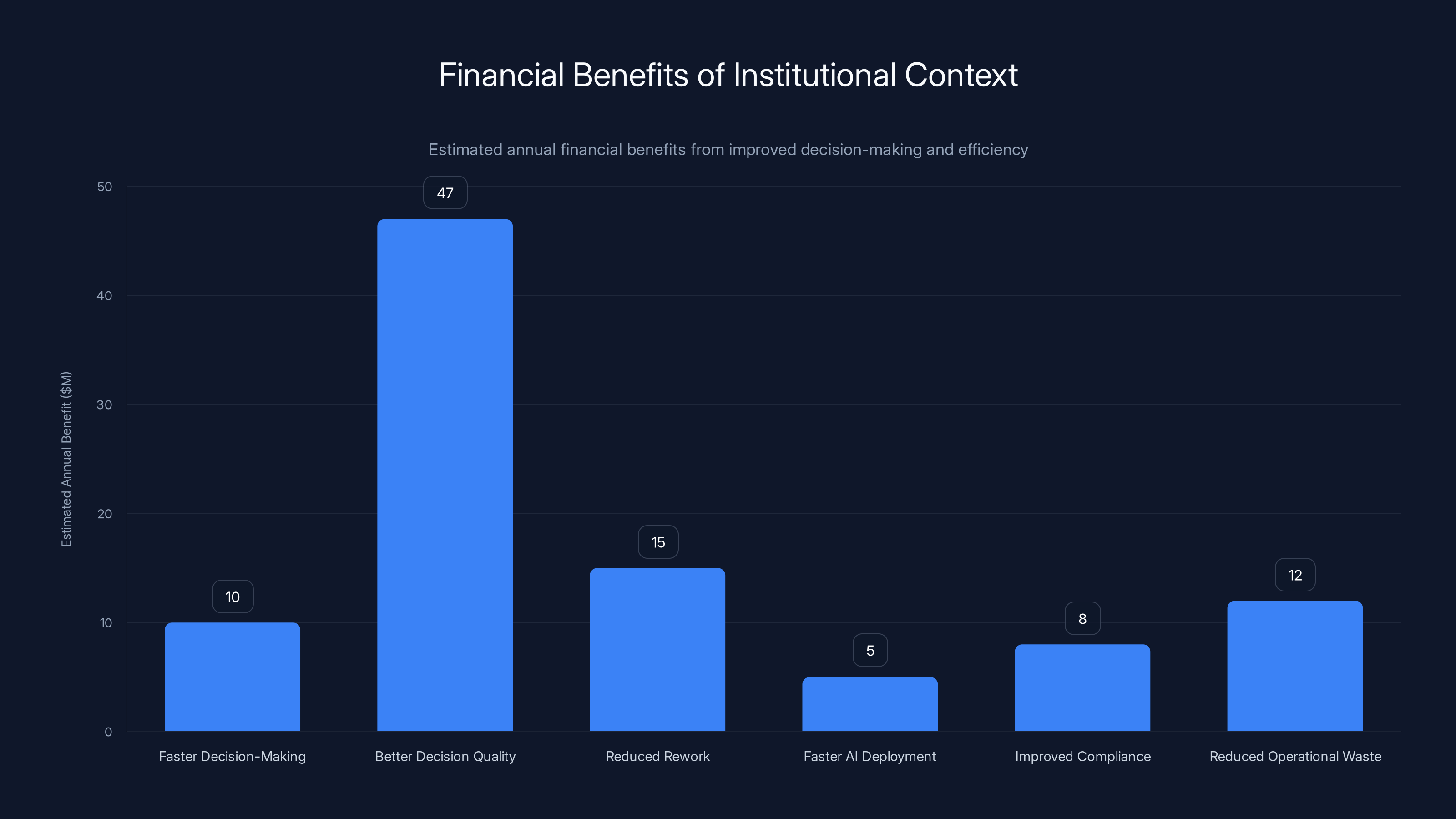

Investing in institutional context can yield significant financial benefits, with better decision quality alone potentially saving $47 million annually. Estimated data.

Why Today's AI Tools Miss the Mark

Vendors will tell you their latest AI platform is "enterprise-grade." What they mean is it can integrate with your existing systems and scale to thousands of users.

What they don't mean—because they can't deliver it—is that it understands your business.

Look at the AI tools flooding the market:

Analytics copilots: They can generate SQL queries and create visualizations, but they can't reason about why a metric matters or what decisions it should influence.

Document summarization tools: They compress information efficiently, but they don't distinguish between a minor observation and a critical business signal.

Process automation platforms: They execute workflows faster, but they don't question whether the workflow itself reflects current business reality.

Forecasting models: They find statistical patterns in historical data, but they can't reason about the business logic that should constrain predictions.

All of these tools operate in what we might call isolation mode. They process the data immediately available to them, without understanding how it connects to the broader business context.

This works fine for narrow, transactional tasks. It falls apart for strategic decisions.

Why? Because enterprise value doesn't come from faster email drafting or better summaries. It comes from proactive intelligence: AI systems that identify problems before they become crises, explain root causes instead of surface symptoms, and recommend actions grounded in business reality.

That requires institutional context. And most vendors aren't building for it—they're building for the 80% of use cases where context doesn't matter yet.

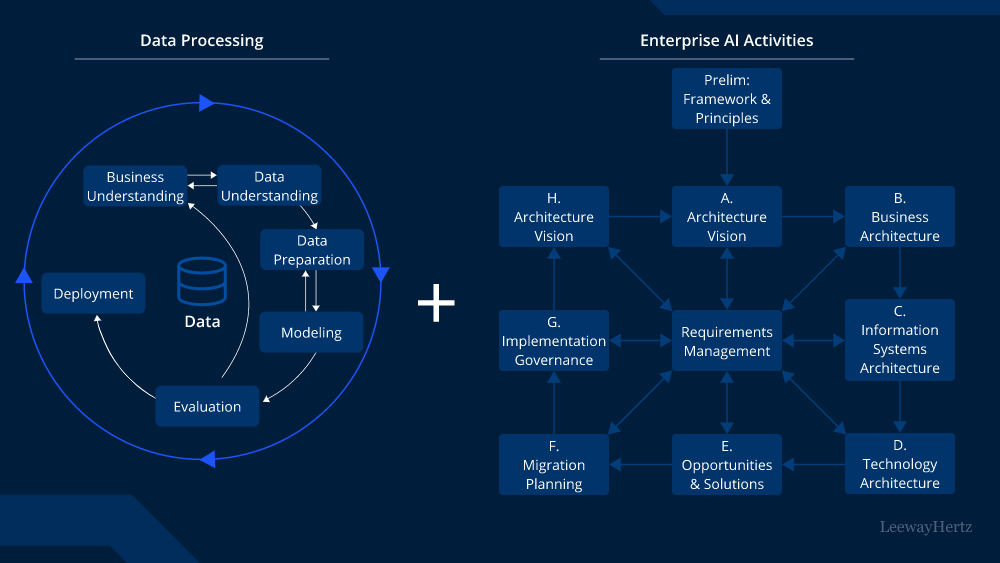

The Three Layers of Context Every Enterprise Needs

Building institutional AI requires three interconnected layers of context. Most enterprises have built at most one of them.

Layer 1: Semantic Context (What Things Actually Mean)

A metric isn't just a number. It's a definition with boundaries, assumptions, and implications.

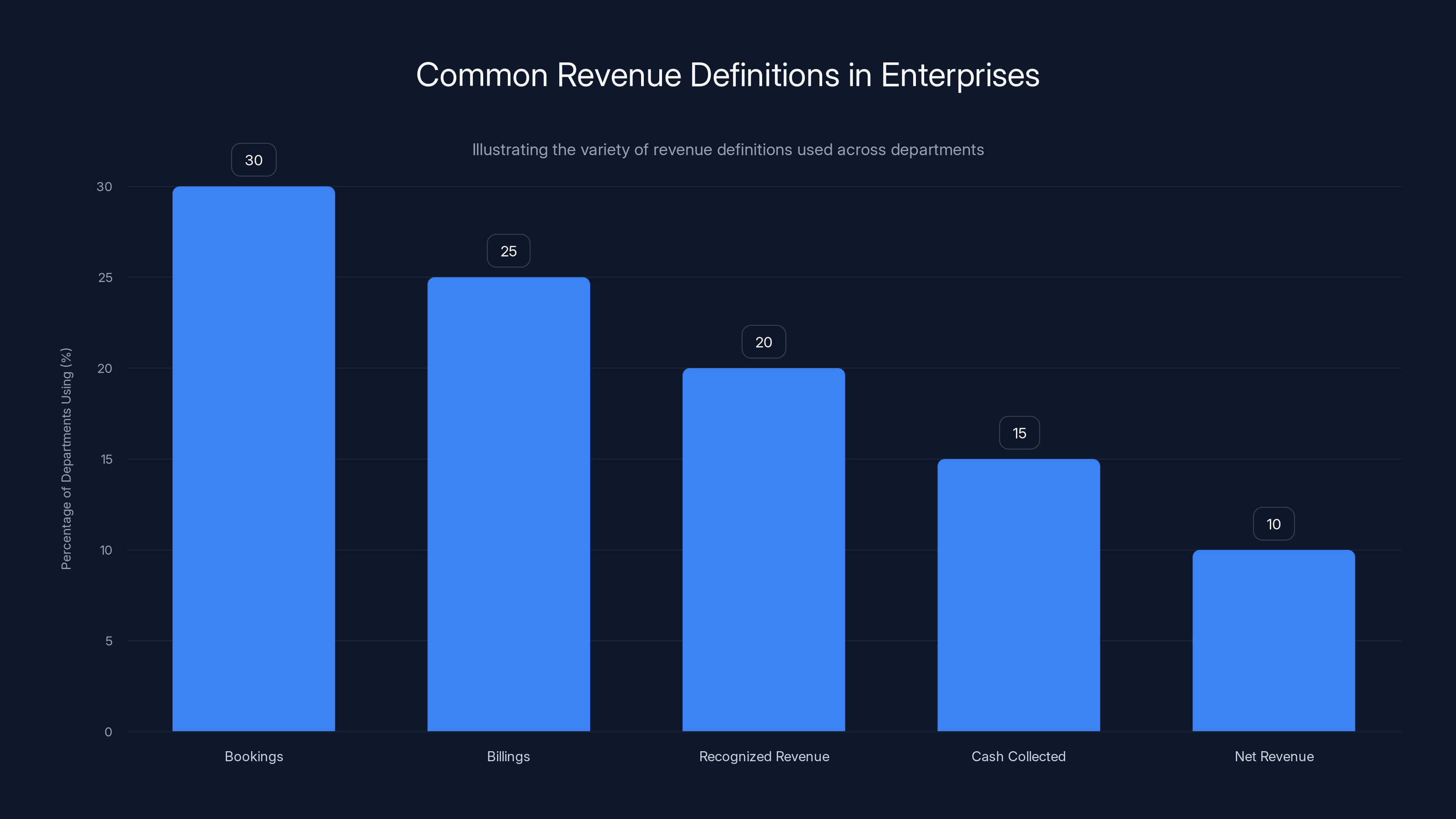

Consider "revenue." What counts as revenue in your business?

- Bookings when a contract is signed?

- Billings when an invoice is sent?

- Recognized revenue according to ASC 606 accounting standards?

- Cash collected when payment clears?

- Net revenue after refunds and credits?

Different departments might use different definitions. Finance probably uses one definition, sales uses another, and the board might care about a third.

Now imagine you ask an AI to forecast revenue for next quarter. Without knowing which definition it should use, the forecast is meaningless. The AI will make an assumption (often the simplest one), and that assumption will be wrong.

This happens thousands of times across enterprise data ecosystems. Metrics that sound like they mean the same thing actually don't. Data elements that appear in multiple systems carry different meanings. Business rules that should constrain calculations are undocumented.

Building semantic context means formalizing what things actually mean in your business:

- Metric definitions: What counts, how it's calculated, what assumptions underlie it

- Data lineage: Where numbers come from, what transformations they undergo, what systems of record exist

- Business entities: How customers, products, transactions, and other core concepts are defined

- Relationships: How entities connect (which customers own which contracts? Which transactions belong to which campaigns?)

This sounds boring and administrative. It's actually the foundation everything else rests on.

Layer 2: Governance Context (Who Decides What)

Business decisions aren't made by equations. They're made by people operating within rules, constraints, and approval hierarchies.

Consider a pricing decision. An AI system might recommend raising prices on a certain product based on demand elasticity and margin optimization. The recommendation might be mathematically sound.

But it doesn't account for:

- Who has authority to make that decision (is it the product manager, pricing manager, VP of Sales, or CEO?)

- What approval process applies (does it require board approval? Financial review? Customer impact assessment?)

- What constraints exist (are there contractual commitments? Competitive commitments? Market dynamics?)

- What trade-offs matter (is revenue optimization more important than market share? Customer satisfaction? Predictability?)

- What happened last time (was a similar decision made? What were the consequences?)

Governance context defines who makes decisions, what rules apply, and how AI recommendations should integrate into those decision-making processes.

This includes:

- Access control: Who can see what data, and under what circumstances?

- Approval workflows: What steps must AI recommendations pass through before implementation?

- Decision frameworks: What factors should influence decisions, and how should they be weighted?

- Exception handling: How are edge cases and special circumstances managed?

- Audit trails: How do we track what was decided, why, and what the outcomes were?

Without governance context, AI systems make recommendations that bypass legitimate decision-making processes, create liability exposure, or violate regulatory requirements.
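One way to picture governance context is as explicit routing rules that sit between an AI recommendation and its implementation. The sketch below is an assumption-laden illustration: the role names, the 5% and 15% thresholds, and the `PriceChange` shape are invented for the example, not taken from any real policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceChange:
    product: str
    percent_change: float               # e.g. 0.08 for an 8% increase
    affects_contracted_customers: bool  # existing contractual commitments?


def required_approvers(change: PriceChange) -> list[str]:
    """Return the approvals a recommendation needs before anyone acts on it."""
    approvers = ["pricing_manager"]          # every change gets a first review
    if abs(change.percent_change) > 0.05:    # hypothetical threshold
        approvers.append("vp_sales")
    if abs(change.percent_change) > 0.15:    # hypothetical threshold
        approvers.append("cfo")
    if change.affects_contracted_customers:
        approvers.append("legal_review")
    return approvers
```

With rules like these written down, an agent can attach the approval path to its recommendation instead of silently bypassing it.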

Layer 3: Operational Context (How Things Actually Work)

Every business operates with rules that don't appear in any formal documentation. They're learned over time, embedded in process design, and understood by experienced people.

These are operational exceptions and edge cases. The way the business actually works versus the way it's supposed to work on paper.

A customer acquisition campaign that was supposed to run for two months but became permanent. A supplier relationship that was formalized but operates under verbal agreements. A product category that was supposed to be discontinued but still has loyal customers. A pricing model that was updated in the system, while the old logic still applies to legacy accounts.

AI trained on current data alone will miss these patterns. It will apply general rules and produce recommendations that look good statistically but fail operationally.

Operational context captures:

- Historical decisions and their outcomes: What was decided, when, and what happened next?

- Exceptions and special cases: What situations require different logic? What customer segments need special handling?

- Informal constraints: What technical limitations, relationship dynamics, or market realities shape actual decisions?

- Seasonal and cyclical patterns: What variations are normal? What signals indicate true anomalies?

- Integration points: How do different systems actually talk to each other, despite what the documentation says?
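The question of which variations are normal can be sketched with a simple z-score check against a metric's own history. This is a deliberately simplified illustration: the history values and the threshold of 2.0 are assumptions, and a real check would also compare like-for-like seasons rather than pooling all months.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 2.0) -> bool:
    """Flag `latest` if it sits more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least two points
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical month-over-month churn changes, in percent.
CHURN_CHANGE_HISTORY = [2.0, -1.0, 3.0, -2.0, 1.0, -3.0, 4.0, 0.0]
```

Against this made-up history, a 3% move is within normal volatility while a 12% move is flagged. Without the history, both look like "churn went up."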

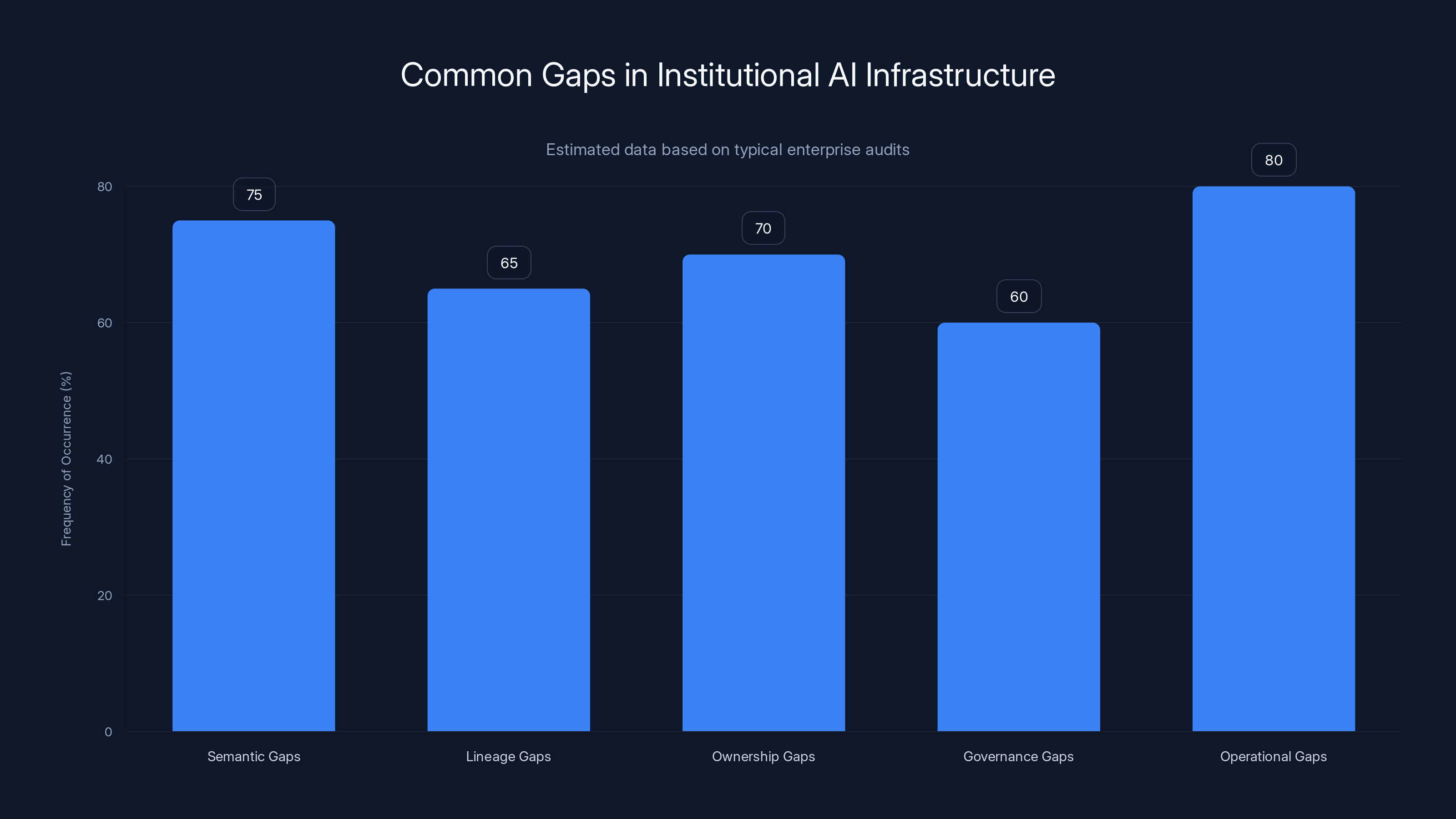

Estimated data shows that operational gaps are the most frequently occurring issue in building institutional AI infrastructure, followed closely by semantic and ownership gaps.

Where Enterprise AI Breaks Down: The Analytics Crisis

Want to see institutional context gaps? Look at enterprise analytics. This is where they become impossible to ignore.

The Fragmentation Problem

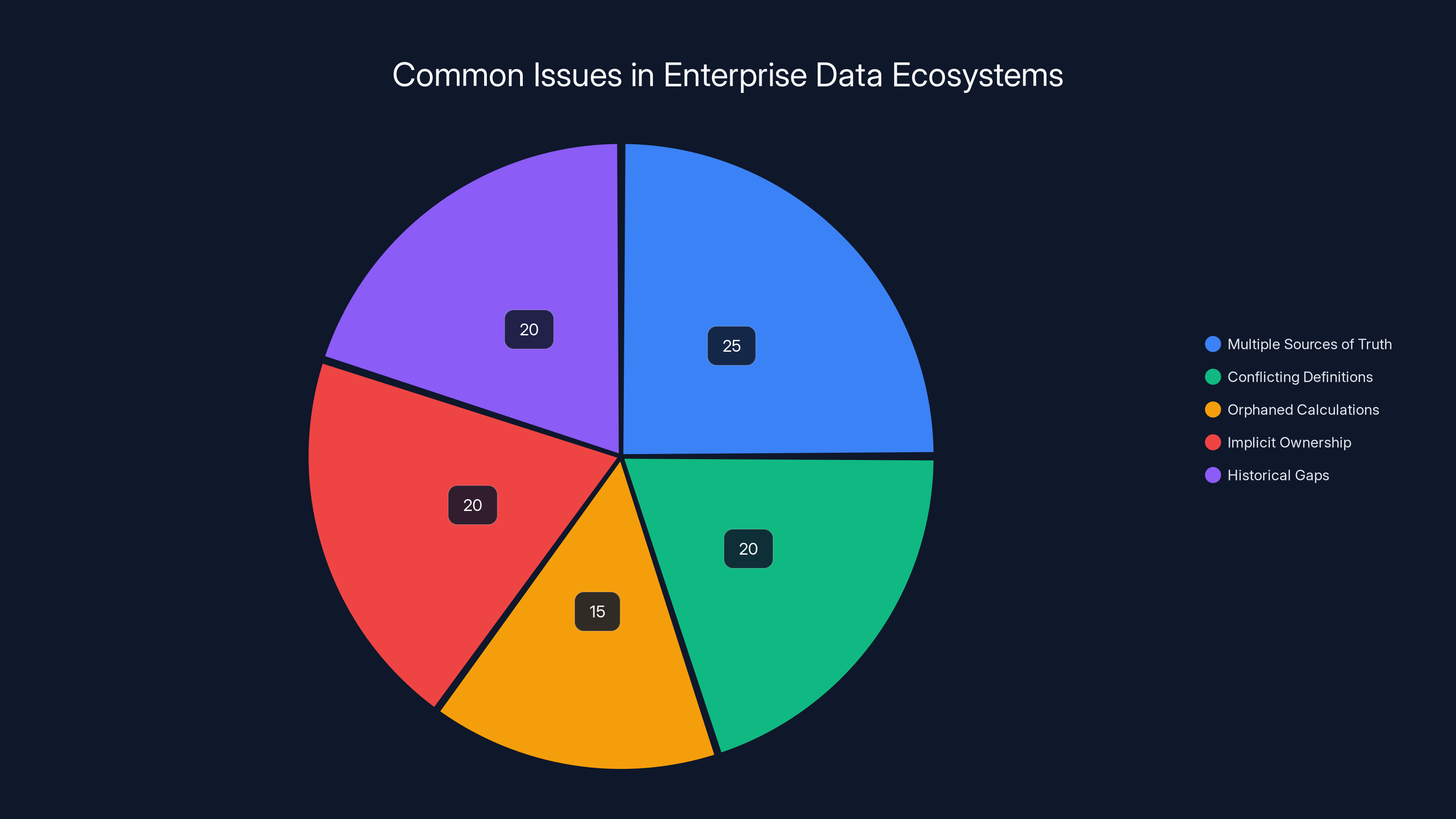

Most enterprises have evolved chaotic data ecosystems. You've got:

- Multiple sources of truth: The same metric calculated differently in your data warehouse, BI platform, and CRM

- Conflicting definitions: Marketing's definition of a "lead" differs from sales'. Finance's definition of "revenue" differs from operations'

- Orphaned calculations: Legacy metrics nobody fully understands, calculated in spreadsheets because the original system was decommissioned

- Implicit ownership: Everyone assumes someone else owns data quality, so nobody actually does

- Historical gaps: You changed how you calculate metrics three years ago, but you don't have clean historical comparison data

Now ask an AI to explain why a metric moved. It's working with:

- Data from multiple systems that sometimes conflict

- Definitions it's inferring from how the data is structured, not how it's formally defined

- No understanding of ownership or accountability

- No knowledge of changes in calculation methodology

- No context about business decisions that drove the change

The result is an analysis that sounds sophisticated but might be completely wrong. And you won't know it unless you already understand the underlying data architecture—at which point, you don't need the AI.
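A small reconciliation check shows how the "multiple sources of truth" problem can be surfaced before an AI ever sees the data. The system names, the figures, and the 1% tolerance below are hypothetical.

```python
def find_conflicts(values_by_metric: dict[str, dict[str, float]],
                   tolerance: float = 0.01) -> dict[str, dict[str, float]]:
    """Return metrics whose values differ across systems by more than `tolerance` (relative spread)."""
    conflicts = {}
    for metric, readings in values_by_metric.items():
        lo, hi = min(readings.values()), max(readings.values())
        largest = max(abs(lo), abs(hi))
        if largest == 0:
            continue  # every system reports zero, nothing to reconcile
        if (hi - lo) / largest > tolerance:
            conflicts[metric] = readings
    return conflicts


# Made-up readings of the same metrics from three systems.
READINGS = {
    "monthly_revenue": {"warehouse": 1_000_000, "bi_platform": 1_000_000, "crm": 1_080_000},
    "active_customers": {"warehouse": 5_000, "bi_platform": 5_000, "crm": 5_010},
}
```

Here `find_conflicts(READINGS)` would flag `monthly_revenue` but not `active_customers`. The check cannot say which system is right; that still takes a named owner and an agreed definition.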

Why This Matters More Than It Should

In a well-governed data environment, analytics AI could be incredibly powerful. It could identify root causes automatically. It could flag anomalies before they become problems. It could recommend actions with confidence.

But in a fragmented environment (which is most enterprises), analytics becomes the proving ground where AI's limitations are exposed. And it teaches the entire organization not to trust AI for important decisions.

Why? Because these aren't hard technical problems. They're hard organizational problems. They require:

- Consensus on what metrics mean

- Accountability for data quality

- Documentation of business logic

- Governance around who changes what

- Discipline in maintaining these standards over time

None of these things are easy. But they're prerequisites for AI that works at enterprise scale.

From Single-Player to Multi-Player: Context-Aware AI Agents

The next evolution of enterprise AI isn't about bigger models or faster inference. It's about AI that operates across systems and over time, reasoning with institutional knowledge to drive real operational decisions.

We can call these context-aware AI agents. They're different from today's copilots and chatbots in fundamental ways.

What Makes Agents Different

Today's copilots are reactive. You ask a question, they provide an answer based on immediate context. They're helpful for known problems.

Context-aware agents are proactive. They monitor operational signals over time. They reason across multiple systems and data sources. They identify problems before they become crises and explain causation, not just correlation.

Consider an agent designed to monitor customer health:

Single-player AI would respond to: "Which accounts are at risk of churn?" by analyzing recent usage patterns and maybe engagement scores.

A context-aware agent would:

- Understand what churn means in your business and which metric matters

- Monitor leading indicators that historically preceded churn (payment failures? Support ticket volume increases? Feature usage changes?)

- Know which customer segments have different risk profiles

- Understand which interventions have worked before for similar situations

- Flag accounts where patterns deviate from historical norms

- Prioritize them based on business impact (some customers matter more)

- Recommend specific actions based on what's worked before

- Learn from outcomes to improve future recommendations

That second approach delivers real business value. It prevents churn rather than merely identifying it.

But it only works if the agent has institutional context:

- Formal definitions of churn and health metrics

- Historical data about what preceded churn events

- Documentation of successful intervention strategies

- Understanding of customer value and business priority

- Clear ownership and accountability for follow-up
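As a rough sketch of how those pieces combine, the example below scores accounts on leading indicators and then ranks them by business impact. The indicators, point weights, and account data are all invented for illustration; in practice they would come from your own documented definitions and history.

```python
from dataclasses import dataclass


@dataclass
class AccountHealth:
    name: str
    annual_revenue: int
    payment_failures: int
    support_tickets_30d: int
    usage_change_pct: float  # negative means usage dropped


def risk_points(a: AccountHealth) -> int:
    """Score 0-10 from hypothetical leading indicators of churn."""
    points = 0
    if a.payment_failures > 0:
        points += 4
    if a.support_tickets_30d >= 5:
        points += 3
    if a.usage_change_pct <= -20:
        points += 3
    return points


def prioritize(accounts: list[AccountHealth]) -> list[AccountHealth]:
    """Rank at-risk accounts by risk weighted by revenue, highest impact first."""
    at_risk = [a for a in accounts if risk_points(a) > 0]
    return sorted(at_risk, key=lambda a: risk_points(a) * a.annual_revenue, reverse=True)


PORTFOLIO = [
    AccountHealth("Acme", 500_000, 0, 6, -25.0),
    AccountHealth("Beta", 50_000, 2, 8, -40.0),
    AccountHealth("Gamma", 1_000_000, 0, 1, 5.0),
    AccountHealth("Delta", 200_000, 1, 0, 0.0),
]
```

In this made-up portfolio, Beta has the highest raw risk score but ranks last among the at-risk accounts because it is the smallest. That ordering only makes sense if "customer value" has an agreed definition.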

Why Agents Need Institutional Context

An agent operating without institutional context might be impressive in isolation. It could identify statistical anomalies or predict future patterns.

But here's what it can't do:

- Explain root causes without understanding business logic (it might identify that a metric moved, but not why it matters)

- Recommend trustworthy actions without understanding governance (it might suggest something technically sensible but organizationally impossible)

- Learn from feedback without understanding intended outcomes (it can't distinguish between a decision that failed and a decision that succeeded but had different consequences than expected)

- Integrate into decision processes without understanding how your business actually makes decisions

These limitations aren't minor. They're the difference between AI that helps and AI that harms.

The ROI of Context-Aware Agents

When institutional context is in place, AI agents can drive measurable business value:

In revenue operations: Agents can identify at-risk accounts before renewal, understand why deals are stalling, and recommend next steps grounded in what's worked before. One financial services firm reported $2.4 million in recovered ARR in the first six months of deploying a context-aware agent that understood their sales processes and customer dynamics.

In customer success: Agents can identify high-value customers requiring attention, understand what caused satisfaction declines, and recommend personalized interventions. A SaaS company reported a 12% reduction in churn and a 23% increase in expansion revenue after deploying agents that understood their customer segments and success metrics.

In operational finance: Agents can flag cost overruns early, explain variances, and recommend corrective actions aligned with business strategy. An enterprise SaaS company reported $8.7 million in cost reductions identified by agents that understood their cost structure and budget constraints.

In supply chain: Agents can predict disruptions, identify root causes, and recommend actions to maintain continuity. A manufacturing company reported a 34% reduction in supply chain incidents and an 18% improvement in inventory efficiency using agents that understood their supplier relationships and operational constraints.

These aren't modest improvements. They're transformational.

But none of them happen without institutional context.

Estimated data shows that different departments within enterprises use various definitions of revenue, with 'Bookings' being the most common.

Building the Infrastructure for Institutional AI

So how do you actually build institutional context? It sounds daunting because it is. But it's also tractable if you approach it systematically.

Step 1: Inventory Your Current Context Gaps

Before you build anything, understand what you're working with. Conduct an honest audit:

Semantic gaps: For your top 20 metrics, can you document their definitions? Do all teams agree? Where are conflicts?

Lineage gaps: Can you trace a metric backward to its source systems? Where does it get transformed? What systems of record exist?

Ownership gaps: For each metric, can you name the person accountable for its accuracy? If you can't, you have a governance problem.

Governance gaps: Do you have documented approval workflows for different types of decisions? Who actually makes decisions, and do they follow documented processes?

Operational gaps: Interview experienced leaders about edge cases and exceptions. How much of their knowledge is documented versus tribal?

This audit will reveal your constraints. Most enterprises find they're missing more than they thought.

Step 2: Prioritize Your Most Consequential Metrics

Don't try to formalize everything at once. Start with metrics that:

- Drive significant business decisions

- Involve multiple systems or teams

- Have high error rates or frequent disputes about definitions

- Are prerequisites for AI systems you want to deploy

A typical enterprise might start with:

- Revenue and bookings

- Customer churn and expansion

- Operating costs and margin

- Cash flow and burn rate

- Employee productivity and retention

Formalize these first. Get governance around them. Then expand to secondary metrics.

Step 3: Establish Semantic Standards

For each prioritized metric, create a formal definition document that specifies:

The metric itself: What is it measuring? What's included and excluded? Why does it matter?

The calculation: What's the formula? What data sources feed into it? What transformations occur?

The history: When was this metric created? Has the definition changed? How do you handle historical comparisons if it has?

The ownership: Who's accountable for its accuracy? Who can authorize changes to the definition? Who reviews it regularly?

The governance: Under what circumstances can it be recalculated? Who can access it? What approval processes apply?

The context: What assumptions underlie it? What constraints apply? What exceptions exist?

This documentation becomes the source of truth that AI systems reference. It's not exciting work. But it's foundational.
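A definition document does not need special tooling to be machine-readable. Here is one possible shape, sketched as a Python dataclass with a helper that reports which sections are still empty. The field names and the sample metric are assumptions made for the example.

```python
from dataclasses import dataclass, field, fields


@dataclass
class MetricDefinition:
    name: str
    description: str = ""                                        # what it measures, inclusions, exclusions
    formula: str = ""                                            # how it is calculated
    source_systems: list[str] = field(default_factory=list)     # where the data comes from
    owner: str = ""                                              # accountable for accuracy
    change_approver: str = ""                                    # who can authorize definition changes
    assumptions: list[str] = field(default_factory=list)        # assumptions, constraints, exceptions
    definition_history: list[str] = field(default_factory=list) # past definition changes


def missing_sections(defn: MetricDefinition) -> list[str]:
    """List the sections that have not been filled in yet."""
    return [f.name for f in fields(defn) if not getattr(defn, f.name)]


# Hypothetical, partially documented metric.
churn_rate = MetricDefinition(
    name="logo_churn_rate",
    description="Share of customers at period start who cancelled during the period",
    formula="cancelled_customers / customers_at_period_start",
    source_systems=["billing"],
    owner="VP of Customer Success",
)
```

For this sample, `missing_sections(churn_rate)` returns the approver, assumptions, and history sections, which gives the working group a concrete to-do list per metric.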

Step 4: Map Your Data Lineage

Build a map showing:

- What source systems feed into key metrics

- What transformations occur at each step

- Which systems are systems of record for different entities

- Where data conflicts might occur

- What systems would be affected if something broke

This doesn't require a complex tool. Many enterprises start with spreadsheets or simple databases. The point is making connections explicit.
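Even a lineage map kept as a plain dictionary can answer the last question in that list: what is affected if something breaks. The sketch below walks a small graph breadth-first; the system and table names are hypothetical.

```python
from collections import deque

# Edges point from a source to the things built directly on top of it.
LINEAGE = {
    "billing_db": ["revenue_staging"],
    "crm": ["revenue_staging", "pipeline_report"],
    "revenue_staging": ["recognized_revenue"],
    "recognized_revenue": ["board_dashboard", "revenue_forecast"],
}


def downstream(node: str, edges: dict[str, list[str]]) -> set[str]:
    """Return everything that directly or indirectly depends on `node`."""
    seen: set[str] = set()
    queue = deque(edges.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        queue.extend(edges.get(current, []))
    return seen
```

In this toy map, an outage in `billing_db` reaches the board dashboard and the revenue forecast through two intermediate steps, which is exactly the kind of connection that tends to live only in someone's head.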

Step 5: Establish Governance Standards

Define clear rules for:

- Who can change metrics: Typically, a small governance committee reviews proposed changes

- What approval processes apply: Different changes require different review levels

- How changes are communicated: Affected teams need to know when metrics change

- How historical data is handled: Do you adjust historical data when definitions change, or maintain both versions?

- How AI systems participate: What proposal authority do AI agents have? What manual review is required?

Governance doesn't mean bureaucracy. It means clarity about who decides what and why.
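For the historical-data question, one option is to keep every version of a definition with the date it took effect, so any analysis can look up the rule that applied at the time. This is a minimal sketch of that idea; the dates and definition texts are made up.

```python
from bisect import bisect_right
from datetime import date

# Versions sorted by effective date (hypothetical content).
CHURN_DEFINITIONS = [
    (date(2021, 1, 1), "v1: subscription cancelled"),
    (date(2023, 7, 1), "v2: cancelled or inactive for 90 days"),
]


def definition_in_effect(versions: list[tuple[date, str]], on: date) -> str:
    """Return the definition that applied on the given date."""
    starts = [start for start, _ in versions]
    idx = bisect_right(starts, on) - 1  # last version starting on or before `on`
    if idx < 0:
        raise ValueError(f"no definition in effect on {on}")
    return versions[idx][1]
```

Keeping both versions avoids rewriting history, at the cost of making year-over-year comparisons across the change date an explicit, documented decision.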

Step 6: Create Feedback Loops

Institutional knowledge isn't static. It evolves as the business changes. Build mechanisms for:

- Learning from decisions: Track what was recommended, what was decided, and what the outcomes were

- Updating operational context: When edge cases occur, document them for future reference

- Refining governance: When approval processes become bottlenecks, streamline them

- Validating assumptions: Periodically confirm that historical patterns still hold

Treating business semantics and governance as living systems, not static infrastructure, is what allows AI to improve over time.
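The "learning from decisions" loop can start as a very small log of what was recommended, what was decided, and how it turned out. The record shape and summary metrics below are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecisionRecord:
    recommendation: str
    accepted: bool
    outcome_positive: Optional[bool] = None  # None until the outcome is known


def summarize(log: list[DecisionRecord]) -> dict[str, float]:
    """Summarize how often recommendations are accepted and how accepted ones turn out."""
    accepted = [r for r in log if r.accepted]
    resolved = [r for r in accepted if r.outcome_positive is not None]
    return {
        "acceptance_rate": len(accepted) / len(log) if log else 0.0,
        "success_rate": sum(r.outcome_positive for r in resolved) / len(resolved) if resolved else 0.0,
        "awaiting_outcome": len(accepted) - len(resolved),
    }


# Made-up log entries.
DECISION_LOG = [
    DecisionRecord("Offer renewal discount to account A", True, True),
    DecisionRecord("Pause campaign B", True, False),
    DecisionRecord("Raise price on plan C", False),
    DecisionRecord("Escalate account D to executive sponsor", True),
]
```

A falling acceptance rate or a growing pile of unresolved outcomes is itself a signal that the context the agent relies on has drifted.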

The CIO's Roadmap: From Strategy to Implementation

If you're a CIO or technology leader tasked with building enterprise AI capabilities, here's how to approach it strategically.

Phase 1: Foundation (Months 1-4)

Goal: Establish clarity on your institutional context gaps and build consensus on priorities.

Activities:

- Conduct the context gap audit described earlier

- Interview business leaders to understand pain points and opportunities

- Document your top 20 metrics and their current definitions

- Identify which gaps are blocking progress on business priorities

- Build a cross-functional working group to guide the effort

Success criteria: You have consensus on what "revenue" means, who owns it, and how it flows through your organization. You've identified the biggest context gaps that are limiting AI value.

Phase 2: Semantic Foundation (Months 4-8)

Goal: Formalize definitions, ownership, and governance for your prioritized metrics.

Activities:

- Create formal documentation for your top 5 metrics

- Map data lineage for those metrics

- Establish ownership and accountability

- Create change management processes for metric updates

- Build a business glossary that teams can reference

Success criteria: You have formal, documented definitions that all teams agree on. Ownership is clear. There's a process for proposing and approving changes.

Phase 3: Governance Implementation (Months 8-12)

Goal: Build processes and tools that enforce governance standards.

Activities:

- Document decision-making authority and approval workflows

- Implement access controls aligned with governance requirements

- Create audit trails that track changes to metrics and decisions

- Build feedback mechanisms that capture outcomes

- Train teams on new standards

Success criteria: Governance is being followed. Changes to metrics are documented and approved. Outcomes of decisions are being tracked.

Phase 4: Agent Deployment (Months 12-16)

Goal: Deploy context-aware AI agents that can leverage the institutional knowledge you've built.

Activities:

- Identify the highest-impact use case for an AI agent

- Configure the agent with your formal definitions, governance rules, and operational context

- Pilot the agent with a limited user group

- Measure outcomes and iterate based on feedback

- Plan expansion to other use cases

Success criteria: The agent makes recommendations that teams trust and act on. Outcomes are measurable and positive.

Phase 5: Continuous Improvement (Ongoing)

Goal: Build mechanisms for continuous learning and adaptation.

Activities:

- Monitor agent performance and outcomes

- Capture feedback about edge cases and exceptions

- Update institutional knowledge based on new patterns

- Expand agent capabilities as context improves

- Periodically audit governance adherence and adjust as needed

Success criteria: Agent accuracy and usefulness improve over time. New insights emerge from better data and governance. Teams increasingly rely on AI recommendations for decisions.

Estimated data shows that 'Multiple Sources of Truth' and 'Conflicting Definitions' are among the most prevalent issues in enterprise data ecosystems, each accounting for about 20-25% of the challenges.

Common Pitfalls and How to Avoid Them

As you build institutional AI, you'll encounter predictable obstacles. Here's how to navigate them.

Pitfall 1: Treating Semantics as an IT Problem

The mistake: Assuming your data team can formalize metrics without business input. They can document how calculations work, but not what they should mean.

The fix: Make metric definition a business-led process. IT provides technical infrastructure, but business leaders decide what metrics mean. This ensures definitions reflect business reality, not technical convenience.

Pitfall 2: Building Governance Without Adoption

The mistake: Implementing rigid governance processes that slow down decision-making, causing teams to bypass them entirely.

The fix: Involve the people who actually make decisions in designing governance. Make processes efficient enough that they're faster than alternatives. Start with guidance, then enforcement.

Pitfall 3: Assuming Historical Data Tells the Whole Story

The mistake: Training AI agents exclusively on historical data, ignoring unwritten rules and tribal knowledge.

The fix: Systematically interview experienced leaders about exceptions, edge cases, and decisions that broke the historical pattern. Encode this operational knowledge alongside data patterns.

Pitfall 4: Deploying Agents Too Early

The mistake: Expecting an AI agent to work reliably on metrics that are still under dispute or in a fragmented data environment.

The fix: Wait until you've formalized definitions, established governance, and mapped operational context. An agent deployed prematurely will fail and damage credibility.

Pitfall 5: Measuring the Wrong Things

The mistake: Tracking technical metrics (model accuracy, query performance) instead of business outcomes.

The fix: Measure what matters: Did the agent's recommendation lead to better decisions? Did it prevent problems? Did it save money or time? These outcomes are what justify the investment.

Pitfall 6: Treating AI as Autonomous

The mistake: Believing that once an AI agent is deployed, it should operate independently without human oversight.

The fix: Design AI agents as decision-support systems that enhance human judgment, not replace it. Humans should always understand the reasoning behind recommendations and maintain veto authority.

The Technology Stack: What Actually Matters

You'll encounter vendors pitching solutions: AI platforms, data governance tools, metadata management systems, etc.

Here's what actually matters when evaluating them.

Essential Capabilities

Semantic capture: Can the platform help you formally document what metrics mean, who owns them, and how they're calculated?

Lineage tracking: Does it show you where data comes from, what transformations occur, and what the dependency graph looks like?

Governance enforcement: Can it encode approval workflows, access controls, and change management processes?

Integration breadth: Can it connect to your source systems, data warehouse, analytics platforms, and AI tools?

Operational context: Does it let you document edge cases, exceptions, and decision outcomes that should inform AI recommendations?

Auditability: Can you track what changed, who changed it, why, and what the consequences were?

Nice-to-Have Capabilities

Automated lineage discovery: Tools that can infer lineage from your systems are helpful, but manual curation is usually needed.

AI-powered documentation: AI that drafts metric definitions based on code analysis can accelerate documentation, but humans need to validate.

Visualization: Pretty dashboards and graphs are nice, but clarity of information matters more than aesthetics.

Collaboration features: Workflow and commenting capabilities help teams work together on governance.

What to Avoid

"Fully automated" solutions: If a vendor claims you can just point their AI at your data and it will figure everything out, they're overpromising. Institutional context requires human expertise.

Single-vendor lock-in: Choose platforms that integrate with your existing tools rather than trying to replace your entire stack.

Complexity for complexity's sake: The best governance tools are often the simplest ones. Spreadsheets and databases often work fine for semantic foundations.

All-in-one promises: Vendors that claim to solve data governance, analytics, AI, and automation in one platform are usually solving none of them well.

Understanding the source of truth and churn definitions are the most challenging aspects for AI in multi-system enterprises. Estimated data.

The ROI Calculation: Why This Matters Financially

Building institutional context requires investment. How do you justify it to the CFO?

Quantifiable Benefits

Faster decision-making: When metric definitions are clear and data lineage is transparent, decisions that used to take weeks take days. For fast-moving businesses, this is worth millions.

Better decision quality: Context-aware AI catches problems that humans miss. One enterprise reported $47 million in prevented errors in a single year from an agent that understood cost structure and constraints.

Reduced rework: When governance is clear, teams don't redo work or argue about what metrics mean. This frees up 10-20% of analytical capacity.

Faster AI deployment: Once institutional knowledge is formalized, new AI agents and analytics can be deployed in weeks instead of months.

Improved compliance: Formal governance makes audits and regulatory compliance easier, reducing risk and potential penalties.

Reduced operational waste: Context-aware agents identify inefficiencies and unusual patterns, preventing small problems from becoming big ones.

Investment Required

Typical enterprises spend:

- Staff time for documentation and governance building: $2M depending on organization size

- Technology infrastructure for semantic management and governance: $1M in initial costs, $500K annually

- Change management to drive adoption: $500K

- Ongoing maintenance to keep definitions current: $300K annually

The Payback Calculation

For a mid-sized enterprise ($500M+ revenue), typical payback:

Example:

- 50 high-impact decisions per year improved by context-aware AI: $50M benefit (an average of $1M per decision)

- 50 analytical FTEs freed up: $7.5M benefit (about $150K per FTE)

- Total investment: $3M

- ROI = ($57.5M - $3M) / $3M ≈ 1,817% (payback in under 2 months)
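
For readers who want to rerun the arithmetic with their own numbers, here is a minimal sketch. It assumes the common net-benefit definition of ROI (benefit minus investment, divided by investment) and treats the benefits as annual; the function name `roi_and_payback` is illustrative, not from any particular tool. With the example figures above, it works out to roughly 1,817% and a payback of well under two months.

```python
def roi_and_payback(annual_benefit: float, investment: float) -> tuple[float, float]:
    """Return (ROI as a percentage, payback period in months).

    Assumes ROI = (benefit - investment) / investment and that the
    benefit accrues evenly over twelve months.
    """
    roi_pct = (annual_benefit - investment) / investment * 100
    payback_months = investment / (annual_benefit / 12)
    return roi_pct, payback_months


# Figures from the example above.
decision_benefit = 50_000_000   # improved high-impact decisions
fte_benefit = 7_500_000         # freed-up analytical capacity
investment = 3_000_000          # total investment

roi_pct, payback_months = roi_and_payback(decision_benefit + fte_benefit, investment)
print(f"ROI: {roi_pct:,.0f}%  payback: {payback_months:.1f} months")
```

Swapping in your own benefit and investment estimates is a quick way to test how sensitive the business case is to each assumption.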

These numbers are real. They're conservative compared to what leaders see in practice.

The Future of Enterprise AI: What's Coming

As institutional context becomes table stakes, several trends are emerging.

Trend 1: Semantic-First AI Architectures

New AI platforms are being designed around the principle that business semantics come first, models come second. This inverts the typical approach where data scientists build models and business analysts try to interpret them.

Instead, you define what should happen (business logic), then the AI learns how to execute it based on data. This produces more interpretable, trustworthy systems.
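
One way to picture "semantics first" is a registry in which every metric is declared, with its business meaning, before anything is allowed to compute it. The sketch below is purely illustrative: `MetricSpec`, `REGISTRY`, and the `gross_margin_pct` example are hypothetical names, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MetricSpec:
    """A business-approved metric: the meaning is declared before any computation."""
    name: str
    description: str
    compute: Callable[[dict], float]


REGISTRY: dict[str, MetricSpec] = {}


def register(spec: MetricSpec) -> None:
    """Add an approved metric definition to the registry."""
    REGISTRY[spec.name] = spec


def evaluate(metric_name: str, row: dict) -> float:
    """Compute a metric only if the business has defined it."""
    if metric_name not in REGISTRY:
        raise KeyError(f"No approved definition for metric '{metric_name}'")
    return REGISTRY[metric_name].compute(row)


# Hypothetical example definition.
register(MetricSpec(
    name="gross_margin_pct",
    description="(revenue - cost of goods sold) / revenue, as a percentage",
    compute=lambda row: (row["revenue"] - row["cogs"]) / row["revenue"] * 100,
))

print(evaluate("gross_margin_pct", {"revenue": 200.0, "cogs": 150.0}))
```

The design choice worth noticing is that an agent asking for an undefined metric gets an error rather than an improvised answer.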

Trend 2: Continuous Governance as Infrastructure

Governance is moving from static checkboxes to continuous monitoring and enforcement. In this model, an AI system continuously verifies that it's operating within approved bounds, automatically surfaces exceptions, and learns from feedback.

This is more complex to build, but it scales governance far better than manual review.
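
As a rough illustration of what "operating within approved bounds" could look like in code, the sketch below checks a proposed action against a list of approved ranges and returns any exceptions for human review. The `Bound` class, the `check_action` function, and the discount example are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bound:
    """An approved numeric range for one field of a proposed action."""
    field: str
    minimum: float
    maximum: float


def check_action(action: dict, bounds: list[Bound]) -> list[str]:
    """Return a list of governance exceptions; an empty list means the action is in bounds."""
    exceptions = []
    for bound in bounds:
        value = action.get(bound.field)
        if value is None:
            exceptions.append(f"{bound.field}: missing value")
        elif not (bound.minimum <= value <= bound.maximum):
            exceptions.append(
                f"{bound.field}: {value} outside approved range "
                f"[{bound.minimum}, {bound.maximum}]"
            )
    return exceptions


# Hypothetical policy: discounts must stay between 0% and 20%.
policy = [Bound("discount_pct", 0, 20)]
print(check_action({"discount_pct": 35}, policy))
print(check_action({"discount_pct": 10}, policy))
```

Running a check like this on every proposed action, rather than in a quarterly review, is what makes the governance continuous.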

Trend 3: Federated Institutional Knowledge

Large enterprises are moving away from centralized metadata repositories toward federated models where business units maintain their own definitions, aligned to company-wide standards. This balances autonomy with consistency.
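
A federated setup still needs a way to check that each unit's definitions meet the company-wide standard. The sketch below assumes the standard is simply a set of required fields; `REQUIRED_FIELDS`, `validate_unit_glossary`, and the sample glossary are hypothetical.

```python
# Company-wide standard (assumed for illustration): every metric entry
# maintained by a business unit must fill in these fields.
REQUIRED_FIELDS = {"definition", "calculation", "owner"}


def validate_unit_glossary(unit_glossary: dict[str, dict]) -> dict[str, set[str]]:
    """Return, per metric, the required fields that the unit left empty or omitted."""
    problems = {}
    for metric, entry in unit_glossary.items():
        filled = {key for key, value in entry.items() if value}
        missing = REQUIRED_FIELDS - filled
        if missing:
            problems[metric] = missing
    return problems


# Hypothetical glossary maintained by one business unit.
emea_sales = {
    "churn_rate": {
        "definition": "Share of customers who cancel in a month",
        "calculation": "cancelled / active_at_month_start",
        "owner": "EMEA Sales Ops",
    },
    "net_revenue": {
        "definition": "Revenue after refunds",
        "calculation": "",
        "owner": "EMEA Finance",
    },
}

print(validate_unit_glossary(emea_sales))
```

Units keep control of the content, while a central check like this enforces only the shape.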

Trend 4: AI That Questions Its Own Assumptions

Next-generation agents won't just follow institutional context; they'll identify when context might be wrong. "This pattern contradicts what we expect given the documented business logic. Should we investigate?" This kind of meta-reasoning is becoming possible and increasingly valuable.

Trend 5: Institutional Knowledge as Competitive Advantage

Over the next 3-5 years, enterprises that have formalized their institutional knowledge will have a structural advantage over those that haven't. Their AI systems will work better. Their decisions will be faster and more reliable. Their people will be more productive.

This won't just be a technology advantage; it will be a business advantage.

Getting Started: Your First 90 Days

If you're convinced this matters but overwhelmed about where to start, here's a concrete 90-day plan.

Month 1: Understand Your Current State

Weeks 1-2: Audit your institutional context gaps. Interview 20 people (leaders, analysts, data engineers) about:

- What metrics matter most to their decision-making

- Which metric definitions are unclear or disputed

- Where they struggle to trust data

- What tribal knowledge should be documented

Week 3: Synthesize findings into a report identifying your biggest gaps.

Week 4: Socialize findings with leadership and get alignment on priorities.

Month 2: Establish Foundations

Weeks 1-2: Form a cross-functional working group (business leads, data leads, IT leadership).

Week 3: Document your top 5 metrics using a simple template:

- Definition

- Calculation

- Owner

- Systems involved

- Known issues
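
If you would rather keep the template in code than in a document, the five fields map naturally onto a small record type. This is a minimal sketch: the class name `MetricDefinition`, the `missing_fields` helper, and the churn example are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class MetricDefinition:
    """One entry in the metric template: definition, calculation, owner, systems, issues."""
    name: str
    definition: str
    calculation: str
    owner: str
    systems_involved: list[str] = field(default_factory=list)
    known_issues: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """List the core text fields that are still blank, so gaps are visible before sign-off."""
        core = {
            "definition": self.definition,
            "calculation": self.calculation,
            "owner": self.owner,
        }
        return [label for label, value in core.items() if not value.strip()]


# Hypothetical example entry.
churn = MetricDefinition(
    name="Monthly churn rate",
    definition="Share of customers active at month start who cancel during the month",
    calculation="cancelled_customers / customers_at_month_start",
    owner="",  # not yet agreed
    systems_involved=["CRM", "Billing"],
    known_issues=["Paused accounts are counted inconsistently"],
)

print(churn.missing_fields())
```

Here the blank owner is surfaced immediately, which is exactly the kind of gap the working group should close before presenting to leadership.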

Week 4: Present to leadership and get sign-off.

Month 3: Build Momentum

Weeks 1-2: Create a formal change control process for metric updates. Document it clearly.

Week 3: Identify one small AI opportunity where better institutional knowledge would create value. Design a 4-week pilot.

Week 4: Launch the pilot. Plan for a readout with leadership.
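
The change control process from Month 3 does not need heavy tooling at first. The sketch below shows one possible shape, assuming a simple rule that only the metric's owner can approve or reject a proposed change; `ChangeRequest`, `Status`, and the sample names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ChangeRequest:
    """A proposed change to a metric definition, with an audit trail."""
    metric: str
    proposed_definition: str
    requested_by: str
    status: Status = Status.PROPOSED
    history: list[str] = field(default_factory=list)

    def decide(self, approver: str, metric_owner: str, approve: bool) -> None:
        """Record a decision. Assumed rule: only the metric owner may decide, and only once."""
        if approver != metric_owner:
            raise PermissionError(f"{approver} is not the owner of '{self.metric}'")
        if self.status is not Status.PROPOSED:
            raise ValueError(f"Request already {self.status.value}")
        self.status = Status.APPROVED if approve else Status.REJECTED
        self.history.append(f"{approver} {self.status.value} the change")


# Hypothetical usage.
request = ChangeRequest(
    metric="Monthly churn rate",
    proposed_definition="Exclude accounts paused for fewer than 30 days",
    requested_by="analytics_team",
)
request.decide(approver="cfo_office", metric_owner="cfo_office", approve=True)
print(request.status.value, request.history)
```

Even this much gives you two things a spreadsheet edit does not: a named decision-maker and a record of what changed and why.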

After 90 days, you'll have momentum. The hard part is sustaining it, but you'll have proven the concept.

FAQ

What is institutional context in enterprise AI?

Institutional context refers to the interconnected web of business semantics, governance rules, and operational knowledge that determines how an organization actually functions. It includes formal definitions of metrics, data lineage, ownership and accountability structures, decision approval workflows, historical patterns, and edge cases. Without it, AI systems lack the business understanding needed to make reliable decisions at scale.

How is institutional context different from just having good data governance?

Data governance focuses on data quality, lineage, and access control. Institutional context is broader: it includes the business meaning of data, the decision-making processes that data informs, the organizational authority structures, and the operational exceptions that don't follow standard patterns. Good data governance is a prerequisite for institutional context, but it's not sufficient on its own.

Why do most enterprise AI initiatives fail without institutional context?

AI systems trained on data alone, without understanding business meaning, make decisions based on statistical patterns rather than business logic. They can produce confident-sounding recommendations that are wrong in ways that aren't obvious unless you already understand the business. They can recommend actions that violate governance rules or business strategy. They struggle to explain their reasoning in business terms. Over time, teams learn not to trust them, and adoption stalls.

How long does it take to build institutional context?

For a mid-sized enterprise, formalizing institutional context for your top 20 metrics typically takes 6-12 months to establish baseline clarity, plus ongoing maintenance. You'll start seeing AI value within 4-6 months, long before everything is perfect. The key is prioritizing your highest-impact metrics and building iteratively rather than trying to solve everything at once.

Can you build institutional context without a specialized tool?

Yes. Many enterprises start with spreadsheets, wikis, or simple databases to document their semantic foundations and governance rules. Specialized tools become valuable as scale increases, but they're not prerequisites. Start with what you have, then invest in tools once you've proven the value and identified the specific needs those tools must meet.

What's the relationship between institutional context and AI explainability?

AI explainability becomes much more meaningful when there's institutional context. Without it, you can explain what an AI model did statistically, but you can't explain whether it made sense from a business perspective. With institutional context, explainability becomes bidirectional: you can understand why the AI made a recommendation, and the AI can understand whether its reasoning aligns with business rules and domain knowledge.

How do we ensure institutional context stays current as the business evolves?

Build feedback loops and continuous governance. Track decision outcomes and identify where assumptions were wrong. Create mechanisms for proposing and approving changes to definitions and business logic. Periodically audit whether documented context still reflects reality. Treat institutional knowledge as a living system that evolves with the business, not a static artifact.
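
The periodic audit can start as something very small. The sketch below flags definitions that have not been reviewed within a chosen window; the 180-day default, the function name `stale_definitions`, and the sample dates are assumptions for illustration.

```python
from datetime import date, timedelta


def stale_definitions(
    last_reviewed: dict[str, date],
    today: date,
    max_age_days: int = 180,
) -> list[str]:
    """Return the metrics whose last review is older than max_age_days, sorted by name."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, reviewed in last_reviewed.items() if reviewed < cutoff)


# Hypothetical review log.
review_log = {
    "churn_rate": date(2024, 11, 15),
    "net_revenue": date(2025, 5, 1),
}

print(stale_definitions(review_log, today=date(2025, 7, 1)))
```

The output becomes the agenda for the next governance review, so stale definitions get looked at before an AI agent relies on them.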

What's the difference between institutional context and a business glossary?

A business glossary documents what terms mean. Institutional context includes the glossary but goes much deeper: it documents relationships between concepts, how those concepts flow through systems and processes, who makes decisions about them, and what operational exceptions apply. A glossary is table stakes, but it's the foundation, not the whole building.

Can AI agents learn institutional context automatically from data?

No. AI can identify statistical patterns and infer some relationships from data. But it can't discover business meaning, governance rules, decision authorities, or operational exceptions just by analyzing data. These must be explicitly taught. That said, once you've formalized institutional context, AI can help you maintain and evolve it by flagging when new patterns suggest that documented assumptions need updating.

The Bottom Line: Institutional Context Is Your Next Competitive Advantage

The enterprise AI moment we're living through is revealing a hard truth: bigger models and more data don't solve business problems without business understanding.

The enterprises winning with AI aren't the ones with the fanciest tools or the most aggressive AI roadmaps. They're the ones that took the time to understand how their business actually works, formalize that understanding, and build governance around it.

This isn't sexy. It won't make headlines in tech news. It won't impress the board with flashy AI capabilities.

But it will:

- Make your AI systems actually work

- Let you deploy new AI capabilities in weeks instead of months

- Give your teams confidence in AI-driven decisions

- Compound over time as you build more intelligence on a stable foundation

- Create a structural competitive advantage that's hard to copy

The enterprises that build this foundation in the next 12-18 months will have a significant advantage over those that don't. Not because they have better AI, but because they've made their business legible to machines.

That's the real unlock. That's where transformational ROI lives.

Start small. Document your top 20 metrics. Clarify ownership. Build governance. Then deploy AI agents that actually understand your business.

Everything else is just noise.

Key Takeaways

- Single-player AI excels at individual tasks but fails when required to understand institutional complexity like governance, ownership, and business logic

- Most enterprise AI initiatives stall not because the technology is immature, but because organizations haven't formalized the institutional knowledge AI needs to operate reliably

- Institutional context requires three interconnected layers: semantic (what things mean), governance (who decides what), and operational (how things actually work in practice)

- Analytics exposes institutional context gaps first because revenue forecasting, churn analysis, and cost variance diagnosis require understanding business meaning across multiple systems

- Context-aware AI agents deliver transformational ROI by identifying root causes, recommending actions aligned with business reality, and learning from outcomes over time

- Building institutional context is a 6-12 month foundation effort that pays for itself through faster decision-making, better decision quality, and faster AI deployment cycles

- The enterprises that formalize institutional knowledge in the next 12-18 months will gain significant competitive advantage through AI systems that actually work at scale


![Enterprise AI Needs Business Context, Not More Tools [2025]](https://tryrunable.com/blog/enterprise-ai-needs-business-context-not-more-tools-2025/image-1-1768491553224.jpg)