Introduction: The Enterprise AI Bet Nobody Expected

Snowflake just dropped a

This isn't how enterprise software usually works. Historically, companies pick one vendor and stick with them. Maybe they hedge by keeping a second option in the wings, just in case. But this is different. These aren't desperate contingency plans. These are deliberate, simultaneous, hundred-million-dollar commitments to multiple AI companies with overlapping capabilities.

What's driving this shift? The enterprise AI landscape has fundamentally changed. Not long ago, ChatGPT was shiny and new. Everyone wanted access. But now? The C-suite is asking harder questions. Which model actually performs better on our specific tasks? Do we need reasoning-focused systems, or speed-optimized ones? How do we maintain compliance and data governance across different AI providers? What happens if one model has an outage?

The answers to those questions don't point toward a single vendor solution. They point toward flexibility, optionality, and the ability to swap between models based on the job at hand.

This shift tells us something profound about how enterprise AI adoption is actually going to play out over the next five years. It's not going to be a "winner takes most" market. It's going to look more like the ride-hail ecosystem, where Uber and Lyft coexist because users switch between them based on context. Or the way teams use both Figma and Adobe Creative Cloud depending on the workflow. Or how developers reach for different programming languages for different problems.

Multiple companies can win. Multiple models can thrive. And enterprises will get better, more reliable AI systems as a result.

Let's dig into what's actually happening, why it matters, and what comes next.

TL;DR

- The New Pattern: Enterprise giants such as Snowflake and ServiceNow are signing multi-year deals with competing AI companies (OpenAI, Anthropic, Google, Meta) simultaneously rather than picking winners

- Why This Matters: Different AI models have different strengths and weaknesses—enterprises need optionality to match the right model to each task

- The Market Implication: This signals a multi-winner AI market, not a "winner takes all" scenario like we've seen in previous software revolutions

- Workforce Impact: Employees at these enterprises are already using their preferred models regardless of official company contracts, forcing leadership to embrace choice

- The Business Case: Model agnosticism reduces risk, improves performance, and gives enterprises more negotiating leverage with AI providers

- What's Next: Expect more enterprises to adopt portfolio strategies, and expect AI providers to compete on specific model strengths rather than trying to be everything to everyone

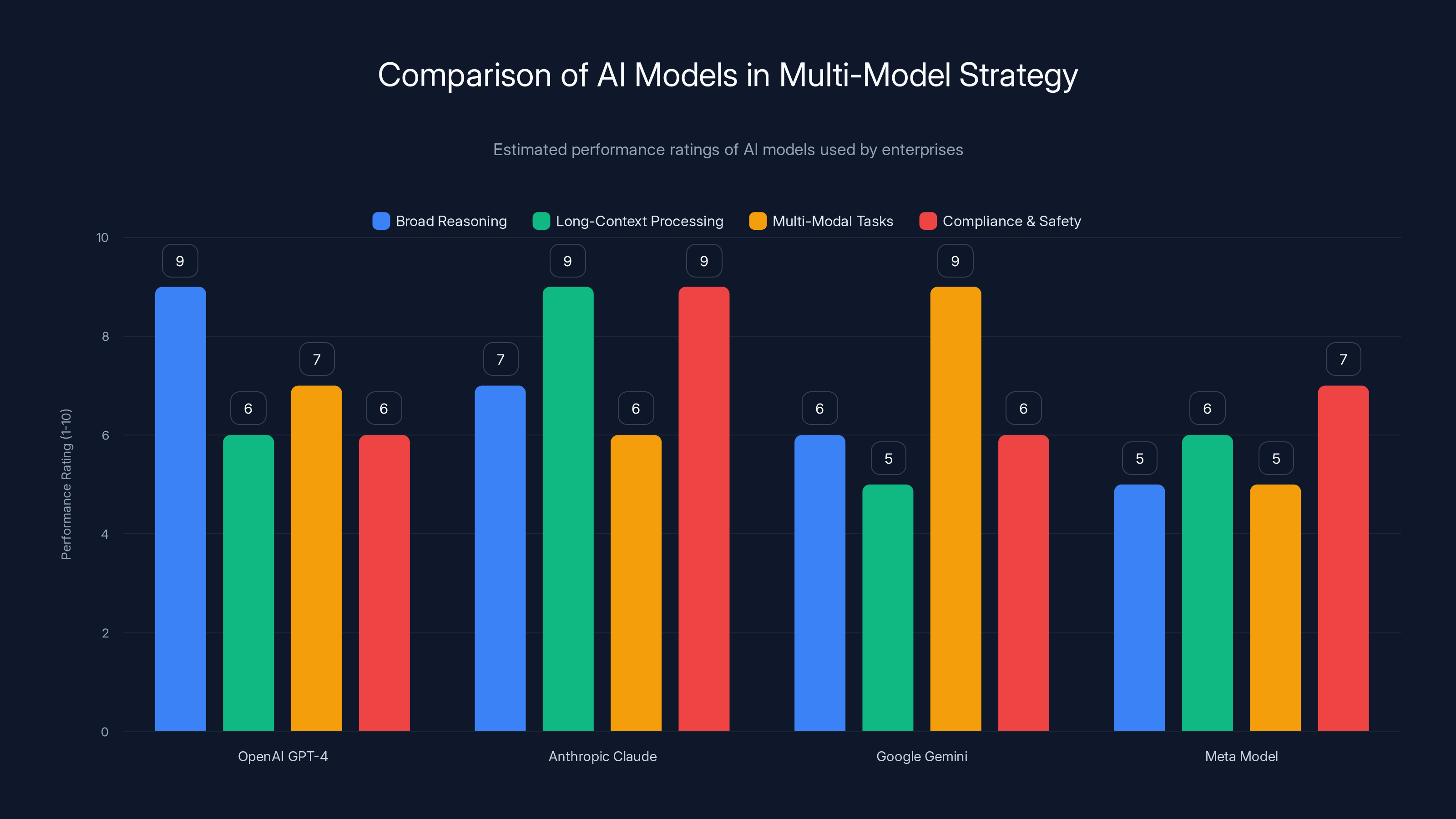

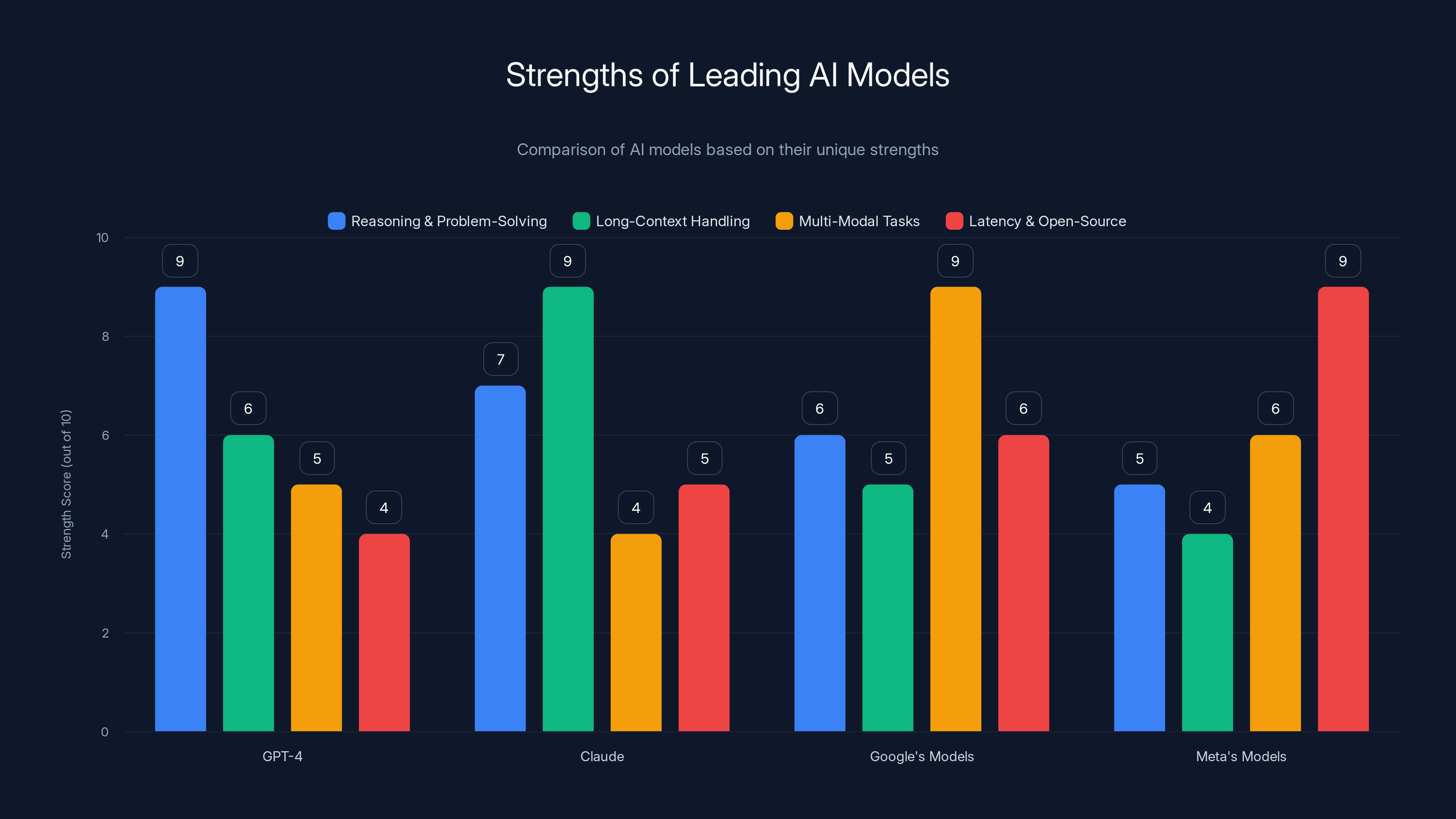

Estimated data shows that different AI models excel in different areas, supporting the multi-model strategy for tailored task performance.

The Snowflake Deal: What Actually Happened (And Why It Matters)

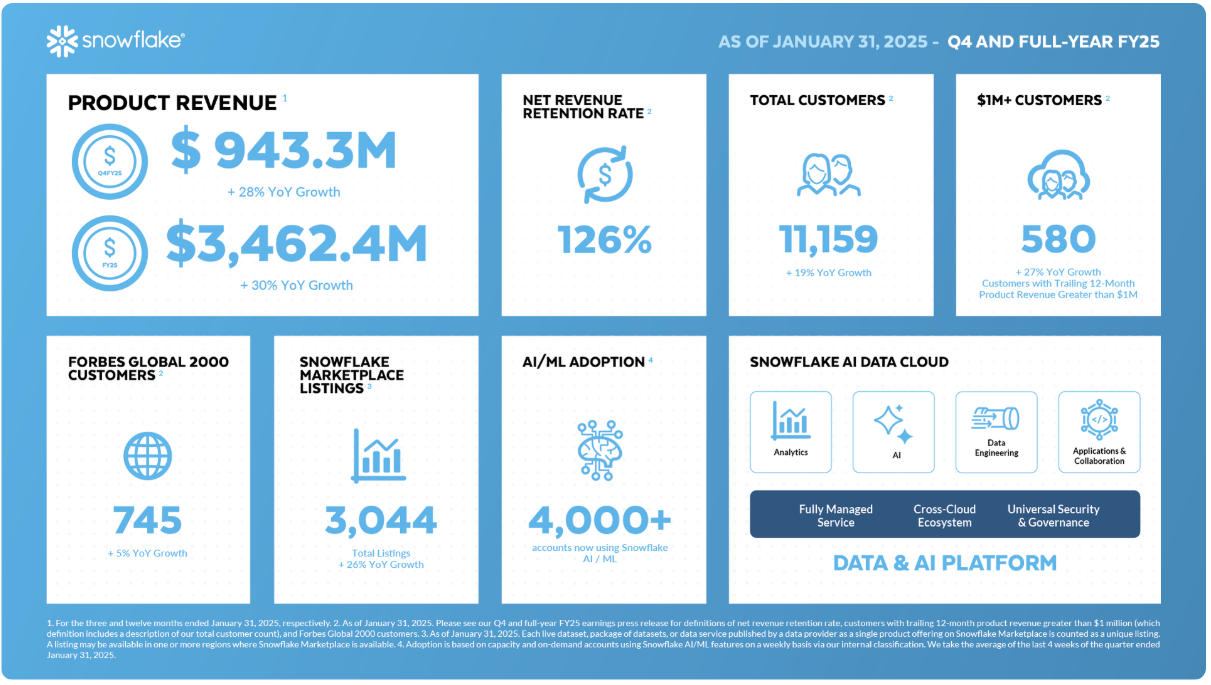

On February 2, 2026, Snowflake announced a $200 million multi-year deal with OpenAI. The headline was straightforward: enterprise data gets access to frontier AI models. Snowflake's 12,600 customers now have OpenAI's models integrated into their data platforms. Snowflake employees get ChatGPT Enterprise as a company standard. The companies committed to building new AI agents together.

Snowflake CEO Sridhar Ramaswamy's statement was carefully crafted corporate speak, but one phrase jumped out: "Customers can now harness all their enterprise knowledge in Snowflake together with the world-class intelligence of OpenAI models." Translation: your data gets smarter when it meets frontier AI.

But here's the thing that should grab your attention. This deal came just two months after Snowflake announced a near-identical $200 million deal with Anthropic in December 2025. Same structure. Same language. Same commitment level. Different AI company.

When TechCrunch asked Snowflake about the apparent redundancy, vice president of AI Baris Gultekin's response was telling: "Our partnership with OpenAI is a multi-year commercial commitment focused on reliability, performance, and real customer usage. At the same time, we remain intentionally model-agnostic. Enterprises need choice, and we do not believe in locking customers into a single provider."

That "intentionally model-agnostic" phrase is the real story. Snowflake is explicitly, deliberately choosing not to standardize on a single AI vendor. This isn't happening because Anthropic wasn't good enough, or because OpenAI somehow beat them out. It's happening because Snowflake figured out that their customers are better served when they have options.

Gultekin went on to list the roster: Anthropic, OpenAI, Google, Meta, and others. Not some. Not most. Not "we're evaluating." These partnerships are already live.

This strategy is unconventional precisely because most enterprise software works on winner-take-most dynamics. A sales team picks Salesforce or HubSpot as its CRM, not both. A data team picks Snowflake or Databricks, usually not both at once. The idea of a major enterprise signing huge deals with direct competitors is unusual enough that it demands explanation.

Why Snowflake's Strategy Signals a New Era

The Snowflake approach isn't just about hedging bets. It's about recognizing a fundamental truth that's emerged from eighteen months of enterprise AI experimentation: different models solve different problems.

Consider what we know about frontier models. OpenAI's GPT-4 is strong at broad reasoning and general problem-solving, and it handles nuance and context well. Claude (from Anthropic) is known for long-context reasoning, compliance awareness, and a reluctance to take shortcuts. Google's models have advantages in multi-modal tasks (images, text, video together). Meta's Llama models are openly available, so they can be self-hosted and tuned for lower latency. Different tools. Different strengths.

For a customer trying to build an AI system on top of their data, this variation matters enormously. If you're summarizing legal documents, you might want Claude's longer context window and compliance focus. If you're generating customer insights from product analytics, GPT-4's reasoning might shine. If you're processing images alongside text, Google's offering gets the nod. If you need sub-100ms latency, open-source models might be the play.

Snowflake's customers weren't going to wait for a single "best" model to emerge and then slowly migrate to it. They needed solutions now, and they needed multiple options to solve multiple problems. By signing deals with all the major players, Snowflake essentially said: we're going to let our customers pick the right tool for their job.

This has profound implications for how enterprise AI gets built. Instead of waiting for platform wars to resolve (which could take a decade), enterprises can build and ship AI systems today. They can experiment. They can see what works. They can optimize over time. The cost of switching models is lower when you're not locked into one vendor's ecosystem.

Gultekin's phrase matters again here: "enterprises need choice." That's not philosophical. That's practical. When you're building mission-critical systems, you want redundancy. You want the ability to swap components if something breaks. You want competition keeping vendors honest on pricing and performance.

The ServiceNow Precedent: Dual Deals Become the Norm

Snowflake wasn't first. In January 2025, ServiceNow, the workflow automation giant with 12,000+ enterprise customers, announced multi-year deals with both OpenAI and Anthropic. ServiceNow president Amit Zavery explained the logic directly: they wanted to give customers and employees the ability to choose which model made sense for each task.

This was significant. ServiceNow isn't a data company like Snowflake. It's a workflow and automation platform. If a major workflow automation company sees the value in model agnosticism, it suggests the pattern isn't limited to one industry vertical. It's a broader shift in how enterprises are thinking about AI integration.

Zavery's framing was explicit about what enterprises actually want. Not a single, all-powerful model. Not a long bet on one horse. They want the ability to evaluate, test, and optimize across options. They want their employees to use what works best. They want their customers to build on whatever foundation makes sense.

Both Snowflake and ServiceNow are betting that this flexibility creates better customer outcomes. The enterprise data analytics company and the enterprise workflow company, two different markets, both making nearly identical strategic choices about AI partnerships. That's not coincidence. That's pattern recognition at scale.

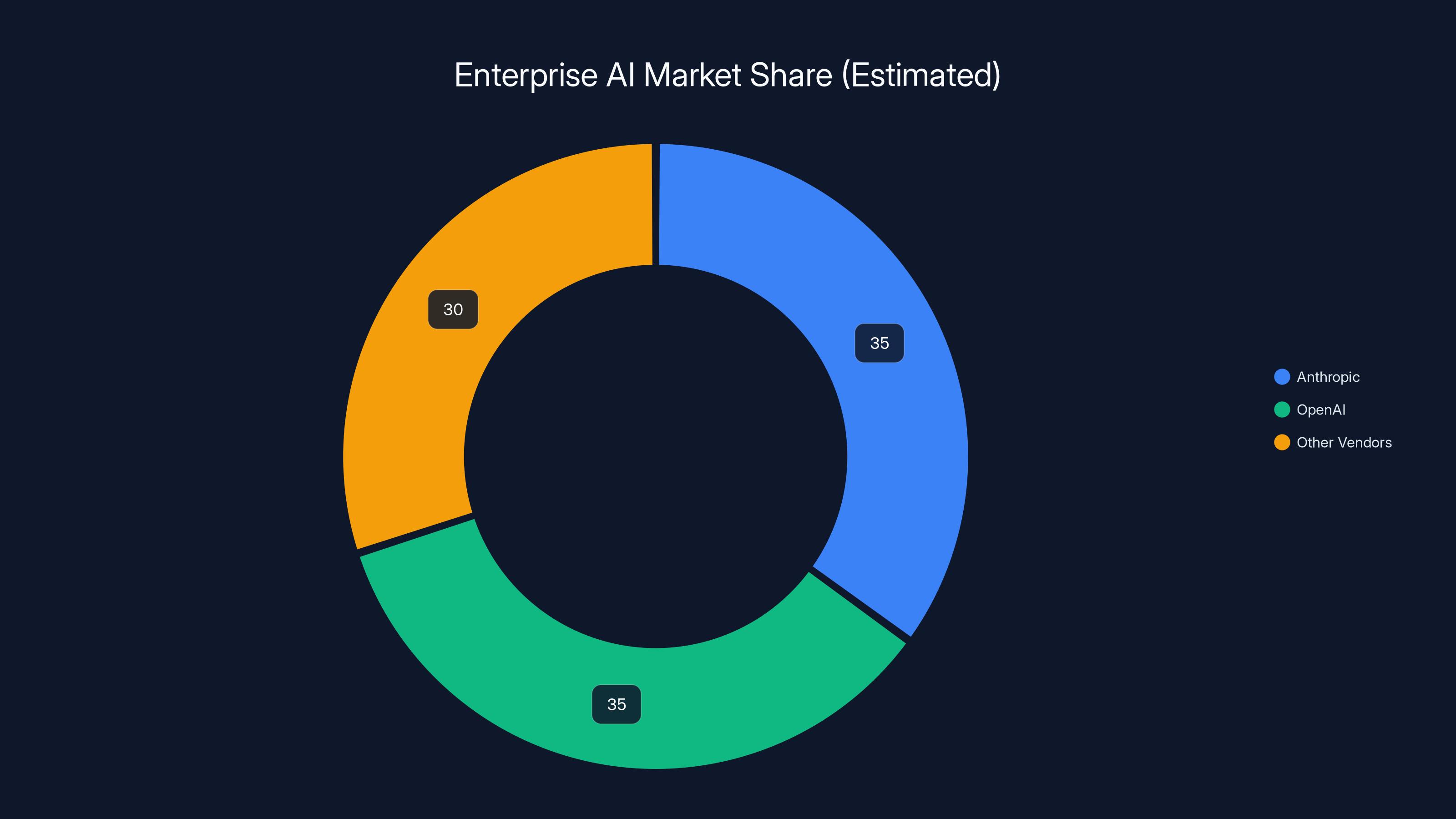

Estimated data suggests Anthropic and OpenAI each hold a 35% share, with other vendors collectively holding 30%. This reflects the trend of enterprises diversifying AI model usage due to unclear market leaders.

The Employee Factor: Why Top-Down AI Strategy Breaks Down

Here's a reality that most enterprise leadership hasn't fully grappled with yet: your employees are already using their preferred AI models regardless of what your company contracts with.

A Snowflake employee with ChatGPT Plus might prefer GPT-4 for creative tasks. Same employee might keep Claude open in another tab for deep analysis because they've found it handles long-form technical documents better. A ServiceNow engineer might use Perplexity for research because the source citations are valuable. Another colleague might swear by Copilot for code because they're already in the Microsoft ecosystem.

This happens whether or not the company officially supports it. And it's driving enterprise leadership to reckon with an uncomfortable truth: standardizing on a single model doesn't actually standardize how people work. It just creates shadow adoption of other models.

Snowflake and ServiceNow's leadership realized something important: instead of fighting this behavior, facilitate it. Let employees use what works. Provide company accounts for multiple platforms. Build integrations that make switching seamless. This approach has multiple benefits:

First, it improves output quality. When someone can reach for the right tool instead of forcing their problem into a suboptimal tool, the work gets better. That's not speculation. That's basic craftsmanship.

Second, it surfaces real data about which models excel at which tasks. Enterprises can now collect metrics about actual usage patterns. When does your organization choose Claude over GPT-4? When does it pick open-source models? These patterns tell you something about what your people actually value.

Third, it kills the artificial scarcity mentality that emerges from single-vendor lock-in. People stop hoarding access. They stop complaining about quotas. Procurement friction eases. When everyone can access multiple options, selection becomes merit-based instead of politically fraught.
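The usage metrics described in the second point can start out very simple. Here is a minimal Python sketch, assuming a hypothetical log of (task type, model chosen) pairs. The task names, the placeholder model names, and the `preferred_model_by_task` helper are illustrative and do not reflect any vendor's telemetry format.

```python
from collections import Counter, defaultdict

# Hypothetical usage log: (task_type, model_chosen) pairs.
usage_log = [
    ("legal_summary", "model-a"),
    ("legal_summary", "model-a"),
    ("legal_summary", "model-b"),
    ("code_review", "model-b"),
    ("code_review", "model-b"),
]


def preferred_model_by_task(log):
    """Return the most frequently chosen model for each task type."""
    counts = defaultdict(Counter)
    for task, model in log:
        counts[task][model] += 1
    return {task: c.most_common(1)[0][0] for task, c in counts.items()}


preferences = preferred_model_by_task(usage_log)
```

Even a tally this crude shows where employees actually reach for one model over another, which is the signal leadership needs before standardizing anything.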

Snowflake and ServiceNow aren't being altruistic here. They're being pragmatic. They've watched their best employees build workarounds. Now they're building those workarounds into the product.

The Market Data Problem: Whose Numbers Should You Trust?

Amidst all this, there's a nagging problem: we don't have clear data on which AI companies are actually winning in enterprise. The conflicting surveys paint wildly different pictures.

Menlo Ventures released a survey in late 2025 showing Anthropic holding a commanding lead in the enterprise market. a16z (Andreessen Horowitz) released a report shortly after showing OpenAI leading. Both firms have obvious conflicts of interest: a16z is an investor in OpenAI, and Menlo has Anthropic in its portfolio. Neither firm released methodology detailed enough to fully evaluate, and neither has access to the full picture.

This creates a strange situation. Major enterprise partnerships are happening, but we're essentially blind about the underlying adoption numbers. Snowflake tells us it has signed $200 million deals with each of OpenAI and Anthropic. That's useful. ServiceNow has announced similar dual partnerships. But we don't know how their customers actually allocate usage between models. We don't know the cost per inference. We don't know retention rates. We don't know which models are generating the most value.

What we do know is what enterprises are signaling through their deals. And the signal is clear: model diversity is valuable. Enterprises aren't betting on a single horse because they genuinely don't know which horse will win, and the cost of guessing wrong is too high.

This lack of clarity actually accelerates the multi-vendor trend. If there were clear adoption winners, enterprises would gravitate toward them. But the absence of clear winners creates space for multiple vendors to survive and thrive by competing on different dimensions, supporting different use cases, and building loyal followings for different reasons.

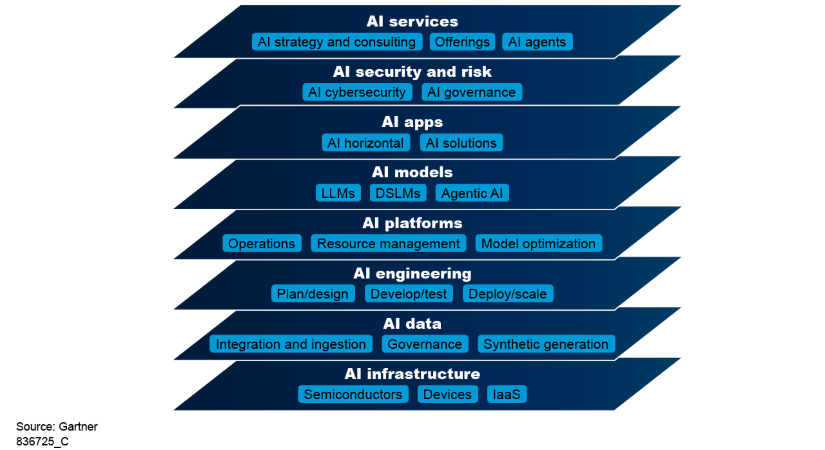

Why Different Models Excel at Different Things

Understanding the multi-model strategy requires understanding why the models themselves are genuinely different. It's not just marketing differentiation. The underlying architectures and training choices create real differences in behavior.

OpenAI's GPT-4 was trained with an emphasis on broad capability. The model learned to handle nearly any task thrown at it. As a result, it's exceptionally flexible. Ask it to write poetry, debug Python, explain quantum mechanics, or draft a contract, and it handles all of them competently. This flexibility is powerful but comes with a tradeoff: it's not specifically optimized for any one task.

Anthropic's Claude was built with constitutional AI principles and long-context training as priorities. This means it can handle much longer inputs (up to 200K tokens in the latest version, compared to GPT-4's 128K). It's also trained to be cautious about edge cases and explicit about uncertainty. For tasks like summarizing legal documents, analyzing compliance, or processing long research papers, this matters.

Google's Gemini brought multi-modal capabilities to enterprise earlier than some competitors. It understands images, text, audio, and video in a single model. For enterprises with complex data that spans multiple media types, this is valuable. You don't have separate models for text analysis and image understanding.

Meta's Llama models (released with openly available weights) enabled something different: on-premises deployment and fine-tuning. For enterprises with serious data governance constraints or extremely large-scale needs, running models on your own infrastructure cuts network latency and keeps data internal.

These aren't minor differences. They're architectural choices that cascade through how the models work. Enterprises building systems realize quickly that picking a single model means optimizing for one set of tradeoffs. Multiple models mean you can optimize for the actual problems you're trying to solve.

Snowflake customers building AI applications now do something interesting. They might use GPT-4 for initial ideation and rapid prototyping because of its flexibility. Then migrate the production system to Claude if the task involves sensitive compliance issues. Then layer in Gemini if the data involves images. This isn't indecision. It's sophisticated engineering.

The Ride-Hail Model: What Enterprise AI Might Look Like

There's a useful analogy hiding in how people use Uber and Lyft. Both companies compete directly. Both solve the same core problem (getting you from point A to point B). Yet both survive profitably. Here's why: users switch between them based on context.

One app has a driver nearby right now. The other has a slight surge pricing. One has better ratings in this neighborhood. The other is cheaper. Experienced users maintain both apps and choose based on the specific situation. Neither has "won." Neither needs to. They coexist.

This is increasingly how enterprises are approaching AI models. Different models for different contexts. Different vendors because each has different strengths. Different pricing structures because they've optimized for different customer segments.

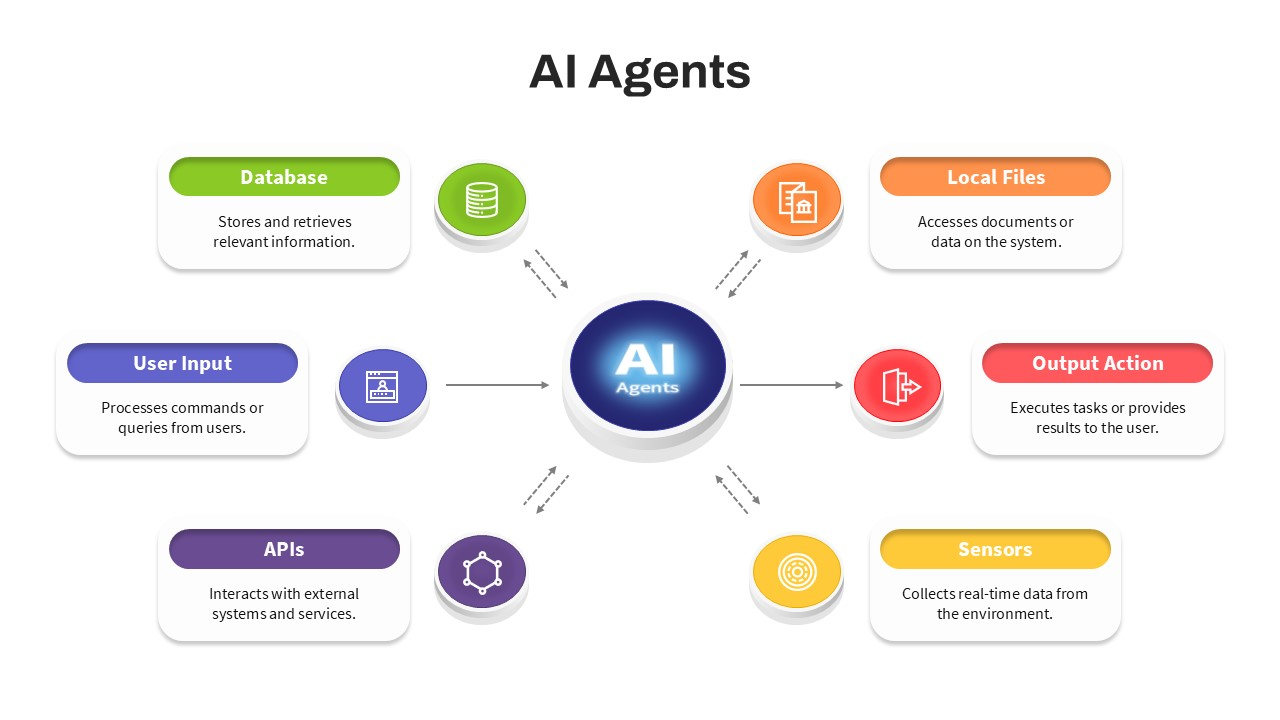

For this to work at enterprise scale, there's a crucial infrastructure requirement: models need to be interchangeable at the integration layer. Snowflake's strategy requires that swapping between OpenAI, Anthropic, and Google doesn't require rewriting applications. It requires standardized APIs, similar input/output formats, and abstraction layers that make the model beneath irrelevant to the application.
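The abstraction layer described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `ChatModel`, `StubProviderA`, `StubProviderB`, and `summarize` are hypothetical names, the stubs make no network calls, and none of this is Snowflake's or any vendor's actual API. A real adapter would wrap each vendor's SDK behind the same method.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical common interface every provider adapter implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


class StubProviderA:
    """Stand-in for an adapter around one vendor's SDK (no network calls)."""

    name = "provider-a"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class StubProviderB:
    """Stand-in for an adapter around a second vendor's SDK."""

    name = "provider-b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    """Application code depends only on the interface, not on a vendor."""
    return model.complete(f"Summarize: {text}")


# Swapping providers is a one-argument change at the call site.
result_a = summarize(StubProviderA(), "Q3 revenue report")
result_b = summarize(StubProviderB(), "Q3 revenue report")
```

The design choice is that `summarize` never imports a vendor SDK, so replacing the model underneath does not touch application logic.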

The industry is moving in this direction. Companies like Anthropic and OpenAI are adopting compatible APIs. Cloud providers (AWS, GCP, Azure) are building multi-model abstraction layers. Enterprises are investing in middleware that translates between different model APIs. The infrastructure for the "ride-hail model" of AI is being built right now.

If this infrastructure matures (which seems increasingly likely), then competition shifts. Instead of companies competing on whether their model is "better," they compete on specific dimensions: reasoning speed, context length, cost per token, latency, specialized capabilities, compliance features. Different companies can win at different dimensions, and customers can choose based on their priorities.

This sounds chaotic. It's actually efficient. It's competition working the way it's supposed to.

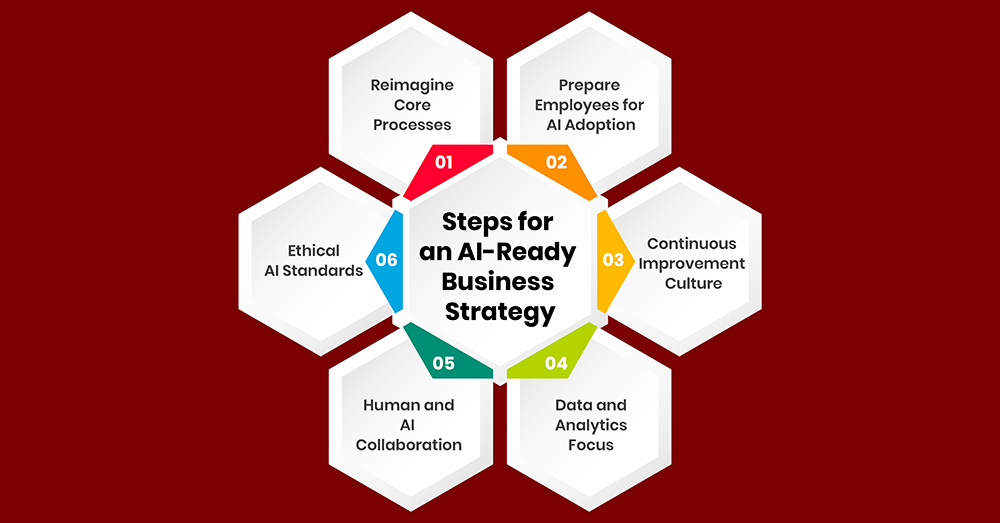

Different AI models excel in various areas, highlighting the importance of choice in enterprise AI strategies. Estimated data based on model descriptions.

The Cost Dynamics: How Model Agnosticism Improves Pricing

Here's an economic reality that enterprises understand but don't always voice publicly: when you're locked into a single vendor, that vendor has enormous pricing power. You've bet your applications on their model. Switching costs are huge. They know it. You know it. Pricing reflects that reality.

When enterprises signal that they're willing to switch between models, something changes in the negotiation dynamic. Vendors can no longer assume lock-in. They have to compete on value, not just capabilities. Pricing pressure emerges. Service levels matter more.

Snowflake's dual partnerships with OpenAI and Anthropic create a negotiating situation where Snowflake can credibly say: "Your model is valuable, but if pricing doesn't work, we can optimize toward the other option." This isn't empty leverage. Snowflake has $200 million committed to Anthropic. They genuinely could shift usage.

And Snowflake isn't alone. If every major enterprise customer follows the same pattern, pricing dynamics change industry-wide. Companies can't rely on lock-in to sustain premium pricing. They have to deliver value that's worth the price relative to alternatives.

This might sound beneficial to enterprises, and it is. But it cascades through the AI industry. If pricing becomes more competitive, that affects how much money companies can pour into research and development. It affects how much they spend on safety and alignment. It affects how aggressively they can expand to new market segments.

The multi-model strategy isn't just about getting better systems. It's about creating a market structure where innovation and value delivery matter more than just being one of a few incumbents.

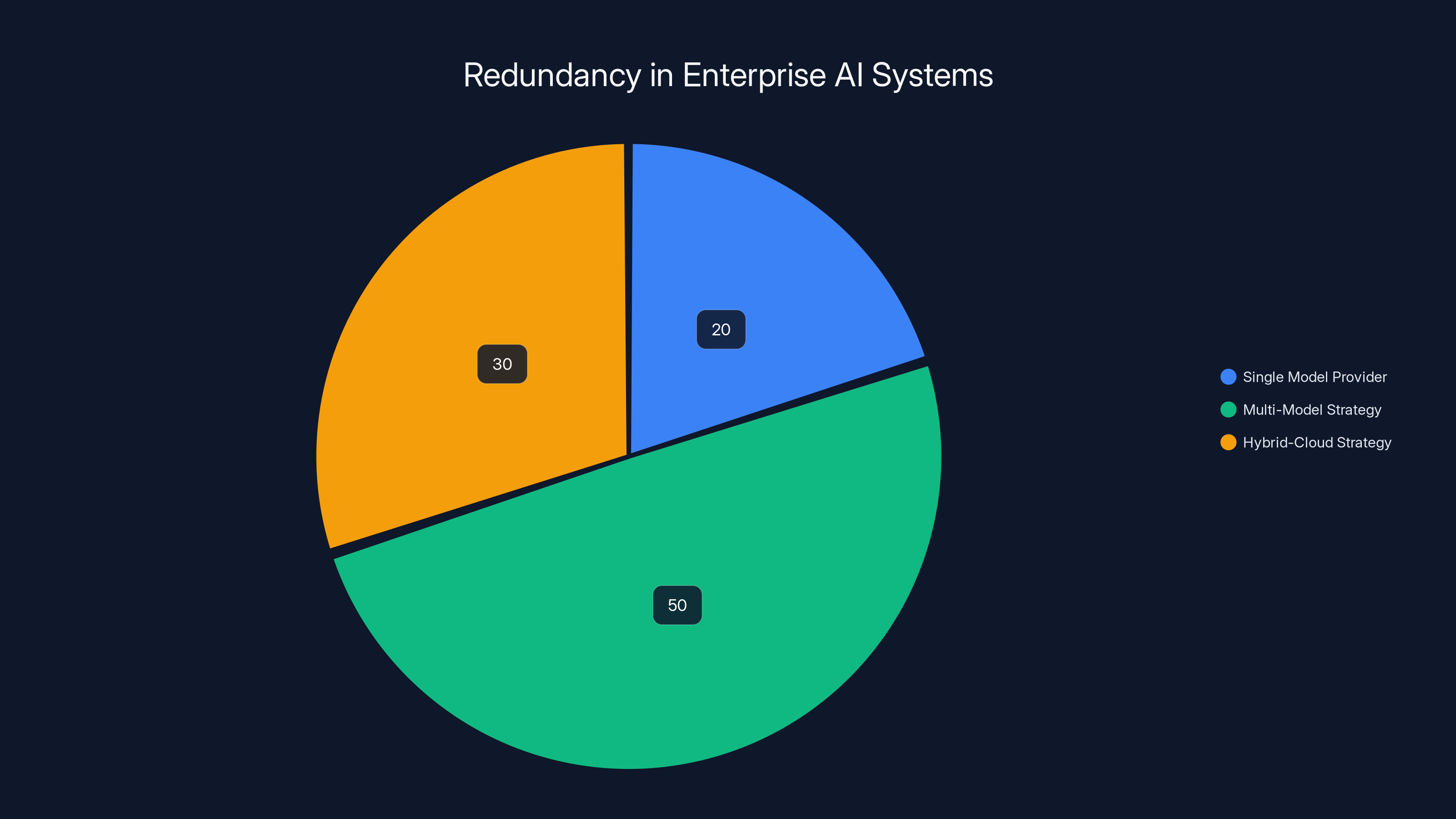

The Reliability Factor: Why Redundancy Matters in Mission-Critical Systems

When you're building systems that thousands of enterprises depend on, reliability becomes non-negotiable. Relying on one model provider creates a single point of failure: an outage or a performance degradation at that provider hits every system built on top of it.

Multi-model strategies create built-in redundancy. If OpenAI's systems experience a regional outage, Snowflake can route traffic to Anthropic. If pricing suddenly increases, Snowflake can optimize toward lower-cost alternatives. If a model stops advancing as quickly as needed, there are other options to evaluate.
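A rough Python sketch of that failover idea, assuming each provider is wrapped in a callable that raises a hypothetical `ProviderUnavailable` error when it cannot serve a request. The function names and the simulated outage are illustrative only and say nothing about how Snowflake routes traffic in practice.

```python
from typing import Callable, Sequence


class ProviderUnavailable(Exception):
    """Raised by a (hypothetical) adapter when its provider cannot serve a request."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, response)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    # Simulates a regional outage at the first-choice provider.
    raise ProviderUnavailable("regional outage")


def secondary(prompt: str) -> str:
    return f"answer to: {prompt}"


used, answer = complete_with_fallback(
    "forecast churn", [("primary", primary), ("secondary", secondary)]
)
```

Here the request lands on the second provider because the first one raises. A production router would also need timeouts, retries, and health checks, which are omitted from this sketch.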

This reliability benefit isn't just theoretical. It mirrors how cloud infrastructure evolved. Companies initially bet on a single cloud provider, then realized that concentrating risk was dangerous. Now, multi-cloud and hybrid-cloud strategies are common for mission-critical workloads.

Enterprise AI is following the same arc, just faster because the stakes became clear earlier. Snowflake's customers are running important workloads on top of AI systems. Downtime is costly. A single model provider outage affects them all. Redundancy isn't luxury. It's necessity.

Snowflake's willingness to sign major deals with multiple providers signals to their customers: we've thought about the worst cases. If something goes wrong with one vendor, we have plans. Your critical systems have fallbacks.

This creates a market environment where model providers have to think about SLA guarantees, uptime commitments, and degradation modes. Not because customers are demanding it explicitly, but because enterprises like Snowflake are signaling through their vendor choices that reliability is table stakes.

Compliance and Data Governance: The Hidden Driver

Snowflake's core value proposition is secure, governed data platforms. Compliance isn't an afterthought. It's the entire reason enterprises trust Snowflake with their most valuable data assets.

When Snowflake brings in AI models, they inherit these compliance requirements. Different models have different compliance strengths. Claude was explicitly trained with constitutional AI principles and has built-in guardrails for sensitive use cases. GPT-4 is more general-purpose but less specialized for compliance-heavy workflows. Different models might have different data handling policies.

For enterprise customers in regulated industries (healthcare, finance, legal, government), the ability to choose which model processes sensitive data is enormously valuable. You might use GPT-4 for general analysis, but route healthcare data exclusively to Claude because of its compliance orientation. You might use Google's models for image processing but keep sensitive documents with Anthropic.

This isn't just risk management. It's operational efficiency. Instead of imposing blanket restrictions ("no models are allowed to process healthcare data"), enterprises can implement granular controls. Different data gets different models. Different models have different compliance profiles. Different users get access to different options.
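Those granular controls amount to a policy table keyed on data sensitivity. The Python sketch below is one way to express it, assuming three hypothetical sensitivity classes and placeholder model names. The `POLICY` mapping and `route` function are illustrative assumptions, not a compliance recommendation or any platform's real configuration.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    REGULATED = "regulated"  # e.g. healthcare or financial records


# Hypothetical policy table: which models may see which class of data.
# The model names are placeholders, not recommendations.
POLICY: dict[Sensitivity, list[str]] = {
    Sensitivity.PUBLIC: ["general-model", "vision-model", "compliance-model"],
    Sensitivity.INTERNAL: ["general-model", "compliance-model"],
    Sensitivity.REGULATED: ["compliance-model"],
}


def route(sensitivity: Sensitivity, preferred: str) -> str:
    """Use the preferred model if policy allows it, else the first allowed one."""
    allowed = POLICY[sensitivity]
    return preferred if preferred in allowed else allowed[0]


# A request for the general model on regulated data is redirected by policy.
chosen = route(Sensitivity.REGULATED, "general-model")
```

Keeping the policy in data rather than in scattered `if` statements means a compliance team can review and change it without reading application code.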

Snowflake's compliance customers (and Snowflake has a lot of them) benefit enormously from having multiple models available. Snowflake benefits because it keeps customers who might otherwise have left to find a more compliant AI integration strategy.

This compliance dimension is underappreciated in the current discussion of multi-model strategies. But for regulated enterprises, it might be the most important factor driving the move toward model agnosticism.

The Open-Source Wild Card: How Meta's Llama Changes the Game

Snowflake's partnership list includes Meta, which is significant for reasons that don't always make it into mainstream coverage. Meta's Llama models are open-source. You can download them. You can run them on your own infrastructure. You can fine-tune them on your own data.

This changes the economics of enterprise AI. Instead of being locked into cloud-based APIs controlled by OpenAI or Anthropic, enterprises can deploy Llama models on their own hardware. This offers multiple advantages:

Data stays completely internal. No API calls. No data leaving your infrastructure. For enterprises with serious data governance requirements or highly sensitive data, this is transformative. You get capable general-purpose models without ever sending data to external providers.

Latency drops dramatically. Running inference locally eliminates network round trips. For applications where response time matters (customer-facing chatbots, real-time analysis, live coding assistance), this makes Llama compelling.

Cost changes. API pricing models charge per token. On-premises models have upfront capital costs but lower marginal costs at scale. For high-volume use cases, the economics might favor local deployment.

Customization becomes possible. You can fine-tune Llama models on your own data, creating models specifically optimized for your domain. This is hard with proprietary APIs but straightforward with open-source models.
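The cost point above is a break-even calculation: self-hosting pays off once the per-token savings cover the upfront spend. Here is a small Python sketch of that arithmetic. Every number in it is an invented placeholder rather than real pricing, and `break_even_tokens` is a hypothetical helper.

```python
def break_even_tokens(
    fixed_cost: float,
    api_price_per_million: float,
    local_cost_per_million: float,
) -> float:
    """Tokens (in millions) at which self-hosting matches API spend.

    Solves fixed_cost + local * v = api * v for v.
    """
    saving_per_million = api_price_per_million - local_cost_per_million
    if saving_per_million <= 0:
        raise ValueError("self-hosting never breaks even at these prices")
    return fixed_cost / saving_per_million


# Illustrative inputs only: $120,000 of hardware and setup, $10.00 per million
# tokens via API, $2.00 per million tokens in local power and operations.
volume = break_even_tokens(120_000, 10.0, 2.0)  # 15000.0 (million tokens)
```

Under these made-up inputs, local deployment only wins past 15 billion tokens, which is why the economics favor it mainly for high-volume use cases.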

Snowflake including Llama in its portfolio is a smart move. It gives customers a complete spectrum: use Anthropic or OpenAI for cloud-hosted convenience, or use Llama for maximum control and customization. The choice is the customer's to make.

This open-source element is part of what makes the multi-model future credible. It's not just different closed-source options competing. It's a mix of proprietary and open approaches, each with different tradeoffs. Enterprises can genuinely choose based on what matters to them.

Estimated data shows that multi-model strategies are the most adopted redundancy approach in enterprise AI systems, reflecting the importance of reliability in mission-critical workloads.

How Enterprises Are Actually Using This Choice

Theory is one thing. Implementation is another. What does multi-model usage actually look like inside enterprises that have access to several models?

Based on patterns we're seeing emerge, it looks something like this:

Customer service applications tend to land on Claude for its compliance strengths and reduced hallucination. When you're representing the company to customers, accuracy and safety matter more than raw creativity.

Analytics and insights generation often gravitates toward GPT-4 because its reasoning capabilities excel at interpreting complex datasets and generating novel insights. The flexibility and broad capability matter more than specialized strengths.

Content generation for marketing might use multiple models in parallel. Create drafts with GPT-4, refine with Claude, get creative variations from Gemini. Different tools for different stages of the workflow.

Code generation and debugging show interesting patterns. Developers use Copilot (originally built on OpenAI models) when they're in IDEs, Cursor when they want a more code-focused experience, and Claude in browsers when they want different reasoning approaches. They've essentially built multi-model development practices without even thinking of it as that.

Complex research tasks layer models. Start with Perplexity or Claude for comprehensiveness. Use GPT-4 for reasoning through implications. Use local Llama models for tasks involving sensitive internal data. The research process becomes multi-model by nature.

What's interesting is that none of this is mandated. There's no edict from leadership saying "use this model for that task." These patterns emerge because people discover they work better. They're organic optimization.

Enterprises that institutionalize this learning (documenting which models work best for which tasks, building integrations that make switching easy, training employees on what each model is good at) will likely out-execute their single-model competitors. Their systems will be better because they're using better tools for each job.

The Vendor Response: How AI Companies Are Adapting

AI companies are reading the same signals. They understand they're competing in a world where customers will have multiple options. This is forcing rethinking of strategy across the industry.

OpenAI's approach has been to stay somewhat ahead on capability. Be the model that's most capable at the broadest range of tasks. If you're the frontier, enterprises have an incentive to use you even if other options exist. The challenge with this strategy is that frontier improvements are slowing. Each generation brings incremental gains, not paradigm shifts.

Anthropic is leaning into specialized strengths: constitutional AI, compliance awareness, long-context reasoning. The bet is that enterprises will value these strengths enough to keep Claude in their portfolio even if GPT-4 is generally more capable. This is viable if the specialization is real and valuable.

Google is emphasizing multi-modal and integration with existing enterprise infrastructure. If you're already in the Google Cloud ecosystem, using Gemini is friction-free. Enterprises optimize for reducing operational friction.

Meta's open-source strategy creates a different competitive dynamic. They're not trying to win on the merits of a proprietary model they control. They're trying to become infrastructure. If Llama becomes the standard model that enterprises self-host, Meta wins through ubiquity, not exclusivity.

None of these strategies involve competing head-to-head for exclusive enterprise relationships. They all assume a multi-model future where success means being valuable enough to keep in the portfolio.

This shift in competitive strategy is profound. For the first time in major software markets, leading companies aren't trying to win by locking in customers. They're trying to win by being so valuable for specific use cases that enterprises choose them even with other options available.

The Data Infrastructure Layer: Why This Matters for Architecture

The multi-model strategy is only possible because companies like Snowflake have built flexible data infrastructure. The ability to run different models against the same data, to switch between models without data migrations, to integrate multiple APIs seamlessly—this requires architectural thinking.

Snowflake's core is a data warehouse that separates compute from storage. Different workloads (SQL queries, Python ML pipelines, now AI model inference) can run against the same data without moving anything. This architecture makes integrating multiple AI models relatively simple. Add an integration for OpenAI's API. Add an integration for Anthropic. Add Llama support for local inference. The underlying data doesn't care which model is asking. The platform just serves requests from different places.

Other data platforms are adding similar multi-model support. Databricks, another major player in the data space, is building competing multi-model integrations. This signals that the data infrastructure layer is becoming the competitive battleground for multi-model access.

Why does this matter? Because it means the ability to integrate multiple models isn't a feature that gets bolted on later. It's becoming part of the core platform design. Companies building data platforms now are assuming multi-model futures. They're designing architecture to make switching between models trivial.

This cascades upward. When switching models is architecturally trivial, enterprises can experiment more freely. When experimentation is easy, learning accelerates. When learning accelerates, optimization happens faster. The data infrastructure layer enables the multi-model strategy.

For enterprises building their own AI infrastructure (not using pre-built platforms like Snowflake), this is a valuable lesson. Architect for flexibility. Don't embed model choice so deeply that switching is painful. Build abstractions that let you swap implementations. This flexibility will matter increasingly.

The Employee Experience: How Choice Cascades Through Organizations

When enterprises give employees access to multiple AI models, something shifts in how work actually gets done. People find preferences. They discover which tools are best for different jobs. They develop patterns and workflows that leverage multiple models.

This creates organizational learning. Over time, you develop institutional knowledge about which models are best at which tasks. You train new employees on these patterns. The optimization becomes implicit in how your organization operates.

Companies like Snowflake that embrace this are building persistent competitive advantages. Not because they own better models (they don't; they're integrating others' models). But because they're learning how to use multiple models effectively. That knowledge is hard to replicate. It compounds over time.

Moreover, employee satisfaction increases. Professionals want the best tools to do their jobs. Forcing someone to use a suboptimal tool because of licensing agreements is frustrating. Giving them multiple options, letting them pick what works, improves morale. This sounds soft, but it cascades through retention, productivity, and institutional capability.

Companies that restrict employees to single AI models will find their best people eventually leave or build workarounds. Companies that embrace multi-model strategies will find their people more engaged and productive.

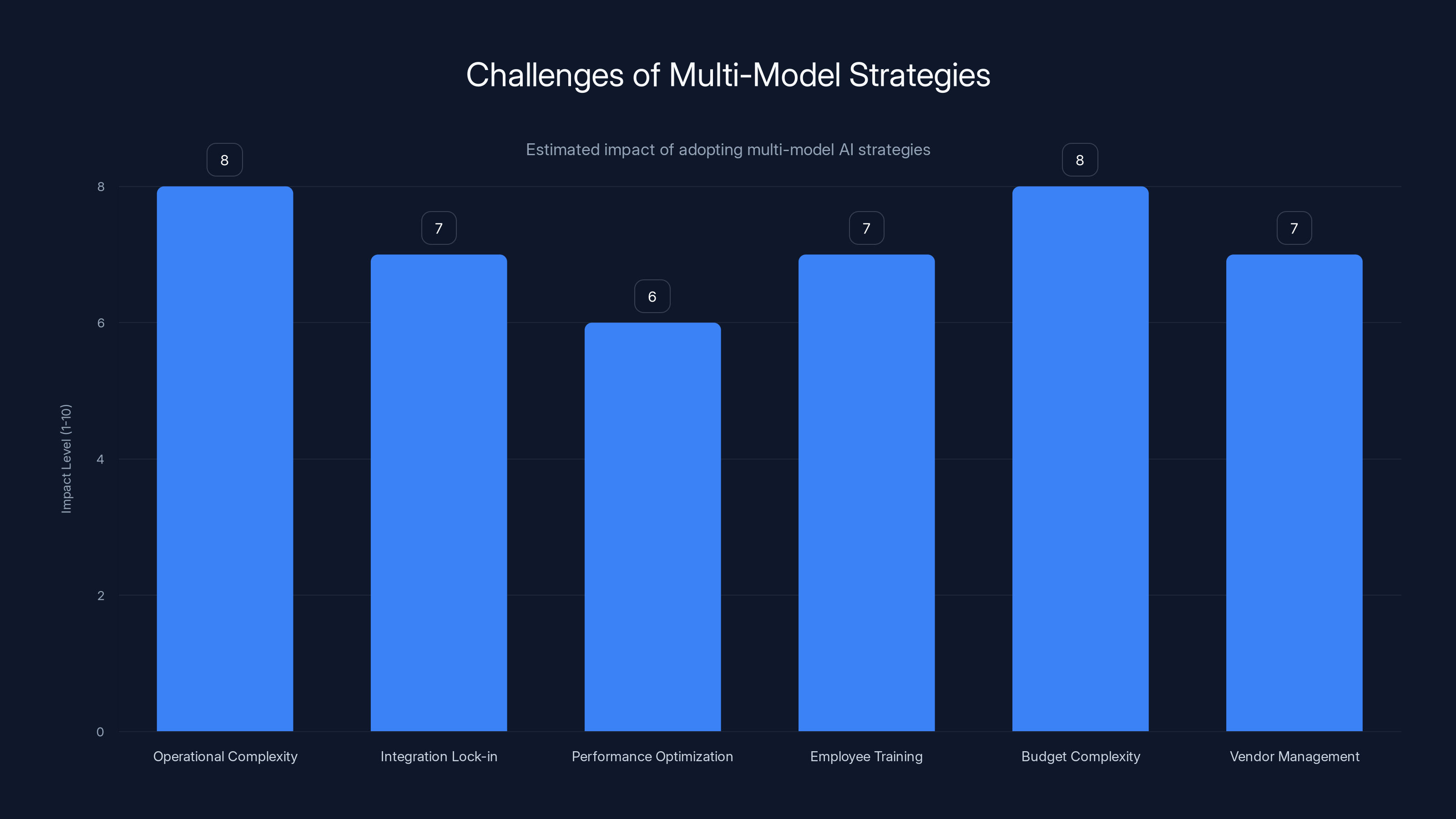

Multi-model strategies increase complexity across various operational aspects, with operational and budget complexities being the most impacted. Estimated data.

Merger and Acquisition Implications: How Consolidation Might Play Out

The multi-model strategy has interesting implications for M&A in the AI space. If enterprises are deliberately avoiding single-vendor lock-in, then company survival depends on being so valuable for specific use cases that enterprises keep you in their portfolio, not on being "the" solution.

This changes how startups get valued and acquired. In the old software model, acquisition targets were companies that could expand the total addressable market (TAM) for the acquiring company. Slack got acquired by Salesforce to enhance CRM. Figma remained independent as the clear category leader after Adobe's proposed acquisition was called off.

In the multi-model AI world, acquisitions look different. A company might get acquired not because it's the best overall, but because it's the best on specific dimensions and complements an existing portfolio. OpenAI (backed by Microsoft, but not owned by it) and Anthropic (separate) coexist because they serve different needs.

Smaller AI companies might find themselves viable for longer. You don't need to be the universal winner. You need to be the best at something that enough enterprises care about. You need to play nice with other models in customer environments. You need to accept that you'll be one option among several.

This is actually healthier for innovation. It means the market can sustain more companies with different approaches. Less pressure to optimize for all-purpose general capability. More freedom to specialize.

The Global Dimension: How This Plays Internationally

The multi-model strategy is particularly interesting when viewed through an international lens. Different countries have different regulatory requirements. Europe has GDPR. China has data residency laws. India has data localization requirements. Brazil has LGPD.

A global enterprise using a multi-model strategy might use Anthropic for certain tasks in Europe (knowing it has compliance strengths), local providers where regulations require it, open-source Llama where data governance is critical, and proprietary models in regions where the regulatory environment is more permissive.

This isn't just theoretical. Enterprises with serious international presence are already doing this. They're building hybrid strategies that respect regional requirements while maintaining global optimization. The multi-model approach makes this architecturally possible.
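One way to picture such a hybrid strategy is as a policy table that the routing layer consults before any model call. The sketch below is illustrative only: the region codes, the provider names, and the choice of a self-hosted model as the conservative default are all assumptions, not a description of any company's actual setup. Real entries would come from legal and compliance review.

```python
from typing import Dict, List

# Hypothetical policy table: region -> providers allowed to process data there,
# listed in order of preference.
REGION_POLICY: Dict[str, List[str]] = {
    "eu": ["provider_eu_hosted", "self_hosted_open_model"],
    "in": ["local_provider_in", "self_hosted_open_model"],
    "us": ["provider_a", "provider_b", "self_hosted_open_model"],
}

# Assumed fallback for regions with no explicit entry: keep data in-house.
DEFAULT_PROVIDERS: List[str] = ["self_hosted_open_model"]


def allowed_providers(region: str) -> List[str]:
    """Return a copy of the providers permitted for `region`."""
    return list(REGION_POLICY.get(region.lower(), DEFAULT_PROVIDERS))


def pick_provider(region: str, preferred: str) -> str:
    """Use the preferred provider if policy allows it, else the region's first choice."""
    allowed = allowed_providers(region)
    return preferred if preferred in allowed else allowed[0]
```

With this table, a request tagged `"EU"` that asks for `provider_a` is redirected to `provider_eu_hosted`, while the same request tagged `"us"` goes through unchanged.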

This also creates opportunities for regional AI providers. If enterprises need different models in different geographies, local providers have a foothold. They don't need to beat OpenAI globally. They just need to be better at serving their region's requirements.

This international dimension suggests the multi-model future will look more pluralistic globally than many people expect. Not just multiple global players, but multiple regional players too. The architecture accommodates it.

The Performance Measurement Challenge: How Enterprises Are Evaluating Models

When you're using multiple models, how do you measure which is actually performing better? The obvious answer is metrics. But in practice, it's complex.

Different models excel on different measures. GPT-4 might score higher on general reasoning benchmarks. Claude might score higher on compliance-specific tasks. Gemini might excel at multi-modal tasks. You can't just say "GPT-4 is better" because it's only better on specific dimensions.

Enterprises are developing internal benchmarking practices. They're running the same tasks through multiple models and comparing outputs for quality, accuracy, compliance, latency, and cost. They're building evaluation frameworks specific to their use cases.
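A bare-bones version of such an evaluation framework might look like the sketch below. It is a toy under stated assumptions: the two "models" are lookup-table stubs rather than real API calls, `is_correct` is a simple exact-match scorer, and only accuracy and latency are tracked (cost and compliance checks would be added the same way). The names `evaluate`, `Result`, and `ModelFn` are made up for this example.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple

ModelFn = Callable[[str], str]  # prompt -> output


@dataclass
class Result:
    model: str
    accuracy: float        # fraction of tasks scored as correct
    mean_latency_s: float  # average wall-clock seconds per task


def evaluate(models: Dict[str, ModelFn],
             tasks: List[Tuple[str, str]],
             is_correct: Callable[[str, str], bool]) -> List[Result]:
    """Run every task through every model; return results sorted by accuracy.

    `tasks` must be non-empty, since the averages are undefined otherwise.
    """
    results = []
    for name, fn in models.items():
        scores, latencies = [], []
        for prompt, expected in tasks:
            start = time.perf_counter()
            output = fn(prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(1.0 if is_correct(output, expected) else 0.0)
        results.append(Result(name, mean(scores), mean(latencies)))
    return sorted(results, key=lambda r: r.accuracy, reverse=True)


# Stub models standing in for real provider calls (hypothetical).
tasks = [("2+2", "4"), ("capital of France", "Paris")]
models = {
    "model_a": lambda p: {"2+2": "4", "capital of France": "Paris"}[p],
    "model_b": lambda p: {"2+2": "4", "capital of France": "Lyon"}[p],
}
report = evaluate(models, tasks, lambda out, exp: out.strip() == exp)
```

Here `report` ranks `model_a` first because it answers both stub tasks correctly. In practice the task set would be drawn from the enterprise's own workloads, and the scorer would often be a rubric or human review rather than exact match.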

This is more work than just using a single model. But it's creating organizational capability. Companies that get good at evaluating and comparing models develop expertise that becomes competitive advantage.

Moreover, this measurement drives feedback that helps AI companies improve. If Snowflake can tell OpenAI exactly why their customers are choosing Claude for certain tasks, that information is gold. It drives product development toward actually addressing customer needs, not just chasing benchmark scores.

Looking Forward: The Five-Year Trajectory

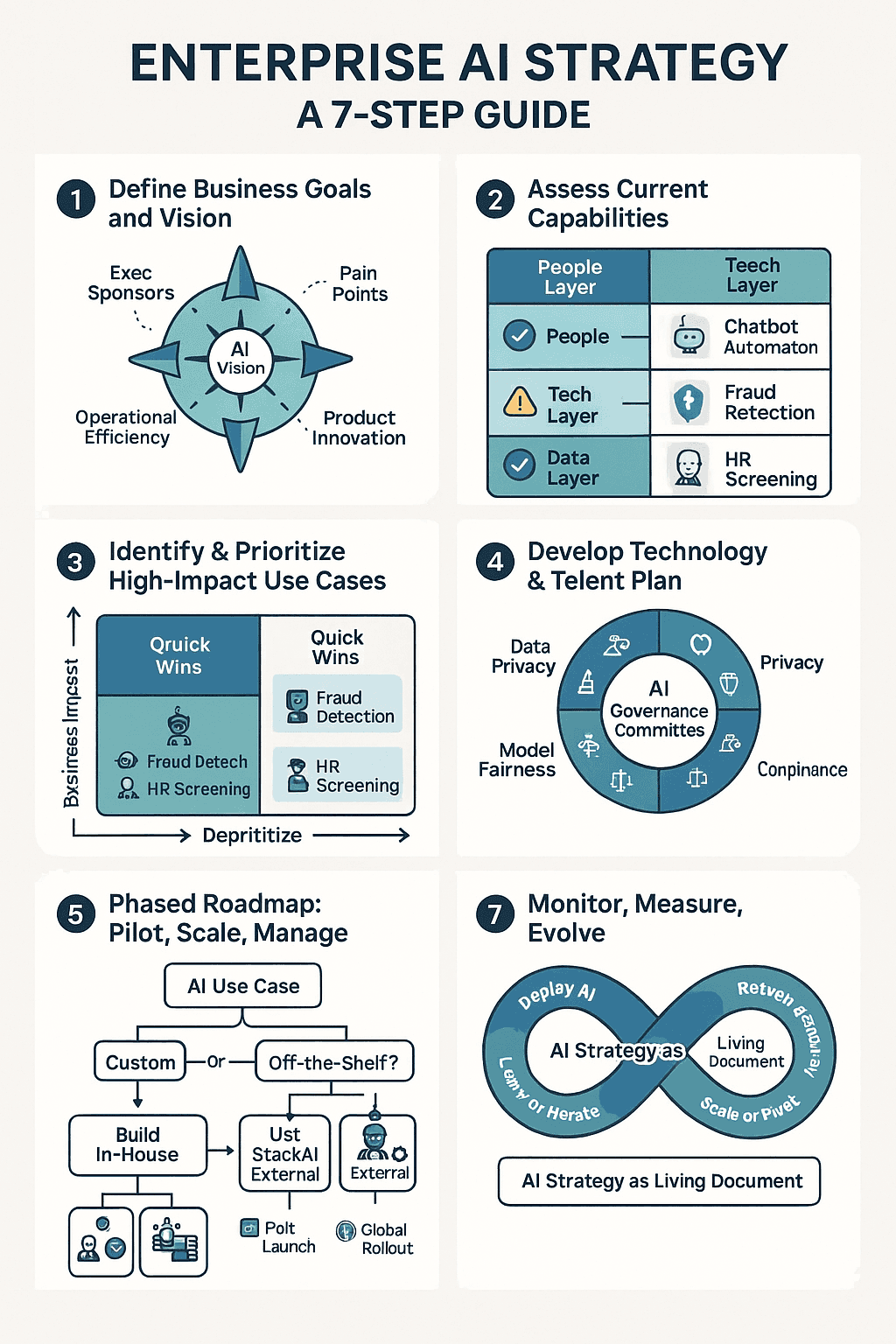

If Snowflake and ServiceNow's strategy becomes the norm (which current signals suggest it will), what does enterprise AI look like in five years?

Most likely scenario: the multi-model approach becomes standard. Enterprises maintain relationships with 3-5 major AI providers. They have clear patterns for which provider is best for which task. They've built abstractions that make switching seamless. They're not locked into anything.

Model providers compete on specialization, not universality. OpenAI owns general reasoning. Anthropic dominates compliance and long-context. Google controls multi-modal. Meta becomes the self-hosted standard. Smaller players own specific niches. Different companies win at different things.

Pricing becomes more competitive because lock-in disappears. Companies can't charge premium prices just because switching is hard. They have to charge prices that reflect value. This creates pressure to innovate continuously, not just maintain status quo.

Edge cases and specialized models proliferate. Since there's room for multiple winners, there's room for specialized models optimized for specific industries or tasks. Healthcare gets models trained on healthcare data. Financial services gets models trained on financial tasks. Legal gets specialized models. The market becomes more segmented.

Open-source models (like Meta's Llama) become more important, not less. As enterprises learn to use multi-model stacks, they realize open-source options are valuable for privacy, latency, and customization. The commercial viability of open-source AI increases.

AI becomes infrastructure, not product. Like databases or cloud providers, enterprises view AI as a utility they'll source from multiple places depending on the workload. This shift is already underway. It accelerates from here.

The companies that win are those that become indispensable for specific jobs, not those that try to be everything for everyone. This is healthier for the market, healthier for innovation, and healthier for enterprises.

Snowflake's strategy involves multiple AI partnerships, with OpenAI and Anthropic each holding a 25% share, emphasizing their model-agnostic approach. Estimated data.

The Honest Trade-offs: What You're Giving Up

Multi-model strategies have genuine costs. Let's be direct about them.

Operational complexity increases. Managing multiple AI vendors means multiple contracts, multiple APIs, multiple authentication systems, multiple compliance certifications. This is harder than one vendor. It requires organizational discipline and infrastructure investment.

Lock-in sometimes comes back through integration. If you've built your entire workflow around orchestrating models together, switching one becomes hard because you've built infrastructure dependencies. The flexibility is real but not unlimited.

Performance optimization becomes harder. With a single model, you can deeply optimize for that model's characteristics. With multiple models, you're compromising. You might get slightly worse performance on each task in service of the overall flexibility.

Employee training is more complex. If your team is using three different models with different capabilities and interfaces, onboarding is more difficult. People have to learn multiple tools instead of one.

Budget complexity increases. Instead of a single contract with predictable costs, you're managing multiple usage-based pricing models with different rate structures. Forecasting costs becomes harder.

Vendor management becomes more sophisticated. You're not just implementing one platform. You're managing relationships with multiple providers, ensuring compatibility, handling edge cases where one provider has an outage.

These aren't dealbreakers. For enterprises, the benefits of flexibility outweigh these costs. But pretending they don't exist would be dishonest. Multi-model strategies are more complicated than single-model approaches. Enterprises choosing this path need to accept that complexity.
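The outage case is one place where the extra complexity pays for itself, and it is also easy to sketch. The example below assumes each provider is wrapped as a plain function from prompt to text. `complete_with_fallback`, `AllProvidersFailed`, and the `down`/`up` stubs are hypothetical names for illustration, not part of any vendor SDK.

```python
from typing import Callable, List, Sequence, Tuple

ModelFn = Callable[[str], str]  # prompt -> output


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(prompt: str,
                           providers: Sequence[Tuple[str, ModelFn]]) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, output) from the first success."""
    errors: List[str] = []
    for name, fn in providers:
        try:
            return name, fn(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stubs simulating one provider in an outage and one healthy provider.
def down(prompt: str) -> str:
    raise ConnectionError("simulated outage")


def up(prompt: str) -> str:
    return "ok: " + prompt


used, answer = complete_with_fallback("hi", [("primary", down), ("secondary", up)])
```

After this runs, `used` is `"secondary"`. A production version would likely add timeouts, retries with backoff, and a check that the fallback model is approved for the same data, which is where the vendor-management overhead described above comes from.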

The Workflow Automation Angle: ServiceNow's Specific Case

ServiceNow's dual deals deserve deeper analysis because they tell a slightly different story than Snowflake's.

ServiceNow is fundamentally about workflow. The company automates business processes for enterprises. When ServiceNow integrates AI, it's typically at workflow decision points. Should this request be routed here or there? Should we escalate? Do we have enough information to proceed?

Different models have different strengths at these decision points. GPT-4 is great at nuanced judgment calls that require broad reasoning. Claude excels at consistency and reliability when compliance matters. Different workflows benefit from different models.

By integrating both, ServiceNow gives workflow designers more options. Build a workflow that uses GPT-4 for creative problem-solving. Build another that uses Claude for compliance-sensitive decisions. Build a third that layers models based on the specific situation.

This is more architecturally sophisticated than just "use both models." It's about workflow builders having model selection as a native design capability. It's about different processes being able to optimize for different models based on requirements.
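To illustrate what "model selection as a native design capability" could mean, here is a small sketch in which each workflow step carries its own model preference. This is not ServiceNow's API or data model. `WorkflowStep`, `select_model`, and the model names are invented for the example, and the rule that compliance-sensitive steps get a dedicated model is an assumption.

```python
from dataclasses import dataclass

# Hypothetical defaults; which model suits which step is the designer's call.
DEFAULT_MODEL = "general_model"
COMPLIANCE_MODEL = "compliance_model"


@dataclass(frozen=True)
class WorkflowStep:
    name: str
    compliance_sensitive: bool = False
    model_override: str = ""  # explicit choice by the workflow designer, if any


def select_model(step: WorkflowStep) -> str:
    """Resolve the model for a step: explicit override, then compliance rule, then default."""
    if step.model_override:
        return step.model_override
    if step.compliance_sensitive:
        return COMPLIANCE_MODEL
    return DEFAULT_MODEL


steps = [
    WorkflowStep("draft_reply"),
    WorkflowStep("approve_refund", compliance_sensitive=True),
    WorkflowStep("classify_ticket", model_override="fast_small_model"),
]
assignments = {s.name: select_model(s) for s in steps}
```

The design choice worth noting is the precedence order: a designer's explicit override wins, a policy rule comes next, and the platform default applies last.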

ServiceNow customers are getting a much richer capability surface by having multiple models available. This is likely why ServiceNow made the investment in both partners simultaneously. It wasn't defensive. It was offensive. It's building product capabilities that rival platforms can't easily replicate.

The Competitive Response: How Other Platforms Are Catching Up

Snowflake and ServiceNow moved first. But they won't move alone for long. Other enterprise platforms are scrambling to build similar multi-model capabilities.

Databricks is building multi-model integrations into its data platform. Palantir is exploring how to give customers AI model choice. Salesforce is integrating AI models into CRM workflows. SAP is adding multi-model capabilities to its enterprise suite.

This race to multi-model support is actually beneficial. It pushes toward standardization. The more platforms support multiple models, the more model-agnostic the infrastructure becomes. The more agnostic the infrastructure, the more freely enterprises can choose models.

Within 12-18 months, multi-model support will be table stakes for major enterprise platforms. It's not going to be a differentiator. It's going to be basic functionality that customers expect.

When that happens, competition shifts to other dimensions. How well do you integrate? How fast is inference? How good is your documentation? How responsive is support? Pure model capability becomes less important than execution quality.

What This Means for AI Startups Building on Top

If enterprises are multi-model by default, what does that mean for startups building AI applications?

The obvious lesson: don't lock yourself into a single model. Build abstractions that let you swap models as the landscape evolves. Use APIs that are relatively standardized. Avoid deep dependencies on model-specific capabilities unless you're specifically designed around that model.

The harder lesson: you probably can't win by being better than Claude or GPT-4 at reasoning. If your value prop is "we're a reasoning engine," you're competing against frontier models from well-funded companies. You'll lose.

You can win by being better at specific tasks. Better at something domain-specific. Better for a particular industry. Better at orchestrating models together. Better at integrating AI into workflows where humans weren't using AI before. You win by solving problems, not by owning models.

Startups building AI tools should study how Figma won. It didn't win by having the best rendering engine (Adobe already had that). It won by building a fundamentally better experience for collaborative design work. Similarly, AI startups don't need better models. They need better tools, better workflows, better integrations, better UX.

The multi-model future rewards builders, not model owners.

The Research Community Impact: How This Affects AI Development

One underappreciated aspect of Snowflake and ServiceNow's multi-model strategies: it changes incentives for AI research companies.

When enterprises are locking in exclusively, a company has a huge incentive to pursue moonshot capabilities. Push the frontier aggressively. Be the best at something, lock customers in, rake in profits.

When enterprises are diversifying, the incentive shifts. You need to be valuable in your particular niche, not try to be everything. This actually might lead to more focused, strategic research rather than broad capability chasing.

Anthropic's focus on constitutional AI and alignment is sensible precisely because OpenAI is going for broader reasoning capability. If Anthropic tried to match GPT-4's breadth, they'd lose. But they've carved out a niche where their approach is valued.

Google and Meta approaching multi-modal and open-source respectively makes sense in this context too. Different niches. Different strengths. Different research directions.

This diversification of research directions is probably healthier long-term. It means you're not betting everything on a single approach to intelligence. You've got multiple teams pushing in different directions, learning different things, creating options.

The Risk Scenario: What Could Go Wrong

The multi-model future sounds plausible. But plausibility isn't certainty. What could derail it?

If one company achieves genuine AGI or something close to it, lock-in returns. If GPT-5 is so much better than everything else that there's no rational reason to use alternatives, the multi-model market collapses. Everyone becomes a customer of whoever has AGI. This seems unlikely soon, but over a five-year horizon, it's not impossible.

Regulatory intervention could force consolidation. Governments concerned about AI dominance might require open standards or interoperability. This could either accelerate the multi-model trend or create artificial winners if regulation is captured by incumbent interests.

Massive commoditization could make models interchangeable. If all frontier models become basically identical in capability and cost, enterprises stop caring about diversity. The multi-model strategy becomes pointless if you can't distinguish between models.

A new competitor emerges and becomes so dominant that it resets the market. OpenAI wasn't obvious ten years ago. Neither was Anthropic. An unknown competitor could emerge with an approach that's so much better it reshapes the landscape.

These are real risks. But they seem less likely than the multi-model future continuing to develop as current signals suggest.

The Bottom Line: Why This Matters Beyond Enterprise AI

Snowflake's $200 million deals with OpenAI and Anthropic matter, but not primarily because of the money. They matter because of what they signal about market structure.

For two decades, software markets have consolidated around winners. Winner-takes-most economics created huge incumbent advantages and substantial switching costs. That created tremendous value for the companies that won but also created innovation deserts where alternatives struggled to gain traction.

The multi-model strategy suggests AI might be different. It suggests a future where multiple companies can win at different things and coexist profitably. It suggests competition that's based on value delivery and specialization rather than lock-in and exclusivity.

If this plays out, it's not just better for enterprises (more choice, better tools, lower prices). It's better for the entire ecosystem. It allows more companies to compete. It encourages specialization and diversity of approach. It creates options that don't exist in winner-takes-all markets.

The enterprise AI race isn't about finding a single winner. It's about building a market structure where multiple companies can win. That's what Snowflake and ServiceNow's strategy signals. And if they're right, it changes everything about how AI gets deployed, commercialized, and improved over the next decade.

FAQ

What is the multi-model strategy in enterprise AI?

The multi-model strategy is when enterprises deliberately maintain relationships with several AI companies and integrate multiple models into their systems rather than standardizing on a single model. Snowflake, for example, signed $200 million deals with both OpenAI and Anthropic simultaneously, and also integrated models from Google, Meta, and others. This approach gives enterprises the flexibility to use different models for different tasks based on which model is best suited for the specific job.

Why are enterprises like Snowflake choosing multiple AI models instead of picking the best one?

Enterprises are adopting multi-model strategies because different AI models genuinely excel at different things. OpenAI's GPT-4 excels at broad reasoning and flexibility. Anthropic's Claude is stronger at long-context processing, compliance, and safety. Google's Gemini handles multi-modal tasks better. Instead of forcing all problems through a single model, enterprises realize they get better results by using the right tool for each job. Additionally, diversification provides redundancy—if one model provider has an outage or pricing increases, alternatives are available. Finally, having multiple options gives enterprises better negotiating leverage on pricing and terms.

How does multi-model strategy affect employees using AI tools?

When enterprises embrace multi-model strategies, employees typically end up using their preferred models across different platforms, which was already happening informally. By formalizing this approach, companies like Snowflake and ServiceNow improve employee satisfaction because people can use the tools that work best for their specific tasks rather than being forced into a single solution. Employees discover that Claude might be better for detailed analysis while GPT-4 is better for creative ideation. They learn when to use open-source models versus proprietary ones. This flexibility creates organizational knowledge about which tools are best for which problems, which becomes a competitive advantage as these practices compound over time.

What does the multi-model future mean for AI pricing and competition?

The multi-model approach eliminates vendor lock-in, which fundamentally changes AI pricing dynamics. When customers can switch between models, vendors can't rely on high switching costs to maintain premium pricing. This creates pressure for AI companies to compete on actual value rather than just being hard to leave. Smaller specialized companies can survive because they don't need to be "the best at everything"—they just need to be the best at specific dimensions that enterprises value. Instead of a winner-takes-all market, you get a more competitive landscape where multiple companies win at different things. This is healthier for innovation because companies compete on capability and service, not just lock-in.

How do enterprises technically implement multi-model strategies?

Implementing a multi-model strategy requires flexible architecture and abstraction layers. Companies like Snowflake use their data warehouse's separation of compute and storage to make adding new AI model integrations straightforward. You standardize on API formats where possible, build middleware that handles differences between providers, and create workflows that can route to different models based on the task. This requires more operational complexity than single-model approaches—you're managing multiple contracts, authentication systems, and compliance certifications. But it means that swapping between models or adding new ones doesn't require complete system redesigns. You're building for flexibility from the start.
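As a rough illustration of "middleware that handles differences between providers," the sketch below normalizes two differently shaped responses into one common record. The two payload shapes are illustrative assumptions, loosely modeled on common chat-completion formats. Check each provider's current API reference before relying on any field name. `Completion`, `ADAPTERS`, and `normalize` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Completion:
    """Provider-neutral result that downstream code can rely on."""
    text: str
    input_tokens: int
    output_tokens: int


def from_choices_style(raw: Dict[str, Any]) -> Completion:
    # Assumed shape: text under choices[0].message.content.
    usage = raw["usage"]
    return Completion(
        text=raw["choices"][0]["message"]["content"],
        input_tokens=usage["prompt_tokens"],
        output_tokens=usage["completion_tokens"],
    )


def from_blocks_style(raw: Dict[str, Any]) -> Completion:
    # Assumed shape: a list of typed content blocks; keep only the text blocks.
    usage = raw["usage"]
    text = "".join(b["text"] for b in raw["content"] if b.get("type") == "text")
    return Completion(text, usage["input_tokens"], usage["output_tokens"])


ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Completion]] = {
    "choices_style": from_choices_style,
    "blocks_style": from_blocks_style,
}


def normalize(style: str, raw: Dict[str, Any]) -> Completion:
    """Convert a raw provider payload into the common Completion record."""
    return ADAPTERS[style](raw)


a = normalize("choices_style", {
    "choices": [{"message": {"content": "hello"}}],
    "usage": {"prompt_tokens": 3, "completion_tokens": 1},
})
b = normalize("blocks_style", {
    "content": [{"type": "text", "text": "hello"}],
    "usage": {"input_tokens": 3, "output_tokens": 1},
})
```

Both `a` and `b` end up as the same `Completion`, so logging, cost tracking, and downstream workflow steps never need to know which provider answered.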

Which AI models are best at which tasks in enterprise environments?

Different models have emerged with distinct strengths. Claude from Anthropic excels at handling very long documents, compliance-sensitive tasks, and providing consistent, safety-aware responses. OpenAI's GPT-4 is exceptional at general-purpose reasoning, creative tasks, and handling novel problems. Google's Gemini is particularly strong with multi-modal inputs (images, text, video together). Meta's open-source Llama models are best for organizations needing on-premises deployment, full control over data, or significant customization. Customer service often uses Claude for accuracy. Analytics and insights generation gravitates toward GPT-4. Content creation might layer multiple models. The specific choice depends on your requirements for speed, cost, compliance, accuracy, and customization.

Is the multi-model approach becoming industry standard?

Yes, multi-model is becoming the new normal. Snowflake's and ServiceNow's early moves are being followed by other major platforms. Databricks, Salesforce, SAP, and Palantir are all building multi-model capabilities. Within 12-18 months, support for multiple AI models will be table stakes for enterprise platforms rather than a differentiator. This is driving toward infrastructure that treats AI models as interchangeable components where possible, making the entire ecosystem more flexible and competitive. Smaller companies and startups without legacy single-model investments can build multi-model support from day one.

What are the operational challenges of managing multiple AI models?

Multi-model strategies trade simplicity for flexibility. You have more contracts to manage, more vendor relationships to maintain, more authentication systems to secure, and more pricing models to forecast. It's harder to train teams on multiple tools than one. Integration complexity increases because you're orchestrating different APIs. Performance optimization becomes trickier—you might accept slightly lower performance on individual tasks to achieve overall flexibility. Troubleshooting becomes harder when failures could originate from different providers. Data governance gets more complex when different models have different data handling policies. These aren't insurmountable challenges, but they're real costs that enterprises making this move need to accept and plan for.

How does open-source AI fit into the multi-model landscape?

Open-source models like Meta's Llama are critical to the multi-model future. They provide enterprises with options for on-premises deployment, full data privacy, and customization without being dependent on any company's API or policies. This creates a spectrum from fully proprietary (OpenAI API) to fully open (Llama deployed locally). Enterprises might use cloud-based proprietary models for convenience and performance, but keep open-source models available for sensitive data or cases where they need maximum control. As enterprises learn to integrate multiple models, open-source becomes more valuable rather than less. It's no longer a second-rate option—it's a legitimate choice for specific use cases within a diversified portfolio.

What does this mean for startups building on top of AI models?

Startups shouldn't try to compete directly on raw model capability—that's a losing game against well-funded companies like OpenAI and Anthropic. Instead, startups should build around solving specific problems, creating better workflows, delivering better user experiences, or serving specific industries where they can develop deep expertise. The multi-model world rewards builders and integration specialists more than model creators. The most valuable AI companies in five years will likely be those that brilliantly combine multiple models and solve real problems, not necessarily those that own the underlying models. Keep your solutions model-agnostic so you can adapt as the landscape evolves.

Will there be a single winner in the AI market eventually?

It's possible but increasingly unlikely. The signals from enterprises like Snowflake suggest they're betting on continued diversity. If one company achieves genuine transformative AI capability (something significantly better than anything else available), lock-in could return. But this seems unlikely in the near term, and by then the infrastructure for multi-model approaches will be deeply embedded in enterprise systems. Even if one model becomes technically superior, enterprises might still maintain alternatives for reliability, redundancy, and negotiating leverage. The trajectory suggests a market with multiple sustainable winners, each dominant in specific dimensions, similar to how Uber and Lyft coexist in ride-hailing rather than one clearly winning.

Conclusion: The Enterprise AI Market Has Already Made Its Choice

Snowflake's decision to sign massive deals with both OpenAI and Anthropic, combined with ServiceNow's identical strategy and the emerging patterns across enterprise platforms, tells us something clear: the enterprise AI market has essentially decided that diversity is more valuable than standardization.

This isn't a tentative experiment or a hedge-your-bets contingency plan. These are deliberate, front-loaded, $200 million+ commitments to multiple vendors with the explicit acknowledgment that enterprises need choice.

The implications ripple across the entire AI ecosystem. For enterprises, it means better tools and more leverage. For AI companies, it means competition will be based on genuine value delivery rather than lock-in. For startups, it means building integration layers and solving problems is more important than owning models. For the research community, it means incentives favor specialization over trying to be everything to everyone.

The ride-hail analogy is apt. Uber and Lyft aren't in a death match to be the only option. They coexist because they serve slightly different user preferences, have different regional strengths, and users maintain both apps. AI models are heading in the same direction. OpenAI, Anthropic, Google, Meta, and others will coexist because enterprises, teams, and developers will maintain relationships with multiple options.

This future is healthier for innovation, competition, and user outcomes. It's also harder to execute than a single-vendor approach. But enterprises are clearly making the tradeoff deliberately.

The enterprise AI race isn't about finding one winner. It's about building infrastructure and practices that make multiple winners viable. Snowflake and ServiceNow aren't just making business decisions. They're shaping the market structure that will define AI's role in enterprise for the next decade.

That's why these deals matter. Not because of the dollars (though $200 million isn't nothing). Because of what they signal about where the market is heading.

Key Takeaways

- Enterprise AI leaders are deliberately signing major deals with competing AI companies instead of standardizing on single vendors

- Different AI models have genuinely different strengths (reasoning, compliance, speed, multi-modal), making diversity valuable

- Multi-model strategies give enterprises better negotiating leverage, redundancy, and the ability to match models to specific tasks

- The market is heading toward a multi-winner ecosystem similar to ride-hailing rather than traditional winner-takes-most software markets

- Flexibility at the infrastructure layer (Snowflake, ServiceNow) is becoming table stakes for enterprise platforms
