Introduction: The Data Problem Nobody Solved

When you walk into most Fortune 500 companies, their data teams are drowning. Not in data itself, but in the tools they're using to make sense of it.

Large language models like ChatGPT and Claude have revolutionized how we work with unstructured data: text documents, emails, videos, code repositories. But here's what nobody talks about: structured data (the spreadsheets, databases, and tables that actually run most businesses) still relies on the same methods from the 1990s. A data analyst opens a CSV with a million rows and runs SQL queries. A data scientist spins up Python notebooks. A business analyst uses Tableau dashboards that take weeks to build correctly.

Meanwhile, everyone's touting AI as this universal solver. It's not. At least, not yet.

Enter Fundamental, a stealth AI company that just announced a $255 million Series A funding round to solve exactly this problem. Instead of forcing structured data into the context windows of language models (which doesn't work well, honestly), the company built something fundamentally different: a Large Tabular Model called Nexus.

This isn't just another AI funding announcement. This is a signal that the industry is finally acknowledging a massive gap in how enterprise AI actually gets deployed. Most of the conversations about artificial intelligence focus on chatbots and content generation. But behind closed doors at major companies, the real bottleneck is analyzing billions of rows of transactional data, customer records, and operational metrics.

Fundamental's approach challenges everything we've assumed about how modern AI should work. No transformers. No context window limitations. Deterministic outputs (same input, always the same answer). It sounds almost retro until you realize it's solving a problem that affects every large organization on the planet.

This article digs into what Fundamental actually built, why investors threw a quarter-billion dollars at it, and what this means for how enterprises will analyze data in the next decade.

TL;DR

- $255M Series A: Fundamental raised a massive Series A led by Oak HC/FT, Valor Equity Partners, Battery Ventures, and Salesforce Ventures to commercialize its Large Tabular Model

- The Problem: Traditional LLMs struggle with structured data (tables, databases, spreadsheets) because of context window limitations and transformer architecture constraints

- The Solution: Nexus, a deterministic foundation model optimized for tabular data analysis without relying on transformer architecture

- Market Opportunity: Enterprise structured data is massive, complex, and currently handled by legacy tools; this represents a multi-billion-dollar addressable market

- Proof: Seven-figure contracts already signed with Fortune 100 companies; strategic partnership with AWS for deployment integration

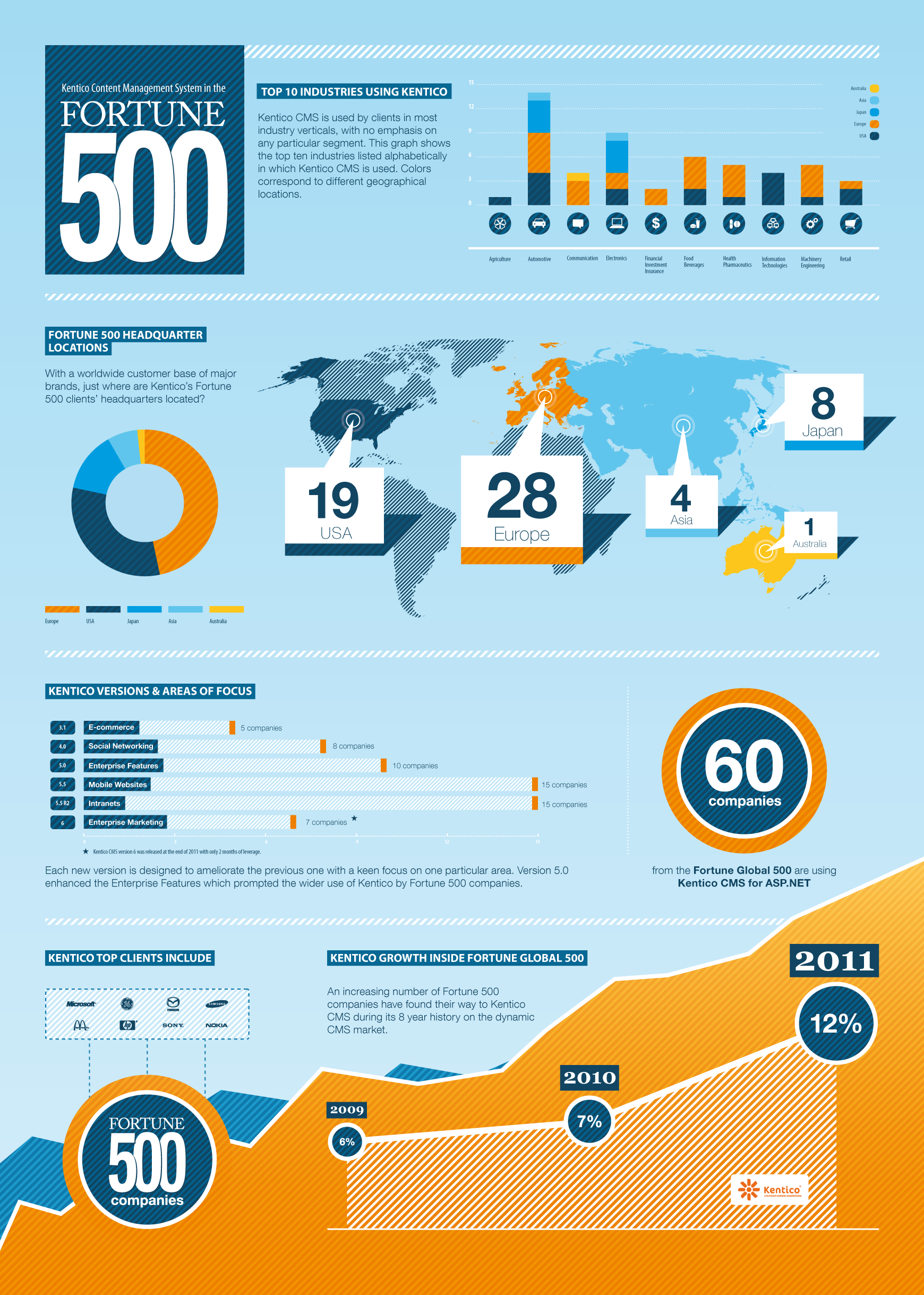

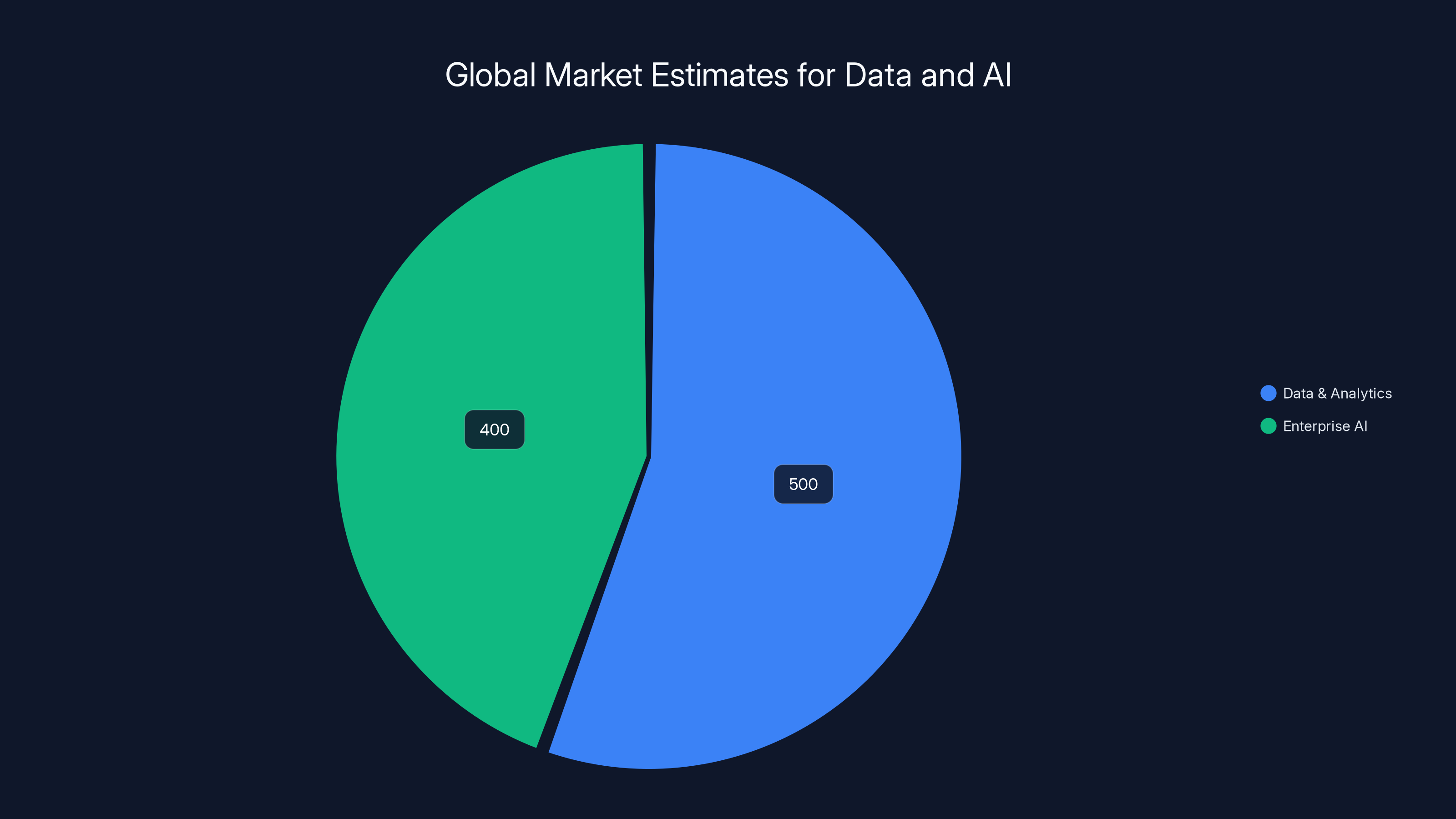

The global data and analytics market is estimated at

What Fundamental Actually Built: Beyond Language Models

Fundamental's CEO Jeremy Fraenkel spent months explaining the problem before landing on the solution. The conversation always started the same way: "LLMs are amazing at text. But nobody's trained a foundation model specifically for numbers."

That distinction matters more than it might sound. Language models are built around the transformer architecture, which processes information in sequences. Feed it text, and it learns patterns. Feed it a table with 10 billion rows, and you've hit a ceiling. The context window—the amount of information the model can process at once—becomes your constraint. You can't analyze a dataset that's bigger than what fits in that window.

Most enterprises don't have small datasets. They have data warehouses. Petabytes of historical transactions. Billions of customer interactions. Millions of log entries per day.

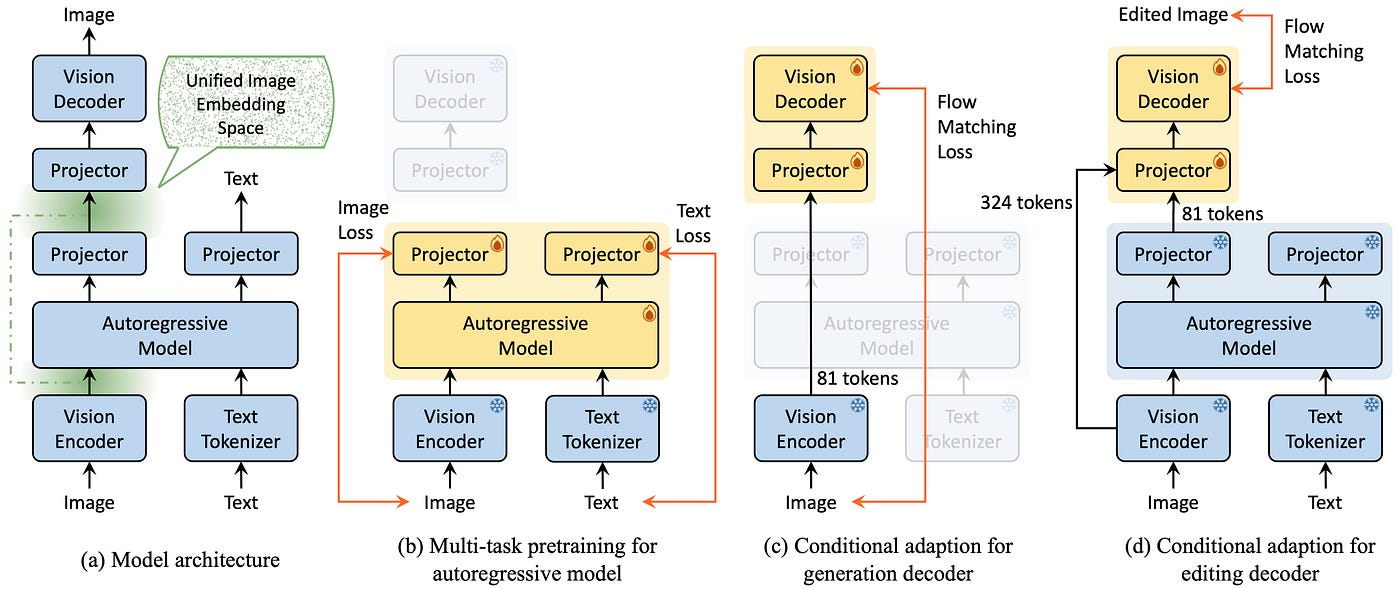

Fundamental's Nexus doesn't work like traditional language models. It's called a Large Tabular Model (LTM) specifically because it's engineered from the ground up for structured data. The model doesn't rely on transformer architecture at all. Instead, it uses different mathematical foundations designed to handle the scale and nature of tabular data.

Here's where it gets interesting: Nexus is deterministic. Ask it the same question twice, and you get the exact same answer. For business-critical decisions, this matters. A loan approval algorithm that gives different results depending on when you ask? That's a compliance nightmare. But language models are probabilistic by nature: they typically sample each next token from a probability distribution, which means the output can vary from run to run.
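To see the difference in miniature, here is a toy sketch. It is not how Nexus or any production LLM is implemented; the tokens and probabilities are made up. Always picking the most likely option gives the same answer every time, while sampling from the distribution does not.

```python
import random

# Toy next-token distribution. Tokens and probabilities are invented.
NEXT_TOKEN_PROBS = {"approve": 0.55, "deny": 0.40, "escalate": 0.05}

def greedy_decode(probs):
    """Always pick the single most likely token: deterministic."""
    return max(probs, key=probs.get)

def sampled_decode(probs, rng):
    """Draw a token in proportion to its probability: can vary per call."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random()  # unseeded, so sampled results differ between runs
print([greedy_decode(NEXT_TOKEN_PROBS) for _ in range(5)])
print([sampled_decode(NEXT_TOKEN_PROBS, rng) for _ in range(5)])
```

The first line printed is the same on every run. The second usually is not, which is the behavior that worries compliance teams.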

The model went through the standard foundation model training process: pre-training on massive structured datasets, then fine-tuning for specific use cases. But the output is something entirely different from what you'd get from OpenAI or Anthropic. It's not trying to generate creative text or write code. It's trying to understand relationships in data at scales that would break traditional approaches.

Fraenkel describes it as bringing contemporary AI techniques to a problem that's been stuck using older methods. "You can now have one model across all of your use cases," he told me in conversation. "You can expand massively the number of use cases you tackle. And on each one, you get better performance than you'd get with an army of data scientists."

That last part is the real claim. Better than data scientists. That's bold. But the underlying logic is sound: data scientists spend most of their time on repetitive tasks (feature engineering, model selection, hyperparameter tuning). A foundation model that's specifically optimized for these problems could handle them automatically.

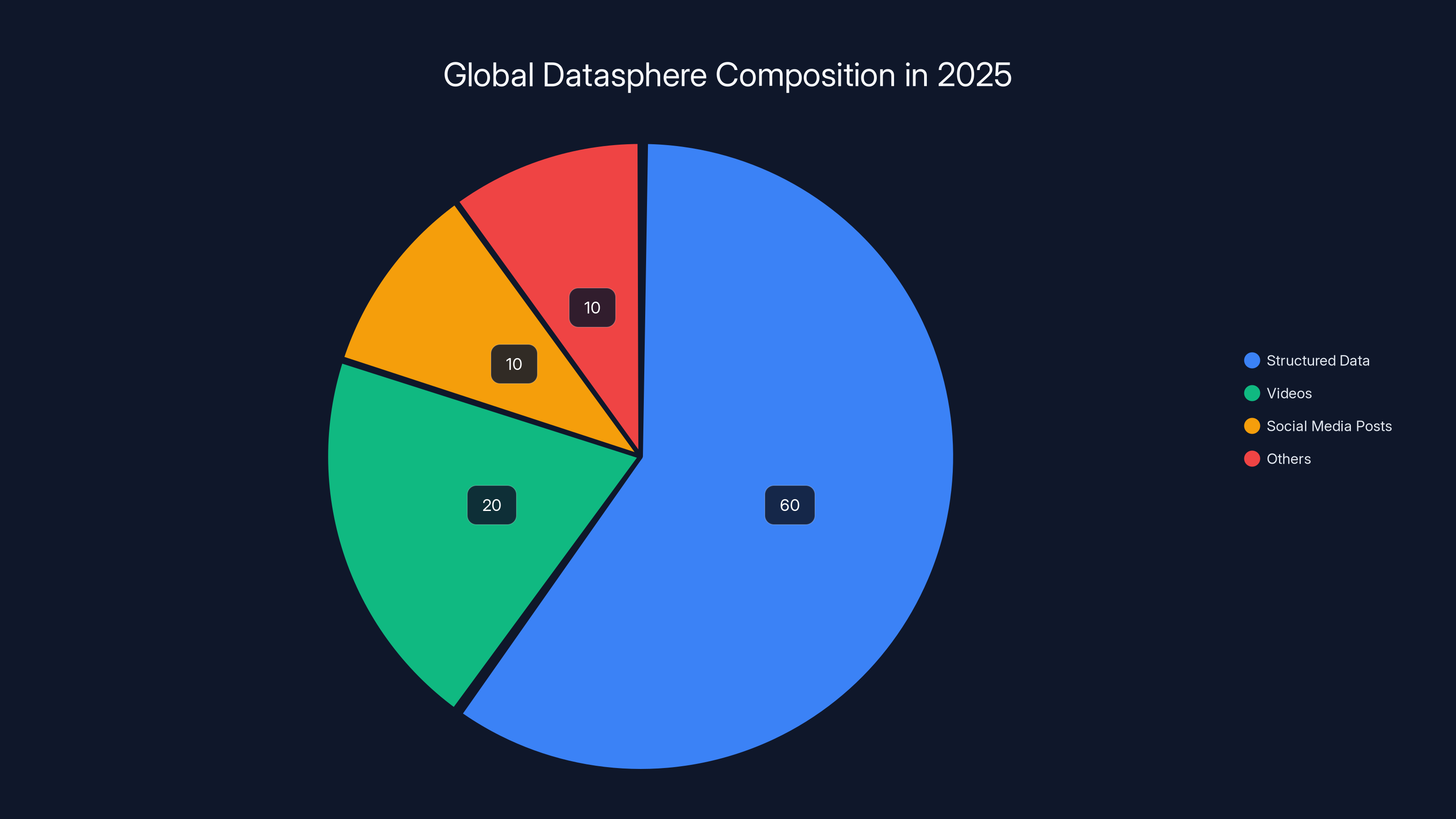

In 2025, structured data is estimated to comprise 60% of the global datasphere, highlighting its dominance over other data types like videos and social media posts. (Estimated data)

The Funding Round: When Serious Money Shows Up

A

The lead investors are telling: Oak HC/FT, Valor Equity Partners, Battery Ventures, and Salesforce Ventures. These aren't seed-stage operators. They're firms that invest in companies targeting enterprise-scale problems. Oak HC/FT focuses specifically on healthcare and financial services, industries where structured data and regulatory compliance matter enormously. Valor invests in enterprise infrastructure. Battery's portfolio includes dozens of billion-dollar companies.

Then there's Salesforce Ventures. Salesforce doesn't just write checks—they integrate. If Fundamental's technology works, it could become part of the Salesforce ecosystem, giving the company instant distribution to millions of users.

The angel investors are even more telling. Aravind Srinivas from Perplexity, Henrique Dubugras from Brex, and Olivier Pomel from Datadog all put personal money into this. These are founders and CEOs who understand technology at a deep level. They wouldn't invest unless they genuinely believed in the technical approach.

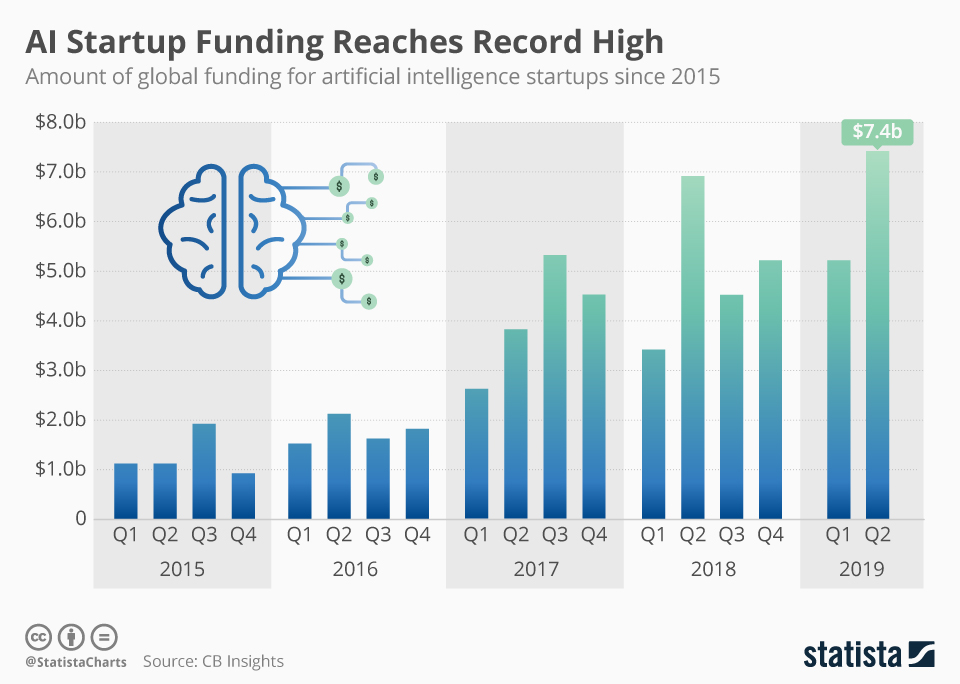

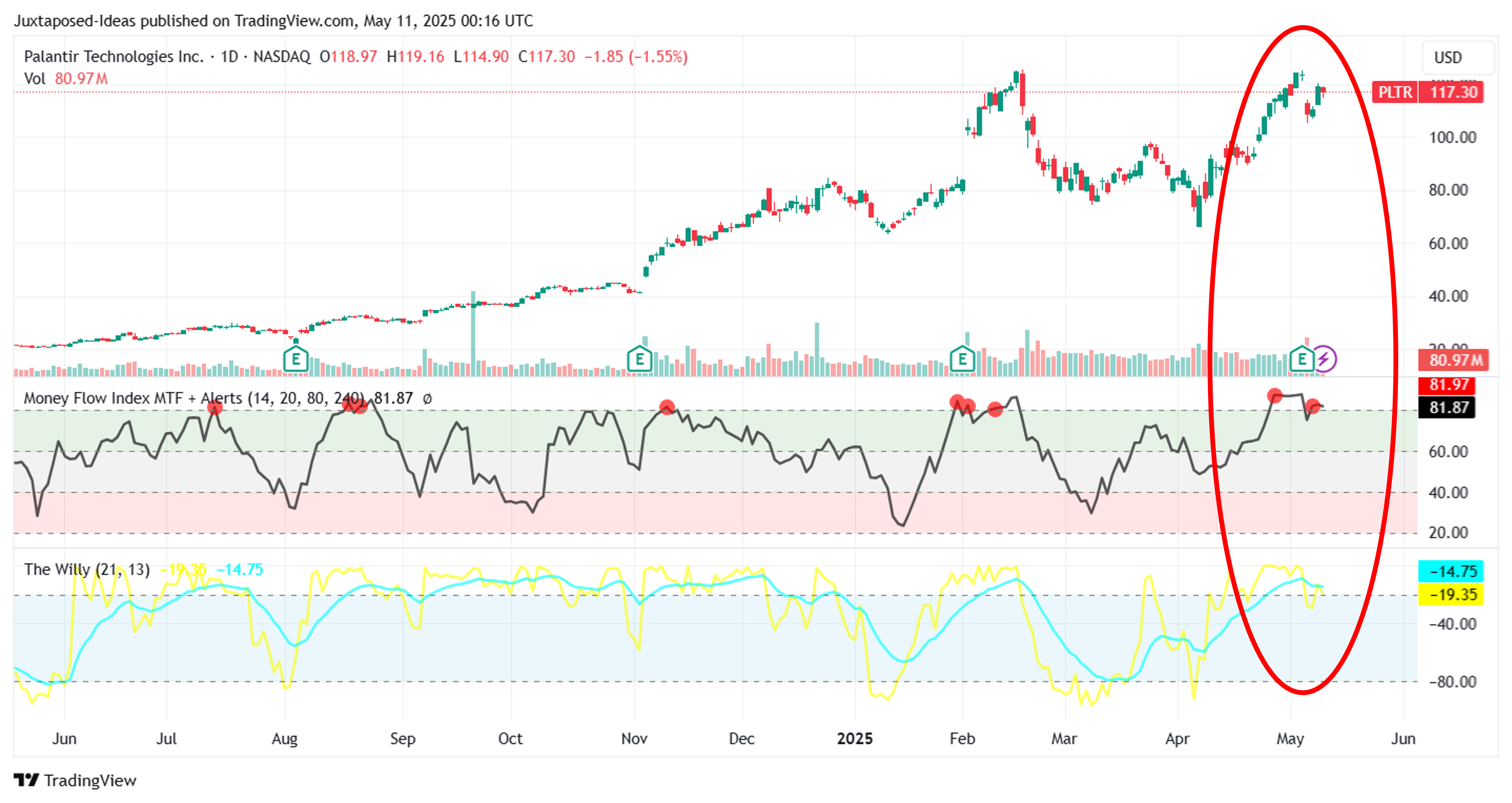

The timing is also important. This funding round happened in early 2025, a period when AI funding has become more cautious and selective. Investors are moving past the "AI is going to do everything" phase and into the "AI solves specific, measurable business problems" phase. Fundamental fits that narrative perfectly. It's not trying to build AGI. It's trying to solve a specific, expensive problem that enterprises face every single day.

The amount of money also signals runway. At typical burn rates for an AI company with serious compute costs, $255 million could fund operations for 3-5 years, even if revenue is slower than expected. That's enough time to build a real product, establish enterprise contracts, and prove the business model.
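As a back-of-the-envelope check, the 3-5 year range implies a particular annual burn. The sketch below just divides the round size by the runway and ignores revenue, interest, and any change in spending over time (simplifying assumptions, not figures from the company).

```python
RAISE_USD = 255_000_000  # Series A size stated in the article

def implied_annual_burn(raise_usd, runway_years):
    """Average yearly spend that would exhaust the raise in runway_years."""
    return raise_usd / runway_years

for years in (3, 5):
    burn = implied_annual_burn(RAISE_USD, years)
    print(f"{years}-year runway -> about ${burn / 1e6:.0f}M per year")
```

In other words, the 3-5 year estimate assumes the company spends somewhere between roughly $51 million and $85 million a year.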

The Market Opportunity: Why Structured Data Matters

To understand why investors are this interested, you need to understand the market Fundamental is attacking.

Structured data isn't sexy. It doesn't make for good marketing. But it's everywhere. Every transaction at a bank. Every click on an e-commerce site. Every sensor reading from a factory. Every patient record in a hospital. Every stock trade. Every insurance claim.

The global datasphere (the total amount of digital data created, captured, and replicated) hit roughly 175 zettabytes in 2025. That's not a typo. A zettabyte is a billion terabytes. Most of that isn't videos or social media posts—it's structured data. Databases. Logs. Financial records. Operational metrics.
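For anyone who wants to sanity-check the unit conversion, here is the arithmetic using decimal (SI) units.

```python
BYTES_PER_TERABYTE = 10**12
BYTES_PER_ZETTABYTE = 10**21

# One zettabyte expressed in terabytes.
terabytes_per_zettabyte = BYTES_PER_ZETTABYTE // BYTES_PER_TERABYTE

# The 175 ZB figure from the text, expressed in terabytes.
datasphere_terabytes = 175 * terabytes_per_zettabyte

print(f"{terabytes_per_zettabyte:,} TB per ZB")
print(f"{datasphere_terabytes:,} TB in 175 ZB")
```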

Large enterprises don't struggle to collect this data. They struggle to derive insight from it.

Consider a typical use case: A major bank wants to detect fraudulent transactions in real-time. They have historical data on billions of transactions, patterns of user behavior, network graphs showing relationships between accounts. A modern approach would involve:

- Data engineers spending weeks cleaning and preparing the data

- Data scientists building feature engineering pipelines

- Machine learning engineers training and tuning models

- DevOps engineers deploying and monitoring the results

- Analysts managing the output and handling edge cases

That entire workflow, from question to deployed model, typically takes months and costs hundreds of thousands of dollars. And that's just one problem. The bank has dozens of similar problems.

With a foundation model optimized for structured data, the workflow compresses dramatically. The model already understands patterns in tabular data. You don't need to hand-engineer features—the model discovers them. You don't need to select between 50 different algorithms—the model adapts to the specific structure and scale of your data.

This isn't speculative. Fundamental already has seven-figure contracts with Fortune 100 companies. That's deployments. Real problems. Real money.

The addressable market is enormous. IDC estimates that data and analytics spending across enterprises will exceed $500 billion annually by 2025. That includes data warehousing, analytics platforms, business intelligence tools, and data science infrastructure. Fundamental is attacking a specific segment of that market, but it's a segment that affects nearly every company above a certain size.

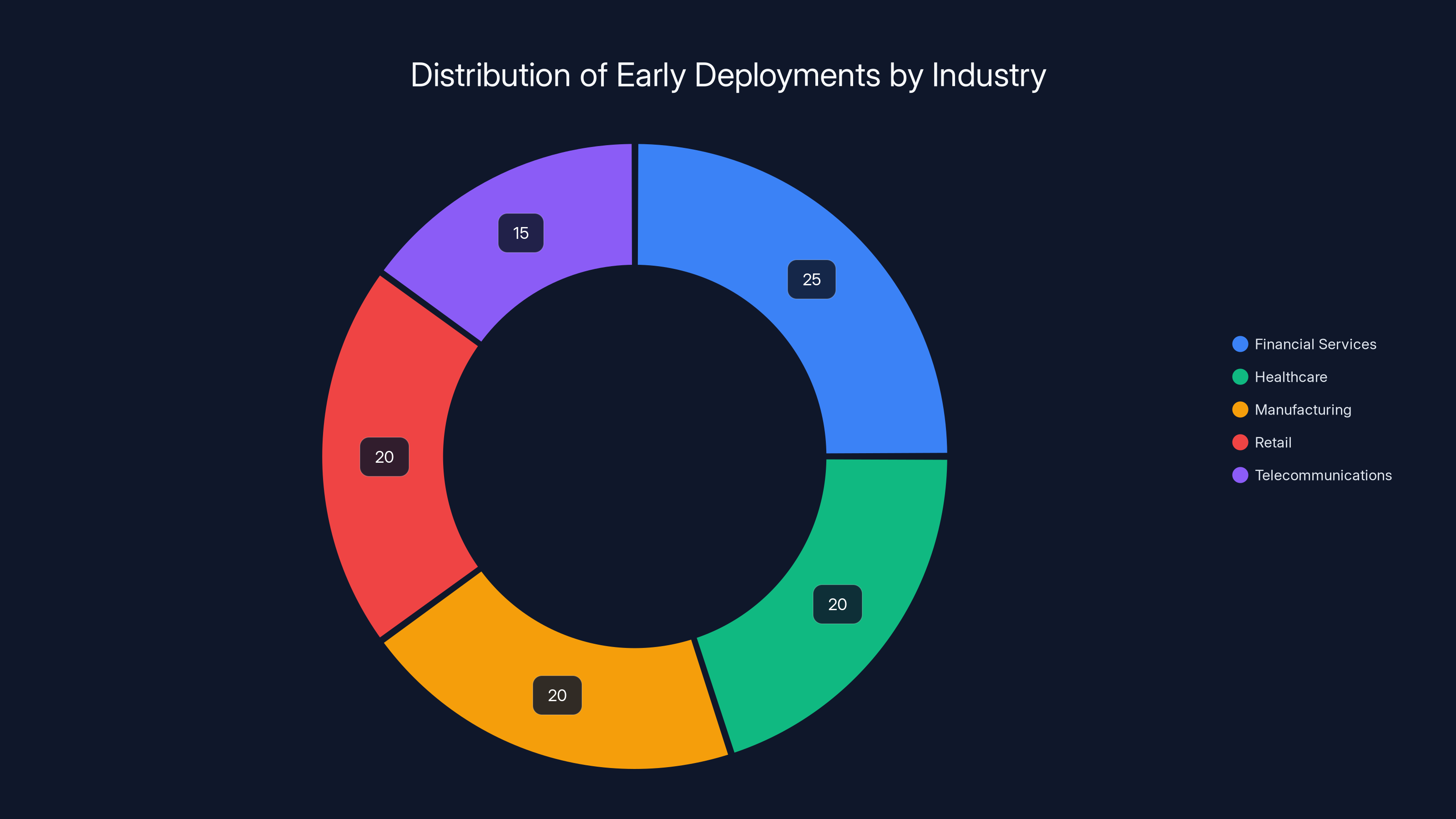

Estimated data shows Financial Services leading with 25% of early deployments, followed by Healthcare, Manufacturing, and Retail each at 20%. Telecommunications holds 15%.

The Technical Approach: Why Not Using Transformers Matters

Most modern AI discussions assume that transformer architecture is the final answer. It's not. Or at least, not for every problem.

Transformers work brilliantly for sequences. Text is a sequence. Code is a sequence. Audio can be treated as a sequence. The self-attention mechanism—the core innovation of transformers—lets the model learn which parts of the input are relevant to which parts of the output. That's powerful for language.

Structured data is different. Tables aren't sequences. They're relationships. A table has columns and rows, and the meaning of a cell depends on its position in both dimensions. Forcing this into a sequential model is like reading a spreadsheet one cell at a time and trying to understand the whole picture.
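To make the sequence problem concrete, here is a minimal sketch of what happens when a table is flattened row by row into a single sequence. The table contents and helper names are illustrative and have nothing to do with Fundamental's internals. Two values that sit directly above and below each other in one column end up a full row's width apart.

```python
# Toy table. The columns and values are made up for illustration.
COLUMNS = ["account_id", "amount", "country", "merchant"]
TABLE = [
    ["A1", 120.0, "US", "grocer"],
    ["B2", 950.0, "BR", "electronics"],
    ["C3", 15.5, "US", "cafe"],
]

def flatten_row_major(table):
    """Serialize a table into one flat sequence, one row after another."""
    return [cell for row in table for cell in row]

def flat_index(row, col, n_cols):
    """Position of cell (row, col) in the row-major sequence."""
    return row * n_cols + col

sequence = flatten_row_major(TABLE)
n_cols = len(COLUMNS)
amount_col = COLUMNS.index("amount")

# Vertically adjacent cells in the "amount" column are n_cols positions apart.
gap = flat_index(1, amount_col, n_cols) - flat_index(0, amount_col, n_cols)
print(sequence[flat_index(1, amount_col, n_cols)], gap)
```

With four columns the gap is four positions. With a few hundred columns, neighbors in the same column are hundreds of positions apart, and the model has to relearn the grid from position alone.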

Beyond that, transformers have a hard ceiling: the context window. Many current large language models handle context windows of roughly 100,000 to 200,000 tokens, and a few advertise a million or more. That sounds like a lot until you realize that a single row in a database table can take many tokens to represent, and enterprise datasets have millions or billions of rows.
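A quick calculation shows how fast the ceiling arrives. The 200,000-token window is the upper figure quoted in this section. The 20 tokens per row is an assumption for illustration, since real rows vary widely with column count and value length.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # upper figure quoted in the text
TOKENS_PER_ROW = 20              # assumption: depends on schema and tokenizer

def rows_that_fit(context_tokens, tokens_per_row):
    """How many whole rows fit in one context window."""
    return context_tokens // tokens_per_row

def windows_needed(total_rows, context_tokens, tokens_per_row):
    """How many separate windows it takes to see every row once."""
    per_window = rows_that_fit(context_tokens, tokens_per_row)
    return -(-total_rows // per_window)  # ceiling division

print(rows_that_fit(CONTEXT_WINDOW_TOKENS, TOKENS_PER_ROW))
print(windows_needed(1_000_000_000, CONTEXT_WINDOW_TOKENS, TOKENS_PER_ROW))
```

Under these assumptions a single window holds 10,000 rows, so a billion-row table would need 100,000 separate passes, none of which can see the others.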

Fundamental's approach is different. Without getting into proprietary details, the model uses mathematics tailored to the structure of tabular data. This allows it to:

- Scale to massive datasets: Not limited by context window size

- Maintain determinism: Same input produces same output, crucial for compliance

- Work with multiple data types: Numbers, categories, dates, text all handled natively

- Preserve numerical precision: Language models read numbers as text tokens, so they can lose precision when handling floating-point values

- Reason over entire datasets: Not just sampling or approximating patterns

The trade-off is that Nexus won't write poetry or generate creative content. It's not designed to. It's designed to find patterns in data that humans can't find by hand and make predictions that matter for business decisions.

This is actually a strength, not a weakness. Enterprises don't want creativity from their data analysis tools. They want accuracy. They want reliability. They want repeatability.

Existing Solutions: Why They Fall Short

Before Fundamental, how were enterprises handling structured data analysis? Mostly with tools designed 20+ years ago, updated incrementally.

SQL is still the lingua franca for data analysis. It's powerful, precise, and limiting. Writing SQL well requires expertise. Writing SQL that performs well on billion-row datasets requires expertise, patience, and experience. Most organizations have a bottleneck: the people who can write good SQL are busy.
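For readers who haven't written one in a while, here is a small, self-contained example of the kind of query an analyst writes every day, run against an in-memory SQLite database from Python. The table name, columns, and rows are invented for illustration.

```python
import sqlite3

# In-memory database with a toy transactions table (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (account_id TEXT, amount REAL, country TEXT)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        ("A1", 120.0, "US"),
        ("A1", 80.0, "US"),
        ("B2", 950.0, "BR"),
        ("B2", 50.0, "US"),
    ],
)

# Accounts whose total spend exceeds 500, largest first.
rows = conn.execute(
    """
    SELECT account_id, COUNT(*) AS n_txns, SUM(amount) AS total
    FROM transactions
    GROUP BY account_id
    HAVING SUM(amount) > 500
    ORDER BY total DESC
    """
).fetchall()
print(rows)
conn.close()
```

The query is precise and easy to audit. The hard part is knowing which question to ask, and keeping queries like this fast once the table has billions of rows.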

Traditional machine learning, before deep learning, handled structured data pretty well. Random forests, gradient boosting, neural networks without transformers—these approaches worked on tabular data. The problem is they still required feature engineering. You had to decide which columns matter, how to combine them, which transformations to apply. This is where data scientists spent most of their time.
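Here is a minimal sketch of what that manual feature engineering looks like for a single transaction. The field names and the specific features are hypothetical choices. Deciding on them, and revisiting them as the data changes, is exactly the work described above.

```python
import math
from datetime import datetime

def engineer_features(txn, account_avg_amount):
    """Turn one raw transaction into hand-picked model inputs."""
    ts = datetime.fromisoformat(txn["timestamp"])
    return {
        # Compress the long tail of transaction amounts.
        "log_amount": math.log1p(txn["amount"]),
        # How unusual is this amount for this account?
        "amount_vs_avg": txn["amount"] / account_avg_amount,
        # Flag transactions between midnight and 6 a.m.
        "is_night": 1 if ts.hour < 6 else 0,
        # Flag transactions outside the account's home country.
        "is_foreign": 1 if txn["country"] != txn["home_country"] else 0,
    }

example = {
    "amount": 900.0,
    "timestamp": "2025-01-15T03:20:00",
    "country": "BR",
    "home_country": "US",
}
print(engineer_features(example, account_avg_amount=100.0))
```

Every one of these four features encodes a guess by a data scientist about what matters. The pitch for a tabular foundation model is that such guesses would be learned rather than hand-written.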

Modern business intelligence tools like Tableau and Power BI handle visualization and dashboarding. They're great at showing you what's in the data. They're not great at discovering what you didn't know was there. And they still require someone to define the metrics, build the queries, and create the dashboards.

AutoML platforms (like H2O, DataRobot, Google AutoML) tried to automate the machine learning pipeline. They work okay on small to medium datasets, but they tend to break down on enterprise scale and complexity.

Large language models, predictably, have been oversold for structured data work. Some companies tried feeding tabular data into GPT models. The results were... mixed. The models can answer questions about data, but they can't handle the scale, they're slow, they're expensive, and they hallucinate. You might ask an LLM to analyze a financial dataset and get an answer that sounds perfectly reasonable but is completely wrong.

Where these solutions all fall short: they don't adapt to new datasets automatically. Every new problem requires manual configuration. Every new data source requires custom engineering. Every new business question requires new development work.

A foundation model for structured data changes this equation. Just as pre-training on massive text corpora lets GPT handle almost any language task without task-specific training, pre-training on massive structured datasets is meant to let Nexus handle almost any tabular analysis task without custom engineering.

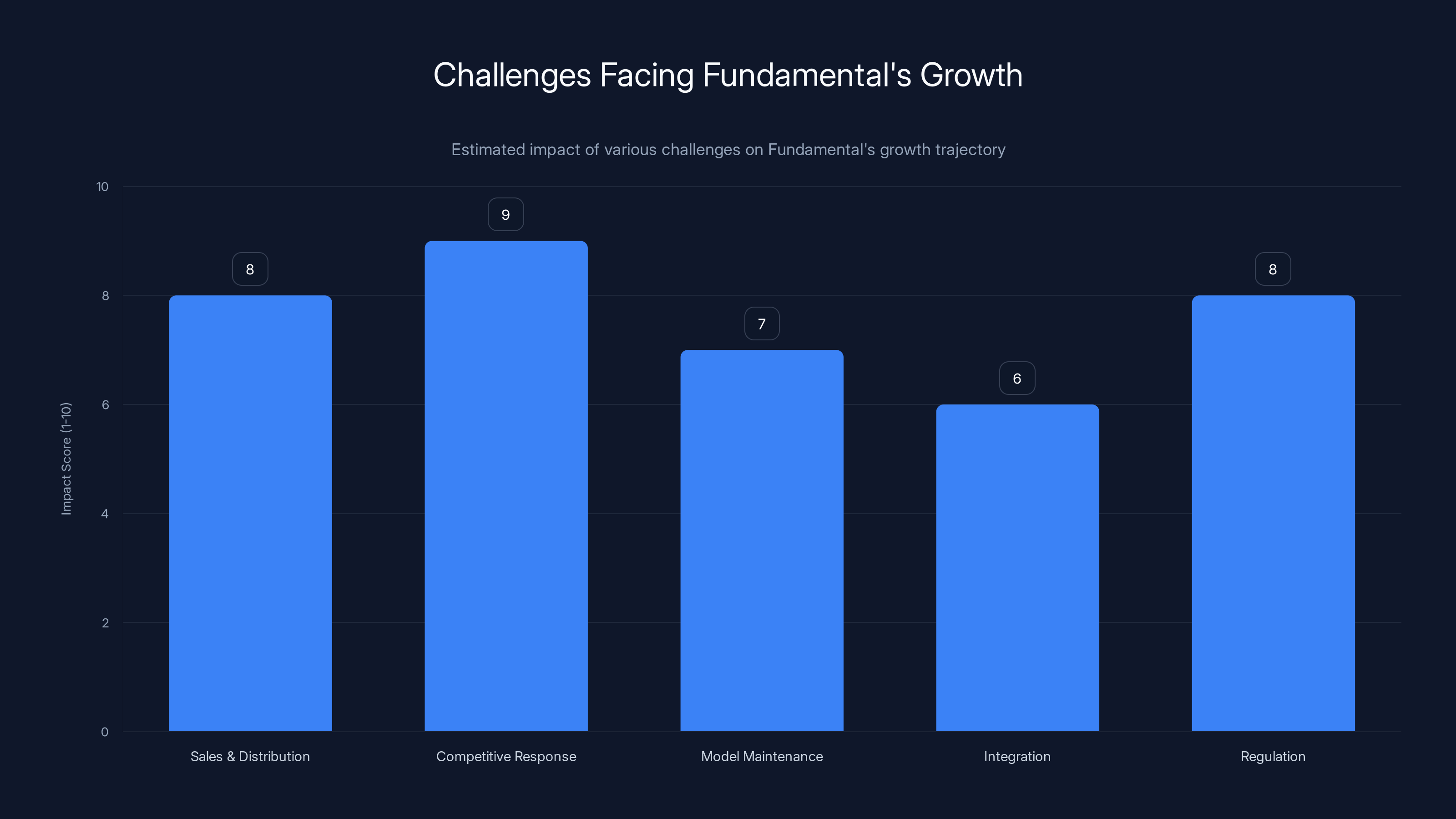

The competitive response and sales/distribution are estimated to have the highest impact on Fundamental's growth, with regulation and model maintenance also posing significant challenges. (Estimated data)

Real-World Use Cases and Early Deployments

Fundamental's early customers are already extracting serious value. The company has signed seven-figure contracts with Fortune 100 companies. That's deployments, not evaluations. Real money, not potential money.

We can infer some use cases from what makes sense for large enterprises:

Financial Services: Fraud detection, credit risk modeling, trading signal generation, compliance monitoring. Banks process trillions of transactions annually. Traditional approaches to fraud detection are rule-based (if this pattern emerges, flag it) or machine learning models that need retraining when fraud tactics change. A foundation model could adapt continuously.

Healthcare: Patient risk stratification, clinical outcome prediction, drug discovery analysis, operational optimization. Healthcare data is massive and complex—patient records, lab results, imaging data, billing information. Predicting which patients are at risk for readmission or complications is enormously valuable and currently done through a combination of manual review and statistical models.

Manufacturing and Operations: Predictive maintenance (when will this machine break?), quality control, supply chain optimization, energy usage prediction. Industrial facilities generate millions of sensor readings daily. Current approaches to predictive maintenance are mostly reactive (fix things when they break) or rules-based (monitor these sensors and alert when they exceed thresholds).

Retail and E-commerce: Demand forecasting, inventory optimization, churn prediction, recommendation systems. These companies have years of transaction data. Current forecasting methods are statistical (exponential smoothing, ARIMA) or require extensive feature engineering.

Telecommunications: Network optimization, churn prediction, usage forecasting. Telecom companies have exceptionally detailed data about customer behavior and network performance.

The common thread: these are all problems where the data is structured, the scale is massive, and the current solutions are either hand-coded or require constant human tuning. A model that can adapt automatically is a genuine breakthrough.
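Two of the baselines named in the use cases above are simple enough to sketch in a few lines: a fixed-threshold sensor alert and a simple exponential smoothing forecast. The sensor names, limits, and smoothing factor are hypothetical, and that is the point: someone has to pick them by hand and keep them current.

```python
# Hand-coded alert rule. Sensor names and limits are hypothetical.
THRESHOLDS = {"temperature_c": 90.0, "vibration_mm_s": 7.1}

def threshold_alerts(reading):
    """Return the sensors whose reading exceeds its fixed limit."""
    return sorted(
        name
        for name, limit in THRESHOLDS.items()
        if reading.get(name, 0.0) > limit
    )

def exponential_smoothing_forecast(series, alpha):
    """One-step-ahead forecast from simple exponential smoothing.

    alpha is hand-picked: values closer to 1 track recent data more closely.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

print(threshold_alerts({"temperature_c": 95.0, "vibration_mm_s": 3.0}))
print(exponential_smoothing_forecast([100, 120, 110], alpha=0.5))
```

Both work, and both are cheap to run. Neither notices when the underlying pattern shifts unless a person goes back and retunes the constants.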

The seven-figure contracts matter psychologically. Fundamental isn't giving the product away to test it. Fortune 100 companies are paying serious money because they believe the product delivers serious value. When a bank pays millions for something, it's not buying hype. It has evaluated the product, tested it, and concluded that it solves the problem better than the alternatives.

The AWS Partnership: Distribution and Validation

Fundamental's strategic partnership with Amazon Web Services is significant and often overlooked in funding announcements.

Why does this matter? Because AWS doesn't partner with unproven technology. AWS has a massive suite of data tools and AI services. If Fundamental's model weren't genuinely good, AWS wouldn't integrate it. The partnership signals validation at the highest level.

Practically, here's what the partnership means: AWS customers can now deploy Nexus directly from their AWS instances. If you're already running data warehouses on Amazon Redshift or storing data in S3, you can access Fundamental's model without moving data or complex integration work. This dramatically lowers friction.

It also means AWS has skin in the game. They're not just endorsing the product—they're integrating it into their ecosystem. That means they've likely invested engineering resources. That means future AWS releases will consider Fundamental's needs. It's not a casual partnership.

For Fundamental, it's even better. AWS has millions of enterprise customers. AWS can surface Fundamental's solution to companies that might never have heard of the startup directly. It's distribution built into the platform.

This partnership also suggests where the market opportunity really is: cloud-native enterprise analytics. Most large companies are moving their data infrastructure to cloud providers. AWS, Google Cloud, Microsoft Azure. They have data warehouses, lakes, and processing infrastructure all running in the cloud. Fundamental's model works in that environment seamlessly.

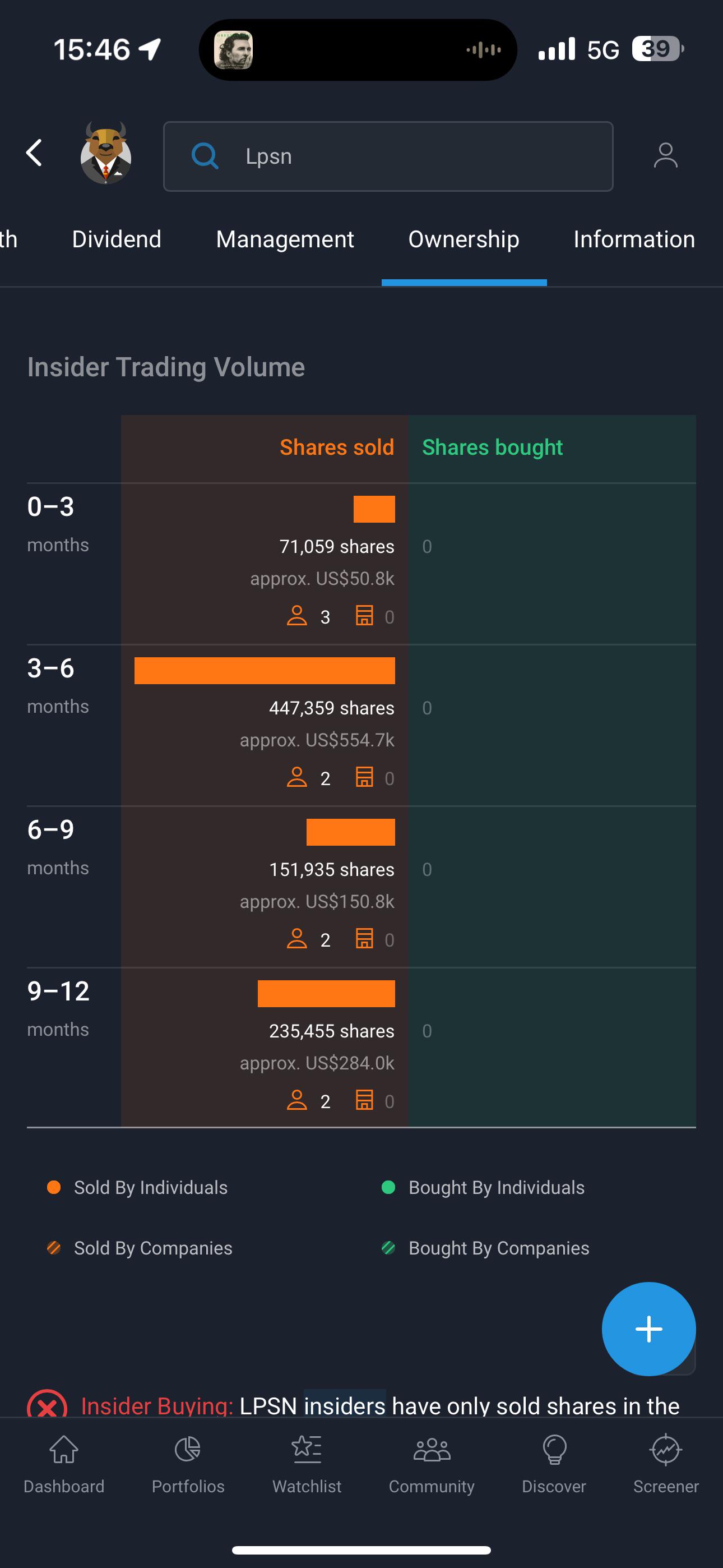

Nexus significantly outperforms traditional ML models in scalability and deterministic outputs, making it ideal for enterprise-scale data analysis. (Estimated data)

Founder and Team Background: Why This Matters

Jeremy Fraenkel, Fundamental's CEO, doesn't come from the typical "AI researcher turned founder" background. He spent years in data infrastructure and optimization. That's significant. The problem Fundamental is solving isn't primarily a research problem anymore—it's an engineering and deployment problem. You need someone who understands both cutting-edge AI and how to make it work in messy enterprise environments.

The angel investors—Srinivas from Perplexity, Dubugras from Brex, Pomel from Datadog—all built companies that process massive amounts of data. Perplexity searches the web in real-time and synthesizes results. Brex processes financial transactions at scale. Datadog monitors every metric enterprises care about. They understand data problems at a deep level.

Their involvement signals something specific: they believe Fundamental has solved a real problem that they themselves have experienced. Founders who've built billion-dollar companies don't invest in companies they don't believe in.

The investor mix (Oak HC/FT in healthcare, Valor in infrastructure, Battery in enterprise) suggests the team is thinking about multiple verticals. This isn't a company betting everything on one industry. It's a company with technology that applies across most enterprise sectors.

Competitive Landscape: Who Else Is Trying This?

Fundamental isn't the only company thinking about AI for structured data. But the space is surprisingly open.

Traditional analytics companies like Tableau, Looker, and Power BI are adding AI features, but they're bolting features onto existing products designed for dashboarding. It's like adding an AI chatbot to a spreadsheet—helpful, but not a fundamental reimagining of what's possible.

AutoML platforms (DataRobot, H2O, Google AutoML) have been in this space for years. They've achieved some success, but they haven't fundamentally transformed how enterprises work with data. That's partly because AutoML itself has limitations, and partly because enterprises are skeptical of trusting critical decisions to fully automated systems.

LLM companies (OpenAI, Anthropic, Google) are trying to solve structured data with language models. Some success on medium-sized datasets, but it doesn't scale. You can't ask GPT-4 to analyze a billion-row transaction database effectively. It's the wrong architecture.

There are academic approaches and startups trying various things, but nothing has raised $255 million or deployed at enterprise scale the way Fundamental is doing.

This is partly because the problem is hard (building a foundation model for a new data type is non-trivial) and partly because the market wasn't ready until now. Enterprise adoption of AI accelerated dramatically post-2023. Companies are now seriously investing in AI infrastructure, which means they're evaluating solutions like Fundamental.

Fundamental's competition isn't really from other startups yet. It's from the status quo: SQL, traditional machine learning, and expensive data science teams. That's easier competition to beat because the status quo is expensive and slow.

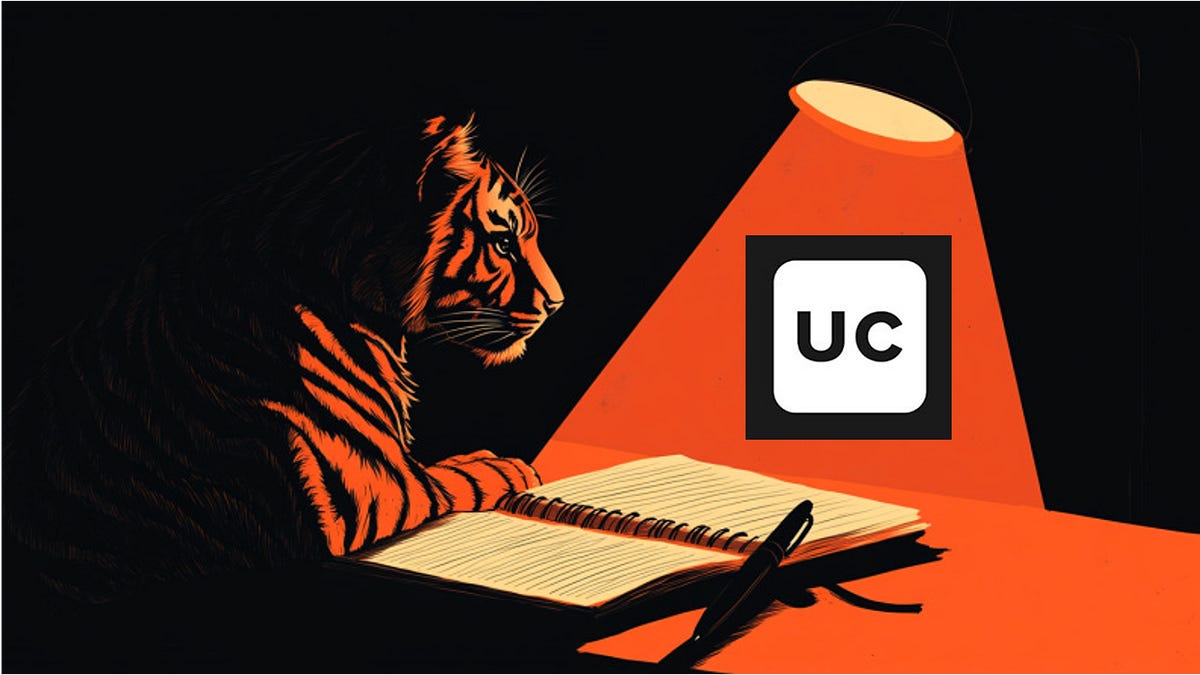

Projected growth shows increasing Fortune 100 customers and revenue, with potential expansion into new markets and use cases by 2028. Estimated data based on strategic goals.

Challenges Ahead: What Could Go Wrong

Fundamental is in a strong position, but the path to becoming a billion-dollar company is not guaranteed.

The first challenge is sales and distribution. Enterprise AI adoption is still early. Many companies are cautious. They want to see proof (which Fundamental has with Fortune 100 contracts), but they also want to see success stories from companies similar to theirs. Building this takes time and requires a strong enterprise sales team.

The second challenge is competitive response. Once Fundamental proves this market exists, bigger companies will move in. Google could build a Large Tabular Model. Microsoft could. Amazon could. These companies have more resources, established enterprise relationships, and can subsidize pricing to lock in market share. Fundamental's window to establish dominance is probably 2-4 years.

The third challenge is the nature of foundation models: they require constant retraining and improvement. As new data patterns emerge and business problems evolve, the model needs to stay current. This requires ongoing investment in data, compute, and research. The investment doesn't decrease after launch; it increases.

The fourth challenge is integration. For Fundamental to be truly useful, it needs to integrate with the data infrastructure companies already use. That's not just AWS. It's Databricks, Snowflake, Google BigQuery, Oracle, and others. Each integration requires engineering work.

The fifth challenge is regulation. As Fundamental gets used for increasingly important decisions (loan approvals, clinical decisions, hiring), regulatory scrutiny will increase. Model interpretability becomes critical. If the model makes a decision, someone needs to understand why. This is harder for neural network-based approaches than traditional machine learning.

Market Sizing and Revenue Potential

To understand why investors threw a quarter-billion at this, you need to understand the addressable market.

IDC estimates the global data and analytics market at roughly

Fundamental is targeting a specific segment: foundation models for structured data. Even if Fundamental captures just 5% of the enterprise analytics market over the next decade, that's tens of billions in revenue.
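As a rough check on that claim, the sketch below takes the $500 billion annual spending figure cited earlier in this article as the market size. That is an assumption for illustration, not a sizing of Fundamental's actual segment.

```python
# Assumption: the ~$500B annual data and analytics spend cited earlier.
MARKET_USD_PER_YEAR = 500_000_000_000
SHARE_PERCENT = 5  # the hypothetical share from the text

# Integer arithmetic keeps the result exact.
revenue_usd_per_year = MARKET_USD_PER_YEAR * SHARE_PERCENT // 100
print(f"${revenue_usd_per_year / 1e9:.0f}B per year")
```

On those numbers, a 5% share works out to about $25 billion a year, which is where "tens of billions" comes from.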

How does this translate to user pricing? There are a few models Fundamental could use:

Per-Query Pricing: Like most AI APIs, charge per query or per computation. This scales with usage. A bank running millions of queries monthly could spend hundreds of thousands or millions. This rewards scale but creates uncertainty in costs.

Subscription Tiers: Offer different tiers based on dataset size, number of queries, or advanced features. Enterprise contracts could run to multiple millions of dollars annually. This creates recurring revenue and predictability.

Revenue Share: For use cases where Fundamental directly drives revenue (trading signals, fraud detection preventing losses, etc.), take a percentage of incremental value. This aligns incentives but is complex to track.

Hybrid: Some combination based on the customer and use case.
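To compare the first two options, here is a small sketch with entirely hypothetical prices (Fundamental has not published pricing). It shows where a flat subscription starts to beat per-query billing.

```python
# All prices are hypothetical. The article does not disclose pricing.
PRICE_PER_QUERY_CENTS = 5             # assumed per-query price
SUBSCRIPTION_USD_PER_MONTH = 150_000  # assumed flat monthly tier

def per_query_cost_usd(queries, price_cents=PRICE_PER_QUERY_CENTS):
    """Monthly bill under usage-based pricing."""
    return queries * price_cents / 100

def breakeven_queries(subscription_usd=SUBSCRIPTION_USD_PER_MONTH,
                      price_cents=PRICE_PER_QUERY_CENTS):
    """Monthly query volume at which both plans cost the same."""
    return subscription_usd * 100 / price_cents

def cheaper_plan(queries):
    """Which plan costs less at a given monthly query volume."""
    usage_bill = per_query_cost_usd(queries)
    return "per-query" if usage_bill < SUBSCRIPTION_USD_PER_MONTH else "subscription"

for monthly_queries in (1_000_000, 5_000_000):
    print(monthly_queries, per_query_cost_usd(monthly_queries),
          cheaper_plan(monthly_queries))
print(breakeven_queries())
```

With these made-up numbers the plans cross at three million queries a month. Below that, usage pricing is cheaper for the customer. Above it, the subscription is cheaper, and its cost is predictable.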

Based on the seven-figure early contracts, Fundamental is likely already using enterprise pricing models that generate significant revenue per customer. If the company has 10-20 Fortune 100 customers at

For comparison, Databricks (founded 2013, similar timeline for building enterprise infrastructure) was valued at

The Shift in Enterprise AI Strategy

Fundamental represents a larger shift in how enterprises think about AI.

The initial AI wave (2016-2023) was about proving AI could work at all. Can we build a chatbot? Can we generate images? Can we write code? Yes, yes, yes. The focus was on novel applications.

The current wave (2023-2026) is about making AI valuable for the specific problems businesses actually care about. For most businesses, that's not chatbots or creative content. It's optimization, prediction, and decision support on their core data.

Fundamental is part of this second wave. It's not trying to do everything. It's trying to do one thing exceptionally well: help enterprises get more value from their structured data.

This shift has major implications:

- Specialized Models Over General Models: The era of one model doing everything is probably ending. Companies will build or buy specialized models for specific domains and tasks.

- On-Premise and Private Deployment: Enterprises won't ship sensitive data to external APIs. Fundamental will need to run on customer infrastructure. The AWS partnership enables this.

- Explainability Over Power: As AI influences more critical decisions, understanding why the model decided something becomes table stakes. A model that's 99% accurate but can't explain its reasoning is less valuable than a model that's 95% accurate but transparent.

- Integration Over Isolation: Standalone AI tools are useful. AI that integrates into existing workflows is transformative. Fundamental integrated with AWS is much more valuable than Fundamental as a standalone service.

- Measurement Over Hype: Enterprises are finally demanding proof that AI actually works. Not proof of concept, but proof of value. Hard ROI numbers.

Fundamental's strategy aligns with all of these trends.

What This Means for Data Scientists

When a new technology emerges, the first question people in affected roles ask is: "Will this replace me?"

Data scientists are asking this about Fundamental.

Honest answer: partially, probably.

A foundation model for structured data will automate the routine parts of data science. The parts that don't require deep business knowledge or creativity. Feature engineering (increasingly automated). Model selection (automated). Hyperparameter tuning (automated). Deployment (simplified through integration).

But it won't eliminate data scientists. Instead, it will elevate them. The best data scientists will move from "how do I build a model" to "what problems should we solve" and "how do we ensure this model serves our business goals."

Dataframe manipulation, statistical testing, and exploratory analysis—these might be handled by the model automatically. But defining success metrics, interpreting results, and making recommendations to leadership still require human judgment.

It's similar to how calculators didn't eliminate mathematicians. They eliminated calculation. And good mathematicians got better at math because they could focus on harder problems.

The data scientists who'll struggle are those doing routine work—building the same model types for the same problems repeatedly. The data scientists who'll thrive are those who can think about business problems strategically and use tools (including Fundamental) to solve them efficiently.

Timeline and Outlook: What's Next

Assuming Fundamental executes well, here's a plausible timeline:

2025: Expand Fortune 100 customer base to 20-50 companies across multiple industries. Build integration with other major cloud providers (Google Cloud, Azure). Release improvements to Nexus based on real-world feedback.

2026: Achieve $50-200 million annual revenue (based on enterprise contracts). Begin expanding downmarket to mid-market companies. Release industry-specific versions (Nexus for Financial Services, Nexus for Healthcare, etc.).

2027-2028: Become the de facto standard for structured data analysis at large enterprises. Similar to how Databricks became essential infrastructure for data engineers. Explore acquisition by Microsoft, Google, or Amazon (or remain independent if growth and profitability are strong).

2028+: Expand to new use cases (video analysis, real-time decision-making). Build partner ecosystem of integrations and consultants.

This isn't guaranteed. But the company has strong fundamentals: real product-market fit (proven by early contracts), experienced leadership, sufficient capital, and a massive addressable market.

How Enterprises Should Think About This Technology

If you work at a large enterprise with substantial structured data, here's how to think about Fundamental and similar technologies:

First, audit your current state. How much of your data is analyzed? What tools are you using? How long does it take to answer new business questions? What's the bottleneck?

Most enterprises will find that analysis is the bottleneck, not data collection. You have data but can't get insight from it fast enough.

Second, identify high-value use cases. Don't try to apply this to everything. Start with problems where:

- The answer directly impacts revenue or saves costs

- You have historical data to learn from

- Current analysis is slow or expensive

- The decisions are repeated (so any improvement scales)

Third, run a pilot. Fundamental (and likely competitors coming soon) will offer evaluation periods. Use this seriously. Evaluate on your actual data, your actual problems. Don't just run benchmarks.

Fourth, think about integration. Technology is only valuable if it integrates into your existing workflows. Can your analysts use it alongside their current tools? Does it export to formats you already use? Can your data engineers deploy it on your existing infrastructure?

Fifth, consider the economics. Total cost of ownership is subscription fees plus integration effort; compare that total against the value generated. For well-chosen enterprise use cases, the value should far exceed the cost.
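The comparison can be written out as simple arithmetic. Every figure in the sketch below is a hypothetical placeholder, not a Fundamental price or a measured result; plug in your own contract quote, integration estimate, and value estimate from the pilot.

```python
def total_cost_of_ownership(subscription_fee, integration_cost):
    """Annual cost: what you pay the vendor plus what you spend wiring it in."""
    return subscription_fee + integration_cost


def return_on_investment(value_generated, total_cost):
    """Net value per dollar spent; 1.0 means every dollar returned one extra dollar."""
    return (value_generated - total_cost) / total_cost


# Hypothetical annual figures in US dollars.
annual_subscription = 1_500_000
integration_effort = 500_000
estimated_value = 6_000_000   # e.g. fraud losses avoided, taken from pilot results

tco = total_cost_of_ownership(annual_subscription, integration_effort)
net_value = estimated_value - tco
roi = return_on_investment(estimated_value, tco)

print(f"Total cost of ownership: ${tco:,}")
print(f"Net value:               ${net_value:,}")
print(f"ROI:                     {roi:.1f}x")
```

If the net value is small or negative under conservative assumptions, that is a signal to revisit the choice of use case before signing a multi-year contract.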

The Broader Implications for AI

Fundamental's funding and success have implications beyond this one company.

It signals that enterprise AI is moving beyond chatbots and creative applications into operational AI that directly impacts business decisions. That's more valuable long-term.

It validates the idea that specialized models for specific data types can outperform general models. This is obvious in hindsight (specialized tools work better than general tools), but the AI industry spent years trying to prove that general models could do everything.

It shows that foundation models aren't limited to language. The same pre-training plus fine-tuning approach that works for text can work for other domains. This opens possibilities for foundation models for code (which already exist), for scientific data, for audio, and for other domains.

It demonstrates that enterprise adoption of AI is real and accelerating. This wasn't a pivot to AI because of hype. This was a company solving a real problem that enterprises genuinely need solved and are willing to pay for.

Fundamental's success will likely trigger a wave of similar companies: foundation models for legal documents, for medical imaging, for technical papers, for time-series sensor data. Each addressing a specific, valuable niche.

Conclusion: The Next Era of Enterprise AI

We're at an inflection point in how enterprises use AI.

The first era (2016-2023) was about proof of concept. Could AI work? Yes. Could it do interesting things? Absolutely. That era created enormous value and justified the hype in many ways.

The current era (2023 onward) is about practical value. AI needs to solve real business problems more effectively and cost-effectively than existing approaches. Hype is being replaced with ROI.

Fundamental is a perfect representative of this new era. The company isn't trying to build AGI or create the next viral AI app. It's trying to help enterprises get more value from the data they already have, using tools specifically designed for that job.

A $255 million Series A doesn't represent hype. It represents conviction from sophisticated investors that there's a real market opportunity, a real technical approach that works, and a real team that can execute.

If Fundamental executes well (and the early evidence suggests it might), it will become essential infrastructure for how enterprises analyze data. That's not revolutionary in the flashy sense. But it's transformative for the bottom line of every company that uses it.

The structured data problem has been waiting for a solution for decades. Fundamental might be it.

FAQ

What is a Large Tabular Model (LTM)?

A Large Tabular Model is a foundation model specifically designed to understand and analyze structured data (tables, databases, spreadsheets) rather than unstructured data like text or images. Unlike Large Language Models that rely on transformer architecture and sequential processing, LTMs use different mathematical foundations optimized for the relationships and patterns found in tabular data. They're pre-trained on massive structured datasets, then fine-tuned for specific business applications.

How does Fundamental's Nexus differ from traditional machine learning models?

Traditional machine learning models require extensive manual feature engineering (deciding which data columns matter and how to combine them) and explicit model selection by data scientists. Nexus, as a foundation model, automatically discovers patterns and relationships in data without manual feature engineering. It also handles scale problems that traditional models struggle with, such as analyzing billions of rows of data at once, and provides deterministic outputs (same answer every time for the same input), which is critical for business compliance and decision-making.

Why can't companies just use ChatGPT or Claude for structured data analysis?

Large Language Models like ChatGPT are built around transformer architecture designed for sequential text processing. They have context window limitations, meaning they can't process extremely large datasets (billions of rows). Additionally, they're probabilistic by nature and can generate slightly different answers each time, which creates problems for business-critical decisions. They also struggle with numerical precision and can't effectively reason over the entire structure of large databases. Nexus addresses these problems by using an architecture specifically optimized for tabular data at enterprise scale.

What kinds of businesses benefit most from Fundamental's technology?

Any enterprise with massive structured data benefits: financial services (fraud detection, risk modeling), healthcare (patient risk prediction, clinical outcomes), manufacturing (predictive maintenance, quality control), retail (demand forecasting, inventory optimization), and telecommunications (churn prediction, network optimization). The common factor is having billions of historical structured records and needing to make predictions or identify patterns at scale.

How much does Fundamental's technology cost?

Fundamental hasn't published standard pricing, but early enterprise contracts are in the seven-figure range (millions of dollars annually). Pricing likely depends on dataset size, number of queries, specific use cases, and deployment model. The company will probably offer different tiers for mid-market versus enterprise customers. Based on early contracting patterns, enterprises should expect costs between $500,000 and several million dollars annually.

Can Fundamental's Nexus work on our existing cloud infrastructure?

Yes, if you run on AWS. Through the strategic partnership with AWS, Fundamental customers can deploy Nexus directly from existing AWS instances without moving data or doing complex integration work. The company is likely expanding partnerships with other major cloud providers (Google Cloud, Microsoft Azure). On-premise deployment options are also likely being developed for customers with strict data residency requirements. Integration with existing data warehouses (Snowflake, Databricks, Redshift) is essential to the product strategy.

What's the learning curve for using Fundamental's technology?

One major advantage of foundation models is that they're designed to be accessible to non-experts. You don't need a PhD in machine learning to use Nexus effectively. Data analysts with SQL knowledge should be able to get started relatively quickly. The company will likely provide APIs, SDKs, and integrations with familiar tools so teams don't need to completely change their workflows. However, getting maximum value from the technology will require understanding your business data well and thinking strategically about which problems to solve first.

How does Fundamental make money, and what's the business model?

Based on early contract sizes (seven-figure enterprise deals), Fundamental is likely using a combination of subscription pricing (annual or multi-year contracts) and potentially usage-based pricing depending on query volume or dataset size. The company could also eventually offer tiered pricing for mid-market companies at lower price points. As the business scales, they might add professional services revenue (helping customers implement and optimize the technology) or partner revenue (from AWS and other platform integrations).

Key Takeaways

- Fundamental's $255M Series A validates that specialized AI for structured data is a multi-billion-dollar market opportunity overlooked by LLM companies

- Large Tabular Models solve fundamental limitations of transformer-based LLMs: context window constraints, lack of numerical precision, and inability to handle enterprise-scale datasets

- Early Fortune 100 customers and AWS partnership provide strong proof of product-market fit beyond typical startup hype

- This funding round signals the shift from general-purpose AI to specialized models solving specific business problems with measurable ROI

- Enterprise data science teams will shift from building models to defining business problems, with automation handling routine analysis tasks
