Understanding Apple's AI Monetization Dilemma: The Elephant in the Room

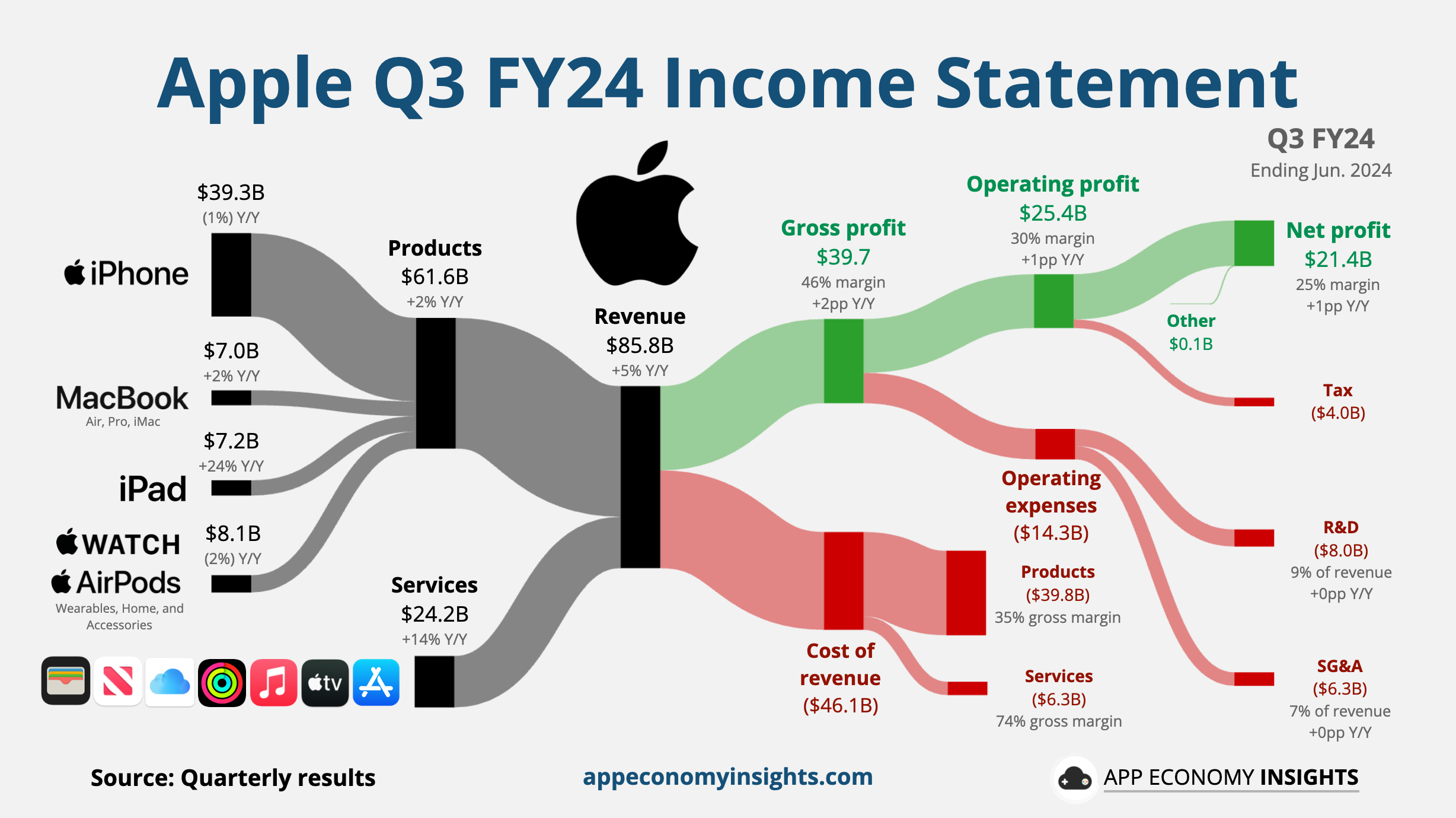

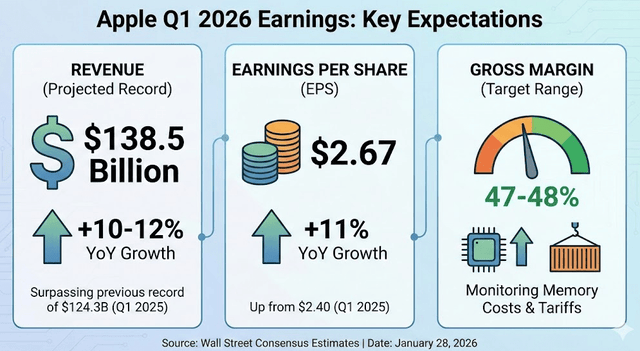

When Tim Cook reported Apple's quarterly earnings of $143.8 billion in revenue—a 16% year-over-year increase—the company appeared unstoppable. Yet beneath the impressive financial surface lies a question that haunts Silicon Valley like an unanswered midnight call: How exactly will tech companies monetize their massive investments in artificial intelligence?

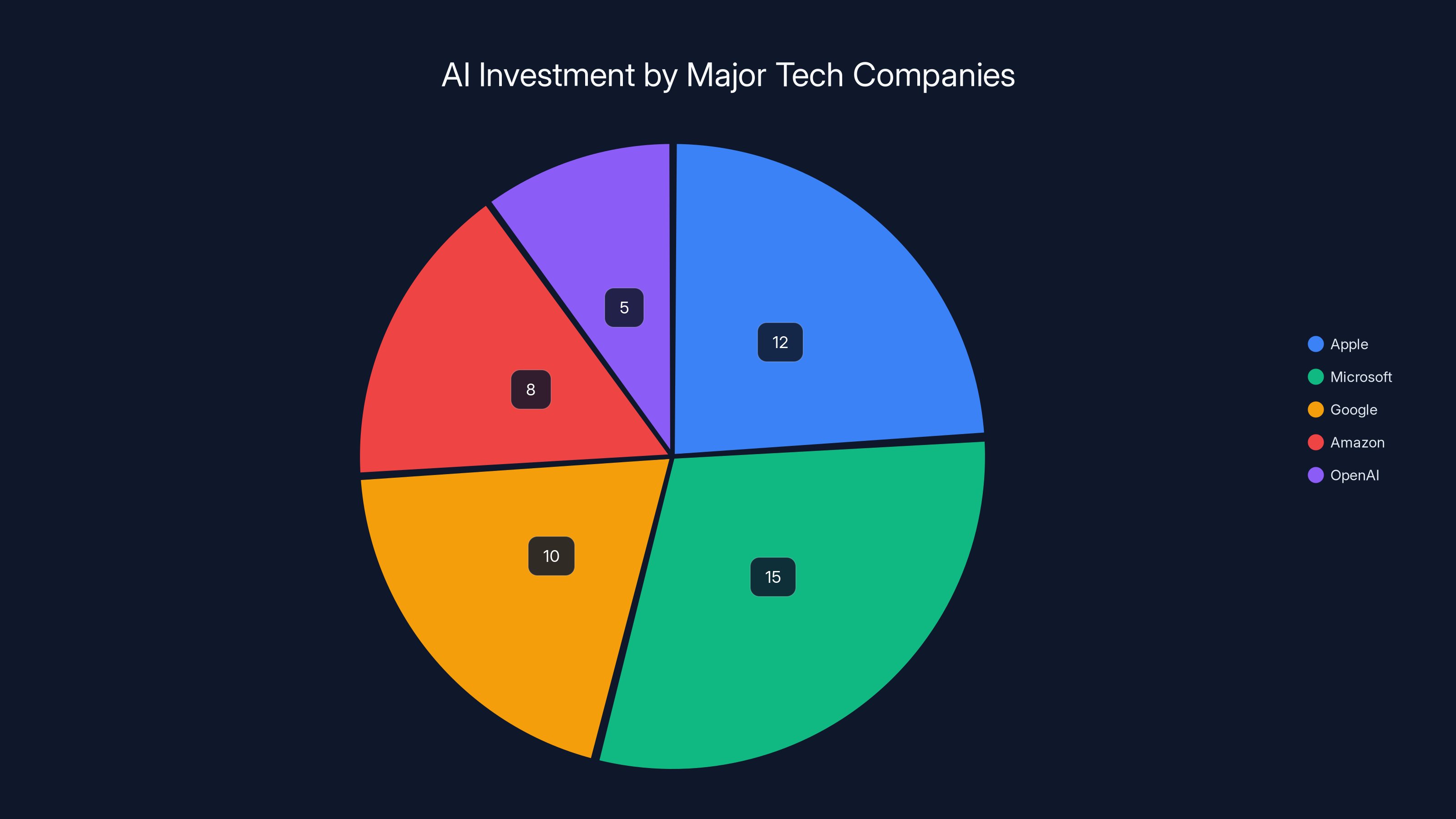

This isn't a trivial concern. According to industry analysts, major technology companies have invested over

During Apple's January 2026 earnings call, Morgan Stanley analyst Erik Woodring posed a question so deceptively simple it exposed a fundamental vulnerability in the tech industry's narrative: "How do you monetize AI?" What followed was a masterclass in corporate non-answering, with Cook explaining that Apple brings "intelligence to more of what people love" and integrates it "in a personal and private way." Translation: The company hasn't figured it out yet, and neither has anyone else.

This moment crystallizes a broader crisis of confidence that's beginning to permeate the investment community. While venture capitalists and tech evangelists have spent years preaching the gospel of AI transformation, they've largely glossed over the inconvenient truth: the economics of artificial intelligence remain fundamentally uncertain. Companies are investing as if the returns are guaranteed, yet the actual business models that will generate those returns remain largely theoretical.

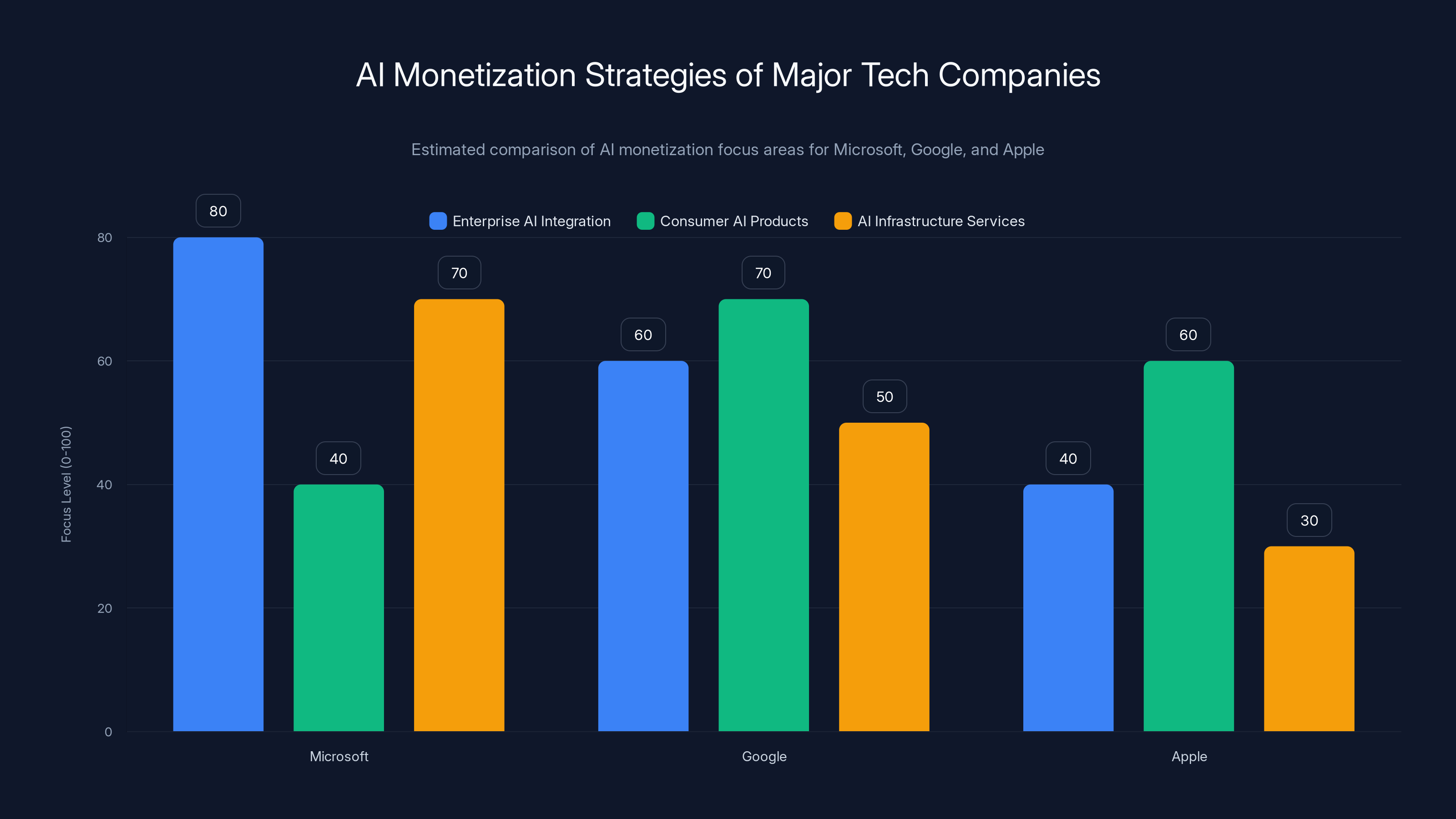

The monetization challenge becomes even more complex when you consider the competitive landscape. Apple's competitors—Microsoft, Google, Meta, and Amazon—are pursuing radically different strategies, from embedding AI into workplace productivity tools to developing proprietary large language models. Some strategies appear more promising than others, but none have definitively proven they can generate returns proportional to the investment required.

This comprehensive analysis explores the multifaceted dimensions of Apple's AI monetization challenge, examines how industry competitors are approaching the problem, evaluates different revenue models being tested across the sector, and provides frameworks for understanding which approaches might ultimately succeed.

The Current State of Apple's AI Strategy: Foundation Without Revenue

Apple's approach to artificial intelligence differs significantly from competitors in both philosophy and execution. Rather than building flashy consumer-facing AI products or promoting generative AI capabilities as headline features, Apple has chosen a more conservative, privacy-centric path that quietly embeds intelligence throughout its devices and services.

This strategy manifests in several concrete ways. Apple Intelligence, launched in late 2024, integrates machine learning into core iPhone, iPad, and Mac functionality. The system handles writing assistance, image generation, text summarization, and device-level processing without constantly shipping data to cloud servers. This on-device approach aligns with Apple's well-established brand positioning around privacy, but it also creates a monetization obstacle: consumers don't pay separately for features they perceive as natural OS improvements.

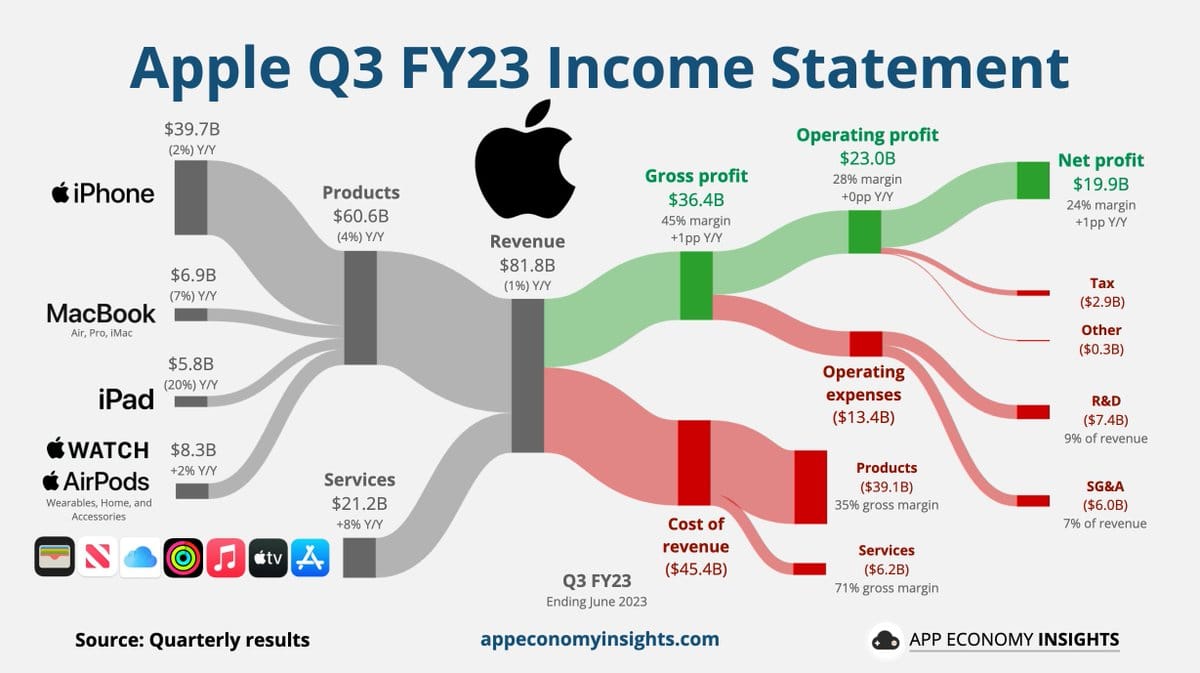

The company has invested heavily in building proprietary chips optimized for AI workloads, including the Neural Engine that processes machine learning tasks locally. These chips represent billions of dollars in R&D spending and manufacturing costs. Apple spreads this investment across hundreds of millions of devices sold annually, but the company doesn't charge customers extra for AI capabilities. They're bundled into the base product price, meaning Apple must recoup these costs through traditional device sales and services revenue.

Apple's services business provides the clearest monetization path. With over $20 billion in quarterly services revenue as of early 2026, Apple has built recurring revenue streams through iCloud subscriptions, Apple Music, Apple TV+, AppleCare, and App Store commissions. In theory, AI could enhance the value proposition of these services, justifying premium pricing or driving adoption. In practice, Apple hasn't aggressively marketed AI as a standalone service enhancement.

Siri, Apple's virtual assistant powered by machine learning, represents a perfect case study in unrealized monetization potential. Despite being available on over two billion devices, Siri generates no direct revenue. The assistant handles user requests for information, device control, and app launching, but Apple doesn't charge for Siri access or provide premium Siri tiers. Competitors like Amazon (with Alexa) and Google (with Google Assistant) face similar challenges, though all three companies view their assistants as platforms for enabling other monetized services rather than revenue sources themselves.

Apple's decision to embed privacy-preserving AI directly into devices rather than relying on cloud-based processing creates a fundamental economic constraint. Cloud-based AI processing generates clear monetization opportunities: companies can track usage, implement metering, and charge based on computational resources consumed. On-device AI, by contrast, operates within devices already owned by customers, making usage-based pricing impractical.

Apple's premium brand positioning imposes a further constraint. It lets the company command high prices for hardware, but customers expect AI features as standard functionality rather than paid add-ons. Charging separately for AI capabilities would contradict Apple's carefully cultivated identity as a seamless, integrated ecosystem provider.

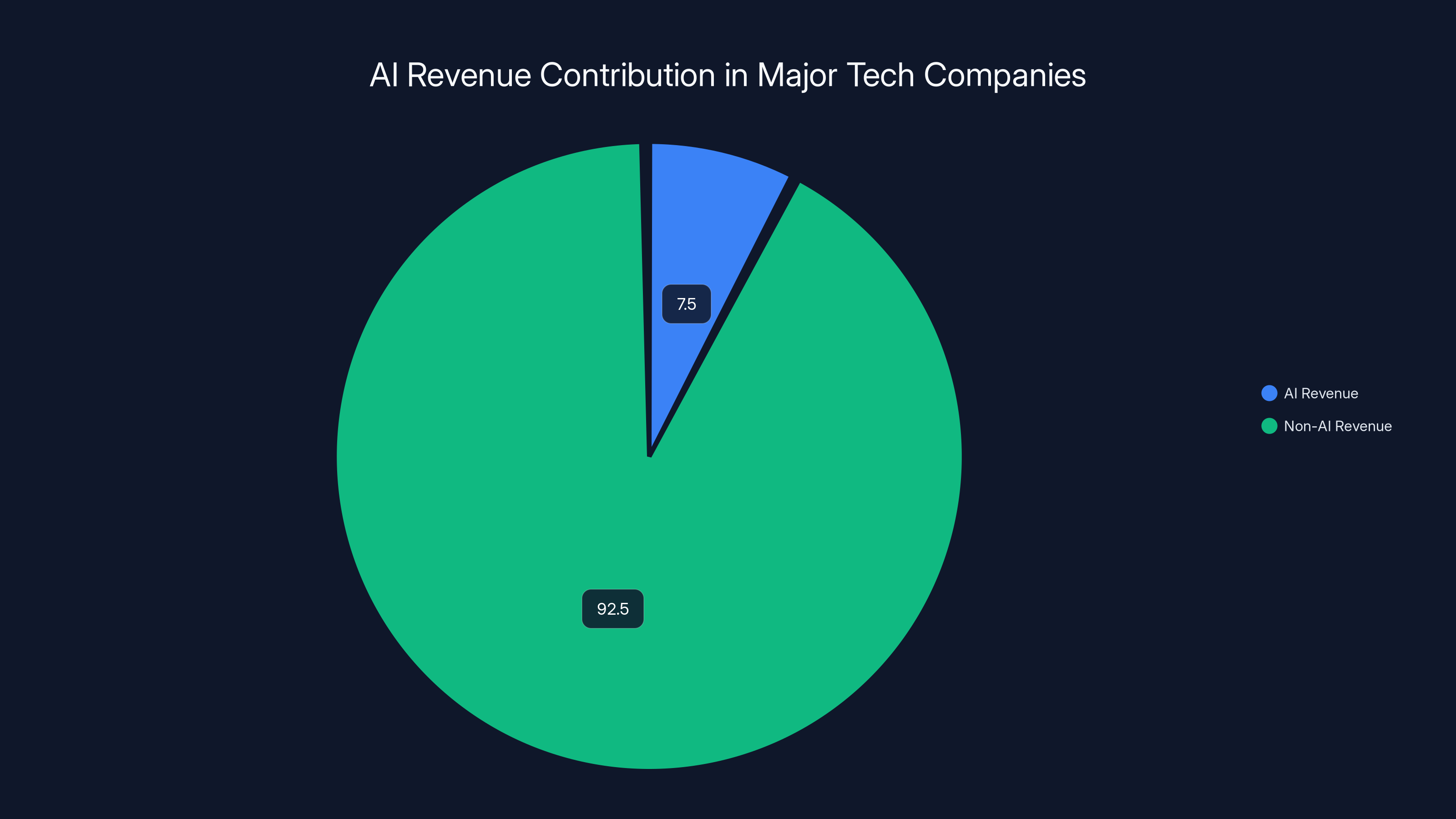

Estimated data suggests AI contributes approximately 5-10% of revenue for major tech companies, highlighting the current limited monetization success.

Competitive Approaches: How the Industry Is Searching for the AI Monetization Answer

Understanding Apple's monetization challenge requires examining how competitors are attacking the same fundamental problem. Each major technology company has adopted a distinctly different strategy, reflecting different core business models and competitive advantages.

Microsoft's Enterprise AI Bet: Vertical Integration and Integration Fees

Microsoft has positioned itself as the enterprise AI company, pursuing an aggressive strategy of embedding AI throughout its productivity suite, cloud infrastructure, and enterprise services. The company's relationship with OpenAI (in which Microsoft invested $13 billion) represents a calculated bet that paying for access to advanced AI models makes business sense if those models drive enterprise adoption and usage.

Microsoft's approach centers on charging for AI-enhanced services within existing products. Copilot, available across Office 365, Azure, and Windows, operates on a straightforward premise: enterprises pay for subscriptions that include AI-powered features like document drafting, code generation, and data analysis. The company charges $30 per user per month for Microsoft 365 Copilot, layered on top of an existing Microsoft 365 subscription. This model works because enterprises have already committed to Microsoft's ecosystem and view AI augmentation as a justified productivity improvement.

The Copilot strategy generates revenue in multiple ways. First, existing customers upgrade to higher-tier plans to access AI features. Second, the AI capabilities reduce customer churn by increasing switching costs—once employees become dependent on Copilot for generating code and documents, moving to competing platforms becomes painful. Third, Microsoft's cloud infrastructure business grows as enterprises demand more computational resources to power AI workloads, creating a self-reinforcing virtuous cycle.

Google's Diversified AI Revenue Streams: Advertising, Cloud, and Ecosystem Integration

Google faces a different monetization landscape due to its dominant position in advertising. The company generates over $280 billion in annual revenue, with approximately 80% coming from advertising. This revenue concentration means Google's AI strategy must first and foremost protect and enhance advertising effectiveness.

Google's AI monetization approach includes four primary components. First, AI improves advertising targeting and content recommendations, directly boosting advertiser ROI and Google's pricing power with advertisers. Second, AI-powered Google Cloud services generate direct revenue from enterprises seeking machine learning capabilities, data analysis, and infrastructure. Third, integrating generative AI into search (as Google has attempted with its AI Overviews feature) could eventually justify new premium search subscription tiers, though consumer adoption remains uncertain. Fourth, AI tools embedded in Google's productivity suite (Docs, Sheets, Gmail) mirror Microsoft's approach, though Google's smaller enterprise footprint limits near-term revenue potential.

Google's search advertising dominance provides a massive advantage: the company can test new monetization approaches on billions of search queries daily, seeing which AI-augmented features drive advertiser willingness to pay higher prices. Unlike Apple's privacy-focused approach, Google explicitly tracks user interactions, enabling sophisticated machine learning models that optimize for advertiser ROI.

Meta's AI-Driven Engagement and Advertising: Betting on Attention

Meta pursues perhaps the most aggressive AI development strategy among major tech companies, having invested billions in AI infrastructure for video recommendation, content moderation, and advertising optimization. The company's core monetization model—advertising—benefits substantially from AI improvements that increase user engagement and advertising targeting precision.

Meta's AI strategy focuses on using machine learning to understand user preferences with unprecedented granularity, enabling hyper-targeted advertising that commands premium prices from advertisers. The company also views AI as critical for competitive positioning in emerging platforms like virtual and augmented reality, where personalization powered by advanced AI could become decisive. Unlike Apple's conservative approach, Meta openly incorporates AI into its primary business model, with each algorithm improvement directly correlated to measurable revenue gains.

Amazon's AI Infrastructure Play: Selling the Picks and Shovels

Amazon operates differently from consumer-focused competitors, deriving the majority of profits from Amazon Web Services (AWS). The company's AI monetization strategy centers on selling AI infrastructure, machine learning services, and tools to enterprises.

AWS offers numerous AI services including SageMaker (a machine learning platform), Rekognition (computer vision), Polly (text-to-speech), and others. Enterprises pay for these services based on usage and computational resources consumed. Amazon also benefits from AI improvements in its retail operations—recommendation engines, fraud detection, and logistics optimization all improve profitability while requiring no additional customer payments. Amazon's partnership with Anthropic (in which the company invested over $4 billion) reflects its belief that having direct relationships with leading AI model developers creates strategic value.

Amazon's approach generates revenue immediately through AWS service charges while also improving operational efficiency across the company's retail, logistics, and advertising businesses. This diversified revenue model provides more insulation against the possibility that some AI monetization approaches ultimately fail.

The computational requirements for training AI models have increased exponentially from GPT-3 to GPT-4 and are projected to continue rising for future models. (Estimated data)

The OpenAI Cautionary Tale: When Vision Exceeds Business Model Clarity

No analysis of AI monetization challenges would be complete without examining OpenAI, the company that catalyzed the current AI boom through ChatGPT's explosive success. OpenAI presents a cautionary tale about the gap between technical achievement and sustainable business models.

ChatGPT achieved unprecedented growth metrics for a software product, reaching 100 million monthly active users faster than any consumer application before it. The company's technical achievements are undeniable: GPT-4 represents a genuine leap forward in language model capability compared to predecessor models. Yet OpenAI's path to profitability remains uncertain.

The company currently operates ChatGPT Plus, a subscription service at

OpenAI's projections that it will reach profitability by 2030 are based on assumptions about future scale, improved efficiency, and new revenue models that haven't yet materialized. The company's funding requirements—analysts estimate $207 billion in cumulative future funding needs—reflect the staggering economics of building and maintaining world-class AI models. The training compute costs alone for advanced language models rival the GDP of small nations.

OpenAI's situation exposes a fundamental tension in AI economics: the most capable models are expensive to develop and expensive to run. Creating a profitable service requires either (a) aggregating billions of users at high margins, (b) selling to enterprises at prices that justify computational costs, (c) dramatically reducing computational costs through new techniques, or (d) some combination thereof. None of these paths are guaranteed to succeed.

The company's uncertainty about monetization extends to enterprise customers as well. While companies like Microsoft integrate OpenAI's models into enterprise products, OpenAI's own direct enterprise sales remain modest. Large enterprises hesitate to depend on OpenAI for mission-critical applications because OpenAI's long-term viability remains unproven, and the company lacks existing trust relationships in enterprise settings.

Analyzing the Economics of AI Model Development and Deployment

Understanding why tech companies struggle with AI monetization requires examining the underlying economics of building and operating AI systems. The mathematical realities are less forgiving than many public narratives suggest.

The Scaling Laws Challenge: When Bigger Isn't Always Profitable

Fundamental research in machine learning has established consistent relationships between model size, training data, and performance: doubling model size typically requires approximately two to three times more computational resources. This creates a scaling problem where each generation of improved models costs exponentially more to train and operate.

GPT-3, released in 2020, required approximately 3,640 Petaflop/s-days of computation to train. GPT-4, released in 2023, likely required 10x to 100x more computation (exact figures remain proprietary). The next generation will presumably require similar or greater increases. These computational requirements translate to direct costs: training a state-of-the-art language model costs between

Serving these models to users requires additional computational resources. Each user query to a large language model consumes measurable compute resources—roughly 0.0001 to 0.001 GPU hours per query depending on query length and model size. At scale, with millions of concurrent users, this aggregates to massive infrastructure costs.

The monetization trap becomes clear: training costs are fixed investments that must be recouped through service revenue, but serving costs are variable and directly correlated to usage. If serving costs are high relative to revenue per query, increasing usage actually decreases profitability. This inverts traditional software economics, where serving additional users typically increases profitability (because software can be copied at nearly zero cost).
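The trap can be made concrete with a back-of-the-envelope sketch. The GPU-hours-per-query range is taken from the estimate above; the subscription price, the GPU hourly cost, and the query volumes are hypothetical placeholders chosen only for illustration, not reported figures.

```python
def serving_cost_per_user(queries_per_month: int,
                          gpu_hours_per_query: float,
                          gpu_cost_per_hour: float) -> float:
    """Variable serving cost for one user over a month, in dollars."""
    return queries_per_month * gpu_hours_per_query * gpu_cost_per_hour


def monthly_margin_per_user(price_per_month: float,
                            queries_per_month: int,
                            gpu_hours_per_query: float,
                            gpu_cost_per_hour: float) -> float:
    """Flat subscription revenue minus variable serving cost for one user."""
    cost = serving_cost_per_user(queries_per_month, gpu_hours_per_query,
                                 gpu_cost_per_hour)
    return price_per_month - cost


def break_even_queries(price_per_month: float,
                       gpu_hours_per_query: float,
                       gpu_cost_per_hour: float) -> float:
    """Monthly query count at which serving cost equals subscription revenue."""
    return price_per_month / (gpu_hours_per_query * gpu_cost_per_hour)


if __name__ == "__main__":
    PRICE = 20.0     # hypothetical flat subscription price, $/month
    GPU_COST = 2.0   # hypothetical cost of one GPU hour, $

    # 0.0001 and 0.001 GPU hours per query are the bounds quoted in the text.
    for gpu_hours in (0.0001, 0.001):
        limit = break_even_queries(PRICE, gpu_hours, GPU_COST)
        print(f"{gpu_hours} GPU-h/query: break-even at {limit:,.0f} queries/month")
        for queries in (1_000, 10_000, 30_000):
            margin = monthly_margin_per_user(PRICE, queries, gpu_hours, GPU_COST)
            print(f"  {queries:>6} queries -> margin ${margin:,.2f}")
```

Under any such assumptions, a flat-price subscriber is profitable only below the break-even query count. Every query beyond it deepens the loss, which is the inversion of traditional software economics described above.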

Comparison of AI Revenue Models

Tech companies have experimented with several revenue models to extract value from AI investments:

Subscription Model (Microsoft Copilot Pro, ChatGPT Plus): Users pay monthly fees for access to advanced models and features. This provides predictable recurring revenue but requires consistent value delivery to justify continued payments. Success depends on customers perceiving sufficient benefit relative to subscription cost. Typical pricing ranges from

Usage-Based Pricing (AWS AI Services, OpenAI API): Companies charge based on computational resources consumed—typically dollars per million tokens processed. This aligns incentives: more usage generates more revenue. However, it requires customers to accept variable costs and creates pricing transparency that invites competitive pressure. A typical API call might cost

Embedded Features (No Direct Charge) (Apple Intelligence, Google Assistant): AI features are included in products without separate charges. This protects user experience consistency and supports premium positioning but generates no direct AI revenue. The monetization occurs through base product sales and improved user retention.

Enterprise Infrastructure (AWS SageMaker, Google Cloud AI): Sell infrastructure and tools for enterprises to build their own AI systems. This generates predictable infrastructure revenue while transferring development risk to customers. Typical enterprise deals range from

Advertising Enhancement (Google, Meta, Amazon): Improve advertising targeting and ROI through AI-driven optimization. This generates revenue indirectly through improved advertiser ROI and higher advertising prices. The advantage is that optimization investments can be measured directly against revenue impact.

Each model has trade-offs. Subscription models require ongoing value delivery and face consumer willingness-to-pay constraints. Usage-based pricing exposes companies to margin pressure from competitors offering similar services at lower prices. Embedded features don't generate direct revenue. Enterprise infrastructure requires extensive sales and support efforts. Advertising enhancement works only for companies with existing advertising businesses.
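To illustrate how usage-based pricing scales with consumption, and why a competitor's price cut translates directly into lost revenue, here is a minimal sketch of per-million-token billing. The separate input and output rates and every price and volume shown are hypothetical assumptions, not any provider's actual price list.

```python
def api_call_cost(input_tokens: int,
                  output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Dollar cost of one API call under per-million-token pricing."""
    total = (input_tokens * input_price_per_million
             + output_tokens * output_price_per_million)
    return total / 1_000_000


def monthly_bill(calls_per_month: int,
                 input_tokens: int,
                 output_tokens: int,
                 input_price_per_million: float,
                 output_price_per_million: float) -> float:
    """Monthly bill for a customer making identical calls at a fixed volume."""
    per_call = api_call_cost(input_tokens, output_tokens,
                             input_price_per_million, output_price_per_million)
    return calls_per_month * per_call


if __name__ == "__main__":
    # Hypothetical workload: 1M calls/month, 1,000 input + 500 output tokens each.
    ours = monthly_bill(1_000_000, 1_000, 500, 2.0, 8.0)
    # Hypothetical competitor charging half as much for a comparable model.
    rival = monthly_bill(1_000_000, 1_000, 500, 1.0, 4.0)
    print(f"our price:   ${ours:,.2f}/month")
    print(f"rival price: ${rival:,.2f}/month")
```

Because the customer can run this calculation against any provider's published rates, matching a rival's lower price reduces revenue by the same proportion for identical usage.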

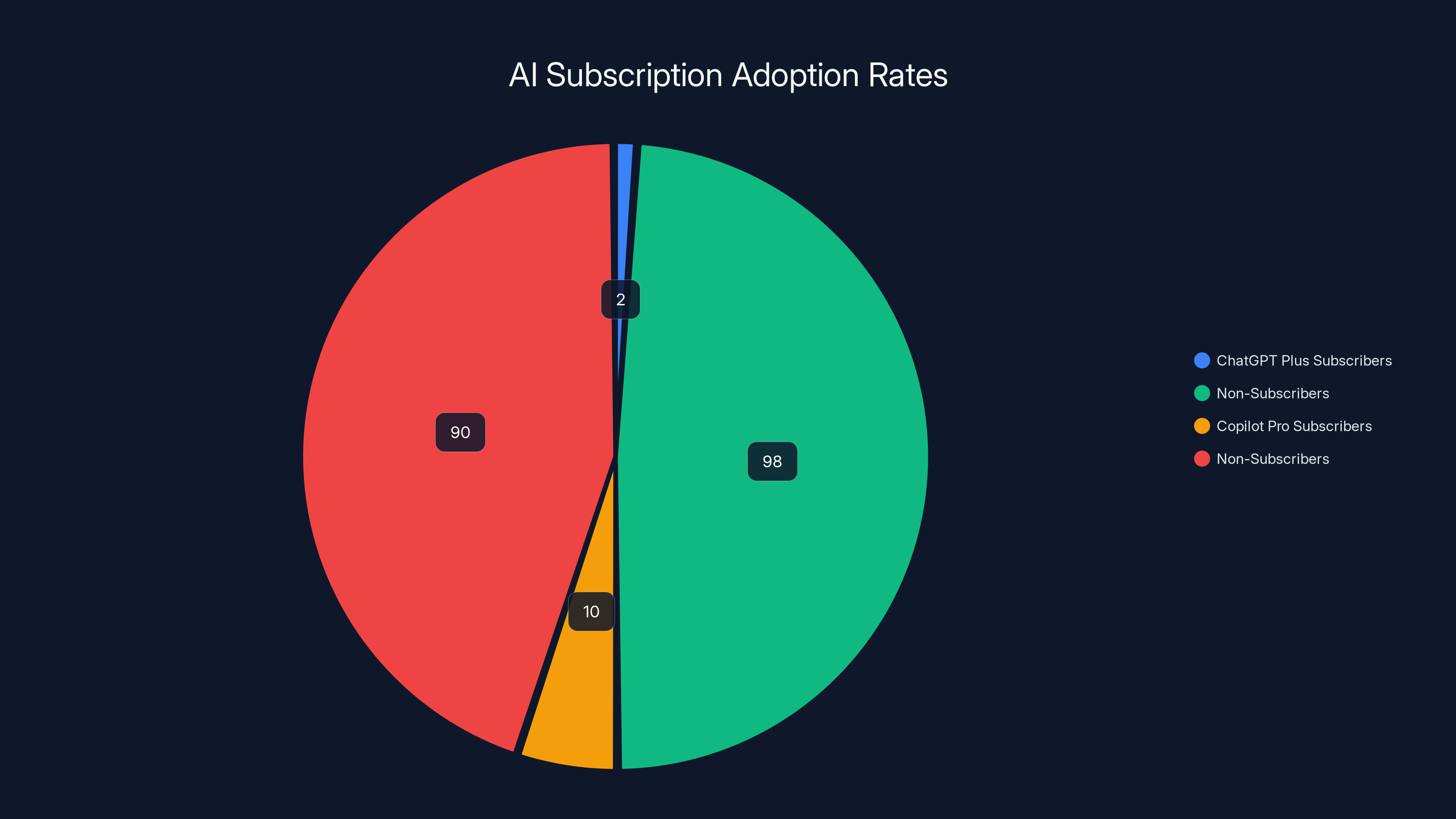

Estimated data shows that only 2% of ChatGPT users subscribed to ChatGPT Plus, while 10% of Microsoft's enterprise users adopted Copilot Pro, indicating higher willingness to pay among enterprise users.

The Privacy-Monetization Trade-off: Apple's Strategic Constraint

Apple's monetization challenge is compounded by a strategic choice that competitors avoided: the company committed to privacy-preserving AI that processes data locally on devices rather than in cloud data centers.

This choice provides genuine customer benefits. Users don't transmit sensitive documents, emails, photos, or personal information to Apple's servers for processing, and Apple cannot access that data to train or improve its AI models. From a privacy perspective, this approach is demonstrably superior to cloud-based alternatives.

However, this design choice creates significant monetization constraints. Cloud-based AI generates clear monetization opportunities: companies can measure usage, implement metering, and charge based on computational resources consumed. Apple cannot easily implement usage-based pricing for on-device AI because the processing occurs on devices already owned by customers.

Furthermore, on-device AI processing is less efficient than cloud processing. The computational resources available on a smartphone or tablet are orders of magnitude smaller than cloud data centers. This means Apple's on-device models must be smaller, less capable, and optimized heavily for efficiency. Complex AI tasks still require cloud processing, which reintroduces the privacy trade-offs Apple sought to avoid.

Apple's competitive positioning in premium consumer electronics provides limited guidance for monetization. Samsung, Google Pixel, and other Android manufacturers face similar challenges: their AI features must be competitive with Apple's, but customers expect them as standard functionality rather than premium add-ons. Across the smartphone industry, reaching feature parity earns little additional margin, making AI an expensive competitive necessity rather than a profit driver.

The privacy-monetization tension illustrates a deeper challenge: the most valuable AI applications require data—lots of it. Privacy-preserving AI is inherently constrained in capability compared to models trained on billions of user interactions. Companies seeking maximum AI capability must choose between privacy and model performance. Companies choosing privacy necessarily accept reduced capability and reduced monetization potential.

Market Adoption and Consumer Willingness to Pay

Beyond technical and strategic challenges, AI monetization faces fundamental market adoption hurdles. Consumers and enterprises have limited experience with standalone AI products, making it difficult to predict how much they'll pay for AI capabilities.

Consumer Behavior: Reluctance to Adopt Premium AI Subscriptions

Early data on consumer AI subscription adoption reveals hesitation. ChatGPT Plus achieved a reported 2 million subscribers by early 2025, representing approximately 2% of ChatGPT's 100 million monthly active users. This suggests that while users value free access to AI, the vast majority are unwilling to pay for premium tiers.

Microsoft's experience with Copilot Pro proved more positive, reaching approximately 1 million subscribers by late 2025, though Microsoft has a 10x larger enterprise user base. The difference between Copilot Pro's uptake and that of ChatGPT Plus suggests that enterprise users are more willing to pay than consumers, which aligns with historical software monetization patterns.

Several factors explain consumer reluctance to adopt premium AI subscriptions. First, expectations are anchored to free AI access—ChatGPT established a baseline expectation that advanced AI should be free or nearly free. Second, differentiation between free and premium tiers is less obvious than traditional software features. A free cloud storage service clearly differentiates between 2GB (free) and 200GB (paid), but AI quality differences are subtle and subjective. Third, consumers have numerous free or low-cost AI alternatives, creating competitive pressure that limits pricing power.

Enterprise adoption appears more promising because businesses evaluate AI through ROI rather than price sensitivity. If a tool demonstrably increases productivity by 20%, the ROI calculation is straightforward—the tool pays for itself through efficiency gains. This business logic doesn't apply to consumer use cases where "efficiency gain" is ambiguous and value is subjective.
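The enterprise ROI logic can be sketched in a few lines. The 20% productivity gain is the example used above and the $30 license fee is the per-user monthly price cited earlier for Microsoft's Copilot offering. The fully loaded employee cost is a hypothetical placeholder, and valuing a productivity gain as the same fraction of employee cost is a simplifying assumption.

```python
def monthly_roi(productivity_gain: float,
                monthly_cost_per_employee: float,
                license_per_user: float) -> float:
    """Return on the license fee: (value created - fee) / fee."""
    value_created = productivity_gain * monthly_cost_per_employee
    return (value_created - license_per_user) / license_per_user


def break_even_gain(monthly_cost_per_employee: float,
                    license_per_user: float) -> float:
    """Smallest productivity gain at which the tool pays for itself."""
    return license_per_user / monthly_cost_per_employee


if __name__ == "__main__":
    EMPLOYEE_COST = 8_000.0  # hypothetical fully loaded cost, $/month
    LICENSE = 30.0           # $/user/month, figure cited earlier in the article

    roi = monthly_roi(0.20, EMPLOYEE_COST, LICENSE)
    needed = break_even_gain(EMPLOYEE_COST, LICENSE)
    print(f"ROI at a 20% gain: {roi:.1f}x the license fee")
    print(f"break-even productivity gain: {needed:.3%}")
```

The point of the sketch is the asymmetry: when the fee is small relative to labor cost, even a gain far below 20% clears the bar, which is why enterprise buyers can justify prices that consumers will not.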

Enterprise Monetization: The More Promising Path

Enterprise software has historically achieved superior monetization compared to consumer software, a pattern emerging with AI as well. Companies like Microsoft benefit from existing enterprise relationships, established budgeting processes, and clear ROI metrics.

Enterprises purchase AI capabilities for specific use cases: customer service automation, document processing, code generation, fraud detection, demand forecasting, and content creation. For each use case, enterprises can measure ROI: reduction in customer service staff hours, faster contract review, reduced code development time, etc. This business logic justifies premium pricing that consumer markets won't support.

However, enterprise AI markets face their own challenges. Enterprises move slowly in adopting new technologies, requiring extensive proof-of-concept testing, security evaluation, and integration planning. Competitors can undercut on price while enterprises evaluate offerings, compressing margins. Enterprise deals also require extensive sales and support investments, reducing profitability compared to subscription-only consumer models.

The enterprise AI market is also consolidating around dominant players. Microsoft, Google, and Amazon have structural advantages through existing enterprise relationships, established trust, and integrated ecosystems. Startups face the challenge of proving their AI technology is sufficiently superior to convince enterprises to switch from established providers.

Estimated data shows that Microsoft and Apple lead in AI investment with

Alternative Monetization Models: Lessons from Adjacent Industries

Understanding how AI companies might ultimately monetize requires examining successful monetization models from adjacent technology sectors and emerging experiments in the AI industry.

The Software-as-a-Service Model: Established Success, Uncertain Applicability

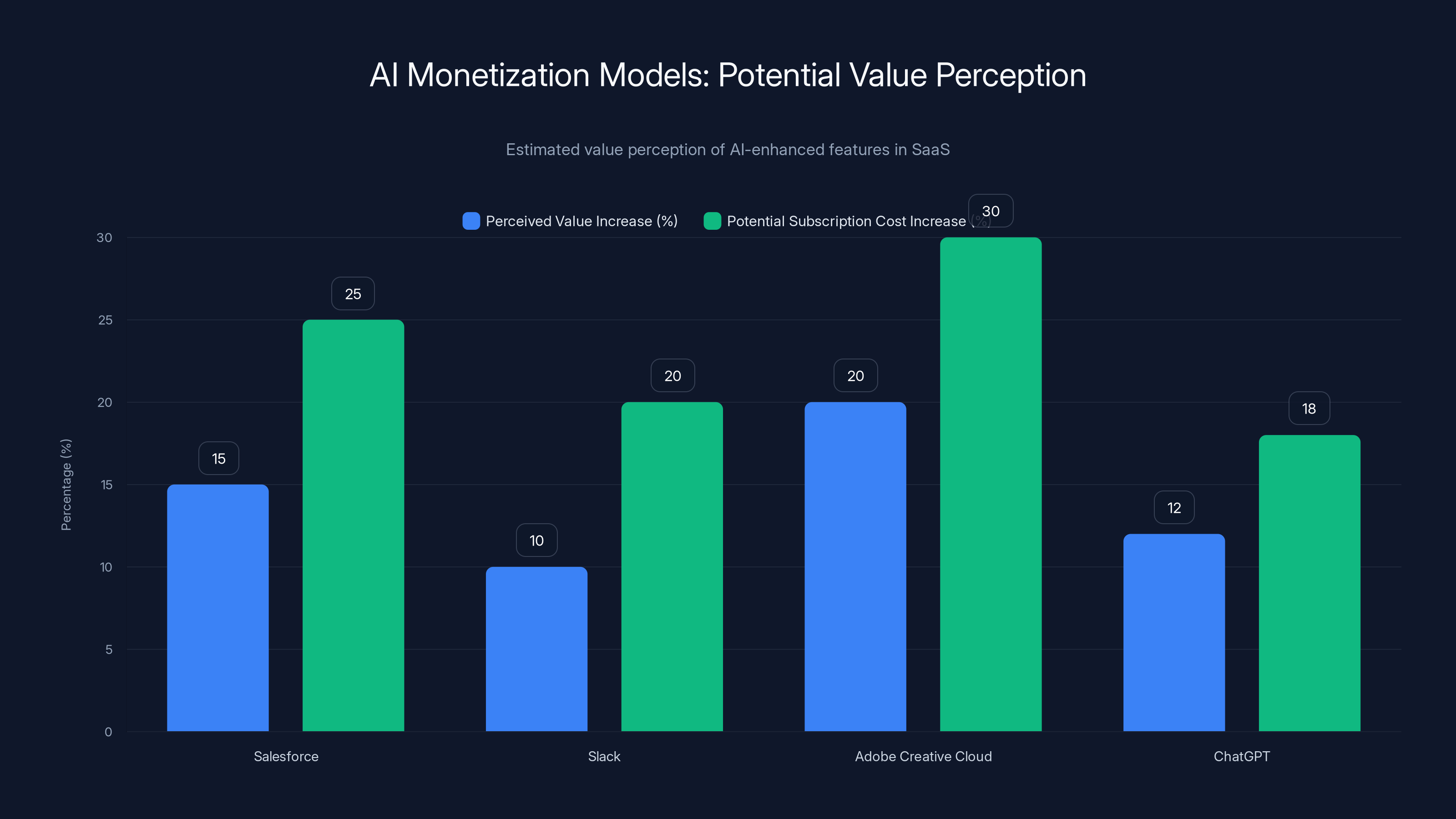

Traditional SaaS companies monetize through subscription fees based on usage tiers, features, or number of users. This model works exceptionally well for tools like Salesforce (customer relationship management), Slack (team communication), and Adobe Creative Cloud (content creation). These products provide clear, measurable value that justifies ongoing subscription fees.

AI services are attempting to apply similar models. Slack integrated AI writing assistance into its platform and could theoretically charge premium prices for enhanced AI features. The question remains whether businesses perceive sufficient incremental value. If Slack's AI writer improves message composition by 10%, does that justify a 20% increase in subscription cost? The answer depends on subjective value perception rather than objective metrics.

The Freemium Model: Generating Revenue Through Conversion

Freemium products provide baseline functionality free and charge for advanced features. This model works well when there's clear feature differentiation and a meaningful subset of users converts to paying status. Slack itself operates on a freemium model, as do tools like Notion, Figma, and Discord.

AI companies have experimented extensively with freemium approaches. ChatGPT (free with paid premium tier), Claude (free and paid), and Perplexity (free and paid) all use freemium models. Success metrics remain mixed—each company reports some percentage of free users converting to paid, but exact figures are proprietary. The challenge for AI specifically is that free AI is quite good, reducing motivation to pay for premium variants.
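One way to see why conversion rates matter so much for AI freemium products is that free users are not free to serve. The sketch below computes the conversion rate at which paid subscribers' contribution covers the serving cost of the free tier. The 2% rate is the ChatGPT Plus estimate cited earlier; the price and per-user serving costs are hypothetical placeholders.

```python
def profit_per_user(conversion_rate: float,
                    price: float,
                    cost_paid: float,
                    cost_free: float) -> float:
    """Average monthly profit per user (free and paid combined)."""
    paid_contribution = conversion_rate * (price - cost_paid)
    free_burden = (1.0 - conversion_rate) * cost_free
    return paid_contribution - free_burden


def break_even_conversion(price: float,
                          cost_paid: float,
                          cost_free: float) -> float:
    """Conversion rate at which paid margin exactly covers free-tier cost."""
    contribution = price - cost_paid
    if contribution <= 0:
        raise ValueError("paid tier must earn more than it costs to serve")
    return cost_free / (contribution + cost_free)


if __name__ == "__main__":
    PRICE = 20.0      # hypothetical subscription price, $/month
    COST_PAID = 5.0   # hypothetical serving cost of a paid user, $/month
    COST_FREE = 0.5   # hypothetical serving cost of a free user, $/month

    needed = break_even_conversion(PRICE, COST_PAID, COST_FREE)
    at_two_percent = profit_per_user(0.02, PRICE, COST_PAID, COST_FREE)
    print(f"break-even conversion rate: {needed:.2%}")
    print(f"profit per user at 2% conversion: ${at_two_percent:.2f}")
```

In traditional freemium software the free-tier cost is close to zero, so the break-even rate is close to zero as well. With meaningful per-query serving costs, the required conversion rate rises with every free user's usage.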

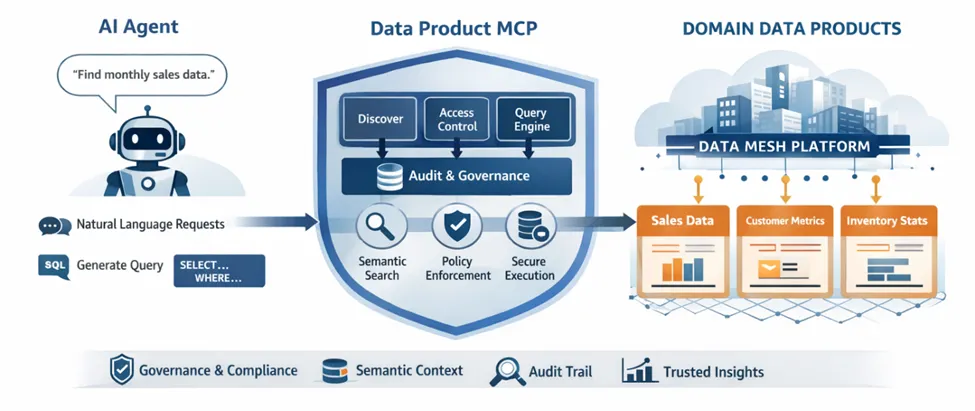

The Infrastructure/Platform Model: Selling to Builders

Rather than targeting end users directly, some companies are positioning themselves as platforms for developers and enterprises to build AI applications. OpenAI's API, Anthropic's API, Hugging Face, and Mistral all pursue this approach.

The infrastructure model has advantages: it's usage-based (more usage = more revenue), it removes the company from building consumer products (reducing customer support burden), and it taps into enterprise budgets that are more generous than consumer budgets. The disadvantage is commoditization risk—if multiple companies offer similar AI infrastructure, prices decline and margins compress. This describes the current trajectory of the API market.

Vertical AI Solutions: Domain-Specific Applications

An emerging pattern shows promise: companies building AI solutions for specific vertical markets (healthcare, legal, financial services) can achieve better monetization than horizontal platforms. A healthcare company deploying AI for diagnostic imaging can justify premium pricing because the value is measurable and the market's willingness to pay is high.

Vertical AI companies and products like Scale AI (data annotation for AI), Jasper (AI writing for marketing), and GitHub Copilot (AI for code) have demonstrated stronger business model sustainability than horizontal AI platforms. The reason is straightforward: domain-specific expertise allows better optimization and more defensible competitive positioning.

The Data Leverage Model: Monetizing Data Advantages

Some companies are exploring monetization models based on data advantages. Companies with privileged access to high-quality domain-specific data can build better AI models than competitors and monetize through superior capabilities. This describes Google (search queries), Meta (social data), and Amazon (e-commerce transactions).

However, this model works only for companies that already possess substantial data advantages. For new entrants, building equivalent data advantages requires either (a) years of operation to accumulate data, (b) acquisition of existing companies with data, or (c) partnerships that provide data access. All three paths are slow or expensive, limiting the viability of the data leverage model for most AI companies.

The Role of Artificial General Intelligence: Speculative Futures

Many technology industry observers argue that current AI monetization challenges will be moot once artificial general intelligence (AGI) is achieved. This argument contains both logic and deep uncertainty.

AGI Scenarios and Economic Implications

If AI systems achieve general intelligence matching or exceeding human cognitive capabilities across all domains, economic implications would be transformative. Such systems could perform virtually any knowledge work, potentially replacing human labor across entire sectors. The economic value would be staggering—potentially worth trillions in labor replacement value alone.

However, multiple scenarios could unfold. In an optimistic scenario, AGI systems are expensive to develop and operate but provide immense value to the businesses that deploy them, and AGI developers extract a large share of the resulting profits as rents. In a pessimistic scenario, AGI becomes a commodity: multiple companies develop comparable systems, competition drives down prices, and whoever developed the system first reaps temporary benefits before commoditization destroys profitability.

A third scenario is genuinely concerning: if AGI systems become sufficiently capable and autonomous, economic returns might accrue not to developers but to the AGI systems themselves or to whoever controls them. This raises complex questions about economic organization, power distribution, and value capture that extend far beyond traditional business model analysis.

Timeline Uncertainty: Why AGI Speculation Doesn't Solve Current Challenges

While AGI represents a potential long-term game-changer, its timeline remains deeply uncertain. Optimistic estimates place AGI development within the next 5-10 years. More conservative estimates extend to 20-30 years or suggest AGI may not be achievable at all. This uncertainty matters because companies must solve monetization challenges with current technology; speculating about future capabilities is not a viable business strategy.

Apple, OpenAI, Google, Microsoft, and Amazon all need to generate returns on AI investments before any AGI arrives. Current monetization models must work with current technology. Companies cannot operate at losses indefinitely waiting for AGI-scale disruption that may never arrive.

Furthermore, assuming AGI is achieved, significant lags typically occur between technical capability and economic monetization. Even with powerful new technology, creating business models, capturing market share, building customer relationships, and establishing pricing power takes years. Companies that have effectively monetized earlier AI generations will have competitive advantages in monetizing AGI-scale systems.

Estimated data shows Microsoft leading in enterprise AI integration, while Google focuses more on consumer AI products. Apple's strategy appears more balanced across areas.

Investment Market Reactions: How Markets Are Pricing AI Monetization Uncertainty

Public markets have responded to AI monetization uncertainty with volatility and divergent valuations. Examining market reactions provides insight into investor sentiment regarding AI monetization prospects.

Big Tech Stock Performance Amid AI Uncertainty

Despite monetization questions, big technology companies have maintained strong stock valuations. Apple's market capitalization exceeded

However, returns on AI-specific investments remain difficult to demonstrate. When companies report earnings, they emphasize AI integration but struggle to quantify AI-specific revenue contributions. Investors are essentially betting that companies will eventually figure out monetization rather than seeing clear evidence it's already happening.

AI Startup Funding and Valuation Dynamics

AI startups have received massive funding despite unprofitable business models. OpenAI raised billions despite projecting losses until 2030. Anthropic raised billions with even less clarity on its path to monetization. Thousands of smaller AI startups are funded based on technology promise rather than business model validation.

This funding dynamic reflects a familiar investment pattern: when an important new technology emerges, investors provide capital to numerous startups, betting that some will solve monetization and achieve massive scale. For every successful company, many others fail. This is venture capital's intended function: allocating capital across uncertain outcomes at scale.

However, the ratio of failures to successes in AI is likely to be higher than in previous technology cycles because AI monetization is particularly challenging. Investors have relatively high priors that OpenAI, Anthropic, and a few other advanced model companies have competitive moats. Most other AI startups are essentially software services built on top of others' models, which makes them inherently less defensible.

Public Market Corrections and Sentiment Shifts

Technology stocks experienced modest corrections during 2025-2026 as monetization questions became more mainstream. This reflects a logical market process: as more investors recognize that AI monetization is uncertain, valuations compress from "extremely optimistic" to "moderately optimistic." Full recognition that AI monetization is genuinely difficult would trigger more substantial repricing.

Market expectations are now pricing in a scenario where AI generates meaningful incremental value for major technology companies but not at the transformative scale that early AI evangelists promised. This represents a significant moderation from 2023-2024 hype, when some investors believed AI would revolutionize entire industries overnight.

Geographic and Regulatory Considerations: Additional Monetization Constraints

Beyond technical and market challenges, geographic and regulatory factors significantly impact AI monetization strategies.

Data Localization Requirements: Constraining Cloud-Based AI

Many countries restrict where personal data can be stored and processed. The European Union's GDPR limits transfers of personal data outside the EU, while China's data protection laws, Brazil's LGPD, and similar regulations across dozens of other countries impose comparable limits or outright localization requirements. Together, these rules constrain cloud-based AI systems that process personal data.

For companies like Google, Microsoft, and Amazon that operate global cloud infrastructure, data localization requirements mean maintaining separate data processing infrastructure in multiple countries. This increases operational costs and reduces the efficiency gains that cloud-based AI would normally provide. These regulations favor privacy-preserving, on-device AI approaches like Apple's—but on-device processing has its own monetization limitations.

Regulations also constrain the types of AI applications that companies can monetize. Biometric identification, facial recognition, and other surveillance-adjacent AI capabilities face restrictions in multiple jurisdictions. This eliminates potentially valuable monetization opportunities while forcing companies to invest in compliance infrastructure.

Export Controls: Limiting Market Access

U.S. export controls on advanced computing chips significantly impact global AI monetization. Companies cannot freely export advanced AI systems to countries like China, creating bifurcated markets where U.S. companies cannot compete in large portions of the global economy.

This matters substantially because China represents approximately 20% of global GDP and has the world's largest smartphone market, making it an essential market for consumer AI monetization. Export restrictions limit U.S. companies' addressable market, reducing potential returns on AI investments.

Tax Implications: Complexity in Global Operations

Different countries tax software and cloud services differently, creating incentive structures that affect monetization strategies. Some countries tax software as goods, others as services. Some provide preferential tax treatment for R&D-intensive companies, others don't. These differences matter when deciding which services to monetize where.

The uncertainty around digital services taxation also creates investment hesitation. Companies are uncertain whether AI-derived revenue will face special taxation, potentially reducing profitability. This uncertainty extends to carbon taxation—energy-intensive AI operations might face future carbon taxes that aren't fully priced into current business models.

Estimated data shows that while AI features can increase perceived value, the potential subscription cost increase may not always align, posing a challenge for monetization.

Case Study: How Runable Addresses AI Monetization Through Simplified Workflows

While major technology companies struggle with AI monetization at scale, emerging platforms like Runable demonstrate alternative approaches to generating value from AI investments. Rather than attempting to monetize advanced foundation models at consumer or enterprise scale, Runable focuses on practical AI automation workflows that address specific productivity needs.

Runable's approach centers on $9/month pricing for AI-powered automation tools including slides generation, document creation, report production, and presentation building. This positions Runable in the vertical AI solutions category—domain-specific applications rather than horizontal platforms.

The monetization model demonstrates several principles worth examining: (1) Domain specificity reduces competition and allows premium positioning relative to generic AI tools, (2) Bundled features (multiple AI capabilities in one platform) increase switching costs and reduce temptation to try competitors, (3) Accessible pricing ($9/month) focuses on market breadth rather than capturing maximum value per customer, and (4) Developer-first positioning creates a community that naturally drives adoption and retention.

Runable's business model also demonstrates how companies can generate returns on AI investments without solving the generalized AGI monetization problem. By focusing on specific use cases where AI provides clear value—content generation, workflow automation—Runable can implement straightforward pricing that customers accept because the value is clear and quantifiable.

For teams seeking AI-powered automation capabilities without massive infrastructure investments, platforms like Runable offer practical value propositions that established competitors often overlook. The willingness of customers to pay $9 monthly for specialized AI tools suggests that the problem isn't AI monetization generally, but rather designing specific, valuable applications where customers perceive clear benefits.

Emerging Monetization Models Being Tested in 2025-2026

Even as major companies struggle with traditional monetization approaches, emerging models are being tested in real market conditions. Several deserve examination as potential paths forward.

Model 1: AI as a Service Financer

Some companies are exploring hybrid models where AI services are provided at reduced cost or free, with profitability driven by downstream transactions. For example, an AI writing assistant might be provided free or cheaply, with monetization through premium services like professional editing, fact-checking, or publishing services. This converts AI from a product to a platform for additional services.

Model 2: Enterprise AI Staffing

Another emerging model treats AI as staff augmentation. Rather than selling software licenses, companies hire AI-augmented teams to perform specific work for clients, using proprietary AI tools internally. Monetization flows from client fees for completed work rather than software licensing.

Companies such as Fold AI and Relativity Labs operate in this space. The model works because clients want outcomes (completed work) rather than tools. The company retains competitive advantage through superior AI capabilities and workflow optimization.

Model 3: AI-Powered Managed Services

Similar to enterprise staffing but applied to ongoing services: companies provide managed services (customer support, content moderation, data processing) that are executed using proprietary AI augmented by humans. The client pays for the service outcome rather than AI technology. The company's competitive advantage is the combination of AI and operational expertise.

Model 4: Industry-Specific Licensing

Emerging models involve licensing AI capabilities specifically to industry-specific software vendors. For example, healthcare software companies license AI diagnostic capabilities that they integrate into their products. The healthcare vendor monetizes; the AI developer receives licensing fees. This divides the revenue opportunity—AI developers receive less revenue per user than if they monetized directly, but they reduce sales and support burden by working through established vertical vendors.

Model 5: AI Training and Certification

With skilled AI practitioners in short supply, companies are monetizing through training and certification programs. Organizations invest heavily in teaching employees and partners how to use AI tools effectively. This model works because training, education, and certification have historically commanded premium pricing in professional services markets.

Competitive Dynamics: How Monetization Success Depends on Market Structure

The likely success of different AI monetization approaches depends significantly on market structure—specifically, how fragmented or concentrated the market becomes.

Scenario 1: Winner-Take-Most Market (High Concentration)

If AI markets consolidate around a few dominant platforms (likely Microsoft, Google, Amazon, and potentially OpenAI), monetization becomes more feasible. Dominant platforms can more easily extract rents—charge premium prices because customers have limited alternatives. This scenario benefits large, established technology companies that can integrate AI across existing products and relationships.

In this scenario, Apple's challenge becomes even more acute: competing against dominant cloud-based AI providers while maintaining privacy positioning becomes increasingly difficult. Apple might be forced to choose between (a) loosening privacy commitments to compete on AI capability, (b) accepting a secondary position in AI capabilities, or (c) investing massive additional capital to maintain competitive parity.

Scenario 2: Fragmented Market with Specialization

Alternatively, AI markets might fragment into numerous vertical specialists, with different companies dominating different industries. Legal AI platforms, healthcare AI platforms, financial services AI platforms, etc., each with specialized capabilities and defensible market positions.

This scenario actually favors startups and specialized companies more than the dominant players, because vertical expertise becomes more valuable than platform dominance. It also enables better monetization because vertical specialists can charge premium prices justified by domain-specific value.

Scenario 3: Commodity Markets with Margin Compression

If AI models and capabilities become commoditized, with multiple companies offering comparable capabilities at similar prices, margins compress dramatically. This scenario describes aspects of the current API market, where OpenAI, Anthropic, Google, and others all offer language model APIs at comparable pricing.

In this scenario, monetization becomes extremely difficult because price is the primary competitive variable. Companies with superior operational efficiency can sustain profitability, but companies that cannot operate at scale, or that carry high cost structures, face sustained margin pressure. Venture-funded startups with substantial burn rates would find this environment inhospitable.

Future Outlook: What Must Happen for AI Monetization to Succeed

Despite current challenges, AI will eventually become meaningfully monetized. The question is not whether monetization will occur, but rather how, when, and which companies will succeed. Several preconditions must be met.

Requirement 1: Demonstrated Quantifiable Value

For any monetization approach to succeed at scale, customers must perceive clear, quantifiable value. Currently, many AI applications provide uncertain value—better writing assistance, more helpful customer service chatbots, improved image generation. These improvements are real but subjective.

As AI matures, applications with clearer value propositions will proliferate. Reducing customer service costs by 30% through AI automation is quantifiable. Using AI for fraud detection that prevents $100 million in losses annually is quantifiable. These use cases will monetize more readily than marginal improvements to consumer tools.

Requirement 2: Customer Willingness to Pay Must Develop

A generation of free AI access has created expectations that AI should be cheap or free. As customers become more dependent on AI for critical workflows, their willingness to pay will increase. This describes a standard technology adoption pattern: free access during early adoption, willingness to pay as value becomes critical.

We're likely in the transition phase where the vast majority of customers expect free access but a growing minority recognizes sufficient value to justify payments. This minority will expand as AI capabilities improve and applications become more essential.

Requirement 3: Cost Structures Must Improve

Current AI cost structures make profitability difficult even at scale. As computational efficiency improves—through better algorithms, more efficient hardware, and operational optimization—margins will expand. Companies that achieve 2x or 3x efficiency improvements relative to competitors will establish defensible profitability.
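To see why a 2x or 3x efficiency gain matters so much, consider how it changes gross margin at a fixed price. This is a rough sketch under stated assumptions: the $20 monthly price matches the ChatGPT Plus figure cited elsewhere in this article, but the $15 monthly serving cost per user is a hypothetical number, and the helper names are invented for illustration.

```python
def gross_margin(price_per_user: float, serving_cost_per_user: float) -> float:
    """Gross margin as a fraction of price."""
    return (price_per_user - serving_cost_per_user) / price_per_user


def margin_after_efficiency(price: float, cost: float, efficiency_multiple: float) -> float:
    """Gross margin after serving cost is divided by an efficiency multiple."""
    return gross_margin(price, cost / efficiency_multiple)


PRICE = 20.0          # monthly subscription price
SERVING_COST = 15.0   # hypothetical monthly compute cost per user

for multiple in (1, 2, 3):
    margin = margin_after_efficiency(PRICE, SERVING_COST, multiple)
    print(f"{multiple}x efficiency -> gross margin {margin:.1%}")
```

Under these assumed inputs, the same product at the same price moves from a thin margin to a software-like one purely through cost reduction, with no change in capability or pricing power.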

This suggests that companies investing heavily in computational efficiency and operational optimization may ultimately succeed where companies betting on raw capability do not. Microsoft's integration of custom AI chips into Azure infrastructure positions the company advantageously in this regard.

Requirement 4: Regulatory Clarity

Uncertainty about taxation, data protection, and AI-specific regulations creates hesitation in monetization planning. As regulatory frameworks clarify—which is already happening in the EU with the AI Act and emerging U.S. regulations—companies can make more confident monetization decisions.

Regulatory clarity also creates defensibility: established players can more easily comply with regulations than startups, so regulatory clarity often benefits incumbent technology companies.

Strategic Recommendations for Technology Companies

For companies attempting to monetize AI investments, several strategic approaches show promise based on current market conditions and emerging patterns.

Recommendation 1: Focus on Enterprise Over Consumer

Enterprise markets demonstrate superior monetization potential because businesses evaluate purchases on ROI, whereas consumers respond mainly to price. Investing more aggressively in enterprise AI solutions and less in consumer-facing AI provides clearer paths to profitability. This particularly applies to companies like Apple that lack existing enterprise sales infrastructure: developing this capability requires time but enables substantially better monetization.

Recommendation 2: Specialize Rather Than Generalize

Vertical specialization shows superior monetization compared to horizontal platforms. Companies should consider whether their AI investments can be more effectively monetized through domain-specific applications rather than general-purpose capability.

Recommendation 3: Establish Clear ROI Metrics

Successful monetization requires that customers understand value in concrete, measurable terms. Rather than marketing "better AI," successful monetization focuses on specific outcomes: "reduce customer service costs by 30%" or "improve fraud detection accuracy by 15%." Companies should invest in demonstrating ROI rather than marketing capability.

Recommendation 4: Develop Ecosystem Partnerships

Companies that position themselves as infrastructure or capability providers within broader ecosystems often achieve better monetization than standalone offerings. Partnering with established vertical software companies, consulting firms, and systems integrators extends reach without requiring direct sales efforts.

Recommendation 5: Maintain Optionality on Business Models

Given uncertainty about optimal monetization approaches, companies should structure investments to maintain flexibility. Building AI capabilities that can be monetized through multiple approaches (subscription, usage-based, licensing, managed services) provides insurance against any single approach failing.

Conclusion: The Monetization Question Will Eventually Be Answered

Tim Cook's non-answer to Erik Woodring's monetization question reflects genuine uncertainty rather than evasion. The uncomfortable truth is that no technology company—regardless of scale, resources, or talent—has definitively figured out how to monetize advanced AI capabilities profitably at scale. Apple, Microsoft, Google, Amazon, and OpenAI are all still experimenting with approaches whose long-term viability remains unproven.

This doesn't mean AI monetization is impossible. Rather, it means that solving this problem requires patience, experimentation, and willingness to accept interim periods of profitability uncertainty. The companies that will ultimately succeed in monetizing AI are those that:

- Invest continuously in AI capability improvement, recognizing that better models and more capable systems eventually become worth paying for.

- Experiment relentlessly with monetization approaches, learning from failures and scaling successes.

- Focus on customer value delivery rather than technology for its own sake.

- Develop operational efficiency so that as usage scales, profitability increases rather than declines.

- Maintain long-term strategic patience rather than demanding immediate returns on AI investments.

For teams and developers seeking AI-powered solutions today, platforms like Runable demonstrate that practical monetization is already possible in specific applications. By focusing on solving genuine problems—content generation, workflow automation, productivity enhancement—with clear value propositions and accessible pricing ($9/month), companies can generate sustainable returns even as larger players work through monetization challenges at scale.

The broader lesson is that AI monetization will eventually work, but not through any single universal approach. Instead, multiple monetization models will emerge across different market segments. Enterprise solutions will monetize differently than consumer products. Vertical specialists will monetize differently than horizontal platforms. Infrastructure providers will monetize differently than application developers.

The next 12-24 months will likely clarify which monetization approaches are working in practice. Companies demonstrating clear revenue growth from AI services will become valuable case studies. Companies unable to translate AI capabilities into paying customers will face investor pressure to either monetize more aggressively or reduce AI spending.

Until then, Tim Cook's vague answer—that Apple creates great value through AI integration—remains the most honest response the industry can offer. "Great value" will eventually translate to revenue. But the timeline remains uncertain, the path forward unclear, and the ultimate winners far from determined. This uncertainty is not comfortable for investors accustomed to predictable technology trajectories, but it's honest. And when companies finally solve the AI monetization equation, having maintained that honesty while the industry experiments will itself prove valuable.

FAQ

What is AI monetization and why is it challenging?

AI monetization refers to generating revenue from investments in artificial intelligence technology. It's challenging because the costs of developing and operating AI systems are extraordinarily high—training large language models costs tens to hundreds of millions of dollars—while pricing models for consumers and enterprises remain uncertain. Most companies have invested billions in AI with unclear paths to recouping these investments through customer payments.

How do major technology companies currently monetize AI?

Major tech companies employ several approaches. Microsoft embeds AI into its enterprise productivity suite and charges for premium features through Copilot. Google monetizes AI indirectly through improved advertising targeting and offers cloud-based AI services. Amazon sells AI infrastructure and tools through AWS. Apple includes AI in devices without separate charges. OpenAI offers subscription plans (ChatGPT Plus at $20/month) and API access on usage-based pricing. Each approach has trade-offs regarding revenue potential and operational complexity.

What percentage of technology companies' revenue comes from AI?

Exact percentages remain largely proprietary, but estimates suggest AI contributes no more than 5-10% of revenue for most major technology companies as of early 2026. Despite massive AI investments, AI services remain a small portion of overall business. This reflects both limited monetization success to date and companies' preference for embedding AI into existing products rather than creating new revenue streams. As AI capabilities improve and become more essential, the revenue percentage should increase substantially.

Why hasn't OpenAI achieved profitability despite ChatGPT's massive user base?

OpenAI serves over 100 million monthly active users, yet faces profitability challenges because serving language model queries is expensive. Each ChatGPT query consumes computational resources that cost money. OpenAI's subscription revenue (approximately 2% of users converting to

What is the difference between subscription-based and usage-based AI monetization models?

Subscription models charge fixed monthly fees regardless of usage—customers pay $20 monthly for ChatGPT Plus whether they make 1 query or 1000. This provides predictable revenue but risks customer dissatisfaction if they perceive they're not using the service enough. Usage-based models charge per transaction or computational unit used, aligning costs with actual usage. This works well for APIs but creates pricing uncertainty for customers and requires transparent, competitive pricing to avoid customer backlash. Different models suit different applications and customer segments.
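The trade-off between the two models can be made concrete with a break-even calculation. The sketch below assumes a hypothetical usage price of $0.02 per query, which is not a real price from any provider. The $20 subscription fee is the ChatGPT Plus figure mentioned above, and the function names are illustrative.

```python
def break_even_queries(subscription_fee: float, price_per_query: float) -> float:
    """Number of monthly queries at which usage-based cost equals the flat fee."""
    return subscription_fee / price_per_query


def cheaper_plan(queries: int, subscription_fee: float, price_per_query: float) -> str:
    """Return which plan costs the customer less for a given monthly query count."""
    usage_cost = queries * price_per_query
    return "usage" if usage_cost < subscription_fee else "subscription"


FEE = 20.0               # flat monthly subscription fee
PRICE_PER_QUERY = 0.02   # hypothetical usage-based price

print(f"Break-even: {break_even_queries(FEE, PRICE_PER_QUERY):.0f} queries per month")
for q in (1, 500, 5000):
    print(f"{q} queries -> cheaper on {cheaper_plan(q, FEE, PRICE_PER_QUERY)}")
```

Light users come out ahead on usage pricing and heavy users on a flat fee. This is why a provider's revenue under each model depends heavily on how usage is distributed across its customer base.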

How does Apple's privacy-first approach constrain AI monetization?

Apple processes most AI computations locally on devices rather than uploading data to cloud servers. This protects privacy but prevents Apple from tracking usage metrics that would enable usage-based pricing or advertising targeting. Cloud-based competitors can implement metering and usage-based charges; Apple cannot easily do this for on-device AI. Additionally, on-device processing has lower computational capability than cloud processing, limiting AI sophistication. This creates a trade-off: privacy is preserved but monetization options are constrained and AI capability is limited compared to cloud alternatives.

What role will artificial general intelligence (AGI) play in AI monetization?

If AGI is achieved—AI systems matching or exceeding human cognitive capabilities across all domains—the economic implications would be transformative, potentially generating trillions in value. However, the timeline for AGI remains deeply uncertain, ranging from 5-30+ years depending on the source. Companies cannot wait for AGI to solve current monetization challenges; they must generate returns on investments using current technology. Additionally, if multiple companies achieve AGI-level capability, competition would compress prices and reduce profitability despite the enormous underlying value. Near-term monetization success depends on current technology, not speculative future capabilities.

Which industries show the most promising AI monetization opportunities?

Vertical markets with specific, quantifiable AI use cases demonstrate the strongest monetization potential. Healthcare shows promise for diagnostic imaging and clinical decision support (where outcomes are measurable). Financial services show promise for fraud detection and algorithmic trading (clear ROI calculations). Legal services show promise for contract analysis and legal research (time savings are quantifiable). Enterprise software shows promise for content generation and customer service automation (cost reduction is measurable). Consumer applications with subjective value propositions face greater monetization challenges than these verticals where value is economically quantifiable.

How could companies like Runable achieve sustainable monetization at lower price points?

Companies offering specialized AI solutions at accessible pricing (Runable's

What metrics should investors use to evaluate AI company monetization progress?

Beyond traditional SaaS metrics, investors should examine: (1) Payback periods: how long until the cumulative margin earned from a customer recovers the cost of acquiring that customer, ideally under 12 months; (2) Unit economics: revenue per customer relative to serving costs (critical for AI given computational expenses); (3) Gross margins excluding infrastructure, which reveal whether pricing is sustainable; (4) Customer retention and expansion, which indicate whether customers perceive sufficient value to continue paying; (5) Computational efficiency improvements, which show whether companies are improving operational leverage over time; (6) Market penetration in target segments, which reveals whether monetization models are working for specific customer types; (7) Competitive win rates, which demonstrate whether customers switch from competitors to the company's AI solution. Traditional metrics like revenue growth alone are insufficient given the capital intensity of AI business models.
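The first two metrics in that list can be sketched in a few lines. This is an illustrative calculation rather than a standard formula from any particular source: it assumes payback is measured as acquisition cost divided by monthly contribution (revenue minus serving cost), and the $120 acquisition cost and $8 monthly serving cost are hypothetical inputs.

```python
def payback_months(cac: float, monthly_revenue: float, monthly_serving_cost: float) -> float:
    """Months of per-customer contribution needed to recover acquisition cost.

    Returns infinity when each customer costs more to serve than they pay,
    since the acquisition cost is then never recovered.
    """
    contribution = monthly_revenue - monthly_serving_cost
    if contribution <= 0:
        return float("inf")
    return cac / contribution


def serving_margin(monthly_revenue: float, monthly_serving_cost: float) -> float:
    """Share of per-customer revenue left after paying for compute."""
    return (monthly_revenue - monthly_serving_cost) / monthly_revenue


# Hypothetical customer: $120 to acquire, pays $20/month, costs $8/month to serve
months = payback_months(cac=120, monthly_revenue=20, monthly_serving_cost=8)
margin = serving_margin(monthly_revenue=20, monthly_serving_cost=8)
print(f"Payback: {months:.1f} months, serving margin: {margin:.0%}")
```

The infinite-payback branch is the important one for AI businesses. If serving costs exceed revenue per customer, growth makes the losses larger, which is why revenue growth alone is an insufficient signal.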

Key Takeaways

- Apple, Microsoft, Google, Amazon, and OpenAI have invested over $50 billion cumulatively in AI development with monetization models remaining fundamentally uncertain

- Enterprise markets show superior monetization potential compared to consumer markets because businesses evaluate AI through ROI rather than price sensitivity

- OpenAI projects profitability by 2030 despite having 2 million ChatGPT Plus subscribers, demonstrating the scale required for AI monetization success

- Vertical specialization approaches (healthcare AI, legal AI, financial AI) show stronger monetization prospects than horizontal general-purpose platforms

- Privacy-preserving on-device AI provides user benefits but constrains monetization options and limits capability compared to cloud-based approaches

- Multiple monetization models will likely coexist rather than a single dominant approach emerging industry-wide

- Companies must develop operational efficiency and cost reduction alongside capability improvement for AI monetization to succeed

- Accessible pricing platforms like Runable demonstrate that sustainable monetization is possible through specialization and bundled features

- Regulatory clarity around taxation and AI-specific rules will accelerate monetization decisions in 2026-2027

- The next 12-24 months will reveal which monetization approaches work in practice as companies report concrete AI revenue contributions
