Gemini 3 Becomes Google's Default AI Overviews Model: What This Means for Search

Google just made a quiet but significant shift. Starting today, Gemini 3 is the default model powering AI Overviews across Google Search globally. If you've been using Google Search lately, you've probably noticed those AI-generated summaries appearing above the traditional results. Those used to be powered by a mix of Google's older systems, with only the trickiest queries routed to Gemini 3. Now every query gets the newer, more capable model.

This matters more than you might think. AI Overviews have been controversial since they launched, with users reporting hallucinations, incorrect information, and bizarrely unhelpful summaries. Google's been quietly iterating behind the scenes. The upgrade to Gemini 3 as the standard model represents a genuine attempt to fix those problems at scale.

But there's more happening here than just a model swap. Google's also launching direct integration between AI Overviews and AI Mode conversations. Instead of reading a summary and then running a separate search, you can now jump straight into a conversation with context preserved. It's a small change that signals how Google sees the future of search unfolding: less about finding links, more about getting answers through dialogue.

Let's break down what's actually happening, why it matters, and what this tells us about where search is heading.

TL;DR

- Gemini 3 now powers all AI Overviews globally, replacing the previous routing system that only sent difficult queries to the newer model

- AI Mode conversations can start directly from AI Overviews, preserving context and creating a fluid experience without separate searches

- The shift aims to improve accuracy and relevance in AI-generated summaries that users previously complained about

- Mobile devices get the feature first, with desktop access rolling out afterward

- This represents a fundamental change in how Google structures search, shifting the emphasis from finding links to getting answers through conversation

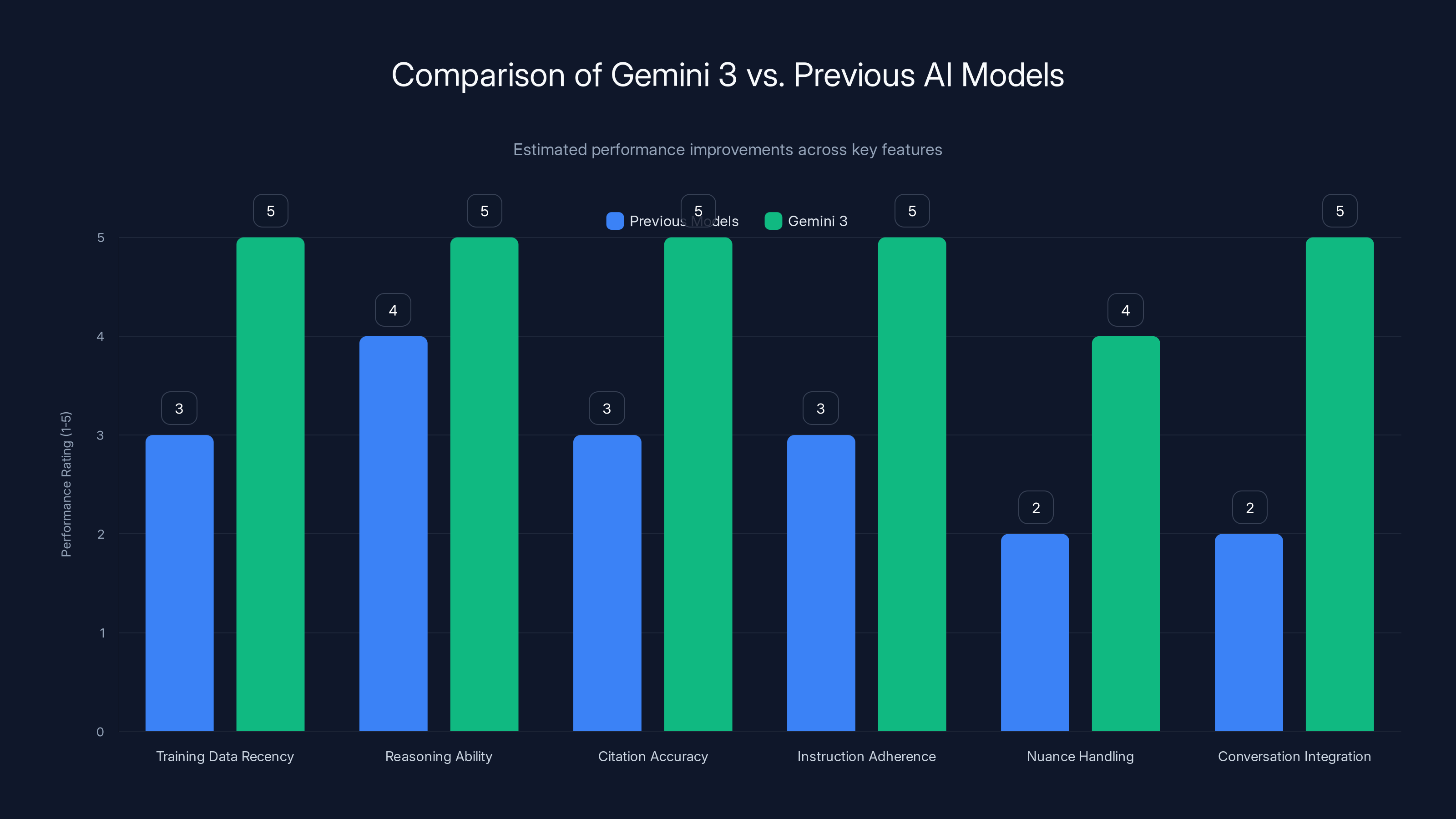

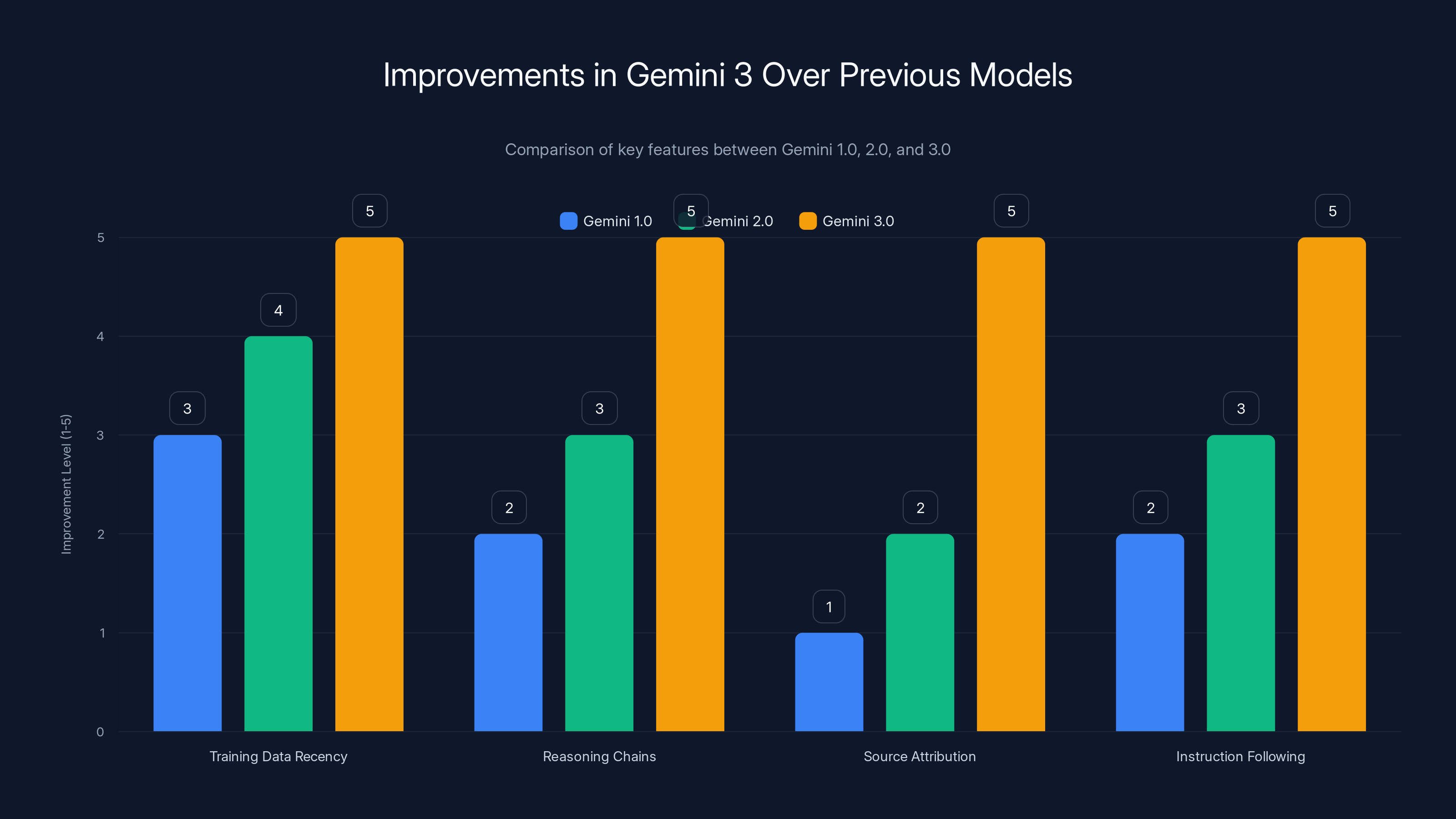

Gemini 3 shows significant improvements over previous models in areas such as reasoning ability, citation accuracy, and conversation integration. Estimated data based on qualitative descriptions.

Understanding Google's AI Overviews: The Context You Need

Google launched AI Overviews last year with genuine ambition. The idea was simple but powerful: instead of scanning a page of blue links, users would get a natural-language summary synthesizing multiple sources. It would be like having a personal research assistant who reads everything and tells you the answer.

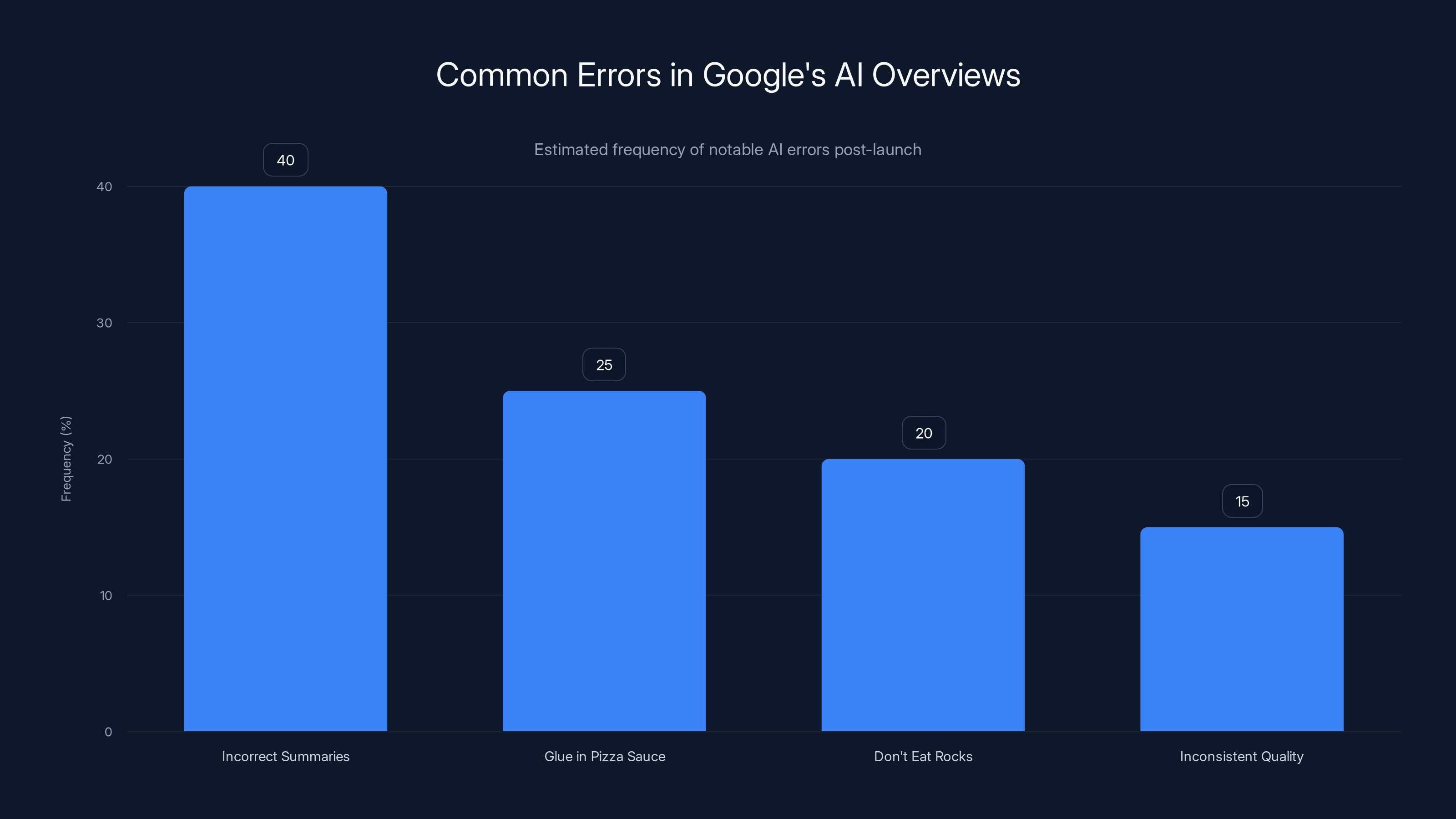

The reality crashed into that ambition pretty hard. Within weeks of launch, people started posting screenshots of completely wrong summaries. Someone asked how to keep cheese from sliding off pizza, and Google's AI helpfully suggested mixing glue into the sauce. Its claim that people should eat at least one small rock a day became a meme. These weren't isolated edge cases. They happened frequently enough that many users noticed them quickly.

What went wrong? The system was trying to do something incredibly difficult. AI Overviews weren't just summarizing existing content. They were generating entirely new text based on patterns learned from training data, attempting to synthesize multiple sources into a coherent answer. When sources conflicted, the model had to make judgment calls. When information was ambiguous, the model sometimes just made things up.

Google's previous approach to this problem was routing. If a query seemed difficult, the system would send it to Gemini 3, the newer model that performed better on complex reasoning. Simple queries went through older systems. But this created inconsistency. Users would see varying quality depending on whether their specific question hit the routing threshold for the better model.
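The difference between the two strategies is easy to see in a toy sketch. Google hasn't published its routing logic, so everything here is an assumption for illustration: the `difficulty` score (imagined as coming from some upstream classifier), the 0.7 threshold, and the model labels are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical labels; Google has not published its routing logic.
LEGACY_MODEL = "legacy-overview-model"
GEMINI_3 = "gemini-3"


@dataclass
class Query:
    text: str
    difficulty: float  # assumed score in [0, 1] from some upstream classifier


def route_by_difficulty(query: Query, threshold: float = 0.7) -> str:
    """Old approach as described: only queries judged difficult reach Gemini 3."""
    return GEMINI_3 if query.difficulty >= threshold else LEGACY_MODEL


def route_default(query: Query) -> str:
    """New approach: every query goes to Gemini 3, whatever its difficulty."""
    return GEMINI_3


queries = [
    Query("capital of france", 0.1),
    Query("compare tax rules for remote workers in two countries", 0.9),
    Query("is it safe to mix bleach and vinegar", 0.65),
]
for q in queries:
    print(f"{q.text!r}: routed={route_by_difficulty(q)}, default={route_default(q)}")
```

The third query scores just under the threshold, so the routed version hands it to the older model even though it arguably deserves careful handling. That cliff edge is the inconsistency users ran into; the default version removes it by sending everything to the same model.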

The new approach is simpler and more democratic: everyone gets Gemini 3. Everyone gets the better model. It's a significant resource commitment because Gemini 3 is more computationally intensive than the older systems, but it signals that Google's betting on scale and capability rather than trying to be clever about routing logic.

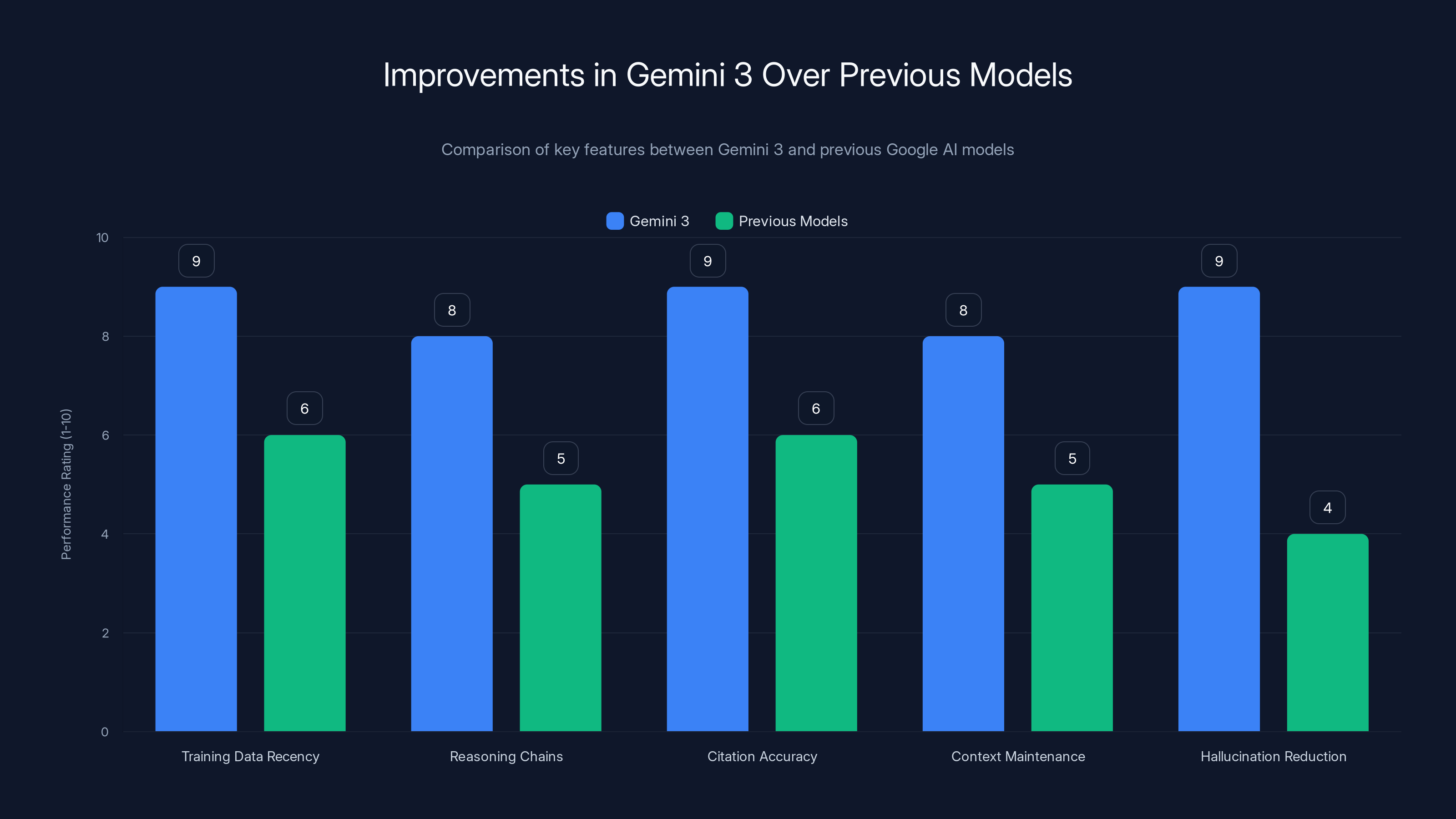

Gemini 3 significantly improves over previous models in key areas such as training data recency, reasoning chains, and hallucination reduction. Estimated data based on feature descriptions.

How Gemini 3 Actually Improves on Previous Models

Gemini 3 isn't just a marginally better version of what came before. The architecture is fundamentally different. Earlier Gemini versions (such as 1.0 and 2.0) were designed with certain constraints and optimizations that made them faster but less capable. Gemini 3 represents a different philosophy: capability first, then optimize.

The model has been trained on significantly more recent data. This matters because one of the biggest failures in AI Overviews was outdated information. Someone would ask about current events, and the system would synthesize information from months or years ago because it was pattern-matching against stale training data. Gemini 3's training includes more recent information and better mechanisms for temporal reasoning, which means it understands that "recent" events matter differently than "historical" facts.

Gemini 3 also has improved reasoning chains. It doesn't just pattern-match and output text. Internally, the model follows more explicit logical steps when synthesizing information. When you ask a question that requires combining information from multiple sources, the model now explicitly reasons through how those pieces fit together. You don't see this reasoning in the output, but it happens underneath, and it makes the final answer more reliable.

The model has also been trained specifically to cite its sources. This is technically complex but practically important. Gemini 3 learned to generate summaries in a way that naturally connects each claim to its source. When it generates a statement, it's simultaneously identifying which source that statement comes from. This means AI Overviews powered by Gemini 3 can include better attribution, and users can more easily verify claims.
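What "connecting a claim to its source" means can be illustrated with a deliberately crude sketch. This is not how Gemini 3 works: attribution in Gemini 3 is described as happening during generation, while the sketch below matches claims to sources after the fact using word overlap. The function name `attribute`, the sample sources, and the 0.5 minimum-overlap threshold are all hypothetical.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real semantic matching."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def attribute(claim: str, sources: dict[str, str], min_overlap: float = 0.5) -> Optional[str]:
    """Return the id of the source covering the largest share of the claim's words,
    or None if no source covers at least `min_overlap` of them."""
    claim_words = tokens(claim)
    if not claim_words:
        return None
    best_id, best_score = None, 0.0
    for source_id, text in sources.items():
        score = len(claim_words & tokens(text)) / len(claim_words)
        if score > best_score:
            best_id, best_score = source_id, score
    return best_id if best_score >= min_overlap else None


sources = {
    "source-a": "The bridge reopened to traffic in March after repairs.",
    "source-b": "Repairs cost the city about 12 million dollars.",
}
for claim in [
    "The bridge reopened in March",
    "Repairs cost about 12 million dollars",
    "Engineers expect a second closure next year",
]:
    print(claim, "->", attribute(claim, sources))
```

A claim that no source covers well comes back as `None`, which is the signal a system could use to withhold or flag a citation rather than attach a misleading one.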

There's also improved instruction-following. Older models sometimes ignored specific constraints. If you asked something that should have a simple, factual answer, the older models might still try to be creative or add editorial commentary. Gemini 3 better understands when to stick to facts and when to add context. This is harder than it sounds because the model still needs to be natural and conversational, but it's been specifically trained to recognize these distinctions.

The Problems It's Designed to Solve

The AI Overviews that launched last year had specific, documented failure modes. Understanding these helps explain why the Gemini 3 upgrade matters.

Hallucination and confidence without accuracy was the most obvious problem. The model would output information with complete confidence even when that information was wrong or made up. It would cite sources it had technically "learned" about but that didn't actually support the claim. Gemini 3 has better uncertainty estimation, which means it's more likely to acknowledge when something is unclear or when information comes from limited sources.
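Google hasn't said how Gemini 3 estimates uncertainty, and a model's internal confidence is a different mechanism from anything rule-based. Still, the "limited sources" part of the idea can be sketched as a simple wording policy. The function `phrase_with_confidence` and its phrasing rules are hypothetical.

```python
def phrase_with_confidence(claim: str, supporting_sources: list[str]) -> str:
    """Hypothetical wording policy: hedge more when fewer distinct sources back a claim."""
    distinct = sorted(set(supporting_sources))  # repeats of one source count once
    if not distinct:
        return f"Unverified: {claim}"
    if len(distinct) == 1:
        return f"According to one source ({distinct[0]}): {claim}"
    return f"{claim} ({len(distinct)} sources agree)"


print(phrase_with_confidence("The museum reopens in June.", []))
print(phrase_with_confidence("The museum reopens in June.", ["city-news", "city-news"]))
print(phrase_with_confidence("The museum reopens in June.", ["city-news", "museum-site"]))
```

Counting distinct sources rather than raw citations keeps one outlet repeated twice from looking like independent confirmation.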

Information synthesis failures happened when sources contradicted each other or presented incomplete pictures. The older models would sometimes pick one source and ignore contradictions. Sometimes they'd blend contradictory information together and hope it sounded coherent. Gemini 3 has been trained to explicitly identify contradictions, flag them when they exist, and explain different perspectives when appropriate.

Recency problems were common. Someone would ask about something that happened last week, and Google would synthesize information from older events with the same name or category. Gemini 3 has better temporal reasoning and understands that recency matters for different types of queries in different ways.

Lack of nuance was another issue. Some topics require acknowledging complexity. Previous models would oversimplify or miss important caveats. Gemini 3 is better at expressing nuance while still being concise. It understands that sometimes the honest answer is more complicated than a simple summary.

Citation reliability was a significant issue. Sources were cited, but sometimes they didn't actually contain the information attributed to them, or the quote was taken out of context. Gemini 3 was specifically trained to improve citation accuracy, which means better matching between claimed information and actual sources.

Estimated data shows that incorrect summaries were the most frequent error in Google's AI Overviews, followed by specific notable errors like 'glue in pizza sauce' and 'don't eat rocks'.

How the AI Mode Integration Works Now

This is the second major change Google's rolling out, and it might actually be more important to how search evolves than the model change itself. You can now jump directly from an AI Overview into an AI Mode conversation with context preserved.

Here's what that means in practice. You search for something. Google shows you an AI Overview with a summary. Previously, if you wanted to follow up with questions, you had two choices: you could run another search (losing context), or you could... run another search. There was no conversation mode integrated into the search results.

Now there's a button. Click it, and the conversation opens right there, with the AI Overview context already loaded. When you ask a follow-up question, the model knows what the original overview was about. It doesn't need you to re-explain context. This is such a small thing, but it fundamentally changes how search feels.

Google's been testing this for months, and the data suggests people actually prefer it. That makes intuitive sense. When you're researching something, you don't want to context-switch. You want to stay in one place and dig deeper. The conversation is more fluid. It feels less like using search and more like having someone help you understand something.

Mobile gets this feature first, which shows Google's priorities. Most people search on mobile, and most mobile searches are quick lookups. But conversations happen on mobile too, and mobile is probably where integrating them smoothly into search makes the UX improvement most obvious.

The conversation mode preserves everything: your original query, the sources in the overview, and the context of what you asked. When you ask a follow-up question, the model has all of that information. This means fewer clarifications, fewer misunderstandings, and genuinely more helpful responses.
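One way to picture "context preserved" is a conversation object seeded with the overview, so every follow-up is sent to the model together with the original query, the summary, and its sources. This is a minimal sketch under assumptions: the `Overview` and `Conversation` classes and the prompt layout are invented for illustration, and Google hasn't documented how AI Mode actually carries context.

```python
from dataclasses import dataclass, field


@dataclass
class Overview:
    query: str
    summary: str
    sources: list[str]


@dataclass
class Conversation:
    """Hypothetical session seeded from an overview so follow-ups keep its context."""
    overview: Overview
    turns: list[tuple[str, str]] = field(default_factory=list)  # (role, text) pairs

    def ask(self, follow_up: str) -> str:
        """Record a follow-up and return the full prompt a model would receive."""
        self.turns.append(("user", follow_up))
        lines = [
            f"Original query: {self.overview.query}",
            f"Overview shown: {self.overview.summary}",
            "Sources: " + ", ".join(self.overview.sources),
        ]
        lines += [f"{role}: {text}" for role, text in self.turns]
        return "\n".join(lines)

    def record_answer(self, answer: str) -> None:
        """Store the model's reply so later follow-ups see it too."""
        self.turns.append(("model", answer))


overview = Overview(
    query="best time to visit kyoto",
    summary="Spring and autumn are the most popular seasons.",
    sources=["https://example.com/kyoto-guide"],
)
chat = Conversation(overview)
prompt = chat.ask("What about crowds then?")
print(prompt)
```

On its own, "What about crowds then?" is ambiguous; packaged with the overview, it clearly refers to visiting Kyoto in spring and autumn, so the user never has to restate it.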

What This Means for Search Quality

Google's been taking heat on search quality for years. The company faced criticism that results were becoming less useful, cluttered with spam and AI-generated content, and filled with affiliate links that prioritized revenue over helpfulness. Some of those criticisms are fair. Some are overblown. But the narrative is real, and it's affecting Google's reputation.

AI Overviews were pitched as part of the solution. Instead of scanning ten links yourself, the AI does it for you. But the early execution was rough enough that some people started questioning whether AI Overviews made things worse, not better.

The Gemini 3 upgrade is Google's statement that it's committed to fixing the execution. Better models, more consistent capability, improved accuracy. It's not a magic bullet. AI will still sometimes make things up. That's a fundamental limitation of the technology. But pushing everyone to Gemini 3 suggests Google believes the improvement in average quality is worth the additional computational cost.

For search quality specifically, this probably means fewer hallucinations and more accurate summaries. You should start seeing fewer of those bizarre, factually incorrect overviews. The information should be more reliably linked to actual sources. Follow-up conversations should feel more coherent and less like the model is making things up as it goes.

But there's a secondary effect. By making the system more reliable, Google can be more confident about AI Overviews being the primary way people interact with search. If quality stays patchy, people will ignore the overviews and go back to scanning blue links. If quality improves substantially, the overviews become the dominant interface. That changes everything about how search works.

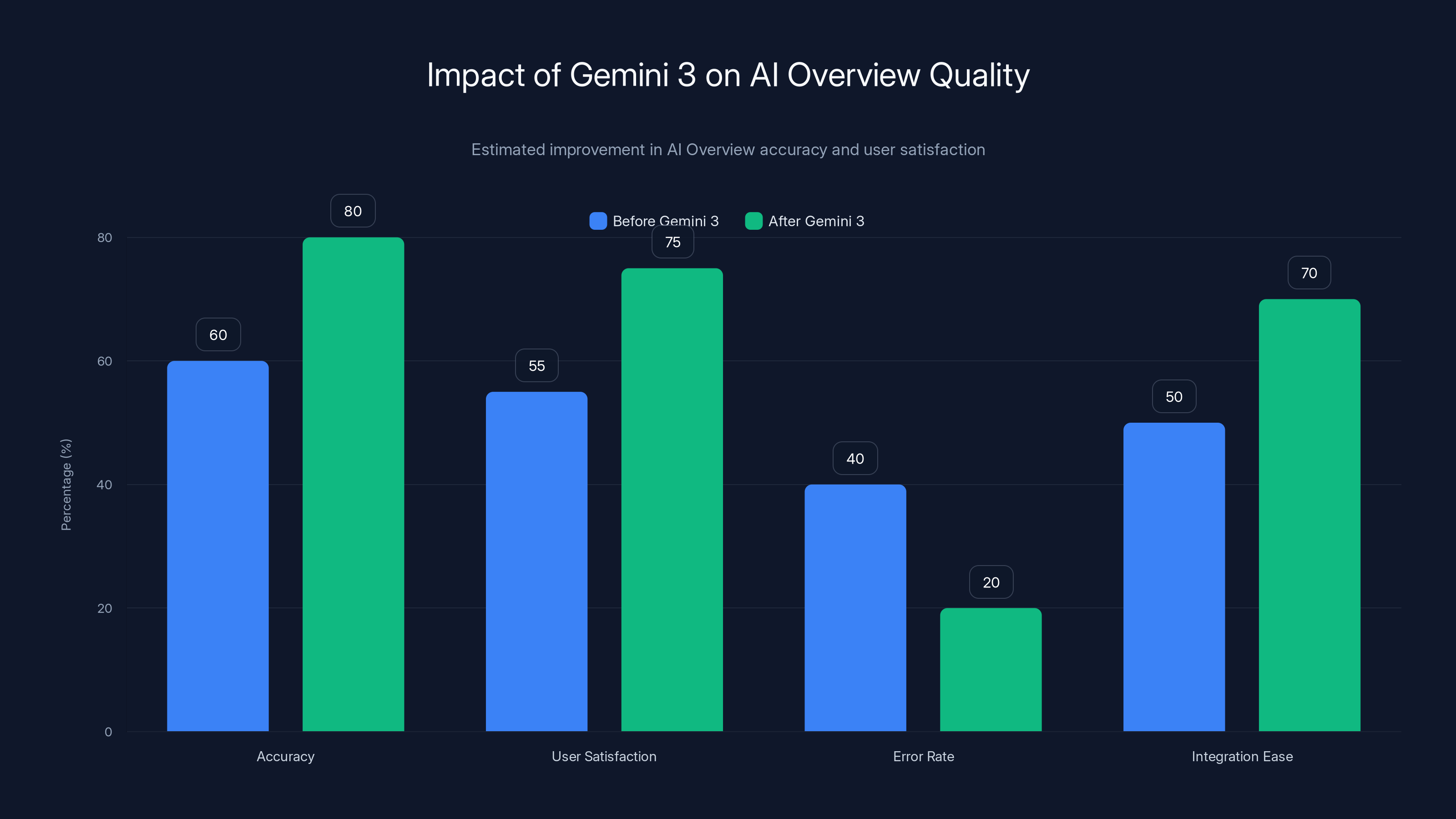

The transition to Gemini 3 is estimated to improve AI Overview accuracy and user satisfaction significantly, while reducing error rates. Estimated data.

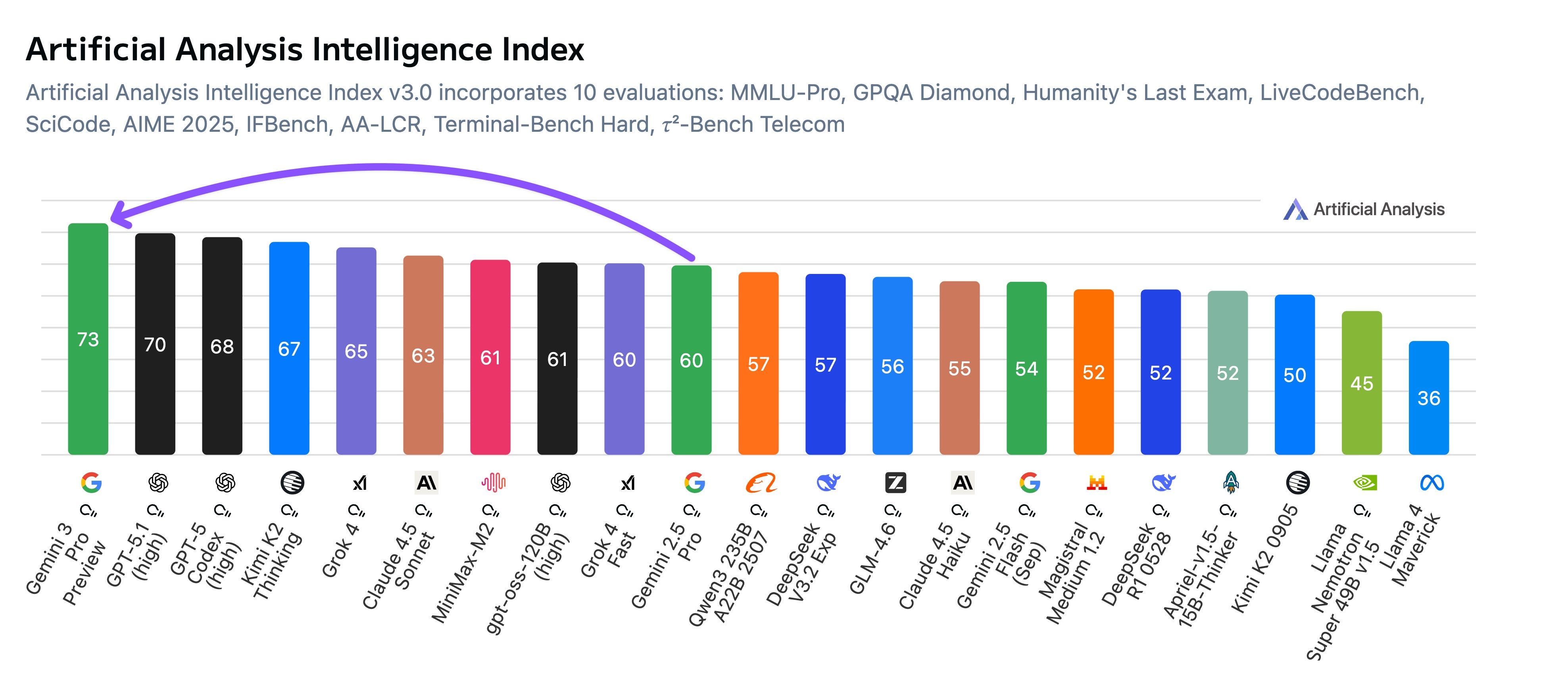

The Competitive Landscape: Where This Fits

Google isn't the only company trying to integrate AI into search. Perplexity has built an entire platform around AI-powered search with source citations. OpenAI and Microsoft have made search a central feature of their AI offerings. Even specialized search tools are adopting AI summaries.

What's interesting about Google's approach is the integration into an existing search engine that billions of people already use. Google doesn't need to convince people to try a new product. They're improving what you already use.

But this also creates constraints. Google can't completely reinvent search overnight. The system has to work with existing indexing, existing ranking algorithms, and existing user expectations. The AI features are being integrated into search, not replacing it. That's why AI Overviews appear above results, not instead of them. The blue links are still there.

Gemini 3 as the default moves Google closer to a unified, AI-first experience, but it's still a hybrid. You get an overview, then sources. You can have a conversation, then go to sources. It's a different approach from pure-play AI search tools, and whether it's the right approach depends on what people actually want.

The data Google's collected suggests people want both. They want a quick answer (the overview), but they also want the ability to dig deeper and verify things (the sources). The new integration that lets you jump into conversation mode while maintaining context is addressing exactly that need.

Impact on Content Creators and Publishers

There's a real question that content creators have been asking: if Google's giving AI summaries directly in search results, why would people click through to websites? The answer, in Google's view, is that good sources still matter. The AI still needs information to synthesize. And users still want to go deeper.

But the distribution of traffic changes. Publishers who get cited in AI Overviews get traffic from people clicking those citations. Publishers who don't get cited in overviews might not get traffic at all, because users get their answer directly from Google.

Gemini 3 being more reliable at citing sources is actually helpful for publishers, because it means the citations are more likely to be accurate and properly attributed. If Gemini 3 says "According to TechCrunch, X is true," and TechCrunch actually does say that, then people are more likely to click through to verify or read more.

However, there's still the core problem: some percentage of people will take the answer they got from Google and not visit any source. That's just how it works. Publishers have to reckon with that reality, regardless of model improvements.

Content creators who focus on depth and nuance are probably safer than those creating thin content just for Google ranking. If an AI overview can capture the essence of your article in two sentences, you need to offer something beyond those two sentences to get traffic. That something could be deeper analysis, expert commentary, primary data, case studies, or anything else that adds real value beyond the facts that made it into the overview.

Gemini 3 shows significant improvements in training data recency, reasoning chains, source attribution, and instruction following compared to previous models. (Estimated data)

Technical Deep Dive: How AI Overviews Actually Generate Text

Understanding the actual mechanics helps explain why model changes matter and why there are still failure modes to watch for.

When you search for something, Google's system first retrieves relevant documents using standard search algorithms. This retrieval step is actually pretty good. Google knows how to find relevant pages. The problem starts when you try to synthesize multiple retrieved documents into a coherent answer.

The system feeds those retrieved documents to the language model (now Gemini 3) along with the query. The model reads through all of them and generates a summary. But the model doesn't really "read" in the human sense. It's processing tokens and predicting the most likely next token based on its training. When it generates text, it's doing statistical pattern completion.
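The retrieve-then-generate flow can be sketched in a few lines. This is a toy under stated assumptions: ranking by shared words is a stand-in for Google's real retrieval, the prompt wording is invented, and the generation step is left out because Google hasn't described the model interface. The string returned by `build_prompt` is the kind of input a language model would receive before it starts predicting tokens.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document ids, ranked by how many query words each contains."""
    q = tokens(query)
    ranked = sorted(corpus, key=lambda doc_id: len(q & tokens(corpus[doc_id])), reverse=True)
    # Drop documents that share no words with the query at all.
    return [doc_id for doc_id in ranked[:k] if q & tokens(corpus[doc_id])]


def build_prompt(query: str, corpus: dict[str, str], doc_ids: list[str]) -> str:
    """Pack the retrieved passages and the query into a single model input."""
    passages = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the passages below and cite them by id.\n"
        f"{passages}\n"
        f"Question: {query}"
    )


corpus = {
    "doc1": "Sourdough needs a mature starter and a long fermentation.",
    "doc2": "Stock markets closed higher on Friday.",
    "doc3": "To keep a sourdough starter alive, feed it flour and water daily.",
}
query = "how do I keep a sourdough starter alive"
doc_ids = retrieve(query, corpus)
print(doc_ids)
print(build_prompt(query, corpus, doc_ids))
```

Everything up to this point is ordinary retrieval; the hard part, turning those passages into a faithful summary, happens inside the model and is exactly where hallucinations can creep in.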

This is where hallucination comes from. The model can generate plausible-sounding text that isn't in any source. It can cite sources implicitly (mentioning something that sounds like it came from a source without saying so). It can blend information from different sources in ways that create false implications.

Gemini 3 has been trained to avoid all of these failures more effectively, but the fundamental challenge remains. The model is still doing statistical pattern completion. It's just gotten better at doing it in ways that are more likely to be accurate.

The system also uses a technique called grounding, where it tries to keep the generated text connected to actual source material. But grounding isn't perfect. Sometimes the model can be grounded to a source in a way that's technically true but misleading. The source mentions X, the model mentions X, but in a context the source didn't actually discuss.
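A crude grounding check shows both the idea and its weakness. The sketch below is not Google's implementation; it assumes an arbitrary stop-word list and a 0.8 threshold, and marks a sentence as grounded if a single source contains most of its content words.

```python
import re

# Arbitrary, hypothetical stop-word list chosen for this illustration only.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "of", "to", "in", "and", "it", "for", "on"}


def content_words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOP_WORDS


def grounded(sentence: str, sources: list[str], threshold: float = 0.8) -> bool:
    """True if a single source contains at least `threshold` of the sentence's content words."""
    words = content_words(sentence)
    if not words:
        return False  # nothing checkable, so treat it as unsupported
    return any(len(words & content_words(src)) / len(words) >= threshold for src in sources)


sources = [
    "Studies found that moderate coffee intake was not linked to higher "
    "heart disease risk in healthy adults."
]
supported = "Moderate coffee intake was not linked to higher heart disease risk."
unsupported = "Coffee cures insomnia."
misleading = "Higher coffee intake was linked to heart disease risk."

for sentence in (supported, unsupported, misleading):
    print(grounded(sentence, sources), "|", sentence)
```

The third sentence passes even though it drops "not" and "moderate" and so reverses the source's finding. Every word it uses appears in the source, so a lexical check calls it grounded; catching the reversal requires understanding what the source actually says.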

Gemini 3 has better semantic understanding, which helps it avoid these kinds of mismatches. It's better at understanding not just the words a source uses, but what the source actually means. That's a more subtle improvement than raw capability, but it's probably where most of the quality gains come from.

User Experience Changes You'll Notice

If you start seeing AI Overviews (they're not available everywhere yet, and they're often behind a toggle), you should notice some differences with Gemini 3 as the default.

First, the summaries should be more stable. You'll ask something, get an answer, and the answer will be consistent when you ask again. The older models sometimes generated wildly different summaries for the same query depending on exactly when you ran the query or some other factor. Gemini 3 is more deterministic.

Second, sources should be better cited. You'll see clearer connections between claims in the overview and the sources cited. If something seems suspicious, you can more reliably follow the citation and check it.

Third, the conversation jump will change how you interact with search. On mobile, this will feel pretty natural. You get an overview, ask a follow-up, and the conversation just continues. It'll reduce the friction of doing multi-step research.

Fourth, you might notice fewer bizarre errors. Not zero errors, but fewer. The hallucination problem isn't solved, but it should be reduced. You probably won't see new summaries suggesting you add glue to pizza sauce or eat rocks.

The most important change is probably the subtlest one: consistency. The system should feel more reliable. You might not consciously notice it, but you'll unconsciously start trusting the summaries more, which changes how you interact with search.

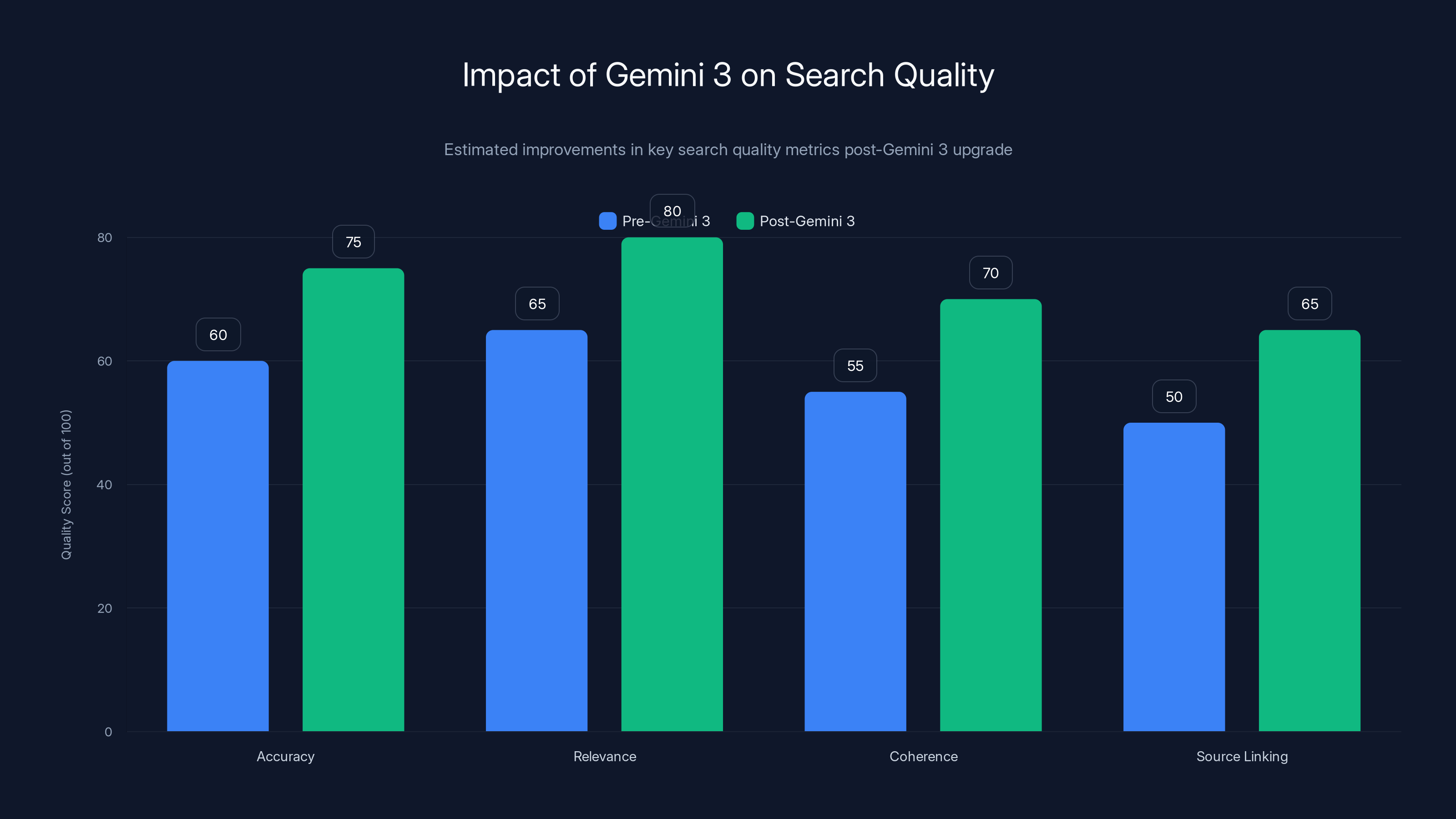

The Gemini 3 upgrade is expected to enhance search quality by improving accuracy, relevance, coherence, and source linking. Estimated data based on projected improvements.

The Bigger Picture: Search is Becoming Conversation

This rollout is part of a larger shift that's been happening for a few years. Search used to be about finding pages. Now it's becoming about getting answers. And the next step is about having conversations.

Google's adding conversational capabilities because that's what users actually want. Instead of running multiple searches and synthesizing results yourself, you want to ask follow-up questions and have the system understand the context. That's a fundamentally different interaction model.

Making Gemini 3 the default and integrating AI Mode conversations is Google pushing hard on this shift. The company is betting that this is the future of search. Whether that bet pays off depends on execution. But the direction is clear.

Other players are moving in the same direction. Perplexity has been conversational from the start. OpenAI is approaching search as a conversation. Even Microsoft's Copilot, which started as a pure generation tool, is becoming more search-like.

The convergence suggests something real is happening. Search and conversation are merging. The tools that do this well will dominate. The tools that don't adapt will become niche.

What Could Still Go Wrong

For all the improvements Gemini 3 brings, there are still real risks. The model can still hallucinate. It can still misinterpret sources. It can still generate confident-sounding nonsense.

One specific risk is that users will start trusting AI Overviews too much. If reliability improves dramatically, people might start relying on summaries without checking sources. Then if hallucinations happen (and they will), the impact is bigger because trust was higher.

Another risk is that the system creates echo chambers or biases. If the model learns patterns from sources that themselves have biases, those biases get amplified in the overviews. Gemini 3 probably handles this better than previous models, but the problem isn't solved.

There's also the problem of changing information. Some topics don't have stable answers. If you ask about the weather, the stock market, or breaking news, the correct answer depends on when you ask. AI models can struggle with these temporal dependencies. Gemini 3 has been trained to handle recency better, but the underlying challenge remains.

For high-stakes information (medical, legal, financial), AI Overviews have real limitations. The system will sometimes generate plausible-sounding advice that's actually wrong. Google typically includes disclaimers for these topics, but disclaimers don't prevent people from using the information anyway.

The Road Ahead: Where Search Goes From Here

If Gemini 3 as the default and integrated conversations are working well (and early signals suggest they are), Google will probably keep pushing further in this direction. The next logical steps would be things like:

Better personalization in conversations. Right now the system knows your search history, but it could use more of your broader context. What topics are you interested in? What's your background? How technical should explanations be? The system can already infer some of this, but it'll get better.

Deeper integration with Google's other products. Google has tools for everything from analytics to email to documents. Search conversations could pull information from these tools in useful ways. Imagine asking about your business metrics and getting a conversation-based answer that's informed by your actual data.

Improved fact-checking and verification. Google could build better mechanisms for checking claims against databases of verified facts. Some of this is happening, but it could go much further.

Better handling of complex, multi-part questions. Right now the system works best for relatively simple queries. But real conversations often involve complex questions that need nuance and qualification. Gemini 3 is better at this than previous models, but there's still room for improvement.

More explicit source control. Users might want to specify which sources they trust. The system could learn to weight cited sources based on your preferences. This gets into personalization territory, which has its own challenges, but it's a plausible direction.

The fundamental trajectory is clear: search is becoming less about finding documents and more about having intelligent conversations with a system that understands context and can synthesize information. Whether that's good or bad depends on your perspective, but the direction isn't changing.

Practical Tips for Using AI Overviews Effectively

If you're going to use AI Overviews as they evolve with Gemini 3, here are some practical approaches that work better than others.

Always check sources, especially for anything important. An AI Overview can give you a quick understanding, but verifying with actual sources is still essential. Gemini 3 is better at citation accuracy, but it's not perfect. The blue links are still important.

Use follow-up questions in conversation mode to clarify and dig deeper. Don't take the first answer and leave. Ask why, ask for examples, ask about edge cases. The conversation model is designed to handle this, and you get better information.

Be specific in your initial query. The more specific your question, the better the overview. Vague queries tend to produce vaguer overviews. If you can be precise about what you're looking for, do it.

Pay attention to when overviews are less reliable. For breaking news, recent events, niche topics, and cutting-edge information, AI Overviews are less trustworthy. These are areas where sources might not have caught up yet, or information is changing rapidly. In these cases, check sources carefully.

Use overviews for quick understanding, then decide if you need to go deeper. The overview isn't the final answer. It's a starting point. If being wrong about what you're reading would matter, dig into the sources.

For searches involving expertise or judgment (medical advice, legal questions, career decisions), treat AI Overviews as background information only. These topics need real expertise, not AI synthesis. Google includes disclaimers for some of these, but even with disclaimers, people sometimes rely too heavily on AI answers for high-stakes questions.

Comparison: Gemini 3 vs. Previous AI Overview Models

Understanding how Gemini 3 compares to what came before helps explain why this change matters.

Previous models were trained on older data. Gemini 3 has more recent training data, which means better understanding of recent events and current information. This is a huge improvement because outdated information was a consistent problem.

Previous models had weaker reasoning chains. Gemini 3 explicitly reasons through multi-step logic. When synthesizing sources, it doesn't just pattern-match. It actually works through how information fits together. You don't see this reasoning, but it happens underneath and produces better outputs.

Previous models were less reliable at citation accuracy. Gemini 3 was specifically trained to connect claims to sources accurately. This means when it cites something, there's a higher probability the source actually says what it's being cited for.

Previous models sometimes ignored instructions or constraints. Gemini 3 better understands when to stick to facts and when to add context. It's more reliable at following the implicit instruction to be accurate when accuracy matters.

Previous models had harder limits on handling nuance and complexity. Gemini 3 can express more subtle ideas while staying concise. This is hard to quantify but noticeable in real examples.

Previous models didn't integrate well with conversation mode. Gemini 3 was designed from the start to support the conversation interface, so it maintains context better and responds more naturally to follow-ups.

All of these are incremental improvements, but together they add up to a substantially better experience. You probably won't notice every improvement individually, but you'll notice the overall quality is higher.

FAQ

What is Gemini 3 and how does it differ from previous Google AI models?

Gemini 3 is Google's latest large language model and now powers all AI Overviews in Google Search. It differs from previous models by featuring more recent training data, improved reasoning chains for multi-step logic, better citation accuracy, and enhanced ability to maintain context in conversations. The model was specifically trained to reduce hallucinations and provide more reliable summaries of search results.

How do AI Overviews work with Gemini 3 as the default?

When you search for something, Google retrieves relevant documents using traditional search algorithms. Gemini 3 then reads through these retrieved documents and generates a natural-language summary that appears above the traditional search results. The model synthesizes information from multiple sources into a coherent answer. With Gemini 3 as the default, all queries now benefit from this improved model rather than only complex queries being routed to it.

What is the AI Mode conversation feature and how does it integrate with AI Overviews?

AI Mode is a conversation interface that lets you ask follow-up questions to get deeper information. Previously, if you wanted to follow up on an AI Overview, you'd need to run a new search and lose context. Now you can jump directly from an AI Overview into conversation mode while maintaining all the context from the original summary. This creates a fluid experience where you ask questions and the system understands what you're asking about.

What were the main problems with previous AI Overviews that Gemini 3 addresses?

Previous AI Overviews suffered from hallucinations and confidence without accuracy, poor information synthesis when sources contradicted each other, recency problems where older information was blended into answers about recent events, insufficient nuance for complex topics, and unreliable citations that didn't actually support the claims being made. Gemini 3 addresses these through improved uncertainty estimation, better contradiction detection, stronger temporal reasoning, better expression of nuance, and more accurate matching of citations to actual sources.

Why did Google make Gemini 3 the default instead of continuing with selective routing?

Google's previous approach routed difficult questions to Gemini 3 while simpler queries went through older systems. This created inconsistency in quality. Making Gemini 3 the default for all users globally ensures consistent quality across all queries, though it requires more computational resources. Google determined that the quality improvement justifies the additional cost, and testing showed users prefer the more reliable experience.

How does Gemini 3 improve citation accuracy in AI Overviews?

Gemini 3 was specifically trained with techniques to connect each claim in a summary to its source more accurately. When the model generates a statement, it simultaneously identifies which source that statement comes from. This means the citations aren't applied after generation as an afterthought, but are integrated into the generation process itself. The model has learned what it means for a source to actually support a claim, rather than just pattern-matching similar words.

When will the Gemini 3 update and AI Mode conversation feature be available?

Google began rolling out both features globally right away, with mobile devices getting priority access to the AI Mode conversation integration and desktop access following. The rollout is gradual, so not all users will see these features simultaneously. Availability also depends on your geographic region and whether you have AI Overviews enabled in your Google Search settings.

Can I trust AI Overviews with important information now that Gemini 3 is the default?

Gemini 3 significantly improves reliability compared to previous models, but it's not a replacement for verification. For important information, especially in high-stakes areas like medical, legal, or financial decisions, you should always check the original sources. AI Overviews are best used as a starting point for understanding a topic, followed by deeper research when the information matters significantly.

How does this update affect content creators and publishers?

Publishers stand to benefit from Gemini 3's improved citation accuracy: claims attributed to their content are more likely to reflect what that content actually says, which can make users more willing to click through to the source. However, the fundamental challenge remains: some users will accept the AI-generated answer without visiting any sources. To drive traffic, publishers need to offer depth beyond what appears in overviews, such as analysis, primary data, case studies, or expertise that adds value beyond summary-level facts.

What's the future direction for Google Search with these changes?

These changes reflect Google's shift from search as a way to find documents to search as a way to get answers through conversation. Future developments likely include better personalization in conversations, deeper integration with Google's other products, improved fact-checking against verified databases, better handling of complex multi-part questions, and more explicit user control over which sources are trusted. The fundamental direction is clear: search is becoming a conversational interface rather than a link-finding tool.

Conclusion: The Evolution of Search Continues

Google's move to make Gemini 3 the default for AI Overviews and to integrate AI Mode conversations marks a significant milestone in how search is evolving. It's not revolutionary: the basic concept of AI-powered summaries has been around for months. But execution matters, and committing to reliability by moving everyone to the better model signals that Google is serious about making AI summaries work.

The integration of conversations into search is equally important. For years, search has been a one-off interaction: you ask a question, you get results, done. Conversations change that. They let you refine your query, ask follow-ups, explore tangents, and maintain context. That's a fundamentally different way of interacting with information.

Both changes together point toward a future where search becomes less about finding and more about understanding. Instead of you doing the work to find documents and synthesize information, the system does that work for you. Whether that's good or bad is a question people disagree on, but the trajectory is clear, and it's not reversing.

For users, this means better search experiences on average, with fewer hallucinations and more reliable information. It also means relying more on systems you don't fully control and can't easily verify. That's a tradeoff worth thinking about.

For publishers, it means working in an information landscape where Google's summaries compete with your content for attention. Publishers who offer depth, expertise, and unique information will do better than those creating thin content optimized only for search ranking.

For Google itself, this is about defending search in an era where users are increasingly comfortable asking questions directly to AI systems. By making search more conversational and AI-integrated, Google is trying to keep search the primary interface for information access, even as AI capabilities evolve.

The Gemini 3 rollout won't be a dramatic moment where everything changes overnight. It'll be gradual. Over weeks and months, you'll notice that summaries are slightly more reliable, conversations flow a bit better, and search feels more useful. That's how transformative technology usually works in practice: not with a bang, but with incremental improvements that add up.

Key Takeaways

- Gemini 3 is now the default model for all AI Overviews globally, replacing selective routing that only sent difficult queries to the newer model

- Direct integration between AI Overviews and AI Mode conversations preserves context and enables fluid follow-up questions without separate searches

- The shift aims to reduce hallucinations, improve citation accuracy, and enhance information synthesis from multiple sources

- Mobile devices are getting the feature first, indicating Google's priority for mobile-first search experiences

- Search is fundamentally shifting from link-finding to answer-conversation, representing a major evolution in how users interact with information
