Where Tech Leaders and Students Really Think AI Is Going [2025]

The future feels less certain than ever. We're living through one of those rare moments in history where technology is moving so fast that even the people building it aren't entirely sure what comes next. Politics is shifting, culture's transforming, science is exploding in new directions, and artificial intelligence is sitting at the center of it all.

That's why we decided to do something different. Instead of predicting the future ourselves, we asked the people who are actually building it. We spoke with tech founders, AI researchers, entertainment executives, business leaders, and—crucially—the students who'll inherit whatever we create. We asked them one question: where is AI really going?

The answers were revealing. Some were optimistic to the point of evangelism. Others were deeply, genuinely worried. Most fell somewhere in between—excited about the potential but wrestling with real, tangible concerns about what happens when AI systems become even more powerful, more integrated into daily life, and less understood by the people relying on them.

This isn't a story about predictions. This is a snapshot of how the smartest people thinking about technology actually see the world right now. It's messy, contradictory, and honest. And if you're trying to understand where AI is headed—whether that's for your business, your career, or just your own peace of mind—this is the conversation you need to read.

TL;DR

- AI is already mainstream: Nearly 2 in 3 US teens use chatbots, and tech leaders are integrating them into daily tasks from parenting to healthcare

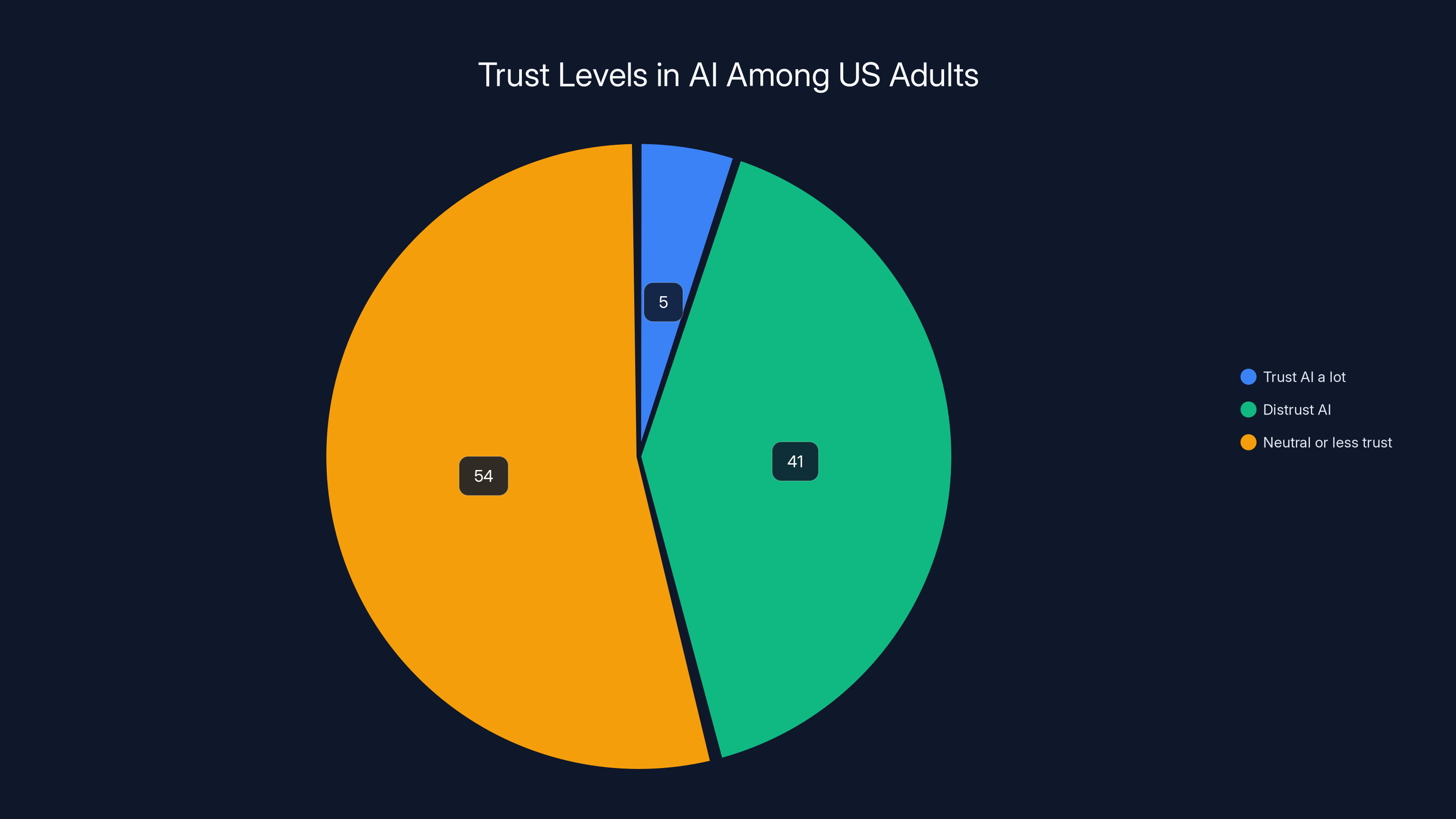

- Trust is the biggest problem: Only 5% of Americans trust AI companies "a lot," while trust in data protection actually declined globally from 2023 to 2024

- Safety questions aren't optional: Leading founders and CEOs are asking harder questions before launch: "Would I give this to my own child?"

- Students are skeptical: Unlike executives, many students view AI with caution, prioritizing personal work and independent thinking over convenience

- The regulatory gap is dangerous: With minimal government oversight, companies are largely self-policing on crucial safety and ethics questions

Only 5% of US adults trust AI a lot, while 41% actively distrust it, highlighting a significant trust deficit despite high usage rates.

AI Has Already Won the Mainstream Battle

There's a moment in any technology's lifecycle where you realize it's not coming anymore. It's already here. It's been here for a while. And you've stopped noticing.

That's where we are with AI.

When you talk to people across industries, you realize AI isn't some distant threat or fascinating experiment. It's woven into the fabric of how people actually work and live. Angel Tramontin, a business student at UC Berkeley, uses large language models multiple times a day to answer questions he used to Google. It's faster. It's more conversational. It's contextual. He doesn't think of it as special anymore—it's just what you do.

This casual integration is the real story. It's not the flashy demos or the apocalyptic headlines. It's people asking their AI assistant for parenting advice at 2 AM, or getting a quick health symptom check before deciding whether to call a doctor. Anthropic's president and cofounder Daniela Amodei has used Claude to help with childcare basics, including potty training strategies. Director Jon M. Chu turned to LLMs for parenting health advice (and honestly admitted it might not be the best source, but it works as a starting point).

The scale of this adoption is staggering. According to Pew Research, nearly two-thirds of US teenagers use chatbots. About 3 in 10 use them daily. And here's the thing: many of them might not even know it. When Google Gemini became integrated into search results, millions started using AI without explicitly deciding to. It just happened. Search evolved, and suddenly you were talking to an AI.

AI companies themselves are actively pushing into new domains. OpenAI announced ChatGPT Health earlier this year, disclosing that hundreds of millions of people use chatbots weekly for health and wellness questions. They've even added privacy protections because health data is so sensitive. Anthropic launched Claude for Healthcare, targeting hospitals and healthcare systems as enterprise customers. This isn't fringe usage anymore. This is mainstream healthcare infrastructure.

But not everyone is on board. Some people are actively resisting. UC Berkeley undergraduate Sienna Villalobos deliberately avoids using AI, believing that doing your own work and forming your own opinions is essential. She's not wrong—there's a legitimate concern that outsourcing thinking to AI systems could atrophy critical thinking skills. Her position, however, seems increasingly in the minority among her peers.

The real question isn't whether AI has reached mainstream adoption. It clearly has. The question is what that means for how we work, think, and relate to information.

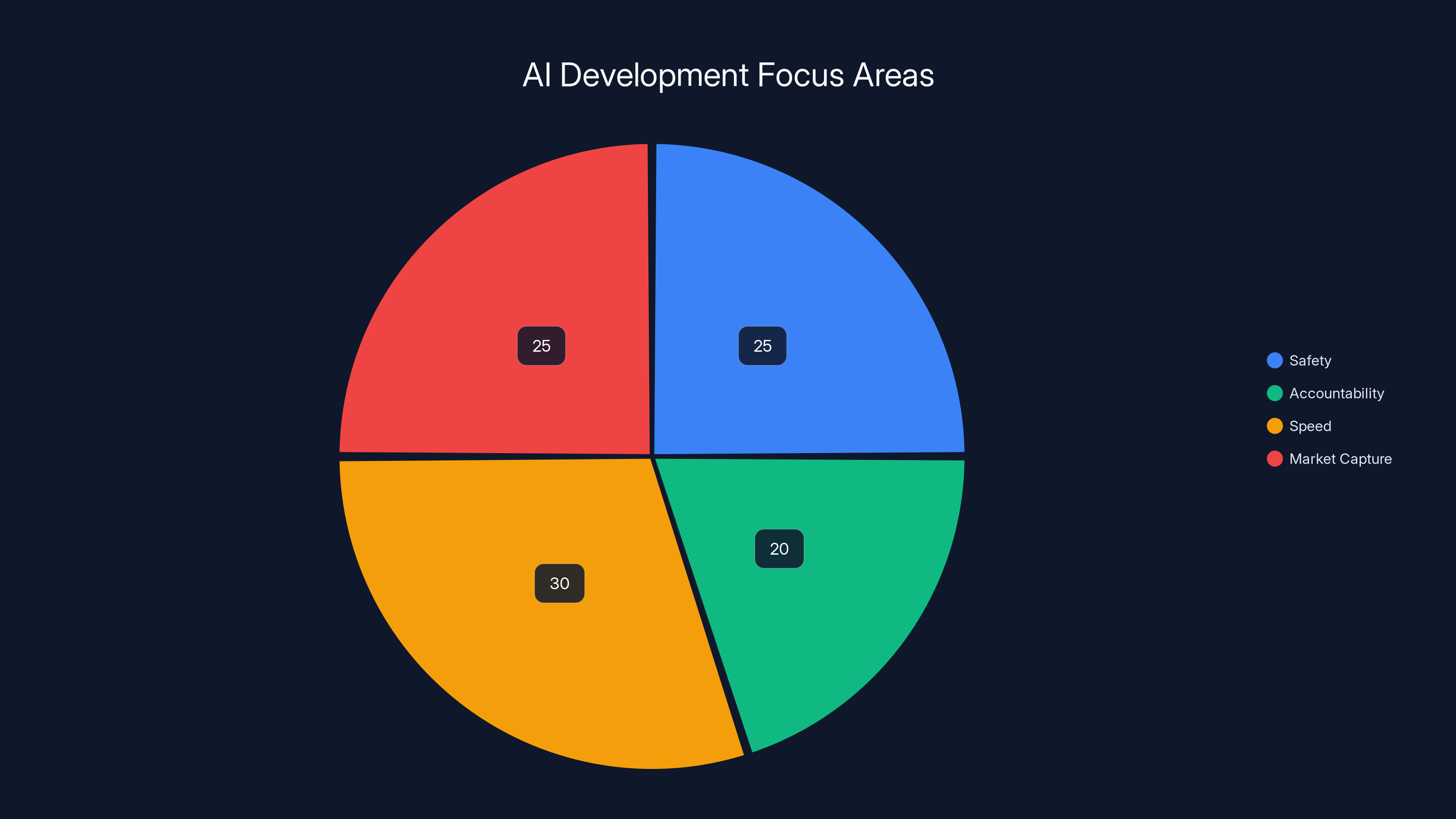

Anthropic emphasizes safety and transparency, while Cloudflare focuses on accountability. Both companies accept a trade-off in market share for ethical practices. Estimated data.

The Trust Crisis That Threatens Everything

Here's a problem that doesn't get enough attention: nobody trusts AI companies.

Let's be specific about this, because the numbers are alarming. While 35% of US adults use AI daily, only 5% say they trust AI "a lot." That's a 7:1 ratio of users to true believers. Meanwhile, 41% of Americans are actively distrustful of AI. This is adoption without confidence, integration without belief. That's an unstable combination.

It gets worse. A recent Ipsos poll showed that global trust in AI companies to protect personal data actually fell from 2023 to 2024. As AI companies have gotten bigger and more aggressive, trust has eroded. Why? Look at the lawsuits. Major publishers suing for unauthorized scraping of content for training data. Artists suing over image generation trained on their work. Individual creators watching their likenesses used to build commercial AI products without consent.

Cloudflare CEO Matthew Prince, whose company has been at the forefront of holding AI companies accountable for data scraping, emphasizes that trust needs to come before launch, not after. Building trust isn't a marketing problem to solve with better messaging. It's a fundamental business problem. If people don't trust your system with their data, they'll eventually stop using it—or they'll use it while feeling violated.

UC Berkeley student Sienna Villalobos articulated the core problem clearly: "I think a lot of them put financial gain over morality, and that's one of the biggest dangers." This isn't a fringe student opinion. This is increasingly mainstream sentiment. When people feel like companies are prioritizing profit over their wellbeing, they become wary.

Michele Jawando, president of the nonprofit Omidyar Network, frames the essential question: "Who does it hurt, and who does it harm? If you don't know the answer, you don't have enough people in the room." This is deceptively simple. Most AI companies probably can't answer this question comprehensively before they launch. They should be able to. If they can't, they shouldn't ship.

The trust crisis is particularly acute for large language models. These systems are trained on massive amounts of internet data—much of it collected without explicit consent. The models themselves work as black boxes. You ask a question, you get an answer, but neither the user nor the company fully understands why that specific answer emerged from the weights and parameters. There's an inherent opacity that makes trust harder to build.

What Safety Actually Looks Like in Practice

Daniela Amodei from Anthropic asks a deceptively simple question before her company launches any new AI system: "How confident are we that we've done enough safety testing on this model?" Think about that. Not "is this cool?" or "will people pay for this?" or "can we beat our competitors to market?" The first question is about safety.

She uses an analogy: car manufacturers don't launch new vehicles without crash tests. Why? Because cars can hurt people. The liability is real. The responsibility is clear. Yet AI companies often move faster than car manufacturers, with less testing and lower liability concerns. "We're actually putting this out into the world; it's something people are going to rely on every day," Amodei says. "Is this something that I would be comfortable giving to my own child to use?"

That parent test—would you give this to your own child—is a useful safety filter. It cuts through the marketing and gets to the actual question: is this reliable enough for real-world use? If an AI system makes mistakes, could those mistakes hurt someone? If yes, how much testing is enough?

The challenge is that AI systems can fail in unintuitive ways. A chatbot might confidently hallucinate a medical fact. It might reinforce bias in hiring decisions. It might subtly manipulate people through persuasive language it's learned from training data. These aren't bugs you find through traditional testing. They're emergent properties of how the system generalizes from training data to novel situations.

Mike Masnick, founder of Techdirt, emphasizes the importance of asking "What might go wrong?" before every launch. This sounds obvious, but it's not how most tech companies operate. The dominant culture in tech is move fast, iterate, fix problems when users complain. For many products, that's fine. For AI systems that influence health decisions, hiring processes, or major life choices, it's reckless.

The problem compounds at scale. A bug that affects 0.1% of users might cause serious harm to thousands of people. When millions use your system daily, even rare failure modes become common in absolute terms. The math forces you to be more careful.
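The arithmetic behind rare failures at scale can be sketched in a few lines of Python. The user counts below are hypothetical round numbers chosen for illustration, not figures from the interviews; only the 0.1% rate comes from the text, and the function name `expected_affected` is made up for this sketch.

```python
def expected_affected(users: int, failure_rate: float) -> int:
    """Expected number of users hit by a failure mode with the given per-user rate."""
    return round(users * failure_rate)


FAILURE_RATE = 0.001  # 0.1% of users, as in the example in the text

# Hypothetical user-base sizes, for illustration only.
for users in (10_000, 1_000_000, 100_000_000):
    affected = expected_affected(users, FAILURE_RATE)
    print(f"{users:>11,} users -> {affected:,} affected")
```

The rate never changes across the three rows; only the user base does. That is why a failure mode that looks negligible in a pilot can still reach a large number of people once a system is in daily use by millions.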

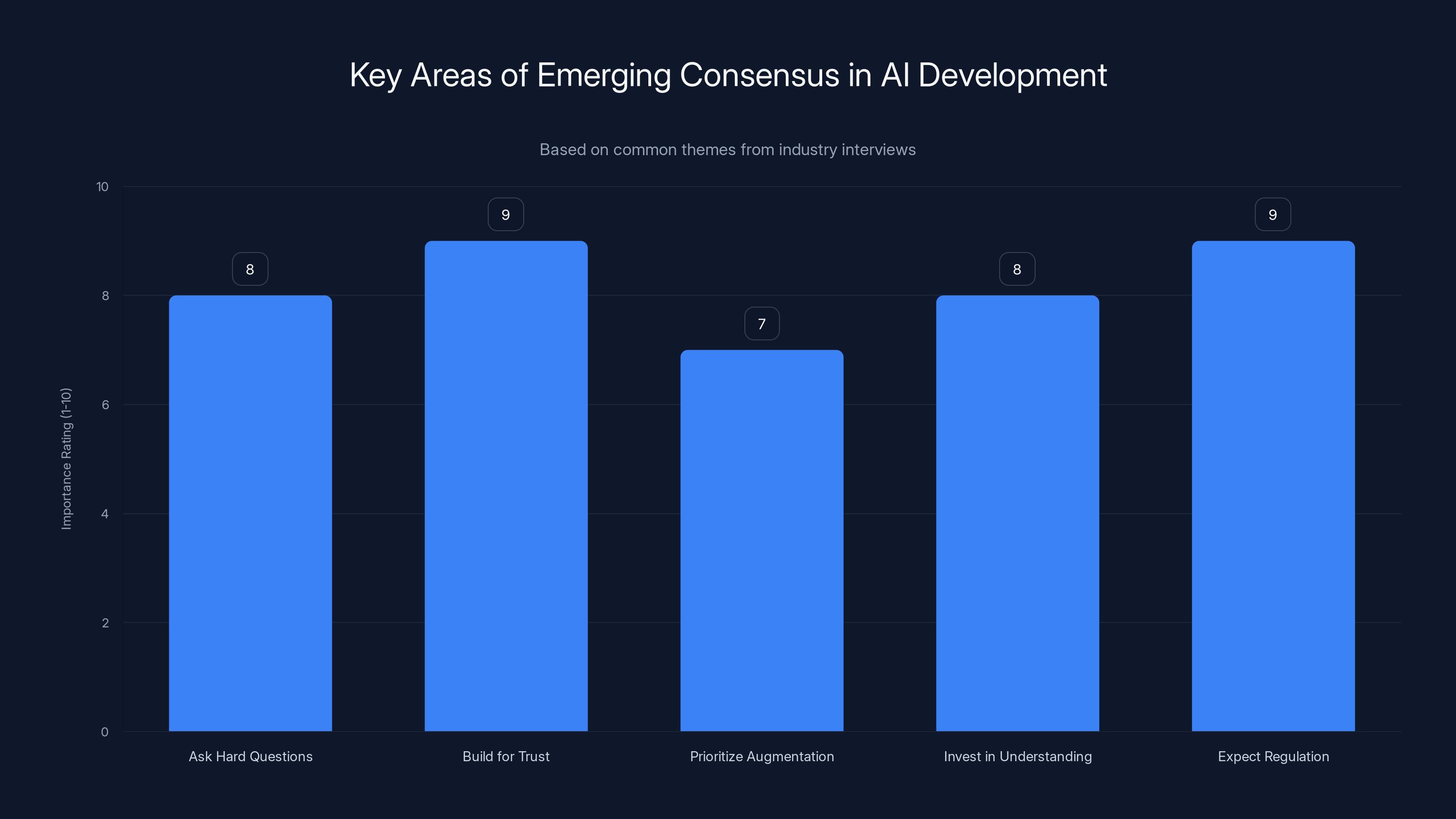

The chart highlights the emerging consensus on key areas in AI development, with 'Build for Trust' and 'Expect Regulation' rated as highly important. Estimated data based on industry insights.

Regulatory Vacuum: Who's Actually in Charge?

There's a crucial absence hanging over the entire AI landscape: regulation. In the US, there's minimal federal AI regulation. Europe's moving faster with their AI Act, but enforcement is still ramping up. Most of the world? Basically no guardrails.

This creates a strange dynamic. AI companies are essentially self-policing. Some, like Anthropic, take this seriously. Others are moving faster than they probably should. The regulatory vacuum means that the only constraint on deployment is the company's own judgment, public pressure, and fear of lawsuits after something goes wrong.

Matthew Prince articulates this well: companies need to establish trust before launching products. Why? Because if you don't, you're betting that the regulatory environment stays favorable to you forever. That's a bet no one should make. Public trust erosion leads to regulatory backlash. The AI companies that move carefully now are the ones that'll face less harsh regulation later.

But here's the reality: some companies aren't moving carefully. They're racing to scale. They're racing to capture market share. They're racing to achieve capabilities that seem impressive in demos, even if real-world reliability is questionable.

The absence of regulation creates perverse incentives. A company that invests heavily in safety testing bears costs that a company moving recklessly doesn't. If both can launch similar products, the careless company wins on price and speed. This is a classic market failure that regulation exists to solve.

Jawando's point about having enough people in the room is about this too. You can't think through all the ways something might go wrong if the room is full of engineers and product managers optimizing for launch speed. You need ethicists, social scientists, people from affected communities, domain experts. That's expensive and slow. Companies that do it sacrifice speed. Companies that don't do it sacrifice responsibility.

The Student Perspective: Digital Natives Are Skeptical

One of the most interesting voices in these conversations came from students. These are people who've never known a world without smartphones, without constant internet, without AI assistants. They're digital natives. And many of them are surprisingly cautious about AI.

Sienna Villalobos represents a meaningful minority: the deliberate non-users. She sees AI as a shortcut that undermines deeper thinking. When you ask an AI what your opinion should be, you're outsourcing judgment. That concerns her. Philosophically, she's not wrong. There's a real cost to outsourcing thinking, even if that outsourcing is convenient.

Other students cited job security concerns. Berkeley students worry about whether their chosen fields will exist in a decade. Computer science students, you'd think, would be most comfortable with AI. Instead, many are worried that AI will commoditize coding. Design students worry that AI can now generate visual concepts. Business students worry that analysis they'd spend weeks on can be done in minutes. The worry isn't theoretical. It's specific, field-by-field erosion of job roles.

Data privacy is another consistent concern among students. They've grown up seeing their data harvested, sold, and used in ways they never explicitly consented to. They're less trusting of tech companies than previous generations, not more. When they see AI companies scraping data to train models, they think: "Of course they are. That's what tech companies do." The normalization of data exploitation in their lifetimes makes them more cynical about promises of better privacy practices.

Another interesting dynamic: many students use AI extensively despite their concerns. It's not ideological purity. It's pragmatism mixed with resignation. "I don't like it, but everyone else is using it, and I can't fall behind," is the sentiment. That's a different kind of adoption than enthusiasm. It's adoption born from competitive pressure, not genuine belief in the technology.

AI companies like Anthropic spend 2-3 times longer on safety testing compared to typical QA processes in tech companies. Estimated data.

The Optimism That Survives Caution

Despite all these concerns, many of the people interviewed expressed genuine optimism about AI's potential. This isn't naive optimism. It's cautious, conditional, but real.

Matthew Prince from Cloudflare says plainly: "I'm pretty optimistic about AI. I think it's actually going to make humanity better, not worse." This from the guy whose company has spent substantial resources holding AI companies accountable for web scraping. He's not optimistic because he thinks companies are behaving perfectly. He's optimistic because he believes the underlying technology, used responsibly, could genuinely improve human capabilities.

The optimism tends to center on specific domains. Healthcare. Scientific research. Education. Accessibility. These are areas where AI could genuinely augment human capability rather than replace human judgment. An AI that helps a doctor diagnose disease faster is different from an AI that replaces the doctor. One amplifies human expertise. The other removes human judgment from a critical decision.

The pattern emerges when you talk to people who've seen AI work well: it's best at augmentation, not replacement. At amplifying human capability, not substituting for human judgment. The companies that understand this distinction and build accordingly seem to be the ones people trust more.

Anthropic's Amodei emphasizes this in her thinking about Claude: it's designed to be a thinking partner, not a replacement for thinking. You use it to explore ideas, test logic, find problems in your reasoning. The human is still the decision-maker. The AI is the amplifier. That distinction matters.

The Specific Worries That Keep People Up at Night

Beyond the broad concerns about trust and safety, people are worried about specific failure modes. Some of these are technical. Some are social. All are real.

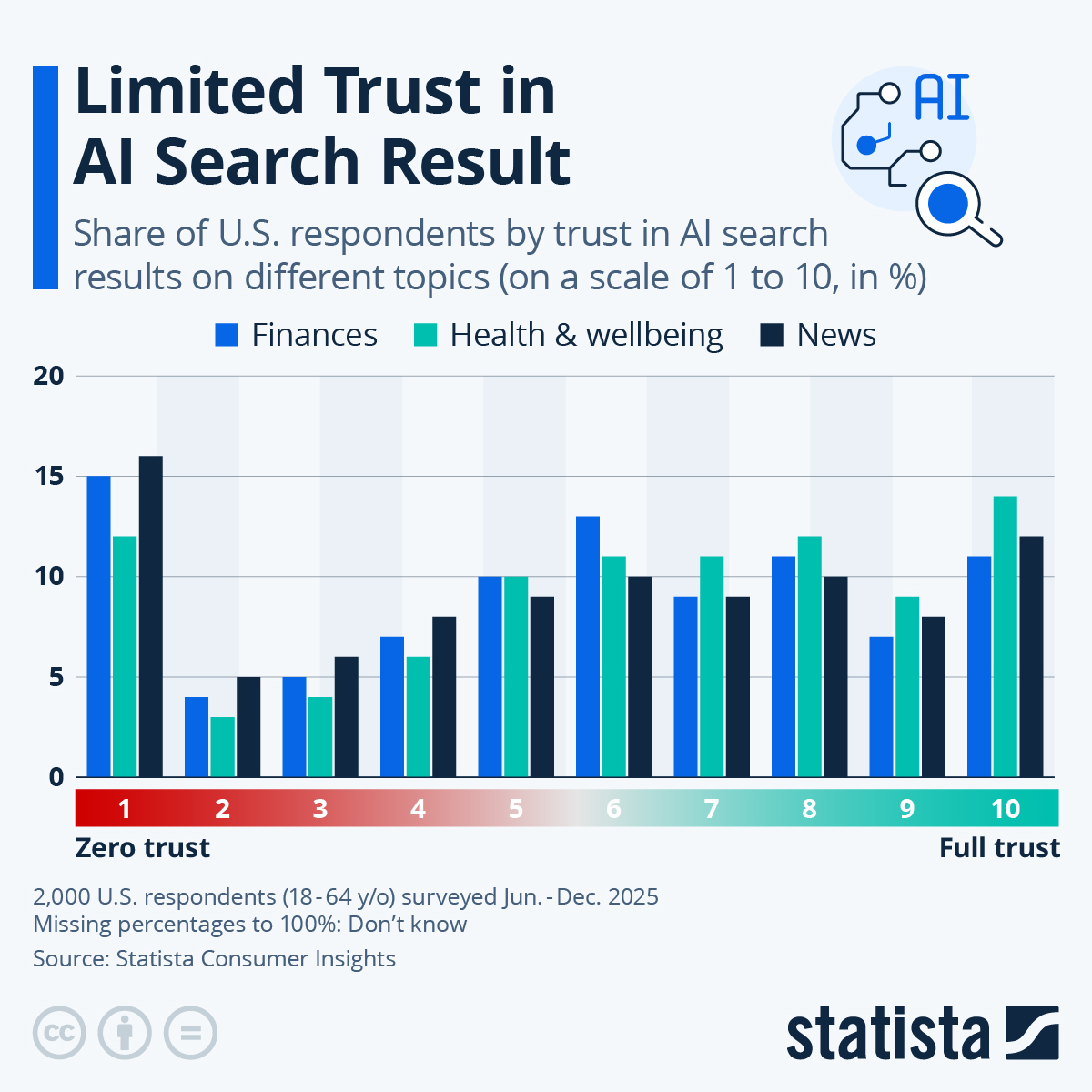

Health information reliability is near the top of everyone's worry list. When Jon M. Chu jokes about using AI for health advice "which is maybe not the best," he's actually pointing at something serious. If AI systems are confidently wrong about health questions, and millions of people rely on them for initial guidance, you have a public health problem. Not every question is urgent, but some are. An AI that confidently tells someone their symptom is harmless when it's actually serious could lead to delayed medical care and real harm.

Environmental impact concerns emerged in several conversations. Training large language models requires massive computational resources. That means server farms running 24/7, pulling enormous amounts of electricity. Some estimates suggest training a large model uses as much electricity as thousands of homes in a year. If we're scaling this up to serve billions of users, the environmental cost becomes massive. The irony: we're deploying AI to help with climate problems while the AI itself contributes to energy consumption.

Job displacement is personal. It's not abstract. When a marketing coordinator realizes that AI can now write decent copy, when a junior designer realizes AI can generate visual concepts, the threat is real. Not all jobs will disappear, but some will. Others will transform. The people in those jobs right now are understandably anxious.

Social and political manipulation is harder to quantify but increasingly concerning. Large language models can be remarkably persuasive. If they're deployed to generate personalized political content, or to create convincing disinformation, or to subtly nudge people toward particular behaviors, the stakes are high. The technology is already capable of this. The question is how aggressively it gets deployed and whether people can even tell they're being influenced.

Estimated data shows a balanced focus on safety, accountability, speed, and market capture in AI development, highlighting the ongoing tension between responsible innovation and competitive pressures.

Where Companies Get It Right

Amid all the concerns, a few companies stand out as getting things more right than others. Not perfect. But thoughtful.

Anthropic's approach centers on safety from day one. They've published research on constitutional AI, trying to design systems that follow principles even when they're not explicitly told to. They're transparent about limitations. They built safety testing into their deployment process, not as an afterthought. This costs them speed and probably costs them market share against competitors moving faster. They've chosen to trade growth for responsibility.

Cloudflare's approach is different but related: accountability. They're using the power of their position in the internet infrastructure stack to create consequences for companies that scrape the web unethically. They can't force everyone to be responsible, but they can make irresponsibility more costly. That's not perfect, but it's something.

Both companies seem to believe that the long-term business advantage comes from building trust and responsibility now. They're betting that the regulatory environment and public sentiment will eventually reward companies that were careful early. That might be correct. It might not be. But it's a bet on something other than pure quarterly growth.

The Skills Gap: What Companies Actually Need

One conversation that emerged repeatedly: most companies deploying AI don't actually understand their own systems. They're using black boxes they don't fully comprehend. They're shipping products they can't fully explain. That's a problem.

The best teams have people who can explain what the model does, why it works, where it fails. They have people who understand the training data, the potential biases, the edge cases. They have people who can say: "If we deploy this in this way, here's what might go wrong." That's rare. Most companies are moving too fast to build this expertise.

This creates a gap between what companies think their systems can do and what they actually do. The public announcement says X. The actual capabilities are Y. Reality is a messy Z that depends on specific inputs and specific contexts. When the gap between promised and actual is large, that's when trust erodes.

Building true AI literacy in an organization takes time and money. It requires hiring for expertise, not just for execution speed. It requires patience to understand systems before deploying them at scale. Most companies aren't willing to invest this. The competitive pressure is too high. Everyone's racing to be first, which means being second on safety.

Only 5% of Americans trust AI companies 'a lot,' highlighting significant public skepticism. Estimated data based on narrative.

The Emerging Consensus on What Should Happen

Across all the interviews, a few things kept emerging. They weren't universal, but they were common enough to feel like an emerging consensus.

One: Ask hard questions before launch. Not after. Before. "What might go wrong?" "Would I give this to my child?" "Who does this hurt?" "Do we understand why the model makes the decisions it does?" If you can't answer these questions, you're not ready.

Two: Build for trust from the start. Trust isn't a marketing problem. It's a design and execution problem. How is data handled? What's the privacy policy and is it understandable? What are the actual limitations? Be honest about them. Transparency builds trust faster than marketing claims ever will.

Three: Prioritize augmentation over replacement. Design systems that amplify human capability rather than removing human judgment. Keep humans in the loop on important decisions. Make it clear where the AI is contributing and where human judgment is required.

Four: Invest in understanding your systems. This is expensive and slows deployment. Do it anyway. You need to understand what you're shipping. Your users will demand this eventually. Doing it now is cheaper than doing it after lawsuits start.

Five: Expect regulation and build for it. The regulatory environment is coming. Companies that build responsibly now will have an easier transition than companies that've optimized for growth at all costs. Regulation will happen. The question is whether you'll be on the good side of it.

The Uncomfortable Reality About Competition

Here's the tension nobody wants to talk about directly: doing AI responsibly is harder and slower than doing it recklessly. A company that tests thoroughly, considers consequences, and invests in safety will likely move slower than a company that's optimizing for speed. In the short term, speed wins. In the long term, responsibility wins. But "long term" is years away, and quarterly earnings are right now.

This creates a prisoner's dilemma dynamic. Every company would prefer a world where everyone moves responsibly. But if you're the only one moving responsibly while others move fast, you lose. So everyone moves faster than they probably should.
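A toy payoff table makes the prisoner's dilemma structure concrete. The sketch below uses invented payoff numbers (higher is better) purely to illustrate the shape of the incentive; none of the values come from the interviews, and the names `PAYOFF` and `best_response` are hypothetical.

```python
# Hypothetical payoffs (higher is better) for one of two competing AI companies.
# Each entry maps (my_choice, rival_choice) -> my payoff.
PAYOFF = {
    ("careful", "careful"): 3,  # both build trust; the market grows steadily
    ("careful", "fast"): 0,     # I pay for safety while the rival takes the market
    ("fast", "careful"): 4,     # I take the market while the rival pays for safety
    ("fast", "fast"): 1,        # race to the bottom; trust erodes for everyone
}
CHOICES = ("careful", "fast")


def best_response(rival_choice: str) -> str:
    """Return the choice that maximizes my payoff given the rival's choice."""
    return max(CHOICES, key=lambda mine: PAYOFF[(mine, rival_choice)])


for rival in CHOICES:
    print(f"If the rival is {rival}, my best response is {best_response(rival)}")

# "fast" is the best response either way, yet (fast, fast) pays 1 each,
# which is worse than the 3 each that (careful, careful) would give.
```

Under these assumed numbers, any penalty larger than 1 applied to the "fast" option would make "careful" the best response in both cases. A rule that applies to every company changes the payoffs for all of them at once, which is the role regulation plays in this argument.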

The only way out of this is either regulation (which changes the rules for everyone) or market dynamics where users actively prefer responsible companies. The market dynamics aren't there yet. Trust measures show people don't believe in AI companies to begin with. The responsibility message hasn't broken through as a differentiator.

Regulation is coming. The question is whether it'll be light, focused, and encouraging to good actors—or heavy, punitive, and driven by backlash. Companies that move responsibly now are basically betting that lighter regulation comes faster and is better for their business. That might be correct.

What These Conversations Actually Reveal

If you step back from the specifics, the interviews reveal something important: there's no consensus about AI's future, but there is consensus about how companies should behave while building it.

People disagree about whether AI will be net positive or net negative for humanity. They disagree about which applications are beneficial and which are dangerous. They disagree about job displacement and economic disruption. But they agree on something simpler: companies should be honest, should test thoroughly, should consider consequences, and should build systems that amplify human judgment rather than replace it.

That's not a high bar. It's not innovation. It's just responsibility. The fact that so few companies are doing it says something about the current state of tech industry incentives.

The Path Forward: What Happens Next

AI is going to keep evolving. That's certain. Systems will get more capable. They'll be deployed in more domains. They'll touch more people's lives. That's not debatable.

What's debatable is how well-built those systems will be. Whether they'll be designed with care or just shipped quickly. Whether companies will invest in understanding what they're deploying. Whether users will be informed about what they're relying on. Whether the technology will augment human capability or erode human judgment.

The next two years matter more than people realize. The companies that make conscious choices about responsibility now will be well-positioned for a more regulated environment. The companies that race to scale without thinking through consequences will face harder regulatory headwinds and more public backlash.

For individuals, the path forward is simpler: use AI thoughtfully. Understand what you're relying on. Don't outsource judgment on important decisions. When you use these tools, think about the trade-offs. Speed and convenience have costs. Sometimes those costs are worth it. Sometimes they're not.

For organizations: build AI literacy. Make sure people understand the systems you're deploying. Invest in safety testing. Ask hard questions before launch. Don't compete purely on speed. Competition on responsibility is harder but ultimately more sustainable.

The future of AI isn't predetermined. It's being shaped right now by decisions companies and organizations are making every day. The pattern that emerges from these conversations is clear: the smart money is on responsibility. Not because it's faster or cheaper or more profitable in the short term. But because it's the only strategy that works over time.

FAQ

What's driving the rapid adoption of AI in mainstream use?

AI adoption is being driven by three factors: ease of access (free or low-cost tools), obvious practical benefits (time savings, better answers), and competitive pressure (if everyone else is using it, you feel like you're falling behind if you don't). Nearly two-thirds of US teenagers now use chatbots regularly, up from essentially zero just three years ago. The integration into search engines and productivity tools means many people are using AI without even realizing it.

Why do people use AI if they don't trust AI companies?

This is the central tension. People use AI tools because they're useful, even though they don't trust the companies behind them. It's similar to using Google Search or Facebook—useful enough that the convenience outweighs concerns about privacy and data handling. This gap between usage and trust is unstable. As awareness grows about data practices and algorithmic bias, that gap may close, but through declining usage rather than growing trust.

What specific safety concerns do AI experts have about current systems?

The main safety concerns are: (1) systems confidently producing false information (hallucinations), (2) biases learned from training data affecting decisions in hiring or lending, (3) lack of transparency about how systems make decisions, (4) potential for manipulation and persuasion at scale, and (5) environmental costs of running massive model inference. Many experts argue these aren't unsolvable problems, but they require deliberate investment to address.

How should companies approach AI safety testing?

Leading companies like Anthropic are applying a framework: ask "what might go wrong?" before launch, conduct thorough testing across different use cases and edge cases (similar to how car manufacturers do crash testing), involve ethicists and domain experts in addition to engineers, and use the "would I give this to my own child?" test for critical applications. This process is slower and more expensive than launching quickly, but results in higher reliability.

Will AI eliminate jobs or transform them?

Most experts believe it will do both. Some jobs will be eliminated entirely. Others will be transformed—the skills required will change, and the level of expertise needed will shift. What's clear is that the transition will be disruptive for workers currently in affected fields. Workers in data analysis, coding, design, and knowledge work generally face the most near-term exposure. Policies around retraining and economic support during this transition are still being developed.

What regulatory changes are likely in the next 2-3 years?

Expected regulations focus on: mandatory disclosure when users are interacting with AI, transparency about training data sources and consent, liability for harms caused by AI systems, requirements for bias testing and disclosure, and privacy protections for data used in model training. The EU's AI Act is already moving in these directions. The US is likely to follow with sector-specific regulations (healthcare, finance, hiring) before broad federal rules.

What This Moment Demands

We're at a fork in the road. The path we take in the next two years will shape how AI develops for the next decade. The people building AI systems today have more power than they realize to determine whether the technology becomes something we can trust or something we just tolerate.

The good news: there's growing awareness that responsibility matters. Companies like Anthropic are proving you don't have to sacrifice all speed for safety. Cloudflare is showing that accountability can be built into systems. Students are asking hard questions instead of blindly accepting the hype.

The challenge: competitive pressure pushes toward speed and market capture. It's easier to move fast and apologize later than to move carefully and risk being second. Until that incentive structure changes—either through regulation or through markets actively preferring responsible companies—most companies will take the path of least resistance.

The conversations we captured in this reporting show people who are thinking hard about these questions. They're not all in agreement, but they're all engaged with the real implications of what they're building. That's rarer than it should be.

Your own path forward is personal. But if you're using AI, working with AI, or relying on AI systems: think about what you're accepting, what the actual costs are, and whether the convenience is worth the trade-offs. If you're building AI systems: ask hard questions. Test thoroughly. Consider consequences. Build for the world you want, not just the outcome you want next quarter.

The future isn't written yet. That's actually the most important thing everyone in these conversations agreed on. We're making it right now, every day, with the decisions we're making about what to build and how to build it responsibly.

Key Takeaways

- AI adoption is mainstream: Nearly two-thirds of US teens use chatbots (about 3 in 10 daily) and 35% of US adults use AI daily, but this adoption lacks corresponding trust in AI companies

- Trust crisis threatens stability: Only 5% of Americans trust AI companies "a lot," while 41% actively distrust them; global trust in AI companies to protect personal data fell from 2023 to 2024

- Safety requires deliberate investment: Leading companies ask hard questions before launch (would I give this to my child?), mimicking safety practices from the automotive industry

- Students bring healthy skepticism: Gen Z views AI with caution despite digital nativity, citing job security, data privacy, and critical thinking erosion concerns

- Responsibility beats speed long-term: Companies investing in safety testing, transparency, and augmentation-focused design will outperform reckless competitors once regulation arrives
