Why AI Projects Fail: The Alignment Gap Between CEOs and IT Leaders [2025]

Last Tuesday, I talked to a CTO at a mid-sized fintech company. They'd just shut down a six-month AI initiative. The project had solid funding, decent talent, and reasonable goals.

But here's what killed it: the CEO wanted to transform the customer experience. The IT leader wanted to stabilize infrastructure first. Neither was wrong. They were just speaking different languages.

This isn't a rare story.

The Silent Crisis Nobody's Talking About

Artificial intelligence is everywhere in boardrooms right now. CEOs see transformation, efficiency gains, and competitive advantage. They see ChatGPT, Claude, and GPT-4 and think: "This is the future. We need this."

Meanwhile, IT leaders are thinking about integration complexity, security nightmares, data quality issues, and the fact that their infrastructure barely handles today's workload.

They're not wrong either.

But here's the problem: when leadership teams don't share the same mental model of what AI actually requires, projects become zombies. They stumble forward without real alignment, burning budget and credibility until someone finally pulls the plug.

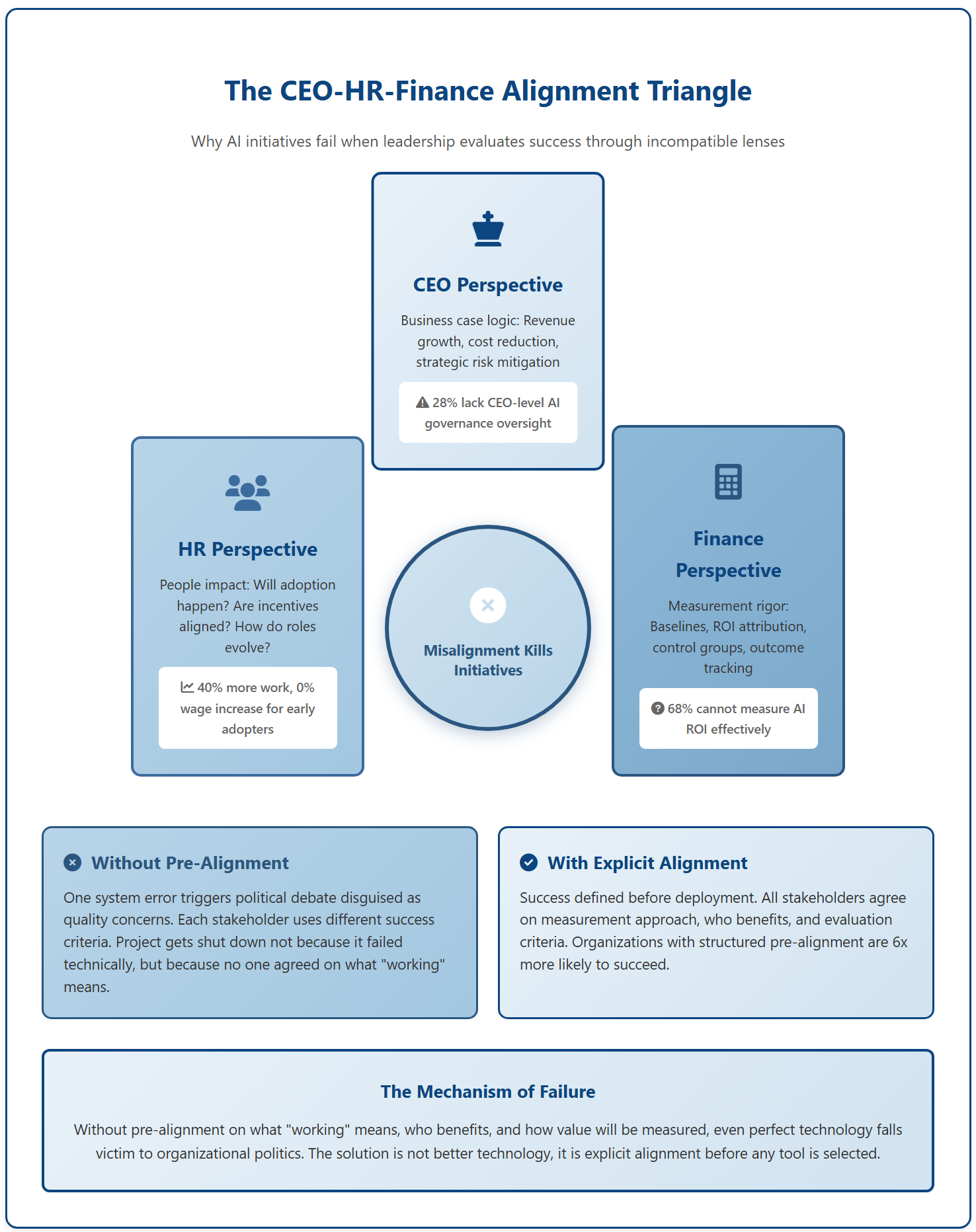

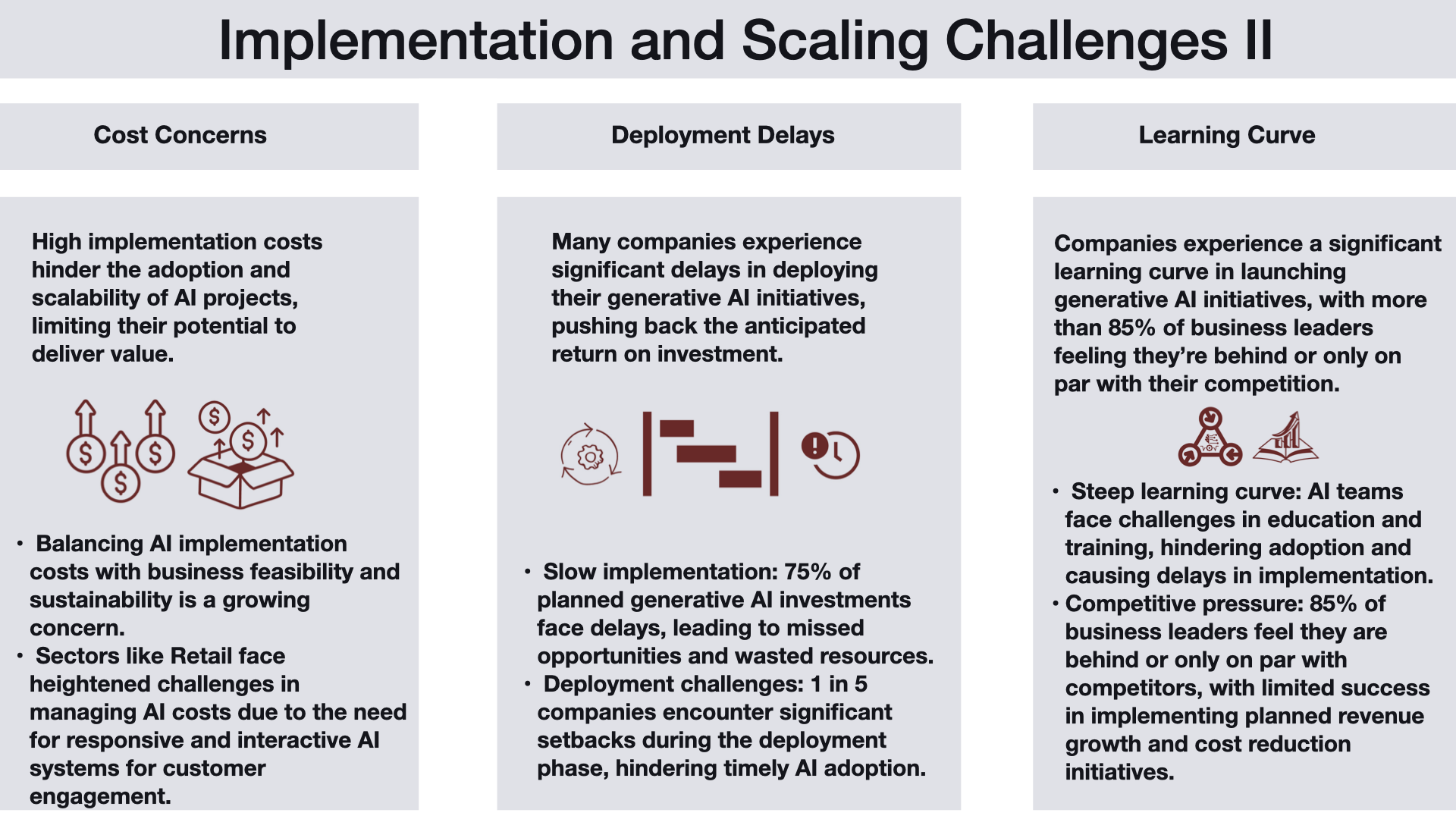

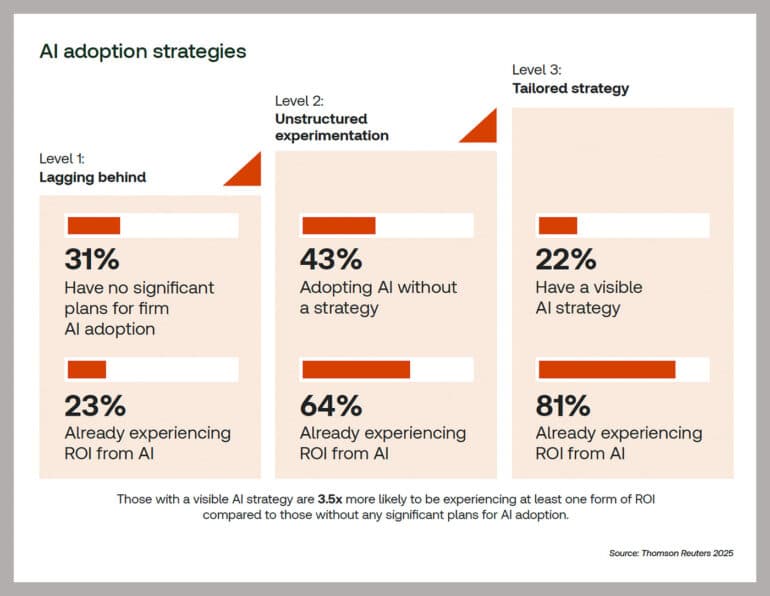

Studies suggest this pattern is common: companies fail at AI adoption not because the technology is bad (it keeps getting better every month) but because they never aligned on what success even looks like. CEOs think success is "transformative change." IT leaders think success is "doesn't crash the system."

These aren't compatible definitions of success.

The gap isn't technical. It's linguistic. It's cultural. It's about two groups of smart people looking at the same opportunity through completely different lenses.

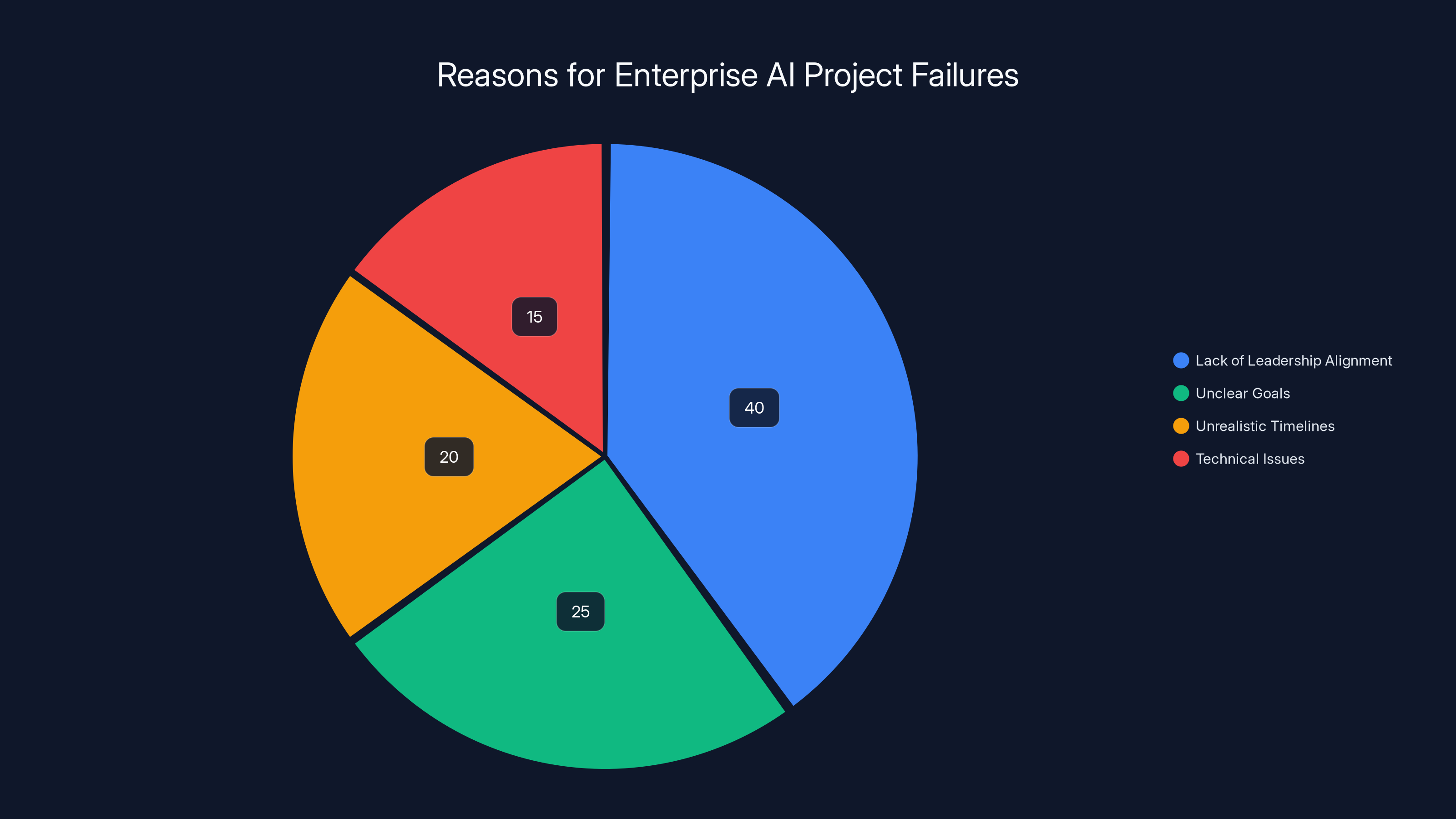

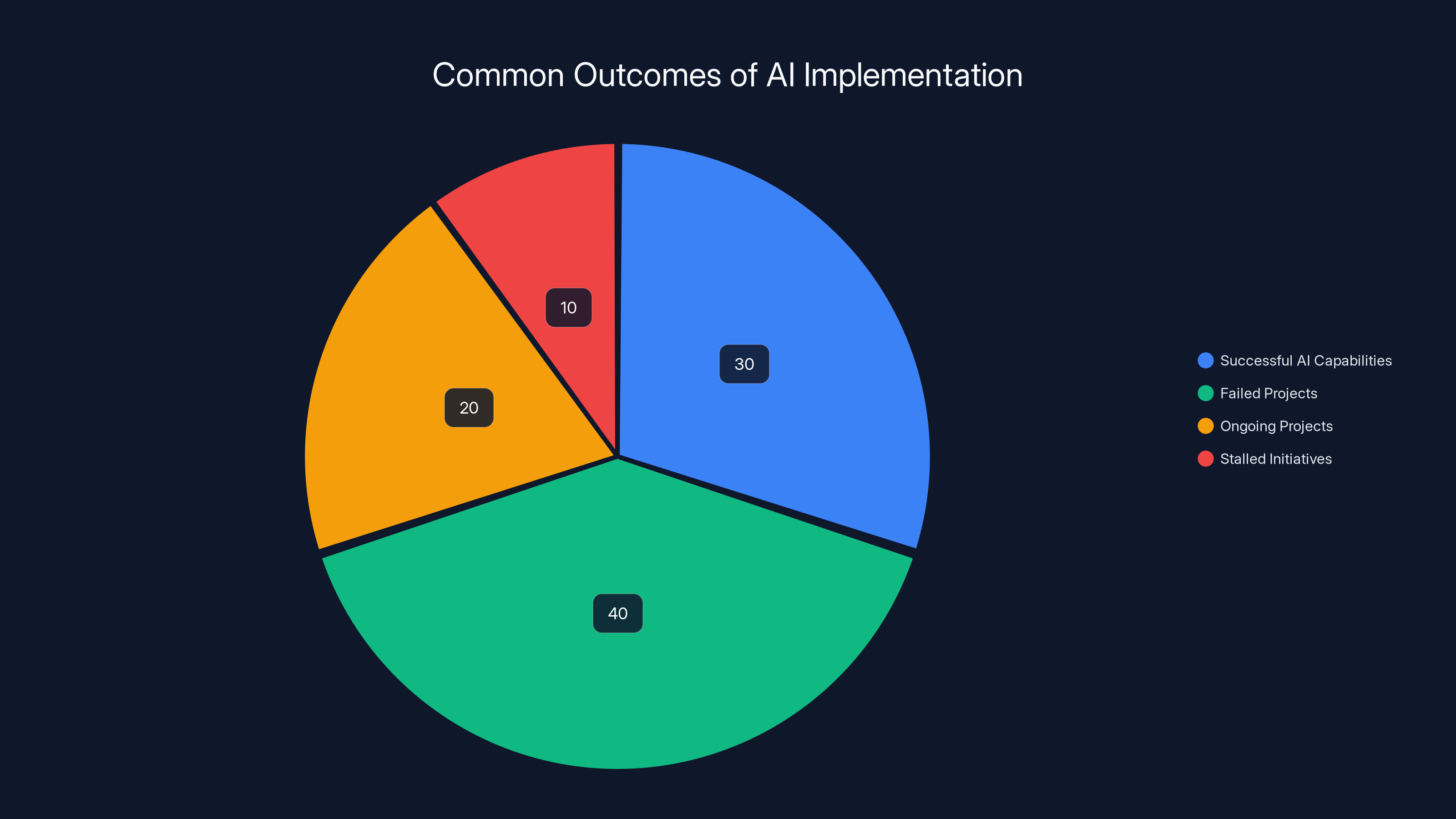

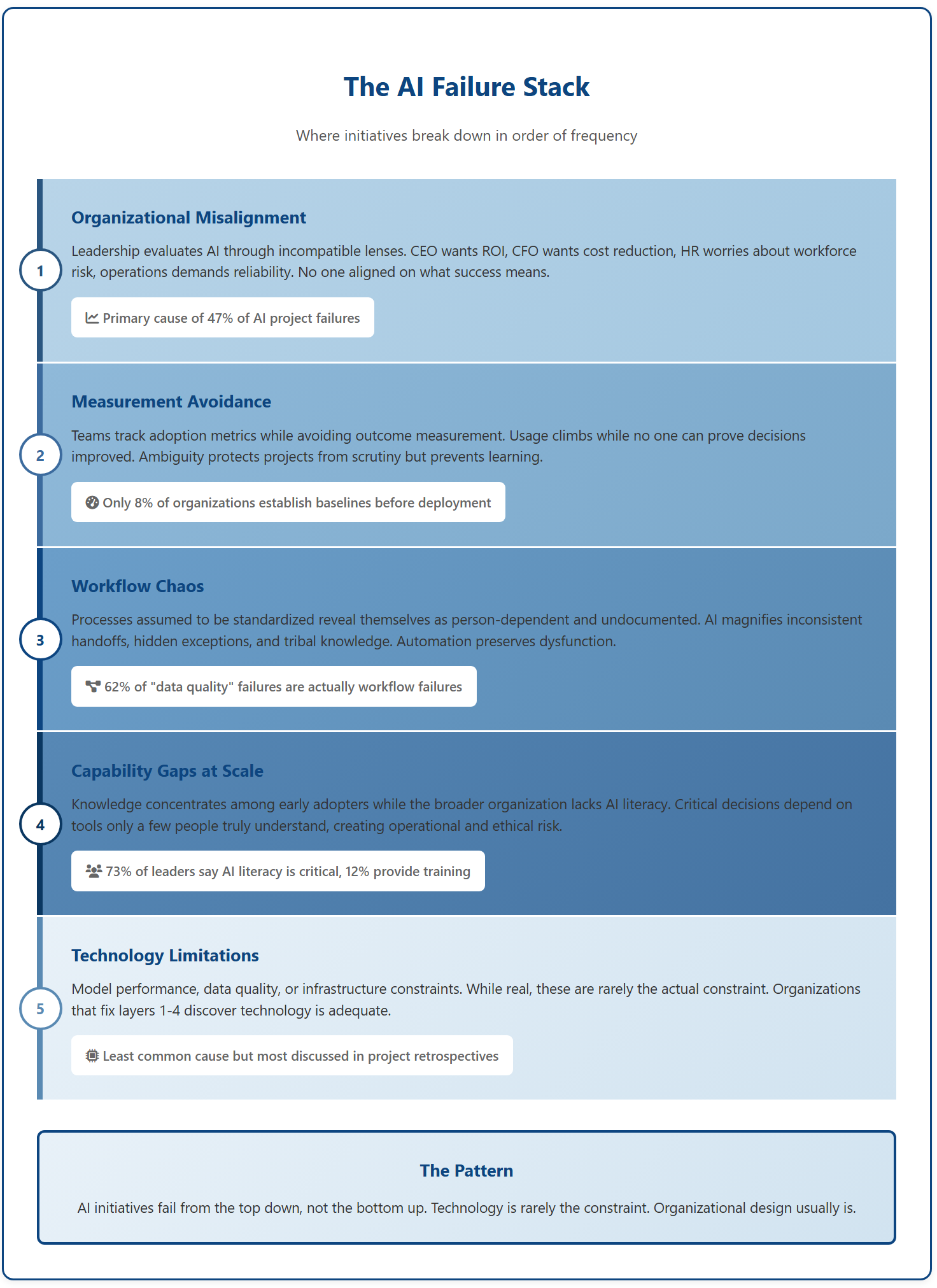

The primary reason for AI project failures is a lack of leadership alignment, accounting for 40% of failures. Estimated data based on common issues.

Understanding the CEO Perspective

Let's start with the C-suite view. CEOs live in a world of quarterly earnings, shareholder expectations, and competitive pressure. They see OpenAI's GPT models getting smarter every quarter. They read about competitors integrating AI into customer interactions. They see the clock ticking.

From their vantage point, AI is the future. Not someday. Now. Companies that move fast gain advantage. Companies that move slow get left behind.

So CEOs ask reasonable questions: Can we use AI to personalize our customer experience? Can we automate our support team? Can we predict churn better? Can we speed up decision-making?

These aren't crazy ambitions. They're legitimate business goals.

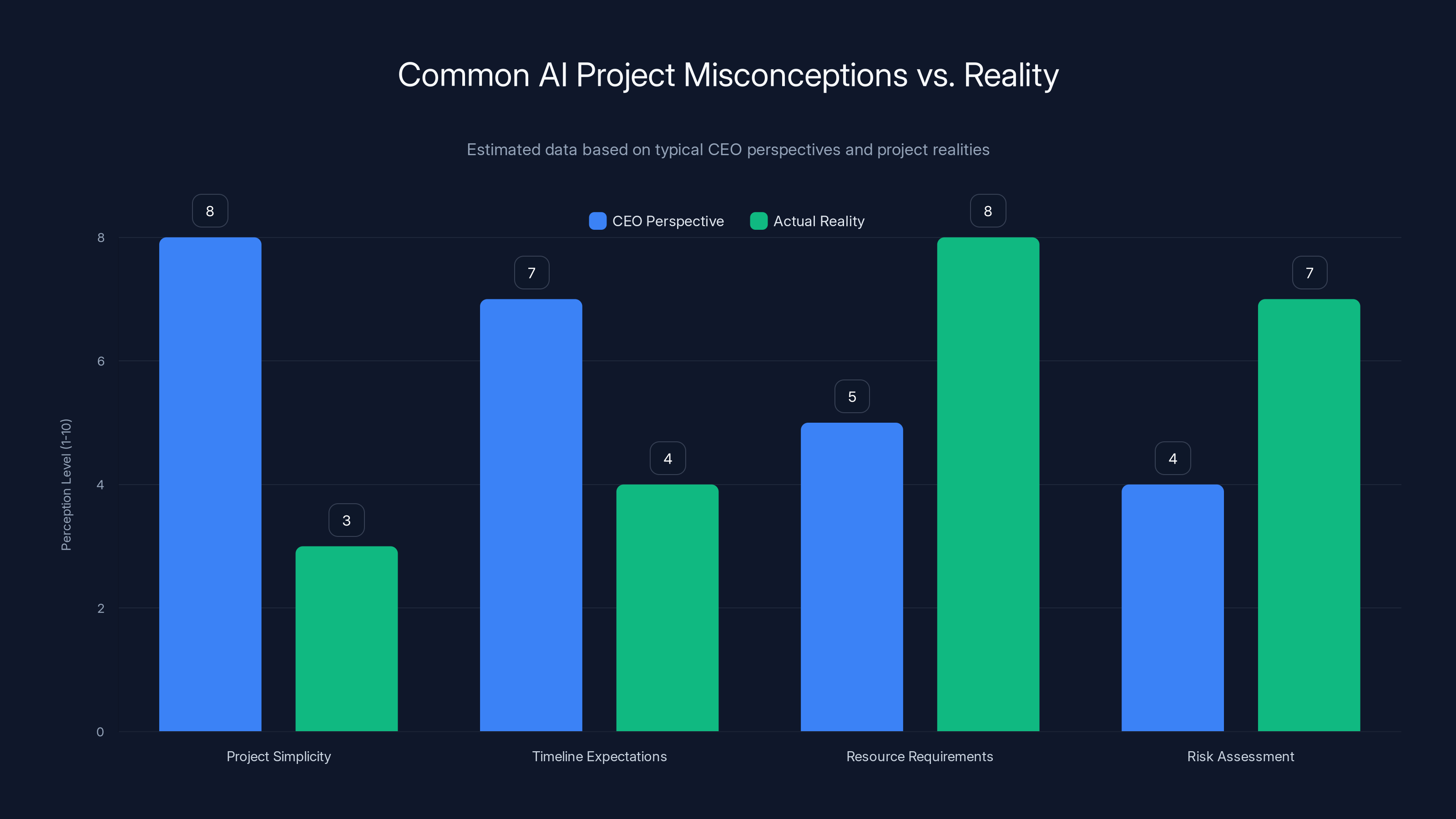

But here's where the gap opens up. CEOs often have limited visibility into what these projects actually require. They might think: "We'll plug in ChatGPT and it'll handle our customer service." Simple. Elegant. Three-month project.

What they don't see is the work underneath: data cleaning, API integration, security audits, staff retraining, compliance checking, and the 47 edge cases where AI gives completely wrong answers that you have to catch and fix manually.

The CEO incentive structure also pushes toward big swings. CEOs are rewarded for transformation, growth, and moving markets. "We made an incremental improvement" doesn't move the stock price. "We launched an AI-powered product" gets press coverage.

This creates natural optimism bias. Projects get framed as smaller and simpler than they actually are. Risk gets minimized. Timeline estimates get compressed.

Again, this isn't stupidity. It's just how incentives work.

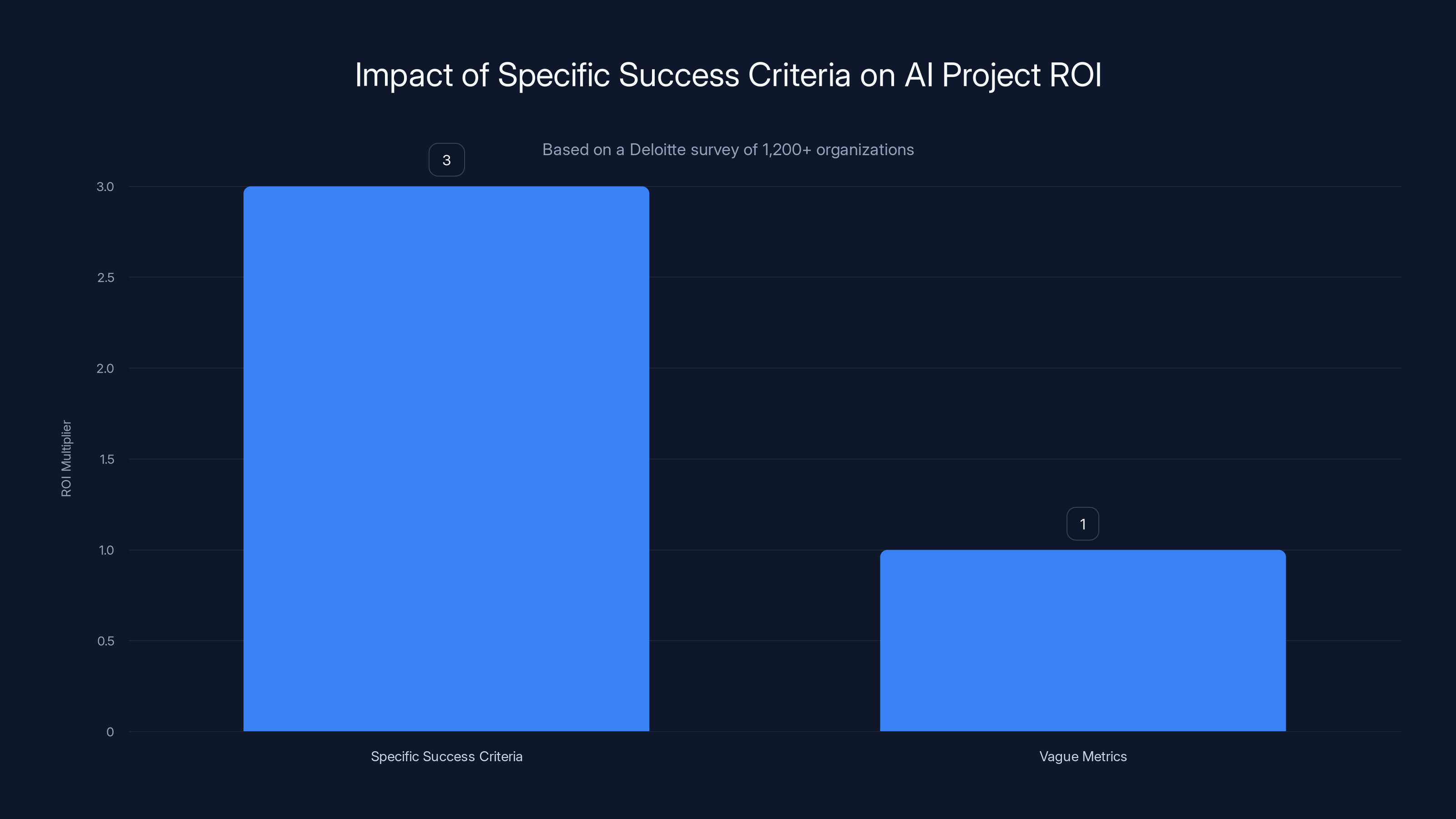

Companies using specific, measurable success criteria for AI projects report 3x higher ROI compared to those using vague metrics like 'transformation' or 'modernization'.

Understanding the IT Leader Perspective

Now flip the view. IT leaders are thinking about something different entirely.

They're managing production systems that can't go down. They're protecting sensitive data. They're balancing technical debt against new features. They're coordinating between legacy systems and modern ones.

When an IT leader hears "AI project," they immediately start thinking about infrastructure requirements. How much compute power? How do we handle data at scale? What happens if the model fails? How do we ensure security?

These aren't paranoid questions. They're the questions of someone responsible for keeping the lights on.

IT leaders also have visibility into constraints that leadership often doesn't understand. They know their data is fragmented across three legacy systems. They know their current infrastructure is already running hot. They know that adding new workloads could create cascading failures.

So they say things like: "We need to stabilize infrastructure first." "We should start with a pilot." "We need to address data quality."

From the IT perspective, this is cautious and responsible. From the CEO perspective, it sounds like: "No. You don't want to move."

IT leaders are also incentivized differently. They're rewarded for stability, security, and efficiency. A production outage is catastrophic. A missed deadline is bad, but recoverable. A security breach is a career-ending event.

This creates natural conservatism. Not obstruction—just caution.

The problem is that both perspectives are correct. The CEO is right that speed matters. The IT leader is right that infrastructure matters. But without a shared framework for thinking about both, they're just talking past each other.

The Mid-Market Amplifier

This problem gets worse in mid-market companies. These are organizations big enough to have real complexity—multiple systems, distributed teams, security requirements—but small enough that everyone still reports to the same few people.

At a large enterprise, layers of management sit between the CEO and IT, and messages get translated on the way through. At a small startup, everyone talks constantly and alignment happens naturally.

But mid-market? That's the danger zone.

You've got a CEO who talks directly to the CTO. You've got an ops team that works with both. You've got business units pushing for speed and IT pushing for stability.

And because the org is small, when things get misaligned, there's nowhere to hide. Everyone sees the friction. Projects get defended by their champions and questioned by their skeptics. Nothing gets the benefit of the doubt.

Mid-market companies also face a unique talent squeeze. Your IT team probably isn't huge. They're wearing multiple hats. They don't have a dedicated AI strategy person. They don't have a separate infrastructure team. They've got maybe three solid engineers who are already running the entire tech operation.

Add an AI project onto that? Something else breaks.

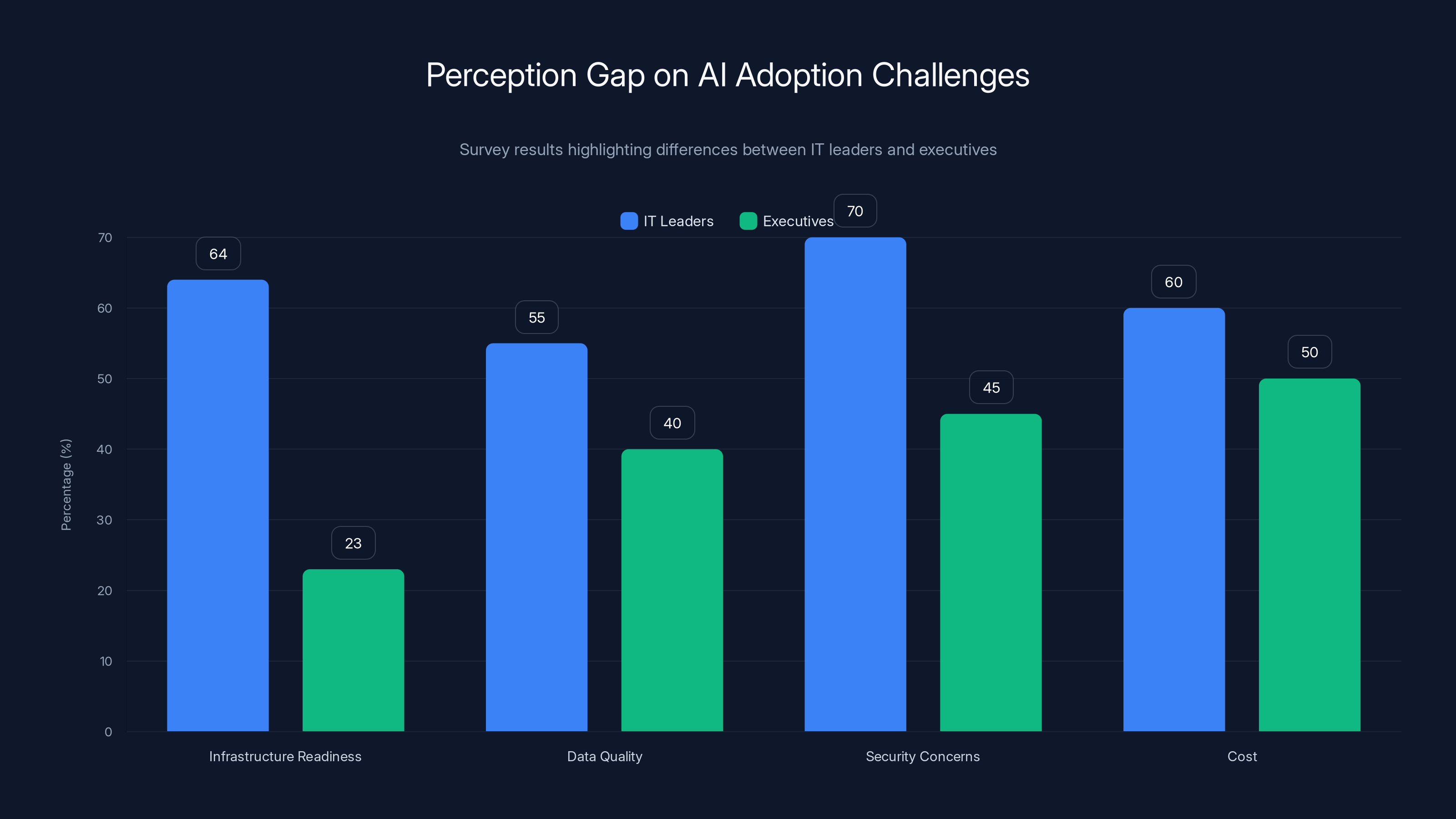

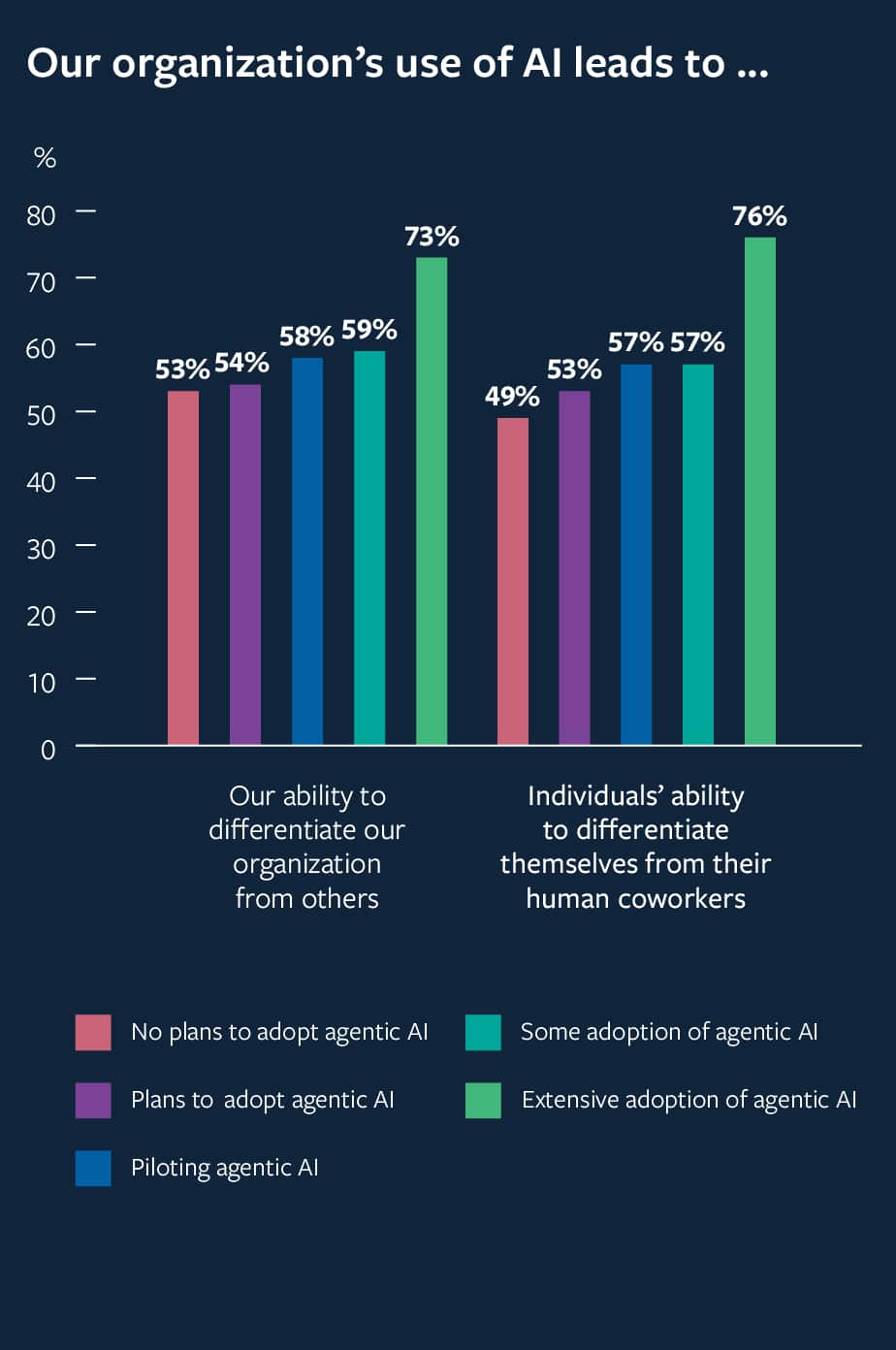

A significant perception gap exists between IT leaders and executives on AI adoption challenges, with infrastructure readiness being a major concern for IT leaders but not for executives.

The Advice Gap: Where the Real Problem Lives

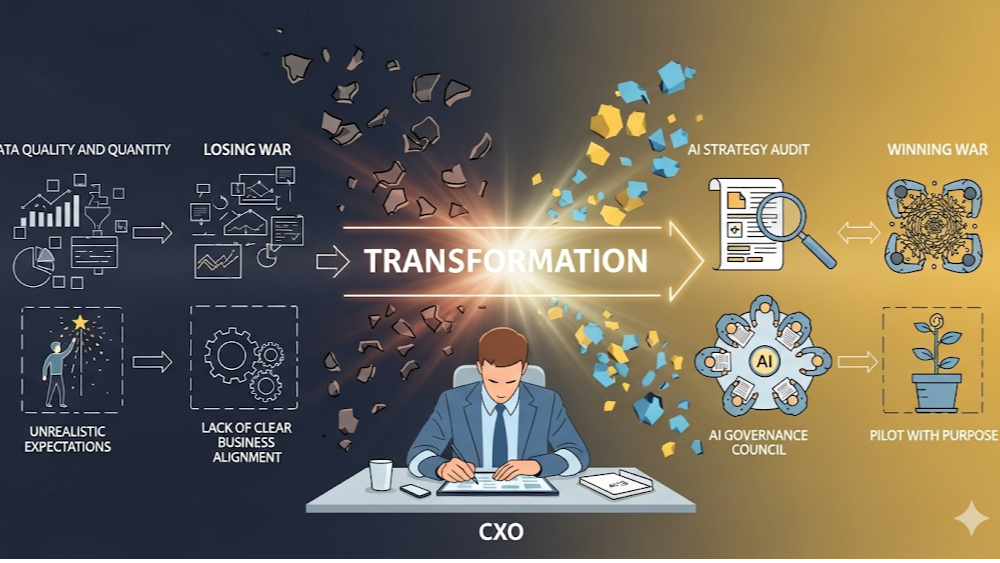

Here's the insight that changes everything: the real issue isn't the gap between CEO and IT leader. It's the advice gap.

CEOs get advice from business consultants, industry peers, conference keynotes, and LinkedIn posts. They hear: "AI is transformative. Move fast. Competitors are ahead. You need to act now."

IT leaders get advice from technical documentation, engineering blogs, Stack Overflow, and internal war stories. They hear: "Data is messy. Implementations fail. Security is critical. Move cautiously. Test everything."

Both sources are giving good advice. But it's advice optimized for different outcomes.

The business advice is optimized for growth and competitive advantage. The technical advice is optimized for stability and risk mitigation. These aren't contradictory, but they're not naturally aligned either.

Without a shared framework for thinking about AI specifically—not generic business advice, not generic technical advice—leadership teams stay in separate worlds.

The CEO reads a McKinsey report on AI ROI and plans accordingly. The IT leader reads about MLOps challenges and plans accordingly. They never actually integrate these mental models.

So projects get proposed on the business case alone. Then they hit technical reality and grind to a halt. Then there's conflict about why progress stopped. Then the project gets killed.

And everyone blames the other side.

Building Shared Context

Fixing this requires something simple but rare: shared context about what AI actually is and what it actually requires.

Not MBA-level abstraction. Not deep technical documentation. Shared understanding.

Here's what needs to be true:

The CEO needs to understand: AI is incredibly useful for specific, well-defined problems with good data. It's not useful for everything. It requires integration work, data quality, model monitoring, and human oversight. It's not magic. It's a tool that amplifies what you already do.

The IT leader needs to understand: AI is genuinely different from previous tech adoptions. It's not just infrastructure. It requires different processes, different skill sets, and different risk management. Ignoring AI is also a risk. There's a cost to moving too slowly.

These aren't contradictory. But they're not intuitive either.

Most leadership teams never have this conversation. They assume they already share an understanding and operate on that assumption.

Building shared context means actually talking about:

- What specific problem we're solving (not "transform the business," but "reduce support response time by 40%")

- What data we have and what state it's in

- What timeline is realistic (not what we want, what's actually realistic)

- What success looks like in measurable terms

- What can go wrong and how we'll handle it

- What other projects might suffer if we pull resources

- What expertise we need and whether we have it

This conversation is uncomfortable. It surfaces disagreement. But it beats six months of misalignment followed by project death.

Estimated data suggests that companies with aligned leadership are more likely to develop successful AI capabilities, while misalignment can lead to failed projects and stalled initiatives.

The Phased Approach That Actually Works

Once you have shared context, you can build an actual roadmap. And the roadmap that actually works in practice looks nothing like what most companies do.

Most companies try to do this: "We're going to build an AI system that transforms our business. Here's the vision. Go." Then IT disappears for six months and comes back saying "it's complicated."

What actually works is methodical and iterative.

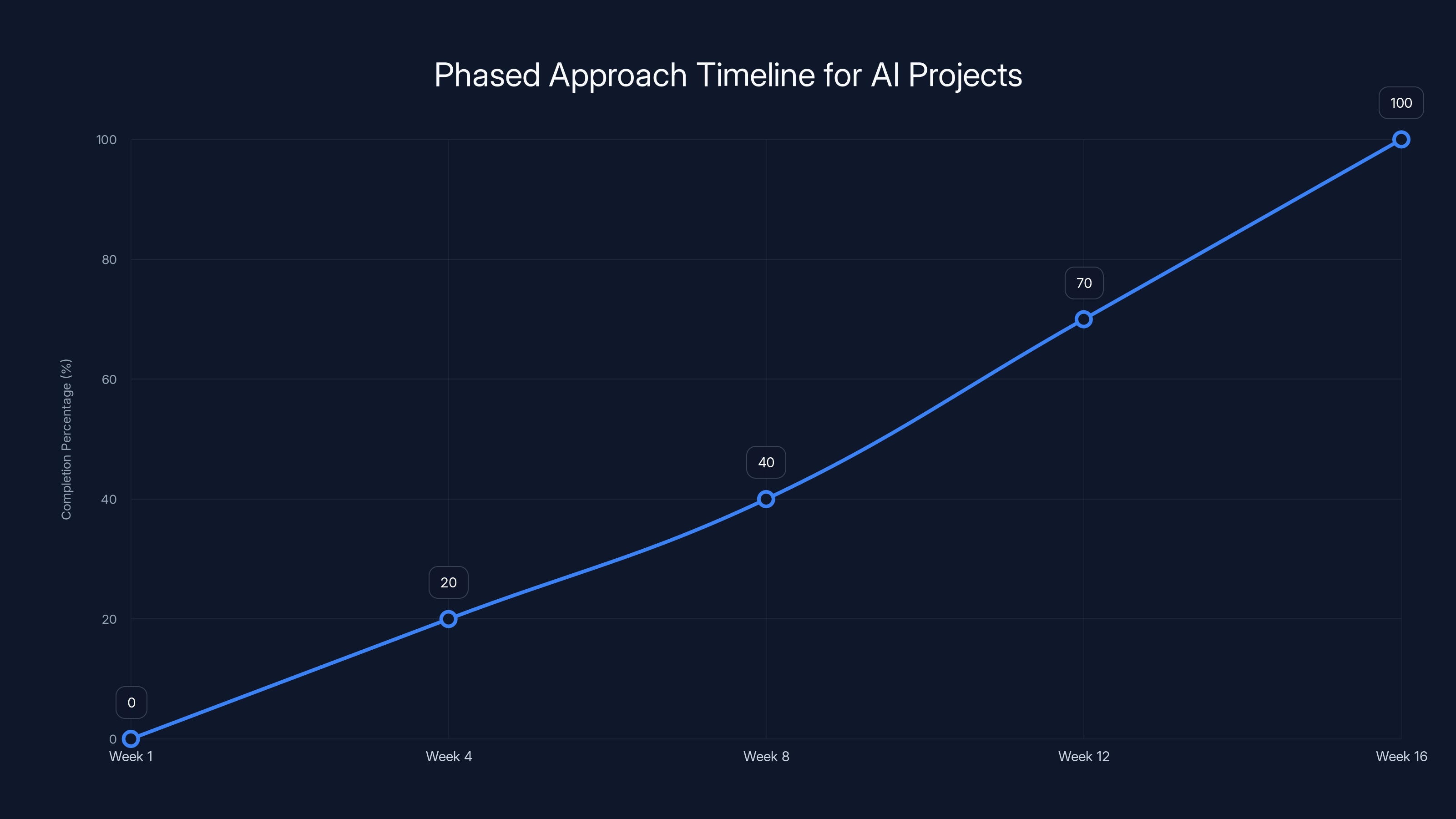

Phase 1: Pilot Selection (Weeks 1-4)

Identify a high-impact problem that's also low-friction. Not the most important problem. The problem that's important enough to matter, but small enough that you can actually succeed.

Good pilot: "Reduce time support team spends categorizing incoming tickets by 30%."

Bad pilot: "Revolutionize customer experience with conversational AI."

The good pilot has clear success criteria. You can measure it. You can do it in 8-12 weeks. You can fail without catastrophic consequences.

Phase 2: Data Assessment (Weeks 4-8)

Stop assuming your data is ready. It's not. Look at what you actually have.

Where does it live? In what format? How clean is it? How biased is it? How much manual work would it take to use it?

You'll discover that the data you thought was clean isn't. That the data you thought was comprehensive has huge gaps. That data you thought was unbiased isn't.

This is where most projects should fail if they're going to fail. If your data isn't usable, an AI project can't succeed. Full stop.

Phase 3: Small Team Execution (Weeks 8-16)

Assign a small, focused team. Three people max: one person who knows the business problem deeply, one engineer, one data person.

Their job is to build something that works. Not something beautiful or elegant. Something that solves the problem.

This team should have explicit permission to ignore process. Normal approval workflows, standard architecture, formal documentation—that comes later. Right now, the job is to prove the concept works.

Phase 4: Measurement and Learning (Weeks 16-20)

Does it work? Be honest about this. Not "does it work perfectly," but "does it solve the problem better than the current approach?"

Measure three things: Does it improve the metric you're targeting? Does it create new problems? Does it cost more or less than you expected?

If the answer to the first question is "yes," you move forward. If it's "no," you kill it and move to the next problem.
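To make the Phase 4 questions concrete, here is a minimal Python sketch of a go/kill check. It assumes a metric where lower is better (for example, minutes spent categorizing a ticket, as in the good pilot above) and a 30% target. `PilotResult`, `reduction`, and `evaluate` are hypothetical names for illustration, not part of any tool. Following the text, only the first question (does it beat the current approach?) decides whether to move forward; new problems and cost overruns are reported alongside so the team can discuss them.

```python
from dataclasses import dataclass, field


@dataclass
class PilotResult:
    """Hypothetical record of one pilot's Phase 4 measurements."""
    baseline: float          # metric before the pilot (lower is better here)
    pilot: float             # same metric measured during the pilot
    target_reduction: float  # agreed success criterion, e.g. 0.30 for "by 30%"
    expected_cost: float
    actual_cost: float
    new_problems: list[str] = field(default_factory=list)


def reduction(baseline: float, value: float) -> float:
    """Fractional reduction relative to the baseline (0.25 means 25% lower)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - value) / baseline


def evaluate(result: PilotResult) -> dict:
    """Answer the three Phase 4 questions; only the first one gates go/kill."""
    achieved = reduction(result.baseline, result.pilot)
    return {
        # Q1: does it improve the metric we're targeting?
        "achieved_reduction": round(achieved, 3),
        "met_target": achieved >= result.target_reduction,
        # Q2: does it create new problems?
        "new_problems": list(result.new_problems),
        # Q3: does it cost more or less than expected? (positive = over budget)
        "cost_overrun": result.actual_cost - result.expected_cost,
        "decision": "move forward" if achieved > 0 else "kill",
    }


# Illustrative numbers only: 10 minutes per ticket before, 6.8 during the pilot.
ticket_pilot = PilotResult(
    baseline=10.0,
    pilot=6.8,
    target_reduction=0.30,
    expected_cost=20_000,
    actual_cost=23_000,
    new_problems=["billing tickets sometimes misrouted"],
)
print(evaluate(ticket_pilot))
```

With these made-up numbers the pilot cuts the metric by 32%, clears the 30% target, and runs over budget, so the decision is to move forward with the misrouting issue and the overrun on the table for the next conversation.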

Phase 5: Scaling (Week 20 onward)

Now bring in the architecture and process stuff. Documentation. Security reviews. Integration with existing systems. Proper monitoring and alerting.

But you're scaling something that works, not building something that hopefully will work.

This approach feels slow at first. Sixteen weeks for a pilot sounds long when your CEO wanted results in three months.

But it's actually the fast path. Because when you succeed, you know it works and why. You can scale confidently. You can talk about AI projects with credibility.

Companies that skip this and try to go fast end up going slow. They fail at the big project, rebuild the team, try a different approach, and six months later they're back at square one.

Governance That Doesn't Suck

Once you're beyond the pilot phase, you need governance. Not the 47-approval-gate kind. The kind that actually helps.

Good AI governance has four components:

Clear Decision Rights

Who decides which AI projects get funded? Not a committee that argues forever. A clear decision-maker with input from both business and technical sides.

That person should have a simple rubric: What problem are we solving? How big is the problem? What's our confidence in the solution? What resources would it require?

Then they make a decision and move on.

Regular Sync Communication

Every two weeks, the people running AI projects talk to leadership. Not a big presentation. A 30-minute conversation: What did we learn? What's the new plan? What do we need from you?

This prevents the situation where a project disappears into IT for six months and resurfaces with "it's way harder than we thought."

Risk Management

AI projects have specific risks: data bias, model drift, security vulnerabilities, performance degradation over time.

Have a simple process for identifying and monitoring these. Not a 50-page risk document. A living list: What could go wrong? How would we catch it? What's our response?
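A living risk list can be as simple as a shared table. As a hedged sketch, here is one way to keep it in Python, with the three questions above as fields plus a hypothetical `last_reviewed` date so anything not discussed at the two-week sync gets flagged. The class names, the 14-day default, and the example risks are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Risk:
    """One entry in a living risk list (field names are illustrative)."""
    what_could_go_wrong: str
    how_we_catch_it: str
    our_response: str
    last_reviewed: date


@dataclass
class RiskRegister:
    risks: list[Risk] = field(default_factory=list)

    def add(self, risk: Risk) -> None:
        self.risks.append(risk)

    def stale(self, today: date, max_age_days: int = 14) -> list[Risk]:
        """Risks not reviewed within max_age_days (default matches a two-week sync)."""
        cutoff = today - timedelta(days=max_age_days)
        return [r for r in self.risks if r.last_reviewed < cutoff]


# Hypothetical entries for a ticket-categorization pilot.
register = RiskRegister()
register.add(Risk(
    what_could_go_wrong="Model drift: ticket categories shift after a product launch",
    how_we_catch_it="Weekly sample of categorized tickets reviewed by the support lead",
    our_response="Retrain on recent tickets; route low-confidence tickets to humans",
    last_reviewed=date(2025, 1, 6),
))
register.add(Risk(
    what_could_go_wrong="Data bias: some customer segments underrepresented in training data",
    how_we_catch_it="Compare accuracy per segment in each evaluation run",
    our_response="Collect more labeled examples for the weak segment before scaling",
    last_reviewed=date(2025, 1, 20),
))

for r in register.stale(today=date(2025, 1, 27)):
    print("Needs review:", r.what_could_go_wrong)
```

With these example dates, only the drift risk is flagged, because it was last reviewed more than two weeks before January 27. The aim is a list that stays short and gets revisited, not an exhaustive document.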

Success Criteria That Make Sense

Not "AI transformation." Specific metrics: "Reduce customer support response time by 30%." "Identify 20% more churn risk earlier." "Reduce data entry errors by 50%."

Measure against these. Celebrate wins. Learn from misses.

This chart illustrates the phased approach timeline for AI projects, showing key phases from pilot selection to execution. Estimated data based on typical project phases.

The Skills Gap Nobody Mentions

Here's something that doesn't get enough attention: the skills required to actually execute on AI are different from the skills that got you promoted.

Your best engineer might be amazing at building traditional software systems. That doesn't mean they know how to build ML systems. Different problems. Different solutions.

Your business operations expert might be excellent at optimizing existing processes. That doesn't mean they can assess whether AI is the right solution to a problem or just expensive automation.

Your IT operations team might be world-class at keeping systems stable. That doesn't mean they understand how to monitor ML models for drift or bias.

So here's what needs to happen: someone has to become the translator.

Not a full-time ML researcher. Not someone who leaves to work at OpenAI. Someone in your organization who understands enough about AI to make informed decisions, explain technical constraints to non-technical people, and work with your technical team on implementation.

This person needs to:

- Understand what AI can and can't do

- Know how to read research papers without getting lost

- Understand data concepts at a working level

- Know your business well enough to spot real problems

- Be credible with both the business and technical sides

- Actually care about getting this right

Finding this person is hard. Paying them appropriately is harder. But skipping this step is where so many projects fail.

Making the Hard Conversations Happen

None of this works without actually having the hard conversations. The ones where people disagree openly.

Most leadership teams avoid this. It's uncomfortable. Someone might get defensive. Someone might challenge the CEO. It's easier to just let people operate from their own assumptions.

But that's how projects die.

Having good conversations means creating conditions where it's actually safe to disagree. Some practical ways to do this:

Separate the People from the Problem

You're not arguing about whether the CTO is too cautious or the CEO is too aggressive. You're looking at a specific problem together and trying to understand different perspectives on it.

Language matters: "I'm concerned about infrastructure stability" is different from "you're blocking progress." The first is a problem to solve. The second is a personal attack.

Bring in Data

Arguments without data are just opinions. "What percentage of our support tickets could AI actually help with?" is a better question than "AI could transform support."

Spend time on research before jumping to conclusions. Get people thinking about the same facts.

Assign Intellectual Honesty

Ask people to steelman the other side's argument. The CEO explains why IT caution is legitimate. The CTO explains why speed matters.

This prevents people from building strawman versions of opposite positions and arguing against those instead of reality.

Make Decisions and Move On

Talk, gather data, understand perspectives. Then someone makes a decision. Not "let's table this," but "here's what we're doing and why."

This keeps debate from dragging on forever and keeps the work moving.

CEOs often perceive AI projects as simpler and quicker than they are, underestimating resources and risks involved. Estimated data highlights this gap.

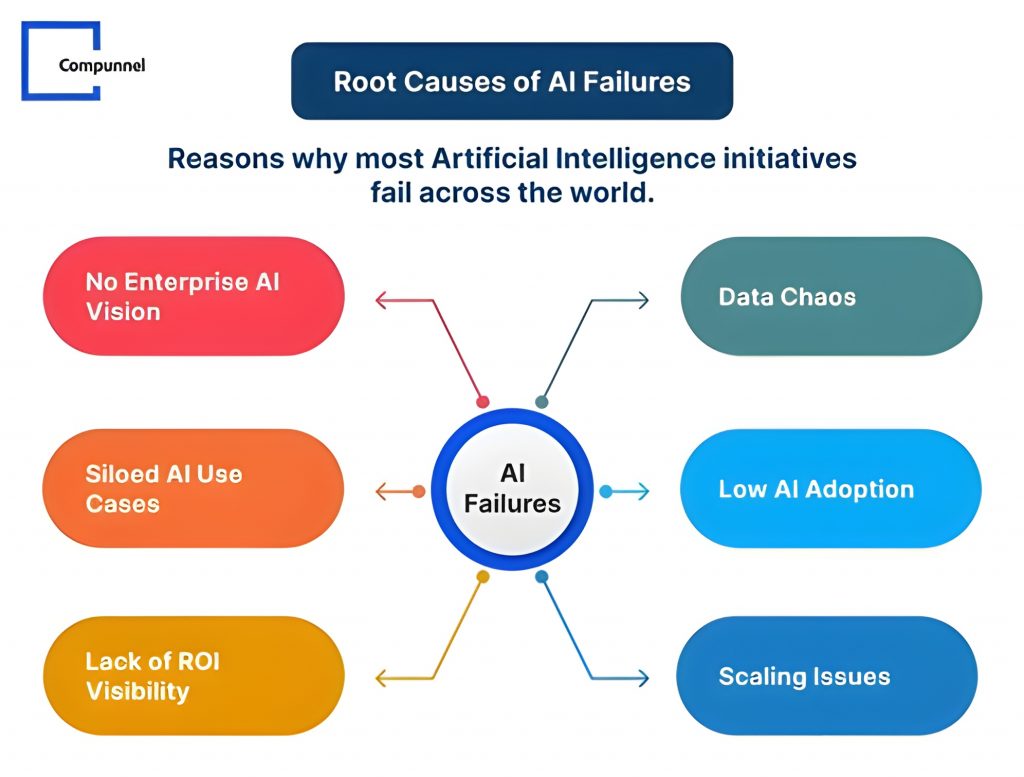

Common Patterns in Failed AI Projects

Over time, you see patterns in how AI projects fail. Understanding these helps you avoid them.

The Vaporware Pattern

Project is announced with great fanfare. Lots of talk about transformation. Then it disappears into IT for six months. When it resurfaces, expectations have changed. The original problem has been partially solved by something simpler. Or the focus has shifted. The project keeps going but no one's convinced it matters.

Cause: Not enough regular communication during development.

The Scope Creep Pattern

Start with a reasonable project: "Improve support ticket categorization." Three months in, someone says "while we're building this, we should also do sentiment analysis, and predict customer satisfaction, and identify upsell opportunities."

Scope explodes. Timeline extends. Complexity increases. Team gets demoralized. Project fails.

Cause: No clear decision-maker saying "no."

The Wrong Team Pattern

Assemble a team of people who are already overloaded. They have their normal jobs, plus "10% of their time" on the AI project. Except 10% of their time means no one's actually working on it.

Or assign people who are good at traditional engineering but have no idea how to build ML systems. They apply their existing playbook, which doesn't work for this problem.

Cause: Treating AI like any other project instead of recognizing it needs different skills.

The No Pilot Pattern

Skip the pilot. Go straight to the big ambitious project. Seems faster. But when things go wrong—and they go wrong—there's no learning, no iteration, no graceful fallback.

Cause: Overconfidence or pressure to show results fast.

The Data Denial Pattern

"We have great data." Then the project starts and it turns out the data is a mess. Fragmented. Inconsistent. Missing values. Biased. Cleaning it would take longer than originally planned.

Project timeline shifts. Team gets frustrated. Quality drops.

Cause: Not actually looking at data before committing.

Recognizing these patterns early helps you stop them before they become fatal.

Building a Culture That Actually Embraces AI

There's something deeper than alignment that matters: organizational culture around technology adoption.

Some organizations treat new technology like a threat. "This might make our jobs obsolete. Better resist it."

Other organizations treat new technology like a religion. "This is the future. We must adopt it or die."

Neither approach produces good outcomes.

The organizations that actually succeed with AI have a different culture. They're genuinely curious. They see AI as a tool that could help, but they don't assume it's the answer to everything. They're willing to experiment and learn.

They also create psychological safety around failure. If a pilot doesn't work, that's data, not a disaster. If someone raises a concern about an approach, they get heard, not shut down.

Building this culture is leadership work. It means:

- Being visibly curious about AI yourself

- Asking good questions, not pretending to know

- Creating space for people to say "this won't work" without career risk

- Celebrating smart failures (projects that fail and teach something)

- Investing in learning for people at all levels

- Making decisions about AI based on evidence, not hype

The Competitive Reality

Let's be clear about what's at stake: companies that figure out how to actually execute on AI will have a competitive advantage. Maybe not today. But in five years, probably yes.

Companies that can't figure it out—that stay misaligned and process-bound and stuck arguing about whether to move forward—will fall behind.

This isn't theory. You're already seeing it. AI-native startups are doing in six months what takes traditional companies two years.

The good news: being AI-native isn't about having the smartest people or the biggest budget. It's about alignment, focus, and execution.

Mid-market companies actually have an advantage here. You're small enough to move fast. You're big enough to have real problems to solve. You don't have layers of bureaucracy slowing everything down.

But only if you actually align.

Moving from Theory to Action

So here's what needs to happen in the next 30 days:

Week 1: Have the alignment conversation

CEO and CTO sit down for two hours. Bring a whiteboard. Not a formal meeting. Not a presentation. A conversation.

Where do we agree? Where do we disagree? What's our shared understanding of what AI actually requires? What's our shared understanding of what matters most?

Document it. Even if it's messy.

Week 2: Identify the first pilot project

Not the most important problem. The right problem. High impact, but actually solvable, with the team you have, in the timeline you have.

Write down the success criteria. Be specific. "Improve support efficiency by 20%" is specific. "Transform the support function" is not.

Week 3: Assess data readiness

Actually look at the data for that pilot. Don't assume it's ready. Look at it. Be honest about what needs to happen to make it usable.

If data prep will take longer than the entire project, maybe it's not the right pilot.

Week 4: Assemble the team

Three people: one person who knows the business problem, one engineer, one data person. Give them explicit permission to ignore normal process. Their job is to learn if this can work.

This isn't a huge commitment. It's a learning investment.

After four weeks, you'll know more than most companies do about whether AI makes sense for this specific problem.

That's the starting point.

What Success Actually Looks Like

When alignment is real, you notice it.

Projects move faster not because people are working harder, but because they're not working against each other. Decisions get made and people commit to them. Problems surface early because everyone's talking about them.

When pilots succeed, teams have real evidence to point to. "We reduced support ticket response time by 32%. Here's the data." That creates credibility and momentum for the next project.

When pilots fail, teams learn from them. "We thought sentiment analysis would help, but it didn't change behavior. Here's why." That's valuable. That informs the next attempt.

Over time, you build a track record. One successful project. Two. Three. Your team learns. Your processes improve. Your competitive advantage grows.

Companies that do this well can end up with AI deeply integrated into their operations within 18-24 months. Not as a separate initiative. As part of how they work.

That's the goal.

The Real Cost of Not Doing This

What's the cost of staying misaligned?

In the short term: wasted money and time. Failed projects. Demoralized teams.

In the medium term: loss of competitive advantage. Your competitors figure it out. They move faster. They serve customers better.

In the long term: irrelevance. In five years, AI will be as normal as cloud infrastructure is today. Companies that didn't figure it out will have to catch up. That's expensive and painful.

Companies that did figure it out will be so far ahead it won't be close.

So the real cost of misalignment isn't the projects that fail today. It's the competitive ground you lose over the next five years.

Conclusion: From Ambition to Action

The gap between CEOs and IT leaders around AI is real. It's not a character flaw on either side. It's structural.

CEOs see opportunity and urgency. IT leaders see complexity and risk. Both perspectives matter. Both are true.

But when leadership teams operate from separate mental models, nothing good happens.

What actually works is committing to a shared frame. Not a frame where IT says "yes, we'll do whatever you want." Not a frame where the CEO says "okay, we'll move at the pace you want." A frame where you're actually solving the same problem together.

That means having hard conversations. It means looking at data instead of assumptions. It means moving in phases instead of leaping. It means accepting some constraints while still moving with urgency.

It's not complicated. But it is rare.

The companies that figure this out will end up building real, sustainable AI capabilities. The ones that don't will end up with a graveyard of failed projects and expensive lessons.

Which one do you want to be?

Start with one conversation. Start with one pilot. Start with real alignment. Everything else follows from there.

For teams looking to accelerate AI execution without the complexity of custom implementations, Runable offers a different approach. It provides AI-powered automation tools for creating presentations, documents, reports, images, and videos—eliminating entire categories of manual work. At $9/month, it's a practical way to start realizing AI value immediately without the overhead of massive infrastructure investments or custom model training. For teams stuck between ambition and execution, sometimes a simpler tool beats a complex project.

FAQ

What is the AI alignment gap between business and technical leaders?

The alignment gap is the mismatch between how CEOs and IT leaders think about AI projects. CEOs focus on business transformation and competitive advantage, receiving advice about moving fast and capturing opportunity. IT leaders focus on infrastructure stability and risk management, receiving advice about technical best practices and cautious implementation. Without shared context about what AI actually requires, these different perspectives create conflict that stalls projects.

Why do most enterprise AI projects fail?

By some estimates, around 70% of enterprise AI projects fail or significantly underperform, most often because leadership teams lack alignment on goals, success criteria, and realistic timelines. The failure usually isn't about technology. It's about organizations not creating a shared understanding of what they're actually building, why it matters, and what it requires. When CEOs and IT leaders operate from different mental models, projects get caught between competing priorities.

How can mid-market companies bridge the alignment gap?

Mid-market companies should start with a structured conversation between CEO and CTO to identify shared understanding of what AI requires and what success looks like. Then identify a pilot project that's high-impact but low-friction, assess data readiness honestly, assemble a small focused team, and measure results clearly. Regular communication during execution prevents the six-month disappearance act that creates misunderstanding and conflict.

What makes a good AI pilot project?

A good pilot solves a real, measurable problem but isn't so big that failure is catastrophic. "Reduce support ticket categorization time by 30%" is better than "transform the customer experience." The pilot should have clear success criteria, a realistic 8-12 week timeline, data of usable quality, and low impact on other operations. Good pilots teach what works and what doesn't without a massive resource commitment.

What role does data quality play in AI project success?

Data quality is often the determining factor in whether an AI project succeeds or fails. Messy, incomplete, or biased data undermines everything downstream. Many projects fail because organizations assume data is ready and move fast, then discover six weeks in that data prep will take months. Assessing actual data state before committing to a project prevents timeline surprises and failure.

How should companies measure AI project success?

Success should be specific and measurable, not vague. Instead of "AI transformation," define outcomes like "reduce response time by 25%," "identify churn risk 30 days earlier," or "reduce error rate by 40%." Measure actual results against these criteria. Celebrate wins, learn from failures, and use evidence to decide which problems to tackle next. Specific metrics create alignment and credibility.

What governance structure actually works for AI projects?

Effective AI governance is lightweight and decision-oriented, not bureaucratic. It includes: clear decision-makers who evaluate projects against simple rubrics, regular two-week syncs that surface problems early, active risk management for AI-specific issues like bias and model drift, and meaningful success metrics. The goal is enabling speed while preventing catastrophic failures.

How important is the "AI translator" role in organizations?

The AI translator—someone who understands both technical constraints and business needs—is critical for success. This person explains why infrastructure matters to business leaders, explains why business context matters to engineers, and makes informed decisions about where AI can help. This role prevents the misunderstanding and miscommunication that kills projects. Often the best translator is someone promoted from within your organization who's always been curious about technology.

Why do companies often skip the pilot phase?

Skipping pilots usually stems from pressure to show results fast or from overconfidence that "this time it'll be different." In reality, pilots are the fastest path forward because they test assumptions, surface problems early, and build credibility for scaling. Companies that skip pilots end up failing at bigger projects, rebuilding teams, and relearning lessons they could have learned cheaply in a 12-week pilot.

How can organizations build a culture that supports AI adoption?

Culture change requires leadership to be visibly curious about AI, ask good questions without pretending to know answers, create psychological safety around smart failures, invest in learning for people at all levels, and make decisions based on evidence not hype. Monthly learning sessions, celebrating failed projects that teach something valuable, and promoting people who raise legitimate concerns all contribute to a culture where AI can actually succeed.

Key Takeaways

- AI project failure is primarily caused by alignment gaps between CEOs and IT leaders, not technology limitations

- CEOs and IT leaders receive different advice from different sources, creating separate mental models that prevent collaboration

- Mid-market companies face amplified alignment challenges due to small teams and direct reporting lines

- The phased approach (pilot → assess → execute → measure → scale) succeeds where big-bang projects fail

- An 'AI translator' role is critical for bridging business and technical perspectives in organizations

- Specific, measurable success criteria and lightweight governance prevent most common failure patterns

- Data quality assessment is often the deciding factor in whether AI projects succeed or fail

- Building organizational culture that supports AI adoption is as important as technical execution
