Gemini on Google TV: AI Video and Image Generation Comes Home

You're sitting on your couch, watching TV, and you casually say, "Create a video of my dog running through the park as a superhero." Thirty seconds later, it's playing on your screen. No switching devices, no opening an app on your phone, no transferring files. This isn't science fiction anymore. It's what Google is rolling out to living rooms right now.

For years, AI content generation has lived in apps and websites. You'd pull out your phone, open an app, wait for processing, and then transfer files to your TV if you wanted to see anything bigger than 6 inches. That workflow is clunky, and it breaks the living room experience. Google understands this. At CES 2026, the company announced something genuinely different: Gemini is coming to Google TV with full image and video generation capabilities built directly into the platform.

This is bigger than it sounds at first. We're not talking about adding a chatbot to your smart TV (though that's happening too). We're talking about bringing generative AI workflows directly into the space where you actually consume media. Your TV becomes a creative tool, not just a passive display. Your Google Photos library can be remixed with AI. You can generate entire videos from prompts. You can ask follow-up questions and get contextually rich responses displayed across your entire screen.

The implications ripple across three major domains: how we create content at home, how we interact with entertainment systems, and how deeply integrated AI becomes in everyday technology. Let's break down what's actually happening, why it matters, and what the real constraints are.

TL;DR

- Gemini on Google TV includes two powerful AI models: Nano Banana for image generation and Veo for video generation, running on compatible TVs with cloud processing as a fallback when local hardware falls short

- Photo remixing and video creation connect directly to your Google Photos library, letting you transform stored memories into new content without manually downloading or uploading files

- Voice-first interaction lets you command the TV to generate content, adjust settings, or get information—all through natural conversation

- The rollout starts with TCL smart TVs, then expands to other devices such as the Google TV Streamer in the coming months

- Requires Android 14 or higher, meaning older TVs and streaming boxes won't support these features immediately

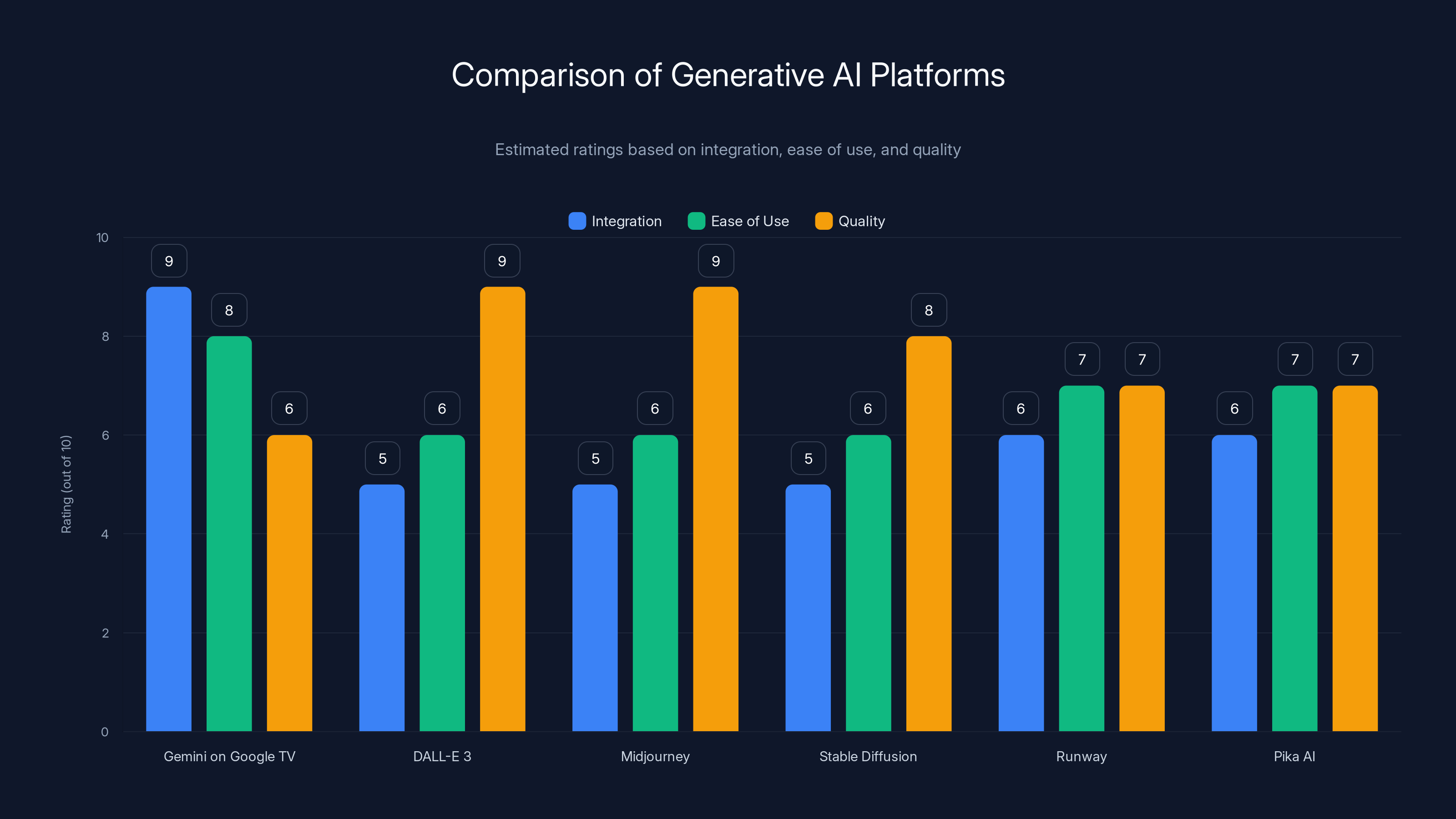

Gemini on Google TV excels in integration and ease of use, making it ideal for casual home use, while DALL-E 3 and Midjourney lead in quality for professional work. Estimated data based on platform characteristics.

Understanding the Gemini Expansion on Google TV

Google's TV strategy has evolved considerably. The company didn't invent the smart TV platform—that emerged from a combination of TV manufacturers' needs and content providers' demands. But Google TV has become increasingly central to how millions of people watch content. It's the operating system running on TCL TVs, Sony TVs, and Google's own TV Streamer. It powers the interface connecting you to Netflix, YouTube, Disney+, and everything else.

For years, Google TV was primarily a discovery and playback platform. It learned what you watched, recommended what to watch next, and gave you a unified interface across apps. The AI involved was mostly machine learning—collaborative filtering, recommendation algorithms, content ranking. Nothing generative. Nothing creative.

The shift toward generative AI on TVs started with Google's TV Streamer device last fall, which introduced basic Gemini capabilities. But that version was limited. It could answer questions and provide information, but it couldn't generate images or videos. It wasn't a creative tool.

This expansion changes that fundamentally. Google is bringing two of its most capable generative models directly to your TV. This isn't simply a web version of Gemini streamed to your screen: the aim is model inference on your local hardware where possible, with the cloud as a fallback. That's a technical achievement worth understanding.

The Two Models: Nano Banana and Veo Explained

Google has more than one image and video generation model, with versions designed for different purposes. Understanding the difference matters because it explains what you can actually do with Gemini on your TV.

Nano Banana: Fast, Local Image Generation

Nano Banana is Google's efficient image generation model. The name sounds silly (and it kind of is), but the underlying concept is serious. This is a smaller, optimized version of larger image generation models. It's designed to run quickly on consumer hardware without requiring a data center's worth of GPU resources.

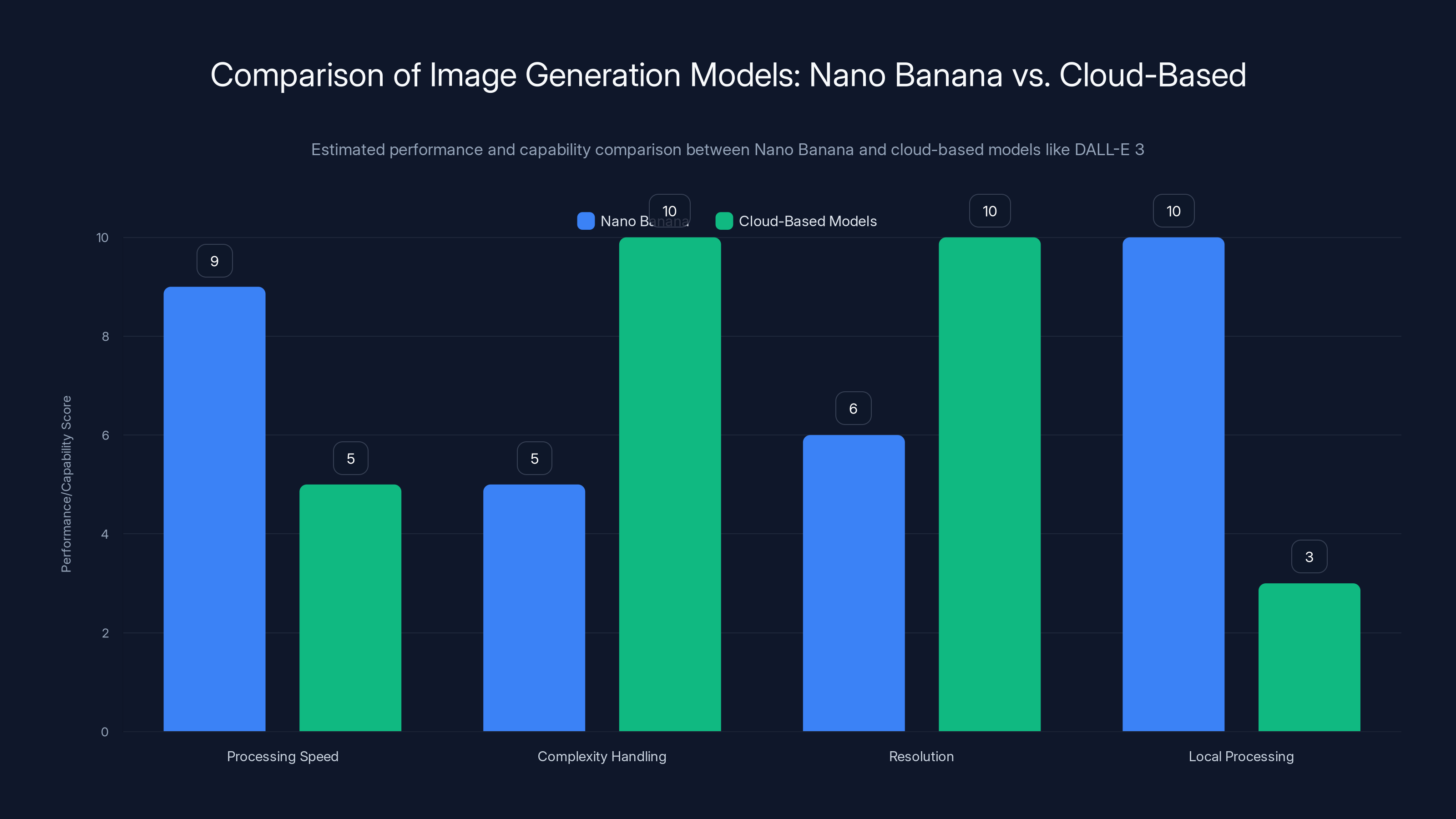

What does "efficient" mean in practice? It means you can generate an image in seconds, not minutes. Traditional image generation models like those powering DALL-E 3 or Midjourney require significant computational power. You're sending your prompt to a server farm, waiting in a queue, and checking back for results. With Nano Banana on your TV, the processing happens locally. You describe what you want, and it appears on your screen.

The trade-off? Local models are typically less capable than their cloud-based equivalents. They can't handle extremely complex prompts with dozens of specific requirements. They have limitations on resolution and detail. But for everyday use cases—generating a poster, creating a scene variation, remixing your photo collection—they work well enough. And honestly, the latency reduction alone makes them worth the capability trade-off for casual home use.

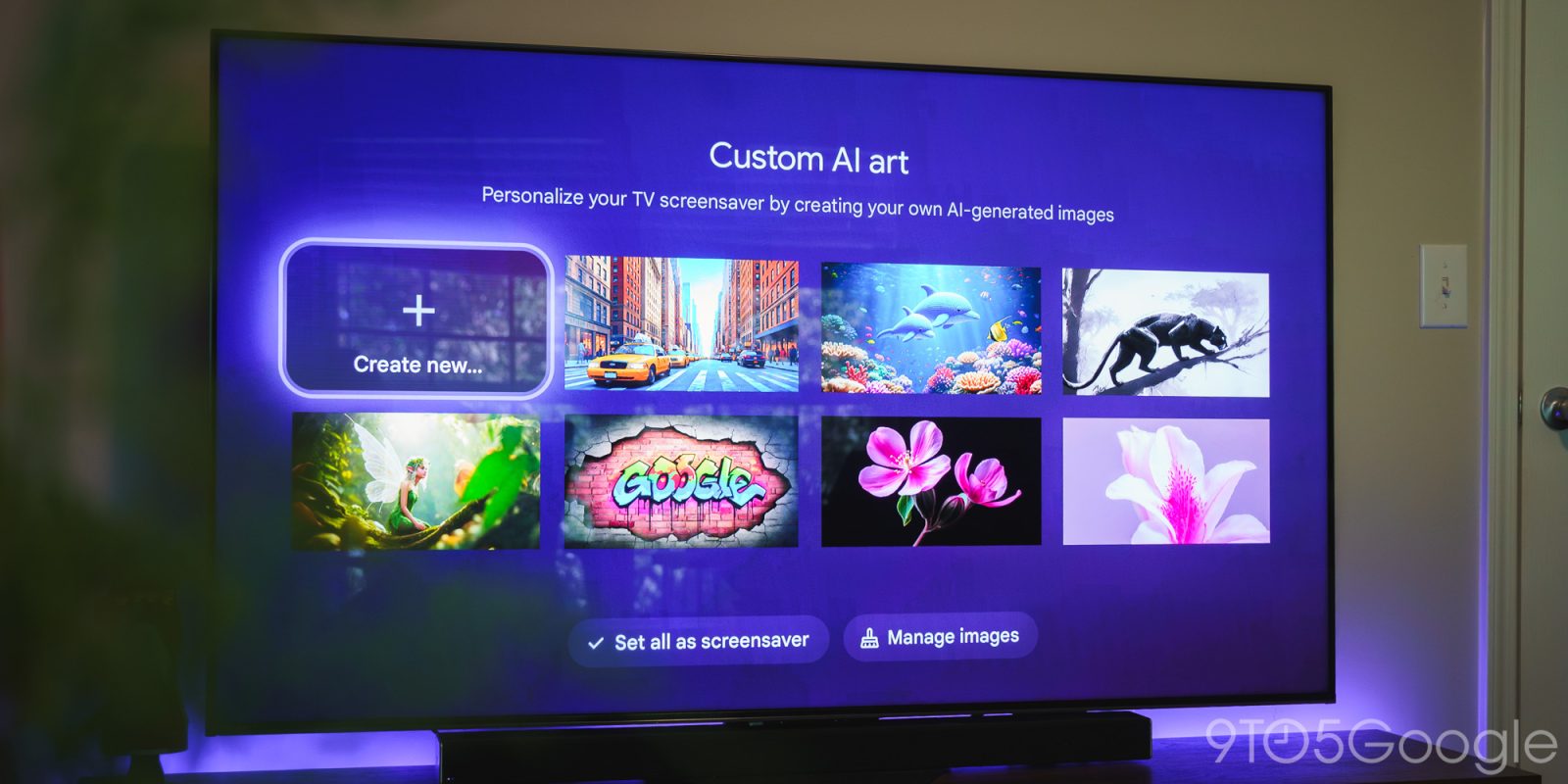

Nano Banana's role on Google TV is specifically for image generation. You can use it to create new images from scratch, or more interestingly, to remix existing photos from your Google Photos library. Let's say you have a family photo from vacation. Instead of just keeping it as is, you can ask Nano Banana to reimagine the scene in different styles. "Make it look like a watercolor painting." "Put us in a different location." "Render it as a fantasy epic." The model processes your request and generates variations.

Veo: Video Generation at Home

Veo is Google's more powerful video generation model. When Google introduced Veo in 2024, the improvement over previous video generation systems was noticeable. Earlier models produced short clips with visible artifacts. Veo produced clips that looked more natural, with better motion coherence and higher visual quality. It was a meaningful leap forward.

Bringing Veo to Google TV means you can generate short video clips directly from your TV. This is extraordinary from a UX perspective. Imagine describing a scene, and 30 seconds later, it's playing on your 65-inch display. That's the vision Google is pushing.

The practical implementation works like this: you use voice commands or text prompts to describe what you want to see. Veo processes the request and generates a short video clip, measured in seconds rather than minutes. The clip appears directly on your TV screen. You can watch it, save it to your library, or generate variations.

Veo is also being integrated with photo remixing. You can take a still image from your Google Photos library and ask Veo to turn it into a video. That photo of your dog sitting perfectly still becomes a short video of your dog running through a field. That sunset photo becomes a time-lapse. This is where the real creative potential emerges.

The difference in compute demands between Nano Banana and Veo is significant. Video generation is substantially more compute-intensive than image generation, because the model has to produce many coherent frames instead of a single image. That is why Google is being strategic about which devices get which features first.

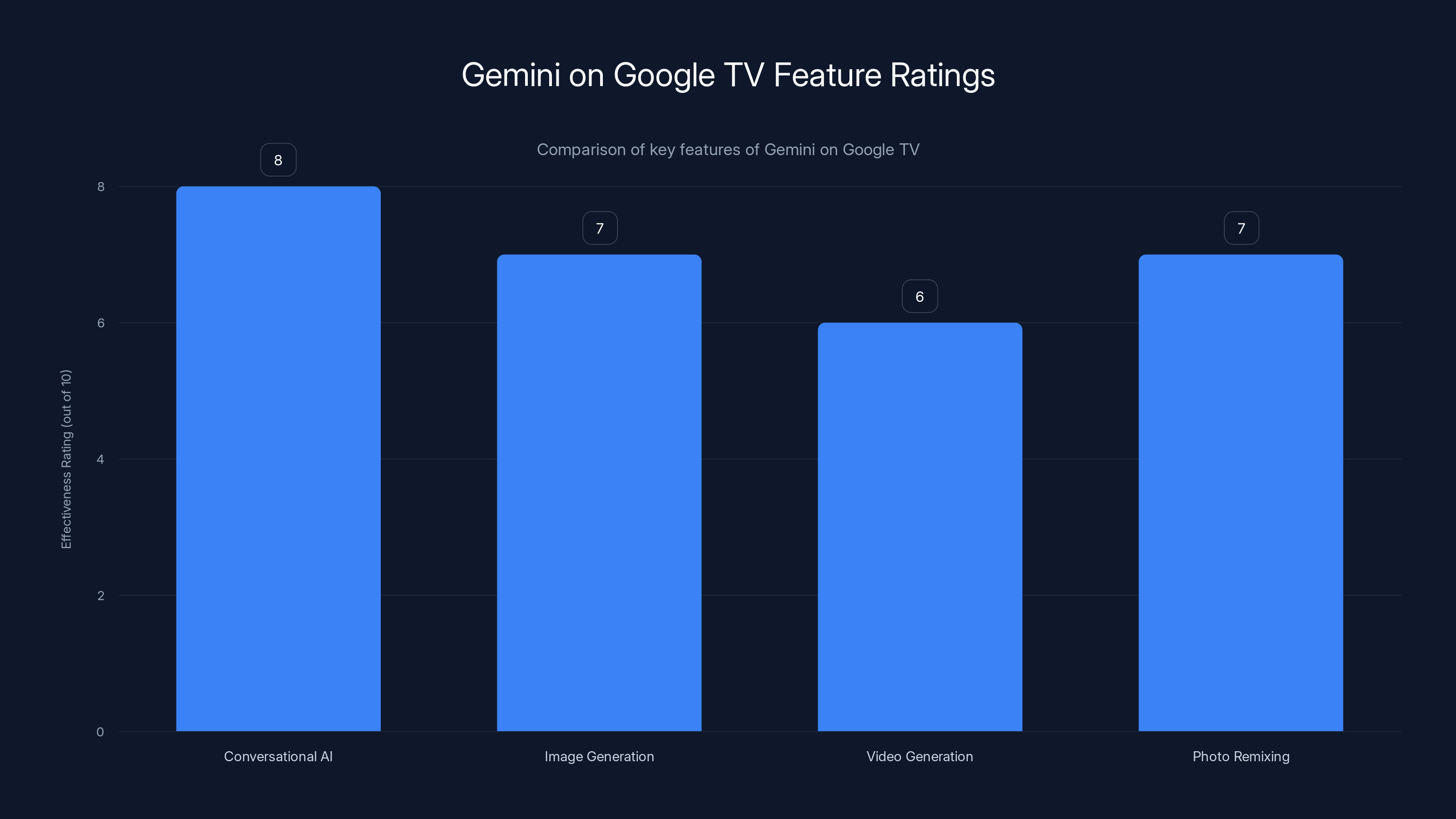

Gemini on Google TV offers a range of AI-driven features with conversational AI rated highest in effectiveness. Estimated data based on typical feature performance.

How Photo Remixing and Content Generation Actually Works

The feature that's getting the most attention is photo remixing, specifically because it connects to something people actually have: photos. Most of us have years of photos sitting in cloud storage. They're memories, but they're static. Google is now offering a way to transform those memories using AI.

Here's the technical architecture: when you enable Gemini on your Google TV, you grant it permission to access your Google Photos library. You don't move or re-upload anything yourself. Your photos already live in Google Photos, and the Gemini system on your TV retrieves them through your Google account. When you ask it to remix or modify a photo, the processing happens on your TV hardware where possible, or in the cloud if the local hardware can't handle the computation.

The workflow is straightforward. You're watching TV, you open Gemini on your TV (through voice or the interface), and you say something like: "Take the beach photo from March and make it a painting in the style of Van Gogh." Gemini retrieves that specific photo from your Google Photos library, feeds it into Nano Banana along with your style preferences, and generates a new image. That new image appears on your TV. You can save it, share it, or delete it. Your original photo is untouched.
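
The non-destructive flow described above (find the photo, run it through the image model, return a new image) can be sketched in a few lines of Python. This is a hypothetical illustration, not Google's code: `Photo`, `find_photo`, `fake_image_model`, and `remix` are invented names, and the "model" is a placeholder that just tags the bytes with the requested style.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Photo:
    """Hypothetical library entry; `pixels` stands in for real image data."""
    photo_id: str
    taken: date
    tags: frozenset[str]
    pixels: bytes


def find_photo(library: list[Photo], tag: str, month: int) -> Optional[Photo]:
    """Return the first photo carrying `tag` that was taken in `month` (1-12)."""
    for photo in library:
        if tag in photo.tags and photo.taken.month == month:
            return photo
    return None


def fake_image_model(pixels: bytes, style: str) -> bytes:
    """Placeholder for an image model: appends the style instead of repainting."""
    return pixels + b"|" + style.encode("utf-8")


def remix(library: list[Photo], tag: str, month: int, style: str) -> Photo:
    """Create a styled copy of a matching photo; the source photo is never changed."""
    source = find_photo(library, tag, month)
    if source is None:
        raise LookupError(f"no {tag!r} photo from month {month}")
    new_pixels = fake_image_model(source.pixels, style)
    # dataclasses.replace builds a new frozen instance, leaving `source` intact.
    return replace(source, photo_id=f"{source.photo_id}-remix", pixels=new_pixels)


library = [
    Photo("p1", date(2024, 3, 14), frozenset({"beach"}), b"raw-beach"),
    Photo("p2", date(2024, 7, 2), frozenset({"beach", "sunset"}), b"raw-sunset"),
]
painting = remix(library, "beach", 3, "Van Gogh")
print(painting.photo_id, painting.pixels)
```

Because `Photo` is frozen and `remix` returns a copy, the original library entry can't be modified by accident, which mirrors the "your original photo is untouched" behavior.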

This differs fundamentally from how photo editing has traditionally worked. You've always needed to download a file, open an editing app, make changes, and save. Now the file stays in the cloud, the processing happens on your TV, and you get results on the same device where you're having the conversation.

The more ambitious use case involves video generation. You take a still photo and ask Veo to animate it. A photo of a snowy landscape becomes a video with snow gently falling. A portrait becomes a short clip of that person blinking or smiling. This is where the technology gets genuinely impressive, but also where the limitations become visible. Video generation from still images requires the AI to invent motion that never existed. The results can look unnatural if the prompt isn't specific enough.

Google is also adding what it calls "Dive Deeper" functionality. When Gemini responds to a request, you can ask follow-up questions or request variations. This creates an interactive loop. "Generate a sunset video" is followed by "Make it more dramatic," "Add some birds in the sky," or "Make the colors more vibrant." Each request refines the output.

Voice-First Interface and Natural Interaction

One of the most significant changes to Google TV is how you interact with it. Traditional smart TV interfaces involve remote controls with directional pads, sometimes voice buttons for searching. You navigate menus, click on things, select options. It's functional but not particularly efficient for creative tasks.

Gemini on Google TV is designed to be voice-first. You don't navigate menus to generate an image. You speak a command. "Create an image of a dragon flying over mountains." "Turn my beach photo into a video." "Make it more colorful." "Generate something completely different." The interface understands context. If you ask for variations, it knows what "it" refers to. If you ask to make something "more dramatic," it understands you're referring to the last generated content.
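
One simple way to picture how "it" gets resolved is a session that remembers the last thing it generated and treats follow-ups as refinements of that output. The sketch below is a toy under stated assumptions: `Session`, `Generation`, and the cue-word list are hypothetical, and a real assistant would rely on the language model itself, not prefix matching, to decide whether a request is a follow-up.

```python
from dataclasses import dataclass, field

# Hypothetical cue words that mark a request as a refinement of the last output.
FOLLOW_UP_CUES = ("make it", "add ", "more ", "less ", "slower", "faster")


def is_follow_up(utterance: str) -> bool:
    """Crude stand-in for intent detection: check how the request starts."""
    return utterance.lower().startswith(FOLLOW_UP_CUES)


@dataclass
class Generation:
    kind: str    # "image" or "video"
    prompt: str  # accumulated prompt sent to the model


@dataclass
class Session:
    history: list[Generation] = field(default_factory=list)

    def request(self, utterance: str) -> Generation:
        last = self.history[-1] if self.history else None
        if last is not None and is_follow_up(utterance):
            # "it" refers to the last output: keep its kind, extend its prompt.
            gen = Generation(last.kind, f"{last.prompt}; {utterance}")
        else:
            kind = "video" if "video" in utterance.lower() else "image"
            gen = Generation(kind, utterance)
        self.history.append(gen)
        return gen


session = Session()
session.request("Generate a sunset video")
refined = session.request("Make it more dramatic")
print(refined.kind, "->", refined.prompt)
```

Accumulating the prompt is the simplest design that keeps context across turns; a production system would more likely pass the previous output and the conversation history to the model.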

Google claims to have built a "visually rich framework" for Gemini's responses on TV. This means the outputs are optimized for large screens. When you ask Gemini a question on your phone, you get text. When you ask it on your TV, you get a visually rich response with images, videos, and interactive elements. If you ask "What are the best restaurants in San Francisco," instead of just getting text, you might get images of restaurants, ratings, location maps, and options to "Dive Deeper" into specific cuisines or neighborhoods.

This voice-first interaction also extends to system control. You can say "the dialogue is too quiet" and Gemini will adjust your TV's audio settings. "Make the picture brighter" triggers brightness adjustments. "I want to watch comedy" brings up comedy recommendations. The TV is listening to your conversational requests and acting on them. This is more natural than reaching for a remote and navigating menus.
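
A toy version of turning a conversational complaint into a settings change is a lookup from keywords to a setting and an adjustment, clamped to that setting's range. Everything here is hypothetical (`TvSettings`, the 0-10 and 0-100 scales, the keyword table); Gemini interprets requests with a language model rather than a fixed table, so treat this only as a picture of the final "intent to setting" step.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TvSettings:
    dialogue_boost: int = 0  # hypothetical 0-10 scale
    brightness: int = 50     # hypothetical 0-100 scale


# Hypothetical intent table: (keywords that must all appear, setting, change).
# "dialog" also matches "dialogue", so one entry covers both spellings.
INTENTS = [
    (("dialog", "too quiet"), "dialogue_boost", 2),
    (("picture", "brighter"), "brightness", 10),
]
LIMITS = {"dialogue_boost": (0, 10), "brightness": (0, 100)}


def apply_command(settings: TvSettings, utterance: str) -> Optional[str]:
    """Adjust the first matching setting and return its name, or None if no match."""
    text = utterance.lower()
    for keywords, attr, delta in INTENTS:
        if all(k in text for k in keywords):
            low, high = LIMITS[attr]
            new_value = min(high, max(low, getattr(settings, attr) + delta))
            setattr(settings, attr, new_value)
            return attr
    return None  # not a settings request; hand off to search or recommendations


tv = TvSettings()
apply_command(tv, "The dialogue is too quiet")
apply_command(tv, "Make the picture brighter")
print(tv)
```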

The privacy and security implications are worth considering. Your TV is listening for commands, understanding context, and accessing your Google Photos library. Google says this processing happens on-device when possible, with encrypted communication to Google's servers for more complex tasks. But it's still worth being aware of what data is flowing where. If you're uncomfortable with voice commands, you can also type requests with a physical or on-screen keyboard.

Technical Requirements and Device Compatibility

Not every TV will get these features immediately, and that's actually important to understand. Google TV might be on millions of TVs, but the hardware requirements for running image and video generation models locally are substantial.

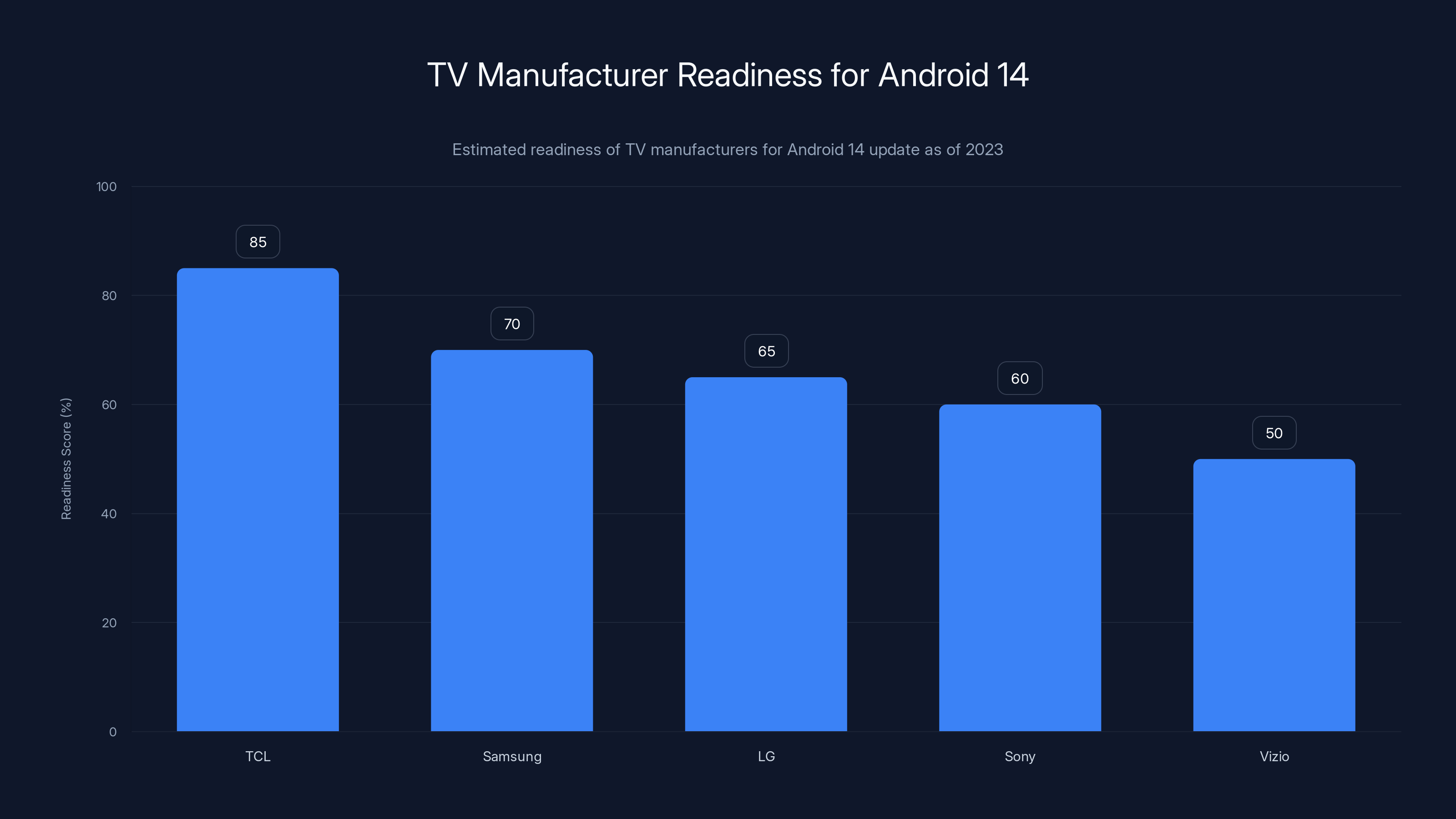

The baseline requirement is Android 14 or higher for the TV's operating system. This excludes many older TVs and any device stuck on an earlier Android version, because not every TV manufacturer has pushed Android 14 updates to its devices. TCL is leading the charge with these features, but broader adoption requires other manufacturers to update their TVs.

Beyond the OS requirement, there's the hardware itself. Running Nano Banana and Veo requires significant GPU resources. Not every TV's processor is powerful enough. Some TVs use simpler processors designed to save power and cost. Streaming video playback doesn't require much processing. Generating images and video does.

Google's strategy here is to start with newer, more powerful TVs from partners like TCL, then expand the feature set gradually. The Google TV Streamer device (Google's own hardware) will get these features, but not immediately. Google says most devices will receive them "in a few months." That's not specific, but it suggests they're testing the features first, optimizing performance, and planning a staged rollout.
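
The gating described in this section (an Android 14 floor plus a staged, partner-first rollout) amounts to two checks. The sketch below is hypothetical: the `Device` type and the wave table are invented for illustration, and only the mapping of Android 14 to API level 34 is a real Android fact.

```python
from dataclasses import dataclass

ANDROID_14_API_LEVEL = 34  # Android 14 is API level 34

# Hypothetical rollout waves, mirroring "TCL first, other devices in a few months".
ROLLOUT_WAVES = [
    {"TCL"},
    {"TCL", "Google TV Streamer"},
]


@dataclass
class Device:
    family: str
    api_level: int


def is_eligible(device: Device, current_wave: int) -> bool:
    """True if the device meets the OS floor and its family is enabled in this wave."""
    if device.api_level < ANDROID_14_API_LEVEL:
        return False  # older OS: excluded no matter which wave is active
    wave = ROLLOUT_WAVES[min(current_wave, len(ROLLOUT_WAVES) - 1)]
    return device.family in wave


streamer = Device("Google TV Streamer", api_level=34)
print(is_eligible(streamer, current_wave=0), is_eligible(streamer, current_wave=1))
```

Checking the OS floor first reflects the point that no rollout wave can help a TV that never receives the Android 14 update.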

This staggered approach is actually smart. Launching features on flagship hardware first, proving they work, gathering user feedback, and then expanding prevents the entire ecosystem from experiencing bugs or poor performance. It's more cautious than shipping a feature to every device at once, but it makes sense for resource-intensive features.

The requirements also explain why cloud processing will still be involved for some operations. If your TV doesn't have sufficient local hardware, complex prompts might be processed in Google's data centers with results sent back to your TV. This maintains the responsive experience while falling back to cloud resources when needed.
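
The fallback logic in the paragraph above can be expressed as a small routing decision: run locally when the TV can handle the task, otherwise send it to the cloud. This is a hypothetical policy, not Google's: `Hardware`, `choose_backend`, and the 40-word "complexity" cutoff are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    can_run_image_model: bool
    can_run_video_model: bool


def choose_backend(task: str, prompt: str, hw: Hardware, max_local_words: int = 40) -> str:
    """Return "local" or "cloud" for a generation request (hypothetical policy)."""
    if task == "image":
        local_ok = hw.can_run_image_model
    elif task == "video":
        local_ok = hw.can_run_video_model
    else:
        raise ValueError(f"unknown task: {task!r}")
    # Crude proxy for a "complex" prompt: its length in words.
    too_complex = len(prompt.split()) > max_local_words
    return "local" if local_ok and not too_complex else "cloud"


tv = Hardware(can_run_image_model=True, can_run_video_model=False)
print(choose_backend("image", "a dragon flying over mountains", tv))  # local
print(choose_backend("video", "waves crashing on a rocky beach", tv))  # cloud
```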

Nano Banana excels in local processing and speed, making it ideal for quick, everyday tasks, while cloud-based models offer superior complexity handling and resolution. Estimated data.

Photo Remixing: Transforming Your Digital Archive

Photo remixing deserves its own deep dive because it's the feature most people will actually use, even if they never generate a video from scratch.

Most of us have thousands of photos. We take pictures constantly. Smartphones make it effortless. Google Photos backs them up automatically. But once they're in the cloud, we rarely interact with them. We might scroll through memories, export a few for printing, maybe share some with family. But we're not actively creating with them.

Photo remixing changes this dynamic. It transforms your photo library from a storage problem into a creative resource. Say you have a photo of your house. With photo remixing, you can ask Gemini to:

- "Show what this house would look like with a different color of exterior paint"

- "Reimagine this as a fairytale castle"

- "Make it look like it's in a different season"

- "Render it as an oil painting"

- "Show what this location would look like in 100 years"

Each request generates a variation without modifying the original. You're essentially asking the AI to answer "what if" questions about your photos. This is genuinely useful for more than just entertainment. Homeowners considering renovations can preview paint colors. Parents can create fantasy versions of family photos. Artists can use it for inspiration.

The implementation connects Google Photos directly to Nano Banana. When you select a photo in Google Photos while Gemini is running, you get remix options. You can also search your library from within Gemini. "Show me that beach photo from last summer, and make it snowy." Gemini retrieves the photo and applies the transformation.

This feature highlights an important shift in how we think about AI. It's not just for generating completely new content. It's for interacting with content you already have. It's a way to explore variations and possibilities without losing the original. The creative potential here extends far beyond casual fun. Photographers could use it to explore editing directions before actually editing. Content creators could generate variations of images for social media. Teachers could remix educational photos for different contexts.

Video Generation: From Static to Motion

Image remixing is one thing. Video generation is another level entirely.

Veo can generate short video clips from prompts. "A cat jumping off a table." "Waves crashing on a rocky beach." "A person dancing in a nightclub." "A spaceship launching into orbit." The model takes your description and synthesizes a short video. Results vary with how specific the prompt is, and clip lengths are measured in seconds, not minutes.

But the more interesting implementation on Google TV is animating still images. Take a photo from your Google Photos library and have Veo transform it into motion. The AI needs to infer what motion would look natural. A photo of trees becomes a short clip with branches swaying. A photo of a beach becomes waves moving. A portrait becomes a person blinking or smiling.

This is technically harder than generating video from scratch because the AI must respect what's already in the image while adding believable motion. A person's face needs to move naturally. Water needs to flow realistically. The lighting and shadows need to remain consistent. This is where Veo's improvements matter. Earlier video generation models would produce visible artifacts and unnatural motion. Veo handles this more smoothly.

The practical use cases are interesting. You could create video slideshows from your photo library. "Turn my travel photos into a video montage." You could create short animated clips for social media without needing video editing software. You could bring old family photos to life. You could generate reference videos for artists and animators. The range of applications expands as the technology improves.

Google is also building in refinement loops. Generate a video, and if you don't like it, you can ask for variations. "Make it slower." "Add more movement." "Make it more dramatic." Each request generates a new clip with your requested adjustments. This interactive loop is essential because you rarely get exactly what you want on the first try.

The limitation you'll hit immediately: these are short clips. Veo isn't generating movies; it's generating clips that last seconds, not minutes. This is suitable for social media, highlight reels, and creative projects, but not for longer-form content. The computational requirements to generate longer videos are substantial, and there's also a creative challenge: maintaining narrative coherence over longer durations is harder for AI than generating short clips.

Conversational AI as a Smart TV Assistant

Beyond content generation, Gemini on Google TV includes a full conversational AI assistant. This extends beyond just creative tasks into general TV use.

You can ask Gemini things you'd normally search for online. "What's happening in sports today?" "What should I watch?" "Tell me about this actor." "What's the weather tomorrow?" "How do I cook risotto?" Gemini provides answers with a TV-optimized visual interface. Instead of text, you get images, videos, and interactive cards. Instead of tiny text on your phone screen, information is displayed large enough to see from across your room.

The "Dive Deeper" feature mentioned earlier is crucial here. When Gemini provides an answer, you can ask follow-up questions. "What's happening in sports today?" is followed by "Show me highlights of the Lakers game" or "What's the schedule for tomorrow?" The context remains consistent across the conversation. You're not starting over with each question. You're having an actual conversation.

Gemini can also control your TV settings. This is genuinely useful. "The dialogue is too quiet" automatically adjusts audio levels. "Make the picture brighter" increases brightness. "Switch to dark mode" changes the interface. "Set a sleep timer for 30 minutes" initiates a timer. These aren't revolutionary features, but they're significantly more natural than navigating menus.

The assistant also integrates with Google's ecosystem. Ask about your Google Calendar events, and it can pull that information. Ask what smart home devices you have, and it can control them if they're connected to Google Home. Ask about your photos, and it accesses Google Photos. This ecosystem integration is where the real utility emerges. Your TV becomes a hub for managing your entire digital life, not just watching content.

However, there's a significant caveat: this only works if you trust Google with access to all this data. Your calendar, your photos, your home devices, your search history. Gemini needs permission to access all of this to function as a complete assistant. If you're privacy-conscious, you can limit what Gemini can access, but you're also limiting functionality.

Estimated data suggests TCL leads in Android 14 readiness, with a score of 85%, while other manufacturers lag behind, indicating a staggered adoption of new features.

Privacy, Data Handling, and Security Considerations

Anytime you're dealing with a microphone, a camera (if your TV has one), access to your photos, and integration with your home devices, privacy becomes an important conversation.

Google's official position is that Gemini on Google TV processes as much as possible locally. Image and video generation models run on your TV hardware. Voice recognition happens locally. Data doesn't get sent to Google's servers unnecessarily. When cloud processing is needed (for complex requests or devices without sufficient local hardware), the communication is encrypted.

But here's what actually matters: even if the processing is local, Google may still log the prompts you use, the content you generate, and the questions you ask. This information could theoretically be used to improve the service, train new models, or provide more targeted advertising. Google's privacy policy covers this, but it's worth being aware of.

Photo access is particularly sensitive. When you grant Gemini permission to access your Google Photos library, it can retrieve any photo you've uploaded. Theoretically, this is sandboxed within the Gemini system. But it's still data flowing through Google's infrastructure (at minimum, during the authentication and permission process).

For audio, if your TV has a microphone (many do), it can listen for voice commands. On many TVs, the microphone only activates when you press the voice button on the remote, and you can disable voice commands entirely. But if your TV supports hands-free listening, it may pick up speech from anyone in the room. Because TV voice control is usually push-to-talk, this tends to be less concerning than an always-listening smart speaker, but it's worth being aware of.

Security updates are another consideration. These features require Android 14 or higher, which means your TV needs to receive regular updates from the manufacturer. Not all TV manufacturers have good track records with security updates. Some stop supporting devices after a few years. If your TV isn't receiving regular updates, it could become a security vulnerability. This is a broader problem with smart TVs, but it's relevant here.

Google's recommendation is reasonable: only grant permissions you're comfortable with, review what data is being accessed, and consider disabling voice commands if you're uncomfortable with microphone use. But the fundamental trade-off remains: more convenience means more data sharing.

Real-World Use Cases and Practical Applications

Let's move beyond the theoretical and consider what people will actually use these features for.

Content Creation Without Leaving Your Couch

TikTok creators, Instagram content creators, and social media managers spend hours generating variations of content. "Let me try this photo with a different filter." "Let me see how this looks in black and white." "Let me generate some variations for the algorithm." Currently, this requires multiple devices and applications. With Gemini on Google TV, you could do this from your living room. Select a photo from your Google Photos library, ask for variations, and save the best ones directly to your library. You're not leaving your TV, not switching applications, not uploading anything manually.

Family Entertainment and Shared Creativity

A family watches TV together. Someone says, "Wouldn't it be cool if our family dog was in that action movie?" Another person asks, "Can you show what our house would look like painted blue?" Kids want to see themselves as superheroes. Parents want to create videos of family memories with creative effects. Gemini on Google TV turns the TV from a passive viewing device into a creative tool that the entire family can interact with.

Design and Home Planning

Someone is considering renovating their kitchen. They take a photo of their current kitchen and ask Gemini to show what it would look like with new cabinets, different paint, new flooring, different appliances. They generate dozens of variations, each showing a different design direction. This provides inspiration without needing to hire a designer or use specialized software.

Photo Organization and Storytelling

Years of photos sit in Google Photos, barely organized. With Gemini, you can search for photos more naturally. "Show me photos from that trip to Colorado." "Create a video from my winter photos." "Generate variations of my favorite family photos." The library transforms from static storage into an interactive scrapbook.

Educational and Professional Use

Teachers could use these features to generate educational materials. Create variations of images for different age groups or learning contexts. Generate videos explaining concepts. Illustrators and designers could use it for brainstorming. The feature set is broad enough that professional applications will emerge.

Comparison with Existing Generative AI Platforms

How does Gemini on Google TV compare to the ecosystem of generative AI tools already available?

DALL-E 3, Midjourney, and Stable Diffusion are powerful image generation tools, but they involve subscriptions or local setup, learning curves, and interfaces separate from where you consume content. You're not generating images on your TV. You're generating them elsewhere and transferring them.

Runway and Pika AI offer video generation, but again, you're working on a computer, not on your TV, and you're subscribing to separate services.

The unique value of Gemini on Google TV is integration. Your TV is where you already spend entertainment time. Your Google Photos library is already synced. Your voice is already the primary input method. Google isn't asking you to learn a new interface or adopt a new service. It's extending what you already have.

However, this convenience comes with trade-offs. Nano Banana and Veo, while capable, are optimized for efficiency, not peak quality. They might not produce the stunning results that DALL-E 3 or Midjourney can generate when given access to unlimited cloud computing. They're designed to be good enough, not best-in-class. For professional creative work, you'll likely still use specialized tools. For casual home use, Gemini on Google TV is more convenient and more integrated.

Runable offers an alternative approach to AI-powered content creation, focusing on automating presentations, documents, and reports. While it doesn't directly compete with TV-based image and video generation, it represents a similar trend: embedding generative AI into tools and platforms where people already work.

The broader ecosystem is moving toward AI being ubiquitous. It's not a separate thing you do. It's integrated into the platforms and devices you already use. Gemini on Google TV exemplifies this trend. So does Runable's approach to integrating AI agents into document creation and presentations. The pattern is consistent: embed AI where people are already working.

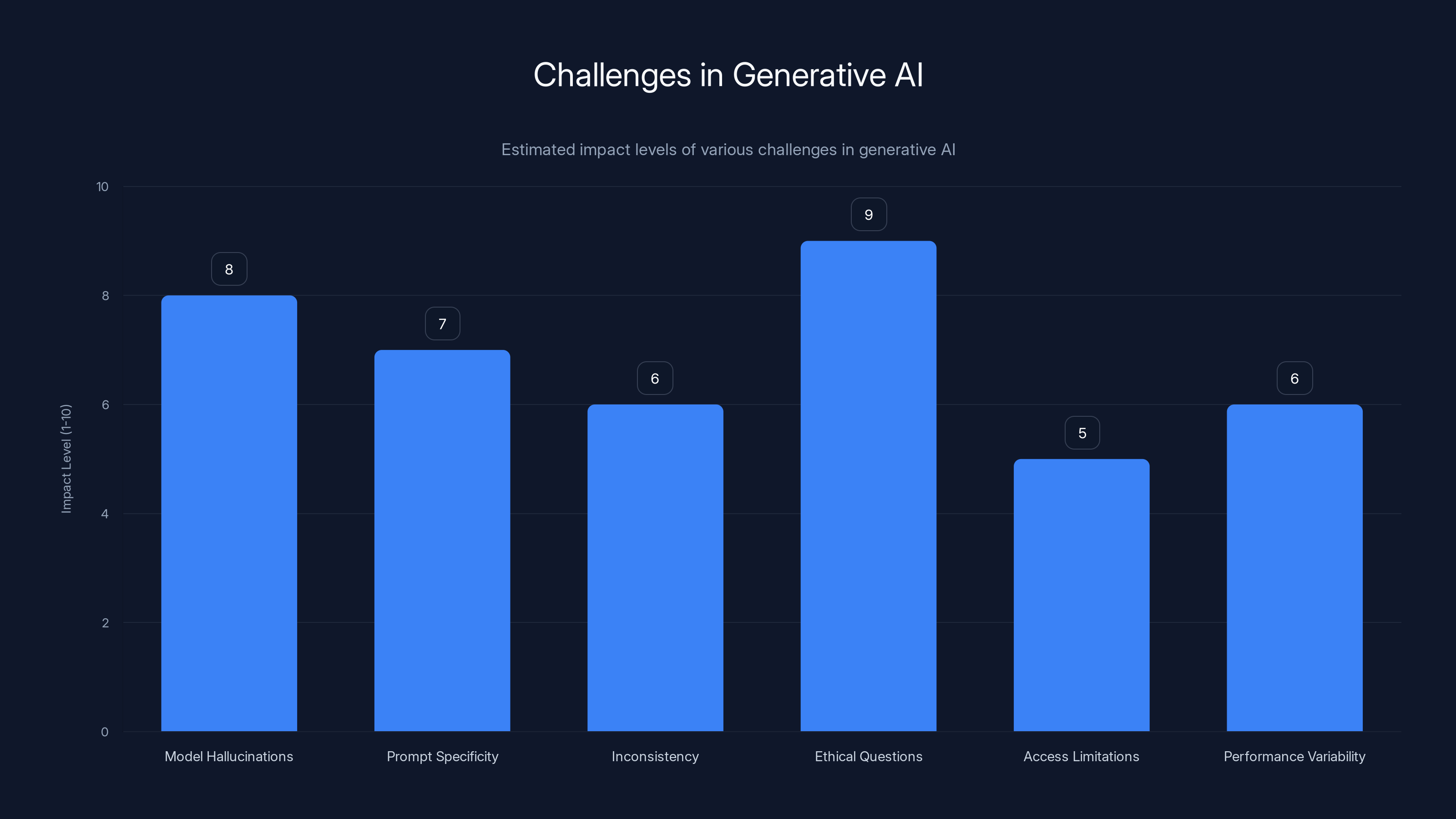

Model hallucinations and ethical questions are perceived as the most impactful challenges in generative AI, with high impact levels. (Estimated data)

Performance and Latency Considerations

One of the biggest technical challenges in running generative models locally on TVs is latency. How long does it actually take to generate an image or video?

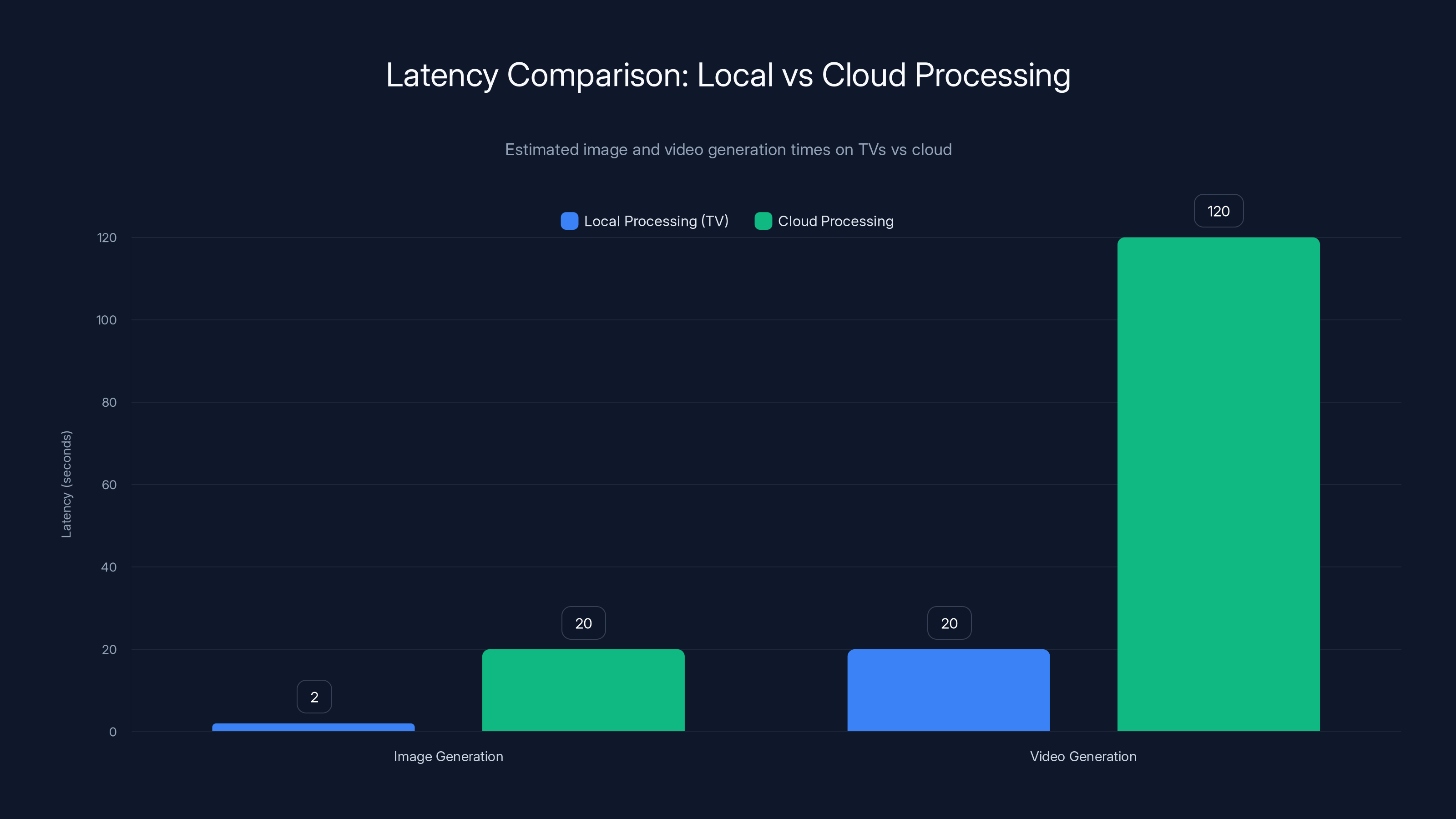

For image generation, users expect a response within a second or two. You ask for something, and it appears quickly. If it takes 10 seconds, the experience feels laggy. If it takes a minute, you've mentally moved on to something else. Nano Banana is designed with this in mind. It's optimized for speed. Typical image generation takes 1-3 seconds on modern TV hardware. This is dramatically faster than cloud-based alternatives, which might take 10-30 seconds depending on server load.

Video generation is slower. Veo typically takes 10-30 seconds to generate a short clip, depending on the prompt complexity and the hardware capabilities of your TV. This is still faster than cloud-based video generation, which might take minutes, but it's long enough that you notice the wait. You ask for a video, and you wait 15 seconds or so for it to appear. It's acceptable, but it's not instant.

As model optimization continues and TV hardware improves, these latencies should decrease. Newer TVs with more powerful processors will generate faster, and future versions of the models may be more efficient. But there's a fundamental trade-off: running models locally means you're limited by your TV's hardware. Cloud processing can be more powerful, but it adds network and queueing delays. Google has chosen to favor local processing, accepting hardware limits in exchange for responsiveness, privacy, and integration benefits.

Prompt complexity also affects latency. A simple prompt might generate in 2 seconds, while a complex prompt with specific requirements might take 10 seconds. This is normal and expected: more detailed requests take longer.
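
A rough way to reason about these numbers is to treat end-to-end latency as the sum of the stages a request passes through: network round trip, time waiting in a queue, transferring the result, and inference itself. The figures below are made up for illustration, not measurements; the point is that a faster cloud model can still lose to a slower local one once queueing and network time are added.

```python
def total_latency(inference_s: float, network_rtt_s: float = 0.0,
                  queue_s: float = 0.0, transfer_s: float = 0.0) -> float:
    """Simplified additive latency model: every stage adds to the wait."""
    return network_rtt_s + queue_s + transfer_s + inference_s


# Hypothetical numbers for illustration only.
local_image = total_latency(inference_s=2.0)  # no network or queue on-device
cloud_image = total_latency(inference_s=1.0, network_rtt_s=0.1,
                            queue_s=8.0, transfer_s=0.5)

print(f"local: {local_image:.1f} s, cloud: {cloud_image:.1f} s")
```

Real systems overlap some of these stages, so a plain sum tends to overestimate slightly, but it shows why server load (the queue term) dominates the cloud figures quoted above.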

The Rollout Timeline and Availability Strategy

Google's decision to roll out these features gradually is worth analyzing because it reveals something about their strategy.

Starting with TCL TVs makes sense. TCL is a major TV manufacturer with modern hardware. TCL TVs generally receive software updates more consistently than some competitors. Starting with a single manufacturer allows Google to test the features, gather feedback, and optimize before expanding.

The timeline suggests the Google TV Streamer and other devices will get these features "in a few months." That's vague, but it points to months rather than years. Google is buying time to test, optimize, and prepare for broader rollout.

The requirement of Android 14 or higher creates a natural gate. Only newer TVs and devices running recent versions of Android will get these features initially. This limits the support burden. Google doesn't need to optimize for hundreds of different hardware configurations. It can focus on newer, more capable devices first.

This phased approach is smart from a business perspective. Early adopters with newer TVs get the features first. This creates excitement and media attention. The features improve and mature in the market. By the time broader rollout happens, most bugs are fixed and the experience is optimized. Mainstream audiences then get a polished feature rather than a rough launch.

For users, this means patience. If you have an older TV or an older Google TV Streamer, these features aren't coming tomorrow. But they're probably coming eventually. The question is whether you can wait or whether you should upgrade your hardware to access these features sooner.

Implications for the Broader Smart TV Market

Gemini on Google TV isn't just a feature update. It signals a shift in how manufacturers and software platforms think about TVs.

For decades, TVs were dumb displays. You connected them to cable boxes or streaming devices, and the TV's job was displaying content and nothing else. The software layer was an afterthought. Gradually, this changed. Smart TVs emerged with built-in software, app stores, and recommendation algorithms. But the core function remained: display content someone else created.

Now, the TV is becoming a creative tool. You're not just watching content. You're generating it. You're asking questions and getting rich interactive responses. You're controlling your home through voice commands. The TV is becoming a hub.

This has implications for competing platforms. Amazon's Fire TV operating system has Alexa integration, which is powerful for smart home control but less developed for creative content generation. Roku has a different strategy, focusing on simplicity and app accessibility. Apple TV emphasizes seamless ecosystem integration for Apple users. Each platform is making different bets about what a smart TV should be.

Google's bet is clear: AI is central. Not as an add-on, but as a core capability. Image and video generation. Conversational assistance. Smart control. These aren't optional features. They're foundational to Google TV's future direction.

For TV manufacturers, this raises an important question: can they compete with their own TV operating systems? TCL, Samsung, and LG all make TVs running their own custom operating systems in some markets. But the advantage of Google TV, Amazon Fire TV, or Roku is that manufacturers outsource the software complexity. The OS gets frequent updates, supports a huge app ecosystem, and integrates with a broader service platform. Building your own OS and keeping it competitive with Google TV and Fire TV is increasingly expensive.

This might accelerate consolidation around three major OS platforms: Google TV, Fire TV, and Roku. Some manufacturers might keep their own software, but most would compete mainly on hardware quality, because the OS platform, more than the TV brand, would determine the experience.

Chart (not reproduced): estimated latency of local versus cloud-based image and video generation on TVs. Local processing is estimated to be significantly faster for both; the figures are estimates, not measurements.

Technical Architecture and Model Inference

Understanding how these models actually work on your TV requires looking at the technical architecture.

Generative models, especially image and video models, are substantial. A single model can be several gigabytes. Quantization (reducing precision) and model compression can make them fit on consumer devices, but you're still talking about significant storage and memory requirements. Your TV needs not only to store the model but to have enough RAM to run inference.
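To see why size matters, consider the arithmetic for storing the weights alone. The 3-billion-parameter figure below is hypothetical, since Google hasn't published sizes for the TV versions of Nano Banana or Veo; the byte widths per precision are standard.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes).

    Activations, intermediate buffers, and the rest of the TV's software
    need RAM on top of this figure.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical 3-billion-parameter model.
for precision in ("fp32", "fp16", "int8", "int4"):
    print(f"{precision}: {weight_memory_gb(3e9, precision):.1f} GB")
```

At full 32-bit precision this hypothetical model needs 12 GB just for weights, well beyond the memory budget of most TVs; at 4-bit precision the same weights fit in 1.5 GB.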

For Nano Banana specifically, running on TV hardware requires a model optimized for mobile and embedded devices: smaller, but still producing good results. Google hasn't published the details, but distillation, where knowledge from a larger model is transferred to a smaller one, is likely involved, and quantization (storing weights at lower numeric precision) very likely is as well. These techniques cut both model size and compute requirements, making it feasible to run on TV hardware.
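Quantization itself is easy to illustrate. This is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several; whether Google uses this particular scheme is not known. Each float weight is stored as an 8-bit integer plus one shared scale factor.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The largest-magnitude weight maps to +/-127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now takes 1 byte instead of 4, and rounding to the nearest integer keeps the per-weight error within half a scale step. Real deployments often use finer-grained scales (per channel or per block) to keep that error small across layers with very different value ranges.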

Veo is more demanding. Video generation requires more computation than image generation. The model probably runs with some compromise: either reduced resolution, shorter videos, or cloud fallback for complex requests. Or some combination. Google hasn't been completely transparent about this, but the technical reality is that generating video locally on TV hardware would be extremely challenging without fallback to cloud processing for some scenarios.

Inference is also highly parallel. Google hasn't detailed the architectures, but diffusion-based generators, a common design for image and video models, don't build their output pixel by pixel or frame by frame. They start from random noise and refine the entire image, or a whole chunk of video frames, over a series of steps, updating every element in parallel within each step. That kind of workload runs far better on GPUs or dedicated AI accelerators than on CPUs, which is why TVs need modern graphics and AI hardware to handle it.
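The split between sequential steps and parallel work within each step can be made concrete with a little arithmetic. This sketch assumes a diffusion-style sampler; the step count, frame count, and latent sizes are hypothetical, since the actual Nano Banana and Veo configurations are not public.

```python
def denoising_work(steps: int, frames: int, height: int, width: int) -> dict:
    """Work for a diffusion-style sampler over a latent of frames x height x width.

    Steps must run one after another; the elements inside one step can be
    updated in parallel (which is what GPUs and NPUs are good at).
    """
    per_step = frames * height * width
    return {
        "sequential_steps": steps,
        "parallel_updates_per_step": per_step,
        "total_updates": steps * per_step,
    }


# Hypothetical sizes: one image latent vs. a 48-frame video latent.
image = denoising_work(steps=30, frames=1, height=64, width=64)
video = denoising_work(steps=30, frames=48, height=64, width=64)

print(image)
print(video)
print("video/image work ratio:", video["total_updates"] // image["total_updates"])
```

The number of sequential steps is identical, but each video step carries 48 times as much work, which is why Veo is the more demanding model. Real models do far more computation per element, and attention layers can make video cost grow faster than linearly with frame count, so this only shows the shape of the problem.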

Google's architectural decision to do local processing when possible is interesting from a systems perspective. Local processing reduces latency and bandwidth requirements. It also reduces privacy concerns: your request stays on your device. The trade-off is compute power. Cloud processing could be more powerful and more flexible, but it adds latency and requires uploading data.

Google likely uses a hybrid approach: local processing for simple requests, cloud fallback for complex ones. This maintains performance while handling edge cases where local hardware isn't sufficient.
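A hybrid approach like this needs some routing rule. The following is a hypothetical policy, not Google's documented behavior; every class, field, and threshold is an assumption. It keeps a request local when it fits the device's current memory and video-length budget, falls back to the cloud when the TV is online, and otherwise reports that the request can't run.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LOCAL = "local"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"


@dataclass
class Request:
    kind: str                      # "image" or "video"
    est_memory_gb: float           # hypothetical estimate of memory the job needs
    seconds_of_video: float = 0.0  # only meaningful for video requests


@dataclass
class DeviceBudget:
    free_memory_gb: float          # changes with whatever else the TV is doing
    max_local_video_seconds: float
    online: bool


def route(req: Request, dev: DeviceBudget) -> Route:
    """Prefer local processing; fall back to the cloud only when needed."""
    fits_locally = req.est_memory_gb <= dev.free_memory_gb and (
        req.kind != "video" or req.seconds_of_video <= dev.max_local_video_seconds
    )
    if fits_locally:
        return Route.LOCAL
    if dev.online:
        return Route.CLOUD
    return Route.UNAVAILABLE


budget = DeviceBudget(free_memory_gb=3.0, max_local_video_seconds=8.0, online=True)
print(route(Request("image", 1.5), budget))                         # Route.LOCAL
print(route(Request("video", 2.5, seconds_of_video=20.0), budget))  # Route.CLOUD
```

Because `free_memory_gb` depends on what else is running, the same request could land on different paths at different times, one plausible source of performance variability.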

The Content Generation Quality Question

Let's be honest about what these models actually produce.

Nano Banana and Veo are good, but they're not perfect. Nano Banana can struggle with complex prompts. If you ask for specific details, exact configurations, or particular artistic styles, results might be hit-or-miss. Some generations look great. Others look obviously AI-generated. The quality varies based on the prompt and the model version.

Veo has similar challenges with video. Motion can look unnatural if the prompt is ambiguous. Character details might not be consistent frame-to-frame. Camera movements might be jerky. The quality is impressive for how fast it generates videos, but it's not ready for professional production work. It's ready for social media, fun experiments, and creative exploration. Not for commercial video production.

This quality ceiling is important to understand. These are consumer-grade generative tools, not professional-grade. They're designed to be accessible and fun, not to replace professionals. If you're a professional designer or video producer, you'll still use specialized tools where you have more control and better results.

But for casual home use, the quality is good enough. You're not trying to win awards. You're trying to have fun with your photos, create interesting variations, and explore creative possibilities. For that, Nano Banana and Veo are perfectly adequate.

Google is also iterating. Current versions of these models are already better than the ones that first launched, and future versions should be better still. As the models improve, the quality on TVs will improve as well. What looks slightly artificial today might look quite natural in a year or two.

Integration with Google's Broader AI Strategy

Gemini on Google TV doesn't exist in isolation. It's part of Google's broader push to embed AI everywhere.

Google is integrating Gemini (their conversational AI model) across their entire product suite. Search results now show Gemini-generated summaries. Gmail can suggest completions. Google Docs can generate content. Google Sheets can analyze data. Google Photos can auto-generate albums and collages. Each product is getting an AI layer.

Google TV is a natural extension of this. The TV is a major product for Google. It's something people use daily. It's a place where Google can offer unique value by integrating generative AI in ways that other platforms can't match as easily.

The broader strategy is about ecosystem lock-in, but done in a way that benefits users. If you use Google services (Gmail, Google Calendar, Google Photos), you benefit from Gemini integration on your TV because it can access your data and provide contextual assistance. If you're in the Apple ecosystem, you might prefer using Apple devices where integration is potentially stronger. Google's bet is that for most people, Google services and Google devices will provide better value through integrated AI capabilities.

This strategy is smart but also reinforces Google's dominance in information services and personal data. Gemini on Google TV is one more mechanism through which Google becomes more central to your digital life.

Comparison Table: AI Content Generation Tools

| Tool | Best For | Generate Images | Generate Video | TV Integration | Monthly Cost |
|---|---|---|---|---|---|
| Runable | AI automation, presentations, documents | Yes | Yes | Limited | $9 |
| DALL-E 3 | Professional image generation | Yes | No | No | Free (limited) |
| Midjourney | Artistic image creation | Yes | No | No | $10–120 |
| Stable Diffusion | Custom image generation | Yes | No | No | Free (self-hosted) |
| Runway | Professional video editing | No | Yes | No | $12–60 |
| Pika AI | Quick video generation | No | Yes | No | $10–30 |
| Google TV + Gemini | Living room content creation | Yes (local) | Yes (local) | Yes | Included |

Expert Insights and Industry Reactions

When Google announced these features at CES, the reaction from tech observers and industry analysts was largely positive, with some caveats.

The common refrain: this is a natural evolution of smart TV platforms, and the integration of local generative models on consumer hardware is technically impressive. The criticism: Google TV's market penetration is strong in some regions but weaker in others, so the impact might be geographically limited. The speculation: other platforms will add similar features, creating competitive parity.

What stands out is that generative AI on TVs wasn't necessarily on anyone's priority list until now. Streaming content recommendations? Yes. Smart home control? Yes. Generative content creation on TVs? Most people didn't think about it until Google showed it was possible. This is characteristic of Google: they identify something that wasn't obvious as a priority, and they make it work.

The skepticism worth noting: Is this a feature anyone actually wanted? Or is Google creating demand? Early adopters and content creators will definitely find uses. Casual TV watchers might never touch these features. The uptake rate will reveal what people actually want versus what's technically impressive.

Future Roadmap and Expected Evolution

What's coming next for Gemini on Google TV?

Google hasn't released a detailed roadmap, but based on their typical pattern, expect:

Improved Models: Nano Banana and Veo will get regular updates. Each new version should be faster and produce better quality. Model improvements are continuous, and TV versions will benefit.

Broader Device Support: The phased rollout will continue. More TVs and TV devices will get access. Eventually, any device running recent Android versions should get these features.

Enhanced Integration: More TV apps will integrate with Gemini. You'll be able to generate content within specific apps. Netflix might let you generate artwork. YouTube might let you remix clips. YouTube Music might create visualizers. As developers add Gemini integration, the capabilities expand.

Multimodal Experiences: Current features are somewhat separate: image generation, video generation, conversational AI. Expect more integrated experiences where you're combining these capabilities. "Show me a video of my photo as a superhero" is one example. More complex requests requiring multiple models working together will become possible.

Personalization: Gemini will learn your preferences. The images and videos it generates will get better tailored to your taste. The recommendations it offers will get better. The system becomes more personalized over time.

Real-time Processing: Latency will continue to improve. Models will run faster. Eventually, these features might approach real-time responsiveness, making the experience feel more natural.

The longer-term question: does local model inference on TVs represent the future, or is this a transitional phase? As cloud computing becomes faster and more powerful, the advantage of local processing diminishes. But privacy and the appeal of offline-capable features might sustain local processing even as cloud capabilities improve. Likely future: hybrid approach where local processing handles routine tasks, cloud processing handles complex ones.

Challenges and Limitations

Let's talk honestly about what doesn't work and what probably won't be solved soon.

Model Hallucinations: Generative models sometimes produce artifacts, distortions, or completely wrong elements. A photo of a dog might get generated with seven legs. Text in generated images might be incomprehensible. Video might have temporal inconsistencies. These aren't bugs. They're fundamental limitations of how these models work. They'll improve, but won't disappear completely.

Prompt Specificity: Writing good prompts is an art. Vague prompts produce mediocre results. Detailed prompts require effort to compose. Users will need to learn how to ask for what they want. This is a friction point. Unlike traditional software, where you click buttons to do specific things, generative AI requires articulating what you want. Some people will find this intuitive. Others will struggle.

Inconsistency Across Generations: Ask Nano Banana to generate "a dragon" five times, and you'll get five completely different dragons. This is useful for exploration, but problematic if you're trying to create consistent branding or narrative. Professional tools might handle this better, but the basic challenge remains.
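Much of this variation comes from random sampling: many generative models start from random noise chosen by a seed. The toy below shows the principle; the `generate` function and its outputs are hypothetical stand-ins, not a real model. Different seeds give different dragons, while reusing a seed reproduces the same result. Many open image tools, such as Stable Diffusion front ends, expose the seed for exactly this reason; whether Gemini on Google TV does is not stated.

```python
import random
from typing import Optional


def generate(prompt: str, seed: Optional[int] = None) -> str:
    """Hypothetical stand-in for an image generator.

    The seed fixes the random choices (in a real model, the starting noise),
    so the same prompt with the same seed yields the same output.
    """
    rng = random.Random(seed)  # seed=None draws fresh randomness on each call
    color = rng.choice(["red", "green", "gold", "black", "blue"])
    wings = rng.choice(["feathered", "bat-like", "crystal"])
    return f"{prompt}: {color} scales, {wings} wings"


# Five unseeded requests: results usually differ from call to call.
for _ in range(5):
    print(generate("a dragon"))

# Same seed twice: identical output, useful for consistent characters or branding.
print(generate("a dragon", seed=42) == generate("a dragon", seed=42))  # True
```

Fixing the seed only helps when the prompt stays the same; change a single word and the output can shift substantially, which is why consistency remains a challenge even with seed control.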

Ethical and Copyright Questions: If Gemini generates an image that's suspiciously similar to something in its training data, who owns the result? If you generate a video of a celebrity, is that okay? If you create AI-generated content, how do you label it? These are complex questions that society is still figuring out. Google's terms of service cover some of this, but the broader questions remain.

Access Limitations: These features require Android 14 or higher, specific hardware, internet connectivity (for cloud fallback), and a Google account. Not everyone has access. This creates a digital divide where newer, more expensive TVs get features that older, cheaper TVs don't.

Performance Variability: Depending on what else your TV is doing, model inference performance varies. If your TV is streaming video, running apps, or doing other processing, generative features might be slower. This variability is hard to predict and frustrating when it happens.

These aren't reasons to dismiss the features. They're important context for understanding the limitations and being realistic about what's possible today.

FAQ

What is Gemini on Google TV?

Gemini on Google TV brings Google's generative AI capabilities directly to your smart TV. It includes conversational AI for answering questions and getting recommendations, image generation through the Nano Banana model, and video generation through the Veo model. You can generate new content from scratch, remix photos from your Google Photos library, or get AI-assisted help with TV control and recommendations.

How does photo remixing work on Google TV?

When you enable Gemini on your TV, you grant it permission to access your Google Photos library. You can then select a photo or search for specific images, and ask Gemini to remix them. "Make this sunset photo look like a painting" or "Show what this room would look like with different furniture." Nano Banana processes your request locally on your TV and generates variations without modifying the original photo.

Can Gemini on Google TV generate videos from scratch?

Yes, using the Veo video generation model. You can provide a text prompt describing a scene you want to see, and Veo generates a short video (typically 5-30 seconds depending on complexity). You can also animate still photos from your library. "Turn my beach photo into a video with waves moving" is a valid request that Veo can fulfill.

What devices currently support Gemini image and video generation?

TCL smart TVs with Google TV are the first devices getting these features. Google TV Streamer and other devices will receive support in the coming months. The requirement is Android 14 or higher and sufficient hardware (newer processors and GPUs). Older TVs and older Android versions won't support these features.

How is Gemini on Google TV different from using DALL-E or Midjourney?

Gemini on Google TV prioritizes convenience and integration over maximum quality. You generate content directly on your TV without switching devices. The models are optimized for speed and run locally when possible. However, Nano Banana and Veo are designed for consumer use and might not produce the same professional-quality results as DALL-E 3 or Midjourney. Choose based on your actual workflow: use Gemini on TV for casual home use, use specialized desktop tools for professional creative work.

Is there a subscription cost for Gemini on Google TV?

Google hasn't announced separate pricing for Gemini on Google TV image and video generation. These features appear to be included with Google TV. If you're already a Google One subscriber, you might get additional benefits or higher usage limits, but the core features appear to be available to all Google TV users with compatible devices.

Can I save generated images and videos to my Google Photos library?

Yes, generated content can be saved to your Google Photos library directly through Gemini. You can then access it on other devices, share it, or use it in other applications. The saved content is treated like any other photo or video in your library.

What happens if my TV doesn't meet the hardware requirements?

If your TV doesn't have sufficient processing power or doesn't run Android 14 or higher, Gemini's image and video generation features won't be available locally. Some functionality might be available through cloud processing if it exists as a fallback option, but it would be slower. Your alternative is upgrading to a newer TV that meets the requirements, or using generative AI tools on other devices (phone, computer) and transferring the results to your TV.

Is my Google Photos library private when using Gemini on Google TV?

Gemini accesses your Google Photos only with your explicit permission, and you can revoke that permission anytime. Local processing means photos don't necessarily get sent to Google's servers for simple remixing tasks. However, for complex requests or devices without sufficient local hardware, some cloud processing might be involved. Review Google's privacy policy and Gemini's specific privacy documentation for complete details.

How good are the generated images and videos compared to professional AI tools?

Nano Banana and Veo are good for consumer use but not professional-grade. Quality is impressive given the local processing constraints, but artifacts and unnatural elements can appear. These tools are suitable for social media content, creative exploration, family entertainment, and design inspiration. For professional commercial work, specialized tools like DALL-E 3, Midjourney, or Runway might provide better results and more control.

Conclusion: Your TV Just Became a Creative Tool

For decades, your TV has been a passive device. It displays what others created. You turn it on, watch, and turn it off. Smart TVs added apps and recommendations, but the fundamental experience didn't change. You were still consuming content others made.

Gemini on Google TV changes that dynamic. Your TV is now a creative tool. You can generate images. You can create videos. You can remix your memories. You can ask questions and get rich interactive responses. The experience is different.

This isn't revolutionary in the sense that it solves a critical problem. Most people don't desperately need to generate images from their couch. But it's significant because it represents a shift in how we think about home entertainment technology. The TV isn't just for consumption. It's for creation.

The practical impact will vary by person. Content creators will find real utility. People who enjoy visual projects will have fun. Casual TV watchers might never touch these features. But the option is there, integrated seamlessly into a platform that already exists in millions of homes.

The broader implications are interesting. As generative AI becomes ubiquitous, we'll see it show up everywhere. Not as special separate tools, but as integrated capabilities within the systems we already use. Gemini on Google TV is one example. Runable's AI agents for presentations and documents are another. The pattern is consistent: AI as a feature of existing platforms, not a standalone service.

The question isn't whether these features will matter. It's how deeply generative AI will integrate into everyday life before we stop noticing it as something special. Gemini on Google TV is another step in that direction.

For the near future, these features are coming to newer TVs running Google TV first. Other platforms will follow with similar capabilities. The smart TV experience will become increasingly AI-powered. Whether you use these features or not, they're becoming standard.

The takeaway: if you're considering a TV purchase, Google TV devices with these features coming soon are worth considering. If you already have a compatible TV, these features will arrive in the next few months. And if you have an older TV, you might be thinking about whether an upgrade is worth it for these capabilities. The answer depends on how much you value content creation on your TV versus purely consumption. But the conversation is worth having, because your TV just became more than a screen.

Key Takeaways

- Gemini on Google TV brings image and video generation directly to your smart TV using local AI models (Nano Banana and Veo) for faster, privacy-respecting processing

- Photo remixing connects directly to your Google Photos library, letting you transform memories without uploading anything or switching devices

- These features are rolling out first to TCL smart TVs with Google TV, expanding to other devices like Google TV Streamer in coming months

- The integration requires Android 14 or higher and modern TV hardware, excluding older devices from receiving these capabilities immediately

- Generative AI on TVs represents a shift in how we use home entertainment systems, transforming them from passive viewing devices into creative tools
