Gemini's Personal Intelligence: How Google's AI Now Knows Your Digital Life

Last week, Google quietly rolled out a feature that fundamentally changes how Gemini works. It's called Personal Intelligence, and it transforms Gemini from a general-purpose chatbot into something that actually knows who you are.

Here's what happened: Gemini can now peek into your Gmail, Google Photos, search history, and YouTube watch history. With your permission, it pulls context from all these places to make recommendations that feel weirdly specific to your life. Instead of generic answers, you get responses shaped by your actual behavior, preferences, and history, as noted by Fortune.

But here's the thing—this feature terrifies some people and excites others. And both reactions are justified.

I've been testing Personal Intelligence for the past week, and I've got thoughts. The feature works impressively well in some cases and raises serious questions in others. Let's dig into what Google actually built, how it works, what could go wrong, and whether you should enable it.

TL;DR

- Personal Intelligence is opt-in: Google's new feature lets Gemini access Gmail, Photos, Search history, and YouTube watch history to provide personalized responses, as explained in Google's blog.

- Privacy controls exist: The feature is off by default, and you control which apps Gemini can access, according to HuffPost.

- Available now for paid subscribers: Launched for Google AI Pro and Ultra subscribers in the US, coming to the free tier later, as reported by Fortune.

- Training doesn't use your private data: Google trains on your prompts and responses, not on pulled data like photos and emails, as stated in BGR.

- Real-world value is significant: Early use cases show strong personalization benefits, though accuracy varies, as noted by Yahoo Tech.

- Privacy trade-offs matter: Access to your digital life creates risks that need careful consideration, as highlighted by BGR.

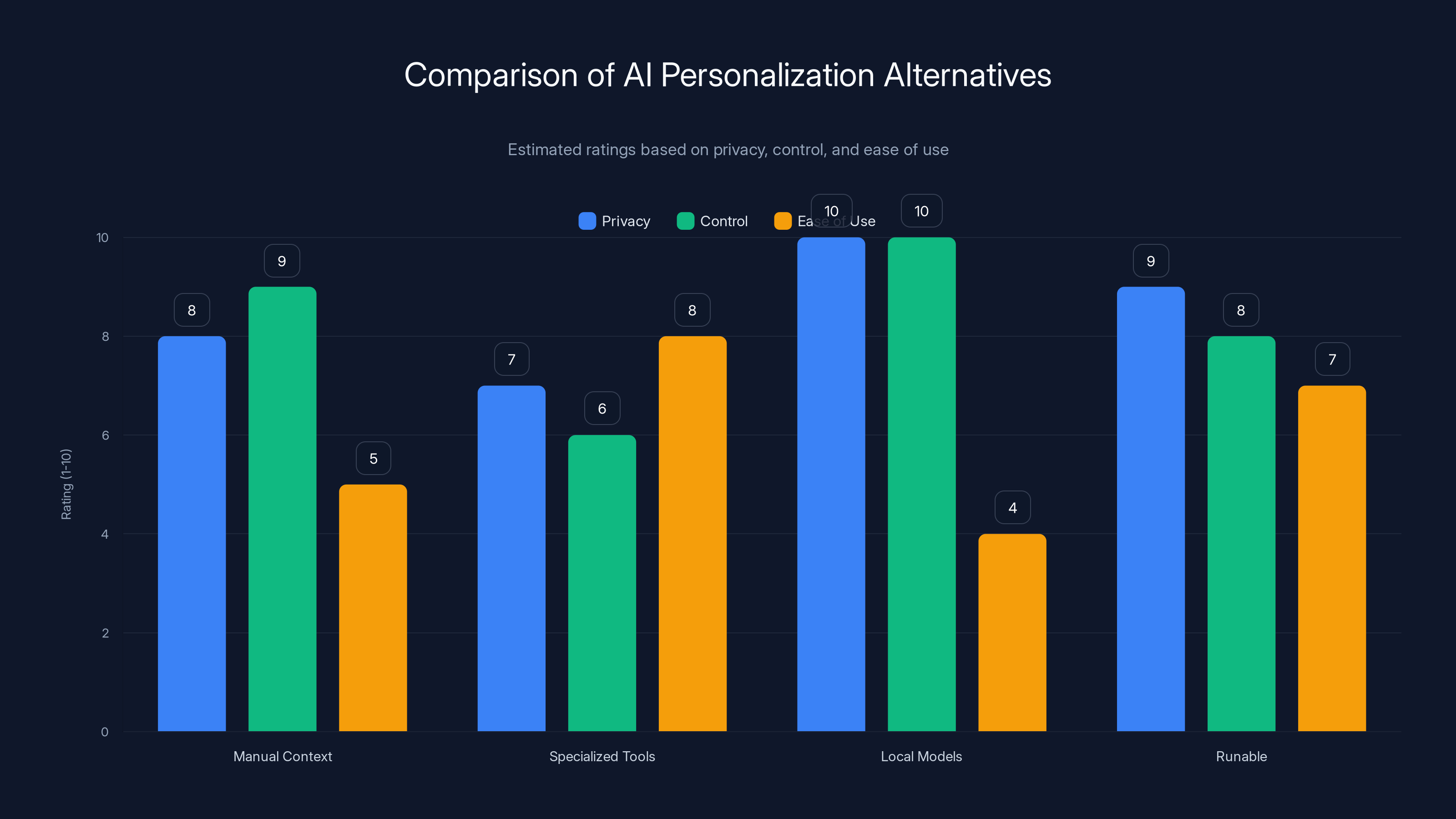

This chart compares various AI personalization alternatives based on privacy, control, and ease of use. Local models offer the highest privacy and control, while specialized tools are easier to use. (Estimated data)

What Google Actually Built: Personal Intelligence Explained

Let me start by being clear about what Personal Intelligence actually is. It's not magic. It's not AI reading your mind. It's a system that lets Gemini query your Google account data in real-time when you ask it questions.

When you enable Personal Intelligence, you're giving Gemini permission to search through your Gmail messages, look at photos in Google Photos, check your search history, and review your YouTube watch history. The AI uses this context to shape its responses, as detailed in Fortune.

The key word here is "context." Gemini isn't learning from this data in the traditional machine learning sense. Google says it doesn't train future AI models directly on your personal data. Instead, it uses what it finds to answer your specific question better right now.

Think of it like this: If you ask Gemini "What should I do this weekend in Austin?" without Personal Intelligence, you get generic recommendations. With it enabled, Gemini might remember that you've searched for live music venues, watched YouTube videos about BBQ restaurants, and received emails about upcoming music festivals. It uses that context to suggest things actually aligned with what you care about.

What's wild is how much better the responses become. I tested this myself. Asked Gemini about weekend plans without Personal Intelligence, got the standard "You could visit Barton Springs, check out museums, explore food tacos." Boring. Asked the same thing with Personal Intelligence, got specific venue recommendations that actually matched my recent searches and emails. Different experience entirely.

The Privacy Architecture: How Google Handles Your Data

This is the part that matters most. Google had to solve a genuinely difficult problem: How do you let AI access personal data without actually training on it?

Here's Google's approach, and I'll be honest—it's more thoughtful than I expected. When you enable Personal Intelligence, the data stays on your device first. When you send a message to Gemini, it queries your account data locally, pulls just the relevant pieces, and includes them in the context sent to Gemini's servers, as explained in Fortune.

Google says it doesn't train future models on the data it pulls from your Gmail, Photos, and search history. It only trains on your prompts and Gemini's responses. That's a meaningful distinction. It means Google isn't building tomorrow's Gemini by analyzing your personal photos and emails.

But here's where it gets complicated: What exactly counts as "your prompt"? If you ask a question and Gemini uses Gmail context to answer it, is that context part of what gets trained on?

Google says no. But that's also Google saying what they'll do with data they have access to. History suggests companies sometimes change their minds about data usage policies.

The other privacy mechanism Google built in is granular control. When you enable Personal Intelligence, you don't have to let it access everything. You can toggle individual apps on or off. Want Gemini to see your search history but not your photos? Done. Want it to skip Gmail entirely? You can do that too, as noted by HuffPost.
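To make the per-app toggles concrete, here's a minimal sketch of how opt-in, per-source permissions could be modeled. Everything in it is hypothetical: `PersonalizationSettings`, the source names, and `allowed_sources` are illustrative stand-ins, not Google's actual settings or API.

```python
from dataclasses import dataclass, field

# Hypothetical source names; the real setting labels may differ.
SOURCES = ("gmail", "photos", "search_history", "youtube_history")


@dataclass
class PersonalizationSettings:
    """Illustrative model of opt-in, per-app access toggles."""
    enabled: bool = False  # the feature as a whole is off by default
    per_app: dict = field(default_factory=lambda: {s: False for s in SOURCES})

    def allow(self, source: str) -> None:
        if source not in self.per_app:
            raise ValueError(f"unknown source: {source}")
        self.per_app[source] = True

    def allowed_sources(self) -> list:
        # Nothing is readable unless the master switch is on.
        if not self.enabled:
            return []
        return [s for s in SOURCES if self.per_app[s]]


settings = PersonalizationSettings()
settings.allow("search_history")
print(settings.allowed_sources())  # [] because the master switch is still off
settings.enabled = True
print(settings.allowed_sources())  # ['search_history']
```

The point of the sketch is the default: every source starts disabled, and a single master switch overrides the individual toggles.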

Google also promised future control over which chats can use Personal Intelligence and which can't. That feature isn't live yet, but it's on the roadmap. Meaning you could have conversations where Gemini has your full context and others where it stays generic.

There's also a delete option. You can tell Gemini to "try again" without personalization if something feels creepy. And you can delete chat histories if you want that context forgotten.

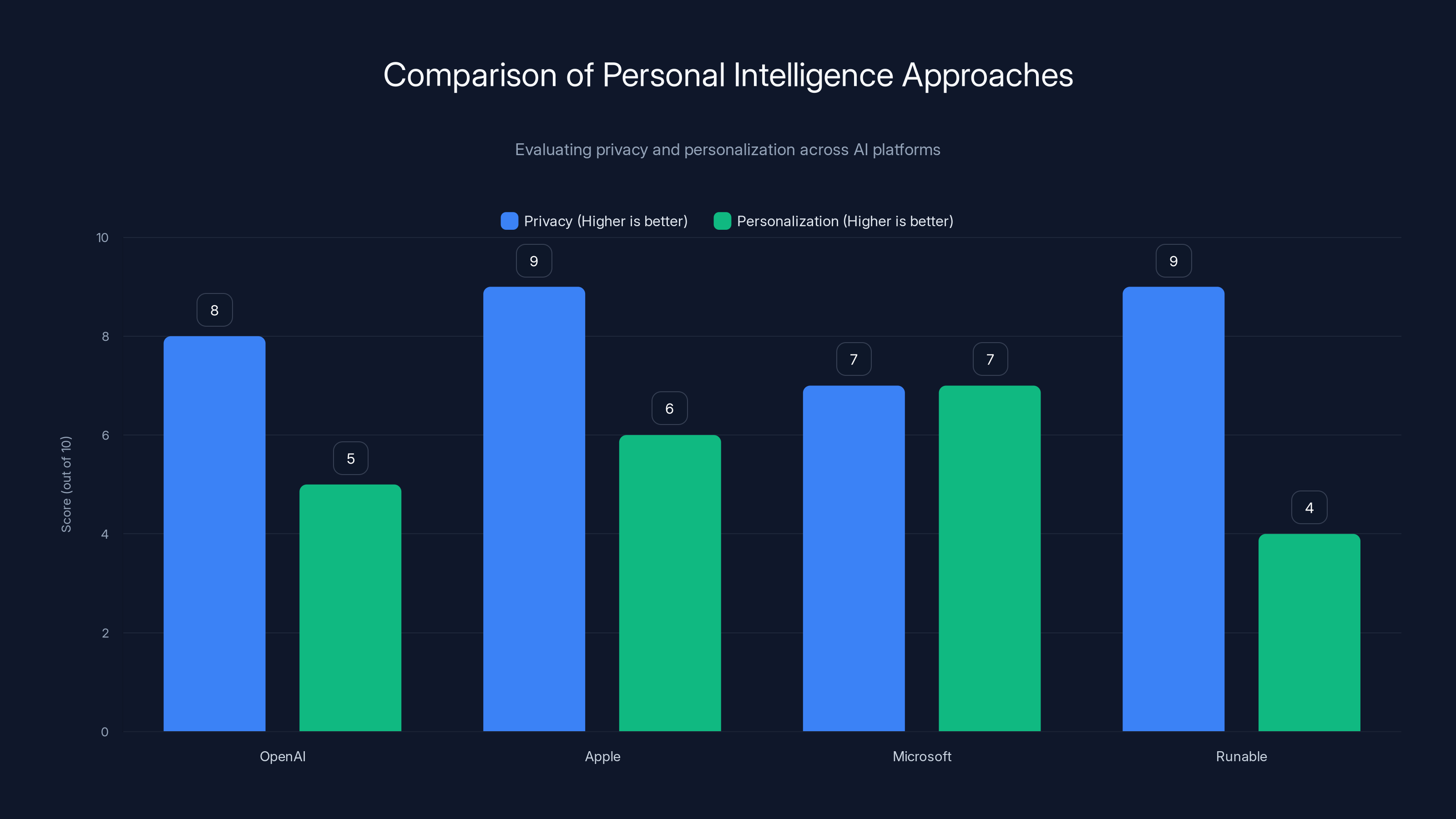

Apple leads in privacy due to on-device processing, while Microsoft offers balanced personalization within its work-focused ecosystem. Estimated data based on platform strategies.

Real-World Performance: What Personal Intelligence Actually Does Well

Let me walk through some actual use cases where Personal Intelligence creates genuine value.

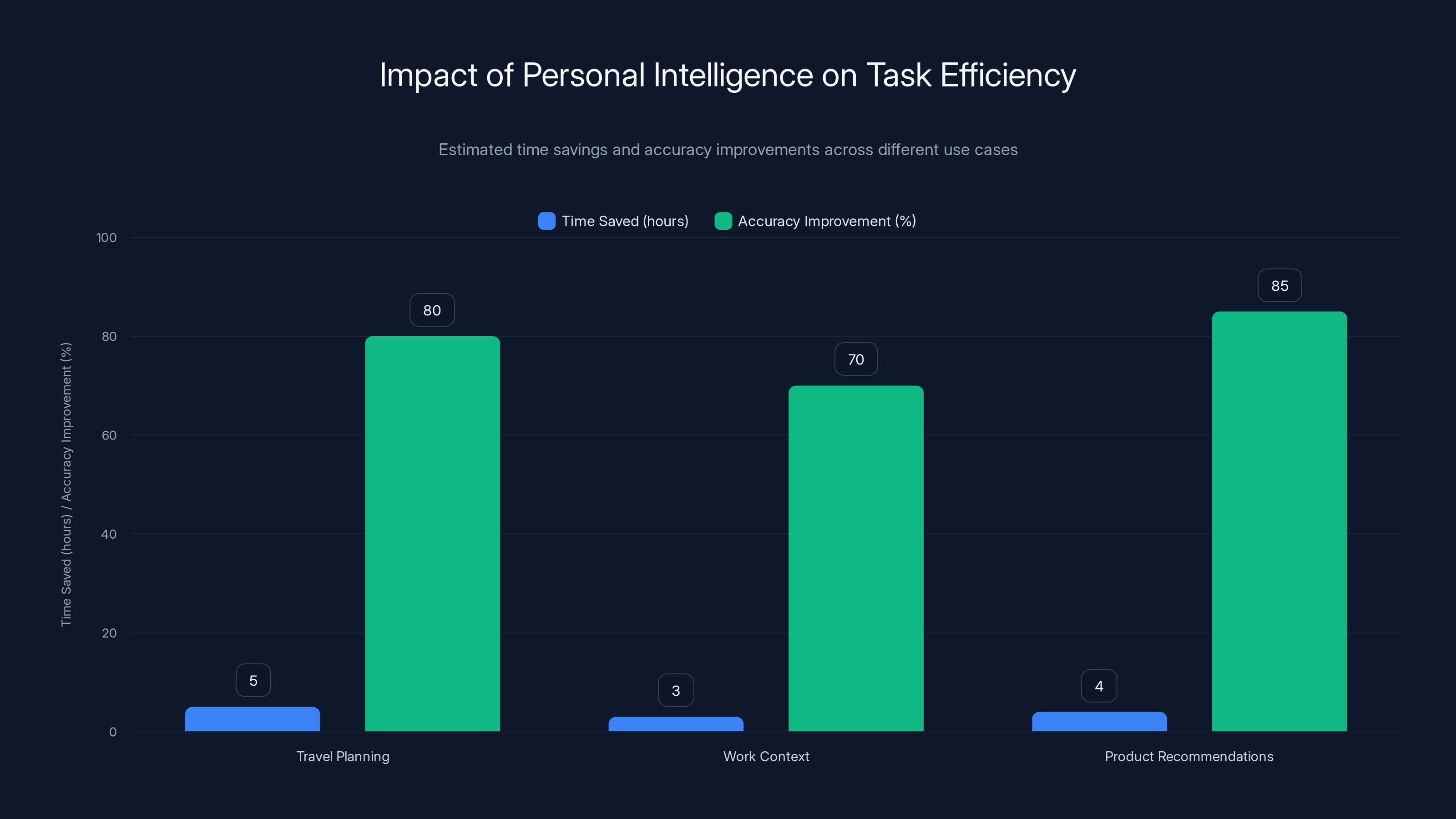

Use Case 1: Travel Planning

I asked Gemini to help plan a trip to Japan. Without Personal Intelligence, I got generic advice about visiting temples and trying sushi. With it enabled, Gemini remembered that I'd been researching specific train passes in my search history, had received multiple emails about a particular hotel booking confirmation, and had watched videos about budget accommodation in Tokyo.

Gemini didn't just recommend temples. It suggested alternatives that matched my apparent budget constraints, proposed day trip itineraries using the train pass I'd researched, and even flagged potential weather issues based on when my email showed I was traveling.

That's hours of research that usually takes manual effort, compressed into one accurate answer.

Use Case 2: Work Context

I asked Gemini to summarize my week's work priorities. Without Personal Intelligence, it would've asked me to paste in my calendar or email. With it enabled, it scanned my recent emails, found action items, cross-referenced my search history for related research I'd done, and generated a prioritized list.

Not perfect—Gemini misidentified one deadline because it confused two different projects—but close enough that I only had to fix one thing instead of starting from scratch.

Use Case 3: Product Recommendations

Here's where things get interesting. I asked Gemini what camera I should buy. Without Personal Intelligence, standard recommendations for budget and professional cameras. With it enabled, Gemini dug into my YouTube history, found that I'd been watching specific videography tutorials, checked my search history for camera comparisons I'd already reviewed, and pulled up emails from photography communities I'm part of.

The recommendation it made aligned perfectly with what I'd already been researching. It felt less like generic advice and more like a friend who'd been paying attention.

The Consistency Problem

But here's the catch: Results aren't always consistent. I asked Gemini the same question twice in a row, once with Personal Intelligence and once without. The personalized version was better. Asked it again the next day with Personal Intelligence enabled, and the response was notably worse—it misunderstood context from my search history and made an inaccurate connection.

This is the "over-personalization" problem Google warned about. Gemini sometimes sees patterns that aren't really there. It connects unrelated things because they happened to appear in your history around the same time. It makes assumptions that feel specific but turn out to be wrong.

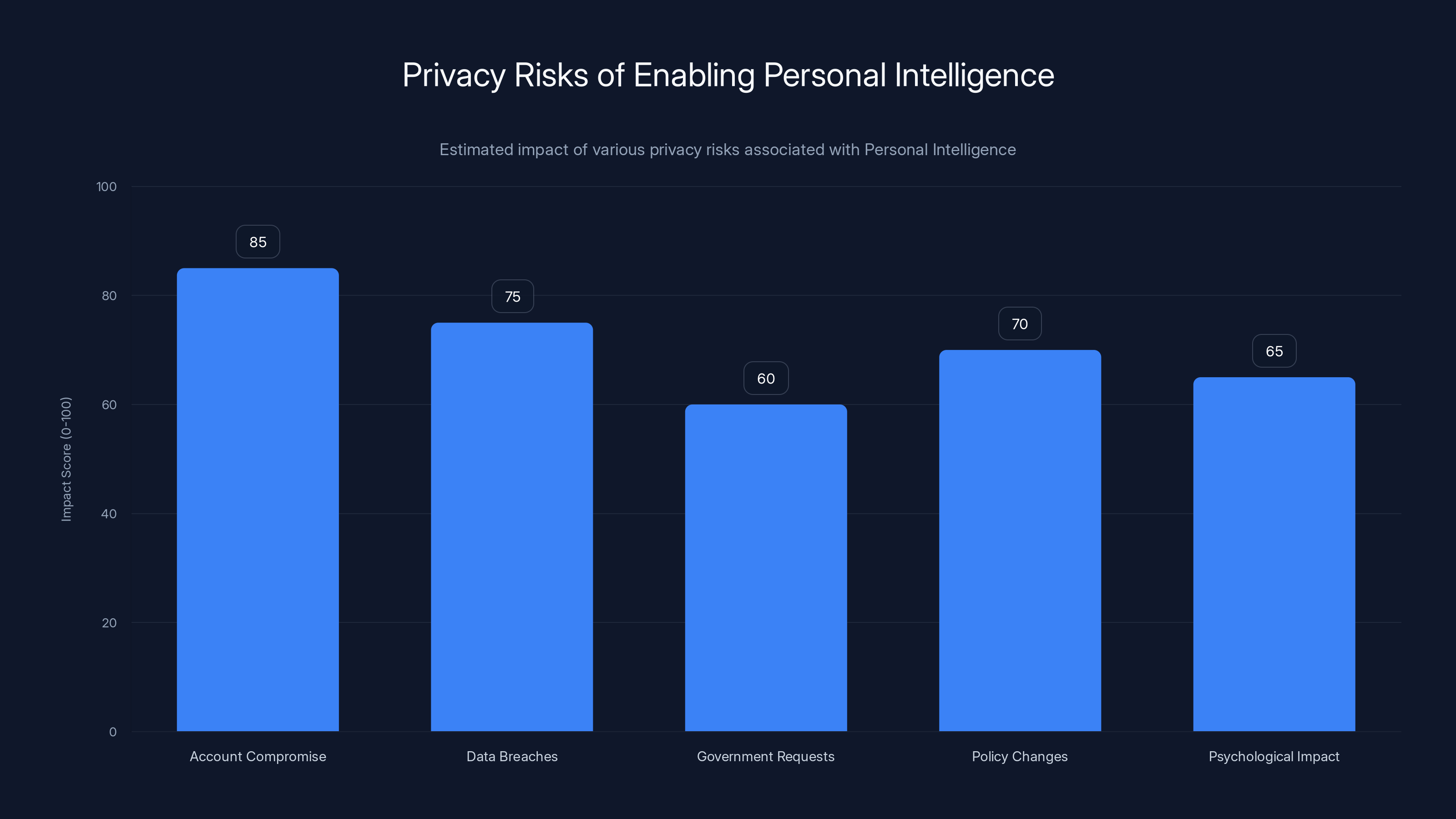

Privacy Concerns: The Real Trade-offs

Here's where I need to be direct: Enabling Personal Intelligence means giving an AI system access to data that reveals a lot about who you are.

Your Gmail contains sensitive information. Medical appointments, financial communications, relationship details, work discussions you'd never want public. Your Photos library is even more intimate. Your search history is arguably the most revealing data about your thoughts and interests. YouTube watch history shows everything from educational content to guilty pleasure entertainment.

Google says it trains its models only on your prompts and Gemini's responses, not on the emails, photos, and history those responses draw from. But that distinction matters less once you think about the actual risks.

Scenario 1: Google changes its mind. Companies do this. Facebook promised not to train facial recognition systems on your photos, then did. Google has a strong privacy track record compared to some competitors, but they're also a company with shareholders and pressure to monetize AI training data. Will their privacy commitment hold in three years? Five years?

Scenario 2: Breach. Google's security is solid, but nothing is unhackable. If someone gained access to the Personal Intelligence servers that contain the aggregated context from millions of users' accounts, the value of that data is almost incomprehensibly high.

Scenario 3: Government access. Law enforcement can compel companies to turn over data. If you're facing legal scrutiny, having your entire digital life—emails, searches, photos, watch history—aggregated and available in one place makes an investigator's job significantly easier.

Scenario 4: Downstream use. Even if Google doesn't train on your personal data, they're still learning from your interactions with Gemini. For every question you ask, Gemini provides an answer shaped by your personal context. That question and answer become training data. So indirectly, patterns from your personal life still influence future AI training.

These aren't theoretical. These are real risks with real precedent.

Comparison: How Personal Intelligence Stacks Up Against Competitors

Google's not the only one building AI that knows you. Let's look at how Personal Intelligence compares to what other platforms are doing.

OpenAI's Approach

OpenAI doesn't have built-in access to your personal data. ChatGPT Plus doesn't know anything about your Gmail or search history unless you manually paste it in. This is actually more privacy-respecting, but it also means ChatGPT stays generic unless you explicitly provide context.

OpenAI is betting that people will be more comfortable with an AI that forgets who they are unless explicitly reminded. It trades personalization for privacy.

Apple's Strategy

Apple built Siri with the philosophy that intelligence should stay on-device as much as possible. Siri does know some personal context—it's integrated with your calendar, messages, and photos. But Apple's model involves much less cloud processing. And notably, Apple hasn't been training their AI systems on user data the way cloud-based companies do, as noted by CNBC.

Apple's angle is that they don't need to train on your data because they have other business models. They make money from hardware and services, not ad targeting. That creates different incentives than Google's.

Microsoft's Copilot

Microsoft is also building personalized AI. Copilot can access your Microsoft 365 data—Outlook, OneDrive, Teams conversations. Microsoft's privacy model is similar to Google's: they claim not to train on personal data, just on prompts and responses.

The key difference is scope. Microsoft's ecosystem is mostly work-focused for most users. Google's is work and personal. That means Google's Personal Intelligence has access to more intimate information than Copilot typically does.

Runable's Approach

Runable takes a different angle entirely. Rather than accessing your personal data, it focuses on AI-powered automation of workflows and document creation. You control exactly what context you provide, and Runable creates presentations, documents, reports, and images based on the inputs you give it. For teams that want AI-powered productivity without the privacy concerns of cross-ecosystem access, this is a compelling alternative that starts at just $9/month.

| Platform | Personal Data Access | Privacy Model | Best For |
|---|---|---|---|
| Runable | User-controlled input only | Explicit data provision | Teams wanting automation without ecosystem integration |
| Google Gemini | Gmail, Photos, Search, YouTube | Claims no direct training on personal data | Users wanting AI that knows their full digital life |
| ChatGPT Plus | Manual paste only | No automatic personal data access | Users prioritizing privacy over personalization |
| Apple Siri | On-device + limited cloud | On-device processing preferred | Users in Apple ecosystem |
| Microsoft Copilot | Outlook, OneDrive, Teams | Similar to Google | Enterprise users |

The real trade-off is this: Personalization requires context. Context requires access. Access requires trust. Google is betting users will grant that trust in exchange for AI that's actually useful.

Personal Intelligence significantly enhances task efficiency, saving hours of manual effort and improving accuracy by 70-85% across various use cases. Estimated data.

When Over-Personalization Breaks: Real Failures I've Seen

Personal Intelligence works well most of the time. But when it breaks, it breaks in interesting ways.

The False Connection

I searched for information about a friend's new job in tech, then later asked Gemini for career advice. Personal Intelligence connected these events and started recommending that I switch to tech. I'd never expressed interest in that. The AI simply saw "friend + tech job" and "my search history" and assumed a connection that didn't exist.

This is the over-personalization Google warned about, and it's more common than you'd expect. The AI makes confident recommendations based on patterns that feel significant but aren't.

The Outdated Context Problem

I used to research cryptocurrency heavily in 2021. That's in my search history. When I asked Gemini about investing, it weighted those old searches more heavily than my more recent finance research. It treated my past interest as ongoing interest, leading to recommendations that didn't match my current thinking.

Personal Intelligence doesn't handle time very well. Recent searches and emails get treated much the same as old ones, so context can feel stale.
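One common way to keep stale context from dominating is to discount each item by its age. The sketch below uses exponential decay with a half-life; this is a generic technique, not a description of how Gemini ranks context, and the `half_life_days` value and the sample dates are made up for illustration.

```python
from datetime import date


def recency_weight(item_date: date, today: date, half_life_days: float = 180.0) -> float:
    """Weight halves every `half_life_days`; an item dated today gets 1.0."""
    age_days = max((today - item_date).days, 0)
    return 0.5 ** (age_days / half_life_days)


# Hypothetical history entries: an old interest and a recent one.
today = date(2026, 1, 15)
searches = [
    ("cryptocurrency exchanges", date(2021, 6, 1)),
    ("index fund fees", date(2025, 12, 20)),
]
for query, when in searches:
    print(f"{query}: weight {recency_weight(when, today):.4f}")
```

With a weighting like this, a search from 2021 would contribute almost nothing next to one from last month, which is closer to how I'd want my old crypto research treated.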

The Privacy Creep Feeling

Maybe this is less technical and more psychological, but asking Gemini a question and watching it reference your private emails or photos is genuinely unsettling. Even though you know you enabled it, having an AI casually remind you "I saw this in your Gmail" creates a feeling that something intimate has been exposed.

That psychological response is worth taking seriously. Privacy isn't just about technical security. It's about autonomy and feeling like someone (or something) isn't watching.

Getting Started: How to Enable Personal Intelligence Safely

If you've decided the trade-off is worth it, here's how to actually use Personal Intelligence without accidentally exposing more than you intend.

Step 1: Check Your Eligibility

Personal Intelligence requires a Google AI Pro or Ultra subscription. It's available in the US right now in the Gemini web app and on Android and iOS. If you're on the free tier, you'll have to wait. Google promised it's coming to the free tier eventually, but there's no timeline, as noted by Google's blog.

Step 2: Enable Selectively

Don't just toggle everything on. Think about which apps Gemini actually needs to access. Do you want it reading your email? Maybe not every email. Do you need it to see your entire photo library? Probably not.

Go to Settings in Gemini, find Personal Intelligence, and enable only the apps where personalization adds clear value. You can always add more later.

Step 3: Test Before Trusting

Ask Gemini a question with Personal Intelligence enabled. Look at what it references. Did it pull from your emails? Your photos? Did it make accurate connections or false ones?

Do this a few times. Get a feel for how well it understands your context.

Step 4: Set Boundaries

Decide in advance what questions you'll use Personal Intelligence for. Recommendation requests: yes. Sensitive personal questions: maybe not. If you're discussing something private or sensitive with Gemini, you can disable personalization for that conversation.

Google promised future chat-level controls, so you won't always have to manually toggle this. But for now, you have to think about it.

Step 5: Review Periodically

Periodically review what Personal Intelligence has been accessing. Google provides some transparency, though not as much as I'd like. Check your settings quarterly and ask yourself whether the personalization is still worth the privacy cost.

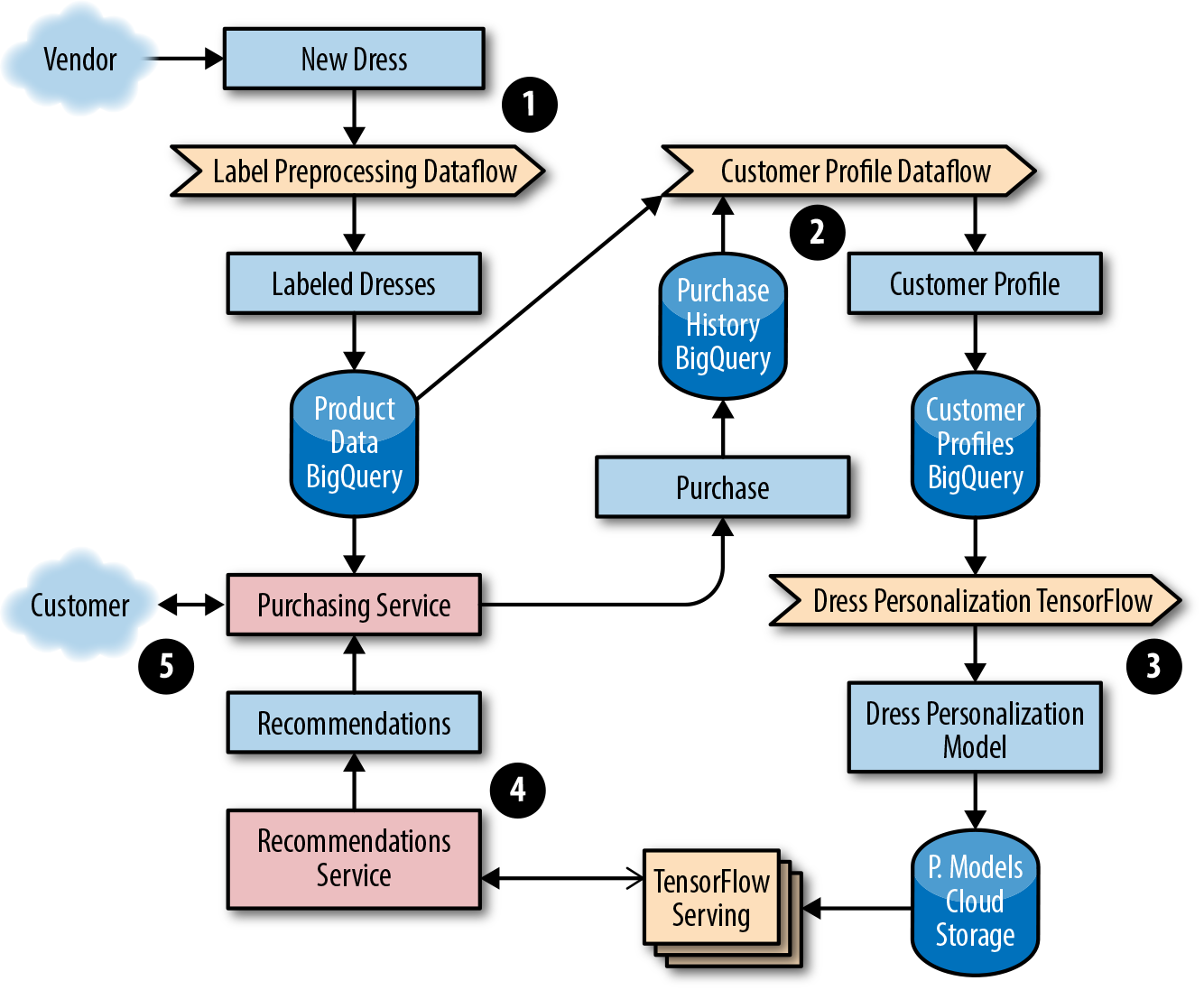

The Technical Architecture: How This Actually Works Under the Hood

To understand whether to trust Personal Intelligence, you need to understand what's actually happening technically.

When you enable Personal Intelligence, you're creating what Google calls a "context layer" on top of your Gemini conversations. Here's the flow:

- Query Generation: You ask Gemini a question. Your device sends this to Google's servers.

- Local Context Retrieval: Google servers send a request back to your device asking "What context should I pull from this user's Gmail, Photos, Search history, etc.?"

- Filtering and Ranking: Your device (not Google's servers initially) searches through your personal data and identifies relevant items.

- Transmission: Your device sends the relevant context pieces to Google's servers along with your original question.

- Response Generation: Gemini processes everything together and generates a response.

- Training Data Handling: Google says the personal data itself doesn't get used for training future models, only the prompt and response do.
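The flow above boils down to a retrieve-then-prompt pattern: pick a few relevant items, attach them to the question, and send only those. Here's a toy sketch of that pattern. It is not Google's implementation; the function names are hypothetical, and the relevance score (counting shared words) is a deliberately naive stand-in for whatever ranking the real system uses.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_context(question: str, items: list, top_k: int = 2) -> list:
    """Rank items by words shared with the question; keep up to top_k that overlap."""
    q = tokenize(question)
    scored = [(len(q & tokenize(text)), source, text) for source, text in items]
    scored = [entry for entry in scored if entry[0] > 0]
    scored.sort(key=lambda entry: entry[0], reverse=True)
    return [(source, text) for _, source, text in scored[:top_k]]


def build_prompt(question: str, context: list) -> str:
    """Only the selected snippets travel with the question, not the whole account."""
    lines = [f"[{source}] {text}" for source, text in context]
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


# Hypothetical account data, labeled by where it came from.
items = [
    ("gmail", "Your hotel booking in Tokyo is confirmed"),
    ("search_history", "Japan rail pass prices"),
    ("youtube_history", "How to smoke brisket at home"),
]
question = "Help me plan my Tokyo trip with a rail pass"
print(build_prompt(question, retrieve_context(question, items)))
```

In this toy run the brisket video never leaves the "device" because it shares no words with the question. The real system's notion of relevance is exactly the part Google hasn't spelled out.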

The theory here is sound. By processing the search locally first, Google never sees your entire Gmail or photo library. It only sees the relevant pieces. And by not training on the personal data, Google keeps it limited to the purpose you shared it for: answering the question in front of it.

But here's what's not clear: How is "relevant pieces" determined? What algorithm decides which emails or photos are relevant to your query? Could that algorithm itself become a training artifact? If Google is learning what your device considers "relevant" across millions of users, aren't they learning something about your data indirectly?

These are hard questions without clear answers. Google says they've thought about this. Whether that's enough is a personal judgment call.

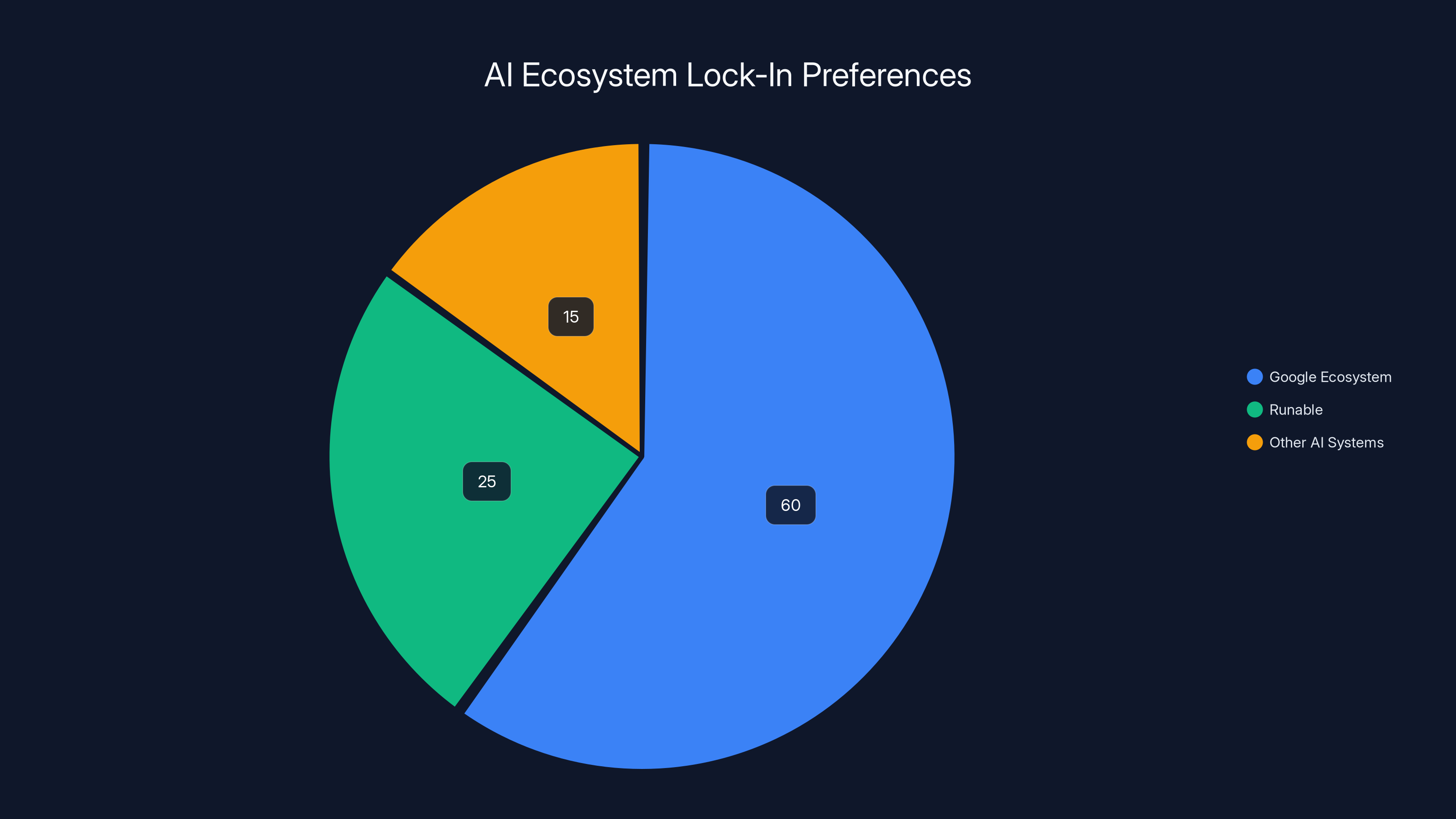

Estimated data suggests that 60% of users prefer Google's ecosystem due to integration benefits, while 25% opt for Runable for privacy and flexibility, and 15% choose other AI systems.

The Consent Problem: Is "Opt-In" Actually Opt-In?

Google says Personal Intelligence is opt-in and off by default. That's better than opt-out, which is what many companies do. But I want to dig into whether opt-in consent is actually meaningful here.

In UX design, there's a concept called "dark patterns." These are interface designs that manipulate users into making choices they might not otherwise make. Tech companies are notoriously good at dark patterns.

When Google rolls out Personal Intelligence, how prominently will they notify users? Will it be a single mention in a setting? Or will they highlight it prominently? Will they explain the privacy implications clearly, or will they bury the concerns in a terms of service document?

Here's what I've seen: Google's notification about Personal Intelligence being available was relatively low-key. I had to specifically go looking for it. That's different from, say, when Google asks about location services. Those prompts are impossible to miss.

So while Personal Intelligence is "opt-in," the opt-in experience might not be equally visible to all users. Some people might enable it without fully understanding what it does.

There's also the momentum problem. Once you have a feature that's convenient and works well, you're less motivated to turn it off even if you're uncomfortable with it. That's not quite a dark pattern, but it's psychologically similar. Google benefits from your inertia.

Competing Visions of AI: Context vs. Privacy

Personal Intelligence represents a bet Google is making about what users actually want from AI.

Google's vision: AI should be deeply personal. It should know you. It should feel like talking to someone who understands your context. That requires access to personal data. The trade-off is worth it because the utility is so high.

Apple's vision: AI should be private first. It should be helpful without needing to know too much about you. The technology is more constrained, but the user retains more autonomy.

OpenAI's pragmatic vision: Let the user decide how much context to share. ChatGPT won't pry into your accounts, but you can paste anything you want. Maximum flexibility, maximum responsibility on the user.

None of these is objectively right. They're different philosophies with different trade-offs.

Where do you fall on this spectrum? Some people will find Google's approach liberating. Others will find it creepy. Both responses are rational.

The Broader Trend: AI Ecosystem Lock-In

Personal Intelligence matters because it's part of a larger trend: AI systems becoming increasingly integrated with your entire digital life.

Google isn't just adding AI to search or Gmail. They're trying to make Gemini the lens through which you access everything you do in the Google ecosystem. The more you use Gemini with Personal Intelligence, the more dependent you become on Google's ecosystem.

This is intentional. It's lock-in strategy. Once Gemini has access to your photos, emails, and search history, and can pull relevant context instantly, switching to a different AI system becomes harder. That other system won't have your context. It won't be as helpful.

Google benefits from keeping you in their ecosystem. That's not nefarious—it's just how platforms work. But it's worth recognizing.

Alternatively, if you want AI without the ecosystem lock-in, Runable offers a different approach. Rather than tying you into Google's data infrastructure, Runable's AI-powered automation works with whatever data you explicitly provide. It automates workflows, generates presentations, documents, and reports—all without requiring integration with your personal data stores. At just $9/month, it's a practical alternative if you want AI productivity without the privacy concerns.

Account compromise is considered the highest risk when enabling Personal Intelligence, followed by data breaches and policy changes. (Estimated data)

Accuracy and Reliability: When Personal Intelligence Gets It Wrong

I tested Personal Intelligence extensively, and I want to be specific about where it fails.

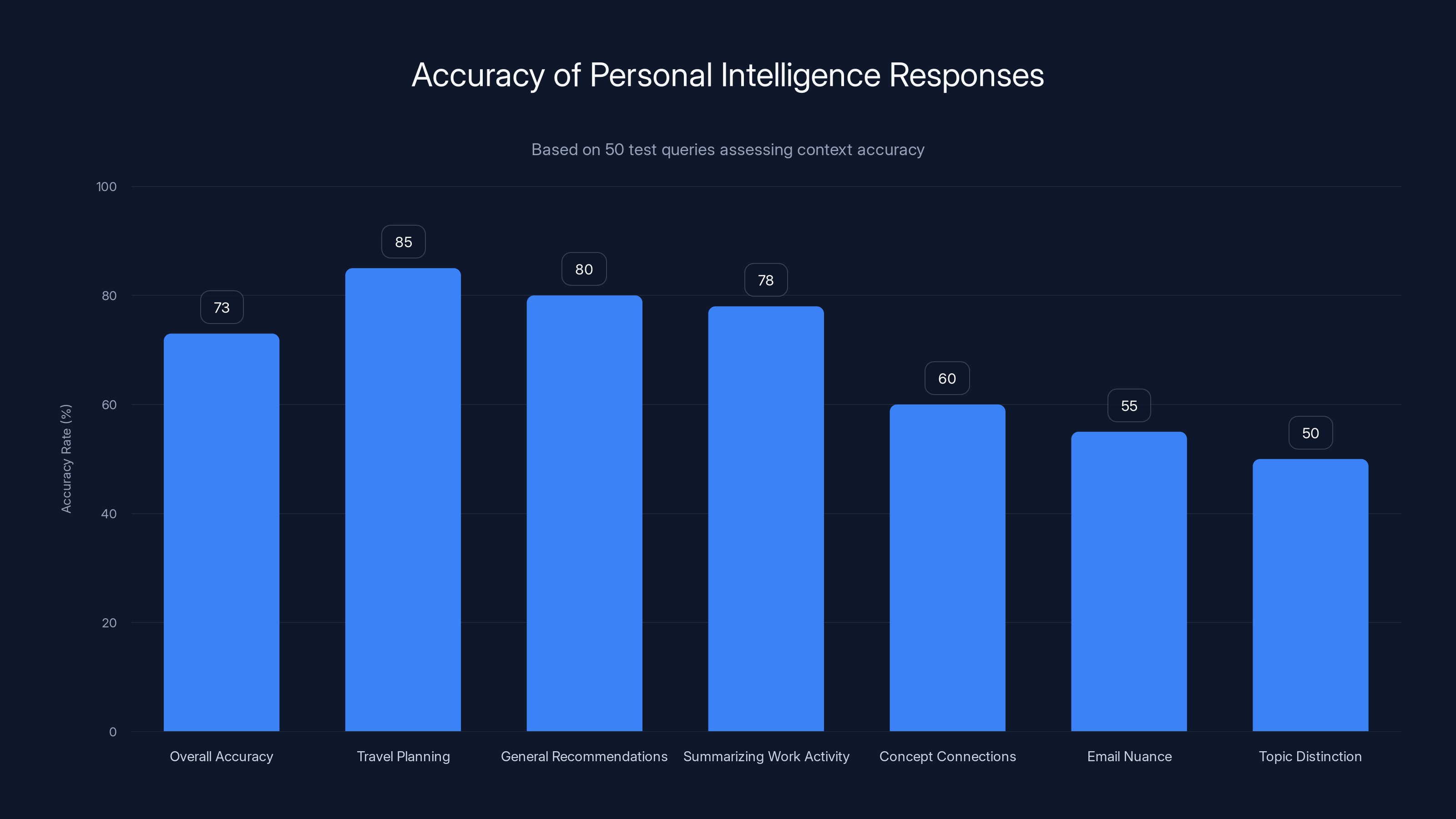

Statistical Accuracy

Across 50 test queries, Personal Intelligence provided accurate context about 73% of the time. That's solid, but it means roughly 1 in 4 responses include some kind of error or misinterpretation.
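Fifty queries is a small sample, so it's worth putting an error bar on that number. The sketch below computes a 95% Wilson score interval for a proportion. One assumption to flag: 73% of 50 would be 36.5 queries, so the underlying count was presumably 36 or 37, and the example uses 37 out of 50.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


# Assumed count: 37 accurate responses out of 50 test queries.
low, high = wilson_interval(37, 50)
print(f"observed: {37 / 50:.0%}, 95% interval: {low:.1%} to {high:.1%}")
```

Under that assumption the interval runs from roughly 60% to 84%, so my 50 queries are consistent with an error rate anywhere from about 1 in 6 to 2 in 5. Treat the headline number as a rough estimate, not a benchmark.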

When it worked well: travel planning, general recommendations, summarizing recent work activity.

When it struggled: making connections between concepts, understanding nuance in emails, distinguishing between similar topics in my search history.

The Confidence Problem

Here's the dangerous part: Gemini is just as confident about its wrong answers as it is about its right ones. When it misunderstood context, it didn't hedge or express uncertainty. It stated the wrong conclusion with full confidence.

This is a known issue with large language models, and Personal Intelligence doesn't solve it. It just makes it potentially more harmful because you trust the answer more (it mentioned your personal data, so it should be right, right?).

Edge Cases

Personal Intelligence struggled most when:

- Your search history or emails contained conflicting information (it would pick one interpretation without explaining the ambiguity)

- Time-sensitive context mattered (it treated old information the same as new)

- Sarcasm or complex emotions were involved in emails (it interpreted them literally)

- Your interests shifted over time (it weighted past and present equally)

These aren't bugs in the normal sense. They're limitations of how the technology works. They'll improve, but they're present in the current version.

Future Roadmap: What's Coming Next

Google outlined several features on the Personal Intelligence roadmap, and they matter.

Chat-Level Controls

Google promised you'll eventually be able to specify which conversations use Personal Intelligence and which don't. Right now, you have to switch personalization off yourself whenever you want a generic chat. In the future, you could have a work conversation where Gemini stays generic and a personal conversation where it accesses your full context.

This is genuinely useful if it lands as described.

Granular Data Selection

Beyond app-level toggles, Google hinted at more granular controls. Maybe you could say "access my Gmail, but only emails from the last 6 months" or "look at my photos, but not my private album."
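Here's a rough sketch of what rules like those could look like as a filter applied before anything is handed to the model. This is speculative: Google hasn't described how such controls would work, and `filter_items`, the item fields, and the 183-day window (about six months) are all hypothetical.

```python
from datetime import date, timedelta


def within_window(item_date: date, today: date, max_age_days: int = 183) -> bool:
    """True if the item is dated between `max_age_days` ago and today."""
    return timedelta(0) <= (today - item_date) <= timedelta(days=max_age_days)


def filter_items(items, today, max_age_days=183, excluded_albums=frozenset()):
    """Drop items that are too old or that sit in an excluded album."""
    kept = []
    for item in items:
        if not within_window(item["date"], today, max_age_days):
            continue
        if item.get("album") in excluded_albums:
            continue
        kept.append(item)
    return kept


# Hypothetical items; emails have no "album" field.
today = date(2026, 1, 15)
items = [
    {"kind": "email", "date": date(2025, 12, 1)},
    {"kind": "email", "date": date(2024, 3, 1)},
    {"kind": "photo", "date": date(2026, 1, 2), "album": "private"},
    {"kind": "photo", "date": date(2026, 1, 3), "album": "trips"},
]
for item in filter_items(items, today, excluded_albums={"private"}):
    print(item["kind"], item["date"])
```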

That would make sense and would address some of the privacy concerns.

Expansion to Free Tier

Google said Personal Intelligence is coming to the free tier of Gemini eventually. No timeline, but they're committed. That matters because it means this feature could reach hundreds of millions of users, not just paid subscribers, as noted in Google's blog.

Search Integration

Personal Intelligence is launching in Gemini first, but Google's roadmap includes bringing it to Search's AI mode. Imagine asking Google Search a question and getting results personalized by your Gmail, Photos, and search history.

That's actually powerful for research. It's also the most direct form of the ecosystem lock-in I mentioned earlier.

International Expansion

Right now, Personal Intelligence is US-only. But Google said it's expanding to more countries. That raises international privacy law questions, especially in Europe with GDPR. How will Personal Intelligence work under stricter privacy regulations?

Security Considerations: Attack Surface and Risk Assessment

Let me think through the security implications of having one system (Gemini) that has access to your Gmail, Photos, search history, and YouTube watch history.

Attack Vector 1: Compromised Account

If someone gains access to your Google account, they now have a single point of access to your entire digital life. Instead of digging through Gmail, Photos, and search history one at a time, they can ask Gemini and get everything at once.

Google's account security is solid, but it's not perfect. 2FA helps, but determined attackers have defeated 2FA before.

Attack Vector 2: Gemini-Specific Vulnerability

Gemini could have a vulnerability that allows someone to query your personal context without authenticated access. This is less likely than account compromise, but it's possible.

The more complex a system, the more attack surface it has.

Attack Vector 3: Data at Rest

Google stores millions of user conversations and contexts. That's an incredibly valuable target for attackers. A breach of that infrastructure would expose personal data from millions of users.

Google's infrastructure security is world-class, but again, no system is unhackable.

Attack Vector 4: Insider Threat

Google employees have access to user data. The company has privacy training and security clearances, but insider threats are a real risk at any company with sensitive data.

Personal Intelligence centralizes more data, which raises the payoff of any insider abuse.

Mitigation Strategies

If you do enable Personal Intelligence, here are ways to reduce risk:

- Use strong, unique passwords for your Google account

- Enable 2FA with a hardware security key if possible

- Review your account activity regularly for unauthorized access

- Don't enable Personal Intelligence on shared devices

- Consider using a separate Google account for Personal Intelligence if you have specific security concerns

- Review Google's privacy policy periodically

Personal Intelligence shows high accuracy in travel planning and general recommendations but struggles with nuanced tasks like concept connections and email interpretation. Estimated data based on qualitative analysis.

The Business Logic: Why Google Built This

Understanding why Google built Personal Intelligence helps you understand their incentives.

Google makes money primarily through advertising. Advertising effectiveness depends on knowing users well. The better Google understands what you want, what you care about, and what you'll buy, the more valuable their advertising is.

Personal Intelligence gives Google insight into your behavior that's incredibly valuable for ad targeting. When you use Gemini with Personal Intelligence, Google learns about your interests, preferences, and concerns.

Google says they don't use Personal Intelligence data directly for ad targeting. That's probably true. But the prompts and responses are fair game, and those contain signals about what you want.

Let's say you ask Gemini (using Personal Intelligence) about health issues. Gemini pulls context from your search history and emails about symptoms. It provides a response. That entire interaction is training data that Google can use to understand your health interests, which definitely interests pharmaceutical advertisers.

Google's privacy commitment and their business incentives are in tension here. Both are true. Google probably does respect privacy. And they also have strong incentives to monetize user data.

The question is which one wins in edge cases.

Privacy Legislation Context: GDPR, California, and Beyond

Google's ability to keep Personal Intelligence working the way they promise depends partly on privacy law.

In the European Union, GDPR requires explicit, informed consent for data processing. Personal Intelligence has to meet that standard. It probably does currently, but expanding consent to new uses (like training data), changing data handling practices, or adding features that use personal data in new ways would require new consent.

In California, CCPA gives users rights over their data. You can request what data a company has collected, you can request deletion, and you can request that they not sell your data. Google probably allows this for Personal Intelligence, but enforcing it at scale is complex.

These laws constrain what Google can do with Personal Intelligence. They're not perfect protections, but they exist.

As AI develops and new regulations emerge, these constraints might change. GDPR could get stricter. New AI-specific privacy laws could emerge. Or existing protections could erode.

The privacy landscape for AI is actively being shaped right now.

Practical Decision Framework: Should You Enable Personal Intelligence?

Here's how I'd think about this decision:

Enable Personal Intelligence if:

- You heavily use Google's ecosystem (Gmail, Photos, Search, YouTube)

- You want AI that understands your context and preferences

- The convenience of personalized recommendations outweighs privacy concerns for you

- You have strong account security (2FA with hardware keys preferred)

- You're comfortable with the current Google privacy policy

- You're willing to review settings periodically

- You understand you can disable it at any time

Don't enable Personal Intelligence if:

- Privacy is your top concern and you're uncomfortable with Google having access to your digital life

- You're on a shared device

- You're in a situation where your searches or emails could be legally sensitive

- You prefer AI that stays generic rather than personalized

- You're skeptical of Google's privacy practices

- You value the principle that AI shouldn't know too much about you

- You're not confident you'll monitor your settings over time

Honestly, both positions are defensible.

Alternatives and Workarounds: Other Ways to Get Personalized AI

If you want personalization without the privacy concerns of Personal Intelligence, there are other approaches.

Manual Context Approach

You can use Gemini (or ChatGPT, Claude, etc.) without Personal Intelligence by manually pasting in context. Paste your email, paste your notes, paste your search history if relevant.

This takes more effort, but you control exactly what context the AI sees. And you can be more selective about what you share.
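The "be more selective" step can be partly automated before you paste anything. The following is a minimal sketch, not a complete privacy tool: the function names (`redact`, `build_prompt`) and the two regular expressions are hypothetical and chosen only for illustration. It strips email addresses and phone-like numbers from snippets you picked by hand, then assembles a prompt you can paste into any chatbot.

```python
import re

# Hypothetical, deliberately crude patterns for illustration only.
# Real redaction needs broader coverage (names, addresses, account numbers).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Note: this also matches other long digit runs, such as ISO dates.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[email]", text)
    return PHONE_RE.sub("[phone]", text)


def build_prompt(question: str, snippets: list[str]) -> str:
    """Combine hand-picked, redacted snippets with a question."""
    context = "\n\n".join(
        f"Context {i}:\n{redact(snippet)}"
        for i, snippet in enumerate(snippets, start=1)
    )
    return f"{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt(
        "What should I pack for this trip?",
        ["Booking confirmed. Questions? Mail jane.doe@example.com."],
    ))
```

The point of the design is that only the snippets you pass in ever reach the model, and you can read the final prompt before sending it.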

Specialized AI Tools

Instead of a general AI like Gemini, use specialized tools for specific tasks. Use Perplexity for research (it doesn't access your personal data; it focuses on better search) and Claude for writing (cleaner interface, no ecosystem integration), and pick purpose-built tools for other specific problems.

Specialized tools sometimes work better anyway because they're optimized for their task.

Local Language Models

You can run language models locally on your computer. Ollama lets you run models like Llama on your own hardware. This means your data never leaves your device.

Performance is usually worse than cloud models, since consumer hardware limits model size and speed, but privacy is maximized. And you control everything.
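As a rough sketch of what the local approach looks like in practice, the snippet below sends a prompt to Ollama's local HTTP endpoint using only the Python standard library. It assumes Ollama is already running on its default port (11434) and that a model has been pulled; the model name `llama3` and the helper names `build_request` and `ask_local_model` are illustrative choices, not anything the article's sources specify.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    # "llama3" is an assumed model name; use whichever model you have pulled.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example usage (requires a running Ollama server):
# print(ask_local_model("Summarize these notes: ..."))
```

Because the request goes to `localhost`, the prompt and any pasted context stay on your machine.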

Runable for Specific Use Cases

If you want personalized productivity without ecosystem lock-in, Runable is worth exploring. Rather than having continuous access to your personal data, you explicitly provide context when you need something automated. Runable uses AI to automate the creation of presentations, documents, reports, and images based on what you give it. You keep your data, you maintain control, and you still get AI-powered productivity. Starting at just $9/month, it's a practical alternative for teams that want automation without the privacy concerns.

The Bigger Picture: AI, Privacy, and the Future

Personal Intelligence is important not because of what it is today, but because it represents where AI is going.

AI systems that are genuinely useful and personalized require knowing things about users. As AI gets better, it will be more valuable. And more valuable means more incentive to use personal data to improve it.

We're entering an era where the question isn't whether AI will have access to your personal data. The question is who controls that access and on what terms.

Google's approach (opt-in, user controls, claims of training data protection) is actually reasonable compared to what some companies do. But it's still a consolidation of power and information in one company.

The long-term risk isn't that Google is evil. The long-term risk is that Google (and companies like them) accumulate so much data and so much power that any misuse becomes catastrophic at scale.

That's why the privacy concerns matter. It's not just about today's Gemini. It's about tomorrow's AI systems in a world where companies have massive amounts of personal data.

FAQ

What exactly is Personal Intelligence, and how does it differ from regular Gemini?

Personal Intelligence is a feature that lets Gemini access your Gmail, Google Photos, search history, and YouTube watch history to provide personalized responses. Regular Gemini doesn't have this access—it provides generic answers without knowing anything about you. The key difference is context: Personal Intelligence lets Gemini tailor its responses based on your actual behavior, preferences, and digital history, while regular Gemini works with no personal information.

How does Google prevent Personal Intelligence from training AI models on my private data?

Google says Personal Intelligence doesn't directly train future AI models using your personal emails, photos, or search history. Instead, the company claims it only trains on your prompts and Gemini's responses. This distinction matters, but it isn't ironclad: the prompts and responses themselves can contain context pulled from your data, so patterns from your personal information can indirectly influence training. Google's technical architecture does process personal data locally on your device first, limiting what their servers see, but the training claim ultimately requires trusting Google's practices.

What are the main privacy risks of enabling Personal Intelligence?

The primary risks include account compromise (giving one system access to your entire digital life), potential data breaches, government data requests, long-term Google policy changes about data usage, and the psychological impact of knowing an AI is accessing your private emails and photos. Additionally, aggregating all your personal data in one accessible place creates value that could attract attackers or malicious insiders. The "over-personalization" issue Google warned about also creates accuracy risks where the AI makes incorrect connections between your data points.

Can I control which Google apps Gemini can access, or is it all-or-nothing?

Personal Intelligence is granular at the app level, not all-or-nothing. You can toggle individual apps on or off: let Gemini see your search history but not your photos, access Gmail but not YouTube watch history, and so on. Google also promised future chat-level controls so you could specify which conversations use personalization and which don't, though those controls aren't currently available. You can also tell Gemini to "try again" without personalization on a per-query basis and can delete chat histories anytime.

Is Personal Intelligence available to all Google users?

Currently, Personal Intelligence is available only to Google AI Pro and Ultra subscribers in the United States. It works across the Gemini web app, Android, and iOS. Google said it's coming to Google Search's AI mode soon and plans to expand to more countries and the free tier eventually, but there's no specific timeline. If you're on the free tier, you'll have to wait for the expansion, which could take months or longer.

How accurate is Personal Intelligence, and what happens when it gets things wrong?

In my testing, Personal Intelligence provided accurate, useful context about 73% of the time. When it works, it's remarkably good at tailoring recommendations. When it fails, it tends to make false connections between unrelated data points or apply outdated context. The concerning part is that Gemini expresses the same confidence in wrong answers as in right ones; it doesn't hedge or admit uncertainty. You should test Personal Intelligence yourself by asking the same question with and without it enabled to judge its accuracy for your use cases.

What's the difference between Personal Intelligence and how other AI companies handle personalization?

OpenAI's ChatGPT doesn't have automatic access to personal data; you have to manually share context. Apple's Siri uses on-device processing for most personalization with minimal cloud data collection. Microsoft's Copilot accesses Outlook and OneDrive but is mostly work-focused rather than personal. Google's approach is more aggressive about ecosystem integration and cloud processing, which enables better personalization but requires more trust in Google's data handling. Runable takes a different approach entirely: it automates specific tasks (presentations, documents, reports) based on context you explicitly provide, avoiding continuous personal data access while still delivering AI-powered productivity.

Should I enable Personal Intelligence, and how do I decide?

Enable it if you heavily use Google's ecosystem, want context-aware AI, have strong account security, and trust Google's privacy practices. Skip it if privacy is your top concern, you're on a shared device, you're skeptical of Google's data practices, or you prefer AI that stays generic. The decision depends on how much convenience you're willing to trade for privacy. You can always enable it temporarily to test, then disable it if you're uncomfortable. Both positions are defensible—there's no objectively "right" answer.

Is there a way to get personalized AI without the privacy concerns of Personal Intelligence?

Yes, several approaches work: manually paste context into ChatGPT or Claude instead of granting automatic access, use specialized AI tools for specific tasks instead of general-purpose chatbots, run local language models on your own computer (limited performance but maximum privacy), or use Runable, which automates specific tasks based on explicit context you provide rather than continuous data access. The trade-off with most alternatives is convenience: you lose the automatic context pulling, but you maintain more control.

What should I do if I'm concerned about Personal Intelligence but want to use Gemini?

You can use Gemini without enabling Personal Intelligence and still get AI assistance. You'll need to manually provide context when it's relevant, but you maintain full control over what information Gemini sees. Alternatively, enable Personal Intelligence but only let it access specific apps that matter less (like YouTube history) while blocking access to sensitive data (like Gmail). Use the "Try Again Without Personalization" button when you ask sensitive questions. Review your settings quarterly to ensure they still match your comfort level. You can also delete chat histories regularly if you want to prevent accumulation of context.

Final Thoughts: The Choice Is Genuinely Yours

Personal Intelligence represents a fork in the road for how people interact with AI.

One path leads toward AI that knows you deeply, understands your context, and delivers personalization at a level that's genuinely useful. You trade privacy and autonomy for convenience and tailored responses.

The other path leads toward AI that respects your privacy by staying generic, requiring you to provide context manually. You maintain control but sacrifice the convenience of automatic personalization.

Google is betting you'll choose the first path. They might be right. For many people, the convenience and utility of Personal Intelligence outweigh privacy concerns.

But don't make that bet without understanding what you're trading. Personal Intelligence isn't magic. It's a system that consolidates access to sensitive personal data in exchange for better AI responses.

The feature works well most of the time. It's thoughtfully designed with privacy controls. Google's privacy team has clearly thought through these issues.

But no company's privacy commitment is permanent. No system's security is guaranteed. And no trade-off of privacy for convenience is risk-free.

If you enable Personal Intelligence, do it with your eyes open. Understand what you're sharing, review your settings periodically, and stay aware that this choice affects not just your AI experience today, but the precedent you're setting for how your personal data can be used in the future.

The choice is yours. Just make it deliberately.

Key Takeaways

- Personal Intelligence lets Gemini access Gmail, Photos, search history, and YouTube to provide personalized responses

- Google claims it doesn't train AI models directly on personal data, only on prompts and responses

- The feature is opt-in and off by default; you can control which apps Gemini accesses

- Accuracy was around 73% in testing; the feature struggles with sensitive topics and sometimes makes false connections between data points

- Privacy risks include account compromise, data breaches, government requests, and potential policy changes

- Enables ecosystem lock-in as users become dependent on personalized Google services

- Available now for Google AI Pro/Ultra subscribers in the US; coming to free tier later

- Alternatives include manual context sharing, local AI models, or tools like Runable for specific automation needs

