Introduction: The Future of Photo Editing Has Arrived

Remember when editing a photo meant opening Photoshop, wrestling with layers, adjusting curves, and spending 30 minutes just to brighten your vacation pic? Yeah, those days are dying fast.

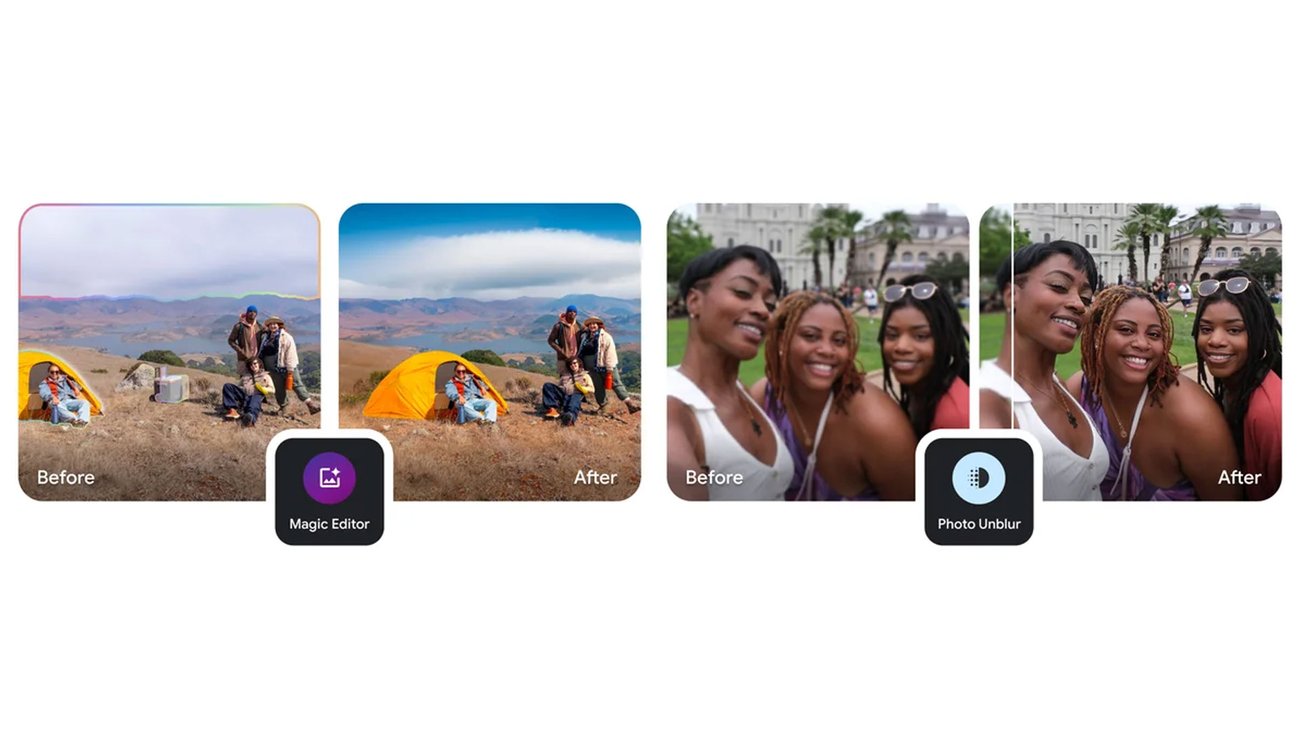

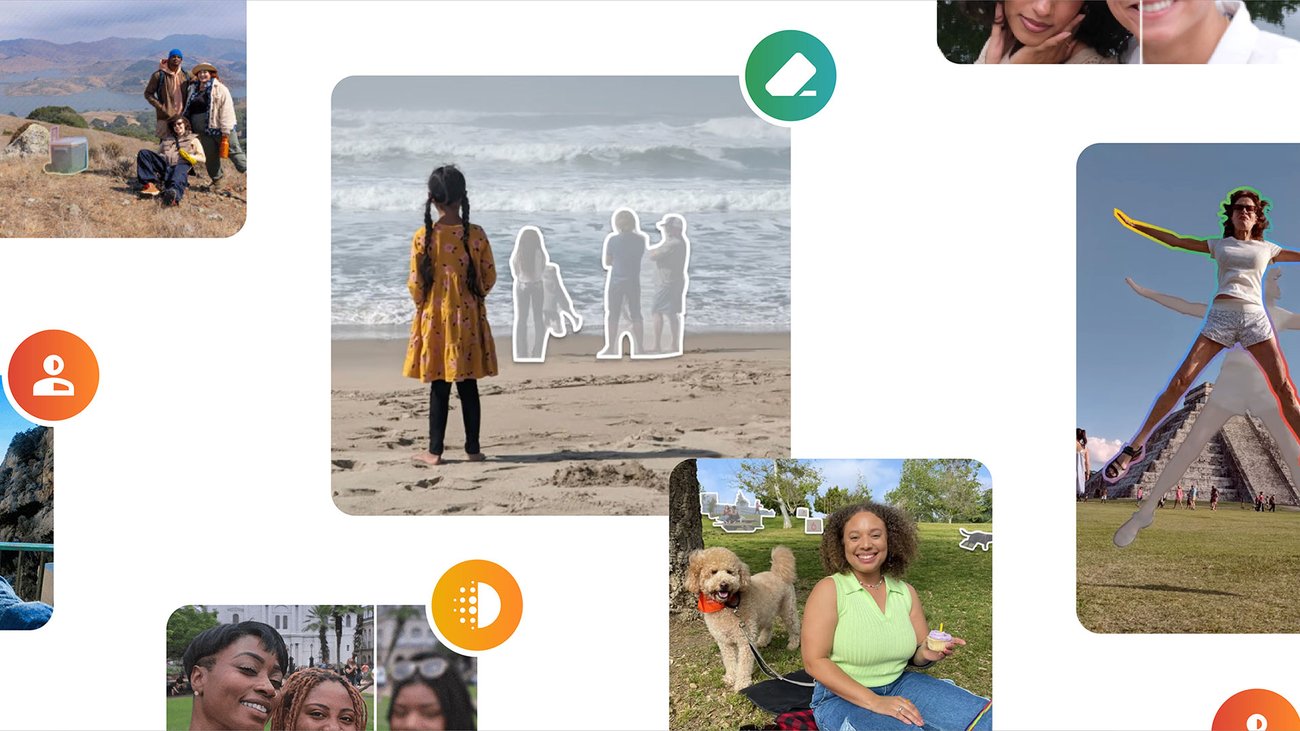

Google Photos just rolled out something genuinely game-changing: AI-powered photo editing through natural language. Instead of clicking sliders or hunting through menus, you just describe what you want. "Remove the motorcycle in the background." "Make the sky more dramatic." "Fix the red-eye." You type it, the AI does it, and you move on with your life.

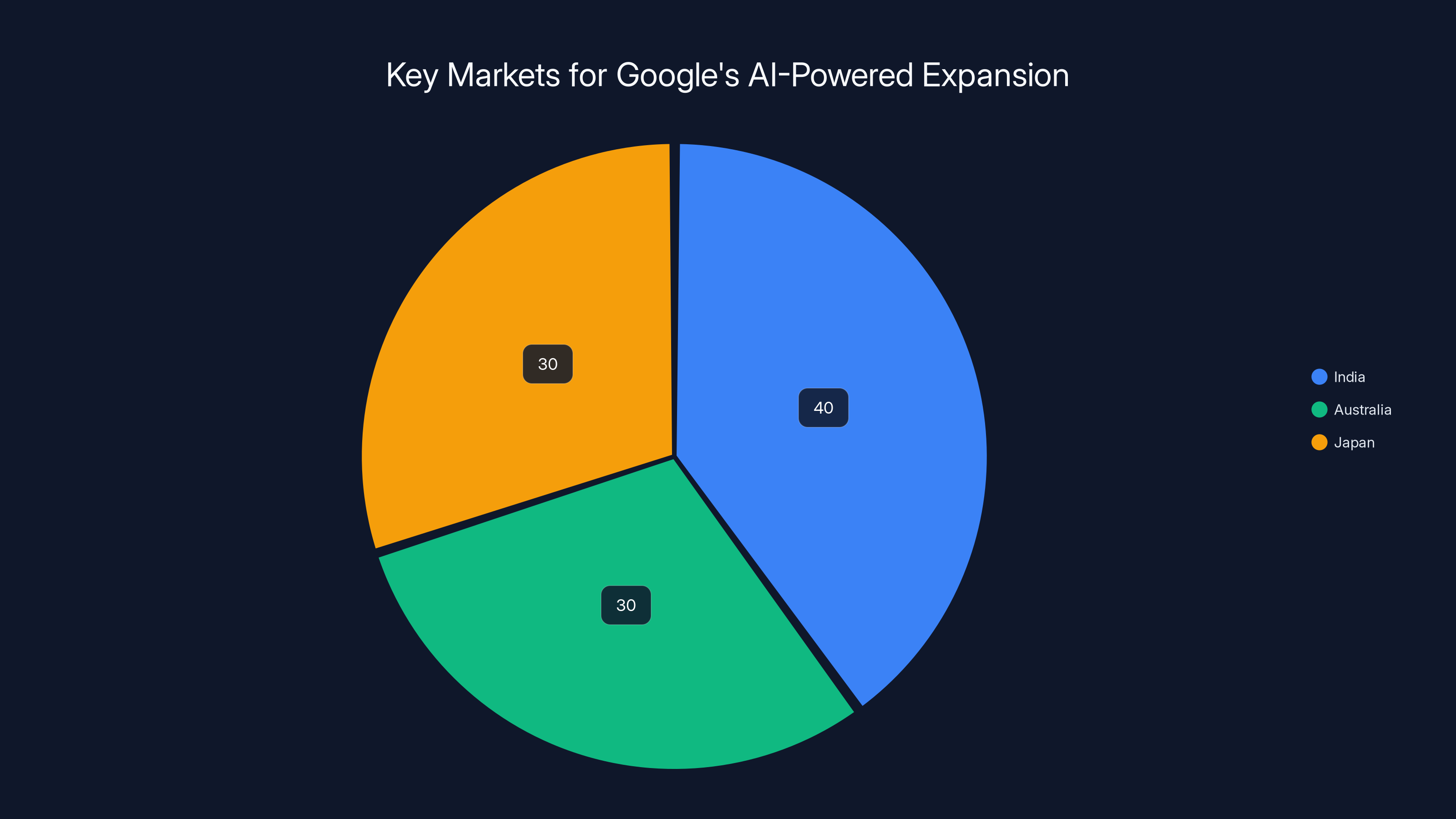

This isn't some experimental lab feature anymore. Google's expanding this to India, Australia, and Japan, which means over 2 billion Google account holders now have access to one of the smartest photo editing tools ever created. The feature arrived for Pixel 10 users in the U.S. last August, but the global expansion signals something important: AI-powered photo editing is becoming table stakes, not a luxury feature.

What makes this particularly interesting isn't just the convenience (though it's genuinely convenient). It's the shift in how technology is getting built. We're moving away from tools that force you to learn complex interfaces and toward systems that understand human intent. You don't need to know what "desaturation" means. You just say what you want.

In this deep dive, we're exploring what this means for photography, content creation, image authentication, and the broader implications of AI tools that actually work without requiring a Ph.D. in UX. We'll look at the technical mechanics, the real-world use cases, the honest limitations, and why this matters way more than it seems on the surface.

TL;DR

- Natural Language Editing: Users describe photo edits in plain text instead of using traditional sliders and tools

- AI-Powered Processing: Google's Nano Banana model handles the edits directly on-device, though submitting the prompt still requires an internet connection

- Global Expansion: Now available in India, Australia, Japan, and dozens of other markets beyond initial U.S. launch

- Content Authentication: New C2PA metadata tracking shows when images were created or AI-edited

- Specific Capabilities: The tool handles complex requests like removing objects, adjusting poses, fixing blinks, and restoring old photos

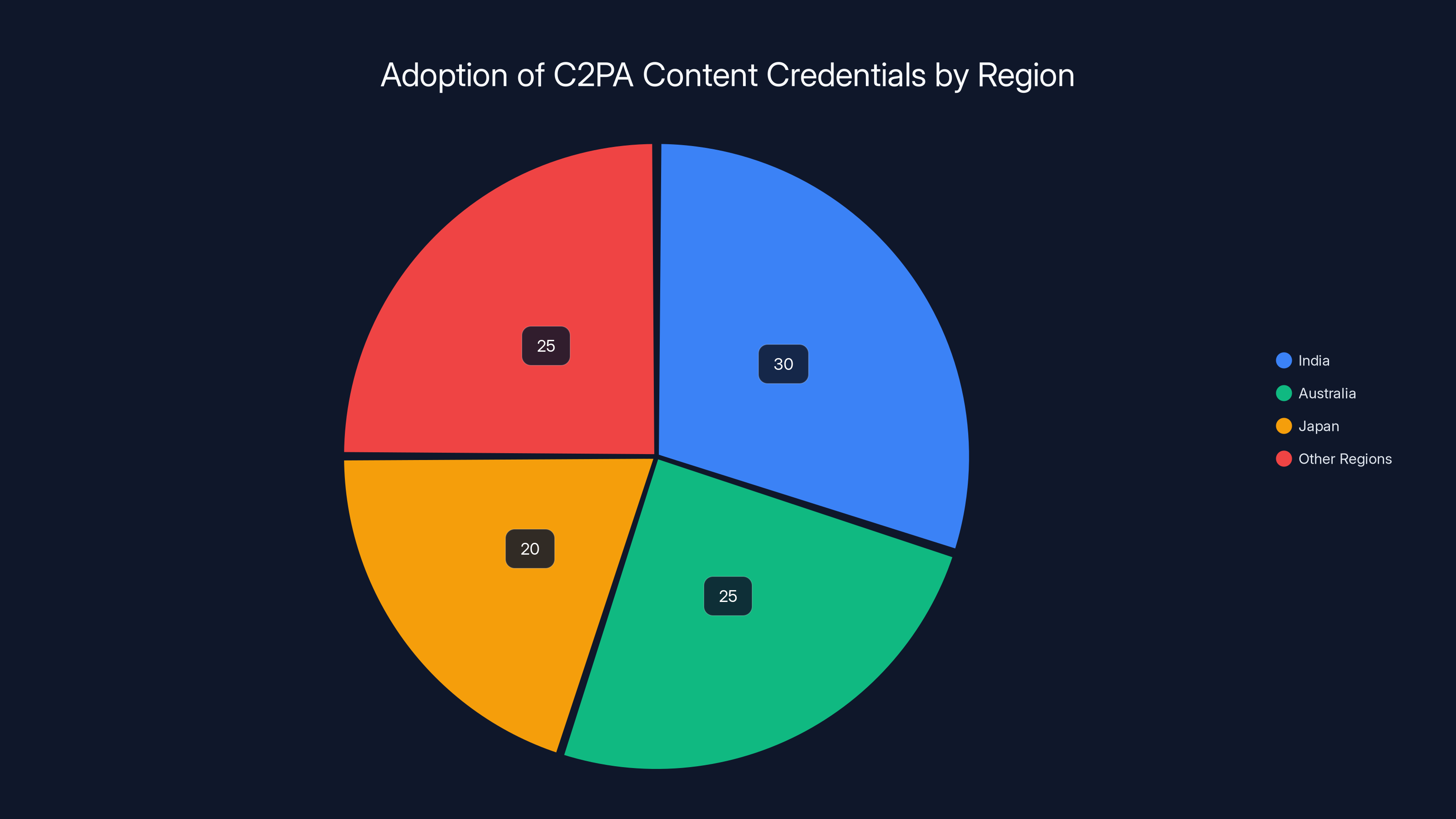

Estimated data shows that India, Australia, and Japan are leading in adopting C2PA content credentials, each contributing significantly to the early adoption phase.

What Is Prompt-Based Photo Editing?

Prompt-based photo editing is exactly what it sounds like: you write a description of the edit you want, and AI makes it happen. But the simplicity of that explanation hides some genuinely sophisticated technology underneath.

When you open a photo in Google Photos and tap the edit button, you now see a "Help me Edit" box. From there, you get two paths. First, you can choose from suggested prompts Google's system recommends based on what it detects in your photo. See people with their eyes closed? Suggested prompt: "Fix the blink." See a cluttered background? Suggested prompt: "Blur the background." It's contextual and actually useful.
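To make the "suggested prompts" idea concrete, here's a minimal sketch of how detected issues could map to suggestions. The detection labels (`eyes_closed`, `cluttered_background`) and the function name are invented for illustration. Only the two prompt strings come from the examples above, and nothing here reflects how Google actually implements it.

```python
# Hypothetical mapping from detected photo issues to suggested prompts.
# The labels on the left are made up for this sketch; the prompt text
# on the right is taken from the article's examples.
SUGGESTIONS = {
    "eyes_closed": "Fix the blink",
    "cluttered_background": "Blur the background",
}


def suggest_prompts(detections):
    """Return suggested prompts for the detected issues, in order, without duplicates."""
    suggestions = []
    for label in detections:
        prompt = SUGGESTIONS.get(label)
        if prompt is not None and prompt not in suggestions:
            suggestions.append(prompt)
    return suggestions


print(suggest_prompts(["eyes_closed", "cluttered_background", "eyes_closed"]))
```

Anything the detector reports that has no matching entry is simply skipped, so an unfamiliar photo yields no suggestions rather than a wrong one.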

Or, you type your own prompt. This is where things get interesting. Google's system doesn't just recognize simple commands. You can ask for surprisingly specific edits. "Remove the motorcycle behind the person," "Change the pose," "Remove their glasses," "Make the sunset warmer," "Add more vibrant colors," "Restore this old photo." The tool handles all of it.

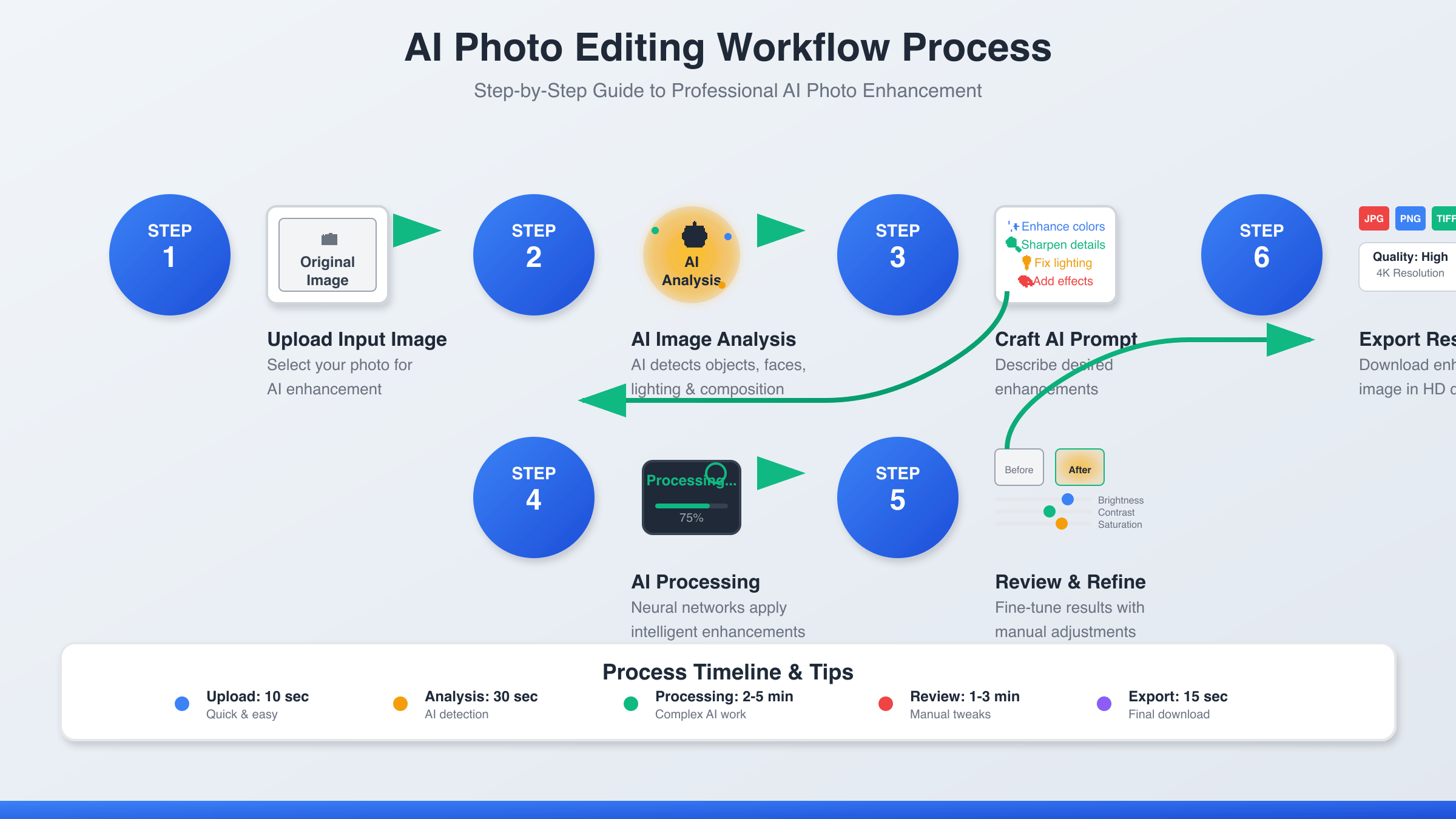

The magic happens on-device. Google uses its Nano Banana image model for the processing, and here's the critical part: all the actual editing happens locally on your phone. Your photo isn't shipped off to some Google data center to be processed. This matters because it means faster processing and better privacy. The one catch is that you do need to be online to submit the prompt itself, so fully offline editing isn't possible.

Compare this to traditional photo editing workflows. You'd open Photoshop or Lightroom, hunt for the right tool, figure out the settings, maybe watch a YouTube tutorial, make mistakes, undo, try again. With prompt-based editing, you describe the problem, and the AI solves it. The cognitive load drops dramatically.

The expansion to India, Australia, and Japan isn't just geographic. These are markets with huge mobile-first photography audiences. India, especially, has over 700 million smartphone users, most of whom take and edit photos regularly. Australia has among the highest smartphone penetration rates globally. Japan has some of the world's most tech-forward photography communities. These aren't random choices.

How the AI-Powered Editing Actually Works

The technical architecture here is worth understanding because it reveals why this is genuinely different from simpler editing tools.

First, there's the prompt understanding layer. When you type "remove the motorcycle in the background," Google's system needs to parse your natural language, understand what you're referring to (the motorcycle), understand the action (removal), and understand the context (background, implying depth). This uses large language model technology, but it's tuned specifically for image editing tasks, not general conversation.
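Here's a toy illustration of that parsing step: pulling an action, a target, and an optional region out of a prompt. It uses plain keyword rules as a stand-in for the language model, so treat it as a sketch of the output shape, not the real technique. `EditIntent`, `ACTIONS`, and `REGIONS` are all hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class EditIntent:
    action: str            # what to do, e.g. "remove"
    target: str            # what to do it to, e.g. "motorcycle"
    region: Optional[str]  # where, e.g. "background" (None if not stated)


# Keyword lists are assumptions for this sketch; a real system would
# rely on a language model rather than fixed vocabularies.
ACTIONS = ("remove", "blur", "brighten", "fix", "restore")
REGIONS = ("background", "foreground")
FILLER = ("the", "a", "an", "this", "that")


def parse_prompt(prompt: str) -> Optional[EditIntent]:
    """Extract a rough intent from a prompt, or None if no known action appears."""
    words = re.findall(r"[a-z']+", prompt.lower())
    action = next((w for w in words if w in ACTIONS), None)
    if action is None:
        return None
    region = next((w for w in words if w in REGIONS), None)
    # The target is the first non-filler word after the action.
    rest = words[words.index(action) + 1:]
    target = next((w for w in rest if w not in FILLER), None)
    if target is None:
        return None
    return EditIntent(action, target, region)


print(parse_prompt("Remove the motorcycle in the background"))
```

A prompt like "Make the sky more dramatic" returns `None` here because "make" isn't in the keyword list, which is exactly the kind of gap a language model closes and fixed rules can't.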

Second, there's the object detection and segmentation. The AI needs to actually identify the motorcycle in your image. It uses computer vision models trained on millions of images to detect objects, understand their boundaries, and separate them from the rest of the scene. This segmentation is crucial because you can't edit something you haven't identified.

Third, there's the inpainting or editing generation step. Once the system knows what to remove, it needs to fill that space intelligently. This is where the Nano Banana model comes in. It's a generative model specifically designed for on-device image generation. Instead of using massive transformer models that require cloud computing, Nano Banana is optimized for efficiency. It generates plausible content to fill the removed area while maintaining consistency with the surrounding image.

Fourth, there's the quality assurance and blending. The generated content needs to blend seamlessly with the original image. If you remove an object and leave visible seams or artifacts, the user notices immediately. Google's system applies additional processing to ensure smooth transitions, correct lighting, and natural-looking results.

What's particularly clever is that all this happens on-device. Your phone or tablet is doing the heavy lifting. This is different from how many AI tools work, where processing happens on remote servers. On-device processing means:

- Speed: The photo itself never has to upload, so your edit starts almost immediately after you describe it.

- Privacy: Your photos never leave your device (unless you explicitly share them).

- Reliability: Less dependence on cloud infrastructure, since only the prompt (not the photo) needs a network connection.

- Cost: Google doesn't need to run massive server farms for every edit request.

The Nano Banana model is specifically designed for this on-device work. It's smaller and faster than larger generative models, but it still delivers quality results. Think of it like the difference between a specialized compact car and a full-size SUV. The compact car won't tow a boat, but it gets you where you need to go faster and uses less fuel.

Google's also smart about showing you the editing process. You don't just see the final result. The app shows you suggestions based on what it detects in your image, which teaches users what kinds of edits are possible. Over time, users get better at formulating prompts because they see what works and what doesn't.

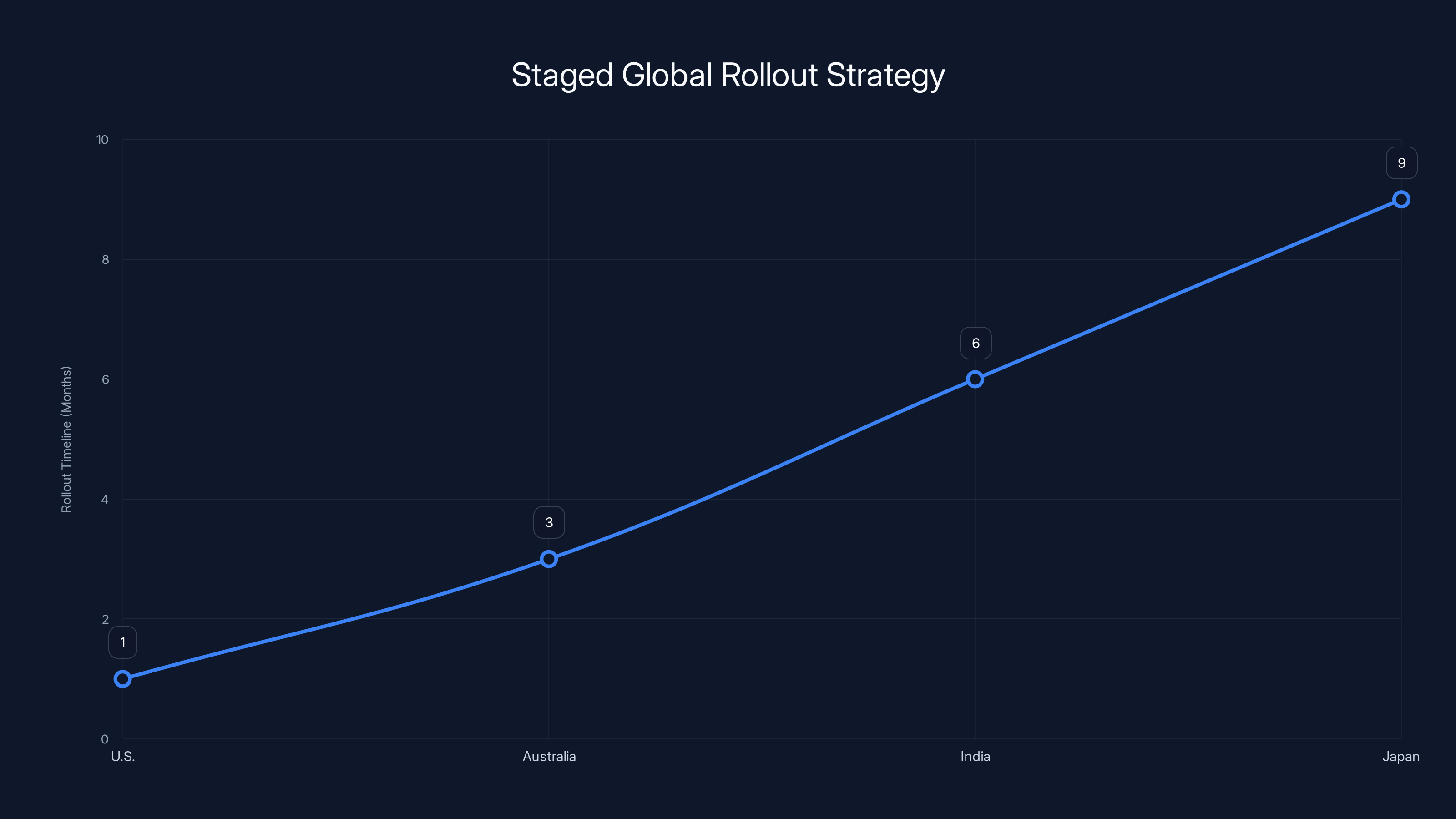

Google's staged rollout strategy begins with the U.S. and progresses through Australia, India, and Japan over an estimated 9-month period. This approach ensures robust testing and adaptation to local needs. Estimated data.

The Geographic Expansion: Why These Markets Matter

Google isn't expanding to India, Australia, and Japan randomly. These markets represent critical opportunities for AI-powered consumer technology, each for different reasons.

India's Mobile-First Photography Boom

India has over 700 million smartphone users, and the number is growing. Most of these users interact with technology almost exclusively through mobile. Photography is core to how Indians engage with social media. WhatsApp and Instagram are where content is shared and consumed. A tool that makes photo editing faster and more intuitive has massive appeal here.

The smartphone user base in India is also younger, on average, than in mature markets. This means higher comfort with AI-powered tools and faster adoption of novel features. India's also a major market for Google Photos' core functionality (storage and organization), so expanding editing capabilities to Indian users strengthens the entire ecosystem.

Beyond consumer appeal, there's a strategic element. China dominates consumer tech in Asia, and India represents one of Google's largest opportunities to establish itself as the primary tech ecosystem. Expanding features to India quickly signals Google's commitment to the market.

Australia's High-Tech Adoption Rate

Australia has one of the world's highest smartphone penetration rates. Australians are also early adopters of new technology features. Cloud photography storage adoption is high, which means Australian users already trust Google Photos with their image libraries. Rolling out advanced editing features here lets Google test adoption and feedback from a tech-savvy audience that will immediately try new capabilities.

Australia's also a market where competitors like Adobe and others have strong presences. Expanding editing capabilities to Australia directly competes with those tools, helping Google defend its position in the premium photography space.

Japan's Photography-Centric Culture

Japan has a unique relationship with photography. Japanese consumers have historically spent more on camera equipment and photography technology than consumers in most other markets. There's a sophisticated photography culture, from casual phone photography to serious hobbyists.

Japan's also an early adopter of AI-powered features. Japanese consumers generally embrace new technology and are willing to experiment with novel AI tools. Expanding prompt-based editing to Japan lets Google demonstrate the technology to one of the world's most sophisticated consumer tech audiences.

Moreover, Japan's a major market for Google's search and cloud products. Strengthening Google's position in consumer photography helps support the broader business relationship.

The expansion to these three markets also signals something broader about how Google is approaching AI rollout. Rather than launching globally simultaneously, Google's pursuing a staged approach. Start with the U.S. (understand the feature), expand to developed markets (Australia), then expand to massive growing markets (India), then expand to sophisticated tech markets (Japan). This staged rollout lets Google gather feedback, improve the model, and adjust the feature based on real-world usage patterns.

The Technical Magic: How Specific Edits Get Done

Let's get concrete about what this tool can actually do, because the capabilities are genuinely impressive.

Object Removal

You take a photo of your friends, but there's a random person in the background. You type "remove the person in the background." The system identifies that person using object detection, segments them from the background, and then uses generative filling to create plausible background content to replace where that person was. This is genuinely hard to do well. Simple systems would just blur the area or leave obvious artifacts. Google's system understands the background pattern and extends it naturally.

The same principle works for removing other objects: motorcycles, poles, signs, or anything else cluttering your shot. The constraint is that the system needs to be able to identify the object and segment it clearly. If the object is partially occluded or blended with other elements, the editing becomes harder.
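To show the mask-then-fill idea in miniature, here's a sketch that fills a removed region by repeatedly averaging known neighboring pixels. This is plain neighbor averaging on a grayscale grid, deliberately much simpler than a generative model like the one described above, and the function name is made up for illustration.

```python
def fill_masked(image, mask):
    """Fill masked pixels with the average of their already-known 4-neighbors.

    image: list of rows of numbers (a grayscale grid).
    mask:  same shape, True where the removed object used to be.
    Returns a new grid; the input image is not modified.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    unknown = {(y, x) for y in range(h) for x in range(w) if mask[y][x]}
    while unknown:
        updates = {}
        for (y, x) in unknown:
            neighbors = ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
            known = [out[ny][nx] for ny, nx in neighbors
                     if 0 <= ny < h and 0 <= nx < w and (ny, nx) not in unknown]
            if known:
                updates[(y, x)] = sum(known) / len(known)
        if not updates:
            break  # the whole image is masked, so there is nothing to copy from
        for (y, x), value in updates.items():
            out[y][x] = value
        unknown.difference_update(updates)
    return out


photo = [[10, 10, 10],
         [10, 99, 10],
         [10, 10, 10]]
hole = [[False, False, False],
        [False, True, False],
        [False, False, False]]
print(fill_masked(photo, hole)[1][1])
```

The fill works inward from the edge of the hole, which is why it copes well with flat backgrounds and badly with textured ones. That gap is what the generative step is there to close.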

Pose and Expression Edits

This is where things get wild. You take a photo where your friend is mid-blink or making a weird expression. You type "fix the blink" or "make them smile." The system uses face detection and facial landmark detection to identify the relevant features, and then generatively adjusts them while preserving facial identity and consistency.

This is sophisticated because the system needs to understand not just what to change but how to change it while keeping the person looking like themselves. Make someone smile, but change their face too much, and the result is creepy. The technology needs to find the right balance.

Lighting and Color Adjustments

You describe the aesthetic you want: "Make the sunset warmer," "Brighten the background," "Add more vibrant colors." These adjustments are more straightforward than object removal, but they still require the system to understand what region to adjust and how to make the change look natural.

The advantage here is that color and lighting are relatively easier for AI to adjust than generating new content. The system's just redistributing existing tones and light values, not creating entirely new pixels.
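As a rough illustration of "redistributing existing tones," here's a sketch of warming a single pixel by nudging red up and blue down. The 10% default and the function name are arbitrary choices for this example, not anything Google has documented.

```python
def warm_pixel(rgb, amount=0.1):
    """Shift an (r, g, b) pixel with 0-255 channels toward warmer tones.

    Red is scaled up and blue scaled down by `amount`; green is unchanged.
    Results are rounded and clamped to the valid 0-255 range.
    """
    def clamp(value):
        return max(0, min(255, round(value)))

    r, g, b = rgb
    return (clamp(r * (1 + amount)), g, clamp(b * (1 - amount)))


print(warm_pixel((200, 150, 100)))
```

No new pixels are invented here: every output value is computed from the input pixel alone, which is why this kind of edit is cheaper and more predictable than object removal.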

Restoration of Old Photos

One of the more emotionally powerful capabilities is the "restore this old photo" command. Old photographs often suffer from fading, discoloration, and damage. Google's system can colorize faded photos, reduce noise, correct color casts, and repair damage.

This combines multiple AI techniques: denoising networks (to reduce grain), colorization models (to add appropriate color), and inpainting (to repair damaged areas). The result: photos from decades ago suddenly look like they were taken yesterday.
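To give a feel for the denoising piece, here's a classic 3x3 median filter on a grayscale grid. It's a simple, well-known stand-in for the denoising networks mentioned above, not the method Google uses, and `median_denoise` is a name chosen for this sketch.

```python
from statistics import median


def median_denoise(image):
    """Replace each pixel with the median of its 3x3 neighborhood.

    image: list of rows of numbers (a grayscale grid).
    Windows are clipped at the borders. Returns a new grid.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            window = [image[ny][nx]
                      for ny in range(max(0, y - 1), min(h, y + 2))
                      for nx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = median(window)
    return out


scan = [[5, 5, 5],
        [5, 255, 5],   # a single bright speck, like dust on an old print
        [5, 5, 5]]
print(median_denoise(scan)[1][1])
```

A median filter removes isolated specks well but also softens fine detail, which is one reason learned denoisers are preferred for restoration work.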

The constraint on all these edits is that they happen on-device, which means the Nano Banana model has to be small enough to run on phones without using all available memory and battery. There's an inherent trade-off between model size and quality. Google's found a sweet spot where the model is capable enough for most real-world edits but efficient enough to run on consumer hardware.

C2PA Content Credentials: Authenticity in the Age of AI

Alongside the editing expansion, Google's rolling out support for C2PA Content Credentials. This is actually more important than it sounds, especially as AI-generated and AI-edited images become mainstream.

C2PA stands for Coalition for Content Provenance and Authenticity. It's a standard that embeds metadata into digital media, creating a record of what happened to a file over time. When you edit a photo with Google Photos' prompt-based tool, C2PA metadata gets added that says, "This image was created on [date] and edited using AI on [date]." This creates a chain of custody for the image.

Why does this matter? Because in a world where AI can generate convincing fake photos, remove people from images, or manipulate content, the ability to verify what's actually happened to an image becomes critical. A news outlet publishing a photo needs to know if that image has been significantly altered. A user sharing a family photo wants to know if they're looking at authentic content or AI-modified content.

C2PA credentials don't prevent editing (they can't). They just document it. It's like the difference between a photo with no metadata and a photo with EXIF data showing the camera make, model, and when it was taken. The credential says, "Here's what happened to this image."
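Here's a heavily simplified sketch of the "document, don't prevent" idea: an append-only edit history attached to an image. The field names, function names, and dates are all invented for illustration. Real C2PA manifests are signed, structured records defined by the specification and look nothing like this plain list.

```python
def new_record(created_on):
    """Start a history with a single 'created' entry."""
    return [{"action": "created", "date": created_on, "ai": False}]


def add_edit(history, date, tool, ai):
    """Return a new history with one more entry; earlier entries are left untouched."""
    return history + [{"action": "edited", "date": date, "tool": tool, "ai": ai}]


def was_ai_edited(history):
    """True if any step in the history was flagged as AI-assisted."""
    return any(entry["ai"] for entry in history)


# Placeholder dates, purely for illustration.
history = new_record("2024-06-01")
history = add_edit(history, "2025-01-15", "Help me Edit", ai=True)
print(was_ai_edited(history))
```

The point of the sketch is the shape: edits are appended rather than overwritten, so a platform reading the record can see that AI was involved without having to inspect the pixels.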

This has real implications for social media platforms, news organizations, and how we handle AI content generally. If a photo has C2PA credentials showing significant AI editing, platforms can handle it differently than authentic photos. This isn't about preventing AI editing (which would be impossible). It's about transparency and allowing consumers to make informed decisions about what they're looking at.

Google's support for C2PA in India, Australia, and Japan means that when users in these regions edit photos with the prompt-based tool, those edits are documented. Over time, as more platforms support C2PA, this creates a broader ecosystem where image provenance is actually trackable.

The technical implementation is invisible to users. You edit a photo, and the credentials get added automatically. You don't need to do anything. But the metadata is there, readable by platforms and tools that support the standard.

This is part of a broader industry movement toward content authenticity. Platforms like TikTok, Instagram, and others are grappling with how to handle AI-generated content. Some are adding labels. Some are restricting AI content. C2PA credentials offer a more nuanced approach: instead of banning or restricting, just document and let users decide.

India, Australia, and Japan are critical markets for Google's AI expansion, with India leading due to its large, young smartphone user base. Estimated data.

The Broader Google Photos AI Strategy

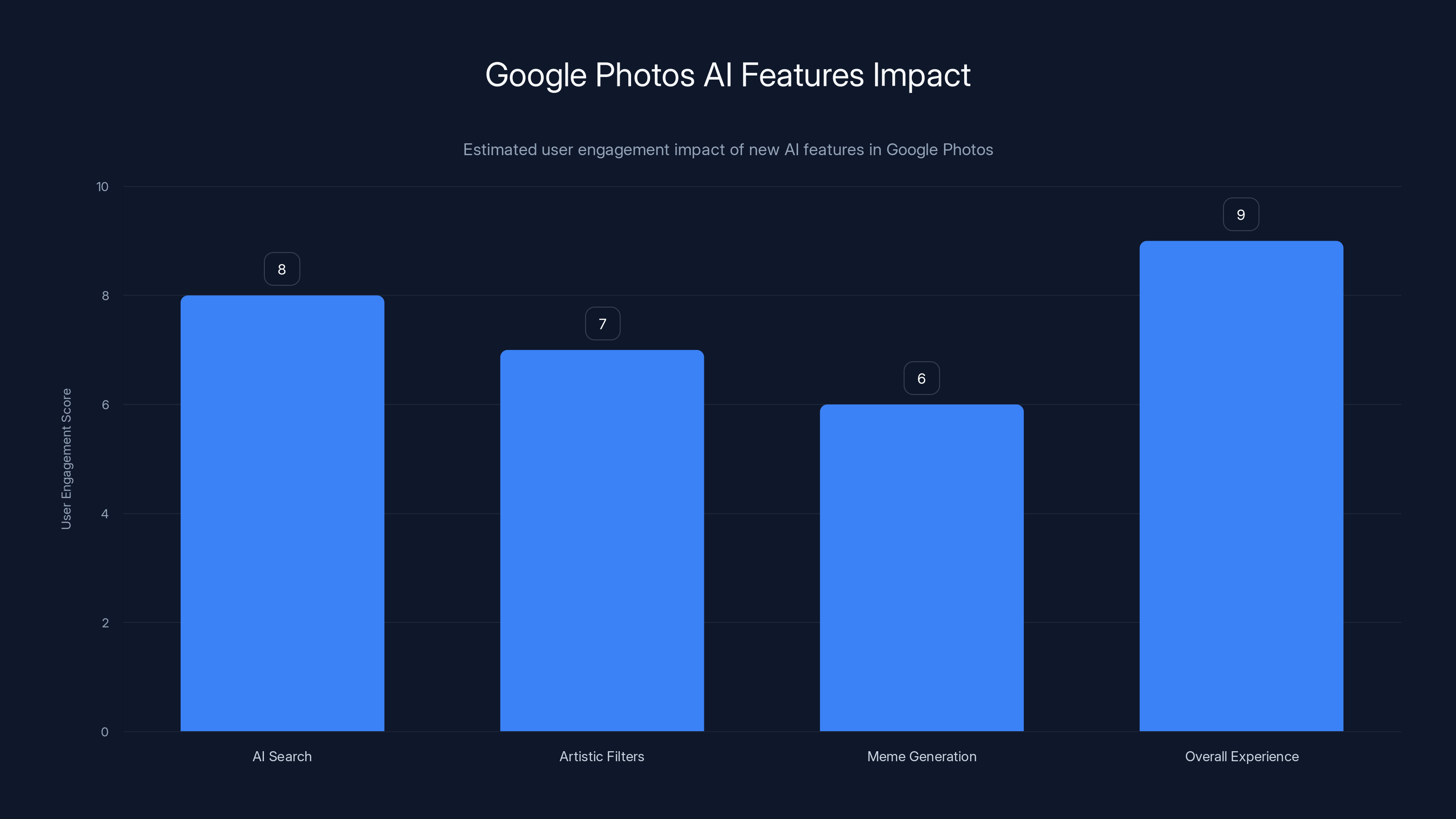

The prompt-based editing expansion isn't happening in isolation. It's part of a much larger push by Google to weave AI throughout the entire Google Photos experience.

Search and Discovery

Last November, Google expanded AI-powered search capabilities to over 100 countries with support for more than 17 languages. Instead of just searching for dates or camera types, you can now describe what you're looking for in plain language. "Find photos of me with my family at the beach" instead of scrolling through months of photos manually.

This is genuinely useful for people with massive photo libraries. The average user with Google Photos has thousands or tens of thousands of photos. Being able to search using natural language instead of metadata tags is transformative.

Artistic Filters and Templates

Google's also rolled out AI templates that can convert photos into different artistic styles. Want your vacation photo to look like a watercolor painting or a charcoal sketch? AI templates can do it. These aren't just preset filters. They're AI-generated stylizations that maintain content while changing the artistic rendering.

Meme Generation

Just last week, Google rolled out a "Meme me" feature that lets users combine reference templates with their own images to create memes. It's lighter and more playful than the other features, but it demonstrates how Google's thinking about AI as a content creation tool, not just a content organization tool.

All of these features share a common thread: they're reducing friction between users and the photos they want to create or find. Instead of learning complex tools, users describe what they want, and AI makes it happen. This is a fundamental shift in how consumer photography tools work.

The business logic here is also worth noting. Google Photos is a core product that drives engagement and dependency on Google services. Better photo editing means more reasons to use Google Photos instead of alternatives like Amazon Photos, Apple Photos, or specialized tools like Lightroom. Better search means users spend more time in Google Photos. Better creative tools mean users create more content and share it more often, driving more traffic through Google services.

Real-World Use Cases and Practical Applications

Let's ground this in actual scenarios where prompt-based editing becomes genuinely useful, not just cool.

Social Media Content Creation

You take a selfie, but the lighting's off. Instead of retaking 47 photos, you type "improve the lighting." Done. You take a group photo for social media, but random people in the background are distracting. "Remove the people in the background." Done. For creators managing multiple social platforms with different content needs, reducing editing time is massive.

Calculate the time savings: if you post to 5 platforms weekly and spend 5 minutes editing each photo before posting, that's 25 minutes per week, nearly 2 hours per month, and roughly 22 hours per year. For a creator with hundreds of photos, prompt-based editing recovers real time.
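Here's that arithmetic as a quick sanity check, assuming one photo per platform per week and a 52-week year (both are assumptions for this sketch, not figures from Google).

```python
platforms_per_week = 5
minutes_per_photo = 5
weeks_per_year = 52  # assumption: posting every week of the year

minutes_per_week = platforms_per_week * minutes_per_photo
hours_per_year = minutes_per_week * weeks_per_year / 60
hours_per_month = hours_per_year / 12

print(minutes_per_week)          # 25
print(f"{hours_per_month:.1f}")  # 1.8
print(f"{hours_per_year:.1f}")   # 21.7
```

Change either of the first two numbers to match your own posting habits and the yearly total scales linearly.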

Family Photo Archiving

Family members are getting older, and that old shoebox of photos from the 1970s is finally being digitized. "Restore this old photo" suddenly makes those faded, discolored photos vivid again. Grandkids can see their great-grandparents' wedding in color, clearly, without artifacts.

This isn't just sentimental. Restored photos become heirlooms that can be printed, framed, and preserved for the next generation.

Professional Use Cases

Real estate agents photograph properties, and a few unwanted details can hurt the listing. "Remove the car in the driveway" or "fix the shadows in the living room." Product photographers can fix minor issues quickly without requiring a Photoshop expert.

The tool isn't replacing professional photo editing yet. Professional photographers still use specialized software for complex work. But for quick, everyday fixes, prompt-based editing is fast and accessible enough that it eliminates the need to pay someone or spend hours learning Photoshop.

Accessibility and Inclusivity

Here's something often overlooked: prompt-based editing makes photo manipulation accessible to people who can't use traditional editing tools due to disability, lack of training, or language barriers. If you can describe what you want in your native language, you can edit photos. No need to learn complex UI, no need to understand technical jargon.

This is a genuine accessibility win. Making creative tools more accessible expands who can participate in digital culture.

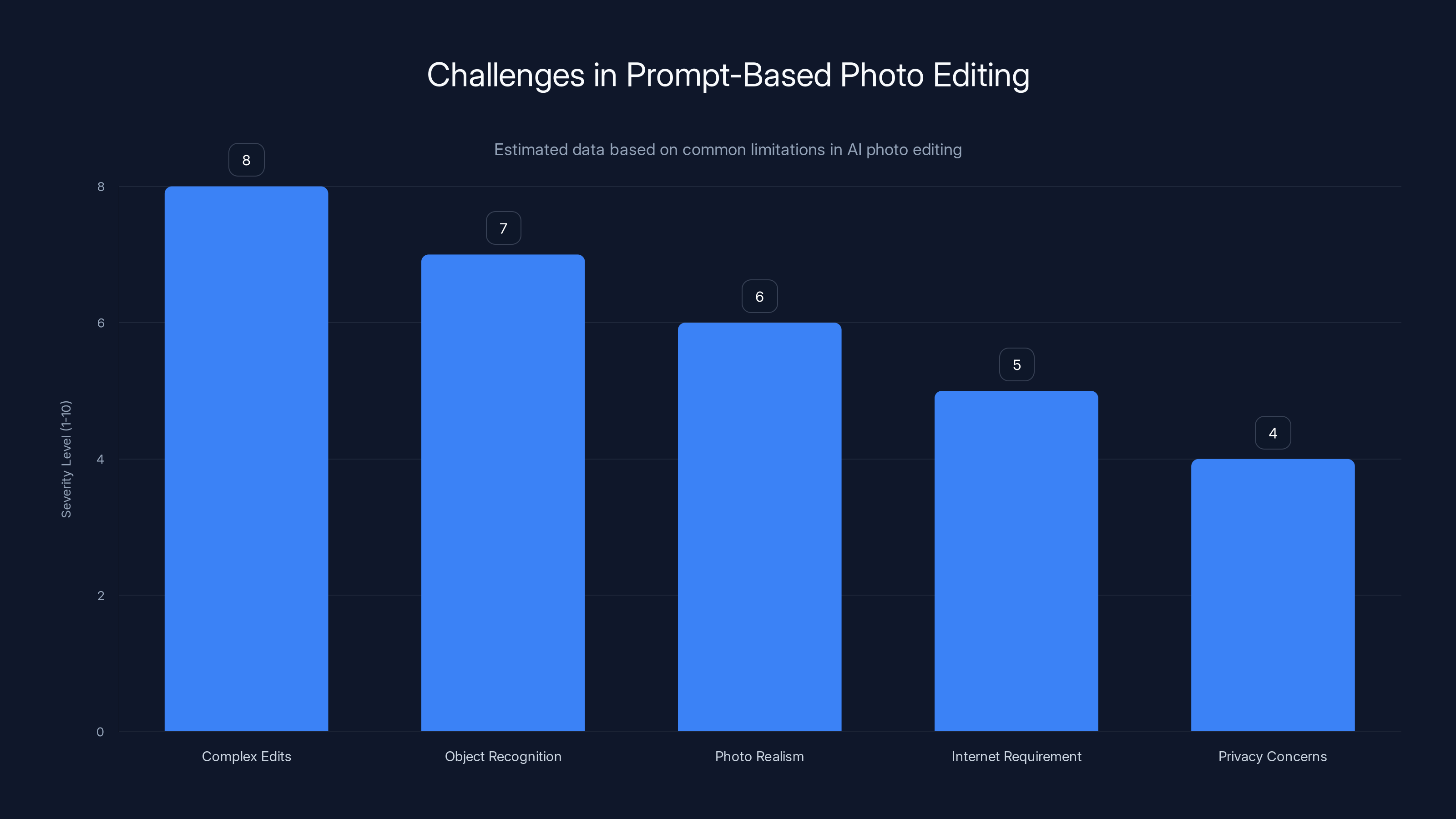

The Limitations and Honest Trade-Offs

Prompt-based editing is genuinely impressive, but it's not magic. There are real limitations worth understanding.

Complex Edits Can Fail

The system handles straightforward requests well. Remove an object, brighten the photo, fix a blink. But complex edits that require deep understanding of context or significant content generation can fail or produce weird results.

Ask it to "change the background completely" and you might get something that looks synthetic. Ask it to "make them look younger" and you might get uncanny results. The technology works best when the edit is clear, bounded, and doesn't require extensive content generation.

Specific Object Recognition Challenges

If the system can't identify what you're referring to, the edit fails. Refer to something ambiguous like "remove the thing in the corner" without more detail, and the AI might get confused. Small objects are harder to remove than large ones. Objects that blend with their background are harder to isolate than discrete objects.

Maintaining Photo Realism

When the system generates content to fill removed objects, it aims for photorealism. But sometimes it fails. You might see artifacts, incorrect lighting, unnatural blending, or content that looks "AI-generated" in subtle ways. The results are usually good, but not always perfect.

Internet Requirement for Prompt Submission

While the actual editing happens on-device, you still need internet to submit your prompt. This is a minor inconvenience for most people, but it means you can't edit completely offline.

Privacy Considerations

On-device processing means your photos don't leave your phone. But your prompt is sent to Google's servers for processing. While this is better than sending full photos, it does mean your editing requests are logged by Google. For some users, this is a privacy concern worth considering.

Not a Replacement for Professional Tools

This bears repeating: prompt-based editing is not replacing Photoshop or Capture One for professional photographers. It's solving a different problem: quick, accessible edits for average users. Professionals working on complex projects with exacting standards still need specialized tools.

But for the 90% of users who just want to fix their phone photos quickly, this is genuinely better than the alternatives.

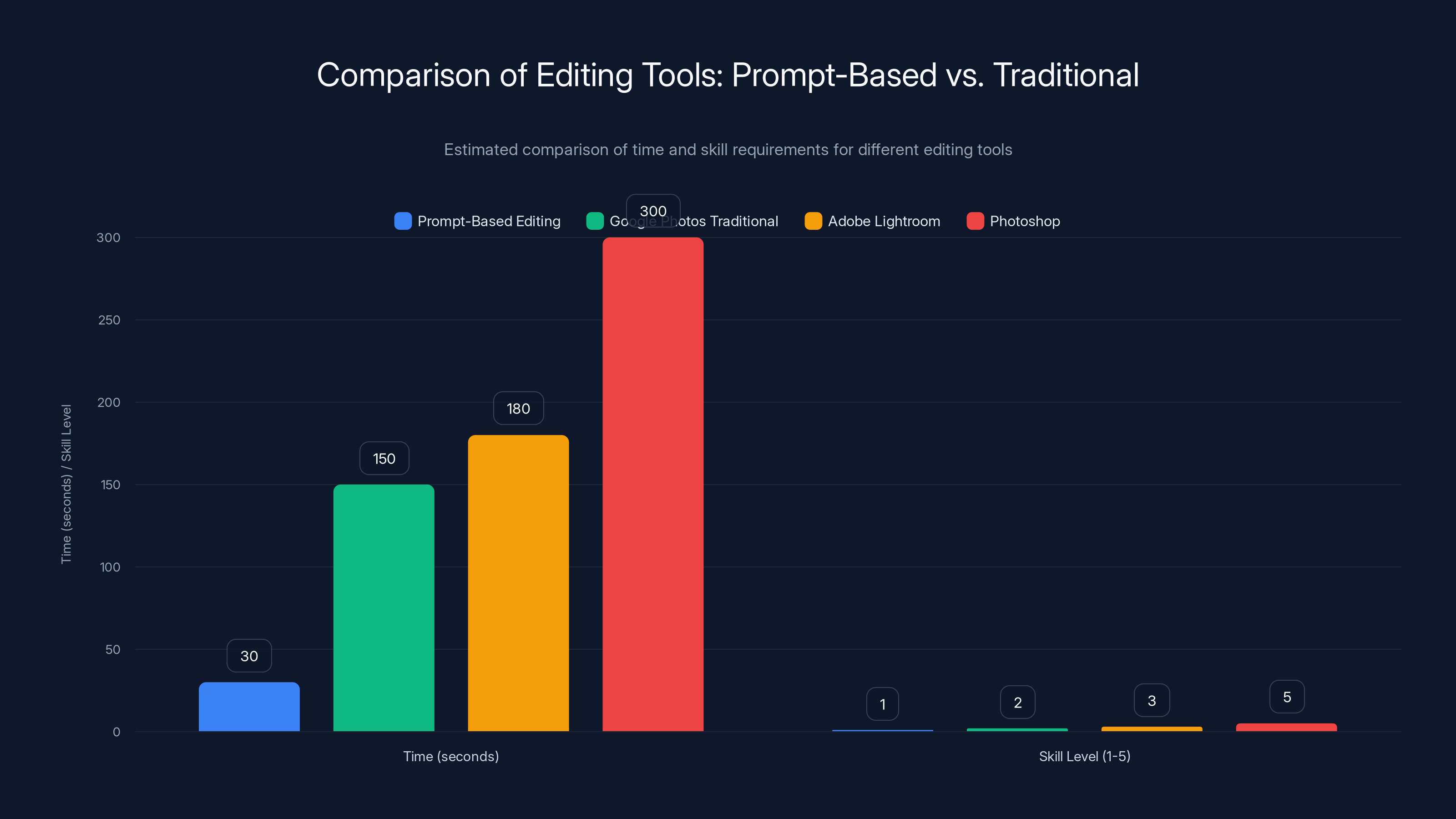

Prompt-based editing is significantly faster and requires less skill compared to traditional tools like Photoshop and Lightroom. (Estimated data)

Comparison: Prompt-Based Editing vs. Traditional Tools

Let me break down how this compares to existing approaches to photo editing.

Google Photos Traditional Editing

Google Photos already had editing tools: brightness, contrast, saturation, color curves, effects. These are slider-based, meaning you adjust values manually. Prompt-based editing removes the need for manual adjustment. You describe the result, not the parameters.

Time difference: Traditional editing of a cluttered photo might take 2-3 minutes. Prompt-based editing can take closer to 30 seconds.

Skill difference: Traditional editing requires understanding what saturation does, what curves do, etc. Prompt-based editing requires only describing what you want.

Adobe Lightroom

Lightroom is more powerful than Google Photos' traditional editing, but it requires understanding its interface and workflow. You can use AI-powered features like Sky Replacement or content-aware removal, but these are add-ons to the core editing workflow.

Prompt-based editing in Google Photos is more accessible but less powerful for complex professional work.

Photoshop

Photoshop is the professional standard, but it has a steep learning curve. Adobe's added generative AI features via generative fill, but these are still within Photoshop's broader, complex interface.

Google's approach prioritizes accessibility over power. Most users don't need Photoshop. They need quick, understandable edits. Google's delivering that.

Specialized Mobile Apps

There are hundreds of mobile photo editing apps: Snapseed, Pixlr, Canva, etc. Some offer AI features. Google's advantage is integration with Google Photos and the sheer number of users who already have Google Photos installed.

Google's approach is also more integrated. You're editing your photo directly in the app where you organize and store it, not jumping between apps.

The Competitive Landscape: Who's Racing to Match This

Google's not alone in pursuing prompt-based photo editing, though they're currently leading the execution.

Apple

Apple has AI-powered photo features in Photos for macOS and iOS, but nothing quite matching prompt-based editing yet. Apple's been cautious about on-device AI, but they're investing heavily in on-device ML. Expect Apple Photos to get similar features eventually.

Adobe

Adobe's been aggressive with generative AI in Photoshop and Lightroom. Their generative fill works on text prompts. But Adobe's approach is cloud-based, which means slower processing and privacy trade-offs. Adobe has also historically charged subscription fees, making their tools less accessible than Google's free offering.

Amazon and Microsoft

Both have photo services, but neither has rolled out prompt-based editing at Google's scale yet. This might not be a strategic priority for them compared to other AI initiatives.

Smartphone Manufacturers

Samsung, OnePlus, and others have custom photo apps on their devices. Some are adding AI features, but none have matched the sophistication of Google's implementation.

Google's advantage is a combination of scale (billions of Google Photos users), technical capability (years of AI research), and strategic focus (photo tools are core to Google's ecosystem).

Market Implications and What This Means for Consumers

Prompt-based photo editing is arriving at an inflection point where AI tools are becoming commoditized expectations rather than premium features.

Democratization of Photo Editing

Historically, good photo editing was a skill. You had to learn Photoshop, Lightroom, or specialized tools. Now, if you can describe what you want, you can edit. This is democratization. More people can create better-looking content, which changes what's possible in digital communication.

Shift in Tool Design Philosophy

This is a broader signal about how tools are evolving. Instead of learning to use a tool, you describe your intent and the tool figures it out. This principle is spreading beyond photos to documents, presentations, code, and more. The question changes from "How do I use this tool?" to "What do I want to accomplish?"

Privacy vs. Convenience Trade-off

On-device processing is a win for privacy compared to cloud-based alternatives. But you still need to send your prompt to Google. Users need to decide if that trade-off is acceptable. For most users, it probably is. For privacy-conscious users, it's worth considering.

Acceleration of AI Adoption in Consumer Tech

Every AI feature Google adds to Google Photos makes the product stickier and increases the switching cost to competitors. If your photo library, organization system, and editing tools are all in Google Photos, switching to Amazon Photos or Apple Photos becomes harder. Google's using AI as a defensive moat to protect its consumer services.

Impact on Professional Photography

The availability of accessible, good photo editing to everyone raises the baseline for what's expected in visual content. This might hurt entry-level photo editing freelancers, but it also increases demand for high-level professional work because consumers' expectations rise.

Estimated data shows that AI Search and overall experience enhancements have the highest impact on user engagement, highlighting the effectiveness of Google's AI strategy in Google Photos.

The Technical Evolution: What This Means for AI

Prompt-based photo editing is one example of a broader trend: AI becoming embedded in everyday consumer tools.

On-Device AI Becoming Practical

For years, AI meant cloud processing because the models were too large and complex for consumer devices. On-device AI was limited to simple tasks like face detection. Nano Banana and models like it represent the next evolution: capable AI models that run efficiently on phones.

This changes everything. It enables privacy-first AI, faster processing, and reduced dependency on cloud infrastructure.

Multimodal AI Becoming Seamless

Prompt-based photo editing combines understanding language (NLP), understanding images (computer vision), and generating images (generative models). For users, this is seamless. Submit a prompt, get an edit. But underneath, multiple specialized AI systems are working together.

As AI capabilities improve, these multimodal combinations will become more sophisticated and common.

AI as a Productivity Multiplier

The real value here isn't just the individual edits. It's the aggregate time savings across billions of users. If Google's feature saves each user an average of 2 minutes per month editing photos, then for every billion users that's 2 billion minutes per month, or roughly 33 million hours recovered from tedious work.
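A quick back-of-the-envelope version of that estimate, assuming a round one billion users purely for illustration (the user count and the 2-minute saving are both hypothetical inputs, not measured figures):

```python
def aggregate_hours(users, minutes_saved_per_user):
    """Total hours saved per month across all users."""
    return users * minutes_saved_per_user / 60


hours = aggregate_hours(users=1_000_000_000, minutes_saved_per_user=2)
print(f"{hours / 1_000_000:.1f} million hours per month")  # 33.3 million hours per month
```

Because the relationship is linear, doubling either input doubles the total, so the conclusion holds across a wide range of plausible user counts.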

This is what AI should do: take tasks that are time-consuming and error-prone and make them fast and reliable. Google's doing it in photos. Others are doing it in documents, code, presentations, and more.

Implementation and Rollout Strategy

The geographic rollout strategy itself is worth examining because it reveals how companies now approach new AI features.

Staged Global Rollout

Google started with the U.S., then expanded to established tech markets (Australia), then to massive growth markets (India), then to sophisticated tech markets (Japan). This staged approach lets them:

- Test with a sophisticated, feedback-rich audience first (U.S.)

- Expand to markets with high tech adoption (Australia)

- Validate at massive scale (India)

- Reach sophisticated users with high content expectations (Japan)

Each stage informs the next. Feedback from the U.S. improves the model before the India rollout. Usage data from India then informs the experience in Japan.

Infrastructure Considerations

Bringing prompt-based editing to billions of users requires ensuring the infrastructure can handle it. On-device processing helps here because most computing happens locally. But the initial prompt processing, model downloads, and credential embedding still require Google's servers.

The rollout to these specific markets suggests Google is confident its infrastructure can support global scale.

Localization Beyond Translation

It's not just translating the interface. The AI needs to understand prompts in different languages, recognize culturally specific photo content, and handle regional variations in how people describe edits. A prompt in Hindi might describe an edit differently than a prompt in English.

Google's support for "more than 17 languages" in their broader AI search reflects this investment in localization.

Future Directions: Where This Is Heading

If you squint, you can see where prompt-based editing is heading.

Video Editing

Photography is just the beginning. Google's likely working on prompt-based video editing. "Remove this person from this scene," "change the lighting in this shot," "extend this video to 30 seconds." Video editing is more complex than photo editing, but the principles are similar.

Multimodal Prompts

Currently, you submit text prompts. Future versions might accept voice prompts, sketches, or even reference images. "Make this look like that photo I took in Greece." The UI gets simpler and more natural.

Real-Time Editing

Imagine the camera app showing real-time AI-based editing. Want to apply effects while recording? "Make this black and white," "reduce the blur." Prompt-based real-time editing could change how content is captured, not just edited afterward.

Integration with Other Services

Google Photos is becoming an AI-powered creative platform, not just storage. Integration with Docs ("Turn this photo into a document"), Slides ("Put this photo in a presentation"), and other services makes sense.

Third-Party API

Eventually, other developers might get access to Google's prompt-based editing capabilities through an API. This would spread the technology to countless third-party apps and services.

Figure: Estimated relative difficulty of AI photo editing challenges. Complex edits and object recognition rank as the most challenging; privacy concerns rank as the least severe. (Estimated data.)

Challenges and Concerns Worth Considering

Like any powerful technology, prompt-based photo editing raises some valid concerns.

Misinformation and Deepfakes

The ability to edit photos convincingly raises questions about misinformation. If journalists or social media posters use prompt-based editing to remove people, change expressions, or manipulate scenes, how do consumers know what's real? C2PA credentials help, but only if consumers actually check them.

Google has a responsibility to encourage metadata adoption and help consumers understand AI-edited content.

Job Displacement

Photo editors and retouchers might worry about this technology replacing their work. Some entry-level editing work will be automated. But as mentioned earlier, this typically raises quality expectations, which creates demand for higher-level professional work.

Bias in AI Models

AI models trained on biased data can perpetuate or amplify bias. If the Nano Banana model was trained primarily on Western faces, it might perform worse on faces of other ethnicities. Google needs to ensure its training data is diverse and test performance across different demographics.

Environmental Impact

Training and running AI models requires energy. Google should be transparent about the environmental impact of scaling these features to billions of users. On-device processing helps because it reduces cloud processing requirements.

Practical Recommendations for Users

If you're getting access to this feature, here are some practical tips.

Start Simple

Begin with straightforward edits like "remove the blink," "brighten the photo," or "remove the person in the background." As you see what works, you can try more complex edits.

Understand the Limitations

Not every edit will work perfectly. Complex requests involving extensive content generation are riskier than bounded edits. If something doesn't work, try rephrasing or breaking it into multiple steps.

Use It as a Starting Point

For professional work, use prompt-based editing as a starting point, then refine with traditional tools if needed. For casual editing, it's often the final step.

Export and Archive

After editing, export and back up your photos. While Google stores your edits, having local backups is good practice.

Stay Informed About Metadata

Understand that C2PA metadata is added to your edited photos. If you share photos across platforms, the metadata might be preserved (or stripped, depending on the platform). This affects what information about your editing travels with the image.
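If you're curious whether a platform stripped the credentials from a JPEG you shared, you can do a rough check yourself. In JPEG files, C2PA manifests are carried as JUMBF boxes inside APP11 marker segments, so the sketch below walks the file's header segments and looks for the telltale `jumb` and `c2pa` byte strings. Treat it as a heuristic only: the function names are made up for this example, it doesn't parse the manifest or verify any signature, and a proper check should use a real C2PA validator.

```python
import struct


def app11_payloads(jpeg: bytes) -> list[bytes]:
    """Collect payloads of APP11 (0xFFEB) segments that precede the image scan."""
    if jpeg[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG stream")
    payloads = []
    pos = 2
    while pos + 4 <= len(jpeg) and jpeg[pos] == 0xFF:
        marker = jpeg[pos + 1]
        if marker == 0xFF:  # fill byte before a marker: skip it
            pos += 1
            continue
        if marker in (0xDA, 0xD9):  # start of scan or end of image: stop walking
            break
        (length,) = struct.unpack(">H", jpeg[pos + 2 : pos + 4])
        if length < 2:  # malformed segment length
            break
        if marker == 0xEB:  # APP11, where JUMBF boxes are embedded
            payloads.append(jpeg[pos + 4 : pos + 2 + length])
        pos += 2 + length  # marker (2 bytes) + length field and payload
    return payloads


def may_contain_c2pa(jpeg: bytes) -> bool:
    """Heuristic: APP11 data mentions both a JUMBF box and the 'c2pa' label."""
    blob = b"".join(app11_payloads(jpeg))
    return b"jumb" in blob and b"c2pa" in blob
```

A typical use would be `may_contain_c2pa(open("edited.jpg", "rb").read())` on the original export and again on the copy you downloaded back from a social platform. If the first returns `True` and the second `False`, the metadata likely didn't survive the trip.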

The Bigger Picture: AI in Consumer Tools

Prompt-based photo editing isn't isolated. It's part of a massive shift in how consumer technology is built.

AI is moving from being a specialized capability ("We have AI now!") to being a core feature of mainstream tools. Google Photos, Gmail, Docs, Sheets, and more are all getting AI features that prioritize natural language interaction over complex interfaces.

This shift fundamentally changes how technology companies compete. Instead of competing on UI design or feature breadth, they're competing on AI capabilities. Who has the best model? Who can make it work on-device? Who can make it accessible across languages and regions?

Google has advantages here: massive training data, years of AI research, scale of infrastructure, and deep integration across consumer products. But Apple, Microsoft, and others are catching up.

For consumers, this is generally positive. Better tools, more accessible technology, less time spent learning interfaces and more time spent on actual work or creativity. But it also means increased dependency on these companies' AI systems and the need to understand how those systems work and what trade-offs you're making.

Conclusion: The Photo Editing Future Arrives

Google Photos' prompt-based editing expansion to India, Australia, and Japan represents more than a feature rollout. It's a milestone marking the transition from AI as a novelty to AI as a standard consumer expectation.

When you can edit your photos by describing what you want in natural language instead of learning sliders and menus, the entire paradigm of consumer software changes. You don't learn to use the tool. The tool learns to do what you ask.

The technology itself is impressive: on-device processing, sophisticated computer vision, generative models optimized for efficiency, and seamless integration into existing workflows. But the real story is about accessibility and democratization.

Photo editing, once requiring skill and specialized software, is now accessible to anyone who can describe what they want. This changes what's possible in digital communication, social media, family archiving, and professional photography.

The C2PA credential integration addresses an increasingly urgent need: helping people understand what's real and what's AI-edited in a world where convincing image manipulation is becoming easier.

For Google, this is a smart strategic move. Photo tools drive user engagement and lock-in to the Google ecosystem. For competitors, this is a signal that they need to invest in AI-powered consumer tools or risk falling behind.

For the rest of us, it's a glimpse of what consumer technology will look like going forward: less friction between intent and action, more natural interfaces, and technology that understands what we're trying to accomplish rather than forcing us to learn how to accomplish it.

The question isn't whether prompt-based editing becomes standard across photo apps. It will. The question is who else rolls it out, how the technology improves, and how society adapts to living in a world where photo manipulation is both more powerful and more accessible than ever before.

The photo editing revolution is here. And honestly? It's overdue.

FAQ

What exactly is prompt-based photo editing?

Prompt-based photo editing allows you to describe the changes you want to make to a photo using natural language, rather than manually adjusting sliders or learning complex editing software. You type a description like "remove the person in the background" or "make the sunset warmer," and AI processes the edit. Google's implementation uses its Nano Banana model to run the edit itself on your device, which keeps edits fast and the photo local, though the text prompt still goes to Google's servers.

How does on-device processing work differently than cloud-based editing?

On-device processing means your phone does the actual editing work instead of sending data to remote servers. This approach provides three major benefits: faster processing with no network latency, improved privacy since photos don't leave your device, and better reliability since the edit itself doesn't depend on cloud infrastructure. While you need an internet connection to submit your prompt, the actual editing computation happens locally on your phone.

What kinds of edits can Google Photos' prompt-based editing handle?

The tool handles a surprisingly wide range of edits including object removal (removing people or items from backgrounds), pose and expression adjustments (fixing blinks, changing expressions), lighting and color modifications (brightening, adjusting warmth, changing vibrance), and restoration of old photos (colorizing faded photos, reducing noise). The system works best with clear, specific requests. Complex edits requiring extensive content generation are less reliable than bounded edits.

Why is C2PA metadata important for edited photos?

C2PA (Coalition for Content Provenance and Authenticity) metadata embeds information into your edited photos showing when they were created and what AI edits were applied. This creates transparency around image authenticity, which matters increasingly as AI-generated and AI-edited images become common. The metadata doesn't prevent editing; it just documents it, allowing consumers and platforms to make informed decisions about content they're viewing.

Will this replace professional photo editing tools like Photoshop?

No. Prompt-based editing is solving a different problem than professional tools. It's designed for quick, accessible edits on mobile devices that average users want to perform on their phone photos. Professional photographers and editors working on complex projects still need specialized tools like Photoshop or Capture One for precision, advanced capabilities, and customization. However, for the majority of casual users wanting to fix their photos quickly, this eliminates the need to learn or pay for professional software.

Is my privacy protected with prompt-based editing?

Your photos stay on your device during editing, which is better for privacy than cloud-based alternatives. However, your text prompt is sent to Google's servers for processing. Google stores logs of your editing requests. If privacy is a major concern, you should understand this trade-off. For most users, the convenience of prompt-based editing outweighs these privacy considerations, but the choice depends on your personal priorities.

How do I get the best results when using prompt-based editing?

Be specific and concise with your prompts. Instead of "fix the background," try "remove the people in the background." Start with straightforward edits like removing objects or fixing blinks before attempting complex edits. If an edit doesn't work perfectly, try rephrasing your prompt more specifically. Understand that results are usually very good but not always perfect, so be prepared to accept minor imperfections or use traditional editing tools for refinement if needed.

When will this feature be available in my region?

Google first rolled out prompt-based editing in the U.S. and is now expanding it to India, Australia, and Japan. The company typically follows a staged rollout approach, expanding to new markets gradually. Check your Google Photos app for the "Help me Edit" feature. If you don't see it yet, it may arrive later as Google continues expanding globally.

Key Takeaways

- Natural language photo editing eliminates the need to learn complex software interfaces or adjust manual sliders

- On-device processing delivers faster editing and better privacy, though submitting a prompt still requires a connection to Google's servers

- C2PA metadata transparency helps users understand what's been edited and when AI modifications were applied

- Global expansion to India, Australia, and Japan reflects strategic markets for Google Photos growth

- The feature represents a broader shift toward AI-powered consumer tools that prioritize describing intent over learning interfaces

- Professional photography tools remain separate from this consumer-focused feature, which addresses different use cases

- Prompt-based editing is likely just the beginning, with video editing and multimodal prompts coming in future versions
