Ring Verify & AI Deepfakes: What You Need to Know [2025]

Security cameras are supposed to show you what actually happened. That's the whole point. You get a notification, pull up the footage, and you've got proof of who broke into your car or walked off with your porch package.

But what if that footage was fake? What if someone generated it entirely with AI?

That's the problem Ring is trying to solve with its new verification tool. Ring Verify launched in December 2025, and it promises to tell you whether a video is authentic. On the surface, that sounds perfect. Finally, a way to know if what you're seeing actually happened.

Except it doesn't quite work that way.

I've spent the last few weeks digging into what Ring Verify actually does, how it works, and more importantly, what it doesn't do. The answer is more complicated than Ring's marketing suggests, and it highlights something critical about the state of video authentication in 2025: we still don't have a reliable way to spot AI-generated deepfakes at scale.

Let me walk you through the reality of video verification, why it matters more than you think, and what this means for your home security.

TL;DR

- Ring Verify can only confirm that a video hasn't been edited since it was downloaded from Ring, not that it shows real events

- It won't catch AI-generated deepfakes because those are "original" files from Ring's perspective

- Videos fail verification if anything changes: cropping, brightness adjustment, compression, trimming even one second

- The tool is built on the C2PA standard, which means it checks integrity (has the file changed?), not whether the content is real

- Most TikTok "Ring footage" videos won't verify, but neither will legitimately edited clips you want to share

- The real problem: We need AI detection technology, not just integrity checking

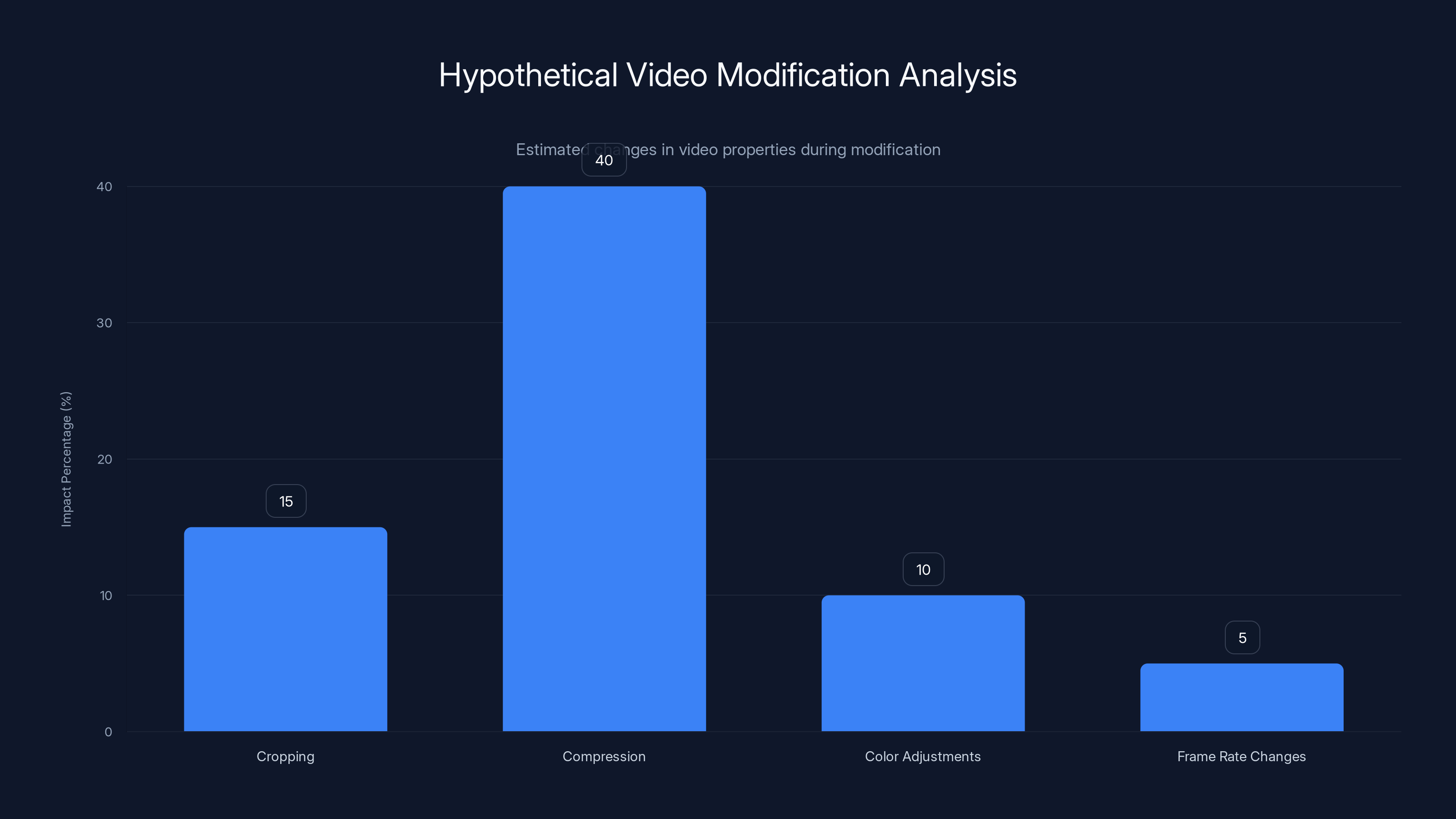

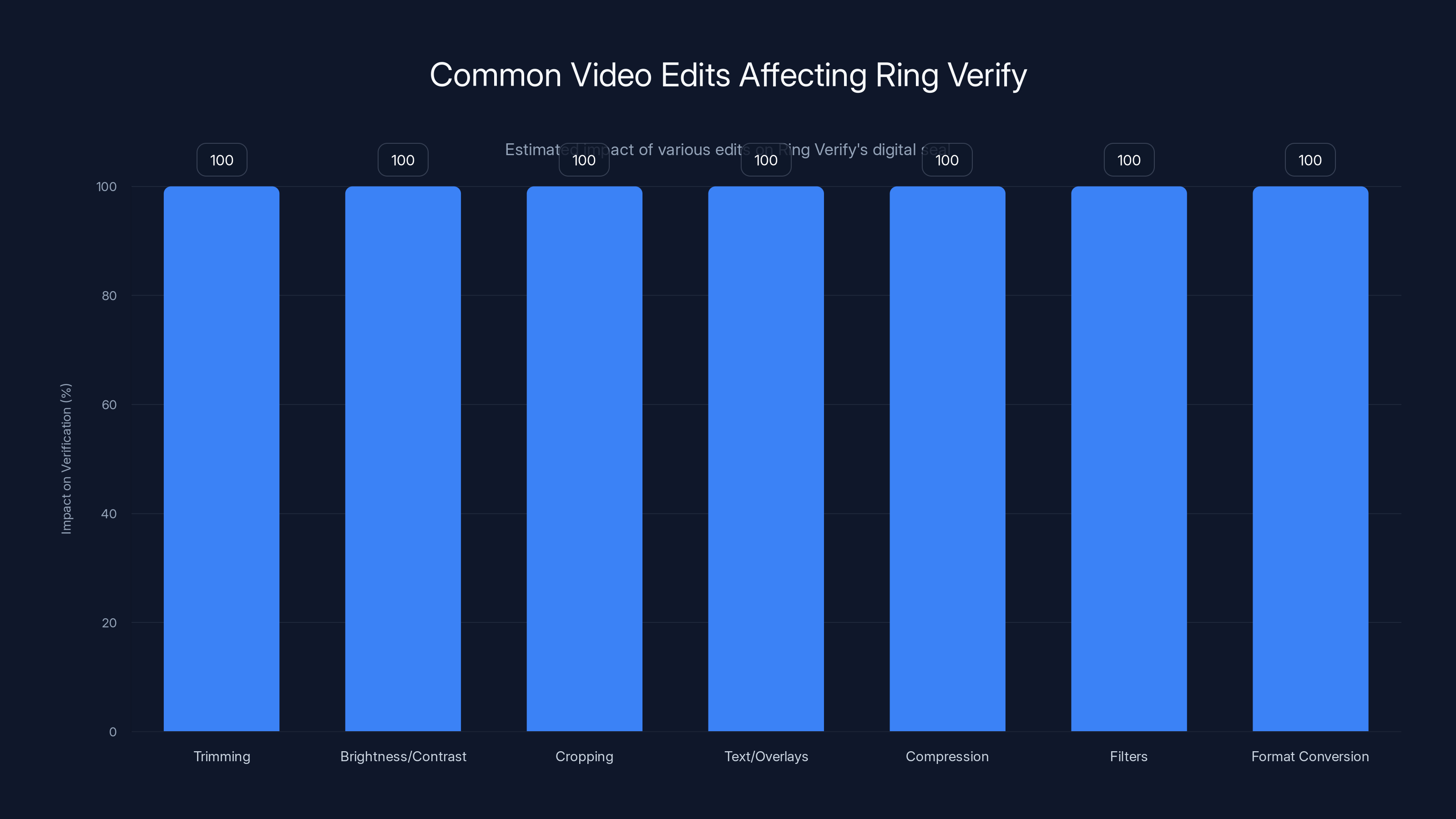

Estimated data showing potential modifications that could affect video verification. Cropping and compression are common changes.

What Ring Verify Actually Does (And Doesn't)

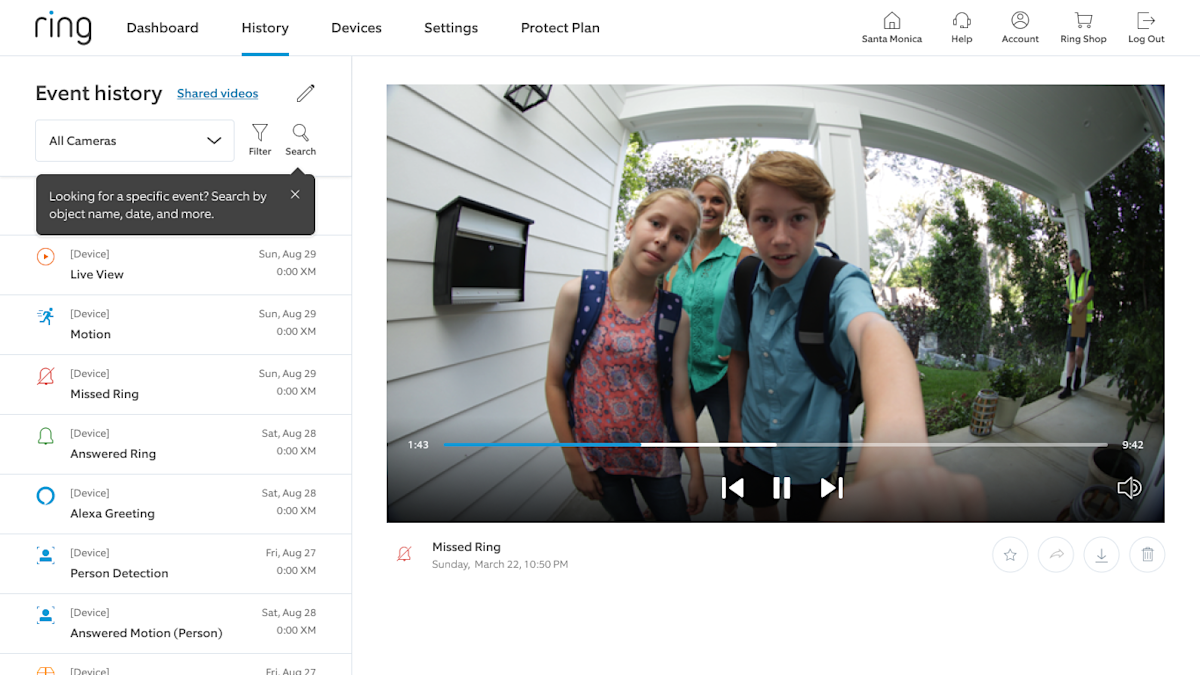

Let's start with what Ring Verify is designed to do. It's actually pretty straightforward: Ring added a "digital security seal" to all videos downloaded from its cloud storage starting in December 2025. When you upload a video to Ring Verify's website, the tool checks whether that digital seal is intact.

If the seal is intact, the video gets a "verified" badge. That means one thing and one thing only: the video hasn't been altered since you downloaded it from Ring.

That's it. That's the full scope of what this tool does.

Now, Ring's marketing language around this is careful. The company says the tool "verifies that Ring videos you receive haven't been edited or changed." Technically accurate, but it creates a perception problem. When people hear "verified," they think "authentic." They think it means the video actually happened, that it's genuine footage of a real event.

It doesn't mean that.

In principle, even fabricated footage could pass Ring Verify's test. If fake content somehow ended up in Ring's system (say, a Ring camera recording a screen that plays AI-generated video) and the downloaded file was never touched afterward, the seal would be intact.

But here's the actual limitation that matters most: Ring Verify won't verify videos that have been edited, cropped, filtered, or altered in any way after download. This includes:

- Trimming even a single second

- Adjusting brightness or contrast

- Cropping the frame

- Adding text or overlays

- Compressing for upload to social media

- Applying any filter or effect

- Converting to a different format

If you want to share a Ring video on social media and make even the smallest edit, it fails verification. The digital seal breaks. You get no verification badge.
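Why does a one-second trim or a brightness tweak break the seal? Integrity seals are typically anchored to a cryptographic hash of the file's bytes, and changing even a single bit produces an unrelated digest. The minimal Python sketch below uses SHA-256 from the standard library. C2PA commonly uses SHA-256 for its hash bindings, but which hash Ring's seal uses is an assumption here, and the byte strings merely stand in for real video files.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


# Stand-in for a downloaded video; a real file would be an MP4's full contents.
original = b"\x00\x00\x00\x18ftypmp42" + bytes(range(256)) * 4

# Simulate the smallest possible edit: flip one bit in one byte.
edited = bytearray(original)
edited[100] ^= 0x01
edited = bytes(edited)

print(digest(original) == digest(bytes(original)))  # True: untouched copy still matches
print(digest(original) == digest(edited))           # False: one flipped bit breaks the match
```

Cropping, re-encoding, or trimming changes far more than one bit, so from the seal's point of view every edit in the list above looks exactly like tampering.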

The C2PA Standard: How Ring Verify Works Under the Hood

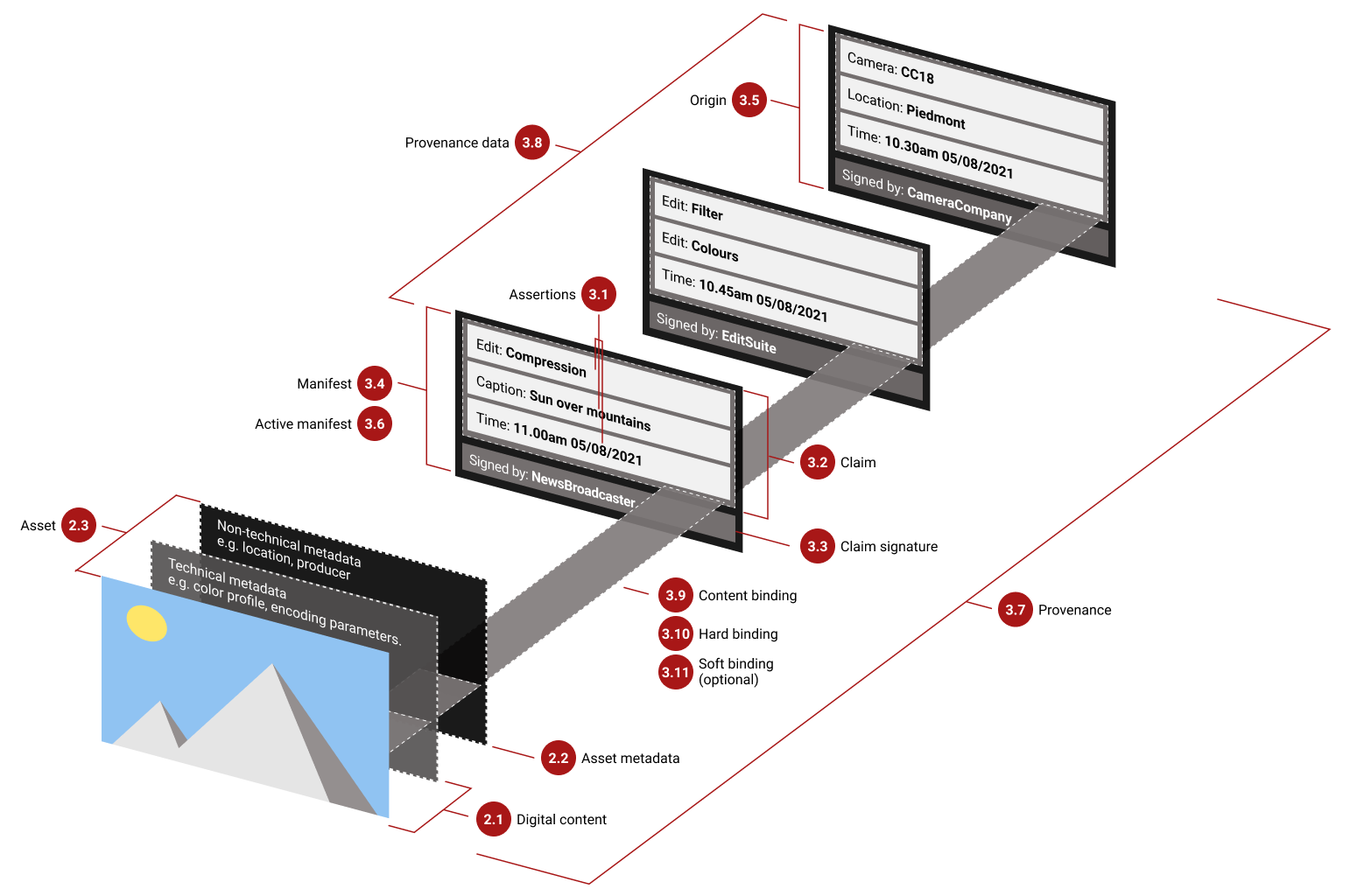

Ring Verify is built on something called C2PA, which stands for Coalition for Content Provenance and Authenticity. If you haven't heard of it, you're not alone. C2PA is industry infrastructure that most consumers have never encountered, but it's becoming increasingly important as AI-generated content becomes harder to spot.

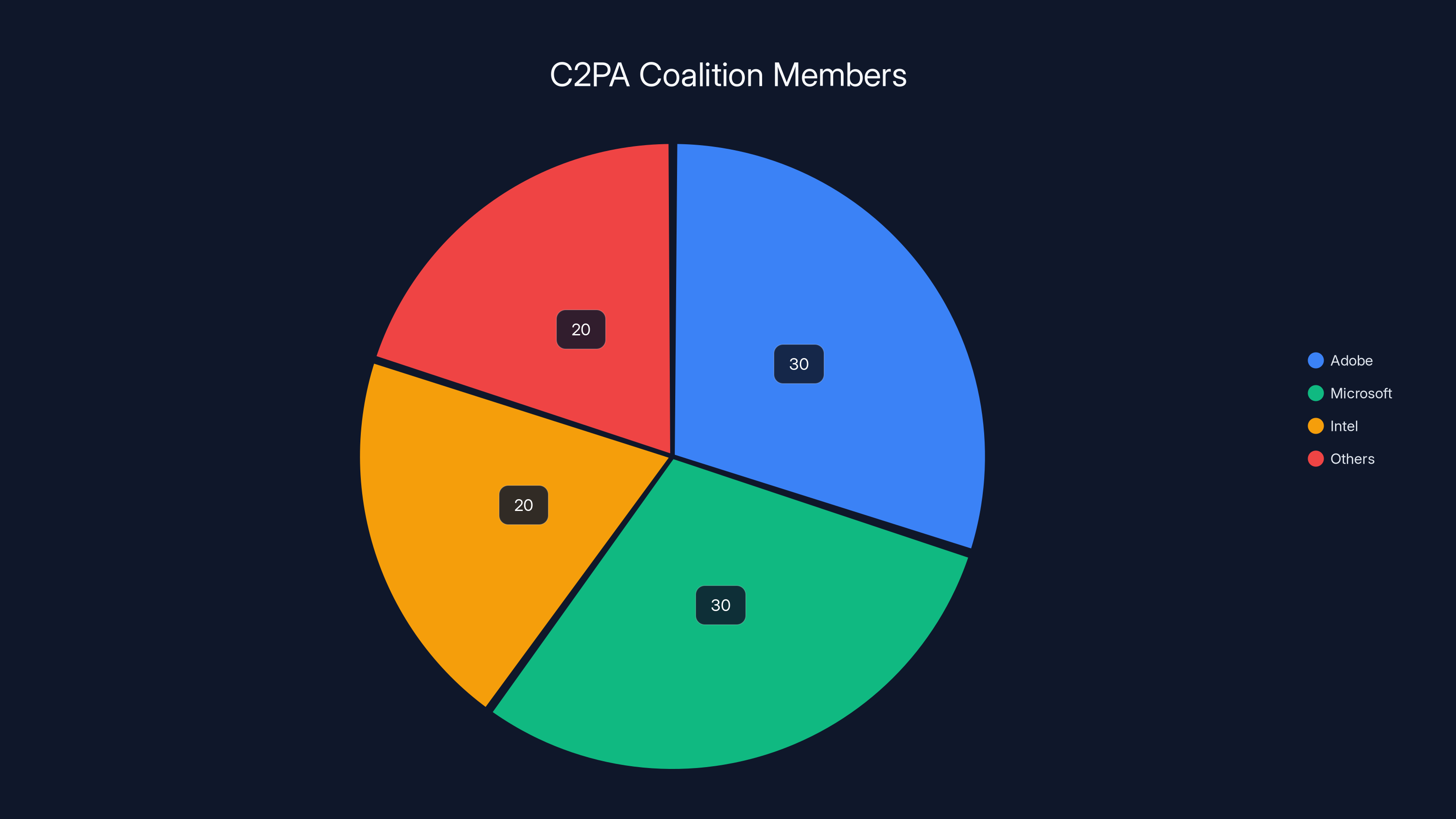

C2PA was developed by a coalition that includes Adobe, Microsoft, Intel, and other major tech companies. The basic idea is that creators can attach metadata to digital content that proves where it came from and whether it's been modified.

Think of it like a tamper seal on a bottle of medicine. If the seal is broken, you know the bottle may have been opened. If it's intact, you know the bottle hasn't been opened since it was sealed at the factory.

With C2PA, Ring can cryptographically sign videos when they're downloaded from the cloud. That signature becomes part of the file's metadata. When you upload it to Ring Verify, the tool checks whether that signature is still valid.
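A simplified model of sign-at-download and check-at-upload looks like this. Real C2PA manifests use public-key signatures backed by X.509 certificates and are embedded in the file itself; this sketch substitutes an HMAC from Python's standard library, keeps the seal next to the file, and uses a hypothetical key, so it illustrates the idea rather than Ring's actual implementation.

```python
import hashlib
import hmac

# Hypothetical server-side secret. A real C2PA signer would use a private key
# and certificate, so that anyone could check the signature with the public key.
SERVER_KEY = b"hypothetical-ring-signing-key"


def seal(video: bytes, key: bytes = SERVER_KEY) -> bytes:
    """Compute a keyed seal over the exact video bytes at download time."""
    return hmac.new(key, video, hashlib.sha256).digest()


def verify(video: bytes, tag: bytes, key: bytes = SERVER_KEY) -> bool:
    """Recompute the seal and compare it in constant time."""
    return hmac.compare_digest(seal(video, key), tag)


downloaded = b"video bytes exactly as served by the cloud"
tag = seal(downloaded)  # attached when the user downloads the clip

print(verify(downloaded, tag))            # True: unchanged since download
print(verify(downloaded + b"\x00", tag))  # False: any change invalidates the seal
```

The design choice that matters is what the seal covers: the bytes, and only the bytes. Nothing in `verify` looks at what the pixels depict.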

Here's the important part: C2PA is fundamentally designed to verify integrity, not authenticity. It answers the question "has this file been modified since it was created?" It does not answer the question "is this file real, or is it AI-generated?"

Those are two completely different problems.

Let's say someone uses NVIDIA's generative AI tools to create a fake video of someone stealing from your porch. They generate the video on their own computer. They never touch Ring's servers. They never download a Ring video. They just create a fake one from scratch and post it to TikTok or Twitter.

From an integrity standpoint, that fake video is flawless. Nothing was modified after the fact; it was created that way. An integrity check can't flag it, because C2PA isn't designed to detect whether something is generated or real.

Ring Verify would be completely useless in that scenario.
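A short sketch makes the integrity-versus-authenticity gap concrete. It reuses an HMAC seal as a stand-in for C2PA signing, and both keys are hypothetical. A fabricated clip sealed by its own creator checks out perfectly under the creator's key; it fails only against Ring's key, and that failure tells you where the file didn't come from, not whether its contents are real.

```python
import hashlib
import hmac

RING_KEY = b"hypothetical-ring-key"      # stands in for Ring's signing identity
FORGER_KEY = b"hypothetical-forger-key"  # anyone can create a key of their own


def seal(data: bytes, key: bytes) -> bytes:
    """Keyed seal over the exact bytes of a file."""
    return hmac.new(key, data, hashlib.sha256).digest()


def is_intact(data: bytes, tag: bytes, key: bytes) -> bool:
    """Integrity check: does the seal still match these bytes under this key?"""
    return hmac.compare_digest(seal(data, key), tag)


# A clip generated from scratch: no camera and no Ring servers involved.
fake_clip = b"AI-generated doorbell footage"
forger_tag = seal(fake_clip, FORGER_KEY)

print(is_intact(fake_clip, forger_tag, FORGER_KEY))  # True: perfect integrity
print(is_intact(fake_clip, forger_tag, RING_KEY))    # False: only shows Ring didn't seal it
```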

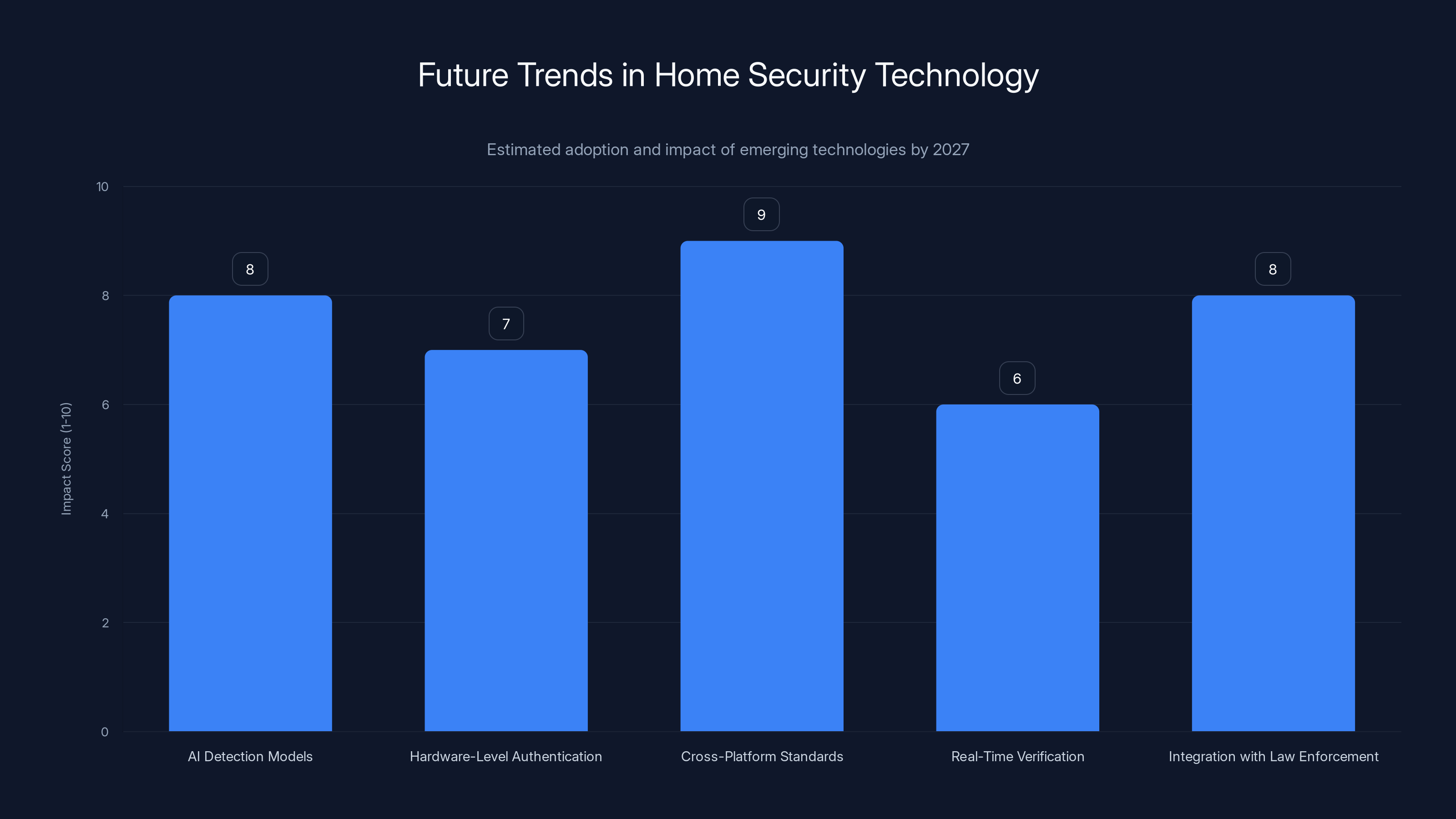

Estimated data suggests that cross-platform standards and AI detection models will have the highest impact on the future of home security by 2027.

The AI Deepfake Problem That Ring Verify Doesn't Solve

This is where things get genuinely concerning. The rise of generative AI has made video forgery trivial. Tools like OpenAI's Sora, Stability AI's video generation models, and other video synthesis platforms can create photorealistic footage from text descriptions.

It's not perfect yet. If you know what to look for, you can often spot the AI-generated videos. But the quality is improving rapidly, and by the time you read this, the detection task has probably gotten harder.

The scary part: there's no widely deployed authentication mechanism that can prove "this video was created with a real camera and not with AI." Not C2PA as Ring uses it. Not any other standard in broad consumer use.

C2PA can tell you a video hasn't been modified. It cannot tell you whether a video is real or synthetic.

Here's a concrete example. Let's say someone creates a viral TikTok video that appears to be Ring camera footage showing something outrageous or funny. The video looks convincing. It has the timestamp overlay, the characteristic compression artifacts, the angle that looks like a doorbell camera.

But it was actually generated with AI. The person never had a Ring camera. They never recorded anything. They just wrote a prompt and let the AI do the work.

You could feed that video into Ring Verify and it would say "cannot verify" because Ring can't verify videos that weren't downloaded from Ring's system. Which makes sense. But you'd still have no way to know whether it's real or fake. You'd only know that the file didn't come from Ring with its seal intact.

Why Ring Can't Tell You How a Video Was Faked

Let's say you upload a video to Ring Verify and it fails the test. The verification fails. You get no badge.

Ring could theoretically tell you what happened. It could analyze the differences between the original and the modified version. It could say: "The video was cropped by 15% on the left side and compressed to 60% of the original file size."

But Ring doesn't do that. If verification fails, Ring just says "cannot verify." You get no information about what changed, how it changed, or why it failed.

This is a limitation of Ring's implementation, not of C2PA standards generally. C2PA supports detailed metadata about modifications. But Ring's tool is stripped down. You either get a green checkmark or you don't.

Why would Ring do this? Probably because detailed modification information could become a liability. If Ring told you exactly what changed, it might imply that Ring knows what the "correct" version should look like. That could open up legal questions if someone claims the original was fraudulent or if Ring's verification process is somehow compromised.

It's easier for Ring to just say "verified or not verified" and punt the investigation to you.
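The gap between a bare pass/fail and the richer reporting C2PA allows can be sketched as follows. The manifest is a plain Python dict, a heavily simplified stand-in for the real C2PA data model (which is a signed binary structure). The action labels such as `c2pa.cropped` and `c2pa.transcoded` follow C2PA's naming convention, while the `binary_result` and `explain` helpers are hypothetical.

```python
# Hypothetical, simplified manifest shape. Real C2PA manifests are signed
# binary structures; this dict only mimics their "actions" assertion.
manifest = {
    "claim_generator": "hypothetical-editor/1.0",
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {"action": "c2pa.opened"},
                    {"action": "c2pa.cropped"},
                    {"action": "c2pa.color_adjustments"},
                    {"action": "c2pa.transcoded"},
                ]
            },
        }
    ],
}


def binary_result(seal_ok: bool) -> str:
    """What a stripped-down checker reports: a single pass/fail string."""
    return "verified" if seal_ok else "cannot verify"


def explain(manifest: dict) -> list:
    """Collect the recorded edit actions, the detail C2PA can carry."""
    actions = []
    for assertion in manifest.get("assertions", []):
        if assertion.get("label") == "c2pa.actions":
            actions.extend(a["action"] for a in assertion["data"]["actions"])
    return actions


print(binary_result(False))  # cannot verify
print(explain(manifest))
# ['c2pa.opened', 'c2pa.cropped', 'c2pa.color_adjustments', 'c2pa.transcoded']
```

One caveat: an actions list like this exists only when the editing tool is C2PA-aware and signs a new manifest. A clip run through an ordinary editor or a social platform's encoder may arrive with its manifest stripped or invalid, so even a more talkative checker would often have nothing to report.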

Who Ring Verify Actually Helps (And Who It Doesn't)

Let's be honest about the use cases where Ring Verify actually provides value.

If you're a landlord with security camera footage from a Ring camera, and you want to share that footage with law enforcement or a court, Ring Verify can help. The verified badge proves that what you're showing is exactly what the camera recorded. You didn't edit it. You didn't crop it. You didn't mess with the exposure. That's genuinely useful in a legal context.

Similarly, if you're concerned that someone intercepted and modified a Ring video before it reached you, the verification process can give you confidence that what you downloaded from Ring's servers is exactly what was actually recorded.

But if your concern is about AI-generated deepfakes, Ring Verify does almost nothing for you. Here's why:

Deepfakes don't come from Ring's system. They're generated independently. They don't have Ring's digital seal. They won't verify. But that doesn't tell you they're fake. It just tells you they didn't come from Ring, which you probably already suspected if you didn't own the camera.

Moreover, if someone wanted to create a convincing fake Ring video, they could just generate the video file, avoid Ring's verification system entirely, and distribute it however they wanted. The fact that it won't verify doesn't matter to them because they have no reason to run it through Ring's verification tool in the first place.

The people most likely to use Ring Verify are people who don't need it. The people who would need it most (people trying to spot AI-generated fakes) have almost no way to use it effectively.

Limitations of the Verification System That Matter

Beyond the AI deepfake problem, there are other limitations to Ring Verify that constrain its usefulness:

End-to-End Encryption Breaks Verification

If you have end-to-end encryption (E2EE) turned on for your Ring videos, they can't be verified. This creates a paradox: the more privacy-conscious you are (and thus the more likely you are to turn on E2EE), the less able you are to prove your videos haven't been edited.

Ring's reasoning here is understandable. If videos are encrypted, Ring's servers can't attach the digital seal without decrypting them first, which breaks the whole security model. But it means that people most concerned about security end up with less verifiable footage.
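A toy example shows why. With E2EE the server only ever holds ciphertext, so any seal it computes binds to scrambled bytes, not to the video the viewer eventually sees. The XOR keystream below is NOT real encryption and does not describe Ring's E2EE scheme; it exists only to produce ciphertext that differs from the plaintext, and the key is hypothetical.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream built from repeated SHA-256 calls; not real encryption."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def toy_crypt(key: bytes, data: bytes) -> bytes:
    """XOR with the keystream; the same call both encrypts and decrypts."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


user_key = b"hypothetical key that never leaves the user's devices"
video = b"plaintext doorbell clip"

ciphertext = toy_crypt(user_key, video)               # the only thing the server stores
server_seal = hashlib.sha256(ciphertext).hexdigest()  # all the server is able to hash

decrypted = toy_crypt(user_key, ciphertext)           # happens on the viewer's device
print(decrypted == video)                                    # True
print(hashlib.sha256(decrypted).hexdigest() == server_seal)  # False
```

The server could seal the ciphertext, but that seal describes scrambled bytes, not the clip you'd hand to a court. Sealing the plaintext would require the very key that E2EE keeps away from the server.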

Videos Must Be Downloaded and Uploaded Fresh

You have to download the video from Ring's app, then upload it to Ring Verify's website. For a police investigation, this is workable. For casual sharing or social media, it's friction that most people won't accept.

Moreover, rather than passing along a downloaded file, Ring recommends having the recipient request a direct link from the Ring app. That's secure, but it sidesteps Ring Verify entirely for everyday use.

The Verification Window Is Limited

Ring Verify only works for videos downloaded since the feature launched in December 2025. Any file you downloaded before then doesn't carry the digital seal, so none of it can be verified.

This limitation will fade over time, but for now it means footage you saved before launch, possibly your most important historical security footage, is unverifiable.

Compression Ruins Everything

When you upload a video to TikTok, Instagram, YouTube, or virtually any social media platform, the platform re-encodes it for streaming. This compression is lossy. The file changes. The digital seal breaks. The video fails verification.

So if you want proof that a Ring video is authentic, you basically can't share it on social media while keeping it verifiable. You have to choose: share it widely, or keep it verifiable. You can't do both.
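To see why compression and exact seals don't mix, compare a cryptographic hash with a compression-tolerant fingerprint. The toy "average hash" below is a simple form of perceptual hashing and is not part of Ring Verify; the 16 numbers stand in for a tiny grayscale frame, and the "recompression" simply nudges pixel values the way lossy encoding does.

```python
import hashlib


def average_hash(pixels: list) -> str:
    """Toy perceptual hash: '1' where a pixel is brighter than the frame mean."""
    mean = sum(pixels) / len(pixels)
    return "".join("1" if p > mean else "0" for p in pixels)


def sha256_hex(pixels: list) -> str:
    """Cryptographic hash of the exact pixel bytes."""
    return hashlib.sha256(bytes(pixels)).hexdigest()


# A tiny 16-pixel grayscale "frame" (values 0-255).
frame = [10, 200, 30, 220, 15, 210, 25, 230, 12, 205, 28, 215, 18, 225, 22, 235]

# Simulated lossy re-encode: small, uneven nudges to every pixel value.
recompressed = [p + (3 if i % 2 else -2) for i, p in enumerate(frame)]

print(average_hash(frame) == average_hash(recompressed))  # True: looks "the same"
print(sha256_hex(frame) == sha256_hex(recompressed))      # False: a seal would break
```

That tolerance is also the trade-off: a fingerprint that shrugs off compression can shrug off small malicious edits too, which is why integrity seals are deliberately exact.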

How Bad Actors Will Adapt to Ring Verify

Assuming Ring Verify becomes widely used, we should think about how people will work around it.

The most obvious move: if you want to create a fake Ring video and make it seem real, you just won't run it through Ring Verify. You'll post it directly to social media. You'll host it on an external server. You'll send it via email or messaging apps. You'll do anything except put it on Ring's verification system.

Ring Verify requires that you actively choose to verify a video. It doesn't automatically verify all videos that claim to be from Ring. This is good for simplicity but bad for actually catching fakes.

A more sophisticated approach: create a fake video that looks like a Ring video, post it to social media, and wait for someone to demand verification. When they ask "can you verify this?" you reply "oh, I didn't save the original, I only have this compressed version." Bingo. It can't be verified, but you never claimed it could be.

The adversarial dynamics are important here. Ring Verify is a tool for proving a Ring video is unmodified, not for catching fakes. The burden is on you to verify videos that seem suspicious. But most people won't bother. They'll just watch viral Ring videos on TikTok and assume they're real because they look real and because TikTok is full of security camera footage.

The Bigger Picture: What We Actually Need

Ring Verify is a step in the right direction, but it's solving the wrong problem.

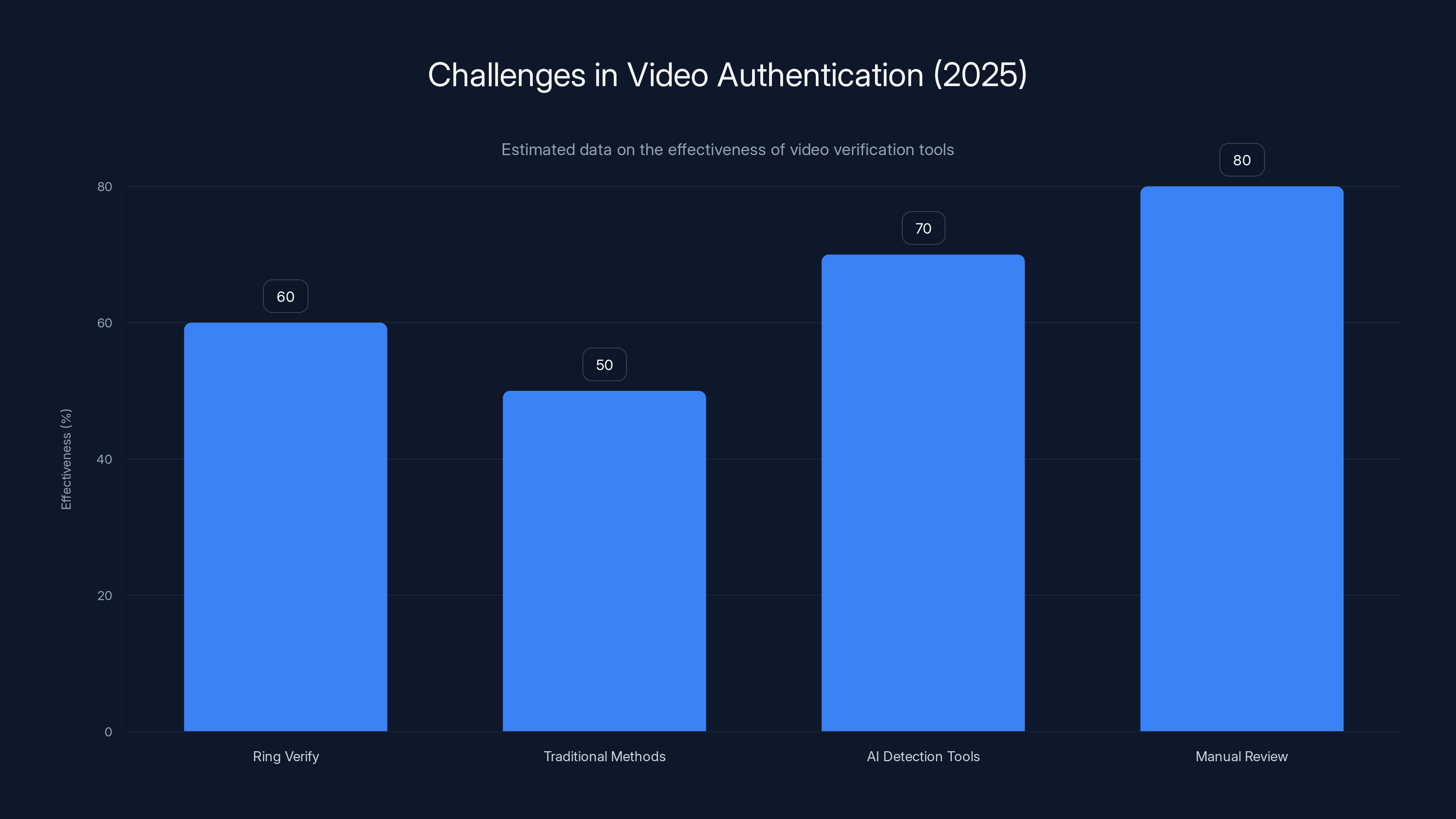

What we actually need is not integrity verification (proving a video hasn't been modified). We need authenticity verification (proving a video is real and not AI-generated).

These are completely different technical challenges. Integrity verification just requires a cryptographic signature. Authenticity verification requires AI detection models that can distinguish real video from synthetic video.

The bad news: we don't have reliable AI detection yet. The good news: we're getting closer.

Researchers at MIT, UC Berkeley, and other institutions are working on synthetic media detection. There are companies like Truepic and SenseTime building tools to detect deepfakes and generated content.

But most of these approaches have a fundamental limitation: they're reactive. They try to detect deepfakes after the fact, using AI detection models. But as generative models get better, detection gets harder. The arms race favors the attackers.

The real solution, long term, involves hardware-level authentication. This could mean:

- Cryptographic signatures embedded in video at the point of capture (the camera itself)

- Hardware-based content provenance tracking

- Integration of authentication standards across entire device ecosystems

- Real-time AI detection built into devices before video is stored
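A rough sketch of the first idea in that list, under heavy assumptions: a hypothetical per-device key signs each clip at capture, and the cloud counter-signs only clips that carry a valid device signature, so the final seal covers both the bytes and the claim that a particular camera produced them. HMAC stands in for the public-key signatures and certificates a real design would need, and nothing here describes Ring's actual hardware.

```python
import hashlib
import hmac
from typing import Optional, Tuple

DEVICE_KEY = b"hypothetical key stored in one camera's secure hardware"
CLOUD_KEY = b"hypothetical cloud signing key"


def sign(key: bytes, data: bytes) -> bytes:
    """Keyed signature stand-in over the given bytes."""
    return hmac.new(key, data, hashlib.sha256).digest()


def capture(raw: bytes) -> Tuple[bytes, bytes]:
    """On the camera: sign the clip before it ever leaves the device."""
    return raw, sign(DEVICE_KEY, raw)


def cloud_seal(clip: bytes, device_sig: bytes) -> Optional[bytes]:
    """In the cloud: only counter-sign clips carrying a valid capture signature."""
    if not hmac.compare_digest(sign(DEVICE_KEY, clip), device_sig):
        return None
    return sign(CLOUD_KEY, clip + device_sig)


clip, dev_sig = capture(b"raw sensor output")
print(cloud_seal(clip, dev_sig) is not None)             # True: chain starts at capture
print(cloud_seal(b"uploaded AI clip", dev_sig) is None)  # True: no valid capture signature
```

Even then, the weak points move rather than disappear: the device key has to stay secret, and a real camera pointed at a screen playing synthetic video would still produce a validly signed clip.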

Ring is starting to do some of this with C2PA integration. But it's not enough. As long as videos can be generated entirely outside the Ring ecosystem, Ring Verify can't touch them.

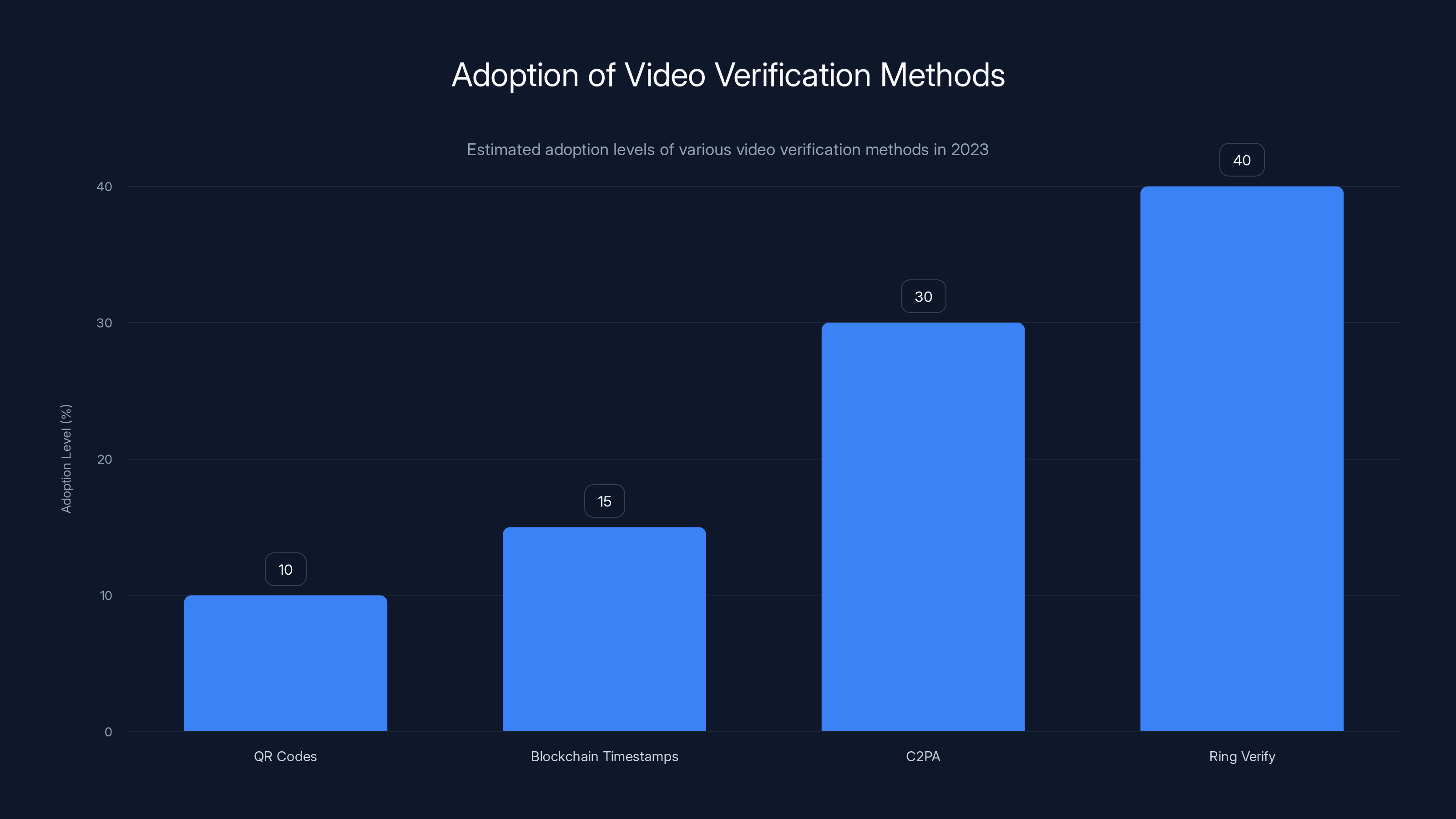

C2PA and Ring Verify show higher adoption than earlier attempts such as QR codes and blockchain timestamps. (Estimated data)

What Happened to Video Verification Before Ring?

Ring Verify didn't come out of nowhere. The company has been thinking about authentication for years.

Back in 2023, Ring experimented with other verification approaches, including physical QR codes on devices and blockchain-based timestamp verification. None of these gained significant traction.

C2PA offered a more promising path because it has industry backing. When Adobe and Microsoft put serious engineering resources behind a standard, other companies are more likely to adopt it.

But adoption has been slower than expected. Most cameras, most editing software, and most platforms still don't support C2PA. Ring is one of the first mainstream products to implement it for consumer use.

The question now is whether this creates momentum or whether Ring Verify becomes a feature that almost nobody uses because the friction is too high and the benefit is unclear.

How This Affects the Smart Home Security Industry

Ring's move to implement C2PA verification signals something important: smart home companies are waking up to the authentication problem.

If Ring Verify becomes standard, we could see verification built into other security products. Arlo, Wyze, Logitech, and other camera makers might follow.

More likely: this becomes a feature that exists but is rarely used outside of legal/law enforcement contexts.

Here's why I think that: Authentication is a coordination problem. It only provides value if enough parties adopt it. If Ring implements C2PA but law enforcement agencies don't have tools to verify C2PA metadata, what's the point? If social media platforms don't recognize C2PA verification, what's the point?

These coordination problems are hard to solve. Ring can do its part. But unless the entire ecosystem (camera makers, software developers, platforms, and law enforcement) agrees to prioritize authentication, it remains a niche feature.

The Security Implications for Homeowners

Let's talk about what this actually means for you if you own a Ring camera.

First, the good news: Ring Verify doesn't break anything. Your camera still works exactly the same way. You get notifications, you can watch live view, you can share videos. Ring Verify is purely optional.

Second, the good news continues: if you ever need to prove to a court or law enforcement that a video is authentic, Ring Verify can help. That's legitimately valuable in the rare situations where it matters.

Third, the complicated part: Ring Verify might give you a false sense of security. You might think that because Ring has verification, you're protected against deepfake fraud. You're not. Ring Verify doesn't prevent someone from creating a fake video of, say, a delivery person stealing a package.

The real security lesson here is that video will always be vulnerable to manipulation and forgery. As long as someone can generate video from text prompts, convincing video forgery is possible. No verification system changes that fundamental reality.

What you can do instead:

- Trust video as evidence only in context. A video alone isn't enough to prove someone committed a crime.

- Combine video with other evidence. Timestamps, metadata, witness testimony, financial records.

- Be skeptical of viral videos that seem too perfect. If it's outrageous and it's on TikTok, there's a chance it's not real.

- Use Ring Verify for legal contexts but don't rely on it for everyday security.

- Keep your Ring account secure with strong passwords and two-factor authentication.

Timeline: How We Got Here and Where We're Going

Understanding the history of video authentication helps explain why Ring Verify is the way it is.

2018-2020: First Concerns About Deepfakes

Researchers at multiple institutions publish papers showing that AI-generated video is becoming photorealistic. Early deepfake videos mostly focus on celebrity faces swapped onto other bodies. The technology is crude but improving rapidly.

2021-2022: Generative Image Models Emerge

OpenAI's DALL-E and Stability AI's Stable Diffusion text-to-image models launch. It becomes clear that generative AI will soon be able to create video from text. Companies and researchers realize that detecting synthetic content is becoming critical.

2022-2023: C2PA Standard Adoption

Adobe, Microsoft, Intel, and others formalize the C2PA standard for content provenance. The focus is on allowing creators to prove when and where content was created and whether it's been modified.

2023-2024: Generative Video Goes Mainstream

OpenAI releases Sora (initially limited, then broader access). Other companies release competing products. Creating convincing video from text becomes trivial for anyone with compute access.

Late 2024: Ring Announces Verify Feature

Ring announces that verification is coming in December 2025. The industry realizes that even integrity verification (proving a video hasn't been modified) is valuable, even if it doesn't solve the AI deepfake problem.

December 2025: Ring Verify Launches

The tool goes live. Early adoption is slow. Most people don't know it exists or understand what it does.

2025-2026 and Beyond: The Real Problem Emerges

As generative video gets even better, and as deepfakes become more prevalent in fraud and disinformation, pressure builds for real authentication solutions. Integrity verification is nice, but authenticity verification is what's actually needed.

Comparing Ring Verify to Other Authentication Approaches

Ring Verify isn't the only approach to video authentication. Let's compare it to some alternatives:

| Approach | How It Works | Strengths | Weaknesses |
|---|---|---|---|
| Ring Verify (C2PA) | Cryptographic signature proves the video hasn't been modified since download | Industry standard, useful as legal evidence, built into Ring's download process | Doesn't detect AI-generated video, requires an unmodified download, friction to use |
| Blockchain Timestamping | Records the video's hash on a public blockchain | Immutable, transparent, verifiable | Slow, expensive, doesn't prove authenticity, doesn't prevent modification |
| Hardware Authentication | Camera embeds a signature at the point of capture | Strongest guarantee of provenance | Requires hardware changes, only works with trusted devices, doesn't help with existing video |
| AI Detection Models | Machine learning classifies real vs. synthetic video | Could catch deepfakes, scalable | Accuracy uncertain, arms race with generative AI, no legal standing yet |
| Watermarking | Invisible watermark embedded in the video indicates origin | Can be designed to survive compression, hard to remove | Can be stripped by sophisticated attackers, doesn't prevent forgery |
| Manual Review | Humans watch the video and assess credibility | Most accurate option for now, applies human judgment | Doesn't scale, subject to bias, expensive, slow |
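The blockchain timestamping row rests on one idea: each record commits to the hash of the one before it, so rewriting history breaks every later link. A minimal in-memory hash chain (a toy with no network, consensus, or real blockchain) shows both what that buys and the weakness noted in the table: it proves a hash was recorded at a given time, not that the video behind it is genuine. The `record` and `chain_is_valid` helpers are hypothetical.

```python
import hashlib
import json
import time


def entry_hash(entry: dict) -> str:
    """Hash a ledger entry deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


def record(chain: list, video_hash: str) -> None:
    """Add an entry that commits to the hash of the previous entry."""
    prev = entry_hash(chain[-1]) if chain else "0" * 64
    chain.append({"video": video_hash, "time": time.time(), "prev": prev})


def chain_is_valid(chain: list) -> bool:
    """Every entry must still match the 'prev' recorded by its successor."""
    return all(chain[i]["prev"] == entry_hash(chain[i - 1]) for i in range(1, len(chain)))


ledger = []
for clip in (b"clip-1", b"clip-2", b"clip-3"):
    record(ledger, hashlib.sha256(clip).hexdigest())

print(chain_is_valid(ledger))  # True
ledger[0]["video"] = hashlib.sha256(b"swapped clip").hexdigest()
print(chain_is_valid(ledger))  # False: entry 1 no longer matches entry 0
```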

Ring Verify's position in this landscape is interesting. It's not the strongest technical approach, but it's one of the few that's already deployed and used by real people.

Practical Use Cases for Ring Verify Today

Let's get specific about situations where Ring Verify actually helps:

Police Investigations

If you have a break-in or theft and police request your Ring footage, Ring Verify adds credibility to what you provide. The verified badge shows you didn't edit the video to hide something. This is particularly valuable if the footage contradicts the suspect's story.

Insurance Claims

Insurers have to watch for claims backed by doctored video. Ring Verify gives them a way to check that submitted Ring footage hasn't been modified. If your claim includes verified video, the insurance company is more likely to trust it.

Landlord-Tenant Disputes

If you're a landlord with security camera footage showing damage or unauthorized access, Ring Verify shows the video is unchanged since you downloaded it from Ring. This strengthens your position in disputes.

Legal Proceedings

In court or arbitration, verified Ring video may carry more weight as evidence. The seal documents that the file hasn't changed since download, which supports, though doesn't replace, a proper chain of custody.

Proving Your Innocence

If you're accused of doctoring Ring footage you've provided, you can use Ring Verify to show that you didn't tamper with it. The verified badge shows the file is exactly what Ring delivered when you downloaded it.

None of these use cases involve catching AI-generated deepfakes. All of them involve proving that a video from a Ring camera hasn't been modified after download. It's useful, but it's a narrower use case than the marketing suggests.

The Honest Limitations You Should Know

Let me be direct about what Ring Verify can't do:

It can't prove a video is real. It can only prove it hasn't been modified.

It can't catch AI deepfakes. Those would come from outside the Ring system anyway.

It can't tell you if a video was shot with the camera pointed at a monitor playing pre-recorded video (a simple spoofing technique).

It can't verify videos compressed for social media.

It can't verify videos that have been edited in any way.

It can't tell you what changed if verification fails.

It can't prove that the person sharing the video is the person who owns the camera.

It can't prevent screenshots or screen recordings of video, which can then be edited and shared.

It's not foolproof if Ring's servers are compromised or if someone gains unauthorized access to your Ring account.

Understanding these limitations is crucial. If you think Ring Verify can do more than it can, you might make security decisions based on false confidence.

What This Means for the Future of Home Security

Ring Verify is a sign of where the industry is heading, but it's probably not the final answer.

The next steps will likely involve:

Better AI Detection Models

As generative video improves, detection research will need to keep pace. By 2026-2027, we might have AI detection models sophisticated enough to be useful, though never perfect.

Hardware-Level Authentication

Future cameras might include specialized hardware that makes it harder to spoof or manipulate video at the source. This could mean dedicated cryptographic processors or tamper-evident hardware markers.

Cross-Platform Standards

C2PA will likely expand, and we'll see more devices and platforms adopting it. This creates network effects that make verification actually useful.

Real-Time Verification

Instead of verifying video after download, future systems might provide real-time authenticity assessment. You'd see a live indicator telling you whether footage is genuine or suspicious.

Integration with Law Enforcement

Police departments and prosecutors will build tools to verify C2PA metadata at scale. This creates demand among consumers who know they might need to prove video authenticity someday.

But here's the hard truth: authentication and AI detection will never be perfect. As long as someone can invest enough compute power into fooling the system, perfect fakes will be possible. The goal isn't to make perfect fakes impossible. The goal is to raise the bar high enough that only bad actors with serious resources can succeed.

Ring Verify raises the bar slightly. It makes it easier to prove video hasn't been modified, which is useful. But it doesn't fundamentally solve the problem of AI-generated video fraud.

How to Use Ring Verify Effectively

If you have a Ring camera and want to actually use Ring Verify, here's how:

Step 1: Download Your Video

Open the Ring app, find the video you want to verify, and download it to your device. This is crucial: you need to download the original file, not a screenshot or screen recording.

Step 2: Don't Edit It

Do not crop it, adjust brightness, apply filters, compress it, or make any modifications whatsoever. Even trimming a single second breaks the verification.

Step 3: Visit Ring Verify

Go to Ring's verification website and upload your unmodified video file.

Step 4: Get Your Result

You'll get either a "verified" badge or a "cannot verify" message. If verified, you now have proof that the video hasn't been modified since download.

Step 5: Use Verification Appropriately

If you need to provide the video to police or for a legal proceeding, include the verification result as evidence that the file hasn't been modified. If you want to share it on social media, expect to lose verification, since editing the clip or letting the platform recompress it breaks the seal.

It's a simple process, but the friction is real. Most people won't bother. Which means Ring Verify likely remains a niche tool for legal and law enforcement use.
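One practical habit fits Steps 1 and 2: fingerprint the file right after you download it and check again right before you upload, so you know your copy is byte-for-byte unchanged. This is a purely local sanity check that assumes nothing about Ring's service; it can't tell you whether Ring Verify will accept the file, only whether your copy changed. The file name is hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large videos needn't fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def unchanged_since_download(path: Path, recorded: str) -> bool:
    """True if the file's bytes still match the fingerprint taken at download."""
    return file_sha256(path) == recorded


with tempfile.TemporaryDirectory() as tmp:
    video = Path(tmp) / "front_door_clip.mp4"  # hypothetical downloaded file
    video.write_bytes(b"pretend video bytes")
    fingerprint = file_sha256(video)  # record this right after downloading
    print(unchanged_since_download(video, fingerprint))  # True
    video.write_bytes(b"pretend video bytes, trimmed")
    print(unchanged_since_download(video, fingerprint))  # False
```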

The Verdict: Is Ring Verify Worth Caring About?

If you're a regular Ring user worried about AI deepfakes, here's the honest answer: Ring Verify doesn't solve that problem. You should still be skeptical of viral Ring videos on social media. You should still consider video alone as insufficient proof of anything important. You should still use common sense and critical thinking when evaluating video evidence.

But if you're in a situation where you might need to prove video authenticity to police, a court, or an insurance company, Ring Verify adds meaningful value. It creates a cryptographically verifiable record that the video you're providing hasn't been tampered with.

That's genuinely useful, even if it's not the silver bullet that solves deepfakes.

Ring Verify is a tool that improves the reliability of one specific type of evidence (unmodified video from Ring cameras) in specific contexts (legal, law enforcement, insurance). It doesn't revolutionize security. It doesn't prevent fraud. It doesn't catch AI-generated fakes.

But it does make it harder to lie about whether a Ring video has been edited. In a world where video evidence is becoming increasingly important and increasingly vulnerable to manipulation, that's worth something.

FAQ

What exactly does Ring Verify do?

Ring Verify checks whether a Ring video has been modified since you downloaded it from Ring's cloud storage. If the video hasn't changed at all, it gets a "verified" badge. This proves that what you're showing is exactly what the camera recorded, with no edits, crops, brightness adjustments, or compression.

Can Ring Verify detect AI-generated deepfakes?

No, it cannot. Ring Verify can only prove that a video hasn't been edited after downloading. It cannot determine whether a video is real or AI-generated. If someone creates a deepfake entirely outside of Ring's system and posts it to social media, Ring Verify has no way to detect it.

How does C2PA work with Ring cameras?

When you download a video from Ring's cloud storage, the company cryptographically signs it using C2PA standards. This creates a digital seal that proves the file hasn't been tampered with. When you upload it to Ring Verify, the tool checks whether that seal is still intact. If the seal is intact, verification succeeds. If anything changed about the file, the seal breaks and verification fails.

What edits will cause a video to fail Ring Verify?

Any modification at all will cause failure. This includes trimming even a single second, adjusting brightness or contrast, cropping the frame, adding text, applying filters, compressing for social media, or converting to a different format. Ring Verify requires the video to be completely unmodified from the moment of download.

Can I share a Ring Verify-verified video on social media?

No, not while keeping it verified. Social media platforms compress videos for streaming, which modifies the file and breaks the digital seal. You can share the video, but you'll lose the verification badge. If you want verification and sharing, Ring suggests asking people to access the original video through a direct link from the Ring app instead.

What happens if Ring Verify says it can't verify a video?

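It means the file doesn't carry an intact Ring seal, but Ring won't tell you why. The video might have been edited, compressed, or converted; it might have been downloaded before the December 2025 feature launch or recorded with end-to-end encryption turned on; or it might never have come from Ring's system at all. Ring doesn't provide details about why verification failed.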

Is Ring Verify available for all Ring camera models?

Ring Verify works for all Ring cameras going forward, but only for videos downloaded after the feature launched in December 2025. Older footage cannot be verified because those videos don't have the digital security seal.

If I have end-to-end encryption turned on, can I still verify videos?

No. Videos with end-to-end encryption cannot be verified because Ring's servers cannot attach the digital seal without decrypting them first. You'll need to turn off E2EE if you want verification capabilities.

What are the legal implications of Ring Verify?

In legal proceedings, a Ring Verify badge shows that a video hasn't been modified since download, which may strengthen the case for admitting it as evidence. Courts may view verified video more favorably than unverified video. However, verification doesn't prove the video is real or that it depicts actual events accurately.

Could Ring Verify be used to commit fraud?

Potentially. If someone has unauthorized access to your Ring account, they could download your videos before you do and verify them, then claim they're authentic. However, Ring's account security measures (two-factor authentication, etc.) should prevent most unauthorized access.

The Bottom Line

Ring Verify represents progress on an important problem: how do we prove video authenticity in an age of AI-generated content? The answer, it turns out, is complicated.

Ring Verify doesn't solve the deepfake problem. It addresses a different problem: proving that a video hasn't been modified after download. For legal and law enforcement contexts, that's useful. For catching AI deepfakes, it's almost useless.

The real challenge ahead isn't building verification systems for videos that come from trusted sources like Ring. The real challenge is detecting synthetic video that's created entirely outside the ecosystem.

That problem remains unsolved. And until we have better AI detection capabilities or hardware-level authentication that spans the entire device landscape, deepfakes will remain a vulnerability we can't fully defend against.

Ring Verify is a step in the right direction. But it's only the first step. The journey toward trustworthy video evidence is just beginning, and we still have a long way to go.

Key Takeaways

- Ring Verify proves videos haven't been edited, not that they're authentic or real

- It cannot detect AI-generated deepfakes because those originate outside Ring's system

- Videos fail verification if anything changes: trimming, cropping, brightness adjustment, or compression

- C2PA standards focus on integrity verification, not authenticity verification

- Ring Verify is most useful for legal proceedings, law enforcement, and insurance claims

- Sharing on social media breaks verification, because platforms recompress uploaded video

- AI detection models are still insufficient for reliably spotting synthetic video at scale
