The Voice Revolution in Task Management: How AI Turns Rambling Into Results [2025]

Last Tuesday, I spent twenty minutes trying to explain a project timeline to my team. Then I realized I could've just opened my phone, talked for ninety seconds, and had the system organize everything automatically. That's the quiet revolution happening right now in productivity tools.

Voice input isn't new. But voice input that actually understands context, nuance, and urgency? That's the game-changer nobody saw coming.

There's something genuinely powerful about speaking instead of typing. Your brain works faster than your fingers. You don't self-edit. You just let everything out, and then—here's the magic part—an AI system takes that rambling brain dump and turns it into structured tasks, deadlines, and projects. No formatting. No copying between apps. Just talk.

The productivity landscape shifted hard once voice became reliable enough to handle real work. We're not talking about setting a timer or sending a text message anymore. We're talking about sophisticated natural language processing that can parse multiple related projects, extract deadlines buried three sentences into your rant, identify dependencies, and create actionable tasks.

This matters because context switching costs time. Real time. Research from the American Psychological Association shows that switching between tasks can reduce productivity by up to 40%. Every time you stop talking and start typing, stop thinking and start clicking, your brain pays a penalty. Voice-first workflows cut that friction.

The tools have gotten smart enough that they're not just transcribing anymore. They're understanding. They're contextualizing. And they're doing it well enough that people are actually replacing their old workflows.

Why Voice Task Management Works Better Than Text

Here's what nobody talks about: your voice contains information that text can't capture. Tone matters. Pacing matters. Emphasis matters. When you're tired, that comes across. When something's urgent, your voice conveys that before you consciously frame it.

AI systems trained on massive amounts of voice data have learned to pick up on these signals. A task you mention quickly, almost in passing, is handled differently than one you spend time explaining. The system gets it.

There's also the sheer speed differential. Fast typists hit about 75 words per minute. Average speakers hit 150 words per minute. That's literally double the throughput. For complex projects with multiple moving parts, that difference compounds fast.

But the real unlock is mental flow state. When you're typing, part of your brain is managing the interface. Where's the field? What's the format? Should this be a subtask or a new task? With voice, you're just thinking and speaking. The AI handles the structure.

Another underrated factor: accessibility. People with mobility limitations, vision impairments, or repetitive strain injuries can suddenly use productivity tools the way they were originally designed to be used. Voice removes a barrier, as noted by the National Council on Aging.

There's also something psychological happening. When you talk about what you need to do, you're externalizing your thoughts. That act of articulation itself clarifies thinking. You stumble over confusing points. You realize dependencies while you're speaking. You catch contradictions. The AI system isn't just transcribing—it's helping you think through problems by forcing you to articulate them.

And then there's the audit trail. Everything you've said is recorded and tagged. Future you or future team members can understand not just what was decided, but the thinking behind it. That's invaluable for asynchronous teams.

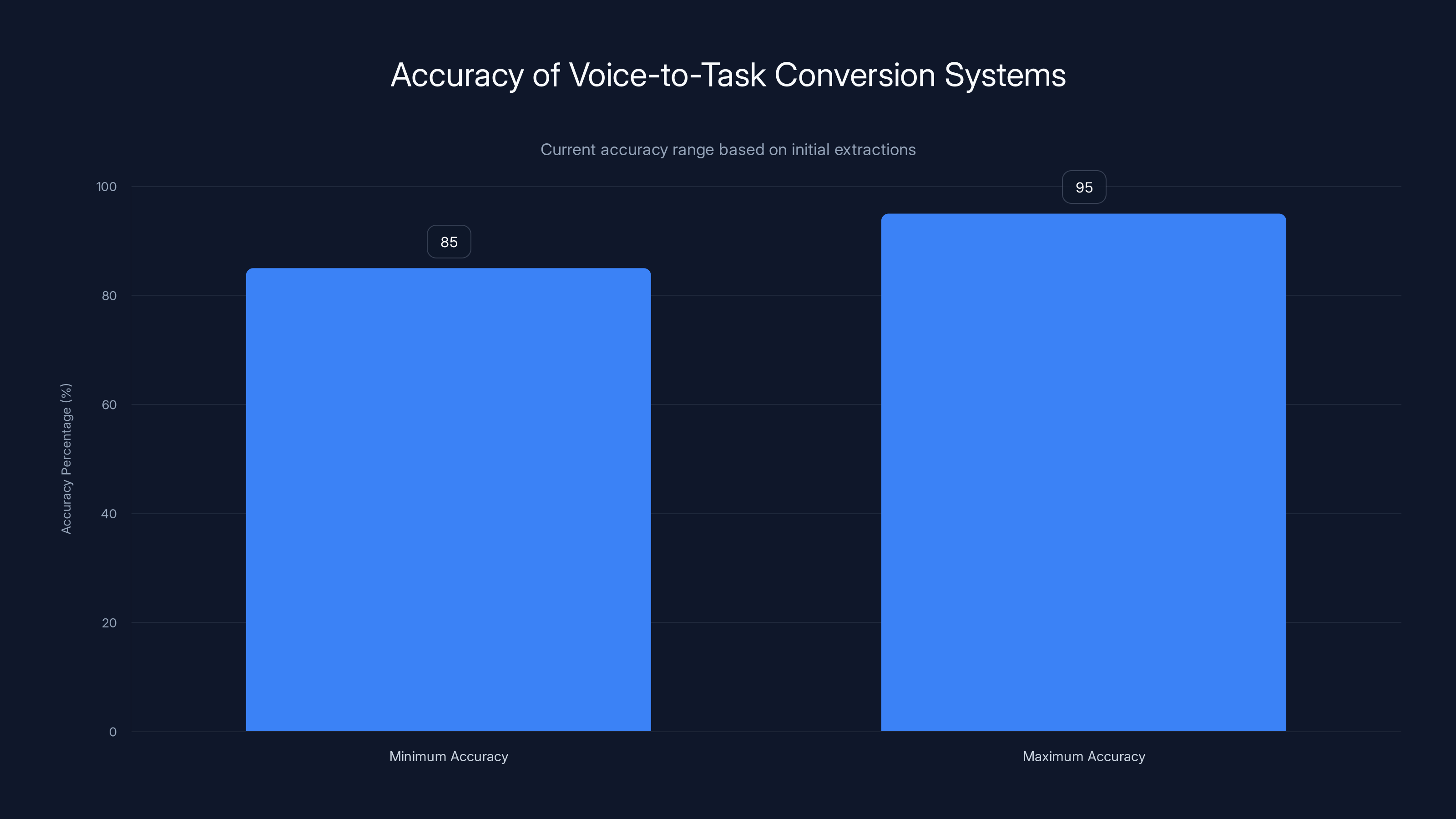

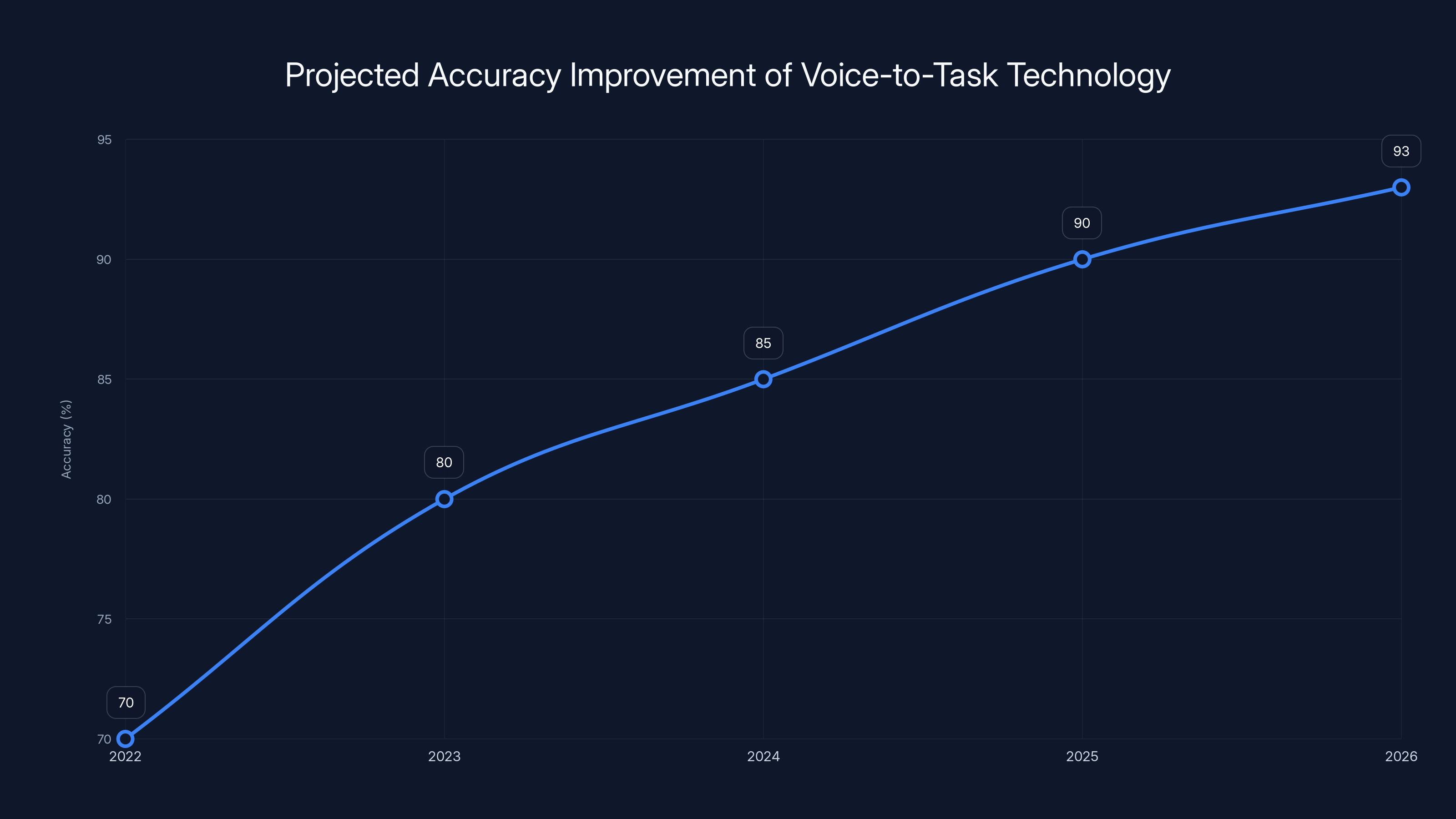

Voice-to-task conversion systems achieve 85-95% accuracy on the first pass, indicating that they are generally reliable for initial task extraction.

The Technology Behind Voice-to-Task Conversion

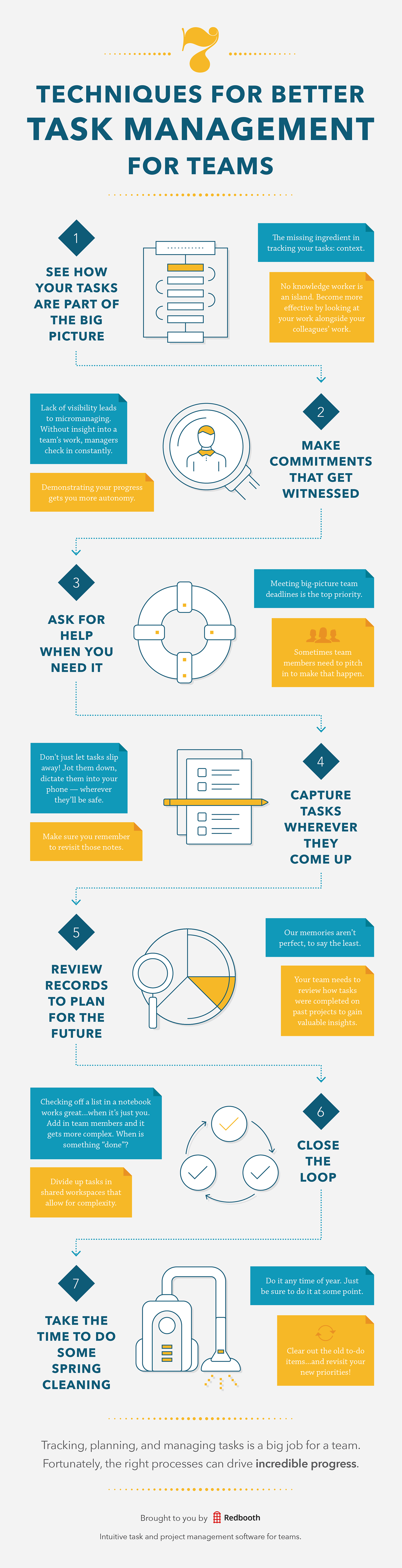

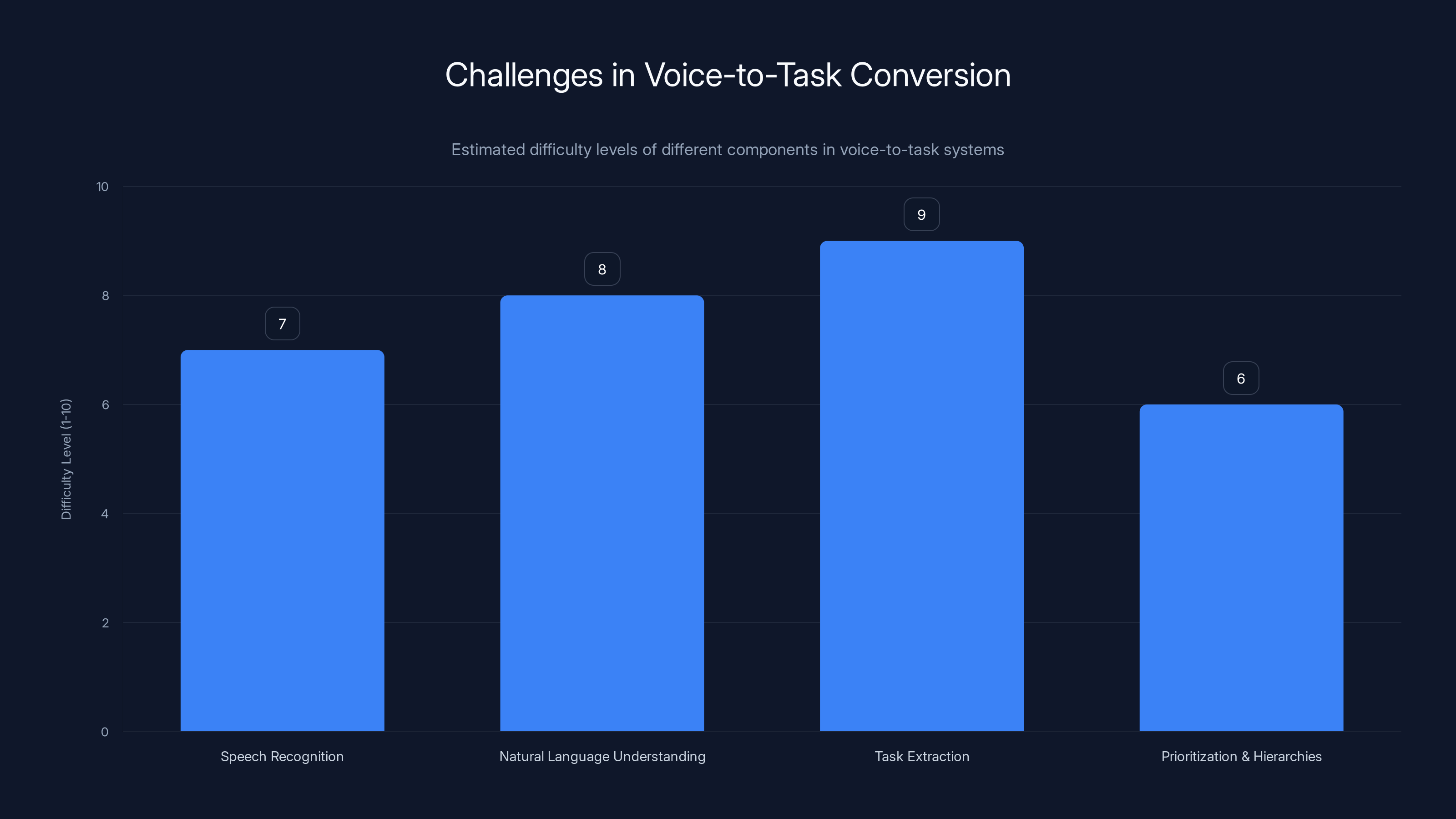

Making this actually work is harder than it sounds. The system needs to handle several distinct problems simultaneously.

First, there's speech recognition. Modern speech-to-text is incredibly accurate for clear audio, but real-world conditions are messier. Background noise. Accents. Technical jargon that's not in the standard dictionary. Interruptions. The system needs to be robust enough to handle all that.

Second, there's natural language understanding. The transcribed text is just the beginning. The system needs to understand intent. Is this a task or a comment about a task? Is this a deadline or just temporal context? Multiple projects mentioned in one breath—how should those be parsed?

Third comes task extraction. Converting natural language into structured data requires understanding business context. What's the difference between "I need to talk to Sarah" and "Schedule a meeting with Sarah and Tom about Q3 planning"? The second one contains a task, a participant, a subject, and timing context. The system needs to identify and extract all of that.
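To make the difference between those two sentences concrete, here is a minimal sketch of what the extracted structure could look like. The `ExtractedTask` class and its field names are hypothetical, chosen for illustration only, and reading "Q3" as the timing context is an assumption about the example sentence.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractedTask:
    """Hypothetical structured form of one spoken request."""
    action: str                                   # what needs to happen
    participants: List[str] = field(default_factory=list)
    subject: Optional[str] = None                 # what the work is about
    timing: Optional[str] = None                  # raw timing phrase, resolved later


# "I need to talk to Sarah": an action and a person, nothing more.
vague = ExtractedTask(action="talk", participants=["Sarah"])

# "Schedule a meeting with Sarah and Tom about Q3 planning"
specific = ExtractedTask(
    action="schedule meeting",
    participants=["Sarah", "Tom"],
    subject="Q3 planning",
    timing="Q3",  # assumption: "Q3" is treated as the timing context
)


def filled_fields(task: ExtractedTask) -> int:
    """Count how many of the four fields carry information."""
    return sum(bool(v) for v in (task.action, task.participants, task.subject, task.timing))
```

The second sentence fills every field, while the first leaves half of them empty, which is why it gives the system so much less to work with.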

Fourth is prioritization and hierarchies. Real work has structure. Some tasks are subtasks of larger projects. Some are blockers for other work. The system needs to understand these relationships from conversational speech that doesn't explicitly label them.
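Once blockers have been identified, turning them into a workable order is a standard graph problem. A small sketch using Python's standard-library `graphlib` module follows. The task names are made up, and this shows only the ordering step, not how a real product infers the relationships from speech.

```python
from graphlib import TopologicalSorter

# Hypothetical launch plan: each task maps to the set of tasks that block it.
blocked_by = {
    "announce launch": {"ship build"},
    "ship build": {"finish QA"},
    "finish QA": {"write test plan"},
    "write test plan": set(),
}


def work_order(dependencies):
    """Return tasks in an order where every blocker comes before what it blocks."""
    return list(TopologicalSorter(dependencies).static_order())


order = work_order(blocked_by)
```

If two tasks block each other, `static_order()` raises `graphlib.CycleError`, which is a useful signal that the spoken plan contradicts itself.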

The current state-of-the-art uses transformer-based language models trained on task management data. These models have learned patterns about how people describe work. They understand that "blocked by" indicates a dependency. They recognize that mentioning a person's name often means assigning to that person. They can pick up on urgency markers.

They're not perfect. They hallucinate sometimes. They miss context if you haven't explained it clearly. But they're good enough that they're faster and more accurate than manual entry for most use cases.

The filtering stage is where a lot of the sophistication hides. Not every sentence needs to become a task. "I'm going to finish this by Friday" is context, not a task creation instruction. The system learns to distinguish between information that should create tasks and information that should modify existing tasks.

Then there's the disambiguation stage. When you mention "the redesign project", the system needs to figure out which project you mean. When you say "next week", it needs to convert that to an actual date. These seem simple until you try to scale them across thousands of users with different calendars, timezones, and project naming conventions.
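Here is a rough sketch of the date half of that problem. The function name and the two conventions baked into it are assumptions: "next week" is read as the Monday of the following week, and a bare weekday as its next occurrence after today. Timezones and user calendars are ignored entirely.

```python
from datetime import date, timedelta

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]


def resolve_relative_date(phrase: str, today: date) -> date:
    """Turn a small set of spoken date phrases into a calendar date."""
    phrase = phrase.strip().lower()
    if phrase == "today":
        return today
    if phrase == "tomorrow":
        return today + timedelta(days=1)
    if phrase == "next week":
        # Assumption: "next week" means the Monday of the following week.
        return today + timedelta(days=7 - today.weekday())
    if phrase in WEEKDAYS:
        # Assumption: a bare weekday means its next occurrence after today.
        target = WEEKDAYS.index(phrase)
        days_ahead = (target - today.weekday() - 1) % 7 + 1
        return today + timedelta(days=days_ahead)
    raise ValueError(f"unrecognized date phrase: {phrase!r}")
```

Even this toy version has to pick a side on questions like whether "Friday" said on a Friday means today or a week from now. That's exactly the kind of convention that varies between users.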

The really sophisticated systems also handle speaker adaptation. Your voice is unique. Your patterns of speech are unique. The system that knows you knows that when you say "quick touch base", you usually mean 15 minutes, and when you say "big meeting", you mean several hours. It learns your conventions.

Todoist Ramble: The Flagship Example

Todoist Ramble launched out of beta recently, and it's probably the most polished example of this concept currently available. The workflow is stripped-down simple: open the app, hit record, start talking, stop talking, let it process.

What's impressive is how much gets extracted from casual speech. You can mention three different projects, set varying levels of urgency, throw in deadlines that are relative ("by the time we ship") and absolute ("January 15th"), assign tasks to people, create subtasks, set due dates—all in one continuous brain dump.

The system handles interruptions gracefully. You can stop, think, resume, and it picks up coherently. You can ramble. The more data you give it, the better it works, because the AI can triangulate intent from multiple mentions.

One thing that works particularly well is how it handles negation and correction. "Actually, never mind about that Marketing thing, focus on the Dashboard instead" gets parsed correctly as canceling one set of tasks and prioritizing another.

The integration is seamless. Tasks appear directly in your Todoist projects with appropriate structure, due dates, and assignments. No copy-paste. No manual formatting. Just structure appearing from chaos.

What's notable about this approach is that it works because Todoist simplified the problem domain. Todoist is a task manager, nothing more. It's not trying to also be a calendar, a note-taker, a project planner, a communication tool. By focusing on tasks specifically, the AI can be really good at extracting exactly that information.

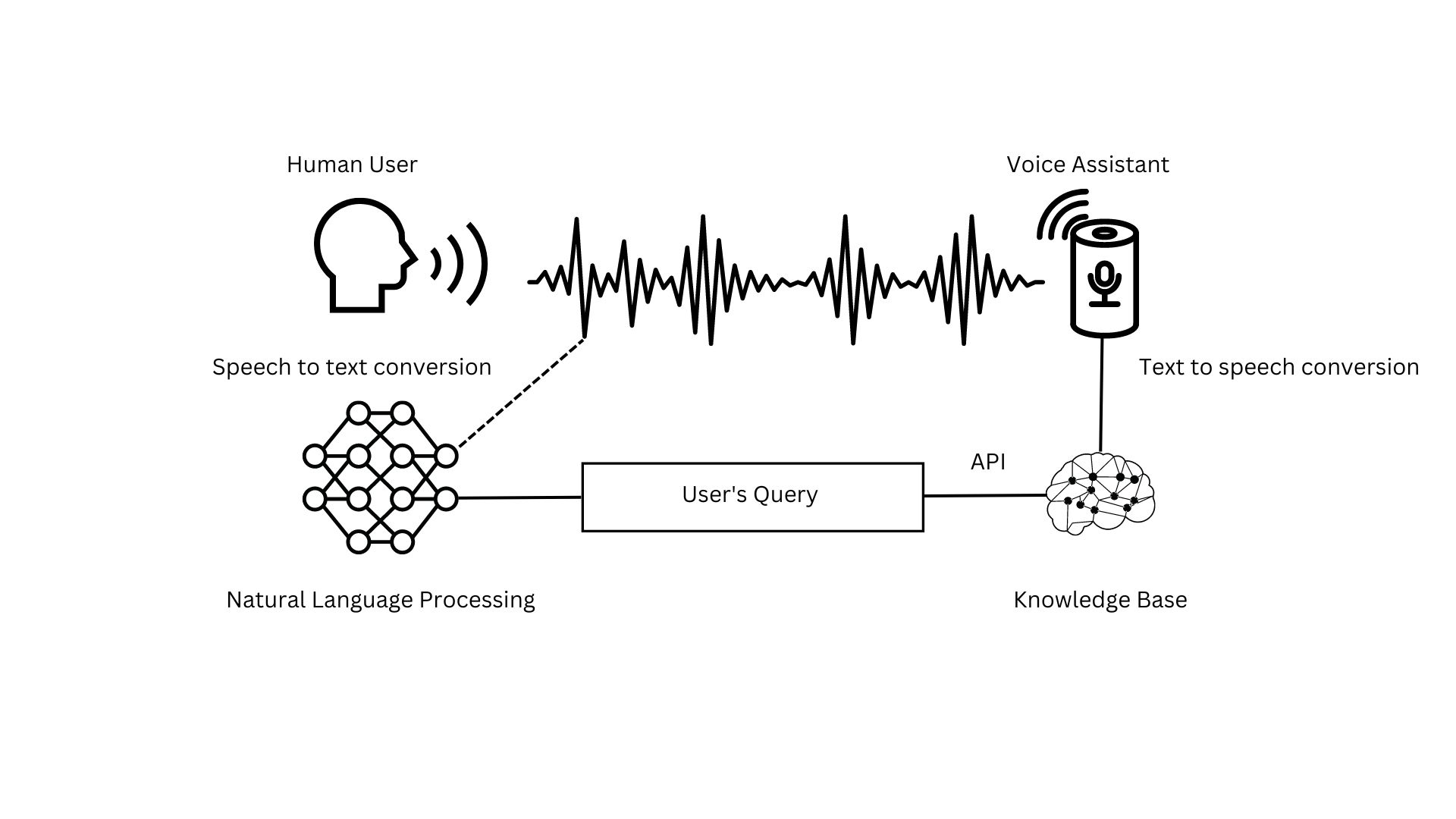

The current version handles about 85-90% of inputs cleanly on the first pass. The remaining 10-15% need manual tweaking. But that's still faster than doing the whole thing manually.

Users report that after about a week of using voice input, they stop reaching for typed input for task creation. The speed difference is just too pronounced. And the lower cognitive load of speaking instead of structuring text is something you notice every single time.

Todoist Ramble efficiently handles approximately 85-90% of inputs cleanly, requiring manual tweaks for only 10-15% of inputs. Estimated data.

The Productivity Multiplier Effect

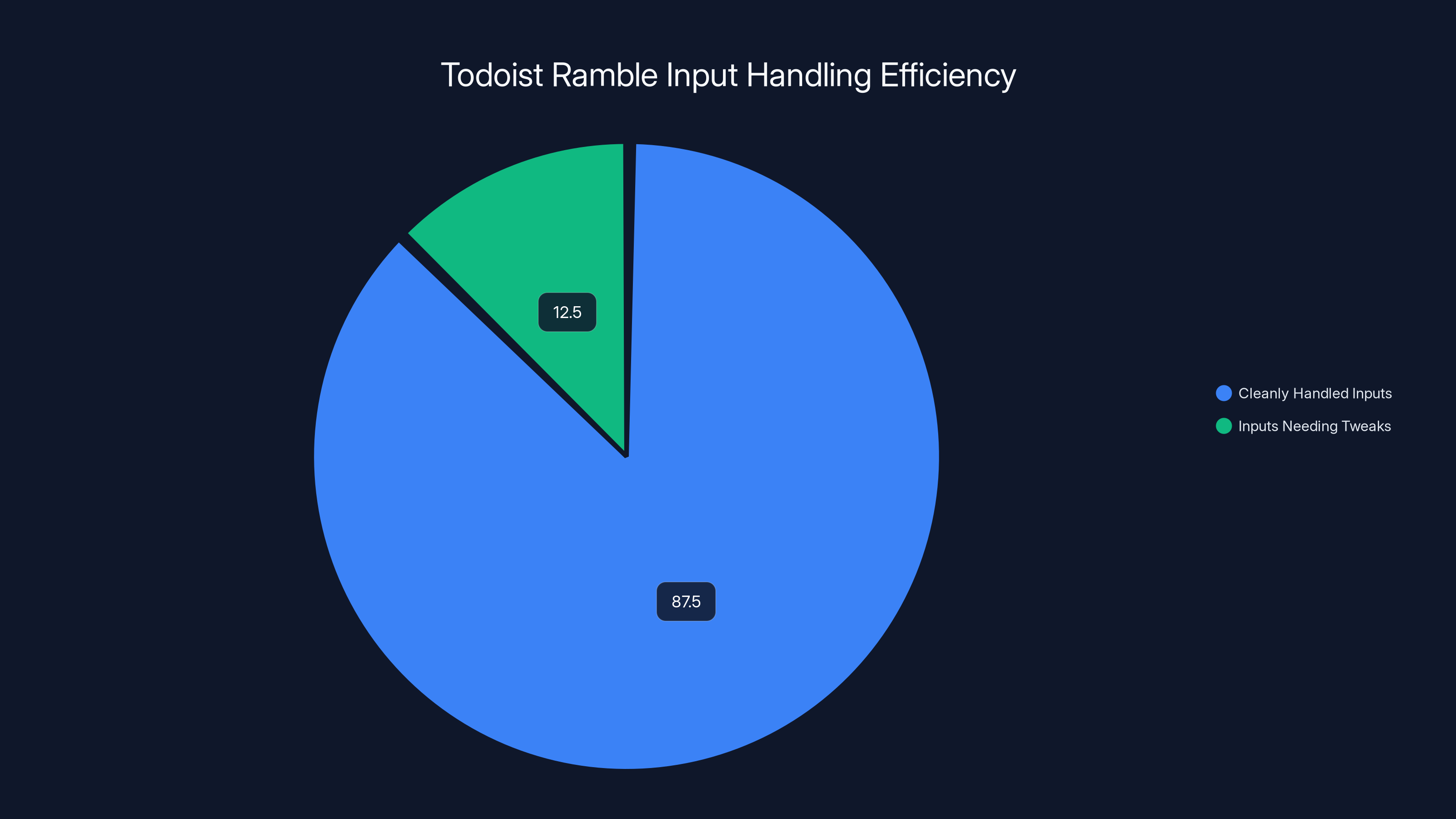

Here's where it gets interesting mathematically. The time saved on data entry alone is significant, but it's not the biggest factor.

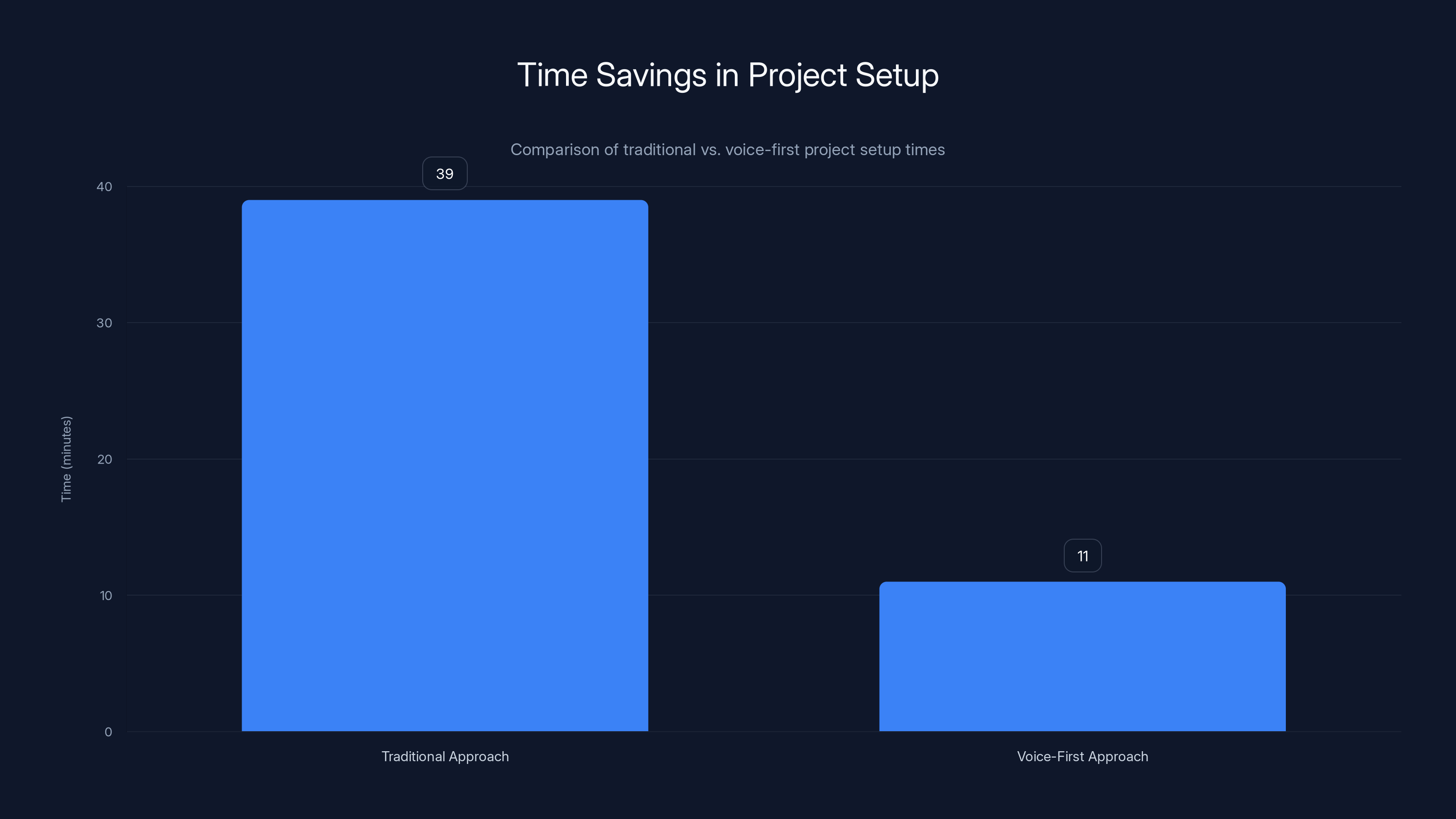

Let's break down the actual time investment for a moderately complex project—say, planning a product launch with multiple dependencies. Traditional approach:

- Think through the project (10 minutes)

- Open task manager (30 seconds)

- Create the project structure (5 minutes)

- Create individual tasks, assigning them, setting dates (15 minutes)

- Create dependencies and relationships (5 minutes)

- Review and adjust (3 minutes)

Total: 39 minutes

Voice-first approach:

- Open app and record brain dump (5 minutes)

- System generates structure (1 minute processing)

- Manual review and tweaking (5 minutes)

Total: 11 minutes

That's a 72% reduction in setup time. But the bigger win is the elimination of context switching. In the traditional approach, you're constantly switching between "thinking mode" and "interface mode". With voice, you stay in thinking mode the entire time. Your brain never context switches.
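The setup-time comparison above is easy to check yourself. This short sketch just adds up the step durations from the two lists. Nothing in it is measured data beyond those estimates.

```python
# Step durations in minutes, taken from the two lists above.
traditional_minutes = [10, 0.5, 5, 15, 5, 3]
voice_minutes = [5, 1, 5]

traditional_total = sum(traditional_minutes)   # the article rounds this up to 39
voice_total = sum(voice_minutes)

minutes_saved = traditional_total - voice_total
reduction = minutes_saved / traditional_total  # fraction of setup time removed

print(f"{traditional_total} min vs {voice_total} min, {reduction:.0%} less setup time")
```

Depending on whether you round the traditional total before dividing, the reduction lands at roughly 71-72%.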

Context switching has a cognitive cost that extends beyond the immediate task. A study from UC Irvine found it takes an average of 23 minutes to return to the original task after interruption. With voice input, you're not being interrupted—you're just transitioning from speaking to reviewing, which is continuous thinking.

Over a year, if you're planning a few complex projects per month, that difference adds up. A conservative estimate suggests 30-50 hours per year saved just from eliminating context switching, separate from the direct time savings.

Then there's the quality factor. Projects set up via voice tend to be more thoroughly thought through, because you had to articulate everything. The act of explaining reveals gaps in thinking. You catch contradictions while speaking. You realize you haven't assigned ownership to something important. This results in fewer mid-project reschedules and timeline adjustments.

Projects that are well-planned upfront have about 15-20% better on-time delivery rates compared to projects that are half-planned and half-improvised. So the productivity gain isn't just faster setup—it's better execution.

Building the Tech Stack: What's Needed

Creating a voice-to-task system that actually works requires several pieces to come together.

At the foundation is speech-to-text. Modern solutions like Whisper from OpenAI have reached a quality threshold where they can handle most inputs cleanly, including technical terminology and background noise. But phone audio quality, thick accents, or heavily jargon-filled speech can still create issues.

On top of that sits the natural language understanding layer. This is where the proprietary magic usually lives. Training a model to understand business context, task-specific language, and individual user patterns takes significant data and refinement.

The extraction engine then converts the understood meaning into structured task data. This involves entity recognition (identifying what's a person, what's a deadline, what's a project name) and relationship mapping (understanding that "blocked by" creates a dependency).
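As a toy illustration of relationship mapping, the sketch below pulls a dependency out of a sentence with a single regular expression. Production systems rely on learned models rather than hand-written patterns, so treat this as a stand-in for the idea, with a hypothetical function name and pattern.

```python
import re
from typing import Optional, Tuple

# Matches sentences of the form "<task> is blocked by <blocker>".
DEPENDENCY_PATTERN = re.compile(
    r"(?P<task>.+?)\s+is blocked by\s+(?P<blocker>.+)", re.IGNORECASE
)


def extract_dependency(sentence: str) -> Optional[Tuple[str, str]]:
    """Return (task, blocker) if the sentence states a dependency, else None."""
    match = DEPENDENCY_PATTERN.search(sentence.strip().rstrip("."))
    if match is None:
        return None
    return match.group("task").strip(), match.group("blocker").strip()
```

A pattern like this breaks as soon as someone says "we can't start X until Y is done", which is part of why this layer is usually model-driven.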

The disambiguation engine handles ambiguity. When the user says "the project", which one do they mean? When they say "Friday", what date? The system uses conversation history, calendar data, and project context to make educated guesses.
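One simple way to make that kind of educated guess is to score each known project by word overlap with what was said, then nudge recently discussed projects ahead. The scoring rule, the filler-word list, and the function name here are all assumptions made for illustration.

```python
from typing import Iterable, Optional, Set

# Words that carry no signal about which project is meant.
FILLER_WORDS = {"the", "a", "that", "project", "thing"}


def pick_project(mention: str, projects: Iterable[str], recent: Set[str]) -> Optional[str]:
    """Guess which project a spoken mention refers to, or None if unclear."""
    tokens = set(mention.lower().split()) - FILLER_WORDS
    best_name, best_score = None, 0.0
    for name in projects:
        overlap = len(tokens & set(name.lower().split()))
        # Recently discussed projects get a small tie-breaking bonus.
        score = overlap + (0.5 if name in recent else 0.0)
        if overlap > 0 and score > best_score:
            best_name, best_score = name, score
    return best_name
```

Returning `None` when nothing overlaps is deliberate. That's the point where a well-behaved system should ask a clarifying question instead of guessing.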

Finally, there's the delivery layer—actually creating tasks in the system with appropriate formatting, assignments, and due dates.

All of this has to work in real-time or near-real-time. Processing lag kills the experience. Users expect to hit stop recording and immediately see results, or at least within 5-10 seconds.

The AI Learning Loop

What makes voice-to-task smarter over time is feedback. Every time a user corrects an extraction, the system learns. Every time a user says "that's wrong, here's what I meant", the system gets better.

This is where individual user training becomes powerful. A system that's been adapted to your specific patterns, your naming conventions, your team structure, and your communication style becomes dramatically more useful than a generic system.

The best implementations track:

- Which corrections you make most frequently

- Which ambiguities cause problems for you specifically

- Your preferred task structure and naming patterns

- Your team's common project types and workflows

- Temporal patterns (when you plan projects, how urgently they usually run)

With this data, the system can proactively ask clarifying questions for the things it struggles with for you specifically. It learns to interpret your particular way of speaking. It adapts to your context.
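The simplest version of this feedback loop is just counting. The sketch below tracks which fields a user corrects and flags the ones worth confirming up front. The `CorrectionLog` class and its threshold are hypothetical, and a real system would feed these signals into model adaptation, not only into prompts for confirmation.

```python
from collections import Counter
from typing import List


class CorrectionLog:
    """Track which extracted fields a user keeps fixing."""

    def __init__(self, ask_threshold: int = 3):
        self.counts = Counter()
        self.ask_threshold = ask_threshold

    def record(self, field_name: str) -> None:
        """Note that the user corrected this field on an extracted task."""
        self.counts[field_name] += 1

    def fields_to_confirm(self) -> List[str]:
        """Fields corrected often enough that the system should ask first."""
        return sorted(
            name for name, n in self.counts.items() if n >= self.ask_threshold
        )
```

For example, after three fixes to due dates and one to an assignee, only the due date would trigger a clarifying question.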

Over time, this adaptation can increase accuracy from 85-90% to 95%+. The system becomes individualized. It stops feeling like a generic tool and starts feeling like it understands how you work.

The voice-first approach reduces project setup time by 72%, from 39 minutes to 11 minutes, highlighting significant efficiency gains.

Limitations and Reality Checks

Let's be honest about what voice-to-task systems can't do well yet.

First, they struggle with truly complex planning. If you're setting up a project with 50+ tasks, significant interdependencies, critical path analysis, and resource constraints, voice input alone isn't going to cut it. You need a visual interface for that complexity.

Second, they can miss context if you haven't been explicit. If you haven't mentioned a project in three weeks and then suddenly reference "the thing", the system might not know what you're talking about. You have to help it by providing enough specificity.

Third, they work better for certain types of tasks than others. "Create invoice for Client X" extracts cleanly. "Figure out why the dashboard is slow and identify solutions" is fuzzier. Concrete, action-oriented tasks convert well. Exploratory, research-based work converts poorly.

Fourth, they require good audio. If you're on a noisy call, in an airport, or speaking a heavily accented dialect that the training data didn't include much of, accuracy drops. This is improving, but it's still a factor.

Fifth, they're biased toward the dominant language of their training data. English works great. Other languages work less well. Widely documented technical domains tend to work well, but highly specialized business jargon might not be in the model.

Sixth, there's a learning curve for the user. You need to be explicit in ways you wouldn't be if typing. "Make sure we contact the three key decision-makers" is too vague. "Contact Sarah, James, and Michelle—they're the decision-makers for this vendor selection" is specific enough to extract actual tasks.

Seventh, privacy and security considerations matter. You're recording your voice and sending it to a service. That data contains sensitive information sometimes. You need to trust the platform and its security practices.

And finally, there's an adoption hurdle. Some people feel self-conscious recording voice notes. Talking to your phone feels weirder to some people than typing, even if it's faster. That's a real barrier.

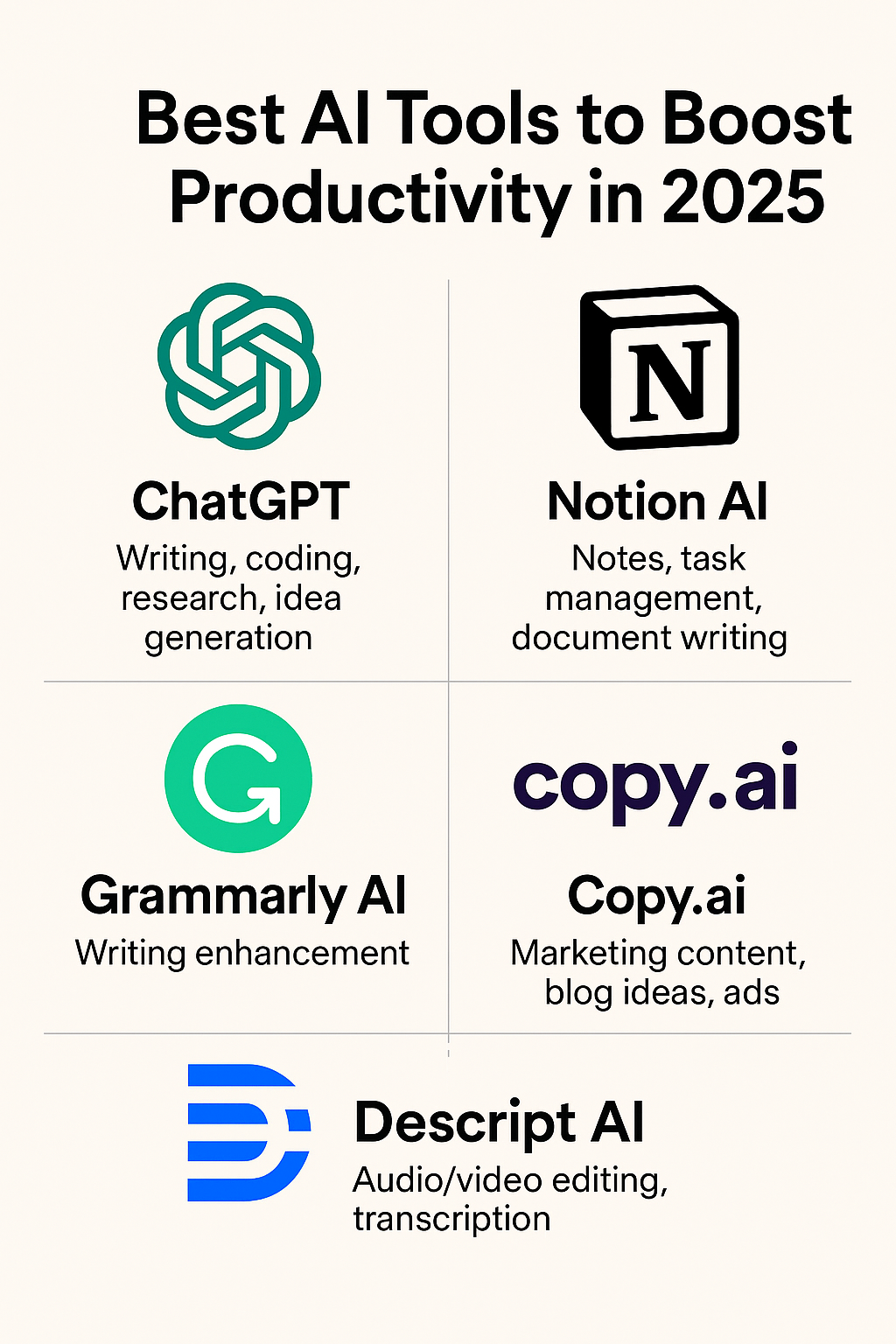

How This Fits Into Broader AI Productivity Trends

Voice-to-task conversion is one data point in a larger trend: AI making interaction with tools more natural and less procedural.

The pattern is consistent across productivity tools. Instead of learning the system's logic and formatting your input accordingly, you express intent naturally and let the AI handle the translation. The design center moves from the system to the user.

This mirrors what happened with search. Google didn't ask you to format queries in Boolean logic. It understood natural language questions. That was revolutionary at the time. The same thing is happening with task management, document creation, data analysis, and dozens of other tools.

The underlying technology (large language models) got good enough that this became viable. And once it became viable, the productivity gains were so obvious that adoption followed naturally.

We're seeing the same pattern with:

- Natural language queries on databases

- AI writing assistants that understand context

- Visual builders that convert natural language descriptions to code

- Chat interfaces that replace complex menus

The unifying theme is: fewer steps between intention and result.

Practical Implementation: Getting Started With Voice Workflows

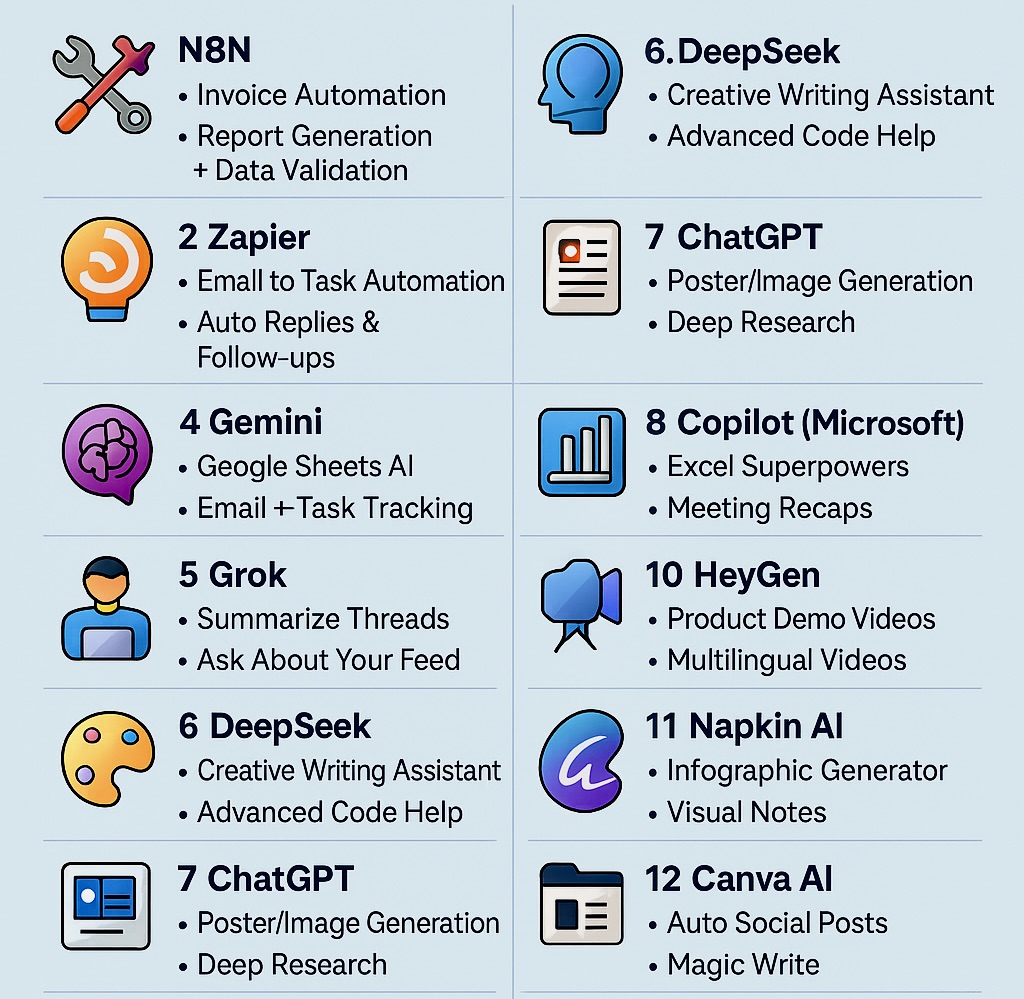

If you're interested in actually using voice-to-task conversion, there are a few approaches.

The easiest entry point is with dedicated tools like Todoist Ramble. They're purpose-built, so the extraction is optimized for task management specifically. Setup is minimal. You open the app, record, and you're done.

The advantage is reliability and ease. The disadvantage is lock-in and lack of customization. The system works the way it works. You adapt to it.

Another approach is using general-purpose AI assistants like ChatGPT or Claude with specific task management tools. You record a voice note (most phones have built-in transcription now), paste it into the assistant with instructions like "extract tasks, projects, deadlines, and assignees from this rambling thought", and then manually copy the structured output into your task manager.

This approach gives you more flexibility and customization, but it's slower because you're manually moving data between systems.

A third approach, for teams with technical resources, is building a custom integration. Record voice to a transcription service, parse the transcription with an LLM using custom prompts tailored to your team's specific context, and automatically create tasks in your system of choice.

This approach gives maximum flexibility and customization, but it requires engineering resources and ongoing maintenance.
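A custom integration along those lines could be wired together roughly like this. Everything here is a sketch under assumptions: `transcribe`, `complete`, and `create_task` are placeholders you would back with your own transcription service, LLM client, and task manager API, and the prompt wording and JSON keys are invented for the example.

```python
import json
from typing import Callable, Dict, List

PROMPT_TEMPLATE = (
    "Extract tasks from the transcript below. Reply with a JSON list of "
    'objects with keys "title", "assignee", and "due".\n\n'
    "Transcript:\n{transcript}"
)


def build_prompt(transcript: str) -> str:
    """Wrap the raw transcript in extraction instructions."""
    return PROMPT_TEMPLATE.format(transcript=transcript.strip())


def parse_tasks(llm_reply: str) -> List[Dict]:
    """Parse the model's reply, dropping anything malformed or untitled."""
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    return [
        {"title": item["title"], "assignee": item.get("assignee"), "due": item.get("due")}
        for item in data
        if isinstance(item, dict) and item.get("title")
    ]


def voice_to_tasks(
    audio_path: str,
    transcribe: Callable[[str], str],    # placeholder: audio file -> transcript
    complete: Callable[[str], str],      # placeholder: prompt -> LLM reply
    create_task: Callable[[Dict], None]  # placeholder: push one task downstream
) -> List[Dict]:
    """Run the full record -> transcribe -> extract -> create pipeline."""
    tasks = parse_tasks(complete(build_prompt(transcribe(audio_path))))
    for task in tasks:
        create_task(task)
    return tasks
```

Passing the three services in as plain functions keeps the pipeline testable with fakes, and it means swapping transcription or LLM providers doesn't touch the parsing logic. The defensive parsing matters because model replies aren't guaranteed to be valid JSON.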

For most people, starting with a dedicated tool like Todoist Ramble makes the most sense. It's low friction. The technology is already there. You just need to build the habit.

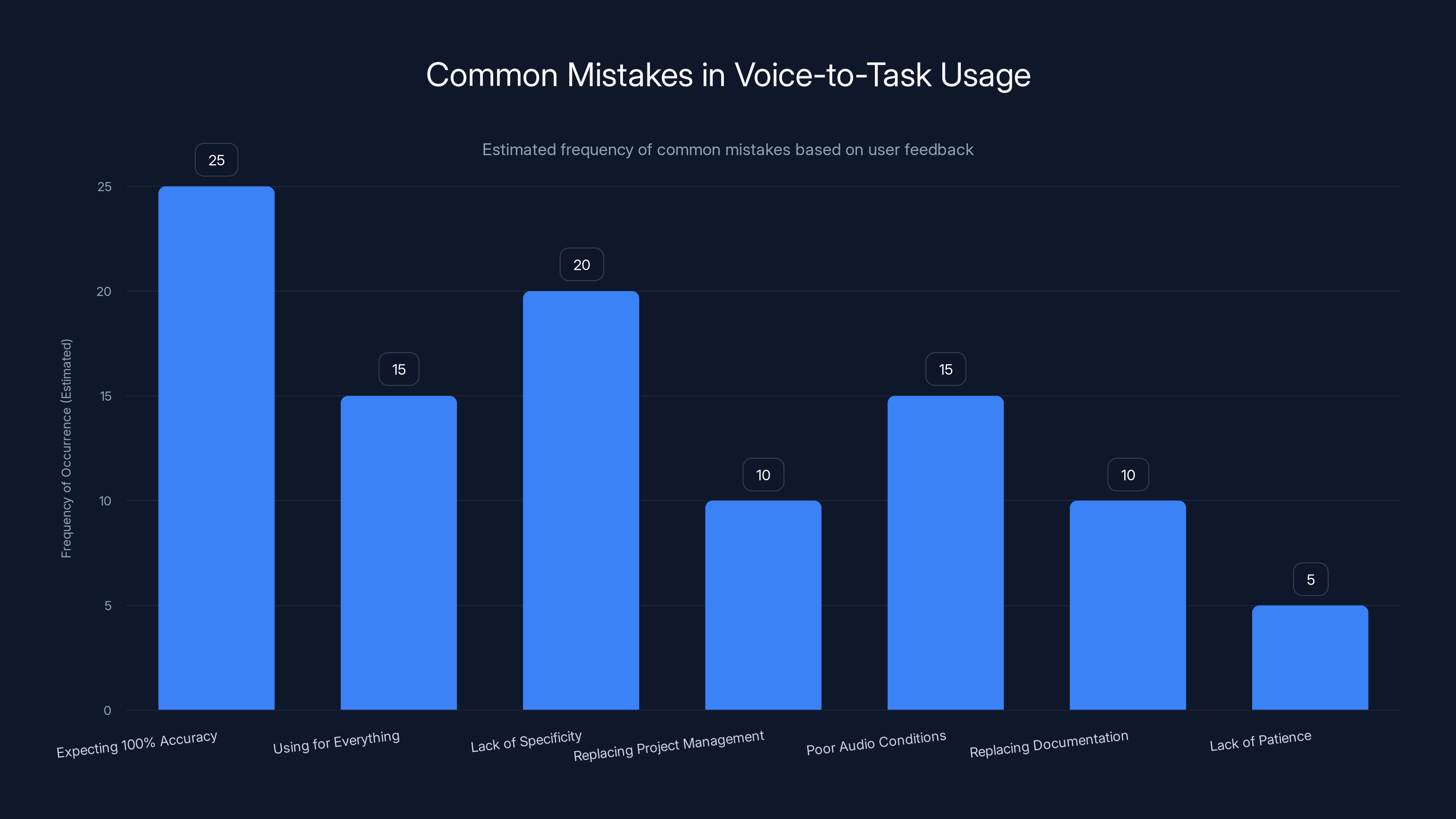

Expecting 100% accuracy is the most common mistake, while not giving the system time to learn is less frequent. Estimated data based on typical user feedback.

The Team Dynamic: From Individual to Collaborative

While voice-to-task conversion started as an individual productivity tool, it's evolving into something more collaborative.

Imagine a standup meeting where one person (usually the most organized person on the team) records the whole thing. The AI extracts tasks for different people, identifies blockers and dependencies, creates subtasks from discussions, and generates a fully structured project from a 30-minute conversation. That's becoming possible.

Or consider async teams. Someone records a voice memo explaining the problem they're solving, the approach they're taking, and the help they need. The AI extracts specific tasks for team members, creates a project structure, and identifies who needs to be involved. Messages go out automatically with context and clear asks.

This changes the cost-benefit calculation for documentation. Right now, teams avoid documenting because it's effort-intensive. But if the documentation extracts itself from voice discussion, suddenly everything gets documented as a side effect of normal communication.

There's also the knowledge capture angle. Over time, all the voice recordings create a searchable archive of how decisions were made, what was considered, what was rejected and why. This becomes invaluable for onboarding new team members or revisiting decisions months later.

The privacy and consent implications need thinking through—people need to understand that they're being recorded and what happens with that data. But the collaborative potential is significant.

Integration Ecosystem

For voice-to-task systems to become truly powerful, they need to integrate with the rest of the stack.

The most useful direct integrations are with:

- Calendar systems (to identify conflicts and suggest meeting times)

- Email (to create tasks from emails or assign emails to tasks)

- Communication tools like Slack (to create tasks from messages, update task status via chat)

- CRM systems (to tie tasks to customer records)

- Project management tools (for complex dependencies and resource allocation)

- Document stores (to attach relevant docs to tasks)

Right now, most of these integrations are manual or partial. But as the tooling matures, they should become seamless.

Imagine: record a voice note mentioning a customer problem, and the system automatically creates a task, finds the relevant customer record in your CRM, links the task to their account, identifies the relevant team members to assign it to, checks their calendars for when they have capacity, creates a draft message on Slack asking them to work on it, and sets a follow-up reminder to check on progress.

That's not magic. That's just integration. And it's coming.

The Economics: What This Costs and What It Saves

Todoist Ramble is available as a premium feature within Todoist's paid plans. You're looking at roughly $4-5 per month as part of a broader subscription.

That's cheap enough that the payback period is measured in days, not weeks.

Let's do the math: if you create 5-10 complex tasks or projects per month, and voice input saves you 20 minutes per project, that's 100-200 minutes per month saved. At even modest hourly rates, that time is worth well over the $4-5 subscription cost. You break even almost immediately.

But the actual benefit is higher because some of that saved time comes from reduced context switching, which creates downstream productivity gains.

For teams, the economics are even more compelling. If a standup meeting that generates 20-30 tasks gets automatically structured as a project with clear ownership and deadlines, you're saving each participant 15-20 minutes of post-meeting overhead.

For a team of 8 people, that's roughly 2-2.7 hours saved per standup. Over a year with 50 standups, that's about 100-133 hours. At even modest billing rates, that's significant money.
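If you want to plug in your own numbers, the arithmetic behind both the individual and the team case fits in a few lines. The constants come from the figures quoted in this section, and the function names are just illustrative.

```python
SUBSCRIPTION_USD = 5.0            # upper end of the quoted $4-5 per month
MINUTES_SAVED_PER_PROJECT = 20    # per-project saving assumed in this section


def monthly_hours_saved(projects_per_month: int) -> float:
    """Hours saved per month for one person."""
    return projects_per_month * MINUTES_SAVED_PER_PROJECT / 60


def break_even_hourly_rate(projects_per_month: int) -> float:
    """Hourly value of your time at which the subscription pays for itself."""
    return SUBSCRIPTION_USD / monthly_hours_saved(projects_per_month)


def team_hours_saved(people: int, minutes_each: float, standups: int = 1) -> float:
    """Total hours saved across a team for a number of standups."""
    return people * minutes_each * standups / 60
```

At 5 projects a month, the subscription pays for itself if your time is worth more than about $3 an hour. For an 8-person team saving 15-20 minutes each, the per-standup figure comes out between 2 and roughly 2.7 hours.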

There's also the reduced error rate, fewer missed tasks, better on-time delivery, and all the downstream benefits of better-planned projects.

Estimated data shows a steady increase in accuracy of voice-to-task technology, projecting a rise from 70% in 2022 to 93% by 2026. This improvement is expected to drive further adoption.

Privacy, Security, and Data Handling

Voice is personal data. When you start recording yourself, the company handling that data becomes a confidant, whether you think about it that way or not.

You're recording your thinking process. Your concerns. Your plans. Sometimes sensitive information—financial details, personnel issues, customer problems. This data is valuable and needs to be treated carefully.

Key questions to ask any voice-to-task system:

- Is audio data encrypted in transit and at rest?

- How long is audio retained?

- Can you delete your audio?

- Is the AI transcription private to you, or is it used to train models?

- Can you use it on-premises or does everything go to the cloud?

- Who can access your recordings?

- How is the data used if the company is acquired?

Responsible vendors should have clear, honest answers to all of these. If they're vague or evasive, that's a red flag.

For regulated industries (healthcare, finance, law), there are additional considerations. You might need on-premises solutions or specific compliance certifications.

Generally, assume that any voice data you record could theoretically be accessed. Handle it accordingly. Don't record sensitive information unless necessary. Delete old recordings. Review what you're saying before you record.

But also recognize that this is a solvable problem. Privacy-preserving architectures exist. Edge computing (processing on your device rather than in the cloud) is getting better. The tools are improving.

Looking Forward: Where This Technology Is Heading

The trajectory is clear. Voice input for productivity is going to become standard, not novel.

As speech recognition improves, as LLMs get better at context understanding, as more tools integrate, voice-to-task conversion will become more reliable and more broadly applicable.

We're also going to see semantic understanding deepen. Right now, the system understands that "blocked by" means dependency. In the future, it'll understand more subtle relationships. It'll understand risk factors and implications. It'll model resource constraints. It'll integrate with real-time project data to suggest optimal task sequencing.

We'll see voice integrated into virtual and augmented reality tools. Imagine planning a project while wearing AR glasses, gesturing and speaking, and seeing the task structure materialize in front of you in real-time.

We'll see team-based voice interfaces that handle multiple speakers, understand turn-taking, and extract intent from group discussions without needing explicit labels.

We'll see voice-first interfaces for other workflows. Data analysis: "Show me customer churn by region and let me know which are declining fastest." Content creation: "Outline an article about voice productivity tools with these five talking points, then write the introduction." Code generation: "Create an API endpoint that..." All done conversationally.

The underlying paradigm shift is from "learn the system" to "the system learns you." From procedural interfaces to conversational interfaces. From steps to intentions.

Voice is just the medium through which this is happening first. It's the most natural way humans think and communicate. Everything else builds from there.

Comparison: Voice vs. Text Entry for Task Creation

Let's be systematic about how these actually compare in practice:

| Factor | Voice Input | Text Input | Winner |
|---|---|---|---|
| Speed (minutes per task) | 1-2 | 3-5 | Voice |
| Learning curve | Low | Medium | Voice |
| Accuracy (%) | 85-95 | 98+ | Text |
| Context capture | Excellent | Good | Voice |
| Mobile-friendly | Excellent | Poor | Voice |
| Open office friendly | Poor | Excellent | Text |
| Self-editing during entry | Low | High | Voice |
| Setup friction | Minimal | Minimal | Tie |
| Integration requirements | Medium | Low | Text |
| Privacy concerns | Higher | Lower | Text |
| Accessibility (vision) | Good | Poor | Voice |
| Accessibility (mobility) | Excellent | Poor | Voice |
| Detailed, structured data | Moderate | Excellent | Text |

The honest answer: they're complementary. Voice excels at quickly capturing complex, interconnected thoughts. Text excels at precision and detailed structured data.

The optimal workflow probably involves both: voice for capture and initial planning, then text entry or structured forms for precise details and refinement.

Estimated data suggests task extraction is the most challenging component in voice-to-task conversion, followed closely by natural language understanding.

Common Mistakes and How to Avoid Them

People trying voice-to-task for the first time often make predictable mistakes.

Mistake 1: Expecting 100% accuracy immediately. Out of the box, the system handles about 85-90% of inputs correctly. The remaining 10-15% needs human review. Plan for 2-3 minutes of review and correction per voice entry, not zero.

Mistake 2: Trying to use it for everything. Voice-to-task is great for complex, multi-task planning. It's overkill for "buy milk" or "call the plumber." Use it strategically.

Mistake 3: Not being explicit enough. "The thing" is unclear. "The dashboard redesign project that started last month and needs QA by the 15th" is specific. Be specific.

Mistake 4: Expecting it to replace project management completely. This is a tool for task capture and initial structuring. For complex projects with serious constraints, you still need a proper project management interface.

Mistake 5: Recording in bad audio conditions. Noisy office, moving car, weak phone signal—all degrade quality. Find a quiet spot. Use a decent microphone. The better the audio, the better the extraction.

Mistake 6: Treating it as a replacement for async written documentation. Voice is real-time capture. It's not a substitute for written specs or documented decisions. It's a complement.

Mistake 7: Not giving the system time to learn your patterns. The first week will be more manual review. By week three or four, it gets significantly better as it learns your style. Stick with it.

Industry Applications and Use Cases

Voice-to-task conversion isn't just for general productivity. Different industries are finding unique applications.

Product Management: Capturing feedback, building feature requests, planning roadmaps. A PM can record customer conversations and have tasks auto-extract from insights and requests.

Consulting: Consultants in the field capture observations and recommendations that need to become deliverables. Voice capture during client discussions automatically generates tasks for the team.

Sales: Capturing deal progression, identifying blockers, planning follow-ups. A sales call gets recorded (with consent), key points are extracted, tasks are created for the team.

Research: Lab notes, findings, next experiments. Researchers can capture thoughts while still working, have them organized later.

Construction/Field Work: Team leads in the field directing work, planning next steps, identifying issues. Voice capture creates a task list for the crew and documentation for the project.

Healthcare: Providers in clinics recording patient issues and care plans that become task lists for follow-up care and administration.

Creative Work: Designers and writers brainstorming projects, planning sprints, defining requirements. Voice brainstorming becomes a work breakdown structure.

The common thread: situations where capturing the thought in real-time matters more than formatting it perfectly, and where the thought needs to become structured work later.

Building a Voice-First Workflow

If you want to actually adopt this, here's a practical roadmap.

Week 1: Explore. Get access to whatever tool you're using (Todoist Ramble, or your own setup). Create a few voice memos. Get used to the interface. See how accurate it is for your specific use case and communication style.

Week 2-3: Refine. Use voice for 2-3 complex projects. Build awareness of what works well (detailed project descriptions work better than quick mentions). Correct the system when it gets things wrong. Review results to build confidence.

Week 4: Expand. Start using voice for your regular workflow. Maybe it's Monday planning sessions. Maybe it's project kickoffs. Maybe it's standup follow-up. Find the use cases where it creates the most value.

Month 2+: Optimize. The system knows your patterns now. Accuracy is higher. You've found the rhythm that works for you. Now it's about integrating it into your daily workflow and removing friction.

The reality: adoption takes 4-6 weeks to feel natural. Don't expect to love it immediately. It'll feel weird at first. That's normal.

The Broader Productivity Shift

Voice-to-task conversion is symptomatic of a bigger shift in how we think about productivity tools.

For decades, productivity tools made you think like a machine. You had to format your input according to the system's logic. You had to navigate menus. You had to learn syntax. The system was the constraint.

Now, systems are becoming elastic. They learn your patterns. They understand your intent. They adapt to your style.

This shift is irreversible. Once you've experienced tools that work the way you think instead of forcing you to think the way they work, going backward is painful.

The companies that understand this are winning. The ones still building machine-centric interfaces are losing relevance with every passing year.

This isn't just about voice. It's about conversational interfaces, AI-assisted everything, adaptive systems, and tools that get better the more you use them instead of requiring more manual setup.

Voice is just the most natural expression of this shift. It's how humans think. It's how humans communicate. It's where everything else leads.

FAQ

What is voice-to-task conversion?

Voice-to-task conversion is an AI-powered process where you record a voice memo describing work that needs to be done, and a system automatically extracts specific tasks, deadlines, priorities, and assignees from that recording, then populates them directly into your task management system. Instead of typing out tasks manually, you just talk about what needs to happen, and the AI handles the organization.

How accurate is the voice-to-task conversion right now?

Current systems achieve 85-95% accuracy on first pass, meaning roughly 1 in 7 to 1 in 20 extractions might need minor tweaking or correction. Accuracy improves with better audio quality, clearer speech, and as the system learns your specific patterns and vocabulary. Most users find the accuracy high enough that voice entry is still faster than manual entry, even accounting for correction time.
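If you want to sanity-check what an accuracy figure means in practice, the arithmetic is simple. The sketch below is illustrative only: the function names are made up for this example, and it assumes "accuracy" means the share of extractions that need no correction.

```python
def corrections_per_hundred(accuracy: float) -> float:
    """Expected number of extractions needing a fix, per 100 extractions."""
    if not 0.0 <= accuracy < 1.0:
        raise ValueError("accuracy must be in the range [0, 1)")
    return round((1.0 - accuracy) * 100, 1)


def one_in_n(accuracy: float) -> float:
    """Express the error rate as '1 in N' extractions."""
    if not 0.0 <= accuracy < 1.0:
        raise ValueError("accuracy must be in the range [0, 1)")
    return round(1.0 / (1.0 - accuracy), 1)


if __name__ == "__main__":
    for acc in (0.85, 0.95):
        print(
            f"{acc:.0%} accurate: about {corrections_per_hundred(acc)} fixes "
            f"per 100 extractions (1 in {one_in_n(acc)})"
        )
```

Run it against your own correction counts after a week or two of use; that number is more useful than any vendor's headline figure.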

What's the difference between voice-to-task and just recording a voice memo?

A voice memo is just an audio recording. Voice-to-task conversion goes further—it transcribes the audio to text, analyzes that text to understand intent and extract specific information (tasks, deadlines, priorities, assignees), and then creates structured task entries in your system. It's the difference between recording yourself rambling and having a system that understands what you meant and organizes it accordingly.
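To make the "analyze the text and create structured entries" step concrete, here is a deliberately tiny sketch. It assumes the transcript already exists as text, and it swaps in a handful of hand-written patterns where real products would use speech-to-text plus a language model. The `Task` fields, the trigger phrases, and the `extract_tasks` name are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Task:
    title: str
    priority: str = "normal"
    assignee: Optional[str] = None


# Hypothetical trigger phrases; a real system would not rely on fixed patterns.
ACTION = re.compile(r"\b(?:need to|needs to|have to|has to|should)\s+(.+)", re.IGNORECASE)
ASSIGNEE = re.compile(r"\b([A-Z][a-z]+)\s+(?:needs to|has to)\b")
URGENT = re.compile(r"\b(?:urgent|asap|critical)\b", re.IGNORECASE)


def extract_tasks(transcript: str) -> List[Task]:
    """Turn a transcript into tasks, one per sentence that contains an action phrase."""
    tasks: List[Task] = []
    for sentence in re.split(r"(?<=[.!?])\s+", transcript.strip()):
        match = ACTION.search(sentence)
        if not match:
            continue  # plain rambling, not a task
        title = match.group(1).rstrip(".!?").strip()
        if not title:
            continue
        who = ASSIGNEE.search(sentence)
        tasks.append(
            Task(
                title=title[:1].upper() + title[1:],
                priority="high" if URGENT.search(sentence) else "normal",
                assignee=who.group(1) if who else None,
            )
        )
    return tasks


if __name__ == "__main__":
    memo = (
        "So the launch is coming up. Urgent: Maria needs to update the pricing page. "
        "We should book the venue. Anyway, that was a long week."
    )
    for task in extract_tasks(memo):
        print(task)
```

The point is the shape of the output, not the matching logic: the rambling sentences are dropped, and what remains is structured data your task manager can store.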

Can voice-to-task systems handle complex projects with many dependencies?

They can handle moderate complexity well, extracting multiple related tasks and some basic dependencies from a single voice memo. However, for projects with extensive interdependencies, critical path analysis, or resource constraints, you'll likely still need a dedicated project management interface and visual representation. Voice-to-task excels at initial capture and simple-to-moderate structuring.

What languages and accents does voice-to-task support?

English support is excellent. Spanish, French, German, and Mandarin have good support. Most other languages work but with reduced accuracy. Accents are increasingly well-supported thanks to training data diversity, but very heavy accents or non-standard dialects may see reduced accuracy. The quality and breadth of language support varies by platform.

How does voice-to-task handle sensitive information and privacy?

Voice recordings contain personal data and potentially sensitive information, so it's important to understand how your platform handles this. Look for platforms that encrypt data in transit and at rest, clearly state how long audio is retained, allow you to delete recordings, explain whether voice is used for model training, and provide privacy controls. For regulated industries, consider whether on-premises solutions are available.

Is voice-to-task worth it for small teams or is it only for large organizations?

Actually, voice-to-task becomes more valuable for small teams. You save time on manual task creation and project planning, and the planning itself tends to be more thorough because you're forced to articulate everything. For a single person or small team, the time savings accumulate quickly and the cost is minimal (usually $4-5/month for the feature).

How does the system know which project to create a task in if I have multiple projects?

The system uses context from your conversation. If you mention a project name, it recognizes that. If you describe work that sounds like it belongs to a certain type of project, it makes an educated guess based on patterns it's learned from you. If it's ambiguous, some systems ask you to clarify; others create a task in a default location for you to move. The more specific you are in your voice memo, the better the system performs.
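The simplest version of that routing logic can be sketched in a few lines. This is an assumption-heavy illustration, not how any particular product works: it only covers the "you mentioned the project name" case and the "fall back to a default location" case, and the `route_to_project` function and `Inbox` default are invented for the example. The learned, pattern-based guessing described above is out of scope here.

```python
from typing import Iterable

DEFAULT_PROJECT = "Inbox"  # hypothetical fallback location


def route_to_project(
    task_text: str, projects: Iterable[str], default: str = DEFAULT_PROJECT
) -> str:
    """Pick a project only when exactly one project name is mentioned."""
    text = task_text.lower()
    matches = [name for name in projects if name.lower() in text]
    if len(matches) == 1:
        return matches[0]
    # Zero matches or several matches: too ambiguous, leave it for the user to move.
    return default


if __name__ == "__main__":
    projects = ["Q3 Launch", "Hiring"]
    print(route_to_project("Draft the Q3 launch announcement", projects))
    print(route_to_project("Call the plumber", projects))
```

Sending ambiguous tasks to a default location is a design choice: a task in the inbox is a minor annoyance, while a task silently filed in the wrong project can get lost.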

Can voice-to-task extract deadlines from natural language like "by end of quarter" or "before the client meeting"?

Yes, it can handle relative deadlines. Phrases like "end of quarter," "next Friday," and "before the client meeting on the 15th" get parsed and converted to actual dates. Purely calendar-based phrases only need today's date; deadlines tied to an event ("before the client meeting") require the system to have access to your calendar. Ambiguous deadlines ("soon", "eventually") are harder; the system might skip these or ask for clarification.
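Here is a minimal sketch of how relative deadlines might be resolved, using only Python's standard library. Several things are assumptions made for illustration: the three supported phrases, the reading of "next Friday" as the first Friday strictly after today, the reading of "before the meeting" as the day before it, and the plain dictionary standing in for real calendar access.

```python
from datetime import date, timedelta
from typing import Dict, Optional


def next_weekday(today: date, weekday: int) -> date:
    """First occurrence of `weekday` (Monday=0) strictly after `today`."""
    days_ahead = (weekday - today.weekday() - 1) % 7 + 1
    return today + timedelta(days=days_ahead)


def end_of_quarter(today: date) -> date:
    """Last day of the calendar quarter containing `today`."""
    quarter_end_month = ((today.month - 1) // 3 + 1) * 3
    if quarter_end_month == 12:
        return date(today.year, 12, 31)
    # First day of the following month, minus one day.
    return date(today.year, quarter_end_month + 1, 1) - timedelta(days=1)


def resolve_deadline(
    phrase: str, today: date, calendar: Optional[Dict[str, date]] = None
) -> Optional[date]:
    """Return a concrete date, or None when the phrase cannot be resolved."""
    text = phrase.lower().strip()
    if text == "next friday":
        return next_weekday(today, 4)
    if text == "end of quarter":
        return end_of_quarter(today)
    if text.startswith("before the "):
        # Event-relative deadlines need calendar data (here: a simple dict).
        event_date = (calendar or {}).get(text[len("before the "):])
        return event_date - timedelta(days=1) if event_date else None
    return None  # "soon", "eventually", etc.: ask the user instead of guessing


if __name__ == "__main__":
    today = date(2025, 3, 10)
    calendar = {"client meeting": date(2025, 3, 15)}
    for phrase in ("next Friday", "end of quarter", "before the client meeting", "soon"):
        print(phrase, "->", resolve_deadline(phrase, today, calendar))
```

Returning `None` for vague phrases mirrors the behavior described above: it is better to skip a deadline or ask for clarification than to invent a date.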

What happens to accuracy when you have background noise during recording?

Background noise degrades accuracy in two ways: speech-to-text misses words or misinterprets them, and the system's understanding of intent can be affected if critical phrases are unclear. Find a relatively quiet spot to record for best results. Modern microphones and noise-cancellation help. If you're recording in genuinely noisy conditions, expect to spend more time on correction.

How long does it take to process a voice memo into structured tasks?

Most systems process a memo within 5-30 seconds: you stop recording, and tasks usually appear in your system in under a minute. Some systems process faster (real-time or near-real-time) while others might take a few minutes if they're running more complex analysis. Total time from "hit record" to "tasks are in my system and I'm adjusting them" is typically 3-10 minutes for a moderately complex project.

The Path Forward: Adoption and Future Development

We're at an inflection point with voice-to-task technology. The capabilities have reached a threshold where they're genuinely useful. The user experience is smooth enough that it doesn't feel like using beta software. The economics are compelling.

What happens next depends on adoption. As more people use voice-to-task systems, more data gets generated. More data improves the models. Better models increase accuracy and capability. Higher accuracy drives more adoption. It's a positive cycle.

The companies that win in this space will be the ones that:

- Obsess over accuracy and user feedback. Every correction is a learning opportunity. They iterate fast.

- Integrate broadly. The tool that works with everything else in your stack is the one you actually use.

- Respect privacy. People will only trust you with voice data if they're confident you're handling it responsibly.

- Focus on specific use cases. Generalist tools struggle. Tools purpose-built for a specific workflow win.

- Build communities of power users. Users who rely on voice-to-task become evangelists. They drive adoption.

For users, the advice is straightforward: try it. The friction to entry is low. The potential benefit is high. The worst case is you spend 20 minutes and decide it's not for you. The best case is you find a 30% productivity increase.

And if it doesn't work for you the first time, try again in six months. The technology improves steadily. What was 70% accurate last year is 90% accurate this year. The bar keeps moving.

Voice-powered productivity isn't the future. It's the present. And it's just getting started.

Key Takeaways

- Voice-to-task conversion achieves 85-95% accuracy on first pass, extracting tasks, deadlines, and assignments from casual speech

- Time to plan complex projects drops 72% with voice input (from 39 minutes to 11 minutes), and voice also cuts context-switching costs

- The technology works best for complex, multi-task planning in product management, consulting, sales, and field services

- Accuracy improves to 95%+ after 3-4 weeks as the system learns your specific communication patterns and conventions

- Privacy and data security are critical considerations—voice recordings contain sensitive information requiring careful platform selection

