How Google Photos Is Revolutionizing Image-to-Video Creation

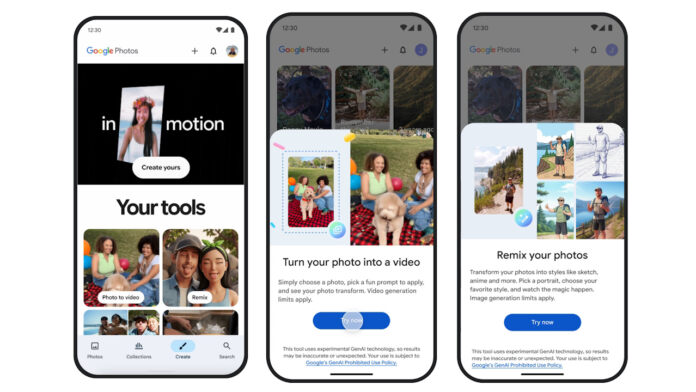

Last year, when Google first introduced its generative AI image-to-video feature in Photos, it felt like watching someone else drive your car. You got a video, sure, but it went wherever Google felt like taking it: either subtle movements nobody asked for, or an "I'm Feeling Lucky" button that might produce something watchable or something genuinely bizarre.

Then came the update that changed everything: text prompts.

Now you can tell Google's AI exactly what you want to see. Want your static portrait to look like someone's gazing out a window with wind rustling their hair? Describe it. Need your landscape photo to pan dramatically across a canyon? Type it. The control has shifted from passive viewer to active director, and honestly, it's one of those features that makes you wonder why it took this long to arrive.

This isn't just another incremental feature drop. It's a fundamental shift in how everyday people interact with generative AI. For years, AI tools have felt intimidating or unpredictable. You'd get results that ranged from impressive to embarrassing with no middle ground. Google Photos' text prompt feature flips that script. It gives creators real agency without requiring them to master prompt engineering or settle for preset options.

The timing matters too. As platforms from TikTok to Instagram increasingly demand video content, tools that make video creation faster and easier become genuinely valuable. Google's 1.9 billion Photos users suddenly have access to a video generation capability that previously required expensive software, technical knowledge, or luck.

But this feature exists in a complicated landscape. xAI's Grok offers similar capabilities through its own image-to-video feature, and other AI companies have been experimenting with similar tools. The question isn't whether text-prompt video generation exists anymore. It's whether Google's implementation actually works better than the alternatives, and whether regular people will actually use it.

Let's dig into what Google just rolled out, what it means for creators, and what the broader implications are for the future of AI-powered content creation.

TL;DR

- Text Prompts Are Now Live: Google Photos users can now describe exactly how still images should animate into videos using natural language descriptions instead of preset options.

- Age Restriction Applied: The feature is limited to users 18 and older in the Photos app, though it's available to users 13+ in Gemini.

- Built-In Audio Support: Videos can now include default audio, making them ready to publish without additional editing.

- Competitive Positioning: This brings Google Photos more in line with rivals like Grok while addressing safety concerns from past AI video misuse.

- Regional Limitations: Features remain geographically restricted, so availability varies by location and account type.

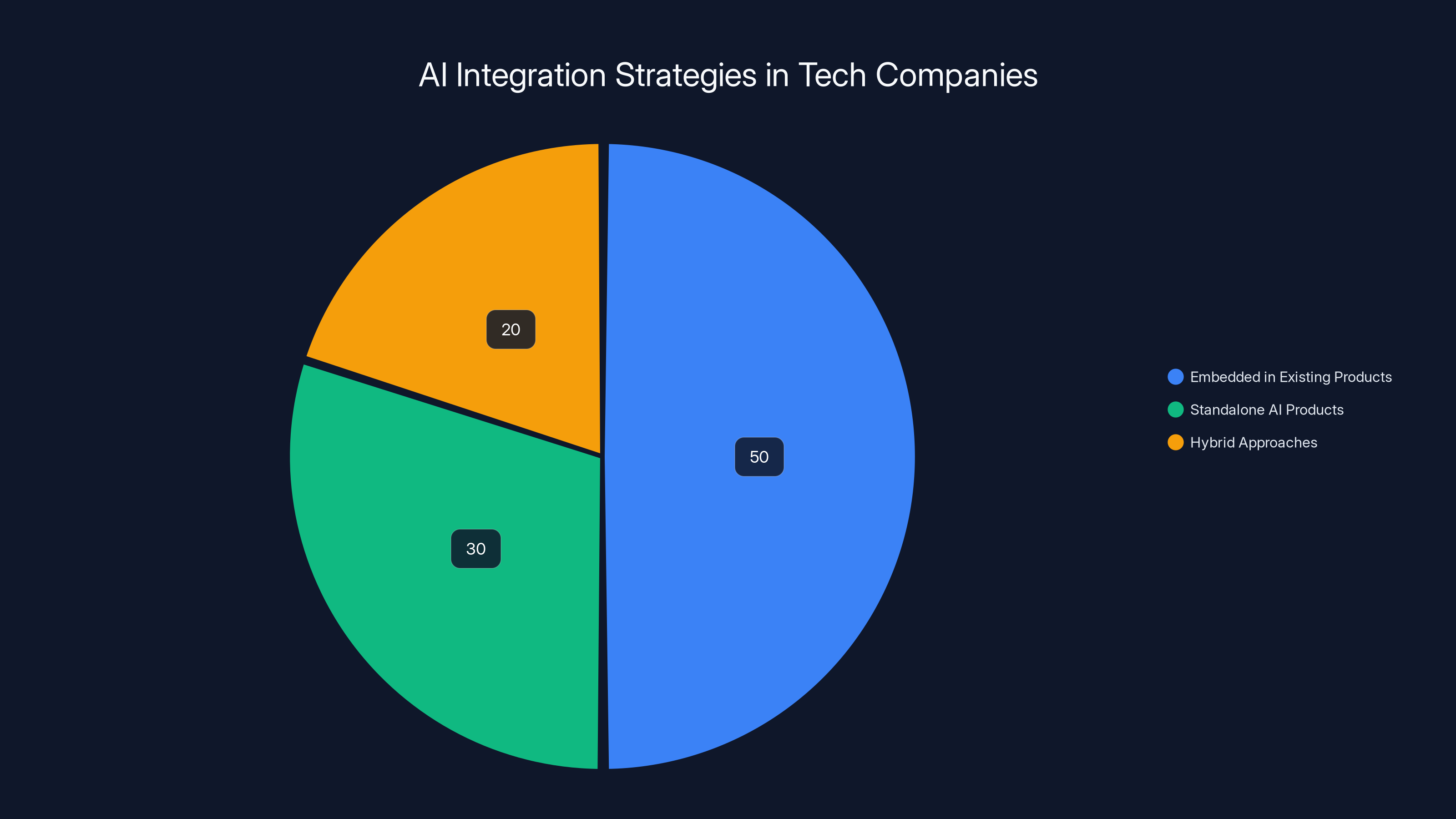

Estimated data shows that embedding AI into existing products is the most common strategy, accounting for 50% of AI integration approaches among major tech companies.

What Google Photos' Image-to-Video Feature Actually Does

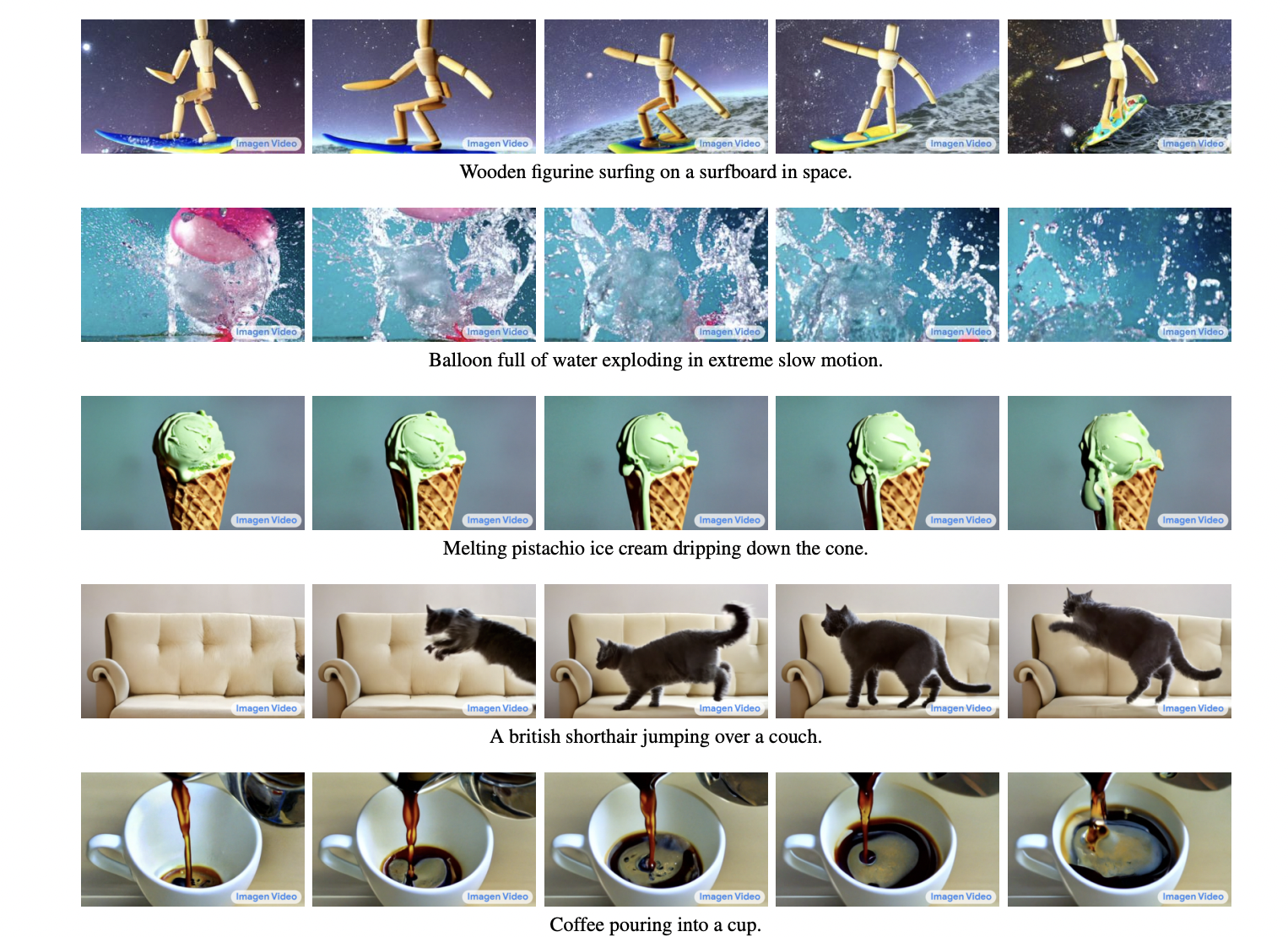

Before we talk about the new text prompt capability, you need to understand what the base feature does. Google Photos' image-to-video tool takes a single still photograph and animates it. Not by shifting pixels around randomly, but by using AI to understand spatial depth, lighting, and composition, then creating realistic movement that feels like the moment captured in the photo is continuing to unfold.

This is fundamentally different from simple zoom-and-pan effects. Those are transitions. This is animation. The AI generates new pixels that don't exist in the original image, extrapolating what the scene might look like if time advanced by a few seconds.

A portrait becomes a person turning slightly or breathing subtly. A landscape becomes a gentle camera pan. A food photo becomes steam rising from a bowl. The sophistication of these animations has improved dramatically since Google first introduced the feature. The algorithm has gotten better at understanding what should move, how fast, and in what direction.

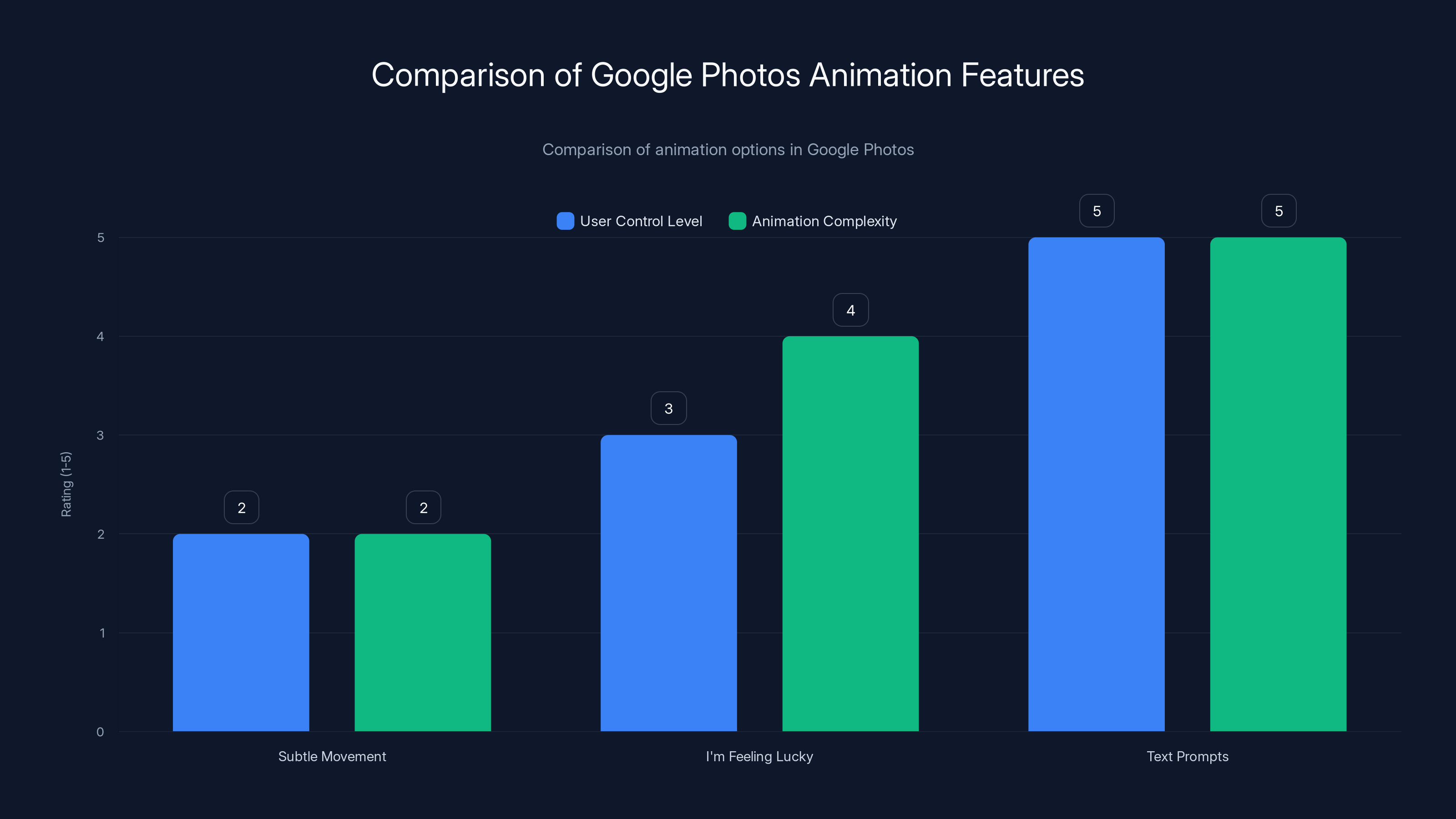

Before the text prompt update, you had two options: let Google apply subtle movement automatically, or hit "I'm Feeling Lucky" for something more pronounced. Beyond that, your only choice was to skip the feature entirely. Those presets worked okay for generic situations, but they didn't allow for creative direction. You couldn't say "I want the camera to move left while the subject stays centered" or "add wind effects to make the scene feel more dynamic."

The practical limitation was real. Creators forced to choose between presets often felt the results didn't match their vision. Users without strong creative instincts sometimes got results that looked weird or felt off without understanding why. The feature existed, but it didn't give you control.

Now text prompts change that equation entirely. You describe the video you want, and the AI attempts to generate it. This is where the feature becomes genuinely useful rather than just a novelty.

Text prompts offer the highest level of user control and animation complexity in Google Photos, surpassing previous preset options.

Understanding the Text Prompt Capability

The new text prompt feature works like a traditional AI image or video prompt, but specifically optimized for animating existing photos. You describe the movement, style, mood, or effect you want, and Google's AI interprets your description and applies it to your image.

Examples of effective prompts might include:

- "Slow, gentle zoom on the subject's face while maintaining focus"

- "Pan camera left to right across the landscape"

- "Add gentle water ripples with light reflections"

- "Subject looks around the room with a curious expression"

- "Slow fade to dark with stars appearing overhead"

- "Gentle wind blowing through grass while camera stays still"

These aren't random ideas. They're the kinds of movements and effects that require understanding both the original image and what the prompt is asking for. The AI has to recognize the key elements in your photo, understand spatial relationships, predict how things should move, and generate frames that feel natural rather than glitchy.

Google also provides prompt suggestions as you work, offering inspiration for what's possible with your specific image. This is helpful for people who aren't sure what to ask for. Instead of staring at a blank text field wondering what to write, you get examples like "romantic sunset gaze" or "morning light coming through window," which you can use as-is or modify to match your vision.

You can edit and refine prompts until you get something you like. The workflow isn't click-once-and-done. It's iterative. Try a prompt, see the result, adjust, try again. This matters because AI video generation is imperfect. Sometimes it misinterprets your request, or the result looks good but not quite right. The ability to iterate without starting from zero is genuinely valuable.

The generated videos are relatively short, probably in the 3 to 5 second range based on Google's typical approach to generative video. Long enough to feel like actual content, not long enough to be feature-film material. But for social media, product photos, or personal memories, that's exactly the right length.

The Age Restriction Controversy

Here's where things get interesting, and a little complicated. Google restricted text prompt image-to-video generation to users 18 and older in the Photos app. But the same capability is available to users 13 and older in Gemini.

This inconsistency raises immediate questions. Why does Gemini allow younger users but Photos doesn't? Is it actually less safe in Photos? Is the restriction about liability rather than actual risk?

The answer appears to be both. Generative AI video, particularly when combined with image-to-video conversion, can be misused. The most notorious example is what happened with Grok, which made headlines when people used it to generate undressing videos of real people, including minors. That wasn't a theoretical concern. That was actual, documented abuse.

Google is likely restricting the Photos version to avoid similar headlines and potential legal liability. Gemini's lower 13+ threshold may reflect that Gemini is a general AI assistant with its own dedicated safety systems, where people generating video are presumably doing so in a more deliberate, considered context. Photos is different. It's casual, and practically anyone with a Google account uses it. Restricting the more powerful video feature to adults looks like Google erring toward caution.

You can argue whether this restriction is necessary or overreach. Some people will say teens should be allowed to use creative tools. Others will say that given the history of generative video abuse, restrictions make sense. The practical reality is that Google made a deliberate choice to treat Photos as more restricted than Gemini, acknowledging the different use contexts.

What's worth noting is that the age restriction gates this one feature, not the rest of Photos. If your account meets the age requirement, the feature works. If it doesn't, the option simply isn't offered, and there's no workaround. It's a hard restriction.

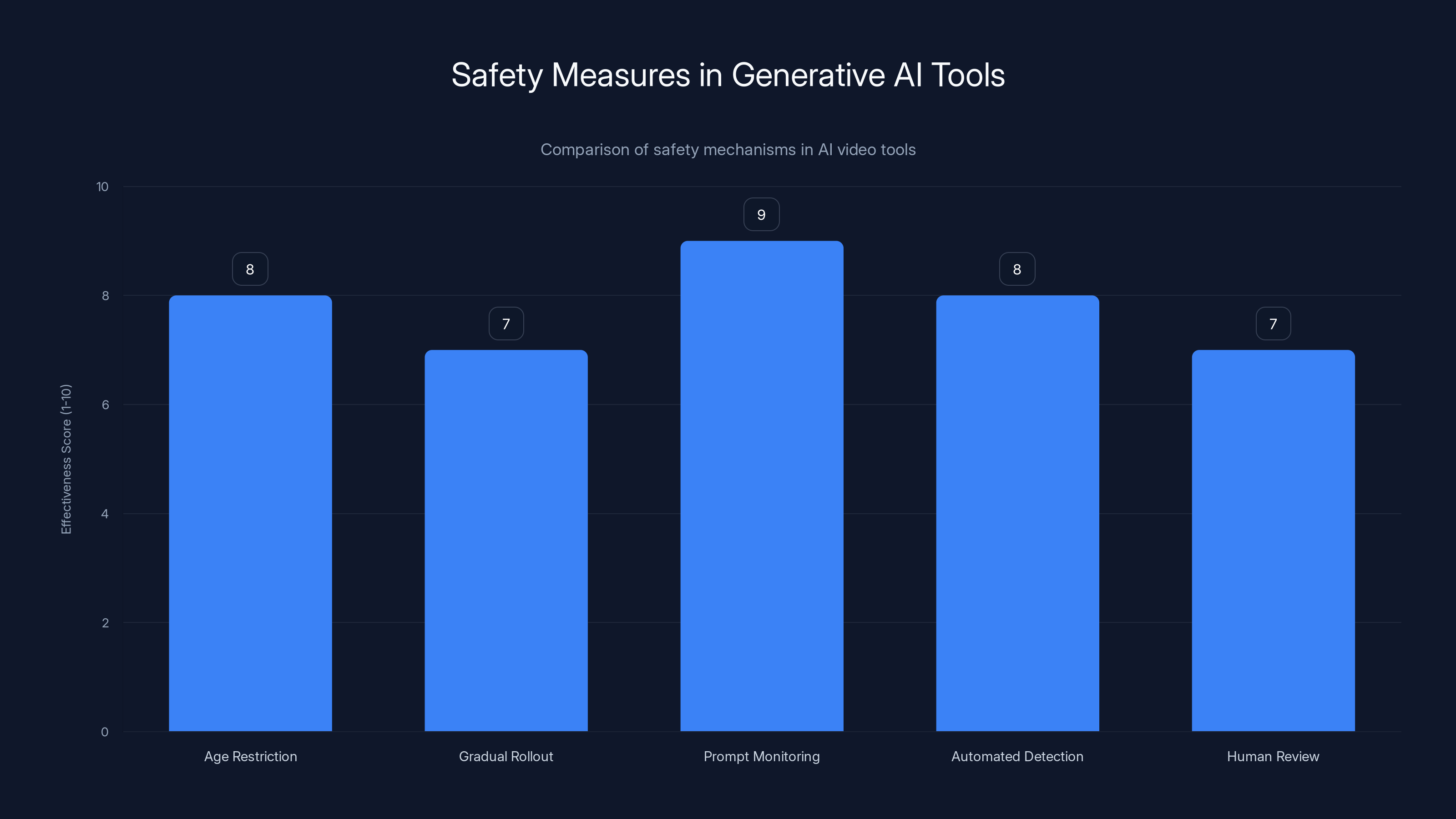

Google's generative AI tools employ multiple safety mechanisms, each with varying effectiveness, to prevent misuse. Estimated data based on typical industry practices.

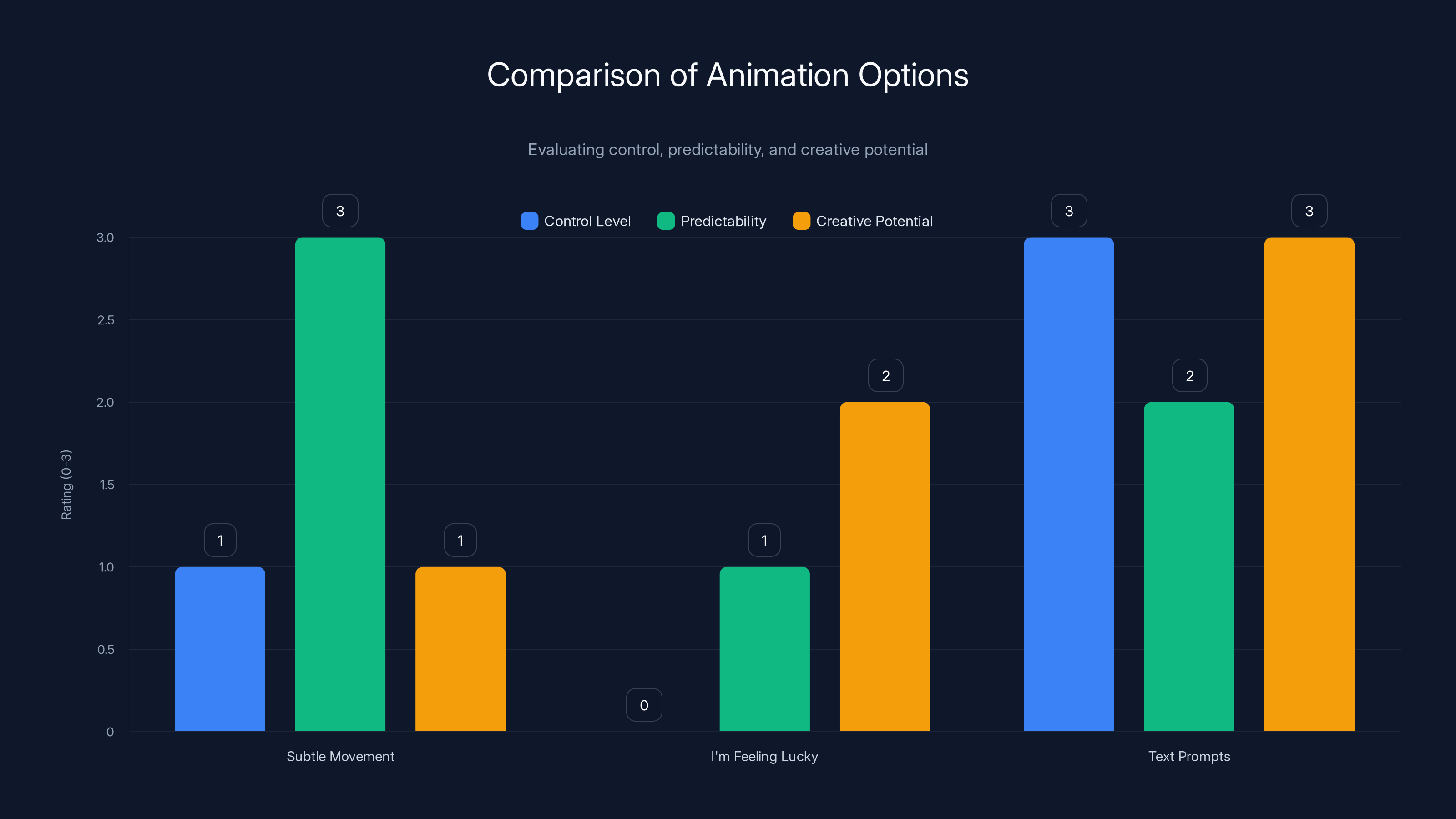

How Text Prompts Compare to Preset Options

To understand what you're gaining with text prompts, it helps to contrast them with what came before. The old system gave you two choices: subtle movement or random generation.

Subtle Movement was designed to be safe and understated. It's the option Google recommended for most users. It would apply minimal animation that enhanced the image without changing it fundamentally. A person's eyes might blink slightly. Their shoulders might shift. A flower's petals might seem to sway. The movement was gentle enough that if you didn't notice it, you wouldn't feel like something was missing.

This approach was safe and predictable. It rarely produced bizarre results. But it also rarely produced anything remarkable. If you wanted your video to feel dynamic or creative, subtle movement just wasn't enough.

I'm Feeling Lucky was the alternative. This option applied more pronounced animation. Think dramatic camera pans, significant movement, noticeable effects. Sometimes it produced stunning results. Other times it produced videos that looked like someone fed your photo to an AI that had just woken up from a five-year nap and was still confused about how reality works. The unpredictability was the entire point.

With text prompts, you get a middle ground. You can request whatever level of animation you want, describe the specific mood or effect you're looking for, and guide the AI toward your vision. It's not fully deterministic (the AI will still interpret your prompt in ways you didn't expect), but it's far more directional than the preset options.

The comparison table tells the story:

| Option | Control Level | Predictability | Creative Potential | Best For |
|---|---|---|---|---|
| Subtle Movement | Very Low | Very High | Low | Safe, professional content |
| I'm Feeling Lucky | None | Low | Medium | Experimental, fun videos |
| Text Prompts | High | Medium | High | Creative direction with flexibility |

This is why text prompts matter. They fill a genuine gap in the tool's capabilities. For professionals, casual creators, marketers, or anyone with a specific vision, the ability to describe what you want is far more useful than choosing between "subtle" and "random."

Built-In Audio: Making Videos Ready to Publish

A smaller but meaningful addition to this update is built-in audio support. Generated videos can now include default audio, making them ready to share directly without additional editing.

This seems minor until you think about workflow. Previously, if you generated a video and wanted audio, you'd need to export it, open a video editor or audio application, add a soundtrack, and re-export. Even for simple background music, that's multiple steps. Now you can generate a video with audio already included.

The audio isn't customizable within Photos itself: you can't choose specific music or adjust audio levels. It appears to work more like a default, presumably royalty-free soundtrack that Google matches to the mood of your video, something appropriate and inoffensive that works for social media.

For TikTok or Instagram posting, this is genuinely useful. Those platforms care about whether your video has audio, and videos with sound tend to perform better. Before this update, you had to handle audio manually. Now it's included.

The philosophy here is clear: Google wants to reduce friction between creation and publishing. Fewer steps between idea and finished product means more people will actually use the feature. It's smart product design.

Text prompts offer the highest control and creative potential, balancing between the predictability of subtle movement and the creativity of 'I'm Feeling Lucky'.

The New Gmail Photos Picker Integration

Alongside the text prompt update, Google introduced a new Photos picker for Gmail. While not directly related to image-to-video generation, it represents Google's broader push to make its ecosystem more integrated and friction-free.

The new picker lets you search your Photos library directly from Gmail's attachment interface. Instead of downloading photos to your computer and uploading them, you search your library within Gmail, select what you want, and attach it. You can select multiple photos at once and browse your albums, collections, and shared albums without leaving Gmail.

This matters because email remains a common way to share photos, despite social media's dominance. Making that process faster removes a small but real barrier to sharing. Instead of thinking "this will take a minute to find the file," you just search and attach.

It's the kind of feature that doesn't seem significant until you use it; then you realize how much time you've been wasting on a simple task. Google didn't invent the idea (plenty of applications let you search and attach), but it brought it to Gmail, which 1.8 billion people use.

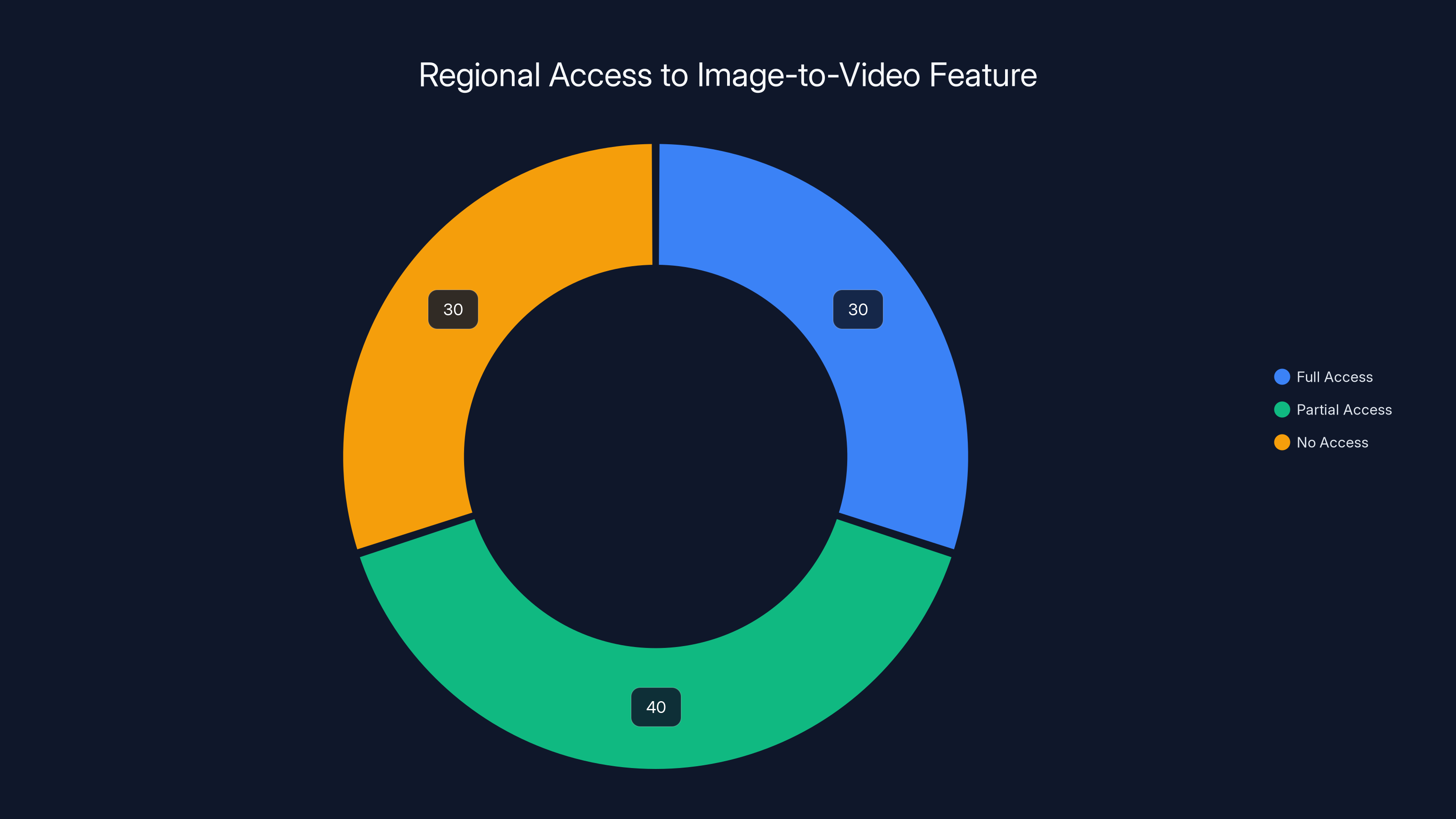

Regional Availability and Limitations

Here's where things get frustrating: the image-to-video feature, including the new text prompt capability, isn't available everywhere. Google has geographically restricted access for various reasons related to regulation, licensing, and content moderation.

The exact restrictions vary by region and even by account type. Some countries have full access. Others have partial access to the base feature but not the text prompt variant. Some have access via Gemini but not Photos. The limitations are real and they're inconsistent.

Google publishes this information on their official support pages, but it requires checking your specific region. You might have access, or you might not. There's no universal rollout. This is frustrating for international users and creators in restricted regions, but it's the reality of operating a consumer product at scale while complying with different regulatory frameworks.

The practical implication is this: don't assume you have access just because the feature exists. Check Google's official documentation or try accessing it. If it's not available in your region, it may arrive eventually, or it may not arrive for months. That depends on local regulations and Google's priorities.

Estimated data showing that around 30% of regions have full access, 40% have partial access, and 30% have no access to the image-to-video feature. This highlights the fragmented rollout due to regulatory and licensing issues.

Safety Measures and Why They Matter

The age restriction and gradual rollout of Google Photos' text prompt feature aren't just corporate caution. They reflect real lessons learned from how generative AI video can be misused.

The Grok situation established that image-to-video tools can be weaponized. People used Grok's capabilities to generate synthetic videos of real people, often without consent, sometimes depicting them in undressing scenarios. The fact that this happened publicly meant other AI companies had to reckon with the same potential.

Google's approach has been more cautious from the start. Their generative AI features include multiple safety mechanisms: they're tested for potential abuse patterns before rollout, they're restricted by age or region when necessary, and they're monitored for misuse once live.

Text prompts cut both ways for safety. On one hand, they give more directional control, which could theoretically be used for harmful purposes. On the other, they create an explicit record of what someone is asking the AI to generate, which makes moderation and abuse detection easier.

Google likely has systems that flag prompts appearing designed to create harmful content. Automated detection could catch many attempted abuses before videos are even generated, with human review catching others. The combination creates friction against misuse without making the feature unusable for legitimate creative purposes.

This is important context because some people criticize these safeguards as overreach or censorship. The reality is more nuanced. These guardrails exist because generative video tools have demonstrated potential for real harm. The restrictions aren't arbitrary. They're responses to documented problems.

How This Stacks Up Against Competitors

Google Photos isn't the only platform offering image-to-video generation. Understanding how the text prompt feature compares to alternatives helps contextualize what Google is doing and whether it's actually competitive.

Grok was an early mover in this space, and its image-to-video feature is powerful. You can generate videos with significant creative control. The problem, as mentioned, is the safety issues that emerged with misuse. Grok's approach has been more permissive, which generated headlines for all the wrong reasons.

Other AI companies, such as OpenAI, have been working on video generation capabilities. OpenAI's Sora model, for instance, can generate video from text descriptions, though it's a standalone tool rather than something built into the photo library where your images already live.

There's also a broader ecosystem of specialized video generation tools, such as Runway and Synthesia, that offer professional-grade capabilities. These are more powerful but also more complex and usually paid services.

Google's advantage is distribution. 1.9 billion Photos users suddenly have access to video generation without installing new software or paying for specialized tools. The feature is built into an app they already use, so, regional and age limits aside, the barrier to entry is close to zero.

The tradeoff is flexibility. Specialized tools let you do more. Google Photos' implementation is simpler, more constrained, but accessible to everyone. For the average person wanting to animate a photo, Google's approach is better. For professionals needing advanced capabilities, specialized tools are better.

The text prompt feature makes Google's offering more competitive because it adds the creative control that was previously missing. You can now describe specific effects and movements, which brings it closer to what specialized tools offer, while maintaining the simplicity advantage of being in an app people already use.

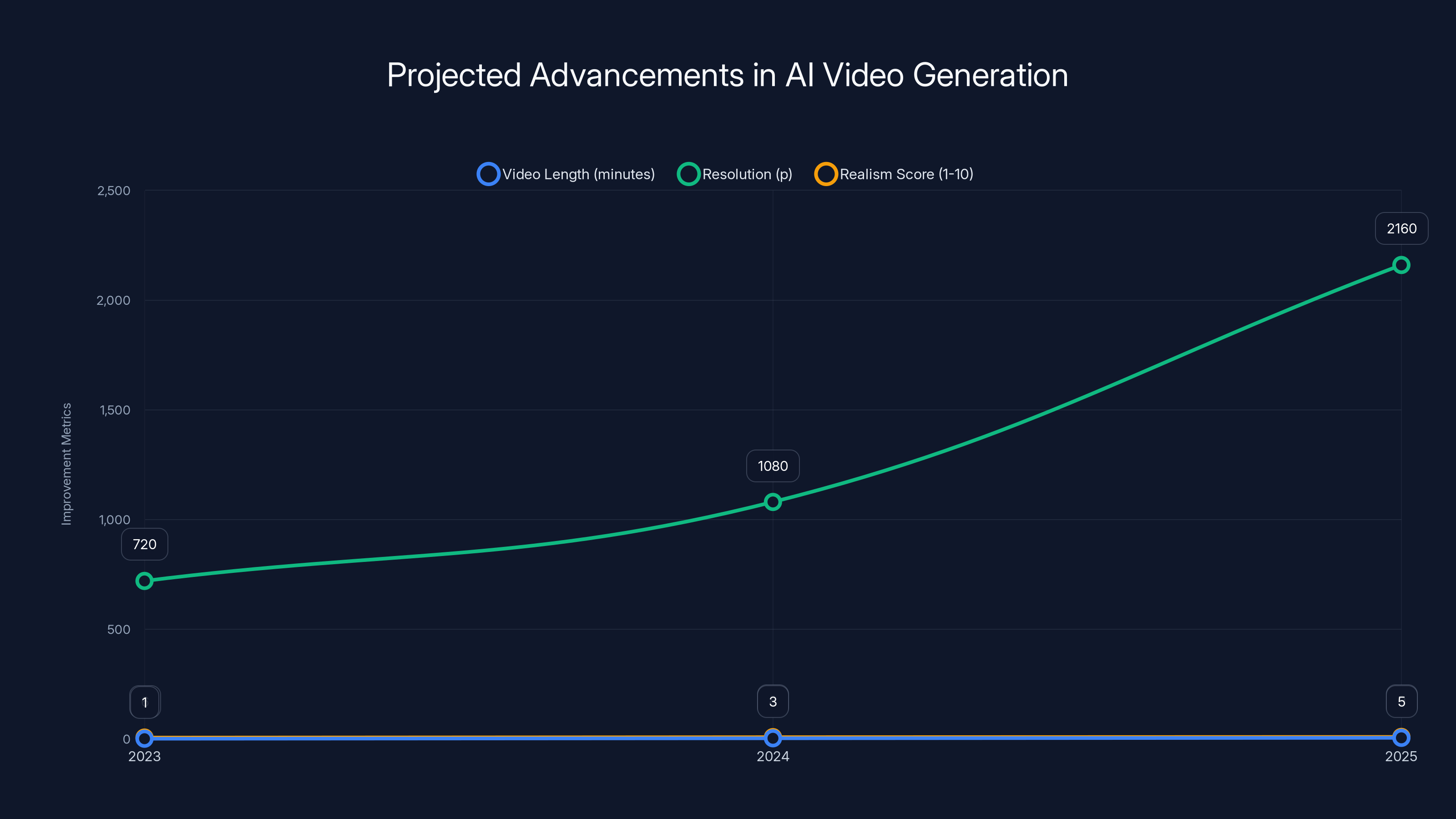

AI video generation is expected to produce longer videos, higher resolution, and more realistic motion by 2025. Estimated data.

Best Practices for Using Text Prompts Effectively

If you're going to use the text prompt feature, here are practical guidelines that will improve your results:

Be specific about movement. Instead of "pan the camera," say "slow pan from left to right" or "zoom in gradually while maintaining focus on the subject's face." The more detail you provide, the better the AI can interpret your intent.

Include mood or tone. You can describe not just the movement but the feeling you want. "Dramatic wind blowing through hair with cinematic lighting" tells the AI more than "add wind effects." The mood helps guide the overall aesthetic.

Describe what stays still. If the subject should remain centered while the background moves, say so. The AI needs to understand what the static anchor point is.

Start simple, then iterate. Your first prompt might not produce the perfect video. That's normal. Try it, see the result, adjust based on what worked or didn't work, and try again. The iteration process is how you get good results.

Use the suggested prompts as starting points. Google provides suggestions. They're not perfect, but they give you direction and examples of what's possible with your image. Use them as inspiration rather than final answers.

Test different images. Some photos animate better than others. Images with clear depth, obvious subjects, and interesting backgrounds tend to produce better results than flat, minimal compositions. This matters more with AI video generation than with static images.
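The first three guidelines boil down to a simple template: state the movement, add the mood, and name what stays still. As an illustration only, here is a hypothetical Python helper that assembles those pieces into one prompt string you could paste into Photos. Google Photos is not called here and no Photos API is assumed; the `VideoPrompt` class and its field names are our own invention, a sketch of the prompt structure rather than anything Google provides.

```python
from dataclasses import dataclass


@dataclass
class VideoPrompt:
    """Hypothetical container for the ingredients recommended above.

    Not part of any Google API; it only builds a text string.
    """
    movement: str     # e.g. "slow pan from left to right"
    mood: str = ""    # e.g. "dramatic, cinematic lighting" (optional)
    anchor: str = ""  # what should stay still (optional)

    def render(self) -> str:
        """Join the non-empty parts into one comma-separated prompt."""
        parts = [self.movement.strip()]
        if self.mood.strip():
            parts.append(self.mood.strip())
        if self.anchor.strip():
            parts.append(self.anchor.strip())
        text = ", ".join(parts)
        # Capitalize only the first character; leave the rest untouched.
        return text[:1].upper() + text[1:]


prompt = VideoPrompt(
    movement="slow pan from left to right",
    mood="gentle wind blowing through the grass",
    anchor="subject stays centered and still",
)
print(prompt.render())
```

Because `mood` and `anchor` are optional, you can start with just a movement and add the other parts one at a time between attempts, which mirrors the "start simple, then iterate" advice.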

Creative Applications and Use Cases

Beyond the obvious "make a video of this photo" use case, the text prompt feature opens up several interesting creative possibilities.

Product photography becomes more dynamic. A product shot becomes a video with the camera panning around the object, showing it from multiple implied angles. For e-commerce, this is actually valuable. Videos tend to outperform static images in conversion.

Travel and event photography can be enhanced. Landscape photos become videos with camera movement that creates a sense of exploration. Event photos become videos with subtle movement that brings the moment to life rather than freezing it.

Personal archival becomes more interesting. Family photos can be animated in ways that preserve memories more vividly than static images. Birthdays, holidays, and vacations all become short video memories rather than snapshots.

Social media content creation becomes faster. Platforms increasingly favor video content. The ability to generate videos from your existing photo library without specialized tools or complex editing makes it realistic for regular people to create video content.

Portfolio and professional use becomes accessible. Photographers, designers, and creatives can use the feature to create dynamic portfolios or presentations without expensive video software.

The common thread is that text prompts enable creative control that was previously missing. You're not just delegating the animation to an algorithm. You're directing it. That's powerful for anyone creating content.

Future Directions and What's Coming Next

If you're thinking about where this technology is heading, the trajectory is clear: AI video generation will become more sophisticated, more accessible, and more integrated into everyday creative tools.

Google's text prompt feature is a waypoint, not a destination. Within a year or two, expect longer videos, higher resolution outputs, more realistic motion, and better prompt interpretation. The current generation is impressive but imperfect. The next generation will be noticeably better.

You'll also likely see more integration with other Google products. Imagine having image-to-video capabilities built into Google Docs, Google Slides, or even Google Search. Once a technology becomes mature enough, Google tends to distribute it across their entire ecosystem.

Other companies will continue iterating as well. OpenAI's Sora and other advanced models will become more accessible, specialized video creation tools will improve, and the category as a whole will mature.

The bigger shift is cultural. As AI video generation becomes easier and more common, people's expectations for content will change. Static images will feel dated faster. Video creation will feel as normal and straightforward as photo editing. The barrier between idea and creation will continue shrinking.

For creators and professionals, this means staying ahead of the curve has real value. Learning to use these tools effectively now will be an advantage as they become ubiquitous. People who understand how to craft effective prompts, which images animate well, and how to edit and refine results will have an edge.

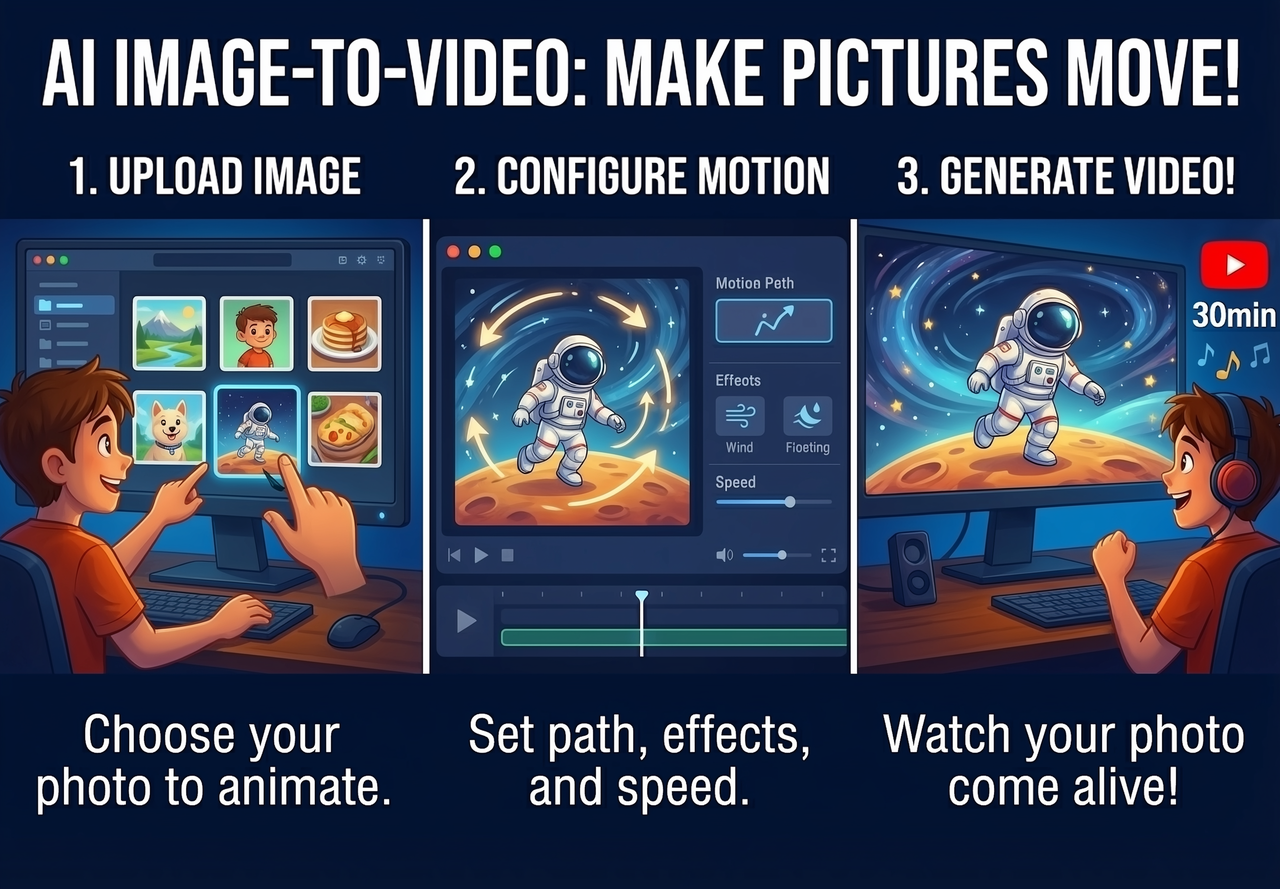

Practical Workflow: From Idea to Published Video

Let's walk through what an actual workflow looks like with the new text prompt feature, from initial idea to published content.

Step 1: Choose your image. Pick a photo you want to animate. Consider composition, lighting, and whether there's interesting depth or implied movement. A good source image makes everything easier.

Step 2: Open Photos and navigate to the image. Find it in your library, tap it, and look for the image-to-video option. It should be available in the editing interface.

Step 3: Select the text prompt option. Instead of choosing preset options, select "Add text prompt" or similar wording.

Step 4: Describe your vision. Type what you want to see. Be specific about movement, mood, and effects. Don't overthink it—you can iterate.

Step 5: Review the result. The AI generates a video based on your prompt. Watch it fully. Does it match your vision? Partially? Not at all?

Step 6: Iterate if needed. If the result isn't what you wanted, edit your prompt and try again. The system remembers your original image, so you're just trying different prompts, not starting over.

Step 7: Check the audio. Decide whether the default audio suits the clip. Since Photos doesn't let you pick music or adjust levels, use a separate editor if your use case needs silence or specific music.

Step 8: Save and export. Once you're happy with the result, save it to your library or export it directly for social media posting.

Step 9: Publish. Depending on where you're sharing (TikTok, Instagram, YouTube, etc.), upload the video and post it.

The entire process takes maybe 5-10 minutes if the first prompt works, or 15-20 minutes if you need to iterate. That's genuinely fast for video creation. Compare that to traditional video editing, and you're saving substantial time.

Potential Limitations and Honest Assessment

Here's the thing about generative AI video: it's impressive, but it's not magic. There are real limitations worth understanding before you get too excited.

Quality varies by image. Some photos animate beautifully. Others produce weird results or awkward motion. You can't predict which without trying. This means some of your attempts won't work out, and you'll either accept the imperfect result or abandon the video.

Motion interpretation is imperfect. The AI doesn't always understand spatial relationships the way you do. Sometimes the motion feels wrong or unnatural. Sometimes it's brilliant. The inconsistency is part of using generative AI.

Prompts still require precision. You can describe what you want, but vague prompts produce vague results. Writing effective prompts is a skill that improves with practice. It's not as simple as describing anything and getting exactly what you envisioned.

Regional restrictions limit access. If you're in a restricted region, you might not be able to use this feature at all. The geographic fragmentation is frustrating but real.

Age restrictions prevent some users from accessing it. If you're under 18, you're blocked from using text prompts in Photos, even though you might have legitimate creative reasons to use the feature.

It's not a replacement for real video. For serious creative projects, professional-grade video production still beats AI generation. This feature is useful for casual content, social media, and specific use cases. It's not an all-purpose video solution.

These limitations don't make the feature worthless. They just mean you should approach it with realistic expectations. It's a tool that solves specific problems well, not a tool that solves all video creation problems.

The Broader Implications for AI Integration

Google's approach to integrating image-to-video generation into Photos reveals something important about how major tech companies are thinking about AI: distribute it quietly, integrate it deeply, and make it accessible by default.

Google isn't making a big deal about this feature. It's not a separate product or service. It's just part of Photos, available to existing users, with optional text prompts if you want more control. This is different from how some AI features are marketed: as revolutionary new products you need to learn about and adopt.

Instead, Google is treating generative AI as infrastructure. It's something Photos does now, the same way it does photo editing or backup. This approach has advantages: it gets technology to users without requiring them to seek it out, it integrates with existing workflows, and it doesn't require massive user education.

It also creates dependencies. If you use Photos, you're now potentially using generative AI whether you consciously chose to or not. Google's handling of the feature (age restrictions, safety systems, regional rollout) becomes important because it affects billions of people.

This is the future of how major technology gets distributed. Not as separate products you opt into, but as capabilities embedded in tools you already use. It's efficient and effective, but it also raises questions about consent, agency, and choice.

For product teams across the industry, Google's approach is instructive. When you have distribution at scale—billions of users—you don't need flashy marketing. You just need to build something useful and make it part of the product. The technology adoption happens naturally.

Conclusion: Why This Matters More Than It Seems

On the surface, Google Photos adding text prompts to its image-to-video feature is an incremental update to a tool most people already have access to. You might think "okay, cool, another AI feature, moving on."

But if you squint and look at the broader pattern, something more significant is happening. Generative AI tools are moving from novel capabilities to standard features in mainstream apps. Image-to-video generation went from a specialized tool that required Runway or a dedicated application to something built into Photos with text prompts.

This democratization matters. It means a student can create video content for a project without buying expensive software. A small business owner can generate product videos without hiring a videographer. A creator can prototype video ideas without extensive technical knowledge.

The text prompt capability specifically matters because it gives people agency. Instead of choosing between presets, you can describe your vision. That shift from passive to active, from choosing options to directing an AI, is significant from both a capability and a psychological perspective.

Yes, there are safety concerns to manage. Yes, there are limitations to the technology. Yes, age restrictions and regional barriers are frustrating. But the underlying trend is real: creative tools are becoming more accessible, more integrated, and more capable. AI is making that acceleration possible.

For anyone working with photos, video, or creative content, this feature is worth trying. Not because it's perfect, but because it represents a genuine expansion of what's possible for non-professionals. That's genuinely valuable.

The next evolution will be even better. Text-to-video will improve. Image-to-video will become more sophisticated. Integration will deepen. The current version is impressive. The future versions will be even more so.

FAQ

What exactly is Google Photos' image-to-video feature?

Google Photos' image-to-video feature takes a single still photograph and animates it using AI to create realistic movement and visual effects. Unlike simple zoom-and-pan transitions, this feature generates new pixels and realistic motion that makes it look like the moment in the photo is continuing to unfold. The animation can include subject movement, camera pans, lighting effects, and other visual changes that feel natural.

How do text prompts work differently from the previous preset options?

Before text prompts, users had two choices: "Subtle Movement" for minimal, safe animation, or "I'm Feeling Lucky" for unpredictable, more dramatic effects. Text prompts let you describe exactly what movement or effect you want. Instead of choosing between presets, you can specify directions like "slow pan from left to right" or "zoom in on the subject's face," giving you creative control while still letting the AI handle the technical animation work.

Why is there an age restriction for text prompts in Photos but not in Gemini?

Google restricted text prompt image-to-video generation to users 18 and older in the Photos app, while allowing users 13 and older to access similar capabilities through Gemini. The difference likely reflects Google's assessment that Photos is a more casual app with broader accessibility, while Gemini is a dedicated AI assistant where users are making more deliberate choices. The age restriction also acknowledges past misuse of image-to-video tools, such as Grok's similar feature being used to create non-consensual synthetic videos.

Is this feature available everywhere?

No. Google's image-to-video feature, including the new text prompt capability, is geographically restricted. Availability varies by region due to different regulatory requirements, licensing agreements, and content moderation considerations. Some regions have full access to all features; others have limited access or none at all. Users should check Google's official support documentation to see whether the feature is available in their region and for their account type.

How long are the generated videos?

Generated videos from Google Photos' image-to-video feature are short, usually in the 3 to 5 second range. That length suits social media content, animated memories, and quick creative pieces, but it's too short for longer-form content or professional video projects.

Can I edit the generated videos after creation?

Yes. Once a video is generated, you can save or export it and refine it in any video editing software. The feature adds default audio, which you can remove or replace with your own music or sound.

How does Google's feature compare to dedicated video generation tools like Runway or Synthesia?

Google Photos' feature is simpler and more accessible but less flexible than specialized tools. Runway and similar platforms offer more advanced controls and longer video generation. However, Google's approach has a major advantage: it's built into an app 1.9 billion people already use, with zero barrier to entry. For casual users and quick video creation, Google's simplicity is an advantage. For professionals needing advanced capabilities, specialized tools are still better.

What happens if the AI misinterprets my prompt?

If the generated video doesn't match your description, you can edit your prompt and generate a new version. Iteration is normal with generative AI, and it sometimes takes several attempts to get exactly what you want. The system keeps your original image, so you're only trying different prompts, not restarting from the beginning each time.

Is this feature completely free?

Yes. The image-to-video feature, including text prompts, is included free for all Google Photos users who meet the age requirement (18+) and are in a region where the feature is available. You don't need a paid subscription or additional purchase to access these capabilities.

What's the difference between generative AI video and deepfakes?

Generative AI video like Google Photos' feature creates animated content from existing static images using AI-generated motion and effects. Deepfakes typically refer to synthetic videos of real people that are designed to deceive by making them appear to say or do something they didn't actually do. While the underlying technologies can overlap, their intentions are very different: one is creative animation, while the other is often used for deception or misinformation. This is why Google implements safety restrictions and why text prompts are age-restricted.

Can I use generated videos commercially?

Yes, generally you can use videos generated from your own photos commercially since you own the source material. However, if you use the default audio that Google provides, you need to verify that it's licensed for commercial use. The terms of service for Google Photos specify permitted uses, so reviewing those terms for your specific use case is important before using generated videos in professional or commercial contexts.

Key Takeaways

- Google Photos' new text prompt feature for image-to-video generation gives users creative control previously unavailable through preset options

- The feature is restricted to users 18+ in Photos (but 13+ in Gemini) due to demonstrated misuse patterns with similar tools like Grok

- Generated videos include default audio and are ready to publish without additional editing, reducing friction for content creators

- Text prompts enable practical applications including product photography, travel content, social media video, and personal archiving

- The feature represents a broader trend of AI capabilities being embedded into mainstream apps rather than distributed as separate products
