Introduction: The Shift From Reactive to Proactive AI

For years, AI assistants have operated on a simple principle: you ask, they answer. ChatGPT waits for your prompt. Claude reads your message. Gemini sits idle until you type something into the chat box. But Google just broke that pattern.

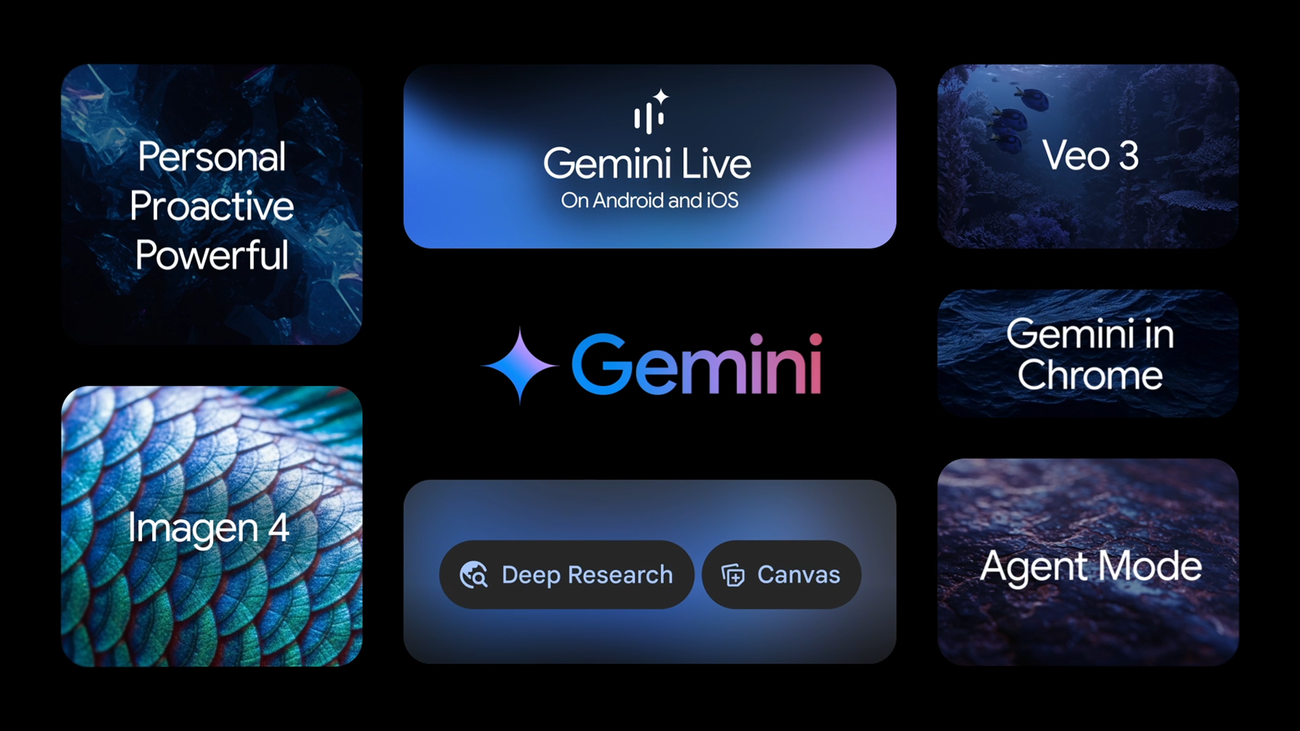

In January 2026, Google announced Personal Intelligence, a new beta feature that changes how Gemini interacts with your data. Ask Gemini about your car's tire size, and it can go further, suggesting tire types based on your road trip photos. Instead of requiring you to search manually for video recommendations, it can notice your cooking searches and suggest relevant YouTube channels.

This isn't an incremental improvement. It's the difference between a tool and something that actually understands your life.

But here's what matters: Google built this with privacy controls baked in. Personal Intelligence is completely off by default. Users choose which apps to connect. Data doesn't train the model directly. Google even avoids making proactive assumptions about sensitive topics like health. Yet the capability is undeniably powerful—and undeniably controversial.

This shift raises important questions. What happens when AI understands your photos, emails, and search history well enough to make personalized suggestions without being asked? How do companies balance capability with privacy? What does "consent" actually mean when you're giving AI access to years of personal data? And most importantly: is this the future of AI assistants, or a warning sign about how much power we're willing to give tech companies?

The answers matter because Personal Intelligence isn't just a Gemini feature. It's a proof of concept for how AI assistants will work in the next five years. Every major AI company is building toward this—the ability to understand you contextually, proactively, across your entire digital footprint.

Let's break down what Personal Intelligence actually does, how it works, what makes it different from competitors, and what it means for you.

What Is Personal Intelligence, Exactly?

Personal Intelligence is Gemini's ability to reason across your Google apps and provide contextual, proactive suggestions without being explicitly asked. That's the simple version. The complex version requires understanding what "reasoning across" and "proactive" actually mean in practice.

Traditionally, when you ask Gemini a question, it answers from its general knowledge and whatever real-time sources are available. You ask "What's a good tire brand?" and it gives you a generic answer. With Personal Intelligence, Gemini gets standing permission to access the apps you connect: your email history, photo library, YouTube watch history, and Google Search records. More importantly, it can analyze patterns across these sources to understand your preferences, needs, and context.

So when you ask about tires, Gemini doesn't just give you generic recommendations. It recognizes that you've been searching for family road trip destinations, finds photos from previous family trips, reads emails about vacation planning, and synthesizes all of that to suggest tires suitable for someone who drives long distances with family. It combines tire safety data with your actual life context.

The "proactive" part is even more significant. Gemini doesn't limit itself to the literal question. When it detects that information from your apps could help with something you're working on, it offers suggestions you didn't ask for. This is radically different from search engines (which require a query) and even from traditional AI assistants (which respond only to what the prompt asks).

Think about the tire shop example Google shared. The user didn't ask Gemini for tire recommendations. They asked about tire size for their car. Gemini recognized that the user was standing in a tire shop (inferred from context), found relevant photos showing family trips and terrain types, identified that the family did multi-day road trips regularly, and suggested all-weather tires before the user even knew to ask about tire types.

That's not just retrieval. That's AI understanding.

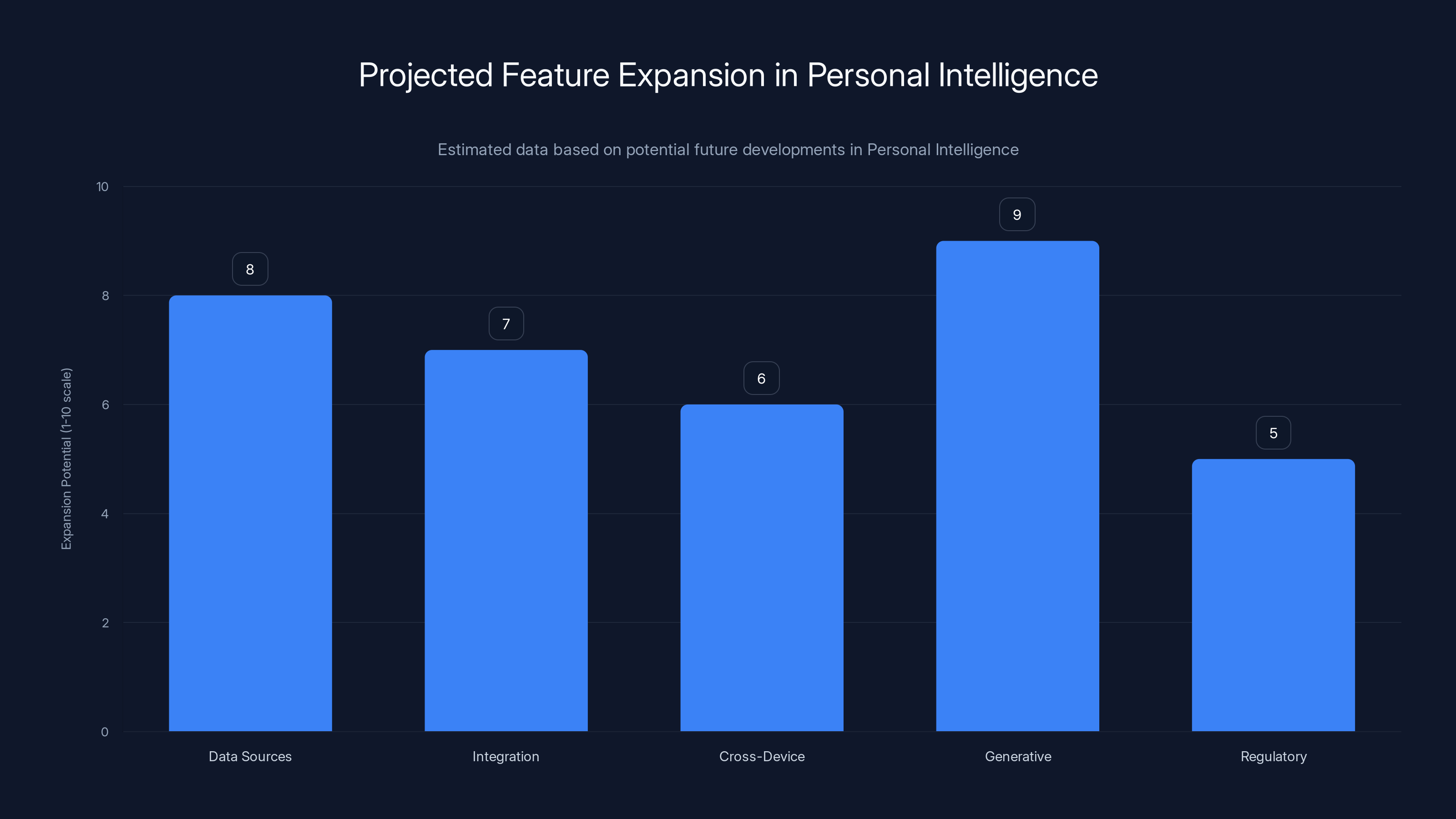

Estimated data suggests significant expansion potential in generative capabilities and data sources for Personal Intelligence, with regulatory evolution being a moderate factor.

How Personal Intelligence Actually Works

The mechanics of Personal Intelligence are deceptively simple on the surface but complex underneath. Based on what Google has described, the process works roughly like this:

Step 1: Permission and Connection. Users explicitly enable Personal Intelligence in their Gemini settings and choose which Google apps to connect. You can connect Gmail, Photos, Search history, and YouTube viewing history independently. This isn't automatic—it requires deliberate action and is off by default.

Step 2: Context Analysis. When you interact with Gemini, the system analyzes your prompt and determines whether accessing your connected apps would provide relevant context. This is the critical filtering mechanism. Gemini doesn't automatically scan everything. It only accesses your data when it predicts that doing so will improve its response.

Step 3: Cross-Source Reasoning. If the system determines that your apps contain relevant information, Gemini retrieves specific data points: relevant emails, photos matching certain criteria, videos you've watched, or searches you've performed. The system looks for patterns—what topics you've researched, what visual elements appear in your photos, what you've watched repeatedly.

Step 4: Synthesis and Response. Gemini combines this cross-app information with its training data and your actual query to generate a personalized response. If you ask about cookbook recommendations, it might recognize that you've been researching specific cuisines, watched cooking videos featuring certain ingredients, and sent emails discussing dietary preferences. It then synthesizes this into a tailored recommendation.

Step 5: Optional Proactive Suggestions. Finally, Gemini can offer unprompted suggestions. If it detects that you're asking about one thing but your cross-app data suggests another need, it might offer additional insights. You ask about tire size and Gemini also suggests tire types.
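To make the first four steps concrete, here is a minimal Python sketch, assuming a toy keyword matcher in place of Gemini's real relevance model. Every name in it (`Snippet`, `Settings`, `build_context`) is hypothetical; Google has not published how Personal Intelligence is implemented, and the sketch stops at assembling the prompt a language model would receive.

```python
from dataclasses import dataclass, field


@dataclass
class Snippet:
    """One hypothetical item pulled from a connected app."""
    source: str  # "gmail", "photos", "search", or "youtube"
    text: str    # email text, a photo description, a query, or a video title


@dataclass
class Settings:
    # Step 1: nothing is connected by default; the user opts in per app.
    connected: set = field(default_factory=set)


def keywords(text: str) -> set:
    # Crude tokenizer: lowercase words longer than three characters.
    words = (w.strip(".,?!:").lower() for w in text.split())
    return {w for w in words if len(w) > 3}


def build_context(query: str, settings: Settings, corpus: list, k: int = 3) -> str:
    # Step 1: only apps the user explicitly connected are visible at all.
    visible = [s for s in corpus if s.source in settings.connected]
    # Step 2: relevance gate; fall back to a generic prompt when no
    # connected item shares a keyword with the query.
    q = keywords(query)
    scored = [(len(q & keywords(s.text)), s) for s in visible]
    relevant = [(score, s) for score, s in scored if score > 0]
    if not relevant:
        return f"QUESTION: {query}"
    # Step 3: cross-source retrieval; keep the k best matches across all apps.
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    # Step 4: synthesis input; a language model would receive this prompt.
    context = "\n".join(f"[{s.source}] {s.text}" for _, s in relevant[:k])
    return f"CONTEXT:\n{context}\nQUESTION: {query}"


corpus = [
    Snippet("gmail", "Booked cabins for our family road trip in June"),
    Snippet("photos", "Minivan parked on a gravel mountain road"),
    Snippet("search", "best all-weather tires for road trips"),
]
print(build_context("What tire size does my minivan need for the road trip?",
                    Settings(connected={"gmail", "photos"}), corpus))
```

The design choice worth noticing is that the opt-in check runs before anything else: the search item matches the query well, but because Search history isn't connected here, it is never even scored.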

Critically, Google implemented specific safeguards. The system maintains a list of sensitive topics where it avoids making proactive assumptions. Health information is off-limits for unsolicited suggestions. Financial data similarly receives restricted treatment. This isn't foolproof—it's a guardrail, not a guarantee—but it's more thoughtful than typical AI deployment.
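A sensitive-topic guardrail of this kind could be as simple as the following sketch. It assumes hand-written keyword lists where a real system would use a trained classifier, and the names (`SENSITIVE_TOPICS`, `sensitive_topic`, `allow_suggestion`) are hypothetical. It blocks unsolicited suggestions, not answers to explicit questions.

```python
from typing import Optional

# Hypothetical keyword lists standing in for a real sensitive-topic classifier.
SENSITIVE_TOPICS = {
    "health": {"diagnosis", "prescription", "symptoms", "doctor", "therapy"},
    "finance": {"loan", "debt", "salary", "bankruptcy", "mortgage"},
}


def sensitive_topic(text: str) -> Optional[str]:
    """Return the first sensitive topic the text touches, or None."""
    words = {w.strip(".,?!:").lower() for w in text.split()}
    for topic, cues in SENSITIVE_TOPICS.items():
        if words & cues:
            return topic
    return None


def allow_suggestion(candidate: str, explicitly_asked: bool) -> bool:
    # Non-sensitive suggestions are always allowed.
    if sensitive_topic(candidate) is None:
        return True
    # Sensitive ones pass only when the user asked about the topic;
    # unsolicited (proactive) suggestions are suppressed.
    return explicitly_asked


print(allow_suggestion("Consider all-weather tires for your road trips", explicitly_asked=False))  # True
print(allow_suggestion("Your doctor mentioned a follow-up visit", explicitly_asked=False))  # False
print(allow_suggestion("Your doctor mentioned a follow-up visit", explicitly_asked=True))  # True
print(allow_suggestion("Book another physio appointment", explicitly_asked=False))  # True
```

The last call shows why this is a guardrail rather than a guarantee: "physio appointment" matches none of the keywords, so a health-related suggestion slips through.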

Also important: your data doesn't directly train Gemini's base model. The system doesn't feed your Gmail inbox into the next version of Gemini. Instead, it only uses your data to generate responses in that specific conversation. Your photos, emails, and search history remain isolated to your Personal Intelligence interactions.

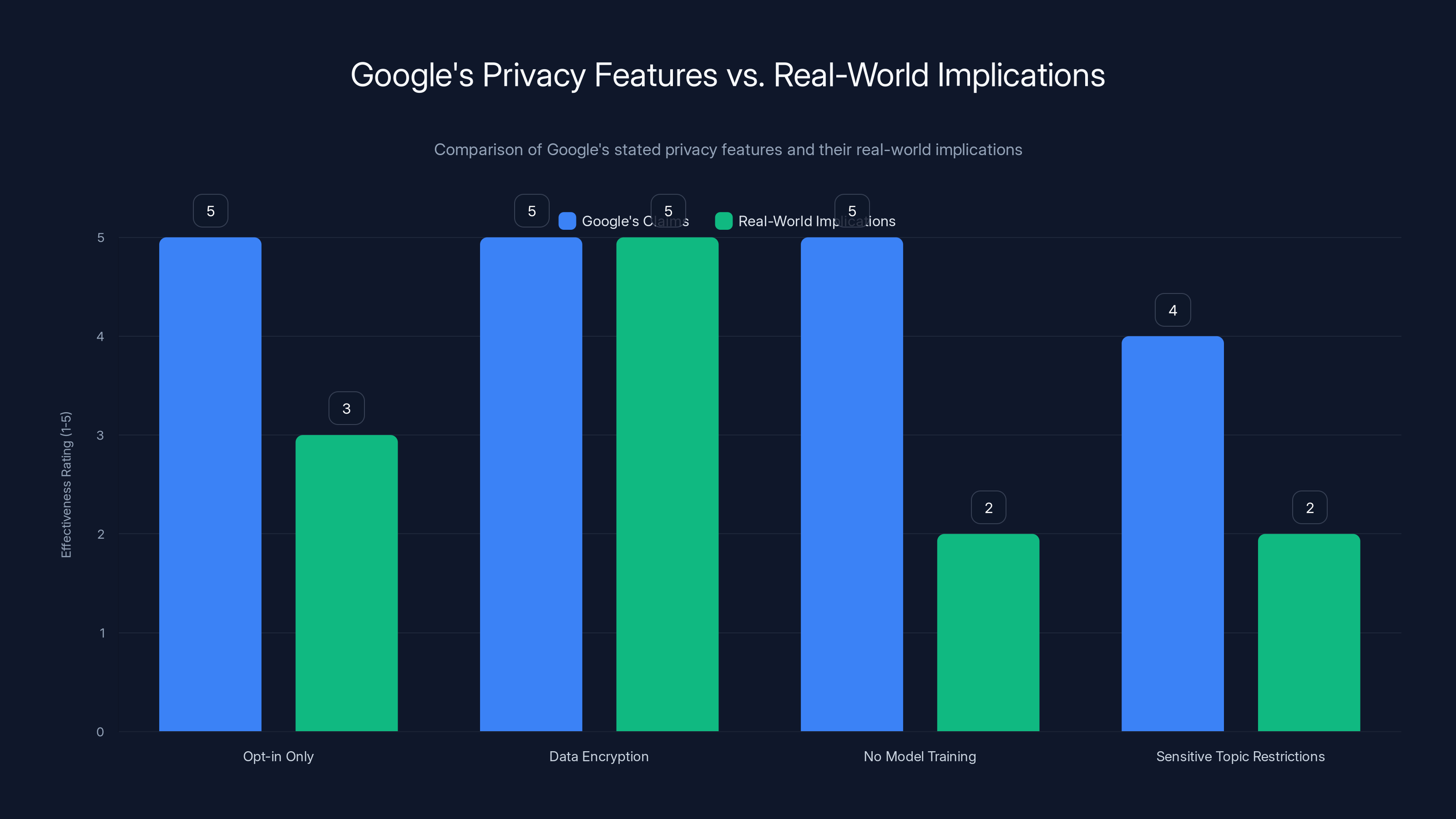

While Google's privacy features are robust on paper, real-world implications reveal gaps, particularly in data usage and topic restrictions. Estimated data based on content analysis.

Privacy Architecture: Guardrails and Real-World Implications

Google's privacy story around Personal Intelligence requires careful examination. The company claims strong protections, but the reality is more nuanced.

What Google Says About Privacy: Personal Intelligence is opt-in only. Users must explicitly connect each app. Google doesn't use your personal data to train the base Gemini model. The system has built-in restrictions on sensitive topics. Data remains encrypted in transit and at rest. Users can disconnect apps anytime.

What This Actually Means: Yes, it's opt-in, but "opting in" to Gmail access means Gemini can potentially read every email you've kept, which may go back 15 years. "Opting in" to Photos means the system can analyze your entire personal photo library. The promise that your data doesn't train the model is technically true, but it's incomplete. If the prompts and responses from these conversations are used to improve Gemini, personal data still leaves traces. When you ask about cookbooks and Gemini draws on your cooking searches to suggest recipes, that exchange reflects your data. Over thousands of similar interactions, Gemini's behavior can be shaped indirectly by personal data, even if your inbox itself never becomes training data.

The restrictions on sensitive topics are also more limited than implied. Google avoids making proactive suggestions about health data—but if you ask Gemini a health question, it will happily dig through your medical-related searches and emails. That's not protection; it's just a restriction on unsolicited health suggestions.

Real Privacy Risks: The biggest concern isn't what Google does with your data today. It's what it could do later. Regulatory pressure, business incentives, or policy shifts could change how Personal Intelligence data is used. Data breaches are another concern. The more centralized your personal data becomes in one system, the higher the impact of a compromise. If someone gains access to your Personal Intelligence profile, they don't just get your email. They get a synthesized understanding of your preferences, patterns, and life context.

There's also the question of how data might be disclosed in legal proceedings. If someone subpoenas your Gemini data, they're not just getting random emails. They're getting the system's synthesized understanding of your life—arguably more revealing than the raw data itself.

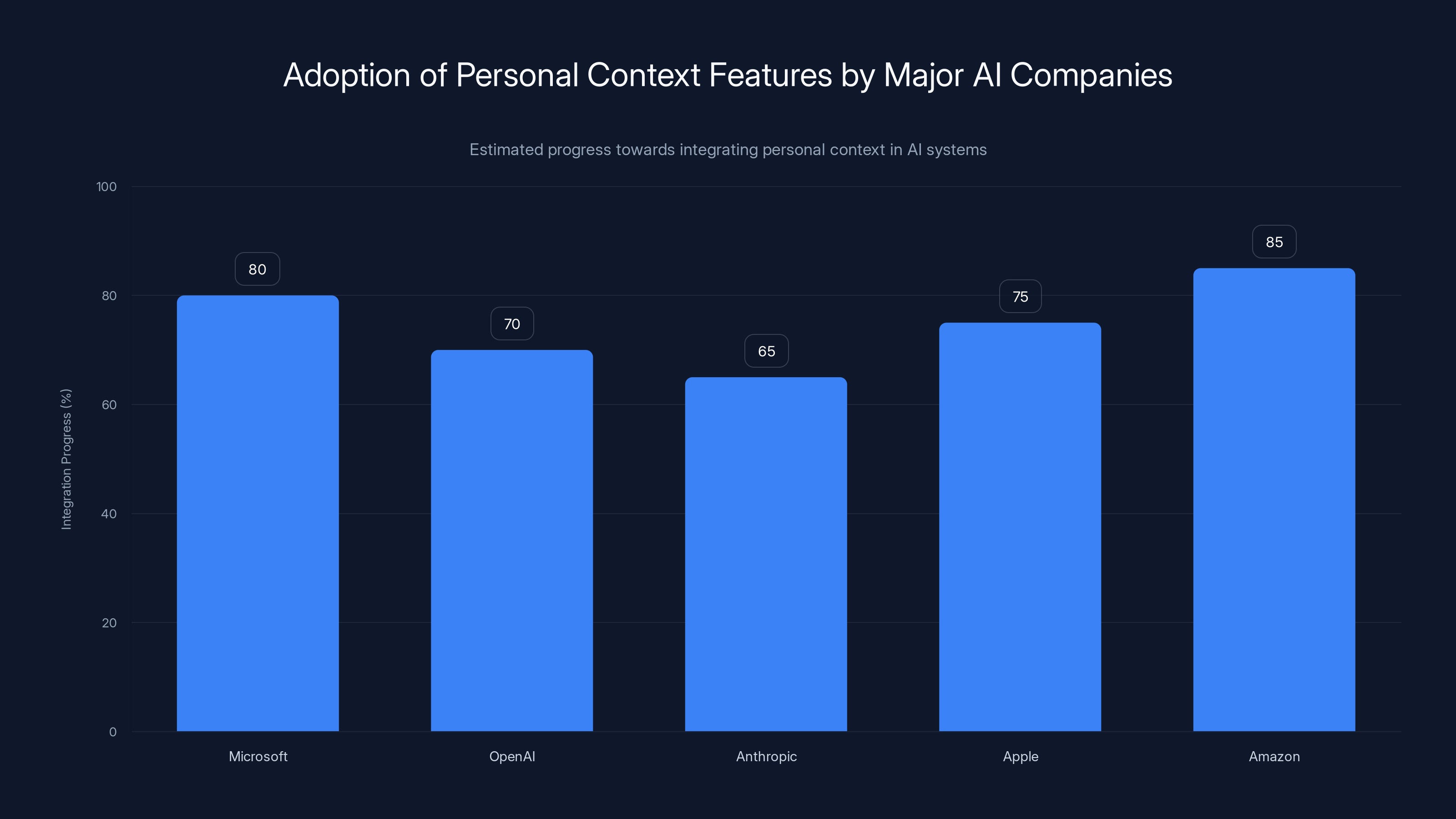

The Broader Context: This isn't unique to Google. Every major AI company is building similar features. Microsoft's Copilot is integrating Office data. OpenAI is exploring personal context features. Amazon's Alexa has access to your shopping and smart home data. Apple is working on on-device personal AI. The industry consensus is that the future of AI assistants requires personal context. Privacy controls are becoming table stakes, but the fundamental pattern of "give AI your data to get better answers" is becoming standard.

Personal Intelligence vs. Competitors: The Competitive Landscape

Google isn't alone in building AI assistants that understand personal context. But the execution differs significantly across companies.

ChatGPT and Advanced Context: OpenAI's ChatGPT doesn't have automatic access to your emails or photos, but it does offer file uploads and memory features. You can upload documents for ChatGPT to analyze, and its memory feature lets the model remember information about you across conversations. But this is reactive: you provide the context, rather than the system pulling it from your digital life. ChatGPT Plus and Enterprise users also get some integration with other apps, but it's limited compared to Personal Intelligence.

Claude and Document Analysis: Anthropic's Claude also lacks automatic personal context, but it offers strong document analysis capabilities. You can upload PDFs, emails, or other documents, and Claude will analyze them in depth. Claude's approach is more structured: it focuses on specific tasks like writing, analysis, or coding rather than proactive life understanding.

Microsoft Copilot and Enterprise Integration: Microsoft has built Copilot across Microsoft 365 (formerly Office 365), drawing on your emails, documents, and calendar. In enterprise settings, Copilot for Microsoft 365 actually does reason across your work context: suggesting which colleagues to invite to meetings, finding relevant past emails, or analyzing documents in context. But this is limited to work apps and enterprise deployment.

Perplexity's Research Focus: Perplexity AI connects to web search but not to your personal data. Its approach is opposite to Personal Intelligence—it's about understanding current information and sources rather than understanding you personally.

Apple's On-Device Personal Context: Apple is building AI features that run locally on your iPhone and Mac, processing personal data on the device where possible and handing larger requests to Apple's Private Cloud Compute servers. This is potentially the most privacy-friendly approach, though it's limited by on-device processing power. Apple Intelligence can draw on your photos, emails, and messages because they're stored locally.

The Key Difference: Personal Intelligence is notable because it's the first scaled, cloud-based, cross-app, proactive personal AI system from a major tech company. Others have pieces of this—Microsoft has the enterprise version, Apple has privacy-first local processing—but Google is building the comprehensive version that reasons across your entire Google ecosystem and proactively suggests things.

This matters competitively because context is increasingly what separates generic AI assistance from genuinely useful AI. ChatGPT can write well. Claude can analyze documents. Perplexity can research thoroughly. But Gemini with Personal Intelligence can do all of those things while understanding your specific situation. That's more valuable than any individual capability.

Estimated data shows an even distribution of Personal Intelligence use cases, highlighting diverse applications from professional research to travel planning.

Real-World Use Cases That Actually Make Sense

Google's marketing examples (tire recommendations, spring break planning) are interesting, but they're carefully selected to seem helpful without being creepy. Real-world uses are more varied and sometimes more concerning.

Genuinely Useful Cases:

Professional research and synthesis. If you're a marketer researching competitor strategies, Personal Intelligence can connect emails from sales conversations, search queries about competitor features, and relevant YouTube videos into a coherent competitive analysis. You don't need to manually compile information—Gemini synthesizes it.

Financial decision-making. Gemini could analyze your spending patterns from Gmail receipts and shopping emails, understand your financial goals from search history, and suggest budget adjustments. It understands your actual situation rather than generic financial advice.

Health and fitness. If you've been searching for running training plans, logging your runs via email confirmations, and watching fitness videos, Gemini could provide training suggestions tailored to your level and goals. This requires understanding your journey, not just giving generic advice.

Content discovery. The cookbook example makes sense: if you've been researching specific cuisines, watched cooking videos, and emailed about dietary preferences, personalized cookbook recommendations are actually useful. Generic recommendations miss what you actually care about.

Travel planning. Beyond Google's spring break example, imagine Personal Intelligence understanding that you prefer boutique hotels (from booking confirmation emails), enjoy photography (from your YouTube watch history), and have specific accessibility needs (from your search history). Travel suggestions become actually useful.

Uncomfortable Cases:

Inferring sensitive situations. If you've been searching for specific health conditions, reading emails from doctors, or looking at medical information, what happens if you ask an unrelated question? Does Gemini infer something about your health and modify its responses? The guardrails prevent proactive suggestions, but the data is still accessible.

Reminder of past regrets. Personal Intelligence inherently brings up old information. You might ask a simple question and have Gemini remind you of something you were researching a year ago that you've since moved past. This could be uncomfortable (being reminded of job searches, relationship research, etc.).

Construction of behavior profiles. Over time, Gemini's understanding of you becomes detailed enough to predict behavior. Such a profile could hypothetically be valuable to advertisers or used for manipulation. Google says it won't do this, but the capability exists.

Disclosure in sensitive situations. If someone gains access to your Gemini account, they don't just see random emails. They see what the system has synthesized about you—a detailed, AI-generated understanding of your life and interests.

The Honest Assessment:

Personal Intelligence is genuinely useful for research-oriented tasks and when you need contextual understanding. It's less useful for routine questions. And it introduces privacy trade-offs that some users will be comfortable with and others won't. There's no universal answer to whether it's worth it—it depends on your comfort with data access and what you actually use AI for.

The Technical Challenge: Cross-Source Reasoning

What makes Personal Intelligence technically interesting is the cross-source reasoning problem. This is harder than it sounds.

When you ask about tire recommendations, Gemini needs to:

- Parse your query to understand that you're asking about tires

- Determine whether your personal data would be relevant

- Search across multiple types of data (emails, photos, videos) for relevant information

- Extract useful signals from unstructured data (photos require image analysis, emails require NLP, video requires summarization)

- Synthesize information from different sources into a coherent context

- Generate a response that uses this context without hallucinating

Each step is technically complex. Extracting meaning from photos requires computer vision. Understanding email context requires NLP. Determining relevance across types of data requires semantic understanding. Synthesizing coherent responses without hallucinating requires careful prompt engineering.

Google's approach is to use Gemini's base reasoning capabilities (which are already strong) combined with retrieval of specific data from your apps. This is more practical than trying to build an entirely new system, but it's not trivial.
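The retrieval-plus-synthesis approach can be sketched as follows, assuming each data type has already been turned into text (the `caption` and `title` fields stand in for computer-vision and summarization output). Ranking uses bag-of-words cosine similarity as a simple substitute for learned embeddings, and the final prompt asks the model to cite numbered context, one common way to reduce hallucination. All class and function names are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Email:
    subject: str
    body: str


@dataclass
class Photo:
    caption: str  # assumed output of an image-analysis model


@dataclass
class Video:
    title: str  # assumed stand-in for a video summary


def to_text(item) -> tuple:
    """Normalize each data type into (label, text) so one ranker can compare them."""
    if isinstance(item, Email):
        return "email", f"{item.subject} {item.body}"
    if isinstance(item, Photo):
        return "photo", item.caption
    if isinstance(item, Video):
        return "video", item.title
    raise TypeError(f"unsupported item: {item!r}")


def vectorize(text: str) -> Counter:
    return Counter(w.strip(".,?!:").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def grounded_prompt(query: str, items: list, k: int = 2) -> str:
    q = vectorize(query)
    # Score every item from every source on one shared scale, best first.
    scored = sorted(
        ((cosine(q, vectorize(text)), label, text) for label, text in map(to_text, items)),
        key=lambda t: t[0],
        reverse=True,
    )
    top = [(label, text) for score, label, text in scored[:k] if score > 0]
    context = "\n".join(f"[{i}] ({label}) {text}" for i, (label, text) in enumerate(top, 1))
    # Ask the model to stay within the retrieved context and cite it.
    return (
        "Answer using only the numbered context and cite it as [n]; "
        "say so if the context is not relevant.\n"
        f"{context}\nQuestion: {query}"
    )


items = [
    Email("Road trip plan", "Cabins booked for the family road trip"),
    Photo("Minivan parked on a snowy mountain road"),
    Video("Knife skills for home cooks"),
]
print(grounded_prompt("tires for a snowy road trip", items))
```

The normalization step is the point of the design: once emails, photos, and videos share a text representation, a single ranker can compare them, and the hard, source-specific work (vision, NLP, summarization) is isolated in `to_text`.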

The filtering mechanism—deciding whether to access your data at all—is probably the trickiest part. Ask "what should I eat for dinner" and accessing your entire email history and photo library would be wasteful. Ask "what should I eat for dinner given my recent travels" and the data becomes relevant. Gemini needs to make this distinction correctly most of the time without constantly accessing your data unnecessarily.

Google likely uses several techniques: routing your query through a classifier that predicts whether personal data would be helpful, setting confidence thresholds before accessing data, and monitoring user feedback to improve the filtering mechanism. But this is still an area where Personal Intelligence probably makes mistakes—either accessing data unnecessarily or missing opportunities to provide better answers.
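A classifier-plus-threshold gate with a feedback loop might look like the sketch below. The cue words, weights, bias, and step size are invented for illustration, and a logistic function over hand-picked cues stands in for a trained classifier; nothing here reflects Google's actual model. It reproduces the dinner example: the plain question stays generic, while the one mentioning recent travels crosses the threshold.

```python
import math
from dataclasses import dataclass

# Hypothetical cue weights; a production system would learn these from data.
CUE_WEIGHTS = {"my": 1.5, "our": 1.5, "recent": 1.0, "last": 0.8, "again": 0.6, "usual": 1.2}
BIAS = -2.0


def personal_context_probability(query: str) -> float:
    """Logistic score: estimated chance that personal data would help this query."""
    words = [w.strip(".,?!").lower() for w in query.split()]
    z = BIAS + sum(CUE_WEIGHTS.get(w, 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class RelevanceGate:
    threshold: float = 0.5  # confidence required before touching personal data
    step: float = 0.05      # how far one piece of feedback moves the threshold

    def should_access(self, query: str) -> bool:
        return personal_context_probability(query) >= self.threshold

    def record_feedback(self, used_personal_data: bool, helpful: bool) -> None:
        # Unhelpful personal answers make the gate stricter; helpful ones relax it.
        # Feedback on generic answers is ignored in this sketch.
        if used_personal_data and not helpful:
            self.threshold = min(0.95, self.threshold + self.step)
        elif used_personal_data and helpful:
            self.threshold = max(0.05, self.threshold - self.step)


gate = RelevanceGate()
print(gate.should_access("What should I eat for dinner"))  # False
print(gate.should_access("What should I eat for dinner given my recent travels"))  # True
```

Each unhelpful answer that used personal data raises the threshold by 0.05, so after three such complaints the travel question (probability about 0.62) no longer clears the bar. That is one way a system could learn to access data less often, at the cost of sometimes missing useful context.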

Signal extraction and multi-source search are the most complex steps in cross-source reasoning, requiring advanced technologies like computer vision and NLP. Estimated data.

How This Changes What AI Assistants Can Do

Personal Intelligence represents a shift in AI assistant architecture. Instead of stateless systems that reset between conversations, Personal Intelligence introduces persistent context about you specifically.

The Implications for AI Capability:

AI becomes more useful the more context it has. Generic advice about time management is less valuable than advice tailored to someone who works in tech and has family commitments. Generic cookbook recommendations are less valuable than recommendations from someone who understands your dietary preferences, cooking skill level, and kitchen equipment.

This is why Personal Intelligence works. Gemini becomes more useful specifically because it understands you.

But this also means AI becomes more opinionated. Instead of asking you clarifying questions, Personal Intelligence makes inferences about your preferences and goals based on your data. This is more efficient but also more fallible. Inferences can be wrong. Preferences change. AI might understand you based on last year's behavior without realizing you've changed.
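One standard way to keep last year's behavior from dominating is exponential recency decay, sketched below under the assumption that interests are inferred from timestamped events. The function name and the 90-day half-life are hypothetical choices, not anything Google has described.

```python
from collections import defaultdict


def decayed_interest(events, now_days: float, half_life_days: float = 90.0) -> dict:
    """Score topics from (topic, day) events with exponential recency decay.

    An event `age` days old contributes 0.5 ** (age / half_life_days), so its
    weight halves every `half_life_days`.
    """
    scores = defaultdict(float)
    for topic, day in events:
        age = max(0.0, now_days - day)  # treat future timestamps as brand new
        scores[topic] += 0.5 ** (age / half_life_days)
    return dict(scores)


# Five job-search events a year ago versus two recent marathon-training events.
events = [("job search", d) for d in range(5)] + [("marathon training", 360), ("marathon training", 364)]
scores = decayed_interest(events, now_days=365)
print(max(scores, key=scores.get))
```

With a 90-day half-life, the five job-search events from a year ago add up to roughly 0.3, while the two marathon-training events from the past week score close to 2, so the recent interest wins despite fewer events.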

The Skill Shift for Users:

Using Personal Intelligence effectively requires different skills than using traditional AI. You need to understand what data the system has access to and how that might bias its suggestions. You need to recognize when it's making good inferences and when it's making bad ones. You need to provide negative feedback when it misunderstands your preferences.

This is similar to the skill shift that happened with recommendation algorithms. Learning to use Netflix, Spotify, or YouTube effectively requires understanding that these systems make inferences and knowing how to correct them. Personal Intelligence creates the same skill requirement.

The Strategic Implications:

Personal Intelligence makes Google's AI stickier. If you've connected your Gmail, Photos, and search history to Gemini, switching to ChatGPT means losing that context. You're not just switching tools; you're abandoning the AI system that understands you. This is a powerful lock-in mechanism.

It also gives Google (and future competitors with similar features) deep insight into user behavior, preferences, and patterns. Not the raw data, but the synthesized understanding. Over time, this compounds into a strategic advantage.

Privacy Regulations and What They Mean

Personal Intelligence exists in a regulatory gray area that's rapidly becoming a regulated space.

GDPR Implications: In Europe, GDPR gives users rights around personal data processing. Personal Intelligence probably qualifies as processing personal data, which means users have rights to access, correction, and deletion. Google's opt-in approach likely satisfies GDPR's consent requirements, but the regulations are strict enough that regulators might challenge specific practices. For example, if Google uses patterns from Personal Intelligence interactions to improve its ad targeting, that might constitute secondary use that requires additional consent.

California Consumer Privacy Act (CCPA): California's CCPA gives users rights to know what data is collected, delete data, and opt out of sale or sharing. Personal Intelligence probably complies on the surface, but again, the interpretation of what constitutes "sharing" is contested. If Google shares insights derived from Personal Intelligence with advertisers, that might violate CCPA.

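UK Data Protection Law: In the UK, the UK GDPR and the Data Protection Act 2018 impose requirements similar to the EU's, including transparency about how personal data is processed. (The Online Safety Act 2023 targets harmful online content rather than AI's use of personal data.) Personal Intelligence would likely need clear disclosures about data usage.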

The Broader Regulatory Trend: Globally, regulators are focusing on AI transparency and data protection. The EU's AI Act, proposed regulations in the US, and emerging standards worldwide are all pushing toward stricter requirements around how AI uses personal data.

Google built Personal Intelligence with privacy safeguards partly because compliance is becoming mandatory. The guardrails around sensitive data, the opt-in approach, and the clear data usage policies are likely influenced by anticipated regulatory requirements.

What This Means: Personal Intelligence as currently designed probably complies with existing and anticipated regulations. But regulations will tighten. Features that are acceptable today might require additional consent tomorrow. Users should assume that what's currently allowed may become more restricted—and conversely, what's currently restricted might become allowed if regulations change.

Policy makers have the highest priority in addressing Personal Intelligence implications, followed by individual users and businesses. Estimated data based on content analysis.

The Broader Trend: Personal Context as Standard

Personal Intelligence isn't an outlier. It's the leading edge of a trend that's reshaping AI assistants.

Why Personal Context Matters: AI without personal context is generic. With personal context, it becomes personalized, and personalized assistance is more useful for most tasks. That economic reality is pushing every AI company toward similar capabilities.

The Competition: Every major AI company is building similar features. Microsoft has already integrated Office data into Copilot. OpenAI is exploring memory and personal context. Anthropic is working on similar capabilities. Apple is building on-device personal context. Amazon is leveraging smart home and shopping data.

The question isn't whether personal context becomes standard—it's already becoming standard. The question is how it's implemented, what controls users have, and how data is protected.

The User Experience Evolution: As personal context becomes standard, AI assistant behavior will shift:

- From query-response to contextual assistance. Instead of just answering your question, AI will proactively offer relevant information.

- From generic to personal. Instead of the same suggestions for everyone, each user gets unique recommendations.

- From stateless to stateful. AI will remember things about you across conversations instead of starting fresh each time.

- From shallow to deep. AI will understand not just your current task but your broader context and goals.

This is more useful but also requires more trust. You're not just trusting AI with good responses; you're trusting it with your personal data.

Security Considerations Beyond Privacy

Privacy and security are related but distinct. Personal Intelligence has security implications beyond privacy protection.

Account Compromise Risk: If someone gains access to your Gemini account, they don't just get access to that account. They get access to a synthesized understanding of your entire digital life across Google's ecosystem. This is more valuable than access to a single app because it reveals patterns and connections.

Cross-App Attack Surface: Personal Intelligence connects multiple Google services. A vulnerability in any one of them could potentially expose data drawn from all of them. This increases the attack surface, not because Google is less secure, but because connecting more systems means more potential vulnerabilities.

Phishing and Social Engineering: An attacker who understands your Personal Intelligence profile (your interests, preferences, patterns, and goals) can craft more convincing phishing attacks. They can reference your personal context to make malicious messages seem more trustworthy.

Data Retention: The longer Personal Intelligence retains your data, the greater the risk of a historical breach. If your entire email history, photo library, and search history are indexed and searchable by Gemini's systems, a breach could expose years of data. This is different from accessing a service once; it's storing a comprehensive profile.

Google's Response: Google uses standard security practices—encryption in transit and at rest, access controls, regular security audits, and breach notification policies. These are table stakes, not differentiators. But the security is only as strong as the implementation. Previous Google services have had vulnerabilities. Future vulnerabilities are inevitable.

What Users Can Do: Enable two-factor authentication (Google calls it 2-Step Verification). Review which apps are connected to Personal Intelligence and disconnect ones you're uncomfortable with. Run Google's Security Checkup and watch your account for suspicious activity. And understand that no system is perfectly secure: there's inherent risk when consolidating personal data.

Estimated data shows that Amazon leads in integrating personal context features, closely followed by Microsoft and Apple.

Practical Setup and Getting Started

If you decide to use Personal Intelligence, the setup process is straightforward but requires deliberate choices.

Step 1: Access Personal Intelligence Settings

Open Gemini and navigate to Settings > Personal Intelligence. Google will display information about what Personal Intelligence does, what data it accesses, and privacy controls.

Step 2: Choose Which Apps to Connect

You can enable Personal Intelligence for:

- Gmail: Allows Gemini to read your emails and understand your communication history

- Photos: Allows image analysis of your photos to understand visual context

- Search History: Allows analysis of your Google searches to understand your interests and research

- YouTube Viewing History: Allows analysis of what you've watched to understand your preferences

Start with one app if you're uncertain. Gmail alone may provide significant contextual value.

Step 3: Adjust Privacy Controls

Google provides checkboxes for sensitive topics where you don't want proactive suggestions:

- Health and medical information (proactive suggestions disabled)

- Sensitive financial data (limited proactive suggestions)

- Relationship and personal matters (limited scope)

Enable all restrictions initially, then disable ones you're comfortable with.

Step 4: Start Using It Naturally

You don't need to change how you interact with Gemini. Just ask questions normally. The system will determine when to use personal context and when to provide generic responses.

Step 5: Provide Feedback

Gemini should offer feedback options on responses. Thumbs up/down or more detailed feedback helps the system learn your preferences and understand when contextual suggestions are helpful.

Step 6: Monitor and Adjust

After a week, evaluate whether Personal Intelligence is actually helpful. If it's providing better suggestions, keep it enabled. If it's mostly noise or if you're uncomfortable with data access, disconnect the apps.

The Future of Personal Intelligence

Personal Intelligence will almost certainly expand significantly in the coming years.

Feature Expansion: Google will likely add more data sources over time. Phone activity, calendar, travel history, fitness data, and smart home information could all become Personal Intelligence inputs. Each addition makes the system more contextually aware.

Deeper Integration: Personal Intelligence might integrate more deeply with Google's services. Imagine Gmail getting better at automatically prioritizing messages based on Personal Intelligence understanding of what's important to you. Or Google Photos automatically organizing images based on contextual understanding. Or Google Search results ranking differently based on personal context.

Cross-Device Consistency: Currently, Personal Intelligence is primarily in Gemini. Google might extend it to other interfaces—maybe Google Assistant on your phone, or built into Chrome, or integrated into Google's other products.

Generative Capabilities: Personal Intelligence is currently extraction and synthesis focused. Future versions might generate content specifically for you—summaries, reports, presentations—all tailored to your context and preferences.

Regulatory Evolution: As regulators clarify rules around personal AI, Personal Intelligence will adapt. Some features might be restricted in certain regions. Additional consent mechanisms might be required. Transparency requirements will likely become stricter.

Competitive Response: Competitors will accelerate building similar features. Microsoft, OpenAI, Anthropic, and others will race to build personal context capabilities that match or exceed Gemini's.

Key Takeaways and Recommendations

For Individual Users:

- Understand what you're enabling. Personal Intelligence gives AI access to significant personal data. Before enabling it, be clear on what that means for your privacy.

- Start selectively. Don't enable all apps at once. Start with one and evaluate whether the results are worth the privacy trade-off.

- Use it for what it's good for. Personal Intelligence is most valuable for research tasks, planning, and discovery. It's less useful for simple factual questions.

- Maintain control. Remember that you can disconnect apps anytime. This isn't an irreversible decision.

- Stay informed. Google will evolve Personal Intelligence. Stay aware of how it changes and what new data sources it accesses.

For Businesses:

- Prepare for personal AI. If your customers or employees use Personal Intelligence, understand what that means for your interactions with them.

- Data implications. If you generate content that ends up in the data Personal Intelligence draws on (emails, YouTube videos, search results), understand that it's now part of users' personal context.

- Competitive pressure. Personal context will become standard in AI. Consider how this affects your own AI strategy.

For Policy Makers:

- Regulation is necessary. Personal Intelligence operates in a regulatory gray area today. Clear rules around consent, data usage, and transparency are needed.

- Balance innovation and protection. Overly restrictive regulations could prevent beneficial uses. Insufficient regulation could allow harmful practices.

- International coordination. Personal AI systems are global. Coordinated regulatory approaches are more effective than fragmented rules.

FAQ

What exactly is Google's Personal Intelligence feature?

Personal Intelligence is a beta feature in Gemini that allows the AI assistant to access and reason across your Google apps—including Gmail, Photos, Search history, and YouTube viewing history—to provide contextual, personalized responses. Instead of just answering generic questions, Gemini can now understand your specific situation by connecting information from multiple sources and even offer proactive suggestions related to your interests and needs.

How is Personal Intelligence different from regular Gemini?

Regular Gemini responds to your questions without access to your personal data, providing generic answers. Personal Intelligence enables Gemini to retrieve and synthesize information from your Gmail, Photos, and other Google apps to deliver personalized, context-aware responses. For example, when you ask about tire recommendations, Personal Intelligence can analyze your road trip photos and travel emails to suggest appropriate tires for your specific driving patterns, whereas regular Gemini would offer generic tire brand suggestions.

Is my data used to train Gemini's model?

No. Google states that your personal data does not directly train Gemini's base model weights. However, your data still shapes how Gemini behaves and responds to you in Personal Intelligence interactions: when you ask questions, the system draws on patterns in your own account to personalize its answers, even though the underlying foundation model isn't retrained on your data.

Can I use Personal Intelligence without connecting all my Google apps?

Yes, absolutely. Personal Intelligence is completely opt-in, and you choose which apps to connect individually. You can connect just Gmail while keeping Photos disconnected, or enable Photos while leaving your Search history private. You can change these settings anytime and disconnect apps if you decide you're uncomfortable with the data sharing.

What happens to my privacy with sensitive information?

Google has implemented guardrails that restrict proactive suggestions about sensitive topics like health information. This means Gemini won't offer unsolicited health advice based on your medical searches or emails. However, if you explicitly ask a health-related question, Gemini can still draw on health-related information in the apps you've connected. The restriction applies to proactive behavior, not to direct queries you make to the system.

How do I enable or disable Personal Intelligence?

Open Gemini, navigate to Settings, find Personal Intelligence, and toggle it on or off. You'll then be prompted to select which Google apps you want to connect. You can add or remove apps from Personal Intelligence at any time, and disconnecting an app immediately revokes Gemini's access to that data. There's no lengthy process or contractual commitment; the feature is designed to be user-controlled.

Is Personal Intelligence available to everyone?

As of January 2026, Personal Intelligence is rolling out as a beta feature exclusively to Google AI Pro and AI Ultra subscribers in the United States. Google has announced plans to expand the feature to additional countries and eventually to Gemini's free tier users, but the timeline remains unclear. If you're not currently eligible, you may need to wait for broader availability or consider upgrading to a paid Gemini plan.

What data does Personal Intelligence actually access?

Personal Intelligence can access your Gmail inbox (reading emails and understanding communication history), your Google Photos library (analyzing images to understand context and visual patterns), your Google Search history (understanding what you've researched), and your YouTube viewing history (knowing what you've watched and your viewing patterns). The system synthesizes this information to provide contextual suggestions relevant to your questions.

Conclusion: Understanding AI's Role in Your Digital Life

Personal Intelligence represents a significant shift in how AI assistants will work. For years, AI has been a tool you query. With Personal Intelligence, AI becomes something that understands your life, remembers your preferences, and anticipates your needs.

This is genuinely useful. In the tire recommendation example Google highlighted, Gemini understands your family's travel patterns and suggests appropriate tires. That is actually helpful: it's the difference between generic advice and advice tailored to your specific situation.

But usefulness comes with trade-offs. Giving AI access to your emails, photos, and search history means giving Google (and future regulators, potential attackers, or acquirers) access to a detailed understanding of your life. The privacy controls Google built are thoughtful, but they're not bulletproof. Nothing is.

The honest assessment is that Personal Intelligence is worth trying if you're curious about what personal context adds to AI assistance. Start with limited data sharing—maybe just Gmail. See whether the contextual suggestions are actually helpful or mostly noise. If it's genuinely valuable, expand to other apps. If it feels creepy or invasive, disconnect and go back to regular Gemini.

Personal Intelligence also signals where AI is heading. Every major AI company is building toward this. The future of AI assistance isn't just smarter models or more advanced reasoning. It's AI that understands you specifically: your preferences, your patterns, your context. This will be more useful than generic AI. It will also require more trust.

The question you need to answer isn't whether Personal Intelligence is good or bad in the abstract. It's whether you trust Google with this level of access to your digital life, whether the usefulness justifies the privacy trade-off, and whether you're comfortable with how this data might be used in the future.

That's ultimately a personal decision. But it's worth making deliberately rather than by default.


![Gemini's Personal Intelligence: How AI Now Understands Your Digital Life [2025]](https://tryrunable.com/blog/gemini-s-personal-intelligence-how-ai-now-understands-your-d/image-1-1768406791730.jpg)