Introduction: When Voice Assistants Listen Without Permission

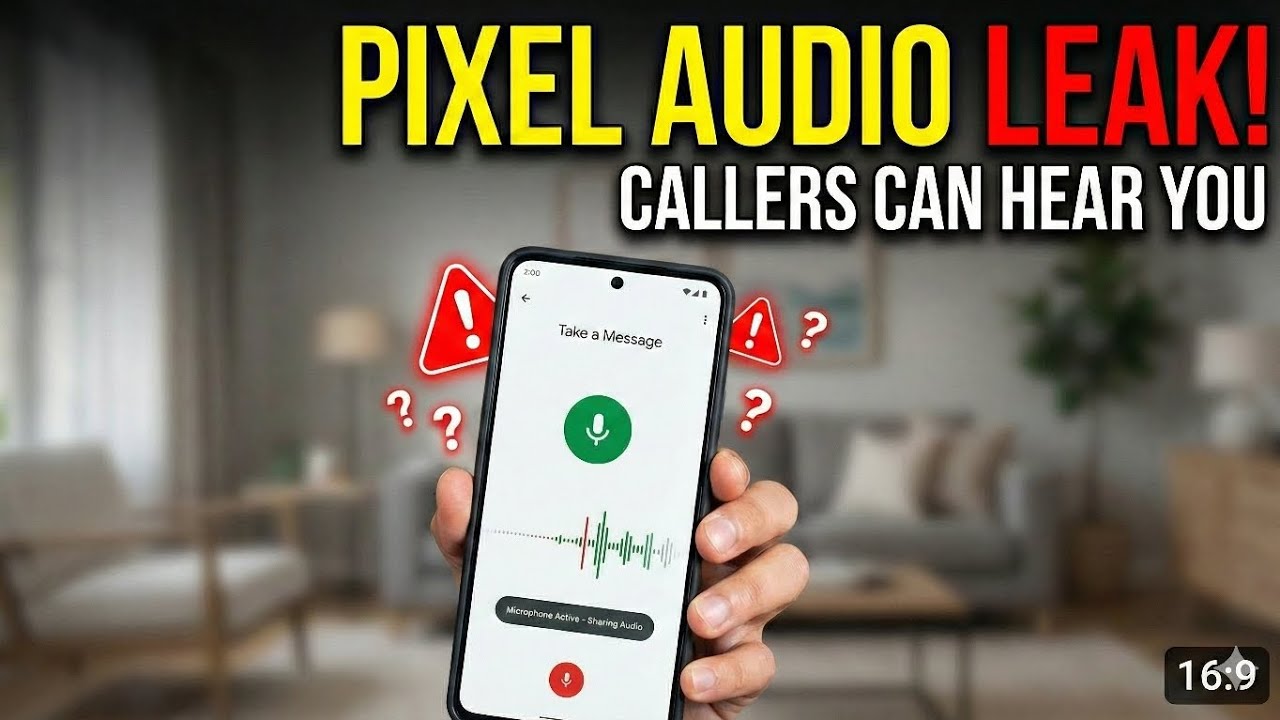

Imagine missing a call, and instead of a quiet voicemail, your phone's microphone silently activates and sends your room sounds, conversations, and ambient noise directly to the caller. No notification. No warning beyond a small indicator that is easy to miss. This wasn't a hypothetical nightmare; it actually happened to dozens of Google Pixel 4 and Pixel 5 owners.

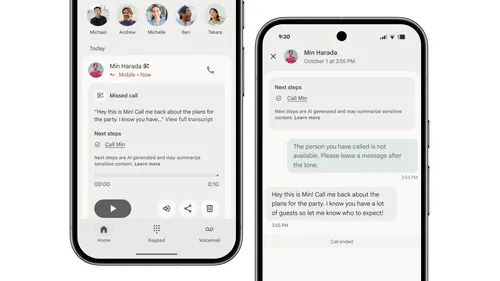

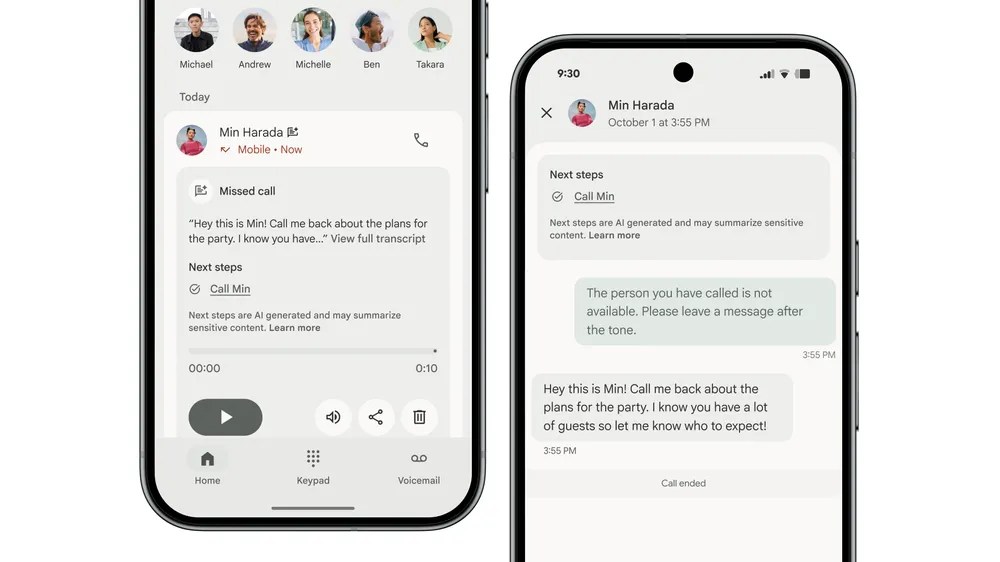

In late 2020, Google rolled out an ambitious feature called Take a Message. The idea was genuinely useful: if you missed a call, the feature would automatically answer, politely let the caller know you weren't available, and then transcribe their message in real-time. No more playing voicemail tag. No more missing important information buried in garbled audio messages.

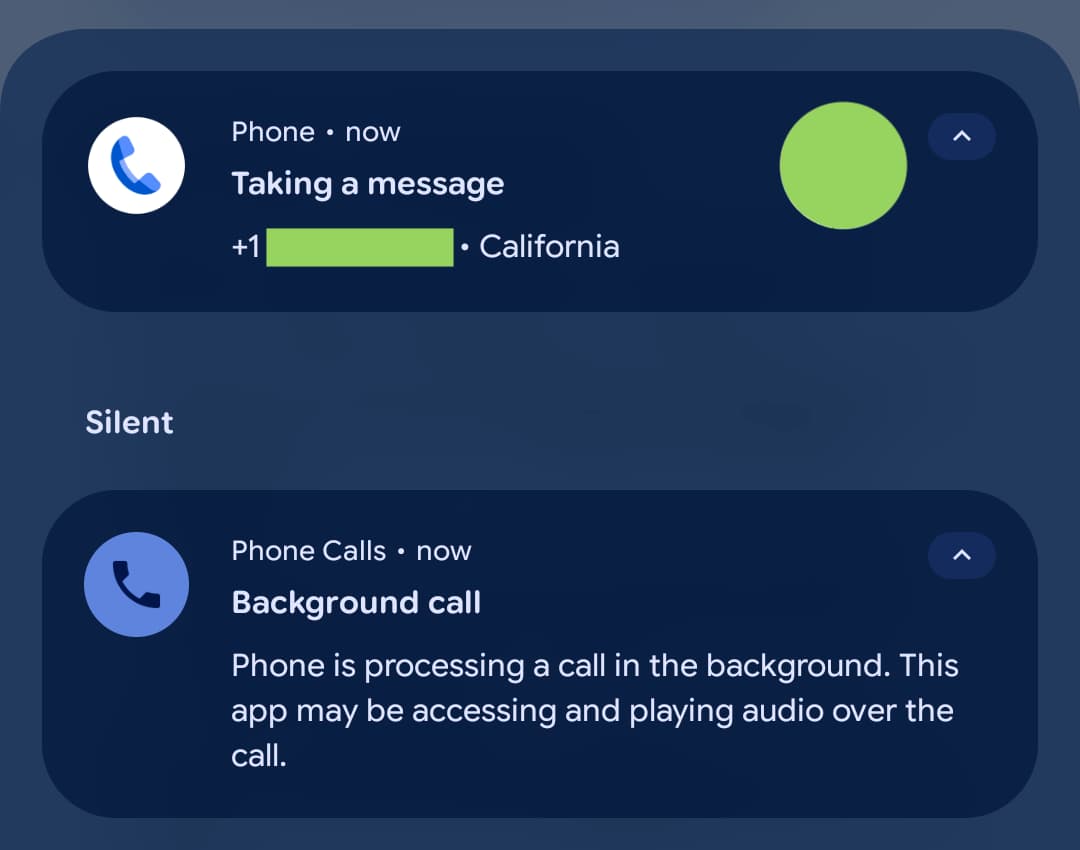

But somewhere in the development cycle, something went wrong. A bug that surfaced under "very specific and rare circumstances" meant that instead of just listening to the caller's message, the feature was also capturing the phone owner's environment and transmitting it to the caller. The microphone would turn on. The microphone indicator would appear. And the user would have no idea unless they happened to notice it, or later checked their call history and realized their privacy had been breached.

This incident touches on something larger than a single feature malfunction. It highlights the complex tension between convenience and privacy in modern smartphones, where AI-powered features operate in the background with minimal user awareness. When a feature can listen to your environment, who controls that activation? What safeguards exist? And how quickly does a company respond when those safeguards fail?

This is the story of what happened, why it matters, and what it reveals about the hidden mechanisms inside our phones.

TL;DR

- Critical Privacy Bug: Google's Take a Message feature on Pixel 4 and 5 leaked audio to callers under specific circumstances

- How It Happened: The microphone activated during voicemail transcription, broadcasting room sounds instead of just recording the caller's message

- Google's Response: Disabled Take a Message and Call Screen features on affected devices as a precautionary measure

- Real User Impact: Affected owners experienced unwanted audio exposure during missed calls, with no clear notification

- Alternative Features: Users can still use manual Call Screening or their carrier's voicemail service

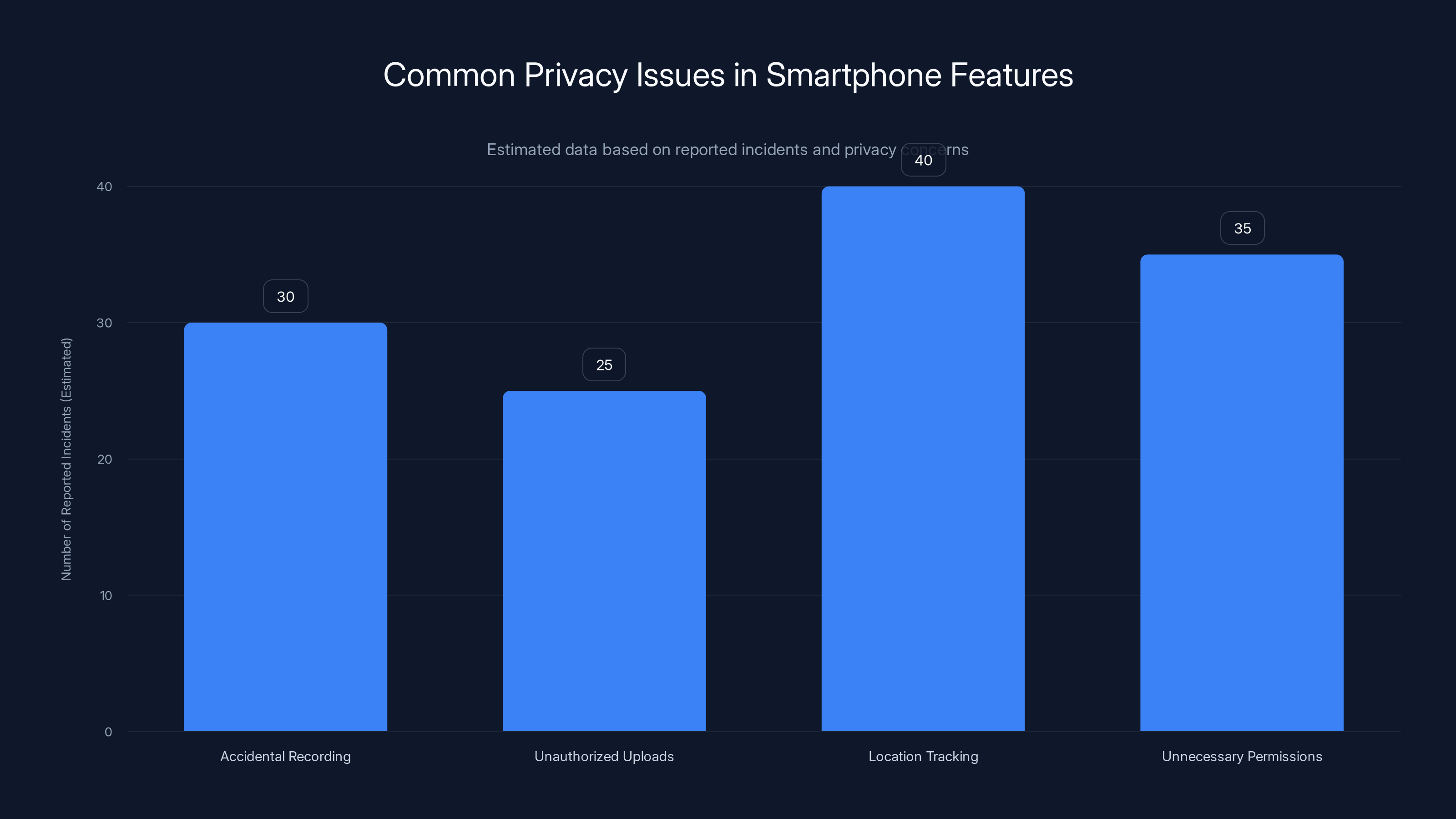

Estimated data shows that location tracking and unnecessary permissions are the most reported privacy issues in smartphones, highlighting the need for improved transparency and control.

Understanding Take a Message: Google's AI-Powered Voicemail Replacement

Before diving into the bug itself, you need to understand what Take a Message actually does and why Google considered it valuable enough to develop.

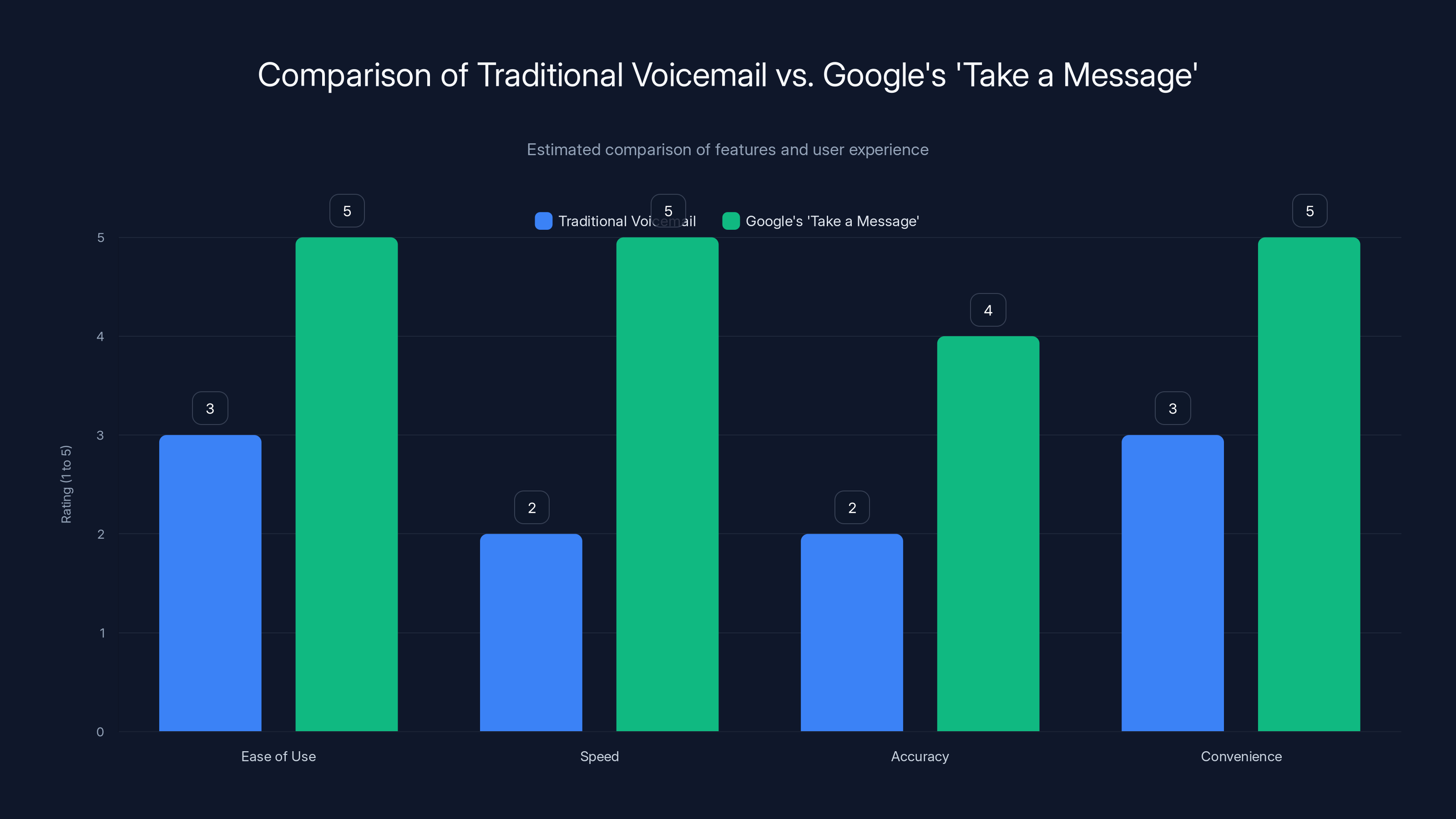

Traditional voicemail is broken. It's slow, asynchronous, and requires you to dial in, enter a PIN, and listen through multiple messages at real-time speed. If someone leaves a 90-second message with critical information, you're spending 90 seconds listening, unable to skip or skim. Transcription existed, but it was usually inaccurate, especially with accents, background noise, or technical jargon.

Take a Message tried to solve this by combining real-time transcription with intelligent call answering. Here's how it was supposed to work: when you miss a call, the feature automatically answers with a pre-recorded message ("I can't take your call right now, but I can take a message"). The caller then leaves a message. Google's AI transcribes it in real-time and stores the full text alongside the audio. You get an instant notification with both the original audio and a searchable transcript. No dialing in. No playing through messages at full speed.

The feature was genuinely innovative. For busy professionals, customer service teams, or anyone missing frequent calls, this could have been a meaningful quality-of-life improvement. Google positioned it as part of a broader "helper" philosophy—using AI to handle the boring, repetitive parts of communication so humans could focus on what matters.

The problem was in execution. To transcribe a caller's message in real-time, Google needed to process audio continuously. The feature had to listen to the caller and convert speech to text. But somewhere in the implementation, the listening mechanism became bidirectional. The phone wasn't just processing the caller's audio—it was also streaming the receiver's environment back to the caller.

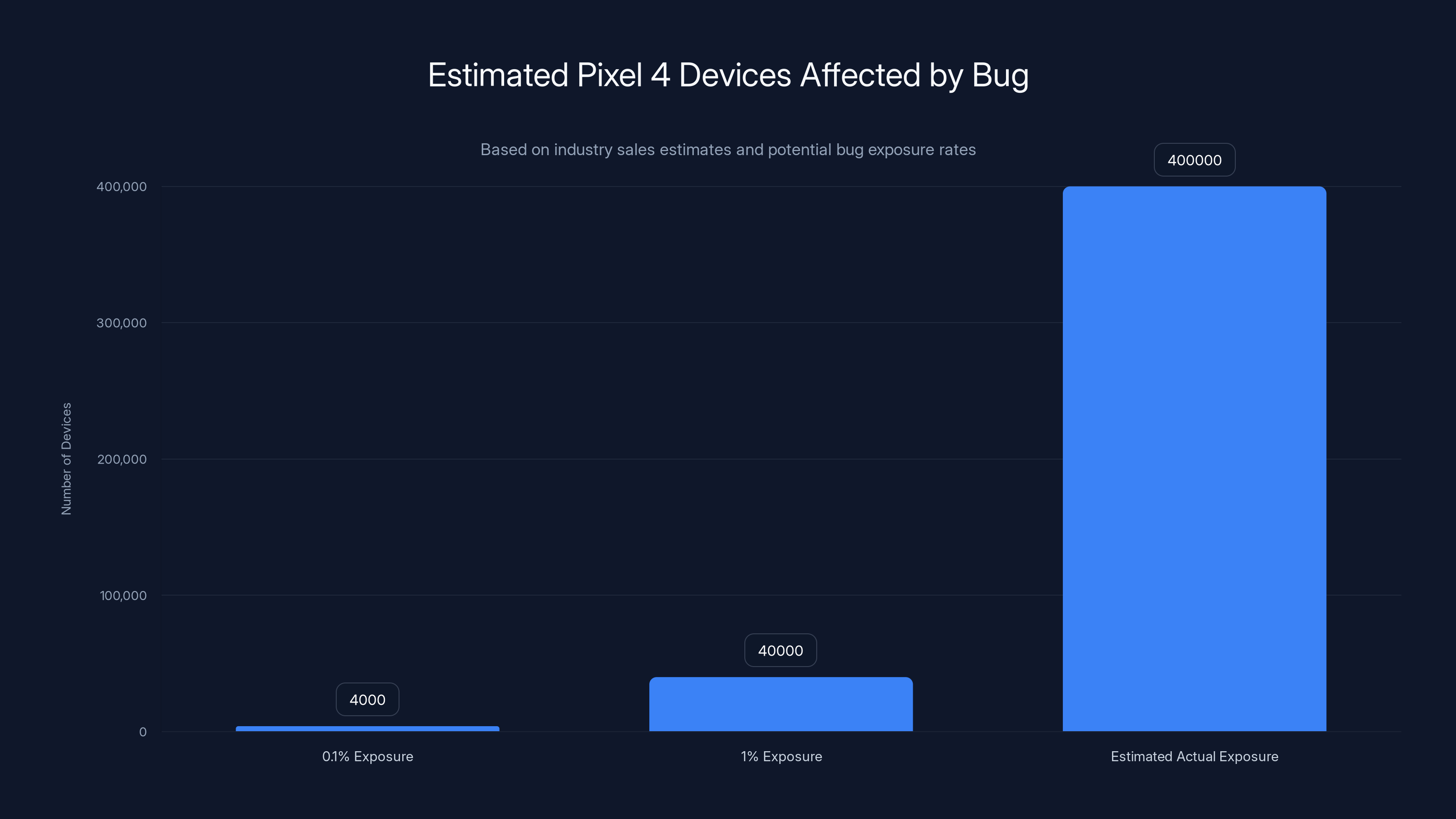

Estimated data suggests that while Google reported a small subset of Pixel 4 devices affected, the actual number of affected devices could be significantly higher, potentially reaching up to 400,000 if underreporting is considered.

The Bug: How Audio Leaked in Both Directions

Google's official statement described the issue as affecting "a very small subset of Pixel 4 and 5 devices under very specific and rare circumstances." This bureaucratic language doesn't capture what actually happened or why it's so alarming.

According to reports from affected users, here's what occurred: when a call came in and the user didn't answer, Take a Message would activate automatically. The feature would send the pre-recorded greeting to the caller. Then, instead of silently recording only the caller's response, the microphone would activate on the receiver's end and begin streaming audio back to the caller.

This wasn't background noise filtering or acoustic echo. This was active microphone capture of the user's environment. One Reddit user who experienced the issue described it clearly: "It was as though I picked up the phone, except I had done nothing. It just passively started recording me and sending audio to the caller."

The user noticed the microphone indicator, the small green icon Android shows at the top right of the screen, appear after missing a call. Under normal circumstances, when Take a Message is working correctly, that indicator shouldn't appear. The feature should process only the caller's audio, not activate the local microphone. The fact that the indicator appeared meant the phone was actively recording its local environment.

What makes this bug particularly insidious is that it's silent. There's no notification saying "your microphone is active." There's no popup asking for permission. The user only finds out something went wrong by noticing the indicator light or, worse, after the call ends and they realize a caller heard their background conversations.

The bug appeared to be triggered by a specific combination of conditions. Google acknowledged that the issue occurred "under very specific and rare circumstances," but the company didn't publicly detail what those circumstances were. Based on user reports, it seems the bug manifested when:

- A call arrived while the Pixel 4 or 5 was locked

- Take a Message was enabled as the default call answering feature

- The device had specific Android or Google Phone app versions installed

- Possibly when the device was in certain power or connectivity states

The "rare circumstances" qualifier is important because it's both a reassurance and a problem. If the bug only affected specific conditions, it means most Pixel 4 and 5 users never experienced it. But "rare" doesn't mean nonexistent. It means anyone with a vulnerable device who missed calls under those specific conditions had their privacy breached without knowing it.

The Red Flag: When Privacy Indicators Betray Hidden Activation

Privacy experts often point out that technical controls (like microphone indicators) only work if users notice them. A privacy indicator light that flashes for one second while you're not looking at your phone is arguably worse than no indicator—it gives false confidence that the system is transparent.

In this case, the indicator actually saved the day. The user who discovered the bug noticed the indicator and realized something was wrong. They then tested the bug repeatedly, documented the behavior, and reported it publicly. This is essentially how privacy bugs get caught: not through company testing, but through accident and user diligence.

The microphone indicator light exists specifically because phones do things in the background that users can't see or hear. Google's philosophy (borrowed from Apple) was to make that activity transparent. But transparency only matters if users pay attention. Most people don't stare at their phone's indicator lights. They're focused on their apps, their screens, their tasks. A privacy-critical feature that only works if you're watching the right corner of your phone is a feature that will frequently fail.

This raises a broader question: how many bugs like this exist in AI-powered features where the user interaction is primarily behind-the-scenes? If a machine learning model is processing your data, your voice, your location—and you're not actively interacting with the phone—how would you ever know if something goes wrong? The privacy indicator helps with visual acknowledgment, but it doesn't capture the scope of data being processed or the potential for misuse.

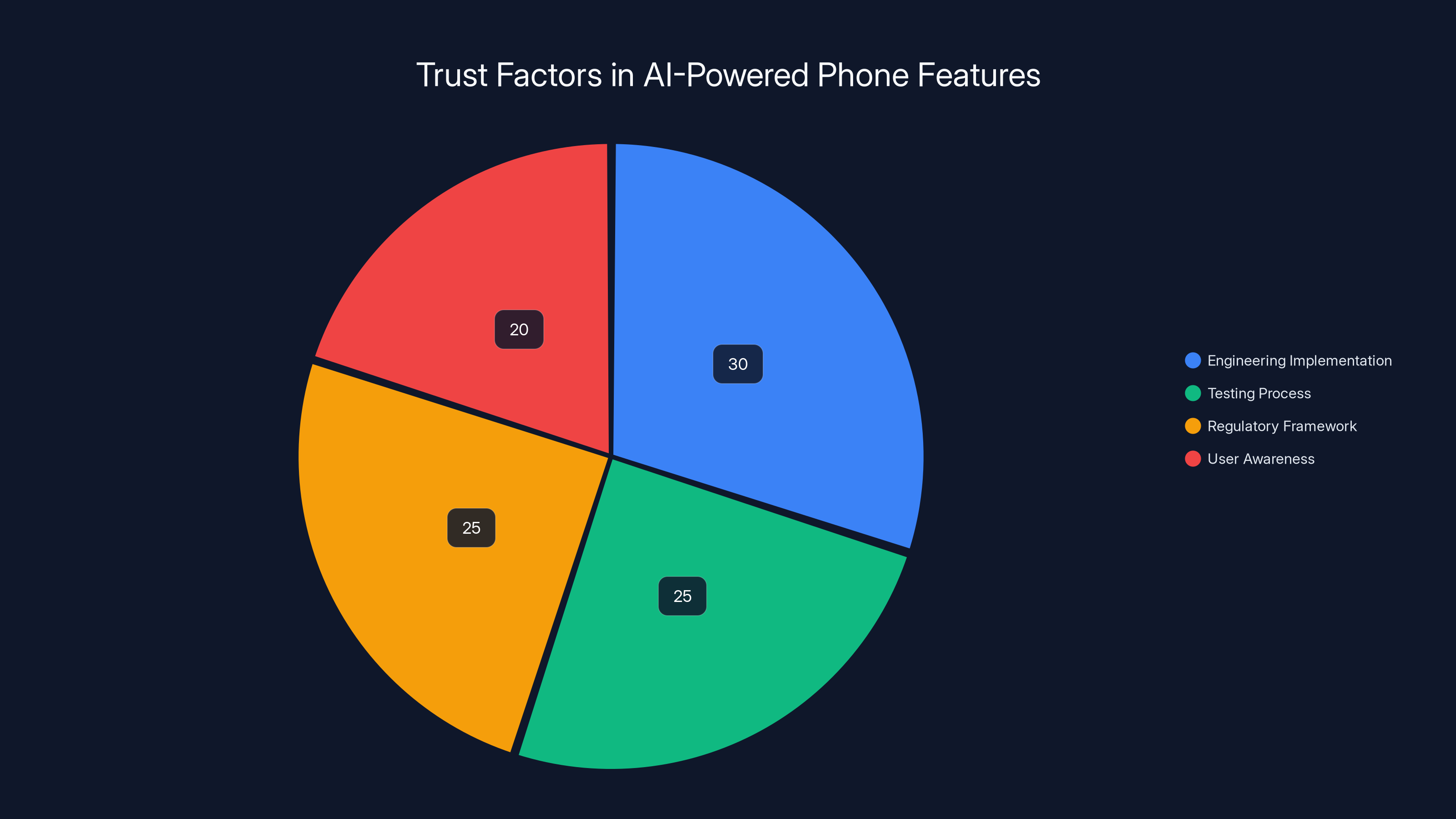

Estimated data shows that users place significant trust in engineering and testing processes, but regulatory frameworks and user awareness are also crucial components.

Why This Bug Matters: Privacy, Consent, and AI Features

On the surface, this might seem like a minor bug. A "very small subset" of devices. "Rare circumstances." A few Reddit users complaining. Why should anyone care?

Because the architecture of modern phones puts microphones, speakers, and processing engines in constant potential communication. We've normalized the idea that phones are listening to some degree. Hey Siri. OK Google. Alexa. These wake words mean your phone is listening for activation. Most of the time, that's fine. Most of the time, processing happens on-device or in secure servers.

Most of the time. But bugs happen. Misconfigurations happen. And when they do, the first line of defense—user awareness—is almost nonexistent. The average Pixel owner doesn't think about how Take a Message accesses their microphone. They certainly don't think about what should prevent the microphone from streaming their environment back to callers.

The deeper issue is the implicit trust model. When you enable a convenience feature on your phone, you're trusting:

- The engineers who built it to implement it correctly

- The company's testing process to catch bugs before release

- The regulatory framework around privacy to enforce accountability

- Your own awareness to notice when something goes wrong

This case broke the first three and tested the fourth. Google's engineering and testing process (apparently) didn't catch the bug before it affected real users. The regulatory framework didn't prevent it from happening. And only one user's attentiveness to the microphone indicator revealed the problem.

For AI and ML-powered features specifically, this is a critical vulnerability. These features operate through inference—the model makes decisions based on input data. The developer might not fully understand why the model made a specific decision in a specific case. Testing becomes harder because you can't enumerate every possible input combination the model might encounter in production. Edge cases and unexpected behaviors are more likely.

Google's Official Response: Disabling Features Rather Than Fixing Them

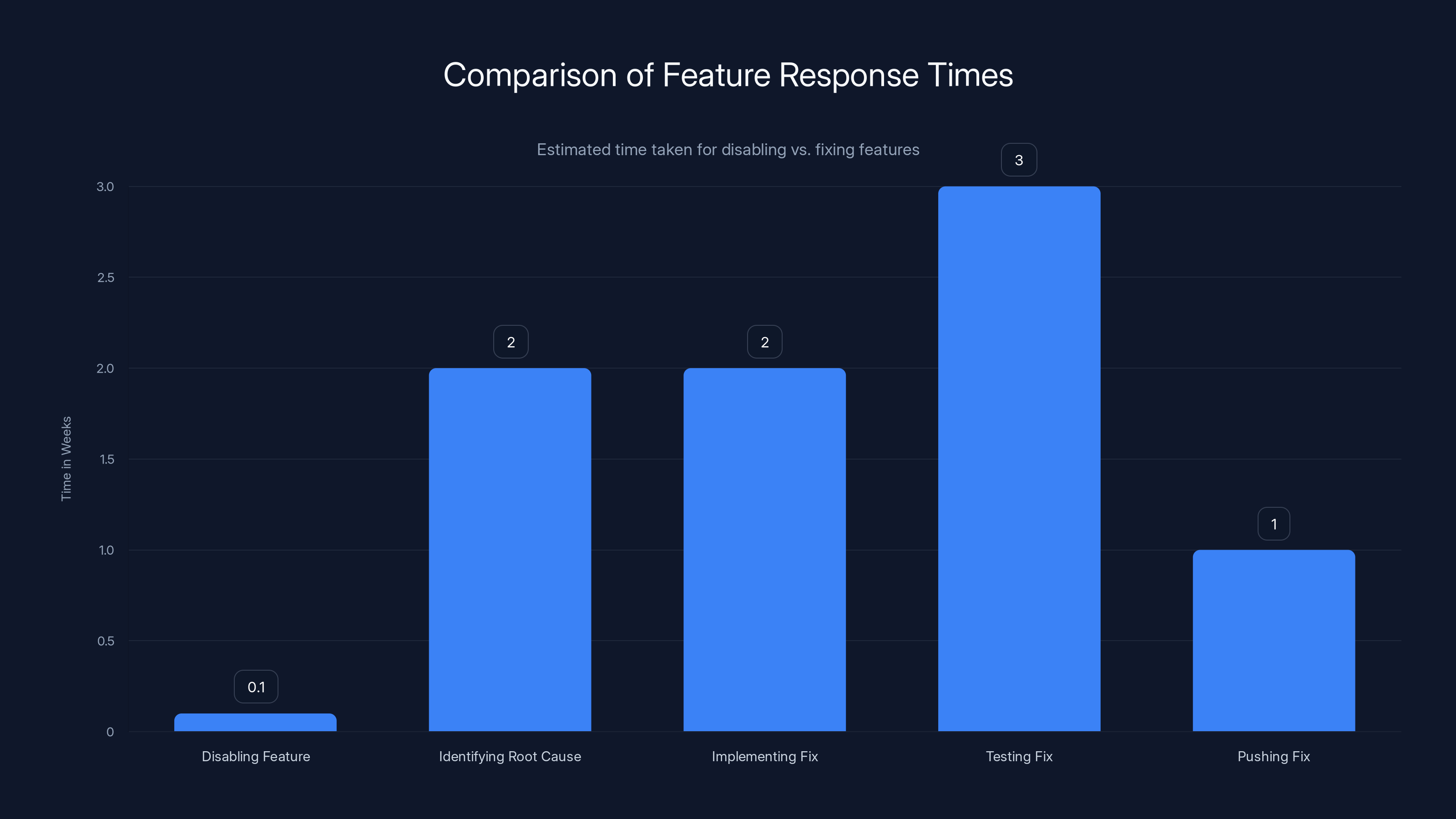

Google's response was swift, even if it wasn't ideal. Within days of the bug being publicly reported, Google disabled the Take a Message feature on affected Pixel 4 and 5 devices. The company also disabled the newer "Call Screen" feature, which uses similar architecture.

From a user perspective, this is frustrating. You weren't using the buggy code on purpose. You were relying on a feature you were promised would work. Now it's gone, and Google's official position is basically "we don't know if we can fix this, so we're just turning it off."

But from a liability and safety perspective, it's the right move. Disabling a feature is the fastest way to guarantee it can't cause harm. Fixing a bug requires:

- Identifying the root cause (sometimes takes weeks)

- Implementing a fix (more weeks)

- Testing the fix across different device variations and Android versions (more weeks)

- Pushing the fix through the Play Store or system updates (even more waiting)

- Dealing with devices that don't update or devices running older Android versions

Disabling the feature takes hours. It's a server-side change that can be pushed immediately. Every affected phone stops using the vulnerable code right away.
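To make the "server-side change" concrete, here is a minimal sketch of a remote kill switch. It assumes a hypothetical `RemoteFlags` object delivered from a server; the class, the flag name `take_a_message`, and `handle_missed_call` are illustrative names, not Google's actual mechanism.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class RemoteFlags:
    """Hypothetical server-delivered configuration (an assumption for illustration)."""
    disabled: Dict[str, Set[str]] = field(default_factory=dict)

    def disable(self, feature: str, models: Set[str]) -> None:
        # Record that `feature` must not run on the given device models.
        self.disabled.setdefault(feature, set()).update(models)

    def is_enabled(self, feature: str, model: str) -> bool:
        return model not in self.disabled.get(feature, set())


def handle_missed_call(flags: RemoteFlags, model: str) -> str:
    # Once the flag is off, the vulnerable code path is never entered.
    if flags.is_enabled("take_a_message", model):
        return "take_a_message"
    return "carrier_voicemail"


flags = RemoteFlags()
before = handle_missed_call(flags, "Pixel 4")            # feature still on
flags.disable("take_a_message", {"Pixel 4", "Pixel 5"})  # the "hours, not weeks" step
after = handle_missed_call(flags, "Pixel 4")             # falls back to voicemail
```

The point of the design is that no new code ships to the device: the buggy implementation is still installed, but the check in front of it keeps it from running on the listed models.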

Google's statement from community manager Siri Tejaswini framed this as an "abundance of caution," which is corporate speak for "we want to avoid a privacy lawsuit." The company offered affected users an alternative: use manual Call Screening (which doesn't automatically answer) or stick with your carrier's voicemail.

These alternatives are functional but objectively worse. Manual Call Screening requires you to choose, in the moment, whether to screen each call. Carrier voicemail returns to the old paradigm that Take a Message was designed to improve. Users who had grown to rely on real-time transcription were now back to playing voicemail messages at full speed.

Disabling features is significantly faster (hours) than the weeks required for identifying, fixing, and deploying a bug fix. Estimated data.

The Larger Pattern: When Convenience Features Have Hidden Costs

This incident isn't isolated. It's part of a pattern where modern smartphones bundle convenience with privacy complexity, and that complexity creates exploitable gaps.

Consider Google's history with privacy bugs:

- Google Assistant accidentally recording conversations: Reported in 2019, the assistant was transcribing audio even when users thought recording had stopped

- Gmail automatically uploading photos: Users thought they were saving photos to their device; Gmail was uploading them to Google's servers

- Location tracking even when disabled: Multiple reports of Pixel phones reporting location data even when location settings were turned off

None of these are intentional surveillance. They're mistakes in complex systems. But they share a common thread: the user interface doesn't match the actual system behavior. You think you're turning something off, but background processes continue. You think a feature isn't active, but it is. You think your data is staying local, but it's being transmitted.

The Pixel 4 and 5 Take a Message bug is part of this pattern. It's a reminder that convenience features require constant skepticism. Every feature that operates in the background is a potential privacy liability. Every feature that processes audio, location, or sensitive data is worth scrutinizing.

Technical Analysis: How Bidirectional Audio Streaming Failed

To understand why this bug happened, you need to understand the technical architecture of how Take a Message works.

A simplified version of the architecture looks like this:

1. Incoming Call Detection: The Phone app detects an incoming call

2. Feature Activation: If Take a Message is enabled and the call is unanswered, the system activates the feature

3. Greeting Transmission: The system sends a pre-recorded greeting to the caller (this is audio going OUT from the phone)

4. Audio Capture: The system needs to capture the caller's response (this requires the local microphone or the system audio stream from the call)

5. Transcription: Google's servers transcribe the captured audio using speech-to-text

6. Storage and Notification: The transcript and audio are stored and the user is notified

The bug appears to have occurred at step 4, audio capture. When the system was set up to capture "the caller's response," the implementation appears to have chosen the wrong audio source, or an extra one.

In a normal phone call, there are technically two audio streams:

- The caller's voice coming IN through the earpiece/speaker

- The receiver's microphone capturing their voice to send OUT to the caller

The bug seems to have opened the second stream when only the first was needed. Instead of just capturing the incoming audio from the caller, the system also activated the local microphone and sent that audio back to the caller.
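The two streams can be modeled as a small routing table. This is a sketch under stated assumptions: the `Source` and `Sink` names and both route sets are hypothetical, chosen to match the behavior users described, and say nothing about how the Phone app is actually written.

```python
from enum import Enum, auto


class Source(Enum):
    GREETING = auto()       # pre-recorded greeting
    MICROPHONE = auto()     # the phone owner's local microphone
    CALL_DOWNLINK = auto()  # the caller's voice arriving from the network


class Sink(Enum):
    CALL_UPLINK = auto()    # audio sent out to the caller
    RECORDER = auto()       # message capture and transcription


# Intended routing while taking a message: greeting goes out, caller audio is recorded.
INTENDED = {
    (Source.GREETING, Sink.CALL_UPLINK),
    (Source.CALL_DOWNLINK, Sink.RECORDER),
}

# Routing consistent with the user reports: one extra edge from microphone to caller.
REPORTED = INTENDED | {(Source.MICROPHONE, Sink.CALL_UPLINK)}


def leaks_to_caller(routes) -> bool:
    """True if the local microphone is routed to the caller."""
    return (Source.MICROPHONE, Sink.CALL_UPLINK) in routes
```

Seen this way, the failure is a single unwanted edge in the graph, which is why it can hide behind an otherwise working feature: the greeting still plays and the message is still recorded.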

Why would this happen? A few possibilities:

Possibility 1: Audio Routing Error: The implementation might have attached the local microphone to the call's outgoing audio path while setting up capture of the incoming audio. Sending microphone audio to the caller is appropriate in a two-way call, but during message capture the outgoing path should carry only the pre-recorded greeting.

Possibility 2: Call State Confusion: The system might have been confused about whether it was in a call or just capturing audio. If the system thought it was handling a two-way call instead of a one-way message capture, it would activate both the speaker (to receive) and the microphone (to transmit), which are normal in calls but shouldn't be active when just recording a message.

Possibility 3: Permission Scope Explosion: The feature requested permission to use the microphone for transcription purposes. That permission might have been overly broad, allowing the microphone to be accessed in contexts beyond what was intended.

Without seeing Google's internal code, we can't know which scenario happened. But each points to a failure in the architecture review process. Someone should have asked: "In what states can the microphone be active? When should it be active? What prevents it from being active when it shouldn't be?"
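One way to answer those review questions in code is an explicit table of call states and whether the microphone may be open in each, enforced at the single place where the microphone is opened. The states, the table, and `open_microphone` below are hypothetical names for illustration only.

```python
from enum import Enum


class CallMode(Enum):
    IDLE = "idle"
    RINGING = "ringing"
    TWO_WAY_CALL = "two_way_call"
    MESSAGE_CAPTURE = "message_capture"  # Take a Message is recording the caller


# Every state must appear here, so "when should the mic be active?" has one answer.
MIC_ALLOWED = {
    CallMode.IDLE: False,
    CallMode.RINGING: False,
    CallMode.TWO_WAY_CALL: True,
    CallMode.MESSAGE_CAPTURE: False,
}


class MicPolicyError(RuntimeError):
    """Raised when code tries to open the microphone in a state that forbids it."""


def open_microphone(mode: CallMode) -> str:
    # Fail loudly instead of silently streaming the room to a caller.
    if not MIC_ALLOWED[mode]:
        raise MicPolicyError(f"microphone must stay off in state {mode.value}")
    return "mic_open"
```

A guard like this does not prevent the state machine itself from being wrong (Possibility 2), but it turns a silent leak into an error that testing or crash reporting can surface.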

Users rated 'Take a Message' significantly higher than other call management features, highlighting the gap in satisfaction after its disablement. (Estimated data)

The Privacy Indicator That Worked

One aspect of this story is actually encouraging: the privacy indicator light worked. It did exactly what it was designed to do.

When you see the microphone indicator light on your Pixel phone, that's not a bug or a false positive. That's the Android system telling you "something is actively using your microphone right now." It's one of the few transparent signals smartphones provide about background activity.

Compare this to older Android phones or iOS before the privacy transparency features. Users had no visual indication when apps were accessing their microphone, camera, or location. They had to trust permission dialogs from months ago and hope nothing changed.

The privacy indicator works because it's a system-level control. It doesn't ask apps to report their behavior; it directly monitors hardware access. An app can't lie to the indicator. If the microphone is powered on and being used, the indicator will light up.

The problem isn't the indicator itself—it's that users need to be staring at the right corner of their phone at the right moment to see it. A 1-second flash is easy to miss if you're looking at the screen, the notification area, or literally anywhere else. And once you see the light, you need to understand what it means and care enough to investigate.

This is why transparent privacy features are necessary but not sufficient. They need to be paired with user education, accessible controls, and clear feedback when something unexpected happens.

Affected Devices: Pixel 4, Pixel 5, and the Question of Older Hardware

The bug specifically affected Pixel 4 and Pixel 5 devices. Why these models and not others?

Pixel 4 (released October 2019) and Pixel 5 (released October 2020) were the phones where Take a Message was first introduced or widely available. Newer Pixel models like the Pixel 6 and 6a had the feature too, but they apparently didn't experience the bug, or Google was less concerned about it on those devices.

The difference might be related to hardware variations. Pixel 4 and 5 might have different audio chipsets, microphone configurations, or drivers compared to the Pixel 6. The bug might be specific to how those devices route audio between different hardware components.

Alternatively, the software versions running on Pixel 4 and 5 might be different. Not all users update their phones to the latest Android version, so there might be lingering older versions of the Google Phone app that contain the vulnerable code.

Google's decision to disable the feature only on Pixel 4 and 5 (and not older Pixel models like Pixel 3 or 3a) suggests the bug was specific to these devices' architecture or software versions.

The broader point is that mobile devices are complex hardware-software combinations. A bug that affects one model might not affect another due to subtle differences in how audio is routed, how permissions are enforced, or how the OS manages hardware access. This is why device-specific testing is crucial but also difficult to do comprehensively.

Google's 'Take a Message' significantly improves user experience with higher ratings in ease of use, speed, accuracy, and convenience compared to traditional voicemail. (Estimated data)

The Broader Context: AI Voice Features and Privacy Risk

Take a Message was part of Google's larger push into AI-powered assistant features. The company wanted to position its phones as truly intelligent devices that could handle routine tasks without user intervention.

This philosophy has expanded dramatically:

- Google Assistant can now proactively suggest actions based on context

- Gboard uses machine learning for typing predictions and now AI-powered text rewriting

- Google Photos uses AI to organize, enhance, and understand images

- Pixel phones have increasingly moved toward always-listening architecture for voice commands

All of these features operate partially in the background, using various sensors (microphone, camera, location) to gather data and make decisions. The more features like this accumulate on a single device, the larger the attack surface becomes.

Each feature is generally well-intentioned. Each one is tested by reasonable engineers. But each one adds complexity to the permission system, the audio routing, the data processing pipeline. The more complex the system, the more likely edge cases will slip through.

This is a fundamental challenge of modern smartphones: they're incredibly capable but also incredibly opaque. Users enable features without understanding the full technical implications. Companies ship features without fully grasping how they'll interact with other components. Edge cases emerge in production that nobody anticipated.

Google's response—disabling the feature—is pragmatic but also somewhat pessimistic. It says, "We don't trust ourselves to keep this safe, so we're just removing it." That might be the honest assessment. Voice features that activate automatically and process audio in real-time are inherently risky in ways that other features aren't.

User Experience: From "Smart" to "Not Worth the Risk"

From the user's perspective, the saga of Take a Message is frustrating. You had a feature that worked well most of the time. It made your life better. Then it turned out the feature had a privacy bug. Google disabled it. And now you're back to the old way of doing things.

This is the real cost of being an early adopter of smart features. You get to beta test Google's ideas. When they work, you love them. When they break, you lose access.

For Pixel 4 and 5 users who loved Take a Message, the alternatives are clearly inferior:

Call Screening: Still lets you screen calls intelligently, but doesn't answer automatically or capture messages

Carrier Voicemail: Returns to the old paradigm of playing voicemail messages at full speed without transcription

No automated response: Caller gets your regular voicemail greeting and has to wait

None of these are as good as a feature that automatically answers, records, and transcribes messages. Users are worse off after the disable.

This creates a perverse incentive structure. If Google had just never shipped Take a Message, users wouldn't miss what they never had. But because Google shipped it, users experienced the benefit, became dependent on it, and then felt robbed when it was taken away.

The Regulatory Question: Who Enforces Accountability?

One of the striking aspects of this incident is the lack of regulatory consequence. Google identified a privacy bug, disabled the feature, and that was largely it. A few tech news outlets wrote about it. Some Reddit discussions happened. Then the news cycle moved on.

Compare this to how a similar incident might be handled in other industries:

- Healthcare: If a medical device had a bug that exposed patient data, there would be FDA investigations, patient notifications, regulatory fines, and possibly class-action lawsuits

- Banking: Financial institutions face severe penalties for any data exposure, including regulatory investigations and mandatory public disclosures

- Automotive: If a car had a malfunction that exposed data or endangered safety, there would be recalls, investigation, and potential fines

For smartphones, the regulatory framework is much lighter. There's no equivalent to the FDA overseeing phone software. The FTC has enforcement power but focuses mainly on companies making false claims about privacy rather than actual privacy failures. Users have basically no recourse beyond potential class-action lawsuits, which are expensive and time-consuming.

Google's privacy policy probably includes language like "we strive to protect your data but make no guarantees" and "we're not liable for indirect damages." These clauses are standard boilerplate that shield companies from accountability.

The lack of regulatory framework for privacy in consumer technology is a major reason bugs like this happen. If Google faced substantial penalties for privacy breaches, they'd invest more heavily in testing and security. If users had clear regulatory recourse, they'd have stronger incentives to hold Google accountable. Instead, both parties operate under a regime where privacy breaches are treated as business-as-usual risks.

This is likely to change eventually. Europe's GDPR has started holding companies more accountable. The US might eventually follow with similar regulations. But today, the incident with Take a Message illustrates how weak consumer privacy enforcement actually is.

Real-World Impact: Estimating the Actual Risk Exposure

Google said the bug affected "a very small subset of Pixel 4 and 5 devices." What does that actually mean?

Pixel 4 was released in October 2019. By the time of the bug report in 2020, it had been on the market for about a year. Google doesn't publicly disclose Pixel sales figures, but industry analysts estimate Google sold somewhere between 3 and 5 million Pixel 4 units in that year.

Pixel 5 had been on the market for a shorter period at the time of the bug report.

If "very small subset" means 0.1% of devices, that's 3,000-5,000 Pixel 4 units potentially affected. If it means 1%, it could be 30,000-50,000 devices. Neither number is enormous, but both represent real privacy exposure.
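The arithmetic behind those ranges is simple enough to check directly. The sketch below uses only the figures quoted above (3 to 5 million units, and 0.1% or 1% as guesses at what "very small subset" means); the function name is illustrative.

```python
def affected_range(units_low: int, units_high: int, fraction: float):
    """Return the (low, high) count of devices for a given affected fraction."""
    return round(units_low * fraction), round(units_high * fraction)


PIXEL4_UNITS = (3_000_000, 5_000_000)  # the analyst estimate quoted in the text

for label, fraction in [("0.1%", 0.001), ("1%", 0.01)]:
    low, high = affected_range(*PIXEL4_UNITS, fraction)
    print(f"{label}: {low:,} to {high:,} devices")
# prints:
# 0.1%: 3,000 to 5,000 devices
# 1%: 30,000 to 50,000 devices
```

Both inputs are estimates, so the output is an order-of-magnitude guide rather than a count of affected users.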

We don't know how many of those affected devices actually experienced the bug in practice. The bug required specific circumstances, and users had to be missing calls and relying on Take a Message. Some devices with the vulnerability might have never triggered the bug.

Still, the actual risk exposure was non-trivial. Dozens of Reddit users reported experiencing the bug. Assuming underreporting, the actual number of affected users was probably in the thousands.

For each of those users, the privacy exposure was significant:

- Room sounds and conversations were streamed to a caller

- The user had no idea it was happening in real-time

- Only a visible indicator light (which might have been missed) revealed the problem

- No audit trail showing which calls were affected

Lessons for Other AI Voice Features

Take a Message was a specific implementation, but the lessons apply broadly to any AI-powered voice feature that operates automatically.

Lesson 1: Explicit vs. Implicit Activation. Features that activate automatically (like Take a Message answering calls) are inherently riskier than features that require explicit user action. When the user has to actively press a button or say a wake word, the activation is deliberate and observable. When the system decides to activate on its own, the user might not know it's happening.

Lesson 2: Real-Time Processing Creates Complexity. Take a Message did real-time transcription, which required continuous audio processing. Continuous processing creates more states where things can go wrong. A simpler feature that recorded audio first and transcribed later would have fewer failure modes.

Lesson 3: Audio Handling Is Uniquely Complex. Audio hardware, drivers, and software layers are deeply intertwined. Unlike visual features or data processing, audio routing touches multiple hardware components and can be affected by subtle differences in device configuration. Voice features need extra scrutiny.

Lesson 4: Privacy Indicators Must Be Noticeable. The Pixel's microphone indicator was the only thing that caught this bug. If users hadn't been able to see the indicator, the bug might never have been discovered. Privacy controls need to be impossible to miss, not hidden in a corner of the screen.

Lesson 5: Disable Over Fix When Unsure. Google's decision to disable the feature rather than deploy a patch was correct. When privacy is at stake and the fix is uncertain, disabling is the right move. A partially fixed bug is worse than a completely disabled feature.

These lessons should influence how all companies design voice features. The technology for voice assistants, real-time transcription, and AI-powered call handling is improving rapidly. But the privacy implications are keeping pace. Each new feature needs serious thought about failure modes, user awareness, and recovery procedures.

What This Reveals About Smartphone Security

Smartphone operating systems like Android are designed with multiple layers of security and privacy controls. Apps are sandboxed. Permissions are requested. Users can revoke access. In theory, a rogue feature shouldn't be able to secretly activate the microphone without permission.

The Take a Message bug suggests these controls aren't airtight. Either:

- The permission model isn't granular enough: Take a Message might have had permission to use the microphone for transcription, but that permission was interpreted too broadly

- System apps have too much power: As a built-in system app, the Google Phone app might have access that regular apps don't have, bypassing normal permission constraints

- The security model doesn't account for bugs: The system assumes if code is authorized to do something, the developer intended it. But bugs can cause code to do things the developer didn't intend

This is a fundamental challenge in system design. You can't prevent all bugs through permission models. Permission systems are designed to prevent malicious abuse, not accidental misuse.

The most promising defense against this category of bug is transparency. If users can see when the microphone is active (through indicators) and can audit what data was processed (through activity logs), then accidental exposure becomes detectable.

Google's approach of using visible indicators is good. But the company could go further by:

- Providing detailed activity logs showing exactly when the microphone was active and what data was transmitted

- Allowing users to download and review transcripts of calls to verify they're correct

- Implementing redundant checks where system services periodically verify that the microphone is only active when expected

The Path Forward: Safer Voice Features

Voice features aren't going away. In fact, they're becoming more central to the smartphone experience. The question is how to make them safer.

Explicit User Awareness: Instead of silently answering calls, Take a Message could have notified the user: "Someone called you. I'm taking a message. Your microphone is active." A simple notification would have given users clear awareness of what was happening.

Redundant Safeguards: The system could double-check: "I'm about to activate the microphone for voicemail transcription. Is the user expecting this?" If there's any ambiguity, disable the feature.
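One way to read this safeguard is as a fail-closed check: local audio goes to the caller only when independent signals agree that the owner is actually on the call. The sketch below assumes two such signals, a call state and an explicit user confirmation. Both are hypothetical, as are the names `CallState` and `may_send_local_audio`.

```python
from enum import Enum


class CallState(Enum):
    USER_ANSWERED = "user_answered"    # the owner picked up the call
    TAKING_MESSAGE = "taking_message"  # the phone answered on its own
    UNKNOWN = "unknown"                # state could not be determined


def may_send_local_audio(call_state, user_confirmed):
    """Decide whether local microphone audio may be sent to the caller.

    Fails closed: audio is sent only when both independent signals agree
    that the owner is actively on the call. Any ambiguity returns False.
    """
    if call_state is not CallState.USER_ANSWERED:
        return False
    if user_confirmed is not True:  # None or False both count as "not sure"
        return False
    return True
```

With this shape, `may_send_local_audio(CallState.TAKING_MESSAGE, True)` is `False`: a message-taking call never sends the owner's audio, whatever the second signal says.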

Graceful Degradation: If the system isn't confident it can handle something safely, it should degrade gracefully. Instead of attempting to transcribe in real time (which is risky), the system could just record the caller's message and transcribe it later, when resources are available and the system is less constrained.
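A minimal sketch of that fallback, assuming the caller's audio arrives as a sequence of chunks and that transcription is a function passed in by the caller. `handle_message`, `can_transcribe_live`, and `transcribe` are illustrative names, not part of any real phone API.

```python
def handle_message(audio_chunks, can_transcribe_live, transcribe):
    """Return (transcript, saved_audio) for one caller message.

    If live transcription is unavailable or fails, fall back to keeping the
    caller's audio so it can be transcribed later.
    """
    audio = list(audio_chunks)
    if can_transcribe_live:
        try:
            return transcribe(audio), None
        except Exception:
            # Broad catch is deliberate in this sketch: any failure on the
            # live path should land on the simpler deferred path.
            pass
    return None, audio
```

Either way the caller's message is kept. Only the timing of the transcript changes, which is the cheaper thing to give up.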

User Control: Instead of a binary on/off toggle, the feature could offer different modes: fully automatic (current approach, but riskier), semi-automatic (alerts user when feature activates), or manual (user decides for each call).
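The three modes map naturally onto a small setting type. The following is a hypothetical sketch of how such a setting might drive behavior. `MessageMode` and `plan_for_missed_call` are invented for illustration and do not describe how the Google Phone app is built.

```python
from enum import Enum


class MessageMode(Enum):
    AUTOMATIC = "automatic"            # answer missed calls silently
    SEMI_AUTOMATIC = "semi_automatic"  # answer, but alert the owner
    MANUAL = "manual"                  # ask the owner for each call


def plan_for_missed_call(mode, user_approved=False):
    """Return (answer_call, notify_user) for one missed call."""
    if mode is MessageMode.AUTOMATIC:
        return True, False
    if mode is MessageMode.SEMI_AUTOMATIC:
        return True, True
    # MANUAL: only answer if the owner explicitly approved this call.
    return bool(user_approved), True
```

In manual mode the default is not to answer, so a missing or dropped approval leaves the microphone off.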

Auditability: Every time the microphone is used, the system should log it with timestamp, duration, and reason. Users should be able to review these logs easily and see: "On date X at time Y, the microphone was active for reason Z."
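Such a log needs very little structure. The sketch below stores the three fields named above and renders them in the user-facing form just described. The class names `MicAuditEntry` and `MicAuditLog` are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class MicAuditEntry:
    started_at: datetime  # when the microphone was opened
    duration: timedelta   # how long it stayed open
    reason: str           # why it was opened

    def describe(self):
        day = self.started_at.strftime("%Y-%m-%d")
        time = self.started_at.strftime("%H:%M:%S")
        secs = int(self.duration.total_seconds())
        return (f"On {day} at {time}, the microphone was active "
                f"for {secs}s (reason: {self.reason}).")


class MicAuditLog:
    def __init__(self):
        self._entries = []

    def record(self, started_at, duration, reason):
        self._entries.append(MicAuditEntry(started_at, duration, reason))

    def report(self):
        """Return human-readable lines, oldest entry first."""
        ordered = sorted(self._entries, key=lambda e: e.started_at)
        return [e.describe() for e in ordered]
```

A user reviewing `report()` would see one line per microphone session, which is enough to spot a session that lines up with a missed call rather than an answered one.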

These improvements would make voice features simultaneously more useful (better transparency and control) and safer (more defense-in-depth).

Conclusion: Living With Imperfect Smart Features

The Take a Message bug is ultimately a story about the gap between what we assume technology does and what it actually does.

Users assumed Take a Message would intelligently handle their missed calls. Google engineers assumed their implementation was safe. The company's testing assumed the feature worked as intended. And privacy advocates assumed regulatory frameworks would catch problems before they harmed users.

All of these assumptions failed. The bug existed. It exposed real audio. Users were harmed. Google's response was to disable the feature on the affected devices.

This isn't an indictment of Google specifically—similar bugs could happen at any company making smartphone features. It's a reality of building complex software where the system is partly automatic, partly intelligent, and partly opaque to the user.

The broader lesson is skepticism about convenience features that operate in the background. Every feature that listens, watches, or transmits data is a potential privacy vulnerability. Every automatic action is a potential privacy exposure. The more of these features you enable, the larger your attack surface becomes.

This doesn't mean you should reject all smart features. Take a Message, for example, was genuinely useful. But it does mean you should be selective. Enable the features that provide real value. Disable the features that you don't use. And monitor your device's behavior using the privacy indicators and activity logs that systems like Android now provide.

For Google, the incident highlights the need for stronger internal testing of privacy-critical features, especially those involving audio. For users, it's a reminder that your smartphone is listening more than you probably realize, and that listening can fail in ways you don't expect.

The technology industry is moving toward more automated, more intelligent, more background-running features. That's the trajectory. But with that trajectory comes increasing responsibility to build these features safely, transparently, and with genuine safeguards. The Take a Message bug showed that even a well-intentioned company can fall short of that standard.

The good news is that the incident was caught, disclosed, and addressed relatively quickly. The bad news is that it happened at all, and that it probably won't be the last such incident. As long as phones are running complex AI features in the background, privacy bugs will be part of the reality we live with.

FAQ

What is Google's Take a Message feature?

Take a Message was an AI-powered voicemail feature that automatically answered incoming calls the user missed, let the caller leave a message, and transcribed it in real time using Google's speech recognition technology. Instead of dialing into voicemail and listening to each message, users got instant, searchable transcripts.

How did the Take a Message bug work?

The bug caused the Pixel phone's microphone to activate and stream audio back to the caller when Take a Message was handling a missed call. Instead of silently transcribing only what the caller said, the system also recorded and transmitted the phone owner's environment sounds back to the caller, exposing room conversations and background noise without the owner's knowledge.

Which phones were affected by the Take a Message bug?

The bug specifically affected Google Pixel 4 and Pixel 5 devices under "very specific and rare circumstances." Google didn't publicly disclose the exact conditions that triggered the bug, so any explanation involving particular software versions, device states, or hardware configurations remains speculation.

How did users discover the bug?

A Pixel user noticed the microphone indicator light (the LED at the top of the phone) illuminating when they missed calls with Take a Message enabled. Upon investigation and testing, they realized the microphone was actively recording and transmitting their environment sounds to the caller, rather than just silently processing the caller's message.

What did Google do in response to the bug?

Google disabled the Take a Message feature and the newer Call Screen feature on affected Pixel 4 and 5 devices as a precautionary measure. The company framed this as "an abundance of caution" while investigating the root cause. Users could still use manual Call Screening, standard Call Screen without automation, or their carrier's voicemail service instead.

Can the Take a Message feature be restored to Pixel 4 and 5 devices?

Google hasn't confirmed whether the feature will eventually be restored after a fix is implemented. The company's initial statement suggested the feature would remain disabled on these devices, but it hasn't given a definitive answer about future availability. Newer Pixel models, such as the Pixel 6 and later, continued to have access to similar features.

What privacy safeguards should prevent something like this?

Privacy safeguards include microphone indicator lights that show when the microphone is active, granular app permissions that restrict what each app can do, system-level auditing of sensor access, and explicit user notifications when sensitive hardware is activated. However, the Take a Message bug showed that these safeguards sometimes fail when a built-in system app accidentally misuses its permissions.

Why is audio handling particularly risky compared to other features?

Audio features are risky because they require direct access to hardware (the microphone), they often process data continuously in real-time, they interact with multiple software layers and drivers, and they're often automated or background-running rather than user-initiated. Unlike other data types, audio captures physical sounds and conversations, making accidental exposure more invasive.

What lessons does this incident teach smartphone developers?

Key lessons include: explicitly notify users when automated features activate, test edge cases thoroughly for features that handle sensitive data, prefer explicit user action over automatic activation, implement redundant safeguards rather than relying on a single control, disable risky features if you can't fix them safely, and provide transparency through activity logs so users can audit what happened.

How can users protect themselves from similar privacy risks?

Users can review microphone permissions in phone settings and disable microphone access for apps that don't need it, monitor the microphone indicator and investigate if it appears unexpectedly, review the Privacy dashboard in Android settings (available on Android 12 and later) to see which apps recently used the microphone, disable automated voice features if the convenience isn't worth the privacy risk, and stay informed about privacy bugs in widely used phones and software.

Related Technical Deep Dives

For those wanting to explore related topics:

Understanding Mobile Audio Architecture explores how modern smartphones route audio between different hardware components, software layers, and applications. This foundation helps explain why audio bugs are particularly complex to prevent.

Privacy Indicators and Transparency Features examines how iOS and Android use visual signals to reveal background microphone and camera access, including their limitations and how users can actually notice them effectively.

Automatic Call Handling in Modern Phones details how modern smartphones decide when to answer calls, what to do with incoming audio, and how features like voicemail, call screening, and AI assistants interact with the device's calling infrastructure.

Testing Automated Voice Features for Privacy covers the testing strategies companies should use specifically for features that activate automatically and process audio, including edge case discovery and real-world scenario simulation.

Key Takeaways

- Google's Take a Message feature leaked user audio to callers due to a microphone activation bug affecting Pixel 4 and 5 devices

- The microphone privacy indicator light was the only effective safeguard that caught this bug—it should have been more obvious and user-friendly

- Automatic voice features are inherently risky because they operate in the background without explicit user awareness of when they're active

- Google's response to disable the feature was pragmatic but disappointing for users who had benefited from real-time voicemail transcription

- Modern smartphones need stronger privacy safeguards including explicit user notifications, redundant checks, and detailed activity auditing for sensitive features
