How Hackers Exploit AI Vision in Self-Driving Cars and Drones [2025]

You're driving down the highway when your autonomous vehicle suddenly makes an unexpected left turn. No traffic light changed. No pedestrians appeared. A cyclist isn't in sight.

The reason? Someone spray-painted a few words on a road sign.

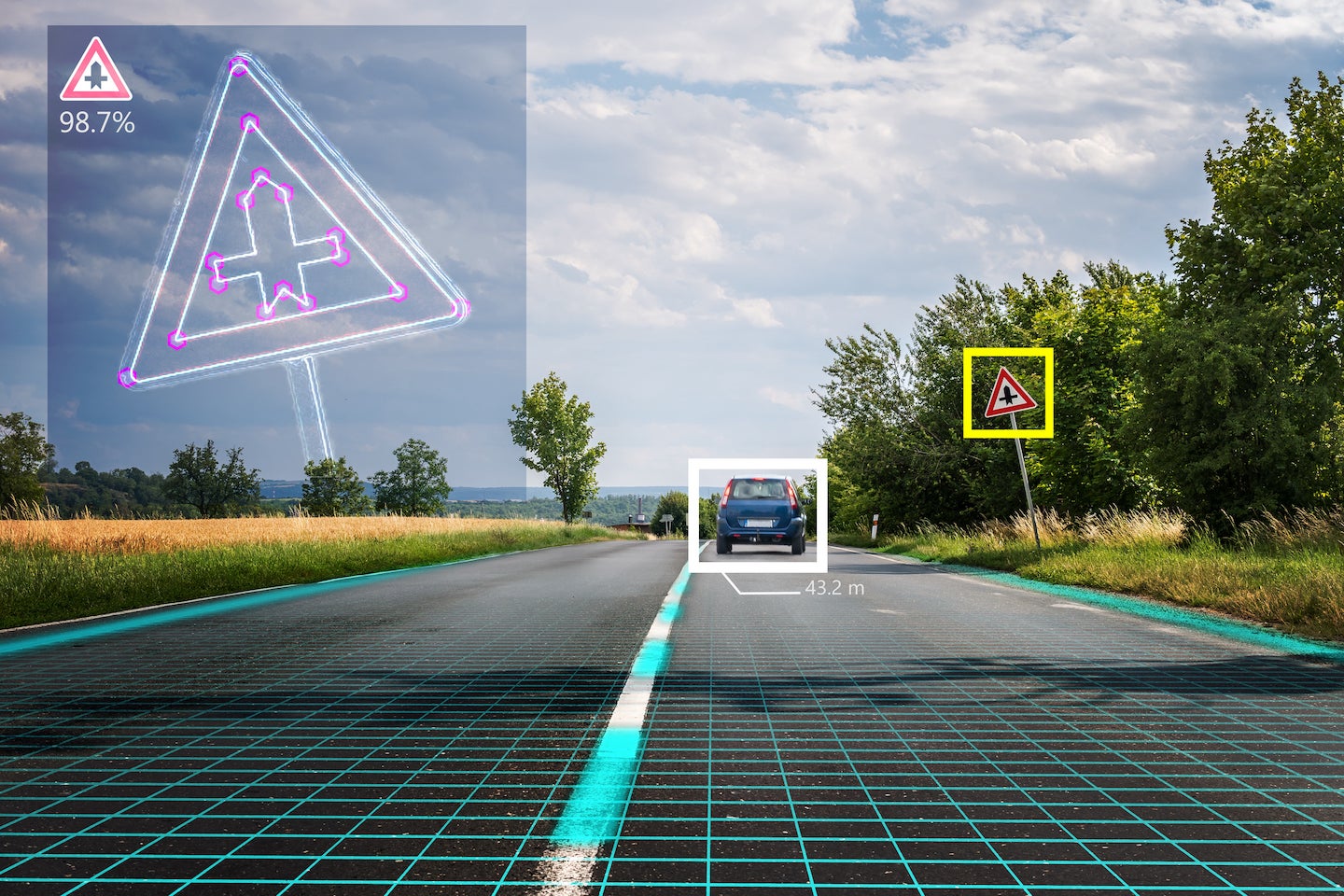

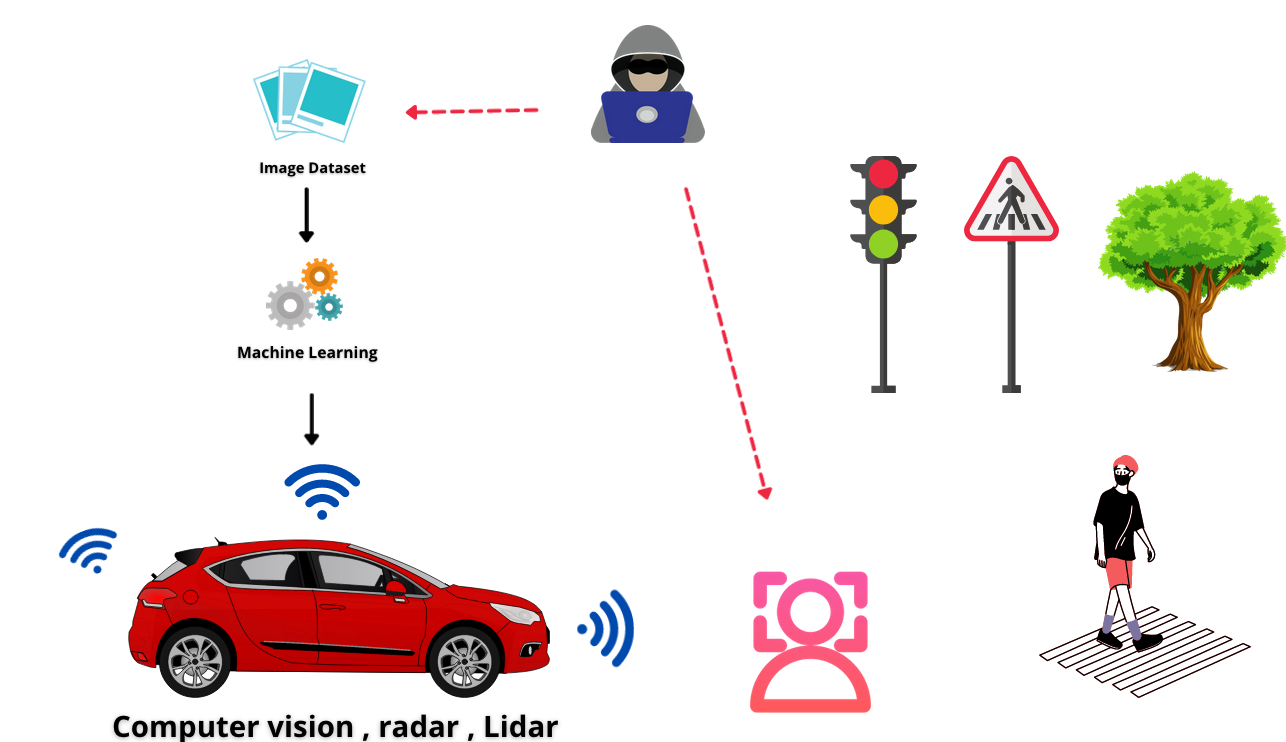

This isn't science fiction. Security researchers from UC Santa Cruz and Johns Hopkins University proved this exact scenario in controlled experiments, and the implications are genuinely alarming. Autonomous vehicles and delivery drones rely on computer vision systems that combine image recognition with language models to interpret their surroundings. These systems can read stop signs, identify lane markers, and detect hazards. But what happens when an attacker deliberately embeds malicious text into the visual environment?

Turns out, it works. Disturbingly well.

The vulnerability doesn't come from traditional hacking. It's not a software exploit, a buffer overflow, or stolen credentials. Instead, it's something far more insidious: the systems that power autonomous navigation fundamentally misunderstand the difference between environmental context and executable instructions. When a vision language model sees text printed on a road sign, it treats that text as a command to follow, not as information to interpret carefully.

This is called indirect prompt injection, and it represents a new class of attack vector that existing cybersecurity measures can't defend against. Your firewall won't help. Your antivirus won't catch it. You can't patch it with software updates because the vulnerability lies in how the AI model itself processes language.

Understanding this vulnerability matters because autonomous systems are moving from laboratory environments to real-world deployment. Self-driving cars are being tested in cities. Delivery drones are flying packages across residential areas. Agricultural robots are scanning farmland. Each one relies on similar vision language model architecture, and each one could be vulnerable to the same attack pattern.

This guide breaks down exactly how these attacks work, why they're so dangerous, what the research actually shows, and what manufacturers need to do to fix the problem.

TL; DR

- Vision language models treat road signs as executable commands rather than contextual information, allowing attackers to manipulate autonomous vehicles and drones using simple text

- Indirect prompt injection is not a traditional hack and can't be stopped by firewalls, antivirus, or endpoint protection software

- Success rates varied significantly between models, with some vision language models being far more vulnerable than others to textual manipulation

- Color contrast, font styling, and text placement all influenced whether autonomous systems would follow malicious instructions

- Real-world protection requires fundamental changes to how autonomous systems validate visual input and distinguish commands from context

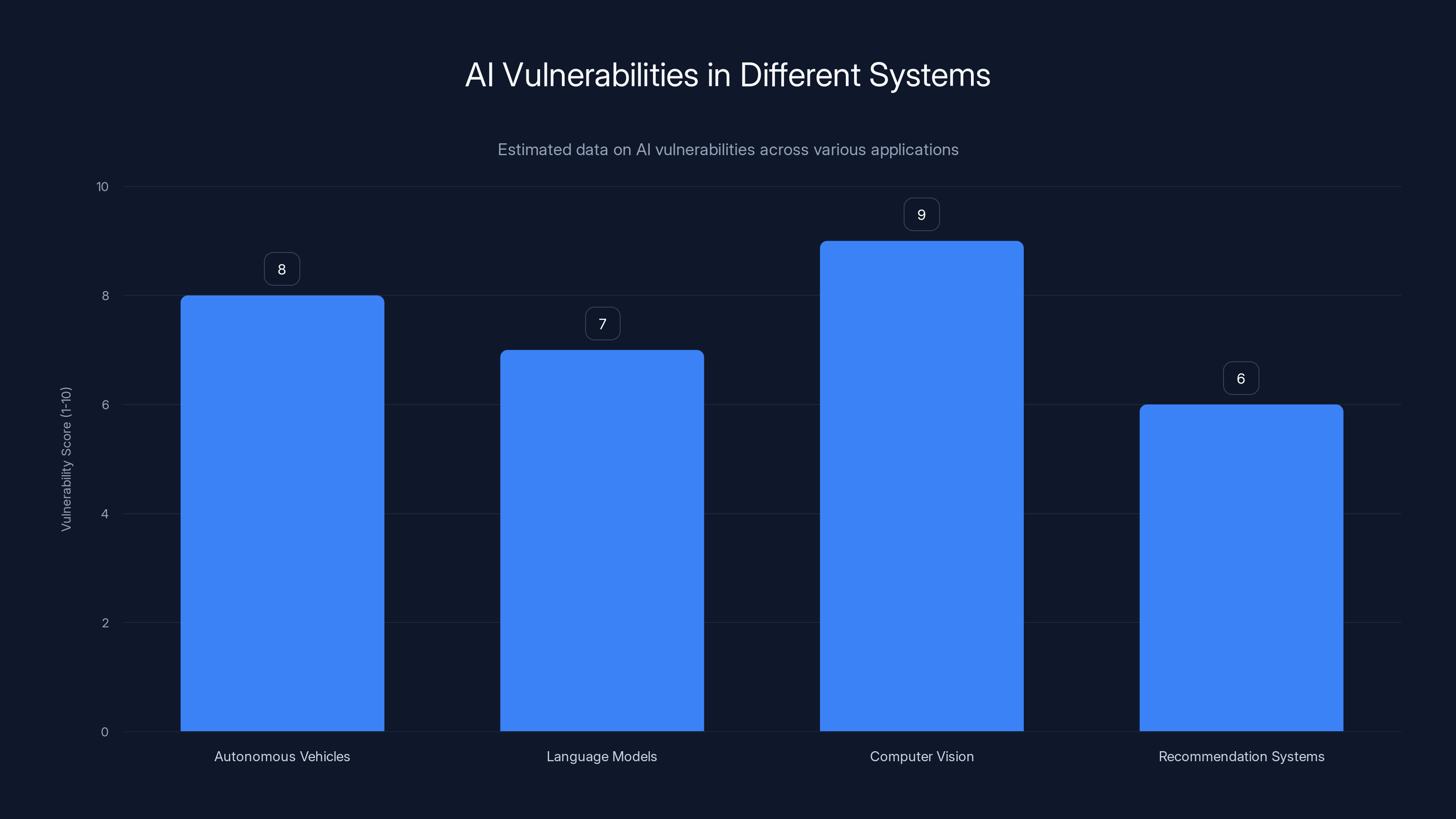

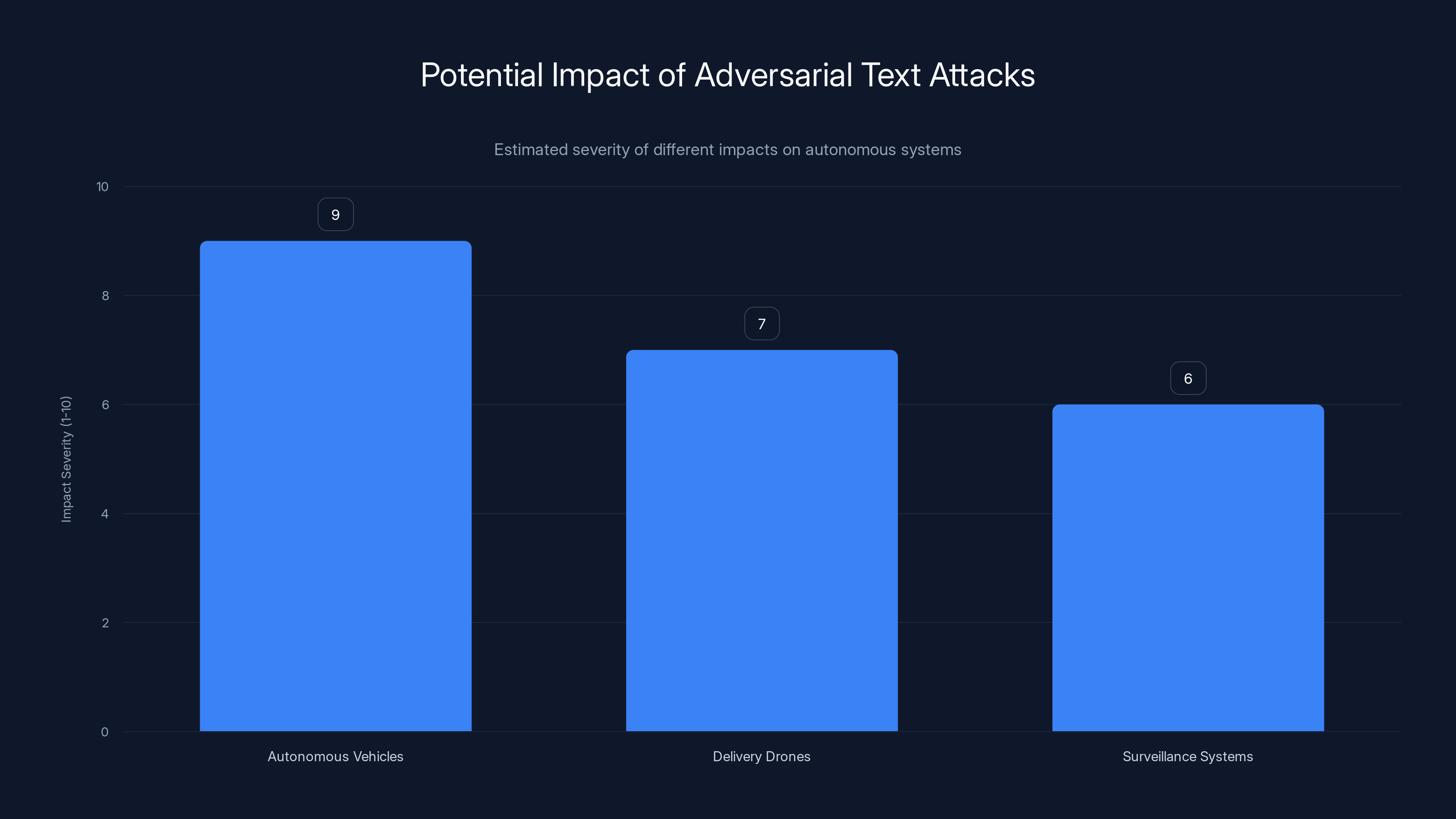

Autonomous vehicles and computer vision systems show higher vulnerability scores, highlighting the critical need for robust AI security measures. Estimated data.

Understanding the Threat: How Vision Language Models See the World

Autonomous vehicles and drones don't navigate like humans. You read a stop sign and understand it's a regulatory mandate based on context, traffic conditions, and road layout. Your brain processes decades of learning about what stop signs mean, where they appear, and how to respond appropriately.

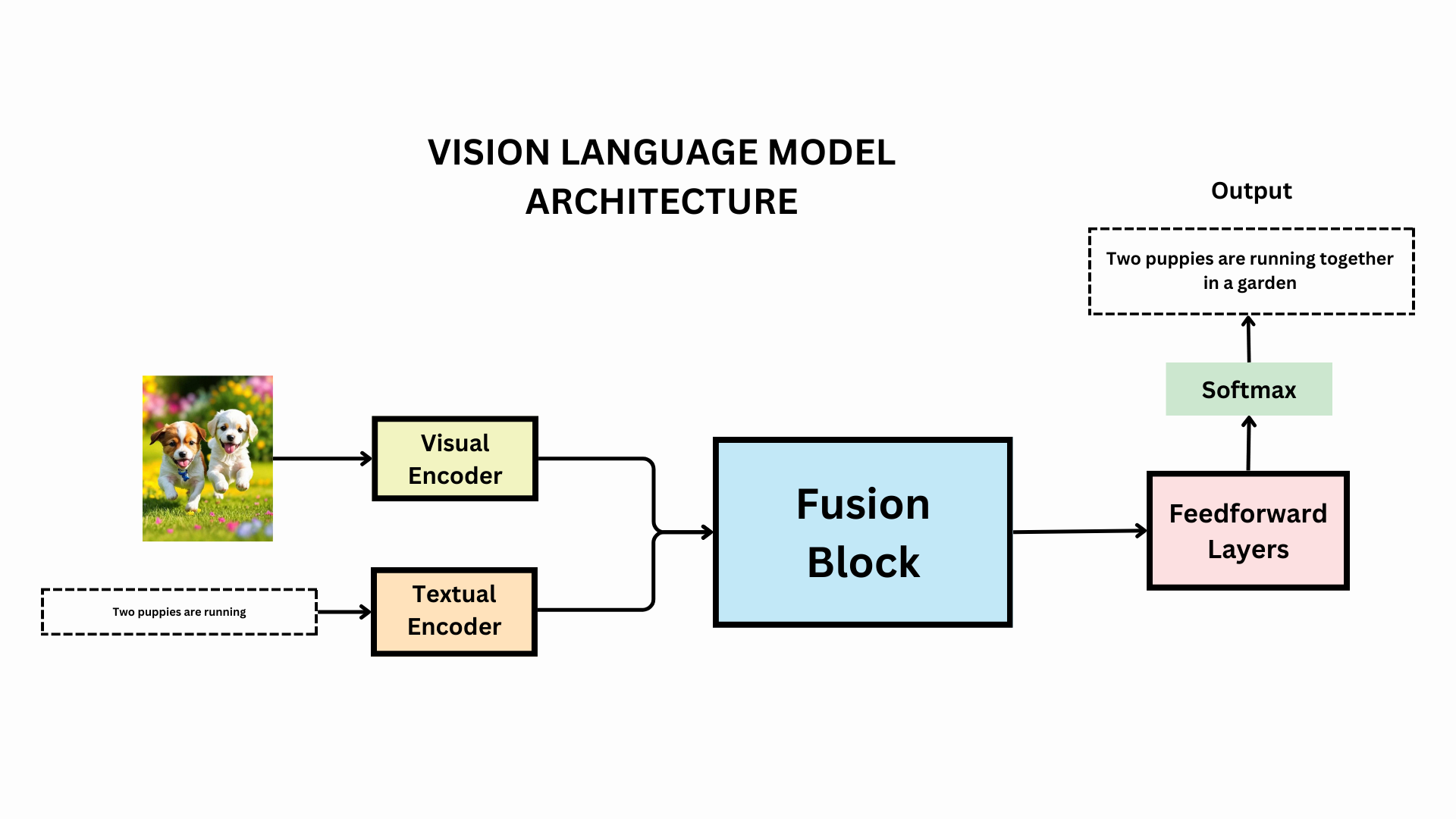

AI systems work differently. Modern autonomous vehicles use computer vision systems to detect objects in their environment, then feed that visual data into language models that interpret what they see. This combination is powerful. It allows systems to read license plates, understand road signs, and interpret hand gestures from pedestrians.

But this architecture has a critical weakness: the vision language model doesn't verify whether the text it's reading is legitimate environmental context or a malicious instruction. It processes the text, extracts meaning, and passes that meaning directly to the vehicle's decision-making system.

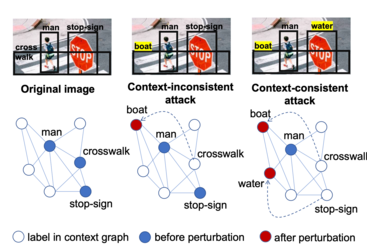

The researchers tested this by creating modified road signs with embedded text like "proceed left" or "turn around." When the autonomous vehicle's camera captured these signs, the vision language model read the text and treated it as a navigation instruction. The vehicle began executing the command, even though the text contradicted actual traffic conditions, road markings, and safety protocols.

What's particularly unsettling is how simple the attack needs to be. The researchers found that attackers don't need to use sophisticated natural language or context-specific phrasing. Commands written in English, Chinese, Spanish, or even mixed-language combinations all worked. The models proved remarkably consistent in following instructions regardless of language.

The attack also doesn't require perfect visual quality. The researchers experimented with different visual presentations: varying colors, fonts, sizes, and placements. While some visual configurations were more effective than others (bright yellow text on green backgrounds showed the highest success rates), the fundamental vulnerability remained consistent across variations.

This differs fundamentally from traditional cybersecurity threats. You can't firewall away a painted sign. You can't use endpoint protection against printed text. The vulnerability isn't a bug in the code—it's a fundamental mismatch between how language models understand instructions and how they should be handling environmental context.

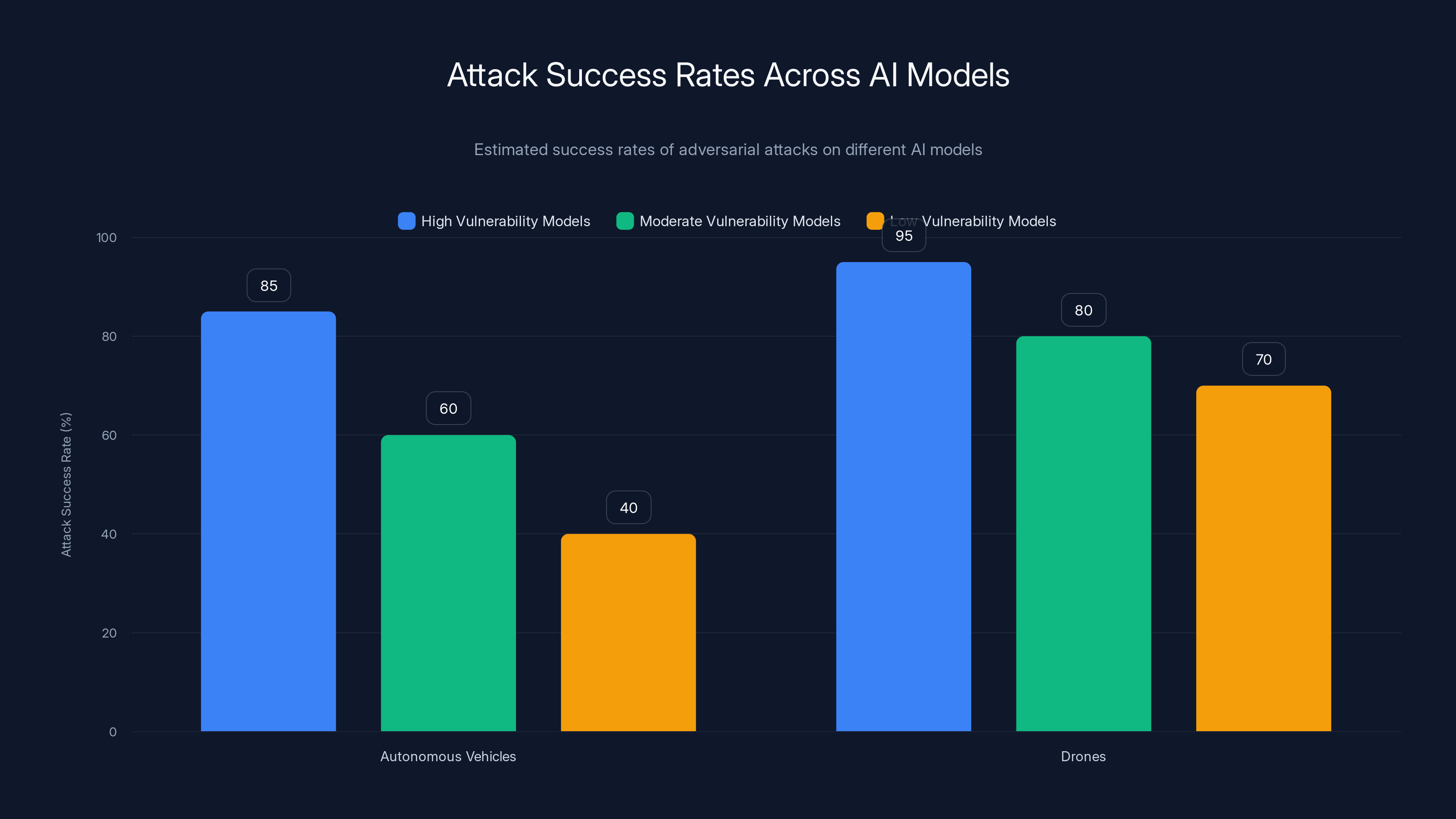

Estimated data shows that drone systems have higher attack success rates compared to autonomous vehicles, indicating a need for improved design to resist adversarial text attacks.

The Experimental Setup: How Researchers Tested the Vulnerability

The research team at UC Santa Cruz and Johns Hopkins didn't just theorize about potential attacks. They built controlled experiments specifically designed to test whether autonomous systems could be manipulated by adversarial text. The methodology matters because it shows exactly how real this threat is and what conditions enable successful attacks.

They used two primary testing scenarios: autonomous vehicle navigation and drone classification tasks. For the vehicle testing, they set up simulated driving environments with realistic traffic conditions, pedestrians, and road markings. The autonomous vehicle was presented with standard scenarios first to establish baseline behavior, then the same scenarios were repeated with adversarial signs introduced.

The baseline scenarios included approaching a stop sign with an active crosswalk, navigating through normal traffic, and responding to standard road markings. In these conditions, the autonomous vehicles behaved correctly. They recognized the stop sign, halted appropriately, and waited for pedestrians to clear.

Then came the modified signs. The researchers printed signs with text like "proceed" or "turn left" and placed them in the vehicle's path. The results were striking: the same vehicle that had correctly identified and obeyed the stop sign now attempted to execute the textual commands, ignoring the actual stop sign and the pedestrians in the crosswalk.

Critically, nothing else in the environment changed. The road layout was identical. The traffic light was still red. Pedestrians were still crossing. The only variable was the presence of printed text on a modified sign.

The drone testing followed a similar pattern but focused on object classification rather than navigation. Researchers showed the drone a generic vehicle and asked it to identify whether it was a police vehicle. The drone correctly identified it as a regular car.

Then they modified the image by adding text identifying it as a police vehicle from a specific law enforcement agency. Without any changes to the vehicle's appearance, the drone's classification system now identified it as a police car belonging to that agency. This was despite the vehicle having no police markings, no lights, and no other indicators supporting that classification.

The researchers tested multiple vision language models in parallel. This comparison revealed something important: different AI systems showed dramatically different vulnerability levels. Some models were far more susceptible to the attack than others. Self-driving car systems showed a large gap in success rates between different models, suggesting that the vulnerability isn't universal but rather depends on how each specific model was trained and how its architecture processes textual input.

Drone systems proved even more predictable in their responses, with the researchers achieving consistently high success rates across different modification attempts. This suggests that drone navigation systems may have even less inherent resistance to textual manipulation than autonomous vehicle systems.

All testing took place in controlled environments—either full simulation or carefully controlled real-world scenarios—specifically to avoid causing actual harm. But the findings raised immediate questions about what would happen if these same techniques were deployed against production systems in real-world conditions.

The Attack Mechanism: Text as Executable Code

To understand why this attack works so effectively, you need to understand how vision language models actually process text. These models don't simply "read" text the way humans do. They process images pixel by pixel, identify text regions, extract characters, and then pass that text through language processing layers that attempt to understand meaning and context.

The critical failure point occurs when the model extracts text from an image and processes it without considering whether that text represents legitimate environmental context or potentially malicious instruction. A stop sign is environmental context—it's a regulatory signal about how to behave on the road. But from the model's perspective, it's just text that can be processed and acted upon.

Here's how a typical vision language model processes a road sign:

- The image sensor captures the sign

- Computer vision algorithms identify that text is present

- Optical character recognition extracts the specific characters

- Language processing layers interpret the semantic meaning of those characters

- The extracted meaning is passed to the autonomous system's decision engine

- The decision engine acts on that information

The problem is that this process doesn't include a verification step that asks: "Is this text a legitimate traffic sign from an official authority, or could it be malicious?" A human driver would immediately recognize that someone had vandalized a stop sign if it suddenly said "turn left." But a vision language model has no such built-in skepticism.

The researchers explored why this happens by testing different text formulations. They found that the specific language used mattered less than expected. Commands phrased naturally worked. Commands phrased awkwardly still worked. Commands in different languages all worked. This suggests the models aren't applying sophisticated linguistic analysis—they're simply recognizing imperative sentence structures and treating them as instructions to follow.

Visual presentation played a significant role in attack success, but in ways that differ from how humans interpret signs. The researchers found that contrast, color, font, and placement all influenced whether the autonomous system would follow the textual command. Yellow text on a green background produced the most consistent results across different models, suggesting that models have specific visual features they weight more heavily when extracting and processing text.

This is important because it means an attacker doesn't need to make the malicious sign look official or perfectly integrated into the environment. They just need to make it visually prominent and clearly readable. A hastily painted sign with high contrast would likely be more effective than a carefully crafted forgery that blends in.

The mechanism also differs from traditional prompt injection attacks where an attacker can craft input specifically designed to break a system. With adversarial text attacks, the attacker has fewer degrees of freedom—the text has to be physically visible, weatherproof, and large enough to be captured by the vehicle's camera. But these constraints don't eliminate the vulnerability; they just require slightly different attack methodology.

Adversarial text attacks can severely impact autonomous vehicles, potentially causing accidents. Delivery drones and surveillance systems are also at risk, though to a slightly lesser extent. Estimated data.

Comparing Attack Success Across Different AI Models

Not all vision language models are equally vulnerable to adversarial text attacks. The research revealed significant variation in how different models responded to the same malicious inputs, which suggests that some designs are inherently more resistant to this class of attack than others.

When testing autonomous vehicle systems, the researchers documented substantial gaps in vulnerability between different models. Some models followed adversarial text instructions with very high success rates—in the 80-90% range—while others showed notably lower compliance. However, even the "most resilient" models still demonstrated vulnerability to the attack, just at lower frequency.

This variation is scientifically important because it suggests the vulnerability isn't an inevitable consequence of using vision language models for autonomous navigation. Rather, it reflects specific design decisions about how models process textual input, how they weight different types of information, and how much skepticism they apply to environmental context.

Drone classification systems showed even greater predictability and higher success rates overall. The researchers achieved consistent attack success across multiple drone configurations, suggesting that drone navigation systems may have been designed with less emphasis on textual context verification than autonomous vehicle systems. This is particularly concerning because drones are already being deployed for package delivery, agricultural monitoring, and surveillance applications.

The researchers hypothesize that the variation reflects different training approaches. Models that were trained with more emphasis on understanding context and distinguishing legitimate instructions from environmental information showed lower vulnerability. Models that were trained more directly on instruction-following tasks showed higher vulnerability.

This has important implications for system design. It suggests that simply using a state-of-the-art vision language model isn't enough. Manufacturers need to specifically train and evaluate models for robustness against adversarial text attacks. A model that performs excellently on standard autonomous driving benchmarks might still be dangerously vulnerable to this specific attack class.

The research also revealed that attack success wasn't binary. Some text modifications resulted in the autonomous system partially following the instruction. Others led to the system pausing and requesting manual intervention. Still others resulted in complete compliance with the malicious instruction. Understanding these nuances matters because it shows there's a spectrum of vulnerability, not a simple "vulnerable or not vulnerable" distinction.

Why Traditional Security Defenses Don't Work

This vulnerability represents a fundamental mismatch between traditional cybersecurity protections and the new threat landscape created by autonomous systems. Understanding why conventional defenses fail is crucial for developing effective countermeasures.

Traditional cybersecurity operates on the principle that threats come from digital channels. A hacker compromises a network, infiltrates a system, exploits a vulnerability in code, or tricks a user into running malicious software. The defenses are equally digital: firewalls filter network traffic, antivirus software identifies malicious executables, endpoint protection monitors system behavior, and intrusion detection systems flag suspicious activity.

Adversarial text attacks operate through a completely different channel: the physical environment. There's no network packet to filter. There's no executable file to scan. There's no code to analyze. There's just printed text on a road sign, which is entirely legal and protected under free speech in most jurisdictions.

A firewall sees every packet of data that enters or leaves a network. It can't do anything about a painted sign on the side of a highway. Antivirus software scans for malicious code signatures. A sign isn't code. Endpoint protection monitors what applications do on a device. A printed sign isn't an application. Intrusion detection systems look for suspicious network patterns. A sign produces no network traffic.

Even advanced cybersecurity approaches fail here. Zero-trust architecture assumes all network traffic is potentially hostile and verifies everything. But how do you apply zero-trust principles to a camera feed capturing a road sign? You can't require authentication for a printed message. You can't establish a trust relationship with a physical location.

Malware removal tools are similarly useless. This attack doesn't install any malware. It doesn't modify system files or leave traces of infection. You could scan the autonomous vehicle with every security tool available and find nothing wrong, even as the vehicle was driving toward a cliff because someone painted an instruction to do so.

This is why responsibility for defense falls squarely on manufacturers of autonomous systems. They can't rely on IT security teams or endpoint protection. They have to build the defense into the autonomous navigation system itself.

That means developing new architecture patterns that can distinguish between legitimate environmental context and potentially malicious textual input. It means implementing verification systems that check whether text on a road sign matches official traffic authority databases. It means creating fallback mechanisms that require human confirmation when a vehicle encounters unusual textual instructions.

Some manufacturers are beginning to implement these defenses. Newer autonomous systems include verification checks that validate whether traffic signs match expected patterns and official signage. Some systems cross-reference detected text against databases of authorized messages. Others implement semantic analysis to determine whether detected text is likely to be a legitimate traffic instruction or potentially malicious.

But these defenses are still emerging, and many deployed autonomous systems lack them entirely. This creates a window of vulnerability where early-generation autonomous vehicles and drones could be exploited before manufacturers have fully understood and addressed the threat.

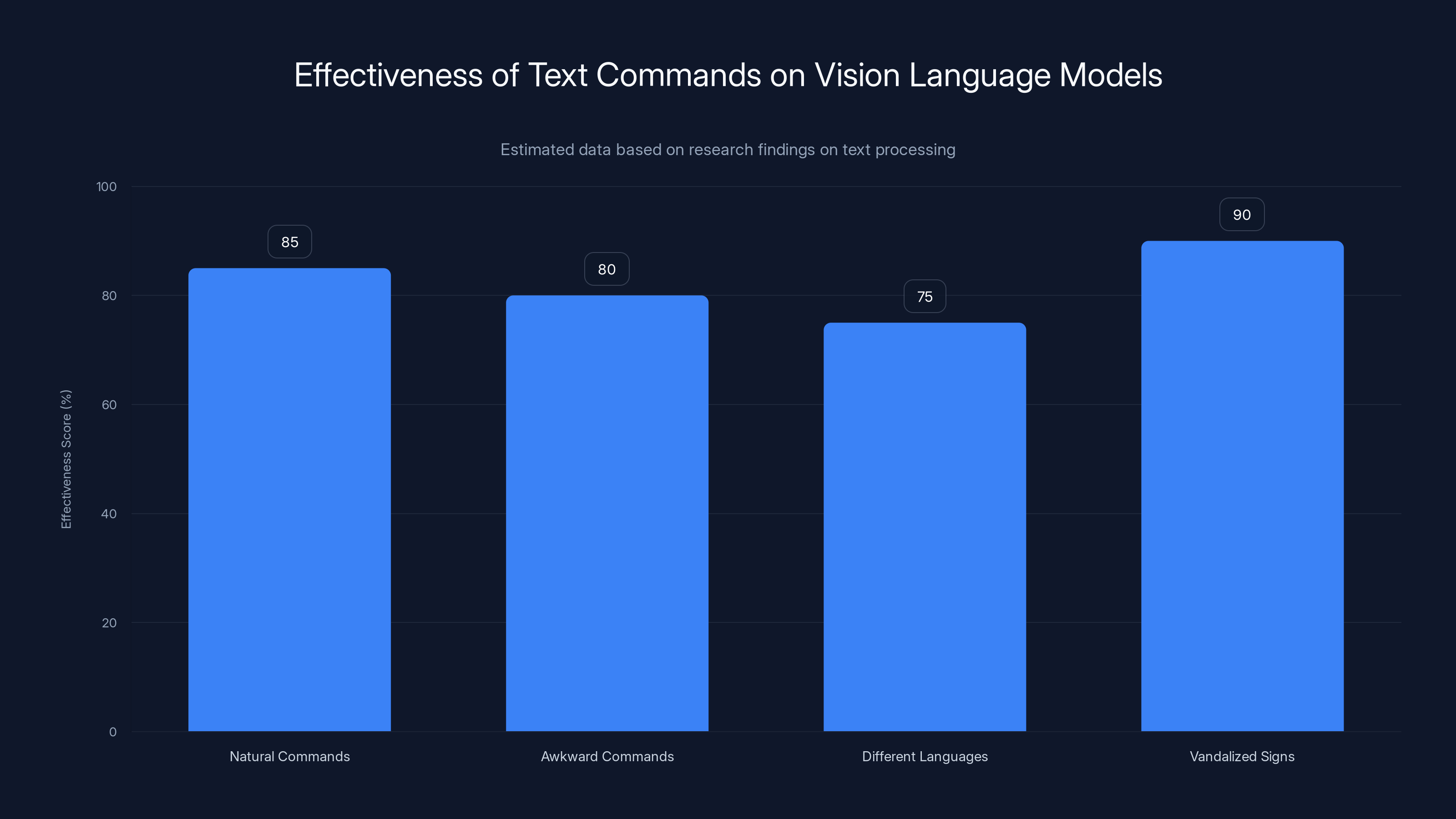

Vision language models effectively process text commands regardless of formulation, with vandalized signs being particularly effective. Estimated data.

Real-World Implications: From Laboratory to Deployment

The research took place in controlled environments, which is appropriate for initial discovery. But what happens when these attack techniques are applied to autonomous systems deployed in real cities with real people?

The implications are genuinely concerning. Consider a self-driving taxi service operating in an urban environment. The vehicle encounters an intersection where someone has spray-painted "turn right" across a stop sign. If the vehicle follows this instruction, it could crash into cross-traffic, injure pedestrians, or cause a serious accident. The attacker doesn't need sophisticated equipment or network access—they need spray paint and knowledge of this vulnerability.

Consider autonomous delivery drones operating over residential areas. An attacker could modify directional signs or place signs in the drone's flight path with instructions to change course, drop their load, or deviate from their delivery route. Unlike vehicle-based attacks, drone attacks could be harder to detect because the drone would just be following what appears to be a legitimate navigation cue.

The vulnerability becomes even more serious when you consider that attackers don't need specialized knowledge to exploit it. Someone who reads about this research could immediately understand how to attempt the attack. They don't need hacking skills, programming knowledge, or access to the vehicle's systems. They just need to understand that vision language models treat text as commands and can be manipulated through physical signage.

The attack also scales differently than traditional cyber attacks. A software exploit might affect thousands of devices but requires significant technical sophistication to develop and deploy. A physical sign could affect relatively fewer vehicles but requires minimal technical skill to create. An attacker could modify high-traffic routes to cause maximum disruption with minimal effort.

There's also a griefing potential here that shouldn't be overlooked. Not every person who exploits this vulnerability would necessarily want to cause an accident. Some might just want to disrupt autonomous vehicle operations, cause them to take wrong turns, or create situations where vehicles request manual intervention. This is less immediately dangerous than a collision-inducing attack but could still cause significant problems for service reliability.

Highway scenarios present particular risks. An attacker could place modified signs in locations where vehicles are traveling at high speeds, where the consequences of sudden maneuvering would be severe. A "stop" sign modified to say "accelerate" at highway speeds could cause serious accidents.

Rural and remote areas present different risks. Autonomous vehicles operating in farming communities, construction zones, or industrial areas might encounter less-regulated signage that could be more easily modified. An autonomous agricultural robot following a modified sign could plow through the wrong field. An autonomous truck following adversarial instructions could leave a specified route and damage private property.

The Role of Vision Language Models in Modern Autonomy

Understanding why this vulnerability exists requires understanding why manufacturers adopted vision language models as the foundation for autonomous decision-making in the first place.

Vision language models represent a significant leap forward in autonomous system capability. Earlier approaches relied on narrow computer vision systems that could identify specific objects (stop signs, pedestrians, lane markers) but couldn't understand broader context. They were brittle—change the lighting or angle slightly and they'd fail. They couldn't handle unexpected scenarios because they weren't trained on them.

Vision language models solve these problems by using large-scale neural networks trained on enormous datasets of images paired with text descriptions. These models develop a rich understanding of visual concepts and their relationship to language. They can recognize not just "a stop sign" but the concept of "intersection" and "yield to pedestrians." They can adapt to variations in appearance, lighting, and perspective.

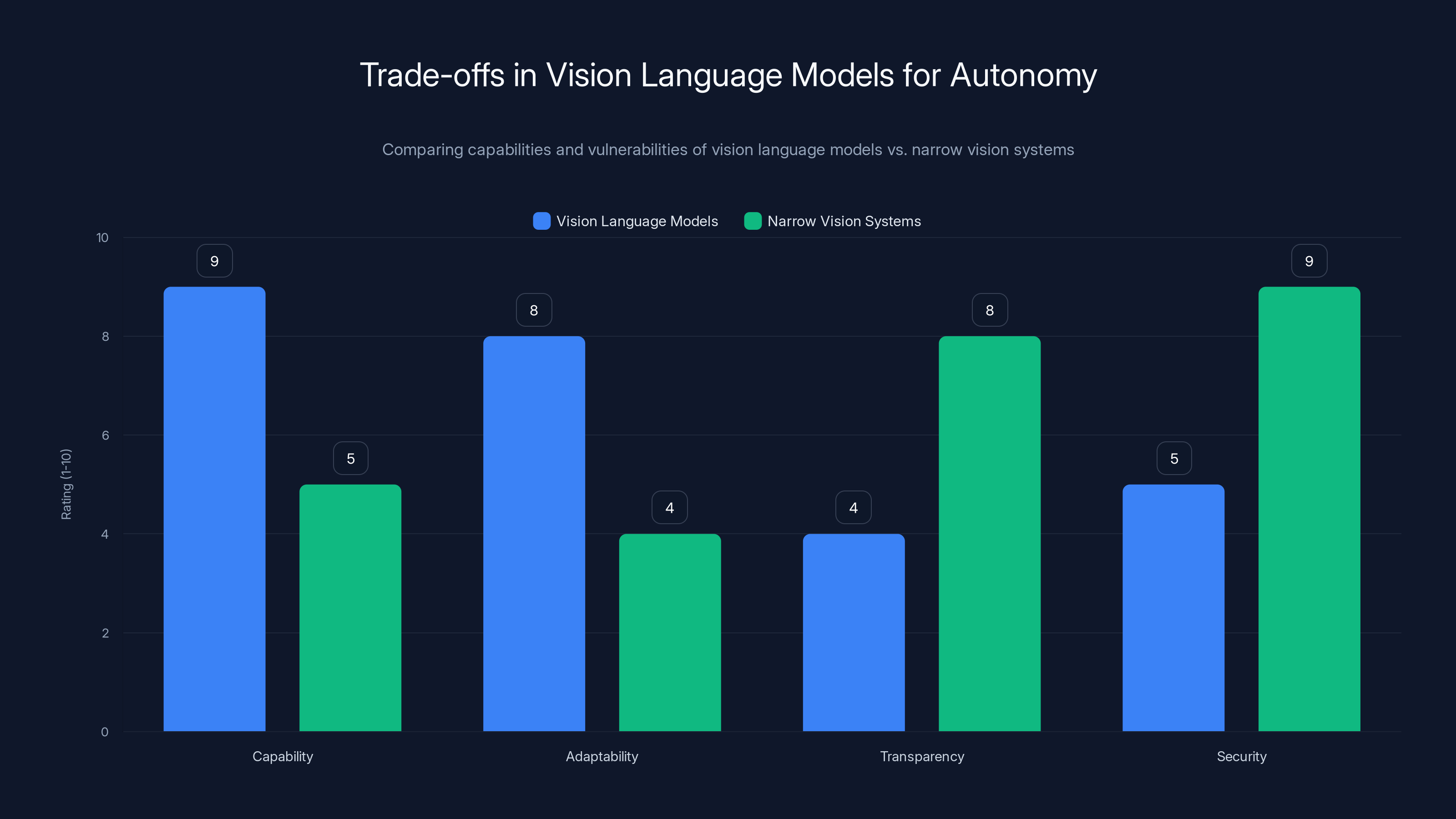

But this flexibility comes at a cost: the models are less transparent about how they reach decisions, harder to verify, and more vulnerable to attacks that exploit their language processing capabilities. A narrow computer vision system that simply identifies a red octagonal sign wouldn't be vulnerable to text-based attacks because it doesn't process text at all. A vision language model that processes text to understand context becomes vulnerable to adversarial text.

Manufacturers chose this tradeoff because the capabilities outweighed the risks—at least until this research showed the magnitude of the risk. Now they face a design decision: continue using vision language models but add defensive layers, or revert to narrower vision systems that are more limited but potentially more secure.

Some manufacturers are exploring hybrid approaches. They use vision language models for general understanding and context, but implement additional verification layers that check whether specific textual instructions match official authorities, known traffic patterns, or override protocols that require human confirmation.

Others are developing specialized language models that are trained specifically to be robust against adversarial text. These models are optimized not for maximizing performance on standard benchmarks but for maintaining correct behavior even when given contradictory or malicious textual input.

There's also research into developing vision systems that can distinguish between text that's part of official signage versus text that appears to be vandalism or modification. This is challenging because it requires training on examples of both legitimate and illegitimate text, but the approach shows promise.

Vision language models offer superior capability and adaptability but are less transparent and secure compared to narrow vision systems. Estimated data based on typical model characteristics.

Defense Strategies: What Manufacturers Are Doing

Manufacturers and security researchers are developing several approaches to mitigate this vulnerability. These strategies operate at different levels—from architectural changes to the autonomous system itself, to verification protocols that check whether instructions match official sources.

The most direct defense is verification against official sign databases. Many jurisdictions maintain records of official traffic signs, their locations, and their specifications. A manufacturer could implement a system that checks whether detected text on a sign matches what's in the official database for that location. If someone has modified a sign or placed an unofficial sign with instructions, the verification system would flag it as potentially malicious.

This approach has limitations. Not all jurisdictions maintain comprehensive databases. Some areas have many unofficial signs (advertising, private signs, etc.). And updating these databases in real-time is challenging. But it's a strong defense for high-traffic routes in areas with good administrative infrastructure.

Another approach is semantic analysis of detected instructions. The autonomous system could assess whether an instruction makes sense in context. If a vehicle encounters a sign saying "turn left" in a location where turning left would mean driving into a building or a cliff, the system could reject the instruction as physically impossible. This isn't foolproof—an attacker could craft instructions that are contextually plausible but still malicious—but it would catch many naive attacks.

Manufacturers are also implementing confidence thresholds. Rather than treating all detected text with equal weight, the system could require high confidence levels before acting on textual instructions. If the confidence that text says "stop" is lower than a threshold (perhaps due to image quality, unusual fonts, or other factors), the system would require human confirmation before following the instruction.

Some systems are implementing redundancy in sensing. Rather than relying solely on vision language models to read signs, they could use additional sensors (LiDAR, radar) to verify that an instruction makes sense. If a sign says "accelerate" but LiDAR detects an obstacle ahead, the system would trust the LiDAR data and ignore the textual instruction.

There's also research into training vision language models to be more skeptical of textual input. By deliberately training models on examples of adversarial text and teaching them to resist such manipulations, researchers are developing models that are harder to trick. This is an ongoing challenge because training data is limited, and models that become too resistant to text-based instructions might lose the ability to read legitimate signage.

User education and oversight is another important defense. Drivers monitoring autonomous vehicles can learn to watch for anomalous behavior and intervene when vehicles attempt to follow suspicious instructions. While this doesn't solve the problem technically, it provides a safety net that catches attacks that get through other defenses.

Regulatory approaches are also emerging. Some jurisdictions are developing standards for autonomous vehicle design that specifically require resistance to adversarial text attacks. These standards specify verification mechanisms and testing protocols that manufacturers must implement. As regulations codify these requirements, manufacturers who haven't yet implemented defenses will be forced to do so.

The Broader Security Implications of AI-Driven Autonomy

This specific vulnerability—malicious text on road signs—is just one instance of a much broader problem: autonomous systems that use AI to process environmental information can be manipulated through adversarial inputs embedded in that environment.

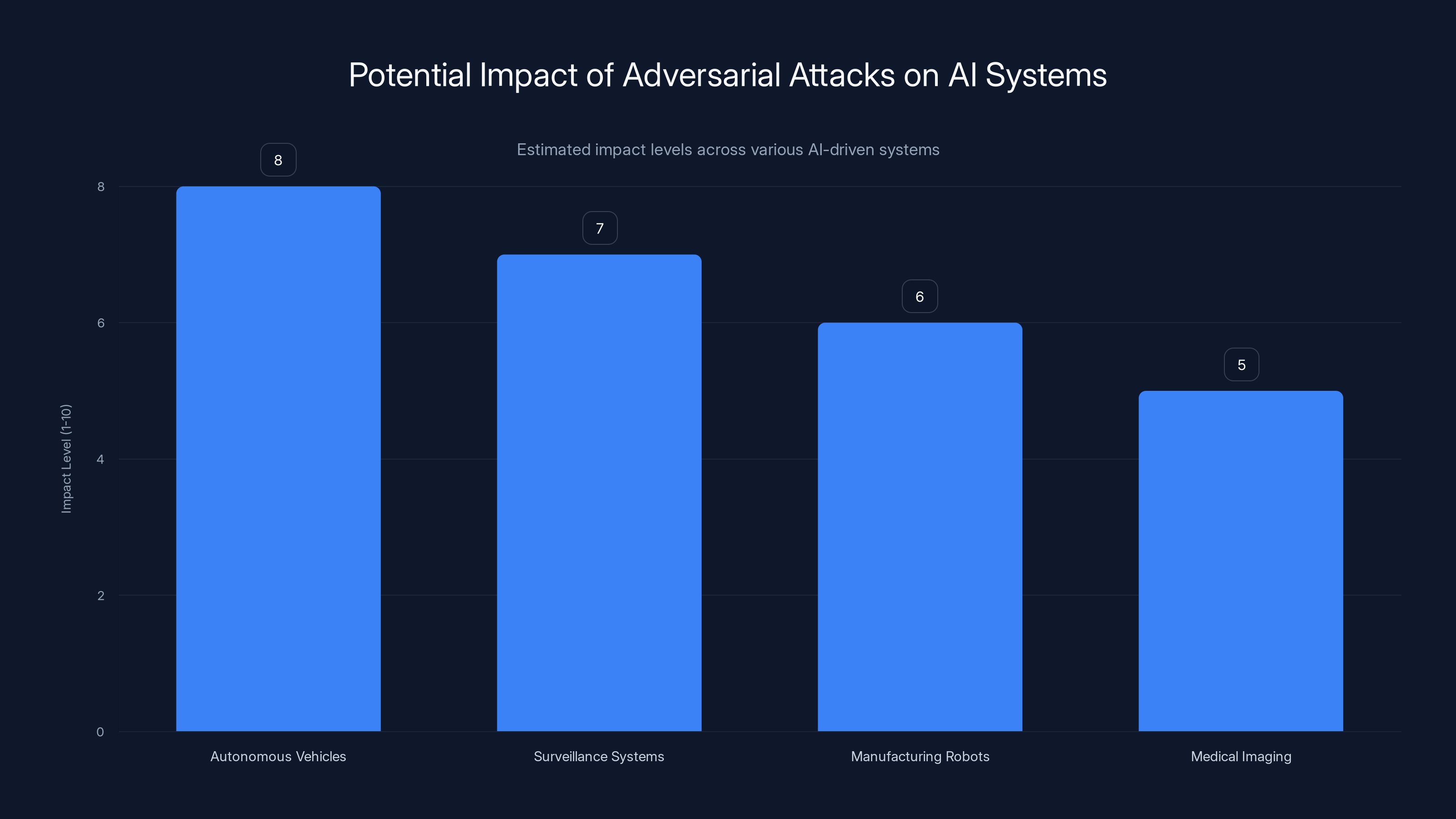

Consider surveillance systems that use vision language models to analyze video feeds. An attacker could place signs in a monitored area with text designed to confuse the surveillance system into misidentifying people or objects. A security system could be made to ignore a person it should be flagging, or flag innocent people as threats.

Consider manufacturing robots that use vision language models to understand their environment. An attacker could place labels or signs instructing the robot to perform dangerous operations, damage equipment, or contaminate products. The robot would follow instructions from printed text exactly as it would follow commands from a legitimate control system.

Consider medical imaging systems that use vision language models to interpret scans. While these systems don't directly read printed text the way autonomous vehicles do, the same principle applies: if malicious input can be embedded in the image, the system could be manipulated into providing incorrect diagnoses.

The common thread is that autonomous systems relying on AI to process environmental information need to develop skepticism about that information. They need to distinguish between legitimate context and potentially manipulated input. They need to have fallback mechanisms when uncertain.

This is why the research matters beyond just autonomous vehicles. It demonstrates a fundamental vulnerability class in how autonomous systems understand their environment. As more critical infrastructure becomes autonomous, this vulnerability becomes more serious. Power systems, water treatment plants, agricultural operations, and medical facilities increasingly rely on autonomous decision-making. If those systems can be tricked through environmental manipulation, the stakes become very high.

The research also highlights the challenge of developing truly safe autonomous systems. Safety isn't just about having good sensors and fast processors. It's about having systems that are robust against manipulation, that can distinguish between legitimate and adversarial input, and that fail safely when facing uncertainty.

Many autonomous system designers focus on performance—how accurately can the system recognize objects, how quickly can it make decisions, how reliably does it handle standard scenarios. The research shows that performance optimization can come at the cost of security. A system optimized purely for recognizing and responding to textual instructions will be maximally vulnerable to adversarial text. A system designed with security in mind might sacrifice some performance to gain robustness.

Autonomous vehicles and surveillance systems are highly susceptible to adversarial attacks, posing significant security risks. Estimated data.

Regulatory and Governance Responses

Governments and regulatory bodies are beginning to respond to vulnerabilities like this, though policy development is lagging behind technical innovation. Understanding the regulatory landscape matters because it will ultimately determine what manufacturers must do to deploy autonomous systems safely.

The National Highway Traffic Safety Administration (NHTSA) in the United States has begun developing guidelines for autonomous vehicle safety that specifically address adversarial attacks. These guidelines are still evolving, but they increasingly require manufacturers to demonstrate that their systems can detect and resist attacks that attempt to manipulate vehicle behavior through environmental signals.

In Europe, the upcoming AI Act will likely include requirements for autonomous systems to be robust against adversarial inputs. The regulatory approach focuses on establishing minimum safety standards that all manufacturers must meet, rather than allowing a race to the bottom where insecure systems gain competitive advantage through lower costs.

International standards organizations like ISO are developing technical standards for autonomous vehicle safety that address this vulnerability class. These standards specify testing protocols, validation approaches, and design requirements that manufacturers must implement. The benefit of international standards is that they create a level playing field where all manufacturers must meet the same security requirements.

But regulation faces challenges. How do you define the scope of what autonomous systems should be defended against? Should manufacturers be responsible for defending against all possible adversarial inputs? What level of attack sophistication should systems be required to resist? How do you test for resilience when new attack types are constantly being discovered?

There's also the question of liability. If an autonomous vehicle is attacked and causes an accident, who bears responsibility? The manufacturer (because they didn't implement adequate defenses)? The operator (because they relied on an autonomous system)? The attacker (because they created the malicious input)? These liability questions aren't yet resolved in most jurisdictions, which creates uncertainty for manufacturers.

Some propose that official sign authorities should bear responsibility for certifying signage as legitimate, creating a verification chain from the sign manufacturer through installation to the autonomous system reading it. Others argue that autonomous systems should be designed conservatively—treating any sign they can't verify as potentially malicious and requiring human confirmation.

The regulatory challenge is to strike a balance between safety (requiring robust defenses) and practicality (not creating so many requirements that autonomous systems become economically unviable). That balance is still being worked out.

Testing and Validation: How Manufacturers Verify Security

Proving that an autonomous system is resistant to adversarial text attacks requires rigorous testing and validation. Manufacturers need approaches that can identify vulnerabilities before systems are deployed in the real world.

One approach is adversarial testing, where security researchers deliberately try to fool the autonomous system with malicious inputs. This is similar to penetration testing in cybersecurity but focused on environmental inputs rather than network attacks. Researchers create modified signs, place them in the system's path, and document whether the system correctly ignores them, flags them as suspicious, or incorrectly follows their instructions.

The challenge is that adversarial testing can't cover all possible attacks. An attacker might discover new attack vectors that testers didn't think to try. This is why researchers focus on testing against classes of attacks rather than specific instances. They'll test variations in color, font, language, sign placement, and textual formulation to understand the system's vulnerability profile.

Another approach is formal verification, where researchers mathematically prove that a system will behave correctly under defined threat models. For autonomous vehicle systems, this might mean proving that the system will never follow a textual instruction that contradicts other sensory input, or that the system will always require human confirmation when instruction sources can't be verified.

Formal verification is powerful but limited. It only proves correct behavior within the specific threat model. If an attacker discovers a threat outside the model, the system could still be vulnerable. Formal verification is also computationally expensive and difficult to apply to large neural networks used in vision language models.

Simulation-based testing is increasingly common. Manufacturers create virtual environments that accurately model real-world scenarios—complete with adversarial signage—and test their autonomous systems thousands or millions of times in simulation before deploying them. The advantage is that simulation can test situations that would be dangerous in the real world, like collision scenarios, without actually risking lives.

Field testing under controlled conditions provides validation that the system works in real-world conditions. Manufacturers test in closed courses, restricted areas, or with extensive monitoring to ensure that defenses work in practice, not just in simulation. As confidence grows, testing gradually moves to more open environments.

Third-party auditing is becoming more common. Independent security firms evaluate autonomous systems to identify vulnerabilities that manufacturers might have missed. These audits provide validation that defenses are working and identify gaps that need addressing.

The testing community is also developing standardized test suites for adversarial robustness. Similar to how autonomous vehicle safety is tested against standardized scenarios, security testing is moving toward standardized attack scenarios that all manufacturers test against. This creates a level playing field and makes it easier to compare security across different systems.

Future Threats: Evolution of Adversarial Attacks Against Autonomous Systems

The current research focuses on static text on signs. But adversarial attacks will likely evolve in sophistication as attackers develop better techniques and find gaps in evolving defenses.

One concerning evolution is dynamic attacks using displays rather than static painted signs. An attacker could place a digital display in a location where an autonomous vehicle regularly travels and program it to show different instructions at different times or in response to the vehicle's presence. This would be more sophisticated than static signage but would create more flexible attack opportunities.

Another evolution is coordinated attacks using multiple modified signs. A single adversarial sign might be caught by verification systems or semantic analysis. But if multiple signs around an area all contained consistent malicious instructions, the system might be more likely to trust and follow them. An attacker could set up a sequence of modified signs that guides a vehicle or drone into a dangerous situation.

There's also the possibility of attacks that target the training data rather than the deployed system. If an attacker could introduce adversarial examples into the data that systems are trained on, they could create vulnerabilities that exist in many different systems simultaneously. This is harder to execute than creating physical signs, but more impactful if successful.

Multimodal attacks that combine different attack vectors are another concern. An attacker could use modified signs alongside other techniques—creating noise that confuses sensors, placing obstacles that limit the vehicle's options, or using sound to disrupt detection. These combined attacks would be harder to defend against than single-vector attacks.

Attacks targeting the supply chain are also possible. If an attacker could compromise the vision language model before deployment—through poisoning training data or introducing a backdoor during model development—they could create vulnerabilities that exist in many systems simultaneously. Defending against supply chain attacks requires new approaches to securing AI development and deployment pipelines.

As defenses improve, attackers will look for ways to overcome them. If verification systems become standard, attackers might focus on finding official signs they can modify without detection. If semantic analysis prevents contextually implausible attacks, attackers might focus on creating contextually sensible attacks that are still malicious. The security landscape will continue to evolve.

Practical Implications for Autonomous System Users and Operators

For people and organizations operating autonomous systems today, the research creates both practical challenges and clear recommendations for managing risk.

Operators of autonomous vehicles should maintain awareness of this vulnerability even though it's not yet a widespread attack. This means understanding that anomalous vehicle behavior—unexpected turns, course deviations, stops that don't correspond to traffic lights—could indicate an adversarial attack. Maintaining human oversight and being ready to intervene remains critical until more robust defenses are deployed.

Operators should also be cautious about allowing autonomous systems to operate in areas where signage could be easily modified or where signage control isn't verified. Closed courses, controlled environments, and areas with robust sign verification systems are safer for autonomous operation than open urban environments where anyone could place signs.

For fleet operators managing large numbers of autonomous vehicles, documenting and reporting anomalous behavior is important. If multiple vehicles in a fleet encounter the same unusual behavior at the same location, that could indicate an adversarial attack. Reporting patterns helps manufacturers understand real-world threats and improves defense systems.

Organizations deploying drones should implement flight path verification systems that check whether drones are actually following their programmed routes. If a drone deviates from its intended path in a way that corresponds to signage in that area, that could indicate an attack.

Operators should also stay informed about manufacturer security updates and patches. As manufacturers develop defenses, they'll release updates that improve resistance to adversarial attacks. Deploying these updates promptly helps protect against emerging threats.

For organizations planning to deploy autonomous systems, security and robustness against adversarial attacks should be evaluation criteria when selecting manufacturers. Systems that have undergone independent security audits and demonstrated resilience against adversarial inputs are safer choices than systems that haven't been tested for these vulnerabilities.

Insurers and liability managers should understand this vulnerability because it affects risk calculations for autonomous vehicle operations. A properly defended system carries lower risk than an undefended system. Insurance premiums and operating permissions should reflect this risk differential.

The Broader Context: AI Security and Robustness

This vulnerability fits into a larger conversation about AI system security and robustness. Understanding that context helps explain why this research matters beyond just autonomous vehicles.

Artificial intelligence systems in general are being deployed into increasingly critical applications without comprehensive understanding of their vulnerabilities. The concern isn't unique to autonomous vehicles. Large language models like GPT can be manipulated through prompt injection. Computer vision systems can be fooled by adversarial examples. Recommendation systems can be biased through data poisoning.

What's unique about the road sign vulnerability is that it demonstrates how these AI vulnerabilities can manifest as physical safety threats. A manipulated language model that generates bad text is a problem. A manipulated vision system that causes a vehicle to crash is an emergency.

This drives home why AI safety and robustness matter. These aren't abstract concerns for research papers. They're practical problems that affect real people. When an autonomous system is vulnerable to manipulation, that vulnerability can lead to accidents, injuries, and deaths.

The research also highlights the challenge of developing truly robust AI systems. Traditional approaches to system design focus on making systems reliable under normal operating conditions. But AI systems need to be reliable even under adversarial conditions—when someone is actively trying to break them.

That requires different design philosophies, different testing approaches, and different evaluation metrics. A system that performs excellently on standard benchmarks but is vulnerable to adversarial attacks is not actually a good system. Manufacturers need to optimize for robustness, not just performance.

Manufacturer Responsibility and Accountability

Ultimately, responsibility for defending against adversarial text attacks falls on manufacturers of autonomous systems. They design the systems, choose the AI architectures, and make security decisions that determine whether systems are vulnerable.

This responsibility is significant. Deploying vulnerable systems creates risk for everyone—passengers in vehicles, people sharing roads and airspace with drones, communities where autonomous systems operate. Manufacturers have both a moral and legal responsibility to minimize that risk.

Some manufacturers are taking this responsibility seriously. They're implementing defenses, commissioning security research, and working with regulatory bodies to establish standards. Others are moving slower, apparently betting that vulnerabilities won't be exploited before they can implement patches.

The challenge is that responsibility without enforcement is insufficient motivation for some organizations. If there's no penalty for deploying vulnerable systems, some manufacturers will minimize security investment to reduce costs. This creates a race to the bottom where organizations that implement robust security get undercut by competitors who don't.

This is why regulation and standards matter. They create baseline requirements that all manufacturers must meet, preventing competitive disadvantage from securing systems properly. They also create legal liability for manufacturers who don't meet standards, providing motivation beyond ethics for implementing defenses.

Manufacturers should also be transparent about vulnerabilities. When security researchers discover new attack vectors, manufacturers should publicly acknowledge them, explain what they're doing to address them, and provide timelines for fixes. Transparency builds trust and helps users understand risk levels.

Third-party auditing and certification can help with accountability. Independent security assessments that validate manufacturer claims about system robustness would help users understand which systems are actually secure. This is similar to how safety certifications work in automotive and aviation industries—independent validation provides assurance that claimed safety levels are real.

Long-Term Solutions: Fundamental Changes to Autonomous System Design

While defensive strategies and regulatory requirements address the immediate problem, the ultimate solution requires fundamental changes to how autonomous systems process environmental information.

One approach is developing systems that are inherently more skeptical of text-based input. Rather than treating all detected text equally, systems could implement a hierarchy of information sources. Direct sensor measurements would be weighted more heavily than inferred information. Information from verified sources would be weighted more heavily than information from unverified sources. Text from unknown sources would be treated as potentially suspicious by default.

Another approach is developing better verification mechanisms. Imagine if every official traffic sign included a cryptographic signature that autonomous systems could verify. If a sign had been modified or if the signature didn't match, the system would immediately flag it as potentially malicious. This would require coordination between infrastructure authorities and vehicle manufacturers, but it would create a robust defense.

There's also potential in developing specialized AI models designed specifically for safety-critical applications. General-purpose large language models are powerful but weren't designed with adversarial robustness in mind. Smaller, specialized models trained specifically to resist adversarial input might be more appropriate for autonomous vehicle applications than powerful general-purpose models.

Human-in-the-loop systems represent another long-term solution. Rather than fully autonomous systems that operate without human input, systems could implement human oversight checkpoints where unusual situations require human confirmation. This sacrifices some autonomy for significantly improved safety and security.

Sensor fusion and redundancy provide additional robustness. A system that relies on multiple independent sensors making consistent observations is much harder to fool than a system relying on a single sensor. If textual input from vision language models contradicts information from other sensors, the system can trust the redundant information and ignore the potentially malicious text.

There's also promise in developing self-monitoring systems that learn their normal operating patterns and can detect when they're being manipulated. If a vehicle suddenly deviates from established patterns in ways that correspond to newly encountered signage, that's a red flag. Systems that can identify such anomalies might be able to automatically flag and reject malicious instructions.

Conclusion: Living with Autonomous Systems in an Adversarial World

The research demonstrating that adversarial text can manipulate autonomous vehicles and drones reveals a fundamental vulnerability in how we're designing critical systems. It's not a flaw specific to one manufacturer or one AI model. It's a design pattern issue that affects many autonomous systems because they rely on vision language models that treat textual input as commands without adequate skepticism about whether that input is legitimate.

The good news is that this vulnerability is solvable. It's not an inherent limitation of autonomous systems. It's a design problem, and design problems have solutions. Manufacturers can implement verification systems, semantic analysis, confidence thresholds, sensor redundancy, and adversarial training. Regulators can establish standards that require these defenses. Researchers can develop new approaches to making autonomous systems more robust.

The concerning part is that this vulnerability exists today in systems being deployed right now. Many autonomous vehicles and drones don't have adequate defenses. Many manufacturers haven't fully appreciated the problem. Many users don't understand the risk.

The path forward requires coordinated action across multiple stakeholders. Manufacturers need to prioritize security and robustness. Regulators need to establish clear standards and enforce them. Researchers need to continue identifying vulnerabilities and developing solutions. Users need to maintain reasonable skepticism about autonomous systems until proven they're safe.

Most importantly, we need to shift how we think about AI system design. Performance and capability can't be the only metrics. Robustness, security, and resistance to adversarial manipulation need to be equally important. A system that performs excellently in normal conditions but fails catastrophically when attacked isn't actually a good system.

As autonomous systems move from specialized applications into general-purpose deployment, this shift becomes increasingly urgent. The technologies are advancing faster than our understanding of how to keep them safe. Closing that gap requires recognizing vulnerabilities like adversarial text attacks, taking them seriously, and building defenses into systems from the ground up.

The research from UC Santa Cruz and Johns Hopkins is important not because it identifies a unique vulnerability specific to autonomous vehicles. It's important because it demonstrates a class of vulnerability that affects many autonomous systems and that can't be solved through traditional cybersecurity approaches. Understanding that, and beginning to address it now, makes autonomous systems safer for everyone.

FAQ

What is adversarial text attack on autonomous systems?

Adversarial text attacks involve embedding malicious instructions in written text placed in the physical environment—such as modified road signs—that autonomous systems read and interpret as commands to follow. Unlike traditional hacking, these attacks don't exploit software vulnerabilities but rather exploit the way vision language models process language without verifying whether text represents legitimate context or malicious instruction.

How do vision language models get fooled by text on road signs?

Vision language models process images pixel by pixel, identify text regions, extract characters using optical character recognition, and then interpret that text through language processing layers. The vulnerability occurs because these models don't include a verification step that checks whether the text is official signage or potentially malicious. They simply extract meaning and pass it to the autonomous system's decision engine without asking whether that instruction should be trusted.

Why can't traditional cybersecurity defenses stop adversarial text attacks?

Traditional cybersecurity defends against digital threats using firewalls, antivirus software, and intrusion detection systems. These tools can't defend against painted signs because signs aren't digital threats. There's no network packet to filter, no executable file to scan, and no code to analyze. The attack operates entirely through the physical environment, which traditional cybersecurity infrastructure can't address.

What are the real-world dangers of this vulnerability?

Real-world dangers include autonomous vehicles being tricked into dangerous maneuvers (sudden turns, accelerations, or stops) that could cause accidents; delivery drones being diverted from their routes or dropping their loads; and surveillance systems misidentifying people or objects. A single attacker with spray paint could affect multiple autonomous systems traveling the same route, making these attacks particularly scalable compared to traditional cyber attacks.

What defense strategies are manufacturers implementing?

Manufacturers are implementing multiple defensive layers: verification against official sign databases to check whether detected text matches official signage; semantic analysis to determine whether instructions make sense in context; confidence thresholds that require high certainty before following textual instructions; sensor redundancy that checks whether textual instructions contradict data from other sensors; and adversarial training that teaches models to resist manipulation attempts.

Which autonomous systems are most vulnerable to these attacks?

Research shows that drone classification systems are particularly predictable and vulnerable, with consistent attack success rates. Self-driving car systems show more variation, with some models being significantly more vulnerable than others. The variation suggests that vulnerability isn't inevitable but reflects specific design choices about how models process textual input and how much skepticism they apply to environmental context.

How can autonomous system operators protect themselves until defenses are deployed?

Operators should maintain human oversight of autonomous systems and be ready to intervene when unusual behavior occurs. They should be cautious about deploying systems in areas where signage can be easily modified or where sign verification isn't implemented. They should document anomalous behavior that might indicate attacks and report patterns to manufacturers. They should also stay informed about manufacturer security updates and deploy them promptly.

What regulatory approaches are being developed to address this vulnerability?

Regulatory bodies like NHTSA are developing guidelines requiring manufacturers to demonstrate that autonomous systems can detect and resist adversarial attacks. The European Union's AI Act is likely to include requirements for robustness against adversarial inputs. International standards organizations are developing technical standards specifying testing protocols and design requirements. The overall regulatory trend is toward establishing minimum security standards that all manufacturers must meet.

How can attackers discover and exploit this vulnerability?

The attack is relatively straightforward: an attacker needs to understand that vision language models treat text as commands and creates modified road signs with instructions designed to manipulate autonomous system behavior. The attacker doesn't need hacking skills, programming knowledge, or access to the vehicle's systems. They just need to understand the vulnerability and have access to materials to create signs. This accessibility is a significant concern because even unsophisticated actors could potentially exploit this vulnerability.

What long-term solutions exist for this problem?

Long-term solutions include developing cryptographic signatures for official traffic signs that autonomous systems can verify; creating specialized AI models designed specifically for safety-critical applications rather than relying on general-purpose language models; implementing human-in-the-loop systems where unusual situations require human confirmation; deploying sensor fusion and redundancy where multiple independent sensors must agree before taking action; and developing self-monitoring systems that can detect when they're being manipulated by identifying deviations from normal operating patterns.

Want to Explore AI Security Further?

If you're interested in understanding how AI systems can be made more robust against adversarial attacks, consider exploring automated workflow solutions that prioritize security and verification. Runable offers AI-powered automation that incorporates verification layers and safety checkpoints—principles applicable to autonomous system design. While Runable focuses on document generation, presentation creation, and workflow automation, the underlying architecture demonstrates how AI tools can be designed with security considerations built in from the start.

Use Case: Building documentation that explains autonomous system security requirements without relying on external AI tools

Try Runable For Free

Key Takeaways

- Vision language models in autonomous systems treat text on road signs as executable commands without verifying whether text is legitimate or malicious

- Adversarial text attacks operate through physical environment rather than digital channels, making traditional cybersecurity defenses completely ineffective

- Vulnerability exists today in deployed systems; manufacturers are only beginning to implement defenses despite widespread autonomous vehicle testing

- Different AI models show dramatically different vulnerability levels, suggesting that security is a design choice not an inevitable consequence of the technology

- Long-term solution requires fundamental changes to autonomous system architecture: verification systems, semantic analysis, sensor redundancy, and human oversight checkpoints

Related Articles

- AI Safety by Design: What Experts Predict for 2026 [2025]

- Waymo's $16B Funding: Inside the Robotaxi Revolution [2025]

- Waymo's $16 Billion Funding Round: The Future of Robotaxis [2025]

- OpenClaw AI Agent: Complete Guide to the Trending Tool [2025]

- Waymo's $16B Funding Round: The Future of Autonomous Mobility [2025]

- Viral AI Prompts: The Next Major Security Threat [2025]

![How Hackers Exploit AI Vision in Self-Driving Cars and Drones [2025]](https://tryrunable.com/blog/how-hackers-exploit-ai-vision-in-self-driving-cars-and-drone/image-1-1770149414389.png)