Is AI Really Causing Layoffs? The Data Says Otherwise [2025]

We hear it constantly: AI is destroying jobs. Scroll through LinkedIn any given Tuesday and you'll see someone claiming their role was cut because of artificial intelligence. News outlets run headlines about AI-driven layoffs. Industry reports warn of automation wiping out millions of positions. It's become the go-to explanation for why companies are cutting headcount.

But here's the uncomfortable truth nobody wants to admit: the data doesn't actually support this narrative.

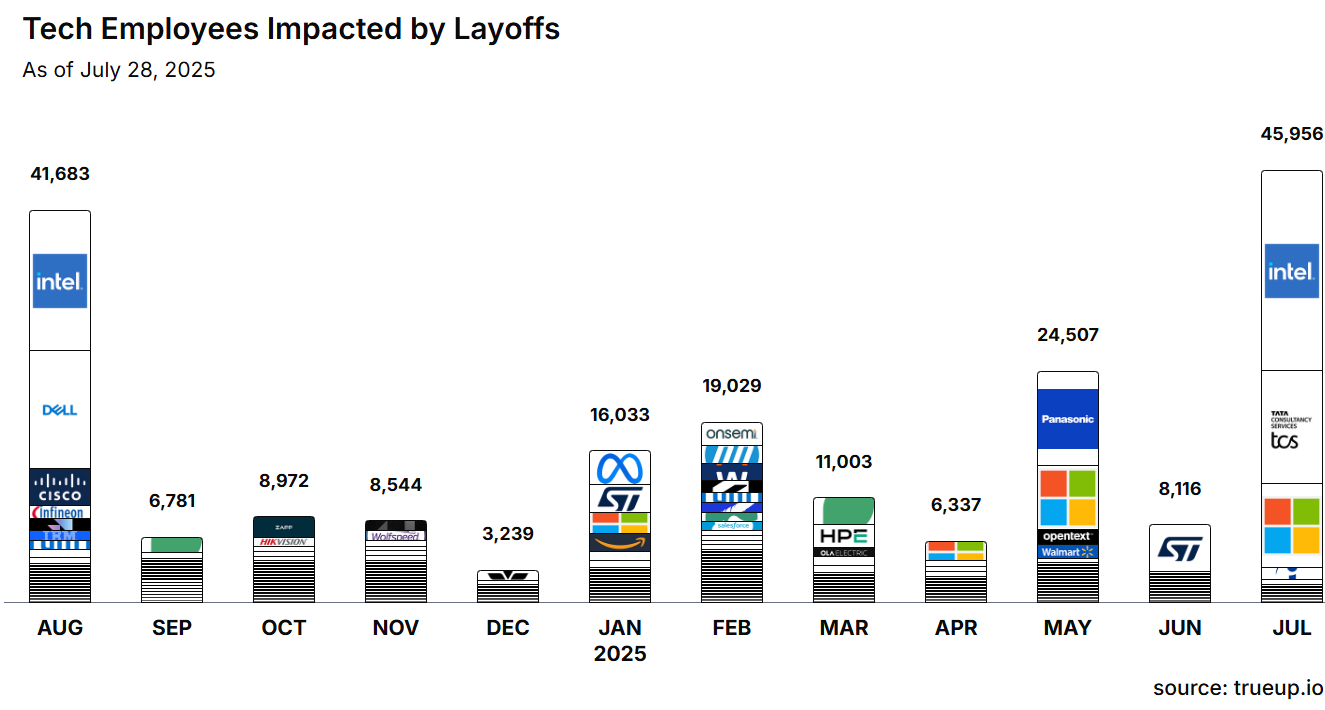

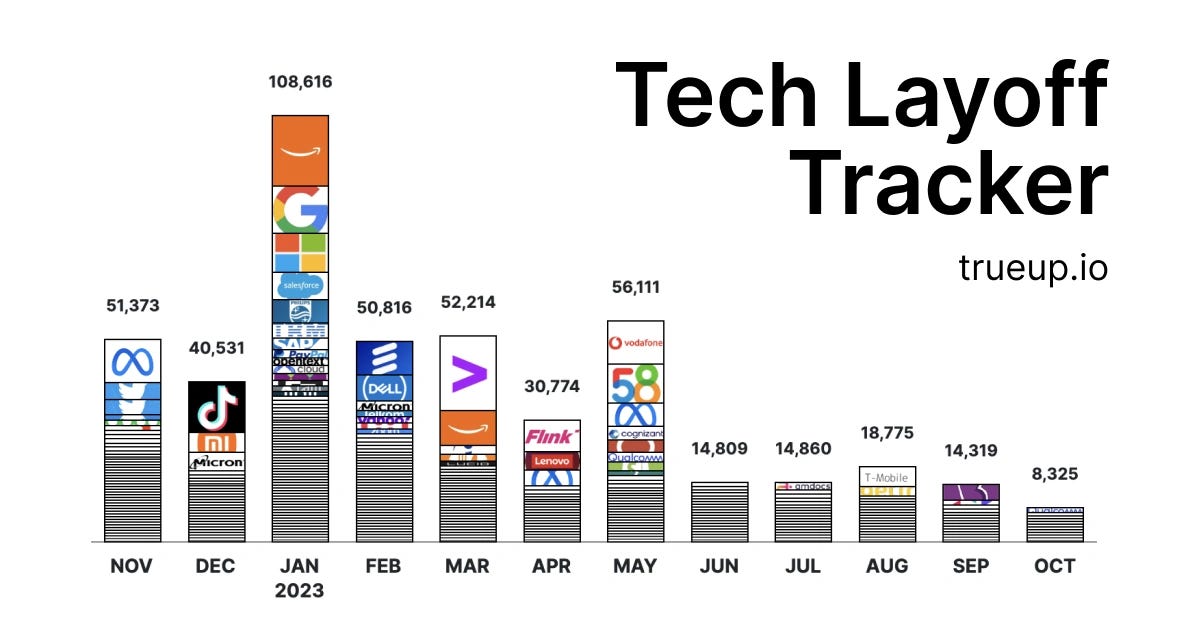

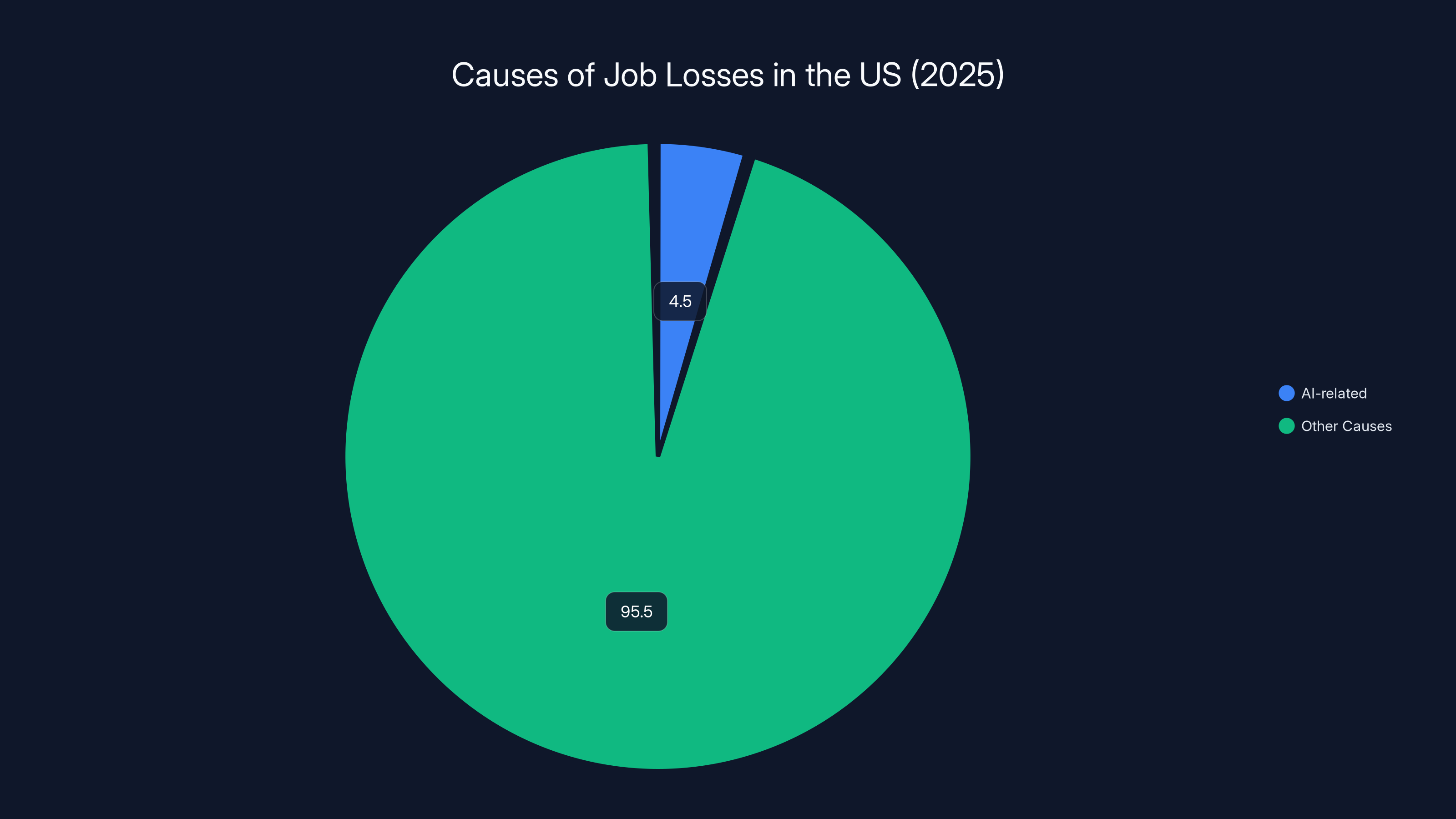

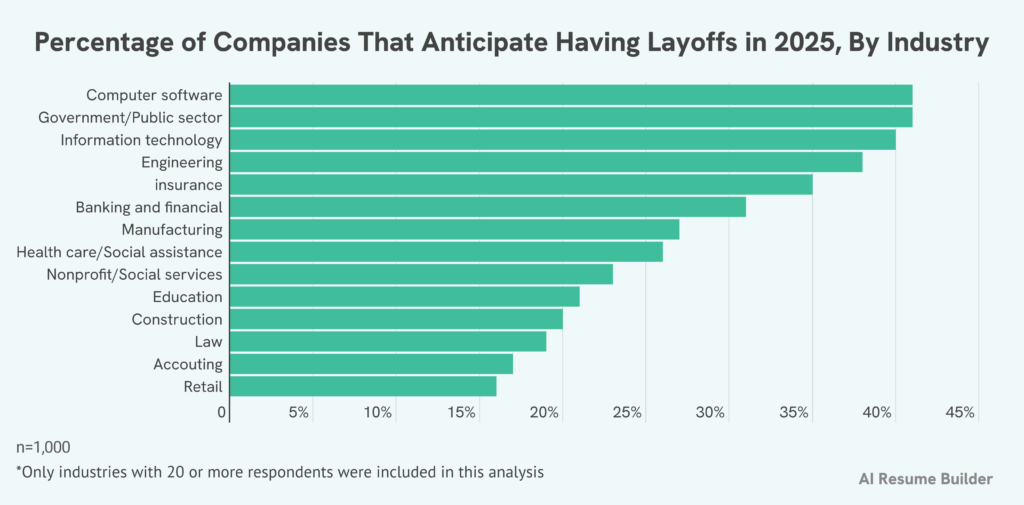

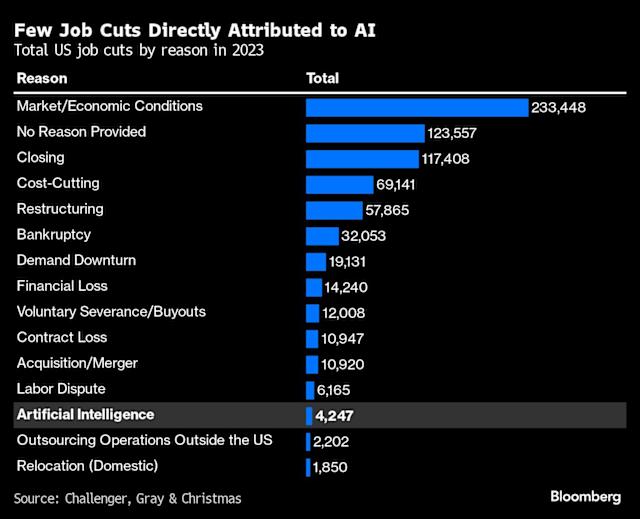

New research from Oxford Economics dug into the real numbers behind job losses across the United States. What they found challenges almost everything we think we know about AI and employment. According to their analysis, artificial intelligence was cited as the reason for only 55,000 job cuts throughout 2025, representing just 4.5% of all US job losses during that period. Let that sink in for a moment: only a tiny fraction of total job cuts was attributed to AI.

Meanwhile, companies are losing money. Profit margins are tightening. Management is under pressure. And suddenly, every layoff becomes an "AI initiative" or a "strategic automation project." It's better PR to say you're implementing cutting-edge technology than to admit the business model isn't working.

This matters because the story we tell ourselves shapes policy, education, and personal career decisions. If AI is actually causing massive job displacement, we need entirely different solutions than if companies are simply using AI as a convenient excuse. The narrative and the reality have drifted dangerously far apart.

TL;DR

- AI caused only 4.5% of US job losses in 2025: Out of all job cuts, just 55,000 were attributed to artificial intelligence

- "Market and economic conditions" were cited 4x more often: In their own stated reasons, companies point to general economic pressures far more often than to AI

- Zero productivity surge from AI: If AI were actually replacing workers, we'd see measurable productivity gains—we haven't

- More graduates, fewer entry-level roles: The flood of new degree holders entering the job market explains youth unemployment better than automation does

- AI is convenient narrative cover: Companies use AI layoffs as better PR than admitting profits are low or management failed

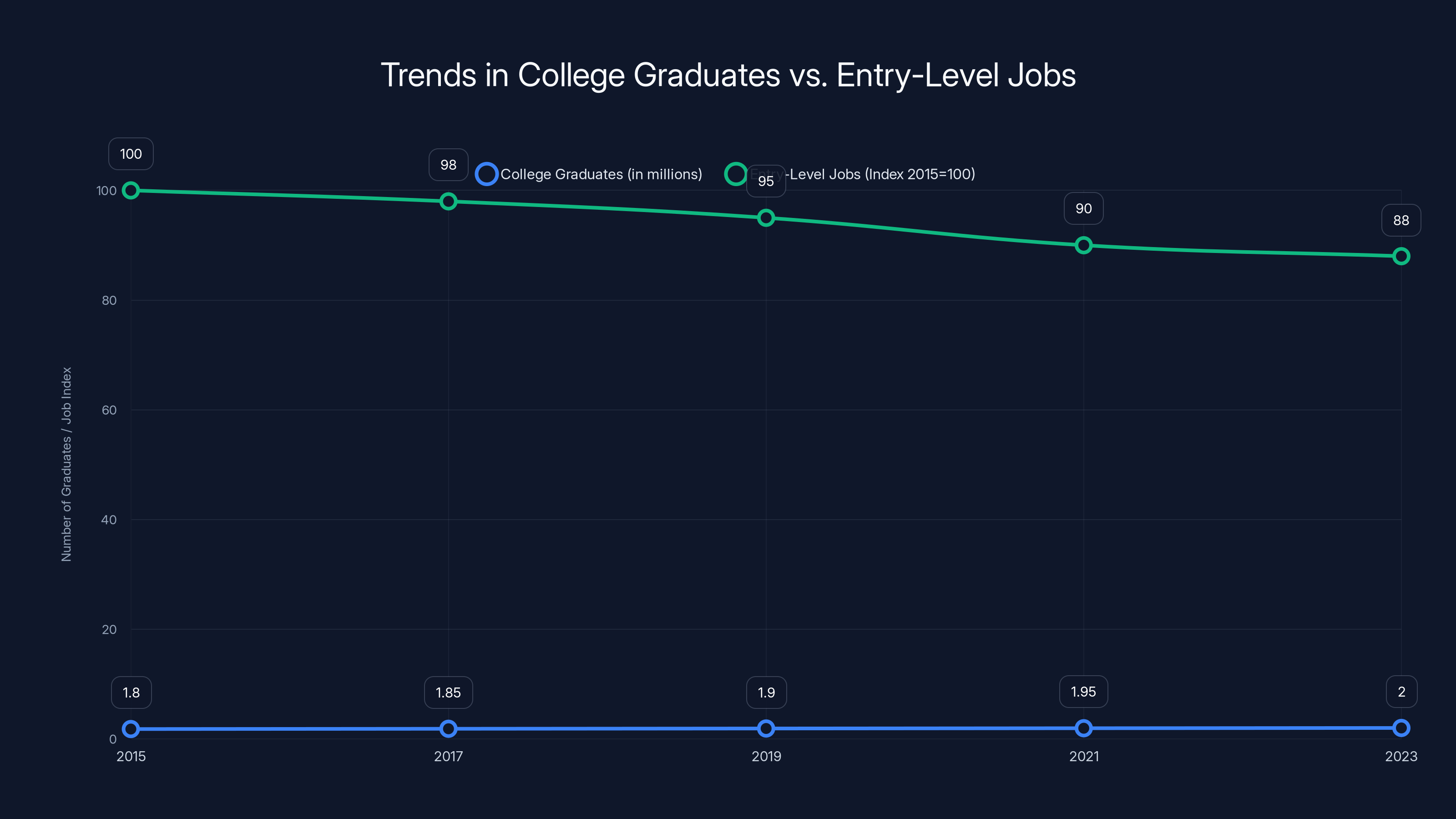

The number of college graduates has steadily increased from 1.8 million in 2015 to 2 million in 2023, while entry-level job availability has declined by 12% since 2015. Estimated data for graduates.

The AI Layoff Narrative vs. What Actually Happened

The story is seductive in its simplicity: brilliant engineers built powerful AI systems, these systems got really good at doing human jobs, companies realized they don't need as many humans anymore, and suddenly millions of people are redundant.

It's a clean narrative. It fits perfectly into tech media's favorite storylines about disruption and inevitable technological progress. It also absolves individual companies of responsibility. After all, you can't blame a CEO for following the inevitable march of technology, right?

Except that's not what the data shows.

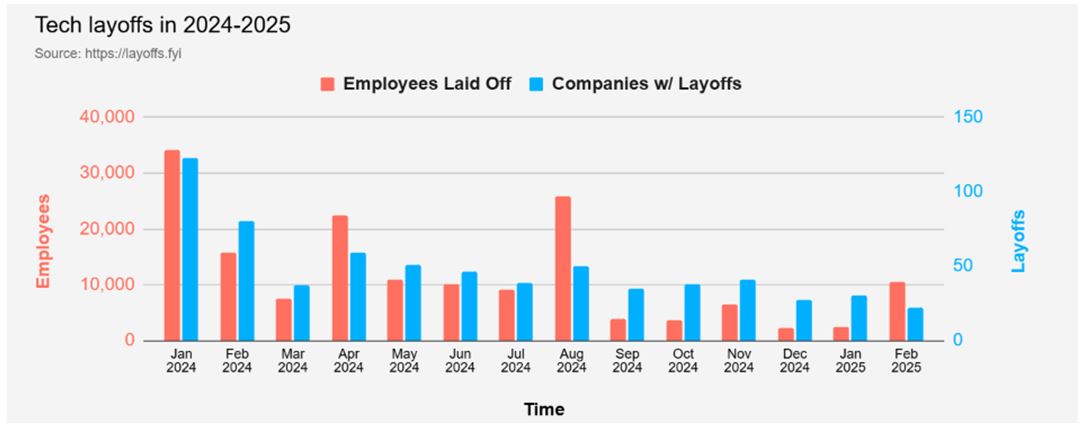

When you actually look at the numbers, the AI layoff story falls apart almost immediately. In 2025, across the entire United States economy, approximately 55,000 job losses were explicitly tied to artificial intelligence. In the same period, companies cited "market and economic conditions" as the reason for roughly 250,000 job cuts. That's more than four times as many people losing jobs to general economic pressure as to AI.
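The arithmetic behind those figures is easy to check. The sketch below is illustrative only: it uses the rounded numbers quoted in this article (55,000 AI-attributed cuts, a 4.5% share, and roughly 250,000 cuts attributed to market and economic conditions), and the variable names are made up for the example.

```python
# Rounded figures quoted in the article; treat them as approximate inputs.
ai_cuts = 55_000          # job cuts attributed to AI in 2025
ai_share = 0.045          # AI's stated share of all US job cuts
economic_cuts = 250_000   # cuts attributed to "market and economic conditions"

# If 55,000 cuts are 4.5% of the total, the implied total is 55,000 / 0.045.
implied_total = ai_cuts / ai_share

# How many times more often economic conditions were cited than AI.
ratio = economic_cuts / ai_cuts

# Economic conditions as a share of the implied total.
economic_share = economic_cuts / implied_total

print(f"Implied total job cuts: {implied_total:,.0f}")      # 1,222,222
print(f"Economic-to-AI ratio: {ratio:.1f}x")                # 4.5x
print(f"Economic share of total: {economic_share:.1%}")     # 20.5%
```

Because the inputs are rounded, the implied total of about 1.2 million is only a ballpark. It also shows that the two cited categories together cover roughly a quarter of all cuts, so most layoffs were attributed to other reasons entirely.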

Think about what that means. If AI were truly the dominant force reshaping the job market, you'd expect it to show up as the primary driver of layoffs. Instead, it barely registers. It's not that AI isn't affecting work—it certainly is. It's that companies are dealing with far bigger problems right now: inflation, higher interest rates, slowing consumer spending, weakening demand, and the need to cut costs to maintain profit margins.

When you talk to people who've been through these layoffs, the story gets more complicated. A product manager might have been cut as part of a shift toward leaner teams. A data analyst might have lost their job because the company's forecasts were wrong and they don't need as many people analyzing data that's now less relevant. A customer support specialist might have been replaced—not by AI, but by reducing service tiers and asking fewer people to do more work.

Does AI play a role in some of these decisions? Sure. But it's rarely the primary reason. It's more often a justification layered on top of existing cost-cutting impulses.

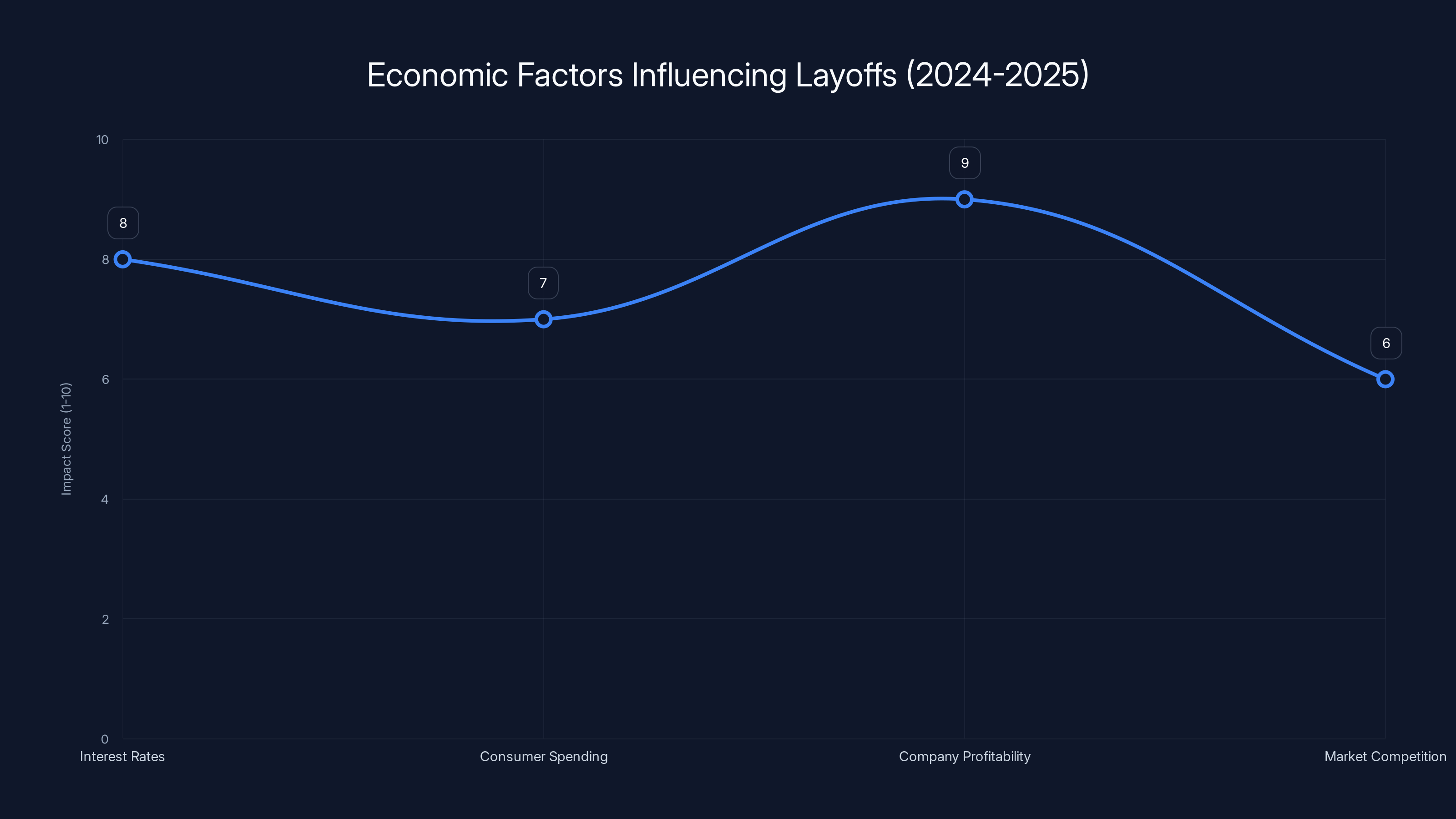

Interest rates and company profitability had the highest estimated impact on layoffs in 2024-2025, highlighting the economic pressures beyond AI. Estimated data.

The Missing Productivity Surge

Here's the test that should settle this debate once and for all: if AI is actually replacing workers and making companies more efficient, we should see productivity through the roof.

We're not.

In fact, productivity data from 2025 shows something far more mundane. Worker productivity has been essentially flat. Some quarters it ticks up slightly, some quarters it ticks down slightly, but the overall trend is unremarkable. If AI were delivering on its promise to amplify human workers' capabilities—or worse, to replace them entirely—we should be seeing dramatic productivity improvements. The remaining workers should be doing vastly more with the same tools and resources.

Instead, what we're actually seeing is more complexity layered on top of existing workloads. Employees are learning new AI tools. They're adapting workflows to accommodate AI outputs. They're fact-checking AI-generated content because LLMs have a tendency to confidently hallucinate. They're managing AI systems and dealing with the unique failure modes that emerge when you deploy machine learning in the real world.

Most importantly, they're doing all of this in addition to their existing work, not instead of it.

A software engineer who used to write code now spends time reviewing code generated by AI, which sounds faster until you realize the AI code needs serious review and often isn't suitable for production. A marketing team that implemented an AI copywriting tool now has copywriters reviewing, editing, and fact-checking everything the AI produces—adding extra steps rather than removing them. A customer support team using an AI chatbot now has to handle the escalations from customers angry that they can't talk to humans.

This is the reality of AI deployment. You don't get a clean replacement of humans with machines. You get a temporary productivity dip, followed by a slow climb back to where you started, and maybe eventually some modest gains if you're lucky.

So when the Oxford Economics report concludes that we're seeing zero compelling evidence of major productivity improvements from AI, that conclusion doesn't surprise anyone who's actually worked with these tools. The productivity gains exist in the marketing presentations and the board meetings, not in the actual day-to-day work.

Why Companies Love AI as an Excuse

Let's be brutally honest: calling your layoffs "AI-driven" sounds better to the public than admitting the truth.

The truth is often far less attractive: "We overhired during the pandemic, demand for our product hasn't grown as fast as we thought, consumer spending is slowing, our profit margins are shrinking, and we need to cut 15% of our workforce to hit our financial targets." That's a true statement at many companies, but it's not the story you want to tell. It makes the CEO and the board look bad. It suggests poor planning. It invites questions about whether the company's business model actually works.

But say the same layoffs are "part of our strategic AI initiative" or "optimizing operations for next-generation AI tools" and suddenly it sounds forward-thinking. It sounds like you're being proactive. It sounds like you're making smart bets on the future instead of just cutting costs because profits are down.

From a PR perspective, it's brilliant. The company gets to frame negative news—losing your job is unquestionably negative for the person losing it—as part of a larger vision for technological progress. Tech workers, and the journalists who cover them, tend to be excited about AI. Framing layoffs as "AI-driven" transforms them from sad news into "disruption."

It also gives the company political cover. If your job is cut as part of a vague "cost reduction" initiative, that's on the company's leadership. If your job is cut because of inevitable technological progress, well, who can you blame? You can't argue with the future, right?

This pattern isn't new. During the height of cloud computing adoption, companies blamed layoffs on "digital transformation." During the mobile revolution, they blamed it on "transition to mobile-first strategy." Every wave of technology gets weaponized as an excuse for the hard business decisions that would be unpopular if explained honestly.

But the pattern does real damage. It shapes how we think about technology's role in society. It influences which skills young people choose to develop. It affects policy decisions about education and workforce development. And it lets executives off the hook for what are often poor strategic decisions disguised as inevitable technological change.

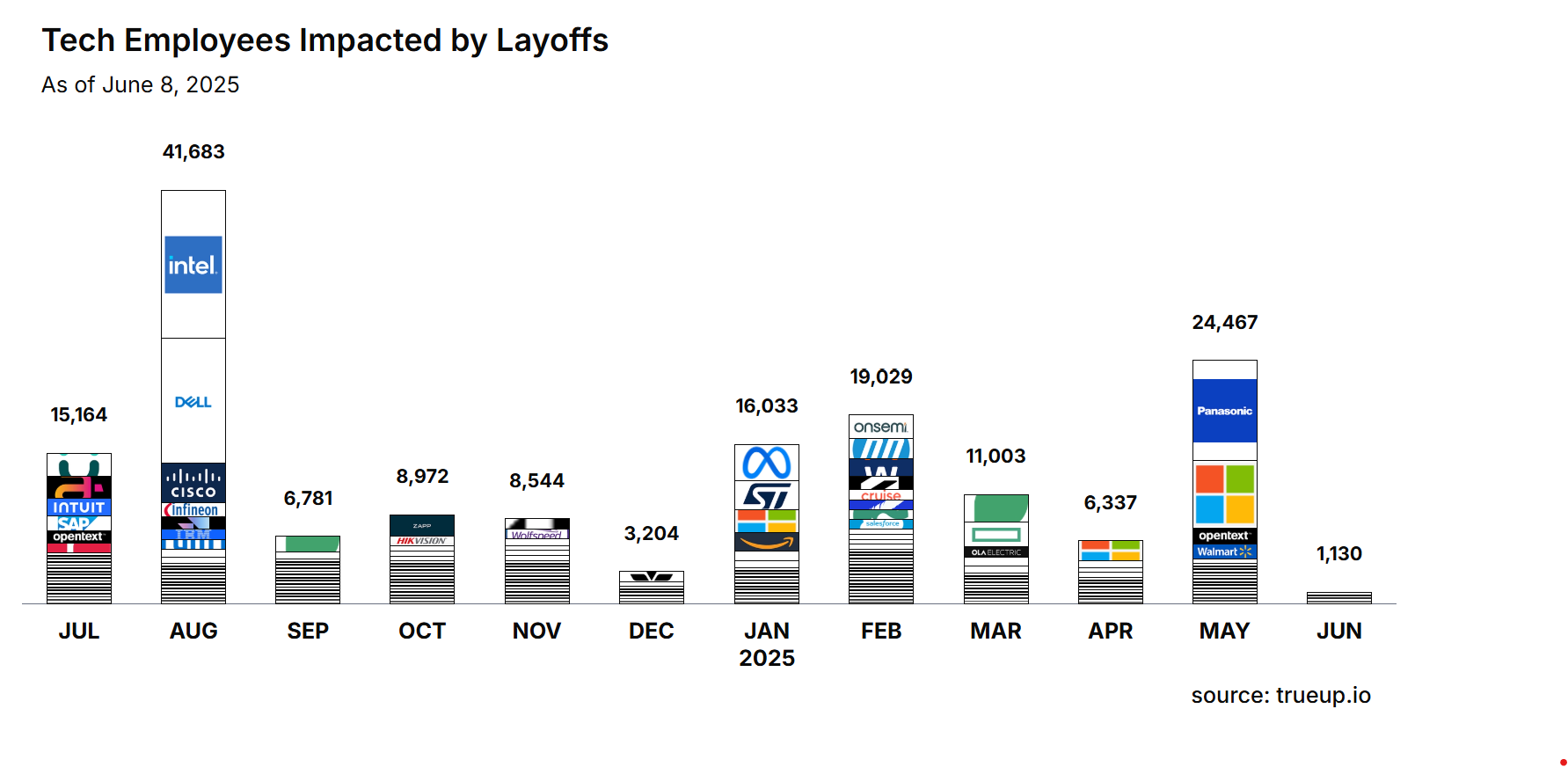

In 2025, AI accounted for only 4.5% of layoffs, while market and economic conditions were cited four times more frequently, highlighting broader economic issues as the primary driver. Estimated data.

The Actual Culprit: Too Many Graduates, Too Few Entry-Level Roles

If AI isn't causing the youth unemployment crisis, what is?

The data points to something far more straightforward: there's been an explosion in the number of college graduates entering the job market, and entry-level positions haven't kept pace.

Since the late 2010s, the percentage of young adults pursuing higher education has climbed steadily. We've also seen the rise of credential inflation—the phenomenon where employers require a bachelor's degree for jobs that previously only needed a high school diploma. A "business analyst" position that once required a few years of relevant work experience now requires a degree. Customer service positions that once hired high school graduates now ask for "some college experience."

The result is a massive flood of new degree holders competing for a relatively fixed number of entry-level roles. In late 2022, right around the time AI started dominating headlines, we saw a sharp uptick in unemployed college graduates. The timing might seem suspicious, as if correlation implied causation, but the underlying trend was already well established before ChatGPT existed.

Consider the numbers: the Bureau of Labor Statistics reported approximately 2 million bachelor's degrees awarded in the United States in 2023-2024 alone. Meanwhile, the number of entry-level positions available hasn't grown proportionally. A 2024 analysis found that entry-level jobs requiring less than one year of experience have become increasingly rare, declining by roughly 12% since 2015.

When two million new graduates compete for a shrinking pool of entry-level positions, youth unemployment is going to rise. It's supply and demand. It has nothing to do with AI.
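The supply-and-demand squeeze can be put into rough numbers. This is a back-of-the-envelope sketch, not a labor-market model: it assumes the estimated figures quoted in this article (1.8 million graduates in 2015, 2 million in 2023, and a roughly 12% decline in entry-level roles since 2015), and the variable names are hypothetical.

```python
# Estimated figures quoted in the article; treat them as rough inputs.
grads_2015 = 1_800_000
grads_2023 = 2_000_000
entry_level_change = -0.12   # change in entry-level openings relative to 2015

# Growth in the number of graduates since 2015.
grad_growth = grads_2023 / grads_2015 - 1

# Entry-level openings indexed to 2015 = 1.0.
roles_index = 1 + entry_level_change

# Graduates per entry-level opening, relative to 2015.
competition_index = (grads_2023 / grads_2015) / roles_index

print(f"Graduate growth since 2015: {grad_growth:.1%}")                       # 11.1%
print(f"Graduates per entry-level role vs. 2015: {competition_index:.2f}x")   # 1.26x
```

Under these assumptions, each entry-level opening attracts about 26% more new graduates than it did in 2015, before AI enters the picture at all.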

Now here's where it gets interesting: AI might eventually accelerate this trend. If employers can use AI to automate some entry-level work, they might hire fewer junior employees. But that's a future concern, not what's happening right now. Today, the youth unemployment problem is almost entirely explained by credential inflation and an oversupply of graduates relative to available positions.

But again, this makes for a less compelling narrative. It's harder to write dramatic articles about the slow creep of credential inflation and the mismatch between degree production and job creation. It's much easier to blame the shiny new technology that everyone's talking about anyway.

Correlation, Causation, and Convenient Timing

One of the most important logical rules is also one of the most commonly violated: correlation does not equal causation.

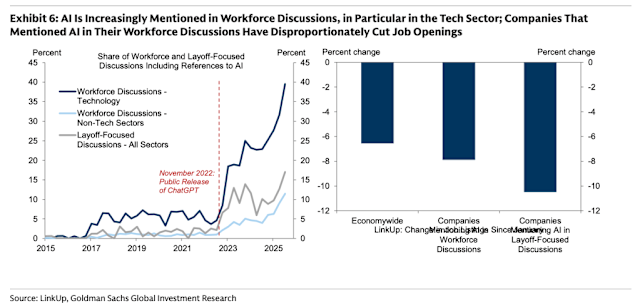

AI adoption accelerated dramatically starting in late 2022. Layoffs also increased significantly around the same time. Journalists and analysts observed these two trends happening together and concluded one caused the other. It's a natural inference, but it mistakes a shared cause for a causal link.

The layoffs weren't caused by AI adoption. Rather, the difficult business environment—the one forcing companies to lay people off—also encouraged them to invest in technologies like AI that might help them operate with fewer employees. Companies laying people off are simultaneously looking for efficiency improvements. These things happen at the same time because they have the same root cause (tougher business conditions), not because one causes the other.

Think of it this way: both umbrella sales and raincoat sales increase when it rains. Buying umbrellas doesn't cause people to buy raincoats. The two are correlated because they have the same external cause.

Similarly, AI deployment and layoffs are both symptoms of the same underlying pressure: companies are struggling to maintain profitability in a tougher economic environment. They cut costs (through layoffs) and invest in efficiency improvements (through AI). The media observes these two things happening together and assumes one caused the other. In reality, they're both responses to the same underlying pressure.
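A small simulation makes the common-cause argument concrete. The sketch below uses entirely synthetic data, not real layoff or AI spending figures: a hypothetical "economic pressure" variable drives both layoffs and AI investment, and neither affects the other. The names and the noise levels are assumptions chosen for illustration.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

rng = random.Random(0)  # fixed seed so the run is repeatable
n = 5000

# Synthetic common cause: economic pressure on each simulated company.
pressure = [rng.gauss(0, 1) for _ in range(n)]

# Both outcomes depend on pressure plus independent noise.
# Neither outcome appears in the other's formula.
layoffs = [p + rng.gauss(0, 1) for p in pressure]
ai_spend = [p + rng.gauss(0, 1) for p in pressure]

# Raw correlation between layoffs and AI spending.
r_raw = pearson(layoffs, ai_spend)

# Partial correlation: the same relationship after controlling for pressure.
r_lp = pearson(layoffs, pressure)
r_ap = pearson(ai_spend, pressure)
r_partial = (r_raw - r_lp * r_ap) / math.sqrt((1 - r_lp**2) * (1 - r_ap**2))

print(f"Raw correlation (layoffs vs. AI spend): {r_raw:.2f}")
print(f"Partial correlation, controlling for pressure: {r_partial:.2f}")
```

With this setup the raw correlation lands near 0.5 even though no causal link exists between the two outcomes, and the partial correlation falls to roughly zero once the shared cause is accounted for. Real data is messier, but the pattern is the one described above.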

This matters because it shapes how we understand technology's role in society. If we incorrectly attribute layoffs to AI when they're actually caused by economic factors, we'll develop the wrong policies and make the wrong predictions about the future. We might overinvest in programs aimed at AI displacement while ignoring the economic instability that's driving the actual employment problems.

A helpful way to think about this: ask yourself what the counterfactual would be. If AI didn't exist, would companies still be laying people off in 2024 and 2025? Almost certainly yes. The economic fundamentals would be the same: slower growth, higher interest rates, consumers spending less, companies fighting for market share while trying to maintain profitability. All of those pressures would still exist.

So AI is better understood as an enabling tool for cost-cutting that was going to happen anyway, not as the root cause of job losses. The job losses would happen regardless. AI just makes them sound more palatable.

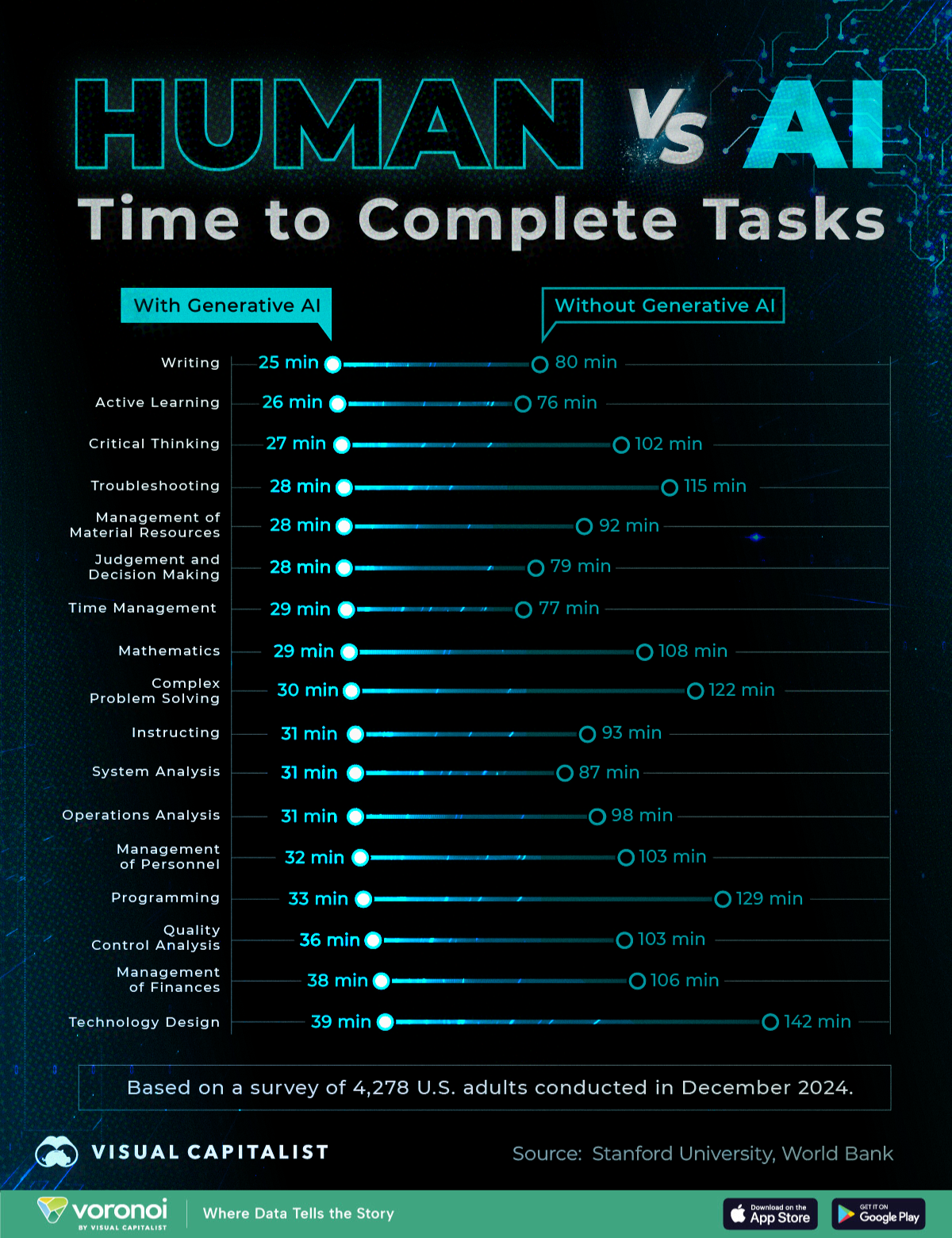

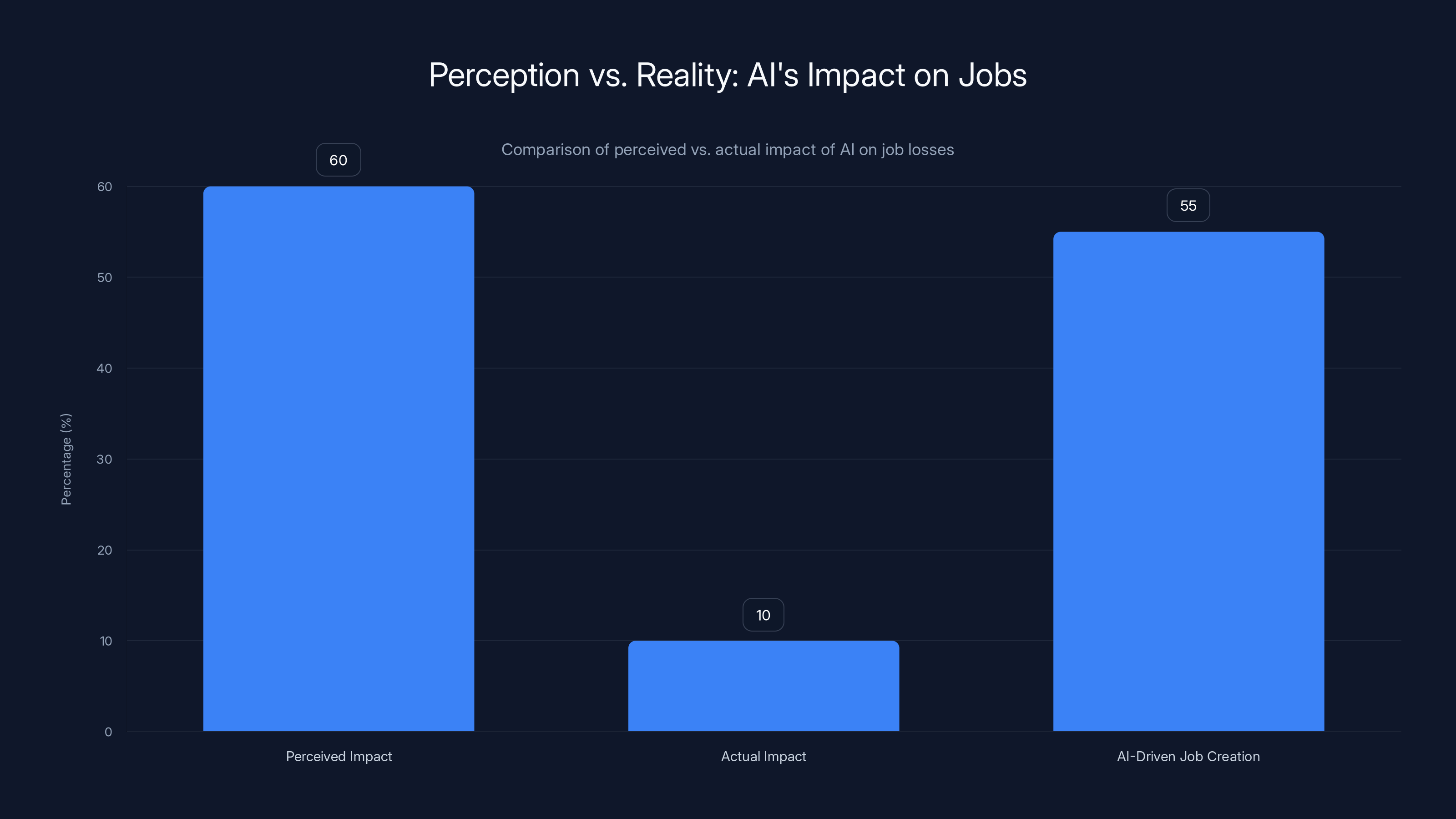

Estimated data shows a significant perception gap: 60% of workers fear AI's impact on jobs, while actual AI-driven job losses are minimal. Interestingly, 55% of companies plan to hire for AI roles.

The Complexity of AI Work: Managing Rather Than Replacing

Here's something that doesn't get talked about enough: AI doesn't just eliminate jobs. It often transforms them into different, sometimes harder jobs.

Take content moderation as an example. Before AI, companies employed thousands of human moderators to review user-generated content and remove violations. AI was supposed to eliminate most of those jobs by automatically catching violations. And to some degree, it has: companies need fewer moderators than they used to.

But here's what actually happened: you still need human moderators, but now they're reviewing AI's decisions and handling edge cases the AI can't figure out. You've gone from "moderator reviews content" to "moderator reviews AI's assessment of content." It's a different job, it requires different skills, and critically, it's often more intellectually demanding than the original job. You can't just train someone to review content. You need someone who understands what content violates policy and can judge the nuance when the AI gets it wrong.

Or look at writing. Journalists feared that AI would eliminate journalism jobs. Instead, what happened in some newsrooms is that reporters now spend time fact-checking AI-generated articles, interviewing sources to verify AI claims, and doing the original thinking that AI can't do. The job transformed rather than disappeared.

Software developers feared GitHub Copilot would put them out of work. Instead, developers are using it to write boilerplate code faster, but they still need to think through the system architecture, understand the requirements, review the generated code for quality and security issues, and handle all the complex, nuanced work that AI can assist with but can't own entirely.

What's happening across industries is the emergence of hybrid roles where humans and AI work together, and frankly, these roles often demand more from the human than the pre-AI version did. You need to understand what the AI is doing, why it's making certain decisions, where it's likely to fail, and how to maintain quality when you're working with an imperfect tool.

For workers, this is both good and bad. It's good in the sense that the jobs might not disappear entirely. It's bad in the sense that the jobs have gotten more cognitively demanding without always bringing corresponding salary increases. Many companies are treating AI as a way to do more work with the same number of people, not as a way to create better-paying, more interesting roles.

But again, this is a much more nuanced story than "AI is replacing workers," so it doesn't get told as often.

The Perception Gap: What We Think vs. What's Real

One of the most interesting findings from the Oxford Economics research is the huge gap between perception and reality when it comes to AI and job losses.

Workers perceive AI as a massive threat to employment. Surveys show that roughly 60% of workers believe AI will significantly impact job availability in their field within the next 5-10 years. News coverage amplifies this fear. Tech media runs constant stories about AI's potential to automate entire industries. Investment bankers present slides about the trillion-dollar productivity gains from AI, which inherently implies that a lot of jobs will become redundant.

But the actual data on current job losses is radically different from the perception. AI is responsible for a tiny fraction of current layoffs. The economic pressures causing most job cuts have nothing to do with artificial intelligence.

This perception gap matters because it shapes behavior. Workers might make career decisions based on a threat that's far less immediate than they think. They might avoid certain fields or skills because they believe AI will make them obsolete, when in reality those fields still have plenty of opportunities. They might invest time learning AI tools that won't help their career because they believe AI will make their current skills worthless.

On the flip side, the perception gap means that AI's real risks don't get taken seriously. The real challenges from AI adoption—the need to retrain workers in new tools, the transformation of job roles without corresponding support, the potential for dramatic changes down the road if AI keeps improving—these real issues get drowned out by apocalyptic narratives about massive job loss.

The perception gap also creates a credibility problem. When predictions about AI-driven job loss don't materialize as dramatically as forecasted, people start to dismiss warnings about AI entirely. It's the boy-who-cried-wolf effect: even when real concerns exist, they get ignored because the previous alarmist predictions didn't come true.

Much of this perception gap flows from media incentives. "AI gradually changes job requirements over the next decade" doesn't sell as many articles as "Millions of Jobs at Risk from AI." Tech journalists naturally gravitate toward stories about disruption and transformation. Nuanced stories about how AI is actually changing work are harder to write and less attention-grabbing.

Companies also benefit from the perception gap in the short term. If workers believe AI is coming for their jobs, they're less likely to ask for raises or push for better benefits. They'll accept "you should be grateful you still have a job" as management positioning. They'll volunteer for AI training because they're scared. In the medium term, this undermines productivity and retention, but it gives management short-term cost control.

Media amplification and confirmation bias significantly shape AI-driven layoff narratives, overshadowing the actual impact of AI. Estimated data.

The Data That's Actually Important: Sectoral Shifts and Skill Changes

Instead of focusing on the dramatic "AI will replace all human workers" narrative, it would be more useful to look at what the data actually shows: which specific jobs are being affected, how job requirements are changing, and which skills are becoming more valuable.

The Oxford Economics data shows that certain sectors are more affected by AI adoption than others. Knowledge work—jobs that involve processing information, writing, analysis, and communication—is seeing more AI-driven change than manual labor. Jobs that require complex physical dexterity, interpersonal nuance, or deep domain expertise are relatively resistant to automation.

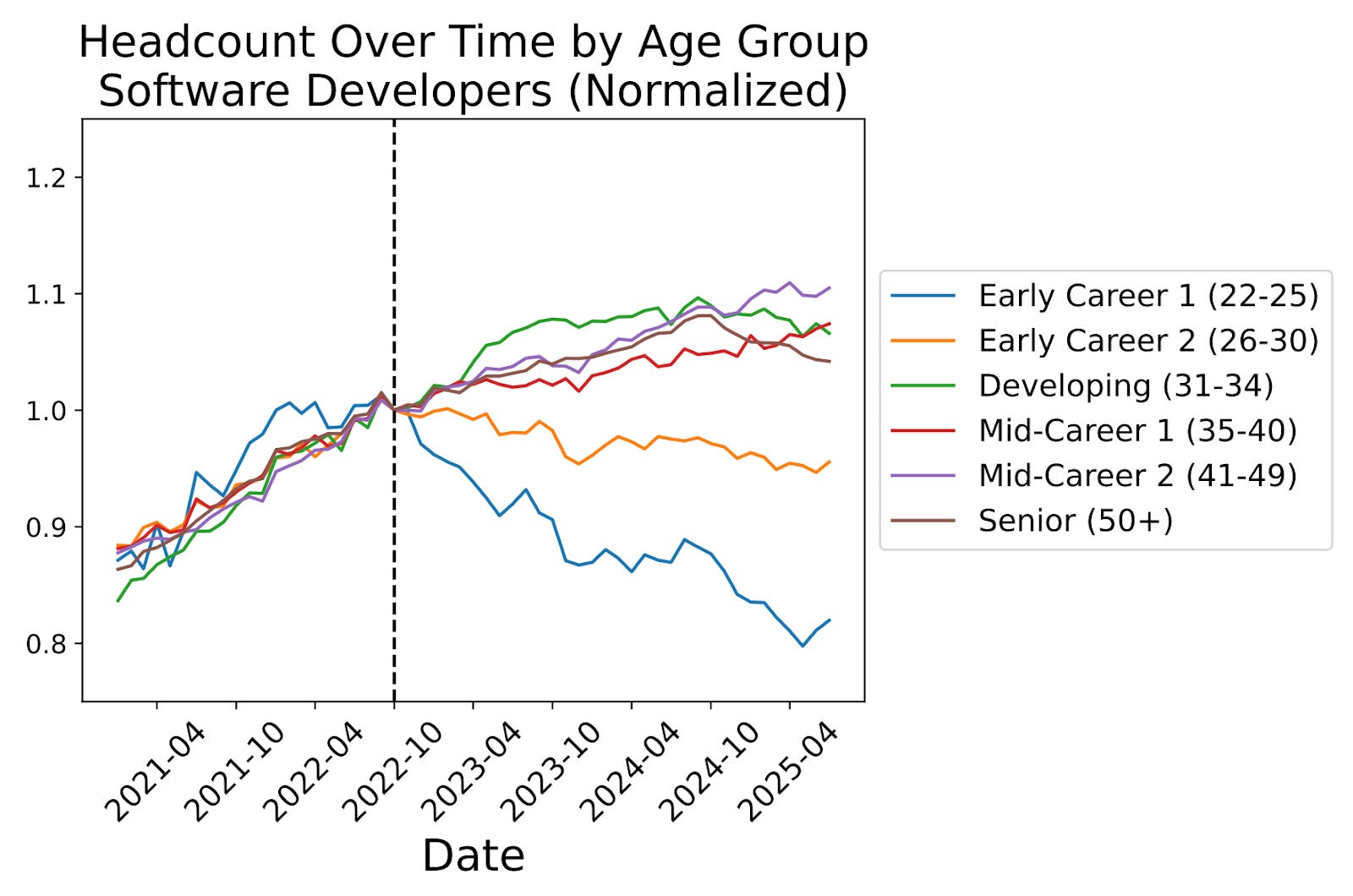

Within affected sectors, the pattern that emerges is specialization. Entry-level, commoditized knowledge work is under pressure, not because AI is replacing experienced workers, but because AI is increasingly good at doing routine cognitive tasks. A junior analyst doing straightforward data compilation might see their job pressured. An analyst with deep domain knowledge and judgment, who can think through complex problems, remains valuable.

This has huge implications for education and career development. It means that differentiating yourself through unique expertise becomes more important. It means that the career path of "learn basic skills, do routine work for a few years, develop expertise gradually" might not work anymore, because the routine work gets automated while you're learning. You need to be competent from day one, or find work that requires more judgment and less routine execution.

It also means that the jobs that are safest aren't necessarily the high-skilled ones (which might be easier to automate) but the ones that require human judgment, interpersonal skills, and real-world grounding. A nurse who coordinates complex medical care, manages patient relationships, and makes judgment calls isn't at risk from AI, even though nursing requires learning a lot of technical information. A radiologist who does routine image review might be more at risk, because that task is more easily automated.

What Actually Drives Layoffs: The Economic Reality

To understand what's really causing job losses, you have to look at economic fundamentals: interest rates, consumer spending, company profitability, and market competition.

In 2024-2025, US companies faced a specific set of economic pressures. First, interest rates were much higher than they'd been in the previous decade, making debt more expensive. Second, many companies had over-hired during the pandemic boom, betting that demand growth would continue. Third, consumer spending began slowing as inflation hit, people depleted their pandemic savings, and the wealth effect from rising asset prices reversed. Fourth, multiple markets became increasingly competitive and saturated, making it harder for companies to grow at the rates they'd promised to investors.

The result: companies made earnings forecasts they couldn't meet. They had too many employees for the revenue they were actually generating. Investors got unhappy. Stock prices fell. Boards demanded cost reductions. And suddenly, layoffs became necessary to hit financial targets.

This is just normal business cycle stuff. It happens regularly. The difference this time is that there's a convenient new technology to blame—AI—so the layoffs get reframed as strategic positioning for the future rather than painful necessity.

But if you want to understand why a specific company is laying off 10% of its workforce, don't look to AI. Look to the company's balance sheet, growth rate, and operating margins. That's where the real story is.

And the economic environment is important because it tells us that layoffs would be happening anyway, regardless of AI. The economic pressures would still be there. The need to cut costs would still exist. AI is just making a difficult necessity sound better in the press release.

AI was responsible for only 4.5% of job losses in the US in 2025, highlighting that other factors were the primary causes.

The Future: Where AI Might Actually Create Job Risk

All of this isn't to say AI poses zero risk to employment. It does. It's just not the risk we're talking about right now.

The real medium-term risk is that AI continues to improve, and at some point it genuinely becomes capable of handling tasks that currently require human judgment. Not in the next year or two, but over the next five to ten years. If AI can write better code than software engineers, create better marketing copy than copywriters, conduct better analysis than analysts, and do research better than researchers, then you have a genuine displacement problem.

The question is whether that happens, and if it does, how quickly and what policies we put in place to manage the transition.

But that's a future risk, not a present reality. Right now, AI is augmenting human capabilities in uneven ways. It's good at certain narrow tasks and genuinely bad at others. It can write a first draft of an email but can't manage a complex project. It can generate an idea but can't evaluate its feasibility. It can answer questions based on its training data but can't navigate novel situations.

So the real policy question isn't "how do we protect workers from AI-driven unemployment" right now. It's "how do we prepare for the possibility of AI-driven displacement in the future, and how do we refocus workforce development on skills that will remain valuable even if AI gets dramatically better."

That's a very different conversation than the one we're having now.

How to Think About AI and Your Career

If you're worried about AI affecting your career—and you probably should be thinking about it, even if not panicking—here's a practical framework.

First, separate the present from the future. Right now, in 2025, AI is responsible for a tiny percentage of job losses. It's a minor factor in current employment dynamics. If you're losing your job right now, AI probably isn't why. The reason is more likely economic, organizational, or strategic. Don't blame a future technology for a present problem that has present causes.

Second, look at your specific role and be honest about what AI can currently do. If your job involves routine, well-defined tasks with clear inputs and outputs, AI poses more risk. If your job involves judgment, creativity, complex problem-solving, managing relationships, or handling unprecedented situations, you're relatively safe. At least for now.

Third, develop AI competency regardless of how safe your job feels. Not because you need to panic, but because AI is becoming a normal tool in most fields. Learning to use AI tools well, understanding their capabilities and limitations, and integrating them into your workflow will make you more effective. It's like learning Excel in the 1990s—not essential for survival, but it made you dramatically more effective.

Fourth, focus on skills that complement AI rather than compete with it. Judgment, communication, complex problem-solving, domain expertise, and the ability to work with imperfect tools are all things that AI handles poorly. These are worth developing.

Fifth, understand that the risk timeline is longer than the headlines suggest. Current job losses aren't being driven by AI. They're being driven by economic factors. But over the next five to ten years, as AI improves, the risk profile could change. Plan accordingly, but don't make drastic career decisions based on a threat timeline that might be ten years out.

The Narrative We Should Be Having Instead

Instead of talking about AI replacing workers, we should be talking about AI changing work.

Work is always changing. The manufacturing jobs that dominated the early 1900s mostly disappeared by 2000, not because factories stopped making things, but because automation and overseas manufacturing restructured the industry. Agricultural jobs went from employing 50% of the workforce to employing less than 2% because of mechanization. These transitions were traumatic for the people involved and caused real hardship. But they also created new opportunities and jobs that didn't exist before.

AI will likely follow a similar pattern. Some jobs will become less in-demand. Others will be created. The transition will be difficult for some people and exciting for others. But it won't be the apocalyptic job extinction event that some narratives suggest.

The conversation we should be having is about how to manage this transition thoughtfully. How do we retrain workers whose jobs are disappearing? How do we avoid a situation where AI's benefits are captured by a small number of people while disruption is distributed broadly? How do we ensure that as AI increases productivity, those gains benefit workers and not just capital owners?

These are hard questions. They involve policy, education, economics, and labor relations. They're less dramatic than "AI is killing jobs," so they don't get as much attention. But they're far more important.

The fact that AI layoff narratives are exaggerated doesn't mean we should ignore AI's real impacts. It just means we should focus on the real impacts rather than the imagined ones. We should look at how AI is changing job requirements, creating new skill demands, and reshaping career paths. We should think about education systems that prepare people for these changes. We should consider policies that help people adapt to technological transition.

But we should do all of this based on evidence, not on narratives that feel true because they fit existing technology-disruption stories.

The Role of Media and Incentives in Shaping Narratives

Why are AI-driven layoff narratives so persistent when the evidence doesn't support them?

A lot of it comes down to media incentives and how stories get amplified. Outlets that cover technology need to show their readers why technology matters. "AI gradually changes job requirements in subtle ways over the next decade" doesn't make a compelling headline. "AI is Killing Millions of Jobs" does.

When a company announces layoffs and cites AI as part of the restructuring, that's news. Journalists report it. Other outlets pick it up. It becomes part of the larger narrative about AI and employment. The fact that AI was maybe 10% of the reason for the layoffs and generic "cost reduction" was 90% of the reason gets lost in translation.

There's also confirmation bias. Once the narrative exists—"AI is driving massive job losses"—journalists naturally look for evidence that confirms it. Every layoff announcement that mentions AI gets framed as more evidence. Announcements that don't mention AI get ignored. Over time, the selective reporting creates the impression that all layoffs are AI-driven, even though they're not.

Investors also have incentives to promote the AI narrative. AI companies benefit from fears about AI disruption—it drives investment and valuations. Venture capitalists have billions of dollars betting on AI companies, so they benefit when media amplifies stories about AI's impact. Companies laying off workers benefit from framing it as technological inevitability rather than management failure. These incentives all push toward amplifying the AI-driven job loss narrative.

The media ecosystem creates a kind of narrative drift where stories that fit existing patterns and confirm existing beliefs get amplified, while evidence that contradicts those stories gets ignored. The result is that we collectively come to believe things that the data doesn't support.

This is worth being aware of because it shapes how we make decisions. If you believe AI is going to destroy your career, you'll make different choices than if you believe AI will augment your capabilities. If policymakers believe AI will cause mass unemployment, they'll pursue different policies than if they understand AI's impact as more gradual and sector-specific. Media narratives have real effects.

What Companies Actually Need to Do (And Probably Won't)

If we take seriously the idea that AI is going to change work in significant ways, even if not in the catastrophic job-destruction way that's often predicted, what should companies actually be doing?

They should invest heavily in training and reskilling. They should help current employees understand how AI will change their roles and develop new skills. They should think carefully about how to integrate AI into workflows in ways that enhance rather than replace human capabilities. They should be transparent about how AI decisions are made, especially when they affect customers or employees.

Most importantly, they should recognize that successful AI adoption requires people to change how they work, and changing how people work requires investment in that change. It requires time, training, management support, and often cultural shifts. You can't just drop an AI tool into an organization and expect productivity gains. You get those gains through thoughtful implementation and people development.

What companies are actually doing is often different. They're deploying AI tools without investing in training. They're using AI to reduce headcount rather than to augment existing employees. They're making decisions about AI systems without involving the people who'll be affected. They're pursuing "move fast and break things" approaches when what's breaking is people's careers and livelihoods.

There's no mystery why. The benefits of AI implementation accrue to the company immediately in the form of cost reduction and efficiency. The costs—retraining, lost productivity during transition, dealing with disrupted employees—are also immediate but feel temporary from the company's perspective. Once people are retrained and productivity recovers, the company has permanently lower costs.

The problem is distributed across time and people. A company saves money. An employee loses their job or has to retrain on their own time. A community loses tax revenue and purchasing power. These costs don't show up on the company's balance sheet, so they don't factor into decision-making.

This is partly a policy problem. The costs of technological transitions should be distributed more broadly—through retraining programs funded by government, through taxes on AI implementation, through regulations requiring company investment in transition support. But we're not there yet.

The Honest Assessment: Where We Actually Stand

Let's cut through all the narrative and get to what we actually know:

- AI is not currently causing massive job losses. In 2025, AI was cited as a reason for less than 5% of all job losses. This is measurable and verifiable. Any claim that AI is the primary driver of current layoffs is not supported by the data.

- Economic factors are the primary driver of current layoffs. Slower growth, higher costs, weaker demand, and the correction of pandemic over-hiring account for the vast majority of job losses.

- AI is changing work and will continue to. How jobs are done is evolving. Some tasks are being automated. Some new roles are being created. But the change is gradual and uneven, not sudden and universal.

- AI competency is becoming valuable. Understanding AI's capabilities and limitations, and being able to use AI tools effectively, are increasingly important skills.

- The future is uncertain. AI might continue to improve and eventually handle tasks that currently require human judgment. Or it might hit plateaus and turn out to be less transformative than we think. Nobody knows.

- Current narratives are exaggerated. Both the apocalyptic "AI will destroy all jobs" narratives and the breathlessly optimistic "AI will solve everything" narratives overstate what we actually know.

The honest truth is that we're in the early stages of deploying a powerful technology whose long-term impacts we don't fully understand, in an economic environment that's creating pressure on businesses and workers regardless of AI, and with a narrative ecosystem that rewards drama and confirmation over accuracy.

This doesn't mean there's nothing to worry about. It means we should worry about the things that are actually happening and might actually happen, not the things that sound dramatic but don't have evidence.

FAQ

Is AI actually causing layoffs in 2025?

AI was cited as the reason for only 55,000 job losses out of approximately 1.2 million total US layoffs in 2025, accounting for 4.5% of all job cuts. While AI may have played a role in some restructuring decisions, it is far from the primary driver of current layoffs. Instead, companies cited "market and economic conditions" roughly four times more frequently, suggesting that broader economic pressures, slowing consumer demand, and general profitability concerns are the dominant factors pushing companies to reduce headcount.
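If you want to sanity-check those figures yourself, the arithmetic is simple. The sketch below uses only the numbers quoted above (55,000 AI-attributed cuts, roughly 1.2 million total layoffs, a 4.5% share); the variable and function names are illustrative, and the total is treated as the rounded approximation it is.

```python
def share_of_total(part: float, total: float) -> float:
    """Return part as a fraction of total."""
    return part / total


# Figures as quoted in this article; the total is an approximation.
AI_CITED_CUTS = 55_000
APPROX_TOTAL_CUTS = 1_200_000
AI_SHARE_QUOTED = 0.045

# Share of layoffs attributed to AI, using the rounded total.
ai_share = share_of_total(AI_CITED_CUTS, APPROX_TOTAL_CUTS)

# Total layoffs implied by taking the quoted 4.5% share at face value.
implied_total = AI_CITED_CUTS / AI_SHARE_QUOTED

print(f"AI share using rounded total: {ai_share:.2%}")
print(f"Total implied by 4.5% share: {implied_total:,.0f}")
```

Using the rounded total gives a share of about 4.58%, and taking 4.5% at face value implies a total a little over 1.2 million, so the quoted numbers are consistent with each other within rounding.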

Why do companies claim AI is the reason for layoffs if it isn't?

Framing layoffs as "AI-driven" is better PR than admitting lower profits, weak demand, or management failures. Describing job cuts as part of a strategic "AI initiative" makes companies sound forward-thinking and innovative rather than just cutting costs. It also appeals to tech-savvy investors and journalists who find technological disruption stories more compelling than economic realities. Additionally, if jobs are cut due to "inevitable technological progress," it shifts blame away from leadership decisions, which executives obviously prefer.

What does the data actually show about AI and productivity?

Despite predictions that AI would dramatically increase worker productivity, the actual data from 2025 shows no meaningful productivity surge. Worker productivity has remained relatively flat, suggesting that while AI tools are being deployed, they're not yet delivering the transformative efficiency gains that were promised. In many cases, employees spend time learning new AI tools, fact-checking AI outputs, and managing AI systems, which can actually add complexity rather than reduce it—at least in the near term before workflows fully adapt.

Why are young people struggling to find jobs if AI isn't to blame?

Youth unemployment is primarily driven by an oversupply of college graduates relative to available entry-level positions. Since the late 2010s, the percentage of young adults pursuing higher education has increased significantly, while the number of entry-level jobs has not grown proportionally. Additionally, credential inflation means employers now require degrees for positions that previously only needed a high school education. This creates a supply-demand mismatch that has nothing to do with AI: the trend existed before ChatGPT and would continue regardless of AI deployment.

Could AI cause job losses in the future, even if it isn't doing so now?

Yes, this is genuinely possible. If AI continues to improve and becomes capable of handling complex tasks that currently require human judgment—such as writing, analysis, software development, and creative work—it could displace workers in these fields. However, this is a medium-to-long-term risk, not an immediate one. The real challenge is preparing for this possibility through education, workforce development, and policies that help people adapt, rather than assuming it's already happening.

What skills should I develop to be safe from AI displacement?

Focus on skills that complement AI rather than compete with it: complex problem-solving, critical judgment, communication, interpersonal skills, domain expertise, and the ability to manage and work with AI tools effectively. Jobs requiring human connection, contextual understanding, and nuanced decision-making are more resistant to automation than jobs involving routine, well-defined tasks. Additionally, developing AI literacy—understanding what AI can and cannot do—has become an increasingly valuable meta-skill across fields.

How should I think about AI and my career right now?

Don't make drastic career changes based on AI fears, but do invest in understanding how AI is affecting your specific field. Develop competency with AI tools relevant to your work. Focus on differentiating yourself through expertise, judgment, and skills that AI doesn't handle well. Learn continuously, since the specific demands of your field will likely evolve. Most importantly, separate present realities from future possibilities—current job losses are primarily economic, not AI-driven, so make decisions based on what's actually happening now, not on predictions about what might happen in five to ten years.

Why does the narrative about AI and job loss persist if the data doesn't support it?

Media outlets benefit from dramatic narratives about disruption and technological change, as these stories drive engagement. Confirmation bias means journalists naturally look for evidence that confirms the "AI is killing jobs" narrative. Investors benefit from AI disruption narratives because they drive funding and valuations. Companies benefit from framing layoffs as technological necessity rather than management failure. These systemic incentives push toward amplifying the AI-driven job loss narrative, even when evidence is limited. Additionally, evidence-based accounts spread more slowly than dramatic, emotionally resonant stories.

Conclusion

We're living through a fascinating moment where a powerful new technology is emerging, economic pressures are real and significant, and our narratives about what's happening have drifted dangerously far from what the data actually shows.

AI is affecting work. It will continue to affect work. It may eventually displace workers in ways we're only starting to understand. These are real things worth paying attention to.

But AI is not currently the primary driver of job losses. That distinction belongs to economic factors: slowing growth, higher costs, weaker demand, and the structural correction of pandemic-era over-hiring. Companies are using AI as a convenient narrative frame for layoffs that would be happening anyway.

This matters because narratives shape behavior. If workers believe AI will destroy their careers, they make different decisions. If policymakers believe AI is causing mass unemployment, they pursue different policies. If we base decisions on false narratives, we'll solve the wrong problems while ignoring the real ones.

The real conversation we should be having is about how to manage the genuine changes that AI is bringing to work. That means investing in education and retraining. It means thinking carefully about how to implement AI in ways that augment human capabilities rather than just reduce costs. It means preparing for future risk while recognizing present reality.

It means, most importantly, stopping to look at the evidence before accepting a narrative just because it's dramatic and fits existing patterns.

AI might eventually become the dominant driver of job change. But in 2025, that's simply not what the data shows. And if we want to make good decisions about technology, policy, and our careers, we need to start with what's actually true, not with what would make the best headline.

Key Takeaways

- AI accounted for just 4.5% of US job losses in 2025—economic factors drove ~75% of layoffs

- Companies deliberately frame cost-cutting as AI initiatives because it sounds better than admitting business failures

- Zero productivity surge from AI deployment despite widespread adoption—evidence it's not replacing workers yet

- Youth unemployment driven by credential inflation and oversupply of graduates, not AI automation of entry-level roles

- Future AI risk to employment is real but medium-term; current job losses are economic, not technological
