AI Isn't a Bubble—It's a Technological Shift Like the Internet [2025]

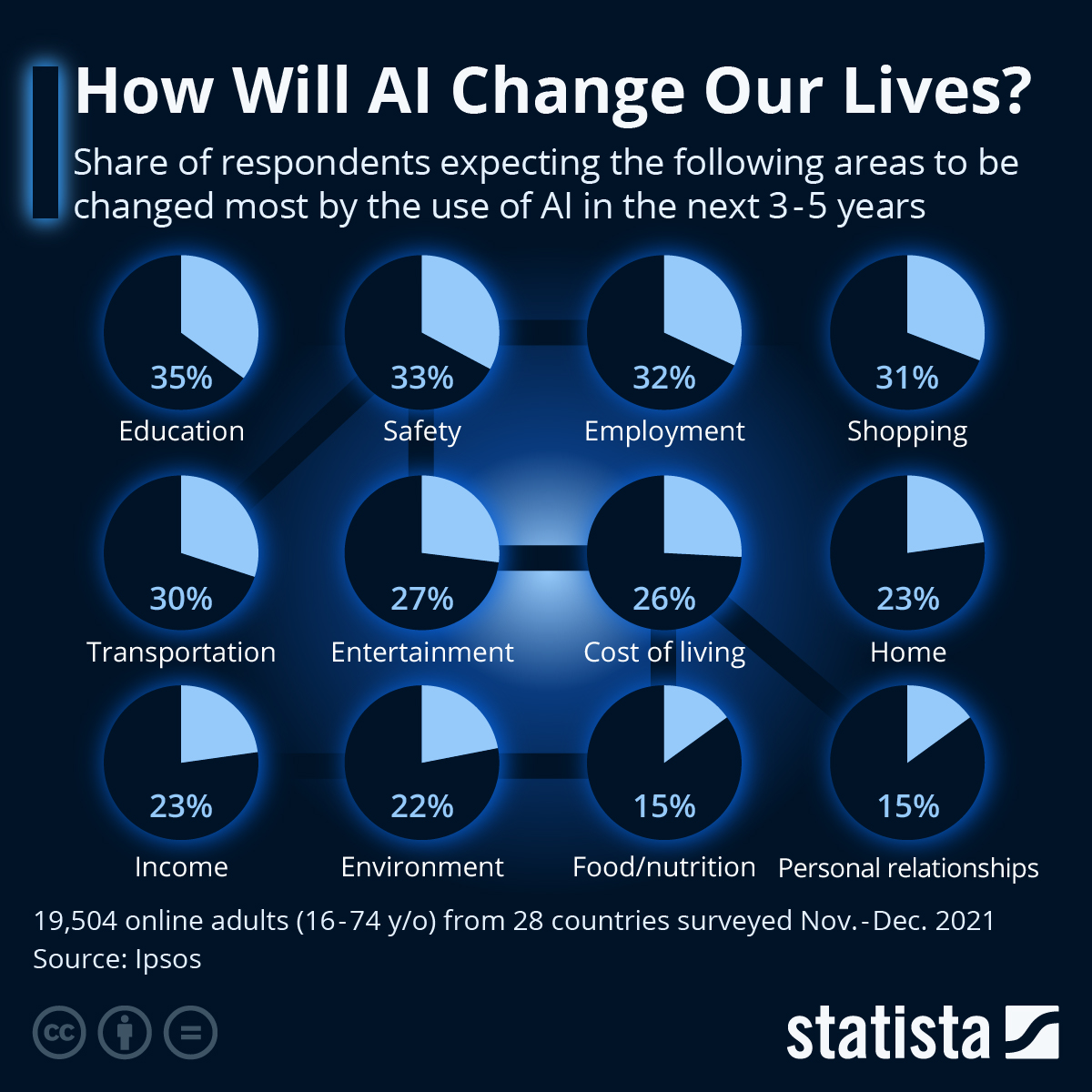

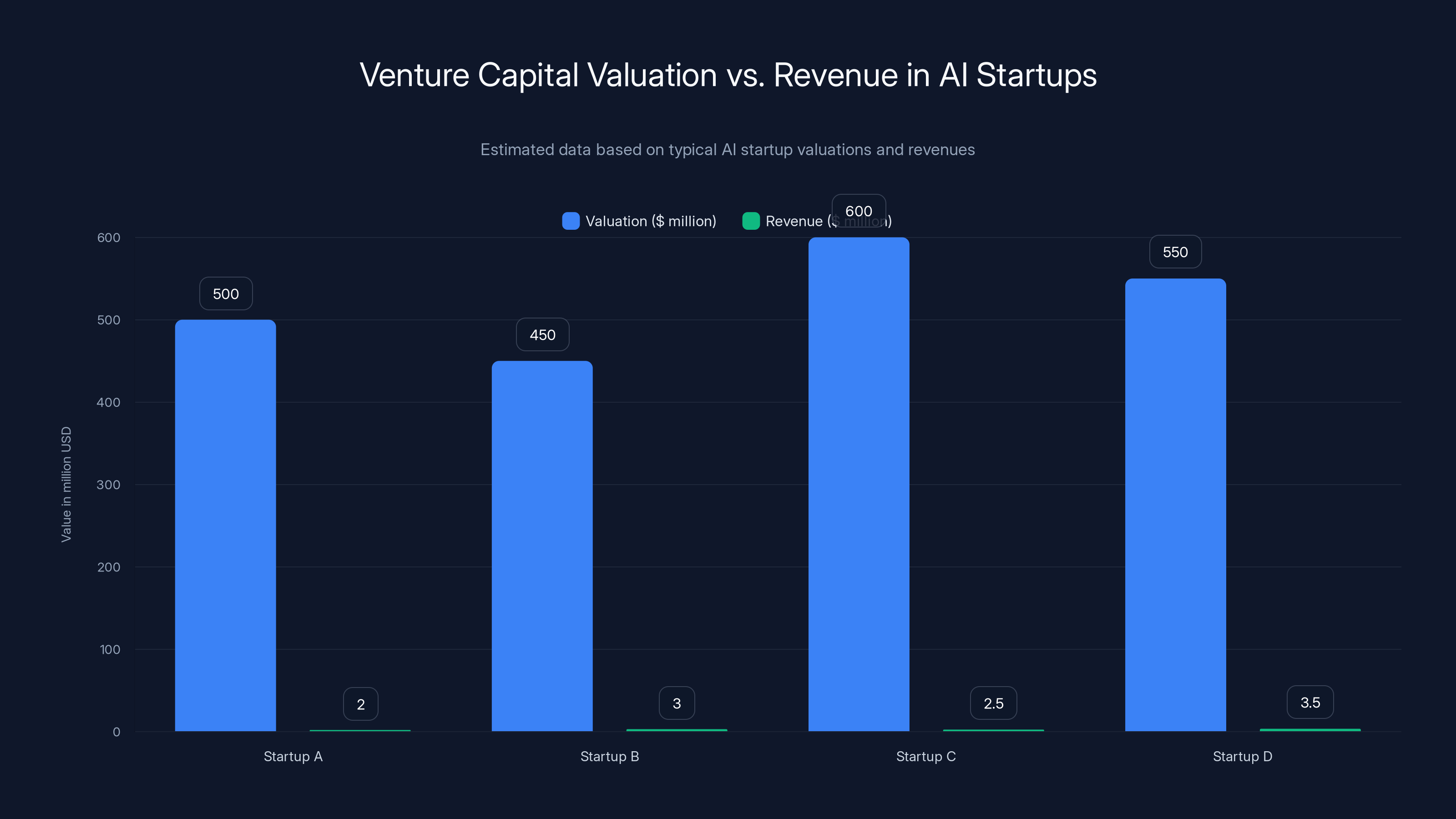

You've probably heard it a dozen times in the last month: "AI is in a bubble. It's going to burst. Mark my words." People point to unprofitable startups commanding billion-dollar valuations and to general market exuberance as proof that we're living through another tech cycle destined for correction.

Here's the thing: they're not entirely wrong about the valuations. But they're asking the wrong question. The question isn't whether AI's bubble will burst. The question is what happens after it does.

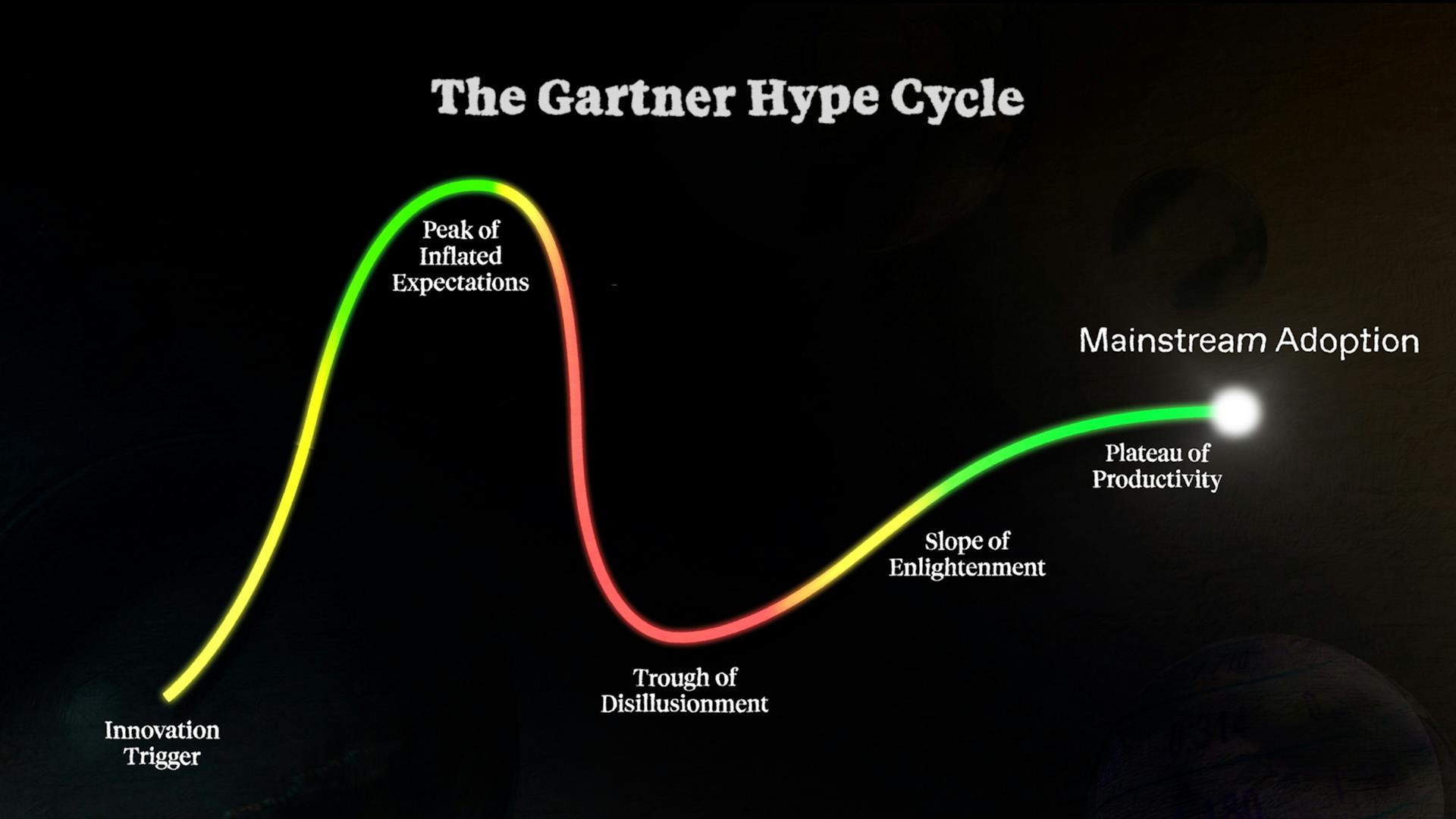

Every major technological shift in modern history has looked like a bubble from certain angles. The dot-com crash wiped out billions in paper wealth. Companies with no revenue and infinite burn rates disappeared overnight. And yet, the internet didn't go anywhere. It rewired civilization. The same pattern played out with cloud computing, mobile devices, and blockchain. The infrastructure remained. The applications evolved. The valuations corrected. But the underlying technology fundamentally changed how humans work.

AI is following that exact same arc. And the organizations sitting on the sidelines waiting for the pop will discover far too late that the world has stopped waiting for them.

TL;DR

- The bubble is real, but it's a bubble of expectations, not capability: Current AI systems fall well short of AGI, but the technology is already valuable for enterprise automation and efficiency

- Every tech bubble leaves permanent value behind: The internet, electricity, and computing all survived their corrections; AI will too

- The real competition is global and it's heating up: The US innovates, China scales, Europe regulates—and only the innovators will define the future

- AI will reshape work, not replace workers: Organizations that use AI to amplify human capability will dominate those that use it for cost-cutting

- The infrastructure and implementation layer is where real money lives: Not in flashy demos, but in data governance, analytics, and enterprise-grade systems

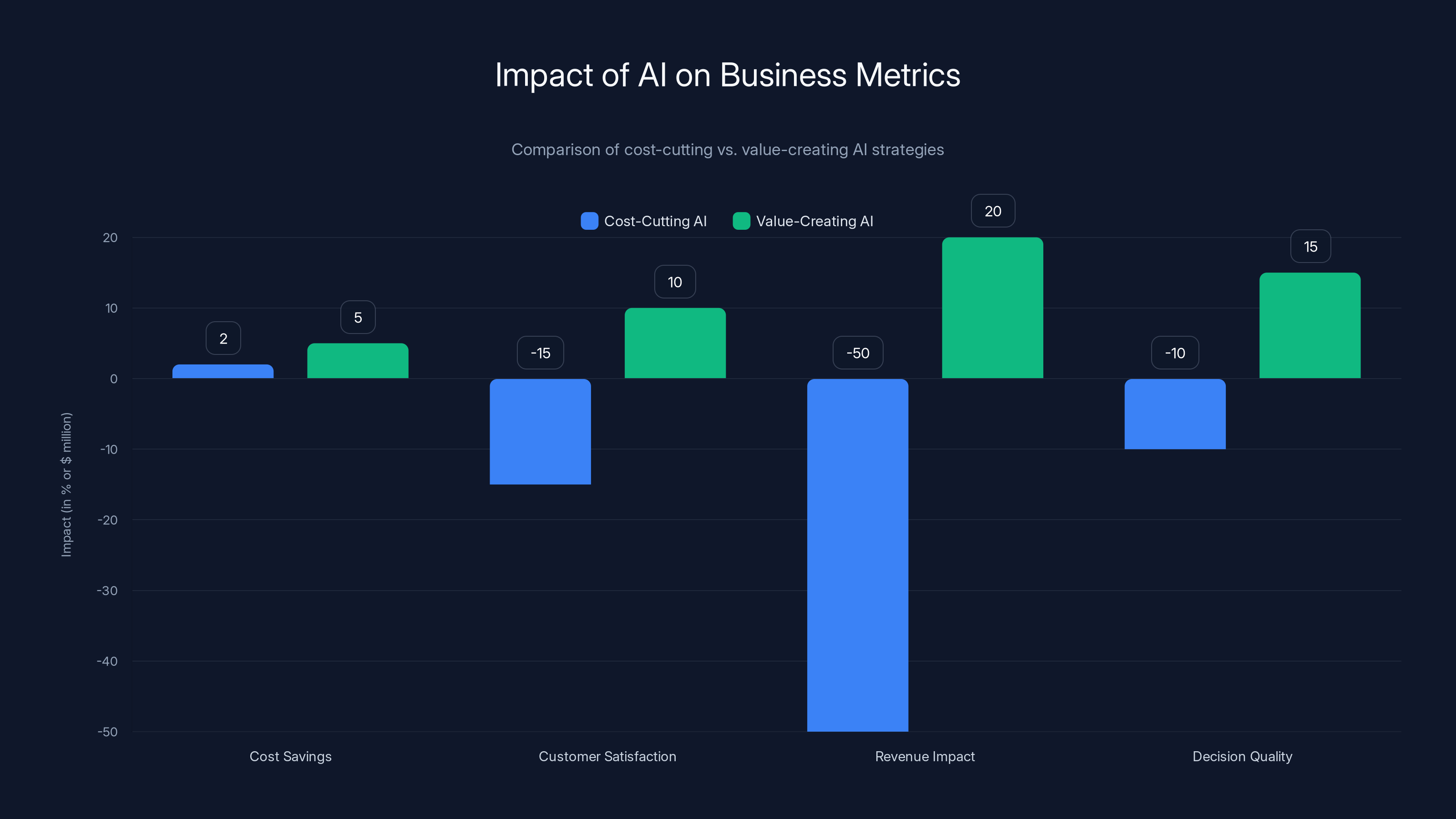


The Distinction Between Hype and Reality: Where the Actual Bubble Sits

Let's get specific about what's actually a bubble and what isn't.

The bubble exists. But it's not in the technology itself. It's in the expectations market. Venture capitalists are funding startups that assume breakthroughs in artificial general intelligence (AGI) that simply haven't materialized. They're betting that a company founded last Tuesday will somehow achieve what teams with billions in capital and world-class researchers haven't managed in decades.

Valuations have drifted into science fiction. A startup with

The giveaway? Look at what the loudest companies actually demo. It's rarely enterprise-grade infrastructure. It's rarely deep integration into real business processes. It's coding assistants. It's chatbots. It's shopping tools. You know why? Because those are easy to show. They look impressive in a 10-minute demo. A person can understand them immediately. "Look, the AI wrote code!" Boom. Minds blown.

But building a full, enterprise-grade data backbone? That's boring. There's no narrative tension. Nobody gets excited about data lineage tracking. Nobody takes screenshots of semantic grounding and posts them to Twitter. But that's the work that actually matters.

The real AI infrastructure lives in:

- Analytics and data movement: How does the data flow from your actual systems into the AI model?

- IT infrastructure: Can your existing architecture handle the computational load?

- Data governance and compliance: How do you ensure data is trusted, secure, and used legally?

- Output reliability and explainability: Can you audit why the AI made a decision? Can you explain it to regulators?

- Context preservation: Does the system understand the meaning and nuance of your specific business context?

These aren't sexy problems. But they're the ones that determine whether a company gets actual value from AI or just burns money on experiments.

The chart highlights the disparity between the high valuations and relatively low revenues of AI startups, indicating a potential bubble in expectations. Estimated data.

The Precedent: Every Major Tech Bubble Left Infrastructure Behind

Let's look back at what actually happened when previous "bubbles" burst.

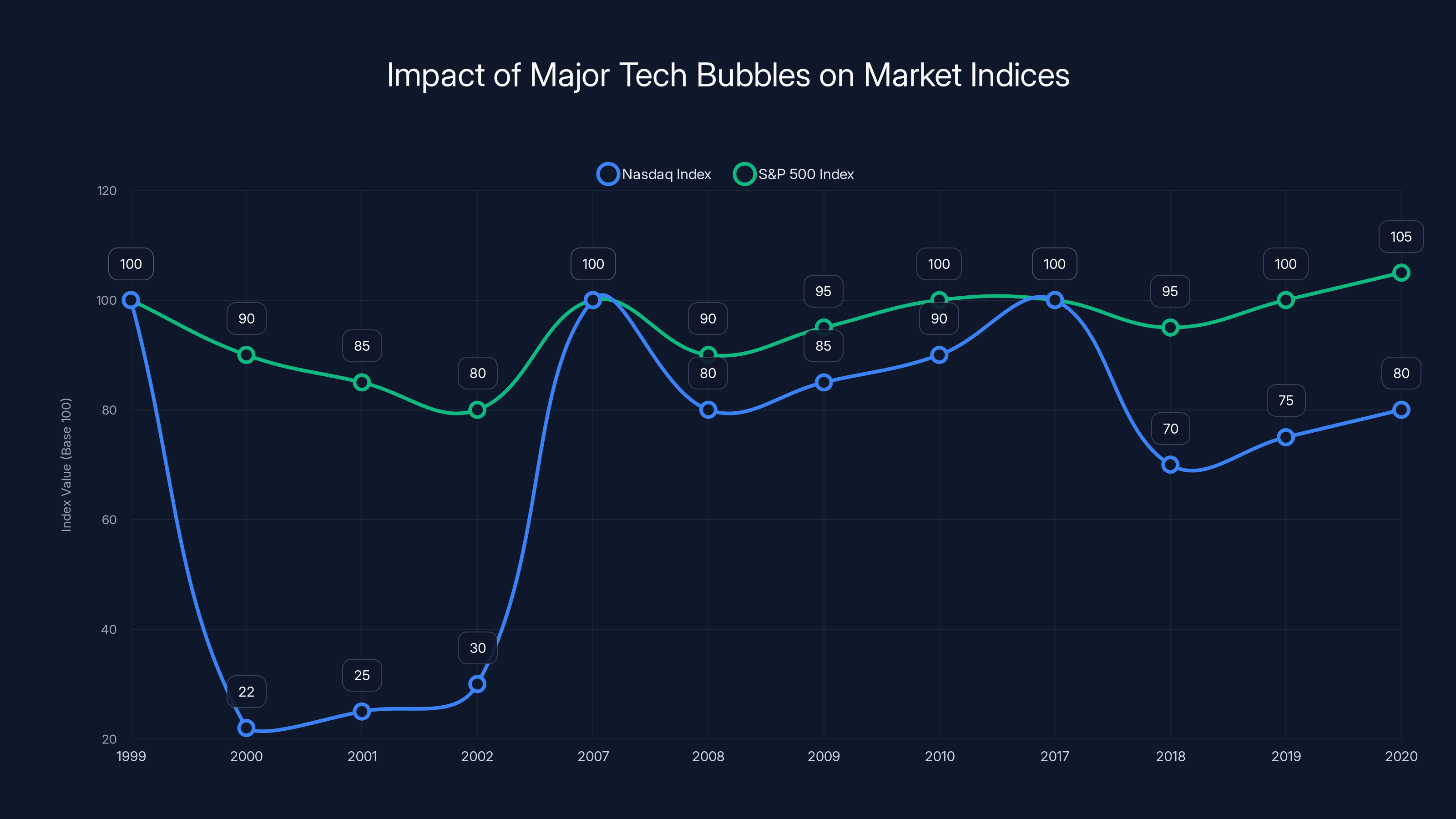

The Dot-Com Collapse (2000-2002)

Companies with names like Pets.com and eToys were valued at hundreds of millions of dollars or more. The Nasdaq Composite fell roughly 78% from its March 2000 peak to its October 2002 low. Entire investment firms imploded. Articles declaring "the internet is dead" filled newspapers.

Yet the internet didn't die. It was still there. The hyperlink still worked. Email still functioned. The servers still ran.

What died were companies predicated on the belief that "the internet changes everything about business, so the old rules don't apply." Companies with no path to profitability and no competitive advantages suddenly looked very expensive at bubble valuations.

But the companies that survived—or were built in the aftermath—didn't succeed despite the internet. They succeeded because of it. Google emerged from the wreckage. Amazon, which was nearly destroyed during the crash, rebuilt and became one of the world's most valuable companies. The infrastructure remained.

The Mobile Bubble (2007-2012)

Every tech company and investor decided that "mobile is the future." The assumption was that anything mobile would succeed. Apps for everything. Startups raised hundreds of millions to build mobile-first experiences for problems that didn't need them.

Many of those companies are now dead. But the smartphone? It didn't go anywhere. It rewired how humans access information, communicate, and conduct commerce. The technology was never the problem. The unrealistic expectations about what mobile alone could achieve were the problem.

Companies that treated mobile as infrastructure—a new platform to build real solutions on—won. Companies that treated it as a magic bullet lost.

The Blockchain Bubble (2017-2022)

Every investor decided that "blockchain will replace databases." Every startup decided to slap "blockchain" into their pitch deck and raise Series A funding at a 10x valuation multiple. The hype was deafening.

Most of those startups are now defunct. But blockchain technology didn't disappear. It's been quietly integrated into logistics, supply chain verification, and financial infrastructure. The technology remained valuable. The unrealistic expectations didn't.

The pattern is consistent: Technology survives. Bubble expectations evaporate. The organizations betting on the technology do fine. The organizations betting on the hype go bankrupt.

AI will follow the same arc.

The Expectation Bubble: AGI, Superintelligence, and the Timeline Problem

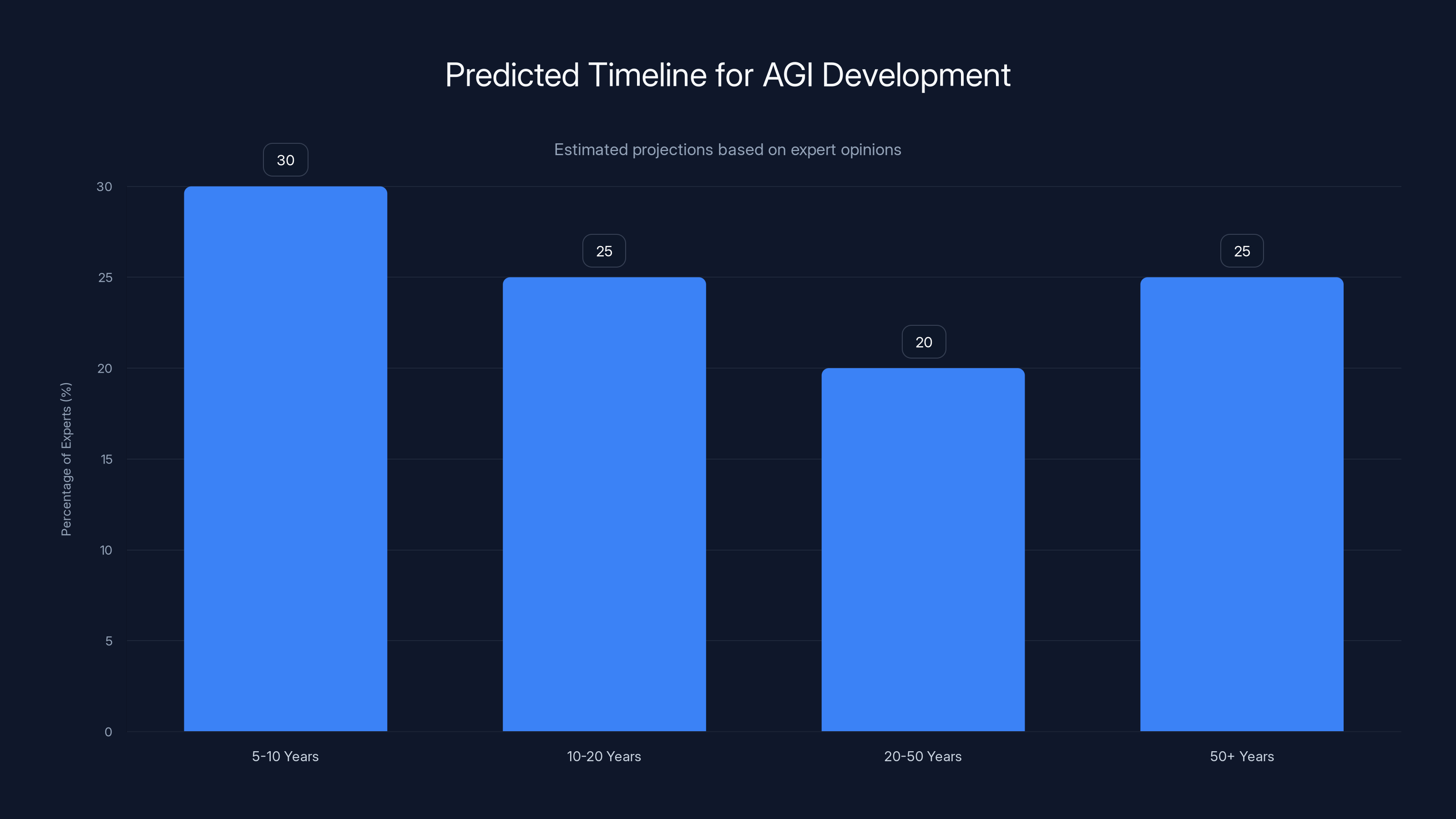

The current AI bubble is built on a specific bet: that we're very close to artificial general intelligence.

For context, artificial general intelligence is a system that can understand, learn, and apply knowledge across any domain at human level or beyond. It's a theoretical concept. We don't have it. We might never have it with current approaches. But investors are pricing in its arrival as if it's a few quarters away.

Here's the problem: the timelines are fundamentally uncertain. Some researchers think AGI is 5-10 years away. Others think it's 50+ years away. Still others think the concept itself is misconceived—that you might have superhuman performance on specific tasks without having "general" intelligence.

When a major part of your investment thesis depends on an uncertain future breakthrough, you've stopped betting on current capability. You've started betting on mythology.

Companies raise funding at bubble valuations because investors believe:

- "We'll achieve AGI before competitors": The company will be the first to general intelligence and will therefore dominate the industry

- "AGI will create trillion-dollar value": Once AGI exists, whoever controls it will have a license to print money

- "First-mover advantage is permanent": Being first means you'll stay dominant forever

All three assumptions are questionable. None of them are guaranteed.

But here's what's not uncertain: AI is useful right now. It can:

- Detect fraud patterns that humans miss

- Optimize supply chains in real time

- Analyze medical imagery faster and sometimes more accurately than radiologists

- Automate routine tasks so humans can focus on judgment calls

- Generate initial drafts of documents, code, and creative work

These applications don't require AGI. They don't depend on a breakthrough that's 5-10 years away. They work today. And they deliver measurable value today.

The bubble companies are betting you won't notice the difference. They're building on speculation about AGI while the real value is being extracted from more mundane applications.

The chart illustrates how major tech bubbles, such as the Dot-Com and Mobile bubbles, impacted market indices like Nasdaq and S&P 500. Despite significant drops during bubble bursts, infrastructure like the internet and mobile technology persisted and eventually supported recovery. Estimated data based on historical trends.

The Geopolitical Reality: US Innovation, China's Scale, Europe's Regulation

There's another dimension to the AI debate that often gets overlooked in American tech discourse: geopolitics.

The world isn't moving as a unified bloc on AI development. Different regions have chosen fundamentally different strategies. Understanding these strategies is crucial to understanding which AI bets will actually matter.

The United States: Move Fast and Break Things

America's approach to AI is essentially: "Build it. Figure out the implications later. Regulation will catch up eventually." The country has the research talent, the computational resources, the capital markets, and the cultural appetite for risk-taking. Companies like OpenAI, Anthropic, Google, and Meta have invested enormous resources into AI development with minimal regulatory overhead.

The result: The US is currently leading in frontier AI research. The largest language models. The most advanced vision systems. The cutting-edge breakthroughs.

But leadership in research doesn't automatically translate to dominance in applications. Research is one layer. Building products that customers actually use is another.

China: Copy at Scale

China's approach is fundamentally different. The country has:

- Limited access to the hardware behind frontier research (due to export controls on chips and cloud computing resources)

- Enormous engineering talent that can rebuild systems from scratch

- A culture of rapid iteration and optimization

- Scale: A market of 1.4 billion people with unique data and unique applications

China isn't leading on frontier research. But it's building production-grade AI systems and deploying them at scale. The economic value isn't necessarily in being first. It's in being fast at turning research into products that work at scale.

The assumption that the US will dominate AI is based on current research leadership. But research leadership has been treated as a predictor of market dominance before, and it hasn't always worked out that way.

Europe: Regulate and Hope

Europe's approach is essentially: "Let's pass laws." The EU AI Act attempts to regulate AI development and deployment. The theory is that by setting strict rules on how AI can be trained and used, Europe will ensure responsible AI development.

The problem with this approach is that regulations don't create innovation. They can prevent harms. They can ensure accountability. But they can't compel breakthroughs. A company operating under strict European regulations still needs the research talent, the computational resources, and the market to justify investment.

Europe has talented researchers. But the best and brightest often migrate to the US or Asia. Europe has strong regulations. But regulations don't translate to competitive advantage in a race.

The geopolitical reality is this: The AI race is starting to look like the space race. You either launch, or you watch. Pretending you can legislate your way into technological relevance is delusional.

This matters because the valuations of AI companies are partly a bet on which geopolitical bloc will win the AI race. If you believe America will dominate, you bet on US companies. If you believe China will win at scale, you invest accordingly. If you believe Europe has a chance, you're probably making a mistake.

The bubble will deflate. But the geopolitical competition will intensify. The winners will be the companies and countries that balance innovation with responsible deployment.

The Wrong Fear: AI Will Replace Humans

There's a persistent anxiety that runs through discussions of AI: "Will this technology replace me?"

It's the same fear that surfaced with every major automation wave. The printing press would eliminate scribes. Factory machinery would eliminate craftspeople. ATMs would eliminate bank tellers. Calculators would eliminate mathematicians.

All of those predictions were partially correct and partially wrong. Those jobs didn't disappear entirely, but they did change. The skills required shifted. The value proposition of human labor shifted. Some people adapted. Some didn't. Some industries restructured entirely.

AI will do the same. But the outcome depends on how organizations use the technology.

The Cost-Cutting Trap

Some companies will use AI as cover for layoffs. They'll see AI as a way to reduce headcount. "We can automate this function. We don't need those people anymore." This approach has a consistent track record: it fails.

Here's why: If you're using AI to cut costs, you're not getting the productivity gains that make the technology worthwhile. You're just replacing people with a system you don't understand, that you didn't train properly, and that you have no incentive to improve.

The organizations that thrive will be the ones that use AI to make their people more capable. More decisive. More strategic. They'll automate the tasks that nobody wants to do anyway, and redeploy that human capacity toward higher-value work.

The Amplification Path

A customer service representative with AI-powered tools can handle 3-5x more cases than without those tools. But the representative is still required. The human judgment, the ability to handle edge cases, the empathy—these are still essential.

A financial analyst with AI can analyze 10x more data sources and produce recommendations 10x faster. But the analyst is still required. Someone needs to question the outputs, integrate external knowledge, and make the final judgment call.

A doctor with AI can review medical imagery 100x faster and catch patterns a human might miss. But the doctor is still required. Someone needs to integrate the AI findings with patient history, clinical judgment, and ethical considerations.

In every case, the human becomes more valuable, not less. The work becomes more strategic, not less. The role evolves.

The fear that "AI will replace humans" is based on a misunderstanding of how technology actually works in practice. Technology rarely eliminates roles outright. It changes what value those roles provide.

But this only works if organizations invest in retraining, in creating a culture where AI is a tool rather than a threat, and in restructuring roles to capitalize on what AI can do (process massive data quickly) while preserving what humans can do (judge, contextualize, decide).

Organizations that don't make that investment? They'll struggle. But that's a failure of strategy, not a failure of the technology.

Estimated data shows a wide range of predictions for AGI development, with significant uncertainty about the timeline.

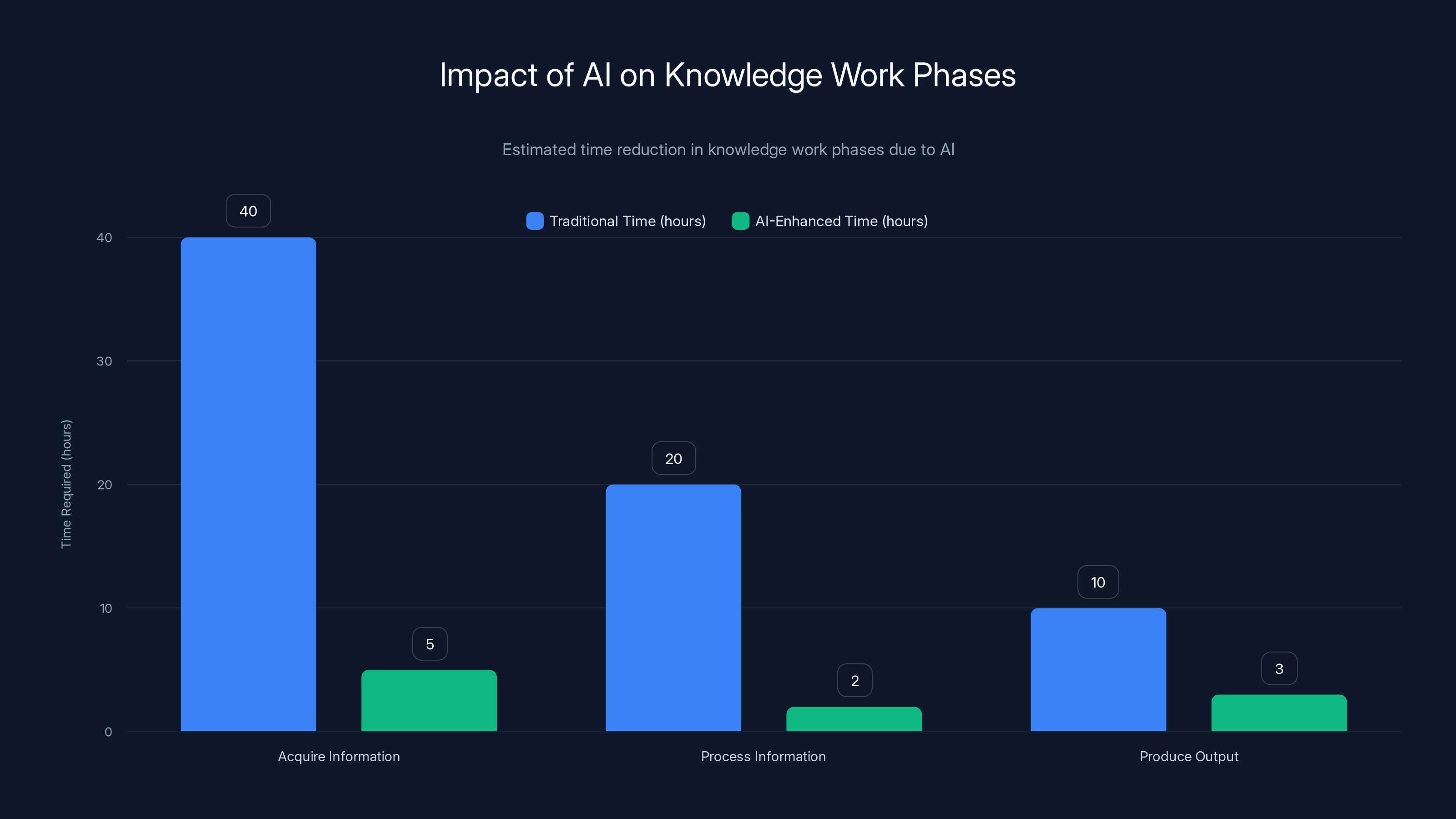

Knowledge Work Is Being Rewritten in Real Time

We're living through a transition in how knowledge work actually happens. And the speed of change is creating a new set of opportunities and challenges.

For most of human history, knowledge work followed a pattern:

- Acquire information (read, research, talk to people)

- Process it (think, analyze, synthesize)

- Produce output (write, present, decide)

Each step took time. Lots of time. A financial analyst would spend weeks gathering data from disparate sources. A researcher would spend months reviewing the literature. A writer would spend days researching before writing a single article.

That's still the conceptual model. But the execution has changed.

AI accelerates the acquisition phase: You can now get relevant information from thousands of sources instantly. You don't need to manually search through databases. You don't need to call people to ask questions. You ask the system, and it synthesizes a response.

AI accelerates the processing phase: You can analyze larger datasets, spot patterns, and generate hypotheses faster than humans can. What used to take a week of analysis now takes an hour.

AI changes what the output phase means: You're no longer starting from a blank page. You're starting from an AI-generated draft, outline, or recommendation. Your job isn't to create from scratch. Your job is to refine, question, and integrate.

This is a fundamental shift in how knowledge work functions. And it has cascading implications.

What becomes more valuable:

- Critical thinking: The ability to question AI outputs is now essential. If you accept everything the system generates, you'll make terrible decisions. You need judgment.

- Integration: The ability to connect disparate insights into a coherent narrative is now the bottleneck. AI can generate insights. Humans need to connect them.

- Judgment calls: Decisions that require ethical consideration, business context, or stakeholder alignment are now the domain of human work. Optimization problems are AI's domain.

What becomes less valuable:

- Routine analysis: If it's a pattern-matching problem that can be solved by optimization, AI will do it better and faster.

- Information gathering: If the information is documented and structured, AI will find it faster than you will.

- Mechanical writing: If you're just translating data into a standard format, automation will handle it.

The organizations that thrive in this environment won't be the ones that replace workers with AI. They'll be the ones that redefine what workers do.

A manager's job changes from "coordinate task execution" to "set strategy and override AI recommendations when business context demands it." An analyst's job changes from "gather data and create reports" to "question AI recommendations and integrate them with business knowledge." A researcher's job changes from "search the literature" to "synthesize AI findings with original thinking."

Everyone's job becomes more strategic, not less. But it requires a different skill set. And it requires organizations that invest in developing that skill set.

Agentic Systems and Autonomous Decision-Making: The Next Layer

We're currently in the era of conversational AI. You ask a question. The system answers. You ask another question. The system answers again.

But the frontier is moving toward agentic systems. Systems that don't just answer questions. They take action.

A conversational system answers: "Based on the data, you should probably investigate the supply chain route through Vietnam." An agentic system does the investigation, analyzes alternatives, and proposes a specific change to the supply chain.

A conversational system answers: "Here's a summary of the fraud patterns in your transaction data." An agentic system flags suspicious transactions, freezes accounts, and logs the decisions for human review.

A conversational system answers: "Here's what the market analysis suggests." An agentic system adjusts pricing in real time based on market conditions, logs the changes, and flags anomalies.

Agentic systems introduce a new layer of complexity: how do you maintain human oversight of autonomous decisions?

This is where the real infrastructure challenges emerge. You can't just deploy an agentic system and hope it makes good decisions. You need:

- Continuous monitoring: Are the decisions consistent with expected patterns?

- Audit trails: Why did the system make this choice? Can you explain it?

- Override capabilities: When the human disagrees, can they override the system?

- Feedback loops: Does the system learn from human corrections?

These are the unglamorous infrastructure problems that determine whether agentic AI succeeds or fails in production.
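To make those four requirements concrete, here is a minimal Python sketch of what a human-oversight wrapper around an agent might look like. Everything in it is hypothetical and for illustration only: the `Decision` and `OverseenAgent` names, the `review_threshold` of 0.9, and the idea that the agent reports its own confidence are all assumptions, not a description of any real product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class Decision:
    action: str        # what the agent proposes, e.g. "freeze_account"
    reason: str        # explanation stored for the audit trail
    confidence: float  # agent's self-reported confidence in [0, 1] (assumption)
    approved_by: Optional[str] = None
    overridden: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class OverseenAgent:
    """Wraps a hypothetical agent with an audit log and a human override."""

    def __init__(self, propose: Callable[[dict], Decision], review_threshold: float = 0.9):
        self.propose = propose
        self.review_threshold = review_threshold
        self.audit_log = []    # audit trail: every decision, approved or not
        self.corrections = []  # feedback loop: cases a human rejected

    def decide(self, case: dict, reviewer: Callable[[dict, Decision], bool]) -> Decision:
        decision = self.propose(case)
        if decision.confidence >= self.review_threshold:
            decision.approved_by = "auto"
        elif reviewer(case, decision):
            decision.approved_by = "human"
        else:
            # Override: the human disagreed, so the action is not executed
            # and the case is kept for later retraining.
            decision.overridden = True
            self.corrections.append((case, decision))
        self.audit_log.append(decision)
        return decision
```

The design choice worth noticing is that the log and the override live outside the model. Continuous monitoring then becomes a question you can ask of `audit_log` (how often are humans overriding, and on which kinds of cases?) rather than something you hope the agent does for itself.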

And these are precisely the problems that bubble companies ignore. Building a system that makes autonomous decisions is easy. Building a system that makes autonomous decisions that humans trust enough to deploy at scale is hard.

The real competition in AI isn't happening in the headlines. It's happening in the infrastructure layer. The companies that figure out how to build trustworthy, auditable, explainable autonomous systems will own the future. The companies that just build chatbots and hope investors believe they're on the path to AGI will become cautionary tales.

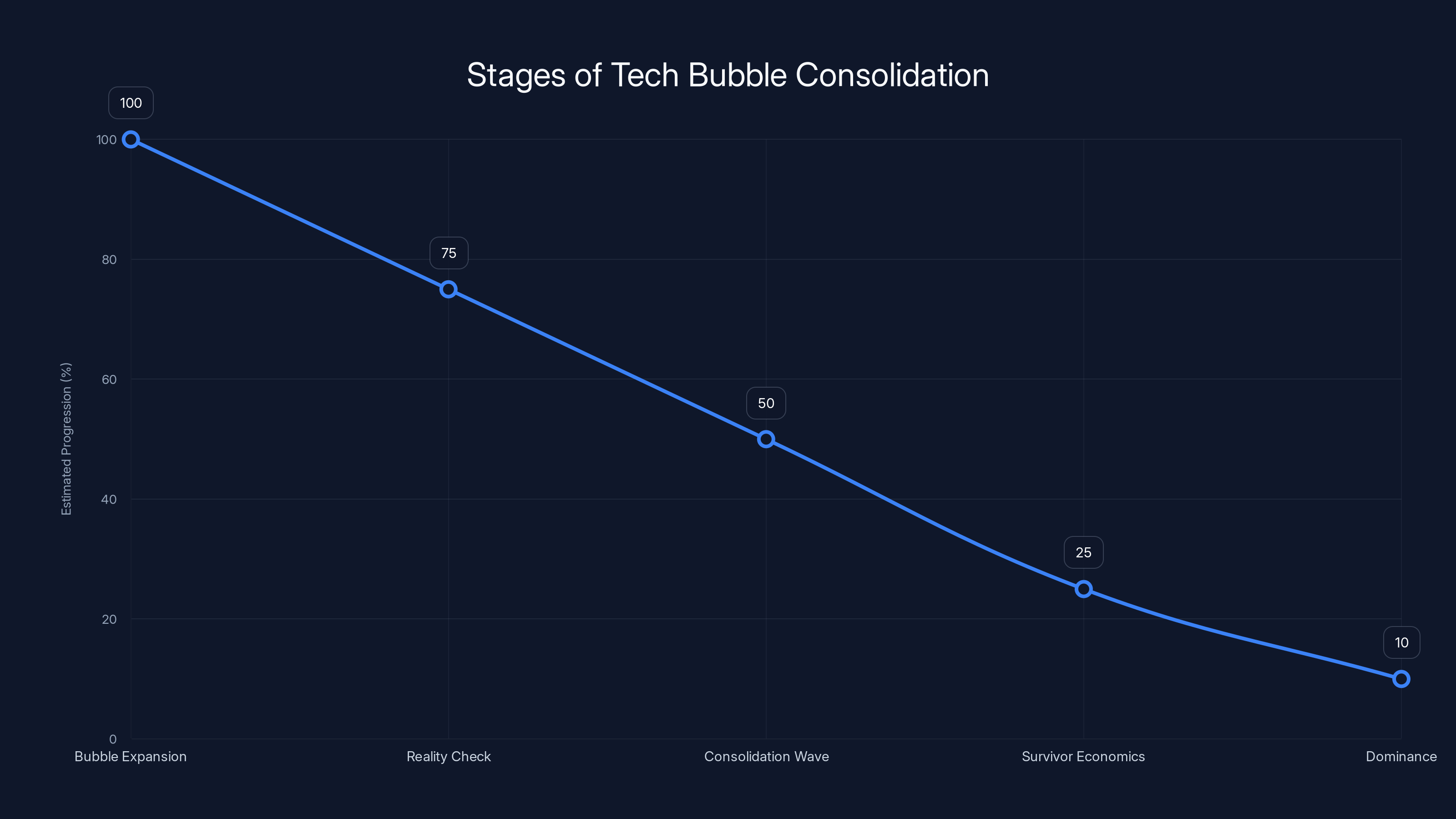

The tech bubble lifecycle progresses from expansion to dominance, with significant consolidation occurring during the 'Consolidation Wave' stage. Estimated data.

Value Creation vs. Cost Cutting: How to Actually Win with AI

Here's a critical distinction that leaders often miss: There are two ways to use AI. One creates value. One destroys it.

Cost-Cutting AI

Using AI to cut costs is straightforward: "We have a process that costs $X. We can automate it with AI and save money." The logic is simple. The execution is often a disaster.

Why? Because when you use AI to cut costs, you're optimizing for a metric (lower expense line) that has nothing to do with what customers actually want.

You automate customer service and save

You automate data analysis and eliminate analysts' jobs. But the organization loses the institutional knowledge those analysts had. Decisions become more mechanical. The organization becomes less adaptive.

Cost-cutting AI is seductive because it's easy to measure. You can point to the line item and say "we saved money." But it's rarely the path to sustainable value creation.

Value-Creating AI

Using AI to create value is harder to measure and harder to execute. It requires thinking differently about what the technology is for.

Value-creating AI is deployed to:

- Open new revenue streams: Netflix uses AI to understand viewing patterns and commission content that viewers actually want. This created an entirely new category of value—data-driven content creation.

- Develop new products: OpenAI's API allows companies to build new products on top of AI capabilities that didn't exist before. That's not cost-cutting. That's new value creation.

- Improve decision quality: Amazon uses AI to optimize inventory and pricing. This isn't about eliminating people. It's about making decisions faster and more accurately than humans can.

- Accelerate time to market: Companies use AI to generate initial drafts of code, documentation, and designs. This doesn't eliminate engineers. It makes them faster.

- Create new data products: Companies use AI to analyze customer data in ways that weren't possible before, creating new insights they can monetize.

In every case, the organization is deploying AI to do something that was previously impossible or impractical.

The organizations that win with AI will be the ones that figure out how to combine the two approaches: deploy AI to automate the tasks that nobody wants to do, and redeploy the human capacity to higher-value activities.

But this only works if the organization invests in its people. If you use AI to automate tasks and then lay off the people, you've just done a cost-cutting exercise. But if you automate tasks and then invest in retraining those people for higher-value work, you've created a more capable organization.

Culture matters as much as compute in determining whether AI creates value or destroys it.

The Retraining Imperative: Why Culture Matters More Than Technology

This is the part of AI strategy that most organizations get wrong.

They focus on the technology. "What AI system should we buy?" "What vendor should we partner with?" "How do we implement this?" These are important questions. But they're not the most important question.

The most important question is: "Do our people have the skills and confidence to actually use this technology effectively?"

A mediocre team with a strong AI system will produce mediocre results. A strong team with a mediocre AI system will find ways to make it work. But a strong team with a strong AI system and an organizational culture that encourages experimentation and intellectual challenge? That's where the real value lives.

Retraining is expensive. It's time-consuming. It's exactly the kind of line item that gets cut when budgets tighten. But it's where the value actually comes from.

Here's what effective AI-driven organizations do differently:

They invest in foundational understanding: Before deploying AI systems, they ensure that teams understand what the systems can and can't do. Not at a surface level. At a deep level. If your team thinks AI is magical, they'll trust it too much. If they think it's useless, they won't use it at all.

They create a culture of challenge: The best organizations using AI encourage people to question the system. "Why did it recommend this? Let me check." This isn't friction. This is how you prevent AI from making bad decisions at scale.

They iterate and experiment: They don't expect the first deployment to be perfect. They deploy, measure results, adjust, deploy again. They treat AI like any other system that needs tuning.

They maintain clear human accountability: Someone owns the decision. Even if the AI generated the recommendation, a human is accountable for whether that recommendation was followed. This creates the right incentives for skepticism.

They communicate what's changing: If AI is going to change people's roles, they tell people early and explicitly. They don't surprise anyone. They invest in helping people adapt.

Organizations that do these things will win. Organizations that just buy the best AI system and assume it will automatically make them better will struggle.

AI significantly reduces the time required for each phase of knowledge work, enhancing efficiency and allowing for more focus on critical thinking and integration. Estimated data.

The Plumbing Problem: Why Boring Infrastructure Matters More Than Flashy Demos

There's a massive gap between "we built an AI system" and "we have an AI system running in production that produces reliable, auditable, compliant outputs."

Most of the media attention goes to the flashy demos. The ones that make headlines. But the real work—the work that determines whether AI creates value or just costs money—is in the plumbing.

Data Governance

Before you can use AI effectively, you need to know what data you have. Where it lives. How clean it is. Whether it's up to date. Whether it's compliant with regulations.

Most organizations don't have this figured out. They have data scattered across legacy systems, cloud platforms, and shadow IT. Nobody's quite sure what the complete picture is.

Building an AI system on top of garbage data means you get garbage outputs. It doesn't matter how sophisticated the AI is. Garbage in, garbage out.

Data governance is unglamorous. It's not something you demo. But it's foundational.

Data Pipelines

You need to get data from where it lives to where the AI system can use it. You need to do this reliably, at scale, without losing data or introducing errors.

Data pipelines are complex. They break. Systems go down. Data formats change. Vendors update APIs. If you don't have robust pipelines, your AI system ends up running on outdated or incomplete data.
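One way to keep a pipeline from quietly passing bad data along is to validate every batch at the boundary. The Python sketch below is illustrative only: the `SCHEMA` fields, the 24-hour freshness window, and the `validate_batch` helper are all made-up assumptions, and it expects timezone-aware timestamps.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"order_id": str, "amount": float, "updated_at": datetime}


def validate_batch(rows, schema=SCHEMA, max_age=timedelta(hours=24), now=None):
    """Split rows into (valid, rejected) instead of silently passing bad data on."""
    now = now or datetime.now(timezone.utc)
    valid, rejected = [], []
    for row in rows:
        # Missing fields and wrong types are both caught by isinstance on .get().
        problems = [
            f"{name}: expected {typ.__name__}"
            for name, typ in schema.items()
            if not isinstance(row.get(name), typ)
        ]
        ts = row.get("updated_at")
        # Assumes timezone-aware datetimes; naive ones would raise here.
        if isinstance(ts, datetime) and now - ts > max_age:
            problems.append("updated_at: stale")
        if problems:
            rejected.append((row, problems))
        else:
            valid.append(row)
    return valid, rejected
```

Rejected rows come back with the reason attached, so a format change upstream shows up as a spike in rejections rather than as a slow decline in model quality.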

Monitoring and Alerting

Once your AI system is running, you need to know when something goes wrong. Is the system still producing reliable outputs? Are the patterns it's detecting still valid? Has the data distribution changed in ways that make the model unreliable?

Without monitoring, you don't know when your AI system becomes useless. It just silently starts making bad recommendations.
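A common way to check whether the data distribution has shifted is the Population Stability Index (PSI), which compares how a feature was distributed in a reference sample against how it looks in live traffic. The sketch below is a simplified, dependency-free version in Python. The equal-width binning, the bin count of 10, and the small floor for empty bins are implementation assumptions, and the often-quoted thresholds (below 0.1 stable, above 0.25 significant shift) are rules of thumb rather than guarantees.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

In practice you would run something like this on a schedule for each important input feature and alert when the value crosses whatever threshold your team has agreed on.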

Compliance and Audit

If your AI system is making decisions that affect people (hiring, lending, content moderation, medical diagnosis), you probably need to be able to explain those decisions. You need audit trails. You need evidence that the system is behaving as expected.

Building compliant AI systems is hard. It requires rethinking how you design and deploy the technology.

Version Control and Reproducibility

When your AI model makes a bad decision, you need to be able to go back and understand why. What version of the model was running? What data was it trained on? What were the inputs?

Without this, you're flying blind.
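Answering those three questions later means writing the answers down at prediction time. Here is a minimal Python sketch of such a record. The field names and the `fingerprint` and `prediction_record` helpers are hypothetical, and it assumes inputs are JSON-serializable; a real system would also need durable storage and retention rules.

```python
import hashlib
import json


def fingerprint(obj) -> str:
    """Stable SHA-256 over a JSON-serializable object (key order does not matter)."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def prediction_record(model_version, training_data_id, inputs, output):
    """Capture what is needed to replay one prediction later."""
    return {
        "model_version": model_version,        # which model was running
        "training_data_id": training_data_id,  # which dataset snapshot trained it
        "input_hash": fingerprint(inputs),     # quick lookup / tamper check
        "inputs": inputs,                      # the exact inputs it saw
        "output": output,
    }
```

With a record like this stored alongside each decision, "why did the model do that?" becomes a lookup followed by a replay instead of a guessing exercise.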

These infrastructure layers don't get press coverage. They're not what gets pitched to investors. But they're what determines whether your AI implementation succeeds or fails in the real world.

The companies that win with AI will be the ones that treat infrastructure as a first-class problem, not an afterthought.

The Talent War: Where AI Advantage Actually Lives

There's a shortage of people who know how to build production-grade AI systems.

There's no shortage of people who can fine-tune a model or run a chatbot API. But people who understand the full stack—from data engineering to model training to monitoring to compliance—are rare and expensive.

This creates a talent concentration problem. The best people congregate at a few dominant companies. Google has a large share of the expertise. OpenAI has some. A few other companies have pockets of capability. Everyone else is scrambling.

For most organizations, the limiting factor in AI implementation isn't the technology. It's not even the data. It's talent. Can you hire the people who know how to actually build these systems? Can you retain them? Can you build a culture where they'll do their best work?

This is where the AI bubble really bites. Venture-backed startups can throw massive salaries at AI talent. But once the bubble deflates and valuations correct, those salaries become unsustainable. Some companies will have to cut staff. The best people will migrate to better-funded companies. The organization's capability will crater.

Established companies with deep pockets and strong brands will win the talent war. They can offer stability and long-term career growth. They can offer challenging problems. They can offer compensation that's attractive even if the equity isn't as exciting as it was during the bubble.

Bubble-funded startups with mediocre problems and sky-high salaries will lose the talent war. Once the salaries become untenable, the people will leave.

The Geopolitical Arms Race and Chip Constraints

There's a physical constraint to AI development that's often overlooked: you need specialized hardware.

Training large AI models requires GPUs (graphics processing units) or TPUs (tensor processing units). These are expensive, specialized chips. And they're produced by a very small number of companies.

NVIDIA dominates the market. It has designed most of the GPUs used to train the latest generation of AI models, with the actual fabrication outsourced to foundries such as TSMC. This gives NVIDIA enormous leverage and enormous market power.

But there's a secondary layer: access to chips is increasingly becoming a geopolitical question. The US has implemented export controls on advanced chips to prevent them from being used in China for military purposes. This constrains China's ability to develop frontier AI systems.

China is building its own chips to reduce dependence on US suppliers. This is taking time. But it's happening.

Meanwhile, Europe is trying to develop its own chip capacity through initiatives like the European Chips Act. But Europe is years behind in terms of existing capability and manufacturing at scale.

The result: Access to chips is a geopolitical constraint on AI development. The US, by controlling access to advanced chips, has a structural advantage in developing frontier AI systems. China is trying to reduce this disadvantage. Europe is playing catch-up.

This matters because it means AI development is not purely a function of talent and capital. It's also a function of geopolitics and supply chain control. Organizations operating under different geopolitical constraints face different options.

For most organizations outside the US, this means you can't build frontier AI systems locally. You'll either need to depend on US cloud platforms and APIs, or build on Chinese infrastructure, which means different technology and different constraints.

For startups, this is a problem. For established companies, it's less of a constraint because they can access compute wherever they need it.

The Consolidation Pattern: What Happens After the Bubble Pops

Historically, when tech bubbles burst, consolidation follows.

During the dot-com bubble, there were hundreds of startups in a given space. After the pop, there were a handful of winners and a graveyard of failures.

The same pattern played out with mobile apps. Hundreds of startups building the next Instagram. A few winners. Most failures.

AI consolidation is already happening. We're seeing bigger companies (Google, Meta, Microsoft) acquire smaller AI companies. We're seeing venture capital dry up for mid-tier startups. We're seeing the return of the "only the biggest wins" dynamic that characterizes technology markets.

The consolidation pattern follows a predictable trajectory:

- Bubble expansion: Lots of startups. Lots of capital. Lots of ideas.

- Reality check: Some startups start missing milestones. Investors get nervous.

- Consolidation wave: Better-funded companies and bigger incumbents start acquiring smaller competitors.

- Survivor economics: A handful of surviving companies become very valuable because they've eliminated competition.

- Dominance: The surviving companies face limited competition and enjoy fat margins.

We're somewhere between the reality check and the consolidation wave right now. Some startups are missing milestones. Investors are getting nervous. Acquisitions are happening.

This is bad news for bubble-funded startups. It's good news for:

- Investors in the companies that acquire the startups: Acquisitions are a way to buy talent and technology at reasonable prices.

- Employees at the surviving companies: Consolidation creates job security and career opportunities.

- Large tech companies: They can buy innovative startups at discounts and integrate the technology.

But it's bad news for founders of bubble companies that don't make the acquihire list. Those companies will die.

The Role of Open Source: AI for Everyone vs. Winner-Takes-All

There's a hidden war happening in AI between closed, proprietary systems and open-source alternatives.

OpenAI, Anthropic, Google, and other companies have built closed AI systems. You access them through APIs. You can't see the code. You can't modify them. You depend on the company to keep the service running and keep it priced reasonably.

But there are also open-source models. Meta's Llama. Mistral's models. Open-source implementations of major architectures. These models are free to download, though license terms vary from model to model. You can modify them. You can run them on your own infrastructure.

For a long time, the closed, proprietary systems were better. They were trained on more data. They had more resources behind them. But the open-source alternatives are getting good. Really good.

This has massive implications for the AI bubble and what happens after it pops.

Scenario 1: Closed-source dominance

If OpenAI, Google, and a handful of other companies maintain a significant capability advantage, they'll be able to extract enormous value. Startups will depend on their APIs. Enterprises will depend on their systems. They'll have pricing power.

In this scenario, the bubble winners will be the companies that own the APIs. Everyone else will be a customer or a failure.

Scenario 2: Open-source parity

If open-source models achieve parity with closed-source models, the dynamics change dramatically. Organizations won't need to depend on any single vendor's API. They can download models and run them locally. This drives commodity pricing for the underlying models.

In this scenario, value moves from owning the models to owning the applications and the data. Building a ChatGPT-class model becomes less valuable than building the tools on top of such models that solve real problems.

We're not at parity yet. But we're moving in that direction. Organizations that have bet heavily on API dependency might find themselves in a weak negotiating position in a few years.
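One way to hedge against that weak negotiating position is to keep application code independent of any single model provider. The sketch below is illustrative only: `TextModel`, `HostedApiModel`, `LocalOpenModel`, `build_model`, and `summarize_ticket` are hypothetical names, and both backends are stand-ins that return labeled strings rather than calling a real API or loading a real model.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class HostedApiModel:
    """Stand-in for a vendor API client; a real one would make a network call."""
    vendor: str

    def complete(self, prompt: str) -> str:
        return f"[{self.vendor} API] {prompt}"


@dataclass
class LocalOpenModel:
    """Stand-in for an open model running on your own infrastructure."""
    model_name: str

    def complete(self, prompt: str) -> str:
        return f"[local {self.model_name}] {prompt}"


def build_model(config: dict) -> TextModel:
    """Choose a backend from configuration, so switching is a config change."""
    if config["backend"] == "hosted":
        return HostedApiModel(vendor=config["vendor"])
    if config["backend"] == "local":
        return LocalOpenModel(model_name=config["model_name"])
    raise ValueError(f"unknown backend: {config['backend']!r}")


def summarize_ticket(model: TextModel, ticket_text: str) -> str:
    """Application logic depends only on the interface, not on a vendor."""
    return model.complete(f"Summarize: {ticket_text}")


if __name__ == "__main__":
    for cfg in ({"backend": "hosted", "vendor": "acme"},
                {"backend": "local", "model_name": "demo-7b"}):
        print(summarize_ticket(build_model(cfg), "printer down"))
```

If the application only ever talks to `TextModel`, moving from a hosted API to a local model (or back) touches configuration instead of business logic. That is the kind of optionality that matters if pricing or capability shifts.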

The Practical Path Forward: What Organizations Should Actually Do

If you're leading an organization and trying to figure out what to do about AI, here's the straightforward path:

Don't bet on AGI. Bet on productivity.

AI will change how your organization works. But the value won't come from a magical breakthrough. It will come from incremental improvements in how you process data, make decisions, and execute tasks.

Start with applications that:

- Solve a real problem: Not "it's cool" but "it saves us time" or "it improves our output"

- Have clear metrics: You can measure whether it's working

- Don't require perfection: It needs to be good, not perfect. Good enough AI often delivers more value than perfect human work that takes 100x longer

- Are reversible: If it doesn't work, you can turn it off and go back to how you were doing it
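The "clear metrics" and "reversible" criteria above can be sketched in a few lines. This is a minimal illustration, not a production pattern: `ReversibleFeature`, `flaky_ai_classifier`, and `manual_queue` are hypothetical names, and the "AI" path is a keyword check standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReversibleFeature:
    """Routes work to an AI path or the legacy path, and counts outcomes."""
    ai_path: Callable[[str], str]
    legacy_path: Callable[[str], str]
    enabled: bool = True  # the "off switch": set to False to go back
    stats: dict = field(
        default_factory=lambda: {"ai": 0, "legacy": 0, "ai_errors": 0}
    )

    def run(self, item: str) -> str:
        if self.enabled:
            try:
                result = self.ai_path(item)
                self.stats["ai"] += 1
                return result
            except Exception:
                # Count the failure, then fall through to the legacy path.
                self.stats["ai_errors"] += 1
        self.stats["legacy"] += 1
        return self.legacy_path(item)

    def error_rate(self) -> float:
        """Share of AI attempts that failed; 0.0 if none were attempted."""
        attempts = self.stats["ai"] + self.stats["ai_errors"]
        return self.stats["ai_errors"] / attempts if attempts else 0.0


def flaky_ai_classifier(text: str) -> str:
    """Hypothetical stand-in for a model call that can fail."""
    if not text.strip():
        raise ValueError("empty input")
    return "urgent" if "outage" in text.lower() else "routine"


def manual_queue(text: str) -> str:
    """Hypothetical stand-in for the process you had before."""
    return "needs-human-review"


if __name__ == "__main__":
    feature = ReversibleFeature(flaky_ai_classifier, manual_queue)
    for ticket in ["Outage in region A", "  ", "Password reset"]:
        print(repr(ticket), "->", feature.run(ticket))
    print("stats:", feature.stats, "error rate:", feature.error_rate())
```

The point of the design is that turning the AI path off is a one-line change (`feature.enabled = False`), and the counters give you something to look at before deciding whether to do so.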

Invest in people and infrastructure, not flashy demos.

Your competitive advantage isn't going to come from having the most sophisticated AI system. It will come from having people who understand how to use AI effectively and infrastructure that makes that possible.

This means:

- Hiring people who understand AI: Not necessarily PhD researchers. But people who understand the technology well enough to evaluate it critically.

- Training existing staff: Your current employees have domain expertise. AI doesn't replace that. It augments it. Help them understand how.

- Building infrastructure for data governance: You can't have good AI without good data. This is unsexy but essential.

- Monitoring and iteration: Don't expect the first deployment to be perfect. Plan to iterate.

Participate in open source.

Even if your organization isn't a pure AI company, participating in open-source AI projects gives you visibility into what's coming and builds relationships with the people doing the work.

This could be as simple as contributing to a popular open-source model or framework. Or as involved as building tools on top of open-source models and sharing the improvements.

Maintain healthy skepticism about vendor promises.

If a vendor says their AI system will solve your problem, ask for evidence. Ask for references. Ask to run it on your data before you commit to a contract.

If it's too good to be true, it probably is.
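Running a vendor's system on your own data doesn't have to be elaborate. Here is a rough sketch of the idea, assuming you have a small labeled sample and a baseline to compare against. Everything in it is hypothetical: `vendor_stub` and `baseline_stub` are keyword rules standing in for the vendor's system and your current process, and the `min_gain` threshold of 0.05 is an arbitrary placeholder.

```python
from typing import Callable, Sequence

Example = tuple[str, str]  # (input text, expected label)


def accuracy(predict: Callable[[str], str], examples: Sequence[Example]) -> float:
    """Fraction of labeled examples the predictor gets right."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for text, label in examples if predict(text) == label)
    return correct / len(examples)


def compare(vendor: Callable[[str], str],
            baseline: Callable[[str], str],
            examples: Sequence[Example],
            min_gain: float = 0.05) -> dict:
    """Score both systems on the same data and report the difference."""
    vendor_acc = accuracy(vendor, examples)
    baseline_acc = accuracy(baseline, examples)
    return {
        "vendor": vendor_acc,
        "baseline": baseline_acc,
        "worth_piloting": vendor_acc - baseline_acc >= min_gain,
    }


def vendor_stub(text: str) -> str:
    """Hypothetical stand-in for the vendor's classifier."""
    lowered = text.lower()
    return "refund" if "refund" in lowered or "money back" in lowered else "other"


def baseline_stub(text: str) -> str:
    """Hypothetical stand-in for what you do today."""
    return "refund" if "refund" in text.lower() else "other"


# A tiny made-up sample; in practice this would be your own labeled data.
SAMPLE: list[Example] = [
    ("I want a refund", "refund"),
    ("Please send my money back", "refund"),
    ("Where is my order?", "other"),
    ("This charge should be reversed", "refund"),
]

if __name__ == "__main__":
    print(compare(vendor_stub, baseline_stub, SAMPLE))
```

A real evaluation would need a larger, representative sample and a metric that fits the problem, but even a crude comparison like this turns "trust us" into a number you can check.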

The Future: AI as Infrastructure

In five to ten years, AI will be infrastructure. Like databases. Like APIs. Like cloud computing. It will be everywhere. Most organizations will use it. Nobody will be talking about it as a revolutionary technology.

Some companies will have built on top of AI and become very valuable. Some will have bet on pure-play AI and disappeared. The venture capitalists who picked the winners will have made enormous returns. The venture capitalists who picked the losers will have written off capital.

But the technology will still be there. Useful. Powerful. Embedded into how we work.

The organizations that will thrive are the ones that aren't waiting to see if the bubble bursts. They're deploying AI today. Learning what works. Building capability. Investing in people.

The organizations that will struggle are the ones that are sitting on the sidelines waiting for the market to correct. By the time the bubble pops, the leaders will already be lapping them.

The Bottom Line: The Genie Isn't Going Back in the Bottle

Yes, the AI bubble will deflate. Valuations will correct. Startups will fail. Capital will dry up. Hype will cool.

But the technology will keep evolving. The value will keep compounding. The organizations that adapt will win. The ones that don't will become historical footnotes.

This is the pattern with every major technology. The technology survives the bubble. The organizations and investors who bet on the technology instead of the hype do fine. The ones who panic and sell or sit on the sidelines get left behind.

AI isn't special in this regard. It's just the next layer in a progression that's been happening since electricity.

The question isn't whether AI is a bubble. The question is what you're doing about it right now. Because by the time you have certainty about the answer, it will be too late to act.

FAQ

What does it mean to say AI is a bubble but not a technology failure?

The distinction is between valuations and capability. AI valuations—especially for early-stage startups—are based on optimistic assumptions about AGI and future breakthroughs that may never happen. These valuations will correct. But the underlying technology is real and useful today. Organizations can create measurable value from AI right now without waiting for AGI breakthroughs. When the bubble deflates, companies making money from real applications will survive. Companies betting purely on future breakthroughs will disappear.

How is the AI bubble different from previous tech bubbles like the dot-com crash?

Previous bubbles required breakthroughs in infrastructure before the technology could be useful at scale. The internet required fast broadband. Mobile required powerful processors and wireless networks. AI is already useful on current infrastructure. This means the value creation can happen faster, and the timeline between hype and reality is shorter. But the pattern is the same: inflated expectations about distant futures combined with real near-term capabilities.

What should enterprises prioritize if they want to actually benefit from AI?

Focus on solving specific problems that have clear metrics and don't require perfection. Build the infrastructure for data governance and pipeline management. Invest in retraining people to work effectively with AI. Create a culture where people feel empowered to question AI outputs and experiment with new approaches. Don't chase the flashiest use cases. Chase the ones that solve problems you actually have and create measurable value.

Why does geopolitical positioning matter for AI development?

AI capability is constrained by access to specialized hardware (GPUs and TPUs), computational resources, and top-tier talent. The US has advantages in all three. China is building alternatives to reduce dependence on US suppliers. Europe is trying to play catch-up but is behind in terms of existing capability. This means organizations in different geopolitical locations face different constraints on what AI systems they can build and where they can build them.

Will open-source AI models eventually replace commercial AI services?

It's possible. Open-source models are getting good fast. But there's a difference between having access to a model and having the infrastructure, expertise, and support to run it in production. Most organizations will use a mix: open-source models for some applications, commercial APIs for others. The real question is whether open-source achieves parity in capability. If it does, value shifts from the model itself to applications and data.

How long until the AI bubble actually bursts and valuations correct?

Bubbles are impossible to time precisely. What we can say is that the most inflated valuations (early-stage startups betting on AGI) are vulnerable now. A significant correction is already underway. But the overall AI market will probably continue growing even after valuations adjust. The crash won't be as dramatic as the dot-com collapse because more companies are actually generating revenue from AI today.

What's the single most important factor in determining whether an AI initiative will succeed?

Not the quality of the AI system. Not the sophistication of the infrastructure. It's whether the organization has people—from frontline staff to executives—who understand what the technology can and can't do and feel empowered to use it effectively. Culture beats everything else. If you have that, mediocre tools will work. If you don't, the best tools will fail.

Final Thoughts

The world isn't waiting for certainty. AI is reshaping how work happens. The organizations leading this transition aren't the ones with perfect clarity about the future. They're the ones deploying iteratively, learning fast, and adapting.

The AI bubble will burst. But intelligence isn't going anywhere. The technology, the capability, the competitive advantage—those persist. The organizations that act now will define the next decade. The ones waiting for the dust to settle will be playing catch-up.

Don't wait. Start now. Build. Learn. Adapt. The future belongs to the people and organizations that do.

Key Takeaways

- The AI bubble is real, but it's a bubble of expectations about AGI, not a failure of the underlying technology itself

- Every major tech bubble has left permanent infrastructure and value behind; AI will follow the same pattern

- Organizations win with AI by focusing on productivity and specific problem-solving, not flashy demos or AGI speculation

- The real value in AI lives in unsexy infrastructure—data governance, pipelines, monitoring, and compliance—not conversational applications

- Geopolitical positioning and access to specialized hardware (GPUs) are becoming constraints on AI development alongside talent and capital

- Human-AI collaboration produces better outcomes than either humans or AI working independently; investment in people matters more than technology selection

- Open-source AI models are rapidly closing the capability gap with proprietary systems, shifting value from the models themselves to applications and data

- The AI talent war will determine winners after the bubble pops; organizations that retain experienced people will maintain competitive advantage
