Meta Pauses Teen AI Characters: A Major Shift in Social Media Safety Strategy

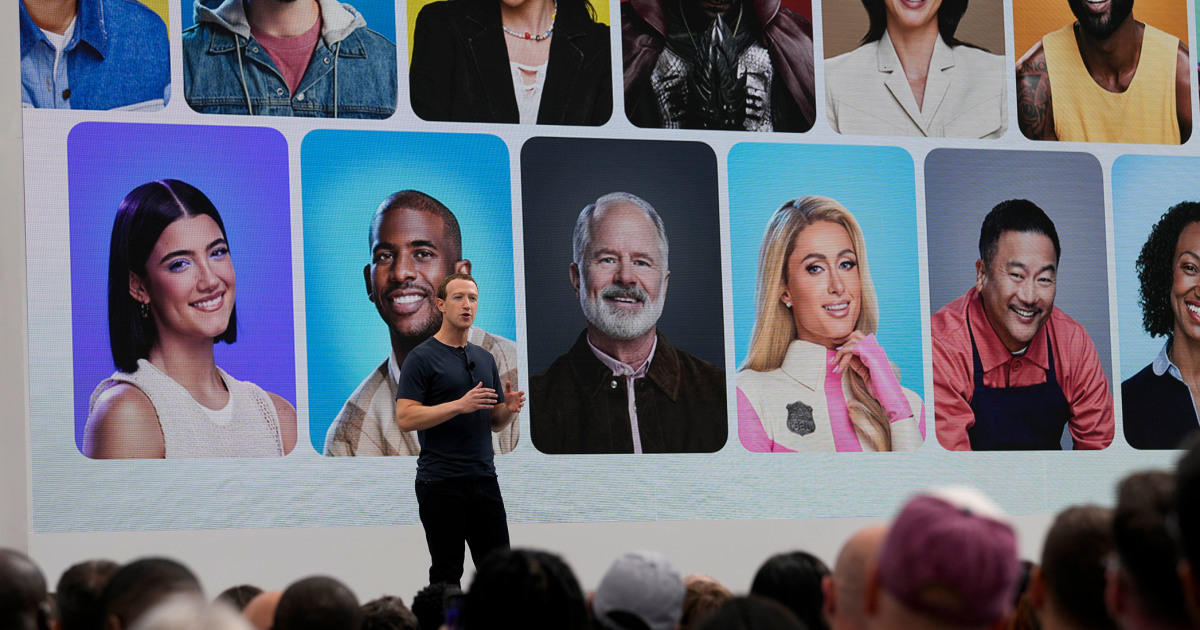

Meta just made a significant move that's reshaping how teenagers interact with artificial intelligence on its platforms. The company announced it's pausing teenage access to AI characters across Instagram, Facebook, WhatsApp, and Threads while developing updated versions designed specifically for younger users. This isn't a casual feature tweak—it's a calculated response to mounting regulatory pressure, parental concerns, and the growing complexity of keeping kids safe in an AI-powered digital landscape.

The timing matters too. This decision comes just days before Meta faces trial in New Mexico on accusations of failing to protect children from sexual exploitation on its apps, as reported by New Mexico Political Report. The company is also bracing for another lawsuit alleging its platforms fuel social media addiction, with CEO Mark Zuckerberg expected to testify, according to The New York Times. Against this backdrop, pausing teen AI character access looks like both a protective measure and a strategic positioning move.

But what does this really mean for teenagers, parents, and the broader tech industry? More importantly, what are the underlying issues that forced Meta's hand? And what can we expect when these "age-appropriate" AI characters finally return?

The answer to these questions reveals something bigger than one company's policy change. It shows us how the intersection of artificial intelligence, child safety, and regulatory oversight is fundamentally reshaping the social media landscape. Let's dig into exactly what's happening, why it matters, and what comes next.

TL;DR

- Meta is pausing teen access to AI characters across all its apps globally starting in the coming weeks, with no current timeline for relaunch

- Regulatory pressure is mounting with trials in New Mexico and other jurisdictions focused on teen safety and platform accountability

- Age-appropriate controls will include parental oversight, content restrictions on topics like extreme violence and graphic drug use, and education-focused interactions

- Industry-wide shift toward stricter teen AI safety, with OpenAI, Character.AI, and other platforms implementing similar restrictions

- Parental controls will be built into the new version, allowing guardians to monitor and restrict teen interactions with AI characters

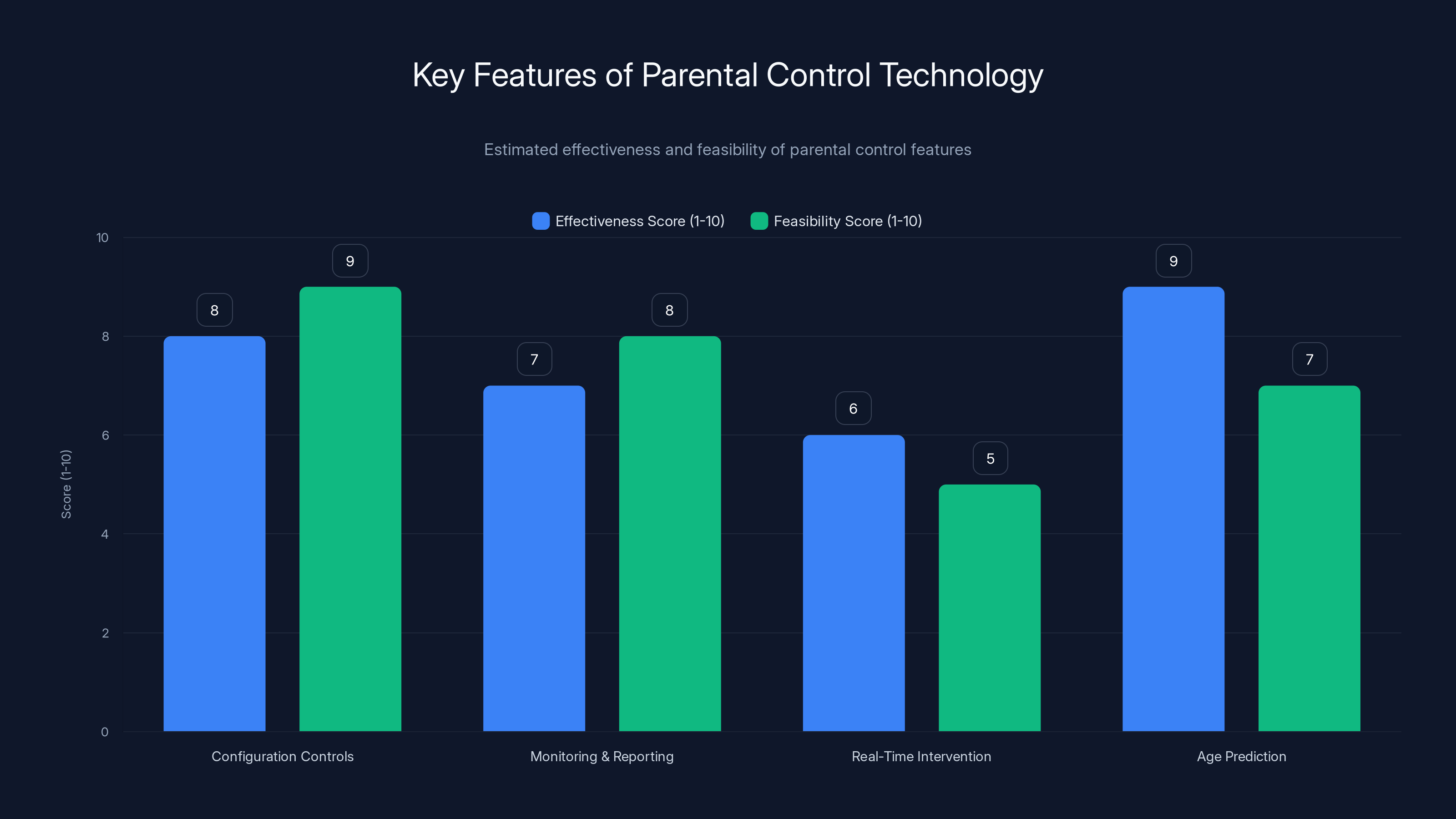

Configuration controls and age prediction are highly feasible and effective, while real-time intervention poses more technical challenges. Estimated data based on typical technology capabilities.

The Perfect Storm: Why Meta Had to Act Now

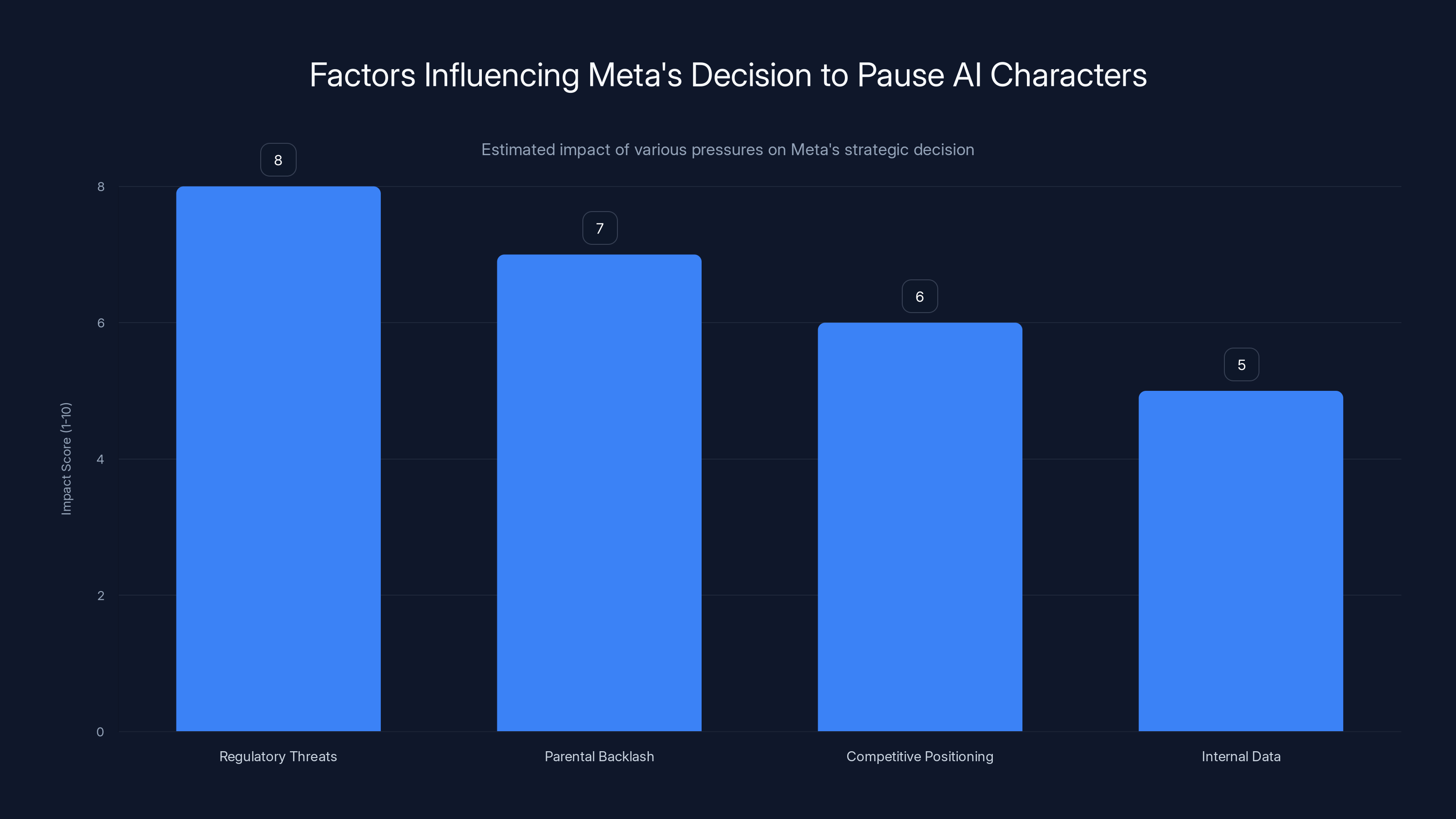

Meta didn't wake up one morning and decide to pause AI characters out of the blue. The decision reflects a perfect storm of pressures colliding simultaneously. Understanding what drove this move requires looking at multiple angles: regulatory threats, parental backlash, competitive positioning, and the company's own data about how teens interact with these tools.

First, let's talk about the legal pressure. Meta faces a lawsuit in New Mexico accusing the company of not doing enough to shield kids from sexual exploitation. The timing of that trial couldn't be worse for Meta—right as AI characters are becoming more sophisticated and, potentially, more risky. The company doesn't want a jury watching testimony about inadequate protections while teenagers are actively chatting with AI avatars that might not be properly safeguarded, as noted by New Mexico Political Report.

Second, there's the addiction lawsuit. Mark Zuckerberg being called to testify about platform engagement and teen mental health creates an obvious vulnerability. If the company is simultaneously promoting AI character interactions to teenagers while facing accusations of designing addictive features, that's a terrible narrative position. The pause effectively removes that particular criticism from the courtroom, according to The New York Times.

Third, parental concern is genuine and growing. After Meta surveyed what parents actually wanted, the feedback was overwhelming: more visibility, more control, and more assurance that AI interactions are age-appropriate. Parents aren't comfortable with their teens having open-ended conversations with AI systems without oversight. That's not hysteria—that's a reasonable concern about a technology that's still evolving, as discussed in Wired.

Fourth, other platforms are moving faster on this. Character.AI, which specializes in AI chat avatars, already restricted open-ended conversations for users under 18 in October. OpenAI added teen safety rules for ChatGPT and implemented age prediction technology. If Meta doesn't move quickly to match these safety standards, it looks negligent by comparison, as noted by TechCrunch.

Finally, there's the competitive angle that nobody talks about openly. By pausing AI characters now and announcing a more sophisticated version later, Meta gets to look responsible while also repositioning AI as a premium feature. When these characters return with parental controls built in, Meta can market them as "the safe way for teens to interact with AI"—and that's a selling point competitors will have to match.

Regulatory threats and parental backlash are the most significant pressures influencing Meta's decision to pause AI characters. Estimated data.

What Parents Asked For (And Why Meta Finally Listened)

Meta claims this entire shift came from listening to parent feedback. That's partially true, but it's worth understanding what specifically parents complained about and why Meta's initial approach wasn't sufficient.

Parents wanted three things: transparency, control, and confidence that their kids weren't being exposed to harmful content. The original AI character rollout gave them almost none of these things. Teens could chat with AI avatars designed by Meta and third-party creators, and parents had no way to know what topics were being discussed or whether the AI was giving age-appropriate responses.

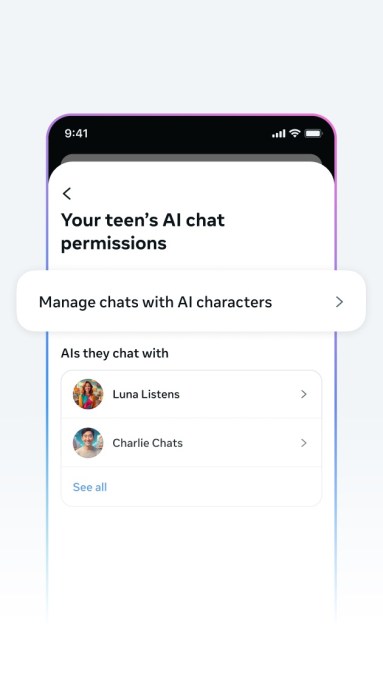

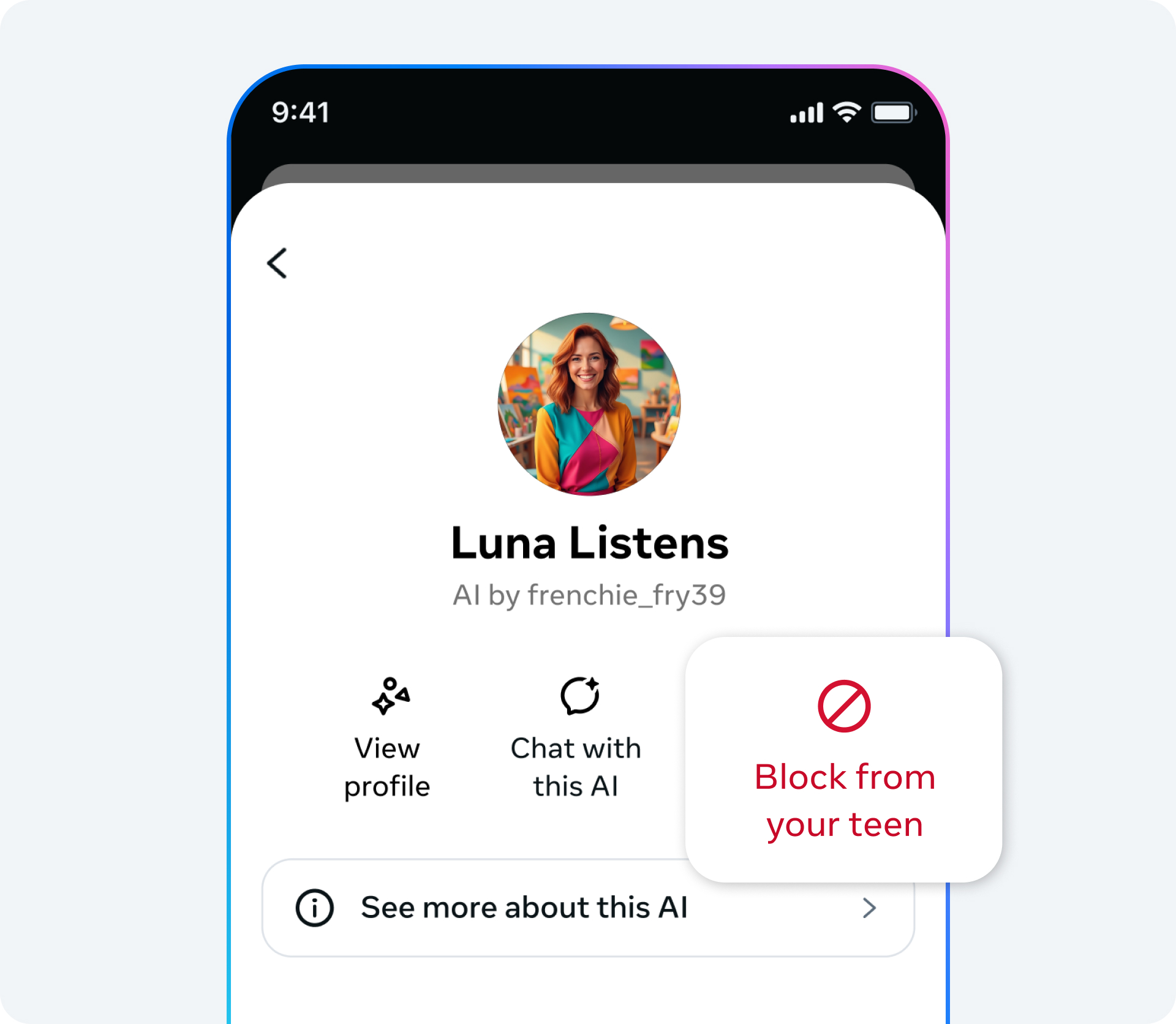

In October, Meta previewed some controls that were supposed to address this. Parents would be able to:

- Monitor which characters their teens were chatting with

- Block access to specific characters

- See what topics the AI was allowed to discuss

- Completely disable AI character interactions

These features were initially set to roll out in 2025. But parents wanted something different—not just monitoring tools, but assurance that the AI itself was fundamentally designed for teenage users, with safety built into the system rather than layered on top.

This distinction is crucial. Monitoring tools assume the platform is generally safe and parents just need oversight. What parents actually wanted was for Meta to ensure the platform was safe first, with monitoring as an additional layer. That's a completely different engineering challenge.

Meta also heard concerns about specific topics. Teenagers were supposedly having conversations with AI characters about:

- Self-harm and suicide

- Drug use and addiction

- Explicit sexual content

- Eating disorders and body image issues

Some of these conversations might have been helpful—a teen struggling with depression getting supportive information from an AI could be therapeutic. But other conversations might have been harmful—an AI character describing methods of self-harm or encouraging risky behavior. The line between helpful and harmful is blurry, and Meta realized its original system wasn't equipped to navigate that complexity, as noted by Hawaii News Now.

The company's response is to essentially say: "We're going to stop this experiment, completely redesign the system with safety first, and bring it back when we're confident it's age-appropriate." Whether that's genuine commitment or strategic positioning is debatable. But from a parental perspective, it's the right move.

The Age-Appropriate Redesign: What's Actually Changing

When Meta brings back AI characters for teens, the company says they'll be fundamentally different. Let's break down what "age-appropriate" actually means in Meta's redesign and why it matters.

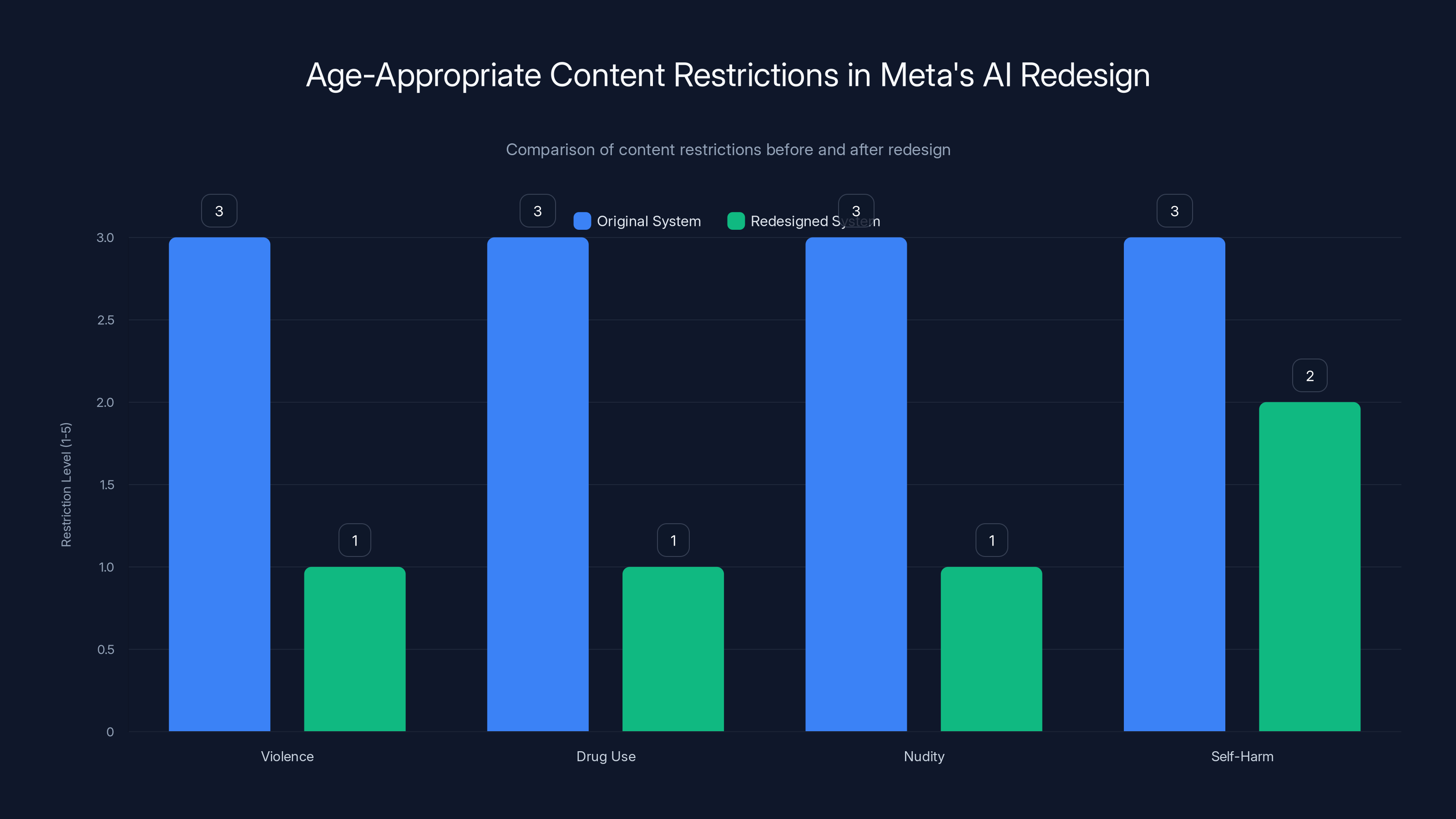

Content Filtering and Topic Restrictions

The new AI characters will operate under a set of content guidelines inspired by PG-13 movie ratings. That means:

- Extreme violence is off-limits

- Graphic drug use is forbidden

- Nudity and sexual content are excluded

- Discussions around self-harm are restricted

This is more restrictive than the original system, which largely relied on user discretion and content policies that were more reactive than preventative. The new system will be designed to actively avoid these topics rather than simply flagging them after the fact, as noted by CryptoRank.

But here's where it gets complicated. Completely banning discussions of self-harm means a depressed teen can't ask an AI for support with suicidal thoughts. That could actually be harmful—pushing teenagers toward isolation rather than connection. Meta's answer is that the new system will recognize when a teen is in crisis and redirect them to human resources (school counselors, crisis hotlines, mental health professionals) rather than trying to handle it through AI conversation.

That's theoretically good policy, but implementation is tricky. How does an AI system reliably detect crisis situations? False negatives (missing actual crises) could be dangerous. False positives (flagging normal teen angst as crisis) could create unnecessary alarm.
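The trade-off between false negatives and false positives can be made concrete with a toy example. The sketch below assumes a hypothetical classifier that assigns each message a crisis score between 0 and 1; the messages, scores, and labels are invented for illustration and do not describe Meta's system. It only shows how moving a single threshold trades one kind of error for the other.

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    crisis_score: float  # hypothetical classifier output in [0, 1]
    is_crisis: bool      # ground-truth label for this toy example


def count_errors(messages, threshold):
    """Return (false_positives, false_negatives) at a given threshold."""
    false_pos = sum(
        1 for m in messages if m.crisis_score >= threshold and not m.is_crisis
    )
    false_neg = sum(
        1 for m in messages if m.crisis_score < threshold and m.is_crisis
    )
    return false_pos, false_neg


# Invented examples; the scores are made up for illustration only.
SAMPLE = [
    Message("I failed my test, my life is over lol", 0.55, False),
    Message("my ankle is killing me after practice", 0.35, False),
    Message("I don't see a way out anymore", 0.80, True),
    Message("nobody would notice if I was gone", 0.45, True),
]

for threshold in (0.3, 0.5, 0.7):
    fp, fn = count_errors(SAMPLE, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

On this toy data, the lowest threshold catches every real crisis but also flags ordinary teen venting, while the highest threshold raises no false alarms but misses a quietly worded message. No single setting removes both kinds of error, which is why the implementation is the hard part.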

Education and Hobbies: The Safe Zone

Meta has been explicit that the new characters will focus on "education, sport, and hobbies." This is telling—these are the topics Meta considers genuinely safe for teen-AI interaction. Think of it like AI tutors, coaching chatbots, and hobby discussion partners.

A teen interested in learning Python programming could chat with an AI character designed to teach coding. An aspiring athlete could get fitness advice. A teenager interested in creative writing could get feedback from an AI character specialized in that domain.

The logic is sound. These use cases have clear educational or recreational value without triggering mental health, safety, or exploitation concerns. But it also dramatically narrows the scope of what AI characters can do, which raises a question: if AI characters are restricted to tutoring and hobby discussion, are they still interesting enough for teenagers to use regularly?

That's not a rhetorical question. Meta's entire strategy for AI characters depends on teenager engagement. If the new versions are too restrictive, adoption could collapse. Teenagers might decide AI characters are boring and irrelevant. Meta needs the sweet spot between safety and engagement, which is notoriously hard to find.

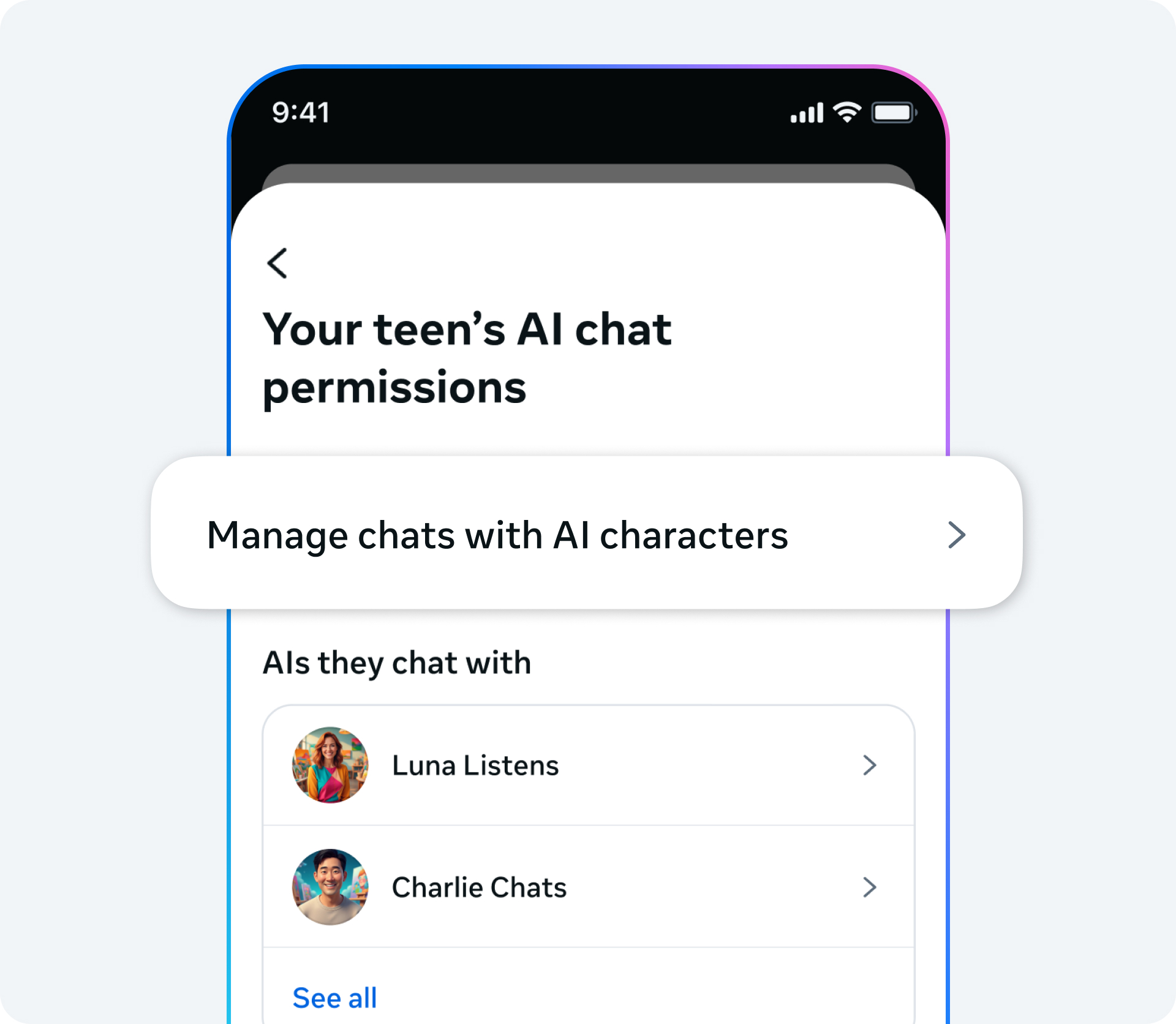

Built-In Parental Controls

The critical difference in the redesigned version is that parental controls won't be an afterthought—they'll be part of the core architecture. This means:

- Parents can set content restrictions before teens ever access the system

- Real-time monitoring is available without installing third-party monitoring software

- Parents can adjust settings as their teen matures

- Teens have less ability to work around parental restrictions

This is significantly more sophisticated than the controls Meta originally previewed. Instead of monitoring what's happening after the fact, parents can essentially configure the AI character's behavior upfront. It's the difference between a babysitter watching your kids and telling you what happened versus a nanny following a detailed set of instructions from you before the kids even arrive.

The redesigned AI system by Meta imposes stricter content restrictions across violence, drug use, and nudity, with a moderate approach to self-harm, aiming to provide a safer environment for teens. Estimated data.

The Broader Industry Response: A Race to the Middle

Meta's decision didn't happen in a vacuum. The entire AI industry is scrambling to figure out teen safety, and Meta's move is part of a much larger trend.

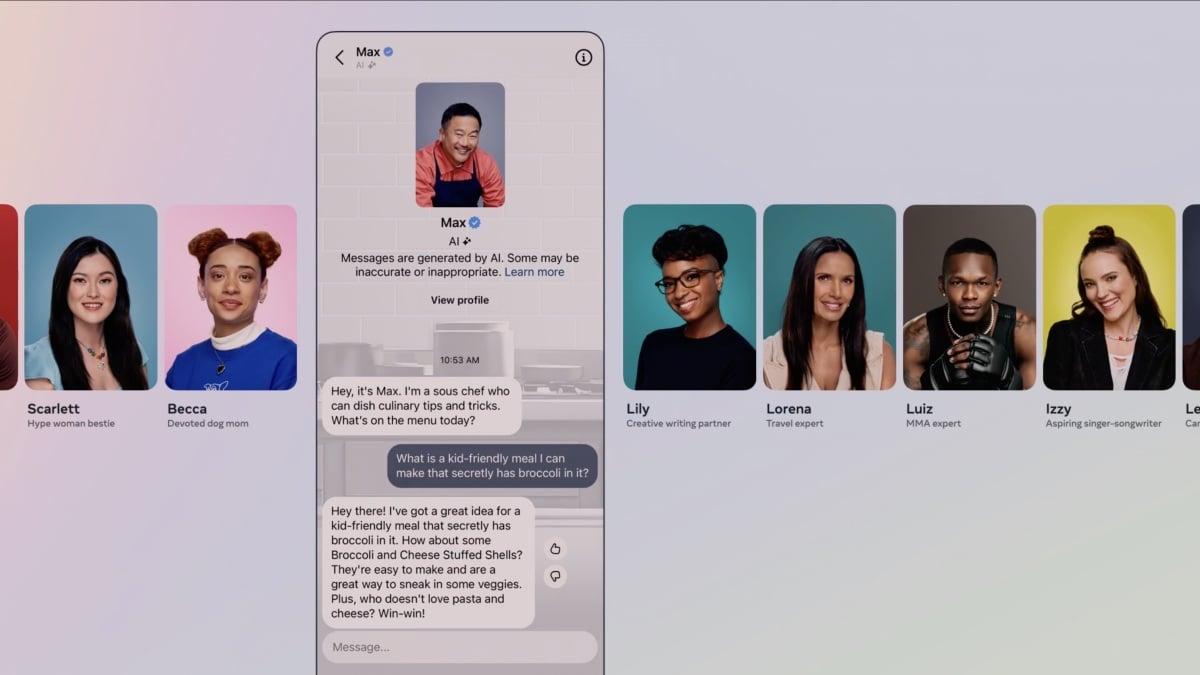

Character.AI's Restriction Model

Character.AI, the platform that lets users create and chat with AI avatars, moved first on this issue. In October, the company disabled open-ended conversations for users under 18. That's a more aggressive restriction than Meta's approach—essentially saying teenagers can use Character.AI, but only in controlled, guided ways rather than free-form conversation.

Character.AI's rationale was straightforward: a teenager talking with an AI that could say anything, created by essentially anyone on the internet, is a recipe for exposure to inappropriate content. By restricting open-ended conversations, Character.AI reduced that risk surface dramatically. The company also said it would develop interactive stories for kids—essentially moving toward the same education and hobby focus that Meta is pursuing, as noted by TechCrunch.

Character.AI's approach suggests something important: restrictions don't necessarily kill the product. Users can still get value from the platform while interacting with it differently. That may give Meta some confidence that its redesigned, safer version can still be attractive to teenagers.

OpenAI's Age-Based Safeguards

OpenAI took a different approach with ChatGPT. Rather than restricting teenager access, OpenAI added new safety rules specifically for users identified as teens. The system:

- Refuses requests for help with substance abuse

- Declines to engage with explicit sexual content

- Avoids conversations that might encourage self-harm

- Redirects health concerns toward professional resources

OpenAI also implemented age prediction technology similar to Meta's—using behavioral signals to identify likely teenagers and apply restrictions even if they claim to be adults. This is less intrusive than parental controls but more proactive than simple content policies, as noted by The Regulatory Review.

The difference between OpenAI's and Meta's approaches reflects their different use cases. ChatGPT is primarily a productivity and learning tool. AI characters are primarily social and entertainment. Restricting ChatGPT less is fine because teenagers are using it for homework and information. Restricting Meta's characters more is necessary because teenagers might use them for emotional support, validation, or even grooming-adjacent conversations.

The Regulatory Moment

These moves by Character.AI, OpenAI, and Meta suggest something bigger happening: the industry is collectively moving toward self-regulation before government regulation becomes more draconian. If major platforms voluntarily implement strong teen safety measures, regulators might be satisfied without imposing heavier-handed restrictions.

That's almost certainly part of Meta's strategic calculation. By being seen as responsible and proactive on teen AI safety, the company hopes to blunt future regulatory criticism. As the New Mexico trial and other lawsuits proceed, Meta's lawyers can point to the AI character redesign as evidence of the company's commitment to teen protection.

But there's also genuine concern here. The AI industry knows it's dealing with powerful systems that could genuinely harm teenagers if misused. Moving fast to restrict teenage access to unfiltered AI is the responsible thing to do, regardless of the regulatory angle, as discussed in Alabama Daily News.

The Timeline and What It Means for Teens Right Now

Meta's announcement is vague on one critical detail: when exactly are these new AI characters coming back? The company said "in the coming weeks" access will be paused, but didn't specify when the updated version will launch.

This vagueness is intentional. Meta wants:

- Time to redesign the system without promising specific dates it might miss

- Space to see how courts rule in pending cases before committing to specific features

- Flexibility to adjust based on parent and teen feedback while the system is being rebuilt

For teenagers, this means immediate impact. Starting in the coming weeks, they simply won't have access to AI characters. No chatbots, no avatar interactions, nothing. Teens who were regularly using this feature will lose it abruptly.

Meta is framing this as temporary. The company says the new version will be "accessible to everyone, not just teens, when it launches," with built-in parental controls. That means when AI characters return, they won't be relegated to a teen-only section. They'll be platform-wide features that both adults and teenagers can use, with age-appropriate restrictions applied based on the user's age.

For teens who were using AI characters for legitimate purposes (homework help, hobby discussion, learning), this pause is an inconvenience. For teens who were using them for potentially harmful reasons, it's a forced reset. For parents, it's a reprieve—time to have conversations with their kids about AI safety while access is paused.

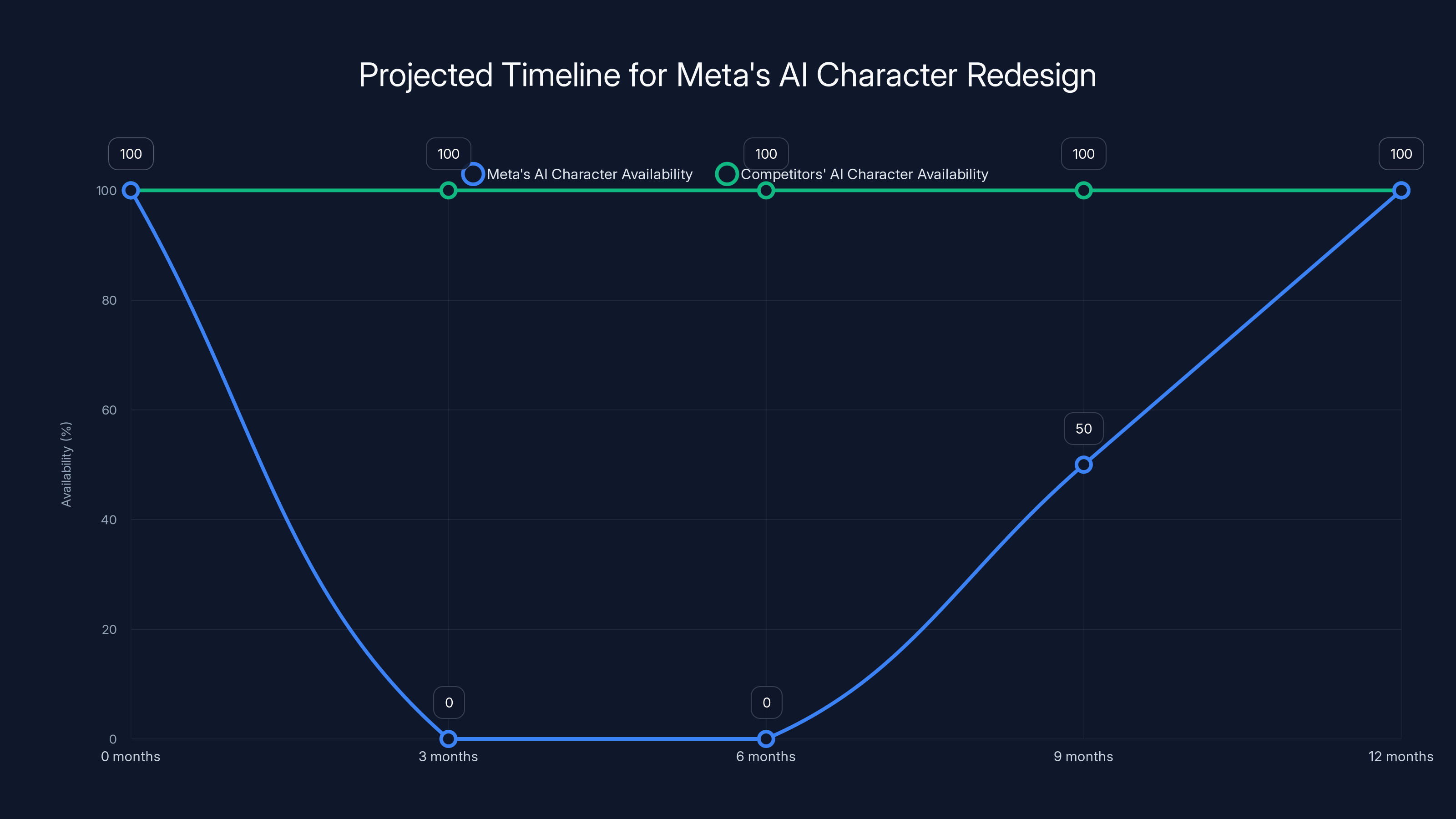

The timeline also affects Meta's competitive position. If the redesign takes 6 months, that's 6 months where Meta's AI character feature is completely unavailable while competitors like Character.AI continue operating (albeit with restrictions). If it takes 12 months, Meta might fall behind in this category altogether.

That pressure will likely push Meta to accelerate the redesign. We might see a beta version launching within 3-4 months, with a full rollout some months after that. But that's speculation; Meta isn't committing to any timeline.

Implementing AI safety measures upfront costs 15-25% of development, while retrofitting can cost 3-5 times more. Estimated data.

The Mental Health Question: Are Restrictions Actually Protective?

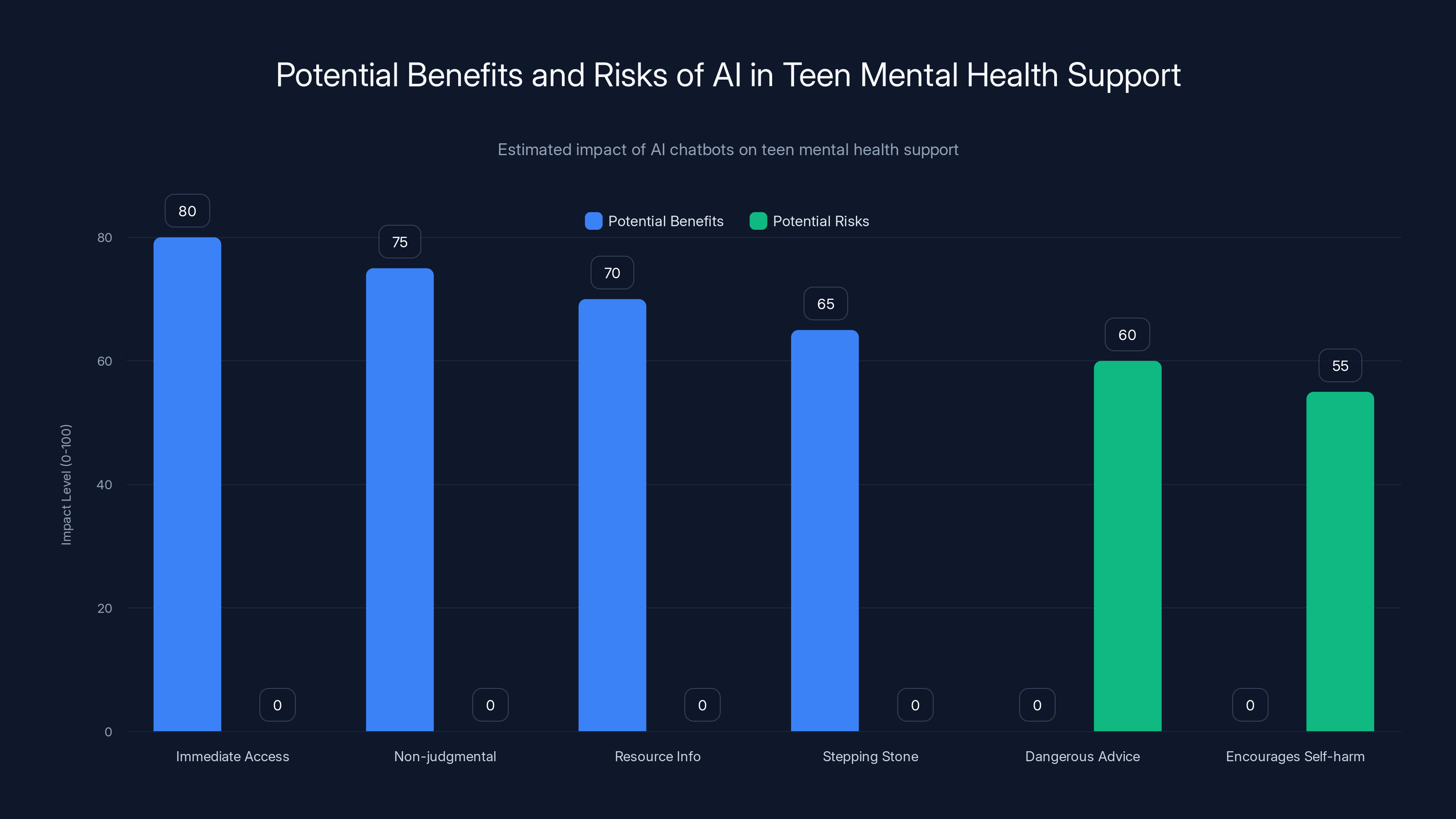

This is the thorny issue everyone's dancing around. Restricting teenager access to AI conversations about self-harm, depression, and other mental health concerns is supposedly protective. But does it actually protect teens, or does it push them away from potential resources?

There's a real argument that an AI chatbot could be genuinely helpful for a struggling teenager. Imagine a 16-year-old dealing with depression who finds it easier to talk to an AI than to a human counselor. The AI could provide:

- Immediate access to support (no appointment waiting)

- Non-judgmental conversation

- Information about mental health resources

- A stepping stone toward human help

Completely restricting that conversation means that teenager loses even that option. They might not reach out to human help at all.

But the counterargument is equally strong. An AI isn't trained as a therapist. It might give dangerous advice. It might accidentally encourage self-harm. It might be manipulated by bad actors who've jailbroken the system to remove safety restrictions. A teenager in crisis needs a trained professional, not a chatbot pretending to be one, as discussed in Frontiers in Education.

Meta's answer is the "redirect to human resources" approach—the AI identifies crisis signals and steers the conversation toward professional help. But that only works if:

- The AI accurately detects crisis signals

- The teenager actually follows the redirection

- Professional resources are accessible (not always true)

- The conversation hasn't already caused harm

These are big ifs. In practice, Meta's safeguards will probably prevent some harm while also preventing some genuine connections. That's an acceptable trade-off from a legal liability perspective (Meta is protected), but maybe not from a public health perspective.

The broader implication is that Meta and other platforms are essentially saying: "We don't feel comfortable letting AI systems interact with teenagers about mental health." That's a reasonable position. It's honest about the limits of current technology. But it also means teenagers won't get AI-based support for these issues, even though some teenagers might benefit from it.

Parental Control Technology: What's Actually Possible

Understanding what Meta means by "built-in parental controls" requires understanding what's technologically feasible. These controls will likely include:

Configuration Controls

Parents will be able to set the AI character's behavior before their teen ever interacts with it. This might include:

- Topic allowlists (only certain topics can be discussed)

- Content rating levels (how explicit can responses be?)

- Character filtering (which AI avatars are allowed?)

- Time restrictions (can the teen access AI characters during school hours?)

These are all achievable with standard software engineering. They're essentially configuration settings that adjust the AI's operating parameters.
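To show how ordinary this kind of configuration is, here is a minimal sketch of a settings object and a check that runs before an interaction starts. Everything in it is hypothetical: the `ParentalConfig` fields, the topic and character names, and the default quiet hours are assumptions made for illustration, not Meta's actual design.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass
class ParentalConfig:
    # Hypothetical defaults, loosely based on the "safe zone" topics above.
    allowed_topics: set = field(
        default_factory=lambda: {"education", "sports", "hobbies"}
    )
    blocked_characters: set = field(default_factory=set)
    # Quiet hours during which access is paused (start inclusive, end exclusive).
    quiet_start: time = time(8, 0)
    quiet_end: time = time(15, 0)


def is_allowed(config, character, topic, now):
    """Return (allowed, reason) for a single requested interaction."""
    if character in config.blocked_characters:
        return False, "character blocked"
    if topic not in config.allowed_topics:
        return False, "topic not on allowlist"
    if config.quiet_start <= now < config.quiet_end:
        return False, "outside permitted hours"
    return True, "ok"


config = ParentalConfig(blocked_characters={"night_owl"})
print(is_allowed(config, "coding_coach", "education", time(17, 30)))
print(is_allowed(config, "coding_coach", "education", time(10, 0)))
print(is_allowed(config, "night_owl", "hobbies", time(17, 30)))
```

The checks run before the AI generates anything, which is the "configure upfront" idea in miniature: the parent's settings decide whether a conversation can start at all, rather than reporting on it afterward.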

Monitoring and Reporting

Parents will likely be able to see:

- How often their teen is using AI characters

- Which characters they're interacting with most

- Summary statistics about conversation topics

- Flagged conversations that the system identifies as concerning

This doesn't mean parents see the full conversation transcripts (that would be technically complex and legally thorny). Instead, they see high-level summaries and alerts when unusual patterns are detected.
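A summary-only report of this kind could be built from conversation metadata alone. The sketch below assumes each conversation has already been tagged with a character name, a topic label, and a flag; those keys and the sample data are hypothetical, and how the tagging itself would work is outside the scope of the example.

```python
from collections import Counter


def summarize_activity(events):
    """Aggregate per-conversation metadata into a parent-facing summary.

    Each event is a dict with hypothetical keys: 'character', 'topic', 'flagged'.
    No message text is stored or returned.
    """
    characters = Counter(e["character"] for e in events)
    topics = Counter(e["topic"] for e in events)
    return {
        "total_conversations": len(events),
        "top_characters": characters.most_common(3),
        "topic_counts": dict(topics),
        "flagged_conversations": sum(1 for e in events if e["flagged"]),
    }


# Invented sample data for illustration.
events = [
    {"character": "coding_coach", "topic": "education", "flagged": False},
    {"character": "coding_coach", "topic": "education", "flagged": False},
    {"character": "fitness_pal", "topic": "sports", "flagged": True},
]
print(summarize_activity(events))
```

Because the function never touches message text, a parent sees usage patterns and a count of flagged conversations without reading the conversations themselves.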

Real-Time Intervention

Meta might also allow parents to:

- Block or allow specific characters in real-time

- Adjust content restrictions on the fly

- Pause access temporarily

- Set up alerts for certain keywords or topics

These are more technically challenging because they require real-time monitoring and integration with the AI system itself. But they're certainly possible with modern technology.
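Keyword alerts are the simplest of these to picture. The sketch below is one hypothetical way to match a parent's keyword list against an incoming message using whole-word, case-insensitive matching; the function names and keywords are illustrative assumptions, not a description of Meta's implementation.

```python
import re


def build_alert_pattern(keywords):
    """Compile a case-insensitive, whole-word pattern from a keyword list."""
    if not keywords:
        raise ValueError("at least one keyword is required")
    escaped = (re.escape(k) for k in keywords)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def find_alerts(pattern, message):
    """Return the sorted, lowercased set of alert keywords found in a message."""
    return sorted({m.group(0).lower() for m in pattern.finditer(message)})


pattern = build_alert_pattern(["weed", "diet pills"])
print(find_alerts(pattern, "anyone selling Weed or diet pills?"))
print(find_alerts(pattern, "We had seaweed salad for lunch"))
```

Whole-word matching avoids the obvious false alarm ("seaweed"), but plain keyword lists are easy to evade with slang or deliberate misspellings, so a real system would need more than this.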

Age Prediction Overlay

Meta's age prediction technology is the real innovation here. The system will try to identify teenagers even if they claim to be adults. If identified, the more restrictive teen safeguards apply automatically. This prevents teenagers from circumventing parental controls by lying about their age.

Age prediction works by analyzing:

- Typing patterns and slang usage

- Social graph (who they follow/are friends with)

- Device type and settings

- Behavior patterns (when they're most active, how they interact)

- Account history and previous declarations of age

None of these signals is reliable on its own, but together they yield a probabilistic estimate of the user's likely age. Accuracy is probably somewhere in the 70-80% range (a rough guess, not a published figure), which is good enough for applying age-appropriate restrictions by default but not good enough for high-stakes decisions.
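One common way to combine weak signals into a single probability is a weighted sum passed through a logistic function. The sketch below illustrates that general technique only; the signal names, weights, bias, and threshold are invented for the example and are not Meta's model.

```python
import math

# Hypothetical weights: positive values push the estimate toward "likely a teen".
SIGNAL_WEIGHTS = {
    "teen_slang": 1.2,
    "mostly_teen_friends": 1.5,
    "active_after_school_hours": 0.6,
    "declared_adult": -1.0,
}
BIAS = -1.0  # prior log-odds before any signal is seen; also an invented value


def teen_probability(signals):
    """Combine boolean signals into a probability using a logistic function."""
    log_odds = BIAS + sum(
        weight for name, weight in SIGNAL_WEIGHTS.items() if signals.get(name)
    )
    return 1.0 / (1.0 + math.exp(-log_odds))


def apply_teen_safeguards(signals, threshold=0.5):
    """Decide whether the stricter teen settings should apply."""
    return teen_probability(signals) >= threshold


# An account that claims to be an adult but looks like a teen on other signals.
account = {"teen_slang": True, "mostly_teen_friends": True, "declared_adult": True}
print(round(teen_probability(account), 2), apply_teen_safeguards(account))
```

In this toy model a declared adult age lowers the estimate but doesn't override the other signals, which matches the idea that restrictions can apply even when a user claims to be an adult. Where the threshold sits is a policy choice: set it lower and more adults get teen restrictions, set it higher and more teens slip through.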

The concern about age prediction technology is privacy. Analyzing user behavior to estimate age means analyzing a lot of personal data. There are privacy implications, though Meta's argument is that it's using this data to protect teenagers, so the benefit outweighs the cost.

Estimated data shows Meta's AI characters could be unavailable for up to 6-12 months, potentially affecting its competitive stance against ongoing competitors.

The Legal Strategy: Timing and Optics

Let's be direct: Meta's decision to pause AI characters is strategically timed to influence ongoing litigation. The company faces multiple lawsuits alleging it harms teenagers. By pausing AI characters now and redesigning them with strong safeguards, Meta gets to:

- Remove a liability from the courtroom narrative

- Demonstrate proactive safety measures

- Show responsiveness to regulatory concerns

- Position itself as more responsible than competitors

This is smart legal strategy. It doesn't mean the decision is insincere—Meta probably genuinely wants safer AI for teenagers. But the legal implications are definitely part of the calculation.

The New Mexico trial is particularly significant. That case involves allegations that Meta failed to protect children from sexual exploitation on its apps. If a jury is hearing testimony about insufficient child protection measures, Meta definitely does not want teenagers actively using a new AI feature that might have safety gaps. By pausing access now, Meta essentially says: "We're taking this seriously and fixing the issue." That narrative helps in litigation, as noted by New Mexico Political Report.

The addiction lawsuit is similarly positioned. If Mark Zuckerberg is testifying about whether Meta designs features to be addictive, the company doesn't want teenagers visibly hooked on AI character interactions while the trial is under way. By pausing, the company removes that specific line of criticism, as reported by The New York Times.

From a legal perspective, this is a masterclass in preemptive protection. Meta isn't admitting any wrongdoing (the pause is framed as wanting to improve the feature, not fixing a safety problem). But the practical effect is that the company addresses the most vulnerable aspect of its AI character product before critics can use it against the company in court.

Regulators watching this case will also be influenced. If Meta is being proactive about teen safety, regulators might be less inclined to impose heavy-handed restrictions. That's valuable for Meta's long-term ability to develop AI features for all ages.

What This Means for AI Companies Generally

Meta's move creates a template that other AI companies will likely follow. The industry is essentially learning: if you're building AI that teenagers might use, you need to build in strong safety controls from the start, or you'll face regulatory pressure and have to bolt them on later.

For startups building AI products, this is important context. If your AI product could potentially attract teenagers as users, you need to:

- Consider teen safety from day one, not as an afterthought

- Implement age detection systems

- Create different experience paths for different age groups

- Get ahead of regulatory criticism by being proactive

- Document your safety measures thoroughly

For larger AI companies like OpenAI and Google, Meta's move validates their own cautious approach to teenage users. OpenAI's teen-specific safety rules for ChatGPT now look less like excessive caution and more like an emerging industry standard that Meta's pause reinforces.

For AI character platforms specifically, this is a moment of reckoning. Any platform serving teenagers needs to decide: do we implement similar safeguards proactively, or wait for regulatory pressure to force us to act? Meta's experience suggests that being proactive is cheaper and less damaging than being reactive.

AI chatbots offer significant benefits like immediate access and non-judgmental support but pose risks such as potentially dangerous advice. Estimated data highlights the need for careful balance.

Parental Perspective: What Should You Actually Be Doing

If you're a parent with teenagers on Meta's platforms, Meta's pause on AI characters is good news. It's buying you time to have conversations with your kids about AI safety before the feature returns.

Here's what you should be doing:

Have the Conversation

Talk with your teenager about their experience with AI characters. What did they like? Did they ever talk about concerning topics? Were they aware of any limitations? This isn't about judgment—it's about understanding their experience and helping them think critically about AI interactions.

Establish Expectations

When AI characters return, help your teenager understand what you're comfortable with. What topics are okay to discuss? How much time is reasonable to spend? Are there certain types of AI characters you're not comfortable with?

Use the Parental Controls

When the redesigned version launches, actually use the parental controls Meta provides. Don't just enable them and forget—check in periodically on what your teenager is doing with AI characters. These tools are only useful if you engage with them actively.

Teach Critical Thinking About AI

Help your teenager understand that AI systems aren't human. They don't understand context the way humans do. They can seem smarter than they actually are. They can hallucinate facts. They can be manipulated. The more your teenager understands how AI actually works, the safer their interactions will be.

Know the Resources

If your teenager is struggling with mental health, depression, or suicidal thoughts, know what resources are available. AI characters won't provide real help—human professionals can. Having this information ready is important.

The Future of AI and Teenagers: Where This Goes

Meta's redesign is a step toward safer AI for teenagers, but it's not the end of the story. The real future will probably involve several developments.

Specialized AI for Education

AI tutors and homework helpers will probably become much more common and more sophisticated. These are genuinely valuable for teenagers and have clear safety parameters. Expect this category to grow significantly as schools adopt AI tutoring tools.

Restricted Social AI

General-purpose AI characters for social interaction will probably remain restricted for teenagers. The safety risks are too high and the value proposition is unclear. As AI gets better, this might change, but not for several years.

Family-Focused Features

Look for more features designed to let families use AI together—parents and teenagers co-using the same AI system with different privilege levels. This might be the "safe" model for teenage AI interaction: shared spaces with oversight rather than private conversations with AI.

Regulatory Clarity

Regulators will probably establish clearer standards for teen AI interaction over the next 2-3 years. This could come through legislation, court precedent from ongoing cases, or industry standards. Meta's actions now are partly shaping what those standards will look like.

Better Age Detection

Age prediction technology will improve significantly. Within a few years, it might be quite good at identifying teenagers even if they try to disguise their age. This could make teen-specific safety features much more reliable.

Liability Questions

Eventually, courts will have to determine who's responsible if AI causes harm to a teenager. Is it the platform's responsibility? The parent's responsibility? The AI company's responsibility? These liability questions will reshape what companies are willing to deploy for teen audiences.

Implementation Challenges Meta Will Face

Designing safe AI for teenagers sounds good in theory, but implementation is genuinely difficult. Meta will face several challenges as it rebuilds AI characters.

False Positives in Safety Detection

If the system is too aggressive in flagging concerning content, it will block legitimate conversations. A teenager asking for help with homework might get flagged as abuse. A teen talking about sports injuries might trigger self-harm warnings. Too many false positives and the feature becomes useless.

Circumvention Attempts

Teenagers are creative at working around restrictions. If parental controls are in place, they'll find ways to disable them. If certain topics are blocked, they'll use slang or code words. If certain characters are restricted, they'll create new ones. Meta will be in an arms race with motivated teenagers trying to bypass safeguards.

Cultural Differences

What's age-appropriate varies significantly by culture. PG-13 standards are American. Globally, Meta needs to adapt these standards to local norms, religious beliefs, and cultural values. That's a massive complexity layer.

Balancing Safety and Utility

The more restrictions Meta adds, the less useful the feature becomes. Finding the right balance between restrictive enough to be safe and permissive enough to be valuable is incredibly difficult. Most implementation attempts will err too far in one direction or the other.

Keeping Parents Informed

Building monitoring tools is one thing. Making sure parents actually understand and use them is another. If the interface is confusing or the alerts are overwhelming, parents won't use the parental controls effectively.

The Bigger Picture: AI and Childhood in 2025

Meta's decision about AI characters is one data point in a much larger story: how society is grappling with AI's role in children's lives. The pause on AI characters reflects broader uncertainty about what's safe, what's beneficial, and what's appropriate for teenagers.

We're at a moment where AI is becoming mainstream, but we don't fully understand its effects on developing brains and psyches. The precautionary principle suggests we should be careful. Meta's decision reflects that caution. But it also reflects something deeper: the realization that tech companies shouldn't be making these decisions unilaterally.

For teenagers, this moment matters: it signals that their safety counts for more than feature launches. For parents, it's validation that their concerns about their kids and technology are legitimate. For regulators, it's evidence that the industry is capable of correcting course when the stakes are high enough.

The redesigned AI characters, whenever they launch, will tell us a lot about whether the tech industry has learned to build safer systems or whether it's just gotten better at the appearance of safety.

FAQ

What exactly is an AI character on Meta platforms?

AI characters are chatbots designed to simulate conversations with specific personas or archetypes. They can be created by Meta or by third-party developers, and they're designed to be interactive, entertaining, and (theoretically) helpful. Teenagers could chat with AI characters for conversation, learning, or entertainment, similar to how users might interact with chatbots on other platforms. The interaction is text-based, real-time, and lacks human oversight during the conversation.

Why is Meta pausing AI character access for teens specifically?

Meta is pausing teen access because the company couldn't guarantee that the AI characters were giving age-appropriate responses to potentially sensitive topics. The decision is part protective (removing potential harms from circulation) and part strategic (improving the product before regulatory scrutiny becomes more intense). By pausing now, Meta gets credit for proactive safety measures rather than waiting for complaints or lawsuits to force the decision later.

When will these redesigned AI characters be available again?

Meta hasn't provided a relaunch timeline. The company has said only that the pause will take effect "in the coming weeks" and that the new version will follow at some later point. The vagueness is likely deliberate, since it avoids committing to dates the company might miss. A reasonable estimate based on typical tech product cycles would be 3-6 months, but that's speculation. Meta has incentives both to move fast (to avoid looking irresponsible) and to move carefully (to ensure the redesign actually works).

What will the parental controls actually let parents see?

Parental controls will likely allow parents to see high-level summaries of teen interaction with AI characters, including how frequently they use the feature, which characters they interact with most, and alerts when the system detects unusual or concerning patterns. Parents probably won't have access to full conversation transcripts unless a pattern triggers a safety alert. The controls will also let parents set topic restrictions and character allowlists before their teen interacts with the system.

Are teens currently able to circumvent the pause and still access AI characters?

Once Meta fully implements the pause, regular teenage users won't have access to AI characters. However, teenagers who lie about their age during signup might still be able to access some features. That's why Meta is investing in age prediction technology to identify likely teenagers even if they claim to be adults. Over time, the system should become better at identifying teenagers and applying age-appropriate restrictions, but there will always be some margin of error and opportunities for circumvention.

How does this compare to what other AI companies are doing for teen safety?

Character.AI restricted open-ended conversations for users under 18 in October 2025. OpenAI added teen-specific safety rules for ChatGPT. Google has also implemented age-appropriate restrictions on its AI tools. Meta's approach is somewhat more aggressive (a complete pause and redesign) than its competitors', but that might reflect the additional legal pressure Meta faces from teen protection lawsuits. The industry trend is clearly toward stricter teen AI safeguards across the board.

What should parents do about AI character access while it's paused?

Parents should use this pause as an opportunity to have conversations with their teenagers about AI safety, about what they were using AI characters for, and about how they'll use redesigned versions when they return. Parents should also set expectations about monitoring and parental controls before the new version launches. Finally, parents should educate themselves about how AI actually works so they can have informed conversations with their teens about its capabilities and limitations.

Could restricting AI character access actually harm teenagers who were using them for genuine purposes?

That's a legitimate concern. Teenagers who were using AI characters for homework help, hobby discussion, or learning will lose that resource temporarily. The restriction could push teens toward less safe alternatives or simply remove a potentially useful tool. Meta's answer is that the new version will include legitimate educational uses while restricting potentially harmful ones. Whether that balance actually works won't be known until the feature relaunches.

Is Meta's focus on parental controls enough to make AI characters safe for teens?

Parental controls are necessary but probably not sufficient for teen safety. They assume parents are engaged and monitoring, which isn't always the case. Some teenagers have absent parents, hostile parents, or parents who aren't comfortable with technology. For those teenagers, built-in safety features in the AI itself matter more than parental oversight. Meta's approach of combining parental controls with built-in safety restrictions is better than either alone, but there's no guarantee it's sufficient.

What happens if the redesigned AI characters still have safety problems after launch?

If problems emerge after the new AI characters launch, Meta will face additional liability and regulatory pressure. The company has essentially committed to fixing the safety issues by pausing and redesigning. If the redesigned version also has safety problems, that would be a significant failure of the redesign process and would likely trigger more aggressive regulatory intervention. This gives Meta strong incentive to get the redesign right before launching.

Conclusion

Meta's decision to pause teenage access to AI characters represents a watershed moment for how tech companies approach artificial intelligence, child safety, and regulatory relationships. It's not a casual feature pause. It's a strategic restructuring in response to converging pressures: litigation concerns, parental demands, regulatory scrutiny, and honest uncertainty about whether current AI systems are safe enough for teenage users.

What makes this decision significant isn't that Meta is pausing the feature, but how and why. The company is essentially admitting that its original AI character rollout didn't adequately account for teenage users' unique needs and vulnerabilities. Rather than defend the existing system, Meta is building a new one from the ground up with safety as a core design principle rather than a bolt-on afterthought.

This is good. It shows that the company is capable of prioritizing teen safety over product launches. It demonstrates that regulatory pressure and legal liability are powerful incentives for tech companies to do the right thing. And it suggests that the industry as a whole is learning to take teenage AI safety seriously.

But the real test comes when the redesigned AI characters launch. Will they actually be safe, or just appear safe? Will they be useful enough to attract teenage users, or will restrictions make them irrelevant? Will parental controls actually be used by parents, or will they gather dust? These questions don't have answers yet.

What we do know is that the tech industry is at an inflection point with AI and teenagers. The decisions made over the next 6-12 months will shape what teenage AI interaction looks like for the next decade. Meta's pause is part of that decision-making process: it buys the company time to get it right, or at least to look like it's trying to.

For parents, regulators, and teenagers themselves, that's worth watching closely. The future of how AI integrates into adolescent life is being decided right now, in meetings at Meta, in courtrooms, and in policy discussions happening mostly out of public view. The pause on AI characters is the visible tip of that iceberg.

Whatever comes next, one thing is clear: the era of tech companies building features for teenagers without seriously considering safety is ending. Meta's redesign is evidence of that shift. Whether the actual implementation matches the rhetoric remains to be seen.

Key Takeaways

- Meta is pausing global teen access to AI characters to develop age-appropriate versions with built-in parental controls and content restrictions

- The decision is driven by regulatory pressure from pending lawsuits, parental demands for oversight, and industry-wide movement toward stricter teen AI safeguards

- Redesigned AI characters will focus on education and hobbies while restricting content about self-harm, extreme violence, and explicit material based on PG-13 standards

- Other AI platforms including OpenAI, Character.AI, and Google are implementing similar teen safety measures, indicating an industry-wide shift

- When the redesigned characters launch, they are expected to include built-in parental controls that let parents monitor interactions, set content boundaries, and adjust restrictions in real time
