Meta's Vibes Standalone App: The Future of AI-Generated Social Content

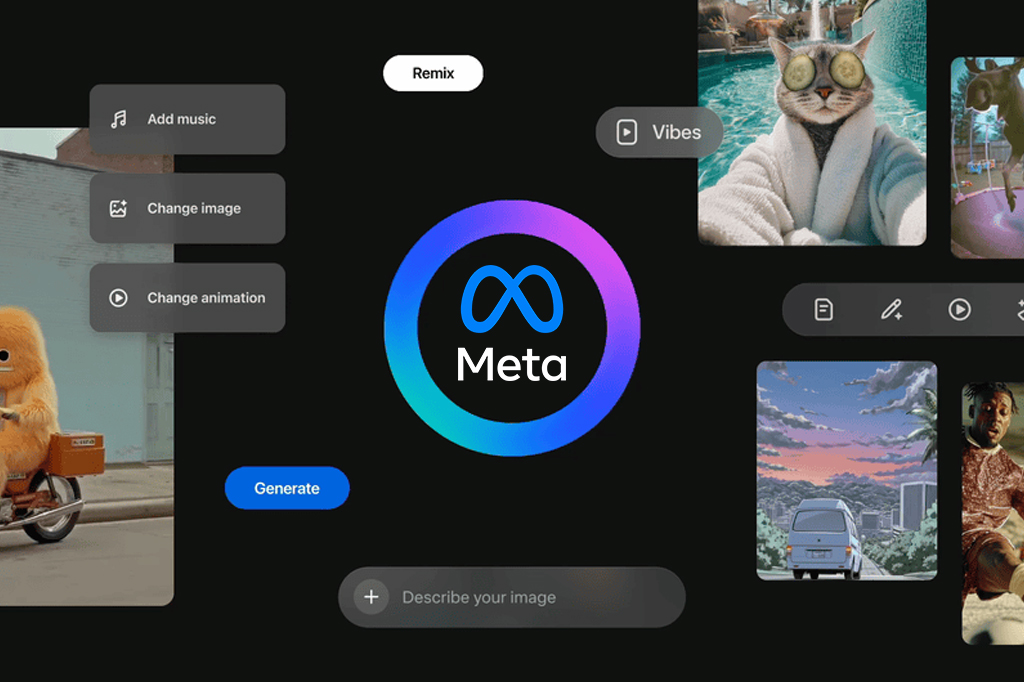

In September 2025, Meta introduced Vibes as a feature within its Meta AI app, marking a significant pivot in how social media platforms approach content generation and distribution. The feature was designed to democratize video creation by allowing users to generate TikTok-style vertical videos through simple text prompts powered by advanced AI models. Now, recognizing the unexpected momentum this feature gained within months of its launch, Meta has announced the development of a standalone Vibes app, signaling the company's commitment to AI-generated content as a core pillar of its social media strategy.

This move represents more than just feature separation; it reflects a fundamental shift in how Meta perceives the future of engagement on its platforms. Rather than competing solely on curated content or user-generated material, Meta is betting heavily on AI-generated video as the next major driver of user engagement and time spent on the platform. The decision to give Vibes its own dedicated application follows a pattern established by companies like OpenAI with its Sora app, suggesting that AI-powered creative tools are becoming standalone entertainment and productivity products in their own right.

What makes this development particularly significant is the timing and context. Social media platforms have increasingly struggled with content moderation, creator burnout, and algorithm fatigue. By introducing AI-generated content as a primary offering, Meta is attempting to create an infinite stream of fresh, engaging material that doesn't rely on human creators uploading content on their own schedules. This approach addresses a fundamental challenge facing all social platforms: the constant need for new content to keep users engaged.

The standalone app approach also allows Meta to experiment with features and monetization strategies that might clutter its primary social apps. Rather than forcing all users of Facebook, Instagram, or Threads to engage with Vibes functionality, Meta can create a dedicated environment where the core experience revolves entirely around discovering, creating, and sharing AI-generated videos. This separation of concerns fits product strategies Meta has used before, such as how Instagram Reels was eventually integrated into Instagram proper, or how WhatsApp maintained its own identity before integration.

For developers, creators, and content strategists, understanding Vibes and its implications is becoming increasingly important. The app represents a new category of creative tools that blur the line between social media platforms and content generation software. Unlike traditional social platforms where users must either create content themselves or curate content from others, Vibes democratizes professional-quality video creation, making it accessible to anyone with a smartphone and an idea.

This comprehensive guide explores every aspect of Meta's Vibes standalone app, analyzing its features, technical capabilities, market positioning, and implications for the broader creator economy. We'll examine how it compares to competing solutions, discuss the advantages and limitations of AI-generated content platforms, and help you understand whether this tool fits your content creation or business needs.

Understanding Meta's Vibes: What It Is and How It Works

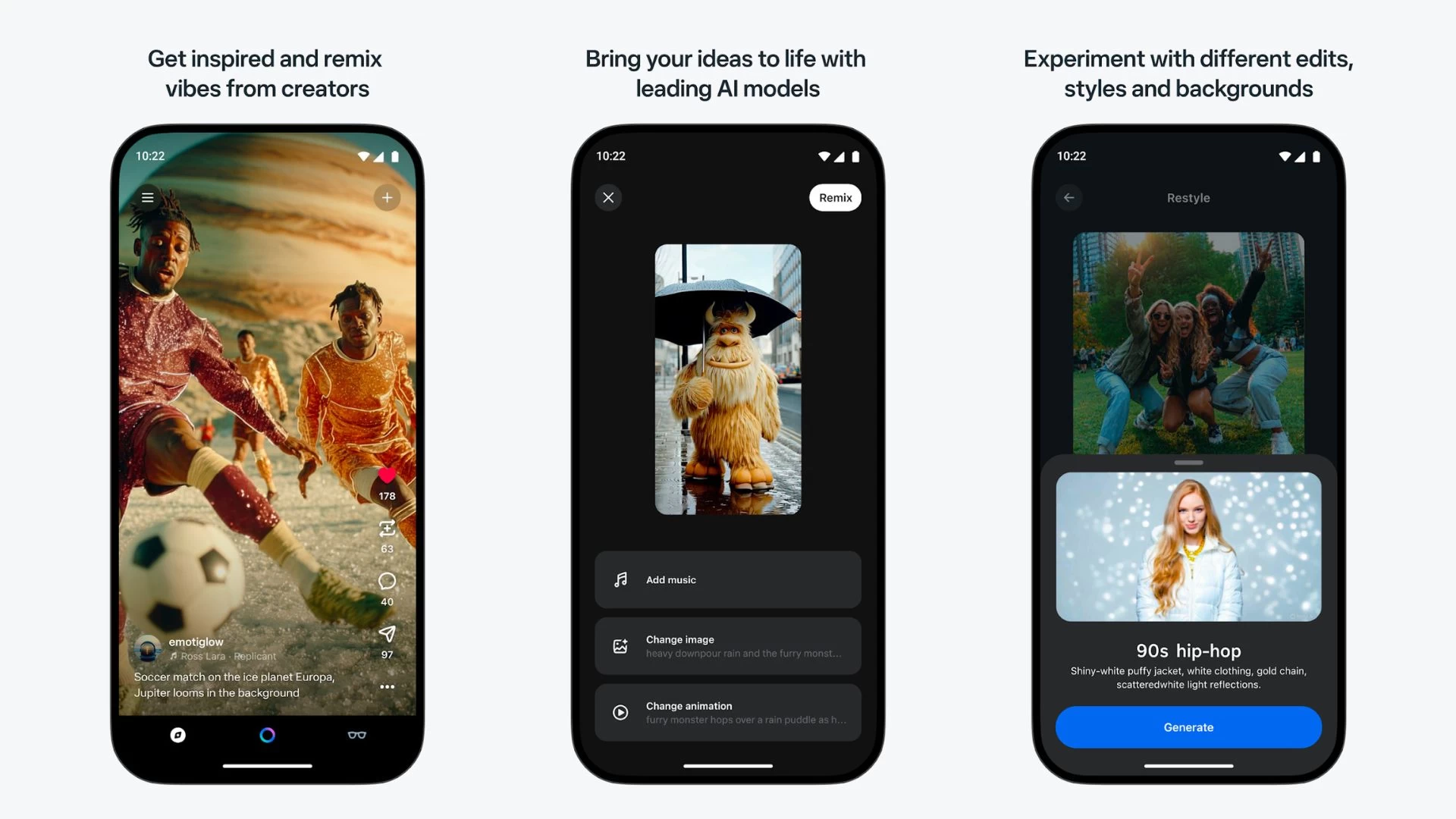

The Evolution from Feature to Standalone App

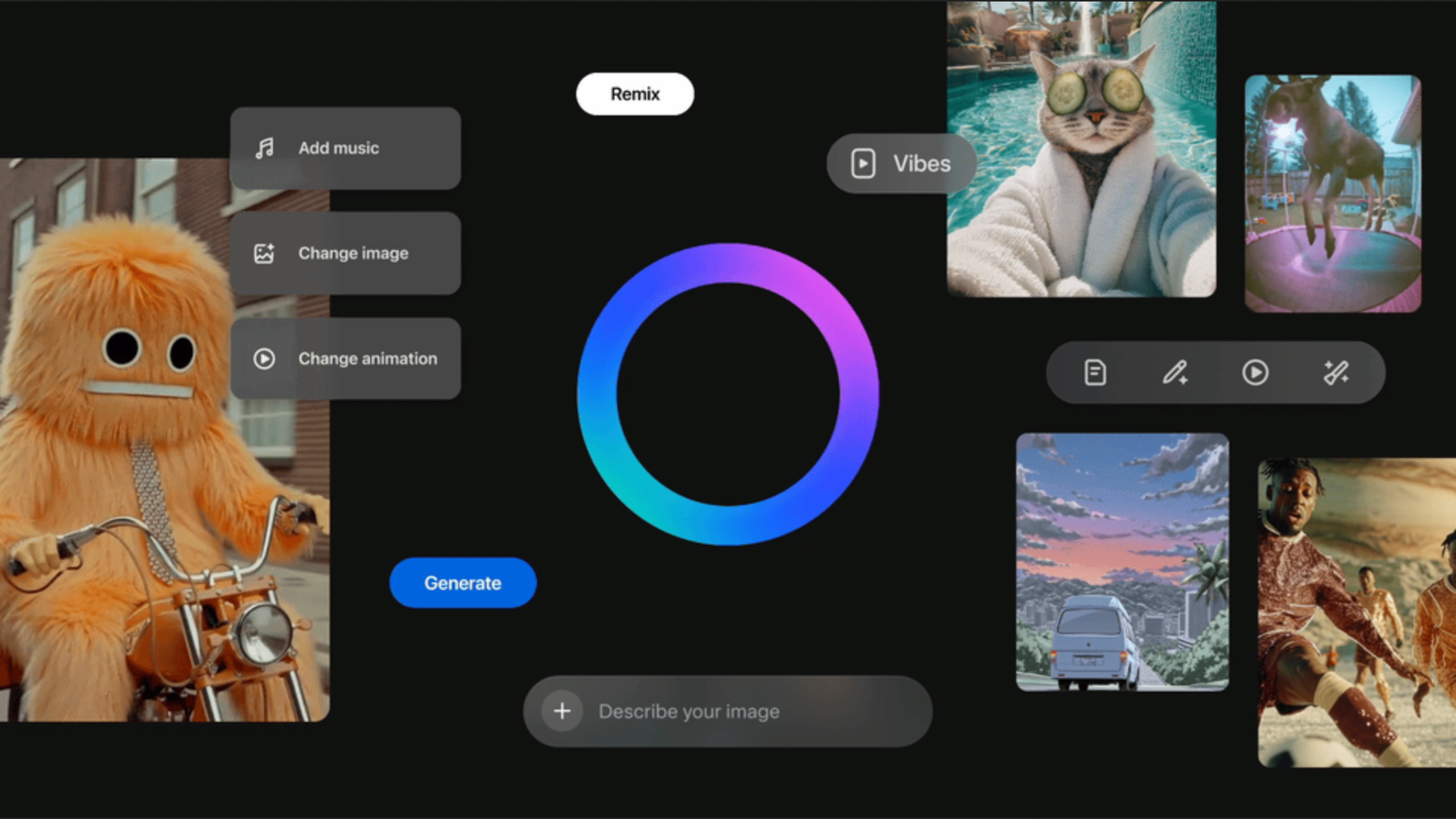

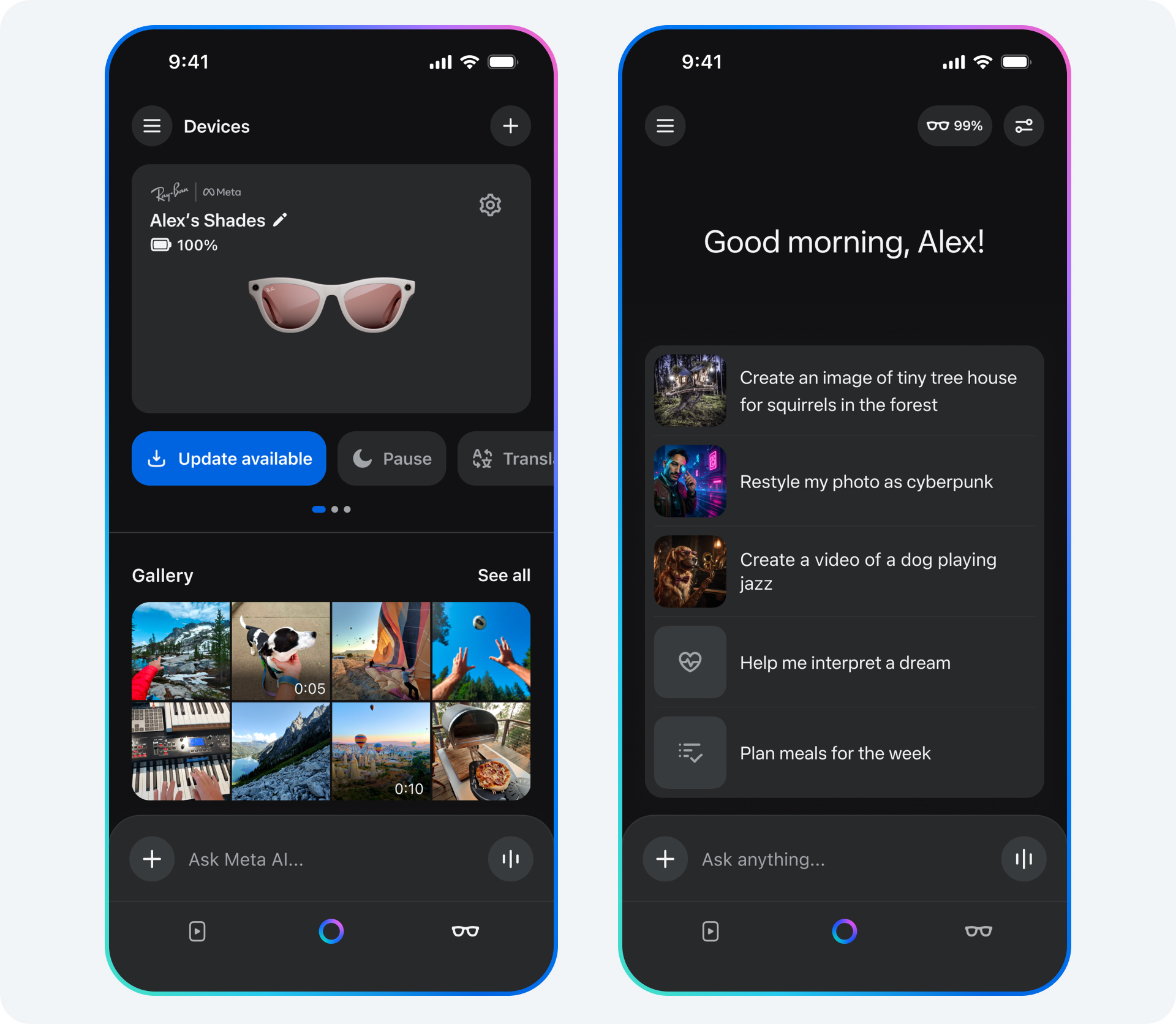

Meta's journey with Vibes began not as a standalone product but as an experimental feature buried within the Meta AI app. Launched in September 2025, Vibes was initially positioned as one of several creative tools available within Meta's broader artificial intelligence suite. The feature allowed users to input text descriptions of videos they wanted to create, and Meta AI would generate corresponding vertical videos in the style popular on TikTok and Instagram Reels. Initial adoption metrics were surprising to Meta's product teams: the feature saw strong engagement from a diverse user base including creators, casual content consumers, and experimental users curious about AI capabilities.

This strong early adoption prompted Meta to reconsider Vibes' positioning within its product portfolio. Rather than treating it as a secondary feature, Meta recognized an opportunity to create a dedicated platform where AI-generated video creation could be the primary experience. The decision to develop a standalone app reflects a broader trend in the tech industry where successful features graduate to become independent products with their own development teams, user bases, and monetization strategies. Similar transitions have occurred with Instagram (originally a photo-sharing app that became social media's leading visual platform), WhatsApp (initially focused on messaging that evolved into a multimedia communication platform), and Threads (Meta's answer to Twitter, launched as a standalone app rather than integrated into Facebook).

The standalone approach offers several strategic advantages. First, it allows Meta to focus development resources on optimizing the AI-generated video experience without compromise. Second, it enables differentiated branding and marketing—Vibes can be positioned as a creative tool rather than a social network, potentially attracting users who might not be interested in Meta's other properties. Third, it creates opportunities for platform-specific features that wouldn't make sense in the broader Meta ecosystem, such as advanced video customization, effect libraries, or specialized AI models trained on particular content genres.

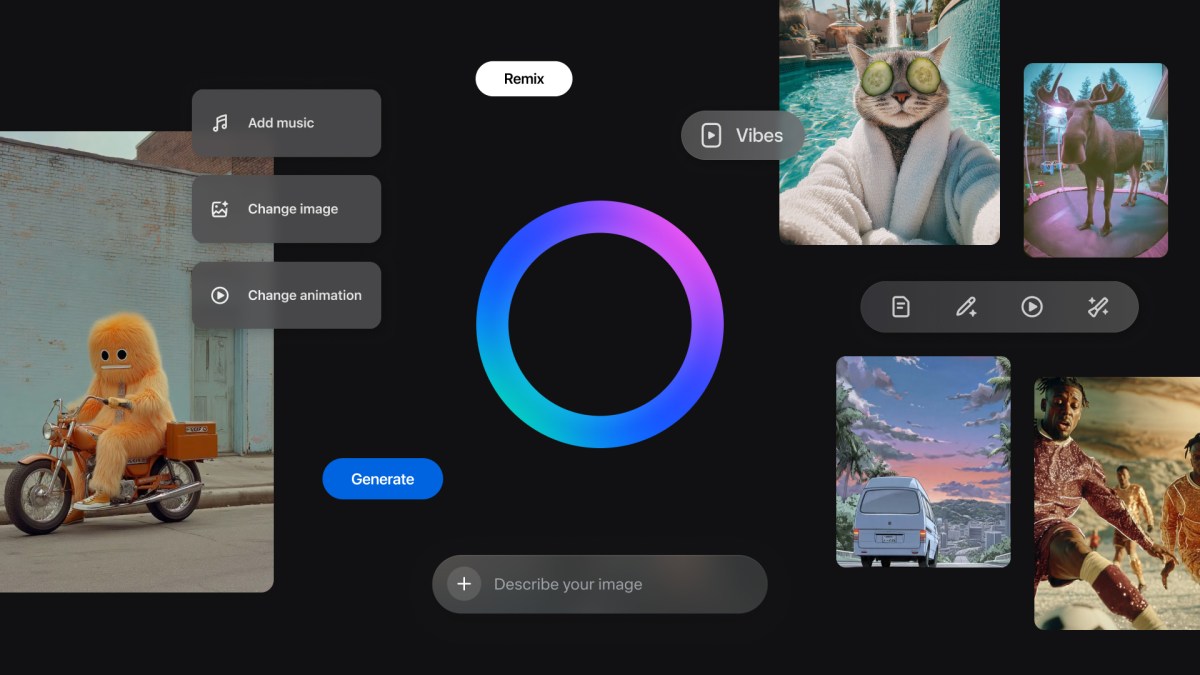

How Vibes Generates AI Videos

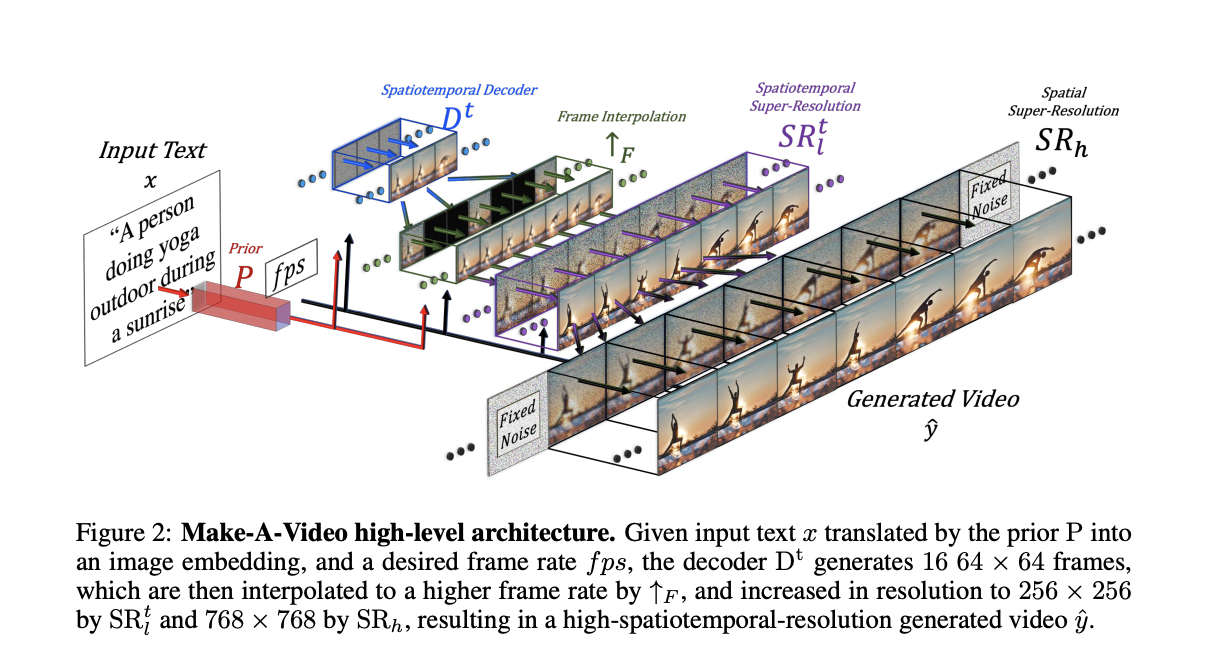

At its technical core, Vibes functions as a user interface wrapper around Meta's large language models and video generation models. When a user inputs a text prompt describing a video they want to create, the system follows a multi-stage processing pipeline. First, the language model parses the user's description, extracting key semantic information about the desired content, including visual elements, motion characteristics, audio requirements, and overall aesthetic direction.

Once the prompt has been understood and refined, Meta's video generation model (a transformer-based architecture similar in principle to the diffusion models used by competitors like OpenAI's Sora) begins the generation process. Rather than creating high-resolution video in a single pass, which would be computationally expensive, the model generates video progressively, starting with key frames and gradually adding detail and motion coherence. The model is trained on vast amounts of video data collected from the internet, enabling it to understand visual conventions, motion physics, and aesthetic principles that make videos appear professionally produced.

The quality of generated videos depends significantly on the specificity and clarity of the user's prompt. A detailed description like "A person in a red jacket running through a snowy forest at dawn with warm morning light filtering through the trees" will generate more coherent results than a vague prompt like "Running in the snow." However, Meta has invested in prompt optimization systems that help users refine their inputs—the app includes suggestions for improving prompts and examples of how slight wording changes can dramatically affect output quality.

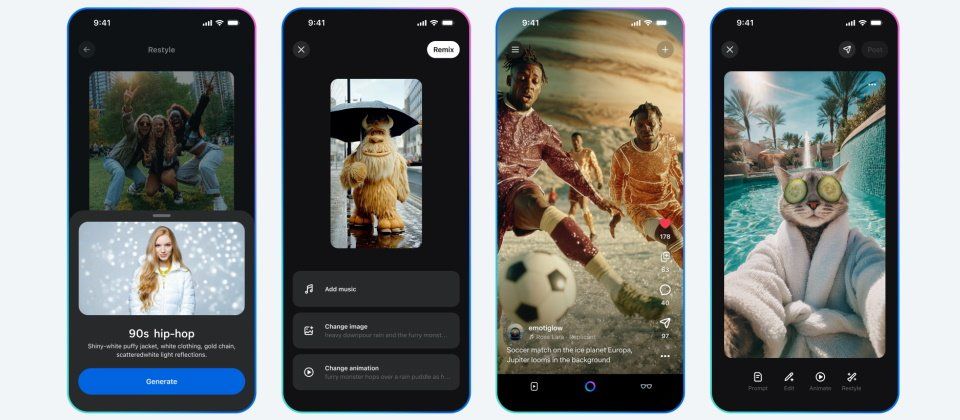

Vertical video aspect ratios (typically 9:16) were chosen specifically because they dominate on mobile platforms like TikTok, Instagram Reels, and YouTube Shorts. By optimizing for vertical formats, Vibes generates content that's immediately shareable on any platform where vertical video dominates, reducing friction between content creation and distribution. The generated videos typically range from 15 to 60 seconds, matching the optimal length for short-form video consumption on modern social platforms.

Key Technical Specifications

While Meta hasn't published detailed technical specifications for Vibes, industry analysis and available information suggest several important capabilities. Generated videos support resolutions up to 1080p, with frame rates typically set to 24 frames per second (matching cinema standards while balancing quality with file size). The system supports text-to-video generation as the primary input method, with planned support for image-to-video (where users can provide a starting image that the model animates) and potentially video-to-video capabilities (where users can modify existing videos through prompting).
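To make these figures concrete, here is a small worked calculation. It assumes that "1080p" for a 9:16 vertical video means a 1080-pixel short side (so 1080x1920), and it uses the 15 to 60 second range and 24 fps figure cited in this guide. The function names are ours, for illustration only, and none of this is a published Meta specification.

```python
from fractions import Fraction


def vertical_frame_size(short_side: int, aspect: Fraction = Fraction(9, 16)) -> tuple:
    """Return (width, height) for a vertical video with the given short side.

    Assumption: the aspect ratio is width:height, so 9:16 is taller than wide.
    """
    height = short_side / aspect  # exact Fraction arithmetic: 1080 / (9/16) = 1920
    if height.denominator != 1:
        raise ValueError("short side does not give a whole-pixel height")
    return short_side, int(height)


def frame_count(duration_s: int, fps: int = 24) -> int:
    """Total frames the model must produce for a clip of the given length."""
    return duration_s * fps


width, height = vertical_frame_size(1080)
print(f"Frame size: {width}x{height}")
print(f"15 s clip: {frame_count(15)} frames, 60 s clip: {frame_count(60)} frames")
```

Under these assumptions, a 60-second clip is four times the frame budget of a 15-second one, which is one reason longer clips are harder to keep coherent.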

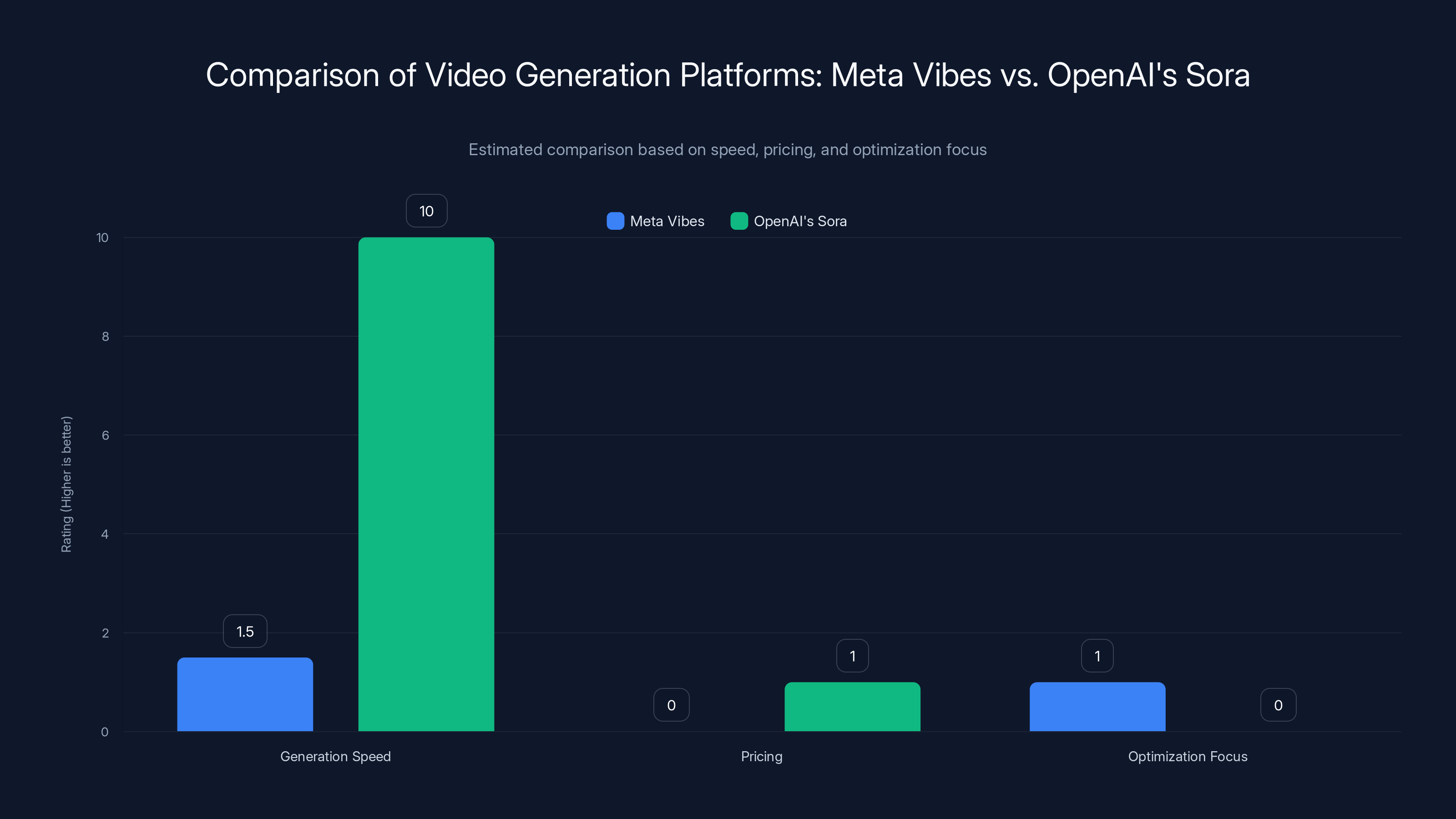

Generation time varies based on system load and output complexity, but typical videos generate within 30 seconds to 2 minutes. The faster generation times compared to competitors like Sora (which can take 10+ minutes per video on paid tiers) represent a significant technical achievement, suggesting Meta has optimized its inference pipeline for responsive user experience. This speed advantage is crucial for maintaining user engagement—users are far more likely to iterate on prompts and create multiple videos if they're not waiting several minutes between each generation.
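The effect of generation time on iteration is easy to quantify. The sketch below uses the timings quoted above (30 seconds, 2 minutes, and 10 minutes per video) and makes the simplifying assumption that generations run back to back with no time spent reviewing results, so the numbers are upper bounds rather than measured throughput.

```python
def attempts_per_hour(seconds_per_video: int) -> int:
    """Whole generations that fit in one hour at a fixed time per video."""
    return 3600 // seconds_per_video


# Timings are the figures quoted in this guide, not benchmarks we ran.
for label, seconds in [("30 s", 30), ("2 min", 120), ("10 min", 600)]:
    print(f"{label:>6} per video -> up to {attempts_per_hour(seconds)} attempts per hour")
```

At 10 minutes per video a creator gets at most 6 attempts an hour, compared with 30 to 120 at the speeds attributed to Vibes.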

The platform includes watermarking of generated videos by default, clearly indicating that content was created with Vibes. This addresses copyright and authenticity concerns while also serving as marketing for the platform. Users presumably will have options to remove or customize watermarks through premium subscriptions, a common monetization strategy for content creation tools.

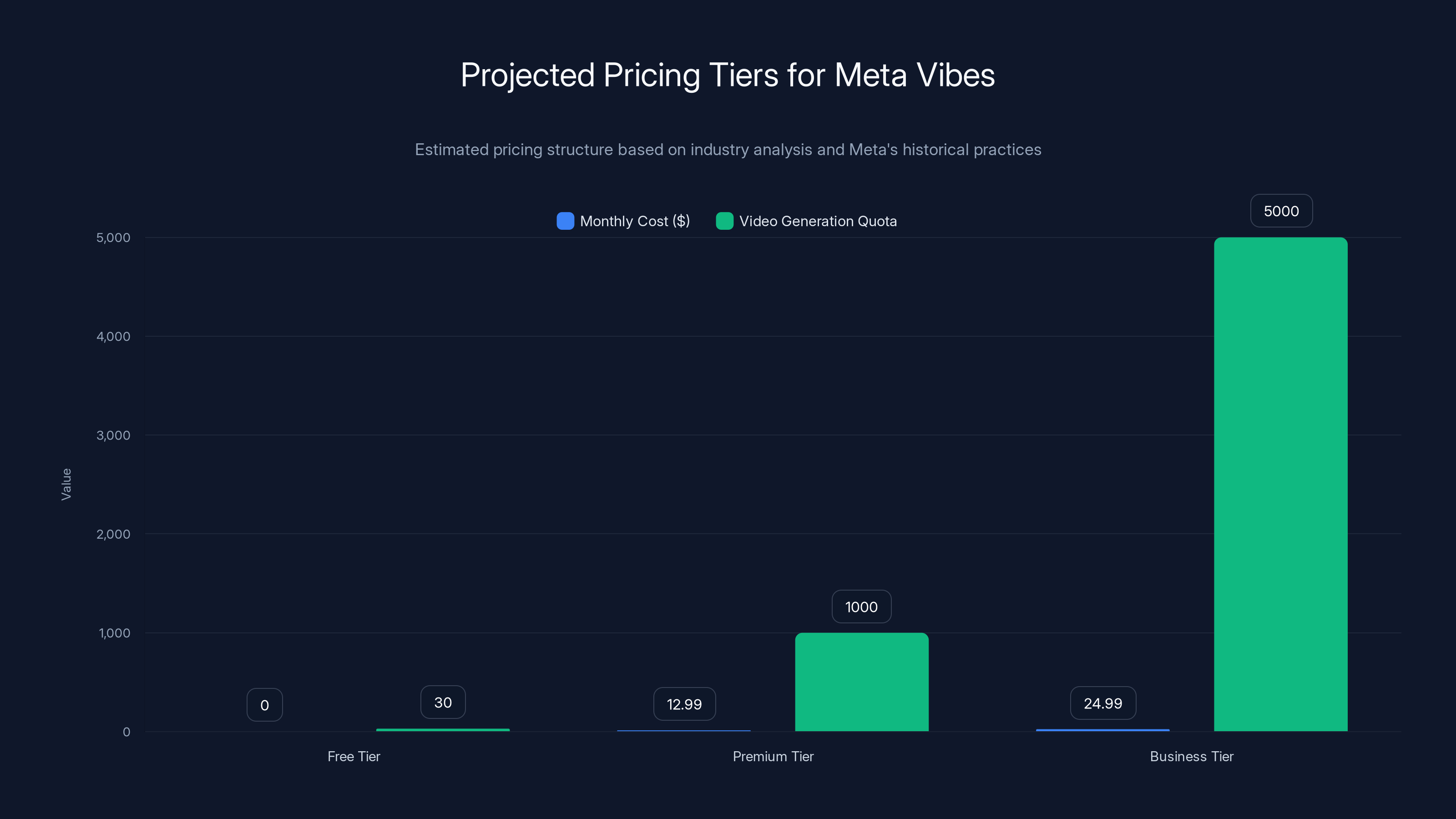

Estimated data suggests a freemium model with a free tier offering limited video generation, a premium tier with extensive features at

Core Features and Capabilities of the Vibes Platform

Text-to-Video Generation at Scale

The foundational feature of Vibes is its ability to transform written descriptions into finished video content with minimal user intervention. Unlike video editing software that requires knowledge of composition, transitions, color grading, and timing, Vibes abstracts away these technical requirements. A user with no video production experience can generate sophisticated-looking videos by simply describing what they want to see.

This democratization has profound implications for content creation. Historically, producing professional-quality video required either years of training in video production techniques or significant financial investment in equipment and education. With Vibes, a smartphone, which most users already own, is sufficient hardware. The only other requirements are creative vision and the ability to articulate that vision in text, skills that are far more widely distributed across the population than video production expertise.

The text-to-video capability includes several optimization features to improve output quality. The system recognizes when prompts are incomplete or ambiguous and suggests clarifications. For example, if a user writes "A person dancing," the system might suggest adding details like "A woman in a silver dress dancing in an electric blue nightclub with neon lights," explaining that additional visual detail typically results in higher-quality outputs. This guidance has been shown to significantly improve user satisfaction with generated videos.
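As a rough illustration of how prompt guidance of this kind could work, the sketch below flags which categories of visual detail a prompt is missing. Everything in it is hypothetical: the categories, the cue words, and the `suggest_clarifications` function are our own stand-ins, and a production system would more plausibly rely on a language model than on keyword matching.

```python
import re

# Hypothetical detail categories and cue words, chosen only for this example.
DETAIL_CUES = {
    "setting": {"forest", "nightclub", "city", "beach", "cafe", "street", "room"},
    "lighting": {"dawn", "dusk", "neon", "sunlight", "light", "lights", "shadow"},
    "appearance": {"red", "blue", "silver", "jacket", "dress", "wearing"},
}


def suggest_clarifications(prompt: str) -> list:
    """Return one suggestion for each detail category the prompt never mentions."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return [
        f"Consider adding {category} details"
        for category, cues in DETAIL_CUES.items()
        if not words & cues  # no cue word from this category appears
    ]


print(suggest_clarifications("A person dancing"))
print(suggest_clarifications(
    "A woman in a silver dress dancing in an electric blue nightclub with neon lights"
))
```

The short prompt triggers a suggestion for every category, while the detailed version from the example above triggers none.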

Meta has also implemented content filtering within the text-to-video system, preventing generation of videos containing violence, explicit content, or other policy violations. These filters operate at the prompt understanding stage, meaning the system recognizes potentially problematic requests before attempting generation, saving computational resources and maintaining platform safety standards.
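The key design point here is ordering: screening happens before the expensive generation step. The following sketch shows that ordering with a placeholder blocklist. The term list, `screen_prompt`, and `generate_video` are hypothetical names for illustration; Meta has not described its filter, which would presumably use trained classifiers rather than word matching.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Placeholder blocklist for illustration only; not Meta's policy list.
BLOCKED_TERMS = {"gore", "explicit"}


@dataclass
class ScreenResult:
    allowed: bool
    reason: str = ""


def screen_prompt(prompt: str) -> ScreenResult:
    """Cheap check that runs before any video generation is attempted."""
    tokens = set(re.findall(r"[a-z]+", prompt.lower()))
    hits = sorted(tokens & BLOCKED_TERMS)
    if hits:
        return ScreenResult(False, "blocked terms: " + ", ".join(hits))
    return ScreenResult(True)


def generate_video(prompt: str, render: Callable[[str], str]) -> Optional[str]:
    """Call the expensive `render` step only when the prompt passes screening."""
    if not screen_prompt(prompt).allowed:
        return None  # rejected early, so no compute is spent on rendering
    return render(prompt)
```

Passing the renderer in as a function keeps the sketch self-contained and makes it easy to confirm that rejected prompts never reach it.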

Discovery and Curation Features

While the focus of Vibes is creation, a successful platform also requires robust discovery mechanisms that help users find interesting content created by others. The standalone app includes a feed of popular Vibes-generated videos, curated through algorithmic ranking similar to Instagram's Explore page or TikTok's For You page. The algorithm considers factors like watch time, shares, likes, and saves to determine which videos appear prominently in feeds.
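A ranking of this kind is often expressed as a weighted score over engagement signals. The sketch below combines the four signals named above; the weights, the per-view normalization, and the `VideoStats` type are our assumptions for illustration, since the actual ranking formula has not been published.

```python
from dataclasses import dataclass


@dataclass
class VideoStats:
    video_id: str
    avg_watch_fraction: float  # share of the video watched on average, 0.0 to 1.0
    shares: int
    likes: int
    saves: int
    views: int


# Hypothetical weights; a real system would learn or tune these.
WEIGHTS = {"watch": 0.50, "shares": 0.25, "saves": 0.15, "likes": 0.10}


def engagement_score(v: VideoStats) -> float:
    """Weighted sum of watch time and per-view interaction rates."""
    if v.views == 0:
        return 0.0  # nothing to rank on yet
    return (
        WEIGHTS["watch"] * v.avg_watch_fraction
        + WEIGHTS["shares"] * v.shares / v.views
        + WEIGHTS["saves"] * v.saves / v.views
        + WEIGHTS["likes"] * v.likes / v.views
    )


def rank_feed(videos: list) -> list:
    """Order videos from highest to lowest engagement score."""
    return sorted(videos, key=engagement_score, reverse=True)
```

Normalizing by views keeps a widely seen but quickly skipped video from outranking a smaller one that viewers actually finish.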

The curation system faces interesting challenges unique to AI-generated content. Traditional social feeds curate based on explicit user actions (what did this user like? who do they follow?) and implicit signals (how long did they watch before scrolling?). With AI-generated content, an additional dimension emerges: the quality and sophistication of the creation process. A beautifully crafted prompt that generated an exceptional video should potentially be surfaced more prominently than a lazy prompt that happened to produce acceptable results. Some reports suggest Meta is experimenting with ways to surface not just the best videos, but also the most interesting prompts that generated those videos.

Search functionality allows users to discover videos by topic, style, or creator. Users can search for terms like "cyberpunk city," "cozy cafe," or "underwater scenes" to find relevant content. This search-driven discovery complements algorithmic feeds, accommodating both browsing behavior (users who want to explore what's trending) and goal-driven behavior (users looking for specific content types or inspiration).

Community and Sharing Features

Beyond individual video creation and discovery, Vibes includes social features that encourage community building and content distribution. Users can comment on videos, like content they enjoy, and share videos across Meta's ecosystem (Facebook, Instagram, Threads) or to external platforms via link sharing or direct download.

The ability to share generated videos across platforms is strategically important for Meta. Each shared video serves as implicit marketing for Vibes itself—friends who see shared content and want to create similar videos are directed to download the app and try it themselves. This viral loop has driven adoption of previous Meta products and is likely a key growth lever for Vibes.

Following and profile features allow users to see what other creators are making, encouraging community formation around popular creators. While all videos on Vibes are AI-generated, community dynamics still emerge—users who consistently create compelling content attract followers who enjoy their aesthetic and creative sensibility, even if the actual video generation is handled by AI.

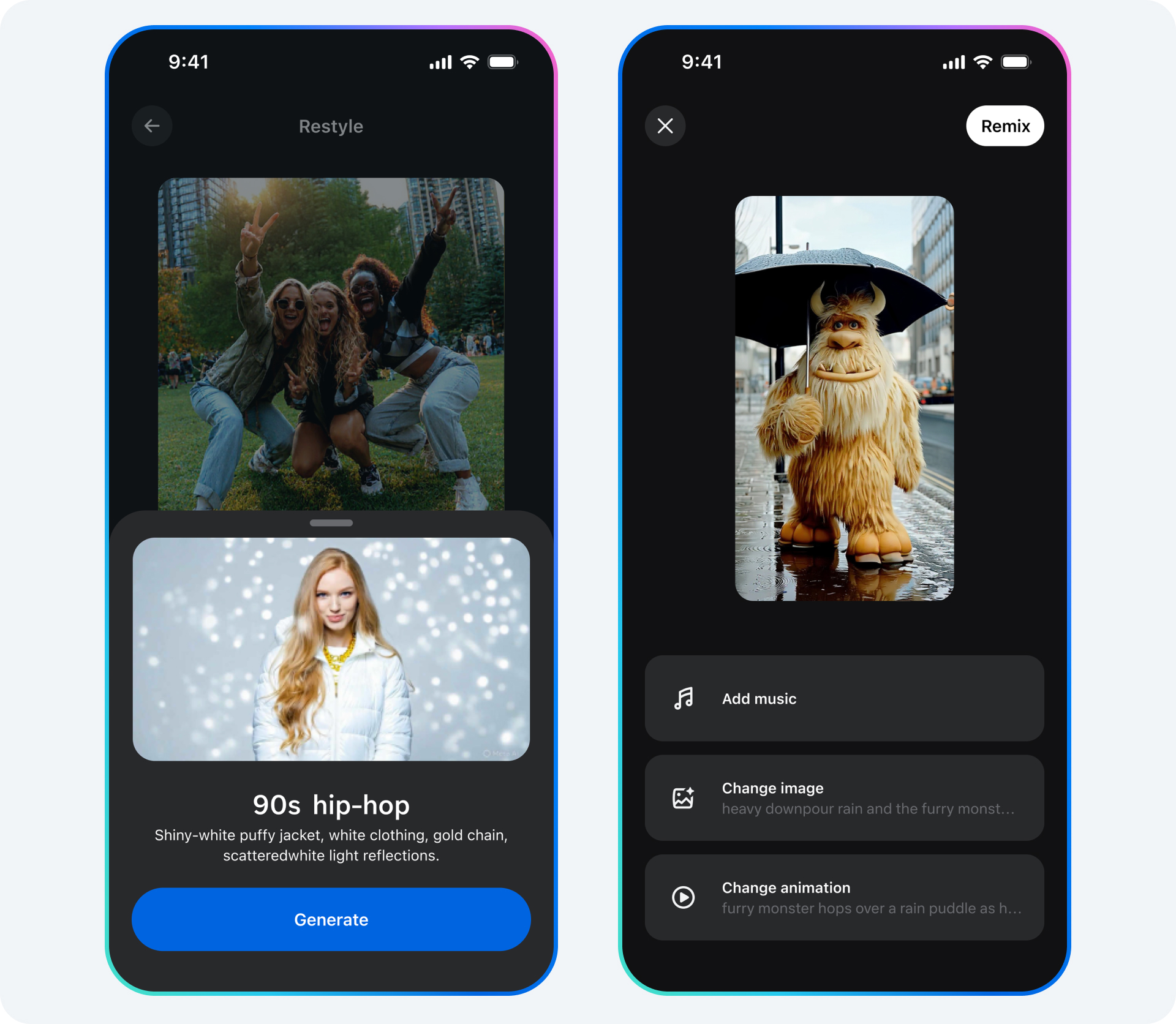

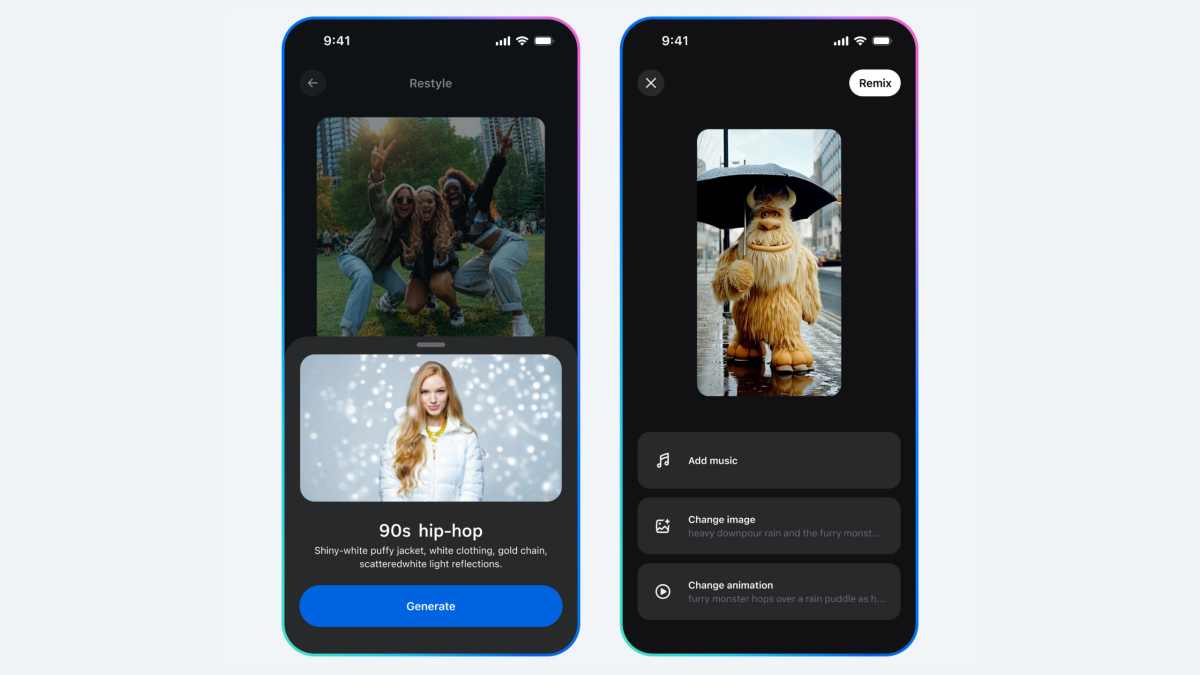

Advanced Customization Options

As users develop proficiency with Vibes, they gain access to more granular controls over the generation process. Rather than simply entering a text prompt, advanced users can specify parameters like aspect ratio, video length, camera movement style (static, pan, zoom, dolly movement), color grading preferences, and even AI model versions for different effects.
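One way to picture these controls is as a small, validated settings object. The sketch below is hypothetical: `GenerationParams` and its field names are ours, and Vibes has no documented configuration format. The camera options and the 15 to 60 second range come from the descriptions in this guide.

```python
from dataclasses import dataclass, replace

CAMERA_MOVES = {"static", "pan", "zoom", "dolly"}


@dataclass(frozen=True)
class GenerationParams:
    """Hypothetical bundle of the advanced controls described above."""
    prompt: str
    aspect_ratio: str = "9:16"
    length_s: int = 15
    camera_move: str = "static"
    color_grade: str = "neutral"
    model_version: str = "default"

    def __post_init__(self):
        if self.camera_move not in CAMERA_MOVES:
            raise ValueError(f"unknown camera move: {self.camera_move!r}")
        if not 15 <= self.length_s <= 60:
            raise ValueError("length_s must be between 15 and 60 seconds")


# A reusable "house style": only the prompt changes from video to video.
house_style = GenerationParams(
    prompt="Jacket on a hiker in the mountains",
    camera_move="dolly",
    color_grade="warm",
)
beach_variant = replace(house_style, prompt="Jacket on a casual wearer at a beach")
print(beach_variant)
```

Keeping the settings immutable and deriving variants with `replace` means every video in a campaign shares the same camera and color choices by construction.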

These advanced parameters are crucial for creators and brands wanting to maintain visual consistency across multiple videos. A brand might develop a house style—a consistent color palette, motion vocabulary, and visual aesthetic—that should appear in all their marketing videos. The ability to specify these parameters ensures that AI-generated videos align with brand guidelines rather than producing random variations that would confuse audiences.

Effect libraries and stylization options allow users to apply post-generation modifications to videos. Generated videos can be adjusted for color grading, brightness and contrast, saturation, and even stylistic effects like film grain or a cinematic look. While these adjustments don't require expertise with professional software like DaVinci Resolve or Final Cut Pro, they provide creators with more control over the final aesthetic.

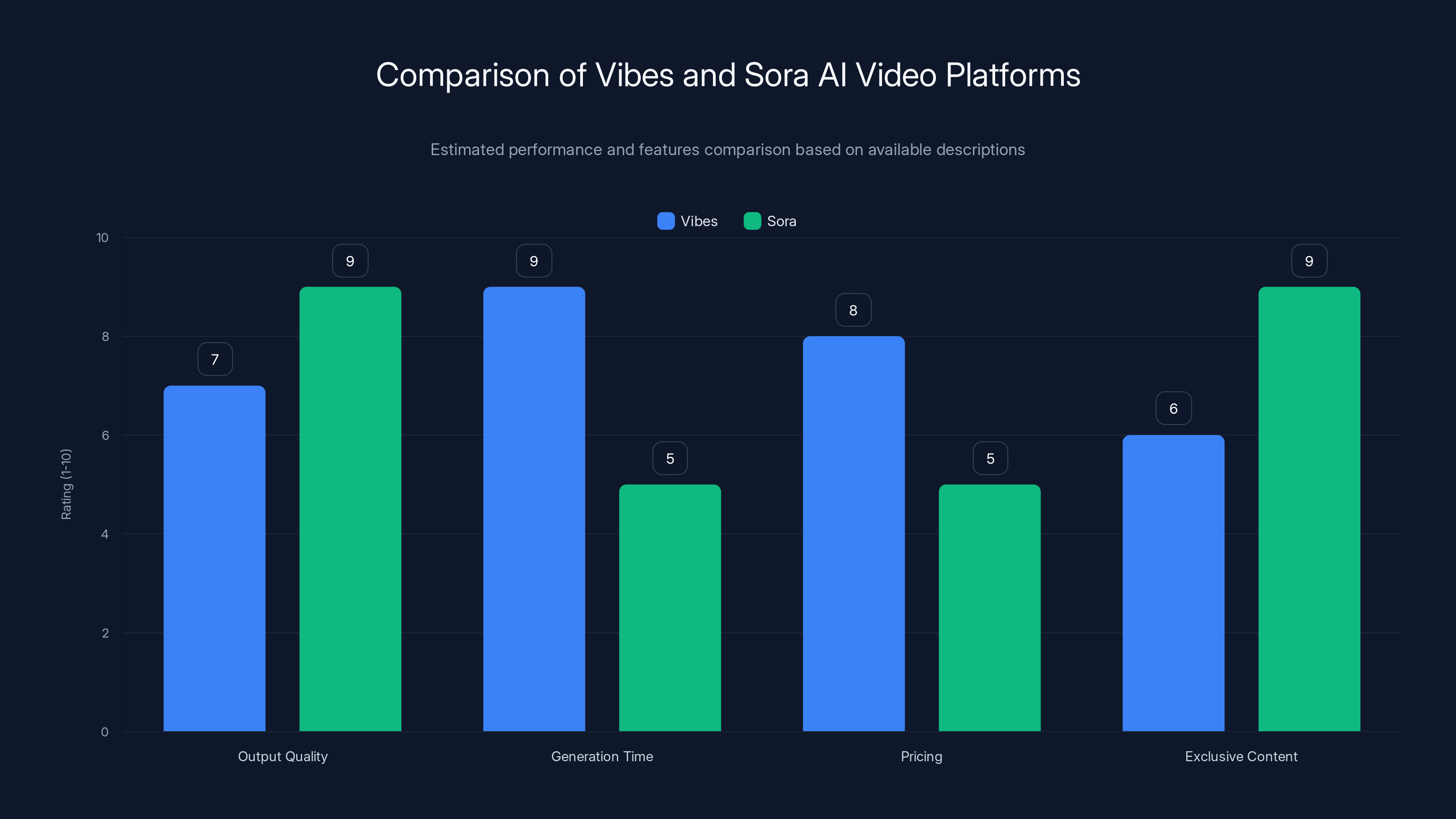

Vibes excels in faster generation times and potentially lower pricing, while Sora leads in output quality and exclusive content. (Estimated data)

How Vibes Compares to Competing AI Video Platforms

OpenAI's Sora: The Premium Competitor

OpenAI's Sora, first previewed in early 2024 with public access expanding through 2025, represents the most direct competitor to Vibes. Sora also generates videos from text prompts using transformer-based AI models trained on vast video datasets. In head-to-head comparisons, Sora's output quality often edges out Vibes, particularly in terms of physical coherence, realistic lighting interactions, and complex multi-object scenes.

However, Sora's advantage comes with significant trade-offs. Generation times are substantially longer—a single 60-second video can take 10+ minutes to generate on Sora's free tier, with paid tiers offering somewhat faster processing. This slow feedback loop discourages iteration and experimentation. Users are less likely to try multiple prompts, refine prompts based on initial results, or engage in the creative process of testing ideas if each attempt requires a lengthy wait.

Pricing represents another critical difference. OpenAI charges for Sora access through monthly subscriptions starting at $20/month or pay-per-use credits, with more complex or longer videos consuming more credits. Meta's pricing for Vibes has not been finalized, but Meta historically monetizes through advertising rather than direct subscription fees, suggesting Vibes will likely be free with optional premium features. For creators and businesses generating dozens or hundreds of videos monthly, the pricing difference becomes substantial.

OpenAI has pursued licensing deals with major entertainment properties, including an announced partnership with Disney allowing Sora users to generate videos with Disney characters and intellectual property. These exclusive deals provide competitive differentiation: users wanting to create content with specific characters or franchises must use Sora. Meta, having previously negotiated celebrity likeness licenses for Instagram features, will likely pursue similar partnerships.

Runway and Other Generalist Platforms

Runway's AI video tools occupy a different positioning within the landscape. Rather than focusing exclusively on text-to-video generation, Runway provides a comprehensive creative studio including AI-powered video editing, motion graphics generation, visual effects, and style transfer. This breadth makes Runway valuable for creators who want to use AI throughout their entire video production pipeline, not just for initial video generation.

Runway's advantage is versatility: a creator can generate a base video with text-to-video, then use Runway's editing tools to modify it, apply effects, and refine the result without leaving the platform. Vibes, by contrast, focuses narrowly on generation. If users want to edit generated videos, they must export them to video editing software like CapCut, Adobe Premiere, or Final Cut Pro. This workflow friction might be acceptable for casual creators but becomes problematic for professional production pipelines.

Runway's pricing also reflects its broader capabilities—subscriptions start at $12.50/month for basic access to AI video tools, with higher tiers offering more generation minutes. For creators who need comprehensive AI-powered creative tools, Runway represents better value. For creators whose primary need is fast, easy video generation, Vibes' narrower focus and superior speed provide better utility.

YouTube Dream Screen and Platform-Native Solutions

YouTube introduced Dream Screen, a feature allowing creators to generate AI backgrounds and environments for Shorts. While not full video generation, Dream Screen demonstrates how platform-native AI video features are becoming commonplace. By integrating AI video generation directly into YouTube Studio, Google ensured its user base has easy access to these tools without requiring external applications.

This trend has significant implications for Vibes. Many users might prefer using YouTube Dream Screen, Instagram's AI features, or TikTok's generative capabilities because they're integrated into creation workflows they already know. Meta's decision to create a standalone app somewhat contradicts this platform-native trend, though it allows for more focused development and potentially higher quality experiences than could be achieved with feature integration.

Emerging Competitors and Startups

Beyond these major platforms, numerous startups are building AI video generation tools targeting specific use cases. Synthesia focuses on video generation for business presentations, allowing users to generate videos with virtual presenters. D-ID specializes in creating talking head videos from photos and scripts. Pictory offers AI-powered video creation specifically designed for social media content. These specialized tools serve particular user needs that general-purpose platforms like Vibes don't address as effectively.

The fragmentation of the AI video landscape suggests that no single tool will dominate all use cases. Different creators will choose different platforms based on their specific needs, target formats, and workflow preferences. Vibes' strength lies in rapid, high-volume generation of short-form vertical video, making it ideal for TikTok and Reels creators. Specialized platforms serve narrower but deeper needs.

Use Cases and Ideal User Personas

Content Creators and Influencers

Content creators represent Vibes' most natural user base. The ability to rapidly generate dozens of video ideas and test which resonate with audiences directly addresses creators' core challenge: producing consistent, high-quality content on demanding schedules. A TikTok creator might use Vibes to generate 50 video concepts, select the 10-15 with highest potential, and release them across a week, maintaining audience engagement without requiring constant personal time investment.

For creators facing algorithm fatigue—where their current audience has seen their content style exhaustively and engagement is declining—Vibes offers a mechanism to explore new aesthetics and formats without the effort of personally producing new content. A creator can experiment with "cyberpunk" themes, "nature documentary" styles, or "retro 80s" aesthetics across multiple generated videos to test which resonates with their audience.

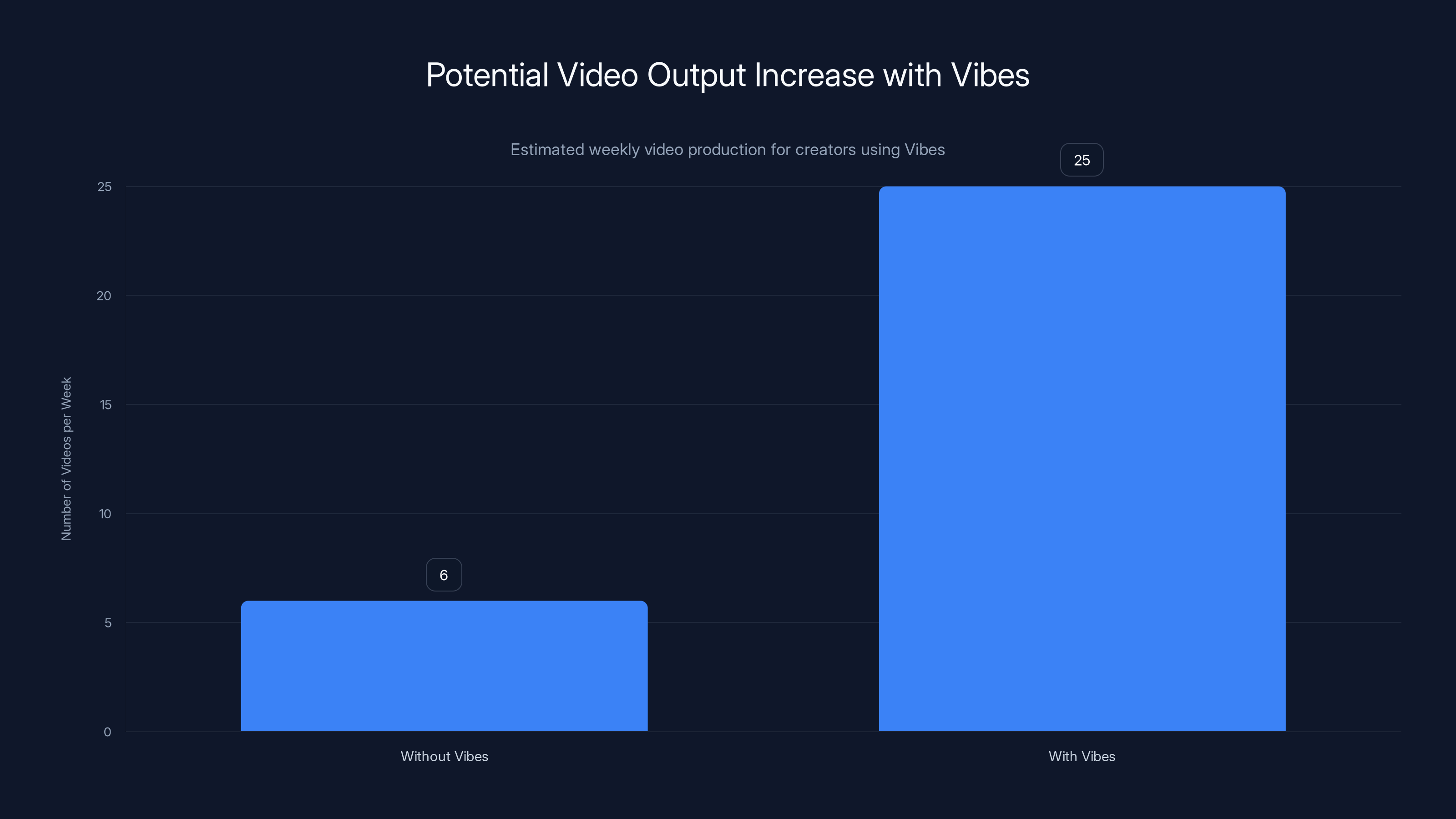

The financial implications are significant. A professional video creator earning income through sponsorships, brand partnerships, or platform monetization can use Vibes to dramatically increase output while maintaining quality standards. Where previously one creator might produce 4-8 videos weekly, Vibes enables production of 20-30 videos weekly with similar effort investment. This scaling directly translates to increased revenue opportunities.
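The size of that jump depends on which ends of the two ranges you compare. The short calculation below uses only the figures quoted above (4-8 videos weekly before, 20-30 after); the `scaling_range` helper is our own name for illustration.

```python
def scaling_range(before: tuple, after: tuple) -> tuple:
    """Smallest and largest output multiplier implied by two (low, high) ranges."""
    smallest = min(after) / max(before)  # most productive "before" vs. least "after"
    largest = max(after) / min(before)   # least productive "before" vs. most "after"
    return smallest, largest


low, high = scaling_range(before=(4, 8), after=(20, 30))
print(f"Weekly output grows by {low:.1f}x to {high:.1f}x")
```

So the quoted ranges imply anywhere from a 2.5x to a 7.5x increase in weekly output, before accounting for the time spent selecting and reviewing generated videos.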

Marketing and Brand Content

Brands represent a secondary but equally important user segment. Marketing departments can use Vibes to rapidly generate product showcase videos, advertising content, and social media marketing materials. Where a brand previously spent

For e-commerce brands particularly, the impact is transformative. Rather than shooting videos of their products in a few fixed settings, they can generate the same product shown in dozens of different contexts and situations. A clothing brand can see their jacket displayed on a hiker in the mountains, a professional in an urban setting, a casual wearer at a beach, and an athlete in active use—all generated without requiring actual production shoots.

Smaller brands and startups with limited marketing budgets gain access to video content capabilities previously only available to well-funded enterprises. This democratization of content quality helps level the competitive landscape, allowing well-executed marketing campaigns to come from companies without massive production departments.

Educational and Explainer Content

Educational institutions and training platforms can use Vibes to generate visual explanations and animated demonstrations. A science educator might generate videos showing molecular structures, planetary systems, or biological processes animated in ways that are difficult or impossible to film with traditional cameras. A language learning platform could generate scenarios showing everyday situations to help learners understand contextual language usage.

The ability to rapidly generate multiple variations is particularly valuable for education. An instructor can create 5-10 versions of the same concept explained visually, then test which approach resonates most effectively with their student population. This evidence-driven iteration improves educational outcomes by matching explanations to student learning preferences.

Entertainment and Experimentation

Beyond commercial use cases, Vibes serves entertainment purposes. Users create and share videos purely for enjoyment—funny scenarios, surreal imagery, creative experiments that explore the boundaries of AI capabilities. This entertainment use case drives social engagement and platform growth as users share entertaining or impressive videos with friends.

The experimental angle is particularly interesting. Creatives in music, visual art, and filmmaking use Vibes to visualize ideas that exist only in imagination, then share these visualizations to gather feedback and iterate on creative concepts. An independent filmmaker might generate hundreds of visual concepts and select the most compelling to guide actual production, dramatically reducing preproduction time and risk.

Meta Vibes excels in generation speed and vertical video optimization, while Sora has a higher pricing structure. Estimated data based on platform descriptions.

Pricing Models and Monetization Strategy

Expected Pricing Structure

While Meta hasn't publicly finalized Vibes pricing, industry analysis and Meta's historical practices suggest the likely structure. Meta typically monetizes user-facing products through advertising rather than direct user charges. We can expect Vibes to follow a freemium model where basic video generation remains free, supported by advertising, while premium tiers unlock advanced features and ad-free experiences.

The free tier would likely include a monthly generation quota—perhaps 20-50 generated videos monthly, sufficient for casual users but limiting for heavy creators. Generation time might be slower on the free tier (with videos processing during lower-demand hours) compared to premium tiers with immediate processing.
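A monthly generation quota of this kind is simple to model. The sketch below is a hypothetical illustration, not Meta's implementation: the `MonthlyQuota` class is ours, and the default limit of 20 is just the low end of the speculative 20-50 range above.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MonthlyQuota:
    """Tracks generations per calendar month against a fixed limit."""
    limit: int = 20  # assumed value, taken from the low end of the range above
    used: dict = field(default_factory=dict)  # (year, month) -> generations so far

    def try_consume(self, today: date) -> bool:
        """Record one generation if quota remains; return whether it was allowed."""
        key = (today.year, today.month)
        count = self.used.get(key, 0)
        if count >= self.limit:
            return False
        self.used[key] = count + 1
        return True

    def remaining(self, today: date) -> int:
        return self.limit - self.used.get((today.year, today.month), 0)
```

Keying the counter by year and month means the allowance resets automatically when the calendar month changes, with no scheduled reset job needed.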

Premium subscriptions would probably start at

Business accounts might include additional tiers starting at $24.99 monthly or higher, offering features like batch processing (uploading lists of prompts for bulk generation), API access for automated workflows, advanced analytics, and branded templates. This tiering approach generates revenue from multiple user segments based on willingness and ability to pay.

Enterprise and Licensing Opportunities

Beyond consumer monetization, Meta will likely offer enterprise licensing to brands, agencies, and media companies. An advertising agency might license Vibes technology to use internally for client work, integrating AI video generation into their creative production pipeline. A media company might integrate Vibes capabilities into their content management systems.

These enterprise relationships unlock significantly higher revenue per customer compared to consumer subscriptions. An agency serving 20+ brands might pay

Data and Advertising Value

Beyond direct monetization, Vibes generates enormous value through data collection. Every prompt entered, every video generated, and every engagement signal reveals information about user interests, aesthetic preferences, and creative inclinations. This behavioral data informs Meta's broader advertising targeting and content recommendation systems.

Video generation data also trains Meta's AI models. Each generated video and corresponding prompt provides training examples that improve future model performance. While Meta must balance privacy considerations (ensuring user data isn't misused), the proprietary training data from Vibes usage gives Meta's AI systems significant advantages over competitors.

Advantages of Using Meta's Vibes for Content Creation

Speed and Efficiency

The most compelling advantage of Vibes is sheer speed. Generating a finished video in 30 seconds to 2 minutes fundamentally changes creative workflows. Rather than spending hours planning shots, securing locations, recruiting talent, and filming and editing, creators can generate dozens of video concepts in the time previously required to produce a single video.

This speed advantage extends to iteration and refinement. A creator might generate five variations of a concept with different prompts, review results, and select the strongest version within minutes. Testing creative ideas that would require weeks of planning and execution with traditional methods becomes feasible with AI generation.

For time-sensitive content, this speed is transformative. News organizations can rapidly generate supporting visuals for breaking stories. Brands can create timely marketing content responding to trends within hours rather than days or weeks. The ability to quickly capitalize on trending topics or seasonal moments significantly improves content relevance.

Cost Reduction

The financial implications of Vibes extend far beyond subscription costs. Professional video production requires significant investment in equipment, talent, locations, and time. Even creating modest-quality videos professionally costs hundreds to thousands of dollars. AI-generated videos eliminate these costs, replacing them with computation costs that Meta absorbs.

This cost elimination levels competitive dynamics. A small brand can now create marketing content comparable in technical quality to what large corporations produce, something that previously required a far larger investment. This democratization accelerates market innovation by reducing barriers to professional content production.

Beyond direct production costs, AI generation eliminates opportunity costs. A creator or marketer spending 8 hours daily on video production can reallocate those 8 hours to strategy, planning, audience engagement, or other value-generating activities. The time-to-value dramatically improves, with creators going from ideation to published content in minutes rather than days.

Creative Exploration and Experimentation

With traditional production, creators are conservative about experimenting with new styles, formats, or concepts. Each experiment requires time and financial investment, creating real downside risk. This risk aversion leads to content homogenization—creators stick with formats and styles that have proven successful.

AI generation dramatically reduces this experimentation cost. A creator can generate 20 different aesthetic treatments of the same concept, from photorealistic to abstract to surreal, at minimal cost. This low-cost experimentation enables discovery of new creative directions that audiences respond to, driving innovation in the creators' content.

Specialized creators can explore niche aesthetics previously too costly to pursue. A filmmaker interested in cyberpunk visual styles can generate dozens of videos exploring this aesthetic, building an audience of fans interested in this visual language. The cost is so low that pursuing niche interests becomes economically viable.

Accessibility and Inclusivity

Video production requires technical knowledge of equipment, composition, lighting, editing software, and post-production workflows. This expertise barrier excludes many potential creators from participating in video culture. Vibes eliminates this barrier—anyone who can describe ideas in text can now create videos.

This accessibility has profound cultural implications. Creators from non-traditional backgrounds, people with disabilities that make traditional video production difficult, and creators from low-resource communities can participate in video culture at the same technical quality level as well-resourced professionals. The democratization of content quality improves cultural representation in media.

Language barriers could also fall significantly. While Vibes currently operates primarily in English, expanding to additional languages would remove constraints on who can use the tool. Non-English speakers could then describe videos in their native language and generate professional-quality content without a translation step.

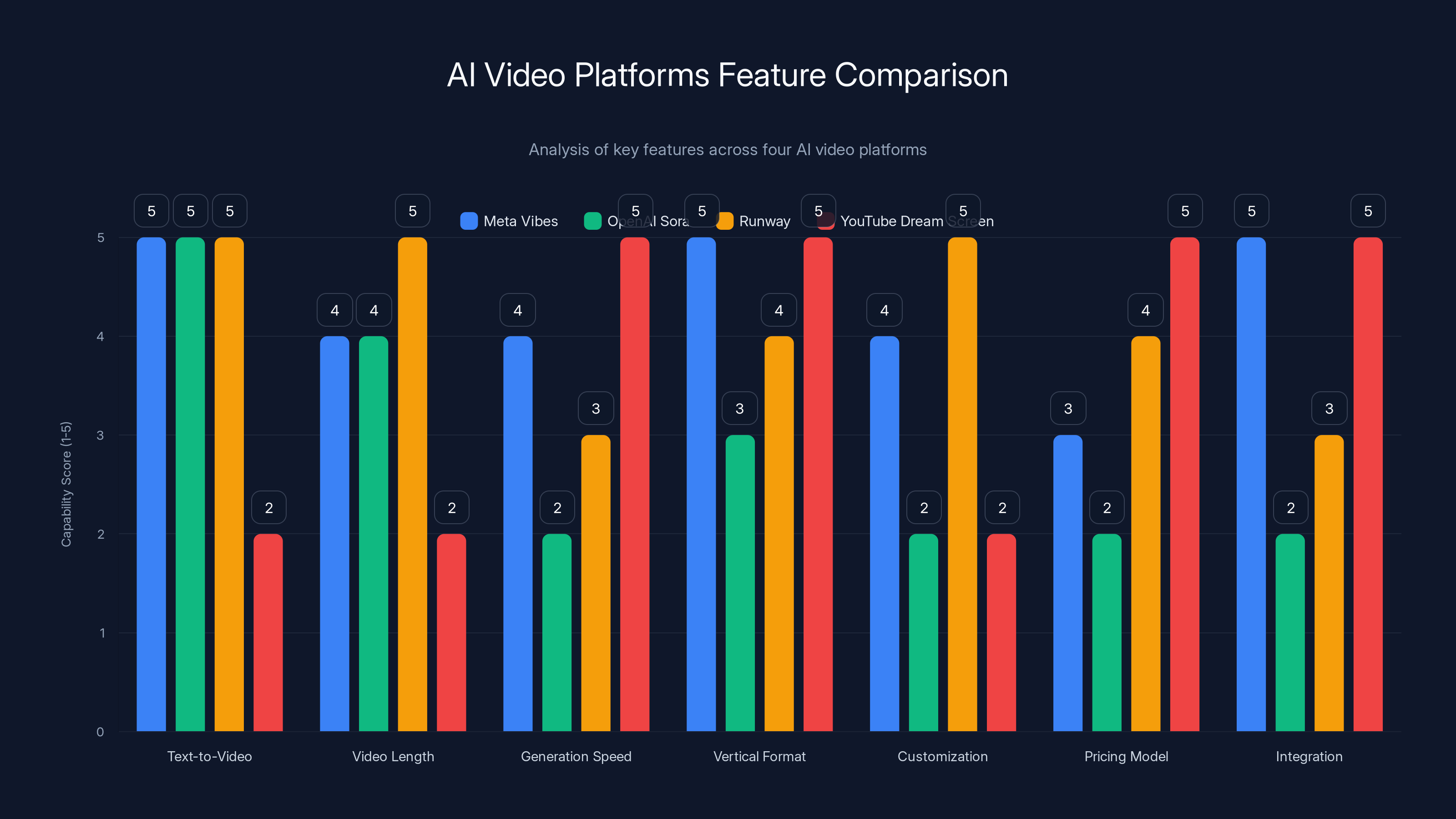

This chart compares the capabilities of AI video platforms across key features. Meta Vibes and Runway excel in text-to-video and customization, while YouTube Dream Screen offers seamless integration for YouTube creators. Estimated data based on feature descriptions.

Limitations and Challenges of AI-Generated Video

Video Quality and Coherence Issues

Despite significant advances, AI-generated videos still exhibit quality limitations compared to professionally produced content. Common issues include physical incoherence (people's hands appearing distorted, objects passing through each other), inconsistent perspectives (camera angles shifting unnaturally), temporal discontinuities (abrupt jumps between scenes that should flow together), and lighting inconsistencies.

These quality issues become more pronounced in complex scenes with multiple interacting objects or people. A simple scene—a person walking through a forest—generates more coherently than a complex scene like "five dancers performing choreographed movements while fireworks explode in the background." The AI model struggles with temporal consistency when managing multiple simultaneous elements.

For content where imperfection is acceptable or even desirable (experimental art, playful entertainment), these limitations matter little. For professional applications demanding photorealism and technical accuracy (product demonstrations, instructional videos, corporate communications), quality limitations remain significant.

Prompt Engineering Skill Required

While Vibes is designed to be accessible, creating high-quality videos still requires skill—specifically, the ability to write effective prompts. "Generate a nature video" produces worse results than "A wide shot of a misty pine forest at sunrise, with golden light filtering through the canopy, slight breeze creating gentle movement in the branches, peaceful and serene mood." The difference between mediocre and excellent results often comes down to prompt quality.

This creates a new skill category: "prompt engineering"—the ability to communicate with AI systems in ways that produce desired outputs. Users must learn what level of detail matters, which descriptive terms the AI understands well, and how to structure prompts for optimal results. This learning curve, while gentler than learning video production, still requires time investment.

Inequality emerges around prompt engineering skills. Users who invest time in learning effective prompting achieve dramatically better results than casual users, recreating some of the expertise gatekeeping that video production traditionally had. While the barrier is much lower, it's not zero.

Originality and Intellectual Property Concerns

AI-generated videos trained on vast datasets create questions about training data provenance and intellectual property. The models generating videos were trained on existing videos scraped from the internet, including content created by professional creators. Questions persist about fair compensation for creators whose work trained the models, copyright implications, and potential legal liability.

Meta has attempted to address these concerns through licensing agreements with content partners and a commitment to removing content from creators who opt out of training data inclusion. However, questions remain about how comprehensive these approaches are and whether they adequately compensate creators.

Additionally, users generating content with Vibes may inadvertently generate videos that closely resemble existing content. The AI model, having been trained on existing videos, might generate outputs that are highly similar to specific existing works. This creates potential copyright infringement risk for users who generate and share content without realizing how closely it resembles protected works.

Authenticity and Trust

As AI-generated content becomes more prevalent, challenges emerge around authenticity, trust, and media literacy. Audiences increasingly encounter videos and question whether they're real or AI-generated. This uncertainty can undermine trust in content from creators, brands, and news organizations. An educational video that's actually AI-generated rather than filmed with real people might be perceived as less authentic by viewers.

Misinformation risks also escalate. Bad actors can use Vibes to generate convincing deepfakes of real people or false scenarios that appear real but are entirely fabricated. While Vibes includes watermarking and content filters, determined actors might circumvent these protections. The impact on media literacy and public trust could be significant.

Brands must consider reputational implications of using AI-generated content. Some audience segments respond positively to brands embracing AI, while others perceive it as cheap or inauthentic. Marketing professionals must strategically decide when AI-generated content aligns with brand identity and audience expectations, and when human-created content remains preferable.

Market Implications and Industry Impact

Disruption to Traditional Video Production

Meta's investment in Vibes signals confidence that AI-generated video will become a primary content source on social platforms. This has significant implications for traditional video production. Entry-level videography jobs—filming simple promotional videos, product demonstrations, or training content—become less essential as AI can generate equivalent content at lower cost.

This disruption parallels previous technology waves. Photography became democratized as film cameras, then digital cameras, then smartphones enabled anyone to take quality photos. Professional photographers shifted focus to areas where aesthetic judgment, composition expertise, and subject matter mastery remain valuable. Similarly, videography will shift toward work requiring creative direction, visual storytelling, and production value that AI cannot yet match.

Smaller video production shops serving local businesses face particular pressure. A small business no longer needs to hire a videographer for basic promotional content when they can generate equivalent content with Vibes. Surviving shops will need to specialize in high-end production, narrative storytelling, or niche expertise where AI cannot yet compete.

Shift in Content Creator Economics

The viability of AI-generated content at scale transforms economics for content creators. The marginal cost of producing additional content drops dramatically, making high-volume content strategies economically feasible for small creators. This pushes the market toward content creators who can produce volume, identify successful formats, and optimize for engagement.

Creators who built careers around content production skills (shooting, editing, color grading) face pressure to evolve. Those who transition to become "creative directors"—focused on conceptualizing compelling videos rather than executing their technical production—remain valuable. Those who fail to evolve risk obsolescence as AI handles execution.

New creator archetypes emerge. The "prompt engineer" who excels at writing creative AI video descriptions, the "creative strategist" who identifies what videos will engage audiences, and the "style curator" who selects AI generations that align with brand aesthetic become valuable roles. These roles require creativity and strategic thinking but not traditional video production skills.

Platform Consolidation and Ecosystem Effects

Meta's standalone Vibes app contributes to a platform ecosystem where Meta controls content creation, distribution, and monetization. Users create videos with Vibes, share to Instagram and Facebook through native integrations, and Meta captures advertising value from all engagement. This vertical integration creates advantages for Meta compared to competitors like TikTok.

However, the standalone app approach also invites competition. Rather than integrating video generation as a platform feature, Meta created a separate app that competes for user attention with other apps. This approach risks fragmentation—users must juggle multiple apps (Vibes for creation, Instagram for sharing, Threads for social engagement, Messenger for communication) rather than accessing everything from one platform.

Competitors will respond. TikTok might integrate more advanced AI video generation into its creation tools. YouTube is expanding Dream Screen capabilities. Competing platforms will offer differentiated approaches to AI video generation, creating genuine alternatives to Vibes rather than knockoffs.

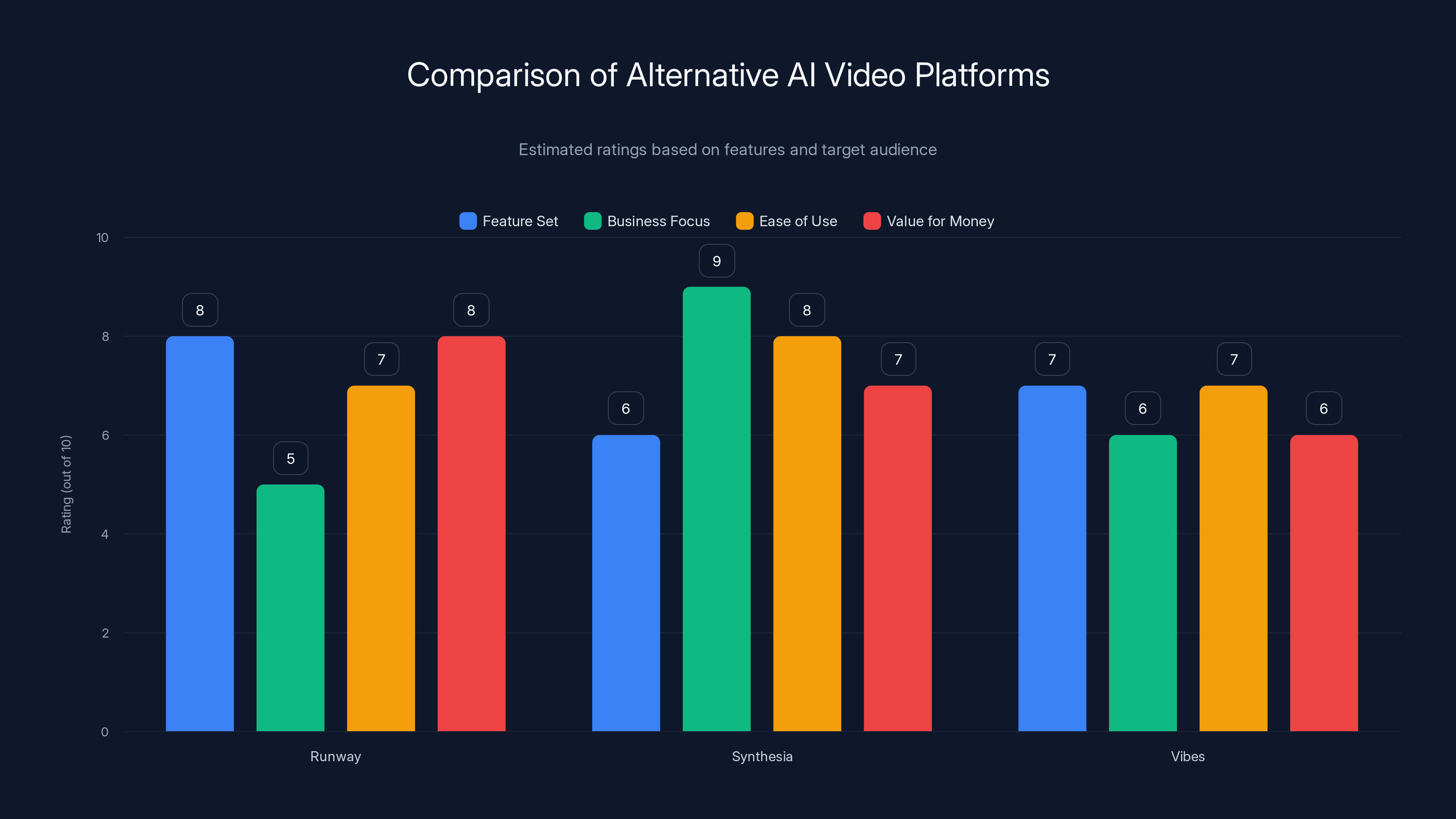

Runway excels in feature set and value for money, while Synthesia is highly rated for business-focused applications. Estimated data based on platform descriptions.

Understanding Vibes in the Broader Meta Strategy

AI as Core Product Strategy

Vibes represents more than a single feature—it exemplifies Meta's broad strategic commitment to AI integration across its entire product portfolio. From content recommendation algorithms powered by AI models, to generative AI features in image editing, to the standalone Vibes app, Meta is betting that AI capabilities will become core differentiators in social media.

Mark Zuckerberg has explicitly stated that AI-generated content will become increasingly dominant on Meta's platforms, with both recommendation algorithms and content production leveraging AI. The October 2025 earnings call referenced in the Vibes announcement confirms this strategic direction: Meta is deliberately shifting its content mix so that AI-generated material makes up a larger share of the content it surfaces.

This strategic commitment has important implications for creators and businesses. Platforms that privilege AI-generated content in their recommendation systems will surface such content more prominently than human-created alternatives. Creators who adopt AI tools early gain advantages in visibility and engagement. Businesses that incorporate AI into content production gain efficiency and cost advantages over competitors who maintain traditional production workflows.

Long-term Vision: AI-First Social Platforms

Meta's vision extends beyond simply adding AI tools to existing platforms. The company is building toward AI-first social platforms where AI-generated content represents a significant percentage of what users consume, alongside human-created and curated content. Rather than humans creating and algorithms distributing, the dynamic shifts toward AI generating, algorithms curating, and humans engaging.

This AI-first approach addresses fundamental challenges facing social platforms. Platforms constrained by content supply struggle to keep users engaged: if creators aren't uploading content, feeds become stale and user engagement drops. AI generation addresses this by ensuring an effectively unlimited supply of fresh, varied content. Recommendation systems can optimize the content mix in real time, testing which content types and styles generate the highest engagement.

The transition toward AI-first platforms represents a fundamental change in media consumption. Rather than watching content created by people they know or follow, users watch an increasingly large percentage of content created by AI and curated by algorithms. The implications for authenticity, parasocial relationships, and human connection remain unclear.

Comparing AI Video Platforms: Feature and Capability Analysis

To help creators and businesses make informed decisions about which AI video platforms suit their needs, a detailed feature comparison is valuable:

| Feature | Meta Vibes | OpenAI Sora | Runway | YouTube Dream Screen |
|---|---|---|---|---|
| Text-to-Video Generation | Yes, primary feature | Yes, primary feature | Yes, with editing tools | No, backgrounds only |
| Video Length | 15-60 seconds | Up to 60 seconds | Variable | 5-15 second clips |
| Generation Speed | 30 seconds to 2 minutes | 10+ minutes | 1-3 minutes | Real-time |
| Vertical Format Optimization | Yes, primary focus | Secondary focus | Supported | Yes, designed for |
| Advanced Customization | Color grading, effects | Limited parameters | Comprehensive editing | Limited customization |
| Pricing Model | Free + premium (expected) | $20+/month or pay-per-use | $12.50+/month | Included in YouTube |
| Platform Integration | Facebook, Instagram, Threads | Limited sharing | Export/download | Direct Shorts integration |
| Watermarking | Default included | Configurable | Configurable | Not applicable |
| Image-to-Video | Planned | Yes | Yes | Not available |
| API/Batch Processing | Unknown | Limited | Yes | Scripting available |
| Character/Likeness Generation | TBD | Disney partnership deal | Limited | Not applicable |
| Community/Discovery | Built-in features | Minimal | External gallery | YouTube ecosystem |

This comparison reveals that different platforms serve different creator needs. For creators prioritizing speed and ease of use with vertical video focus, Vibes excels. For creators wanting comprehensive post-generation editing within a single platform, Runway provides better value. For YouTube creators integrating AI backgrounds into existing workflows, Dream Screen offers seamless integration. No single platform is universally superior; choice depends on specific creative needs and workflow preferences.

Estimated data shows creators can increase their weekly video output from 4-8 to 20-30 videos using Vibes, enhancing content diversity and revenue potential.

Best Practices for Creating Effective Vibes Videos

Crafting Compelling Prompts

The most important skill for Vibes users is writing prompts that accurately communicate creative intent to the AI model. Effective prompts include specific visual elements (colors, textures, lighting), action descriptions (movement, pacing, energy level), emotional tone, and stylistic references.

Strong Prompt Example: "A woman in her 20s with dark curly hair, wearing a mint green vintage-style jacket, walking confidently through a rain-soaked Tokyo street at night. Neon signs reflect on wet pavement. Cinematic, cool color palette with hints of warm amber light. Steady camera movement, slight tracking from left to right. Moody, contemplative, mysterious atmosphere."

Weak Prompt Example: "A woman walking in the rain in Tokyo."

The strong version provides specific descriptive details about appearance, environment, mood, camera movement, and style. The AI model can interpret these details and generate a video matching the creative vision. The weak version is vague, allowing the AI to make arbitrary decisions about all these elements.

Effective prompt engineering follows several principles. Start with the main subject and action. Add specific descriptive details about appearance, environment, and conditions. Include camera and movement direction. Specify emotional tone and mood. Reference stylistic influences if relevant. Avoid unnecessary qualifiers and redundant descriptions.
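The principles above can be sketched as a small prompt template. This is only an illustration of the ordering (subject and action, details, camera, mood, style); the `VideoPrompt` class and its field names are hypothetical and are not part of any Vibes interface, since the source does not describe one. The output is plain text that a user would paste into a prompt box.

```python
from dataclasses import dataclass


@dataclass
class VideoPrompt:
    """Hypothetical helper that assembles a prompt in a fixed order."""
    subject_action: str      # main subject and what it is doing
    details: str = ""        # appearance, environment, conditions
    camera: str = ""         # camera position and movement
    mood: str = ""           # emotional tone
    style: str = ""          # stylistic references

    def render(self) -> str:
        parts = [self.subject_action, self.details,
                 self.camera, self.mood, self.style]
        # Drop empty fields and trailing periods so joins stay clean.
        cleaned = [p.strip().rstrip(".") for p in parts if p.strip()]
        if not cleaned:
            return ""
        return ". ".join(cleaned) + "."


prompt = VideoPrompt(
    subject_action="A woman walking confidently through a rain-soaked Tokyo street at night",
    details="Neon signs reflect on wet pavement",
    camera="Steady tracking shot from left to right",
    mood="Moody, contemplative atmosphere",
    style="Cinematic, cool color palette",
)
print(prompt.render())
```

Keeping each element in its own field makes it easy to see which part of a prompt is missing before generating anything.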

Testing and iteration refine prompts further. Generate an initial video, review the results, identify shortcomings, and refine the prompt based on the gap between what the AI generated and what was intended. This iterative process dramatically improves results over initial attempts.

Maintaining Visual Consistency Across Videos

For creators building a recognizable aesthetic, maintaining consistency across generated videos is essential. Develop a "visual style guide" documenting preferred colors, camera movements, emotional tones, and subject matter. Reference this guide when writing prompts to ensure each generated video aligns with your overall brand aesthetic.

Include style descriptions in every prompt. Rather than relying on AI defaults, explicitly specify desired aesthetics. "Cinematic, warm color grading inspired by 1970s photography" guides the AI toward specific visual choices. Consistent style creates brand recognition—audiences begin to identify your content by its distinctive visual language, even if all videos are AI-generated.
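One lightweight way to keep a style guide in use is to store it as data and append it to every prompt. The sketch below assumes the guide is a simple mapping of style aspects to phrases; `STYLE_GUIDE` and `apply_style` are illustrative names, and the phrases are examples rather than recommended values.

```python
# Hypothetical style guide: one phrase per aspect of the brand aesthetic.
STYLE_GUIDE = {
    "color": "warm color grading inspired by 1970s photography",
    "camera": "slow, steady camera movement",
    "mood": "nostalgic, relaxed mood",
}


def apply_style(base_prompt: str, guide: dict[str, str]) -> str:
    """Append the same style clause to any prompt so outputs stay consistent."""
    base = base_prompt.strip().rstrip(".")
    style_clause = ", ".join(guide.values())
    return f"{base}. Style: {style_clause}."


print(apply_style("A barista pouring latte art", STYLE_GUIDE))
```

Because the style clause lives in one place, changing the brand aesthetic later means editing the guide once rather than rewriting every prompt.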

Strategic Platform Optimization

Vibes generates vertical video optimized for TikTok, Instagram Reels, and YouTube Shorts. However, platform algorithms and audience expectations differ slightly across these platforms. TikTok audiences favor experimental and surreal content. Instagram Reels audiences appreciate polished, aspirational content. YouTube Shorts audiences expect value through humor, education, or entertainment.

Optimize generated videos for each platform's cultural norms. Generate different videos suited to each platform's audience rather than cross-posting identical content everywhere. While this requires more generation work, the improved engagement from platform-specific optimization justifies the effort.

Identifying Successful Formats

Content creators succeed by identifying formats that resonate with their audience, then executing variations on those formats. Use Vibes to rapidly test multiple format variations. Generate videos showing the same core concept in different styles, settings, or perspectives. Analyze which formats drive the highest engagement, then focus generation efforts on those formats.

This data-driven approach to format discovery would be impossible with traditional production. Testing 20 different format variations might cost $10,000+ in traditional production costs. With Vibes, it costs essentially nothing except time for prompt writing. This enables creators to optimize their content strategy based on audience response rather than guessing what might work.
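As a rough sketch of the analysis step, the snippet below ranks formats by pooled engagement rate, defined here as (likes + comments + shares) divided by views. That definition is one common convention and is an assumption, not something the source specifies. The numbers in `results` are made up purely for illustration and would be replaced by figures exported from a platform's analytics.

```python
from collections import defaultdict

# Hypothetical per-video metrics, invented for illustration only.
results = [
    {"format": "surreal", "views": 1000, "likes": 80, "comments": 10, "shares": 10},
    {"format": "surreal", "views": 2000, "likes": 250, "comments": 30, "shares": 20},
    {"format": "photoreal", "views": 1500, "likes": 60, "comments": 10, "shares": 5},
]


def rank_formats(rows):
    """Return (format, engagement_rate) pairs, highest rate first."""
    views = defaultdict(int)
    interactions = defaultdict(int)
    for row in rows:
        views[row["format"]] += row["views"]
        interactions[row["format"]] += row["likes"] + row["comments"] + row["shares"]
    # Pool totals per format; a format with zero views gets a rate of 0.
    rates = {
        fmt: (interactions[fmt] / views[fmt]) if views[fmt] else 0.0
        for fmt in views
    }
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


ranked = rank_formats(results)
for fmt, rate in ranked:
    print(f"{fmt}: {rate:.1%}")
```

Pooling totals per format, rather than averaging per-video rates, keeps a single low-view video from dominating the ranking.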

Alternative Platforms Worth Considering

While Vibes represents an exciting option for AI-powered video creation, several alternative platforms merit consideration depending on specific creative needs and preferences. Understanding the strengths of each helps creators make informed decisions about which platforms to adopt.

Runway: Comprehensive Creative Suite

Runway positions itself as a comprehensive AI creative studio rather than a narrowly-focused video generation tool. Beyond text-to-video capabilities, Runway includes AI video editing, motion graphics generation, visual effects application, and style transfer. For creators wanting to work entirely within one platform, Runway reduces friction by eliminating context-switching between multiple applications.

The pricing starts at $12.50/month for basic access, with higher tiers offering more generation minutes and advanced features. For creators generating substantial video volume, Runway's more generous generation quotas compared to expected Vibes pricing might provide better value. The comprehensive feature set appeals to creators who want flexibility in their creative process—generating base videos, then refining through editing and effects within the same platform.

Synthesia: Business-Focused Video Generation

Synthesia specializes in video creation for business applications, particularly presentations and training materials. Rather than generating cinematic content or entertainment videos, Synthesia generates videos featuring AI-powered talking-head presenters delivering scripted content. Users write scripts, select avatars and voices, and Synthesia generates finished videos with synchronized speech and gestures.

For businesses creating training content, product demonstrations, or corporate communications, Synthesia offers advantages Vibes doesn't provide. The AI avatars provide credible presenters without hiring actors or recording video of real people. Companies can rapidly produce translated versions with localized avatars and voiceovers, enabling global content distribution in multiple languages.

Synthesia's pricing starts at $22.50/month for small business plans, with enterprise pricing available for larger organizations. The specialization toward business content means Synthesia isn't suitable for entertainment, social media, or artistic content creation, but for organizations producing business videos at scale, it provides superior specialization.

D-ID: Talking Head Video Generation

D-ID focuses specifically on creating "talking head" videos—videos of people speaking directly to camera. Users provide photos of people (themselves, employees, or stock images), write scripts, and D-ID animates the photos to deliver the script with synchronized lip movements and natural gestures.

This specialization serves specific use cases well. Real estate agents can generate videos of themselves describing properties without recording actual video. Customer service teams can generate personalized video messages at scale. Educational instructors can create lesson videos without performing to camera. The narrow focus means D-ID isn't suitable for narrative storytelling, action sequences, or most entertainment use cases, but for head-and-shoulders talking format, it excels.

Alternative Options Worth Monitoring

Beyond established platforms, numerous startups are building AI video generation tools for specific use cases. Pika focuses on image-to-video generation with particular strength in animation creation. Stable Video provides video generation from text and image prompts with emphasis on stability and consistency. As the market matures, new platforms will likely emerge with different approaches and specializations.

Creators should avoid committing entirely to a single platform. Instead, maintain awareness of alternatives and be prepared to adopt emerging platforms if they offer compelling advantages. The AI video market is rapidly evolving—today's best option might be supplanted by something better within months.

Future Developments and Roadmap Predictions

Expanded Capability Roadmap

Based on Meta's statements and industry trends, Vibes will likely expand its capabilities significantly over the next 12-24 months. Image-to-video generation, allowing users to provide starting images that the model animates, will likely arrive as a Vibes feature. This extends creative possibilities—users can generate videos starting from custom artwork, photographs, or even sketches.

Video-to-video capabilities would allow users to provide existing videos that Vibes can modify by changing backgrounds, adding effects, or morphing video content in response to prompts. This functionality would bridge the gap between full generation and post-production editing.

Audio generation and synchronization represent another likely expansion. Rather than generating silent videos that users add music to afterward, Vibes might generate accompanying music, sound effects, and dialog. This integration would enable complete video generation without requiring additional audio production work.

Character consistency across multiple videos represents a technical challenge that Vibes will likely address. Currently, generating multiple videos featuring "the same character" results in different appearances because the AI model generates each video independently. Future iterations will likely introduce systems ensuring consistent character appearance across videos, enabling narrative sequences and serialized content.

Potential Business Model Evolution

Beyond generation capabilities, Vibes' business model will likely evolve. Licensing deals with entertainment properties and celebrities will expand the platform's appeal. Users might be able to request videos featuring Marvel characters, sports celebrities, or other licensed IP, with revenue sharing between Meta and license holders.

White-label opportunities will emerge for enterprises wanting to integrate video generation into their own platforms. A large social network, media company, or brand might license Vibes technology to offer customers AI video generation as a native feature. These enterprise deals could become significant revenue sources.

Content marketplace features might develop where users can purchase and sell prompt libraries, style packs, or character templates. These ecosystem features would create secondary economies around Vibes, incentivizing high-engagement creators and prompt engineers to build valuable assets.

Competitive Dynamics

As Vibes gains traction and Meta demonstrates market viability, competitors will respond aggressively. TikTok, with enormous technical talent and a massive user base, will likely accelerate AI video generation capabilities integrated into its platform. Rather than requiring a separate app, TikTok might embed advanced generation directly into its creation tools.

Google will expand YouTube's capabilities beyond Dream Screen, building more comprehensive AI generation features. Emerging platforms will continue launching specialized AI video generation tools for specific use cases. The market will likely fragment, with different platforms serving different creator segments rather than one winner taking most of the market.

Regulatory developments will also shape competitive dynamics. As AI-generated content becomes more prevalent, regulations around authenticity disclosure, copyright, and AI training data will emerge. Platforms that proactively address these concerns will gain advantages over those fighting regulatory battles reactively.

Conclusion: Making Informed Decisions About AI Video Platforms

Meta's Vibes standalone app represents a significant milestone in AI video generation evolution. By extracting AI video generation from the Meta AI app and creating a dedicated platform, Meta is signaling confidence that AI-generated content will become central to social media engagement. For creators, marketers, and content professionals, understanding Vibes' capabilities, limitations, and strategic positioning is increasingly important as AI-powered content creation becomes mainstream.

Vibes excels in specific areas. Generation takes 30 seconds to 2 minutes per video, far faster than competitors like Sora. Vertical video optimization makes Vibes ideal for TikTok, Instagram Reels, and YouTube Shorts creators. The expected freemium pricing model removes financial barriers for casual creators. Integration with Meta's ecosystem enables seamless distribution across Facebook, Instagram, and Threads.

However, Vibes isn't universally superior to all alternatives. Creators needing comprehensive editing within a single platform might prefer Runway. Businesses generating talking-head videos might prefer Synthesia. Professionals demanding the highest visual quality might prioritize Sora despite slower generation times. The optimal choice depends on specific creative needs, workflow preferences, budget constraints, and target platforms.

The broader implication is that AI video generation has matured from experimental technology to practical tool for content creation. The debate is no longer whether to use AI for video creation, but rather which AI video platform best serves specific needs. This shift has significant consequences for content creators, traditional video production professionals, brands, and social media platforms.

Creators who proactively adopt AI video tools position themselves advantageously. Combining AI generation for rapid production with human creativity for conceptualization and curation creates synergies that magnify creative output. Creators who ignore AI tools risk falling behind competitors who leverage AI for efficiency gains.

Traditional video production professionals face displacement in commoditized video work (product demonstrations, simple promotional content, basic corporate videos). However, opportunities emerge in high-end production, creative direction, and specialized storytelling that AI cannot yet match. Success requires evolution—those who transition to roles where human expertise remains valuable will thrive; those who fail to evolve will face career challenges.

Businesses gain enormous value from AI video platforms through cost reduction, increased output capacity, and ability to rapidly test content variations. The financial case for adopting AI tools is compelling. However, strategic deployment matters—using AI for all content creation without human direction produces mediocre results. The optimal approach combines AI generation for efficiency with human judgment for quality control and strategic direction.

For media literacy and public trust, AI video proliferation creates challenges. As AI-generated content becomes increasingly prevalent and visually convincing, distinguishing real from synthetic video becomes harder. This emphasizes the importance of watermarking, disclosure standards, and media literacy education. Platforms must maintain transparency about content origins and audiences must develop critical evaluation skills.

Looking ahead, the trajectory is clear: AI video generation will become increasingly central to social media. Meta's Vibes investment confirms this direction. Competing platforms will expand their capabilities. New startups will build specialized tools. Creators, brands, and organizations that understand these platforms and incorporate them strategically into their content workflows will operate with significant advantages over those who remain traditional-media focused.

The AI video revolution is underway. Vibes represents one major manifestation of this broader trend. Whether Vibes becomes the dominant platform or faces serious competition from superior alternatives remains uncertain. What's certain is that AI-generated video will become increasingly important for content creators, and decisions about which platforms to adopt will significantly impact creative output, efficiency, and success in the evolving social media landscape.

FAQ

What is Meta Vibes and how does it work?

Meta Vibes is an AI-powered video generation platform that transforms text descriptions into finished vertical videos suitable for TikTok, Instagram Reels, and YouTube Shorts. Users input text prompts describing videos they want to create, and Meta's AI models generate corresponding videos in 30 seconds to 2 minutes. The technology uses advanced transformer-based models trained on vast video datasets to understand user descriptions and generate coherent, visually compelling video content.

How does Vibes differ from OpenAI's Sora?

While both platforms generate videos from text prompts, they differ in generation speed, pricing, and optimization focus. Vibes generates videos in 30 seconds to 2 minutes, while Sora takes 10+ minutes per video. Vibes optimizes specifically for vertical video formats ideal for mobile social platforms, while Sora focuses on general video generation. Pricing also differs significantly: Vibes is expected to offer a free tier with premium options, while Sora requires a monthly subscription ($20+/month) or pay-per-use pricing. For speed and ease of use with vertical video content, Vibes generally offers advantages over Sora.

What are the main use cases for Vibes?

Vibes serves multiple user segments. Content creators use it to rapidly generate dozens of video concepts for social media distribution. Marketing professionals use it for generating product showcase videos and promotional content. Educational institutions use it for creating animated explanations and visual demonstrations. Brands leverage it for cost-effective marketing content generation. Entertainment users create content purely for enjoyment and creative experimentation. For teams looking for cost-effective automation and content generation solutions, platforms like Runable offer AI-powered content creation features that complement video generation tools.

How much does Vibes cost?

Meta hasn't finalized pricing, but industry analysis suggests Vibes will follow a freemium model. The free tier will likely include monthly generation quotas (20-50 videos per month), with slower processing and watermarked videos. Premium subscriptions probably start at around $14.99 per month.

What quality issues exist with AI-generated videos?

AI-generated videos still exhibit several limitations. Physical incoherence can occur—hands appear distorted, objects pass through each other, or lighting behaves unnaturally. Temporal consistency challenges emerge with complex multi-object scenes where the AI struggles to maintain coherent movement over extended sequences. Complex scenarios with multiple interacting elements prove more difficult than simple scenes. These limitations matter less for entertainment or experimental content but remain problematic for professional applications demanding technical accuracy and photorealism.

How does Vibes integrate with Meta's ecosystem?

Vibes generates videos that integrate seamlessly with Meta's social platforms. Users can share generated videos directly to Instagram Reels, Facebook Feed, and Threads through native sharing functionality. This ecosystem integration makes Vibes particularly valuable for creators focused on Meta's platforms. Generated videos can also be downloaded and shared to competing platforms like TikTok and YouTube, though they're optimized specifically for Meta's vertical video formats.

What makes effective Vibes prompts?

Effective prompts include specific visual details (colors, textures, lighting), action descriptions (movement, pacing, energy), emotional tone, and stylistic references. Instead of "a woman walking in the rain," a strong prompt specifies "a woman in her 20s wearing a mint green vintage jacket, walking confidently through rain-soaked Tokyo streets at night with neon signs reflecting on wet pavement, cinematic mood, cool color palette with warm amber accents." More specific descriptions guide the AI toward desired outputs. Testing and iteration—generating videos, reviewing results, and refining prompts based on shortcomings—improves results dramatically.
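One way to make that checklist routine is to assemble prompts from named parts. The Python sketch below is purely illustrative: `PromptSpec`, `missing_elements`, and `build_prompt` are hypothetical helpers, not part of any Meta or Vibes API, and the comma-joined output format is an assumption about what reads well as a prompt rather than a documented requirement.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt ingredients described above."""
    subject: str
    visual_details: list[str] = field(default_factory=list)  # colors, textures, lighting
    action: str = ""                                          # movement, pacing, energy
    tone: str = ""                                            # emotional tone
    style_refs: list[str] = field(default_factory=list)      # stylistic references


def missing_elements(spec: PromptSpec) -> list[str]:
    """Return the names of the optional ingredients that are still empty."""
    optional = ("visual_details", "action", "tone", "style_refs")
    return [name for name in optional if not getattr(spec, name)]


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty ingredients into one comma-separated description."""
    parts = [spec.subject, spec.action, *spec.visual_details, spec.tone, *spec.style_refs]
    return ", ".join(part.strip() for part in parts if part.strip())


vague = PromptSpec(subject="a woman walking in the rain")
detailed = PromptSpec(
    subject="a woman in her 20s wearing a mint green vintage jacket",
    action="walking confidently through rain-soaked Tokyo streets at night",
    visual_details=[
        "neon signs reflecting on wet pavement",
        "cool color palette with warm amber accents",
    ],
    tone="cinematic mood",
)

if __name__ == "__main__":
    print(missing_elements(vague))   # every optional ingredient is still empty
    print(build_prompt(detailed))
```

Running `missing_elements` before each generation gives a quick reminder of which ingredients a draft prompt still lacks, which fits the generate, review, and refine loop described above.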

How does Vibes compare to Runway for video creation?

Runway and Vibes serve somewhat different needs. Runway positions itself as a comprehensive creative studio, with AI video generation, editing, motion graphics, visual effects, and style transfer all within one platform. This breadth appeals to creators who want integrated workflows without switching between applications. Vibes focuses narrowly on generation speed and ease of use. For rapid video generation optimized for social platforms, Vibes excels. For creators who need comprehensive post-generation editing within a single platform, Runway likely provides better value. Pricing differs too: Runway starts at $12.50/month, while Vibes is expected to be free with optional premium tiers.

What are the intellectual property and copyright concerns with AI video generation?

AI video models trained on vast datasets raise questions about fair compensation for creators whose work trained the models, copyright implications, and potential legal liability. Users generating videos might inadvertently create content closely resembling existing copyrighted works. Meta has addressed concerns through licensing agreements and content removal options, but questions remain about comprehensiveness. Users should be aware that using AI-generated videos without understanding potential copyright implications carries legal risk, particularly for commercial use without proper licensing verification.

Will AI-generated videos replace human video creators?

While AI generation handles commoditized video work (product demonstrations, simple promotional content, basic corporate videos), human creativity remains valuable in areas requiring aesthetic judgment, narrative storytelling, and subject matter expertise. Video production professionals who evolve toward roles as creative directors and strategic consultants—rather than technical executors—will remain valuable. The market will likely segment, with high-end, narrative-driven, and specialized content continuing to be created by humans, while routine, high-volume content increasingly uses AI generation. Success requires adaptation rather than traditional production skills alone.

What are the best alternative AI video platforms to Vibes?

Several compelling alternatives exist depending on specific needs. OpenAI's Sora offers the highest visual quality for general video generation, but with slower processing and higher costs. Runway provides a comprehensive creative suite with generation and editing in a single platform. Synthesia specializes in business-focused talking-head video generation suited to corporate communications. D-ID focuses on animated talking-head videos from photos and scripts. Each platform serves different creator segments. For developers and teams seeking broader automation solutions beyond video, Runable offers AI agents for content generation and workflow automation at competitive pricing. The optimal choice depends on specific creative needs and workflow preferences.

Key Takeaways

- Meta Vibes generates high-quality vertical videos from text prompts in 30 seconds to 2 minutes, significantly faster than competitors like Sora

- Vibes optimizes specifically for TikTok, Instagram Reels, and YouTube Shorts formats, making it ideal for social media creators

- A freemium pricing model, with premium tiers expected to start around $14.99 per month, removes financial barriers for casual creators

- Alternative platforms like OpenAI Sora, Runway, Synthesia, and D-ID serve different creator needs and specializations

- AI video generation transforms content creator economics by enabling high-volume production at minimal cost, creating advantages for early adopters

- Quality limitations in physical coherence and temporal consistency mean AI generation remains most suitable for entertainment and experimental content

- Prompt engineering skills become valuable as users learn to write detailed descriptions that guide AI models toward desired outputs

- Platform integration with Meta's ecosystem enables seamless sharing to Facebook, Instagram, and Threads, creating vertical integration advantages

- Traditional video production shifts toward high-end creative work, narrative storytelling, and specialized content where human expertise remains valuable

- Intellectual property and authenticity concerns require attention to watermarking, copyright implications, and transparent disclosure of AI-generated content origins

![Meta's Vibes App: Complete Guide to AI-Generated Video Platform [2025]](https://tryrunable.com/blog/meta-s-vibes-app-complete-guide-to-ai-generated-video-platfo/image-1-1770320269524.jpg)