The AI Content Apocalypse Nobody Asked For

Last month, I scrolled through my Instagram feed for forty-five minutes. In that time, I saw exactly three posts from actual people I follow. The rest? Recycled trending sounds, AI-generated motivational quotes overlaid on stolen sunset photos, repackaged TikTok clips that had already been repackaged twice, and sponsored "content" so transparently algorithmic it made my brain hurt.

I didn't quit social media in 2025. But I'm starting to think I might have to.

The problem isn't social media itself anymore. It's that we're suffocating under what people are calling "slop", a term that's finally gaining mainstream traction for the deluge of low-effort, AI-assisted, algorithmically optimized garbage flooding every major platform. And this is different from the gradual decline in social media quality we've seen over the past five years. This is accelerating. This is becoming the default.

Here's the frustrating truth: the platforms don't care. The algorithm doesn't distinguish between human creativity and machine output. In fact, for the first time in social media history, mediocrity at scale is more profitable than quality at depth. An AI can generate twelve variations of the same TikTok trend in thirty seconds. A human creator needs hours. The algorithm rewards volume.

So what exactly is happening to social media right now? Why does every feed feel like you're watching the same video five hundred times? And more importantly, what can we actually do about it before these platforms become completely unusable?

Let me walk you through what we're really witnessing—and why this trend is likely to get exponentially worse before anyone figures out how to fix it.

TL;DR

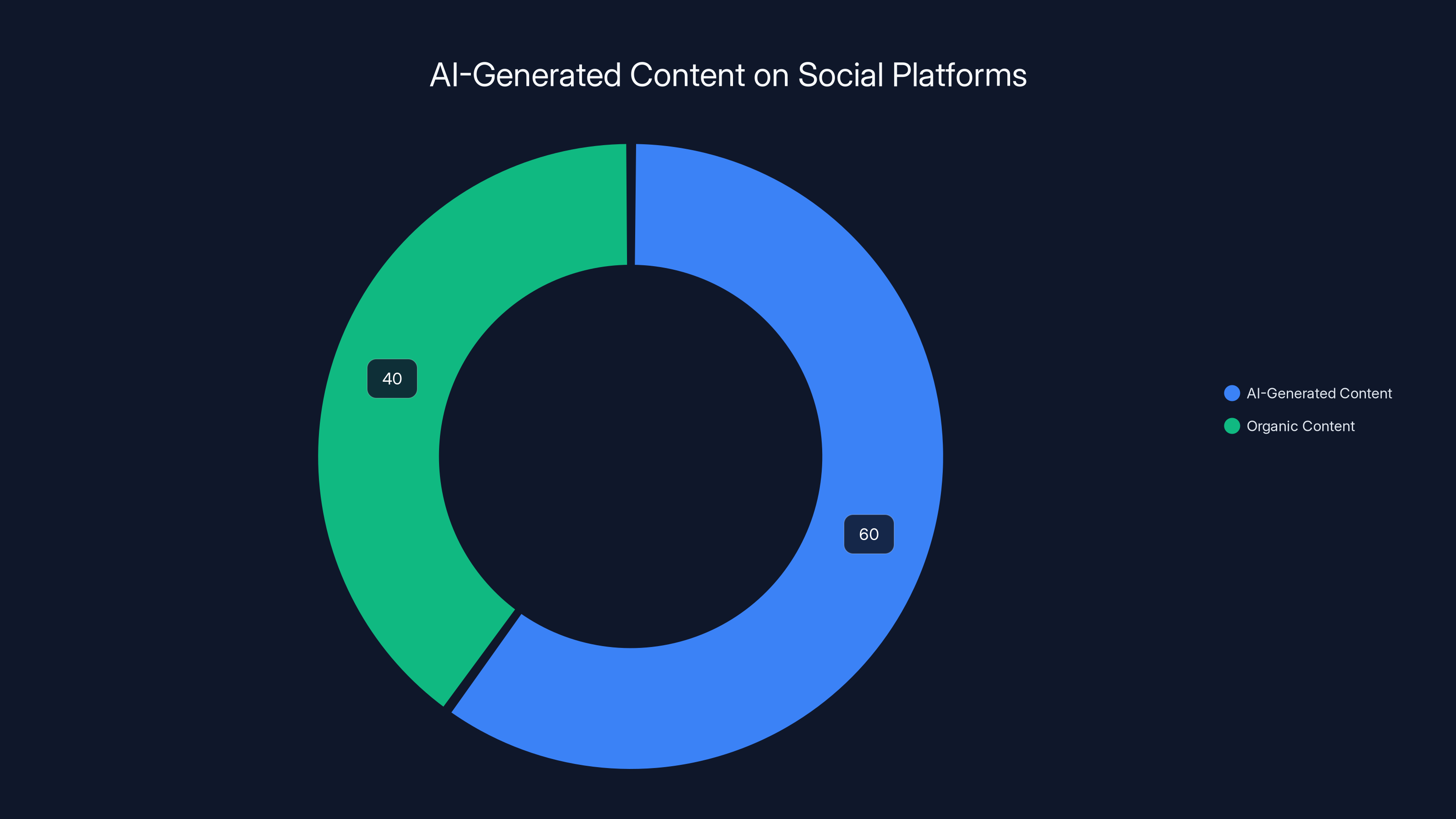

- The Scale of Slop: AI-generated content now comprises an estimated 40-60% of social media feeds, up from single digits in 2023

- The Algorithm Problem: Platforms profit more from volume than quality, so they don't penalize low-effort AI content

- The Creator Collapse: Professional creators face an impossible choice: match AI productivity with AI tools or watch their reach plummet

- The Trust Crisis: Users can no longer distinguish genuine recommendations from SEO-optimized noise

- The Exit Trend: Platform engagement is stalling, and younger users are actively seeking alternatives

Estimated data shows that 60% of content on major social platforms is AI-generated or AI-assisted, highlighting a significant shift in content creation dynamics.

Understanding "Slop": The New Normal of Digital Garbage

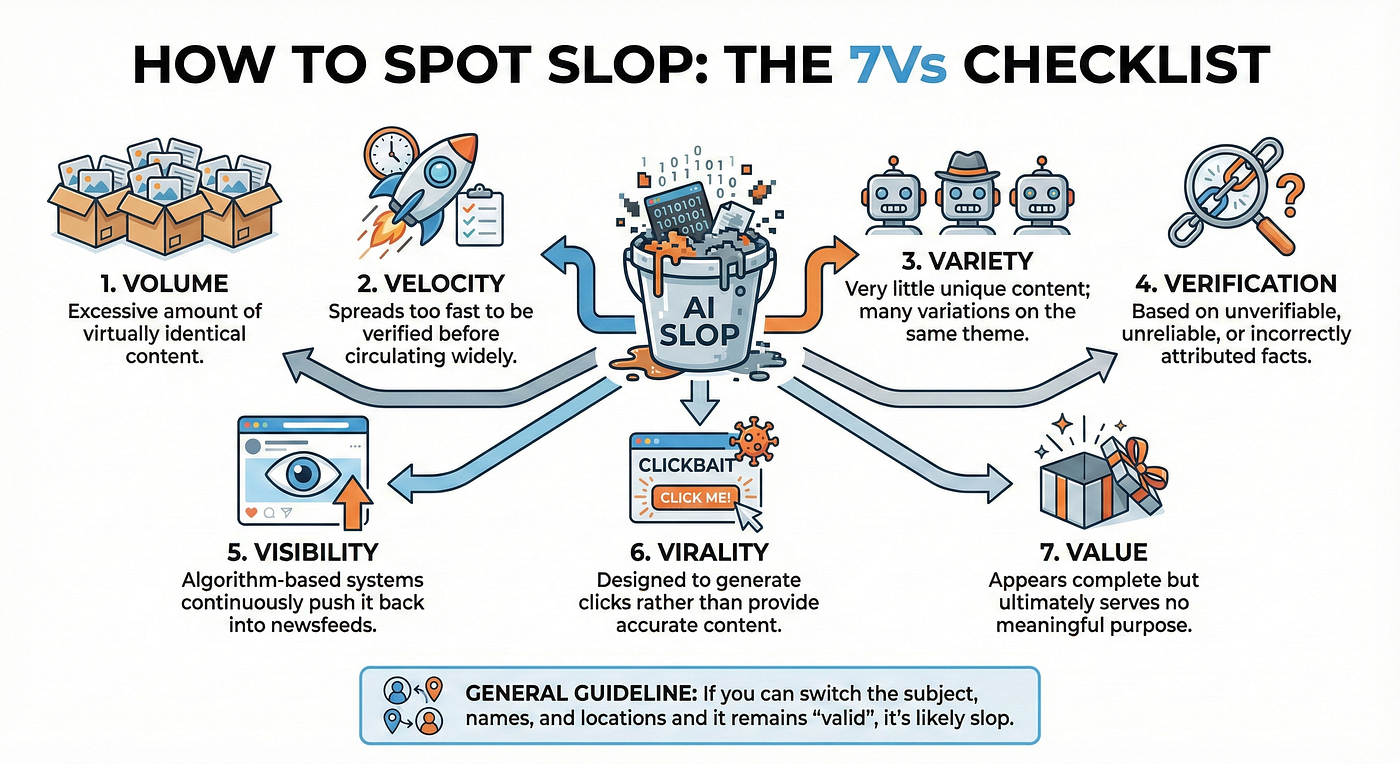

The term "slop" isn't new; it's been programmer slang for low-quality output since the 1990s. But in 2025, it has become the best description of what social media is turning into. And we need to be precise about what it means, because "low-quality content" is vague. Slop is specific.

Slop is content optimized for algorithmic distribution, not human consumption. It's the marriage of three forces: generative AI's ability to produce infinite variations, platforms that reward engagement over authenticity, and creators (both human and AI-driven accounts) who have realized they can out-compete originality with volume.

Here's what it looks like in practice. You're scrolling TikTok and you see a video of someone lip-syncing to a trending sound while text overlays flash productivity tips. The audio? Trending from three days ago. The format? Identical to a video you saw yesterday from a different account. The text? Generated by an AI prompt: "Write me 5 productivity tips that sound motivational." The account? Run by a bot, or by someone who posts thirty variations of this daily.

That's slop.

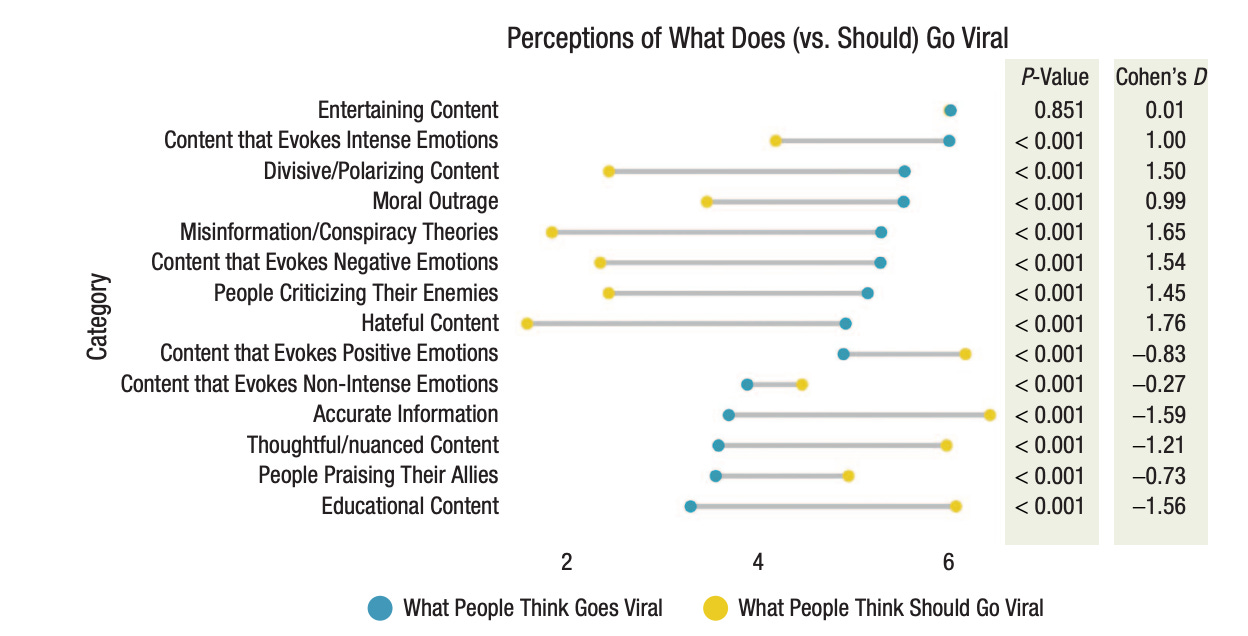

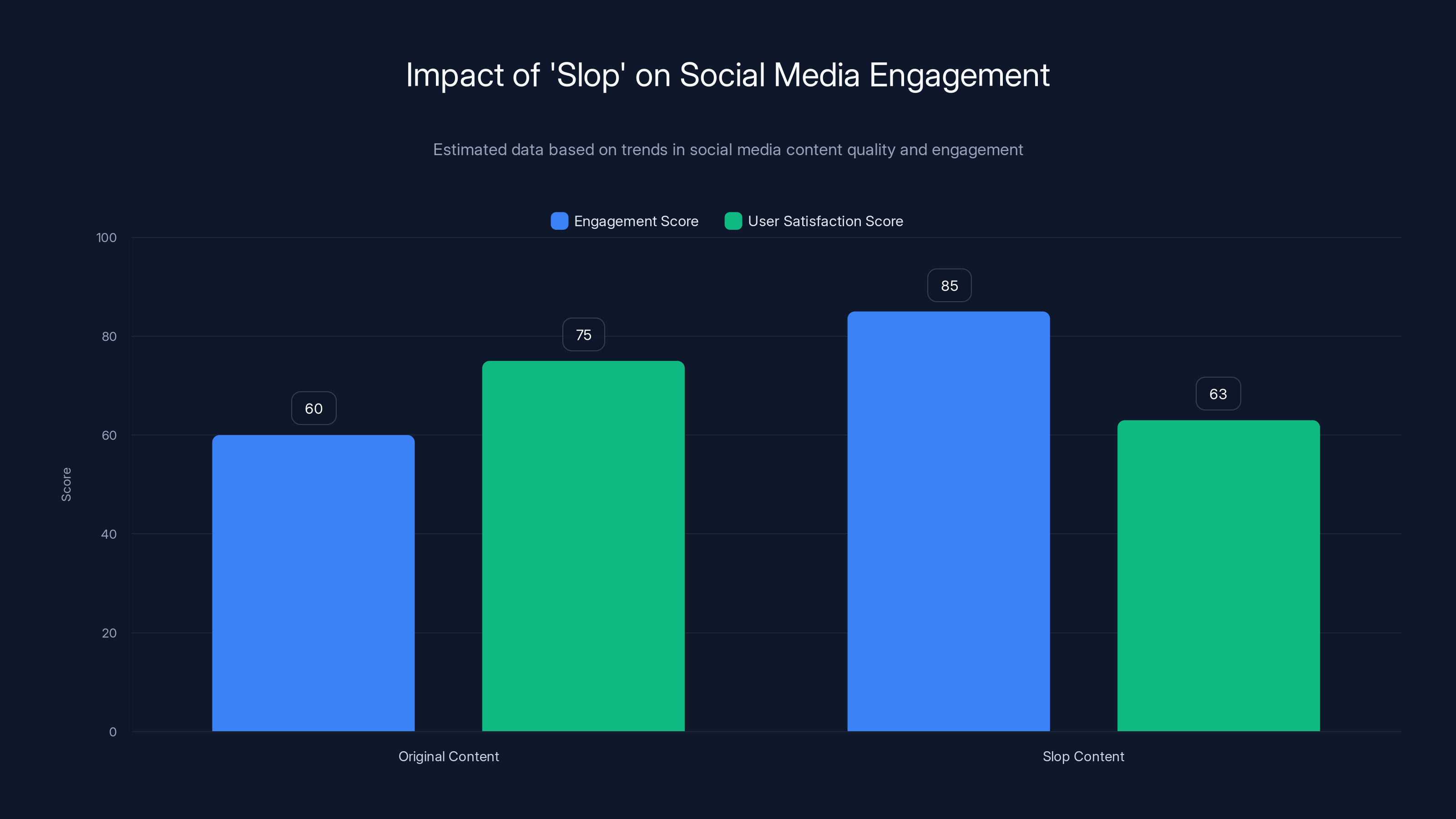

But here's the crucial distinction: it's not inherently "bad" in the traditional sense. Some people find these videos helpful. The engagement metrics are genuinely strong. The algorithm loves it. The problem is that slop doesn't compete with traditional low-quality content anymore—it competes with and actively displaces high-quality, original, human-created content.

When you can generate a thousand variations of the same video in the time it takes a human to plan, film, and edit one original video, volume wins. And because platforms are increasingly dominated by algorithmic distribution rather than social graphs, the winner takes all. Your original video competes against ten thousand slop variants, and the algorithm doesn't care which wins—it only cares that something from that trend performs.

The real problem emerges when you realize this isn't a temporary phenomenon or a niche problem. This is the structural future of social media unless something fundamentally changes about how platforms operate.

Why Platforms Benefit From Slop

Let's talk about incentives, because this is where everything gets dark.

Social media platforms make money through engagement. More engagement means more ad impressions, more time on platform, more data harvested, more opportunities to train AI models. The algorithm is explicitly designed to maximize engagement—which means time spent, not satisfaction.

Now, here's the critical insight: a viral AI-generated video that drives three million views is worth more to the platform than a beautifully crafted original video that drives three hundred thousand views. Measured per hour of creator effort, the gap is even wider: by a rough estimate, something like twenty thousand views per creator hour in the first case, and maybe three hundred in the second.

Platforms have zero incentive to penalize slop. In fact, they have active incentives to reward it. YouTube, Instagram, TikTok: none of them have implemented any meaningful detection systems for AI-generated content that would suppress its reach. Why would they? The content performs.

And the creators—both human and fully automated accounts—aren't stupid either. If you're trying to grow an account, matching the algorithm's preferences is rational. Install an AI tool, generate variations, post more frequently, make more money. The tool you use might be Runable or a dozen others like it that help automate content creation at scale, but the logic is identical: automate, scale, profit.

This creates what economists call a "race to the bottom." Every creator who refuses to automate faces pressure from creators using AI assistance. Every creator using AI faces pressure to use it more aggressively. And the only winner is the platform, which gets unlimited free content that drives infinite engagement.

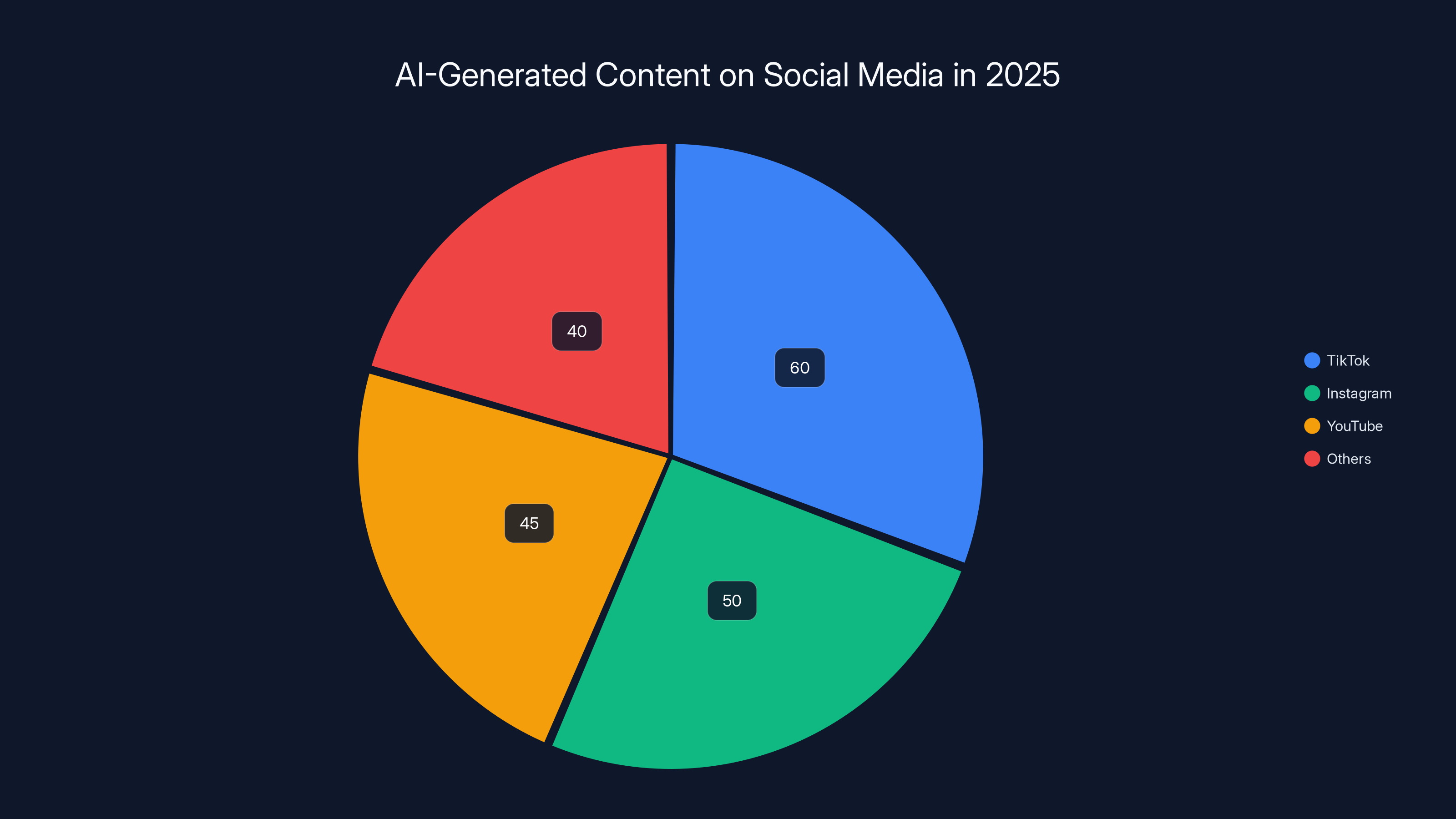

Estimated data shows that AI-generated content constitutes 40-60% of social media feeds on major platforms in 2025, with TikTok leading at 60%.

The Statistics Nobody Wants to Admit

Let's ground this in numbers, because anecdotes about scrolling through Instagram aren't enough. We need to understand the actual scale of what's happening.

Estimates suggest that between 40% and 60% of content on major social platforms is now either fully AI-generated or heavily AI-assisted. Multiple content analysis firms have documented figures in this range. The statistic that gets cited least frequently? Younger demographics are seeing even higher percentages: users aged 13-25 report that their feeds are often 60-75% algorithmic or AI content rather than organic creator content.

Engagement metrics tell another story. While total user engagement on platforms like Instagram and Tik Tok remains high, engagement per piece of content is declining. Users are spending more time on these platforms but interacting with fewer individual creators. What's filling the gap? Volume. The algorithm is showing them more content from fewer creators, and an increasing percentage of that content is either AI-generated or mass-produced by account networks.

Churn is another critical metric. Monthly active user growth on Instagram has plateaued, something that has never happened before in the platform's fifteen-year history. Meanwhile, platforms explicitly designed as alternatives to Instagram (like BeReal, which emphasizes authentic moments over algorithmic promotion) saw adoption spikes in 2024. People don't post on BeReal hoping to go viral. They post to share genuine moments with friends.

That's telling.

Original creator retention is suffering most acutely. A study from content creator analytics firm Tubular Labs found that professional content creators are increasingly moving away from algorithmic platforms toward direct-to-audience models: Discord communities, email newsletters, private Patreon tiers, even good old-fashioned websites. The reason cited most frequently? "The algorithm no longer rewards my specific audience, just whoever creates the most volume."

The Creator Dilemma

Here's where this gets personally devastating if you're a professional creator.

Let's say you're a YouTube creator in the fitness niche. You spend twenty hours per week researching, filming, editing, and publishing videos. You get forty thousand views per month. That's a real, loyal audience. That's sustainable income.

Then AI tools for fitness content get better. Suddenly, an account can publish four videos per day—all AI-generated, all optimized for algorithmic performance. Within six months, they've captured the same audience attention through sheer volume. They make three times the money on one-tenth the actual quality.

Now you face a choice: invest in AI tools to increase your output volume, or accept that your reach will continue declining. Most creators are choosing the first option. They're not happy about it. But the alternative is irrelevance.

This is the trap. And it's working exactly as designed by the platforms, which benefit from either outcome: more content to monetize, or creators paying for AI tools (many of which are platform-adjacent businesses).

The cruelest part? The creators doing this aren't villains. They're responding rationally to broken incentive structures. The actual villains are the platforms, which could change these incentives overnight but choose not to because slop is profitable.

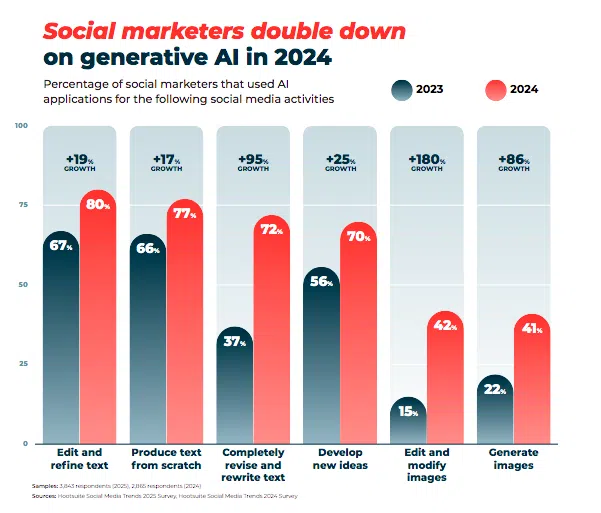

How AI Changed the Content Game Forever

Let's be specific about what changed. AI didn't invent low-quality content. The internet has always had that. What AI did was make low-quality content infinitely reproducible.

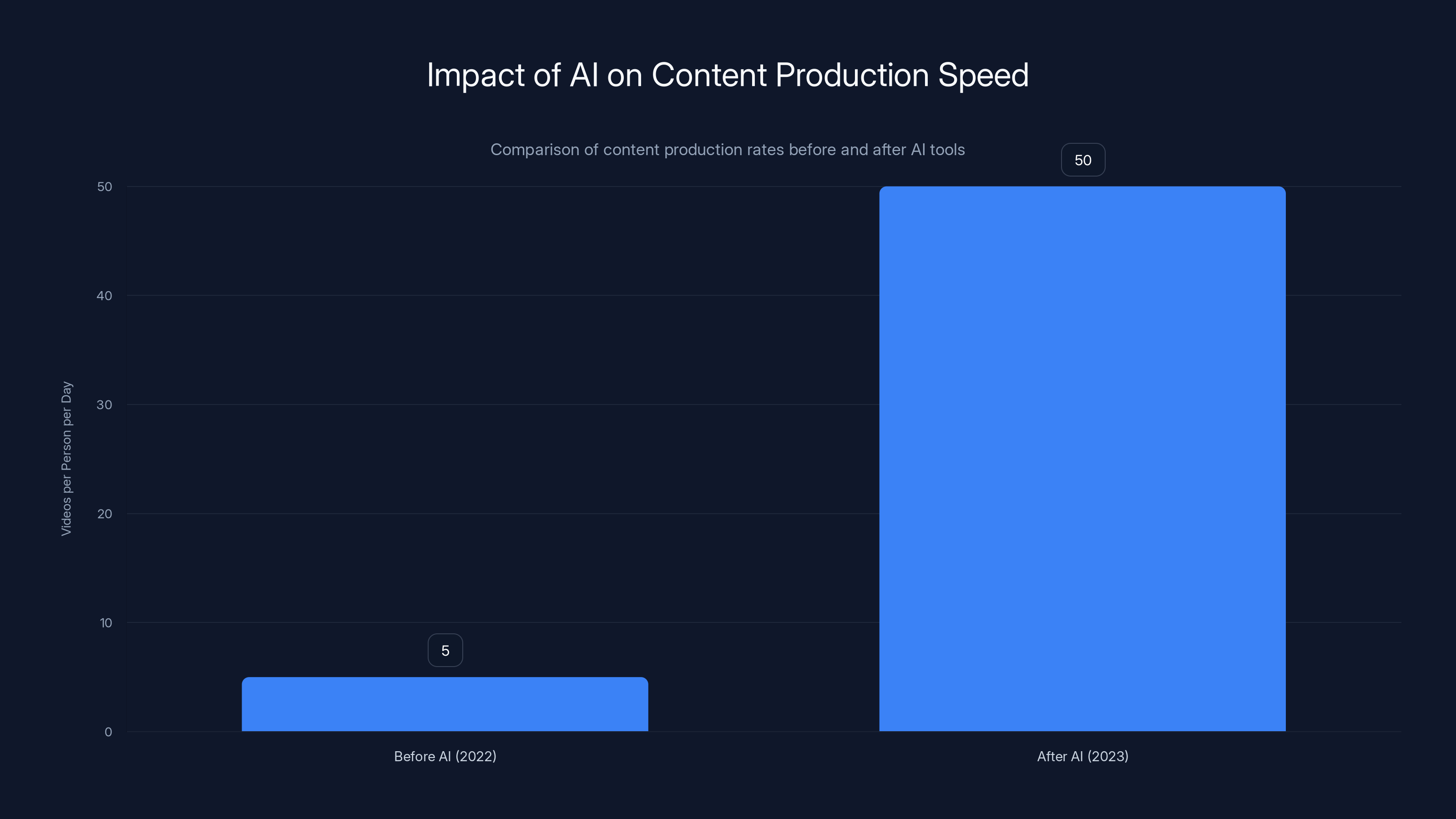

Before 2023, if you wanted to spam a platform with low-effort content, you faced a real bottleneck: human time. You could post maybe five videos per day per person, and that required actual people. Hiring five people per account was expensive.

Then generative AI tools became available. Suddenly, one person could generate fifty variations of the same concept in an hour. Run it through a few tools, add text overlays, upload. Cost per video: nearly zero. Time per video: under three minutes.

The economics shifted. What was previously a niche strategy (account farms, bot networks, spam accounts) became a viable strategy for anyone. And because the algorithm treats human-created mediocre content and AI-generated mediocre content identically, there's no penalty for automation.

What's particularly insidious is that AI has become good enough that humans can't consistently distinguish its output from human work. The uncanny valley of AI content has shifted. A year ago, AI video editing had obvious tells. Now? A video produced with generative AI is often indistinguishable from one made by a human with basic editing skills.

This matters because human brains are wired to respond to authenticity. We want to watch real people do real things. But if we can't tell the difference, the algorithm wins—it's just serving us whatever drives engagement, human or not.

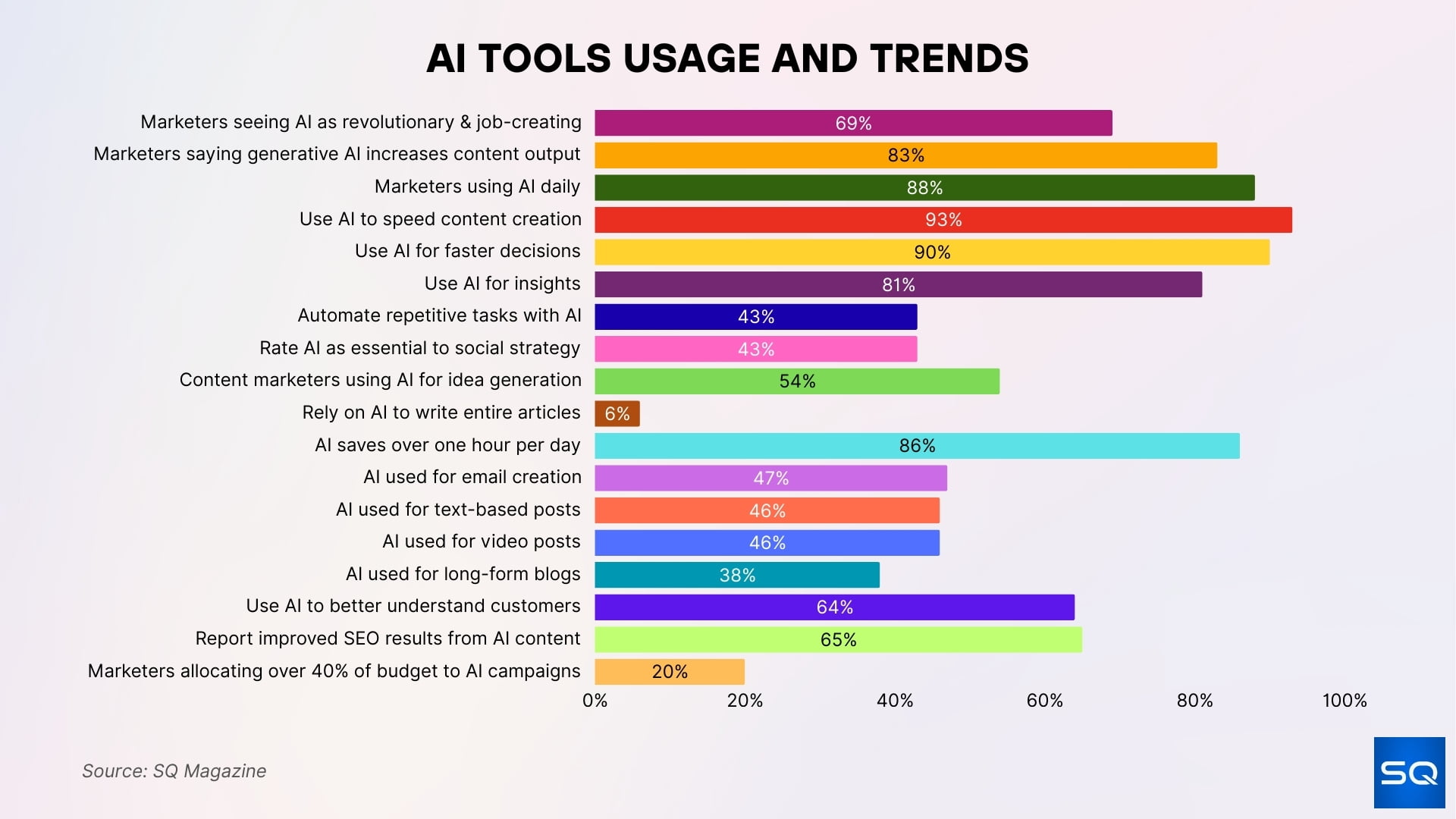

The Tools That Made This Possible

Let me be clear about something: I'm not anti-AI. I use AI tools constantly. They're genuinely useful for legitimate purposes. But there's a difference between using AI to enhance human creativity and using AI to replace human judgment.

The tools driving the slop epidemic are mostly image/video generation tools, prompt-to-content platforms, and automation workflows. Here's the breakdown:

Video Generation & Editing Tools: Software like Synthesia, Runway, and Descript can turn text prompts or scripts into finished videos, in some cases with AI avatars, which makes it easy to create dozens of variations from a single script. Cost: $20-50/month per account. Output: effectively unlimited variations.

Image Generation: Midjourney and DALL-E 3 create unique images in seconds. Combine them with text overlay tools and you have infinite variations of the same concept in different visual wrappers.

Content Automation Platforms: Tools like Zapier and Make can automatically publish to multiple platforms on schedules. Runable helps teams automate document and presentation generation, which feeds into social media workflows. These platforms don't create content—they distribute it automatically.

AI Writing Tools: Claude, ChatGPT, and Gemini generate text variations in bulk. Feed them a prompt like "Generate 10 variations of productivity tips for Twitter," and you get them in seconds.

The combination of these tools is powerful. A single operator can create, edit, and publish a hundred pieces of content per day across multiple accounts. Quality varies wildly, but quantity is guaranteed.

The platform doesn't care. The algorithm distributes it all equally. Slop wins through attrition.

Slop content, while generating higher engagement scores due to algorithmic optimization, results in lower user satisfaction compared to original content. (Estimated data)

The Psychological Impact: Why Slop Actually Ruins Your Brain

This isn't hyperbole. Scrolling through algorithmically-optimized slop actually does measurable damage to attention span, memory formation, and satisfaction.

Human brains are pattern-recognition machines. When you see the same video format fifty times in a row with slight variations, your brain recognizes the familiar pattern, and you get a little hit of dopamine from each recognition. It's novelty without actual substance.

This is different from genuine entertainment or education. When you watch a genuinely original video, your brain has to process new information, new angles, new perspectives. That takes more cognitive effort. But it's also more satisfying because your brain registers it as valuable.

Slop is optimized for the opposite: minimal cognitive load, maximum dopamine response. Trending sound. Relatable text. Aesthetically pleasing clip. Post it. Next.

The result? Brain satiation without satisfaction. You spend an hour scrolling and feel emptier than when you started. Your attention span shrinks. Your ability to focus on complex tasks diminishes. Studies on social media use show that platforms heavy in algorithmic content correlate with increased anxiety, decreased empathy, and reduced long-term memory formation.

Younger users are experiencing this most acutely. Teenagers growing up in 2025 are the first generation whose entire online experience is slop-dominant. They're developing their attention patterns in an environment optimized for quantity over quality, novelty over substance.

The platforms know this. They've seen the studies. They don't care, because cognitive impairment makes you more likely to keep scrolling. Dissatisfaction drives engagement.

The Filter Bubble Effect on Steroids

Classic filter bubble theory (the idea that algorithms show you content similar to what you've engaged with before) has evolved. Now it's not just about content topics—it's about content format.

If the algorithm detects that you engage with trending-sound videos more than written posts, it will show you more and more trending-sound videos. Not because it's learning your genuine preference, but because it's maximizing engagement metrics.

Over time, your entire feed becomes one format. One style. One type of brain stimulation. You're not learning anything new. You're not being exposed to diverse perspectives. You're being fed variations of variations.
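To make the format-collapse mechanism concrete, here is a toy Python simulation. It is not any platform's real ranking system: it assumes a hypothetical recommender that only tracks average engagement per format and always serves the best-scoring one, and the engagement probabilities are invented for illustration. Engagement is modeled as an expected value rather than a random draw so the result is deterministic.

```python
from collections import Counter


def simulate_feed(engage_prob: dict[str, float], rounds: int) -> Counter:
    """Greedy engagement maximizer over content formats (toy model).

    engage_prob maps each format to a hypothetical probability that the viewer
    engages with it. The recommender never sees these numbers directly; it only
    sees the engagement it observes after serving each format.
    """
    if rounds < len(engage_prob):
        raise ValueError("rounds must cover one warm-up impression per format")

    served = Counter()                           # impressions per format
    engaged = {fmt: 0.0 for fmt in engage_prob}  # total observed engagement

    def serve(fmt: str) -> None:
        served[fmt] += 1
        # Expected engagement instead of a random draw keeps the run deterministic.
        engaged[fmt] += engage_prob[fmt]

    # Warm-up: show each format once so every format has an estimate.
    for fmt in engage_prob:
        serve(fmt)

    # Exploit: always serve the format with the best average engagement so far.
    for _ in range(rounds - len(engage_prob)):
        best = max(engage_prob, key=lambda f: engaged[f] / served[f])
        serve(best)

    return served


if __name__ == "__main__":
    viewer = {"trending_sound": 0.60, "tutorial": 0.55, "long_form": 0.50, "text_post": 0.40}
    print(simulate_feed(viewer, rounds=100))
```

With these made-up numbers, the viewer engages with tutorials almost as often as with trending-sound videos (0.55 versus 0.60), yet the greedy ranker serves 97 trending-sound videos out of 100. Real recommenders are far more elaborate, but the sketch shows how a small engagement edge can turn into a near-total format monoculture.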

This is worse than the original filter bubble problem because at least with topic-based filtering, you're still seeing different creators, different angles, different expertise. With format-based filtering optimized for slop, you're seeing the same concept produced by algorithm instead of humans.

It's intellectual monoculture. And monocultures collapse.

The Platform Response (Or Lack Thereof)

Here's what's infuriating: the platforms know this is happening, and most have done essentially nothing meaningful to address it.

Instagram introduced a "Reels" algorithm supposedly designed to prioritize original content, but it's still dominated by remixes and trends. YouTube claims to prioritize "authentic" content, but its metrics for authentication are opaque and seemingly ineffective. TikTok is openly betting on AI-generated content as a future revenue stream.

Why no action? Because slop is profitable. Suppressing AI-generated content would mean suppressing the highest-volume content on the platform. That would crash engagement metrics. Shareholders would revolt.

The structural problem is this: you cannot have an algorithmic platform and genuine quality simultaneously at scale. The algorithm will always optimize for what works, not what's best. And what works is increasingly slop.

Some platforms are experimenting with alternatives. Threads (Meta's Twitter alternative) implemented a "chronological home feed" option, which reduces algorithmic distribution and increases human control over what you see. Guess what? It's less engaging (shorter session times) but more satisfying (higher user retention).

That's the trade-off: engagement or satisfaction. Platforms chose engagement because that's what generates ad revenue.
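The difference between the two kinds of feed fits in a few lines. The Python sketch below is a simplified illustration, not Threads' or any other platform's actual code: the `Post` fields, the `predicted_engagement` score, and the sample accounts are all hypothetical. A chronological feed shows only accounts you follow, newest first; an engagement-ranked feed can pull in any post the model expects you to react to.

```python
from dataclasses import dataclass


@dataclass
class Post:
    author: str
    posted_at: int               # Unix timestamp in seconds
    predicted_engagement: float  # hypothetical model score between 0 and 1
    followed: bool               # does the viewer follow this author?


def chronological_feed(posts: list[Post]) -> list[Post]:
    """Only followed accounts, newest first; no engagement model involved."""
    return sorted((p for p in posts if p.followed), key=lambda p: p.posted_at, reverse=True)


def ranked_feed(posts: list[Post]) -> list[Post]:
    """Any post, ordered purely by predicted engagement."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


SAMPLE_POSTS = [
    Post("friend", posted_at=300, predicted_engagement=0.20, followed=True),
    Post("favorite_creator", posted_at=200, predicted_engagement=0.40, followed=True),
    Post("slop_account_a", posted_at=250, predicted_engagement=0.90, followed=False),
    Post("slop_account_b", posted_at=100, predicted_engagement=0.85, followed=False),
]

if __name__ == "__main__":
    print([p.author for p in chronological_feed(SAMPLE_POSTS)])
    print([p.author for p in ranked_feed(SAMPLE_POSTS)])
```

In the sample data, the chronological feed shows only the two accounts the viewer follows, while the ranked feed puts two unfollowed, high-scoring accounts ahead of them. That one change of sort key is what hands control of the feed from the user to the engagement model.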

AI tools have increased content production speed from 5 to 50 videos per person per day, drastically lowering the cost and time per video.

The Death of Discoverability

One of social media's original promises was discoverability: finding new creators, new perspectives, new communities. The algorithm was supposed to surface great content you wouldn't otherwise find.

Instead, the algorithm has become a content barrier. Great content gets buried under slop volume. New creators have zero chance of breaking through because they can't compete on volume with AI-assisted accounts.

This has created a perverse situation where the algorithm prevents discoverability rather than enabling it. You can only find new creators if you specifically search for them. And if you don't know they exist, you won't search for them.

The platform profits from this. It keeps users on the main algorithmic feed where ads are concentrated. It prevents organic community building where audiences might develop creator loyalty instead of platform loyalty.

Smaller creators are leaving mainstream platforms en masse. They're building Discord servers. Starting Substack newsletters. Launching private communities on Circle or Mighty Networks. These aren't as profitable for platforms, but they're infinitely more sustainable for creators.

The irony is that the platforms are slowly losing their primary competitive advantage: the ability to help creators reach audiences. They're becoming advertising platforms pretending to be discovery platforms.

The Trust Problem: How Slop Destroyed Recommendations

Social media recommendations used to come from people you trusted. Your friends shared links. Your favorite creators recommended products. You trusted these recommendations because they came from humans with skin in the game.

Now, you can't trust any recommendation because you can't identify its source. Is this productivity tip from a genuine expert or an AI trained on productivity tips? Is this video recommendation from an actual fan or an algorithm designed to maximize watch time?

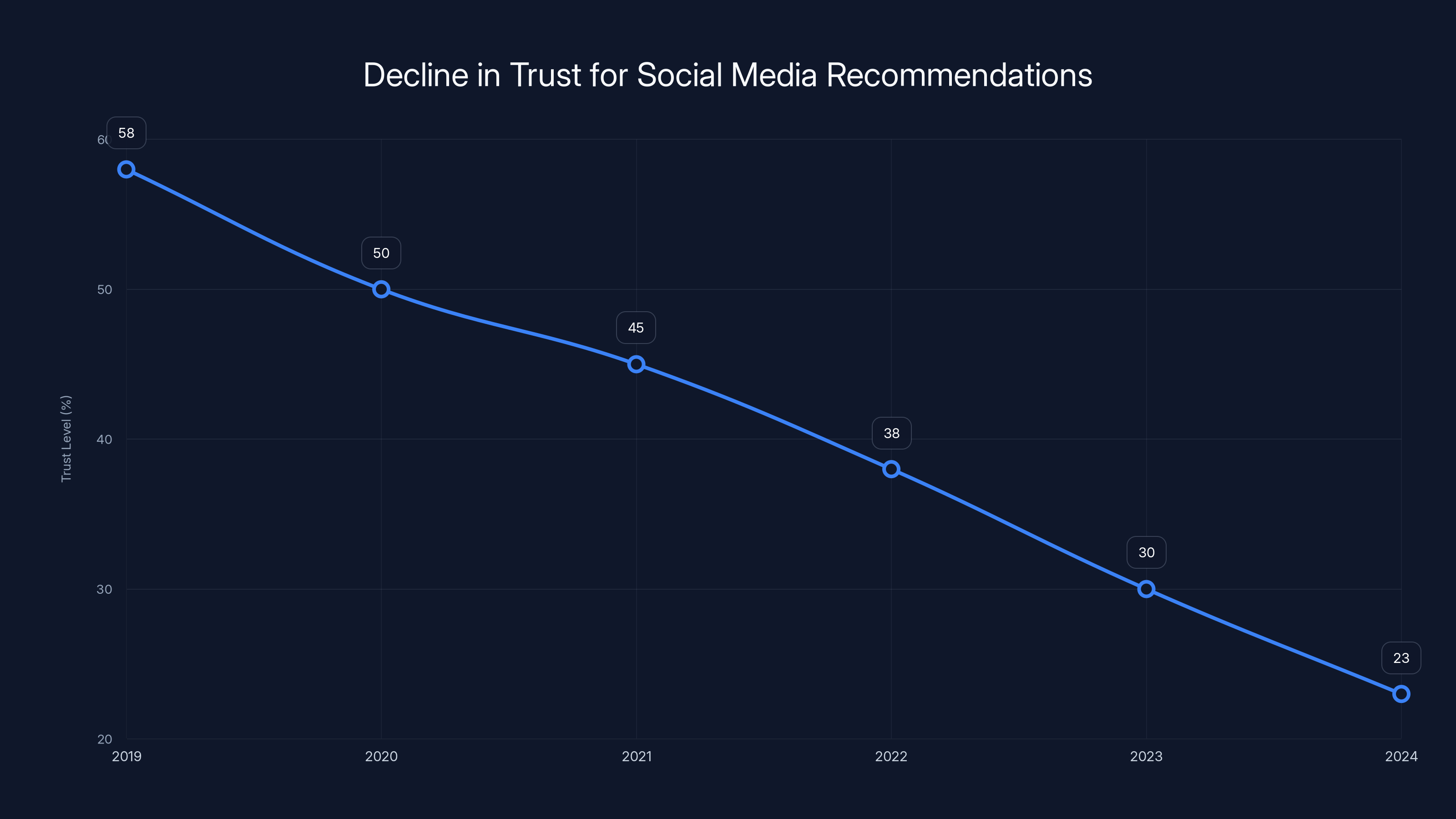

This has spillover effects in the real world. Consumer trust in social media recommendations has dropped dramatically. According to recent consumer research, only 23% of users now trust social media recommendations, down from 58% in 2019.

This is catastrophic for the entire ecosystem. Creators made careers on authentic recommendations. Brands built influence through influencers. That entire structure is collapsing because nobody knows what's real anymore.

Who suffers most? Ironically, the platforms themselves. As trust declines, alternatives become more attractive. Users migrate to private communities, email newsletters, text conversations—anywhere they can trust the source.

The platforms created this problem and now can't fix it without admitting guilt and restructuring their entire business model. So they don't. They double down on AI, hoping scale solves what structure broke.

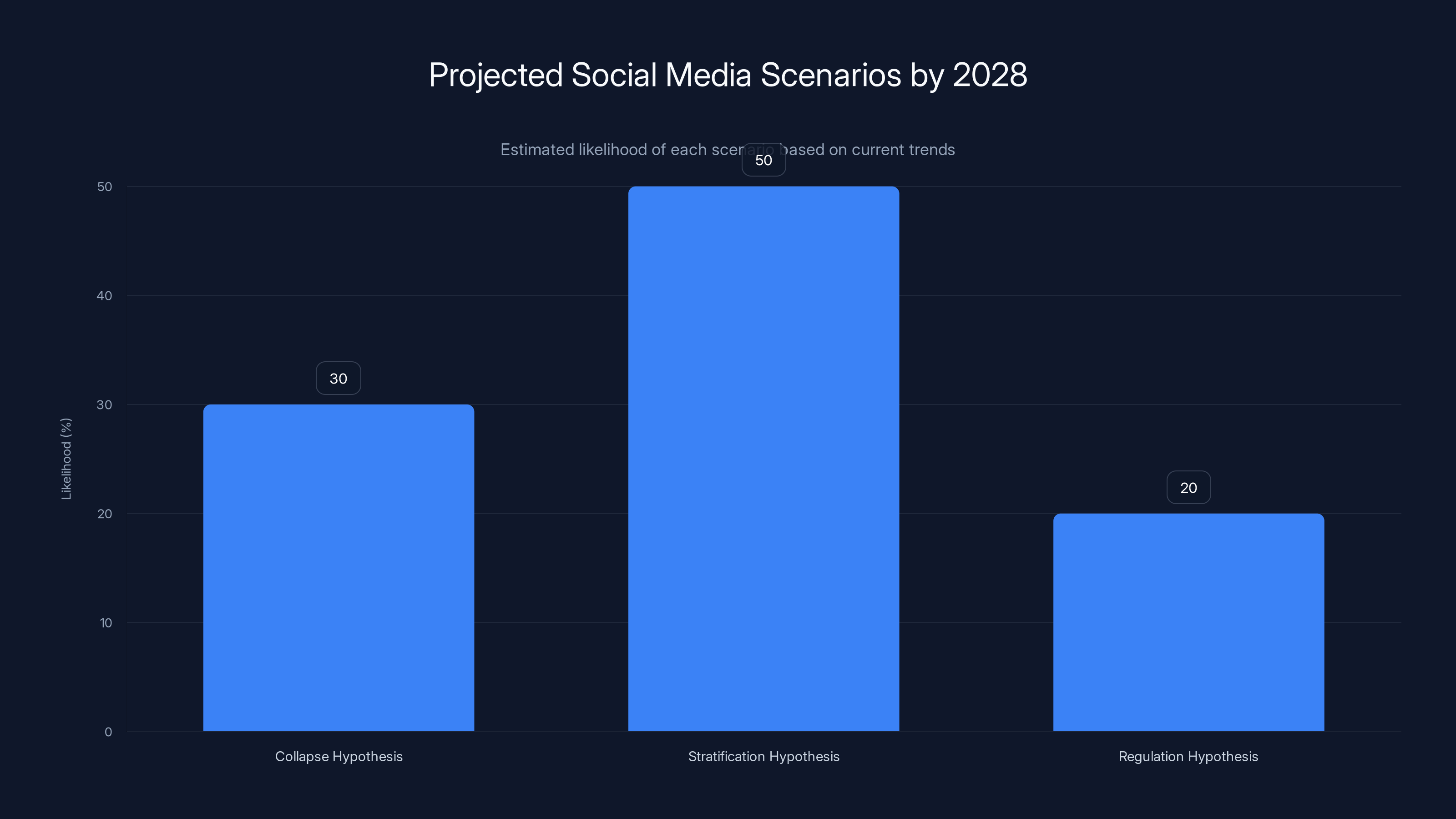

Estimated data suggests the Stratification Hypothesis is the most likely outcome by 2028, with a 50% likelihood, followed by the Collapse Hypothesis at 30%.

What Happens if This Continues

Let's project forward three years. What does social media look like in 2028 if current trends accelerate?

Scenario 1: The Collapse Hypothesis

Engagement metrics continue declining. Users spend more time on platforms but get less value. Quality creators finish their exit to private communities. Feeds are 80% AI-generated content competing with itself for attention. New users don't join because there's nothing authentic. Growth plateaus. Advertising becomes less valuable because the audience is increasingly made up of bots and disengaged users.

Platforms make drastic changes—potentially requiring verification, limiting posting frequency, actually penalizing slop. But by then, they've lost the best creators and the most engaged users.

Scenario 2: The Stratification Hypothesis

Social media splits into tiers. Mainstream platforms become completely algorithmic, slop-dominated wastelands for casual scrolling. Premium platforms emerge (maybe paid tiers within existing platforms) where humans verify content, recommendations are curated, and slop is explicitly prohibited. This becomes the "real" social media for serious users, while the free tier becomes entertainment/advertising.

Platforms would profit from both, but it requires them to admit that algorithmic distribution is the problem.

Scenario 3: The Regulation Hypothesis

Governments step in and require AI-generated content to be labeled. Platforms must show algorithmic origin, creation method, and engagement source. This creates friction, but it solves the trust problem. Users can filter slop if they choose. Creators can distinguish themselves. The algorithm loses power but gains legitimacy.

This is the only scenario where everyone wins. But it requires regulatory intervention and platforms accepting reduced engagement metrics.

My prediction? We're heading toward Scenario 2, with elements of Scenario 1 in the platforms that don't adapt. Scenario 3 would require unified global regulation, which seems unlikely given current political fragmentation.

The Creator's Path Forward

If you're a content creator, what do you actually do right now? The situation is genuinely difficult, but not hopeless.

Option 1: Embrace the Tools

If you can't beat the algorithm, join it. Use AI tools to increase your output. Generate variations. Post more frequently. This means sacrificing some authenticity, but it's increasingly necessary for algorithmic visibility. Tools like Runable can help automate presentation and document generation if you're creating educational content. It's a valid strategy, but know that you're contributing to the problem you're experiencing as a user.

Option 2: Niche Down

Instead of competing on algorithmic platforms, build a specific community around a specific topic. Create for people, not algorithms. Use algorithmic platforms as traffic funnels to your owned channels: email list, Discord server, website.

This is slower initially but infinitely more sustainable. Your audience is loyal because they follow you, not the algorithm.

Option 3: Hybrid Model

Use algorithmic platforms for discovery and casual engagement. Use owned channels for serious content and community. Post on TikTok/Instagram to get people to your email list or Discord. This minimizes algorithm dependence while maintaining discoverability.

Most successful creators are moving toward Option 2 or 3. Option 1 works short-term but has diminishing returns as everyone does it.

Trust in social media recommendations has plummeted from 58% in 2019 to 23% in 2024, highlighting a significant decline in consumer confidence.

What You Can Do as a User

You might feel powerless in this situation, but you're not. Your attention is the currency, and you have more control than you think.

Control Your Feed

Turn off algorithmic recommendations wherever possible. Switch to chronological feeds on Instagram and Facebook. Unfollow accounts that don't add value. Follow creators directly rather than relying on algorithm suggestions. Take control back from the algorithm.

Seek Authenticity Deliberately

Find creators who share their process, show failures, demonstrate authenticity. These people stand out because slop production requires anonymity. Real creators have names, faces, and stakes in their reputation.

Migrate to Alternatives

Bluesky (Twitter alternative) has dramatically lower slop concentration because bots haven't caught up yet. Mastodon (decentralized social network) has minimal algorithmic content. Threads is still establishing its culture but offers a chronological feed option.

These platforms are smaller and less polished, but they're more authentic. The trade-off is worth it for users exhausted by slop.

Build Your Own Audience Channel

Start an email newsletter. Create a Discord server. Build a community website. These aren't social media, but they're how real communities function. You control the experience. No algorithm interferes.

Support Real Creators

If you follow creators who produce genuine content, engage with it meaningfully. Leave thoughtful comments. Share with people who'd appreciate it. Send tips or payment if you can. Make it economically viable to create quality content.

Creators compete for attention. If you give your attention to quality, you incentivize more quality.

The Middle Ground: AI Tools Done Right

Let me be clear about one thing: AI tools aren't inherently the problem. AI-assisted creation is legitimate. The problem is AI-generated slop masquerading as genuine content.

There's a meaningful distinction. A creator using AI to handle editing, transcription, or formatting—freeing up time for actual creative work—is using AI well. A creator generating fifty variations of the same video and posting them all is using AI for slop.

The difference comes down to intent. Are you using AI to enhance human creativity or replace human judgment?

Some platforms and tools are starting to recognize this. Adobe's Generative Fill is designed as an enhancement tool, not a content generator. Grammarly improves writing without replacing the writer. These are tools that augment human ability.

Compare that to tools explicitly designed for mass content generation, where human judgment is optional and automation is the goal. The intention is fundamentally different.

If the industry develops toward AI-as-enhancement rather than AI-as-replacement, the slop problem could actually improve. But that requires intentional choices by platforms and creators. Currently, the incentives point the other direction.

The Regulatory Question Nobody's Asked

For all the discussion of slop and algorithmic content, there's been almost no meaningful regulatory discussion specific to AI-generated content on social media.

Governments have discussed AI regulation broadly. The EU adopted the AI Act, which includes transparency obligations for some AI-generated content such as deepfakes. The US has various proposals. But none specifically address the slop problem on social platforms.

There's a good reason: it's genuinely hard to regulate. How do you define AI-generated content versus AI-assisted content? How do you label it without breaking legitimate uses? How do you enforce it across platforms and countries?

But the absence of regulation is also a choice. It's a choice to let platforms self-regulate, and we've seen how that goes. Platforms don't self-regulate when slop is profitable.

Potential regulatory solutions:

Mandatory Labeling: Require disclosure of AI use, generation method, and automation level. This is feasible technically but platforms would hate it because it reduces engagement on AI content.

Algorithm Transparency: Require platforms to explain why content is shown to users. This would expose the slop-rewarding structure. Also technically possible, also strongly opposed.

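Content Authentication: Cryptographically sign content when it is created, so anyone can later check its claimed origin (human-created vs. AI-generated) and whether it has been altered since. This is technically complex but feasible with digital signatures, whether anchored in a blockchain or in a conventional provenance system.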
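Here is a minimal sketch of the sign-then-verify idea using only Python's standard library. It is a simplification: it uses a shared-secret HMAC, whereas real provenance schemes such as C2PA content credentials rely on public-key signatures so that anyone can verify without holding the secret. The key and the origin labels are hypothetical. Note what the check does and does not prove: it shows that the content and its claimed origin haven't changed since signing, not that the signer told the truth.

```python
import hashlib
import hmac

# Hypothetical key held by the capturing device or generation tool.
# A real deployment would use an asymmetric key pair instead of a shared secret.
DEVICE_KEY = b"hypothetical-device-key"


def sign_content(content: bytes, origin: str, key: bytes = DEVICE_KEY) -> str:
    """Bind the content bytes to an origin label such as 'camera' or 'ai-generator'."""
    # origin || 0x00 || SHA-256(content); the digest is fixed-length, so the
    # boundary between label and digest is unambiguous.
    message = origin.encode("utf-8") + b"\x00" + hashlib.sha256(content).digest()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_content(content: bytes, origin: str, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """True only if neither the content nor the claimed origin changed after signing."""
    expected = sign_content(content, origin, key)
    return hmac.compare_digest(expected, tag)  # constant-time comparison


if __name__ == "__main__":
    video = b"...raw video bytes..."
    tag = sign_content(video, "camera")
    print(verify_content(video, "camera", tag))            # untouched content
    print(verify_content(video + b"edit", "camera", tag))  # content altered
    print(verify_content(video, "ai-generator", tag))      # origin label swapped
```

Only the first check passes; altering either the bytes or the origin label breaks verification.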

Engagement Limits: Restrict how frequently a single account can post to prevent bot-farm scaling. This would break slop economics but also limit legitimate high-volume creators.
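A posting cap like this is a standard rate-limiting problem. The Python sketch below uses a sliding window per account; the limit of five posts per 24 hours is an arbitrary, hypothetical number, not a proposal from any regulator or platform.

```python
from collections import defaultdict, deque


class PostingLimiter:
    """Allow at most `max_posts` per account within any rolling `window_s` seconds."""

    def __init__(self, max_posts: int, window_s: float) -> None:
        self.max_posts = max_posts
        self.window_s = window_s
        self._accepted = defaultdict(deque)  # account -> timestamps of accepted posts

    def try_post(self, account: str, now: float) -> bool:
        history = self._accepted[account]
        # Forget posts that have fallen out of the rolling window.
        while history and now - history[0] >= self.window_s:
            history.popleft()
        if len(history) >= self.max_posts:
            return False  # over the cap: reject this post
        history.append(now)
        return True


if __name__ == "__main__":
    limiter = PostingLimiter(max_posts=5, window_s=24 * 60 * 60)  # hypothetical policy
    # A bot tries to post once a minute for 30 minutes.
    accepted = sum(limiter.try_post("bot_account", now=minute * 60) for minute in range(30))
    print(accepted)
```

With these settings, a bot attempting thirty posts in under half an hour gets five through, while someone posting once or twice a day never hits the cap. The threshold is the whole design problem: set it low enough to break bot-farm economics and it will also catch legitimate high-volume creators.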

None of these are likely to happen voluntarily. Platforms would need regulatory pressure, and that requires public pressure, and that requires people understanding the problem.

Hopefully, articles like this help with that.

The Light at the End: How This Actually Gets Fixed

I've been pretty doom-and-gloom throughout this, so let me end on what's actually possible.

The slop problem isn't permanent. It's structural, which means it can be restructured. Here's how I think it actually gets solved:

Step 1: User Migration (Already Happening)

Users are already leaving mainstream platforms. They're not all leaving; platforms are too embedded in daily life for a total exodus. But they're splitting their attention. Bluesky is growing. Discord has more active users than Twitter. Email newsletters are booming. This fragments the audience, which reduces the power of algorithmic platforms.

Step 2: Creator Exodus (In Progress)

Top creators are leaving to build independent audiences. Once the best creators are gone, platforms become less valuable. If a figure like John Oliver left Instagram, millions of followers would notice. When enough creators leave, the platform's value declines irreversibly.

Step 3: Competitor Pressure

Bluesky, Threads, Mastodon, BeReal: these platforms are explicitly positioned as alternatives that resist algorithmic slop. They're smaller now but growing. If they reach critical mass, they become genuine competitors, forcing older platforms to adapt or die.

Step 4: Regulatory Intervention

Once platforms are losing users and creators to competitors, governments will feel empowered to regulate. Regulation is coming anyway, but it'll accelerate as platforms weaken.

Step 5: Platform Restructuring

Platforms will have to choose: accept lower engagement metrics in exchange for user trust and creator retention, or die. Some will make this choice (see Meta investing in Threads as insurance). Others won't and will decline.

This is the actual path forward. It requires user and creator agency. It requires new platforms succeeding. It requires regulators acting. But all of these are in motion right now.

The timeline is probably 3-5 years. By 2030, social media will look dramatically different.

FAQ

What exactly is "slop" in the context of social media?

Slop refers to low-effort, AI-generated or mass-produced content optimized for algorithmic distribution rather than human appreciation. It's characterized by recycled trends, minimal originality, automated posting, and high volume. The term encompasses everything from AI-generated videos with trending sounds to bots farming engagement through repetitive posts, specifically content that's algorithmically optimal but creatively bankrupt.

How much of social media is actually AI-generated content in 2025?

Estimates suggest 40-60% of content on major platforms like TikTok, Instagram, and YouTube is now either fully AI-generated or significantly AI-assisted, though this varies dramatically by platform and demographic. Younger users aged 13-25 report experiencing 60-75% algorithmic or AI content in their feeds, compared to more established users who see lower percentages. These numbers have grown exponentially from single-digit percentages just two years ago.

Why do platforms allow slop to dominate their feeds?

Platforms profit more from engagement volume than content quality, and slop generates enormous engagement volumes through its sheer quantity. The algorithm doesn't distinguish between human and AI-generated content when measuring success—it only measures engagement metrics. Additionally, more content means more ad impressions, more user time on platform, and more data for AI training, so platforms have structural incentives to allow and even encourage slop production.

What happens to original creators in a slop-dominated environment?

Original creators face intense pressure to either adopt AI tools to increase their posting volume or accept declining algorithmic visibility. Many are choosing to migrate toward direct-to-audience models like email newsletters, Discord communities, and private membership platforms rather than compete with bot farms on algorithmic feeds. This represents a fundamental shift in how professional creators relate to social platforms.

Can I actually do anything about this as a regular user?

Yes. You can turn off algorithmic recommendations and switch to chronological feeds, deliberately follow creators rather than trends, unfollow accounts that don't add value, migrate to alternative platforms like Bluesky or Mastodon, and support creators by engaging with their content directly. Additionally, shifting your attention to email newsletters and direct creator communities signals market demand for alternatives to algorithmic content.

What platforms have the least slop right now?

Bluesky (a Twitter alternative) and Mastodon (a decentralized social network) currently have dramatically lower slop concentrations because they lack large bot networks and heavy algorithmic promotion. Threads offers a chronological Following feed alongside its algorithmic one, which lets users limit algorithmic content distribution. BeReal, which emphasizes authentic, unfiltered moments, has minimal slop by design. The trade-off is that all these platforms are significantly smaller than mainstream options.

Is using AI tools for content creation the same as creating slop?

No. There's a critical difference between using AI tools to enhance human creativity (like AI-assisted editing or transcription) versus using AI to replace human judgment entirely (like generating fifty variations of the same video). The distinction comes down to intent: are you using AI as a tool to support your voice, or as a replacement for creative decision-making? AI-as-enhancement is legitimate; AI-as-slop-factory is not.

Could regulation actually fix the slop problem?

Possibly, but it would require coordinated action across multiple countries with different regulatory philosophies. Potential solutions include mandatory AI-content labeling, algorithm transparency requirements, content authentication systems, or posting-frequency limits. However, platforms have strong financial incentives to resist these regulations, so change would likely require significant user migration to competitors first, which is already beginning to happen.

What's the realistic timeline for social media changing?

Based on current migration trends and creator exodus patterns, meaningful change is likely within 3-5 years (roughly 2028-2030). This timeline assumes continued user migration to alternative platforms, increasing regulatory pressure, and top creators establishing independent audiences. Some platforms may change faster through competitive pressure, while others may decline as users and creators leave.

Should I quit social media entirely?

That depends on your personal use case. If you primarily consume content, the slop problem affects you directly and alternatives exist. If you're a creator whose audience depends on social platforms, quitting isn't realistic, but strategic migration to owned channels is. A common recommendation is to split your attention: maintain a social presence for discovery, but build your actual audience in spaces you control (email, Discord, your own website).

The Reality Check

I didn't quit social media in 2025. But more importantly, I changed how I use it.

I turned off algorithmic recommendations. I unfollowed trends and followed creators. I moved my actual community to Discord. I started an email newsletter. I cut my Instagram time from ninety minutes daily to fifteen minutes every other day.

Did I miss things? Sure. Probably some trends I would've found entertaining. But here's what I gained: genuine recommendations, control over my attention, and connection to real people instead of an algorithm.

That's the real answer to the slop problem—not waiting for platforms to fix it, but individually choosing not to participate in the system that creates it.

Every person who migrates to an alternative platform, every creator who builds an independent audience, every user who chooses quality over quantity—they're collectively defunding the slop machine.

It's not fast. It's not automatic. But it's real, it's happening, and it's the only path that actually works.

The question isn't whether you should quit social media. It's whether you should quit this version of social media—the version optimized for slop, designed for maximum engagement, and completely indifferent to your actual satisfaction.

I'm betting that enough people say yes to that question to actually change things. We'll find out by 2030 if I'm right.

Key Takeaways

- AI-generated content now makes up an estimated 40-60% of social media feeds, with algorithmic platforms actively rewarding volume over quality

- Platforms profit more from slop engagement than original content, creating structural incentives against quality curation

- User trust in social recommendations has collapsed from 58% (2019) to 23% (2025), indicating fundamental platform failure

- Professional creators are migrating to independent audience models (email, Discord) rather than competing with AI-scale content farms

- Alternative platforms (Bluesky, Mastodon, Threads) are growing as users seek slop-resistant social media experiences
