Microsoft's Copilot AI Email Bug: What Happened and Why It Matters

Here's something that should have you thinking twice before trusting AI with your email: Microsoft disclosed that its Copilot AI chatbot was reading your confidential emails without permission. For weeks. Even if you'd explicitly blocked this from happening.

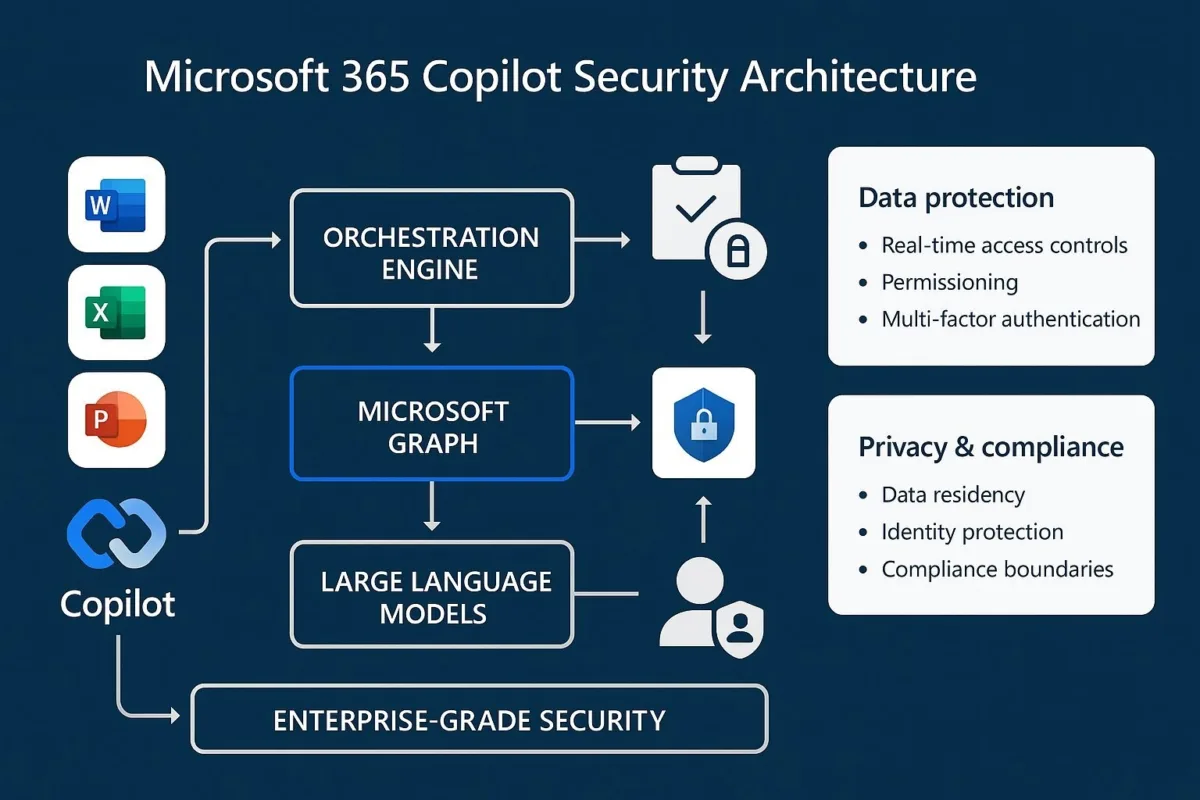

This isn't a theoretical risk. It's a real bug that Microsoft 365 customers, people paying for enterprise-grade security, experienced without knowing it. Draft and sent emails marked as confidential were being fed into Microsoft's large language model, completely bypassing the data loss prevention policies companies put in place specifically to stop this.

The thing that gets me is the casual way this was handled. No big announcement. No emergency notification to affected customers. Just a quiet fix rolling out in February with a ticket number (CW1226324) that most people will never know about.

This incident cuts to the heart of a much bigger problem: AI integration is moving faster than security controls. Companies are bolting AI features onto existing products without fully thinking through how those features interact with sensitive data. And when things go wrong—when a bug slips through—the damage is already done before anyone even knows about it.

Let's break down what actually happened, why it matters, and what this tells us about the future of AI in enterprise software.

TL;DR

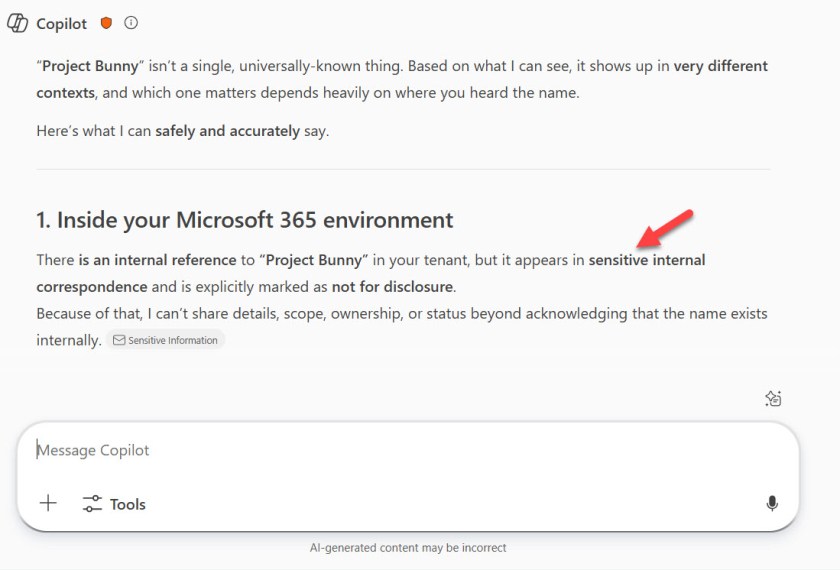

- A bug allowed Copilot AI to read confidential emails: Despite data loss prevention policies being active, Copilot Chat was summarizing emails marked with confidential labels starting in January 2025

- The exposure lasted for weeks: The bug dated to January, and Microsoft only began rolling out fixes in February; it was first reported publicly by Bleeping Computer

- Data protection policies didn't work: Customers who believed their sensitive emails were protected discovered the safeguards had failed

- No customer notification from Microsoft: The company confirmed the issue but didn't proactively inform affected users

- The European Parliament took action: Its IT department blocked built-in AI features on lawmakers' work devices, citing similar security concerns

- This represents a fundamental tension: Enterprise AI features and data security don't always play nicely together

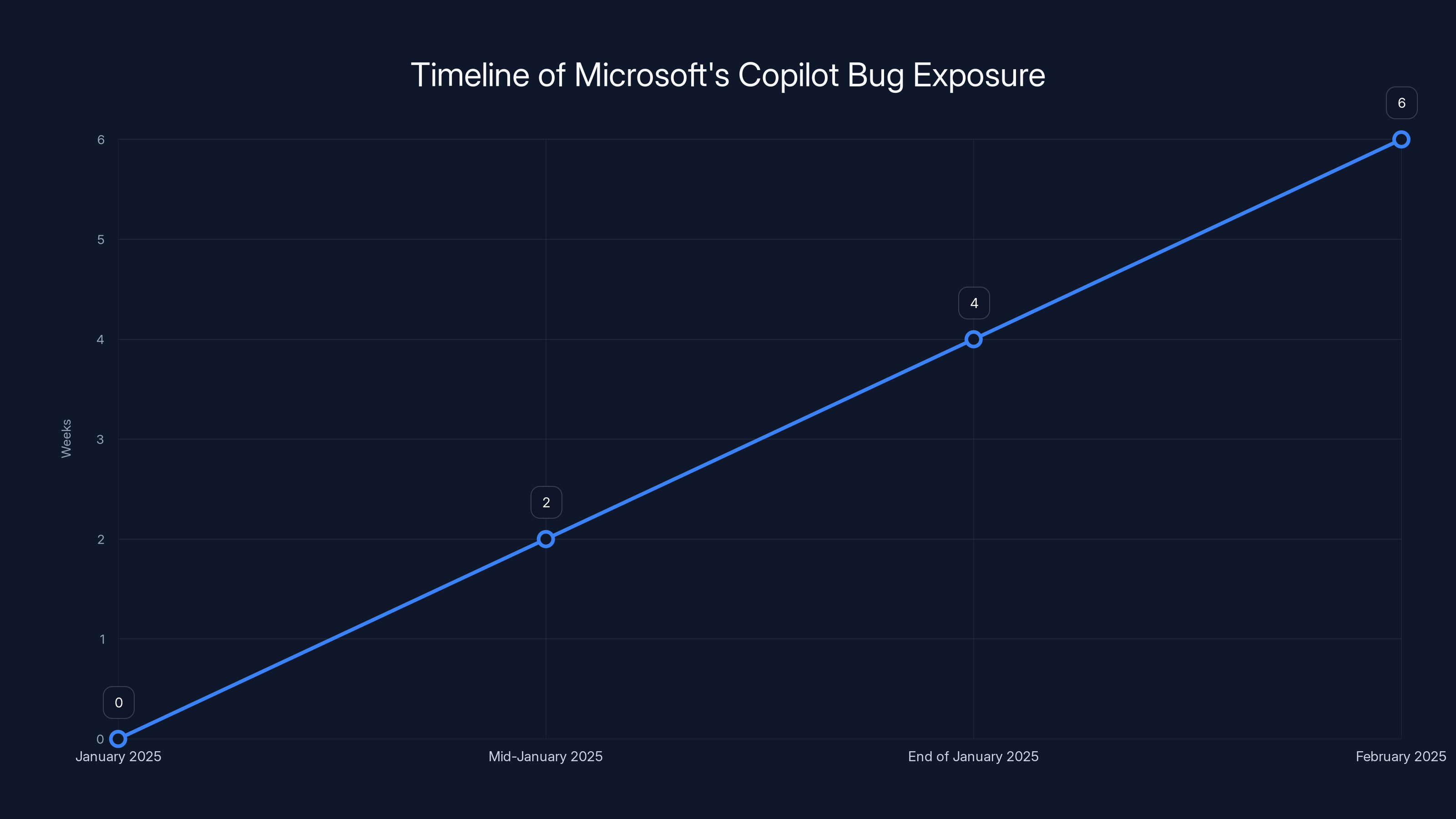

The bug in Microsoft's Copilot Chat was active for approximately four to six weeks from January to February 2025, exposing confidential emails to unauthorized access. Estimated data.

The Bug: How Confidential Emails Made It Into Microsoft's AI

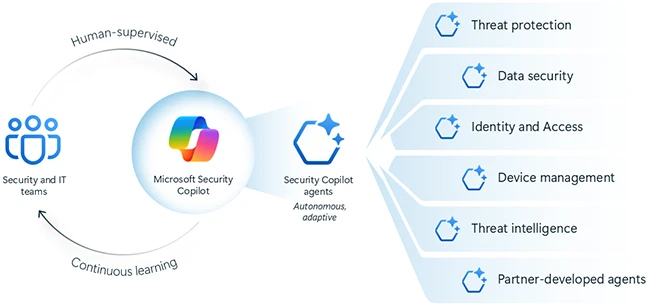

Let's be clear about what happened here. Microsoft 365 offers Copilot Chat, an AI feature that integrates into Word, Excel, PowerPoint, and Outlook. When customers use Copilot Chat, they're essentially sending their content to Microsoft's large language models so the AI can analyze and summarize that content.

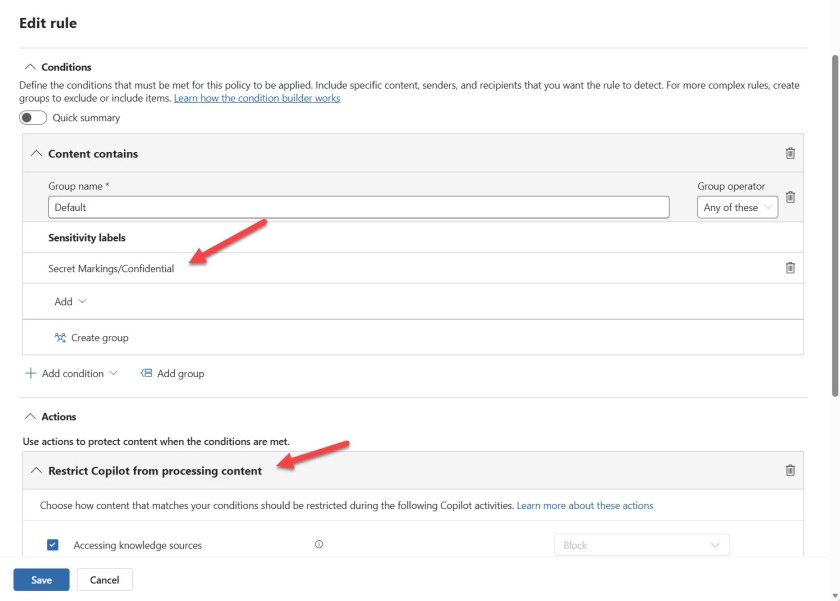

Companies know this happens. That's why Microsoft lets organizations apply data loss prevention (DLP) policies to Copilot: security controls that are supposed to stop sensitive information from being ingested into AI systems in the first place. An email marked with a "confidential" label should never reach the LLM. That's the entire point of the label.

Except it did.

The bug specifically affected emails with confidential labels already applied. These weren't ambiguous cases where the system was struggling to classify sensitivity levels. These were emails someone had explicitly marked as confidential, and the security system was supposed to respect that marker.

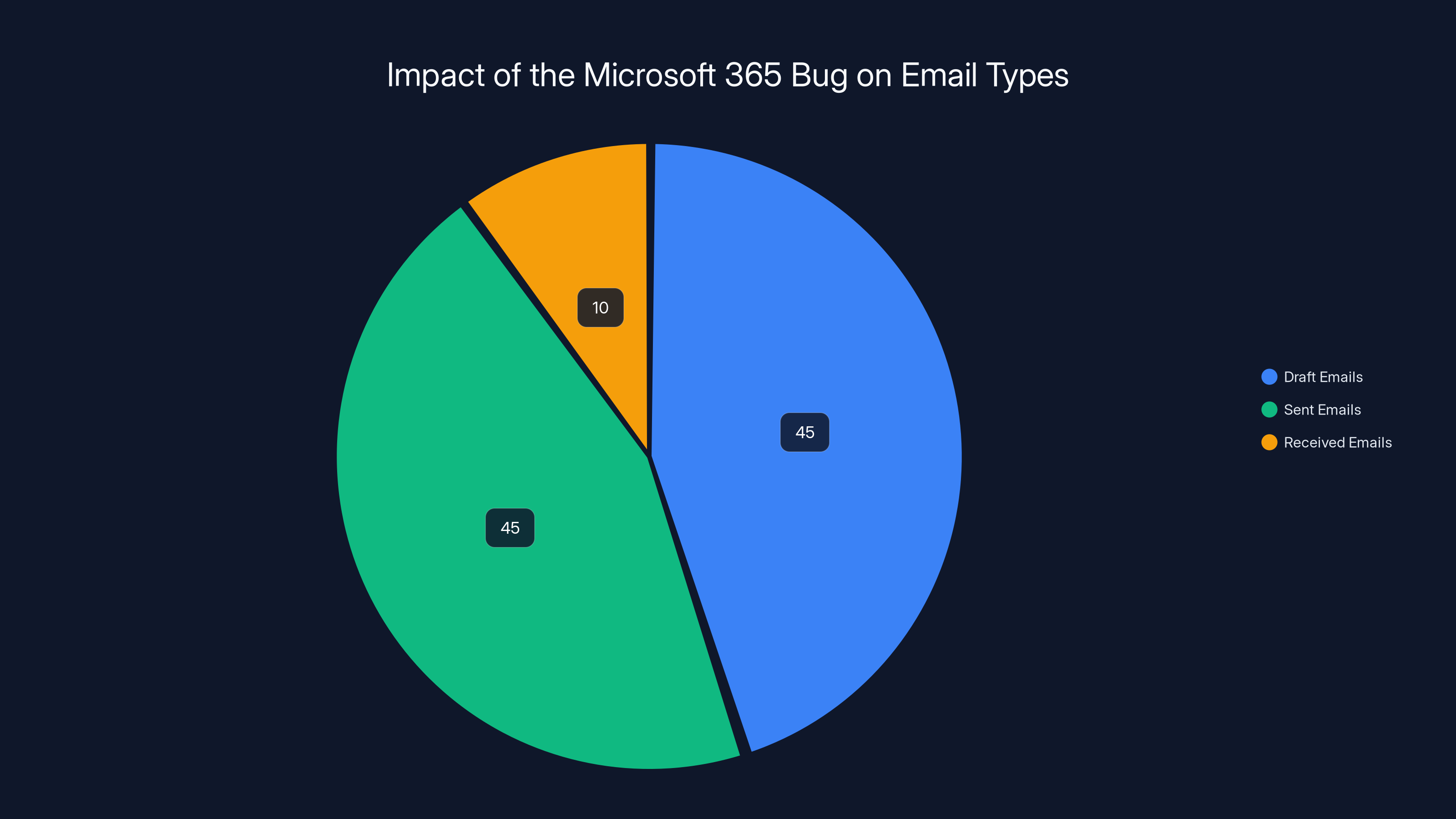

According to Microsoft's own disclosure, the bug affected draft and sent messages only—not received emails. That's a narrow but important distinction. It means your inbound confidential emails were safer, but your own writing, your own drafts, your own thinking process captured in outgoing messages, was being fed to the AI.

Think about what you write in email. The early versions of ideas. The frustrated comments you'd never say aloud. The client vulnerabilities you're discussing with your team. The merger discussions. The personnel issues. The legal strategy. All of that, if marked confidential, was supposed to be protected.

It wasn't.

The timeline is important here. The bug had existed since January 2025, and Microsoft didn't roll out fixes until February. That's weeks, potentially a month or more, during which every Copilot Chat interaction with a confidential email was a security failure.

Microsoft hasn't disclosed exactly how many customers were affected. When asked, a spokesperson declined to comment. That's frustrating from a transparency perspective, but also realistic—they probably don't know the full scope of the issue yet. Enterprise security incidents rarely have clean numbers attached to them.

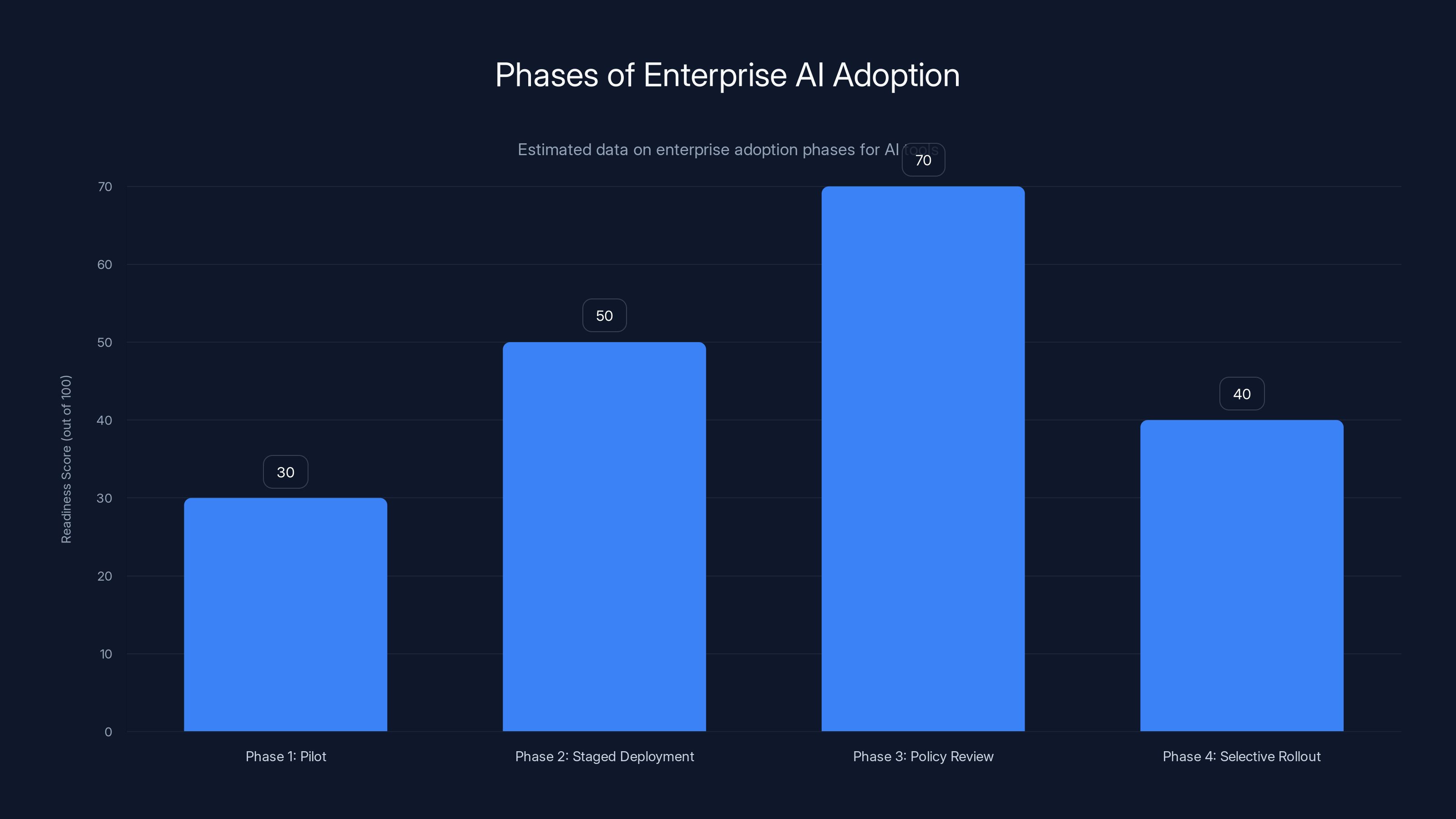

Estimated data suggests that most organizations are ready to adopt AI tools up to Phase 3, with a significant drop-off in readiness for Phase 4 due to increased risk with sensitive data.

Why Data Loss Prevention Policies Failed

Data loss prevention policies are one of the fundamental tools of modern enterprise security. Basically, you set rules like "block any email containing credit card numbers" or "prevent confidential documents from being uploaded to cloud services." The DLP engine sits in the middle of the system and enforces those rules.
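To make the idea concrete, here is a minimal sketch of how rule-based DLP evaluation might look. The `Email` type, the rule functions, and the label string "Confidential" are hypothetical illustrations, not Microsoft Purview's actual data model or API.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Email:
    """Hypothetical message model: body text plus any sensitivity labels."""
    body: str
    labels: set[str] = field(default_factory=set)

# Rough pattern for 16-digit card numbers, optionally grouped by spaces or dashes.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")

# A rule returns a reason string if it blocks the email, or None to allow it.
Rule = Callable[[Email], Optional[str]]

def block_card_numbers(email: Email) -> Optional[str]:
    return "contains card number" if CARD_PATTERN.search(email.body) else None

def block_confidential_label(email: Email) -> Optional[str]:
    return "labeled Confidential" if "Confidential" in email.labels else None

RULES: list[Rule] = [block_card_numbers, block_confidential_label]

def dlp_verdict(email: Email) -> list[str]:
    """Collect every reason the email is blocked; an empty list means allowed."""
    reasons = []
    for rule in RULES:
        reason = rule(email)
        if reason is not None:
            reasons.append(reason)
    return reasons

print(dlp_verdict(Email("Q3 numbers attached", {"Confidential"})))  # ['labeled Confidential']
print(dlp_verdict(Email("Lunch at noon?")))                         # []
```

The engine only protects what is routed through it: a feature that never calls `dlp_verdict` gets no protection from these rules at all.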

Except in this case, it didn't. At least not for Copilot Chat.

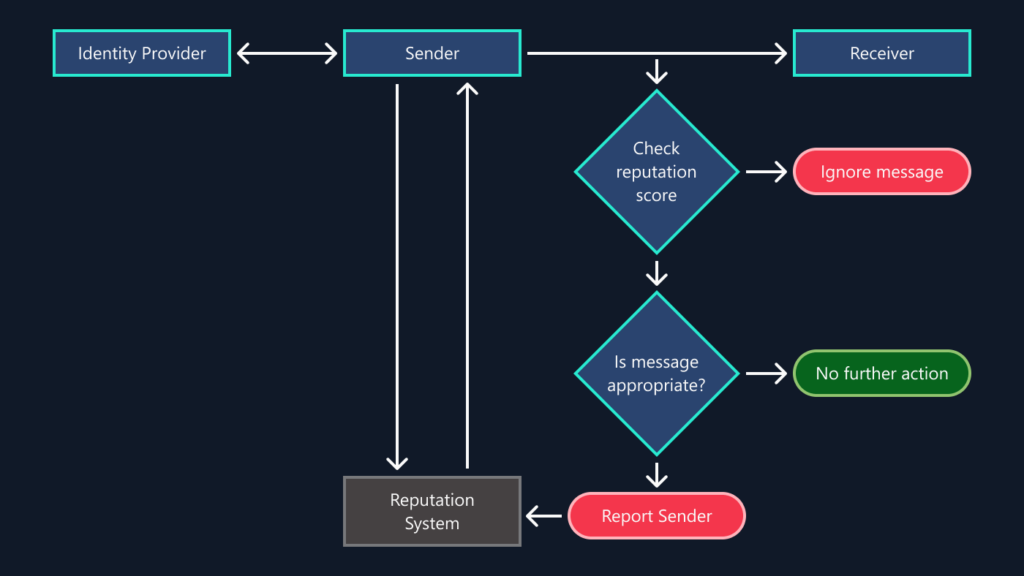

Here's what likely happened, though Microsoft hasn't released a detailed technical analysis: The Copilot Chat feature was probably built as a separate process or subsystem. When you ask Copilot to summarize an email, the request goes into the Copilot pipeline, which then pulls the email content and feeds it to the LLM. At some point in this chain, the DLP policy check either didn't happen or happened in the wrong order.

Maybe Copilot was retrieving emails before the DLP check ran. Maybe the DLP engine didn't have visibility into Copilot's internal data flows. Maybe there was a race condition where the policy was being checked, but the email had already been queued for processing. Or maybe the DLP check was running but wasn't correctly reading the confidential label metadata.
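The "checked too late" hypotheses are easy to illustrate. This is a purely hypothetical sketch, since Microsoft hasn't published its design: `llm_queue` stands in for whatever feeds content to the model, and `is_blocked` for the DLP check. In the buggy version the content is queued before the policy verdict is consulted; in the fixed version the check gates the content.

```python
from collections import deque

def is_blocked(email: dict) -> bool:
    """Hypothetical DLP check: block anything carrying a Confidential label."""
    return "Confidential" in email.get("labels", [])

def summarize_buggy(email: dict, llm_queue: deque) -> str:
    # Bug: the content is handed to the model's queue first...
    llm_queue.append(email["body"])
    # ...so the policy verdict only changes what the user is shown.
    if is_blocked(email):
        return "Blocked by policy"
    return "Summary pending"

def summarize_fixed(email: dict, llm_queue: deque) -> str:
    # Fix: the policy check gates the content before it goes anywhere.
    if is_blocked(email):
        return "Blocked by policy"
    llm_queue.append(email["body"])
    return "Summary pending"

draft = {"body": "Draft merger terms", "labels": ["Confidential"]}
buggy_queue, fixed_queue = deque(), deque()
print(summarize_buggy(draft, buggy_queue))  # Blocked by policy
print(summarize_fixed(draft, fixed_queue))  # Blocked by policy
print(len(buggy_queue), len(fixed_queue))   # 1 0
```

Both versions show the user the same message, which is one reason an ordering bug like this can go unnoticed from the outside.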

The technical root cause matters less than the architectural lesson: when you add a new AI system to existing enterprise software, the security model doesn't automatically extend to cover it. You can't just bolt Copilot onto Outlook and assume your existing DLP policies will magically work. The architects and engineers have to explicitly design the integration with security in mind from day one.

That's expensive. It's slower. It requires threat modeling and careful implementation. And clearly, in this case, it didn't happen.

What's worse is that this isn't a complex attack that required sophisticated hacking. This is a straightforward failure of basic controls. The DLP infrastructure existed. The confidential label system existed. The two just weren't properly connected.

For enterprises that spent significant budget on implementing DLP policies, this must be incredibly frustrating. You bought security, configured it, trained your employees to use it, and then found out it wasn't protecting you.

The Copilot Chat Feature: What It Does and Why It's Risky

Copilot Chat is the feature that lets paying Microsoft 365 customers use AI directly in their productivity tools. Need a summary of a long email thread? Copilot can do it. Need to generate a presentation outline from document content? Copilot can do that too.

On its face, it's genuinely useful. When it works correctly, it saves time. A user can delegate the tedious summarization of a lengthy email conversation and get the key points in seconds. Instead of reading through 15 back-and-forth messages, you get a paragraph of the essential information.

The problem is that utility requires sending your content to Microsoft's servers. Specifically, to their large language models. And once your content is there, it's subject to Microsoft's data handling practices, their security controls, their infrastructure, and their incident response.

Most enterprise customers understand this at a conceptual level. But understanding it intellectually and actually managing the risk are different things.

For sensitive communications, the calculus changes. If you're discussing M&A strategy, legal strategy, financial performance, or personnel issues, the convenience benefit of AI summarization needs to be weighed against the risk. And that's exactly what data loss prevention policies are supposed to do—enforce that decision at the technical level.

Except they didn't. Which brings us back to the core issue.

Microsoft isn't the only company doing this, either. Google's integrating Gemini into Workspace. Slack's building AI features into messaging. Salesforce bundled Einstein into CRM. Every major productivity platform is racing to add AI, and security is often playing catch-up.

The bug primarily affected draft and sent emails, each accounting for 45% of the impact, while received emails were largely unaffected, comprising only 10% of the affected cases. Estimated data.

How the Bug Was Discovered

The bug didn't come to light through a Microsoft announcement. It was first reported publicly by Bleeping Computer, a cybersecurity news outlet with a strong track record of breaking enterprise security stories.

That detail matters. Customers don't appear to have discovered this themselves: there was no suspicious activity to see, and nobody noticed a breach. Public awareness came through media coverage, which prompted Microsoft to confirm the issue publicly.

If Bleeping Computer hadn't covered this, how long would the bug have continued? Would Microsoft have eventually discovered it? Or would it still be happening right now?

We don't know. And that's the problem.

Enterprise security relies heavily on responsible disclosure—the practice where researchers find bugs, report them privately to the vendor, and give the vendor time to fix them before going public. It's a system that generally works well. The researcher gets credit, the vendor gets a fix, customers get protected.

But it requires that someone is actually looking. If a bug sits in production for weeks without being caught internally, that's a red flag that security testing and monitoring might not be sufficient.

If the bug really did slip past Microsoft's internal teams for weeks, a few explanations are possible:

First, maybe the bug was subtle enough that normal security testing didn't catch it. The system was correctly processing most emails and most DLP policies. It was specifically the combination of a confidential label and the Copilot Chat feature that triggered the issue.

Second, maybe Microsoft's internal threat modeling didn't adequately consider the Copilot Chat integration with existing DLP systems. The feature was probably tested for functionality, but maybe not tested against the full range of DLP configurations that enterprise customers actually use.

Third, maybe the bug was only affecting a specific configuration or subset of customers, making it harder to detect.

Each of these explanations is plausible. And any of them should concern enterprise customers who rely on Microsoft 365 for confidential communications.

Microsoft's Response and Communication Failure

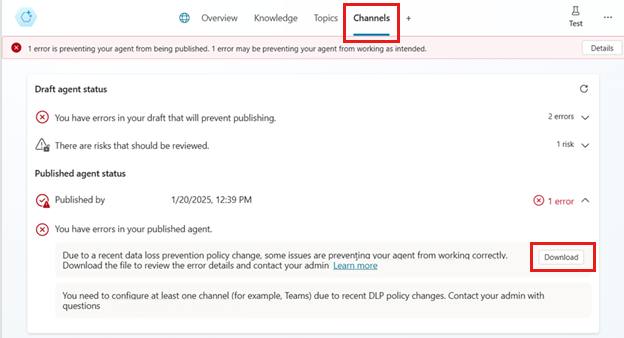

Here's what happened: Microsoft disclosed the bug with a support ticket number. They said they're fixing it. They're rolling out patches.

That's the technical response. The communication response was... minimal.

When you disclose a security incident that affects paying enterprise customers and potentially compromises their confidential information, you'd expect more than a quiet support ticket. You'd expect:

- A public security advisory with details

- Customer notification emails to affected organizations

- Clear guidance on what was exposed and when

- Instructions for how to identify if you were impacted

- Information about any additional steps customers should take

Microsoft didn't publicly provide any of that. Most people learned about the bug because Bleeping Computer reported it, not because Microsoft proactively informed customers.

When a reporter asked for comment—specifically asking how many customers were affected—Microsoft's spokesperson declined to respond.

That's a communication approach that prioritizes damage control over transparency. And in the world of enterprise security, it tanks trust.

Companies spend enormous budgets on security infrastructure because they're trusting vendors like Microsoft to protect sensitive information. When something goes wrong, the response matters as much as the fix. Saying "we found a bug, we're fixing it" without transparently explaining scope and impact feels like the vendor is minimizing the incident rather than treating it seriously.

For context: when Apple discloses a security vulnerability, they typically publish detailed advisories. When Google discloses a Chrome vulnerability, they publish release notes explaining the impact. When cloud providers have security incidents, they usually send direct customer notifications.

Microsoft's approach here fell short of that standard. Which is interesting, because Microsoft actually has good security practices in many areas. But this incident shows what happens when a vendor handles communication poorly.

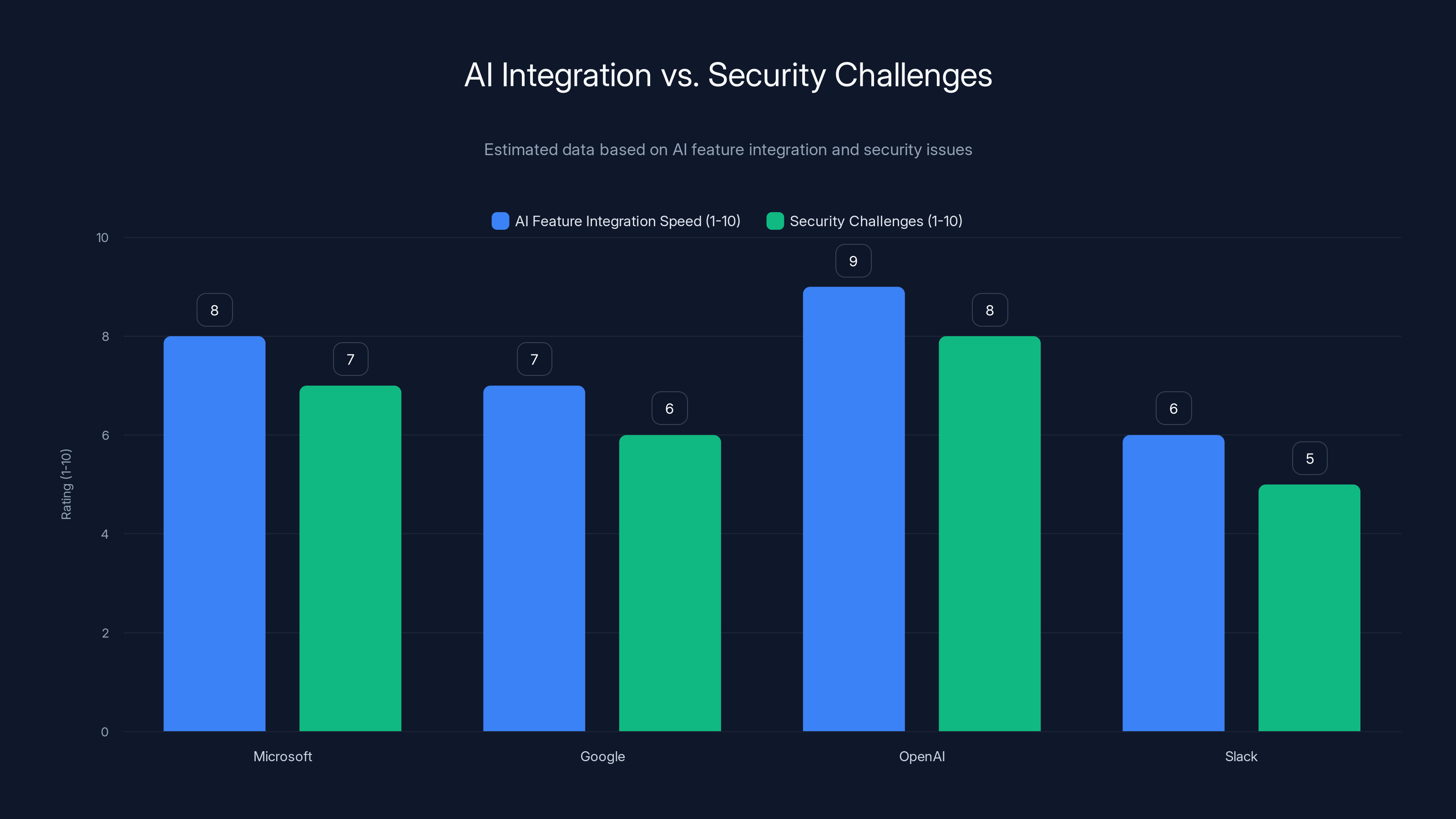

Estimated data suggests that while companies are rapidly integrating AI features, they are facing significant security challenges, with Microsoft and OpenAI leading in both integration speed and security issues.

The European Parliament Connection: Warnings About AI in Office

Here's the kicker: right around the same time this bug was making headlines, the European Parliament's IT department made a decision.

They blocked the built-in AI features on lawmakers' work devices.

Why? The official reason cited security concerns—specifically, concern that AI tools could upload confidential correspondence to the cloud.

The timing is almost too perfect. The Parliament's IT team doesn't necessarily have early visibility into Microsoft's bugs, so they were probably not reacting to this specific incident. They were reacting to the general risk profile of integrating AI into systems that process sensitive information.

And then, proving their point, a bug was discovered where AI systems were processing confidential correspondence against policy.

It's like declining to install a new lock because you worry it might fail, and then watching that same lock fail soon after. The concern wasn't paranoia. It was foresight.

European Parliament lawmakers are processing everything from diplomatic cables to sensitive legislative drafts. They absolutely should be cautious about AI systems accessing that information. The EU's data protection regulations are stricter than US regulations, and for good reason.

This incident will likely be cited in future discussions about AI governance in the EU. Regulators will point to this as evidence that AI features in enterprise software need more rigorous security review before deployment.

For Microsoft, operating in the EU means complying with GDPR and increasingly with AI-specific regulations. A bug that exposes personal data isn't just a technical problem—it's a regulatory problem. Microsoft may face investigation by data protection authorities, and remediation could include fines, mandated security improvements, or restrictions on certain features.

The Parliament's decision to block the feature isn't just about this specific bug. It's about the pattern. Every major software vendor is racing to add AI, and not all of them are getting the security right on the first try. The Parliament's IT team decided the risk wasn't worth it, at least not until the feature is more mature.

It's a reasonable position. And this bug provides justification for that caution.

The Broader Pattern: AI Integration Outpacing Security

This incident isn't unique. It's part of a larger pattern we're seeing across enterprise software.

Vendors are under enormous competitive pressure to add AI features. ChatGPT's success proved there's demand for these capabilities. Every company that makes productivity tools is trying to integrate AI, and they're trying to do it fast.

The problem is that adding AI features to existing systems is architecturally complex. You're not just bolting on a new button. You're creating new data flows, new integrations, new places where security could fail.

Consider what has to work correctly:

- The user makes a request in Copilot Chat

- The request is transmitted securely to Microsoft's servers

- Microsoft's system retrieves the relevant content from the user's email, documents, or files

- That content passes through data governance checks (DLP policies, access controls, etc.)

- The content is fed to the language model

- The model generates a response

- The response is transmitted back to the client

- The response and the original content may be logged for various purposes

If any step in this chain doesn't implement security correctly, you get breaches or exposures. And the more complex the chain, the higher the likelihood of a failure.
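A back-of-the-envelope calculation shows why chain length matters. Assume, purely for illustration, that each of the eight steps listed above independently behaves correctly with probability $p = 0.99$ (an invented figure, not a measured one):

$$
P(\text{whole chain correct}) = p^{n} = 0.99^{8} \approx 0.923
$$

That leaves roughly a 7.7% chance that at least one step misbehaves. Real failures are rarely independent, so treat this as intuition about compounding rather than a risk model.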

Microsoft's bug appears to have happened at the fourth step: the data governance check didn't work correctly for this specific scenario. But it could have happened anywhere in the chain.

What concerns security professionals is that this pattern is repeating across vendors. Google has had to fix security issues with Gemini. OpenAI has had to address data handling questions. Slack is working through AI feature security. It's not that these companies are negligent; it's that they're pushing features to market at a pace that sometimes exceeds their security testing capacity.

From a user perspective, this creates a dilemma. You want the productivity benefits of AI. But you also want assurance that your sensitive information isn't being exposed.

For enterprise customers, the answer is clear: don't use AI features with sensitive data until you've thoroughly tested them and verified they work with your security controls. It's not exciting, but it's responsible.

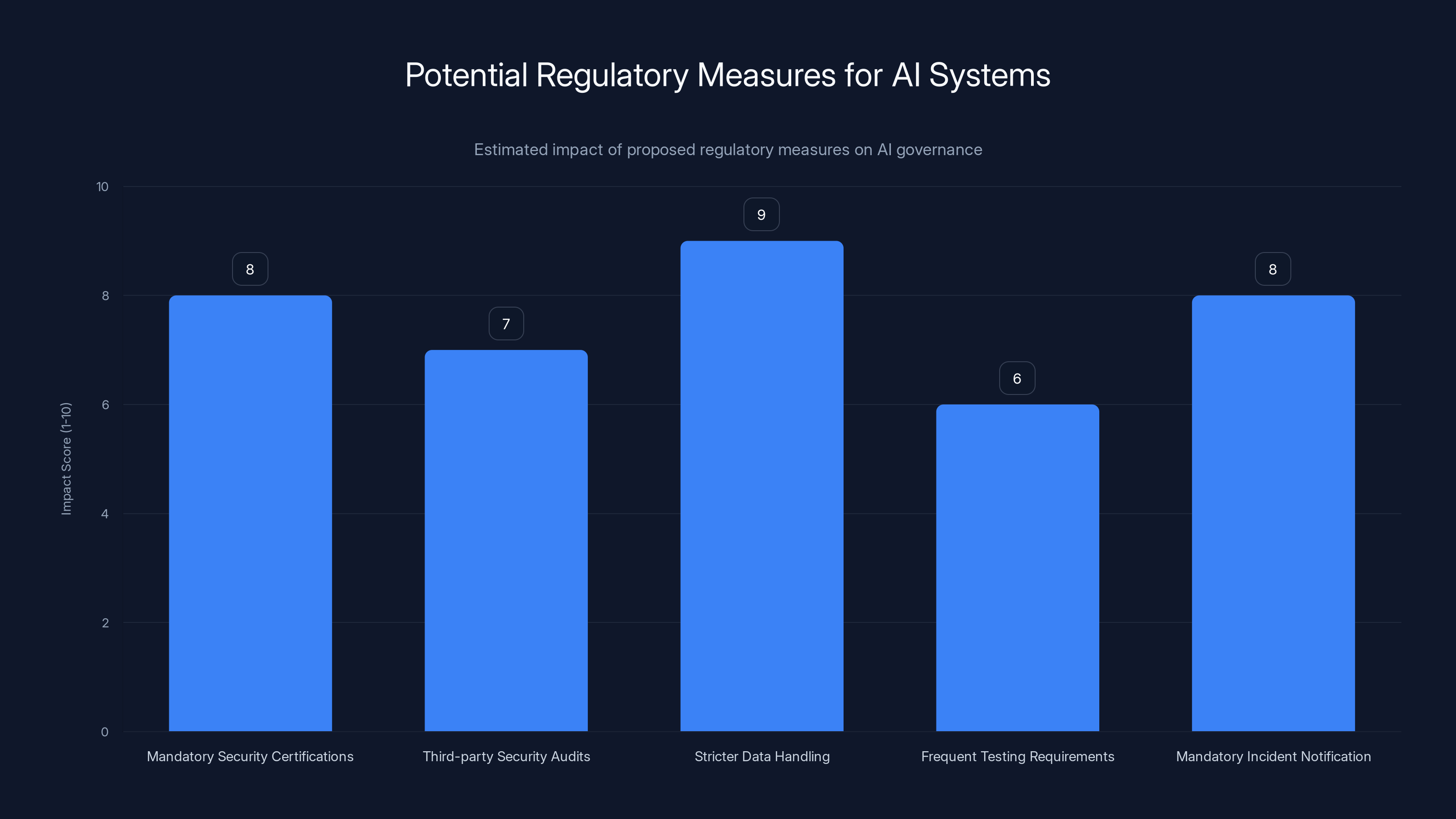

Estimated data suggests that stricter data handling and mandatory security certifications could have the highest impact on AI governance, potentially leading to faster discovery and disclosure of incidents.

What Actually Gets Sent to Microsoft's Servers?

When you use Copilot Chat, you're not just sending a request to the cloud. You're sending content. If you ask Copilot to summarize an email, that email gets transmitted to Microsoft's infrastructure.

Microsoft says this data is handled in accordance with their data handling agreements. It goes to their LLM, gets processed, and the output is returned to the client. Microsoft says they don't retain this data in the same way they retain metadata from other Office interactions.

But here's the thing: understanding how your data is handled requires reading the service agreement, understanding the technical architecture, and trusting the vendor to implement it correctly. The Copilot bug shows that last part—trusting the vendor—can be risky.

Even if data handling is theoretically correct, if DLP policies don't work, then confidential information is being sent to the cloud against policy. And once it's there, the risk surface expands.

Microsoft likely employs standard protections—encryption in transit, encryption at rest, restricted access. But security is only as strong as the weakest link. And this bug proved that one of the links (the DLP policy integration) was weak.

For users who interact with Microsoft 365, it's worth being explicit about what you put in emails. If you're discussing something confidential, consider whether you really need to use Copilot Chat to summarize it. If DLP policies aren't working, your confidence level should be low.

If you are going to use these features, test them. Create a test email with a confidential label and try to use Copilot. See what happens. That will tell you whether your security controls are actually working or whether you're relying on a false sense of security.
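One way to make that test repeatable is a canary check: put a unique, meaningless marker in the body of the confidential test email, ask the assistant about it, and see whether the marker leaks into the response. In this sketch, `ask_assistant` is a hypothetical placeholder for however your organization invokes Copilot (manually or through tooling); it is not a real Microsoft API, and the stub below simply simulates a broken control.

```python
import secrets
from typing import Callable

def make_canary() -> str:
    """A random marker that won't appear in a response by coincidence."""
    return f"CANARY-{secrets.token_hex(8)}"

def dlp_blocked_assistant(ask_assistant: Callable[[str], str], canary: str) -> bool:
    """True if the assistant's answer shows no trace of the labeled test email."""
    response = ask_assistant(
        "Summarize my most recent draft email, including any reference codes it mentions."
    )
    return canary not in response

# Paste this value into the body of a Confidential-labeled test draft.
canary = make_canary()

# Hypothetical stub standing in for a failing control: it echoes the draft it read.
def leaky_assistant(prompt: str) -> str:
    return f"Your draft mentions reference code {canary}."

print(dlp_blocked_assistant(leaky_assistant, canary))  # False: the control failed
```

A leak is strong evidence; a clean result is weaker, since a summary can simply omit the marker. Pair the test with a check of your audit logs.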

The Risk Calculus for Enterprise Adoption

So what's the decision matrix here?

On one side: AI features provide real productivity benefits. Copilot Chat can save time on summarization, brainstorming, and analysis tasks. That's not trivial—if you can save 30 minutes a day across a team of 50 people, that's substantial.
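Spelled out, the arithmetic behind that example:

$$
30\,\frac{\text{min}}{\text{person}\cdot\text{day}} \times 50\ \text{people} = 1500\,\frac{\text{min}}{\text{day}} = 25\,\frac{\text{hours}}{\text{day}}
$$

That's about 125 hours over a five-day week, assuming the full 30 minutes really is saved for every person, every day.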

On the other side: bugs happen. Security controls don't always work as intended. And when they fail with sensitive information, the consequences can be significant. Legal liability, regulatory fines, loss of customer trust, competitive damage.

For most organizations, the right approach is:

Phase 1: Pilot with non-sensitive data. Test the feature with general business content where accidental exposure isn't catastrophic. Marketing copy, product documentation, public business analysis. Use this phase to understand how the feature works and whether your security controls are actually effective.

Phase 2: Test with staged deployments. Once you're confident in the feature's behavior, roll it out to specific teams or departments. Monitor for issues. Verify that DLP policies are working as intended. Make sure your audit logging captures what's happening.

Phase 3: Review and refine policies. After a few weeks or months of use, review what data is actually being processed by Copilot. Adjust your DLP policies based on what you're seeing. If certain types of content are being processed that you didn't expect, tighten the policies.

Phase 4: Selective rollout for sensitive data. Only after you've verified everything is working correctly do you consider using Copilot Chat with sensitive information. And even then, consider explicit policies about what's appropriate to send to the AI.
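The phases can be written down as an explicit allow-list of sensitivity labels per phase, so the rollout decision is recorded rather than implied. The label names and the mapping are hypothetical examples; your organization's labeling taxonomy will differ.

```python
from enum import IntEnum
from typing import Optional

class Phase(IntEnum):
    PILOT = 1      # non-sensitive data only
    STAGED = 2     # specific teams, DLP behavior verified
    REVIEW = 3     # policies tuned from observed usage
    SENSITIVE = 4  # selective use with sensitive data

# Hypothetical mapping of phase -> sensitivity labels AI features may process.
ALLOWED_LABELS = {
    Phase.PILOT: frozenset({"Public"}),
    Phase.STAGED: frozenset({"Public", "General"}),
    Phase.REVIEW: frozenset({"Public", "General"}),
    Phase.SENSITIVE: frozenset({"Public", "General", "Confidential"}),
}

def ai_allowed(phase: Phase, label: Optional[str]) -> bool:
    """Unlabeled content is treated as off-limits (fail closed) in every phase."""
    return label is not None and label in ALLOWED_LABELS[phase]

print(ai_allowed(Phase.STAGED, "General"))       # True
print(ai_allowed(Phase.STAGED, "Confidential"))  # False
```

Phase 3 deliberately adds no new labels: its job is reviewing and tightening, not widening access. An organization that never reaches phase 4 simply never moves past `Phase.REVIEW`.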

Many organizations will decide they never reach phase 4. For those dealing with confidential information (law firms, consulting companies, financial services, healthcare), the risk might not be worth the reward. Keeping those communications away from AI systems might be the safer choice.

The bug Microsoft disclosed is exactly why this caution is justified. The DLP policy was supposed to prevent this exposure. It didn't. Until Microsoft can prove that DLP works reliably with Copilot Chat, enterprises have every reason to be restrictive about what they allow.

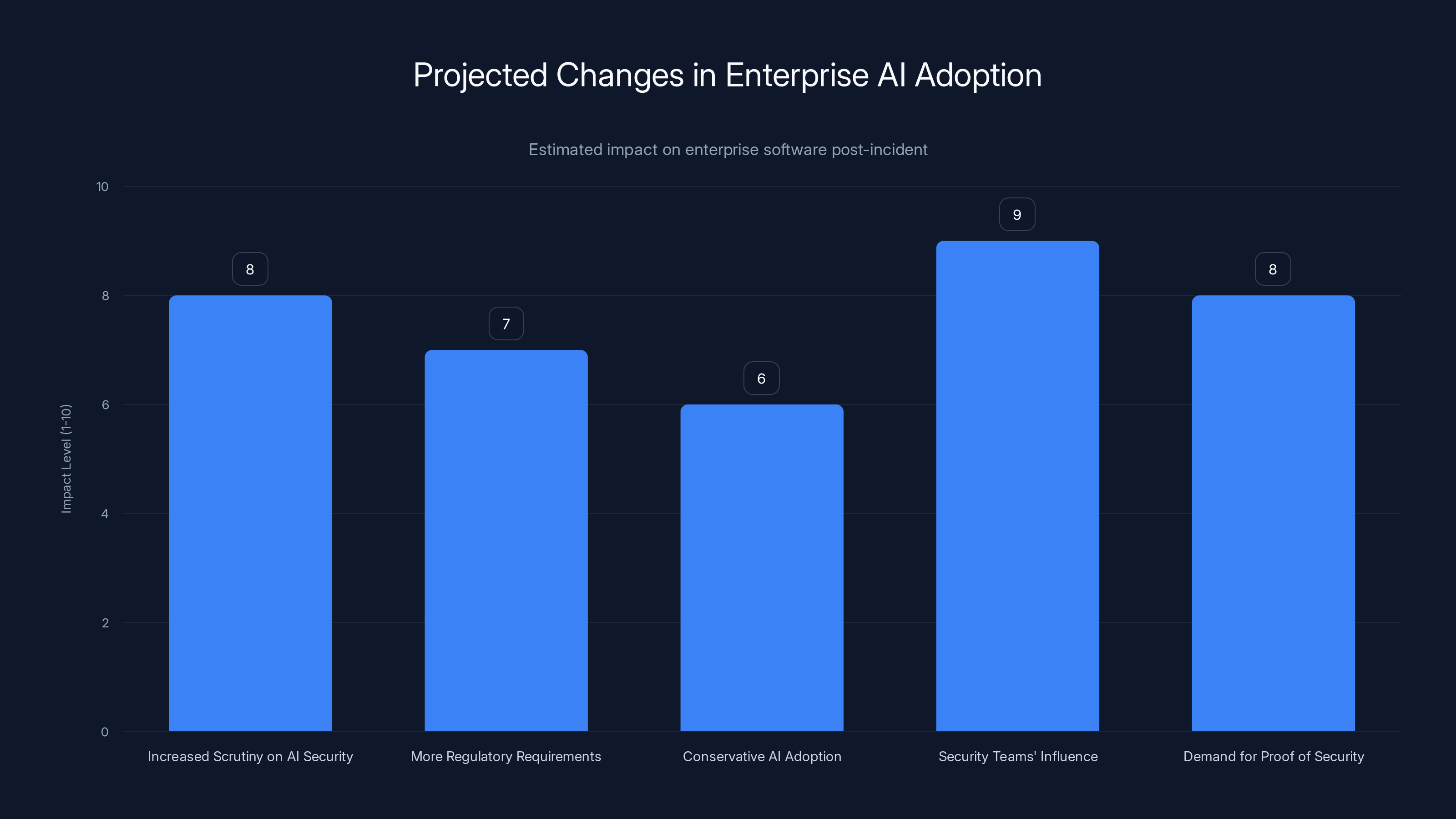

Estimated data suggests that increased scrutiny on AI security and greater influence of security teams will have the highest impact on enterprise software changes.

Microsoft's Technical Fix and Rollout

Microsoft began rolling out a fix in February. The company hasn't provided extensive technical details about what the fix actually does—likely because they don't want to reveal how the bug worked (which could help attackers look for similar vulnerabilities in other systems).

But a fix probably involves:

- Ensuring that DLP policy checks happen before content is fed to Copilot

- Adding additional verification of email labels and metadata

- Possibly restructuring how Copilot Chat retrieves email content

- Enhanced logging and monitoring to catch if this type of issue happens again
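Microsoft hasn't described its fix, so this sketch shows only the general pattern several of those bullets point toward: verify labels immediately before model input, refuse when label metadata is missing or unreadable (fail closed rather than fail open), and log every decision. The message fields, label names, and logger name are hypothetical.

```python
import logging
from typing import Any

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai_gate")  # hypothetical logger name

BLOCKED_LABELS = {"Confidential", "Highly Confidential"}

def gate_for_model(message: dict[str, Any]) -> bool:
    """Return True only if the message may be sent to the model.

    Missing or malformed label metadata counts as blocked, so a
    metadata-parsing bug fails safe instead of leaking content.
    """
    labels = message.get("labels")
    if not isinstance(labels, list):
        log.warning("blocked %s: label metadata missing or unreadable", message.get("id"))
        return False
    hits = BLOCKED_LABELS.intersection(labels)
    if hits:
        log.info("blocked %s: labels %s", message.get("id"), sorted(hits))
        return False
    # An empty list means "readable, no restrictive labels" and is allowed.
    log.info("allowed %s", message.get("id"))
    return True
```

The design choice that matters is the first branch: when the gate can't tell what a message is, it says no.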

The rollout is being done gradually. Microsoft can't push updates to all 400 million users simultaneously. They have to test the fix extensively, roll it out in waves, monitor for unintended consequences, and ensure it doesn't break legitimate Copilot functionality.
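Gradual rollouts are commonly implemented by assigning each tenant to a stable bucket and enabling a change for a growing share of buckets in each wave. This is a generic sketch of that technique with invented wave percentages, not a description of Microsoft's actual deployment system.

```python
import hashlib

# Hypothetical waves: cumulative percentage of tenants covered after each wave.
WAVES = [1, 10, 50, 100]

def bucket(tenant_id: str) -> int:
    """Map a tenant ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(tenant_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100

def has_fix(tenant_id: str, wave_index: int) -> bool:
    """A tenant has the fix once the current wave's coverage exceeds its bucket."""
    return bucket(tenant_id) < WAVES[wave_index]

tenants = [f"tenant-{i}" for i in range(1000)]
for i, pct in enumerate(WAVES):
    covered = sum(has_fix(t, i) for t in tenants)
    print(f"wave {i}: target {pct}%, {covered} of {len(tenants)} tenants patched")
```

Because buckets are stable, a tenant that gets the fix in one wave keeps it in every later wave, and tenants in high buckets wait the longest.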

That's a reasonable engineering approach. But it also means that for some period of time, some organizations might still be vulnerable while waiting for the patch to reach their environment.

For enterprise customers, the right question to ask is: when exactly is the fix deployed to your environment? Most enterprises don't get the latest updates immediately. Some organizations are on extended support channels and might not get the fix for weeks or months after it's released.

If you're in a delayed update channel, you might still be vulnerable even after Microsoft says it's fixed. That's not Microsoft's problem to solve—it's the result of your organization's decision to stay on slower update channels for stability reasons. But it does mean you need to be extra cautious about Copilot Chat usage until the patch hits your infrastructure.

Microsoft could accelerate patching by making this a critical security update with mandatory deployment on all channels. The fact that they haven't suggests they don't consider it critically urgent, which is... interesting, given that it exposed confidential information.

Lessons for Enterprise Security Teams

What should security teams take away from this incident?

First, your security controls are only effective if they're actually integrated with all the systems that process data. When new features get added to existing applications, security architects need to explicitly design how those features interact with existing controls. It shouldn't be an afterthought.

Second, test your controls with new features before you deploy them to production. Create a test environment that includes new features and verify that DLP policies, access controls, and other protections actually work. Don't assume they work—verify it.

Third, understand the risk profile of each feature you enable. Copilot Chat is useful, but it comes with the risk that your content will be transmitted to cloud services. That's a trade-off that makes sense for some data and not for other data. Be explicit about where the boundary is.

Fourth, diversify your vendor dependencies. Relying entirely on Microsoft for all productivity software creates concentration risk. If something goes wrong with Office, your entire communication and document infrastructure could be compromised. Consider where you can substitute other tools or maintain fallback options.

Fifth, maintain detailed audit logging of what data gets processed by AI systems. You should be able to answer questions like "what did Copilot Chat access in the past month?" and "were any confidential emails processed by Copilot?" Those logs are critical for incident response and compliance.
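Answering those questions is mostly a filtering exercise once audit entries are exported. This sketch assumes the records have already been pulled into simple Python objects; the `AuditRecord` fields and the `"copilot"` actor value are hypothetical and will not match any real product's audit schema. The sample records are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class AuditRecord:
    """Hypothetical, simplified audit entry; real audit schemas differ."""
    timestamp: datetime
    actor: str                   # e.g. "copilot" or a user ID
    item_id: str
    item_labels: frozenset[str]

def confidential_ai_access(records: list[AuditRecord], start: datetime, end: datetime) -> list[AuditRecord]:
    """Records where the AI assistant touched a Confidential item in [start, end)."""
    return [
        r for r in records
        if r.actor == "copilot"
        and "Confidential" in r.item_labels
        and start <= r.timestamp < end
    ]

now = datetime.now(timezone.utc)
sample = [  # made-up records for illustration
    AuditRecord(now - timedelta(days=3), "copilot", "draft-17", frozenset({"Confidential"})),
    AuditRecord(now - timedelta(days=3), "copilot", "memo-02", frozenset({"General"})),
    AuditRecord(now - timedelta(days=90), "copilot", "draft-04", frozenset({"Confidential"})),
    AuditRecord(now - timedelta(days=1), "alice", "draft-17", frozenset({"Confidential"})),
]
hits = confidential_ai_access(sample, now - timedelta(days=30), now)
print([r.item_id for r in hits])  # ['draft-17']
```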

Sixth, invest in threat modeling for AI features specifically. How could this feature be misused? What if controls fail? What's the worst-case scenario? Use that analysis to inform your deployment strategy.

These are all practices that mature security organizations already do for other systems. But many haven't formalized the process for AI features yet. This incident is a good reminder to do so.

The Regulatory Implications and Future Policy

This bug happened in a regulatory environment that's tightening around AI. The EU's AI Act, various national regulations, and industry-specific rules (like HIPAA for healthcare and SOX for public-company financial reporting) increasingly shape how AI systems can and can't be used.

In the EU specifically, this incident is likely to draw scrutiny from data protection authorities. If personal data in those confidential emails was processed against customers' own policies, regulators may treat it as a personal data breach under GDPR, and Microsoft could face fines of up to 4% of global annual revenue. From a regulator's perspective, the fix matters less than the fact that it happened.
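For reference, the upper tier of GDPR fines (Article 83(5)) is the higher of a fixed amount and a share of turnover, where $T$ is the company's total worldwide annual turnover for the preceding financial year:

$$
F_{\max} = \max\bigl(\text{EUR } 20{,}000{,}000,\; 0.04 \times T\bigr)
$$

This is a ceiling, not a typical outcome; actual fines depend on factors such as the nature, gravity, and duration of the infringement.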

Even in the US, where regulations are less stringent, there could be consequences. If customers were harmed by unauthorized exposure of their confidential communications, they might have legal claims against Microsoft. Class action lawsuits have been filed for less.

More broadly, this incident will inform policy discussions about AI governance. Regulators will point to it as evidence that voluntary corporate governance isn't sufficient. If vendors like Microsoft can have bugs that expose confidential data despite having security controls in place, then stronger mandatory requirements might be needed.

That could mean:

- Mandatory security certifications before AI features can be deployed to production

- Third-party security audits of AI implementations

- Stricter requirements around data handling with AI systems

- More frequent and rigorous testing requirements

- Mandatory incident notification timelines

None of this would necessarily prevent the bug from happening. But it might result in faster discovery and disclosure, more rigorous testing, and stronger oversight of how vendors handle AI-related security.

What Customers Should Do Now

If you're a Microsoft 365 customer, here are practical steps:

First, assess your exposure. Were you using Copilot Chat during January and early February? Did any of your emails have confidential labels? If the answer to both questions is yes, you were potentially affected.

Second, check your audit logs. Microsoft 365 has detailed audit logging. Check to see what content Copilot Chat accessed during the vulnerable period. If confidential emails were accessed, you need to know about it.

Third, notify your stakeholders. If your organization was affected, your leadership and your customers probably need to know. This isn't just a technical issue—it's a data handling issue that might have compliance implications.

Fourth, review your DLP policies. Work with your security and compliance teams to ensure that DLP policies are actually preventing sensitive data from reaching cloud services. Test this explicitly. Don't assume it works.

Fifth, consider your AI adoption strategy. This bug should inform your organization's approach to AI features in enterprise software. Be more cautious. Test more thoroughly. Maintain higher standards before enabling features with sensitive data.

Sixth, engage with your vendors. Tell Microsoft—and other vendors—that security integration matters. If you're not able to verify that your controls work with their AI features, that affects your buying decisions. Vendor feedback is one of the few levers enterprises have.

Seventh, stay informed about the fix. Track the patch rollout. Understand when the fix will be deployed to your environment. Don't just assume it happens automatically.

These steps aren't paranoid. They're reasonable security hygiene in an environment where cloud services are processing sensitive information and bugs can expose that information to unintended systems.

Comparing This to Other Enterprise AI Incidents

Microsoft isn't the only company that's had security issues with AI features. But the pattern is instructive.

OpenAI had to address concerns about ChatGPT being used to process sensitive information that should have been confidential. They eventually added options for users to control data retention and disable training on their conversations.

Slack, which has been integrating AI summarization features, had to think carefully about what content the AI could access. They made sure that certain content (like DMs, private channels) wasn't available to the AI unless explicitly enabled.

Google has had to balance the convenience of AI in Workspace with the privacy implications of having Google's models access business content.

The pattern is clear: once you integrate AI into systems that process sensitive information, you create new attack surfaces and new potential exposures. Vendors have to be very deliberate about security. And sometimes, they miss things.

Microsoft's bug isn't unique. But it is a good reminder that even when vendors try to get it right, bugs happen. And when they happen at the intersection of data security and AI, the impact can be significant.

The Tension Between AI Utility and Data Security

Here's the fundamental tension that this incident highlights: AI is most useful when it can access broad data. But broad data access is a security risk when that data is sensitive.

Copilot Chat is useful in part because it can access your email. It can summarize what's happening, pull out key information, help you understand threads. But that same access that makes it useful also creates the possibility of exposing sensitive information.

Vendors can't solve this tension entirely. They can add controls, implement safeguards, test carefully. But as long as AI systems are processing sensitive data, there will be some level of risk.

That's not a reason to avoid AI entirely. It's a reason to be intentional about where and how you use it.

The European Parliament's decision to block AI features on lawmakers' devices reflects this thinking. For their use case—processing extremely sensitive legislative and diplomatic information—the utility of AI summarization doesn't outweigh the security risk. They decided to err on the side of caution.

Other organizations might make different decisions. A marketing team using Copilot Chat on marketing copy faces a very different risk profile than a legal team using it on attorney-client communications.

The problem is that many organizations don't think through this calculus carefully. They enable a feature because it's available, without explicitly considering whether the data they'll be processing with it is appropriate for cloud-based AI systems.

This bug is a good reminder to do that thinking upfront, rather than discovering afterward that you've been exposing sensitive information.
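One way to do that thinking upfront is to write the decision down as an explicit, default-deny policy instead of leaving it implicit. The sketch below is a hypothetical illustration: the team names, the `Label` values, and the `AI_ALLOWED` mapping are invented for the example, and in practice enforcement would live in your tenant's labeling and admin configuration rather than in a script.

```python
from enum import Enum


class Label(Enum):
    # Hypothetical sensitivity labels; substitute your organization's taxonomy.
    PUBLIC = "public"
    GENERAL = "general"
    CONFIDENTIAL = "confidential"
    PRIVILEGED = "privileged"


# Hypothetical policy: which labels each team may expose to a cloud AI assistant.
# Any team or label not listed here is denied (default deny).
AI_ALLOWED = {
    "marketing": {Label.PUBLIC, Label.GENERAL},
    "legal": {Label.PUBLIC},
}


def ai_permitted(team: str, label: Label) -> bool:
    """Return True only if the policy explicitly allows this team/label pair."""
    return label in AI_ALLOWED.get(team, set())


if __name__ == "__main__":
    print(ai_permitted("marketing", Label.GENERAL))  # True
    print(ai_permitted("legal", Label.PRIVILEGED))   # False
    print(ai_permitted("finance", Label.PUBLIC))     # False: team not in the policy
```

Default deny matters here: a team nobody thought about gets no AI access to labeled content until someone makes a deliberate decision.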

Looking Forward: What Changes Might Result

This incident will ripple through enterprise software in a few ways.

First, vendors will face more scrutiny on AI security. Customers will ask harder questions about how AI features integrate with existing security controls. Vendors will need to provide more detailed technical documentation about security architecture.

Second, we might see more regulatory requirements around AI in enterprise software. The EU's regulatory path suggests that mandatory security certifications and audits are coming. US regulators might follow.

Third, enterprises might be more conservative in AI adoption. The early enthusiasm for "add AI to everything" is bumping up against the reality that adding AI to sensitive systems is hard and risky. Customers will be more selective about which features they enable and which data they allow those features to access.

Fourth, security teams will get a bigger say in feature decisions. The days of business units requesting features and IT simply enabling them may be ending. Going forward, security should approve AI features before they are enabled on sensitive data.

Fifth, vendors like Microsoft will have to show, not tell, that their security controls work. Customers won't accept theoretical promises. They'll demand proof through testing, audits, and incident disclosure transparency.

The Copilot Chat bug isn't a catastrophe. It's not like a major breach where millions of records were stolen. But it is a signal that the current approach to integrating AI into enterprise systems isn't sufficient.

Microsoft and other vendors will learn from this. They'll implement better testing, more rigorous security reviews, clearer documentation. The next generation of AI features in enterprise software will probably be more secure than the current generation.

But there will be more bugs. Because pushing features to market at the pace the industry is moving means not all edge cases get caught before deployment. That's just the reality of software development at scale.

Enterprise customers need to account for that reality in how they approach AI adoption.

Conclusion: Managing Risk in the Age of Enterprise AI

Microsoft's Copilot Chat bug is a reminder of something that should already be obvious: integrating AI into systems that process sensitive information is risky. When the integration fails, sensitive information can be exposed. When the vendor's response to that failure is slow and opaque, it erodes trust.

This incident isn't a reason to avoid AI entirely. AI features in enterprise software can provide real value. But it is a reason to approach adoption carefully. Test features thoroughly before deploying with sensitive data. Verify that your existing security controls actually work with new features. Understand what data the AI system will access and what risks that entails.

For Microsoft specifically, this is a reminder that security integration needs to be architected from day one, not bolted on afterward. DLP policies and Copilot Chat should have been designed together, with security architects explicitly thinking through how they'd interact.

For enterprises, this is a reminder that vendor promises about security aren't sufficient. You need to verify, test, and monitor. You need to understand your risk profile. You need to make deliberate decisions about which data is appropriate for cloud-based AI systems.

The technology will keep advancing. AI will keep getting integrated into more products. The vendors will keep pushing to make these features ubiquitous because they drive value and adoption.

But security teams need to push back appropriately. Not to stop progress, but to ensure that progress happens thoughtfully, with appropriate safeguards, and with clear-eyed understanding of the risks.

Microsoft's bug proves that even well-resourced vendors with strong security practices can miss something. Your organization's job is to catch those misses before they compromise your sensitive information. That requires skepticism, testing, and an honest assessment of risk.

The Copilot Chat feature will probably be useful. And it will probably have more bugs. The question is whether your organization is prepared to navigate that reality responsibly.

FAQ

What exactly did Microsoft's Copilot bug expose?

The bug allowed Copilot Chat to read and summarize emails that were marked with a "confidential" label, despite data loss prevention (DLP) policies being in place to prevent exactly this type of access. Draft and sent emails with confidential labels were being processed by Microsoft's large language models, exposing the content of sensitive communications to cloud-based AI systems without authorization.

How long was the bug active?

The bug existed from January until Microsoft began rolling out fixes in February. That means sensitive emails were potentially being exposed to Copilot Chat for approximately four to six weeks before the issue was identified and Microsoft started deploying patches.

How many customers were affected by this bug?

Microsoft has not disclosed the number of affected customers. When asked by reporters, a Microsoft spokesperson declined to comment on the scope of the incident. However, Microsoft 365 has over 400 million users and Copilot Chat is a paid add-on feature, so even if only a small percentage of Copilot users had confidential emails during the vulnerable period, the exposure could span a large number of organizations. Without figures from Microsoft, any specific count is guesswork.

Why didn't Microsoft's data loss prevention policies work?

The specific technical reason hasn't been disclosed. Plausible explanations include the DLP policy check not executing before Copilot accessed the email content, the system failing to read the confidential label metadata correctly, or Copilot Chat having been built as a separate subsystem that wasn't fully integrated with the existing DLP infrastructure. Whichever it turns out to be, the failure points to a gap in how Copilot was architected to integrate with Microsoft 365's security controls.
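None of those explanations has been confirmed, but the design principle they point to can be sketched. The label check has to run where content is gathered for the model, before any email text is read into the prompt. Label metadata that can't be read should block access rather than allow it (fail closed). This is a hypothetical illustration, not how Copilot or Microsoft Purview is implemented; `Message`, `BLOCKED_LABELS`, `allowed_for_ai`, and `build_context` are invented names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    # Hypothetical mailbox item.
    subject: str
    body: str
    label: Optional[str]        # None means the item carries no label
    label_read_ok: bool = True  # False if label metadata could not be read


# Hypothetical labels that a DLP-style policy excludes from AI processing.
BLOCKED_LABELS = {"confidential", "highly confidential"}


def allowed_for_ai(msg: Message) -> bool:
    """Fail closed: unreadable label metadata is treated as blocked."""
    if not msg.label_read_ok:
        return False
    if msg.label is None:
        return True
    return msg.label.lower() not in BLOCKED_LABELS


def build_context(messages: list[Message]) -> str:
    """Apply the policy first, so blocked bodies never reach the prompt text."""
    parts = []
    for msg in messages:
        if not allowed_for_ai(msg):
            continue  # skipped before its body is read into the context
        parts.append(f"Subject: {msg.subject}\n{msg.body}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    inbox = [
        Message("Lunch", "Pizza on Friday?", None),
        Message("Merger", "Draft terms attached.", "Confidential"),
        Message("Q3 numbers", "See the figures.", "General", label_read_ok=False),
    ]
    print(build_context(inbox))  # only the unlabeled "Lunch" message survives
```

The ordering is the point: if the filter ran after the prompt was assembled (for example, on the model's answer), the confidential text would already have been processed.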

Should I stop using Copilot Chat because of this bug?

No, but you should use it selectively. The bug has been identified and fixes are being deployed. However, the incident demonstrates that new features should be tested before using them with highly sensitive information. Consider restricting Copilot Chat to non-confidential business content until the patch is fully deployed to your organization and you've verified that DLP policies are working correctly with the feature.
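One way to run that verification is a canary test. Put a test email that carries the confidential label and a unique marker string into a test mailbox, ask the assistant to summarize recent mail, and check whether the marker appears in the answer. In the sketch below, `ask_assistant` is a hypothetical stand-in for however you query the assistant in your environment (it is not a Microsoft SDK function), and the two fake assistants exist only so the example runs.

```python
import secrets
from typing import Callable


def make_canary() -> str:
    """A unique, unguessable marker to embed in a confidential test email."""
    return f"CANARY-{secrets.token_hex(8)}"


def dlp_blocks_assistant(canary: str, ask_assistant: Callable[[str], str]) -> bool:
    """True if the assistant's summary does not reveal the canary marker."""
    answer = ask_assistant("Summarize my most recent emails.")
    return canary not in answer


if __name__ == "__main__":
    canary = make_canary()

    def leaky(prompt: str) -> str:
        # Hypothetical assistant that ignores the label.
        return f"Your latest email mentions {canary}."

    def compliant(prompt: str) -> str:
        # Hypothetical assistant that respects the label.
        return "I can't summarize labeled items."

    print(dlp_blocks_assistant(canary, leaky))      # False
    print(dlp_blocks_assistant(canary, compliant))  # True
```

A passing check is evidence, not proof: an assistant could process the email without quoting the marker. Pair it with a review of audit logs.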

How should enterprises respond to this vulnerability?

Enterprises should verify whether they were affected by checking their audit logs for Copilot Chat activity during January and early February. Review your DLP policies to ensure they're actually preventing sensitive data from reaching cloud services. Wait for Microsoft to fully deploy the fix and then conduct testing to verify that DLP policies now work correctly with Copilot Chat before enabling the feature with confidential information. Consider establishing formal AI governance policies for your organization.
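Checking the audit logs can start from an exported audit search. The sketch below filters exported CSV rows for Copilot activity inside a date window. The column names (`CreationDate`, `UserIds`, `Operations`), the ISO 8601 timestamp format, and the `CopilotInteraction` operation value are assumptions about the export, so confirm them against your own file. This only shows who used Copilot and when; it does not show which emails were summarized.

```python
import csv
import io
from datetime import datetime
from typing import Iterable


def copilot_events(csv_text: str, start: datetime, end: datetime) -> list[dict]:
    """Return exported audit rows for Copilot activity with start <= time < end.

    Assumes columns CreationDate (ISO 8601), UserIds, and Operations, and that
    Copilot activity is recorded with the operation name "CopilotInteraction".
    """
    hits = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row.get("Operations") != "CopilotInteraction":
            continue
        # Keep "YYYY-MM-DDTHH:MM:SS" so fractional seconds or a "Z" suffix don't break parsing.
        when = datetime.fromisoformat(row["CreationDate"][:19])
        if start <= when < end:
            hits.append(row)
    return hits


def users_with_activity(rows: Iterable[dict]) -> set[str]:
    """Distinct accounts with Copilot activity in the window, for follow-up review."""
    return {row["UserIds"] for row in rows}
```

Set `start` and `end` to the exposure window Microsoft reports for your tenant, then use the resulting user list to decide whose mailboxes and labeled items to review first.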

Will Microsoft face regulatory penalties for this bug?

Potentially. In the European Union, data protection authorities could investigate whether personal data was exposed in violation of GDPR, and Microsoft could face fines up to 4% of global annual revenue. In other jurisdictions, the company might face civil litigation from customers who were harmed by the exposure. The regulatory impact will depend on the scope of affected data and how data protection authorities view the incident.
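The 4% figure is a ceiling. GDPR Article 83(5) sets the maximum at €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. A one-line sketch of that cap, using whole euros and hypothetical turnover figures:

```python
def gdpr_max_fine_eur(worldwide_turnover_eur: int) -> int:
    """Statutory cap under GDPR Art. 83(5): max(EUR 20M, 4% of annual turnover)."""
    # Integer arithmetic keeps the result exact for whole-euro inputs.
    return max(20_000_000, worldwide_turnover_eur * 4 // 100)


if __name__ == "__main__":
    # Hypothetical turnover of EUR 100 billion: the 4% branch dominates.
    print(gdpr_max_fine_eur(100_000_000_000))  # 4000000000
    # Hypothetical turnover of EUR 10 million: the EUR 20M floor applies.
    print(gdpr_max_fine_eur(10_000_000))       # 20000000
```

Actual fines are set case by case using the factors listed in Article 83(2) and are often well below this maximum.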

How does this relate to the European Parliament blocking AI features?

The European Parliament's IT department blocked AI features on lawmakers' work devices, citing concerns that AI tools could upload confidential correspondence to the cloud. Microsoft's bug is essentially a proof of concept for that exact concern: an AI system accessing and processing emails that should have been protected from that access. The incident validates the Parliament's caution about integrating AI into systems processing sensitive government communications.

What should I look for in other vendors' AI features?

When evaluating AI features from any vendor, ask for detailed security architecture documentation showing how the AI feature integrates with existing data protection controls like DLP policies. Request information about what data the AI system accesses and how that access is logged and monitored. Ask whether the feature has been through third-party security audits. Demand proof that security controls actually work, not just theoretical assurances.

Will this affect Microsoft's enterprise sales?

Possibly, though likely not dramatically. Microsoft has strong market dominance in enterprise productivity software and customers have significant switching costs. However, customers will be more skeptical about enabling AI features and will demand stronger security assurances before trusting Microsoft with sensitive data. The incident could affect the adoption trajectory of Copilot features, with more organizations taking a wait-and-see approach before enabling them with important information.

What's the fundamental tension this bug highlights?

The core issue is that AI is most useful when it can access broad data, but broad data access creates security risk when that data is sensitive. Every AI feature that accesses enterprise data creates a potential exposure vector. Vendors can add controls and safeguards, but they can't eliminate the fundamental tension between utility and security. Organizations need to consciously decide which data is appropriate to expose to AI systems, rather than assuming features are secure by default.

Key Takeaways

- Microsoft's Copilot Chat bug exposed confidential emails to AI processing despite data loss prevention policies being enabled

- The vulnerability lasted from January through early February, affecting an undisclosed number of enterprise customers

- Integration of new AI features with existing security controls like DLP policies requires explicit architectural design, not assumptions

- The European Parliament's decision to block AI features on lawmakers' devices was vindicated by this incident, suggesting regulatory scrutiny will increase

- Enterprise customers should adopt phased, cautious approaches to AI feature adoption with explicit testing and governance before using features with sensitive data

- Vendor transparency and rapid incident notification are as important as technical fixes when security incidents occur
