EU Parliament's AI Ban: Why Europe Is Restricting AI on Government Devices [2025]

In February 2026, something quietly happened that probably should've been front-page news everywhere. The European Parliament, the democratic institution representing over 400 million Europeans, decided to block AI tools on the devices it issues to lawmakers and staff. Not a temporary pilot. Not a limited trial. A full block.

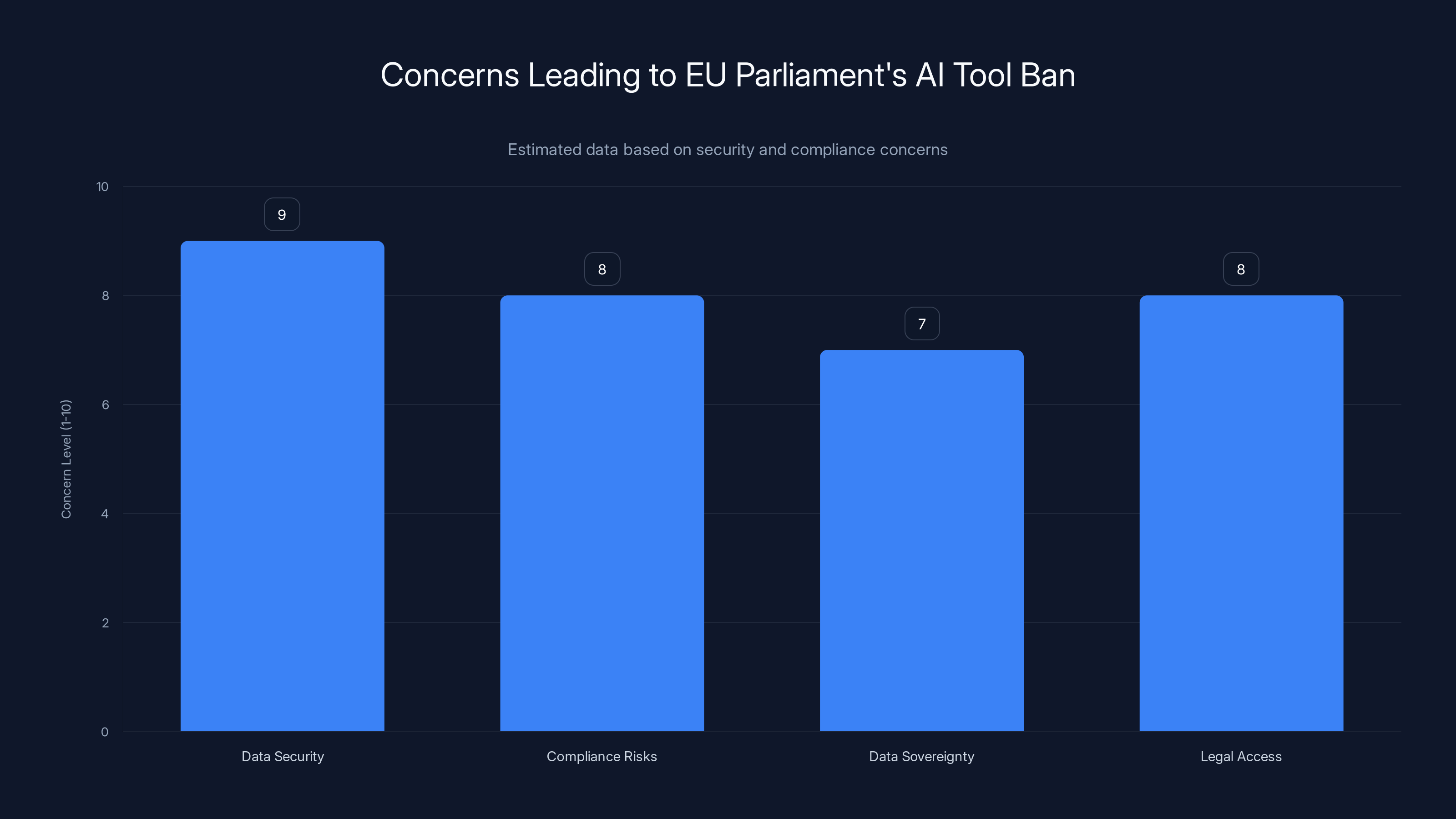

The official reason? Security. Privacy. Data protection. But here's what really happened: one of the world's most powerful legislative bodies looked at the current state of AI and decided the risk wasn't worth it.

This move sent shockwaves through Silicon Valley, Brussels, and corporate IT departments everywhere. Because if the EU Parliament (an institution with billions in resources, dedicated cybersecurity teams, and direct access to the continent's strictest data protection frameworks) couldn't trust AI tools with its data, what does that say about the rest of us?

This article dives deep into what happened, why it matters, and what it means for the future of AI in enterprise environments, government agencies, and every organization caught between the promise of AI productivity and the reality of data security.

TL;DR

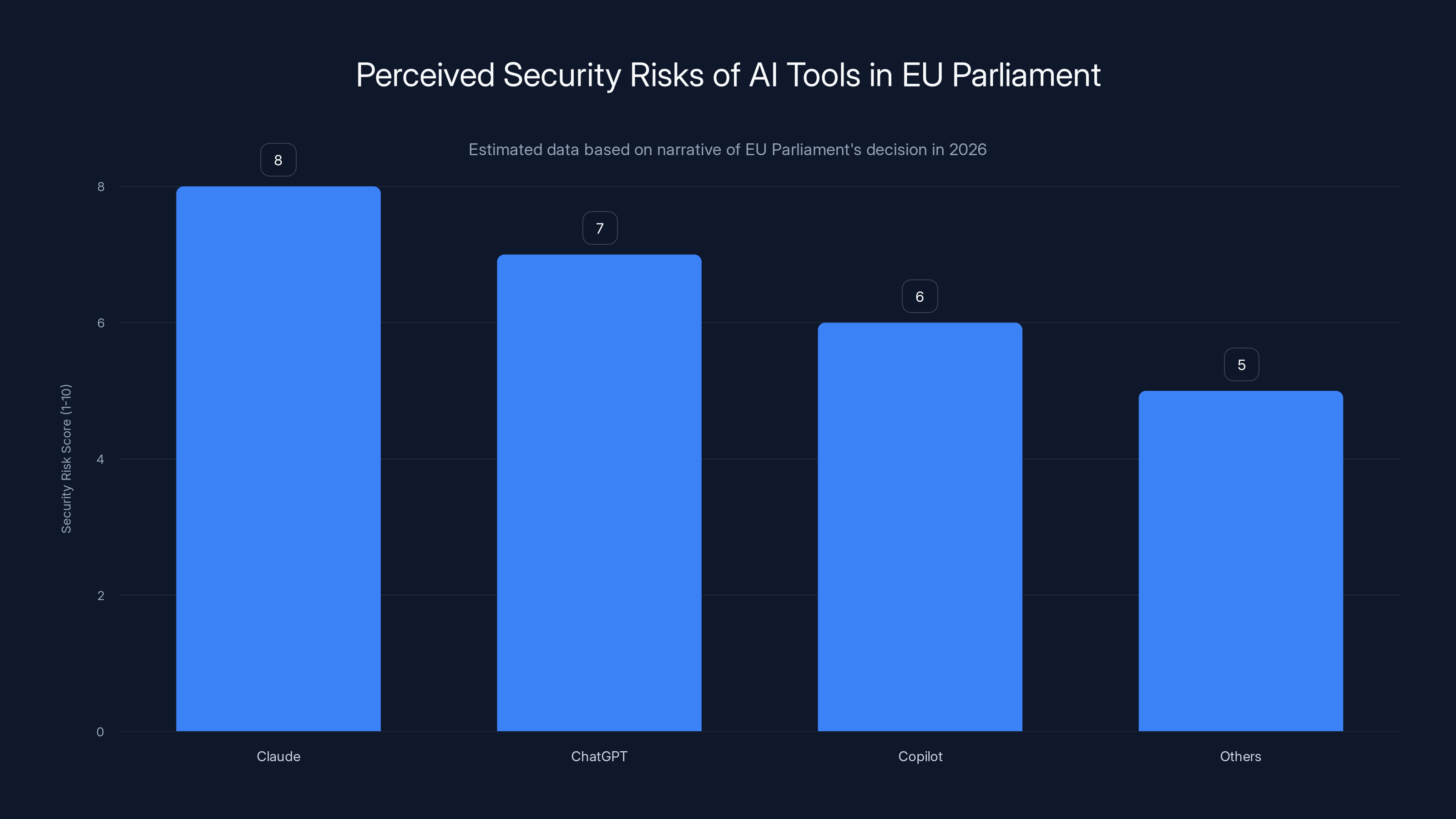

- The Decision: EU Parliament blocked built-in AI tools (Claude, ChatGPT, Copilot) on all government devices, citing insufficient security guarantees, as reported by Politico.

- Core Risk: Sensitive legislative data uploaded to U.S. servers operated by companies subject to U.S. law and government demands.

- Broader Impact: Signals growing skepticism about AI data handling, challenges U.S. tech dominance in Europe, and will reshape enterprise AI adoption policies.

- Data Sovereignty Crisis: Reveals fundamental tension between AI's need for data access and Europe's data protection regulations.

- Future Implications: Expect more restrictive AI policies globally, rise of alternative tools, and increased pressure for EU-based AI solutions.

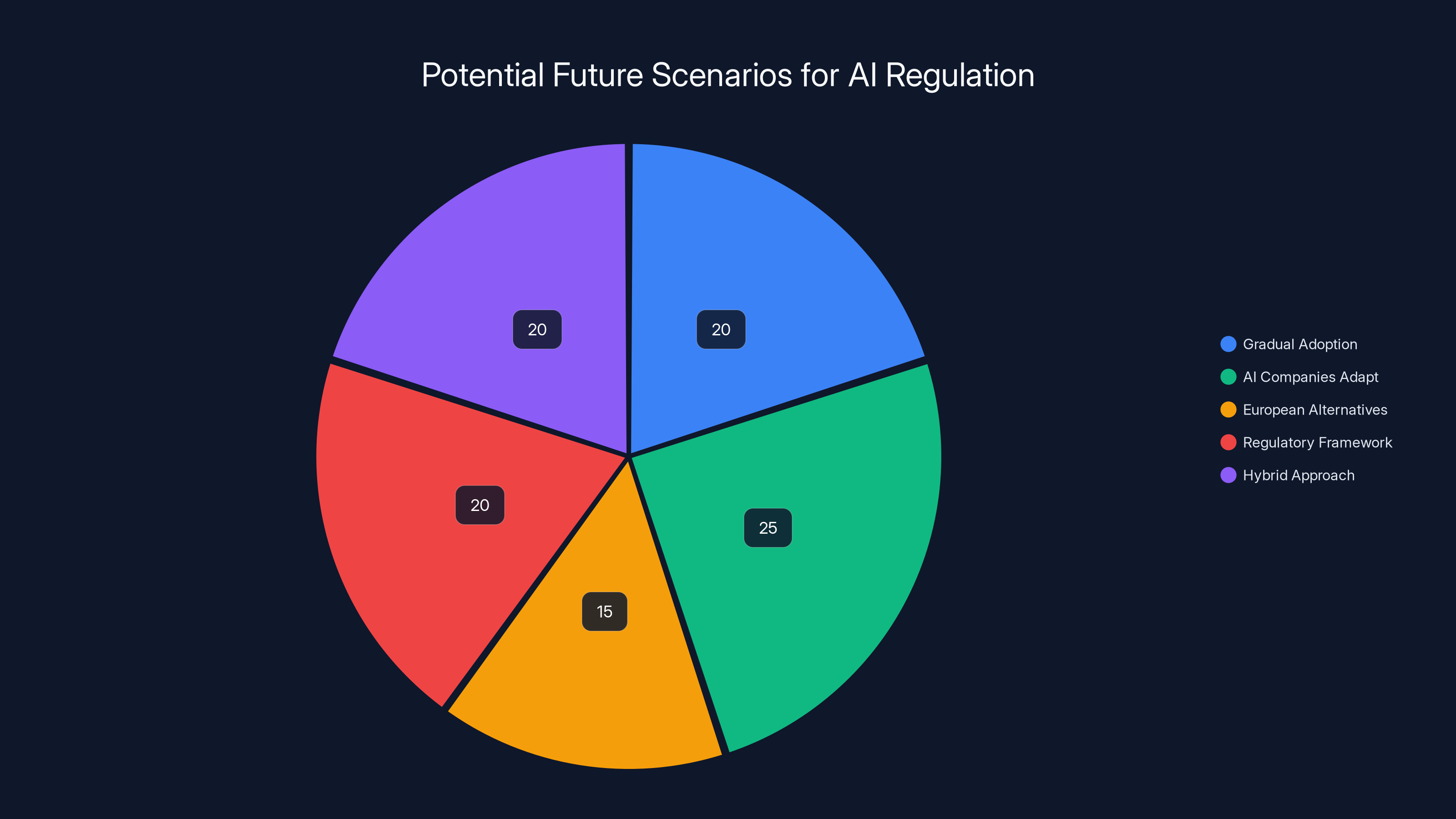

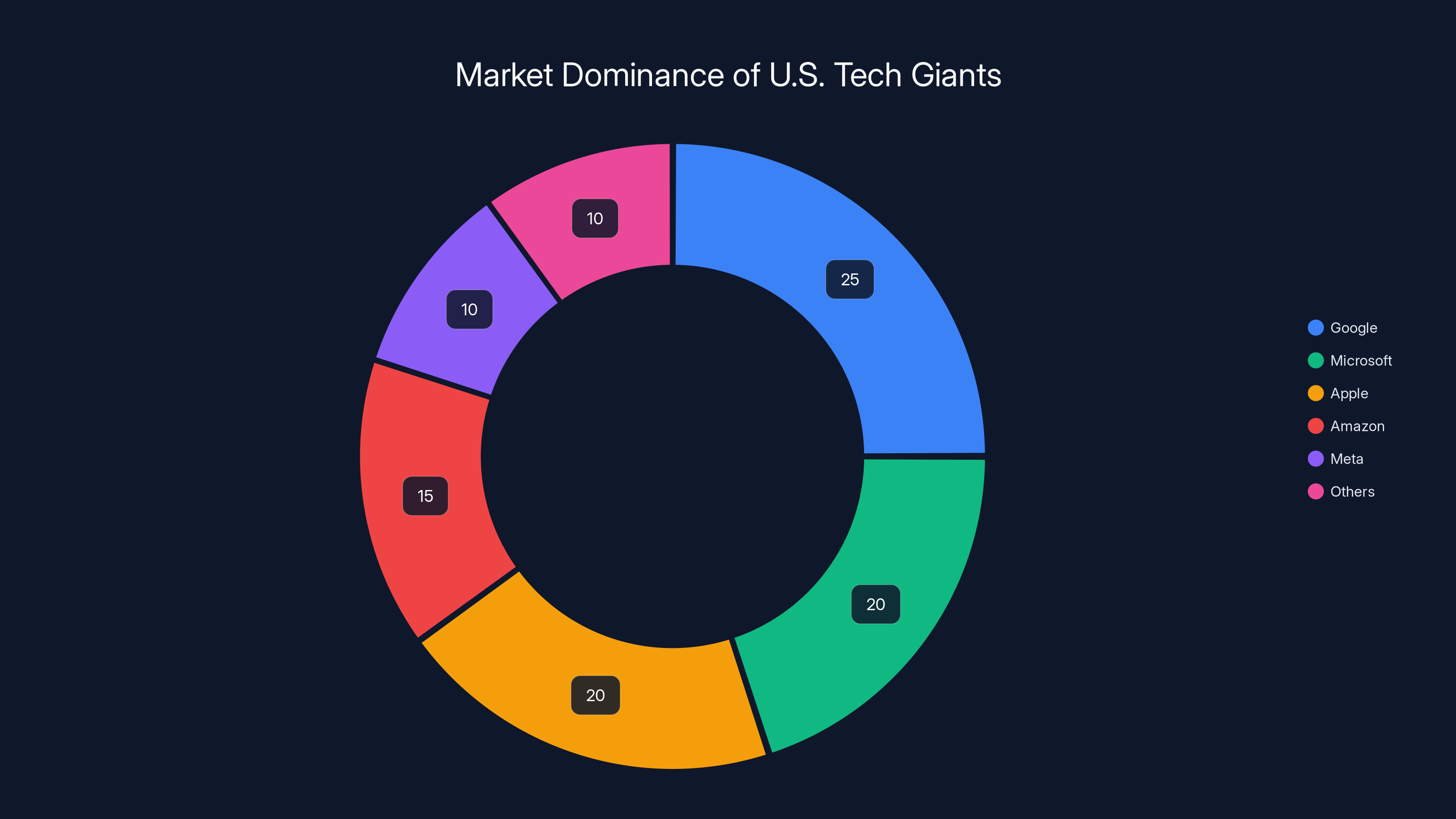

EU Parliament perceived AI tools like Claude and ChatGPT as high security risks, leading to their block. Estimated data reflects the relative concern levels.

What Actually Happened: The EU Parliament's Quiet Decision

Let's start with the basics. On a Tuesday in February 2026, EU lawmakers found something unusual on their work devices. The AI tools they'd been using (Claude, ChatGPT, Copilot, and others) simply stopped working.

Not a glitch. Not an update. A deliberate block.

The decision came via internal email from the Parliament's IT department, and the message was clear and uncompromising: these tools created unacceptable security risks. The Parliament "could not guarantee the security of data uploaded to the servers of AI companies," and the "full extent of what information is shared with AI companies is still being assessed," as detailed by K&L Gates.

Translate that IT-speak into plain English: we don't know where your data goes, we don't know who sees it, and we're not comfortable with that.

What makes this remarkable isn't just the decision itself. It's that this happened at an institution whose 720 members include some of the continent's most tech-savvy, digitally forward politicians. These aren't Luddites afraid of technology. These are people who've been regulating the tech industry for years. They understand innovation. They push for digital transformation.

And they still said no.

The timing mattered too. This came as relations between Europe and the United States were already strained. The Trump administration had begun aggressively pursuing information from tech companies through subpoenas that, according to TechBuzz, weren't even always issued by judges. Google, Meta, and Reddit had already complied in multiple cases.

For EU lawmakers, the message was clear: U.S. tech companies operate under U.S. jurisdiction. That means U.S. law enforcement can demand data, and companies have limited ability to refuse.

Now imagine what that means when you're uploading legislative communications, draft policy documents, political strategy memos, and constituent information to those U.S. servers.

The Technical Reality: Where Does Your Data Actually Go?

Here's where it gets complicated, because the conversation about "data security" requires understanding exactly what happens when you use an AI chatbot.

When you type something into ChatGPT, Claude, or Copilot, several things happen in quick succession:

First, your input travels across the internet to servers operated by the company running the AI. These servers are typically located in the United States (though some companies operate distributed infrastructure).

Second, the AI processes your input. This processing itself creates data trails. Logs of what you asked, when you asked it, what language you used, what type of information you requested.

Third—and this is critical—your data may be used to train or improve the AI model, depending on the service. Many AI services explicitly state that user inputs might be processed by third-party vendors, stored for service improvement, or analyzed to understand usage patterns.

Fourth, because these companies operate under U.S. law, U.S. government agencies can demand access to any of that data through legal processes.

Now, most AI companies will tell you they have protections in place. They'll mention encryption, access controls, and privacy policies. And technically, they probably do have those things. But "technically protected" isn't the same as "actually secure" when you're dealing with legislative communications about sensitive policy debates, draft laws that haven't been made public, and detailed information about political negotiations.

Take a concrete example: imagine an EU legislator drafting a new regulation on artificial intelligence. That draft regulation, combined with private notes about how different member states might respond and internal debates about potential compromises, is legislative work product that requires complete confidentiality until the right moment.

Upload a summary to ChatGPT to get feedback on the wording? Now that information exists on U.S. servers. It's theoretically encrypted, theoretically protected, but it's there. And if U.S. law enforcement wants to know what the EU is planning legislatively, they now have legal tools to access it.

This isn't theoretical paranoia. The EU Parliament's decision specifically cited the "unpredictable whims and demands of the Trump administration." In preceding weeks, the U.S. Department of Homeland Security had sent hundreds of subpoenas to tech companies demanding user information about people critical of administration policies.

Google complied. Meta complied. Reddit complied. And they did this even though, according to Tech Policy Press, the subpoenas hadn't been issued by judges and weren't formally enforced by courts.

That's the technical reality the EU Parliament was responding to: the formal structures designed to protect privacy can be overwhelmed by executive demands.

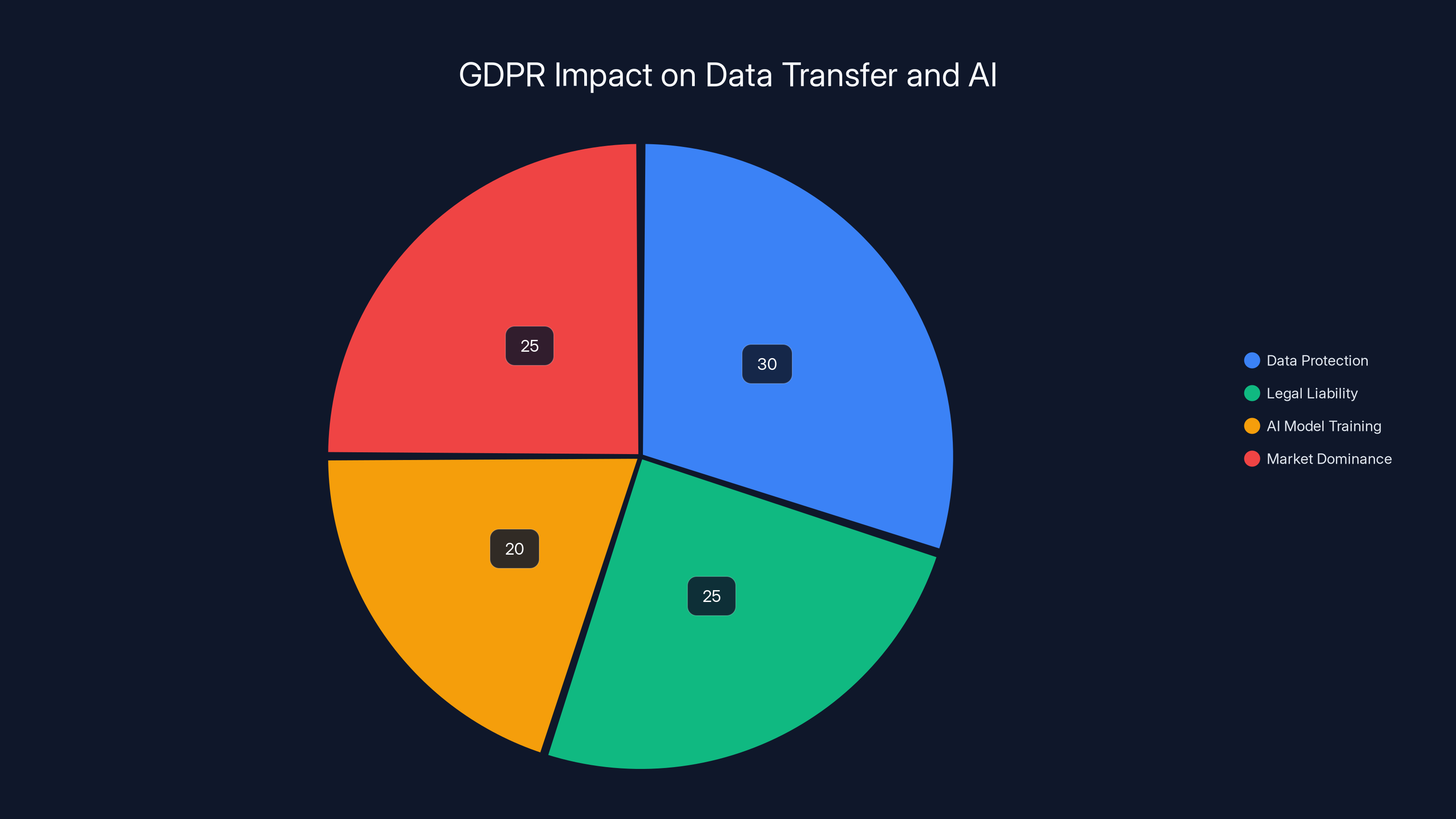

Data security and compliance risks are the top concerns leading to the EU Parliament's decision to block U.S.-based AI tools. Estimated data shows high concern levels across all categories.

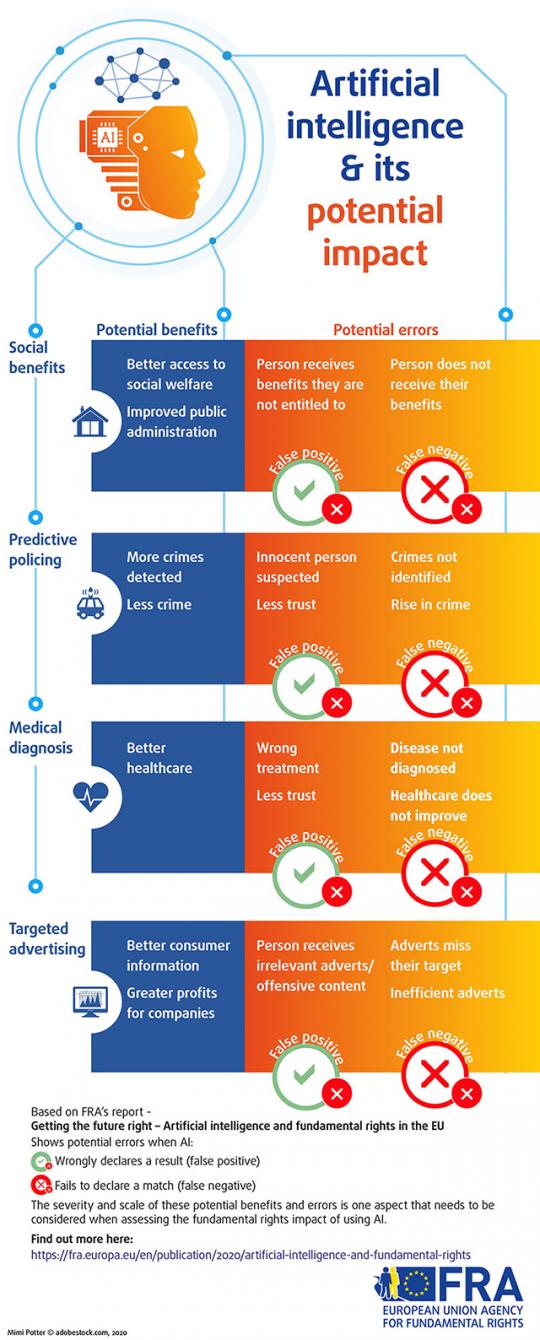

The Data Training Problem: Your Input Trains the Next Version

Here's another layer to this that makes the EU Parliament's decision even more sensible.

Many AI companies use user inputs to improve their models. OpenAI, for example, has stated that it may review conversations to improve its services, though it has added opt-out mechanisms for business users. Google's Gemini works similarly. Even Anthropic's Claude uses some user information for improvement purposes, depending on the account type.

Now think about that in context. You ask Claude for help drafting a legislative proposal about tax policy. Your input—which includes strategic thinking, policy rationale, and negotiating positions—gets fed into a training pipeline. The next version of Claude is slightly better at understanding tax policy nuances.

But who else uses Claude? Potentially your political competitors. Tax lawyers working for opposing parties. Lobbyists working against your position.

You've just uploaded your strategic thinking into a system that will make your opponents slightly smarter.

This is the scenario that keeps government data security officers awake at night. It's not just about an individual breach or a hacker stealing your password. It's about voluntarily uploading your most sensitive information into a system designed to learn from it and improve based on it.

The EU Parliament recognized this. Their decision directly referenced the fact that "AI chatbots typically rely on using information that users provide or upload to improve their models, increasing the chance that potentially sensitive information uploaded by one person may be shared and seen by other users" as noted by France24.

That's not speculation. That's how these systems work.

The European Regulatory Context: Why This Matters More in the EU

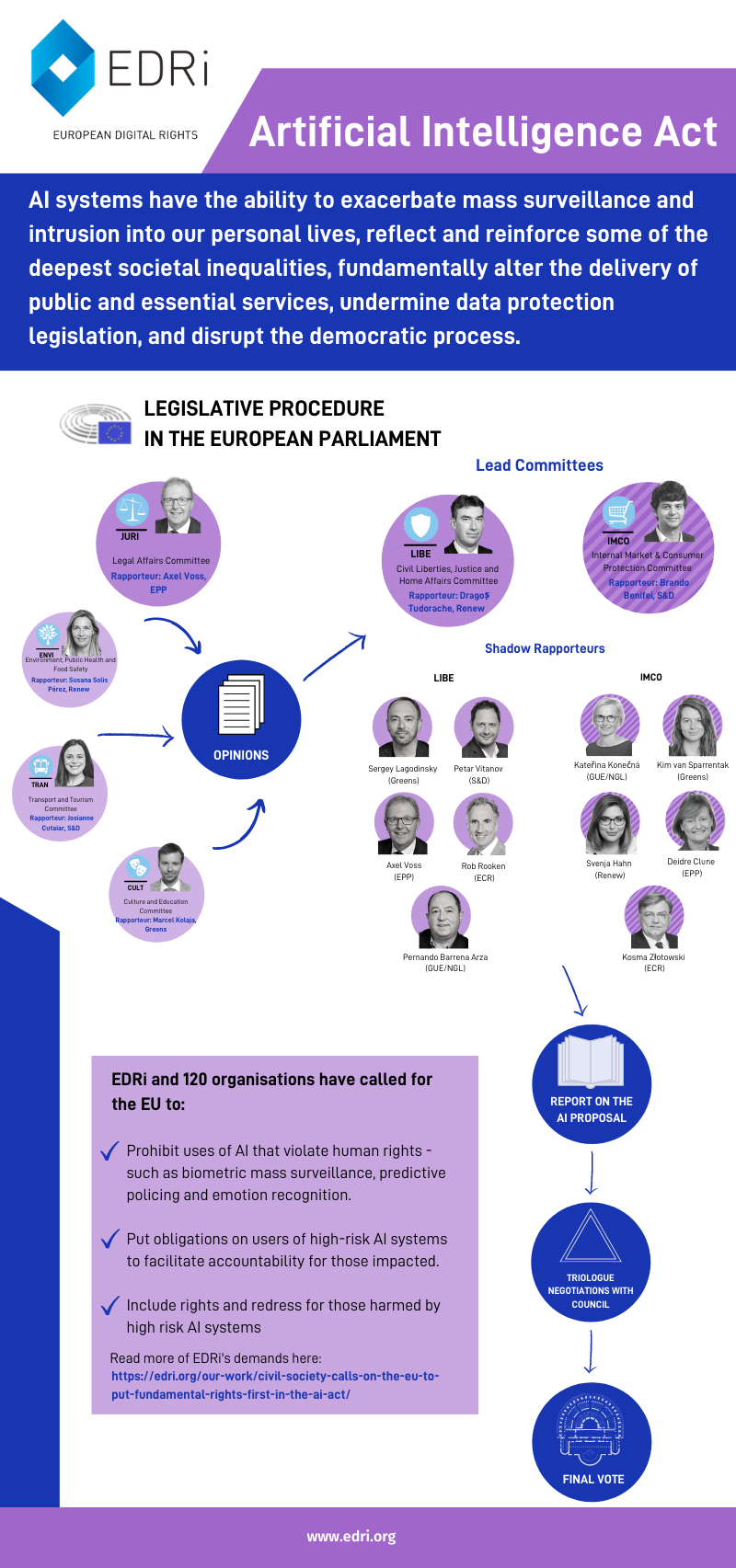

Understand that the EU Parliament didn't make this decision in a vacuum. It happened in a specific regulatory and political context that makes this decision particularly significant.

Europe has the world's strongest data protection regulations. The General Data Protection Regulation (GDPR) didn't emerge because Europeans are paranoid. It emerged because Europeans experienced what happens when governments and corporations have unchecked access to personal data.

The GDPR created massive fines, legal liability, and accountability for data mishandling. Under GDPR, organizations that collect personal data are liable for protecting it. Uploading that data to third-party services doesn't absolve you of that responsibility.

In fact, uploading personal or sensitive data to U.S. servers creates what GDPR specialists call a "data transfer problem." Europe has specific legal requirements for transferring data to countries outside the EU. Those requirements have become increasingly strict as U.S. law enforcement and surveillance capabilities have become more aggressive.

The European Commission itself recognized this growing tension. Last year, it floated proposals to relax data protection rules, specifically to make it easier for tech companies to train AI models on European data. The goal was clear: help AI companies grow by making it easier for them to access training data.

But that proposal faced immediate criticism from privacy advocates, who argued that loosening data protection requirements primarily benefits U.S. tech companies, not European ones. It would allow companies like OpenAI and Google to access more European data, further entrenching their market dominance.

The EU Parliament's decision to block AI tools was, in part, a response to that controversy. They were essentially saying: no, we're not comfortable with that trade-off.

This reveals a fundamental tension in the AI era. AI systems need data. Lots of it. And the data that makes them most useful is often the most sensitive. But Europe has decided that data protection matters more than AI convenience.

It's a choice. And it's a choice the EU Parliament made very consciously.

U.S. Law and Foreign Data: The Core Problem

Here's the part that might seem abstract but is actually very concrete: U.S. law enforcement has broad legal tools to access data held by U.S. companies.

These tools include:

- Subpoenas: Demands for information, issued either by a court or, in the case of administrative subpoenas, directly by an agency without a judge's sign-off

- Court-authorized warrants: Legal orders to search for information

- National security letters: FBI demands that don't require court authorization and include gag orders

- Executive orders: Presidential directives that companies often feel compelled to follow

All of these can be used to access data held by U.S. tech companies, regardless of where the data originated or whose information it is.

Now, companies do push back on some of these demands. Microsoft publishes transparency reports about government requests. Apple has made public statements about refusing certain demands. But the reality is that U.S. companies ultimately operate under U.S. law.

What made the EU Parliament's decision particularly urgent was the recent pattern of broad subpoenas and compliance. The Department of Homeland Security's wave of subpoenas demanding information about people critical of administration policies—without even going through judges—represented what EU officials saw as a dangerous precedent.

If a U.S. agency can demand information about Americans who speak critically, what about information about Europeans? What about information about EU legislators?

The answer is: there's nothing legally stopping it.

This is the issue that the EU has been grappling with for years. Europe wants to use modern technology, including AI. But Europe also wants to protect its citizens' and institutions' data from surveillance and unauthorized access.

Those two goals are in tension when the AI tools are operated by U.S. companies.

Some might argue that for routine business purposes, this tension doesn't matter. If you're using ChatGPT to write an email, does it really matter if that email gets stored on U.S. servers?

But the EU Parliament made a different judgment: yes, it matters. Because data patterns matter. Because aggregate information matters. Because the principle matters.

If you're uploading your legislative strategy to a U.S.-based AI system, you're accepting a risk that EU regulators decided isn't acceptable.

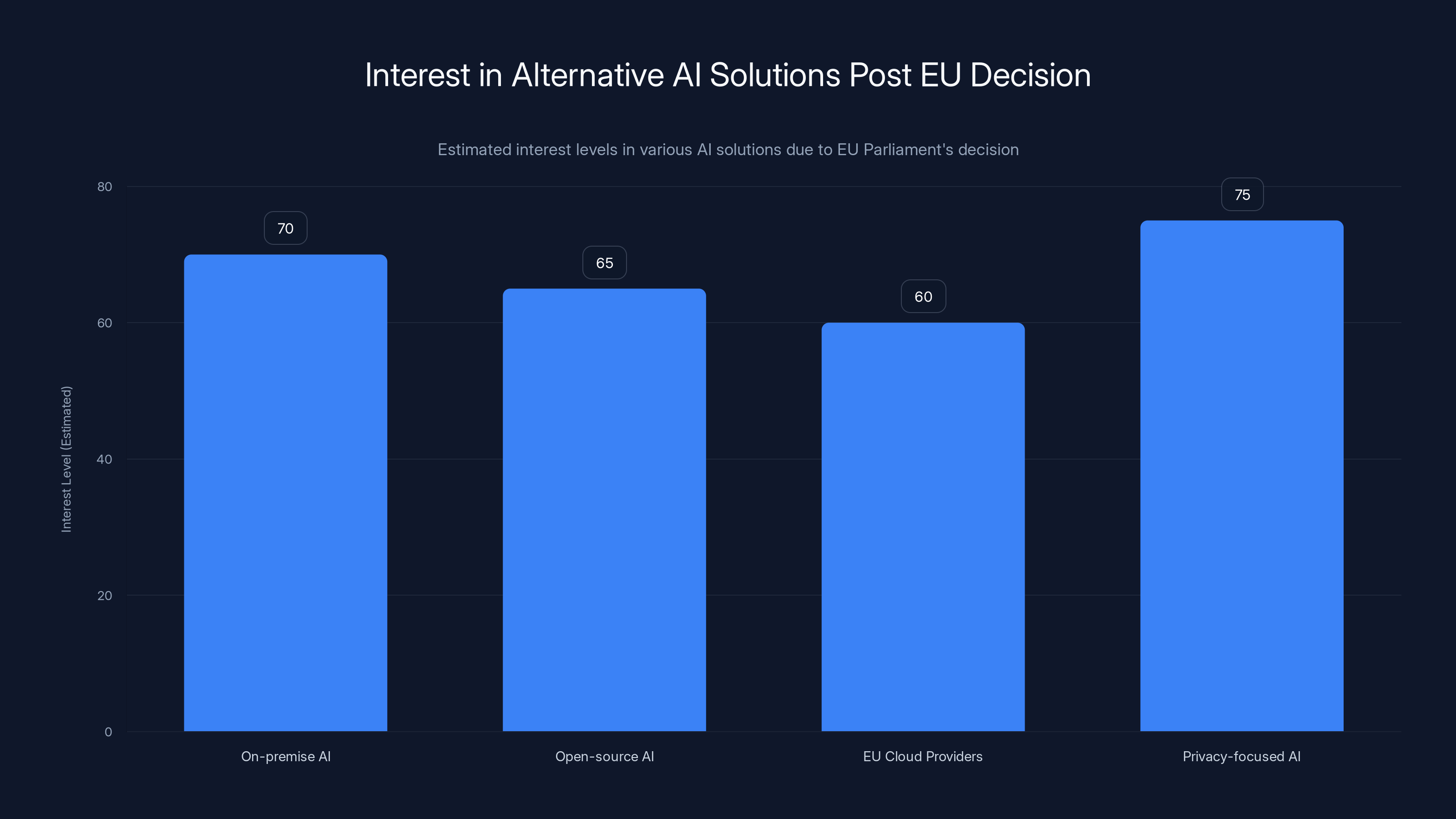

The EU Parliament's decision has sparked increased interest in privacy-focused and EU-based AI solutions, with privacy-focused AI seeing the highest estimated interest level. Estimated data.

The Broader Geopolitical Context: U.S.-EU Tech Tensions

The EU Parliament's decision didn't happen in isolation from larger U.S.-Europe tensions over technology, data, and sovereignty.

For decades, U.S. tech companies have dominated the global market. Google, Microsoft, Apple, Amazon, Meta—the companies that control how billions of people communicate, access information, and work—are all American.

Europe has built a strong regulatory framework (GDPR, Digital Services Act, AI Act), but it hasn't built a competitive alternative tech ecosystem. European tech companies exist, but none rival U.S. giants in scale or capability.

This creates a structural problem: Europe makes rules, but U.S. companies control the tools that everyone uses. The U.S. companies operate under U.S. law and U.S. government authority. So when Europe makes a rule about data protection or privacy, U.S. companies have to balance European regulations against U.S. legal demands.

Often, U.S. law wins.

The EU Parliament's decision to block AI tools is, in this context, a form of leverage. It's a relatively small institution saying: "We won't use your tools because we don't trust the legal framework you operate under."

It's not enough to stop U.S. companies. But it is a signal. And signals matter in regulatory negotiations.

Meanwhile, geopolitical tensions between the U.S. and Europe have been rising. Trade disputes, defense spending disagreements, and now the Trump administration's unpredictable legal demands have strained the relationship.

EU officials have explicitly mentioned the Trump administration's "unpredictable whims." That's diplomatic language for: we don't know what legal demands might come next, and we're not comfortable with our legislative data sitting on U.S. servers while that administration is in office.

This is more than a technical security question. It's a political and geopolitical statement.

What EU Institutions Were Using AI For (And What They're Losing)

Before diving into the implications, let's be clear about what the AI ban actually restricts.

EU Parliament lawmakers and staff were using these tools for things like:

- Drafting support: Getting feedback on document structure and clarity

- Translation assistance: Though EU institutions have their own translation services

- Research assistance: Finding relevant information on policy topics

- Email composition: Getting help with professional communication

- Document summarization: Condensing long reports into key points

- Brainstorming: Generating ideas for policy approaches

None of this is exotic or classified. These are routine productivity tasks that millions of professionals do every day with AI tools.

So what's the actual impact of blocking these tools? For most day-to-day work, it's probably manageable. EU legislators can still use traditional office tools like Word, Excel, and email. They can still access search engines and databases.

What they can't do is use AI tools built into their devices for quick productivity boosts.

Is that a significant handicap? Probably not catastrophic. Office workers have been productive for decades without AI assistance.

But it does slow things down. It does mean that certain tasks take longer. And it does signal something important: security and data protection are more important than convenience.

That's a statement with implications beyond the EU Parliament.

How This Decision Affects Enterprise AI Adoption

Now here's where the EU Parliament's decision gets really important for everyone else.

If government institutions are blocking AI tools due to security concerns, what does that mean for other organizations?

Most enterprises haven't gone as far as the EU Parliament. But many are asking the same questions:

- Can we trust these AI systems with sensitive data?

- Do we understand where the data goes?

- Are we comfortable with the legal jurisdiction?

- What's the actual security risk?

For organizations in regulated industries—finance, healthcare, law, government—these questions are especially urgent. These are industries with compliance requirements around data protection, privacy, and security.

Using an AI tool that uploads data to U.S. servers creates potential compliance problems for organizations in Europe, especially under GDPR.

What the EU Parliament did was essentially formalize this risk assessment and make it institutional policy.

Expect similar policies to follow. Not everywhere. Not immediately. But in government agencies, regulated companies, and institutions dealing with sensitive information, we'll likely see restrictions on AI tool usage.

Some organizations might handle this by:

- Blocking tools entirely: Like the EU Parliament did

- Restricting sensitive data: Allowing AI tools for routine tasks but not sensitive work

- Implementing approved alternatives: Using EU-based or compliance-focused AI tools

- Requiring formal reviews: Making AI usage something that needs approval from security and legal

- Using enterprise agreements: Negotiating specific terms that address data security concerns

The EU Parliament chose the first option. But that's a nuclear option that most organizations probably won't take. Instead, expect a lot more organizations to seriously re-evaluate their AI tool policies.
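For organizations that land somewhere between blocking everything and allowing everything, the options listed above can be combined into a simple decision rule. The following is a minimal sketch, not a description of any institution's actual policy. The sensitivity tiers, the `eu_hosted` and `enterprise_agreement` flags, and the rule itself are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # needs sign-off from security and legal
    BLOCK = "block"


@dataclass(frozen=True)
class Tool:
    name: str
    eu_hosted: bool             # hypothetical flag: data stays in the EU
    enterprise_agreement: bool  # hypothetical flag: negotiated data terms exist


def decide(tool: Tool, sensitivity: Sensitivity) -> Decision:
    """Return a policy decision for sending data of this sensitivity to this tool."""
    if sensitivity is Sensitivity.PUBLIC:
        return Decision.ALLOW
    if sensitivity is Sensitivity.CONFIDENTIAL:
        # Confidential material only goes to EU-hosted tools, and only after review.
        return Decision.REVIEW if tool.eu_hosted else Decision.BLOCK
    # INTERNAL data: allowed where hosting or contract terms address the risk.
    if tool.eu_hosted or tool.enterprise_agreement:
        return Decision.ALLOW
    return Decision.REVIEW


# Illustrative tools; the names are made up.
consumer_chatbot = Tool("consumer-chatbot", eu_hosted=False, enterprise_agreement=False)
eu_assistant = Tool("eu-assistant", eu_hosted=True, enterprise_agreement=True)

for tool in (consumer_chatbot, eu_assistant):
    for level in Sensitivity:
        print(tool.name, level.name, decide(tool, level).value)
```

A rule like this is deliberately coarse. Its value is less in the exact thresholds than in forcing an organization to write down which combinations of tool and data it is willing to accept.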

Estimated data suggests a balanced distribution of potential scenarios following the EU Parliament's decision on AI regulation, with AI companies adapting and hybrid approaches being slightly more likely.

The Rise of Alternative Solutions: EU-Based and Privacy-Focused AI

One immediate consequence of the EU Parliament's decision is increased demand for alternative AI solutions.

If you can't use ChatGPT or Claude because they're U.S.-based, what are your options?

Europe has actually been investing in its own AI capabilities. There are European AI companies building tools that operate under European jurisdiction and European data protection frameworks.

These companies are smaller and less well-known than OpenAI or Anthropic. They don't have the same level of funding or user base. But they have something that U.S. companies don't: they operate under European law.

The EU Parliament's decision creates an immediate market opportunity for these companies. Organizations that need to maintain GDPR compliance or avoid U.S. legal jurisdiction suddenly have strong reasons to explore European alternatives.

We're also likely to see increased interest in:

- On-premise AI solutions: Tools that run on your own servers rather than cloud-based services

- Open-source alternatives: Models that you can run yourself without relying on cloud providers

- European cloud providers: Services that keep data within the EU

- Privacy-focused tools: AI systems specifically designed to minimize data collection

None of these are perfect. On-premise solutions require more technical expertise and infrastructure investment. Open-source models can be powerful but require more engineering work. European alternatives often have less capability than U.S. competitors.

But they offer something increasingly valuable: data sovereignty and regulatory compliance.

The EU Parliament's decision, while affecting a relatively small institution, creates a ripple effect that favors European tech alternatives and privacy-focused approaches.

The Precedent: Why This Matters for Global AI Governance

Here's what might be the most important aspect of the EU Parliament's decision: it sets a precedent.

When one major institution makes a bold decision about technology governance, other institutions pay attention. Especially when that institution is as influential as the European Parliament.

We're likely to see similar restrictions in:

- National governments: If the EU Parliament is blocking these tools, individual EU member states will likely follow

- Financial institutions: Banks and financial firms already have strict data policies; they may use the Parliament's decision as justification for restrictions

- Healthcare organizations: Hospitals and medical institutions dealing with patient data will face similar pressures

- Law firms and legal departments: Organizations handling confidential information will likely implement similar policies

- Other democratic institutions: National parliaments, city councils, and other legislative bodies may follow the EU's lead

Once one major institution says "this is a security risk," it's much harder for other institutions to justify allowing it.

Moreover, the EU Parliament's decision will likely influence regulatory discussions worldwide. Other countries may look at what the EU did and decide to implement similar rules.

This could have enormous consequences for how AI tools are deployed globally. If major institutions increasingly restrict AI usage, that reduces the ability of AI companies to integrate their tools into enterprise and government workflows.

The Security vs. Productivity Trade-off: What's Actually at Stake?

At its core, the EU Parliament's decision reflects a choice about trade-offs.

On one side: productivity. AI tools genuinely make some tasks faster and easier. They're useful. They're convenient.

On the other side: security, privacy, and data protection. Uploading sensitive information to U.S. servers operated by companies subject to U.S. law and U.S. government demands creates security and privacy risks.

The EU Parliament decided the security side was more important. They said: we're willing to be less productive if it means better protection of sensitive information.

This is a judgment call. Different organizations will probably come to different conclusions.

For most commercial enterprises, the risk might be acceptable. If you're uploading routine business communications to ChatGPT, the security risk is probably low enough compared to the productivity benefit.

But for legislative institutions, government agencies, and organizations handling truly sensitive information, the calculation is different.

What's interesting is that this trade-off becomes more visible in the post-AI world. Before AI tools existed, the question didn't arise. You couldn't upload your legislative strategy to a cloud-based service that improves its models based on user input.

Now that the option exists, we're forced to ask: is this trade-off worth it?

The EU Parliament's answer is no. But that answer is likely context-dependent. Different organizations, in different industries, with different types of data, will probably reach different conclusions.

What we might see is a divergence in practices. Some organizations embrace AI tools across the board. Others restrict them carefully. Still others, like the EU Parliament, block them entirely for sensitive work.

The GDPR significantly impacts data protection and legal liability, with notable effects on AI model training and market dominance. (Estimated data)

Looking Ahead: What This Means for AI Companies

Now let's think about this from the perspective of AI companies themselves.

The EU Parliament's decision is a problem for them. Not catastrophic. The Parliament isn't a huge market. But symbolically significant.

AI companies will likely respond with several strategies:

First, they'll attempt to address the security concerns. OpenAI, Google, Microsoft, and Anthropic will all point to their security infrastructure, encryption, privacy policies, and terms designed to address regulatory requirements.

But they have a fundamental problem: they operate under U.S. law. No matter how good their security is, that basic fact doesn't change.

Second, they'll try to negotiate. Microsoft and Google, which have significant presences in Europe, will try to work with EU regulators to find solutions. They might offer special terms for government clients. They might offer data residency options.

But again, they can't change the fundamental fact that they're U.S. companies subject to U.S. law.

Third, they'll probably develop European versions or partnerships. Google and Microsoft might partner with European cloud providers to offer EU-based versions of their services. This could help address some of the data residency concerns.

Fourth, they'll probably accept that some segments—government, regulated finance, and similar—will be difficult markets. They'll focus on commercial enterprises and consumers where the security concerns are less critical.

Overall, the EU Parliament's decision is a signal that there's a growing market segment that won't use U.S.-based AI tools. AI companies will need to either address this through technical and legal solutions, or accept that they won't have access to these markets.

Regulatory Implications: AI Acts and Data Protection

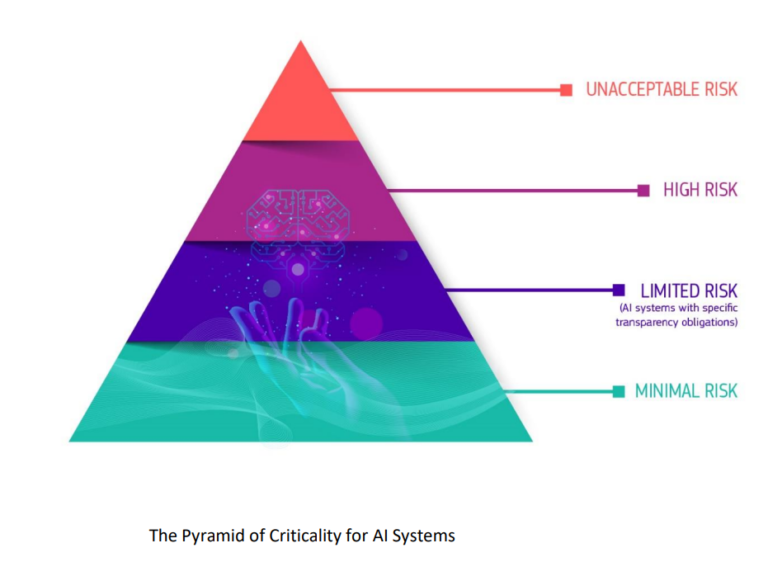

The EU Parliament's decision also has implications for how AI will be regulated going forward.

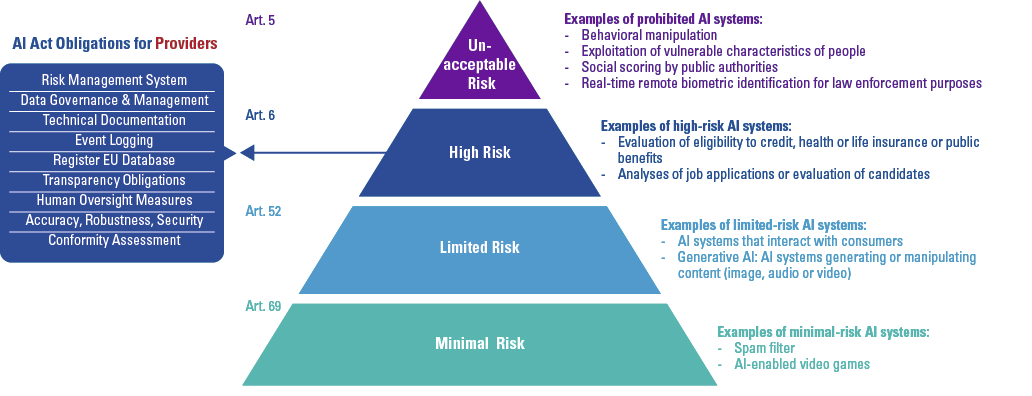

Europe has already passed the AI Act, which creates comprehensive regulations for AI systems. The EU Parliament's decision to block AI tools suggests that European regulators are willing to take practical steps to enforce their values around data protection and privacy.

This could influence how the AI Act is actually implemented and enforced. It signals that European institutions won't simply accept U.S. tech as inevitable. They'll actually make hard choices about what tools they use.

Meanwhile, conversations about AI regulation will increasingly focus on the data security issue. Regulators in other countries will likely note Europe's experience and consider whether they want similar protections.

We might see regulatory frameworks emerge that specifically address:

- Data residency: Requiring data to stay in certain jurisdictions

- Legal jurisdiction: Requiring AI services to operate under specific legal frameworks

- Government access: Creating clear rules about what happens when governments demand information

- Training data: Specifying what data can and can't be used to train AI models

- Transparency: Requiring AI companies to disclose exactly where user data is stored and who can access it

The EU Parliament's decision is a shot across the bow. It says: we're serious about these issues, and we're willing to inconvenience ourselves to address them.

The Broader Question: Can We Trust AI Companies with Sensitive Data?

Ultimately, the EU Parliament's decision raises a fundamental question: can we trust AI companies with sensitive data?

The answer, from the Parliament's perspective, is: not really. Not when the companies operate under foreign jurisdiction. Not when the data can be accessed by foreign governments. Not when the data might be used to improve systems that competitors also use.

Now, AI companies would say their security is robust. And technically, it probably is. But security isn't the only issue here. Jurisdiction, legal access, and data practices are different questions.

And on those questions, U.S. companies have inherent limitations. They can't change the fact that they operate under U.S. law. They can't make U.S. law enforcement needs disappear.

So the question becomes: is there a path forward?

Possibly. Some options:

- Special legal frameworks: Create specific legal agreements that address EU concerns and limit U.S. government access

- Data localization: Require data to be stored in Europe and not transferred to the U.S.

- European alternatives: Develop AI systems that operate under European jurisdiction

- Hybrid approaches: Use AI for non-sensitive work while keeping sensitive data off these systems

- Regulation: Create rules that require AI companies to implement specific security and privacy measures

But all of these require either significant changes from U.S. companies or significant investments in European alternatives. Neither seems likely to happen quickly.

So expect this tension—between AI's productivity benefits and data security concerns—to persist for a while.
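The hybrid approach in the list above depends on deciding, before anything leaves the device, whether a piece of text is sensitive. One rough way to sketch that is a screening step that routes flagged text to a locally run model and everything else to a cloud service. The patterns below are illustrative assumptions only. Keyword and regex matching is crude, and a real deployment would need a proper data classification process behind it.

```python
import re

# Illustrative patterns only; a real policy would define its own markers.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "classification_marker": re.compile(
        r"\b(confidential|restricted|not for distribution)\b", re.IGNORECASE
    ),
}


def screen(text: str) -> list[str]:
    """Return the sorted names of all patterns found in the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


def route(text: str) -> str:
    """Send flagged text to a local model and everything else to the cloud."""
    return "local" if screen(text) else "cloud"


print(route("Summarize this press release"))
print(route("CONFIDENTIAL draft, send comments to a.b@example.eu"))
```

The design choice here is to fail toward the local path: any match at all keeps the text off external servers, which trades some convenience for a lower chance of an accidental upload.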

U.S. tech giants like Google, Microsoft, and Apple dominate the global market, collectively holding an estimated 80% share. This underscores the challenge for the EU in balancing regulatory power with technological dependency. (Estimated data)

Comparing Global Approaches: How Different Countries Are Handling AI Security

While the EU Parliament took a relatively aggressive stance, other countries and institutions are handling AI security differently.

United States: The U.S. federal government hasn't implemented blanket restrictions on AI tools. Agencies use various tools and have different policies. Generally, the approach is more permissive.

United Kingdom: Post-Brexit, the UK has its own approach to AI regulation. They've emphasized responsible innovation over restriction.

Asia-Pacific: Countries like Singapore, Japan, and South Korea have generally been supportive of AI adoption while developing regulatory frameworks.

Canada: Similar to the U.S., more permissive approach with emerging regulations.

The EU's approach is notably more restrictive. This reflects Europe's broader philosophy that privacy and data protection deserve significant weight in policy decisions.

Whether the EU's approach is right or wrong depends on your priorities. If you prioritize AI adoption and productivity, Europe's caution seems excessive. If you prioritize data protection and privacy, it seems prudent.

What's likely is that we'll see a divergence. The EU might develop stricter AI rules and practices. Other regions might remain more permissive. This could create a situation where AI tools work differently depending on geography, similar to how privacy rules differ globally.

What Organizations Should Do Now

If you work in an organization that deals with sensitive data, here's what you should do now:

First, audit your current AI tool usage. Document what tools people are using, what data is being uploaded, and what sensitivity level that data has.

Second, review your compliance and security requirements. What do your regulations and security policies say about uploading data to cloud services?

Third, engage your legal, security, and compliance teams. This isn't just an IT decision. It's a legal and policy question.

Fourth, consider developing an AI tool policy. What tools are allowed? Which data can be uploaded? Do certain tools require approval?

Fifth, if you determine that you need to restrict AI usage, start implementing it now before regulatory pressure forces the issue.

Sixth, explore alternatives. Whether that's on-premise solutions, European-based tools, or different ways of using AI, understand your options.
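The audit and policy steps above can be sketched in a few lines of Python. This is a minimal illustration, not a recommended implementation: the sensitivity levels, the tool names, and the `POLICY` mapping are all hypothetical placeholders, and a real organization would define its own categories with its legal and compliance teams.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity levels; higher means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: the highest sensitivity level each tool may receive.
# Tools that are not listed are treated as unapproved.
POLICY = {
    "hosted-chatbot": Sensitivity.PUBLIC,
    "enterprise-assistant": Sensitivity.INTERNAL,
    "on-prem-model": Sensitivity.CONFIDENTIAL,
}


@dataclass(frozen=True)
class UsageRecord:
    """One row of the audit: who uses which tool with what kind of data."""
    team: str
    tool: str
    data_description: str
    sensitivity: Sensitivity


def audit(records, policy=POLICY):
    """Return (record, reason) pairs for every usage that breaks the policy."""
    findings = []
    for record in records:
        ceiling = policy.get(record.tool)
        if ceiling is None:
            findings.append((record, "tool not approved"))
        elif record.sensitivity > ceiling:
            findings.append((record, f"data exceeds {ceiling.name} ceiling"))
    return findings


# Made-up audit data for illustration only.
records = [
    UsageRecord("comms", "hosted-chatbot", "press release draft", Sensitivity.PUBLIC),
    UsageRecord("legal", "hosted-chatbot", "contract under negotiation", Sensitivity.CONFIDENTIAL),
    UsageRecord("finance", "browser-plugin", "quarterly forecast", Sensitivity.INTERNAL),
]

for record, reason in audit(records):
    print(f"{record.team}: {record.tool} -> {reason}")
```

With this sample data, the legal team's entry is flagged because confidential material exceeds the ceiling set for the hosted chatbot, and the finance team's entry is flagged because the browser plugin is not on the approved list. Keeping the policy as plain data makes it easy for non-engineers to review and change.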

The EU Parliament's decision isn't immediately binding on other organizations. But it's a strong signal that major institutions are taking data security seriously with respect to AI tools.

Better to get ahead of this now than to be forced into hasty restrictions later.

The Future: Where This Goes From Here

What happens next is uncertain, but here are the most likely scenarios:

Scenario 1: Gradual Adoption of Restrictions: Other government agencies, regulated companies, and institutions follow the EU Parliament's lead. Over time, AI tool restrictions become more common in sensitive sectors.

Scenario 2: AI Companies Adapt: OpenAI, Google, Microsoft, and others develop solutions specifically designed to address EU concerns. They might offer EU-hosted versions, special legal frameworks, or other accommodations.

Scenario 3: European Alternatives Emerge: European AI companies receive more investment and attention. Over time, they develop competitive products that operate under European jurisdiction.

Scenario 4: Regulatory Framework Develops: New regulations emerge that specifically address AI security, data protection, and data residency. These become the new standard.

Scenario 5: Hybrid Approach: Organizations develop nuanced policies that allow AI usage for non-sensitive work while restricting it for sensitive data. This becomes the dominant approach.

Most likely, we'll see some combination of these. Different organizations will make different choices based on their specific needs and constraints.

But the EU Parliament's decision marks a turning point. It's the moment when a major, influential institution said: no, we're not comfortable with the current approach to AI and data security.

That signal will echo through organizations worldwide.

Lessons for Security and Policy Teams

For security and policy professionals, the EU Parliament's decision offers several lessons:

First: Security risk assessment matters more than convenience. The Parliament decided that the security risk of using AI tools with sensitive legislative data outweighed the productivity benefits.

Second: Jurisdiction and legal framework are real security concerns, not just theoretical ones. When a U.S. government agency can demand data from a U.S. company, that's a concrete security risk that needs to be addressed.

Third: Institutions need to make active choices about tool adoption. Drift, where people start using tools without explicit policy, creates risk and compliance problems. Better to decide deliberately.

Fourth: Privacy and data protection are increasingly powerful factors in technology decisions. Organizations that ignore these issues will eventually be forced to address them.

Fifth: There's often a trade-off between convenience and security. Sometimes security wins. Understanding that trade-off is important.

These lessons apply far beyond just AI tools. They're relevant for any technology that handles sensitive data.

FAQ

Why did the European Parliament block AI tools?

The EU Parliament blocked AI tools like ChatGPT, Claude, and Copilot because of concerns that sensitive legislative data could be uploaded to U.S.-based servers operated by companies subject to U.S. law and U.S. government demands. The Parliament's IT department determined that it could not guarantee the security of data uploaded to these services and that sensitive information might be used to train AI models or accessed by U.S. authorities through legal processes.

What data security risks are associated with using U.S.-based AI tools?

U.S.-based AI companies operate under U.S. jurisdiction, meaning U.S. authorities can demand access to user data through subpoenas, warrants, or national security letters. Additionally, many AI services use user inputs to improve their models, which creates a risk that sensitive information resurfaces in responses to other users. GDPR still applies to the personal data of people in the EU when it is stored on U.S. servers, but U.S. legal demands can conflict with those protections, creating compliance risks.

Could this decision affect other organizations outside the EU?

While not directly binding on organizations outside the EU, the EU Parliament's decision sets an important precedent. Other government agencies, regulated financial institutions, healthcare organizations, and law firms may implement similar restrictions. The decision also signals to AI companies that major institutions are serious about data security concerns, likely influencing how AI tools are developed and deployed globally.

What are the alternatives to U.S.-based AI tools for organizations concerned about data security?

Organizations can explore several alternatives: EU-based AI companies operating under European jurisdiction, on-premise AI solutions that run on your own servers, open-source AI models you can deploy yourself, enterprise agreements with special data handling terms, or hybrid approaches that use AI only for non-sensitive tasks. Some U.S. companies are developing EU data residency options, though the companies themselves remain subject to U.S. law.

How does the EU's GDPR affect AI tool usage?

GDPR requires organizations handling the personal data of people in the EU to protect that data and to ensure it is not transferred to countries with insufficient data protection without proper safeguards. Uploading such data to U.S.-based AI services creates GDPR compliance challenges, especially if the data is used for model training or accessed by U.S. authorities. For the most serious violations, organizations face fines of up to 20 million euros or 4% of global annual revenue, whichever is higher.
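To make the fine ceiling concrete, here is a small worked calculation. It assumes the higher penalty tier in GDPR Article 83(5), where the cap is the greater of 20 million euros or 4% of worldwide annual turnover. The function name and the example turnover figures are illustrative, and the result is only the statutory maximum, not a prediction of what a regulator would actually impose.

```python
FIXED_CAP_EUR = 20_000_000      # flat ceiling in euros
TURNOVER_SHARE_PERCENT = 4      # share of worldwide annual turnover


def gdpr_fine_cap(annual_turnover_eur: int) -> int:
    """Return the maximum fine in whole euros for the higher GDPR tier.

    The cap is whichever is greater: the flat ceiling or the turnover share.
    """
    if annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# Illustrative turnover figures, not real companies.
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover {turnover:>13,} EUR -> cap {gdpr_fine_cap(turnover):>11,} EUR")
```

The two ceilings meet at 500 million euros of turnover. Below that, the flat 20 million euro figure is the binding cap; above it, the 4% share takes over, which is why the exposure for large multinationals is far greater than the headline number suggests.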

Will AI companies change their practices in response to the EU Parliament's decision?

AI companies will likely respond through several strategies: improving security documentation, developing EU-hosted versions of their services, negotiating special terms for government clients, partnering with European cloud providers, or accepting that some markets (especially government and regulated finance) will be difficult to access. However, they cannot fundamentally change the fact that they operate under U.S. law.

What should my organization do about AI tool policies?

Begin by auditing current AI tool usage and the types of data being uploaded. Review your compliance and security requirements, then engage legal, security, and compliance teams to develop a formal policy. Categorize data by sensitivity level, decide what tools are appropriate for each level, and establish approval processes. Consider both U.S.-based and alternative solutions based on your specific needs and regulatory context.

Is this the start of broader restrictions on AI tools?

The EU Parliament's decision is likely to inspire similar restrictions in other government agencies, regulated industries, and major institutions. Expect increasing focus on AI tool policies, particularly in sectors handling sensitive data. However, most commercial organizations will probably maintain more permissive approaches for non-sensitive work while restricting AI usage for sensitive information.

Conclusion

The European Parliament's decision to block AI tools on government devices is more significant than it might initially appear. This wasn't a temporary pilot or a conservative overreaction. It was a deliberate institutional choice by one of Europe's most influential bodies to prioritize data security over the productivity benefits of AI tools.

That choice reveals something important about where we are in the AI revolution. We've reached a point where the benefits are obvious and the risks are real enough that major institutions are willing to inconvenience themselves to address them.

The EU Parliament didn't block these tools because they're afraid of technology. They blocked them because they've carefully considered what happens when sensitive information is uploaded to U.S. servers operated by companies subject to U.S. government demands. And they concluded that the risk wasn't worth it.

This decision will have ripple effects. Other institutions will likely follow. AI companies will need to adapt. Regulatory frameworks will evolve. And organizations worldwide will need to think more carefully about what data they're uploading to cloud-based services and under what circumstances.

The fundamental tension revealed here is real: AI tools offer genuine productivity benefits, but they come with data security and privacy costs. For organizations dealing with sensitive information, that trade-off is increasingly difficult to accept.

The question now is whether this will force change in how AI companies operate and how they handle data, or whether it will create a divergence where major institutions use different tools than commercial enterprises.

Either way, the EU Parliament has made a statement: the AI era isn't just about adoption and innovation. It's also about security, sovereignty, and who controls the most sensitive information. And on those questions, there are no easy answers.

Key Takeaways

- The EU Parliament blocked built-in AI tools (ChatGPT, Claude, Copilot) over concerns that sensitive legislative data could be uploaded to U.S. servers subject to U.S. government demands

- Data uploaded to AI services can be used for model training, potentially exposing sensitive information to other users and competitors

- U.S. law enforcement has multiple legal mechanisms to demand data from tech companies, and recent patterns show aggressive use of subpoenas without judicial oversight

- This decision signals that major institutions are prioritizing data security and sovereignty over AI productivity benefits, likely inspiring similar policies in other sectors

- Organizations in regulated industries should immediately audit AI tool usage and develop policies that balance security concerns with productivity needs
