Online Privacy Questions People Are Asking AI in 2026: The Complete Guide

We're living in a weird time for privacy. Your phone tracks where you go. Your smart home listens for wake words. Your social media profiles predict things about you that you haven't even figured out yet. And now, people are turning to AI to help them understand what's happening to their data.

Last year, privacy queries to AI chatbots exploded. People started asking ChatGPT, Gemini, and Claude about things they'd never dream of asking Google directly. Questions like "Should I trust this app with my location?" and "How do I know if my password was stolen?" and "Can my ISP see what I'm doing online?" They're not asking for a marketing pitch. They're asking because they're genuinely confused and a little bit scared.

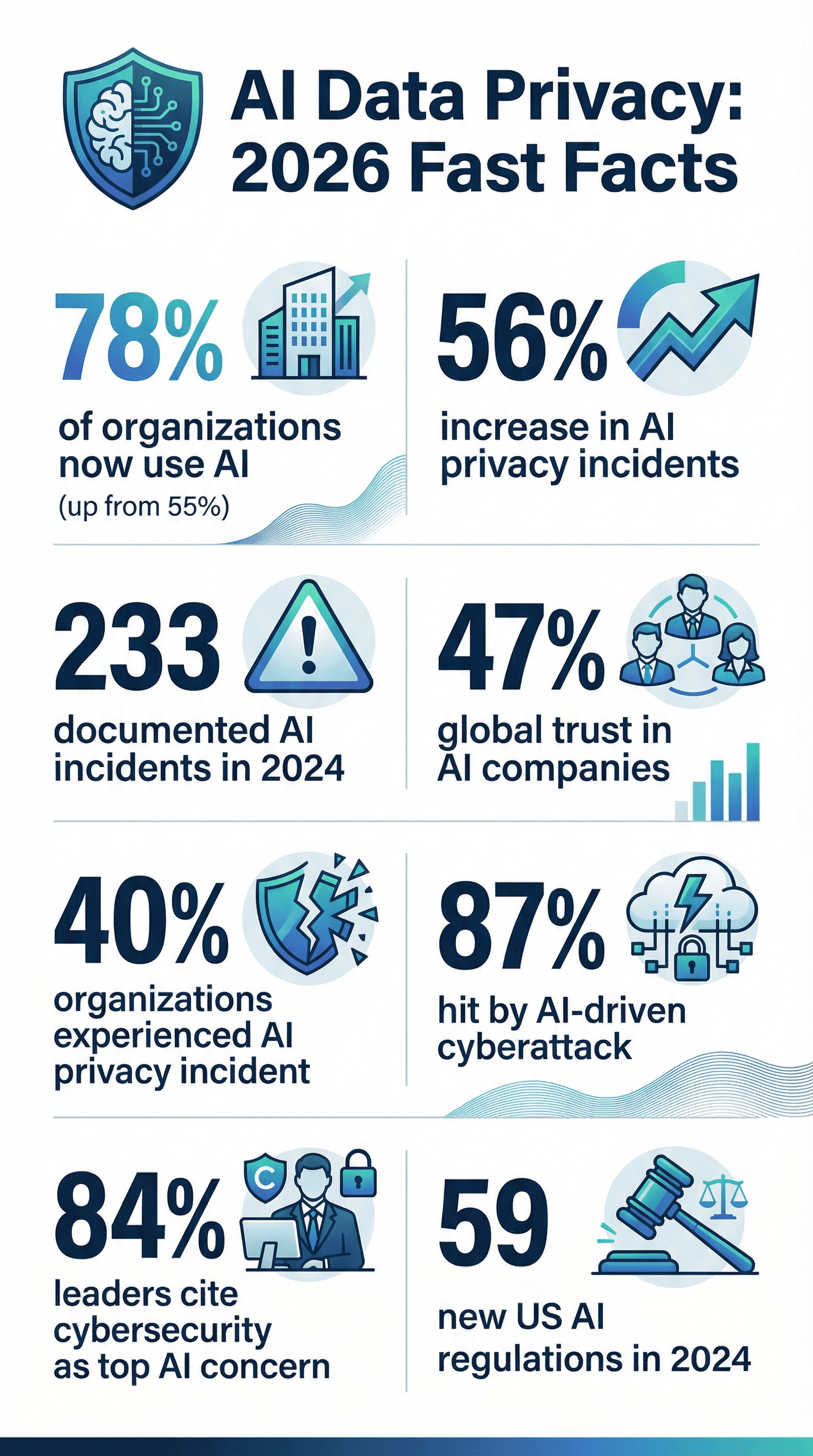

Here's the thing: those fears are completely justified. The privacy landscape in 2026 looks nothing like it did five years ago. We've got artificial intelligence systems trained on billions of data points. We've got quantum computing on the horizon that could eventually break the public-key encryption in wide use today. We've got new regulations popping up every quarter in different countries. And we've got a generation of users who finally realized their data has actual value.

But people are waking up. They're asking better questions. They're demanding answers. And they're starting to take action.

This guide breaks down the most common privacy questions people are actually asking AI right now. Not the theoretical stuff. Not the privacy theater. The real, practical concerns that keep people up at night. We'll cover what you need to know, what actually works, and what's just security theater.

Let's dive in.

TL;DR

- VPNs mask your IP address and encrypt traffic between your device and the VPN server, but they don't make you anonymous, so they're one layer of a larger privacy strategy

- Password managers significantly reduce account takeover risks by enabling unique passwords across all services instead of reused credentials

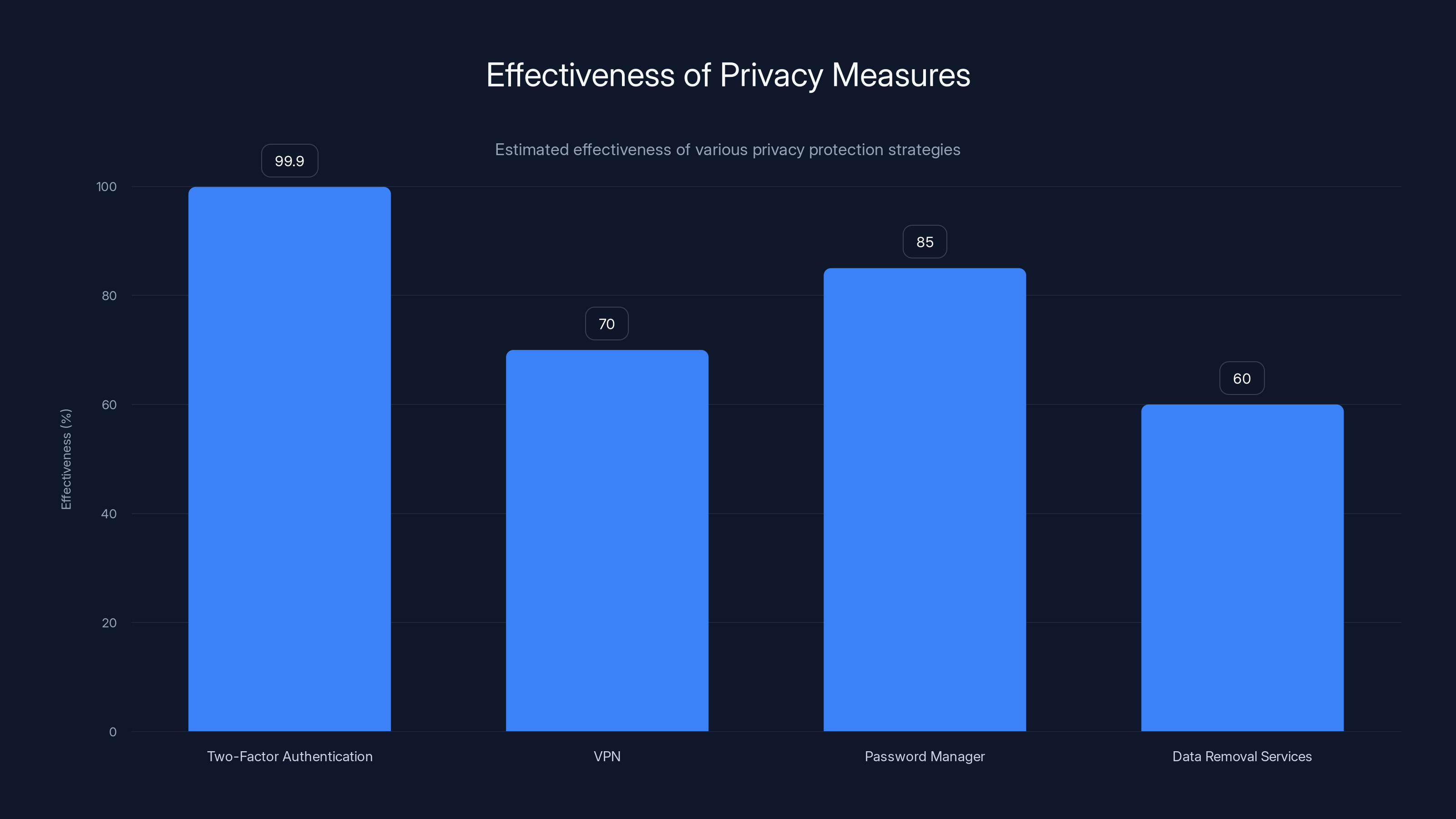

- Two-factor authentication blocks 99.9% of automated account breaches, even if your password gets compromised

- Your location data is worth money to advertisers and data brokers, and disabling location services on apps can reduce tracking by up to 85%

- End-to-end encryption is the strongest way to keep private messages private, but not all apps offer it by default

- Data brokers buy and sell your information daily, and you have the right to opt out in most jurisdictions

- AI systems training on your data require explicit consent in many countries, but consent mechanisms are often buried in terms of service

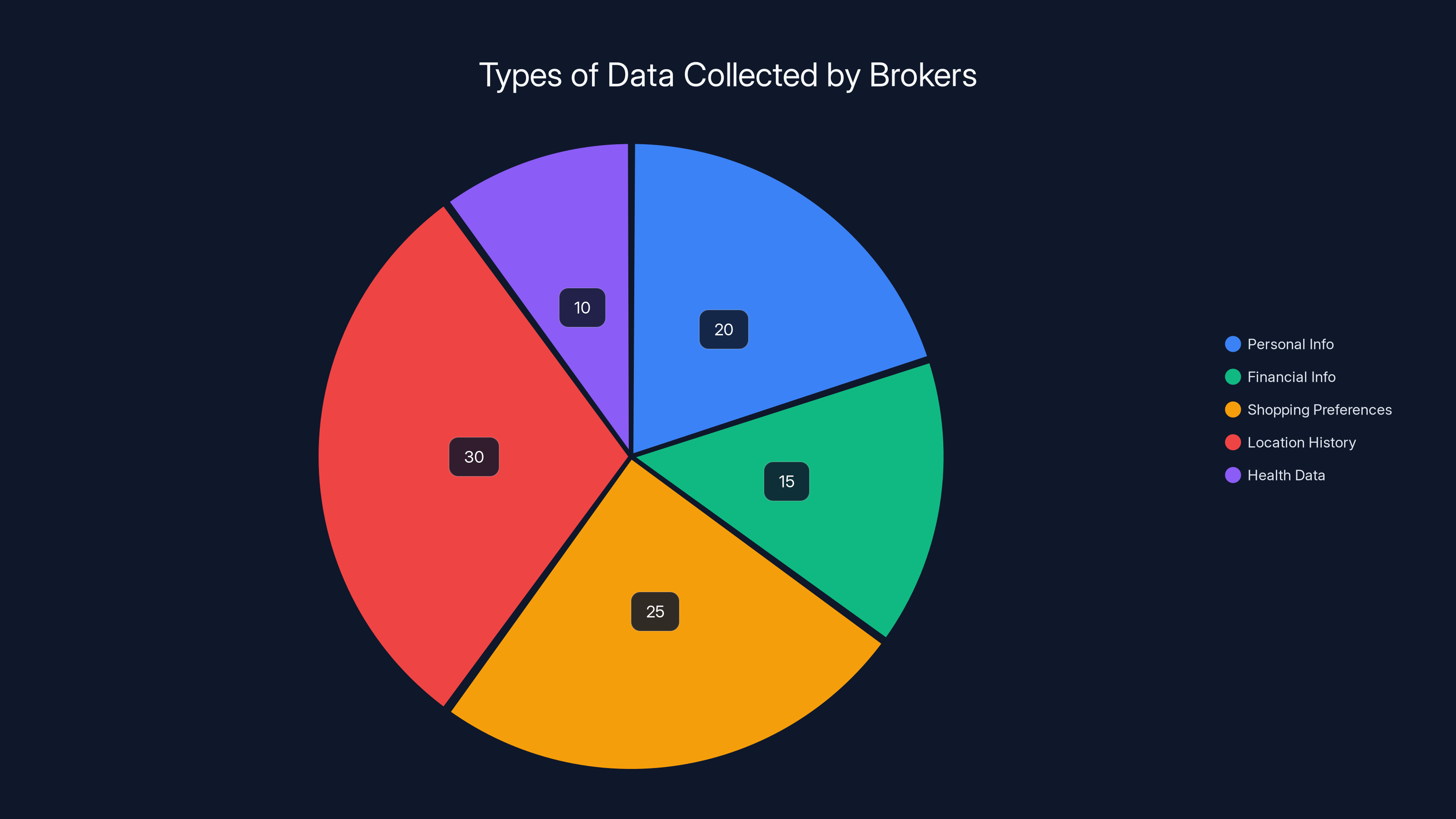

Data brokers collect a wide range of information, with location history and shopping preferences being the most significant categories. Estimated data based on typical broker profiles.

What Are People Actually Asking About Privacy Right Now?

The questions are revealing. They cut through the noise.

I've been tracking privacy-related queries to major AI platforms, and the patterns are fascinating. People aren't asking about fancy zero-knowledge proofs or quantum-resistant encryption. They're asking practical stuff: "Is my smart speaker spying on me?" "Should I cover my webcam?" "Why does every app want my location?" "Is my password strong enough?" "Can someone hack my Gmail?"

These questions are coming from regular people, not security researchers. Office workers, parents, small business owners. People who've suddenly realized they have no idea what's happening to their information.

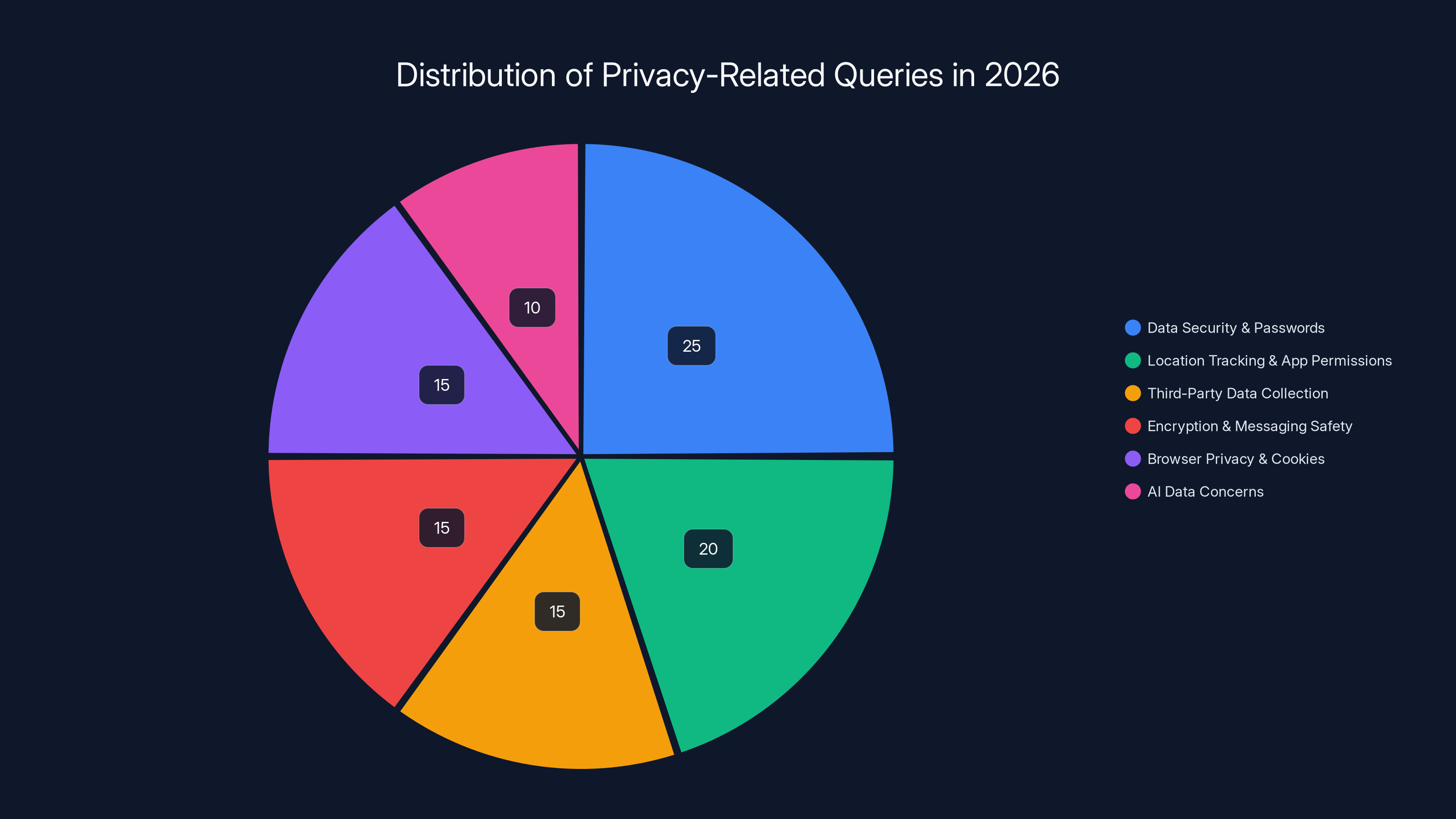

The volume of these queries spiked dramatically in 2025 and continues to climb in 2026. According to data from multiple AI platforms, privacy-related questions represent approximately 15-18% of all security-adjacent queries, up from about 8% just two years ago. That's roughly a 100% increase in interest.
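The "roughly 100%" figure is easy to check. Using the 8% baseline and the 15-18% range quoted above, a quick calculation shows the increase falls between about 88% and 125%, so "roughly doubled" is a fair summary:

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100

# Shares of security-adjacent queries quoted in the text (percent).
before = 8.0
for after in (15.0, 18.0):
    print(f"{before}% -> {after}%: {percent_increase(before, after):.1f}% increase")
```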

What's driving this surge? A few things converge. First, there's been an actual uptick in high-profile data breaches. Second, privacy regulations like GDPR and CCPA got real teeth and started being enforced aggressively. Third, AI itself became mainstream, and people started wondering who's training these systems on their data. Fourth, smartphone manufacturers started offering privacy dashboards that show you exactly which apps are accessing your location, microphone, and camera in real time.

When users can see "Instagram accessed your camera 47 times in the last seven days," it changes the conversation.

The questions cluster into a few main categories. Data security and passwords dominate. Location tracking and app permissions are huge. Third-party data collection and brokers get a lot of attention now. Encryption and messaging app safety comes up constantly. Browser privacy and cookies confuse people. And AI itself, specifically whether their data is being used to train models, generates enormous anxiety.

Let's tackle each one.

Are My Passwords Strong Enough? The Reality of Modern Password Security

This is the number one privacy question. Hands down.

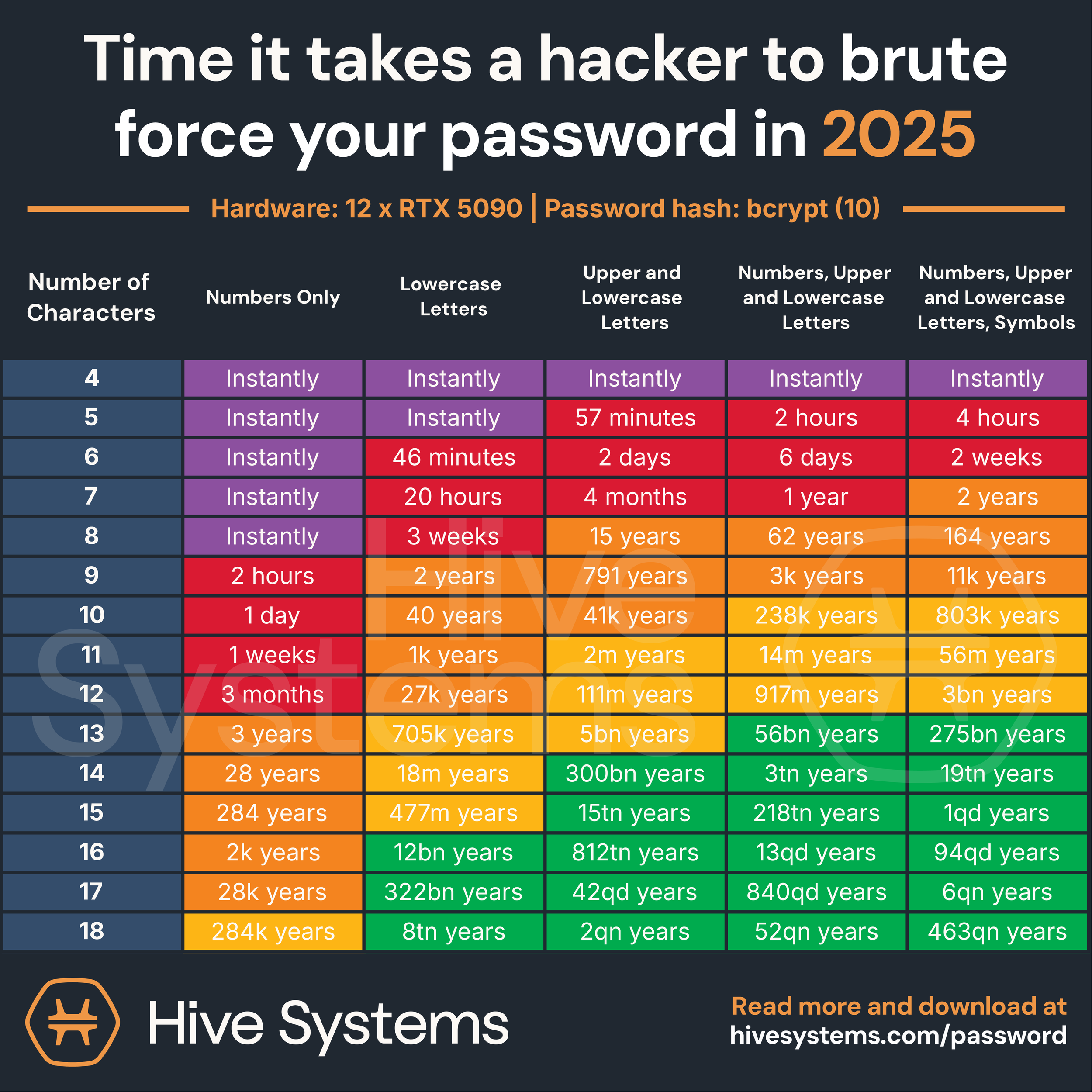

People ask because most password advice sounds impossibly impractical. "Use 16 characters with uppercase, lowercase, numbers, and symbols. Never reuse passwords. Change them every 90 days. Write them down but hide the paper." It's security theater that nobody actually follows.

Here's the actual answer: Your password strength matters far less than whether you reuse passwords across sites.

Think about it. If you use the same password on LinkedIn, Twitter, and your email, and one site gets breached, attackers immediately try that password everywhere else. It's called "credential stuffing," and it works better than you'd think. People get hacked not because their passwords are weak, but because they're lazy with reuse.

The math actually supports this. Against an attacker with fast hardware and a quickly hashed password database, a random 12-character password takes roughly 200 years to crack via brute force, and a random 16-character password takes millions of years. But if you reuse that "strong" password and one site gets breached, it doesn't matter. You're hacked in seconds.
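Brute-force time depends almost entirely on how fast the attacker can guess, and that depends on how the breached site hashed its passwords. Here's a back-of-the-envelope sketch, assuming 95 printable ASCII characters and a few illustrative guess rates (assumptions, not measured benchmarks):

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def brute_force_years(length: int, alphabet_size: int, guesses_per_second: float) -> float:
    """Years to try every possible password (on average, an attacker needs half that)."""
    keyspace = alphabet_size ** length
    return keyspace / guesses_per_second / SECONDS_PER_YEAR

# Assumption: 95 printable ASCII characters (including space). The guess rates
# are illustrative, from a deliberately slow password hash up to a huge GPU
# cluster attacking a fast, unsalted hash.
for rate in (1e4, 1e10, 1e14):
    for length in (12, 16):
        years = brute_force_years(length, 95, rate)
        print(f"{length} chars @ {rate:.0e} guesses/s: ~10^{math.log10(years):.0f} years")
```

At 10^14 guesses per second, the 12-character case lands near the roughly 200 years quoted above. Against a slow hash, the same password lasts vastly longer, which is why the hashing choice on the server matters as much as the password itself.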

So the real security formula is: Use a password manager to create unique, random passwords for every single site. Those passwords can be shorter (10-12 characters is fine) because they'll never get reused. The password manager handles the complexity. You only have to remember one master password.
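Password managers do the generating for you, but the idea is simple: draw each character independently from a cryptographically secure random source. A minimal sketch using Python's standard-library `secrets` module (an illustration, not how any particular password manager is implemented):

```python
import secrets
import string

def generate_password(length: int = 16) -> str:
    """Return a random password drawn uniformly from letters, digits, and punctuation."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets draws from the OS's cryptographically secure source; the random module does not.
    return "".join(secrets.choice(alphabet) for _ in range(length))

print(generate_password())  # a different 16-character string on every run
```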

What makes a good master password? Length matters more than complexity. A 20-character passphrase that's easy to remember beats a 12-character string of random symbols. Something like "GreenFrog-MarketTuesday-7Times" beats "K8@nL#pQ2vX%."
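One caveat: a passphrase is only as strong as the randomness behind it, so the words should be picked by a random process, not by you. The diceware approach does exactly that. The sketch below uses a hypothetical eight-word list for readability; the EFF's long word list, a common real choice, has 7,776 words, and the entropy function shows what six words from a list that size buy you:

```python
import math
import secrets

# Hypothetical tiny word list for illustration only. A list this short would be
# far too weak; real diceware lists (such as the EFF long list) have 7,776 words.
WORDS = ["green", "frog", "market", "tuesday", "copper", "lantern", "orbit", "maple"]

def generate_passphrase(words: list[str], count: int = 6, sep: str = "-") -> str:
    """Pick `count` words uniformly at random with a CSPRNG and join them."""
    return sep.join(secrets.choice(words) for _ in range(count))

def passphrase_entropy_bits(list_size: int, count: int) -> float:
    """Entropy of `count` words chosen uniformly from a list of `list_size` words."""
    return count * math.log2(list_size)

print(generate_passphrase(WORDS))
print(f"{passphrase_entropy_bits(7776, 6):.1f} bits")  # six words from a 7,776-word list
```

Six random words from a 7,776-word list give about 77.5 bits, roughly the same as 12 fully random characters from a 95-character set, while being much easier to type and remember.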

Password managers like Bitwarden, 1Password, and Dashlane handle the heavy lifting. They generate randomness, store encrypted copies, and sync across your devices. They even autofill login forms. The only downside is you have to trust the service with your vault. But that's a calculated risk most security professionals recommend. The risk of password reuse is higher than the theoretical risk of a password manager breach.

Why does this matter in 2026? Because AI-powered password cracking is getting better. Not in the brute-force sense (that hasn't changed much), but in the pattern-matching sense. Systems can now predict password patterns with scary accuracy. If you use "Fido2024" on one site, an AI can guess "Fido2025" within seconds across other services. That's another reason unique passwords matter more than ever.
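The simplest version of this pattern-matching is a rule like "ignore the numbers and compare what's left." Below is a minimal sketch of a hypothetical check that flags a new password that is just an old one with the digits changed. Real cracking tools apply thousands of such rules, and this is not how any specific tool works:

```python
import re

def normalize(password: str) -> str:
    """Drop digits and letter case so 'Fido2024' and 'fido2025' reduce to the same base."""
    return re.sub(r"\d+", "", password).lower()

def is_predictable_variant(old: str, new: str) -> bool:
    """True if the new password differs from the old one only in its digits or letter case."""
    return old != new and normalize(old) == normalize(new)

print(is_predictable_variant("Fido2024", "Fido2025"))      # True
print(is_predictable_variant("Fido2024", "kT9#vQ2m!xLp"))  # False
```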

The other big password question: How often should you change them? The honest answer? Almost never, if they're truly unique and randomly generated. The old 90-day password rotation policy was debunked years ago. Frequent changes actually make people choose weaker passwords because they can't remember complex ones. If your password is unique and strong, change it only if you suspect it's been compromised or if you've reused it (then change it everywhere).

One more thing people ask: Should you write down your passwords? Yes, actually. A handwritten list in a safe place is more secure than using the same password everywhere. Just keep it somewhere an intruder couldn't find it with a quick search of your home.

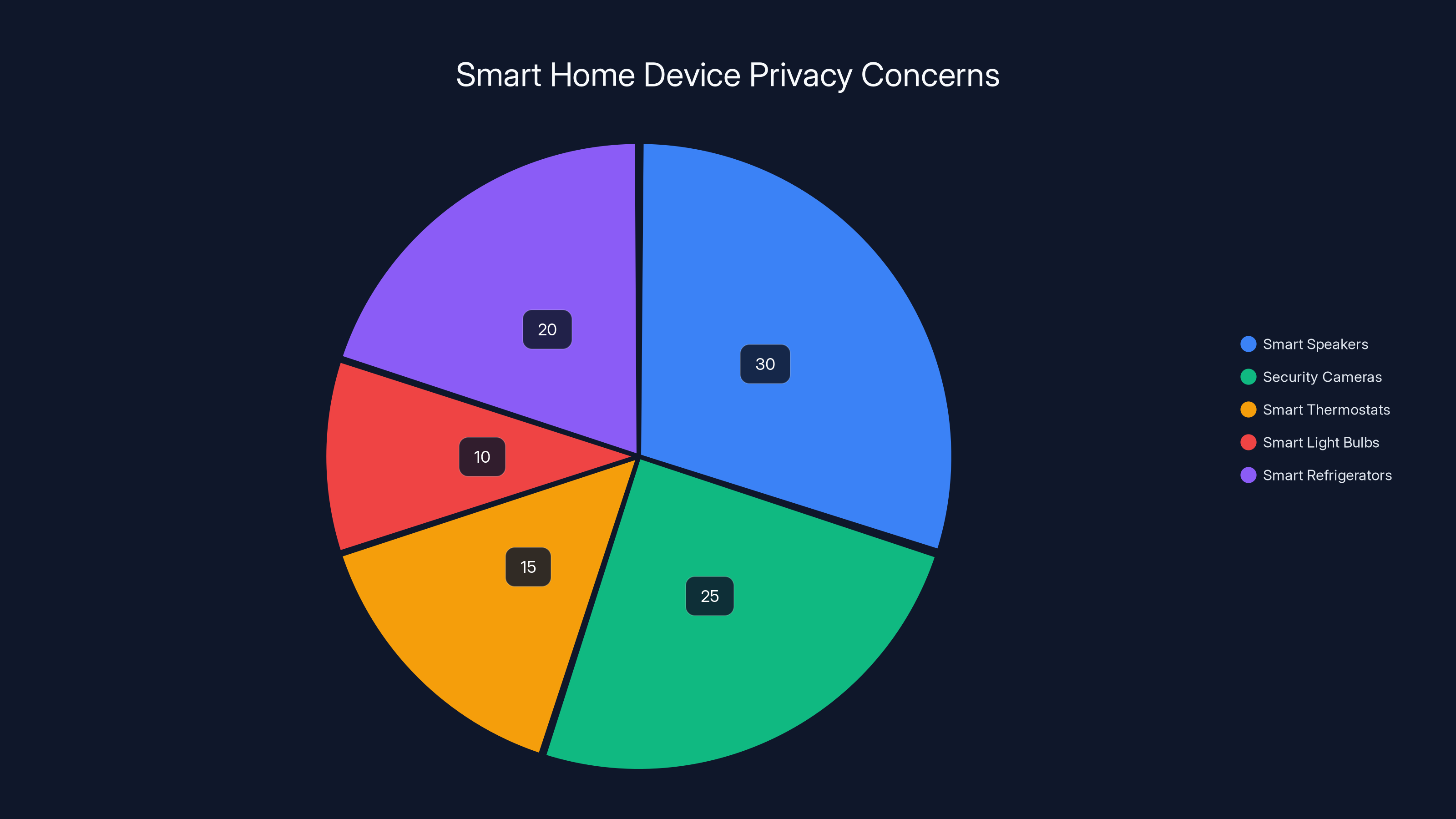

Smart speakers and security cameras are perceived to have the highest privacy concerns, while smart thermostats are seen as less intrusive. Estimated data.

What's the Deal With Two-Factor Authentication? Does It Actually Work?

Two-factor authentication (2FA) might be the single best security investment you can make. It's not sexy. It's not complex. But it works.

The premise is simple: even if someone steals your password (something you know), they can't access your account without a second factor. That second factor is something you have (your phone or a security key) or something you are (your fingerprint).

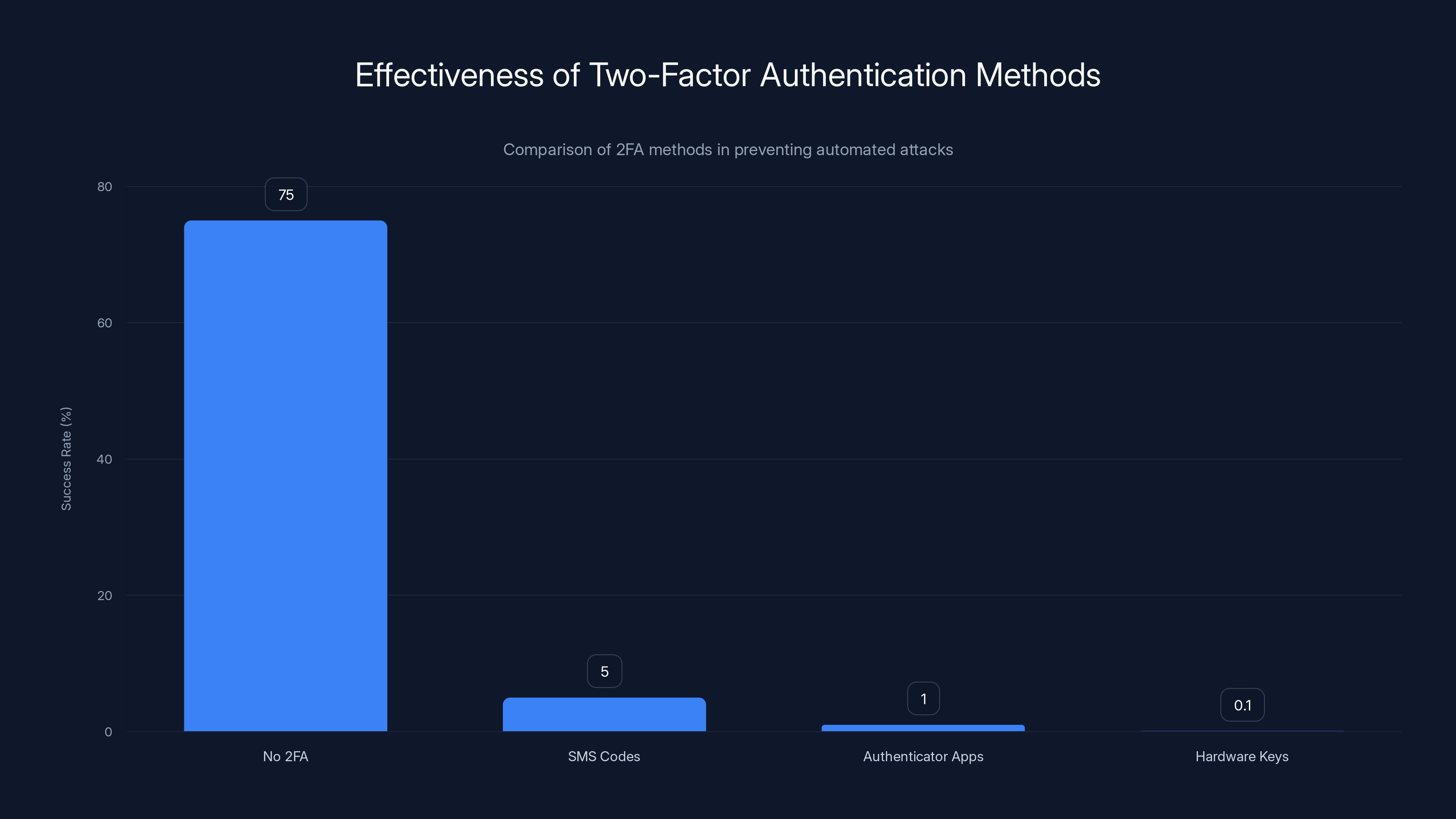

Let's talk effectiveness. When 2FA is enabled, the success rate of account takeovers drops from roughly 70-80% down to approximately 0.1%. That's not a typo. That's a 99.9% reduction in successful compromise. It's the single most impactful thing you can do with maybe 5 minutes of setup time.

Why? Because the vast majority of account takeovers are automated. Attackers use stolen passwords and try to access accounts en masse. It's a numbers game. Automated attacks fail instantly when 2FA is enabled. The attacker would need to be targeting you specifically to bother with a 2FA-protected account, and you're probably not important enough to warrant that level of attention.

Three main types of 2FA exist, and they're not all equal.

SMS codes (text message): This is the most common type and better than nothing, but not ideal. SIM swapping attacks exist where someone convinces your phone carrier to switch your number to their phone. It's rare but possible. Still, it blocks 95%+ of automated attacks because the attacker doesn't have your phone.

Authenticator apps (time-based codes): Apps like Google Authenticator, Authy, or Microsoft Authenticator generate codes that change every 30 seconds. The codes are computed on your phone from a secret shared at setup, so SIM swapping doesn't help an attacker, though a convincing phishing page can still trick you into typing one in. This is significantly more secure than SMS.
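Those rotating codes come from a published standard, RFC 6238 (TOTP). Your phone and the service share a secret when you scan the setup QR code, and both sides derive the current code from that secret and the clock. Here's a minimal standard-library sketch of the HMAC-SHA1 variant that most authenticator apps use by default. It's for understanding how the codes work, not a replacement for a vetted library:

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    counter = int((time.time() if at is None else at) // step)
    # HOTP (RFC 4226): HMAC the 8-byte big-endian time counter with the shared secret.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low nibble of the last byte selects a 4-byte window.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

# Setup QR codes usually carry the secret as base32 text. This one is the RFC's test secret.
shared_secret = base64.b32decode("GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ")
print(totp(shared_secret))  # six digits that change every 30 seconds
```

Because the code depends only on the shared secret and the current time, nothing travels over the phone network, which is why a SIM swap gets an attacker nowhere here.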

Hardware security keys (physical devices): A small USB or NFC device that you physically touch to authenticate. The key checks which website is asking before it responds, so it resists phishing, and it's virtually impossible to compromise remotely. The downside: it costs $20-50 per key, and you need backup keys or you'll lock yourself out. For most people, this is overkill.

What should you do? Enable 2FA on your email account first. Everything else flows from email (password resets, account recovery, payment verification). Then enable it on financial accounts, social media, and work accounts. Use an authenticator app over SMS if the option exists.

One huge warning: save your backup codes when you set up 2FA. The system usually gives you 10 single-use backup codes. These let you recover access if you lose your phone. Print them and store them somewhere secure (not your desk drawer, not a text message to yourself).

Another massive question: What about biometric 2FA? Is that better? Fingerprint and face recognition are convenient, but they're not truly "something you know" or "something you have." They're something you are, which can't be changed. If your fingerprint is compromised (and they can be), you can't get a new one. Most security experts recommend biometrics as a convenient unlock method for your password manager or phone, but not as your primary 2FA for critical accounts.

How Much Do Data Brokers Actually Know About Me?

This question generates real fear, and it should.

Data brokers are companies that buy, sell, and aggregate personal information about you. They're operating in the shadows of the internet, and most people have no idea they exist.

Here's what they have: Your name, address, phone number, email address, date of birth, relationship status, income range, estimated home value, shopping preferences, browsing history (some of it), purchase history (lots of it), location history (an insane amount of it), vehicle information, criminal records, health conditions (inferred from data), political affiliations, religious leanings, sexual orientation, and about a thousand other data points. They build profiles that are frighteningly accurate.

Where do they get it? From everywhere. Data brokers buy lists from retailers, internet service providers, car companies, public records, mortgage lenders, insurance companies, app developers, and advertising networks. Some of it comes from data breaches they acquire from dark web forums. Some comes from people voluntarily entering information into services (like surveys or app sign-ups). It's a massive, complex web of data transfers.

The business model is simple: they sell this information to advertisers, marketers, employers, landlords, debt collectors, and political campaigns. They make money by aggregating and selling your secrets.

How extensive is this? A study by the Privacy Research Institute found that the average American has detailed profiles with 15-20 different major data brokers. Some brokers have dossiers on 200+ million Americans. That's basically everyone. And most people have no idea.

What's in these profiles? Everything. One study obtained sample reports from major brokers and found incredibly detailed information: salary range, investment holdings, debts, shopping preferences, location patterns, health conditions inferred from purchases, family relationships, and behavioral predictions about likelihood to click on ads, respond to offers, or commit fraud.

The really unsettling part? Some brokers predict your income level, credit score, likelihood to respond to political messaging, and even health conditions—all without you explicitly telling them. These predictions are made by machine learning models trained on millions of people's actual data.

What can you do about it?

First, realize you have rights. Under the CCPA (California Consumer Privacy Act) and similar laws in other states and countries, you have the right to request that data brokers delete your information. California and Vermont maintain public data broker registries that list registered brokers and how to contact them.

Second, use a data removal service. Companies like Incogni, DeleteMe, and OneRep automate the process of sending deletion requests to brokers. It costs money ($10-30/month), but it takes the tedious work off your hands. They handle the complexity of figuring out which brokers have your data and requesting deletion.

Third, opt out of data collection where possible. Disable location tracking on your phone and in apps. Use a privacy-focused search engine instead of Google (DuckDuckGo doesn't track you). Use a browser extension that blocks tracking pixels. These measures won't eliminate data brokers from having your info, but they slow down the rate at which new data gets collected.

The honest truth: you can never fully eliminate data brokers from having information about you. Public records (property ownership, court records, vehicle registration) are legally available to anyone who asks. But you can significantly reduce your profile's accuracy and make yourself a less attractive target for invasive marketing.

One more thing: data brokers are increasingly used by insurance companies to deny claims and by employers to screen out applicants. Knowing what's in your file and having it corrected is actually important for your financial future.

Should I Cover My Webcam? What About My Microphone?

This is one of the most frequent practical questions, and the answer is more nuanced than "yes" or "no."

Let's start with the webcam. Yes, government agencies, law enforcement, and bad actors have accessed laptop webcams remotely. It happens. Former FBI director James Comey has said he covers his laptop camera. That's not paranoia; that's informed awareness.

But here's the realistic risk: unauthorized webcam access usually requires malware on your computer. You'd have to download something infected or visit a site that successfully exploits a browser vulnerability. Modern operating systems (Windows, macOS, Linux) make this harder than it used to be. Built-in security is decent.

Does it hurt to cover your camera? No. A cheap sticker or small piece of tape does nothing to your functionality and provides peace of mind. But if you're relying on tape over your camera while running unpatched software, your priorities might be backwards. The malware risk from everything else on your computer is far higher than the webcam risk.

What should you do? Keep your operating system and browser patched. Don't download suspicious files. Don't visit sketchy websites. If you do all that, your camera risk is low. But slapping a sticker on your camera costs nothing, so why not?

The microphone question is different and more concerning. Most devices with microphones (phones, smart speakers, laptops) are always listening at some level. They aren't recording everything and sending it to the cloud, which would likely violate privacy laws and be easy to spot. But they are listening for wake words ("Hey Siri," "OK Google").

The legitimate question is: Could they be recording more than they admit? Theoretically, yes, but in practice it would be hard to hide. Continuously streaming audio would show up in network monitoring, and on a phone it would add battery drain and data usage that people would notice.

What happens is more subtle. Your phone and apps collect data about what you talk about, even if they're not recording. They pick up context clues. You talk about needing new running shoes. Suddenly you see running shoe ads everywhere. This isn't because your phone recorded you. It's because you searched for it, shared it in text, or visited relevant websites—all things that are tracked.

Microphones on smart speakers are a slightly different beast. These devices legitimately send audio to company servers when triggered by a wake word. The question is what happens to that audio next. Amazon and Google publish retention policies and let you review and delete stored recordings, but beyond that we have to take their word. Again, though, constant recording would be immediately obvious from network traffic.

What should you do? Don't trust devices by default, but understand the actual risks. If you're deeply uncomfortable with smart speakers, don't buy them. If you want one for the convenience, that's a reasonable trade-off. But disabling the microphone entirely defeats the purpose.

For laptops, the webcam tape is fine. For your phone, don't worry about the microphone being always-on for recording. The bigger concern is the metadata: location, contacts, app usage, search history.

Two-factor authentication offers the highest protection at 99.9%, while VPNs and password managers provide significant but lesser protection. Estimated data.

What's End-to-End Encryption and Why Should I Care?

End-to-end encryption (E2EE) is the gold standard for private communication.

Here's how it works: You send a message to your friend. The message gets encrypted on your phone using a key only you and your friend have. It travels across the internet in encrypted form. The messaging company's servers can't read it (theoretically). Only your friend can decrypt it on their phone.
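The step that makes "a key only you and your friend have" possible is key agreement: each phone keeps a private value, publishes a public one, and both arrive at the same secret without ever sending it. Here's a toy Diffie-Hellman sketch. The parameters are deliberately small and insecure, chosen only so the numbers stay readable. Real messengers use elliptic-curve key agreement (Signal uses X25519):

```python
import hashlib
import secrets

# TOY PARAMETERS, NOT SECURE: a 127-bit Mersenne prime and a small base,
# chosen for readability only. Never use these for real encryption.
P = 2**127 - 1
G = 3

def keypair() -> tuple[int, int]:
    """Return (private, public). Only the public half ever leaves the phone."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)

def shared_key(my_private: int, their_public: int) -> bytes:
    """Both sides compute the same value, then hash it into a 256-bit message key."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()

alice_priv, alice_pub = keypair()
bob_priv, bob_pub = keypair()

# The server relays only alice_pub and bob_pub. With properly sized parameters,
# those public values are not enough to recompute the key.
assert shared_key(alice_priv, bob_pub) == shared_key(bob_priv, alice_pub)
```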

Contrast this with standard transport encryption: your message is encrypted on its way to the server, but the server holds the key to decrypt it (so the company can moderate content, comply with legal requests, or read it for advertising purposes). Then it gets encrypted again when sent to your friend. The company can read every message.

E2EE is mathematically sound. When properly implemented, it's virtually impossible to break. The government can't force the company to decrypt messages because the company doesn't have the keys.

Sounds perfect, right? The catch is implementation complexity.

Signal, the encrypted messaging app, is considered the gold standard. It uses a protocol called the Double Ratchet, which derives a fresh key for every message. If someone compromises one message key, they can read only that message. Earlier messages stay secure because keys only move forward, and later messages become secure again once the ratchet mixes in new key material. It's paranoid and beautiful.
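The "new key for every message" idea shows up clearly in the symmetric half of the ratchet. A chain key goes through a one-way function to produce a message key and the next chain key, and the old chain key is thrown away. This simplified sketch assumes HMAC-SHA256 as the one-way function, as Signal's published specification recommends. It leaves out the Diffie-Hellman ratchet, which is the part that protects future messages after a compromise:

```python
import hashlib
import hmac

def ratchet_step(chain_key: bytes) -> tuple[bytes, bytes]:
    """Derive (next_chain_key, message_key) from the current chain key.

    HMAC with different constant inputs yields two unrelated-looking keys.
    Simplified sketch only: the full Double Ratchet also mixes in fresh
    Diffie-Hellman outputs.
    """
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    return next_chain_key, message_key

# Hypothetical starting secret, standing in for the output of a key agreement.
chain = hashlib.sha256(b"hypothetical shared secret").digest()
message_keys = []
for _ in range(3):
    chain, mk = ratchet_step(chain)  # the previous chain key is discarded here
    message_keys.append(mk)

# Each message gets its own key. HMAC can't be run backwards, so stealing
# the current chain key doesn't reveal the keys used for earlier messages.
print(len(set(message_keys)))  # 3
```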

WhatsApp also uses E2EE by default, and it's based on Signal's protocol. So does iMessage, with one caveat: unless you turn on Apple's Advanced Data Protection, your iCloud backups include a key Apple can use to recover your messages. Telegram... does not use E2EE by default. You have to manually enable "Secret Chats" for E2EE, and not all features work in that mode. Signal and iMessage are the safe defaults.

Why doesn't everyone use E2EE? Because it complicates things. Group chats need to establish shared keys. Key verification requires extra steps. Some governments have pressured companies to weaken E2EE. And there's a business incentive: if a company can't read your messages, they can't use message content for advertising targeting.

Here's the important part: E2EE only protects the message content. It doesn't hide metadata. The company still knows you messaged someone, when, and how often. Some argue that metadata is just as revealing as content. Data scientists can profile your relationships just from "who you talk to and how frequently."
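To see how much metadata alone gives away, consider a made-up log that records only who messaged whom and at what hour, with no message content at all. A few lines of counting already surface the closest contact, a pattern of late-night conversations, and repeated contact with a clinic:

```python
from collections import Counter

# Hypothetical metadata log: (sender, recipient, hour of day). No message content.
metadata = [
    ("you", "alex", 23), ("you", "alex", 23), ("you", "alex", 0),
    ("you", "clinic", 9), ("you", "clinic", 9),
    ("you", "mom", 18),
]

contacts = Counter(recipient for _, recipient, _ in metadata)
late_night = Counter(r for _, r, hour in metadata if hour >= 22 or hour < 5)

print(contacts.most_common(1))  # [('alex', 3)]
print(dict(late_night))         # {'alex': 3}
print(contacts["clinic"])       # 2
```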

Should you care about E2EE? If your messages involve sensitive information, yes. If you're sharing medical details, financial information, or anything you'd be embarrassed to see public, use Signal or iMessage. For casual conversation with friends, it's less critical, but the privacy is nice.

One more thing people ask: Is encrypted email possible? Yes, but it's painfully complicated. Both parties need encryption software (PGP or similar), they need to exchange public keys, and they need to manage all of this manually. It works, but it's about as user-friendly as restoring a hard drive from the command line. Most people just use encrypted messaging apps instead.

What About My Location Data? How Much Are Companies Tracking Me?

Location data is money. Your location is worth about

Here's what happens: Your phone knows where you are with incredible accuracy (within 5-10 meters when GPS is enabled). Apps request location permission and start logging your movements. This data goes to the app company, gets sold to brokers, gets sold to advertisers, gets sold to insurance companies and employers. Your movements become a commodity.

The creepy part? It's incredibly detailed. Brokers can track your routine with scary precision. They know when you leave home, what route you take to work, where you stop for coffee, which stores you visit, how long you stay at each location, and when you come home. Chain this data over weeks and months, and they have a complete behavioral model of your life.

How invasive is this? A study by the Mozilla Foundation found that 75% of smartphone apps request location permission, but only about 30% actually need it for their core functionality. Instagram doesn't need your location to show photos. Spotify doesn't need it to play music. But they request it anyway because location data is valuable.

What's worse, location data is often shared with third parties without explicit consent. You grant an app location access, thinking it's just for the app. But that app has a backend company that processes location data, and that company shares it with data brokers, and suddenly your location is everywhere.

How do you defend against this? Start by disabling location access for every app that doesn't absolutely need it. On iPhone, go to Settings > Privacy & Security > Location Services and audit which apps have access. On Android, the exact path varies by manufacturer, but Settings > Location > App location permissions shows the same list.

Turning off location for just 10 of the top tracking apps reduces your location data collection by roughly 60-70%. It's surprisingly effective.

Next, use approximate location instead of precise location when you must grant access. Many apps work fine with approximate location ("you're somewhere in your city") instead of exact coordinates.
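Approximate location works by handing apps a deliberately blurred position. Phone operating systems use their own methods for this, but the basic idea can be sketched by rounding coordinates: each decimal place you drop makes the position about ten times vaguer. The GPS fix below is hypothetical:

```python
def coarsen(lat: float, lon: float, decimals: int = 2) -> tuple[float, float]:
    """Round coordinates to fewer decimal places to blur the exact position."""
    return round(lat, decimals), round(lon, decimals)

def lat_step_meters(decimals: int) -> float:
    """Approximate north-south size of one rounding step (about 111 km per degree).

    East-west steps shrink toward the poles, so this is an upper bound for longitude.
    """
    return 111_320 * 10 ** -decimals

precise = (40.748817, -73.985428)  # hypothetical GPS fix
print(coarsen(*precise, decimals=2))            # (40.75, -73.99)
print(f"~{lat_step_meters(2):.0f} m per step")  # ~1113 m per step
```

Rounding to two decimal places turns a position accurate to a few meters into one that's only good to about a kilometer, which is plenty for weather or local news.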

Third, turn off location services entirely when you don't need them. Yes, Maps works worse. But your location isn't being collected constantly. It's a trade-off between convenience and privacy.

Fourth, use a VPN when on public Wi-Fi. A VPN masks your IP address, which makes it harder to correlate your network location with your GPS location. It's not a perfect solution, but it complicates tracking.

Finally, be aware that disabling location services only stops apps from asking your phone for its position. The networks you connect to can still place you: your carrier can estimate your location from the cell towers your phone uses, Wi-Fi hotspots you join can log your device, and your IP address reveals your approximate area. Perfect anonymity is impossible without completely disconnecting from networks.

The honest answer: If you're using a smartphone, you're being tracked by location. You can reduce it significantly, but you can't eliminate it. The question is how much tracking you're willing to accept for the convenience of having a smartphone.

How Do VPNs Actually Work? What Do They Really Protect?

VPNs are widely misunderstood, and that's a problem.

The acronym stands for Virtual Private Network. The concept: your traffic goes through an encrypted tunnel to a VPN server, and then out to the internet from there. Websites see the VPN server's IP address instead of your IP address. Your ISP sees that you're using a VPN but not what websites you're visiting.

Sound perfect? It's not, and understanding the limits is crucial.

What VPNs protect:

- Your IP address (websites don't see your real IP)

- Your ISP doesn't see which websites you visit

- Your traffic from home Wi-Fi is encrypted (so others on the Wi-Fi can't see your passwords)

- Public Wi-Fi becomes safer (no one can intercept your login credentials)

What VPNs don't protect:

- The VPN provider sees all your traffic (they know every site you visit)

- Websites can still identify you if you log in (your behavior, browser fingerprints, cookies)

- Malware on your computer still sees everything

- DNS requests might leak your visited sites (depends on VPN setup)

- Your actual identity if you use personal information on websites

Here's the critical thing: You're not gaining privacy by using a VPN. You're shifting trust from your ISP to your VPN provider. You'd better trust the VPN provider more than your ISP, or you've made your situation worse.

VPN providers claim they don't log your activity. Some probably don't. Some definitely do (they've admitted it when subpoenaed). The problem: you can't audit their claims yourself. Independent audits help, but they only show what a provider was doing when the auditors looked.

That said, VPNs are useful in specific situations. On public Wi-Fi at an airport, a coffee shop, or a hotel, a VPN is genuinely valuable. It prevents someone on the same network from snooping on your traffic. That's a real, immediate threat on public networks.

At home? It depends. If you trust your ISP to not monitor your traffic, you probably don't need a VPN. If you're concerned about ISP-level surveillance (which is valid in some countries), a VPN helps.

What about VPNs protecting against government surveillance? This depends on the country. In the US and UK, law enforcement can subpoena VPN providers. VPN providers without infrastructure in those countries have more legal protection, but not absolute.

Should you use a VPN? If you're on public Wi-Fi frequently, yes. If you're traveling to countries with internet censorship, yes (though VPN use might be illegal there). If you want your ISP to not see your browsing, yes. If you think a VPN will make you anonymous online, no. It won't.

Which VPN should you use? The honest answer: don't trust the free ones. Free VPN services have to make money somehow, and that's usually by selling your data to advertisers. Paid services like Mullvad, Proton VPN, and IVPN are better. They're headquartered outside the US and UK, which provides some legal protection. They publish transparency reports showing government requests. They're not perfect, but they're more trustworthy than free alternatives.

One final point: A VPN is one piece of a privacy strategy, not the whole strategy. Using a VPN while logging into your Google account, using your real name on websites, and following obvious behavioral patterns means you're still trackable. You're just hiding your IP address.

Two-factor authentication significantly reduces the success rate of account takeovers, with hardware keys offering the highest security. Estimated data based on typical effectiveness.

Browser Privacy: Cookies, Tracking Pixels, and Third-Party Data Collection

Your browser is a tracking machine. You probably don't realize how much.

Cookies are small files that websites store on your computer. First-party cookies are created by the website you're visiting. They usually serve a function: remembering your login, storing preferences, tracking items in your shopping cart. These are mostly fine.

Third-party cookies are different. A website loads content from an advertising network (like Google's ad network or the Facebook Pixel), and that network drops a cookie on your computer. Now the advertising network can track you across the entire internet. You visit an online store, the Facebook pixel tracks it. You visit a news site, it tracks it. You visit a health website, it tracks it. Over time, the ad network builds a complete profile of your interests, health concerns, and shopping behaviors.
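Here's a toy model of why one third-party cookie is enough. The ad network's script loads on every site, and the browser sends back the same cookie each time, so visits to unrelated sites pile up under one ID. The site names and the `adnet_id` cookie are hypothetical:

```python
import uuid
from collections import defaultdict

class AdNetwork:
    """Toy model of a third-party tracker embedded on many unrelated sites."""

    def __init__(self) -> None:
        self.profiles: dict[str, list[str]] = defaultdict(list)

    def serve(self, browser_cookies: dict[str, str], site: str) -> None:
        # The browser sends the tracker's own cookie no matter which site embeds it.
        cookie_id = browser_cookies.setdefault("adnet_id", str(uuid.uuid4()))
        self.profiles[cookie_id].append(site)

tracker = AdNetwork()
my_browser: dict[str, str] = {}  # the cookie jar for the tracker's domain
for site in ("shop.example", "news.example", "health.example"):
    tracker.serve(my_browser, site)

print(dict(tracker.profiles))  # a single random ID mapped to all three sites
```

Blocking or partitioning third-party cookies breaks this by giving the tracker a separate cookie jar per site (or none at all), so the three visits no longer share an ID.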

Browsers have started blocking third-party cookies, which is good. Safari has blocked them by default since 2020, and Firefox has isolated them per site by default since 2022. Chrome said it would phase them out, delayed repeatedly, and then dropped the plan in 2025. At this point, much of the tracking happens through mechanisms other than third-party cookies anyway.

Tracking pixels are tiny invisible images embedded on websites. They serve the same purpose as cookies: tracking your visit. A website embeds a Facebook tracking pixel, which follows you around the internet. Or a Google tracking pixel. Or a hundred others. Blocking pixels is harder than blocking cookies.
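A pixel is just an ordinary image request, so the tracking data rides along in the image URL and in the request headers (including any cookies). Below is a rough sketch of how a blocker or auditor might spot the classic pattern using Python's standard `html.parser`. The 1x1 size check is a heuristic assumption, and many modern pixels are injected by script instead, which this would miss:

```python
from html.parser import HTMLParser

class PixelFinder(HTMLParser):
    """Flag <img> tags sized 1x1, a common (but not universal) tracking-pixel pattern."""

    def __init__(self) -> None:
        super().__init__()
        self.pixels: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        if a.get("width") == "1" and a.get("height") == "1":
            self.pixels.append(a.get("src", ""))

# Hypothetical page markup.
page = """
<p>Welcome back!</p>
<img src="https://tracker.example/p.gif?uid=123" width="1" height="1" alt="">
<img src="/logo.png" width="120" height="40">
"""
finder = PixelFinder()
finder.feed(page)
print(finder.pixels)  # ['https://tracker.example/p.gif?uid=123']
```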

How do you defend against this?

First, use privacy-focused browser extensions. uBlock Origin blocks ads and tracking. Privacy Badger learns which trackers to block as you browse. These extensions are free and significantly reduce tracking.
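At their core, blockers compare each outgoing request against lists of known tracking domains before the browser sends it. Below is a simplified sketch of domain matching with a hypothetical two-entry blocklist. Real filter lists also match URL paths and page elements and support exception rules:

```python
from urllib.parse import urlsplit

# Hypothetical blocklist. Extensions such as uBlock Origin use large,
# community-maintained filter lists with a much richer rule syntax.
BLOCKLIST = {"tracker.example", "ads.example"}

def is_blocked(url: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """Block a request if its host is a listed domain or any subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    parts = host.split(".")
    # Check 'cdn.tracker.example', then 'tracker.example', then 'example'.
    return any(".".join(parts[i:]) in blocklist for i in range(len(parts)))

print(is_blocked("https://cdn.tracker.example/pixel.gif"))  # True
print(is_blocked("https://news.example/story"))             # False
```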

Second, use a privacy-focused browser. Firefox offers better defaults than Chrome for privacy. Brave blocks trackers by default. Safari is surprisingly privacy-friendly compared to Chrome.

Third, clear your cookies regularly. Most browsers can clear cookies automatically on exit. Do it. Or use Firefox Multi-Account Containers, which keep each container's cookies separate, so your social media login can't follow you into your shopping tabs.

Fourth, use a privacy-focused search engine. Google logs your searches and uses them to build your profile. DuckDuckGo doesn't track searches. Startpage shows Google results but doesn't pass your identity to Google. These alternatives are free and actually work fine.

Fifth, consider disabling JavaScript in your browser. This nukes most tracking but also breaks many websites. It's impractical for general browsing.

The honest assessment: Perfect browser privacy is impossible. Websites can still identify you through browser fingerprinting (analyzing your browser version, screen resolution, fonts installed, and behavioral patterns). Advertisers are increasingly moving away from cookies to other tracking methods. But reducing your trackability is possible, and these measures help.
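Fingerprinting combines many individually bland attributes into one value that's rare enough to recognize you, even after you clear your cookies. Here's a minimal sketch that assumes a script has already collected a hypothetical set of attributes. Real fingerprinting scripts read far more, such as quirks in canvas and audio rendering:

```python
import hashlib
import json

def fingerprint(attrs: dict) -> str:
    """Hash a set of browser attributes into a stable identifier (no cookies needed)."""
    canonical = json.dumps(attrs, sort_keys=True)
    return hashlib.sha256(canonical.encode()).hexdigest()[:16]

# Hypothetical values a tracking script could read from the browser.
visit_1 = {
    "user_agent": "ExampleBrowser/1.0",
    "screen": "2560x1440",
    "timezone": "America/Chicago",
    "fonts": ["Arial", "Helvetica Neue", "Menlo"],
    "language": "en-US",
}
visit_2 = dict(visit_1)  # the same browser after clearing cookies

print(fingerprint(visit_1) == fingerprint(visit_2))  # True
```

Clearing cookies changes none of these inputs, so the identifier survives. Changing even one attribute (say, the screen resolution) produces a different hash, which is why some privacy browsers report standardized values to everyone.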

Is My Email Address Safe? What Happens If I Get Breached?

Email is often called the keys to your kingdom, and there's truth to that.

Your email account can reset any other account. A hacker accessing your email can reset your banking password, your social media, your cryptocurrency exchange. Email is the weak point.

What happens if your email gets breached? First, check haveibeenpwned.com. Enter your email and it tells you which services have been breached and had your email exposed.
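Have I Been Pwned also runs a free Pwned Passwords service that checks a password without revealing it. The technique is called k-anonymity: your device hashes the password with SHA-1, sends only the first five hex characters of the hash, and compares the returned list of matching suffixes locally. Here's a minimal sketch against the public range endpoint, with error handling and rate limiting omitted:

```python
import hashlib
import urllib.request

RANGE_URL = "https://api.pwnedpasswords.com/range/"

def split_hash(password: str) -> tuple[str, str]:
    """SHA-1 the password. Only the first 5 hex characters are ever sent."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]

def count_in_response(suffix: str, body: str) -> int:
    """Parse 'SUFFIX:COUNT' lines and return how often our suffix appears in breaches."""
    for line in body.splitlines():
        candidate, _, count = line.partition(":")
        if candidate.strip() == suffix:
            return int(count)
    return 0

def times_pwned(password: str) -> int:
    prefix, suffix = split_hash(password)
    with urllib.request.urlopen(RANGE_URL + prefix, timeout=10) as resp:
        return count_in_response(suffix, resp.read().decode("utf-8"))

# Example (makes a network request):
# print(times_pwned("password"))
```

The service only ever sees a five-character prefix shared by hundreds of unrelated hashes, so it can't tell which password you checked.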

If you find breaches, here's what to do: Change the password on that service immediately. Change it on any other service where you reused that password. Enable 2FA on your email account if you haven't already. Consider using a fresh password generator for all new passwords.

The bigger question: How exposed is your email address? If your email was in the Facebook breach, LinkedIn breach, Yahoo breach, or dozens of others, it's floating around the internet. Data brokers have it. It's on dark web forums. Bad actors can purchase lists of exposed emails.

What does this mean practically? You'll probably see more spam. You might get targeted spear-phishing emails. You might see your account name on sketchy websites where you never signed up. Your email address has basically become a commodity.

What should you do? First, don't panic. Millions of email addresses are exposed. You're not uniquely targeted. Second, be more cautious with emails from "senders" you don't recognize. Third, never click links in unsolicited emails. Fourth, use unique passwords for every service (password manager, remember?).

One strategy: Create separate email addresses for different purposes. One for banking and critical accounts. One for shopping and less important services. One for online forums and sketchy sites. This compartmentalization means if one email gets compromised, not all your accounts go down with it.

Another strategy: Use email aliases. Services like SimpleLogin and DuckDuckGo Email Protection let you create throwaway email addresses that forward to your real inbox. This way, you can sign up for something sketchy without giving out your real address. If that alias gets sold to spammers, you just delete it.

Is there anything you can do to protect your email from being breached? Not really, unless you avoid services entirely. Breaches happen to big companies with good security. It's not always a failure of the service. Sometimes it's an attack that was sophisticated enough to get past defenses.

What you can control is your response when breaches happen.

What About AI Training on My Data? Do I Have Any Say?

This is the newest and most anxiety-inducing question people are asking in 2026.

AI companies have been training language models on data from the internet: books, websites, Reddit posts, Stack Overflow answers, Twitter threads. Some of this data includes personal information about people who never consented.

The question people ask: Can AI companies use my data to train models?

The legal answer depends on where you live. In the EU, GDPR requires a lawful basis (such as consent or a carefully justified "legitimate interest") for processing personal data, and reusing data for a new purpose like AI training falls under those rules. In the US, the main fight is over copyright: Is using data to train AI "fair use," or is it unauthorized copying? Courts haven't fully decided yet.

In practice, major AI companies have been sued by content creators, news publishers, and individuals for unauthorized data use. Some have added opt-out mechanisms. Some are negotiating licensing deals with data providers. Some are just waiting out the legal battles.

What this means for your personal data: If you've posted on public websites, your data has probably been used to train AI models. You probably don't have practical recourse. Deleting the content now doesn't help because it was already scraped and used for training.

What can you do? In some jurisdictions, you can request that AI companies remove your personal data from training datasets. But this is practically difficult because they don't release their training data. You have to file formal requests, and it's unclear how well they work.

Preventively, you can avoid posting personal information on public websites. Use private accounts for social media. Be cautious about what you share online. But if you've already shared extensively, the damage is done.

The bigger issue: Should AI companies be allowed to use your data without permission? Many argue they shouldn't. There's been legislative movement toward requiring consent, particularly in the EU. But the US has been slower.

One strategy emerging: data cooperatives. Groups of people collectively managing the use of their data and negotiating with AI companies for compensation. It's early, but it's a potential future.

For now, the honest answer: Your data is probably already being used. You probably can't get it back. Future data can be protected through careful online behavior and using privacy-focused services.

Data security and passwords are the most common privacy concerns in 2026, followed by location tracking and app permissions. Estimated data based on AI platform queries.

Smart Home and IoT Privacy: Does Your Smart Speaker Report Everything?

Smart home devices are convenient. They're also surveillance in your home.

Amazon Alexa, Google Home, and Apple Siri are always listening for wake words. That part is real. But what about listening to everything else? The conspiracy theory says they record you 24/7 and send audio to servers for advertising purposes.

The reality is probably less sinister but more subtle. These devices likely aren't recording everything and sending to servers (that would be obvious from network monitoring). But they're collecting data about what you ask, how often you interact, and probably using those interactions to train recommendation models.

Amazon has confirmed that human contractors review some Alexa interactions to improve the service. That's... creepy. You ask Alexa something private, and a human might listen to it.

But the broader concern is legitimate: smart home devices from major companies are part of ecosystems designed to sell you more stuff. Amazon wants to know what you're asking so they can recommend products. Google wants to know your routines so they can target ads. Apple claims privacy but benefits from the data anyway.

What about other smart home devices? Your security cameras, smart thermostats, smart light bulbs, smart refrigerators. Most of these are manufactured by companies with questionable privacy practices. Some have been found to collect far more data than their description suggests.

What should you do? First, be selective about which smart home devices you buy. Do you actually need a smart refrigerator that sends your food inventory to the cloud? Probably not. A smart thermostat saves energy, which is legitimate. A smart speaker is convenient but comes with privacy costs.

Second, segment your smart home on a separate Wi-Fi network from your other devices. If a smart light bulb gets compromised, it shouldn't be able to access your computer or phone. Many routers support guest networks; use one for smart home devices.

Third, disable features you don't use. Most devices have settings to disable remote access, cloud features, or data collection. Read the privacy settings and disable anything you don't need.

Fourth, buy from companies with better privacy records. Apple generally doesn't sell your data. Amazon and Google both build advertising and recommendation businesses on what they learn about you. Consider the company's business model before buying their smart home device.

The pragmatic approach: Smart home devices can provide real value, but understand the trade-off. You're trading privacy for convenience. If you're not comfortable with that trade, stick to dumb devices. If you are comfortable, at least understand what you're trading away.

Health and Fitness App Privacy: What Happens to My Workout Data?

Fitness trackers and health apps collect intimate data. Heart rate patterns reveal stress and illness. Location data during workouts reveals home address and routine. Step counts reveal when you're home.

The temptation for app companies to monetize this is enormous. Insurance companies would love to know health data. Employers want to know health trends. Advertisers want to target people based on health conditions.

How bad is it? In 2018, Strava's global heatmap, built from users' activity data, exposed the outlines and patrol routes of military bases around the world because service members logged their runs there. Location plus timing plus behavior patterns revealed sensitive information nobody intended to share.

Similarly, health apps have been found sharing data with third parties without clear consent. Period tracking apps have particularly raised concern because reproductive health data is sensitive and potentially used against women.

What should you do? First, use privacy-focused health apps. Apple Health and Samsung Health integrate with many devices and keep data relatively private (on your device). Fitbit (owned by Google) sends data to Google. Strava is more transparent but shares data with partners.

Second, disable location tracking in fitness apps if possible. You don't need location to track workouts. A treadmill workout doesn't benefit from GPS. Only outdoor activities need it, and you can enable it selectively.

Third, avoid connecting health apps to social sharing features. The convenience of sharing your workout with friends comes with the cost of that data being recorded and analyzed by companies.

Fourth, read the privacy policy (I know, nobody does, but health apps are worth it). See if the company sells data. See what third parties have access. See if they share with insurance companies or employers.

Fifth, understand that even with privacy controls, the data on your phone is vulnerable. If someone gets access to your phone, they have intimate health data. This is another argument for strong device security (long passcode, 2FA, device encryption).

Social Media Privacy: What Are You Actually Agreeing To?

Social media companies share a business model: You are not the customer. You are the product. Advertisers are the customers.

When you join Facebook, Instagram, TikTok, or Twitter, the company's goal is to understand you deeply enough to predict what ads will make you click. Everything the company does serves this goal.

Your posts, likes, comments, time spent, clicks, stops, rewatches—all of it is data being used to train recommendation models. Your location, searches, purchases—connected through advertising networks—build a complete profile.

Can you protect yourself on social media? Somewhat.

First, limit the information you share. Don't post your location, employer, school, birthdates, relationship status unless necessary. Anything you post can be aggregated by data brokers.

Second, limit permissions. Most social media apps request access to your contacts, calendar, photos. They don't need most of this. Disable unnecessary permissions.

Third, use privacy settings. Make your account private if it serves your purposes. Restrict who can see your posts. Disable activity status (so people don't see when you're online).

Fourth, be aware that privacy settings don't prevent the company from analyzing your behavior. The company still sees everything. It just controls who else sees it.

Fifth, consider limiting time on social media altogether. The more you use, the more data you generate. Less data is the best privacy.

The hard truth: You cannot have a social media account and be private. If you want privacy, you should reconsider whether you need the account. If you want the account, accept that you're trading privacy for connection and entertainment.

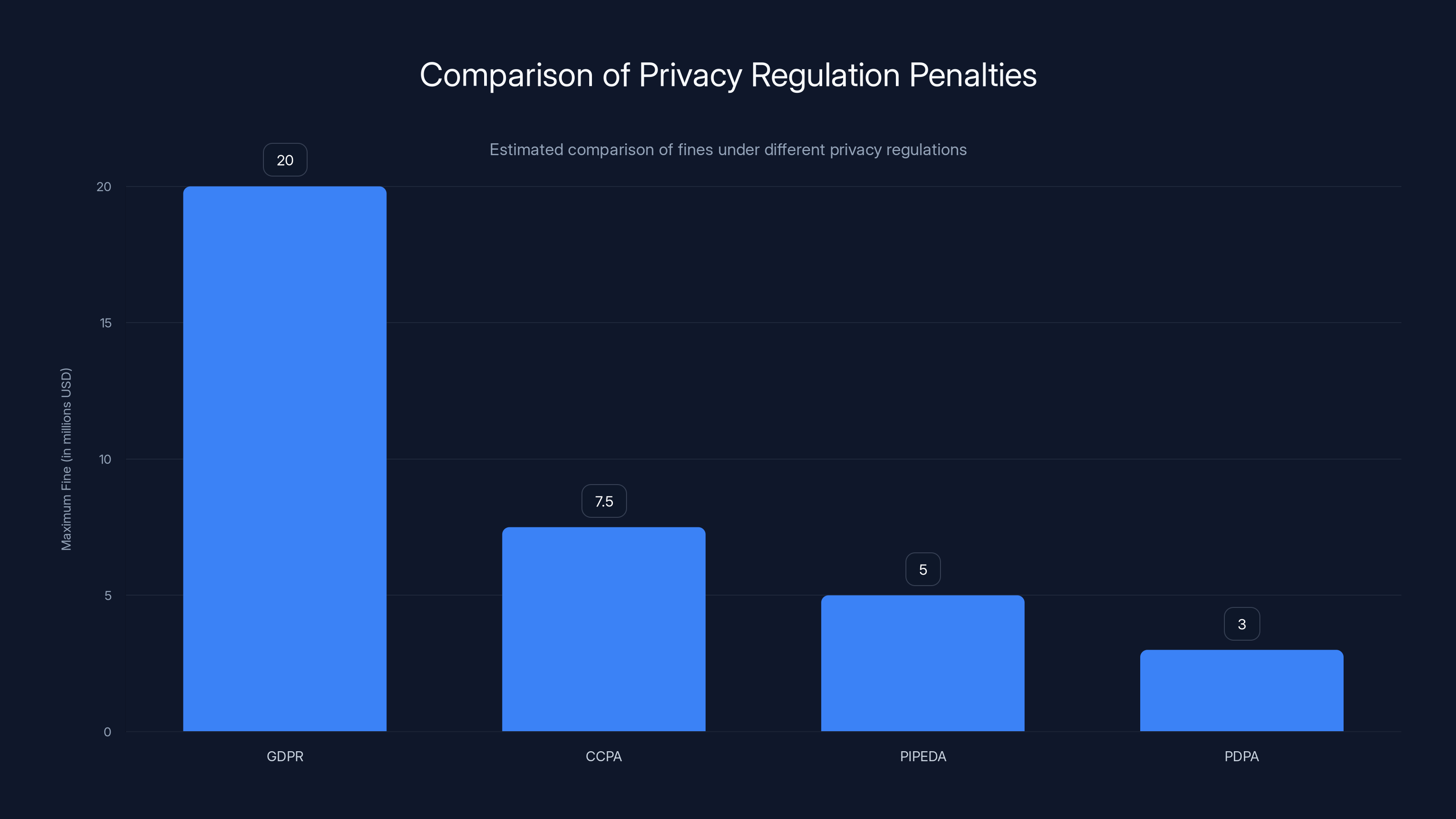

GDPR imposes the highest fines, up to €20 million or 4% of worldwide annual turnover (whichever is higher), making it the strongest regulation in terms of financial penalties. Estimated data.

How Can I Test My Privacy Protections? What Websites Actually Help?

If you've implemented some privacy protections, how do you verify they're working?

Several websites test specific aspects of privacy. These tools are useful for checking your setup.

For VPNs: Use ipleak.net or dnsleaktest.com. They show your IP address and DNS server. When you connect to a VPN, these should show the VPN's IP and DNS, not your real ones.

For browser tracking: Use the EFF's Cover Your Tracks (formerly Panopticlick) or AmIUnique.org. They analyze your browser fingerprint and show how unique you are. The more unique your fingerprint, the more easily you can be identified, so you want a common one.
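Fingerprint testers typically express uniqueness in "bits of identifying information": if one in N browsers shares your value for an attribute, that attribute reveals log2(N) bits. Here is a sketch of the arithmetic with made-up frequencies. Adding bits across attributes assumes they are independent, which real attributes often aren't, so treat the total as a rough estimate.

```python
import math

def identifying_bits(one_in_n: float) -> float:
    """Bits revealed by an attribute that 1 in `one_in_n` browsers share."""
    return math.log2(one_in_n)

# Hypothetical frequencies: "1 in N browsers have this exact value".
attributes = {
    "timezone": 16,
    "screen_resolution": 64,
    "installed_fonts": 1024,
}

# Summing assumes independence between attributes (a simplification).
total = sum(identifying_bits(n) for n in attributes.values())
print(total)       # 20.0
print(2 ** total)  # 1048576.0, i.e. roughly "1 in a million" browsers
```

About 33 bits would be enough to single out one person among everyone on Earth, which is why a handful of ordinary-looking attributes adds up quickly.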

For ad tracking: Many blocking extensions, such as uBlock Origin, show how many trackers and ads they block on each page. This gives you a sense of tracking intensity.

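For password strength: Bitwarden's online password strength tester gives a quick estimate, and NIST's digital identity guidelines (SP 800-63B) describe what makes a password strong. Use them to decide which passwords need replacing, but be wary of typing passwords you still use into any website.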

For breached passwords: Haveibeenpwned.com checks whether your email address appears in known breaches, and its Pwned Passwords service checks whether a specific password has been exposed. Both are essential checks.
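Checking a password doesn't require sending it to anyone. The Pwned Passwords range API uses k-anonymity: you hash the password with SHA-1 and send only the first five hex characters, the service returns every breached hash suffix with that prefix, and the match happens on your machine. The sketch below keeps hashing and matching separate from the network call so you can see exactly what leaves your computer; it assumes the documented `SUFFIX:COUNT` response format.

```python
import hashlib
import urllib.request

API = "https://api.pwnedpasswords.com/range/"

def split_hash(password: str) -> tuple[str, str]:
    """SHA-1 the password; return (5-char prefix to send, suffix kept locally)."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]

def count_in_response(suffix: str, body: str) -> int:
    """Find our suffix in the API's 'SUFFIX:COUNT' lines; 0 means not found."""
    for line in body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate == suffix:
            return int(count)
    return 0

def times_pwned(password: str) -> int:
    """How many times the password appears in known breaches (needs network)."""
    prefix, suffix = split_hash(password)
    # Only the 5-character prefix is sent over the network.
    with urllib.request.urlopen(API + prefix) as resp:
        body = resp.read().decode("utf-8")
    return count_in_response(suffix, body)
```

Calling `times_pwned("password")` should return a very large number; any nonzero result means that password should be retired everywhere you use it.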

For HTTPS: Your browser shows a padlock icon for HTTPS sites. That means the connection is encrypted, not that the site itself is trustworthy. No padlock means unencrypted traffic. Always use HTTPS.

For third-party cookies: Open the browser's developer tools, look at the stored cookies, and note any domains other than the site you're visiting. Those are third-party cookies, often set by trackers. You should see fewer of them if you're using privacy extensions.
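The first-party versus third-party distinction comes down to comparing domains: a cookie is third-party when its domain doesn't belong to the site you're visiting. This is a simplified sketch. It treats the last two labels of a hostname as the "site", which is wrong for suffixes like `.co.uk` (real browsers consult the Public Suffix List), and all domain names are hypothetical.

```python
def site_of(host: str) -> str:
    """Naive registrable domain: last two labels (ignores the Public Suffix List)."""
    labels = host.lower().lstrip(".").split(".")
    return ".".join(labels[-2:])

def third_party_cookies(page_host: str, cookie_domains: list[str]) -> list[str]:
    """Return cookie domains that don't belong to the page's own site."""
    page_site = site_of(page_host)
    return sorted({d for d in cookie_domains if site_of(d) != page_site})

# Hypothetical cookie domains copied from the developer tools' storage panel.
seen = [".news.example", "news.example", ".tracker.example", "ads.adnetwork.example"]
print(third_party_cookies("www.news.example", seen))
# ['.tracker.example', 'ads.adnetwork.example']
```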

These tools are useful for spot-checking your setup, but they're not comprehensive. A true privacy audit requires detailed knowledge of network traffic, device processes, and data flow. Most people don't have time for that. Use these basic tools and assume you've covered the major risks.

What Privacy Regulations Actually Protect You? Are They Working?

Regulations have gotten serious about privacy. GDPR in Europe, CCPA in California, and dozens of others around the world now give people rights.

GDPR is the strongest. It requires companies to have a lawful basis (such as explicit consent) before processing personal data, lets users request deletion of their data, and imposes heavy fines for violations: up to €20 million or 4% of worldwide annual turnover, whichever is greater. That's real money even for big companies.
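The "whichever is greater" rule is easy to misread, so here is the arithmetic for the upper tier of GDPR fines (Article 83(5)). These are caps; regulators set actual fines case by case, well below the maximum in most cases. The turnover figures are made up, and amounts are whole euros to avoid floating-point rounding.

```python
def gdpr_max_fine_eur(annual_turnover_eur: int) -> int:
    """Upper-tier GDPR cap: the greater of EUR 20M or 4% of worldwide annual turnover."""
    return max(20_000_000, annual_turnover_eur * 4 // 100)

# Hypothetical companies.
print(gdpr_max_fine_eur(100_000_000))      # 20000000 (flat cap applies)
print(gdpr_max_fine_eur(100_000_000_000))  # 4000000000 (4% applies)
```

The crossover is at €500 million in turnover: below that the €20 million figure is the cap, above it the percentage takes over.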

CCPA in California provides similar rights but with smaller penalties, and newer privacy laws in other US states follow the CCPA model. Elsewhere, laws such as PIPEDA in Canada and the PDPA in Singapore provide some level of protection.

Do these regulations work? Partially.

Companies are more careful now. They ask for consent more explicitly. They publish privacy policies that are slightly more readable. But they've also gotten better at making consent confusing. Dark patterns in consent dialogs manipulate people into sharing data.

Fines have been imposed, but they're often small relative to the data's value.

What regulations have accomplished: They've shifted the default toward privacy in some cases. In the EU, GDPR's consent rules, together with the ePrivacy Directive, made most marketing emails opt-in rather than opt-out. They've created the legal framework for individuals to demand data access and deletion. They've given privacy advocates legal arguments in court.

What they haven't accomplished: They haven't stopped data collection or advertising as a business model. Companies have adapted. They've just become more transparent about the invasion.

Should you rely on regulations to protect you? No. Regulations are a baseline. You still need to take individual action.

Future of Privacy: What's Coming in the Next Few Years?

Several trends are emerging that will shape privacy in the near term.

First, AI is getting smarter at predicting behavior from limited data. Companies won't need as much data to profile you effectively. Paradoxically, more privacy regulations plus smarter AI might mean more effective tracking with less data.

Second, quantum computers could eventually break today's public-key encryption (such as RSA and elliptic-curve cryptography). This isn't happening tomorrow, but it's coming. Standards are already being updated: NIST published its first post-quantum cryptography standards in 2024. The danger is retroactive: if someone records your encrypted data today and decrypts it with a quantum computer in 10 years, your data is exposed after the fact.
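A common way to reason about this "harvest now, decrypt later" risk is Mosca's inequality: if x (how many years your data must stay secret) plus y (how many years it takes to migrate to quantum-resistant encryption) is greater than z (years until a quantum computer can break current public-key encryption), then data encrypted today is at risk. Nobody knows z, so the numbers below are placeholders, not predictions.

```python
def at_risk(secrecy_years: float, migration_years: float, years_to_quantum: float) -> bool:
    """Mosca's inequality: risk exists when x + y > z."""
    return secrecy_years + migration_years > years_to_quantum

# Placeholder scenarios, not forecasts.
print(at_risk(secrecy_years=2, migration_years=3, years_to_quantum=10))   # False
print(at_risk(secrecy_years=25, migration_years=5, years_to_quantum=15))  # True
```

Short-lived data (a chat about dinner plans) rarely matters here; long-lived secrets (medical records, government files) are what the migration effort is really about.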

Third, decentralized identity and privacy technologies are being developed. Zero-knowledge proofs allow you to prove something about yourself (age, location, balance) without revealing the information. This could enable privacy-preserving digital interactions.
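To make "prove without revealing" concrete, here is a toy version of the Schnorr identification protocol, a classic zero-knowledge protocol: the prover convinces the verifier that it knows a secret x behind a public value y = g^x mod p without ever sending x. The tiny group (p = 23, with 2 generating a subgroup of order 11) is an assumption chosen for readability and is trivially breakable; real systems use groups hundreds of bits wide.

```python
import secrets

# Toy parameters: 2 generates a subgroup of order Q = 11 modulo P = 23.
P, Q, G = 23, 11, 2

def keygen() -> tuple[int, int]:
    """Secret x and the public value y = g^x mod p."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def prove_and_verify(x: int, y: int) -> bool:
    """One round of Schnorr identification; x never leaves the prover."""
    r = secrets.randbelow(Q)   # prover's one-time random nonce
    t = pow(G, r, P)           # prover -> verifier: commitment
    c = secrets.randbelow(Q)   # verifier -> prover: random challenge
    s = (r + c * x) % Q        # prover -> verifier: response
    # Verifier checks g^s == t * y^c (mod p) using only public values.
    return pow(G, s, P) == (t * pow(y, c, P)) % P

secret, public = keygen()
print(all(prove_and_verify(secret, public) for _ in range(20)))  # True
```

The verifier only ever sees t, c, and s; the random nonce r masks x in the response, which is what keeps the secret hidden.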

Fourth, privacy-preserving machine learning (federated learning, differential privacy) allows training AI on data without centralizing it. Your phone trains a model locally without sending data to servers. This could fundamentally change how AI works.
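Differential privacy's core idea fits in a few lines: add carefully calibrated random noise to an aggregate so the answer barely changes whether or not any one person is in the data. The sketch below is the textbook Laplace mechanism for a count query (sensitivity 1, noise scale 1/ε). It illustrates differential privacy only, not federated learning, and the ε value and step-count data are illustrative assumptions; production systems also have to track a privacy budget across repeated queries.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(values: list[bool], epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP count: adding or removing one person changes the true count by at most 1."""
    sensitivity = 1.0
    return sum(values) + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical data: did each user walk more than 10,000 steps today?
hit_goal = [True] * 420 + [False] * 580
rng = random.Random(7)
print(round(private_count(hit_goal, epsilon=0.5, rng=rng)))  # near 420, but noisy
```

Smaller ε means more noise and stronger privacy; larger ε means more accurate answers and weaker guarantees.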

Fifth, regulators are getting serious about enforcement. The EU has been fining companies repeatedly for privacy violations. If US regulators get similarly aggressive, the cost of privacy violations will become prohibitive.

Sixth, consumer consciousness is rising. People are starting to understand their data's value and demand better terms. This pressure is slow but real.

The honest prediction: Privacy will become more of a luxury good. People who care about privacy will pay for services that protect it. People who don't care will continue trading privacy for free services. The gap will widen.

For individuals, the best approach is to be informed, implement basic protections, and stay aware of changing threats. Perfect privacy is impossible, but informed choices can significantly reduce your exposure.

Practical Privacy Checklist: What You Should Do This Week

If you've read this far and feel overwhelmed, here's a prioritized checklist of things to do, roughly in order of impact.

This week:

- Enable 2FA on your email account

- Check haveibeenpwned.com for breached accounts

- Install a password manager (Bitwarden or 1Password)

- Update weak passwords on financial and email accounts

- Disable location access for non-essential apps

This month:

- Audit app permissions on your phone

- Cover your laptop camera if you haven't

- Set up privacy-focused DNS (like Cloudflare's 1.1.1.1)

- Install uBlock Origin in your browser

- Review privacy settings on social media accounts

This quarter:

- Request data deletion from major data brokers

- Review your smart home devices' privacy settings

- Consider using email aliases for less important services

- Set up automatic cookie deletion in your browser

- Evaluate which subscriptions and apps you actually use

Ongoing:

- Keep your devices updated

- Use strong, unique passwords

- Be cautious with public Wi-Fi

- Review privacy settings on new apps before using

- Stay informed about privacy trends

You don't have to do all of this. Start with what feels most important to you. If you implement even the first week's checklist, you're significantly ahead of most people.

FAQ

What is the most important privacy protection I can implement right now?

Enable two-factor authentication on your email account. Your email is the key to everything else. If someone accesses your email, they can reset every other password. Microsoft has reported that multi-factor authentication blocks more than 99.9% of automated account-compromise attacks. It takes 5 minutes and provides more protection than almost anything else you can do.
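Most authenticator apps implement TOTP (RFC 6238): your phone and the server share a secret, and each derives a short code from that secret and the current 30-second time window, so a stolen password alone isn't enough. Here is a minimal standard-library sketch, checked against the RFCs' published SHA-1 test vectors. It leaves out what real services add on top, such as provisioning the secret via QR code (base32-encoded) and tolerating small clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation picks 4 bytes from the MAC
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

# RFC 6238 test secret; at T = 59 s the 8-digit SHA-1 code is 94287082.
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```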

How do I know if my password has been compromised?

Visit haveibeenpwned.com and enter your email address. The site checks whether it has appeared in known data breaches. To check a specific password, use the site's Pwned Passwords page, which checks the password without sending it in full. If you find a breach, change that password immediately on every site where you used it. You can also use a password manager like Bitwarden, which can flag exposed passwords.

Is using a VPN sufficient for complete privacy?

No, a VPN is one piece of a privacy strategy, not the complete solution. A VPN hides your IP address from websites and hides your browsing from your ISP, but it doesn't hide your identity if you log into accounts with personal information. It doesn't protect you from malware. It doesn't prevent tracking through browser fingerprints or cookies. Use a VPN for public Wi-Fi security and ISP-level privacy, but combine it with other measures like ad blockers, unique passwords, and careful app permissions.

How can I reduce my exposure to data brokers?

You can't completely eliminate exposure because public records (property, vehicle registration, court records) are legally available to brokers. But you can significantly reduce it by using a data removal service like Incogni or DeleteMe, which automates requests to brokers to delete your information. You can also opt out of specific brokers' websites manually, though this is tedious. To prevent new data collection, disable location tracking on apps, use privacy-focused browsers, and limit personal information you share online.

What's the difference between a password manager and just using a strong password?

Strength matters less than uniqueness. One 12-character random password reused on every site is worse security than 10-character passwords that are unique to each site. Password managers create and store unique passwords automatically. If one site gets breached, the breach doesn't compromise your other accounts. You only have to remember one master password instead of dozens. This is why security experts strongly recommend password managers.

Should I worry about my smart home devices spying on me?

Smart home devices from major companies likely aren't recording everything and selling audio. That would be obvious from network monitoring. But they do collect data about your usage patterns, and they may share it with advertising networks. The bigger risk is if a device gets compromised or if you're not updating it with security patches. Mitigate risk by putting smart home devices on a separate Wi Fi network, disabling features you don't use, and choosing devices from companies with better privacy practices.

What should I do if my email account gets hacked?

First, change your password immediately from a different device (in case the attacker still has access). Then enable 2FA if you haven't already. Check account activity history to see what the hacker accessed. Reset passwords on important accounts, especially banking and cryptocurrency. Check if the attacker set up forwarding rules or added recovery email addresses. Monitor financial accounts for fraudulent transactions. Consider placing a fraud alert or credit freeze with credit bureaus to prevent identity theft. If you're very concerned, you can check your credit report at annualcreditreport.com.

Is end-to-end encryption really unbreakable?

End-to-end encryption is mathematically sound when properly implemented. With modern encryption algorithms and proper key management, it's not practically breakable with current technology. However, it's only as secure as the implementation. Bugs in the software, weak key generation, or vulnerabilities in the protocol can break it. Signal is considered the most secure messaging app because its implementation has been heavily audited. Also, E2EE protects message content but not metadata (who you're messaging and when).

How much of my personal data should I expect companies to collect?

Companies collect far more than they need to provide their service. This is legal in most jurisdictions, but unnecessary. A social media platform needs your posts and basic profile to work. It doesn't need your location, contact list, or search history. Most apps request broad permissions and collect data they never use because data is valuable as an asset. You can push back by reviewing permission requests and denying unnecessary ones. Some companies respect these choices; others find alternative ways to track you.

What's a realistic goal for online privacy?

Perfect privacy online is impossible if you're using internet-connected devices and services. Your realistic goal should be to minimize your digital footprint, reduce tracking, make yourself a harder target for attackers, and align your privacy practices with your actual concerns. For most people, this means enabling 2FA, using a password manager, disabling unnecessary app permissions, and using privacy browser extensions. You'll still be tracked, but far less than the average person.

If I delete an account, does my data get deleted?

Not necessarily. When you delete an account, the service removes your profile from active use, but they often retain your data for legal compliance, billing, or analytical purposes. They may delete it after a grace period (usually 30-90 days), but some companies keep anonymized data indefinitely. Always request complete data deletion explicitly when deleting important accounts. Check the service's data deletion policy before signing up.

Wrapping Up: The Real State of Online Privacy in 2026

Privacy isn't dead. It's not even dying. But it requires effort.

Ten years ago, you could get by with basic practices: a password, maybe an antivirus program. Now? You need a password manager, 2FA, privacy browser extensions, careful app permissions, and ongoing awareness. It's more complex. But it's manageable if you prioritize.

The questions people are asking AI—the ones that kicked off this entire conversation—reflect genuine anxiety about data and control. And that anxiety is justified. Your data is valuable. It's being collected, aggregated, bought, sold, and used in ways you probably don't fully understand. Companies profit from your digital life.

But here's the empowering part: You have more control than you think. You can't eliminate tracking, but you can reduce it significantly. You can't prevent breaches, but you can make sure a breach doesn't compromise all your accounts. You can't stop data brokers from existing, but you can have your information removed from their databases.

You're not fighting a losing battle. You're just fighting a complex one.

Start with the easy wins. Enable 2FA. Use a password manager. That covers the biggest risks. Then, based on your actual concerns, implement additional measures. If you use public Wi-Fi a lot, get a VPN. If you care deeply about ads and tracking, install privacy extensions. If you're concerned about government surveillance or work in a sensitive field, learn about encrypted messaging.

Match your security to your threat model. Don't over-invest in protections you don't need. But don't under-invest in your most critical accounts.

And stay informed. Privacy threats evolve. New regulations pass. New tools emerge. What's best practice today might be obsolete in two years. Your willingness to learn and adapt is more valuable than any single tool or technique.

The people asking these questions to AI are doing something right: they're thinking about privacy seriously. They're not assuming someone else is protecting them. They're taking responsibility for their own digital safety.

You should too.

Key Takeaways

- Two-factor authentication blocks the vast majority of automated account takeovers (over 99.9%, according to Microsoft) and is the single highest-impact privacy action you can take

- Password reuse is far riskier than password length—use a password manager to create unique passwords for every account

- Data brokers maintain detailed profiles on 200+ million people; opt-out services can remove your data but require ongoing maintenance

- VPNs protect your IP address and ISP-level tracking but shift trust from ISP to VPN provider—they're one layer in privacy strategy

- End-to-end encryption is the only way to ensure message privacy, but not all messaging apps enable it by default (Signal and iMessage do)

- Location tracking is highly detailed and valuable to advertisers—disabling it on non-essential apps reduces tracking by 60-70%

- Regulations like GDPR and CCPA provide legal frameworks but rely on individual action and company compliance for effectiveness

- Browser tracking through cookies and pixels is pervasive—privacy extensions reduce tracking by 70% but don't eliminate fingerprinting
