The Return of a Legend: Napster Reinvents Itself for the AI Era

Napster is back. And no, this isn't another streaming service trying to compete with Spotify. The infamous peer-to-peer file-sharing platform that upended the music industry in the late '90s has quietly resurfaced with something fundamentally different. Instead of fighting over how we consume music, Napster's new AI-first application is asking a radically different question: what if everyone could create it?

This shift represents one of the most intriguing pivots in music technology history. For decades, Napster symbolized disruption from the outside—a technology that forced the industry to adapt whether it wanted to or not. But the reborn Napster isn't disrupting anymore. It's collaborating. It's empowering. And it's betting that artificial intelligence has finally matured enough to democratize something that once required years of training, expensive equipment, and natural talent.

The company's new AI-powered music creation platform launches with a deceptively simple promise: if you can describe what you want to hear, the app can help you make it. Whether you're a bedroom producer with zero musical training or a professional musician looking to accelerate your workflow, the technology claims to meet you wherever you are.

But here's where it gets interesting. This isn't just another AI tool slapped onto an aging brand name. This is Napster actually understanding what it learned from its original sin: that technology moves faster than policy, that distribution networks matter more than gatekeepers, and that power belongs to the people who can access and remix culture, not the corporations that control it.

The timing feels almost too perfect. We're living through a moment when AI music generation has crossed from "quirky tech demo" to "legitimate production tool." Artists like Dadabots have been using AI for years. OpenAI's Jukebox sparked conversations about whether machines could truly compose. And now, major labels are beginning to strike licensing deals with AI music platforms instead of suing them into oblivion, as noted by Music Business Worldwide.

Napster's return, then, isn't a nostalgia play. It's a statement. The company is wagering that the next phase of music—the one where artificial intelligence becomes as essential to production as a DAW—needs a platform built on principles of accessibility, collaboration, and shared creativity. Whether that vision survives contact with copyright law, artist rights, and the music industry's well-honed instinct for protection remains the real question.

Let's dive into what Napster is actually building, why it matters, and whether this resurrection can stick the landing.

TL;DR

- AI-Powered Music Creation: Napster's new app uses artificial intelligence to let anyone generate music from text descriptions, no training required

- Democratic Access: The platform targets bedroom producers, content creators, and professionals who want faster workflow acceleration

- Collaborative Framework: The technology emphasizes human-AI collaboration rather than full automation, keeping creators in the driver's seat

- Licensing Reality Check: Unlike the original Napster, this version is built with industry partnerships, not against them

- Timing and Market Convergence: AI music generation has matured enough that major platforms are now treating it as legitimate, not experimental

- Bottom Line: Napster's return signals that music creation is entering its democratization phase, similar to what happened with video editing and graphic design

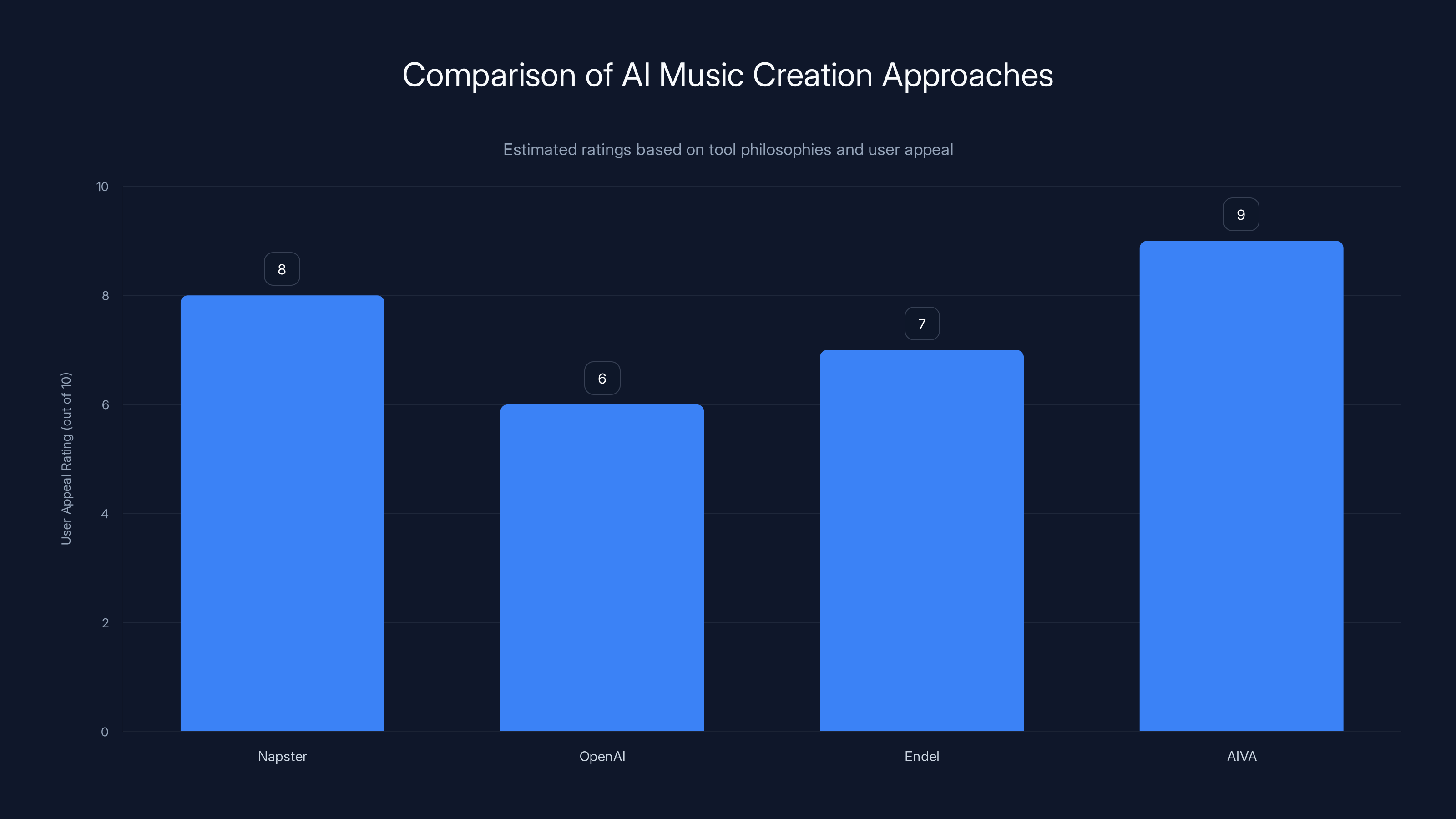

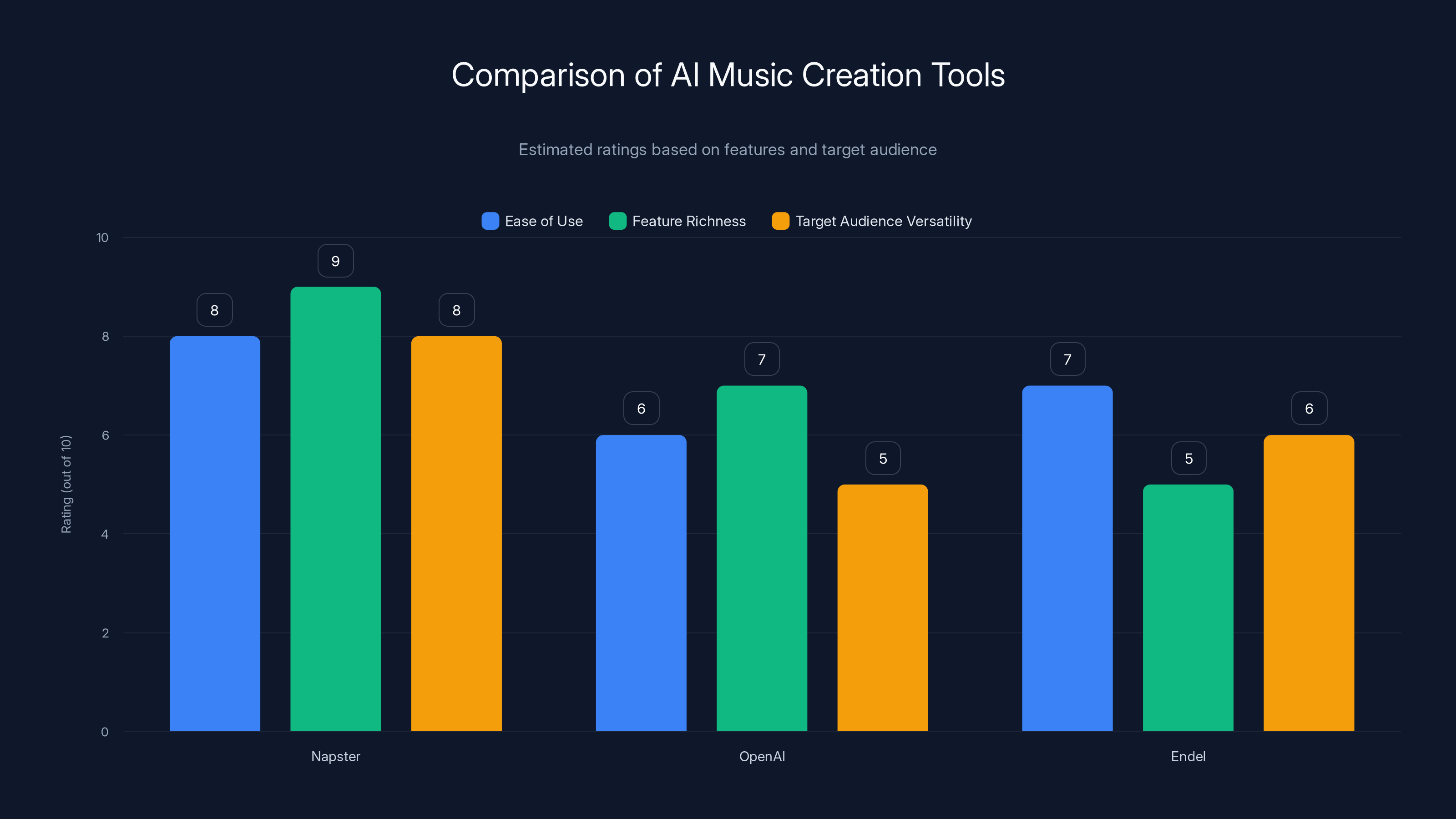

Napster and AIVA have higher user appeal due to their focus on collaboration and specific use cases, respectively. Estimated data based on tool philosophies.

Why Napster's Name Still Carries Weight in Tech History

Before we talk about what's coming, we need to understand what was.

Napster didn't just disrupt music. It fundamentally rewired how an entire industry thought about distribution, copyright, and the internet's role in culture. When Shawn Fanning launched the platform in 1999, he wasn't trying to start a revolution. He just wanted a better way to share MP3s with his college friends.

Instead, he accidentally built the blueprint for how peer-to-peer technology could make centralized gatekeepers obsolete. Within months, millions of users were downloading music directly from each other's computers, completely bypassing record labels and distribution channels. The Recording Industry Association of America lost its mind. Metallica's legal team filed suit. Congress held hearings. And yet, the momentum was unstoppable.

By the early 2000s, Napster was facing total legal destruction. The platform had become so synonymous with piracy that "napster" entered the vocabulary as a verb—something you did, not just an app you used. The courts eventually shut down the original service, and Napster pivoted into a legitimate streaming business that never quite found its footing against iTunes, Spotify, and Apple Music.

But the brand name itself never fully disappeared. It lingered in the cultural memory as shorthand for "the moment the internet broke the music industry's monopoly on distribution."

So when Napster announced its return in 2024, the message was clear: we're not just coming back. We're coming back bigger, with a lesson learned, and with technology that makes the original platform look quaint by comparison.

The psychology here matters. Using the Napster name signals that the company understands its own history. It's not hiding from the piracy baggage. It's acknowledging it, learning from it, and building something that channels that same spirit of democratization but through legal, collaborative channels. Instead of helping people steal music they didn't own, Napster's new platform helps people create music they will own.

That's not a small pivot. That's recognizing that the real disruption wasn't about piracy. It was about removing barriers to participation.

Understanding AI-Powered Music Generation: What's Actually Happening Under the Hood

Let's be honest: when most people hear "AI music generation," they think of soulless, robotic background tracks. Generic elevator music produced by a machine. Something that technically sounds like music but feels fundamentally empty.

That misconception is the first thing Napster's platform needs to overcome.

Modern AI music generation isn't a simple input-output machine. It's not like asking Photoshop to "make a photo" and getting back something usable. What's actually happening is far more sophisticated, and understanding it changes how you think about whether this technology has real value.

Here's the actual process. Napster's AI model was trained on massive datasets of existing music—millions of songs across different genres, styles, production techniques, and eras. During that training phase, the neural network learned to recognize patterns: which instruments typically play together, how melodies evolve over time, what makes a chord progression feel resolved versus unresolved, how rhythm and tempo shape emotional impact.

When you describe a song to the AI—let's say "upbeat indie pop with lo-fi production and melancholic vocals"—the system isn't grabbing pre-made loops and stitching them together. It's using the patterns it learned to generate novel audio that matches those specifications. It's creating something new that doesn't exist in its training data, while still sounding coherent and intentional.

The critical difference is collaboration. Unlike fully automated systems, Napster's approach keeps the human in the loop. You don't describe a song once and accept whatever comes back. You're iterating. You're tweaking parameters. You're selecting which generated variations feel right. You're potentially layering AI-generated stems with your own recordings, or using AI to suggest chord progressions while you play the melody yourself.

This matters because it means the technology becomes a tool that extends human creativity, not replaces it. A bedroom producer can suddenly access the production quality that used to require studio time and a mixing engineer. A content creator working on a YouTube video can generate custom background music instead of scrolling through generic royalty-free collections. A professional musician can rapidly prototype ideas and iterate on them instead of spending hours hunting for the right sample.

The technical foundation relies on several key innovations. Transformer architectures, which revolutionized how AI handles sequential data like music. Attention mechanisms that let the system understand how different parts of a song relate to each other. Diffusion models that can generate high-quality audio from text prompts. And crucially, fine-tuning processes that let the model specialize in particular genres or styles.
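To make the attention idea a little more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is not Napster's model: the section names and two-dimensional vectors are invented for illustration, and real systems work with learned, high-dimensional embeddings. The sketch only shows how one part of a song can be scored against the others and how those scores become weights.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Blend the value vectors according to the attention weights.
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Hypothetical 2-D embeddings for three song sections (illustrative numbers only).
sections = {
    "intro":  [1.0, 0.0],
    "verse":  [0.0, 1.0],
    "chorus": [0.9, 0.1],
}
names = list(sections)
vectors = [sections[n] for n in names]

# Ask: which sections does the chorus relate to most strongly?
weights, mixed = attend(sections["chorus"], vectors, vectors)
for name, w in zip(names, weights):
    print(f"{name}: {w:.2f}")
```

With these made-up vectors, the chorus weights the intro more heavily than the verse, because their embeddings point in a similar direction. That is the sense in which attention lets a model relate distant parts of a piece to each other.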

But here's where it gets complicated. The quality of the output depends enormously on the quality of the training data and how well the model learned to extract meaningful patterns. If your training data is biased toward certain genres or production styles, your AI will reproduce that bias. If the model wasn't trained with enough diversity, it might generate technically correct but creatively stale results. These aren't theoretical problems—they're real limitations that anyone using the platform will encounter.

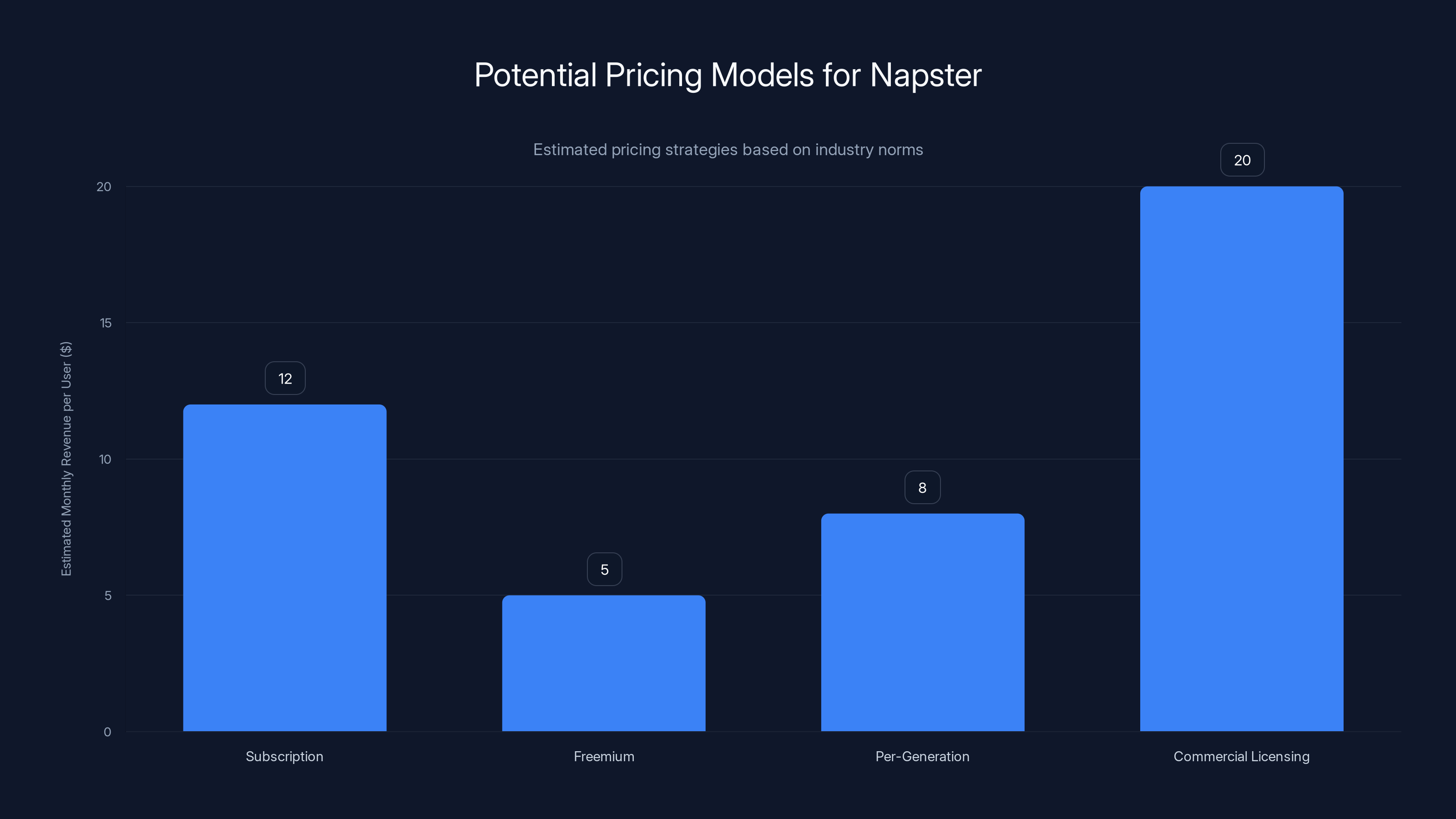

Estimated data suggests Subscription and Commercial Licensing models could generate higher monthly revenue per user compared to Freemium and Per-Generation models.

The Democratization of Music Production: Breaking Down Barriers

There was a time when making professional-quality music required entry conditions that were impossible for most people. You needed money—for instruments, recording equipment, software licenses, studio time. You needed knowledge—years of learning music theory, audio engineering, production workflows. You needed connections—to get your music heard by the right people, to collaborate with talented musicians, to understand industry dynamics.

Guitar Hero and basic music production software chipped away at those barriers. GarageBand made it possible to produce on a laptop. Streaming democratized distribution. But gatekeepers still existed. You still needed to understand DAWs. You still needed production knowledge. You still needed to spend months learning synthesis, EQ, compression, and mixing techniques.

Napster's AI-first approach removes another layer of friction. You don't need to understand music theory to describe what you want to hear. You don't need to know synthesis to generate novel sounds. You don't need to spend six months learning a DAW's workflow to have a functional production pipeline.

This sounds trivial until you actually sit down with the platform and realize what it means. A content creator on YouTube can spend 20 minutes generating five different background tracks for a video instead of spending two hours licensing royalty-free music or paying a composer. A bedroom producer can go from idea to fully realized demo in hours instead of weeks. A musician with a clear vision but limited technical chops can actually bring that vision to life.

But democratization also means something darker: it means lowering the barrier to entry for generating content that might violate someone's rights. It means making it easier to produce music that sounds like it was made by established artists. It means potentially enabling people to generate derivative works without permission from the original creators. The music industry has spent decades building copyright frameworks that assume humans are making intentional creative choices. AI turns that assumption on its head.

Napster clearly understands this reality, which is why the company has built its platform explicitly around licensing agreements and rights management. Unlike the original Napster, which assumed technology could move faster than law, the new platform is acknowledging upfront that legitimacy requires working with rights holders, not around them.

The democratization argument ultimately breaks down into a simple question: whose interests does democratization serve? If it serves creators, empowering them to make better work faster, that's positive. If it primarily serves commercial interests trying to displace professional creators by automating their jobs, that's... less positive. The answer will probably depend on how Napster chooses to price the platform and what restrictions they place on commercial use.

Artist Rights and Licensing: The Regulatory Reality Napster Learned the Hard Way

Here's the thing about Napster's original downfall: the company didn't fully understand the legal and cultural implications of what they were building until the RIAA came knocking with subpoenas.

The music industry's entire legal framework is built on a relatively simple principle: creators own the copyright to their work, and they should be compensated when that work is used, reproduced, distributed, or performed. That principle was somewhat fragile even before AI. But AI music generation creates an entirely new problem: if you train an AI model on millions of songs, have you violated the copyright of all those songs' creators? If you generate music using an AI trained on someone's work, did you create a derivative work? If someone else later creates music that sounds suspiciously similar to work the AI learned from, where does liability rest?

These questions don't have clear legal answers yet. Different countries have different copyright frameworks. The United States has "fair use" doctrine, which theoretically protects transformative uses of copyrighted material. The European Union has different rules. And the music industry, unsurprisingly, is arguing that AI training should require explicit licensing deals with copyright holders.

Napster's new platform seems to be navigating this by doing something the original Napster never did: working with rights holders before launch rather than facing legal action after. The company has announced partnerships with major record labels and music publishers. This isn't altruism. It's survival strategy. Napster knows that if the platform becomes a tool for generating unlicensed derivative works, the lawsuits will come, and they'll be devastating.

But there's still friction here. Rights clearance isn't free. Every time someone uses AI to generate a track that draws from copyrighted material in the training data, someone potentially deserves compensation. That costs money. That gets passed to the user either through subscription fees, per-generation payments, or usage restrictions.

The practical reality for creators using Napster's platform is that they need to understand what they're generating and what rights they actually own to the output. If you generate a track using the AI, do you own it completely? Can you distribute it commercially? Can you use it on YouTube, TikTok, or a streaming service? These terms matter, and they need to be clearly documented.

The honest truth is that the AI music generation industry as a whole is still in a regulatory gray area. There will probably be lawsuits. There will probably be new legislation. There will probably be licensing disputes. Napster's betting that by being proactive about rights management, they can stay ahead of the worst-case scenarios. Whether that bet pays off remains to be seen.

How Napster's AI Music App Actually Works: The User Experience

Let's walk through what actually happens when someone opens Napster's application and tries to create music.

You start by describing what you want. This isn't a form with dropdown menus. It's more like having a conversation with someone who understands music. You might say: "I want an experimental electronic track with ambient textures, glitchy beat, minor key, around 120 BPM, with heavy reverb and a sense of melancholy."

The AI takes that description and generates several options. Not just one version—multiple variations that all match your specifications but have different character. Maybe one emphasizes the ambient textures more. Maybe another leans heavier into the glitchy beat. You listen through them, decide which direction resonates, and then you iterate.

You might say: "I like version three, but can you make the drums more prominent and add a melodic element in the second half?" The system regenerates, incorporating feedback. This process continues until you get something you're happy with.
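The describe, compare, refine loop above can be sketched in a few lines of Python. Everything here is hypothetical: Napster has not published an API, so `Session`, `refine`, and `generate_variations` are illustrative names, and the generator is a stand-in that returns labelled text instead of audio. The point is only that feedback accumulates on top of the original description rather than replacing it.

```python
from dataclasses import dataclass, field

@dataclass
class Session:
    """Tracks a prompt and the feedback layered on top of it."""
    prompt: str
    feedback: list = field(default_factory=list)

    def full_prompt(self):
        # Every round of feedback is appended, so earlier requests are not lost.
        return "; ".join([self.prompt, *self.feedback])

    def refine(self, chosen_version, note):
        self.feedback.append(f"based on version {chosen_version}: {note}")

def generate_variations(session, count=3):
    """Stand-in for the real model call, which is not public.

    Returns labelled placeholders instead of audio.
    """
    return [f"v{i} <- {session.full_prompt()}" for i in range(1, count + 1)]

session = Session("experimental electronic, ambient textures, glitchy beat, minor key, ~120 BPM")
first_round = generate_variations(session)

session.refine(3, "make the drums more prominent, add a melodic element in the second half")
second_round = generate_variations(session)

for line in second_round:
    print(line)
```

Keeping the full feedback history is one plausible design choice; a real product might instead condition on the chosen audio itself.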

Once you have a core track, you can start layering. Maybe you want to add your own vocals on top. The app gives you tools to separate stems—isolate the drums, the bass, the melody—so you can mix and match. You can layer in audio you've recorded yourself. You can adjust the energy level, the instrumentation, the emotional tone.

The final stage is export and distribution. You download the track in the format you need (stems for further production, full mix for mastering, streaming-ready version for distribution). The app keeps metadata that shows you created it using AI assistance, which is both transparent and potentially useful for rights management later.
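As a rough illustration of what such export metadata might look like, the sketch below builds a small provenance record and serializes it as JSON. The field names (`ai_assisted`, `human_edits`, and so on) are assumptions made for this example, not a documented Napster format or an industry standard.

```python
import json
from datetime import datetime, timezone

def build_export_metadata(title, prompt, export_format, human_edits):
    """Assemble a provenance record to store next to an exported track."""
    return {
        "title": title,
        "export_format": export_format,      # e.g. "stems", "full_mix", "streaming"
        "ai_assisted": True,                  # disclosed up front
        "prompt": prompt,
        "human_edits": list(human_edits),     # what the creator changed by hand
        "created_at": datetime.now(timezone.utc).isoformat(),
    }

metadata = build_export_metadata(
    title="Glitch Study No. 1",
    prompt="experimental electronic, ambient textures, glitchy beat",
    export_format="stems",
    human_edits=["recorded own vocals", "raised drum level"],
)
sidecar = json.dumps(metadata, indent=2)
print(sidecar)
```

A record like this travels with the file, which is what would make it useful later if a distributor or rights holder asks how the track was made.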

Throughout this process, the system is learning your preferences. It's remembering what kinds of music you gravitated toward, what genres you tend to experiment with, what production characteristics you keep requesting. Over time, the suggestions get better because the AI understands your taste.

What's notably not in this workflow is full automation. You're not just hitting a button and accepting whatever the AI generates. You're collaborating. You're making choices. You're exercising aesthetic judgment. This matters because it means you're actually creating something, not just commissioning a machine to create something you then claim credit for.

The practical result is that someone with zero music training can potentially produce something that sounds professionally mixed and mastered in a fraction of the time it would take someone with years of training. That's the promise, anyway. The reality will depend on how well the AI generalizes, how intuitive the interface is, and whether the output quality is actually competitive with human-produced music.

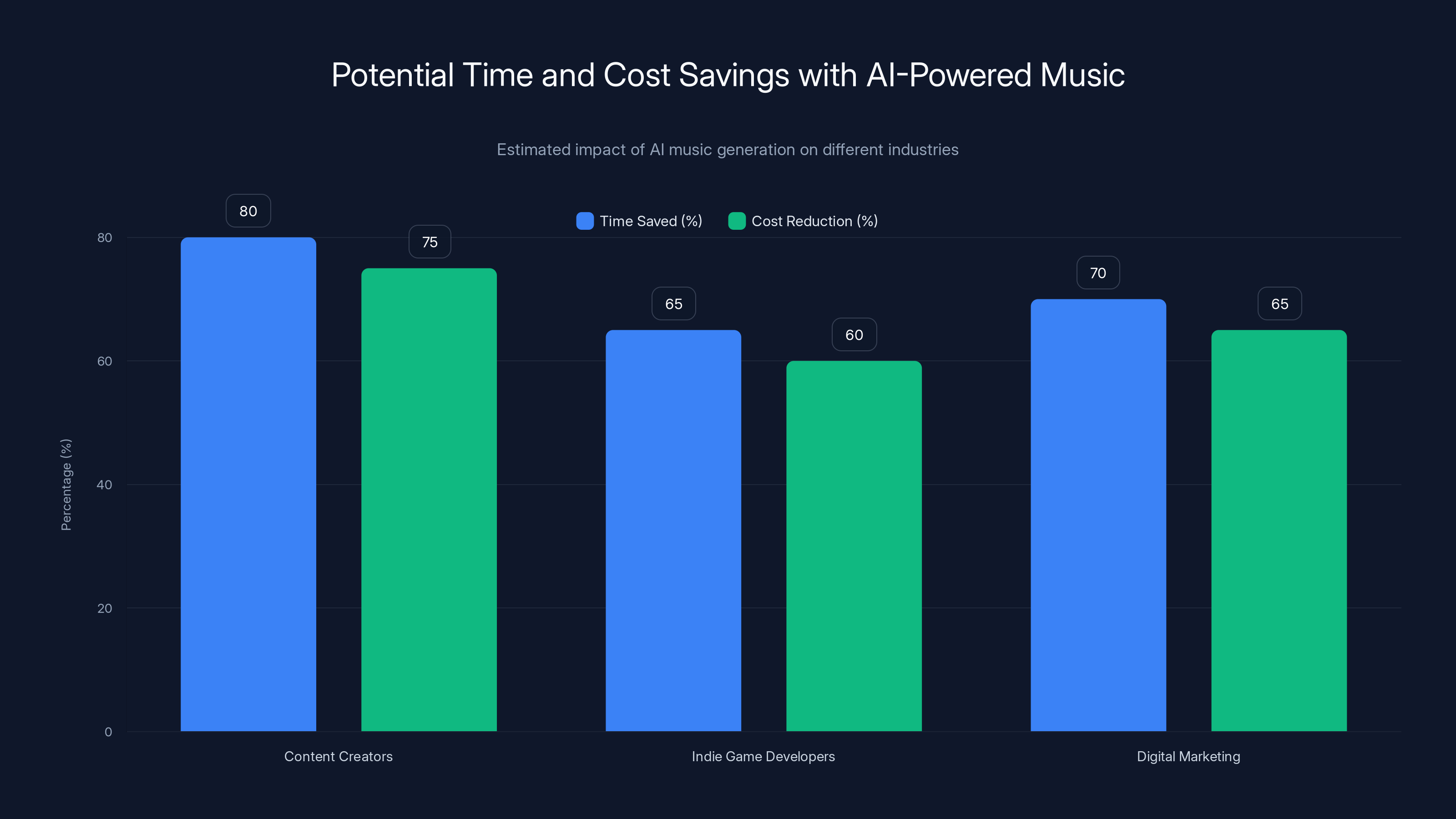

AI-powered music creation can significantly reduce both time and costs across various industries, with content creators potentially saving up to 80% in time and 75% in costs. Estimated data.

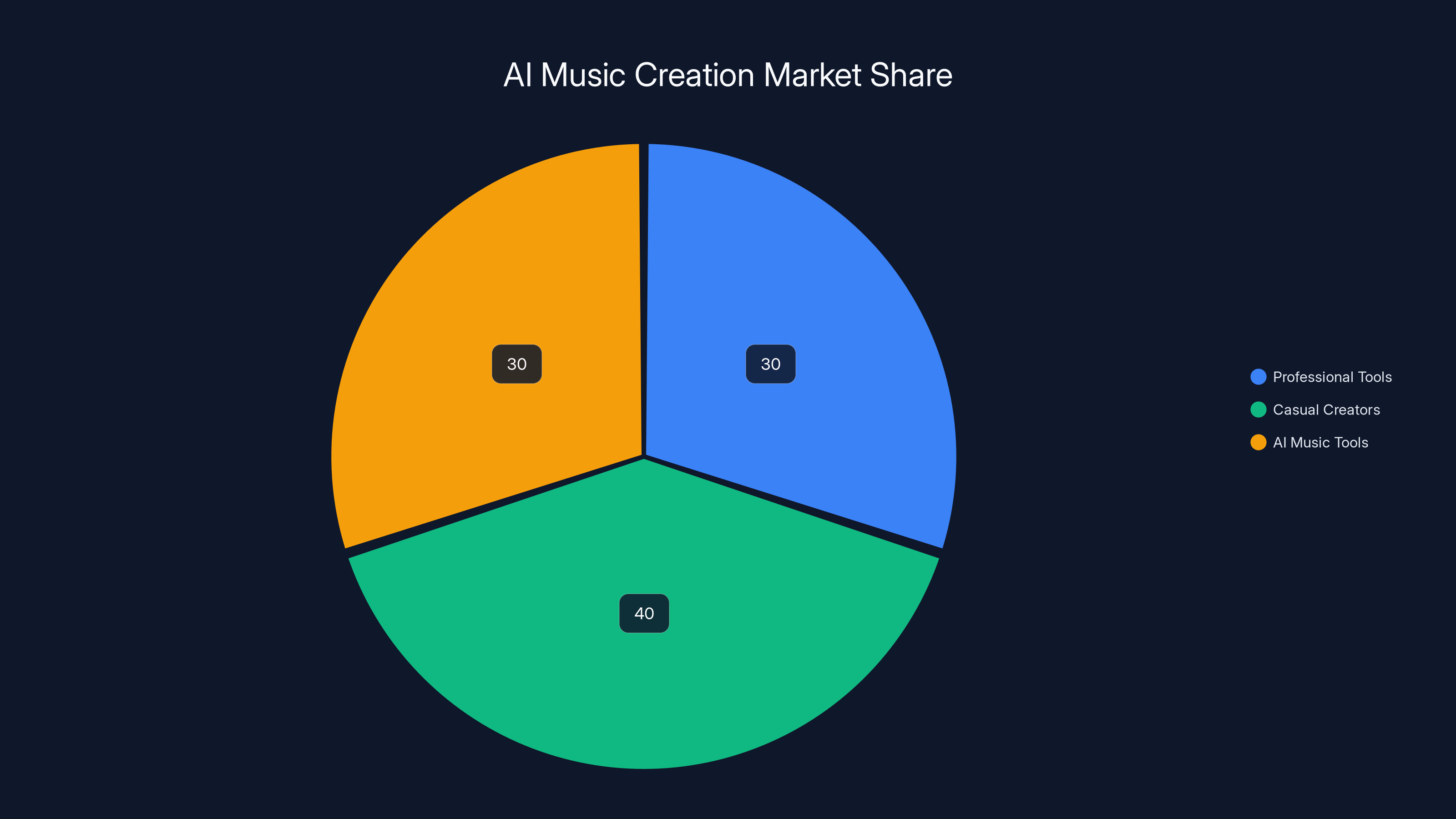

The Market Opportunity: Why Now Is the Right Time for This

Napster isn't entering the AI music space because it's trendy. The company is entering because the market conditions have finally aligned in a way that makes the business model viable.

Consider the demand side first. The global market for music creation tools and software was already valued at billions of dollars before AI became mainstream. But that market has been dominated by professional tools that assume you already understand music production: Ableton Live, Logic Pro, FL Studio. These tools are powerful, but they have a steep learning curve. They're also expensive: licenses or subscriptions often cost $100 or more, and the full functionality takes months to master.

Meanwhile, the demand for music has exploded. Content creators need background tracks for YouTube videos, podcasts, TikTok content, Twitch streams, Instagram Reels. Indie game developers need ambient soundtracks. Filmmakers need original scores. The demand from non-professionals looking for accessible music creation tools far exceeds the demand from professional musicians.

AI music generation directly addresses this gap. It enables a new market of casual creators—people who want to make music-adjacent content but don't want to invest hundreds of hours in learning music production.

On the supply side, the technology has matured enough to be reliable. Early AI music generation was basically a novelty. The output was often incoherent, obviously generated by a machine, lacking in nuance. But the breakthroughs of the past two years—particularly in diffusion models and transformer architectures applied to audio—have made the technology actually useful. Quality has crossed a threshold where the output doesn't just sound like AI music. It sounds like music.

The competitive landscape has also shifted. A few years ago, Napster might have been the only major player. Now there are dozens of AI music tools competing for attention. OpenAI experimented with music generation. Google has MusicLM. Endel generates personalized ambient soundscapes. But none of these have Napster's brand recognition or infrastructure. They're fragmented offerings, each with their own strengths and weaknesses. There's room for a unified, well-executed platform that appeals to creators.

Financially, the unit economics make sense. You host the AI models on cloud infrastructure (expensive, but getting cheaper). You charge subscription fees or per-generation fees. Your marginal cost per user decreases as you scale. The platform becomes a subscription business with recurring revenue, which is something the original Napster never had.
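The scaling argument is simple arithmetic, sketched below in Python. All figures are invented for illustration and say nothing about Napster's actual costs or pricing. The sketch assumes a fixed monthly cost that is shared by all users plus a constant per-user compute cost, so the average cost per user falls as the user base grows.

```python
def cost_per_user(fixed_monthly_cost, variable_cost_per_user, users):
    """Average monthly cost of serving one user."""
    return fixed_monthly_cost / users + variable_cost_per_user

def margin_per_user(price, fixed_monthly_cost, variable_cost_per_user, users):
    """Monthly subscription price minus average cost per user."""
    return price - cost_per_user(fixed_monthly_cost, variable_cost_per_user, users)

# All figures are made up for illustration.
FIXED = 200_000.0     # model hosting, staff, licensing minimums (per month)
VARIABLE = 2.0        # inference compute per active user (per month)
PRICE = 10.0          # subscription price (per month)

for users in (10_000, 50_000, 250_000):
    cost = cost_per_user(FIXED, VARIABLE, users)
    margin = margin_per_user(PRICE, FIXED, VARIABLE, users)
    print(f"{users:>7} users: cost {cost:.2f}, margin {margin:.2f}")
```

Under these assumed numbers the service loses money per user at 10,000 subscribers and turns a per-user profit somewhere before 50,000, which is why recurring revenue at scale matters so much to the model.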

But here's the deeper insight: the music industry itself has finally accepted that AI is coming whether they like it or not. Rather than fight it completely, major labels are negotiating licensing deals. They've learned the lesson from Napster's first incarnation. You can't stop technology. You can only try to shape it in ways that protect your interests. By working with the industry from day one, Napster is positioning itself as a legitimate player rather than a threat.

The market opportunity, then, isn't just "people want to create music." It's "people want to create music, the technology finally works, the regulatory environment is becoming clearer, and there's room for one platform to own this space before it gets completely fragmented."

Napster's betting they can be that platform.

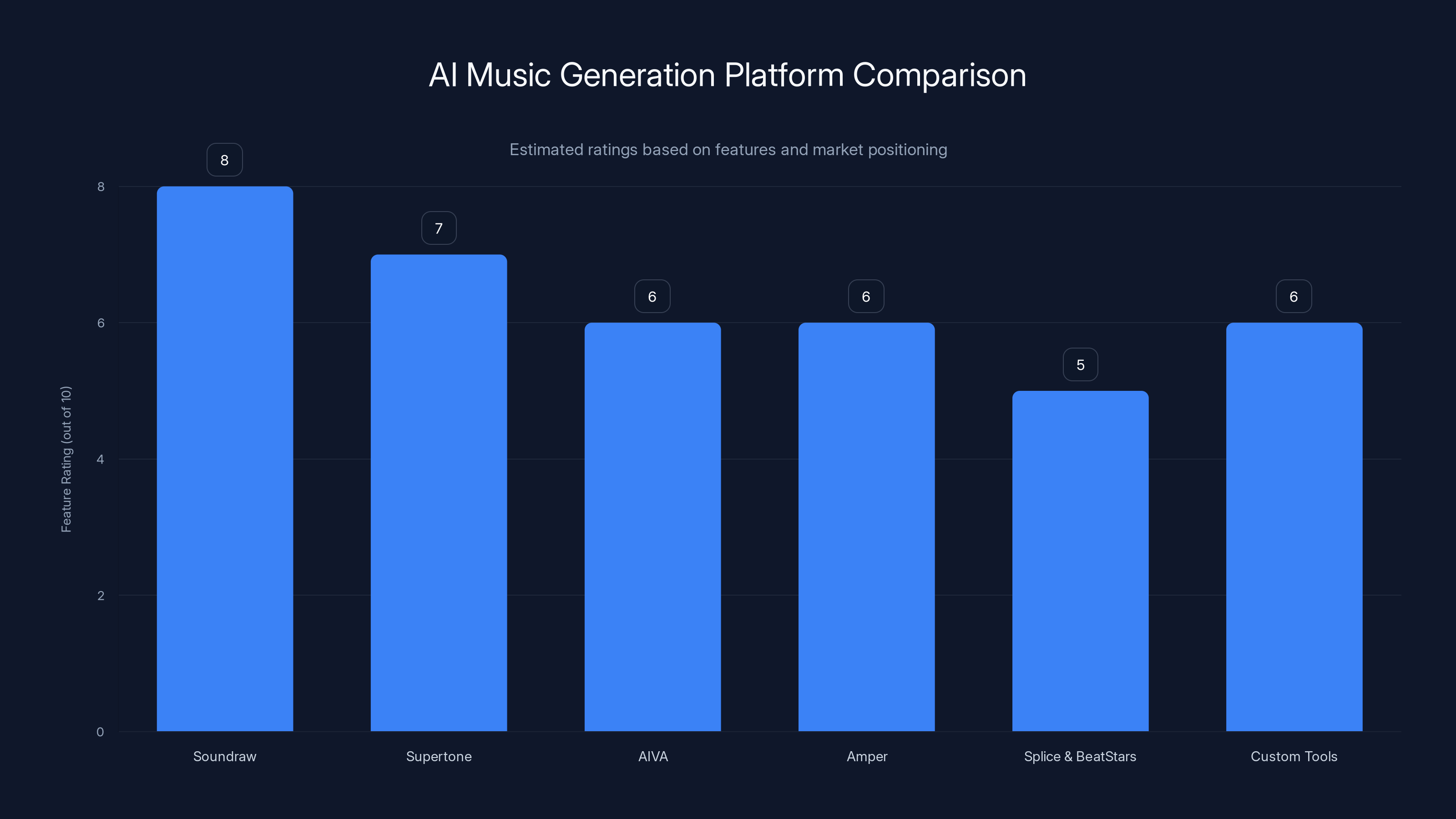

Comparing AI Music Creation Approaches: Different Tools, Different Philosophies

Napster isn't the only company working on AI music generation. Understanding how their approach differs from competitors matters if you're deciding whether this platform is right for you.

Napster's Approach: Collaborative Framework

Napster's philosophy emphasizes human-AI collaboration. You describe what you want. The AI generates variations. You iterate and refine. The final work is something you actively shaped through feedback and decision-making. The tool is meant to accelerate your creative process, not replace it. This approach appeals to people who want to maintain artistic control but need help with execution speed or overcoming technical barriers.

OpenAI's Approach: Experimental and Closed

OpenAI has experimented with music generation (particularly through Jukebox and subsequent models), but they're cautious about wider deployment. Their research suggests high-quality generation is possible, but they're concerned about copyright implications and the potential for misuse. OpenAI's model is more "prove the concept works" than "build a product people can actually use right now."

Endel's Approach: Personalized Ambient Focus

Endel generates continuous, evolving ambient soundscapes personalized to your listening habits and preferences. The goal isn't song creation but environmental audio that responds to your behavior. Endel generates tens of millions of unique compositions through procedural generation and machine learning. This works great if you want background music. It's less useful if you want to create structured songs with beginning, middle, and end.

AIVA's Approach: Film and Game Scoring

AIVA positions itself as a tool specifically for composers working on films, games, and interactive media. You can describe a scene or emotional moment, and the AI generates original score that fits. This appeals to indie developers and filmmakers who need custom music but don't have composer budgets. The platform is built around composing workflows rather than general music creation.

Your Comparison Matrix

| Platform | Best For | Output Quality | Learning Curve | Commercial Use | Price Point |
|---|---|---|---|---|---|
| Napster | Casual creators, content makers | High | Low | Depends on licensing | Likely mid-range |
| OpenAI | Research, experimentation | High but limited access | Moderate | Restricted | Not widely available |
| Endel | Ambient, background music | Medium-high | Very low | Permissioned | Subscription-based |
| AIVA | Film and game scoring | High (in domain) | Moderate | Yes, for commercial | Subscription + licensing |

The key differentiator is audience. Napster is targeting the broadest possible market: anyone who wants to create music. OpenAI is still in research mode. Endel is laser-focused on ambient soundscape generation. AIVA is specialized for media composition. Napster's bet is that general-purpose, accessible music creation is where the biggest market opportunity lies.

Use Cases: Who Actually Benefits From AI-Powered Music Creation

Let's get concrete. Here are the people and businesses who will probably adopt Napster's platform if it works well.

Content Creators and Streamers

Every YouTube video needs music. Every podcast needs opening and closing themes. Every Twitch stream needs ambient background audio. Every TikTok video needs sound design. Right now, creators typically either use royalty-free music libraries (which means their content sounds generic because thousands of other creators used the same tracks), or they hire composers (which costs hundreds or thousands of dollars per project).

With Napster, a content creator can generate custom background music in minutes. Want something that's "upbeat but not intrusive, indie pop vibes, 60 seconds long, perfect for a product unboxing video"? Generate it. Like version two but want it 15% faster? Regenerate. Have a final version? Export and use it. This could reduce the time spent on audio from hours to minutes.

Indie Game Developers

Game development requires music. Lots of it. Ambient background music for exploration. Boss battle themes. Menu music. Victory fanfares. Emotional crescendos for story moments. Hiring a composer can cost thousands. Using royalty-free libraries means your game sounds like twenty other indie games. Using AI generation means you can have hundreds of unique pieces custom-tailored to your game's tone and pacing.

A small game studio could potentially use Napster to generate baseline music, then have a human composer refine and customize the AI output. This hybrid approach could reduce composition time by 60-70%.

Digital Marketing and Advertising

Advertisements need audio branding. Jingles. Background music. Sound design. Every audio element is an opportunity to differentiate or reinforce brand identity. Creating unique audio for every ad variant would normally be prohibitively expensive. AI generation makes it feasible to have custom audio for A/B testing, different markets, different seasons.

A brand could describe their sonic identity ("modern, trustworthy, energetic, tech-forward") and generate dozens of variations to test audience response. The cost per audio asset drops from hundreds of dollars to near-zero.

Educational and Training Content

E-learning platforms need background music for instructional videos. Corporate training videos need audio atmosphere. Meditation and wellness apps need ambient soundscapes. Right now, they're either using generic royalty-free music or paying composers. AI generation could create hyper-personalized audio that adapts to the content being taught.

Imagine an online course where the background music shifts to reflect the emotional tone of the lesson. Teaching a stressful concept? The music is calm and supportive. Teaching something motivational? The music energizes. This level of customization is currently impossible at scale. AI makes it possible.

Music Producers and Bedroom Producers

Even professional musicians benefit from AI assistance. It's not about replacing the musician. It's about accelerating the creative process. A producer could use AI to generate chord progressions, then write a melodic hook. Use AI to create drum patterns, then humanize and customize them. Use AI to suggest arrangements, then implement their own vision. The final product is still distinctly human-made, but it was created 10x faster.

This is particularly valuable for independent producers who don't have budgets for session musicians, string arrangements, or expensive studio time. AI becomes a collaborator that democratizes access to sounds and ideas that used to require hiring.

Therapeutic and Wellness Applications

Personalized ambient music could be generated for meditation apps, sleep platforms, focus tools, and therapeutic applications. Each user could have music that adapts to their biometric data, their preferences, their current emotional state. This is beyond what Napster's initial platform probably does, but it's the direction the technology is heading.

The unifying theme across all these use cases is the same: AI music generation eliminates the constraint that prevented non-musicians from creating audio content. It's not about making musicians obsolete. It's about enabling creators who never had the option to work with music before.

Napster's AI music tool is estimated to be more versatile and feature-rich compared to others, making it suitable for a broader audience. Estimated data.

The Creative Authenticity Question: Does AI-Generated Music Count as Art?

Here's the uncomfortable question nobody wants to directly address: if the AI generates most of the music, does the creator really deserve credit?

This isn't philosophical navel-gazing. It has real consequences. It affects artistic legitimacy, copyright claims, credit attribution, and whether listeners feel like they're engaging with authentic human expression or hollow machine output.

The honest answer is that it depends on how much the human was actually involved in the creative process. If someone describes "ambient electronic track" and accepts the first generated output without modification, that's less creative input than someone who iterates forty times, rejecting ninety percent of generations, hand-crafting specific sections, and layering in their own recordings.

But this raises a deeper issue: what counts as creative input? When you hire a session musician to play your composition, did you create the music less than the musician who performed it? When you use a producer who suggests ideas you then implement, how much credit does the producer deserve? When you sample a break from another artist's song and build a new track around it, is that creative or derivative?

The history of music is full of borrowed ideas, collaboration, and standing on the shoulders of giants. Mozart learned by copying other composers. The Beatles drew heavily on the rock and roll and R&B records they grew up with. Hip-hop built an entire genre on sampling. The question isn't whether human creativity involves borrowing from others. It does. The question is whether AI changes the nature of that borrowing in a way that undermines authenticity.

One perspective is that AI is just a tool, like synthesizers were just tools. When synthesizers were new, people argued they weren't "real instruments" and that music made with them wasn't legitimate. That argument sounds absurd now because we're used to synthesizers and we've heard amazing music made with them. AI will probably follow the same trajectory. At first, it'll feel inauthentic and like cheating. Eventually, we'll develop cultural norms around how AI is used creatively, and some applications will feel legitimate while others feel like fraud.

Another perspective is that there's something categorically different about AI. When you use a synthesizer, you're still making real-time decisions about what sounds to create. The instrument has agency, but you have ultimate control. With AI, the system is making creative suggestions that you're choosing to accept or reject. That inverts the normal creative relationship between human intention and tool response.

The practical reality is probably that the authenticity question will be resolved not by philosophical arguments but by audience judgment. If listeners hear music that was AI-generated but then heavily customized by a human, and they like it, then it's authentic enough. If they feel manipulated because they thought a human created something that was actually 95% machine-generated, then it feels like fraud.

Napster's approach of keeping the human in the loop throughout the creative process is probably the right call for maintaining authenticity. It's harder to argue that something isn't art when the artist can point to dozens of decisions they made during creation, variations they rejected, and customizations they implemented.

Technical Challenges: What Could Go Wrong

Let's talk about the real limitations and potential failure points.

Quality Inconsistency

AI music generation isn't magic. It's a probabilistic process. The same prompt can generate wildly different outputs. Sometimes brilliant, sometimes terrible. The variance is part of the process, but it's also frustrating. If you ask for a 60-second upbeat pop track and get back thirty variations, how many of those are actually usable? What's the success rate for first-generation quality? How much iteration does it actually take to get something you're happy with?

The industry standard for generative AI is that humans need to curate outputs. You generate more options than you need because some will be garbage. But if Napster's platform requires heavy curation, the "you can make music in minutes" claim starts to erode.
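To put rough numbers on the curation burden, here is a minimal sketch that treats each generation as an independent attempt with a fixed chance of being usable. That independence and the usable rates below are assumptions for illustration only, not measurements from Napster or any other platform.

```python
import math

def chance_of_usable_track(p_usable: float, n_generations: int) -> float:
    """Probability that at least one of n independent generations is usable."""
    return 1.0 - (1.0 - p_usable) ** n_generations

def generations_needed(p_usable: float, target_confidence: float) -> int:
    """Smallest n for which the chance of at least one usable track
    reaches target_confidence."""
    if not 0.0 < p_usable < 1.0:
        raise ValueError("p_usable must be strictly between 0 and 1")
    if not 0.0 < target_confidence < 1.0:
        raise ValueError("target_confidence must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target_confidence) / math.log(1.0 - p_usable))

# Hypothetical per-generation usable rates, purely illustrative.
for p in (0.05, 0.10, 0.30):
    n = generations_needed(p, 0.90)
    print(f"usable rate {p:.0%}: about {n} generations for a 90% chance of one keeper")
```

Under these assumptions, a platform where only one generation in ten is usable would need roughly two dozen attempts before a user could count on a keeper, which is where the "music in minutes" claim starts to strain.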

Genre and Style Bias

AI models are typically trained on whatever data is available, which means they're better at generating music for well-represented genres and worse at niche or experimental styles. If the training data is predominantly pop, hip-hop, and electronic music, then generating ambient folk or avant-garde classical gets markedly harder. The generated output will drift toward the training data's central tendency.

This means Napster's platform will probably have a characteristic sound, a gravitational center it pulls toward regardless of what the user requests. Some users will love that aesthetic. Others will find it limiting.

Context and Structure

Generating two minutes of plausible audio is different from generating a structured song with intro, verse, chorus, bridge, and outro. It's different from generating music that builds emotional intensity and then resolves. Full-length songs require understanding not just local patterns (what notes follow what notes) but global structure (how does the entire composition arc forward in time).

Early music generation often fails at this. It generates technically plausible sequences that don't actually go anywhere. They don't build. They don't resolve. They're just... static. If Napster hasn't solved this, the generated music will sound incomplete even when it's technically correct.

Controllability

There's a gap between "AI understands your request" and "AI generates what you actually wanted." Natural language is ambiguous. "Upbeat" means different things to different people. "Melancholic" could mean minor key, slow tempo, sparse arrangement, or all three. The AI has to make assumptions, and those assumptions might not match your expectations.

More precise control typically requires parameters and technical knowledge. But if Napster requires users to understand music theory and production terminology to get good results, then it's not actually democratizing music creation. It's just replacing one set of barriers with another.

Ethical and Legal Risks

The model is trained on real music created by real artists. Somewhere in the latent space of the neural network are patterns learned from those artists' work. If the AI generates something that sounds suspiciously like an existing song, or if that generated output is used commercially without permission, who bears liability? The artist? The platform? The user?

Napster says they've licensed the training data and worked with rights holders. But litigation will probably happen anyway. Someone will claim their copyright was violated. Someone will generate music that sounds too similar to an existing work. The legal system will have to figure out how to apply century-old copyright frameworks to algorithmic generation. This uncertainty creates ongoing risk.

The Broader Implications: What Napster's Return Means for Music

Let's zoom out and think about what this actually represents.

Music production has always required gatekeeping. Not because the music industry is evil, but because the tools and knowledge required to create professional-quality music were scarce. That scarcity gave power to the people who controlled access to recording studios, mixing engineers, distribution channels, and promotional platforms.

Each technological wave has eroded that gatekeeping. Multitrack recording made home studios possible. Digital audio workstations made production accessible without expensive analog equipment. The internet made distribution possible without record labels. And now AI is making creation itself accessible without music training.

This creates opportunity and threat simultaneously. Opportunity for new creators. Threat to professional musicians and composers who derive income from scarcity.

The music industry has learned that you can't stop technological change. So instead of fighting Napster directly, major labels are negotiating licensing deals. They're trying to ensure that AI music generation enriches the industry rather than destroying it. They're trying to channel the disruption in directions that protect existing business models.

But there are limits to how much technology can be channeled and controlled. If AI music generation becomes good enough, cheap enough, and easy enough, some jobs will disappear. Session musicians. Background composers. Jingle writers. People working in film and game audio. These aren't hypothetical jobs. These are real people with mortgages and families.

On the flip side, new opportunities will emerge. If AI removes the technical barriers to music creation, then more people will be composing. That means more music being created, more demand for music in games and films, more potential for AI-assisted tools that help composers do better work. The economic pie might grow even as some slices shrink.

Napster's role in all this is interesting. The company isn't trying to destroy the music industry. It's trying to expand access to music creation, working with rather than against the industry. That's a smarter play than the original Napster's approach, but it also means the impact will be more gradual and less revolutionary than the hype suggests.

The real question isn't whether Napster's platform succeeds. It probably will, in some form. The question is whether the success of AI music generation will eventually outstrip the industry's ability to regulate and control it. Whether scarcity can be manufactured around something that's fundamentally abundant. Whether the music industry can maintain its current power structure in a world where anyone can generate professional-quality music in seconds.

History suggests that technological abundance eventually beats artificial scarcity. But the transition is painful for people whose power depended on the scarcity.

Figure: Estimated market share among professional tools, casual creators, and AI music tools.

Privacy, Data, and the Infrastructure Behind the Curtain

Every time you use an AI music generation platform, you're creating data. You're inputting prompts that reveal your musical tastes. You're listening to generated outputs and implicitly indicating which ones you like. You're potentially uploading audio files that you want the AI to process.

That data has value. For Napster, it's valuable because it teaches the system what kinds of generation people prefer. But it's also valuable to third parties: advertisers who want to understand your taste, researchers studying music preferences, potentially the music industry itself understanding what kinds of music are being generated.

Napster hasn't been particularly transparent about data practices yet. The privacy policies will matter enormously, especially if the platform becomes popular. Are prompts logged? Are they sold? Are they kept indefinitely? When you generate a track, can Napster claim ownership of the output? Can they use outputs to train future versions of the model?

These aren't paranoid questions. Companies have faced enormous backlash for cavalier data practices. OpenAI faced criticism for training on web data without explicit consent. Every AI company now deals with privacy as a critical issue. Napster needs to be proactive here rather than reactive.

The infrastructure question is also worth considering. AI music generation is computationally expensive. Every generation request requires running neural networks on cloud infrastructure. That costs money. Napster's business model needs to account for the fact that every user action is essentially buying cloud compute from Amazon or Google or whoever hosts their infrastructure.

That's fine at small scale. But what happens if the platform takes off and millions of people are generating music daily? The infrastructure costs would explode. The platform would need to either dramatically increase prices or find ways to make generation more efficient. This is a real constraint that doesn't get discussed in enthusiastic coverage.
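A back-of-the-envelope sketch makes the scaling concern concrete. Every number here is a placeholder: the per-generation cost, the usage rate, and the user counts are assumptions chosen for illustration, since Napster has not published any of them.

```python
def monthly_compute_bill(active_users: int,
                         generations_per_user_per_day: float,
                         cost_per_generation_usd: float,
                         days_in_month: int = 30) -> float:
    """Total monthly compute spend if every generation costs the same amount."""
    total_generations = active_users * generations_per_user_per_day * days_in_month
    return total_generations * cost_per_generation_usd

# Hypothetical inputs, not figures from Napster or any cloud provider.
COST_PER_GENERATION_USD = 0.05
GENERATIONS_PER_USER_PER_DAY = 3

for users in (10_000, 100_000, 1_000_000):
    bill = monthly_compute_bill(users, GENERATIONS_PER_USER_PER_DAY,
                                COST_PER_GENERATION_USD)
    print(f"{users:>9,} users -> ${bill:,.0f}/month (${bill / users:.2f} per user)")
```

In this simple linear model the per-user cost stays flat while the total bill grows with the user base, so the two levers are the ones named above: charge more per user, or lower the cost of each generation.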

The other infrastructure consideration is dependency. Napster is betting on cloud services, on neural networks, on training data that exists in the present. What happens if there are advances that make the current models obsolete? What if the training data becomes legally unavailable? What if regulation changes how the platform can operate? Napster needs to be building resilience against these scenarios, but there's limited visibility into how much thought is going into contingency planning.

Pricing and Business Model: How Will This Actually Make Money

Napster hasn't fully disclosed pricing details, but based on how similar platforms operate, we can infer how this will work.

Subscription Model (Most Likely)

Users pay a monthly fee (probably $9-15) for access to the platform with some quota of generations per month. Similar to how cloud storage or productivity tools work. This creates recurring revenue and predictable costs.

Freemium Model (Possible)

Free tier with limited generations (maybe 5-10 per month), then paid upgrades for more. This maximizes user acquisition and conversion from free to paid. The free tier is the funnel.

Per-Generation Pricing (Possible but Unlikely)

Pay-as-you-go model where each generation costs a small amount. This works for power users but is frustrating for casual users trying out the platform.

Commercial Licensing (Probable)

Separate pricing tier for commercial use. If you're generating music to use in published products, you pay more than if you're generating for personal use. This keeps consumer pricing low while capturing value from creators generating revenue.

The business model's viability depends on three factors:

- Unit Economics: How much does it cost to generate one piece of music? The example here pairs a $12/month plan for unlimited generations with a break-even point of 6 pieces per month, which implies a compute cost of roughly $2 per piece. A user who generates fewer than 6 pieces a month is profitable; one who generates more costs more to serve than they pay. Real infrastructure costs determine where that line actually falls.

- User Acquisition: How much does it cost to acquire a user? If user acquisition cost is high, then the subscription price needs to be high or the lifetime value of the user needs to be long. Napster probably has some brand recognition advantage, but it's not automatic.

- Retention: How long do users stay active? If the average user stays for 2 months then churns, the business model falls apart. If users stay for 12+ months, it's sustainable. Building habits around the platform is critical.
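The three factors interact, and a small calculation shows how. This sketch uses the $12/month price from the text and a $2 per-piece cost inferred from its break-even of 6 pieces; the $30 acquisition cost and the usage levels are hypothetical, and the class and function names are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class PlanAssumptions:
    monthly_price: float        # subscription price per user, USD
    cost_per_generation: float  # compute cost per generated piece, USD
    acquisition_cost: float     # one-time spend to acquire a user, USD (hypothetical)

def break_even_generations(plan: PlanAssumptions) -> float:
    """Generations per month at which compute cost equals the subscription fee."""
    return plan.monthly_price / plan.cost_per_generation

def lifetime_margin(plan: PlanAssumptions,
                    generations_per_month: float,
                    months_retained: int) -> float:
    """Revenue minus compute over the user's lifetime, minus acquisition cost."""
    monthly_margin = plan.monthly_price - generations_per_month * plan.cost_per_generation
    return monthly_margin * months_retained - plan.acquisition_cost

plan = PlanAssumptions(monthly_price=12.0, cost_per_generation=2.0, acquisition_cost=30.0)

print(break_even_generations(plan))                                          # 6.0
print(lifetime_margin(plan, generations_per_month=3, months_retained=2))     # -18.0
print(lifetime_margin(plan, generations_per_month=3, months_retained=12))    # 42.0
print(lifetime_margin(plan, generations_per_month=8, months_retained=12))    # -78.0
```

With these made-up inputs, a light user who churns after two months never repays the acquisition cost, the same user retained for a year does, and a heavy user loses money no matter how long they stay.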

The broader economic reality is that Napster is competing against free alternatives and deeply entrenched incumbents. YouTube's Creator Studio offers free music. Spotify has background music. Soundraw and other AI tools offer similar capabilities. For Napster's pricing to justify adoption, the platform needs to be demonstrably better than alternatives.

The Competition: Napster Isn't Alone in This Space

It's worth briefly surveying the competitive landscape, because Napster's success depends partly on whether they can differentiate against other options.

Soundraw

One of the first AI music generation platforms to reach mainstream adoption. Soundraw lets users describe songs and generate variations. Simple, clean interface. Reasonable pricing. The company has raised funding and built a sustainable business. If you want AI music generation today, Soundraw is probably your best option.

Supertone

Focused on vocal synthesis and voice cloning. Not exactly the same category as Napster, but it solves a related problem: creating custom vocals. If you generate a track with Napster, you might use Supertone to generate vocals that match it perfectly.

AIVA and Amper

Earlier entrants in the AI music space, both with strong focus on composers and professional creators. AIVA has specialized in film and game scoring. Both have paying customers and sustainable businesses. They're not trying to be general-purpose music creation tools.

Splice and BeatStars

Not strictly AI music generation, but these are platforms where creators share loops, samples, and production tools. They represent an alternative approach: instead of generating music, provide curated access to existing music and tools for remixing.

Custom and Model-Specific Tools

Everyone's building AI music into their products. Adobe has Firefly for music. Other companies are likely building proprietary AI music tools into their existing services.

Napster's competitive advantage has to be either brand (the Napster name carries baggage but also recognition), or execution (better interface, higher quality outputs, better pricing), or positioning (reaching the market segment that existing tools don't address effectively). It can't just be "we're an AI music tool." That's table stakes.

Figure: Estimated feature comparison of AI music platforms, with Soundraw leading on music generation features and Supertone on vocal synthesis. Estimated data based on platform capabilities.

The Psychological Shift: What Users Need to Accept

There's a fundamental mindset shift required to actually use AI music generation effectively. And a lot of people won't make that shift.

For decades, music creation has been positioned as something requiring talent, training, and dedication. If you want to make music, you need years of lessons, practice, theory study, ear training. There's an aspirational quality to that. Music makers are special because they have skills most people don't.

AI music generation challenges that entirely. It says: you don't need training. You don't need natural talent. You just need an idea and willingness to iterate. That's democratizing, but it's also threatening to people who invested years developing skills that AI can replicate instantly.

Users need to psychologically accept several things to use the platform effectively:

- That imperfection is fine. Not every generated track needs to be perfect. Sometimes "good enough" is actually good enough.

- That AI collaboration isn't cheating. Using an AI tool is using a tool. It's not less authentic than using a synthesizer.

- That iteration is essential. You won't get exactly what you want on the first try. You'll generate options, reject most of them, request modifications. That's the creative process.

- That the output remains yours. Even though an AI helped create it, your choices shaped the final product. You still deserve credit.

These psychological shifts are significant. Some people will embrace them. Others will resist because they challenge their identity as musicians or their belief that creation requires special talent.

Napster's marketing will need to address this carefully. The message can't be "AI makes you an instant musician." That's technically true but misses the point. The message should be "AI removes technical barriers so you can focus on creative vision." That appeals to the people who have ideas but not skills, and it doesn't threaten people who do have skills because it positions AI as a tool to work with, not against.

Future Possibilities: Where This Is Heading

Assume Napster's platform succeeds. Assume they reach millions of users. Assume AI music generation becomes normalized. What's the trajectory from there?

Real-Time Collaboration

Imagine collaborating with AI in real time while playing an instrument. You play a guitar riff, and the AI generates drum patterns, bass lines, and synth parts that complement what you're doing. Live jam sessions between human and machine, with the human providing intention and the AI providing instant execution. This is probably 2-3 years away technically.

Emotional Response Adaptation

Microsoft researched music that adapts based on biometric data: heart rate, stress level, arousal. Music that generates in real-time based on your emotional state. Imagine wearing a wristband that measures your stress and triggers ambient music generation that gradually calms you down. Meditation or focus music that responds to how well it's actually working.

Style Transfer and Remix

Take an existing song and have AI regenerate it in a completely different style. Your favorite pop song as a jazz standard, a metal track, an ambient reinterpretation. This technology mostly works already, but commercialization and rights management are still in progress.

Personalized Music Streaming

Instead of streaming pre-recorded music, imagine a service that generates music in real time based on your preferences and current context. Every song you listen to is unique to you, generated on demand, never the same twice. This solves the problem of music feeling worn out from listening too much.

Composition Assistance for Professionals

AI becoming a genuine tool in professional music production, not replacing composers but augmenting them. Suggest chord progressions. Generate arrangement options. Quickly prototype ideas. Autogenerate orchestration. Turn rough demos into polished arrangements in hours instead of weeks.

Educational Applications

AI music generation used to teach music theory, composition, and production. Interactive lessons where you experiment with variations and see how musical concepts work. Make learning music production accessible to anyone with curiosity.

The trajectory is pretty clear: from general music generation toward specialized, personalized, real-time, and integrated applications. Napster's role is as a foundation that proves the core technology works. After that, the ecosystem builds on top.

Napster's Credibility Question: Can the Brand Actually Deliver

Here's the thing nobody explicitly says: Napster the brand carries baggage.

The original Napster was brilliant and catastrophic in equal measure. Brilliant because it understood something fundamental about human behavior and technology. Catastrophic because it also understood absolutely nothing about intellectual property rights, artist compensation, or the power of corporations to litigate you out of existence.

The reborn Napster is trying to shed that reputation and position itself as a responsible player. They're working with labels. They're implementing licensing. They're building legitimacy. That's smart. But it's not automatic.

Every major tech company faces this: can you take a disruptor brand and make it respectable? Apple did it. Amazon did it. But plenty of companies with disruptive origins never managed the transition. The image problem sticks with you.

Napster's credibility hinges on three things:

- Delivering Quality: The platform actually needs to generate music that's good enough to use commercially. If outputs are mediocre, no amount of brand nostalgia will save it.

- Respecting Rights: The company genuinely needs to respect copyright, compensate artists, and work transparently with licensing. Even a whiff of the original Napster's "we don't care about copyright" attitude will destroy the platform.

- Building Community: Success in creative tools depends on community. You need creators using the platform, sharing results, collaborating, pushing the boundaries. Napster needs to foster that rather than trying to control it.

The stakes are high because Napster's cultural moment is limited. The company gets maybe 12-18 months of mainstream attention before the story gets boring and competitors move in. They need to use that time to build something genuinely valuable, not ride nostalgia.

The Philosophical Question: What Makes Music Matter

Underneath all this technology discussion sits a more fundamental question that probably can't be answered definitively but is worth asking anyway.

What makes music meaningful?

Is it the skill of the musician? The years of practice? The natural talent? If so, then AI-generated music can never be as meaningful because nobody suffered for it, nobody trained for years to create it. It's just algorithmic output.

Or is it the emotional resonance? The way the music makes you feel? If so, then it doesn't matter whether a human or AI created it. If it moves you, it's meaningful.

Or is it the intention? The artist put thought and vision into the piece? If so, then AI is just a tool like any other. The vision comes from the human using the tool.

These are not technical questions. They're philosophical. And philosophers have been arguing about what makes art "real" since at least Plato arguing that paintings are fake copies of reality. We're not going to solve this in a blog post.

But the resolution of these questions will shape how people feel about AI-generated music long-term. If people decide that authenticity requires human suffering and years of training, then AI music will always feel hollow. If people decide that authenticity requires only honest creative intention, then AI is just another tool and no different from synthesizers.

Historically, the public has gotten over these objections faster than the gatekeepers expected. Photography wasn't "real art" to some painters in the 1800s. Now it obviously is. Video game music wasn't "real music" to some classical composers. But Koji Kondo's Super Mario Bros. theme is now in the Library of Congress's National Recording Registry. Digital art isn't "real art" in some people's opinion. And yet, it's everywhere.

AI music will probably follow the same trajectory. Eventually, the question won't be "is this real music" but "is this good music." And that's a question that's always context-dependent anyway.

FAQ

What exactly is Napster's new AI music creation app?

Napster's new platform is an AI-powered music creation tool that lets users describe what they want to hear and generate original music from those descriptions. Rather than fully automating music creation, the platform emphasizes collaboration—you describe a concept, the AI generates variations, you iterate and refine until you get something you're happy with. The output can be stems (individual parts like drums, bass, melody) or full mixes ready for use.

How does the AI actually generate music?

The system works by analyzing patterns from massive datasets of existing music during training, learning which instruments typically play together, how melodies evolve, what makes chord progressions feel resolved, and how rhythm shapes emotion. When you describe what you want, the neural network generates novel audio that matches your specifications without simply replaying existing songs. The human remains in the loop throughout, iterating and refining based on feedback until the final result matches their vision.

Can I legally use AI-generated music commercially?

This depends entirely on the licensing terms in Napster's platform and what you plan to do with the music. The company has announced licensing partnerships with major record labels, which means some commercial use is likely permitted. However, the specific terms will matter—whether you can sell music, use it in YouTube videos, include it in games, or redistribute it. Always read the licensing agreement carefully before committing to commercial use, and understand that the legal landscape around AI-generated music is still evolving.

How is Napster's approach different from other AI music tools?

While OpenAI's music research remains experimental and Endel focuses specifically on ambient generation, Napster is positioning itself as a general-purpose music creation tool for casual creators and professionals. The emphasis on human-AI collaboration throughout the creative process distinguishes it from fully automated systems. Additionally, Napster's brand recognition and working partnerships with major labels suggest a more mainstream-focused, commercially viable approach than experimental alternatives.

What are the main limitations I should expect?

AI music generation isn't perfect. Quality varies depending on your prompt specificity. Genre representation bias means some styles generate better than others. Long, structured songs with clear emotional arcs are harder than short loops. The system makes decisions about musical elements that you then have to accept or regenerate. And while the output might sound professionally produced, it requires human curation—most generations won't be immediately usable without iteration and refinement.

Do I need any music training to use the platform?

No. The platform is designed for people without music background. You don't need to understand music theory, audio production, or how synthesizers work. The trade-off is that you'll get better results if you can be specific about what you want, which sometimes requires learning basic music terminology (tempo, key, instrumentation, etc.). But this is the point—the platform removes the biggest barrier to music creation: years of training.

How much will Napster's music creation platform cost?

Exact pricing hasn't been finalized, but based on industry standards and hints from Napster's announcements, expect either a subscription model ($9-15/month with limited generations) or freemium model (some free generations, paid for more). Commercial licensing will probably cost more. There will likely be free trials so you can experiment before committing money. The actual pricing will depend on how much compute costs Napster absorbs versus passes to users.

What happens to copyright and artist rights with AI-generated music?

This is actively evolving. Napster has licensed training data and announced partnerships with major labels, meaning the company is trying to ensure that artists are compensated for music the AI learned from. However, the legal framework is still developing. The key distinction is training data licensing (paying artists whose music the AI learned from) versus output licensing (what rights you have to use the music you generate). Both matter, and the terms may differ between regions and use cases.

Can AI replace human musicians and composers?

Probably not completely, but it will certainly change how musicians work. AI is more likely to augment professional musicians than replace them. It handles tedious production work, generates variations for rapid prototyping, and accelerates iteration. However, in some domains (background music for videos, ambient tracks, generic scoring), AI might displace lower-end commercial music work. The real value of human musicians will increasingly come from distinctive voice, emotional authenticity, and artistic vision that's hard to automate.

What's the future of music if AI generation becomes really good?

The likely trajectory includes real-time human-AI collaboration, emotionally responsive music that adapts to your state, personalized streaming where music is generated on demand, and integration into all creative tools (games, apps, films, etc.). Music itself probably doesn't change fundamentally—it will still need to be emotionally resonant and intentional. But the production process, the cost of entry, and who can participate all change dramatically. The impact is probably similar to what digital audio workstations did to music production in the 1990s: it democratized access and changed how professionals work.

The Bottom Line: A Smart Pivot for a Controversial Brand

Napster returning with an AI-first music creation platform is actually a clever move. The company learned from the original disaster: disruption works better when you work with power structures rather than against them. The timing aligns with mature AI technology and genuine demand for accessible music creation. The market opportunity is real.

But Napster is also carrying baggage. The name carries associations with copyright violation and corporate arrogance. The company needs to prove that the new version is genuinely different. That requires delivering exceptional product quality, respecting artist rights, and building a real community of creators.

If Napster executes well, they could own this space. The first-mover advantage in AI music creation for general audiences is still there. The brand recognition is there. The infrastructure is there. But the window for capitalizing on it is limited. Once competitors have better products or the novelty of Napster's return wears off, it gets much harder.

The bigger picture is that AI music generation isn't a question of whether it happens. It's definitely happening. The question is how it happens. Are the tools controlled by a few corporations? Open source and freely available? Distributed across many competing platforms? Does it benefit creators or primarily benefit the platforms that own the tools? Does it expand musical creativity or replace it with generated genericity?

Napster's bet is that they can be a player in shaping that future. Whether that bet pays off probably depends less on technology and more on culture—whether users accept AI-assisted creativity, whether the music industry tolerates the disruption, whether copyright law evolves in ways that make the platform viable long-term.

The 1999 version of Napster couldn't control those factors. They just pushed technology forward and dealt with the consequences. The 2024 version of Napster is trying to be smarter about it. Whether that wisdom actually applies to the music industry's legal and cultural systems remains the central question. History suggests that technology eventually outpaces regulation. The interesting part is watching how hard the establishment fights the transition and how messy it gets before equilibrium is restored.

One thing seems certain: music creation is about to become radically more accessible. Whether that's ultimately positive or negative will depend on what people do with that access.

Key Takeaways

- Napster's return with AI music generation represents a fundamental shift from consumption to creation, democratizing tools that once required years of training and expensive equipment

- The platform uses neural networks trained on massive music datasets to generate novel compositions based on text descriptions, emphasizing human-AI collaboration rather than full automation

- Unlike the original Napster that fought copyright law, the new platform works with major labels and licensing agreements, learning that legitimacy requires operating within regulatory frameworks

- AI music generation is maturing from novelty to legitimate production tool, attracting content creators, game developers, marketers, and independent producers who previously lacked music creation access

- The market opportunity is massive—billions consume music but only millions can create it, and AI removes the primary barrier that prevented participation: the need for years of musical training

