Deezer's AI Music Detection Tool Goes Commercial [2025]: How Platforms Are Fighting AI Fraud in Music Streaming

You've probably noticed something odd about music streaming lately. Log into Spotify, Apple Music, or YouTube Music, and you're increasingly likely to stumble onto AI-generated tracks mixed in with legitimate music. Some are obvious—robotic vocals, weird production choices. Others? Disturbingly convincing.

The real problem isn't that AI-generated music exists. It's that bad actors are flooding streaming platforms with it specifically to game the royalty system. They upload thousands of low-effort tracks, create fake playlists, and use bots to stream their own songs, basically stealing from the royalty pools that human artists depend on.

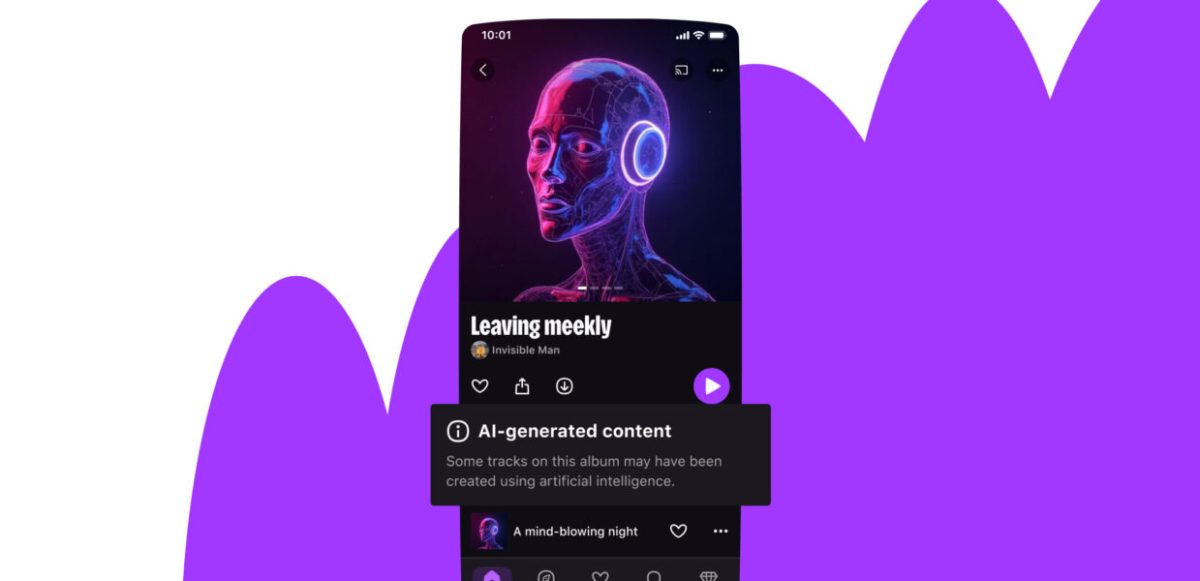

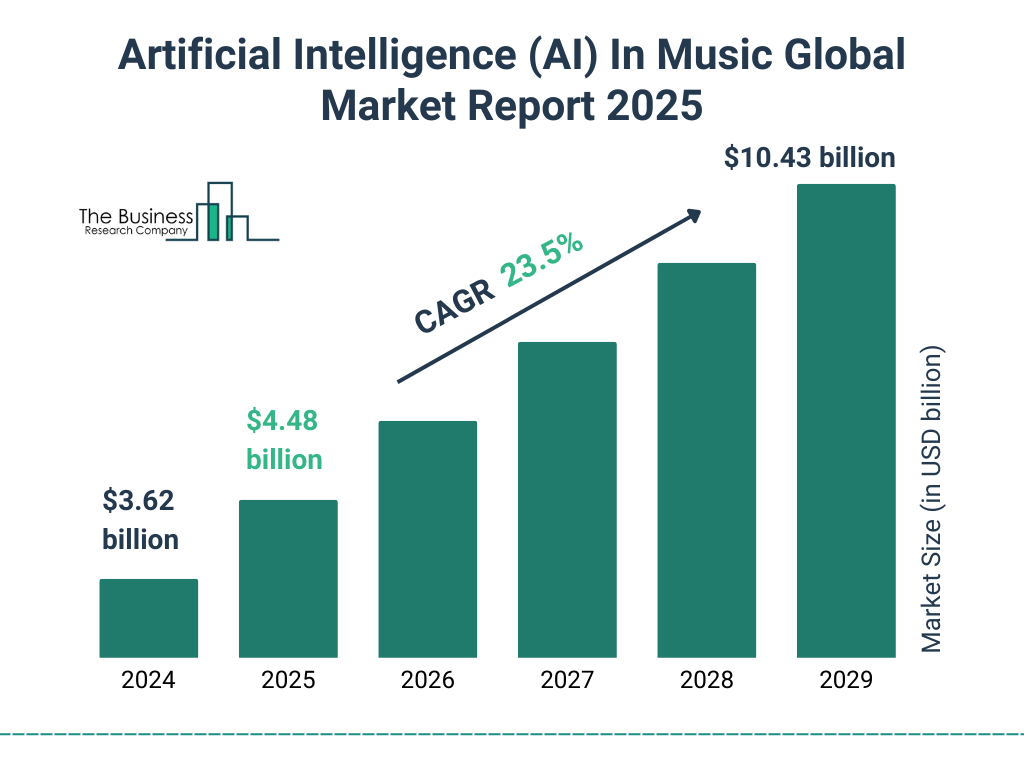

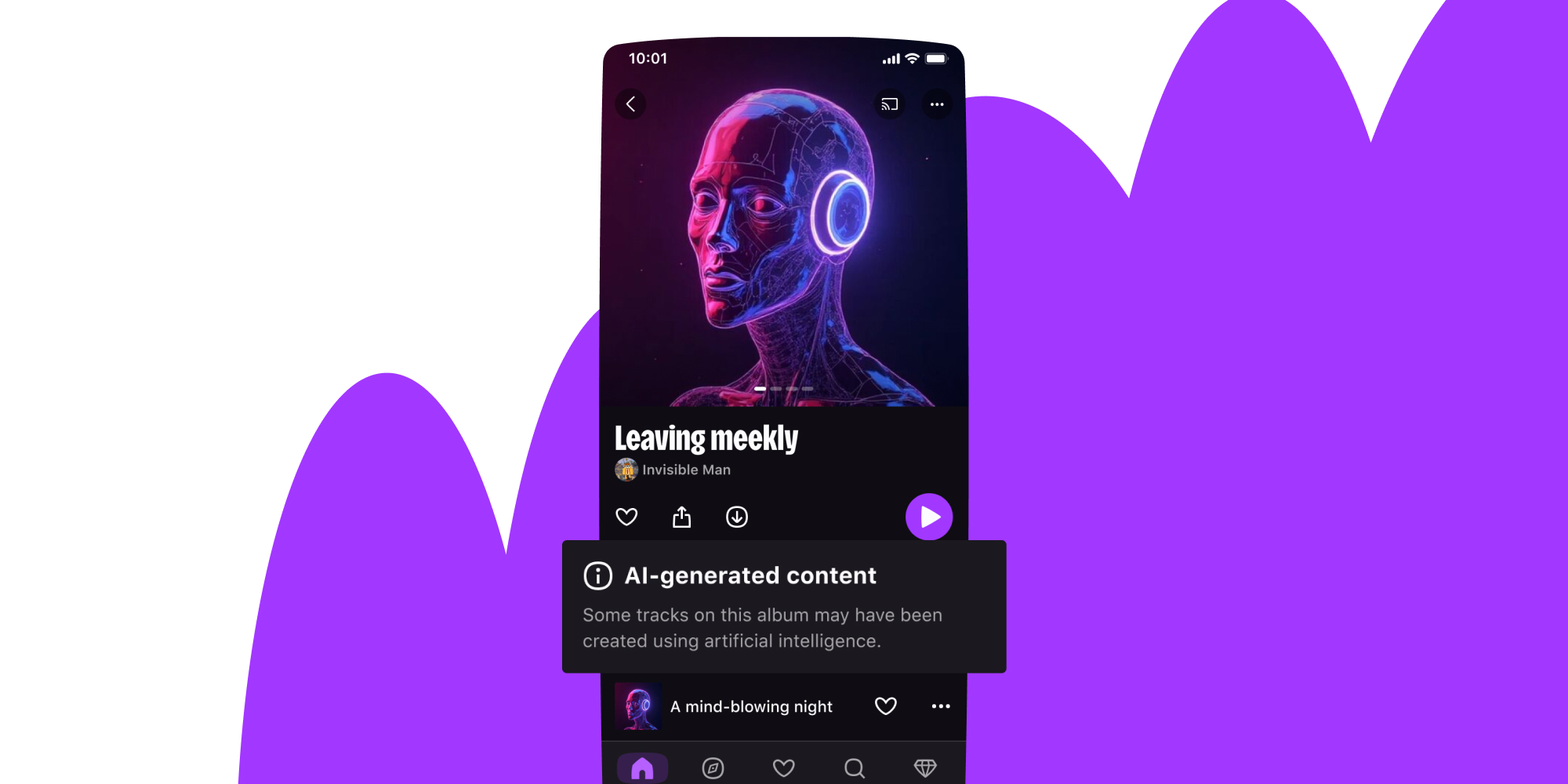

Enter Deezer. The French streaming giant just opened up access to its AI music detection tool commercially, and it's a pretty big deal. This isn't some experimental feature—it's battle-tested technology that's already identified over 13.4 million AI-generated tracks in 2025 alone, with a 99.8% accuracy rate.

We're talking about a tool that can tell the difference between legitimate AI-assisted music production and straight-up fraudulent dumps. And now any platform, label, or rights holder can use it.

Let's dig into what this means for the music industry, why it matters, and what comes next in the increasingly complex relationship between AI and music streaming.

TL;DR

- Deezer detected 13.4M AI tracks in 2025, proving the scale of the problem

- 99.8% detection accuracy sets a new bar for identifying fraudulent AI-generated music

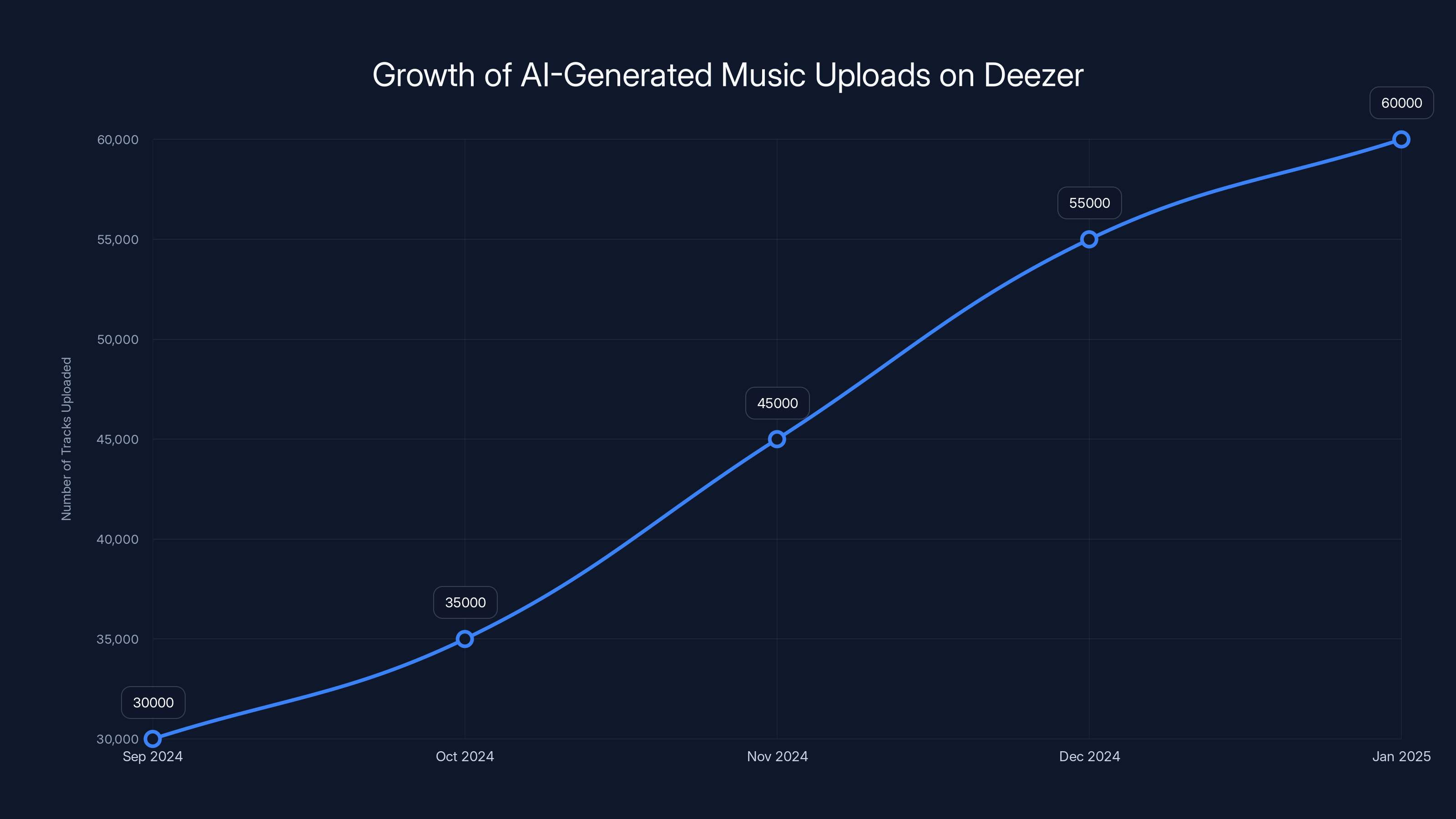

- 60,000 AI tracks uploaded daily to Deezer, double the rate from September 2024

- 85% of AI streams are fraudulent, compared to just 8% of all streams

- Commercial availability means other platforms can now access enterprise-grade detection

- Industry-wide action with Spotify, Bandcamp, and others also tackling AI music fraud

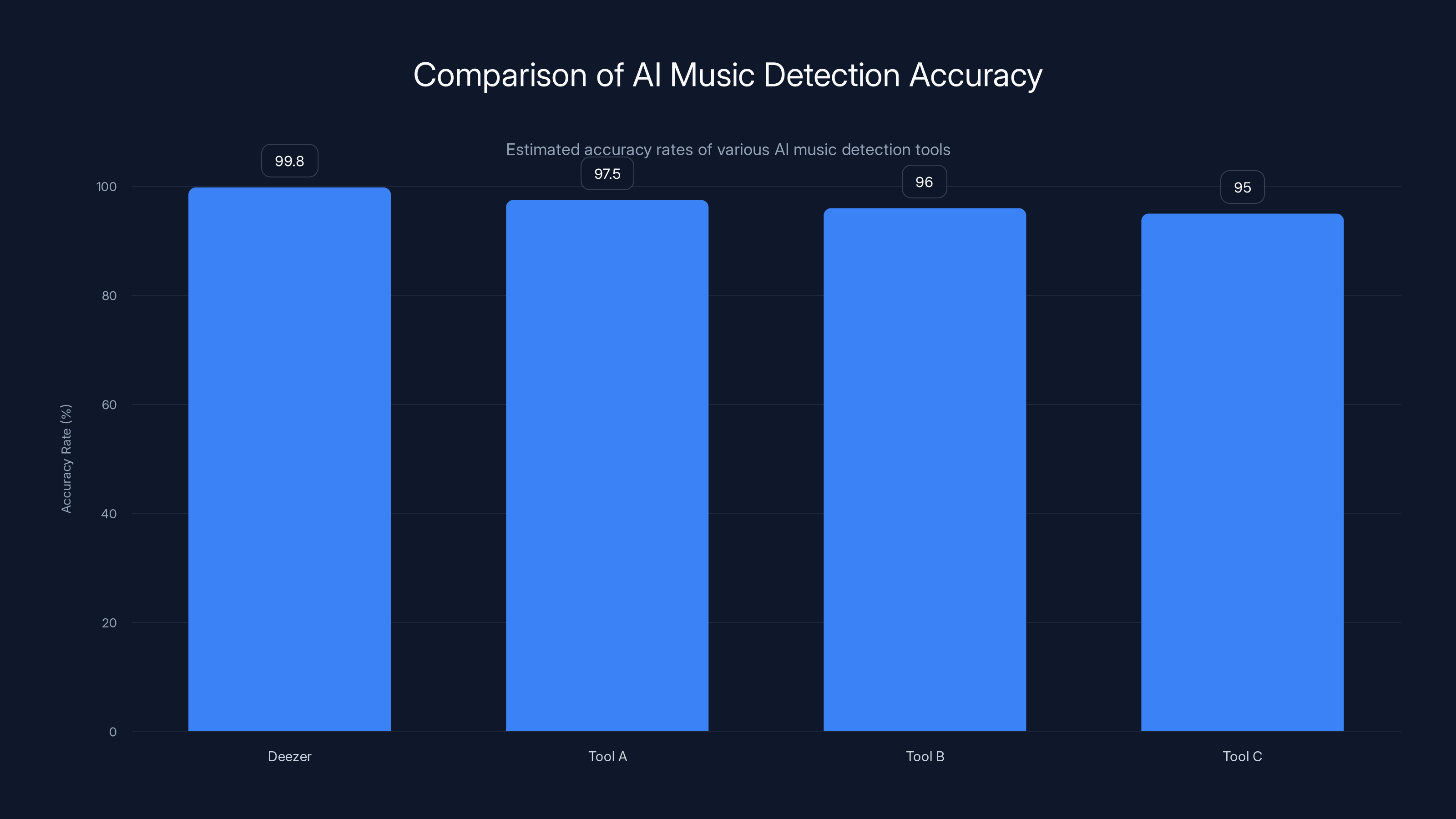

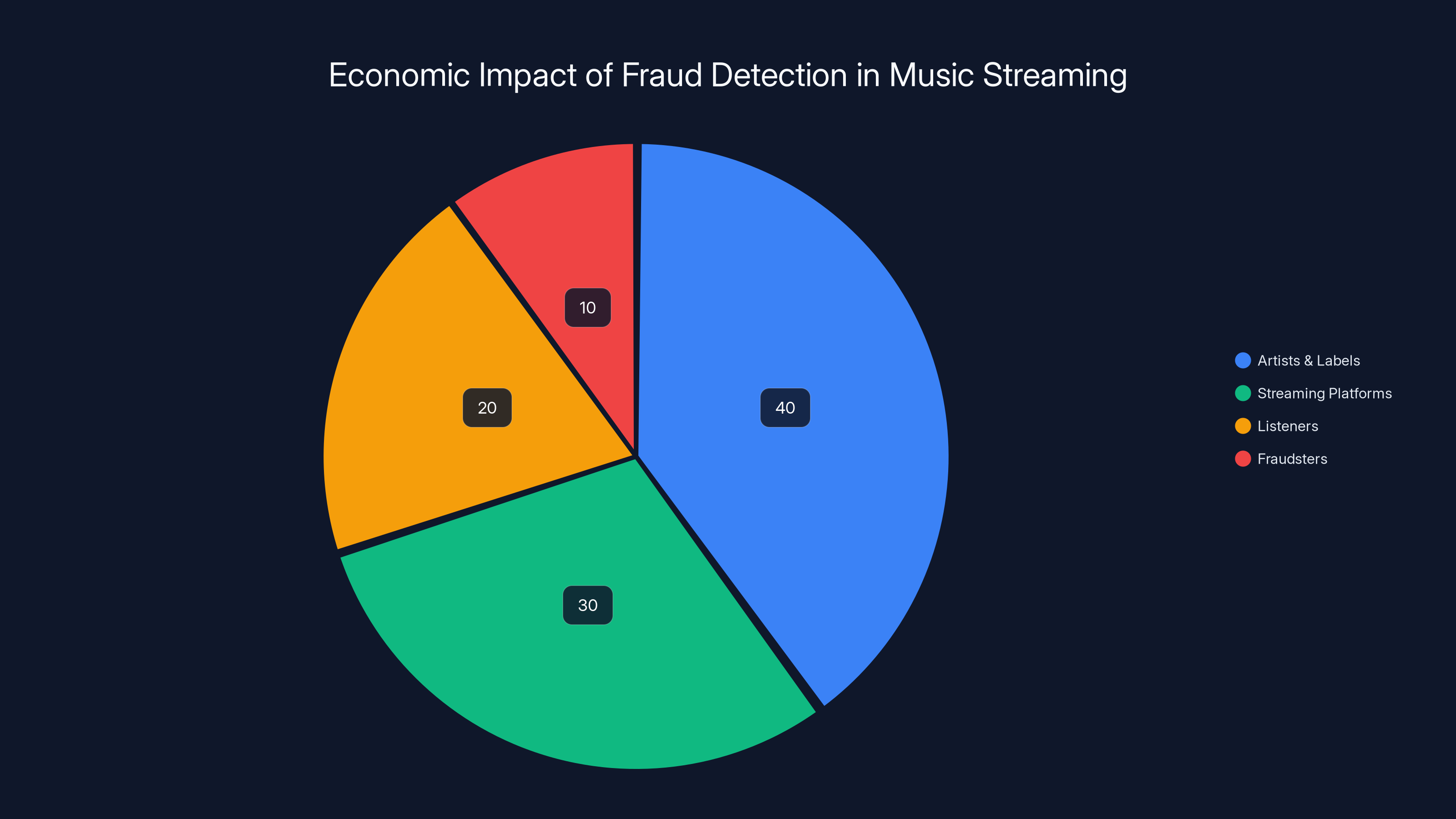

Deezer's AI music detection tool boasts a 99.8% accuracy rate, surpassing other tools estimated at 95-97.5%. Estimated data.

The AI Music Problem Is Bigger Than You Think

Let's establish baseline facts here. AI-generated music isn't inherently bad. Artists use AI tools to speed up production, explore new sounds, and collaborate in ways that weren't possible five years ago. The ethical uses are real.

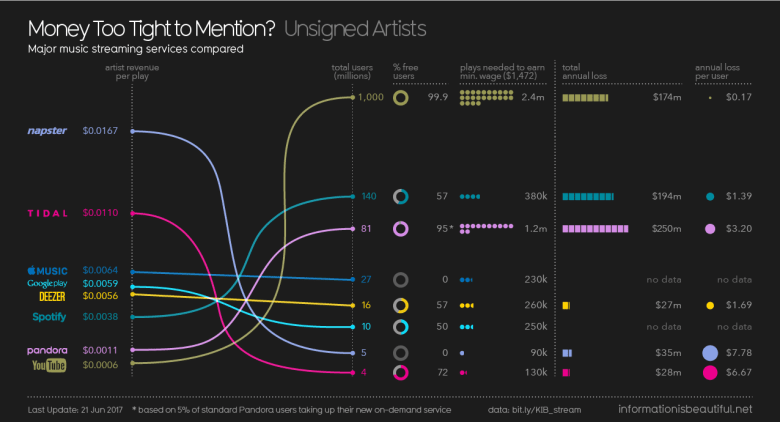

But here's where it breaks down. Streaming platforms distribute royalties per stream, which creates a perverse incentive. If you can generate 1,000 mediocre tracks for essentially free and then run them up to a million plays each through bot networks and fake playlists, you've just diverted revenue away from artists who actually spent months on a single song.

Deezer's data shows this is happening at scale. The company receives more than 60,000 AI-generated tracks every single day. That's a 100% increase from September 2024, when they reported 30,000 daily uploads. In other words, the problem is getting worse, not better.

Even more damning, Deezer found that up to 85% of streams from AI-generated tracks are fraudulent. Compare that to just 8% of all streams being fraudulent. This means AI-generated music is fundamentally warping how the royalty system functions.

The scale of daily uploads tells you something important. No human could manually review 60,000 tracks a day. You need automation. You need AI to catch AI. That's exactly what Deezer built.

How Deezer's AI Detection Actually Works

Okay, so how do you build a system that can identify AI-generated music with 99.8% accuracy? It's not magic—it's applied machine learning trained on massive amounts of data.

Deezer's tool analyzes audio characteristics that AI generators tend to produce consistently. We're talking about things like harmonic patterns, vocal characteristics, instrument separation, and production artifacts. Human-made music has natural imperfections—slight timing variations, analog warmth, intentional "mistakes" that add character. AI tends to generate more uniform, mathematically perfect audio.

The tool also considers metadata patterns. Fraudulent uploads often come from newly created accounts with zero follower relationships, generic descriptions, or suspicious upload patterns. When you combine audio analysis with behavioral data, you can identify fraud with genuinely high accuracy.
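
To make the idea of combining audio analysis with behavioral data concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `UploadSignals` fields, the risk weights, and the 0.8 threshold are hypothetical and do not describe Deezer's actual model, which has not been published in this detail.

```python
from dataclasses import dataclass


@dataclass
class UploadSignals:
    """Hypothetical inputs; none of these field names come from Deezer."""
    audio_ai_score: float    # 0.0-1.0 output of some audio classifier (assumed)
    account_age_days: int    # age of the uploading account
    follower_count: int      # followers of the uploading account
    uploads_last_24h: int    # recent upload volume from the same account


def behavior_risk(signals: UploadSignals) -> float:
    """Turn the metadata patterns described above into a 0.0-1.0 risk score."""
    risk = 0.0
    if signals.account_age_days < 30:
        risk += 0.4  # newly created account
    if signals.follower_count == 0:
        risk += 0.3  # zero follower relationships
    if signals.uploads_last_24h > 100:
        risk += 0.3  # suspicious upload pattern
    return min(risk, 1.0)


def combined_score(signals: UploadSignals, audio_weight: float = 0.7) -> float:
    """Weighted blend of the audio score and the behavioral risk."""
    behavior_weight = 1.0 - audio_weight
    return audio_weight * signals.audio_ai_score + behavior_weight * behavior_risk(signals)


def is_flagged(signals: UploadSignals, threshold: float = 0.8) -> bool:
    """Flag the upload when the blended score crosses an assumed threshold."""
    return combined_score(signals) >= threshold
```

With these made-up weights, a brand-new account with no followers that bulk-uploads AI-sounding audio crosses the threshold, while the same audio score from a long-standing account with a real following does not. A real system would learn such weights from labeled data rather than hard-code them.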

What makes Deezer's approach different from competitors is the combination of specificity and scale. They've trained their model on millions of songs, both legitimate and AI-generated, building a baseline of what real music sounds like versus what AI music sounds like. The 99.8% accuracy rate isn't theoretical—it's been validated against their own platform data.

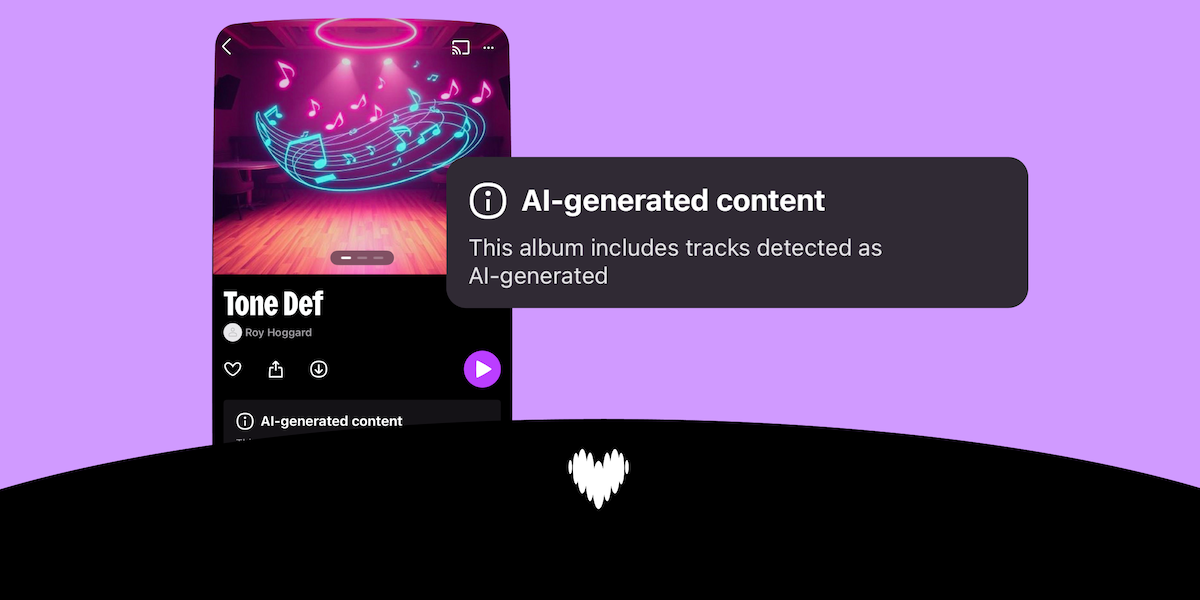

Here's the practical part. When Deezer's system flags a track as AI-generated, it doesn't automatically delete it. Instead, it tags the track, excludes it from algorithmic recommendations, and most importantly, demonetizes it. The track stays available and can still be streamed, but the money goes into a holding account, not to whoever uploaded it.

That's a really clever approach because it doesn't overreach into censorship territory while actually removing the financial incentive for fraud.
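
The tag, hide, and demonetize policy described above can be sketched in a few lines. This is only an illustration: the `Track` and `Ledger` types and the function names are hypothetical, and the $0.004 rate is the rough per-stream figure used elsewhere in this article, not a published Deezer payout.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: str
    ai_tagged: bool = False
    recommendable: bool = True
    monetized: bool = True


@dataclass
class Ledger:
    paid_to_uploader: float = 0.0
    held: float = 0.0


def apply_ai_flag(track: Track) -> Track:
    """Tag the track, pull it from recommendations, and demonetize it.

    The track itself is kept, so it can still be streamed.
    """
    track.ai_tagged = True
    track.recommendable = False
    track.monetized = False
    return track


def settle_streams(track: Track, streams: int, rate: float, ledger: Ledger) -> None:
    """Route stream revenue to the uploader or to the holding account."""
    amount = streams * rate
    if track.monetized:
        ledger.paid_to_uploader += amount
    else:
        ledger.held += amount


ledger = Ledger()
flagged = apply_ai_flag(Track("example-track"))
settle_streams(flagged, streams=1_000, rate=0.004, ledger=ledger)
print(ledger)
```

The design choice worth noticing is that nothing is deleted: the only state that changes is visibility in recommendations and where the money goes.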

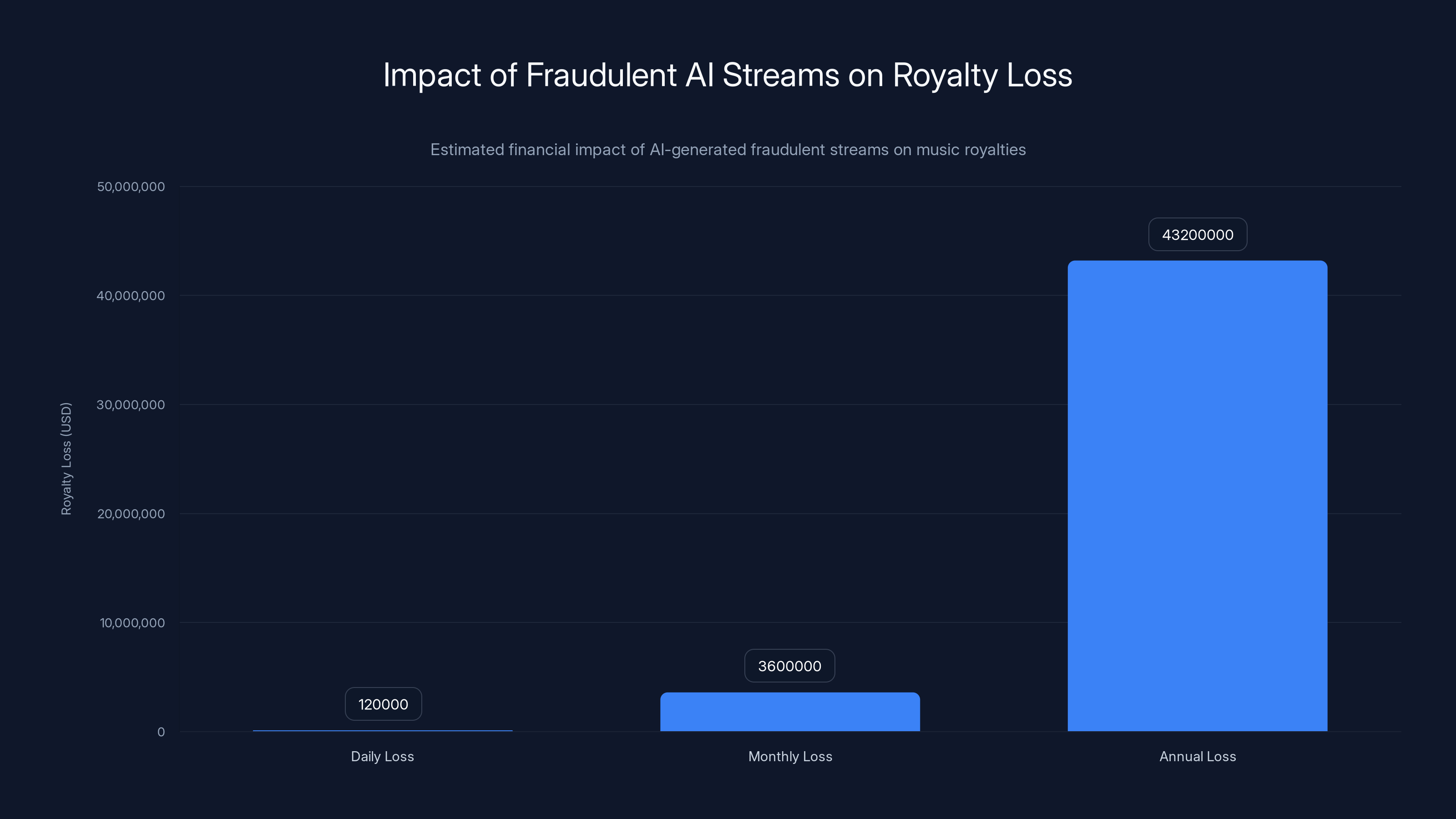

Estimated data shows fraudulent AI streams could siphon off millions annually, impacting legitimate artists' earnings.

Why This Matters: The Royalty Protection Angle

Here's something that doesn't get enough attention in discussions about AI music. The streaming royalty system is fragile. It's designed around the assumption that uploaded content is legitimate and created by real artists.

When fraudsters flood the system with AI tracks, they're not just competing for listener attention. They're directly diverting money from the actual artists whose songs people want to hear. It's resource theft disguised as content distribution.

Deezer CEO Alexis Lanternier put it plainly: "Every fraudulent stream that we detect is demonetized so that the royalties of human artists, songwriters and other rights owners are not affected."

That statement is important because it shows the actual goal here. This isn't about gatekeeping or preventing AI use entirely. It's about protecting the economic viability of the streaming model for legitimate creators.

Consider this calculation. If 85% of AI-generated streams are fraudulent, and those AI tracks represent a growing percentage of total uploads, you're looking at a situation where an increasing share of the royalty pool gets diverted away from human artists every month.

That's literally millions of dollars per year being siphoned away. Scale matters.
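
To see how the diversion works mechanically, here is a small sketch that assumes a simple pro-rata model, where a fixed pool is split in proportion to stream counts. The pool size and stream counts are invented for illustration. Only the 8% fraudulent share echoes the figure quoted earlier.

```python
def pro_rata_payout(pool: float, artist_streams: int, total_streams: int) -> float:
    """Artist's share of a pool split in proportion to stream counts."""
    if total_streams <= 0:
        raise ValueError("total_streams must be positive")
    return pool * artist_streams / total_streams


# Hypothetical month: all numbers below are illustrative, not Deezer data.
POOL = 1_000_000.0          # dollars in the royalty pool
ARTIST_STREAMS = 50_000     # one human artist's streams
LEGIT_STREAMS = 92_000_000  # all legitimate streams, including the artist's
FRAUD_STREAMS = 8_000_000   # fraudulent streams (8% of the 100M total)

clean = pro_rata_payout(POOL, ARTIST_STREAMS, LEGIT_STREAMS)
diluted = pro_rata_payout(POOL, ARTIST_STREAMS, LEGIT_STREAMS + FRAUD_STREAMS)

print(f"without fraud:    ${clean:,.2f}")
print(f"with fraud:       ${diluted:,.2f}")
print(f"lost to dilution: ${clean - diluted:,.2f}")
```

Under these assumptions the artist's stream count never changes, yet the payout shrinks because the denominator grows. Demonetizing fraudulent streams removes them from that denominator.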

The Commercial Launch: What It Means

So Deezer isn't just using this detection tool internally. They're making it available as a commercial service. Other platforms can license it. Independent labels can use it. Playlist curators can run their submissions through it.

This is significant because it democratizes access to enterprise-grade detection. Previously, only massive platforms with billion-dollar resources could build detection systems good enough to catch sophisticated AI fraud. Now smaller platforms can achieve similar results.

What's the business model? Deezer hasn't published exact pricing, but typically these B2B detection tools run on a per-track or per-API-call model. A label processing 1,000 submissions monthly might pay $500-2,000 depending on volume. A platform processing millions would pay more, naturally.

The real value isn't just in having the tool. It's in having consistent detection across platforms. If Spotify uses one detector, Apple Music uses another, and independent labels use a third, bad actors can game the differences. A unified standard makes fraud considerably harder.

That's probably why we're seeing other platforms make simultaneous moves. Spotify is working on a metadata standard for AI disclosure. Bandcamp took the harder line and is banning AI-generated music entirely. Everyone's converging on the same problem from different angles.

Industry Response: A Coordinated Approach

Deezer's move isn't happening in isolation. The entire music industry is adjusting to the reality of AI-generated music at scale.

Spotify's response focuses on transparency. They're implementing policies that require artists to disclose when AI was used in music creation. They're also building out a metadata standard—essentially, a way to tag AI-generated content at the source so platforms can handle it consistently. This is more about informed consumption than outright blocking.

The metadata approach is actually smart because it sidesteps censorship concerns. If listeners see "This track was generated using Suno AI" right in the track details, they can decide if they want to listen. Some people actively prefer AI music. Others want to support only human artists. Both preferences are valid.

Bandcamp took the opposite approach. They're not allowing AI-generated music on the platform at all. This is more restrictive, but it also creates a haven for human artists who want a platform that guarantees AI-free content. Different market positioning.

The broader pattern is what matters. The industry isn't trying to kill AI music. It's trying to contain fraud while allowing legitimate innovation. That's a more nuanced problem than a blanket ban.

Deezer has seen a 100% increase in daily AI-generated music uploads from September 2024 to January 2025, highlighting the growing scale of the issue. Estimated data.

The Detection Technology Arms Race

Here's something that doesn't get talked about enough. Once detection tools exist, AI generators will evolve to evade them. This is an arms race.

Good detection works by identifying consistent patterns. AI music tends to have certain harmonic characteristics, vocal traits, and production signatures. But AI models improve constantly. Suno and Udio are getting better at producing music that sounds more human every month.

Eventually, you'll have AI music that's genuinely indistinguishable from human music. What do you do then?

This is where things get philosophically tricky. If AI music becomes truly indistinguishable from human music, is the distinction still meaningful? The answer depends on context.

For fraud prevention, you could require watermarking or cryptographic proof of origin. Every track uploaded to a streaming platform would need to pass authentication. This is more technically complex but much harder to fake.

For artistic disclosure, you rely on metadata. The artist must declare if AI was used. This relies on honesty, which does little to stop fraudsters but works fine for legitimate artists who want to be transparent.

For competitive advantage, you might see platforms differentiate by requiring human-only content or AI-disclosed content, creating market segments.

The Economics: Who Benefits, Who Loses

Let's talk money because that's ultimately what this is about.

For platforms like Deezer, better fraud detection directly protects their business model. Every fraudulent stream they catch is money that stays in the royalty pool instead of going to a fraudster, which means more funds available for actual payouts to artists. This makes them more attractive to artists and labels.

For independent labels and artists, access to commercial detection tools levels the playing field. A small label now has the ability to quality-gate their submissions, ensuring they're not promoting fraudulent tracks. This preserves their credibility and the integrity of their artist rosters.

For listeners, the benefit is subtler but real. A cleaner feed means better algorithmic recommendations. When the algorithm isn't trying to distinguish between legit music and bot-amplified garbage, it works better for everyone.

The losers? Fraudsters, obviously. But also potentially, AI music generators if they become solely associated with fraud. There's a risk that legitimate use cases for AI music—collaboration tools, learning resources, creative exploration—get tainted by association with fraud. Companies like Suno and Udio need to be careful here.

Let's do some rough math on the economic impact. Assume:

- 60,000 AI tracks uploaded daily

- Average of 500,000 streams per track

- $0.004 per stream average payout

- 85% fraud rate

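Multiply those out and you get 60,000 × 500,000 × $0.004 × 0.85 ≈ $102 million per day, or roughly $37 billion per year.

Wait, that's obviously too high. Let me recalculate with a more realistic number. At around 10,000 streams per track, the same formula gives about $2.04 million per day, or roughly $744 million per year.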

Still enormous. This is money that should be going to artists but instead goes to fraudsters. Even at conservative estimates, we're talking about hundreds of millions in annual royalty theft.
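
The rough math above is easy to rerun with different inputs. In the sketch below, the first pass uses the listed assumptions as written. The second pass uses 10,000 streams per track, which is an assumption chosen here because it reproduces the $744 million estimate quoted in the Key Takeaways. The function name is hypothetical.

```python
def annual_diverted_royalties(
    tracks_per_day: int,
    streams_per_track: int,
    payout_per_stream: float,
    fraud_rate: float,
    days: int = 365,
) -> float:
    """Back-of-envelope estimate of royalties diverted per year, in dollars."""
    daily = tracks_per_day * streams_per_track * payout_per_stream * fraud_rate
    return daily * days


# First pass: the assumptions exactly as listed.
first_pass = annual_diverted_royalties(60_000, 500_000, 0.004, 0.85)

# Second pass: 10,000 streams per track (assumed, see the note above).
second_pass = annual_diverted_royalties(60_000, 10_000, 0.004, 0.85)

print(f"first pass:  ${first_pass:,.0f} per year")
print(f"second pass: ${second_pass:,.0f} per year")
```

The first pass lands around $37.2 billion a year, which is clearly implausible, and the second around $744.6 million. The estimate scales linearly with every input, so the streams-per-track assumption dominates the result.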

Accuracy Matters: The 99.8% Question

Here's where I get skeptical. A 99.8% accuracy rate sounds incredible. But what does that actually mean?

Accuracy is measured in different ways. Precision (of detected tracks, how many are actually AI-generated) is different from recall (of all AI tracks, how many did you catch). Deezer probably reported precision—meaning when they flag something, they're right 99.8% of the time.

But what about recall? If they're catching 99.8% of fraudulent tracks, that's amazing. If they're catching 50% of fraudulent tracks with 99.8% precision on what they do catch, that's less impressive.

They haven't published detailed metrics, which is fine for competitive reasons. But when evaluating detection tools, you need to ask for both precision and recall. You also need to know false positive rates. If the system flags legitimate human-created music as AI 0.2% of the time, that's still thousands of false positives across millions of tracks.
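
The difference between these metrics is easier to see with numbers. The counts below are invented purely for illustration and are not Deezer results. They describe a detector that is right 99.8% of the time when it flags something but still misses half of the AI tracks.

```python
def precision(tp: int, fp: int) -> float:
    """Of everything flagged as AI, the fraction that really is AI."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Of all real AI tracks, the fraction the detector caught."""
    return tp / (tp + fn)


def false_positive_rate(fp: int, tn: int) -> float:
    """Of all human-made tracks, the fraction wrongly flagged as AI."""
    return fp / (fp + tn)


# Hypothetical confusion-matrix counts (not Deezer data).
tp = 499_000    # AI tracks correctly flagged
fp = 1_000      # human tracks wrongly flagged
fn = 499_000    # AI tracks that slipped through
tn = 4_999_000  # human tracks correctly left alone

print(f"precision: {precision(tp, fp):.3f}")
print(f"recall:    {recall(tp, fn):.3f}")
print(f"false positive rate: {false_positive_rate(fp, tn):.4%}")

# Separate scenario: a 0.2% false positive rate across 5 million human tracks.
wrongly_flagged = round(0.002 * 5_000_000)
print(f"wrongly flagged at a 0.2% rate: {wrongly_flagged:,}")
```

In the first scenario, precision is 0.998 while recall is only 0.5, which is why a single headline accuracy number says little on its own. The second scenario shows how a false positive rate that sounds tiny still affects thousands of human-made tracks.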

That said, the fact that Deezer has flagged 13.4 million tracks and apparently has this detection running in production suggests the accuracy claims have held up in real-world testing. A detection system that generates excessive false positives would destroy platform reputation fast.

Estimated data shows that artists and labels benefit the most from fraud detection, followed by streaming platforms and listeners, while fraudsters lose out.

How Other Platforms Are Responding

Spotify's approach emphasizes collaboration. They're not just building detection tools; they're talking to AI music companies about standards. They want a situation where creators who use AI can do so transparently, and platforms can enforce disclosure requirements.

Apple Music has been quieter publicly but is likely integrating similar detection capabilities into its upload pipeline. They've got the resources and the motivation: a cleaner platform means better recommendations, which means better retention.

YouTube Music is in an interesting position because YouTube allows creator-uploaded content, so they're exposed to the same fraud. They probably benefit most from Deezer's commercial tool availability.

SoundCloud is another interesting case. They have a huge library of producer-uploaded music, which means massive exposure to AI-generated content. They'll probably be an early adopter of commercial detection tools.

The independent labels—which represent a substantial portion of music streaming revenue—are in a bind. They want to protect their artists from fraud, but they don't have the resources to build detection systems themselves. Deezer's commercial offering solves that problem directly.

Technical Limitations and Edge Cases

No detection system is perfect, and that's worth acknowledging. Here are the scenarios where detection gets genuinely hard.

First, hybrid music. A producer uses Suno to generate a baseline, then records live vocals over it. Is it AI music? Technically yes, partially. But it's also human-created. Detection systems might flag it as AI-generated when it arguably deserves different treatment.

Second, remix and interpolation. AI music generators can be trained on existing songs. Output might contain fragments that sound similar to existing tracks. Is it plagiarism detected as AI, or AI appropriation? Different question, same detection challenge.

Third, emerging generators. Deezer trained their system on known AI generators at the time they built it. New tools emerge constantly. Detection tools need continuous updates to stay effective.

Fourth, intentional evasion. Bad actors will specifically try to create AI music that passes as human. It's an ongoing competition between detection and evasion.

These aren't bugs in Deezer's system. They're inherent to the problem. Perfect detection probably isn't possible. You're aiming for good enough to disrupt fraud economics.

Artist Perspective: Is This Actually Helpful?

Let's zoom out and think about this from an artist's viewpoint. You're a musician trying to make money from streaming. How does Deezer's detection help you?

Direct benefit: If you're losing royalties to fraudsters, detection reduces that loss. Your share of the royalty pool grows because fraudulent shares get removed. That's real money.

Indirect benefit: Cleaner platforms attract better listeners. If Deezer becomes known as "the platform where you actually know the artists are real," that becomes a selling point. Artists prefer platforms that work to maintain integrity.

But here's the thing. Most artists don't think about fraud when they choose platforms. They think about payouts per stream, audience size, and discoverability. Deezer needs to convert fraud prevention into one of those factors.

The best version of this would be Deezer publicly crediting artists for royalty protection. "We removed fraudulent music and increased your payout by 3% last month because of our detection system." Make it explicit and concrete.

Without that framing, artists might not even notice the benefit. They'll keep comparing Spotify's payout rate to Deezer's and choose based on cents per stream, not fraud prevention.

Estimated data on the potential cost range for using Deezer's detection tool.

The Broader Implications: AI as Both Problem and Solution

There's an irony here worth noting. The music industry is using AI to detect and stop fraudulent use of AI. That's the pattern we'll see across industries.

You'll get AI content detection systems, AI plagiarism checkers, AI authenticity verification. The problem creates the solution, which creates new problems, which spawn new solutions.

This is actually healthy. It means the industry isn't trying to suppress AI entirely; it's trying to create legitimate boundaries. The goal isn't "no AI music." The goal is "AI music that's properly disclosed and not fraudulent."

That's a more sophisticated approach than either blanket adoption or total rejection.

For artists specifically, this creates opportunity. If you're working with AI as a creative tool, getting certified as legit through proper disclosure becomes valuable. You're demonstrating integrity, which builds audience trust.

For listeners, it means more informed choices. You can choose human-created music, AI-created music, or hybrid. Your preference, your choice.

For platforms, it means playing defense while building better tools. The game keeps evolving, but at least there's evolution instead of chaos.

Pricing, Availability, and Adoption

Deezer hasn't published formal pricing for their commercial detection tool, which is typical for B2B software. Enterprise pricing is usually negotiated based on volume and requirements.

But we can make reasonable estimates. Similar detection tools typically charge:

- Per-track pricing: up to $0.50 per track analyzed

- Monthly subscription: as much as $10,000 for up to 100,000 tracks

- Enterprise licensing: Custom pricing for platforms processing millions

For a small independent label processing 1,000 submissions monthly, you're probably looking at something in the $500-2,000 range estimated earlier.

Adoption will follow where the pain is highest. Labels and distributors dealing with high AI-generated submission rates will adopt first. Platforms losing revenue to fraud will follow. The long tail of small creators probably won't use it unless it integrates into their distribution platforms automatically.

The real shift happens when distribution platforms like DistroKid or CD Baby integrate detection tools into their submission workflows. Then detection becomes automatic rather than something creators actively choose.

Unintended Consequences: What Could Go Wrong

Here's what keeps me up at night about this. Powerful detection tools, even well-intentioned ones, can be weaponized.

Scenario one: Overzealous detection flags legitimate music as AI-generated, and an artist's entire catalog gets demonetized. Without a clear appeals process, that's devastating.

Scenario two: The detection tool becomes a bottleneck. Small artists can't afford to run their music through detection systems before uploading, so they get filtered out. Big labels can. This increases centralization.

Scenario three: Bad actors reverse-engineer the detector. If they can identify the patterns it relies on, they can create AI music built specifically to evade it, or try to poison the training data it learns from.

Scenario four: The detection system becomes a de facto copyright enforcement tool, used to block music for other reasons beyond fraud. License dispute? Flag it as AI and demonetize it.

None of these are guaranteed to happen, but they're real risks with systems this powerful.

The best safeguard is transparency. Deezer should publish regular reports on detection accuracy, false positive rates, and appeals outcomes. They should make the appeals process clear and fair. If they don't, the tool becomes just another way to concentrate power among platforms and away from artists.

The Future: Detection, Disclosure, and Differentiation

Where does this go long-term?

I expect we'll see three separate market segments emerge:

Human-only platforms: Places like Bandcamp that ban AI music entirely. These become havens for artists who want their audience to know they're dealing with genuine humans. Premium positioning.

AI-transparent platforms: Services that allow AI music but require disclosure. Listeners can filter by creation method. Spotify is moving in this direction.

AI-integrated platforms: Places where AI and human music coexist without special marking, but fraud detection keeps the system honest. This is Deezer's positioning.

Different platforms, different strategies. Listeners pick based on preference. Artists choose based on compensation and audience. The market fragments, but in a healthy way.

Detection technology will keep improving. Better precision, better recall, faster processing. But it'll always be one step behind evasion attempts. That's fine. You don't need perfect detection; you need good enough detection to disrupt fraud economics.

The metadata disclosure standard might actually be more important long-term than detection. If every AI-generated track carries cryptographic proof of its origin, detection becomes less critical. You're not guessing; you're verifying.
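
As a rough idea of what "verifying instead of guessing" could look like, here is a minimal sketch of a signed provenance record. It is an assumption-heavy illustration: the record fields and function names are hypothetical, and it uses a shared-secret HMAC from Python's standard library as a stand-in. A real scheme would more likely use public-key signatures so that platforms never need to hold the generator's signing key.

```python
import hashlib
import hmac
import json


def sign_provenance(audio_bytes: bytes, generator: str, key: bytes) -> dict:
    """Build a provenance record bound to the exact audio content."""
    record = {
        "audio_sha256": hashlib.sha256(audio_bytes).hexdigest(),
        "generator": generator,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_provenance(audio_bytes: bytes, record: dict, key: bytes) -> bool:
    """Check that the record matches the audio and was signed with the key."""
    claimed = {k: v for k, v in record.items() if k != "signature"}
    if claimed.get("audio_sha256") != hashlib.sha256(audio_bytes).hexdigest():
        return False  # the audio was altered or the record belongs to another file
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("signature", ""))


key = b"demo-key-not-for-production"
audio = b"fake audio bytes for the example"
record = sign_provenance(audio, "example-generator", key)

print(verify_provenance(audio, record, key))
print(verify_provenance(audio + b"!", record, key))
```

The first check succeeds and the second fails because the audio no longer matches the signed hash. Note that this only proves what a cooperating generator declared. It does nothing about tracks uploaded with no record at all, which is where detection still matters.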

For Deezer specifically, commercializing this tool is smart business. They've built a competitive advantage through scale and data. Now they're monetizing it while making the entire industry better. That's the kind of move that builds long-term brand loyalty.

What This Means for Creators, Listeners, and the Industry

Let's bring this back to practical implications.

For creators: The landscape is getting more complex, but also more honest. If you make music with AI, you can be transparent about it. If you make music without AI, you can be proud of that. Both are valid. Just be honest.

For listeners: You're getting more control. Eventually, you'll be able to filter playlists by creation method. Listen to human-only, AI-only, or hybrid music based on your preference. Better curation means better recommendations.

For labels and platforms: Better detection means cleaner royalty distribution. Fraud gets contained. Artists get paid more fairly. Audiences have better content. Everyone wins except fraudsters.

For Deezer specifically: This is a competitively smart move. They're establishing themselves as the trustworthy platform while creating a B2B revenue stream. Good business and good for the industry.

The bigger picture is that the music industry is actually adapting reasonably well to AI. It's not rejecting it outright, but it's also not letting fraud run wild. That's a measured response.

FAQ

What is AI music detection?

AI music detection is technology that analyzes audio files to identify whether they were generated using artificial intelligence tools or created by humans. The detection works by analyzing acoustic characteristics, harmonic patterns, and production artifacts that differ between AI-generated and human-created music. Deezer's detection system claims 99.8% accuracy in identifying AI-generated tracks.

How does Deezer's detection tool work?

Deezer's system uses machine learning trained on millions of audio samples to identify distinctive patterns in AI-generated music. It analyzes audio characteristics like harmonic structure, vocal production, and instrument separation, while also considering metadata patterns and account behavior. When flagged, tracks are tagged and excluded from algorithmic recommendations while remaining publicly available but demonetized.

Why is AI music detection important for streaming platforms?

Detection prevents fraud in the royalty system where bad actors upload AI-generated tracks and use bots to artificially inflate streams, diverting money from legitimate artists. Deezer reports that 85% of streams from AI-generated music are fraudulent, meaning without detection, hundreds of millions in annual royalties get diverted away from actual artists creating the music listeners want to hear.

Can artists appeal if their music is incorrectly flagged as AI-generated?

Appeals processes depend on the platform implementing the detection tool. Deezer has indicated that legitimate artists can appeal false positives, but specific procedures haven't been publicly detailed. Artists should document their creation process with project files and production notes to support appeals if their human-created music gets incorrectly flagged.

How does Deezer's 99.8% accuracy rate compare to other detection tools?

Deezer's accuracy claim is among the highest reported, but accuracy measurements vary by methodology. The 99.8% likely refers to precision (when they flag something, they're right) rather than recall (what percentage of total AI tracks they catch). Different detection systems use different measurements, making direct comparisons difficult without seeing complete technical specifications.

What happens to demonetized AI-generated tracks on Deezer?

When Deezer's detection system flags a track as AI-generated, it gets tagged and excluded from algorithmic recommendations, which significantly reduces visibility and discoverability. The track remains publicly available and can still be streamed, but monetary payouts from those streams go into a holding account rather than to whoever uploaded it, removing the financial incentive for fraud while avoiding outright censorship.

How much does it cost for other platforms to license Deezer's detection tool?

Deezer hasn't published pricing for their commercial detection tool. Pricing likely varies based on volume and requirements, and per-track, subscription, and custom enterprise models are all typical for this kind of B2B service.

What's the difference between AI music detection and AI music disclosure?

Detection identifies AI-generated music after it's created. Disclosure is transparency about AI involvement during the creation process. Spotify is focusing on disclosure (requiring creators to tell audiences when AI was used) while Deezer emphasizes detection (finding fraudulent AI tracks automatically). Both approaches can coexist to create a more honest music ecosystem.

The Bottom Line

Deezer's decision to commercialize its AI music detection tool is significant not because it's revolutionary technology, but because it represents the industry finally acknowledging and addressing a real problem at scale.

AI-generated music isn't going away. Neither is fraud. But having enterprise-grade detection available commercially means smaller platforms and labels can now protect themselves from fraud without building systems from scratch. That's democratizing access to tools that were previously available only to billion-dollar companies.

The 13.4 million AI tracks identified in 2025 prove this isn't a hypothetical problem. It's happening right now, at massive scale, diverting real money from real artists. Detection helps, but it's only part of the solution.

The real solution is a combination of detection, disclosure, and differentiation. Detect fraud. Disclose AI involvement. Differentiate your platform based on your policy toward AI music. Different segments of the market will choose different approaches.

For artists, this should be moderately encouraging. The industry is taking fraud seriously. For listeners, it means cleaner platforms and better recommendations. For platforms, it's a competitive tool that also happens to improve the ecosystem.

The music industry didn't kill AI. It didn't ban it entirely. It's doing something more sophisticated: creating boundaries, maintaining integrity, and letting the market decide what it wants.

That's actually the healthiest outcome we could hope for.

Key Takeaways

- Deezer's AI detection claims 99.8% accuracy and identified 13.4 million AI-generated tracks in 2025, proving detection is viable at scale

- AI music submissions doubled to 60,000 daily uploads within months, with 85% of resulting streams being fraudulent

- Commercial availability of detection tools democratizes access to fraud prevention for independent labels and smaller platforms

- Industry responses vary from Spotify's metadata disclosure to Bandcamp's complete AI ban, creating market segmentation

- Estimated $744 million in annual royalties are diverted to fraudsters through AI track manipulation and bot streaming

![Deezer's AI Music Detection Tool Goes Commercial [2025]](https://tryrunable.com/blog/deezer-s-ai-music-detection-tool-goes-commercial-2025/image-1-1769704861894.jpg)