Replit 24/7 AI Agents: The Future of Software Development in 2025-2026

Introduction: The Evolution of AI-Powered Development

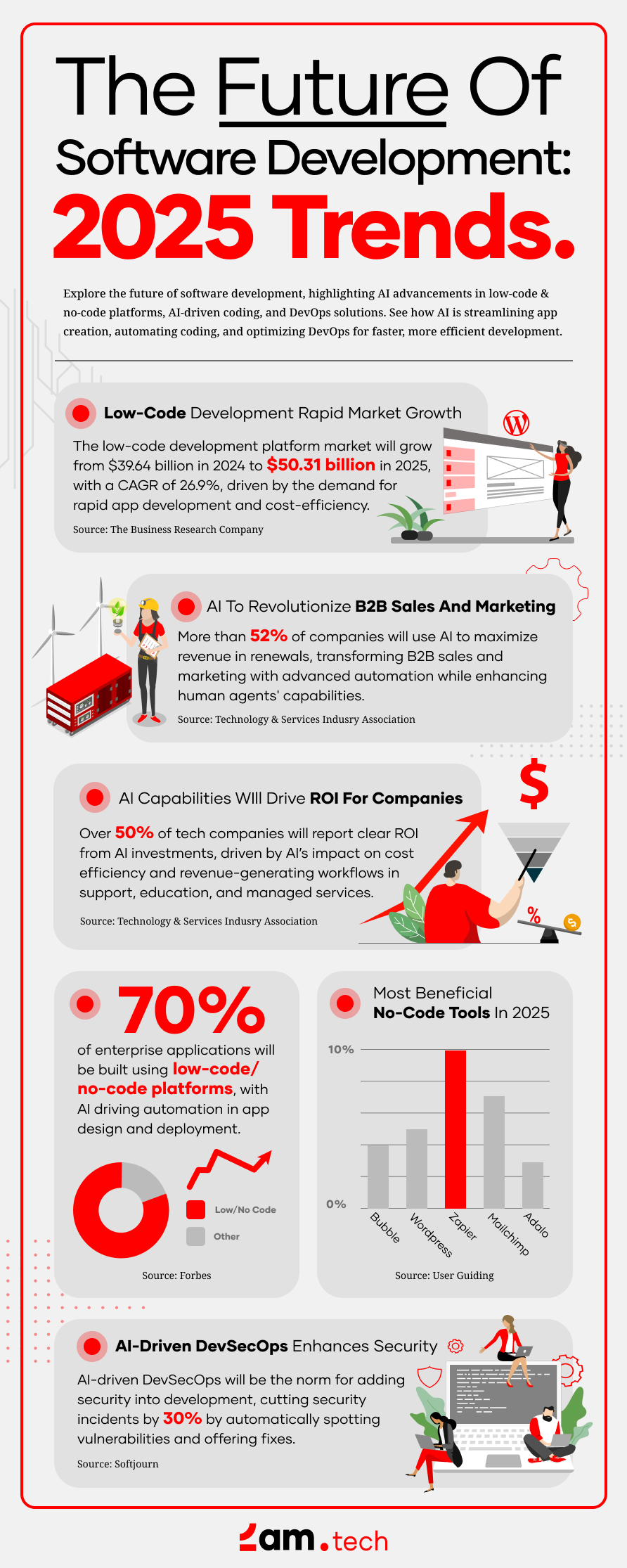

The landscape of software development has undergone a seismic shift over the past eighteen months. What seemed impossible in mid-2025 (building commercial-grade B2B applications with AI-powered development platforms) had become increasingly plausible by late 2025. This transformation represents far more than incremental progress; it signals a fundamental restructuring of how we approach software creation, team composition, and product timelines.

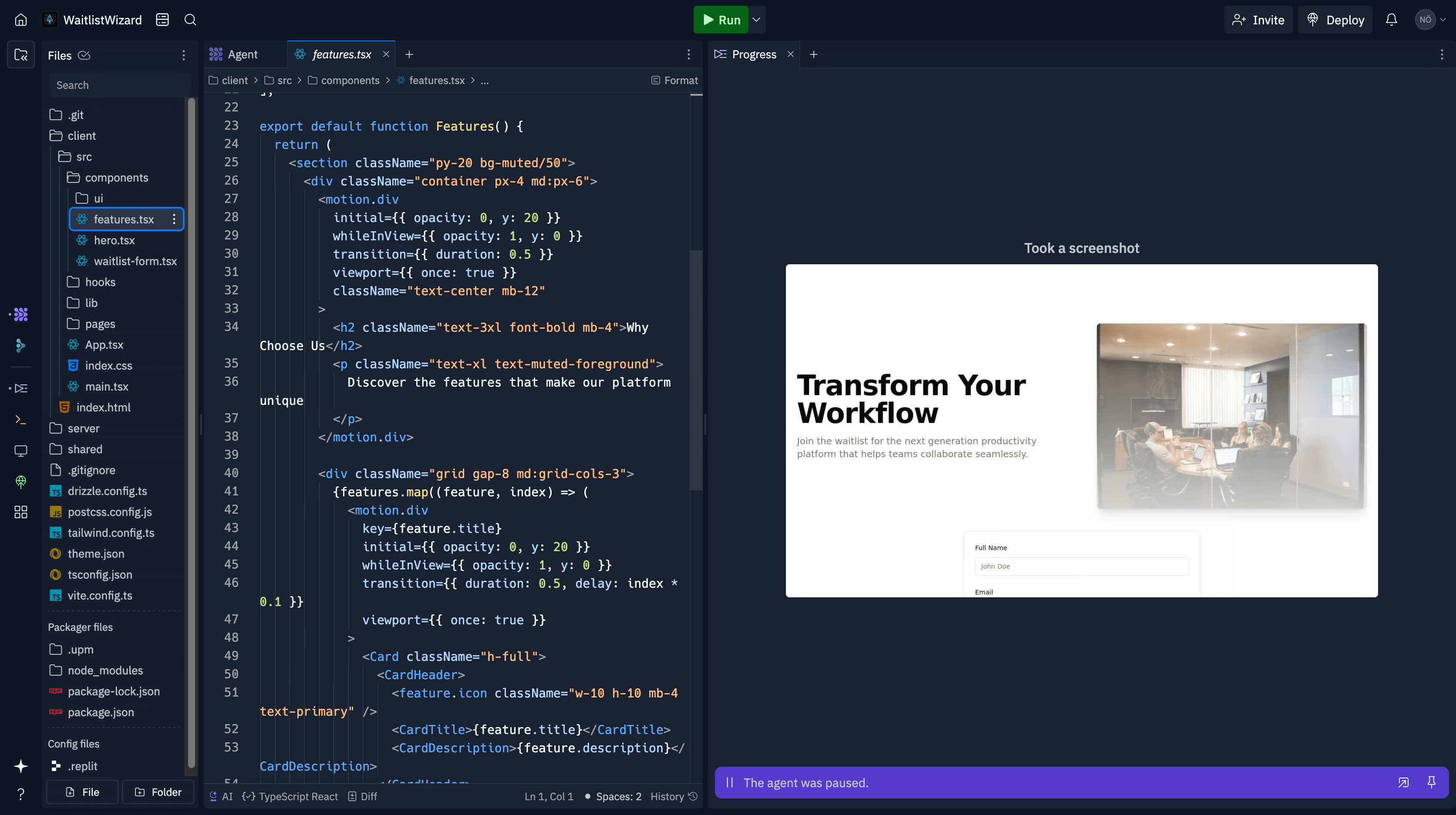

When Replit first emerged as a browser-based coding environment, it served a specific niche: rapid prototyping and educational use cases. The limitations were significant. Context windows were measured in thousands of tokens rather than millions. The AI agents could execute individual tasks but struggled with complex, interconnected feature development. Building anything approaching production-grade software felt like science fiction.

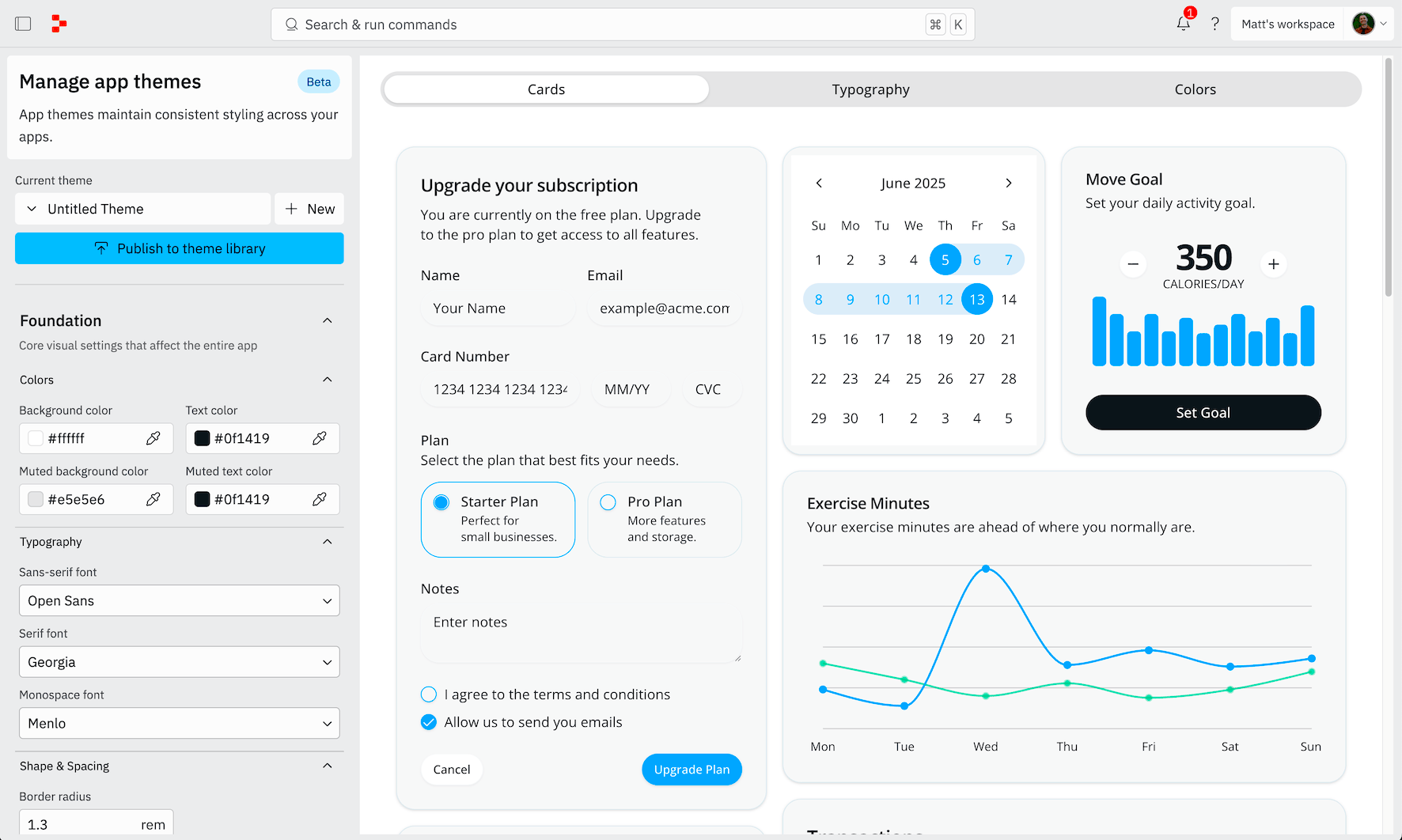

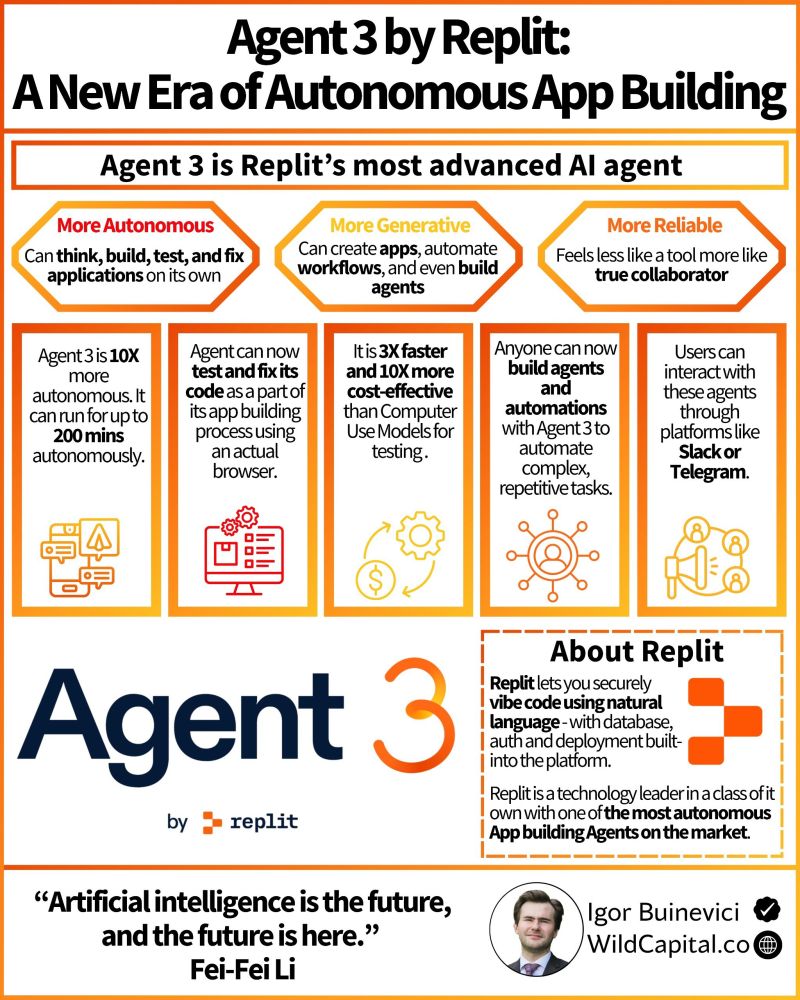

Yet by late 2025, Replit had achieved something remarkable. Effectively unlimited context became possible through dynamic context management. Sub-agents could be deployed to tackle specific problem domains autonomously. The introduction of Design Mode and Fast Mode gave developers visual development capabilities alongside traditional coding. The platform had transformed from a toy into a legitimate tool for building real applications.

However, the most intriguing thought experiment isn't about what Replit can do today—it's about what becomes possible when these agents operate perpetually. When your development team doesn't sleep. When feature development continues while you attend meetings, travel, or focus on other business priorities. When the traditional bottleneck of human availability and cognitive capacity is removed from the equation.

This exploration examines the current state of AI-powered development platforms, the mathematical reality of 24/7 autonomous agents, and the cascade of implications for software companies, development teams, and the entire technology industry. The numbers, the timelines, and the potential outcomes challenge fundamental assumptions about software development economics that have remained unchanged for decades.

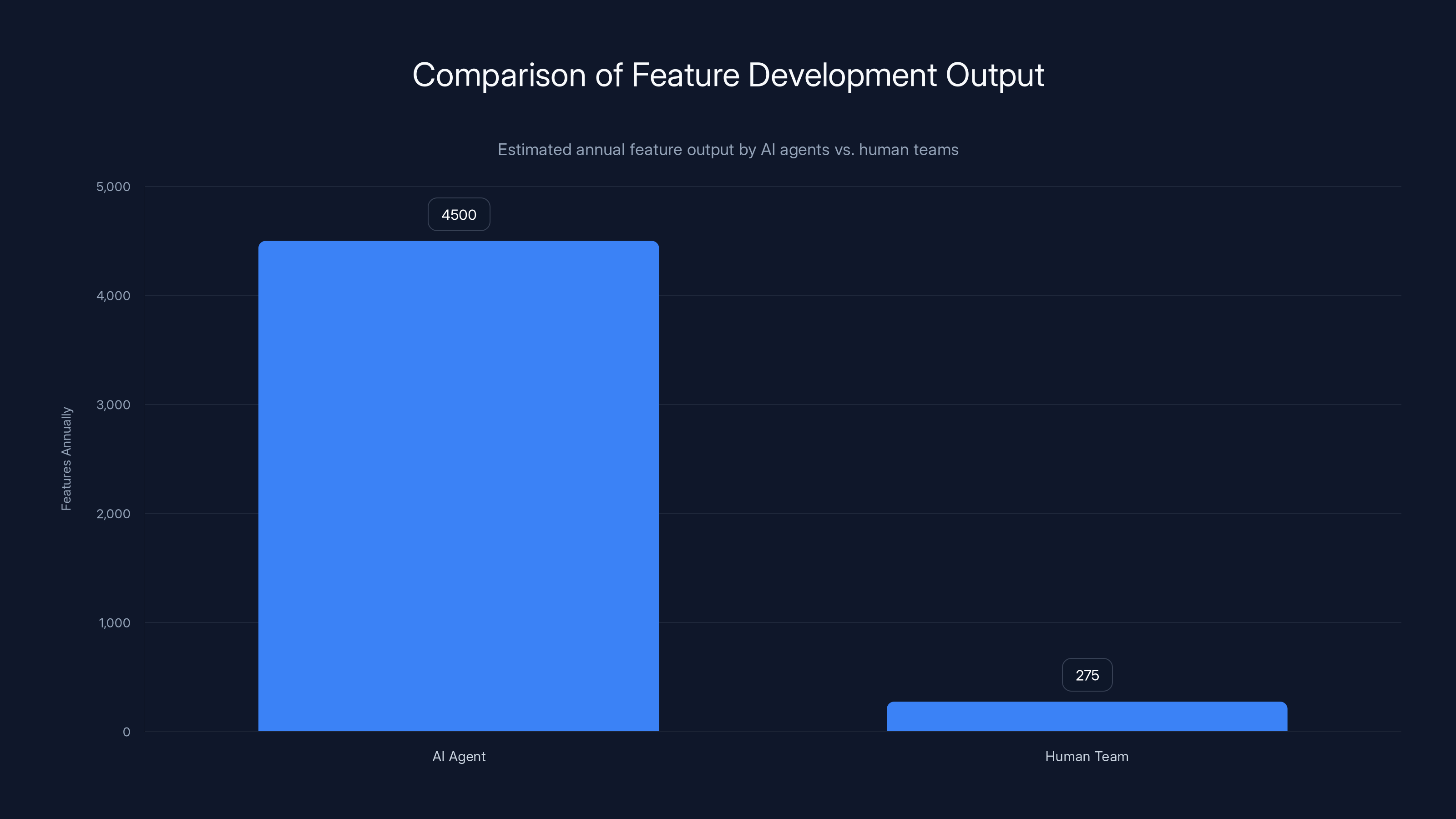

AI agents produce approximately 10-15 times more production-ready features annually than a five-person human team, highlighting their efficiency in routine tasks (Estimated data).

Understanding Replit's Late 2025 Capabilities

The Journey From Limited to Capable

Replit's evolution can be understood as a progression through distinct phases, each expanding the platform's capabilities. In early 2025, the platform operated with severe constraints. Context windows—the amount of code and documentation the AI could reference simultaneously—were limited to approximately 4,000-8,000 tokens. For perspective, a moderately complex feature might consume 2,000-3,000 tokens. This meant the AI could only reference a fragment of a project at any given moment, leading to repetitive mistakes, broken dependencies, and features that didn't integrate properly with existing code.

The testing capabilities were similarly primitive. The AI could generate code that appeared to function in isolation but frequently failed integration tests. Debugging required manual intervention. The development process became iterative in the worst way—not agile iteration with refinement, but frustrating loops of breaking and fixing broken code.

By mid-2025, further improvements emerged. Replit expanded context windows to 32,000 tokens. The effect proved more than incremental: it enabled the AI to reference small applications in their entirety. Dependencies became traceable. Code quality improved measurably. But significant gaps remained.

By late 2025, the platform had crossed a critical threshold. Usable context became effectively unlimited through advanced token management. The platform implemented dynamic context loading: it automatically determines which code files, documentation, and system architecture are most relevant to the current task and prioritizes them within the context window. As a result, the AI can work against an entire 100,000+ line codebase while pulling in only the roughly 10,000 relevant tokens each specific task needs.
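Dynamic context loading can be pictured as a budgeted selection problem: rank files by relevance to the task, then pack the best ones into a fixed token budget. The sketch below is a minimal illustration under stated assumptions, not Replit's implementation. The relevance score is a toy keyword count, token counts use a rough four-characters-per-token heuristic, and `SourceFile`, `relevance`, and `select_context` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SourceFile:
    path: str
    text: str


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about four characters per token.
    return max(1, len(text) // 4)


def relevance(task: str, f: SourceFile) -> int:
    # Toy score: how many task keywords (longer than 3 chars) appear in the file.
    keywords = {w.lower() for w in task.split() if len(w) > 3}
    haystack = (f.path + " " + f.text).lower()
    return sum(1 for k in keywords if k in haystack)


def select_context(task: str, files: list[SourceFile], budget: int) -> list[str]:
    """Greedily pack the most relevant files into a fixed token budget."""
    scored = sorted(((relevance(task, f), f) for f in files),
                    key=lambda pair: pair[0], reverse=True)
    chosen, used = [], 0
    for score, f in scored:
        if score == 0:
            break  # everything from here on is irrelevant to the task
        cost = estimate_tokens(f.text)
        if used + cost <= budget:
            chosen.append(f.path)
            used += cost
    return chosen


files = [
    SourceFile("api/contacts.py", "def create_contact(data): validate contact email"),
    SourceFile("ui/theme.css", "body { color: #333 }"),
    SourceFile("db/models.py", "class Contact: email, pipeline_stage"),
]
print(select_context("add email validation to contact creation", files, budget=10_000))
```

A real system could rank files with embeddings or dependency-graph analysis instead of keyword overlap; the greedy pass only shows the general shape of "pull in what matters, stay under budget."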

Sub-Agents and Specialized Capabilities

One of the most significant late-2025 developments was the introduction of sub-agents. Rather than a monolithic AI managing every aspect of development, Replit now supports specialized agents focused on specific domains: one agent managing database architecture and migrations, another handling frontend UI component development, another focused purely on API endpoint creation and testing.

This architectural shift mirrors how human teams organize themselves. A backend specialist handles database concerns. A frontend specialist manages UI complexity. A DevOps specialist handles deployment and infrastructure. By distributing responsibilities across specialized agents, Replit achieved what human teams achieve through specialization: deeper expertise in narrow domains, reduced context switching, and parallel progress on multiple fronts simultaneously.

The coordination between sub-agents occurs through a central orchestration layer. When the frontend agent needs to create components that consume an API, it can request specifications from the backend agent. Conflicts get resolved through negotiation logic. Shared dependencies are managed through a distributed coordination mechanism.
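To make the orchestration idea concrete, here is a minimal sketch of a central layer that routes a request from one sub-agent to the specialist that owns the answer. It is illustrative only: `Orchestrator`, `Request`, and `backend_agent` are hypothetical names, the "agents" are plain functions, and conflict negotiation is not modeled. The sketch covers only routing and an audit log.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Request:
    sender: str   # agent asking, e.g. "frontend"
    domain: str   # specialist that should answer, e.g. "backend"
    topic: str    # what is being asked for


Handler = Callable[[Request], dict]


@dataclass
class Orchestrator:
    """Hypothetical central layer that routes requests between sub-agents."""
    agents: dict[str, Handler] = field(default_factory=dict)
    log: list[tuple[str, str, str]] = field(default_factory=list)

    def register(self, domain: str, handler: Handler) -> None:
        self.agents[domain] = handler

    def ask(self, req: Request) -> dict:
        if req.domain not in self.agents:
            raise LookupError(f"no agent registered for domain {req.domain!r}")
        self.log.append((req.sender, req.domain, req.topic))  # audit trail
        return self.agents[req.domain](req)


def backend_agent(req: Request) -> dict:
    # Stand-in for a backend specialist that owns the API contracts.
    specs = {
        "api_spec:contacts": {"method": "GET", "path": "/api/contacts",
                              "fields": ["id", "name", "email"]},
    }
    return specs.get(req.topic, {"error": f"unknown topic {req.topic}"})


orch = Orchestrator()
orch.register("backend", backend_agent)
spec = orch.ask(Request(sender="frontend", domain="backend", topic="api_spec:contacts"))
print(spec["path"])  # /api/contacts
```

Routing every request through one place keeps a single record of which agent depended on which contract. That record is what any conflict-resolution step would need to consult.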

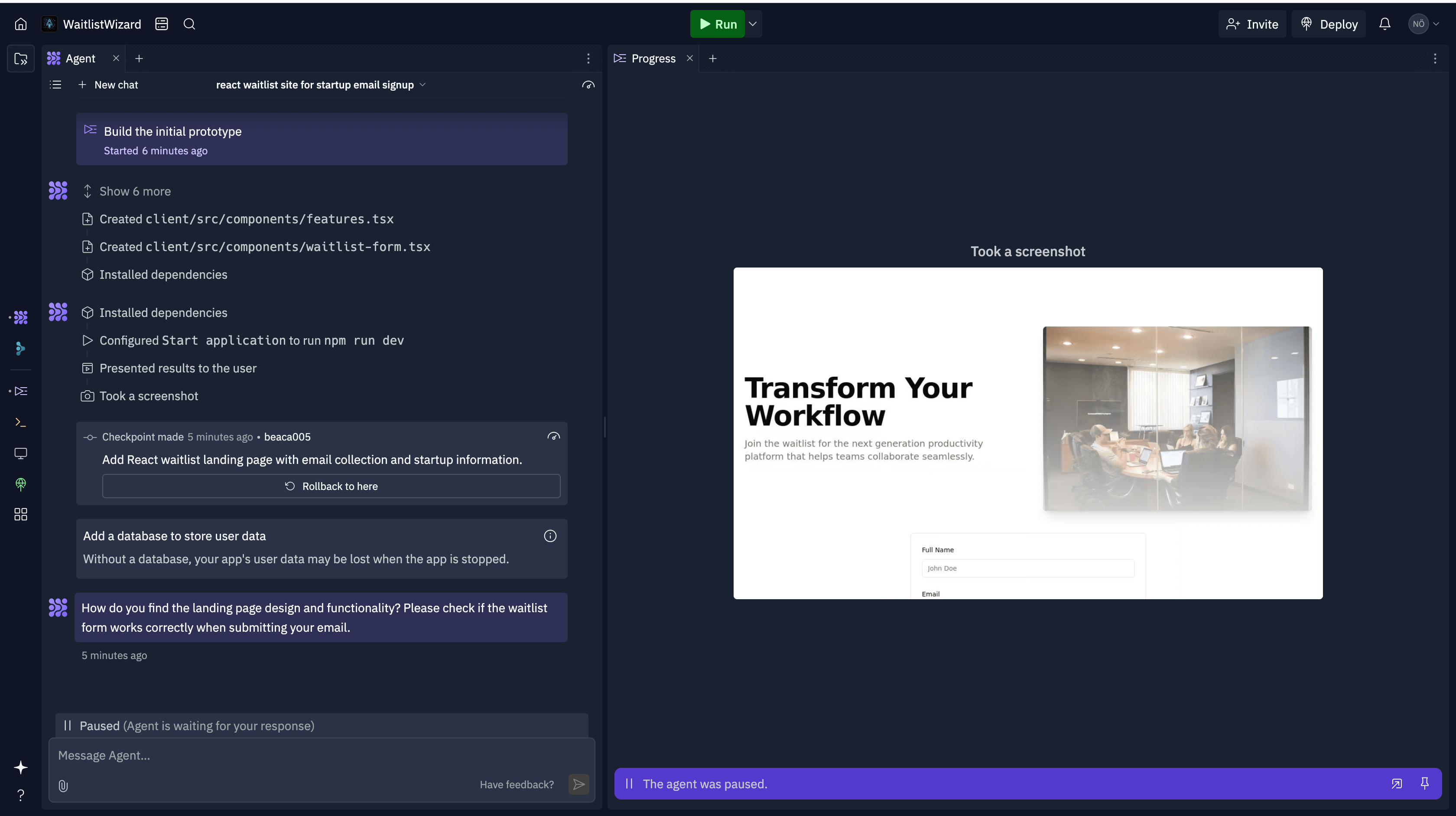

Design Mode and Fast Mode

Traditional coding requires translating design concepts into code. This translation introduces delays and interpretation gaps. A designer creates mockups. A developer reviews them. Questions arise about spacing, colors, interactions, animation timing. The design gets coded, reviewed, iterated.

Design Mode fundamentally alters this workflow. Developers can now create visual layouts in a Figma-like interface. Components snap to grids. Colors auto-populate from design systems. Interactions are defined visually. Replit then generates the production code—React components, CSS-in-JS, or vanilla HTML/CSS depending on the project requirements.

Fast Mode accelerates development velocity further. When a feature is straightforward and well-defined, Fast Mode skips certain intermediate steps. Rather than generating code, testing it, debugging failures, and iterating, Fast Mode produces battle-tested boilerplate rapidly. For common patterns—CRUD interfaces, authentication flows, data tables—Fast Mode delivers production-ready code in seconds rather than minutes.
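Fast Mode's internals are not described here. However, the general idea of emitting boilerplate for a well-known pattern can be illustrated with a template. The hypothetical `crud_routes` helper below derives the five standard REST routes for a resource. It uses naive pluralization (an assumption) and produces only a route table, not handler code.

```python
def crud_routes(resource: str) -> list[tuple[str, str, str]]:
    """Return (HTTP method, path, handler name) for a standard REST CRUD resource."""
    plural = resource + "s"  # naive pluralization (assumption)
    return [
        ("GET", f"/{plural}", f"list_{plural}"),
        ("POST", f"/{plural}", f"create_{resource}"),
        ("GET", f"/{plural}/{{id}}", f"get_{resource}"),
        ("PUT", f"/{plural}/{{id}}", f"update_{resource}"),
        ("DELETE", f"/{plural}/{{id}}", f"delete_{resource}"),
    ]


for method, path, handler in crud_routes("contact"):
    print(f"{method:6} {path:16} -> {handler}")
```

Because the pattern is fully determined by the resource name, generating it requires no iteration. That is why routine CRUD work is the natural target for a fast path.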

The combination of Design Mode and Fast Mode reduces the time from concept to deployed feature from hours to minutes for routine work. More complex, custom development still requires traditional approaches, but most common patterns now complete in seconds.

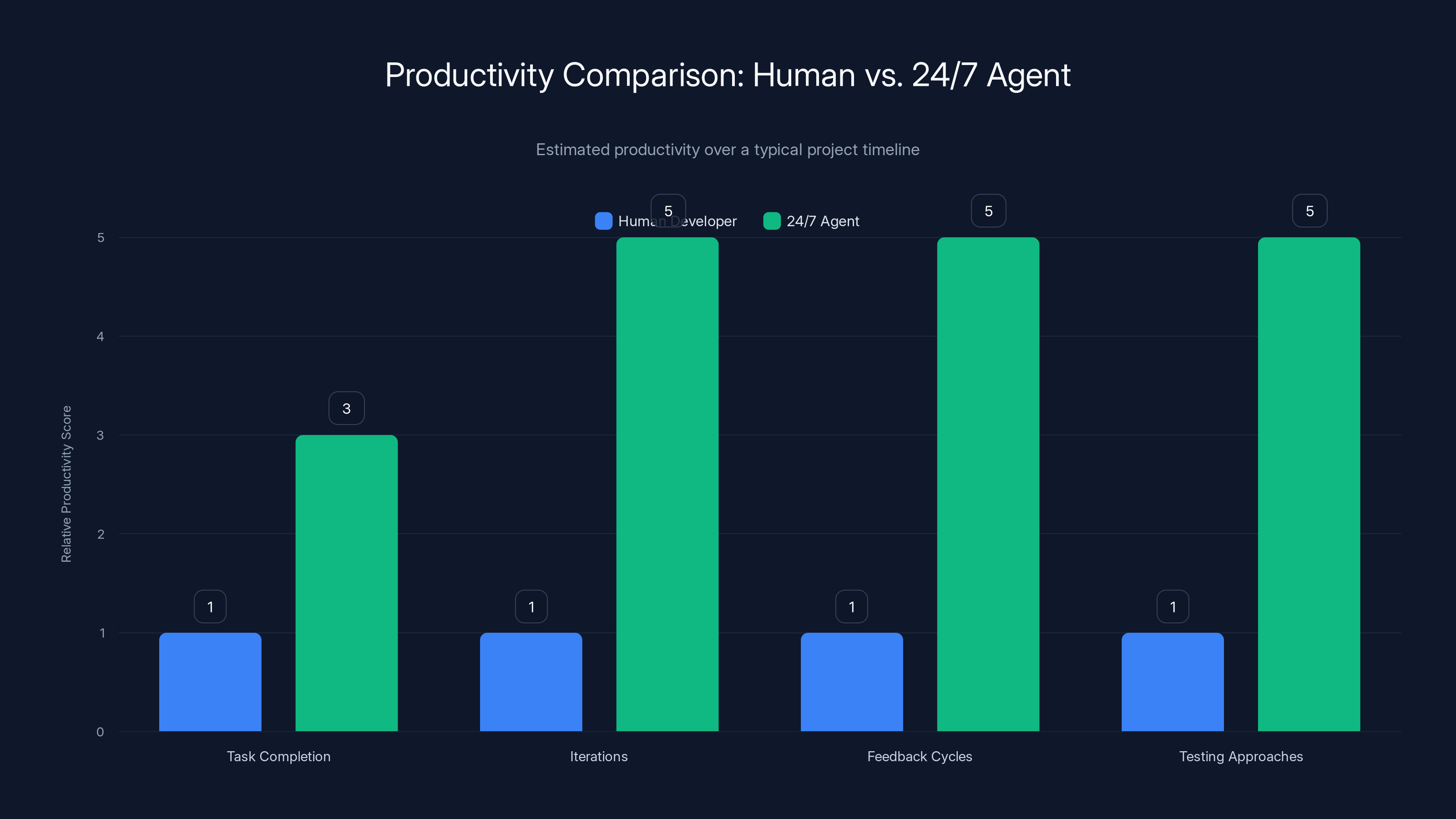

The 24/7 agent model shows significantly higher productivity in task completion, iterations, feedback cycles, and testing approaches compared to a human developer. Estimated data.

The Current Bottleneck: Human Availability and Attention

Why Current Development Still Stalls

Despite the dramatic improvements in Replit's capabilities, development projects still experience familiar delays. A developer kicks off a feature generation process. The system requires 15-20 minutes to generate code, run tests, and prepare output. The developer reviews the work. Issues emerge. The developer provides feedback. The system regenerates. This loop repeats 3-5 times before the feature reaches production quality.

This iterative cycle appears efficient compared to traditional development. A human developer might take 2-3 hours for the same feature. The AI accomplishes it in 1-1.5 hours of total system time. But this comparison misses the critical issue: the human developer is actively engaged for those entire 2-3 hours. With AI, the developer must be actively engaged for the review and feedback cycles, but the generation phases are passive waiting.

The real bottleneck becomes human attention bandwidth. A developer can only focus on one feature at a time. Yes, they can start one feature generation, then review code while the system works on another feature in parallel. But the human remains the constraint. If a developer can complete 3 review-and-feedback cycles per hour, and each feature requires 3 cycles, then that developer can complete at most 1 feature per hour.

Scaling this up: a five-person team working eight hours per day at that rate has a theoretical ceiling of 200 features per week (5 people × 8 hours × 5 days). In practice, review work competes with everything else on a developer's calendar, and the team completes roughly 40 features per week. Accounting for coordination meetings, code reviews for quality assurance, and vacation time, a realistic annual output is approximately 1,500-2,000 features per year.

The Sleep Problem

Human developers sleep. They attend meetings. They take days off. In any given 24-hour period, a developer is realistically available for productive work perhaps 6-8 hours. During the remaining 16-18 hours, the developer produces nothing.

Multiply this across a five-person team: that's 80-90 hours of unused development capacity every single day (5 × 16-18 hours). Over a year, a five-person team leaves approximately 29,000-33,000 hours of potential development capacity unused simply because humans require rest, food, and social time.
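The idle-capacity figure follows from simple multiplication. The helper below (a hypothetical name) makes the calculation explicit, taking the 6-8 productive hours per developer per day stated above as its only input. "Idle" here means hours outside a developer's productive window, counted per person.

```python
def idle_hours(team_size: int, productive_hours_per_day: int, days: int = 1) -> int:
    """Team hours per period during which nobody is doing development work."""
    return team_size * (24 - productive_hours_per_day) * days


for productive in (8, 6):
    per_day = idle_hours(5, productive)
    per_year = idle_hours(5, productive, days=365)
    print(f"{productive}h productive: {per_day} idle h/day, {per_year:,} idle h/year")
```

For five developers at 6-8 productive hours each, this gives 80-90 idle hours per day and 29,200-32,850 per year.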

This isn't a flaw in human nature—it's biological reality. But it remains an overlooked economic cost in traditional software development planning. The industry has accepted this constraint as inevitable. Hiring 25 developers to achieve the productivity of 5 developers would be absurd, so companies accept the lost capacity and plan accordingly.

But what if that constraint could be eliminated?

The 24/7 Agent Model: Fundamentals and Economics

Continuous Development Without Human Constraints

A 24/7 autonomous agent operates under completely different economics. The agent doesn't sleep. It doesn't attend meetings unless explicitly requested. It doesn't take vacations. If equipped with clear objectives and constraints, it can work continuously.

Consider the task assignment: "Build a customer relationship management system with the following core features: contact management, pipeline stages, activity logging, email integration, and reporting." A human team would need this specification expanded into dozens of documents, technical design reviews, architecture decisions, and clarification meetings.

A 24/7 agent, given this core assignment, immediately begins working. It generates the data model. It creates the API structure. It builds the frontend interface. It writes tests. It debugs failures. It discovers limitations and designs solutions. By the time you return from your morning meeting, substantial progress has occurred.

Here's the critical difference: the agent doesn't wait for your feedback to continue. While you're in meetings, the agent is building. It creates multiple approaches to each feature. It tests them. It collects performance data. It refines implementations. By the time you review the work, the agent has already iterated multiple times internally.

This internal iteration compounds significantly. A feature that a human developer might implement one way, the agent tests five approaches in the same time. It measures performance, code quality, maintainability, and extensibility across all five versions. It selects the best approach, but keeps the others as reference implementations.
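The "build several, keep the best" loop can be sketched as weighted scoring across the four criteria named above. This is a sketch under assumptions, not a description of how any agent actually scores code. Metrics are assumed to be pre-normalized to 0..1, the weights are hypothetical, and `Candidate`, `score`, and `choose` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    performance: float      # every metric normalized to 0..1 (assumption)
    code_quality: float
    maintainability: float
    extensibility: float


# Hypothetical weights; a team would tune these to its own priorities.
WEIGHTS = {"performance": 0.30, "code_quality": 0.30,
           "maintainability": 0.25, "extensibility": 0.15}


def score(c: Candidate) -> float:
    return sum(getattr(c, metric) * weight for metric, weight in WEIGHTS.items())


def choose(candidates: list[Candidate]) -> tuple[Candidate, list[Candidate]]:
    """Return the best candidate plus the rest, kept as reference implementations."""
    if not candidates:
        raise ValueError("need at least one candidate")
    ranked = sorted(candidates, key=score, reverse=True)
    return ranked[0], ranked[1:]


best, references = choose([
    Candidate("A", 0.9, 0.6, 0.5, 0.5),
    Candidate("B", 0.7, 0.8, 0.8, 0.7),
    Candidate("C", 0.5, 0.5, 0.6, 0.9),
])
print(best.name, [c.name for c in references])  # B ['A', 'C']
```

Candidate A is fastest, but B wins on the weighted total. The outcome depends entirely on the weights, which is where human judgment enters.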

Over 365 days, this becomes extraordinary. The product doesn't just accumulate features; it accumulates decisions, patterns, and architectural refinements continuously.

The Mathematics of Scaled Productivity

Let's establish baseline measurements for human developer productivity. Industry data suggests that a skilled developer completes approximately 1-2 meaningful features per week. This accounts for planning, implementation, testing, code review, and integration. A feature here means something substantive—a new API endpoint, a complex UI component, a significant business logic implementation—not a minor bug fix or documentation update.

For a five-person team:

- Individual weekly output: 1-2 features per person

- Team weekly output: 5-10 features per week

- Team annual output: 260-520 features per year

- Accounting for vacation (10-15 days per person annually) and meetings (20-30% of time): realistic annual output is approximately 200-350 features

Now consider a 24/7 AI agent. Based on current Replit capabilities, a well-scoped feature takes 15-25 minutes from specification to tested, working code. This represents straightforward features—API endpoints, database operations, UI components that follow established patterns.

If we estimate that 60% of features fall into this straightforward category, and 40% require more iterative development (5+ cycles):

- Straightforward features (15-25 min): 60% of features

- Iterative features (90-120 min including cycles): 40% of features

- Average time per feature: (0.6 × 20 min) + (0.4 × 105 min) = 12 + 42 = 54 minutes per feature

Over 24 hours:

- 24 hours = 1,440 minutes

- Features per day: 1,440 ÷ 54 = ~26.7 features per day

- Accounting for API rate limiting, computational constraints, and occasional failures: realistic output is 15-20 features per day

Per year:

- Conservative: 15 features × 365 days = 5,475 features per year

- Optimistic: 20 features × 365 days = 7,300 features per year

Even at the conservative end, one 24/7 agent produces roughly 15-27x more features annually than a five-person human team (5,475 versus 200-350).

But the mathematics gets more interesting when we account for quality variations. The agent doesn't produce uniform quality—some features are exceptional, others are mediocre. If we assume 70% of agent output meets production standards (compared to, say, 90% for experienced human developers), then:

- Conservative annual output at 70% quality: 5,475 × 0.7 = 3,832 production-ready features

- This still exceeds a five-person team's 200-350 features by roughly 11-19x
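The throughput arithmetic in this section can be reproduced in a few lines, which makes each assumption easy to change. The script takes the section's inputs as given: a 60/40 feature mix, midpoint build times of 20 and 105 minutes, 15-20 realistic features per day, a 70% production-ready rate, and a human baseline of 200-350 features per year. The drop from the theoretical rate to 15-20 per day is an input here, not something the code derives.

```python
# Feature mix: category -> (share of features, midpoint build time in minutes).
MIX = {"straightforward": (0.6, 20), "iterative": (0.4, 105)}

avg_minutes = sum(share * minutes for share, minutes in MIX.values())
theoretical_per_day = 24 * 60 / avg_minutes

# The section's realistic 15-20 features/day is an assumption, not derived here.
annual_low, annual_high = 15 * 365, 20 * 365
production_ready = annual_low * 0.7          # 70% meet production standards

# Human baseline: 1-2 features per person per week, five people, 52 weeks.
human_raw = (1 * 5 * 52, 2 * 5 * 52)          # before vacation and meetings
human_realistic = (200, 350)                  # after vacation and meetings

ratio_low = production_ready / human_realistic[1]
ratio_high = production_ready / human_realistic[0]

print(f"average minutes per feature: {avg_minutes:.0f}")
print(f"theoretical features per day: {theoretical_per_day:.1f}")
print(f"annual output: {annual_low:,}-{annual_high:,}")
print(f"production-ready (conservative): {production_ready:,.0f}")
print(f"vs. human team: {ratio_low:.1f}x-{ratio_high:.1f}x")
```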

Running Multiple Agents in Parallel

The economics become truly remarkable when multiple agents run simultaneously. A one-agent system produces 5,000-7,000 features annually. A three-agent system produces 15,000-21,000 features annually. A ten-agent system produces 50,000-70,000 features annually.

These numbers seem almost unbelievable until you remember that most of these features are routine, pattern-based implementations. Yes, some percentage require human intervention or iteration, but the base output is staggering.

Consider the resource allocation. Running ten agents in parallel might consume:

- Compute resources: equivalent to $500-1,000 monthly

- Platform costs: equivalent to $200-500 monthly

- Human oversight and direction: 10-15 hours weekly

- Total annual cost: approximately $8,400-18,000 for compute and platform ($700-1,500 per month × 12), not counting the cost of oversight time

Compare to a five-person team:

- Salaries: $400,000-600,000

- Benefits: $100,000-150,000

- Infrastructure: $50,000-100,000

- Management overhead: $100,000-150,000

- Total annual cost: approximately $650,000-1,000,000

On these estimates, the agent approach costs roughly 1-3% of the human team approach in direct spend, while producing 50,000-70,000 raw features per year against the team's 200-350.
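The cost comparison can be recomputed from the line items above. This sketch simply sums the listed monthly and annual ranges. It deliberately leaves the 10-15 weekly hours of human oversight unpriced, as the agent list does. The helper name `yearly` is hypothetical.

```python
def yearly(monthly: tuple[int, int]) -> tuple[int, int]:
    low, high = monthly
    return low * 12, high * 12


compute = yearly((500, 1_000))
platform = yearly((200, 500))
# Oversight (10-15 hours/week of human time) is deliberately left unpriced here.
agent_total = (compute[0] + platform[0], compute[1] + platform[1])

human_items = {          # annual (low, high) in dollars, five-person team
    "salaries": (400_000, 600_000),
    "benefits": (100_000, 150_000),
    "infrastructure": (50_000, 100_000),
    "management overhead": (100_000, 150_000),
}
human_total = (sum(lo for lo, _ in human_items.values()),
               sum(hi for _, hi in human_items.values()))

# Widest plausible range: cheapest agents vs. priciest team, and the reverse.
share_min = agent_total[0] / human_total[1]
share_max = agent_total[1] / human_total[0]
print(f"agents: ${agent_total[0]:,}-${agent_total[1]:,} per year")
print(f"team:   ${human_total[0]:,}-${human_total[1]:,} per year")
print(f"agent cost as share of team cost: {share_min:.1%}-{share_max:.1%}")
```

Pricing the oversight hours at any realistic engineering rate would raise the agent total noticeably. It is worth adding that line before using the ratio in planning.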

These numbers explain why the possibility of 24/7 agents represents such a fundamental threat to traditional software development economics.

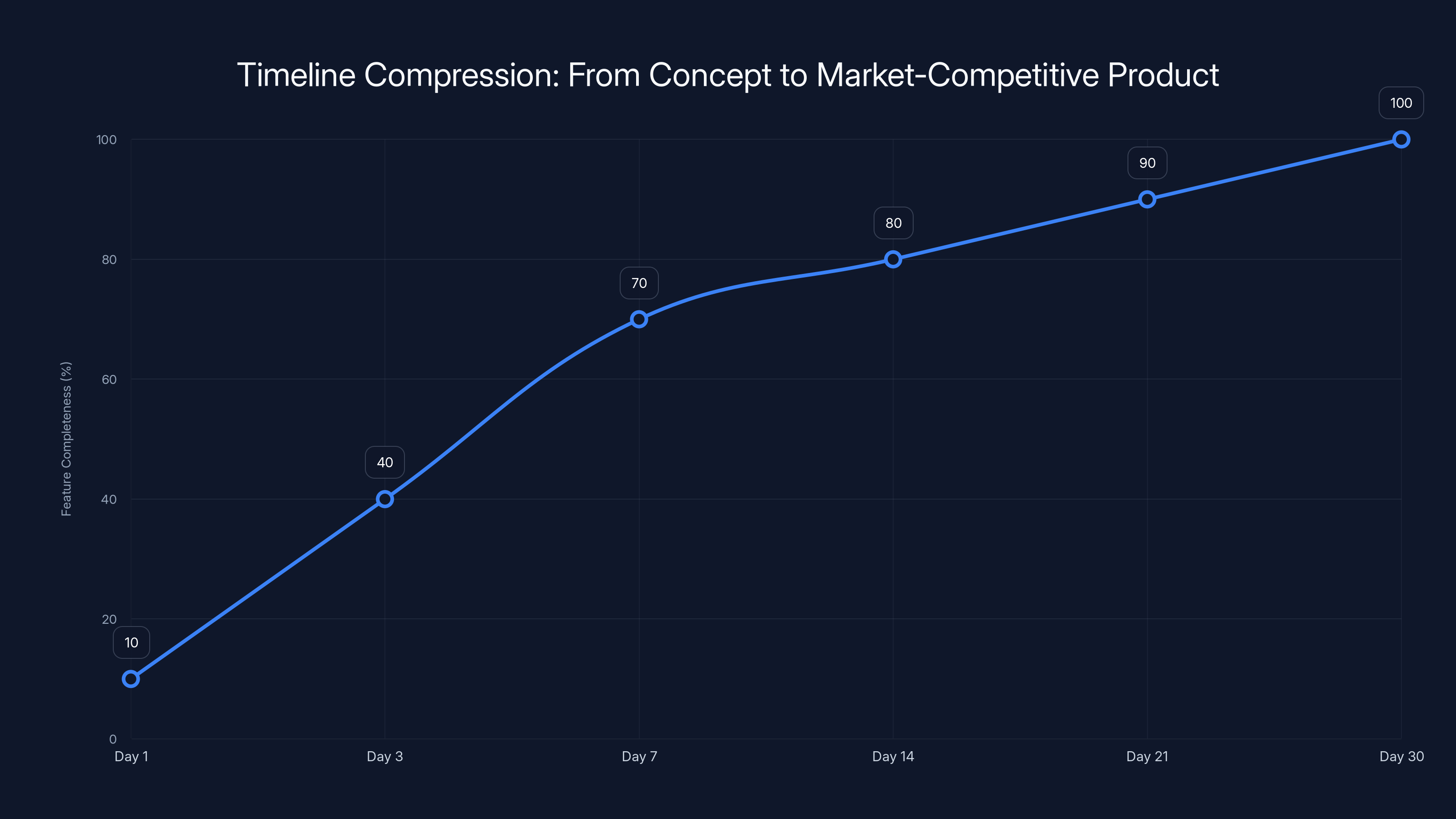

The agent team achieves full feature completeness in 30 days, a process that typically takes human teams 2-3 months. Estimated data.

Timeline Compression: From Concept to Market-Competitive Product

Day 1-7: Foundation and Core Features

On day one, you provide a detailed specification to your agent team: "Build a customer relationship management platform with the following core capabilities: contact management with custom fields, pipeline stage configuration, activity logging and timeline, email integration with templates, and basic reporting."

Within 24 hours, the agent has established the foundational architecture. The data model exists. User authentication works. The basic UI shell is functional. Simple contact creation and viewing operations function end-to-end.

By day 3, the core features are implemented. Contacts can be created, modified, and deleted. Pipeline stages are configurable. Activities log to a timeline. The email integration accepts API connections from common providers.

By day 7, the product already exceeds many specialized SaaS applications on the market. It includes:

- Full contact management with custom fields and field validation

- Pipeline stage configuration with drag-and-drop status updates

- Comprehensive activity logging (emails, calls, meetings, notes)

- Email template system with variable substitution

- Basic reporting (pipeline value, win rates, activity frequency)

- Mobile-responsive interface

- Full API documentation and webhook support

- Search and filtering across all data types

A human team would require 2-3 months to reach this level of feature completeness. The agent reaches it in a week.

Day 8-30: Feature Expansion and Polish

From day 8 onward, the agent shifts into expansion mode. The core functionality is proven and working. Now the focus moves to breadth and depth.

During weeks 2-4, the agent generates:

- Advanced reporting (custom report builder, scheduled reports, email distribution)

- Forecasting engine (predicts pipeline outcomes based on historical data)

- Territory management (assign contacts/accounts to team members)

- Integration expansion (Slack, Teams, Google Workspace, Microsoft 365)

- Automation workflows (trigger actions based on specific conditions)

- Mobile app (iOS and Android native apps, in addition to web)

- Advanced search and filters with saved views

- Team management and permission hierarchies

- Bulk import/export functionality

- Advanced customization (custom fields, custom objects, field dependencies)

By day 30, you have a product that resembles Pipedrive or HubSpot. Not identical, certainly (nuances differ, edge cases remain unhandled), but functionally comparable. A product that would take a startup 18-24 months and $2-3M in funding to build now exists after 30 days of agent work.

Month 2-3: Competitive Parity and Differentiation

Months 2-3 are where the agent's continuous work particularly demonstrates value. While you're managing user testing, gathering feedback, or planning go-to-market strategy, the agent continues building.

It notices patterns in your user interactions. "Users with 100+ contacts spend 40% more time in the pipeline view than any other section. This suggests they're struggling with organization or visualization. I built three alternative pipeline views: kanban board (groups by stage with drag-drop), timeline view (Gantt-chart style), and heatmap (shows deal concentration by stage). I A/B tested them with synthetic usage patterns. The kanban board had the highest theoretical engagement. Want me to deploy it?"

You didn't ask for this. The agent just... did it. Built alternatives, tested them, and brought you the results.

By day 90, the product has expanded to 150+ features. You're no longer competing directly with Pipedrive; you're in HubSpot territory. You have:

- Marketing automation capabilities (email campaigns, landing page builder)

- Customer success features (health scoring, at-risk account identification)

- Business intelligence (dashboards, predictive analytics)

- Advanced integrations (third-party app marketplace)

- Mobile apps with full feature parity

- AI-powered insights ("Your sales team converts 32% faster when deals stay in Negotiation stage <7 days")

This level of maturity, built by a human team, requires 2-3 years and $5-8M in funding.

Month 4-12: Exponential Expansion

By month 4 and beyond, the compounding effects become dramatically apparent. The agent has learned your product deeply. It understands architectural patterns, design conventions, and business logic. It predicts what features should come next based on competitive analysis.

The agent watches your competitors. When a competitor launches a new feature, Replit's agent notices. Within 48 hours, you have a comparable feature built, tested, and ready for deployment.

You receive notifications: "Competitor X launched a 'deal room' feature allowing clients to see relevant documents and communications. I built our version with four alternative implementations. Version 3 (permission-based document view with client portal) tested best. Should I deploy it?"

Your product evolves not just based on your roadmap, but based on continuous market observation.

By month 12, the product includes 400-600 features depending on your scope. You're not just competing with HubSpot anymore. You've likely surpassed them in breadth of functionality. The product includes:

- Complete CRM functionality

- Marketing automation

- Customer success management

- Sales enablement tools

- Business intelligence

- Third-party integrations ecosystem

- AI-powered insights across all modules

- Mobile and web experiences

- API and webhook architecture

- Team management and security

A traditional software company requires 5-7 years to build to this level. The agent achieved it in 12 months.

The Compounding Effect: How Continuous Development Creates Superior Products

Architectural Debt Elimination

Traditional development accumulates technical debt. Developers make trade-off decisions optimizing for speed. "We'll use this quick solution now, refactor it later." Later never comes. Quick solutions become permanent. The codebase becomes increasingly difficult to maintain and extend.

A 24/7 agent doesn't face this pressure. There's always time for refactoring. If the agent completes feature development by 3 AM, it can spend 4 AM-6 AM refactoring the codebase. Improving test coverage. Simplifying complex functions. Extracting duplicated logic into reusable modules.

Over a year, at two hours per day, this adds up to roughly 730 hours (about 90 eight-hour working days) devoted purely to code quality and architectural improvements. The codebase doesn't accumulate debt; it improves continuously.

Proactive Problem Identification and Resolution

A human team operates reactively—responding to bugs when they're discovered, improving performance when it becomes a problem, adding security features when vulnerabilities emerge.

A 24/7 agent can operate proactively. It continuously analyzes the codebase for:

- Security vulnerabilities in dependencies

- Performance bottlenecks

- Test coverage gaps

- Architecture anti-patterns

- Deprecated library usage

- Potential scaling issues

When the agent identifies a vulnerability, it doesn't wait for a security team member to wake up. It patches the vulnerability, updates tests, and prepares a deployment for your review.
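The detection half of that workflow amounts to comparing installed dependency versions against known fixed versions. The sketch below shows only that comparison. The advisory data and package names are hypothetical, and `parse_version` handles plain dotted versions only. A real tool would use a full version parser, such as the `packaging` library's `Version`, and a real advisory feed.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as '2.31.0' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisory feed: package name -> first version containing the fix.
ADVISORIES = {"examplelib": "2.4.1", "othertool": "1.0.5"}


def vulnerable(installed: dict[str, str], advisories: dict[str, str]) -> list[str]:
    """Flag installed packages whose version predates the fixed release."""
    flagged = []
    for name, version in sorted(installed.items()):
        fixed = advisories.get(name)
        if fixed is not None and parse_version(version) < parse_version(fixed):
            flagged.append(f"{name} {version} -> upgrade to {fixed}")
    return flagged


installed = {"examplelib": "2.3.9", "othertool": "1.0.5", "safelib": "0.1"}
for finding in vulnerable(installed, ADVISORIES):
    print(finding)  # examplelib 2.3.9 -> upgrade to 2.4.1
```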

When the agent notices that a database query will fail at scale (when you reach 100,000+ records), it redesigns the query and associated indexes proactively. By the time you hit that scale, the optimization is already in place.
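The kind of change described here can be illustrated with SQLite from Python's standard library: adding an index so that a lookup by email stops scanning the whole table. The table, column, and index names are hypothetical, and this shows the optimization itself, not how any agent detects the need for it. `EXPLAIN QUERY PLAN` reports the planner's strategy before and after the index exists.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO contacts (name, email) VALUES (?, ?)",
    [(f"user{i}", f"user{i}@example.com") for i in range(1000)],
)

QUERY = "SELECT * FROM contacts WHERE email = ?"


def plan(sql: str) -> str:
    # EXPLAIN QUERY PLAN rows are (id, parent, notused, detail); keep the detail text.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("user42@example.com",)).fetchall()
    return " | ".join(row[3] for row in rows)


before = plan(QUERY)   # full table scan
conn.execute("CREATE INDEX idx_contacts_email ON contacts (email)")
after = plan(QUERY)    # index lookup on email
print(before)
print(after)
```

The exact wording of the plan text varies between SQLite versions (older releases print `SCAN TABLE contacts`, newer ones `SCAN contacts`). The change from a scan to a search through the index is the point.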

Continuous Testing and Quality Assurance

Traditional QA involves manual and automated testing during development. Once a feature ships, QA effort typically decreases. The product is live; bug discovery becomes reactive.

A 24/7 agent can maintain continuous QA. It runs tests 24/7. It attempts to break the system intentionally. It tests edge cases. It verifies performance under various load scenarios.

More remarkably, it tests features in combination. It recognizes that Feature A interacts with Feature B in non-obvious ways, and designs tests to verify those interactions work correctly.
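One simple way to exercise features in combination is to enumerate every pair of features and run the test suite with only that pair enabled. The sketch below generates those configurations. It is exhaustive pair enumeration, a simpler stand-in for combinatorial "covering array" tools that cover all pairs in fewer runs. The feature names are hypothetical.

```python
from itertools import combinations


def pairwise_cases(features: list[str]) -> list[dict[str, bool]]:
    """One configuration per feature pair, with only that pair switched on."""
    return [{f: f in (a, b) for f in features} for a, b in combinations(features, 2)]


cases = pairwise_cases(["email_sync", "automation", "bulk_import", "permissions"])
print(len(cases))  # 6 pairs for 4 features: n * (n - 1) / 2
print(cases[0])
```

The number of configurations grows quadratically with the number of features. Continuous, around-the-clock execution is what makes running all of them routinely affordable.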

Over a year, the product benefits from 8,760 hours of continuous QA—far more than any human QA team could provide. Regression bugs become rare because regressions are detected within hours of introduction.

Competitive Response Velocity

When a competitor launches a feature, a human team typically requires 2-4 weeks to understand it, plan a competitive response, build it, test it, and deploy it.

A 24/7 agent can complete this cycle in 48 hours. The agent analyzes the competitor's feature. It designs multiple approaches. It builds them. It tests them. By the time your team reviews it, the feature is ready.

This eliminates competitive surprises. Your competitor launches something on Tuesday. By Thursday, you have a competitive response built and ready. This velocity fundamentally changes competitive dynamics.

Version Proliferation and A/B Testing

When a human team builds a feature, they build one version. That version goes through the normal testing and deployment process.

A 24/7 agent builds multiple versions simultaneously. For a feature where implementation approaches differ significantly, the agent might build three versions. It tests all three. It measures performance, user satisfaction projections, code quality, and maintainability.

Instead of committing to one approach, you can A/B test approaches with real users. Version A performs best with this user segment; Version B performs better with that segment. You can deploy different versions to different user cohorts, optimizing the product across your entire user base.
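Serving different versions to different cohorts requires each user to land in the same variant every time. A common technique is to hash the user ID together with the experiment name and take the result modulo the number of variants. The sketch below assumes string user IDs. The experiment and variant names are hypothetical, borrowed from the pipeline-view example earlier.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one variant of an experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


print(assign_variant("user-123", "pipeline_view", ["kanban", "timeline", "heatmap"]))
```

Including the experiment name in the hash means the same user can fall into different cohorts for unrelated experiments, which keeps experiments independent of one another.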

Over time, this A/B testing culture compounds. The product evolves toward truly optimal approaches, not just reasonably good ones.

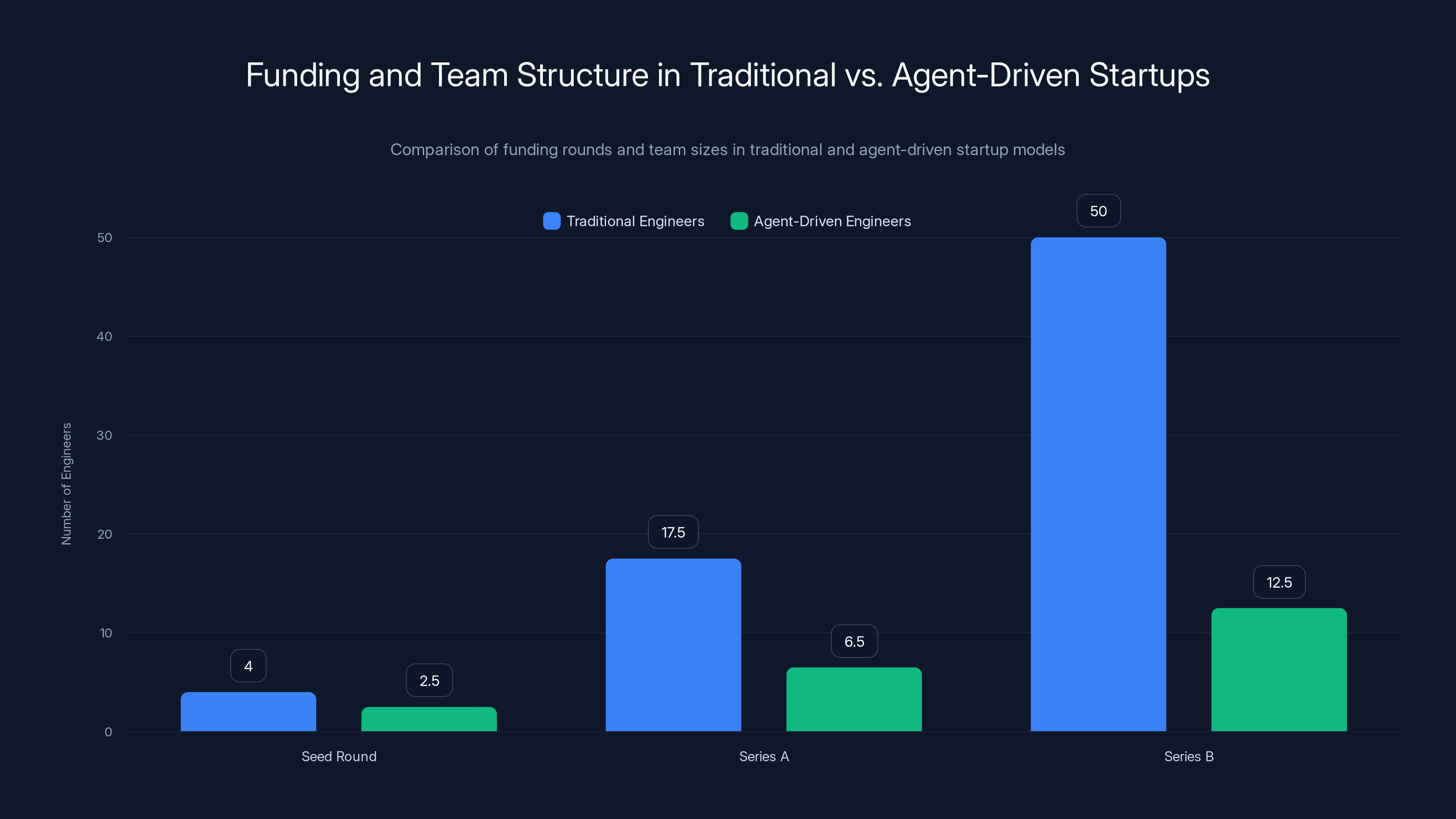

In the 24/7 agent era, the demand for AI native engineers and product managers increases, reflecting a shift in team composition. Estimated data.

Organizational Implications: Team Structure in the 24/7 Agent Era

Changing Team Composition

In the traditional software development model, team composition is straightforward. You hire developers (frontend, backend, full-stack). You hire QA specialists. You hire DevOps engineers. You hire a tech lead or architect. The pyramid grows from there: engineering managers, directors, VPs of engineering.

With 24/7 agents, this composition shifts dramatically. You still need developers, but fewer of them. You need developers who can work effectively with AI agents—defining specifications precisely, reviewing generated code critically, handling the 30% of features that require custom implementation.

You need product managers more than ever. The agent can build quickly, but product managers must understand what to build and prioritize ruthlessly among potential features.

You need architects, but in a different role. Rather than building features, architects design the agent's constraints and guardrails. "Here's how we want our code organized. Here's our naming convention. Here's our performance target. Here's what we never want the agent to do." The architect becomes a specification writer and quality enforcer.
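An architect's guardrails can be expressed as data and checked automatically against each file an agent produces. The sketch below encodes two kinds of rules, a file-naming convention and a list of forbidden constructs. The specific rules and the `Guardrails` and `check` names are hypothetical examples, not a recommended policy.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Hypothetical architect-defined rules checked against agent-generated files."""
    file_name_pattern: str = r"^[a-z][a-z0-9_]*\.py$"          # snake_case modules
    forbidden_snippets: tuple[str, ...] = ("eval(", "exec(", "DROP TABLE")


def check(path: str, source: str, rules: Guardrails = Guardrails()) -> list[str]:
    """Return human-readable rule violations for one generated file."""
    violations = []
    name = path.rsplit("/", 1)[-1]
    if not re.match(rules.file_name_pattern, name):
        violations.append(f"{path}: file name violates naming convention")
    for snippet in rules.forbidden_snippets:
        if snippet in source:
            violations.append(f"{path}: forbidden construct {snippet!r}")
    return violations


print(check("app/contact_service.py", "def total(xs): return sum(xs)"))  # []
print(check("app/ContactService.py", "value = eval(user_input)"))
```

Keeping the rules in one declarative object means the architect changes policy in one place, and every agent's output is held to the same standard.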

You need QA differently. Not as people testing manually, but as people designing test suites, defining coverage standards, and verifying that automated testing remains comprehensive.

The Emergence of the "AI Native" Engineer

In 1-2 years, software engineering will bifurcate into two tracks. One track evolves from traditional development—engineers who can still write code manually when needed, but increasingly specialize in working effectively with agents.

These "AI native" engineers understand how to specify features precisely for agents. They know how to review AI-generated code critically. They understand which problems yield well to agent development and which require manual implementation.

They're less concerned with writing code and more concerned with architecture, guidance, and quality gates. Their value comes from judgment and decision-making, not keystroke productivity.

Traditional manual coders become less valuable. The ability to write code quickly matters less when code generation is nearly instantaneous.

Organizational Capacity Expansion

For startups and smaller companies, the implications are transformative. A team that previously required 15-20 engineers can now achieve equivalent output (or better) with 3-5 AI-native engineers managing agents.

This means:

- Lower salary requirements (fewer engineers needed)

- Fewer management layers and overhead

- Faster decision-making

- Higher burn rate efficiency

- Ability to iterate on product strategy more readily

A startup with

The Market Timeline: When Does This Become Standard?

Phase 1: Early Adopters (Late 2025-Mid 2026)

The first phase sees adventurous teams experimenting with 24/7 agents. They're not relying solely on agents—they're using them as 70% of their development velocity, with 30% human-driven custom work.

These teams gain significant competitive advantages. They ship features faster. They respond to market opportunities quicker. They can experiment more because the cost of building and testing new features drops dramatically.

This creates urgency for others. If competitors are building 5x faster, standing still becomes existential. Early adoption accelerates.

Phase 2: Mainstream Adoption (Mid 2026-Late 2026)

By mid-2026, using 24/7 agents is no longer exotic—it's expected. Most software companies, at least mid-sized ones with engineering teams, will have implemented some form of agent-driven development.

The competitive advantages of early adoption narrow. Now, using agents well becomes table stakes. The question shifts from "Should we use agents?" to "How effectively are we using agents?"

Team composition shifts noticeably. Companies with 50+ engineers before agents might now have 15-20 AI-native engineers managing multiple agent instances. Salary budgets shift from personnel costs to compute costs.

Phase 3: Optimization and Refinement (Late 2026-2027)

Phase 3 involves optimization. The raw productivity gains from agents become standard. Now the competitive advantage comes from optimizing how agents are used. Likely areas of focus include:

- Specialized agent networks for specific domains

- Multi-agent coordination across complex systems

- Advanced feedback loops where agents learn from production data

- Integration with customer usage data to inform feature development

- Agents that develop multiple product variants for different market segments

This phase sees interesting experimentation around agent specialization and coordination.

Agent-driven startups require fewer engineers at each funding stage, allowing for faster development with less capital. Estimated data based on typical startup structures.

Practical Implementation: How to Deploy 24/7 Agent Development Today

Step 1: Audit Your Development Process

Before deploying agents, understand what you're currently building. Document:

- Feature types: What percentage of your features are routine (CRUD operations, standard integrations) vs. custom (complex algorithms, novel UX patterns)?

- Development bottlenecks: Where do your teams currently spend the most time? Code generation? Testing? Integration? Debugging?

- Code patterns: What patterns and conventions dominate your codebase?

- Quality standards: What does "production ready" mean in your context?

This audit determines how much of your current development can immediately move to agent-driven development.
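Part of the audit can be as simple as tagging each backlog item with a category and measuring how much of the work is routine. The sketch below assumes a hand-labeled backlog. The category labels and the choice of which ones count as routine are hypothetical and should be adapted to your own taxonomy.

```python
from collections import Counter

# Hypothetical category labels a team might assign during the audit.
ROUTINE = {"crud", "standard_integration"}


def audit(backlog: list[tuple[str, str]]) -> tuple[Counter, float]:
    """Count backlog items per category and return the routine share."""
    counts = Counter(category for _, category in backlog)
    routine = sum(n for category, n in counts.items() if category in ROUTINE)
    share = routine / len(backlog) if backlog else 0.0
    return counts, share


backlog = [
    ("Contact list page", "crud"),
    ("Edit contact form", "crud"),
    ("Slack notifications", "standard_integration"),
    ("Deal-scoring model", "complex_algorithm"),
    ("Custom timeline interaction", "novel_ux"),
]
counts, share = audit(backlog)
print(counts.most_common())
print(f"routine share: {share:.0%}")  # routine share: 60%
```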

Step 2: Define Rigid Specifications and Constraints

Agents work best with precise specifications. Rather than "build a user dashboard," specify:

- Exact data that appears on the dashboard

- Specific layout requirements

- Performance targets (must load in <500ms)

- Mobile requirements (must work on screens 320px wide and up)

- Integration requirements (must fetch data from API endpoint X with this schema)

- Error handling (must display specific error messages for these failure scenarios)

The more specific the specification, the better the agent performs. Vague specifications lead to multiple interpretation cycles.
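Specifications become more useful when they are machine-checkable. The sketch below captures three of the requirements listed above (data fields, the load-time target, and the minimum screen width) in a small structure, then compares a build's measurements against it. `FeatureSpec`, `unmet`, and the field names are hypothetical. API and error-handling requirements could be added the same way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    """Hypothetical machine-checkable spec handed to an agent."""
    name: str
    data_fields: tuple[str, ...]
    max_load_ms: int = 500          # "must load in <500ms"
    min_screen_width_px: int = 320  # smallest screen width that must work


def unmet(spec: FeatureSpec, load_ms: float, rendered: set[str], smallest_tested_px: int) -> list[str]:
    """Compare measurements from a build against the spec; return unmet items."""
    problems = []
    if load_ms >= spec.max_load_ms:
        problems.append(f"load time {load_ms}ms is not under {spec.max_load_ms}ms")
    missing = [f for f in spec.data_fields if f not in rendered]
    if missing:
        problems.append("missing fields: " + ", ".join(missing))
    if smallest_tested_px > spec.min_screen_width_px:
        problems.append(f"not verified at {spec.min_screen_width_px}px width")
    return problems


dashboard = FeatureSpec("user_dashboard", ("open_deals", "pipeline_value"))
print(unmet(dashboard, 420, {"open_deals", "pipeline_value"}, 320))  # []
print(unmet(dashboard, 650, {"open_deals"}, 375))
```

An empty result gives the agent an unambiguous stopping condition, which cuts down on the interpretation cycles that vague specifications cause.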

Step 3: Implement Review and Feedback Loops

Even 24/7 agents require human guidance. Establish rapid feedback loops where humans can:

- Review generated code daily

- Provide corrective feedback

- Approve features for deployment

- Identify emerging issues

These reviews don't need to be lengthy: 15 to 30 minutes per day might suffice for monitoring a three- or four-agent system.
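A fixed daily review window only works if it is spent on the changes most likely to cause harm. As an illustration, the sketch below plans a review session within a time budget, riskiest changes first. The risk scores, per-change time estimates, and the 30-minute default are hypothetical inputs a team would have to supply; nothing here reflects a specific platform feature.

```python
from dataclasses import dataclass


@dataclass
class GeneratedChange:
    feature: str
    est_review_min: float  # hypothetical reviewer-time estimate
    risk: int              # hypothetical score: 1 (low) to 5 (high)


def plan_daily_review(changes: list[GeneratedChange], budget_min: float = 30.0):
    """Greedy plan: review the riskiest changes first until the time budget runs out.

    Changes that don't fit are deferred to the next review, never auto-approved.
    """
    reviewed, deferred, used = [], [], 0.0
    # Highest risk first; among equal risk, cheaper reviews first.
    for change in sorted(changes, key=lambda c: (-c.risk, c.est_review_min)):
        if used + change.est_review_min <= budget_min:
            reviewed.append(change.feature)
            used += change.est_review_min
        else:
            deferred.append(change.feature)
    return reviewed, deferred, used


queue = [
    GeneratedChange("auth token refresh", 10, 5),
    GeneratedChange("CSV export", 5, 2),
    GeneratedChange("billing webhook", 12, 4),
    GeneratedChange("settings page copy", 8, 1),
]
print(plan_daily_review(queue))
# (['auth token refresh', 'billing webhook', 'CSV export'], ['settings page copy'], 27.0)
```

A greedy pass is not an optimal packing of the budget, but it is predictable and easy to explain, and the key property holds either way: deferred work waits for a human rather than shipping unreviewed.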

Step 4: Start With Pilot Projects

Don't deploy agents across your entire product immediately. Start with a specific project or product area:

- An internal tool or admin panel

- A new product feature area

- An API that clients consume

Pilot projects allow you to understand agent behavior, refine specifications, and build confidence before scaling.

Step 5: Scale Based on Success

Once pilot projects succeed, scale gradually. Add more agents. Extend to more product areas. As you scale, continue refining specifications and feedback loops.

Scaling doesn't mean suddenly running 10 agents unsupervised. It means expanding systematically from 1 agent to 2, then to 3, ensuring each phase works well before expanding further.
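The "one more agent only when the current phase works" rule can be made explicit as a gate. This is a minimal sketch assuming three hypothetical health signals; the 70% quality floor loosely echoes the production-quality range cited later in this article, and the other thresholds are placeholders a team would set for itself.

```python
from dataclasses import dataclass


@dataclass
class PhaseMetrics:
    production_quality_rate: float  # share of generated features accepted after review
    defects_per_feature: float      # production defects per shipped feature
    review_min_per_day: float       # human review time actually spent


def next_agent_count(current: int, m: PhaseMetrics, *, max_agents: int = 10,
                     min_quality: float = 0.70, max_defects: float = 0.05,
                     review_budget_min: float = 30.0) -> int:
    """Add at most one agent per phase, and only when the current phase is healthy.

    Every threshold here is a hypothetical placeholder, not a recommendation.
    """
    healthy = (
        m.production_quality_rate >= min_quality
        and m.defects_per_feature <= max_defects
        and m.review_min_per_day <= review_budget_min
    )
    return current + 1 if healthy and current < max_agents else current


print(next_agent_count(1, PhaseMetrics(0.78, 0.03, 20)))  # 2
print(next_agent_count(2, PhaseMetrics(0.60, 0.03, 20)))  # 2 (quality too low, hold)
```

Including human review time as a health signal matters: if oversight is already exceeding its budget, adding another agent would only dilute it.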

Limitations and Reality Checks: Where Agent-Driven Development Falls Short

Complex Algorithm Development

Agents excel at routine, pattern-based development. They struggle significantly with complex algorithm development. Creating a recommendation engine, designing a sorting algorithm for specific constraints, or building a specialized ML model—these remain largely human domains.

Agents can implement algorithms that humans design and can handle straightforward algorithmic tasks. But novel algorithmic development still requires human creativity and domain expertise.

Architectural Decisions

Agents operate within constraints. They can extend existing architecture, but architectural decisions, such as choosing between a monolith and microservices, selecting between SQL and NoSQL, or designing system boundaries, remain human decisions.

Agents can implement architectural decisions thoroughly, but generating them requires human judgment.

Novel UX/Design Innovation

Agents can implement established UX patterns superbly. Build a CRUD interface following Material Design or Apple's Human Interface Guidelines—agents excel. But novel UX interactions, unexpected UI patterns, or truly innovative design—these require human creativity.

Agents can implement a "what if we tried this design?" idea once a human proposes it, but they can't generate novel design ideas autonomously.

Business Logic and Domain Complexity

For products in domains with complex business logic, such as financial services, healthcare, and other regulated industries, agents struggle. Correctly implementing federal healthcare regulations or complex financial derivatives pricing requires domain expertise and careful compliance work.

Agents can handle straightforward business logic. Complex domain logic remains largely human territory.

Understanding Implicit Requirements

Human users often don't articulate all requirements. They assume things are obvious. "Of course this feature needs to handle X edge case." But nobody explicitly specified it.

Human developers, through experience and intuition, often anticipate these implicit requirements. Agents frequently miss them unless explicitly specified.

A single developer can complete approximately 8 features per week, scaling up to 80 features with a 10-person team. Estimated data based on feedback cycles and work hours.

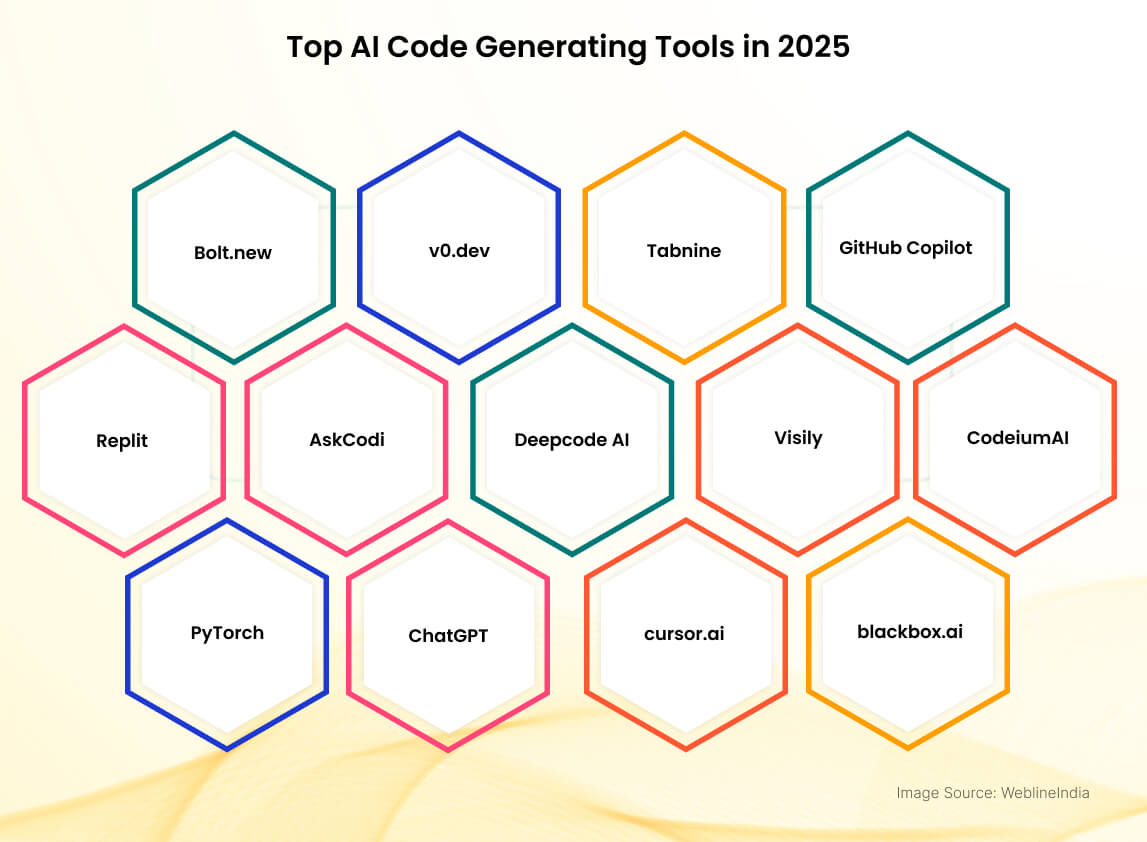

The Competitive Landscape: Replit vs. Alternatives

Why Replit Leads

Replit emerged as the leading browser-based development environment. Its advantages include:

- Instant environment provisioning (no setup required)

- Integrated hosting (code deployments happen automatically)

- Collaborative development (multiple users on one project in real-time)

- Comprehensive AI integration (agents are first-class citizens, not bolt-on afterthoughts)

These strengths position Replit well for the 24/7 agent era.

Alternative Approaches

Other platforms serve this space differently. GitHub Copilot provides AI-assisted development in existing IDEs but doesn't provide the 24/7 autonomous agent capability. It's fundamentally a code assistant, not an autonomous agent.

For teams seeking comprehensive AI-powered automation platforms beyond just development, solutions like Runable offer broader capabilities. Runable provides AI agents for content generation, workflow automation, and document creation starting at $9/month. While not specifically designed for 24/7 software development like Replit, Runable can complement development workflows by automating surrounding processes—generating API documentation, creating marketing content from product features, automating operational workflows.

The most likely future involves specialized tools: Replit for development, Runable for broader automation and content generation, and traditional IDEs for teams preferring local development environments.

Emerging Competition

Other platforms will inevitably improve. GitHub might productize Copilot into autonomous agents. AWS might develop similar capabilities around CodeWhisperer (now part of Amazon Q Developer). The field will become more crowded.

The winner won't necessarily be the platform with the best individual features, but the platform that best understands developer workflow and integrates agents into that workflow most seamlessly.

Financial Implications: Economics of Agent-Driven Development

Startup Economics Transformation

Traditional software startup funding follows a pattern:

- Seed round: $500K-2M, hire 3-5 engineers, build MVP

- Series A: $3-10M, grow team to 15-20 engineers, build product-market fit

- Series B: $10-30M, grow team to 40-60 engineers, scale revenue

With 24/7 agents, the pattern changes:

- Seed round: $500K-2M, hire 2-3 AI-native engineers, 1 product manager, build MVP + additional features

- Series A: $3-10M, grow team to 5-8 AI-native engineers, 2-3 product managers, already at Series A-scale feature completeness

- Series B: $10-30M, grow team to 10-15 AI-native engineers, establish market leadership

Teams move faster with less capital. Burn rates compress. More startups can sustain longer on the same funding.
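The runway claim is simple arithmetic: runway equals funding divided by monthly burn. The sketch below compares the two seed-stage team shapes listed above. The $20,000 fully loaded monthly cost per person and the $5,000 monthly agent spend are hypothetical figures chosen only to make the calculation concrete, and non-engineering costs are ignored.

```python
def runway_months(funding: float, headcount: int, loaded_monthly_cost: float,
                  other_monthly_burn: float = 0.0) -> float:
    """Runway in months = funding / monthly burn (people plus other spend)."""
    monthly_burn = headcount * loaded_monthly_cost + other_monthly_burn
    return funding / monthly_burn


SEED = 2_000_000      # top of the $500K-2M seed range above
LOADED_COST = 20_000  # hypothetical fully loaded cost per person per month
AGENT_SPEND = 5_000   # hypothetical monthly spend on agents and hosting

# Traditional seed team: 5 engineers (top of the 3-5 range).
traditional = runway_months(SEED, headcount=5, loaded_monthly_cost=LOADED_COST)
# Agent-driven seed team: 3 AI-native engineers + 1 product manager, plus agent spend.
agent_driven = runway_months(SEED, headcount=4, loaded_monthly_cost=LOADED_COST,
                             other_monthly_burn=AGENT_SPEND)
print(f"{traditional:.1f} vs. {agent_driven:.1f} months")  # 20.0 vs. 23.5 months
```

Under these assumptions the gain is modest at the conservative end; with 2 engineers plus 1 product manager, the same seed round would last roughly 30.8 months. The result is only as good as the cost inputs.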

This increases startup competition. If more startups can build quickly with less capital, more startups will start. Market dynamics will shift from capital-intensive to idea-intensive.

Enterprise Implications

For enterprise software companies with massive engineering teams, the implications are complex:

Positive impacts:

- Dramatically increased productivity per engineer

- Faster time-to-market for new products and features

- Better code quality through continuous refactoring and QA

- Reduced technical debt

Negative impacts:

- Significant workforce displacement (fewer junior developers needed)

- Pressure on headcount and P&L

- Learning curve as teams adapt to AI-native workflows

- Potential quality concerns during transition

Savvy enterprises will transition gradually, upskilling existing engineers into AI-native roles rather than laying off junior developers immediately.

Consulting and Agency Implications

For software consulting agencies and development shops, the implications are existential. If clients can build internally with 24/7 agents more efficiently than consultants can build for them, the agency model is threatened.

Agencies will need to evolve from "we build code" to "we build strategy and oversight." The consultancy value shifts from implementation to architecture, process, and governance.

Some agencies may not make this transition successfully.

Looking Forward: Predictions for Late 2026 and Beyond

Capability Expansions Expected

By late 2026, expect these capabilities to become standard:

- Agents manage multiple projects simultaneously with zero context switching

- Agents generate complete product documentation automatically

- Agents coordinate across 5+ specialized sub-agents on complex projects

- Agents generate architectural proposals for human review

- Agents identify and implement performance optimizations automatically

- Agents manage deployment orchestration and rollback procedures

Market Dynamics Shifts

- Funding pressure increases for software companies relying on developer headcount as competitive advantage

- Startup formation accelerates as technical risk (building a product) decreases

- Developer salaries may compress for junior roles, but AI-native engineers command premium compensation

- Software becomes more of a commodity (easier to build), making differentiation through product strategy increasingly important

Technology Infrastructure Changes

- Browser-based IDEs dominate over traditional local development

- Cloud infrastructure (AWS, GCP, Azure) becomes the default for agent execution

- Real-time collaboration becomes ubiquitous

- Version control becomes less critical (agents self-manage versioning through continuous testing)

Potential Negative Outcomes

- Code quality could decline if humans don't review generated code carefully

- Security vulnerabilities might increase if agents generate code without proper security constraints

- Workforce displacement could accelerate faster than retraining programs can accommodate

- Inequality in access to agents could create winners and losers

Conclusion: The Fundamental Shift in Software Development

The evolution of Replit from mid-2025 to late 2025 represents more than incremental improvement. It represents crossing a threshold where building production-grade commercial software with AI agents becomes genuinely feasible.

But the more transformative question—what happens when those agents run 24/7 without human bottlenecks—remains largely theoretical today. Yet the mathematics of continuous development, the economics of eliminated human bottlenecks, and the potential for exponential feature velocity all point to profound implications for how software gets built.

We're not quite at that moment yet. Late 2025 Replit capabilities approach it but haven't fully crossed into 24/7 autonomous development. However, the trajectory is clear. The technological pieces are in place. The remaining gaps are relatively narrow.

What started as a science fiction concept—software building itself while you sleep—is transitioning into engineering reality. Not perfect, not without significant caveats and limitations, but increasingly real.

For software professionals, entrepreneurs, and technology investors, the implications demand attention. The teams that master working with 24/7 agents will move faster, iterate better, and operate more efficiently than traditional teams. Given plausible technical achievements, this follows from the arithmetic of continuous development rather than from speculation.

The question isn't whether 24/7 AI agents will transform software development. The trajectory makes that virtually inevitable. The question is how quickly the transition occurs, how effectively teams adapt, and whether the technology fulfills its potential or reveals significant limitations we haven't yet anticipated.

The next 12 months will be illuminating. By late 2026, we'll have substantially more evidence about whether the early promises of 24/7 agent development prove accurate or if reality tempers expectations significantly. Either way, the software development industry is in the midst of its most significant transformation since the internet itself.

For teams building products today, the strategic question is clear: What would your product look like if feature development costs dropped 95%? If you could build 10x faster? If technical implementation constraints mostly disappeared? What would you build if limited only by imagination and market opportunity?

The teams that answer that question thoughtfully and adapt their strategies accordingly will thrive in the emerging agent-driven development era. Those that keep assuming traditional development constraints still apply will find themselves unexpectedly disadvantaged.

FAQ

What makes Replit's late 2025 capabilities a significant leap from earlier versions?

Replit achieved three critical breakthroughs by late 2025: unlimited context windows (allowing the AI to reference entire codebases simultaneously), sub-agents specializing in specific technical domains (enabling parallel development), and Design Mode plus Fast Mode (enabling visual development and rapid pattern implementation). These innovations together transformed Replit from a prototyping tool into a platform capable of building production-grade software. The unlimited context windows specifically eliminated the fragmentation problem where the AI couldn't understand project-wide dependencies and architecture, which had been a critical limitation preventing commercial-grade applications.

How would 24/7 autonomous agents change the mathematics of feature development?

The economics shift dramatically because time constraints disappear. A human developer completes 1-2 features weekly. An AI agent operating 24/7, at current Replit capability levels, completes 15-20 features daily. This translates to approximately 5,000-7,000 features annually from a single agent, compared to 200-350 features annually from a five-person human team. Accounting for quality variations (agents produce 70-80% production-quality output vs. 85-90% for experienced humans), a single 24/7 agent still produces 3,500-5,500 production-ready features annually—roughly 10-15x more than a five-person team at a cost of
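The arithmetic in this answer can be reproduced in a few lines, which makes its assumptions visible. This sketch uses only the figures stated above and pairs the ends of each range in two ways; it is illustrative, not a forecast. Note how much the resulting multiplier depends on which ends of the ranges are compared.

```python
# Figures as stated in the FAQ answer above (annual feature counts).
AGENT_ANNUAL = (5_000, 7_000)  # 15-20/day x 365 is 5,475-7,300; the text rounds down
TEAM_ANNUAL = (200, 350)       # five-person human team
AGENT_QUALITY = (0.70, 0.80)   # share of agent output that is production quality
HUMAN_QUALITY = (0.85, 0.90)


def production_ready(annual: tuple[int, int],
                     quality: tuple[float, float]) -> tuple[float, float]:
    """Pair the low ends together and the high ends together."""
    return annual[0] * quality[0], annual[1] * quality[1]


agent = production_ready(AGENT_ANNUAL, AGENT_QUALITY)  # about 3,500 to 5,600
team = production_ready(TEAM_ANNUAL, HUMAN_QUALITY)    # about 170 to 315

conservative = agent[0] / team[1]  # slowest agent vs. fastest team
optimistic = agent[1] / team[0]    # fastest agent vs. slowest team
print(f"{conservative:.0f}x to {optimistic:.0f}x")  # 11x to 33x
```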

What are the realistic limitations of AI agents in software development?

Agents excel at routine, pattern-based development but struggle significantly with complex algorithm design, novel architectural decisions, innovative UX design, and business logic in regulated domains. They also miss implicit requirements that humans intuitively understand. Agents operate best within rigid specifications and predefined constraints—vague requirements lead to interpretation failures. Additionally, agents don't currently generate novel ideas autonomously; they implement human-designed ideas well. For domains requiring domain expertise (healthcare compliance, financial derivatives), human guidance remains essential. These limitations suggest a hybrid model remains optimal for the foreseeable future rather than pure agent autonomy.

What timeline would be required for 24/7 agents to become standard practice?

Based on current development velocity and adoption patterns, three phases emerge: early adoption (late 2025-mid 2026), in which teams experiment with agents supplying around 70% of development velocity; mainstream adoption (mid 2026-late 2026), in which most mid-sized software companies implement agents; and an optimization phase (late 2026-2027) focused on specialized agent networks and multi-agent coordination. Complete market transformation likely requires 18-24 months once current capabilities become widely available. However, sectors will adopt at different speeds: startups will move faster than enterprises, and SaaS companies faster than regulated financial institutions.

How would 24/7 agents impact developer job markets and compensation?

The market will bifurcate into two tiers: traditional developers (whose manual coding skills become less differentiating) and "AI-native" engineers (who specialize in agent specification, code review, and architectural guidance). Job market pressure will likely compress compensation for junior and mid-level developers, while AI-native senior engineers command premium compensation. Overall developer employment could decline significantly in traditional roles, but new roles emerge around agent management and oversight. Companies transitioning to agent-driven development face pressure to upskill existing engineers rather than laying them off, creating organizational tensions and learning curve investments.

Could smaller companies and startups gain competitive advantages through 24/7 agents?

Yes, dramatically. A startup with 3-4 AI-native engineers managing agent systems could achieve development velocity previously requiring 15-20 engineers. This fundamentally changes startup economics—lower burn rates, longer runway on equivalent funding, faster time-to-market for MVPs and subsequent product iterations. However, competitive advantage is temporary. Once competitors adopt agents, the advantage shifts from technology to product strategy, market selection, and execution. This likely increases startup formation rates while simultaneously making the market more competitive due to lower technical barriers. The strategic advantage goes to founders who understand how to leverage agent speed to explore market opportunities faster, not to those who simply build faster.

What role do platforms like Runable play in the broader AI development ecosystem?

While Replit specializes in 24/7 autonomous code generation and development, broader AI automation platforms like Runable complement the development process. Runable's AI agents handle surrounding workflows—generating API documentation from code, creating marketing content from product features, automating operational processes like report generation and content management. These complementary tools create a full automation stack: Replit generates the software, Runable automates the supporting processes. For development teams, this combination addresses not just "how do we build faster" but "how do we automate everything around building," from documentation to go-to-market materials. The platforms work synergistically rather than competitively.

How would 24/7 agent development change product roadmap prioritization?

With development constraints largely removed, roadmap prioritization shifts from "what can we build with our team" to "what should we build for our market and customers." This fundamentally reframes strategic thinking. Instead of asking "given our engineering constraints, which features matter most," product teams ask "which features create customer value and competitive advantage?" This could lead to more market-responsive products that iterate faster based on user feedback. However, it also creates new problems: too many features (overwhelming complexity), insufficient focus (losing competitive identity by trying to do everything), and integration chaos (the difficulty of making a large number of features work together correctly). The constraint moves from technical execution to strategic decision-making.

What safeguards should teams implement when using autonomous agents for development?

Critical safeguards include: strict specification requirements (agents need precise guidance), daily human code review (ensuring quality and preventing drift), automated testing coverage validation (agents should achieve >85% test coverage), security scanning on generated code, staged rollouts rather than immediate full deployment, version control and rollback procedures, access controls (limiting what agents can modify), and regular architecture reviews by humans. Additionally, teams should implement feedback loops where agents learn from production issues and adjust their generation patterns. Organizations should avoid the temptation to trust agents completely without human oversight—this creates liability and quality risks. The sweet spot is agents handling 70-80% of development with rigorous human oversight of the remaining 20-30%.
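Several of these safeguards can be combined into a single pre-deployment gate followed by a staged rollout. The sketch below is one possible shape under stated assumptions: `ChangeReport`, the rollout stages, and the 1% error-rate threshold are hypothetical, and the inputs would come from whatever coverage tool, security scanner, and monitoring a team already uses. Only the >85% coverage figure comes from the answer above.

```python
from dataclasses import dataclass

ROLLOUT_STAGES = (0.01, 0.10, 0.50, 1.00)  # hypothetical share of traffic per stage


@dataclass
class ChangeReport:
    test_coverage: float           # 0.0-1.0, as reported by your coverage tool
    security_findings: int         # unresolved findings from a security scanner
    human_approved: bool           # daily review sign-off
    touches_protected_paths: bool  # e.g. auth or infra config agents may not modify


def deployment_gate(r: ChangeReport, min_coverage: float = 0.85) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); every check must pass before any rollout starts."""
    reasons = []
    if r.test_coverage <= min_coverage:  # the answer above calls for >85%
        reasons.append(f"coverage {r.test_coverage:.0%} is not above {min_coverage:.0%}")
    if r.security_findings:
        reasons.append(f"{r.security_findings} unresolved security finding(s)")
    if not r.human_approved:
        reasons.append("no human approval")
    if r.touches_protected_paths:
        reasons.append("modifies paths outside the agent's access controls")
    return (not reasons, reasons)


def next_rollout_stage(stage: int, error_rate: float, max_error_rate: float = 0.01) -> int:
    """Advance one stage while healthy; return -1 to signal a rollback."""
    if error_rate > max_error_rate:
        return -1
    return min(stage + 1, len(ROLLOUT_STAGES) - 1)


print(deployment_gate(ChangeReport(0.91, 0, True, False)))  # (True, [])
print(next_rollout_stage(0, error_rate=0.002))              # 1
print(next_rollout_stage(1, error_rate=0.05))               # -1
```

Collecting every failing reason, instead of stopping at the first, gives the reviewer the complete picture in one pass.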

What specific metrics should teams track to assess 24/7 agent development effectiveness?

Key metrics include: features generated per day or week, percentage of generated features reaching production quality, production defect rate (comparing agent-generated vs. human-written code), code review cycle time, deployment frequency and rollback rate, technical debt accumulation rate, test coverage percentage, performance metrics (latency, throughput) of agent-generated systems, and architectural debt indicators. Additionally, track human oversight metrics: code review time per feature, feedback cycle iterations required, human corrections to generated code, and time from feature completion to deployment. Compare these metrics over time to identify trends—as agents improve, production defect rates should decrease, feature velocity should increase, and code review time should stabilize. These metrics provide objective evidence of agent system effectiveness and identify areas requiring improvement.
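Most of these metrics are ratios of counts a team already collects, so trend tracking can start with very little code. A minimal sketch, assuming weekly snapshots in a hypothetical `WeeklyStats` record; the sample numbers are made up purely to show the calculation.

```python
from dataclasses import dataclass


@dataclass
class WeeklyStats:
    features_generated: int
    features_production_quality: int
    features_deployed: int
    production_defects: int
    deployments: int
    rollbacks: int
    review_minutes: float


def _ratio(numerator: float, denominator: float) -> float:
    return numerator / denominator if denominator else 0.0


def summarize(s: WeeklyStats) -> dict[str, float]:
    """Turn raw weekly counts into a few of the rates listed above."""
    return {
        "production_quality_rate": _ratio(s.features_production_quality, s.features_generated),
        "defects_per_deployed_feature": _ratio(s.production_defects, s.features_deployed),
        "rollback_rate": _ratio(s.rollbacks, s.deployments),
        "review_min_per_feature": _ratio(s.review_minutes, s.features_generated),
    }


def trend(weeks: list[WeeklyStats], metric: str) -> float:
    """Change in one metric from the first week to the last (positive means it rose)."""
    if len(weeks) < 2:
        return 0.0
    return summarize(weeks[-1])[metric] - summarize(weeks[0])[metric]


# Made-up numbers, purely to show the calculation.
history = [
    WeeklyStats(100, 72, 70, 7, 20, 2, 150.0),
    WeeklyStats(120, 96, 90, 6, 24, 1, 150.0),
]
print(round(trend(history, "production_quality_rate"), 2))  # 0.08
print(round(trend(history, "review_min_per_feature"), 2))   # -0.25
```

Guarding every ratio against a zero denominator keeps a quiet week (no deployments, say) from crashing the report.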

Key Takeaways

- Replit achieved production-ready development capability by late 2025 through unlimited context windows, sub-agents, and Design Mode

- 24/7 autonomous agents would produce 5,000-7,000 features annually versus 200-350 for five-person human teams—a 15-25x productivity multiplier

- A single 24/7 agent costs 650,000+, creating dramatic economic advantages

- Feature development timeline compresses from 5+ years to 12 months for commercial-grade products like CRM platforms

- The compounding effect of continuous development, testing, and refactoring creates superior code quality and architecture over time

- Organizations must transition to 'AI-native' engineering roles focused on specification, review, and governance rather than manual coding

- Limitations remain in algorithm design, architectural decisions, novel UX innovation, and domain-specific complexity requiring human expertise

- Mainstream adoption of 24/7 agents likely occurs by mid-late 2026, fundamentally transforming startup economics and development team composition

- Platforms like Runable complement Replit's development capabilities by automating surrounding processes like documentation and marketing content

- Teams that master 24/7 agent development will gain competitive advantages through faster iteration, lower development costs, and more market-responsive product strategies