Amazon's $50 Billion Open AI Investment: Reshaping the AI Landscape in 2025

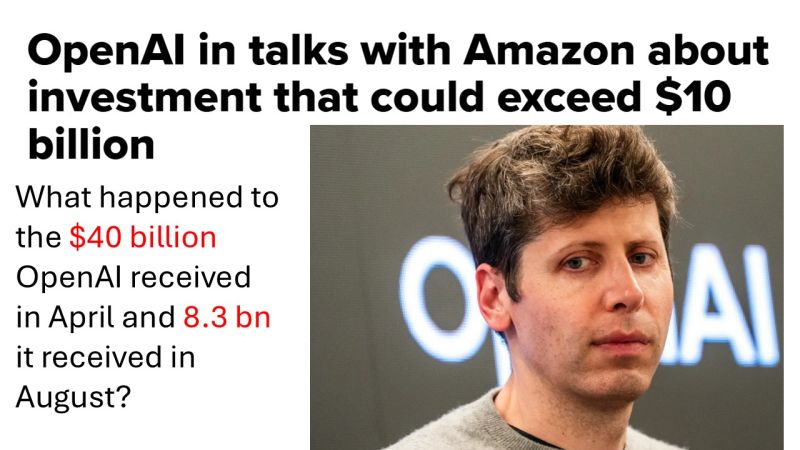

The artificial intelligence industry is experiencing unprecedented consolidation and investment activity. In early 2025, reports emerged that Amazon was in advanced negotiations to invest $50 billion in Open AI, one of the most significant technology funding developments in recent memory. The potential deal is far more than a simple capital infusion: it signals a fundamental shift in how major cloud providers are positioning themselves in the race for AI dominance.

The significance of this investment is hard to overstate.

What makes this situation particularly striking is the apparent contradiction it creates: Amazon has simultaneously been making massive commitments to Anthropic, Open AI's primary competitor.

This article explores the depth and breadth of this investment opportunity, its implications for the broader AI ecosystem, the strategic reasoning behind such a dual-investment approach, and what these developments mean for enterprises, developers, and competing platforms. We'll examine the deal structure, the players involved, the timeline expectations, and the competitive dynamics that make this investment so strategically important.

The artificial intelligence market has become the defining competitive battleground of the technology industry. Unlike previous technology cycles where success could be achieved through platform dominance or distribution advantages, the AI era demands exceptional foundation models, cutting-edge infrastructure, and substantial capital reserves. Amazon's reported investment reflects an understanding that participating meaningfully in this landscape requires direct financial participation in the companies shaping its future.

The Deal Structure and Financial Details

Investment Size and Valuation Implications

The reported $50 billion investment from Amazon would be extraordinarily significant in the context of technology industry financing. To provide perspective, this amount exceeds the total venture capital raised by most technology companies throughout their entire histories. The investment size suggests that Amazon views Open AI not as a vendor or partner but as a strategic asset worthy of substantial ownership stakes.

According to reporting, this investment would be part of a larger funding round in which Open AI is seeking approximately $100 billion in total.

The scale of this round highlights the outsized returns investors expect from AI. Technology companies are typically valued on revenue multiples, user growth, or competitive positioning; Open AI's rising valuation suggests investors believe the company will achieve exceptional financial outcomes, potentially reaching tens of billions of dollars in annual revenue, from licensing and deploying its models and services.

Amazon's $50 billion contribution would likely secure it substantial equity ownership in Open AI, though the exact percentage remains undisclosed in available reporting. Depending on the deal structure, Amazon might receive board representation, information rights, preferred investor protections, or other governance benefits. Such terms would typically be negotiated as part of a major institutional investment of this size.
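
The reporting does not disclose Amazon's percentage, but the arithmetic behind it is simple: in a priced round, an investor's stake is its investment divided by the post-money valuation, which equals the pre-money valuation plus all new money raised. The sketch below assumes the full $50 billion is new primary capital in a round of roughly $100 billion and ignores option pools, preferred-share terms, and secondary sales. The pre-money valuations it loops over are hypothetical inputs chosen only to bracket the range, not reported figures.

```python
def implied_stake(investment: float, pre_money: float, round_size: float) -> float:
    """Fraction of a company an investor owns right after a priced round.

    Simplified model: all new money buys newly issued shares at one price,
    so post-money valuation = pre-money valuation + total new money raised.
    """
    if investment < 0 or pre_money <= 0 or round_size < investment:
        raise ValueError("need 0 <= investment <= round_size and pre_money > 0")
    post_money = pre_money + round_size
    return investment / post_money


AMAZON_INVESTMENT = 50e9  # reported figure, in dollars
ROUND_SIZE = 100e9        # approximate total round size, per the reporting

# Hypothetical pre-money valuations, used only to bracket the possible range.
for pre_money in (400e9, 700e9, 1_000e9):
    stake = implied_stake(AMAZON_INVESTMENT, pre_money, ROUND_SIZE)
    print(f"pre-money ${pre_money / 1e9:,.0f}B -> implied stake {stake:.1%}")
```

Because the post-money figure sits in the denominator, every increase in the assumed valuation shrinks the implied stake, which is why the valuation terms matter as much as the headline dollar amount.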

Timeline and Closing Expectations

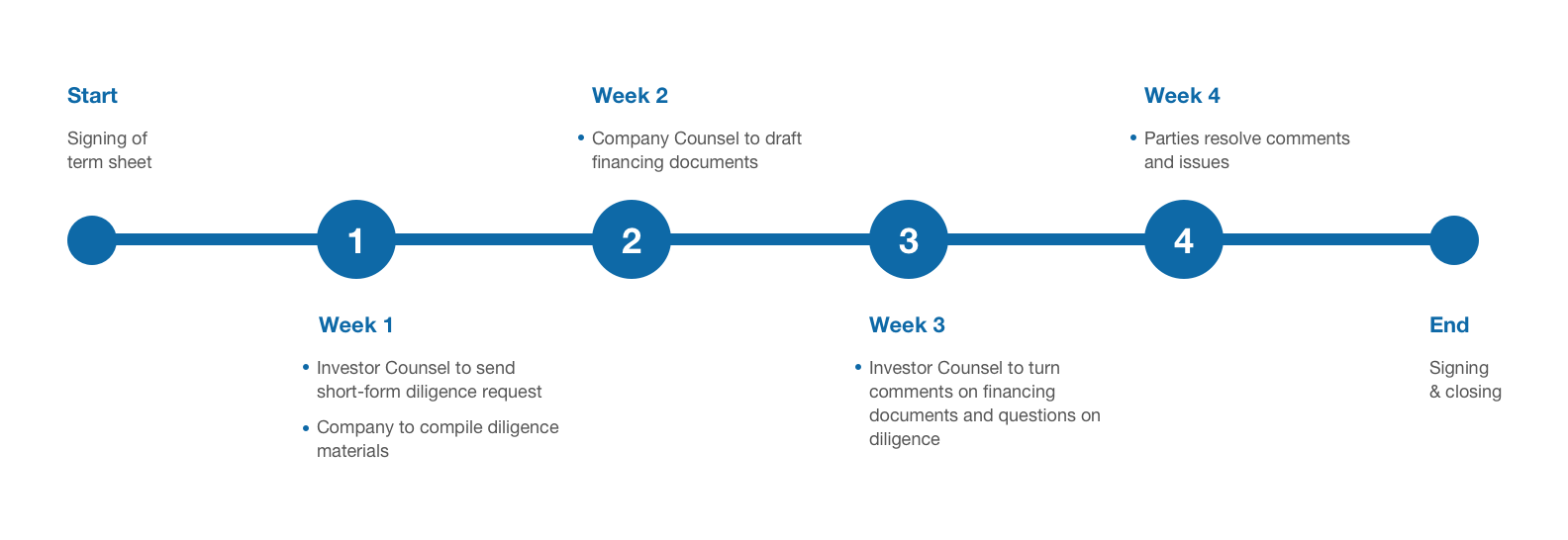

Reports indicate that the funding deal is expected to close by the end of Q1 2025, providing a clear timeline for when this transformative capital infusion would become official. This timeline suggests that the negotiations were already in advanced stages when reports emerged, with due diligence processes likely well underway.

The expedited timeline is notable because major investments of this scale typically require extended evaluation periods. The fact that negotiations appear to be moving toward rapid closure suggests that Amazon and Open AI have high confidence in the strategic fit and that regulatory concerns—if any—are not expected to create significant obstacles. Technology investments of this type generally receive less regulatory scrutiny than acquisitions would, though significant foreign investment considerations could theoretically complicate matters.

Q1 2025 represents a compressed timeline for completing final negotiations, but not unusually so for parties with strong motivation and alignment. Both Amazon and Open AI would benefit from closing quickly to begin the operational integration and infrastructure deployment phases of the partnership.

Negotiation Leadership and Strategic Oversight

The reported negotiations are being led by Amazon CEO Andy Jassy and Open AI CEO Sam Altman, indicating that both companies view this as a top-priority strategic initiative. CEO-level involvement in negotiations is typically reserved for deals with transformational implications, suggesting that both executives see this investment as critical to their respective company strategies.

Jassy has been instrumental in transforming Amazon's cloud operations into a profit-generating engine while maintaining market share growth. His willingness to personally lead these negotiations suggests he understands the strategic importance of artificial intelligence to Amazon's future competitive position. Similarly, Altman's involvement reflects Open AI's commitment to closing this critical funding round with preferred investors who align with the company's strategic vision.

The direct CEO involvement also streamlines decision-making and enables faster progress on complex issues. Rather than negotiations proceeding through multiple layers of management and board committees, CEO-to-CEO discussions can resolve strategic questions more rapidly and with greater authority.

Strategic Context: Amazon's Dual Investment Approach

The Anthropic Partnership and Infrastructure Investment

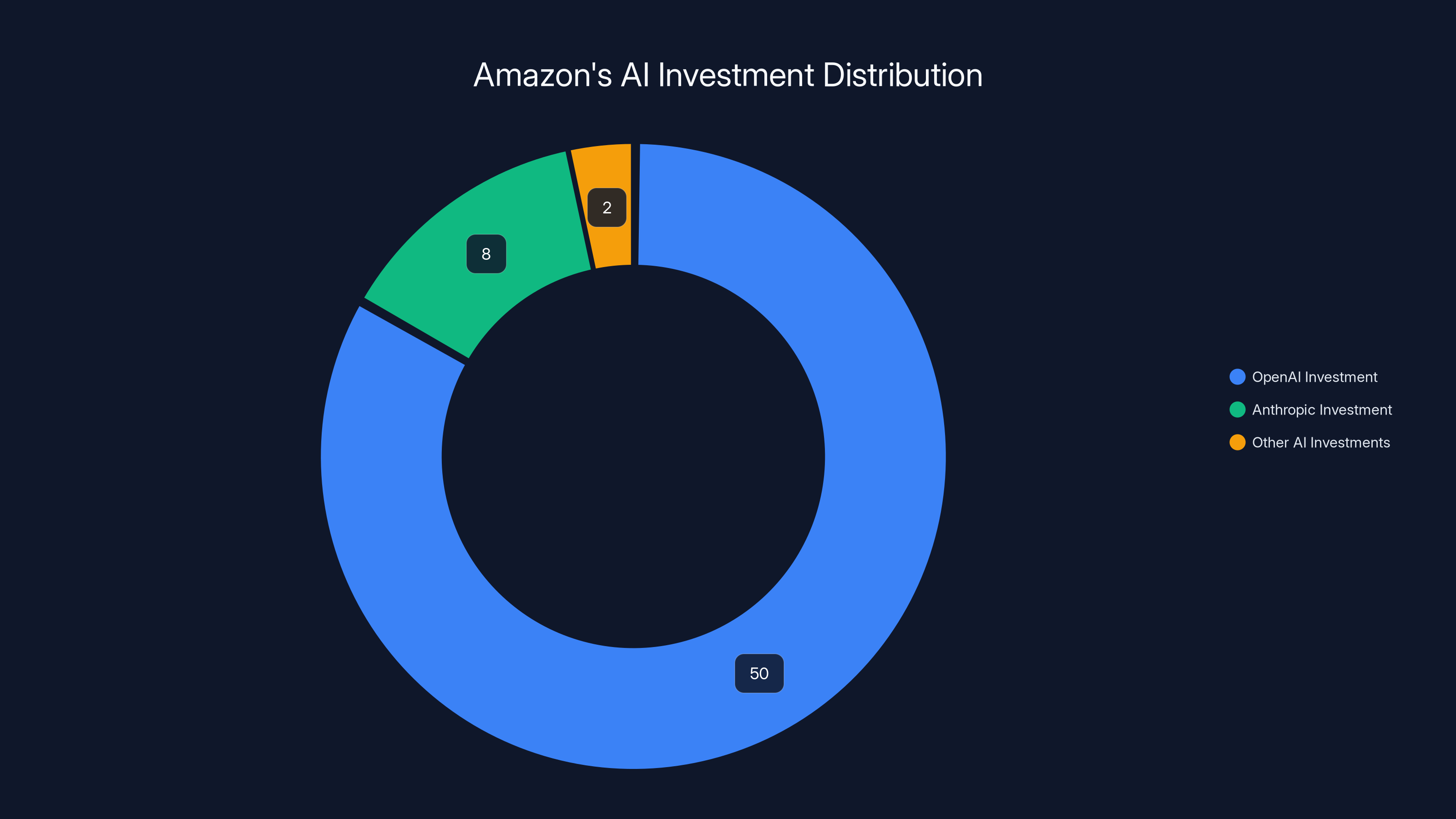

Perhaps the most intriguing aspect of Amazon's reported Open AI investment is that it occurs simultaneously with Amazon's massive commitment to Anthropic, Open AI's primary competitor. Amazon has invested at least $8 billion into Anthropic and serves as the primary cloud provider and training infrastructure partner for that company's model development.

This dual-investment strategy might seem contradictory on the surface, but it actually reflects a sophisticated approach to managing risk in the foundation model era. By maintaining significant investments in multiple competing AI companies, Amazon ensures that it is not dependent on any single vendor's success or strategic direction. If Open AI's model architecture proves to be the most effective for certain applications, Amazon benefits from its Open AI investment. Conversely, if Anthropic's approach to model alignment and safety creates competitive advantages, Amazon's Anthropic investment positions it to capitalize on that success.

The strategy also reflects the reality that the foundation model space is still in early stages of development, and no company can yet definitively claim technological superiority across all use cases. By participating in multiple companies' success, Amazon increases the likelihood that it will be well-positioned regardless of which companies emerge as long-term leaders.
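
The hedging logic can be made concrete with a toy scenario model. Assume, purely for illustration, that exactly one lab ends up as the long-term leader, and give each candidate a hypothetical probability. The chance that Amazon holds a stake in the eventual leader is then the sum of the probabilities of the scenarios it is invested in. The probabilities in the sketch are invented placeholders, not forecasts.

```python
# Hypothetical probabilities that each lab becomes the long-term leader.
# Scenarios are treated as mutually exclusive and exhaustive (they sum to 1).
LEADER_SCENARIOS = {
    "Open AI": 0.35,
    "Anthropic": 0.25,
    "Google": 0.25,
    "Other": 0.15,
}


def prob_holding_leader(holdings: set[str], scenarios: dict[str, float]) -> float:
    """Probability that the eventual leader is one of the companies held."""
    if abs(sum(scenarios.values()) - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p for lab, p in scenarios.items() if lab in holdings)


print(prob_holding_leader({"Anthropic"}, LEADER_SCENARIOS))
print(prob_holding_leader({"Anthropic", "Open AI"}, LEADER_SCENARIOS))
```

Under these made-up numbers, adding an Open AI stake to an Anthropic stake raises the probability of owning part of the leader from 0.25 to 0.60. The model deliberately ignores stake sizes and the possibility that several labs share leadership.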

The Indiana Data Center Campus and Infrastructure Strategy

Amazon's recent announcement of an $11 billion data center campus in Indiana dedicated to running Anthropic models demonstrates a long-term commitment to that partnership. The campus represents years of guaranteed capacity and gives Anthropic a meaningful competitive advantage in model training and deployment.

Yet this commitment need not prevent Amazon from simultaneously investing in Open AI. In fact, having diversified infrastructure partnerships could actually strengthen Amazon's overall position. With capacity dedicated to Anthropic models and broad Open AI access through the reported investment, Amazon ensures access to leading AI capabilities across multiple technical approaches and applications.

This infrastructure-level commitment suggests that Amazon is thinking about the AI market in terms of decades-long competitive positioning rather than quarterly financial results. Massive data center investments represent multi-year commitments that reflect confidence in long-term strategic directions.

AWS Cloud Implications and Competitive Positioning

Amazon's reported investments in both Open AI and Anthropic have direct implications for the competitive positioning of AWS (Amazon Web Services) against Microsoft Azure and Google Cloud. Microsoft has heavily promoted its integration with Open AI through Azure services, using its close relationship with Open AI to drive cloud adoption and lock customers into the Azure ecosystem.

By becoming a major Open AI shareholder, Amazon potentially gains greater influence over deployment strategies, pricing, and feature prioritization. The company could potentially negotiate terms that ensure Open AI models are efficiently deployable on AWS infrastructure or that pricing structures favor AWS customers. Even without explicit preferential terms, being an owner and strategic partner could provide Amazon with technical insights and roadmap information that benefit AWS competitive positioning.

Similarly, the Anthropic partnership gives AWS customers access to a distinct family of AI models, built with different design choices and potentially stronger on specific use cases. Offering customers a choice between Open AI and Anthropic models, both well integrated with AWS services, would create a competitive advantage over cloud providers with more limited model access.

Competitive Landscape and Market Implications

Microsoft's Strategic Position and Azure AI Integration

Microsoft was the first major cloud provider to form a strategic alliance in the foundation model space. Its multibillion-dollar investment in Open AI, combined with preferred integration rights in Azure, has given Microsoft meaningful competitive advantages in the enterprise AI market. Microsoft's Copilot products, which build on Open AI technology, have become significant drivers of adoption for Azure and Microsoft 365.

Amazon's reported Open AI investment doesn't necessarily diminish Microsoft's position, as Microsoft's existing Open AI investments and contractual relationships with the company remain in place. However, it does create a situation where multiple major cloud providers have strategic relationships with the same foundation model company, potentially reducing Microsoft's ability to exclusively differentiate its offerings through Open AI access.

The competitive dynamics between cloud providers are now shifting from a binary choice (Azure with Open AI, or other providers without) to a more complex landscape where multiple providers offer varying degrees of access to multiple AI models. This pluralization of access could actually accelerate enterprise AI adoption by reducing concerns that choosing one cloud provider would lock organizations into a specific AI vendor.

Google Cloud's Gemini Strategy and Alternative AI Approaches

Google Cloud has pursued a different strategy, building its AI offerings primarily around Google's own Gemini foundation models rather than relying on external AI companies. This approach gives Google control over its models but requires substantial internal investment in model research and infrastructure.

Google's approach offers real advantages: control over its technology roadmap, less dependence on external partners, and the ability to integrate AI capabilities deeply into Google Cloud services. However, it also requires Google to compete directly in foundation model development against specialized AI companies that focus exclusively on that domain. As of 2025, Open AI's models are widely regarded as among the most capable for many enterprise applications, which gives companies with Open AI access a meaningful competitive advantage.

Amazon's dual strategy of investing in external companies while leveraging AWS infrastructure advantages creates a middle ground—the company maintains strong relationships with cutting-edge AI companies while ensuring its cloud services can efficiently deploy and integrate their models.

Specialized AI Companies and Competitive Challenges

Beyond the major cloud providers, numerous specialized AI companies have emerged, each with distinct technical approaches and target use cases. Anthropic's focus on alignment and safety, x AI's different architectural approaches, and other emerging companies represent alternatives to Open AI's dominance in the general-purpose foundation model space.

Amazon's multi-investment approach reflects an understanding that specialization will likely matter in the AI era. Rather than betting entirely on general-purpose models, enterprises will likely combine multiple specialized models with specific performance characteristics for different tasks. By maintaining relationships with leading companies across the competitive landscape, Amazon ensures it can offer customers access to best-of-breed solutions regardless of which companies emerge as category winners.

Open AI's Funding Strategy and Capital Requirements

The $100 Billion Funding Round and Its Strategic Purpose

Open AI's pursuit of approximately $100 billion in additional funding reflects the extraordinary capital requirements of cutting-edge AI development. Foundation model research and training demands unprecedented computational resources. Open AI's recent announcements about developing more advanced models, expanding to new modalities, and deploying at scale all require substantial capital investment.

The funding is intended to support multiple strategic priorities: accelerating research into more capable models, expanding computational infrastructure for training, building deployment infrastructure to serve enterprise and consumer customers at scale, and hiring world-class talent in a highly competitive hiring environment. Each of these areas requires billions in capital investment.

Foundation model training alone has become extraordinarily expensive. Estimates suggest that training state-of-the-art large language models costs hundreds of millions to billions of dollars. As models become more capable and larger, training costs increase substantially. Open AI's funding round reflects the reality that maintaining technological leadership requires continuous reinvestment in infrastructure and research.
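
A common back-of-the-envelope way to see why costs climb with scale is the rule of thumb that training a dense transformer takes roughly 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. Dividing by the throughput actually achieved per accelerator gives GPU-hours, and multiplying by an hourly price gives a compute bill. The sketch below applies that rule; the model size, token count, hardware throughput, utilization, and price are all hypothetical assumptions, not figures for any Open AI model.

```python
def training_cost_usd(
    params: float,
    tokens: float,
    peak_flops_per_gpu: float,
    utilization: float,
    usd_per_gpu_hour: float,
) -> float:
    """Rough compute cost of a single training run for a dense transformer.

    Uses the common rule of thumb that training needs about 6 * N * D
    floating-point operations (forward plus backward pass), where
    N = parameter count and D = number of training tokens.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 6 * params * tokens
    achieved_flops_per_gpu = peak_flops_per_gpu * utilization  # FLOP/s actually sustained
    gpu_hours = total_flops / achieved_flops_per_gpu / 3600
    return gpu_hours * usd_per_gpu_hour


# Hypothetical inputs: 1 trillion parameters, 20 trillion tokens,
# 1e15 peak FLOP/s per accelerator at 40% utilization, $2 per GPU-hour.
cost = training_cost_usd(1e12, 20e12, 1e15, 0.40, 2.0)
print(f"${cost / 1e6:,.0f} million")
```

With these inputs the estimate comes to roughly $167 million, consistent with the "hundreds of millions" range. Because cost is linear in both N and D, doubling either doubles the bill, and the figure covers only the final training run, not experiments, failed runs, data acquisition, or staff.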

Diversified Investor Base and Strategic Alignment

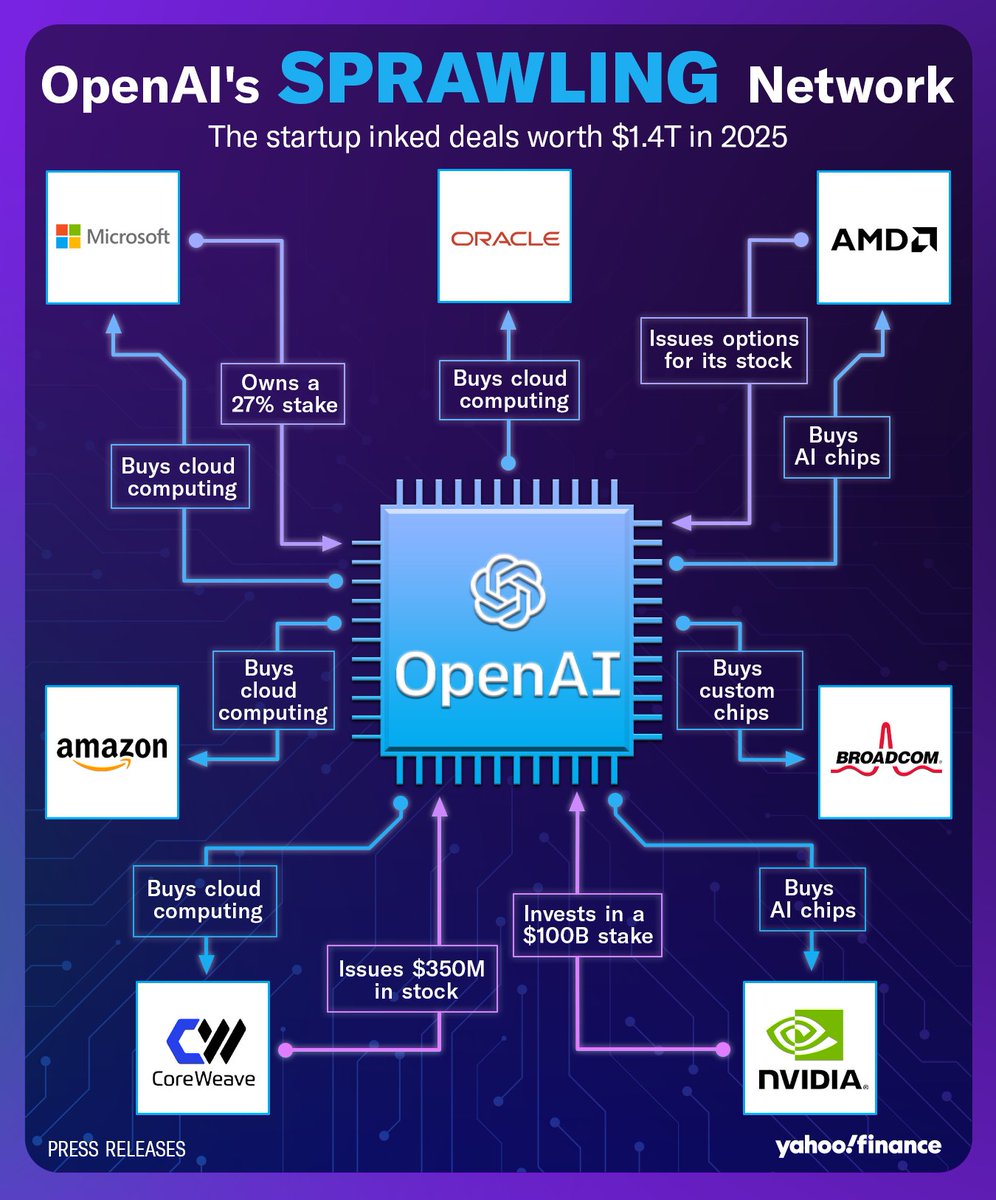

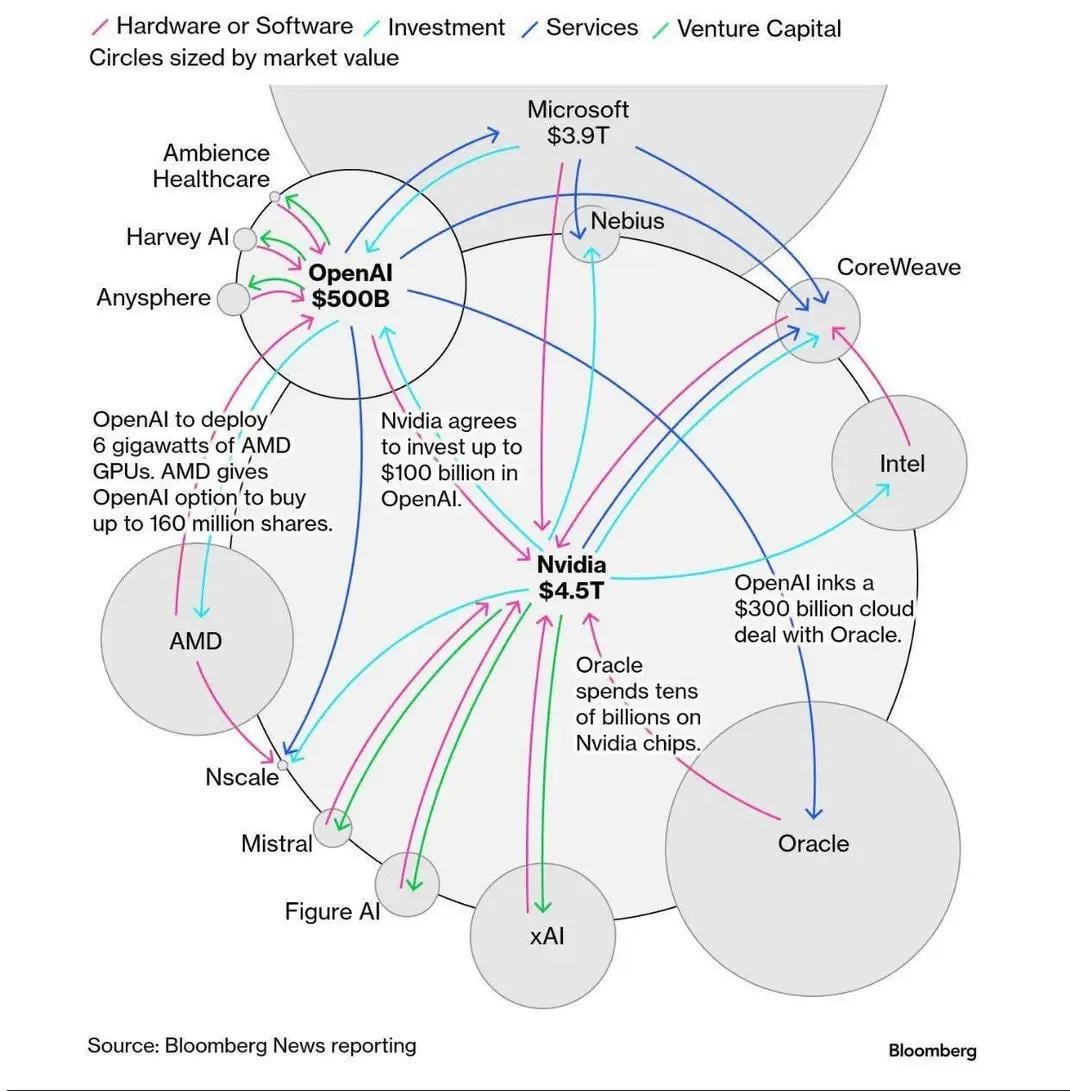

Open AI's strategy of raising capital from multiple sources—Amazon, sovereign wealth funds from the Middle East, Nvidia, Microsoft, and Soft Bank—reflects a desire to create a diversified investor base with strategic alignment rather than concentrated dependence on a single major investor.

Sovereign wealth funds from the Middle East represent long-term, patient capital from governments and entities with extended investment horizons. These investors are less concerned with quarterly performance or immediate returns and more focused on long-term participation in transformative technologies. Their participation provides stability and reduces pressure for rapid commercialization.

Nvidia's potential participation is particularly interesting because the company designs the GPUs that power AI training and inference. Its investment in Open AI aligns with its business model: as Open AI scales operations, it needs more Nvidia hardware. This creates a mutually reinforcing relationship in which Open AI's success directly drives demand for Nvidia's products.

The Role of Microsoft and Soft Bank

Microsoft's existing investments in Open AI, combined with potential participation in this funding round, demonstrate the company's continued commitment to maintaining close relationships with the AI company. Microsoft's strategy involves both direct investment and deep integration into Azure services, positioning the company to benefit from Open AI's success across multiple dimensions.

Soft Bank, led by Masayoshi Son, has a long history of making large bets on transformative technology companies. The company's participation in Open AI's funding round reflects a belief that foundation models represent the defining technology of the coming decades. Soft Bank's capital provides additional liquidity and strategic support.

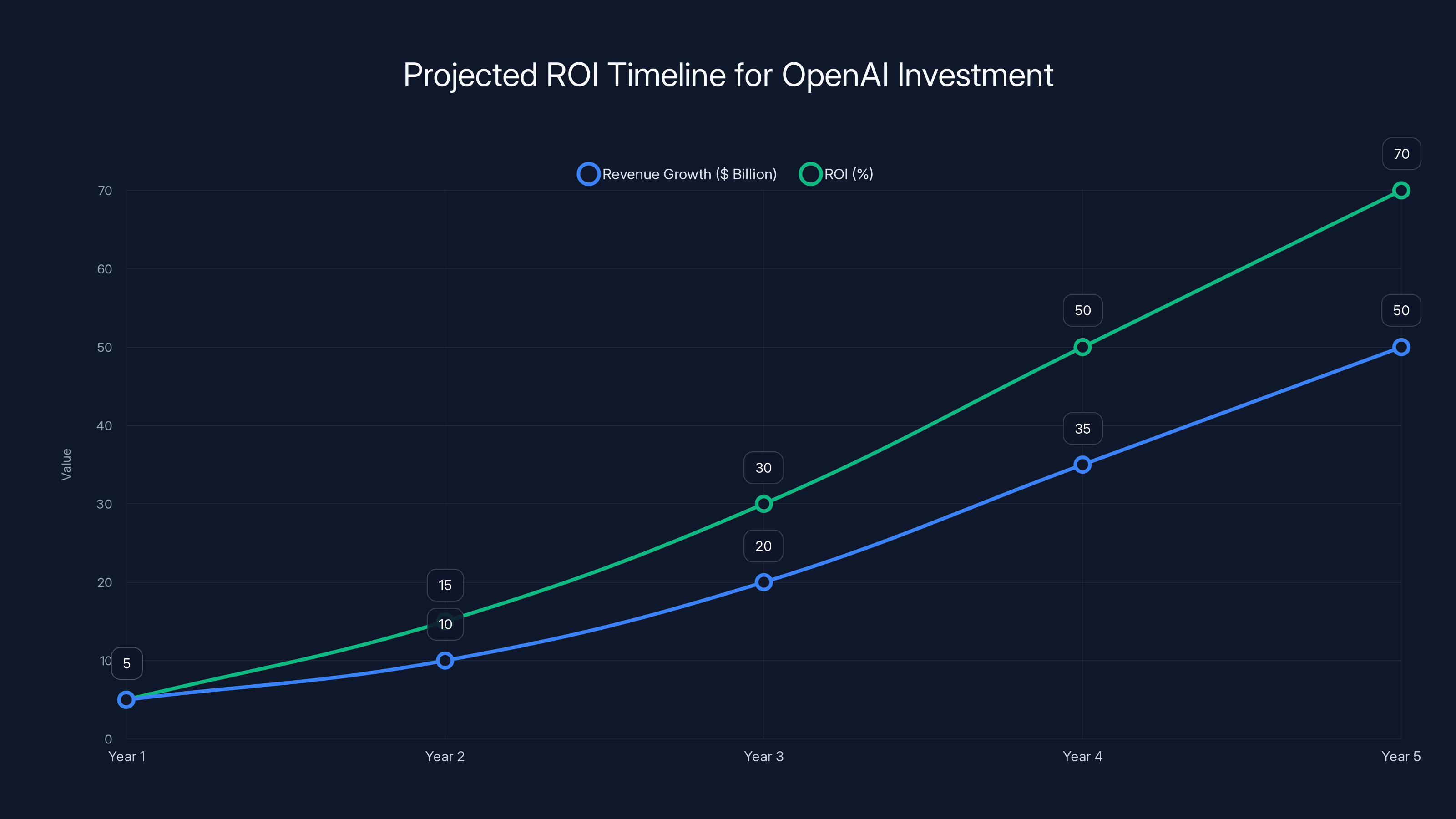

Projected revenue growth and ROI for OpenAI investment over 5 years show significant potential, with revenue reaching $50 billion and ROI at 70% by Year 5. Estimated data based on market trends.

Implications for Cloud Infrastructure and Deployment

Computational Infrastructure Requirements at Scale

Open AI's models require extraordinary computational resources. The company operates some of the largest GPU clusters in the world, utilizing tens of thousands of high-end processors to train and run models. As Open AI scales commercial deployments, computational requirements increase dramatically.

Amazon's investment likely comes with expectations that Open AI will leverage AWS infrastructure for portions of this computational workload. AWS offers extensive GPU availability, networking infrastructure optimized for machine learning workloads, and integration with enterprise services that make it attractive for AI deployment. An investment of $50 billion creates natural expectations that Open AI will make AWS a significant infrastructure partner.

This doesn't mean Open AI will exclusively use AWS—major companies like this typically maintain relationships with multiple cloud providers for redundancy and negotiating leverage. However, an investor relationship typically translates into preferred status and favorable pricing for significant workloads.

Integration with AWS AI Services

AWS already offers extensive AI and machine learning services, including SageMaker for model development and deployment, Bedrock for foundation model access, and various specialized AI capabilities. An Open AI investment creates natural opportunities to integrate Open AI models more deeply into these services.

For AWS customers, having official Open AI model access through AWS services would provide simplified procurement, consolidated billing, and better integration with other AWS capabilities. This could accelerate adoption of both Open AI models and AWS services among enterprises evaluating AI deployment strategies.

Multi-Cloud AI Deployment Strategies

The broader implication of Amazon's Open AI investment is that enterprises will increasingly have access to foundation models across multiple cloud providers. Rather than being locked into a single vendor's AI capabilities, organizations can choose cloud providers based on broader criteria including pricing, existing infrastructure, data location requirements, and specialized services.

This pluralization of access is likely to accelerate enterprise AI adoption by reducing switching costs and increasing competition among cloud providers to offer compelling AI-integrated services. As more foundation models become available across cloud providers, competitive dynamics will increasingly focus on integration quality, pricing, and specialized capabilities rather than exclusive model access.
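
Choosing among providers on these criteria can be sketched as a two-step filter: first discard offers that violate hard constraints such as data location, then rank what remains by price. The provider names, model name, regions, and prices below are hypothetical placeholders; a real evaluation would also weigh existing infrastructure, integration quality, and specialized services.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOffer:
    """One cloud provider's offer for hosting a given foundation model."""
    provider: str
    model: str
    regions: frozenset[str]  # regions where the provider can keep data resident
    usd_per_million_tokens: float


def choose_offer(offers: list[ModelOffer], model: str, required_region: str) -> Optional[ModelOffer]:
    """Return the cheapest offer for `model` that can serve `required_region`."""
    eligible = [
        o for o in offers
        if o.model == model and required_region in o.regions
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda o: o.usd_per_million_tokens)


# Hypothetical offers; names and prices are illustrative only.
OFFERS = [
    ModelOffer("provider-a", "model-x", frozenset({"us", "eu"}), 12.0),
    ModelOffer("provider-b", "model-x", frozenset({"us"}), 10.0),
    ModelOffer("provider-c", "model-x", frozenset({"eu", "apac"}), 11.0),
]

best_eu = choose_offer(OFFERS, "model-x", "eu")
print(best_eu.provider if best_eu else "no eligible offer")
```

Treating data location as a filter rather than a cost term reflects that residency requirements are usually pass/fail, whereas price differences can be traded off against other factors.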

Regulatory Considerations and Approval Pathways

Antitrust Review Potential and Foreign Investment Considerations

While technology investments typically face less regulatory scrutiny than outright acquisitions, investments of this magnitude occasionally attract attention from regulatory authorities. The relevant question is whether Amazon's investment in Open AI creates antitrust concerns by reducing competition or increasing barriers to entry in the foundation model space.

Arguments that the investment could raise antitrust concerns include Amazon's already substantial cloud market share, the possibility that Amazon could favor Open AI over competing model providers, and the risk that the investment could weaken Open AI's incentive to develop products independently. Arguments against include that the foundation model market remains highly competitive with numerous alternatives, that Amazon's simultaneous Anthropic investment shows it is backing competing model developers rather than a single one, and that Open AI's models remain commercially available to other companies, including competitors.

Foreign investment review is unlikely to affect Amazon's stake itself, since Amazon and Open AI are both U.S. companies and the vast majority of Open AI's operations are in the United States. Other participants in the broader round, such as Soft Bank (Japan) and Middle Eastern sovereign wealth funds, are non-U.S. investors whose stakes could fall within the scope of U.S. foreign investment review, although none of them comes from the countries that typically face the heaviest scrutiny.

Precedent from Previous Large Technology Investments

Large technology investments in recent years have generally received approval without significant regulatory obstacles. Microsoft's billions in Open AI investment, while scrutinized, proceeded without being blocked. This precedent suggests that Amazon's investment would likely be approved without major regulatory impediments, though regulatory authorities might request information about the investment structure and integration plans.

The typical regulatory review process would involve providing information about the investment structure, assurances about maintaining competitive access to Open AI models, and explanations of how the investment aligns with both companies' strategic objectives. Assuming Amazon provides reasonable assurances that it won't attempt to restrict competitors' access to Open AI models, regulatory approval would likely proceed relatively straightforwardly.

Strategic Rationale and Long-Term Vision

Amazon's Cloud-Centric AI Strategy

Amazon has repeatedly emphasized that artificial intelligence is integral to AWS's future competitive positioning. Andy Jassy has described AI as a transformative technology that will reshape cloud computing and enterprise operations. The reported $50 billion Open AI investment would be Amazon's largest single bet on realizing that vision.

The strategic rationale is straightforward: foundation models are becoming essential infrastructure for modern applications. AWS customers increasingly demand access to state-of-the-art AI capabilities integrated with their cloud workloads. By securing a major investment position in Open AI, Amazon ensures it can offer customers access to best-in-class foundation models while maintaining close partnerships that enable preferred integration and deployment.

The investment also protects Amazon against scenarios where exclusive partnerships or strategic relationships give competitors overwhelming advantages in the AI era. If Microsoft's exclusive Open AI relationship became sufficiently advantageous, enterprises might migrate workloads from AWS to Azure to gain access to superior AI capabilities. By becoming a major Open AI shareholder, Amazon mitigates this risk.

Open AI's Independence and Strategic Direction

For Open AI, accepting Amazon's $50 billion investment provides crucial capital for accelerating research and scaling deployment while maintaining the company's independence and strategic autonomy. Open AI is not becoming a subsidiary of Amazon—rather, it's accepting a large investment from a strategic partner who has complementary business interests.

Open AI's unique position as a foundation model company serving multiple cloud providers, enterprises, and consumers requires careful balance. Accepting investments from multiple large companies actually helps maintain this balance by ensuring that no single investor has dominant influence over the company's strategic direction. Amazon's investment would be balanced by existing Microsoft relationships, Soft Bank participation, and other investors.

This structure enables Open AI to remain focused on its core mission of developing increasingly capable and safe AI systems while having the capital and infrastructure partnerships necessary to scale deployment globally.

Impact on Enterprise AI Adoption and Strategy

Simplified Access to Foundation Models in the Cloud

One practical implication of Amazon's Open AI investment is that enterprises using AWS will likely gain more direct, integrated access to Open AI models. Rather than negotiating separate contracts with Open AI and managing access independently, AWS customers could potentially access Open AI models through familiar AWS tools and billing systems.

This simplification would reduce friction in enterprise AI adoption. Organizations evaluating AI capabilities could leverage existing AWS relationships and infrastructure to deploy Open AI models without navigating complex procurement processes. The integration would likely include pre-built connectors, optimized inference infrastructure, and consolidated billing.
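
For a sense of what access through familiar AWS tools looks like today, Amazon Bedrock exposes hosted models through a common Converse API in the Bedrock Runtime service. The sketch below builds a Converse request and extracts the reply text. Whether Open AI models are offered on Bedrock, and under which model ID, is an assumption here: `EXAMPLE_MODEL_ID` is a hypothetical placeholder to be replaced with an ID enabled in your account and region. The client is passed in rather than created inside the function so the logic can be exercised without AWS credentials.

```python
from typing import Any

# Hypothetical placeholder: replace with a model ID that is actually enabled
# for your AWS account and region in the Amazon Bedrock console.
EXAMPLE_MODEL_ID = "example-provider.example-model-v1"


def build_converse_request(model_id: str, prompt: str, max_tokens: int = 512) -> dict[str, Any]:
    """Build keyword arguments for the Bedrock Runtime `converse` call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


def ask(client: Any, model_id: str, prompt: str, max_tokens: int = 512) -> str:
    """Send a single user turn and return the first text block of the reply.

    `client` is expected to be a boto3 "bedrock-runtime" client, or any object
    with a compatible `converse` method (for example, a test double).
    Returns an empty string if the reply contains no text block.
    """
    response = client.converse(**build_converse_request(model_id, prompt, max_tokens))
    content = response["output"]["message"]["content"]
    return next((block["text"] for block in content if "text" in block), "")
```

In practice `client` would be `boto3.client("bedrock-runtime", region_name=...)`, with AWS credentials configured and model access granted in the Bedrock console. Because Converse uses the same message format across the models it supports, switching models is largely a matter of changing the model ID.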

Competitive Positioning and Cloud Provider Choices

For enterprises evaluating cloud providers, Amazon's Open AI investment changes the strategic calculus. Until now, Microsoft's Azure has been positioned as offering superior Open AI integration because of Microsoft's direct investment relationship. Amazon's investment would help level this playing field, positioning AWS as a more viable platform for Open AI model deployment.

Enterprises could then choose cloud providers based on broader criteria (existing infrastructure investment, pricing, specialized services, data location requirements, and geographic presence) rather than being constrained by differential AI model access. This broader evaluation framework is likely to produce more balanced cloud provider competition and better outcomes for customers.

Multi-Cloud and Hybrid AI Strategies

Many large enterprises are pursuing multi-cloud strategies to avoid vendor lock-in and maintain negotiating leverage with cloud providers. Amazon's Open AI investment supports this trend by ensuring that organizations can adopt Open AI models across multiple cloud platforms. Customers can standardize on Open AI models for certain workloads while using provider-specific models and services for other use cases.

This flexibility would be particularly valuable for large organizations with complex infrastructure, geographically distributed operations, and diverse application portfolios. Rather than being forced to consolidate on a single cloud provider to access leading AI capabilities, enterprises can maintain their preferred multi-cloud architectures while enjoying comparable access to foundation models across platforms.

Timeline, Milestones, and Deal Closure

Q1 2025 Closure Expectations

The reported timeline for deal closure by the end of Q1 2025 provides clear expectations for when this transformative capital infusion would become official. Q1 extends through March 31, 2025, meaning the deal is expected to close within approximately three months of when the reporting emerged in January 2025.

This timeline is aggressive for a deal of this magnitude but not unusually so given the parties' apparent strong mutual interest. The fact that Amazon and Open AI are moving quickly suggests they have high confidence in strategic alignment and expect minimal regulatory or operational obstacles to closure.

Post-Closure Integration and Operational Plans

Following the expected Q1 2025 closure, both companies would begin integration activities. For Amazon, this would likely include finalizing infrastructure arrangements, integrating Open AI models into AWS services, and beginning the process of migrating certain Open AI workloads to AWS infrastructure. For Open AI, it would involve establishing governance structures with Amazon as a new major investor while maintaining operational independence.

The integration process would likely extend over multiple quarters as the two companies refine how they collaborate. AWS infrastructure teams would work with Open AI's engineering staff to optimize model deployment on AWS infrastructure, and AWS product teams would develop new service integrations to make Open AI models more accessible to customers.

First Announcements and Customer-Facing Changes

One of the first visible results of the partnership would likely be announcements of new AWS services featuring Open AI models or improved integration with existing services. Amazon would probably announce new capabilities in Amazon Bedrock or other AI services that reflect the partnership.

From the customer perspective, the most significant early changes would be simplified access to Open AI models through AWS services and potentially improved pricing for consolidated access. AWS customers who are already paying for Open AI services separately would benefit from integrated billing and consolidated management.

Alternative Platforms and Competitive Considerations

Evaluating Foundation Model Alternatives for Enterprises

While Open AI's models remain widely regarded as among the most capable available, enterprises evaluating AI strategies should consider multiple alternatives. Anthropic's Claude models take a distinct approach, with particularly strong performance on reasoning tasks and a pronounced focus on safety and alignment. For certain use cases, particularly those involving complex reasoning or where safety is paramount, Claude might provide better results.

Google's Gemini models offer integration with Google Cloud services and leverage Google's extensive research infrastructure. Organizations heavily invested in Google Cloud platforms might find Gemini particularly compelling for native integration with their existing infrastructure.

Meta's Llama models, released with openly available weights, let organizations maintain full control over model deployment without depending on commercial API vendors. While Llama models may lag proprietary alternatives in certain capabilities, they offer significant advantages in cost, customization flexibility, and operational control.

For organizations seeking cost-effective automation and content generation capabilities, platforms like Runable provide compelling alternatives to expensive foundation model licensing. Runable offers AI-powered automation tools, content generation, and developer productivity features at competitive pricing ($9/month), enabling teams to access advanced AI capabilities without the enormous licensing costs associated with enterprise foundation models.

Multi-Model and Hybrid Approaches

Sophisticated enterprises are increasingly adopting hybrid approaches that combine multiple foundation models optimized for specific use cases. An organization might use Open AI's GPT models for general-purpose natural language understanding, Anthropic's Claude for reasoning-intensive applications, and specialized open-source models for cost-sensitive or customization-heavy workloads.

This hybrid approach provides several advantages: organizations avoid over-dependence on a single vendor, they optimize costs by matching models to requirements, and they maintain flexibility to adjust as the technology landscape evolves. Amazon's dual investment in both Open AI and Anthropic actually supports this kind of enterprise strategy by ensuring customers have access to leading alternatives across different approaches.
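
A hybrid setup like this is often implemented as a routing table that maps each task type to an ordered list of preferred models, falling back down the list when a model is unavailable. The task categories below mirror the example in the text; the model identifiers are hypothetical placeholders, not real product or API names.

```python
# Hypothetical routing table: task type -> models in order of preference.
# The identifiers are placeholders, not real product or API names.
ROUTES: dict[str, list[str]] = {
    "general": ["openai-general", "open-weight-small"],
    "reasoning": ["anthropic-reasoning", "openai-general"],
    "bulk": ["open-weight-small"],  # cost-sensitive, high-volume work
}


def pick_model(task: str, available: set[str]) -> str:
    """Return the first preferred model for `task` that is currently available.

    Unknown task types fall back to the "general" route.
    """
    for model in ROUTES.get(task, ROUTES["general"]):
        if model in available:
            return model
    raise LookupError(f"no available model for task {task!r}")


print(pick_model("reasoning", {"openai-general", "open-weight-small"}))
```

Here the reasoning request falls back to `openai-general` because the preferred reasoning model is not in the available set. Keeping the routing policy in data rather than code means a team can reorder preferences, or add a new vendor, without touching the calling application.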

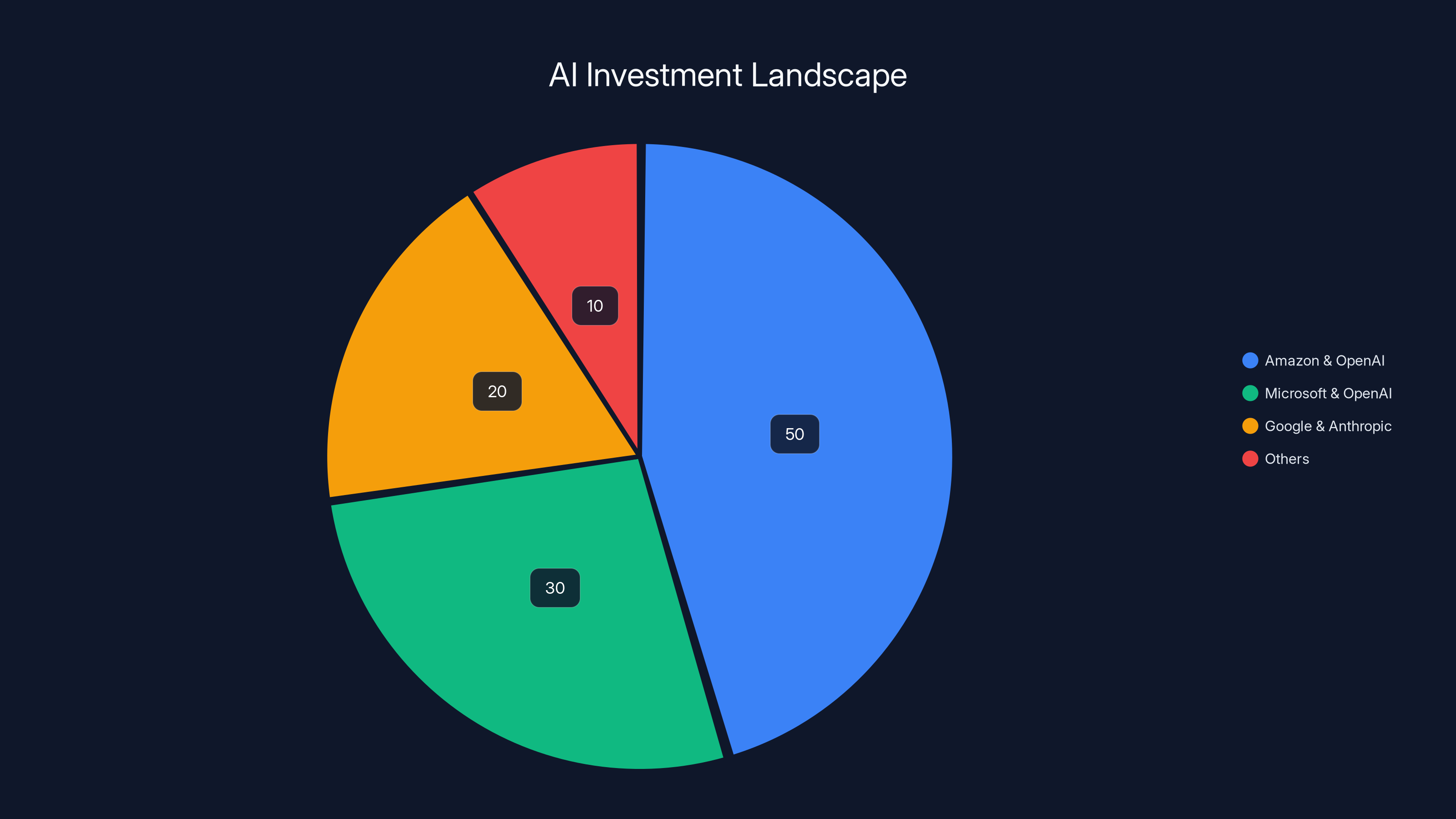

Amazon's $50 billion investment in OpenAI dominates the AI investment landscape, highlighting the strategic importance of AI partnerships. Estimated data.

Market Trends and AI Industry Consolidation

The Era of AI Consolidation and Strategic Partnerships

Amazon's reported Open AI investment reflects a broader trend of consolidation and strategic partnership in the AI industry. As foundation models become more sophisticated and require greater resources to develop and deploy, companies are increasingly choosing strategic partnerships and investments rather than attempting to compete entirely independently.

This consolidation pattern has historical precedent. In previous technology cycles, periods of rapid innovation typically led to strategic partnerships and acquisitions as companies with complementary capabilities combined forces. The AI era is following a similar pattern, with cloud providers, large technology companies, and specialized AI firms forming networks of partnerships and investments.

The consolidation is driven by fundamental economics: training state-of-the-art foundation models costs billions, infrastructure at scale requires enormous capital investment, and commercial applications are still evolving. Companies with capital and infrastructure are increasingly investing in specialized AI companies with strong research capabilities, while those AI companies in turn depend on their partners for deployment infrastructure and market access.

Venture Capital and Later-Stage Funding Trends

Traditional venture capital funding has partially given way to mega-rounds led by established technology companies and sovereign wealth funds. Rather than startups raising

This shift has implications for how AI companies raise capital and maintain independence. Rather than being acquired by larger technology companies, AI companies can maintain independence while accepting investments from multiple strategic partners. This creates a more diverse ecosystem than would exist if major technology companies were acquiring all promising AI research companies.

Regulatory, Ethical, and Safety Considerations

AI Safety and Responsibility Discussions

As foundation models become increasingly powerful and widely deployed, regulatory and ethical considerations have become more prominent. Governments worldwide are developing regulations for AI systems, and major companies are making commitments around responsible AI development and deployment.

OpenAI has positioned itself as taking AI safety seriously through its own research initiatives and deployment policies, while Anthropic, its primary competitor, has made safety its central focus. Amazon's investment would likely include discussions about how Amazon and OpenAI each approach safety, testing, and responsible deployment of increasingly capable models.

Enterprise customers are increasingly demanding assurances about model safety, bias testing, and responsible development practices. Amazon and Open AI would benefit from clearly communicating their commitments in these areas as they deepen their partnership.

Content Moderation and Misuse Prevention

As large language models are deployed at scale, questions about content moderation, preventing misuse, and ensuring compliance with applicable laws become increasingly important. Governments and regulators are beginning to establish requirements around how AI systems should handle harmful content, protect privacy, and comply with applicable regulations.

Amazon's infrastructure teams and Open AI's AI safety teams would need to collaborate to ensure that deployed models meet requirements across different jurisdictions and use cases. This collaboration could actually improve both companies' approaches to safety and compliance.

Financial Analysis and ROI Considerations

Return on Investment Timeline and Projections

Assessing the financial return on a $50 billion investment requires understanding the trajectory of OpenAI's business. The company generates revenue through API access to its models, consumer subscriptions such as ChatGPT Plus, and enterprise deployments. As more organizations move from pilots to company-wide adoption, revenue is expected to grow substantially.

The foundation model market remains relatively nascent, with most organizations still in exploratory or early deployment phases. Analysts project that as AI capabilities mature and organizations move into production, the total addressable market for foundation models could reach tens of billions of dollars annually within the next three to five years.

Amazon would expect returns not only from appreciation of OpenAI's equity value as the company scales revenue and becomes profitable, but also from indirect benefits: access to best-in-class models for AWS services, competitive positioning against Microsoft, and a better ability to serve customers' growing AI requirements. These indirect benefits may ultimately exceed the direct equity gains.

Comparison to Previous Large Technology Investments

Comparing Amazon's Open AI investment to previous large technology investments provides useful context. Amazon's acquisition of Whole Foods for approximately

For comparison, Microsoft's investment in OpenAI has been reported at roughly $13 billion, spread over several years rather than concentrated in a single round. If the $50 billion figure represents Amazon's total committed capital, Amazon's single investment would exceed Microsoft's cumulative OpenAI commitment.

These comparisons highlight that Amazon is viewing AI and the Open AI partnership as strategically important enough to justify capital commitments exceeding even the largest previous acquisitions in the company's history.

Implications for Developers and Development Teams

Expanded API Access and Development Tools

For developers building applications, Amazon's Open AI investment should translate into improved access to Open AI models through AWS services. Developers building on AWS would benefit from simplified integration, potentially through improved SDKs and libraries, pre-built examples, and documentation.

The investment also creates motivation for both companies to develop new capabilities specifically useful for developers. AWS might develop new services that make it easier to deploy Open AI models, handle scaling, implement monitoring and observability, or integrate models into complex applications. Open AI would benefit from ensuring its models are optimally integrated into the development workflows that AWS customers use.

Pricing and Commercial Terms for Developers

One of the most significant implications for developers would be pricing. If Amazon negotiates favorable pricing for accessing Open AI models through AWS, developers and teams using AWS could benefit from lower costs for model access. This would directly impact the economics of applications built on Open AI models.

For developers evaluating whether to build on Open AI models or alternative platforms, favorable pricing through AWS could be a decisive factor. Startups and teams with limited budgets might be particularly influenced by pricing advantages, as the cost of model access can be a significant percentage of total infrastructure spending for AI-heavy applications.

Competition and Alternative Platforms for Development Teams

While Open AI models remain widely used for development, teams should also consider alternatives. Anthropic's Claude models offer strong performance on many tasks with different architectural approaches. Open-source models like Meta's Llama provide full control and customization potential. Specialized platforms like Runable offer cost-effective solutions for specific use cases like content generation, automation, and document handling.

Developers evaluating platforms should consider factors beyond just model capability: pricing, integration ease, support quality, and long-term sustainability of the vendor. While Open AI offers strong capabilities, the competitive landscape provides alternatives suitable for different use cases and budget constraints.

Future Outlook and Industry Evolution

The Next Generation of AI Capabilities

Following Amazon's investment, both companies would likely accelerate development of next-generation capabilities. Open AI would direct a portion of the new capital toward research into more capable models, new modalities beyond text, and improved efficiency in model training and deployment. Amazon would accelerate development of AI-integrated AWS services and infrastructure optimizations for model deployment.

The combination of Open AI's research capabilities and Amazon's infrastructure and engineering resources creates potential for breakthrough advances in AI deployment and efficiency. Both companies have strong incentives to demonstrate that their partnership is creating innovations that neither could achieve independently.

Competitive Dynamics and Industry Consolidation

Amazon's Open AI investment will likely trigger additional consolidation and strategic partnerships across the AI industry. Companies without major relationships with leading foundation model providers will likely seek partnerships or begin developing their own models. This could lead to a market structure with several competing ecosystems, each organized around different foundation models and cloud providers.

However, the investment also supports competition by enabling Open AI to remain independent while serving multiple cloud providers. Rather than being acquired by Amazon and exclusively serving AWS, Open AI maintains relationships with Microsoft, Google Cloud, and others. This pluralistic structure is actually more competitive than alternatives where each major cloud provider owns its own foundation model company exclusively.

Regulatory Evolution and AI Governance

As AI capabilities become more powerful and economic impact grows, regulatory frameworks will increasingly shape industry development. Amazon and Open AI's partnership occurs within the context of evolving regulations in the United States, European Union, and other jurisdictions. Both companies would benefit from working together to understand regulatory requirements and ensure compliance.

The partnership could also position both companies to influence regulatory development. Large, responsible companies with genuine commitments to AI safety and responsible deployment are often more effective at shaping regulatory frameworks than smaller companies or purely academic institutions. Amazon and Open AI together have the resources and credibility to be influential voices in AI policy discussions.

Implementation Best Practices for Enterprises

Evaluating AI Capabilities for Your Organization

For enterprises considering how to incorporate Open AI models—or alternatives—into their strategies, several key evaluation criteria should be considered. First, assess technical requirements: what specific capabilities does your organization need? Text generation, image generation, reasoning, code generation, and other capabilities have different performance profiles across available models.

Second, evaluate cost implications. Foundation model licensing costs vary substantially across providers and pricing models. Calculate expected usage patterns and costs under different platforms. Consider not just direct model costs but also infrastructure, integration, and operational costs.

Third, assess integration complexity. How easily can candidate models be integrated into your existing systems and workflows? Will they require significant infrastructure changes, or can they be integrated relatively straightforwardly?

Fourth, evaluate vendor stability and roadmap. Is the vendor likely to maintain the product and service long-term? What capabilities are planned for the future? How aligned are vendor roadmaps with your organization's long-term requirements?

Fifth, consider compliance and regulatory requirements. Do candidate solutions meet your organization's compliance requirements? How do they handle data privacy, security, and other regulatory concerns?
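The cost comparison described in the second criterion (direct model costs plus infrastructure, integration, and operational costs) can be sketched as a small monthly calculation. This is a minimal sketch under stated assumptions: the platform names, per-token prices, token volumes, and overhead figures are hypothetical placeholders rather than quotes from any vendor, and one-time integration work is assumed to be amortized over 12 months.

```python
from dataclasses import dataclass


@dataclass
class PlatformCosts:
    """Monthly cost inputs for one candidate platform. All values are hypothetical placeholders."""
    name: str
    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float
    infrastructure_per_month: float  # hosting, networking, monitoring
    operations_per_month: float      # support, evaluation, on-call time
    integration_one_time: float      # engineering effort to integrate the platform


def monthly_cost(p: PlatformCosts, input_tokens: int, output_tokens: int,
                 amortize_months: int = 12) -> float:
    """Direct model usage plus infrastructure, operations, and amortized integration cost."""
    usage = (input_tokens / 1_000_000) * p.usd_per_million_input_tokens
    usage += (output_tokens / 1_000_000) * p.usd_per_million_output_tokens
    integration = p.integration_one_time / amortize_months
    return usage + p.infrastructure_per_month + p.operations_per_month + integration


def rank_by_cost(platforms, input_tokens, output_tokens):
    """Return (name, monthly cost) pairs, cheapest first."""
    costs = [(p.name, monthly_cost(p, input_tokens, output_tokens)) for p in platforms]
    return sorted(costs, key=lambda pair: pair[1])


# Hypothetical candidates: a pay-per-token hosted API and a self-hosted open model.
hosted_api = PlatformCosts("hosted-api", 3.0, 15.0, 500.0, 1_000.0, 12_000.0)
self_hosted = PlatformCosts("self-hosted-open-model", 0.0, 0.0, 2_500.0, 2_000.0, 24_000.0)

# Assumed workload: 200M input tokens and 40M output tokens per month.
for name, cost in rank_by_cost([hosted_api, self_hosted], 200_000_000, 40_000_000):
    print(f"{name}: ${cost:,.0f}/month")
```

With these placeholder numbers the hosted API comes out at about $3,700 per month and the self-hosted option at about $6,500, even though the latter has no per-token charge. At much higher volumes the ordering can flip, which is why modeling expected usage matters more than comparing list prices. The sketch treats infrastructure as a flat monthly figure; real self-hosted costs also grow with volume.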

Building Multi-Model Strategies

Rather than betting entirely on a single platform, sophisticated organizations should consider hybrid approaches. Evaluate multiple foundation model providers and specializations—use Open AI models for general-purpose natural language work, Anthropic models for reasoning-intensive applications, and specialized or open-source models for cost-sensitive use cases.

This multi-model approach provides flexibility to optimize performance and costs across different requirements. If a particular vendor's pricing becomes disadvantageous or capabilities prove insufficient, you're not locked into exclusive dependence.
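The multi-model strategy above can be sketched as a small dispatcher that picks a provider by workload type and falls back to the next one in a list. This is a minimal sketch, assuming each provider is wrapped as a plain function from prompt to text; the provider keys, workload labels, and stub functions are hypothetical stand-ins, not any vendor's SDK. The mapping mirrors the example split in this section (general-purpose work, reasoning-intensive work, cost-sensitive work).

```python
from typing import Callable, Dict, List

# A provider is modeled as any function that takes a prompt and returns text.
# Real deployments would wrap vendor SDK calls here; these names are hypothetical.
ProviderFn = Callable[[str], str]


class ModelRouter:
    """Send each request to the preferred provider for its workload, falling back in order."""

    def __init__(self, providers: Dict[str, ProviderFn], routes: Dict[str, List[str]]):
        self.providers = providers
        self.routes = routes  # workload type -> ordered provider names; must include "default"

    def complete(self, workload: str, prompt: str) -> str:
        errors = []
        for name in self.routes.get(workload, self.routes["default"]):
            try:
                return self.providers[name](prompt)
            except Exception as exc:  # outage, quota limit, etc.
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def make_stub(label: str) -> ProviderFn:
    """Hypothetical stand-in for a real vendor client: echoes the prompt with a label."""
    return lambda prompt: f"[{label}] {prompt}"


def simulated_outage(prompt: str) -> str:
    """Hypothetical provider that is currently unavailable."""
    raise TimeoutError("simulated outage")


router = ModelRouter(
    providers={
        "openai-gpt": make_stub("openai-gpt"),
        "anthropic-claude": simulated_outage,
        "self-hosted-llama": make_stub("self-hosted-llama"),
    },
    routes={
        "general": ["openai-gpt", "self-hosted-llama"],
        "reasoning": ["anthropic-claude", "openai-gpt"],
        "cost-sensitive": ["self-hosted-llama", "openai-gpt"],
        "default": ["openai-gpt"],
    },
)

# The reasoning route's first choice is down, so the request falls back to the next provider.
print(router.complete("reasoning", "Summarize Q3 risks"))
```

Keeping the routing table as data rather than code means a pricing change or outage at one vendor only requires reordering a list, which is the kind of flexibility against lock-in this section describes.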

Leveraging Development Platforms and Productivity Tools

Beyond foundation models, consider specialized development platforms that can accelerate implementation. For content generation, documentation, and automation tasks, platforms like Runable offer cost-effective solutions with pre-built capabilities for common use cases. Rather than building custom implementations using raw foundation models, these platforms provide ready-to-use capabilities that can accelerate time-to-value and reduce implementation complexity.

Specialized platforms often provide better performance for specific use cases than generic foundation models, while also reducing operational complexity and support burdens. Evaluate these tools alongside foundation model licensing in your technology investment decisions.

The Broader Technology Ecosystem Impact

Effects on GPU and Infrastructure Markets

Amazon's $50 billion investment would significantly increase demand for GPU infrastructure, benefiting GPU manufacturers such as Nvidia. The capital would let OpenAI expand its computational infrastructure, driving demand for Nvidia GPUs, networking hardware, and data center capacity.

This demand has ripple effects across the entire infrastructure industry. Suppliers to Amazon and Open AI benefit from increased purchasing, and companies providing infrastructure services experience growing demand. The competitive dynamics around GPU supply and pricing are directly influenced by how aggressively Open AI expands computational capacity using the new capital.

Supply Chain and Manufacturing Implications

As AI infrastructure demands grow, supply chain and manufacturing capabilities become increasingly important competitive factors. Ensuring adequate supplies of high-performance GPUs, specialized networking hardware, and data center components requires coordination across multiple suppliers and manufacturers.

Amazon's vertical integration, spanning its own AWS infrastructure, its supplier relationships, and now a reported stake in OpenAI, positions the company well to secure necessary components. Its size and purchasing power let it obtain supply and favorable pricing in ways smaller competitors cannot.

Addressing Common Questions and Misconceptions

Will Amazon's Investment Reduce Open AI's Independence?

One legitimate question is whether Amazon's $50 billion investment would compromise OpenAI's operational independence. The answer is nuanced: while the investment would likely give Amazon significant governance rights and influence, OpenAI would probably retain operational autonomy, much as it has kept its independence despite Microsoft's substantial investment.

Open AI's structure as a company with both commercial and research missions suggests the company values maintaining control over strategic decisions about which capabilities to prioritize, how to approach safety and alignment, and how to deploy models. Amazon would likely respect these commitments to maintain Open AI as a trusted partner that independently develops valuable technology rather than treating it as a subsidiary.

Would Open AI Models Become Exclusive to AWS?

Another common question concerns whether Open AI models would become exclusively available through AWS following the investment. The answer is almost certainly no. Open AI's business model depends on serving multiple customers across different cloud providers and on consumer platforms. Restricting access exclusively to AWS would damage Open AI's revenue and competitive position.

The investment is more likely to result in preferential integration of Open AI models into AWS services while models remain available through other channels. AWS customers might benefit from simplified access or favorable pricing, but external customers and other cloud providers would retain access to Open AI models on existing terms.

What About the Anthropic Investment?

A third common question concerns how the Open AI investment affects Amazon's existing Anthropic relationship. The answer is that both investments serve Amazon's strategic interests in maintaining access to multiple competing foundation models and ensuring AWS offers customers best-of-breed capabilities.

Maintaining relationships with both Open AI and Anthropic might seem contradictory, but it actually enables Amazon to avoid over-dependence on a single AI company while maintaining flexibility to offer customers access to models with different technical approaches and performance characteristics.

FAQ

What is Amazon's reported $50 billion investment in Open AI?

Amazon is in advanced negotiations to invest approximately

Why is Amazon investing such a large amount in Open AI?

Amazon's investment reflects the strategic importance of artificial intelligence to AWS's competitive positioning against Microsoft Azure and Google Cloud. By becoming a major Open AI shareholder, Amazon ensures access to best-in-class foundation models for its cloud services, gains influence over model development and deployment strategies, and mitigates the risk that exclusive partnerships with competitors would create overwhelming competitive advantages. The investment also reflects Amazon's broader strategy of participating in multiple AI companies and technologies rather than betting exclusively on a single approach.

How does this investment relate to Amazon's existing Anthropic partnership?

Amazon already holds a substantial investment in Anthropic, OpenAI's primary competitor, and the reported deal would add a major stake in OpenAI. This dual-investment strategy lets Amazon avoid over-dependence on a single AI company while keeping access to competing technical approaches. It also gives AWS customers a choice between foundation models suited to different use cases: Anthropic emphasizes safety and alignment, while OpenAI emphasizes general-purpose capability, so together they let Amazon serve diverse customer needs.

What will change for AWS customers following this investment?

Following the deal's closure, AWS customers should expect improved integration of Open AI models into AWS services, potentially including simplified API access, better documentation and examples, and consolidated billing. Pricing for AWS customers accessing Open AI models might improve if Amazon negotiates favorable terms. However, Open AI models would remain available through other channels—the investment would provide preferential access and integration rather than exclusive AWS availability.

How does this affect competition in the foundation model market?

The investment would mean that multiple major cloud providers (Amazon, Microsoft, Google) have strategic relationships with foundation model companies. Rather than Microsoft's preferential OpenAI relationship creating an overwhelming competitive advantage, Amazon's investment would help level the playing field. It would also reinforce OpenAI's independence while increasing its capital resources, allowing the company to keep competing against other foundation model developers, including Anthropic, Google with its Gemini models, and others.

What are alternatives to Open AI models for enterprises evaluating AI capabilities?

Enterprise customers evaluating foundation models have multiple options beyond Open AI. Anthropic's Claude models offer distinct architectural approaches with particular strengths in reasoning tasks and safety. Google's Gemini models provide integration with Google Cloud infrastructure. Meta's open-source Llama models enable organizations to maintain full control over deployment. For specific use cases like content generation and workflow automation, specialized platforms like Runable offer cost-effective ($9/month) solutions with pre-built capabilities. Organizations should evaluate multiple options based on technical requirements, cost, integration complexity, and vendor stability.

When is the deal expected to close and what happens next?

The deal is expected to close by the end of Q1 2025 (by March 31, 2025). Following closure, both companies would begin integration activities including finalizing infrastructure arrangements, integrating Open AI models into AWS services, and potentially developing new jointly-developed capabilities. The integration process would likely extend over multiple quarters as both companies optimize their partnership and announce new customer-facing capabilities.

Could regulatory review prevent this investment from closing?

While large technology investments occasionally attract regulatory scrutiny, Amazon's OpenAI investment seems unlikely to face significant regulatory obstacles. It doesn't involve acquiring a competitor, doesn't concentrate control in a way that reduces competitive options (OpenAI remains independent and serves multiple customers), and, because Amazon is a U.S. company, doesn't raise the foreign-ownership concerns that can trigger national-security review. Review would likely be straightforward as long as both companies demonstrate that the investment won't restrict competitors' access to OpenAI models.

How does Amazon's investment compare to Microsoft's Open AI relationship?

Microsoft's relationship with OpenAI involves billions of dollars invested over several years, preferential integration into Azure services, and close technical collaboration. Amazon's reported $50 billion would potentially exceed Microsoft's total OpenAI commitment, though as a single investment round rather than a commitment built up over time. Both companies would then be major OpenAI investors and strategic partners. Rather than holding exclusive relationships, Microsoft and Amazon would be competing for OpenAI's attention and resources while each maintains other partnerships.

What does this mean for developers and development teams?

For developers, the investment should translate into improved access to Open AI models through AWS services—potentially simplified integration, better documentation, improved pricing, and new developer-focused capabilities. However, developers should also evaluate alternative platforms including other foundation models and specialized development tools. For specific use cases like content generation, documentation automation, and workflow automation, platforms like Runable may provide better performance and value than generic foundation model licensing.

How will this investment affect AI safety and responsible development?

Both Amazon and Open AI emphasize responsible AI development and safety. The partnership creates opportunities for collaboration on safety research, testing methodologies, and responsible deployment practices. Amazon's infrastructure expertise and Open AI's AI safety research could combine to ensure models are deployed responsibly at scale. However, questions about AI safety, bias, and responsible development will remain important ongoing concerns regardless of investment relationships.

Conclusion: Navigating the AI Investment Era

Amazon's reported $50 billion investment in Open AI represents one of the most significant technology investments in recent memory, signaling a fundamental shift in how major companies are participating in the artificial intelligence revolution. The investment reflects the extraordinary capital requirements of cutting-edge AI development, the strategic importance of foundation models to cloud providers' competitive positioning, and the rapidly consolidating nature of the AI industry.

The deal demonstrates that Amazon views artificial intelligence as central to AWS's future competitive positioning. Rather than allowing Microsoft's close OpenAI relationship to create an insurmountable advantage, Amazon is seeking direct participation in OpenAI's success while maintaining its independent relationship with Anthropic. This multi-investment strategy reflects an expectation that the foundation model market will support several competing companies and technical approaches, and that participants benefit from maintaining relationships across the landscape.

For enterprises evaluating AI strategies, the investment underscores both the importance of foundation models and the expanding range of options available. While Open AI models remain technically impressive and widely used, organizations should evaluate alternatives including Anthropic's Claude, Google's Gemini, open-source options like Llama, and specialized platforms optimized for specific use cases. The competitive dynamics created by Amazon's investment actually support enterprise choice by ensuring that multiple cloud providers can offer access to leading models and capabilities.

The investment also highlights the critical importance of cloud infrastructure, computational resources, and strategic partnerships in the AI era. Companies without access to massive capital, sophisticated infrastructure, or partnerships with leading technology firms face significant disadvantages in developing and deploying competitive AI systems. This dynamic favors larger, well-capitalized companies with existing customer relationships and infrastructure advantages—precisely the characteristics that define Amazon.

Looking forward, expect continued consolidation and strategic partnerships in the AI industry. Other companies without secure access to leading foundation models will seek similar arrangements. Cloud providers will continue positioning themselves as platforms for AI deployment and integration. Specialized AI companies will increasingly depend on partnerships with cloud providers, enterprise software companies, and other strategic partners for market access and scaling.

For developers, teams, and enterprises, the key takeaway is that foundation models are becoming essential infrastructure, but they are not the only factor in AI success. Equally important are integration capabilities, developer experiences, specialized tools optimized for specific use cases, operational infrastructure for deployment and monitoring, and long-term partnership stability. Organizations should evaluate their AI strategies holistically, considering both foundation model access and the full ecosystem of tools, services, and platforms required for successful AI deployment.

As the AI industry evolves, the leaders will be companies that combine strong foundational technology with deep integration into existing enterprise and developer workflows, competitive pricing, reliable operations, and genuine commitments to safe and responsible development. Amazon and Open AI's partnership—along with alternative platforms and technical approaches—will collectively shape how enterprises access and deploy AI capabilities for years to come.

Key Takeaways

- Amazon's 100 billion funding round, fundamentally shifting cloud provider competitive dynamics

- The dual investment strategy in both OpenAI and Anthropic enables Amazon to maintain diversified access to competing AI approaches while avoiding vendor lock-in

- Foundation model market dynamics are increasingly consolidated, with major infrastructure companies investing in multiple competing AI companies

- AWS customers should expect improved OpenAI model integration, simplified access, and potentially favorable pricing following deal closure

- Enterprises evaluating AI strategies should consider multiple foundation models and specialized platforms, as the competitive landscape now includes viable alternatives beyond OpenAI

- The investment demonstrates AI infrastructure and research as fundamental competitive factors, with Amazon willing to invest unprecedented amounts to maintain cloud leadership

- For developers and teams seeking cost-effective AI solutions, specialized platforms like Runable offer compelling alternatives to expensive foundation model licensing for specific use cases

- Regulatory review is unlikely to prevent closure, as the investment maintains OpenAI's independence and supports competitive dynamics in the foundation model market
