OpenAI Disbands Alignment Team: What It Means for AI Safety [2025]

In early February 2025, OpenAI made a decision that sent ripples through the AI research community. The company quietly dissolved its Mission Alignment team, a unit specifically dedicated to ensuring its AI systems remained safe, trustworthy, and aligned with human values. At the same moment, the team's former leader, Josh Achiam, received a promotion to "Chief Futurist." On the surface, it looks like a routine organizational restructure. But dig deeper, and you'll find something far more consequential about how AI companies are approaching safety, research, and their responsibility to the world.

This isn't just an internal shuffle at one company. It's a signal about shifting priorities in the AI industry at a critical moment when these systems are becoming more powerful and more integrated into every aspect of human life. The move raises uncomfortable questions about whether safety research is being deprioritized as companies race to ship products and chase capabilities. It also reveals tensions within OpenAI between its founding mission and the practical business realities of scaling AI to billions of users.

Let's unpack what actually happened, why it matters, and what this tells us about the future of AI safety in an industry increasingly focused on speed over caution.

TL;DR

- OpenAI dissolved its Mission Alignment team in February 2025, a dedicated unit focused on AI safety and human-value alignment

- The team's leader became Chief Futurist, a different role that shifts focus from operational safety research to long-term strategic thinking

- Team members were reassigned to other departments, with the company claiming they'd continue similar work in different roles

- This follows the 2024 disbanding of OpenAI's Superalignment team, suggesting a pattern of restructuring safety-focused units

- The shift reflects broader industry tensions between scaling capabilities quickly and investing in safety research and alignment

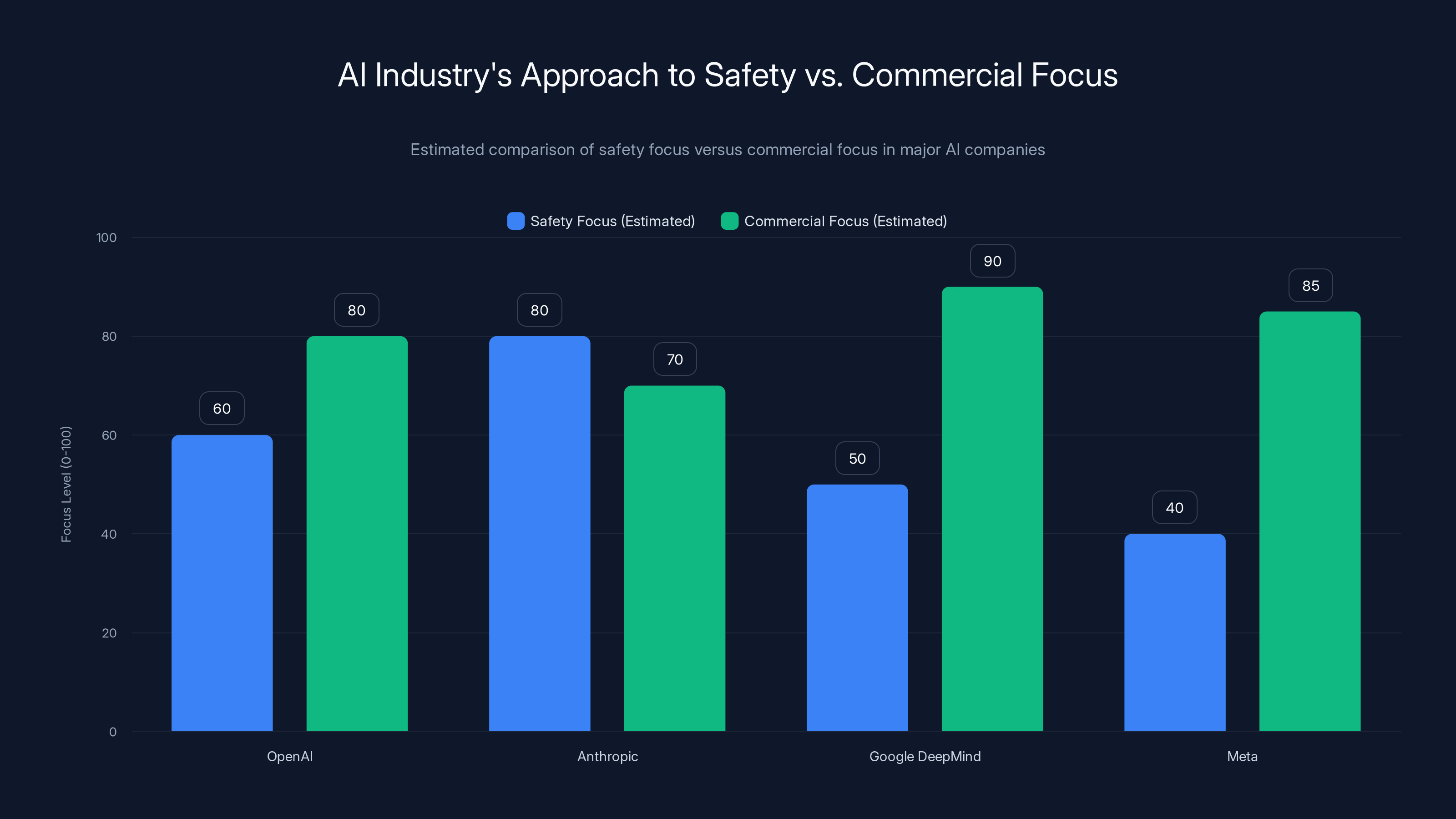

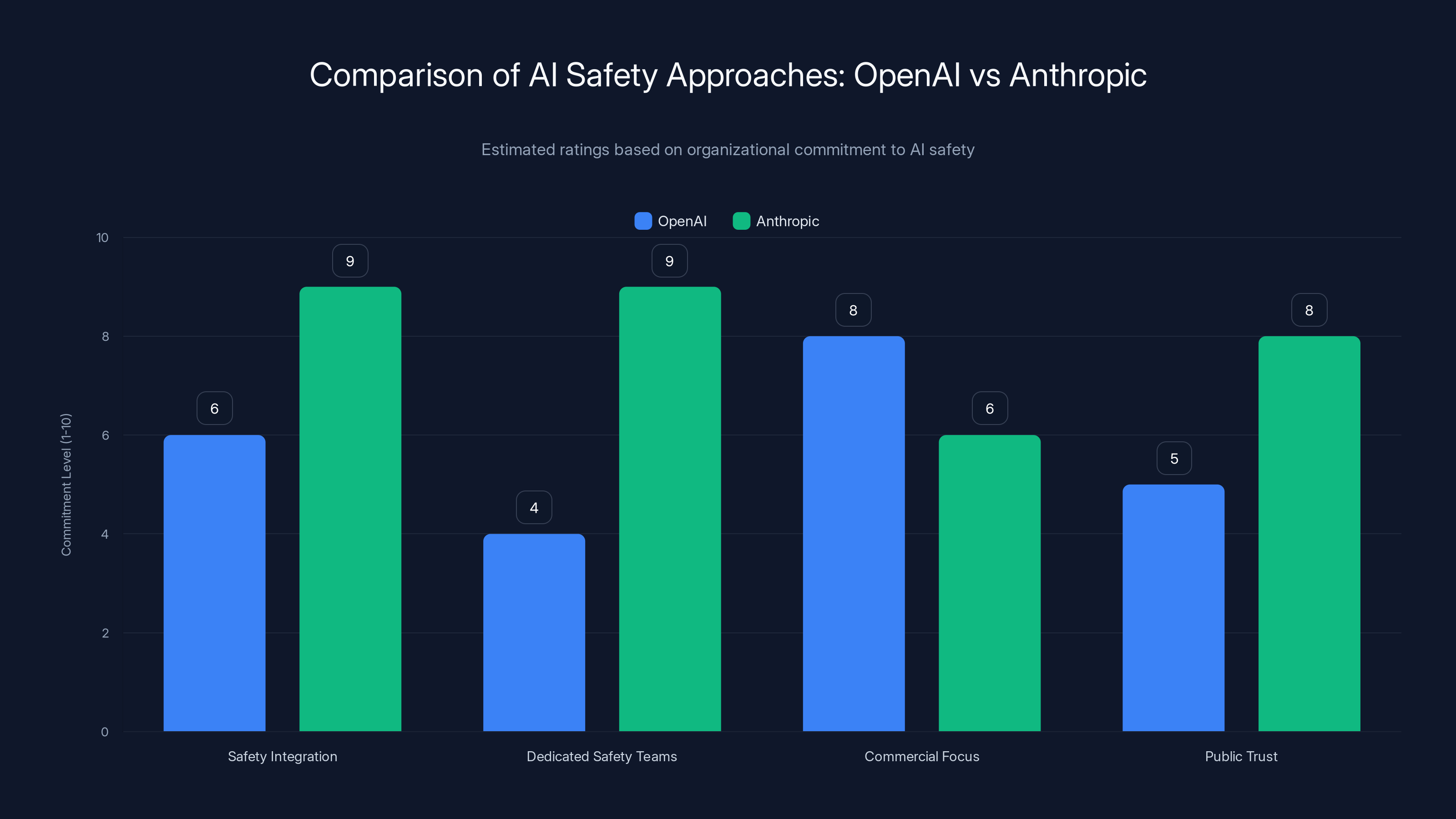

Estimated data shows that while companies like Anthropic maintain a high safety focus, commercial pressures often lead to a stronger emphasis on product development and market competition.

Understanding What the Mission Alignment Team Actually Did

Before we talk about why OpenAI dissolved this team, you need to understand what it actually did and why the company created it in the first place. This wasn't some fringe research unit. It was positioned as core to OpenAI's stated values.

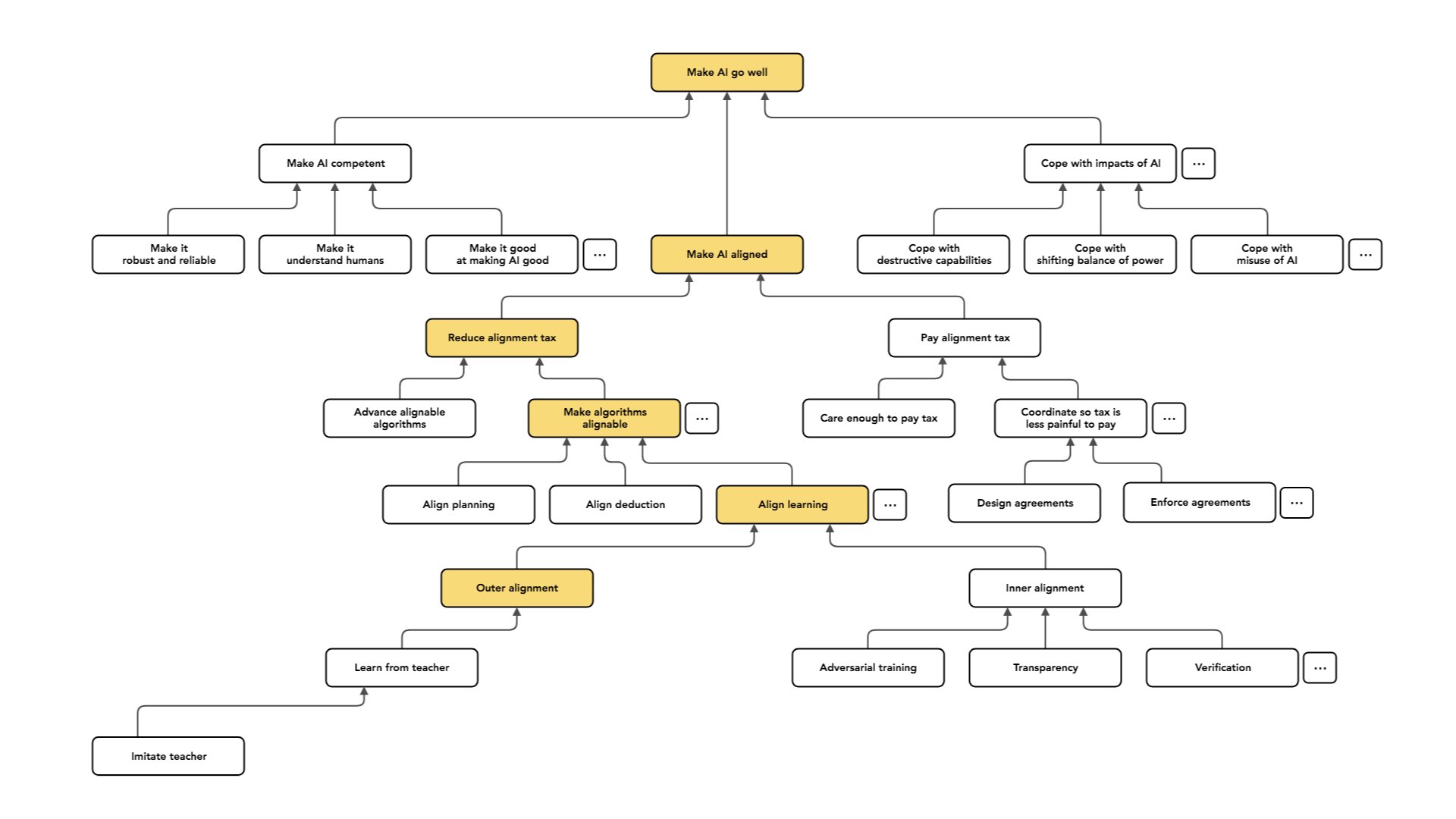

The Mission Alignment team, formed in September 2024, was OpenAI's internal research unit dedicated to what the industry calls "alignment." Now, that word gets thrown around a lot, and it can sound abstract. But what it actually means is concrete: ensuring that AI systems follow human intent reliably, even in complex, adversarial, or high-stakes situations.

Think about it this way. When you prompt GPT-4 to write you a poem, alignment isn't really the issue. But when a hospital uses an AI system to diagnose patients, or when a financial institution uses it to approve loans, or when a government uses it to inform policy decisions, alignment becomes existential. Does the system understand what you actually want? Does it avoid harmful outputs even when someone tries to trick it? Does it remain controllable as it becomes more capable?

OpenAI's own job descriptions for the team described it as focused on "developing methodologies that enable AI to robustly follow human intent across a wide range of scenarios, including those that are adversarial or high-stakes." The team was studying how to make sure that as AI systems got more powerful, they remained trustworthy.

The company's alignment research blog outlined the challenge clearly: "We want these systems to consistently follow human intent in complex, real-world scenarios and adversarial conditions, avoid catastrophic behavior, and remain controllable, auditable, and aligned with human values." That's not academic navel-gazing. That's describing the fundamental technical challenge of deploying superintelligent AI safely.

So what did the team actually do? From public information, they were working on research problems like interpretability, robustness, and control mechanisms. They were likely studying how to understand what's happening inside these black-box neural networks. They were researching how to make systems robust to adversarial inputs. They were exploring how to maintain human oversight and control as AI systems scale up in capability.

This isn't exotic stuff. It's the technical foundations needed to deploy AI responsibly at scale. Every major AI lab has some version of this research happening. It's the unglamorous work that doesn't generate headlines but that actually matters for safe AI deployment.

The Restructuring: What Happened to the Team

Now let's talk specifics. OpenAI confirmed that the Mission Alignment team has been dissolved. The group consisted of roughly six or seven people. Rather than folding the work entirely, the company reassigned team members to "different parts of the company," according to a statement to TechCrunch. But here's where things get murky.

OpenAI's spokesperson said the team members "were engaged in similar work in those roles." But the company couldn't specify where exactly people were moved or what specific projects they'd be working on. That's a yellow flag. If these safety researchers were being moved to continue critical alignment work, you'd think the company would want to make that clear. The vagueness suggests either (a) the company doesn't want to emphasize the internal movement, or (b) the reassignments actually represent a significant shift in what this team will be focused on.

Meanwhile, Josh Achiam, the team's former leader, got promoted—technically. His new title is "Chief Futurist," and his charter is to "study how the world will change in response to AI, AGI, and beyond." It's a different animal entirely from running the alignment team. Being Chief Futurist means thinking strategically about long-term scenarios and implications. Running an alignment team means doing the technical research to make sure systems are actually safe and controllable right now.

There's another detail worth noting: Achiam's personal website still lists him as "Head of Mission Alignment at OpenAI." His LinkedIn profile shows he had held that position since September 2024, about five months before he was reassigned. So he ran the team for less than half a year before it was restructured.

The timing also stands out. This restructuring comes just weeks after OpenAI released GPT-4.5, advancing the company's capabilities even further. As the systems get more powerful, the company is moving away from a dedicated team focused on ensuring those systems remain safe and aligned. Draw your own conclusions, but that's a remarkable choice.

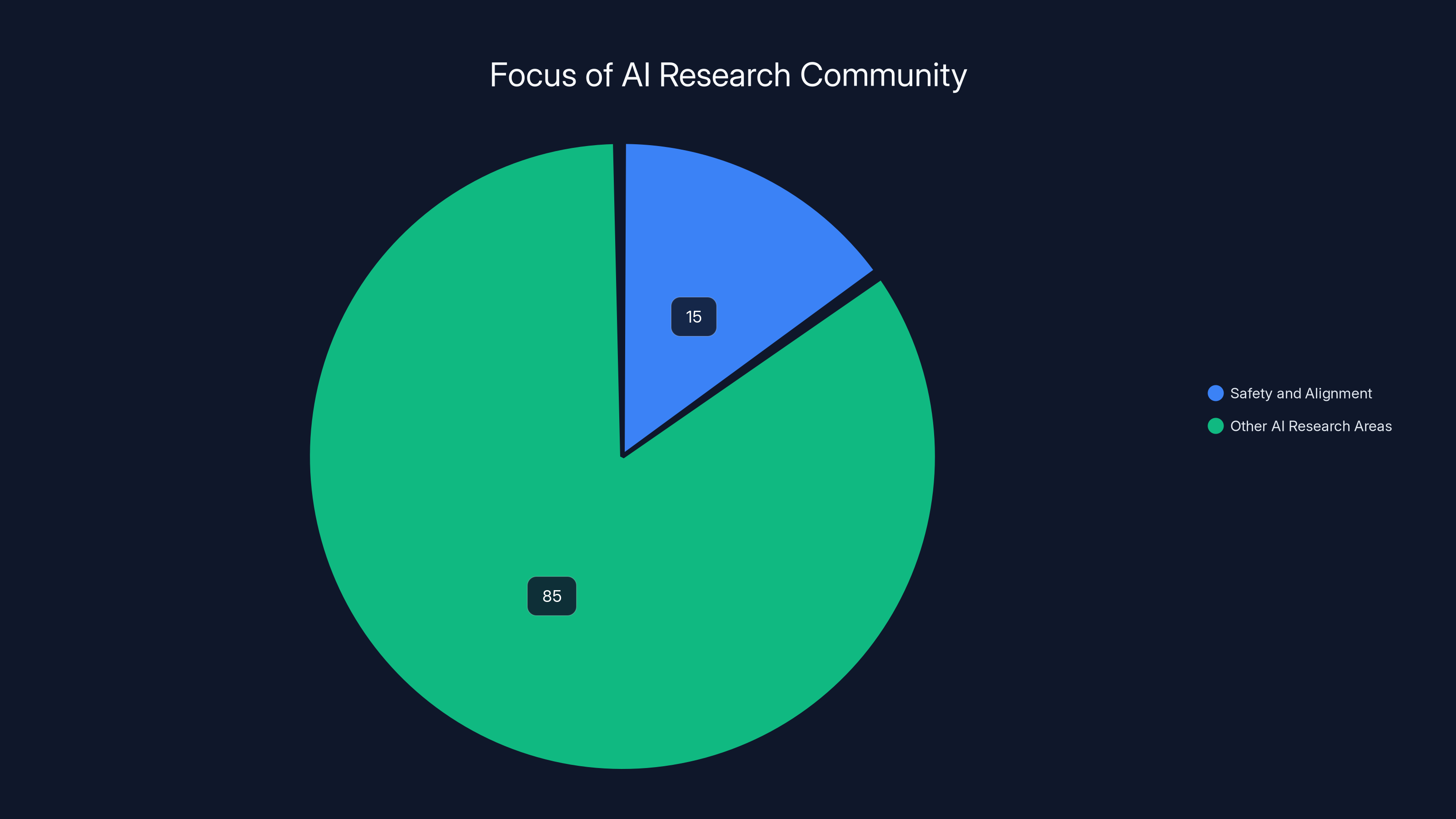

Only about 15% of the AI research community focuses on safety and alignment, highlighting the critical need for dedicated teams in this area.

Context: OpenAI's History with Safety Teams

This isn't the first time OpenAI has dissolved a safety-focused team. If it were, you might chalk it up to routine reorganization. But the pattern is significant.

In 2023, OpenAI formed what it called the "Superalignment team." This team had an even broader mandate: studying long-term existential risks posed by AI and how to ensure that superintelligent systems could be aligned with human values. It was a big deal. The team included top researchers and had resources behind it. It represented OpenAI's commitment to thinking seriously about the fundamental safety challenges posed by advanced AI systems.

Then in 2024, OpenAI disbanded the Superalignment team.

Now, to be fair, the company announced that the work wasn't being abandoned entirely. But the team structure—which provides focus, resources, and institutional prioritization—was dismantled. Some researchers moved into other teams. The focused effort on the alignment problem was dispersed.

Now, in 2025, the company is doing it again, but this time with what was positioned as a core team focused on immediate safety and alignment challenges. The trajectory is clear: teams dedicated specifically to safety and alignment are being dissolved, with the work being absorbed into broader product and research organizations.

There are charitable interpretations here. Maybe OpenAI genuinely believes that alignment research is best done in integrated teams rather than isolated units. Maybe the company thinks having safety work embedded throughout the organization is more effective than having a separate team. Maybe this is about scaling impact rather than deprioritizing safety.

But there's another interpretation too. Maybe as AI companies race to scale capabilities and compete with each other, safety research is becoming a bottleneck rather than a priority. Maybe having a dedicated team meant saying "no" to certain product features or keeping systems more locked down than competitors would. Maybe moving that work into other teams means it gets diluted, reprioritized, and absorbed into the endless queue of product development work.

The Chief Futurist Role: What Changed?

Let's talk about what Achiam's new role actually entails and why it matters. The "Chief Futurist" title sounds important. It comes with promotion, presumably better compensation, and elevated status. But it's fundamentally different from leading the alignment team.

Achiam wrote in a blog post explaining the transition: "My goal is to support OpenAI's mission, to ensure that artificial general intelligence benefits all of humanity, by studying how the world will change in response to AI, AGI, and beyond." That's strategic thinking, scenario planning, and long-term analysis.

Running the alignment team meant being responsible for the concrete technical work of making systems safer right now. It meant setting deadlines, managing research projects, solving specific technical problems, and having accountability for whether systems were actually aligned. It meant being in the weeds of implementation.

Being Chief Futurist means stepping back from that operational responsibility and thinking big picture. It's intellectually stimulating work, and it's important work. But it's not the same as running a team responsible for safety research. It's possible to be Chief Futurist while alignment work is deprioritized everywhere else in the company.

Achiam mentioned that in his new role he'd be collaborating with Jason Pruet, a physicist from OpenAI's technical staff. That's a nice partnership, but again, it's not a team with accountability for shipping safe systems.

The move also raises questions about expertise and institutional knowledge. Achiam had been leading alignment research. That comes with deep knowledge about current problems, research directions, and technical challenges. Moving that person to a different role means that expertise is no longer being deployed in that focused way. It's dispersed.

Patterns in AI Industry Approach to Safety

OpenAI's restructuring isn't happening in isolation. It's part of a broader pattern across the AI industry, and understanding that pattern helps explain what's actually going on.

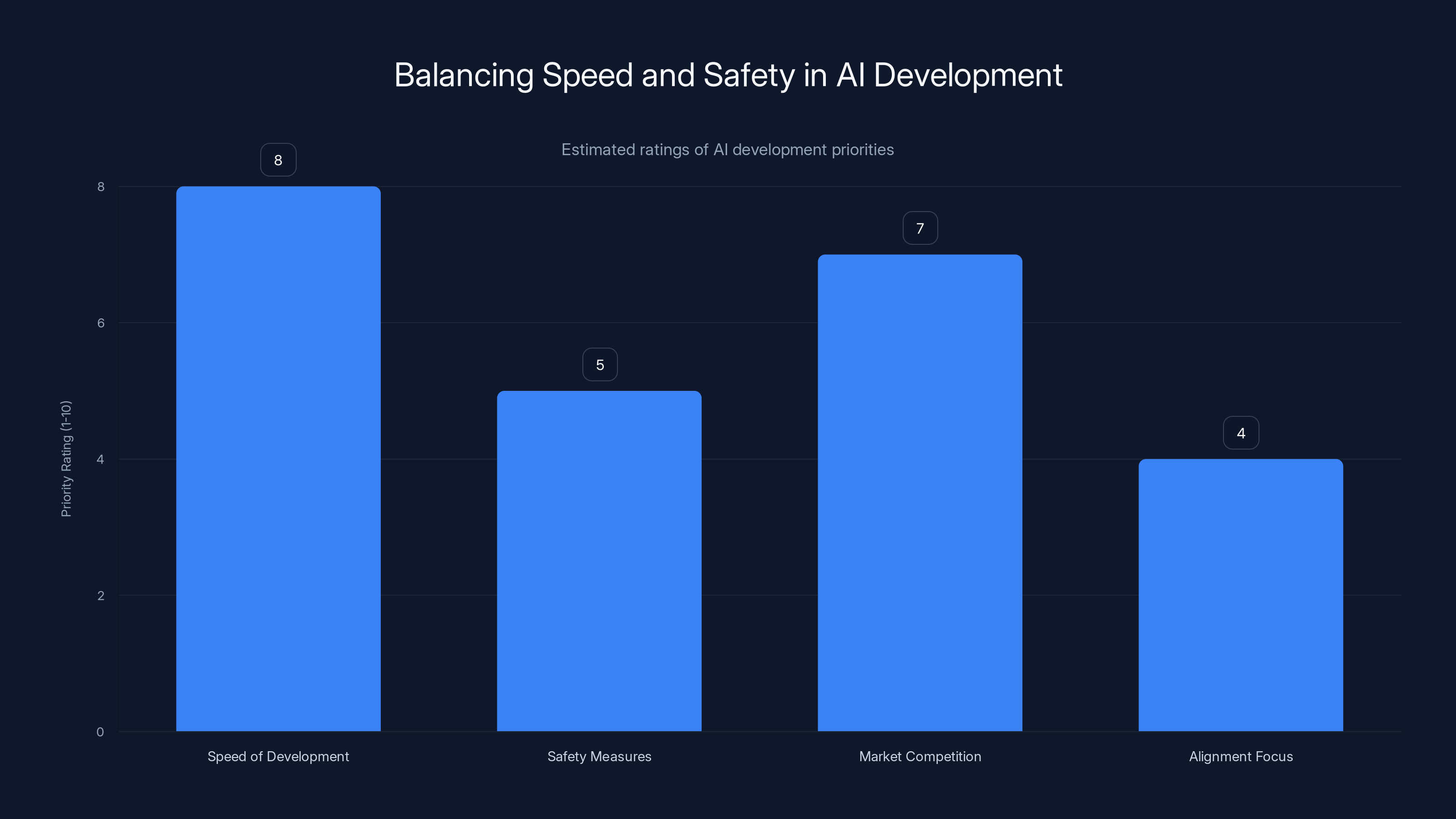

Right now, the AI industry is in hypergrowth mode. We're in the phase where capabilities are advancing rapidly, adoption is accelerating, and companies are competing fiercely to capture market share. In that environment, there's massive pressure to ship features, improve models, and move fast. Safety work, by its nature, is the opposite of that. Safety work slows things down. It asks hard questions. It sometimes says "no" to product ideas. It requires investment in research that doesn't directly generate revenue.

Look at the major AI labs. OpenAI is racing to deploy GPT models and build products on top of them. Anthropic was founded specifically with Constitutional AI and safety at the core, but it's also building commercial products and competing in the market. Google DeepMind has resources but sits inside a giant corporation with competing priorities. Meta has open-sourced large models while dealing with public pressure about AI safety.

Where does dedicated safety research fit in that competitive landscape? It's expensive. It slows down product development. It can conflict with business objectives. It's hard to sell to investors as a reason to pay premium prices for your model when competitors are shipping fast and iterating quickly.

So you see this pattern: initial commitment to safety research as part of the founding mission, gradual de-emphasis as commercial pressures increase, eventual restructuring that breaks up dedicated teams. It's not usually a deliberate conspiracy. It's the natural result of incentive structures, competitive pressure, and the reality that safety research is a cost center, not a profit center.

Estimated data suggests that speed and market competition are prioritized over safety and alignment in AI development. This reflects the tension between rapid advancement and ensuring safety.

Why This Matters: The Timing and the Stakes

The timing of this decision is what makes it significant. OpenAI isn't disbanding an alignment team when the technology is stable and safe. It's doing this while the technology is rapidly advancing, becoming more capable, and integrating into more critical systems.

Consider the context. We're at a point where large language models are being used to help make decisions about hiring, criminal justice, medical diagnosis, and financial lending. We're at a point where AI is being integrated into military and defense applications. We're at a point where governments are starting to regulate AI, and the regulatory framework relies on companies' commitments to safety and alignment.

This is exactly the moment when you'd think dedicated alignment research would be most important. These systems are powerful enough to cause real harm if they go wrong, but we still don't fully understand how to ensure they reliably follow human intent in all contexts. The alignment problem gets harder as systems get more capable, not easier.

Yet this is the moment OpenAI chooses to dissolve its dedicated team focused on this problem. The company might argue that alignment researchers can work on these issues in other parts of the organization, but organizational structure matters. Teams get resources. Teams get accountability. Teams get autonomy to focus on their specific mission. When you dissolve a team and scatter its work, you're signaling that the problem is lower priority.

There's also a question about whether the reassigned team members will actually continue doing alignment work or whether they'll be absorbed into product teams focused on scaling capabilities. The company says similar work will continue, but "similar work" is vague. Will they be doing the same research? Will they have the same autonomy? Will they report to the same leadership? Will they have the same accountability? We don't know.

The Competitive Pressure Hypothesis

One reasonable explanation for this move is competitive pressure. The AI space is brutally competitive right now. OpenAI is in a race with Google, Anthropic, Microsoft (through partnerships), and a growing list of other players trying to build the most capable models and most useful products.

Maintaining a large team focused specifically on safety research is expensive. It's also potentially a drag on speed. If competitors are moving faster because they're not investing heavily in safety research, there's pressure to do the same. If customers and investors are more impressed by new capabilities than by safety improvements, there's pressure to allocate resources toward capabilities.

OpenAI has talked about the mission of ensuring AGI benefits all of humanity, but it's also a private company that needs to be competitive to survive. Those pressures can come into conflict. When they do, commercial pressure often wins. By dissolving the dedicated team, OpenAI can redeploy those researchers and those resources toward other priorities while maintaining the appearance of commitment to safety through the "Chief Futurist" role and the claim that reassigned team members continue similar work.

This isn't unique to OpenAI. It's a pattern you see across the tech industry. Companies start with mission-driven commitments to safety, privacy, or responsible development. As competitive pressures increase, those commitments get watered down. Teams get dissolved. Work gets deprioritized. Leadership changes happen.

What This Means for Industry Safety Standards

If OpenAI is deprioritizing alignment research, it sends a signal to the rest of the industry. Smaller AI companies will look at this and think, "If the leader in the field doesn't have a dedicated alignment team, why should we?"

We're in a critical window where AI safety practices are still being established. Standards haven't solidified. Regulations are just starting to take shape. Companies have a chance to build safety-first practices into their development processes from the ground up. But that only happens if leading companies are actually prioritizing it.

When OpenAI dissolves its safety team, it doesn't just affect OpenAI. It sets a precedent for the industry. It signals that safety research isn't as important as it was claimed to be. It creates an out for other companies that were on the fence about investing in safety. They can point to OpenAI's move and say, "If the leader isn't maintaining a dedicated team, we don't need to either."

Over time, this compounds. Less safety research happens across the industry. Safety standards get weaker. We end up in a situation where AI systems are more powerful but less well-understood and less rigorously tested for safety properties.

The irony is that this might ultimately hurt the industry. If AI systems cause significant harms because they weren't properly aligned and tested, it will trigger massive regulatory crackdowns. Governments will mandate safety standards that the industry should have been setting itself. Customers will lose trust. The technology will be restricted in ways that could have been avoided through earlier, better safety research.

Anthropic shows a stronger commitment to safety integration and maintaining dedicated safety teams compared to OpenAI, which focuses more on commercial scaling. (Estimated data)

The Institutional Knowledge Problem

There's another aspect of this that's worth considering: institutional knowledge and continuity. Dedicated teams build up expertise over time. Researchers understand the landscape of problems, what's been tried, what didn't work, and what directions are promising.

When you dissolve a team and scatter its members, you lose that concentrated expertise. The knowledge gets distributed across different teams, different projects, different priorities. Some of it will be retained by individuals, but some will be lost. Some researchers will continue focusing on alignment because it's their passion, but others will get absorbed into product teams and move on to other problems.

Over the long term, this matters. Building expertise in safety research takes time. Training new researchers takes time. Re-accumulating knowledge that was lost takes time. By dissolving the dedicated team, OpenAI is betting that this work can continue effectively without institutional structure. History suggests that's not usually how it works.

Comparing to Anthropic's Approach

It's worth considering how this compares to other approaches in the industry. Anthropic, OpenAI's primary competitor, was founded on the principle that AI safety should be central to how the company operates. The company was founded by several researchers who left OpenAI partly because they felt safety wasn't being prioritized enough.

Anthropic's approach is to integrate safety into every part of the development process. But it also has dedicated research teams focused specifically on safety and Constitutional AI. The company hasn't, as far as is publicly known, dissolved its safety-focused teams.

So you have two approaches competing in the market. OpenAI's approach: start with safety-focused teams, then gradually dissolve them as you scale and commercialize. Anthropic's approach: maintain safety-focused research throughout the organization while building commercial products.

Over the long term, we'll be able to see which approach produces better outcomes. Which systems are safer? Which systems are more aligned with human values? Which companies avoid incidents that erode public trust?

Right now, it's too early to judge. But OpenAI's move suggests some lack of confidence in the institutional importance of dedicated safety research.

Questions the Restructuring Raises

OpenAI's move raises some important questions that don't have clean answers but are worth thinking about:

First: Is alignment research better done in dedicated teams or distributed throughout the organization? OpenAI seems to be betting on distributed. But there's a risk that distributed work becomes no one's responsibility. Dedicated teams create accountability. When work is distributed, it's easier for it to get deprioritized when other things come up.

Second: What does it mean for OpenAI's stated commitment to ensuring AGI benefits humanity? If that's actually a core mission, you'd expect dedicated research teams focused on how to achieve that. Dissolving such teams suggests the mission might be more aspirational than operational.

Third: How much of this is about genuine organizational optimization vs. redirecting resources toward capabilities and products? The company claims it's routine restructuring, but the timing and the fact that this has happened twice in two years suggest something deeper.

Fourth: Will the reassigned team members actually continue alignment research or will they be absorbed into other priorities? The company didn't specify where people were moved, which is telling.

Fifth: Does this create a regulatory risk? As governments start regulating AI, they'll likely want to see evidence that companies have robust safety research and alignment work happening. Having dissolved dedicated teams might create vulnerability during regulatory scrutiny.

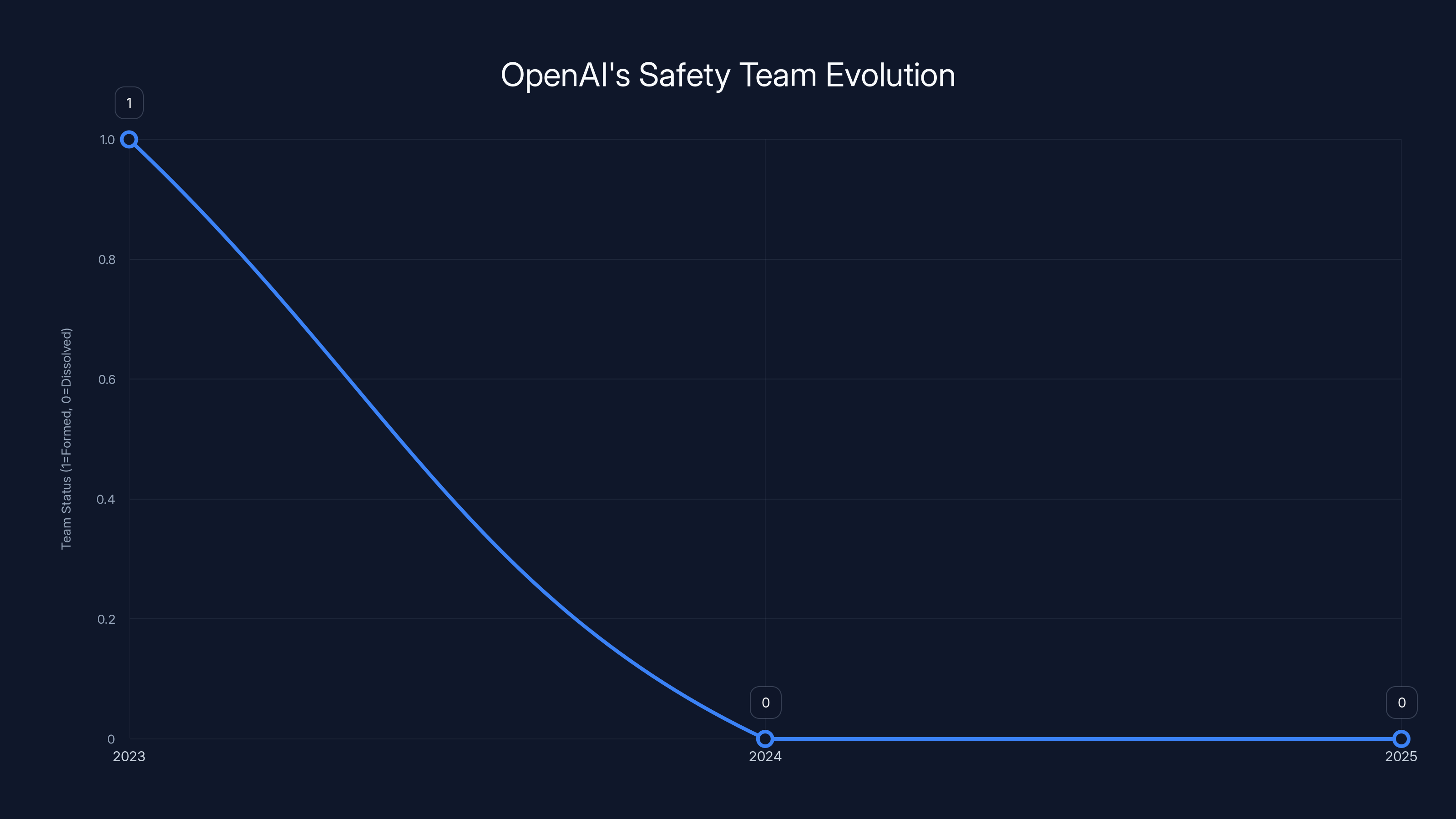

The chart shows the formation of the 'Superalignment team' in 2023 and its dissolution in 2024, followed by another dissolution of a core safety team in 2025. Estimated data highlights the trend of team dissolutions.

The Broader Context: Industry Maturation and Safety

In the longer arc of how technology industries develop, there's often a pattern. Early stage: companies emphasize responsible development, safety, and ethics as founding values because it differentiates them and builds trust. Growth stage: companies start compromising on those values because they become obstacles to scaling and competing. Maturation stage: regulations force the industry to implement the practices it originally claimed to value.

The AI industry might be moving into that middle stage. Companies that started with big commitments to safety are encountering commercial pressures and starting to deprioritize those commitments. If that continues without regulatory intervention, the industry might end up in a situation where safety practices are weaker than they could have been.

This isn't inevitable, though. The industry could choose a different path. It could prioritize long-term trust and safety over short-term capability gains. It could maintain dedicated teams focused on alignment and safety. It could resist competitive pressures that push toward shortcuts.

But those choices are harder to make when you're in a competitive race. And OpenAI's move suggests the company is choosing the path of least resistance rather than the path that's best for the long-term development of safe, beneficial AI.

What's Next for AI Safety Research?

So where does this leave the field of AI safety research? If OpenAI is deprioritizing it, and other companies see that signal and do the same, what happens to the work that actually needs to be done?

One possibility is that safety research shifts more toward academia and independent research organizations. Universities can focus on fundamental research without commercial pressure. Organizations like the Future of Life Institute and others focused specifically on existential risk can continue doing research without worrying about product timelines.

But there's a limit to what independent researchers and academics can do. They don't have access to the most capable models. They don't have the resources of major tech companies. They're somewhat separated from the practical realities of building and deploying systems at scale.

So ideally, you'd want both. You'd want fundamental research happening in academia and independent labs. And you'd want rigorous, well-resourced safety research happening inside the companies actually building the systems.

If companies like OpenAI keep dissolving their safety teams, you're left with a gap. The fundamental research happens, but it's not coupled to practical deployment. The systems get built and deployed, but without the focused research effort to ensure they're safe.

How Companies Should Actually Approach Safety

Looking at this from first principles, here's how AI companies should approach safety:

1. Treat it as a core technical function. Safety research should be as important as research on capabilities. You wouldn't dissolve your model architecture team because you're deploying products. Safety should get the same commitment.

2. Maintain dedicated teams with accountability. Distributed responsibility is diffused responsibility. Dedicated teams create focus, expertise, and accountability. They're essential for making progress on hard problems.

3. Integrate safety into the development process. Safety research shouldn't be siloed. It should inform decisions about what models to train, what capabilities to ship, how to deploy systems. But it needs to be brought in early and treated seriously.

4. Be transparent about safety research and challenges. If companies are genuinely working on alignment and safety, they should be transparent about what they're doing, what challenges they've found, and what they've learned. This helps the industry advance as a whole.

5. Accept that safety might slow down product development. If alignment research reveals that a feature could cause harm, the company needs to be willing to not ship that feature. That's expensive. It's slower. But it's what responsible development looks like.

6. Hire and retain safety researchers. The best safety researchers are also the most capable people in the field, and they could go work on capabilities anywhere. Companies need to make the case that safety research is important enough to dedicate top talent to.

OpenAI's restructuring violates several of these principles. That's a problem.

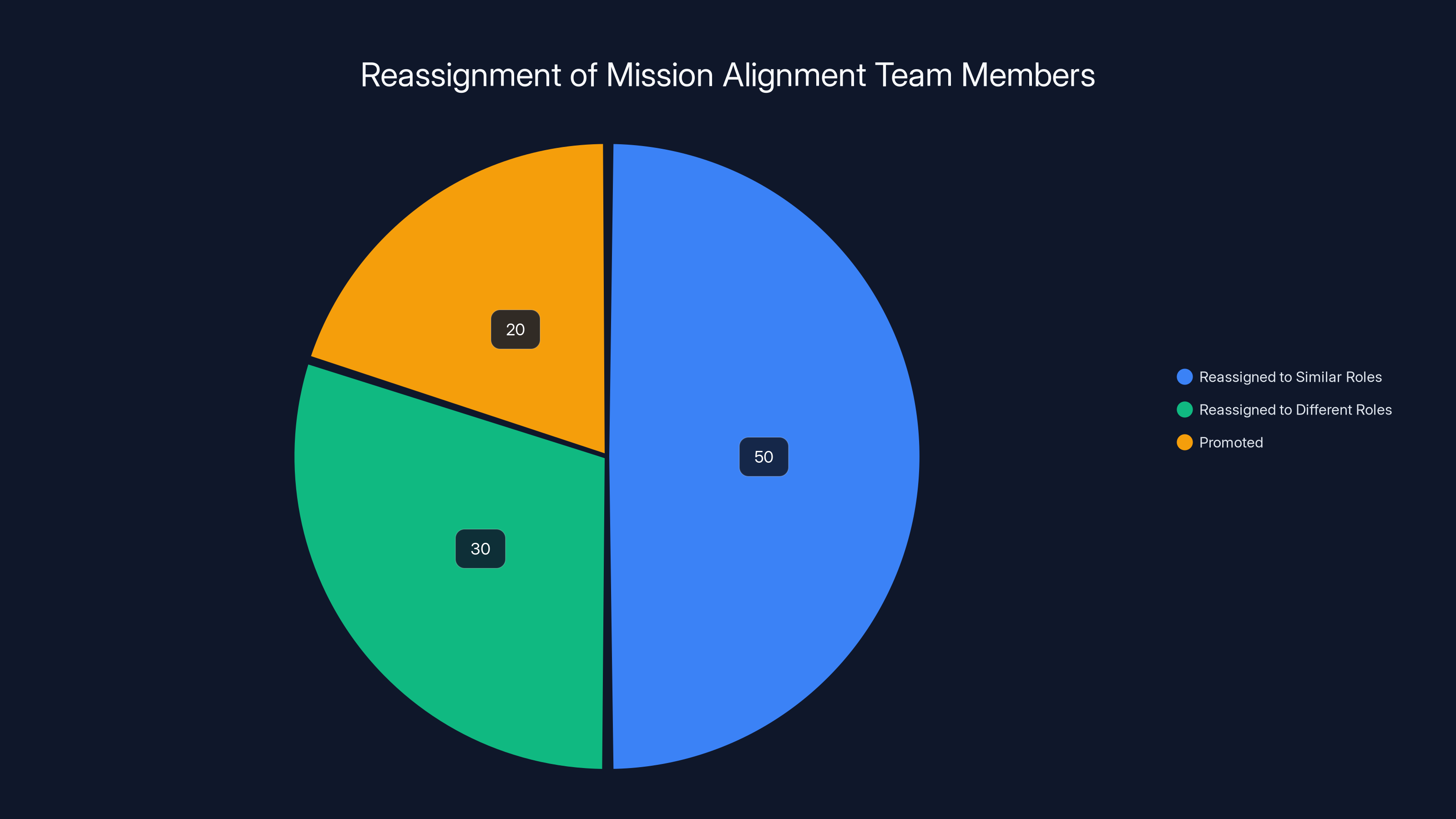

Estimated data suggests that 50% of the Mission Alignment team members were reassigned to similar roles, 30% to different roles, and 20% were promoted, highlighting a significant shift in focus.

The Long Game: Why This Matters for Everyone

You might be thinking: "This is internal OpenAI politics. Why should I care?" Here's why: the decisions OpenAI makes about how to organize its research and development affect all of us.

OpenAI's models are being integrated into Microsoft's products, which billions of people use. OpenAI's research affects how other companies approach their own AI development. OpenAI's choices about what to prioritize signal to the entire industry what's important.

If OpenAI deprioritizes alignment research, that's not just a decision that affects OpenAI. It's a decision with consequences for how safe the AI systems we all interact with actually are.

That doesn't necessarily mean OpenAI made the wrong choice. Maybe the company has learned that alignment research is better done in integrated teams. Maybe the team members will actually do better work in their new roles. Maybe this is the right move for OpenAI's development as a company.

But from the outside, it looks like a company is choosing capabilities and speed over safety and caution at exactly the moment when those systems are becoming powerful enough to require both.

What We Don't Know

There's also a lot we don't know that makes it hard to judge this decision fairly:

- We don't know where exactly the reassigned team members went or what they're working on now

- We don't know what the alignment team had actually accomplished or what problems remained unsolved

- We don't know whether the research will continue to progress in its new organizational form

- We don't know what role, if any, Achiam will have in influencing safety-related decisions

- We don't know whether this is truly routine restructuring or a signal of changing priorities

OpenAI could, if it chose to, clarify any of these things. It could explain where team members were assigned and what work they'll be doing. It could detail what the alignment team accomplished and what research directions they were pursuing. It could explain how it's ensuring alignment work continues in its new form.

The fact that it hasn't chosen to be transparent about these details is itself informative. It suggests either that the company doesn't think the details matter much, or that it doesn't want the details examined too closely.

The Role of External Accountability

One thing this situation highlights is how limited external accountability is for AI companies. OpenAI isn't a public company with shareholders demanding transparency. It's not a government agency subject to freedom of information requests. It's a private company funded by venture capital and its own revenue, accountable primarily to its leadership and investors.

So when it dissolves a team focused on safety, there's no requirement to explain why, or how it will ensure that work continues, or how it will maintain the same level of rigor. The company can simply announce the restructuring and move on.

That's a problem for the field of AI safety. The companies doing the most important work on AI alignment are also the least accountable to the public. We're supposed to trust that they're making the right decisions about how to organize their research.

In the long term, external accountability might come through regulation. Governments might require AI companies to maintain dedicated safety research teams, to report on their alignment work, to justify decisions to scale back safety efforts. But we're not there yet. For now, we're mostly relying on companies' stated commitments.

When those commitments translate into dedicated teams, it means something. When companies maintain those teams even when it's inconvenient, it shows real commitment. When companies dissolve those teams, it's hard not to read it as a lack of genuine commitment.

Looking Forward: What Comes Next

What happens next depends on several factors:

Will the reassigned team members continue doing alignment research effectively? If yes, then maybe this restructuring doesn't matter much. If no, then OpenAI has lost capacity for this critical work.

Will other companies follow OpenAI's lead? If other major AI labs dissolve their safety teams, it signals the entire industry is moving away from dedicated alignment research. That would be concerning.

Will this trigger regulatory response? As governments start regulating AI, they might see dissolving safety teams as a red flag. Regulators might require companies to maintain dedicated safety research.

Will there be public backlash? If safety incidents occur and people trace them back to deprioritization of alignment research, there could be significant reputational damage.

Will the approach actually work? Maybe OpenAI is right that distributed safety research works better than dedicated teams. Maybe this restructuring will produce better outcomes. We'll find out over time.

The Bigger Picture: AI Development Philosophy

Ultimately, this situation reflects different philosophies about how AI should be developed.

One philosophy says: move fast and iterate. Build systems, deploy them, learn from real-world use, improve based on feedback. Safety emerges from rapid iteration and market forces. Dedicated safety teams slow things down and prevent valuable innovation.

Another philosophy says: think carefully about risks before you scale. Do research on alignment and safety in advance. Build systems with safety properties baked in from the start. Dedicated safety teams are essential for doing this work properly.

Open AI's restructuring suggests the company is moving toward the first philosophy. Anthropic seems to lean more toward the second. The industry will shape itself based on which approach produces better outcomes, which companies succeed, and which approach regulators end up mandating.

For now, Open AI's choice to dissolve its alignment team is a signal that the company values capabilities and speed over safety caution. That might prove to be the right call. Or it might prove to be something the company regrets. We'll find out over time.

FAQ

What is AI alignment and why is it important?

AI alignment refers to the challenge of ensuring that AI systems reliably follow human intent and values, even in complex or adversarial situations. It's critical because powerful AI systems that don't align with human values could cause significant harm, whether through misunderstanding instructions, being manipulated by users, or optimizing for objectives that have unintended consequences. As AI systems become more capable and are deployed in consequential domains like healthcare, criminal justice, and finance, alignment becomes increasingly important for ensuring these systems behave safely and predictably.

Why would Open AI dissolve a team focused on such an important problem?

OpenAI's official explanation is that this is the kind of routine reorganization that occurs in fast-moving companies. The company claims that reassigned team members will continue doing similar alignment work in their new roles. However, the move could also be driven by competitive pressure to allocate resources toward building and scaling capabilities rather than safety research, or by a genuine belief that alignment research is more effective when distributed throughout the organization rather than concentrated in dedicated teams. Without more transparency from OpenAI, it's difficult to know the true motivation.

What does the Chief Futurist role entail and how is it different from leading the alignment team?

The Chief Futurist role, which Josh Achiam moved into, involves strategic thinking about how the world will change in response to AI and AGI. It's a forward-looking, scenario-planning role focused on long-term implications. Leading the alignment team, by contrast, involved hands-on management of research projects aimed at making AI systems safer and more controllable right now. These are fundamentally different responsibilities, with the Chief Futurist role being more strategic and less operationally focused on specific safety research.

Is this the first time Open AI has restructured a safety-focused team?

No. Open AI previously disbanded its "Superalignment team" in 2024, which had been focused on studying long-term existential risks posed by AI. The dissolution of the Mission Alignment team in 2025 represents a similar move. This pattern suggests a broader shift in how Open AI is organizing its approach to safety research, possibly indicating reduced prioritization of dedicated safety-focused teams.

How does this compare to how other AI companies approach safety research?

Different companies take different approaches. Anthropic, OpenAI's main competitor, was founded specifically with AI safety at the core and appears to maintain dedicated safety research teams while building commercial products. Google DeepMind and other major labs also have safety-focused research groups. OpenAI's move toward dissolving dedicated teams and distributing the work is distinctive and suggests a different philosophy about how safety research should be organized.

Could this affect the safety and reliability of AI systems that Open AI deploys?

Potentially. If the reassigned team members don't continue doing rigorous alignment research with the same focus and resources they had before, there could be less progress on critical safety challenges. However, it's also possible that alignment research continues effectively in its new organizational form. The impact will depend on whether the company actually maintains the same commitment to this work without a dedicated team structure, which is difficult to assess from the outside.

What does this mean for AI regulation and oversight?

As governments start regulating AI, they may look at decisions like this as part of assessing whether companies are genuinely committed to safe AI development. Dissolving safety teams could be viewed as a red flag during regulatory scrutiny. Conversely, regulators might require companies to maintain dedicated safety research teams, which could reverse OpenAI's decision regardless of the company's preference.

Will other AI companies follow Open AI's lead on this?

That remains to be seen. Smaller AI companies or companies racing to catch up might view this as permission to deprioritize safety research. However, companies like Anthropic that have built their identity around safety commitment are unlikely to follow suit. The industry will likely split between companies that maintain strong safety research programs and those that minimize them, which will have different consequences over time.

What would a better approach to this restructuring have looked like?

A more responsible approach would have involved: (1) being transparent about why the team was being restructured, (2) specifying where team members were being reassigned and what they would work on, (3) explaining how the company would ensure alignment research continues at the same level of rigor, (4) potentially maintaining some dedicated team structure while also integrating safety throughout the organization, and (5) publicly committing to the level of resources that would be allocated to this work going forward.

Should I be concerned about using Open AI's products because of this restructuring?

This restructuring is a signal about Open AI's internal priorities, but it doesn't necessarily mean the company's products are unsafe. Open AI has invested heavily in safety mechanisms and testing for its deployed models. The question is more about whether the company will continue that commitment with the same rigor in the future. Users should make their own assessment based on their risk tolerance and use case, but the restructuring is worth being aware of when evaluating whether to rely on Open AI's systems for sensitive applications.

Conclusion: The Crossroads of Speed and Safety

Open AI's decision to disband its Mission Alignment team represents more than just an internal reorganization. It's a moment that reveals the real pressures facing AI development at this critical juncture. The company faces genuine tension between its founding mission to ensure AGI benefits humanity and its need to compete in an increasingly crowded market for AI capabilities. When those pressures conflict, the restructuring shows which way the company is leaning.

The official explanation, that this is routine reorganization and that alignment work will continue in other parts of the company, might be entirely accurate. Distributed safety research could work fine. The reassigned team members might do excellent work in their new roles. The Chief Futurist might influence safety-related decisions in ways that maintain the same level of rigor.

But from the outside, the pattern looks consistent: teams dedicated specifically to safety and alignment get dissolved as companies scale. Work gets distributed. Institutional structure dissolves. The organization finds it easier to prioritize capabilities and products over the slower, harder work of ensuring systems are genuinely safe and aligned.

This happens not because the companies are evil or indifferent to safety. It happens because safety research is a cost center, not a profit center. It happens because competitive pressure rewards speed over caution. It happens because it's easier to maintain safety through policy and distributed work than through dedicated teams with real accountability.

But it matters. These systems are becoming more capable and more integrated into critical infrastructure. The alignment problem gets harder as systems get more powerful, not easier. Dedicated research focused on this problem is valuable. When companies dissolve such teams, something is lost.

The question for Open AI, for the rest of the industry, and for the public is: what's the right balance between moving fast and ensuring we're moving in a safe direction? Open AI's choice suggests the company believes speed matters more. Whether that's the right call will become clear over time. Let's hope it is.

For teams and organizations trying to use AI responsibly, Open AI's restructuring is a reminder that you can't fully delegate responsibility for safety to the AI companies. You need to understand these systems, test them rigorously, maintain human oversight, and stay skeptical of claims that safety and alignment will automatically happen.

The future of AI safety depends on getting these choices right. And Open AI's recent moves suggest the industry might not be getting them right at all.

Key Takeaways

- In February 2025, OpenAI dissolved its Mission Alignment team, which was dedicated to researching AI safety and alignment with human values

- The team's leader Josh Achiam was promoted to Chief Futurist, a strategic role distinct from operational alignment research

- This is the second time in a year OpenAI has restructured a safety-focused team, following the 2024 disbanding of its Superalignment team

- The move reflects broader industry tensions between competitive pressure to scale capabilities quickly and the need for dedicated safety research

- Dissolving dedicated teams signals to the industry that safety research may be deprioritized, potentially influencing how competitors approach the problem

- The lack of transparency about where team members were reassigned or what work they'll continue is concerning for maintaining research continuity

- Competitors like Anthropic appear to maintain dedicated safety teams, reflecting a different philosophy of AI development

- This restructuring has implications for AI regulation, industry standards, and how the public can assess companies' genuine commitment to safe AI development
