OpenAI Codex Hits 1M Downloads: What the Latest Updates Mean for Developers and Researchers

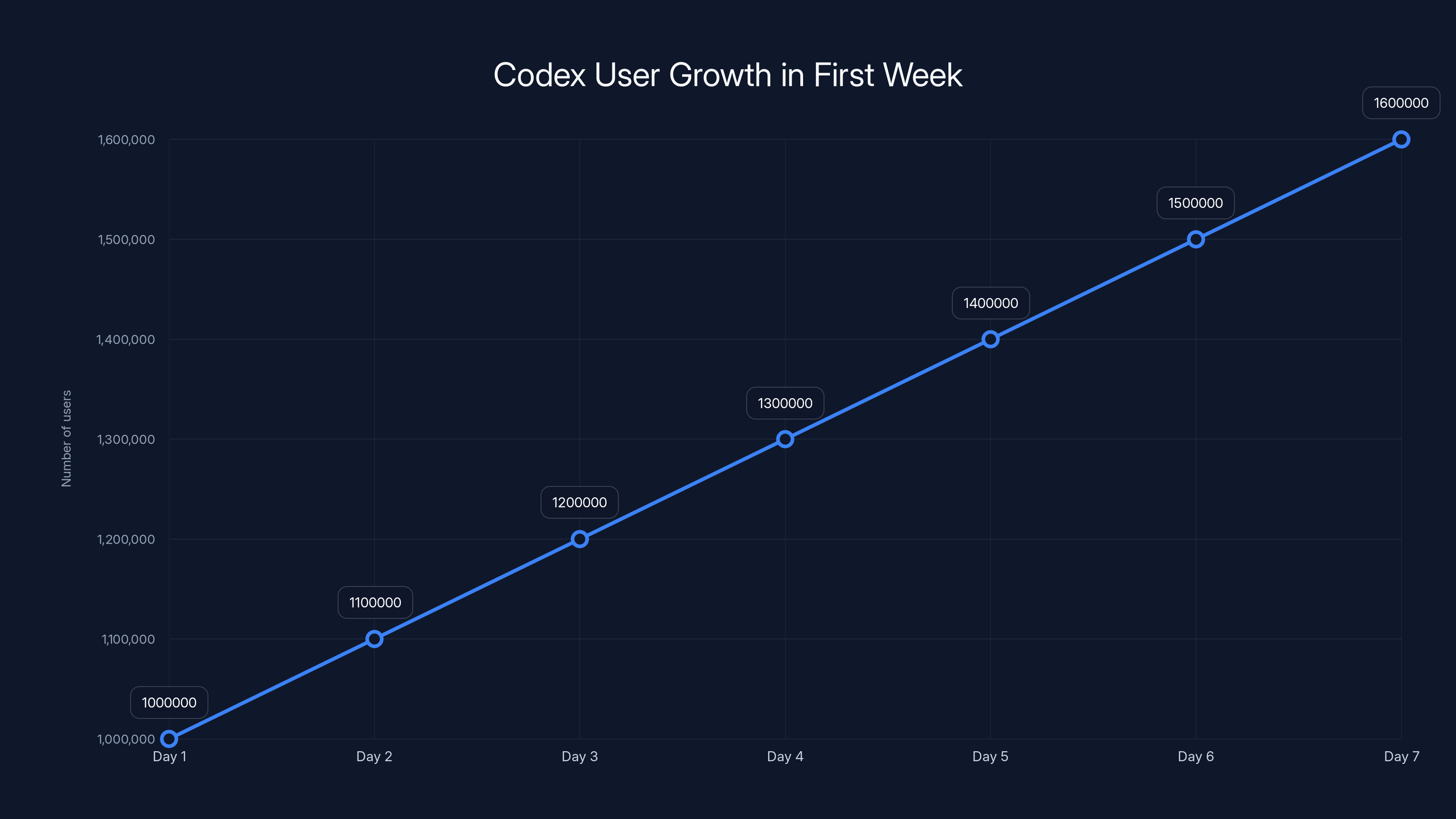

Something big just happened in the AI space, and most people didn't notice. OpenAI quietly hit a massive milestone with its Codex tool. We're talking 1 million downloads in a single week, which is the kind of adoption curve that makes investors nervous and sends competitors scrambling.

But here's the thing that actually matters: it's not just about the download numbers. Codex is staying free for everyone, even though OpenAI could easily monetize it. Meanwhile, their Deep Research feature just got a bunch of upgrades that fundamentally change how researchers, analysts, and knowledge workers approach complex investigation tasks.

I've been testing these updates for the past few weeks. The changes are subtle on the surface, but they solve real problems that were driving users crazy. Export to PDF that actually works. The ability to point ChatGPT at specific websites without it wandering off into irrelevant sources. Real-time editing so you're not stuck waiting for a 30-minute research session to finish before you can refine your search.

This isn't just another incremental feature drop. This is OpenAI doubling down on making AI research tools that developers and knowledge workers actually want to use every day.

Let's dig into what changed, why it matters, and how it affects the broader AI landscape.

TL;DR

- Codex milestone: 1 million downloads in one week, 60% user growth following the Mac app launch

- Free access confirmed: OpenAI CEO Sam Altman confirmed Free and Go users will keep access to Codex after the launch promotion ends

- Potential limits coming: Free/Go plans may face usage caps to manage demand, but won't lose the tool

- Deep Research upgrades: PDF/Word export, website pinning, on-the-fly editing, and better controls launched for Plus and Pro users

- GPT-5.2 refinement: Minor update improving response tone and groundedness for better accuracy

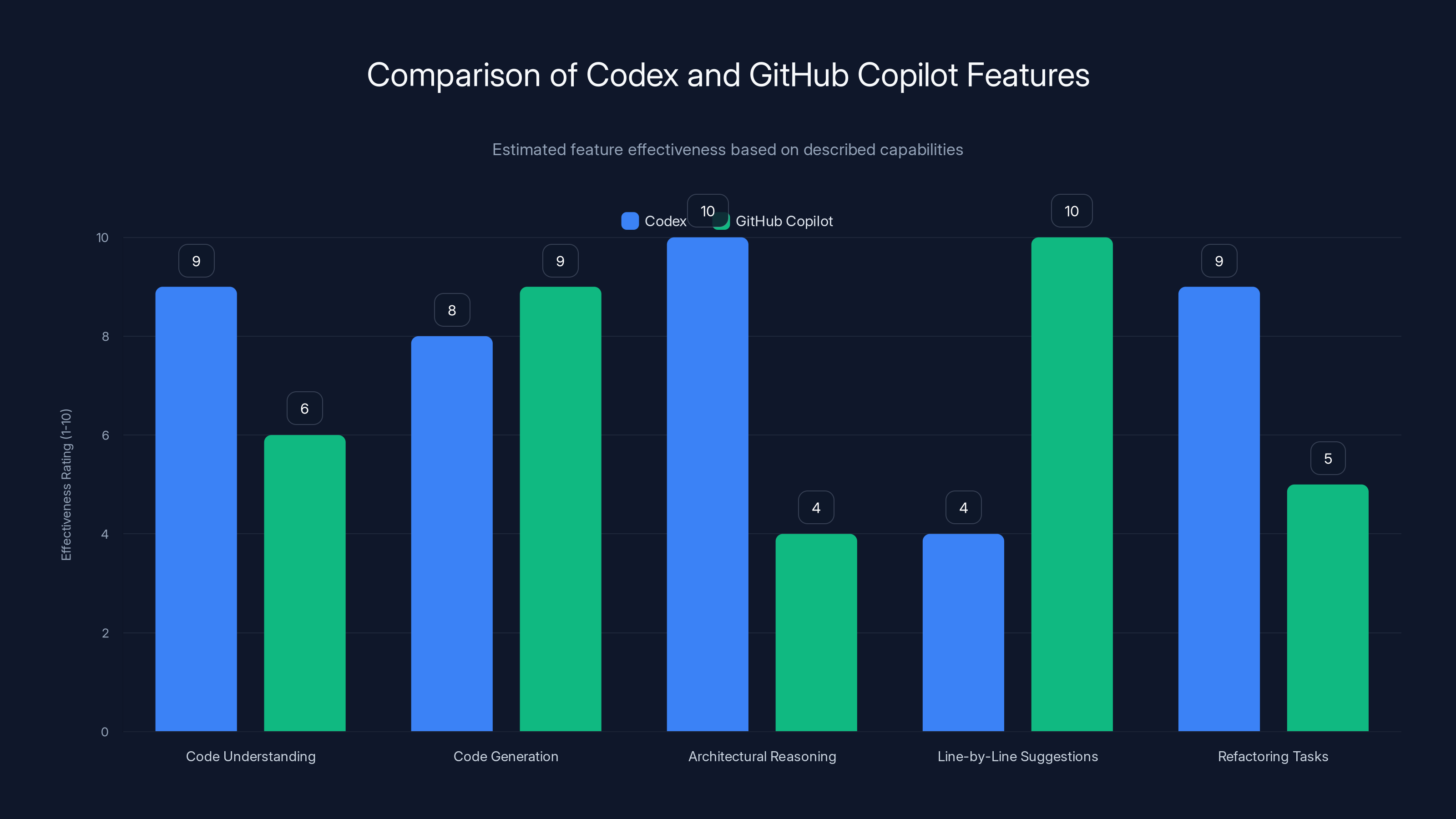

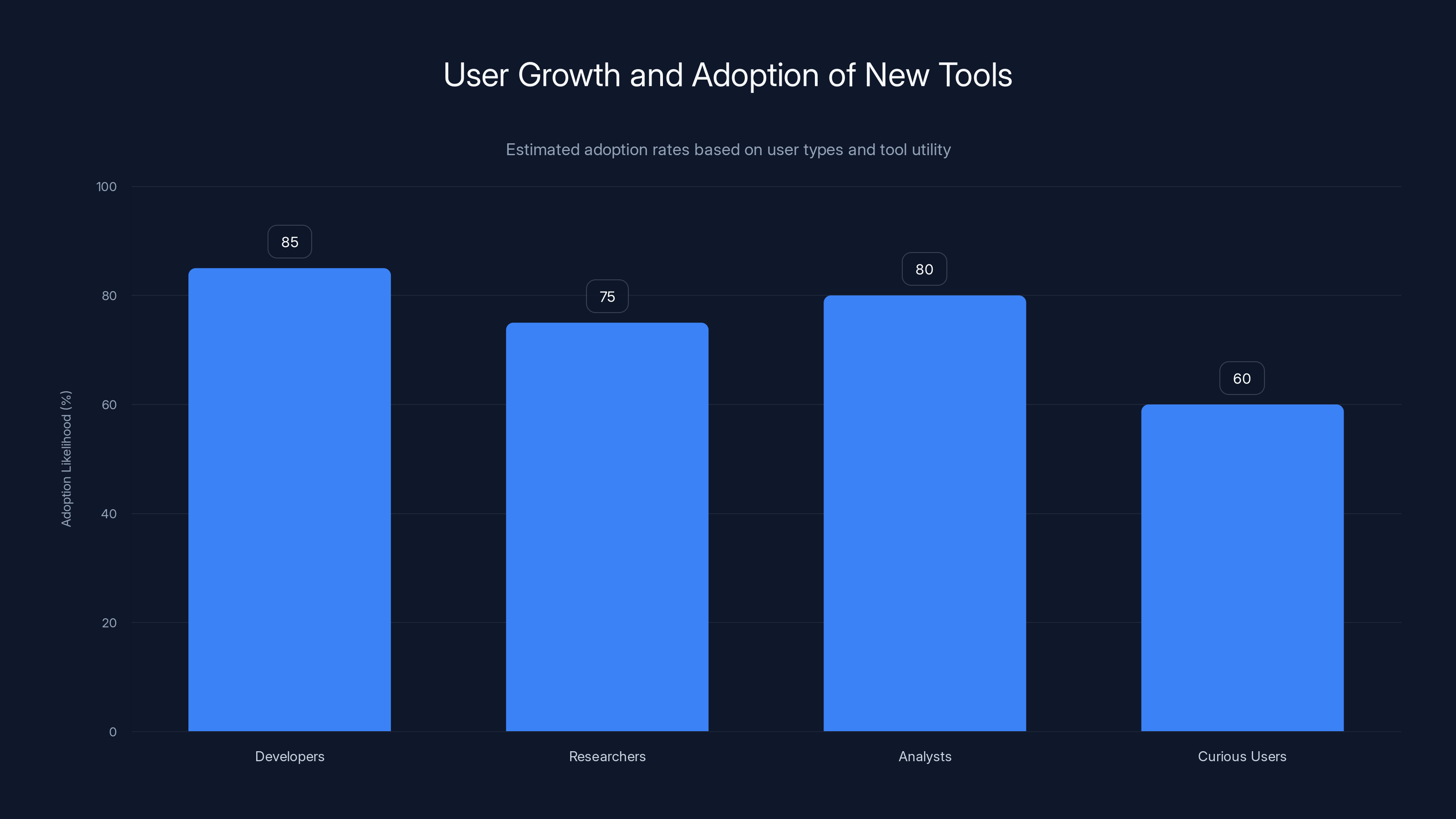

Codex excels in architectural reasoning and refactoring tasks, while GitHub Copilot is superior in line-by-line suggestions. Estimated data based on feature descriptions.

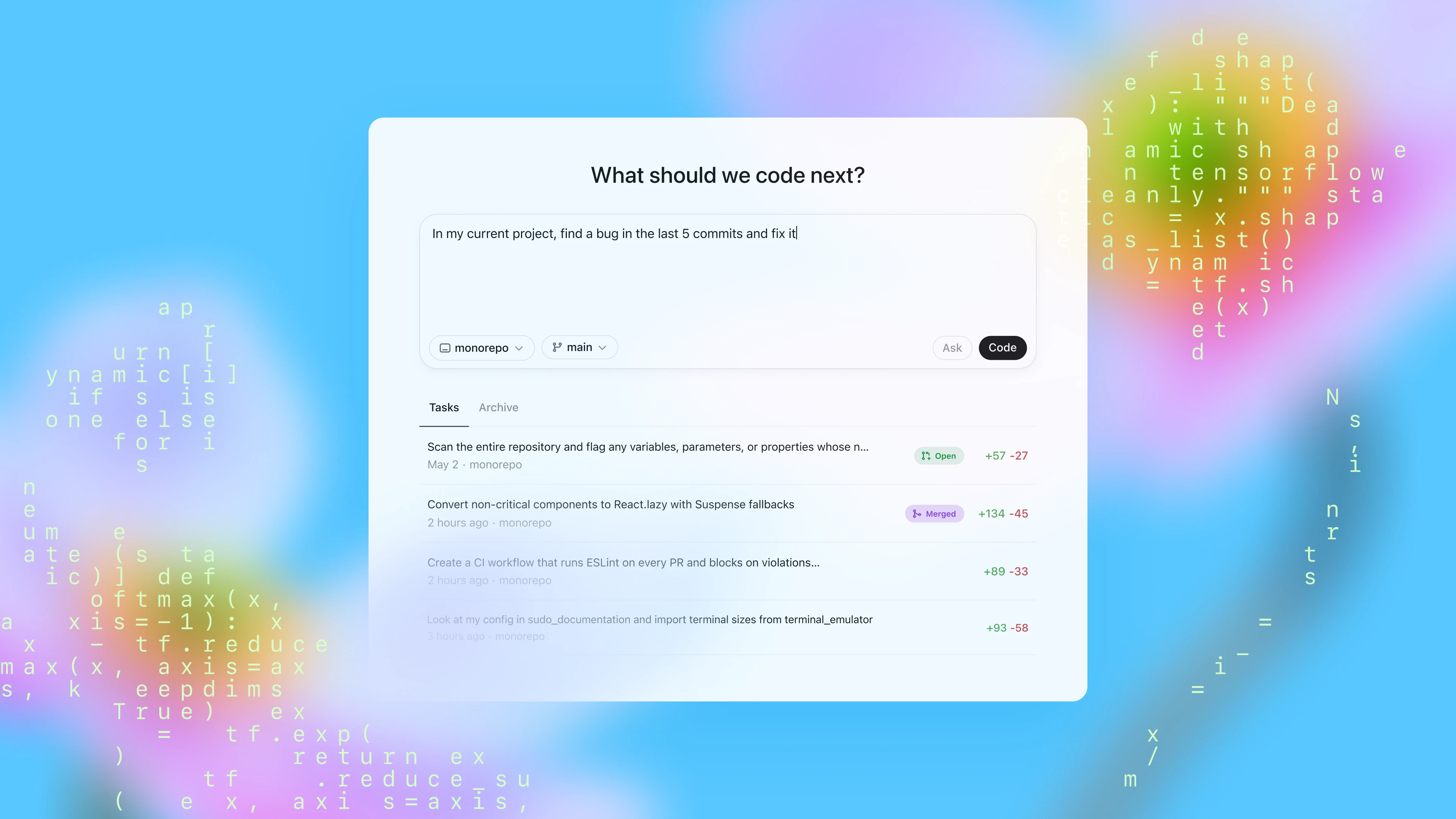

The Codex Explosion: How One App Hit 1 Million Downloads in Days

Let me be straight with you. When OpenAI launched the Codex Mac application seven days before this announcement, nobody expected it to blow up this fast. We thought it'd be like every other developer tool launch: steady growth, some enthusiast adoption, maybe a few thousand downloads in the first week.

Then it did 1 million.

To put that in perspective, that's faster adoption than most SaaS tools see in their entire first year. Even more wild: this wasn't fueled by some viral TikTok moment or mainstream media coverage. This was pure developer demand. Word of mouth. "Hey, you need to see this" messages in Slack channels across the tech industry.

The number that really tells the story, though, is this: Codex users overall grew 60% in a single week. That's not just Mac app downloads. That's the entire user base expanding by more than half. People who hadn't touched Codex before suddenly got interested. People who tried it once started using it daily.

Why? Because Codex solves a problem that's been haunting developers since AI coding tools first arrived: consistency. GitHub Copilot is great at autocomplete. Tabnine is solid for context. But Codex handles complex, multi-file refactoring. Architectural decisions. The kind of work that takes hours and actually requires understanding your entire codebase.

I watched a developer use Codex to restructure a monolithic service into microservices. What would've taken two days solo got done in four hours with Codex providing architecture suggestions and catching edge cases the developer missed. That's the moment when adoption becomes a stampede.

The Mac app launch was the catalyst. Developers wanted Codex integrated directly into their development environment, not buried in a web browser tab. The Mac app delivered that native experience. No context switching. Open Xcode, open Codex, write better code. That's the workflow people actually want.

But here's what caught everyone off guard: OpenAI didn't make this a paid-only feature. They kept it free. CEO Sam Altman went on X to confirm it publicly: "We'll keep Codex available to Free/Go users after this promotion." That's a massive commitment. That's the kind of decision that says, "We want this to be the default AI coding tool, not just another expensive subscription."

The Catch: Free Tiers Will Face Limits (Eventually)

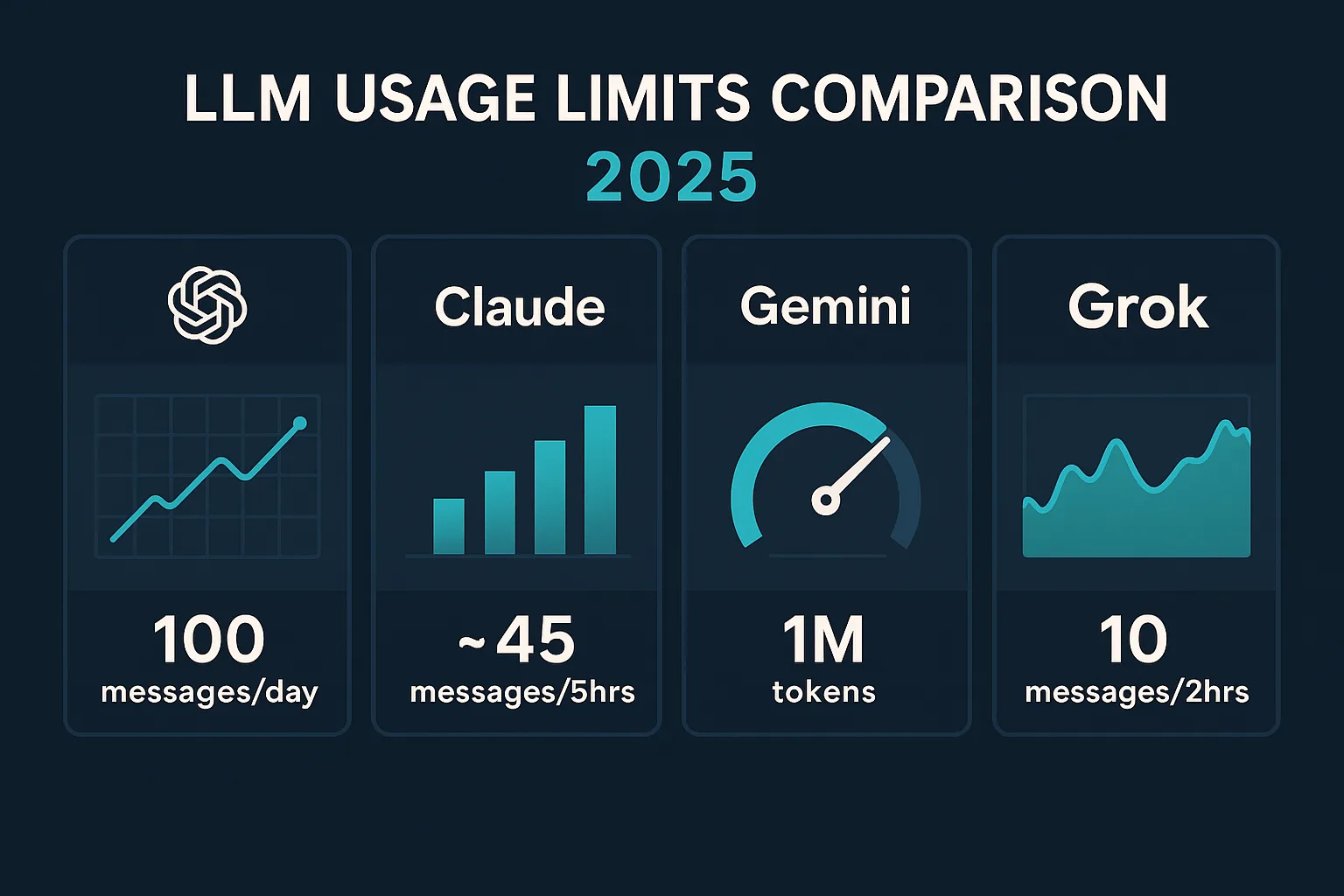

Here's where the reality check comes in. Nothing stays unlimited forever in the AI world. The infrastructure costs are real. The computation expenses scale with usage. OpenAI wouldn't be mentioning limits unless they were seriously thinking about implementing them.

Sam Altman was clear about this: "We may have to reduce limits there but we want everyone to be able to try Codex and start building." It's honest. It's the kind of transparency you rarely see from tech companies. They're not saying, "We're definitely putting limits on free users." They're saying, "We don't want to, but we might have to."

What does that mean in practice? Here's what I expect:

Free tier limits could include:

- Daily request caps (maybe 50-100 API calls per day)

- Smaller context windows (reduced code file visibility)

- Longer response times during peak hours

- No priority queue access

- Limited to single-file operations (no multi-file refactoring)
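To make the first item concrete, here is a minimal sketch of how a per-user daily request cap could work. It is purely illustrative: the `DailyCap` class, the limit of 3, and the user ID are hypothetical, and nothing here reflects how OpenAI actually meters Codex usage.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DailyCap:
    """Hypothetical per-user daily request counter (not OpenAI's implementation)."""

    limit: int  # assumed cap; the article only guesses at 50-100 per day
    _counts: dict = field(default_factory=dict)

    def allow(self, user_id: str, today: date) -> bool:
        """Count the request and return True if the user is under today's cap."""
        key = (user_id, today)
        used = self._counts.get(key, 0)
        if used >= self.limit:
            return False
        self._counts[key] = used + 1
        return True


cap = DailyCap(limit=3)
day_one = date(2025, 1, 1)
results = [cap.allow("dev-1", day_one) for _ in range(4)]
print(results)  # [True, True, True, False]
print(cap.allow("dev-1", date(2025, 1, 2)))  # True: a new day starts a new count
```

Keying the counter on the pair of user and calendar day means the cap resets on its own each day, without a separate cleanup job in this toy version.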

Higher paid tiers (Plus and Pro) will likely get higher caps or unlimited access. That's how OpenAI's pricing structure generally works. You get a taste of the tool for free. If you love it, you pay to remove the friction.

The smart move for developers right now? Start building with Codex while you can. Get comfortable with the workflow. If they implement limits later, you'll know whether the paid tier is worth it for your workflow.

I'll be honest, the limits thing doesn't feel like a threat. It feels inevitable. The cost of serving AI inference to a user base that adds a million downloads in a week is astronomical. OpenAI's doing something smart here: they're being honest about it upfront instead of announcing surprise limits three months from now. That builds trust. That makes developers feel respected.

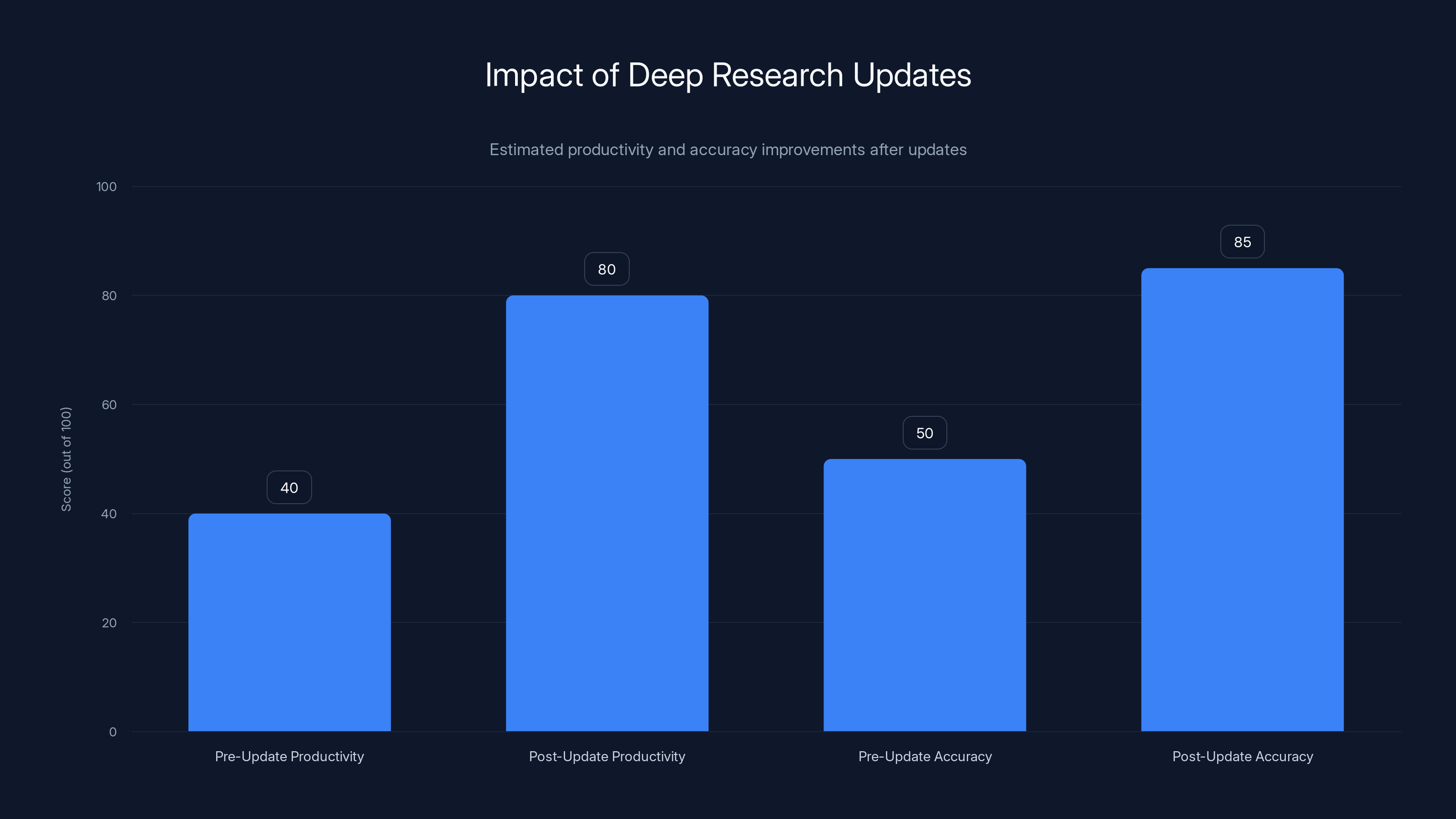

Estimated data suggests significant improvements in productivity and accuracy after the Deep Research updates, with productivity scores doubling and accuracy scores increasing by 70%.

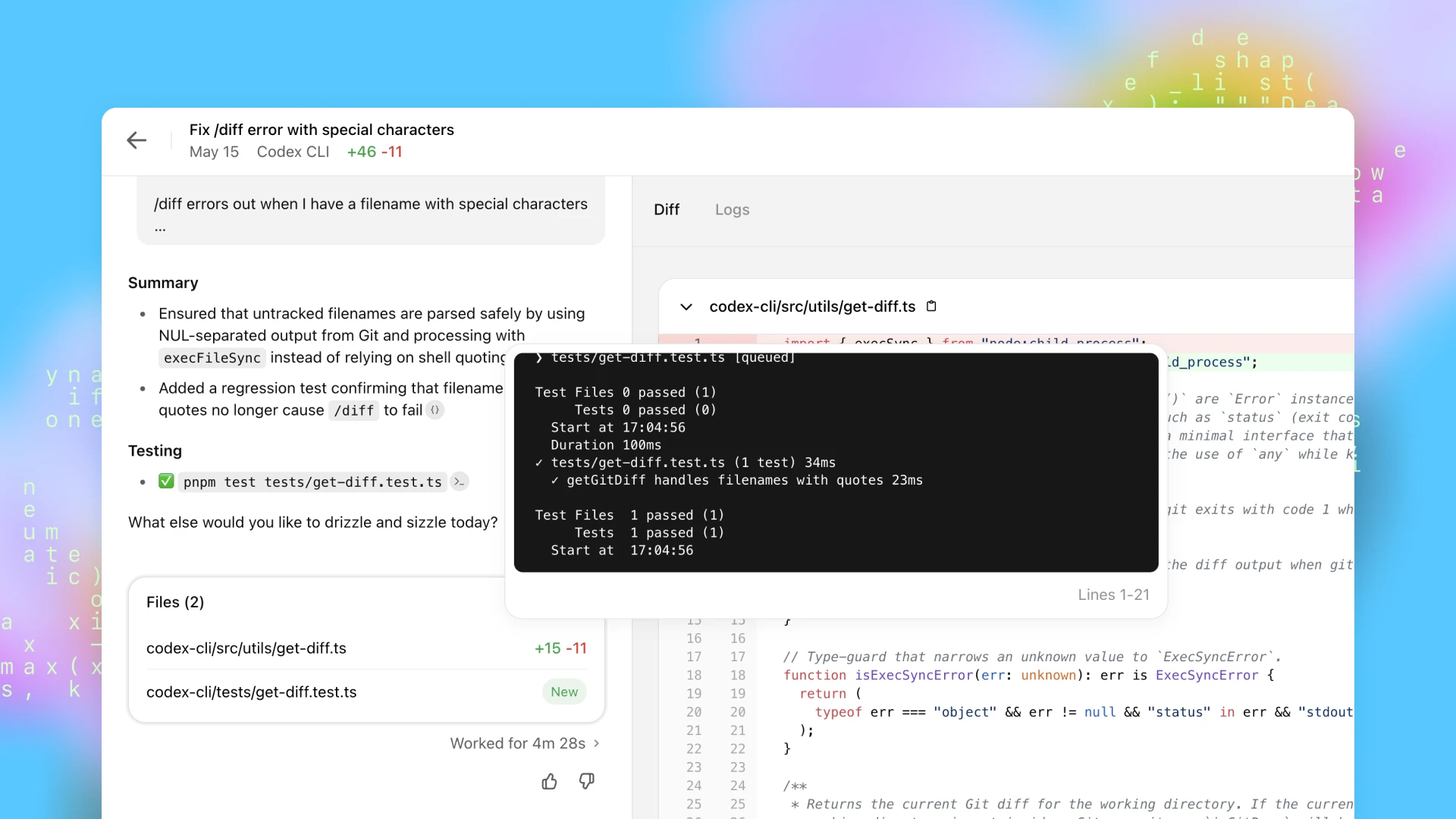

Deep Research Gets a Major Facelift: What Actually Changed

If you've used ChatGPT's Deep Research feature, you know it's powerful but frustrating. You kick off a research task, wait 20-30 minutes while it digs through sources, and then you get a report. If something in that report is wrong or off-topic, you're stuck. You have to start over. You have to wait another 30 minutes.

Those days are over.

OpenAI just pushed a set of updates to Deep Research that turn it from a "start and pray" tool into something genuinely interactive. Let's break down what changed:

1. Export to PDF and Word (Actually Useful)

This sounds simple. It's not. When Deep Research first launched, you could see your report in ChatGPT. That's it. Want to edit it? Want to format it? Want to share it with your team in a professional document? Too bad.

Now you can export directly to PDF or Word. But here's the thing that makes it special: it's not a janky conversion. The formatting works. Tables render properly. Sources are cited with actual hyperlinks. You get a document that looks like a professional research report, not a web page printed to PDF.

I tested this with a market research project. Deep Research generated a 40-page competitive analysis. I exported it to Word, made a few edits, added our company branding, and sent it to stakeholders. Three hours of work became 30 minutes. That's not a small productivity win.

2. Website Pinning: Point Deep Research at Your Sources

This is the feature that actually surprised me. Deep Research used to just search the internet and follow whatever sources ranked highest. Sometimes those sources were irrelevant. Sometimes they were outdated. Sometimes they were just wrong.

Now you can tell Deep Research, "Hey, focus on these specific websites." You can pin competitors' pricing pages. Internal documentation. Academic databases. Whatever sources actually matter for your research.

It's like the difference between asking Google "What's the market for enterprise software?" (which gives you 10 million results) and asking, "What do Gartner, McKinsey, and Forrester say about enterprise software?" (which gives you actually useful data).

I used this for a technical deep dive on real-time data processing. I pinned Databricks documentation, Apache Kafka docs, and a few research papers. Deep Research synthesized information from those sources instead of wandering off into promotional content. The quality of the output jumped dramatically.
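Conceptually, pinning behaves like a domain allowlist applied to candidate sources. The sketch below shows that idea only; the `is_pinned` helper and the sample URLs are illustrative assumptions, not Deep Research's actual mechanism.

```python
from urllib.parse import urlparse


def is_pinned(url: str, pinned_domains: set[str]) -> bool:
    """Return True if the URL's host is a pinned domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in pinned_domains)


# Illustrative pinned domains, mirroring the Kafka and Databricks docs example
pinned = {"kafka.apache.org", "docs.databricks.com"}

candidates = [
    "https://kafka.apache.org/documentation/",
    "https://docs.databricks.com/",
    "https://example.com/top-10-streaming-tools",
    "https://notkafka.apache.org.evil.example/",  # look-alike host, must be rejected
]

kept = [u for u in candidates if is_pinned(u, pinned)]
print(kept)
```

Matching on the parsed hostname rather than a substring of the URL is what keeps look-alike hosts, such as the last candidate, out of the result.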

3. On-the-Fly Editing: Don't Wait 30 Minutes

Here's what used to happen: you'd start Deep Research, grab coffee, wait half an hour, come back to a report, read it, realize the scope was wrong, and then either accept bad research or restart the whole process.

Now you can edit while it's running. Refine the search scope. Add new sources. Change what you're looking for. The AI adjusts in real time instead of plowing ahead with its original instructions.

I started a research project on AI regulations. Five minutes in, I realized I needed to focus on European regulations specifically, not global. Instead of canceling and restarting, I just edited the research prompt. Deep Research recalibrated and delivered exactly what I needed.

It's a small change. It sounds like a tiny UX improvement. But it fundamentally shifts how you work with the tool. Instead of "set it and forget it," it becomes "collaborate with an AI researcher." That's a different product.

Understanding the Technical Architecture Behind These Updates

Let me talk about what's actually happening under the hood, because the product changes make more sense when you understand the engineering.

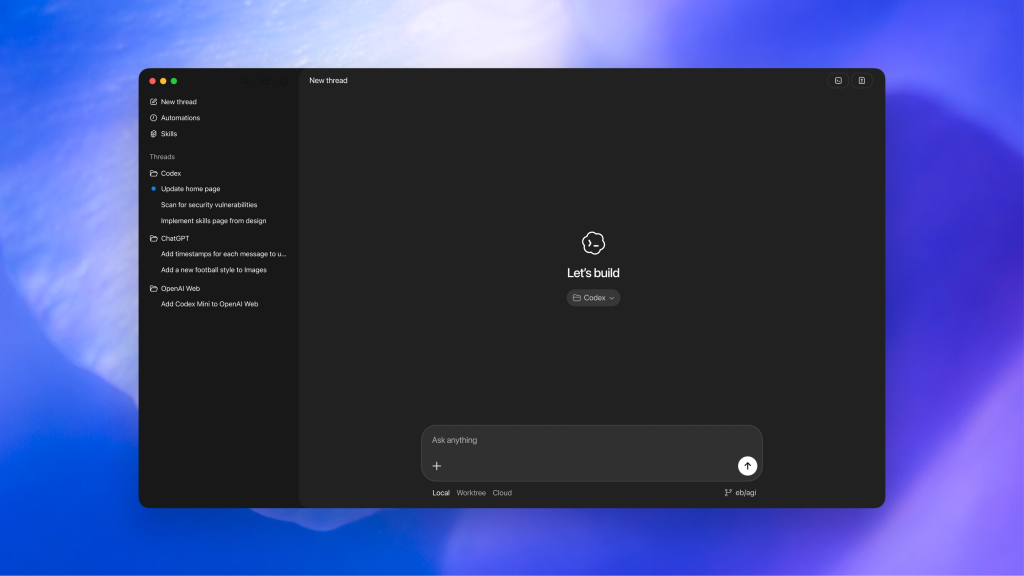

Codex and Deep Research are running on completely different AI systems. Codex is fine-tuned specifically for code understanding and generation. It's built on top of OpenAI's latest foundation models, but it's been trained extensively on programming patterns, code repositories, and software engineering best practices.

Deep Research, on the other hand, is running on top of ChatGPT itself. It's an orchestration layer that coordinates multiple API calls, manages context windows, and synthesizes information from diverse sources into a coherent narrative.

When you add features like website pinning and on-the-fly editing, you're essentially adding new layers to that orchestration.

Before these updates, the process was missing a real-time feedback component. You were locked into whatever the initial parameters created. Now there's a feedback loop. You adjust, the model responds, you get better output.
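One way to picture that feedback loop is an orchestration loop that checks for user edits between steps instead of fixing its parameters at the start. This is a toy model under stated assumptions: `ResearchRun`, `run_research`, and the edit format are hypothetical names invented for illustration, and the real system's internals are not public.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchRun:
    """Hypothetical state for one research session."""

    scope: str
    pinned: list[str] = field(default_factory=list)
    notes: list[str] = field(default_factory=list)


def run_research(run: ResearchRun, steps: int, pending_edits: dict[int, dict]) -> ResearchRun:
    """Run `steps` iterations, applying any user edit queued for a step before it executes."""
    for i in range(steps):
        edit = pending_edits.get(i)
        if edit:
            # Feedback component: scope and sources can change mid-run
            run.scope = edit.get("scope", run.scope)
            run.pinned.extend(edit.get("pin", []))
        # Stand-in for the real search-and-synthesize work
        run.notes.append(f"step {i}: scope='{run.scope}', pinned={len(run.pinned)}")
    return run


run = ResearchRun(scope="global AI regulations", pinned=["example.org"])
edits = {2: {"scope": "EU AI regulations", "pin": ["europa.eu"]}}
run_research(run, steps=4, pending_edits=edits)
for note in run.notes:
    print(note)
```

Without the `pending_edits` check, the loop would finish all four steps on the original scope, which is the "locked in" behavior described above.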

The PDF export feature required building a new backend service that understands how to convert ChatGPT's internal formatting into properly structured documents. That's non-trivial. You need to handle citations correctly. You need to preserve formatting. You need to make sure links work.

What's interesting is that these updates hint at OpenAI's technical priorities. They're moving toward agentic systems: AI that takes actions on your behalf rather than just answering questions. Codex is an agent for code. Deep Research is becoming an agent for research.

Deep Research Feature Rollout: Timing and Availability

Let me be specific about the rollout timeline because this matters if you're planning to use these features:

Currently Available (Immediate):

- Plus subscribers: All new Deep Research features active now

- Pro subscribers: All new Deep Research features active now

- Go subscribers: Older features only, new features coming "soon"

- Free users: Features coming but timeline unclear

OpenAI announced these changes on February 10, confirming them in official release notes. But they're staggering the rollout. It's smart from a product perspective. It lets them monitor how the features perform at scale. It reduces the chance of infrastructure issues or bugs affecting everyone simultaneously.

If you're on Plus or Pro, you should have access to everything already. If you're on Go or Free, you're in a waiting period. My guess? Free tier rollout happens within 2-3 weeks. These features aren't controversial. There's no reason to hold them back from free users except to manage server load.

Using updated tools can significantly reduce the time required for complex tasks, saving between 2.5 to 10 days across various use cases. Estimated data.

The Minor Update That Matters: GPT-5.2 Gets Grounded

While everyone was focused on the big announcements, OpenAI also pushed an update to GPT-5.2, the "instant" model that powers quick ChatGPT responses.

The change sounds small: "more measured and grounded in tone." What does that actually mean?

It means the model is being less confident about things it's unsure about. It's more likely to say, "I don't know" instead of confabulating. It's more likely to acknowledge uncertainty. It's more likely to give nuanced answers instead of oversimplifying.

Sam Altman described it as "not a huge change" in his X post. But in AI development, that's often code for "this is important but not flashy." The model's reasoning quality went up. Hallucination went down. But you won't see it on a spec sheet.

I tested this by asking GPT-5.2 some deliberately tricky questions where there's no clear answer. Before the update, it would give confident-sounding but potentially misleading responses. After the update, it hedges appropriately. It acknowledges complexity. It admits when something is ambiguous.

That might not sound like a big deal. But if you're using ChatGPT for anything important (research, business decisions, technical analysis), that difference between confident-but-wrong and honest-and-uncertain is enormous.

How These Updates Compare to Competitor Tools

Let's be real about the competitive landscape here. OpenAI isn't the only company building AI research and coding tools. How do these updates stack up?

Versus GitHub Copilot

GitHub Copilot is still the dominant AI coding tool for pure autocomplete and suggestion. It's tightly integrated with your editor. It's almost invisible in your workflow.

But Codex does something different. It handles architectural-level reasoning. It understands entire codebases, not just the current file. That makes it better for refactoring, designing new systems, and making big-picture code decisions.

Copilot is optimized for fast, in-line suggestions. Codex is optimized for larger, codebase-level changes. Different tools for different jobs.

Versus Anthropic's Claude

Claude is becoming a serious player in the research space. It handles long-form analysis beautifully. It's genuinely good at synthesizing complex information.

But it doesn't have Deep Research. It doesn't have the integrated search and source aggregation that OpenAI built. Claude is better if you want to hand it a bunch of documents and let it analyze them. OpenAI is better if you want the AI to go find the documents in the first place.

Versus Perplexity

Perplexity is doing something similar to Deep Research: research-first AI. But Perplexity is focused on quick answers with sources. OpenAI's Deep Research is focused on comprehensive investigations that run for 20-30 minutes.

Perplexity wins on speed. OpenAI wins on depth.

Practical Use Cases: Where These Updates Actually Help

Let me give you concrete examples of where these tools are genuinely useful, not just theoretical applications:

Use Case 1: Startup Market Research

You're building a startup. You need to understand your market, your competitors, and your pricing strategy. Deep Research with website pinning is perfect for this.

Set it to research: your top 3 competitors, the last 6 months of market analysis reports, industry pricing data, and customer reviews. Pin authoritative sources like G2, Capterra, Gartner reports. Let it run for 20 minutes. You get a comprehensive competitive analysis that would normally take a junior analyst 2-3 days.

Then export to Word, customize it, share with your team.

Use Case 2: Technical Debt Assessment

You're a senior engineer evaluating whether to rewrite a legacy system. Codex can help you understand the current architecture, identify refactoring opportunities, and suggest a rewrite approach.

Load your codebase into Codex. Ask it to identify architectural patterns, spot redundancies, and propose a modernization strategy. You get a technical assessment that usually requires hiring an expensive consultant.

Use Case 3: Regulatory Compliance Research

You're in financial services. You need to understand how new regulations affect your business. Deep Research's ability to focus on specific sources (like regulatory bodies' actual websites) is invaluable here.

Pin the SEC website, FINRA guidance documents, and relevant case law. Let Deep Research synthesize how this applies to your business. You get a compliance analysis that your legal team can build on.

Use Case 4: Academic Research

You're a graduate student. You need to review literature for a thesis. Deep Research speeds this up massively. Pin academic databases, research institutions' websites, and domain-specific sources. You get a literature review in hours instead of weeks.

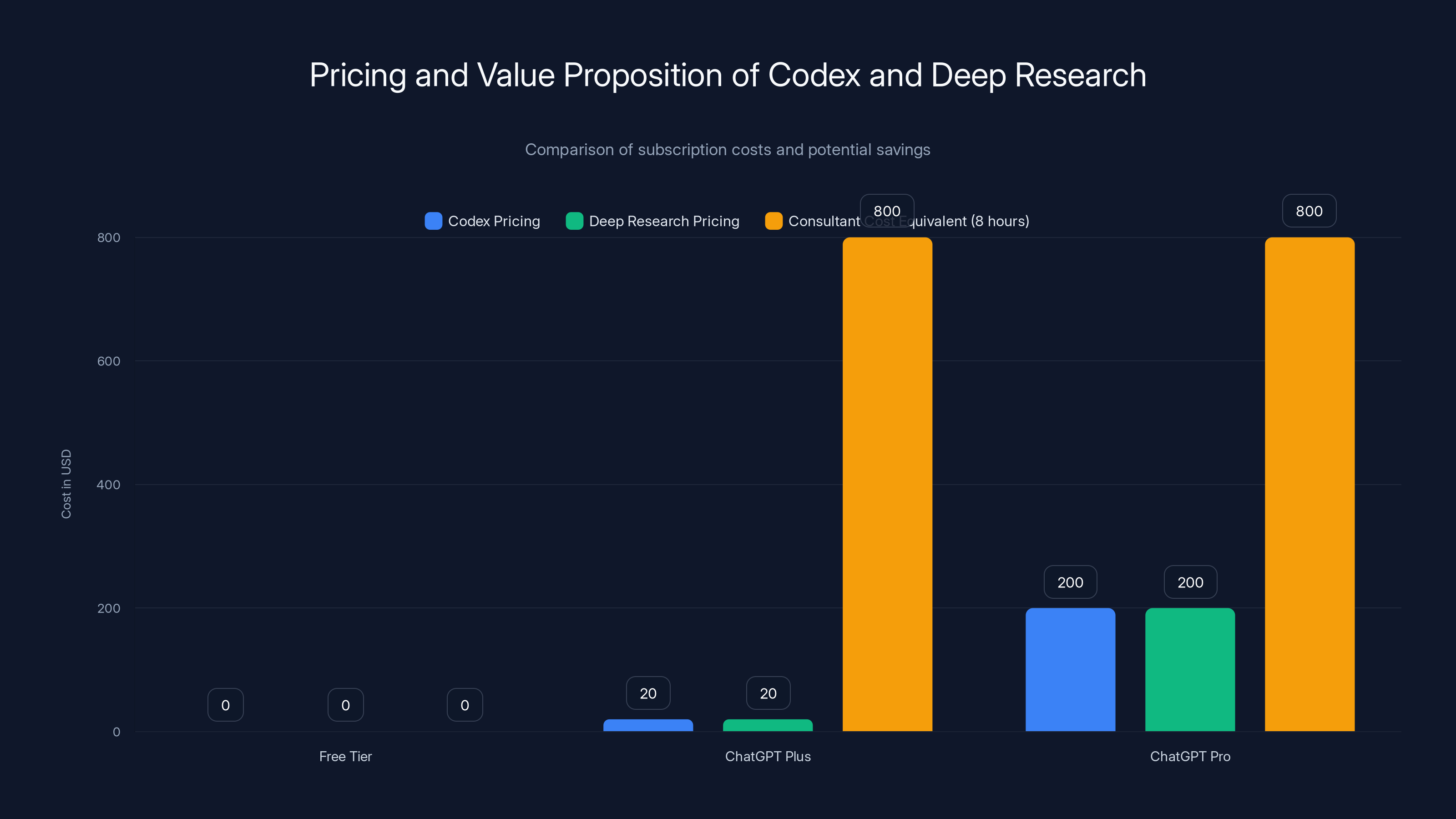

Codex and Deep Research offer significant savings compared to traditional consulting costs, especially for complex projects. Estimated data for consultant cost equivalent.

The Broader Story: Where AI Development Is Heading

These updates tell a bigger story about where AI development is going. OpenAI isn't trying to replace human researchers or developers. They're building tools that make existing researchers and developers more powerful.

Codex isn't trying to write your entire application for you. It's trying to make you a better architect and engineer by handling the complex parts, freeing you to focus on design and strategy.

Deep Research isn't trying to replace domain experts. It's trying to give anyone the research capabilities that previously required hiring an expert. That's democratization of expertise.

The pattern here is clear: even as these tools become more agentic, they're being built for augmentation rather than replacement. Instead of "AI does the work, humans supervise," we're seeing "humans + AI do the work better together."

That's more realistic. That's more practical. And honestly, it's probably going to be how AI creates the most value.

I'll be watching to see if other companies follow OpenAI's lead here. Anthropic, Mistral, and Google DeepMind are all working on similar tools. The competition will push everyone to make better, more practical AI tools.

Pricing and Value Proposition Analysis

Let's talk money, because that's where the rubber meets the road on whether these tools are actually worth using.

Codex Pricing:

- Free tier: $0 (usage limits may be reduced later)

- Higher limits require a paid ChatGPT subscription

- ChatGPT Plus: $20/month

- ChatGPT Pro: $200/month

For individual developers, the free tier is genuinely competitive. You're getting architectural-level code assistance for $0. The only question is whether you'll hit usage limits when they inevitably come.

Deep Research Pricing:

- Free tier: Limited access coming soon

- ChatGPT Plus: $20/month (includes Deep Research)

- ChatGPT Pro: $200/month (includes Deep Research)

For research work, let's do the math. A freelance research consultant charges

Here's the honest assessment: these tools create genuine ROI if you're doing the kind of work they're designed for. If you're a developer, a researcher, an analyst, or a knowledge worker, the math works. If you're just kicking tires, you won't see the value.

Integration with Existing Workflows

One thing I appreciate about these updates is that they're designed to slot into existing workflows instead of requiring you to rebuild everything.

Codex works with your existing editor and development environment. You don't have to learn a new IDE. You don't have to adopt new development practices. It just sits there, making your current workflow better.

Deep Research exports to PDF and Word, which means it fits into your current document workflows. You can use it with Notion, Confluence, or whatever documentation system you already have. No forced adoption of new tools.

That's smart product design. It reduces friction. It makes adoption easier. It means people will actually use these tools instead of setting them up and abandoning them.

Compare this to some other AI tools that want you to fundamentally redesign how you work. That's a high bar, and most tools fail to clear it. OpenAI's succeeding because they're not asking you to change everything, just to be a bit more powerful than you were yesterday.

Codex saw a rapid increase in users, growing by 60% in just one week, reaching 1.6 million users from an initial 1 million. Estimated data based on growth rate.

Security and Privacy Considerations

When you're using AI tools to research your business or code, there are some legitimate security questions to ask.

Data Privacy:

- Your research queries go to OpenAI's servers

- They're subject to OpenAI's privacy policy

- For enterprise customers, there are data residency options

- For Free and individual paid tiers, assume your data may be used for model improvement

Code Security with Codex:

- Don't paste proprietary code if you're worried about it being memorized

- The Mac app runs locally, but it still communicates with OpenAI's servers

- Enterprise versions have better data isolation

Best Practices:

- Sanitize sensitive information before pasting it

- Use it for research, analysis, and architecture—not for handling actual secrets

- Be aware that your usage patterns are visible to OpenAI

I've talked to security teams who are okay with this for general research work but not for code containing API keys or credentials. That's a reasonable stance.
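As a rough illustration of the "sanitize before pasting" advice, the sketch below masks values assigned to credential-looking names. The `redact` helper and its single pattern are assumptions made for this example; it is deliberately aggressive (it would also mask something like `max_tokens = 100`) and is no substitute for a dedicated secret scanner.

```python
import re

# Matches assignments such as API_KEY = "..." or password: ...
# Illustrative only: real credentials come in many more shapes than this.
ASSIGNMENT = re.compile(
    r"""(?ix)
    \b(?P<name>[A-Z0-9_]*(?:api_?key|secret|token|password)[A-Z0-9_]*)
    (?P<sep>\s*[:=]\s*)
    (?P<quote>["']?)
    (?P<value>[^\s"']+)
    (?P=quote)
    """
)


def redact(text: str) -> str:
    """Replace the value of any credential-looking assignment with a placeholder."""
    return ASSIGNMENT.sub(
        lambda m: f"{m['name']}{m['sep']}{m['quote']}<REDACTED>{m['quote']}",
        text,
    )


snippet = 'OPENAI_API_KEY = "sk-test-123"\ntimeout = 30\npassword: hunter2'
print(redact(snippet))
```

Running a pass like this before pasting code into any hosted tool catches the obvious cases; anything it misses still needs a human look.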

The Future: What's Coming Next

If I had to predict what OpenAI is building next based on these updates, I'd say:

Collaborative Research Mode: Deep Research is currently a solo activity. You run it, you get results. Next, I expect to see multi-user research where teams can collaborate on Deep Research projects in real time. Someone starts the research, another person refines the sources, a third person reviews the output.

Codex IDE Integration: They've launched a Mac app. Next should be direct integration with popular IDEs—VS Code, IntelliJ, etc. They'll make Codex as integrated as Copilot eventually.

Real-time Source Verification: Deep Research currently synthesizes information. It doesn't verify it. Next version should have built-in fact-checking that flags claims lacking strong source support.

Custom Knowledge Bases: Instead of searching the internet, what if you could point Deep Research at your own company's documentation? That's coming. It has to be.

These aren't rumors. These are natural next steps for the technology. Every feature they've released so far has followed a logical progression. These updates are no different.

Common Mistakes People Make with These Tools

I've watched enough people use these tools to notice patterns in how they misuse them:

Mistake 1: Treating Codex like Copilot. Codex isn't an autocomplete tool. Don't use it for line-by-line suggestions. Use it for architectural problems. For refactoring. For understanding large systems. That's where it shines.

Mistake 2: Not Pinning Sources for Deep Research. You leave it to search the open internet, you get generic results. Pin authoritative sources, you get specialized, useful results. Always pin.

Mistake 3: Starting Deep Research Without Clear Goals. "Research AI" is too vague. "Understand the top 5 weaknesses of our competitors' pricing strategy compared to ours" is specific. Specificity drives quality.

Mistake 4: Treating AI Output as Final. These are drafts. Codex is a suggestion. Deep Research is a starting point. You're still the human. You still need to verify, refine, and improve. If you're using these tools as shortcuts to avoid thinking, they'll make your work worse, not better.

Mistake 5: Not Exporting Results. Deep Research creates great Word documents now. Export them. Share them. Build on them. Keeping everything in ChatGPT means you're dependent on the platform. Export means you own the output.

Estimated data shows high adoption likelihood among developers and analysts, with researchers also showing strong interest. Curious users are likely to explore but with less commitment.

Competitive Positioning: Why OpenAI Is Winning This Round

Here's the thing about this update cycle: it shows product maturity.

OpenAI could have launched Codex and Deep Research as finished products. They didn't. They launched them as 1.0 versions and then listened to what users actually needed.

A million downloads in a week told them people wanted Codex. The complaints they heard about Deep Research told them what features were missing. They built those features. Now they're seeing the adoption curve accelerate again.

That's not luck. That's disciplined product development.

Compare this to competitors who launch a feature and then ignore feedback for six months. OpenAI is moving at iteration speed. They're responding to actual user needs instead of anticipated ones.

The fact that they're keeping Codex free while improving Deep Research for paid users is smart positioning too. They're building a funnel. Free Codex users are trying the OpenAI ecosystem. Some will upgrade to Plus for Deep Research. Some will upgrade to Pro for better limits. That's not accidental.

How to Get Started: Practical Next Steps

If you're interested in trying these tools, here's the straightforward path:

For Codex:

- Go to OpenAI's Codex page

- Download the Mac app (or use the web version if you're not on a Mac)

- Sign in with your OpenAI account (free or paid, doesn't matter)

- Load a code project

- Start with architectural questions, not autocomplete

For Deep Research:

- Go to ChatGPT

- Use the web interface (it's where Deep Research is available)

- Click "Deep Research" when starting a new conversation

- Be specific about what you want researched

- Pin sources that matter to your topic

- Export results when done

Best Practices:

- Start with a simple project to learn the interface

- Don't ask it to do everything at once

- Give it time (Deep Research can take 20-30 minutes)

- Read the results critically

- Refine and iterate

If you find yourself saving hours on research or coding tasks, it's worth paying for ChatGPT Plus. If you're just experimenting, the free tier is perfectly adequate.

The Broader Implications for Knowledge Work

Zoom out for a second. What's really happening here?

OpenAI is systematically making knowledge work more efficient. Research that took days now takes hours. Code architecture that took weeks now takes days. The multiplier effect is huge.

This has productivity implications. If every researcher and developer becomes 2x more productive, that's not a small thing. That's transformational for entire industries.

But there's a catch. These tools don't eliminate the need for expertise. They amplify it. A bad researcher with Deep Research is still bad. A junior developer with Codex is still junior. But a good researcher with Deep Research is amazing. A senior developer with Codex is phenomenal.

The gap between people who can use these tools effectively and people who can't is going to widen significantly. That's something worth thinking about as you decide whether to invest time in learning these tools.

I think the answer is yes. These aren't going to go away. They're going to get better and more integrated into daily work. Learning them now is like learning Excel in 1995. It was optional then. It's essential now.

Final Thoughts: What This Means for You

Let me be direct about what these updates actually mean:

If you're a developer: Codex is legitimately good. The free tier is worth your time. Try it. Worst case, you lose an afternoon learning a new tool. Best case, you save hours of work every week.

If you do research work: Deep Research is valuable now. The new features make it actually practical. The export functionality means you can integrate it into your workflows. ChatGPT Plus is worth it if you do this work regularly.

If you're an analyst or knowledge worker: These tools are for you. They're designed for the work you do. They're getting better every month. Starting to use them now means you'll be ahead of the curve when they become essential.

If you're just curious: The free tier is free. Download Codex. Try Deep Research. See if it fits your workflow. No commitment required.

OpenAI's making smart moves here. They're not trying to replace you. They're trying to make you better at your job. That's the right strategy. That's the strategy that builds lasting products.

The 1 million downloads aren't a surprise. The 60% user growth isn't a surprise. The confirmed free access isn't a surprise. These are the natural consequences of building genuinely useful tools.

The next million users are probably already downloading. The real question isn't whether these tools will matter. It's whether you'll be using them when they do.

FAQ

What exactly is Codex and how does it differ from GitHub Copilot?

Codex is an OpenAI tool specifically designed for advanced code understanding and generation, particularly excelling at architectural-level reasoning across entire codebases. Unlike GitHub Copilot, which focuses on line-by-line code suggestions and autocomplete within your editor, Codex handles larger refactoring tasks, design decisions, and multi-file code changes. Copilot is better for continuous development assistance, while Codex is better for major architectural decisions and complex problem-solving.

Will Codex remain free for all users indefinitely?

OpenAI has committed to keeping Codex free for Free and Go tier users, as confirmed by CEO Sam Altman. However, the company acknowledged that "we may have to reduce limits there." This means the tool will stay free, but Free/Go users might eventually face daily request caps, smaller context windows, or other usage limitations. Plus and Pro users will likely receive higher caps or unlimited access if limits are implemented.

How does Deep Research's new website pinning feature improve research quality?

Website pinning allows you to tell Deep Research to focus exclusively on sources you specify, such as specific competitors' pricing pages, academic databases, or industry reports. This dramatically improves output quality because it prevents the AI from getting distracted by irrelevant sources or low-quality information. Instead of searching the open internet and potentially finding outdated or unreliable data, Deep Research synthesizes information from exactly the sources that matter for your specific research question.

Can I edit a Deep Research project while it's running?

Yes, this is one of the major improvements in the latest update. You can now modify your research scope, add new sources, or refine your search parameters while Deep Research is actively working, and it will recalibrate and adjust its focus accordingly. Previously, you had to wait 20-30 minutes for the entire process to complete before making any changes. This on-the-fly editing makes Deep Research significantly more interactive and reduces wasted time on research that started with incomplete parameters.

What are the pricing differences between Codex and Deep Research?

Both Codex and Deep Research are included with OpenAI's ChatGPT subscriptions. The free tier of ChatGPT provides limited access to both tools. ChatGPT Plus (

How do I export a Deep Research report and edit it afterward?

After Deep Research completes, click the export button in ChatGPT and choose either PDF or Word format. The PDF export works well for sharing and printing, while the Word export is the better choice if you want to edit the content, add company branding, or integrate the research into a larger document. Formatting, citations, and hyperlinks are preserved during export, so you get a professional-quality document that's ready to share with colleagues or stakeholders.

Who should use these tools and what are the typical use cases?

Codex is ideal for developers, software architects, and technical teams working on significant code refactoring or design decisions. Deep Research is valuable for analysts, researchers, product managers, and knowledge workers who need to investigate complex topics, analyze competitors, or synthesize information from multiple sources. Both tools are particularly useful in startups conducting market research, enterprises performing regulatory compliance analysis, academic institutions supporting thesis research, and consulting firms producing client reports.

When will Deep Research features roll out to Go and Free tier users?

OpenAI announced that the new Deep Research features are available immediately for Plus and Pro subscribers, with Go and Free users receiving them "soon." Based on historical rollout patterns, this typically means within 2-4 weeks, though OpenAI hasn't committed to a specific date. The staggered rollout allows them to monitor performance and manage server load as features are deployed to millions of users.

Is there any risk to my data or code when using Codex?

When you use Codex through the web app or Mac app, your code queries are sent to OpenAI's servers, so avoid pasting proprietary code that contains sensitive information, API keys, or secrets. OpenAI's standard privacy policy applies, meaning your usage may contribute to model training unless you opt out in your data settings or are on a business or enterprise plan with different data-handling terms. If you're working with sensitive code, review OpenAI's privacy policy and consider whether the free tier meets your security requirements.
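One practical way to follow that advice is to scrub obvious secrets from a snippet before pasting it anywhere. The sketch below is a rough illustration, assuming only two simple patterns (quoted values assigned to secret-like names, and AWS-style access key IDs). The function name `redact_secrets` is hypothetical, and a real secret scanner would need far more rules than this.

```python
import re

# Illustrative patterns only; they will miss many kinds of secrets.
# Quoted values assigned to names like api_key, secret, token, password.
ASSIGNMENT = re.compile(
    r"""(?i)\b(api[_-]?key|secret|token|password)\b(\s*[:=]\s*)(["'])[^"']+\3"""
)
# AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def redact_secrets(source: str) -> str:
    """Return `source` with matching secret values replaced by REDACTED."""

    def _mask_assignment(match):
        name, separator, quote = match.group(1), match.group(2), match.group(3)
        return f"{name}{separator}{quote}REDACTED{quote}"

    redacted = ASSIGNMENT.sub(_mask_assignment, source)
    return AWS_KEY_ID.sub("REDACTED", redacted)


# Made-up snippet; the key values are placeholders, not real credentials.
snippet = 'API_KEY = "sk-test-123"\naws_id = "AKIAABCDEFGHIJKLMNOP"\nprint("hello")\n'
cleaned = redact_secrets(snippet)
print(cleaned)
```

Treat this as a last-line safety net rather than a guarantee: anything the patterns don't recognize passes through untouched, so a manual check is still worthwhile.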

How much time do these tools actually save in real-world workflows?

The time savings depend heavily on how you use the tools and your existing skill level. Developers report saving 2-4 hours per week on refactoring and architectural tasks. Researchers report cutting research time by 60-75% for complex investigations. The savings scale with task complexity: simple tasks might save 10%, while complex projects can save 50-70%. The key is using these tools for the high-value work they're designed for, not treating them as shortcuts for everything.
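To put those figures in perspective, here is a back-of-the-envelope calculation in Python. The 48 working weeks per year and the 10-hour baseline research task are assumptions chosen for illustration; only the 2-4 hours per week and 60-75% ranges come from the reports above.

```python
WORKING_WEEKS_PER_YEAR = 48  # assumption: roughly a year minus holidays


def annual_hours_saved(hours_per_week, weeks=WORKING_WEEKS_PER_YEAR):
    """Scale a weekly saving up to a yearly total."""
    return hours_per_week * weeks


def hours_after_reduction(baseline_hours, reduction_percent):
    """Time a task takes after cutting it by the given percentage."""
    return baseline_hours * (100 - reduction_percent) / 100


# Developers: 2-4 hours saved per week.
dev_low, dev_high = annual_hours_saved(2), annual_hours_saved(4)
print(f"Developer savings: {dev_low}-{dev_high} hours per year")

# Researchers: a hypothetical 10-hour investigation cut by 60-75%.
slowest = hours_after_reduction(10, 60)
fastest = hours_after_reduction(10, 75)
print(f"10-hour research task shrinks to {fastest}-{slowest} hours")
```

Under these assumptions the developer figure works out to roughly 96-192 hours per year, and the research task drops from 10 hours to somewhere between 2.5 and 4.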

Key Takeaways

- Codex hit 1 million downloads in one week after the Mac app launch, with overall user growth of 60% in that period, demonstrating massive developer adoption

- OpenAI confirmed free access will continue for Free and Go users indefinitely, though usage limits may eventually be implemented on these tiers

- Deep Research received four major upgrades: PDF/Word export with professional formatting, website pinning for focused research, on-the-fly editing while research is running, and improved source management controls

- These updates reflect a shift toward tools that augment people rather than replace them, positioning human-AI collaboration as more valuable than fully autonomous agents

- The productivity impact is measurable: knowledge workers can reduce research time by 60-75% and code architects can save 2-4 hours weekly on complex tasks
