The Scientific Revolution Nobody Expected

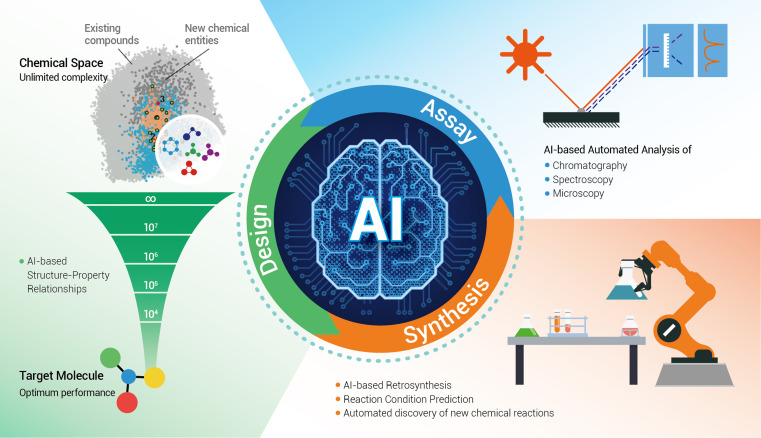

Something shifted in science sometime around late 2024. Researchers started treating large language models less like novelties and more like collaborators. Not autonomous research bots that would replace human scientists—nobody's buying that fantasy anymore—but actual thinking partners that could handle tedious verification work, identify citation gaps, or suggest new proof strategies.

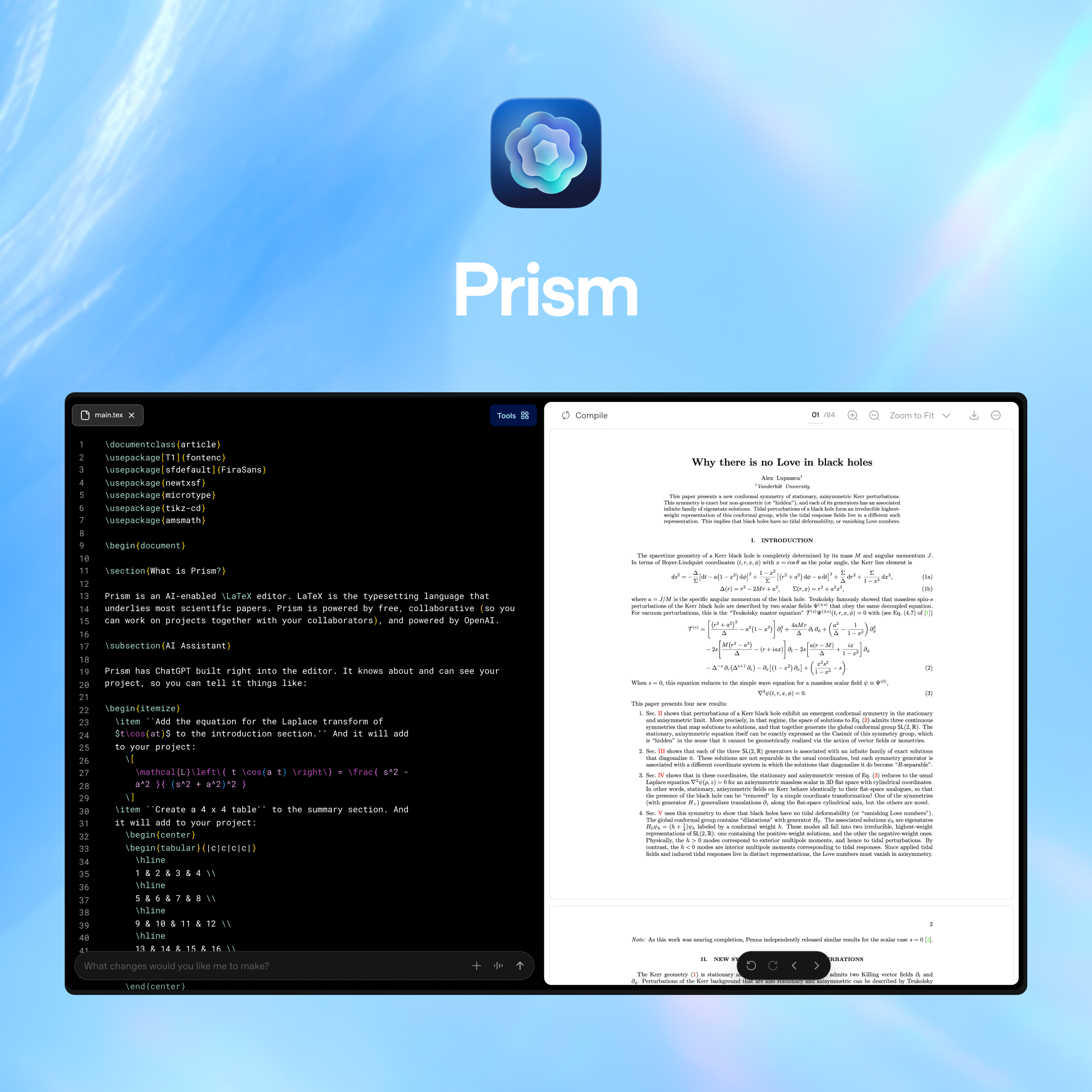

Then OpenAI announced Prism on January 27, 2026, and it became clear they'd been watching these experiments closely. Really closely. Close enough to understand what scientists actually needed: not another AI chatbot in a browser tab, but a purpose-built workspace that speaks the language of research—literally LaTeX, the typesetting standard that's been the backbone of academic papers for four decades.

Here's the thing about scientific work that tech companies usually get wrong. It's not about replacing human judgment. It's about removing friction from the parts of research that slow everything down: literature review, notation consistency, proof verification, diagram assembly, citation management. The busywork. That's where Prism lives, and it's a genuinely smart move.

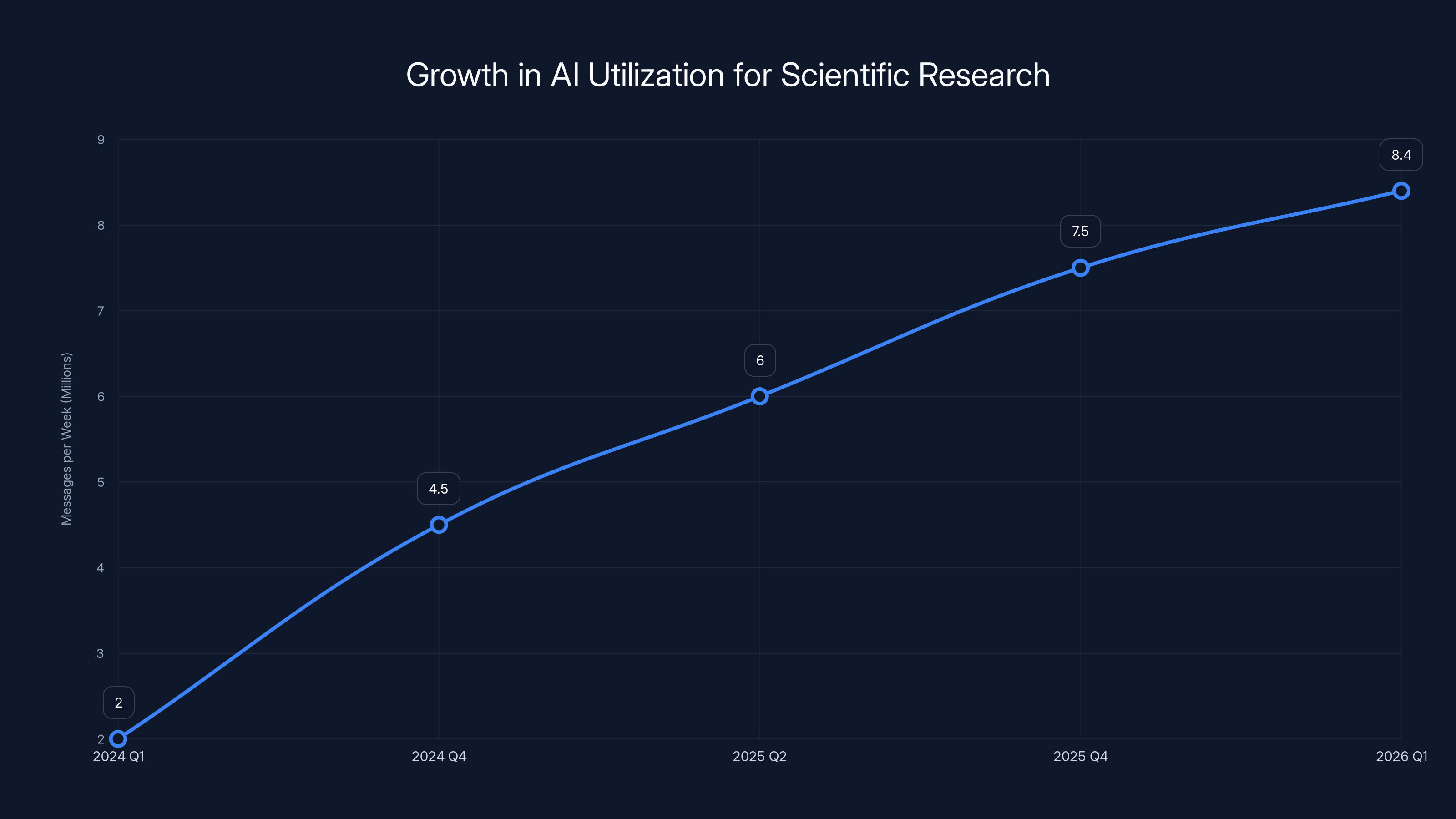

The data supports this shift. OpenAI reported that ChatGPT now receives an average of 8.4 million messages per week on advanced scientific topics. That's not people asking ChatGPT to write their homework. That's working scientists, mathematicians, and researchers treating AI as part of their toolkit. The company watched this happen in their own product and asked the obvious question: what if we built the tool researchers actually wanted instead of a general-purpose chatbot they're repurposing for science?

Prism is the answer. And it's free for anyone with a ChatGPT account, which means adoption could be explosive. The scientific community has spent the better part of a year figuring out how to use GPT models effectively. Now they have a tool designed specifically for their workflow.

Let me walk you through what makes this different, why the timing matters, and what this means for the future of how science actually gets done.

What Prism Actually Is (And Isn't)

Prism is an AI-enhanced research workspace, not a research automation system. This distinction matters more than you'd think because there's been so much hype about AI discovering proofs on its own that people default to imagining robots doing science alone in a lab.

That's not Prism. That's not what it does.

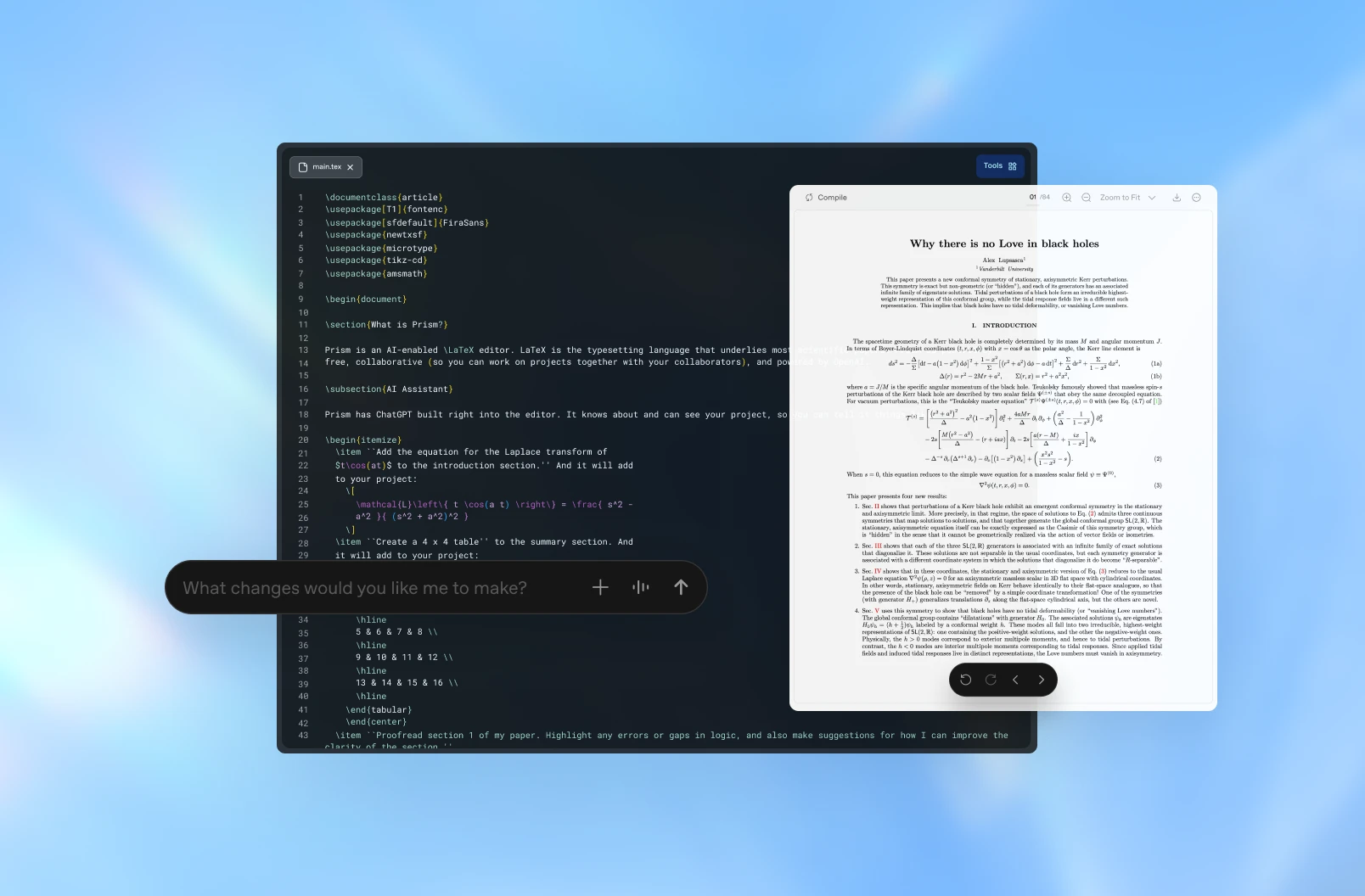

Instead, think of it as a word processor meets reference management system meets collaborative AI assistant. You write your paper in a familiar interface. The AI reads what you're writing and offers suggestions. You ask it to fact-check claims against existing literature. You paste in rough whiteboard diagrams and it converts them to publication-ready figures. You're still driving the research. Prism is the copilot that handles the overhead.

Kevin Weil, VP of Science at OpenAI, made the analogy explicit. He compared Prism to Cursor and Windsurf, the AI-enhanced coding environments that transformed how software engineers work. Those tools didn't replace engineers. They made engineers dramatically more productive by handling boilerplate, suggesting refactors, and catching obvious bugs. Prism does the same thing for scientists.

The core features are straightforward:

- Integrated GPT-5.2 access: The model runs alongside your research, reading full context and suggesting improvements

- LaTeX integration: Works directly with the document format scientists already use, not some proprietary format

- Claim verification: AI can assess factual claims in your writing against training data and suggest citations

- Literature search: When you mention a concept, Prism can search for relevant prior work and flag if you're missing something important

- Visual assembly: Upload sketches or whiteboard photos and convert them to diagrams suitable for publication

- Prose revision: Get specific suggestions for clarity, grammar, and terminology without losing your voice

But here's what separates Prism from just running GPT-5.2 in a browser window with your document copied into the chat: context. Full, persistent context.

When you open ChatGPT and paste in a section of a 40-page paper, the model sees only that section. Paste all 40 pages instead and you trade one problem for another: lots of overhead, and you may hit token limits. When you use Prism, the model understands the entire research project: your methods section, your data, your hypotheses, your previous results. It doesn't just respond to isolated prompts. It understands the arc of your work.

This matters more than it sounds. A suggestion that makes sense in isolation might contradict your methodology. The model can catch that. A citation you're considering might conflict with your thesis statement. It can flag that too. The intelligence becomes contextual instead of transactional.

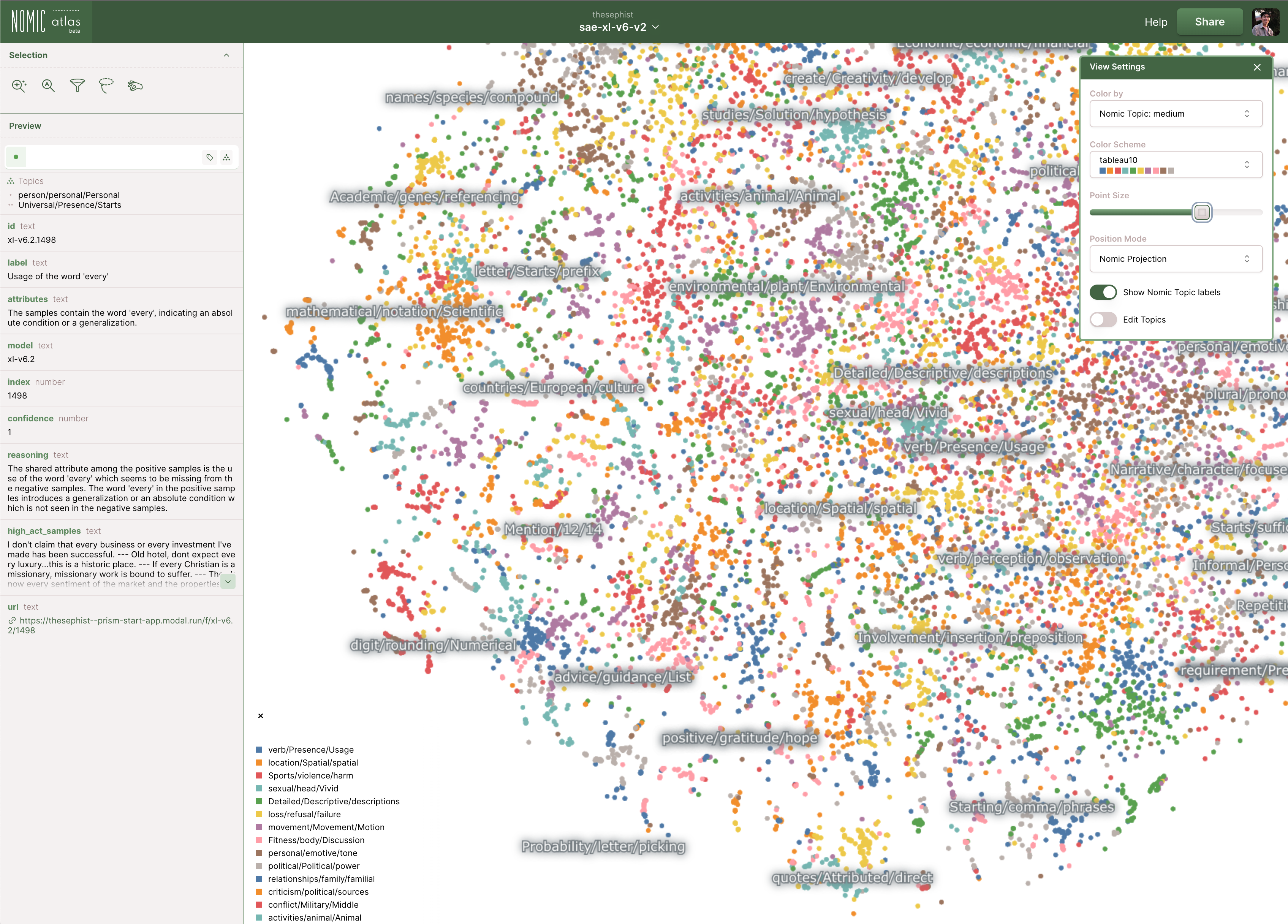

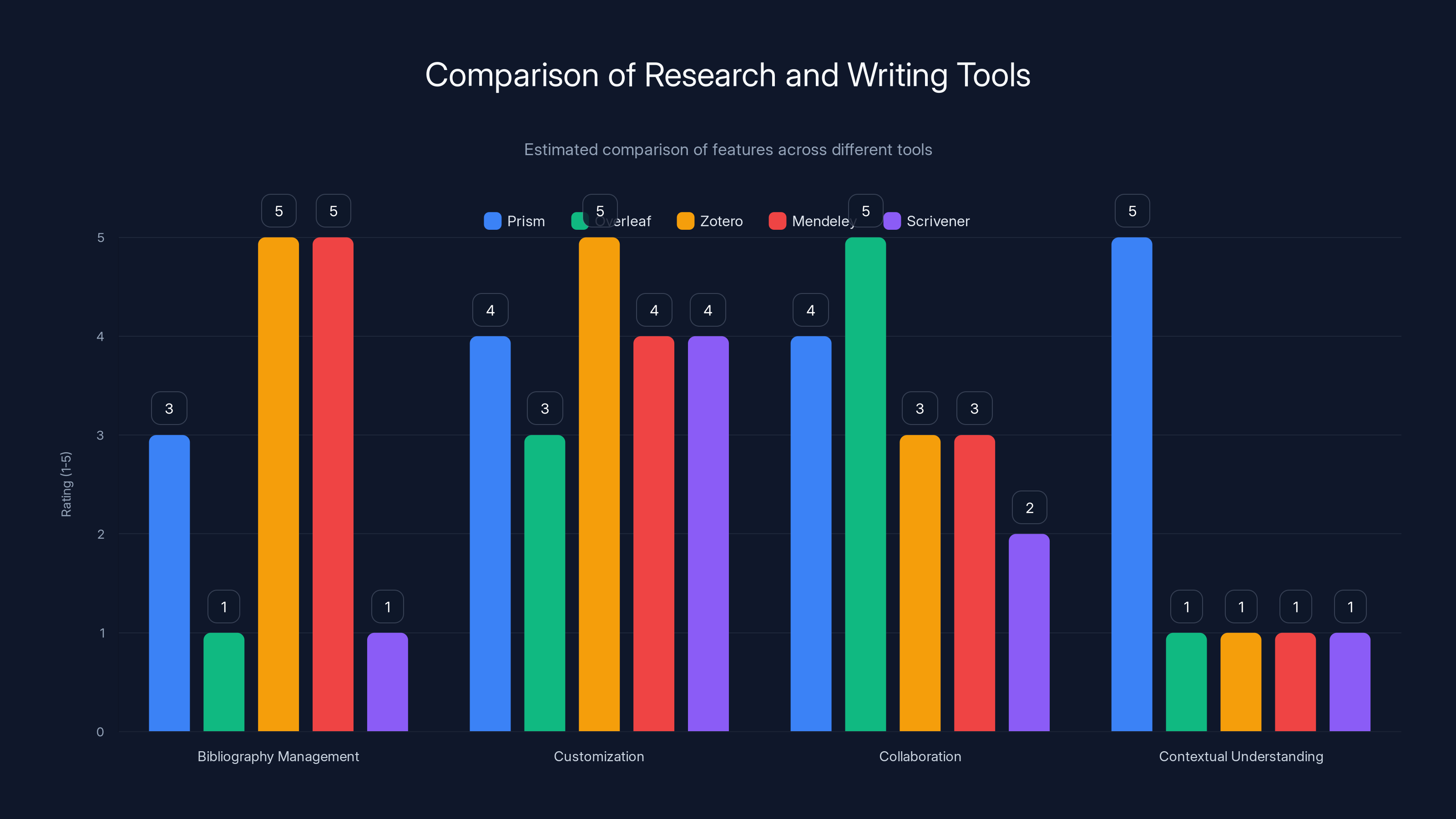

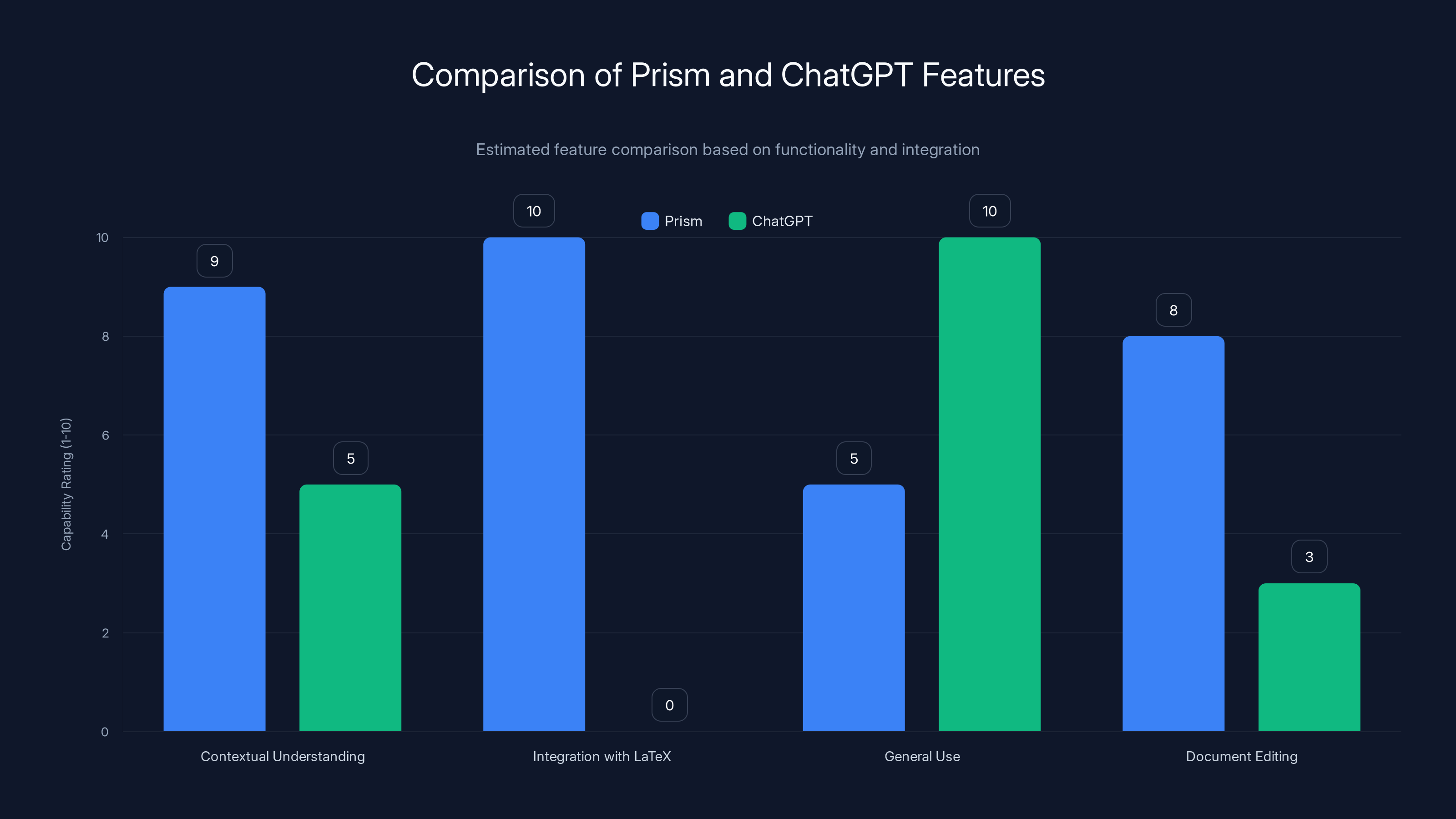

Prism excels in contextual understanding, integrating language model capabilities, while other tools are stronger in specific areas like bibliography management or collaboration. Estimated data.

Why Scientists Need This Right Now

The timing of Prism isn't random. It's a response to a very specific problem that's emerged over the past 18 months: scientists are using AI, but the tooling is broken.

Here's the workflow most researchers currently use. You're writing a paper in LaTeX or Word. You hit a problem—you need to check if a claim you're making is accurate, or you need citation suggestions, or you want to brainstorm how to phrase something technical without losing precision. You open ChatGPT in a separate window. You copy-paste the relevant section. You ask your question. You get a response. You manually incorporate what's useful and ignore what isn't. Then you go back to your document.

That context switching kills productivity. You break flow. You lose the narrative structure of what you're writing. You have to manage two windows and manually sync the changes. It's the same problem that plagued software engineering for years until IDE-integrated AI came along and changed everything.

Scientists have been vocal about this frustration. Over the past year, I've talked to researchers who've built their own workflows using Zapier automation and scripting to pipe their documents through APIs to get AI assistance. It works, but it's like building a bridge when you really just need a road.

Prism is the road.

There's also a secondary driver: AI's success in proof verification has served as a proof of concept (pun intended), and researchers are now confident collaborating with AI on technical work.

Last December, a statistics paper was published that used GPT-5.2 to establish new proofs for a central axiom in statistical theory. The human researchers prompted the model, verified the work, but didn't write the proofs themselves. It was genuine collaboration. The proofs were novel, rigorous, and published in a peer-reviewed journal. That wouldn't have happened two years ago. The scientific community wouldn't have accepted it. But the evidence of AI's capacity for formal reasoning changed minds.

Moreover, AI models have recently contributed to proving several long-standing Erdős problems in mathematics—a group of notoriously difficult unsolved conjectures. The proofs typically involved literature review combined with novel applications of existing techniques, areas where AI models show genuine strength. These weren't examples of machines independently discovering mathematics. They were examples of human mathematicians using AI as a tool to explore an idea space they wouldn't have explored otherwise.

That track record creates confidence. When scientists open Prism, they're not wondering whether AI can be useful for research. They know it can. They're just looking for a tool that makes the collaboration less clunky.

Prism delivers that. It removes the friction that made manual context-switching necessary.

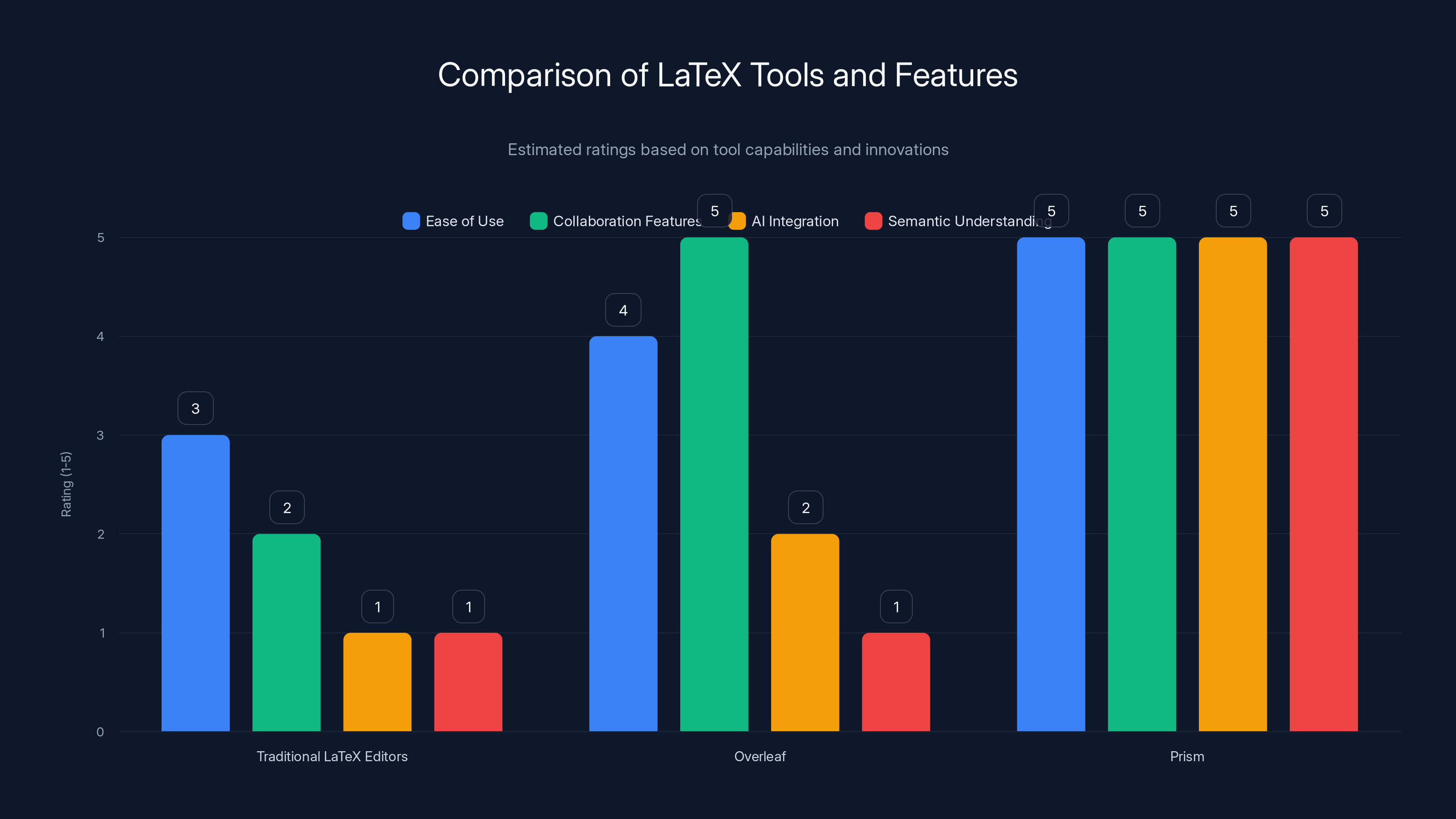

Prism significantly enhances LaTeX integration by incorporating AI for semantic understanding and improved collaboration, surpassing traditional editors and Overleaf. Estimated data.

The LaTeX Integration That Changes Everything

To understand why Prism is positioned as a research workspace rather than just another chatbot, you need to understand LaTeX and why it matters in science.

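LaTeX is software that separates content from formatting. You write \textbf{bold} and it renders as bold. You write a math expression between dollar signs, such as $\sum_{i=1}^{n} x_i$, and it renders as typeset mathematics.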

That's intentional. LaTeX was created by scientists, for scientists, because it handles complex technical documents better than anything else. Mathematical typesetting, cross-references, bibliographies, figure placement, equation numbering: all the things that make scientific papers hard to format. LaTeX handles them cleanly. Most major journals in mathematics, physics, and computer science accept LaTeX submissions, and many prefer them.

But here's the problem: LaTeX tools are... ancient. The best editors haven't fundamentally changed in 15 years. Overleaf made LaTeX collaborative in the cloud, which was huge. But even Overleaf is, at core, a text editor with a LaTeX compiler. It doesn't understand your research. It doesn't help you write better. It just compiles your code.

Prism changes that. It brings AI understanding into a LaTeX-native environment. You're not copying snippets of LaTeX code into ChatGPT. You're writing naturally, and the system understands both the content and the LaTeX structure.

This enables several things that are genuinely novel:

Semantic understanding of your document structure means the AI knows what's a hypothesis, what's a data description, what's an interpretation. It won't suggest citations that contradict your methodology because it understands the architecture of your argument.

Visual diagram assistance addresses a pain point nobody talks about until you've spent six hours trying to get TikZ code to render a decent figure. Prism uses GPT-5.2's visual capabilities to let you sketch something on a whiteboard (literally take a photo), paste it into Prism, and get back publication-ready LaTeX diagram code. That saves hours per paper.

Automatic citation integration means when the AI suggests a reference, it's not just text—it's integrated into your bibliography and citation system. No more managing reference lists manually or dealing with formatting inconsistencies.

Bibliography management that actually understands context. Upload a bibliography, and Prism can identify which citations you're actually using in your paper, which ones you've forgotten, and which ones might be redundant.

These aren't flashy features. They're unglamorous bits of academic work that currently take enormous time. But removing that friction is what makes a tool actually useful for scientists.
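The bibliography check described above (which entries you cite, which you've forgotten, which are dead weight) can be approximated mechanically. The sketch below is a minimal, hypothetical version, not Prism's implementation. It assumes a single .tex string and a single .bib string, treats any macro whose name contains "cite" (\cite, \citep, \textcite, and so on) as a citation, and ignores text after an unescaped %. The function names cited_keys, bib_keys, and audit are illustrative.

```python
import re

# Any macro whose name contains "cite" (\cite, \citep, \textcite, \parencite, ...),
# with up to two optional [...] arguments, followed by {key1, key2, ...}.
CITE_RE = re.compile(r"\\[A-Za-z]*cite[A-Za-z]*\*?(?:\[[^\]]*\]){0,2}\{([^}]*)\}")
# The key of a BibTeX entry, e.g. "@article{smith2019,".
BIB_KEY_RE = re.compile(r"@\s*(\w+)\s*\{\s*([^,\s]+)\s*,")
# An unescaped % starts a LaTeX comment that runs to the end of the line.
COMMENT_RE = re.compile(r"(?<!\\)%.*")


def cited_keys(tex: str) -> set[str]:
    """Return every citation key used in the LaTeX source, ignoring comments."""
    keys: set[str] = set()
    for group in CITE_RE.findall(COMMENT_RE.sub("", tex)):
        keys.update(k.strip() for k in group.split(",") if k.strip())
    return keys


def bib_keys(bib: str) -> set[str]:
    """Return the keys of all reference entries in a .bib file."""
    skip = {"string", "preamble", "comment"}  # BibTeX entry types that are not references
    return {key for kind, key in BIB_KEY_RE.findall(bib) if kind.lower() not in skip}


def audit(tex: str, bib: str) -> dict[str, list[str]]:
    """Compare the keys cited in the paper with the keys defined in the bibliography."""
    used, available = cited_keys(tex), bib_keys(bib)
    return {
        "missing_from_bib": sorted(used - available),  # cited but never defined
        "unused_in_paper": sorted(available - used),   # defined but never cited
    }
```

Anything in missing_from_bib will show up as an undefined citation when you compile; anything in unused_in_paper is either redundant or a reference you meant to cite and forgot.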

The comparison to coding tools is apt here. VS Code revolutionized software development not because it was fundamentally different from older editors, but because it reduced friction in the developer workflow. Built-in debugging, integrated terminal, extension ecosystem, language intelligence. It understood code. Prism does the same thing for LaTeX and research writing.

Context Management: The Real Innovation

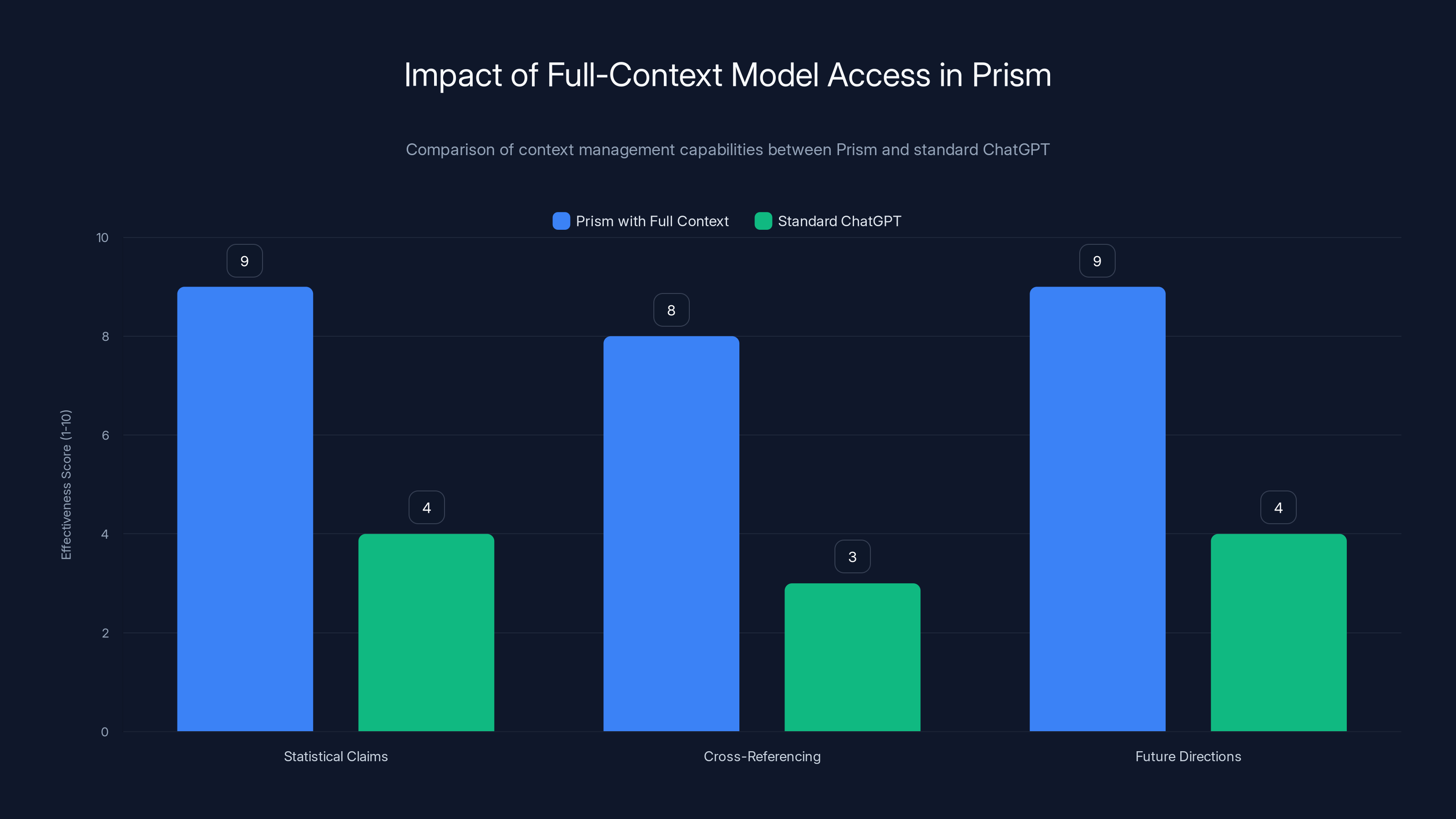

The most powerful feature of Prism isn't the most obvious one. It's not the LaTeX integration or the visual features. It's the full-context model access.

When you open ChatGPT and ask a question, the model sees that question and whatever history you've fed it in that conversation. It's stateless except for the thread. But when you use Prism, GPT-5.2 is running continuously with complete access to your entire research project.

This changes what the model can do. Let me give you specific examples of how this matters:

Scenario 1: You're writing your results section and you claim a finding is "statistically significant with p < 0.05." Your methods section, however, mentions you're testing 47 different hypotheses. The model, with full context, can flag that this claim needs multiple comparison correction (Bonferroni, FDR, etc.) because it understands both where you made the claim and what your methods actually were. Without full context, ChatGPT would just think "p < 0.05, cool."

Scenario 2: You're in the introduction and you want to describe prior work that informed your approach. You mention "Smith's framework for network analysis." The model, with full context, can cross-reference that against your methods section where you implemented that framework and ask if you need to be more specific about which components you used. It can suggest clarifications that prevent reader confusion. That's only possible with full context.

Scenario 3: You're proposing a future direction in your discussion section and you suggest a particular statistical test would be useful for follow-up work. The model, having read your methods, can flag that you actually already collected that data in Study 2 (which you forgot about) and offer to help you write an analysis of it instead. This actually saves you from wasting future grant money on redundant studies.

None of these things happen in ChatGPT-in-a-browser. They only work with deep, persistent context.
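Scenario 1 is the kind of check that can be written down precisely. The sketch below shows two standard corrections in plain Python: Bonferroni, which compares each p-value against α/m to control the family-wise error rate, and the Benjamini-Hochberg step-up procedure, which controls the false discovery rate. With m = 47 tests and α = 0.05, the Bonferroni threshold is 0.05/47 ≈ 0.00106, so a result at p = 0.03 no longer clears the bar. The p-values in the usage lines are made up for illustration.

```python
def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Reject hypothesis i when p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate).

    Sort the p-values, find the largest rank k with p_(k) <= (k / m) * alpha,
    and reject the k hypotheses with the smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            k_max = rank  # keep the largest passing rank (step-up)
    rejected = [False] * m
    for i in order[:k_max]:
        rejected[i] = True
    return rejected


# Hypothetical results: three p-values under 0.05 among 47 tests.
p = [0.001, 0.02, 0.04] + [0.5] * 44
print(sum(x < 0.05 for x in p))                        # 3 "significant" before correction
print(sum(bonferroni(p)), sum(benjamini_hochberg(p)))  # 1 1
```

Bonferroni is the conservative choice; Benjamini-Hochberg rejects more when many small p-values cluster together, which is why it is common in exploratory work with many tests.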

OpenAI calls this "context management" internally, and it's the same innovation that makes Cursor so powerful for coding. The IDE has full context of your codebase, and the AI understands the entire project. It can make suggestions that are relevant to your specific work rather than generic ones that may or may not apply to your problem.

For research, this is transformational. Science is fundamentally about understanding systems and arguments in their entirety. A suggestion that makes sense in isolation but breaks your logic is worse than useless—it's actively harmful. Full-context AI is the only way to get suggestions that actually strengthen your work instead of breaking it.

Weil emphasizes this in how he talks about Prism's design: "Software engineering accelerated in part because of amazing models, but in part because of deep workflow integration." That's code for: the model itself matters, but the integration into your actual working environment matters just as much. Maybe more.
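Mechanically, "full context" starts with something mundane: getting the whole project into one place. Real LaTeX projects are often split across files with \input and \include, so a tool has to stitch them together before a model can see the methods and results sections at once. The sketch below is a hypothetical illustration of that first step, not a description of how Prism works internally. It assumes project files are held in a dict keyed by path relative to the project root, resolves \input and \include paths against that root (as LaTeX does when compiling from the main file's directory), and inlines each file at most once.

```python
import posixpath
import re
from pathlib import Path

# \input{...} or \include{...}; \includegraphics does not match because "{" must follow.
INPUT_RE = re.compile(r"\\(?:input|include)\{([^}]+)\}")


def load_project(root: str) -> dict[str, str]:
    """Read every .tex file under root, keyed by its POSIX path relative to root."""
    base = Path(root)
    return {p.relative_to(base).as_posix(): p.read_text(encoding="utf-8")
            for p in base.rglob("*.tex")}


def flatten(files: dict[str, str], main: str = "main.tex") -> str:
    """Inline \\input and \\include targets recursively into one string.

    Paths are resolved against the project root. Each file is inlined at most
    once, which also stops include cycles; missing files leave a comment.
    """
    seen: set[str] = set()

    def expand(name: str) -> str:
        name = posixpath.normpath(name)  # "./sections/intro" -> "sections/intro"
        if not name.endswith(".tex"):
            name += ".tex"  # LaTeX adds the extension when it is omitted
        if name not in files:
            return f"% [missing: {name}]"
        if name in seen:
            return ""
        seen.add(name)
        return INPUT_RE.sub(lambda m: expand(m.group(1).strip()), files[name])

    return expand(main)
```

In use, something like flatten(load_project("paper")) (with "paper" standing in for your project directory) yields a single string that a model can read end to end.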

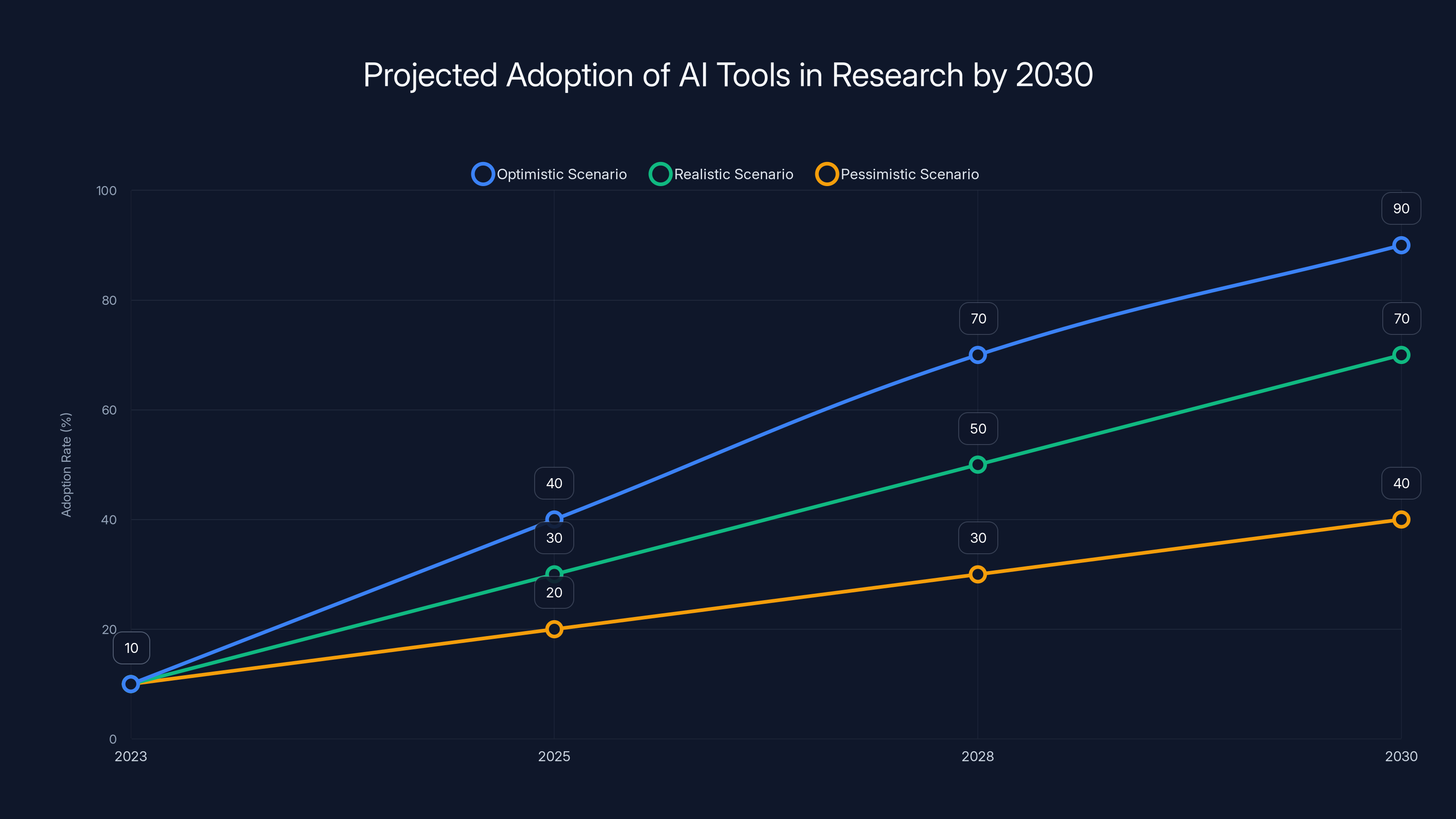

Under the optimistic scenario, AI tools like Prism could become nearly ubiquitous in research fields by 2030. The realistic scenario suggests a steady increase, while the pessimistic scenario indicates slower adoption. Estimated data.

Scientific Proof Verification: Where AI Shows Real Strength

While Prism isn't designed for autonomous research, there's a specific area where it excels: proof verification and mathematical reasoning. This isn't speculation. We have evidence.

The most compelling evidence comes from the recent statistics paper I mentioned earlier. The researchers used GPT-5.2 to develop new proofs for a central axiom of statistical theory—specifically, the relationship between maximum likelihood estimation and sufficiency. These aren't trivial results. The proofs had to satisfy peer review at a rigorous journal.

Here's how the collaboration actually worked: The human mathematicians posed the problem to the model. The model suggested approaches based on its training on mathematical literature. The humans verified each step, sometimes asking for variations or refinements. When a proof path seemed promising, they worked through it carefully. The final proofs were novel and correct.

This is crucial: the humans did the verification. They didn't just trust the model. They understood the mathematics well enough to verify it. But the model dramatically accelerated the exploration of proof strategies. Instead of spending weeks reading related literature and trying approaches manually, they could test dozens of approaches in hours.

Similarly, AI models have contributed to proofs of several Erdős problems—long-standing conjectures in mathematics that have resisted proof for decades. The role of AI in these proofs has been primarily in literature review and in exploring combinations of existing techniques that humans might not have tried. The contributions are real, but they're not autonomous. They're augmentative.

Why does AI show particular strength in mathematical reasoning? Several reasons:

Mathematical reasoning is highly structured. Unlike natural language, which is ambiguous and context-dependent, mathematics has rigid rules. A proof either follows logically or it doesn't. AI models trained on mathematical text learn to recognize these patterns. They're not infallible (they do make mistakes), but they're quite good.

Mathematics has a closed information domain. The training data for mathematical reasoning is comprehensive. The model has seen most published mathematics and can reference across domains. A physicist might not immediately see the connection to a technique from abstract algebra, but the model, having read thousands of papers across all mathematical fields, might see it.

Proof verification is procedural. You can check if a proof is correct by following the logical steps. You can't do that with, say, experimental methodology. Experimental design involves judgment calls about what's "reasonable." Proofs don't have that fuzziness. Either the step follows from the premises or it doesn't.

Prism leverages this strength explicitly. When you're developing mathematical arguments or verifying proofs in your paper, the model can actually check the logic, not just generate plausible-sounding text.
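The claim that a proof "either follows or it doesn't" can be made concrete with a toy checker. The sketch below handles only one inference rule, modus ponens, over propositional formulas encoded as strings (atoms) and ("->", A, B) tuples (implications); that encoding and the function name check_proof are assumptions for illustration. Real proof assistants such as Lean or Isabelle check far richer logics, but the principle is the same: every line must be justified by earlier lines, and a single unjustified line fails the whole proof.

```python
from typing import Optional, Union

Formula = Union[str, tuple]  # an atom like "p", or ("->", A, B) meaning A implies B


def check_proof(premises: list[Formula], steps: list[Formula]) -> tuple[bool, Optional[int]]:
    """Check a toy propositional proof that uses only modus ponens.

    Each step must be a premise, or follow from two earlier lines A and
    ("->", A, B). Returns (True, None) if every step is justified, otherwise
    (False, i) where i is the index of the first unjustified step.
    """
    proven: list[Formula] = []
    for i, formula in enumerate(steps):
        is_premise = formula in premises
        by_modus_ponens = any(("->", a, formula) in proven for a in proven)
        if not (is_premise or by_modus_ponens):
            return False, i
        proven.append(formula)
    return True, None


premises = ["p", ("->", "p", "q"), ("->", "q", "r")]
print(check_proof(premises, ["p", ("->", "p", "q"), "q", ("->", "q", "r"), "r"]))  # (True, None)
print(check_proof(premises, ["p", "r"]))  # (False, 1): r does not follow from p alone
```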

But Weil and OpenAI are careful not to oversell this. In their announcement, they emphasized that Prism is designed to "accelerate the work being done by human scientists." The emphasis is on human. The tool augments human judgment. It doesn't replace it.

This restraint is notable. In an era when every AI company is claiming their tool will "revolutionize" or "transform" their domain, OpenAI is positioning Prism as an accelerator, not a replacement. That's honest. It's also more useful. Scientists don't want to be replaced. They want their work to go faster.

The Broader Shift in AI and Science

Prism doesn't exist in a vacuum. It's part of a larger recognition that AI and science are converging in ways that benefit both domains.

Think about what's happened in the past 18 months. We've moved from "can AI help with science at all?" to "what specific tools do scientists need to collaborate effectively with AI?" That shift happened because of evidence. Evidence that AI models can verify proofs. Evidence that AI can identify citation gaps. Evidence that AI can help scientists understand their own data better.

The OpenAI blog post announcing Prism lays this out explicitly. "In domains with axiomatic theoretical foundations, frontier models can help explore proofs, test hypotheses, and identify connections that might otherwise take substantial human effort to uncover."

Notice what that's saying: AI excels in domains with strong theoretical foundations. Mathematics, formal logic, theoretical physics. It's less powerful in domains that require judgment, intuition, and tacit knowledge. You can't use AI to do really innovative experimental design because good experimental design requires taste and intuition that come from experience. You can use it to check citations or to help you format your methods section clearly.

This is important because it sets realistic expectations. Prism will be most useful in theoretical fields. A mathematician using Prism will probably get more value than a biologist. A theoretical computer scientist will get more value than an experimental psychologist. The tool is optimized for domains where reasoning is highly structured.

But that doesn't mean empirical sciences won't benefit. Every researcher, regardless of field, spends time on literature review, writing clarity, citation management, and figure generation. Prism helps with all of that. The added bonus for theoretically-oriented fields is that they also get proof assistance.

This is also why the comparison to coding tools is so apt. Software engineering varies wildly—from highly mathematical formal verification work to messy real-world system design. AI coding tools help with both, but they're most powerful for the highly structured parts (refactoring, boilerplate, pattern recognition). The same will be true with Prism.

The announcement also signals OpenAI's broader strategy. They're not trying to build a general "AI for science" tool. They're building domain-specific tools that integrate deeply with how people actually work. Having watched AI coding tools such as Cursor and Windsurf succeed, they're applying the same playbook to science: take a specific domain, understand the workflow deeply, build tools that integrate seamlessly into that workflow, and let the product speak for itself.

Prism excels in contextual understanding and LaTeX integration, while ChatGPT is more suited for general use. Estimated data.

Feature Breakdown: What You Actually Get

Let me walk through what Prism actually offers, feature by feature, so you understand what you're working with.

Integrated Writing Environment

This is the foundation. You write your paper in Prism's editor. It's not revolutionary—it's a clean, modern text editor with LaTeX support. But it's where everything else connects. As you write, the model is watching. It's not intrusive (you don't get constant pop-up suggestions if you don't want them). But it's there, ready to help.

You can ask it to improve a section's clarity. You can ask it to check if your notation is consistent. You can ask it to suggest alternative phrasings that preserve your meaning but hit a different tone.
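A crude, non-AI version of the notation check is easy to sketch, and it shows what "consistent" means in practice: the same kind of object should be written the same way throughout. The groups of competing macros below are hypothetical examples (bold vectors written as \mathbf, \vec, or \boldsymbol; expectation written three ways) and would need tuning to a given field's conventions. Pattern matching like this cannot tell when one symbol is being used for two different quantities; that is where a context-aware model would have to do the work.

```python
import re
from collections import Counter

# Hypothetical groups of competing macros for the same kind of object.
# Tune these to your own field's conventions.
NOTATION_GROUPS = {
    "vector": [r"\mathbf", r"\vec", r"\boldsymbol"],
    "expectation": [r"\mathbb{E}", r"\operatorname{E}", r"\mathrm{E}"],
}


def notation_report(tex: str, groups: dict[str, list[str]] = NOTATION_GROUPS) -> dict[str, Counter]:
    """For each group where more than one variant appears, count the variants used."""
    report: dict[str, Counter] = {}
    for name, variants in groups.items():
        counts: Counter = Counter()
        for v in variants:
            pattern = re.escape(v)
            if v[-1].isalpha():
                # Don't let \vec also match longer macros such as \vector.
                pattern += r"(?![A-Za-z])"
            n = len(re.findall(pattern, tex))
            if n:
                counts[v] = n
        if len(counts) > 1:  # only report groups with competing notations
            report[name] = counts
    return report


sample = r"$\mathbf{x} + \vec{y} = \mathbf{z}$, and later $\mathbb{E}[X]$"
print(notation_report(sample))
```

Here the vector group is flagged because \mathbf and \vec both appear, while the expectation group is not, since only \mathbb{E} is used.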

Claim Verification

You make a factual claim: "The effectiveness of strategy X has been demonstrated across 47 studies." You ask Prism to verify. The model checks this against its training data and either confirms, suggests modification ("across 47 peer-reviewed studies in the past decade"), or flags that the claim might be overstated.

This is valuable because it catches the kind of incremental exaggeration that creeps into papers. You don't mean to overstate, but three studies might feel like "extensive evidence" when you're deep in writing.

Citation Suggestions and Gap Analysis

You describe a concept, and Prism suggests relevant citations. More usefully, it tells you when you're missing major prior work in an area you're discussing. Its specific picks can't be taken on faith, but it can flag gaps such as: "you're discussing network analysis frameworks, but you haven't cited Smith 2019 or Johnson 2021, which are central to this area."

Then you can look those up and decide if they're relevant. The model is surfacing gaps, not filling them.

Visual Diagram Generation

This is the feature that solves an actual pain point. Creating publication-ready diagrams in LaTeX is tedious. You either write TikZ code (which is complex), use external tools and embed PDFs (which breaks collaboration and version control), or create images (which lose quality at print resolution).

With Prism, you sketch on a whiteboard. You take a photo. You paste it. The model reads your sketch, understands what it's supposed to represent, and generates LaTeX code that renders it. It's not perfect—it might need tweaking—but it takes you from "rough sketch" to "nearly publication-ready" in seconds rather than hours.
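The photo-to-diagram pipeline has two halves: recognizing what the sketch shows, which is the model's job, and emitting TikZ source for it, which is deterministic. The sketch below illustrates only the second half, under the assumption that recognition has already produced labeled nodes with coordinates and a list of arrows; the function name to_tikz and that input format are hypothetical. The output compiles in a document that loads the tikz package.

```python
def to_tikz(nodes: dict[str, tuple[float, float, str]], edges: list[tuple[str, str]]) -> str:
    """Emit TikZ source for a simple box-and-arrow diagram.

    nodes maps a node name to (x, y, label), in TikZ's default unit (cm);
    edges lists (source, target) arrows. Labels are inserted verbatim, so
    LaTeX special characters such as & or % must already be escaped.
    """
    lines = [r"\begin{tikzpicture}[every node/.style={draw, rounded corners}]"]
    for name, (x, y, label) in nodes.items():
        lines.append(f"  \\node ({name}) at ({x},{y}) {{{label}}};")
    for src, dst in edges:
        lines.append(f"  \\draw[->] ({src}) -- ({dst});")
    lines.append(r"\end{tikzpicture}")
    return "\n".join(lines)


# Hypothetical recognizer output for a whiteboard sketch of a three-stage pipeline.
print(to_tikz(
    {"data": (0, 0, "Data"), "model": (3, 0, "Model"), "eval": (6, 0, "Evaluation")},
    [("data", "model"), ("model", "eval")],
))
```

Keeping generation this simple is deliberate: the hard, error-prone part is recognition, and a plain intermediate format makes it easy to correct a misread node before any TikZ is produced.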

Full Document Context

This isn't a feature you see, but it's the most important one. Everything the model suggests is based on understanding your entire paper. It knows your methods. It knows your data. It knows your thesis. Suggestions are contextual.

Revision Suggestions

Prism can identify sections that might be unclear and suggest revisions. But again, with context. It knows what you're trying to argue, so suggestions preserve your meaning while improving clarity.

You can accept, reject, or modify each suggestion. You're always in control.

The Adoption Timeline: What Happens Next

Prism is free with any ChatGPT account, which is a clever distribution strategy. It removes the activation energy barrier. You don't need to convince your department to buy software. You don't need an institution account. If you use ChatGPT, you can use Prism.

But adoption won't be immediate. There are several stages we'll likely see:

Early adopter phase (Q1-Q2 2026): Computer scientists, mathematicians, and physicists will start experimenting. These are researchers who are already comfortable with AI and who work in domains where Prism's strengths are most evident. Success stories will emerge.

Institutional adoption (Q2-Q3 2026): Universities will start integrating Prism into their writing centers and graduate writing programs. Early adopters will evangelize within their departments. You'll start seeing Prism mentioned in methodology courses.

Broad adoption (Q4 2026 onward): Once enough graduate students have used Prism and experienced the productivity gains, it becomes part of the standard workflow. By 2027, not using something like Prism might be seen as unnecessarily slow.

This timeline is informed by how adoption of similar tools has unfolded. GitHub went from niche developer tool to industry standard in about five years. Slack went from startup to ubiquitous in about four years. Overleaf took longer (about seven years) because it required changing established workflows, but it's now used by a substantial percentage of LaTeX users.

Prism will follow a similar arc. The advantage it has is that it's not asking scientists to change how they work. It's improving the tools they're already using. That accelerates adoption.

The disadvantage is switching costs. Some Overleaf users will move to Prism, but researchers who rely heavily on Overleaf have more to migrate, and that will slow adoption among its most active users. This is a real competitive dynamic, and we'll see how it plays out.

My prediction: Prism becomes the standard tool for new researchers (grad students and postdocs) within 18 months. Established researchers slowly migrate if they're writing heavily, or use both tools for different purposes. By 2028, Prism is simply part of the academic research toolkit, like LaTeX itself.

Prism's full-context model access significantly enhances its ability to manage context, outperforming standard ChatGPT in handling statistical claims, cross-referencing, and future directions. Estimated data.

Comparison to Existing Solutions

Let's be clear about what Prism is competing with. It's not competing with ChatGPT (which you can already use for research—lots of scientists do). It's competing with two categories of tools:

Specialized research tools like Overleaf (LaTeX collaboration), Zotero (bibliography management), and Mendeley (reference management).

General writing tools like Google Docs, Word, or specialized academic writing software like Scrivener.

Prism isn't better than all of these at all tasks. Mendeley has more sophisticated bibliography features. Zotero is more customizable. But Prism does one thing that none of them do: it integrates with a state-of-the-art language model that understands context.

The relevant comparison is to adding ChatGPT on top of your existing tools. Many researchers do exactly that—they keep their bibliography in Mendeley, write in Overleaf, and have ChatGPT open in another window. Prism consolidates that workflow into one tool, with the added benefit of full context.

Is that worth switching? For researchers who are already happy with their current workflow, probably not immediately. For new researchers, or researchers frustrated with the context-switching of multiple tools, definitely.

There's also potential for Prism to expand. Right now it's focused on document creation and editing. But research involves other tasks: data analysis, literature review, experiment design. If Prism expands into those areas (and OpenAI's blog hints they might), it could become a much more comprehensive research suite.

Think about what that would look like. You upload your dataset. Prism suggests analytical approaches. You ask it to generate visualization code. You describe an experiment design and it suggests statistical tests. That's further away, but it's the logical endpoint of this trajectory.

Limitations and Honest Assessment

Prism is impressive, but it has real limitations that you should understand before adopting it.

It's Still Prone to Hallucination

When Prism suggests a citation, it's making an educated guess based on patterns in its training data. Sometimes that guess is right. Sometimes it's not. It might suggest a paper that doesn't exist or misattribute a finding to the wrong author. You absolutely must verify every suggestion the model makes.

This is less critical with structured suggestions ("your methods section contradicts your results") than with factual ones ("you should cite Smith 2024"). But it's always something to watch.
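One mechanical first pass against hallucinated references is to confirm that a suggested DOI is registered at all. The sketch below queries the public Crossref REST API (https://api.crossref.org/works/{doi}), which returns a record for DOIs registered with Crossref and a 404 otherwise. It assumes the model's suggestion includes a DOI, which is not always the case, and the function names are illustrative. DOIs registered with other agencies, such as DataCite, will also return 404 here, so a miss is a prompt to check by hand, not proof of fabrication. And a DOI that resolves only shows the paper exists; you still need to compare the returned title with the suggestion and read the paper to confirm it supports your claim.

```python
import json
import re
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional

# Loose DOI shape: "10." + registrant code + "/" + suffix.
DOI_RE = re.compile(r"^10\.\d{4,9}/\S+$")


def looks_like_doi(doi: str) -> bool:
    """Cheap offline check before making any network request."""
    return bool(DOI_RE.match(doi.strip()))


def crossref_title(doi: str, timeout: float = 10.0) -> Optional[str]:
    """Return the title Crossref has on record for a DOI, or None if it has none."""
    if not looks_like_doi(doi):
        return None
    url = "https://api.crossref.org/works/" + urllib.parse.quote(doi.strip(), safe="/")
    # Crossref recommends that clients identify themselves; replace the placeholder address.
    req = urllib.request.Request(url, headers={"User-Agent": "citation-check (mailto:you@example.org)"})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            record = json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:  # not registered with Crossref
            return None
        raise
    titles = record.get("message", {}).get("title") or []
    return titles[0] if titles else None
```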

Limited to Text-Based Research

Prism works beautifully for theoretical work, math, and conceptual research. It's much less useful if your research is fundamentally about empirical data that isn't publicly available. If you're working with proprietary datasets or sensitive data that can't be shared with an AI, Prism's utility drops significantly.

Doesn't Understand Every Notation System

LaTeX is standard, but different fields have different mathematical conventions. Prism handles standard LaTeX notation beautifully. But if you're using obscure notations or highly specialized field conventions, the model might not understand them fully. This is a limitation of the model's training data, not Prism specifically, but it's worth knowing.

Integration with Existing Tools Is Limited

You can write in Prism, but importing from Overleaf is manual. Exporting your Prism documents to other formats might have some friction. If you're deeply integrated into a specific tool ecosystem, switching might be more complicated than it sounds.

It's Designed for English-Language Academic Writing

The model is trained primarily on English-language academic text. If you're writing in another language, Prism's usefulness drops considerably. This isn't necessarily a limitation of Prism, but it's a practical reality.

It Can't Do Truly Novel Experimental Design

Prism can suggest statistical tests and help you understand which experimental approaches make sense given your constraints. But it can't generate genuinely innovative experimental designs that haven't been published before. Experimental design requires judgment and taste that AI models struggle with. You can use Prism to formalize your experimental ideas, but the ideation still comes from you.

These limitations aren't fatal. They're just real. OpenAI isn't claiming Prism is perfect. They're claiming it accelerates research, and within those limitations, it does.

The use of AI in scientific research has grown significantly, with ChatGPT receiving an average of 8.4 million messages per week on advanced scientific topics by early 2026. Estimated data.

The Bigger Picture: AI and Scientific Method

Prism is interesting not just as a tool, but as evidence of a larger shift: AI is becoming part of the scientific method itself.

For centuries, science has been a human enterprise. You design experiments, run them, analyze results, write them up. Maybe you collaborate with colleagues. But the thinking is human. The judgment is human. The creativity is human.

Now that's changing. Not because AI will replace human judgment, but because humans working with AI can explore more possibilities faster. A mathematician using Prism can test proof strategies that would take weeks to explore manually. A biologist can ask AI to suggest experimental approaches they hadn't considered. A physicist can verify calculations faster.

This isn't new, exactly. Scientists have always used tools to extend their capabilities. Telescope astronomy isn't less "true" science than naked-eye astronomy. Computers didn't make physics less human. They made it possible to explore more possibilities more deeply.

AI is another step in that progression. The tools get better. The possibilities expand. The humans doing the thinking stay central.

But there's a real question about what this means for scientific culture. If everyone is using AI assistants, are we converging on a monoculture? If everyone is using the same model with the same training data, does that bias the kinds of hypotheses we explore? These are fair concerns.

OpenAI's approach with Prism suggests they're thinking about this. By integrating with LaTeX rather than replacing it, by positioning the tool as an accelerator rather than a replacement, by emphasizing human judgment in verification—they're acknowledging that scientific culture matters. The tool augments it. It doesn't define it.

That's the difference between tools that improve science and tools that corrupt it. The good ones enhance human capability while preserving the human judgment that makes science trustworthy.

Practical Implementation: How You Actually Use Prism

If you're considering using Prism, here's what the actual workflow looks like.

Getting started: You need a ChatGPT account. If you have one, you already have access to Prism. No additional signup. You go to the Prism interface and either start a new document or import an existing LaTeX file.

Writing your paper: You write naturally. The interface is clean and familiar. You're not thinking about the fact that an AI model is monitoring what you're writing. You just write.

Using AI assistance: You can invoke help in several ways. Highlight a section and ask for revision suggestions. Ask the model to verify a claim. Ask it to suggest citations for a topic you're discussing. Ask it to generate diagram code from a sketch. You make all of these requests in natural language; you're not dealing with API calls or technical configuration.

Dealing with suggestions: The model makes suggestions. You review them. You can accept, reject, or modify. The suggestions don't automatically apply. You're always in control. This is important—Prism isn't a service that modifies your work behind your back.

Collaboration: If you're working with coauthors, collaboration works much as it does in Overleaf. Multiple people can work on the same document simultaneously. The AI context is shared, so everyone gets the same suggestions. This is actually an advantage over each collaborator using ChatGPT separately: everyone works from the same context.

Exporting: When you're done, you export as standard LaTeX or PDF. Your document is yours. You're not locked into Prism's format.

The whole workflow is designed to feel natural. You're not aware you're using an AI tool unless you explicitly ask for help. When you do, the help is contextual and relevant.

Looking Forward: The Trajectory of AI in Research

Where does Prism fit in the longer arc of AI and science? That depends on what happens over the next few years.

The optimistic case: Prism becomes ubiquitous by 2028. It evolves to include data analysis tools, experiment design assistants, and literature review automation. Scientific productivity increases measurably. Grant-funded research accelerates. We see more published research, more novel ideas explored, and faster scientific progress. The bottleneck for science shifts from "doing the work" to "funding the work."

The realistic case: Prism becomes standard in certain fields (math, computer science, theoretical physics) while being less common in others. It solves the coordination problem of managing multiple tools. It increases productivity, but not dramatically—maybe 15-20% time savings. Scientific culture absorbs AI gradually, without major disruption. By 2030, using AI as a research tool is as normal as using computers.

The pessimistic case: Adoption is slower than expected because of switching costs and institutional inertia. Researchers keep using their existing tools. Prism becomes a niche product used mostly in computer science and AI research. Science progresses with or without it, because research productivity is bottlenecked by other factors (funding, lab space, access to data) more than by writing tools.

My prediction: We end up somewhere between realistic and optimistic. Adoption is fast in theory-heavy fields, slower in experimental fields. Within five years, Prism is standard for mathematical and theoretical research. It's optional elsewhere. That's still a meaningful shift.

But the more interesting question isn't what happens to Prism specifically. It's what this signals about the future of work more broadly. If AI can meaningfully accelerate research—not replace researchers, but make them more productive—what other domains are about to be transformed similarly?

The answer is: everything involving writing, analysis, and knowledge work. Prism is just the first product in what will probably be a wave of similar tools for different professions. Prism for scientists. Prism for lawyers. Prism for consultants. Prism for programmers (which, in a sense, already exists in the form of Cursor and similar tools).

The pattern is always the same. Understand a specific domain deeply. Build tools that integrate into how people actually work in that domain. Let the AI handle the boring parts and the verification. Let humans handle the judgment and creativity. The result is productivity gains that are real but not science-fiction level. Just meaningful enough to change how work gets done.

Prism is the evidence that OpenAI has figured this pattern out. Watch for it to repeat.

TL;DR

- Prism is a research workspace, not autonomous research AI. It integrates GPT-5.2 into LaTeX-based scientific writing with full-document context awareness, meaning the model understands your entire research project and can make contextually intelligent suggestions.

- It solves real friction points in academic workflows, particularly diagram generation from sketches, citation management, claim verification, and prose revision: tasks that currently consume hours per week.

- Full-context AI is the key innovation. Unlike copy-pasting sections into ChatGPT, Prism's model understands your methodology, data, and arguments holistically, enabling suggestions that strengthen rather than contradict your work.

- It's most powerful for theoretical research where reasoning is highly structured (mathematics, formal logic, theoretical physics) and less powerful for empirical work requiring judgment and experimental intuition.

- Adoption will likely be fast in certain fields (mathematics, computer science, physics) by 2027, with broader adoption taking longer due to switching costs and institutional inertia. By 2030, using AI research assistants will probably be standard for academic writing.

- The tool has real limitations: hallucination risk for citations, limited empirical usefulness, and inability to generate truly novel experimental designs. Verification of AI suggestions remains essential.

- This signals a larger pattern where AI tools will increasingly integrate deeply into professional workflows (legal, consulting, research) rather than existing as standalone applications.

FAQ

What is Prism and how is it different from ChatGPT?

Prism is a dedicated research workspace that integrates GPT-5.2 with LaTeX document editing, whereas ChatGPT is a general-purpose chat interface. The key difference is context: when you use Prism, the model has full access to your entire research project and understands how different sections relate to each other. With ChatGPT, you're pasting snippets into isolated conversations. That difference enables Prism to make suggestions that are actually contextually relevant to your specific work rather than generic suggestions that might apply to similar problems.

How much does Prism cost?

Prism is free for anyone with a ChatGPT account. You don't need a separate subscription or institutional license. If you're already using ChatGPT, you can access Prism immediately. This free tier is a deliberate choice by OpenAI to accelerate adoption—they're betting on the value of the tool becoming apparent through use.

Can I use Prism if I write in Word or Google Docs instead of LaTeX?

Currently, Prism is optimized for LaTeX documents, the standard format in much of academic research, especially mathematics, physics, and computer science. If you write in Word or Google Docs, you have two options: convert your documents to LaTeX and import them into Prism (feasible, but it takes effort), or keep using your preferred tool and use ChatGPT separately, though you'll lose the full-context benefits that make Prism powerful.

What if Prism makes a suggestion that's wrong or inappropriate for my research?

You have complete control over every suggestion. Prism never modifies your document without your explicit approval. When the model suggests changes, you review them and decide whether to accept, reject, or modify the suggestion. This is important—Prism is an advisor, not an autocorrect service. The responsibility for your work remains entirely yours.

Is my research data safe if I use Prism?

Your documents are stored on OpenAI's servers, which means they're subject to OpenAI's privacy policy and data retention practices. If you're working with sensitive data or proprietary information that can't be shared with a third party, Prism might not be appropriate for that work. You should review OpenAI's data practices before uploading confidential research. For public research or non-sensitive academic work, the privacy concerns are minimal.

Will universities adopt Prism, or will it remain a standalone tool?

Adoption will likely follow a pattern similar to how GitHub and Slack spread through institutions. Early-adopter researchers will evangelize it. Graduate programs may incorporate it into writing courses. If researchers using Prism prove measurably more productive, universities will have reason to add it to their recommendations. Within 3-5 years, major research universities may well list Prism as a standard tool for academic writing, similar to how they currently recommend LaTeX editors and reference management software.

Can Prism help me with experimental design or data analysis, or just writing?

Currently, Prism is focused on document creation, editing, and literature-based assistance. It can suggest statistical tests and help you think through analytical approaches, but it's not designed for hands-on data analysis or truly novel experimental design. If Prism expands (which seems likely), these capabilities will probably come later. For now, use it for the writing and structural parts of research, and use other specialized tools for data analysis.

What happens to my Prism documents if OpenAI changes or discontinues the service?

You can export your documents as standard LaTeX at any time, so you're not locked into Prism. Your work is portable. OpenAI has given no indication they plan to discontinue the service, and given the strategic importance of AI in research to their mission, it seems unlikely. But the portable export format means you're not taking a major risk by adopting the tool.

How does Prism handle citations and bibliography management?

Prism integrates with standard bibliography formats (BibTeX, etc.) and can manage citations directly within your LaTeX documents. When you accept a suggested citation, it's added to your bibliography automatically. You can also ask Prism to identify citation gaps: areas where you're missing important prior work that a researcher in your field would expect to see cited. The model can't reliably tell you which specific papers are missing, but it can flag that gaps exist.
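Because AI-suggested citations carry a hallucination risk, a cheap first check is to confirm that every key you cite resolves to an entry in your bibliography. The sketch below is a minimal Python example under stated assumptions: a single `.tex` source, a single `.bib` file, and standard `\cite`-family commands. The function names (`cited_keys`, `bib_keys`, `missing_citations`) are hypothetical, and nothing here reflects how Prism itself manages citations.

```python
import re

# Hypothetical helper, not a Prism feature: cross-check citation keys used in a
# LaTeX source against the entry keys defined in a BibTeX file.

# Matches common cite commands (\cite, \citep, \citet, \parencite, \textcite,
# \nocite, ...) with up to two optional [..] arguments, capturing the {key,list}.
CITE_RE = re.compile(r"\\[A-Za-z]*cite[A-Za-z]*\*?(?:\[[^\]]*\]){0,2}\{([^}]*)\}")

# Matches the start of a BibTeX entry such as "@article{smith2020," and captures
# the entry type and the key.
BIB_ENTRY_RE = re.compile(r"@\s*(\w+)\s*\{\s*([^,\s]+)\s*,")

# BibTeX entry types that define macros or comments rather than citable works.
NON_CITABLE = {"string", "preamble", "comment"}


def cited_keys(tex_source: str) -> set[str]:
    """Return every citation key used in a LaTeX source string."""
    keys = set()
    for key_list in CITE_RE.findall(tex_source):
        for key in key_list.split(","):
            key = key.strip()
            # "\nocite{*}" means "include everything", not a real key.
            if key and key != "*":
                keys.add(key)
    return keys


def bib_keys(bib_source: str) -> set[str]:
    """Return every entry key defined in a BibTeX source string."""
    return {
        key
        for entry_type, key in BIB_ENTRY_RE.findall(bib_source)
        if entry_type.lower() not in NON_CITABLE
    }


def missing_citations(tex_source: str, bib_source: str) -> list[str]:
    """Return cited keys with no matching bibliography entry, sorted."""
    return sorted(cited_keys(tex_source) - bib_keys(bib_source))


if __name__ == "__main__":
    tex = r"As shown by \citet{smith2020} and \citep[see][p.~3]{doe2019, roe2021}."
    bib = """
@article{smith2020,
  title = {A Paper},
}
@book{doe2019,
  title = {A Book},
}
"""
    print(missing_citations(tex, bib))  # prints ['roe2021']
```

The check is deliberately narrow: it catches cited keys with no matching entry, but it cannot confirm that an entry describes a real paper or that the paper supports your claim. Multi-file projects, `\bibitem`-based bibliographies, and commands such as biblatex's `\cites` with several key groups would need extra handling.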

Is Prism suitable for collaborative research with multiple authors?

Yes. Prism supports real-time collaboration similar to Overleaf, so multiple authors can work on the same document simultaneously. The AI context is shared, meaning all collaborators see the same suggestions and can discuss them together. This is actually an advantage over having some team members using ChatGPT manually—everyone gets the same intelligence and the same suggestions, which reduces confusion.

Will using AI assistance in research affect how my work is evaluated by peer reviewers?

There's no consensus yet on this, but the trajectory suggests that AI assistance in research will become normal and accepted. Scientific journals are already publishing papers where AI played a role in proof verification or literature review. The key is transparency: if you used AI tools, you disclose that in your methodology. As AI becomes more standard, reviewers will likely expect researchers to use these tools. The evaluation will focus on whether your human judgment and understanding are sound, not on whether you used an assistant.


The broader lesson from Prism's launch is this: the next wave of AI productivity tools won't be general-purpose chatbots. They'll be deeply integrated into specific workflows. They'll understand the domain intimately. They'll remove friction without replacing human judgment. That pattern is spreading across professions.

Key Takeaways

- Prism is not autonomous research AI but rather a purpose-built workspace integrating GPT-5.2 with LaTeX, designed for human-led scientific discovery with AI assistance

- Full-document context awareness is the core innovation: the model understands your entire research project, enabling contextually intelligent suggestions rather than generic responses

- Most powerful for theory-heavy fields (mathematics, computer science, physics) where reasoning is structured; less valuable for empirical research requiring experimental judgment

- Addresses real academic friction points including diagram generation from sketches, citation management, claim verification, and maintaining notation consistency

- Free access via ChatGPT accounts will likely drive rapid adoption among mathematicians and computer scientists by 2027, with broader adoption in other fields following
