OpenAI's Codex for Mac: Multi-Agent AI Coding Reimagined [2025]

Last spring, OpenAI quietly launched Codex, a programming agent that promised to write code for developers. Nobody expected it to evolve into something that could orchestrate multiple AI models working in parallel, delegating tasks like a seasoned project manager. But that's exactly what happened.

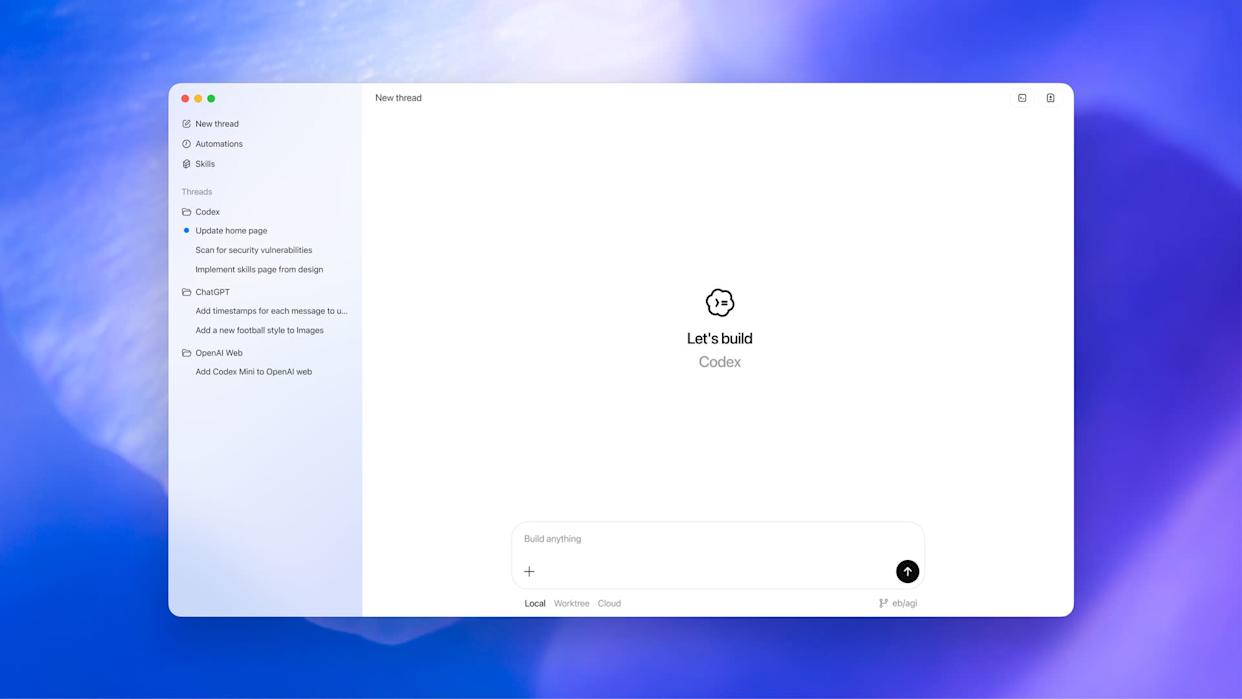

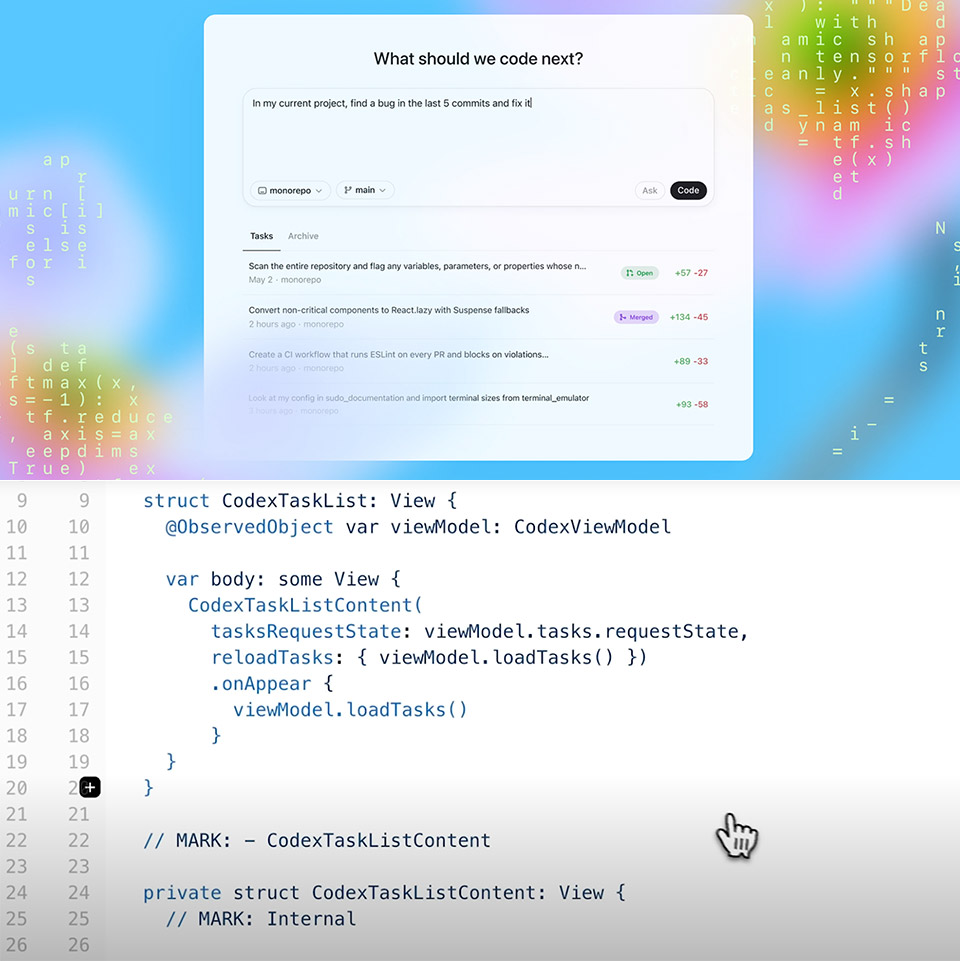

Now available as a dedicated macOS application, Codex represents a fundamental shift in how AI approaches software development. Instead of asking an AI to build something complex and hoping it handles all the moving pieces, Codex breaks work into smaller problems, assigns them to specialized models, and coordinates the results. It's like having a tech lead who never sleeps, never gets frustrated, and can juggle five projects simultaneously.

The implications are wild. We're not talking about marginal productivity gains anymore. We're talking about tasks that previously required entire teams or weeks of work getting completed in hours. But before diving into the hype, let's understand what's actually happening here, why it matters, and what it means for developers in 2025.

TL;DR

- Multi-agent coordination: Codex now manages multiple AI models working in parallel, each handling specialized tasks simultaneously

- Real-world proof: OpenAI built a complete Mario Kart-style game with visual assets, game logic, and quality assurance using Codex's multi-agent approach

- Automation capabilities: The new Automations section lets developers schedule recurring tasks like bug detection, code review, and CI/CD failure analysis

- Broader developer impact: Multi-agent AI patterns are proving developers can achieve what previously required larger teams or longer timelines

- Strategic timing: The release comes as the industry explores agentic AI's potential, with competitors like Cursor already demonstrating similar architectures

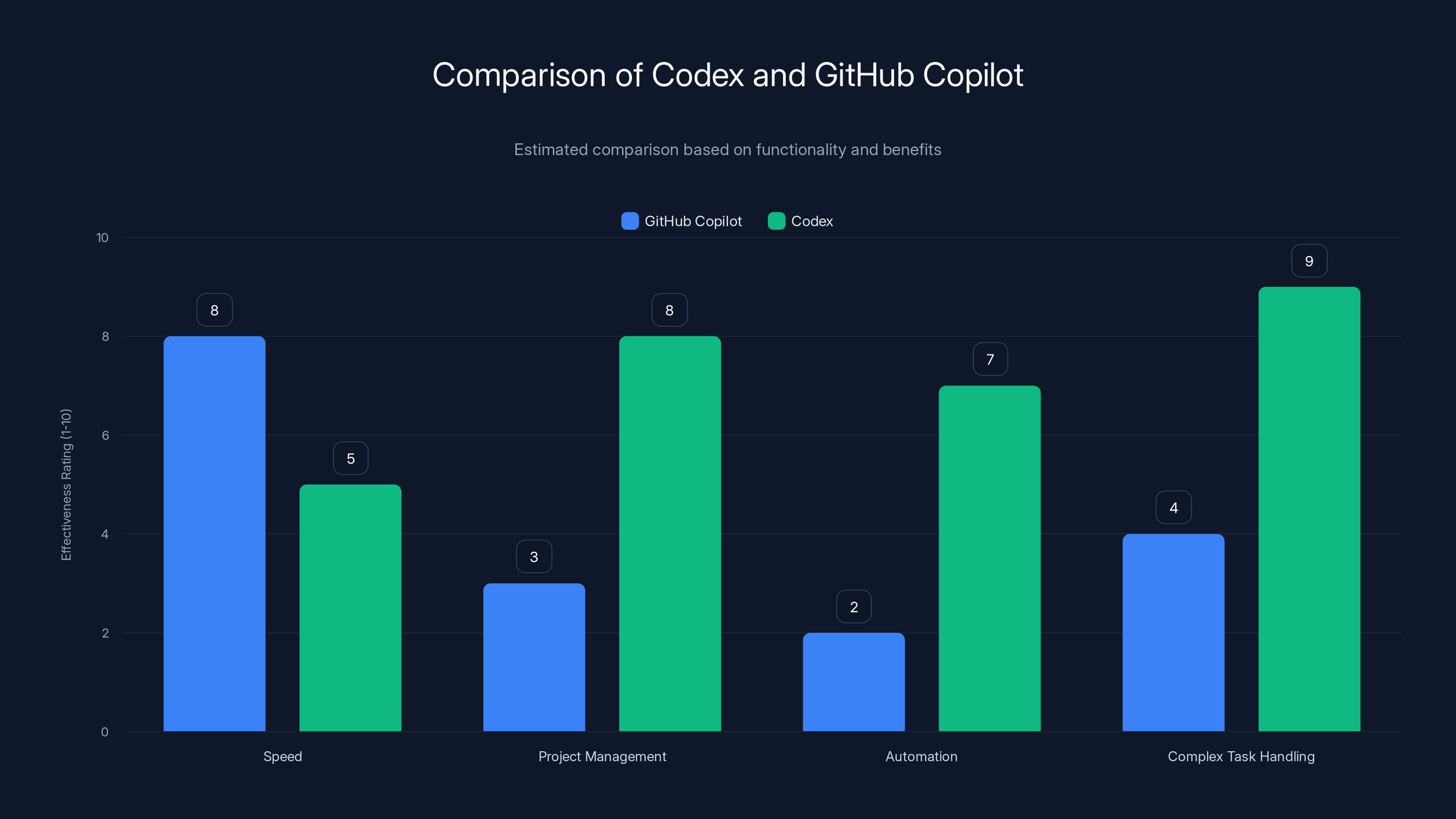

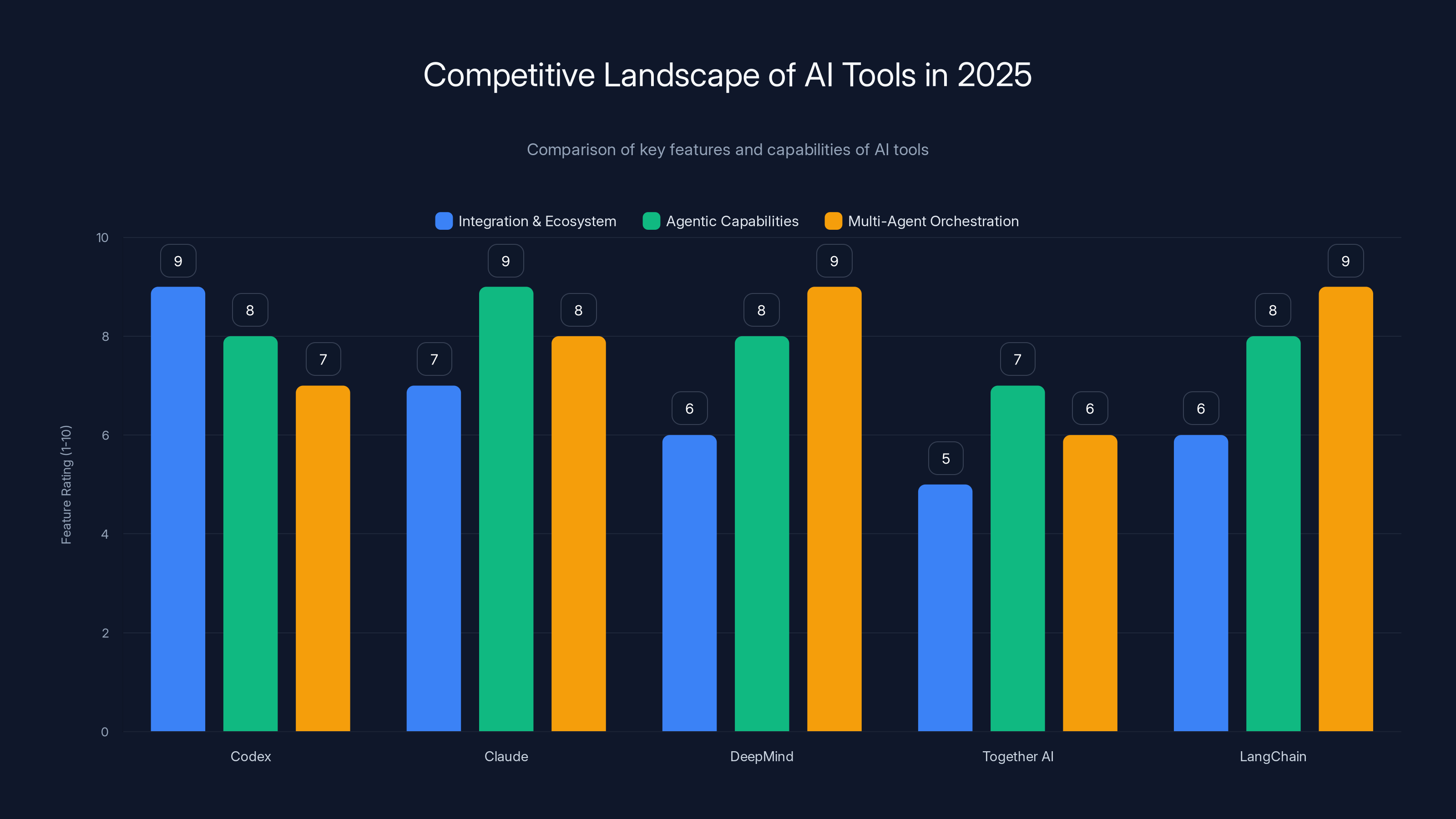

Codex excels in project management and handling complex tasks, while GitHub Copilot is superior in speed for inline code suggestions. Estimated data based on described functionalities.

What Codex Actually Is (And What It Isn't)

Here's the thing that confuses people: Codex isn't ChatGPT with a code editor. It's not Claude wrapped in a new interface. It's a fundamentally different approach to how AI tackles programming problems.

At its core, Codex is an agentic AI system. That means it doesn't just generate code and stop. It takes goals, breaks them into steps, executes those steps, checks the results, adjusts course if needed, and repeats until the job is done. Think of it as the difference between telling someone "build me a website" versus having someone who figures out what needs doing, does it, checks their work, and comes back with a finished product.
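The plan, execute, check, adjust loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Codex's implementation: `plan`, `execute`, and `check` are hypothetical stand-ins for model calls, and the three-attempt limit is arbitrary.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentRun:
    goal: str
    log: List[str] = field(default_factory=list)


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],      # hypothetical: goal -> ordered steps
    execute: Callable[[str, int], str],    # hypothetical: (step, attempt) -> result
    check: Callable[[str], bool],          # hypothetical: is this result acceptable?
    max_attempts: int = 3,
) -> AgentRun:
    """Toy agentic loop: plan, execute, check, adjust, repeat until done."""
    run = AgentRun(goal)
    for step in plan(goal):
        for attempt in range(1, max_attempts + 1):
            result = execute(step, attempt)
            if check(result):
                run.log.append(f"done: {step}")
                break
            run.log.append(f"retry: {step}")  # adjust course and try again
        else:
            # No attempt passed the check; a real agent might escalate here.
            raise RuntimeError(f"gave up on step: {step}")
    return run


# Usage with deterministic stubs: the test step fails once, then passes.
def plan(goal: str) -> List[str]:
    return ["write function", "write tests"]


def execute(step: str, attempt: int) -> str:
    return "failing" if step == "write tests" and attempt == 1 else "ok"


def check(result: str) -> bool:
    return result == "ok"


run = run_agent("add CSV export", plan, execute, check)
print(run.log)  # ['done: write function', 'retry: write tests', 'done: write tests']
```

The `for ... else` clause fires only when no attempt breaks out of the inner loop, which keeps the "give up" path explicit.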

What makes the new version different is multi-agent orchestration. Instead of one model trying to do everything, Codex can spawn multiple AI agents, each with different capabilities, and coordinate their work. This is still rare in developer tools.

The old model of AI coding assistance looked like this: developer writes a prompt → AI generates code → developer integrates it. It works fine for simple tasks. But for anything complex—a game with graphics, physics, UI, and test cases—the single-model approach hits a wall. The AI either produces half-working code that requires heavy editing, or it gets confused trying to hold too much context.

Codex's approach flips the script. A single orchestration layer coordinates specialized agents. One handles graphics generation via image models. Another writes the game logic. A third handles UI. A fourth validates the code works. They all work simultaneously, feed results back to each other, and the orchestrator makes sure everything integrates.

This architecture solves a real problem in AI development: specialization versus generalization. A general model tries to be good at everything and ends up being okay at most things. Specialized models are excellent at narrow tasks but need coordination. Codex solves this by being the coordination layer.

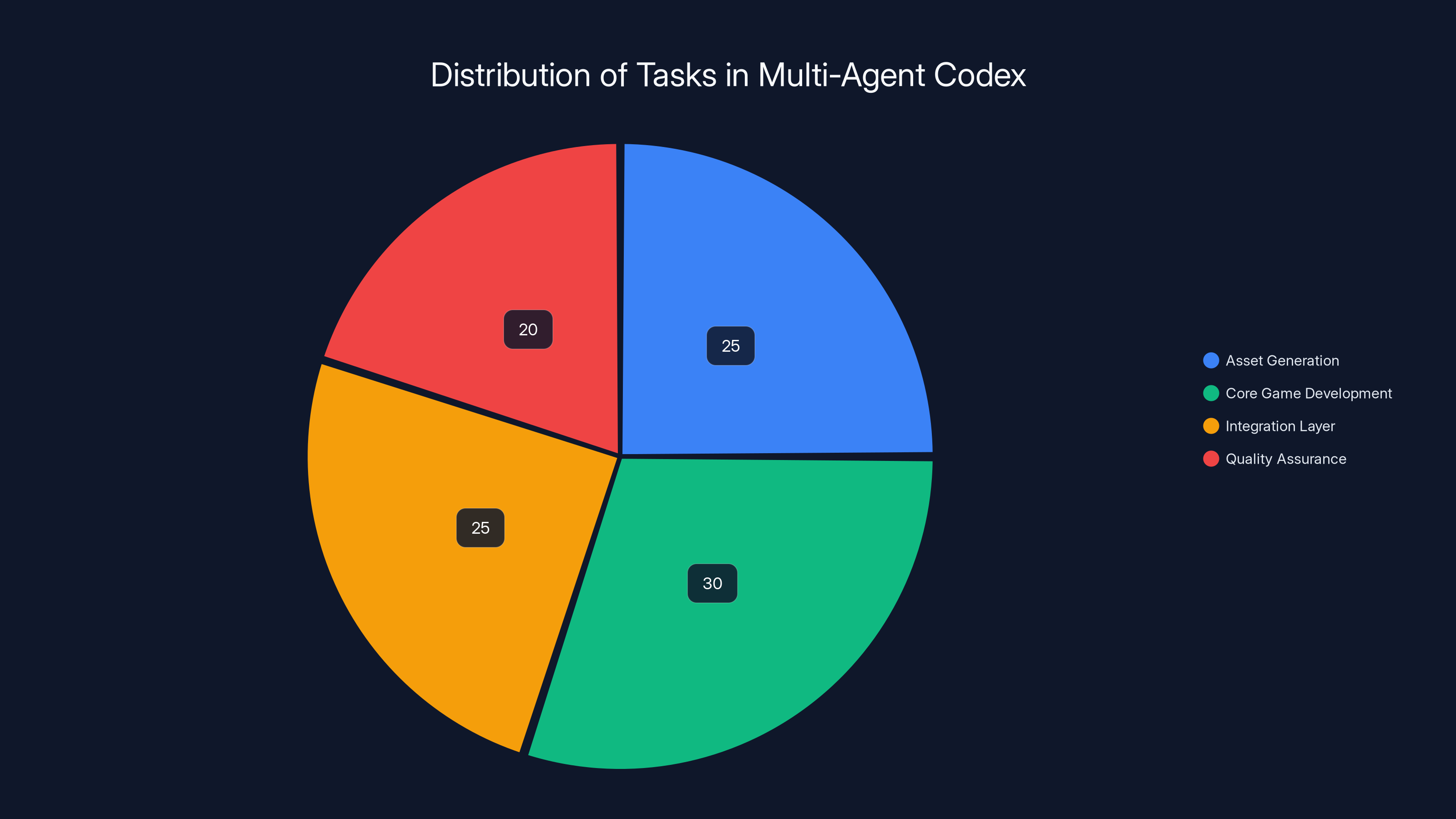

Estimated data shows how Codex distributed tasks across different phases. Core Game Development and Asset Generation were the largest components, each taking about a quarter of the effort.

The Mario Kart Case Study: How Multi-Agent Codex Works in Practice

OpenAI's example of building a Mario Kart-style racing game using Codex is more than marketing fluff. It's genuinely illustrative of what becomes possible when you stop asking one AI to do everything.

Let's break down what actually happened, because the technical choreography is impressive.

OpenAI gave Codex a goal: "Build a playable Mario Kart-like racing game." That goal needed several components:

- Visual assets: Car designs, track graphics, UI elements

- Game mechanics: Physics engine, collision detection, lap tracking

- Gameplay features: Selectable cars, eight different tracks, power-up systems

- Quality assurance: Testing that the game actually plays and doesn't crash

A single AI model would need to: generate visual code to describe cars, write JavaScript physics logic, handle UI implementation, build the game loop, manage assets, and simultaneously optimize for performance. It's possible in theory. In practice, the output is usually a partially working prototype that needs serious debugging.

Codex distributed the work:

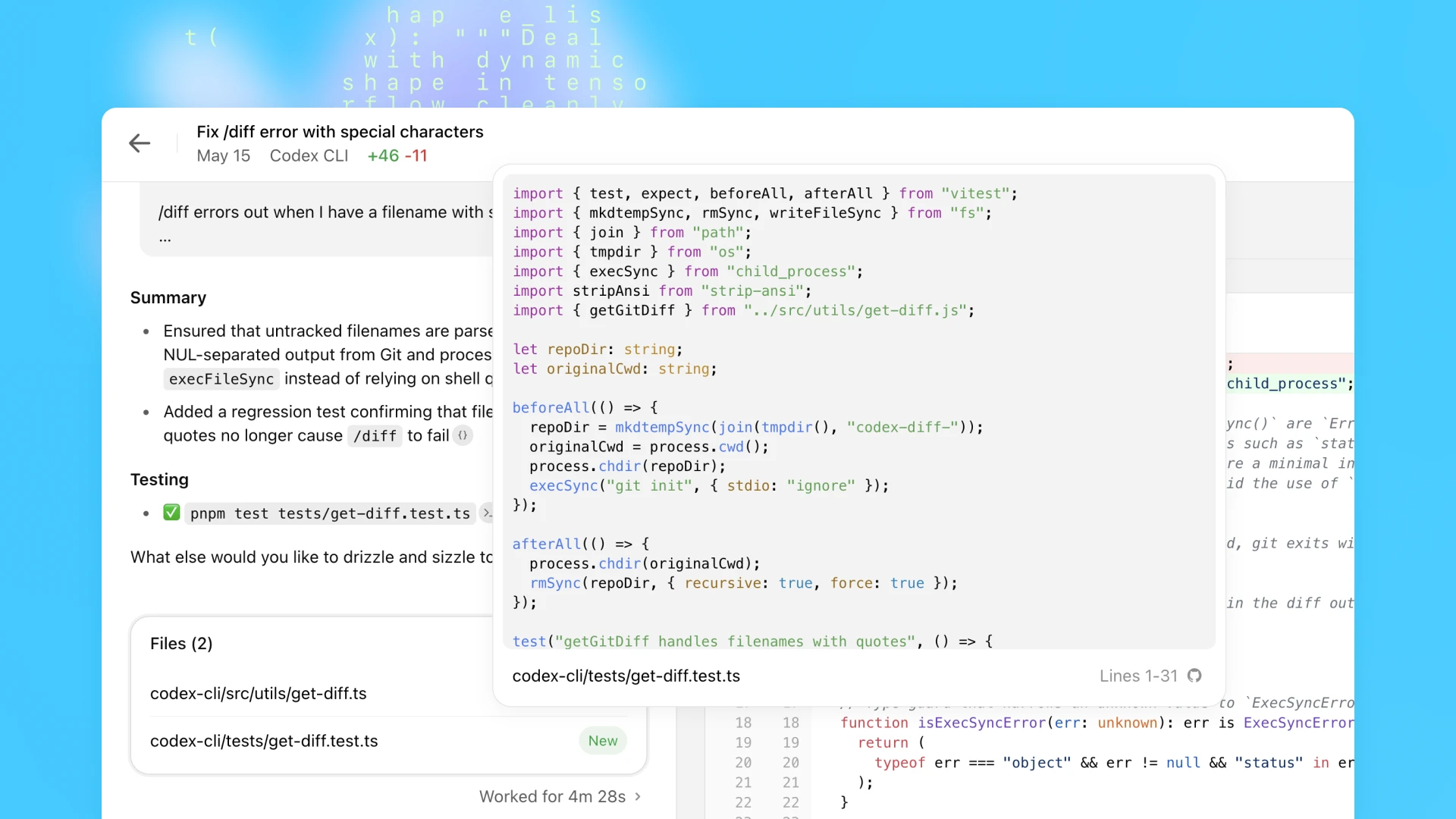

Phase 1: Asset Generation. Codex sent visual generation tasks to GPT Image (or a similar image model). The model generated 20+ assets: different car designs, track variations, power-up icons. While that was running, the next phase started.

Phase 2: Core Game Development. A separate language model wrote the actual game code. This model focused purely on JavaScript game logic: physics calculations, collision detection, lap counting, win conditions. Without needing to worry about generating images, it could write tighter, more focused code.

Phase 3: Integration Layer. Another agent took the generated assets and game code, stitched them together, and handled all the integration work: asset paths, image loading, memory management.

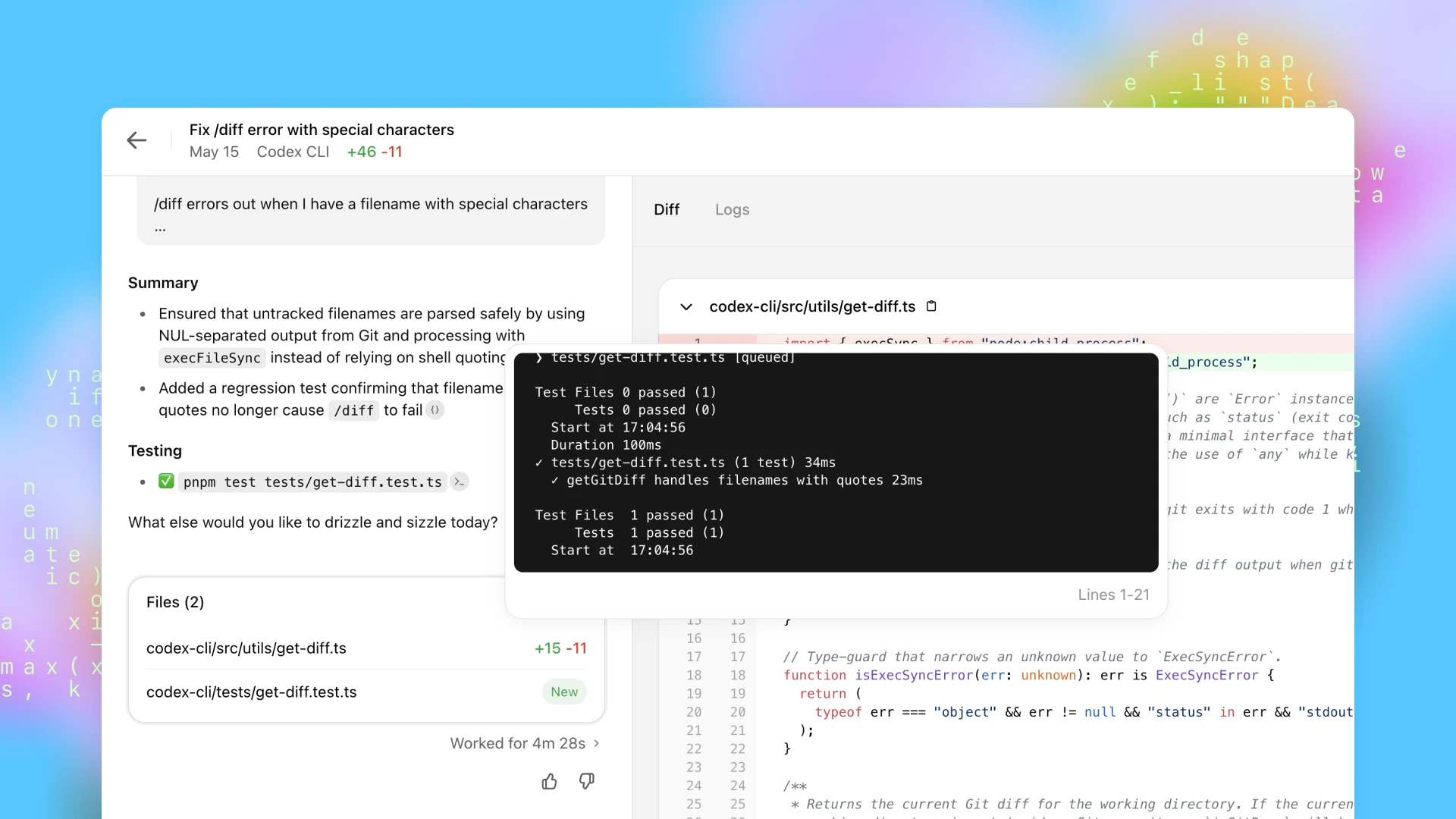

Phase 4: Quality Assurance. This is the part that shocks people. Codex didn't just generate code and hope it worked. A separate agent actually played the game to validate it functioned. The agent tried different cars, drove different tracks, collected power-ups, and reported bugs back to the development agent for fixing.

This automation of QA is genuinely significant. Most AI developer tools focus on code generation. Codex also tests the code, which means fewer broken builds and faster iteration.
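The QA phase is a feedback loop: one agent exercises the build, another fixes what it reports, and the cycle repeats until QA comes back clean. The sketch below models that loop under loud simplifying assumptions: a "build" is just a hypothetical dict of scenario names to pass/fail, and the toy `dev_agent` fixes every reported bug in one pass, which real agents will not.

```python
from typing import Dict, List, Tuple

Build = Dict[str, bool]  # toy model: scenario name -> does it currently work?


def qa_agent(build: Build, scenarios: List[str]) -> List[str]:
    """'Play' each scenario and report the ones that fail."""
    return [s for s in scenarios if not build.get(s, False)]


def dev_agent(build: Build, bug_reports: List[str]) -> Build:
    """Return a patched build; here every reported bug is fixed in one pass."""
    patched = dict(build)
    for bug in bug_reports:
        patched[bug] = True
    return patched


def build_until_clean(
    build: Build, scenarios: List[str], max_rounds: int = 5
) -> Tuple[Build, int]:
    """Alternate QA and fixes until QA reports nothing, or give up."""
    for round_no in range(1, max_rounds + 1):
        bugs = qa_agent(build, scenarios)
        if not bugs:
            return build, round_no
        build = dev_agent(build, bugs)
    raise RuntimeError(f"QA still failing after {max_rounds} rounds")


scenarios = ["select car", "drive track 3", "collect power-up"]
final, rounds = build_until_clean({"select car": True}, scenarios)
print(rounds)  # 2
```

The `max_rounds` cap matters: without it, a bug the dev agent can never fix would loop forever instead of surfacing to a human.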

The entire process took hours, not days. For comparison, a human team building the same game would typically need:

- 1 game programmer for core mechanics (3-4 days)

- 1 graphics designer for assets (2-3 days)

- 1 integrations developer for stitching pieces together (1-2 days)

- 1 QA tester finding and reporting bugs (1-2 days)

Total: About 7-11 person-days of work. Codex did it in a few hours with supervision.

Now, honest assessment: The game Codex built wasn't a AAA title. It won't ship on Steam. But it was playable, debugged, and complete with features. That's the bar for proving multi-agent AI works in practice, and Codex cleared it.

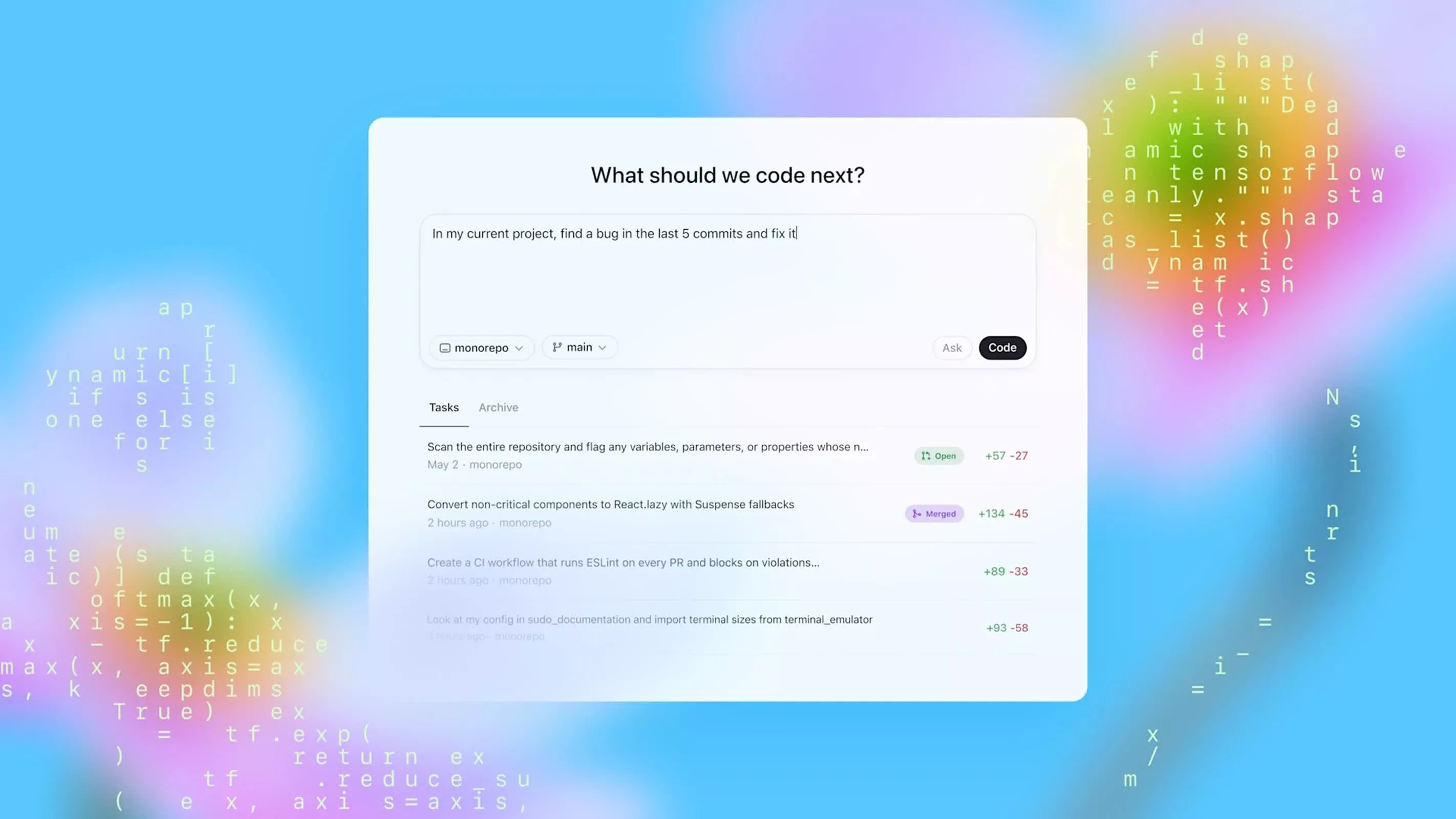

The Automations Feature: Turning Developers Into Managers of AI

The second major addition to Codex isn't as flashy as game generation, but it might be more practically useful. Automations lets developers schedule recurring tasks that run in the background, handled entirely by AI.

This is the end game of AI coding assistants—not replacing developers with robots, but making the repetitive, soul-crushing parts of development automated so humans can focus on the creative and strategic work.

Open AI's examples are specific:

Daily Issue Triage: Every morning, Codex reviews new GitHub issues, categorizes them by priority and type, assigns severity labels, and summarizes them for the team. A task that takes a developer 30-45 minutes per day just... disappears.

CI/CD Failure Analysis: When tests fail, Codex investigates. It checks the logs, identifies which test failed, digs into the code changes that preceded the failure, and generates a summary. "Test X failed because commit Y changed function Z without updating its callers." Instead of a developer manually tracing failures for 20-30 minutes, they get a report.

Release Brief Generation: Before every release, someone writes a summary of what changed, what's new, what's fixed. Codex does this by analyzing commits, PRs, and issue history. A 30-minute task becomes automatic.

Bug Detection: Codex scans code for common patterns that indicate bugs. Race conditions in concurrent code, null pointer dereferences, security vulnerabilities in how APIs are called. It flags these proactively instead of waiting for them to surface in production.
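The CI/CD failure analysis above boils down to linking a failing test to the recent change most likely to have broken it. The Python sketch below shows only that core step and assumes two things a real tool would have to work out itself: which module the failing test exercises, and a commit list ordered newest first. The `Commit` type, file paths, and commit messages are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Commit:
    sha: str
    files: List[str]
    message: str


def explain_failure(failed_test: str, module: str, recent: List[Commit]) -> str:
    """Point at the newest recent commit that touched the module under test.

    Assumes `recent` is ordered newest first and that the module the failing
    test exercises is already known.
    """
    for commit in recent:
        if module in commit.files:
            return (f"{failed_test} failed; commit {commit.sha} changed "
                    f"{module} ({commit.message!r}).")
    return f"{failed_test} failed; no recent commit touched {module}."


recent = [
    Commit("a1b2c3", ["docs/README.md"], "Update docs"),
    Commit("d4e5f6", ["billing/pricing.py"], "Rename calc_total to compute_total"),
]
print(explain_failure("test_checkout_total", "billing/pricing.py", recent))
```

A real analysis would also read the logs and diffs, but even this heuristic narrows the search from "everything since the last green build" to one suspect commit.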

The underlying pattern here is automation of high-frequency, high-certainty tasks. These are tasks that:

- Happen repeatedly (daily, weekly)

- Have clear success criteria

- Don't require human judgment

- Take 20-60 minutes when done manually

- Are tedious enough that humans deprioritize them

By automating these, Codex claims developers reclaim 5-8 hours per week. At the upper end, that's a full workday every single week.

But here's where you need to be skeptical. Automations work best when you:

- Have precise requirements: "Flag issues without a milestone" works. "Find important issues" doesn't.

- Can tolerate occasional errors: If Codex categorizes 95% of issues correctly and mislabels 5%, you catch the mislabels during review. Even after that review pass, you keep most of the time you used to spend on triage.

- Have established workflows: If your team categorizes issues five different ways depending on who does it, automation gets confused.

- Review outputs regularly: Automation is only time-saving if you're not spending 40 minutes verifying every output. Spot-check, don't full-audit.
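To make "precise requirements" and "spot-check, don't full-audit" concrete, here is a hypothetical triage rule set in Python. It is not Codex's Automations configuration format, which this article does not document. The `Issue` fields loosely mirror what an issue tracker exposes, and the sample data is invented. The point is that a rule like "no milestone" is mechanically checkable, and review can be a small random sample.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Issue:
    number: int
    title: str
    milestone: Optional[str] = None
    labels: Tuple[str, ...] = ()


def triage(issues: List[Issue]) -> Dict[str, List[int]]:
    """Apply precise, checkable rules rather than 'find important issues'."""
    report: Dict[str, List[int]] = {"needs-milestone": [], "bug": []}
    for issue in issues:
        if issue.milestone is None:
            report["needs-milestone"].append(issue.number)
        if "bug" in issue.labels:
            report["bug"].append(issue.number)
    return report


def spot_check(numbers: List[int], k: int, seed: int = 0) -> List[int]:
    """Pick a small random sample for human review instead of auditing everything."""
    return random.Random(seed).sample(numbers, min(k, len(numbers)))


issues = [
    Issue(101, "Crash on login", labels=("bug",)),
    Issue(102, "Add dark mode", milestone="v2.1"),
    Issue(103, "Typo in footer"),
]
report = triage(issues)
print(report)  # {'needs-milestone': [101, 103], 'bug': [101]}
print(spot_check(report["needs-milestone"], k=1))
```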

The automation feature also hints at a bigger strategic shift at OpenAI. They're positioning Codex not as a code writer, but as a development operations tool. The value isn't "Codex will write your entire app." It's "Codex handles the operational overhead of development so your team focuses on building." That's a more realistic, more valuable positioning.

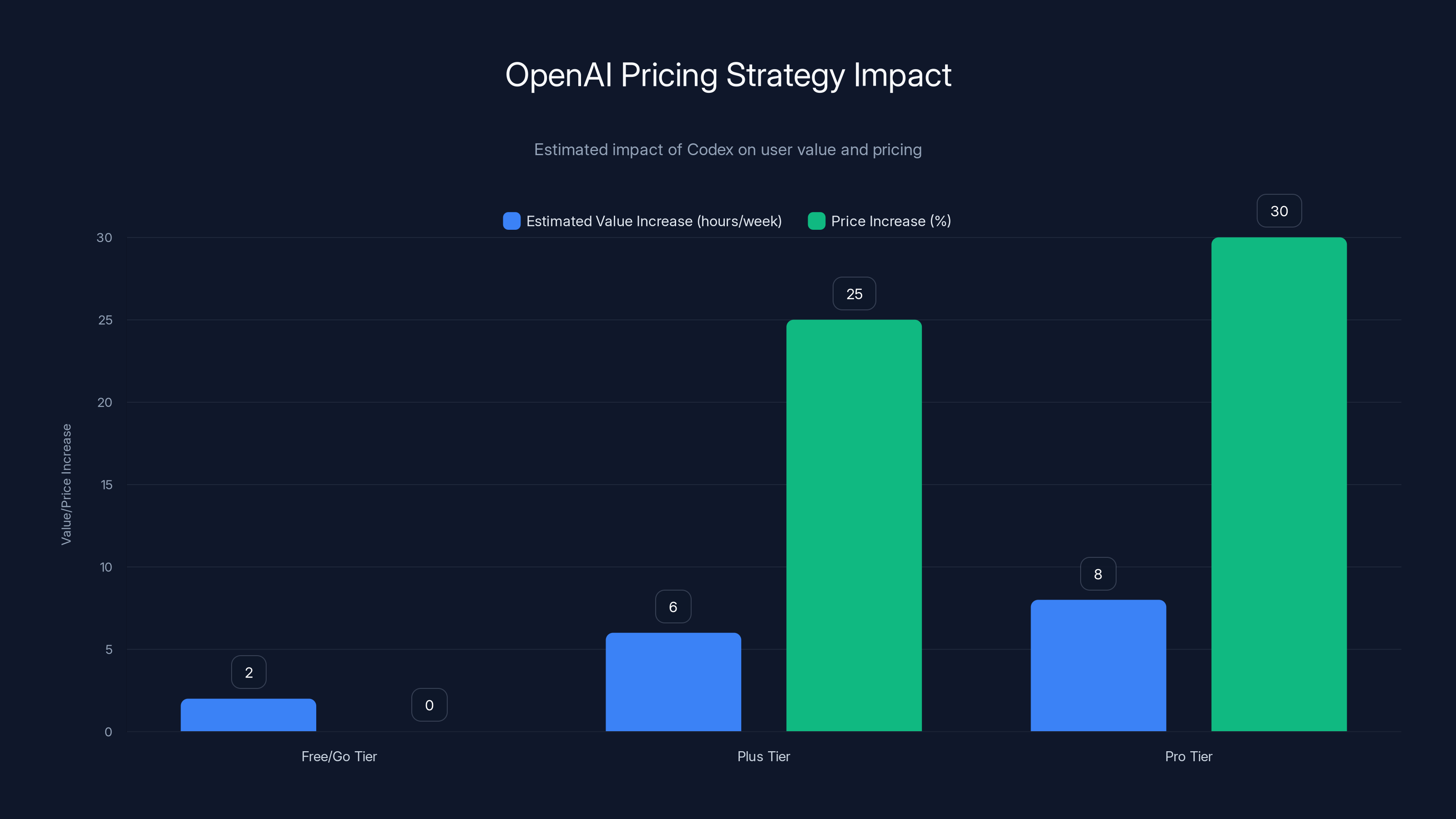

Estimated data shows that while Free/Go tier users gain access to Codex without a price increase, Plus and Pro users experience a 25-30% price increase, aligning with the additional value provided by Codex.

Multi-Agent Architecture: Why This Pattern Matters

The technical architecture behind Codex's multi-agent capabilities deserves examination, because it's becoming a pattern across AI development.

Traditional AI coding assistants operate with a single-model pipeline:

User Input → Single LLM → Generated Code → Output

This works. It's simple. But it has constraints:

- Context windows: Even the largest models have limits. A complex project description plus code history plus requirements approaches limits quickly.

- Quality variance: A single model has a baseline quality. It's good at some domains (Python) and weaker at others (embedded C).

- Latency: One model must do everything sequentially, so latency equals one model's inference time times the number of steps.

Multi-agent architecture changes this:

User Input → Orchestrator → [Agent 1, Agent 2, Agent 3] (Parallel) → Coordination Layer → [Testing Agent] → Output

Each agent specializes. Agent 1 handles backend code. Agent 2 handles frontend. Agent 3 handles DevOps configuration. They work simultaneously. An orchestration layer (the conductor) ensures their outputs integrate. A testing agent validates everything works.

This architecture has several advantages:

1. Latency Reduction. Instead of tasks running sequentially (model A, then model B, then model C, then testing), they run in parallel. If three tasks each take 30 seconds sequentially, that's 90 seconds. In parallel, it's 30 seconds total. For complex projects, this is massive.

2. Quality Improvement. Specialized models often outperform generalists within their domain. A model tuned specifically on game engine patterns can generate better game code than a general model. By routing specialized domains to specialized agents, overall quality can increase.

3. Error Handling. Multi-agent systems can validate and correct each other. If one agent produces code that doesn't integrate, another agent catches it and either fixes it or asks for revision. This creates a quality loop.

4. Scalability. You can add agents without changing the core architecture. Need better security analysis? Add a security agent. Need better documentation? Add a documentation agent. The orchestrator routes work accordingly.

5. Cost Efficiency. Specialized smaller models cost less per call than large generalist models. Using three smaller specialized models instead of one massive generalist model can reduce compute costs while maintaining, or even improving, quality.
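The latency arithmetic in point 1 is easy to check empirically. The Python sketch below uses `asyncio.sleep` as a stand-in for independent model calls and scales the 30-second tasks down to 0.1 seconds. It assumes the subtasks have no dependencies on one another. Sequential time is roughly the sum of the durations, while parallel time is roughly the longest single duration.

```python
import asyncio
import time
from typing import Awaitable, Callable, List


async def subtask(seconds: float) -> None:
    await asyncio.sleep(seconds)  # stand-in for one model call


async def sequential(durations: List[float]) -> None:
    for d in durations:
        await subtask(d)  # each call waits for the previous one


async def parallel(durations: List[float]) -> None:
    await asyncio.gather(*(subtask(d) for d in durations))  # all calls in flight at once


def timed(runner: Callable[[List[float]], Awaitable[None]], durations: List[float]) -> float:
    start = time.perf_counter()
    asyncio.run(runner(durations))
    return time.perf_counter() - start


durations = [0.1, 0.1, 0.1]  # scaled down from the 30-second example
seq = timed(sequential, durations)
par = timed(parallel, durations)
print(f"sequential: {seq:.2f}s, parallel: {par:.2f}s")
```

The speedup disappears as soon as one subtask needs another's output, which is why dependency tracking matters as much as raw parallelism.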

The orchestration challenge is real though. Coordinating multiple agents requires:

- State management: Tracking what each agent produced, what depends on what

- Error recovery: If one agent fails, deciding whether to retry, use fallback, or escalate

- Integration logic: Ensuring outputs from different agents actually work together

- Human involvement: Knowing when to escalate decisions to humans versus letting AI decide
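The error-recovery and human-involvement items above describe an escalation ladder: retry, then fall back, then hand off to a person. A minimal Python sketch of that ladder follows. The `EscalateToHuman` exception and the retry count are hypothetical choices, and treating every exception as retryable is a simplification a production system should not copy.

```python
from typing import Callable, Optional


class EscalateToHuman(Exception):
    """Raised when automated recovery is exhausted."""


def run_with_recovery(
    primary: Callable[[], str],
    fallback: Optional[Callable[[], str]] = None,
    retries: int = 2,
) -> str:
    """Retry the primary agent, then try a fallback, then escalate."""
    last_error: Optional[Exception] = None
    for _ in range(retries + 1):
        try:
            return primary()
        except Exception as err:  # toy policy: treat every failure as retryable
            last_error = err
    if fallback is not None:
        try:
            return fallback()
        except Exception as err:
            last_error = err
    raise EscalateToHuman(f"automated recovery failed: {last_error}")


# A flaky agent that times out twice before succeeding.
calls = {"n": 0}


def flaky_agent() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("model timeout")
    return "patch ready"


print(run_with_recovery(flaky_agent))  # patch ready
```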

Codex appears to handle this, though the documentation is sparse on exact mechanisms. Based on the public examples, it seems to:

- Decompose goals into subtasks

- Route subtasks to appropriate agents

- Manage dependencies so agents can work in parallel when possible

- Validate outputs before moving to next stages

- Handle failures by re-attempting or escalating

- Integrate results into final deliverables
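"Manage dependencies so agents can work in parallel when possible" is a classic topological-sort problem. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to group a hypothetical subtask graph, modelled loosely on the Mario Kart phases, into waves that could run concurrently. Whether Codex schedules work this way is not documented; this is one standard technique for the job.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical subtask graph: each key lists the subtasks it depends on.
deps: Dict[str, Set[str]] = {
    "assets": set(),
    "game_logic": set(),
    "integration": {"assets", "game_logic"},
    "qa": {"integration"},
}


def parallel_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group subtasks into waves; everything in one wave can run concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if the dependencies loop
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all subtasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # pretend the whole wave finished
    return waves


print(parallel_waves(deps))
# [['assets', 'game_logic'], ['integration'], ['qa']]
```

Asset generation and game logic land in the same wave, matching the case study's note that the next phase started while assets were still being generated.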

This is non-trivial engineering. It's essentially a project management system where the manager and workers are all AI.

How Codex Compares to Competing AI Developer Tools

Codex doesn't exist in a vacuum. It's competing against established tools and newer entrants, each with different philosophies.

GitHub Copilot (the OG AI coding assistant) focuses on inline code completion. You're writing code, Copilot suggests next lines. It's helpful for speed but doesn't manage complex projects. It's good for implementation, not architecture.

Cursor (built by Anysphere) emphasizes full-file understanding and codebase knowledge. Rather than inline suggestions, Cursor lets you ask questions about your entire project and get answers. In early 2025, Cursor demonstrated building a web browser from scratch using multi-agent orchestration—essentially validating the approach Codex is now using.

Claude (from Anthropic) offers long-context assistance. You can dump entire codebases and ask questions. Claude handles the context better than other models but doesn't automate task execution the way Codex does.

Codeium focuses on enterprise deployment and compliance. It's GitHub Copilot's main competitor for teams that need on-premise deployment and security guarantees.

Codex's differentiator is agentic automation combined with macOS integration. It's not trying to be the best at inline suggestions (Copilot) or codebase understanding (Cursor). It's trying to be the tool that manages entire development workflows, schedules tasks, and handles project-level complexity.

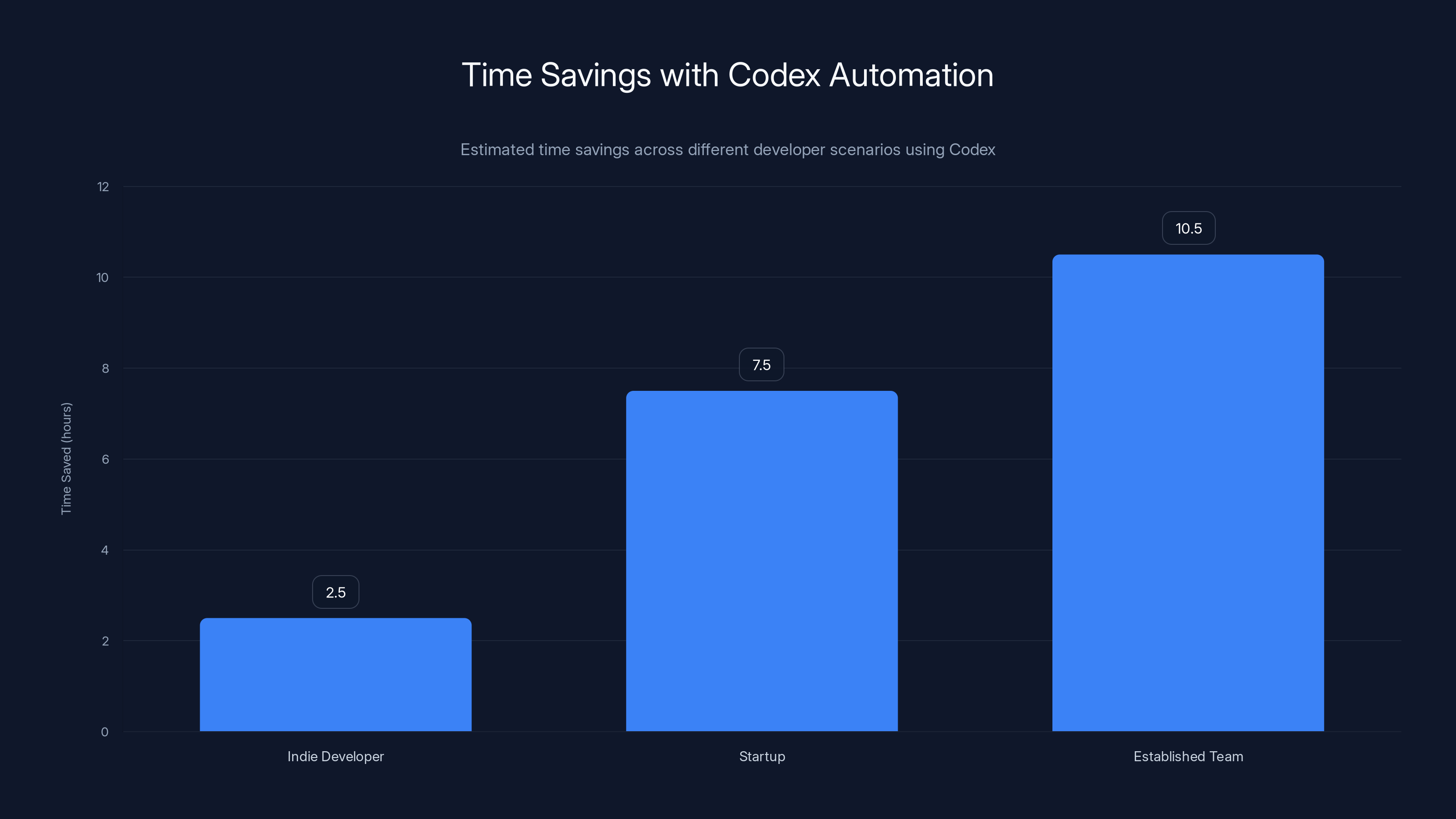

The macOS-exclusive launch is interesting strategically. macOS represents roughly 15-20% of developer machines but skews heavily toward indie developers and startups. These audiences often work solo or in small teams, where automating operational overhead has a disproportionate impact. A solo developer who reclaims 5-8 hours per week through Automations gets back roughly 12-20% of a 40-hour week, a substantial productivity gain for a team of one.

One honest limitation: Codex requires familiarity with agentic AI concepts to use well. You can't just throw any task at it. You need to decompose complex goals into clear subtasks. This requires understanding how the system works, what it's good at, and what to expect. This is a higher bar for adoption than "click a button and get suggestions."

For teams already thinking in terms of task automation and CI/CD pipelines, Codex is natural. For teams used to traditional coding assistance, the mental model shift is steeper.

Estimated data: Codex significantly reduces time spent on routine tasks, saving up to 10.5 hours per week for established teams.

Pricing and Access Strategy

OpenAI's access approach reveals their positioning. For a limited time, they're offering Codex to ChatGPT Free and Go users. Free users get access. ChatGPT Go users (the lower-cost paid tier below Plus) get access. This is intentionally broad.

The catch: they're simultaneously doubling rates for Plus and Pro subscribers. This is a smart pricing strategy.

Here's the logic:

Free/Go tier gets Codex → Attracts users, builds habit, demonstrates value

Plus tier price increase → Existing Plus users pay more, but they also get more value if they adopt Codex

Pro tier price increase → Premium users (likely teams/professionals) subsidize development costs

This isn't a hostile move the way it might sound. If Plus users suddenly have access to automation that saves 5-8 hours per week, they're getting more value. The price increase roughly aligns with the value increase.

The strategy makes sense for OpenAI. Codex is more computationally expensive than basic ChatGPT. Multi-agent coordination requires more inference, more context management, more validation steps. The cost to OpenAI is higher, so prices need to increase.

But this also signals confidence. OpenAI isn't charging massive premiums for Codex. They're making it available to free users initially. This suggests confidence that usage will drive value realization, which drives willingness to pay.

Practical Implementation: How Developers Will Actually Use Codex

Theory is nice. But how do real developers integrate this into workflows?

Scenario 1: The Indie Developer. Sarah is a solo developer maintaining three production SaaS products. Every morning, she used to spend 30 minutes checking logs, categorizing issues, and writing a status report. Codex automates this. Now she runs Codex as a background automation every morning. By the time she opens her laptop, she has a report of what broke, what's urgent, what's new. That 30 minutes becomes 5 minutes of review. She saves 25 minutes per day, which adds up to about 2 hours per five-day week.

Sarah also uses Codex for code generation when building new features. Instead of writing boilerplate and common patterns manually, she describes what she wants, Codex breaks it into subtasks (API endpoints, database schema, frontend components), generates them in parallel, tests the integration, and produces working code. Development time for new features drops 30-40%.

Scenario 2: The Early-Stage Startup. A five-person startup is moving fast. Two engineers spend significant time on code review, catching bugs, ensuring quality. Codex Automations run weekly code analysis, flagging common issues before code review even happens. Code review time drops 25-30% because the obvious mistakes are already caught.

When building a new product version, instead of one engineer managing the project and the other four working on features, Codex handles project decomposition and coordination. One engineer still manages strategic decisions, but Codex handles tactical execution. Time from "we have an idea" to "we have a demo" drops from two weeks to five days.

Scenario 3: The Established Team. A 20-person team maintains legacy systems and builds new features. Operations overhead is significant: ticket triage, documentation updates, release management, security scanning. Codex automates 60-70% of these operational tasks. The time saved allows the team to ship more features without growing headcount.

The operational gains are invisible in day-to-day work but massive cumulatively. If 3 engineers spend 40% of their time on operations, that is 1.2 engineers' worth of capacity. If Codex automates 60-70% of that work, it frees roughly 0.7-0.8 of an engineer, which is close to a free engineering hire.

All three scenarios have a common pattern: Codex works best when you have clear, repeatable tasks. The more standardized your workflows, the better automation performs. If your team is chaotic, decision-making is ad-hoc, and processes change weekly, Codex will struggle.

This actually creates a selection effect. Codex will likely be adopted fastest by organizations with mature engineering practices. These are teams that already have clear processes, documented standards, and consistent workflows. Codex amplifies what they're already good at.

In 2025, Codex leads in integration with OpenAI's ecosystem, while Claude and LangChain excel in agentic capabilities and multi-agent orchestration. Estimated data.

The Competitive Landscape in 2025

Codex's launch is well-timed but not surprising. The industry has been moving toward agentic AI for 12-18 months.

Anthropic has been emphasizing agentic capabilities in Claude. The company published research on constitutional AI agents that can decompose tasks and work autonomously. Claude's extended thinking capabilities align with agentic workflows.

Google DeepMind released papers on multi-agent reinforcement learning and agents that can learn to collaborate. These aren't directly marketed as coding tools, but the underlying research is foundational.

Smaller specialized players are also making moves. Together AI focuses on inference optimization for agentic workflows, understanding that multi-agent systems are computationally expensive. LangChain provides orchestration frameworks for building agents, making multi-agent systems accessible to developers without needing to build from scratch.

The competitive advantage Codex has is integration with OpenAI's ecosystem. ChatGPT users already trust OpenAI. Codex is a natural extension. Pricing is integrated into existing ChatGPT plans. The user experience is unified. This reduces friction dramatically compared to adopting a completely new tool.

But make no mistake: Cursor, Claude, and others are building similar capabilities. The pattern of multi-agent orchestration isn't proprietary to OpenAI. Within 12 months, expect most major AI developer tools to offer multi-agent features.

The differentiation will be in execution: whose orchestration is most reliable? Whose agents handle edge cases best? Whose integration is smoothest? These are operational questions, not fundamental capability gaps.

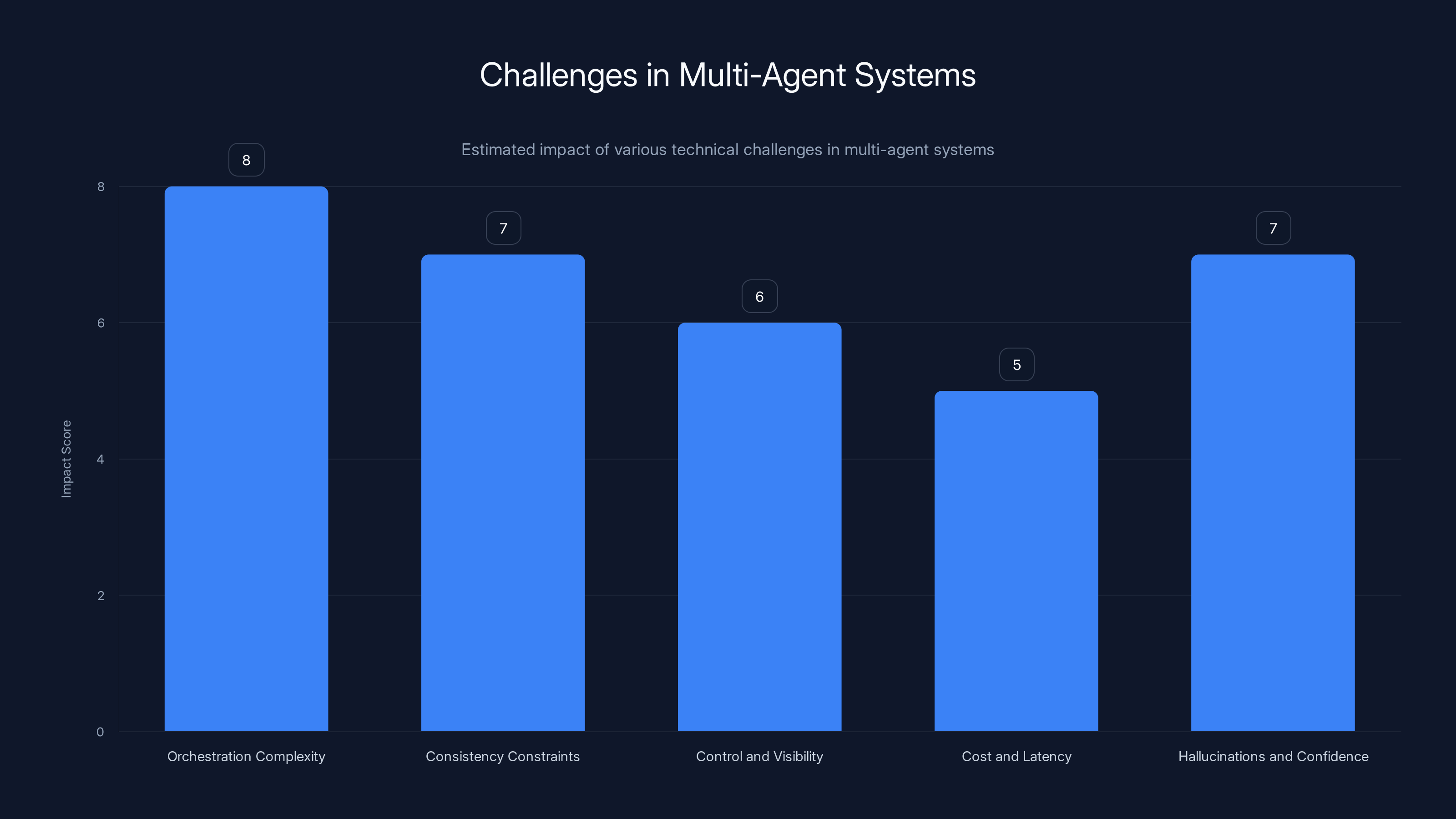

Technical Challenges and Real Limitations

Here's where enthusiasm needs tempering with reality. Multi-agent systems are powerful, but they have real limitations.

1. Orchestration Complexity. Coordinating multiple agents requires managing state, dependencies, and error cases. If Agent 1's output is subtly incompatible with Agent 2's expectations, the system breaks silently. Debugging multi-agent failures is harder than debugging single-agent failures because failures can propagate between agents.

Codex likely handles this through aggressive validation and rollback mechanisms, but it's not a solved problem universally.

2. Consistency Constraints. When multiple agents work on a system, maintaining consistency is hard. Agent 1 makes assumptions about the database schema. Agent 2 changes the schema. Agent 3 is using outdated assumptions. Now the system is broken.

Codex mitigates this through shared context and integration testing, but it's not perfect. Complex projects with many agents have higher failure rates than simpler projects with fewer agents.

3. Control and Visibility. When one AI does all the work, you watch it happen. With multi-agent systems, parallel execution means you can't see everything at once. If something goes wrong, tracing back to the root cause is harder.

Codex logs its activity, but interpreting agent logs requires understanding how the agents work, which is technical knowledge beyond typical developer experience.

4. Cost and Latency Trade-offs. Parallel execution improves latency, but more agents means more API calls, which means higher costs. Running three agents simultaneously might cost 2.5-3x as much as running one agent sequentially.

For some use cases (one-time game building), this is fine. For high-frequency use (generating code snippets hundreds of times per day), cost becomes prohibitive.

5. Hallucinations and Confidence. AI systems sometimes confidently produce wrong answers. In multi-agent systems, one agent's hallucination can cascade. Agent 1 produces incorrect code. Agent 2 relies on it. Agent 3 builds on that. By the time the system validates the final output, fixing the root cause requires restarting multiple agents.

Codex's testing agent mitigates this, but it's not perfect. Some hallucinations are semantic (wrong logic that still compiles and runs) rather than syntactic (broken code).
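For the consistency problem in item 2, one common mitigation (a general technique, not Codex's documented mechanism) is to version the shared contract and have every agent record which version it built against. The hypothetical Python sketch below replays the schema scenario from item 2: once Agent 2 migrates the schema, an integration step can immediately tell which outputs are stale and must be regenerated.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Artifact:
    agent: str
    schema_version: int  # version of the shared database schema the agent built against


def stale_agents(artifacts: List[Artifact], current_version: int) -> List[str]:
    """List agents whose output assumes an outdated schema and must be redone."""
    return [a.agent for a in artifacts if a.schema_version != current_version]


# Agent 2 migrated the schema to version 2; Agents 1 and 3 still assume version 1.
artifacts = [
    Artifact("agent_1_api", 1),
    Artifact("agent_2_migration", 2),
    Artifact("agent_3_reports", 1),
]
print(stale_agents(artifacts, current_version=2))  # ['agent_1_api', 'agent_3_reports']
```

This turns a silent break into an explicit, checkable condition, though it only helps for assumptions that are actually captured in the version number.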

None of these limitations are dealbreakers. But they are real. Multi-agent AI systems are more powerful and more complex than single-agent systems. You gain speed and capability. You pay for it with complexity and reduced interpretability.

Orchestration complexity and hallucinations/confidence issues are among the highest impact challenges in multi-agent systems. Estimated data based on typical challenges.

What This Means for Developer Career Paths

The introduction of tools like Codex creates uncomfortable questions about developer careers. If AI can handle 30-40% of development work, are developers becoming obsolete?

Historically, the answer to this question is "no, but job composition changes." When calculators replaced human computers doing manual math, mathematical work didn't disappear. It shifted. People stopped doing arithmetic and started doing higher-level analysis. Jobs evolved.

The same dynamic is likely here. If Codex handles routine code generation and operational automation, what does that mean for developers?

Developers become architects and decision-makers. Instead of writing boilerplate, developers decide what needs building and review AI output. The thinking gets higher-level. You're designing systems and ensuring quality, not implementing patterns.

Developer productivity increases, but so do expectations. If one developer with Codex can do the work of 1.5 developers without it, companies will hire fewer developers but expect each to handle more complex problems. The role shifts from implementer to systems thinker.

Early-career developers struggle more. Junior developers learn by doing routine tasks: writing boilerplate, implementing simple features, making mistakes and learning from them. If Codex handles boilerplate, how do juniors learn? This is a real concern. The industry might need to intentionally structure "learning-focused" work separate from "production-focused" work.

Specialization becomes more valuable. Generalist developers are easier to automate. Developers with deep expertise in specific domains (distributed systems, security, performance optimization) remain harder to replace. Specialization becomes a career strategy.

DevOps and infrastructure roles get more complex. As development scales and gets more automated, infrastructure becomes more complex. Operational demands increase. Engineers who understand both application and infrastructure become more valuable.

None of this means developers are doomed. But it does mean the profession is inflecting. The mix of work is changing. Career planning needs to account for this.

Practical Guide: Getting Started with Codex

If you want to evaluate Codex, here's a realistic approach.

Phase 1: Understand the Model. Codex isn't a replacement for your existing workflow. It's a tool you integrate strategically. Spend time understanding:

- What kinds of tasks it handles well (complex, decomposable tasks)

- What it struggles with (tasks requiring deep domain expertise, novel problems)

- How to structure prompts and goals for multi-agent execution

Don't try to use it for everything immediately. That leads to frustration.

Phase 2: Identify Automation Opportunities. Review your development workflow. Where do you waste time? Look for:

- Repetitive tasks (issue triage, release management, documentation updates)

- Dependency-free work that can be parallelized (generating API specs, writing tests, generating UI components)

- Validation work (code review, testing, documentation review)

Prioritize opportunities with high frequency and clear success criteria.

Phase 3: Start Small. Begin with one small automation or one bounded project. Don't try to use Codex to build your entire product on day one. Use it for:

- Automated daily issue triage

- Generating tests for a small module

- Building a side project with game-like scope

Monitor the results. What worked? What didn't? What surprised you?

Phase 4: Iterate and Expand. Once you've gotten comfortable with Codex through small uses, expand gradually. Add more automations. Tackle bigger projects. Build institutional knowledge about what works well with Codex in your specific context.

Specific Prompting Strategies for Codex:

Codex responds better to specific, structured prompts than vague instructions.

Bad: "Build me a web app"

Good: "Build a single-page web app that: 1) Fetches user data from API endpoint /api/users 2) Displays users in a sortable table 3) Allows filtering by department 4) Exports table as CSV"

The second prompt is specific enough for Codex to decompose into subtasks: fetch logic, table component, filter logic, export logic. Each can be handled by a different agent.
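Why does the structured prompt decompose so cleanly? Its requirements are already explicit, separable units. The toy Python sketch below makes that visible by splitting the example prompt on its `1) ... 2) ...` markers and handing each item to a hypothetical agent slot. Real decomposition in Codex is model-driven, not a regex; this only shows that the structure is already present in a good prompt.

```python
import re
from typing import Dict, List


def split_requirements(prompt: str) -> List[str]:
    """Split a prompt written as '1) ... 2) ...' into one subtask per item."""
    parts = re.split(r"\s*\d+\)\s*", prompt)
    # parts[0] is the preamble before "1)"; the rest are the numbered requirements.
    return [p.strip() for p in parts[1:] if p.strip()]


prompt = ("Build a single-page web app that: 1) Fetches user data from API endpoint "
          "/api/users 2) Displays users in a sortable table 3) Allows filtering by "
          "department 4) Exports table as CSV")
subtasks = split_requirements(prompt)
# Hypothetical assignment: one agent slot per requirement.
assignments: Dict[str, str] = {f"agent_{i}": task for i, task in enumerate(subtasks, 1)}
for agent, task in assignments.items():
    print(agent, "->", task)
```

Run against the vague prompt, the same function returns an empty list: there is nothing to fan out.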

Validation Checklist: Before deploying Codex output to production:

- Run through your existing test suite (does it pass?)

- Code review the output (is it reasonable? Are there obvious issues?)

- Performance test it (is it fast enough?)

- Security audit it (are there obvious vulnerabilities?)

- Integration test with your system (does it interact correctly with your existing code?)

Codex isn't perfect, but output that passes these checks has cleared the same bar you would apply to human-written code before shipping it.
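The validation checklist works best as an explicit gate rather than a mental note. In the minimal Python sketch below, the five checks above are hypothetical named gates, and a check that was never run counts as a failure. How each result is produced (test runner, reviewer sign-off, scanner) is left to your existing tooling.

```python
from typing import Dict, List

# The five checks from the validation checklist, as named gates (names are illustrative).
CHECKLIST = ["test_suite", "code_review", "performance", "security_audit", "integration"]


def blocking_items(results: Dict[str, bool]) -> List[str]:
    """Return checklist items that failed or were never run; empty list means clear to ship."""
    return [item for item in CHECKLIST if not results.get(item, False)]


results = {"test_suite": True, "code_review": True, "performance": True, "security_audit": False}
print(blocking_items(results))  # ['security_audit', 'integration']
```

Treating missing results as failures is the key design choice: it stops a skipped check from looking like a passed one.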

The Broader Context: Where AI Development Tools Are Heading

Codex's multi-agent capabilities are one data point in a larger trend. The entire AI-assisted development landscape is moving toward higher-level abstraction.

First generation (2021-2022): AI fills in code. You write skeleton, AI completes patterns.

Second generation (2023-2024): AI understands full files and projects. You ask questions about code, AI answers.

Third generation (2025+): AI manages tasks and workflows. You set goals, AI breaks them into work, executes in parallel, validates results.

Codex sits solidly in the third generation. This generation's killer feature is reduction of cognitive load. Instead of juggling multiple pieces of work yourself, you delegate to AI and review the output.

But there's a fourth generation coming, which we might see by 2026-2027: AI that learns your codebase and your team's practices. Tools that understand not just how code works, but how your code works. The style, the patterns, the conventions you use. At that point, AI-generated code can be indistinguishable from code written by your team.

Codex is a stepping stone toward that, but not there yet. The multi-agent architecture is enabling infrastructure. The next generation of tools will build on this foundation.

Honest Assessment: Is Codex Worth Using in 2025?

Let me be direct.

If you're a solo developer or in a small startup and you have operational overhead eating your time, yes, try Codex. The free tier access means zero risk. The time saved from automations alone could be meaningful. The time saved from project-level code generation could be transformative.

If you're in a mid-size or large team with established engineering practices, evaluate it seriously. The multi-agent code generation is impressive, but the bigger value is operational automation. How much time does your team waste on issue triage, CI/CD failure analysis, documentation? Codex can handle these at scale.

If you're in an organization with immature engineering practices (chaotic workflows, ad-hoc decision-making, inconsistent standards), hold off. Codex needs clear, repeatable processes to work well. You'll get frustrated before seeing benefits.

The pricing is reasonable if you're already a ChatGPT Plus user. The macOS-exclusive launch is limiting, but Windows and Linux versions are presumably coming.

The honest risk: you become dependent on Codex, and if it breaks or changes pricing, your workflow is affected. This is the cost of using any external tool. Mitigate by gradually integrating Codex, not overhauling your entire workflow at once.

Future Predictions: What's Coming Next

Based on industry trajectory and competitive dynamics, here's what's likely in the next 12-18 months:

1. Windows and Linux Versions of Codex: macOS exclusivity won't last. OpenAI will likely expand to all platforms to capture a broader market. Expect this by Q2-Q3 2025.

2. Deeper IDE Integration: Codex will integrate more deeply with VS Code, JetBrains IDEs, and others. Instead of living in a standalone app, it becomes a background agent in your IDE. You invoke it with commands or hotkeys while working in your editor.

3. Training on Private Codebases: Models will become more customizable. Instead of relying on generic models, you'll be able to fine-tune Codex agents on your private codebase so they understand your specific patterns and conventions.

4. Stronger Multi-Language Support: Codex will improve at polyglot development. Right now, it's good at one language per task. Future versions will handle multi-language systems better (a Java backend, a React frontend, and Rust services all coordinating).

5. Better Error Recovery: Multi-agent error cases will be handled more gracefully. Instead of failures cascading, agents will detect incompatibilities earlier and recover automatically.

6. Community Agent Marketplace: Developers will create specialized agents for specific frameworks, domains, and problems. You'll compose these agents to fit your needs.

7. Enterprise-Grade Compliance: For regulated industries (fintech, healthcare, defense), OpenAI will offer Codex versions with audit trails, compliance certifications, and on-premise deployment options.

These predictions are educated guesses, not insider information. But they align with obvious competitive pressures and technical roadmaps that are public.

FAQ

What is multi-agent AI in the context of code development?

Multi-agent AI is a system where multiple specialized AI models work in parallel on different subtasks of a larger problem, with a coordination layer ensuring their outputs integrate correctly. Instead of one AI trying to build everything, you have specialized agents for graphics, logic, testing, etc., each handling what they're best at simultaneously.

How does Codex differ from GitHub Copilot?

GitHub Copilot provides inline code suggestions as you type; it's good for speed and implementation. Codex is a project-level orchestrator that manages multiple agents to handle complex tasks. Copilot helps you code faster. Codex helps you build projects with less total work.

What are the main benefits of using Codex for development?

Codex saves time through automations (issue triage, CI/CD analysis, documentation) and through parallel code generation for complex projects. It also reduces context switching by handling operational overhead. Some teams report a 20-40% reduction in routine development time, with the savings compounding as more automations are added.

Is Codex production-ready for large enterprise projects?

Not yet. Codex works best on well-defined, decomposable problems. For large, complex systems with deep dependencies and intricate logic, human oversight is still essential. Codex is a force multiplier for developer productivity, not a replacement for human architects and senior engineers on complex projects.

How does Codex handle integration between multiple agents' outputs?

Codex uses a testing and validation layer to verify that outputs from different agents integrate correctly. It runs the integrated code, tests the functionality, and reports errors back to the relevant agents for fixing. This creates a feedback loop that ensures compatibility, though it's not perfect for all edge cases.
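The feedback loop described above can be sketched as a retry loop that routes each validation error back to the agent responsible for it and regenerates only the failing outputs. This is an assumption about the general pattern, not OpenAI's actual implementation; the `backend` and `frontend` agents, the route strings, and the `routes_match` validator are all hypothetical.

```python
from typing import Callable, Optional

# An agent receives optional feedback and returns its (revised) output.
Agent = Callable[[Optional[str]], str]
# A validator inspects all outputs and maps agent names to error messages.
Validator = Callable[[dict[str, str]], dict[str, str]]


def feedback_loop(
    agents: dict[str, Agent], validate: Validator, max_rounds: int = 3
) -> tuple[dict[str, str], int]:
    """Regenerate only the outputs that failed validation, up to max_rounds."""
    outputs: dict[str, str] = {}
    feedback: dict[str, Optional[str]] = {name: None for name in agents}
    errors: dict[str, str] = {}
    for round_no in range(1, max_rounds + 1):
        for name, agent in agents.items():
            # First round: every agent drafts. Later rounds: only agents with feedback.
            if name not in outputs or feedback[name] is not None:
                outputs[name] = agent(feedback[name])
        errors = validate(outputs)
        if not errors:
            return outputs, round_no
        # Route each error message back to the agent that owns the failing output.
        feedback = {name: errors.get(name) for name in agents}
    raise RuntimeError(f"still incompatible after {max_rounds} rounds: {errors}")


def backend(feedback: Optional[str]) -> str:
    return "/api/scores"  # hypothetical route the backend agent implemented


def frontend(feedback: Optional[str]) -> str:
    # First draft guesses the route; a retry uses the route named in the feedback.
    return "/api/score" if feedback is None else feedback.rsplit(" ", 1)[-1]


def routes_match(outputs: dict[str, str]) -> dict[str, str]:
    if outputs["frontend"] == outputs["backend"]:
        return {}
    return {"frontend": f"expected route {outputs['backend']}"}


if __name__ == "__main__":
    result, rounds = feedback_loop({"backend": backend, "frontend": frontend}, routes_match)
    print(rounds, result)
```

The `max_rounds` cap matters: without it, two agents that keep producing incompatible outputs would loop forever, which is exactly the kind of edge case where a human still needs to step in.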

What types of tasks should I NOT use Codex for?

Avoid using Codex for: novel problems without clear patterns, projects requiring deep domain expertise, systems with complex state management and intricate dependencies, and tasks where every decision requires human judgment. Codex shines on well-defined, routine, and parallelizable work.

What's the cost, and is it worth the price increase?

Codex is available to ChatGPT Plus users (now at increased pricing). If it saves you 5+ hours per week through automations and 20-30% of development time on projects, a cost of roughly $50/month is easily justified. For freelancers billing hourly, the time savings often pay for the tool within the first week of use.
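The break-even arithmetic behind that claim is easy to check. A minimal sketch, using the figures from the answer above ($50/month, 5 hours saved per week) and assuming about 52/12 ≈ 4.33 weeks per month; the function names and the $75/hour rate in the usage lines are hypothetical.

```python
WEEKS_PER_MONTH = 52 / 12  # about 4.33 weeks in an average month


def break_even_hourly_rate(monthly_cost: float, hours_saved_per_week: float) -> float:
    """Lowest value of an hour of your time at which the tool pays for itself."""
    hours_saved_per_month = hours_saved_per_week * WEEKS_PER_MONTH
    return monthly_cost / hours_saved_per_month


def weeks_to_payback(monthly_cost: float, hours_saved_per_week: float, hourly_rate: float) -> float:
    """Weeks of time savings needed to cover one month of subscription cost."""
    return monthly_cost / (hours_saved_per_week * hourly_rate)


if __name__ == "__main__":
    print(f"break-even rate: ${break_even_hourly_rate(50, 5):.2f}/hour")
    print(f"weeks to payback at $75/hour: {weeks_to_payback(50, 5, 75):.2f}")
```

At 5 saved hours a week, the subscription breaks even at roughly $2.31 per hour of your time, and anyone whose time is worth at least $10/hour recovers the full monthly cost within the first week.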

How should small teams get started with Codex?

Start with one automation (like daily issue triage) for two weeks. Monitor quality and iteration time. Add one more automation only when you're confident in the first. Then experiment with using Codex for a contained project. Build institutional knowledge gradually rather than trying to transform your entire workflow at once.

Will Codex make developers obsolete?

No. Codex automates routine implementation and operational overhead. Developers shift toward architecture, system design, and decision-making. The profession evolves, with more specialization and higher cognitive demands, but demand for developers remains strong. The developers most at risk are those doing purely routine implementation without differentiation.

When will Codex be available on Windows and Linux?

OpenAI hasn't announced specific dates, but historical precedent suggests 6-12 months after the macOS launch. Expect Windows and Linux versions by mid-to-late 2025.

Conclusion: The Inflection Point

Codex's launch with multi-agent capabilities isn't just another feature addition. It's a signal that AI-assisted development has matured from "helper for developers" to "coordinator of technical work."

We're watching the industry hit an inflection point. The tools are becoming capable enough that teams can delegate significant portions of development work to AI while humans focus on higher-level decisions. This is qualitatively different from code suggestions or answer engines.

For individual developers, the opportunity is immediate. The time-saving automations are real. The project-level code generation works. You can adopt Codex cautiously, measure the impact, and decide if it fits your workflow.

For organizations, the strategic question is more complex. Do you invest in teams learning to work with agentic AI? Do you reorganize roles to emphasize high-level thinking over implementation? Do you build automation infrastructure into your development pipeline? These are architectural decisions with long-term implications.

For the industry, expect consolidation around tools that successfully implement multi-agent orchestration. The technical capability won't remain unique to OpenAI for long. But execution, reliability, and integration matter enormously. Winners will be tools that developers trust and integrate into their daily workflows.

Codex is worth trying. It's free to evaluate (for ChatGPT users). The technical innovations are real. The limitations are clear and manageable if you understand them.

But don't expect it to be magic. It's a powerful tool for specific use cases. Use it well, and it multiplies your productivity. Use it poorly, and you'll waste time debugging AI hallucinations.

The future of development is collaborative: humans making decisions, AI executing work, validation loops ensuring quality. Codex is one implementation of this future. More are coming. The question for developers in 2025 isn't whether AI will be part of development. It's whether you'll learn to work with AI effectively or be left behind by teams that do.

The window to get ahead of this curve is now.

Key Takeaways

- Multi-agent orchestration enables parallel task execution, dramatically reducing project completion time for complex development goals

- Codex's Automations feature can take an estimated 5-8+ hours of repetitive developer overhead off your plate each week through issue triage, CI/CD analysis, and release documentation

- Real-world proof: OpenAI built a playable Mario Kart-style game with graphics, logic, and testing entirely through multi-agent coordination

- Industry trajectory shows a shift from single-model AI assistance to specialized agent networks; Cursor has already demonstrated this capability

- Career implications: developers shift from implementation to architecture/decision-making as routine coding becomes automated


![OpenAI's Codex for Mac: Multi-Agent AI Coding [2025]](https://tryrunable.com/blog/openai-s-codex-for-mac-multi-agent-ai-coding-2025/image-1-1770059898137.jpg)