OpenAI's Codex Desktop App Takes On Claude Code [2025]

Introduction: The AI Coding Tool Wars Heat Up

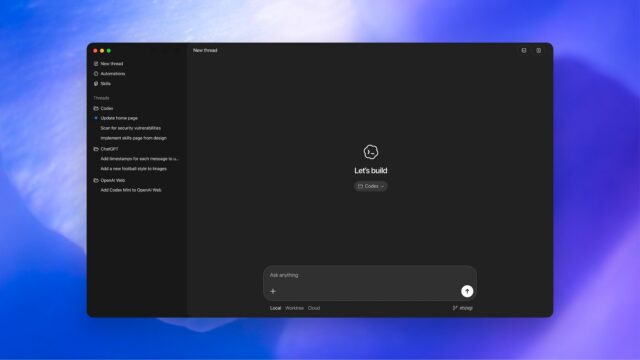

Let me set the scene for you. Six months ago, if you wanted to use OpenAI's Codex for coding tasks, you had exactly three options: a command-line interface, a web interface, or an IDE extension. Not ideal if you're juggling multiple projects and running autonomous agents that need close monitoring. That's where Anthropic's Claude Code had already pulled ahead with a dedicated macOS app that made agent management feel natural.

Now OpenAI's playing catch-up, and they're doing it the way they usually do: by throwing better rate limits at the problem and delivering a solid product that'll make you question whether you actually need the competition's tool anymore.

The gap between leading AI coding tools has become the story nobody's talking about loudly enough. OpenAI has been running behind on specific product features, but they're resourced in a way that means they can leapfrog pretty quickly once they decide to move. The Codex desktop app is exactly that kind of move.

Here's what matters: if you're a developer using AI agents for serious coding work, the macOS app changes where you'll actually spend your time. Instead of context-switching between the terminal and your IDE, you get a dedicated interface built specifically for managing multiple parallel coding tasks. That's not a small thing when you're running three agents simultaneously, each handling different parts of your codebase.

The competitive landscape matters too. Claude had momentum. Developers were talking about it. Teams were experimenting. But momentum only lasts as long as the product stays ahead. Once OpenAI's features catch up (and its rate limits double), the question becomes: what's Claude's actual advantage now?

We're going to dig into this in painful detail. You'll understand exactly what the Codex desktop app does, why it matters, how it compares to what you were using before, and whether you should actually care about switching from whatever CLI or IDE extension you're using right now. By the end, you'll have a solid take on whether this is legitimately competitive or just OpenAI playing catch-up (spoiler: it's both).

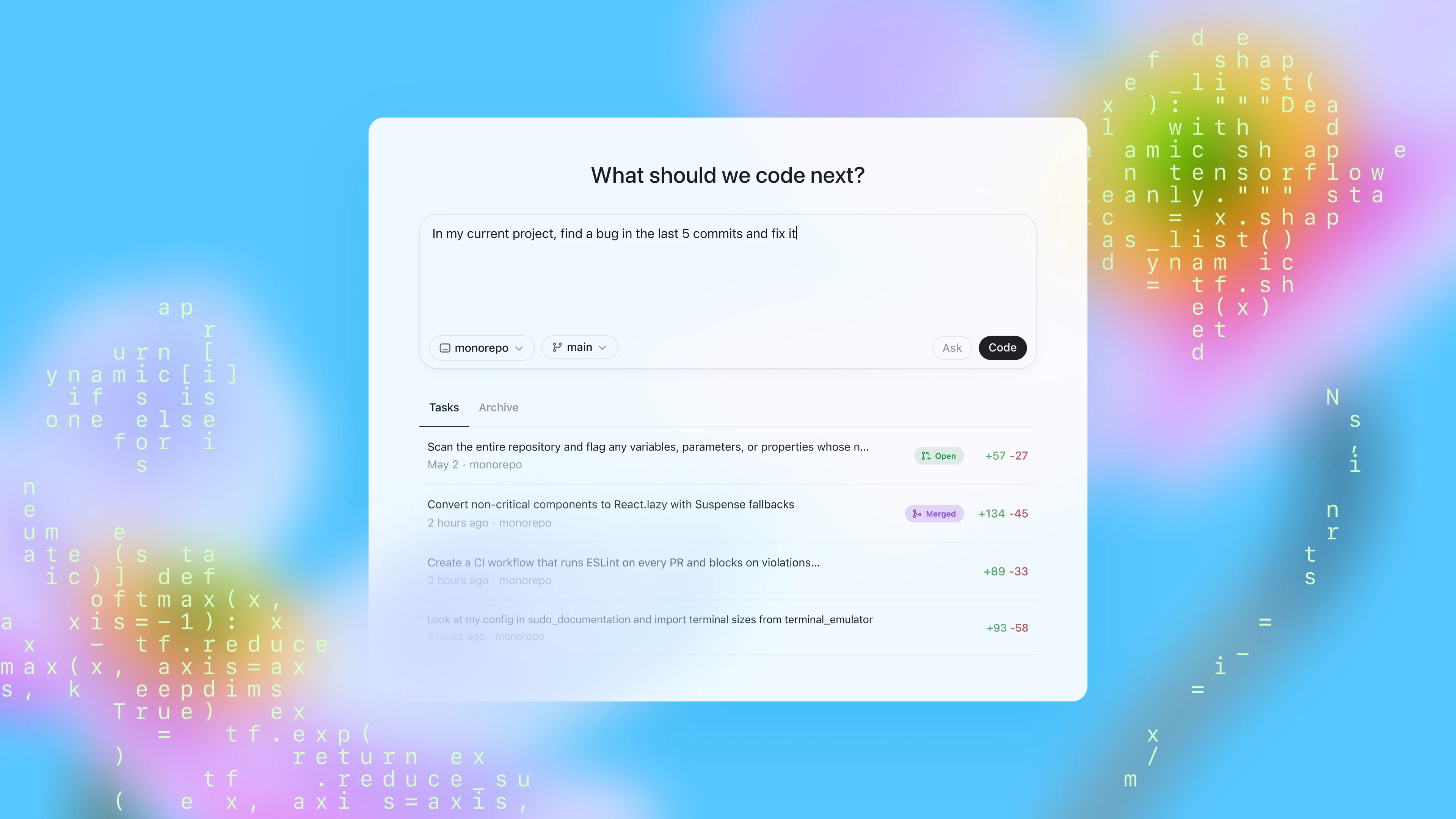

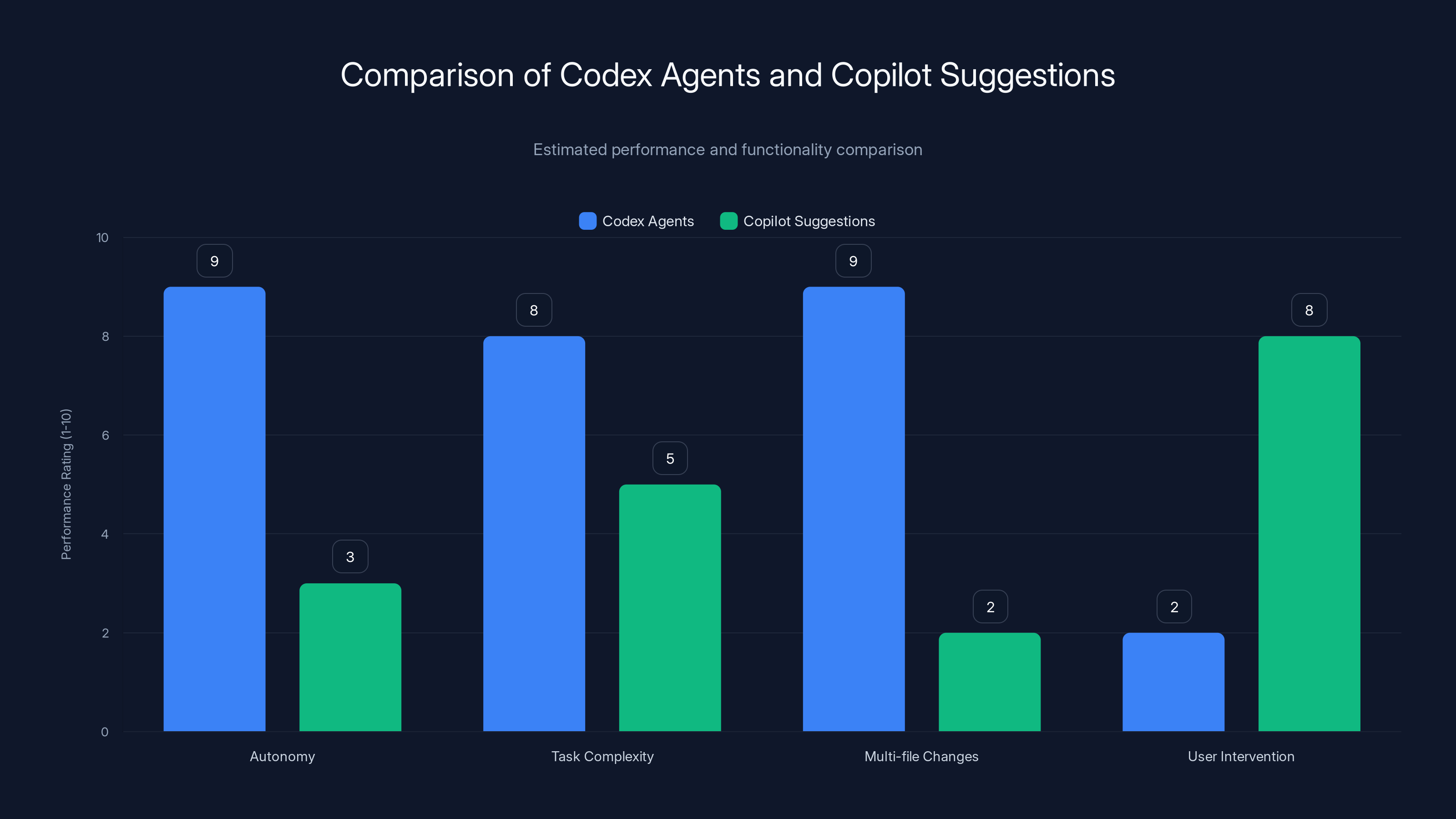

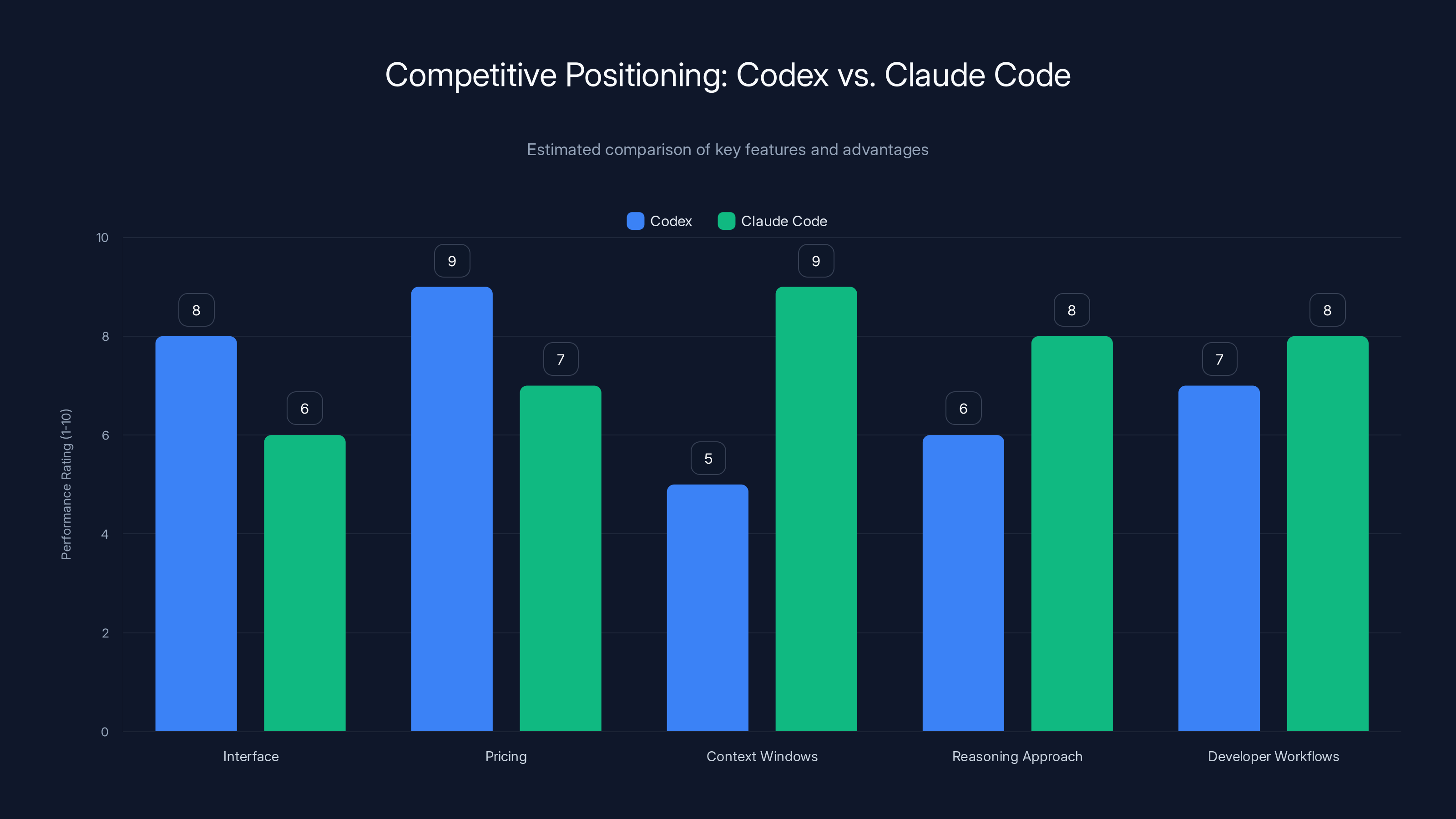

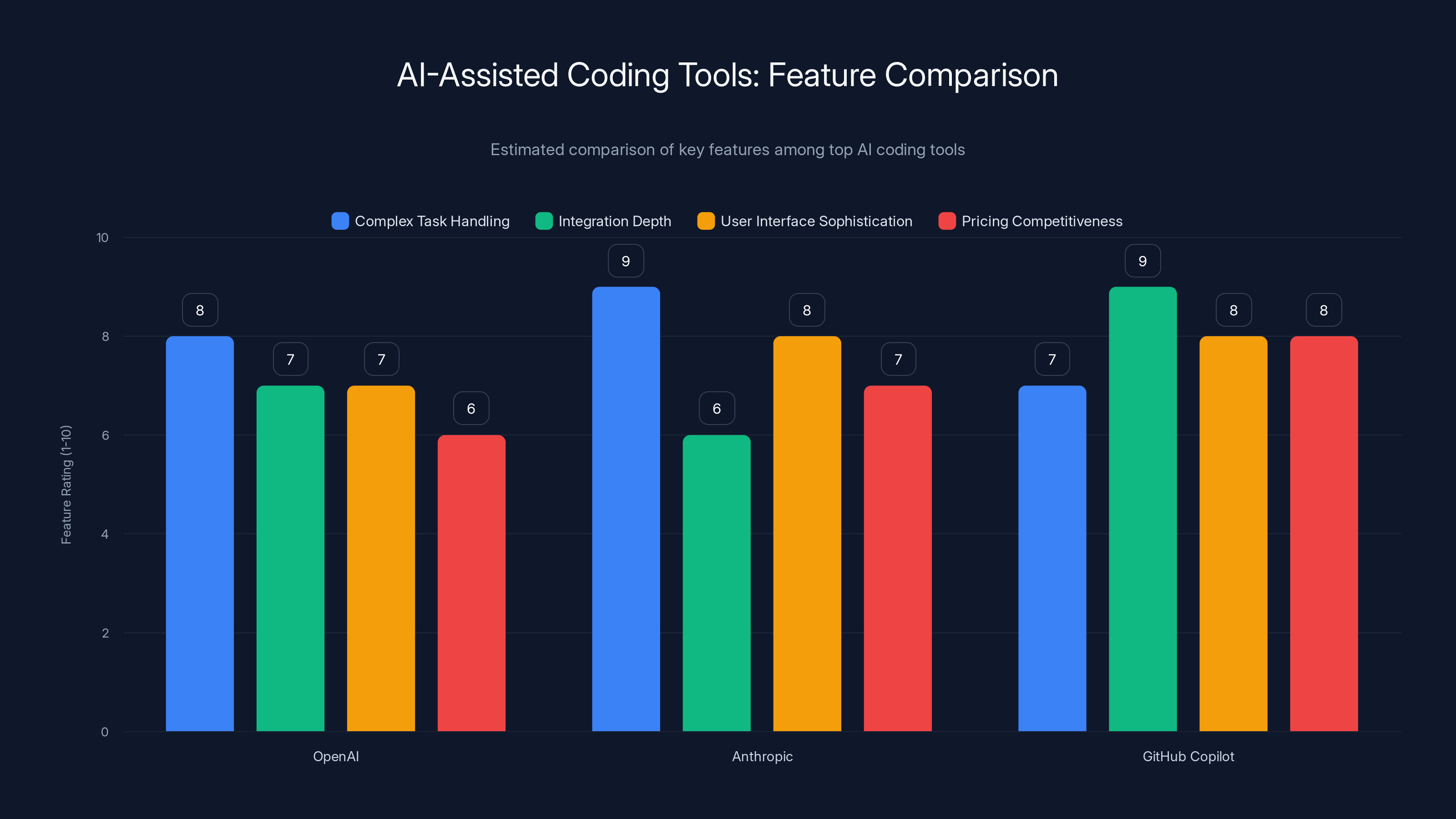

Codex agents outperform Copilot suggestions in autonomy and handling complex, multi-file tasks with minimal user intervention. Estimated data based on feature descriptions.

TL;DR

- Desktop advantage: Codex's new macOS app enables parallel agent management that CLI and IDE extensions can't match

- Rate limits doubled: OpenAI doubled Codex rate limits across Plus, Pro, Business, Enterprise, and Edu plans

- Project organization: Agents are grouped by project, making multi-project workflows cleaner than competitors

- Feature parity: The desktop app does everything the CLI, IDE, and web interfaces do, just in a dedicated interface

- Skills and automations: Support for custom skills and scheduled automations brings flexibility the competition needed to copy

What Changed: The Desktop App Context

For about two years, Codex existed primarily as a back-end capability that you accessed through different interfaces. The CLI was powerful if you liked typing commands. The web interface worked if you wanted to stay in a browser. IDE extensions were perfect if you never wanted to leave VS Code or your editor of choice. But none of these interfaces were designed for one specific use case: managing multiple autonomous agents running in parallel on different projects simultaneously.

That's what Claude Code solved. The macOS app created a dedicated space where you could watch agents work, group them logically, see their progress in real-time, and jump between projects without losing context. For developers managing complex codebases or running multiple simultaneous AI-assisted coding tasks, this made an actual difference.

OpenAI noticed. And they built something similar.

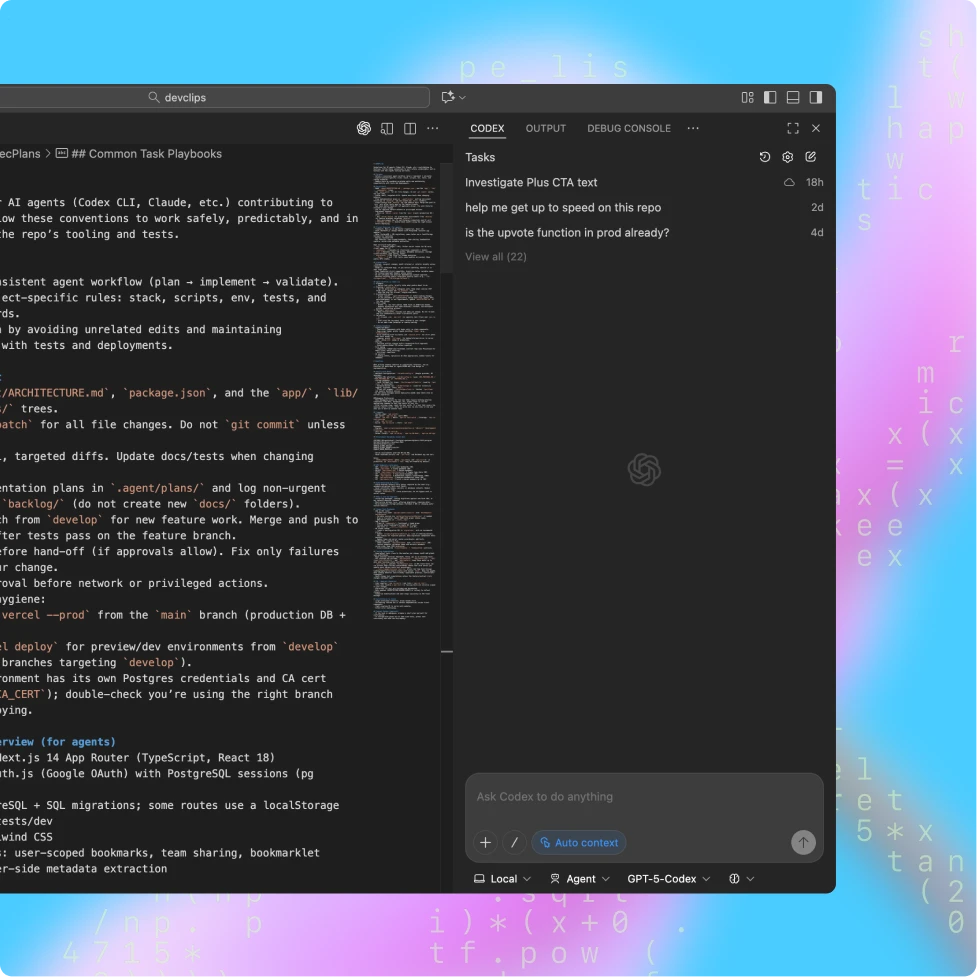

The Codex macOS app launches you into a workspace-style interface. You see your agents organized by project. You can start multiple agents on the same project (they use worktrees to avoid conflicts), pause them, inspect their work, and switch between projects without closing everything and reopening it. If you've used project management tools designed for complex workflows, the mental model is familiar: dedicated space, clear organization, task focus.

What's notable is that this isn't just a wrapper around existing tools. This is a purpose-built interface for how developers actually want to interact with AI agents. The web interface works fine if you're using one agent on one task. But if you're the kind of developer who throws five coding agents at five different problems and checks back in an hour, the desktop app changes your workflow fundamentally.

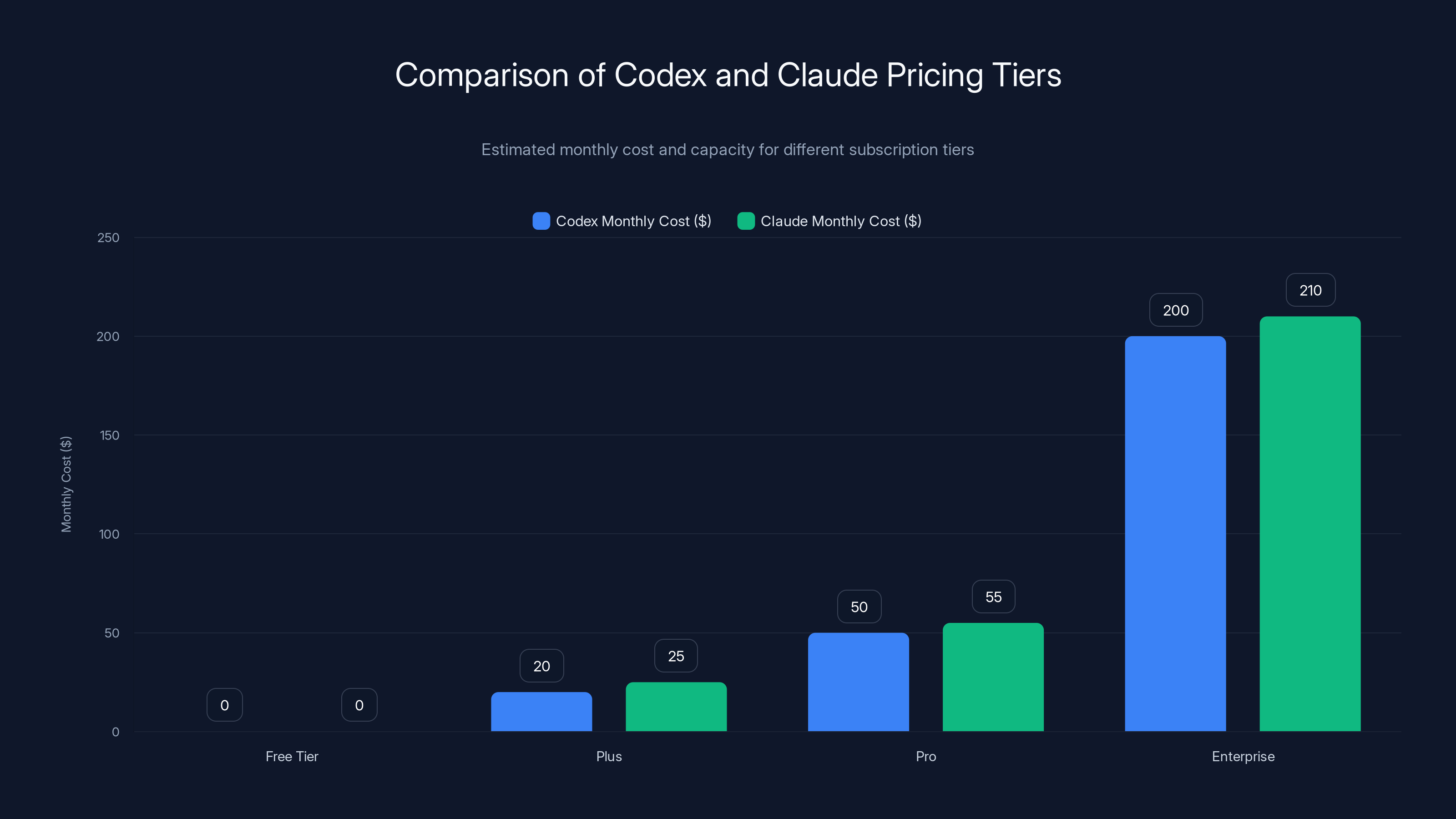

Codex and Claude offer similar pricing structures across tiers. Codex's competitive pricing strategy ensures comparable costs with a focus on increased capacity. (Estimated data)

How the Codex Desktop App Actually Works

Let's talk specifics, because the interface design matters more than you'd think. Opening the Codex app, you see a sidebar with your projects. Click a project, and you're looking at that project's agents. Each agent has a name, status, and controls. You can start new agents, configure them with specific instructions, set them loose, and then switch to another project without losing your place.

The agent panel shows you what the agent is doing in real-time. It's not just a progress bar. You get visibility into the agent's reasoning, the files it's working on, and its next steps. If something looks wrong, you can pause the agent, inspect its work, and either let it continue or kill it and start over with different instructions.

Worktrees deserve their own explanation because they're actually important. When you run multiple agents on the same project, they could theoretically conflict with each other, both trying to modify the same file or overwrite each other's work. Git worktrees give each agent its own working directory, checked out on its own branch, so each agent edits a separate copy of the codebase. The app manages merging these branches back together when the agent finishes. It's not perfect (merge conflicts are still possible), but it prevents the catastrophic state where two agents corrupt each other's changes.
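To make the worktree idea concrete, here is a minimal sketch of the equivalent manual setup. How the Codex app drives Git internally isn't described here, so treat this as an illustration only: the `agent/<name>` branch scheme, the sibling-directory layout, and the repository path are all assumptions. The function only builds `git worktree add` commands and prints them; nothing is executed.

```python
from pathlib import PurePosixPath


def worktree_commands(repo_dir: str, agents: list[str], base: str = "main") -> list[list[str]]:
    """Build one `git worktree add` command per agent (nothing is executed)."""
    repo = PurePosixPath(repo_dir)
    commands = []
    for agent in agents:
        branch = f"agent/{agent}"                    # hypothetical branch naming scheme
        path = repo.parent / f"{repo.name}-{agent}"  # hypothetical: one sibling directory per agent
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base]
        )
    return commands


# Example: two agents working on the same (made-up) repository.
for cmd in worktree_commands("/work/shop-api", ["docs", "tests"]):
    print(" ".join(cmd))
```

Each command creates a new branch from `main` and checks it out in its own directory, which is why two agents can edit the same file at the same time without touching each other's working copy.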

Skills are basically the app's extension mechanism. You create a folder with instructions, code snippets, and resources, and the app treats it as a skill the agent can apply to its work. Say you have a specific coding pattern you want all your agents to follow, or a particular testing framework you want them to use. You bundle that into a skill, and every agent gets access to it. This is where the tool gets interesting for teams because you can codify your team's coding practices as skills.

Automations are where the app gets closest to genuinely autonomous. You configure an automation with instructions and a schedule. Every day at midnight, or every two hours, or once a week, the automation runs a Codex agent with those instructions. It's not intelligent scheduling based on code changes (it's just time-based), but for repetitive tasks like generating documentation, updating dependencies, or running routine refactoring, it works.

Rate Limit Changes: The Real Competitive Move

Here's where the strategy becomes obvious. OpenAI didn't just launch an app. They doubled the rate limits on Codex across Plus, Pro, Business, Enterprise, and Edu plans. Simultaneously. That's not coincidental.

Let's think about what this means in practice. If you were hitting rate limits before (which many active users were), you suddenly have twice the capacity at the same price. For a developer running multiple agents, that could mean the difference between being able to run three agents in parallel versus six. The cost structure doesn't change, but your effective productivity might double.
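The three-versus-six arithmetic can be sketched in a few lines. The numbers below are purely illustrative assumptions (OpenAI's actual limits and per-agent usage are not given here); the point is only that doubling the limit doubles the agent count when per-agent usage stays constant.

```python
def max_parallel_agents(limit_per_window: int, usage_per_agent: int) -> int:
    """How many agents fit under a rate limit if each uses the same amount."""
    return limit_per_window // usage_per_agent


# Hypothetical figures: a limit of 300 units per window, 100 units per agent.
before = max_parallel_agents(300, 100)
after = max_parallel_agents(600, 100)  # same plan, limit doubled
print(before, after)
```

If your agents vary a lot in how much capacity they consume, the gain will be less tidy than a clean doubling.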

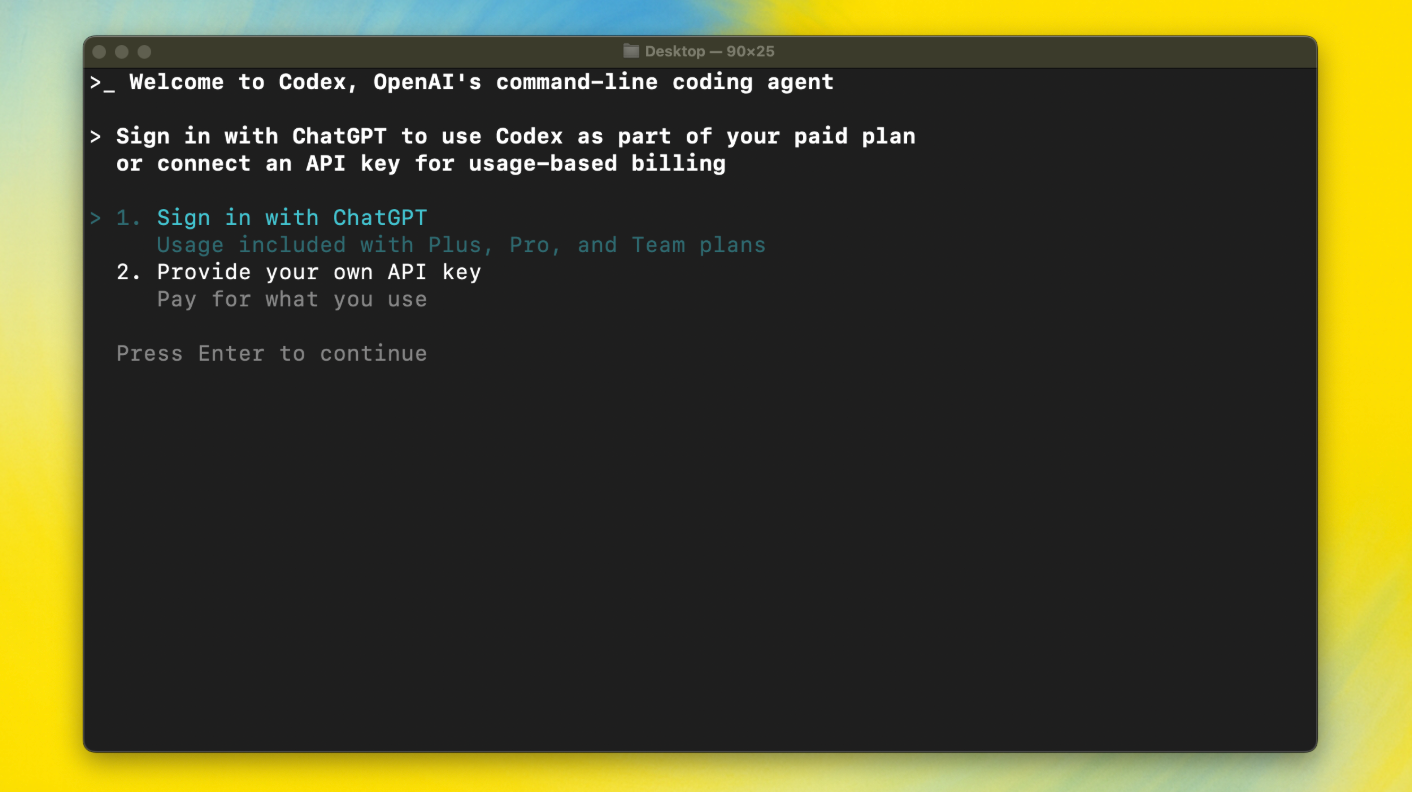

OpenAI also made Codex available to ChatGPT Free and Go subscribers "for a limited time," though the announcement was light on details about what "limited time" actually means. This is textbook product strategy: get users on the free tier comfortable with the tool, then upsell them to a paid plan when the promotional period ends.

What's clever here is that it makes Claude Code's rate limit advantage less relevant. Even if Claude had generous limits, OpenAI just changed the game by matching or exceeding them. The competitive space shifted from "who has the best features" to "who has the best rate limits," and rate limits are something OpenAI can adjust instantly.

Desktop vs. CLI vs. IDE Extensions: When You Actually Care

Okay, let's be real about this. Most developers don't need the desktop app. If you're using Codex for occasional tasks, the CLI or IDE extension works fine. You run a command, Codex does its thing, you move on. Fast, simple, no interface overhead.

But if you're using Codex as a regular part of your development workflow, particularly for complex or parallel tasks, the interface changes matter. The desktop app is built for watching agents work. The CLI is built for scripting tasks. The IDE extension is built for quick in-editor help. They're solving different problems.

Consider the CLI approach. You open a terminal, you run a Codex command with specific parameters, you wait for it to finish. If you're running one task, this is efficient. If you're running five tasks, you need five terminal windows or to daisy-chain commands. At that point, the overhead of tracking what's running where becomes significant.

The IDE extension solves some of this by keeping you in your editor. But IDE extensions are designed for in-editor assistance, not for launching autonomous agents and monitoring their progress over hours. Some extensions try to do both, but they're always stretching the IDE interface beyond what it was designed for.

The desktop app is purpose-built. It assumes you're running agents. It assumes you want to watch them work. It assumes you'll run multiple agents. Every UI decision flows from that assumption.

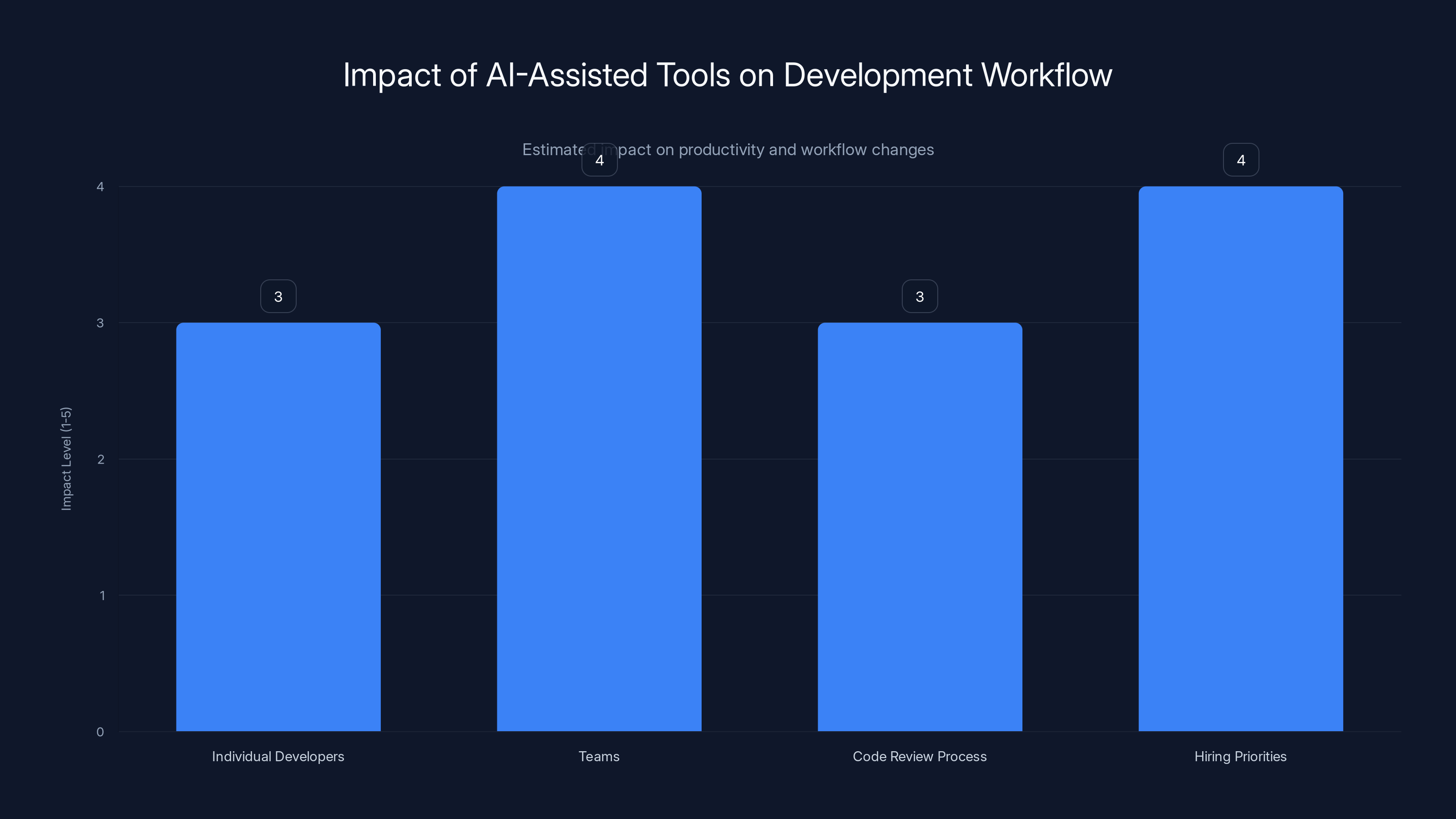

Estimated data suggests that AI-assisted tools have a moderate to high impact on team workflows and hiring priorities, indicating a shift towards integrating AI in regular development processes.

Features That Stand Out Against the Competition

Let's get specific about what makes Codex's desktop app different from what was available before, and how it compares to Claude Code's offering.

Project-based organization is the first big one. You're not managing agents in a flat list. Agents are grouped under projects. This sounds simple, but it's genuinely useful when you're juggling five different projects. You switch to Project A, see all of Project A's agents, run one or modify another. It's a mental model that matches how most developers think about their work.

Worktree integration keeps agents from overwriting each other. When you run two agents on the same project, they don't stomp on each other's work, and any overlap surfaces later as an ordinary merge conflict rather than as silently corrupted files. This alone might save teams hours of debugging.

Skills as reusable blocks let you standardize how your agents approach problems. If your team has specific patterns, conventions, or frameworks, you bundle them as skills and every agent respects them automatically. This is where single-person tools become multi-team tools.

Scheduled automations move Codex from "tool I use when I need it" to "tool that helps me even when I'm not looking." Run a documentation generator every night. Run a dependency updater weekly. Run a code quality analyzer every morning. These aren't groundbreaking, but they're genuinely useful for background maintenance tasks.

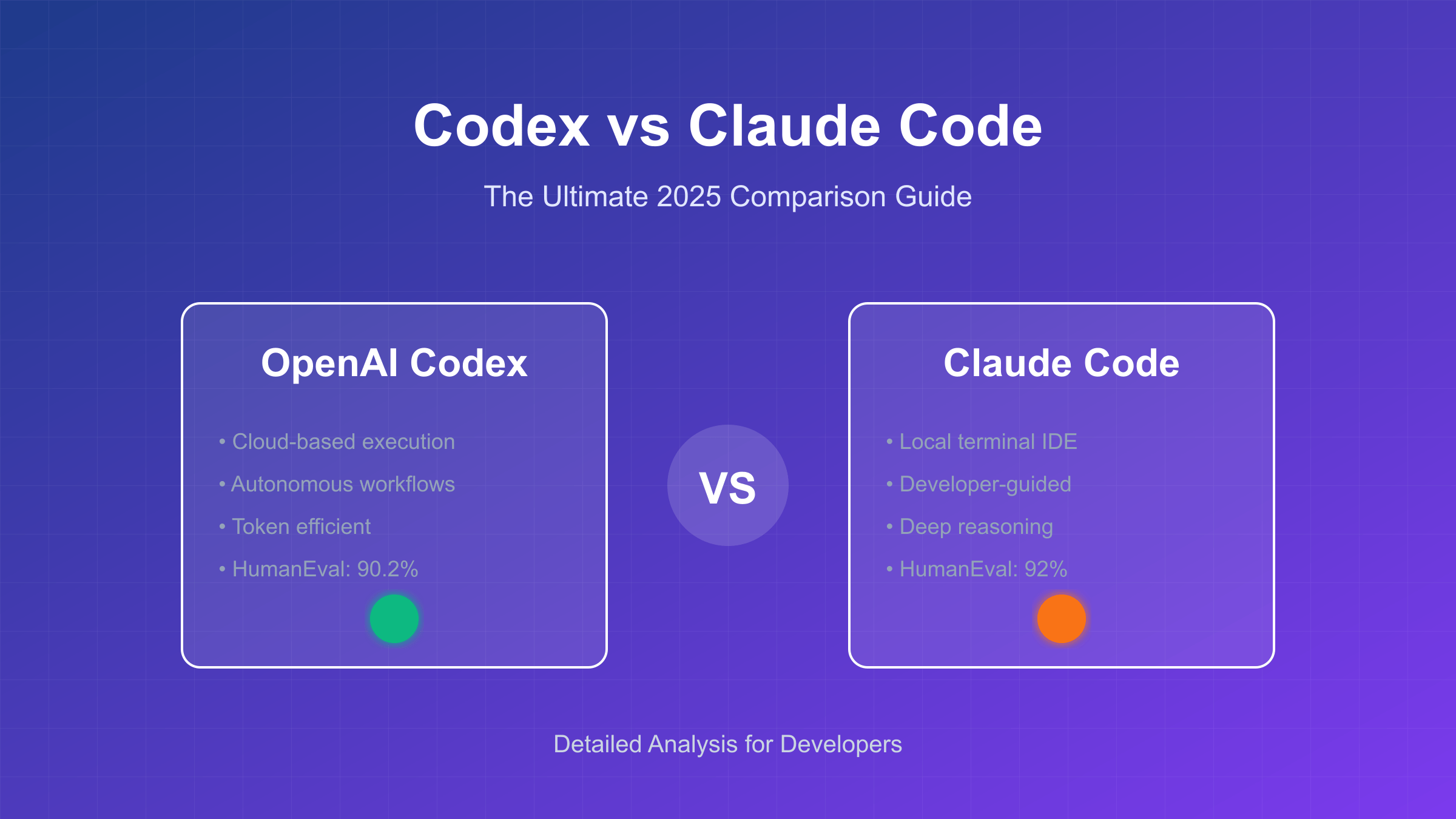

Compared to Claude Code, Codex's approach feels more structured and less magical. Claude Code's positioning has always been "Claude Code is smart, just tell it what you want." Codex's positioning is more "here's a framework, you configure it." Both work, they just appeal to different developer personalities.

Real-World Performance: How Agents Actually Perform

Using Codex agents for extended periods reveals something important: they're capable, but they have consistent blind spots. After testing the desktop app for real coding tasks over several weeks, patterns emerge.

Codex agents are excellent at straightforward refactoring. You ask them to rename a variable across a large codebase? Done correctly, fast. You ask them to add type annotations to an untyped JavaScript file? They'll get most of it right. They're good at mechanical transformations where the rules are clear.

Where they struggle is judgment calls. Should this function be async? Should this logic be split into two files? Should you add a cache here to prevent N+1 queries? These require understanding your specific application's requirements, not just the code. The agents do okay with context clues (comments, existing patterns), but they'll sometimes make architectural decisions you'd reverse.

What I noticed testing the app is that having a good interface for monitoring agents matters. When an agent starts going wrong, you want to see it immediately, not five minutes into a long-running task. The Codex desktop app gives you that visibility. You watch the agent work, you see it approaching a decision point you're unsure about, you can pause it and adjust instructions.

Error handling is where agents need the most work. They're brittle. If they encounter an unexpected file format or a folder structure they weren't prepared for, they can get stuck or make bad decisions. The desktop app helps here too because you can see the agent is stuck instead of assuming it's still working.

Pricing and Cost Implications

OpenAI's pricing strategy for Codex has always been: competitive on features, generous on usage limits. The doubled rate limits double down on this approach.

For Plus subscribers, you're looking at a modest monthly cost but now with significantly more capacity. The effective cost-per-agent-run goes down. For Pro subscribers, the increase in capacity is meaningful for serious usage. Enterprise customers get even higher limits, and the doubled capacity might be the difference between needing an expensive tier upgrade and staying on your current plan.

The limited-time free tier access is the hook. If you've never used Codex before, you get to experience it without paying. The expectation is that enough users will switch to a paid tier when the promotion ends. Some will, many won't, but the conversion rate just needs to be high enough.

Compared to Claude Code, pricing is in the same ballpark. Claude's pricing structure is also monthly subscription with tiered capacity. The actual cost-per-inference is comparable. What's changed is the rate limit advantage, which is essentially gone now that OpenAI matched them.

Codex leads in interface and pricing, while Claude Code excels in context windows and reasoning approach. Estimated data based on typical feature strengths.

The Developer Experience Question

Here's what actually determines whether you'll switch from whatever you're using now: does the interface make you more productive? Everything else is secondary.

The Codex desktop app does make you more productive if you're running multiple agents. Not massively more, not to the point where it's transformative. But measurably more. You spend less time managing multiple terminal windows, less time losing track of what's running where, less time context-switching.

For developers who never run multiple agents, the CLI or IDE extension is fine. Honestly, better. Less overhead, faster. The desktop app is extra complexity you don't need.

For developers running three or more agents in parallel, or managing complex multi-agent workflows across projects, the desktop app changes the game. You're not context-switching as much. You're not managing as many windows. You're watching agents work and being able to intervene if needed.

The design is clean, which matters. Too many developer tools look like they were designed in 2008 with a design budget of zero dollars. The Codex app doesn't have that problem. Navigation is logical, information is presented clearly, you can get to what you need without clicking through five menus.

Worktrees, Merge Conflicts, and Why This Matters

Worktrees deserve deeper explanation because they're the infrastructure that makes parallel agents possible. Without them, running two agents on the same codebase would be like having two people editing the same Word document simultaneously. Chaos. Corruption. Loss of work.

Git worktrees give each agent a separate working directory on its own branch. Agent 1 works on its branch, Agent 2 works on its branch. They can't interfere with each other while they run. When they're done, the app attempts to merge both branches back to main. If the changes don't conflict (for example, they modified different files), it's automatic. If they do conflict, you're notified and can resolve it manually or reject one agent's changes.

This is genuinely useful architecture. It means you can parallelize coding tasks without fear. Send three agents to work on three different features simultaneously, and the worst-case scenario is you need to resolve merge conflicts. The best-case scenario is everything merges cleanly and you've tripled your coding throughput.

The catch is that agents don't understand merge conflict resolution. If two agents both modify the same function, the app can't automatically decide which version is correct. You have to look at both and pick the right one. This happens less often than you'd expect (because agents tend to work on different parts of the codebase), but it happens often enough that you need to understand it's a possibility.
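A rough way to anticipate where manual resolution might be needed is to compare the sets of files each agent touched. The sketch below is an assumption-laden illustration, not how the app works: the agent names and file paths are made up, and file-level overlap is a conservative signal, since Git can often merge two edits to the same file cleanly when they touch different lines.

```python
from itertools import combinations


def overlapping_files(changes: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """Return, for each pair of agents, the files both of them modified."""
    overlaps = {}
    for a, b in combinations(sorted(changes), 2):
        shared = changes[a] & changes[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


# Hypothetical change sets, e.g. collected from each agent branch's diff against main.
changes = {
    "agent-1": {"api/orders.py", "api/users.py"},
    "agent-2": {"api/users.py"},
    "agent-3": {"docs/README.md"},
}
print(overlapping_files(changes))
```

Here only `agent-1` and `agent-2` share a file, so that pair is the one worth reviewing before merging; `agent-3` should merge without attention.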

Skills: Standardizing Agent Behavior Across Teams

Skills are where Codex gets genuinely interesting for teams. Instead of every agent having to learn your codebase's conventions, you encode those conventions as skills and agents apply them automatically.

Example: your team uses a specific pattern for error handling. You write that pattern once as a skill. Every agent that runs now knows your error handling pattern. It's a form of knowledge transfer that scales across your entire agent fleet.

Example: your team has a testing framework you prefer. You create a skill that documents your testing patterns, your specific test structure, your assertion library preferences. When agents write code, they use your testing patterns automatically.

Example: your team uses a specific naming convention for variables and functions. You encode that as a skill. Agents respect it.

This is where the tool goes from being "cool AI helper" to being "integrated into your team's development workflow." The agent isn't working against your conventions, it's working with them.

Building skills requires effort up front. You need to document your patterns clearly enough that an AI agent can understand and apply them. But that documentation becomes useful for onboarding new team members too, so it's not wasted effort.
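As a rough picture of what "a folder with instructions, code snippets, and resources" might look like on disk, here is a small sketch that writes one into a temporary directory. The file name `SKILL.md`, the `examples/` subfolder, and the skill content are assumptions for illustration; check the Codex documentation for the format the app actually expects.

```python
import tempfile
from pathlib import Path


def write_skill(root: Path, name: str, instructions: str, examples: dict[str, str]) -> Path:
    """Create a skill folder holding an instructions file and example snippets."""
    skill_dir = root / name
    (skill_dir / "examples").mkdir(parents=True, exist_ok=True)
    # Assumed layout: one instructions file plus an examples/ directory.
    (skill_dir / "SKILL.md").write_text(instructions, encoding="utf-8")
    for filename, body in examples.items():
        (skill_dir / "examples" / filename).write_text(body, encoding="utf-8")
    return skill_dir


with tempfile.TemporaryDirectory() as tmp:
    skill = write_skill(
        Path(tmp),
        "error-handling",
        "Wrap external calls and re-raise failures as AppError with context.\n",
        {"wrap_errors.py": "class AppError(Exception):\n    pass\n"},
    )
    print(sorted(p.relative_to(skill).as_posix() for p in skill.rglob("*")))
```

Keeping the instructions in plain prose next to a working example is also what makes the same folder usable as onboarding material for people.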

This chart estimates the feature strengths of leading AI-assisted coding tools. GitHub Copilot excels in integration depth, while Anthropic leads in complex task handling. Estimated data.

Automations: Background Work While You Sleep

Scheduled automations are the simplest feature to understand and one of the most useful. You configure a task and a schedule, and the app runs it automatically.

Documentation that goes stale? Run an agent to refresh it every week. Dependencies that need updating? Run an agent to check for updates every Monday. Code that should have type annotations? Run an agent to add them every night. Tests that should be added to untested functions? Run an agent every day.

This is essentially continuous improvement, automated. Your codebase never sits still. It's constantly being improved by agents running on a schedule you set.

The limitation is that automations are time-based, not event-based. You can't trigger an automation when a new function is added (yet). You can't trigger it when a PR is merged. You have to use a schedule. This means sometimes agents run and there's nothing to do, which is a waste of capacity. But most teams would rather have agents run too often than miss important maintenance work.
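The time-based model described above boils down to a fixed interval and a clock. This minimal sketch, which makes no claim about how the app schedules work internally, computes the next run at or after a given moment; the dates and the two-hour interval are made-up example values.

```python
from datetime import datetime, timedelta


def next_run_at_or_after(now: datetime, first_run: datetime, interval: timedelta) -> datetime:
    """Return the first scheduled run time that is not earlier than `now`."""
    if now <= first_run:
        return first_run
    elapsed = now - first_run
    periods = -(-elapsed // interval)  # ceiling division on timedeltas
    return first_run + periods * interval


# Hypothetical schedule: every two hours, starting at midnight.
first = datetime(2025, 1, 6, 0, 0)
print(next_run_at_or_after(datetime(2025, 1, 6, 3, 30), first, timedelta(hours=2)))
```

Nothing in this calculation looks at the repository, which is exactly the limitation: the run fires whether or not any code changed since the last one.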

Competitive Positioning: How This Changes the Market

The Codex desktop app, combined with doubled rate limits, shifts the competitive landscape. Claude Code had momentum from being first with a dedicated macOS app. That advantage is gone. The question now is: what's Claude's actual differentiation?

Anthropic's answer historically has been "our AI is smarter and more reliable." That might be true for specific tasks, but it's hard to quantify and harder to market. OpenAI's answer is "we have the best product and the best rate limits," which is easier to explain and easier to justify with numbers.

Anthropic could respond by building more specific features that Claude Code does better than Codex. They could focus on specific developer workflows that OpenAI hasn't prioritized. They could lean into Claude's strengths (longer context windows, different reasoning approach) for specific use cases.

But they're now in a reactive position. OpenAI isn't. For developers deciding between the two, the question is simpler now: does Codex's interface and pricing work for me? If yes, start there. If no, Claude Code is the alternative.

When You Might Actually Need the Desktop App

Let's be honest about who needs this. Most developers don't. Most coding work is still synchronous, human-driven, and doesn't benefit from running multiple agents in parallel.

You need the desktop app if:

You're running multiple agents in parallel: This is the core use case. If you regularly start three or more agents at once and want to monitor them simultaneously, the desktop app makes that significantly easier than terminal windows or IDE extensions.

You're managing multi-project workflows: If you work on five different projects and each has agents running, the project-based organization in the desktop app saves you mental overhead.

You're building team automations: If you want agents to run maintenance tasks automatically on a schedule, alongside your CI/CD workflow, the automations feature is designed for this.

You're standardizing agent behavior with skills: If you need agents to follow specific patterns and conventions, skills help you enforce that at scale.

You probably don't need the desktop app if:

You use agents occasionally: If you generate a PR once a week or add features using agents sometimes, the CLI is fine. No need for a dedicated interface.

You prefer IDE-based workflows: If you never leave your editor and want AI assistance as a sidebar rather than a separate tool, IDE extensions remain superior.

You're testing Codex for the first time: Start with the web interface or CLI. You'll learn faster. Switch to the desktop app once you understand how you want to use it.

Integration Ecosystem and Developer Tools

The Codex desktop app doesn't exist in isolation. It's part of a larger ecosystem of OpenAI's API and tools.

Codex works with your Git repositories. It works with your code editors. It works with your CI/CD pipeline (though this integration is still rough). It works with your Slack for notifications about long-running agent tasks. It integrates with the rest of your development workflow.

What's missing is tighter integration with some specialized tools. If you use Vercel for deployments, the integration is minimal. If you use LaunchDarkly for feature flags, there's no automatic knowledge of your flags. If you use specialized databases or services, the agent might not know about them without you documenting them as skills.

This is a gap that Claude Code has similar issues with, so it's not a competitive disadvantage. But it's worth knowing that the agent's knowledge of your specific infrastructure, databases, and deployment systems depends on how well you document them.

The Future of AI-Assisted Coding Tools

Where does this go from here? The trajectory is pretty clear. Agents will get better at complex tasks. The interfaces for managing them will get more sophisticated. The integrations with development workflows will deepen. The rate limits will continue to increase as costs come down.

What's less clear is whether this remains a premium feature (requiring a subscription) or becomes commoditized. If agent-based coding becomes standard across teams, the pricing dynamics change. If it remains a niche feature for power users, premium pricing makes sense.

For now, we're in the "prove the value" phase. OpenAI is proving it by delivering a product that works and offering generous rate limits. Anthropic is defending Claude Code's position by emphasizing the quality of Claude's reasoning. Other players like GitHub Copilot are building agent capabilities into their existing platforms.

The competitive intensity is increasing. That's good for developers because it means the products are improving quickly and competition on price and features is real.

FAQ

What is a Codex agent and how does it differ from Copilot suggestions?

Codex agents are autonomous AI assistants that can understand your code structure, make multiple changes across files, and complete complex tasks like refactoring or adding features with minimal direction. Unlike Copilot suggestions, which are in-editor autocomplete-style predictions, agents run autonomously and can work for extended periods, handling multi-step tasks without human intervention between each step.

How do worktrees keep multiple agents from clobbering each other's work?

Worktrees are a Git feature that gives each agent its own working directory, checked out on its own branch. When Agent 1 and Agent 2 run simultaneously, they each work on a separate branch of your codebase, which keeps them from overwriting each other's changes mid-run. Once both agents finish, their branches are merged back to the main codebase, and if they modified different files, the merge happens automatically. Worktrees don't eliminate merge conflicts: if both agents modified the same function or file, you're notified to resolve the conflict manually.

What are Skills and how do they improve agent performance?

Skills are reusable instructions bundled as folders containing documentation, code patterns, and resources that teach agents how to approach specific tasks. For example, if your team has a specific error handling pattern or testing framework preference, you create a skill that documents this. Every agent that runs thereafter applies that skill automatically, standardizing behavior across your entire agent fleet. This transfers team knowledge to the agents without having to explain it manually each time.

Can I run scheduled automations even when I'm not actively working?

Yes, scheduled automations are designed for exactly this. You configure a task and a schedule (every day at 2 AM, every Monday, every six hours), and agents run automatically on that schedule even if you're offline. This is useful for continuous maintenance tasks like dependency updates, documentation refreshes, or code quality improvements that run in the background without human oversight.

How does Codex's pricing compare to Claude Code, and is the rate limit increase meaningful?

Pricing between Codex and Claude Code is comparable, both operating on monthly subscription models with tiered capacity. The significant change is that OpenAI recently doubled Codex rate limits across all paid plans while keeping pricing the same. This effectively doubled the number of parallel agents you can run without additional cost, making Codex substantially more capable per dollar than it was before the update. Claude Code's rate limit advantage is now neutralized.

Is the desktop app required to use Codex, or can I stick with the CLI?

The desktop app is optional. You can continue using Codex through the CLI, web interface, or IDE extensions. The desktop app specifically benefits developers who run multiple agents in parallel or manage complex multi-project workflows. If you use agents occasionally or prefer staying in your IDE, the existing interfaces remain perfectly functional. Choose based on your actual usage pattern, not out of obligation to adopt the newest interface.

What This Means for Your Development Workflow

The Codex desktop app arriving now, with doubled rate limits, marks a meaningful shift in how AI-assisted development tools compete. This isn't about incremental improvement. It's about a category leader ensuring their product stays ahead while lowering the barrier to adoption.

For individual developers, the decision is straightforward: if you're occasionally using agents, keep your current setup. If you're running multiple agents regularly, the desktop app will probably make you more productive. Worth trying, especially since the free tier trial exists.

For teams, the implications are larger. Your team's agent strategy might shift from "agents are nice for occasional work" to "agents are part of our regular workflow." That shifts where you invest time. It changes your hiring priorities (you want developers who can work with and prompt agents well). It changes your code review process (code written by agents has different error patterns).

The bigger picture is that AI-assisted coding is becoming a real part of professional development workflows. Not in a "robots are replacing programmers" way. More in a "repetitive coding work is becoming automated" way, which frees developers to focus on higher-level problems.

OpenAI's willingness to match and exceed Claude Code's capabilities while also being aggressive on pricing says they believe there's a real market here, not just a novelty feature. Anthropic's sustained investment in Claude Code and continued improvements say they agree.

Where this goes depends on whether developers can build reliable workflows around these tools. The desktop app is OpenAI's bet that yes, you can, and they're providing the tooling to make it work.

The competitive intensity is good for developers. It means the tools will keep improving, the prices will stay reasonable, and the best product will win based on actual quality and capability, not just hype or timing.

For now, the Codex desktop app is worth evaluating if you're considering agent-based development tools at all. The free trial removes the risk. The doubled rate limits make it more capable. And the interface is solid enough that you won't feel like you're using an early-stage product.

That's the actual significance of today: not that OpenAI launched an app, but that they've removed the last clear advantage Claude Code had, made their tool cheaper to use per agent, and delivered a solid product that works. It's competitive strategy done well, and developers benefit.

Key Takeaways

- Codex desktop app enables parallel agent management that CLI and IDE extensions cannot match

- OpenAI doubled rate limits across all paid tiers while maintaining pricing, neutralizing Claude Code's rate limit advantage

- Project-based organization and Git worktree integration keep agents from overwriting each other's work when running simultaneously, though merge conflicts remain possible

- Skills feature allows teams to standardize agent behavior across projects, improving consistency and reducing manual configuration

- Scheduled automations enable continuous background improvements to codebases without manual intervention
