How Right-Wing Influencers Weaponized the Minneapolis ICE Narrative

In January 2026, Minneapolis became ground zero for a coordinated information operation. When a federal agent fatally shot Renee Nicole Good during an ICE enforcement action, right-wing content creators didn't just cover the incident. They swarmed the city, armed with cameras and pre-written narratives, transforming raw footage into a carefully orchestrated political campaign. According to MPR News, the incident was part of a larger federal operation.

What happened next reveals something darker than typical partisan coverage. The incident wasn't reported; it was packaged. Edited. Distributed through networks designed to amplify specific messaging. Within days, clips from Minneapolis had traveled from TikTok and X to cable news, where they became "evidence" justifying federal immigration crackdowns. The creators involved weren't journalists chasing a story. They were operatives executing a playbook.

This wasn't spontaneous. It was strategic. And it represents a fundamental shift in how political movements manufacture consensus in the social media age.

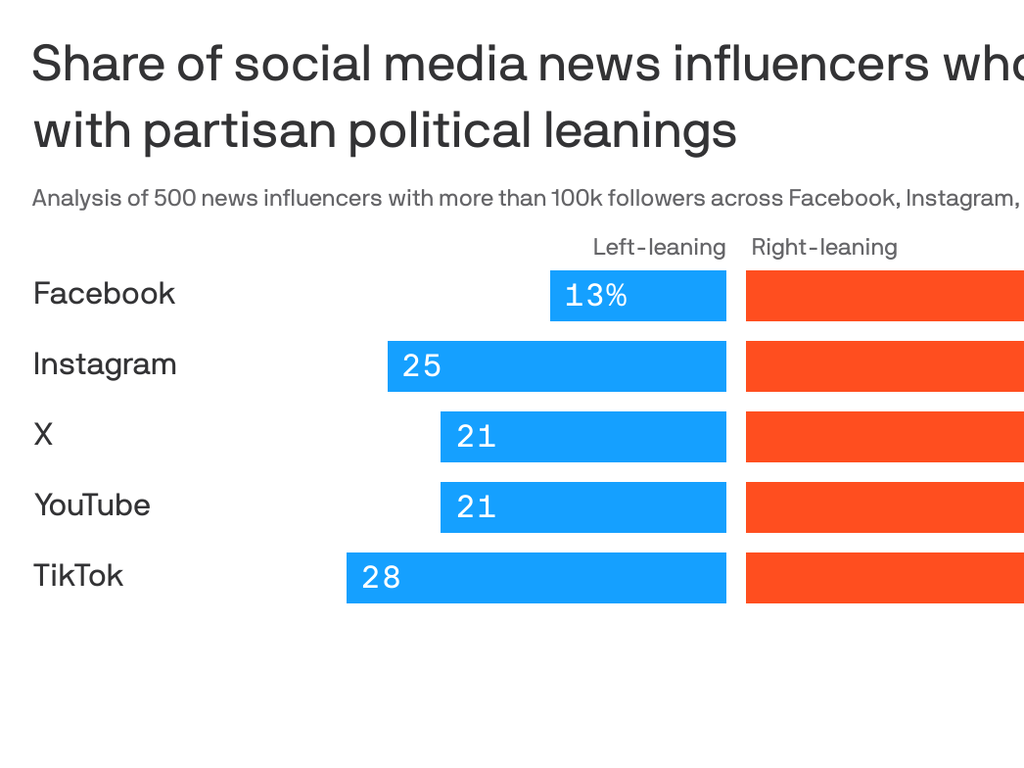

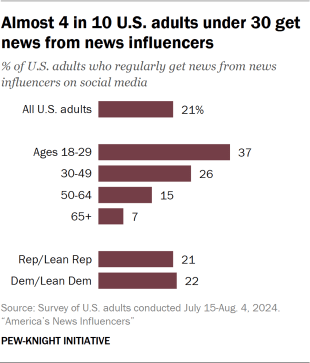

The machinery behind this operation involves more than just a few prominent names. It's a distributed network of influencers, aggregation accounts, and media figures working in concert, whether explicitly coordinated or not. Understanding how this works matters because it shows how misinformation reaches millions before fact-checkers can respond. It demonstrates the monetization of outrage. And it illustrates why viral content, not journalistic investigation, increasingly shapes policy decisions affecting millions of people.

What made Minneapolis different wasn't the incident itself. It was the scale and speed of the response. Previous ICE operations had attracted right-wing coverage, but never with this level of coordinated influencer presence. Never with this much pre-built infrastructure ready to amplify claims. And never with such explicit government involvement in the content creation process.

The Incident That Sparked the Invasion

On a winter day in January, federal agents executing an ICE enforcement action encountered resistance from protesters. An agent opened fire. A woman died. The official narrative emphasized officer safety. The community narrative emphasized excessive force. And the influencer narrative emphasized... well, whatever would generate the most engagement. As reported by NBC News, the shooting sparked widespread debate and coverage.

But the shooting wasn't what brought right-wing creators to Minneapolis. What brought them was a YouTube video from Nick Shirley that went viral the previous December. Shirley's video made claims about a $100 million fraud scheme allegedly involving Somali child care centers. The claims weren't new. Local Minnesota journalists had investigated similar issues for years. But Shirley's presentation was different. It was polished. It was shareable. And it was designed for viral distribution. According to CNN, the video significantly influenced public perception and federal actions.

The video exploded. Three million views. Reposted by Elon Musk. Amplified across right-wing social networks. And suddenly, Minneapolis had been labeled a hotbed of fraud, chaos, and unchecked criminal activity. The narrative was set before anyone in the national media had reported on it.

That video didn't just inform people about potential fraud. It primed an audience. It created a story template. When the ICE shooting happened weeks later, that template was ready. The audience already believed Minneapolis was lawless. The agents were heroes. The protesters were obstacles. The shooting was self-defense.

All that remained was filling in the details with carefully selected footage.

The Creators on the Ground

Nick Sortor arrived in Minneapolis with a specific mission. His X account, followed by hundreds of thousands, existed to amplify right-wing narratives. When the shooting happened, he was ready. His posts weren't investigative. They were advocacy. "HELL YES! ICE just SMASHED a leftist activist's car window in and pulled them out after they interfered in ICE's operations in Minneapolis," he posted. "Consequences must be STEEP!"

Sortor wasn't alone. Cam Higby, Kevin Posobiec, and others descended on the city with cameras, already knowing the story they would tell. They filmed protesters. They interviewed ICE agents (who cooperated, allowing themselves to be positioned as victims defending against chaos). They captured traffic disruptions caused by demonstrations and presented them as evidence of lawlessness.

The content was designed to look spontaneous. Handheld video. Real-time posts. Frantic energy suggesting breaking news coverage. But it followed a template perfected over months of previous operations. The framing was consistent. The narrative arc was familiar. The conclusion was predetermined.

What made this different from traditional advocacy journalism was the scale and precision. These creators didn't just report what they saw. They selected what viewers would see. They decided which angles mattered. They highlighted traffic disruptions while ignoring the fatal shooting that preceded them. They filmed protesters blocking ICE operations and presented resistance to federal agents as inherent lawlessness, rather than civic engagement.

The creators also embedded themselves with ICE agents, receiving access in exchange for favorable coverage. This wasn't simply bias. It was a transaction. Access for favorable framing. Cooperation for amplification. The agents understood the value of these creators' reach, and the creators understood the value of the story.

The Aggregation Network That Multiplied Reach

Individual creators matter, but they're not the story. What matters is the network effect. When Nick Sortor posts something to his followers, maybe tens of thousands see it. But when End Wokeness, an aggregation account with millions of followers, reposts it, the potential audience grows by orders of magnitude. When Matt Walsh from the Daily Wire signals agreement, the reach expands further. When cable news anchors cite "viral video evidence," the narrative enters the mainstream.

This is the multiplier effect. And it works because each node in the network thinks they're simply sharing relevant information. The original creator thinks they're reporting. The aggregation account thinks they're curating. The cable news host thinks they're covering a story. But collectively, they're manufacturing consensus.

What makes this ecosystem particularly effective is that each actor can deny responsibility for the final narrative. Sortor was just documenting. End Wokeness was just sharing. Walsh was just commenting. No individual person created the lie. But the system, collectively, convinced millions that Minneapolis was a lawless hellhole where federal agents had no choice but to use lethal force.

The aggregation accounts are crucial to understanding how misinformation scales. These accounts don't create original content. They curate and amplify existing content, applying their own framing. A video of traffic disruption becomes "evidence of leftist obstruction." A clip of a car window breaking becomes "proof of activist violence." The raw footage doesn't change. The interpretation does.

And because aggregation accounts operate at scale, with millions of followers, their framing reaches far more people than the original content. A creator might reach 100,000 people. An aggregation account reaches 5 million. The mathematics of influence all but guarantees that aggregation accounts set the terms of the national conversation.

The speed matters too. By the time traditional fact-checkers engage with a claim, it has already spread to millions of people across multiple platforms. By the time journalists publish contextualized reporting, the audience has already formed opinions based on decontextualized clips. In information warfare, the advantage goes to whoever moves fastest.

How the Narrative Entered Cable News

Cable news plays a unique role in this ecosystem. It's simultaneously a participant and a validator. Cable hosts don't create the narratives that start on social media, but by covering them, they legitimize them. They convert social media chatter into "news."

When the Minneapolis clips started spreading, cable news was hungry for content. The images were dramatic. The narrative was simple. The political angle was clear. Reporters could frame coverage as responding to "viral videos" and "social media reports" while actually just amplifying coordinated messaging that originated with specific creators. As noted by PBS NewsHour, the narrative was quickly picked up by mainstream media.

DHS spokesperson Tricia McLaughlin appeared on Fox News to explain the shooting. She didn't create the narrative defending the officer. That narrative already existed in viral clips circulated by influencers. She merely confirmed it. But confirmation from an official source gave the narrative governmental authority. It transformed an influencer claim into an official statement.

This is how modern disinformation works. It doesn't start with official lies. It starts with influencer claims that seem spontaneous and grassroots. Officials then repeat those claims, which appears to validate them. Mainstream media then covers the official statements, treating them as newsworthy. And citizens conclude the claims must be true because they're everywhere.

The role of cable news in this process is underappreciated. Journalists at those outlets often don't realize they're distributing carefully curated clips selected specifically to support a predetermined narrative. They think they're covering viral content. They're actually amplifying propaganda that was designed to go viral in the first place.

The feedback loop is particularly effective because each stage appears legitimate when isolated. The creator is documenting. The aggregation account is curating. The cable news host is responding to "viral content." But the system, as a whole, manufactures false consensus about what happened and what it means.

The Government's Influencer Infrastructure

What makes the Minneapolis operation notable is the explicit government involvement. Creators didn't just happen to be available when ICE conducted operations. They were invited. They were embedded. They were given access in exchange for coverage that presented federal agents in a favorable light.

This isn't a new practice. The Trump administration had been building this infrastructure for months. Since at least the previous summer, right-wing influencers had been embedded with immigration officials during ICE raids. Minneapolis formalized and scaled what had previously been informal relationships.

The relationship between federal agencies and content creators raises important questions about accountability and transparency. When an ICE agent allows a right-wing influencer to film an operation, that agent is making an editorial decision. They're deciding which narrative will reach millions of people. They're choosing which images will define public understanding of their actions.

Government agencies claim they engage with influencers to communicate their mission to constituents. But there's a difference between communication and propaganda. Communication involves presenting information. Propaganda involves presenting carefully selected information designed to manipulate understanding.

When an agency provides exclusive access to sympathetic creators, ensuring they capture footage that presents officers in the most favorable light while excluding footage that contradicts the narrative, that's propaganda. The creators become de facto government communications specialists, producing content that serves official interests.

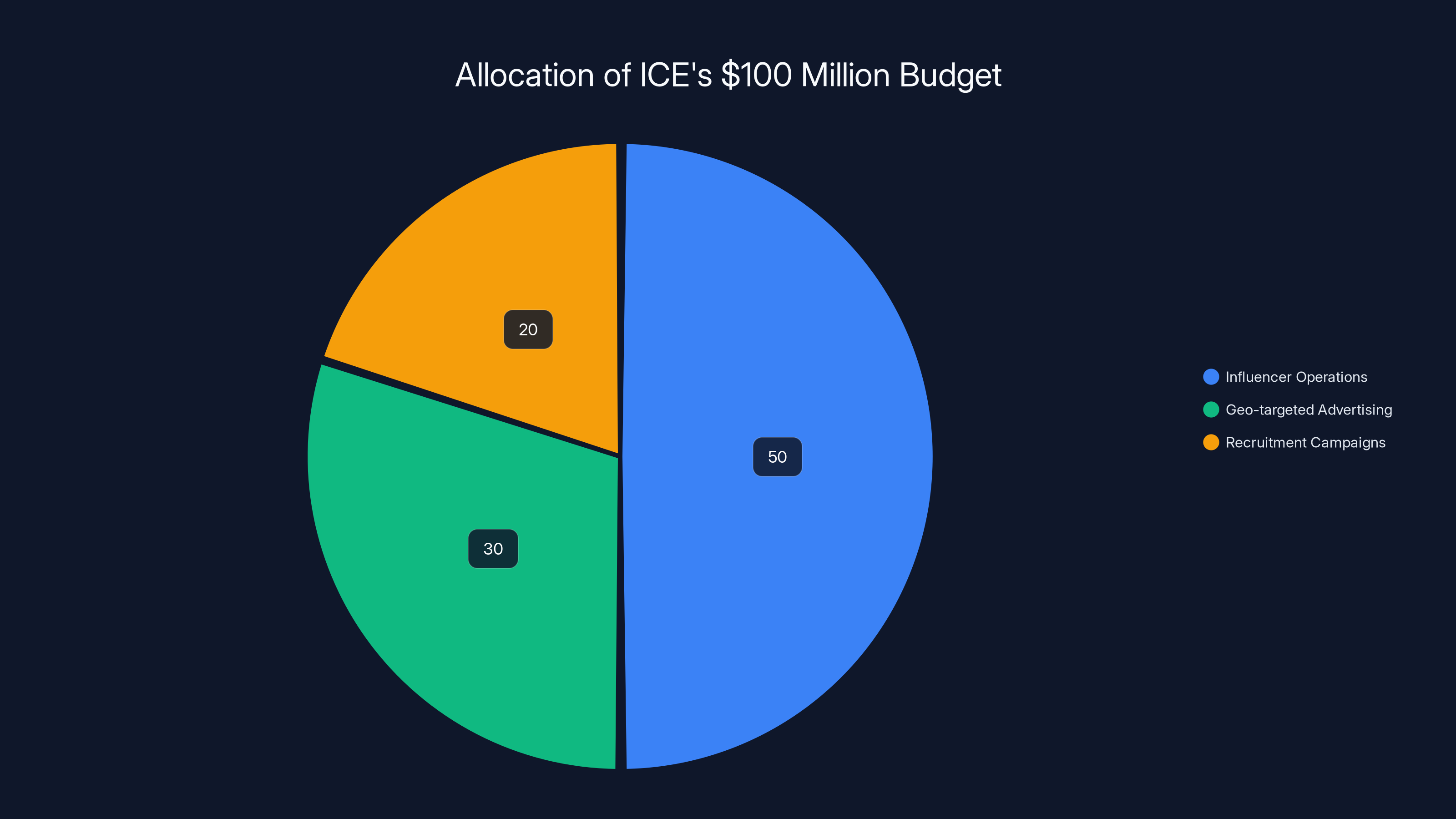

The scale of this operation is significant. The Washington Post reported that ICE planned to spend around $100 million on influencer operations and geo-targeted advertising to recruit deportation officers and shape public opinion about immigration enforcement. That's not a marketing budget. That's the cost of building an entire infrastructure designed to weaponize social media for policy goals.

The Role of Unverified Viral Video as Evidence

One of the most concerning aspects of the Minneapolis operation is how viral video became "evidence" in policy debates. DHS claimed officers acted appropriately. How do we know? Because there's video. But that's a stunning logical leap. Video evidence proves something happened. It doesn't prove the interpretation of what happened is correct.

A video of a car window breaking can be evidence that a car window was broken. It's not evidence that the person breaking it was committing a crime. It's not evidence that their actions were unjustified. It's not evidence that they were dangerous. But when that video reaches millions of people through a coordinated amplification network, all of those interpretations feel like they come from the video itself.

The influencer operation worked by selecting footage that supported predetermined conclusions, then presenting that footage as if the conclusions were obvious. When you see a protester blocking traffic, that's evidence of... traffic being blocked. But the influencer framing suggested it was evidence of lawlessness, of dangerous obstruction, of activists endangering lives.

The shooting that killed Renee Nicole Good became almost secondary to the narrative about traffic and protest tactics. The fatal consequence of federal action disappeared from the conversation, replaced by discussion of whether protesters had adequate warning to clear intersections. The conversation shifted from "why did the officer shoot" to "weren't the protesters being obstructive."

This is a fundamental manipulation of how we understand events. Policy decisions about immigration enforcement shouldn't be based on which clips are most shareable or which narrative structure is most emotionally compelling. But increasingly, they are.

Viral video can be authentic documentation. It can also be carefully curated propaganda. The format looks the same. The viewer experience is identical. But the intentions and accuracy can be completely different. The Minneapolis operation demonstrated that when you control the cameras, the editing, and the distribution network, viral video becomes less evidence and more theater.

The December Video That Primed the Narrative

Nick Shirley's viral video deserves deeper examination because it established the template that the January operation would follow. Shirley presented claims about fraud in Somali child care centers. The claims involved large dollar amounts. They targeted a specific ethnic group. They positioned immigrants as criminals. And they came packaged in a format designed for maximum shareability. According to the Star Tribune, the video was part of a broader narrative about fraud in Minnesota.

The video wasn't original reporting. Journalists in Minnesota had covered similar issues for years. But Shirley's video didn't cite that reporting. It didn't contextualize claims within existing journalistic investigation. It presented information as if it were newly discovered, recently uncovered, revealed for the first time.

This created a false sense of novelty. Viewers seeing the video thought they were encountering new information. They didn't realize they were viewing reframed versions of existing reporting, selected to support a specific narrative about immigration and criminality.

The video's success demonstrated something important about how information travels on social media. The most shareable content isn't the most accurate. It's the most emotionally resonant. It's the content that confirms existing beliefs while presenting itself as evidence-based revelation.

Shirley's video was effective because it told right-wing audiences something they wanted to hear: that immigration was connected to fraud and criminality. That their concerns about policy were validated. That authorities needed to take stronger action. The video didn't prove any of that. It suggested it. And the suggestion was enough.

When Elon Musk amplified clips from the video, he didn't add analysis or context. He simply reposted, effectively endorsing it to his massive audience. This amplification from someone with Musk's reach and cultural influence gave the video legitimacy it hadn't earned through rigorous reporting.

The Strategy Behind Framing Protesters as Obstacles

The influencer content from Minneapolis displayed remarkable consistency in framing choices. Protesters weren't covered as people exercising rights. They were presented as obstacles. They weren't presented as responding to a fatal shooting. They were presented as interfering with law enforcement operations.

This framing involved several deliberate choices. When videos showed protesters blocking traffic, creators emphasized the disruption. When videos showed ICE operations, creators emphasized officer actions. When footage captured both simultaneously, editors selected angles that highlighted official actions and protester obstruction.

The framing transformed context. A protester blocking an ICE van wasn't a person expressing opposition to immigration enforcement. They were a lawless activist interfering with official operations. A police line clearing protesters wasn't crowd control. It was a necessary response to obstruction. A car window breaking wasn't destruction of property committed during a confrontation. It was evidence of activist violence.

Each framing choice was small. None individually seems outrageous. But collectively, they systematized a version of reality where federal agents were reasonable and constrained, while protesters were unreasonable and dangerous. They created a narrative where any federal action was justified because any resistance was inherently illegitimate.

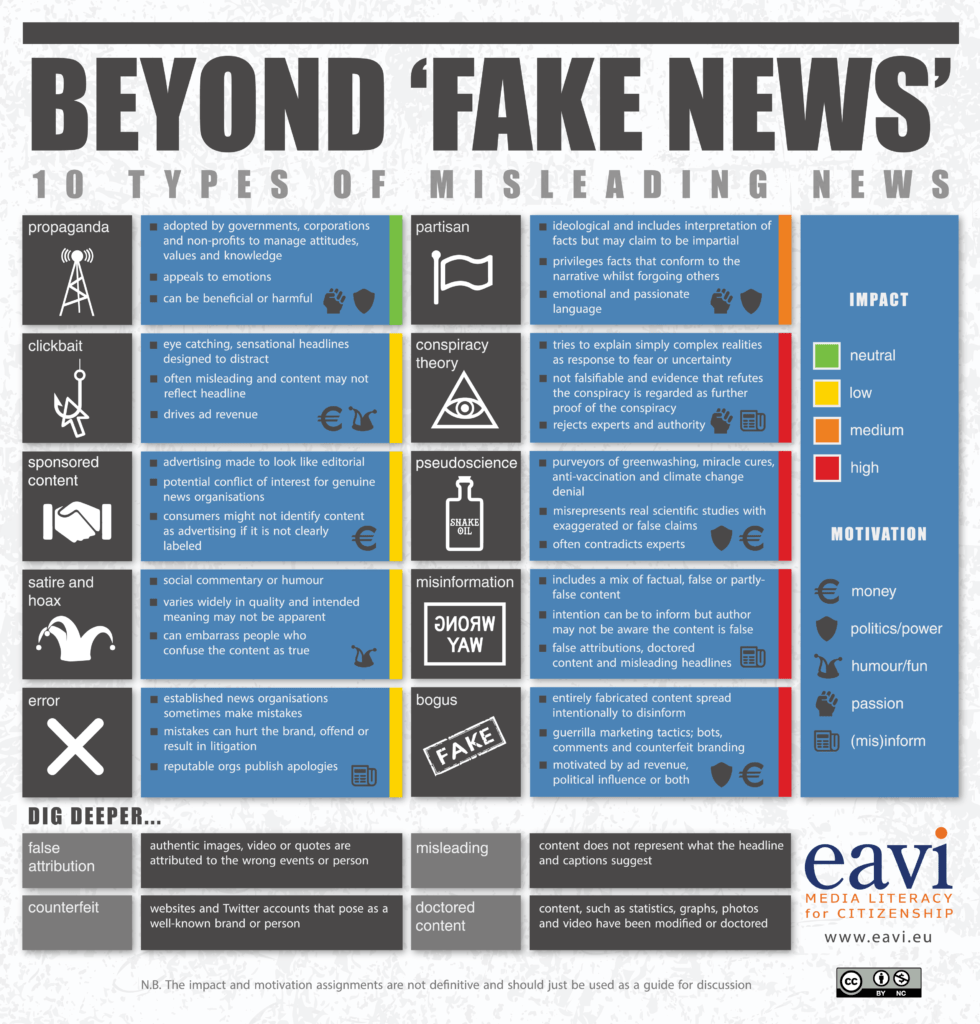

This is how propaganda works at scale. Not through obvious lies, but through systematic selection and framing of partial truths. The traffic was disrupted. That's true. But the reason for the disruption, the significance of the event, the justification for the response, the broader context of federal action, all of that disappeared in the framing.

The strategy proved effective because it aligned with existing media biases and political positions. Right-wing outlets wanted to portray federal enforcement as justified. Left-wing outlets wanted to document police response to protest. Cable news wanted dramatic visuals. The influencer content satisfied all these desires simultaneously, which created incentive structures that amplified it across the entire media ecosystem.

How Social Media Platforms Enabled the Operation

The influencer operation couldn't have worked without platform dynamics designed to reward engagement and controversy. X, formerly Twitter, proved particularly important because it's where political media figures congregate and where content spreads fastest among journalistic and political audiences.

X's algorithm, like most social media algorithms, rewards engagement. A post that generates anger reaches more people than a post that generates agreement. A controversial claim reaches more people than a nuanced analysis. The platform's structure creates incentives for creators to post increasingly sensational content.

The Minneapolis influencer operation understood these incentives perfectly. Sortor's posts weren't written to inform. They were written to provoke outrage and drive sharing among his followers. The emojis, the capitalization, the rhetorical choices, all optimized for algorithmic performance.

X has no verification requirements for claims made in posts. A creator with millions of followers can post something presented as fact-based reporting without any editorial oversight. The platform provides the distribution infrastructure but accepts no responsibility for accuracy. This creates a perverse incentive structure where making false claims can be more profitable than accurate reporting, because false claims are often more sensational.

TikTok carried much of the viral content, though the algorithm there worked differently. TikTok's algorithm prioritizes watch time and engagement without regard to political content. A video that keeps viewers watching gets promoted, regardless of whether the claims are accurate. This meant that clips of Shirley's carefully edited presentation of fraud claims spread not because the platform favored right-wing content, but because the presentation was engaging.

Facebook proved important as a distribution channel to older audiences. Clips from the Minneapolis operation reached Facebook users through aggregation pages, where they were framed with additional commentary designed to provoke reaction.

The platforms themselves bear some responsibility for the operation's success. They built the infrastructure. They designed the algorithms. They made decisions about moderation and verification. They could have required source verification. They could have flagged coordinated amplification. They could have included context explaining where information came from. Instead, they optimized purely for engagement.

The Economics of Outrage Content

Understanding why the Minneapolis operation worked requires understanding the economics of outrage content. Influencers like Sortor and Posobiec generate income from attention. The more people see their content, the more sponsorships they can sell. The more controversial their positions, the more engagement they generate. The more engagement, the more visibility. The more visibility, the more income.

This creates a perfect incentive structure for sensationalism. A creator who posts balanced analysis of complex policy issues will lose followers to creators who post outrage-inducing takes. The market literally pays people to be more sensational, more controversial, more willing to embrace narratives that anger audiences.

The Daily Wire and Human Events, where some of these creators work, built business models on this outrage. They generate traffic through sensational coverage. Traffic generates advertising revenue. The more traffic, the more revenue. Accurate reporting about complex issues generates less traffic than simplified narratives that confirm existing beliefs.

This explains why Shirley's fraud video was so effective. It wasn't designed by a communications professional trying to accurately convey complex information. It was designed by a content creator optimizing for maximum engagement. The selection of footage, the narrative arc, the emotional appeals, all optimized for shareability.

The federal government amplified this by providing access. ICE agents understood the value of favorable coverage from influential creators. They granted access in exchange for framing their actions positively. This is a transaction. The creators get the story. The government gets favorable coverage. And the public gets propaganda presented as reporting.

The economics explain something important: the operation wasn't particularly sophisticated. It didn't require grand conspiracy. It required only that individual actors pursuing self-interest aligned around a shared narrative. Influencers pursuing engagement. Aggregation accounts pursuing growth. Cable hosts pursuing ratings. Government agencies pursuing public support. Align those incentives around a story, and the machinery activates automatically.

How Misinformation Travels Faster Than Facts

One of the clearest lessons from the Minneapolis operation is how misinformation travels faster than correction. By the time journalists could investigate the claims, those claims had already reached millions of people. By the time fact-checkers could respond, the audience had moved on to the next outrage.

This isn't accidental. It's structural. False claims are often simpler than true claims. They don't require nuance or context. A claim that immigrants are committing fraud is simpler than explaining that immigration and crime have complex relationships that vary by region, that not all instances are fraud, that the relevant comparison is how often immigrants commit crimes versus native-born citizens, that policy implications depend on distinguishing between different types of violations.

The influencer operation understood this. Shirley didn't make nuanced claims about immigration policy. He made a simple claim about fraud. Sortor didn't make nuanced observations about protest tactics. He posted outrage. The simplicity is a feature, not a bug.

Misinformation also travels faster because the platforms that distribute it reward engagement, and misinformation generates more engagement than accuracy. A fact-based post might be ignored. A claim that will make people angry will be shared. The platforms have no incentive to slow down false claims, and the algorithm actively accelerates them.

The timeline of the Minneapolis operation demonstrates this. Shirley's video drops. Within days, it's viewed millions of times. Within weeks, it reaches mainstream media. Within a month, it's cited as justification for federal policy. By the time anyone could conduct serious investigation into the claims, the narrative was already established.

This creates a permanent information disadvantage. Truth always arrives late. By the time accurate reporting appears, it's fighting against a narrative already internalized by millions. The initial false claim doesn't disappear. It becomes background assumption.

The Ethical Collapse of Embedded Reporting

Traditional journalism has a concept of independent reporting. Journalists maintain distance from sources. They don't accept favors that compromise independence. They verify claims from multiple perspectives. They distinguish between reporting and advocacy.

The influencer operation abandoned this entirely. Creators weren't maintaining distance from ICE. They were embedded with agents, receiving exclusive access in exchange for favorable coverage. This isn't journalism. It's public relations.

The ethics problem runs deeper. When journalists are embedded with military units, they're expected to maintain critical distance while respecting operational security. They report what they see while understanding the limitations of their access. They acknowledge the perspective they're representing.

The Minneapolis influencers didn't do this. They presented embedded footage as if it were objective documentation. They didn't acknowledge that they were receiving exclusive access from one side. They didn't explain that alternative perspectives weren't available to them. They presented their limited, one-sided perspective as comprehensive truth.

This violates basic journalistic ethics. But it's not unprecedented. It's increasingly common. Influencers who are embedded with governments, corporations, or political movements don't maintain independence. They become advocates. And they present advocacy as reporting.

The audience doesn't see the distinction. A video looks like documentation. A claim presented authoritatively looks like fact. The audience assumes they're getting information when they're actually getting persuasion. The collapse of journalistic ethics isn't obvious to consumers. It's only visible if you trace where access came from and what incentives exist.

Federal Government's $100 Million Influencer Strategy

The scope of federal investment in influencer operations deserves focused attention. The Washington Post reported that ICE planned to spend roughly $100 million on influencer engagement and geo-targeted advertising. This isn't a modest communications budget. This is infrastructure designed to systematically shape public opinion through coordinated influencer content.

The goal, explicitly stated, was both to recruit future deportation officers and to build public support for enforcement operations. In other words, to use influencers to convince young people to join ICE while simultaneously convincing the broader public that ICE operations were necessary and justified.

This represents a fundamental shift in how federal agencies approach public engagement. Rather than presenting information and letting citizens form opinions, agencies are now directly producing content designed to persuade. Rather than accepting that political debate is legitimate, they're using public resources to engineer consensus.

The $100 million budget is significant because it demonstrates commitment. This isn't a pilot program or limited initiative. This is sustained, systematic investment in influencer infrastructure. The budget suggests the administration anticipated this approach being part of its strategy for the entire term.

The influencer approach is particularly effective for federal agencies because it bypasses traditional media scrutiny. A press release from ICE will be reported by journalists who are trained to be skeptical. But a viral video from an influencer who filmed an operation? That looks like documentation, not propaganda. It reaches audiences who distrust traditional media. It comes from someone perceived as independent rather than official.

But the influencer isn't independent. They're part of a government-funded operation. They received access because they could be relied upon to present footage favorably. The entire infrastructure is designed to produce content that serves government interests.

This raises significant questions about how federal agencies should engage with media. Clearly they need to communicate with the public. But should they be paying millions to influencers to present their actions favorably? Should they be providing exclusive access in exchange for favorable coverage? Should they be investing public resources in manufacturing consensus for their operations?

The Broader Implications for Democratic Discourse

The Minneapolis operation isn't unique. It's a template. It demonstrates methods that any organization with resources can employ. It shows how to weaponize influencers. How to manufacture viral content. How to move information from social media into mainstream discourse, and from there into policy decisions.

This should concern everyone, regardless of politics. Once a tool exists, others will use it. Left-wing organizations will eventually build similar infrastructure. Democratic political campaigns will hire similar creators. Federal agencies will refine the approach. The machinery will become more sophisticated.

The fundamental problem is that the system rewards sensationalism, falsehood, and emotional manipulation. And the Minneapolis operation demonstrated that once you optimize for those qualities, you can build narratives that reach millions and influence policy.

What we're watching is the dissolution of shared reality. Not because people disagree about values or policy, but because they're consuming completely different information. A right-wing audience sees ICE agents as heroes defending against chaos. A left-wing audience sees federal agents using excessive force. These aren't different interpretations of the same reality. They're different realities, constructed by different information sources, each designed to reinforce existing beliefs.

The influencer economy creates and accelerates this fragmentation. Each creator optimizes for their audience. Each platform amplifies content that generates engagement. Each user exists in an information bubble reinforced by algorithms. The result is a population increasingly unable to agree on basic facts.

The Minneapolis operation reveals how this functions at scale. Not as conspiracy, but as system. Individual actors pursuing self-interest. Platforms optimizing for engagement. Algorithms amplifying sensationalism. Audiences consuming content that confirms existing beliefs. No coordination required. The machinery activates automatically.

Solutions and Structural Change

Addressing this problem requires intervention at multiple levels. Platform design is crucial. Social media companies could implement verification requirements for claims about events. They could flag coordinated amplification networks. They could include context about where information originated. They could de-prioritize engagement metrics in favor of accuracy metrics.

But platforms have no incentive to make these changes. Engagement-driven algorithms work well for them. Verification requirements slow content distribution. Added context slows the rapid spread of information that keeps users engaged.

Governments could implement oversight of agency influencer spending. They could require transparency about who receives access and what incentives exist. They could establish ethics requirements for federal communications. They could restrict how agencies can use public resources to shape opinion.

But governments, by definition, have incentives to shape public opinion. A government voluntarily restricting its own agencies' use of influencers would be unusual. More likely, regulations will follow a scandal and then be selectively enforced.

Individual responsibility matters too. Consumers of information can demand better. They can check sources. They can notice when coverage comes from one side of a conflict. They can distinguish between reporting and advocacy. But this requires media literacy that most people haven't been trained in. And it assumes people are motivated to seek truth rather than confirmation.

Journalists could reassert professional standards. They could refuse to amplify unverified viral content. They could investigate sources and funding. They could acknowledge when they're limited to one perspective. They could distinguish between documentation and propaganda.

But journalism is under economic pressure. Outlets need traffic. Truth is slow. Outrage is fast. Professional standards that generate less engagement are economically disadvantageous.

The structural problem is that incentives are misaligned. Platforms profit from engagement. Creators profit from sensationalism. Governments profit from public support. And none of these parties has an incentive to prioritize accuracy or democratic discourse over profit and power.

The Future of Political Messaging

The Minneapolis operation won't be the last of its kind. It will be a template for future operations, refined and scaled. As government agencies learn what works, they'll invest more. As influencers learn what generates engagement, they'll be more aggressive. As platforms continue optimizing for engagement, they'll continue amplifying sensationalism.

Future operations will likely be more coordinated. The government will more explicitly fund influencer networks. The coordination will be less deniable. The budget will be larger. The sophistication will increase.

We'll see influencer networks embedded with law enforcement, military operations, and government agencies. We'll see foreign governments investing in similar infrastructure targeting American audiences. We'll see the line between reporting and propaganda disappear entirely.

The tools for manufacturing consensus will become cheaper and more accessible. Deepfakes will make video evidence less reliable. AI-generated content will make distinguishing human creators from automated systems impossible. The ability to verify anything will decline while the incentive to spread false claims increases.

The only thing that might slow this is a catastrophic failure. A scandal significant enough that platforms are forced to change. A law enforcement operation that causes sufficient suffering that the public demands accountability. A moment when the manufactured narrative is so obviously false that consensus fragments.

But even that might not be enough. Because the infrastructure will continue existing. The incentives will continue aligning. The machinery will continue operating. And the next time something happens, the influencer networks will spring into action again, more refined, more effective, more convinced of their righteousness.

The Minneapolis operation is a preview of how political reality will be constructed going forward. Not through direct propaganda. Not through obvious lies. But through systematic selection, strategic framing, coordinated amplification, and algorithmic distribution of partial truths designed to manufacture consensus around predetermined conclusions.

FAQ

What exactly happened during the Minneapolis ICE operation in January 2025?

A federal ICE agent fatally shot Renee Nicole Good during an enforcement action in Minneapolis. The incident immediately became the focus of coordinated right-wing influencer content creation. Influencers descended on the city, filming protesters and interviewing ICE agents, producing content that emphasized traffic disruptions caused by protesters while minimizing discussion of the fatal shooting and its circumstances. As reported by MPR News, the shooting sparked significant controversy and media attention.

How did Nick Shirley's December video contribute to the Minneapolis operation?

Shirley's viral video made claims about fraud in Somali child care centers in Minnesota. The video reached three million views and was amplified by Elon Musk. According to law enforcement sources, the viral spread of this video partly motivated the federal government's surge of ICE agents to Minnesota. The video effectively primed national audiences to view Minneapolis as a location with serious governance and crime problems, creating receptiveness to subsequent narratives about federal enforcement being necessary. This was highlighted in a CBS News report on the impact of the video.

Why is the relationship between ICE and influencers problematic?

Influencers who receive exclusive access from ICE to film operations aren't maintaining journalistic independence. They become de facto government communications specialists, producing content that presents official actions in the most favorable light. This creates conflicts of interest because the creators' access depends on presenting coverage favorable to the agency. Additionally, the public can't distinguish between independent reporting and government-funded propaganda when both appear as informal video content.

How did aggregation accounts multiply the reach of Minneapolis content?

Original creators like Sortor post to their own followers. Aggregation accounts with larger followings then repost that content to their audiences, often with additional framing that reinforces specific interpretations. When End Wokeness, with millions of followers, reposted clips from Minneapolis, the clips' reach expanded dramatically. Each repost added new audiences and new interpretations, creating momentum that eventually reached cable news.

What role did platforms play in enabling the operation?

Social media platforms prioritize engagement over accuracy. This creates incentives for sensational, emotionally manipulative content. The platforms provide the infrastructure for distribution but accept no responsibility for accuracy. They don't require verification of claims. They don't flag coordinated amplification networks. They don't include context explaining where information originated. These design decisions made it possible for the influencer operation to reach millions with minimal friction.

How much money is the federal government spending on influencer operations?

The Washington Post reported that ICE planned to invest approximately $100 million in influencer engagement and geo-targeted advertising. This budget is designed to fund both recruitment of future deportation officers and cultivation of public support for immigration enforcement. The scale of investment suggests this is a sustained strategy, not a one-time initiative.

How does the influencer operation differ from traditional propaganda?

Traditional propaganda is often obviously one-sided and comes from official sources that people recognize as biased. The influencer operation appears grassroots and independent. Creators present themselves as documentarians, not advocates. The footage looks authentic. The commentary seems spontaneous. But the entire operation is coordinated around predetermined conclusions, with government agencies providing resources and access to creators selected specifically for their ability to present favorable narratives.

What incentives drive creators to participate in these operations?

Creators benefit from the access to exclusive footage and official sources. They benefit from the engagement generated by sensational coverage. They benefit from sponsorships and funding that flow to creators who produce politically favorable content. The influencers aren't necessarily acting cynically. Many genuinely believe the narratives they're amplifying. But regardless of motivation, the incentive structures align to produce coordinated messaging that serves government interests.

Why is misinformation spreading faster than accurate information about these events?

Misinformation travels faster because false claims are usually simpler than accurate ones. They don't require nuance, context, or acknowledgment of complexity. Platforms amplify whatever generates engagement, and engagement tends to track with emotional intensity. Anger, fear, and outrage generate more engagement than accuracy or nuance. By the time fact-checkers and journalists can respond to false claims, those claims have already spread to millions of people and become embedded in audiences' beliefs.

What can individuals do to protect themselves from this kind of manipulation?

When consuming content about political events, especially on social media, ask several questions: Who produced this content? What access did they have, and who granted it? What incentive do they have to present information a particular way? What footage or perspectives are excluded? What alternative explanations might exist? If you can't answer these questions, treat the content as one perspective rather than comprehensive documentation. Before sharing, consider whether the content neatly confirms your existing beliefs; that can be a sign it was designed to provoke exactly your reaction.

Conclusion

The Minneapolis operation represents a watershed moment in how political movements, government agencies, and media platforms interact in the social media age. It's not fundamentally about immigration policy or even about that specific incident. It's about how consensus is manufactured. How viral content is weaponized. How misinformation spreads faster than truth. How individual incentives align around systemic manipulation.

What happened in Minneapolis wasn't unique. It was exemplary. It showed methods that will be refined and replicated. It demonstrated infrastructure that will be scaled. It revealed economic incentives that won't change without radical intervention.

Understanding this operation matters because it's a preview of how political reality will be constructed going forward. Not through honest debate about policy. But through coordinated influencer networks manufacturing narratives. Through platforms amplifying engagement over accuracy. Through audiences fragmented into separate information ecosystems consuming different realities. Through governments learning to weaponize social media for policy goals.

The operation also reveals something more hopeful. It shows that these systems aren't mysterious or sophisticated. They operate through incentives that we can understand and potentially change. Platforms could require verification. Governments could demand transparency. Citizens could practice media literacy. Journalists could reassert standards. None of these changes require magic. They require only the commitment to prioritize truth over engagement, accuracy over speed, and democratic discourse over manipulation.

Minneapolis was the test case. The machinery worked. The narrative spread. The footage entered policy discussions. The operation's success probably exceeded even its architects' expectations. But every system has vulnerabilities. Every network can be exposed. Every narrative can be questioned. The question isn't whether the operation will be replicated. The question is whether we'll recognize it the next time and demand better. Because if we don't, this is only the beginning.

Key Takeaways

- Right-wing influencers coordinated a systematic information operation in Minneapolis, transforming unverified footage into national political narratives through deliberate framing and strategic amplification.

- The operation demonstrates how individual incentives (creator engagement, platform reach, government access) align automatically to produce coordinated propaganda without explicit conspiracy.

- Misinformation can travel roughly six times faster than accurate information on social media, according to widely cited research on Twitter, and engagement-driven algorithms reward sensational emotional content over evidence-based reporting.

- ICE planned to invest approximately $100 million in influencer engagement and geo-targeted advertising, designed both to recruit officers and to manufacture public support for immigration enforcement actions.

- The machinery for manufacturing political consensus doesn't require sophisticated coordination; it requires only that incentives align around sensational narratives that confirm existing beliefs.

- Traditional journalistic standards break down when creators receive exclusive access from government agencies in exchange for favorable coverage, collapsing the distinction between reporting and propaganda.
