Roblox's AI Age Verification System Failure Explained

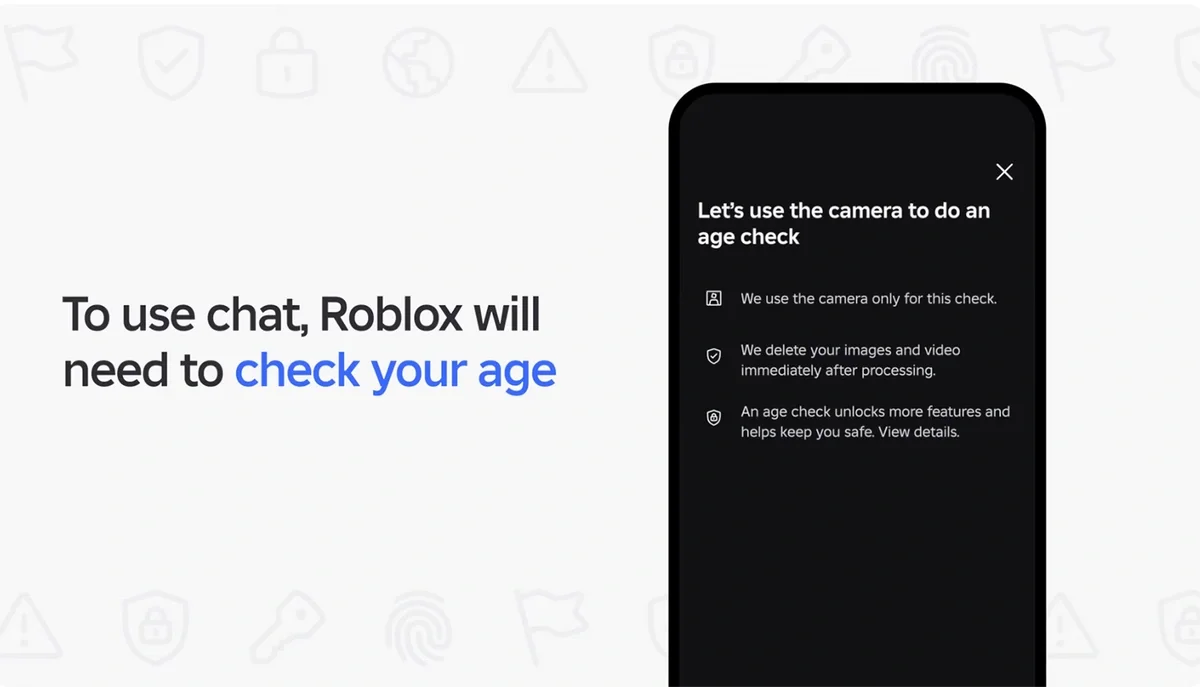

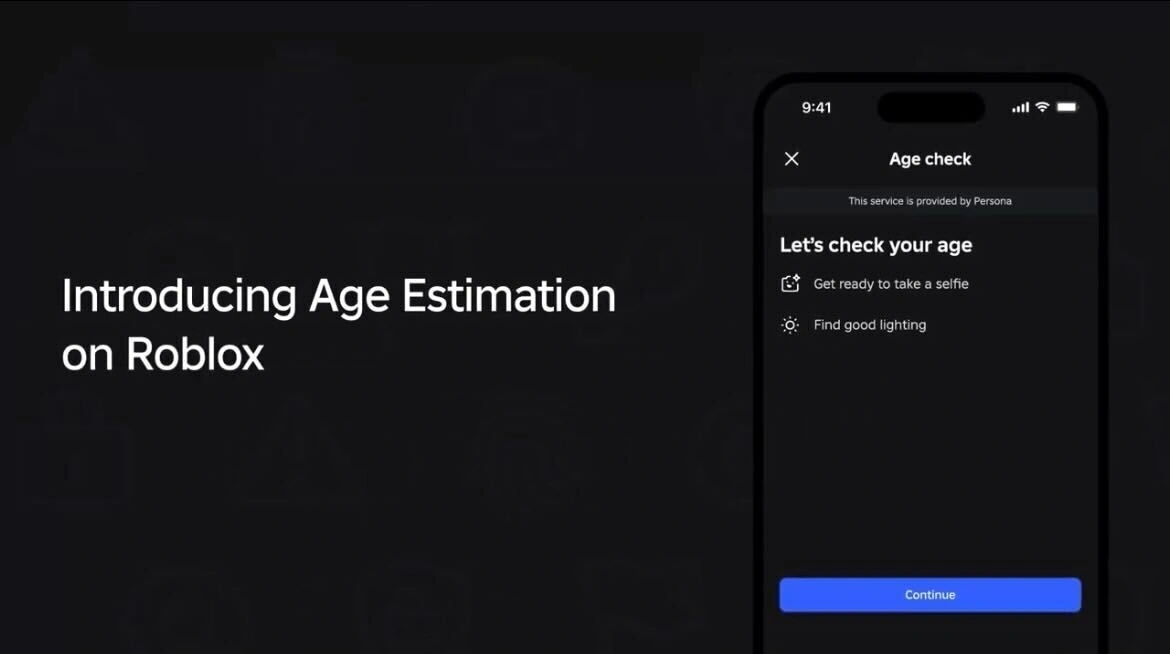

Roblox launched what it promised would be a groundbreaking safety feature: AI-powered age verification using facial recognition. The goal sounded solid on paper. Keep predators away from kids by preventing adults from freely chatting with children they don't know. Sounds reasonable, right?

Then it all fell apart within days.

The system started misidentifying children as adults, adults as teenagers, and—in one viral clip—a kid with marker-drawn wrinkles on his face as 21 years old. Meanwhile, savvy users discovered they could trick the system entirely using deepfakes or photos of celebrities. Even worse, age-verified accounts for kids as young as nine years old started appearing on eBay for four dollars.

This wasn't just a minor glitch. This was a fundamental failure of a safety system designed to protect children, rolled out globally with minimal real-world testing. And it exposed something uncomfortable about the state of AI in 2025: sometimes the technology everyone thinks will solve a problem actually makes things worse.

Let's break down what went wrong, why it matters, and what it tells us about the limits of AI-powered safety systems in gaming.

TL;DR

- Facial recognition accuracy is worse than Roblox suggested: The system misidentifies ages at rates that undermine its core safety promise

- Verified accounts are being sold online for $4: Age verification provides no meaningful verification when the underlying system is broken

- The system doesn't actually stop predators: Child safety experts say it creates false confidence while doing little to address grooming

- The rollout ignored predictable problems: Parents verifying on behalf of kids, spoofed videos, and marker-drawn faces all bypassed the system

- Roblox's response has been inadequate: The company blamed parents, promised fixes, but offered no timeline or technical details

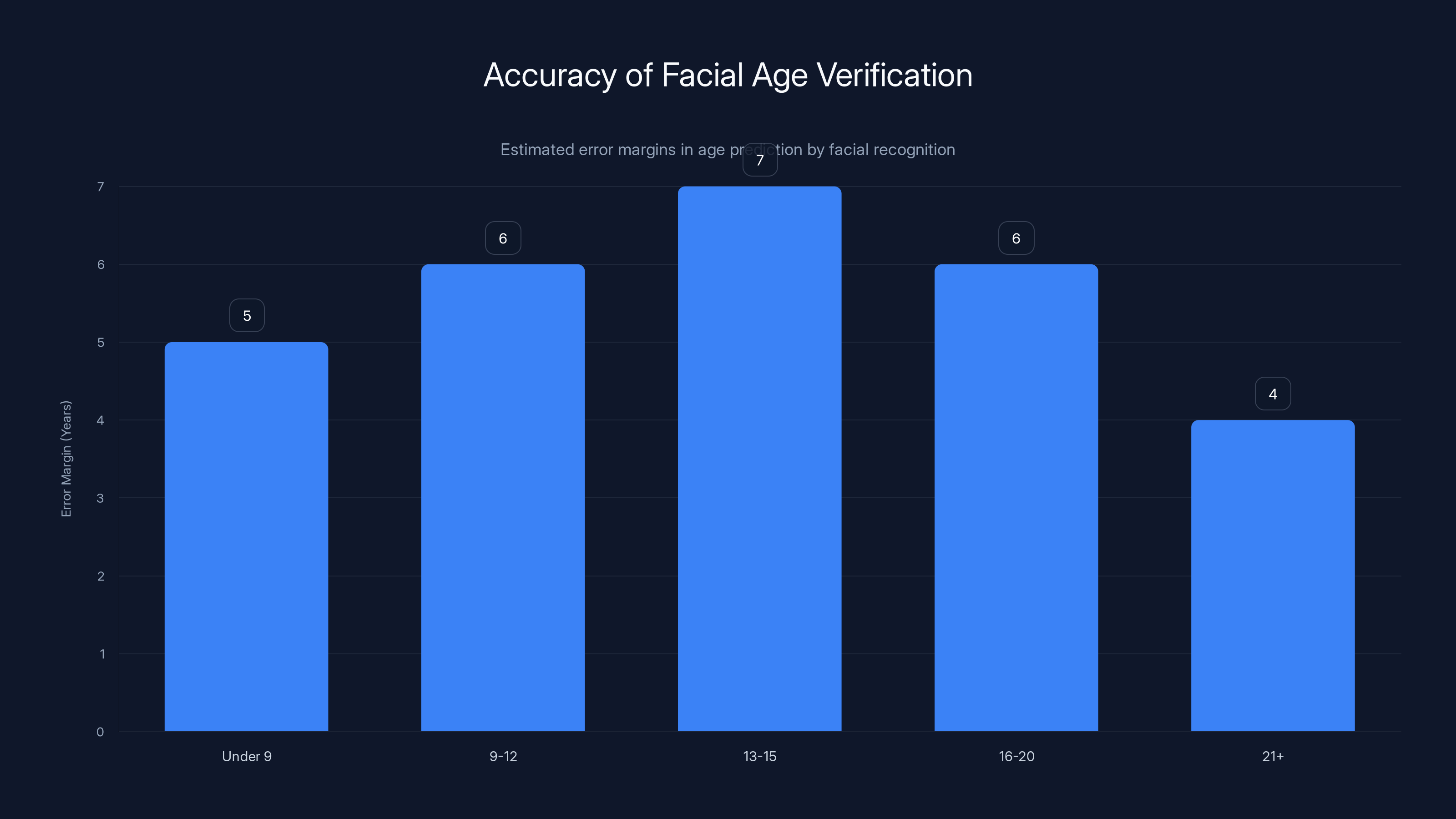

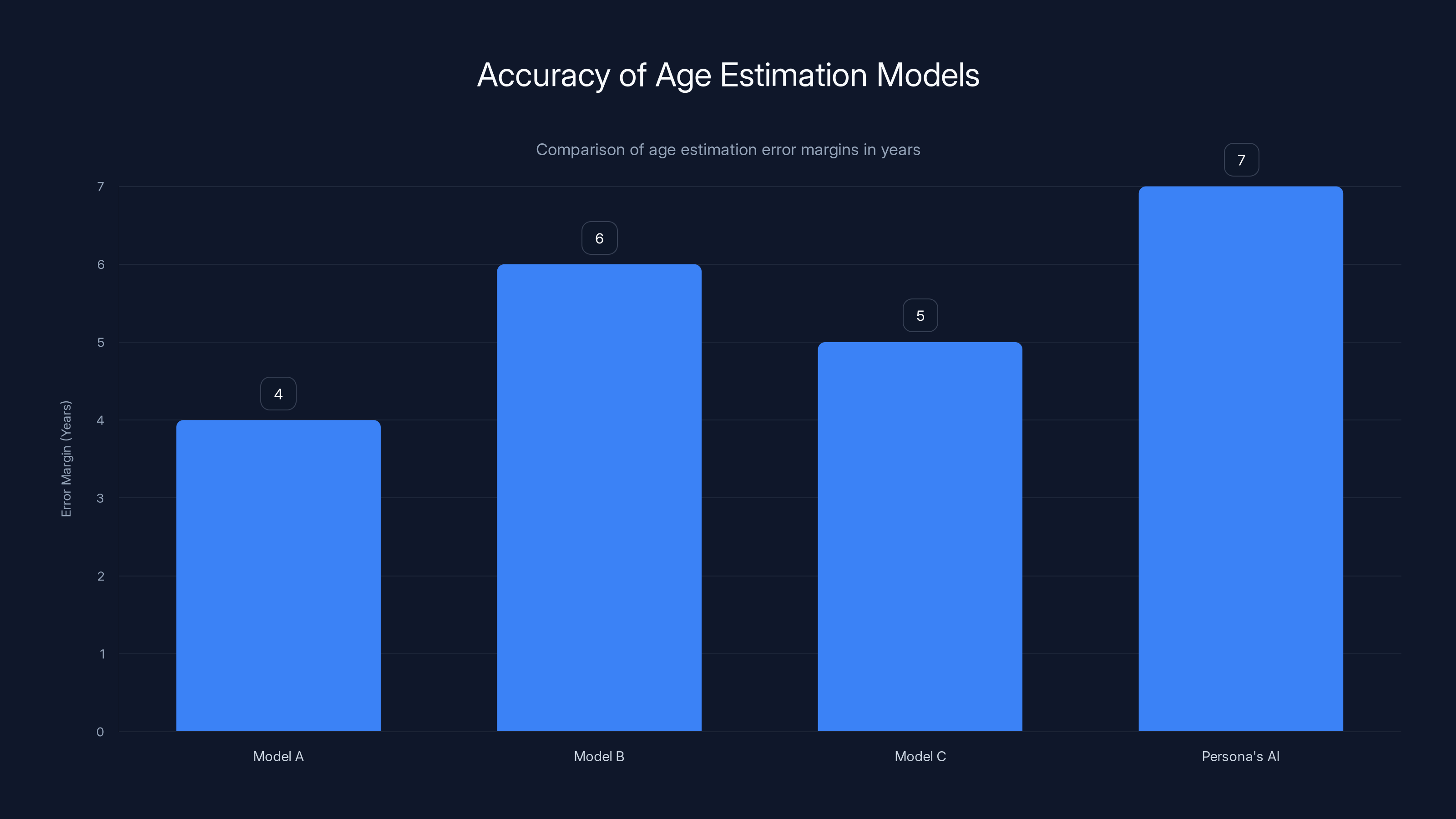

Facial age verification systems have error margins of 4-7 years, leading to potential misclassification of users' ages. Estimated data.

What Roblox Actually Built (And Why It Failed)

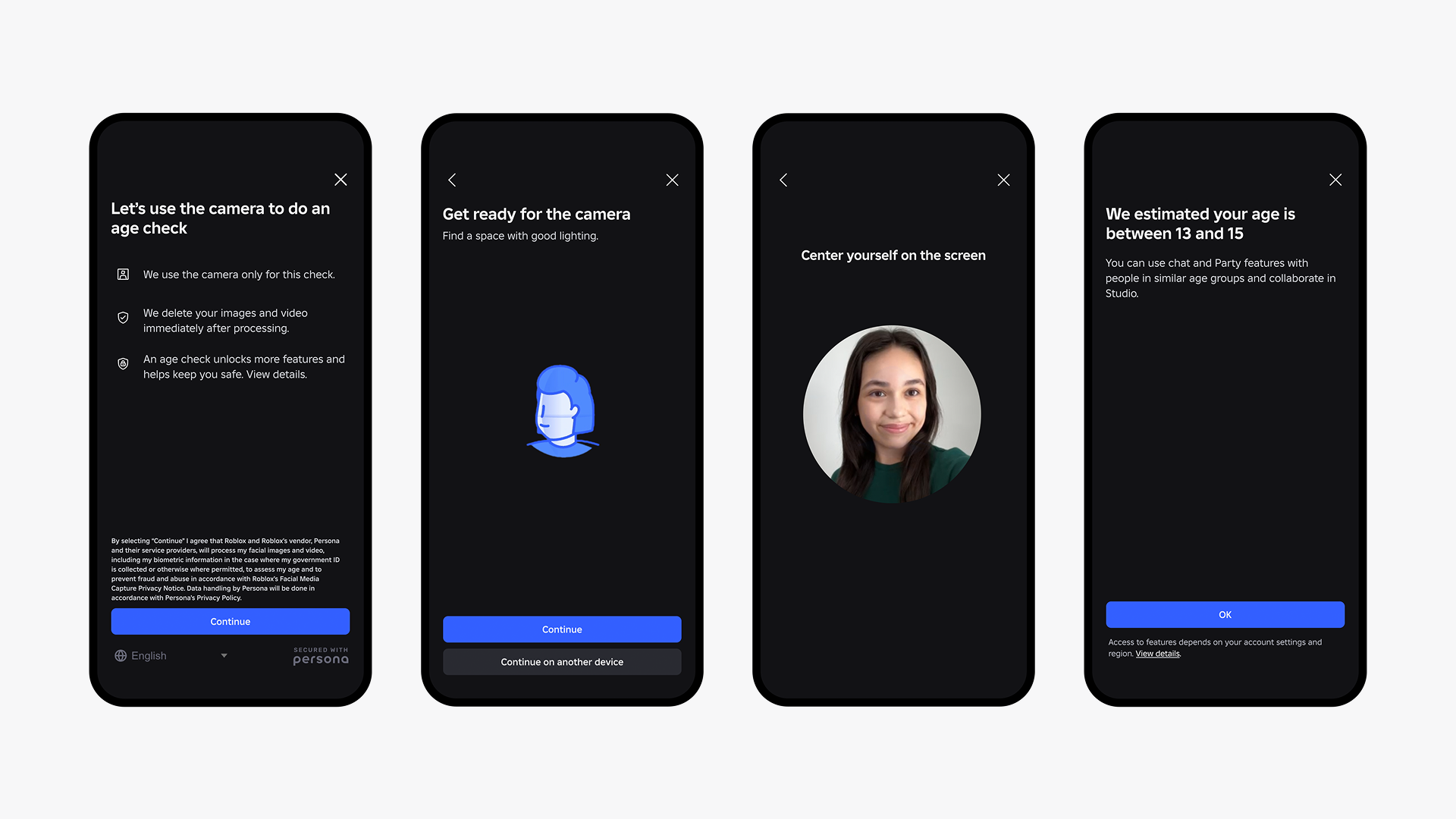

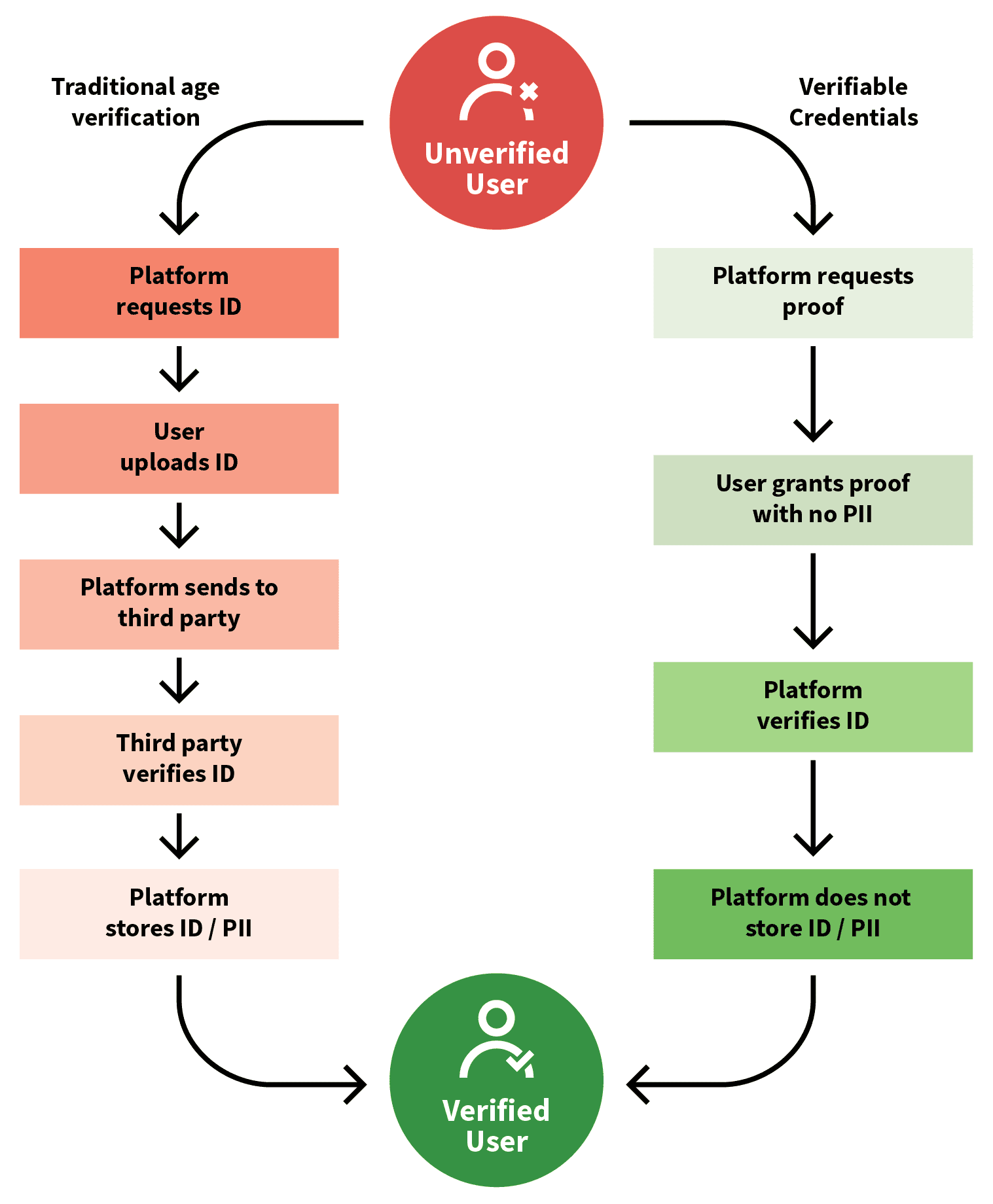

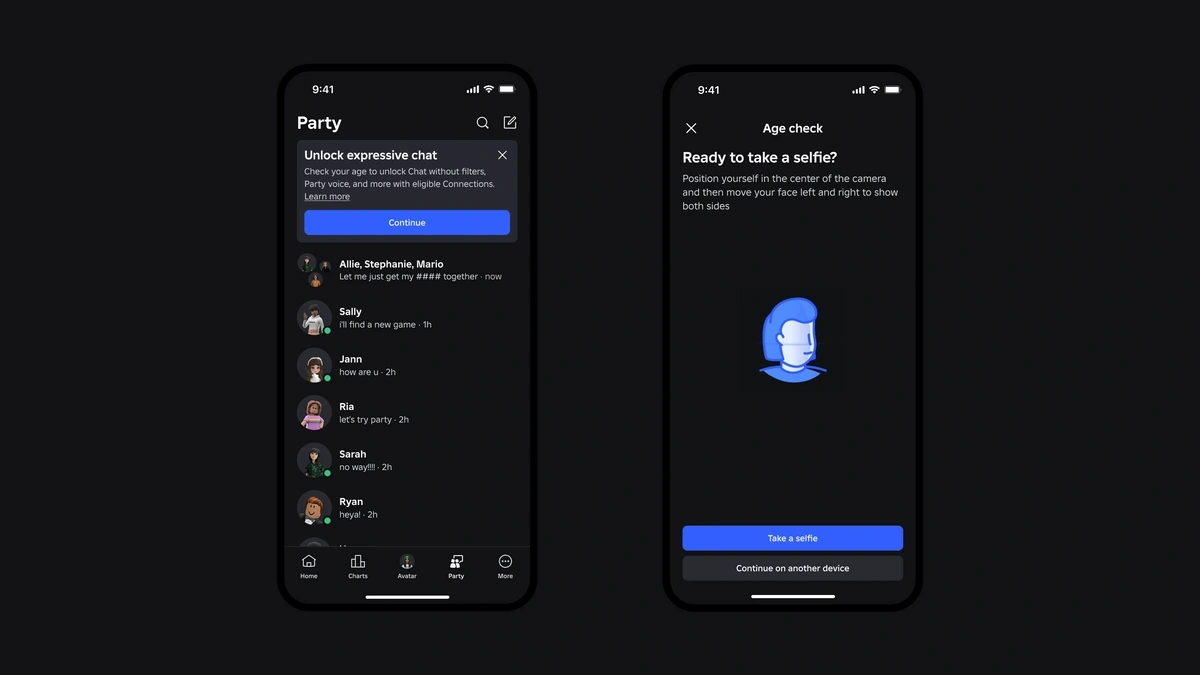

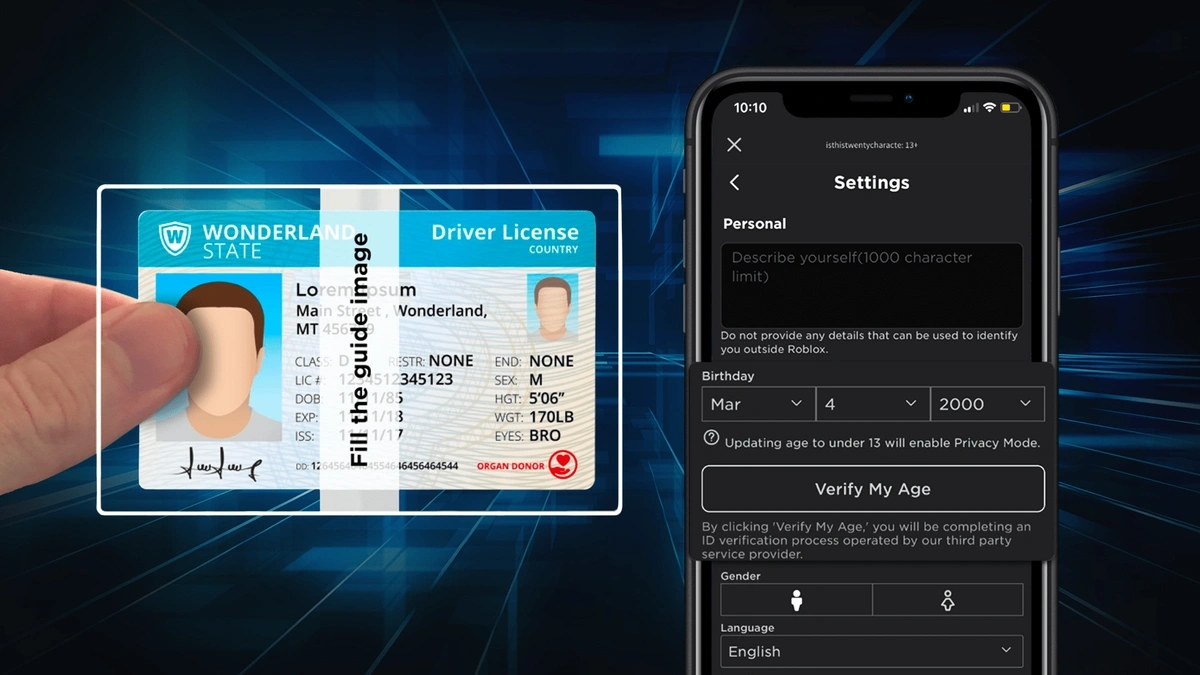

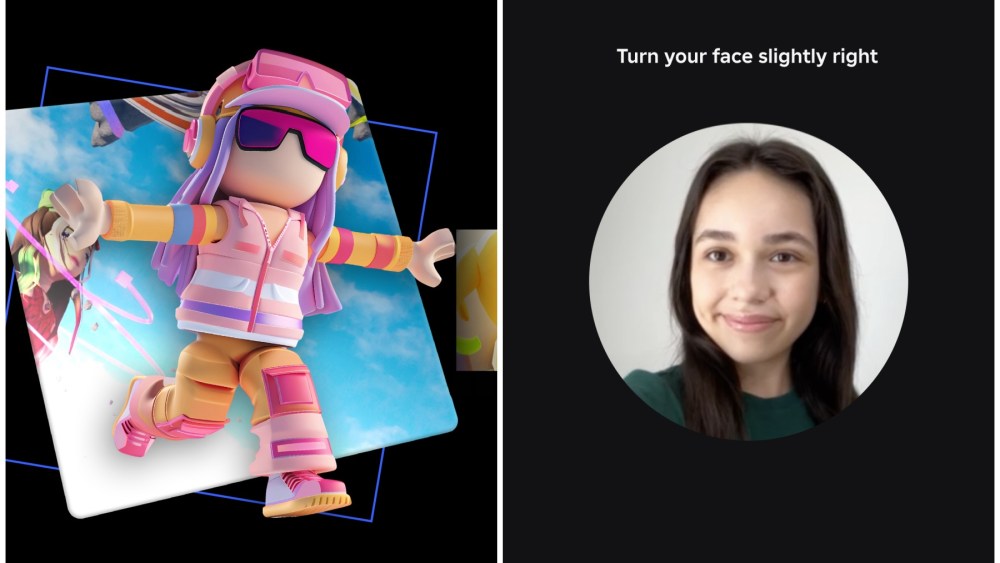

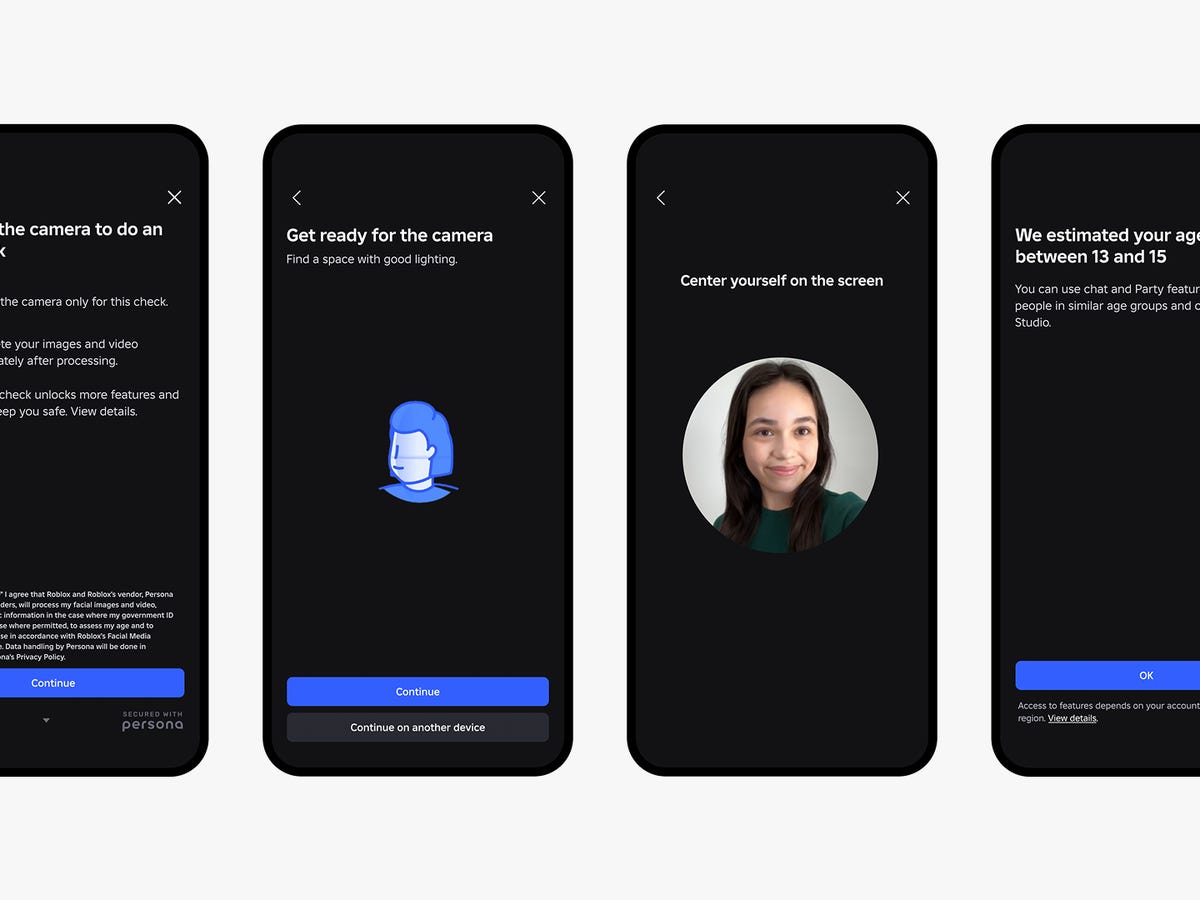

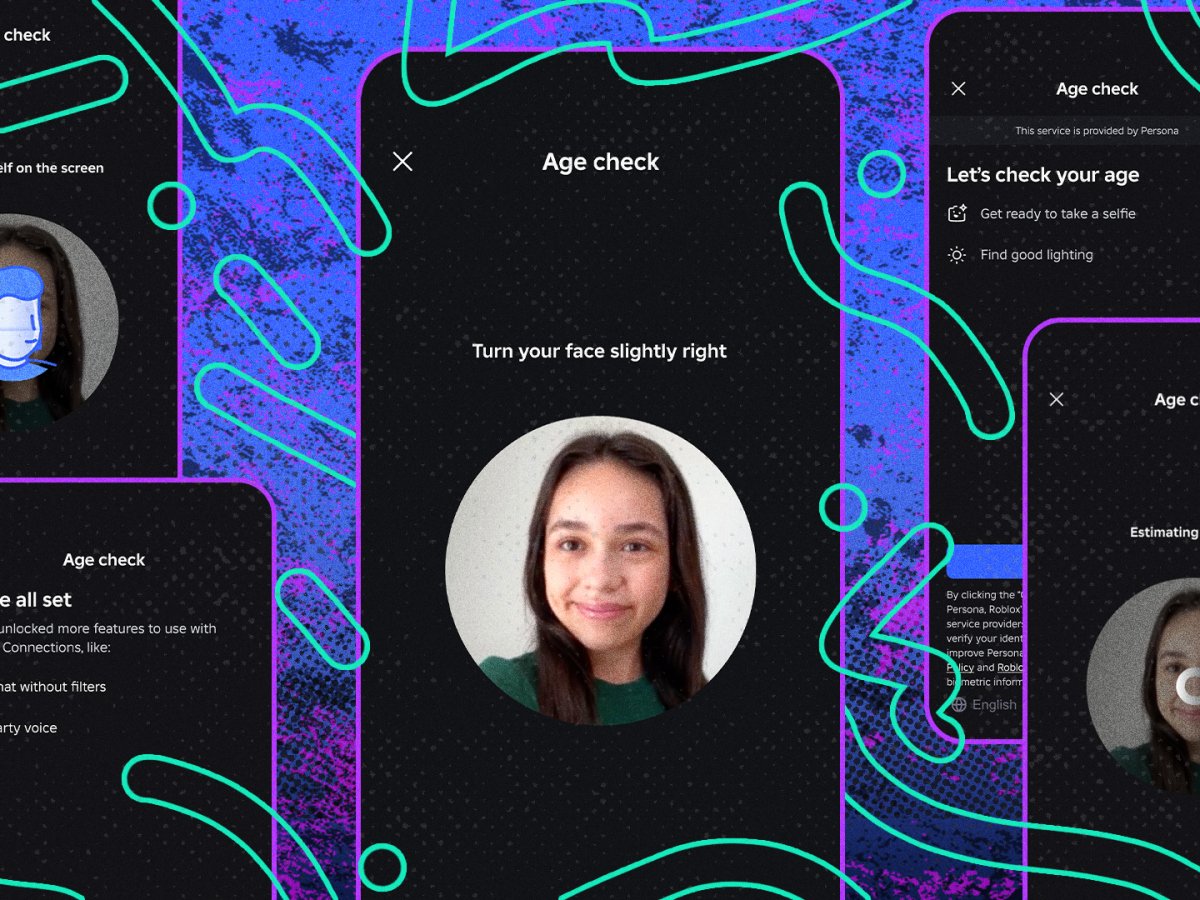

Roblox partnered with a company called Persona to build their age verification system. Here's how it was supposed to work: users would record a short video of their face using their phone or computer camera. Persona's AI would estimate their age, and boom—access unlocked.

Alternatively, users 13 and older could upload a government-issued photo ID. Roblox claimed all biometric data would be "deleted immediately after processing." That last part matters, because it means the company couldn't keep a database of verified faces if they wanted to.

Once verified, the system placed users into age bands. Kids under 9 could only chat with other players up to age 13. Sixteen-year-olds could chat with ages 13 to 20. The system created artificial silos, supposedly keeping children away from adults who might try to groom them.

It sounds great. Age-gated chat. Predators locked out. Kids safe.

Except the underlying technology didn't work reliably.

Within days of the global rollout, hundreds of users posted screenshots and videos showing wildly inaccurate age estimations. A person with a full beard was classified as 13 to 15. Someone claiming to be 18 was locked in the 13 to 15 bracket. On the flip side, actual children were being flagged as 18 or older.

The system wasn't just inaccurate—it was inaccurate in ways that completely defeated its purpose.
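The age-band rules described earlier in this section can be sketched in a few lines. This is a minimal sketch built on two assumptions: a hypothetical six-band layout (under 9, 9-12, 13-15, 16-17, 18-20, 21+) and an "own band or adjacent band" chat rule. Neither is Roblox's published configuration. Under these assumptions the sketch reproduces the 16-year-old example (13 to 20), while an under-9 user reaches up to 12 rather than 13. That mismatch is a reminder that the boundaries are guesses.

```python
# Hypothetical band boundaries (an assumption for illustration, not Roblox's
# published configuration). Each tuple is (label, min_age, max_age), inclusive.
BANDS = [
    ("under 9", 0, 8),
    ("9-12", 9, 12),
    ("13-15", 13, 15),
    ("16-17", 16, 17),
    ("18-20", 18, 20),
    ("21+", 21, 200),
]


def band_index(age: int) -> int:
    """Return the index of the band containing an (estimated) age."""
    for i, (_, lo, hi) in enumerate(BANDS):
        if lo <= age <= hi:
            return i
    raise ValueError(f"age out of range: {age}")


def can_chat(age_a: int, age_b: int) -> bool:
    """Assumed policy: users may chat within their own band or an adjacent one."""
    return abs(band_index(age_a) - band_index(age_b)) <= 1


def chat_range(age: int) -> tuple[int, int]:
    """Youngest and oldest ages a user of this age may chat with."""
    i = band_index(age)
    lo = BANDS[max(i - 1, 0)][1]
    hi = BANDS[min(i + 1, len(BANDS) - 1)][2]
    return lo, hi


print(chat_range(16))  # (13, 20)
```

The important property is that `band_index` receives the *estimated* age. Any estimation error passes straight through to `can_chat`, so a child estimated at 21 is gated exactly like an adult.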

The Technical Problem Hiding in Plain Sight

Facial age estimation is genuinely difficult. It isn't a clean categorical task like telling a cat from a dog, where a computer can learn to separate the classes with high reliability. Age is a continuous variable. People age gradually. Lighting, angle, facial hair, makeup, and a thousand other factors affect how old someone appears.

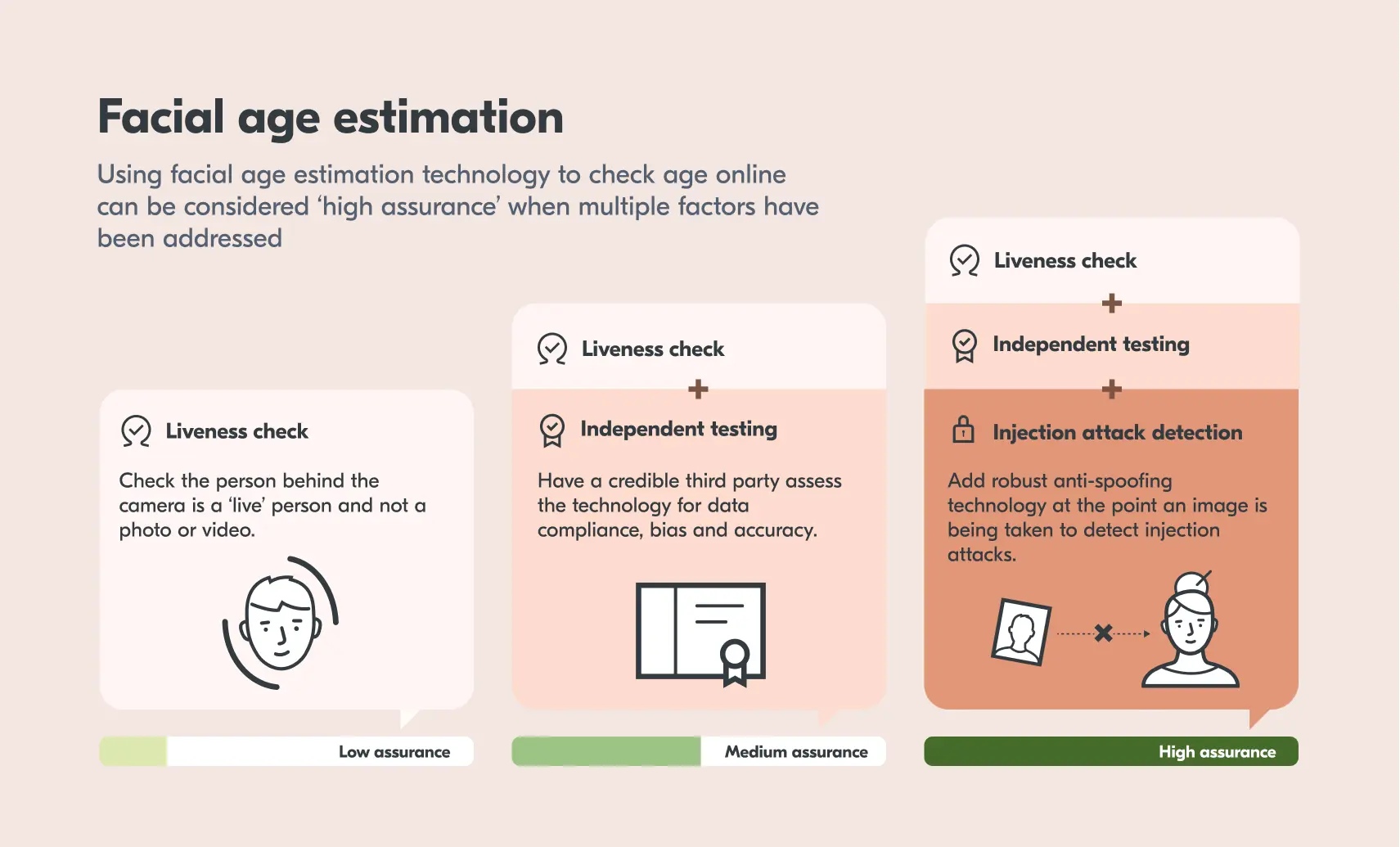

Research shows that even state-of-the-art facial age estimation models have significant error margins. Studies on common benchmark datasets report average errors of roughly 4 to 7 years, and some models perform worse. That means if you're 14, the system might plausibly estimate you anywhere from 7 to 21. Because those figures are averages, individual estimates can miss by even more.
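To see what an average error of several years means at an 18+ cutoff, here is a back-of-the-envelope calculation. It assumes, purely for illustration, that estimation errors are unbiased and normally distributed. Real error distributions for Roblox's system are not published and are likely skewed and demographic-dependent. For a zero-mean normal error, mean absolute error equals σ·√(2/π), which lets us convert an MAE into a standard deviation.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_estimated_at_least(true_age: float, threshold: float, mae: float) -> float:
    """P(estimate >= threshold), assuming estimate = true_age + N(0, sigma^2).

    For a zero-mean normal error, MAE = sigma * sqrt(2 / pi),
    so sigma = MAE * sqrt(pi / 2).
    """
    sigma = mae * math.sqrt(math.pi / 2.0)
    z = (threshold - true_age) / sigma
    return 1.0 - normal_cdf(z)


# Illustrative only: how often would a 14-year-old be scored 18 or older?
for mae in (4, 5, 7):
    p = prob_estimated_at_least(true_age=14, threshold=18, mae=mae)
    print(f"MAE {mae} years: P(14-year-old estimated 18+) = {p:.0%}")
```

Under these assumptions, at an MAE of 5 years roughly one in four 14-year-olds would be scored 18 or older. The exact figure depends entirely on the assumed distribution, but it shows how an "average error" that sounds modest becomes frequent misclassification at a hard cutoff.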

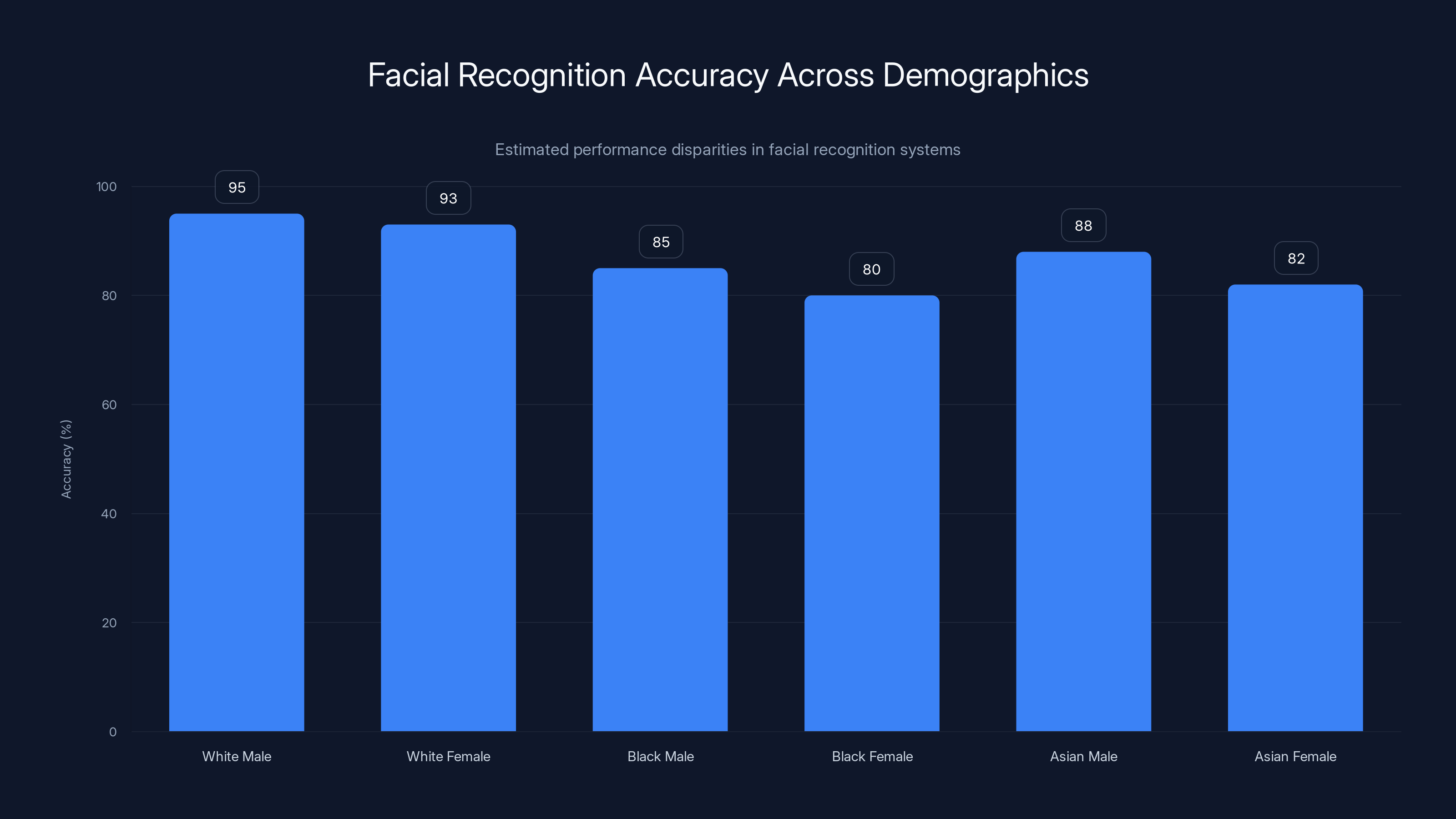

Now scale that across millions of users, all with different lighting conditions, camera qualities, ethnicities, and facial characteristics. The error rates compound. A recent academic study found that facial recognition systems show significant demographic bias, performing worse on darker-skinned individuals and women. Age estimation models have their own biases too.

Roblox's system inherited all of these problems. The company didn't publish accuracy benchmarks before rolling out globally. That should have been a red flag from day one.

Parents Accidentally Defeating Their Own Safety Measures

Here's something Roblox apparently didn't anticipate: parents logging in to verify their own ages on their kids' accounts.

It seems obvious in retrospect. A parent wants their child to play Roblox, and the chat feature is being restricted. So the parent scans their own face on the child's account, and the account is now flagged as adult. The kid lands in an adult age bracket. That may look harmless, except the restrictions meant to stop adults from chatting with children no longer protect them.

Roblox acknowledged this in an update: "We are aware of instances where parents age check on behalf of their children leading to kids being aged to 21+." That's a massive system flaw hiding behind one sentence.

The company said it was "working on solutions," but offered no technical details on how to distinguish between a parent verifying for their kid and an adult creating a fraudulent account. That's a genuinely hard problem. How do you verify that the face in the video actually belongs to the account holder? Without a government ID, you can't.

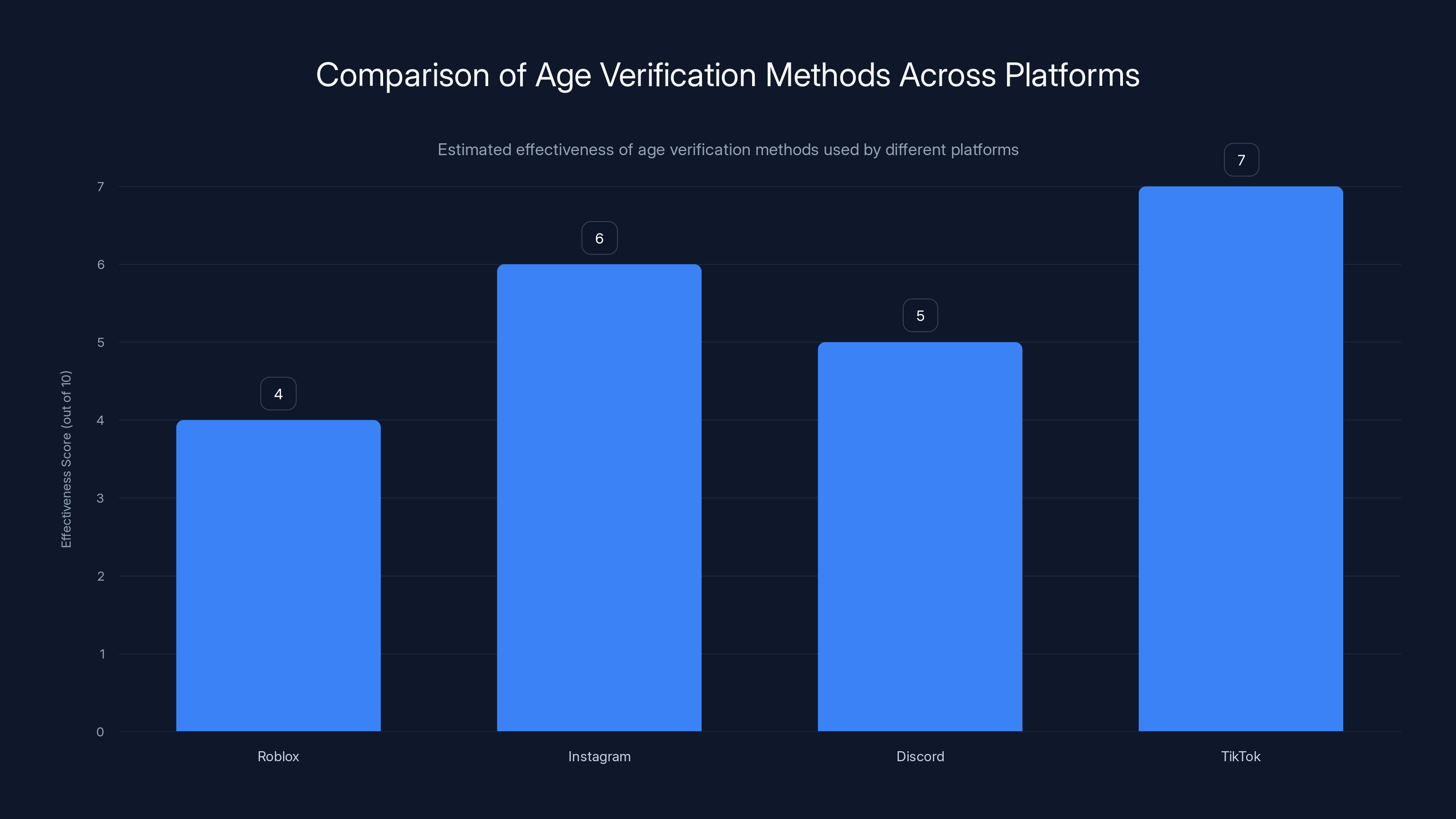

Estimated data: TikTok's combination of methods is rated highest in effectiveness, while Roblox's reliance on facial recognition scores lower.

How Bad Actors Are Gaming the System (And It's Not Hard)

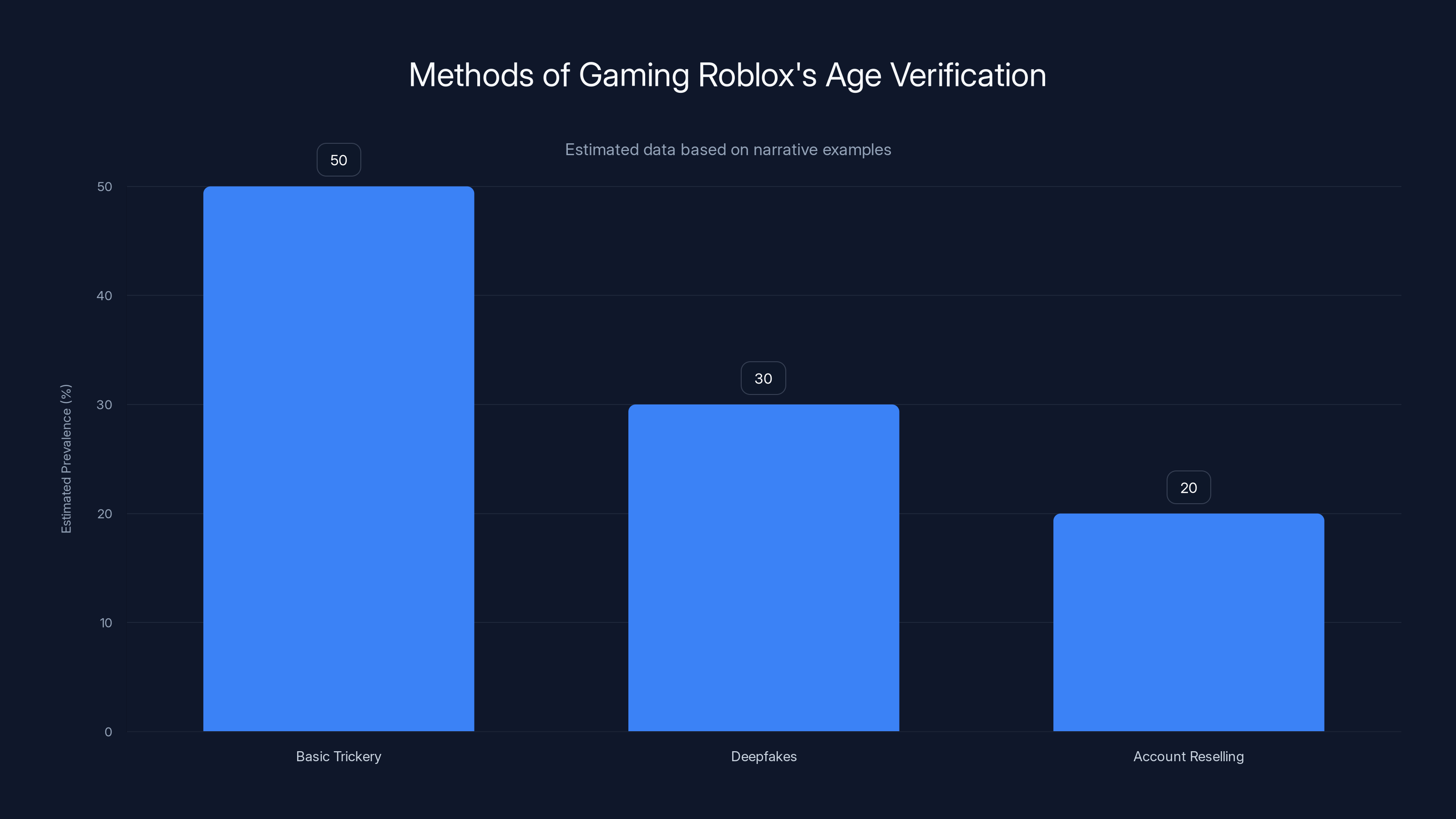

Within hours of the global rollout, social media exploded with tutorials on how to trick the age verification system. Some were creative. Some were just embarrassing for Roblox's engineers.

One widely shared TikTok showed a young boy drawing wrinkles and stubble on his face with a black marker. He submitted the video, and the system classified him as 21+. In another video, someone held up a photo of Kurt Cobain, and the system accepted it.

These aren't sophisticated attacks. They're not deepfakes or advanced AI techniques. They're just... basic fooling around.

The Deepfake Problem (That's Only Getting Worse)

The more sophisticated attack is deepfakes. You can generate a fake video of an adult face using freely available tools. Tools like DeepFaceLab or commercial services like Synthesia make it trivial. Feed that video to Roblox's age verification system, and you've got a verified adult account.

Now, here's the scary part: Roblox deleted the original biometric data immediately. That means they can't do retroactive checks against a database of known fraudulent faces. If you spoofed a verified account three months ago, and Roblox discovers it now, they can't systematically find and ban all accounts that used that same deepfake.

The decision to delete biometric data was meant to protect privacy. It backfired spectacularly as a security feature.

Verified Accounts Selling for Less Than a Coffee

eBay users started listing verified Roblox accounts for as little as $4, including accounts age-verified for children as young as nine.

When WIRED flagged the listings, eBay removed them. But the damage was done. The message was clear: age verification on Roblox wasn't actually verification. It was a checkbox system that someone with $4 could circumvent.

This created a perverse incentive. Motivated actors—whether they're predators, scammers, or just kids wanting to bypass restrictions—now had a clear path forward. Commit fraud once, get verified, then resell the account. The economics work.

The Real Problem: Age Verification Doesn't Solve Grooming

Here's the uncomfortable truth that experts keep pointing out: age-gated chat doesn't actually prevent child grooming. It might feel like it does, but the mechanics don't work the way Roblox is advertising.

A predator's goal on a platform like Roblox isn't to chat with random children. It's to identify vulnerable kids, build trust, and move them to private communication channels. The predator doesn't care if they're locked in an 18+ age bracket—they just need access to the initial chat channels where they can start grooming.

Ry Terran, an independent extremism researcher who tracks exploitation networks on Roblox, pointed out the flaw directly: the real danger isn't from people correctly sorted into their age bracket. It's from the large numbers of people sorted into the wrong bracket entirely, combined with a complete lack of visibility for parents and moderators.

He's right. Here's why:

Verified Adults Can Still Interact With Kids (Just Indirectly)

Suppose you're an adult correctly verified as 25. You can't directly chat with a 12-year-old. But you can join a game, start a public chat in that game's lobby, and a 12-year-old will see your message. Then they can initiate a private message to you. Congratulations, you've circumvented the age gate.

Or suppose you get verified as 16 (either legitimately or through fraud). Now you can chat with kids aged 13-15. You can build relationships, earn trust, and move the conversation to Discord, where age gating doesn't exist.

The system assumes that the age gate is the primary defense. It's not. It's one layer, and a layer only adds protection if it actually holds. Roblox's verification layer was compromised within hours.

Moderators and Parents Lost Visibility

Before age verification, everyone could see everyone's age. Now, only age-verified users know each other's verified age. Parents can't see what age bracket their child was placed into. Moderators have limited visibility into whether someone is actually who they claim to be.

That's a step backward for safety.

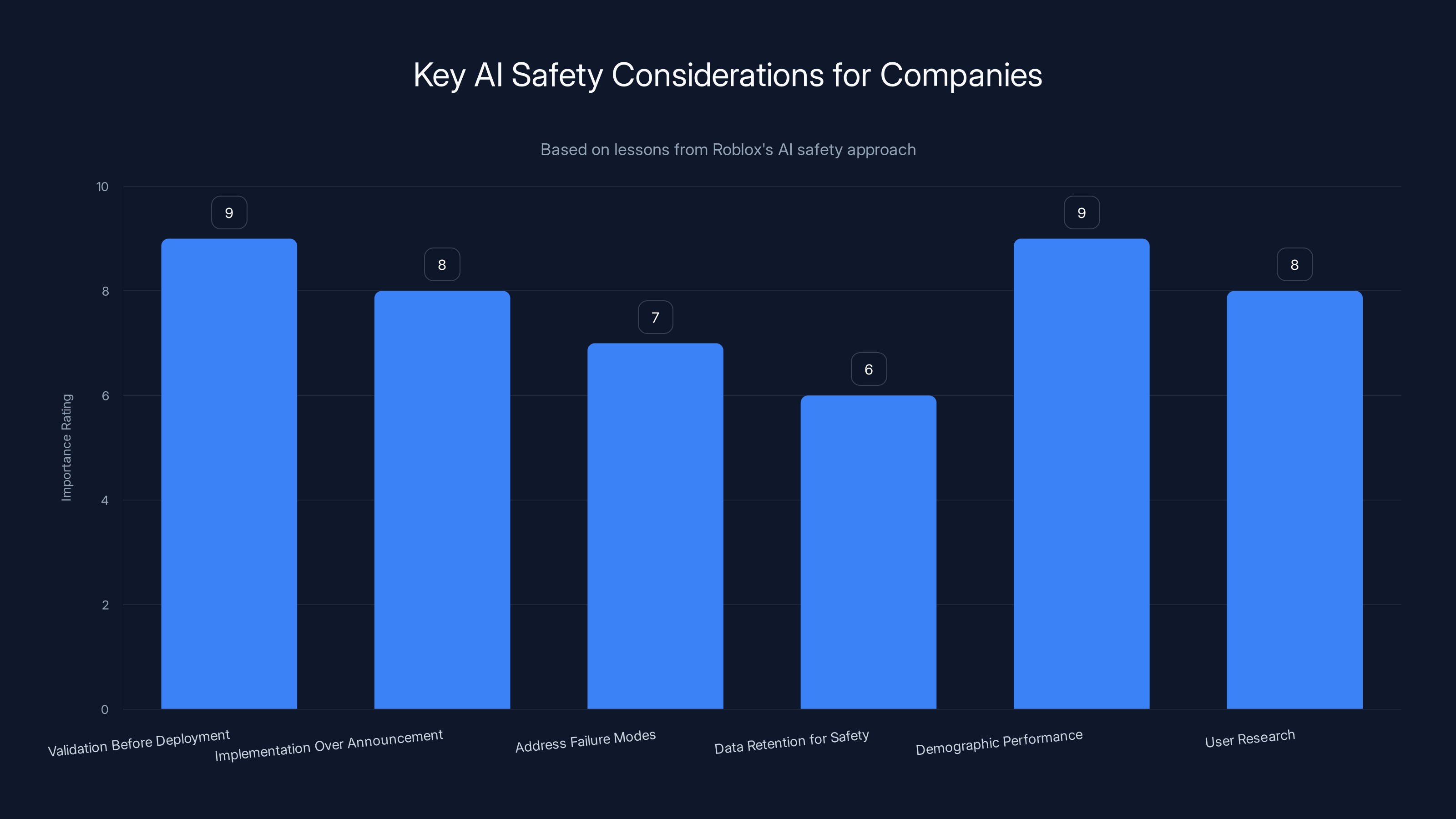

The chart highlights crucial AI safety considerations, emphasizing validation, demographic performance, and user research as top priorities. Estimated data based on content insights.

Why Roblox Pushed This System Forward (Pressure, Not Technology)

Roblox didn't build age verification because the technology was ready. They built it because they were under immense legal and reputational pressure.

The company has faced lawsuits alleging that it failed to protect young users and that predators used the platform to groom children. The attorneys general of Louisiana, Texas, and Kentucky filed lawsuits. Florida's attorney general issued criminal subpoenas. Media coverage was relentless and devastating.

Age verification became a way to tell regulators and the public: "We're doing something. We're being responsible. We're using AI to protect kids." It looked good in a press release.

What Roblox didn't do was stress-test the technology before rollout. They didn't release accuracy benchmarks. They didn't conduct user research on how people would actually use the system or how bad actors would attack it. They ran relatively small rollouts in Australia, New Zealand, and the Netherlands, found that only 50% of users even completed verification, and then went global anyway.

That's not how technology that's supposed to protect children should be deployed.

The Pattern: AI as a PR Band-Aid

Roblox's mistake isn't unique. It's becoming increasingly common for companies facing safety or regulatory pressure to announce AI solutions without fully understanding the implications. The announcement generates headlines. The company looks responsible. Then, months later, when the system fails at scale, the damage is already done.

In Roblox's case, the announcement of age verification probably did help its reputation with regulators in the short term. But the rollout failure has erased most of those gains and created new credibility problems.

Facial Recognition Bias: Why This Matters Across Demographics

One thing worth highlighting separately: facial age estimation doesn't fail equally across all demographics. Research consistently shows that these systems perform worse on darker-skinned individuals, women, and people with non-Western facial features.

That means Roblox's system probably misidentified some players worse than others. A young Black girl might have been classified as older than her actual age at higher rates than a white boy. A woman might be classified as younger than her age more often than a man.

These biases matter because they affect who gets protected and who doesn't. If the system systematically overestimates the age of darker-skinned children, those kids end up in older age brackets, where they can chat with older users, including potential predators.

Roblox hasn't published demographic breakdowns of their system's performance. That's another red flag.
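A demographic breakdown of the kind Roblox hasn't published is not complicated to compute once labeled evaluation data exists. The sketch below uses made-up toy records and hypothetical group labels. For each group it reports the mean absolute error and the share of minors scored as adults, which is the failure mode that matters most for a chat gate.

```python
from collections import defaultdict
from statistics import mean

# Made-up toy records: (hypothetical group label, true age, estimated age).
# A real audit needs a large, consented, demographically labeled evaluation set.
records = [
    ("group_a", 10, 11), ("group_a", 14, 13), ("group_a", 17, 16),
    ("group_b", 10, 16), ("group_b", 14, 21), ("group_b", 17, 20),
]


def audit_by_group(rows, adult_threshold=18):
    """Per-group mean absolute error and share of minors scored as adults."""
    by_group = defaultdict(list)
    for group, true_age, est_age in rows:
        by_group[group].append((true_age, est_age))

    report = {}
    for group, pairs in by_group.items():
        mae = mean(abs(est - true) for true, est in pairs)
        # Estimates for users who are actually minors.
        minors = [est for true, est in pairs if true < adult_threshold]
        scored_adult = sum(1 for est in minors if est >= adult_threshold)
        report[group] = {
            "mae_years": mae,
            "minors_scored_adult": scored_adult / len(minors) if minors else 0.0,
        }
    return report


for group, stats in audit_by_group(records).items():
    print(group, stats)
```

With the toy data, `group_b` has a much larger error and two of its three minors are scored as adults, while `group_a` has none. A single pooled accuracy number would hide that gap.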

The chart compares error margins of different age estimation models. Persona's AI had the highest error margin, contributing to its failure in accurately verifying user ages. Estimated data based on typical error ranges.

The Developer Exodus and Economic Impact

While the safety implications were dire, Roblox also faced an immediate economic problem: developers were leaving, and players were furious.

Thousands of developers flooded Roblox's official forum with complaints. Above all, they were seeing a steep drop in chat usage. One developer shared metrics showing the share of players using chat in their game falling from an average of 85% to 36%, a relative decline of more than half.

That's not a rounding error. That's game-changing. Chat is a core feature that keeps players engaged. Remove it, and retention plummets. Developers' revenue depends on player engagement. Roblox essentially killed a revenue stream for their entire creator economy without meaningful consultation.

The developers' frustration went beyond economics. Many pointed out that they had spent years building communities around chat-based gameplay. Games like role-playing experiences, social simulations, and collaborative building games depend on chat. Age verification didn't just break the feature—it broke entire categories of games.

The Pressure From the Creator Community

Roblox's creator community is powerful. Hundreds of thousands of developers build games on the platform. When they all start posting that a feature is broken, the company has a credibility problem.

Most of the developer feedback boiled down to: "Revert this. It's broken. It's hurting us and it's not actually safer."

Roblox didn't revert. Instead, it doubled down, promising improvements while providing no technical details or timeline. That kind of non-answer tends to make developers more, not less, angry.

What Happens Now: Roblox's Promised Fixes (And Why They're Vague)

Roblox said it would randomly recheck ages if fraud was suspected. The company also acknowledged the parent-verification problem and promised to "develop and improve detection."

But here's the thing: the company provided zero specifics. No technical details. No timeline. No metrics on what "fraud detection" would look like.

How would random rechecking work? Would users be notified? Would there be a grace period? Would a failed recheck result in account suspension? Roblox didn't say.

How would the system detect parents verifying for kids? Would it use behavioral analytics? Would it flag accounts where someone logs in from a different location? Would it require periodic reverification? Again, no details.

That's frustrating because it suggests the company either doesn't have answers yet, or doesn't want to commit to fixes that might require dismantling the system entirely.
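To show what an answer to those questions could look like, here is a hypothetical recheck trigger. Every field, threshold, and rule below is an assumption for illustration, not anything Roblox has described. It combines the ideas raised above (periodic reverification, logins from a different location, random audits) with a hypothetical user-report threshold.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Account:
    # Hypothetical fields for illustration; not a documented Roblox data model.
    user_id: str
    verified_at: datetime
    verification_country: str
    recent_login_countries: set[str]
    reports_against: int = 0


def needs_recheck(
    acct: Account,
    now: datetime,
    rng: random.Random,
    sample_rate: float = 0.01,                # hypothetical random-audit rate
    max_age: timedelta = timedelta(days=365),  # hypothetical reverification interval
    report_threshold: int = 3,                 # hypothetical report count
) -> list[str]:
    """Return the reasons (if any) to ask this account to re-verify its age."""
    reasons = []
    if now - acct.verified_at > max_age:
        reasons.append("periodic reverification due")
    if acct.recent_login_countries - {acct.verification_country}:
        reasons.append("logins from a country other than where verification happened")
    if acct.reports_against >= report_threshold:
        reasons.append("multiple user reports")
    if rng.random() < sample_rate:
        reasons.append("random audit sample")
    return reasons


rng = random.Random(42)
acct = Account("u1", datetime(2025, 1, 1), "NL", {"NL", "US"})
print(needs_recheck(acct, datetime(2025, 3, 1), rng, sample_rate=0.0))
# ['logins from a country other than where verification happened']
```

Note what this would not catch: a parent who verifies on the child's account from the same home produces no location signal, which is exactly why the parent-verification problem is hard.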

The Nuclear Option: Complete Reversal

There's a scenario where Roblox ultimately abandons age verification entirely. Not because the technology will improve, but because the PR cost of maintaining a broken system exceeds the benefit of having something in place.

If the system fails publicly enough—if there's a high-profile case of a predator who used a fake verified account, or if regulators demand accuracy guarantees Roblox can't meet—the company might decide to shut it down.

That would be the honest move. It would admit that facial age estimation isn't reliable enough for safety-critical applications. But it would also leave Roblox without any age verification tool, which brings regulators back to asking uncomfortable questions about how the company plans to protect children.

Basic trickery, such as using markers or photos, is estimated to be the most common method of bypassing Roblox's age verification, followed by deepfakes and account reselling. Estimated data based on narrative.

Comparing Roblox's Approach to Other Platforms

Roblox isn't the only platform struggling with age verification. But most others have learned from similar failures.

Instagram asks users who try to change their age to 18 or older to prove it with an ID or a video selfie analyzed for age, but relies on self-reported age for younger users. It's not perfect, but it's honest about its limitations. Discord doesn't do facial age verification at all: it relies on account age and behavioral signals. TikTok uses a combination of age self-reporting, ID verification (in some regions), and behavioral analytics.

None of these platforms claim that age verification solves grooming. They treat it as one tool among many, not a silver bullet.

Roblox's mistake was positioning facial recognition as a comprehensive safety solution. Facial age estimation is not reliable enough for that role. Period.

What Actually Works for Child Safety on Gaming Platforms

If platforms genuinely want to reduce grooming and exploitation, the research suggests focusing on:

Behavioral monitoring: Detecting suspicious patterns like adults repeatedly initiating private conversations with different minors, or attempting to move conversations off-platform.

Content moderation: Using AI to detect explicit language, links to external sites, and solicitation patterns. This is harder than facial recognition but actually addresses the problem.

Transparent reporting: Making it easy for users and parents to report suspicious behavior, then actually investigating those reports.

Parental visibility: Showing parents what's happening in their kids' accounts and conversations (with privacy guardrails). Roblox actually reduced parental visibility with age verification.

Education: Teaching kids to recognize grooming patterns and know when to report suspicious behavior.

Facial age verification doesn't appear on that list because, as Roblox discovered, it's not actually that helpful.
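The first two items on that list are things code can actually check. Here is a minimal sketch of both signals. It assumes hypothetical message records that already carry sender/recipient age flags and a conversation-start marker, plus a deliberately small, illustrative pattern list for off-platform invites. Messages are assumed to come from a single time window; a real system would slide the window and route every flag to human review rather than act automatically.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

# Illustrative (hypothetical) patterns for off-platform invitations. Real
# coverage would need to be far broader, and every flag should go to a human.
OFF_PLATFORM = re.compile(
    r"discord\.gg/|discord(?:app)?\.com/invite|\b(?:snap|snapchat|telegram|whatsapp)\b",
    re.IGNORECASE,
)


@dataclass
class PrivateMessage:
    # Hypothetical message record; the age flags are assumed to exist upstream.
    sender_id: str
    recipient_id: str
    sender_is_adult: bool
    recipient_is_minor: bool
    starts_conversation: bool
    text: str


def flag_senders(messages, max_distinct_minors=5):
    """Map sender IDs to reasons for human review, over one window of messages."""
    minors_contacted = defaultdict(set)
    reasons = defaultdict(set)
    for m in messages:
        # Signal 1: an adult opening private chats with many different minors.
        if m.sender_is_adult and m.recipient_is_minor and m.starts_conversation:
            minors_contacted[m.sender_id].add(m.recipient_id)
        # Signal 2: any attempt to move a conversation off-platform.
        if OFF_PLATFORM.search(m.text):
            reasons[m.sender_id].add("off-platform invite")
    for sender, minors in minors_contacted.items():
        if len(minors) > max_distinct_minors:
            reasons[sender].add(f"opened chats with {len(minors)} distinct minors")
    return {sender: sorted(r) for sender, r in reasons.items()}


msgs = [PrivateMessage("adult1", f"kid{i}", True, True, True, "hi") for i in range(6)]
msgs.append(PrivateMessage("adult2", "kid9", True, True, True, "join discord.gg/abc"))
print(flag_senders(msgs))
```

One caveat follows from the rest of this article: `sender_is_adult` and `recipient_is_minor` would come from the same age signals discussed above, so misclassification weakens the first rule. The off-platform check does not use the age flags at all, because it keys on what is said rather than on who the system thinks is saying it.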

The Broader Lesson: When AI Isn't Ready for Deployment

Roblox's failure tells us something important about the state of AI in 2025: the technology is being deployed in high-stakes situations before it's actually ready.

Facial age estimation is an inherently difficult problem. The error rates are significant. The demographic biases are well-documented. The vulnerability to spoofing is obvious. Any competent researcher could have told you this wouldn't work reliably.

But regulatory pressure and the desire to appear proactive pushed Roblox to deploy anyway.

This pattern is repeating across industries: companies are deploying AI systems for hiring, lending, law enforcement, and now child safety, all before adequately understanding the technology's limitations.

The difference is that when a hiring AI makes a mistake, someone doesn't get an interview. When a child safety AI fails, children can be harmed.

The Responsibility Gap

Here's something that should concern everyone: Roblox partnered with Persona to build the age verification system. If the system fails to protect children, who's responsible?

Roblox will likely point to Persona's technology. Persona will probably say they built the system to Roblox's specifications. Regulators will be left asking why anyone deployed a system this unreliable in the first place.

There's a responsibility gap. Nobody's fully accountable for a bad outcome because responsibility is distributed across companies, regulators, and users.

Facial recognition systems show lower accuracy for darker-skinned individuals and women, highlighting significant bias. (Estimated data)

What Parents and Players Should Know

If you or someone you know uses Roblox, here's what you should understand about age verification:

It's not trustworthy for safety. Don't assume that because someone's account is verified as an adult, they actually are one. The system has been defeated with marker-drawn wrinkles.

It doesn't replace actual safety practices. Whether or not age verification works, kids should still not share personal information, should be cautious about who they chat with, and should report suspicious behavior.

Parental oversight is still essential. Age verification removed some parental visibility. Parents should use other methods to monitor their kids' gaming and online interactions.

Consider privacy implications. The age verification system requires submitting a facial scan or government ID. That's a privacy trade-off worth thinking about, especially since the data handling wasn't clearly explained.

Report suspicious behavior. If you see someone trying to recruit kids to external platforms or engage in grooming behavior, report it directly to Roblox. Platform reports are more useful than complaining on social media.

The Regulatory Perspective: What Happens Next

Roblox is currently under scrutiny from multiple state attorneys general. The age verification rollout probably didn't help their case—it showed they were taking action, but the action failed publicly.

Regulators face a challenge: they want platforms to be safer, but they can't mandate solutions that don't work. If they accept age verification as a compliance measure while knowing it's broken, they're basically settling for security theater.

There's a possibility that regulators will actually demand better solutions, not just facial recognition. They might require behavioral monitoring, content moderation improvements, or actual identity verification (which is much harder).

Roblox might also face shareholder pressure. The company went public in 2021, and disappointing platform safety metrics affect valuation. Investors might push for more robust solutions, which would require time and money.

The Timeline for Real Solutions

If Roblox actually tries to build a safer platform, what would that timeline look like?

Short-term (3-6 months): Rollback or significant modification of age verification. Implementation of better behavioral monitoring for chat. Improved reporting systems. Enhanced content moderation.

Medium-term (6-12 months): Potential pivot to a hybrid approach combining ID verification for older teens, behavioral monitoring, and improved parental controls.

Long-term (12+ months): Building out full behavioral safety infrastructure, which requires training new ML models, hiring safety experts, and implementing new data infrastructure.

The problem is, regulators probably won't wait 12 months. They might demand faster results, which could force Roblox into deploying half-baked solutions again.

Learning From Roblox: How Other Companies Should Approach AI Safety

Roblox's failure provides a blueprint for what not to do:

Don't deploy AI for safety-critical functions before validation. If you're going to use AI to determine whether someone is a child, the system should have undergone rigorous accuracy testing on diverse populations. Full stop.

Don't treat announcement as accomplishment. Announcing an AI safety feature and actually implementing one are different things. The implementation matters infinitely more.

Don't ignore obvious failure modes. Deepfakes, spoofing, and parents verifying for kids were all predictable failure modes. A two-hour brainstorming session would have surfaced them.

Don't delete data you might need for safety. The decision to delete facial biometrics immediately might have been motivated by privacy concerns, but it eliminated the ability to retroactively verify or audit the system. That trade-off should have been more carefully considered.

Do measure performance across demographics. Any system that affects safety or access should be evaluated across different racial, gender, and ethnic groups. If it doesn't perform equally, it's not ready.

Do run actual user research. Roblox tested in three countries and found that 50% of users didn't complete verification. That should have been a sign to investigate why before going global.

The Fundamental Technical Challenge

Let's be clear about what Roblox was trying to solve and why it's so hard:

Facial age estimation is trying to predict a continuous variable (age) from a single image of a face. The mathematical challenge is this: age is affected by genetics, lifestyle, sun exposure, stress, makeup, facial hair, and dozens of other factors that vary significantly between individuals.

A 16-year-old can look 25. A 35-year-old can look 28. Those differences aren't bugs in the system—they're features of human biology.

In mathematical terms, you're trying to fit a model where the relationship between features (facial characteristics) and the target variable (age) has high variance and significant outliers. The error bars are wide.
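One standard way to make "the error bars are wide" precise is the textbook bias-variance decomposition. Assume, as a modeling simplification, that age relates to facial features as $y = f(x) + \varepsilon$, where $\varepsilon$ has mean zero and variance $\sigma^2$ and is independent of the training data. Then for a learned estimator $\hat f$, the expected squared error at an input $x$ splits into three terms:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\operatorname{Var}\big[\hat f(x)\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Better models and more data can shrink the first two terms, but $\sigma^2$ is the part of age that a face simply doesn't reveal (the 16-year-old who looks 25). No amount of engineering removes it.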

When you deploy that model across millions of users with different lighting conditions, camera qualities, ethnicities, and actual ages, the error compounds. A model with an average error of ±5 years might easily have errors of ±10 years for specific demographic groups or conditions.

That's not a Roblox problem. That's a fundamental technical problem with facial age estimation.
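The point about averages hiding subgroup errors is simple arithmetic. Overall mean absolute error is the population-weighted average of each group's error, where $w_g$ is group $g$'s share of users. With hypothetical numbers, if 90% of users see an average error of 4.4 years and 10% see 10.4 years:

$$
\text{MAE}_{\text{overall}} = \sum_g w_g \,\text{MAE}_g = 0.9 \times 4.4 + 0.1 \times 10.4 = 3.96 + 1.04 = 5.0
$$

So a headline figure of 5 years is fully compatible with one group averaging more than twice that.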

What This Means for AI Regulation Going Forward

Roblox's failure is making a case for stronger AI regulation before deployment, not after. The EU's AI Act, which categorizes different types of AI systems by risk level, would classify facial age verification as a high-risk system if it's being used to restrict access or protect children.

High-risk systems require:

- Pre-deployment risk assessment

- Documentation of accuracy and demographic performance

- Human oversight mechanisms

- Monitoring after deployment

- The ability to override AI decisions

Roblox didn't do most of those things. They probably wouldn't have been required to under existing US regulations (which are minimal). But if those standards were in place, age verification might not have been deployed.

That's worth considering as AI systems increasingly make decisions that affect real people's lives and safety.

Moving Forward: What Roblox Needs to Do (And Probably Won't)

If Roblox were operating in a reasonable world, here's what they should do:

1. Admit the system didn't work. Stop saying "We're improving it" and be honest: facial age estimation isn't reliable enough for this application at this scale.

2. Pause and pivot. Move back to age self-reporting or require ID verification for all users (or none), rather than trying to make facial recognition work.

3. Invest in behavioral safety. Focus resources on actually detecting grooming patterns, not on estimating age from faces.

4. Improve parental tools. Give parents better visibility into their kids' accounts and interactions, not less.

5. Communicate clearly. Tell users why the system failed, what you're changing, and when.

Will Roblox do these things? Probably not immediately. The company has already committed publicly to improving the system. Backing down would be an admission of failure, which companies resist. But pressure from regulators, developers, and users might force it eventually.

Conclusion: AI Isn't Magic, and Neither Is Safety

Here's the thing that keeps coming back: Roblox wanted AI to solve a social problem. They wanted facial recognition to replace what actually prevents grooming on gaming platforms: human moderation, behavioral monitoring, and parental oversight.

AI is good at pattern recognition in images, text, and data. It's not good at substituting for human judgment about safety, especially not when it's deployed at scale without proper testing.

Roblox learned this the hard way. Their users, developers, and the kids using the platform paid the price for that learning experience.

As more platforms and companies rush to deploy AI for safety-critical functions, Roblox's failure is an important cautionary tale. It shows us what happens when you let regulatory pressure, PR needs, and technological enthusiasm override careful testing and honest assessment of what a system can actually do.

The next company building an AI safety system should study what Roblox got wrong. They should demand proper accuracy testing, demographic performance evaluation, and real-world user research before deployment. They should build in human oversight. They should have a plan for when the AI fails.

And they should be honest that AI can't replace human responsibility for keeping kids safe. It can be one tool, but not the only one.

Roblox thought they could use AI as a band-aid for a cultural problem. The result was a system that made things worse, not better. That's a lesson worth learning before the next company makes the same mistake.

FAQ

What is facial age verification and how does it work?

Facial age verification is a technology that analyzes a photograph or video of someone's face to estimate their age. The system uses machine learning models trained on large datasets of faces with known ages, then applies this model to new faces to predict age. Roblox implemented this through a company called Persona, requiring users to record a short video of their face, which is then analyzed to estimate whether they're under 9, 9-12, 13-15, 16-20, or 21+ years old. The company claims biometric data is deleted immediately after analysis.

Why did Roblox implement age verification in the first place?

Roblox introduced age verification in response to intense legal and regulatory pressure over child safety concerns. The company faced multiple lawsuits alleging it failed to protect young users from predators, and several state attorneys general filed suits. By implementing AI-powered age verification, Roblox aimed to show regulators and the public that it was taking meaningful action to restrict communication between adults and children. However, the company didn't adequately test the technology before deploying it globally, leading to widespread failures.

What are the main problems with Roblox's age verification system?

The system has multiple critical flaws: first, facial age estimation is inherently inaccurate with error margins of plus or minus 4-7 years, causing children to be classified as adults and vice versa. Second, the system is trivially easy to fool using marker-drawn wrinkles, photos of celebrities, or deepfakes. Third, parents verifying on behalf of their children accidentally placed kids in adult age brackets. Fourth, verified accounts are being sold online for as little as $4. Fifth, the system doesn't actually prevent grooming, which requires behavioral monitoring rather than age-gating. Finally, the rollout reduced parental visibility into their children's accounts.

Can verified accounts on Roblox actually be trusted?

No. Within days of rollout, age-verified accounts for minors as young as 9 years old were being sold on eBay for $4. Users demonstrated they could trick the system using marker drawings on their faces, photos of celebrities, or deepfake videos. The underlying facial age estimation technology is too unreliable to serve as a trustworthy verification mechanism. Age verification on Roblox should not be treated as proof that someone is actually who they claim to be.

Does age verification actually stop predators from grooming children on Roblox?

No, and experts say it actually creates false confidence that the platform is safer than it is. Age verification only prevents direct chat between age brackets—it doesn't address how predators actually operate. Predators use initial public chat to identify vulnerable kids, build trust through a game, then move conversations to private Discord servers or other platforms where age-gating doesn't apply. The system reduces parental visibility without meaningfully preventing grooming. Child safety experts emphasize that behavioral monitoring and content moderation are far more effective than age-gating.
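The gap can be illustrated with a minimal Python sketch of a bracket-based chat gate. The "same or adjacent bracket" rule, the `User` type, and `can_direct_chat` are hypothetical assumptions for illustration. They are not Roblox's documented policy or API. The point is structural. The gate only reads the bracket the age check assigned, so a misestimated or purchased account passes it. Nothing in the gate looks at what users actually say to each other.

```python
# Hypothetical sketch of an age-bracket chat gate. The "same or adjacent
# bracket" rule is an assumption for illustration, not Roblox's actual policy.
from dataclasses import dataclass

BRACKET_ORDER = ["under 9", "9-12", "13-15", "16-20", "21+"]


@dataclass
class User:
    name: str
    true_age: int           # the user's real age (unknown to the platform)
    estimated_bracket: str  # the bracket assigned by age estimation


def can_direct_chat(a: User, b: User) -> bool:
    """Allow direct chat only between the same or adjacent estimated brackets.

    The check reads only estimated_bracket. It never sees true_age,
    message content, or behavior over time.
    """
    ia = BRACKET_ORDER.index(a.estimated_bracket)
    ib = BRACKET_ORDER.index(b.estimated_bracket)
    return abs(ia - ib) <= 1


child = User("child", true_age=12, estimated_bracket="9-12")
adult_verified = User("adult_a", true_age=34, estimated_bracket="21+")
adult_misread = User("adult_b", true_age=34, estimated_bracket="13-15")

print(can_direct_chat(child, adult_verified))  # False: brackets too far apart
print(can_direct_chat(child, adult_misread))   # True: the misestimate passes
```

Because the check never inspects messages or conversation patterns, it cannot distinguish a grooming attempt from an ordinary exchange. That work falls to the behavioral monitoring and content moderation that experts recommend.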

How many users completed age verification when Roblox tested it in other countries first?

When Roblox tested age verification in Australia, New Zealand, and the Netherlands in November 2024, only 50% of users completed the verification process. That low completion rate suggested the system had problems, yet Roblox proceeded with a global rollout anyway. This negative user research was a significant red flag, and it went unheeded.

What demographic biases exist in facial age estimation?

Research consistently shows that facial recognition and age estimation systems perform worse on darker-skinned individuals, women, and people with non-Western facial features. This means Roblox's system likely misidentified children from certain demographic groups at higher rates than others, potentially leaving some populations less protected than others. The company has not published demographic performance data, which is concerning for a system meant to protect children.

What should Roblox have done instead of implementing age verification?

Experts suggest focusing on behavioral monitoring to detect grooming patterns, improved content moderation to identify suspicious language and solicitation, transparent and responsive reporting mechanisms, enhanced parental controls and visibility, and education for users about recognizing grooming. These approaches actually address the core problem—predatory behavior—rather than relying on age-gating, which is easily circumvented. Combined with ID verification for specific high-risk features (rather than facial recognition), these would be more effective for child safety.

Is Roblox planning to fix or remove the age verification system?

Roblox has acknowledged problems and said it's working on improvements and developing fraud detection for suspected age misrepresentation. However, the company has provided no specific technical details, timeline, or concrete metrics. Many developers and players are calling for the system to be completely rolled back, and it remains unclear whether Roblox will eventually admit the technology is unsuitable for this purpose or attempt to salvage it through additional modifications.

What lessons does Roblox's failure teach about deploying AI for safety-critical functions?

Roblox's failure demonstrates that companies should never deploy AI systems for safety-critical applications without rigorous pre-deployment testing, validation across demographic groups, documentation of accuracy and failure modes, and honest assessment of what the technology can actually achieve. The company let regulatory and PR pressure override technical due diligence. Organizations should demand proper accuracy benchmarks, user research, and rollback plans before deploying any AI system intended to protect children or restrict access based on sensitive characteristics.

Key Takeaways

- Facial age estimation has inherent error margins of ±4-7 years and performs worse for some demographic groups than others, making it unsuitable for safety-critical applications

- Roblox's system failed within days: children were classified as adults, adults as teens, and a child with marker-drawn wrinkles was classified as 21+ years old

- Age-verified accounts were being sold on eBay for as little as $4, proving the system provides no meaningful verification

- Age verification doesn't prevent grooming because predators build trust in public chat, then move to external platforms where age-gating doesn't apply

- Developer engagement dropped dramatically (85% to 36% chat usage) because age verification broke core gameplay and social mechanics

- The company deployed globally despite only a 50% completion rate in testing, ignoring clear warning signs of system failure

- Regulatory pressure and PR needs drove deployment of untested technology, prioritizing the appearance of safety over actual effectiveness

- Effective child safety requires behavioral monitoring and content moderation, not age-gating

