Samsung RAM Price Hikes: What's Behind the AI Memory Crisis [2025]

TL;DR

- RAM costs are spiking due to AI data centers consuming massive amounts of high-bandwidth memory, as noted by Bloomberg.

- Price hikes are coming soon to smartphones, laptops, and other consumer devices according to Samsung executives.

- The shortage affects everything, from cars to gaming consoles, as manufacturers compete for limited memory supplies, highlighted by NPR.

- AI workloads require extreme bandwidth, forcing memory makers to reprioritize away from consumer products, as discussed in IDC's analysis.

- No quick fix exists without major investments in new manufacturing capacity or a slowdown in AI infrastructure spending, according to TrendForce.

It's 2025, and the AI revolution is hitting your wallet harder than anyone expected. Not through expensive AI subscriptions or premium AI features in your phone, but through something far more fundamental: the chips that power everything. Samsung just signaled something that's been brewing in the shadows of the data center boom. RAM costs are about to explode, and that means the prices you pay for devices are going up too.

Wonjin Lee, Samsung's global marketing leader, didn't mince words at CES 2026. "There's going to be issues around semiconductor supplies, and it's going to affect everyone," he said during an interview at the annual tech conference. "Prices are going up even as we speak. Obviously, we don't want to convey that burden to the consumers, but we're going to be at a point where we have to actually consider repricing our products."

This isn't Samsung crying wolf. This is a company that manufactures roughly 30% of the world's DRAM explaining why your next phone, laptop, or tablet might cost more than you expect. And the culprit isn't some natural disaster or geopolitical crisis. It's artificial intelligence, and specifically, the insatiable appetite AI data centers have for high-bandwidth memory.

So what's actually happening here? Why is AI eating all the RAM? And when should you expect to feel the pinch in your pocket? Let's break down what's becoming one of the most consequential supply chain stories of the AI era.

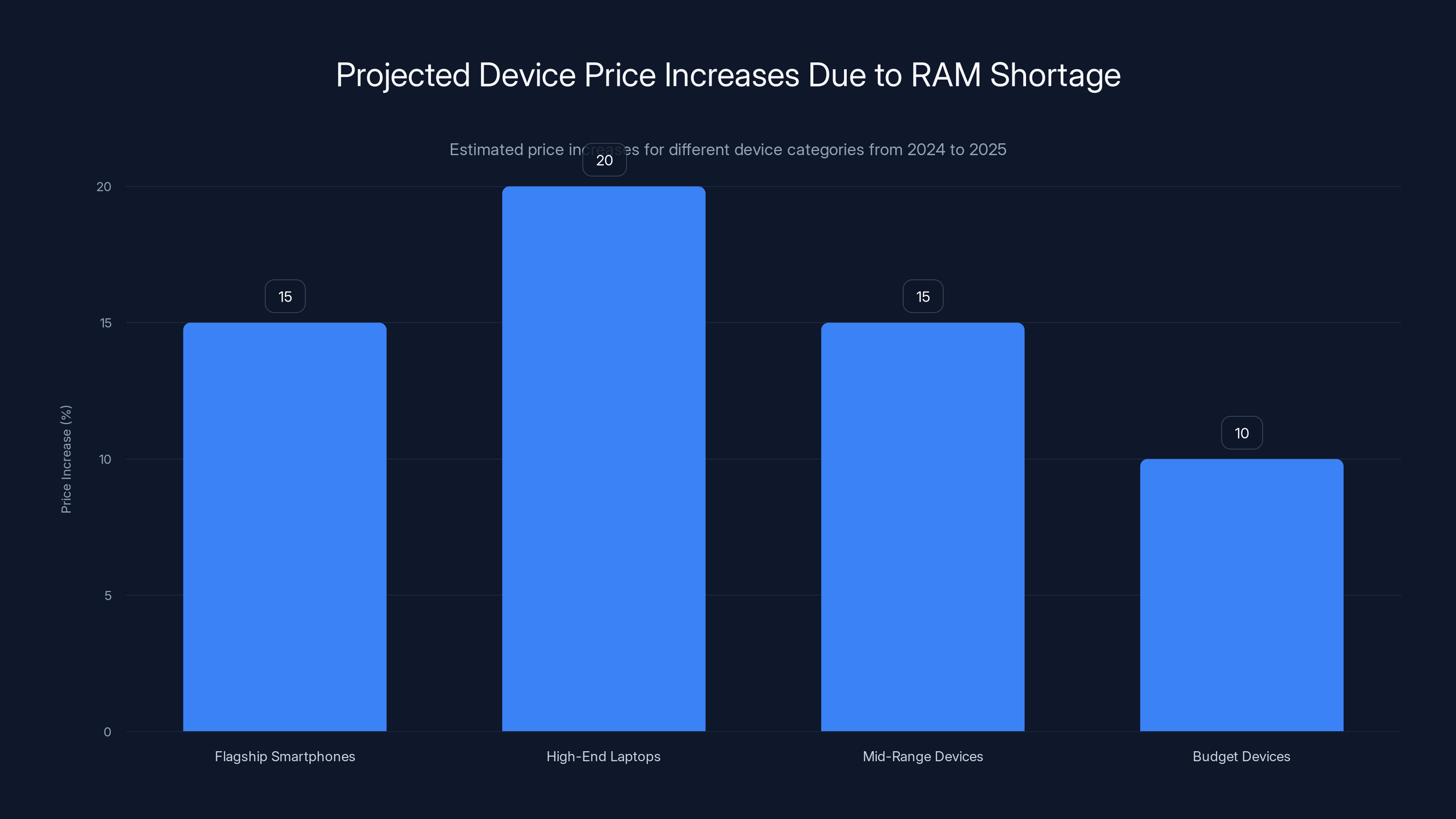

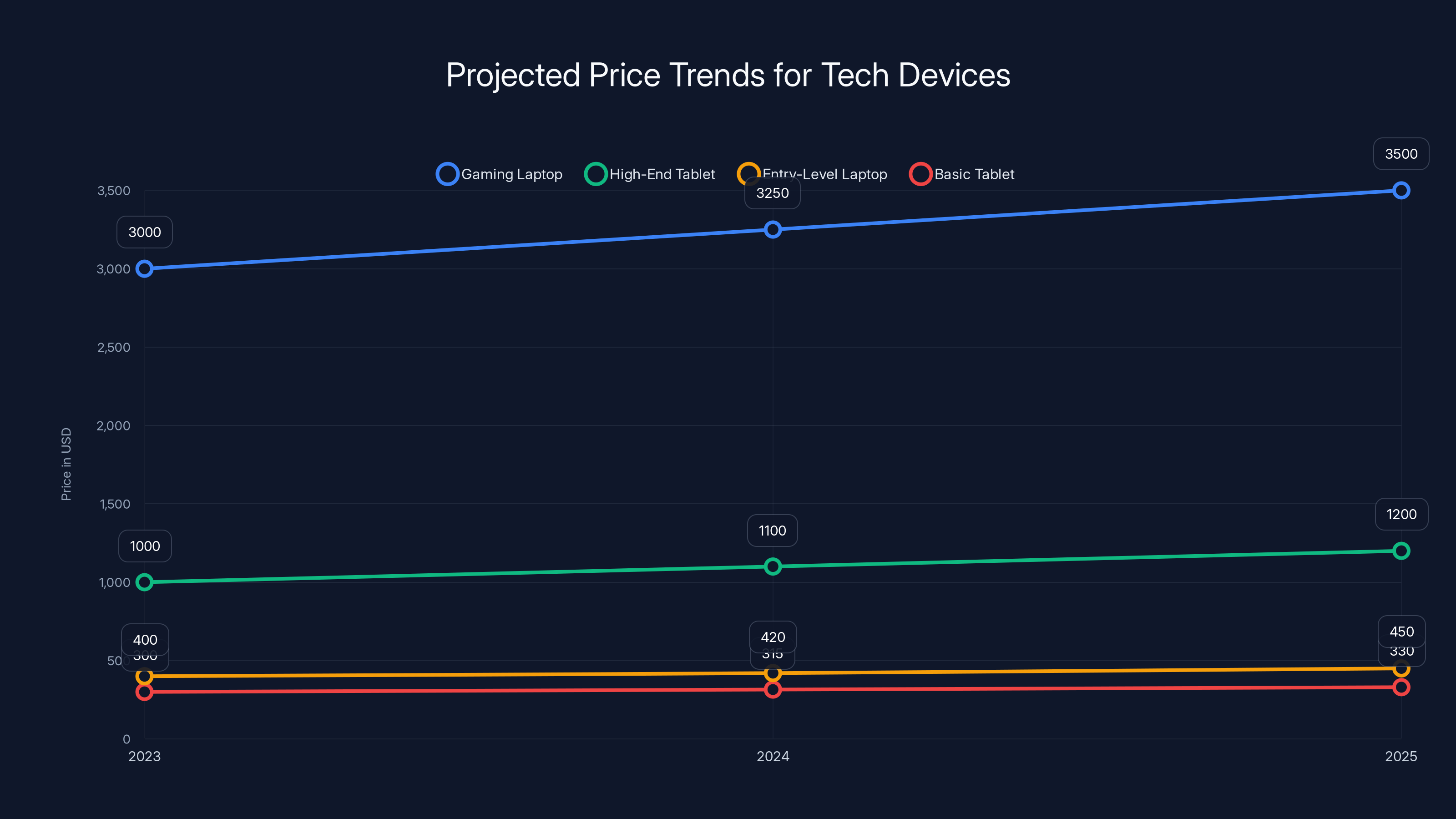

Flagship smartphones and high-end laptops are projected to see the largest price increases due to RAM shortages, with increases of 15-20%. Estimated data based on current trends.

The RAM Shortage Nobody Saw Coming (But Kind Of Did)

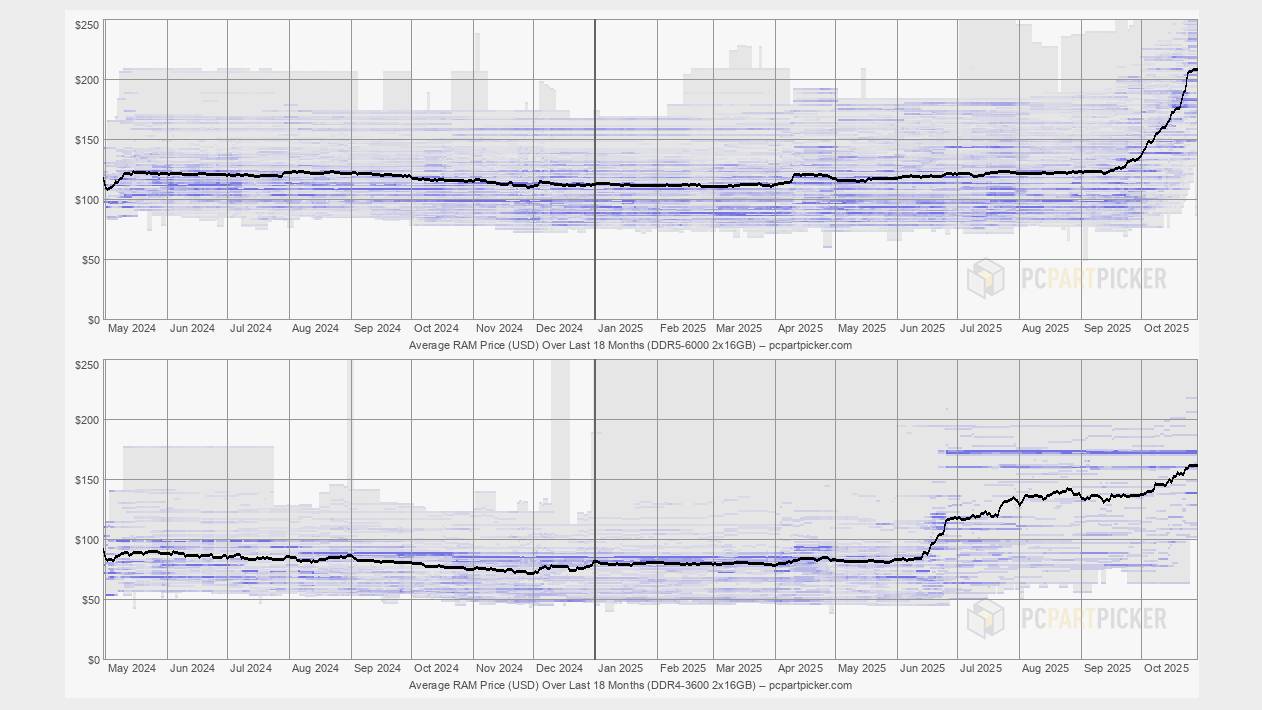

Memory shortages aren't new. The semiconductor industry cycles through tight supply periods like clockwork. But this one's different. In previous cycles, the squeeze came from demand spikes in specific sectors—maybe smartphones got hot one year, then servers the next. These were predictable, manageable shifts that manufacturers adjusted to within 6 to 18 months.

The current situation is different because AI data centers aren't just competing for a slice of RAM production. They're fundamentally changing what "high-demand" means.

HBM, or high-bandwidth memory, is the lifeblood of AI inference and training systems. These chips can transfer data at rates several times faster than conventional DRAM. A single high-end AI processor might need 40GB to 80GB of HBM just to function properly. When you're building a data center with thousands of GPUs, the memory requirements become staggering.

Nvidia's data center GPUs, for example, can come equipped with 192GB of HBM per card. A single cluster with 100 GPUs needs 19.2TB of high-bandwidth memory. When you consider that hyperscalers like OpenAI, Google, Microsoft, and Meta are building dozens of these clusters, sometimes hundreds, the math becomes terrifying. We're talking about petabytes of demand hitting a market that was designed to produce gigabytes.
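The cluster arithmetic above can be sketched in a few lines. This is a rough illustration only: the function names are hypothetical, decimal units (1TB = 1000GB) are assumed because that is what the 19.2TB figure implies, and the petabyte target is just a round number for scale.

```python
import math

GB_PER_TB = 1000  # assumption: decimal units, matching the 19.2TB figure


def cluster_hbm_tb(gpus: int, hbm_gb_per_gpu: float) -> float:
    """Total HBM across a cluster, in terabytes."""
    return gpus * hbm_gb_per_gpu / GB_PER_TB


def gpus_for_hbm(target_gb: float, hbm_gb_per_gpu: float) -> int:
    """Smallest number of GPUs whose combined HBM reaches target_gb."""
    return math.ceil(target_gb / hbm_gb_per_gpu)


if __name__ == "__main__":
    # 100 GPUs at 192GB each, as in the text
    print(cluster_hbm_tb(100, 192))
    # GPUs needed before total HBM reaches one petabyte (1,000,000GB)
    print(gpus_for_hbm(1_000_000, 192))
```

At 192GB per card, a little over 5,000 GPUs is enough to reach a petabyte of HBM, which is why dozens or hundreds of clusters add up so quickly.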

Memory manufacturers have made the logical business decision: follow the money. HBM commands premium prices and delivers higher margins than the low-bandwidth DRAM that goes into phones, laptops, and cars. When Samsung, SK Hynix, and Micron have limited fab capacity, they allocate resources to the highest-value applications first.

That's just basic supply and demand. But it creates a cascading problem downstream. Consumer device manufacturers suddenly find themselves competing for the remaining allocation of standard DRAM. And that scarcity drives prices up. When prices go up, they get passed on to you.

How AI Created This Perfect Storm

To understand how we got here, you need to understand what changed. For decades, RAM demand was relatively predictable. A new generation of smartphones hit the market, they used more RAM, manufacturers ramped production, the market cleared in 12-18 months. Gaming consoles launched. Computers got upgraded. Servers got refreshed. Each had appetite, each had patterns.

AI workloads shattered that pattern.

Sanchit Vir Gogia, CEO of Greyhound Research, explained the core issue to NPR in late December: "AI workloads are built around memory. AI has changed the nature of demand itself. Training and inference systems require large, persistent memory footprints, extreme bandwidth, and tight proximity to compute. You cannot dial this down without breaking performance."

That last sentence is crucial. You can't compromise on memory in AI systems without breaking the entire system. It's not like smartphone RAM where adding an extra 2GB helps but isn't essential. With AI, the memory bandwidth requirements are fundamental to the architecture.

When OpenAI trains GPT-5, or when Google trains Gemini Ultra, or when Meta builds custom AI accelerators, they're not just throwing more compute at the problem. They're reshaping the entire data flow of their systems around memory. That means every dollar spent on GPUs is only as valuable as the memory supporting those GPUs.

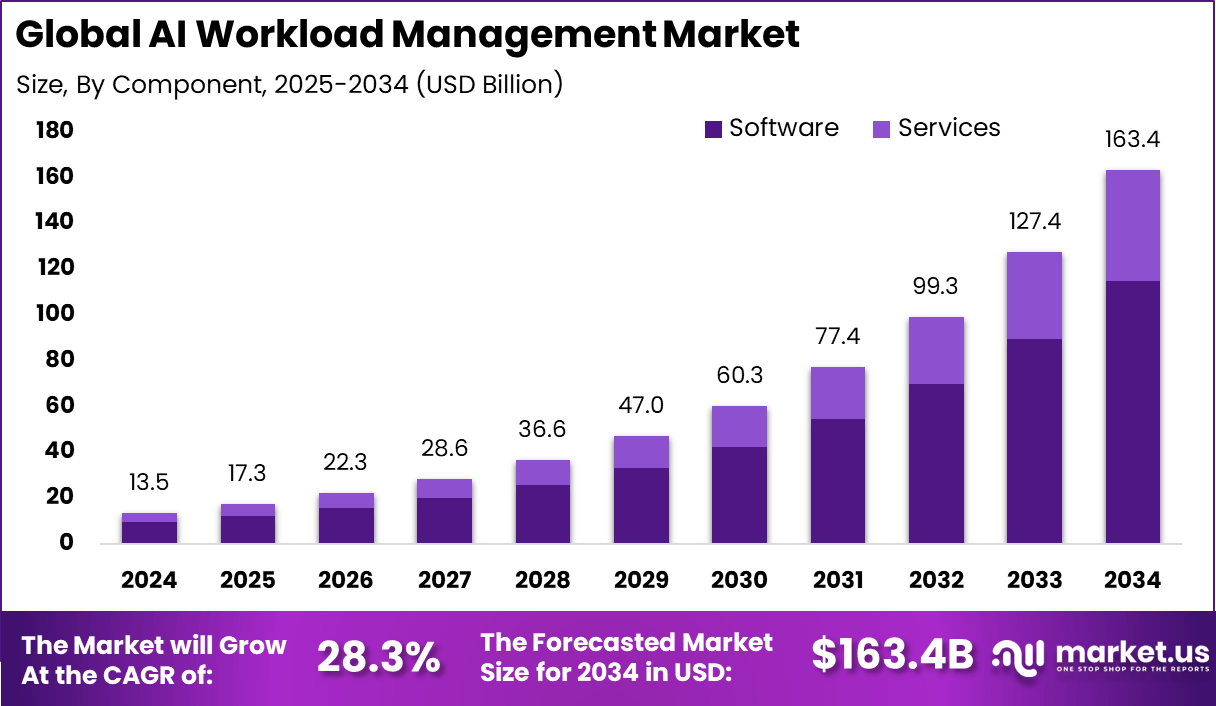

This creates a virtuous cycle from the perspective of memory manufacturers, but a vicious one for everyone else. More AI investment means more memory investment. More memory production capacity means higher manufacturing costs to build the fabs. Higher manufacturing costs get amortized across all memory production, raising baseline prices.

And here's the kicker: those investments in manufacturing take 3-5 years to produce actual new capacity. Samsung announced new fab investments in 2024 and early 2025. You won't see the production capacity hit the market until 2027 or 2028.

That's a long time to be in a supply-constrained market.
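To make the lead-time point concrete, here is a minimal sketch that turns an announcement year into a window for when capacity could arrive. The function name is hypothetical, and the 3-5 year range is simply the figure quoted above, not a measured value.

```python
def capacity_window(announce_year: int, min_lead: int = 3, max_lead: int = 5) -> tuple[int, int]:
    """Earliest and latest year new fab capacity could arrive,
    assuming a build-out of min_lead to max_lead years."""
    return announce_year + min_lead, announce_year + max_lead


if __name__ == "__main__":
    for year in (2024, 2025):
        earliest, latest = capacity_window(year)
        print(f"announced {year}: capacity between {earliest} and {latest}")
```

Under these assumptions, the 2027 and 2028 dates correspond to the short end of the range; a five-year build would push the same announcements out further.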

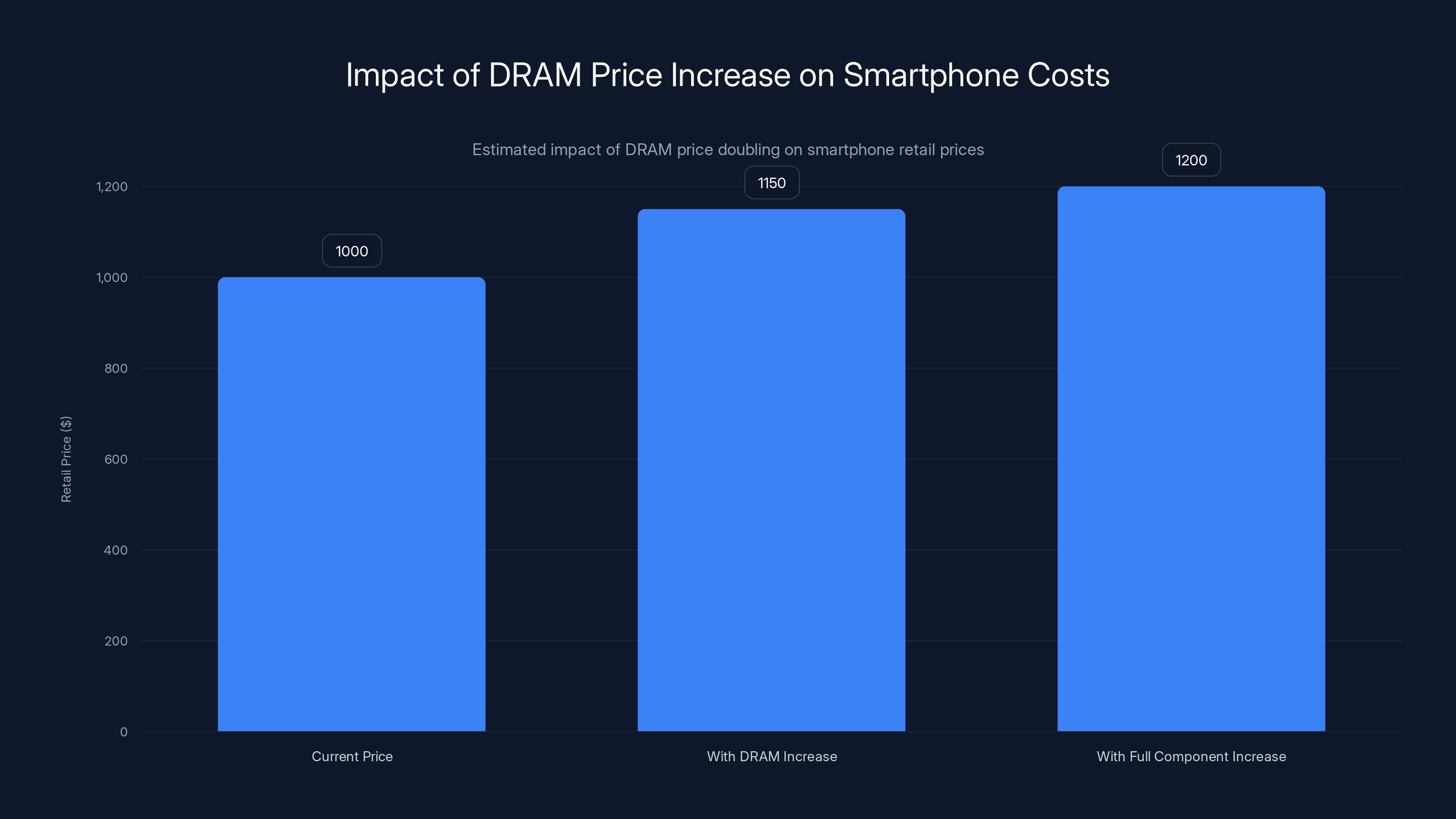

Estimated data shows that a doubling of DRAM prices could increase smartphone retail prices by 10-15%, while a full component cost increase could lead to a 20% price hike.

The Ripple Effect: When RAM Shortage Becomes Everything Shortage

Here's where it gets really interesting. You'd think a RAM shortage would mainly affect data centers and servers. But Samsung's warning wasn't focused on enterprise customers. It was focused on everyone else—the companies making products for consumers.

Because the same manufacturers producing HBM for AI accelerators are producing the standard DRAM that goes into your phone. It's not like HBM manufacturing happens in separate facilities with separate supply chains. It's the same fabs, the same engineers, the same raw materials, competing for production slots.

When a fab is running at capacity and can produce either 100,000 wafers of HBM or 300,000 wafers of consumer DRAM, the economics are clear. HBM generates higher revenue, higher margins, and higher strategic value. So the fab produces HBM.
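The allocation logic can be sketched as a break-even comparison. The wafer counts come from the example above; the per-wafer revenue figures below are placeholders invented for illustration, not real pricing, and the function names are hypothetical.

```python
def breakeven_revenue_ratio(hbm_wafers: int, dram_wafers: int) -> float:
    """How many times more revenue per wafer HBM must earn
    before it matches the DRAM option on total revenue."""
    return dram_wafers / hbm_wafers


def better_option(hbm_wafers: int, dram_wafers: int,
                  hbm_rev_per_wafer: float, dram_rev_per_wafer: float) -> str:
    """Return which product yields more total revenue for the same fab capacity."""
    hbm_total = hbm_wafers * hbm_rev_per_wafer
    dram_total = dram_wafers * dram_rev_per_wafer
    return "HBM" if hbm_total > dram_total else "DRAM"


if __name__ == "__main__":
    # Wafer counts from the text: 100,000 HBM vs 300,000 consumer DRAM
    print(breakeven_revenue_ratio(100_000, 300_000))
    # Placeholder revenue units per wafer (assumed, not sourced)
    print(better_option(100_000, 300_000, hbm_rev_per_wafer=4.0, dram_rev_per_wafer=1.0))
```

With those wafer counts, HBM only has to earn more than three times the revenue per wafer to win on revenue alone, before margins or strategic value enter the picture.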

That means phone manufacturers have to buy DRAM from whoever's selling, at whatever price they're asking. And because everyone's in the same boat, everyone's paying premium prices.

But it doesn't stop there. The shortage cascades through entire product categories. Automotive RAM, which is typically low-bandwidth and cheap, gets affected because the foundries that make it are also capacity-constrained. A car manufacturer that budgeted for

Then there's gaming consoles. The PS5 and Xbox Series X both use GDDR6 memory, which isn't as premium as HBM but is still relatively specialized. Supplies are tight. If there's a new console refresh cycle in 2025 or 2026, you better believe memory costs are factoring into the launch price.

Think about IoT devices, smart TVs, routers, security cameras: anything with onboard memory is affected. The shortage isn't theoretical. It's hitting real product categories right now.

Samsung's Signal and What It Means

Samsung's warning was notable not just for what it said, but for when and how it said it. In early December 2024, Samsung told Reuters it was "monitoring the market" but wouldn't comment on pricing. That's corporate non-speak. By late January 2025, at CES, Wonjin Lee was basically announcing price increases.

That change in messaging is deliberate. Companies don't accidentally signal price hikes without planning for them. Samsung was softening the ground, preparing the market, managing expectations.

Why? Because Samsung sells consumer devices. Smartphones, tablets, laptops, displays, storage drives—all of it requires memory. If Samsung announced price hikes without warning, competitors could underprice them for a quarter or two, stealing market share. But if Samsung gets out ahead, explaining why prices are rising across the industry, it normalizes the increase. Competitors face the same supply constraints, so they have no choice but to follow.

It's smart business, but it's also a signal that Samsung expects these price increases to stick around for a while. Companies don't soft-launch price hikes if they expect demand to normalize in a few months. Samsung's preparing for a 2-3 year supply crunch at minimum.

And Samsung isn't alone. SK Hynix, the second-largest DRAM manufacturer, is facing the same pressures. Micron, the third player, is dealing with similar constraints. The entire memory industry is moving in the same direction.

When the entire industry moves together, it's not collusion. It's just economics. Customers pay more, margins improve, and investors get excited about memory stocks. The only people who lose are everyone buying devices.

The Math Behind the Memory Crisis

Let's quantify what's happening here. The average smartphone currently uses between 8GB and 12GB of RAM. With current DRAM pricing around

If DRAM prices double due to shortage—which some analysts are predicting—that component alone adds

For a phone that currently retails at $900-1000, that's a 10-15% price hike, justified almost entirely by component costs.

Now consider that not just memory is affected. If memory supplies are tight, other semiconductor components get squeezed too. Processors, power management ICs, sensors—everything gets more expensive in a supply-constrained environment. The total impact could easily be 15-20% for new devices launching in 2025-2026.

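Consider the formula for device pricing in a supply-constrained market:

New Price = Current Price + (Component Cost Increase × Margin Factor)

Where the margin factor accounts for maintaining historical profit margins as component costs rise. For a gross margin m, holding the margin constant implies a margin factor of 1 / (1 - m).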

For a $1000 device with a 40% gross margin:

- Base profit: $400

- If component costs rise by $120, holding the 40% margin requires a $200 price increase

- New selling price: $1200

- New margin: 40% (on higher absolute numbers)

That's not speculation. That's how the economics of consumer electronics work when input costs rise.
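The margin arithmetic in the example can be checked with a short sketch. It assumes the manufacturer holds gross margin constant, so price equals unit cost divided by (1 - margin). The function names are hypothetical, and the $120 cost increase is an assumed input chosen because it is the value that lands on the $1200 selling price at a 40% margin.

```python
def price_for_margin(unit_cost: float, gross_margin: float) -> float:
    """Selling price that yields the given gross margin on unit_cost."""
    if not 0 <= gross_margin < 1:
        raise ValueError("gross_margin must be in [0, 1)")
    return unit_cost / (1.0 - gross_margin)


def repriced(current_price: float, gross_margin: float, cost_increase: float) -> float:
    """New selling price after a cost increase, keeping gross margin unchanged."""
    current_cost = current_price * (1.0 - gross_margin)
    return price_for_margin(current_cost + cost_increase, gross_margin)


if __name__ == "__main__":
    # $1000 device at 40% gross margin implies $600 of cost
    new_price = repriced(1000.0, 0.40, 120.0)  # $120 is an assumed input
    print(f"new price: ${new_price:.2f}")
    print(f"new profit: ${new_price * 0.40:.2f}")
```

Note the multiplier effect: at a 40% margin, every extra dollar of component cost becomes roughly $1.67 at retail if the margin is held.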

Estimated data shows that prices for premium tech devices are expected to rise significantly by 2025, with gaming laptops potentially increasing by $500. Budget devices may see smaller increases, with potential savings during sales events.

Which Devices Will Get Hit First (and Hardest)

Not all devices will see equal price increases. Some categories are more memory-sensitive than others. Here's the hierarchy of pain:

Flagship Smartphones and Laptops: These already use premium memory with high bandwidth and large capacities. A Samsung Galaxy S25 with 12GB or 16GB of RAM, plus generous storage, is memory-heavy. Price increases hit quickly and hard. Expect $100-200 increases for premium phones in 2025-2026.

Gaming and Content Creation Devices: Gaming laptops, workstations, and high-end tablets rely on fast RAM. These products already command premium prices, so adding $50-100 in memory costs is absorbed without dramatic percentage increases. But the impact is still there.

Mid-Range and Budget Devices: This is where the disruption matters most. A

Connected Devices and IoT: Your router, smart home hub, security camera, and similar devices use small amounts of cheap DRAM. A 50% increase in memory costs might only add

Automotive and Industrial: Cars and industrial systems use memory too, but the price sensitivity is different. A

The AI Investment Paradox: Why This Keeps Getting Worse

Here's the uncomfortable truth that nobody in tech wants to admit: the AI boom is actively making the supply chain worse, and it's going to continue doing so for years.

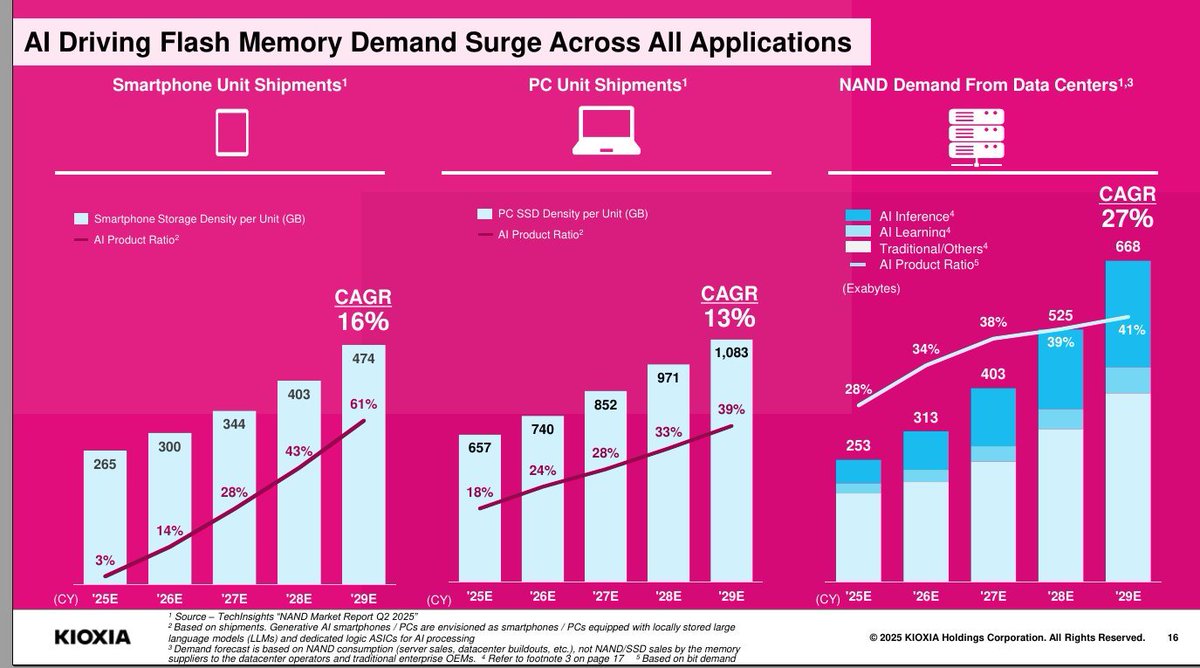

Every dollar spent by Microsoft, Google, Meta, OpenAI, and others on AI infrastructure is a dollar that creates demand for memory that wasn't there before. That demand is legitimate: AI systems genuinely need that memory. But it's also demand that's emerging faster than manufacturing capacity can respond to.

Memory manufacturers have announced $50+ billion in new fab construction globally through 2027. That's massive investment. But fabs take 3-5 years to build and get to full production capacity. So all the new fab announcements being made today won't produce meaningful supply increases until 2027-2028.

Meanwhile, AI spending is accelerating. Estimates suggest AI infrastructure spending will exceed $500 billion annually by 2026. That's before considering the memory requirements of the thousands of startups building AI applications, edge AI devices, and AI-enabled consumer products.

The mathematical reality is grim: demand growth from AI is outpacing supply expansion by a factor of 2-3. That's the definition of a structural shortage. It doesn't resolve in months. It resolves when either (a) AI spending slows down significantly, or (b) manufacturing capacity finally catches up, which won't happen before 2027-2028.

Neither scenario is arriving soon.

What About The AI Bubble Bursting?

Some financial forecasters have started asking the obvious question: what if the AI bubble bursts? If AI spending suddenly collapsed, would that alleviate the memory shortage?

Theoretically, yes. If hyperscalers suddenly stopped building data centers and reduced their memory purchases by 80%, the market would rebalance quickly. Memory prices would collapse. Device manufacturers would face downward cost pressure.

But here's the thing: there's no realistic scenario where that happens overnight. AI spending has become embedded in the strategies of the world's largest technology companies. Microsoft, Google, Amazon, and Meta have publicly committed to multi-year AI infrastructure spending. That's not going away in a quarter or two.

Even if venture capital funding for AI startups slowed down significantly (which it hasn't), the infrastructure spending from hyperscalers would continue. These companies are racing to build competitive AI systems. None of them are going to blink first.

And honestly? Some slowdown in AI spending might be healthy for the market, but it wouldn't dramatically improve things for consumers. Even a 30-40% reduction in AI memory demand would still leave the market supply-constrained by historical standards.

The more likely scenario is a gradual moderation. AI infrastructure spending grows at 40-50% annually instead of 60-80%. That's still substantial growth, just slower than the frenzied pace of 2023-2024.

That still means memory remains constrained and prices remain elevated.
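A quick compounding sketch shows why even the moderated growth rates keep pressure on supply. The growth rates are the ones quoted above; the three-year horizon and the function name are assumptions made for illustration.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Cumulative multiple after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    horizon = 3  # assumed horizon, roughly one fab build-out at the short end
    for rate in (0.40, 0.50, 0.60, 0.80):
        multiple = growth_multiple(rate, horizon)
        print(f"{rate:.0%} annual growth over {horizon} years: {multiple:.2f}x")
```

Even at 40% a year, spending nearly triples over three years, so a slower pace still adds demand faster than a 3-5 year fab cycle can answer it.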

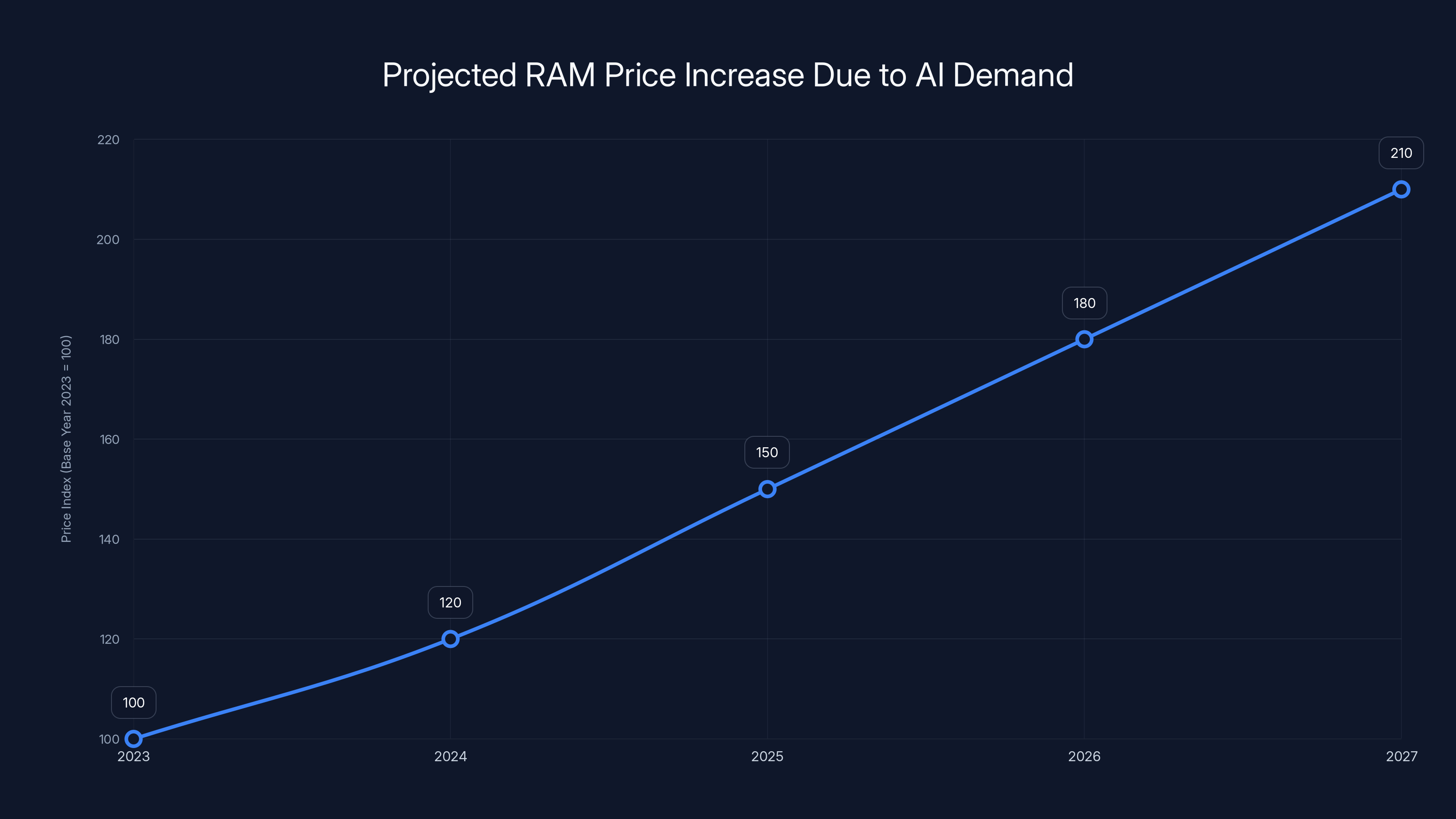

RAM prices are projected to increase significantly from 2023 to 2027 due to AI data centers' demand for high-bandwidth memory. Estimated data.

The Manufacturing Reality: Why New Capacity Takes Forever

It's worth understanding why Samsung can't just spin up new fabs overnight. Memory manufacturing is one of the most capital-intensive industrial processes on Earth.

Building a state-of-the-art DRAM fab costs $10-20 billion and takes 3-5 years. Not because manufacturers are dragging their feet, but because the engineering is genuinely complex. A modern fab has to maintain tolerances measured in nanometers. A single speck of dust ruins a wafer. The chemical processes are finicky. Equipment must be precisely calibrated.

Then there's the challenge of finding capital and securing permits. Samsung, SK Hynix, and Micron aren't the only companies competing for funding and resources. The entire semiconductor industry is in expansion mode. Equipment suppliers like ASML, Tokyo Electron, and Lam Research are booked solid for years. You can't just order a new fab's worth of equipment and get it next quarter.

So when Samsung announces a new fab or a major expansion, that's genuinely the fastest path to capacity growth available. And that path is still 3-5 years long.

Meanwhile, existing fabs are being pushed to absolute limits. Yield rates are dropping slightly as fabs run hot. Equipment is aging faster because it's being used more intensively. But the manufacturers can't slow down because AI demand is too valuable.

It's a treadmill with no way off.

Price Predictions: What's Your Device Going to Cost?

Based on Samsung's warnings and current supply dynamics, here's what reasonable price expectations look like:

Immediate (Q1-Q2 2025): Minimal price increases, maybe $20-50 on flagship devices. Companies are still burning through inventory purchased at lower component costs. Premium brands (Apple, Samsung) might implement increases faster than budget brands.

Mid-Term (Q3-Q4 2025): Significant price increases, $75-150 on flagship devices and laptops. Memory costs have fully propagated through supply chains. New product launches reflect higher BOM costs.

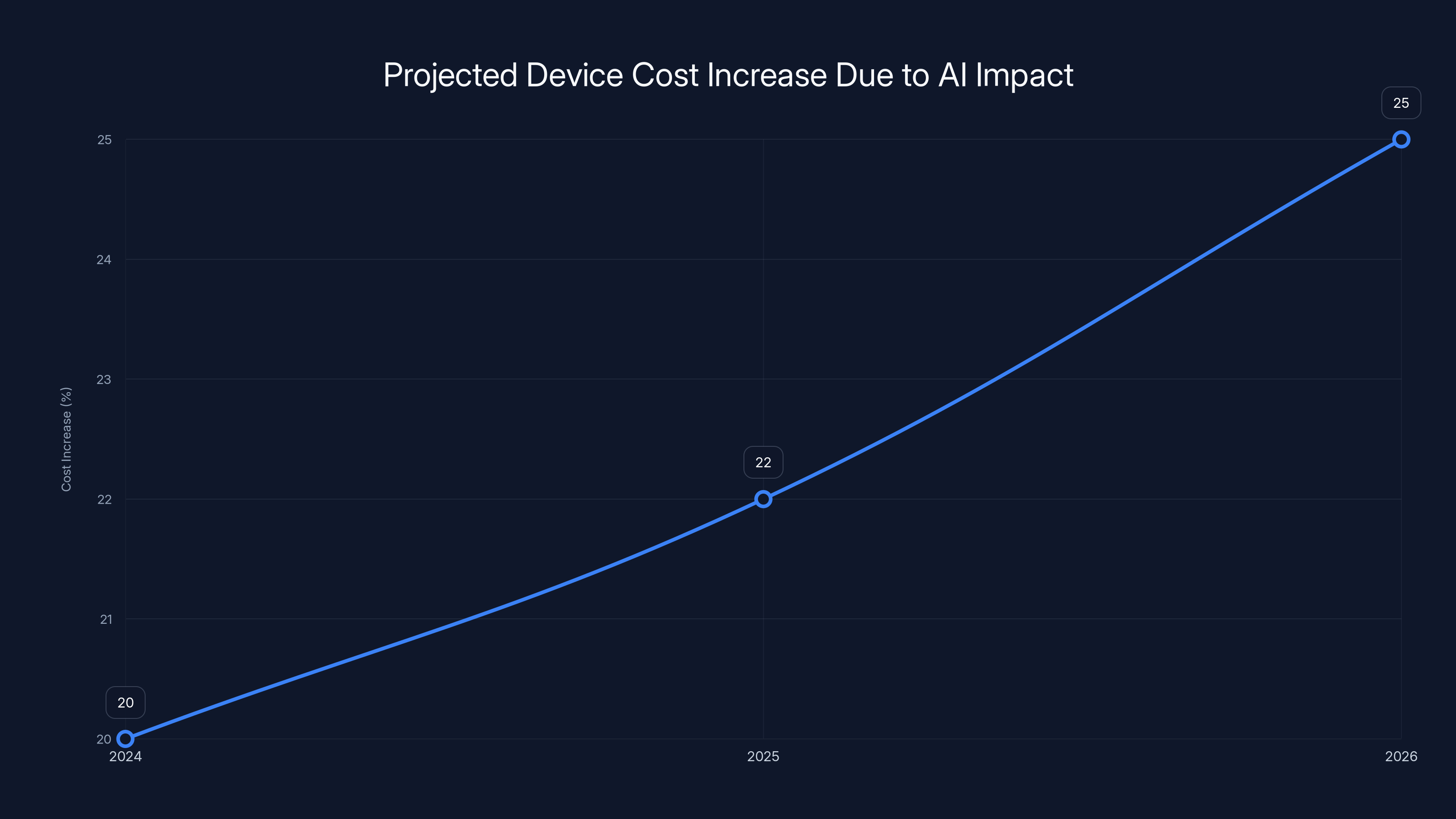

Extended (2026-2027): Further increases if memory prices don't stabilize, potentially 15-25% above 2024 baseline. But also possibility of stabilization if new fab capacity begins coming online in 2027.

For context, in the 2017-2018 shortage, memory prices doubled. Component costs on devices rose 20-40%. Don't expect that scale of impact this time because, well, we're already adjusting for it. But we're also earlier in the cycle.

The most likely scenario is a 10-20% cumulative increase in device prices over the next 18-24 months, with most of that hitting by the end of 2025.

What Consumers Should Do Right Now

So what's the practical advice? Should you rush out and buy a laptop today? Should you hold off and wait for prices to drop? Should you just accept that everything's getting more expensive?

Think about your actual needs. If you need a device now and your current one is struggling, buy now. Waiting 6 months hoping for lower prices is a losing bet. Prices are more likely to go up than down in the near term.

If your device works fine and you don't have a specific deadline, waiting until mid-2025 might catch sales events where manufacturers are clearing 2024 inventory before launching pricier 2025-2026 lines. That could save you $50-200.

For premium purchases (gaming laptop, high-end tablet, powerful desktop), buying soon might make sense. These devices will see bigger absolute price increases. A

For budget purchases (entry-level laptop, basic tablet, phone for casual use), waiting until fall sales events might work in your favor. Manufacturers compete harder on price in the $300-500 range, so promotional discounts might offset some cost increases.

But here's the key insight: this isn't a temporary supply shock that's going to normalize in a few months. This is a structural shift in the market. Memory costs are likely staying elevated for 2+ years. So don't expect a "good time to buy" that's significantly better than today.

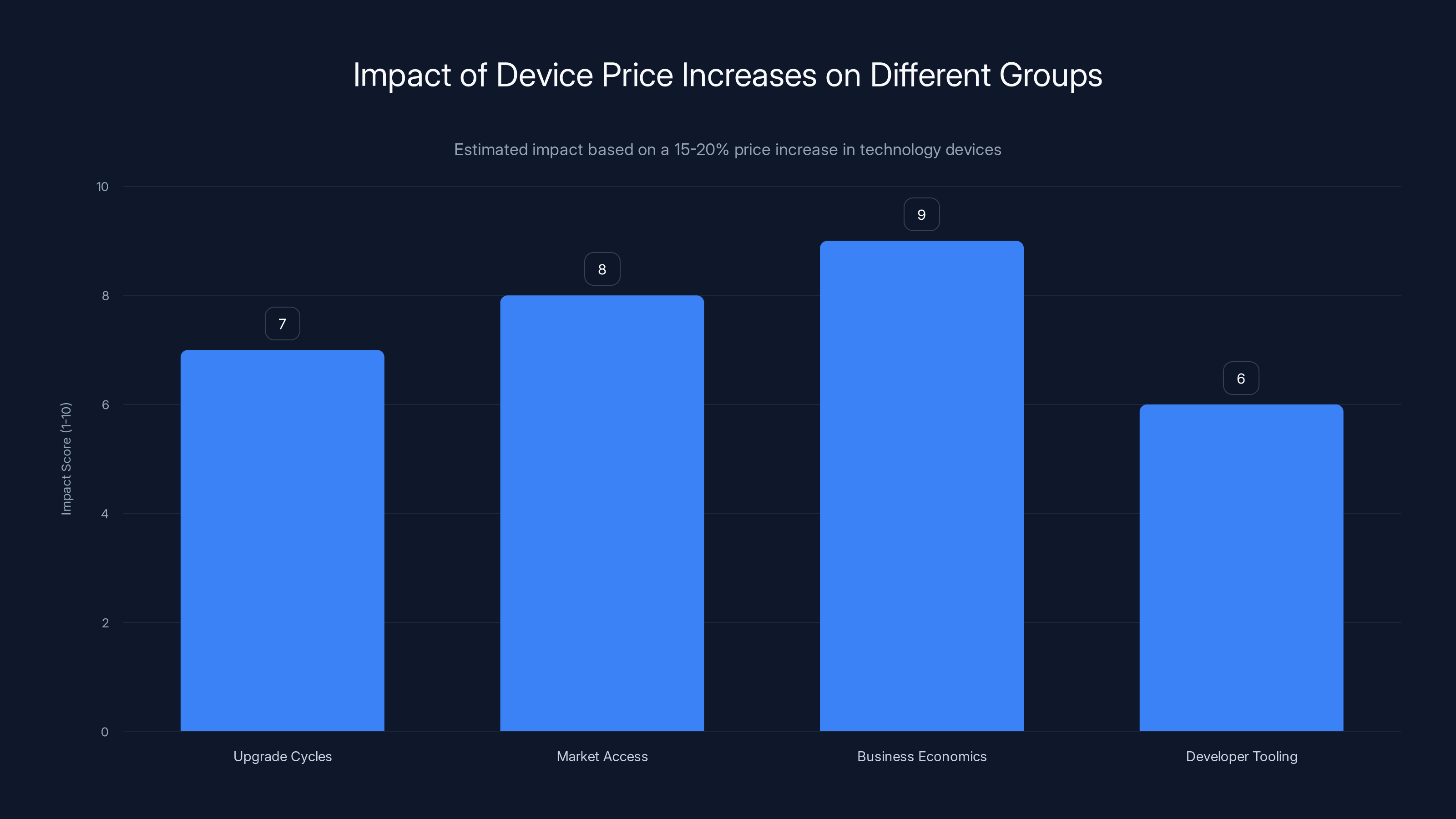

Estimated data shows that a 15-20% increase in device prices significantly impacts market access and business economics, with scores of 8 and 9 respectively, highlighting the widening digital divide and financial strain on startups.

Who Benefits From This Shortage?

While consumers face higher prices, let's be clear about who wins from this situation.

Memory Manufacturers (Samsung, SK Hynix, Micron): Dramatically improved margins, record profitability, increased stock valuations. These companies are loving this market condition.

AI Hardware Companies (Nvidia, Advanced Micro Devices, custom silicon designers): More expensive components mean better margins and higher-value products. They're winning too.

Established Tech Companies: Apple, Microsoft, Google, Amazon, and similar firms have purchasing power and long-term supply agreements that protect them from the worst price increases. Their smaller competitors suffer more.

Enterprise Customers: Corporations and cloud providers can absorb price increases. They pass costs to consumers through higher subscription fees or service charges. It's not about whether they can afford it—they can. It's about whether consumers will accept higher prices.

Who loses? Everyone else. Consumers pay more. Startups face higher component costs that erode already-thin margins. Mid-market manufacturers struggle to compete with larger players who negotiate better bulk pricing.

It's a wealth concentration mechanism, which is a common outcome of supply shortages. The companies with the most power and capital weather it best. Everyone else pays more.

The Bigger Question: Is This The Good Part of the AI Era?

Remember the meta-question that was floating around: "When do we get to the good part of the AI era?" When will AI actually make life better instead of just hyping up tech stocks and enriching chip companies?

We're still waiting.

The promise of AI has always been that it automates work, increases productivity, lowers costs, and improves living standards. Those things are theoretically happening. AI is writing code, answering questions, generating images, and increasingly, handling business processes.

But the economic gains are being captured by AI platform companies, hardware companies, and infrastructure providers. The costs are being distributed to everyone else through higher device prices, higher subscription fees, and job displacement without corresponding wage growth.

It's the classic technology pattern: early benefits flow to capital owners, workers and consumers bear the costs, and eventually some productivity gains flow through as broader living standard improvements. That last part usually takes a decade or more.

We're 2 years into the ChatGPT era and still firmly in the "everyone except capital owners is getting squeezed" phase.

Samsung's warning is a symptom of this dynamic. The AI infrastructure boom is driving costs everywhere, but the economic benefits are narrowly distributed. Most people will experience this era as their devices getting more expensive for reasons they don't understand, powered by AI systems they didn't ask for.

Maybe in 2030 or 2035, the productivity gains from AI will have compounded enough that consumers see meaningful benefits. But in 2025? You're looking at higher prices, not lower ones.

What Needs to Happen to Fix This

There's no silver bullet, but a few things could help:

Demand Management: AI companies could optimize their memory usage, accept slightly lower performance to reduce memory requirements, or stagger infrastructure investments. This is unlikely because competitive pressure means nobody wants to accept lower AI performance.

Supply Acceleration: Memory manufacturers could accelerate fab construction. Samsung and SK Hynix have announced this, but it still takes years. Micron is expanding but from a smaller base. This is the most realistic path, but it's still years away.

New Technologies: Novel memory technologies (photonic memory, quantum memory, advanced neuromorphic architectures) could eventually replace conventional DRAM. But these are research-stage. Nothing production-ready for years.

Market Recalibration: If AI infrastructure spending moderated significantly, or if widespread disappointment with AI ROI hit hyperscalers, spending could slow. This could ease the shortage. But it requires a major sentiment shift in the industry.

Alternative Memory: Companies could design AI systems using more conventional, plentiful memory types with different trade-offs. This would sacrifice performance but increase availability. It's theoretically possible but strategically unattractive for companies racing to build leading AI systems.

None of these are quick fixes. So expect the shortage to persist through at least 2026, with gradual easing in 2027-2028 as new manufacturing capacity comes online.

Device costs are projected to increase by 15-25% from 2024 to 2026 due to AI-driven memory demand. Estimated data.

The Larger Economic Picture

Zoom out for a moment. What's happening with memory pricing is a microcosm of a larger story: the early stages of the AI era are economically extractive for most people.

Big tech companies and semiconductor companies are capturing disproportionate value. Consumers and workers are bearing the costs through higher prices and employment displacement. The distribution of AI gains is top-heavy, not broad-based.

This is worth paying attention to because it informs policy conversations about AI regulation, corporate taxation, and labor protection. If society wants AI to broadly improve living standards rather than narrowly enriching capital owners, we need intentional choices about how that technology gets deployed and who captures its gains.

Right now? The market is just letting supply and demand work. And supply and demand works in favor of whoever has capital and market power.

Consumers? We're just paying the bills.

Looking Ahead: 2025-2027 Timeline

Here's a realistic timeline for how this plays out:

Q1 2025: Samsung and other manufacturers announce official price guidance. Device makers prepare for higher component costs.

Q2-Q3 2025: New flagship devices launch at 10-15% higher prices than 2024 equivalents. Manufacturers frame it as "feature parity pricing" not "component cost increases." Consumers notice and complain.

Q4 2025: Memory prices stabilize at elevated levels. Supply constraints are real but not worsening. Some new fab capacity in Asia begins production ramp.

2026: Mid-year, the first real relief arrives as multiple new fabs reach meaningful volumes. Memory prices begin softening but remain elevated vs. 2023 levels. Device prices stabilize but don't drop, because manufacturers hold prices and improve margins.

2027: Significant new fab capacity online. Memory prices approach more historical norms. But device prices don't fully revert because manufacturers have reset expectations. A "normal" laptop from 2027 costs more than a 2023 comparable model.

2028+: Full equilibrium with new, higher baseline prices. AI memory demand is still huge but is being served by new capacity. Other semiconductor bottlenecks emerge in different areas (like advanced packaging, advanced nodes for specialized chips).

That's the most likely trajectory. It's not fun for consumers, but it's how supply chains actually work.

The Human Impact: Beyond Just Prices

When device prices increase 15-20%, it matters. It's not just about rich people paying more. It's about:

Upgrade Cycles: People keep older devices longer. That extends support costs, reduces security updates, and fragments the user base across older, vulnerable software versions.

Market Access: Lower-income consumers are priced out of new technology. The digital divide widens. Not everyone can afford a

Business Economics: Startups and small businesses get squeezed harder than established companies. A startup that needs 50 laptops for its team faces an unexpected $50,000 bill if they haven't already purchased. That's real money that impacts hiring, growth, and runway.

Developer Tooling: Developers working on budget hardware feel the impact most. A junior developer who was planning to buy a

The abstract economics of supply chains have real human consequences. Samsung's warning isn't just news for investors. It's a signal that more people are about to face higher costs for technology they depend on.

Conclusion: Bracing for Impact

Samsung's warning crystallizes something that's been building in the shadows: the AI revolution is profitable for tech companies and expensive for everyone else, at least in the short term.

Memory costs are rising because AI data centers are consuming memory at unprecedented scales. That's not a small problem that resolves in quarters. That's a structural shift that will reshape component costs and device pricing for the next 2-3 years.

Expect your next device to cost 15-25% more than comparable models from 2024. Expect that premium to persist through at least 2026. And expect the economic benefits of AI to remain narrowly distributed while the costs spread broadly.

Is there a path to a better outcome? Sure. Demand moderation, accelerated manufacturing, new technologies, or market recalibration could all help. But none of them are arriving in the next 6 months. We're locked into the current trajectory for at least 12-18 months.

So what should you do? If you need a device, be thoughtful but don't overthink it. Prices aren't likely dropping anytime soon. Budget for higher costs. And maybe—just maybe—start thinking about how to advocate for policies that ensure AI's gains are distributed more broadly than they currently are.

Because right now, if you're not a shareholder in a memory company or a cloud platform, the AI era is expensive.

FAQ

What is RAM and why does it matter for AI systems?

RAM (Random Access Memory) is the fast, short-term memory that computers use to hold data while processing it. For AI systems, RAM is absolutely critical because AI models need to access massive amounts of data repeatedly while training and running inference. Standard RAM might hold gigabytes of data, but enterprise AI systems need terabytes of accessible memory. Without sufficient fast memory, AI processors sit idle waiting for data, which completely breaks the performance equation. This is why HBM (high-bandwidth memory) exists—it's RAM designed specifically for the extreme bandwidth demands of AI workloads.

How much more will devices cost due to the RAM shortage?

Based on current supply constraints and Samsung's warnings, expect devices launching in 2025-2026 to cost 10-25% more than comparable 2024 models, with the increase largely driven by component costs. A flagship smartphone that cost

When will RAM prices come down?

Memory prices are unlikely to meaningfully decrease before 2027, and that's optimistic. New manufacturing capacity announced today won't produce significant volumes until 2026-2027. Even when new fabs come online, memory prices will normalize to elevated levels rather than revert to 2023 prices. Think of this as a market reset rather than a temporary spike. Expect higher memory baseline prices for the remainder of the decade unless something dramatically changes in AI infrastructure spending.

Which device categories will see the biggest price increases?

Flagship smartphones and high-end laptops will see the largest absolute price increases ($100-200+) because they already use premium memory in large quantities. Gaming devices, workstations, and AI-capable devices will follow. Mid-range devices see smaller absolute increases but proportionally significant impacts on price-sensitive customers. Budget devices are least affected in absolute terms but remain impacted. Automotive electronics, IoT devices, and industrial equipment will also see increases, though less visible to consumers than phone prices.

Why can't manufacturers just build more memory fabs?

Building a modern DRAM fab costs $10-20 billion and takes 3-5 years to construct and bring to full production capacity. Manufacturers can't just speed this up through investment or determination—the engineering constraints are real. Equipment suppliers are booked solid. Real estate and permits take time. And frankly, the ROI on new capacity depends on sustained high prices. If prices drop, the new fabs become less profitable. Manufacturers are announcing investments (Samsung, SK Hynix), but those investments are already in motion and will take years to bear fruit.

Is this shortage really because of AI, or are there other factors?

The shortage is absolutely driven by AI data center demand. That's not a theory—it's what memory manufacturers and industry analysts are confirming. Previous memory shortages came from consumer device spikes or gaming console launches. Those were manageable, cyclical events. This is different because AI demand is structurally larger, not cyclical. It's reshaping the entire memory market. Other factors like geopolitics and logistical challenges play supporting roles, but the core driver is AI infrastructure investment.

What should I do if I'm planning to buy a device in 2025?

If you need the device now or in the next 60 days, buy now. Waiting won't help because prices are more likely to increase than decrease. If you can wait until fall 2025, you might catch promotional pricing as manufacturers clear inventory before new launches. For expensive devices (gaming laptops, high-end workstations), buying sooner rather than later makes sense because the price increases are substantial. For budget devices, waiting for seasonal sales might offset some increases. But don't wait expecting a "good time to buy"—that's unlikely to come for 18+ months.

Will this affect AI product pricing?

Indirectly, yes. AI platforms like ChatGPT Plus, Claude Pro, and others have thin margins dependent on infrastructure costs. Higher memory and compute costs either reduce profitability or force price increases. Some AI services will raise subscription prices in 2025-2026. Others will absorb costs and accept lower margins (unlikely in a venture-backed context). The effect on end users depends on which companies choose which strategy. But the trend is upward pricing pressure across the board.

Key Takeaways

- Samsung's warning signals a 2-3 year supply constraint: Memory costs are rising due to AI data center demand, and the shortage won't resolve until new manufacturing capacity comes online in 2026-2027. Device prices are following.

- HBM demand is fundamentally different from previous shortages: AI systems require extreme memory bandwidth that can't be compromised. This creates persistent, structural demand that won't normalize until manufacturing catches up.

- Price increases will be 10-25% for most devices: Expect $100-200 increases on flagship phones and laptops by late 2025, with further adjustments through 2026.

- The problem cascades across product categories: Not just phones and laptops. Cars, gaming devices, IoT products, and industrial equipment all face memory cost increases.

- AI infrastructure spending is unlikely to moderate soon: Hyperscalers are committed to multi-year AI expansion. Demand will remain strong, keeping memory constrained.

- New manufacturing capacity exists but takes years: Samsung and SK Hynix have announced fab expansions that won't produce meaningful volume until 2026-2027.

- The economic gains of AI are narrowly distributed: While memory and hardware companies see record profitability, consumers face higher prices. This is classic early-stage technology economics.

![Samsung RAM Price Hikes: What's Behind the AI Memory Crisis [2025]](https://tryrunable.com/blog/samsung-ram-price-hikes-what-s-behind-the-ai-memory-crisis-2/image-1-1767807614325.jpg)