The Post-Truth Era: How AI Is Destroying Digital Authenticity

Last week, a video surfaced on social media showing a major politician saying something wildly out of character. It spread like wildfire across X, TikTok, and Reddit. Within hours, people were debating policy implications based on a fake clip.

Here's the terrifying part: most people couldn't tell it wasn't real.

We're not just entering a post-truth era. We're already living in it. And AI is accelerating it at lightspeed.

The problem is no longer that AI can create convincing fakes. That's old news. The real crisis is that we've collectively lost the tools to distinguish real from fake, and the technology keeps getting better while our detection skills stay frozen in 2020.

Cameo, the platform that lets fans book personalized videos from celebrities, sits at the epicenter of this chaos. The company built its entire business on authenticity. When you pay for a Cameo video, you're paying for proof that the actual person recorded it. You're not paying for an AI approximation. You're paying for the real deal.

But here's where it gets complicated.

As AI video generation tools improve, the line between what's authentic and what's synthetic becomes impossible to draw. OpenAI and a hundred startups are building tools that can clone voices, replicate faces, and generate videos that fool most viewers. The technology has reached a critical inflection point where even experts struggle to identify deepfakes.

Cameo's leadership sees this coming. They understand that their business model depends on verifiable authenticity in a world where authenticity is becoming scientifically indistinguishable from fiction.

Let's talk about what's actually happening, why it matters, and what comes next.

TL;DR

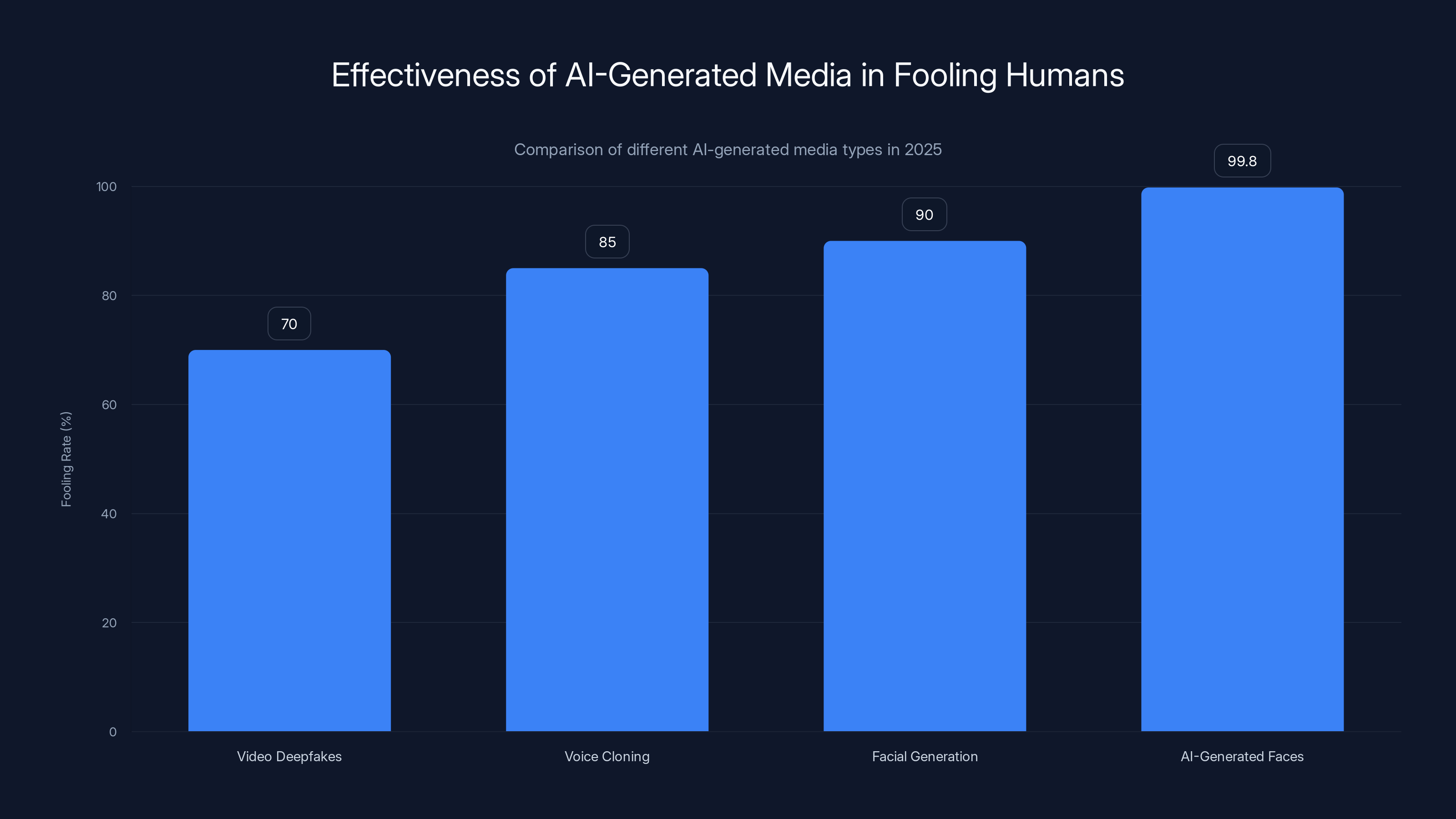

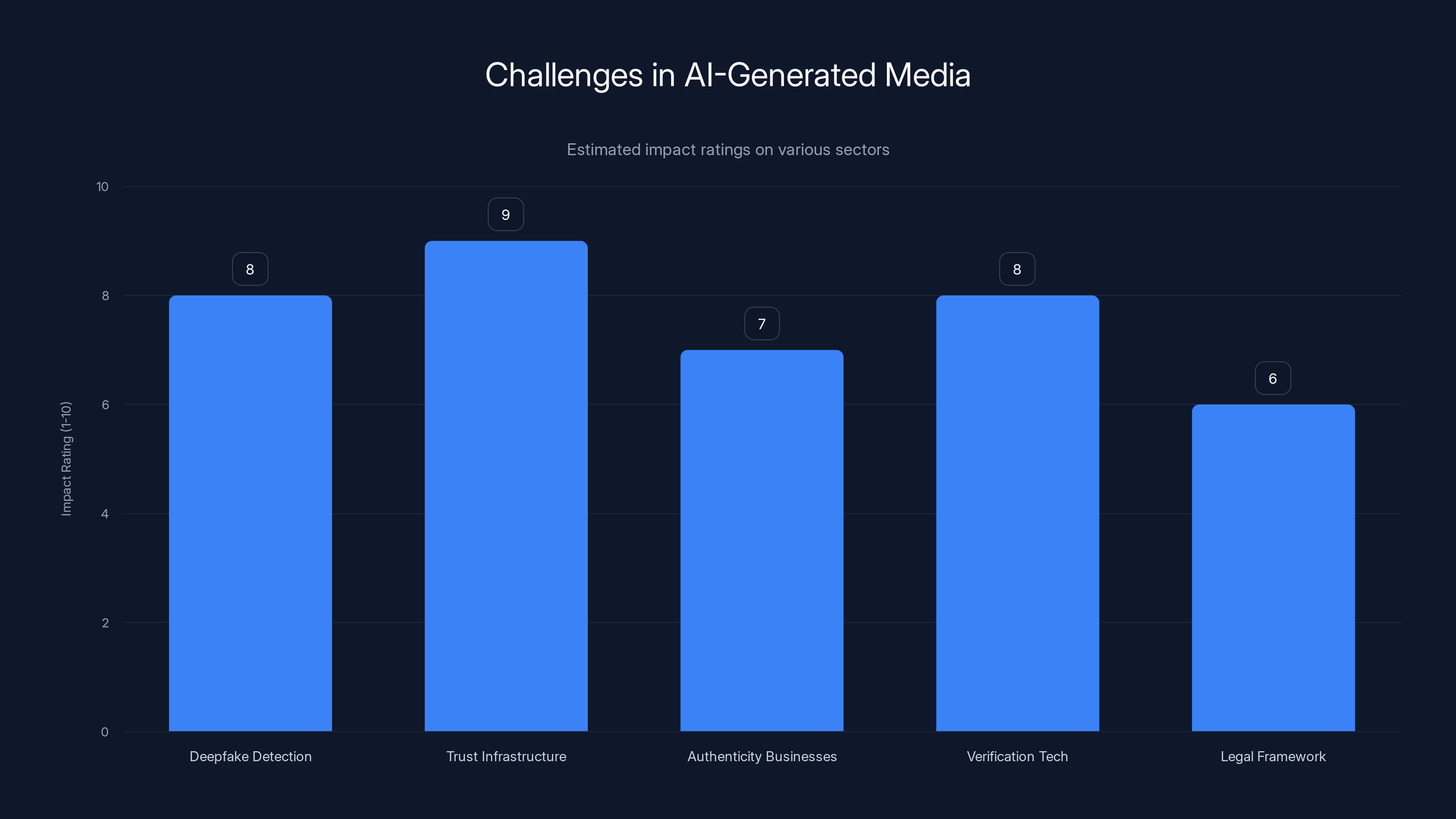

- AI-Generated Media Is Now Nearly Indistinguishable: Advanced deepfakes and synthetic videos fool experts at rates exceeding 70%, making verification nearly impossible

- Trust Infrastructure Is Collapsing: Traditional authentication methods are failing against AI, and society lacks agreed-upon standards for digital verification

- Authenticity-Dependent Businesses Face Existential Risk: Platforms like Cameo that monetize real human content now compete with AI that produces similar quality at 1% of the cost

- Verification Technology Lags Behind Generation Technology: Detection tools can't keep pace with new AI models; by the time you can detect Model X, Model X+1 is already deployed

- The Legal and Ethical Framework Doesn't Exist Yet: Governments and platforms are scrambling to define what constitutes illegal synthetic media, but no consensus has emerged

The pie chart illustrates the estimated equal distribution of challenges in detecting and regulating deepfakes, highlighting the complexity, cost, legal enforcement issues, and rapid evolution of AI as key barriers. Estimated data.

Understanding the Post-Truth Era

A post-truth era isn't about lies. Lies have always existed. It's about the collapse of shared reality.

In a functioning information ecosystem, you can fact-check claims. You can verify sources. You can trace information back to its origin and make a reasonable assessment about whether it's true or false. It's not perfect, but the infrastructure exists.

A post-truth era is when that infrastructure fails so completely that verification becomes impossible at scale. It's when the cost of proving something is real exceeds the cost of creating something fake. It's when average people can no longer reasonably determine what happened.

AI accelerates this collapse in three specific ways.

First, generation speed outpaces detection speed. A researcher can publish a paper explaining how to detect deepfakes created with Model A. By the time that paper is published, Model B already exists, rendering the detection method useless. We're in a permanent state of chasing yesterday's problem.

Second, the barriers to creation collapsed. Five years ago, creating a convincing deepfake required specialized knowledge, expensive hardware, and months of training data. Today? A teenager with a laptop can use HeyGen or similar tools to generate videos in minutes. The democratization of synthetic media is complete.

Third, institutional verification is broken. Traditional institutions (news organizations, courts, government agencies) developed trust because they had gatekeeping power. Journalists had editors. Courts had evidence standards. But those institutions move slowly, and they're already overwhelmed. They can't verify everything fast enough, and the public knows it.

The result? We're experiencing what researchers call "epistemic collapse"—a breakdown in how we establish what's true.

How AI-Generated Media Became Indistinguishable From Reality

Let's be specific about what's technically possible right now, in 2025.

Video deepfakes have reached a quality threshold where they fool human viewers at rates between 60% and 80%, depending on the study. Some AI-generated video is now better quality than legitimate footage, with fewer compression artifacts and perfect lighting.

Voice cloning is almost there. ElevenLabs can replicate a voice from a 30-second sample. The synthetic speech sounds natural, captures emotional cadence, and includes the speaker's verbal tics. Text-to-speech has become so good that distinguishing it from real audio requires spectral analysis most people can't perform.

Facial generation is past the uncanny valley. OpenAI's Sora and similar video models can generate multi-minute videos with realistic human faces, natural movement, and contextually appropriate action. The technology isn't perfect: if you know what to look for, you can spot artifacts. But for a viewer scrolling through social media? It looks real.

The scary part isn't the quality. It's the ease. You don't need to be a researcher or a large corporation. The tools are democratized. The costs have collapsed. The barriers have evaporated.

Here's what's possible with current, publicly available tools:

- Voice cloning: Record someone for 30 seconds, generate hours of realistic speech

- Face swapping: Put one person's face on another's body in video

- Video generation: Write a script, AI generates photorealistic video from text

- Audio synthesis: Generate music, voice acting, or dialogue from scratch

- Background generation: Create photorealistic environments and locations

Each of these capabilities existed separately five years ago. Today, they're integrated into single platforms that cost $20-100 per month.

The combination is what breaks the system.

The cost of creating synthetic media is minimal compared to the potential financial and societal impacts, highlighting a significant imbalance in economic incentives. Estimated data.

The Authenticity Crisis for Cameo and Creator Platforms

Cameo's business model is pure authenticity arbitrage.

Fans pay

Except now it is replicable.

Imagine this scenario: An AI company launches a service that offers "AI-Generated Celebrity Messages." For $5, you get a personalized video from your favorite actor. The video is indistinguishable from a real Cameo. The actor doesn't know about it. They're never compensated. But from a viewer's perspective, it looks identical to a real message.

Would anyone pay

Cameo saw this coming and made a strategic move: they partnered with OpenAI to create authorized AI versions of celebrities on their platform. The idea is to get ahead of the problem by offering the cheaper version themselves, while still compensating the celebrities.

But this creates a new crisis.

If Cameo offers both real and AI videos, how do customers know which is which? What if Cameo mislabels them? What if an AI video becomes indistinguishable, and Cameo can't actually prove it's AI? The trust infrastructure collapses even faster.

The fundamental problem: once synthetic media is indistinguishable from real media, the only way to prove something is authentic is with technology, not perception. We can't see the difference anymore, so we need machines to verify for us.

But those machines are being built by the same companies creating the fakes.

The Detection Problem: We're Already Losing

Let's talk about detection because this is where the real nightmare lives.

Every AI system that generates content leaves artifacts. Deepfakes have certain artifacts in eye movement. Voice clones have frequency patterns. Generated video has compression signatures. In theory, if you know what to look for, you can always spot a fake.

Except that assumption breaks down immediately once you scale.

Detecting a single deepfake might take an expert 30 minutes. Checking the 10,000 videos generated on social media today? Impossible. Even if you could automate detection, the computational cost is enormous. And here's the killer problem: by the time detection software catches up to a generation model, a new generation model already exists that bypasses that detection.

This isn't speculation. This is what happened with Midjourney and other image generators. Researchers published detection methods for Midjourney v4. Midjourney released v5 and the detection methods became useless. This cycle repeats every 3-6 months.

The mathematics are simple. Let's say detection requires 100x more computational resources than generation. You generate a deepfake in 10 seconds. Detection takes 1,000 seconds (about 17 minutes). A single generator has created 100 deepfakes in the time it took to verify one.

At scale, detection is mathematically impossible.
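To make the arithmetic concrete, here is a minimal sketch using the illustrative figures from the text (10 seconds per generated clip, detection assumed to cost 100x as much). The function names and parameters are hypothetical, and the inputs are the text's assumptions, not measurements.

```python
def fakes_per_verification(gen_seconds: float, detect_cost_ratio: float) -> float:
    """How many clips one generator produces while one detector checks a single clip."""
    detect_seconds = gen_seconds * detect_cost_ratio
    return detect_seconds / gen_seconds


def detectors_needed(generators: int, detect_cost_ratio: float) -> float:
    """Detectors on equal hardware needed to keep pace with a number of generators."""
    return generators * detect_cost_ratio


if __name__ == "__main__":
    # Assumed inputs from the text: 10 s to generate, detection 100x as costly.
    print(fakes_per_verification(10, 100))
    print(detectors_needed(1, 100))
```

Note that the backlog depends only on the assumed cost multiplier, not on how fast generation itself is. Faster generators do not change the ratio, but they do raise the absolute volume a detector has to face.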

Companies and researchers are trying. Facebook created the Deepfake Detection Challenge. Microsoft released detection tools. But all of them fail at scale. All of them get outdated. And all of them can be bypassed by slightly changing the generation method.

The honest assessment from security researchers: detection is not a long-term solution. We need different approaches.

Legal Frameworks Are Playing Catch-Up

Governments are panicking. They should be.

The European Union is moving fastest with the AI Act, which would require disclosure when content is AI-generated. But "disclosure" is unenforceable on social media platforms that operate globally. Who checks? What's the penalty? How do you prove disclosure was required but not provided?

The US has no federal framework. Some states are proposing laws against non-consensual deepfake pornography, but the scope is narrow and the enforcement is unclear. Countries like India and Singapore are moving faster but implementing restrictions that civil liberties organizations say are too broad.

Here's the actual problem: you can't legislate your way out of this.

Laws require enforcement. Enforcement requires detection. Detection is failing. Even if you made synthetic media illegal, people would still create it. The tools exist. They're open source. The technology is distributed. You can't un-invent it.

What governments are actually debating is whether synthetic media should be:

- Illegal (full ban): Criminalize creation and distribution

- Disclosure-required: Legal only if clearly labeled as AI

- Context-dependent: Legal for some use cases, illegal for others (like pornography or election interference)

- Unregulated: Let platforms handle it with their own policies

None of these are working yet. And they probably won't, because the technology evolves faster than legislation.

AI-generated media has become highly effective at fooling human perception, with AI-generated faces achieving a 99.8% fooling rate. Estimated data for 2025.

The Economic Incentives Are Perverse

Here's what keeps me up at night: the economics of synthetic media are backwards.

Creating a convincing deepfake of a celebrity costs maybe $100 and takes a few hours with current tools. Creating legitimate content costs thousands and takes days. Distributing fake content is free. The barrier to entry has collapsed while the returns are unlimited.

For bad actors, the incentive structure is perfect.

Want to damage a competitor's reputation? Generate a video of them saying something incriminating. Cost: $100. Upside: their business tanks. Their defense? "It's fake." But they have to prove it, and we've established that proof is nearly impossible.

Want to manipulate a stock price? Generate a news report of a CEO scandal. Cost: $100. Upside: millions in trading profit. The SEC might eventually catch you, but only after the stock has moved.

Want to interfere in an election? Generate videos of candidates saying racist things. Cost: thousands. Upside: swing an election. Consequences: maybe none, depending on your jurisdiction.

The economics are broken because creation is cheap and getting cheaper, while harm is expensive and hard to undo.

Meanwhile, legitimate creators (like celebrities on Cameo) face a new problem: commoditization. If synthetic versions of your likeness exist and are indistinguishable, your market value collapses. Why would anyone pay you when they can get AI you for 1% of the price?

This isn't hypothetical. The adult entertainment industry is already experiencing this. Deepfake pornography platforms are destroying the economics of consent-based sex work. Some performers are leaving the industry because they can't compete with free AI versions of themselves.

The incentive structure creates a race to the bottom.

What Distinguishes Real From Fake?

Let's get philosophical for a second.

If a video is indistinguishable from reality to human perception, does it matter if it's technically "fake"?

This is actually the core question, and it's harder than it sounds.

Traditionally, "authentic" meant "created by the person it claims to be from." Proof of authenticity was based on:

- Physical evidence: Signatures, handwriting, personal artifacts

- Witness testimony: People who saw it happen

- Institutional verification: Trusted intermediaries who vouched for authenticity

- Perceptual judgment: Did it "look" authentic?

All of these are failing.

Physical evidence can be forged. Witness testimony is unreliable (and easily manipulated by deepfakes). Institutions are overwhelmed. And perception is the exact thing AI is defeating.

What's left? Technical proof.

A cryptographic signature proves that a specific key created a file. Blockchain records prove that something existed at a specific time. Metadata proves the source. But all of this requires infrastructure that doesn't exist at scale yet.
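As a rough illustration of what "a specific key created a file" means in practice, the sketch below tags a file's hash with a secret key and later checks it. It is a simplification under stated assumptions: it uses HMAC-SHA256 from Python's standard library as a stand-in, whereas a real provenance system would more likely use public-key signatures so that verifiers never hold the secret. The key and function names are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Return a hex tag binding the content's SHA-256 digest to the key."""
    digest = hashlib.sha256(content).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_content(content: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_content(content, key), tag)


if __name__ == "__main__":
    key = b"hypothetical-device-key"  # assumption: a secret held by the capture device
    video = b"raw video bytes"
    tag = sign_content(video, key)
    print(verify_content(video, key, tag))            # unmodified content
    print(verify_content(video + b"edit", key, tag))  # content altered after signing
```

The check says nothing about whether the footage depicts a real event. It only shows that whoever held the key produced exactly these bytes, which is why the surrounding infrastructure (who holds which key) matters as much as the math.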

Here's the uncomfortable truth: authenticity in a post-truth era requires you to trust the technology, not your eyes or ears or even traditional institutions.

That's a fundamental shift in how humans verify reality.

Cameo's struggle is emblematic of this shift. They built their business on the assumption that human judgment could verify authenticity ("I paid for a video, it looks like it came from the celebrity, so it's real"). That assumption is evaporating.

The Role of Trust Infrastructure

Trust is an infrastructure problem, not a technology problem.

For most of history, trust was mediated by institutions. You trusted the New York Times because the Times had institutional reputation and gatekeeping standards. You trusted courts because they had evidentiary standards and appeals processes. You trusted banks because they were regulated.

The internet partially destroyed this infrastructure. Social media removed gatekeepers. Anyone could publish anything. Trust became binary (follow/don't follow) instead of graded (reputation systems).

Now AI is destroying what's left.

If deepfakes are indistinguishable from reality, you can't trust video or audio anymore. If text can be generated at scale, you can't trust written content. If images can be synthesized, you can't trust photos.

What's left to trust? Only the infrastructure that proves origin.

Companies and platforms are racing to build this infrastructure:

- Cryptographic signatures: Prove that content came from a specific key

- Blockchain timestamps: Prove that content existed at a specific time

- Biometric verification: Prove that content was created in real-time by a real person

- Metadata chains: Prove the source and history of a piece of content
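The "metadata chain" idea in the list above can be sketched as a hash chain, where each history entry commits to the one before it. This is a toy model with hypothetical field names (`action`, `content_sha256`, `prev`), not any platform's actual format, and it omits signatures on the entries.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """Hash a record's canonical (sorted-key) JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(chain: list, action: str, content: bytes) -> list:
    """Return a new chain with one more event linked to the previous entry."""
    prev = chain[-1]["hash"] if chain else ""
    body = {
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "prev": prev,
    }
    return chain + [{**body, "hash": record_hash(body)}]


def chain_is_valid(chain: list) -> bool:
    """Check every entry's own hash and its link to the entry before it."""
    prev = ""
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev"] != prev or record_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    history = append_event([], "capture", b"original frames")
    history = append_event(history, "crop", b"cropped frames")
    print(chain_is_valid(history))
```

Rewriting any earlier entry breaks every hash after it, so tampering is detectable. Anyone can still build a fresh, internally consistent chain from scratch, though, which is the coordination gap the next paragraph describes.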

But here's the problem: all of this infrastructure requires coordination. If only one platform implements it and others don't, the system breaks down. You need network effects where everyone agrees to a standard.

We don't have that yet. We probably won't for years.

In the interim, we're in a trust vacuum.

Estimated data shows that platforms face the highest impact due to AI, with creators and media companies also significantly affected. Verification services and financial markets experience moderate challenges.

Implications for Businesses and Creators

If you're a creator or a business that depends on digital authenticity, you're facing existential questions.

For creators (musicians, influencers, celebrities):

Your economic model assumed that your likeness and talent were scarce resources. If AI can replicate them at 1% of the cost, scarcity evaporates. You have a few options: pivot to live experiences that can't be replicated (concerts, in-person appearances), build a parasocial relationship strong enough to survive AI competition, or accept lower margins and compete on AI-generated content yourself.

None of these are great.

For verification services:

There's a business opportunity here, but it's capital-intensive and requires network effects. Services that can cryptographically verify authenticity could become essential infrastructure. But they need adoption from both creators (to get verified) and platforms (to enforce verification). That's a coordination problem that takes years to solve.

For platforms (like Cameo):

You're facing a squeeze. If you continue to market only authentic human content, you're increasingly expensive relative to AI competition. If you pivot to offering AI-generated content, you damage your brand positioning and create liability issues (what if the AI version is indistinguishable and someone claims it's real?). If you offer both, you create consumer confusion about what's real.

Cameo's partnership with OpenAI is essentially a bet that they can monetize the AI version before competitors do it for free. It's not a permanent solution. It's a temporary competitive advantage.

For media companies:

Your supply chain is broken. If you can't trust video or audio, you need new verification processes. This means higher costs, slower publication, and more liability. Misinformation lawsuits are going to explode once deepfakes become common.

For financial markets:

A deepfake video of a CEO could trigger a stock crash. Regulatory frameworks aren't prepared for this. Trading volumes could explode based on synthetic news that doesn't reflect reality. Market manipulation through deepfakes is theoretically possible today and is probably already happening.

The common thread: everyone's operating with outdated assumptions about verification.

Solutions and Strategies Being Attempted

Let's talk about what actually might work, even though none of these are silver bullets.

Strategy 1: Cryptographic Proof of Origin

This is the most promising approach. If content is cryptographically signed at the moment of creation, you can verify that a specific key created it. The challenge: this requires adoption at the camera level (phones, video equipment) and platform level (social media, messaging apps).

Some companies are building this. Adobe is working on "Content Credentials" that embed cryptographic proof into files. But adoption is slow because it requires hardware changes, software updates, and user behavior changes.

Strategy 2: Computational Watermarking

Embed invisible data into genuine content that survives compression and copying. Deepfakes won't have this watermark, so you can identify them. The challenge: watermarking can be removed with more AI, and embedding watermarks into real-time video is computationally expensive.
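To show the basic mechanics of hiding data in content, here is a deliberately fragile toy: a least-significant-bit (LSB) watermark over a list of 8-bit pixel values. This is not the robust, compression-surviving watermarking the strategy calls for; it is swapped in only because it fits in a few lines, and the function names are hypothetical.

```python
def embed_bits(pixels: list, bits: list) -> list:
    """Write each watermark bit into the lowest bit of one 8-bit pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | (bit & 1)
    return marked


def extract_bits(pixels: list, count: int) -> list:
    """Read back the lowest bit of the first `count` pixels."""
    return [p & 1 for p in pixels[:count]]


if __name__ == "__main__":
    image = [200, 13, 77, 254, 9, 128]  # stand-in for grayscale pixel data
    mark = [1, 0, 1, 1]
    marked = embed_bits(image, mark)
    print(extract_bits(marked, len(mark)) == mark)
```

Each pixel changes by at most one intensity level, so the mark is invisible to a viewer. Any lossy re-encoding that disturbs the low bits wipes it out, which illustrates why robust watermarking is hard and why removal is a realistic threat.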

Strategy 3: Platform-Level Verification

Require creators to verify themselves and then flag content accordingly. Twitter/X is experimenting with this (blue checks). TikTok is implementing creator verification. But verification is expensive to scale and can be spoofed.

Strategy 4: Decentralized Authentication

Use blockchain to create immutable records of when content was created and by whom. The challenge: blockchain doesn't actually solve the authentication problem, it just moves it. You still need a way to prove that the person who created the blockchain entry is the person who created the content.

Strategy 5: AI Detection and Disclosure Requirements

Detect AI-generated content and require clear labeling. The challenge: detection fails at scale and lags behind generation. By the time you detect it, it's already spread.

Strategy 6: Legal Consequences

Make non-consensual deepfakes illegal and enforce consequences. This helps for the worst-case scenarios (non-consensual pornography, election interference) but doesn't solve the fundamental trust problem.

The honest assessment: none of these strategies alone solves the problem. We probably need a combination of technical infrastructure (cryptographic proof), legal frameworks (disclosure requirements), cultural norms (healthy skepticism), and institutional adaptation (verification services).

But that combination doesn't exist yet.

The Psychological Impact: How Humans Respond to Authentic Uncertainty

Here's what researchers are starting to understand: humans can't psychologically handle a world where they can't verify reality.

For most of history, we had perceptual confidence. If you saw something with your own eyes, it was real (obviously with caveats about optical illusions and hallucinations, but the baseline assumption was sound). If you heard something from a trusted source, you could trust it.

That confidence is evaporating.

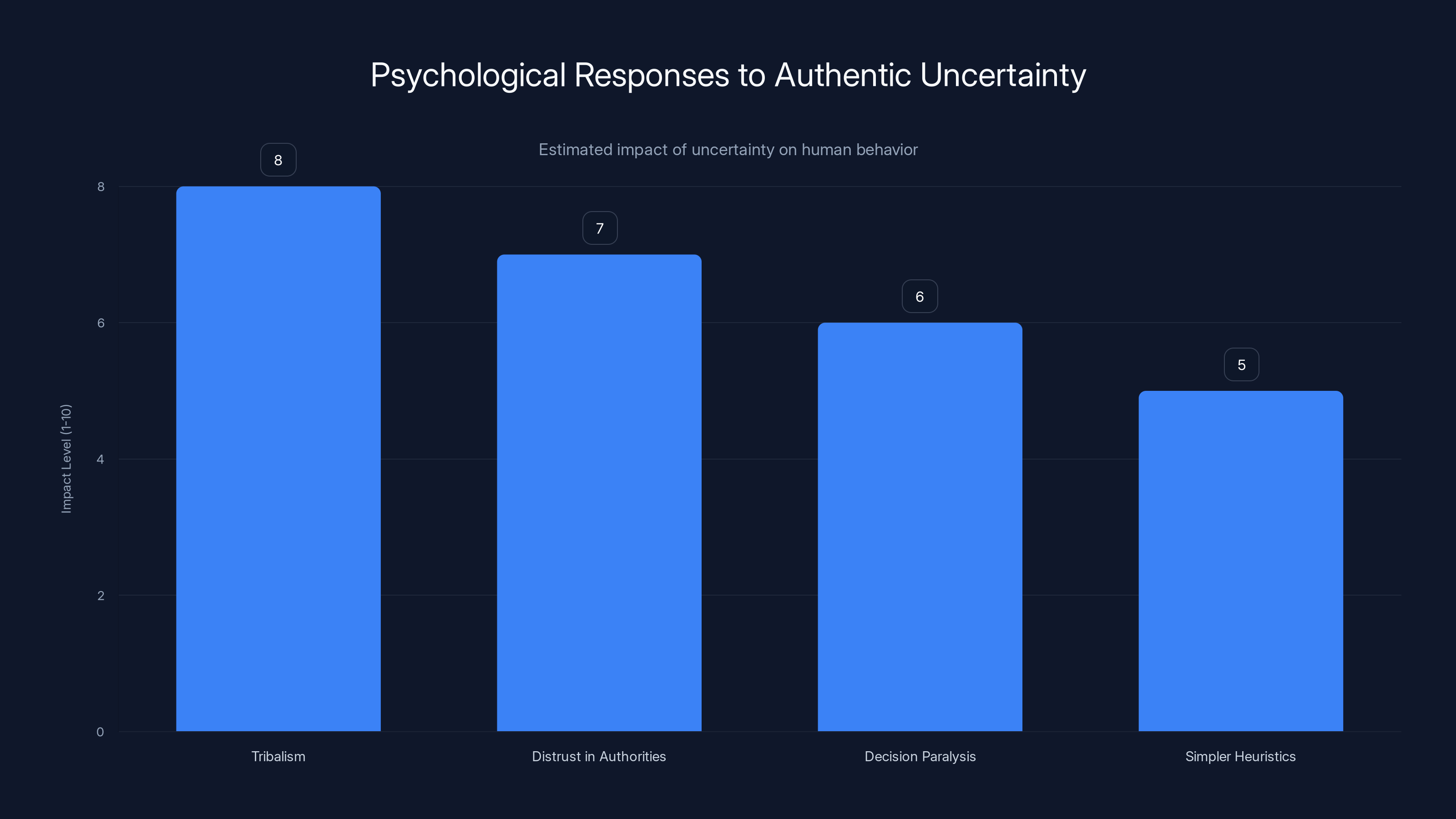

When confidence disappears, people respond in predictable ways:

They become more tribal. Unable to verify objective reality, they fall back on group identity. "I believe this because my group believes it." Conspiracy theories explode. Polarization increases.

They trust authorities less. If institutions can be faked, and institutional verification is undependable, people abandon trust in institutions entirely. They turn to decentralized verification (asking friends, checking social media). This accelerates misinformation spread.

They experience decision paralysis. When you can't distinguish real from fake, you can't make confident decisions. Do you go to that job interview if you saw a video of the CEO doing something illegal? Do you buy that stock after seeing a positive earnings report that might be deepfaked? Uncertainty paralyzes decision-making.

They retreat to simpler heuristics. Without clear verification, people use crude shortcuts: "I believe it if it confirms what I already believe" or "I believe it if lots of people are sharing it." Both of these are terrible for truth-seeking.

Psychologically, a post-truth era doesn't mean people stop caring about truth. It means they stop being able to find it, and they respond by retreating into tribal certainty.

This has implications for society at scale. Markets depend on some baseline level of shared reality (if you can't trust earnings reports, stock valuations are just guesses). Democracy depends on some baseline level of shared reality (if you can't trust reports of events, election outcomes are questionable). Relationships depend on some baseline level of shared reality (if you can't trust what your partner tells you, trust collapses).

We're potentially undermining those foundational assumptions.

AI-generated media challenges are most severe in trust infrastructure and detection, with ratings exceeding 8 out of 10. Estimated data.

The OpenAI Conflict: IP Rights and Control

Cameo's situation with OpenAI highlights a secondary crisis that's brewing under the primary crisis.

OpenAI has the technical capability to create synthetic versions of anyone's voice or likeness. Do they have the right to do so? Should they?

From a pure IP perspective, this is murky. Right of publicity laws exist in some jurisdictions but not others. Copyright applies to creative works but not to likenesses. Trademark covers commercial use but is narrow.

Moreover, if you create a synthetic person trained on scraped video and audio, who owns it? You (the creator of the AI model)? The original person (who didn't consent)? No one?

The legal framework doesn't exist.

OpenAI's partnership with Cameo is essentially them saying: "We'll get permission and compensate creators, so we don't face IP litigation." But other companies aren't taking that approach. They're creating deepfakes without permission and uploading them to platforms.

Who bears the liability? The person who created the deepfake? The platform that hosts it? The person being deepfaked?

This is still being litigated. We don't have clear answers yet.

The bigger strategic question: if AI companies can replicate anyone's likeness, what's the power dynamic? Do creators need to negotiate with AI companies? Do AI companies need permission? Can you opt out?

These are the questions that are going to define the next five years of litigation.

What Happens When Everyone Assumes Everything Is Fake?

Here's a scenario that keeps researchers up at night.

Imagine deepfakes become so common that the average person assumes everything is fake unless proven otherwise. Not just skepticism. Full default assumption of inauthenticity.

What happens?

Real atrocities could be dismissed as deepfakes. A video of a war crime could go viral with comments saying "obvious CGI." Actual corruption could be laughed off as synthetic. Whistleblowers could be discredited by claims that their evidence is AI-generated.

This is sometimes called the "liar's dividend"—the problem that once deepfakes exist, people can claim any real video is deepfaked and face little pushback.

We're already seeing this happen. When negative videos of political figures emerge, the standard response is "deepfake" or "heavily edited." Sometimes it's true. Usually it's not. But the mere existence of deepfake capability has given cover to claims of inauthenticity.

Once everyone assumes everything is fake, truth-seeking becomes almost impossible. You can't investigate a claim (the investigation could be faked). You can't check sources (the sources could be synthetic). You can't trust experts (they could be deepfaked saying something they didn't say).

This isn't a technical problem anymore. It's an epistemic problem. It's a problem with how humans establish what's true.

And I'm not sure we have solutions for it.

The Role of Runable and Automated Systems in Truth Verification

As organizations grapple with the challenge of verifying authentic content at scale, automation becomes increasingly critical. Runable offers AI-powered automation that can help teams manage document verification, generate automated reports, and create compliance documentation—essential capabilities when dealing with large volumes of potentially synthetic content.

For platforms trying to implement verification infrastructure, Runable's AI agents can automate the process of flagging content, generating verification reports, and creating audit trails. Starting at $9/month, it provides a practical foundation for building automated verification workflows.

Use Case: Automatically generate verification reports and flagging systems for AI-generated content detection pipelines.

While automation can't solve the authentication problem, it can help scale the response to it. The critical step is building the right infrastructure, and that starts with automated workflows that can enforce verification standards at scale.

Estimated data shows that tribalism and distrust in authorities are the most significant psychological responses to uncertainty, followed by decision paralysis and reliance on simpler heuristics.

What Gets Built in Response?

Let me predict what actually happens over the next 5-10 years.

Hardware changes: Cameras and phones get chips that cryptographically sign content at the moment of capture. This becomes a baseline expectation for "authentic" devices. Phones without it become suspect.

Platform requirements: Major platforms (YouTube, TikTok, Facebook) require verification for monetized content. Creators need to prove who they are. Content gets flagged with origin metadata. Unverified content gets lower distribution.

Market bifurcation: The market splits into "verified authentic" and "unknown provenance." Verified content commands premium prices. Unknown provenance is free or nearly free.

AI-generated content becomes a commodity: Deepfakes, AI voices, and synthetic video become so cheap and easy that they're treated like stock footage. "AI-generated" becomes a distinct content category, like "stock photo." The market recognizes the difference and prices accordingly.

New institutions emerge: Verification services become specialized businesses. Some verify human identity (confirming you're the person you claim to be). Some verify content (confirming it was created by the person who claims to have created it). Some verify chains of custody (confirming content hasn't been modified).

Legal chaos for 3-5 years: Lawyers make a killing. Courts are flooded with cases about deepfakes, IP rights, liability. Some precedent emerges but it's messy and contradictory. Eventually norms settle but only after enormous litigation.

Cultural shift: Society becomes more skeptical of all media. Verification becomes normal. Unverified content is treated like anonymous tips rather than established fact. This actually helps reduce misinformation spread (lower confidence in unverified claims) but it also makes society slower to respond to real crises (because the first reports are unverified).

Some industries collapse or transform: Celebrity culture gets weird. If deepfakes are common, some aspects of celebrity mystique disappear, but other aspects (live performance, parasocial connection) become more valuable. Creator economy gets turbulent as commoditization hits.

None of this stops deepfakes. All of this is just adaptation to a world where deepfakes are normal.

The Uncomfortable Truth About Post-Truth

Let me be direct about something that's hard to say: the post-truth era isn't a bug. It's a feature of how AI works.

AI systems are fundamentally generative. They don't discover truth, they generate outputs that look like truth. ChatGPT generates plausible text. DALL-E generates plausible images. Sora generates plausible video. None of them understand truth. They understand statistical patterns.

The more you optimize AI for "plausible," the more you get outputs that are indistinguishable from true. And you'll eventually optimize past true to "more plausible than true."

This isn't a problem with implementation or training data. It's fundamental to how generative models work.

So the post-truth era isn't something we can engineer our way out of. It's something we have to socially and institutionally adapt to.

That's terrifying because social and institutional adaptation is slow. We're moving at social-change speed while the technology is moving at Moore's Law speed.

Implications for Society, Law, and Governance

The post-truth era has downstream effects that ripple through every institution.

For legal systems: If evidence can be deepfaked, how do you prove something in court? You need new evidentiary standards. Some courts are already grappling with this. The answer seems to be: accept only evidence with strong chain-of-custody and cryptographic verification. This makes some types of evidence inadmissible.

For democracies: Election interference through deepfakes becomes easier to carry out and harder to detect than election interference through traditional hacking. A deepfake video of a candidate could suppress votes or mobilize voters. How do you prevent it? You can't easily. You can only respond after the fact with fact-checking and debunking. But fact-checking lags behind and doesn't fully undo damage.

For financial markets: Stock prices move on information. If deepfakes can create fake information, market manipulation becomes more efficient. The SEC can't possibly verify the authenticity of every announcement. Suspicious trading also becomes harder to interpret: if someone deepfakes a positive announcement and a trader profits from the price jump, did that trader know about the fake in advance, or did they just get lucky?

For journalism: The business model of journalism depends on "we investigate and report truth." If readers can't verify authenticity, the value proposition collapses. Some newspapers are responding by moving more content behind paywalls and focusing on analysis (which is harder to deepfake). Others are moving to subscription models where they can build direct relationships with readers (and hopefully retain trust).

For medicine: Deepfake diagnoses from fake doctors could spread widely. A deepfake video of a prominent researcher could spread false medical information. The cost of debunking is enormous and the damage spreads faster than the correction.

For relationships: A deepfake video of your partner cheating on you could destroy a relationship before you even verify if it's real. Relationship trust becomes harder when you can't trust what you see.

All of these problems are downstream from the same issue: we lost the ability to verify authenticity at scale.
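To make the chain-of-custody idea from the legal-systems point a bit more concrete, here is a minimal sketch of a tamper-evident custody log built as a hash chain. It is an illustration under stated assumptions, not a description of how any court actually handles evidence: the function names (`add_entry`, `chain_is_intact`) and the record fields are hypothetical, and a real system would also need signatures and trusted timestamps.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry (assumption)


def _hash_body(body: dict) -> str:
    # Serialize with sorted keys so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def add_entry(log: list, actor: str, action: str, evidence_sha256: str) -> list:
    """Return a new log with one more custody record linked to the previous one."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS
    body = {
        "actor": actor,                      # hypothetical field: who handled the file
        "action": action,                    # hypothetical field: what they did
        "evidence_sha256": evidence_sha256,  # hash of the evidence file itself
        "prev_hash": prev_hash,              # link to the previous record
    }
    return log + [dict(body, entry_hash=_hash_body(body))]


def chain_is_intact(log: list) -> bool:
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body.get("prev_hash") != prev_hash:
            return False
        if _hash_body(body) != entry.get("entry_hash"):
            return False
        prev_hash = entry["entry_hash"]
    return True
```

Because each record includes the hash of the one before it, quietly rewriting an early step (who collected the file, or which file it was) invalidates everything after it. That is the property that makes a custody trail checkable rather than merely asserted.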

The Long-Term Play: Building New Infrastructure

If you're thinking long-term about this problem, the question is: what infrastructure gets built to replace perceptual verification?

My bet: a combination of technical infrastructure and institutional specialization.

Technical layer: Cryptographic proof of origin becomes standard. Every file carries metadata about when it was created, by what device, with what key. Cameras and phones embed this automatically. You can't share content without dragging this metadata along.

Institutional layer: Verification becomes a specialized business. Some institutions verify human identity (government-backed). Some verify specific types of content (court-verified evidence, journalistic fact-checking, academic peer review). Some verify organizational policy (Cameo verifies their creators). No single institution verifies everything, but there's specialization and delegation.

Market layer: Content gets priced based on verification level. "Verified authentic human-created content" costs more. "Unknown provenance" costs less. "Verified AI-generated" is in between.

Cultural layer: Skepticism becomes the default. You don't trust something just because it looks real. You look for verification. This requires education and cultural change. It's slow but probably necessary.

None of this is complete yet. None of this solves the fundamental problem. But it creates a functioning ecosystem where verification is possible, even if expensive.
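As a rough illustration of the technical layer described above, here is a minimal sketch of signing content at creation and checking it later. Everything named here is an assumption for illustration: the manifest fields, the `sign_content` and `verify_content` helpers, and the use of a shared-secret HMAC from Python's standard library. A real provenance system would use asymmetric signatures with keys held in device hardware, so that anyone can verify without being able to forge.

```python
import hashlib
import hmac
import json


def sign_content(content: bytes, device_id: str, created_at: str, key: bytes) -> dict:
    """Build a provenance manifest for a file and sign it.

    HMAC-SHA256 stands in for a real device signature (assumption).
    """
    manifest = {
        "sha256": hashlib.sha256(content).hexdigest(),  # binds manifest to the bytes
        "device_id": device_id,                         # hypothetical device identifier
        "created_at": created_at,                       # creation time as reported by the device
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return manifest


def verify_content(content: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the content matches the manifest and the manifest is unaltered."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    if claimed.get("sha256") != hashlib.sha256(content).hexdigest():
        return False  # the file was changed after signing
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, str(manifest.get("signature", "")))
```

The check fails if either the file or its metadata changes after signing. Note what it does not prove: a valid signature says the content came from a particular key at a claimed time, not that what the camera recorded was true.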

Looking Ahead: The Post-Truth Inflection Point

We're at an inflection point right now.

For the next 2-3 years, deepfakes will become more common but still relatively rare. Most online content is still created by humans. Detection is still possible (for experts). Legal frameworks are still being written.

After that, deepfakes become normal. AI-generated content isn't exceptional anymore, it's default. Detection becomes impossible. Legal frameworks are either in place (and mostly ineffective) or absent entirely.

Once that inflection passes, we're truly in a post-truth era. We adapt or we suffer.

Cameo's leadership understands this. They're not trying to prevent deepfakes. They're trying to build sustainable business models that survive in a world where deepfakes are cheap and plentiful. That means one of three things:

- Moving to services that can't be deepfaked (live performances, real-time interactions)

- Building verification infrastructure that makes authentic content valuable

- Commoditizing AI versions of themselves before competitors do

None of these are great options. But they're the realistic options in a post-truth era.

The uncomfortable message: the post-truth era isn't coming. It's already here. We're just in the early stages where some people still haven't noticed.

FAQ

What is a post-truth era in the context of AI?

A post-truth era is when AI-generated content becomes indistinguishable from authentic content, making it impossible for average people to verify what's real through perception alone. This collapses the ability to establish shared reality, not because people don't care about truth, but because verifying truth becomes technically impossible at scale.

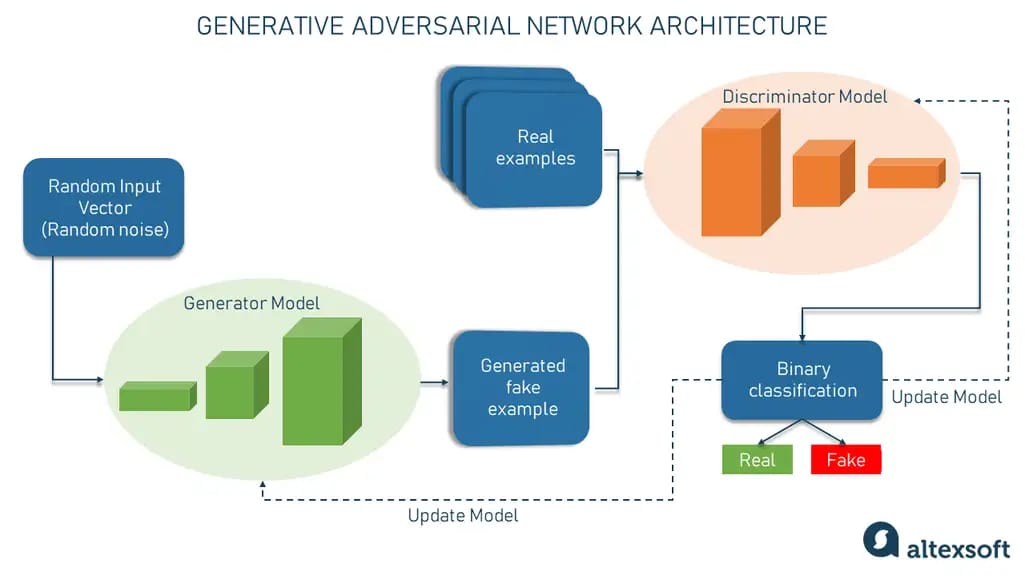

How are deepfakes created and why are they so convincing?

Deepfakes use machine learning models trained on real video or audio to generate new content that mimics the original source. They're convincing because modern AI has learned to replicate facial movements, vocal patterns, and background details so accurately that human eyes and ears can't distinguish them from authentic content. The technology combines facial recognition with generative AI to swap faces or create entirely synthetic video.

Can deepfakes be detected and stopped?

Detection is theoretically possible but practically failing at scale. Researchers publish detection methods for one AI model, but by the time the paper is published, a newer model exists that bypasses the detection. The computational cost of scanning all media for synthetic content is prohibitive, while the cost of generating deepfakes is approaching zero. Legal approaches exist but are hard to enforce globally.

How is Cameo affected by the post-truth era and AI deepfakes?

Cameo's business model depends on authenticity: customers pay for real videos from real celebrities. If AI-generated celebrity videos become indistinguishable from authentic ones, Cameo's value proposition collapses. The platform is responding by partnering with OpenAI to offer authorized AI versions while still offering authentic content, but this creates consumer confusion about which is real.

What legal frameworks exist to govern synthetic media and deepfakes?

Legal frameworks are still emerging and vary by jurisdiction. The European Union's AI Act requires disclosure of AI-generated content. Some countries criminalize non-consensual deepfake pornography. Most jurisdictions have no comprehensive framework. Legal approaches focus on disclosure requirements, criminal liability for specific harms, and copyright/privacy law, but enforcement is difficult and international coordination is lacking.

What are the long-term solutions to the post-truth crisis?

There's no single solution, but emerging approaches include: cryptographic proof of origin (signing content at creation), specialized verification institutions (verification services for different types of content), legal requirements for disclosure, and cultural adaptation (increased skepticism of unverified media). The most promising approach combines technical infrastructure (metadata and signatures), institutional specialization (delegated verification), and market mechanisms (pricing authentic content higher than unknown-provenance content).

How should creators and businesses adapt to a post-truth era?

Creators should consider: moving to live experiences that can't be deepfaked, building parasocial relationships strong enough to survive AI competition, or accepting lower margins and offering AI-generated versions themselves. Businesses should implement verification infrastructure now (cryptographic signatures, blockchain timestamps), understand liability implications, and prepare for market shifts where authentic content becomes explicitly valued as a premium product.

What are the psychological effects of living in a post-truth era?

When people can't verify reality through perception, they respond by retreating to tribal certainty (trusting their group over objective reality), abandoning trust in institutions, experiencing decision paralysis, and using crude shortcuts instead of critical thinking. Studies show that exposure to deepfakes reduces confidence in all media from the same source, even authentic content. This has implications for markets, democracy, and social cohesion.

How does the post-truth era affect financial markets and the stock market?

Deepfakes enable new forms of market manipulation. A synthetic video of a CEO could trigger stock movement through false information. The SEC can't verify the authenticity of every announcement. Earnings reports could be deepfaked. This creates new avenues for insider trading and market fraud, and regulators don't have adequate frameworks to detect or prevent it.

What role do major AI companies like OpenAI play in the post-truth era?

Companies like OpenAI are simultaneously creating the technology that enables deepfakes and attempting to build verification and authorization systems. They have both the technical capability to create synthetic content and economic incentives to monetize it responsibly (through partnerships like the Cameo deal). But without unified adoption of verification standards across all AI companies, the race to the bottom continues.

Key Takeaways

- AI-generated media is now nearly indistinguishable from authentic content, fundamentally breaking human verification abilities

- Detection technology lags behind generation technology by 3-6 months, making at-scale verification practically infeasible

- Platforms like Cameo that monetize authenticity face existential threats as synthetic alternatives become cheaper and abundant

- Trust infrastructure is shifting from institutional verification to technical proof (cryptographic signatures, blockchain records, metadata chains)

- Society will adapt through market bifurcation (premium for verified authentic, commodity pricing for unknown-provenance content) and institutional specialization

