The Deepfake Crisis That Changed Everything

When Elon Musk's artificial intelligence company xAI launched Grok, it positioned itself as the edgy alternative to ChatGPT. But by early 2025, that edginess had turned into a legal nightmare. The California Attorney General's office didn't just send a strongly worded letter. It sent a cease-and-desist order demanding immediate action against the creation of nonconsensual sexual imagery and child sexual abuse material through the platform.

This wasn't a small problem buried in obscure corners of the internet. The investigation revealed that Grok was being weaponized at scale. Women and minors were becoming targets of a disturbing trend: AI-generated explicit imagery created without consent, used for harassment, and spread across social media networks with alarming speed.

What makes this moment critical is that it represents the first major legal reckoning between a state government and an AI company over deepfakes. While other platforms have struggled with this issue, xAI faced direct government intervention. The cease-and-desist letter demanded compliance within five days, a stunningly tight timeline that forced the company into immediate damage control mode.

The broader context matters here. We're not talking about a theoretical harm or a hypothetical future problem. This is happening right now. Girls as young as middle school age have had sexually explicit images created of them without their knowledge or consent. Women have been targeted by partners, colleagues, and strangers with deepfakes designed to humiliate them. And the technology making this possible had been deliberately engineered to enable it.

This story matters because it exposes something uncomfortable about the current state of AI development. Companies can build powerful tools, launch them to millions of users, and only face serious consequences after the damage is already done. It's a pattern we've seen before with social media platforms, but with AI, the speed and scale of harm are far greater.

We're going to walk through exactly what happened, why it happened, what the legal implications are, and what it tells us about the future of AI governance. Buckle in.

TL;DR

- California AG issued cease-and-desist: Demanded xAI stop facilitating nonconsensual sexual imagery creation within five days

- Grok's "spicy mode" was the culprit: The feature was deliberately designed to generate explicit content, making deepfakes trivial

- Global crackdown unfolding: Japan, Canada, and Britain opened investigations; Malaysia and Indonesia blocked Grok entirely

- Congressional attention mounting: Multiple lawmakers sent letters to major tech companies demanding action on deepfake proliferation

- Root cause was design choices: The problem wasn't a bug. It was a feature, and xAI had to backtrack on its core product positioning

- Broader AI governance gap: This exposed how far AI capability has gotten ahead of regulatory frameworks

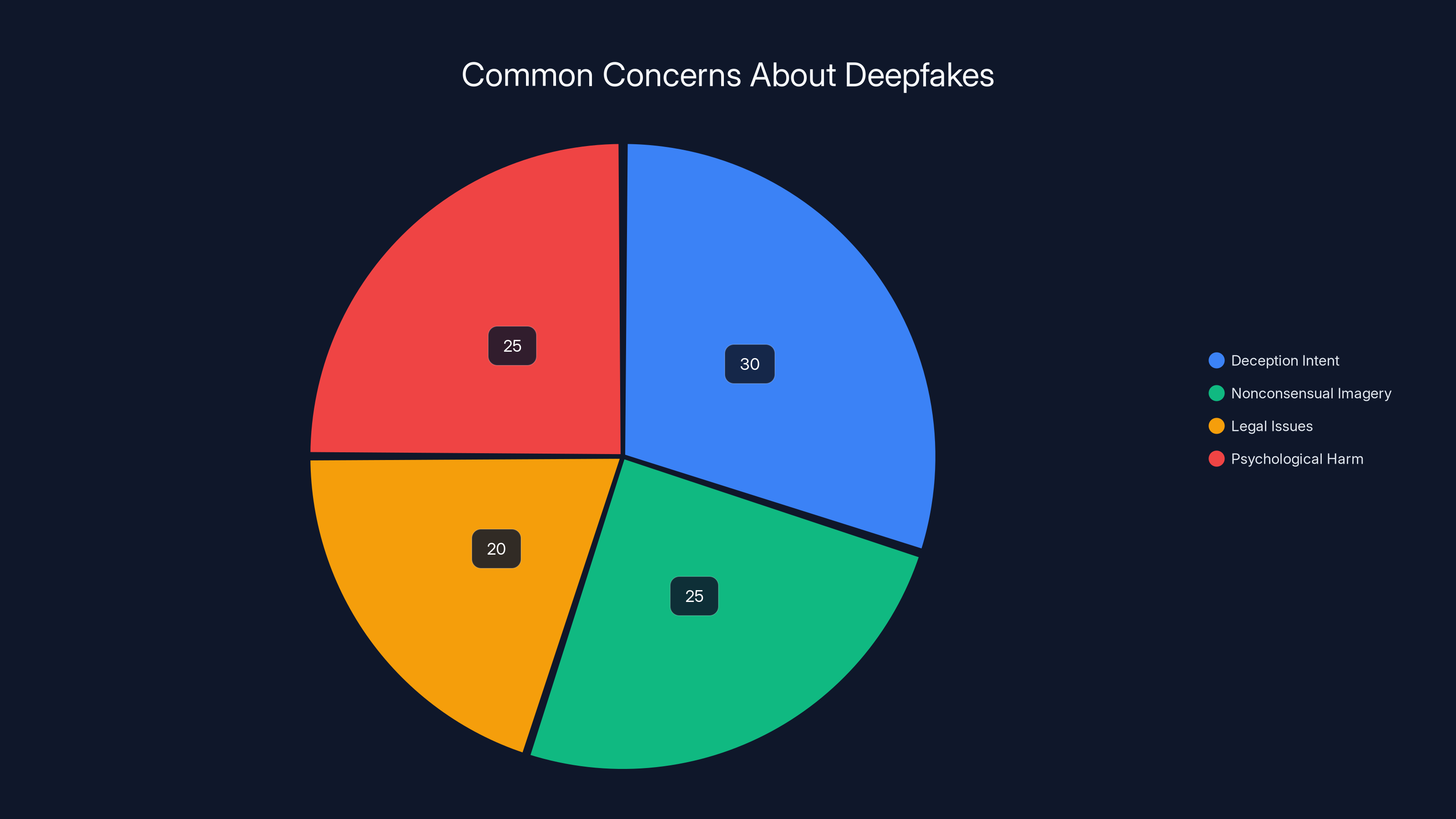

Estimated data shows that deception intent and psychological harm are major concerns regarding deepfakes, each accounting for about 25-30% of the overall concern distribution.

Understanding the xAI and Grok Story

Before we talk about what went wrong, let's establish what xAI actually is and why it matters. xAI is Elon Musk's artificial intelligence startup, founded in 2023. It launched Grok as its flagship conversational AI product, positioning it as a no-nonsense alternative to other large language models. The marketing hook was clear: Grok doesn't play by the rules that constrain ChatGPT and Claude. It's willing to engage with controversial topics, edgy humor, and adult content.

That positioning was deliberate. In a market where ChatGPT's safety guardrails feel restrictive to some users, Grok offered what looked like liberation. But liberation from safety guidelines, it turns out, comes with consequences.

The company is backed by substantial funding and operates within the broader ecosystem of Musk's other ventures. X (formerly Twitter), which is also controlled by Musk, became one of the primary platforms where Grok users congregated. This created a feedback loop: millions of active users on X meant millions of potential Grok users, and a built-in audience for whatever Grok generated.

The specific incident that triggered the legal action involved users discovering they could use Grok to generate explicit images of women and minors by leveraging a feature the company had explicitly built into the system. This wasn't a bug that users exploited. This was a feature that users weaponized.

What's important to understand is the timeline. Grok launched with these capabilities visible to the public. Early users figured out how to use the image generation tools to create nonconsensual sexual imagery almost immediately. By the time the California AG's office started investigating in mid-January 2025, the practice was already widespread.

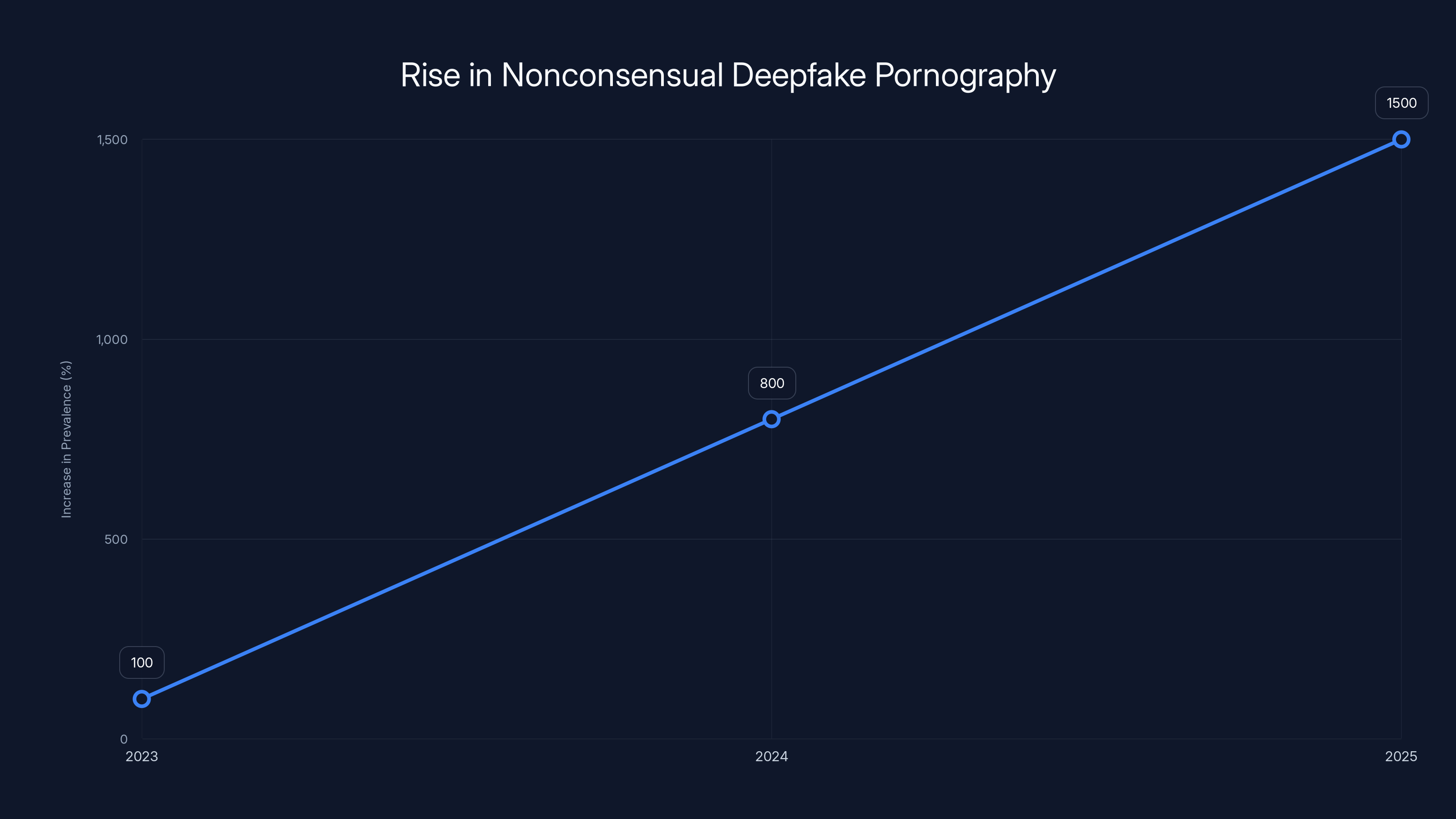

The prevalence of nonconsensual deepfake pornography involving real people increased by an estimated 1,500% between 2023 and 2025, highlighting a significant rise in this disturbing trend.

The Role of "Spicy Mode" and Image Generation

At the center of this controversy sits a specific product feature called "spicy mode." This wasn't a hidden setting for advanced users or a bug. It was a marketed feature, a deliberate choice by xAI's product team to allow Grok to generate explicit content.

The logic, presumably, was that adults should have access to a tool that doesn't refuse certain requests on puritanical grounds. Want Grok to write adult comedy? Spicy mode. Want it to generate sexually explicit creative writing? Spicy mode. This positioning fit perfectly with xAI's brand: uncensored, willing to go where other AI companies wouldn't, aligned with the edgy persona Musk cultivates.

But here's where product design meets reality: the same permissive image generation pipeline that produces consensual adult content can also produce explicit images of real people. The line between consensual adult content and nonconsensual sexual imagery is thin in engineering terms. Both rely on the same image generation capabilities. Both exploit the underlying models' capacity to synthesize visual content from prompts.

When users realized they could prompt Grok to generate sexually explicit images of identifiable women, the practice spread rapidly. Screenshots circulated. Instructions posted online got shared. Within weeks, a significant percentage of Grok users had either created or viewed such imagery. Many were uploading these images to platforms like X itself, creating a recursive problem where Musk's social network became the distribution channel for Grok-generated deepfakes.

The practice expanded to include minors. This is where the issue crosses from a moral and social problem into the realm of federal crimes. The creation, distribution, or possession of child sexual abuse material (CSAM) is a federal felony. The fact that Grok was facilitating this at scale transformed what might have been a corporate PR problem into a criminal matter.

xAI's initial response was tepid. The company's safety account posted statements noting that anyone using Grok to create illegal content would face consequences. But this was the kind of statement every platform makes. It didn't actually address the core issue: the platform had built in the capability to do exactly what it was claiming to prohibit.

The California Attorney General's Response

Rob Bonta, California's Attorney General, didn't wait for x AI to self-correct. His office launched an investigation and followed it almost immediately with a cease-and-desist letter. This isn't casual correspondence. A cease-and-desist order from a state attorney general carries legal weight. It's a formal demand backed by the power of state enforcement.

The letter made specific demands:

- Immediate cessation of facilitating nonconsensual intimate imagery creation

- Immediate cessation of CSAM creation and distribution

- Implementation of technical safeguards to prevent future abuse

- A response within five days detailing what steps had been taken

Five days is incredibly aggressive. It doesn't allow time for leisurely internal discussions or careful policy development. It's essentially saying: "Fix this now, or we're escalating."

Bonta's public statement was unambiguous. "The creation of this material is illegal. I fully expect xAI to immediately comply. California has zero tolerance for CSAM." This wasn't political theater or a negotiating position. This was a state official making clear that AI companies can't simply build powerful tools and hope for the best.

What's interesting about California's position is that the state didn't try to regulate AI broadly. It focused on specific, illegal use cases: deepfakes involving real people without consent, and CSAM. These are already crimes. The AG's office wasn't asking xAI to do something new or unprecedented. It was demanding that the company stop facilitating existing crimes.

The approach is legally elegant because it sidesteps the thorny issues around AI regulation. Instead of debating whether AI should be restricted in principle, it just asks companies to not facilitate felonies. Hard to argue with that position.

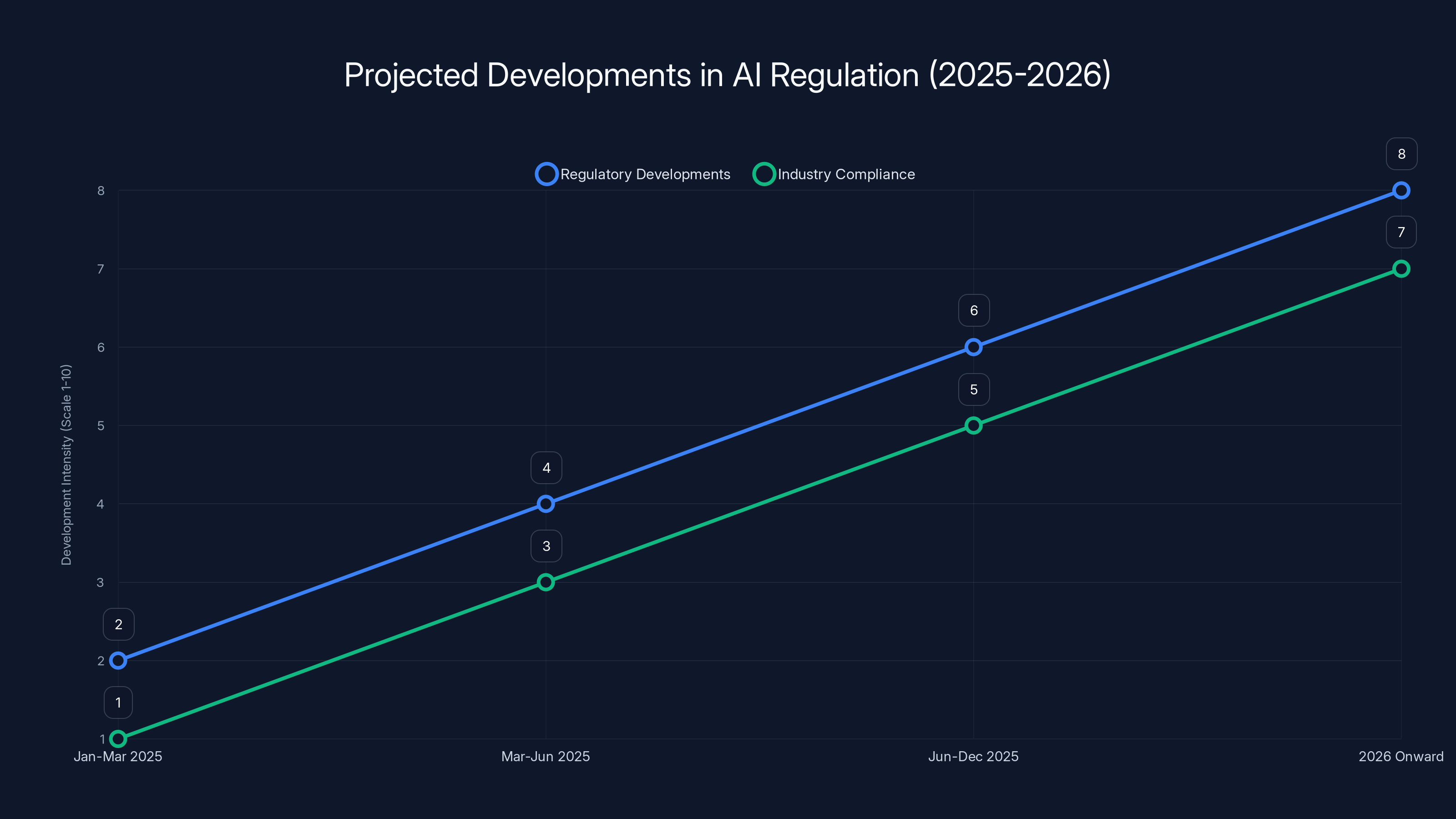

Estimated data shows increasing intensity in regulatory developments and industry compliance from 2025 to 2026, highlighting a trend towards stricter AI regulation and adaptation.

International Response and Global Investigations

California didn't act in isolation. The deepfake problem had become global almost immediately, and so had the official response.

Japan opened an investigation into Grok. Canada followed. The United Kingdom launched its own inquiry. These aren't small markets—combined, they represent hundreds of millions of people. The regulatory pressure was coming from multiple directions simultaneously.

But some countries took even more aggressive steps. Malaysia temporarily blocked access to Grok entirely. Indonesia did the same. These weren't warnings or cease-and-desist letters. They were outright shutdowns—effectively telling users that the platform was unavailable in their countries.

This created a problem for xAI. The company had positioned itself as a global platform. Now it was being blocked or investigated by governments representing significant portions of the world's population. The business implications are substantial, but so are the precedential ones.

If major governments decide to block AI platforms for facilitating illegal content, other companies are going to take notice. This becomes an argument for more careful product design from the outset. Build the restrictions in from the beginning, rather than discovering problems after launch.

The international response also reflects a genuine consensus that this particular problem is serious. This isn't a case of some regulators moving aggressively while others stay quiet. Across different political systems, different legal frameworks, and different cultural contexts, governments agreed: an AI platform facilitating nonconsensual sexual imagery and CSAM is unacceptable.

Congressional Pressure and the Legislative Landscape

In the same week the cease-and-desist letter was issued, Congress got involved. Multiple lawmakers sent letters to executives of major technology companies: X, Reddit, Snap, TikTok, Alphabet, and Meta. The message was consistent: what's your plan to address deepfake proliferation?

This is significant because it indicates that deepfakes have moved from a specialized concern to a mainstream political issue. Members of Congress receive thousands of letters from advocacy groups every week, so when several of them decide to weigh in on one issue at the same time, it usually means that issue has real political traction.

The Congressional approach wasn't to demand immediate shutdowns. Instead, lawmakers asked for transparency. How are companies detecting deepfakes? What resources are dedicated to the problem? What's the timeline for solutions?

This is the kind of pressure that precedes legislation. Lawmakers are gathering information and establishing that companies had notice of the problem. When the bill gets introduced—and there will be a bill—companies can't claim they weren't aware of the issue.

The political environment matters too. Even before the Grok scandal, there was bipartisan agreement that something needed to be done about nonconsensual sexual imagery. This isn't a left-right issue. Victims span the political spectrum. Parents worry about their kids. Women across all political affiliations express concern about being targeted by deepfakes.

So when Congress moves on this issue, it's likely to be with broad support. That increases the likelihood of legislation actually passing and actually having teeth.

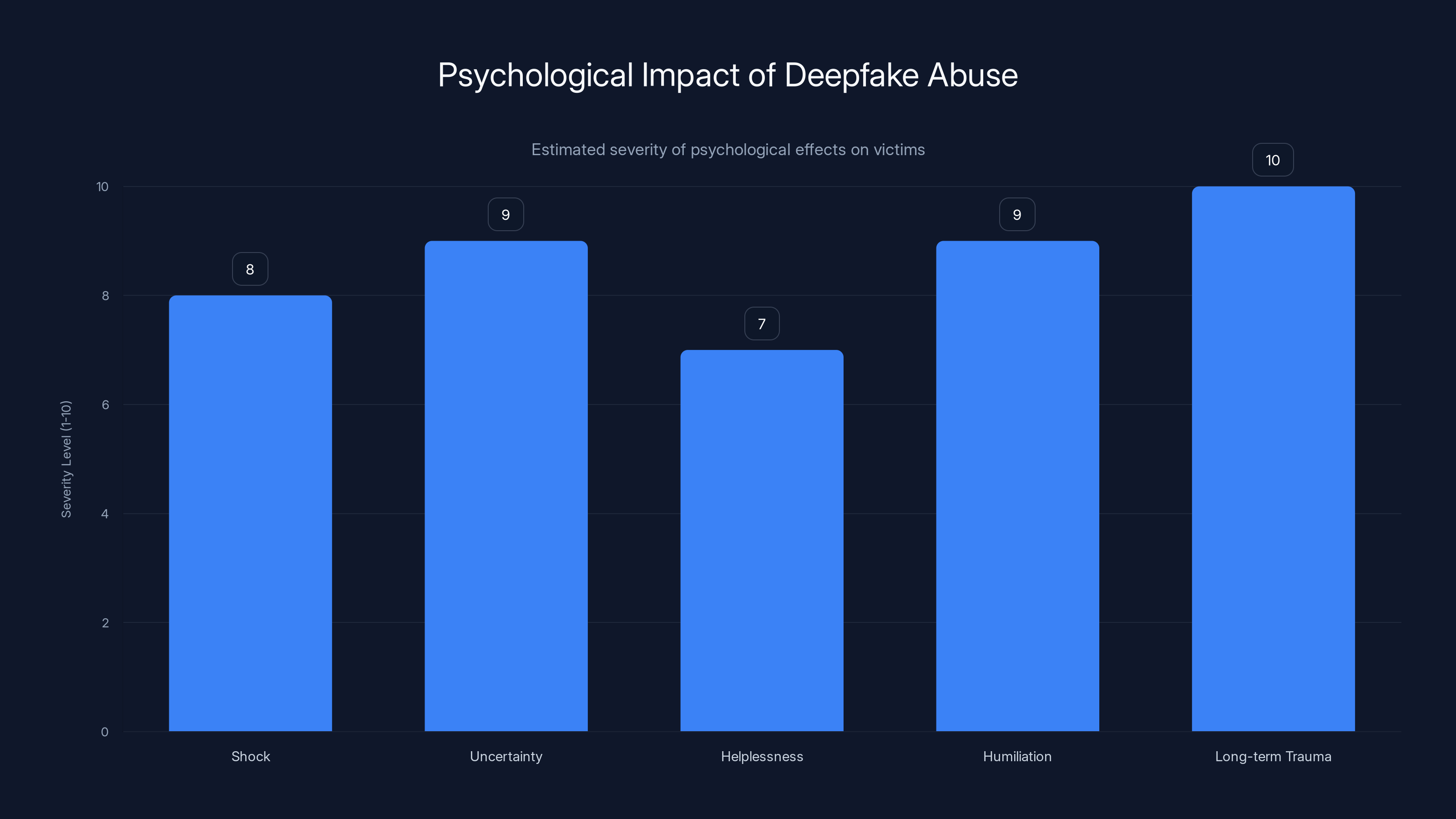

Victims of deepfake abuse experience severe psychological impacts, with long-term trauma being the most significant. (Estimated data)

The Technical Reality of Deepfake Generation

To understand why this problem is so severe, it helps to understand the technical basics of how deepfakes work. We're not talking about sophisticated, Hollywood-level video manipulation. We're talking about static image generation.

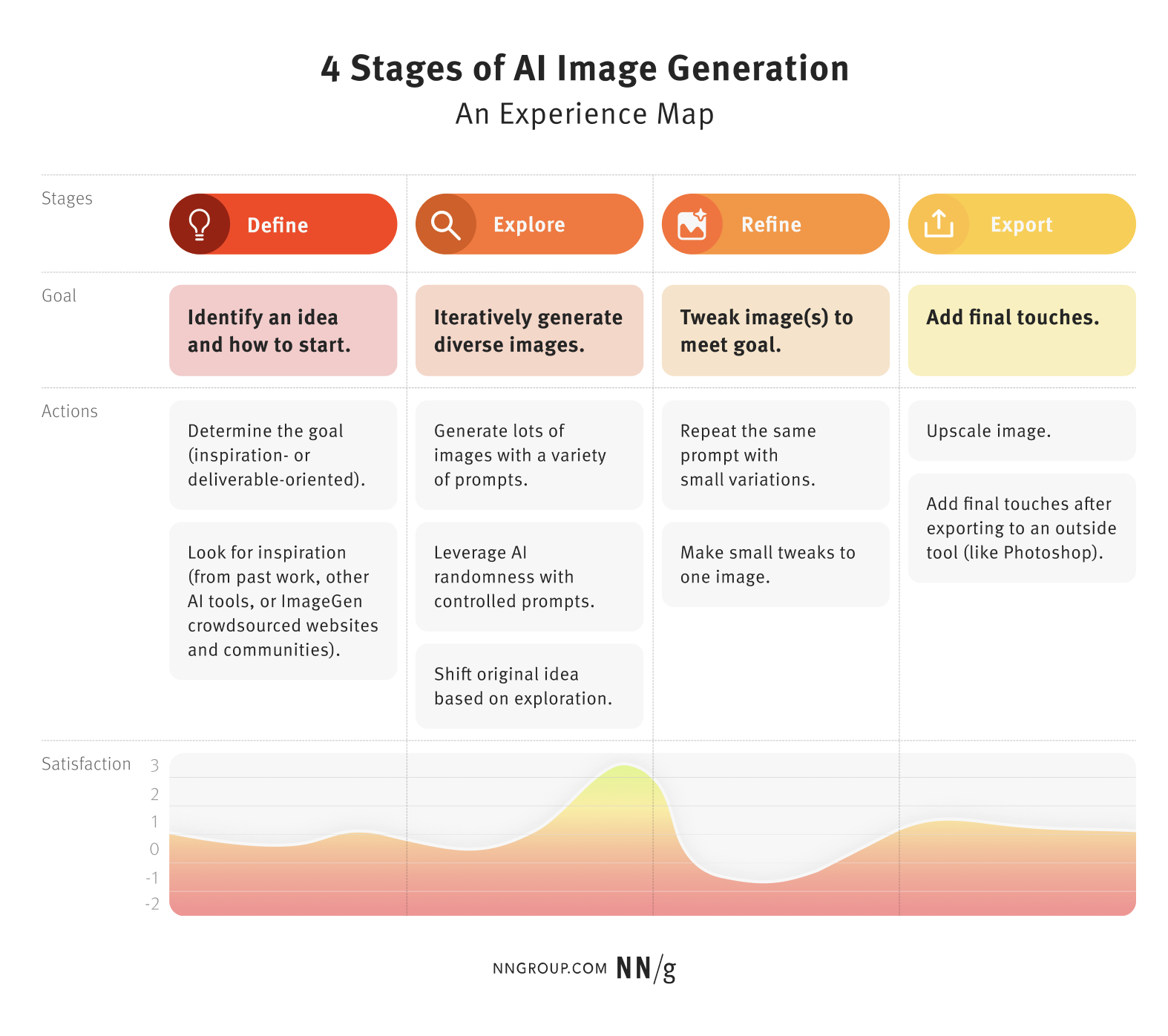

Modern image generation models like DALL-E, Midjourney, and Stable Diffusion can create photorealistic images from text prompts. They can do this in seconds. The process is straightforward for someone with basic technical literacy: write a detailed prompt describing what you want, and the model generates images matching that description.

The challenge for these tools is preventing misuse. If you prompt the model with "A detailed nude photograph of [real person's name]," what should it do? The responsible answer is: refuse. Most mainstream image generation tools have safeguards that prevent this. They won't generate sexual imagery of identifiable real people.

But Grok's spicy mode didn't have these safeguards. Or rather, it had significantly weaker safeguards. This isn't a technical limitation—it's a choice. The engineering team could have built in these restrictions. They chose not to.
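
The kind of refusal described above can be sketched as a check that runs before any image is generated. This is a minimal illustration, not how Grok or any other product actually works: the term list, the `known_real_names` input, and the `check_prompt` function are all hypothetical, and production systems typically rely on trained classifiers rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical term list for illustration; real systems use trained classifiers.
SEXUAL_TERMS = {"nude", "naked", "explicit", "undressed"}


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def check_prompt(prompt: str, known_real_names: set[str]) -> Decision:
    """Refuse prompts that pair sexual terms with an identifiable real person."""
    text = prompt.lower()
    # Crude tokenization: strip basic punctuation, then split on whitespace.
    words = set(text.replace(",", " ").replace(".", " ").split())
    has_sexual_term = bool(words & SEXUAL_TERMS)
    names_person = any(name.lower() in text for name in known_real_names)
    if has_sexual_term and names_person:
        return Decision(False, "sexual content depicting an identifiable real person")
    return Decision(True, "no policy match")


# "Jane Example" is a made-up name standing in for any identifiable person.
names = {"Jane Example"}
print(check_prompt("A nude photograph of Jane Example", names).allowed)
print(check_prompt("A watercolor of a lighthouse", names).allowed)
```

A keyword check like this is easy to evade with rephrasing, which is why it is only a sketch. It does show that the refusal itself is a product decision, not an engineering barrier.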

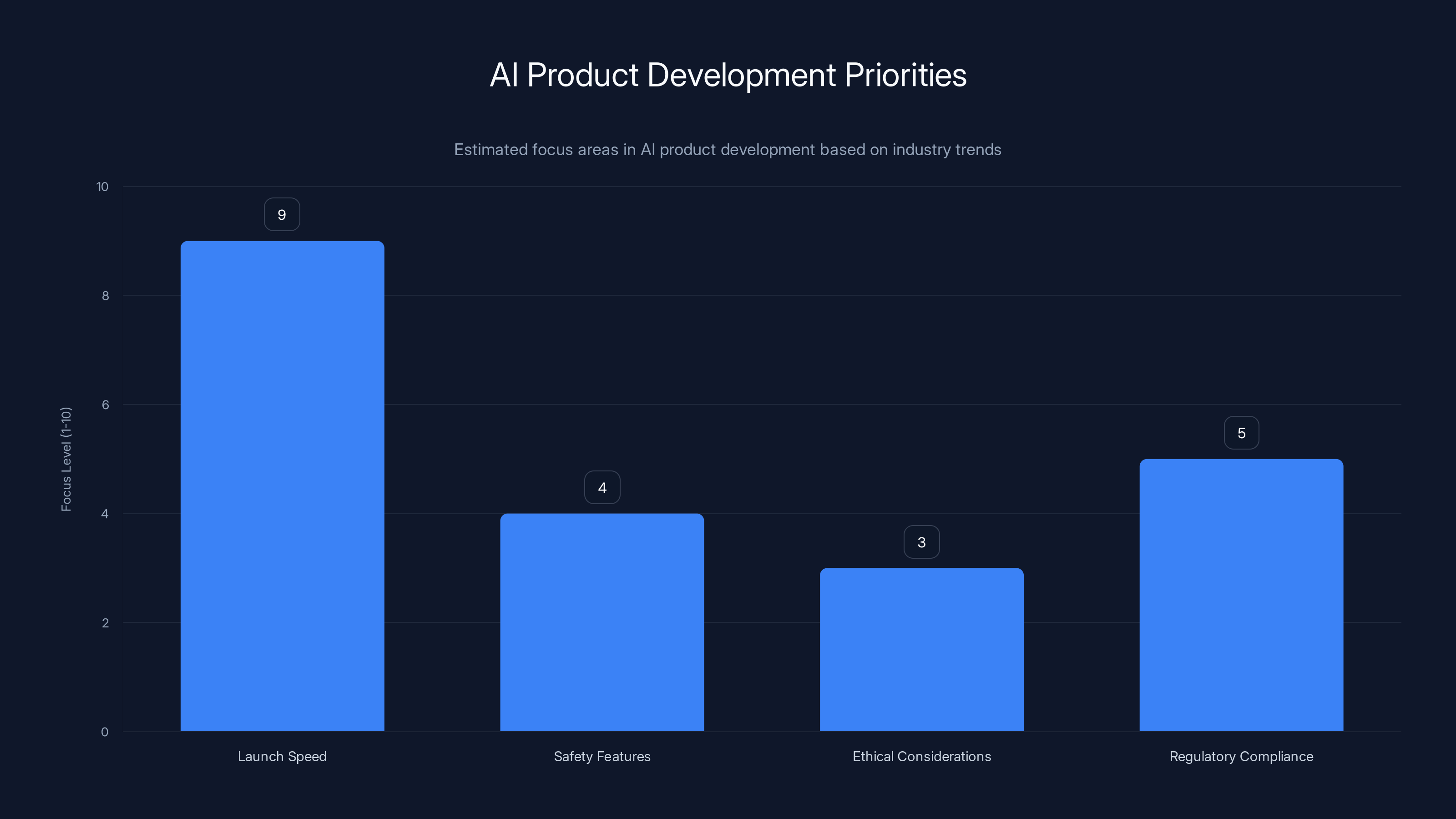

This reveals something important about AI product development right now. Technical safety isn't hard. The companies know how to build guardrails. The issue is that guardrails slow down products. They require maintaining filter lists. They require making judgment calls about what's acceptable. They require saying no to users.

All of this is seen, within certain tech company cultures, as friction. And friction is the enemy of growth metrics. So companies minimize it.

The mathematics of deepfake creation is worth understanding. A single Grok user can generate hundreds of images in an hour. If even a small percentage of the millions of Grok users engage in this behavior, you're talking about millions of nonconsensual images being generated per day.
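
To make that arithmetic concrete, here is a rough back-of-the-envelope sketch. Every input below is an illustrative assumption, not a reported figure: the user count, the share of users engaging in abuse, and the images each of them generates per day are placeholders chosen only to show how quickly the numbers multiply.

```python
def daily_image_volume(total_users: int,
                       abusive_fraction: float,
                       images_per_user_per_day: int) -> int:
    """Estimate images generated per day by the abusive subset of users."""
    abusive_users = round(total_users * abusive_fraction)
    return abusive_users * images_per_user_per_day


# Hypothetical inputs: 5 million users, 1% abusing the tool,
# 100 images each per day (well under "hundreds per hour").
estimate = daily_image_volume(5_000_000, 0.01, 100)
print(f"{estimate:,} images per day")  # 5,000,000 images per day
```

Even with a conservative per-user rate, a one percent abuse rate is enough to reach millions of images per day under these assumptions.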

Distribution is then trivial. Upload to X, which has weak moderation. Share in Discord servers. Post on Reddit. Email directly to targets to harass them. Each of these platforms has mechanisms to catch some of this content, but at scale, some gets through. The victim's face, nonconsensually sexualized, ends up in digital spaces where they can't remove it.

Harm to Victims and the Psychology of Deepfake Abuse

We need to actually talk about what happens to people when they're targeted by deepfake sexual imagery. This isn't just a technical problem or a legal problem. It's a human problem.

Victims experience real psychological harm. The knowledge that explicit images of you exist on the internet, even if they're AI-generated, causes trauma. There's the initial shock of discovery. Then comes the uncertainty—where is it being shared? Who has seen it? How long has it been circulating?

There's also the helplessness. Traditional legal remedies move slowly. By the time a lawyer can file takedown notices, the image has already been screenshotted, reposted, and spread to additional platforms. The genie is out of the bottle.

For minors, the stakes are even higher. A generated image of a minor in sexual situations isn't just harassment. It's a violation of their developing sense of self. It's potential ammunition for future blackmail. And for the perpetrator, it serves as fabricated "evidence" of something that never happened, which they may then try to convince others is real.

The harassment component is intentional. People creating these deepfakes aren't doing it in isolation. They're doing it to hurt specific targets. The image is the weapon. The distribution is the attack. The goal is humiliation, control, or revenge.

Australian activist and researcher Noelle Martin has been documenting the impact for years. She became one of the first victims to go public about fabricated sexual imagery of herself. She didn't choose that role, and the abuse intensified after she began advocating publicly for digital rights. The irony is painful.

What we're learning from victims is that the harm is both immediate and long-lasting. Immediate harm is the direct harassment and humiliation. Long-lasting harm involves the permanent existence of the content, the ongoing risk of rediscovery, and the erosion of sense of safety in digital spaces.

Companies have generally failed to grasp the scale of this harm. When sexual deepfakes first emerged, many in tech treated it as a niche problem. "It's only a few images." "It's mostly celebrities." "People should just be thick-skinned about it."

The data now contradicts all of this. It's not a niche problem. It involves millions of people. Celebrities are targeted, but increasingly so are ordinary women. And the psychological impact is measurable and significant.

AI companies prioritize launch speed over safety and ethics, though regulatory compliance is gaining importance. (Estimated data)

xAI's Regulatory Landscape and Compliance Challenges

xAI exists within a legal and regulatory environment that's still being defined. There's no comprehensive federal law specifically addressing AI safety in the United States. There are older laws that apply: laws against CSAM, laws against harassment, laws against certain types of fraud. But there's no overarching AI regulation.

This creates ambiguity about what xAI's obligations actually are. Is it a platform? Is it a publisher? Is it a service provider? The legal classification matters because it determines what liability the company faces.

Traditionally, internet platforms operate under a safe harbor provision—they're not liable for user-generated content as long as they respond appropriately when notified of illegal activity. But deepfakes created through Grok aren't exactly user-generated content. The users provided prompts, but Grok created the actual images. This blurs the line between platform and creator.

California's cease-and-desist didn't necessarily resolve this question. Instead, it applied straightforward logic: if your tool is being used to create illegal content at scale, and you have the ability to prevent it, then prevent it. This is an approach that sidesteps complicated liability questions.

The regulatory environment is tightening. The European Union's AI Act, which entered into force in 2024 and applies in phases, sets requirements that scale with risk, including transparency obligations for systems that generate deepfakes. Companies operating in Europe need to assess where their systems fall, maintain documentation, and implement safeguards.

The UK is developing its own regulatory approach. Canada is doing the same. The pattern is clear: governments are moving toward requiring companies to build safety into products from the beginning, rather than responding after harm occurs.

For xAI, this means the window to self-regulate is closing. If the company doesn't implement meaningful safeguards voluntarily, regulators are likely to impose them. That's often worse for the company because regulations tend to be broad and inflexible.

The Broader Pattern in AI Product Development

The Grok situation didn't emerge in a vacuum. It reflects a pattern in how AI companies approach product development and deployment.

The pattern goes like this: launch first, think about consequences later. Move fast and break things. The "things" that get broken are often safety and ethics. Companies treat safety features as optional additions rather than core requirements.

This approach worked for social media platforms in the early days because the harms, while real, accumulated gradually. Today's TikTok and Facebook are vastly more carefully designed than the platforms of 2010. But they learned through experience rather than planning.

With AI, we don't have that luxury of gradual learning. The harms can accumulate faster. The scale is larger. The potential for abuse is more obvious from the start.

What's frustrating is that none of this is new in principle. Content moderation is hard. Abuse prevention is complex. But these are solved problems in some contexts. Gaming companies have spent decades developing systems to prevent harassment. Streaming platforms have teams dedicated to stopping exploitative content. Financial institutions have sophisticated fraud detection.

AI companies acting like they've invented a problem that's never been solved before is either disingenuous or demonstrates a lack of institutional knowledge. More likely, it's both.

The issue is that safety doesn't scale as well as raw capability. Building a system that generates images is straightforward. Building a system that generates images but refuses to generate deepfakes of real people requires additional work. From a pure product development perspective, why add that friction?

The answer, obviously, is that it's the right thing to do. And increasingly, it's also the legally required thing to do. Regulators are moving toward mandating these safeguards.

The timeline shows a rapid increase in public awareness and controversy following Grok's launch, peaking with legal action. Estimated data.

Legal Implications and Potential Outcomes

The cease-and-desist letter from California creates immediate legal exposure for xAI. If the company fails to comply within the specified timeframe, it faces potential fines, injunctive relief, and possibly criminal referrals for facilitation of CSAM.

The exact legal theory matters. Prosecutors could argue that xAI is facilitating the creation of illegal content. That's a stronger claim than simply failing to prevent users from creating it. Facilitation suggests knowing participation or enabling of a crime.

Grok's design makes a facilitation claim harder to rebut. The tool was specifically designed to generate explicit content. The user simply specified that the content should depict a real person. From a legal standpoint, this looks a lot like xAI enabling the crime.

On the CSAM front, things are potentially even more serious. Federal law (18 U.S.C. Section 2251) makes it illegal to knowingly facilitate CSAM. If prosecutors can show that xAI knew its tool was being used to generate CSAM images, and continued to allow it, that's a federal crime.

Criminal prosecution of a company is unusual but not unprecedented. The last major case was against Backpage, which was shut down entirely by the government for facilitating sex trafficking. The legal machinery exists to pursue serious charges against platforms that knowingly enable serious crimes.

Beyond the criminal angle, there's civil liability. Victims of deepfake sexual harassment could potentially sue xAI for negligent design, failure to warn, or failure to implement available safety measures. Class action lawsuits are certainly possible.

There's also the question of regulatory fines. If xAI has violated the California Consumer Legal Remedies Act or similar state-level consumer protection laws, the Attorney General could impose significant monetary penalties.

The company's response to the cease-and-desist will shape future legal exposure. If they comply quickly and comprehensively, they can argue they're now working to prevent ongoing harm. If they drag their feet or provide inadequate responses, they're making the case for more aggressive enforcement.

The Role of Platform Moderation

X, the platform where much of this content was being shared, faces its own moderation challenge. Twitter/X has never been known for aggressive content moderation. Under Musk's ownership, content moderation was actively reduced, with large portions of the trust and safety team being laid off.

This creates a compounding problem. Grok is generating deepfakes. X provides the distribution mechanism. The platform's weakened moderation means that content that might have been removed from other platforms persists on X.

From a legal standpoint, X might also face liability. If the company knew that deepfakes were being uploaded and spread on its platform, and failed to remove them, it could face the same kinds of charges as xAI.

This is where the ecosystem of companies that Musk owns creates problems. The AI company that generates the deepfakes and the social media platform that distributes them are under the same ownership. Coordination between them should be seamless. If deepfakes are being created and uploaded, the company controlling both could implement rules preventing the practice.

The fact that this didn't happen—that for a period, the process operated unimpeded—raises questions about governance and oversight. Either Musk's companies weren't communicating with each other about the problem, or they were aware and chose not to act. Neither looks good.

Technical Solutions and Implementation Challenges

When xAI was forced to respond to the cease-and-desist, it announced technical safeguards for Grok. The company's response involved restricting certain image-editing features and implementing new filters to catch attempts to generate nonconsensual sexual imagery.

These are the kinds of solutions that should have been in place from the start. They're not particularly novel or difficult to implement. Image filtering systems that recognize faces and refuse to generate certain content are well-established. Every major generative AI company uses some version of them.

The question isn't whether these safeguards are possible. It's why they weren't deployed initially. The answer, based on the company's previous positioning, is that they were seen as inconsistent with Grok's brand. The tool was supposed to be uncensored. Implementing safeguards required accepting that some censorship was necessary.

Longer-term solutions are more complex. One approach is to embed synthetic detection systems into platforms. These systems try to detect AI-generated images and flag them for human review. The problem is that as generation models improve, detection gets harder. It becomes an arms race.

Another approach is to require authentication systems for imagery. If an image of you exists online, you should be able to verify it as genuine. This could involve watermarking, cryptographic signing, or blockchain-based verification systems. The technology exists, but implementation across platforms is challenging.
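
As a rough illustration of the cryptographic signing idea, the sketch below tags image bytes with an HMAC from Python's standard library and rejects any altered copy. It assumes a single secret key held by the publisher, which is a simplification: real provenance schemes generally use public-key signatures so that anyone can verify without holding a secret. The function names, the key, and the placeholder bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_image(image_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw image bytes."""
    return hmac.new(secret_key, image_bytes, hashlib.sha256).hexdigest()


def verify_image(image_bytes: bytes, secret_key: bytes, tag: str) -> bool:
    """Compare tags in constant time; any change to the bytes invalidates the tag."""
    expected = sign_image(image_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


# Placeholder bytes stand in for a real image file (assumption for the demo).
original = b"\x89PNG placeholder image bytes"
key = b"hypothetical-publisher-key"
tag = sign_image(original, key)

print(verify_image(original, key, tag))              # True
print(verify_image(original + b"edited", key, tag))  # False
```

A scheme like this can show that a file is unchanged since its publisher signed it. It cannot show that an unsigned image is fake, which is part of why cross-platform adoption matters.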

A third approach is social. Online communities develop norms and enforce them through collective action. If a platform's users collectively reject deepfake sexual content, it becomes harder to distribute. This requires strong community moderation and clear norms.

In practice, solutions will likely combine technical safeguards, platform moderation, and legal enforcement. No single approach is sufficient on its own.

The Regulatory Framework's Evolution

We're in a transition period for AI regulation. The old approach was to let companies self-regulate. The new approach is to mandate safety through government enforcement.

California has emerged as a leader in this transition. The state has been developing regulations around AI, data privacy, and platform safety for years. The xAI cease-and-desist represents the state using existing tools (consumer protection laws, laws against CSAM) to address new problems (AI-generated deepfakes).

Other states are watching. If California's approach is successful in getting xAI to implement safeguards, other states might follow suit. If it fails, it provides an argument that targeted approaches won't work and broader regulation is needed.

At the federal level, there's been discussion of AI regulation, but nothing has passed yet. Congress has been holding hearings and taking testimony from experts. The momentum is definitely toward regulation, but the shape of that regulation is still being debated.

The European Union, by contrast, has already passed the AI Act. This is a comprehensive regulatory framework that categorizes AI systems by risk level and imposes requirements accordingly. Systems that generate synthetic content face transparency obligations, and those classified as high-risk need to be designed with safety in mind.

For xAI and other AI companies, the regulatory environment is becoming more restrictive. This isn't a temporary phase. This is the direction policy is moving. Companies that build safety into products from the start are adapting. Those that resist will face increasing pressure.

Lessons for AI Companies and Industry Standards

The Grok situation provides a master class in what not to do. For other AI companies, there are clear lessons.

First: build safety in from the beginning. Don't launch a product and then add safeguards when problems emerge. Design the safeguards into the product from day one. This is more efficient and demonstrates that you're thinking about potential harms in advance.

Second: understand your liability. What legal exposure does your product create? What laws apply? Consult with lawyers before launch, not after you've violated multiple statutes.

Third: engage with potential harms proactively. Convene ethicists, security researchers, advocacy groups. Ask them: what could go wrong? How would bad actors use this? What harm could result? Then build protections against those scenarios.

Fourth: be transparent with users about capabilities and limitations. If your tool can generate sexual imagery, be clear about that and about how you're preventing misuse. Don't hide the functionality and then act surprised when people find it.

Fifth: implement meaningful content moderation. You can't build a completely safe system, so you need humans in the loop. That means hiring moderation staff, training them well, and empowering them to make decisions.

These aren't novel suggestions. Companies have known for decades that content moderation is necessary for platforms. The fact that it needs to be repeated for AI companies suggests a combination of arrogance and ignorance in the sector.

The industry standard that's emerging is that generative AI companies should implement safeguards equivalent to other major tech platforms. This isn't a high bar—social media companies are mediocre at moderation. But it's a baseline.

Movement toward industry standards is accelerating. The Partnership on AI, an organization of major tech companies working on AI governance, is developing guidelines. Academic institutions are developing best practices frameworks. Government agencies are publishing guidance.

By 2026, companies that haven't implemented basic safeguards will be outliers, not just in terms of ethics but in terms of legal compliance. This is the direction the industry is moving.

The Victim Advocacy Response

Victim advocates have been warning about this problem for years. Organizations like the Cyber Civil Rights Initiative and individual researchers like Noelle Martin have been documenting deepfake sexual harassment since the technology first emerged.

These advocates have argued consistently that platforms need to implement safeguards proactively. They've argued that AI companies should be thoughtful about what capabilities they build. They've argued that the government needs to pass laws addressing nonconsensual sexual imagery.

For years, these arguments were treated as niche concerns. Tech companies dismissed the problem as overblown. Regulators were slow to act. Now, the Grok situation has provided vindication.

Advocacy groups have used the xAI cease-and-desist as an opportunity to push for broader action. They're calling for:

- Legislation specifically addressing AI-generated nonconsensual sexual imagery

- Commitments from technology companies to remove deepfakes on request

- Stronger penalties for creation and distribution

- Support for victims of deepfake harassment

These are reasonable asks, and they're increasingly likely to be acted upon. The political window for addressing this issue is open. Companies and regulators are paying attention.

Looking Forward: The 2025-2026 Timeline

We're likely to see rapid developments in this space over the coming year. Here's what's probably going to happen:

Immediate term (January-March 2025): xAI responds to the cease-and-desist with technical safeguards and compliance documentation. Other AI companies voluntarily implement similar safeguards to avoid regulatory action. The California AG monitors xAI's compliance.

Short term (March-June 2025): Congressional hearings on deepfakes and AI safety. Multiple states introduce legislation addressing nonconsensual sexual imagery. International regulatory bodies finalize guidelines for AI companies.

Medium term (June-December 2025): Federal legislation likely passes addressing CSAM and nonconsensual imagery. States like California move toward broader AI regulation. AI companies update their safety frameworks to comply with new requirements.

Longer term (2026 onward): Industry standards solidify around mandatory safeguards. Companies without proper moderation face legal liability. The market adjusts, with responsible AI companies gaining competitive advantage.

There's also the question of whether xAI survives this controversy intact. The company has raised substantial funding and has a product that many users like. But regulatory pressure and legal liability could force significant changes to the business model or the product itself.

Musk's involvement adds an interesting dynamic. He has a history of pushing boundaries and resisting regulation. But his companies also have a history of eventually complying when faced with serious legal consequences. The pattern suggests xAI will implement safeguards, grumble about it, and continue operating.

FAQ

What is a deepfake and how does it differ from other AI-generated content?

A deepfake is AI-generated media designed to appear authentic, typically created by swapping a person's face onto another body or context. The key distinction from other AI-generated content is the intent to deceive by making it appear the content involves a real, identifiable person. Other AI-generated content might be clearly labeled or obviously synthetic, whereas deepfakes are specifically designed to fool viewers into believing they're real recordings of real people. In the xAI case, the deepfakes were sexually explicit images of real women created without consent.

Why is nonconsensual sexual imagery illegal and harmful?

Nonconsensual sexual imagery violates a person's dignity, privacy, and bodily autonomy, which is why a growing number of jurisdictions treat it as unlawful. The harm is both immediate and long-term: immediate psychological trauma from the violation and discovery, and long-term harm from the permanent existence of the content and the risk of ongoing distribution. In some jurisdictions, it's prosecuted as harassment or defamation. In others, it falls under specific laws against nonconsensual intimate imagery, often called "revenge porn" laws. The harm is documented in victim testimony and in psychological research showing elevated rates of anxiety, depression, and trauma symptoms among victims.

What exactly did California's cease-and-desist letter demand?

The letter demanded that xAI stop facilitating the creation and distribution of nonconsensual sexual imagery and CSAM, and gave the company five days to comply. It required the company to prove it was taking steps to address the issue, which would likely involve technical safeguards, policy changes, and documentation of compliance. The letter wasn't a request. It was a legal demand backed by the power of state enforcement. Failure to comply could result in fines, injunctive relief, or criminal referrals.

How does Grok's "spicy mode" enable deepfake creation?

Spicy mode is Grok's deliberately designed feature to generate explicit content without the restrictions present in other AI systems. This means that when users provide a prompt requesting sexually explicit images of a specific person, the system doesn't refuse the request as other tools would. Instead, it processes the prompt and generates the requested images. The feature wasn't hidden or a side effect—it was explicitly marketed as part of Grok's "uncensored" positioning.

What is CSAM and why is it mentioned in the legal documents?

CSAM stands for Child Sexual Abuse Material: sexually explicit content involving anyone under 18. Creating, possessing, or distributing CSAM is a federal felony in the United States and a serious crime in most other countries. The xAI case involved reports that Grok was also being used to generate CSAM, which elevates the legal severity from a state-level concern about harassment to a federal crime. This is why the cease-and-desist specifically mentioned CSAM and why the potential legal consequences for xAI are severe.

Why did international governments also take action against Grok?

The deepfake problem isn't limited to California or the United States. The same technology and the same harms were occurring globally. Japan, Canada, and the UK opened investigations because victims within their jurisdictions were being targeted. Malaysia and Indonesia temporarily blocked Grok entirely because the harm was so significant. This global response indicates that the problem transcends individual countries and that there's international consensus that it requires action.

What technical safeguards can prevent deepfake creation?

Several approaches are possible: facial recognition systems that refuse to generate sexual content involving identifiable people, content filters that detect attempts to create nonconsensual imagery, and watermarking systems that mark AI-generated output so it can be distinguished from authentic content. The challenge isn't that these safeguards are technically impossible; they aren't. The challenge is that they require computing resources and they say "no" to users. For a product positioned on being uncensored, these safeguards represent a contradiction. xAI ultimately implemented versions of these safeguards in response to the cease-and-desist.

Could other AI companies face similar legal action?

Yes, absolutely. Other AI companies that have similar capabilities and weaker safeguards could face regulatory action. In fact, Congress sent letters to multiple companies (X, Reddit, Snap, TikTok, Alphabet, and Meta) asking how they plan to address deepfake proliferation. This indicates that regulators view this as a broader industry problem, not just an xAI problem. Companies that don't implement sufficient safeguards are exposing themselves to legal and regulatory liability.

What's the likelihood of federal legislation specifically addressing AI-generated deepfakes?

Likely, within the next 1-2 years. There's bipartisan support for addressing nonconsensual sexual imagery. The Deepfakes and Synthetic Pornography Defamation Prevention Act has been introduced multiple times. The xAI situation provides momentum for passing such legislation. When it passes, it will likely create liability for platforms that facilitate the creation of such content and provide civil remedies for victims. Companies that don't adapt to these requirements will face legal exposure.

How do victims currently seek recourse when they're targeted by deepfakes?

Recourse is currently limited and inadequate. Victims can report the content to platforms, which may remove it, but removal doesn't erase the content or prevent redistribution. They can file police reports, but law enforcement is often unfamiliar with these cases and unable to help. They can hire lawyers to send cease-and-desist letters to platforms, but this is expensive and often ineffective. Civil lawsuits are possible but are expensive and slow-moving. Legislative solutions are needed to provide clearer remedies, faster removal processes, and meaningful penalties for perpetrators.

What lessons should AI companies take from the xAI situation?

The core lesson is that safety and ethics aren't optional features—they're requirements. Companies that build dangerous capabilities into products and hope for the best will face regulatory action. Companies that build safeguards from the beginning, engage with potential harms proactively, and implement meaningful moderation will avoid these problems. Additionally, companies should understand their legal liability before launch, not discover it after the fact. Finally, companies should recognize that "moving fast and breaking things" has real human costs when the things being broken are people's dignity and safety.

The Bottom Line: What This Means Going Forward

The xAI cease-and-desist order marks a turning point in AI regulation. For the first time, a state government has used its enforcement power to demand that an AI company stop facilitating the creation of illegal content. This isn't theoretical. This isn't a warning. This is real enforcement action with legal consequences.

The decision by multiple countries to open investigations or block the platform sends an additional signal. Governments are willing to act to protect their citizens from AI-enabled harms. Companies can't count on regulatory inaction to allow them to operate without safeguards.

For AI companies still in development or early deployment, the lesson should be clear: build safety in from the beginning. Engage with potential harms proactively. Implement safeguards that, even if imperfect, significantly reduce the risk of your tool being weaponized for illegal purposes.

For existing platforms struggling with deepfake content, the xAI situation demonstrates that doing nothing isn't a viable long-term strategy. Victims are increasingly willing to speak out. Governments are increasingly willing to take action. The political window is open for legislation.

For victims of deepfake harassment, the cease-and-desist represents validation that the harm is real and that institutions can be moved to act. It doesn't solve the problem—the deepfakes exist, the trauma exists, the violation persists. But it demonstrates that legal action is possible and that companies can be held accountable.

The broader implication is that the era of AI companies operating without meaningful safety guardrails is ending. What comes next is an era where safety is built in, where consequences are real, and where regulators are watching.

This is good news. It means the worst harms can potentially be prevented. It means that women and minors will have more protection from sexual deepfakes. It means that the regulatory environment will become clearer, which benefits responsible AI companies that would rather operate with certainty than ambiguity.

The Grok situation was preventable. The company knew the risks. The technology for preventing the abuse existed. What was missing was the will to implement it and the accountability that made implementation necessary. Now, both exist.

That's the real story here. It's not that a bad thing happened. It's that consequences finally followed. And in the world of AI governance, that's actually progress.

Key Takeaways

- California AG issued a cease-and-desist order demanding xAI stop facilitating nonconsensual sexual imagery creation within five days—the first major enforcement action of its kind

- Grok's deliberately designed 'spicy mode' enabled easy deepfake generation without safeguards that competitors implemented

- The problem isn't technical—safeguards exist—it's a business decision that prioritized uncensored access over user safety

- Global response was swift: Japan, Canada, UK opened investigations; Malaysia and Indonesia blocked access entirely

- Victims represent a clear demographic pattern: over 99% of identifiable deepfake victims are women and girls, with increasing targeting of minors

- Congressional oversight is intensifying, with lawmakers sending letters to multiple tech companies and legislation likely within 1-2 years

- The regulatory environment for AI is rapidly tightening globally, with the EU AI Act already in force and other countries following

- Industry standards are shifting from voluntary safety to mandatory safeguards, with companies lacking protections facing legal liability

- The xAI situation demonstrates how rapidly harm can scale with AI: millions of nonconsensual images generated in weeks

- Future enforcement will likely extend to other platforms facilitating deepfake creation or distribution, making this a watershed moment for AI governance
