Responsible AI in 2026: The Business Blueprint for Trustworthy Innovation

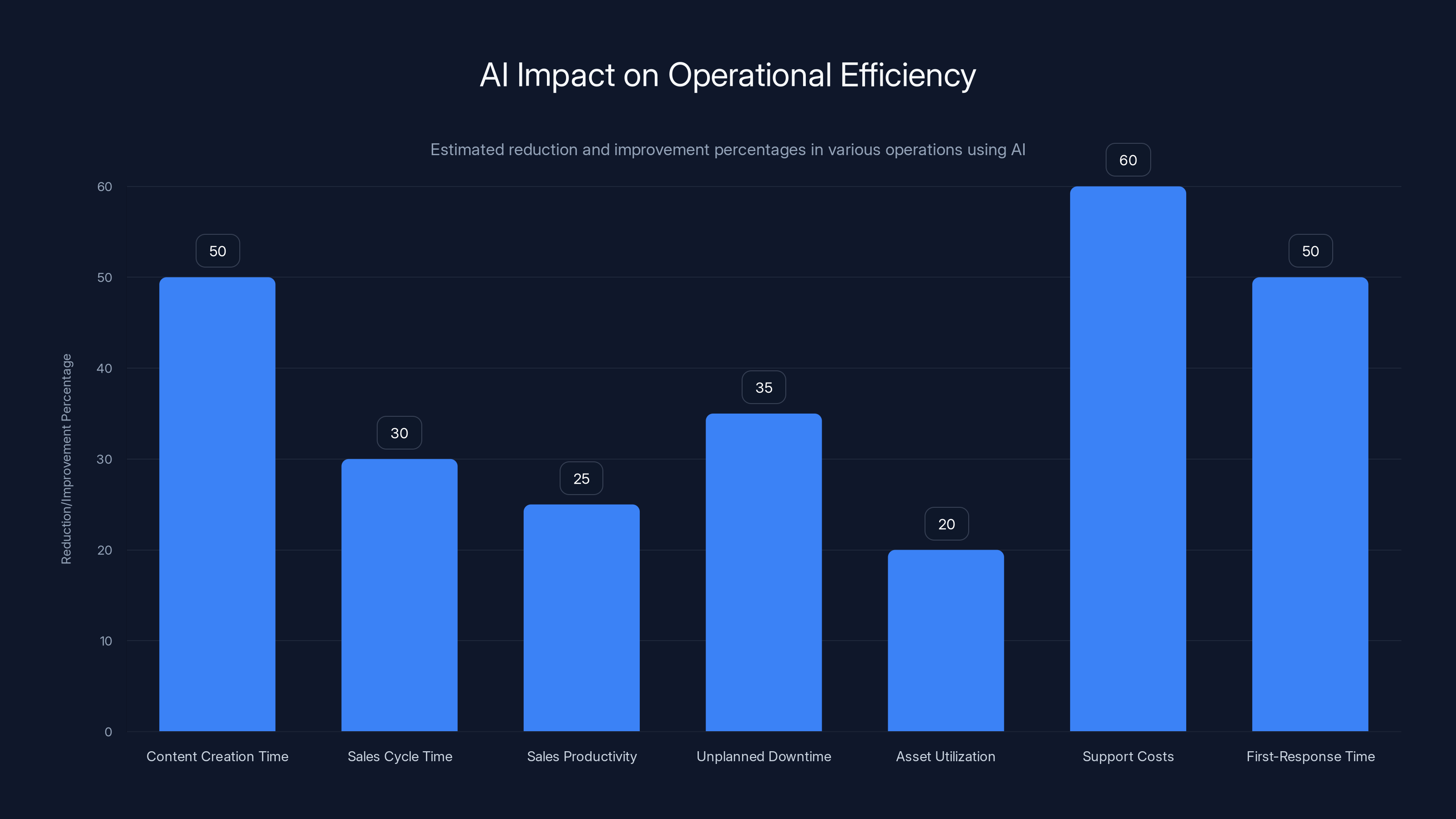

We're past the hype phase now. The questions about whether AI belongs in the enterprise have been replaced by something much harder: how do we implement this responsibly?

That shift matters. A lot.

Right now, businesses are sitting at an inflection point. AI budgets are growing faster than governance structures. Teams are adopting new tools without clear guardrails. And the gap between what AI can do and what it should do is widening daily. The stakes aren't academic anymore. They're operational, financial, and reputational.

In 2026, the businesses that will win aren't the ones moving fastest. They're the ones moving smartest. That means building AI systems anchored in three pillars: responsible governance, data integrity, and team alignment. It means understanding that trust isn't a feature—it's a business imperative.

Let me be direct: the companies treating AI as a speed play are going to stumble. The ones treating it as a trust play are going to scale.

TL;DR

- UK business investment in AI will rise 40% over the next two years, but responsibility and governance must scale alongside adoption

- Trust is a performance driver: 67% of consumers consider brand trust essential to purchase decisions, making transparent AI implementation a competitive advantage

- 78% of CISOs report AI-powered cyber threats are already impacting their organizations, demanding rigorous security governance

- Siloed working is unsustainable: unified channel strategies and collaborative cross-functional teams are essential for commercial impact

- People, process, and governance matter more than technology: structured training and compliance frameworks enable safe, scalable AI deployment

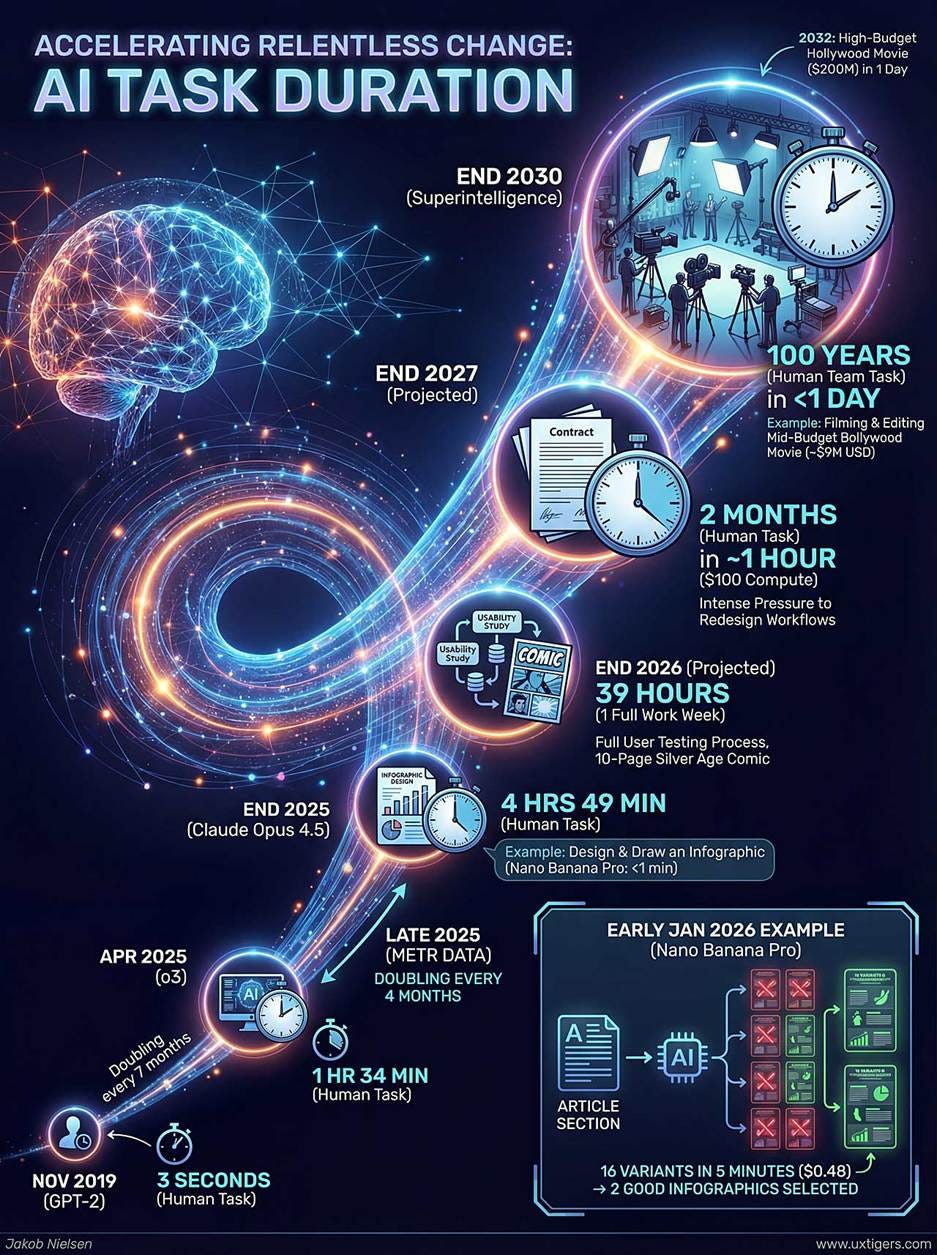

The distribution highlights that 'People' are often the primary focus in Responsible AI initiatives, followed by structured 'Process' and 'Governance'. Estimated data.

The Current State: AI is Mainstream, Governance is Lagging

Let's be honest about where we are. AI isn't experimental anymore. It's embedded in workflows across marketing, sales, operations, and IT. Entire teams are using AI for content generation, lead scoring, predictive maintenance, and customer service without thinking twice. That's progress.

But progress without guardrails creates risk.

The data tells the story. According to recent industry surveys, 78% of Chief Information Security Officers (CISOs) report that AI-powered cyber threats are already having a significant impact on their organizations. That's not a future problem. That's today's problem. And it's accelerating because hackers aren't waiting. They're actively building AI-powered attack strategies to match the defensive tools that businesses are deploying.

The pattern is predictable: businesses adopt AI rapidly, security teams scramble to catch up, and then adversaries find new vectors to exploit. It's a cycle that repeats unless organizations get ahead of it with deliberate governance.

What's interesting is that the technology itself isn't the bottleneck. The bottleneck is people, process, and discipline.

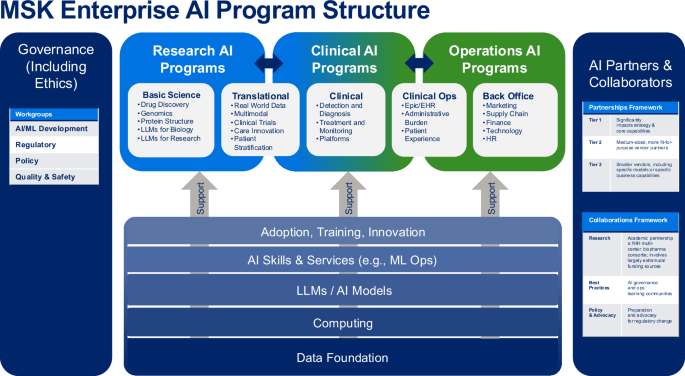

When you talk to organizations that are successfully deploying AI at scale, they're not the ones with the fanciest tools. They're the ones with the clearest governance frameworks, the best-trained teams, and the most rigorous data management practices. They've made conscious choices about how they'll use AI before they've scaled their use of it.

That's the mindset that needs to dominate in 2026.

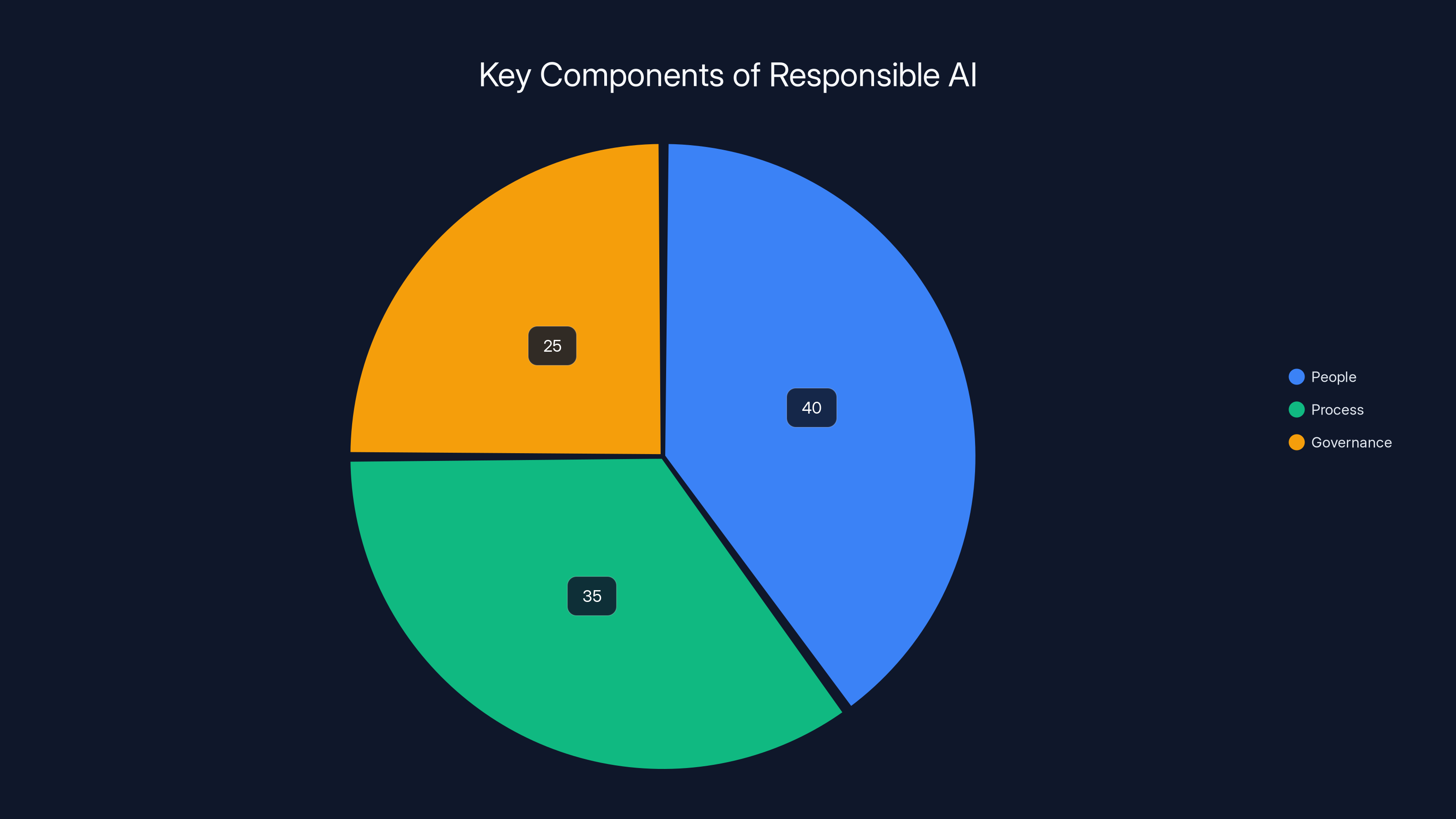

Unified channel strategies significantly outperform siloed strategies across key metrics, leading to higher efficiency, effectiveness, customer satisfaction, and revenue growth. Estimated data.

Section 1: Responsible AI Starts With People, Process, and Governance

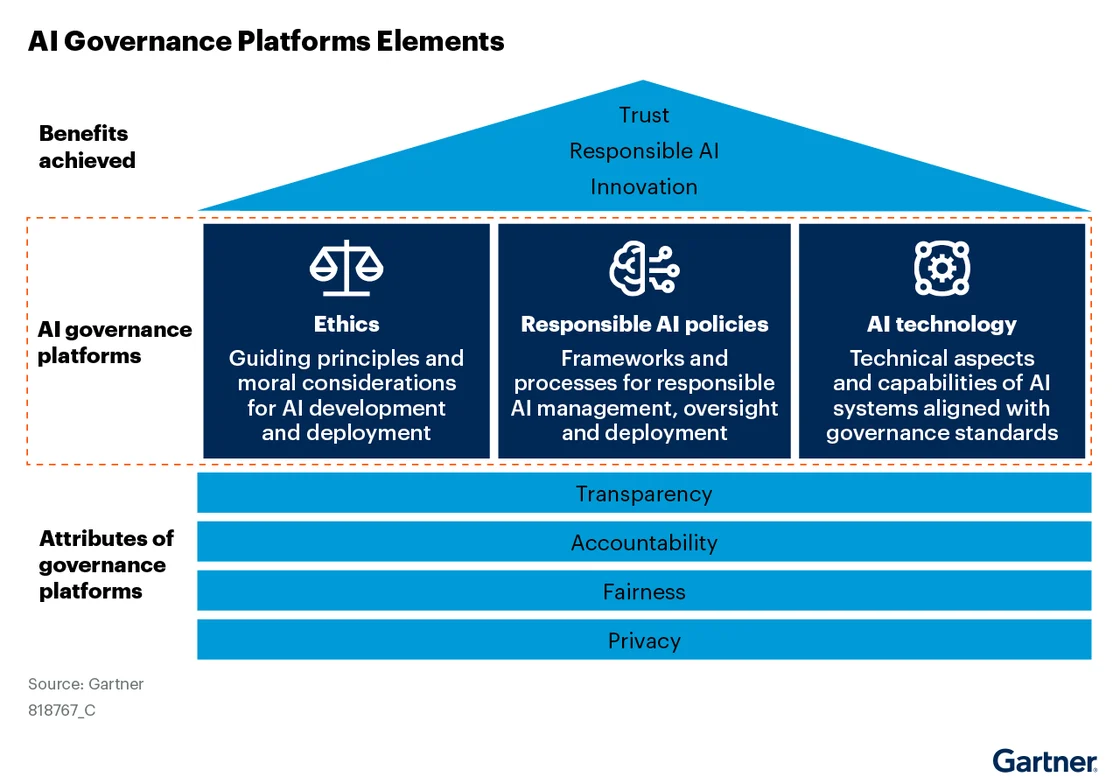

Understanding the Three Pillars of Responsible AI

Responsible AI isn't a technology problem. It's a systems problem. And the system has three interdependent components: people, process, and governance.

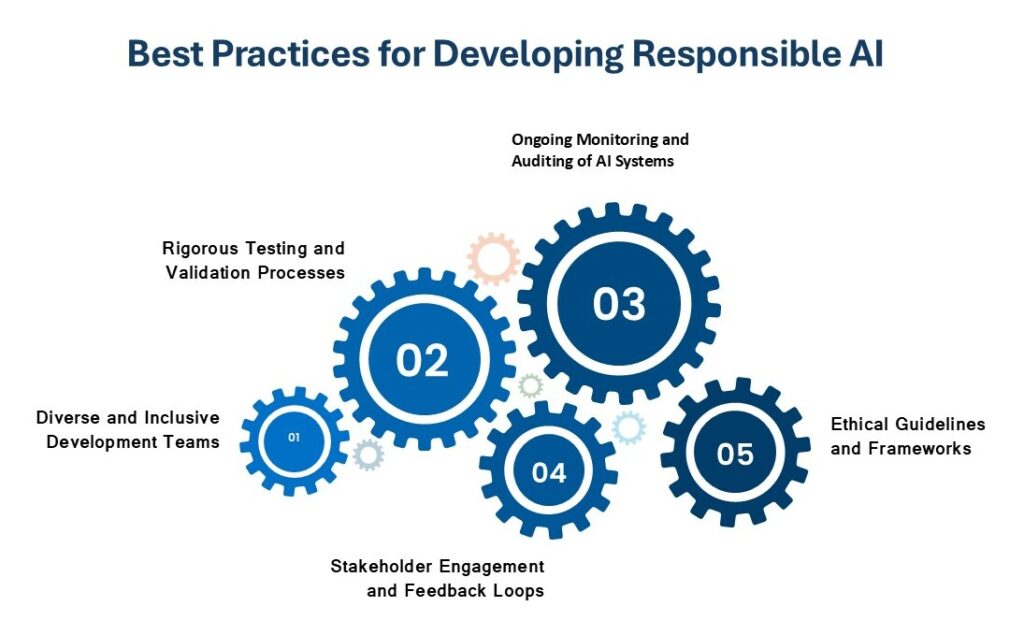

People means upskilling. It means training teams not just on how to use AI, but on when to use it and why certain guardrails matter. The organizations scaling AI fastest are the ones investing in AI literacy programs across the organization. Not just for data scientists. For marketers. For salespeople. For operational teams. Everyone who touches AI needs to understand the basics of how it works, where it can fail, and what their responsibility is in maintaining system integrity.

This isn't academic training. This is practical, use-case-focused education. For a marketing team, it might be: "Here's how AI can generate content faster. Here's how it can hallucinate. Here's what you need to verify before publishing." For an operations team, it might be: "Here's how predictive maintenance catches problems. Here's how it occasionally over-predicts. Here's when human verification is non-negotiable."

When teams understand the mechanics, they use tools more intelligently. They catch problems earlier. They make better judgment calls about when AI is appropriate and when human expertise is essential.

Process means structure. It means creating clear workflows for how AI is evaluated, deployed, and monitored. Before a team implements a new AI tool, there should be a process: What problem are we solving? What data will this use? Who has access? What are the failure modes? How will we measure success? What are our redlines?

Without process, adoption becomes chaotic. Different teams use tools differently. Standards erode. Risk accumulates invisibly.

With process, adoption becomes systematic. You can scale confidently because you've built infrastructure to catch problems before they become crises.
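
One way to make that intake process concrete is a simple record that has to be complete before a use case goes to review. This is a minimal sketch, not a standard: the `AIUseCaseIntake` class, its field names, and the sample answers are all hypothetical, and the fields simply mirror the questions listed above.

```python
from dataclasses import dataclass, fields


@dataclass
class AIUseCaseIntake:
    """Hypothetical intake record; each field maps to one process question."""
    problem: str = ""          # What problem are we solving?
    data_used: str = ""        # What data will this use?
    access: str = ""           # Who has access?
    failure_modes: str = ""    # What are the failure modes?
    success_measure: str = ""  # How will we measure success?
    redlines: str = ""         # What are our redlines?


def missing_answers(intake: AIUseCaseIntake) -> list[str]:
    """Return the names of questions that are still unanswered."""
    return [f.name for f in fields(intake) if not getattr(intake, f.name).strip()]


def ready_for_review(intake: AIUseCaseIntake) -> bool:
    """A use case moves to review only when every question has an answer."""
    return not missing_answers(intake)


# Illustrative draft with only half the questions answered.
draft = AIUseCaseIntake(
    problem="Speed up first drafts of product FAQs",
    data_used="Published product documentation only",
    access="Marketing content team",
)
print(missing_answers(draft))
print(ready_for_review(draft))
```

The point of the sketch is that the questions get asked the same way every time, whichever team is proposing the tool.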

Some of the best-run organizations have AI review boards. Not bureaucratic gatekeepers, but cross-functional groups that evaluate new use cases, ask hard questions, and make recommendations. These boards aren't slowing adoption. They're accelerating it by building confidence that new implementations are sound.

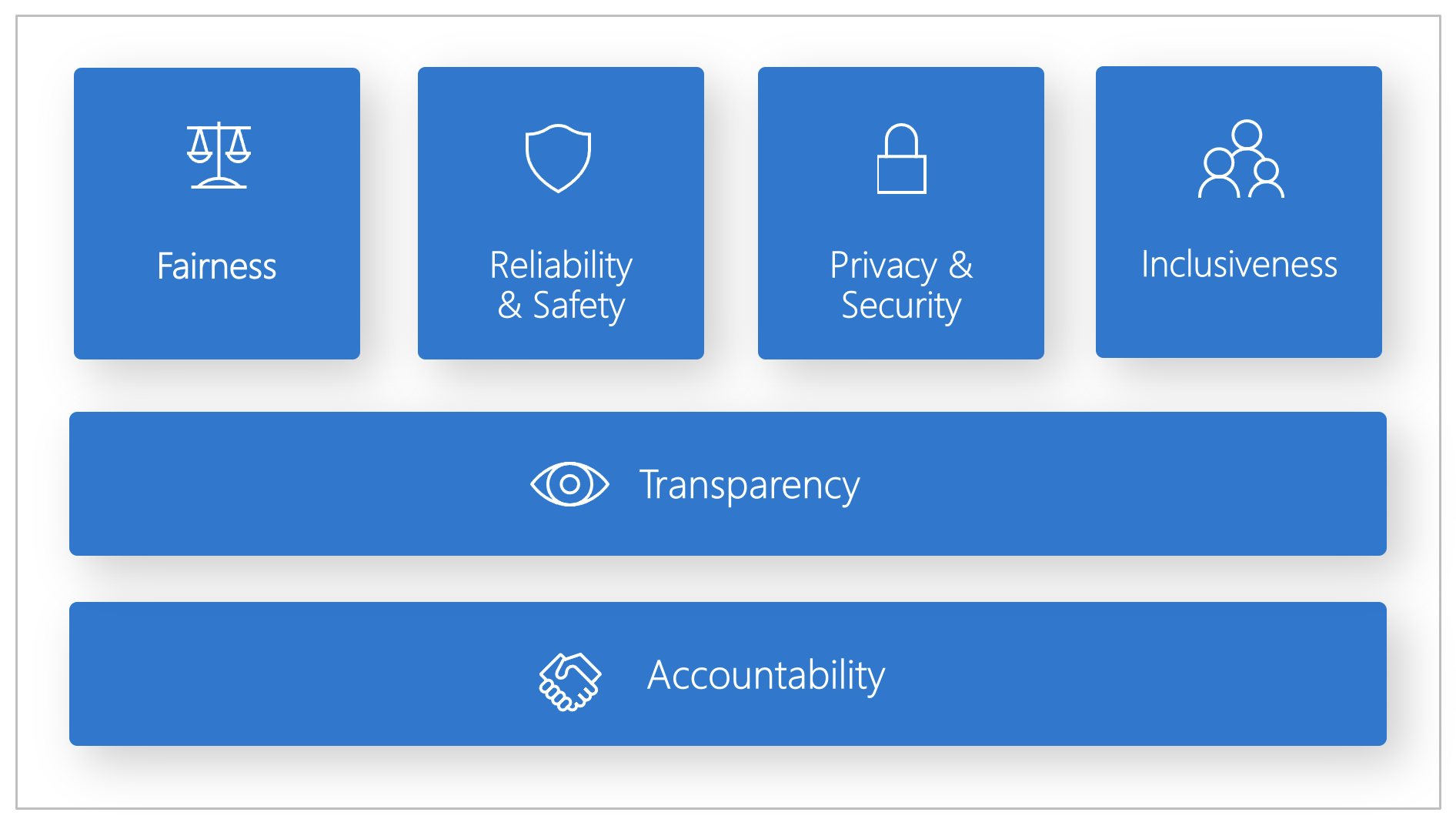

Governance means accountability. It means designating clear ownership for AI systems. It means creating audit trails so you can explain how decisions were made. It means having someone responsible for monitoring for drift, bias, or performance degradation in production systems.

Governance also means compliance. As regulations evolve, your governance framework becomes your insurance policy. If something goes wrong, you can demonstrate that you followed reasonable practices, made good-faith efforts, and acted responsibly. That matters legally, but it also matters reputationally.

Here's what this looks like in practice:

- Structured training programs: Monthly sessions on AI literacy, updated quarterly with new tools and use cases

- Clear approval workflows: New AI implementations require sign-off from data governance, security, and legal teams

- Regular audits: Monthly reviews of AI systems in production to catch drift or unexpected behavior

- Incident response plans: Clear protocols for what to do if an AI system produces erroneous outputs or is compromised

- Data access controls: Strict permissions governing who can use AI systems and what data they can access

- Transparency reporting: Regular updates to leadership on AI adoption, risks identified, and mitigation actions taken

The organizations that build these systems early are going to have a massive competitive advantage in 2026. Because as regulators pay more attention and customers demand more transparency, having these foundations in place won't feel like overhead. It'll feel like insurance.

The Cybersecurity Dimension: AI as Both Sword and Shield

Here's something that doesn't get enough attention: AI is making cybersecurity harder and easier simultaneously.

On the easier side, AI is enabling threat detection and anomaly identification at scales that were impossible before. Organizations can now detect suspicious patterns in network traffic, user behavior, or database access in real time. They can predict where vulnerabilities might appear based on historical patterns. They can automate security responses to routine incidents.

This is genuinely powerful. It frees security teams to focus on the complex, strategic problems instead of fighting fires all day.

But on the harder side, adversaries are using the exact same capabilities. They're using AI to craft targeted phishing emails that are harder to distinguish from legitimate communication. They're using AI to automate the discovery of new vulnerabilities. They're using AI to generate malware that adapts to defensive measures in real time.

The dynamic is escalating fast. Security teams that aren't building AI-powered defenses are going to get outpaced by adversaries using AI-powered attacks. But security teams that implement AI defensively without proper governance are creating new attack surfaces.

The answer is the same: governance. You need to understand what your AI security systems are doing, what data they're using, how they're making decisions, and what happens when they fail. Because when a security system fails, the consequences are immediate and severe.

The organizations that will have the strongest security postures in 2026 won't be the ones using the most sophisticated AI tools. They'll be the ones with the clearest understanding of how those tools work, the strongest oversight mechanisms, and the most rigorous testing protocols.

Section 2: Trust and Reputation as Business Drivers

Why Trust Matters Now More Than Ever

Budgets are tighter. Deal cycles are longer. Decision-makers are more skeptical. In this environment, trust becomes the differentiator.

The data backs this up. Recent consumer research shows that 67% of consumers consider brand trust essential to their purchase decisions. For B2B, the dynamic is even more pronounced. When a purchasing decision involves significant investment, long-term commitments, and organizational risk, trust isn't a nice-to-have. It's the foundation of the entire decision.

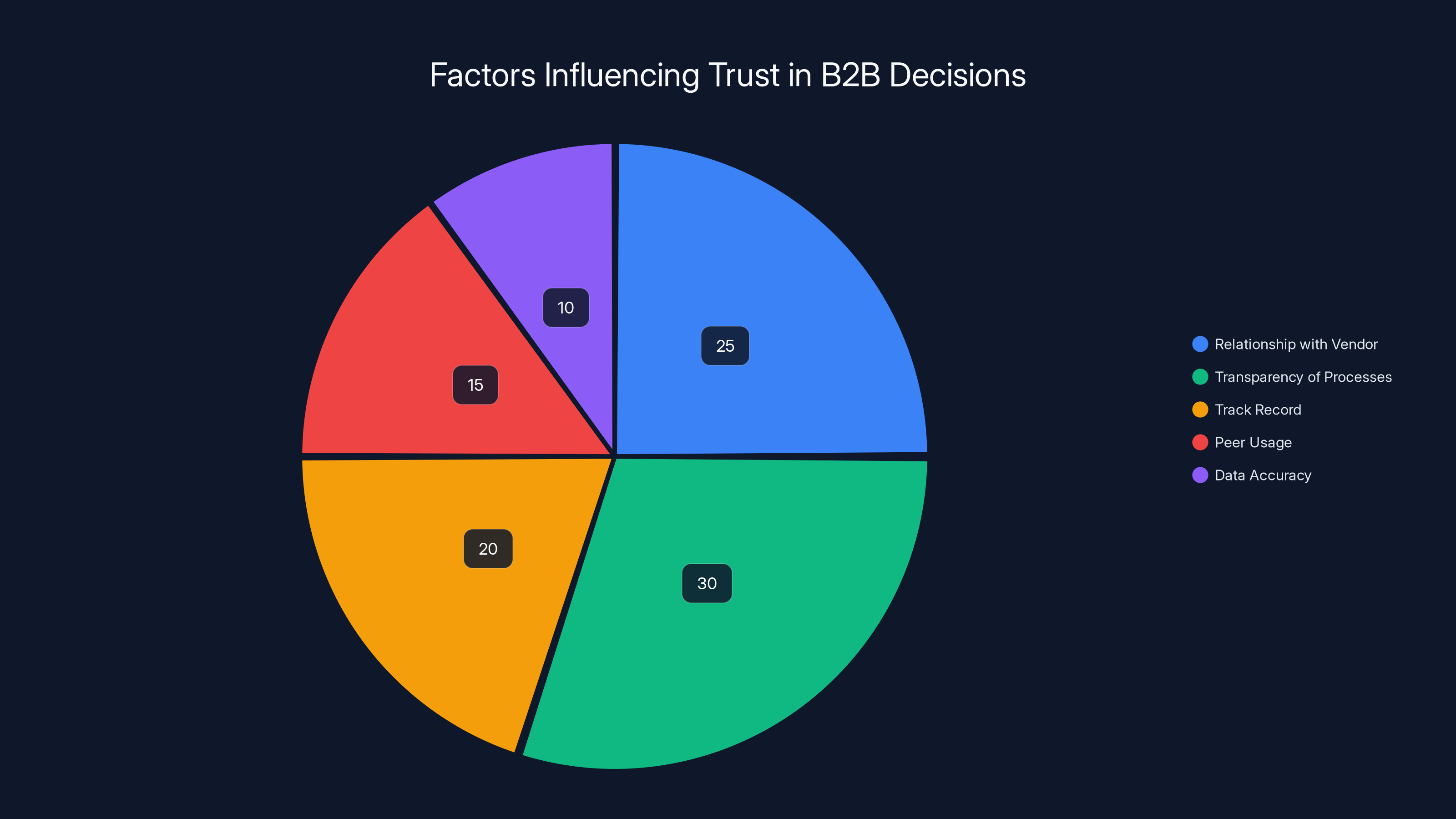

But here's where it gets interesting: as organizations adopt more automated and AI-supported processes, the sources of trust are changing.

Traditionally, B2B trust came from relationships. You trusted your vendor because you knew the sales rep. You trusted their claims because they had a track record. You trusted their product because others like you were using it.

That still matters. But now there's a new dimension: you trust them because you can see how their systems work. You can verify that the data is accurate. You can understand the logic behind recommendations. You can audit their processes and confirm they're sound.

Transparency has become a trust lever. Organizations that can show their work—that can explain the data, the logic, the quality controls—have a credibility advantage over organizations that hide complexity behind black boxes.

This is particularly true in industries like healthcare, finance, and law, where decisions have significant consequences. A healthcare provider using AI to make treatment recommendations needs to be able to explain how that recommendation was generated. A financial institution using AI for credit decisions needs to be able to justify those decisions to regulators and customers. A legal firm using AI for contract review needs to be able to show that the AI is accurate and not introducing bias.

But even in less regulated industries, transparency is becoming a competitive advantage. Customers want to know that the recommendations they're getting are based on solid data, not hallucinations. They want to know that the product is being improved based on genuine insight, not marketing narratives. They want to know they can trust the organization to use their data responsibly.

Organizations that build transparency into their AI systems from the ground up will have an easier time scaling in 2026. They'll have fewer trust-based objections. They'll have higher conversion rates because customers feel more confident in their decisions. They'll have better retention because customers know their data is being handled responsibly.

Building Credibility Through Data Integrity

Transparency is only credible if it's built on solid data. And right now, data integrity is a problem.

Why? Because organizations are drowning in data. They're collecting it from dozens of sources. They're using it for dozens of purposes. And in many cases, they don't have clear governance over data quality, data lineage, or data usage.

The result is that AI systems are trained on data that's incomplete, biased, or outdated. They're making recommendations based on flawed inputs. And they're doing it at scale, with confidence, without any warning lights that something might be wrong.

Here's a concrete example: a B2B software company using AI to predict which leads are most likely to convert. The AI is trained on 18 months of historical data. It learns patterns about which company types, industries, and decision-makers are most likely to buy. It makes predictions. Sales focuses on high-probability leads. Everything seems fine.

But six months into the deployment, the AI starts making worse predictions. Why? Because the world changed. The COVID pandemic shifted buying patterns. Remote work became standard. Industries that were buying suddenly weren't. New competitors entered the market. Customer priorities shifted.

The AI didn't know any of this. It was trained on old data. It was making predictions based on a world that no longer existed. And nobody noticed until deals started slipping and forecasts started missing.

This is a data integrity problem. The AI wasn't bad. The data was obsolete.

Responsible AI organizations solve this by building data governance practices that keep data fresh, accurate, and representative. They create processes to regularly review data quality, identify bias, and update training sets. They create monitoring systems to detect when model performance is degrading. They create human review workflows that catch obvious errors before they cause damage.

This isn't one-time work. It's ongoing operational discipline. And it's the difference between AI systems that become more accurate over time and AI systems that slowly degrade into unreliability.
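
The monitoring idea can be sketched in a few lines. This is an illustration under stated assumptions, not a production monitor: the function names, the 10-point tolerance, and the sample predictions are all made up for the example. It compares how often the model was right at launch with how often it has been right recently.

```python
def hit_rate(predicted: list[bool], actual: list[bool]) -> float:
    """Share of predictions that matched what actually happened."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(predicted)


def has_degraded(baseline: float, recent: float, tolerance: float = 0.10) -> bool:
    """Flag the model when the recent hit rate falls more than `tolerance`
    below the baseline. The default tolerance is an assumption."""
    return (baseline - recent) > tolerance


# Illustrative data: True means "predicted to convert" / "did convert".
launch_predicted = [True, True, False, True, False, True, False, False, True, True]
launch_actual = [True, True, False, True, False, True, False, False, True, False]
recent_predicted = [True, True, True, False, True, True, False, True, True, False]
recent_actual = [False, True, False, False, True, False, False, True, False, False]

baseline = hit_rate(launch_predicted, launch_actual)
recent = hit_rate(recent_predicted, recent_actual)
print(baseline, recent, has_degraded(baseline, recent))
```

A check like this would not have explained why the lead model in the example went stale, but it would have raised the flag before forecasts started missing.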

Content Accuracy and Misinformation Risk

One of the most visible AI risks in 2026 will be misinformation and AI-generated content that's inaccurate or misleading.

Generative AI systems are incredibly good at producing plausible-sounding text. The problem is that plausible-sounding and accurate are not the same thing. AI models can confidently make claims that are completely false. They can cite sources that don't exist. They can misquote people. They can misrepresent statistics.

For marketing and content teams, this creates a real problem. If you're using AI to generate content at scale, and you're not systematically verifying accuracy, you're building a credibility time bomb. Your content might rank well initially, but as customers realize it's inaccurate, they'll lose trust. And once trust is lost, it's incredibly expensive to rebuild.

This is where content accuracy guardrails become essential.

Responsible organizations are implementing workflows like this:

- AI generates content based on guidelines and data sources

- Automated systems check for factual claims and verify them against trusted sources

- Human editors review for accuracy, bias, and tone

- Compliance review ensures nothing violates policy or regulations

- Publication with attribution: content clearly indicates it was AI-generated and reviewed

This isn't fast. It's not meant to be. It's meant to be trustworthy. And in 2026, trustworthiness will be worth more than raw speed.
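
The review workflow above can be expressed as a gate that refuses to publish until every stage has signed off, in order. This is a rough sketch under assumptions: the `Draft` class, the stage names, and the attribution label are hypothetical, and the actual fact checking and editing happen outside the code.

```python
from dataclasses import dataclass, field

# Review stages in the order they must happen (names are illustrative).
REQUIRED_STAGES = ["fact_check", "editorial", "compliance"]


@dataclass
class Draft:
    text: str
    ai_generated: bool = True
    approvals: list[str] = field(default_factory=list)


def approve(draft: Draft, stage: str) -> None:
    """Record a sign-off, enforcing the order in REQUIRED_STAGES."""
    done = len(draft.approvals)
    if done == len(REQUIRED_STAGES):
        raise ValueError("all review stages are already complete")
    expected = REQUIRED_STAGES[done]
    if stage != expected:
        raise ValueError(f"expected stage {expected!r}, got {stage!r}")
    draft.approvals.append(stage)


def publish(draft: Draft) -> str:
    """Return the publishable text with an attribution label attached."""
    if draft.approvals != REQUIRED_STAGES:
        raise RuntimeError("draft has not passed every review stage")
    label = "AI-generated, human-reviewed" if draft.ai_generated else "Human-written"
    return f"{draft.text}\n\n[{label}]"


post = Draft(text="Five ways to improve customer retention")
for stage in REQUIRED_STAGES:
    approve(post, stage)
print(publish(post))
```

Skipping a stage or approving out of order raises an error, which is the whole idea: the slow path is the only path.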

Some organizations are going further. They're building AI systems that can cite their sources and explain their reasoning. When you ask the AI to generate a blog post about customer retention strategies, it doesn't just generate text. It shows you: "Here are the sources I used. Here's the data I drew from. Here's what I'm confident about, and here's where I'm making educated guesses." This transparency makes it easier for humans to verify and improve the output.

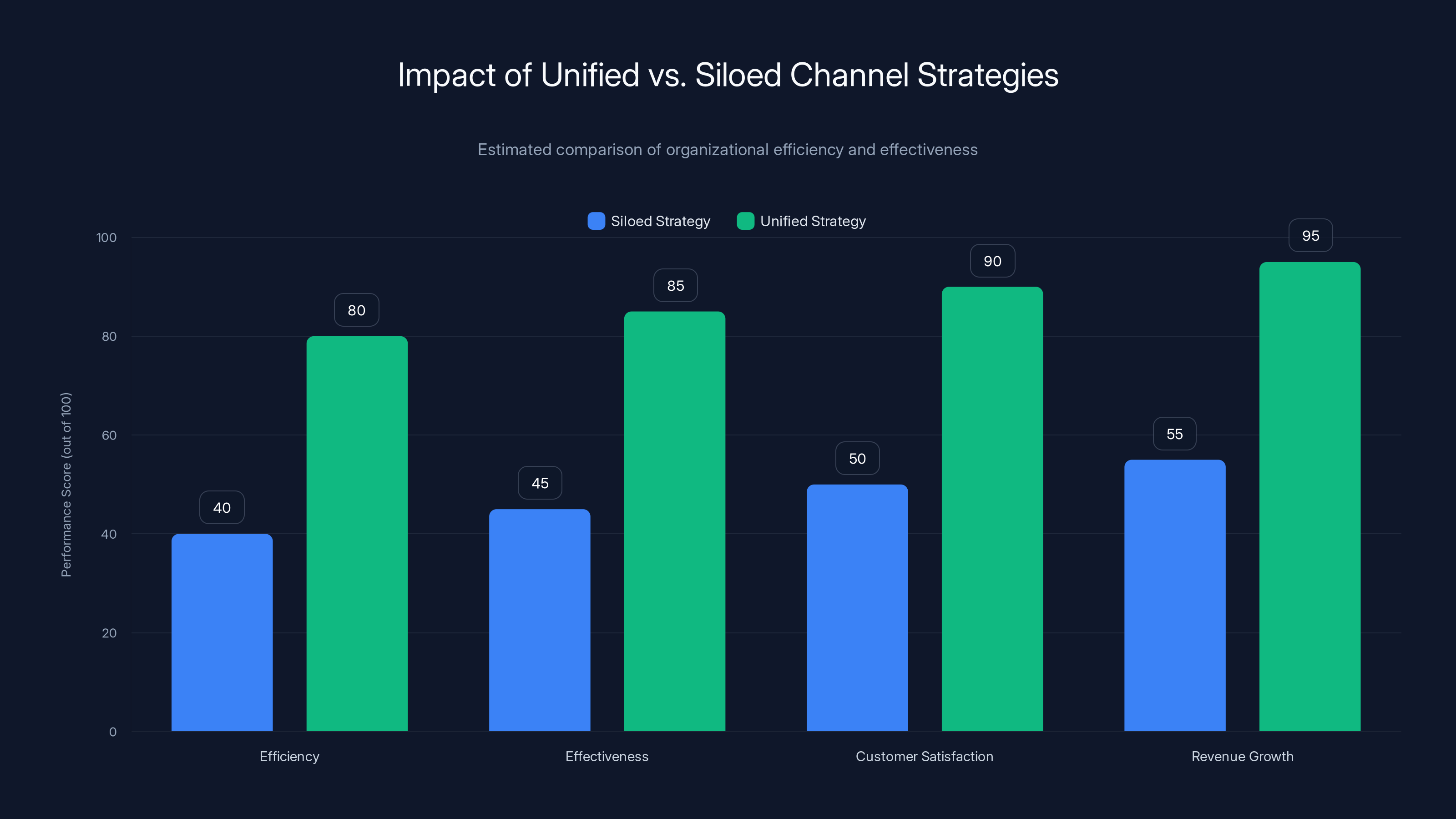

AI implementation can significantly enhance operational efficiency, with up to 60% reduction in support costs and 50% reduction in content creation time. Estimated data based on industry benchmarks.

Section 3: Breaking Down Silos for Unified Channel Strategies

The Problem With Siloed Teams

Most organizations still operate like this: marketing has its budget, sales has its budget, product has its budget. Marketing builds campaigns, sales runs them through their own process, product launches features independently. Each team optimizes for its own metrics. Nobody's looking at the full customer journey.

This worked when business was simpler. It doesn't work anymore.

Here's why: the customer journey is increasingly complex. A prospect might discover your company through content marketing, engage with a sales rep, try your product through a free trial, see a competitor's solution, come back to your website, sign up for a webinar, get a sales call, and then make a decision. That journey touches multiple channels, multiple teams, and multiple touchpoints.

If those teams aren't coordinated, you get fragmented experiences. The messaging is inconsistent. The offers conflict. The timing is off. The customer gets frustrated and buys from someone else.

Siloed organizations are also extremely inefficient. Marketing spends money driving traffic to a landing page. Sales doesn't follow up on the leads. Product doesn't know who's using the free trial. Customer success doesn't know where customers came from or what they've been exposed to.

The result: wasted spend, missed opportunities, and poor outcomes.

Unified organizations look different. They have shared dashboards showing the full customer journey. They have integrated data so that everyone's working from the same source of truth. They have aligned metrics so that individual teams are optimized for overall commercial success, not departmental KPIs. They have regular synchronization points where cross-functional teams review results and make adjustments.

And here's the thing: unified organizations are more efficient and more effective. Because they're not optimizing locally, they're optimizing globally.

Integrated Customer Experience Across Direct and Indirect Channels

One of the biggest shifts in B2B in recent years has been the rise of the indirect channel. It used to be that companies sold directly to customers. Now, they increasingly sell through partners, resellers, integrators, and other intermediaries.

This creates new complexity. If you're managing both direct and indirect channels, you're essentially running two different businesses. Two different sales processes. Two different customer experiences. Two different data systems.

Unified organizations are solving this by building integrated approaches where direct and indirect channels aren't separate strategies, but parts of a cohesive go-to-market model.

What does this look like?

Shared messaging: Direct and indirect channels are using the same core messaging, brand positioning, and value propositions, but tailored for different audience segments and buying contexts.

Integrated data: Customer data from direct sales, indirect partners, and marketing campaigns is flowing into a single system. So you can see the full picture of a customer's journey, regardless of channel.

Coordinated incentives: Direct sales teams and channel partners are incentivized for the same outcomes (customer lifetime value, expansion revenue, customer satisfaction) rather than conflicting KPIs (direct quota vs. channel quota).

Aligned content and tools: Both direct and indirect teams have access to the same content library, the same tools, and the same training. So they're representing the company consistently.

Joint planning and forecasting: Direct and indirect teams are forecasting together, planning together, and holding each other accountable for commitments. Because they're interdependent.

When organizations get this right, the results are dramatic. Efficiency improves because you're not duplicating effort. Effectiveness improves because you have visibility into the full pipeline. Scalability improves because adding indirect channels becomes additive rather than disruptive.

Building Collaborative Workforces

Unifying channels isn't just a data problem. It's a people problem.

Historically, sales and marketing have been siloed (often for good reason—they have different incentives and different ways of working). Bringing them together requires more than process changes. It requires cultural shifts and structural changes.

Organizations that have successfully done this have typically implemented some combination of:

Shared accountability for pipeline: Instead of marketing being measured on leads and sales being measured on closed deals, both are measured on pipeline progression. Marketing brings in leads, sales qualifies and advances them, and both teams are held accountable for moving prospects through each stage.

Integrated planning: Marketing and sales plan campaigns together, not separately. They agree on target segments, messaging, channels, timing, and success metrics upfront.

Regular synchronization: Instead of occasional handoffs, aligned teams have weekly or daily sync points where they review pipeline status, identify bottlenecks, and adjust tactics.

Cross-functional hiring and development: Instead of sales hiring only salespeople and marketing hiring only marketers, aligned organizations hire and develop people with overlap. Salespeople who understand marketing and marketing people who understand sales are more effective at collaborating.

Shared tools and dashboards: Instead of each team having its own system, aligned organizations invest in integrated platforms where everyone can see the same data and collaborate in real-time.

This shift requires investment, patience, and leadership commitment. But the payoff is significant. Organizations that execute this well report:

- 20-30% improvement in sales cycle time because handoffs are smoother and momentum isn't lost

- 15-25% improvement in conversion rates because messaging is consistent and timely

- 25-35% improvement in marketing efficiency because spend is focused on high-probability opportunities

- 10-15% improvement in team retention because people feel more connected to outcomes

Section 4: AI-Powered Workflows in Marketing

Content Generation and Acceleration

One of the most immediate uses of AI in marketing has been content generation. And for good reason—it's genuinely useful.

Where it used to take a team of writers weeks to produce a month's worth of blog content, now it can take days. Where it used to take marketers hours to create email variations, now it takes minutes. Where it used to require designers to manually create multiple versions of social content, now it can be automated.

The productivity gains are real. And they free up human time for higher-value work like strategy, creative direction, and audience insight.

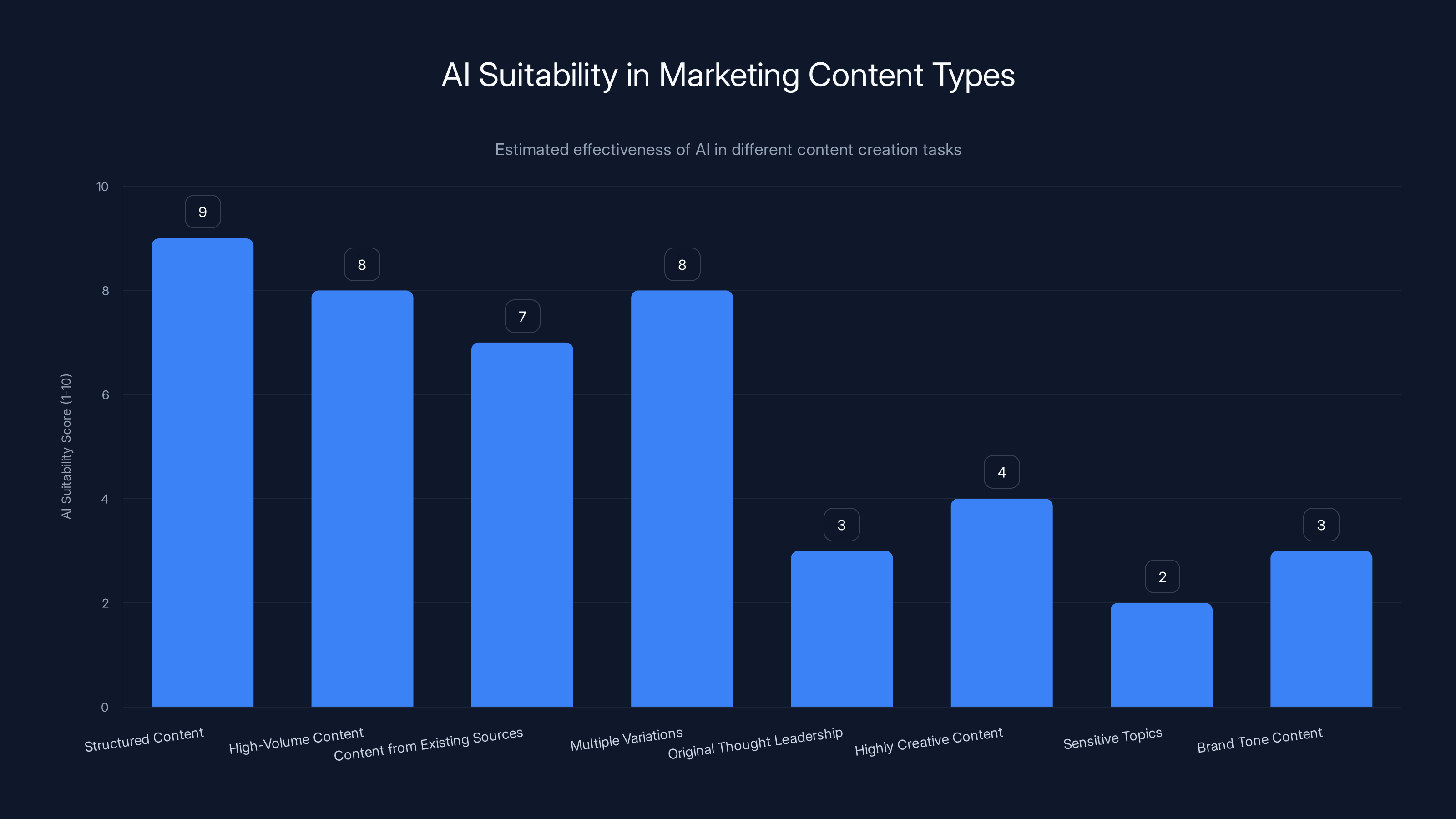

But here's the critical part: not all content is equally suitable for AI generation.

AI excels at:

- Structured content with clear patterns (product descriptions, FAQs, standard email formats)

- High-volume content where consistency matters more than uniqueness (social media variations, ad copy alternatives)

- Content based on existing sources like data, research, or documentation (blog posts summarizing industry research, email campaigns based on customer data)

- Content that benefits from multiple variations (A/B testing alternatives, localized versions)

AI struggles with:

- Original thought leadership that requires deep expertise or unique perspective

- Highly creative content that needs genuine innovation, not variation

- Sensitive topics that require nuance, context, and human judgment

- Content that sets brand tone and shapes how customers perceive your company

Responsible marketing teams are using AI as an accelerator, not a replacement. They're using it to handle the high-volume, routine content work, which frees creative people to focus on the strategic, original, high-impact work.

Here's what this looks like in practice:

- Strategy and planning remain human-driven: Marketers decide what content to create, who the audience is, and what outcomes matter

- AI generates first drafts: Based on guidelines and source material, AI creates initial versions of content

- Human experts review and refine: Marketers, subject matter experts, and editors review for accuracy, tone, and appropriateness

- AI handles variations: Once a piece is finalized, AI can quickly generate variations for different channels, segments, or A/B tests

- Performance tracking and optimization: Humans analyze what's working and feed learnings back into the system

Lead Scoring and Sales Enablement

Another high-impact application of AI in B2B has been lead scoring. Traditional lead scoring is manual and imprecise. Marketers define scoring rules based on hunches: a demo request is 50 points, a whitepaper download is 10 points, a specific industry is 20 points. It's arbitrary and it degrades over time.

AI-powered lead scoring works differently. It looks at historical data to learn which behaviors and characteristics actually predict purchasing. A demo request might only be worth 10 points if your data shows that 70% of people who request demos are misaligned on budget. But a specific search behavior might be worth 50 points if data shows it's highly predictive of intent.

The scoring evolves automatically as new data comes in. If patterns change (like during a market shift or competitive disruption), the model adapts.
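
To see the difference from hand-picked points, here is a deliberately simple stand-in for a trained model: it assigns each behavior points in proportion to how often leads showing that behavior actually converted. The function names, the behavior labels, and the sample history are assumptions made for illustration. A real system would use a proper statistical model and far more data.

```python
from collections import defaultdict


def learn_points(history, max_points=50):
    """history: list of (behaviors, converted) pairs.

    Returns points per behavior, scaled so that a behavior whose leads
    always converted earns `max_points`.
    """
    seen = defaultdict(int)
    won = defaultdict(int)
    for behaviors, converted in history:
        for behavior in set(behaviors):
            seen[behavior] += 1
            won[behavior] += int(converted)
    return {b: round(max_points * won[b] / seen[b]) for b in seen}


def score(lead_behaviors, points):
    """Sum the learned points for the behaviors a lead has shown."""
    return sum(points.get(b, 0) for b in lead_behaviors)


# Illustrative history: most demo requests did not convert,
# while every lead that searched for pricing did.
history = (
    [({"demo_request"}, False)] * 4
    + [({"demo_request", "pricing_search"}, True)]
    + [({"pricing_search"}, True)] * 2
    + [({"pricing_search", "whitepaper"}, True)]
    + [({"whitepaper"}, False)]
)

points = learn_points(history)
print(points)
print(score({"demo_request", "pricing_search"}, points))
```

Rerunning `learn_points` on fresh history is what lets the scores shift as buying patterns change, instead of staying frozen at whatever someone guessed a year ago.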

The result is more efficient sales teams. They're spending time on genuine opportunities instead of chasing unqualified leads. Conversion rates improve. Sales cycle time improves. Revenue per rep improves.

But again, the critical part is governance. You need to understand:

- What data is the model using? If it's using demographic data, you're potentially introducing bias. If it's using behavioral data, that's more defensible.

- How accurate is the model? If it's 95% accurate, great. If it's 60% accurate, it's not ready for production.

- What are the edge cases? Every model has scenarios where it fails. What are the known limitations?

- How will you monitor performance? As business conditions change, model accuracy will degrade. You need systems to detect this.

Organizations that implement AI lead scoring without this governance often discover six months in that the model has degraded or introduced bias. And by then, they've already made wrong decisions based on bad scoring.

SEO and Search Optimization

Search engine optimization is becoming increasingly AI-driven, and increasingly complex.

On the surface, AI can help with basic SEO work: keyword research, content optimization, technical audits. Tools can analyze top-ranking content and suggest optimizations. They can identify technical issues that hurt crawlability. They can recommend which keywords to target and how to structure content.

This is useful. It accelerates SEO work.

But there's a deeper shift happening. Search engines themselves are increasingly AI-powered. Google's recent changes (the Helpful Content Update, the rollout of AI Overviews) favor content that demonstrates genuine expertise and original perspective, and demote thin, keyword-stuffed, low-value content.

This means that AI-generated content that's optimized for search but lacks substance is at risk. And AI-assisted content that has been reviewed by humans and carries real authority is becoming more valuable.

Responsible SEO teams are adapting by:

- Focusing on expertise and authority: Building content that demonstrates genuine knowledge, not just keywords

- Using AI to enhance human expertise: Using AI to identify opportunities, structure content, and optimize, but keeping humans responsible for accuracy and originality

- Building topical authority: Creating comprehensive content ecosystems around topics where you have genuine expertise, not scattered content chasing random keywords

- Emphasizing original data and research: Creating content that includes original research, data analysis, or insights that competitors can't just copy

- Being transparent about AI usage: Disclosing where AI was used in content creation, building trust through transparency

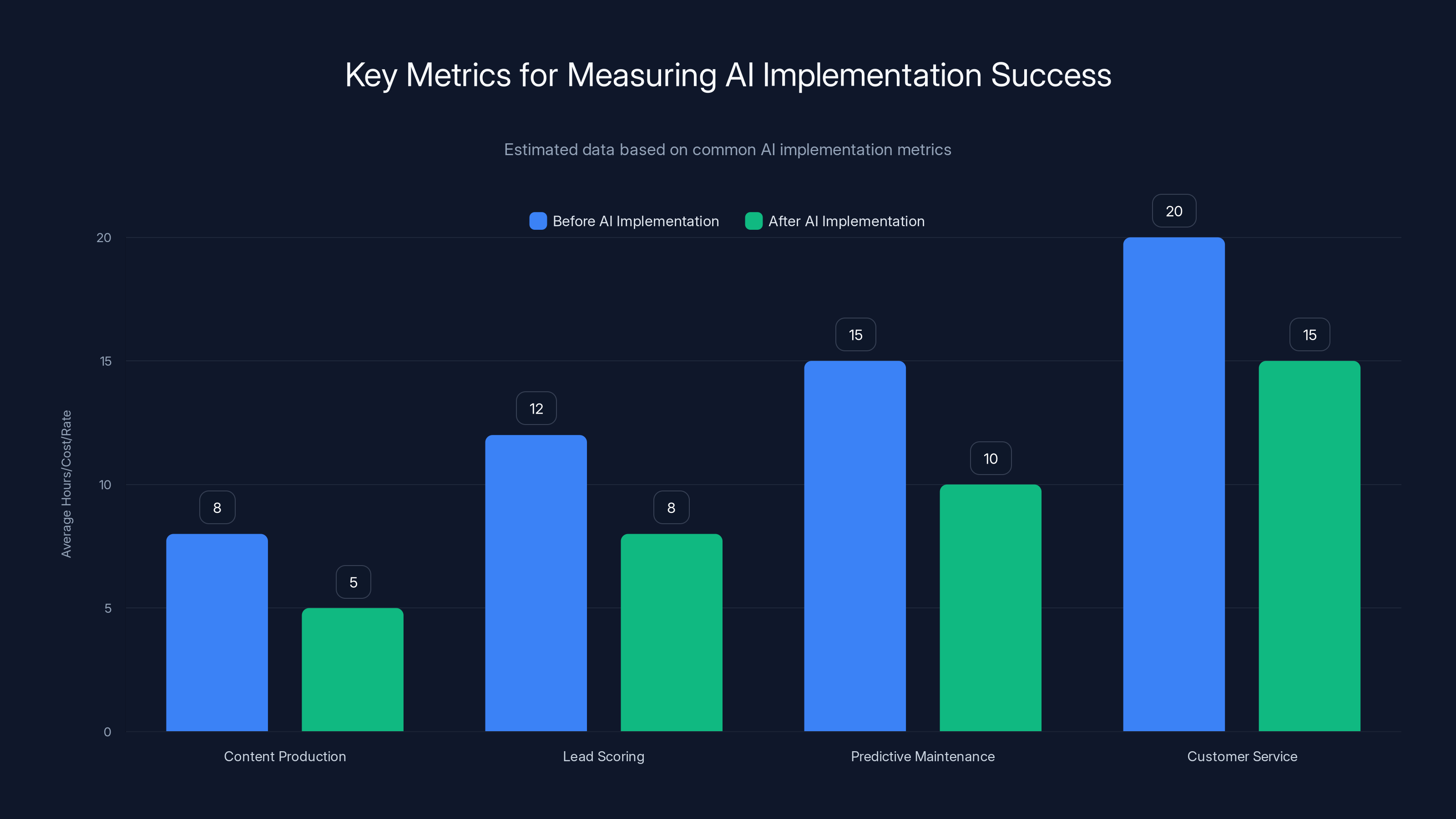

Estimated data showing potential improvements in key metrics after AI implementation. Notable reductions in hours and costs are observed across all categories.

Section 5: Financial and Operational Impact

ROI and Cost Efficiency

Let's talk numbers. Organizations are investing in AI because the ROI potential is significant.

Here are some real-world benchmarks:

Content production: Organizations using AI-assisted content generation report 40-60% reduction in content creation time. If your team produces 100 pieces of content per month and it currently takes 500 hours, AI assistance can reduce that to 200-300 hours. At a fully-loaded cost of

Lead scoring and qualification: Organizations using AI lead scoring report 25-35% reduction in sales cycle time and 20-30% improvement in sales productivity (revenue per rep). For a sales organization with

Predictive maintenance: Organizations using AI for operations report 30-40% reduction in unplanned downtime and 15-25% improvement in asset utilization. For a manufacturing or logistics organization with significant asset bases, this translates directly to the bottom line.

Customer service: Organizations using AI for routine support inquiries report 50-70% reduction in support costs for automated categories and 40-60% reduction in first-response time. This improves customer satisfaction while reducing headcount.
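
The content-production arithmetic above is easy to check. This short sketch uses only the figures from the text (500 hours a month, a 40-60% reduction). The function name is made up for the example.

```python
def hours_after(baseline_hours: float, reduction_pct: float) -> float:
    """Hours remaining after cutting `reduction_pct` percent of the baseline."""
    return baseline_hours * (100 - reduction_pct) / 100


baseline = 500                          # hours per month today
best_case = hours_after(baseline, 60)   # 60% reduction
worst_case = hours_after(baseline, 40)  # 40% reduction

print(f"Hours needed: {best_case:.0f} to {worst_case:.0f}")
print(f"Hours saved:  {baseline - worst_case:.0f} to {baseline - best_case:.0f}")
```

The same function works for any of the other percentage ranges in this section once you plug in your own baseline.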

But here's what's important: these benefits only materialize if the AI systems are implemented responsibly.

Irresponsible AI deployment creates costs that offset benefits:

- Accuracy problems: AI systems that make mistakes generate support tickets, require human rework, and damage reputation

- Governance problems: Organizations that don't have clear ownership end up with duplicate efforts, conflicting implementations, and wasted spend

- Compliance problems: AI systems that introduce bias or violate regulations create legal liability and remediation costs

- Trust problems: AI systems that produce unreliable outputs erode customer confidence and damage brand reputation

The organizations seeing the best ROI are the ones that view AI investment not as "deploy tool and collect savings" but as "build governance, implement systematically, optimize continuously."

Budget Allocation and Investment Priorities

With UK business investment in AI set to rise by 40% over the next two years, the question for many organizations is: where should we invest?

Responsible organizations are typically allocating budget across these categories:

Foundational governance (15-20% of budget)

- Data governance and quality infrastructure

- AI governance frameworks and processes

- Training and upskilling programs

- Compliance and risk management

This seems like overhead, but it's actually insurance. Organizations that don't invest in foundations end up spending way more on remediation and risk management later.

Tool and platform investment (40-50% of budget)

- AI platforms and tools for specific use cases

- Integration platforms to connect AI systems

- Monitoring and observability tools

- Security and access control systems

This is where the productivity gains come from. But the tools are only effective if they're implemented on solid foundations.

Team and capability building (20-30% of budget)

- Hiring AI specialists and engineers

- Training existing teams on AI literacy

- Building internal AI capabilities

- Consulting and implementation support

This is often underfunded, but it's critical. The tools are worthless without people who know how to use them responsibly.

Monitoring and optimization (10-15% of budget)

- Continuous performance monitoring

- Model retraining and updates

- A/B testing and experimentation

- Feedback loops and optimization

This is the most neglected budget category. But organizations that skip this end up with AI systems that degrade over time.
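As a rough illustration, the ranges above can be turned into a quick sanity check on a draft budget. This is a minimal sketch, not a planning tool: the category keys, the `check_allocation` helper, and the sample figures are all hypothetical, and only the percentage ranges come from the text.

```python
# Suggested share of total AI budget per category, as (low, high) fractions.
# The ranges come from the text; the category keys are illustrative names.
RANGES = {
    "foundational_governance": (0.15, 0.20),
    "tools_and_platforms": (0.40, 0.50),
    "team_and_capability": (0.20, 0.30),
    "monitoring_and_optimization": (0.10, 0.15),
}


def check_allocation(budget: dict[str, float]) -> dict[str, str]:
    """Label each category 'under', 'within', or 'over' its suggested range."""
    total = sum(budget.values())
    if total <= 0:
        raise ValueError("budget must have a positive total")
    result = {}
    for category, (low, high) in RANGES.items():
        share = budget.get(category, 0.0) / total
        if share < low:
            result[category] = "under"
        elif share > high:
            result[category] = "over"
        else:
            result[category] = "within"
    return result


# Hypothetical draft plan, in thousands of pounds.
plan = {
    "foundational_governance": 50,
    "tools_and_platforms": 600,
    "team_and_capability": 250,
    "monitoring_and_optimization": 100,
}
report = check_allocation(plan)
```

In this made-up plan, governance comes out underfunded and tooling overfunded, which is the pattern the section warns about.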

Section 6: Regulatory Landscape and Compliance

Evolving Regulations

The regulatory environment around AI is becoming increasingly complex. And unlike some tech regulations that are slow to evolve, AI regulations are changing rapidly.

In Europe, the AI Act is taking effect in phases, creating obligations and potential penalties for organizations that deploy AI systems without proper governance. In the US, the picture is more fragmented: White House executive orders on AI, the voluntary NIST AI Risk Management Framework, sector-specific guidance from regulators, state-level regulations, and industry standards.

The compliance requirements typically fall into these categories:

Transparency and disclosure: Organizations need to disclose when AI is being used in decision-making, how it works, and what data it's using. This applies to everything from hiring decisions to customer service to content generation.

Bias and fairness: Organizations need to test AI systems for bias and have processes to mitigate it. This is particularly important for systems making consequential decisions (hiring, lending, healthcare, etc.).

Data privacy and security: Organizations need to ensure AI systems don't violate data privacy regulations, don't expose personal information, and aren't vulnerable to attack.

Explainability and auditability: Organizations need to be able to explain how AI systems make decisions and maintain audit trails to demonstrate compliance.

Human oversight: Organizations need to maintain meaningful human control over AI systems, particularly for consequential decisions.

Risk management: Organizations need to identify, assess, and mitigate risks associated with AI systems.

The specific requirements vary by jurisdiction and industry, but the overall trend is clear: responsible AI governance is becoming a compliance requirement, not an optional best practice.
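To make the auditability and human-oversight requirements a little more concrete, here is a minimal sketch of what a single audit-trail entry might look like. The `DecisionRecord` class, its field names, and the sample values are hypothetical, and a real system would need retention, access control, and tamper protection that this sketch leaves out.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One audit-trail entry for a single AI-assisted decision."""
    system: str
    inputs: dict
    output: str
    consequential: bool
    reviewer: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def is_final(self) -> bool:
        # Consequential decisions stay pending until a named human signs off.
        return (not self.consequential) or self.reviewer is not None

    def to_log_line(self) -> str:
        # One JSON object per line keeps the trail easy to append to and search.
        return json.dumps(asdict(self), sort_keys=True)


record = DecisionRecord(
    system="loan-screening-v1",           # hypothetical system name
    inputs={"application_id": "A-1001"},  # hypothetical input
    output="refer",
    consequential=True,
)
pending = record.is_final()   # no reviewer yet, so the decision is not final
record.reviewer = "j.smith"   # hypothetical reviewer ID
approved = record.is_final()  # final once a human has reviewed it
```

The design choice worth noting is that the record itself refuses to count as final without a reviewer, so the oversight rule lives in the data model and does not depend on each team remembering it.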

Building Compliant Systems

How do you build AI systems that are compliant by design?

Start with governance frameworks: Before deploying any AI system, establish governance frameworks that define how systems will be evaluated, approved, deployed, and monitored. Make compliance part of the approval process, not an afterthought.

Document everything: Maintain detailed documentation of how AI systems were built, what data they use, how decisions are made, and what testing was performed. This documentation is your evidence of good-faith compliance efforts.

Test for bias systematically: Before deploying any AI system in a consequential domain, test it for bias across demographic groups. Document the findings and mitigation approaches.

Build audit trails: Ensure AI systems maintain detailed logs of what inputs were used, what outputs were generated, and who reviewed them. These audit trails are critical for compliance investigations.

Implement human review workflows: For consequential decisions, require human review before the decision is finalized. This maintains meaningful human control and provides a checkpoint for catching errors.

Monitor continuously: After deployment, continuously monitor AI system performance for degradation, bias drift, or unexpected behavior. Have processes to investigate anomalies and take corrective action.

Stay informed: Keep up with evolving regulations and standards. Join industry groups, participate in standards development, and maintain awareness of regulatory changes.
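As one example of what systematic bias testing can look like, the sketch below compares favourable-outcome rates across groups and flags large gaps. It is a simplified illustration, not a compliance test: the function names and sample data are made up, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment contexts. Which fairness measure and threshold apply to your system is a question for legal and domain experts.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True when the system produced the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up screening results: group A selected 40 of 100, group B 24 of 100.
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)
rates = selection_rates(sample)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; set your own with legal input
```

Here group B is selected at about 60% of group A's rate, so the check flags the system for investigation. A flag is a prompt to look closer, not proof of unfair treatment, and a pass is not proof of fairness.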

Transparency is increasingly important, accounting for 30% of trust factors in B2B decisions, surpassing traditional relationship-based trust. (Estimated data)

Section 7: Building Trust Through Transparency

Customer-Facing Transparency

One of the most underrated competitive advantages in 2026 will be the ability to explain what you're doing and why.

When customers see that you're using AI, they get nervous. Not because AI is inherently bad, but because they don't understand it. They worry about bias. They worry about accuracy. They worry about privacy.

Organizations that address these concerns directly and transparently build trust faster.

What does customer-facing transparency look like?

Clear disclosure of AI usage: If you're using AI in any customer-facing context (customer service, recommendations, content generation, etc.), say so. Don't hide it. Customers respect honesty more than they're troubled by the disclosure.

Explanation of how it works: Give customers a basic understanding of how the AI system works and what data it's using. This doesn't need to be technical. It needs to be honest.

Transparency about limitations: Tell customers what the AI is good at and what it's not. "This recommendation engine is trained on customer usage data from your industry. It's designed to suggest features you might find useful based on how similar companies are using us. It's not personalized to your specific use case, so use it as a starting point and adjust as needed."

Easy escalation to humans: Make it obvious how to talk to a human if the AI isn't working. Don't make customers fight through AI systems. Provide clear paths to human support.

Opt-in for non-essential uses: If you're using AI in ways that aren't essential to the core service (like using customer data for research), make it optional. Let customers opt out if they're uncomfortable.

Regular updates on improvements: As you improve AI systems, tell customers about the improvements. "We've updated our recommendation engine based on feedback from thousands of customers. It should be more accurate now." This builds confidence that the system is improving, not degrading.

Internal Communication and Change Management

Building trust internally is just as important as building it with customers.

When organizations roll out AI systems, they often encounter resistance from employees who fear job displacement or are uncomfortable with new systems. This resistance isn't irrational. It's a signal that you haven't done enough to build confidence.

Responsible organizations handle this by:

Communicating early and often: Don't introduce AI systems as a fait accompli. Communicate about them early, and explain why they're being introduced and what the implications are.

Being honest about changes: If a role is changing due to AI adoption, say so. "This team's work is evolving. You won't be manually processing leads anymore. Instead, you'll be reviewing AI-recommended leads, refining scoring, and working on more strategic activities." Honesty is more motivating than pretending nothing is changing.

Focusing on upskilling: Show employees how they can develop new skills to work effectively with AI. "You're going to learn how to interpret AI recommendations, when to trust them and when to override them, and how to give feedback that improves the system." This turns anxiety into professional development.

Maintaining job security during transition: If AI is replacing some work, commit to no layoffs during the transition period. Redeploy people to new roles, provide training, and maintain career growth. This reduces anxiety and enables people to embrace change.

Celebrating early wins: As people master new workflows and see productivity improvements, celebrate those wins. Share stories about what's working. Build momentum.

Section 8: Technology Stack and Integration

Choosing the Right AI Tools

The market for AI tools is exploding. For almost every business process, there's an AI tool claiming to automate it.

How do you choose?

Responsible organizations evaluate AI tools using this framework:

Problem clarity: Do we have a clear problem we're trying to solve? If we're pursuing AI for its own sake ("AI is trendy, we should try it"), we're going to waste money. If we're solving a specific problem ("We spend 10 hours per week manually processing leads"), we're more likely to succeed.

Data readiness: Do we have clean, representative data to train or tune the system? Many AI implementations fail because the underlying data is poor quality, incomplete, or biased. Audit your data before choosing a tool.

Integration requirements: How does this tool integrate with our existing systems? If it requires manual data entry or duplicate data management, it's going to create friction and errors. Look for tools that integrate directly with your existing platforms.

Governance fit: Does this tool align with our governance requirements? Can we audit it? Can we explain its decisions? Can we test it for bias? If not, it's riskier.

Vendor stability and support: Is this vendor likely to be around in 2 years? Do they provide the support we need? Can we switch to a different vendor if needed? Vendor lock-in creates risk.

Total cost of ownership: What's the true cost, including implementation, training, integration, and ongoing management? Don't just look at tool price. Look at the full cost.

Risk assessment: What are the failure modes? If the tool produces bad outputs, what's the impact? If it causes bias, what's the reputational damage? If it exposes data, what's the legal liability? Build a risk profile.

Organizations that do this analysis upfront tend to have much better outcomes than organizations that just buy the shiny new tool.
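One way to make this evaluation repeatable is a weighted scorecard. The sketch below is illustrative only: the seven criteria come from the framework above, but the weights, the 1-5 rating scale, and the two candidate tools are assumptions to replace with your own.

```python
# Criteria from the framework above; the weights are illustrative assumptions
# and should be tuned to your own priorities. They sum to 1.0.
WEIGHTS = {
    "problem_clarity": 0.20,
    "data_readiness": 0.20,
    "integration": 0.15,
    "governance_fit": 0.15,
    "vendor_stability": 0.10,
    "total_cost": 0.10,
    "risk": 0.10,
}


def score_tool(ratings: dict[str, int]) -> float:
    """Weighted average of 1-5 ratings, one rating per criterion."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name, value in ratings.items():
        if name in WEIGHTS and not 1 <= value <= 5:
            raise ValueError(f"{name} must be rated 1-5, got {value}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Two hypothetical candidates rated by an evaluation team (5 = best).
tool_a = dict(problem_clarity=5, data_readiness=4, integration=4,
              governance_fit=5, vendor_stability=3, total_cost=3, risk=4)
tool_b = dict(problem_clarity=3, data_readiness=2, integration=5,
              governance_fit=2, vendor_stability=5, total_cost=5, risk=3)

# Highest score first.
ranked = sorted(
    {"tool_a": score_tool(tool_a), "tool_b": score_tool(tool_b)}.items(),
    key=lambda item: item[1],
    reverse=True,
)
```

In this example the cheaper tool from the more stable vendor still ranks second, because it scores poorly on data readiness and governance fit. The score is a way to structure the conversation, not a substitute for it.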

Integration and Data Flow

One of the biggest implementation challenges is getting AI systems to work with your existing infrastructure.

Scenario: You have a CRM system, a marketing automation platform, a sales engagement tool, and three different cloud data warehouses. You want to implement AI lead scoring that uses data from all of these systems.

If you try to do this manually, you're going to spend a fortune on custom integration, you're going to have data consistency problems, and you're going to create ongoing maintenance burden.

Responsible organizations are building integration architectures that look like this:

Unified data layer: All data from various systems flows into a central data warehouse or data lake where it's standardized, deduplicated, and made available for analysis.

API-first architecture: Systems communicate through APIs rather than file transfers or manual exports. This enables real-time data sync, reduces errors, and makes it easier to add new systems.

Data quality processes: As data flows in, quality checks are applied. Missing values are flagged. Duplicates are detected and merged. Data is validated against business rules.

Transformation logic: Raw data is transformed into useful formats for analysis. Lead data is enriched with company data. Customer interactions are sequenced into journeys. Statistical models are run.

Feedback loops: Results from AI systems are fed back into the systems that produced the original data. This closes the loop and enables continuous improvement.

Building this infrastructure takes time and investment upfront. But it pays dividends by enabling rapid AI deployment, reducing integration friction, and creating a foundation for future enhancements.
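The data quality step is the easiest part of this architecture to show in miniature. The sketch below assumes a simple lead schema (`email`, `company`, `source`) and made-up records. It merges duplicates and flags incomplete rows in plain Python, where a production pipeline would more likely do this inside the warehouse or a dedicated data quality tool.

```python
REQUIRED_FIELDS = ("email", "company", "source")  # assumed schema


def clean_leads(records):
    """Deduplicate lead records and flag the ones that fail quality checks.

    Returns (clean, flagged). Duplicates are merged by lower-cased email,
    with later records filling in fields the earlier record was missing.
    """
    merged = {}
    flagged = []
    for record in records:
        email = (record.get("email") or "").strip().lower()
        if not email:
            flagged.append({**record, "issue": "missing email"})
            continue
        existing = merged.setdefault(email, {"email": email})
        for key, value in record.items():
            if key != "email" and value and not existing.get(key):
                existing[key] = value

    clean = []
    for record in merged.values():
        missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
        if missing:
            flagged.append({**record, "issue": f"missing {', '.join(missing)}"})
        else:
            clean.append(record)
    return clean, flagged


# Hypothetical rows arriving from a CRM and a marketing platform.
rows = [
    {"email": "Ana@Example.com", "company": "Acme", "source": None},
    {"email": "ana@example.com", "company": None, "source": "webinar"},
    {"email": "", "company": "NoEmail Ltd", "source": "crm"},
    {"email": "bo@example.com", "company": None, "source": "crm"},
]
clean, flagged = clean_leads(rows)
```

The two records for the same person are merged into one complete lead, while the rows with no email or no company are set aside for review and never reach the scoring model.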

AI is highly suitable for structured and high-volume content but struggles with original and sensitive topics. Estimated data.

Section 9: Scaling AI Responsibly

From Pilot to Production

Most organizations start AI initiatives with pilots. You pick a specific use case (lead scoring, content generation, customer service), implement it in one team, run it for a few months, and evaluate results.

Pilots are smart. They let you learn without betting the company.

But many organizations get stuck in pilot mode. They run pilot after pilot, never scaling to production. Either the business case didn't prove out, or the pilot worked great but scaling it was harder than expected.

Responsible scaling requires a different mindset. You need to move from "let's prove this works" to "how do we run this operationally."

Here's what that transition looks like:

Pilot phase (2-4 months)

- Define success metrics clearly

- Build the MVP (minimum viable product)

- Test with a limited audience

- Collect feedback and iterate

- Document what worked and what didn't

- Calculate ROI based on pilot results

Pre-production phase (1-2 months)

- Build production-grade infrastructure (security, monitoring, scalability)

- Implement governance and oversight

- Train users and support teams

- Set up data pipelines and integrations

- Plan for monitoring and optimization

- Prepare runbooks for common issues

Production phase (ongoing)

- Roll out to broader audience

- Monitor performance and user adoption

- Collect feedback and issues

- Optimize based on real-world usage

- Scale to additional use cases as confidence builds

Optimization phase (ongoing)

- Continuously improve system performance

- Retrain models as patterns change

- A/B test variations

- Expand to adjacent use cases

- Build institutional knowledge

Organizations that follow this path, rather than trying to jump directly from pilot to enterprise-wide deployment, are far more successful. They learn as they go. They build capability progressively. They avoid the big bang rollouts that often fail.

Organizational Change Management

Scaling AI isn't just a technical challenge. It's an organizational challenge.

When you pilot AI in one team, change is contained. That team adapts. They learn new workflows. They develop new skills.

When you scale AI across the organization, change becomes pervasive. Multiple teams are affected simultaneously. Organizational workflows are disrupted. Skill gaps emerge.

Managing this requires:

Clear executive sponsorship: AI scaling needs visible support from leadership. Without it, resistance at middle management levels will kill momentum.

Dedicated change management: Assign someone to lead organizational change. They should be focused on adoption, training, communication, and addressing resistance. This is a full-time role.

Phased rollout: Don't roll out to everyone at once. Phase the rollout by department, by geography, or by use case. This spreads the disruption and lets you learn from each phase.

Training infrastructure: Build comprehensive training for all affected employees. This isn't just "here's how to use the tool." It's "here's why we're doing this, how your role is changing, and how to succeed in the new workflow."

Support and help desk: Ensure adequate support during rollout. When people run into problems, support needs to be responsive. Slow support response during rollout kills adoption.

Feedback loops: Create mechanisms for employees to surface concerns, questions, and feedback. Act on the feedback. Show people their input is being heard and incorporated.

Celebrate wins: As people master new workflows and see benefits, celebrate those wins publicly. Share stories about improved productivity, better outcomes, or easier workflows. Build momentum.

Section 10: Measuring Success and Impact

Defining the Right Metrics

How do you know if your AI implementation is working?

This seems like a simple question. But organizations often define metrics poorly, then wonder why they can't demonstrate value.

Good metrics have specific characteristics:

Aligned with business strategy: The metric should measure something that matters to the business. If your strategy is to improve customer retention, measure customer retention. If it's to reduce costs, measure costs. Don't measure vanity metrics that don't connect to business outcomes.

Measurable and verifiable: The metric should be objective and measurable. Not "improved efficiency" (subjective) but "reduced processing time from 10 hours to 6 hours per week" (objective).

Comparable before and after: You need to be able to measure performance before the AI system was implemented, so you can see the improvement after. This requires baseline measurement.

Controlled for external factors: If you implement lead scoring in January and then measure results in March, but your company also launched a major marketing campaign in February, how much of the improvement is from lead scoring vs. the marketing campaign? You need to separate the effects.

Leading and lagging indicators: Leading indicators move early and signal where results are heading (for example, time spent processing leads). Lagging indicators confirm the eventual outcome (for example, conversion rate). You need both.

Examples of good metrics:

- Content production: Hours spent creating content, content volume per team member, cost per piece of content

- Lead scoring: Sales cycle time, conversion rate, cost per sale, time spent on leads that don't convert

- Predictive maintenance: Unplanned downtime hours, maintenance cost, asset utilization rate

- Customer service: Support ticket volume, first-response time, resolution time, customer satisfaction scores
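For the "controlled for external factors" point, one common approach is a difference-in-differences comparison: measure the change in a group that got the AI system, then subtract the change in a comparable group that did not. The sketch below uses made-up conversion rates and a hypothetical helper name. It also rests on the assumption that both groups would have moved in parallel without the AI system, which is worth checking before trusting the result.

```python
def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Estimate the effect of a change, net of whatever moved both groups.

    The treated group got the AI system; the control group did not. Anything
    that affected both groups (such as a company-wide campaign) cancels out,
    assuming the two groups would otherwise have moved in parallel.
    """
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Made-up conversion rates (percent). Team A adopted lead scoring in January;
# Team B did not. Both benefited from the February marketing campaign.
effect = difference_in_differences(
    treated_before=4.0, treated_after=6.5,
    control_before=4.2, control_after=5.2,
)
```

In this example, Team A's conversion rate rose 2.5 points, but Team B's rose 1.0 point without any AI system, so only about 1.5 points can reasonably be credited to lead scoring.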

ROI Calculation Framework

Once you have good metrics, how do you calculate ROI?

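Simplified ROI formula:

ROI = (Total gains - Total costs) / Total costs × 100%

But let's make this concrete with an example.

You implement AI-assisted content creation. Your baseline is 20 hours per week of content creation time, at a fully-loaded cost of about $100 per hour, or roughly $104K per year.

With AI assistance, the same team produces the same content in 12 hours per week. That's 8 hours of time saved at $100 per hour, or $41.6K over 52 weeks.

You invested roughly $19K in the first year to get there.

Total costs: $19K

Total gains: $41.6K

ROI: ($41.6K - $19K) / $19K ≈ 119%

In plain English: for every dollar spent, you got that dollar back plus about $1.19 in net gain in the first year. The investment pays for itself in about 5.5 months.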

But this is just the direct ROI. There are indirect benefits:

- Quality improvements: If AI assistance improves content quality, that can lead to better engagement, higher conversion rates, and more revenue

- Capability expansion: The freed-up time lets your team do more high-value work that they weren't able to do before

- Team satisfaction: If your team is happier doing less routine work, that can reduce turnover and recruitment costs

- Scale without hiring: If you can scale content production without hiring more people, that avoids salary, benefits, and onboarding costs

These indirect benefits are real, but harder to quantify. Conservative organizations quantify only direct benefits. Progressive organizations look for indirect benefits too.
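The direct ROI arithmetic is simple enough to script, which makes it easy to rerun with your own numbers. In the sketch below, the 20 and 12 hours per week come from the example above. The $100 hourly cost and $19,000 total cost are assumptions, chosen because they reproduce the $41.6K gain, the $1.19 return, and the roughly 5.5-month payback quoted above. The function name is hypothetical.

```python
def first_year_roi(hours_saved_per_week, hourly_cost, total_cost, weeks=52):
    """Return (annual_gain, roi, payback_months) for a time-saving project.

    `roi` is a fraction (1.19 means 119%). `payback_months` is infinite
    when the project saves nothing.
    """
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    annual_gain = hours_saved_per_week * hourly_cost * weeks
    roi = (annual_gain - total_cost) / total_cost
    if annual_gain <= 0:
        payback_months = float("inf")
    else:
        payback_months = total_cost / (annual_gain / 12)
    return annual_gain, roi, payback_months


# 20 hours/week before and 12 hours/week after, as in the example above.
# ASSUMPTIONS: $100/hour fully-loaded cost and $19,000 total first-year cost.
gain, roi, payback = first_year_roi(
    hours_saved_per_week=20 - 12,
    hourly_cost=100,
    total_cost=19_000,
)
```

Because this covers direct time savings only, it gives the conservative figure. Any indirect benefits you choose to quantify would be added to `annual_gain` before the ROI is computed.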

Section 11: The Human Element: Skills and Capability Building

AI Literacy Across the Organization

Here's something that doesn't get enough attention: most people in business don't understand AI well enough to use it responsibly.

They know it exists. They know it's powerful. They know it's supposed to make them more productive. But they don't understand how it works, what it's good at, what it's bad at, and when they should trust it vs. verify it.

This creates risk.

A marketing manager who doesn't understand how AI content generation works might publish AI-generated content without verifying accuracy. A sales leader who doesn't understand how AI lead scoring works might rely on bad scores and miss good opportunities. An IT leader who doesn't understand AI security risks might deploy AI tools without proper safeguards.

Responsible organizations are investing in AI literacy programs that are comprehensive and role-specific.

Executive education: Help leaders understand AI's strategic implications, competitive dynamics, and risk profile. This is 2-3 hours of training focused on strategy and business implications.

Manager training: Help managers understand how AI affects their teams, how to support AI adoption, how to identify and address issues. This is 4-5 hours of training focused on management implications.

Individual contributor training: Help employees understand how to use specific AI tools responsibly, when to trust outputs and when to verify. This is 2-4 hours of training per tool, hands-on and practical.

Specialist training: For people building or implementing AI systems, deeper technical training on how models work, how to evaluate them, how to monitor them. This is 20+ hours of training.

These programs should be:

- Ongoing, not one-time: AI is evolving rapidly. What was true six months ago might not be true today. Annual refresher training is the minimum.

- Practical, not theoretical: Use real examples from your business. Let people practice with your actual tools and data.

- Role-specific, not generic: A marketer needs different AI literacy than an IT person.

- Accessible: Don't require advanced technical knowledge. Explain concepts in accessible language.

Building and Hiring AI Talent

Beyond general AI literacy, organizations need specialized talent to build, implement, and manage AI systems.

This is a competitive talent market. AI engineers, data scientists, and machine learning specialists are in high demand and short supply.

Responsible organizations are taking multiple approaches:

Develop internal talent: Rather than only hiring people with AI experience, hire smart people and train them. It takes time, but you build organizational knowledge and reduce dependency on any individual.

Hire strategically: Hire a few senior AI practitioners who can lead implementation and train others. These people are expensive but they're force multipliers.

Partner with external expertise: Use consultants or implementation partners to accelerate implementation and transfer knowledge to your team.

Build AI centers of excellence: Centralize AI expertise in a dedicated team that other departments can access. This avoids duplicate effort and builds consistency.

Create career paths: Show people how they can build careers around AI. This attracts talent and retains experienced people.

The organizations that are best at this are building AI capability as an ongoing investment, not a one-time project. They're creating internal paths for people to move into AI-related roles. They're hiring continuously. They're investing in training. They're treating AI talent as a strategic asset.

Section 12: Looking Ahead: 2026 and Beyond

Emerging Trends to Watch

The AI landscape is changing rapidly. Here are some trends that are likely to shape how organizations use AI in 2026 and beyond:

Agentic AI: Today's AI tools mostly respond to requests. You ask for something, AI generates it. The next wave is "agentic AI" - AI that can autonomously work toward goals, make decisions, and take actions. This is more powerful but also requires more robust governance.

Multimodal AI: Today's AI tools typically work with one type of data (text, images, audio). Multimodal AI works across multiple types simultaneously. This enables richer applications but also increases complexity.

Domain-specific AI: Rather than general-purpose AI, we're seeing increasingly specialized AI for specific industries or functions. This improves performance but requires deep domain knowledge.

AI regulation: Regulatory frameworks are evolving rapidly. By 2026, most AI systems will need to comply with multiple regulatory regimes (GDPR, AI Act, sector-specific regulations, etc.). Governance will become even more critical.

Transparency and explainability: There's growing demand for AI systems that can explain their decisions. This will drive development of explainable AI and transparency tools.

AI security: As AI systems become more critical, they're becoming high-value targets for attackers. AI security is becoming a major focus area.

Human-AI collaboration: Rather than AI replacing humans, the trend is toward more sophisticated human-AI collaboration, with systems that understand human expertise and complement it.

Building Future-Ready Organizations

How do you build an organization that's ready for whatever comes next?

Flexibility and adaptability: Don't over-commit to any single technology or approach. Build systems and capabilities that can adapt as technology evolves.

Continuous learning: Make learning part of your culture. Encourage people to experiment with new tools, attend training, and stay current.

Strong governance foundations: The specific tools and technologies will change, but the governance principles (transparency, accountability, risk management) will remain constant. Build these foundations and they'll serve you regardless of what comes next.

Customer and employee focus: Stay focused on solving problems for customers and employees, rather than chasing technology for its own sake. Technologies come and go, but customer needs are constant.

Ethical framework: Develop and articulate an ethical framework for AI use in your organization. This guides decisions when new technologies and opportunities emerge.

Network and partnerships: Build relationships with peers, industry groups, and experts. These relationships help you stay informed about trends and avoid isolated thinking.

The Path Forward: Key Takeaways for 2026

Let's synthesize this. Here's what organizations need to focus on in 2026:

1. Governance is no longer optional. With 78% of CISOs reporting AI-powered threats and regulatory frameworks tightening, organizations without clear governance frameworks are taking on unacceptable risk. Make governance a strategic priority.

2. Trust is a competitive advantage. In an environment of tighter budgets and longer sales cycles, 67% of consumers cite trust as essential to purchase decisions. Organizations that build transparent, trustworthy AI systems will win.

3. Data integrity is foundational. AI is only as good as its training data. Organizations that can't guarantee data quality are building on sand. Make data governance a core capability.

4. People and process matter more than technology. The organizations with the best AI outcomes aren't necessarily the ones with the fanciest tools. They're the ones with the most disciplined processes, the best-trained teams, and the strongest governance.

5. Unified strategies outperform siloed approaches. In a complex, interconnected business environment, siloed working is unsustainable. Organizations that align marketing, sales, product, and operations around unified strategies will be more efficient and more effective.

6. Responsible implementation drives ROI. Organizations that implement AI responsibly see 3-4x better ROI than organizations that cut corners. The short-term investment in governance pays dividends in the long term.

7. Change management is as important as technology deployment. The technical side of AI is often simpler than the organizational side. Invest in change management, training, and adoption support.

8. Transparency builds confidence. Whether you're communicating with customers or employees, transparency about how AI is being used, why it's being used, and how it's governed builds confidence and accelerates adoption.

The organizations that execute well on these principles will be the ones that capitalize on AI's potential while managing its risks. They won't be the fastest to adopt every new tool. But they'll be the most sustainable, most trustworthy, and most successful in the long term.

FAQ

What is responsible AI?

Responsible AI refers to the practice of developing, deploying, and managing artificial intelligence systems in a way that is transparent, accountable, and aligned with ethical principles. It involves implementing governance frameworks, ensuring data integrity, maintaining human oversight, and continuously monitoring systems for bias, accuracy, and compliance. Responsible AI isn't about avoiding AI—it's about using AI thoughtfully and systematically.

Why does governance matter for AI implementation?

Governance provides the structure and processes that enable safe, effective AI deployment at scale. Without governance, organizations end up with duplicate efforts, inconsistent approaches, uncontrolled risks, and ultimately, poor outcomes. Governance ensures that AI systems are developed and deployed systematically, with clear ownership, defined processes, regular monitoring, and continuous improvement. Organizations with strong governance see 3-4x better ROI on AI investments.

How can organizations build trust through AI transparency?

Transparency means explaining how AI systems work, what data they use, how accurate they are, and what their limitations are. This applies both to customers (explaining how recommendations are generated, why specific decisions were made) and employees (explaining how AI changes workflows and why). Organizations that are transparent about AI usage build confidence faster. Customers and employees respect honesty more than they're concerned about AI's use, as long as it's used responsibly and they understand how it works.

What are the key metrics for measuring AI success?

Metrics should be aligned with business strategy and measurable before and after implementation. For content production, track hours saved and cost reduction. For lead scoring, track sales cycle time and conversion rate improvements. For customer service, track resolution time and satisfaction scores. The best organizations track both leading indicators (current performance) and lagging indicators (eventual business outcomes) to get a complete picture of impact.

How should organizations approach AI adoption across departments?

Start with a clear problem (not technology for its own sake). Conduct a pilot in one department with a specific use case. Document what works and what doesn't. Build production-grade infrastructure (not just a prototype). Implement governance and training. Conduct a pre-production phase where the new system runs in parallel with existing processes. Then phase the rollout across departments, not all at once. This reduces disruption and lets you learn from each phase before scaling further.

What skills do employees need to work effectively with AI?

All employees should have baseline AI literacy: understanding what AI is, what it's good at, what it's bad at, and when to trust it vs. verify it. Managers should understand how AI affects their teams and how to support adoption. Individual contributors using AI tools need hands-on training on those specific tools. Technical specialists building or implementing AI systems need deeper knowledge of how models work, how to evaluate them, and how to monitor them. AI literacy should be ongoing, not one-time training.

Conclusion: The Competitive Advantage of Responsibility

In 2026, the competitive advantage won't go to the organizations that adopt AI fastest. It'll go to the organizations that adopt AI smartest.

This is important because it reframes the conversation. The pressure to move fast creates real urgency. Every competitor seems to be announcing new AI initiatives. Every vendor is pushing implementation. Every executive is feeling pressure to "do something" with AI.

But that pressure is exactly when you need to pause and think strategically.

The organizations that will win in 2026 are the ones that:

- Build governance alongside implementation

- Invest in data quality and integrity

- Prioritize transparency and trust

- Unify channel strategies and cross-functional teams

- Invest in people and capability building

- Measure impact rigorously

- Adapt and optimize continuously

These approaches take time. They require discipline. They feel slower than the competitors who are moving recklessly.

But they work. And they compound.

Organizations that implement AI responsibly in 2025 will have massive advantages in 2026. Their systems will be more reliable. Their teams will be more capable. Their customers will trust them more. Their risk profile will be lower. Their ROI will be higher.

The organizations that moved recklessly? They'll be scrambling to fix problems. Dealing with compliance issues. Rebuilding trust. Retraining teams.

Responsible innovation isn't just about managing risk. It's about building competitive advantage.

In 2026, the race won't be won by the fastest. It'll be won by the most responsible.

Key Takeaways

- 78% of CISOs report AI-powered cyber threats already impacting organizations, demanding rigorous security governance

- 67% of consumers consider brand trust essential to purchase decisions, making transparent AI implementation a competitive advantage

- Organizations with strong AI governance see 3-4x better ROI compared to those deploying AI without governance frameworks

- Unified cross-functional strategies and aligned metrics outperform siloed departmental approaches by 20-35% in efficiency and effectiveness

- People, process, and governance matter more than technology: the most successful organizations prioritize systematic implementation over rapid adoption
