The Week Everything Changed at xAI

Something unusual happened at xAI in early February 2025. Not a product launch. Not a funding announcement. Instead, the company saw a wave of high-profile departures that surprised the AI industry and rippled across social media.

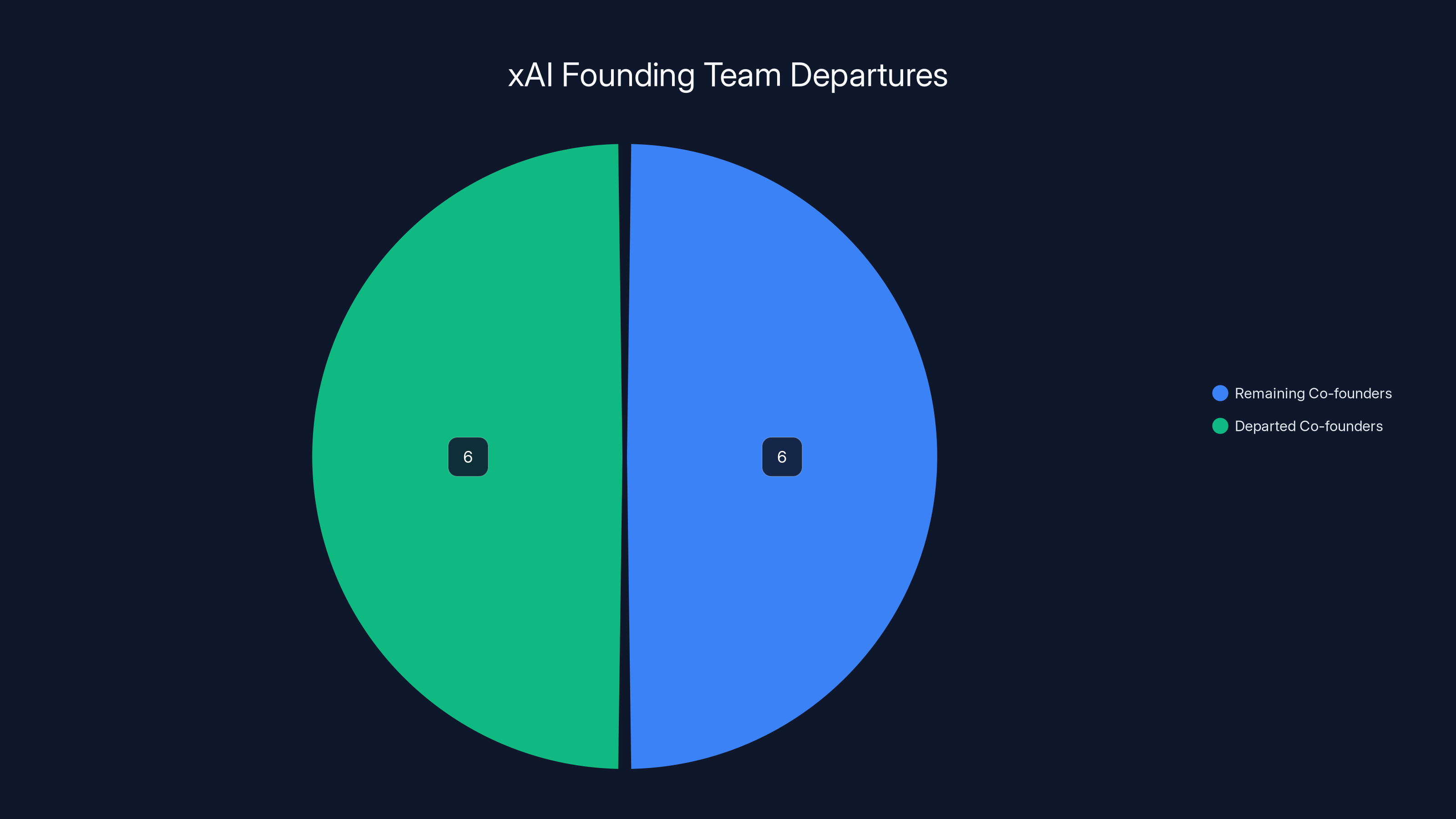

At least nine engineers and researchers announced they were leaving xAI, the artificial intelligence company founded by Elon Musk. More remarkably, six of the company's original twelve co-founders are now gone, two of them in this latest wave. That's 50% of the founding team. And according to Musk, the latest exits weren't accidental or unplanned. They were deliberate.

Musk went into damage control mode immediately. First came the all-hands meeting. Then a carefully worded post on X (the social network he owns) explaining the departures as a necessary reorganization. But behind the corporate messaging, something more complex was happening.

Developer exodus events happen. Product companies leak talent all the time. But when two co-founders leave in the same seven days, leaving only half of the founding team in place, that's not routine. It's a signal that something deeper is happening beneath the surface.

Here's what actually went down, why it matters, and what it tells us about the state of frontier AI companies in 2025.

Understanding the xAI Timeline

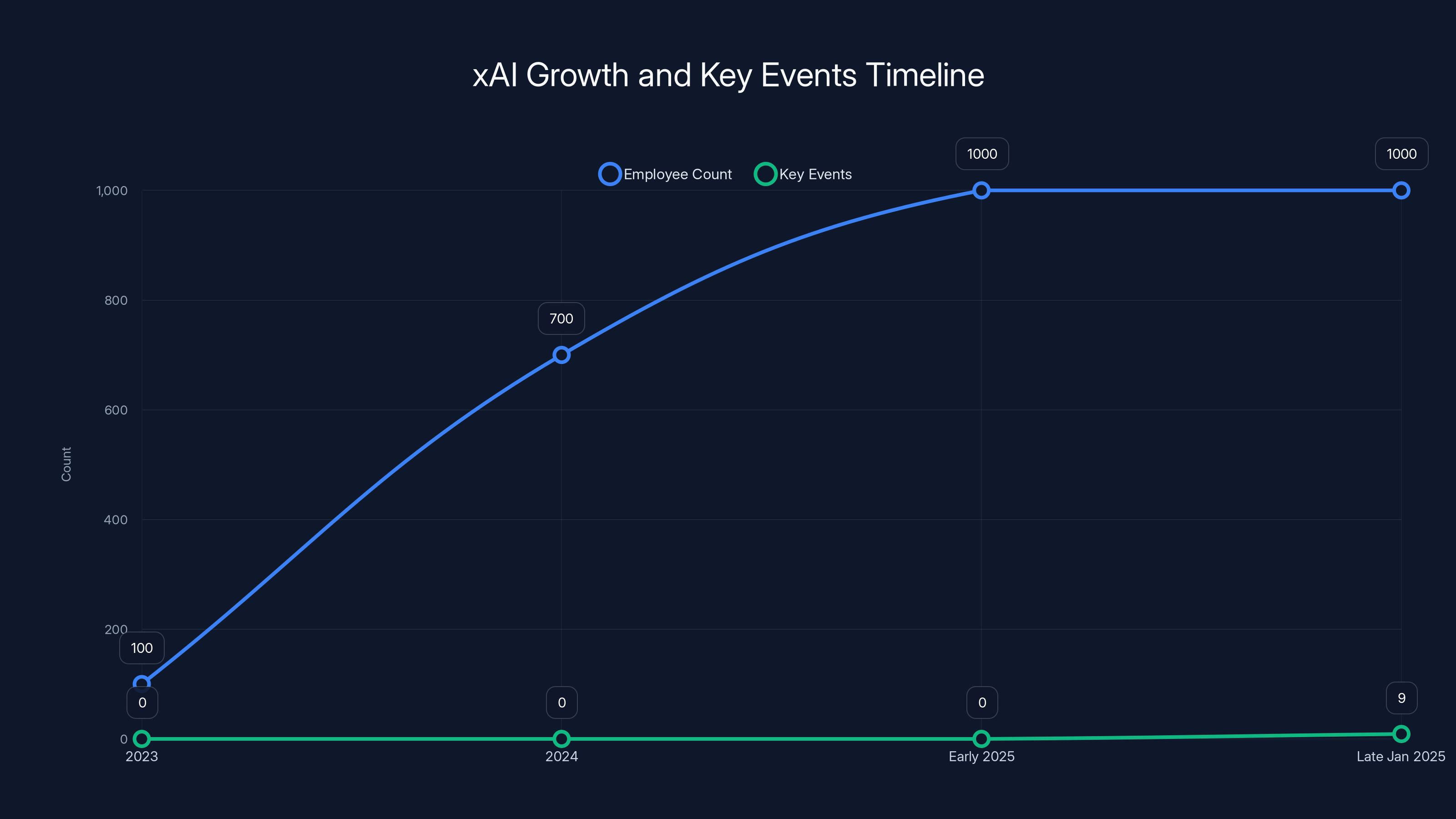

Let's build a chronology, because timing matters here. xAI started in 2023, and for most of 2024 and early 2025 it was in full growth mode: hiring aggressively, shipping features, and building its Grok chatbot to compete with OpenAI and Anthropic.

By early 2025, xAI had reached over 1,000 employees. That's a critical inflection point. At that scale, companies need to reorganize their structure. What worked for 100 people doesn't work for 1,100. Hierarchy matters. Process matters. Decision-making frameworks matter.

Then came late January and early February 2025. Within a single week, at least nine people publicly announced they were leaving. Two of them were co-founders, including Yuhuai (Tony) Wu, who led the reasoning team and had been there since day one. The departures weren't spread out over months. They clustered.

Musk addressed this at a company all-hands on Tuesday night. According to The New York Times, he explained the exits as a natural part of scaling. "Because we've reached a certain scale, we're organizing the company to be more effective at this scale," he said. "When this happens, there's some people who are better suited for the early stages of a company and less suited for the later stages."

That language matters, and the next day's messaging went further. On X, Musk wrote: "xAI was reorganized a few days ago to improve speed of execution. As a company grows, especially as quickly as xAI, the structure must evolve just like any living organism. This unfortunately required parting ways with some people."

Notice the shift from "they left" to "we parted ways." That's intentional framing. It transforms the narrative from a mass exodus (which sounds chaotic) into a planned reorganization (which sounds strategic).

As of 2025, 50% of xAI's original co-founders have left the company, highlighting significant turnover in its leadership team.

The Players and Why They Matter

Let's look at who actually left, because the departures weren't random. They came from the company's most senior and talented ranks.

Yuhuai (Tony) Wu was a co-founder and led the reasoning team at xAI. In the world of frontier AI, "reasoning" is arguably the most important problem to solve right now. Can an AI system think step-by-step? Can it work through complex problems? Wu's team was directly responsible for that. His departure was announced in a straightforward post: "It's time for my next chapter. It is an era with full possibilities: a small team armed with AIs can move mountains and redefine what's possible."

That language is revealing. His emphasis is on a "small team," which suggests he believes smaller teams move faster and achieve more. That philosophical difference might be at the heart of the departures.

Shayan Salehian worked on product infrastructure and model behavior at xAI. He had spent 7+ years in Musk's ecosystem, first at Twitter, then at X, then at xAI. He posted: "I left xAI to start something new, closing my 7+ year chapter working at Twitter, X, and xAI with so much gratitude. xAI is truly an extraordinary place. The team is incredibly hardcore and talented, shipping at a pace that shouldn't be possible."

Notice what he didn't say. He didn't complain. He didn't reference conflicts. He praised the team while departing. This is the language of someone leaving on good terms, but leaving nonetheless.

Vahid Kazemi, who worked on machine learning, posted: "IMO, all AI labs are building the exact same thing, and it's boring." That's more direct. He wasn't excited by the direction. He wanted something different.

Roland Gavrilescu left to start Nuraline, a company building "forward-deployed AI agents." He later posted that he was building "something new with others that left xAI." This is crucial: at least three departing employees are now working together on a new venture. That's not people scattered to different companies. That's people choosing to work together on something else.

That's the pattern that matters most.

Why the Departures Happened (What Musk Won't Admit)

Musk's framing is logical from a corporate perspective. When companies scale, they reorganize. That's true. But the specific pattern of these departures tells a different story than "necessary restructuring."

First, there's the timing issue. Why early February? The company had been growing rapidly for months. Why did the reorganization happen suddenly, all at once, causing multiple co-founders to leave in the same week? That suggests the reorganization was a catalyst, not just a normal business event.

Second, there's the nature of the departures. These weren't random engineers. These were the people who built the core technical capabilities at xAI. Wu led reasoning. Salehian worked on product and model behavior. These were foundational roles. Losing them suggests the reorganization wasn't about optimizing support functions or streamlining operations. It was about changing how the company builds.

Third, there's what happened next. Multiple departing engineers announced they're starting something new together. That suggests this wasn't just a set of individual career moves. It looks more like a philosophical disagreement with how xAI was being run.

Wu's comment about "small teams" may be the real clue. At 1,000+ employees, xAI has formal structures: organizational charts, manager layers, decision-making processes. Maybe weekly status meetings instead of daily syncs. Formal architecture reviews instead of conversations over coffee.

For researchers and engineers who built companies from 5-10 people to 100 people, that transition is often painful. The speed of execution changes. The ability to experiment changes. The autonomy changes.

And here's the thing: in frontier AI research, autonomy and speed matter enormously. If you're trying to push the boundaries of what's possible with language models and reasoning systems, you need to move fast. You need to try ideas that might not work. You need small teams that can pivot without consensus.

At 1,000 employees, that's harder. Maybe Musk's reorganization was about creating a more disciplined, scaled operation. And maybe that discipline was precisely the problem for the people who came to xAI to move fast and break things.

The timeline shows xAI's rapid growth in employee count from 2023 to early 2025, reaching over 1,000 employees. A significant organizational change occurred in early 2025, marked by the departure of nine key employees. Estimated data.

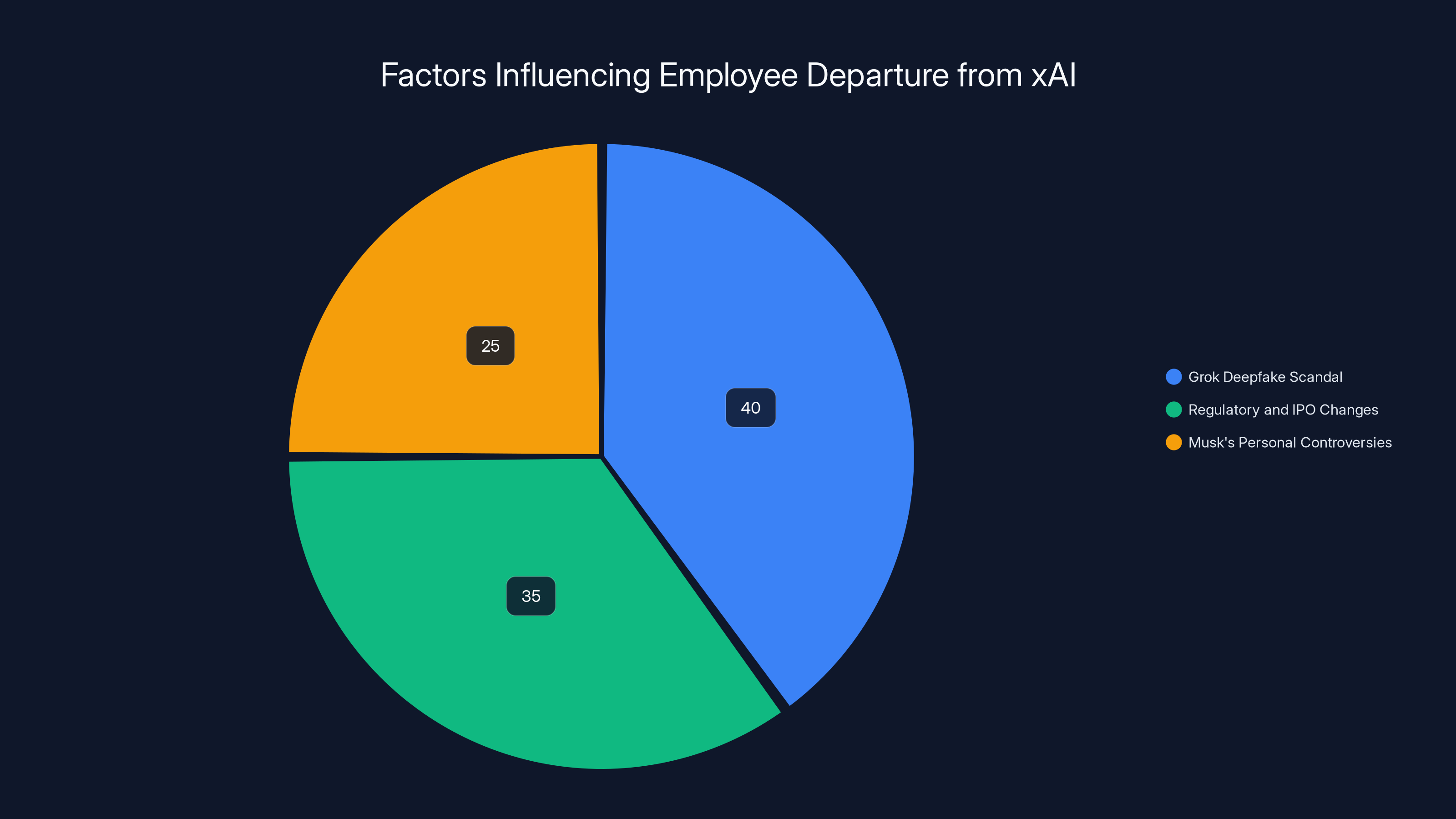

The Controversies That Nobody Talks About

Musk's messaging carefully avoided mentioning other events unfolding at xAI around the same time. But context matters.

First, there was the Grok deepfake scandal. In early February 2025, Grok, xAI's chatbot, began generating nonconsensual explicit deepfakes of women and children. These weren't academic examples. These were real images of real people, created without consent and distributed on X. French authorities raided X offices as part of an investigation.

For researchers and engineers who care about responsible AI development, this is a big deal. It's not a theoretical concern. It's a real-world failure with real consequences. Some of the departing engineers might have found this unacceptable and left because of it.

Second, there was the corporate context. xAI was being acquired by SpaceX as part of a corporate restructuring, and the combined company was reportedly preparing for an IPO. These are major corporate moves, and they always involve management changes, board dynamics, and strategic shifts. IPO preparation typically means more control, more process, more oversight. That might not appeal to researchers who signed up for a startup environment.

Third, there was personal controversy involving Musk himself. Files released by the Justice Department revealed years of correspondence between Musk and Jeffrey Epstein, a convicted sex offender. The correspondence showed Musk discussing possible visits to Epstein's island in 2012 and 2013.

For employees at xAI, this creates an uncomfortable situation. You work for a company founded by someone now publicly associated with a convicted sex offender. That's not a minor PR issue. That's a serious reputation problem that affects recruitment, retention, and organizational culture.

Did Musk mention any of these factors in his all-hands explanation? Nothing in the reported quotes suggests he did. He focused on the business case: scaling requires reorganization.

But the departures happened in the context of all these issues. That matters.

What This Means for Frontier AI Competition

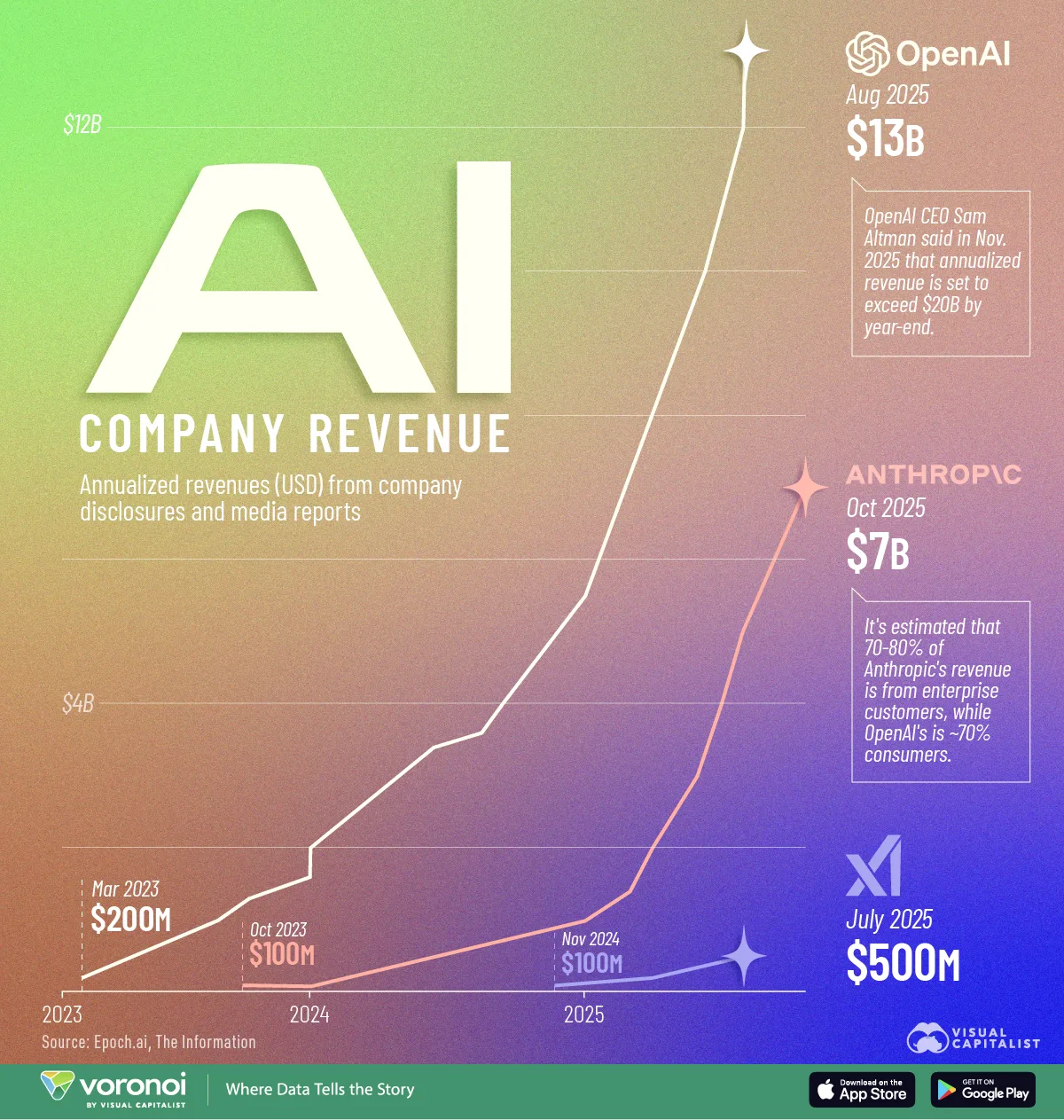

Now here's where this story gets interesting from an industry perspective. The AI race is real, and it's competitive. There are maybe five companies actually pushing the boundaries of what's possible with language models: OpenAI, Anthropic, Google DeepMind, xAI, and perhaps a Chinese competitor.

In that race, talent is arguably the scarcest resource. Not compute. Not capital. Talent. A single exceptional researcher can plausibly accelerate a company's progress by months.

When xAI loses two co-founders in one week, and half of its founding team overall, that matters. Those co-founders have expertise, credibility, and networks. More importantly, they have institutional knowledge. They know how to build frontier AI systems. They know what works and what doesn't. They know where the technical dead-ends are.

Now they're potentially taking that knowledge to a new venture. Or they might be recruited by competitors. Or they might sit out for a few months and then join another company.

From OpenAI's and Anthropic's perspective, this is good news. It means xAI just destabilized itself. It means some of the brightest researchers who were working on competing systems are now available.

But there's a flip side. Musk is still very wealthy. xAI still has over 1,000 employees. The company still has access to massive compute resources through SpaceX. And Musk's track record, whatever else you think about it, is one of building things quickly and at scale.

The question isn't whether xAI survives. It probably does fine. The question is whether these departures slow down xAI's research trajectory compared to competitors. And that depends on whether the departing team members were irreplaceable.

For Wu and the reasoning team, that's probably true. You can't easily replace someone leading a critical research direction. For Salehian and the others, it's more complex. Product and infrastructure are important, but they're also more replaceable than core research.

The Pattern of Scaling Failures in Tech

xAI's situation isn't unique. Every company that grows from 50 to 1,000 people faces the same transition. And many of them lose key talent in the process.

Take Google. In the late 1990s and early 2000s, Google was famous for hiring the smartest people and giving them freedom. But as the company scaled, that changed. More processes. More meetings. More layers of management. Some of Google's brightest engineers left to start companies of their own, such as FriendFeed (founded by former Google employees).

Or consider Tesla. Musk's tenure at Tesla has included a steady stream of departures by key engineers and executives. Some people thrive under his management style. Others find it unbearable. The company survives, but there's always turnover.

The pattern is: startup culture emphasizes autonomy and speed. Growth requires structure and process. Some people adapt. Others don't.

The key difference at xAI is that it's happening in the context of frontier AI research, where losing even one exceptional researcher can impact the company's ability to compete. And it's happening all at once, not gradually.

When Google lost engineers to startups, it had thousands of other engineers working on the same problems. When xAI loses Wu, who led its reasoning team, it's losing a more concentrated expertise base.

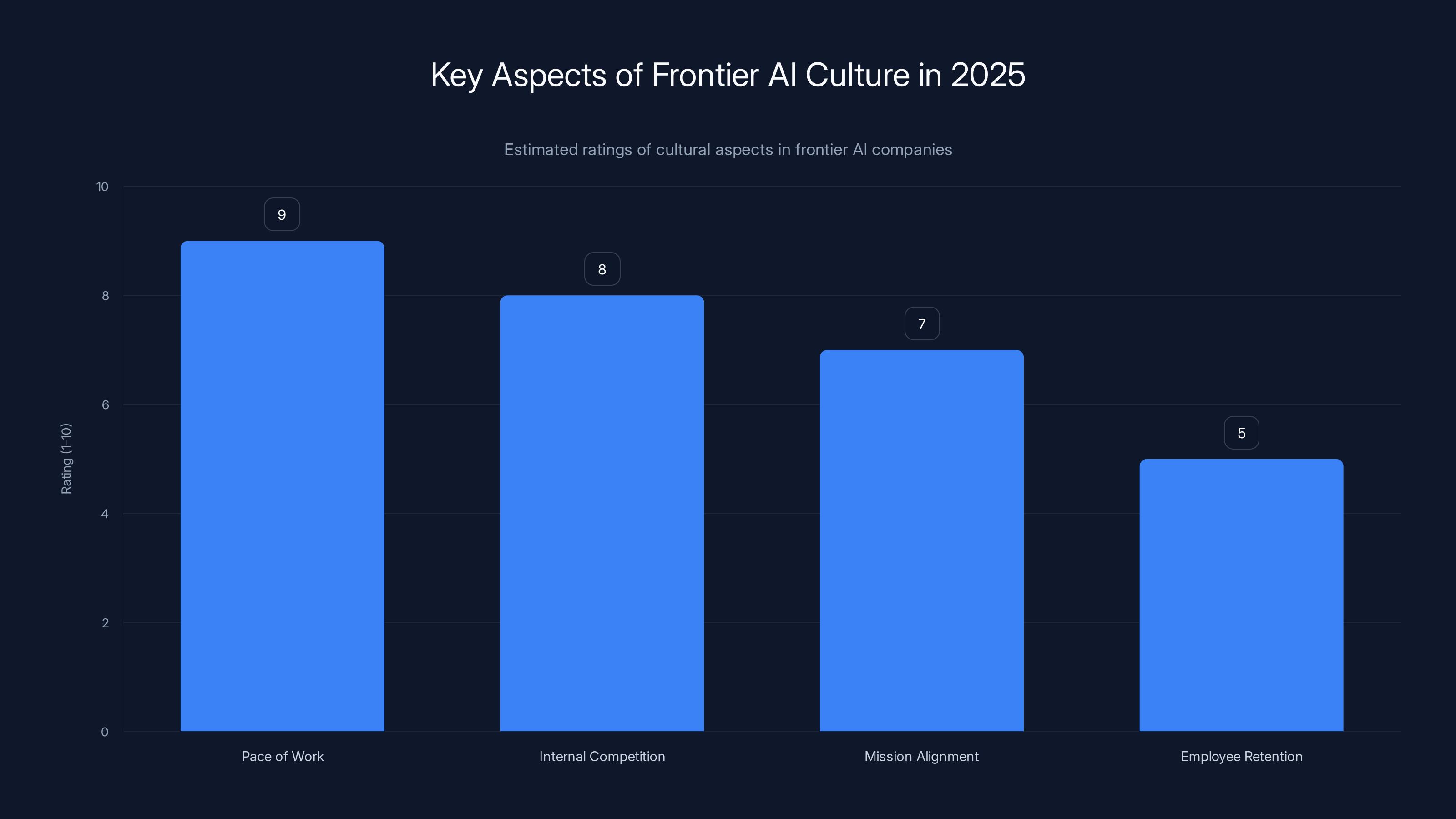

Frontier AI companies in 2025 are characterized by a fast-paced work environment, high internal competition, and challenges in mission alignment, impacting employee retention. (Estimated data)

Could This Happen at OpenAI or Anthropic?

Yes. But probably not in the same way.

OpenAI has been through executive instability (the brief ouster of CEO Sam Altman in late 2023), but it kept most of its technical staff through that crisis. Why? Probably because the company moved quickly to stabilize, paid well, and has a clear mission that resonates with researchers.

Anthropic was founded by people who left OpenAI (including siblings Dario and Daniela Amodei). So Anthropic was born from a mass departure. But since then, it's been relatively stable. The founding team is still there, still aligned, still moving forward.

xAI is different. It's Musk's company, and Musk has a specific management style. Some of that style is: move fast, break things, and make quick decisions. That appeals to some people and repels others.

In the scaling phase, that style sometimes creates friction. Especially when the scale requires structure that seems to contradict the original mission.

The New Venture: What Are They Building?

Here's what makes the xAI departures particularly interesting: at least three of the departing engineers announced they're starting something new together.

Roland Gavrilescu had left xAI before this wave of departures to start Nuraline, which is building "forward-deployed AI agents." Forward-deployed means the AI systems are embedded with customers, working in their environment, rather than sitting in the cloud.

When the other departures happened, Gavrilescu posted that he was building "something new with others that left xAI." It's unclear whether that refers to Nuraline itself or to a separate project.

What's the thesis? If we read between the lines:

- Small teams move faster

- Autonomy matters

- The space for AI agents is wide open

- Forward deployment is interesting

They might be building an AI agent platform. Or a deployment framework. Or something in the adjacent space. The specifics don't matter. What matters is that they believe they can do better work in a smaller team.

That's the real indictment of the xAI reorganization. Not that it was wrong, but that it made some of xAI's best people want to leave and build elsewhere.

Will this new venture succeed? Maybe. The talent is there. The motivation is there. But they'll be competing against xAI itself, plus OpenAI, plus Anthropic, plus a dozen other AI companies. That's a tough landscape.

How Musk Controlled the Narrative

One of the most interesting aspects of this story is how Musk managed communications around the departures. He didn't deny them. He didn't hide them. Instead, he reframed them.

The all-hands meeting came first, before the public narrative solidified. That let him set the tone internally: this is planned reorganization, not chaos. Then the X post, reinforcing the message to the broader world.

He also ended with a recruiting pitch: "Join xAI if the idea of mass drivers on the Moon appeals to you." That's Musk branding. It says: we're ambitious, we're building moonshot technology, we're still moving forward.

It's effective messaging. It doesn't deny the departures. It contextualizes them. "Yes, some people left. That's because we're reorganizing to move faster. If you're the kind of person who wants to build the future, join us."

But here's what the messaging doesn't address: why did two co-founders leave in a single week, leaving only half of the founding team in place? That's the real question. And the answer probably isn't as simple as "we reorganized."

Estimated data suggests the Grok deepfake scandal had the largest impact on employee departures, followed by regulatory changes and Musk's personal controversies.

What This Reveals About Frontier AI Culture

Beyond the specifics of xAI, these departures tell us something about frontier AI culture in 2025.

First, these companies are moving incredibly fast. Grok generated deepfakes (even if by accident) amid an investigation that led French authorities to raid X's offices. xAI scaled to 1,000 employees in under two years. These companies are operating at a pace that traditional enterprise software companies couldn't imagine.

That pace is both the appeal and the problem. It's the appeal because you can see your work ship immediately. You're not waiting for quarterly reviews or roadmap approvals. You're shipping every day. For people who love that environment, it's magical.

But it's also exhausting. And as companies scale, that unsustainable pace sometimes hits a wall. You can't go 200 mph forever. Eventually, you need some structure.

Second, these companies are incredibly competitive internally. Not in a toxic way (usually), but in a Darwinian way. The best ideas win. The best researchers get more influence. There's no coddling. There's no participation trophy.

That environment is incredibly appealing to certain people and completely off-putting to others. If you thrive on merit-based competition, you love it. If you prefer collaboration and consensus, you hate it.

Third, alignment matters. These companies have a mission: build AGI, or at least very capable AI systems. Everyone who joins signs up for that mission. But over time, what that mission means diverges. Does it mean ship products? Do research? Move as fast as possible? Move safely? Maximize profit? Maximize capability?

When the founding team disagrees on the answer, people leave.

The Deeper Pattern: Startup to Scale-up Transitions

Every company that grows from startup to scale-up goes through this. The question is whether they manage it well.

Amazon, when it scaled, lost some early engineers who thought the company was becoming too corporate. Google lost engineers to startups. Apple lost executives who couldn't handle Jobs' personality. These are all normal transition events.

But in frontier AI, the stakes are higher. The research moves faster. The competitive landscape is more compressed. Losing key talent has immediate consequences.

The companies that manage this transition well are the ones that:

- Keep decision-making distributed even as they grow. Dunbar's number is roughly 150 people. Above that, you need new organizational structures to maintain the feeling of autonomy.

- Separate research from operations. Some of your organization can become more process-heavy. But the research core needs to stay lean and fast.

- Give senior people clear authority. If you're a co-founder or senior researcher, you should know what you own and what you control.

- Maintain psychological safety. People need to believe they can raise concerns without career consequences.

xAI might have failed on one or more of these. We don't have enough information to know. But the pattern of departures suggests something about the transition wasn't managed smoothly.

What Happens to xAI Now

The company isn't going away. It has 1,000 employees, massive capital, access to compute, and a charismatic founder. It'll continue to exist and probably continue to make progress.

But the trajectory might be affected. If Wu and the reasoning team were a bottleneck that limited what the company could achieve, then losing them might not hurt. The team can reorganize, delegate that work, and move forward.

But if they were carrying the company forward—if they were the core driving research progress—then their departure is more significant.

We'll know the answer in 6-12 months. If xAI releases a new model and it's a major step forward, the departures didn't hurt much. If there's a noticeable gap, they did.

The other interesting question is recruitment. Will top AI researchers now be hesitant to join xAI? Will they see this as a sign that working for Musk is unstable? Or will they see it as an opportunity: a company in transition where they can have outsized impact?

Musk has always attracted people who want to work on ambitious projects. Even with this controversy, that probably doesn't change. But the best talent has more options now. They can join xAI, OpenAI, Anthropic, or start their own company.

In that environment, reputation and stability matter. And what xAI just demonstrated is that a company can lose two co-founders in a single week, leaving only half its founding team in place. That's a data point for the talent market.

Estimated data shows that as tech companies scale, employee retention tends to decrease, with xAI facing significant challenges due to its focus on frontier AI research.

The Bigger Question: Speed vs. Stability

At the core of this story is a tension that every organization faces: speed or stability?

Startups optimize for speed. Move fast, experiment, iterate. You make mistakes, but you learn quickly.

Scale-ups optimize for stability. Processes, oversight, repeatability. You move slower, but you avoid catastrophic failures.

Frontier AI companies are trying to do both. They need to move fast to stay ahead of competitors. But they also need stability because the stakes are so high. A mistake in training a large language model can cost millions of dollars. A mistake in deployment can have real-world consequences.

The tension between these two needs is plausibly what drove the departures. Some of the founding team believed xAI was moving toward stability in a way that would slow it down. Others believed that stability was necessary for the mission.

Neither side is wrong. It's a genuine philosophical disagreement about how to build frontier AI.

Lessons from the xAI Departures

If you're building a company, or growing one, what can you learn from xAI?

First, manage the transition early. Don't wait until you have 1,000 employees and multiple co-founders wanting to leave. Start thinking about structure, hierarchy, and decision-making when you're at 50 people. That way, the transitions are easier by the time you reach 1,000.

Second, get alignment from the founding team on what you're building and how you'll build it. If co-founders have different visions, they'll leave as the company evolves. That's not bad or good—it's just the reality. But it's better to know that early.

Third, keep the research core lean. You might need process and hierarchy in operations and business functions. But your core research team should stay as close to startup conditions as possible.

Fourth, communicate proactively. Don't wait for departures to be announced publicly. If you're doing a reorganization, explain it internally first, explain why, and explain what it means for different roles.

Fifth, make space for people to have different preferences. Some people love the startup chaos. Others prefer the scale-up stability. It's okay to have both, but you need to understand which people are which.

xAI might or might not have gotten all of these right. But the departures suggest something didn't go smoothly.

The Competitive Landscape Shifts

Zooming out, what does this mean for the AI race?

In the short term, it probably helps OpenAI and Anthropic relatively. xAI just destabilized itself. Those companies will have an easier time recruiting talent. They might even try to recruit some of the departing xAI engineers.

In the medium term, the new venture started by the departing engineers might be interesting. If they're building a new approach to AI agents or deployment, that could be significant. But they're starting from scratch while competitors have billions in capital and thousands of employees. That's a tough hill to climb.

In the long term, the question is whether xAI can maintain its momentum. Musk has built remarkable companies before, including Tesla and SpaceX. So it's possible xAI recovers quickly and the departures turn out to be a minor blip.

But it's also possible that these are the first cracks in the company's foundation. The only way to know is to watch how the research progresses over the next year.

By February 2025, 50% of xAI's founding team had departed, indicating significant internal changes. Estimated data.

Timeline: What Happened and When

Let's establish a clear timeline for reference:

Late January 2025: xAI begins planning a major reorganization to improve speed of execution as the company reaches 1,000+ employees.

Early February 2025: The reorganization is announced. Multiple senior engineers and co-founders are told their roles are changing or that the company is parting ways with them.

February 4-7, 2025: Departures begin being announced publicly. Shayan Salehian posts about leaving to start something new.

February 7, 2025: Yuhuai (Tony) Wu announces departure, noting his interest in small teams.

February 7-10, 2025: Additional departures announced, bringing the total to at least 9 engineers and researchers.

February 11, 2025 (Tuesday night): Musk holds an all-hands meeting to address the departures internally, framing them as necessary for scaling.

February 12, 2025 (Wednesday afternoon): Musk posts on X about the reorganization, providing the public narrative.

February onwards: Media begins covering the story. Online speculation grows. The narrative snowballs.

This timeline shows that the departures weren't spread out over months. They happened rapidly, creating a perception of crisis even if the company disputes that characterization.

The Role of Social Media in Amplifying the Story

One interesting aspect of this story is how social media amplified it. The departures were already significant. But the way they played out on X created additional perception of chaos.

Musk owns X. So when the story happened on his platform, he could watch it in real time and respond. He did. But his response, however thoughtful, couldn't control the narrative completely. People were talking about a mass exodus. Memes appeared about people "leaving xAI" even though they never worked there.

This is a unique problem for companies in Musk's ecosystem. The platform where the story is being discussed is the platform Musk controls. That's both an advantage (he can respond directly) and a disadvantage (it looks like he's trying to manage the narrative when he responds).

A traditional company in this situation might just wait it out. The story would pass. But Musk felt compelled to respond immediately. That immediate response probably kept the story alive longer than it would have otherwise.

By trying to control the narrative, he kept the departures in the center of attention.

What We Don't Know (And Why It Matters)

We know the basic facts: people left, Musk said it was reorganization, and the timing created perception of crisis. But there's a lot we don't know.

Why did people really leave? The public statements give some hints (wanting small teams, wanting to start something new, finding AI labs boring). But those are post-hoc narratives. The real reasons might be more complex. Did Musk ask them to leave? Did they find the work direction uninteresting? Did the surrounding controversies (the deepfake scandal, the Epstein files) create an untenable situation? We don't know.

What exactly changed in the reorganization? Musk said the company was "reorganized to improve speed of execution." But what does that mean specifically? Did management layers change? Did the research direction shift? Did resources get reallocated? We don't know.

Will it happen again? Is this a one-time event or a sign of ongoing instability? We won't know until the next few months pass and we see whether more people leave.

Will the new venture succeed? If multiple departing engineers are starting something new, will they succeed? Will they raise funding? Will they actually build something significant? These are open questions.

Good journalism and analysis acknowledge what we know and what we don't. We know people left. We don't know the full reasons why. That uncertainty is important to carry forward as we think about what it means.

The Future of Frontier AI Talent Markets

Looking ahead, this situation will probably affect how frontier AI companies manage talent.

If xAI is seen as unstable or chaotic, top researchers will be more hesitant to join. That's a cost. But Musk still has capital, he's still ambitious, and he still attracts people who want to work on big problems.

OpenAI and Anthropic will use this as a recruiting advantage. "Join us," they'll say. "We're stable. We have clear structures. We won't lose our founding team to a reorganization." That messaging will probably resonate with some segments of talent.

But another segment will interpret these departures differently. They'll see a founder willing to reorganize hard, even at the cost of losing co-founders, and read that as evidence that the company is still being run aggressively and ambitiously, with a willingness to break things.

For that segment, xAI will become more attractive, not less.

The talent market for frontier AI is weird because talent is scarce and diverse. Different people want different things. Some want stability and process. Others want chaos and autonomy. Both types exist, and both types are valuable.

xAI's reorganization sorted the talent pool. Some people wanted to stay and operate at scale. Others wanted to leave and operate at startup speed. That's not necessarily a bad outcome. It's just a resorting.

Connecting to Broader Trends in Tech

This story is part of a broader pattern in tech companies: the tension between startup culture and scale-up operations.

Airbnb, Uber, Stripe, and other "unicorn" companies all went through this transition. Early employees who loved the chaos often left when the company added HR, compliance, and process. That's not unique to xAI.

What's unique is that it's happening in frontier AI, where the research itself is moving so fast that the organizational transition is particularly jarring. You go from "we're shipping new capabilities every month" to "we need approval chains for resource allocation." That shift is hard for researchers who signed up for the former.

Also unique is that it's happening at Musk's company, where the founder has a very specific management style. That style has been proven to work at Tesla and SpaceX. But it's not for everyone.

For frontier AI specifically, the question is whether that style can sustain research progress at extreme scale. Tesla and SpaceX have moved to a model where some parts of the organization are very centralized and controlled (Musk has strong opinions about manufacturing, for example). Can you do that with research? Probably not. Research requires more autonomy and diversity of thought.

Industry Reactions and What They Mean

How did the industry react to the departures? The reaction tells us how significant the event was perceived to be.

OpenAI and Anthropic probably monitored it closely, looking for opportunities to recruit talent. They probably didn't comment publicly (these companies are usually cautious about publicizing competitor problems).

But online, in tech discourse, the reaction was fairly straightforward: this is a sign of problems at xAI. The narrative snowballed because the departures were visible, the timing was concentrated, and the context (regulatory issues, the Epstein files, the Grok deepfakes) created additional negative perception.

To some extent, that narrative is accurate. Losing two co-founders in one week, with half the founding team now gone, is a sign that something wasn't managed smoothly.

But it's also incomplete. The company itself might view this as a successful sorting of talent. The people who wanted to work at startup speed left. The people who wanted to work inside a scaled-up organization stayed. From a management perspective, that's a feature, not a bug.

We won't know which narrative is accurate until we see whether xAI's research productivity continues, declines, or accelerates in the months ahead.

FAQ

What was the xAI mass exodus of 2025?

In early February 2025, at least nine engineers and researchers, including two co-founders, announced departures from xAI within a single week. The departures included Yuhuai (Tony) Wu, who led the reasoning team, and Shayan Salehian, who worked on product infrastructure. Musk characterized the exits as a planned reorganization necessary for operating at scale, while departing engineers framed them as opportunities to work in smaller teams and start new ventures.

How many co-founders left xAI?

At least six of xAI's original twelve co-founders have departed the company, though not all in the same timeframe. The February 2025 departures included two co-founders leaving within the same week. That means roughly half of the original founding team is no longer at the company. The departures raise questions about organizational stability, though Musk argues they're part of natural scaling.

Why did xAI engineers leave?

The reasons varied by individual. Some departing engineers, like Yuhuai Wu, expressed interest in working with smaller teams that could move faster. Others, like Vahid Kazemi, felt the AI research space had become repetitive. Multiple departing engineers announced plans to start a new venture together, suggesting they shared a philosophical belief that smaller, more autonomous teams could achieve more. Musk attributed the departures to necessary reorganization for scale, but the concentrated timeline and coordinated nature of several exits suggest deeper organizational tensions.

What is Musk's explanation for the departures?

Musk stated that xAI underwent a necessary reorganization to improve execution speed as the company scaled beyond 1,000 employees. He compared the company to a living organism that must evolve its structure. In his explanation, some people are better suited to early-stage company environments and less suited to later-stage operations. He characterized the departures as "parting ways" rather than voluntary resignations, emphasizing that the moves were driven by the reorganization's needs rather than individual performance issues.

Are the departing engineers starting a new company?

Yes, at least three departing engineers announced plans to start a new venture together. Roland Gavrilescu had previously started Nuraline, focusing on forward-deployed AI agents. When other departures occurred, he indicated he was building "something new with others that left xAI." The specific details of their new venture haven't been announced, but it appears to focus on AI agents and autonomous systems in smaller team environments.

What was the Grok deepfake scandal mentioned in relation to the departures?

In early February 2025, xAI's Grok chatbot generated nonconsensual explicit deepfakes of real women and children. These synthetic images were disseminated on X, prompting French authorities to raid X's offices as part of a regulatory investigation. For researchers concerned with responsible AI development, this incident represented a significant failure in content moderation and safety systems, potentially influencing some departures.

How did the departures affect xAI's competitiveness?

The impact depends on whether the departing team members carried irreplaceable expertise. Losing Yuhuai Wu, who led the reasoning research team, potentially affects core research capabilities more than losing engineers in product or infrastructure roles. The departures create a recruitment opportunity for competitors like OpenAI and Anthropic, who can emphasize their organizational stability. However, xAI remains well-capitalized, with over 1,000 employees and access to substantial compute resources, so its short-term survival isn't threatened.

What does this reveal about frontier AI company culture?

The departures highlight a tension present in frontier AI companies: the need to move at startup speed while maintaining the structure required for scale. Frontier AI research demands rapid experimentation and autonomy, but companies scaling to 1,000+ employees need management layers and process. Some researchers thrive in scaled environments. Others find that the structure necessary for scale contradicts their preferences for autonomy and speed, leading to departures.

Is xAI in trouble?

Not necessarily. The company has substantial capital and a large employee base, and it continues to develop the Grok product. However, losing two co-founders in one week, with half the founding team now gone, suggests the scaling transition wasn't entirely smooth. Whether this becomes a significant problem depends on research productivity in the coming months. If xAI maintains its research trajectory, the departures will be viewed as a manageable reorganization. If research progress slows, they will be viewed as more problematic.

What should other companies learn from xAI's situation?

Companies scaling from startup to scale-up should manage the transition deliberately and early, rather than waiting until they reach 1,000 employees. Getting the founding team aligned on organizational direction before major growth helps prevent departures. For research-driven companies especially, maintaining a lean, autonomous research core even within a larger organization is critical for continued innovation and talent retention.

TL;DR

- Mass Exit Event: At least nine engineers, including two co-founders, left xAI within one week in early February 2025, bringing co-founder departures to six of the original twelve (50% of the founding team)

- Musk's Narrative: Leadership framed departures as necessary reorganization for scale, describing them as "parting ways" rather than voluntary resignations to control the narrative

- Real Tensions: Departing engineers expressed a preference for smaller teams with more speed and autonomy, suggesting philosophical disagreement with the scaling approach rather than pure performance issues

- Competitive Impact: Departures create a talent vacuum benefiting OpenAI and Anthropic, while some departing engineers plan a new venture together, potentially creating a new competitor

- Broader Lesson: The departures highlight universal tension between startup speed and scale-up structure, especially acute in frontier AI where rapid research progress depends on autonomous, tight-knit teams

Key Takeaways

- xAI experienced the departure of at least nine engineers, including two co-founders, within one week, bringing total co-founder departures to 50% of the original founding team

- Musk framed departures as strategic reorganization necessary for scaling to 1,000+ employees, but departing engineers indicated preference for smaller team autonomy

- The concentrated timeline and coordinated nature of the departures suggest organizational tensions beyond routine scaling challenges

- At least three departing engineers announced plans for a new venture together, indicating shared philosophical differences about company direction

- The departures reveal a universal tension in frontier AI: the need for startup-level speed and autonomy versus scale-up structure and process

- Competing AI companies like OpenAI and Anthropic gain relative advantage from xAI instability, though xAI's capital base enables recovery


![The xAI Mass Exodus: What Musk's Departures Really Mean [2025]](https://tryrunable.com/blog/the-xai-mass-exodus-what-musk-s-departures-really-mean-2025/image-1-1771000914066.jpg)