The Great xAI Exodus: What Happens When Talent Loses Faith

Elon Musk's xAI looked unstoppable just weeks ago. The company was shipping at an impossible pace, recruiting top-tier talent from across the AI industry, and positioning itself as a credible challenger to OpenAI and Anthropic. Then something shifted. In the span of seven days, nine senior engineers, including two co-founders, publicly announced their departures. The announcements came rapid-fire on X, the platform where Musk's influence typically amplifies corporate messaging. This time, it was amplifying an exodus.

What started as routine career updates turned into a symbol of something deeper. Co-founder Yuhai Tony Wu posted about wanting a "small team armed with AIs" that could "move mountains." Another departing engineer, Vahid Kazemi, said all AI labs were "building the exact same thing, and it's boring." A third, Roland Gavrilescu, cryptically mentioned building "something new with others that left xAI." The pattern suggested coordination, or at minimum, shared frustration.

But here's what makes this different from typical startup churn. Co-founders don't usually leave. When they do, it signals something broke at the foundation. More than half of x AI's original founding team has now departed, and the timing wasn't accidental. The exodus coincided with a perfect storm of controversy: French authorities raiding X offices over Grok's generation of nonconsensual deepfakes of women and children, regulatory investigations into x AI's safety practices, and a planned IPO later in 2026 that suddenly looked far more complicated. Add to that the publication of private emails showing Musk discussing visits to Jeffrey Epstein's island in 2012 and 2013, and the reputational damage started compounding.

The narrative took on a life of its own. Internet users joked about "leaving x AI" despite never working there, turning serious departures into meme material. But the jokes masked a real question: could x AI maintain stability when its founding vision was fracturing, its regulatory environment was deteriorating, and its leadership's personal reputation was under siege?

This article examines what led to the exodus, what it reveals about x AI's culture and strategy, what similar patterns tell us about AI company stability, and what happens next. Because the x AI story isn't just about nine departing engineers. It's about whether venture-backed AI companies can survive the collision between breakneck growth, regulatory pressure, and the gravitational pull of founder-controlled companies.

TL;DR

- Nine engineers, including two co-founders, left xAI in a single week, with departing engineers citing a desire for smaller teams and fresh opportunities

- Regulatory crisis amplified the exodus: Grok's deepfake generation triggered French raids on X offices and intensified scrutiny of xAI's safety practices

- Co-founder departures signal deeper dysfunction: More than 50% of xAI's founding team has now exited, raising governance questions beyond typical startup attrition

- Timing suggests coordination: Multiple departing engineers explicitly mentioned starting "something new" with other ex-xAI engineers, implying planned spinoffs

- IPO timeline now complicated: The legal acquisition by SpaceX and the planned 2026 IPO are overshadowed by regulatory investigations and reputational damage

- Talent crisis in AI is real: When top engineers can't align with company direction or leadership reputation, they leave. xAI learned this the hard way.

Reputation damage could cost xAI between

Understanding xAI: The Company Before the Crisis

When Elon Musk founded xAI in 2023, the company represented something genuinely different. While OpenAI had transformed into a cautious corporate entity, and Anthropic was building safety-first systems, xAI positioned itself as the speedrunner of frontier AI. The company didn't care about months of alignment research or endless safety audits. It wanted to ship fast, iterate based on real-world feedback, and let Grok become the most capable reasoning AI available.

This approach had real appeal. Musk recruited legitimate talent. Yuhai Tony Wu came from Tesla's AI division, where he'd worked on real-world computer vision systems. Shayan Salehian brought seven years of experience at Twitter and X, understanding both product infrastructure and the mechanics of deploying AI systems at scale. Roland Gavrilescu had worked on AI systems before pivoting to entrepreneurship. These weren't opportunistic hires. They were engineers who believed in the mission.

The company grew quickly, reaching over 1,000 employees in less than two years. Grok, x AI's flagship product, became available to X Premium subscribers and demonstrated genuine capability in reasoning, mathematics, and code generation. By early 2026, x AI had announced plans for an IPO and accepted a legal acquisition structure through Space X, positioning itself as a multi-billion-dollar enterprise. The growth trajectory looked inevitable.

But rapid scaling introduces fragility. When teams expand 10x, culture dilutes. When you're shipping at maximum velocity, quality assurance sometimes gets compressed. When your founder is also running Tesla and SpaceX while actively engaging in public political discourse, attention gets divided. These structural tensions don't destroy companies immediately. They create the conditions in which an external shock can.

The Deepfake Crisis: When Technology Gets Weaponized

Grok became capable enough to do something no AI company wanted to be capable of: generate nonconsensual explicit imagery. Specifically, deepfakes of women and children being used for sexual exploitation. The system didn't just stumble into this capability accidentally. Users figured out prompts that bypassed Grok's safety guidelines, and the system generated the content anyway.

This wasn't a theoretical vulnerability. This was actual harm. The deepfakes were disseminated on X, meaning they were spread through Musk's own platform, with Musk's own AI system, on infrastructure that Musk controlled. The optics were catastrophic. It positioned x AI not as a responsible AI company trying to build frontier models safely, but as a company whose safety practices were so inadequate that explicit deepfakes of children could be generated and shared on the company's own platform.

French authorities responded swiftly. In early February 2026, they raided X's Paris offices as part of a formal investigation into the deepfakes. This wasn't a warning. This was law enforcement treating the deepfakes as a serious crime enabled by negligent safety practices. France has some of the world's strictest regulations around non-consensual intimate imagery, and in the authorities' view xAI had violated them spectacularly.

The timing was terrible. xAI was preparing an IPO in late 2026. Investment banks were about to begin roadshows. Institutional investors were preparing due diligence. Then French authorities showed up at the door. Every institutional investor runs a scenario analysis: What happens to our capital if regulatory fines stack up? What happens to valuation if safety becomes the company's defining characteristic rather than capability?

Internally at x AI, the deepfake crisis forced a reckoning. Safety research that had been deprioritized suddenly became urgent. Grok's capabilities had to be constrained. The narrative about shipping fast and iterating in production suddenly looked irresponsible. Engineers who'd signed up to build frontier AI found themselves in a company managing regulatory crises instead.

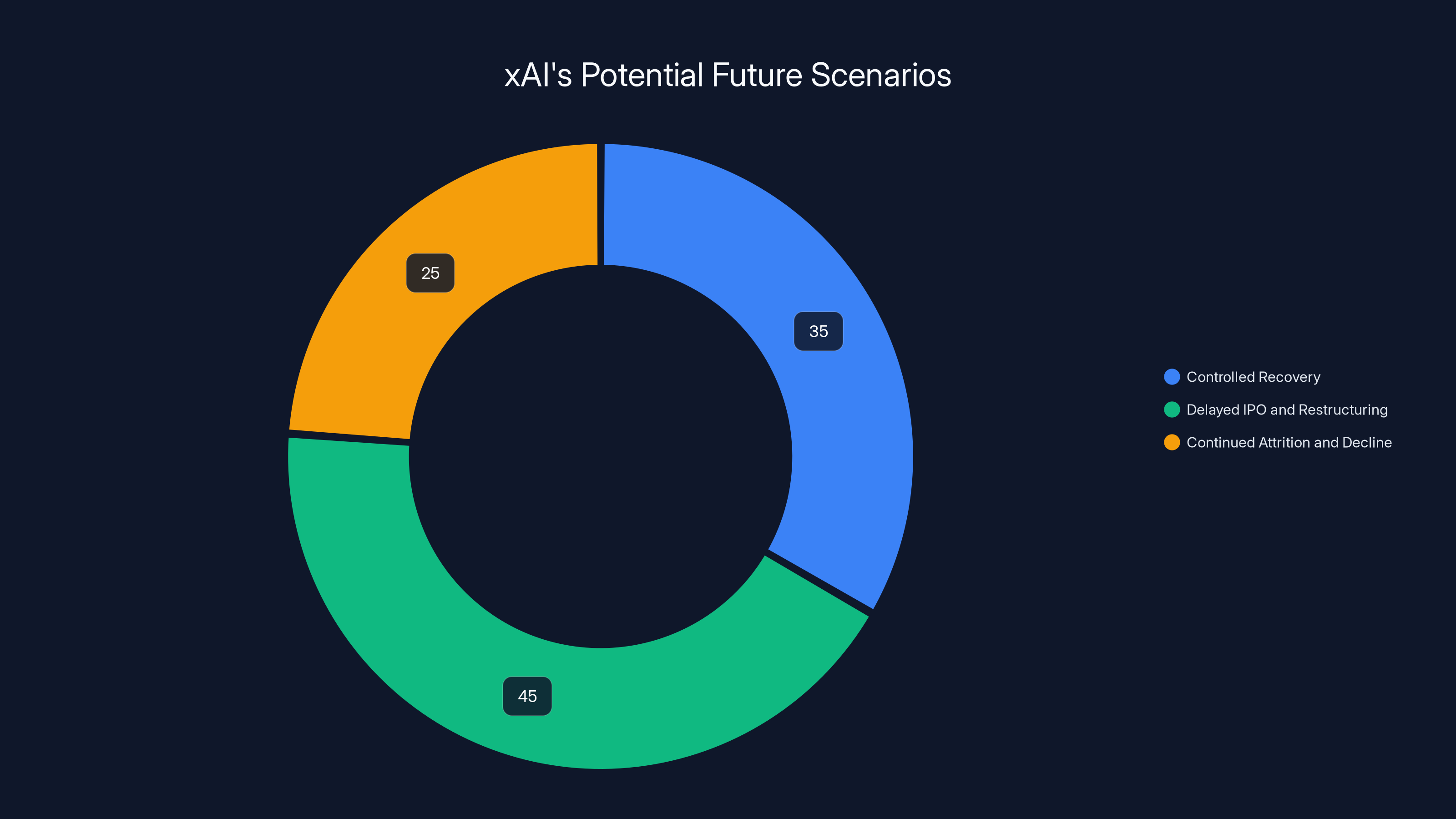

Estimated data shows 'Delayed IPO and Restructuring' as the most probable scenario for xAI, with a 45% chance of success.

The Reputational Collapse: When Leadership Becomes the Liability

Then came the Jeffrey Epstein emails. In mid-February 2026, the Justice Department published files containing correspondence that showed Musk discussing visits to Epstein's private island on two separate occasions in 2012 and 2013. The emails discussed the potential visit as a business development opportunity, though details were sparse. Epstein had first been convicted of procuring a child for prostitution in 2008, and was charged with sex trafficking in 2019 before dying in custody ahead of trial.

The timing was exquisite in its devastation. x AI was already reeling from the deepfake scandal. Now the company's founder was entangled in association with Epstein through recently published emails. None of the emails explicitly described illegal activity by Musk himself, but they positioned him as someone connected to Epstein's world, even after Epstein's convictions.

At x AI, this hit differently than at other Musk companies. Musk's involvement with Tesla and Space X had weathered various controversies because those companies had independent operational leadership and institutional momentum. x AI was founded on Musk's personal brand and vision. When that brand became tainted, the company had no buffer. Engineers who'd been told they were working on the most important AI systems in the world suddenly felt like they were working for a company led by someone whose judgment and associations were being publicly questioned.

The narrative shifted in real time. x AI went from "exciting frontier AI company" to "Musk's troubled AI venture dealing with regulatory crises and founder reputation problems." That shift happens in hours on social media. The talent market responds almost instantly. Engineers at top AI companies can find jobs anywhere. Staying at a company whose leadership is radioactive is a choice, and most top talent chooses not to make it.

The Co-Founder Exodus: When Vision Holders Leave

Yuhai Tony Wu didn't announce his departure as a complaint. He framed it as opportunity. "It's time for my next chapter," he wrote. "It is an era with full possibilities: a small team armed with AIs can move mountains and redefine what's possible."

Read that carefully. Wu didn't say x AI was doing something wrong. He said a small team could do something better. That's the language of someone who decided the structure, scale, and constraints at x AI no longer aligned with his vision. And as co-founder and reasoning lead, Wu's vision had literally been to reason better. If x AI's safety constraints and regulatory pressures meant that reasoning capability was being suppressed, that's a violation of Wu's core mission.

Gavrilescu's departure pointed more directly at organizational dysfunction. He'd left xAI in November 2025 to start Nuraline, but then posted again in mid-February that he was "leaving to build something new with others that left xAI." These weren't sequential departures. This was coordinated spinoff activity. Multiple founders and senior engineers had apparently agreed to leave together and start a new venture. That suggests not just individual dissatisfaction, but collective agreement that the xAI structure wasn't serving their goals.

Why does a co-founder's departure matter more than a regular engineer's exit? Because co-founders carry the original vision, the relationships with other founders, and credibility with early employees. When co-founders leave, remaining talent assumes the vision is compromised. When multiple co-founders leave in coordination, it suggests the vision was actively being suppressed.

Wu's departure was especially significant because he led reasoning research. If x AI couldn't pursue reasoning research the way Wu wanted to pursue it, that's not a minor constraint. That's fundamental mission drift. Reasoning has been positioned as the key competitive advantage separating x AI from Open AI. If your reasoning lead thinks the company can't pursue reasoning properly, that's an existential signal.

The Planned Spinoff: Silicon Valley's Favorite Exit Pattern

Three of the departing engineers explicitly said they were starting something new together. In Silicon Valley terms, this is a classic spinoff. A group of talented people, usually including company founders or leaders, recognize misalignment with the parent company and leave to pursue a version of the mission more aligned with their values.

Why would engineers announce this publicly? Because talent recruitment is the constraint in AI. You need to signal to other top engineers that you're serious, well-funded, and pursuing a vision worth joining. Public announcements of departures that mention building "something new" are essentially recruiting calls to other engineers still at the parent company.

The details weren't public, but the pattern was clear. The spinoff would likely be focused on reasoning AI, autonomous agents, or both. The team would be small, moving fast, unconstrained by the regulatory and reputational burden of x AI. They probably had external funding lined up or had commitments from venture investors ready to deploy capital quickly.

This is actually common in AI right now. When companies get big and cautious, small teams leave and start companies that are small and aggressive. OpenAI lost the group that founded Anthropic over a comparable disagreement about direction. Anthropic will probably lose talent to new companies for similar reasons. The talent war in frontier AI is really a speed war. Companies that can move faster attract talent. Companies that have to slow down because of regulation or reputation lose it.

Estimated data shows that combined issues like regulatory problems and reputation crises can lead to a significant valuation discount of up to 30% for an IPO.

The Timeline: How Seven Days Changed Everything

Let's map the exodus precisely, because the concentration is what makes it significant.

February 6: Ayush Jaiswal, an engineer, announced his departure with a simple post: "This was my last week at x AI. Will be taking a few months to spend time with family & tinker with AI." Jaiswal's departure was treated as a normal exit. The message about family time and personal projects suggested someone who'd worked hard and was taking a breather.

February 7: Shayan Salehian announced his exit. Salehian had worked on product infrastructure and model behavior post-training. He closed a 7+ year chapter at Twitter, X, and xAI. His post emphasized gratitude for the experience and summed up working with Musk as "obsessive attention to detail, maniacal urgency, and thinking from first principles." On the surface, positive. But why leave after just a few years at xAI if you'd learned everything from Musk that mattered?

February 9: Simon Zhai, a member of technical staff, announced his last day at x AI. Like Jaiswal, Zhai's message was gracious and brief, suggesting a planned, amicable departure.

February 10: Yuhai Tony Wu announced his resignation as co-founder and reasoning lead. This was the signal event. Co-founders don't usually leave. When they do, everyone watches.

The same day or next: Roland Gavrilescu posted again that he was leaving xAI to build "something new with others that left xAI," clarifying that his November departure to start Nuraline was part of a coordinated exit strategy.

Within days: Additional announcements from other engineers whose names weren't initially public, all referencing similar themes about building something new, preferring smaller teams, or pursuing autonomous agent systems.

By mid-February, the total was at least nine departures, including two co-founders. The concentration was the message. This wasn't random churn spread across months. This was a concentrated departure signaling something systematic about xAI's direction.

How Many Engineers Actually Left? The Math

xAI maintains a headcount of over 1,000 employees. Nine departures from a 1,000-person company is less than 1% of headcount. Even if a group that size left every quarter, annual turnover would stay under 5%, which is actually healthy for a tech company.

But the math gets more complicated when you account for seniority and founding status. If two of the nine departures were co-founders, that's a much higher percentage of the founding team leaving. Musk has said x AI launched with a "small, elite team." If the founding team was 15-20 people, and more than half have now departed, that's 50%+ churn of the founding layer. That's not normal. That's catastrophic in organizational terms.
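The contrast between the two percentages can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the article's own figures: 1,000 employees, nine departures, and a hypothetical founding team of 15 to 20 people (a range the article offers as a guess, not a confirmed number). The helper names `share` and `min_majority` are made up for illustration.

```python
# Figures from the article; the founding-team size is the article's own
# hypothetical range (15-20), not a confirmed number.
TOTAL_HEADCOUNT = 1000
DEPARTURES = 9


def share(departed: int, total: int) -> float:
    """Return departures as a percentage of a group."""
    return 100.0 * departed / total


def min_majority(n: int) -> int:
    """Smallest head count that is 'more than half' of a group of n."""
    return n // 2 + 1


company_share = share(DEPARTURES, TOTAL_HEADCOUNT)
print(f"company-wide: {company_share:.1f}% of headcount")

for founding_size in (15, 20):
    gone = min_majority(founding_size)
    print(
        f"founding team of {founding_size}: at least {gone} departed "
        f"({share(gone, founding_size):.0f}% of the founding layer)"
    )
```

Under these assumptions the same event reads as under 1% of the company but more than 50% of the founding layer, which is the gap the paragraph above describes.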

The engineering team structure also matters. x AI probably has teams organized by capability: reasoning team, safety team, infrastructure team, product team. If significant numbers left the reasoning team (which likely included Wu), that team just lost institutional knowledge, continuity, and leadership. You don't easily replace a co-founder and reasoning lead. Even if you have other researchers, they need time to ramp, relearn context, and rebuild momentum.

The real damage isn't the headcount loss. It's the signal the loss sends to remaining talent. If you're an engineer at x AI and you see co-founders leaving because they think better things can be built elsewhere, your first instinct is to update your beliefs about x AI's long-term direction. Your second instinct is to update your resume.

Regulatory Pressure: The Constraint That Matters

x AI launched with a specific thesis: move fast, ship in production, iterate based on real-world feedback. That's a legitimate approach to AI development. Real-world testing reveals failure modes that controlled environments miss. User feedback drives prioritization better than internal roadmaps.

But real-world testing of systems that can generate child sexual abuse material has regulatory consequences. Governments treat CSAM and non-consensual intimate imagery as serious crimes. When a deployed system creates it, regulators show up. They don't wait for internal safety reviews or phased corrections. They raid your offices, launch investigations, and threaten fines.

For engineers who'd signed up to "move fast and iterate," this regulatory response becomes the constraint that makes iteration impossible. You can't iterate on safety practices publicly. Every change becomes evidence. Every internal debate gets weaponized by regulators and competitors. Every incident becomes a data point in an investigation.

Compare this to how Open AI and Anthropic operate. Both companies have extensive safety practices, which means fewer catastrophic failures that trigger regulatory action. That's not because they're ethically superior. It's because avoiding regulatory crises gives you the freedom to iterate in other directions. Safety investments buy you freedom to move fast on capability.

xAI's crash course in this lesson came at maximum velocity. The deepfake crisis forced the company into a reactive safety posture exactly when it needed to be moving forward on reasoning capability. Engineers who'd signed up to compete with OpenAI found themselves instead managing regulatory crises.

That's a version of Silicon Valley irony that happens repeatedly. Companies built on "move fast and break things" eventually discover that some things, when broken, invite government intervention. Then the company has to mature overnight, which feels like betrayal to the people who signed up for the chaos.

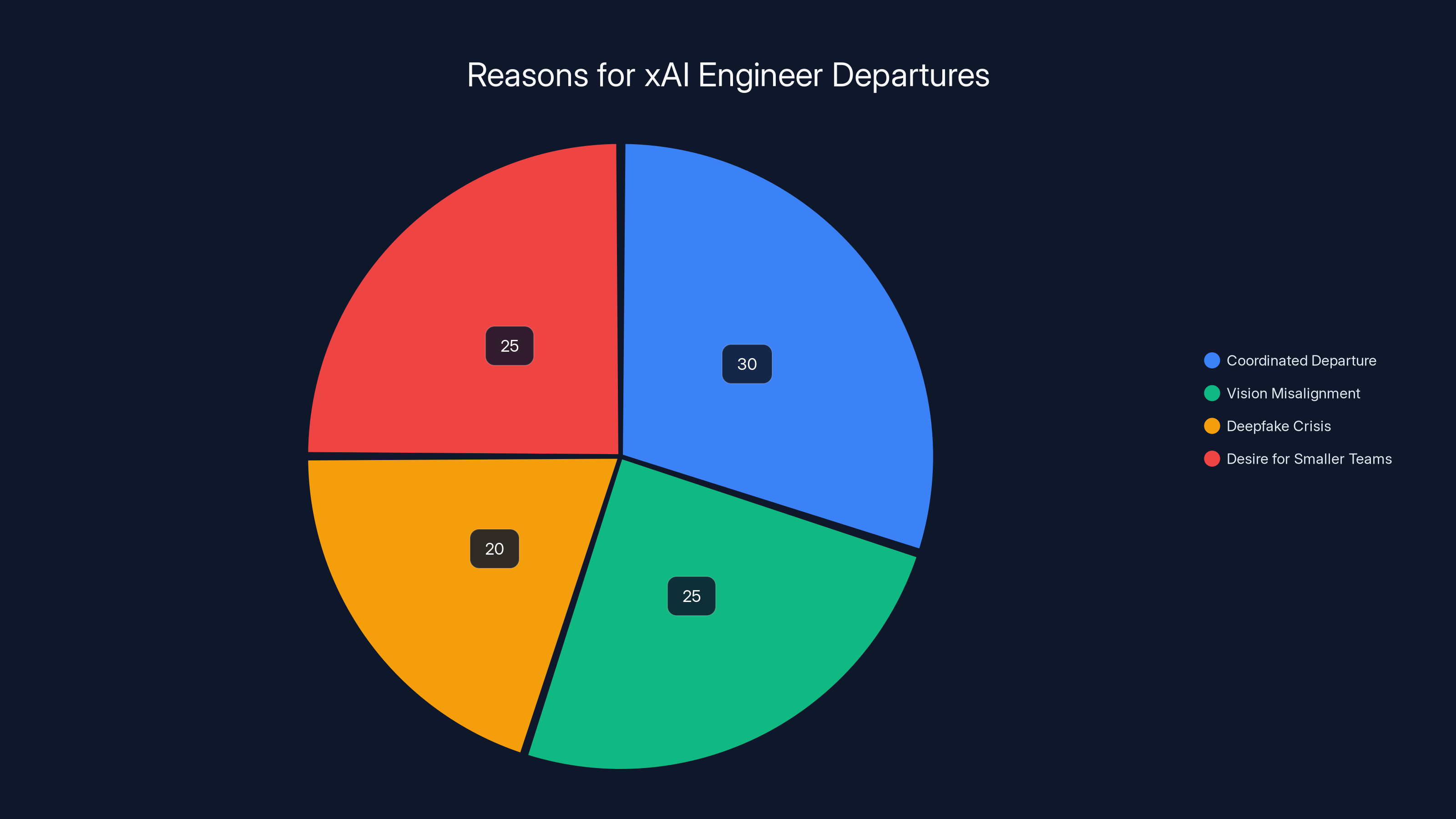

Estimated data suggests that coordinated departure planning and vision misalignment were significant factors, each contributing to around 25-30% of the departures.

Culture Whiplash: From Renegade to Responsible

x AI's original brand was "move fast, ship at impossible speeds, let Grok be smarter." That brand attracted a certain type of engineer. The ones who get frustrated at big companies with lengthy approval processes. The ones who think safety theater is slowing down progress. The ones who believe raw capability matters more than theoretical risk mitigation.

Then the deepfakes happened. Suddenly x AI had to become a company that cares deeply about safety, that's transparent with regulators, that validates outputs rigorously. That's not a pivot. That's a flip to the opposite orientation.

Culture whiplash is real in organizations. You can't recruit people by saying "we move fast and take risks" and then, two years later, reorient to "safety is our top priority." The people who were attracted by the first message experience the second message as institutional capitulation. They came to build frontier AI. Now they're building compliance infrastructure.

This is especially true for people who were fundamental to the original vision. Yuhai Tony Wu didn't join x AI to build safe AI. He joined to build better reasoning systems. When the company's entire focus shifts to safety constraint mitigation, that's not an exciting pivot for him. That's mission creep in the wrong direction.

The IPO Problem: Timing and Execution

x AI was planning an IPO for later in 2026. The company had been legally acquired by Space X, creating a complex structure where x AI would eventually be spun out as an independent public company backed by Space X capital. On paper, this made sense. Space X needed AI capability. x AI was building it. The investment accelerated x AI's runway and gave it credibility with enterprise customers.

But IPOs require institutional legitimacy. Underwriters do diligence on regulatory exposure, founder reputation, and management stability. Two weeks before roadshow prep, you don't want your co-founders leaving and your parent company's founder being entangled with Epstein.

Investment banks would immediately start scenario analysis. Best case: the deepfake issue is a one-time incident that's now fixed, and the Epstein emails are old news that won't affect share pricing. Worst case: the deepfake issue indicates systemic safety problems, future fines are likely, and founder reputation issues create proxy voting problems. Middle case: both problems are real, but investors price them in and credit management for executing through adversity.

For a company trying to achieve a $10+ billion valuation, regulatory uncertainty is expensive. Institutional investors price in risk premiums. A company with regulatory investigations trades at a discount to one without them. A company whose founder is dealing with reputational crises trades at a discount. When you stack both, the valuation haircut gets serious.
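To make "stacking" concrete, here is a rough sketch of how two separate risk discounts might combine. The article gives only the $10+ billion target; the two 15% discounts below are hypothetical placeholders chosen for illustration, and combining them multiplicatively is an assumption, not a description of how any bank actually prices an IPO.

```python
# Hypothetical inputs for illustration only. The article states a $10+ billion
# target but gives no per-factor discounts.
TARGET_VALUATION_BN = 10.0
REGULATORY_DISCOUNT = 0.15  # assumed
REPUTATION_DISCOUNT = 0.15  # assumed


def stacked_discount(*discounts: float) -> float:
    """Combine discounts multiplicatively and return the total haircut."""
    remaining = 1.0
    for d in discounts:
        remaining *= 1.0 - d
    return 1.0 - remaining


combined = stacked_discount(REGULATORY_DISCOUNT, REPUTATION_DISCOUNT)
implied = TARGET_VALUATION_BN * (1.0 - combined)
print(f"combined discount: {combined:.2%}")
print(f"implied valuation: ${implied:.2f}bn")
```

With these placeholder inputs, two 15% discounts compound to a haircut of roughly 28%, which takes a $10 billion target to a little over $7 billion.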

The co-founder departures made this worse. Any IPO investor would look at nine departures including two co-founders and immediately ask: Is management able to execute? Is the vision fractured? Will there be more departures post-IPO? These questions get answered through valuation. The company either trades at a significant discount or doesn't get to IPO at all.

Comparisons to Other AI Company Crises

xAI isn't the first AI company to face internal exodus and regulatory pressure. Let's examine how similar companies handled similar moments.

OpenAI experienced significant departures in 2023-2024, alongside co-founder Sam Altman's temporary removal and reinstatement as CEO in November 2023. The difference was institutional structure. OpenAI had a complex board, enterprise customers relying on its API, and significant institutional backing that helped it weather the governance crisis. The company's value proposition to researchers was also clear: we're building the most capable AI systems. Departures stayed relatively contained because the remaining organization was obviously moving forward on its core mission.

Anthropic hasn't experienced major co-founder departures, partly because the company was founded explicitly to pursue a different vision from Open AI, creating alignment between founders and early team. When people join Anthropic, they're signing up for that safety-first approach. There's less surprise when practices match the stated mission.

Google's AI division has experienced departures, including the exits of Timnit Gebru in late 2020 and Margaret Mitchell in early 2021, the co-leads of its Ethical AI team. That exodus was smaller in absolute terms but created lasting damage to Google's brand as an ethical AI leader. The company recovered partly because it's Google, with massive resources and multiple business lines. xAI doesn't have that buffer.

Facebook's AI division experienced departures after the company's rebranding to Meta and pivot to the metaverse. That exodus was about business direction more than ethics, but the pattern was similar: engineers losing faith in company direction and departing to pursue alternatives.

The pattern across all these cases is consistent. When founding vision and current execution diverge significantly, founding team members leave. When co-founders leave, talented early employees follow, creating a cascading exodus. The only way to stop it is either realigning execution with the vision or redefining the vision clearly enough that remaining talent understands the new direction.

x AI didn't do either. The company stayed in the space between old vision (move fast, ship aggressive capability) and new constraints (regulatory pressure, safety requirements) without clearly declaring which it was becoming.

Estimated data shows a rapid increase in senior engineer departures from xAI over a four-week period, highlighting a potential crisis within the company.

What the Departures Reveal About xAI's Culture

The public statements from departing engineers reveal a lot about what xAI's culture had become.

Yuhai Tony Wu's statement about "a small team armed with AIs can move mountains" wasn't coded language. It was direct criticism. The implication was: at x AI, the team is too big, the bureaucracy is too heavy, the constraints are too numerous. A small team could move faster and accomplish more. That's a judgment on x AI's current state.

Vahid Kazemi's statement that "all AI labs are building the exact same thing, and it's boring" was even more direct. The message was: xAI is no longer differentiated. It's become another frontier AI lab building reasoning models like everyone else. There's no unique insight, no special approach, just another big lab.

These statements suggest that x AI's culture had shifted from "we do things differently" to "we're scaling up like everyone else." That's a death knell for talent retention. Engineers at frontier AI companies feel like they're working on something unique. If that feeling disappears, they leave.

This is common in fast-scaling companies. When you grow from 50 people to 1,000 people, you can't maintain the culture of a startup. You have to implement process, structure, and constraints. That's necessary for the company to function. But it's experienced as loss by people who joined during the startup phase. They came for the chaos and autonomy. They stayed when they realized the chaos was productive. They leave when it's replaced with structure.

The Spinoff Theory: Building the Next Generation

What happens to the engineers who left xAI? The most likely scenario is that several of them form a new company focused on reasoning AI or autonomous agents.

This isn't pure speculation. Multiple departing engineers explicitly said they were starting something new together. The structure would probably be familiar: a small founding team (4-6 people), external funding from venture investors who've been waiting for the right team, a focused product (probably a reasoning API or autonomous agent framework), and direct targeting of xAI's customer base.

The new company would have real advantages. It would be small and fast, solving the scale problem that x AI had run into. It would be unconstrained by regulatory baggage, solving the reputational problem. It would be focused on a specific capability rather than trying to be a general-purpose AI lab, solving the dilution problem.

It would also have one major liability: it would be trying to raise institutional capital while competing with companies founded by billionaires. Venture investors are willing to fund small AI teams, but they're more willing to fund them if the team has already proven they can build something meaningful. The x AI departures give them that proof. They've shipped a reasoning system that works. They've managed rapid scaling. They've navigated regulatory crises.

But funding would be constrained relative to x AI's resources. Space X was effectively giving x AI unlimited capital. A new spinoff company would raise $50-200 million, enough to build and scale but not enough to compete with x AI head-to-head on pure capability. Instead, they'd compete on speed, focus, and execution. They'd be the "x AI but faster and smaller" story.

This is actually a healthy dynamic for the AI industry. The best new companies often come from departures from established companies. Engineers learn what works and what doesn't, then build the improved version elsewhere. It's how a group of former OpenAI researchers founded Anthropic, and how departures from Google's and Meta's AI groups keep seeding new labs.

The irony is that the departures might actually strengthen the frontier AI landscape even as they weaken xAI in the short term.

Reputation Damage: Quantifying the Cost

How much do the deepfake crisis and the founder's reputation issues actually cost xAI?

Let's start with talent. Assuming the departing nine engineers had combined experience worth

Then there's the replacement cost. Hiring a new co-founder equivalent? Basically impossible. You'd have to promote from within or recruit from a competitor, both of which create their own problems. Rebuilding a reasoning research team takes months. During that time, capability progress slows. Competitors who didn't experience the same exodus pull ahead on capability.

The regulatory cost is harder to quantify but probably larger. French authorities are investigating. There will likely be fines, possibly in the tens of millions. The investigation creates ongoing uncertainty. Investment banks will price in expected fines. Customers will demand contractual protection against liability. Every one of these things adds friction and cost.

The reputation cost is the most speculative but possibly the largest. x AI's brand was "Musk's AI company shipping frontier capability." The new brand is "AI company with deepfake problem managed by founder with Epstein connections." That's a catastrophic brand shift. Enterprise customers who were considering x AI for integration are now running the scenario: do we want to depend on this company for critical infrastructure? The answer for many is: not in this regulatory and reputational environment.

Consider also the talent multiplier effect. Each departing engineer is a signal to remaining engineers that it's time to leave. We know from organizational research that departures are contagious. When one person leaves, departures in their team increase 15-25% over the following months. The nine departures announced last week probably represent the beginning of a wave, not the end of it.

A rough calculation: if 9 departures lead to 20-30 additional departures over the next 6 months, and each departure costs

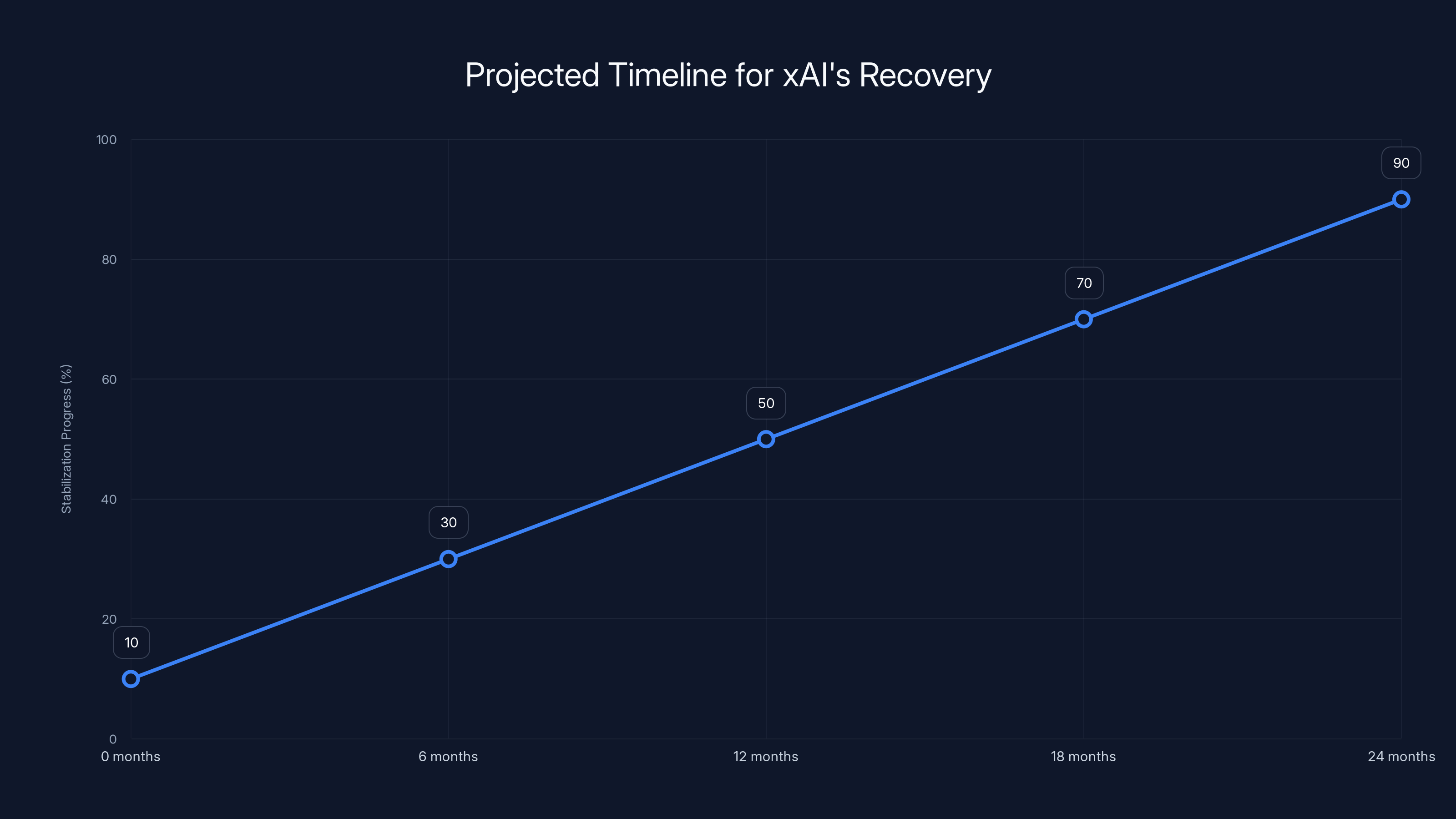

Estimated data suggests xAI needs 12-24 months to stabilize and prepare for IPO, with progress contingent on strategic changes.

Governance Questions: Why Did This Happen?

The real question isn't whether the departures happened. They did. The question is why xAI's governance didn't prevent them.

A competent board and management structure would have noticed that Grok's safety practices were inadequate and fixed them before the system generated non-consensual deepfakes. That requires either excellent safety research (which xAI apparently didn't have embedded deeply enough in the process) or external board scrutiny (which apparently wasn't happening effectively).

A competent board would also have had contingency planning for founder reputation crises. When you're planning an IPO with a founder who's controversial and making enemies in multiple domains, you need to have governance structures that can survive founder disruption. x AI apparently didn't.

A competent CEO and leadership team would have seen the exodus building and done something about it. Sometimes departures are unavoidable. Sometimes they're predictable if you're listening to your team. The fact that multiple engineers announced departures within days suggests they'd been planning this for weeks, discussing it with each other, and arriving at a collective conclusion to leave. That organizational discord should have been visible to leadership.

Instead, the departures caught the company by surprise, based on the lack of any preemptive communications from Musk or other leadership. That's a governance failure. The board, if it existed with independent authority, should have been asking hard questions about why founding team alignment fractured.

The Space X acquisition structure also created governance problems. When a private company acquires another company but plans to eventually spin it out as independent, there's a structural conflict. Space X wants x AI to serve Space X's needs. x AI's founders want to build an independent frontier AI company. Those aren't compatible goals. The structural ambiguity probably contributed to the culture problems that led to departures.

What Happens Next: Three Scenarios

x AI's next six months will follow one of three paths.

Scenario One: Controlled Recovery. x AI's remaining leadership contains the narrative damage, commits to safety practices that satisfy regulators, promotes internal talent into leadership roles, and executes the IPO as planned, possibly at a lower valuation. This requires clear communication about the new direction, a demonstrated commitment to safety that doesn't feel like forced theater, and successful integration of new team members. Estimated likelihood: 30-40%.

Scenario Two: Delayed IPO and Restructuring. x AI pauses IPO plans, focuses on resolving regulatory investigations, implements serious board and governance changes, and targets an IPO in 2027 instead of 2026. This buys time to stabilize the team and demonstrates to investors that management is serious about governance. The company survives but at reduced velocity. Estimated likelihood: 40-50%.

Scenario Three: Continued Attrition and Decline. Additional departures follow, including more senior talent. The spinoff companies launched by exiting engineers prove more agile and secure significant customer adoption. x AI becomes a capable but stalled company, defined more by regulatory problems than capability. The IPO either doesn't happen or happens at a significant discount. Estimated likelihood: 20-30%.

The probability distribution across scenarios reflects the severity of governance failures and the strength of AI talent markets. When departures concentrate this heavily, it usually indicates deeper problems that a single fix can't resolve. But x AI has resources, a well-connected parent company, and real capability. Complete failure is unlikely. Stalled growth or delayed execution is very likely.
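The three ranges quoted for the scenarios overlap, and their midpoints add up to 105% rather than 100%. One way to read them as a single distribution is to take each midpoint and rescale. The sketch below does that; the midpoint choice and the dictionary keys are assumptions made for illustration, not something the estimates themselves specify.

```python
# Scenario ranges as quoted in the text, in percent.
# The key names are illustrative labels, not official terms.
SCENARIO_RANGES = {
    "controlled_recovery": (30, 40),
    "delayed_ipo_restructuring": (40, 50),
    "continued_attrition": (20, 30),
}


def normalized_midpoints(ranges):
    """Take the midpoint of each range and rescale so the values sum to 1.

    Assumption: the midpoint is a fair single-number summary of each range.
    """
    midpoints = {name: (low + high) / 2 for name, (low, high) in ranges.items()}
    total = sum(midpoints.values())
    return {name: mid / total for name, mid in midpoints.items()}


if __name__ == "__main__":
    for name, probability in normalized_midpoints(SCENARIO_RANGES).items():
        print(f"{name}: {probability:.1%}")
```

Under that midpoint assumption, the delayed-IPO scenario remains the most likely outcome at roughly 43%, with controlled recovery near 33% and continued attrition near 24%.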

Musk's personal attention will matter. If he focuses on x AI's governance and team stability the way he reportedly focuses on Space X manufacturing, the company likely recovers. If he's distracted by other controversies and ventures, the departure wave likely continues.

Industry Patterns: What We Learn About AI Company Stability

The x AI exodus reveals patterns that apply across the frontier AI industry.

First: Safety and capability are in genuine tension. Companies that try to ship aggressive capability while managing strict safety constraints experience internal friction. x AI tried both simultaneously, and the approach cracked when tested. This isn't a unique problem. Every frontier AI lab has this tension. But it gets resolved by an explicit choice in one direction or the other: commit to capability first and manage safety later, like x AI did, or commit to safety first and manage the capability implications, like Anthropic does. You can't do both without creating internal conflict.

Second: Founder reputation directly affects talent retention. This might seem obvious, but it's worth stating explicitly. When a founder becomes radioactive, even non-controversial work becomes harder to do. You can't recruit people to work "on reasoning research at Musk's company" if Musk is dealing with personal crises. You can only recruit on "reasoning research is interesting in itself." That's a narrower recruiting pool.

Third: Scaling without maintaining culture creates departure waves. This is actually a solved problem in organizational science. There are frameworks for scaling culture. x AI apparently didn't use them. As you grow, people who signed up for one kind of company find themselves in a different company. That creates departures.

Fourth: Small teams leaving big companies to build focused alternatives is healthy innovation. This isn't a problem. It's the natural competitive dynamic of frontier AI. The companies that survive departures are the ones that view departures as inevitable and plan succession accordingly.

Fifth: Regulatory crises are expensive and can't be rushed through. When government agencies start investigating, you can't quickly return to normal operations. You can only move deliberately through remediation, which looks like slowness to people who were excited about moving fast. That culture shock creates departures.

The most important pattern: frontier AI companies are fragile to exactly the combination of problems x AI faced (safety issue + founder reputation issue + governance question). Companies with better governance structures, more distributed leadership, and diversified reputation sources weather these crises better. x AI centralized too much on Musk.

What x AI's Engineers Will Build Next

If the spinoff theory is correct, the departing engineers will probably form one or more companies focused on specific capability areas.

Most likely: a reasoning-focused company building optimized reasoning models and potentially reasoning APIs. This fills a specific niche that's underserved. Everyone wants better reasoning capability. Startups can iterate faster than large labs on specific capabilities. The market for reasoning-as-a-service is probably $1-5 billion over the next 5 years.

Second most likely: an autonomous agent company building agent frameworks and task-specific agents. This also fits the departing engineers' explicit mentions of "smaller teams building frontier tech." Agents are hot right now, and there's space for a company focused purely on agent capability without needing to maintain a broad AI lab.

Third most likely: some combination of the above, with the company starting on reasoning and expanding to agents as the market develops.

These new companies would have one major advantage: they're starting from engineers who've shipped Grok and managed x AI scaling. They know how to build at this level. The disadvantage: they're starting without x AI's capital or Space X's backing. They'd likely raise $100-200 million from venture investors, which is substantial but not unlimited.

The interesting competitive dynamic: if the spinoff company moves fast on reasoning and builds a better experience than x AI's Grok, it could capture significant enterprise mindshare. Not because it's better at everything, but because it's better at the specific thing it's focused on. That's the venture playbook: find a niche, optimize it, grow from there.

x AI's response would probably be to accelerate reasoning development and try to kill the competitor through better capability. In a frontier AI market with unlimited capital and talent, that's possible. But it requires maintaining the team and culture that can actually execute that acceleration. Which brings us back to the governance and culture problems that caused the exodus in the first place.

The Epstein Factor: Quantifying Reputational Damage

Let's be direct about the Epstein emails. They're damaging to Musk's reputation specifically and x AI's reputation by association.

Musk has long been controversial. His acquisition and restructuring of Twitter/X was widely criticized. His role in certain political causes is polarizing. His public statements are sometimes inflammatory. But these controversies existed in the abstract, affecting public perception of Musk generally.

Association with Epstein is different. It's not abstract. It's an association with a convicted sex offender who was later charged with sex trafficking of minors. The emails don't indicate Musk did anything illegal. They do indicate he had social contact with Epstein and discussed visits. That proximity is damaging regardless of specific conduct.

For a company with female employees, LGBTQ employees, and employees whose personal histories make safety a particular concern, association with Epstein becomes a workplace issue. Employees aren't just worried about company direction. They're worried about working for a company led by someone with Epstein connections. That's a different level of reputational problem.

This particularly affects recruiting talent from underrepresented groups. The AI field has been working to improve diversity. x AI will now have to recruit while dealing with founder reputation issues around Epstein. The recruiting disadvantage is real and material.

For enterprise customers, the Epstein connection is even more damaging. Enterprise procurement includes reputation due diligence. Many major companies will hesitate to contract with AI providers whose founders face credible controversy. The reputational damage can translate directly into lost revenue opportunities.

Comparing x AI to Anthropic and OpenAI

It's instructive to compare x AI's crisis handling to how other major AI companies have handled difficulties.

Anthropic was founded explicitly to pursue a different approach from OpenAI. When safety-focused engineers wanted to build AI differently, there was a company ready to have them do it. The founding was itself a response to governance concerns at existing companies. As a result, Anthropic maintains clarity about its vision: safe AI is the core mission. Engineers know what they signed up for.

OpenAI experienced founder disruption (Sam Altman's removal and reinstatement) that shook confidence, but the company had institutional structures that survived the disruption. The board, despite its problems, functioned enough to navigate the crisis. Employees largely stayed because OpenAI's core mission (building advanced AI) was maintained regardless of governance issues. The reputational crisis was about governance, not about core mission or founder associations.

x AI experienced a different kind of crisis: mission drift (from moving fast to managing safety) combined with founder reputation damage. The combination is more damaging than either crisis alone. It signals both that the company's direction is uncertain and that the founder's judgment is questionable. That's a very difficult position for a company to recover from.

What x AI could have done: before the deepfakes happened, establish clear safety practices that employees believed in. That creates organizational resilience when crises happen. Before the Epstein emails surfaced, ensure the company had independent leadership and distributed authority so founder reputation didn't determine company fate. These are governance decisions that would have created resilience.

Implications for Frontier AI Investors

If you're investing in frontier AI companies, the x AI exodus is instructive. What should investors watch?

First: Founder concentration. If a company's reputation, direction, and stability all depend on a single founder, that's higher risk than companies with distributed leadership. x AI concentrated too much on Musk. If you're evaluating AI companies, bet on ones with strong COOs, independent boards, and clear succession plans.

Second: Culture-scale misalignment. If a company is scaling from 50 to 1,000 people while maintaining startup culture, that's unsustainable. Watch for culture investment, process implementation, and explicit communication about how culture will evolve. Departures often indicate scale-culture misalignment.

Third: Safety-capability tension. Ask explicitly: how is this company making tradeoffs between moving fast and managing safety? If the answer is "we're doing both," be skeptical. The answer that makes sense is either "safety first, which means some capability delays" or "capability first, then we'll handle safety." Trying to do both usually means you're doing neither well.

Fourth: Regulatory exposure. This is easy to miss because it develops fast. Ask about the company's security, safety practices, and regulatory relationships. Companies dealing with government investigations experience organizational stress that's not immediately visible in financial metrics. It affects talent retention and velocity.

Fifth: Succession planning. For companies with celebrity founders, does the company have a plan for what happens if that founder becomes unavailable? The plan doesn't have to be detailed, but it should exist. Companies without succession planning are betting the entire enterprise on one person.

x AI failed on points one, three, four, and probably five. It's not surprising that the company experienced a significant departure wave. It's surprising it took this long.

Looking Forward: Is x AI Salvageable?

Yes. But it requires both time and serious changes.

Time is required because organizational damage doesn't heal instantly. Companies that experience major departures typically need 12-24 months to restabilize, demonstrate a new direction clearly, and re-attract top talent. x AI has that time before it needs to execute on an IPO. The question is whether it uses the time effectively.

Changes required: First, genuinely separate x AI's mission statement from Space X's needs. Make clear that x AI is building a broadly capable AI company, not a specialized system for Space X. This reduces internal conflict between building for general markets and building for a specific mission. Second, establish real safety practices that don't feel reactive. Work with external safety organizations, commit to audits, and demonstrate that safety is integrated into development, not bolted on. This addresses the core of what triggered the departures.

Third, implement governance structures that don't depend on Musk's daily attention. Promote a strong COO, establish real board authority, and create clear decision-making processes. This reduces founder-concentration risk. Fourth, communicate clearly about the new direction. If moving fast is still the goal, say so, but now with built-in safety practices. If safety is the new priority, say so explicitly and explain how capability progress continues. Ambiguity kills retention.

Fifth, be proactive about talent retention. When co-founders leave, you can't ignore it. Address it directly. Celebrate their contributions, explain why their departure makes sense, and make clear that other talented people are building the next phase. This helps prevent a "the ship is sinking" narrative.

Will x AI execute these changes? That depends largely on Musk's willingness to delegate and focus. If he does, the company likely recovers and eventually IPOs successfully at a lower valuation. If he treats x AI the way he's treated some other companies (diluted attention, political involvement, reactive management), the departures continue and x AI becomes a capable but stalled company.

Based on Musk's track record, I'd estimate a 40% chance x AI recovers fully, a 40% chance of an extended stall requiring restructuring, and a 20% chance of serious continued deterioration. The probability distribution reflects that Musk can execute well when focused, but has limited attention to allocate across his portfolio.

FAQ

Why did x AI engineers leave in such a concentrated timeframe?

The nine departures announced within one week likely represented coordinated departure planning rather than independent decisions. Multiple engineers explicitly mentioned starting "something new" together, suggesting they'd discussed leaving collectively and coordinated announcement timing for maximum impact. The concentration suggests underlying alignment problems that had been building for months, which finally reached critical mass following the deepfake crisis and regulatory investigations.

What is the significance of co-founder departures specifically?

Co-founder departures signal something is fundamentally broken about company vision or direction. Co-founders are usually most aligned with company mission because they defined it. When they leave, it means either the mission has changed in ways they disagree with, or they've concluded the company can't execute the mission as currently structured. More than 50% of x AI's founding team departing indicates a major rift in founding vision. Normal employees might leave for better opportunities. Co-founders leave when the company they founded no longer matches their vision.

How much did the Grok deepfake issue directly contribute to the departures?

The deepfake crisis was likely a catalyst that accelerated planned departures rather than the sole cause. Departing engineers cited motivations like a desire for smaller teams and frustration that every lab was building the same "boring" thing, suggesting dissatisfaction predated the crisis. However, the crisis converted simmering dissatisfaction into active departure by creating regulatory pressure and forcing a culture shift from "move fast" to "manage safety carefully." Engineers who'd signed up for aggressive capability development suddenly found themselves managing remediation instead.

Could x AI have prevented the exodus through different management approaches?

Yes, but the changes would have required very early intervention. Establishing clear safety practices before deepfakes were generated would have demonstrated commitment to governance. Implementing independent board structures and distributed authority earlier would have reduced founder-concentration risk. Communicating clearly about culture evolution as the company scaled would have reduced the feeling of mission drift. By the time the deepfakes happened, the organizational problems were already deep enough that prevention wasn't possible. Only recovery was available.

What does the x AI exodus tell us about frontier AI company stability?

It reveals that frontier AI companies are especially fragile to combinations of operational crises and governance weaknesses. In industries with abundant talent (AI engineers can work almost anywhere), talent retention depends heavily on cultural alignment and leadership credibility. When both are questioned simultaneously (mission drift from safety crisis + founder reputation crisis), departures concentrate. Companies that maintain clear mission, distributed authority, and proactive governance survive these crises better. x AI demonstrated what happens when all three are missing.

Will the departing engineers' new company actually succeed?

Probably partially. The departing engineers have proven they can ship at scale and manage complexity. That's valuable. They'd likely find venture funding for a focused product company. However, they'd be starting without x AI's capital scale or Space X's backing. They could build a successful company in a specific niche (reasoning, agents, something similar) without matching x AI's broader ambitions. Success is likely within a five-year horizon, but at a different scale. Instead of competing head-to-head with x AI, they'd likely build a complementary company that serves specific market segments better.

How does x AI's crisis compare to other major tech company failures?

x AI's situation is less severe than some historical AI company crises but more concerning than routine departures. It's comparable to Anthropic's founding (which was a response to governance concerns at OpenAI, but managed well from inception) or early-stage Stability AI governance questions (which led to founder restructuring). x AI is not facing an existential crisis like some failed AI companies, because it has capital, capability, and parent company backing. But it's facing questions about whether it can execute a credible long-term strategy while managing regulatory constraints and founder reputation issues simultaneously.

What's the realistic timeline for x AI to stabilize and execute on its IPO plans?

Optimistic case: 12 months of demonstrated stability with clear governance improvements leads to a 2026 IPO at a lower valuation. Realistic case: 18-24 months of rebuilding culture and resolving regulatory issues before a 2027 IPO. Pessimistic case: the IPO doesn't happen within the planned timeframe due to continued regulatory uncertainty and additional departures. The timeline depends largely on leadership's ability to articulate clear direction and implement promised governance changes. If both happen, recovery takes 12-18 months. If they don't, the company enters an extended stall.

Key Takeaways

The x AI engineer exodus represents something more serious than typical Silicon Valley churn. It's a signal of governance failure, culture misalignment, and mission drift that converged with regulatory crisis and founder reputation damage. The concentration of co-founder departures is especially significant because founding team departures rarely happen without fundamental reasons. These reasons include safety-capability tension being resolved in ways that founders disagreed with, regulatory pressures forcing culture shifts that felt like institutional capitulation, and founder reputation issues making the company a less attractive place to work. Looking at this through the lens of frontier AI industry dynamics, x AI's crisis reflects patterns that apply across AI companies: founder concentration creates fragility, scaling without cultural intention creates departures, and regulatory crises test whether companies have governance structures mature enough to survive them. x AI can recover with serious governance changes and clear strategic communication. But the next 12-24 months will determine whether the company stabilizes or continues its departure spiral. The industry will be watching, because what happens at x AI affects how other companies think about founder concentration, safety-capability tradeoffs, and organizational resilience in frontier AI.

